Difference between Parametric and Non-Parametric Methods

- Probability Distribution

- Confidence Interval

- Mathematics | Covariance and Correlation

- Program to find correlation coefficient

- Robust Correlation

- Normal Probability Plot

- Quantile Quantile plots

- True Error vs Sample Error

- Bias-Variance Trade Off - Machine Learning

- Understanding Hypothesis Testing

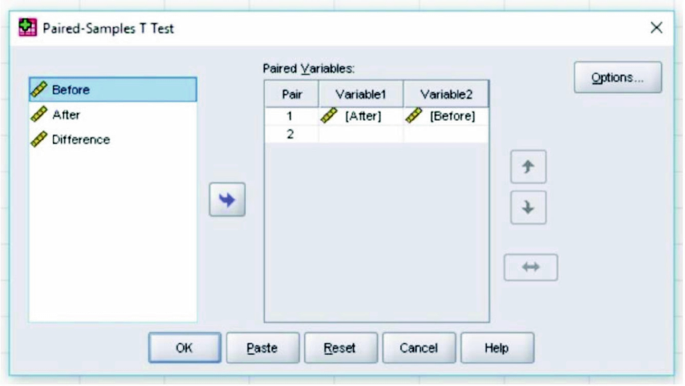

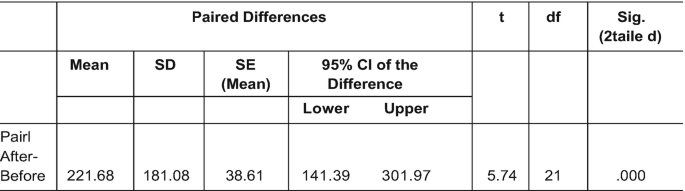

- Paired T-Test - A Detailed Overview

- P-value in Machine Learning

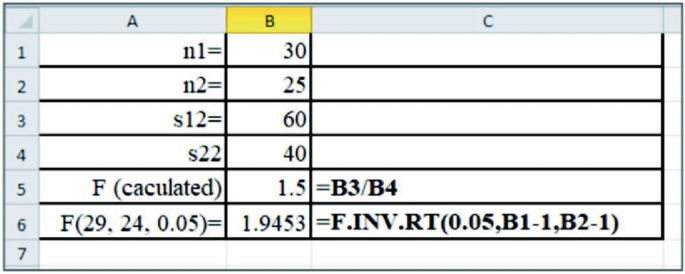

- F-Test in Statistics

- Residual Leverage Plot (Regression Diagnostic)

- Difference between Null and Alternate Hypothesis

- Mann and Whitney U test

- Wilcoxon Signed Rank Test

- Kruskal Wallis Test

- Friedman Test

- Mathematics | Probability

Probability and Probability Distributions

- Mathematics - Law of Total Probability

- Bayes's Theorem for Conditional Probability

- Mathematics | Probability Distributions Set 1 (Uniform Distribution)

- Mathematics | Probability Distributions Set 4 (Binomial Distribution)

- Mathematics | Probability Distributions Set 5 (Poisson Distribution)

- Uniform Distribution Formula

- Mathematics | Probability Distributions Set 2 (Exponential Distribution)

- Mathematics | Probability Distributions Set 3 (Normal Distribution)

- Mathematics | Beta Distribution Model

- Gamma Distribution Model in Mathematics

- Chi-Square Test for Feature Selection - Mathematical Explanation

- Student's t-distribution in Statistics

- Python - Central Limit Theorem

- Mathematics | Limits, Continuity and Differentiability

- Implicit Differentiation

Calculus for Machine Learning

- Engineering Mathematics - Partial Derivatives

- Advanced Differentiation

- How to find Gradient of a Function using Python?

- Optimization techniques for Gradient Descent

- Higher Order Derivatives

- Taylor Series

- Application of Derivative - Maxima and Minima | Mathematics

- Absolute Minima and Maxima

- Optimization for Data Science

- Unconstrained Multivariate Optimization

- Lagrange Multipliers

- Lagrange's Interpolation

- Linear Regression in Machine learning

- Ordinary Least Squares (OLS) using statsmodels

Regression in Machine Learning

Statistical analysis plays a crucial role in understanding and interpreting data across various disciplines. Two prominent approaches in statistical analysis are Parametric and Non-Parametric Methods. While both aim to draw inferences from data, they differ in their assumptions and underlying principles. This article delves into the differences between these two methods, highlighting their respective strengths and weaknesses, and providing guidance on choosing the appropriate method for different scenarios.

Parametric Methods

Parametric methods are statistical techniques that rely on specific assumptions about the underlying distribution of the population being studied. These methods typically assume that the data follows a known Probability distribution, such as the normal distribution, and estimate the parameters of this distribution using the available data.

The basic idea behind parametric methods is that a fixed set of parameters determines the probability model, an idea that is also used in machine learning. Parametric methods are those for which we know a priori that the population is normal or, if it is not, for which we can approximate the sampling distribution of the mean with a normal distribution by invoking the Central Limit Theorem.

The parameters of the normal distribution are as follows:

- Mean

- Standard Deviation

Ultimately, whether a method is classified as parametric depends entirely on the assumptions made about the population.

Assumptions for Parametric Methods

Parametric methods require several assumptions about the data:

- Normality: The data follows a normal (Gaussian) distribution.

- Homogeneity of variance: The variance of the population is the same across all groups.

- Independence: Observations are independent of each other.

What are Parametric Methods?

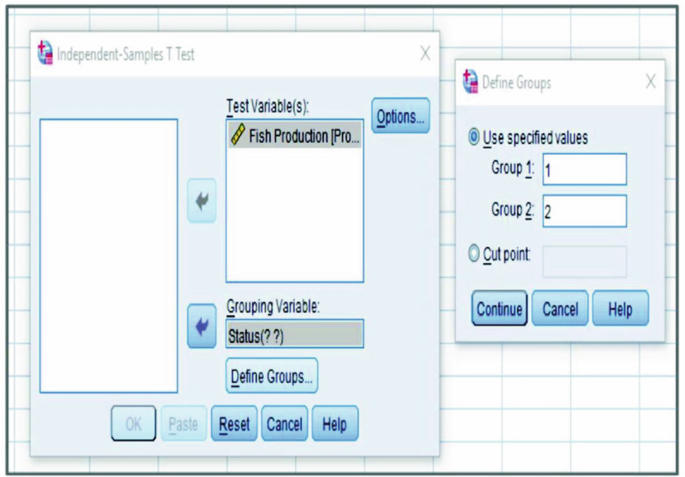

- t-test: Tests for the difference between the means of two independent groups.

- ANOVA: Tests for the difference between the means of three or more groups.

- F-test: Compares the variances of two groups.

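- Chi-square test: Tests for relationships between categorical variables. It is often classified as a non-parametric test instead, because it does not assume a normally distributed population.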

- Correlation analysis: Measures the strength and direction of the linear relationship between two continuous variables.

- Linear regression: Predicts a continuous outcome based on a linear relationship with one or more independent variables.

- Logistic regression: Predicts a binary outcome (e.g., yes/no) based on a set of independent variables.

- Naive Bayes: Classifies data points based on Bayes’ theorem and assuming independence between features.

- Hidden Markov Models: Models sequential data with hidden states and observable outputs.

Some other common parametric methods are:

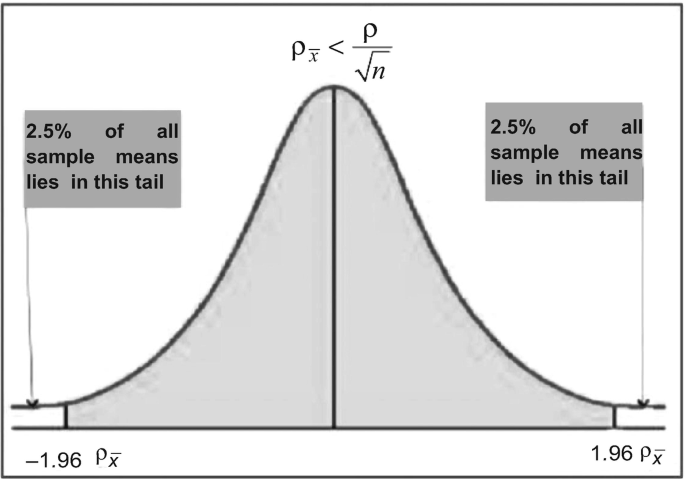

- Confidence interval for a population mean with known standard deviation.

- Confidence interval for a population mean with unknown standard deviation.

- Confidence interval for a population variance.

- Confidence interval for the difference of two means with unknown standard deviation.
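As an illustration of the second item in the list above, the following sketch computes a 95% confidence interval for a population mean when the standard deviation is unknown. The sample values are made up for illustration, and the interval relies on the assumption that the data are approximately normal.

```python
import numpy as np
from scipy import stats

# Hypothetical sample; the values are invented for illustration only.
sample = np.array([4.9, 5.1, 5.0, 5.3, 4.8, 5.2, 5.0, 4.7, 5.4, 5.1])

n = sample.size
mean = sample.mean()
# Standard error of the mean, using the sample standard deviation (ddof=1)
# because the population standard deviation is unknown.
sem = sample.std(ddof=1) / np.sqrt(n)

# 95% interval based on the t-distribution with n - 1 degrees of freedom.
t_crit = stats.t.ppf(0.975, df=n - 1)
ci_low, ci_high = mean - t_crit * sem, mean + t_crit * sem

print(f"mean = {mean:.3f}, 95% CI = ({ci_low:.3f}, {ci_high:.3f})")
```

With a known population standard deviation, the same pattern would use the normal quantile (`stats.norm.ppf`) in place of the t quantile.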

Advantages of Parametric Methods

- More powerful: When the assumptions are met, parametric tests are generally more powerful than non-parametric tests, meaning they are more likely to detect a real effect when it exists.

- More efficient: Parametric tests require smaller sample sizes than non-parametric tests to achieve the same level of power.

- Provide estimates of population parameters: Parametric methods provide estimates of the population mean, variance, and other parameters, which can be used for further analysis.

Disadvantages of Parametric Methods

- Sensitive to assumptions: If the assumptions of normality, homogeneity of variance, and independence are not met, parametric tests can be invalid and produce misleading results.

- Limited flexibility: Parametric methods are limited to the specific probability distribution they are based on.

- May not capture complex relationships: Parametric methods are not well-suited for capturing complex non-linear relationships between variables.

Applications of Parametric Methods

Parametric methods are widely used in various fields, including:

- Biostatistics: Comparing the effectiveness of different treatments.

- Social sciences: Investigating relationships between variables.

- Finance: Estimating risk and return of investments.

- Engineering: Analyzing the performance of systems.

Nonparametric Methods

Non-parametric methods are statistical techniques that do not rely on specific assumptions about the underlying distribution of the population being studied. These methods are often referred to as “distribution-free” methods because they make no assumptions about the shape of the distribution.

The basic idea behind non-parametric methods is that there is no need to make any assumption about the parameters of the population being studied. In fact, the methods do not depend on the form of the population distribution. There is no fixed set of parameters, and no particular distribution (normal distribution, etc.) is assumed. This is why non-parametric methods are also referred to as distribution-free methods. Non-parametric methods are gaining popularity and influence for several reasons:

- The main reason is that we are not bound by the strict distributional requirements that parametric methods impose.

- The second reason is that we do not need to make as many assumptions about the population we are working with.

- Most non-parametric methods are easy to apply and to understand, i.e., their complexity is low.

Assumptions of Non-Parametric Methods

Non-parametric methods still require some assumptions about the data:

- Independence: Data points are independent and not influenced by others.

- Random Sampling: Data represents a random sample from the population.

- Homogeneity of Measurement: Measurements are consistent across all data points.

What are Non-Parametric Methods?

- Mann-Whitney U test: Tests for the difference between the medians of two independent groups.

- Kruskal-Wallis test: Tests for the difference between the medians of three or more groups.

- Spearman’s rank correlation: Measures the strength and direction of the monotonic relationship between two variables.

- Wilcoxon signed-rank test: Tests for the difference between the medians of two paired samples.

- K-Nearest Neighbors (KNN): Classifies data points based on the k nearest neighbors.

- Decision Trees: Makes classifications based on a series of yes/no questions about the features.

- Support Vector Machines (SVM): Creates a decision boundary that maximizes the margin between different classes.

- Neural networks: Can be designed with specific architectures to handle non-parametric data, such as convolutional neural networks for image data and recurrent neural networks for sequential data.
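Two of the rank-based tests listed above can be sketched with SciPy as follows. The data are simulated and purely hypothetical; the exponential distribution is chosen only because it is clearly non-normal.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(0)

# Hypothetical skewed samples from two independent groups.
group_a = rng.exponential(scale=1.0, size=40)
group_b = rng.exponential(scale=2.0, size=40)

# Mann-Whitney U test: compares the two groups using ranks only.
u_stat, p_value = stats.mannwhitneyu(group_a, group_b, alternative="two-sided")
print(f"Mann-Whitney U = {u_stat:.1f}, p = {p_value:.4f}")

# Spearman's rank correlation: measures monotonic (not just linear) association.
x = np.arange(1, 21)
y = x ** 3  # strictly increasing but non-linear in x
rho, rho_p = stats.spearmanr(x, y)
print(f"Spearman rho = {rho:.3f}")
```

Because `y` is a strictly increasing function of `x`, the ranks agree exactly and Spearman's rho equals 1 even though the relationship is not linear.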

Advantages of Non-Parametric Methods

- Robust to outliers: Non-parametric methods are less affected by outliers in the data, making them more reliable in situations where the data is noisy.

- Widely applicable: Non-parametric methods can be used with a variety of data types, including ordinal, nominal, and continuous data.

- Easy to implement: Non-parametric methods are often computationally simple and easy to implement, making them suitable for a wide range of users.

Disadvantages of Non-Parametric Methods

- Less powerful: When the assumptions of parametric methods are met, non-parametric tests are generally less powerful, meaning they are less likely to detect a real effect when it exists.

- May require larger sample sizes: Non-parametric tests may require larger sample sizes than parametric tests to achieve the same level of power.

- Less information about the population: Non-parametric methods provide less information about the population parameters than parametric methods.

Applications of Non-Parametric Methods

Non-parametric methods are widely used in various fields, including:

- Medicine: Comparing the effectiveness of different treatments.

- Psychology: Investigating relationships between variables.

- Ecology: Analyzing environmental data.

- Computer science: Developing machine learning algorithms.

Difference Between Parametric and Non-Parametric

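The main differences between parametric and non-parametric methods are as follows:

- Assumptions: Parametric methods assume a specific distribution for the population (typically the normal distribution); non-parametric methods do not.

- Parameters: Parametric methods rely on a fixed set of parameters; non-parametric methods have no fixed set of parameters.

- Power: When their assumptions are met, parametric tests are generally more powerful; non-parametric tests are generally less powerful in that case.

- Sample size: Parametric tests need smaller samples to reach the same power; non-parametric tests may need larger samples.

- Outliers: Parametric tests are sensitive to violations of their assumptions; non-parametric methods are more robust to outliers.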

Parametric and non-parametric methods offer distinct advantages and limitations. Understanding these differences is crucial for selecting the most suitable method for a specific analysis. Choosing the appropriate method ensures valid and reliable inferences, enabling researchers to draw insightful conclusions from their data. As statistical analysis continues to evolve, both parametric and non-parametric methods will play crucial roles in advancing knowledge across various fields.

Frequently Asked Questions (FAQs)

Q. What are non-parametric methods?

Non-parametric methods do not make any assumptions about the underlying distribution of the data. Instead, they rely on the data itself to determine the relationship between variables. These methods are more flexible than parametric methods but can be less powerful.

Q. What are parametric methods?

Parametric methods are statistical techniques that make assumptions about the underlying distribution of the data. These methods typically use a pre-defined functional form for the relationship between variables, such as a linear or exponential model.

Q. What is the difference between non-parametric method and distribution free method?

Non-parametric methods make no assumptions about the parameters of the underlying distribution, including the mean, the variance, or even the shape (e.g., normal, skewed). They may still estimate parameters, but the number and nature of these parameters are flexible and not predetermined. Examples: chi-square tests, Wilcoxon signed-rank test.

Q. What are some common non-parametric methods?

Some common non-parametric methods are the chi-square test, the Wilcoxon signed-rank test, the Mann-Whitney U test, and Spearman’s rank correlation coefficient.


Parametric vs. Non-Parametric Tests and When to Use Them

The fundamentals of data science include computer science, statistics and math. It’s very easy to get caught up in the latest and greatest, most powerful algorithms — convolutional neural nets, reinforcement learning, etc.

As an ML/health researcher and algorithm developer, I often employ these techniques. However, something I have seen rife in the data science community after having trained ~10 years as an electrical engineer is that if all you have is a hammer, everything looks like a nail. Suffice it to say that while many of these exciting algorithms have immense applicability, too often the statistical underpinnings of the data science community are overlooked.

What is the Difference Between Parametric and Non-Parametric Tests?

A parametric test makes assumptions about a population’s parameters, and a non-parametric test does not assume anything about the underlying distribution.

I’ve been lucky enough to have had both undergraduate and graduate courses dedicated solely to statistics, in addition to growing up with a statistician for a mother. So this article will share some basic statistical tests and when/where to use them.

A parametric test makes assumptions about a population’s parameters:

- Normality: Data in each group should be normally distributed.

- Independence: Data in each group should be sampled randomly and independently.

- No outliers: No extreme outliers in the data.

- Equal variance: Data in each group should have approximately equal variance.

If these assumptions hold, we should use a parametric test. When they do not, a non-parametric test (sometimes referred to as a distribution-free test) is the alternative, because it does not assume anything about the underlying distribution (for example, that the data come from a normal distribution).

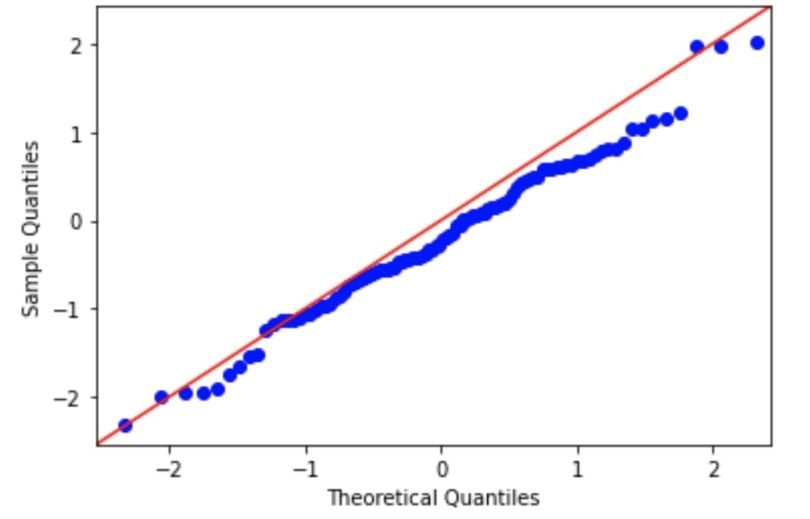

We can assess normality visually using a Q-Q (quantile-quantile) plot. In these plots, the observed data are plotted against the expected quantiles of a normal distribution. The demo shown here, written in Python, uses a randomly generated normal sample. If the data are normal, the points fall along a straight line.
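A minimal sketch of the idea behind a Q-Q plot, using `scipy.stats.probplot` on simulated data (the samples and seed are assumptions for illustration, not the original demo). Instead of drawing the plot, it reports how closely the points follow a straight line via the correlation coefficient of the fit.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(42)

# Hypothetical samples: one normal, one clearly skewed.
normal_sample = rng.normal(loc=0.0, scale=1.0, size=500)
skewed_sample = rng.exponential(scale=1.0, size=500)

# probplot returns the theoretical quantiles, the ordered data, and a
# least-squares line fit (slope, intercept, r) through the Q-Q points.
(theo_q, ordered), (slope, intercept, r_normal) = stats.probplot(normal_sample, dist="norm")
_, (_, _, r_skewed) = stats.probplot(skewed_sample, dist="norm")

print(f"Q-Q straightness (r), normal sample: {r_normal:.4f}")
print(f"Q-Q straightness (r), skewed sample: {r_skewed:.4f}")
```

To draw the plot itself, `theo_q` and `ordered` can be passed to any plotting library as the x and y coordinates; a value of `r` close to 1 corresponds to points lying near the straight line.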


Tests to Check for Normality

- Shapiro-Wilk

- Kolmogorov-Smirnov

The null hypothesis of both of these tests is that the sample was drawn from a normal (or Gaussian) distribution. Therefore, if the p-value is significant (below the chosen significance level), we reject the null hypothesis and conclude that the assumption of normality has been violated. A non-significant p-value does not prove that the data are normal; it only means the test found no evidence against normality.
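Both tests are available in SciPy. The sketch below applies them to simulated data; the helper name `normality_report` and the 0.05 threshold are assumptions made for this example. Note that standardizing with the sample mean and standard deviation before the Kolmogorov-Smirnov test makes its p-value only approximate.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(1)

# Hypothetical samples for illustration.
normal_sample = rng.normal(loc=10.0, scale=2.0, size=200)
skewed_sample = rng.exponential(scale=2.0, size=200)

def normality_report(sample, alpha=0.05):
    """Run Shapiro-Wilk and Kolmogorov-Smirnov tests against normality."""
    sw_stat, sw_p = stats.shapiro(sample)
    # The KS test compares against a fully specified distribution, so the
    # sample is standardized first; with estimated parameters the p-value
    # is approximate (it tends to be too large).
    z = (sample - sample.mean()) / sample.std(ddof=1)
    ks_stat, ks_p = stats.kstest(z, "norm")
    return {
        "shapiro_p": float(sw_p),
        "ks_p": float(ks_p),
        "reject_normality": bool(sw_p < alpha),
    }

print("normal sample:", normality_report(normal_sample))
print("skewed sample:", normality_report(skewed_sample))
```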

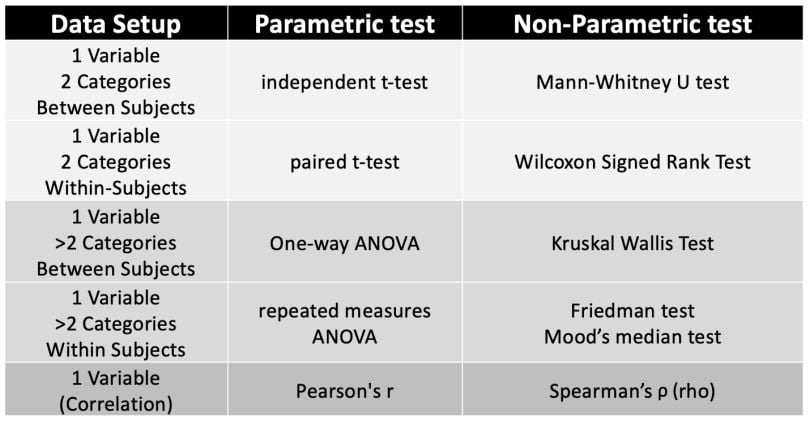

Selecting the Right Test

You can refer to this table when dealing with interval level data for parametric and non-parametric tests.


Advantages and Disadvantages

Non-parametric tests have several advantages, including:

- More statistical power when assumptions of parametric tests are violated.

- Assumption of normality does not apply.

- Small sample sizes are okay.

- They can be used for all data types, including ordinal, nominal and interval (continuous).

- Can be used with data that has outliers.

Disadvantages of non-parametric tests:

- Less powerful than parametric tests if assumptions haven’t been violated



What are statistics parametric tests and where to apply them?

This article will help you understand statistics parametric tests, their most common types, and also where and when to apply them.

Statistics parametric tests are a type of statistical analysis used to test hypotheses about the population mean and variance. These tests are based on the assumption that the underlying data follows a normal distribution and have several key properties, including robustness, reliability, and the ability to detect subtle differences in the data.

Parametric tests are often used in a variety of different applications, including medical research, market research, and social sciences. In these fields, researchers may use parametric tests to determine the significance of changes in population means or variances, or to determine if a particular treatment or intervention has had a significant impact on the data.

Most common types of statistics parametric tests

The t-test

One of the most commonly used parametric tests is the t-test, which is used to compare the means of two populations. The t-test assumes that the underlying data is normally distributed and that the variances of the two populations are equal. The test statistic is calculated using the difference in the means of the two populations, divided by the standard error of the difference.

Another common parametric test is the analysis of variance (ANOVA), which is used to compare the means of three or more populations. The ANOVA test assumes that the underlying data is normally distributed and that the variances of all populations are equal. The test statistic is calculated using the ratio of the variance between the populations to the variance within the populations.

Other parametric tests

In addition to the t-test and ANOVA, there are several other statistics parametric tests that are used in different applications, including the paired t-test, the one-way ANOVA, the two-way ANOVA, the repeated measures ANOVA, and the mixed-design ANOVA. Each of these tests has different assumptions and test statistics, and is used to address different types of research questions.

One of the key benefits of parametric tests is that they are reasonably robust, meaning that they tolerate moderate departures from the assumed shape of the data distribution. As long as the data is approximately normally distributed, parametric tests can provide accurate results.


The reliability of statistics parametric tests

Another benefit of parametric tests is their reliability, as they are based on well-established statistical methods and assumptions. The results of parametric tests are highly repeatable and can be used to make valid inferences about the underlying population.

Despite their many benefits, parametric tests are not always the best choice for every data set. In some cases, the underlying data may not be normally distributed, or the variances of the populations may not be equal. In these cases, non-parametric tests may be more appropriate.

Parametric tests vs. Nonparametric tests

Non-parametric tests are a type of statistical analysis that does not assume a specific form for the underlying data distribution. Instead, many of them rely on the ranks of the data to determine the significance of the results. Some common non-parametric tests include the Wilcoxon rank-sum test (equivalent to the Mann-Whitney U test) and the Kruskal-Wallis test.

When choosing between parametric and non-parametric tests, it is important to consider the nature of the data and the research question being addressed. In general, parametric tests are appropriate for data that is normally distributed and has equal variances, while non-parametric tests are appropriate for data that does not meet these assumptions.

Example of a statistics parametric test

Suppose a researcher is interested in testing whether there is a difference in the mean height of two groups of children – Group A and Group B. To do this, the researcher randomly selects 20 children from each group and measures their heights.

The researcher wants to know if the mean height of children in Group A is different from the mean height of children in Group B. To test this hypothesis, the researcher can use a two-sample t-test. The t-test assumes that the underlying data is normally distributed and that the variances of the two groups are equal.

The researcher calculates the mean height for each group and finds that the mean height for Group A is 150 cm and the mean height for Group B is 155 cm. The researcher then calculates the standard deviation for each group and finds that the standard deviation for Group A is 5 cm and the standard deviation for Group B is 4 cm.

Next, the researcher calculates the t-statistic using the difference in the means of the two groups, divided by the standard error of the difference. If the t-statistic is larger than a critical value determined by the level of significance and degrees of freedom, the researcher can conclude that there is a significant difference in the mean height of children in Group A and Group B.
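The calculation described above can be sketched with the summary statistics from the example (20 children per group, means of 150 cm and 155 cm, standard deviations of 5 cm and 4 cm). The sketch assumes the reported standard deviations are sample standard deviations and uses a two-sided test at the 5% level, which the example does not state explicitly.

```python
import math
from scipy import stats

# Summary statistics from the example (heights in cm).
n_a, mean_a, sd_a = 20, 150.0, 5.0  # Group A
n_b, mean_b, sd_b = 20, 155.0, 4.0  # Group B

# Pooled variance, valid under the equal-variance assumption.
pooled_var = ((n_a - 1) * sd_a**2 + (n_b - 1) * sd_b**2) / (n_a + n_b - 2)

# Standard error of the difference in means, then the t-statistic.
se_diff = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
t_manual = (mean_a - mean_b) / se_diff

# Critical value for a two-sided test at the 5% level (assumed here).
df = n_a + n_b - 2
t_crit = stats.t.ppf(0.975, df)

# Cross-check against SciPy's implementation from summary statistics.
result = stats.ttest_ind_from_stats(mean_a, sd_a, n_a, mean_b, sd_b, n_b, equal_var=True)

print(f"t = {t_manual:.3f}, df = {df}, critical value = {t_crit:.3f}")
print(f"p-value = {result.pvalue:.4f}")
```

Under these assumptions the absolute t-statistic is about 3.49, which exceeds the critical value for 38 degrees of freedom, so the difference in mean height would be judged significant at the 5% level.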

This example demonstrates how a two-sample t-test can be used to test a hypothesis about the difference in means of two groups. The t-test is a powerful and widely used parametric test that provides a robust and reliable way to test hypotheses about the population mean.

Powerful tools for analyzing data

In conclusion, parametric tests are a powerful tool for statistical analysis, providing robust and reliable results for a wide range of applications. However, it is important to choose the appropriate test based on the nature of the data and the research question being addressed. Whether using parametric or non-parametric tests, the goal of statistical analysis is always to make valid inferences about the underlying population and to draw meaningful conclusions from the data.


About Fabricio Pamplona

Fabricio Pamplona is the founder of Mind the Graph - a tool used by over 400K users in 60 countries. He has a Ph.D. and solid scientific background in Psychopharmacology and experience as a Guest Researcher at the Max Planck Institute of Psychiatry (Germany) and Researcher in D'Or Institute for Research and Education (IDOR, Brazil). Fabricio holds over 2500 citations in Google Scholar. He has 10 years of experience in small innovative businesses, with relevant experience in product design and innovation management. Connect with him on LinkedIn - Fabricio Pamplona .



- Invest Ophthalmol Vis Sci

- v.61(8); 2020 Jul

Parametric Statistical Inference for Comparing Means and Variances

Johannes Ledolter

1 Department of Business Analytics, Tippie College of Business, University of Iowa, Iowa City, Iowa, United States

2 Center for the Prevention and Treatment of Visual Loss, Iowa City VA Health Care System, Iowa City, Iowa, United States

Oliver W. Gramlich

3 Department of Ophthalmology and Visual Sciences, University of Iowa, Iowa City, Iowa, United States

Randy H. Kardon


The purpose of this tutorial is to provide visual scientists with various approaches for comparing two or more groups of data using parametric statistical tests, which require that the distribution of data within each group is normal (Gaussian). Non-parametric tests are used for inference when the sample data are not normally distributed or the sample is too small to assess its true distribution.

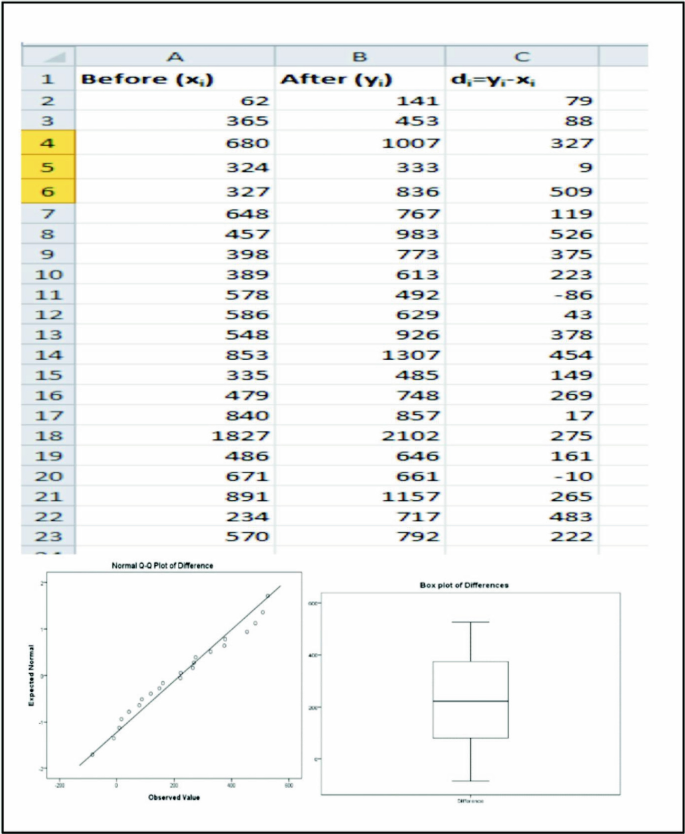

Methods are reviewed using retinal thickness, as measured by optical coherence tomography (OCT), as an example for comparing two or more group means. The following parametric statistical approaches are presented for different situations: two-sample t-test, Analysis of Variance (ANOVA), paired t-test, and the analysis of repeated measures data using a linear mixed-effects model approach.

Analyzing differences between means using various approaches is demonstrated, and follow-up procedures to analyze pairwise differences between means when there are more than two comparison groups are discussed. The assumption of equal variance between groups and methods to test for equal variances are examined. Examples of repeated measures analysis for right and left eyes on subjects, across spatial segments within the same eye (e.g. quadrants of each retina), and over time are given.

Conclusions

This tutorial outlines parametric inference tests for comparing means of two or more groups and discusses how to interpret the output from statistical software packages. Critical assumptions made by the tests and ways of checking these assumptions are discussed. Efficient study designs increase the likelihood of detecting differences between groups if such differences exist. Situations commonly encountered by vision scientists involve repeated measures from the same subject over time, measurements on both right and left eyes from the same subject, and measurements from different locations within the same eye. Repeated measurements are usually correlated, and the statistical analysis needs to account for the correlation. Doing this the right way helps to ensure rigor so that the results can be repeated and validated.

This tutorial deals with statistical parametric tests for inference, such as comparing the means of two or more groups. Parametric tests refer to those that make assumptions about the distribution of the data, most commonly assuming that observations follow normal (Gaussian) distributions or that observations can be mathematically transformed to a normal distribution (e.g., log transformation). Non-parametric tests are used for inference when the sample data are not normally distributed or the sample is too small to assess its true distribution and will be covered in a separate tutorial.

For this tutorial on parametric statistical inference, optical coherence tomography thickness measurements of the inner retinal layers recorded in eyes of control mice and mice with optic neuritis produced by experimental autoimmune encephalomyelitis (EAE) serve as illustration. For brevity, we refer to the measured response as retinal thickness. We have explained the goals of this study in another tutorial on the display of data [1], and they are summarized here. There are three treatment groups: control mice, diseased mice (EAE) with optic neuritis, and treated diseased mice (EAE + treatment). For the purpose of this tutorial, we consider only mice with measurements made on both eyes. This leaves us with 15, 12, and six subjects (mice) in the three groups, respectively. For the various statistical analyses in this tutorial, the variance ($s^2$) is defined as the sum of the squared differences of each sample from their sample mean, which is then divided by the number of samples minus 1 (subtracting 1 corrects for the sample bias). The standard deviation is the square root of the variance. The software programs Prism 8 (GraphPad, San Diego, CA, USA) and Minitab (State College, PA, USA) were used to generate the graphs shown in this tutorial.

This tutorial analyzes the average inner retinal thickness of subjects by averaging the measurements on right and left eyes. It also analyzes the inner retinal thickness of eyes, but incorporates the correlation between right and left eye measurements on the same subject.

Analysis of the Average Retinal Thickness of Subjects After Combining Their Measurements on Right and Left Eyes

Comparing Means of Two Treatment Groups: Two-Sample t-Test

First we discuss whether there is a difference between the average retinal thickness of control and diseased mice after EAE-induced optic neuritis. We compare the two groups A = control and B = EAE. Measurements in these two groups are independent, as each group contains different mice. The two-sample t-test relates the difference of the sample means $\bar{y}_A - \bar{y}_B$ to its estimated standard error, $se(\bar{y}_A - \bar{y}_B) = \sqrt{\frac{s_A^2}{n_A} + \frac{s_B^2}{n_B}}$. Here, $n_A, \bar{y}_A, s_A$ and $n_B, \bar{y}_B, s_B$ are the sample size, mean, and standard deviation for each of the two groups.

Under the null hypothesis of no difference between the two means, the ratio $\frac{\bar{y}_A - \bar{y}_B}{\sqrt{s_A^2/n_A + s_B^2/n_B}}$ is well-approximated by a t-distribution, with its degrees of freedom $\frac{\left[(s_A^2/n_A) + (s_B^2/n_B)\right]^2}{\frac{s_A^4}{n_A^2 (n_A - 1)} + \frac{s_B^4}{n_B^2 (n_B - 1)}}$ given by the Welch approximation [2]. Confidence intervals and probability values can be calculated. Small probability values (smaller than 0.05 or 0.10) indicate that the null hypothesis of no difference between the means can be rejected. Note that, although traditionally a probability of <0.05 has been considered significant, some groups favor an even more stringent criterion, but others feel that a less conservative criterion (e.g., P < 0.1) may still be meaningful, depending on the context of the study.

One can also use the standard error that uses the pooled standard deviation,

$$se(\bar{y}_A - \bar{y}_B) = s_{pooled}\sqrt{\frac{1}{n_A} + \frac{1}{n_B}} = \sqrt{\frac{(n_A - 1)s_A^2 + (n_B - 1)s_B^2}{n_A + n_B - 2}}\,\sqrt{\frac{1}{n_A} + \frac{1}{n_B}},$$

and a t-distribution with $n_A + n_B - 2$ degrees of freedom. However, we prefer the first method, where the standard error of each group is calculated separately (not pooled), and the Welch approximation of the degrees of freedom, as it does not require that the two group variances be the same. The pooled version of the test assumes equal variances and can be misleading when they are not. 3 Both t-tests are robust to non-normality as long as the sample sizes are reasonably large (sample sizes of 30 or larger; robustness follows from the central limit effect).
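For comparison, here is a minimal sketch of the pooled version, again from summary statistics. The function name `pooled_t` is hypothetical, and the inputs are the group sizes, means, and standard deviations reported in this tutorial for the control and EAE groups.

```python
import math

def pooled_t(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Two-sample t statistic using the pooled standard deviation.

    Assumes equal group variances. Returns (t, df, se).
    """
    df = n_a + n_b - 2
    s_pooled = math.sqrt(
        ((n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2) / df
    )
    se = s_pooled * math.sqrt(1.0 / n_a + 1.0 / n_b)
    return (mean_a - mean_b) / se, df, se

# Control (A) versus EAE (B), summary statistics from the text.
t_pooled, df_pooled, se_pooled = pooled_t(66.21, 3.39, 15, 59.81, 3.72, 12)
```

When the two standard deviations are similar, as they are here, the pooled and unpooled standard errors are close and the two tests agree.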

The mean retinal thickness of the diseased mice (group B, EAE: mean = 59.81 µm; SD = 3.72 µm) is 6.40 microns smaller than that of the control group (group A, control: mean = 66.21 µm, SD = 3.39 µm). The P value (0.0001) shows that this difference is quite significant, leaving little doubt that the disease leads to thinning of the inner retinal layer ( Table 1 ).

Subject Average Retinal Thickness (in µm) for Control and Disease Groups: Two-Sample t -Test with Welch Correction Comparing Group A (Control) with Group B (EAE) *

Comparing Means of Two or More (Independent) Treatment Groups: One-Way ANOVA

The one-way analysis of variance can be used to compare two or more means. Assume that there are k groups (for our illustration, k = 3) with observations $y_{ij}$ for i = 1, 2, …, k and j = 1, 2, …, $n_i$ (number of observations in the i-th group). The ANOVA table partitions the sum of squared deviations of the $n = \sum_{i=1}^{k} n_i$ observations from their overall mean, $\bar{y}$, into two components: the between-group (or treatment) sum of squares,

$$SSB = \sum_{i=1}^{k} n_i (\bar{y}_i - \bar{y})^2,$$

expressing the variability of the group means $\bar{y}_i$ from the overall mean $\bar{y}$, and the within-group (or residual) sum of squares,

$$SSW = \sum_{i=1}^{k} \sum_{j=1}^{n_i} (y_{ij} - \bar{y}_i)^2 = \sum_{i=1}^{k} (n_i - 1) s_i^2,$$

which adds up the within-group variances $s_i^2$, each weighted by its degrees of freedom. The ratio of the resulting mean squares (where mean squares are obtained by dividing sums of squares by their degrees of freedom),

$$F = \frac{SSB/(k - 1)}{SSW/(n - k)},$$

serves as the statistic for testing the null hypothesis that all group means are equal. The probability value for testing this hypothesis can be obtained from the F-distribution. Small probability values (smaller than 0.05 or 0.10) indicate that the null hypothesis should be rejected.
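The partition into between-group and within-group sums of squares can be sketched directly from these formulas. The function name `one_way_anova_f` and the small data set are hypothetical and chosen only so that the arithmetic is easy to follow.

```python
def one_way_anova_f(groups):
    """One-way ANOVA F statistic for a list of groups of observations.

    Returns (F, SSB, SSW, (df_between, df_within)).
    """
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n
    ssb = 0.0  # between-group sum of squares
    ssw = 0.0  # within-group sum of squares
    for g in groups:
        group_mean = sum(g) / len(g)
        ssb += len(g) * (group_mean - grand_mean) ** 2
        ssw += sum((y - group_mean) ** 2 for y in g)
    f_stat = (ssb / (k - 1)) / (ssw / (n - k))
    return f_stat, ssb, ssw, (k - 1, n - k)

# Hypothetical data: three groups of three observations each.
example_groups = [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0], [5.0, 6.0, 7.0]]
f_stat, ssb, ssw, dfs = one_way_anova_f(example_groups)
```

For this toy data set the group means are 2, 3, and 6, so SSB = 26 and SSW = 6, giving F = (26/2)/(6/6) = 13 on (2, 6) degrees of freedom.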

The ANOVA assumes that all measurements are independent. This is the case here, as we have different subjects in the three groups. Note that independence could not be assumed if both right and left eyes were included, as right and left eye observations from the same subject are most likely correlated; we will discuss later how to handle this situation.

The ANOVA assumes that the variances of the treatment groups are the same. Its conclusions may be misleading if the variances are different. Box 3 showed that the F -test is sensitive to violations of the equal variance assumption, especially if the sample sizes in the groups are different. The F -test is less affected by unequal variances if the sample sizes are equal. Although the F -test assumes normality, it is robust to non-normality as long as the sample sizes are reasonably large (e.g., 30 samples per group).

For only two treatment groups, the ANOVA approach reduces to the two-sample t -test that uses the pooled variance. Earlier we had recommended the Welch approximation, which uses a different standard error calculation for the difference of two sample means, as it does not assume equal variances. Useful tests for the equality of variances are discussed later.

If the null hypothesis of equal group means is rejected when there are more than two treatment groups, then follow-up pairwise comparisons are needed to determine which of the treatment groups differ from the others. For three groups, there are three pairwise comparisons and three confidence intervals, one for each pairwise difference of two means. The significance level of individual pairwise tests needs to be adjusted for the number of comparisons being made. Under the null hypothesis of no treatment effects, we fix the probability that one or more of these pairwise comparisons are falsely significant at a given level, such as α = 0.05. To achieve this, one must widen individual confidence intervals and increase individual probability values. This is exactly what the Tukey multiple comparison procedure 4 does ( Table 2 , Fig. 1 ). Many other multiple comparison procedures are available (Bonferroni, Scheffe, Sidak, Holm, Dunnett, Benjamini–Hochberg), but their discussion would go beyond this introduction. For a discussion of the general statistical theory of multiple comparisons, see Hsu. 5
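The idea of increasing individual probability values can be illustrated with the Bonferroni adjustment, which is the simplest of the procedures listed above. Note that this is not the Tukey procedure used in Table 2; Tukey's method relies on the studentized range distribution and is best left to statistical software. The function names and the raw probability values below are hypothetical.

```python
def number_of_pairs(k):
    """Number of pairwise comparisons among k groups: k(k - 1)/2."""
    return k * (k - 1) // 2

def bonferroni_adjust(p_values):
    """Multiply each probability value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]

# Hypothetical raw probability values for the three pairwise comparisons.
raw_p = [0.01, 0.04, 0.30]
adjusted_p = bonferroni_adjust(raw_p)
```

With three comparisons, a raw value of 0.04 becomes 0.12 after adjustment and would no longer be judged significant at α = 0.05.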

Subject Average Retinal Thickness (in µm): One-Way ANOVA with Three Groups (Control, EAE, EAE + Treatment) and Tukey's Multiple Comparison Tests *

Subject average retinal thickness (in µm). Visualizations of results. ( A ) Plot of group means and their 95% confidence intervals. Confidence intervals are not adjusted for multiple comparisons. Analysis with Minitab. ( B ) Plot of pairwise differences and their Tukey-adjusted confidence intervals. Analysis with GraphPad Prism 8.

The ANOVA results in Table 2 show that mean retinal thickness differs significantly across the three treatment groups ( P = 0.0001). Tukey pairwise comparisons show differences between the group means of thickness for control and EAE and for control and EAE + treatment. The means of EAE and EAE + treatment are not significantly different.

Comparing Variances of Two or More (Independent) Treatment Groups: Bartlett, Levene, and Brown–Forsythe Tests

As stated above, ANOVA testing assumes that the group variances are equal. How does one test for equal variances? Bartlett's test 6 (see Snedecor and Cochran 7 ) is employed for testing if two or more samples are from populations with equal variances. Equal variances across populations are referred to as homoscedasticity or homogeneity of variances. The Bartlett test compares each group variance with the pooled variance and is sensitive to departures from normality. The tests by Levene 8 and Brown and Forsythe 9 are good alternatives that are less sensitive to departures from normality. These tests make use of the results of a one-way ANOVA on the absolute value of the difference between measurements and their respective group mean (Levene test) or their group median (Brown–Forsythe test).
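A minimal sketch of the Levene and Brown–Forsythe idea follows: replace each measurement with its absolute deviation from the group center, then compute the one-way ANOVA F statistic on those deviations. The function names and data are hypothetical, and only the test statistic is computed; the probability value would come from the F-distribution with (k − 1, n − k) degrees of freedom.

```python
import statistics

def anova_f(groups):
    """One-way ANOVA F statistic (between mean square / within mean square)."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n
    ssb = sum(len(g) * (statistics.fmean(g) - grand_mean) ** 2 for g in groups)
    ssw = sum(sum((y - statistics.fmean(g)) ** 2 for y in g) for g in groups)
    return (ssb / (k - 1)) / (ssw / (n - k))

def spread_test_f(groups, center="median"):
    """F statistic on absolute deviations from the group center.

    center="mean" corresponds to the Levene test, center="median"
    to the Brown-Forsythe test.
    """
    if center == "median":
        centers = [statistics.median(g) for g in groups]
    elif center == "mean":
        centers = [statistics.fmean(g) for g in groups]
    else:
        raise ValueError("center must be 'mean' or 'median'")
    deviations = [[abs(y - c) for y in g] for g, c in zip(groups, centers)]
    return anova_f(deviations)

# Hypothetical data: the second group is more spread out than the first.
data = [[1.0, 2.0, 3.0], [0.0, 2.0, 4.0]]
f_brown_forsythe = spread_test_f(data, center="median")
f_levene = spread_test_f(data, center="mean")
```

In this symmetric toy example the mean and median of each group coincide, so both variants give the same statistic.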

We apply these tests to the average retinal thickness data. We cannot reject the hypothesis that all three variances are the same, so we can be more confident in our interpretation of the ANOVA results, as the variances of the groups appear to be similar ( Table 3 ). If one of the tests shows unequal variance but the other test does not, then one needs to evaluate how significant the P value was in rejecting the null hypothesis of equal variance. If a fair amount of uncertainty remains, then alternative approaches are discussed in the next section.

Subject Average Thickness (in µm): Bartlett and Brown–Forsythe Tests for Equality of Group Variances *

Approaches to Take When Variances Are Different

A finding of unequal variances is not just a nuisance (because it puts into question the results from the ANOVA on means) but it also provides an opportunity to learn something more about the data. Discovering that particular groups have different variances gives valuable insights.

Transforming measurements usually helps to satisfy the requirement that variances are equal. Box and Cox 10 discussed transformations that stabilize the variability so that the variances in the groups are the same. A logarithmic transformation is indicated when the standard deviation in a group is proportional to the group mean; a square root transformation is indicated when the variance is proportional to the mean. Reciprocal transformations are useful if one studies the time from the onset of a disease (or of a treatment) to a certain failure event such as death or blindness. The reciprocal of time to death, which expresses the rate of dying, often stabilizes group variances. For details, see Box et al. 11
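The effect of a logarithmic transformation can be seen in a small sketch. The two groups below are hypothetical and constructed so that the standard deviation is exactly proportional to the mean; real data will only approximate this.

```python
import math
import statistics

# Hypothetical groups: the second is the first multiplied by 10,
# so its mean and its standard deviation are both 10 times larger.
group_low = [8.0, 10.0, 12.0]
group_high = [80.0, 100.0, 120.0]

sd_raw_low = statistics.stdev(group_low)
sd_raw_high = statistics.stdev(group_high)

# After a log transformation the multiplicative factor becomes an
# additive shift, which leaves the standard deviation unchanged.
sd_log_low = statistics.stdev([math.log(y) for y in group_low])
sd_log_high = statistics.stdev([math.log(y) for y in group_high])
```

On the raw scale the standard deviations differ by a factor of 10; on the log scale they are equal, which is the situation the equal-variance assumption requires.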

If one cannot find a variance-stabilizing transformation, one can proceed with the Welch approximation of pairwise two-sample comparisons. For nearly equal and moderately large sample sizes, the assumption of equal standard deviations is not a crucial assumption, and moderate violations of equal variances can be ignored. Another alternative would be to use nonparametric procedures (they are covered in a different tutorial).

Analysis of Retinal Thickness Using Both Right and Left Eye Measurements of Each Subject

Comparing means of two repeated measurements: paired t -test.

In the earlier two-sample comparison, different subjects were assigned to each of two treatment groups. Often it is more efficient to design the experiment such that a treatment (or induction of a disease phenotype, as in this example) is applied to the same subject. For our example, each mouse could be observed both under its initial healthy condition and after having been exposed to a multiple sclerosis phenotype EAE protocol. Measurements are then available on the same mouse under both conditions, and one can control for (remove) the subject effect that exists. A within-subject comparison of the effectiveness of a treatment or drug is subject to fewer interfering variables than a comparison across subjects. The same is true for the comparison of right and left eyes when both measurements come from the same subject and only one eye is treated, with the other eye acting as a within-subject control. The large subject effect that affects both eyes in a similar way can be removed, resulting in an increase of the precision of the comparison, potentially making it more sensitive to detecting an effect, if one exists.

The paired t-test considers treatment differences, d, on n different subjects and compares the sample mean ($\bar{d}$) to its standard error, $se(\bar{d}) = s_d / \sqrt{n}$. Under the null hypothesis of no difference, the ratio (test statistic) $\bar{d} / se(\bar{d})$ has a t-distribution with n − 1 degrees of freedom, and confidence intervals and probability values can be calculated. Small probability values (usually smaller than 0.05 or 0.10) would indicate that the null hypothesis should be rejected.
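The paired statistic can be sketched as follows. The function name `paired_t` and the measurements are hypothetical; the sketch assumes the differences are not all identical, since otherwise the standard error is zero.

```python
import math
import statistics

def paired_t(first, second):
    """Paired t statistic for two measurements on the same subjects.

    Returns (t, df, mean difference, standard error of the mean difference).
    """
    if len(first) != len(second):
        raise ValueError("both lists must have one value per subject")
    differences = [a - b for a, b in zip(first, second)]
    n = len(differences)
    d_bar = statistics.fmean(differences)
    s_d = statistics.stdev(differences)   # uses the n - 1 divisor
    se = s_d / math.sqrt(n)
    return d_bar / se, n - 1, d_bar, se

# Hypothetical paired measurements on three subjects.
t_paired, df_paired, d_bar, se_d = paired_t([11.0, 13.0, 15.0], [10.0, 11.0, 12.0])
```

Only the within-subject differences enter the calculation, so any effect that shifts both measurements of a subject by the same amount cancels out.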

For illustration, we use the right eye (OD) and left eye (OS) retinal thickness measurements from the 15 mice of the control group. Figure 2 demonstrates considerable between-subject variability; the intercepts of the lines that connect measurements from the same subject differ considerably. Pairing the observations and working with changes on the same subject removes the subject variability and makes the analysis more precise. Table 4 indicates that there is no difference in the average retinal thickness of right and left eyes. We had expected this result, as neither eye was treated. However, if one wanted to test a treatment that is given to just one eye without affecting the other, such a paired treatment comparison between the two eyes would be a desirable analysis plan.

Retinal Thickness (in µm) of OD and OS Eyes in the Control Group (15 Mice): Paired t -Test *

Retinal thickness (in µm) of OD and OS eyes in the control group (15 mice). OD and OS measurements from the same subject are connected. Analysis with Minitab.

Correlation Between Repeated Measurements on the Same Subject

The two-sample t -test in Table 1 and the ANOVA in Table 2 used subject averages of the thickness of the right and left eyes. Switching to eyes as the unit of observation, it is tempting to run the same tests with twice the number of observations in each group, as now each subject provides two observations. But, if eyes on the same subject are correlated (in our illustration with 33 subjects, the correlation between OD and OS retinal thickness is very large: r = 0.90), this amounts to “cheating,” as correlated observations carry less information than independent ones. By artificially inflating the number of observations and inappropriately reducing standard errors, the probability values appear more significant than they actually are.

Suppose that measurements on the right and left eye are perfectly correlated. Adding perfect replicates does not change the group means and the standard deviations that we obtained from the analysis of subject averages; however, with perfect replicates, the earlier standard error of the difference of the two group means gets divided by $\sqrt{2}$, which increases the test statistic and makes the difference appear more significant than it actually is. The earlier ANOVA is equally affected. Adding replicates increases the between-group mean square by a factor of 2 but does not affect the within-group mean square, thus increasing the F-test statistic. This shows that a strategy of adding more and more perfect replicates to each observation makes even the smallest difference significant. One cannot ignore the correlation among measurements on the same subject! The following two sections show how this correlation can be incorporated into the analysis.
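The effect of doubling the sample sizes on the standard error can be checked numerically. This sketch follows the simplification in the text, treating the group standard deviations as unchanged; the function name `se_diff` is hypothetical, and the inputs are the group sizes and standard deviations reported earlier for the control and EAE groups.

```python
import math

def se_diff(sd_a, n_a, sd_b, n_b):
    """Unpooled standard error of the difference of two group means."""
    return math.sqrt(sd_a ** 2 / n_a + sd_b ** 2 / n_b)

# Subjects as the unit of observation (15 and 12 mice).
se_subjects = se_diff(3.39, 15, 3.72, 12)

# Eyes treated, wrongly, as independent observations: twice the counts.
se_duplicated = se_diff(3.39, 30, 3.72, 24)

shrink_factor = se_subjects / se_duplicated
```

The ratio equals the square root of 2, so the test statistic grows by about 41% without any new information having been added.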

Analysis of Repeated Measures Data

Many studies involve repeated measurements on each subject. Here we have 15 healthy control mice, 12 diseased mice (EAE), and 6 treated diseased mice (EAE + treatment), and we have repeated measurements on each subject: measurements on the left and right eye. But, repeated measurements may also reflect measurements over time or across spatial segments (e.g., quadrants of each retina). The objective is to study the effects of the two factors, treatment and eye. Repeated measurements on the same subject can be expected to be dependent, as a subject that measures high on one eye tends to also measure high on the other. The correlation must be incorporated into the analysis. This makes the analysis different from that of a completely randomized two-factor experiment where all observations are assumed independent.

The model for data from such a repeated measures experiment represents the observation $Y_{ijk}$ on subject i in treatment group j and eye k according to

$$Y_{ijk} = \alpha + \beta_j + \pi_{i(j)} + \gamma_k + (\beta\gamma)_{jk} + \varepsilon_{i(j)k},$$

where

- $\alpha$ is an intercept.

- $\beta_j$ in this example represents (three) fixed differential treatment effects, with $\beta_1 + \beta_2 + \beta_3 = 0$. With this restriction, treatment effects are expressed as deviations from the average. An equivalent representation sets one of the three coefficients equal to zero; the parameter of each included group then represents the difference between the averages of the included group and the reference group for which the parameter has been omitted.

- $\pi_{i(j)}$ represents random subject effects, represented by a normal distribution with mean 0 and variance $\sigma_\pi^2$. The subscript notation i(j) expresses the fact that subject i is nested within factor j; that is, subject 1 in treatment group 1 is a different subject than subject 1 in treatment group 2. Each subject is observed under only a single treatment group. This is different from the “crossed” design where each subject is studied under all treatment groups.

- $\gamma_k$ represents fixed eye (OD, OS) effects with coefficients adding to zero: $\gamma_1 + \gamma_2 = 0$.

- $(\beta\gamma)_{jk}$ represents the interaction effects between the two fixed effects, treatment and eye, with row and column sums of the array $(\beta\gamma)_{jk}$ restricted to zero. There is no interaction when all $(\beta\gamma)_{jk}$ are zero; this makes effects easier to interpret, as the effects of one factor do not depend on the level of the other.

- $\varepsilon_{i(j)k}$ represents random measurement errors, with a normal distribution, mean = 0, and variance = $\sigma_\varepsilon^2$. Measurement errors reflect the eye by subject (within treatment) interaction.
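To make the roles of the terms concrete, the following sketch simulates data from such a model. It is an illustration only: the function name `simulate_thickness`, all parameter values, and the random seed are hypothetical, and the interaction terms are set to zero for simplicity.

```python
import random

def simulate_thickness(n_per_group, alpha, beta, gamma,
                       sd_subject, sd_error, seed=0):
    """Simulate observations from a mixed model without interaction.

    Each row is (group j, subject i, eye k, y), where
    y = alpha + beta[j] + subject effect + gamma[k] + measurement error.
    """
    rng = random.Random(seed)
    rows = []
    for j, n_j in enumerate(n_per_group):
        for i in range(n_j):
            # One random effect per subject, shared by both eyes.
            subject_effect = rng.gauss(0.0, sd_subject)
            for k in range(len(gamma)):
                error = rng.gauss(0.0, sd_error)
                y = alpha + beta[j] + subject_effect + gamma[k] + error
                rows.append((j, i, k, y))
    return rows

# Hypothetical parameter values; group sizes follow the study (15, 12, 6).
rows = simulate_thickness(
    n_per_group=[15, 12, 6],
    alpha=62.0,
    beta=[4.0, -2.0, -2.0],   # sums to zero
    gamma=[0.0, 0.0],          # no eye effect
    sd_subject=4.0,
    sd_error=1.5,
)
```

Because the subject effect is shared by both eyes of a mouse, the two eye measurements of the same subject are correlated even though the measurement errors are independent.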

This model is known as a linear mixed-effects model as it involves fixed effects (here, treatment and eye and their interaction) and random effects (here, the subject effects and the measurement errors). Maximum likelihood or, preferably, restricted maximum likelihood methods are commonly used to obtain estimates of the fixed effects and the variances of the random effects; standard errors of the fixed effects can be calculated, as well. For detailed discussion, see Diggle et al. 12 and McCulloch et al. 13

Computer software for analyzing the data from such repeated measurement design is readily available. Minitab, SAS (SAS Institute, Cary, NC, USA), R (The R Foundation for Statistical Computing, Vienna, Austria), and GraphPad Prism all have tools for fitting the appropriate models. An important feature of these software packages is that they can handle missing data. It would be quite unusual if a study would not have any missing observations, and software that can handle only balanced datasets would be of little use. Without missing data (as is the case here), the computer output includes the repeated measures ANOVA table. The output from the mixed-effects analysis (which is used if observations are missing) is similar. Computer software also allows for very general correlation structure among repeated measures. The random subject representation discussed here implies compound symmetry with equal correlations among all repeated measures. With time as the repeated factor, other useful models include conditional autoregressive specifications that model the correlation of repeated measurements as a geometrically decreasing function of their time.

Results of the two-way repeated measures ANOVA for the thickness data are shown in Table 5 . Estimates of the two error variances come into play differently when testing fixed effects. The variability between subjects is used when testing the treatment effect; the measurement (residual) variability is used in all tests that involve within-subject factors. See, for example, Winer. 14 These variabilities are estimated by the two mean square (MS) errors that are shown in Table 5 with bold-face type.

Retinal Thickness (in µm) of OD and OS Eyes *

Shown is the GraphPad Prism8 ANOVA output of the two-factor repeated measures experiment with three treatment groups and the repeated factor eye. Sphericity assumes that variances of differences between all possible pairs of within-subject conditions are equal.

In Table 5 , MS(Subject) = 23.45 is used to test the effect of treatment: F (Treatment) = 287.2/23.45 = 12.25. The treatment effect is significant at P = 0.0001. MS(Residual) = 2.192 is used in the test for subject effects and in tests of the main effect of eye and the eye × treatment interaction: F (Subject) = 23.45/2.192 = 10.70 (significant; P < 0.0001); F (Eye) = 1.351/2.192 = 0.6164 (not significant, P = 0.4385) and F (Eye × Treatment) = 0.298/2.192 = 0.1360 (not significant, P = 0.8734). In summary, the mean retinal thickness differs among the control, EAE, and EAE + treatment groups. Thickness varies widely among subjects, but the difference in means between right and left eyes is not significant.
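The choice of error term for each F ratio can be written out explicitly. The mean squares below are the rounded values quoted from Table 5, so the computed ratios may differ from the published ones in the last digit; the dictionary names are hypothetical.

```python
# Mean squares as quoted in the text (rounded values from Table 5).
mean_squares = {
    "Treatment": 287.2,
    "Subject": 23.45,
    "Eye": 1.351,
    "Eye x Treatment": 0.298,
    "Residual": 2.192,
}

# The between-subject effect is tested against MS(Subject);
# subject effects and within-subject effects against MS(Residual).
error_term = {
    "Treatment": "Subject",
    "Subject": "Residual",
    "Eye": "Residual",
    "Eye x Treatment": "Residual",
}

f_ratios = {
    effect: mean_squares[effect] / mean_squares[denominator]
    for effect, denominator in error_term.items()
}
```

Testing treatment against MS(Residual) instead would divide by a much smaller number and overstate the evidence for a treatment effect.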

Assume that we ignore the correlation of repeated measures on the same subject and run a one-way ANOVA (with our three treatment groups) on individual eye measurements. The mean square error in that analysis is (1345.31 – 574.5)/(65 – 2) = 12.23, increasing the F -statistic to F = 287.2/12.23 = 23.47 which is highly significant. However, such incorrect analysis that does not account for the high correlation between measurements on right and left eyes leads to wrong probability values and wrong conclusions. It makes the treatment effect appear even more significant than it really is. In this example, the conclusions about the factors are not changed, but that is not true in general for all cases.

A standard two-way ANOVA on treatment (with three levels) and eye (with two levels) that does not account for repeated measurements also leads to incorrect results, as such analysis assumes that observations in the six groups are independent. This is not so, as observations in different groups come from the same subject.

More Complicated Repeated Measures Designs

Extensions of repeated measures designs are certainly possible. Here are two different illustrations for a potential third factor.

In the first model, the third factor is the (spatial) quadrant of the retina in which the measurement is taken. Measurements on the superior, inferior, nasal, and temporal quadrants are taken on each eye. The model includes random subject effects for the different mice in each of the three treatment groups (G1, G2, G3), with each mouse studied under all eight eye/quadrant combinations. The design layout is shown in Table 6 .

Retinal Thickness (in µm) of OD and OS Eyes: MINITAB Output of the Repeated Measures Experiment with Three Factors: Treatment Group, Eye, and Quadrant *

The residual sum of squares pools the interaction sums of squares between subjects and the effects of eye, quadrant, and eye by quadrant interaction. The three-factor ANOVA in GraphPad Prism8 is quite limited (two of the three factors can only have two levels) and could not be used.

Data for the 15, 12, and six mice from the three treatment groups are analyzed. A total of 33 subjects × 8 regions (four quadrants for the right eye and four for the left eye) = 264 measurements is used to estimate this repeated measures model. Results are shown in Table 6 . MS(Subject) = 93.80 is used for testing the treatment effect, F (Treatment) = 1148.93/93.80 = 12.25. MS(Residual) = 12.66 is used in all other tests (subject effects, main and interaction effects of eye and quadrant, and all of their interactions with treatment). Treatment and subject effects are highly significant, but none of the effects of eye and quadrant is significant; that is, the data show no detectable effect of eye or quadrant on retinal thickness.

In the second model, a third factor, type, represents two different genetic mouse strains. The experiment studies the effect of treatment on mice from either of two genetic strains (type 1 and type 2 below). Treatment and strain are crossed fixed effects, as every level of one factor is combined with every level of the other. Each mouse taken from one of the six groups has a measurement made at four different quadrants in one eye. This is a different repeated measures design, as now the mice are nested within the treatment–strain combinations. The design looks as follows:

The variability between subjects is used for testing main and interaction effects of treatment and strain. The measurement (residual) variability is used in the test for subject effects and the tests for the main effect of quadrant and its interactions with treatment and strain.

This tutorial outlines parametric inference tests for comparing means of two or more groups and how to interpret the output from statistical software packages. Critical assumptions made by the tests and ways of checking these assumptions are discussed.

Efficient study designs increase the likelihood of detecting differences among groups if such differences exist. Situations commonly encountered by vision scientists involve repeated measures from the same subject over time, on both right and left eyes from the same subject, and from different locations within the same eye. Repeated measures are usually correlated, and the statistical analysis must account for the correlation. Doing this the right way helps to ensure rigor so that the results can be repeated and validated with time. The data used in this review (in both Excel and Prism 8 format) are available in the Supplementary Materials.

Two Excel data files can be found under the Supplementary Materials: Supplementary Data S1 contains measurements on each eye as well as on each subject, whereas Supplementary Data S2 contains measurements for each quadrant of the retina. The two GraphPad Prism8 files under the Supplementary Materials illustrate the data analysis: Supplementary Material S3 on the analysis of subject averages, and Supplementary Material S4 on the analysis of individual eyes.

Supplementary Material

Acknowledgments.

Supported by a VA merit grant (C2978-R); by the Center for the Prevention and Treatment of Visual Loss, Iowa City VA Health Care Center (RR&D C9251-C, RX003002); and by an endowment from the Pomerantz Family Chair in Ophthalmology (RHK).

Disclosure: J. Ledolter , None; O.W. Gramlich , None; R.H. Kardon , None

What are Parametric Tests? Types: z-Test, t-Test, F-Test

- Post last modified: 3 September 2023


What are Parametric Tests?

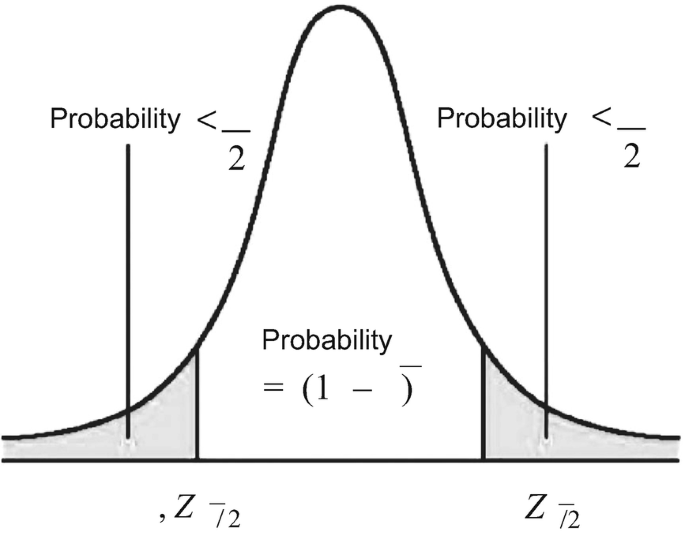

Parametric tests are statistical measures used in the analysis phase of research to draw inferences and conclusions to solve a research problem. There are various types of parametric tests, such as z-test, t-test and F-test. The selection of a particular test for research depends upon various factors, such as the type of population, sample size, Standard Deviation (SD) and variance of population. It is important for a researcher to identify the appropriate test to maintain the authenticity and validity of research results.


Types of Hypothesis Tests

A hypothesis can be tested by using a large number of tests. Therefore, researchers have found it more convenient to categorise these tests on the basis of their similarities and differences. Hypothesis tests are divided into two types, as mentioned below:

Parametric Tests

In these tests, the researcher makes assumptions about the parameters of the population from which a sample is derived. An example of a parametric test is the z-test.

Non-Parametric Tests

These are distribution-free tests of hypotheses. Here, the researcher does not make assumptions about the parameters of the population from which a sample is derived. An example of a non-parametric test is the Kruskal–Wallis test.

Types of Parametric Tests

In parametric tests, researchers assume certain properties of the parent population from which samples are drawn. These assumptions concern properties such as the sample size, the type of population, the mean and variance of the population, and the distribution of the variable. For example, the t-test assumes that the variable under study is normally distributed in the population.

Researchers estimate the parameters of the population from the sample and compute a test statistic. Then, they test the hypothesis by comparing the calculated value with the benchmark value given in the problem. The dependent variable in parametric tests is usually measured on an interval or ratio scale.

The main types of parametric tests are:

z-Test

The z-test is used to study the mean and proportion of samples with a sample size of more than 30. It involves comparing the means of two different and unrelated samples drawn from the same population whose variance is known. The z-value (test statistic) is calculated from the data and compared with the critical z-value at the level of significance chosen in advance. After the comparison, the researcher decides whether to reject or fail to reject the null hypothesis.

The z-test is used in the following cases:

- To compare the mean of a sample with the mean of a hypothesised population when the sample size is large and the population variance is known

- To compare the significant difference between the means of two independent samples in the case of large samples or when the population variance is known

- To compare the proportion of a sample with the proportion of the population
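As a small illustration of the first case in the list, the following sketch computes a one-sample z statistic, z = (sample mean − hypothesised mean) / (σ/√n), and its two-sided probability value. The function name and the numbers are hypothetical.

```python
import math
from statistics import NormalDist

def one_sample_z(sample_mean, mu0, sigma, n):
    """One-sample z statistic with known population standard deviation.

    Returns (z, two-sided probability value).
    """
    z = (sample_mean - mu0) / (sigma / math.sqrt(n))
    p_two_sided = 2.0 * (1.0 - NormalDist().cdf(abs(z)))
    return z, p_two_sided

# Hypothetical example: n = 100, sample mean 52, hypothesised mean 50,
# known population standard deviation 10.
z_value, p_value = one_sample_z(52.0, 50.0, 10.0, 100)
```

Here the standard error is 10/√100 = 1, so z = 2 and the two-sided probability value is a little below 0.05.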

t-Test

The t-test is used to study the mean of samples when the sample size is less than 30 and/or the population variance is unknown. It is based on the t-distribution, a probability distribution that is appropriate for estimating the mean of a normally distributed population when the sample size is small and the population variance is unknown.

The t-value (test statistic) is calculated from the data and compared with the critical t-value at a specified level of significance and the relevant degrees of freedom to decide whether to reject the null hypothesis. For a one-sample test, the degrees of freedom equal the number of observations minus one. The degrees of freedom are used to look up the critical t-value in the t-distribution table.

Sometimes, the t-test is used to compare the means of two related samples when the sample size is small and the population variance is unknown. In such a situation, it is known as the paired t-test.

F-Test

The F-test is used to compare the variances of two samples by examining the ratio of the two sample variances. The test statistic follows an F-distribution, a right-skewed distribution that is most commonly used in Analysis of Variance (ANOVA). The F-value (test statistic) is calculated from the data and compared with the critical F-value at the level of significance chosen in advance.

In an F-test, there are two separate degrees of freedom, one for the numerator and one for the denominator. The degrees of freedom (d.f.) of each sample are calculated separately by subtracting one from its number of observations. The critical F-value is then read from the F-distribution table.
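The variance ratio and its two degrees of freedom can be sketched as follows. The function name and the data are hypothetical; the critical value would still have to be looked up in an F table or obtained from statistical software.

```python
import statistics

def variance_ratio_f(sample_1, sample_2):
    """Ratio of two sample variances with its degrees of freedom.

    Returns (F, (numerator d.f., denominator d.f.)).
    """
    var_1 = statistics.variance(sample_1)   # uses the n - 1 divisor
    var_2 = statistics.variance(sample_2)
    return var_1 / var_2, (len(sample_1) - 1, len(sample_2) - 1)

# Hypothetical samples: the first is twice as spread out as the second.
f_value, f_dfs = variance_ratio_f(
    [2.0, 4.0, 6.0, 8.0, 10.0],
    [1.0, 2.0, 3.0, 4.0, 5.0],
)
```

Doubling every value multiplies the variance by four, so the ratio here is 4 on (4, 4) degrees of freedom.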

Parametric tests are further divided into two parts – one-sample tests and two-sample tests. You will learn more about them in the next sections.

Assumptions of F-Test

The F-distribution is asymmetric (right-skewed), with a minimum value of zero and no upper bound. Assumptions for using an F-test include:

- Both samples come from normally distributed populations.

- Observations in each sample are selected randomly.

The F-statistic can never be negative, as it is a ratio of two variances, which are based on squared deviations. The degrees of freedom are calculated in different ways for different tests.

Parametric Tests: Definition and Characteristics

Concept Building in Fisheries Data Analysis, pp. 59–80

Basic Concept of Hypothesis Testing and Parametric Test

- Basant Kumar Das

- Dharm Nath Jha

- Sanjeev Kumar Sahu

- Anil Kumar Yadav

- Rohan Kumar Raman

- M. Kartikeyan

- First Online: 12 October 2022

Statistical analysis can be done in many ways, but the majority of biologists use ‘classical’ statistics, which involves testing a null hypothesis using experimental data. In this process we estimate the probability of obtaining the observed results, or something more extreme, if the null hypothesis is true. If the estimated probability (the p-value) is less than the significance level, we conclude that the null hypothesis is unlikely to be true, and we reject it. A hypothesis is defined as an assumption about a single population or about the relationship between two or more populations. It is testable and provides a possible explanation of a certain phenomenon or event. If a hypothesis is not testable, on the other hand, there is insufficient evidence to provide more than a tentative explanation, e.g. extinction of inland fishes. To test new information, knowledge or belief about populations against the existing one, two hypotheses are used: the null hypothesis (H₀) and the alternative hypothesis (H₁). The null hypothesis (H₀) assumes that there is no difference between the new and existing populations. However, there can be some indications that existing knowledge or beliefs may not be true. The null hypothesis (H₀) is tested against the alternative hypothesis (H₁). The alternative hypothesis is the statistical statement indicating the presence of an effect or a difference and is sometimes known as ‘an intelligent guess’ based on limited information. Experimental results may match predictions, but they should be believed only after proper testing and analysis with appropriate statistical tool(s), which includes designing an experiment or survey to generate or collect raw data, exploratory data analysis and choosing an appropriate significance level or confidence limits (intervals).

Importance of testing of hypothesis in fisheries

Concept of hypothesis testing for inference

One sample parametric test of significance

Two sample parametric test of significance

In hypothesis testing, only the null hypothesis is tested; based on the results obtained from the data, it is either accepted or rejected, which is expressed as follows:

As the hypothesis is either accepted or rejected based on numerical facts, only trustworthy data can be used for this purpose. Hypothesis testing therefore involves data collection using proper methods, compilation and scrutiny of the data, use of appropriate tools for analysis, and judicious decision, interpretation and explanation.

For example, suppose a researcher conducts a study in which a sample of n fish is randomly selected from a fish population of size N and the weight of each fish is measured. The researcher wants to test whether the mean weight of the fish is 1000 g. The null and alternative hypotheses can be written as H₀: μ = 1000 g and H₁: μ ≠ 1000 g. Here the null hypothesis states that the mean weight of the population represented by the sample is 1000 g, and the alternative hypothesis states that it is not equal to 1000 g. In this situation the alternative hypothesis is non-directional (two-tailed): H₁: μ ≠ 1000 g states only that the fish population mean is not equal to 1000 g, without stating whether it is less than or greater than 1000 g. A directional (one-tailed) alternative hypothesis can take either of two forms, H₁: μ > 1000 g or H₁: μ < 1000 g. The alternative hypothesis H₁: μ > 1000 g states that the mean of the fish population represented by the sample is greater than 1000 g. If it is employed, the null hypothesis can be rejected only if the data indicate that the fish population mean is some value above 1000 g. The alternative hypothesis H₁: μ < 1000 g states that the mean of the fish population represented by the sample is less than 1000 g. If it is employed, the null hypothesis can be rejected only if the data indicate that the fish population mean is some value below 1000 g.

Note: In general, a researcher selects a non-directional alternative hypothesis when there is no expectation about the outcome of the proposed research, and chooses a directional alternative hypothesis when there is a definite expectation about the outcome of the experiment. With a non-directional alternative hypothesis, a larger effect or difference in the sample data is needed to reject the null hypothesis than with a directional alternative hypothesis.

4.1 Significance Level
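To make the fish-weight hypotheses concrete, the following is a minimal Python sketch of a one-sample z-test of H₀: μ = 1000 g against each of the three alternatives. It is an illustration under stated assumptions, not the chapter's own procedure: the weights are made-up values, the population standard deviation (`sigma = 40`) is assumed to be known, the 5% significance level is an arbitrary choice, and the helper name `one_sample_z_test` is hypothetical. When the standard deviation has to be estimated from a small sample, a t-test would normally be used instead.

```python
from math import sqrt
from statistics import NormalDist, mean


def one_sample_z_test(weights, mu0, sigma, alternative="two-sided"):
    """Return (z, p_value) for H0: mu = mu0.

    Assumes the population standard deviation `sigma` is known.
    `alternative` is "two-sided" (H1: mu != mu0),
    "greater" (H1: mu > mu0) or "less" (H1: mu < mu0).
    """
    n = len(weights)
    z = (mean(weights) - mu0) / (sigma / sqrt(n))
    std_normal = NormalDist()  # standard normal: mean 0, SD 1
    if alternative == "two-sided":
        p_value = 2 * (1 - std_normal.cdf(abs(z)))
    elif alternative == "greater":
        p_value = 1 - std_normal.cdf(z)
    elif alternative == "less":
        p_value = std_normal.cdf(z)
    else:
        raise ValueError("alternative must be 'two-sided', 'greater' or 'less'")
    return z, p_value


# Hypothetical sample of fish weights in grams (illustrative values only).
weights = [1030, 1012, 1045, 998, 1021, 1036, 1009, 1027, 1040, 1015]
alpha = 0.05  # assumed significance level

for alternative in ("two-sided", "greater", "less"):
    z, p_value = one_sample_z_test(weights, mu0=1000, sigma=40,
                                   alternative=alternative)
    decision = "reject H0" if p_value < alpha else "fail to reject H0"
    print(f"{alternative:>9}: z = {z:.3f}, p = {p_value:.4f} -> {decision}")
```

With these made-up numbers, the directional test H₁: μ > 1000 g rejects the null hypothesis at the 5% level while the non-directional test does not, which illustrates why a non-directional alternative needs a larger difference in the sample data to reach the same conclusion.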