IBM Data and AI Content


The Data and AI Content Design Team aims to give you exactly the information you need, just in time, so that you can achieve your goals with IBM’s products. We create content such as in-product help, product documentation, videos, tutorials, API docs, chatbots, and support technotes.


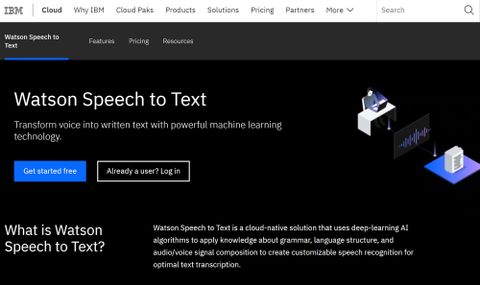

Watson Speech to Text review

Find out how IBM does speech recognition in our Watson Speech to Text review.

TechRadar Verdict

There’s plenty to be said in favor of IBM’s Watson Speech to Text service, such as its ability to convert hours of audio into text quickly and accurately. But price, integration complexity, and somewhat patchy beta features may put some businesses off.

Pros:

- Fast and accurate speech recognition

- Grammar, language, and acoustic model training

Cons:

- More expensive than AWS or Google

- Multi-speaker recognition is hit-and-miss


Watson is IBM’s natural-language-processing computer system. It powers the famous question-answering supercomputer as well as a series of AI-based enterprise products, including Watson Speech to Text. In our Watson Speech to Text review, we’ll take a look at one of the best speech-to-text apps around, ideal for anyone who wants to convert audio to text at scale.

The Watson speech processing platform is available on IBM Cloud. It’s a versatile tool and can be used in many contexts including dictation and conference call transcription. What’s more, unlike most other speech-to-text apps, it’s available as an API, allowing developers to embed it into voice control systems, among other things.

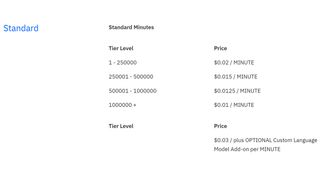

Watson Speech to Text: Plans and pricing

You can use Watson Speech to Text to process up to 500 minutes of audio for free per month. If you want to convert more than that, you’ll need to pay for each audio minute, and the rate changes based on the duration of audio processed. Costs range from $0.01 to $0.02 per minute, and there’s an add-on charge of $0.03 per minute if you require IBM’s Custom Language Model. Premium quote-only Watson plans are available too, and these grant access to enhanced data privacy features and uptime guarantees.
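Here is a minimal Python sketch of a monthly cost estimate that shows how these per-minute rates add up. It makes two hypothetical assumptions: the 500 free minutes are used up first, and every remaining minute is billed at one flat rate you pick from the $0.01 to $0.02 range. The review does not give IBM’s tier breakpoints, so `estimate_monthly_cost` and its parameters are illustrative only.

```python
def estimate_monthly_cost(minutes, rate_per_minute, free_minutes=500,
                          custom_model=False, custom_addon_per_minute=0.03):
    """Estimate one month's bill for `minutes` of audio (hypothetical helper).

    Assumes the free allowance is used first and that every remaining
    minute is billed at a single flat `rate_per_minute`.
    """
    if minutes < 0 or rate_per_minute < 0:
        raise ValueError("minutes and rate_per_minute must be non-negative")
    billable = max(0, minutes - free_minutes)
    per_minute = rate_per_minute
    if custom_model:
        # Custom Language Model add-on, applied to billable minutes only (assumption).
        per_minute += custom_addon_per_minute
    return round(billable * per_minute, 2)
```

Under these assumptions, 10,500 minutes in a month at $0.02 per minute comes to $200. With the Custom Language Model add-on, the total is $500.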

You can also access the Watson Speech to Text system through a general-purpose IBM Cloud subscription. Natural language processing is just one app in a wide range of AI services you can get through IBM Cloud, so this is a good option for any organization that needs access to high-speed data transfers, chatbots, or text-to-speech tools.

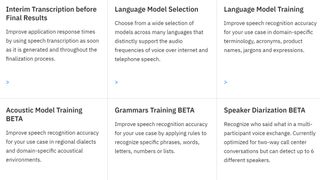

Watson Speech to Text: Features

Thanks to flexible API integration and other prebuilt IBM tools, the Watson speech recognition service goes well beyond basic transcription. If you want to use it in a customer service context, for example, Watson Assistant can be set up to process natural language questions directly or answer queries over the phone.

Watson works with live audio in 11 languages and can import audio in a variety of pre-recorded formats. When streaming, real-time diagnostic support means Watson can prompt users to move closer to their microphone or change their environment. Also impressive is that Watson can distinguish between different speakers in a shared conversation thanks to Speaker Diarization, a feature still in beta testing.
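When you request speaker labels (the service’s `speaker_labels` recognition parameter), they come back alongside word timestamps. The sketch below groups recognized words into speaker turns. It assumes the JSON layout the service returns:

- `results[].alternatives[0].timestamps` holds `[word, start, end]` triples.
- `speaker_labels` holds entries with `from` and `speaker` fields.

Matching words to labels on exact start times is a simplification, and `speaker_turns` is a hypothetical helper.

```python
def speaker_turns(response):
    """Group recognized words into (speaker, text) turns (hypothetical helper)."""
    # Map each labeled word's start time to the speaker the service assigned.
    speaker_at = {label["from"]: label["speaker"]
                  for label in response.get("speaker_labels", [])}
    turns = []  # list of (speaker, [words])
    for result in response.get("results", []):
        best = result["alternatives"][0]
        for word, start, _end in best.get("timestamps", []):
            speaker = speaker_at.get(start)  # None if no label matched
            if turns and turns[-1][0] == speaker:
                turns[-1][1].append(word)  # same speaker keeps talking
            else:
                turns.append((speaker, [word]))  # a new turn begins
    return [(speaker, " ".join(words)) for speaker, words in turns]
```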

Watson Speech to Text: Setup

To use Watson, the first thing you need to do is create an IBM Bluemix account (Bluemix has since been renamed IBM Cloud). Registration is free and painless, requiring just an email address and password. Once logged in, you need to provision the Speech to Text service on your account. You’ll be given a couple of credentials at this stage that you should save in your own records.

After you’ve done that, things get significantly more complex. To access Watson, you’ll need to add those credentials to a cURL command (cURL is a command-line tool for transferring data with URLs) and then run it on your machine. To find out exactly what command to call, check out this handy guide. Alternatively, if you just want to see how well the Watson system works without having to jump through all those hoops, you can try it out on IBM’s demo site instead.
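If you prefer Python to cURL, the sketch below builds the same kind of HTTP request against the `POST /v1/recognize` method using only the standard library. It assumes an IBM Cloud API key and service URL. The key is sent as basic authentication with the literal user name `apikey`, as in IBM’s cURL examples. `build_recognize_request` is a hypothetical helper; it only constructs the request and does not send it.

```python
import base64
import urllib.parse
import urllib.request


def build_recognize_request(service_url, apikey, audio_bytes,
                            content_type="audio/flac", model=None):
    """Build (but do not send) a POST /v1/recognize request."""
    url = service_url.rstrip("/") + "/v1/recognize"
    if model:
        # Optional model choice, passed as a query parameter.
        url += "?" + urllib.parse.urlencode({"model": model})
    # Basic auth with the user name "apikey" and the API key as password.
    token = base64.b64encode(f"apikey:{apikey}".encode()).decode()
    return urllib.request.Request(
        url,
        data=audio_bytes,
        method="POST",
        headers={"Authorization": f"Basic {token}", "Content-Type": content_type},
    )
```

To send it, pass the request to `urllib.request.urlopen` and parse the JSON body of the response.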

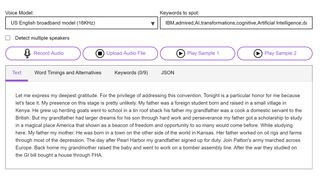

Watson Speech to Text: Interface

Unlike consumer-facing voice-to-text apps, Watson’s services are designed to be accessed through APIs and code embedded in other systems. For this reason, there’s no real Watson “interface”. Instead, Watson can be accessed through three different routes: the WebSocket interface, the REST API, and the Watson Developer Cloud SDKs.

To control Watson, you will need to use a command-line tool that connects to IBM’s cloud via one of those three routes. The interface that the end-user interacting with Watson sees will need to be built by someone on your development team separately.
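On the WebSocket route, the exchange with the service is a sequence of frames:

1. A JSON `start` text message.
2. The audio, sent as binary data.
3. A JSON `stop` text message.

The sketch below only builds the endpoint URL and the two control messages. It assumes an IAM access token passed in the `access_token` query parameter and a 16 kHz linear PCM stream; the helper names are hypothetical. A WebSocket client library (not shown) would send the frames.

```python
import json
import urllib.parse


def websocket_url(service_url, access_token, model=None):
    """Turn an https:// service URL into the wss:// /v1/recognize endpoint."""
    parts = urllib.parse.urlsplit(service_url.rstrip("/"))
    query = {"access_token": access_token}
    if model:
        query["model"] = model
    return urllib.parse.urlunsplit(
        ("wss", parts.netloc, parts.path + "/v1/recognize",
         urllib.parse.urlencode(query), ""))


def start_message(content_type="audio/l16;rate=16000", interim_results=True):
    """JSON text frame that opens a recognition request."""
    return json.dumps({"action": "start",
                       "content-type": content_type,
                       "interim_results": interim_results})


# JSON text frame sent after the last binary audio frame.
STOP_MESSAGE = json.dumps({"action": "stop"})
```

Setting `interim_results` to true lets the service stream partial transcripts that it refines as more audio arrives.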

Watson Speech to Text: Performance

Overall, we were impressed by the way that this natural-language-processing platform handled real speech. We used Watson to transcribe clips we recorded in a range of challenging environments as well as soundbites of famous speeches given in several of Watson’s 11 supported languages.

Although errors grew more frequent for clips with lots of background noise, in general, Watson produced incredibly accurate results. We’d estimate from our tests that unprompted mistakes occurred only once every 150 words on average. However, it became clear why Watson’s Speaker Diarization feature remains in beta testing: several times during our evaluation, one voice was mislabelled as separate speakers.

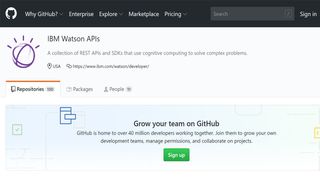

Watson Speech to Text: Support

The IBM resource center offers plenty of documentation to help you understand how to apply Watson to your particular use case. It’s also worth making use of the API integrations and SDKs created by the Watson developer community and posted to GitHub.

If you don’t find the solution to your problem there, you can reach out to IBM directly by opening a support ticket or contacting them over the phone. If you opted for one of the premium Watson packages, your Watson use will also be covered by an uptime service level agreement (SLA).

Watson Speech to Text: Final verdict

If your organization has the know-how and resources to properly integrate the IBM Watson Speech to Text platform into your system, you’ll benefit from advanced functions like real-time sound environment diagnostics and interim transcription results. However, small businesses and organizations will struggle with the technical challenge of setting Watson up properly.

The competition

The IBM Watson Speech to Text service is a direct competitor to bulk transcription services Google Cloud Speech-to-Text and Amazon Transcribe. Both of these are significantly cheaper than Watson, with Google Cloud transcription, for example, starting at $0.006 per minute. All three services share similar functions, such as customized vocabulary, but one feature sorely missing from IBM Watson but available with both competitors is automatic punctuation recognition.

Looking for another speech-to-text solution? Check out our Best speech-to-text software guide.


Watson Speech-To-Text: How to Train Your Own Speech “Dragon” — Part 1: Data Collection and Preparation

IBM Watson Speech Services

Over the past few years, we’ve seen a lot of AI chatbots deployed across many organizations. They typically handle general questions about products and services, and even do some basic transactions without the intervention of a human.

Most of these organizations already have an IVR that automates some customer interactions (press “1”… press “2”…) and then routes the caller to the right call center queue. This locks call center agents into answering very basic questions instead of focusing on truly complex situations.

The current trend is to bring voice to their existing web chatbots and replace their IVR, but many organizations have no clue where to start when introducing automated speech recognition services. A typical IVR solution built with Watson services requires the following components:

- IBM Voice Gateway (VG) / Voice Agent (VA): manages inbound calls using SIP and orchestrates the integration with the other IBM Watson services below

- IBM Watson Assistant (WA): conversational service containing your business flows (chatbot)

- IBM Watson Speech-to-Text (STT): Automated Speech Recognition (ASR) service that converts an audio stream into text for other text-based API services

- IBM Watson Text-to-Speech (TTS): converts text into a natural-sounding audio voice

- Service Orchestration Engine (SOE): application layer that integrates many API services and backend systems

This series of articles will focus on how to train the IBM Watson STT service: its requirements, the methodology, and some best practices inspired by actual customer engagements.

This article explains the core components of Watson STT as well as the data collection and preparation required before we start training Watson STT.

IBM Watson STT Components

First, you need to know what comes out-of-the-box with Watson STT and its components you can use to improve its accuracy:

- Base Model: each provisioned STT service comes with a base acoustic model and a base language model in multiple languages. It’s pre-trained with general words and terms, and it can also handle light accents. IBM has introduced a new model called “US English short form”, which is trained for IVR and Automated Customer Support solutions (US English Narrowband only).

- Language Model Adaptation/Customization: with UTF-8 plain text files, you can enhance the existing base language model with domain-specific terminology, acronyms, jargon, and expressions, which will improve speech recognition accuracy.

- Grammar Adaptation/Customization: this new feature allows you to adapt the speech recognition based on specific rules that limit the choices of words returned. This is especially useful when dealing with alphanumeric IDs (e.g., member ID, policy number, part number).

I will cover the configuration and training in more detail in Part 2.
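To illustrate the grammar feature, the sketch below generates a grammar in the SRGS ABNF format that accepts only a fixed number of spoken digits, such as a policy number. The rule names, the word list (including “oh” for zero), and the `digit_id_grammar` helper are assumptions for illustration, not IBM-provided content.

```python
# Spoken forms accepted for each digit (assumed list; extend as needed).
DIGIT_WORDS = ["zero", "oh", "one", "two", "three", "four",
               "five", "six", "seven", "eight", "nine"]


def digit_id_grammar(length, rule_name="id", language="en-US"):
    """Return an SRGS ABNF grammar accepting exactly `length` spoken digits."""
    if length < 1:
        raise ValueError("length must be at least 1")
    digits = " ".join(["$digit"] * length)  # one $digit reference per position
    return "\n".join([
        "#ABNF 1.0 ISO-8859-1;",
        f"language {language};",
        "mode voice;",
        f"root ${rule_name};",
        f"${rule_name} = {digits} ;",
        "$digit = " + " | ".join(DIGIT_WORDS) + " ;",
    ]) + "\n"
```

Under the same assumptions, you would then do three things:

1. Upload the text to a custom language model, for example with the Python SDK’s `add_grammar` method and the `application/srgs` content type.
2. Train the model.
3. Name the grammar in your recognition requests.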

Yeah yeah — this is all really great but again, where do I start?

Data Collection

The first thing you need is data. Not just any data: representative audio data, meaning data that comes from your actual end users. We recommend collecting 10 to 50 hours of audio, depending on the use case, business flows, data inputs, and target user population. You need to know the demographic information and distribution of your target users:

- Location: Some US states, entire USA, worldwide, …

- Accents: plain English, Indian English, Hispanic English, Japanese English, …

- Devices: cellphone, deskphone, softphone, computer, …

- Environments: busy open office, waiting room, quiet office, …

- Length of Utterances: Long versus short responses

You can use existing call recordings from your current IVR or call center solution. In most cases I have seen, though, they are very “conversational / free form / multi-topic” and rarely mimic the target solution. If you have human transcriptions of these audio files, you could search for calls that match your use case and business flows, then extract the utterances and data inputs you need (text and audio segments). If not, you will most probably end up collecting new, more targeted audio files.

It is recommended to maintain a matrix of accents, devices and environments for each collected audio file within your data set. This matrix will be used to verify if the data set is representative of your actual user population and identify gaps where more training material is required.
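Below is a minimal Python sketch of such a matrix. It assumes each recording has already been tagged with hypothetical `accent`, `device`, `environment`, and `seconds` fields. It reports every accent/device/environment combination that has less audio than a target you choose.

```python
from collections import defaultdict
from itertools import product


def coverage_gaps(recordings, accents, devices, environments, min_hours):
    """Return {(accent, device, environment): hours} for under-covered cells."""
    hours = defaultdict(float)
    for rec in recordings:
        cell = (rec["accent"], rec["device"], rec["environment"])
        hours[cell] += rec["seconds"] / 3600  # convert seconds to hours
    # Check every combination, including ones with no recordings at all.
    return {cell: round(hours[cell], 2)
            for cell in product(accents, devices, environments)
            if hours[cell] < min_hours}
```

Checking every combination may be stricter than you need. In practice, you might only require minimum hours per accent, per device, and per environment separately.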

How Do I Collect Data?

You will need to identify testers, ideally actual users of your previous solution, matching your demographic matrix (location, accents, devices, environments, etc.). The more distributed your testers are, the better the data quality and variety you will get. You can either choose and manage your own group of testers, or you can outsource to a crowdsourcing company, giving it the specific data collection requirements that we will cover next.

To be as representative as possible, you need to use a data collection technical environment as close as possible to your target solution. For our IVR example, you will need to set up an environment that your testers can call into so that their voices are collected. All the customers I worked with had to set up three environments: Development, User Acceptance Testing (UAT), and Production. You can use the Development environment for your data collection. You can simply spin up an IBM Voice Gateway directly connected to a Watson Assistant skill with a simple welcome message and instructions, an STT service, and a TTS service. Your telephony team would need to configure an 800 number that connects to your Voice Gateway.

Next is your data collection strategy. If you know your use case and business flows, you know what you are expecting your users to provide:

- Utterances — to identify your intents and entities

- Data inputs — dates, IDs, numbers, amounts, part numbers

For the testers, should I use a “free form” style or a “scripted” style of data collection? Here are the pros and cons of each style.

- Free form
  - PRO: Provides a variety of data and is more representative; best for utterances (intents)
  - CON: Unpredictable and hard to manage, especially for data inputs; will need manual transcription

- Scripted
  - PRO: Expected utterances and data inputs (no manual transcription, only review); easier for testers to read and follow
  - CON: Limited scope of the data set, which could result in model overfitting

The ideal solution is a balance of both. Build multiple scripts (minimum 5, recommended 10) that give testers a wide variety of pre-scripted data inputs and utterances to read. Then include some “free form” fields with instructions like “how would you ask about filing a claim?”. Include an “identification” section in your scripts. This will greatly help you build your matrix without having to listen to the entire audio. Here’s an example:

- I am reading training script #<1, 2, 3,…,10>

- I am a <gender> (male, female)

- I live in <city>, <state> (e.g., Newark, New Jersey)

- I am using a <device> (cellphone, computer, deskphone)

- I am calling from <environment> (office, street, living room, warehouse)

- I speak English with a <accent>

Test your scripts. Based on past experiences, we have had good adoption when a script did not exceed 15 minutes in duration.

Make sure you deliver a training session to your testers so they understand what you expect out of this exercise, how to read the different scripts, and the number of calls you expect. Establish clear collection targets (e.g., 20 hours of audio in the next 3 weeks) and track progress on a daily basis.
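To track progress daily, you can total the audio received so far. This sketch assumes the calls are saved as `.wav` files in one folder tree, which depends on how your recordings are exported. Both helpers are hypothetical.

```python
import wave
from pathlib import Path


def collected_hours(folder):
    """Sum the duration of every .wav file under `folder`, in hours."""
    total_seconds = 0.0
    for path in Path(folder).rglob("*.wav"):
        with wave.open(str(path), "rb") as wav:
            # Duration = number of frames / frames per second.
            total_seconds += wav.getnframes() / wav.getframerate()
    return total_seconds / 3600


def daily_rate_needed(target_hours, collected, days_left):
    """Hours of audio per day still needed to hit the collection target."""
    if days_left <= 0:
        raise ValueError("days_left must be positive")
    return max(0.0, target_hours - collected) / days_left
```

With 6 hours collected and 14 days left on a 20-hour target, `daily_rate_needed(20, 6, 14)` returns 1.0 hour per day.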

Human Transcriptions

With all the audio files you have collected, you need to get them transcribed by humans (transcribers).

Wait! Why do I need to get human transcriptions when I already have my scripts above? I’ll just use my scripts and that will be the end of it.

Well… not quite. You’ll find out pretty quickly that your testers will:

- hesitate (eg. hummm, mmm, oops, sorry)

- stutter / restart (eg. one t-t-two three … one two three five <uhm> one two three four five…)

- cheat / skip lines

- cut short on the script

- improvise / not follow the script

There are two reasons why we need human transcriptions of the collected audio files:

- Measure the Accuracy of our STT Service: establish the “reference” comparison, knowing exactly what the audio is saying, so we can compare it to what STT returns.

- Train a Language Model Adaptation: even if you use a script, your testers can provide expressions and jargon as part of their free-form utterances. That’s what we need in your language model adaptation.

More to come in part 2.
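A common way to turn that comparison into a number is word error rate (WER). WER is the minimum number of word substitutions, deletions, and insertions needed to turn the STT output into the human reference, divided by the number of reference words. The article does not prescribe a metric, so treat this as one standard option. The sketch splits on whitespace and assumes both transcripts were normalized the same way beforehand (case, and tags such as `%HESITATION`).

```python
def word_error_rate(reference, hypothesis):
    """WER = (substitutions + deletions + insertions) / reference word count."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript is empty")
    # prev[j] = edit distance between the first i reference words
    # and the first j hypothesis words (Levenshtein over words).
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(prev[j] + 1,         # deletion
                          curr[j - 1] + 1,     # insertion
                          prev[j - 1] + cost)  # substitution or match
        prev = curr
    return prev[-1] / len(ref)
```

For example, a reference of “one two three four” and an STT result of “one two tree four” give a WER of 0.25.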

Customers have two options to transcribe the audio files:

- SMEs: using customer staff who know the use case very well (expressions, acronyms, products, IDs, etc.) may make sense, but you have to be mindful that they all have day jobs. Also, they may not be as efficient as professional transcriptionists, and they can be expensive or hard to scale if you have a large volume of audio files.

- Outsource: using companies that specialize in audio transcription could be the obvious choice, but budget is usually a problem. You must also consider data confidentiality and data ownership with the vendor. It may still require an SME to review the transcriptions for quality control.

For proofs of concept, we used both consultants and customer SMEs to expedite this task, as the data set is usually very small.

You will also need to use a Human Transcription Protocol — a standard and consistent way of transcribing audio across all human transcribers. Here are some general guidelines I have been using at customers:

- Transcribe everything that is said as words; never use numerals. For example, the year 1997 should be spelled out “nineteen ninety seven,” and a street address like 1901 Center St. should be spelled out “nineteen oh one Center street.”

- Transcribe human sounds that are not speech, such as coughing, laughter, loud breath noise, or a sneeze, as <vocal_noise>.

- Mark other small disfluencies like “uh” or “um” as <uh> or with the tag %HESITATION (this depends on the final use of the transcription).

- Do not tag any other sounds or noises, even loud ones, unless directed. Only if the task at hand calls for it, mark background noise with a speaker ID of ‘noise’ or a transcription annotation of <noise>.

- Do not make up or use any new tags not mentioned in this sheet, unless directed.

- If someone stammers and says ‘thir-thirty’, the corresponding transcription would be ‘thir- thirty’. Be sure to leave a blank space after the “-” in this example.

- Don’t use any punctuation such as periods, commas, question marks, or exclamation marks. But don’t correct existing.

- Transcribe abbreviations spoken as letters using capital letters with a period and a space after each letter: I. B. M., F. T. P.

- Transcribe acronyms that are said as words using capital letters without spaces: NASA, DAT.

- If someone spells out a word, capitalize the letters and put a space between them. For example, “my name is Dana D. A. N. A.”

- If you are unsure of a word or phrase, do your best to transcribe it. But if you can’t understand it or feel very unsure, mark it <unintelligible>.
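Because the protocol is rule-based, part of it can be checked automatically before transcripts are used. The sketch below flags three kinds of problems:

- digits
- tags other than the ones the protocol names
- punctuation other than the letter-plus-period abbreviations

It is a hypothetical quality-control helper. It does not cover judgment calls such as when to use <unintelligible>.

```python
import re

# Tags the protocol allows; anything else in <...> or %WORD form is flagged.
ALLOWED_TAGS = {"<vocal_noise>", "<uh>", "<noise>", "<unintelligible>", "%HESITATION"}


def protocol_issues(line):
    """List protocol violations found in one transcript line."""
    issues = []
    if re.search(r"\d", line):
        issues.append("digits must be spelled out as words")
    for tag in re.findall(r"<[^>]*>|%\w+", line):
        if tag not in ALLOWED_TAGS:
            issues.append(f"unknown tag {tag}")
    # A period is only allowed right after a standalone capital letter (I. B. M.).
    stripped = re.sub(r"\b[A-Z]\.", "", line)
    if re.search(r"[.,?!]", stripped):
        issues.append("punctuation is not allowed")
    return issues
```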

Building Your Training Set and Your Test Set

Once you have collected your audio files and human transcriptions, the next step is to split them into a training set and a test set. Depending on the amount of data you have collected, you can use the 80/20 rule (80% training / 20% test). Make sure both data sets are randomly selected, evenly distributed across your matrix areas with no overlap between them.

Using a simple spreadsheet with columns and a random function will do the trick. I usually start with the Accents category. First, I sort the column to group all rows with the same accent together and copy each accent group into its own Excel worksheet. Next, I randomize within each accent tab and pick the top 20% of each accent. I create a Test Set worksheet, then copy the top 20% of each accent into it. Finally, I manually validate the balance of devices and environments in the test set to make sure all areas are covered.
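The same procedure can be scripted. This sketch groups rows by accent, shuffles each group with a fixed seed, and puts 20% of each group into the test set, so no row lands in both sets. Rows are assumed to be dicts with a hypothetical `accent` field. The final manual check of devices and environments is left out.

```python
import random
from collections import defaultdict


def stratified_split(rows, key="accent", test_fraction=0.2, seed=42):
    """Split rows into (train, test), taking test_fraction of each group."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row)
    rng = random.Random(seed)  # fixed seed makes the split reproducible
    train, test = [], []
    for group in groups.values():
        shuffled = group[:]
        rng.shuffle(shuffled)  # randomize within each accent group
        n_test = round(len(shuffled) * test_fraction)
        test.extend(shuffled[:n_test])   # "top 20%" goes to the test set
        train.extend(shuffled[n_test:])  # the rest goes to training
    return train, test
```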

In Part 2, I will explain how to use the collected data to configure and train Watson STT, run some experiments, and optimize.

To learn more about STT, you can also watch the STT Getting Started video.

Written by Marco Noel

Sr Product Manager, IBM Watson Speech / Language Translator. Very enthusiastic and passionate about AI technologies and methodologies. All views are only my own


Watson Speech services on Cloud Pak for Data as a Service

Description

The Watson Speech services for IBM Cloud Pak for Data offer speech recognition and speech synthesis capabilities for your applications:

Watson Speech to Text for IBM Cloud Pak for Data transcribes spoken audio into written text. The service leverages machine learning to combine knowledge of grammar, language structure, and the composition of audio and voice signals to accurately transcribe the human voice. It continuously updates and refines its transcription as it receives more speech audio. The service is ideal for applications that need to extract high-quality speech transcripts for use cases such as call centers, customer care, agent assistance, and similar solutions.

For more information about the service, see About Watson Speech to Text.

Watson Text to Speech for IBM Cloud Pak for Data synthesizes natural-sounding speech from written text. The service streams the results back to the client with minimal delay. The service is appropriate for voice-driven and screenless applications, where audio is the preferred method of output.

For more information about the service, see About Watson Text to Speech.

You can customize the Watson Speech services to suit your language and application needs. Both services offer HTTP and WebSocket programming interfaces that make them suitable for any application that produces or accepts audio.
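On the synthesis side, this sketch builds an HTTP request for the Text to Speech `POST /v1/synthesize` method using the standard library. It assumes IBM Cloud API key authentication (basic auth with the user name `apikey`), which applies to the IBM Cloud-hosted services this page describes. Installed Cloud Pak for Data software typically uses bearer tokens instead. The voice name is only an example, and `build_synthesize_request` is hypothetical.

```python
import base64
import json
import urllib.parse
import urllib.request


def build_synthesize_request(service_url, apikey, text,
                             voice="en-US_AllisonV3Voice", accept="audio/wav"):
    """Build (but do not send) a POST /v1/synthesize request."""
    query = urllib.parse.urlencode({"voice": voice})
    url = f"{service_url.rstrip('/')}/v1/synthesize?{query}"
    token = base64.b64encode(f"apikey:{apikey}".encode()).decode()
    return urllib.request.Request(
        url,
        data=json.dumps({"text": text}).encode("utf-8"),  # text to speak
        method="POST",
        headers={
            "Authorization": f"Basic {token}",
            "Content-Type": "application/json",
            "Accept": accept,  # requested audio format of the response
        },
    )
```

Sending the request with `urllib.request.urlopen` should return the audio bytes in the format named in the `Accept` header.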

The services add a tool or other type of interface that runs in IBM Cloud outside of Cloud Pak for Data as a Service and provides APIs that you can run in notebooks.

Quick links

- Use : Work with the service

- Develop : Write code and build applications

- What's new : See what's new each week

- Create : Create the service instance

Integrated services

| Service | Capability |
|---|---|
| Watson Assistant | Build your own branded assistant into any device, application, or channel. Users interact with your application through the user interface that you implement. |


Speech To Text using IBM Watson Studio

IBM Watson Studio is an integrated environment designed to develop, train, manage models, and deploy AI-powered applications and is a Software as a Service (SaaS) solution delivered on the IBM Cloud. The IBM Cloud provides lots of services like Speech To Text, Text To Speech, Visual Recognition, Natural Language Classifier, Language Translator, etc.

The Speech to Text service transcribes audio to text to enable speech transcription capabilities for applications.

Create an instance of the service

- Go to the Speech to Text page in the IBM Cloud Catalog.

- Sign up for a free IBM Cloud account or log in.

- Click Create .

Copy the Credentials to Authenticate to your service instance

- From the IBM Cloud Resource list , click on your Speech to Text service instance to go to the Speech to Text service dashboard page.

- On the Manage page, click Show Credentials to view your credentials.

- Copy the API Key and URL values.

Module Needed:

Now you’re ready to use the IBM Cloud Services.

The code below illustrates the use of IBM Watson Studio’s Speech to Text service using Python and the WebSocket interface.
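Here is a minimal sketch of that approach with the `ibm-watson` Python SDK, using `SpeechToTextV1.recognize_using_websocket` with a `RecognizeCallback`. The environment variable names `STT_APIKEY`, `STT_URL`, and `STT_AUDIO`, the FLAC input, and the model choice are assumptions. The SDK is imported only inside `main()`, so the small parsing helper can be used and tested without it.

```python
import os


def final_transcripts(message):
    """Return the final transcripts in one recognition message (a dict)."""
    return [result["alternatives"][0]["transcript"].strip()
            for result in message.get("results", [])
            if result.get("final")]


def main():
    # SDK imports live here so the helper above works without the SDK installed.
    from ibm_cloud_sdk_core.authenticators import IAMAuthenticator
    from ibm_watson import SpeechToTextV1
    from ibm_watson.websocket import AudioSource, RecognizeCallback

    class PrintFinal(RecognizeCallback):
        def on_data(self, data):
            # Called with the parsed JSON results from the service.
            for text in final_transcripts(data):
                print(text)

        def on_error(self, error):
            print(f"Error received: {error}")

    stt = SpeechToTextV1(authenticator=IAMAuthenticator(os.environ["STT_APIKEY"]))
    stt.set_service_url(os.environ["STT_URL"])
    with open(os.environ.get("STT_AUDIO", "audio-file.flac"), "rb") as audio_file:
        stt.recognize_using_websocket(
            audio=AudioSource(audio_file),
            content_type="audio/flac",
            recognize_callback=PrintFinal(),
            model="en-US_BroadbandModel",
        )


# Only call the service when credentials are configured (hypothetical variable names).
if __name__ == "__main__" and os.environ.get("STT_APIKEY"):
    main()
```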


ibm-watson-speech-to-text

Here are 6 public repositories matching this topic...

ibm / speech-to-text-code-pattern

WARNING: This repository is no longer maintained

- Updated Feb 15, 2023

Sanji515 / Speech-to-text-convertor-and-tone-Analyzer

- Updated Jun 10, 2021

mattljones / MobileV

Capstone MSc project

- Updated Dec 1, 2022

n-shyamprasad / IBMWatsonsSpeechToText

IBM Watsons Speech To Text sample application

- Updated May 26, 2021

krishnac7 / YoutubeTranscriber

This project is a how-to guide on creating a basic YouTube transcriber web app on IBM Cloud using the Watson Speech to Text service and Node-RED

- Updated Sep 22, 2018

yongxuanluo / Cognitive

This repository contains code and description on how to connect, transcribe and write result for Google Speech to Text API, IBM Watson Speech Service and Microsoft Bing Speech library

- Updated Nov 27, 2017


Train Watson Speech to Text on your unique domain language and specific audio characteristics. Protects your data. Enjoy the security of IBM's world-class data governance practices. Truly runs anywhere. Built to support global languages and deployable on any cloud — public, private, hybrid, multicloud, or on-premises.

Experience IBM's Watson Speech to Text Demo, showcasing advanced transcription capabilities with machine learning technology.

Convert human voice into written word with deep-learning AI. The service can be used for chatbots, call centers, and multi-media transcription.

IBM Watson Text to Speech is an API cloud service that enables you to convert written text into natural-sounding audio in a variety of languages and voices within an existing application or within watsonx Assistant. Give your brand a voice and improve customer experience and engagement by interacting with users in their native language.

Learn how to use the IBM Watson Speech to Text service for speech transcription in your applications. The service supports many languages, audio formats, and customization options, and can be deployed as a cloud service or on premises.

The IBM Watson® Speech to Text service transcribes audio to text to enable speech transcription capabilities for applications. This curl-based tutorial can help you get started quickly with the service. The examples show you how to call the service's POST /v1/recognize method to request a transcript. The ...

This video shows you how to provision the Watson Speech to Text service from the IBM Cloud Catalog, locate your service credentials, and then use the API to recognize audio files to create a transcript.

Apr 23, 2021. Watson Speech to Text has released ten languages on our next-generation engine. This release is the beginning of a major architectural shift for Watson Speech to Text. We have ...

Sep 15, 2022. Tutorials on how to use Watson Speech to Text and Text to Speech. IBM Watson Speech-to-Text enables fast and accurate speech transcription in multiple languages for a variety of use ...


Learn how to use Watson Speech to Text Service to transcribe speech data and extract insights from it with the watson_nlp library. Follow the steps to set up the service, preprocess the data, and apply various functions such as background noise suppression, speech audio parsing, and speaker labels.

Tune-By-Example: How To Tune Watson Text-to-Speech For Better Intonations. Co-authored by Rachel Liddell and Marco Noel, IBM Watson Speech Offering Managers. Apr 21, 2021.


From all the various functionalities that IBM Watson speech-to-text offers, real-time processing stands out, as it is really beneficial for apps that require immediate text output, like a live stream or customer service. Apart from this, it is really easy for users to integrate it into various applications through APIs ...

The IBM Watson™ Speech to Text service provides APIs that use IBM's speech-recognition capabilities to produce transcripts of spoken audio. The service can transcribe speech from various languages and audio formats. In addition to basic transcription, the service can produce detailed information about many different aspects of the audio. It returns all JSON response content in the UTF-8 ...

Want to skip out on copying down lecture notes? Maybe you want a live transcript from a meeting? To do that, you can use live speech to text transcription. In ...


Search for Text to Speech, and click the service. You should see the following page where you can create a new service. Select the same location as your watsonx Assistant instance, and create a lite plan for the Text to Speech service. Click Create. Your Text to Speech service should now be created, and you see it in your resources page.

Watson Text to Speech supports a wide variety of voices in all supported languages and dialects. Customize for your brand and use case. Adapt and customize Watson Text to Speech voices for the vocabulary of your business and the tone of your brand.

The audio is streamed to Speech to Text using a WebSocket. The transcribed text from Speech to Text is displayed and updated. The transcribed text is sent to Language Translator and the translated text is displayed and updated. Completed phrases are sent to Text to Speech and the result audio is automatically played.

The Watson Speech to Text service is among the best in the industry. However, like other Cloud speech services, it was trained with general conversational speech for general use. Therefore, it might not perform well in specialized domains such as medicine, law, or sports. To improve the accuracy of the speech-to-text service, you can use ...
