Getting Feedback

What this handout is about

Sometimes you’d like feedback from someone else about your writing, but you may not be sure how to get it. This handout describes when, where, how and from whom you might receive effective responses as you develop as a writer.

Why get feedback on your writing?

You’ll become a better writer, and writing will become a less painful process. When might you need feedback? You might be just beginning a paper and want to talk to someone else about your ideas. You might be midway through a draft and find that you are unsure about the direction you’ve decided to take. You might wonder why you received a lower grade than you expected on a paper, or you might not understand the comments that a TA or professor has written in the margins. Essentially, asking for feedback at any stage helps you break out of the isolation of writing. When you ask for feedback, you are no longer working in a void, wondering whether or not you understand the assignment and/or are making yourself understood. By seeking feedback from others, you are taking positive, constructive steps to improve your own writing and develop as a writer.

Why people don’t ask for feedback

- You worry that the feedback will be negative. Many people avoid asking others what they think about a piece of writing because they have a sneaking suspicion that the news will not be good. If you want to improve your writing, however, constructive criticism from others will help. Remember that the criticism you receive is only criticism of the writing and not of the writer.

- You don’t know whom to ask. The person who can offer the most effective feedback on your writing may vary depending on when you need the feedback and what kind of feedback you need. Keep in mind, though, that if you are really concerned about a piece of writing, almost any thoughtful reader (e.g., your roommate, mother, R.A., brother, etc.) can provide useful feedback that will help you improve your writing. Don’t wait for the expert; share your writing often and with a variety of readers.

- You don’t know how to ask. It can be awkward to ask for feedback, even if you know whom you want to ask. Asking someone, “Could you take a look at my paper?” or “Could you tell me if this is OK?” can sometimes elicit wonderfully rich responses. Usually, though, you need to be specific about where you are in the writing process and the kind of feedback that would help. You might say, “I’m really struggling with the organization of this paper. Could you read these paragraphs and see if the ideas seem to be in the right order?”

- You don’t want to take up your teacher’s time. You may be hesitant to go to your professor or TA to talk about your writing because you don’t want to bother them. The office hours that these busy people set aside, though, are reserved for your benefit, because the teachers on this campus want to communicate with students about their ideas and their work. Faculty can be especially generous and helpful with their advice when you drop by their office with specific questions and know the kinds of help you need. If you can’t meet during the instructor’s office hours, try making a special appointment. If you find that you aren’t able to schedule a time to talk with your instructor, remember that there are plenty of other people around you who can offer feedback.

- You’ve gotten feedback in the past that was unhelpful. If earlier experiences haven’t proved satisfactory, try again. Ask a different person, or ask for feedback in a new way. Experiment with asking for feedback at different stages in the writing process: when you are just beginning an assignment, when you have a draft, or when you think you are finished. Figure out when you benefit from feedback the most, the kinds of people you get the best feedback from, the kinds of feedback you need, and the ways to ask for that feedback effectively.

- You’re working remotely and aren’t sure how to solicit help. Help can feel “out of sight, out of mind” when working remotely, so it may take extra effort and research to reach out. Explore what resources are available to you and how you can access them. What type of remote feedback will benefit you most? Video conferencing, email correspondence, phone conversation, written feedback, or something else? Would it help to email your professor or TA? Are you looking for the back and forth of a real-time conversation, or would it be more helpful to have written feedback to refer to as you work? Can you schedule an appointment with the Writing Center or submit a draft for written feedback? Could joining or forming an online writing group help provide a source of feedback?

Possible writing moments for feedback

There is no “best time” to get feedback on a piece of writing. In fact, it is often helpful to ask for feedback at several different stages of a writing project. Listed below are some parts of the writing process and some kinds of feedback you might need in each. Keep in mind, though, that every writer is different—you might think about these issues at other stages of the writing process, and that’s fine.

- The beginning/idea stage: Do I understand the assignment? Am I gathering the right kinds of information to answer this question? Are my strategies for approaching this assignment effective ones? How can I discover the best way to develop my early ideas into a feasible draft?

- Outline/thesis: I have an idea about what I want to argue, but I’m not sure if it is an appropriate or complete response to this assignment. Is the way I’m planning to organize my ideas working? Does it look like I’m covering all the bases? Do I have a clear main point? Do I know what I want to say to the reader?

- Rough draft: Does my paper make sense, and is it interesting? Have I proven my thesis statement? Is the evidence I’m using convincing? Is it explained clearly? Have I given the reader enough information? Does the information seem to be in the right order? What can I say in my introduction and conclusion?

- Early polished draft: Are the transitions between my ideas smooth and effective? Do my sentences make sense individually? How’s my writing style?

- Late or final polished draft: Are there any noticeable spelling or grammar errors? Are my margins, footnotes, and formatting okay? Does the paper seem effective? Is there anything I should change at the last minute?

- After the fact: How should I interpret the comments on my paper? Why did I receive the grade I did? What else might I have done to strengthen this paper? What can I learn as a writer about this writing experience? What should I do the next time I have to write a paper?

A note on asking for feedback after a paper has been graded

Many people go to see their TA or professor after they receive a paper back with comments and a grade attached. If you seek feedback after your paper is returned to you, it makes sense to wait 24 hours before scheduling a meeting to talk about it. If you are angry or upset about a grade, the day off gives you time to calm down and put things in perspective. More important, taking a day off allows you to read through the instructor’s comments and think about why you received the grade that you did. You might underline or circle comments that were confusing to you so that you can ask about them later. You will also have an opportunity to reread your own writing and evaluate it more critically yourself. After all, you probably haven’t seen this piece of work since you handed it in a week or more ago, and refreshing your memory about its merits and weaknesses might help you make more sense of the grade and the instructor’s comments.

Also, be prepared to separate the discussion of your grade from the discussion of your development as a writer. It is difficult to have a productive meeting that achieves both of these goals. You may have very good reasons for meeting with an instructor to argue for a better grade, and having that kind of discussion is completely legitimate. Be very clear with your instructor about your goals. Are you meeting to contest the grade your paper received and explain why you think the paper deserved a higher one? Are you meeting because you don’t understand why your paper received the grade it did and would like clarification? Or are you meeting because you want to use this paper and the instructor’s comments to learn more about how to write in this particular discipline and do better on future written work? Being up front about these distinctions can help you and your instructor know what to expect from the conference and avoid any confusion between the issue of grading and the issue of feedback.

Kinds of feedback to ask for

Asking for a specific kind of feedback can be the best way to get advice that you can use. Think about what kinds of topics you want to discuss and what kinds of questions you want to ask:

- Understanding the assignment: Do I understand the task? How long should it be? What kinds of sources should I be using? Do I have to answer all of the questions on the assignment sheet or are they just prompts to get me thinking? Are some parts of the assignment more important than other parts?

- Factual content: Is my understanding of the course material accurate? Where else could I look for more information?

- Interpretation/analysis: Do I have a point? Does my argument make sense? Is it logical and consistent? Is it supported by sufficient evidence?

- Organization: Are my ideas in a useful order? Does the reader need to know anything else up front? Is there another way to consider ordering this information?

- Flow: Do I have good transitions? Does the introduction prepare the reader for what comes later? Do my topic sentences accurately reflect the content of my paragraphs? Can the reader follow me?

- Style: Comments on earlier papers can help you identify writing style issues that you might want to look out for. Is my writing style appealing? Do I use the passive voice too often? Are there too many “to be” verbs?

- Grammar: Just as with style, comments on earlier papers will help you identify grammatical “trouble spots.” Am I using commas correctly? Do I have problems with subject-verb agreement?

- Small errors: Is everything spelled right? Are there any typos?

Possible sources of feedback and what they’re good for

Believe it or not, you can learn to be your own best reader, particularly if you practice reading your work critically. First, think about writing problems that you know you have had in the past. Look over old papers for clues. Then, give yourself some critical distance from your writing by setting it aside for a few hours, overnight, or even for a couple of days. Come back to it with a fresh eye, and you will be better able to offer yourself feedback. Finally, be conscious of what you are reading for. You may find that you have to read your draft several times—perhaps once for content, once for organization and transitions, and once for style and grammar. If you need feedback on a specific issue, such as passive voice, you may need to read through the draft one time alone focusing on that issue. Whatever you do, don’t count yourself out as a source of feedback. Remember that ultimately you care the most and will be held responsible for what appears on the page. It’s your paper.

A classmate (a familiar and knowledgeable reader)

When you need feedback from another person, a classmate can be an excellent source. A classmate knows the course material and can help you make sure you understand the course content. A classmate is probably also familiar with the sources that are available for the class and the specific assignment. Moreover, you and your classmates can get together and talk about the kinds of feedback you both received on earlier work for the class, building your knowledge base about what the instructor is looking for in writing assignments.

Your TA (an expert reader)

Your TA is an expert reader: they are working on an advanced degree, either a Master’s or a Ph.D., in the subject area of your paper. Your TA is also either the primary teacher of the course or a member of the teaching team, so they probably had a hand in selecting the source materials, writing the assignment, and setting up the grading scheme. No one knows what the TA is looking for on the paper better than the TA, and most of the TAs on campus would be happy to talk with you about your paper.

Your professor (a very expert reader)

Your professor is the most expert reader you can find. They have a Ph.D. in the subject area that you are studying, and probably also wrote the assignment, either alone or with help from TAs. Like your TA, your professor can be the best source for information about what the instructor is looking for on the paper and may be your best guide in developing into a strong academic writer.

Your roommate/friend/family member (an interested but not familiar reader)

It can be very helpful to get feedback from someone who doesn’t know anything about your paper topic. These readers, because they are unfamiliar with the subject matter, often ask questions that help you realize what you need to explain further or that push you to think about the topic in new ways. They can also offer helpful general writing advice, letting you know if your paper is clear or your argument seems well organized, for example. Ask them to read your paper and then summarize for you what they think its main points are.

The Writing Center (an interested but not familiar reader with special training)

While the Writing Center staff may not have specialized knowledge about your paper topic, our writing coaches are trained to assist you with your writing needs. We cannot edit or proofread for you, but we can help you identify problems and address them at any stage of the writing process. The Writing Center’s coaches see thousands of students each year and are familiar with all kinds of writing assignments and writing dilemmas.

Other kinds of resources

If you want feedback on a writing assignment and can’t find a real live person to read it for you, there are other places to turn. Check out the Writing Center’s handouts. These resources can give you tips for proofreading your own work, making an argument, using commas and transitions, and more. You can also try the spell/grammar checker on your computer. This shouldn’t be your primary source of feedback, but it may be helpful.

A word about feedback and plagiarism

Asking for help on your writing does not equal plagiarism, but talking with classmates about your work may feel like cheating. Check with your professor or TA about what kinds of help you can get legally. Most will encourage you to discuss your ideas about the reading and lectures with your classmates. In general, if someone offers a particularly helpful insight, it makes sense to cite them in a footnote. The best way to avoid plagiarism is to write by yourself with your books closed. (For more on this topic, see our handout on plagiarism.)

What to do with the feedback you get

- Don’t be intimidated if your professor or TA has written a lot on your paper. Sometimes instructors will provide more feedback on papers that they believe have a lot of potential. They may have written a lot because your ideas are interesting to them and they want to see you develop them to their fullest by improving your writing.

- By the same token, don’t feel that your paper is garbage if the instructor DIDN’T write much on it. Some graders just write more than others do, and sometimes your instructors are too busy to spend a great deal of time writing comments on each individual paper.

- If you receive feedback before the paper is due, think about what you can and can’t do before the deadline. You sometimes have to triage your revisions. By all means, if you think you have major changes to make and you have time to make them, go for it. But if you have two other papers to write and all three are due tomorrow, you may have to decide that your thesis or your organization is the biggest issue and just focus on that. The paper might not be perfect, but you can learn from the experience for the next assignment.

- Read ALL of the feedback that you get. Many people, when receiving a paper back from their TA or professor, will just look at the grade and not read the comments written in the margins or at the end of the paper. Even if you received a satisfactory grade, it makes sense to carefully read all of the feedback you get. Doing so may help you see patterns of error in your writing that you need to address and may help you improve your writing for future papers and for other classes.

- If you don’t understand the feedback you receive, by all means ask the person who offered it. Feedback that you don’t understand is feedback that you cannot benefit from, so ask for clarification when you need it. Remember that the person who gave you the feedback did so because they genuinely wanted to convey information to you that would help you become a better writer. They wouldn’t want you to be confused and will be happy to explain their comments further if you ask.

- Ultimately, the paper you will turn in will be your own. You have the final responsibility for its form and content. Take the responsibility for being the final judge of what should and should not be done with your essay.

- Just because someone says to change something about your paper doesn’t mean you should. Sometimes the person offering feedback can misunderstand your assignment or make a suggestion that doesn’t seem to make sense. Don’t follow those suggestions blindly. Talk about them, think about other options, and decide for yourself whether the advice you received was useful.

Final thoughts

Finally, we would encourage you to think about feedback on your writing as a way to help you develop better writing strategies. This is the philosophy of the Writing Center. Don’t look at individual bits of feedback such as “This paper was badly organized” as evidence that you always organize ideas poorly. Think instead about the long haul. What writing process led you to a disorganized paper? What kinds of papers do you have organization problems with? What kinds of organization problems are they? What kinds of feedback have you received about organization in the past? What can you do to resolve these issues, not just for one paper, but for all of your papers? The Writing Center can help you with this process. Strategy-oriented thinking will help you go from being a writer who writes disorganized papers and then struggles to fix each one to being a writer who no longer writes disorganized papers. In the end, that’s a much more positive and permanent solution.

You may reproduce this handout for non-commercial use if you use the entire handout and attribute the source: The Writing Center, University of North Carolina at Chapel Hill

10 Types of Essay Feedback and How to Respond to Them

The moment of truth has arrived: you’ve got your marked essay back and you’re eagerly scanning through it, taking in the amount of red pen, and looking at the grade and hastily scrawled feedback at the end.

After deciphering the handwriting, you’re able to see a brief assessment of how you’ve performed in this essay, and your heart either leaps or sinks. Ideally, you’d receive detailed feedback telling you exactly where you fell short and providing helpful guidance on how to improve next time. However, the person marking your essay probably doesn’t have time for that, so instead leaves you very brief remarks that you then have to decode in order to understand how you can do better. In this article, we look at some of the common sorts of remarks you might receive in essay feedback, what they mean, and how to respond to them or take them on board so that you can write a better essay next time – no matter how good this one was!

1. “Too heavily reliant on critics”

We all fall into the trap of regurgitating whatever scholarship we happen to have read in the run-up to writing the essay, and it’s a problem that reveals that many students have no idea what their own opinion is. We’re so busy paraphrasing what scholars have said that we forget to think about whether we actually agree with them. This is an issue we discussed in a recent article on developing your own opinion, in which we talked about how to approach scholarship with an open and critical mind, form your own views and give your own opinion in your essays. If you’ve received this kind of feedback, the person marking your essay has probably noticed that you’ve followed exactly the same line of thinking as one or more of the books on your reading list, without offering any kind of original comment. Take a look at that article and you’ll soon be developing your own responses.

2. “Too short”

If your essay falls significantly short of the prescribed word count, this could suggest that you haven’t put in enough work. Most essays will require extensive reading before you can do a topic justice, and if you’ve struggled to fill the word count, it’s almost certainly because you haven’t done enough reading, and you’ve therefore missed out a significant line of enquiry. This is perhaps a sign that you’ve left it too late to write your essay, resulting in a rushed and incomplete essay (even if you consider it finished, it’s not complete if it hasn’t touched on topics of major relevance). This problem can be alleviated by effective time management, allowing plenty of time for the research phase of your essay and then enough time to write a detailed essay that touches on all the important arguments. If you’re struggling to think of things to say in your essay, try reading something on the topic that you haven’t read before. This will offer you a fresh perspective to talk about, and possibly help you to understand the topic clearly enough to start making more of your own comments about it.

3. “Too long”

“The present letter is a very long one, simply because I had no leisure to make it shorter” – Blaise Pascal

It sounds counter-intuitive, but it’s actually much easier to write an essay that’s too long than one that’s too short. This is because we’re all prone to waffling when we’re not entirely sure what we want to say, and/or because we want to show the person marking our essay that we’ve read extensively, even when some of the material we’ve read isn’t strictly relevant to the essay question we’ve been set. But the word count is there for a reason: it forces you to be clear and concise, leaving out what isn’t relevant. A short (say, 500-word) essay is actually a challenging academic exercise, so if you see fit to write twice the number of words, the person marking the essay is unlikely to be impressed. Going fifty to a hundred words over the limit probably won’t be much of an issue, and may well go unnoticed, if that’s less than 10% of the word count; but if you’ve ended up with something significantly over this, it’s time to start trimming. Re-read what you’ve written and scrutinise every single line. Does it add anything to your argument? Are you saying in ten words what could be said in three? Is there a whole paragraph that doesn’t really contribute to developing your argument? If so, get rid of it. This kind of ruthless editing and rephrasing can quickly bring your word count down, and it results in a much tighter and more carefully worded essay.

4. “Contradicts itself”

Undermining your own argument is an embarrassing mistake to make, but you can do it without realising when you’ve spent so long tweaking your essay that you can no longer see the wood for the trees. Contradicting yourself in an essay is also a sign that you haven’t completely understood the issues and haven’t formed a clear opinion on what the evidence shows. To avoid this error, have a detailed read through your essay before you submit it and look in particular detail at the statements you make. Looking at them in essence and in isolation, do any of them contradict each other? If so, decide which you think is more convincing and make your argument accordingly.

5. “Too many quotations”

It’s all too easy to hide behind the words of others when one is unsure of something, or lacking a complete understanding of a topic. This insecurity leads us to quote extensively from either original sources or scholars, including long chunks of quoted text as a nifty way of upping the word count without having to reveal our own ignorance (too much). But you won’t fool the person marking your essay by doing this: they’ll see immediately that you’re relying too heavily on the words of others, without enough intelligent supporting commentary, and it’s particularly revealing when most of the quotations are from the same source (which shows that you haven’t read widely enough). It’s good to include some quotations from a range of different sources, as it adds colour to your essay, shows that you’ve read widely and demonstrates that you’re thinking about different kinds of evidence. However, if you’ve received this kind of feedback, you can improve your next essay by not quoting more than a sentence at a time, making the majority of the text of your essay your own words, and including plenty of your own interpretation and responses to what you’ve quoted. Another word of advice regarding quotations: one of my tutors once told me that you should never end an essay on a quotation. You may think that this is a clever way of bringing your essay to a conclusion, but actually you’re giving the last word to someone else when it’s your essay, and you should make the final intelligent closing remark. Quoting someone else at the end is a cop-out that some students use to get out of the tricky task of writing a strong final sentence, so however difficult the alternative may seem, don’t do it!

6. “Not enough evidence”

In an essay, every point you make must be backed up with supporting evidence – it’s one of the fundamental tenets of academia. You can’t make a claim unless you can show what has led you to it, whether that’s a passage in an original historical source, the result of some scientific research, or any other form of information that would lend credibility to your statement. A related problem is that some students will quote a scholar’s opinion as though it were concrete evidence of something; in fact, that is just one person’s opinion, and that opinion has been influenced by the scholar’s own biases. The evidence they based the opinion on might be tenuous, so it’s that evidence you should be looking at, not the opinion of the scholar themselves. As you write your essay, make a point of checking that everything you’ve said is adequately supported.

7. “All over the place” / “Confused”

An essay described as “all over the place” – or words to that effect – reveals that the student who wrote it hasn’t developed a clear line of argument, and that they are going off at tangents and using an incoherent structure in which one point doesn’t seem to bear any relation to the previous one. A tight structure is vital in essay-writing, as it holds the reader’s interest and helps build your argument to a logical conclusion. You can avoid your essay seeming confused by writing an essay plan before you start. This will help you get the structure right and be clear about what you want to say before you start writing.

8. “Misses the point”

This feedback can feel particularly damning if you’ve spent a long time writing what you thought was a carefully constructed essay. A simple reason might be that you didn’t read the question carefully enough. But it’s also a problem that arises when students spend too long looking at less relevant sources and not enough at the most important ones, because they ran out of time, or because they didn’t approach their reading lists in the right order, or because they failed to identify which sources were actually the most important. This leads to students focusing on the wrong thing, or perhaps getting lost in the details. The tutor marking the essay, who has a well-rounded view of the topic, will be baffled if you’ve devoted much of your essay to discussing something you thought was important, but which they know to be a minor detail when compared with the underlying point. If you’re not sure which items on your reading list to tackle first, next time you could ask your tutor which of the material they recommend you focus on first. It can also be helpful to prompt yourself from time to time with the question “What is the point?”, as this will remind you to take a step back and figure out what the core issues are.

9. “Poor presentation”

This kind of remark is likely to refer to issues with the formatting of your essay, spelling and punctuation, or general style. Impeccable spelling and grammar are a must, so proofread your essay before you submit it and check that there are no careless typos (computer spell checks don’t always pick these up). In terms of your writing style, you might get a comment like this if the essay marker found your writing either boring or in a style inappropriate to the context of a formal essay. Finally, looks matter: use a sensible, easy-to-read font, print with good-quality ink and paper if you’re printing, and write neatly and legibly if you’re handwriting. Your essay should be as easy to read as possible for the person marking it, as this lessens their workload and makes them feel more positively towards your work.

10. “Very good”

On the face of it, this is the sort of essay feedback every student wants to hear. But when you think about it, it’s not actually very helpful – particularly when it’s accompanied by a mark that wasn’t as high as you were aiming for. With these two words, you have no idea why you didn’t achieve top marks. In the face of such (frankly lazy) marking from your teacher or lecturer, the best response is to be pleased that you’ve received a positive comment, but to go to the person who marked it and ask for more comments on what you could have done to get a higher mark. They shouldn’t be annoyed at your asking, because you’re simply striving to do better every time.

General remarks on responding to essay feedback

We end with a few general pieces of advice on how to respond to essay feedback.

- Don’t take criticism personally.

- Remember that feedback is there to help you improve.

- Don’t be afraid to ask for more feedback if what they’ve said isn’t clear.

- Don’t rest on your laurels – if you’ve had glowing feedback, it’s still worth asking if there’s anything you could have done to make the essay even better.

It can be difficult to have one’s hard work (metaphorically) ripped apart or disparaged, but feedback is ultimately there to help you get higher grades, get into better universities, and put you on a successful career path; so keep that end goal in mind when you get your essay back.

Reflection and Reflective Writing - Skills Guide

Reflecting on feedback

What is reflecting on feedback?

Feedback is designed to help you identify your own strengths and weaknesses in a piece of work. It can help you improve your work by building on the positive comments and using the critical ones to inform changes in your future writing. Feedback therefore plays a critical role in your learning and helps you to improve each piece of work. As with all reflection, reflecting on your feedback should follow the three stages of reflection outlined earlier in this guide.

What should I do with feedback?

Try to identify the main points of the feedback. What does it say? Can you break it down into main points or areas of improvement? Writing these down can be good to refer to later. You may find keeping all of your feedback in one place helps, as it makes it easier to look back and identify common mistakes. Identifying the main points of the feedback is the descriptive stage of reflection.

Once you have done this, move on to the critical thinking stage. How do you feel about the feedback? What are you particularly proud of? Is there anything you are disappointed by? Are there any points where you need further clarification from your lecturer?

Finally, there is the future-focused stage of reflection. How will this feedback influence how you complete your next assignment? What will you do the same? What will you do differently? You may find it helpful to put together an action plan ready for when you begin your next module.

VP Education's Feedback Guidance

Feedback guidance.

Reflecting on Feedback Video - 2 mins

Naomi discusses top tips for reflecting on feedback from your assignments.

Methods of Reflecting on Your Assignment

- Last Updated: Aug 23, 2023 3:51 PM

- URL: https://libguides.derby.ac.uk/reflectivewriting

Original research article

Feedback that leads to improvement in student essays: testing the hypothesis that “where to next” feedback is most powerful

- 1 Graduate School of Education, University of Melbourne, Melbourne, VIC, Australia

- 2 Hattie Family Foundation, Melbourne, VIC, Australia

- 3 Turnitin, LLC, Oakland, CA, United States

Feedback is powerful but variable. This study investigates which forms of feedback are more predictive of improvement to students’ essays, using Turnitin Feedback Studio, a computer-augmented system that captures teacher- and computer-generated feedback comments. The study used a sample of 3,204 high school and university students who submitted their essays, received feedback comments, and then resubmitted them for final grading. The major finding was the importance of “where to next” feedback, which led to the greatest gains from the first to the final submission. There is support for the worthwhileness of computer-moderated feedback systems that include both teacher- and computer-generated feedback.

Introduction

One of the more powerful influences on achievement, prosocial development, and personal interactions is feedback, but it is also remarkably variable. Kluger and DeNisi (1996) completed an influential meta-analysis of 131 studies and found an overall effect of 0.41 of feedback on performance, with close to 40% of effects being negative. Since their paper there have been at least 23 meta-analyses on the effects of feedback, and recently Wisniewski et al. (2020) located 553 studies from these meta-analyses (N = 59,287) and found an overall effect of 0.53. They found that feedback is more effective for cognitive and physical outcome measures than for motivational and behavioral outcomes. Feedback is also more effective the more information it contains. Praise, for example, not only includes little information about the task but can also dilute the feedback, as receivers tend to recall the praise more than the content. This study investigates which forms of feedback are more predictive of improvement to students’ essays, using Turnitin Feedback Studio, a computer-augmented system that captures teacher- and computer-generated feedback comments.

Hattie and Timperley (2007) defined feedback as relating to actions or information provided by an agent (e.g., teacher, peer, book, parent, internet, experience) that provides information regarding aspects of one’s performance or understanding. This concept of feedback relates to its power to “fill the gap between what is understood and what is aimed to be understood” ( Sadler, 1989 ). Feedback can lead to increased effort, motivation, or engagement to reduce the discrepancy between the current status and the goal; it can lead to alternative strategies to understand the material; it can confirm for the student that they are correct or incorrect, or how far they have reached the goal; it can indicate that more information is available or needed; it can point to directions that the students could pursue; and, finally, it can lead to restructuring understandings.

To begin to unravel the moderator effects that lead to the marked variability of feedback, Hattie and Timperley (2007) argued that feedback can have different perspectives: "feed-up" (comparison of the actual status with a target status), "feed-back" (comparison of the actual status with a previous status), and "feed-forward" (explanation of the target status based on the actual status). They claimed that these relate to the three feedback questions: Where am I going? How am I going? and Where to next? Additionally, feedback can be differentiated according to its level of cognitive complexity: it can refer to a task, a process, one’s self-regulation, or one’s self. Task-level feedback means that someone receives feedback about the content, facts, or surface information (How well have the tasks been completed and understood?). Feedback at the level of process means that a person receives feedback on the processes or strategies underlying his or her performance (What needs to be done to understand and master the tasks?). Feedback at the level of self-regulation means that someone receives feedback about how they regulate the strategies they are using in their performance (What can be done to manage, guide, and monitor your own way of action?). The self level focuses on the personal characteristics of the feedback recipient (often praise about the person). One argument about the variability is that feedback needs to address the appropriate question at the optimal level of cognitive complexity. If not, the message can easily be ignored or misunderstood, and be of low value to the recipient.

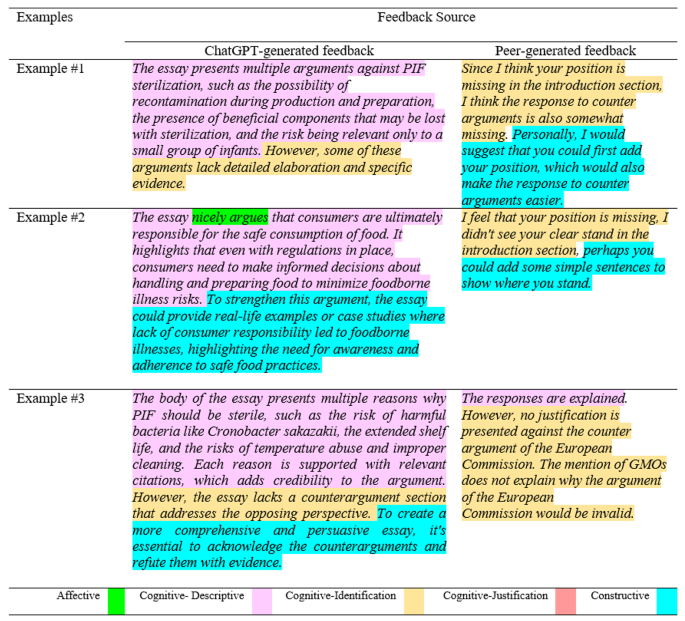

Another important distinction is between the giving and receiving of feedback. Students are more often the receivers, and this is increasingly a focus of research. Students indicate a preference for feedback that is specific, useful, and timely (Pajares and Graham, 1998; Gamlem and Smith, 2013) and relative to the criteria or standards they are assessed against (Brown, 2009; Beaumont et al., 2011), and they do not mind what form it takes provided they see it as informative for improving their learning. Dawson et al. (2019) asked teachers and students what leads to the most effective feedback. The majority of teachers argued that it was the design of the task that led to better feedback, whereas students argued that it was the quality of the feedback provided to them in teacher comments that led to improvements in performance.

Brooks et al. (2019) investigated the prevalence of feedback relative to these three questions in upper elementary classrooms. They recorded and transcribed 12 h of classroom audio involving 1,125 grade five students from 13 primary schools in Queensland. The researchers designed a questionnaire to measure the usefulness of feedback aligned with the three feedback questions (“Where am I going?” “How am I going?” “Where to next?”) along with three of the four feedback levels (task, process, and self-regulation). Results indicated that of the three feedback questions, “How am I going?” (feed-back) was by far the most prominent, accounting for 50% of total feedback words. This was followed by “Where am I going?” (feed-up) (31%) and “Where to next?” (feed-forward) (19%). When considering the focus of verbal feedback, 79% of the feedback was at the task level, 16% at the process level, and <1% at the self level. The findings of such studies are significant in relation to the gap between literature and practice, and indicate that we need to know more about how effective feedback interventions are enacted in the classroom.
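Reporting feedback as shares of total feedback words means every comment is first coded by question and level, and its words are then tallied per category. The Python sketch below illustrates that kind of tally, assuming the comments have already been hand-coded; the `Question`/`Level` enums, the `word_shares` helper, and the two sample comments are hypothetical illustrations, not material from the study.

```python
from collections import Counter
from enum import Enum


class Question(Enum):
    """Hattie and Timperley's three feedback questions."""
    WHERE_AM_I_GOING = "feed-up"
    HOW_AM_I_GOING = "feed-back"
    WHERE_TO_NEXT = "feed-forward"


class Level(Enum):
    """The four levels of cognitive complexity."""
    TASK = "task"
    PROCESS = "process"
    SELF_REGULATION = "self-regulation"
    SELF = "self"


def word_shares(coded_comments):
    """Return each question's and level's share (%) of all feedback words.

    coded_comments: iterable of (text, Question, Level) tuples that a
    human coder has already labelled (a hypothetical input format).
    """
    by_question, by_level = Counter(), Counter()
    total = 0
    for text, question, level in coded_comments:
        n_words = len(text.split())
        by_question[question] += n_words
        by_level[level] += n_words
        total += n_words
    if total == 0:
        return {}, {}
    question_share = {q: 100 * n / total for q, n in by_question.items()}
    level_share = {lvl: 100 * n / total for lvl, n in by_level.items()}
    return question_share, level_share


comments = [
    ("Your thesis is clear", Question.HOW_AM_I_GOING, Level.TASK),
    ("Next, add evidence for each claim", Question.WHERE_TO_NEXT, Level.PROCESS),
]
question_share, level_share = word_shares(comments)
print(question_share[Question.WHERE_TO_NEXT])  # 60.0
```

Counting words rather than comments weights long comments more heavily, which matches the "share of total feedback words" framing used above.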

Mandouit (2020) developed a series of feedback questions from an intensive study of student conceptions of feedback. He found that students sought feedback on how to “elaborate on ideas” and “how to improve.” They wanted feedback that would not only help them “next time” they complete a similar task, but would also help them develop the ability to think critically and self-regulate moving forward. Students considered these transferable skills and understandings important, but, as the study identified, they challenged teachers in practice, and such feedback was rarely offered. His student feedback model included four questions: Where have I done well? Where can I improve? How do I improve? What do I do next time?

One often-suggested method of improving the nature of feedback is to administer it via computer-based systems. Earlier syntheses of this literature tended to focus on the task or item-specific level, investigating the differences between knowledge of results (KR), knowledge of correct response (KCR), and elaborated feedback (EF). Van der Kleij, Feskens, and Eggen (2015), for example, used 70 effects from 40 studies of item-based feedback in a computer-based environment on students’ learning outcomes. They showed that elaborated feedback (e.g., providing an explanation) produced larger effect-sizes (EF = 0.49) than feedback regarding the correctness of the answer (KR = 0.05) or providing the correct answer (KCR = 0.32). Azevedo and Bernard (1995) used 22 studies on the effects of feedback on learning from computer-based instruction, with an overall effect of 0.80. Immediate feedback had an effect of 0.80 and delayed feedback 0.35, but they did not relate their findings to specific feedback characteristics. Jaehnig and Miller (2007) used 33 studies and found that elaborated feedback was more effective than KCR, and KCR was more effective than KR. The major message is that computer-delivered elaborated feedback has the largest effects.
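The three item-level feedback types compared in these syntheses differ only in how much information they add to a verdict. The toy Python function below makes that difference concrete for a single arithmetic item; the function name, its arguments, and the example item are hypothetical, and the code is an illustration rather than anything taken from the cited studies.

```python
def item_feedback(kind, answer, correct, explanation):
    """Return feedback on one answer in the KR, KCR, or EF style."""
    is_right = answer == correct
    verdict = "Correct." if is_right else "Incorrect."
    if kind == "KR":
        # Knowledge of results: right or wrong, nothing more.
        return verdict
    if kind == "KCR":
        # Knowledge of correct response: also reveal the right answer.
        return verdict if is_right else f"{verdict} The correct answer is {correct}."
    if kind == "EF":
        # Elaborated feedback: add an explanation of why.
        return f"{item_feedback('KCR', answer, correct, explanation)} {explanation}"
    raise ValueError(f"unknown feedback kind: {kind!r}")


why = "Start at 3 and count on 5 to reach 8."
for kind in ("KR", "KCR", "EF"):
    print(kind, "->", item_feedback(kind, "9", "8", why))
```

Each type strictly contains the information of the one before it, which is one way to read the ordering EF > KCR > KR reported above.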

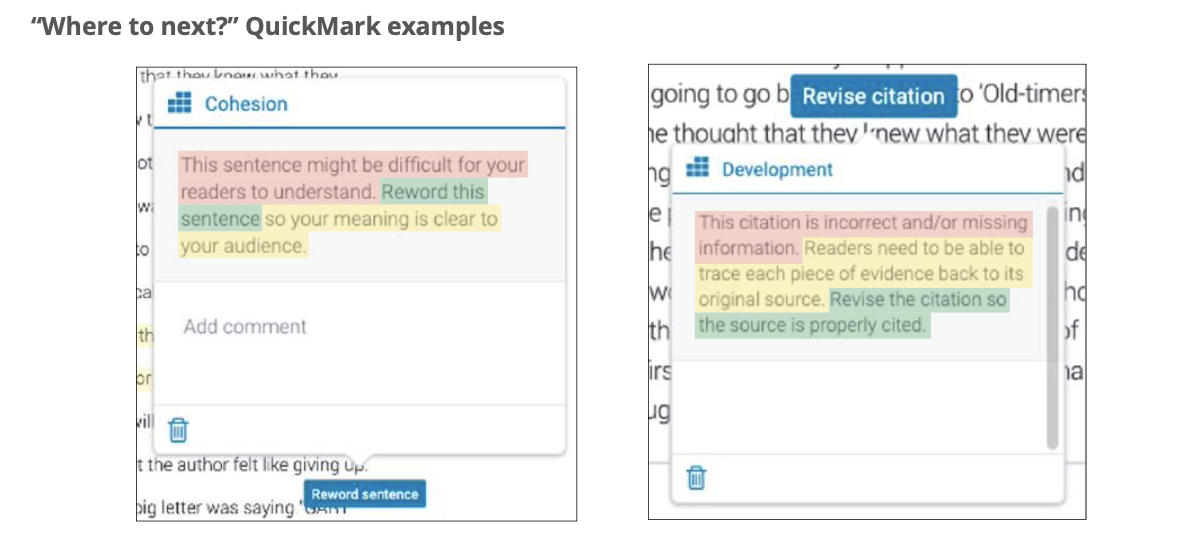

The Turnitin Feedback Studio Model: Background and Existing Research

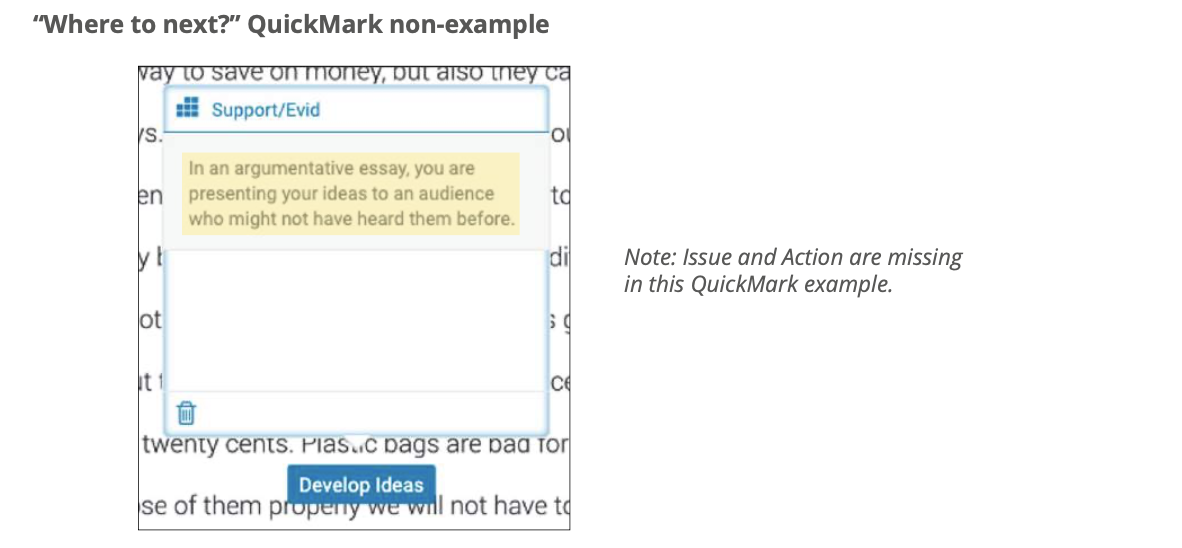

Turnitin Feedback Studio, one such computer-based system, is best known for its similarity checking, powered by a comprehensive database of academic, internet, and student content. Beyond that capability, however, Feedback Studio also offers functionality to support both effective and efficient options for grading and, most relevant to this study, providing feedback. Inside the system, the Feedback Studio model allows for multiple streams of feedback, depending on how instructors opt to use the system, with both automated and teacher-generated options. The primary automated option is grammar feedback, which automatically detects issues and provides guidance through an integration with the e-rater® engine from ETS (https://www.ets.org/erater). Even this option allows for customization and additional guidance, as instructors are able to add elaborative comments to the automated feedback. Outside of the grammar feedback, the remaining capabilities are manual, in that instructors identify the instances requiring feedback and supply the specific feedback content. Within this structure, there are still multiple avenues for providing feedback, including inline comments, summary text or voice comments, and Turnitin’s trademarked QuickMarks®. In each case, instructors determine what student content requires commenting and then develop the substance of the feedback.

As a vehicle for providing feedback on student writing, Turnitin Feedback Studio offers an environment in which the impact of feedback can be leveraged. Student perceptions about the kinds of feedback that most impact their learning align with findings from scholarly research (Kluger and DeNisi, 1996; Wisniewski et al., 2020). Periodically, Turnitin surveys students to gauge different aspects of the product. In studies conducted by Turnitin, student perceptions of feedback over time fall into similar patterns as in outside research. For example, a 2013 survey about students’ perceptions of the value, type, and timing of instructor feedback found that 67% of students said they received general, overall comments, but only 46% of those students rated the general comments as “very helpful.” Respondents from the same study rated feedback on thesis/development as the most valuable, but reported receiving more feedback on grammar/mechanics and composition/structure (Turnitin, 2013). Turnitin (2013) suggests that the disconnect between how often students receive general, overall comments and how much they value them provides further support that students value more specific feedback, such as comments on thesis/development.

Later, an exploratory survey examining over 2,000 students’ perceptions of instructor feedback asked students to rank the effectiveness of types of feedback. The survey found that the greatest percentage of students (76%) rated suggestions for improvement as “very” or “extremely effective.” Students also rated specific notes written in the margins (73%), use of examples (69%), and pointing out mistakes (68%) as highly effective (Turnitin, 2014). Turnitin (2014) proposes, “The fact that the largest number of students consider suggestions for improvement to be “very” or “extremely effective” lends additional support to this assertion and also strongly suggests that students are looking at the feedback they receive as an extension of course or classroom instruction.”

Turnitin found similar results in a subsequent survey that asked students about the helpfulness of types of feedback. Students most strongly reported suggestions for improvement (83%) as helpful. Students also preferred specific notes (81%), identifying mistakes (74%), and use of examples (73%) as types of feedback. Meanwhile, the least helpful types of feedback reported by students were general comments (38%) and praise or discouragement (39%) (Turnitin, 2015). As a result of this survey data, Turnitin (2015) proposed that “Students find specific feedback most helpful, incorporating suggestions for improvement and examples of what was done correctly or incorrectly.” The same 2015 survey found that students consider instructor feedback to be just as critical for their learning as doing homework, studying, and listening to lectures. Of the 1,155 responses, a majority of students (78%) reported that receiving and using teacher feedback is “very” or “extremely important” for learning. Turnitin (2015) suggests that the survey results demonstrate that students consider feedback to be just as important as other core educational activities.

Turnitin’s own studies are not the only evidence of these trends in students’ perceptions of feedback. In a case study examining the effects of Turnitin’s products on writing in a multilingual language class, Sujee et al. (2015) found that the majority of the learners expressed that Turnitin’s personalized feedback and identification of errors met their learning needs. Students appreciated the individualized feedback and claimed a deeper engagement with the content. Students were also able to integrate language rules from the QuickMark drag-and-drop comments, further strengthening the applicability in a second language classroom (Sujee et al., 2015). A 2015 study on perceptions of Turnitin’s online grading features reported that business students favored the level of personalization, timeliness, accessibility, and quantity and quality of receiving feedback in an electronic format (Carruthers et al., 2015). Similarly, a 2014 study exploring the perceptions of healthcare students found that Turnitin’s online grading features enhanced the timeliness and accessibility of feedback. With particular regard to the instructor feedback tools in Turnitin Feedback Studio (collectively referred to as GradeMark), students valued feedback that was more specific, since instructors could add annotated comments next to students’ text. Students claimed it increased the meaningfulness of feedback, which further supports the GradeMark tools as a vehicle for instructors to provide quality feedback (Watkins et al., 2014). In both studies, students expressed interest in using the online grading features more widely across other courses in their studies (Watkins et al., 2014; Carruthers et al., 2015).

In addition to providing insight about students’ perception of what is most effective, Turnitin studies also surfaced issues that students sometimes encounter with feedback provided inside the system. Part of the 2015 study focused on how much students read, use, and understand feedback they receive. Turnitin (2015) reports that students most often read a higher percentage of feedback than they understand or apply. When asked about barriers to understanding feedback, students who claimed to understand a minimal amount of instructor feedback (13%) reported that most often/always the largest challenges were: comments had unclear connections to the student work or assignment goals (44.8%), feedback was too general (42.6%), and they received too many comments (31.8%) ( Turnitin, 2015 ). Receiving feedback that was too general was also considered a strong barrier for students who claimed to understand a moderate or large amount of feedback.

Research Questions

From studies investigating students’ conceptions of feedback, Mandouit (2020) found that while they appreciated feedback about “where they are going”, and “how they are going”, they saw feedback mainly in terms of helping them know where to go next in light of submitted work. Such “where to next” feedback was more likely to be enacted.

This study investigates a range of feedback forms, and in particular investigates the hypothesized claim that feedback that leads to “where to next” decisions and actions by students is most likely to enhance their performance. It uses Turnitin Feedback Studio to ask about the relation of various agents of feedback (teacher, machine program), and codes the feedback responses to identify which kinds of feedback are related to the growth and achievement from first to final submission of essays.

In order to examine the feedback that instructors have provided on student work, original student submissions and revision submissions, along with corresponding teacher- and machine intelligence-assigned feedback from Feedback Studio, were compiled by the Turnitin team. All papers in the dataset were randomly selected using the PostgreSQL random() function. A query was built around the initial criteria to fetch assignments and their associated rubrics. The initial criteria included the following: pairs of student original drafts and revision assignments where each instructor and each student was a member of one and only one pairing of assignments; assignments were chosen without date restrictions through random selection until the sample size (<3,000) had been satisfied; assignments were from both higher education and secondary education students; assignment pairs where the same rubric had been applied to both the original submission and the revision submission and students had received scores based on that rubric; any submissions with voice-recorded comments were excluded; and submissions and all feedback were written only in the English language. Throughout the data collection process, active measures were taken to exclude all personally identifiable information, including student name, school name, instructor name, and paper content, in accordance with Turnitin’s policies. The Chief Security Officer of Turnitin reviewed this approach before it was completed. After the dataset was returned, an additional column was added that assigned a random number to each data item. Sorting on that random number column returned the final dataset of student submissions and resubmissions in random order, from which the final sample of student papers was identified for analysis.
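The selection steps above amount to a filter-then-sample procedure. The Python sketch below assumes a simplified, hypothetical record layout (the `AssignmentPair` fields are illustrative, not Turnitin's schema) and uses the standard `random` module in place of PostgreSQL's `random()`. It applies the per-pair inclusion criteria, keeps each student and each instructor in at most one pair, stops at the target size, and finishes by sorting on a random key. The requirement to draw from both higher education and secondary students is not enforced here.

```python
import random
from dataclasses import dataclass


@dataclass
class AssignmentPair:
    # Hypothetical fields standing in for the real query columns.
    student_id: str
    instructor_id: str
    rubric_original: str
    rubric_revision: str
    scored_with_rubric: bool
    has_voice_comment: bool
    language: str


def eligible(pair):
    """Apply the per-pair inclusion criteria listed in the text."""
    return (
        pair.rubric_original == pair.rubric_revision  # same rubric both times
        and pair.scored_with_rubric
        and not pair.has_voice_comment
        and pair.language == "en"
    )


def draw_sample(pairs, target_size, seed=None):
    rng = random.Random(seed)
    candidates = [p for p in pairs if eligible(p)]
    rng.shuffle(candidates)  # plays the role of ORDER BY random()
    seen_students, seen_instructors, sample = set(), set(), []
    for p in candidates:
        # Each student and each instructor may belong to only one pair.
        if p.student_id in seen_students or p.instructor_id in seen_instructors:
            continue
        seen_students.add(p.student_id)
        seen_instructors.add(p.instructor_id)
        sample.append(p)
        if len(sample) == target_size:
            break
    # Final step: attach a random number to each row and sort on it.
    return sorted(sample, key=lambda _row: rng.random())
```

Shuffling before the uniqueness check matters: it means the pair kept for a given student or instructor is itself a random choice rather than whichever row the query returned first.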

The categories for investigation included country of student, higher education or high school setting, number of times the assignment was submitted, date and time of submission, details regarding the scoring of the assignment (like score, possible points, and scoring method), and details regarding feedback that was provided on the assignment (like mark type, page location of each mark, title of each mark, and comment text associated with each mark), and two outcome measures–achievement and growth from time 1 to time 2.

There were 3,204 students who submitted essays for feedback on at least two occasions. Just over half (56%) were from higher education and the remainder (44%) from secondary schools. The majority (90%) were from the United States, and the others were from Australia (5.2%), Japan (1.5%), Korea (0.8%), India (0.5%), Egypt (0.5%), the Netherlands (0.4%), China (0.4%), Germany (0.3%), Chile (0.2%), Ecuador (0.2%), the Philippines (0.2%), and South Africa (0.03%). Within the United States, students spanned 13 states, with the majority coming from California (464), Texas (412), Illinois (401), New York (256), New Jersey (193), Washington (93), Wisconsin (91), Missouri (81), Colorado (67), and Kentucky (61).

In this study, pairs of student-submitted work—original drafts and revisions of those same assignments—along with the feedback that was added to each assignment, were examined. Student assignments were submitted to the Turnitin Feedback Studio system as part of real courses to which students submit their work via online, course-specific assignment inboxes. Upon submission, student work is reviewed by Turnitin’s machine intelligence for similarity to other published works on the Internet, submissions by other students, or additional content available within Turnitin’s extensive database. At this point in the process, instructors also have the opportunity to provide feedback and score student work with a rubric.

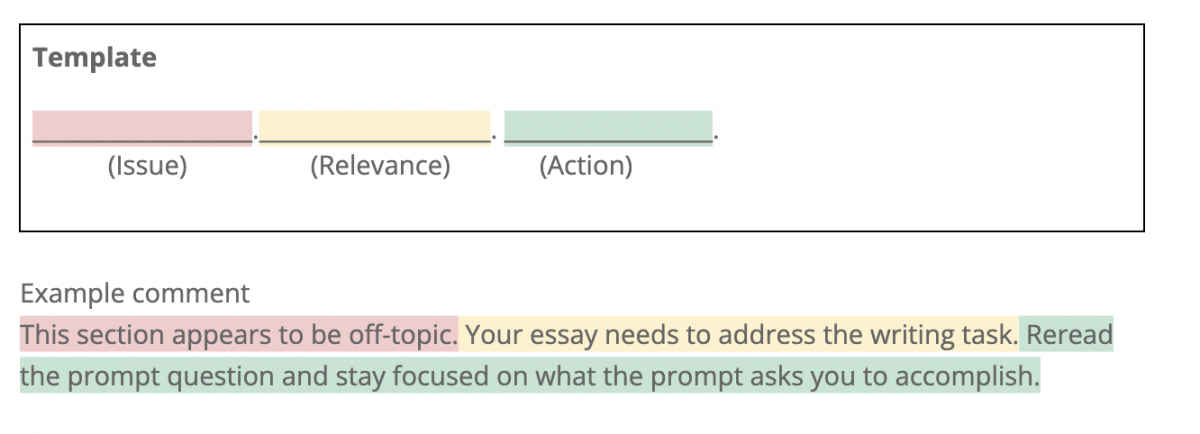

Feedback streams for student submissions in Turnitin Feedback Studio are multifaceted. At the highest level, holistic feedback can be provided in the Feedback Summary panel as a text comment. However, if instructors wish to embed feedback directly within student submissions, there are several options. First, the most prolific feature of Turnitin Feedback Studio is QuickMarks™, a set of reusable drag-and-drop comments derived from corresponding rubrics aligned to genre and skill-level criteria. Instructors may also choose to create their own QuickMarks and rubrics to save and reuse on future submissions. When instructors wish to craft personalized feedback not intended for reuse, they may leave a bubble comment, which appears in a similar manner to the reusable QuickMarks, or an inline comment that appears as a free-form text box they can place anywhere on the submission. Instructors also have access to a strikethrough tool to suggest that a student should delete the selected text. Automated grammar feedback can be enabled as an additional layer, offering the identification of grammar, usage, mechanics, style, and spelling errors. Instructors have the option to add an elaborative comment, including hyperlinks to instructional resources, to the automated grammar and mechanics feedback (delivered via e-rater®) and Turnitin QuickMarks. Finally, rubrics and grading tools are available to the teacher to complete the feedback and scoring process.

Within the prepared dataset, paired student assignments were presented for analysis. Work from each individual student was used only once, but appeared as a pair of assignments, comprising an original, “first draft” submission and a later “revision” submission of the same assignment by the same student. The first set of feedback can thus be considered formative, and the second summative. For each pair of assignments, the following information was reported: institution type, country, and state or province for each individual student’s work. Then, for both the original assignment submission and the revision assignment submission, the following information was reported: assignment ID, submission ID, number of times the assignment was submitted, date and time of submission, details regarding the scoring of the assignment (like score, possible points, and scoring method), and details regarding feedback that was provided on the assignment (like mark type, page location of each mark, title of each mark, and comment text associated with each mark). Prior to the analysis, definitions of all terms included within the dataset were created collaboratively and recorded in a glossary to ensure a common understanding of the vocabulary.
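The fields listed above map naturally onto a small record type. The Python sketch below is one hypothetical way to hold a first-draft/revision pair; the class and field names are illustrative and are not Turnitin's export format, and the `MarkType` values simply name the feedback streams described earlier in this section (voice comments are omitted because such submissions were excluded).

```python
from collections import Counter
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class MarkType(Enum):
    # Feedback streams described in the text; the values are illustrative.
    SUMMARY = "summary text"
    QUICKMARK = "QuickMark"
    BUBBLE = "bubble comment"
    INLINE = "inline comment"
    STRIKETHROUGH = "strikethrough"
    GRAMMAR = "e-rater grammar"


@dataclass
class Mark:
    mark_type: MarkType
    page: int
    title: str
    comment_text: str


@dataclass
class Submission:
    assignment_id: str
    submission_id: str
    times_submitted: int
    submitted_at: str  # date and time, e.g. ISO 8601
    score: float
    possible_points: float
    scoring_method: str
    marks: List[Mark] = field(default_factory=list)


@dataclass
class SubmissionPair:
    institution_type: str  # e.g. "higher education" or "secondary"
    country: str
    state_or_province: str
    original: Submission
    revision: Submission

    def first_draft_mark_counts(self):
        """Tally the formative marks on the original draft by stream."""
        return Counter(m.mark_type for m in self.original.marks)
```

Keeping the marks attached to each submission makes it straightforward to count the formative feedback on the first draft while reading the outcome from the revision.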

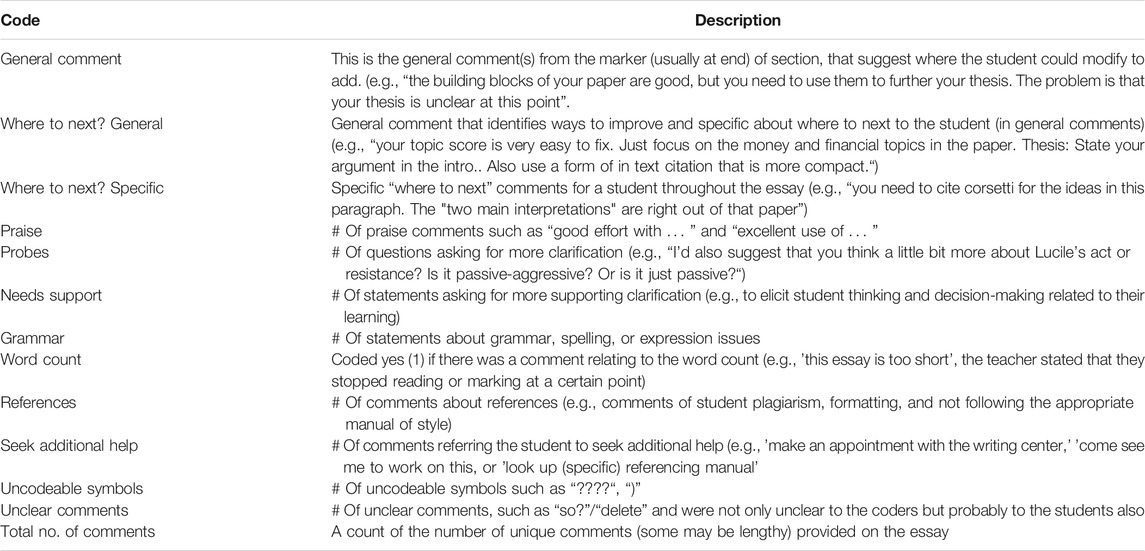

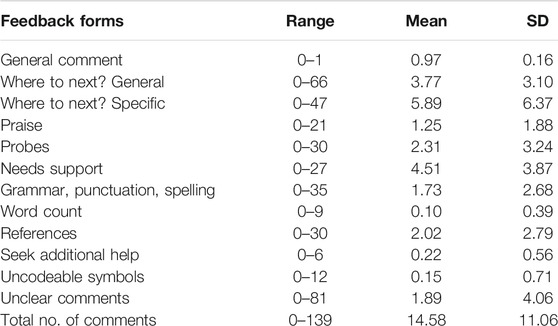

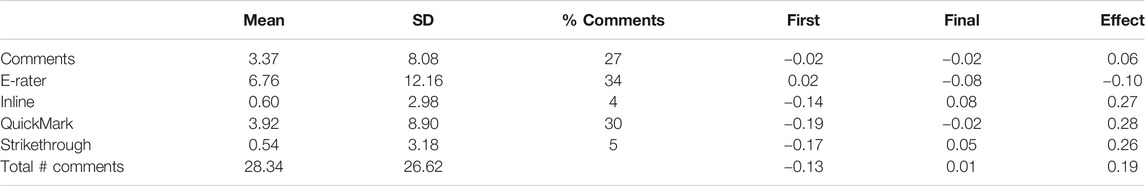

Some of the essays had various criteria scores (such as ideas, organization, evidence, style), but in this study only the total score was used. The assignments were marked out of differing totals so all were converted to percentages. On average, there were 19 days between submissions (SD = 18.4). Markers were invited by the Turnitin Feedback Studio processes to add comments to the essays and these were independently coded into various categories (see Table 1 ). One researcher was trained in applying the coding manual, and close checking was undertaken for the first 300 responses, leading to an inter-rater reliability in excess of 0.90, with all disagreements negotiated.
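Because assignments were marked out of differing totals, every score is rescaled to a percentage before comparison, and the gap between submissions is measured in days. A minimal Python sketch of both steps follows; the helper names and the ISO 8601 timestamp format are assumptions for illustration.

```python
from datetime import datetime


def to_percent(score, possible_points):
    """Rescale a score marked out of any total to 0-100."""
    if possible_points <= 0:
        raise ValueError("possible_points must be positive")
    return 100 * score / possible_points


def days_between(first_iso, revision_iso):
    """Whole days elapsed between the two submissions."""
    first = datetime.fromisoformat(first_iso)
    revision = datetime.fromisoformat(revision_iso)
    return (revision - first).days


print(to_percent(18, 25), to_percent(41, 50))  # 72.0 82.0
print(days_between("2021-03-01T10:00", "2021-03-20T09:00"))  # 18
```

`timedelta.days` truncates partial days, so a gap of 18 days and 23 hours counts as 18.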

TABLE 1 . Codes and description of attributes coded for each essay.

There were two outcome measures. The first is the final score after the second submission; the second is the growth effect-size between the score after the first submission (on which the feedback was provided) and the final score. The effect-size for each student was calculated using the formula for correlated or dependent samples.

A structural model was used to relate the feedback types to the final score and the growth effect-size. A multivariate analysis of variance was used to investigate the nature of changes in means from the first to final scores, moderated by level of schooling (secondary, university). A regression was used to identify the source of feedback relative to the growth and final scores.

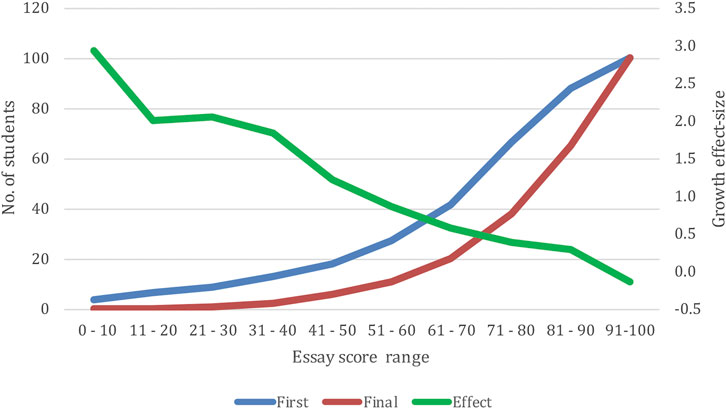

The average score at Time 1 was 71.34 (SD = 19.91) and at Time 2 was 82.97 (SD = 15.03). The overall effect-size was 0.70 (SD = 0.97) with a range from −2.26 to 4.97. The correlation between Time 1 and 2 scores was 0.60.
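The text names the formula for correlated or dependent samples but does not print it. One common version, assumed here, divides the mean gain by the standard deviation of the paired differences, which can be recovered from the two SDs and their correlation. The Python sketch below (hypothetical helper names) plugs in the Time 1 and Time 2 summary statistics above and gives about 0.72. The reported 0.70 appears to summarize per-student effect-sizes (it has its own SD and range), so the two figures need not match exactly.

```python
import math


def sd_of_differences(sd1, sd2, r):
    """SD of paired differences from the two SDs and their correlation."""
    return math.sqrt(sd1**2 + sd2**2 - 2 * r * sd1 * sd2)


def dependent_d(m1, m2, sd1, sd2, r):
    """Mean gain divided by the SD of the gains (often written d_z)."""
    return (m2 - m1) / sd_of_differences(sd1, sd2, r)


d = dependent_d(71.34, 82.97, 19.91, 15.03, 0.60)
print(round(d, 2))  # 0.72
```

Other dependent-samples conventions divide by a pooled or average SD instead, which would give a somewhat smaller value from the same inputs.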

Figure 1 shows the number of students in each score range, and the average effect-size for that score range. Not surprisingly, the opportunity to improve (via the effect-size) is greater for those who scored lower in their essays at Time 1. There were between 1 and 139 total comments for the first-submission essays, with an average of 14 comments per essay (Table 2). The most common comments related to Where to next–Specific (5.9), Needs support (4.5), Where to next–General (3.8), and Probes (2.3). The next most common comments were about style, such as references (2.0), Unclear comments (1.9), and Grammar, punctuation, and spelling (1.7). There was about 1 praise comment per essay, and the other forms of feedback were rarer: Seek additional help (0.22), Uncodeable symbols (0.15), and Word count (0.10). The general message is that instructors were mostly focused on improvement, then on the style aspects of the essays.

FIGURE 1 . The number of students within each first submitted and final score range, and the average effect-size for that score range based on the first submission.
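Figure 1 pairs the number of students in each first-submission score range with their average effect-size. The Python sketch below shows one way to produce such a summary from per-student records; the 10-point band width and the `growth_by_band` name are assumptions, since the text does not describe the figure's bands.

```python
from collections import defaultdict
from statistics import mean


def growth_by_band(students, band_width=10):
    """Summarize (first_score_percent, growth_es) pairs by score band.

    Returns {band_start: (n_students, mean_effect_size)}, ordered by band.
    Assumes band_width divides 100; a score of 100 joins the top band.
    """
    bands = defaultdict(list)
    for first_score, growth in students:
        start = int(first_score // band_width) * band_width
        bands[min(start, 100 - band_width)].append(growth)
    return {b: (len(g), mean(g)) for b, g in sorted(bands.items())}


print(growth_by_band([(45, 1.5), (48, 1.1), (92, 0.1), (100, -0.1)]))
```

Binning on the first-submission score is what exposes the pattern noted above: lower starting scores leave more room for growth.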

TABLE 2 . Range, mean, and standard deviation of feedback comments for first submission essay.

There are two related dependent variables: the Time 2 grade and the improvement between Time 1 and Time 2 (the growth effect-size), and the analysis asks how the comments relate to each. Clearly, there is a correlation between Time 2 and the effect-size (as can be seen in Figure 1), but it is sufficiently low (r = 0.19) to warrant asking about the differential relations of the comments to these two outcomes.

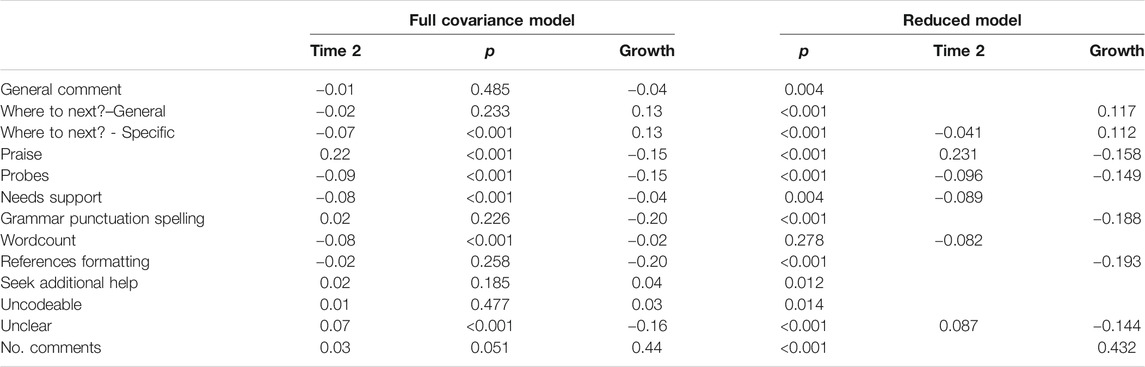

A covariance analysis using SEM (Amos; Arbuckle, 2011) identified the statistically significant correlates of the Time 2 and growth effect-sizes. Using only the forms of feedback that were statistically significant, a reduced model was then run to optimally identify the weights of the best subset. The reduced model (chi-square = 18,466, df = 52) was a statistically significantly better fit (chi-square = 19,686, df = 79; Δchi-square = 1,419, df = 27, p < .001).

Thus, the best predictors of growth from Time 1 to Time 2 were the number of comments (the more comments given, the more likely the essay improved) and Specific and General Where to next comments (Table 3). The best predictor of overall Time 2 performance was Praise, whereas the comments associated with the lowest improvement included Praise, Probes, Grammar, Referencing, and Unclear comments. It is worth noting that Praise is positively related to the summative outcome but negatively related to formative improvement.

TABLE 3 . Standardized structural weights for the full and reduced covariance analyses for the feedback forms.

A closer investigation was undertaken to see if Praise indeed has a dilution effect. Each student’s first submission was coded as having no Praise and no Where-to-next (N = 334), only Praise (N = 416), only Where-to-next (N = 1,113), or both Praise and Where-to-next feedback (N = 1,434). Comparing the first two groups, improvement was appreciably lower where there was Praise than where there was neither Praise nor Where-to-next (Mn = −0.21 vs. 0.40). Comparing the last two groups, improvement was the same with Where-to-next alone as with Praise and Where-to-next (Mn = 0.89 vs. 0.89).
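The dilution check amounts to splitting students into four groups by whether their first submission received any Praise and any Where-to-next comment, then comparing mean growth across groups. The Python sketch below shows that grouping on a handful of invented toy records (they are not the study's data); the group labels and function names are hypothetical.

```python
from statistics import mean


def feedback_group(has_praise, has_where_to_next):
    """Assign a first submission to one of the four comparison groups."""
    if has_praise and has_where_to_next:
        return "praise and where-to-next"
    if has_praise:
        return "praise only"
    if has_where_to_next:
        return "where-to-next only"
    return "neither"


def growth_by_group(students):
    """students: iterable of (has_praise, has_where_to_next, growth_es)."""
    groups = {}
    for praise, where_next, growth in students:
        groups.setdefault(feedback_group(praise, where_next), []).append(growth)
    return {name: (len(g), mean(g)) for name, g in groups.items()}


sample = [(True, False, -0.3), (True, False, -0.1), (False, False, 0.4),
          (False, True, 0.9), (True, True, 0.9)]
for name, (n, avg) in growth_by_group(sample).items():
    print(f"{name}: n={n}, mean growth={avg:.2f}")
```

The groups are mutually exclusive, so each student's growth contributes to exactly one mean.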

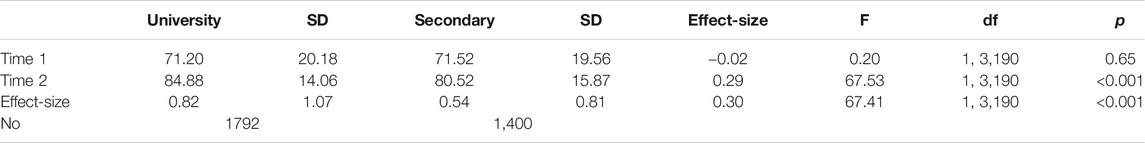

There was an overall mean difference in the Time 1, Time 2, and growth effect-size relating to whether the student was at University or within a High School (Wilks Lambda = 0.965, Mult. F = 57.68, df = 2, 3,189, p < 0.001; Table 4). There were no differences between the mean scores at Time 1, but the University students made greater growth between Time 1 and Time 2, and hence achieved higher final Time 2 grades. There were more comments for University students inviting them to seek additional help, and more Where to next comments. The instructors of University students gave more specific and general Where to next feedback comments (4.11, 6.55 vs. 3.30, 4.87) than did the instructors/markers of the secondary students. There were no differences in the number of words in the comments, Praise, the provision of general comments or not, uncodeable comments, and referencing.

TABLE 4 . Means, standard deviations, effect-sizes, and analysis of variance statistics of comparisons between University and Secondary students.

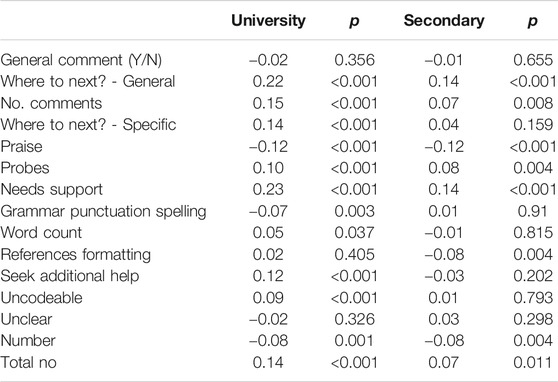

For University students, the highest correlates among the coded essay comments included Where to next, General and Specific Where to next, Need support, Seek additional help, and the total number of comments, with a negative correlation for Praise (Table 5). For secondary students, the highest correlates were Where to next and Need support, with a negative correlation for Praise.

TABLE 5 . Correlations between the forms of feedback for the university and secondary students.

There are five major forms of feedback provision, and the most commonly used were e-rater® (grammar), QuickMarks (drag-and-drop comments), and teacher-provided comments. There were relatively few inline comments (brief instructor comments) and strikethroughs (Table 6). Across all essays, there were significant relations between teacher inline comments, QuickMarks, and strikethroughs and growth over time. Perhaps not surprisingly, these same three correlated negatively with performance at first submission, as lower-scoring first drafts offered the greatest opportunity for teacher comments.

TABLE 6 . Means, standard deviations, and correlations between forms of feedback provision and first submission, final submission, and growth effect-sizes.

Feedback can be powerful, but its effects are also highly variable. Understanding this variability is critical for instructors who aim to improve their students’ proficiencies. There is much advice about feedback sandwiches (a positive comment, then a specific feedback comment, then another positive comment), increasing the amount of feedback, praising effort, and whether to give grades or comments. All of this advice ignores the more important issue of how any feedback is heard, understood, and acted on by students. There is also a proliferation of computer-aided tools for giving feedback. With the inclusion of artificial intelligence engines, these tools are also promoted as a way to reduce the time and effort instructors invest in providing feedback. The question addressed in this study is whether the various forms of feedback are “heard and used by students,” leading to improved performance.

As Mandouit (2020) argued, students prefer feedback that helps them know where to go next in their learning and how to get there (“where to next” feedback). However, this appears to be the least frequent form of feedback (Brooks et al., 2019). Others have found that more elaborate feedback produces greater learning gains than feedback about the correctness of an answer. This is even more likely when students are asked to write essays rather than respond in closed formats (e.g., multiple choice).

The major finding was the importance of “where to next” feedback, which led to the greatest gains from the first to the final submission. Whether general or quite specific, this form of feedback seemed to be heard and acted on by the students. Other forms of feedback helped, but to a lesser degree. The quantity of feedback (regardless of form) also helped improve the essay over time.

Care is needed, however, as “where to next” feedback may need to be scaffolded on feedback about “where they are going” and “how they are going.” It is also notable that these students were not provided with exemplars, worked examples, or scoring rubrics. These may change the power of the various forms of feedback and may even reduce the power of more general “where to next” feedback.

In most essays, teachers provided some praise. This praise had a negative effect on improvement but a positive effect on the final submission. Praise involves a positive evaluation of a student’s person or effort, a positive commendation of worth, or an expression of approval or admiration. Students claim they like praise (Lipnevich, 2007), and it is often claimed that praise is reinforcing, increasing the incidence of the praised behaviors and actions. In an early meta-analysis, however, Deci et al. (1999) showed that the effects of praise on increasing the desired behavior were negative in all cases:
- task-noncontingent praise, given for something other than engaging in the target activity (e.g., simply participating in the lesson) (d = −0.14);
- task-contingent praise, given for doing or completing the target activity (d = −0.39);
- completion-contingent praise, given specifically for performing the activity well, matching some standard of excellence, or surpassing some specific criterion (d = −0.44);
- engagement-contingent praise, dependent on engaging in the activity but not necessarily completing it (d = −0.28).

The message from this study is to reduce the use of praise-only feedback during the formative phase if you want students to focus on the substantive feedback and then improve their writing. In a summative situation, however, praise-only feedback can be given. The effects of such praise on subsequent activities in the class need further investigation (Skipper and Douglas, 2012).

The improvement was greater for university than for high school students. This is probably because university instructors were more likely to provide “where to next” feedback and to invite students to seek additional help. It is not clear why high school teachers were less likely to offer “where to next” feedback, although it is noted that they were more likely to request that the student seek additional help. Neither high school nor college students seem to mind the source of the feedback, especially given the timeliness, accessibility, and quantity of feedback provided by computer-based systems.

The strengths of the study include the large sample size and the availability of data from both a first submission of an essay, which received formative feedback, and a resubmission, which received summative feedback. The findings invite further study of the role of praise and of the possible effects of combining forms of feedback (not explored in this study). A major message concerns the possibilities offered by computer-moderated feedback systems. These systems include both teacher- and automatically generated feedback. Just as important is the ease with which instructors can add inline comments and drag-and-drop comments. The Turnitin Feedback Studio model does not yet use artificial intelligence to provide “where to next” feedback, but this is well worth investigating and building. The use of a computer-aided system of feedback augmented with teacher-provided feedback does lead to enhanced performance over time.

This study demonstrates three things about students. First, they appreciate and act on “where to next” feedback that guides them to enhance their learning and performance. Second, they do not seem to mind whether the feedback comes from the teacher or from a computer-based feedback tool. Third, they were able to decode and act on the feedback statements.

Data Availability Statement

The data analyzed in this study are subject to the following licenses/restrictions: the data were drawn from Turnitin’s proprietary systems in the manner described in the Data Availability Statement to anonymize user information and protect user privacy. Turnitin can provide the underlying data (without personal information) used for this study to parties with a qualified interest in inspecting it (for example, Frontiers editors and reviewers) subject to a non-disclosure agreement. Requests to access these datasets should be directed to Ian McCullough, [email protected].

Author Contributions

JH is the first author and conducted the data analysis independent of the co-authors employed by Turnitin, which furnished the dataset. JC, KVG, PW-S, and KW provided information on instructor usage of the Turnitin Feedback Studio product and addressed specific questions of data interpretation that arose during the analysis.

Turnitin, LLC employs several of the coauthors, furnished the data for analysis of feedback content, and will cover the costs of the open access publication fees.

Conflict of Interest

JC, KVG, PW-S, and KW are employed by Turnitin, which provided the data for analysis.

The remaining author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank Ian McCullough, James I. Miller, and Doreen Kumar for their support and contributions to the setup and coding of this article.

Arbuckle, J. L. (2011). IBM SPSS Amos 20 User’s Guide. Wexford, PA: Amos Development Corporation, SPSS Inc.

Azevedo, R., and Bernard, R. M. (1995). A Meta-Analysis of the Effects of Feedback in Computer-Based Instruction. J. Educ. Comput. Res. 13 (2), 111–127. doi:10.2190/9lmd-3u28-3a0g-ftqt


Beaumont, C., O’Doherty, M., and Shannon, L. (2011). Reconceptualising Assessment Feedback: A Key to Improving Student Learning? Stud. Higher Edu. 36 (6), 671–687. doi:10.1080/03075071003731135

Brooks, C., Carroll, A., Gillies, R. M., and Hattie, J. (2019). A Matrix of Feedback for Learning. Aust. J. Teach. Edu. 44 (4), 13–32. doi:10.14221/ajte.2018v44n4.2

Brown, G. (2009). “The reliability of essay scores: The necessity of rubrics and moderation,” in Tertiary assessment and higher education student outcomes: Policy, practice and research. Editors L. H. Meyer, S. Davidson, H. Anderson, R. Fletcher, P. M. Johnston and M. Rees, (Wellington, NZ: Ako Aotearoa), 40–48.


Carruthers, C., McCarron, B., Bolan, P., Devine, A., McMahon-Beattie, U., and Burns, A. (2015). ‘I Like the Sound of That’: An Evaluation of Providing Audio Feedback via the Virtual Learning Environment for Summative Assessment. Assess. Eval. Higher Edu. 40 (3), 352–370. doi:10.1080/02602938.2014.917145

Dawson, P., Henderson, M., Mahoney, P., Phillips, M., Ryan, T., Boud, D., et al. (2019). What Makes for Effective Feedback: Staff and Student Perspectives. Assess. Eval. Higher Edu. 44 (1), 25–36. doi:10.1080/02602938.2018.1467877

Deci, E. L., Koestner, R., and Ryan, R. M. (1999). A Meta-Analytic Review of Experiments Examining the Effects of Extrinsic Rewards on Intrinsic Motivation. Psychol. Bull. 125 (6), 627–668. doi:10.1037/0033-2909.125.6.627


Gamlem, S. M., and Smith, K. (2013). Student Perceptions of Classroom Feedback. Assess. Educ. Principles, Pol. Pract. 20 (2), 150–169. doi:10.1080/0969594x.2012.749212

Hattie, J., and Timperley, H. (2007). The Power of Feedback. Rev. Educ. Res. 77 (1), 81–112. doi:10.3102/003465430298487

Jaehnig, W., and Miller, M. L. (2007). Feedback Types in Programmed Instruction: A Systematic Review. Psychol. Rec. 57 (2), 219–232. doi:10.1007/bf03395573

Kluger, A. N., and DeNisi, A. (1996). The Effects of Feedback Interventions on Performance: A Historical Review, a Meta-Analysis, and a Preliminary Feedback Intervention Theory. Psychol. Bull. 119 (2), 254–284. doi:10.1037/0033-2909.119.2.254

Mandouit, L. (2020). Investigating How Students Receive, Interpret, and Respond to Teacher Feedback. Melbourne, Victoria, Australia: Unpublished doctoral dissertation, University of Melbourne.

Pajares, F., and Graham, L. (1998). Formalist Thinking and Language Arts Instruction. Teach. Teach. Edu. 14 (8), 855–870. doi:10.1016/s0742-051x(98)80001-2

Sadler, D. R. (1989). Formative Assessment and the Design of Instructional Systems. Instr. Sci. 18 (2), 119–144. doi:10.1007/bf00117714

Skipper, Y., and Douglas, K. (2012). Is No Praise Good Praise? Effects of Positive Feedback on Children's and University Students' Responses to Subsequent Failures. Br. J. Educ. Psychol. 82 (2), 327–339. doi:10.1111/j.2044-8279.2011.02028.x

Sujee, E., Engelbrecht, A., and Nagel, L. (2015). Effectively Digitizing Communication with Turnitin for Improved Writing in a Multilingual Classroom. J. Lang. Teach. 49 (2), 11–31. doi:10.4314/jlt.v49i2.1

Turnitin (2013). Closing the Gap: What Students Say about Instructor Feedback. Oakland, CA: Turnitin, LLC. Retrieved from http://go.turnitin.com/what-students-say-about-teacher-feedback?Product=Turnitin&Notification_Language=English&Lead_Origin=Website&source=Website%20-%20Download.

Turnitin (2014). Instructor Feedback Writ Large: Student Perceptions on Effective Feedback. Oakland, CA: Turnitin, LLC. Retrieved from http://go.turnitin.com/paper/student-perceptions-on-effective-feedback.

Turnitin (2015). From Here to There: Students’ Perceptions on Feedback, Goals, Barriers, and Effectiveness. Oakland, CA: Turnitin, LLC. Retrieved from http://go.turnitin.com/paper/student-feedback-goals-barriers.

Van der Kleij, F. M., Feskens, R. C. W., and Eggen, T. J. H. M. (2015). Effects of Feedback in a Computer-Based Learning Environment on Students' Learning Outcomes. Rev. Educ. Res. 85 (4), 475–511. doi:10.3102/0034654314564881

Watkins, D., Dummer, P., Hawthorne, K., Cousins, J., Emmett, C., and Johnson, M. (2014). Healthcare Students' Perceptions of Electronic Feedback through GradeMark. JITE:Research 13, 027–047. doi:10.28945/1945. Retrieved from http://www.jite.org/documents/Vol13/JITEv13ResearchP027-047Watkins0592.pdf.

Wisniewski, B., Zierer, K., and Hattie, J. (2020). The Power of Feedback Revisited: A Meta-Analysis of Educational Feedback Research. Front. Psychol. 10, 3087. doi:10.3389/fpsyg.2019.03087

Keywords: feedback, essay scoring, formative evaluation, summative evaluation, computer-generated scoring, instructional practice, instructional technologies, writing

Citation: Hattie J, Crivelli J, Van Gompel K, West-Smith P and Wike K (2021) Feedback That Leads to Improvement in Student Essays: Testing the Hypothesis that “Where to Next” Feedback is Most Powerful. Front. Educ. 6:645758. doi: 10.3389/feduc.2021.645758

Received: 23 December 2020; Accepted: 06 May 2021; Published: 28 May 2021.


Copyright © 2021 Hattie, Crivelli, Van Gompel, West-Smith and Wike. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Patti West-Smith, [email protected]


Khan Academy Blog

Introducing Khanmigo’s New Academic Essay Feedback Tool

posted on November 29, 2023

By Sarah Robertson, senior product manager at Khan Academy

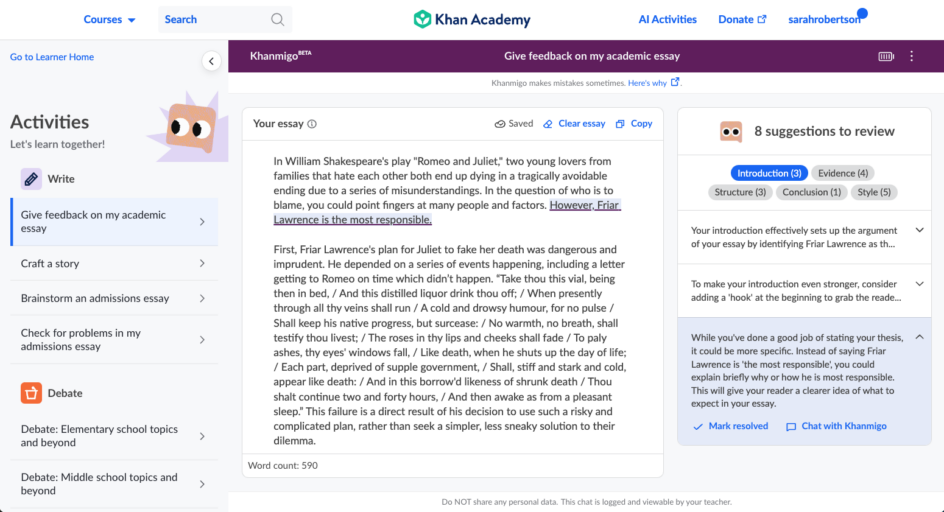

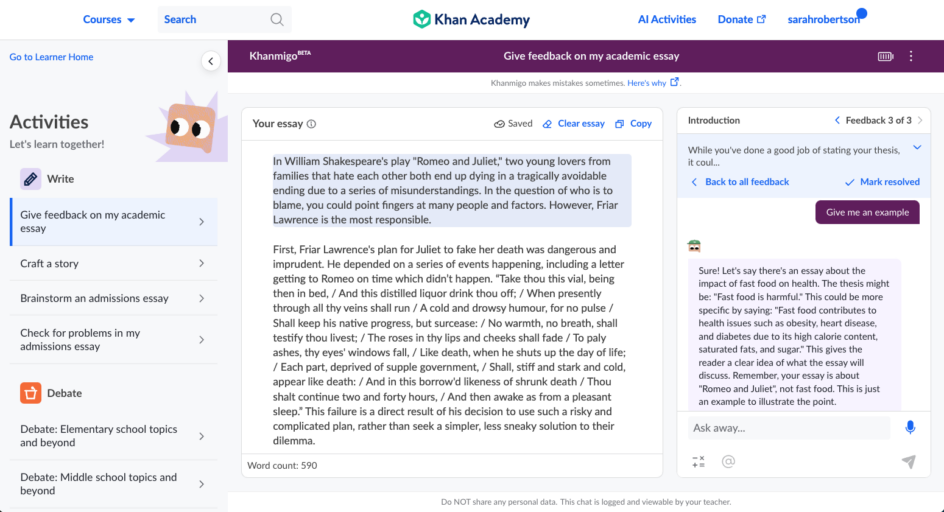

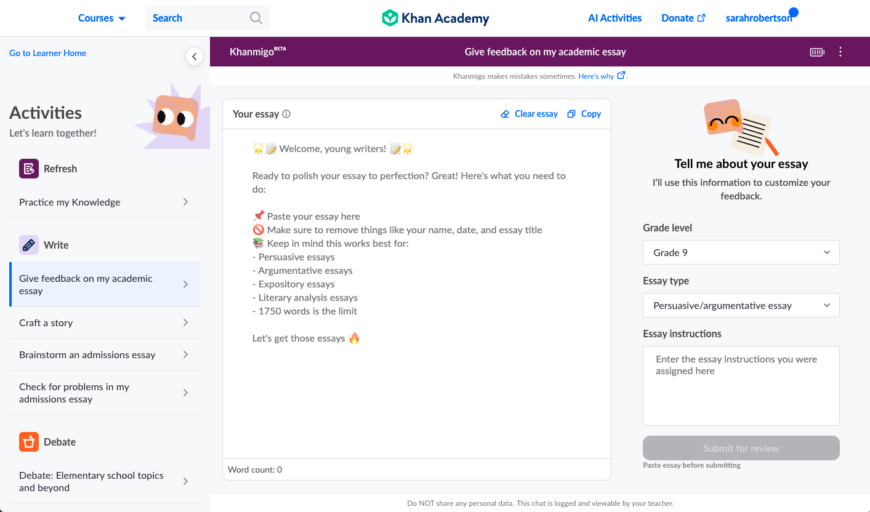

Khan Academy has always been about leveraging technology to deliver world-class educational experiences to students everywhere. We think the newest AI-powered feature in our Khanmigo pilot—our Academic Essay Feedback tool—is a groundbreaking step toward revolutionizing how students improve their writing skills.

The reality of writing instruction

Here’s a word problem for you: A ninth-grade English teacher assigns a two-page essay to 100 students. If she limits herself to spending 10 minutes per essay providing personalized, detailed feedback on each draft, how many hours will it take her to finish reviewing all 100 essays?

The answer is that it would take her nearly 17 hours, and that’s just for the first draft!
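The arithmetic in the word problem can be checked in a few lines of Python. The helper name grading_hours is hypothetical. The sketch assumes, as the problem states, a fixed review time per essay, so total time grows linearly with the number of essays.

```python
def grading_hours(num_essays, minutes_per_essay):
    """Total feedback time in hours for one round of drafts (assumes a fixed time per essay)."""
    return num_essays * minutes_per_essay / 60


hours = grading_hours(100, 10)
print(f"{hours:.1f} hours")  # 1,000 minutes is about 16.7 hours
```

Each additional draft round adds the same amount again under this assumption.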

Research tells us that the most effective methods of improving student writing skills require feedback to be focused, actionable, aligned to clear objectives, and delivered often and in a timely manner.

The unfortunate reality is that teachers are unable to provide this level of feedback as often as students need it, and students need it now more than ever. Only 25% of eighth and twelfth graders are proficient in writing, according to the most recent NAEP scores.

An AI writing tutor for every student