
The Importance of Statistics in Research (With Examples)

The field of statistics is concerned with collecting, analyzing, interpreting, and presenting data.

In the field of research, statistics is important for the following reasons:

Reason 1: Statistics allows researchers to design studies such that the findings from the studies can be extrapolated to a larger population.

Reason 2: Statistics allows researchers to perform hypothesis tests to determine if some claim about a new drug, new procedure, new manufacturing method, etc. is true.

Reason 3: Statistics allows researchers to create confidence intervals to capture uncertainty around population estimates.

In the rest of this article, we elaborate on each of these reasons.

Reason 1: Statistics Allows Researchers to Design Studies

Researchers are often interested in answering questions about populations like:

- What is the average weight of a certain species of bird?

- What is the average height of a certain species of plant?

- What percentage of citizens in a certain city support a certain law?

One way to answer these questions is to go around and collect data on every single individual in the population of interest.

However, this is typically too costly and time-consuming which is why researchers instead take a sample of the population and use the data from the sample to draw conclusions about the population as a whole.

There are many different methods researchers can use to select individuals for a sample. These are known as sampling methods.

There are two classes of sampling methods:

- Probability sampling methods: Every member of the population has a known, nonzero probability of being selected for the sample. In the simplest case, simple random sampling, every member has an equal probability.

- Non-probability sampling methods: Members are not selected at random, so the probability of any given member being selected is unknown.

By using probability sampling methods, researchers can maximize the chances that they obtain a sample that is representative of the overall population.

This allows researchers to extrapolate the findings from the sample to the overall population.
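As a rough illustration of probability sampling, the sketch below draws a simple random sample with Python's standard `random` module. The population of 1,000 bird ID numbers, the sample size of 50, and the helper name `simple_random_sample` are all assumptions made up for this example.

```python
import random

def simple_random_sample(population, n, seed=None):
    """Draw n distinct members; every member is equally likely to be chosen."""
    rng = random.Random(seed)  # local generator so the draw can be reproduced
    return rng.sample(population, n)

# Hypothetical sampling frame: ID numbers for 1,000 birds.
population_ids = list(range(1, 1001))
sample_ids = simple_random_sample(population_ids, n=50, seed=42)

print(len(sample_ids), "birds selected; first few IDs:", sorted(sample_ids)[:5])
```

Fixing the seed only makes the draw repeatable for checking; in a real study the selection would come from an actual list of the population.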


Reason 2: Statistics Allows Researchers to Perform Hypothesis Tests

Another way that statistics is used in research is in the form of hypothesis tests.

These are tests that researchers can use to determine whether an observed effect, such as a difference between medical procedures or treatments, is statistically significant.

For example, suppose a scientist believes that a new drug is able to reduce blood pressure in obese patients. To test this, he measures the blood pressure of 30 patients before and after using the new drug for one month.

He then performs a paired samples t-test using the following hypotheses:

- H0: μ_after = μ_before (the mean blood pressure is the same before and after using the drug)

- HA: μ_after < μ_before (the mean blood pressure is lower after using the drug)

If the p-value of the test is less than some significance level (e.g. α = .05), then he can reject the null hypothesis and conclude that the new drug leads to reduced blood pressure.
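The test above can be sketched in plain Python. The blood pressure readings below are made up for illustration and are not from any real study. Instead of computing a p-value, this sketch compares the t statistic with the one-sided critical value for α = .05 and 29 degrees of freedom (about 1.699, taken from a standard t-table), which leads to the same reject / fail-to-reject decision.

```python
import math
import statistics

def paired_t_statistic(before, after):
    """Return (t, df) for the paired differences after - before."""
    diffs = [a - b for a, b in zip(after, before)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation of the differences
    t = mean_diff / (sd_diff / math.sqrt(n))
    return t, n - 1

# Made-up readings (mmHg) for 30 patients, for illustration only.
before = [140 + (i % 10) for i in range(30)]
after = [b - (2 + (i % 4)) for i, b in enumerate(before)]

t_stat, df = paired_t_statistic(before, after)

# HA is "after < before", so we reject H0 when t falls below the negative critical value.
T_CRITICAL = -1.699  # one-sided, alpha = 0.05, df = 29 (from a t-table)
reject_null = t_stat < T_CRITICAL

print(f"t = {t_stat:.2f}, df = {df}, reject H0: {reject_null}")
```

In practice a statistics package would report the exact p-value; the critical-value comparison is used here only to keep the sketch free of external libraries.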

Note: This is just one example of a hypothesis test that is used in research. Other common tests include a one sample t-test, two sample t-test, one-way ANOVA, and two-way ANOVA.

Reason 3: Statistics Allows Researchers to Create Confidence Intervals

Another way that statistics is used in research is in the form of confidence intervals.

A confidence interval is a range of values that is likely to contain a population parameter with a certain level of confidence.

For example, suppose researchers are interested in estimating the mean weight of a certain species of turtle.

Instead of going around and weighing every single turtle in the population, researchers may instead take a simple random sample of turtles with the following information:

- Sample size n = 25

- Sample mean weight x̄ = 300

- Sample standard deviation s = 18.5

Using the confidence interval for a mean formula, researchers may then construct the following 95% confidence interval:

95% Confidence Interval: 300 ± 1.96 × (18.5/√25) = [292.75, 307.25]

The researchers would then claim that they’re 95% confident that the true mean weight for this population of turtles is between 292.75 pounds and 307.25 pounds.
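The arithmetic behind this interval can be checked with a short sketch. The function name `mean_confidence_interval` is hypothetical. The first call uses the z critical value 1.96, as in the calculation above. The second call is an addition for comparison only: with a sample as small as n = 25 and an unknown population standard deviation, many textbooks use the t critical value for 24 degrees of freedom (about 2.064), which gives a slightly wider interval.

```python
import math

def mean_confidence_interval(mean, sd, n, critical_value):
    """Return (lower, upper) for mean ± critical_value * sd / sqrt(n)."""
    margin = critical_value * sd / math.sqrt(n)
    return mean - margin, mean + margin

# Turtle example from the text: n = 25, sample mean = 300, s = 18.5.
z_low, z_high = mean_confidence_interval(300, 18.5, 25, critical_value=1.96)
t_low, t_high = mean_confidence_interval(300, 18.5, 25, critical_value=2.064)

print(f"z-based 95% CI: [{z_low:.2f}, {z_high:.2f}]")
print(f"t-based 95% CI: [{t_low:.2f}, {t_high:.2f}]")
```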

Additional Resources

The following articles explain the importance of statistics in other fields:

- The Importance of Statistics in Healthcare

- The Importance of Statistics in Nursing

- The Importance of Statistics in Business

- The Importance of Statistics in Economics

- The Importance of Statistics in Education


Hey there. My name is Zach Bobbitt. I have a Masters of Science degree in Applied Statistics and I’ve worked on machine learning algorithms for professional businesses in both healthcare and retail. I’m passionate about statistics, machine learning, and data visualization and I created Statology to be a resource for both students and teachers alike. My goal with this site is to help you learn statistics through using simple terms, plenty of real-world examples, and helpful illustrations.


How To Write The Results/Findings Chapter

For quantitative studies (dissertations & theses).

By: Derek Jansen (MBA) | Expert Reviewed By: Kerryn Warren (PhD) | July 2021

So, you’ve completed your quantitative data analysis and it’s time to report on your findings. But where do you start? In this post, we’ll walk you through the results chapter (also called the findings or analysis chapter), step by step, so that you can craft this section of your dissertation or thesis with confidence. If you’re looking for information regarding the results chapter for qualitative studies, you can find that here .

Overview: Quantitative Results Chapter

- What exactly the results chapter is

- What you need to include in your chapter

- How to structure the chapter

- Tips and tricks for writing a top-notch chapter

- Free results chapter template

What exactly is the results chapter?

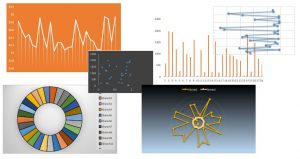

The results chapter (also referred to as the findings or analysis chapter) is one of the most important chapters of your dissertation or thesis because it shows the reader what you’ve found in terms of the quantitative data you’ve collected. It presents the data using a clear text narrative, supported by tables, graphs and charts. In doing so, it also highlights any potential issues (such as outliers or unusual findings) you’ve come across.

But how’s that different from the discussion chapter?

Well, in the results chapter, you only present your statistical findings. Only the numbers, so to speak – no more, no less. In contrast, in the discussion chapter, you interpret your findings and link them to prior research (i.e. your literature review), as well as your research objectives and research questions. In other words, the results chapter presents and describes the data, while the discussion chapter interprets the data.

Let’s look at an example.

In your results chapter, you may have a plot that shows how respondents to a survey responded: the numbers of respondents per category, for instance. You may also state whether this supports a hypothesis by using a p-value from a statistical test. But it is only in the discussion chapter where you will say why this is relevant or how it compares with the literature or the broader picture. So, in your results chapter, make sure that you don’t present anything other than the hard facts – this is not the place for subjectivity.

It’s worth mentioning that some universities prefer you to combine the results and discussion chapters. Even so, it is good practice to separate the results and discussion elements within the chapter, as this ensures your findings are fully described. Typically, though, the results and discussion chapters are split up in quantitative studies. If you’re unsure, chat with your research supervisor or chair to find out what their preference is.

What should you include in the results chapter?

Following your analysis, it’s likely you’ll have far more data than are necessary to include in your chapter. In all likelihood, you’ll have a mountain of SPSS or R output data, and it’s your job to decide what’s most relevant. You’ll need to cut through the noise and focus on the data that matters.

This doesn’t mean that those analyses were a waste of time – on the contrary, those analyses ensure that you have a good understanding of your dataset and how to interpret it. However, that doesn’t mean your reader or examiner needs to see the 165 histograms you created! Relevance is key.

How do I decide what’s relevant?

At this point, it can be difficult to strike a balance between what is and isn’t important. But the most important thing is to ensure your results reflect and align with the purpose of your study. So, you need to revisit your research aims, objectives and research questions and use these as a litmus test for relevance. Make sure that you refer back to these constantly when writing up your chapter so that you stay on track.

As a general guide, your results chapter will typically include the following:

- Some demographic data about your sample

- Reliability tests (if you used measurement scales)

- Descriptive statistics

- Inferential statistics (if your research objectives and questions require these)

- Hypothesis tests (again, if your research objectives and questions require these)

We’ll discuss each of these points in more detail in the next section.

Importantly, your results chapter needs to lay the foundation for your discussion chapter. This means that, in your results chapter, you need to include all the data that you will use as the basis for your interpretation in the discussion chapter.

For example, if you plan to highlight the strong relationship between Variable X and Variable Y in your discussion chapter, you need to present the respective analysis in your results chapter – perhaps a correlation or regression analysis.


How do I write the results chapter?

There are multiple steps involved in writing up the results chapter for your quantitative research. The exact number of steps applicable to you will vary from study to study and will depend on the nature of the research aims, objectives and research questions . However, we’ll outline the generic steps below.

Step 1 – Revisit your research questions

The first step in writing your results chapter is to revisit your research objectives and research questions . These will be (or at least, should be!) the driving force behind your results and discussion chapters, so you need to review them and then ask yourself which statistical analyses and tests (from your mountain of data) would specifically help you address these . For each research objective and research question, list the specific piece (or pieces) of analysis that address it.

At this stage, it’s also useful to think about the key points that you want to raise in your discussion chapter and note these down so that you have a clear reminder of which data points and analyses you want to highlight in the results chapter. Again, list your points and then list the specific piece of analysis that addresses each point.

Next, you should draw up a rough outline of how you plan to structure your chapter . Which analyses and statistical tests will you present and in what order? We’ll discuss the “standard structure” in more detail later, but it’s worth mentioning now that it’s always useful to draw up a rough outline before you start writing (this advice applies to any chapter).

Step 2 – Craft an overview introduction

As with all chapters in your dissertation or thesis, you should start your quantitative results chapter by providing a brief overview of what you’ll do in the chapter and why . For example, you’d explain that you will start by presenting demographic data to understand the representativeness of the sample, before moving onto X, Y and Z.

This section shouldn’t be lengthy – a paragraph or two maximum. Also, it’s a good idea to weave the research questions into this section so that there’s a golden thread that runs through the document.

Step 3 – Present the sample demographic data

The first set of data that you’ll present is an overview of the sample demographics – in other words, the demographics of your respondents.

For example:

- What age range are they?

- How is gender distributed?

- How is ethnicity distributed?

- What areas do the participants live in?

The purpose of this is to assess how representative the sample is of the broader population. This is important for the sake of the generalisability of the results. If your sample is not representative of the population, you will not be able to generalise your findings. This is not necessarily the end of the world, but it is a limitation you’ll need to acknowledge.

Of course, to make this representativeness assessment, you’ll need to have a clear view of the demographics of the population. So, make sure that you design your survey to capture the correct demographic information that you will compare your sample to.

But what if I’m not interested in generalisability?

Well, even if your purpose is not necessarily to extrapolate your findings to the broader population, understanding your sample will allow you to interpret your findings appropriately, considering who responded. In other words, it will help you contextualise your findings . For example, if 80% of your sample was aged over 65, this may be a significant contextual factor to consider when interpreting the data. Therefore, it’s important to understand and present the demographic data.

Step 4 – Review composite measures and the data “shape”.

Before you undertake any statistical analysis, you’ll need to do some checks to ensure that your data are suitable for the analysis methods and techniques you plan to use. If you try to analyse data that doesn’t meet the assumptions of a specific statistical technique, your results will be largely meaningless. Therefore, you may need to show that the methods and techniques you’ll use are “allowed”.

Most commonly, there are two areas you need to pay attention to:

#1: Composite measures

The first is when you have multiple scale-based measures that combine to capture one construct – this is called a composite measure. For example, you may have four Likert scale-based measures that (should) all measure the same thing, but in different ways. In other words, in a survey, these four scales should all receive similar ratings. This is called “internal consistency”.

Internal consistency is not guaranteed though (especially if you developed the measures yourself), so you need to assess the reliability of each composite measure using a test. Cronbach’s Alpha is a common test used to assess internal consistency – i.e., to show that the items you’re combining are more or less saying the same thing. A high alpha score means that your measure is internally consistent. A low alpha score means you may need to consider scrapping one or more of the measures.
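For readers who want to see what sits behind the number, here is a minimal sketch of the standard Cronbach's alpha formula, α = k/(k−1) × (1 − Σ item variances / variance of total scores), using only Python's standard library. The Likert responses are invented for illustration, and the function name `cronbach_alpha` is an assumption; SPSS and R produce the same statistic through their own reliability routines.

```python
import statistics

def cronbach_alpha(items):
    """items: one list of scores per item, all in the same respondent order."""
    k = len(items)
    item_variances = [statistics.variance(scores) for scores in items]
    # Total score per respondent = sum of that respondent's answers across items.
    totals = [sum(answers) for answers in zip(*items)]
    total_variance = statistics.variance(totals)
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)

# Hypothetical 1-5 Likert responses from six respondents to four items.
items = [
    [4, 5, 3, 2, 4, 5],
    [4, 4, 3, 2, 5, 5],
    [5, 5, 2, 1, 4, 4],
    [3, 5, 3, 2, 4, 5],
]

alpha = cronbach_alpha(items)
print(f"Cronbach's alpha = {alpha:.2f}")
```

Because the four made-up items rise and fall together across respondents, the resulting alpha is high; items that disagree with each other would pull it down.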

#2: Data shape

The second matter that you should address early on in your results chapter is data shape. In other words, you need to assess whether the data in your set are approximately normally distributed (i.e. symmetrical and bell-shaped) or not, as this will directly impact what type of analyses you can use. Many common inferential tests such as t-tests or ANOVAs (we’ll discuss these a bit later) assume that your data are approximately normally distributed. If they’re not, you’ll need to adjust your strategy and use alternative tests.

To assess the shape of the data, you’ll usually assess a variety of descriptive statistics (such as the mean, median and skewness), which is what we’ll look at next.

Step 5 – Present the descriptive statistics

Now that you’ve laid the foundation by discussing the representativeness of your sample, as well as the reliability of your measures and the shape of your data, you can get started with the actual statistical analysis. The first step is to present the descriptive statistics for your variables.

For scaled data, this usually includes statistics such as:

- The mean – this is simply the mathematical average of a range of numbers.

- The median – this is the midpoint in a range of numbers when the numbers are arranged in order.

- The mode – this is the most commonly repeated number in the data set.

- Standard deviation – this metric indicates how dispersed a range of numbers is. In other words, how close all the numbers are to the mean (the average).

- Skewness – this indicates how symmetrical a range of numbers is. In other words, do they tend to cluster into a smooth bell curve shape in the middle of the graph (as in a normal distribution), or do they lean to the left or right (a skewed, non-normal distribution).

- Kurtosis – this metric indicates whether the data are heavily or lightly-tailed, relative to the normal distribution. In other words, how prone the distribution is to producing extreme values (outliers).
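To make the statistics in this list concrete, the sketch below computes each of them for a small made-up set of scores using Python's standard library. Skewness and kurtosis are calculated here from population moments (and kurtosis is reported as "excess" kurtosis, so a normal distribution scores 0); packages such as SPSS apply small-sample corrections, so their output can differ slightly. The function name `describe` and the data are assumptions for illustration.

```python
import statistics

def describe(values):
    """Return common descriptive statistics for a list of numbers."""
    n = len(values)
    mean = statistics.mean(values)
    # Central moments (population versions, i.e. divided by n).
    m2 = sum((x - mean) ** 2 for x in values) / n
    m3 = sum((x - mean) ** 3 for x in values) / n
    m4 = sum((x - mean) ** 4 for x in values) / n
    return {
        "mean": mean,
        "median": statistics.median(values),
        "mode": statistics.mode(values),
        "std_dev": statistics.stdev(values),   # sample standard deviation
        "skewness": m3 / m2 ** 1.5,            # 0 for perfectly symmetrical data
        "excess_kurtosis": m4 / m2 ** 2 - 3,   # 0 for a normal distribution
    }

# Hypothetical scores, deliberately symmetrical around 4.
scores = [2, 3, 3, 4, 4, 4, 5, 5, 6]
summary = describe(scores)

for name, value in summary.items():
    print(f"{name}: {value:.3f}")
```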

A large table that indicates all the above for multiple variables can be a very effective way to present your data economically. You can also use colour coding to help make the data more easily digestible.

For categorical data, where you show the percentage of people who chose or fit into a category, for instance, you can either just plain describe the percentages or numbers of people who responded to something or use graphs and charts (such as bar graphs and pie charts) to present your data in this section of the chapter.
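For categorical data, the counts and percentages mentioned above can be tallied in a few lines. This is only a sketch: the survey responses are invented, and `frequency_table` is a hypothetical helper name.

```python
from collections import Counter

def frequency_table(responses):
    """Map each category to (count, percentage of all responses)."""
    counts = Counter(responses)
    total = len(responses)
    return {
        category: (count, 100 * count / total)
        for category, count in counts.most_common()  # most frequent first
    }

# Hypothetical answers to a single survey question.
responses = [
    "Agree", "Agree", "Neutral", "Disagree", "Agree",
    "Neutral", "Agree", "Disagree", "Agree", "Neutral",
]
table = frequency_table(responses)

for category, (count, pct) in table.items():
    print(f"{category:<10}{count:>3}{pct:>7.1f}%")
```

A table like this can be reported directly in the text or used as the input for a bar graph.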

When using figures, make sure that you label them simply and clearly , so that your reader can easily understand them. There’s nothing more frustrating than a graph that’s missing axis labels! Keep in mind that although you’ll be presenting charts and graphs, your text content needs to present a clear narrative that can stand on its own. In other words, don’t rely purely on your figures and tables to convey your key points: highlight the crucial trends and values in the text. Figures and tables should complement the writing, not carry it .

Depending on your research aims, objectives and research questions, you may stop your analysis at this point (i.e. descriptive statistics). However, if your study requires inferential statistics, then it’s time to deep dive into those.

Step 6 – Present the inferential statistics

Inferential statistics are used to make generalisations about a population, whereas descriptive statistics focus purely on the sample. Inferential statistical techniques, broadly speaking, can be broken down into two groups.

First, there are those that compare measurements between groups, such as t-tests (which test for differences between the means of two groups) and ANOVAs (which test for differences between the means of three or more groups). Second, there are techniques that assess the relationships between variables, such as correlation analysis and regression analysis. Within each of these, some tests (parametric tests) assume normally distributed data, while others (non-parametric tests) are designed for data that don’t meet that assumption.

There are a seemingly endless number of tests that you can use to crunch your data, so it’s easy to run down a rabbit hole and end up with piles of test data. Ultimately, the most important thing is to make sure that you adopt the tests and techniques that allow you to achieve your research objectives and answer your research questions .

In this section of the results chapter, you should try to make use of figures and visual components as effectively as possible. For example, if you present a correlation table, use colour coding to highlight the significance of the correlation values, or scatterplots to visually demonstrate what the trend is. The easier you make it for your reader to digest your findings, the more effectively you’ll be able to make your arguments in the next chapter.
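As an example of the second group of techniques (relationships between variables), the sketch below computes a Pearson correlation coefficient by hand. The two variables, study hours and test scores, are made-up data, and `pearson_r` is a hypothetical helper; it returns a value between -1 and 1, where values near 1 indicate a strong positive linear relationship.

```python
import math

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    # Sum of cross-products and sums of squares around the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical data: hours studied and test score for six students.
hours = [1, 2, 3, 4, 5, 6]
score = [52, 55, 61, 60, 68, 71]

r = pearson_r(hours, score)
print(f"r = {r:.2f}")
```

A scatterplot of the same two lists would show the trend visually, which is the kind of figure suggested above.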

Step 7 – Test your hypotheses

If your study requires it, the next stage is hypothesis testing. A hypothesis is a statement , often indicating a difference between groups or relationship between variables, that can be supported or rejected by a statistical test. However, not all studies will involve hypotheses (again, it depends on the research objectives), so don’t feel like you “must” present and test hypotheses just because you’re undertaking quantitative research.

The basic process for hypothesis testing is as follows:

- Specify your null hypothesis (for example, “The chemical psilocybin has no effect on time perception”).

- Specify your alternative hypothesis (e.g., “The chemical psilocybin has an effect on time perception”)

- Set your significance level (this is usually 0.05)

- Calculate your statistics and find your p-value (e.g., p=0.01)

- Draw your conclusions (e.g., “The chemical psilocybin does have an effect on time perception”)
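The five steps above can be walked through in code. The sketch below is one possible illustration, not the method the steps prescribe: it uses an exact permutation test (chosen here because it needs no statistical library) on made-up time estimates, in seconds, from a placebo group and a psilocybin group. The data, group sizes and function name `permutation_p_value` are all assumptions.

```python
from itertools import combinations

def permutation_p_value(group_a, group_b):
    """Exact two-sided permutation test on the difference in group means."""
    pooled = group_a + group_b
    n_a, n_b = len(group_a), len(group_b)
    total = sum(pooled)

    def mean_diff(sum_a):
        return sum_a / n_a - (total - sum_a) / n_b

    observed = abs(mean_diff(sum(group_a)))
    extreme = 0
    arrangements = 0
    # Re-label the pooled values in every possible way and count how often the
    # difference in means is at least as large as the one actually observed.
    for idx in combinations(range(len(pooled)), n_a):
        sum_a = sum(pooled[i] for i in idx)
        if abs(mean_diff(sum_a)) >= observed - 1e-12:
            extreme += 1
        arrangements += 1
    return extreme / arrangements

# Steps 1-2: H0 = no effect on time perception; HA = there is an effect.
ALPHA = 0.05                      # Step 3: significance level
placebo = [58, 60, 61, 62]        # hypothetical time estimates (seconds)
psilocybin = [66, 68, 70, 73]

p_value = permutation_p_value(placebo, psilocybin)   # Step 4
reject_null = p_value < ALPHA                         # Step 5

print(f"p = {p_value:.4f}, reject H0: {reject_null}")
```

With a real dataset you would more likely run a t-test or similar in your statistics package; the decision rule in the last step (compare the p-value with the significance level) is the same.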

Finally, if the aim of your study is to develop and test a conceptual framework , this is the time to present it, following the testing of your hypotheses. While you don’t need to develop or discuss these findings further in the results chapter, indicating whether the tests (and their p-values) support or reject the hypotheses is crucial.

Step 8 – Provide a chapter summary

To wrap up your results chapter and transition to the discussion chapter, you should provide a brief summary of the key findings . “Brief” is the keyword here – much like the chapter introduction, this shouldn’t be lengthy – a paragraph or two maximum. Highlight the findings most relevant to your research objectives and research questions, and wrap it up.

Some final thoughts, tips and tricks

Now that you’ve got the essentials down, here are a few tips and tricks to make your quantitative results chapter shine:

- When writing your results chapter, report your findings in the past tense . You’re talking about what you’ve found in your data, not what you are currently looking for or trying to find.

- Structure your results chapter systematically and sequentially . If you had two experiments where findings from the one generated inputs into the other, report on them in order.

- Make your own tables and graphs rather than copying and pasting them from statistical analysis programmes like SPSS. Check out the DataIsBeautiful reddit for some inspiration.

- Once you’re done writing, review your work to make sure that you have provided enough information to answer your research questions , but also that you didn’t include superfluous information.



Effective Use of Statistics in Research – Methods and Tools for Data Analysis

Remember that sinking feeling you get when you are asked to analyze your data? Now that you have all the required raw data, you need to test your hypothesis statistically. Presenting your numerical data with sound statistics will also help break the stereotype of the biology student who can’t do math.

Statistical methods are essential for scientific research. In fact, they run through every stage of it: planning, designing, collecting data, analyzing, drawing meaningful interpretations and reporting research findings. Furthermore, the results acquired from a research project are meaningless raw data unless they are analyzed with statistical tools. Therefore, applying statistics in research is necessary to justify research findings. In this article, we will discuss how using statistical methods in biology can help draw meaningful conclusions from biological studies.


Role of Statistics in Biological Research

Statistics is a branch of science that deals with the collection, organization and analysis of data, and with drawing inferences about a whole population from a sample. Moreover, it aids in designing a study more meticulously and provides logical reasoning for reaching a conclusion about the hypothesis. Biology focuses on the study of living organisms and their complex pathways, which are very dynamic and cannot be explained by logical reasoning alone. Statistics, in turn, defines and explains patterns in a study based on the sample sizes used. To be precise, statistics reveals the trend in the conducted study.

Biological researchers often disregard statistics when planning their research and mainly use statistical tools at the end of their experiment. This gives rise to a complicated set of results that are not easily analyzed with statistical tools. Statistics in research can help a researcher approach the study in a stepwise manner, in which the statistical analysis proceeds as follows:

1. Establishing a Sample Size

Usually, a biological experiment starts with choosing samples and selecting the right number of repeated experiments. Basic statistical principles, such as randomness and the law of large numbers, guide this step. Statistics teaches how choosing a sufficiently large sample at random from the population helps extrapolate statistical findings and reduce experimental bias and errors.
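One common way to put a number on "the right sample size" is the textbook formula for estimating a mean to within a chosen margin of error, n = (z × σ / E)², rounded up. The sketch below applies it; this is only one of several sample-size approaches, and the standard deviation and margins used are hypothetical values chosen for illustration.

```python
import math

def sample_size_for_mean(sd, margin_of_error, z=1.96):
    """Smallest n so that a 95% interval for the mean is within ±margin_of_error.

    sd is an assumed (e.g. pilot-study) standard deviation; z = 1.96
    corresponds to 95% confidence.
    """
    return math.ceil((z * sd / margin_of_error) ** 2)

# Hypothetical planning values: SD of 10 units, target margins of 2 and 1 units.
n_margin_2 = sample_size_for_mean(sd=10, margin_of_error=2)
n_margin_1 = sample_size_for_mean(sd=10, margin_of_error=1)

print(n_margin_2, n_margin_1)
```

Note how halving the margin of error roughly quadruples the required sample size, which is why precision targets should be set before the experiment starts.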

2. Testing of Hypothesis

When conducting a statistical study with a large sample pool, biological researchers must make sure that a conclusion is statistically significant. To achieve this, a researcher must formulate a hypothesis before examining the distribution of the data. Furthermore, statistics in research helps interpret whether the data are clustered near the mean or spread across the distribution. These trends help analyze the sample and assess the significance of the hypothesis.

3. Data Interpretation Through Analysis

When dealing with large data sets, statistics in research assists in data analysis. This helps researchers draw sound conclusions from their experiments and observations. Drawing conclusions manually or from visual observation may give erroneous results; a thorough statistical analysis instead takes into account the relevant statistical measures and the variance in the sample to provide a detailed interpretation of the data. Researchers can therefore produce detailed and meaningful evidence to support the conclusion.

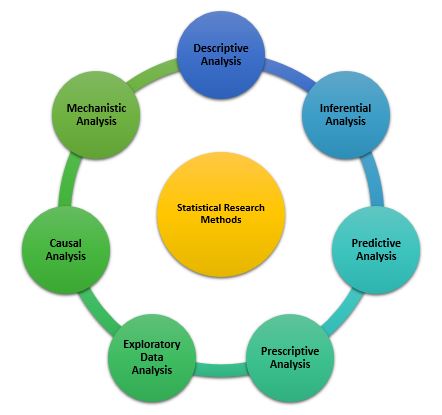

Types of Statistical Research Methods That Aid in Data Analysis

Statistical analysis is the process of examining samples of data to find patterns or trends that help researchers anticipate situations and draw appropriate research conclusions. Based on the type of data and the goal of the analysis, statistical analyses are of the following types:

1. Descriptive Analysis

Descriptive statistical analysis organizes and summarizes large data sets in graphs and tables. It involves processes such as tabulation and measures of central tendency, dispersion (variance) and skewness.

2. Inferential Analysis

Inferential statistical analysis extrapolates from the data acquired from a small sample to the complete population. This analysis helps draw conclusions and make decisions about the whole population on the basis of sample data. It is a highly recommended statistical method for research projects that work with a smaller sample size and aim to extrapolate conclusions to a larger population.

3. Predictive Analysis

Predictive analysis is used to predict future events. It is used by marketing companies, insurance organizations, online service providers, data-driven marketers, and financial corporations.

4. Prescriptive Analysis

Prescriptive analysis examines data to find out what can be done next. It is widely used in business analysis to find the best possible outcome for a situation. It is closely related to descriptive and predictive analysis. However, prescriptive analysis goes further by recommending the most appropriate option among the available choices.

5. Exploratory Data Analysis

EDA is generally the first step of the data analysis process that is conducted before performing any other statistical analysis technique. It completely focuses on analyzing patterns in the data to recognize potential relationships. EDA is used to discover unknown associations within data, inspect missing data from collected data and obtain maximum insights.

6. Causal Analysis

Causal analysis assists in understanding and determining the reasons why things happen the way they do. This analysis helps identify the root cause of failures or simply find the basic reason why something could happen. For example, causal analysis is used to understand what will happen to one variable if another variable changes.

7. Mechanistic Analysis

This is the least common type of statistical analysis. Mechanistic analysis is used in big data analytics and the biological sciences. It aims to understand exactly how individual changes in some variables cause corresponding changes in other variables, while excluding external influences.

Important Statistical Tools In Research

Researchers in the biological field often find statistical analysis the scariest aspect of completing research. However, statistical tools can help researchers understand what to do with their data and how to interpret the results, making the process as easy as possible.

1. Statistical Package for Social Science (SPSS)

It is a widely used software package for human behavior research. SPSS can compile descriptive statistics, as well as graphical depictions of results. Moreover, it includes the option to create scripts that automate analysis or carry out more advanced statistical processing.

2. R Foundation for Statistical Computing

This software package is used in human behavior research and other fields. R is a powerful tool, but it has a steep learning curve and requires a certain level of coding. It comes with an active community that is engaged in building and enhancing the software and the associated packages.

3. MATLAB (The Mathworks)

It is an analytical platform and a programming language. Researchers and engineers use it to write their own code to help answer their research questions. While MATLAB can be difficult for novices, it offers flexibility in terms of what the researcher needs.

4. Microsoft Excel

MS Excel is not the best solution for statistical analysis in research, but it offers a wide variety of tools for data visualization and simple statistics. It is easy to generate summaries and customizable graphs and figures. MS Excel is the most accessible option for those wanting to start with statistics.

5. Statistical Analysis Software (SAS)

It is a statistical platform used in business, healthcare, and human behavior research alike. It can carry out advanced analyses and produce publication-worthy figures, tables and charts.

6. GraphPad Prism

GraphPad Prism is premium software used primarily by biology researchers, but it offers a range of features that can be used in various other fields. Similar to SPSS, GraphPad offers scripting options to automate analyses and carry out complex statistical calculations.

7. Minitab

This software offers basic as well as advanced statistical tools for data analysis. Similar to GraphPad and SPSS, Minitab requires some command of coding and can offer automated analyses.

Use of Statistical Tools In Research and Data Analysis

Statistical tools manage large data sets. Many biological studies use large data sets to analyze trends and patterns. Using statistical tools therefore becomes essential, as they make data processing more convenient.

Following these steps will help biological researchers present the statistics in their research in detail, develop accurate hypotheses, and use the correct tools to test them.

There is a range of statistical tools that can help researchers manage their research data and improve the outcome of their research through better interpretation of data. How you use statistics in research depends on your understanding of the research question, your knowledge of statistics, and your personal experience in coding.


Frequently Asked Questions

Statistics in research can help a researcher approach the study in a stepwise manner: 1. Establishing a sample size 2. Testing of hypothesis 3. Data interpretation through analysis

Statistical methods are essential for scientific research. In fact, they run through the whole of scientific research, as they include planning, designing, collecting data, analyzing, drawing meaningful interpretations, and reporting research findings. Furthermore, the results acquired from a research project are meaningless raw data unless analyzed with statistical tools. Therefore, applying statistics in research is of the utmost necessity to justify research findings.

Statistical tools in research can help researchers understand what to do with data and how to interpret the results, making this process as easy as possible. They can manage large data sets, making data processing more convenient. A great number of tools are available to carry out statistical analysis of data like SPSS, SAS (Statistical Analysis Software), and Minitab.


Thesis life: 7 ways to tackle statistics in your thesis.

By Pranav Kulkarni

The thesis is an integral part of your Master’s study at Wageningen University and Research. It is the most exciting, independent and technical part of the study. More often than not, departments in WU expect students to complete a short-term independent project, or part of a bigger ongoing project, for their thesis assignment.


This assignment involves proposing a research question, tackling it with the help of observations or experiments, analyzing those observations or results, and then drawing conclusions from them.


The penultimate part of this process involves analysis of results, which is crucial for the coherence of your thesis assignment. This analysis usually involves the use of statistical tools to help draw inferences. Most students who don’t pursue statistics in their curriculum are scared by this prospect. Since it is an unavoidable part of your thesis, you can neither run from statistics nor cry for help. But so as not to be intimidated by statistics and its “greco-latin” language, there are a few ways in which you can make your journey through thesis life a pleasant experience.

Make statistics your friend

The best way to end your fear of statistics and all its paraphernalia is to befriend it. Try to learn all that you can about the techniques that you will be using, why they were invented, how they were invented and who did this deed. Personifying the story of statistical techniques makes them digestible and easy to use. Each new method in statistics comes with a unique story and loads of nerdy anecdotes.

If you cannot make friends with statistics, at least make a truce

If you still cannot bring yourself to be interested in the life and times of statistics, the best way to not hate statistics is to make an agreement with yourself. You must realise that, although important, this is only part of your thesis. The better part of your thesis is something you trained for and learned. So, don’t fuss about statistics and make yourself nervous. Do your job, enjoy your thesis to the fullest and complete the statistical section as soon as possible. In the end, you will have forgotten all about your worries and fears of statistics.

Visualize your data

The best way to understand the results and observations from your study or experiments is to visualize your data. Look for trends, patterns, or the lack thereof to understand what you are supposed to do. Moreover, graphics and illustrations can be used directly in your report. These techniques will also help you decide which statistical analyses you must perform to answer your research question. Blind decisions about statistics can often influence your study and make it very confusing or, worse, completely wrong!

Simplify with flowcharts and planning

Similar to graphical visualizations, making flowcharts and planning the various steps of your study can help you make statistical decisions. The human brain can analyse pictorial information faster than literal information. So, it is always easier to understand your exact goal when you can base your decisions on a flowchart or other logical flow-plan.


Find examples on internet

Although statistics is a giant maze of complicated terminology, the internet holds the key to this particular maze. You can find tons of examples on the web. These may be similar to what you intend to do, or they may be different applications of the tools that you wish to use. Especially in the case of statistical programming languages like R, SAS, Python, PERL, VBA, etc., there is a vast database of example code, clarifications and direct training examples available on the internet. Various forums are also available for specialized statistical methodologies, where experts and students discuss issues regarding their own projects.

Comparative studies

Rather than blindly searching the internet for examples and taking advice from faceless people online, you can systematically learn which quantitative tests to perform by rigorously studying the literature of relevant research. Since you came up with a certain problem to tackle in your field of study, chances are that someone else also came up with this issue or something quite similar. You can find solutions to many such problems by scouring the internet for research papers which address the issue. Nevertheless, you should be cautious. It is easy to get lost and disheartened when you find many heavy statistical studies with lots of maths, derivations and cryptic symbolic text.

When all else fails, talk to an expert

All the steps above are meant to help you independently tackle whatever hurdles you encounter over the course of your thesis. But when you cannot tackle them yourself, it is always prudent and most efficient to ask for help. Talking to students from your thesis ring who have done something similar is one way to get help. Another is to make an appointment with your supervisor and take specific questions to him or her. If that is not possible, you can contact other teaching staff or researchers from your research group. Try not to waste their time or yours: make a list of the specific problems that you would like to discuss. I think most are happy to help in any way possible.


Sometimes, with the help of your supervisor, you can make an appointment with someone from “Biometris”, which is WU’s statistics department. These people are the real deal; chances are, they can solve all your problems without any difficulty. Always remember that you are in the process of learning, and nobody expects you to be an expert in everything. Ask for help when there seems to be no hope.

Apart from these seven ways to make your statistical journey pleasant, you should always engage in reading, watching, listening to stuff relevant to your thesis topic and talking about it to those who are interested. Most questions have solutions in the ether realm of communication. So, best of luck and break a leg!!!


MSc Animal Science


Data and your thesis


What is research data?

Research data are the evidence that underpins the answer to your research question and can support the findings or outputs of your research. Research data take many different forms. They may include, for example, statistics, digital images, sound recordings, films, transcripts of interviews, survey data, artworks, published texts or manuscripts, or fieldwork observations. The term 'data' is more familiar to researchers in Science, Technology, Engineering and Mathematics (STEM), but any outputs from research could be considered data. For example, Humanities, Arts and Social Sciences (HASS) researchers might create data in the form of presentations, spreadsheets, documents, images, works of art, or musical scores. The Research Data Management Team in the University Library aims to help you plan, create, organise, share, and look after your research materials, whatever form they take. For more information about the Research Data Management Team, visit their website.

Data Management Plans

Research Data Management is a complex issue, but if done correctly from the start, it could save you a lot of time and hassle when you are writing up your thesis. We advise all students to consider data management as early as possible and create a Data Management Plan (DMP). The Research Data Management Team offers help in creating your DMP and can offer advice and training on how to do this. Some departments have joined a pilot project to include Data Management Plans in the registration reviews of PhD students. As part of the pilot, students are asked to complete a brief DMP, and supervisors and assessors ensure that the student has thought about all the issues and that their responses are reasonable. If your department is taking part in the pilot or would like to, see the Data Management Plans for Pilot for Cambridge PhD Students page. The Research Data Management Team will provide support for any students, supervisors or assessors who need it.

Submitting your digital thesis and depositing your data

If you have created data that is connected to your thesis and the data is in a format separate to the thesis file itself, we recommend that you deposit it in the data repository and make it open access to improve discoverability. We will accept data that either does not contain third party copyright, or contains third party copyright that has been cleared and is data of the following types:

- computer code written by the researcher

- software written by the researcher

- statistical data

- raw data from experiments

If you have created a research output which is not one of those listed above, please contact us on the [email protected] address and we will advise whether you should deposit this with your thesis, or separately in the data repository. If you are ready to deposit your data in the data repository, please do so via Symplectic Elements. More information on how to deposit can be found on the Research Data Management pages. If you wish to cite your data in your thesis, we can arrange for placeholder DOIs to be created in the data repository before your thesis is submitted. For further information, please email: [email protected]

Third party copyright in your data

For an explanation of what third party copyright is, please see the OSC third party copyright page. If your data is based on, or contains, third party copyright, you will need to obtain clearance to make your data open access in the data repository. It is possible to apply a 12 month embargo to datasets while clearance is obtained if you need extra time to do this. However, if it is not possible to clear the third party copyrighted material, it is not possible to deposit your data in the data repository. In these cases, it might be preferable to deposit your data with your thesis instead, under controlled access, but this can be complicated if you wish to deposit the thesis itself under a different access level. Please email [email protected] with any queries and we can advise on the best solution.


© 2024 University of Cambridge


- Indian J Anaesth

- v.60(9); 2016 Sep

Basic statistical tools in research and data analysis

Zulfiqar Ali

Department of Anaesthesiology, Division of Neuroanaesthesiology, Sheri Kashmir Institute of Medical Sciences, Soura, Srinagar, Jammu and Kashmir, India

S Bala Bhaskar

1 Department of Anaesthesiology and Critical Care, Vijayanagar Institute of Medical Sciences, Bellary, Karnataka, India

Statistical methods involved in carrying out a study include planning, designing, collecting data, analysing, drawing meaningful interpretation and reporting of the research findings. The statistical analysis gives meaning to the meaningless numbers, thereby breathing life into lifeless data. The results and inferences are precise only if proper statistical tests are used. This article will try to acquaint the reader with the basic research tools that are utilised while conducting various studies. The article covers a brief outline of the variables, an understanding of quantitative and qualitative variables and the measures of central tendency. An idea of the sample size estimation, power analysis and the statistical errors is given. Finally, there is a summary of parametric and non-parametric tests used for data analysis.

INTRODUCTION

Statistics is a branch of science that deals with the collection, organisation, analysis of data and drawing of inferences from the samples to the whole population.[ 1 ] This requires a proper design of the study, an appropriate selection of the study sample and choice of a suitable statistical test. An adequate knowledge of statistics is necessary for proper designing of an epidemiological study or a clinical trial. Improper statistical methods may result in erroneous conclusions which may lead to unethical practice.[ 2 ]

A variable is a characteristic that varies from one individual member of a population to another.[ 3 ] Variables such as height and weight are measured on some type of scale, convey quantitative information and are called quantitative variables. Sex and eye colour give qualitative information and are called qualitative variables[ 3 ] [ Figure 1 ].

Classification of variables

Quantitative variables

Quantitative or numerical data are subdivided into discrete and continuous measurements. Discrete numerical data are recorded as a whole number such as 0, 1, 2, 3,… (integer), whereas continuous data can assume any value. Observations that can be counted constitute the discrete data and observations that can be measured constitute the continuous data. Examples of discrete data are number of episodes of respiratory arrests or the number of re-intubations in an intensive care unit. Similarly, examples of continuous data are the serial serum glucose levels, partial pressure of oxygen in arterial blood and the oesophageal temperature.

A hierarchical scale of increasing precision can be used for observing and recording the data which is based on categorical, ordinal, interval and ratio scales [ Figure 1 ].

Categorical or nominal variables are unordered. The data are merely classified into categories and cannot be arranged in any particular order. If only two categories exist (as in gender: male and female), the data are called dichotomous (or binary). The various causes of re-intubation in an intensive care unit due to upper airway obstruction, impaired clearance of secretions, hypoxemia, hypercapnia, pulmonary oedema and neurological impairment are examples of categorical variables.

Ordinal variables have a clear ordering between the categories. However, the ordered data may not have equal intervals. Examples are the American Society of Anesthesiologists status or Richmond agitation-sedation scale.

Interval variables are similar to an ordinal variable, except that the intervals between the values of the interval variable are equally spaced. A good example of an interval scale is the Fahrenheit degree scale used to measure temperature. With the Fahrenheit scale, the difference between 70° and 75° is equal to the difference between 80° and 85°: The units of measurement are equal throughout the full range of the scale.

Ratio scales are similar to interval scales, in that equal differences between scale values have equal quantitative meaning. However, ratio scales also have a true zero point, which gives them an additional property. For example, the system of centimetres is an example of a ratio scale. There is a true zero point and the value of 0 cm means a complete absence of length. The thyromental distance of 6 cm in an adult may be twice that of a child in whom it may be 3 cm.

STATISTICS: DESCRIPTIVE AND INFERENTIAL STATISTICS

Descriptive statistics[ 4 ] try to describe the relationship between variables in a sample or population. Descriptive statistics provide a summary of data in the form of mean, median and mode. Inferential statistics[ 4 ] use a random sample of data taken from a population to describe and make inferences about the whole population. It is valuable when it is not possible to examine each member of an entire population. Examples of descriptive and inferential statistics are illustrated in Table 1.

Example of descriptive and inferential statistics

Descriptive statistics

The extent to which the observations cluster around a central location is described by the central tendency and the spread towards the extremes is described by the degree of dispersion.

Measures of central tendency

The measures of central tendency are mean, median and mode.[ 6 ] Mean (or the arithmetic average) is the sum of all the scores divided by the number of scores. The mean may be influenced profoundly by extreme values. For example, the average stay of organophosphorus poisoning patients in ICU may be influenced by a single patient who stays in ICU for around 5 months because of septicaemia. The extreme values are called outliers. The formula for the mean is

$$\bar{x} = \frac{\sum x}{n}$$

where $x$ = each observation and $n$ = number of observations. Median[ 6 ] is defined as the middle of a distribution in ranked data (with half of the values in the sample above and half below the median value), while mode is the most frequently occurring value in a distribution.

Range defines the spread, or variability, of a sample.[ 7 ] It is described by the minimum and maximum values of the variables. If we rank the data and, after ranking, group the observations into percentiles, we can get better information on the pattern of spread of the variables. In percentiles, we rank the observations into 100 equal parts. We can then describe the 25th, 50th, 75th or any other percentile. The median is the 50th percentile. The interquartile range covers the middle 50% of the observations about the median (25th-75th percentile).

Variance[ 7 ] is a measure of how spread out the distribution is. It gives an indication of how closely individual observations cluster about the mean value. The variance of a population is defined by the following formula:

$$\sigma^2 = \frac{\sum (X_i - \bar{X})^2}{N}$$

where $\sigma^2$ is the population variance, $\bar{X}$ is the population mean, $X_i$ is the $i$th element from the population and $N$ is the number of elements in the population. The variance of a sample is defined by a slightly different formula:

$$s^2 = \frac{\sum (x_i - \bar{x})^2}{n - 1}$$

where $s^2$ is the sample variance, $\bar{x}$ is the sample mean, $x_i$ is the $i$th element from the sample and $n$ is the number of elements in the sample. The formula for the variance of a population has the value $N$ as the denominator, whereas the formula for a sample uses $n - 1$. The expression ‘$n - 1$’ is known as the degrees of freedom and is one less than the number of observations in the sample. Each observation is free to vary, except the last one, which must take a defined value once the mean is fixed. The variance is measured in squared units. To make the interpretation of the data simple and to retain the basic unit of observation, the square root of variance is used. The square root of the variance is the standard deviation (SD).[ 8 ] The SD of a population is defined by the following formula:

$$\sigma = \sqrt{\frac{\sum (X_i - \bar{X})^2}{N}}$$

where $\sigma$ is the population SD, $\bar{X}$ is the population mean, $X_i$ is the $i$th element from the population and $N$ is the number of elements in the population. The SD of a sample is defined by a slightly different formula:

$$s = \sqrt{\frac{\sum (x_i - \bar{x})^2}{n - 1}}$$

where $s$ is the sample SD, $\bar{x}$ is the sample mean, $x_i$ is the $i$th element from the sample and $n$ is the number of elements in the sample. An example for calculation of variance and SD is illustrated in Table 2.

Example of mean, variance, standard deviation
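The measures described above can be computed with Python's standard `statistics` module. The sketch below is illustrative only: the `stays` data set is hypothetical (it is not the data from Table 2), and it includes one deliberate outlier to show how the mean is pulled away from the median.

```python
import statistics

# Hypothetical data: length of ICU stay in days for nine patients.
# The last value is a deliberate outlier.
stays = [2, 3, 3, 4, 5, 5, 5, 6, 150]

mean = statistics.mean(stays)      # sum of observations divided by n
median = statistics.median(stays)  # middle value of the ranked data
mode = statistics.mode(stays)      # most frequently occurring value
data_range = (min(stays), max(stays))

# Quartiles: cut points at the 25th, 50th and 75th percentiles.
q1, q2, q3 = statistics.quantiles(stays, n=4)
iqr = q3 - q1

# Population formulas divide by N; sample formulas divide by n - 1.
pop_var = statistics.pvariance(stays)
sample_var = statistics.variance(stays)
pop_sd = statistics.pstdev(stays)
sample_sd = statistics.stdev(stays)

print(f"mean={mean:.2f} median={median} mode={mode} range={data_range}")
print(f"IQR={iqr} sample variance={sample_var:.2f} sample SD={sample_sd:.2f}")
```

With the outlier present the mean is far larger than the median, which is why the median is often the preferred summary for skewed data such as length of stay.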

Normal distribution or Gaussian distribution

Most biological variables cluster around a central value, with symmetrical positive and negative deviations about this point.[ 1 ] The standard normal distribution curve is a symmetrical, bell-shaped curve. In a normal distribution curve, about 68% of the scores are within 1 SD of the mean. Around 95% of the scores are within 2 SDs of the mean and about 99.7% within 3 SDs of the mean [ Figure 2 ].

Normal distribution curve
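The coverage figures quoted for the normal curve can be checked numerically. For a normal variable, the probability of falling within k SDs of the mean is erf(k/√2). The short sketch below uses only the standard `math` module; the function name `coverage_within` is made up for illustration.

```python
import math

def coverage_within(k):
    """Probability that a normally distributed value lies within k SDs of the mean."""
    return math.erf(k / math.sqrt(2))

for k in (1, 2, 3):
    print(f"within {k} SD: {coverage_within(k):.4f}")
# within 1 SD: 0.6827
# within 2 SD: 0.9545
# within 3 SD: 0.9973
```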

Skewed distribution

It is a distribution with an asymmetry of the values about its mean. In a negatively skewed distribution [ Figure 3 ], the mass of the distribution is concentrated on the right of the figure, leading to a longer left tail. In a positively skewed distribution [ Figure 3 ], the mass of the distribution is concentrated on the left of the figure, leading to a longer right tail.

Curves showing negatively skewed and positively skewed distribution
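The direction of skew can also be expressed as a number. The article does not give a formula for it, so the sketch below assumes one common convention, the moment-based coefficient (mean cubed deviation divided by the cube of the population SD). The data sets and the function name `sample_skewness` are hypothetical.

```python
import statistics

def sample_skewness(values):
    """Moment-based skewness: mean cubed deviation divided by the SD cubed."""
    m = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return sum((v - m) ** 3 for v in values) / (len(values) * sd ** 3)

right_tailed = [1, 2, 2, 3, 3, 3, 4, 20]  # hypothetical data, long right tail
left_tailed = [-v for v in right_tailed]  # mirror image, long left tail

print(sample_skewness(right_tailed) > 0)  # positively skewed
print(sample_skewness(left_tailed) < 0)   # negatively skewed
```

A value near zero indicates a roughly symmetrical distribution; the sign tells you which tail is longer.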

Inferential statistics

In inferential statistics, data are analysed from a sample to make inferences in the larger collection of the population. The purpose is to answer or test the hypotheses. A hypothesis (plural hypotheses) is a proposed explanation for a phenomenon. Hypothesis tests are thus procedures for making rational decisions about the reality of observed effects.

Probability is the measure of the likelihood that an event will occur. Probability is quantified as a number between 0 and 1 (where 0 indicates impossibility and 1 indicates certainty).

In inferential statistics, the term ‘null hypothesis’ (H0, ‘H-naught’ or ‘H-null’) denotes that there is no relationship (difference) between the population variables in question.[ 9 ]

The alternative hypothesis (H1 or Ha) denotes that a relationship (difference) between the variables is expected to be true.[ 9 ]

The P value (or the calculated probability) is the probability of obtaining a result at least as extreme as the one observed, by chance alone, if the null hypothesis is true. The P value is a number between 0 and 1 and is interpreted by researchers in deciding whether to reject or retain the null hypothesis [ Table 3 ].

P values with interpretation

If the P value is less than the arbitrarily chosen value (known as α or the significance level), the null hypothesis (H0) is rejected [ Table 4 ]. However, if the null hypothesis (H0) is incorrectly rejected, this is known as a Type I error.[ 11 ] Further details regarding alpha error, beta error and sample size calculation and factors influencing them are dealt with in another section of this issue by Das S et al.[ 12 ]

Illustration for null hypothesis
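The decision rule described above can be sketched in a few lines. This example assumes, purely for illustration, that the test statistic follows a standard normal distribution under the null hypothesis (a z-test), which the article does not specify. The function names and the default α of 0.05 are likewise assumptions.

```python
import math

def two_sided_p_from_z(z):
    """Two-sided P value, P(|Z| >= |z|), for a standard normal Z."""
    return math.erfc(abs(z) / math.sqrt(2))

def decide(p_value, alpha=0.05):
    """Reject H0 when the P value is below the chosen significance level."""
    return "reject H0" if p_value < alpha else "retain H0"

for z in (1.0, 2.5):
    p = two_sided_p_from_z(z)
    print(f"z={z}: P={p:.4f} -> {decide(p)}")
```

Note that rejecting H0 at α = 0.05 still carries up to a 5% risk of a Type I error when the null hypothesis is in fact true.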

PARAMETRIC AND NON-PARAMETRIC TESTS

Numerical data (quantitative variables) that are normally distributed are analysed with parametric tests.[ 13 ]

Two most basic prerequisites for parametric statistical analysis are:

- The assumption of normality which specifies that the means of the sample group are normally distributed

- The assumption of equal variance which specifies that the variances of the samples and of their corresponding population are equal.

However, if the distribution of the sample is skewed towards one side or the distribution is unknown due to the small sample size, non-parametric[ 14 ] statistical techniques are used. Non-parametric tests are used to analyse ordinal and categorical data.

Parametric tests

The parametric tests assume that the data are on a quantitative (numerical) scale, with a normal distribution of the underlying population. The samples have the same variance (homogeneity of variances). The samples are randomly drawn from the population, and the observations within a group are independent of each other. The commonly used parametric tests are the Student's t-test, analysis of variance (ANOVA) and repeated measures ANOVA.

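Student's t-test

Student's t-test is used to test the null hypothesis that there is no difference between the means of the two groups. It is used in three circumstances:

- To test if a sample mean (as an estimate of a population mean) differs significantly from a given population mean (the one-sample t-test). The formula for the one-sample t-test is:

$$t = \frac{\bar{X} - u}{SE}$$

where $\bar{X}$ = sample mean, $u$ = population mean and $SE$ = standard error of mean

- To test if the population means estimated by two independent samples differ significantly (the unpaired t-test). The formula for the unpaired t-test is:

$$t = \frac{\bar{X}_1 - \bar{X}_2}{SE}$$

where $\bar{X}_1 - \bar{X}_2$ is the difference between the means of the two groups and $SE$ denotes the standard error of the difference.

- To test if the population means estimated by two dependent samples differ significantly (the paired t-test). A usual setting for the paired t-test is when measurements are made on the same subjects before and after a treatment.

The formula for the paired t-test is:

$$t = \frac{\bar{d}}{SE}$$

where $\bar{d}$ is the mean difference and $SE$ denotes the standard error of this difference.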
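The three t statistics described above can be sketched with the standard library alone. This is a minimal illustration, not a full test: it returns only the t statistic and not the P value. The function names are hypothetical, and for the unpaired case the standard error is computed from a pooled variance, an assumption chosen to match the equal-variance prerequisite stated earlier.

```python
import math
import statistics

def one_sample_t(sample, mu):
    """t = (sample mean - population mean) / standard error of the mean."""
    se = statistics.stdev(sample) / math.sqrt(len(sample))
    return (statistics.fmean(sample) - mu) / se

def unpaired_t(a, b):
    """t for two independent samples, using a pooled variance (assumes equal variances)."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * statistics.variance(a)
                  + (nb - 1) * statistics.variance(b)) / (na + nb - 2)
    se = math.sqrt(pooled_var * (1 / na + 1 / nb))
    return (statistics.fmean(a) - statistics.fmean(b)) / se

def paired_t(before, after):
    """t for paired measurements: mean difference / standard error of the differences."""
    diffs = [y - x for x, y in zip(before, after)]
    se = statistics.stdev(diffs) / math.sqrt(len(diffs))
    return statistics.fmean(diffs) / se

# Hypothetical measurements on the same five subjects before and after a treatment.
before = [120, 132, 128, 140, 135]
after = [115, 130, 121, 138, 129]
print(f"paired t = {paired_t(before, after):.3f}")
```

The paired test is simply a one-sample test on the within-subject differences against a mean of zero, which is why it removes between-subject variability from the comparison.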

The group variances can be compared using the F-test. The F-test is the ratio of variances (var 1/var 2). If F differs significantly from 1.0, then it is concluded that the group variances differ significantly.

Analysis of variance

The Student's t-test cannot be used for comparison of three or more groups. The purpose of ANOVA is to test if there is any significant difference between the means of two or more groups.

In ANOVA, we study two variances – (a) between-group variability and (b) within-group variability. The within-group variability (error variance) is the variation that cannot be accounted for in the study design. It is based on random differences present in our samples.

However, the between-group variability (or effect variance) is the result of our treatment. These two estimates of variance are compared using the F -test.

A simplified formula for the F statistic is:

$$F = \frac{MS_b}{MS_w}$$

where MS_b is the mean squares between the groups and MS_w is the mean squares within groups.
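The ratio of between-group to within-group mean squares can be sketched as follows for a one-way design. This is an illustrative implementation with hypothetical data; it returns only the F statistic and leaves the comparison with the F distribution (k − 1 and N − k degrees of freedom) to a table or a statistics package.

```python
import statistics

def anova_f(groups):
    """One-way ANOVA: F = (mean squares between) / (mean squares within)."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    # Between-group sum of squares: group means around the grand mean.
    ss_between = sum(len(g) * (statistics.mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: observations around their own group mean.
    ss_within = sum(sum((x - statistics.mean(g)) ** 2 for x in g) for g in groups)
    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n_total - k)
    return ms_between / ms_within

# Three hypothetical treatment groups.
print(anova_f([[1, 2, 3], [2, 3, 4], [5, 6, 7]]))
```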

Repeated measures analysis of variance

As with ANOVA, repeated measures ANOVA analyses the equality of means of three or more groups. However, repeated measures ANOVA is used when all members of a sample are measured under different conditions or at different points in time.

As the variables are measured from a sample at different points of time, the measurement of the dependent variable is repeated. Using a standard ANOVA in this case is not appropriate because it fails to model the correlation between the repeated measures: The data violate the ANOVA assumption of independence. Hence, in the measurement of repeated dependent variables, repeated measures ANOVA should be used.

Non-parametric tests

When the assumptions of normality are not met, and the sample means are not normally distributed, parametric tests can lead to erroneous results. Non-parametric tests (distribution-free tests) are used in such situations as they do not require the normality assumption.[ 15 ] Non-parametric tests may fail to detect a significant difference when compared with a parametric test. That is, they usually have less power.

As is done for the parametric tests, the test statistic is compared with known values for the sampling distribution of that statistic and the null hypothesis is accepted or rejected. The types of non-parametric analysis techniques and the corresponding parametric analysis techniques are delineated in Table 5 .

Analogue of parametric and non-parametric tests

Median test for one sample: The sign test and Wilcoxon's signed rank test

The sign test and Wilcoxon's signed rank test are used for median tests of one sample. These tests examine whether one instance of sample data is greater or smaller than the median reference value.

This test examines the hypothesis about the median θ0 of a population. It tests the null hypothesis H0: θ = θ0. When the observed value (Xi) is greater than the reference value (θ0), it is marked with a + sign. If the observed value is smaller than the reference value, it is marked with a − sign. If the observed value is equal to the reference value (θ0), it is eliminated from the sample.

If the null hypothesis is true, there will be an equal number of + signs and − signs.

The sign test ignores the actual values of the data and only uses + or − signs. Therefore, it is useful when it is difficult to measure the values.
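The counting procedure for the sign test can be sketched as below. The function name and data are hypothetical, and the p-value shown is one common choice: an exact two-sided value from the Binomial(n, 1/2) distribution, obtained by doubling the smaller tail.

```python
from math import comb

def sign_test(sample, theta0):
    """Return (+ count, − count, two-sided exact p-value) against the median theta0."""
    plus = sum(1 for x in sample if x > theta0)
    minus = sum(1 for x in sample if x < theta0)
    n = plus + minus            # observations equal to theta0 are dropped
    k = min(plus, minus)
    # Probability of k or fewer signs of the rarer kind when + and − are equally likely.
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return plus, minus, min(1.0, 2 * tail)

# Hypothetical sample tested against a reference median of 5.
print(sign_test([7, 8, 9, 10, 5, 3, 11, 12], 5))
```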

Wilcoxon's signed rank test

A major limitation of the sign test is that we lose the quantitative information in the data and use only the + or − signs. Wilcoxon's signed rank test not only examines the observed values in comparison with θ0 but also takes into consideration their relative sizes, adding more statistical power to the test. As in the sign test, if there is an observed value that is equal to the reference value θ0, this observed value is eliminated from the sample.
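To show how the signed rank test uses the sizes of the differences and not just their signs, here is a minimal sketch that computes the two rank sums. The names are illustrative, tied absolute differences receive the average of their ranks (an assumption; other tie rules exist), and no p-value is computed.

```python
def signed_rank_sums(sample, theta0):
    """Return (W+, W−): rank sums of positive and negative differences from theta0."""
    diffs = [x - theta0 for x in sample if x != theta0]  # drop values equal to theta0
    sizes = [abs(d) for d in diffs]

    def midrank(value):
        # Rank of `value` among the absolute differences, averaging over ties.
        less = sum(1 for s in sizes if s < value)
        equal = sum(1 for s in sizes if s == value)
        return less + (equal + 1) / 2

    w_plus = sum(midrank(abs(d)) for d in diffs if d > 0)
    w_minus = sum(midrank(abs(d)) for d in diffs if d < 0)
    return w_plus, w_minus

# Hypothetical sample tested against a reference median of 10.
print(signed_rank_sums([12, 9, 15, 10, 7], 10))
```

Under the null hypothesis the two sums are expected to be similar; a large imbalance between them is evidence against θ0.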

Wilcoxon's rank sum test ranks all data points in order, calculates the rank sum of each sample and compares the difference in the rank sums.

Mann-Whitney test

It is used to test the null hypothesis that two samples have the same median or, alternatively, whether observations in one sample tend to be larger than observations in the other.

The Mann–Whitney test compares all data (xi) belonging to the X group and all data (yi) belonging to the Y group and calculates the probability of xi being greater than yi: P (xi > yi). The null hypothesis states that P (xi > yi) = P (xi < yi) = 1/2 while the alternative hypothesis states that P (xi > yi) ≠ 1/2.
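The pairwise comparison described above can be sketched directly: count, over all (xi, yi) pairs, how often xi exceeds yi. The function name and data are hypothetical, and counting a tie as half a win is a common convention assumed here.

```python
def mann_whitney_u(xs, ys):
    """Return (U, estimated P(xi > yi)) from all pairwise comparisons."""
    u = sum(1.0 if x > y else 0.5 if x == y else 0.0 for x in xs for y in ys)
    return u, u / (len(xs) * len(ys))

# Hypothetical X and Y groups.
u_stat, prob = mann_whitney_u([3, 5, 8], [1, 4, 6])
print(u_stat, prob)
```

An estimated probability near 1/2 is what the null hypothesis predicts; the significance of a departure from 1/2 would be judged from the distribution of U.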

Kolmogorov-Smirnov test

The two-sample Kolmogorov-Smirnov (KS) test was designed as a generic method to test whether two random samples are drawn from the same distribution. The null hypothesis of the KS test is that both distributions are identical. The statistic of the KS test is a distance between the two empirical distributions, computed as the maximum absolute difference between their cumulative curves.
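The KS distance can be sketched as the largest gap between the two empirical cumulative distribution functions. This illustrative version evaluates the gap at every observed value, which is sufficient because the step functions only change there. It returns the statistic only, not a p-value.

```python
def ks_statistic(a, b):
    """Maximum absolute difference between the empirical CDFs of samples a and b."""
    def ecdf(sample, t):
        # Fraction of the sample that is less than or equal to t.
        return sum(1 for v in sample if v <= t) / len(sample)

    return max(abs(ecdf(a, t) - ecdf(b, t)) for t in set(a) | set(b))

# Two hypothetical samples that overlap only partly.
print(ks_statistic([1, 2, 3, 4], [3, 4, 5, 6]))
```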

Kruskal-Wallis test

The Kruskal–Wallis test is the non-parametric analogue of one-way ANOVA.[ 14 ] It analyses if there is any difference in the median values of three or more independent samples. The data values are ranked in increasing order, the rank sums are calculated, and the test statistic is then computed from them.
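The ranking and rank-sum steps can be sketched as follows, using the usual form of the statistic, H = 12 / (N (N + 1)) × Σ (R_j² / n_j) − 3 (N + 1), where R_j is the rank sum of group j. The function name and data are hypothetical, and the tie correction is omitted for brevity.

```python
def kruskal_wallis_h(groups):
    """Kruskal-Wallis H statistic (no tie correction)."""
    pooled = [x for g in groups for x in g]
    n = len(pooled)

    def midrank(value):
        # Rank of `value` in the pooled data, averaging over tied values.
        less = sum(1 for v in pooled if v < value)
        equal = sum(1 for v in pooled if v == value)
        return less + (equal + 1) / 2

    weighted = sum(sum(midrank(x) for x in g) ** 2 / len(g) for g in groups)
    return 12 / (n * (n + 1)) * weighted - 3 * (n + 1)

# Three hypothetical independent samples.
print(kruskal_wallis_h([[1, 2, 3], [4, 5, 6], [7, 8, 9]]))
```

H is typically compared with a chi-square distribution on (number of groups − 1) degrees of freedom.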

Jonckheere test

In contrast to the Kruskal–Wallis test, the Jonckheere test assumes an a priori ordering of the groups, which gives it more statistical power than the Kruskal–Wallis test.[ 14 ]

Friedman test

The Friedman test is a non-parametric test for testing the difference between several related samples. It is an alternative to repeated measures ANOVA and is used when the same parameter has been measured under different conditions on the same subjects.[ 13 ]
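A minimal sketch of the Friedman statistic is given below. It ranks the conditions within each subject and then applies the usual formula 12 / (n k (k + 1)) × Σ R_j² − 3 n (k + 1), where R_j is the rank sum of condition j. The function name and data are hypothetical, and no tie correction is applied.

```python
def friedman_statistic(rows):
    """rows[i][j] is the measurement for subject i under condition j."""
    n, k = len(rows), len(rows[0])
    rank_sums = [0.0] * k
    for row in rows:
        for j, x in enumerate(row):
            less = sum(1 for v in row if v < x)
            equal = sum(1 for v in row if v == x)
            rank_sums[j] += less + (equal + 1) / 2  # midrank within this subject
    return 12 / (n * k * (k + 1)) * sum(r ** 2 for r in rank_sums) - 3 * n * (k + 1)

# Three hypothetical subjects, each measured under three conditions.
print(friedman_statistic([[1, 2, 3], [2, 5, 7], [0, 1, 4]]))
```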

Tests to analyse the categorical data

Chi-square test, Fisher's exact test and McNemar's test are used to analyse the categorical or nominal variables. The Chi-square test compares the frequencies and tests whether the observed data differ significantly from the data expected if there were no differences between groups (i.e., the null hypothesis). It is calculated as the sum of the squared differences between the observed ( O ) and the expected ( E ) data (or the deviation, d ), each divided by the expected data, by the following formula:

$$\chi^2 = \sum \frac{(O - E)^2}{E}$$

A Yates correction factor is used when the sample size is small. Fisher's exact test is used to determine if there are non-random associations between two categorical variables. It does not assume random sampling, and instead of referring a calculated statistic to a sampling distribution, it calculates an exact probability. McNemar's test is used for paired nominal data. It is applied to a 2 × 2 table with paired-dependent samples. It is used to determine whether the row and column frequencies are equal (that is, whether there is ‘marginal homogeneity’). The null hypothesis is that the paired proportions are equal. The Mantel-Haenszel Chi-square test is a multivariate test as it analyses multiple grouping variables. It stratifies according to the nominated confounding variables and identifies any that affect the primary outcome variable. If the outcome variable is dichotomous, then logistic regression is used.
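The Chi-square calculation described in this section (squared difference between observed and expected counts, divided by the expected count, summed over all cells) can be sketched as follows. The function name and table are hypothetical. Expected counts are derived from the row and column totals under the assumption of no association, and no Yates correction is applied.

```python
def chi_square(table):
    """Chi-square statistic for a contingency table given as a list of rows."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if rows and columns were independent.
            expected = row_totals[i] * col_totals[j] / total
            stat += (observed - expected) ** 2 / expected
    return stat

# Hypothetical 2 x 2 table: two groups by two outcomes.
print(chi_square([[10, 20], [20, 10]]))
```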

SOFTWARE AVAILABLE FOR STATISTICS, SAMPLE SIZE CALCULATION AND POWER ANALYSIS

Numerous statistical software systems are currently available. The commonly used software systems are Statistical Package for the Social Sciences (SPSS – manufactured by IBM Corporation), Statistical Analysis System (SAS – developed by SAS Institute, North Carolina, United States of America), R (designed by Ross Ihaka and Robert Gentleman from the R core team), Minitab (developed by Minitab Inc), Stata (developed by StataCorp) and MS Excel (developed by Microsoft).

There are a number of web resources which are related to statistical power analyses. A few are:

- StatPages.net – provides links to a number of online power calculators

- G-Power – provides a downloadable power analysis program that runs under DOS

- Power analysis for ANOVA designs – an interactive site that calculates power or sample size needed to attain a given power for one effect in a factorial ANOVA design

- SPSS makes a program called SamplePower. It gives an output of a complete report on the computer screen which can be cut and pasted into another document.

It is important that a researcher knows the concepts of the basic statistical methods used in conducting a research study. This will help in conducting an appropriately designed study leading to valid and reliable results. Inappropriate use of statistical techniques may lead to faulty conclusions, inducing errors and undermining the significance of the article. Bad statistics may lead to bad research, and bad research may lead to unethical practice. Hence, an adequate knowledge of statistics and the appropriate use of statistical tests are important. An appropriate knowledge of the basic statistical methods will go a long way in improving research designs and producing quality medical research which can be utilised for formulating evidence-based guidelines.

Financial support and sponsorship

Conflicts of interest.

There are no conflicts of interest.


Descriptive Statistics


This page is brought to you by the OWL at Purdue University. When printing this page, you must include the entire legal notice.

Copyright ©1995-2018 by The Writing Lab & The OWL at Purdue and Purdue University. All rights reserved. This material may not be published, reproduced, broadcast, rewritten, or redistributed without permission. Use of this site constitutes acceptance of our terms and conditions of fair use.

The mean, the mode, the median, the range, and the standard deviation are all examples of descriptive statistics. Descriptive statistics are used because in most cases, it isn't possible to present all of your data in any form that your reader will be able to quickly interpret.

Generally, when writing descriptive statistics, you want to present at least one form of central tendency (or average), that is, either the mean, median, or mode. In addition, you should present one form of variability , usually the standard deviation.

Measures of Central Tendency and Other Commonly Used Descriptive Statistics

The mean, median, and the mode are all measures of central tendency. They attempt to describe what the typical data point might look like. In essence, they are all different forms of 'the average.' When writing statistics, you never want to say 'average' because it is difficult, if not impossible, for your reader to understand if you are referring to the mean, the median, or the mode.

The mean is the most common form of central tendency, and is what most people usually are referring to when they say average. It is simply the total sum of all the numbers in a data set, divided by the total number of data points. For example, the following data set has a mean of 4: {-1, 0, 1, 16}. That is, the sum of the values (16) divided by the number of data points (4) is 4. If there isn't a good reason to use one of the other forms of central tendency, then you should use the mean to describe the central tendency.

The median is simply the middle value of a data set. In order to calculate the median, all values in the data set need to be ordered, from either highest to lowest, or vice versa. If there is an odd number of values in a data set, then the median is easy to calculate. If there is an even number of values in a data set, then the calculation becomes more difficult. Statisticians still debate how to properly calculate a median when there is an even number of values, but for most purposes, it is appropriate to simply take the mean of the two middle values. The median is useful when describing data sets that are skewed or have extreme values. Incomes of baseball players, for example, are commonly reported using a median because a small minority of baseball players make a lot of money, while most players make more modest amounts. The median is less influenced by extreme scores than the mean.

The mode is the most commonly occurring number in the data set. The mode is best used when you want to indicate the most common response or item in a data set. For example, if you wanted to predict the score of the next football game, you may want to know what the most common score is for the visiting team, but having an average score of 15.3 won't help you if it is impossible to score 15.3 points. Likewise, a median score may not be very informative either, if you are interested in what score is most likely.
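The three measures of central tendency can be computed with Python's standard `statistics` module, as in the short sketch below. The first data set is the one used for the mean above; the list of game scores is hypothetical and only there to illustrate the mode.

```python
import statistics

data = [-1, 0, 1, 16]                     # data set from the mean example
mean_value = statistics.mean(data)        # sum of the values divided by the count
median_value = statistics.median(data)    # even count: mean of the two middle values

scores = [14, 21, 14, 17, 21, 14]         # hypothetical scores for the visiting team
mode_value = statistics.mode(scores)      # most commonly occurring score

print(mean_value, median_value, mode_value)
```

Note how the single extreme value (16) pulls the mean well above the median in the first data set.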

Standard Deviation

The standard deviation is a measure of variability (it is not a measure of central tendency). Conceptually it is best viewed as the 'average distance that individual data points are from the mean.' Data sets that are highly clustered around the mean have lower standard deviations than data sets that are spread out.

For example, the first data set would have a higher standard deviation than the second data set:

Notice that both groups have the same mean (5) and median (also 5), but the two groups contain different numbers and are organized much differently. This organization of a data set is often referred to as a distribution. Because the two data sets above have the same mean and median, but different standard deviation, we know that they also have different distributions. Understanding the distribution of a data set helps us understand how the data behave.
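To make the comparison concrete, the sketch below uses two hypothetical data sets (not the ones from the original example) that share a mean and median of 5 but differ in spread. It uses the sample standard deviation (n − 1 denominator), which is an assumption about which version is wanted.

```python
import statistics

spread_out = [1, 3, 5, 7, 9]   # hypothetical: values far from the mean
clustered = [4, 5, 5, 5, 6]    # hypothetical: values close to the mean

for name, values in [("spread_out", spread_out), ("clustered", clustered)]:
    print(name,
          statistics.mean(values),
          statistics.median(values),
          round(statistics.stdev(values), 3))
```

Both lists report the same mean and median, while the first reports the larger standard deviation, which is exactly the difference in distribution that the mean and median alone cannot show.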


How to collect data for your thesis
