Secondary Data – Types, Methods and Examples

Secondary Data

Definition:

Secondary data refers to information that has been collected, processed, and published by someone else, rather than the researcher gathering the data firsthand. This can include data from sources such as government publications, academic journals, market research reports, and other existing datasets.

Secondary Data Types

Types of secondary data are as follows:

- Published data: Published data refers to data that has been published in books, magazines, newspapers, and other print media. Examples include statistical reports, market research reports, and scholarly articles.

- Government data: Government data refers to data collected by government agencies and departments. This can include data on demographics, economic trends, crime rates, and health statistics.

- Commercial data: Commercial data is data collected by businesses for their own purposes. This can include sales data, customer feedback, and market research data.

- Academic data: Academic data refers to data collected by researchers for academic purposes. This can include data from experiments, surveys, and observational studies.

- Online data: Online data refers to data that is available on the internet. This can include social media posts, website analytics, and online customer reviews.

- Organizational data: Organizational data is data collected by businesses or other organizations about their own internal operations. This can include data on employee performance, financial records, and customer satisfaction.

- Historical data: Historical data refers to data that was collected in the past and is still available for research purposes. This can include census data, historical documents, and archival records.

- International data: International data refers to data collected from other countries for research purposes. This can include data on international trade, health statistics, and demographic trends.

- Public data: Public data refers to data that is available to the general public. This can include data from government agencies, non-profit organizations, and other sources.

- Private data: Private data refers to data that is not available to the general public. This can include confidential business data, personal medical records, and financial data.

- Big data: Big data refers to large, complex datasets that are difficult to manage and analyze using traditional data processing methods. This can include social media data, sensor data, and other types of data generated by digital devices.

Secondary Data Collection Methods

Secondary Data Collection Methods are as follows:

- Published sources: Researchers can gather secondary data from published sources such as books, journals, reports, and newspapers. These sources often provide comprehensive information on a variety of topics.

- Online sources: With the growth of the internet, researchers can now access a vast amount of secondary data online. This includes websites, databases, and online archives.

- Government sources: Government agencies often collect and publish a wide range of secondary data on topics such as demographics, crime rates, and health statistics. Researchers can obtain this data through government websites, publications, or data portals.

- Commercial sources: Businesses often collect and analyze data for marketing research or customer profiling. Researchers can obtain this data through commercial data providers or by purchasing market research reports.

- Academic sources: Researchers can also obtain secondary data from academic sources such as published research studies, academic journals, and dissertations.

- Personal contacts: Researchers can also obtain secondary data from personal contacts, such as experts in a particular field or individuals with specialized knowledge.

Secondary Data Formats

Secondary data can come in various formats depending on the source from which it is obtained. Here are some common formats of secondary data:

- Numeric Data: Numeric data is often in the form of statistics and numerical figures that have been compiled and reported by organizations such as government agencies, research institutions, and commercial enterprises. This can include data such as population figures, GDP, sales figures, and market share.

- Textual Data: Textual data is often in the form of written documents, such as reports, articles, and books. This can include qualitative data such as descriptions, opinions, and narratives.

- Audiovisual Data: Audiovisual data is often in the form of recordings, videos, and photographs. This can include data such as interviews, focus group discussions, and other types of qualitative data.

- Geospatial Data: Geospatial data is often in the form of maps, satellite images, and geographic information systems (GIS) data. This can include data such as demographic information, land use patterns, and transportation networks.

- Transactional Data: Transactional data is often in the form of digital records of financial and business transactions. This can include data such as purchase histories, customer behavior, and financial transactions.

- Social Media Data: Social media data is often in the form of user-generated content from social media platforms such as Facebook, Twitter, and Instagram. This can include data such as user demographics, content trends, and sentiment analysis.

Secondary Data Analysis Methods

Secondary data analysis involves the use of pre-existing data for research purposes. Here are some common methods of secondary data analysis:

- Descriptive Analysis: This method involves describing the characteristics of a dataset, such as the mean, standard deviation, and range of the data. Descriptive analysis can be used to summarize data and provide an overview of trends.

- Inferential Analysis: This method involves making inferences and drawing conclusions about a population based on a sample of data. Inferential analysis can be used to test hypotheses and determine the statistical significance of relationships between variables.

- Content Analysis: This method involves analyzing textual or visual data to identify patterns and themes. Content analysis can be used to study the content of documents, media coverage, and social media posts.

- Time-Series Analysis: This method involves analyzing data over time to identify trends and patterns. Time-series analysis can be used to study economic trends, climate change, and other phenomena that change over time.

- Spatial Analysis: This method involves analyzing data in relation to geographic location. Spatial analysis can be used to study patterns of disease spread, land use patterns, and the effects of environmental factors on health outcomes.

- Meta-Analysis: This method involves combining data from multiple studies to draw conclusions about a particular phenomenon. Meta-analysis can be used to synthesize the results of previous research and provide a more comprehensive understanding of a particular topic.
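As a rough illustration of two of the methods above, the following Python sketch computes descriptive statistics for one variable and a fixed-effect (inverse-variance weighted) pooled estimate of the kind used in a simple meta-analysis. The function names and sample figures are hypothetical, and the fixed-effect model is only one of several pooling approaches; it is shown here as an assumption, not as the method any particular study uses.

```python
import math
import statistics


def describe(values):
    """Summarize one numeric variable: count, mean, sample standard deviation, range."""
    return {
        "n": len(values),
        "mean": statistics.mean(values),
        "stdev": statistics.stdev(values),  # sample standard deviation (n - 1)
        "range": max(values) - min(values),
    }


def pooled_effect(effects, std_errors):
    """Fixed-effect pooling: weight each study's effect by 1 / SE^2."""
    weights = [1.0 / se ** 2 for se in std_errors]
    pooled = sum(w * e for w, e in zip(weights, effects)) / sum(weights)
    pooled_se = math.sqrt(1.0 / sum(weights))
    return pooled, pooled_se


# Hypothetical figures, for illustration only.
summary = describe([2, 4, 4, 4, 5, 5, 7, 9])
estimate, estimate_se = pooled_effect([0.2, 0.4], [0.1, 0.1])
print(summary, estimate, estimate_se)
```

Studies with smaller standard errors receive larger weights, so more precise studies pull the pooled estimate toward their own result.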

Secondary Data Gathering Guide

Here are some steps to follow when gathering secondary data:

- Define your research question: Start by defining your research question and identifying the specific information you need to answer it. This will help you identify the type of secondary data you need and where to find it.

- Identify relevant sources: Identify potential sources of secondary data, including published sources, online databases, government sources, and commercial data providers. Consider the reliability and validity of each source.

- Evaluate the quality of the data: Evaluate the quality and reliability of the data you plan to use. Consider the data collection methods, sample size, and potential biases. Make sure the data is relevant to your research question and is suitable for the type of analysis you plan to conduct.

- Collect the data: Collect the relevant data from the identified sources. Use a consistent method to record and organize the data to make analysis easier.

- Validate the data: Validate the data to ensure that it is accurate and reliable. Check for inconsistencies, missing data, and errors. Address any issues before analyzing the data.

- Analyze the data: Analyze the data using appropriate statistical and analytical methods. Use descriptive and inferential statistics to summarize and draw conclusions from the data.

- Interpret the results: Interpret the results of your analysis and draw conclusions based on the data. Make sure your conclusions are supported by the data and are relevant to your research question.

- Communicate the findings: Communicate your findings clearly and concisely. Use appropriate visual aids such as graphs and charts to help explain your results.
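The validation step in the guide above can be sketched in a few lines of Python. This is a minimal example that assumes the collected data has been loaded as a list of dictionaries; the function name, field names, and records are hypothetical, and real datasets usually need additional checks (value ranges, units, coding schemes).

```python
def validate_records(records, required_fields):
    """Return (row_index, problem) tuples for missing values and duplicate rows."""
    issues = []
    seen = set()
    for index, record in enumerate(records):
        # Flag any required field that is absent or empty.
        for field in required_fields:
            if record.get(field) in (None, ""):
                issues.append((index, f"missing {field}"))
        # Flag rows whose required fields repeat an earlier row exactly.
        key = tuple(record.get(field) for field in required_fields)
        if key in seen:
            issues.append((index, "duplicate record"))
        seen.add(key)
    return issues


# Hypothetical records, for illustration only.
rows = [
    {"region": "North", "year": 2020, "population": 1200},
    {"region": "South", "year": 2020, "population": None},
    {"region": "North", "year": 2020, "population": 1200},
]
problems = validate_records(rows, ["region", "year", "population"])
print(problems)
```

Returning a list of issues, rather than silently dropping rows, leaves the decision about how to address each problem with the researcher.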

Examples of Secondary Data

Here are some examples of secondary data from different fields:

- Healthcare: Hospital records, medical journals, clinical trial data, and disease registries are examples of secondary data sources in healthcare. These sources can provide researchers with information on patient demographics, disease prevalence, and treatment outcomes.

- Marketing: Market research reports, customer surveys, and sales data are examples of secondary data sources in marketing. These sources can provide marketers with information on consumer preferences, market trends, and competitor activity.

- Education: Student test scores, graduation rates, and enrollment statistics are examples of secondary data sources in education. These sources can provide researchers with information on student achievement, teacher effectiveness, and educational disparities.

- Finance: Stock market data, financial statements, and credit reports are examples of secondary data sources in finance. These sources can provide investors with information on market trends, company performance, and creditworthiness.

- Social Science: Government statistics, census data, and survey data are examples of secondary data sources in social science. These sources can provide researchers with information on population demographics, social trends, and political attitudes.

- Environmental Science: Climate data, remote sensing data, and ecological monitoring data are examples of secondary data sources in environmental science. These sources can provide researchers with information on weather patterns, land use, and biodiversity.

Purpose of Secondary Data

The purpose of secondary data is to give researchers access to information that others have already collected, usually for a different purpose. Secondary data can be used to address research questions, test hypotheses, and meet research objectives. Some of the key purposes of secondary data are:

- To gain a better understanding of the research topic: Secondary data can be used to provide context and background information on a research topic. This can help researchers understand the historical and social context of their research and gain insights into relevant variables and relationships.

- To save time and resources: Collecting new primary data can be time-consuming and expensive. Using existing secondary data sources can save researchers time and resources by providing access to pre-existing data that has already been collected and organized.

- To provide comparative data: Secondary data can be used to compare and contrast findings across different studies or datasets. This can help researchers identify trends, patterns, and relationships that may not have been apparent from individual studies.

- To support triangulation: Triangulation is the process of using multiple sources of data to confirm or refute research findings. Secondary data can be used to support triangulation by providing additional sources of data to support or refute primary research findings.

- To supplement primary data: Secondary data can be used to supplement primary data by providing additional information or insights that were not captured by the primary research. This can help researchers gain a more complete understanding of the research topic and draw more robust conclusions.

When to use Secondary Data

Secondary data can be useful in a variety of research contexts, and there are several situations in which it may be appropriate to use secondary data. Some common situations in which secondary data may be used include:

- When primary data collection is not feasible: Collecting primary data can be time-consuming and expensive, and in some cases, it may not be feasible to collect primary data. In these situations, secondary data can provide valuable insights and information.

- When exploring a new research area: Secondary data can be a useful starting point for researchers who are exploring a new research area. Secondary data can provide context and background information on a research topic, and can help researchers identify key variables and relationships to explore further.

- When comparing and contrasting research findings: Secondary data can be used to compare and contrast findings across different studies or datasets. This can help researchers identify trends, patterns, and relationships that may not have been apparent from individual studies.

- When triangulating research findings: Triangulation is the process of using multiple sources of data to confirm or refute research findings. Secondary data can be used to support triangulation by providing additional sources of data to support or refute primary research findings.

- When validating research findings: Secondary data can be used to validate primary research findings by providing additional sources of data that support or refute the primary findings.

Characteristics of Secondary Data

Secondary data have several characteristics that distinguish them from primary data. Here are some of the key characteristics of secondary data:

- Non-reactive: Secondary data are non-reactive, meaning that the current study cannot influence the subjects or their responses, because the data were collected earlier and for a different purpose. It also means that the researcher has no control over the data collection process, and cannot influence how the data were collected.

- Time-saving: Secondary data are pre-existing, meaning that they have already been collected and organized by someone else. This can save the researcher time and resources, as they do not need to collect the data themselves.

- Wide-ranging: Secondary data sources can provide a wide range of information on a variety of topics. This can be useful for researchers who are exploring a new research area or seeking to compare and contrast research findings.

- Less expensive: Secondary data are generally less expensive than primary data, as they do not require the researcher to incur the costs associated with data collection.

- Potential for bias: Secondary data may be subject to biases that were present in the original data collection process. For example, data may have been collected using a biased sampling method or the data may be incomplete or inaccurate.

- Lack of control: The researcher has no control over the data collection process and cannot ensure that the data were collected using appropriate methods or measures.

- Requires careful evaluation: Secondary data sources must be evaluated carefully to ensure that they are appropriate for the research question and analysis. This includes assessing the quality, reliability, and validity of the data sources.

Advantages of Secondary Data

There are several advantages to using secondary data in research, including:

- Time-saving: Collecting primary data can be time-consuming and expensive. Secondary data can be accessed quickly and easily, which can save researchers time and resources.

- Cost-effective: Secondary data are generally less expensive than primary data, as they do not require the researcher to incur the costs associated with data collection.

- Large sample size: Secondary data sources often have larger sample sizes than primary data sources, which can increase the statistical power of the research.

- Access to historical data: Secondary data sources can provide access to historical data, which can be useful for researchers who are studying trends over time.

- Fewer ethical concerns: Secondary data are already in existence, so the researcher does not need to recruit or collect data from human subjects. Privacy, consent, and confidentiality obligations attached to the original data still apply.

- May be more objective: Secondary data may be more objective than primary data, as the data were not collected for the specific purpose of the research study.

Limitations of Secondary Data

While there are many advantages to using secondary data in research, there are also some limitations that should be considered. Some of the main limitations of secondary data include:

- Lack of control over data quality: Researchers do not have control over the data collection process, which means they cannot ensure the accuracy or completeness of the data.

- Limited availability: Secondary data may not be available for the specific research question or study design.

- Lack of information on sampling and data collection methods: Researchers may not have access to information on the sampling and data collection methods used to gather the secondary data. This can make it difficult to evaluate the quality of the data.

- Data may not be up-to-date: Secondary data may not be up-to-date or relevant to the current research question.

- Data may be incomplete or inaccurate: Secondary data may be incomplete or inaccurate due to missing or incorrect data points, data entry errors, or other factors.

- Biases in data collection: The data may have been collected using biased sampling or data collection methods, which can limit the validity of the data.

- Lack of control over variables: Researchers have limited control over the variables that were measured in the original data collection process, which can limit the ability to draw conclusions about causality.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

Secondary research: definition, methods, & examples.

This ultimate guide to secondary research helps you understand changes in market trends, customers’ buying patterns, and your competition using existing data sources.

In situations where you’re not involved in the data gathering process (primary research), you have to rely on existing information and data to arrive at specific research conclusions or outcomes. This approach is known as secondary research.

In this article, we’re going to explain what secondary research is, how it works, and share some examples of it in practice.

What is secondary research?

Secondary research, also known as desk research, is a research method that involves compiling existing data sourced from a variety of channels. This includes internal sources (e.g. in-house research) or, more commonly, external sources (such as government statistics, organizational bodies, and the internet).

Secondary research comes in several formats, such as published datasets, reports, and survey responses, and can also be sourced from websites, libraries, and museums.

The information is usually free, or available at a limited access cost, and was originally gathered using surveys, telephone interviews, observation, face-to-face interviews, and more.

When using secondary research, researchers collect, verify, analyze, and incorporate existing data to help them confirm research goals for the research period.

As well as the above, it can be used to review previous research into an area of interest. Researchers can look for patterns across data spanning several years and identify trends — or use it to verify early hypothesis statements and establish whether it’s worth continuing research into a prospective area.

How to conduct secondary research

There are five key steps to conducting secondary research effectively and efficiently:

1. Identify and define the research topic

First, understand what you will be researching and define the topic by thinking about the research questions you want to be answered.

Ask yourself: What is the point of conducting this research? Then, ask: What do we want to achieve?

This may indicate an exploratory reason (why something happened) or confirm a hypothesis. The answers may indicate ideas that need primary or secondary research (or a combination) to investigate them.

2. Find research and existing data sources

If secondary research is needed, think about where you might find the information. This helps you narrow down your secondary sources to those that help you answer your questions. What keywords do you need to use?

Which organizations are closely working on this topic already? Are there any competitors that you need to be aware of?

Create a list of the data sources, information, and people that could help you with your work.

3. Begin searching and collecting the existing data

Now that you have the list of data sources, start accessing the data and collect the information into an organized system. This may mean you start setting up research journal accounts or making telephone calls to book meetings with third-party research teams to verify the details around data results.

As you search and access information, remember to check the data’s date, the credibility of the source, the relevance of the material to your research topic, and the methodology used by the third-party researchers. Start small and as you gain results, investigate further in the areas that help your research’s aims.
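One way to keep these checks consistent across many sources is to record them in a small structure and flag anything that needs a closer look. The Python sketch below is only an illustration: the `Source` fields, the `screen_source` function, and the five-year age threshold are all assumptions chosen for the example, and credibility and relevance remain judgments the researcher makes by hand.

```python
from dataclasses import dataclass


@dataclass
class Source:
    name: str
    published_year: int
    credible_publisher: bool       # researcher's own judgment
    methodology_documented: bool   # is the collection method described?
    matches_topic: bool            # researcher's own judgment


def screen_source(source, current_year, max_age_years=5):
    """Return the reasons a source needs a closer look (empty list if none)."""
    concerns = []
    if current_year - source.published_year > max_age_years:
        concerns.append("data may be out of date")
    if not source.credible_publisher:
        concerns.append("publisher credibility unverified")
    if not source.methodology_documented:
        concerns.append("methodology not documented")
    if not source.matches_topic:
        concerns.append("weak relevance to the research topic")
    return concerns


# Hypothetical source, for illustration only.
report = Source("Regional market report", 2015, True, False, True)
concerns = screen_source(report, current_year=2024)
print(concerns)
```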

4. Combine the data and compare the results

When you have your data in one place, you need to understand, filter, order, and combine it intelligently. Data may come in different formats where some data could be unusable, while other information may need to be deleted.

After this, you can start to look at different data sets to see what they tell you. You may find that you need to compare the same datasets over different periods for changes over time or compare different datasets to notice overlaps or trends. Ask yourself: What does this data mean to my research? Does it help or hinder my research?
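Comparing the same dataset across two periods can be sketched as follows. This minimal Python example assumes each period has already been reduced to a mapping from category to value; the function name and the sales figures are hypothetical.

```python
def compare_periods(earlier, later):
    """Percent change for keys in both periods, plus keys found in only one."""
    shared = sorted(set(earlier) & set(later))
    changes = {
        key: (later[key] - earlier[key]) / earlier[key] * 100
        for key in shared
        if earlier[key] != 0  # skip zero baselines to avoid dividing by zero
    }
    # Keys present in only one period cannot be compared and need review.
    only_one = sorted(set(earlier) ^ set(later))
    return changes, only_one


# Hypothetical figures, for illustration only.
sales_2022 = {"North": 200, "South": 150, "East": 80}
sales_2023 = {"North": 250, "South": 120, "West": 60}
changes, unmatched = compare_periods(sales_2022, sales_2023)
print(changes, unmatched)
```

Listing the unmatched keys separately makes gaps between datasets visible instead of hiding them inside the comparison.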

5. Analyze your data and explore further

In this last stage of the process, look at the information you have and ask yourself if this answers your original questions for your research. Are there any gaps? Do you understand the information you’ve found? If you feel there is more to cover, repeat the steps and delve deeper into the topic so that you can get all the information you need.

If secondary research can’t provide these answers, consider supplementing your results with data gained from primary research. As you explore further, add to your knowledge and update your findings. This will help you present clear, credible information.

Primary vs secondary research

Unlike secondary research, primary research involves creating data first-hand by directly working with interviewees, target users, or a target market. Primary research focuses on the method for carrying out research, asking questions, and collecting data using approaches such as:

- Interviews (panel, face-to-face or over the phone)

- Questionnaires or surveys

- Focus groups

Using these methods, researchers can get in-depth, targeted responses to questions, making results more accurate and specific to their research goals. However, it does take time to do and administer.

Unlike primary research, secondary research uses existing data, which also includes published results from primary research. Researchers summarize the existing research and use the results to support their research goals.

Both primary and secondary research have their places. Primary research can support the findings found through secondary research (and fill knowledge gaps), while secondary research can be a starting point for further primary research. Because of this, these research methods are often combined for optimal research results that are accurate at both the micro and macro level.

Sources of Secondary Research

There are two types of secondary research sources: internal and external. Internal data refers to in-house data that can be gathered from the researcher’s organization. External data refers to data published outside of and not owned by the researcher’s organization.

Internal data

Internal data is a good first port of call for insights and knowledge, as you may already have relevant information stored in your systems. Because you own this information, and it won’t be available to other researchers, it can give you a competitive edge. Examples of internal data include:

- Database information on sales history and business goal conversions

- Information from website applications and mobile site data

- Customer-generated data on product and service efficiency and use

- Previous research results or supplemental research areas

- Previous campaign results

External data

External data is useful when you: 1) need information on a new topic, 2) want to fill in gaps in your knowledge, or 3) want data that breaks down a population or market for trend and pattern analysis. Examples of external data include:

- Government, non-government agencies, and trade body statistics

- Company reports and research

- Competitor research

- Public library collections

- Textbooks and research journals

- Media stories in newspapers

- Online journals and research sites

Three examples of secondary research methods in action

How and why might you conduct secondary research? Let’s look at a few examples:

1. Collecting factual information from the internet on a specific topic or market

There are plenty of sites that hold data for people to view and use in their research. For example, Google Scholar, ResearchGate, or Wiley Online Library all provide previous research on a particular topic. Researchers can create free accounts and use the search facilities to look into a topic by keyword, before following the instructions to download or export results for further analysis.

This can be useful for exploring a new market that your organization wants to consider entering. For instance, by viewing the U.S. Census Bureau demographic data for that area, you can see what the demographics of your target audience are, and create compelling marketing campaigns accordingly.

2. Finding out the views of your target audience on a particular topic

If you’re interested in seeing the historical views on a particular topic, for example, attitudes to women’s rights in the US, you can turn to secondary sources.

Textbooks, news articles, reviews, and journal entries can all provide qualitative reports and interviews covering how people discussed women’s rights. There may be multimedia elements like video or documented posters of propaganda showing biased language usage.

By gathering this information, synthesizing it, and evaluating the language, who created it and when it was shared, you can create a timeline of how a topic was discussed over time.

3. When you want to know the latest thinking on a topic

Educational institutions, such as schools and colleges, create a lot of research-based reports on younger audiences or their academic specialisms. Dissertations from students also can be submitted to research journals, making these places useful places to see the latest insights from a new generation of academics.

Information can be requested — and sometimes academic institutions may want to collaborate and conduct research on your behalf. This can provide key primary data in areas that you want to research, as well as secondary data sources for your research.

Advantages of secondary research

There are several benefits of using secondary research, which we’ve outlined below:

- Easily and readily available data – There is an abundance of readily accessible data sources that have been pre-collected for use, in person at local libraries and online using the internet. This data is usually sorted by filters or can be exported into spreadsheet format, meaning that little technical expertise is needed to access and use the data.

- Faster research speeds – Since the data is already published and in the public arena, you don’t need to collect this information through primary research. This can make the research easier to do and faster, as you can get started with the data quickly.

- Low financial and time costs – Most secondary data sources can be accessed for free or at a small cost to the researcher, so the overall research costs are kept low. In addition, by saving on preliminary research, the time costs for the researcher are kept down as well.

- Secondary data can drive additional research actions – The insights gained can support future research activities (like conducting a follow-up survey or specifying future detailed research topics) or help add value to these activities.

- Secondary data can be useful pre-research insights – Secondary source data can provide pre-research insights and information on effects that can help resolve whether research should be conducted. It can also help highlight knowledge gaps, so subsequent research can consider this.

- Ability to scale up results – Secondary sources can include large datasets (like Census data results across several states) so research results can be scaled up quickly using large secondary data sources.

Disadvantages of secondary research

The disadvantages of secondary research are worth considering in advance of conducting research:

- Secondary research data can be out of date – Secondary sources can be updated regularly, but if you’re exploring the data between two updates, the data can be out of date. Researchers will need to consider whether the data available provides the right research coverage dates, so that insights are accurate and timely, or if the data needs to be updated. Also, fast-moving markets may find secondary data expires very quickly.

- Secondary research needs to be verified and interpreted – Where there’s a lot of data from one source, a researcher needs to review and analyze it. The data may need to be verified against other data sets or your hypotheses for accuracy and to ensure you’re using the right data for your research.

- The researcher has had no control over the secondary research – As the researcher was not involved in collecting the secondary data, invalid data can affect the results. It’s therefore vital to review the original methodology and controls closely to confirm that the data was collected in a systematic and error-free way.

- Secondary research data is not exclusive – As data sets are commonly available, there is no exclusivity and many researchers can use the same data. This can be problematic where researchers want exclusive rights over the research results, and it raises the risk that the research will be duplicated in the future.
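
Verifying secondary data against another data set, as described in the list above, can be sketched in a few lines of Python. This is a minimal illustration only: the function name `cross_check`, the 5% tolerance, and the regional figures are all hypothetical and not taken from any real source.

```python
def cross_check(source_a, source_b, tolerance=0.05):
    """Flag keys whose values differ between two sources by more than
    `tolerance`, measured relative to the larger of the two values."""
    flagged = {}
    # Only keys present in both sources can be compared.
    for key in source_a.keys() & source_b.keys():
        a, b = source_a[key], source_b[key]
        baseline = max(abs(a), abs(b))
        if baseline == 0:
            continue  # both values are zero, so they agree
        relative_gap = abs(a - b) / baseline
        if relative_gap > tolerance:
            flagged[key] = round(relative_gap, 3)
    return flagged


# Hypothetical sales figures reported by two different secondary sources.
report_a = {"region_north": 1200, "region_south": 950, "region_east": 400}
report_b = {"region_north": 1210, "region_south": 700, "region_west": 310}

discrepancies = cross_check(report_a, report_b)
print(discrepancies)
```

Figures that appear in only one source are skipped rather than flagged, so a separate check would be needed to spot coverage gaps.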

When do we conduct secondary research?

Now that you know the basics of secondary research, when do researchers normally conduct secondary research?

It’s often used at the beginning of research, when the researcher is trying to understand the current landscape. In addition, if the research area is new to the researcher, it can form crucial background context to help them understand what information exists already. This can plug knowledge gaps, supplement the researcher’s own learning or add to the research.

Secondary research can also be used in conjunction with primary research. Secondary research can become the formative research that helps pinpoint where further primary research is needed to find out specific information. It can also support or verify the findings from primary research.

You can use secondary research where high levels of control aren’t needed by the researcher, but a lot of knowledge on a topic is required from different angles.

Secondary research should not be used in place of primary research, as the two are very different and suit different circumstances.

Questions to ask before conducting secondary research

Before you start your secondary research, ask yourself these questions:

- Is there similar internal data that we have created for a similar area in the past?

If your organization has past research, it’s best to review this work before starting a new project. The older work may provide you with the answers, and give you a starting dataset and context of how your organization approached the research before. However, be mindful that the work is probably out of date and view it with that note in mind. Read through and look for where this helps your research goals or where more work is needed.

- What am I trying to achieve with this research?

When you have clear goals and understand what you need to achieve, you can look for the type of secondary or primary research that best supports those aims. Different secondary research data will provide you with different information – for example, news stories won’t tell you as much about your market’s buying patterns as internal or external e-commerce and sales data sources.

- How credible will my research be?

If you are looking for credibility, consider how accurate the research results will need to be, and whether you can sacrifice some credibility for speed by using secondary sources to get you started. Bear in mind which sources you choose: low-credibility data sites, like political party websites that are highly biased to favor their own party, would skew your results.

- What is the date of the secondary research?

When you’re looking to conduct research, you want the results to be as useful as possible, so data that is 10 years old is unlikely to be as accurate as data that was created a year ago. Since a lot can change in a few years, note the date of the research and look for newer data sets that give you a more recent picture of results. One caveat is data collected over a long-term period for comparison with earlier periods, which can tell you about the rate and direction of change.

- Can the data sources be verified? Does the information you have check out?

If you can’t verify the data by looking at the research methodology, speaking to the original team or cross-checking the facts with other research, it could be hard to be sure that the data is accurate. Think about whether you can use another source, or if it’s worth doing some supplementary primary research to replicate and verify results to help with this issue.
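
The date question above lends itself to a simple screening step. The sketch below sorts candidate sources into recent and stale groups; the function name `screen_by_age`, the five-year cutoff, and the source list are hypothetical, chosen only to illustrate the idea.

```python
from datetime import date


def screen_by_age(sources, max_age_years, today=None):
    """Split (name, publication_year) pairs into recent and stale lists."""
    today = today or date.today()
    recent, stale = [], []
    for name, year in sources:
        age = today.year - year
        if age <= max_age_years:
            recent.append(name)
        else:
            stale.append(name)
    return recent, stale


# Hypothetical candidate sources with their publication years.
sources = [
    ("Census extract", 2014),
    ("Industry report", 2023),
    ("Trade survey", 2021),
]

# A fixed reference date keeps the example reproducible.
recent, stale = screen_by_age(sources, max_age_years=5, today=date(2024, 6, 1))
print("recent:", recent)
print("stale:", stale)
```

A stale source is not necessarily useless: as noted above, older data can still serve as a baseline when the aim is to measure change over time.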

Secondary Research: Definition, Methods and Examples.

In the world of research, there are two main types of data sources: primary and secondary. While primary research involves collecting new data directly from individuals or sources, secondary research involves analyzing existing data already collected by someone else. Today we’ll discuss secondary research.

One common source of this research is published research reports and other documents. These materials can often be found in public libraries, on websites, or even as data extracted from previously conducted surveys. In addition, many government and non-government agencies maintain extensive data repositories that can be accessed for research purposes.

While secondary research may not offer the same level of control as primary research, it can be a highly valuable tool for gaining insights and identifying trends. Researchers can save time and resources by leveraging existing data sources while still uncovering important information.

What is Secondary Research: Definition

Secondary research is a research method that involves using already existing data. Existing data is summarized and collated to increase the overall effectiveness of the research.

One of the key advantages of secondary research is that it allows us to gain insights and draw conclusions without having to collect new data ourselves. This can save time and resources and also allow us to build upon existing knowledge and expertise.

When conducting secondary research, it’s important to be thorough and thoughtful in our approach. This means carefully selecting the sources and ensuring that the data we’re analyzing is reliable and relevant to the research question. It also means being critical and analytical in the analysis and recognizing any potential biases or limitations in the data.

Secondary research is much more cost-effective than primary research, as it uses already existing data. In primary research, by contrast, organizations or businesses collect data firsthand, or employ a third party to collect data on their behalf.

Secondary Research Methods with Examples

Secondary research is cost-effective, which is one of the reasons it is a popular choice among businesses and organizations. Not every organization is able to pay a huge sum of money to conduct research and gather data. Secondary research is also aptly termed “desk research”, as the data can be retrieved while sitting behind a desk.

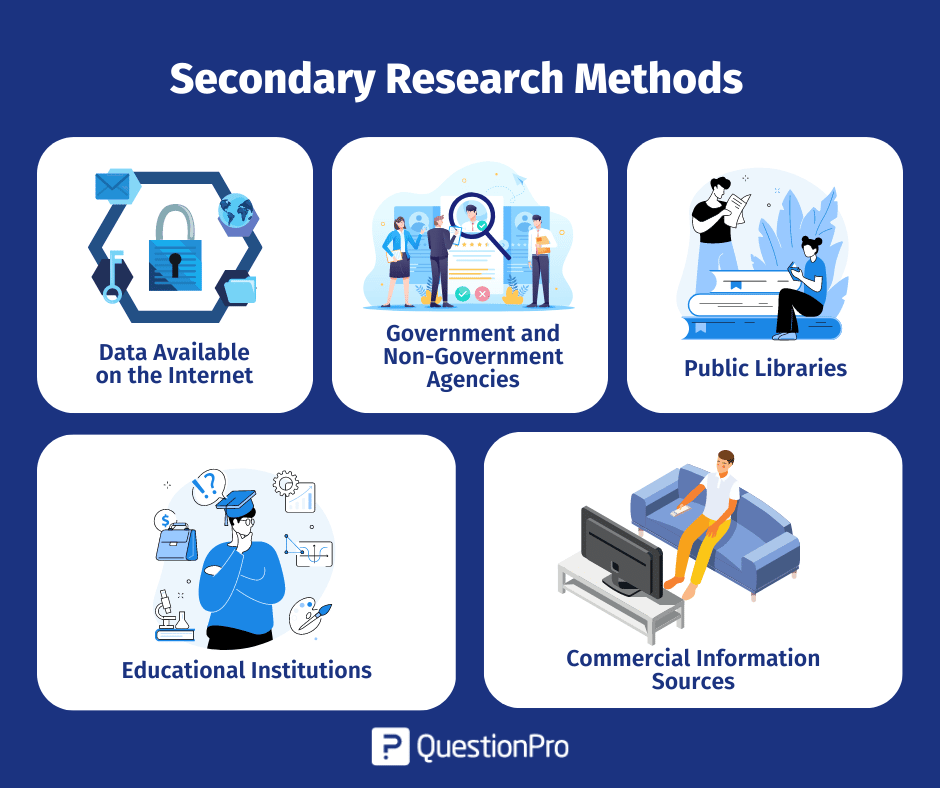

The following are popularly used secondary research methods and examples:

1. Data Available on The Internet

One of the most popular ways to collect secondary data is through the internet. Data is readily available online and can be downloaded at the click of a button.

This data is practically free of cost, or one may have to pay a negligible amount to download it. Websites hold a lot of information that businesses or organizations can use to suit their research needs. However, organizations should collect information only from authentic and trusted websites.

2. Government and Non-Government Agencies

Data for secondary research can also be collected from some government and non-government agencies. For example, the US Government Publishing Office (formerly the Government Printing Office), the US Census Bureau, and Small Business Development Centers have valuable and relevant data that businesses or organizations can use.

Some of these agencies may charge a fee to download or use their data. Data obtained from these agencies is generally considered authentic and trustworthy.

3. Public Libraries

Public libraries are another good source to search for data for this research. Public libraries have copies of important research that were conducted earlier. They are a storehouse of important information and documents from which information can be extracted.

The services provided in these public libraries vary from one library to another. Often, libraries have a large collection of government publications with market statistics, as well as business directories and newsletters.

4. Educational Institutions

The importance of collecting data from educational institutions for secondary research is often overlooked. However, more research is conducted in colleges and universities than in any business sector.

The data collected by universities is mainly for primary research. However, businesses or organizations can approach educational institutions and request data from them.

5. Commercial Information Sources

Local newspapers, journals, magazines, radio and TV stations are a great source to obtain data for secondary research. These commercial information sources have first-hand information on economic developments, political agenda, market research, demographic segmentation and similar subjects.

Businesses or organizations can request to obtain data that is most relevant to their study. Businesses not only have the opportunity to identify their prospective clients but can also know about the avenues to promote their products or services through these sources as they have a wider reach.

Key Differences between Primary Research and Secondary Research

Understanding the distinction between primary research and secondary research is essential in determining which research method is best for your project. These are the two main types of research methods, each with advantages and disadvantages. In this section, we will explore the critical differences between the two and when it is appropriate to use them.

How to Conduct Secondary Research?

We have already learned about the differences between primary and secondary research. Now, let’s take a closer look at how to conduct it.

Secondary research is an important tool for gathering information already collected and analyzed by others. It can help us save time and money and allow us to gain insights into the subject we are researching. So, in this section, we will discuss some common methods and tips for conducting it effectively.

Here are the steps involved in conducting secondary research:

1. Identify the topic of research: Before beginning secondary research, identify the topic that needs research. Once that’s done, list the research attributes and the purpose of the research.

2. Identify research sources: Next, narrow down the information sources that will provide the most relevant data and information for your research.

3. Collect existing data: Once the data collection sources are narrowed down, check for any previously available data that is closely related to the topic. Data related to the research can be obtained from various sources such as newspapers, public libraries, and government and non-government agencies.

4. Combine and compare: Once data is collected, combine and compare the data to check for duplication, and assemble it into a usable format. Make sure to collect data from authentic sources. Incorrect data can hamper research severely.

5. Analyze data: Analyze the collected data and check whether all questions are answered. If not, repeat the process to delve further into actionable insights.
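
The "combine and compare" step can be illustrated with a short Python sketch that merges records from several sources and drops duplicates. Everything here is an assumption made for illustration: the function name `combine_sources`, the `title` and `year` fields used to detect duplicates, and the sample records themselves.

```python
def combine_sources(*sources):
    """Merge record lists, keeping the first copy of each (title, year) pair."""
    seen = set()
    combined = []
    for records in sources:
        for record in records:
            # Normalize the title so trivial differences do not hide duplicates.
            key = (record["title"].strip().lower(), record["year"])
            if key not in seen:
                seen.add(key)
                combined.append(record)
    return combined


# Hypothetical records gathered from two different places.
library_records = [
    {"title": "Retail Trends", "year": 2022, "origin": "library"},
    {"title": "Consumer Survey", "year": 2021, "origin": "library"},
]
agency_records = [
    {"title": "retail trends ", "year": 2022, "origin": "agency"},
    {"title": "Housing Statistics", "year": 2023, "origin": "agency"},
]

combined = combine_sources(library_records, agency_records)
for record in combined:
    print(record["title"], record["year"], record["origin"])
```

Keeping the first copy is a deliberately simple rule. In practice, you might prefer the copy from the more authoritative source.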

Advantages of Secondary Research

Secondary research offers a number of advantages to researchers, including efficiency, the ability to build upon existing knowledge, and the ability to conduct research in situations where primary research may not be possible or ethical. By carefully selecting their sources and being thoughtful in their approach, researchers can leverage secondary research to drive impact and advance the field. Some key advantages are the following:

1. Most information in this research is readily available. There are many sources from which relevant data can be collected and used, unlike primary research, where data needs to be collected from scratch.

2. This is a less expensive and less time-consuming process, as the required data is easily available and doesn’t cost much if extracted from authentic sources. Obtaining the data involves minimal expenditure.

3. The data collected through secondary research gives organizations or businesses an idea of how effective primary research would be. Hence, organizations or businesses can form a hypothesis and evaluate the cost of conducting primary research.

4. Secondary research is quicker to conduct because of the availability of data. It can be completed within a few weeks depending on the objective of businesses or scale of data needed.

As we can see, this research is the process of analyzing data already collected by someone else, and it can offer a number of benefits to researchers.

Disadvantages of Secondary Research

On the other hand, secondary research comes with some disadvantages. Some of the most notable are the following:

1. Although data is readily available, credibility evaluation must be performed to understand the authenticity of the information available.

2. Not all secondary data resources offer the latest reports and statistics. Even when the data is accurate, it may not be recent enough to cover the period you are studying.

3. Secondary research derives its conclusions from collective primary research data. The success of your research will depend, to a great extent, on the quality of the primary research already conducted.

In conclusion, secondary research is an important tool for researchers exploring various topics. By leveraging existing data sources, researchers can save time and resources, build upon existing knowledge, and conduct research in situations where primary research may not be feasible.

There are a variety of methods and examples of secondary research, from analyzing public data sets to reviewing previously published research papers. As students and aspiring researchers, it’s important to understand the benefits and limitations of this research and to approach it thoughtfully and critically. By doing so, we can continue to advance our understanding of the world around us and contribute to meaningful research that positively impacts society.

QuestionPro can be a useful tool for conducting secondary research in a variety of ways. You can create online surveys that target a specific population, collecting data that can be analyzed to gain insights into consumer behavior, attitudes, and preferences; analyze existing data sets that you have obtained through other means or benchmark your organization against others in your industry or against industry standards. The software provides a range of benchmarking tools that can help you compare your performance on key metrics, such as customer satisfaction, with that of your peers.


- Econometrics and Mathematical Economics

- Economic Systems

- Economic Methodology

- Economic History

- Economic Development and Growth

- Financial Markets

- Financial Institutions and Services

- General Economics and Teaching

- Health, Education, and Welfare

- History of Economic Thought

- International Economics

- Labour and Demographic Economics

- Law and Economics

- Macroeconomics and Monetary Economics

- Microeconomics

- Public Economics

- Urban, Rural, and Regional Economics

- Welfare Economics

- Browse content in Education

- Adult Education and Continuous Learning

- Care and Counselling of Students

- Early Childhood and Elementary Education

- Educational Equipment and Technology

- Educational Strategies and Policy

- Higher and Further Education

- Organization and Management of Education

- Philosophy and Theory of Education

- Schools Studies

- Secondary Education

- Teaching of a Specific Subject

- Teaching of Specific Groups and Special Educational Needs

- Teaching Skills and Techniques

- Browse content in Environment

- Applied Ecology (Social Science)

- Climate Change

- Conservation of the Environment (Social Science)

- Environmentalist Thought and Ideology (Social Science)

- Natural Disasters (Environment)

- Social Impact of Environmental Issues (Social Science)

- Browse content in Human Geography

- Cultural Geography

- Economic Geography

- Political Geography

- Browse content in Interdisciplinary Studies

- Communication Studies

- Museums, Libraries, and Information Sciences

- Browse content in Politics

- African Politics

- Asian Politics

- Chinese Politics

- Comparative Politics

- Conflict Politics

- Elections and Electoral Studies

- Environmental Politics

- Ethnic Politics

- European Union

- Foreign Policy

- Gender and Politics

- Human Rights and Politics

- Indian Politics

- International Relations

- International Organization (Politics)

- International Political Economy

- Irish Politics

- Latin American Politics

- Middle Eastern Politics

- Political Methodology

- Political Communication

- Political Philosophy

- Political Sociology

- Political Theory

- Political Behaviour

- Political Economy

- Political Institutions

- Politics and Law

- Politics of Development

- Public Administration

- Public Policy

- Quantitative Political Methodology

- Regional Political Studies

- Russian Politics

- Security Studies

- State and Local Government

- UK Politics

- US Politics

- Browse content in Regional and Area Studies

- African Studies

- Asian Studies

- East Asian Studies

- Japanese Studies

- Latin American Studies

- Middle Eastern Studies

- Native American Studies

- Scottish Studies

- Browse content in Research and Information

- Research Methods

- Browse content in Social Work

- Addictions and Substance Misuse

- Adoption and Fostering

- Care of the Elderly

- Child and Adolescent Social Work

- Couple and Family Social Work

- Direct Practice and Clinical Social Work

- Emergency Services

- Human Behaviour and the Social Environment

- International and Global Issues in Social Work

- Mental and Behavioural Health

- Social Justice and Human Rights

- Social Policy and Advocacy

- Social Work and Crime and Justice

- Social Work Macro Practice

- Social Work Practice Settings

- Social Work Research and Evidence-based Practice

- Welfare and Benefit Systems

- Browse content in Sociology

- Childhood Studies

- Community Development

- Comparative and Historical Sociology

- Economic Sociology

- Gender and Sexuality

- Gerontology and Ageing

- Health, Illness, and Medicine

- Marriage and the Family

- Migration Studies

- Occupations, Professions, and Work

- Organizations

- Population and Demography

- Race and Ethnicity

- Social Theory

- Social Movements and Social Change

- Social Research and Statistics

- Social Stratification, Inequality, and Mobility

- Sociology of Religion

- Sociology of Education

- Sport and Leisure

- Urban and Rural Studies

- Browse content in Warfare and Defence

- Defence Strategy, Planning, and Research

- Land Forces and Warfare

- Military Administration

- Military Life and Institutions

- Naval Forces and Warfare

- Other Warfare and Defence Issues

- Peace Studies and Conflict Resolution

- Weapons and Equipment

- < Previous chapter

- Next chapter >

28 Secondary Data Analysis

Department of Psychology, Michigan State University

Richard E. Lucas, Department of Psychology, Michigan State University, East Lansing, MI

- Published: 01 October 2013

Secondary data analysis refers to the analysis of existing data collected by others. Secondary analysis affords researchers the opportunity to investigate research questions using large-scale data sets that are often inclusive of under-represented groups, while saving time and resources. Despite the immense potential for secondary analysis as a tool for researchers in the social sciences, it is not widely used by psychologists and is sometimes met with sharp criticism among those who favor primary research. The goal of this chapter is to summarize the promises and pitfalls associated with secondary data analysis and to highlight the importance of archival resources for advancing psychological science. In addition to describing areas of convergence and divergence between primary and secondary data analysis, we outline basic steps for getting started and finding data sets. We also provide general guidance on issues related to measurement, handling missing data, and the use of survey weights.

The goal of research in the social sciences is to gain a better understanding of the world and of how well theoretical predictions match empirical realities. Secondary data analysis contributes to these objectives through the application of “creative analytical techniques to data that have been amassed by others” (Kiecolt & Nathan, 1985, p. 10). Primary researchers design new studies to answer research questions, whereas the secondary data analyst uses existing resources. There is a deliberate coupling of research design and data analysis in primary research; however, the secondary data analyst has rarely had input into the design of the original studies in terms of the sampling strategy and measures selected for the investigation. For better or worse, the secondary data analyst simply has access to the final products of the data collection process in the form of a codebook or set of codebooks and a cleaned data set.

The analysis of existing data sets is routine in disciplines such as economics, political science, and sociology, but it is less well established in psychology (but see Brooks-Gunn & Chase-Lansdale, 1991; Brooks-Gunn, Berlin, Leventhal, & Fuligini, 2000). Moreover, biases against secondary data analysis in favor of primary research may be present in psychology (see McCall & Appelbaum, 1991). One possible explanation for this bias is that psychology has a rich and vibrant experimental tradition, and the training of many psychologists has likely emphasized this approach as the “gold standard” for addressing research questions and establishing causality (see, e.g., Cronbach, 1957). As a result, the nonexperimental methods that are typically used in secondary analyses may be viewed by some as inferior. Psychological scientists trained in the experimental tradition may not fully appreciate the unique strengths that nonexperimental techniques have to offer and may underestimate the time, effort, and skills required for conducting secondary data analyses in a competent and professional manner. Finally, biases against secondary data analysis might stem from lingering concerns over the validity of the self-report methods that are typically used in secondary data analysis. These can include concerns about the possibility that placement of items in a survey can influence responses (e.g., differences in the average levels of reported marital and life satisfaction when questions occur back to back as opposed to having the questions separated in the survey; see Schwarz, 1999; Schwarz & Strack, 1999) and concerns with biased reporting of sensitive behaviors (but see Akers, Massey, & Clarke, 1983).