5 Structured Thinking Techniques for Data Scientists

Structured thinking is a framework for solving unstructured problems — which covers just about all data science problems. Using a structured approach to solve problems not only only helps solve problems faster but also helps identify the parts of the problem that may need some extra attention.

Think of structured thinking like the map of a city you’re visiting for the first time.Without a map, you’ll probably find it difficult to reach your destination. Even if you did eventually reach your destination, it’ll probably take you at least double the time.

What Is Structured Thinking?

Here’s where the analogy breaks down: Structured thinking is a framework and not a fixed mindset; you can modify these techniques based on the problem you’re trying to solve. Let’s look at five structured thinking techniques to use in your next data science project .

- Six Step Problem Solving Model

- Eight Disciplines of Problem Solving

- The Drill Down Technique

- The Cynefin Framework

- The 5 Whys Technique

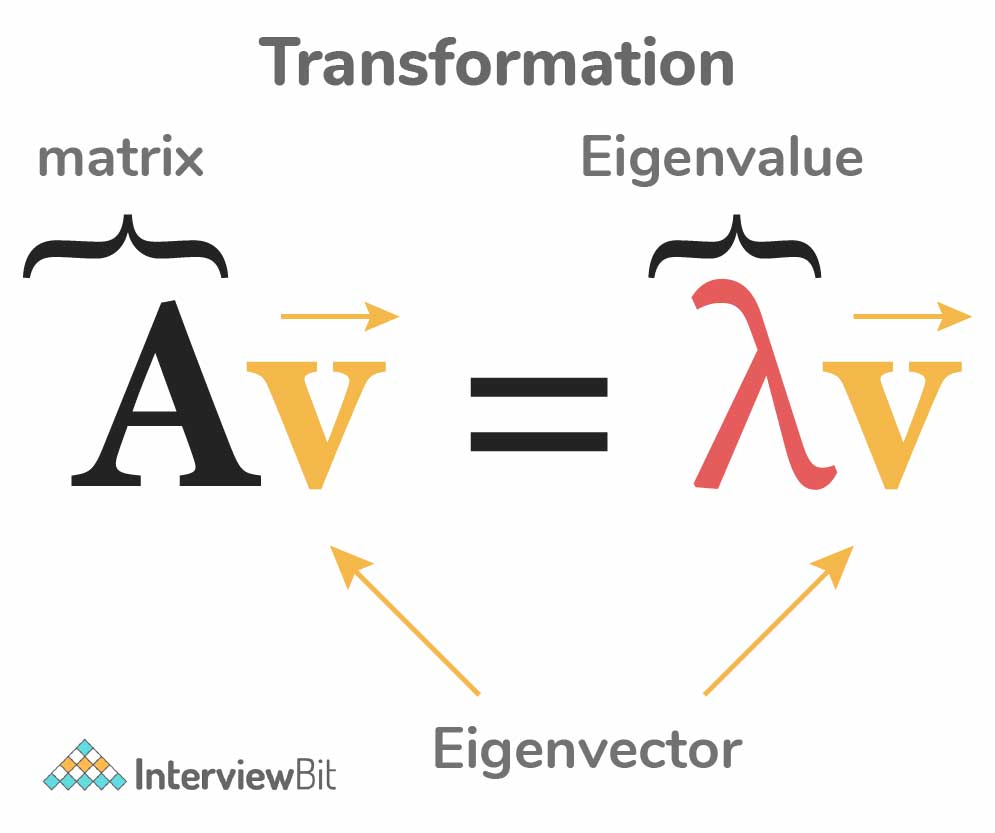

More From Sara A. Metwalli 3 Reasons Data Scientists Need Linear Algebra

1. Six Step Problem Solving Model

This technique is the simplest and easiest to use. As the name suggests, this technique uses six steps to solve a problem, which are:

Have a clear and concise problem definition.

Study the roots of the problem.

Brainstorm possible solutions to the problem.

Examine the possible solution and choose the best one.

Implement the solution effectively.

Evaluate the results.

This model follows the mindset of continuous development and improvement. So, on step six, if your results didn’t turn out the way you wanted, go back to step four and choose another solution (or to step one and try to define the problem differently).

My favorite part about this simple technique is how easy it is to alter based on the specific problem you’re attempting to solve.

We’ve Got Your Data Science Professionalization Right Here 4 Types of Projects You Need in Your Data Science Portfolio

2. Eight Disciplines of Problem Solving

The eight disciplines of problem solving offers a practical plan to solve a problem using an eight-step process. You can think of this technique as an extended, more-detailed version of the six step problem-solving model.

Each of the eight disciplines in this process should move you a step closer to finding the optimal solution to your problem. So, after you’ve got the prerequisites of your problem, you can follow disciplines D1-D8.

D1 : Put together your team. Having a team with the skills to solve the project can make moving forward much easier.

D2 : Define the problem. Describe the problem using quantifiable terms: the who, what, where, when, why and how.

D3 : Develop a working plan.

D4 : Determine and identify root causes. Identify the root causes of the problem using cause and effect diagrams to map causes against their effects.

D5 : Choose and verify permanent corrections. Based on the root causes, assess the work plan you developed earlier and edit as needed.

D6 : Implement the corrected action plan.

D7 : Assess your results.

D8 : Congratulate your team. After the end of a project, it’s essential to take a step back and appreciate the work you’ve all done before jumping into a new project.

3. The Drill Down Technique

The drill down technique is more suitable for large, complex problems with multiple collaborators. The whole purpose of using this technique is to break down a problem to its roots to make finding solutions that much easier. To use the drill down technique, you first need to create a table. The first column of the table will contain the outlined definition of the problem, followed by a second column containing the factors causing this problem. Finally, the third column will contain the cause of the second column's contents, and you’ll continue to drill down on each column until you reach the root of the problem.

Once you reach the root causes of the symptoms, you can begin developing solutions for the bigger problem.

On That Note . . . 4 Essential Skills Every Data Scientist Needs

4. The Cynefin Framework

The Cynefin framework, like the rest of the techniques, works by breaking down a problem into its root causes to reach an efficient solution. We consider the Cynefin framework a higher-level approach because it requires you to place your problem into one of five contexts.

- Obvious Contexts. In this context, your options are clear, and the cause-and-effect relationships are apparent and easy to point out.

- Complicated Contexts. In this context, the problem might have several correct solutions. In this case, a clear relationship between cause and effect may exist, but it’s not equally apparent to everyone.

- Complex Contexts. If it’s impossible to find a direct answer to your problem, then you’re looking at a complex context. Complex contexts are problems that have unpredictable answers. The best approach here is to follow a trial and error approach.

- Chaotic Contexts. In this context, there is no apparent relationship between cause and effect and our main goal is to establish a correlation between the causes and effects.

- Disorder. The final context is disorder, the most difficult of the contexts to categorize. The only way to diagnose disorder is to eliminate the other contexts and gather further information.

Get the Job You Want. We Can Help. Apply for Data Science Jobs on Built In

5. The 5 Whys Technique

Our final technique is the 5 Whys or, as I like to call it, the curious child approach. I think this is the most well-known and natural approach to problem solving.

This technique follows the simple approach of asking “why” five times — like a child would. First, you start with the main problem and ask why it occurred. Then you keep asking why until you reach the root cause of said problem. (Fair warning, you may need to ask more than five whys to find your answer.)

Women Who Code

Built In’s expert contributor network publishes thoughtful, solutions-oriented stories written by innovative tech professionals. It is the tech industry’s definitive destination for sharing compelling, first-person accounts of problem-solving on the road to innovation.

Great Companies Need Great People. That's Where We Come In.

- Data, AI, & Machine Learning

- Managing Technology

- Social Responsibility

- Workplace, Teams, & Culture

- AI & Machine Learning

- Diversity & Inclusion

- Big ideas Research Projects

- Artificial Intelligence and Business Strategy

- Responsible AI

- Future of the Workforce

- Future of Leadership

- All Research Projects

- AI in Action

- Most Popular

- The Truth Behind the Nursing Crisis

- Work/23: The Big Shift

- Coaching for the Future-Forward Leader

- Measuring Culture

The spring 2024 issue’s special report looks at how to take advantage of market opportunities in the digital space, and provides advice on building culture and friendships at work; maximizing the benefits of LLMs, corporate venture capital initiatives, and innovation contests; and scaling automation and digital health platform.

- Past Issues

- Upcoming Events

- Video Archive

- Me, Myself, and AI

- Three Big Points

Framing Data Science Problems the Right Way From the Start

Data science project failure can often be attributed to poor problem definition, but early intervention can prevent it.

- Data, AI, & Machine Learning

- Analytics & Business Intelligence

- Data & Data Culture

The failure rate of data science initiatives — often estimated at over 80% — is way too high. We have spent years researching the reasons contributing to companies’ low success rates and have identified one underappreciated issue: Too often, teams skip right to analyzing the data before agreeing on the problem to be solved. This lack of initial understanding guarantees that many projects are doomed to fail from the very beginning.

Of course, this issue is not a new one. Albert Einstein is often quoted as having said , “If I were given one hour to save the planet, I would spend 59 minutes defining the problem and one minute solving it.”

Get Updates on Leading With AI and Data

Get monthly insights on how artificial intelligence impacts your organization and what it means for your company and customers.

Please enter a valid email address

Thank you for signing up

Privacy Policy

Consider how often data scientists need to “clean up the data” on data science projects, often as quickly and cheaply as possible. This may seem reasonable, but it ignores the critical “why” question: Why is there bad data in the first place? Where did it come from? Does it represent blunders, or are there legitimate data points that are just surprising? Will they occur in the future? How does the bad data impact this particular project and the business? In many cases, we find that a better problem statement is to find and eliminate the root causes of bad data .

Too often, we see examples where people either assume that they understand the problem and rush to define it, or they don’t build the consensus needed to actually solve it. We argue that a key to successful data science projects is to recognize the importance of clearly defining the problem and adhere to proven principles in so doing. This problem is not relegated to technology teams; we find that many business, political, management, and media projects, at all levels, also suffer from poor problem definition.

Toward Better Problem Definition

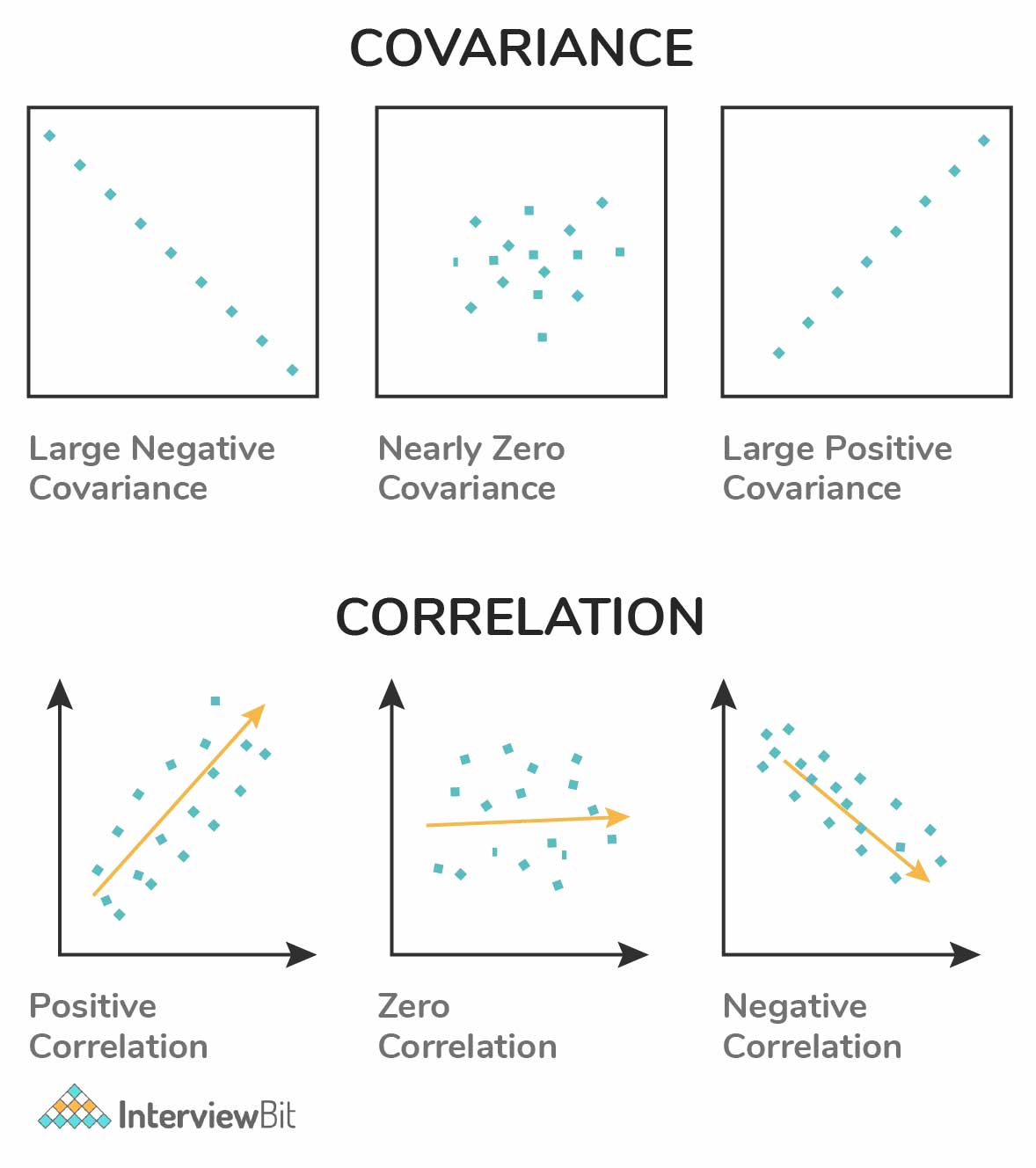

Data science uses the scientific method to solve often complex (or multifaceted) and unstructured problems using data and analytics. In analytics, the term fishing expedition refers to a project that was never framed correctly to begin with and involves trolling the data for unexpected correlations. This type of data fishing does not meet the spirit of effective data science but is prevalent nonetheless. Consequently, defining the problem correctly needs to be step one. We previously proposed an organizational “bridge” between data science teams and business units, to be led by an innovation marshal — someone who speaks the language of both the data and management teams and can report directly to the CEO. This marshal would be an ideal candidate to assume overall responsibility to ensure that the following proposed principles are utilized.

Get the right people involved. To ensure that your problem framing has the correct inputs, you have to involve all the key people whose contributions are needed to complete the project successfully from the beginning. After all, data science is an interdisciplinary, transdisciplinary team sport. This team should include those who “own” the problem, those who will provide data, those responsible for the analyses, and those responsible for all aspects of implementation. Think of the RACI matrix — those responsible , accountable , to be consulted , and to be informed — for each aspect of the project.

Recognize that rigorously defining the problem is hard work. We often find that the problem statement changes as people work to nail it down. Leaders of data science projects should encourage debate, allow plenty of time, and document the problem statement in detail as they go. This ensures broad agreement on the statement before moving forward.

Don’t confuse the problem and its proposed solution. Consider a bank that is losing market share in consumer loans and whose leadership team believes that competitors are using more advanced models. It would be easy to jump to a problem statement that looks something like “Build more sophisticated loan risk models.” But that presupposes that a more sophisticated model is the solution to market share loss, without considering other possible options, such as increasing the number of loan officers, providing better training, or combating new entrants with more effective marketing. Confusing the problem and proposed solution all but ensures that the problem is not well understood, limits creativity, and keeps potential problem solvers in the dark. A better statement in this case would be “Research root causes of market share loss in consumer loans, and propose viable solutions.” This might lead to more sophisticated models, or it might not.

Understand the distinction between a proximate problem and a deeper root cause. In our first example, the unclean data is a proximate problem, whereas the root cause is whatever leads to the creation of bad data in the first place. Importantly, “We don’t know enough to fully articulate the root cause of the bad data problem” is a legitimate state of affairs, demanding a small-scale subproject.

Do not move past problem definition until it meets the following criteria:

Related Articles

- It does no harm. It may not be clear how to solve the defined problem, but it should be clear that solving it will lead to a good business result. If it’s not clear, more refinement may be needed. Consider the earlier bank example. While it might be easy enough to adjust models in ways that grant more loans, this might significantly increase risk — an unacceptable outcome. So the real goal should be to improve market share without creating additional risk, hence the inclusion of “propose viable solutions” in the problem statement above.

- It considers necessary constraints. Using the bank example, we can recognize that more sophisticated models might require hiring additional highly skilled loan officers — something the bank might be unwilling to do. All constraints, including those involving time, budget, technology, and people, should be clearly articulated to avoid a problem statement misaligned with business goals.

- It has an accountability matrix (or its equivalent). Alignment is key for success, so ensure that those who are responsible for solving the problem understand their various roles and responsibilities. Again, think RACI matrix.

- It receives buy-in from stakeholders. Poorly defined or controversial problem statements often produce resistors within the organization. In extreme cases, they may become “snipers,” attempting to ensure project failure. Work to develop a general (not necessarily unanimous) consensus from leadership, those involved in the solution, and the ultimate customers (those who will be affected) on the problem definition.

Taking the time needed to properly define the problem can feel uncomfortable. After all, we live and work in cultures that demand results and are eager to “get on with it.” But shortchanging this step is akin to putting the cart before the horse — it simply doesn’t work. There is no substitute for probing more deeply, getting the right people involved, and taking the time to understand the real problem. All of us — data scientists, business leaders, and politicians alike — need to get better at defining the right problem the right way.

About the Authors

Roger W. Hoerl ( @rogerhoerl ) teaches statistics at Union College in Schenectady, New York. Previously, he led the applied statistics lab at GE Global Research. Diego Kuonen ( @diegokuonen ) is head of Bern, Switzerland-based Statoo Consulting and a professor of data science at the Geneva School of Economics and Management at the University of Geneva. Thomas C. Redman ( @thedatadoc1 ) is president of New Jersey-based consultancy Data Quality Solutions and coauthor of The Real Work of Data Science: Turning Data Into Information, Better Decisions, and Stronger Organizations (Wiley, 2019).

More Like This

Add a comment cancel reply.

You must sign in to post a comment. First time here? Sign up for a free account : Comment on articles and get access to many more articles.

Comments (2)

Tathagat varma.

Data Topics

- Data Architecture

- Data Literacy

- Data Science

- Data Strategy

- Data Modeling

- Governance & Quality

- Education Resources For Use & Management of Data

Data Science Solutions: Applications and Use Cases

Data Science is a broad field with many potential applications. It’s not just about analyzing data and modeling algorithms, but it also reinvents the way businesses operate and how different departments interact. Data scientists solve complex problems every day, leveraging a variety of Data Science solutions to tackle issues like processing unstructured data, finding patterns […]

Data Science is a broad field with many potential applications. It’s not just about analyzing data and modeling algorithms, but it also reinvents the way businesses operate and how different departments interact. Data scientists solve complex problems every day, leveraging a variety of Data Science solutions to tackle issues like processing unstructured data, finding patterns in large datasets, and building recommendation engines using advanced statistical methods, artificial intelligence, and machine learning techniques.

Data Science helps analyze and extract patterns from corporate data, so these patterns can be organized to guide corporate decisions. Data analysis using Data Science techniques helps companies to figure out which trends are the best fit for businesses during various parts of the year.

Through data patterns, Data Science professionals can use tools and techniques to forecast future customer needs toward a specific product or service. Data Science and businesses can work together closely in understanding consumer preferences across a wide range of items and running better marketing campaigns.

To enhance the scope of predictive analytics , Data Science now employs other advanced technologies such as machine learning and deep learning to improve decision-making and create better models for predicting financial risks, customer behaviors, or market trends.

Data Science helps with making future-proofing decisions, supply chain predictions, understanding market trends, planning better pricing for products, consideration of automation for various data-driven tasks, and so on.

For example, in sales and marketing, Data Science is mainly used to predict markets, determine new customer segments, optimize pricing structures, and analyze the customer portfolio. Businesses frequently use sentiment analysis and behavior analytics to determine purchase and usage patterns, and to understand how people view products and services. Some businesses like Lowes, Home Depot, or Netflix use “hyper-personalization” techniques to match offers to customers accurately via their recommendation engines.

E-commerce companies use recommendation engines, pricing algorithms, customer predictive segmentation, personalized product image searching, and artificially intelligent chat bots to offer transformational customer experience.

In recent times, deep learning , through its use of “artificial neural networks,” has empowered data scientists to perform unstructured data analytics, such as image recognition, object categorizing, and sound mapping.

Data Science Solutions by Industry Applications

Now let’s take a look at how Data Science is powering the industry sectors with its cross-disciplinary platforms and tools:

Data Science Solutions in Banking: Banking and financial sectors are highly dependent on Data Science solutions powered with big data tools for risk analytics, risk management, KYC, and fraud mitigation. Large banks, hedge funds, stock exchanges, and other financial institutions use advanced Data Science (powered by big data, AI, ML) for trading analytics, pre-trade decision-support analytics, sentiment measurements, predictive analytics, and more.

Data Science Solutions in Marketing: Marketing departments often use Data Science to build recommendation systems and to analyze customer behavior. When we talk about Data Science in marketing, we are primarily concerned with what we call “retail marketing.” The retail marketing process involves analyzing customer data to inform business decisions and drive revenue. Common data used in retail marketing include customer data, product data, sales data, and competitor data. Customer transactional data is used extensively in AI-powered data analytics systems for increased sales and providing excellent marketing services. Chatbot analytics and sales representative response data are used together to improve sales efficiency.

The retailer can use this data to build customer-targeted marketing campaigns, optimize prices based on demand, and decide on product assortment. The retail marketing process is rarely automated; it involves making business decisions based on the data. Data scientists working in retail marketing are primarily concerned with deriving insights from the data and applying statistical and machine learning methods to inform these decisions.

Data Science Solutions in Finance and Trading: Finance departments use Data Science to build trading algorithms, manage risk, and improve compliance. A data scientist working in finance will primarily use data about the financial markets. This includes data about the companies whose stocks are traded on the market, the trading activity of the investors, and the stock prices. The financial data is unstructured and messy; it’s collected from different sources using different formats. The data scientist’s first task, therefore, is to process the data and convert it into a structured format. This is necessary for building algorithms and other models. For example, the data scientist might build a trading algorithm that exploits the market inefficiencies and generates profits for the company.

Data Science Solutions in Human Resources: HR departments use Data Science to hire the best talent, manage employee data, and predict employee performance. The data scientist working in HR will primarily use employee data collected from different sources. This data could be structured or unstructured depending on how it’s collected. The most common source is an HR database such as Workday. The data scientist’s first task is to process the data and clean it. This is necessary for insights from the data. The data scientist might use methods like machine learning to predict the employee’s performance. This can be done by training the algorithm on historical employee data and the features it contains. For example, the data scientist might build a model that predicts employee performance using historical data.

Data Science in Logistics and Warehousing: Logistics and operations departments use Data Science to manage supply chains and predict demand. The data scientist working in logistics and warehousing will primarily use data about customer orders, inventory, and product prices. The data scientist will use data from sensors and IoT devices deployed in the supply chain to track the product’s journey. The data scientist might use methods like machine learning to predict demand.

Data Science Solutions in Customer Service: Customer service departments use Data Science to answer customer queries, manage tickets, and improve the end-to-end customer experience. The data scientist working in customer service will primarily use data about customer tickets, customers, and the support team. The most common source is the ticket management system. In this case, the data scientist might use methods like machine learning to predict when the customer will stop engaging with the brand. This can be done by training the algorithm on historical customer data. For example, using historical data, the data scientist might build a model that predicts when a customer will stop engaging with the brand.

Big Data with Data Science Solutions Use Cases

While Data Science solutions can be used to get insights into behaviors and processes, big data analytics indicates the convergence of several cutting-edge technologies working together to help enterprise organizations extract better value from the data that they have.

In biomedical research and health, advanced Data Science and big data analytics techniques are used for increasing online revenue, reducing customer complaints, and enhancing customer experience through personalized services. In the hospitality and food services industries, once again big data analytics is used for studying customers’ behavior through shopping data, such as wait times at the checkout. Statistics show that 38% of companies use big data to improve organizational effectiveness.

In the insurance sector, big data-powered predictive analytics is frequently used for analyzing large volumes of data at high speed during the underwriting stage. Insurance claims analysts now have access to algorithms that help identify fraudulent behaviors. Across all industry sectors, organizations are harnessing the predictive powers of Data Science to enhance their business forecasting capabilities.

Big data coupled with Data Science enables enterprise businesses to leverage their own organization data, rather than relying on market studies or third-party tools. Data Science practitioners work closely with RPA industry professionals to identify data sources for a company, as well as to build dashboards and visuals for searching various forms of data analytics in real-time. Data Science teams can now train deep learning systems to identify contracts and invoices from a stack of documents, as well as perform different types of identification for the information.

Big data analytics has the potential to unlock great insights into data across social media channels and platforms, enabling marketing, customer support, and advertising to improve and be more aligned with corporate goals. Big data analytics make research results better, and helps organizations use research more effectively by allowing them to identify specific test cases and user settings.

Specialized Data Science Use Cases with Examples

Data Science applications can be used for any industry or area of study, but the majority of examples involve data analytics for business use cases . In this section, some specific use cases are presented with examples to help you better understand its potential in your organization.

Data cleansing: In Data Science, the first step is data cleansing, which involves identifying and cleaning up any incorrect or incomplete data sets. Data cleansing is critical to identify errors and inconsistencies that can skew your data analysis and lead to poor business decisions. The most important thing about data cleansing is that it’s an ongoing process. Business data is always changing, which means the data you have today might not be correct tomorrow. The best data scientists know that data cleansing isn’t done just once; it’s an ongoing process that starts with the very first data set you collect.

Prediction and forecasting: The next step in Data Science is data analysis, prediction, and forecasting. You can do this on an individual level or on a larger scale for your entire customer base. Prediction and forecasting helps you understand how your customers behave and what they may do next. You can use these insights to create better products, marketing campaigns, and customer support. Normally, the techniques used for prediction and forecasting include regression, time series analysis, and artificial neural networks.

Fraud detection: Fraud detection is a highly specialized use of Data Science that relies on many techniques to identify inconsistencies. With fraud detection, you’re trying to find any transactions that are incorrect or fraudulent. It’s an important use case because it can significantly reduce the costs of business operations. The best fraud detection systems are wide-ranging. They use many different techniques to identify inconsistencies and unusual data points that suggest fraud. Because fraud detection is such a specialized use case, it’s best to work with a Data Science professional.

Data Science for business growth: Every business wants to grow, and this is a natural outcome of doing business. Yet many businesses struggle to keep up with their competitors. Data Science can help you understand your potential customers and improve your services. It can also help you identify new opportunities and explore different areas you can expand into. Use Data Science to identify your target audience and their needs. Then create products and services that serve those needs better than your competitors can. You can also use Data Science to identify new markets, explore new areas for growth, and expand into new industries.

Data Science is an interdisciplinary field that uses mathematics, engineering, statistics, machine learning, and other fields of study to analyze data and identify patterns. Data Science applications can be used for any industry or area of study, but most examples involve data analytics for business use cases . Data Science often helps you understand your potential customers and their buying needs.

Image used under license from Shutterstock.com

Leave a Reply Cancel reply

You must be logged in to post a comment.

Data Analytics with R

1 problem solving with data, 1.1 introduction.

This chapter will introduce you to a general approach to solving problems and answering questions using data. Throughout the rest of the module, we will reference back to this chapter as you work your way through your own data analysis exercises.

The approach is applicable to actuaries, data scientists, general data analysts, or anyone who intends to critically analyze data and develop insights from data.

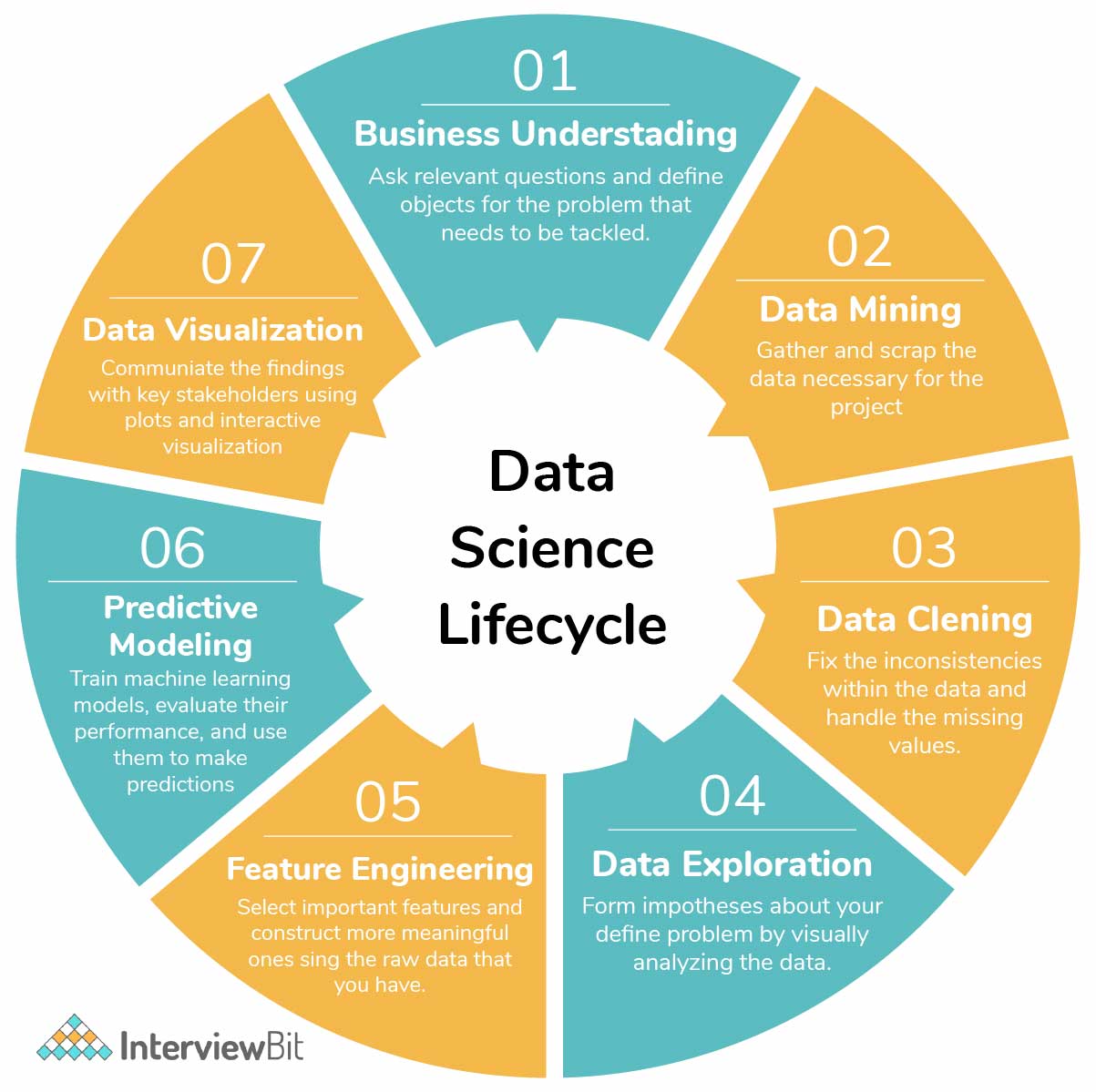

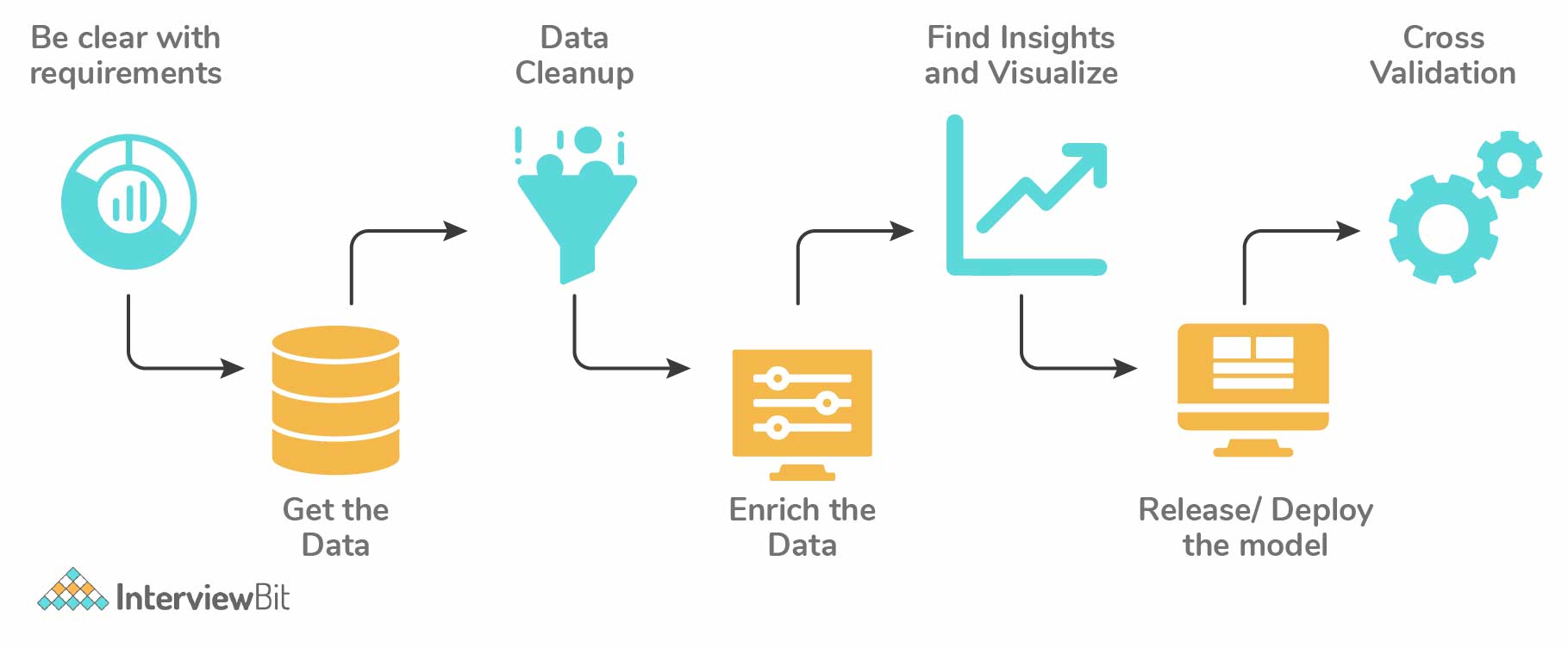

This framework, which some may refer to as The Data Science Process includes the following five main components:

- Data Collection

- Data Cleaning

- Exploratory Data Analysis

- Model Building

- Inference and Communication

Note that all five steps may not be applicable in every situation, but these steps should guide you as you think about how to approach each analysis you perform.

In the subsections below, we’ll dive into each of these in more detail.

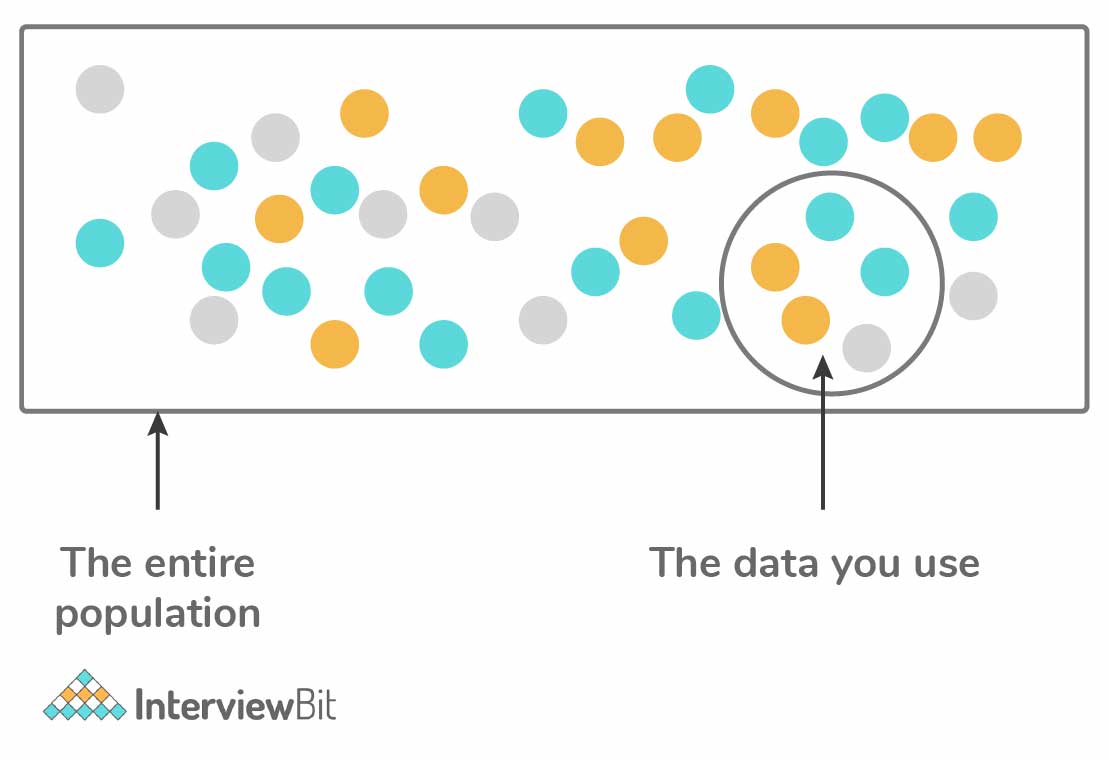

1.2 Data Collection

In order to solve a problem or answer a question using data, it seems obvious that you must need some sort of data to start with. Obtaining data may come in the form of pre-existing or generating new data (think surveys). As an actuary, your data will often come from pre-existing sources within your company. This could include querying data from databases or APIs, being sent excel files, text files, etc. You may also find supplemental data online to assist you with your project.

For example, let’s say you work for a health insurance company and you are interested in determining the average drive time for your insured population to the nearest in-network primary care providers to see if it would be prudent to contract with additional doctors in the area. You would need to collect at least three pieces of data:

- Addresses of your insured population (internal company source/database)

- Addresses of primary care provider offices (internal company source/database)

- Google Maps travel time API to calculate drive times between addresses (external data source)

In summary, data collection provides the fundamental pieces needed to solve your problem or answer your question.

1.3 Data Cleaning

We’ll discuss data cleaning in a little more detail in later chapters, but this phase generally refers to the process of taking the data you collected in step 1, and turning it into a usable format for your analysis. This phase can often be the most time consuming as it may involve handling missing data as well as pre-processing the data to be as error free as possible.

Depending on where you source your data will have major implications for how long this phase takes. For example, many of us actuaries benefit from devoted data engineers and resources within our companies who exert much effort to make our data as clean as possible for us to use. However, if you are sourcing your data from raw files on the internet, you may find this phase to be exceptionally difficult and time intensive.

1.4 Exploratory Data Analysis

Exploratory Data Analysis , or EDA, is an entire subject itself. In short, EDA is an iterative process whereby you:

- Generate questions about your data

- Search for answers, patterns, and characteristics of your data by transforming, visualizing, and summarizing your data

- Use learnings from step 2 to generate new questions and insights about your data

We’ll cover some basics of EDA in Chapter 4 on Data Manipulation and Chapter 5 on Data Visualization, but we’ll only be able to scratch the surface of this topic.

A successful EDA approach will allow you to better understand your data and the relationships between variables within your data. Sometimes, you may be able to answer your question or solve your problem after the EDA step alone. Other times, you may apply what you learned in the EDA step to help build a model for your data.

1.5 Model Building

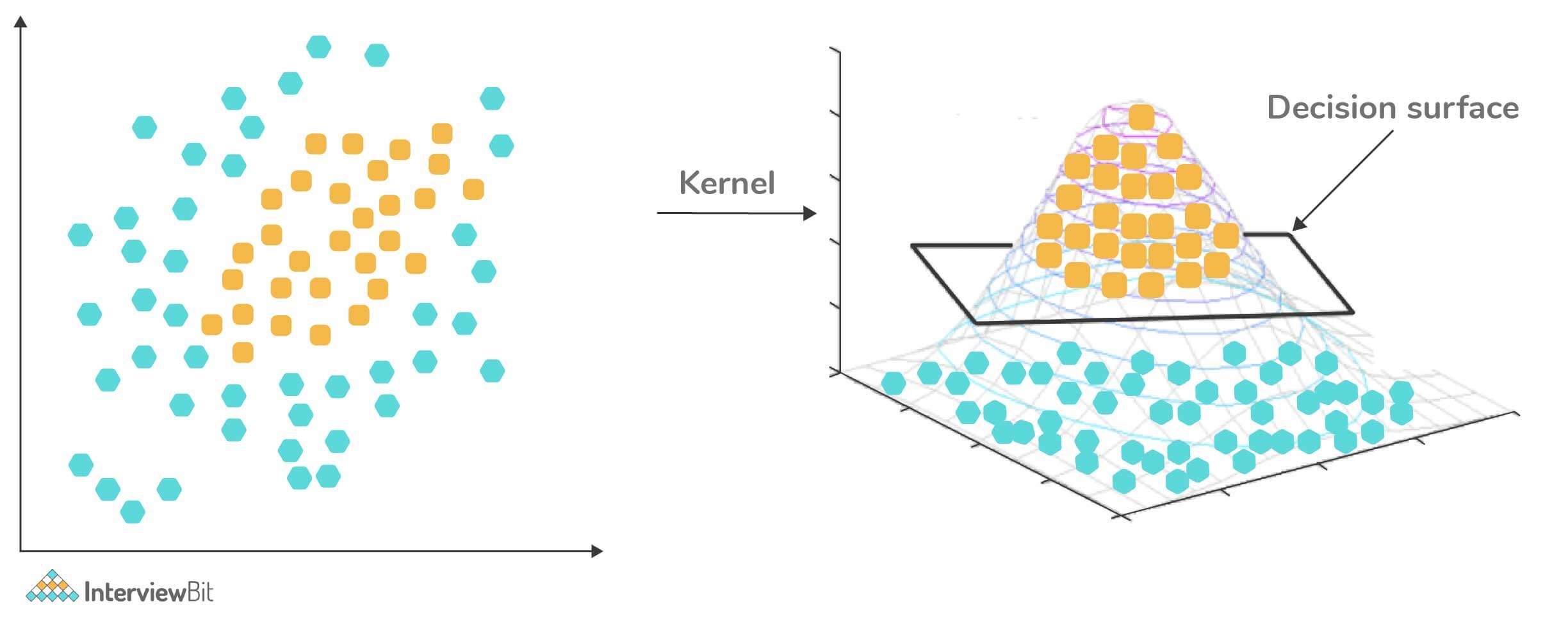

In this step, we build a model, often using machine learning algorithms, in an effort to make sense of our data and gain insights that can be used for decision making or communicating to an audience. Examples of models could include regression approaches, classification algorithms, tree-based models, time-series applications, neural networks, and many, many more. Later in this module, we will practice building our own models using introductory machine learning algorithms.

It’s important to note that while model building gets a lot of attention (because it’s fun to learn and apply new types of models), it typically encompasses a relatively small portion of your overall analysis from a time perspective.

It’s also important to note that building a model doesn’t have to mean applying machine learning algorithms. In fact, in actuarial science, you may find more often than not that the actuarial models you create are Microsoft Excel-based models that blend together historical data, assumptions about the business, and other factors that allow you make projections or understand the business better.

1.6 Inference and Communication

The final phase of the framework is to use everything you’ve learned about your data up to this point to draw inferences and conclusions about the data, and to communicate those out to an audience. Your audience may be your boss, a client, or perhaps a group of actuaries at an SOA conference.

In any instance, it is critical for you to be able to condense what you’ve learned into clear and concise insights and convince your audience why your insights are important. In some cases, these insights will lend themselves to actionable next steps, or perhaps recommendations for a client. In other cases, the results will simply help you to better understand the world, or your business, and to make more informed decisions going forward.

1.7 Wrap-Up

As we conclude this chapter, take a few minutes to look at a couple alternative visualizations that others have used to describe the processes and components of performing analyses. What do they have in common?

- Karl Rohe - Professor of Statistics at the University of Wisconsin-Madison

- Chanin Nantasenamat - Associate Professor of Bioinformatics and Youtuber at the “Data Professor” channel

Practice Exams

Course Notes

Infographics

Career Guides

A selection of practice exams that will test your current data science knowledge. Identify key areas of improvement to strengthen your theoretical preparation, critical thinking, and practical problem-solving skills so you can get one step closer to realizing your professional goals.

Excel Mechanics

Imagine if you had to apply the same Excel formatting adjustment to both Sheet 1 and Sheet 2 (i.e., adjust font, adjust fill color of the sheets, add a couple of empty rows here and there) which contain thousands of rows. That would cost an unjustifiable amount of time. That is where advanced Excel skills come in handy as they optimize your data cleaning, formatting and analysis process and shortcut your way to a job well-done. Therefore, asses your Excel data manipulation skills with this free practice exam.

Formatting Excel Spreadsheets

Did you know that more than 1 in 8 people on the planet uses Excel and that Office users typically spend a third of their time in Excel. But how many of them use the popular spreadsheet tool efficiently? Find out where you stand in your Excel skills with this free practice exam where you are a first-year investment banking analyst at one of the top-tier banks in the world. The dynamic nature of your position will test your skills in quick Excel formatting and various Excel shortcuts

Hypothesis Testing

Whenever we need to verify the results of a test or experiment we turn to hypothesis testing. In this free practice exam you are a data analyst at an electric car manufacturer, selling vehicles in the US and Canada. Currently the company offers two car models – Apollo and SpeedX. You will need to download a free Excel file containing the car sales of the two models over the last 3 years in order find out interesting insights and test your skills in hypothesis testing.

Confidence Intervals

Confidence Intervals refers to the probability of a population parameter falling between a range of certain values. In this free practice exam, you lead the research team at a portfolio management company with over $50 billion dollars in total assets under management. You are asked to compare the performance of 3 funds with similar investment strategies and are given a table with the return of the three portfolios over the last 3 years. You will have to use the data to answer questions that will test your knowledge in confidence intervals.

Fundamentals of Inferential Statistics

While descriptive statistics helps us describe and summarize a dataset, inferential statistics allows us to make predictions based off data. In this free practice exam, you are a data analyst at a leading statistical research company. Much of your daily work relates to understanding data structures and processes, as well as applying analytical theory to real-world problems on large and dynamic datasets. You will be given an excel dataset and will be tested on normal distribution, standardizing a dataset, the Central Limit Theorem among other inferential statistics questions.

Fundamentals of Descriptive Statistics

Descriptive statistics helps us understand the actual characteristics of a dataset by generating summaries about data samples. The most popular types of descriptive statistics are measures of center: median, mode and mean. In this free practice exam you have been appointed as a Junior Data Analyst at a property developer company in the US, where you are asked to evaluate the renting prices in 9 key states. You will work with a free excel dataset file that contains the rental prices and houses over the last years.

Jupyter Notebook Shortcuts

In this free practice exam you are an experienced university professor in Statistics who is looking to upskill in data science and has joined the data science apartment. As on of the most popular coding environments for Python, your colleagues recommend you learn Jupyter Notebook as a beginner data scientist. Therefore, in this quick assessment exam you are going to be tested on some basic theory regarding Jupyter Notebook and some of its shortcuts which will determine how efficient you are at using the environment.

Intro to Jupyter Notebooks

Jupyter is a free, open-source interactive web-based computational notebook. As one of the most popular coding environments for Python and R, you are inevitably going to encounter Jupyter at some point in you data science journey, if you have not already. Therefore, in this free practice exam you are a professor of Applied Economics and Finance who is learning how to use Jupyter. You are going to be tested on the very basics of the Jupyter environment like how to set up the environment and some Jupyter keyboard shortcuts.

Black-Scholes-Merton Model in Python

The Black Scholes formula is one of the most popular financial instruments used in the past 40 years. Derived by Fisher, Black Myron Scholes and Robert Merton in 1973, it has become the primary tool for derivative pricing. In this free practice exam, you are a finance student whose Applied Finance is approaching and is asked to perform the Black-Scholes-Merton formula in Python by working on a dataset containing Tesla’s stock prices for the period between mid-2010 and mid-2020.

Python for Financial Analysis

In a heavily regulated industry like fintech, simplicity and efficiency is key. Which is why Python is the preferred choice for programming language over the likes of Java or C++. In this free practice exam you are a university professor of Applied Economics and Finance, who is focused on running regressions and applying the CAPM model on the NASDAQ and The Coca-Cola Company Dataset for the period between 2016 and 2020 inclusive. Make sure to have the following packages running to complete your practice test: pandas, numpy, api, scipy, and pyplot as plt.

Python Finance

Python has become the ideal programming language for the financial industry, as more and more hedge funds and large investment banks are adopting this general multi-purpose language to solve their quantitative problems. In this free practice exam on Python Finance, you are part of the IT team of a huge company, operating in the US stock market, where you are asked to analyze the performance of three market indices. The packages you need to have running are numpy, pandas and pyplot as plt.

Machine Learning with KNN

KNN is a popular supervised machine learning algorithm that is used for solving both classification and regression problems. In this free practice exam, this is exactly what you are going to be asked to do, as you are required to create 2 datasets for 2 car dealerships in Jupyter Notebook, fit the models to the training data, find the set of parameters that best classify a car, construct a confusion matrix and more.

Excel Functions

The majority of data comes in spreadsheet format, making Excel the #1 tool of choice for professional data analysts. The ability to work effectively and efficiently in Excel is highly desirable for any data practitioner who is looking to bring value to a company. As a matter of fact, being proficient in Excel has become the new standard, as 82% of middle-skill jobs require competent use of the productivity software. Take this free Excel Functions practice exam and test your knowledge on removing duplicate values, transferring data from one sheet to another, rand using the VLOOKUP and SUMIF function.

Useful Tools in Excel

What Excel lacks in data visualization tools compared to Tableau, or computational power for analyzing big data compared to Python, it compensates with accessibility and flexibility. Excel allows you to quickly organize, visualize and perform mathematical functions on a set of data, without the need for any programming or statistical skills. Therefore, it is in your best interest to learn how to use the various Excel tools at your disposal. This practice exam is a good opportunity to test your excel knowledge in the text to column functions, excel macros, row manipulation and basic math formulas.

Excel Basics

Ever since its first release in 1985, Excel continues to be the most popular spreadsheet application to this day- with approximately 750 million users worldwide, thanks to its flexibility and ease of use. No matter if you are a data scientist or not, knowing how to use Excel will greatly improve and optimize your workflow. Therefore, in this free Excel Basics practice exam you are going to work with a dataset of a company in the Fast Moving Consumer Goods Sector as an aspiring data analyst and test your knowledge on basic Excel functions and shortcuts.

A/B Testing for Social Media

In this free A/B Testing for Social Media practice exam, you are an experienced data analyst who works at a new social media company called FilmIt. You are tasked with the job of increasing user engagement by applying the correct modifications to how users move on to the next video. You decide that the best approach is by conducting a A/B test in a controlled environment. Therefore, in order to successfully complete this task, you are going to be tested on statistical significance, 2 tailed-tests and choosing the success metrics.

Fundamentals of A/B Testing

A/B Testing is a powerful statistical tool used to compare the results between two versions of the same marketing asset such as a webpage or email in a controlled environment. An example of A/B testing is when Electronic Arts created a variation version of the sales page for the popular SimCity 5 simulation game, which performed 40% better than the control page. Speaking about video games, in this free practice test, you are a data analyst who is tasked with the job to conduct A/B testing for a game developer. You are going to be asked to choose the best way to perform an A/B test, identify the null hypothesis, choose the right evaluation metrics, and ultimately increase revenue through in-game ads.

Introduction to Data Science Disciplines

The term “Data Science” dates back to the 1960s, to describe the emerging field of working with large amounts of data that drives organizational growth and decision-making. While the essence has remained the same, the data science disciplines have changed a lot over the past decades thanks to rapid technological advancements. In this free introduction to data science practice exam, you will test your understanding of the modern day data science disciplines and their role within an organization.

Advanced SQL

In this free Advanced SQL practice exam you are a sophomore Business student who has decided to focus on improving your coding and analytical skills in the areas of relational database management systems. You are given an employee dataset containing information like titles, salaries, birth dates and department names, and are required to come up with the correct answers. This free SQL practice test will evaluate your knowledge on MySQL aggregate functions , DML statements (INSERT, UPDATE) and other advanced SQL queries.

Most Popular Practice Exams

Check out our most helpful downloadable resources according to 365 Data Science students and our expert team of instructors.

Join 2M+ Students and Start Learning

Learn from the best, develop an invaluable skillset, and secure a job in data science.

- Solving Problems with Data Science

Aakash Tandel , Former Data Scientist

Article Categories: #Strategy , #Data & Analytics

Posted on December 3, 2018

There is a systematic approach to solving data science problems and it begins with asking the right questions. This article covers some of the many questions we ask when solving data science problems at Viget.

T h e r e i s a s y s t e m a t i c a p p r o a c h t o s o l v i n g d a t a s c i e n c e p r o b l e m s a n d i t b e g i n s w i t h a s k i n g t h e r i g h t q u e s t i o n s . T h i s a r t i c l e c o v e r s s o m e o f t h e m a n y q u e s t i o n s w e a s k w h e n s o l v i n g d a t a s c i e n c e p r o b l e m s a t V i g e t .

A challenge that I’ve been wrestling with is the lack of a widely populated framework or systematic approach to solving data science problems. In our analytics work at Viget, we use a framework inspired by Avinash Kaushik’s Digital Marketing and Measurement Model . We use this framework on almost every project we undertake at Viget. I believe data science could use a similar framework that organizes and structures the data science process.

As a start, I want to share the questions we like to ask when solving a data science problem. Even though some of the questions are not specific to the data science domain, they help us efficiently and effectively solve problems with data science.

Business Problem

What is the problem we are trying to solve?

That’s the most logical first step to solving any question, right? We have to be able to articulate exactly what the issue is. Start by writing down the problem without going into the specifics, such as how the data is structured or which algorithm we think could effectively solve the problem.

Then try explaining the problem to your niece or nephew, who is a freshman in high school. It is easier than explaining the problem to a third-grader, but you still can’t dive into statistical uncertainty or convolutional versus recurrent neural networks. The act of explaining the problem at a high school stats and computer science level makes your problem, and the solution, accessible to everyone within your or your client’s organization, from the junior data scientists to the Chief Legal Officer.

Clearly defining our business problem showcases how data science is used to solve real-world problems. This high-level thinking provides us with a foundation for solving the problem. Here are a few other business problem definitions we should think about.

- Who are the stakeholders for this project?

- Have we solved similar problems before?

- Has someone else documented solutions to similar problems?

- Can we reframe the problem in any way?

And don’t be fooled by these deceivingly simple questions. Sometimes more generalized questions can be very difficult to answer. But, we believe answering these framing question is the first, and possibly most important, step in the process, because it makes the rest of the effort actionable.

Say we work at a video game company — let’s call the company Rocinante. Our business is built on customers subscribing to our massive online multiplayer game. Users are billed monthly. We have data about users who have cancelled their subscription and those who have continued to renew month after month. Our management team wants us to analyze our customer data.

Well, as a company, the Rocinante wants to be able to predict whether or not customers will cancel their subscription . We want to be able to predict which customers will churn, in order to address the core reasons why customers unsubscribe. Additionally, we need a plan to target specific customers with more proactive retention strategies.

Churn is the turnover of customers, also referred to as customer death. In a contractual setting - such as when a user signs a contract to join a gym - a customer “dies” when they cancel their gym membership. In a non-contractual setting, customer death is not observed and is more difficult to model. For example, Amazon does not know when you have decided to never-again purchase Adidas. Your customer death as an Amazon or Adidas customer is implied.

Possible Solutions

What are the approaches we can use to solve this problem.

There are many instances when we shouldn’t be using machine learning to solve a problem. Remember, data science is one of many tools in the toolbox. There could be a simpler, and maybe cheaper, solution out there. Maybe we could answer a question by looking at descriptive statistics around web analytics data from Google Analytics. Maybe we could solve the problem with user interviews and hear what the users think in their own words. This question aims to see if spinning up EC2 instances on Amazon Web Services is worth it. If the answer to, “Is there a simple solution,” is, “No,” then we can ask, “ Can we use data science to solve this problem? ” This yes or no question brings about two follow-up questions:

- “ Is the data available to solve this problem? ” A data scientist without data is not a very helpful individual. Many of the data science techniques that are highlighted in media today — such as deep learning with artificial neural networks — requires a massive amount of data. A hundred data points is unlikely to provide enough data to train and test a model. If the answer to this question is no, then we can consider acquiring more data and pipelining that data to warehouses, where it can be accessed at a later date.

- “ Who are the team members we need in order to solve this problem? ” Your initial answer to this question will be, “The data scientist, of course!” The vast majority of the problems we face at Viget can’t or shouldn’t be solved by a lone data scientist because we are solving business problems. Our data scientists team up with UXers , designers , developers , project managers , and hardware developers to develop digital strategies and solving data science problems is one part of that strategy. Siloing your problem and siloing your data scientists isn’t helpful for anyone.

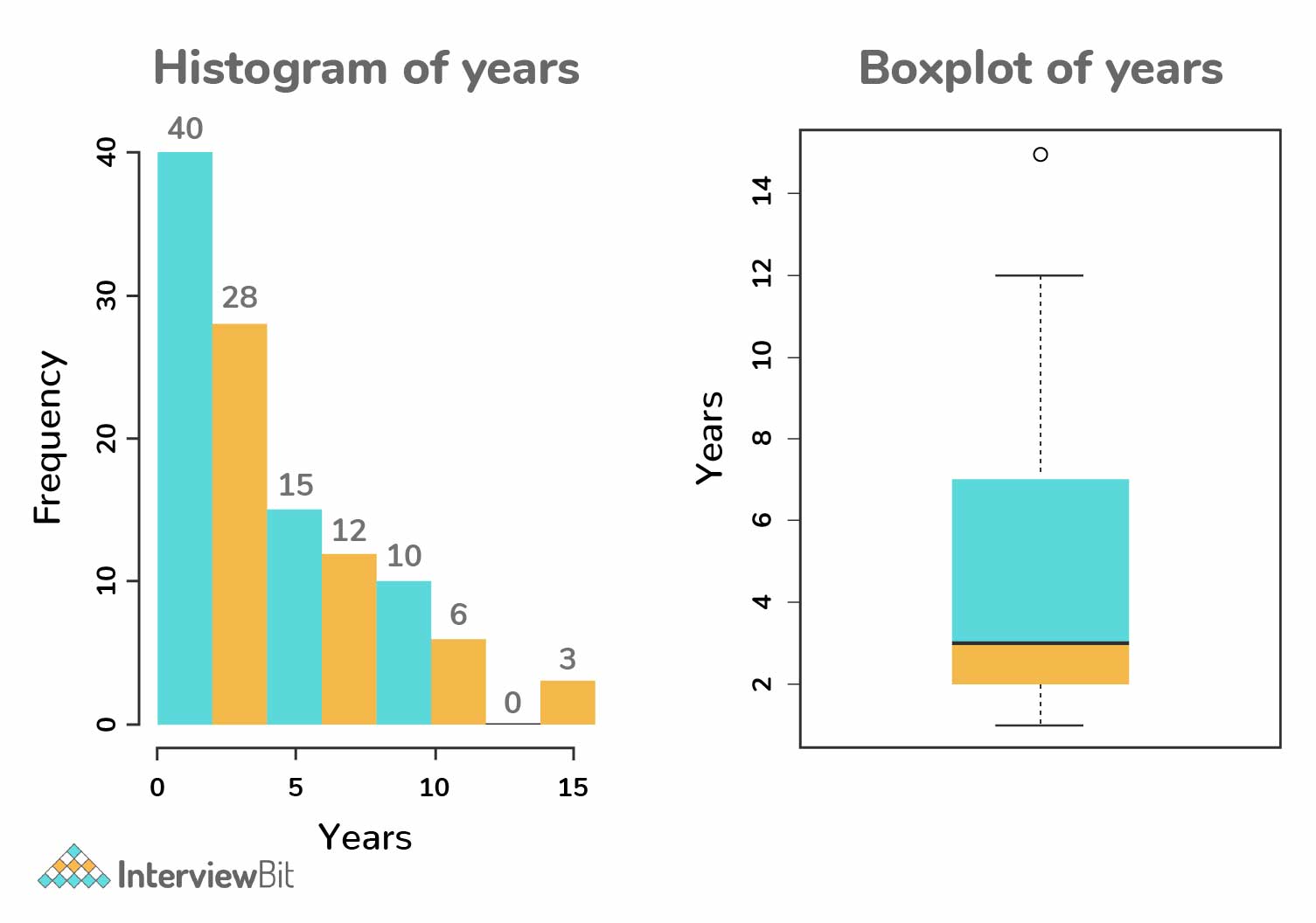

We want to predict when a customer will unsubscribe from Rocinante’s flagship game. One simple approach to solving this problem would be to take the average customer life - how long a gamer remains subscribed - and predict that all customers will churn after X amount of time. Say our data showed that on average customers churned after 72 months of subscription. Then we could predict a new customer would churn after 72 months of subscription. We test out this hypothesis on new data and learn that it is wildly inaccurate. The average customer lifetime for our previous data was 72 months, but our new batch of data had an average customer lifetime of 2 months. Users in the second batch of data churned much faster than those in the first batch. Our prediction of 72 months didn’t generalize well. Let’s try a more sophisticated approach using data science.

- Is the data available to solve this problem? The dataset contains 12,043 rows of data and 49 features. We determine that this sample of data is large enough for our use-case. We don’t need to deploy Rocinante’s data engineering team for this project.

- Who are the team members we need in order to solve this problem? Let’s talk with the Rocinante’s data engineering team to learn more about their data collection process. We could learn about biases in the data from the data collectors themselves. Let’s also chat with the customer retention and acquisitions team and hear about their tactics to reduce churn. Our job is to analyze data that will ultimately impact their work. Our project team will consist of the data scientist to lead the analysis, a project manager to keep the project team on task, and a UX designer to help facilitate research efforts we plan to conduct before and after the data analysis.

How do we know if we have successfully solved the problem?

At Viget, we aim to be data-informed, which means we aren’t blindly driven by our data, but we are still focused on quantifiable measures of success. Our data science problems are held to the same standard. What are the ways in which this problem could be a success? What are the ways in which this problem could be a complete and utter failure? We often have specific success metrics and Key Performance Indicators (KPIs) that help us answer these questions.

Our UX coworker has interviewed some of the other stakeholders at Rocinante and some of the gamers who play our game. Our team believes if our analysis is inconclusive, and we continue the status quo, the project would be a failure. The project would be a success if we are able to predict a churn risk score for each subscriber. A churn risk score, coupled with our monthly churn rate (the rate at which customers leave the subscription service per month), will be useful information. The customer acquisition team will have a better idea of how many new users they need to acquire in order to keep the number of customers the same, and how many new users they need in order to grow the customer base.

Data Science-ing

What do we need to learn about the data and what analysis do we need to conduct.

At the heart of solving a data science problem are hundreds of questions. I attempted to ask these and similar questions last year in a blog post, Data Science Workflow . Below are some of the most crucial — they’re not the only questions you could face when solving a data science problem, but are ones that our team at Viget thinks about on nearly every data problem.

- What do we need to learn about the data?

- What type of exploratory data analysis do we need to conduct?

- Where is our data coming from?

- What is the current state of our data?

- Is this a supervised or unsupervised learning problem?

- Is this a regression, classification, or clustering problem?

- What biases could our data contain?

- What type of data cleaning do we need to do?

- What type of feature engineering could be useful?

- What algorithms or types of models have been proven to solve similar problems well?

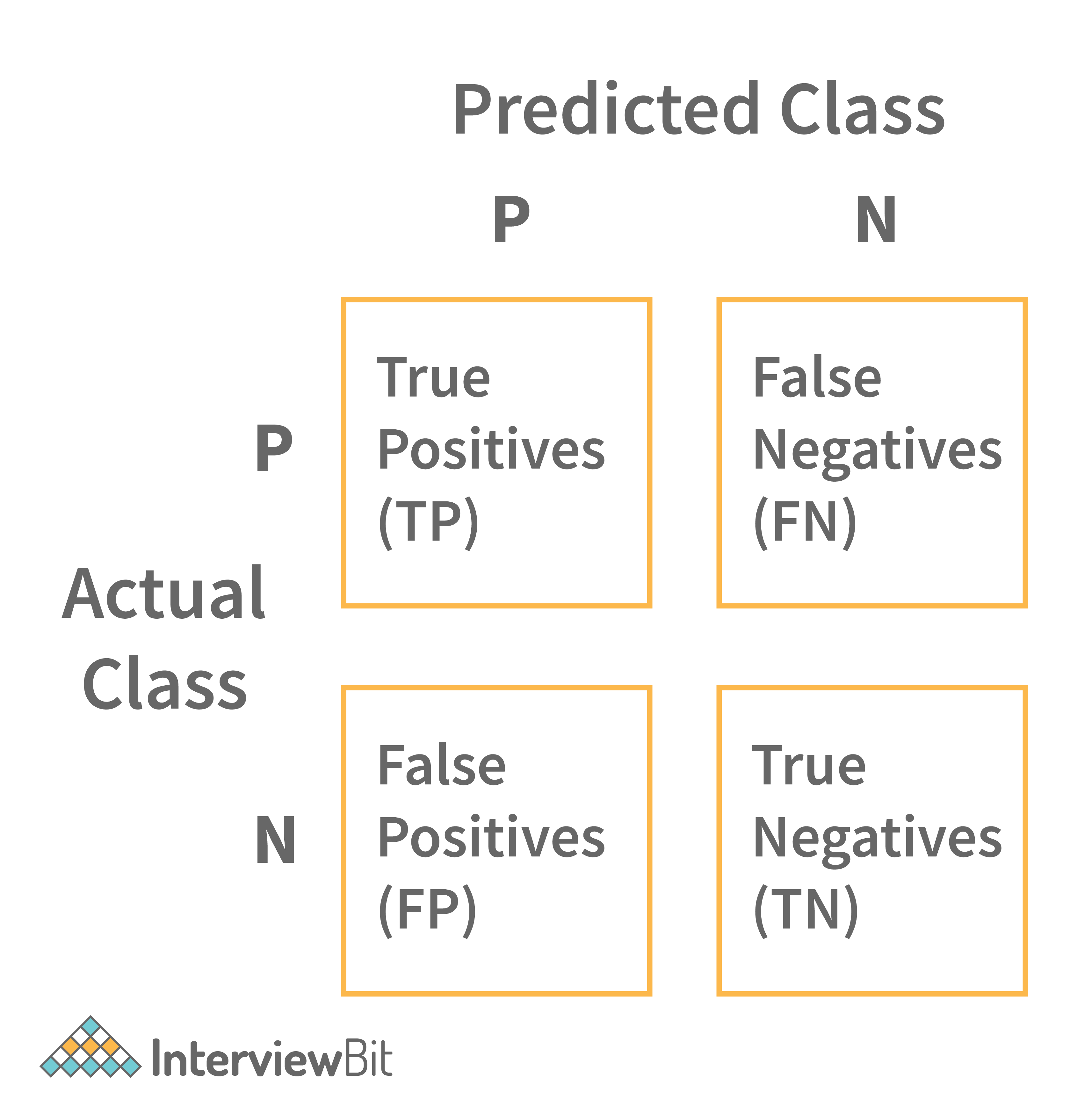

- What evaluation metric are we using for our model?

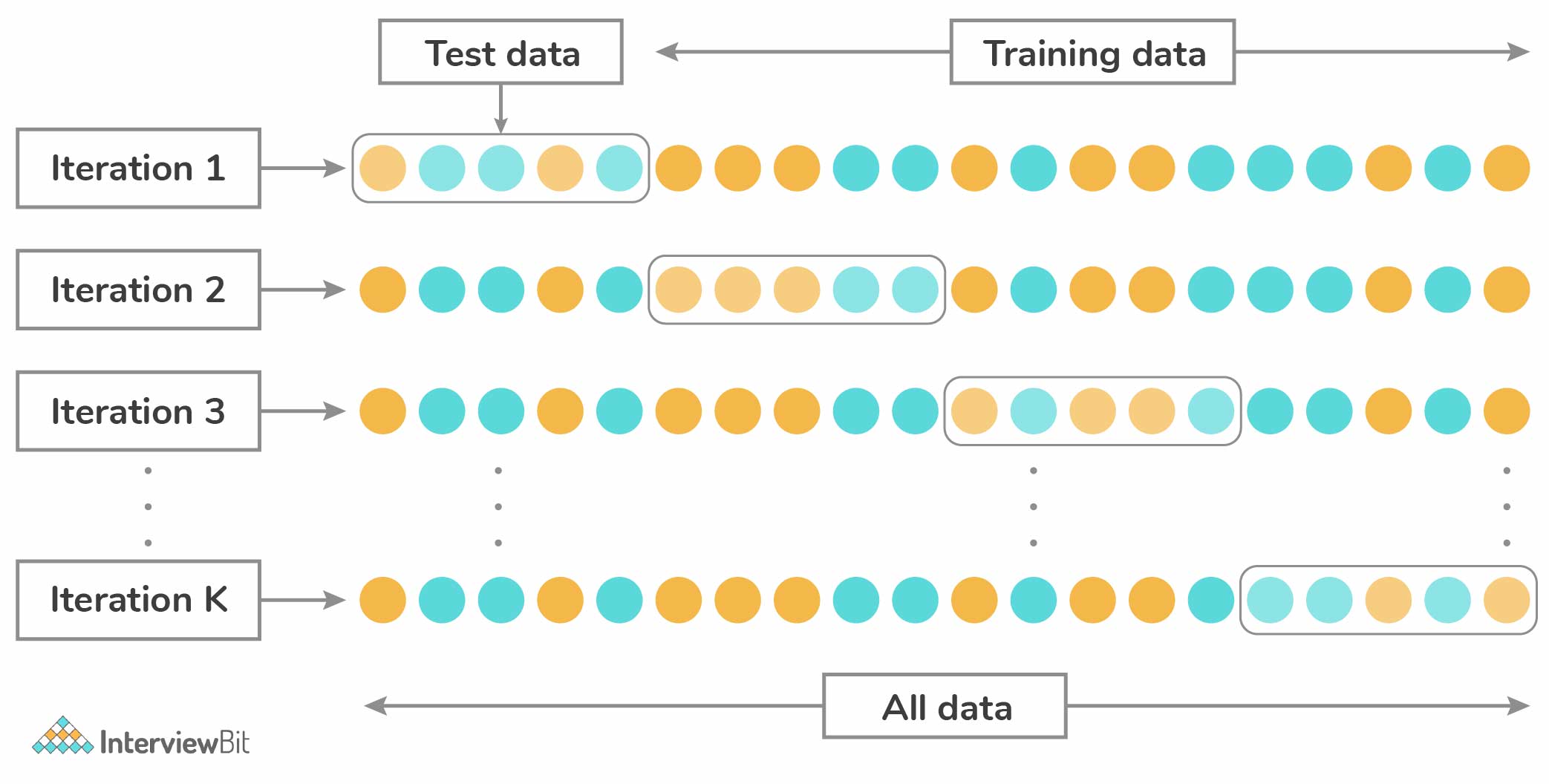

- What is our training and testing plan?

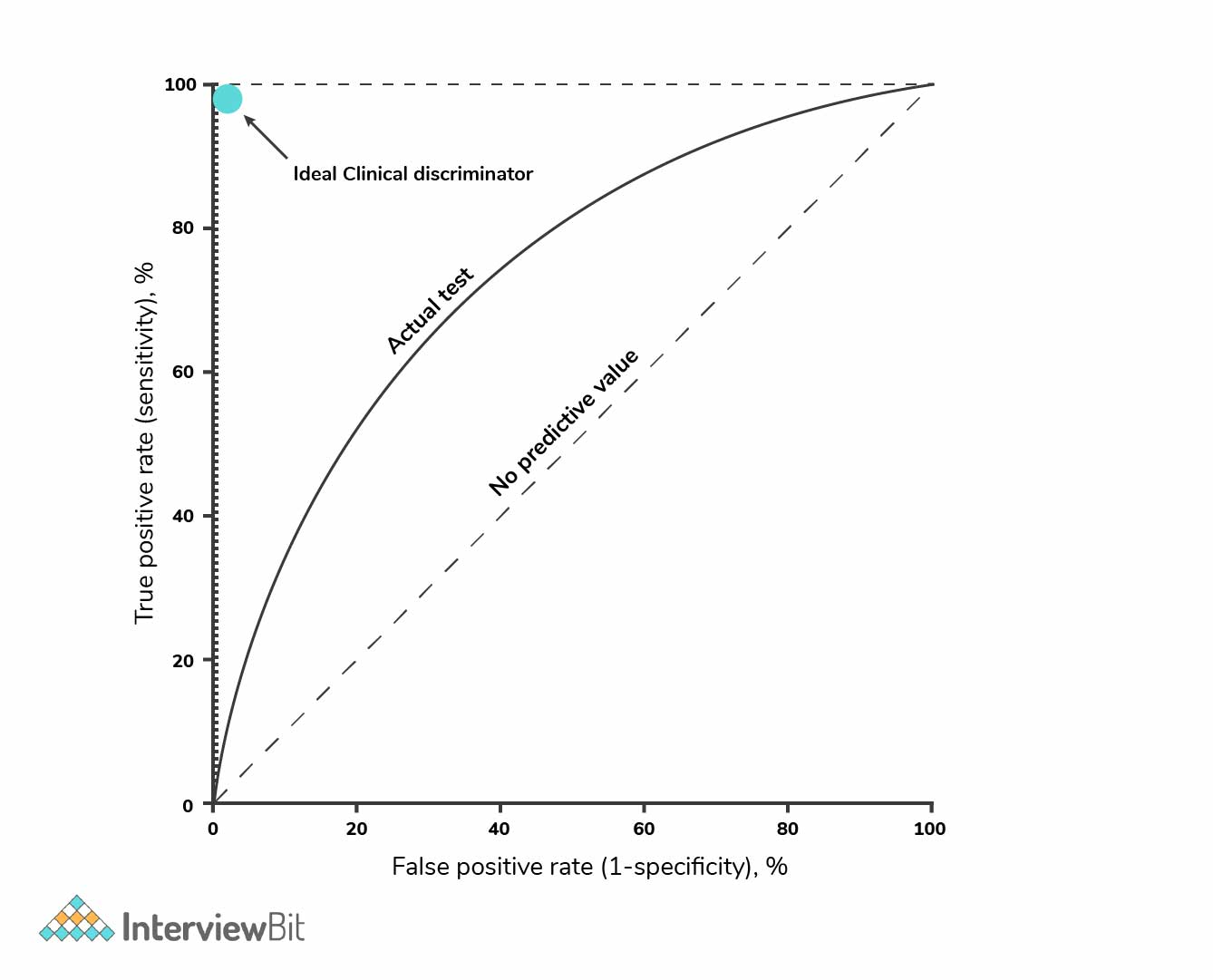

- How can we tweak the model to make it more accurate, increase the ROC/AUC, decrease log-loss, etc. ?

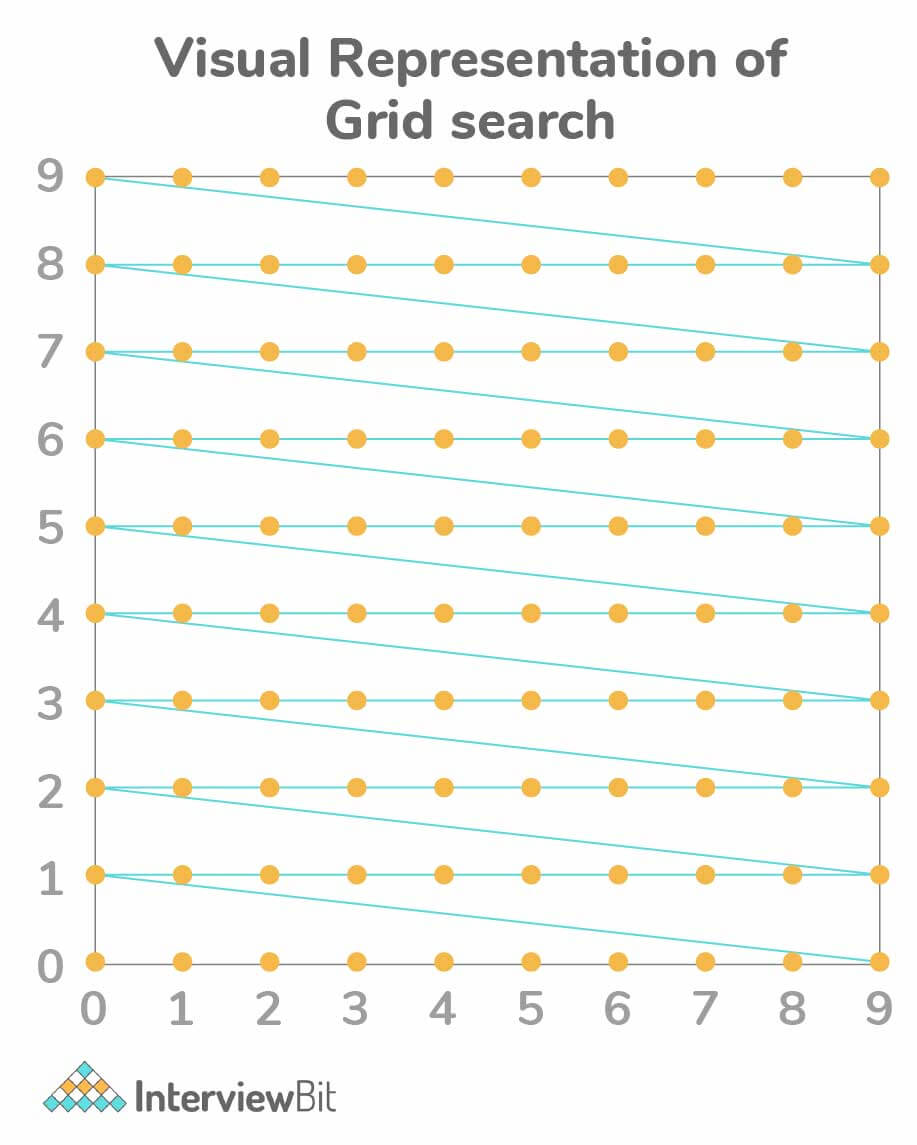

- Have we optimized the various parameters of the algorithm? Try grid search here.

- Is this ethical?

That last question raises the conversation about ethics in data science. Unfortunately, there is no hippocratic oath for data scientists, but that doesn’t excuse the data science industry from acting unethically. We should apply ethical considerations to our standard data science workflow. Additionally, ethics in data science as a topic deserves more than a paragraph in this article — but I wanted to highlight that we should be cognizant and practice only ethical data science.

Let’s get started with the analysis. It’s time to answer the data science questions. Because this is an example, the answer to these data science questions are entirely hypothetical.

- We need to learn more about the time series nature of our data, as well as the format.

- We should look into average customer lifetime durations and summary statistics around some of the features we believe could be important.

- Our data came from login data and customer data, compiled by Rocinante’s data engineering team.

- The data needs to be cleaned, but it is conveniently in a PostgreSQL database.

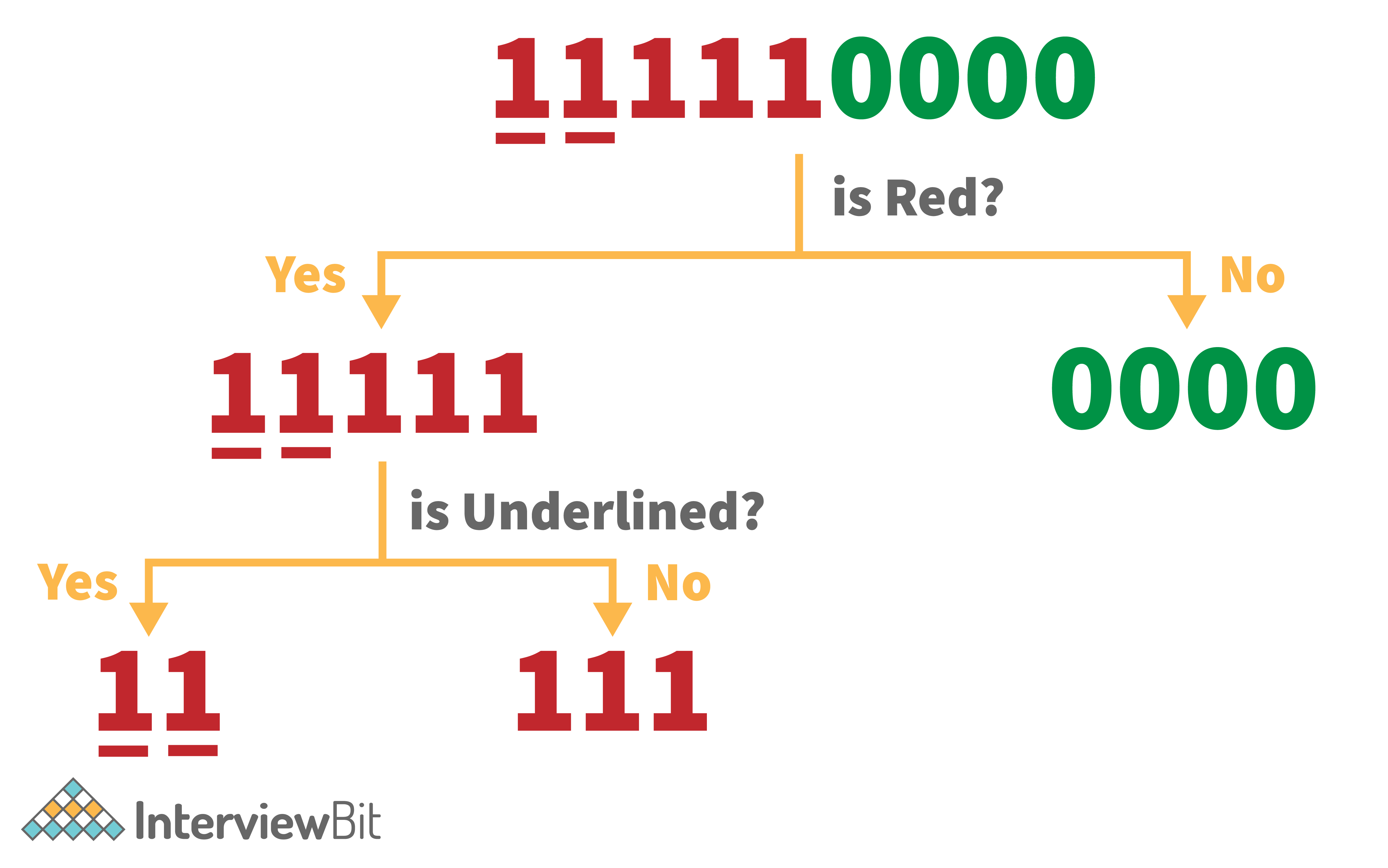

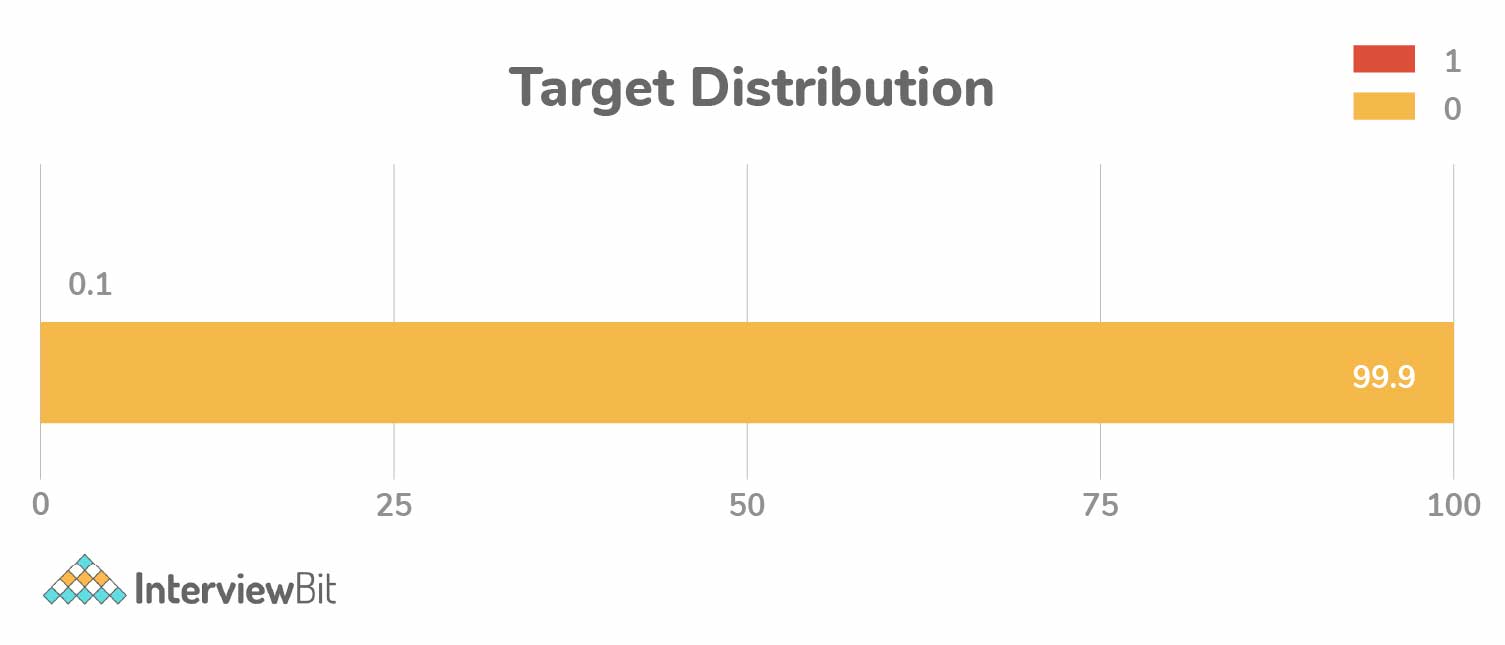

- This is a supervised learning problem because we know which customers have churned.

- This is a binary classification problem.

- After conducting exploratory data analysis and speaking with the data engineering team, we do not see any biases in the data.

- We need to reformat some of the data and use missing data imputation for features we believe are important but have some missing data points.

- With 49 good features, we don’t believe we need to do any feature engineering.

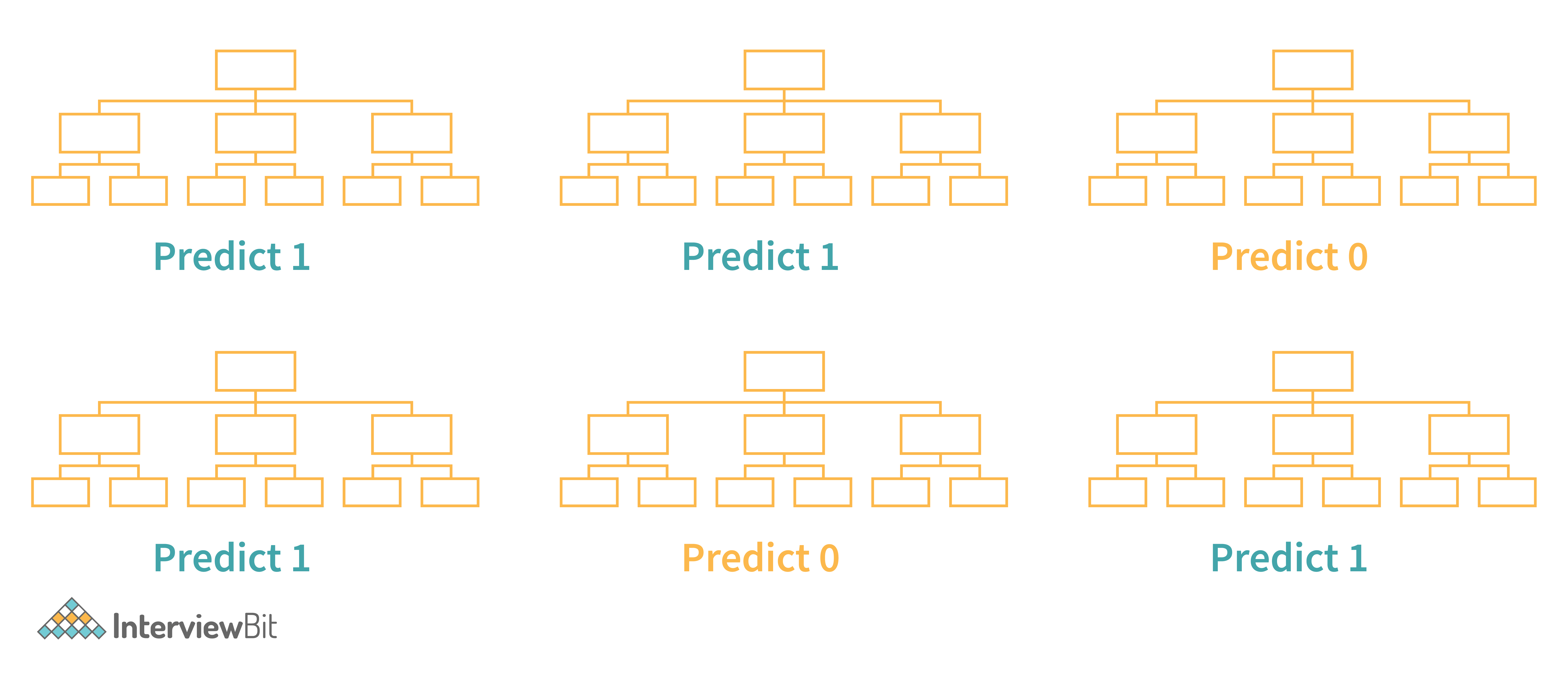

- We have used random forests, XGBoost, and standard logistic regressions to solve classification problems.

- We will use ROC-AUC score as our evaluation metric.

- We are going to use a training-test split (80% training, 20% test) to evaluate our model.

- Let’s remove features that are statistically insignificant from our model to improve the ROC-AUC score.

- Let’s optimize the parameters within our random forests model to improve the ROC-AUC score.

- Our team believes we are acting ethically.

This process may look deceivingly linear, but data science is often a nonlinear practice. After doing all of the work in our example above, we could still end up with a model that doesn’t generalize well. It could be bad at predicting churn in new customers. Maybe we shouldn’t have assumed this problem was a binary classification problem and instead used survival regression to solve the problem. This part of the project will be filled with experimentation, and that’s totally normal.

Communication

What is the best way to communicated and circulate our results.

Our job is typically to bring our findings to the client, explain how the process was a success or failure, and explain why. Communicating technical details and explaining to non-technical audiences is important because not all of our clients have degrees in statistics. There are three ways in which communication of technical details can be advantageous:

- It can be used to inspire confidence that the work is thorough and multiple options have been considered.

- It can highlight technical considerations or caveats that stakeholders and decision-makers should be aware of.

- It can offer resources to learn more about specific techniques applied.

- It can provide supplemental materials to allow the findings to be replicated where possible.

We often use blog posts and articles to circulate our work. They help spread our knowledge and the lessons we learned while working on a project to peers. I encourage every data scientist to engage with the data science community by attending and speaking at meetups and conferences, publishing their work online, and extending a helping hand to other curious data scientists and analysts.

Our method of binary classification was in fact incorrect, so we ended up using survival regression to determine there are four features that impact churn: gaming platform, geographical region, days since last update, and season. Our team aggregates all of our findings into one report, detailing the specific techniques we used, caveats about the analysis, and the multiple recommendations from our team to the customer retention and acquisition team. This report is full of the nitty-gritty details that the more technical folks, such as the data engineering team, may appreciate. Our team also creates a slide deck for the less-technical audience. This deck glosses over many of the technical details of the project and focuses on recommendations for the customer retention and acquisition team.

We give a talk at a local data science meetup, going over the trials, tribulations, and triumphs of the project and sharing them with the data science community at large.

Why are we doing all of this?

I ask myself this question daily — and not in the metaphysical sense, but in the value-driven sense. Is there value in the work we have done and in the end result? I hope the answer is yes. But, let’s be honest, this is business. We don’t have three years to put together a PhD thesis-like paper. We have to move quickly and cost-effectively. Critically evaluating the value ultimately created will help you refine your approach to the next project. And, if you didn’t produce the value you’d originally hoped, then at the very least, I hope you were able to learn something and sharpen your data science skills.

Rocinante has a better idea of how long our users will remain active on the platform based on user characteristics, and can now launch preemptive strikes in order to retain those users who look like they are about to churn. Our team eventually develops a system that alerts the customer retention and acquisition team when a user may be about to churn, and they know to reach out to that user, via email, encouraging them to try out a new feature we recently launched. Rocinante is making better data-informed decisions based on this work, and that’s great!

I hope this article will help guide your next data science project and get the wheels turning in your own mind. Maybe you will be the creator of a data science framework the world adopts! Let me know what you think about the questions, or whether I’m missing anything, in the comments below.

Related Articles

Start Your Project With an Innovation Workshop

Kate Trenerry

Charting New Paths to Startup Product Development

Making a Business Case for Your Website Project

The viget newsletter.

Nobody likes popups, so we waited until now to recommend our newsletter, featuring thoughts, opinions, and tools for building a better digital world. Read the current issue.

Subscribe Here (opens in new window)

- Share this page

- Post this page

5 Steps on How to Approach a New Data Science Problem

Many companies struggle to reorganize their decision making around data and implement a coherent data strategy. The problem certainly isn’t lack of data but inability to transform it into actionable insights. Here's how to do it right.

A QUICK SUMMARY – FOR THE BUSY ONES

TABLE OF CONTENTS

Introduction

Data has become the new gold. 85 percent of companies are trying to be data-driven, according to last year’s survey by NewVantage Partners , and the global data science platform market is expected to reach $128.21 billion by 2022, up from $19.75 billion in 2016.

Clearly, data science is not just another buzzword with limited real-world use cases. Yet, many companies struggle to reorganize their decision making around data and implement a coherent data strategy. The problem certainly isn’t lack of data.

In the past few years alone, 90 percent of all of the world’s data has been created, and our current daily data output has reached 2.5 quintillion bytes , which is such a mind-bogglingly large number that it’s difficult to fully appreciate the break-neck pace at which we generate new data.

The real problem is the inability of companies to transform the data they have at their disposal into actionable insights that can be used to make better business decisions, stop threats, and mitigate risks.

In fact, there’s often too much data available to make a clear decision, which is why it’s crucial for companies to know how to approach a new data science problem and understand what types of questions data science can answer.

What types of questions can data science answer?

“Data science and statistics are not magic. They won’t magically fix all of a company’s problems. However, they are useful tools to help companies make more accurate decisions and automate repetitive work and choices that teams need to make,” writes Seattle Data Guy , a data-driven consulting agency.

The questions that can be answered with the help of data science fall under following categories:

- Identifying themes in large data sets : Which server in my server farm needs maintenance the most?

- Identifying anomalies in large data sets : Is this combination of purchases different from what this customer has ordered in the past?

- Predicting the likelihood of something happening : How likely is this user to click on my video?

- Showing how things are connected to one another : What is the topic of this online article?

- Categorizing individual data points : Is this an image of a cat or a mouse?

Of course, this is by no means a complete list of all questions that data science can answer. Even if it were, data science is evolving at such a rapid pace that it would most likely be completely outdated within a year or two from its publication.

Now that we’ve established the types of questions that can be reasonably expected to be answered with the help of data science, it’s time to lay down the steps most data scientists would take when approaching a new data science problem.

Step 1: Define the problem

First, it’s necessary to accurately define the data problem that is to be solved. The problem should be clear, concise, and measurable . Many companies are too vague when defining data problems, which makes it difficult or even impossible for data scientists to translate them into machine code.

Here are some basic characteristics of a well-defined data problem:

- The solution to the problem is likely to have enough positive impact to justify the effort.

- Enough data is available in a usable format.

- Stakeholders are interested in applying data science to solve the problem.

Step 2: Decide on an approach

There are many data science algorithms that can be applied to data, and they can be roughly grouped into the following families:

- Two-class classification : useful for any question that has just two possible answers.

- Multi-class classification : answers a question that has multiple possible answers.

- Anomaly detection : identifies data points that are not normal.

- Regression : gives a real-valued answer and is useful when looking for a number instead of a class or category.

- Multi-class classification as regression : useful for questions that occur as rankings or comparisons.

- Two-class classification as regression : useful for binary classification problems that can also be reformulated as regression.

- Clustering : answer questions about how data is organized by seeking to separate out a data set into intuitive chunks.

- Dimensionality reduction : reduces the number of random variables under consideration by obtaining a set of principal variables.

- Reinforcement learning algorithms : focus on taking action in an environment so as to maximize some notion of cumulative reward.

Step 3: Collect data

With the problem clearly defined and a suitable approach selected, it’s time to collect data. All collected data should be organized in a log along with collection dates and other helpful metadata.

It’s important to understand that collected data is seldom ready for analysis right away. Most data scientists spend much of their time on data cleaning , which includes removing missing values, identifying duplicate records, and correcting incorrect values.

Step 4: Analyze data

The next step after data collection and cleanup is data analysis. At this stage, there’s a certain chance that the selected data science approach won’t work. This is to be expected and accounted for. Generally, it’s recommended to start with trying all the basic machine learning approaches as they have fewer parameters to alter.

There are many excellent open source data science libraries that can be used to analyze data. Most data science tools are written in Python, Java, or C++.

<blockquote><p>“Tempting as these cool toys are, for most applications the smart initial choice will be to pick a much simpler model, for example using scikit-learn and modeling techniques like simple logistic regression,” – advises Francine Bennett, the CEO and co-founder of Mastodon C.</p></blockquote>

Step 5: Interpret results

After data analysis, it’s finally time to interpret the results. The most important thing to consider is whether the original problem has been solved. You might discover that your model is working but producing subpar results. One way how to deal with this is to add more data and keep retraining the model until satisfied with it.

Most companies today are drowning in data. The global leaders are already using the data they generate to gain competitive advantage, and others are realizing that they must do the same or perish. While transforming an organization to become data-driven is no easy task, the reward is more than worth the effort.

The 5 steps on how to approach a new data science problem we’ve described in this article are meant to illustrate the general problem-solving mindset companies must adopt to successfully face the challenges of our current data-centric era.

Frequently Asked Questions

Our promise

Every year, Brainhub helps 750,000+ founders, leaders and software engineers make smart tech decisions. We earn that trust by openly sharing our insights based on practical software engineering experience.

A serial entrepreneur, passionate R&D engineer, with 15 years of experience in the tech industry. Shares his expert knowledge about tech, startups, business development, and market analysis.

Popular this month

Get smarter in engineering and leadership in less than 60 seconds.

Join 300+ founders and engineering leaders, and get a weekly newsletter that takes our CEO 5-6 hours to prepare.

previous article in this collection

It's the first one.

next article in this collection

It's the last one.

- Data Science

Common Data Science Challenges of 2024 [with Solution]

Home Blog Data Science Common Data Science Challenges of 2024 [with Solution]

Data is the new oil for companies. Since then, it has been a standard aspect of every choice made. Increasingly, businesses rely on analytics and data to strengthen their brand's position in the market and boost revenue.

Information now has more value than physical metals. According to a poll conducted by NewVantage Partners in 2017, 85% of businesses are making an effort to become data-driven, and the worldwide data science platform market is projected to grow to $128.21 billion by 2022, from only $19.75 billion in 2016.

Data science is not a meaningless term with no practical applications. Yet, many businesses have difficulty reorganizing their decision-making around data and implementing a consistent data strategy. Lack of information is not the issue.

Our daily data production has reached 2.5 quintillion bytes, which is so huge that it is impossible to completely understand the breakneck speed at which we produce new data. Ninety percent of all global data was generated in the previous few years.

The actual issue is that businesses aren't able to properly use the data they already collect to get useful insights that can be utilized to improve decision-making, counteract risks, and protect against threats.

It is vital for businesses to know how to approach a new data science challenge and understand what kinds of questions data science can answer since there is frequently too much data accessible to make a clear choice. One must have a look at Data Science Course Subjects for an outstanding career in Data Science.

What is Data Science Challenges?

Data science is an application of the scientific method that utilizes data and analytics to address issues that are often difficult (or multiple) and unstructured. The phrase "fishing expedition" comes from the field of analytics and refers to a project that was never structured appropriately, to begin with, and entails searching through the data for unanticipated connections. This particular kind of "data fishing" does not adhere to the principles of efficient data science; nonetheless, it is still rather common. Therefore, the first thing that needs to be done is to clearly define the issue. In the past, we put out an idea for

"The study of statistics and data is not a kind of witchcraft. They will not, by any means, solve all of the issues that plague a corporation. According to Seattle Data Guy, a data-driven consulting service, "but, they are valuable tools that assist organizations make more accurate judgments and automate repetitious labor and choices that teams need to make."

The following are some of the categories that may be used to classify the problems that can be solved with the assistance of data science:

- Finding patterns in massive data sets : Which of the servers in my server farm need the most maintenance?

- Detecting deviations from the norm in huge data sets : Is this particular mix of acquisitions distinct from what this particular consumer has previously ordered?

- The process of estimating the possibility of something occurring : What are the chances that this person will click on my video?

- illustrating the ways in which things are related to one another : What exactly is the focus of this article that I saw online?

- Categorizing specific data points: Which animal do you think this picture depicts a kitty or a mouse?

Of course, the aforementioned is in no way a comprehensive list of all the questions that can be answered by data science. Even if it were, the field of data science is advancing at such a breakneck speed that it is quite possible that it would be rendered entirely irrelevant within a year or two of its release.

It is time to write out the stages that the majority of data scientists would follow when tackling a new data science challenge now that we have determined the categories of questions that may be fairly anticipated to be solved with the assistance of data science. Data Science Bootcamp review is for people struggling to make a breakthrough in this domain.

Common Data Science Problems Faced by Data Scientists

1. preparation of data for smart enterprise ai.

Finding and cleaning up the proper data is a data scientist's priority. Nearly 80% of a data scientist's day is spent on cleaning, organizing, mining, and gathering data, according to a CrowdFlower poll. In this stage, the data is double-checked before undergoing additional analysis and processing. Most data scientists (76%) agree that this is one of the most tedious elements of their work. As part of the data wrangling process, data scientists must efficiently sort through terabytes of data stored in a wide variety of formats and codes on a wide variety of platforms, all while keeping track of changes to such data to avoid data duplication.

Adopting AI-based tools that help data scientists maintain their edge and increase their efficacy is the best method to deal with this issue. Another flexible workplace AI technology that aids in data preparation and sheds light on the topic at hand is augmented learning.

2. Generation of Data from Multiple Sources

Data is obtained by organizations in a broad variety of forms from the many programs, software, and tools that they use. Managing voluminous amounts of data is a significant obstacle for data scientists. This method calls for the manual entering of data and compilation, both of which are time-consuming and have the potential to result in unnecessary repeats or erroneous choices. The data may be most valuable when exploited effectively for maximum usefulness in company artificial intelligence .

Companies now can build up sophisticated virtual data warehouses that are equipped with a centralized platform to combine all of their data sources in a single location. It is possible to modify or manipulate the data that is stored in the central repository to satisfy the needs of a company and increase its efficiency. This easy-to-implement modification has the potential to significantly reduce the amount of time and labor required by data scientists.

3. Identification of Business Issues

Identifying issues is a crucial component of conducting a solid organization. Before constructing data sets and analyzing data, data scientists should concentrate on identifying enterprise-critical challenges. Before establishing the data collection, it is crucial to determine the source of the problem rather than immediately resorting to a mechanical solution.