- Can J Hosp Pharm

- v.67(1); Jan-Feb 2014

Research: Articulating Questions, Generating Hypotheses, and Choosing Study Designs

INTRODUCTION

Articulating a clear and concise research question is fundamental to conducting a robust and useful research study. Although “getting stuck into” the data collection is the exciting part of research, this preparation stage is crucial. Clear and concise research questions are needed for a number of reasons. Initially, they are needed to enable you to search the literature effectively. They will allow you to write clear aims and generate hypotheses. They will also ensure that you can select the most appropriate research design for your study.

This paper begins by describing the process of articulating clear and concise research questions, assuming that you have minimal experience. It then describes how to choose research questions that should be answered and how to generate study aims and hypotheses from your questions. Finally, it describes briefly how your question will help you to decide on the research design and methods best suited to answering it.

TURNING CURIOSITY INTO QUESTIONS

A research question has been described as “the uncertainty that the investigator wants to resolve by performing her study” 1 or “a logical statement that progresses from what is known or believed to be true to that which is unknown and requires validation”. 2 Developing your question usually starts with having some general ideas about the areas within which you want to do your research. These might flow from your clinical work, for example. You might be interested in finding ways to improve the pharmaceutical care of patients on your wards. Alternatively, you might be interested in identifying the best antihypertensive agent for a particular subgroup of patients. Lipowski 2 described in detail how work as a practising pharmacist can be used to great advantage to generate interesting research questions and hence useful research studies. Ideas could come from questioning received wisdom within your clinical area or the rationale behind quick fixes or workarounds, or from wanting to improve the quality, safety, or efficiency of working practice.

Alternatively, your ideas could come from searching the literature to answer a query from a colleague. Perhaps you could not find a published answer to the question you were asked, and so you want to conduct some research yourself. However, just searching the literature to generate questions is not to be recommended for novices—the volume of material can feel totally overwhelming.

Use a research notebook, where you regularly write ideas for research questions as you think of them during your clinical practice or after reading other research papers. It has been said that the best way to have a great idea is to have lots of ideas and then choose the best. The same would apply to research questions!

When you first identify your area of research interest, it is likely to be either too narrow or too broad. Narrow questions (such as “How is drug X prescribed for patients with condition Y in my hospital?”) are usually of limited interest to anyone other than the researcher. Broad questions (such as “How can pharmacists provide better patient care?”) must be broken down into smaller, more manageable questions. If you are interested in how pharmacists can provide better care, for example, you might start to narrow that topic down to how pharmacists can provide better care for one condition (such as affective disorders) for a particular subgroup of patients (such as teenagers). Then you could focus it even further by considering a specific disorder (depression) and a particular type of service that pharmacists could provide (improving patient adherence). At this stage, you could write your research question as, for example, “What role, if any, can pharmacists play in improving adherence to fluoxetine used for depression in teenagers?”

TYPES OF RESEARCH QUESTIONS

Being able to consider the type of research question that you have generated is particularly useful when deciding what research methods to use. There are 3 broad categories of question: descriptive, relational, and causal.

Descriptive

One of the most basic types of question is designed to ask systematically whether a phenomenon exists. For example, we could ask “Do pharmacists ‘care’ when they deliver pharmaceutical care?” This research would initially define the key terms (i.e., describing what “pharmaceutical care” and “care” are), and then the study would set out to look for the existence of care at the same time as pharmaceutical care was being delivered.

When you know that a phenomenon exists, you can then ask description and/or classification questions. The answers to these types of questions involve describing the characteristics of the phenomenon or creating typologies of variable subtypes. In the study above, for example, you could investigate the characteristics of the “care” that pharmacists provide. Classifications usually use mutually exclusive categories, so that various subtypes of the variable will have an unambiguous category to which they can be assigned. For example, a question could be asked as to “what is a pharmacist intervention” and a definition and classification system developed for use in further research.

When seeking further detail about your phenomenon, you might ask questions about its composition. These questions necessitate deconstructing a phenomenon (such as a behaviour) into its component parts. Within hospital pharmacy practice, you might be interested in asking questions about the composition of a new behavioural intervention to improve patient adherence, for example, “What is the detailed process that the pharmacist implicitly follows during delivery of this new intervention?”

Relational

After you have described your phenomena, you may then be interested in asking questions about the relationships between several phenomena. If you work on a renal ward, for example, you may be interested in looking at the relationship between hemoglobin levels and renal function, so your question would look something like this: “Are hemoglobin levels related to level of renal function?” Alternatively, you may have a categorical variable such as grade of doctor and be interested in the differences between grades with regard to prescribing errors, so your research question would be “Do junior doctors make more prescribing errors than senior doctors?” Relational questions could also be asked within qualitative research, where a detailed understanding of the nature of the relationship between, for example, the gender and career aspirations of clinical pharmacists could be sought.

Causal

Once you have described your phenomena and have identified a relationship between them, you could ask about the causes of that relationship. You may be interested to know whether an intervention or some other activity has caused a change in your variable, and your research question would be about causality. For example, you may be interested in asking, “Does captopril treatment reduce blood pressure?” Generally, however, if you ask a causality question about a medication or any other health care intervention, it ought to be rephrased as a causality–comparative question. Without comparing what happens in the presence of an intervention with what happens in the absence of the intervention, it is impossible to attribute causality to the intervention. Although a causality question would usually be answered using a comparative research design, asking a causality–comparative question makes the research design much more explicit. So the above question could be rephrased as, “Is captopril better than placebo at reducing blood pressure?”

The acronym PICO has been used to describe the components of well-crafted causality–comparative research questions. 3 The letters in this acronym stand for Population, Intervention, Comparison, and Outcome. They remind the researcher that the research question should specify the type of participant to be recruited, the type of exposure involved, the type of control group with which participants are to be compared, and the type of outcome to be measured. Using the PICO approach, the above research question could be written as “Does captopril [intervention] decrease rates of cardiovascular events [outcome] in patients with essential hypertension [population] compared with patients receiving no treatment [comparison]?”
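The PICO components can also be kept as a small structured record, which makes a missing component easy to spot before the question is finalized. The following is a minimal sketch only: the class name `PicoQuestion` and its methods are hypothetical names introduced for illustration, and the sentence template simply mirrors the captopril example.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class PicoQuestion:
    """Hypothetical container for the four PICO components."""

    population: str
    intervention: str
    comparison: str
    outcome: str

    def as_question(self) -> str:
        # Assemble the components in the same order as the captopril example.
        return (
            f"Does {self.intervention} {self.outcome} "
            f"in {self.population} compared with {self.comparison}?"
        )

    def missing_components(self) -> List[str]:
        # List any component left blank, so gaps are visible early.
        return [name for name, value in vars(self).items() if not value.strip()]


captopril = PicoQuestion(
    population="patients with essential hypertension",
    intervention="captopril",
    comparison="patients receiving no treatment",
    outcome="decrease rates of cardiovascular events",
)
print(captopril.as_question())
print(captopril.missing_components())
```

A question drafted without a comparison group, for instance, would show `comparison` in the list returned by `missing_components()`, which is a prompt to rephrase it as a causality–comparative question.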

DECIDING WHETHER TO ANSWER A RESEARCH QUESTION

Just because a question can be asked does not mean that it needs to be answered. Not all research questions deserve to have time spent on them. One useful set of criteria is to ask whether your research question is feasible, interesting, novel, ethical, and relevant. 1 The need for research to be ethical will be covered in a later paper in the series, so is not discussed here. The literature review is crucial to finding out whether the research question fulfils the remaining 4 criteria.

Conducting a comprehensive literature review will allow you to find out what is already known about the subject and any gaps that need further exploration. You may find that your research question has already been answered. However, that does not mean that you should abandon the question altogether. It may be necessary to confirm those findings using an alternative method or to translate them to another setting. If your research question has no novelty, however, and is not interesting or relevant to your peers or potential funders, you are probably better off finding an alternative.

The literature will also help you learn about the research designs and methods that have been used previously and hence to decide whether your potential study is feasible. As a novice researcher, it is particularly important to ask if your planned study is feasible for you to conduct. Do you or your collaborators have the necessary technical expertise? Do you have the other resources that will be needed? If you are just starting out with research, it is likely that you will have a limited budget, in terms of both time and money. Therefore, even if the question is novel, interesting, and relevant, it may not be one that is feasible for you to answer.

GENERATING AIMS AND HYPOTHESES

All research studies should have at least one research question, and they should also have at least one aim. As a rule of thumb, a small research study should have no more than 2 aims. The aim of the study is a broad statement of intention and aspiration; it is the overall goal that you intend to achieve. The wording of this broad statement of intent is derived from the research question. If it is a descriptive research question, the aim will be, for example, “to investigate” or “to explore”. If it is a relational research question, then the aim should state the phenomena being correlated, such as “to ascertain the impact of gender on career aspirations”. If it is a causal research question, then the aim should include the direction of the relationship being tested, such as “to investigate whether captopril decreases rates of cardiovascular events in patients with essential hypertension, relative to patients receiving no treatment”.

The hypothesis is a tentative prediction of the nature and direction of relationships between sets of data, phrased as a declarative statement. Therefore, hypotheses are really only required for studies that address relational or causal research questions. For the study above, the hypothesis being tested would be “Captopril decreases rates of cardiovascular events in patients with essential hypertension, relative to patients receiving no treatment”. Studies that seek to answer descriptive research questions do not test hypotheses, but they can be used for hypothesis generation. Those hypotheses would then be tested in subsequent studies.
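One conventional way to write the directional captopril hypothesis formally is sketched below. It is an illustration rather than part of the original guidance. The symbols are assumptions introduced here: $p_{\mathrm{capt}}$ is the rate of cardiovascular events among patients with essential hypertension who receive captopril, and $p_{\mathrm{none}}$ is the rate among those who receive no treatment. The study hypothesis corresponds to $H_1$, and a statistical test would typically assess it against the null hypothesis $H_0$.

```latex
\begin{align*}
H_0 &: p_{\mathrm{capt}} \ge p_{\mathrm{none}} \\
H_1 &: p_{\mathrm{capt}} < p_{\mathrm{none}}
\end{align*}
```

Writing the hypothesis this way makes its direction explicit: the prediction is specifically that event rates are lower with captopril, not merely different.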

CHOOSING THE STUDY DESIGN

The research question is paramount in deciding what research design and methods you are going to use. There are no inherently bad research designs. The rightness or wrongness of the decision about the research design is based simply on whether it is suitable for answering the research question that you have posed.

It is possible to select completely the wrong research design to answer a specific question. For example, you may want to answer one of the research questions outlined above: “Do pharmacists ‘care’ when they deliver pharmaceutical care?” Although a randomized controlled study is considered by many as a “gold standard” research design, such a study would just not be capable of generating data to answer the question posed. Similarly, if your question was, “Is captopril better than placebo at reducing blood pressure?”, conducting a series of in-depth qualitative interviews would be equally incapable of generating the necessary data. However, if these designs are swapped around, we have 2 combinations (pharmaceutical care investigated using interviews; captopril investigated using a randomized controlled study) that are more likely to produce robust answers to the questions.

The language of the research question can be helpful in deciding what research design and methods to use. For example, if the question starts with “how many” or “how often”, it is probably a descriptive question to assess the prevalence or incidence of a phenomenon. An epidemiological research design would be appropriate, perhaps using a postal survey or structured interviews to collect the data. If the question starts with “why” or “how”, then it is a descriptive question to gain an in-depth understanding of a phenomenon. A qualitative research design, using in-depth interviews or focus groups, would collect the data needed. Finally, the term “what is the impact of” suggests a causal question, which would require comparison of data collected with and without the intervention (i.e., a before–after or randomized controlled study). Subsequent papers in this series will cover these topics in detail.
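The wording cues described above can be sketched as a simple lookup. This is a rough heuristic under stated assumptions, not a substitute for judgment: the function `suggest_design`, the `Suggestion` type, and the rule table are hypothetical names introduced for illustration, and the rules cover only the three phrasings mentioned in the text.

```python
from typing import NamedTuple, Optional


class Suggestion(NamedTuple):
    question_type: str
    design: str


# Rules are checked in order: the more specific openers "how many" and
# "how often" must be tested before the bare "how".
RULES = [
    (("what is the impact of ",),
     Suggestion("causal", "before-after or randomized controlled study")),
    (("how many ", "how often "),
     Suggestion("descriptive (prevalence or incidence)",
                "epidemiological design, e.g. postal survey or structured interviews")),
    (("why ", "how "),
     Suggestion("descriptive (in-depth understanding)",
                "qualitative design, e.g. in-depth interviews or focus groups")),
]


def suggest_design(question: str) -> Optional[Suggestion]:
    """Return a suggested question type and design, or None if no cue matches."""
    text = question.strip().lower()
    for openers, suggestion in RULES:
        if text.startswith(openers):  # str.startswith accepts a tuple of prefixes
            return suggestion
    return None


for q in [
    "How often are prescribing errors made on renal wards?",
    "Why do teenagers stop taking fluoxetine?",
    "What is the impact of pharmacist counselling on adherence?",
    "Is captopril better than placebo at reducing blood pressure?",
]:
    print(q, "->", suggest_design(q))
```

The last question returns `None` even though it is clearly causal, which shows the limit of the approach: the opening words are a hint about the design, and the type of question still has to be judged from its full meaning.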

CONCLUSIONS

This paper has briefly outlined how to articulate research questions, formulate your aims, and choose your research methods. It is crucial to realize that articulating a good research question involves considerable iteration through the stages described above. It is very common that the first research question generated bears little resemblance to the final question used in the study. The language is changed several times, for example, because the first question turned out not to be feasible and the second question was a descriptive question when what was really wanted was a causality question. The books listed in the “Further Reading” section provide greater detail on the material described here, as well as a wealth of other information to ensure that your first foray into conducting research is successful.

This article is the second in the CJHP Research Primer Series, an initiative of the CJHP Editorial Board and the CSHP Research Committee. The planned 2-year series is intended to appeal to relatively inexperienced researchers, with the goal of building research capacity among practising pharmacists. The articles, presenting simple but rigorous guidance to encourage and support novice researchers, are being solicited from authors with appropriate expertise.

Previous article in this series:

Bond CM. The research jigsaw: how to get started. Can J Hosp Pharm. 2014;67(1):28–30.

Competing interests: Mary Tully has received personal fees from the UK Renal Pharmacy Group to present a conference workshop on writing research questions and nonfinancial support (in the form of travel and accommodation) from the Dubai International Pharmaceuticals and Technologies Conference and Exhibition (DUPHAT) to present a workshop on conducting pharmacy practice research.

Further Reading

- Creswell J. Research design: qualitative, quantitative and mixed methods approaches. London (UK): Sage; 2009.

- Haynes RB, Sackett DL, Guyatt GH, Tugwell P. Clinical epidemiology: how to do clinical practice research. 3rd ed. Philadelphia (PA): Lippincott, Williams & Wilkins; 2006.

- Kumar R. Research methodology: a step-by-step guide for beginners. 3rd ed. London (UK): Sage; 2010.

- Smith FJ. Conducting your pharmacy practice research project. London (UK): Pharmaceutical Press; 2005.

Data-driven hypothesis generation in clinical research: what we learned from a human subject study

Hypothesis generation is an early and critical step in any hypothesis-driven clinical research project. Because it is not yet a well-understood cognitive process, the need to improve the process goes unrecognized. Without an impactful hypothesis, the significance of any research project can be questionable, regardless of the rigor or diligence applied in other steps of the study, e.g., study design, data collection, and result analysis. In this perspective article, the authors first provide a literature review on the following topics: scientific thinking, reasoning, medical reasoning, literature-based discovery, and a field study to explore scientific thinking and discovery. Over the years, research on scientific thinking has made excellent progress in cognitive science and its applied areas: education, medicine, and biomedical research. However, a review of the literature reveals a lack of original studies on hypothesis generation in clinical research. The authors then summarize their first human participant study exploring data-driven hypothesis generation by clinical researchers in a simulated setting. The results indicate that a secondary data analytical tool, VIADS (a visual interactive analytic tool for filtering, summarizing, and visualizing large health data sets coded with hierarchical terminologies), can shorten the average time participants need to generate a hypothesis and reduce the number of cognitive events needed to generate each hypothesis. As a counterpoint, this exploration also indicates that hypotheses generated with VIADS received significantly lower ratings for feasibility. Despite its small scale, the study confirmed the feasibility of conducting a human participant study directly to explore the hypothesis generation process in clinical research. This study provides supporting evidence for conducting a larger-scale study with a specifically designed tool to facilitate the hypothesis-generation process among inexperienced clinical researchers. A larger study could provide generalizable evidence, which in turn could improve clinical research productivity and the overall clinical research enterprise.

Article Details

The Medical Research Archives grants authors the right to publish and reproduce the unrevised contribution in whole or in part at any time and in any form for any scholarly non-commercial purpose with the condition that all publications of the contribution include a full citation to the journal as published by the Medical Research Archives.

Hypothesis-generating research and predictive medicine

Leslie G. Biesecker

National Human Genome Research Institute, National Institutes of Health, Bethesda, Maryland 20892, USA

Genomics has profoundly changed biology by scaling data acquisition, which has provided researchers with the opportunity to interrogate biology in novel and creative ways. No longer constrained by low-throughput assays, researchers have developed hypothesis-generating approaches to understand the molecular basis of nature—both normal and pathological. The paradigm of hypothesis-generating research does not replace or undermine hypothesis-testing modes of research; instead, it complements them and has facilitated discoveries that may not have been possible with hypothesis-testing research. The hypothesis-generating mode of research has been primarily practiced in basic science but has recently been extended to clinical-translational work as well. Just as in basic science, this approach to research can facilitate insights into human health and disease mechanisms and provide the crucially needed data set of the full spectrum of genotype–phenotype correlations. Finally, the paradigm of hypothesis-generating research is conceptually similar to the underpinning of predictive genomic medicine, which has the potential to shift medicine from a primarily population- or cohort-based activity to one that instead uses individual susceptibility, prognostic, and pharmacogenetic profiles to maximize the efficacy and minimize the iatrogenic effects of medical interventions.

The goal of this article is to describe how recent technological changes provide opportunities to undertake novel approaches to biomedical research and to practice genomic preventive medicine. Massively parallel sequencing is the primary technology that will be addressed here (Mardis 2008), but the principles apply to many other technologies, such as proteomics, metabolomics, and transcriptomics. Readers of this journal are well aware of the precipitous fall of sequencing costs over the last several decades. The consequence of this fall is that we are no longer in a scientific and medical world where the throughput (and the costs) of testing is the key limiting factor around which these enterprises are organized. Once one is released from this limiting factor, one may ask whether these enterprises should be reorganized. Here I outline the principles of how these enterprises are organized, show how high-throughput biology can allow alternative organizations of these enterprises to be considered, and show how biology and medicine are in many ways similar. The discussion includes three categories of enterprises: basic research, clinical research, and medical practice.

The basic science hypothesis-testing paradigm

The classical paradigm for basic biological research has been to develop a specific hypothesis that can be tested by the application of a prospectively defined experiment (see Box 1). I suggest that one of the major (although not the only) factors that led to the development of this paradigm is that experimental design was limited by the throughput of available assays. This low throughput mandated that the scientific question had to be focused narrowly to make the question tractable. However, the paradigm can be questioned if the scientist has the ability to assay every potential attribute of a given type (e.g., all genes). If the hypothesis is only needed to select the assay, one does not need a hypothesis to apply a technology that assays all attributes. In the case of sequencing, the radical increase in throughput can release scientists from the constraint of the specific hypothesis because it has allowed them to interrogate essentially all genotypes in a genome in a single assay. This capability facilitates fundamental biological discoveries that were impossible or impractical with a hypothesis-testing mode of scientific inquiry. Examples of this approach are well demonstrated by several discoveries that followed the sequencing of a number of genomes. An example was the discovery that the human gene count was just over 20,000 (International Human Genome Sequencing Consortium 2004), much lower than prior estimates. This result, although it was much debated and anticipated, was not a hypothesis that drove the human genome project, but nonetheless was surprising and led to insights into the nuances of gene regulation and transcriptional isoforms to explain the complexity of the human organism. The availability of whole genome sequence data from multiple species facilitated analyses of conservation. While it was expected that protein-coding regions, and to a lesser extent promoters and 5′- and 3′-untranslated regions of genes, would exhibit recognizable sequence conservation, it was unexpected that an even larger fraction of the genomes outside of genes are highly conserved (Mouse Genome Sequencing Consortium 2002). This surprising and unanticipated discovery has spawned a novel field of scientific inquiry to determine the functional roles of these elements, which are undoubtedly important in physiology and pathophysiology. These discoveries demonstrate the power of hypothesis-generating basic research to illuminate important biological principles.

Box 1. Basic science hypothesis-testing and hypothesis-generating paradigms

Clinical and translational research

The approach to clinical research grew out of the basic science paradigm as described above. The first few steps of selecting a scientific problem and developing a hypothesis are similar, with the additional step (Box 2) of rigorously defining a phenotype and then carefully selecting research participants with and without that trait. As in the basic science paradigm, the hypothesis is tested by the application of a specific assay to the cases and controls. Again, this paradigm has been incredibly fruitful and should not be abandoned, but the hypothesis-generating approach can be used here as well. In this approach, a cohort of participants is consented, basic information is gathered on their health, and then a high-throughput assay, such as genome or exome sequencing, is applied to all of the participants. Again, because the assay tests all such attributes, the research design does not necessitate a priori selections of phenotypes and genes to be interrogated. Then, the researcher can examine the sequence data set for patterns and perturbations, form hypotheses about how such perturbations might affect the phenotype of the participants, and test that hypothesis with a clinical research evaluation. This approach has been used with data from genome-wide copy number assessments (array CGH and SNP arrays), but sequencing takes it to a higher level of interrogation and provides innumerable variants with which to work.

Box 2. Clinical research paradigms

An example of this type of sequence-based hypothesis-generating clinical research started with a collaborative project in which we showed that mutations in the gene ACSF3 caused the biochemical phenotype of combined malonic and methylmalonic acidemia (Sloan et al. 2011). At that time, the disorder was believed to be a classic pediatric, autosomal-recessive severe metabolic disorder with decompensation and sometimes death. We then queried the ClinSeq cohort (Biesecker et al. 2009) to assess the carrier frequency, to estimate the population frequency of this rare disorder. Because ClinSeq is a cohort of adults with a range of atherosclerosis severity, we reasoned that this would serve as a control population for an unbiased estimate of ACSF3 heterozygote mutant alleles. Surprisingly, we identified a ClinSeq participant who was homozygous for one of the mutations identified in the children with the typical phenotype. Indeed, one potential interpretation of the data would be that the variant is, in fact, benign and was erroneously concluded to be pathogenic, based on finding it in a child with the typical phenotype. It has been shown that this error is common, with up to 20% of variants listed in databases as pathogenic actually being benign (Bell et al. 2011). Further clinical research on this participant led to the surprising result that she had severely abnormal blood and urine levels of malonic and methylmalonic acid (Sloan et al. 2011). This novel approach to translational research was a powerful confirmation that the mutation was indeed pathogenic, but there was another, even more important conclusion. We had conceptualized the disease completely incorrectly. Instead of being only a severe, pediatric metabolic disorder, it was instead a disorder with a wide phenotypic spectrum in which one component of the disease is a metabolic perturbation and another component is a susceptibility to severe decompensation and strokes. This research indeed raises many questions about the natural history of the disorder, whether the pediatric decompensation phenotype is attributable to modifiers, what the appropriate management of such an adult would be, etc.

Irrespective of these limitations, the understanding of the disease has markedly advanced, and the key to understanding the broader spectrum of this disease was the hypothesis-generating approach enabled by the massively parallel sequence data and the ability to phenotype patients iteratively from ClinSeq. The iterative phenotyping was essential because we could not have anticipated when the patients were originally ascertained that we would need to assay malonic and methylmalonic acid. Nor did we recognize prospectively that we should be evaluating apparently healthy patients in their seventh decade for this phenotype. Indeed, it is impossible to evaluate patients for all potential phenotypes prospectively, and it is essential to minimize ascertainment bias for patient recruitment in order to allow the discovery of the full spectrum of phenotypes associated with genomic variations. This latter issue has become a critical challenge for implementing predictive medicine, as described below.

Predictive genomic medicine in practice

The principles of scientific inquiry are parallel to the processes of clinical diagnosis (Box 3). In the classic, hypothesis-testing paradigm, clinicians gather background information including chief complaint, medical and family history, and physical examination, and use these data to formulate the differential diagnosis, which is a set of potential medical diagnoses that could explain the patient's signs and symptoms. Then, the clinician selects, among the myriad of tests (imaging, biochemical, genetic, physiologic, etc.), a few tests, the results of which should distinguish among (or possibly exclude entirely) the disorders on the differential diagnosis. Like the scientist, the physician must act as a test selector, because each of the tests is low throughput, time consuming, and expensive.

Box 3. Clinical practice paradigms: hypothesis testing and hypothesis generating

As in the basic and translational research discussion above, the question could be raised as to whether the differential diagnostic paradigm is necessary for genetic disorders. Indeed, the availability of clinical genome and exome sequencing heralds an era when the test could be ordered relatively early in the diagnostic process, with the clinician serving in a more interpretative role, rather than as a test selector (Hennekam and Biesecker 2012). This approach has already been adopted for copy number variation, because whole genome array CGH- or SNP-based approaches have mostly displaced more specific single-gene or single-locus assays and standard chromosome analyses (Miller et al. 2010). But the paradigm can be taken beyond hypothesis-generating clinical diagnosis into predictive medicine. One can now begin to envision how whole genome approaches could be used to assess risks prospectively for susceptibility to late-onset disorders or occult or subclinical disorders. The heritable cancer susceptibility syndromes are a good example of this. The current clinical approach is to order a specific gene test if a patient presents with a personal history of an atypical or early-onset form of a specific cancer syndrome, or has a compelling family history of the disease. As in the prior examples, this is because individual cancer gene testing is expensive and low throughput. One can ask whether this is the ideal approach or whether we could be screening for these disorders from genome or exome data. Again, we applied sequencing analysis for these genes to the ClinSeq cohort because its participants were not ascertained for that phenotype. In a published study of 572 exomes (Johnston et al. 2012), updated here to include 850 exomes, we have identified 10 patients with seven distinct cancer susceptibility syndrome mutations. These were mostly familial breast and ovarian cancer (BRCA1 and BRCA2), with one patient with paraganglioma and pheochromocytoma (SDHC) and one with Lynch syndrome (MSH6). What is remarkable about these diagnoses is that only about half of the patients had a convincing personal or family history of the disease, and thus most would not have been offered testing using the current, hypothesis-testing clinical paradigm. These data suggest that screening for these disorders using genome or exome sequencing could markedly improve our ability to identify such families before they develop or die from these diseases, which is the ideal of predictive genomic medicine.
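As a rough illustration of the figures above (10 carriers among 850 exomes), the screening yield and its sampling uncertainty can be sketched in Python. The Wilson score interval is chosen here only for illustration; it is not a method the article reports, and the function name `wilson_interval` is hypothetical.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z = 1.96 gives roughly 95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p_hat = successes / n
    denom = 1 + z**2 / n
    centre = (p_hat + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denom
    return centre - half_width, centre + half_width


# Counts quoted in the text: 10 patients with mutations among 850 exomes.
carriers, exomes = 10, 850
yield_fraction = carriers / exomes
low, high = wilson_interval(carriers, exomes)
print(f"Screening yield: {yield_fraction:.2%} (approx. 95% interval {low:.2%} to {high:.2%})")
```

The point estimate is a little over 1%, but with only 10 positive findings the interval is wide, which fits the cautious tone of the paragraphs that follow.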

Despite these optimistic scenarios and examples, it remains true that our ability to perform true predictive medicine is limited. These limitations include technical factors, such as incomplete sequence coverage and imperfect sequence quality, as well as inadequate knowledge regarding the penetrance and expressivity of most variants, uncertain medical approaches and utility of pursuing variants from genomic sequencing, and the poor preparation of most clinicians for addressing genomic concerns in the clinic (Biesecker 2013). Recognizing all of these limitations, it is clear that we are not prepared to launch broad-scale implementation of predictive genomic medicine, nor should all research be structured using the hypothesis-generating approach.

Hypothesis-testing approaches to science and medicine have served us well and should continue. However, the advent of massively parallel sequencing and other high-throughput technologies provides opportunities to undertake hypothesis-generating approaches to science and medicine, which in turn provide unprecedented opportunities for discovery in the research realm. This can allow the discovery of results that were not anticipated or intended by the research design, yet provide critical insights into biology and pathophysiology. Similarly, hypothesis-generating clinical research has the potential to provide these same insights and, in addition, has the potential to provide us with data that will illuminate the full spectrum of genotype–phenotype correlations, eliminating the biases that have limited this understanding in the past. Finally, applying these principles to clinical medicine can provide new pathways to diagnosis and provide the theoretical basis for predictive medicine that can detect disease susceptibility and allow health to be maintained, instead of solely focusing on the treatment of evident disease.

1 Corresponding author. E-mail: lesb{at}mail.nih.gov

Article is online at http://www.genome.org/cgi/doi/10.1101/gr.157826.113 .

2 The chief complaint is a brief description of the problem that led the patient to the clinician, such as “I have a cough and fever.”

© 2013, Published by Cold Spring Harbor Laboratory Press

This article is distributed exclusively by Cold Spring Harbor Laboratory Press for the first six months after the full-issue publication date (see http://genome.cshlp.org/site/misc/terms.xhtml ). After six months, it is available under a Creative Commons License (Attribution-NonCommercial 3.0 Unported), as described at http://creativecommons.org/licenses/by-nc/3.0/ .

- Dinwiddie DL ,

- Miller NA ,

- Hateley SL ,

- Ganusova EE ,

- Langley RJ ,

- Schilkey FD

- Biesecker LG

- Biesecker LG ,

- Mullikin JC ,

- Cherukuri PF ,

- Blakesley RW ,

- Bouffard GG ,

- Chines PS ,

- Hennekam RC ,

- International Human Genome Sequencing Consortium. 2004. Finishing the euchromatic sequence of the human genome. Nature 431: 931–945.

- Johnston JJ ,

- Rubinstein WS ,

- Miller DT ,

- Aradhya S ,

- Brothman AR ,

- Carter NP ,

- Church DM ,

- Crolla JA ,

- Eichler EE ,

- Mouse Genome Sequencing Consortium. 2002. Initial sequencing and comparative analysis of the mouse genome. Nature 420: 520–562.

- Chandler RJ ,

- Carrillo-Carrasco N ,

- Chandrasekaran SD ,

- O'Brien K ,

- doi: 10.1101/gr.157826.113 Genome Res. 2013. 23: 1051-1053 © 2013, Published by Cold Spring Harbor Laboratory Press


2.4 Developing a Hypothesis

Learning objectives.

- Distinguish between a theory and a hypothesis.

- Discover how theories are used to generate hypotheses and how the results of studies can be used to further inform theories.

- Understand the characteristics of a good hypothesis.

Theories and Hypotheses

Before describing how to develop a hypothesis, it is important to distinguish between a theory and a hypothesis. A theory is a coherent explanation or interpretation of one or more phenomena. Although theories can take a variety of forms, one thing they have in common is that they go beyond the phenomena they explain by including variables, structures, processes, functions, or organizing principles that have not been observed directly. Consider, for example, Zajonc’s theory of social facilitation and social inhibition. He proposed that being watched by others while performing a task creates a general state of physiological arousal, which increases the likelihood of the dominant (most likely) response. So for highly practiced tasks, being watched increases the tendency to make correct responses, but for relatively unpracticed tasks, being watched increases the tendency to make incorrect responses. Notice that this theory, which has come to be called drive theory, provides an explanation of both social facilitation and social inhibition that goes beyond the phenomena themselves by including concepts such as “arousal” and “dominant response,” along with processes such as the effect of arousal on the dominant response.

Outside of science, referring to an idea as a theory often implies that it is untested—perhaps no more than a wild guess. In science, however, the term theory has no such implication. A theory is simply an explanation or interpretation of a set of phenomena. It can be untested, but it can also be extensively tested, well supported, and accepted as an accurate description of the world by the scientific community. The theory of evolution by natural selection, for example, is a theory because it is an explanation of the diversity of life on earth—not because it is untested or unsupported by scientific research. On the contrary, the evidence for this theory is overwhelmingly positive and nearly all scientists accept its basic assumptions as accurate. Similarly, the “germ theory” of disease is a theory because it is an explanation of the origin of various diseases, not because there is any doubt that many diseases are caused by microorganisms that infect the body.

A hypothesis, on the other hand, is a specific prediction about a new phenomenon that should be observed if a particular theory is accurate. It is an explanation that relies on just a few key concepts. Hypotheses are often specific predictions about what will happen in a particular study. They are developed by considering existing evidence and using reasoning to infer what will happen in the specific context of interest. Hypotheses are often, but not always, derived from theories. Some hypotheses are atheoretical, and a theory is developed only after a set of observations has been made. This is because theories are broad in nature and explain larger bodies of data. So if our research question is truly original, we may need to collect some data and make some observations before we can develop a broader theory.

A hypothesis derived from a theory always has an if-then relationship with it. “If drive theory is correct, then cockroaches should run through a straight runway faster, and a branching runway more slowly, when other cockroaches are present.” Although hypotheses are usually expressed as statements, they can always be rephrased as questions. “Do cockroaches run through a straight runway faster when other cockroaches are present?” Thus deriving hypotheses from theories is an excellent way of generating interesting research questions.

But how do researchers derive hypotheses from theories? One way is to generate a research question using the techniques discussed in this chapter and then ask whether any theory implies an answer to that question. For example, you might wonder whether expressive writing about positive experiences improves health as much as expressive writing about traumatic experiences. Although this question is an interesting one on its own, you might then ask whether the habituation theory—the idea that expressive writing causes people to habituate to negative thoughts and feelings—implies an answer. In this case, it seems clear that if the habituation theory is correct, then expressive writing about positive experiences should not be effective because it would not cause people to habituate to negative thoughts and feelings. A second way to derive hypotheses from theories is to focus on some component of the theory that has not yet been directly observed. For example, a researcher could focus on the process of habituation—perhaps hypothesizing that people should show fewer signs of emotional distress with each new writing session.

Among the very best hypotheses are those that distinguish between competing theories. For example, Norbert Schwarz and his colleagues considered two theories of how people make judgments about themselves, such as how assertive they are (Schwarz et al., 1991) [1]. Both theories held that such judgments are based on relevant examples that people bring to mind. However, one theory was that people base their judgments on the number of examples they bring to mind and the other was that people base their judgments on how easily they bring those examples to mind. To test these theories, the researchers asked people to recall either six times when they were assertive (which is easy for most people) or 12 times (which is difficult for most people). Then they asked them to judge their own assertiveness. Note that the number-of-examples theory implies that people who recalled 12 examples should judge themselves to be more assertive because they recalled more examples, but the ease-of-retrieval theory implies that participants who recalled six examples should judge themselves as more assertive because recalling the examples was easier. Thus the two theories made opposite predictions, so that only one of the predictions could be confirmed. The surprising result was that participants who recalled fewer examples judged themselves to be more assertive, which provides particularly convincing evidence in favor of the ease-of-retrieval theory over the number-of-examples theory.

Theory Testing

The primary way that scientific researchers use theories is sometimes called the hypothetico-deductive method (although this term is much more likely to be used by philosophers of science than by scientists themselves). A researcher begins with a set of phenomena and either constructs a theory to explain or interpret them or chooses an existing theory to work with. He or she then makes a prediction about some new phenomenon that should be observed if the theory is correct. Again, this prediction is called a hypothesis. The researcher then conducts an empirical study to test the hypothesis. Finally, he or she reevaluates the theory in light of the new results and revises it if necessary. This process is usually conceptualized as a cycle because the researcher can then derive a new hypothesis from the revised theory, conduct a new empirical study to test the hypothesis, and so on. As Figure 2.2 shows, this approach meshes nicely with the model of scientific research in psychology presented earlier in the textbook—creating a more detailed model of “theoretically motivated” or “theory-driven” research.

Figure 2.2 Hypothetico-Deductive Method Combined With the General Model of Scientific Research in Psychology. Together they form a model of theoretically motivated research.

As an example, let us consider Zajonc’s research on social facilitation and inhibition. He started with a somewhat contradictory pattern of results from the research literature. He then constructed his drive theory, according to which being watched by others while performing a task causes physiological arousal, which increases an organism’s tendency to make the dominant response. This theory predicts social facilitation for well-learned tasks and social inhibition for poorly learned tasks. He now had a theory that organized previous results in a meaningful way, but he still needed to test it. He hypothesized that if his theory was correct, he should observe that the presence of others improves performance in a simple laboratory task but inhibits performance in a difficult version of the very same laboratory task. To test this hypothesis, one of the studies he conducted used cockroaches as subjects (Zajonc, Heingartner, & Herman, 1969) [2]. The cockroaches ran either down a straight runway (an easy task for a cockroach) or through a cross-shaped maze (a difficult task for a cockroach) to escape into a dark chamber when a light was shone on them. They did this either while alone or in the presence of other cockroaches in clear plastic “audience boxes.” Zajonc found that cockroaches in the straight runway reached their goal more quickly in the presence of other cockroaches, but cockroaches in the cross-shaped maze reached their goal more slowly when they were in the presence of other cockroaches. Thus he confirmed his hypothesis and provided support for his drive theory. (Zajonc also found support for drive theory in humans in many other studies; e.g., Zajonc & Sales, 1966 [3].)

Incorporating Theory into Your Research

When you write your research report or plan your presentation, be aware that there are two basic ways that researchers usually include theory. The first is to raise a research question, answer that question by conducting a new study, and then offer one or more theories (usually more) to explain or interpret the results. This format works well for applied research questions and for research questions that existing theories do not address. The second way is to describe one or more existing theories, derive a hypothesis from one of those theories, test the hypothesis in a new study, and finally reevaluate the theory. This format works well when there is an existing theory that addresses the research question—especially if the resulting hypothesis is surprising or conflicts with a hypothesis derived from a different theory.

Using theories in your research will not only give you guidance in coming up with experiment ideas and possible projects but also lend legitimacy to your work. Psychologists have been interested in a variety of human behaviors and have developed many theories along the way. Using established theories will help you break new ground as a researcher, not limit you from developing your own ideas.

Characteristics of a Good Hypothesis

There are three general characteristics of a good hypothesis. First, a good hypothesis must be testable and falsifiable. We must be able to test the hypothesis using the methods of science and, recalling Popper’s falsifiability criterion, it must be possible to gather evidence that will disconfirm the hypothesis if it is indeed false. Second, a good hypothesis must be logical. As described above, hypotheses are more than just random guesses. Hypotheses should be informed by previous theories or observations and logical reasoning. Typically, we begin with a broad and general theory and use deductive reasoning to generate a more specific hypothesis to test based on that theory. Occasionally, however, when there is no theory to inform our hypothesis, we use inductive reasoning, which involves using specific observations or research findings to form a more general hypothesis. Finally, the hypothesis should be positive. That is, the hypothesis should make a positive statement about the existence of a relationship or effect, rather than a statement that a relationship or effect does not exist. As scientists, we don’t set out to show that relationships do not exist or that effects do not occur, so our hypotheses should not be worded in a way that suggests an effect or relationship does not exist. In practice, we provisionally assume that an effect or relationship does not exist and then look for evidence against that assumption, that is, evidence that it really does exist. That may seem backward, but it is how the scientific method works. The underlying reason for this is beyond the scope of this chapter, but it has to do with statistical theory.
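The statistical idea alluded to above can be sketched with a small permutation test: assume no effect exists, then ask how often chance alone would produce a difference at least as large as the one observed. This is only a minimal illustration. The runway times below are invented for the example and are not data from any study, and the function name `permutation_p_value` is hypothetical.

```python
import random
from statistics import mean


def permutation_p_value(group_a, group_b, n_permutations=5_000, seed=0):
    """One-sided p-value for mean(group_a) > mean(group_b), assuming no true difference."""
    rng = random.Random(seed)
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        # Under the "no effect" assumption, group labels are interchangeable.
        rng.shuffle(pooled)
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        if diff >= observed:
            at_least_as_extreme += 1
    # Add-one correction so the estimated p-value is never exactly zero.
    return (at_least_as_extreme + 1) / (n_permutations + 1)


# Invented illustrative running times in seconds (NOT real data).
alone = [41.2, 39.8, 44.1, 40.5, 42.7, 43.0]
with_audience = [33.1, 35.4, 31.9, 36.2, 34.0, 32.8]

p = permutation_p_value(alone, with_audience)
print(f"Estimated one-sided p-value: {p:.4f}")
```

A small p-value means the observed difference would rarely arise if the effect did not exist, which counts as evidence against the "no effect" assumption and, indirectly, as support for the positively worded hypothesis.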

Key Takeaways

- A theory is broad in nature and explains larger bodies of data. A hypothesis is more specific and makes a prediction about the outcome of a particular study.

- Working with theories is not “icing on the cake.” It is a basic ingredient of psychological research.

- Like other scientists, psychologists use the hypothetico-deductive method. They construct theories to explain or interpret phenomena (or work with existing theories), derive hypotheses from their theories, test the hypotheses, and then reevaluate the theories in light of the new results.

- Practice: Find a recent empirical research report in a professional journal. Read the introduction and highlight in different colors descriptions of theories and hypotheses.

1. Schwarz, N., Bless, H., Strack, F., Klumpp, G., Rittenauer-Schatka, H., & Simons, A. (1991). Ease of retrieval as information: Another look at the availability heuristic. Journal of Personality and Social Psychology, 61, 195–202.

2. Zajonc, R. B., Heingartner, A., & Herman, E. M. (1969). Social enhancement and impairment of performance in the cockroach. Journal of Personality and Social Psychology, 13, 83–92.

3. Zajonc, R. B., & Sales, S. M. (1966). Social facilitation of dominant and subordinate responses. Journal of Experimental Social Psychology, 2, 160–168.


- Research article

- Open access

- Published: 03 June 2024

From concerns to benefits: a comprehensive study of ChatGPT usage in education

- Hyeon Jo (ORCID: orcid.org/0000-0001-7442-4736)

International Journal of Educational Technology in Higher Education, volume 21, Article number: 35 (2024)


Artificial Intelligence (AI) chatbots are increasingly becoming integral components of the digital learning ecosystem. As AI technologies continue to evolve, it is crucial to understand the factors influencing their adoption and use among students in higher education. This study is undertaken against this backdrop to explore the behavioral determinants associated with the use of the AI Chatbot, ChatGPT, among university students. The investigation delves into the role of ChatGPT’s self-learning capabilities and their influence on students’ knowledge acquisition and application, subsequently affecting the individual impact. It further elucidates the correlation of chatbot personalization with novelty value and benefits, underscoring their importance in shaping students’ behavioral intentions. Notably, individual impact is revealed to have a positive association with perceived benefits and behavioral intention. The study also brings to light potential barriers to AI chatbot adoption, identifying privacy concerns, technophobia, and guilt feelings as significant detractors from behavioral intention. However, despite these impediments, innovativeness emerges as a positive influencer, enhancing behavioral intention and actual behavior. This comprehensive exploration of the multifaceted influences on student behavior in the context of AI chatbot utilization provides a robust foundation for future research. It also offers invaluable insights for AI chatbot developers and educators, aiding them in crafting more effective strategies for AI integration in educational settings.

Introduction

In the realm of advanced language models, OpenAI’s Generative Pre-trained Transformer (GPT) has emerged as a groundbreaking tool that has begun to make significant strides in numerous disciplines (Biswas, 2023a, b; Firat, 2023; Kalla, 2023). Education, in particular, has become a fertile ground for this innovation (Kasneci et al., 2023). A standout instance is ChatGPT, the latest iteration of this transformative technology, which has been rapidly adopted by a large segment of university students across the globe (Rudolph et al., 2023). At its core, ChatGPT is armed with the ability to produce cohesive, contextually appropriate responses predicated on preceding dialogues, providing an interactive medium akin to human conversation (King & ChatGPT, 2023). This interactive capacity of ChatGPT has the potential to significantly restructure the educational landscape, altering the way students absorb, interact, and engage with academic content (Fauzi et al., 2023). Despite the burgeoning intrigue surrounding this technology and its applications, the comprehensive examination of determinants shaping students’ behavior in the adoption and usage of ChatGPT remains a largely uncharted territory. A thorough, systematic understanding of these determinants, both facilitative and inhibitive, is essential for the more effective deployment and acceptance of such a tool in educational settings, thereby contributing to its ultimate success and efficacy. The current study seeks to shed light on this crucial aspect, aiming to provide a nuanced comprehension of the array of factors that influence university students’ behavioral intentions and patterns towards utilizing ChatGPT for their educational pursuits.

ChatGPT is replete with remarkable features courtesy of its underlying technologies: machine learning (Rospigliosi, 2023) and natural language processing (Maddigan & Susnjak, 2023). Its capabilities span a broad spectrum, from completing texts and answering questions to generating original content. In the landscape of education, these functionalities morph into a powerful apparatus that could revolutionize traditional learning modalities. As with a human tutor, students can converse with ChatGPT by asking questions, eliciting explanations, or diving into profound discussions about their study materials. Beyond its conversational abilities, ChatGPT’s capacity to learn from past interactions enables it to refine its responses over time, catering more accurately to the user’s distinct needs and preferences (McGee, 2023). This attribute of continuous learning and adaptation paves the way for a more personalized and efficient learning experience, offering custom-made academic assistance (Aljanabi, 2023). This intricate combination of characteristics arguably makes ChatGPT a potent tool in the educational sphere, worthy of systematic examination to optimize its potential benefits and mitigate any challenges.

ChatGPT’s unique features manifest a range of enabling factors that can significantly influence its adoption among university students. One such factor is the self-learning attribute that empowers the AI to progressively enhance its performance (Rijsdijk et al., 2007). This feature aligns with the ongoing learning journey of students, potentially fostering a symbiotic learning environment. Another pivotal factor is the scope for knowledge acquisition and application. As ChatGPT assists in knowledge acquisition, students can simultaneously apply their newly garnered knowledge, potentially bolstering their academic performance. Personalization of the AI forms another significant enabling factor, ensuring that the interactions are fine-tuned to each student’s distinctive needs, thereby promoting a more individual-centered learning experience. Lastly, the novelty value that ChatGPT brings to the educational realm can stimulate student engagement, making learning an exciting and enjoyable process. Unraveling the influence of these factors on students’ behavior toward ChatGPT could yield valuable insights, paving the way for the tool’s effective implementation in education.

Alongside the positive influencers, some potential detractors could hinder the adoption of ChatGPT among university students. Among these, privacy concerns stand out as paramount (Lund & Wang, 2023; McCallum, 2023). With data breaches becoming increasingly common, students might be apprehensive about sharing their academic queries and discussions with an AI tool. Technophobia is another potential inhibitor, as not all students might be comfortable interacting with an advanced AI model. Moreover, some students might experience guilt feelings while using ChatGPT, equating its assistance to a form of ‘cheating’ (Anders, 2023). Evaluating these potential inhibitors is crucial to forming a comprehensive understanding of the factors that shape students’ behavior towards ChatGPT. This balanced approach could help identify ways to alleviate these concerns and foster wider adoption of this promising educational tool.

Despite the growing adoption of AI tools like ChatGPT, there is a notable gap in the literature pertaining to how these tools influence behavior in an educational context. Moreover, existing studies often fail to account for both enabling and inhibiting factors. Therefore, this paper endeavors to fill this gap by offering a more balanced and comprehensive exploration. The primary objective of this study is to empirically examine the determinants—both enablers and inhibitors—that influence university students’ behavioral intentions and actual behavior towards using ChatGPT. By doing so, this study seeks to make a valuable contribution to the academic discourse surrounding the use of AI in education, while also offering practical implications for its more effective implementation in educational settings.

The rest of this paper is structured as follows: the next section reviews the relevant literature and formulates the research hypotheses. The subsequent section outlines the research methodology, followed by a presentation and discussion of the results. Finally, the paper concludes with the implications for theory and practice, and suggestions for future research.

Theoretical background and hypothesis development

This study is grounded in several theoretical frameworks that inform the understanding of AI-based language models, like ChatGPT, and their effects on individual impact, benefits, behavioral intention, and behavior.

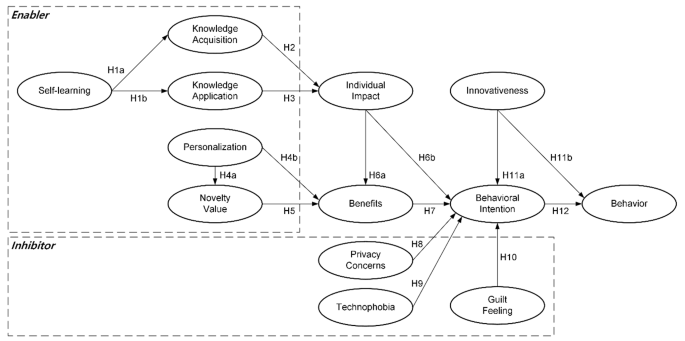

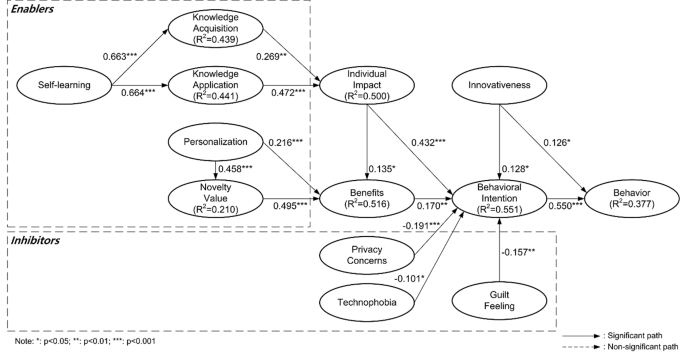

Figure 1 displays the research model. The model asserts that the AI’s self-learning capability influences knowledge acquisition and application, which in turn affect individual impact. Personalization of the AI and the novelty value it offers are also predicted to have substantial effects on the perceived benefits, influencing the behavioral intention to use AI, culminating in actual behavior. The model also takes into account potential negative influences such as perceived risk, technophobia, and feelings of guilt on the behavioral intention to use the AI. The last two constructs in the model, behavioral intention and innovativeness, are anticipated to influence users’ actual behavior toward the AI.

Research Model
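To make the structure of the research model easier to inspect, the paths described above can be written down as a small directed graph. This is only a sketch: the edge list is read off the description of Figure 1 and the abstract, so it may not match the full figure. The construct identifiers, the `upstream` helper, and the positive signs on paths whose direction of effect the text does not state explicitly are all assumptions made for illustration.

```python
from collections import defaultdict

# (source, target, assumed sign) read off the model description and abstract.
# "-" marks the influences the text calls negative; "+" is assumed elsewhere.
PATHS = [
    ("self_learning", "knowledge_acquisition", "+"),
    ("self_learning", "knowledge_application", "+"),
    ("knowledge_acquisition", "individual_impact", "+"),
    ("knowledge_application", "individual_impact", "+"),
    ("individual_impact", "benefits", "+"),              # from the abstract
    ("individual_impact", "behavioral_intention", "+"),  # from the abstract
    ("personalization", "benefits", "+"),
    ("novelty_value", "benefits", "+"),
    ("benefits", "behavioral_intention", "+"),
    ("perceived_risk", "behavioral_intention", "-"),
    ("technophobia", "behavioral_intention", "-"),
    ("guilt", "behavioral_intention", "-"),
    ("behavioral_intention", "behavior", "+"),
    ("innovativeness", "behavior", "+"),
]


def upstream(construct, paths=PATHS):
    """Return every construct that has a directed path into `construct`."""
    parents = defaultdict(set)
    for source, target, _sign in paths:
        parents[target].add(source)
    seen, stack = set(), [construct]
    while stack:
        node = stack.pop()
        for parent in parents[node]:
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen


inhibitors = sorted(s for s, t, sign in PATHS if sign == "-")
print("Antecedents of behavior:", sorted(upstream("behavior")))
print("Hypothesized inhibitors:", inhibitors)
```

Listing the paths this way makes it easy to check that every construct named in the text eventually feeds into actual behavior, and to see which influences are expected to work against adoption.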

Self-determination theory (SDT)

SDT is a critical theoretical foundation of this study. The theory, developed by Ryan and Deci (2000), posits that when tasks are intrinsically motivating and individuals perceive competence in these tasks, it leads to improved performance, persistence, and creativity. This theoretical framework supports the idea that the self-learning capability of AI, like ChatGPT, is vital in fostering intrinsic motivation among its users. Specifically, the self-learning aspect allows ChatGPT to tailor its interactions according to the individual user’s needs, thereby creating a more engaging and personally relevant learning environment. This can enhance users’ perception of their competence, resulting in more effective knowledge acquisition and application (H1).

Further, by promoting effective knowledge acquisition and application, the self-learning capability of ChatGPT can have a significant positive impact on individuals (H2, H3). That is, students can use the knowledge gained from interactions with ChatGPT to improve their academic performance, deepen their understanding of various subjects, and even stimulate their creativity. This aligns with the SDT’s emphasis on competence perception as a driver of enhanced performance and creativity.

Therefore, in line with the SDT, this study hypothesizes that the self-learning capability of ChatGPT, which promotes intrinsic motivation and perceived competence, can lead to improved knowledge acquisition and application, and consequently, a positive individual impact. This theoretical foundation helps to validate the first three hypotheses of the study and underscores the significance of intrinsic motivation and perceived competence in leveraging the educational potential of AI tools like ChatGPT.

Self-learning

Self-learning is considered an inherent capability of the AI tool ChatGPT (Rijsdijk et al., 2007). This unique ability of ChatGPT allows it to conduct continuous learning, improve itself, and solve problems based on big data analysis. The concept of self-learning in AI involves the system’s ability to learn and improve from experience without being explicitly programmed (Bishop & Nasrabadi, 2006). Such an AI-based tool, like ChatGPT, can evolve its capabilities over time, making it a highly efficient tool for knowledge acquisition and application among students (Berland et al., 2014). Berland et al. (2014) pointed out that self-learning in AI can provide personalized learning experiences, thereby helping students to acquire and retain knowledge more effectively. This is due to the AI’s ability to adapt its responses based on past interactions and data, allowing for more targeted and relevant knowledge acquisition. For knowledge application, the self-learning capability of AI tools like ChatGPT can play a significant role. D’mello and Graesser (2013) argued that such AI systems could enhance learners’ ability to apply acquired knowledge, as they can simulate a variety of scenarios and provide immediate feedback. This real-time, contextual problem-solving can further strengthen knowledge application skills in students. Consequently, recognizing the potential of self-learning in AI, such as in ChatGPT, to improve both knowledge acquisition and application among university students, this study suggests the following hypotheses.

H1a. Self-learning has a positive effect on knowledge acquisition.

H1b. Self-learning has a positive effect on knowledge application.

Knowledge acquisition

Knowledge acquisition is the process in which new information is obtained and subsequently expanded upon when additional insights are gained. Research by Nonaka (1994) emphasized the role of knowledge acquisition in enhancing organizational productivity. Another study by Alavi and Leidner (2001) suggested that knowledge acquisition can positively influence individual performance by improving the efficiency and effectiveness of task execution. Several scholars have demonstrated the significance of knowledge acquisition for perceived usefulness in the context of learning (Al-Emran et al., 2018; Al-Emran & Teo, 2020). Given the capabilities of AI chatbots like ChatGPT in providing users with immediate, accurate, and personalized information, it is likely that knowledge acquisition from using such a tool could lead to improved task performance, that is, individual impact. Therefore, this study proposes the following hypothesis:

H2. Knowledge acquisition has a positive effect on individual impact.

Knowledge application

Knowledge application, as defined by Al-Emran et al. (2020), refers to enabling individuals to readily use knowledge through efficient storage and retrieval systems. With AI-driven chatbots like ChatGPT, this application process can be significantly enhanced. The positive influence of effective knowledge application on individual outcomes has also been corroborated by previous research (Al-Emran & Teo, 2020; Alavi & Leidner, 2001; Heisig, 2009). Therefore, this study proposes the following hypothesis:

H3. Knowledge application has a positive effect on individual impact.

- Personalization

The study’s conceptual framework is further informed by personalization (Maghsudi et al., 2021; Yu et al., 2017), underscoring the importance of personalized experiences in a learning environment. Literature on personalization advocates that designing experiences tailored to individual needs and preferences enhances the user’s engagement and satisfaction (Desaid, 2020; Jo, 2022). In the context of AI, personalization refers to the ability of the system, in this case ChatGPT, to adapt its interactions based on the unique requirements and patterns of the individual user. This capacity to customize interactions could make the learning process more relevant and stimulating for students, thereby potentially increasing the novelty value they derive from using ChatGPT (H4a).

Furthermore, personalization theory suggests that a personalized learning environment can lead to increased perceived benefits, as it allows students to learn at their own pace, focus on areas of interest or difficulty, and receive feedback that is specific to their learning progress (H4b). This aligns with the results of the study, which found a significant positive relationship between personalization and perceived benefits.

Personalization refers to the technology’s ability to tailor interactions based on the user’s preferences, behaviors, and individual characteristics, providing a unique and individualized user experience (Wirtz et al., 2018). This capacity has been found to significantly enhance users’ perception of value in their interactions with the technology (Chen et al., 2022). Previous research has indicated that the personalization capabilities of AI chatbots, such as ChatGPT, can enhance novelty value, as the individualized user experience provides a unique and fresh engagement every time (Haleem et al., 2022; Koubaa et al., 2023). Research has also highlighted that personalized interactions with AI chatbots can lead to increased perceived benefits, such as improved efficiency, productivity, and task performance (Makice, 2009). Personalization enables the technology to cater to the individual user’s needs effectively, thereby enhancing their perception of the benefits derived from using it (Wirtz et al., 2018). Thus, this study proposes the following hypotheses:

H4a. Personalization has a positive effect on novelty value.

H4b. Personalization has a positive effect on benefits.

Diffusion of Innovation

The diffusion of innovations theory offers a valuable lens through which to understand the adoption of new technologies (Rogers, 2010). The theory suggests that the diffusion and adoption of an innovation in a social system are influenced by five key factors: the innovation’s relative advantage, compatibility, complexity, trialability, and observability.

One essential aspect of an innovation, which resonates strongly with this study, is its relative advantage, that is, the degree to which an innovation is perceived as being better than the idea it supersedes. This relative advantage often takes the form of novelty value, especially in the early stages of an innovation’s diffusion process. In this context, ChatGPT, with its self-learning capability, personalization, and interactive conversation style, can be seen as a novel alternative to traditional learning methods. This novelty can make the learning process more engaging, exciting, and effective, thereby increasing the perceived benefits for students.

Rogers’ theory also highlights that these perceived benefits can significantly influence an individual’s intention to adopt the innovation. The more an individual perceives the benefits of the innovation to outweigh the costs, the more likely they are to adopt it. In the context of this study, the novelty value of using ChatGPT contributes to the perceived benefits (H5), which in turn influence the intention to use it (H7). This underscores the importance of managing the perceived novelty value of AI tools like ChatGPT in a learning environment, as it can be a crucial determinant of their adoption and use. Furthermore, Rogers’ theory stresses the importance of an innovation’s compatibility and simplicity (that is, low complexity). These attributes correspond to the personalization and self-learning capabilities of ChatGPT, highlighting that an AI tool that fits users’ needs and is easy to use is more likely to be adopted.

Novelty value

Novelty value refers to the unique and new experience that users perceive when interacting with the technology (Moussawi et al., 2021). This sense of novelty has been associated with increased interest, engagement, and satisfaction, contributing to a more valuable and enriching user experience (Hund et al., 2021). In this context, the novelty value provided by AI artifacts can enhance the perceived value of using the technology (Jo, 2022). This relationship has been documented in prior research, showing that the novelty of technology use can lead to increased perceived benefits, possibly due to heightened user interest, engagement, and satisfaction (Huang & Benyoucef, 2013). Therefore, this study suggests the following hypothesis:

H5. Novelty value has a positive effect on benefits.

Technology Acceptance Model (TAM)

The TAM has been widely employed to explain user acceptance and usage of technology. TAM posits that two particular perceptions, perceived usefulness (the degree to which a person believes that using a particular system would enhance their job performance) and perceived ease of use (the degree to which a person believes that using a particular system would be free of effort), determine an individual’s attitude toward using the system, which in turn influences their behavioral intention to use it, and ultimately their actual system use.

In the context of this study, TAM offers valuable insight into how individual impact, perceived as the influence of using ChatGPT on a student’s academic performance and outcomes, can affect perceived benefits and behavioral intention (H6a, H6b). Students are more likely to use ChatGPT if they perceive it to have a positive impact on their learning outcomes. This perceived impact can enhance the perceived benefits of using ChatGPT, which can then influence their behavioral intention to use it. This aligns with Davis’s proposition that perceived usefulness positively influences behavioral intention to use a technology (Davis, 1989).

Moreover, the relationship between perceived benefits and behavioral intention (H7) is also consistent with TAM. According to Davis (1989), when users perceive a system as beneficial, they are more likely to form positive intentions to use it. Thus, the more students perceive the benefits of using ChatGPT, such as improved learning outcomes, personalized learning experiences, and increased engagement, the stronger their intention to use ChatGPT.

Individual impact

Individual impact refers to the perceived improvements in task performance and productivity brought about by the use of an information technology (IT) such as ChatGPT (Aparicio et al., 2017). Perceived usefulness, which is closely related to individual impact, has a positive effect on behavioral intention to use a technology (Davis, 1989). Numerous studies have demonstrated that when users perceive a technology to be beneficial and impactful on their personal efficiency or productivity, they are more likely to have a positive behavioral intention towards using it (Gatzioufa & Saprikis, 2022; Kelly et al., 2022; Venkatesh et al., 2012). Similarly, these perceived improvements can also increase the perceived value of the technology, contributing to its overall appeal (Kim & Benbasat, 2006). Building upon this premise, this study proposes the following hypotheses:

H6a. Individual impact has a positive effect on benefits.

H6b. Individual impact has a positive effect on behavioral intention.

The concept of benefits refers to the perceived advantage or gain a user experiences from the use of an IT (Al-Fraihat et al., 2020). This study treats individual impact and benefits as separate constructs. The rationale stems from the subtle differences in their underlying meanings and implications in the context of using AI chatbots like ChatGPT. While the two might appear closely related, treating them separately can provide a more nuanced understanding of user experiences and perceptions. Individual impact, as defined in this research, pertains to the direct effects of using ChatGPT on the user’s performance and productivity. It encapsulates the tangible, immediate changes that occur in a user’s work efficiency and task completion speed as a result of using the AI chatbot. Individual impact therefore serves as a measure of performance enhancement, indicating the ‘output’ side of the user’s interaction with ChatGPT. Benefits, as gauged by the proposed survey items, are more encompassing. They extend beyond the immediate task-related effects to include the overall advantages perceived by users, ranging from acquiring new knowledge to achieving personal or educational goals. Benefits, in essence, capture the ‘outcome’ aspect of using ChatGPT, reflecting a broader range of positive effects that contribute to user satisfaction and perceived value. By differentiating between individual impact and benefits, this research can provide a more detailed and comprehensive analysis of users’ experiences with ChatGPT.

The role of benefits as a determinant of behavioral intention is firmly established within the extant literature on technology acceptance and adoption. The theory of reasoned action (TRA) posits that the perception of benefits or value is directly linked to behavioral intentions (Fishbein & Ajzen, 1975). Similarly, the TAM proposes that perceived usefulness significantly influences behavioral intention to use a technology (Davis, 1989). Recent studies further confirm this relationship, showing that the perceived benefit of a technology strongly predicts users’ intentions to use it (Cao et al., 2021; Chao, 2019; Yang & Wibowo, 2022). Given the consistent findings supporting this relationship, the following hypothesis is proposed:

H7. Benefits have a positive effect on behavioral intention.

Privacy theory and Technostress

The privacy paradox theory, formulated by Barnes (2006), provides insight into the intriguing contradiction that exists between individuals’ expressed concerns about privacy and their actual online behavior. Despite voicing concerns about their privacy, many individuals continue to disclose personal information or engage in activities that may compromise their privacy. However, in the context of AI technologies like ChatGPT, this study posits that privacy concerns may have a more pronounced impact. Particularly in an educational context, where sensitive academic information may be shared, privacy concerns can act as a deterrent, negatively influencing the behavioral intention to use the technology (H8).

In addition, the technostress model by Tarafdar et al. (2007) sheds light on technology-induced stress and its impact on behavioral intention. This model proposes that individuals can experience different forms of technostress, including technophobia, which refers to the fear or anxiety experienced by some individuals when faced with new technologies. Technophobia can be particularly prevalent among individuals who lack familiarity or comfort with emerging technologies. In the context of this study, technophobia can potentially inhibit students from adopting and using AI tools like ChatGPT, thus negatively impacting their behavioral intention (H9).