
Top 20 MCQs on literature review with answers

MCQs on literature review : The primary purpose of literature review is to facilitate detailed background of the previous studies to the readers on the topic of research.

In this blog post, we have published 20 MCQs on Literature Review (Literature Review in Research) with answers.

20 Multiple Choice Questions on Literature Review

1. Literature is a

Written Record

Published Record

Unpublished Record

All of these

2. Which method of literature review presents data non-statistically while also having the features of the systematic method?

Narrative Method

Systematic Method

Meta-Analysis Method of Literature Review

Meta-Synthesis Method of Literature Review

3. Comparisons of non-statistical variables are performed under which method of literature review?

4. Literature review is not similar to

Annotated Bibliography

5. APA Style, MLA Style, Chicago Manual, Blue Book, OSCOLA are famously known as

Citation Manuals

Directories

Abbreviation Manuals

6. Literature collected is reviewed and preferably arranged

Alphabetically

Chronologically

None of these

7. Literature collected for review includes

Primary and Secondary Sources

Secondary and Tertiary Sources

Primary and Tertiary Sources

8. Literature includes

Previous Studies

Scholarly publications

Research Findings

9. No time frame is set to collect literature in which of the following method of compiling reviews?

Traditional Method

10. Which method of the literature review is more reliable for drawing conclusions of each individual researcher for new conceptualizations and interpretations?

11. The main purpose of finalization of research topics and sub-topics is

Collection of Literature

Collection of Questions

Collection of Statistics

Collection of Responses

12. Literature review is basically to bridge the gap between

Newly established facts

Previously established facts

Facts established time to time

Previous to current established facts

13. The last step in writing the literature review is

Developing a Final Essay

Developing a Coherent Essay

Developing a Collaborated Essay

Developing a Coordinated Essay

14. The primary purpose of literature review is to facilitate detailed background of

Present Studies

Previous studies

Future Studies

15. Narrative Literature Review method is also known as

Advanced Method

Scientific Method

16. Which method of literature review starts with formulating research questions?

17. Which method of literature review involves application of a clinical approach based on a specific subject?

18. Which literature review involves timeline-based collection of literature for review?

19. Which method of literature review involves application of statistical approach?

20. Which literature review method involves conclusions in numeric/statistical form?



Formulating a research question


Clarifying the review question leads to specifying what type of studies can best address that question and setting out criteria for including such studies in the review. These are often called inclusion criteria or eligibility criteria. The criteria could relate to the review topic, the research methods of the studies, specific populations, settings, date limits, geographical areas, types of interventions, or something else.

Systematic reviews address clear and answerable research questions, rather than a general topic or problem of interest. They also have clear criteria about the studies that are being used to address the research questions.

Six examples of types of question are listed below, and the examples show different questions that a review might address based on the topic of influenza vaccination. Structuring questions in this way aids thinking about the different types of research that could address each type of question. Mnemonics can help in thinking about criteria that research must fulfil to address the question. The criteria could relate to the context, research methods of the studies, specific populations, settings, date limits, geographical areas, types of interventions, or something else.

Examples of review questions

- Needs - What do people want? Example: What are the information needs of healthcare workers regarding vaccination for seasonal influenza?

- Impact or effectiveness - What is the balance of benefit and harm of a given intervention? Example: What is the effectiveness of strategies to increase vaccination coverage among healthcare workers? What is the cost effectiveness of interventions that increase immunisation coverage?

- Process or explanation - Why does it work (or not work)? How does it work (or not work)? Example: What factors are associated with uptake of vaccinations by healthcare workers? What factors are associated with inequities in vaccination among healthcare workers?

- Correlation - What relationships are seen between phenomena? Example: How does influenza vaccination of healthcare workers vary with morbidity and mortality among patients? (Note: correlation does not in itself indicate causation).

- Views / perspectives - What are people's experiences? Example: What are the views and experiences of healthcare workers regarding vaccination for seasonal influenza?

- Service implementation - What is happening? Example: What is known about the implementation and context of interventions to promote vaccination for seasonal influenza among healthcare workers?

Examples in practice : Seasonal influenza vaccination of health care workers: evidence synthesis / Loreno et al. 2017

Example of eligibility criteria

Research question: What are the views and experiences of UK healthcare workers regarding vaccination for seasonal influenza?

Include:

- Population: healthcare workers, any type, including those without direct contact with patients.

- Context: seasonal influenza vaccination for healthcare workers.

- Study design: qualitative data including interviews, focus groups, ethnographic data.

- Date of publication: all.

- Country: all UK regions.

Exclude:

- Studies focused on influenza vaccination for general population and pandemic influenza vaccination.

- Studies using survey data with only closed questions, studies that only report quantitative data.
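Eligibility criteria like those above can also be written down as an explicit screening rule. The following is a minimal Python sketch and not part of the original guide: the `Study` record, its field names, and the `is_eligible` helper are hypothetical, and in practice screening depends on human judgement of titles, abstracts and full texts.

```python
from dataclasses import dataclass


@dataclass
class Study:
    """Hypothetical record for one candidate study (field names are assumptions)."""
    population: str            # e.g. "healthcare workers"
    country: str               # e.g. "UK"
    vaccine_context: str       # "seasonal" or "pandemic"
    has_qualitative_data: bool


def is_eligible(study: Study) -> bool:
    """Apply the example inclusion and exclusion criteria to one study."""
    return (
        study.population == "healthcare workers"
        and study.country == "UK"
        and study.vaccine_context == "seasonal"   # pandemic vaccination is excluded
        and study.has_qualitative_data            # quantitative-only studies are excluded
    )


# Made-up candidates, purely for illustration.
candidates = [
    Study("healthcare workers", "UK", "seasonal", True),
    Study("general population", "UK", "seasonal", True),
    Study("healthcare workers", "UK", "pandemic", True),
    Study("healthcare workers", "UK", "seasonal", False),
]
included = [s for s in candidates if is_eligible(s)]
print(len(included), "of", len(candidates), "candidates meet the criteria")
```

Writing the criteria as a single function makes each decision traceable to one named criterion, which is the point of specifying them before screening begins.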

Consider the research boundaries

It is important to consider the reasons that the research question is being asked. Any research question has ideological and theoretical assumptions around the meanings and processes it is focused on. A systematic review should either specify definitions and boundaries around these elements at the outset, or be clear about which elements are undefined.

For example, if we are interested in the topic of homework, there are likely to be pre-conceived ideas about what is meant by 'homework'. If we want to know the impact of homework on educational attainment, we need to set boundaries on the age range of children, or how educational attainment is measured. There may also be particular settings or contexts to specify: type of school, country, gender, the timeframe of the literature, or the study designs of the research.

Research question: What is the impact of homework on children's educational attainment?

Include:

- Scope: Homework, defined as tasks set by school teachers for students to complete out of school time, in any format or setting.

- Population: children aged 5-11 years.

- Outcomes: measures of literacy or numeracy from tests administered by researchers, school or other authorities.

- Study design: Studies with a comparison control group.

- Context: OECD countries, all settings within mainstream education.

- Date Limit: 2007 onwards.

Exclude:

- Any context not in mainstream primary schools.

- Non-English language studies.

Mnemonics for structuring questions

Some mnemonics can help to formulate research questions, set the boundaries of the question, and inform a search strategy.

Intervention effects

PICO: Population – Intervention – Comparison – Outcome

Variations: add ‘T’ for time, ‘C’ for context, or ‘S’ for study type.
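As an illustration of how a PICO-style mnemonic structures a question, here is a minimal Python sketch. The `PICO` class and its `as_question` method are hypothetical helpers, and the "no homework" comparison is an assumed example value rather than something stated in the guide.

```python
from dataclasses import dataclass


@dataclass
class PICO:
    """Hypothetical container for the four PICO elements."""
    population: str
    intervention: str
    comparison: str
    outcome: str

    def as_question(self) -> str:
        """Render the four elements as one answerable review question."""
        return (
            f"In {self.population}, what is the effect of {self.intervention} "
            f"compared with {self.comparison} on {self.outcome}?"
        )


homework = PICO(
    population="children aged 5-11 years",
    intervention="homework set by school teachers",
    comparison="no homework",  # assumed comparison, for illustration only
    outcome="literacy or numeracy test scores",
)
print(homework.as_question())
```

Filling in each field separately forces every boundary of the question to be stated, and the same fields can later seed the search terms.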

Policy and management issues

ECLIPSE: Expectation – Client group – Location – Impact – Professionals involved – Service

Expectation encourages reflection on what the information is needed for, i.e. improvement, innovation or information. Impact looks at what you would like to achieve, e.g. improve team communication.

- How CLIP became ECLIPSE: a mnemonic to assist in searching for health policy/management information / Wildridge & Bell, 2002

Analysis tool for management and organisational strategy

PESTLE: Political – Economic – Social – Technological – Environmental ‐ Legal

An analysis tool that can be used by organizations for identifying external factors which may influence their strategic development, marketing strategies, new technologies or organisational change.

- PESTLE analysis / CIPD, 2010

Service evaluations with qualitative study designs

SPICE: Setting (context) – Perspective– Intervention – Comparison – Evaluation

Perspective relates to users or potential users. Evaluation is how you plan to measure the success of the intervention.

- Clear and present questions: formulating questions for evidence based practice / Booth, 2006

Read more about some of the frameworks for constructing review questions:

- Formulating the Evidence Based Practice Question: A Review of the Frameworks / Davis, 2011


- Last Updated: Apr 4, 2024 10:09 AM

- URL: https://library-guides.ucl.ac.uk/systematic-reviews

Literature Review with MAXQDA

Make the most out of your literature review.

Literature reviews are an important step in the data analysis journey of many research projects, but often it is a time-consuming and arduous affair. Whether you are reviewing literature for writing a meta-analysis or for the background section of your thesis, work with MAXQDA. Our product comes with many exciting features which make your literature review faster and easier than ever before. Whether you are a first-time researcher or an old pro, MAXQDA is your professional software solution with advanced tools for you and your team.

How to conduct a literature review with MAXQDA

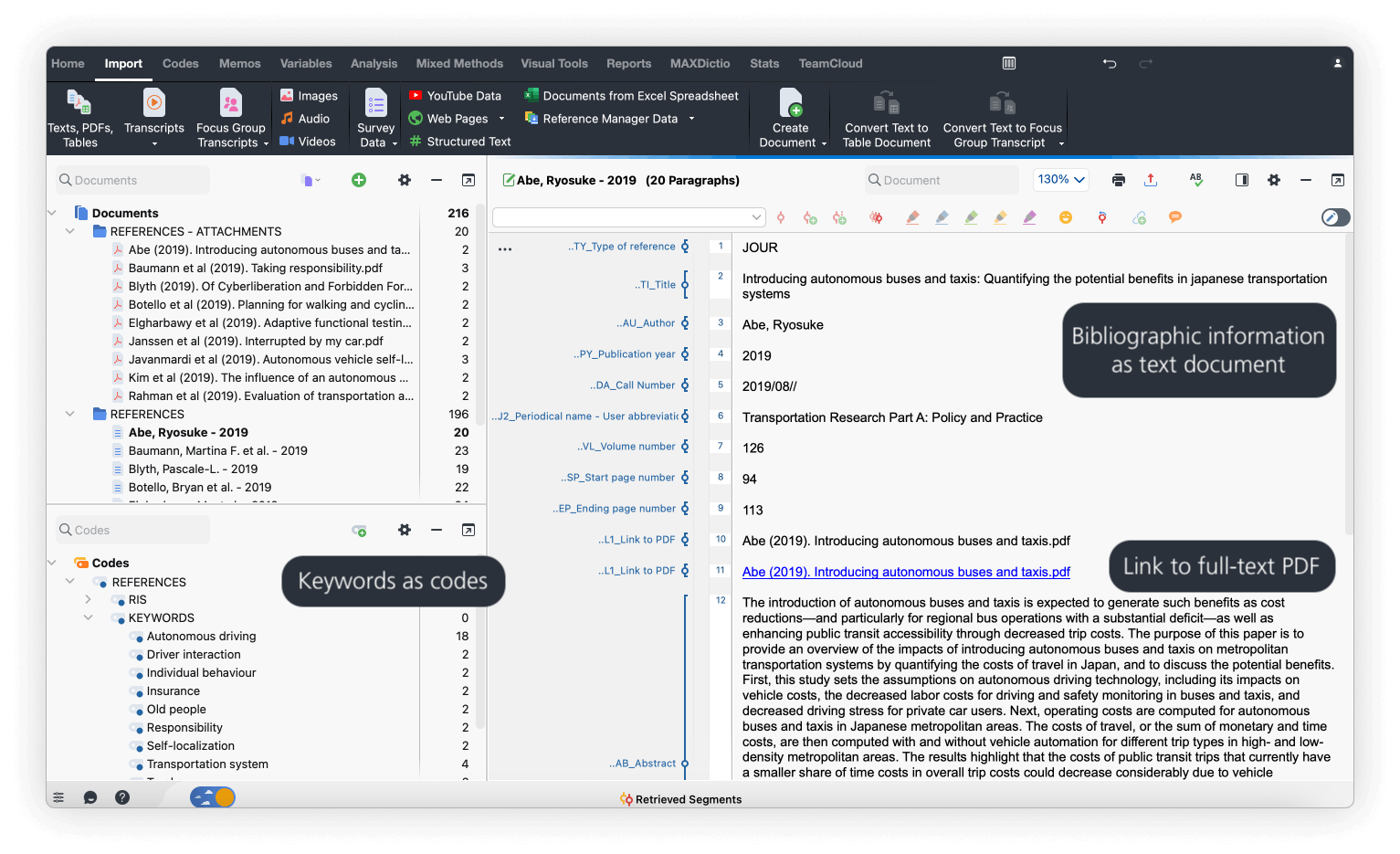

Conducting a literature review with MAXQDA is easy because you can easily import bibliographic information and full texts. In addition, MAXQDA provides excellent tools to facilitate each phase of your literature review, such as notes, paraphrases, auto-coding, summaries, and tools to integrate your findings.

Step one: Plan your literature review

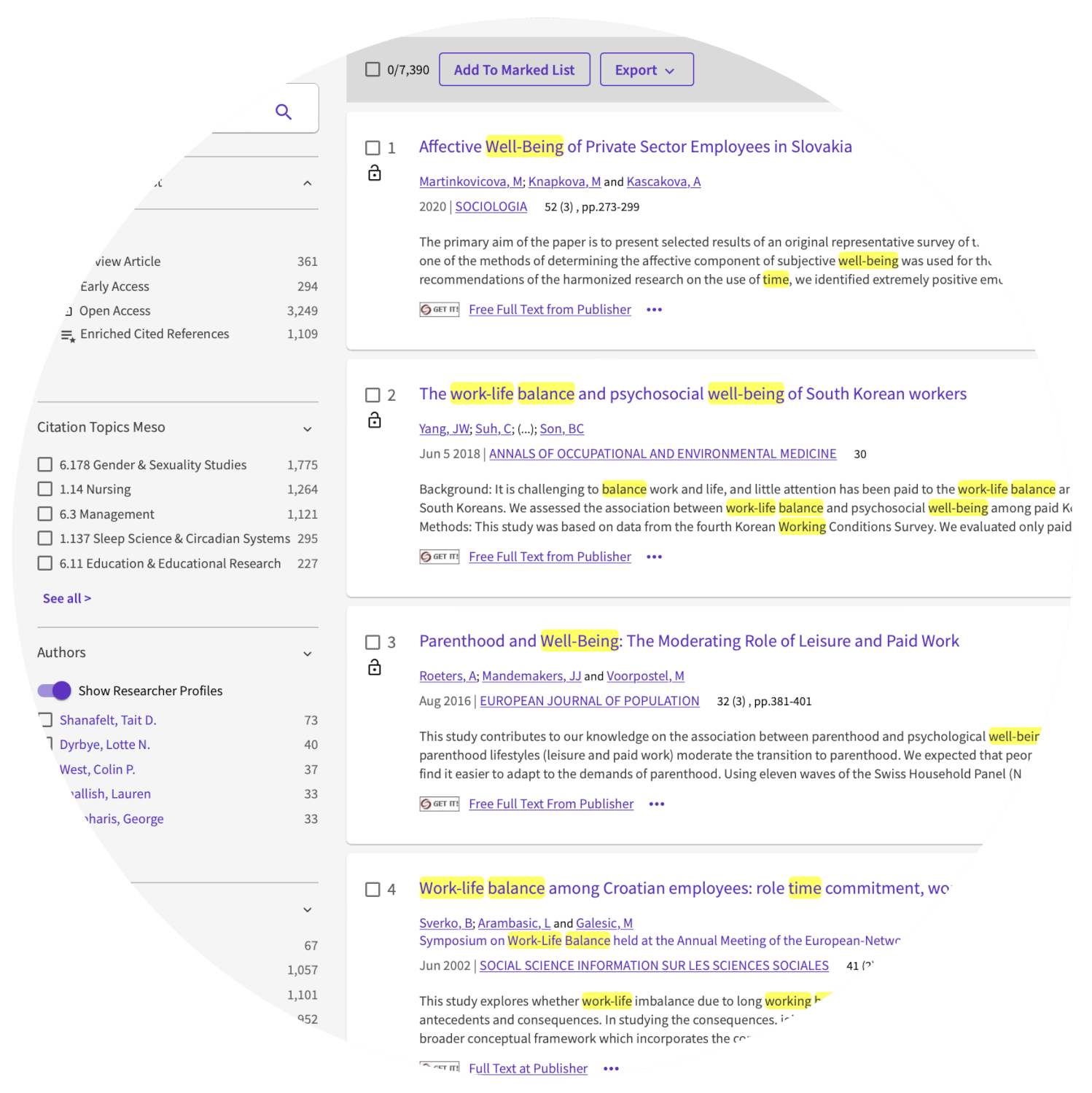

Similar to other research projects, one should carefully plan a literature review. Before getting started with searching and analyzing literature, carefully think about the purpose of your literature review and the questions you want to answer. This will help you to develop a search strategy which is needed to stay on top of things. A search strategy involves deciding on literature databases, search terms, and practical and methodological criteria for the selection of high-quality scientific literature.

MAXQDA supports you during this stage with memos and the newly developed Questions-Themes-Theories tool (QTT). Both are ideal places to store your research questions and search parameters. Moreover, the Questions-Themes-Theories tool is well suited to support your literature review project because it provides a bridge between your MAXQDA project and your research report. It offers an environment in which to bring together findings, record conclusions and develop theories.

Step two: Search, Select, Save your material

Follow your search strategy. Use the databases and search terms you have identified to find the literature you need. Then, scan the search results for relevance by reading the title, abstract, or keywords. Try to determine whether the paper falls within the narrower area of the research question and whether it fulfills the objectives of the review. In addition, check whether the search results fulfill your pre-specified eligibility criteria. As this step typically requires precise reading rather than a quick scan, you might want to perform it in MAXQDA.

If the piece of literature fulfills your criteria and context, you can save the bibliographic information using a reference management system, which is a common approach among researchers as these programs automatically extract a paper’s metadata from the publishing website. You can easily import this bibliographic data into MAXQDA via a specialized import tool. MAXQDA is compatible with all reference management programs that are able to export their literature databases in RIS format, which is a standard format for bibliographic information. This is the case with all mainstream literature management programs such as Citavi, DocEar, Endnote, JabRef, Mendeley, and Zotero.
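To make the RIS format more concrete: an RIS file is plain text in which each line holds a two-character tag (such as `TY` for type, `AU` for author, `TI` for title), then two spaces, a hyphen and the value, and each record ends with an `ER` line. The following Python sketch reads such a file into dictionaries. It is an illustration only, not MAXQDA's importer; `parse_ris` is a hypothetical helper and it ignores multi-line values.

```python
import re
from collections import defaultdict

# Tag (two characters), two spaces, a hyphen, an optional space, then the value.
RIS_LINE = re.compile(r"^([A-Z][A-Z0-9])  - ?(.*)$")


def parse_ris(text: str) -> list[dict[str, list[str]]]:
    """Parse RIS text into one dict per record, mapping tag -> list of values."""
    records = []
    current = defaultdict(list)
    for line in text.splitlines():
        match = RIS_LINE.match(line.rstrip())
        if not match:
            continue  # blank or continuation lines are skipped in this sketch
        tag, value = match.groups()
        if tag == "ER":  # end of record
            if current:
                records.append(dict(current))
            current = defaultdict(list)
        else:
            current[tag].append(value.strip())
    return records


sample = """TY  - JOUR
AU  - Wildridge
AU  - Bell
TI  - How CLIP became ECLIPSE
PY  - 2002
ER  -
"""
for record in parse_ris(sample):
    print(record["AU"], record["PY"])
```

Repeated tags such as `AU` are kept as lists, because a reference can have several authors or keywords.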

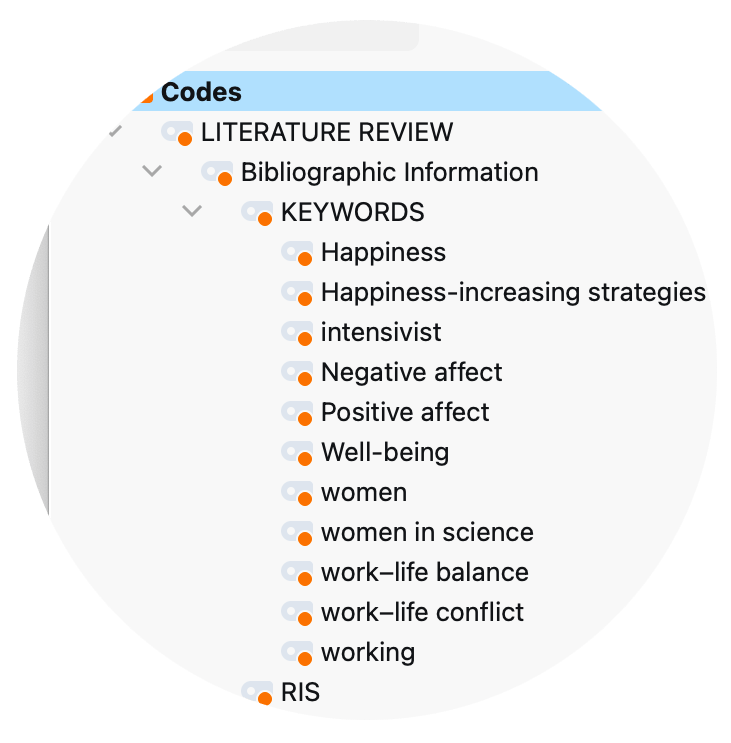

Step three: Import & Organize your material in MAXQDA

Importing bibliographic data into MAXQDA is easy and works seamlessly for all reference management programs that use standard RIS files. MAXQDA offers an import option dedicated to bibliographic data, which you can find in the MAXQDA Import tab. To import the selected literature, just click on the corresponding button, select the data you want to import, and click okay.

Upon import, each literature entry becomes its own text document. If full texts are imported, MAXQDA automatically connects the full text to the literature entry with an internal link. The individual information in the literature entries is automatically coded for later analysis so that, for example, all titles or abstracts can be compiled and searched. To help you keep your literature review organized, MAXQDA automatically creates a document group called “References” which contains the individual literature entries. Like full texts or interview documents, the bibliographic entries can be searched, coded, linked, and edited, and you can add memos for further qualitative and quantitative content analysis (Kuckartz & Rädiker, 2019).

Especially when running multiple searches using different databases or search terms, you should carefully document your approach. Besides being a great place to store the respective search parameters, memos are well suited to capturing your ideas while reviewing your literature and can be attached to text segments, documents, document groups, and much more.

Analyze your literature with MAXQDA

Once your material is imported into MAXQDA, you can explore it using a variety of tools and functions. With MAXQDA as your literature review and analysis software, you have numerous possibilities for analyzing your literature and writing your literature review, too many to mention here, so we present only a subset of tools. Check out our literature about performing literature reviews with MAXQDA to discover more possibilities.

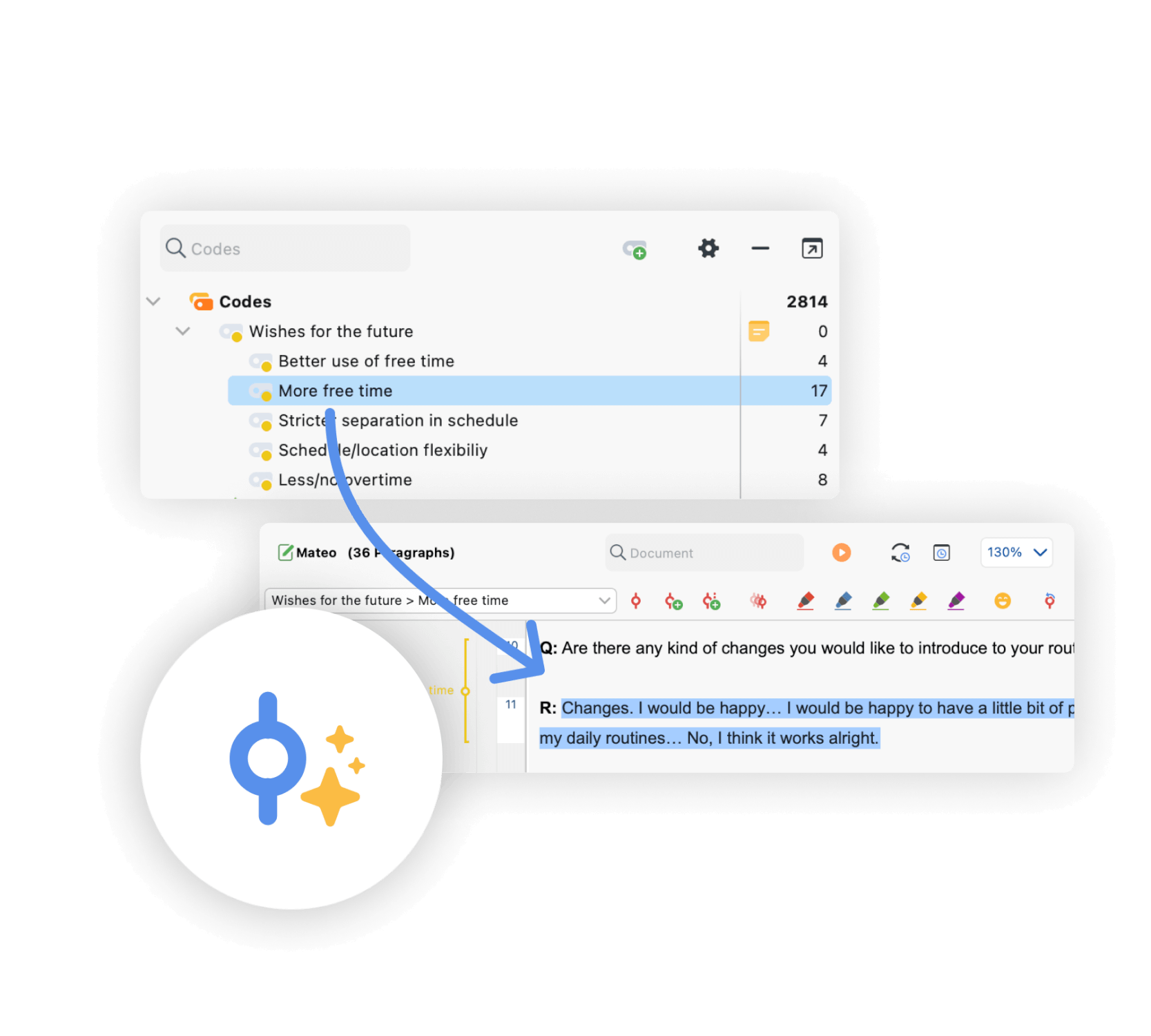

AI Assist: Introducing AI to literature reviews

AI Assist, MAXQDA’s AI-based add-on module, can simplify your literature reviews in many ways. Chat with your data and ask the AI questions about your documents. Let AI Assist automatically summarize entire papers and text segments. Automatically create summaries of all coded segments of a code or generate suggestions for subcodes, and if you don’t know the meaning of a word or concept, use AI Assist to get a definition without leaving MAXQDA. Visit our research guide, AI for Literature Review, for even more ideas on how AI can support your literature review.

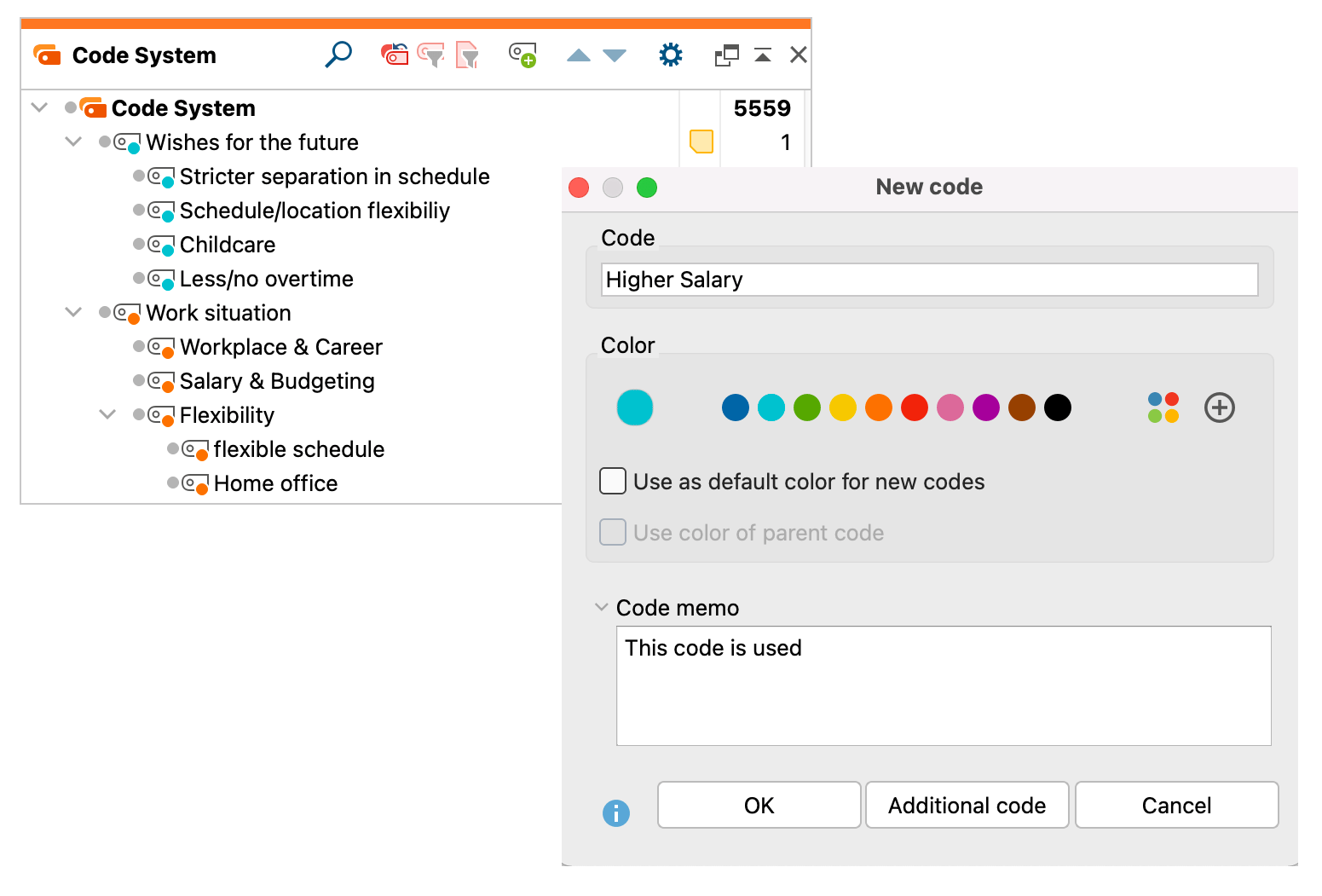

Code & Retrieve important segments

Coding qualitative data lies at the heart of many qualitative data analysis approaches and can be useful for literature reviews as well. Coding refers to the process of labeling segments of your material. For example, you may want to code definitions of certain terms, pro and con arguments, how a specific method is used, and so on. In a later step, MAXQDA allows you to compile all text segments coded with one (or more) codes of interest from one or more papers, so that you can for example compare definitions across papers.

But there is more. MAXQDA offers multiple ways of coding, such as in-vivo coding, highlighters, emoticodes, Creative Coding, or the Smart Coding Tool. The compiled segments can be enriched with variables and the segment’s context accessed with just one click. MAXQDA’s Text Search & Autocode tool is especially well-suited for a literature review, as it allows one to explore large amounts of text without reading or coding them first. Automatically search for keywords (or dictionaries of keywords), such as important concepts for your literature review, and automatically code them with just a few clicks.
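The idea behind keyword-based autocoding can be sketched in a few lines of Python. This is a generic illustration, not MAXQDA's Text Search & Autocode implementation: the `autocode` function, the code names, and the sample paragraphs are all hypothetical.

```python
import re


def autocode(paragraphs: list[str], codebook: dict[str, list[str]]) -> list[tuple[str, int]]:
    """Return (code, paragraph index) for each paragraph containing a keyword of that code."""
    hits = []
    for code, keywords in codebook.items():
        # One case-insensitive, whole-word pattern per code.
        pattern = re.compile(
            r"\b(?:" + "|".join(re.escape(k) for k in keywords) + r")\b",
            re.IGNORECASE,
        )
        for index, paragraph in enumerate(paragraphs):
            if pattern.search(paragraph):
                hits.append((code, index))
    return hits


paragraphs = [
    "A systematic review uses pre-specified eligibility criteria.",
    "A meta-analysis combines results from multiple studies.",
    "Narrative reviews give an overview of a topic.",
]
codebook = {
    "method:systematic": ["systematic review", "eligibility criteria"],
    "method:statistical": ["meta-analysis"],
}
for code, index in autocode(paragraphs, codebook):
    print(code, "-> paragraph", index)
```

A dictionary of keywords per code keeps the search terms separate from the matching logic, so the codebook can grow without changing the function.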

Paraphrase literature into your own words

Another approach is to paraphrase the existing literature. A paraphrase is a restatement of a text or passage in your own words, while retaining the meaning and the main ideas of the original. Paraphrasing can be especially helpful in the context of literature reviews, because paraphrases force you to systematically summarize the most important statements (and only the most important statements) which can help to stay on top of things.

With MAXQDA as your literature review software, you not only have a tool for paraphrasing literature but also tools to analyze the paraphrases you have written: for example, the Categorize Paraphrases tool, which allows you to code your paraphrases, or the Paraphrases Matrix, which allows you to compare paraphrases side-by-side between individual documents or groups of documents.

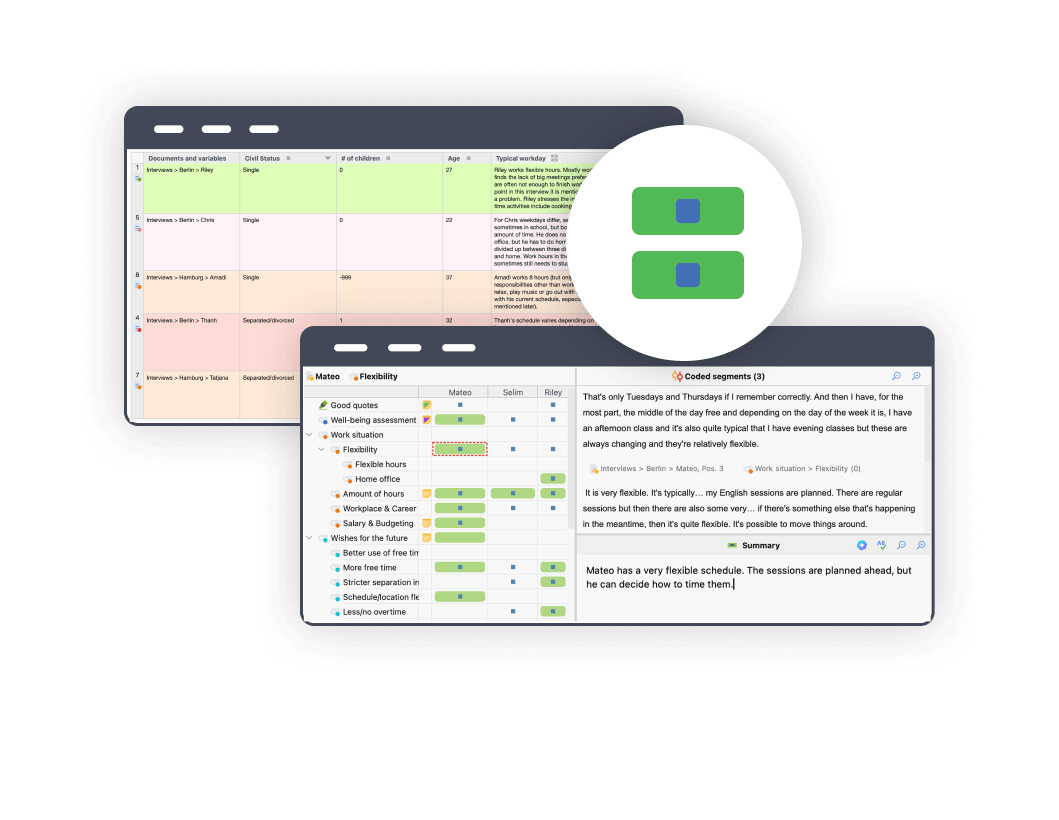

Summaries & Overview tables: A look at the Bigger Picture

When conducting a literature review you can easily get lost. But with MAXQDA as your literature review software, you can keep track of the bigger picture. Among other tools, MAXQDA’s overview and summary tables are especially useful for aggregating your literature review results. MAXQDA offers overview tables for almost everything: codes, memos, coded segments, links, and so on. With MAXQDA literature review tools you can create compressed summaries of sources that can be effectively compared and represented, and with just one click you can export your overview and summary tables and integrate them into your literature review report.

Visualize your qualitative data

The proverb “a picture is worth a thousand words” also applies to literature reviews. That is why MAXQDA offers a variety of Visual Tools that allow you to get a quick overview of the data, and help you to identify patterns. Of course, you can export your visualizations in various formats to enrich your final report. One particularly useful visual tool for literature reviews is the Word Cloud. It visualizes the most frequent words and allows you to explore key terms and the central themes of one or more papers. Thanks to the interactive connection between your visualizations with your MAXQDA data, you will never lose sight of the big picture. Another particularly useful tool is MAXQDA’s word/code frequency tool with which you can analyze and visualize the frequencies of words or codes in one or more documents. As with Word Clouds, nonsensical words can be added to the stop list and excluded from the analysis.
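Counting word frequencies while excluding a stop list is straightforward to illustrate. The Python sketch below shows the general technique only and says nothing about how MAXQDA computes its Word Clouds; `word_frequencies`, the sample text, and the stop list are hypothetical.

```python
import re
from collections import Counter


def word_frequencies(text: str, stop_list: set[str]) -> Counter:
    """Count lower-cased words, skipping any word that appears in the stop list."""
    words = re.findall(r"[a-z]+(?:-[a-z]+)*", text.lower())
    return Counter(w for w in words if w not in stop_list)


text = "The review maps the literature. The review also codes the literature for themes."
stop_list = {"the", "for", "also"}
freq = word_frequencies(text, stop_list)
print(freq.most_common(3))
```

Words added to `stop_list` simply never reach the counter, which mirrors the effect of excluding uninformative words from the analysis.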

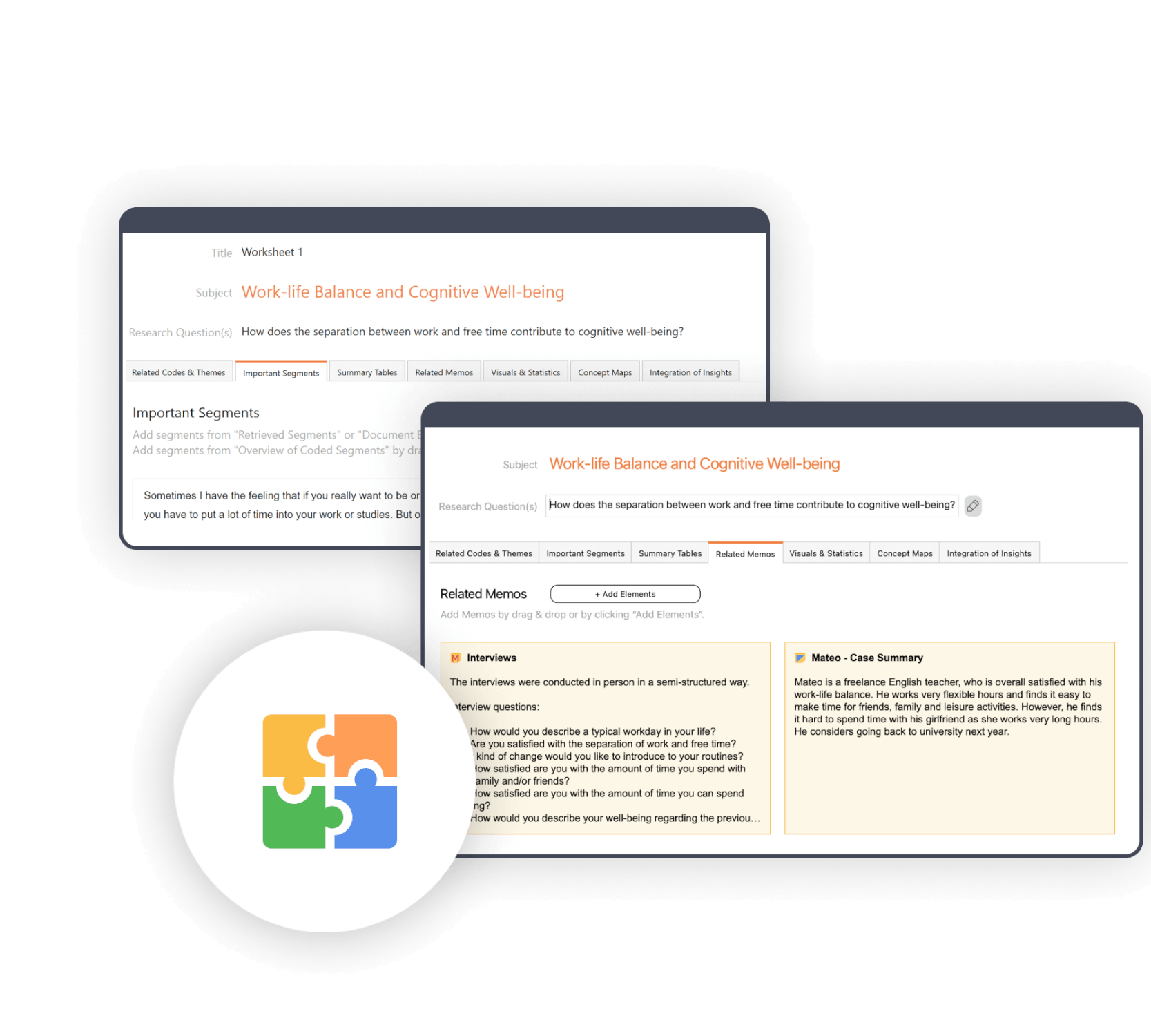

QTT: Synthesize your results and write up the review

MAXQDA has an innovative workspace to gather important visualizations, notes, segments, and other analysis results, which makes it a useful tool for organizing your thoughts and data. Create a separate worksheet for your topics and research questions, fill it with associated analysis elements from MAXQDA, and add your conclusions, theories, and insights as you go. For example, you can add Word Clouds, important coded segments, and your literature summaries and write down your insights. Subsequently, you can view all analysis elements and insights to write your final conclusion. The Questions-Themes-Theories tool is well suited to help you finalize your literature review reports. With just one click you can export your worksheet and use it as a starting point for your literature review report.

Literature about Literature Reviews and Analysis

We offer a variety of free learning materials to help you get started with your literature review. Check out our Getting Started Guide to get a quick overview of MAXQDA and step-by-step instructions on setting up your software and creating your first project with your brand new QDA software. In addition, the free Literature Reviews Guide explains how to conduct a literature review with MAXQDA in more detail.


A literature review is a critical analysis and summary of existing research and literature on a particular topic or research question. It involves systematically searching and evaluating a range of sources, such as books, academic journals, conference proceedings, and other published or unpublished works, to identify and analyze the relevant findings, methodologies, theories, and arguments related to the research question or topic.

A literature review’s purpose is to provide a comprehensive and critical overview of the current state of knowledge and understanding of a topic, to identify gaps and inconsistencies in existing research, and to highlight areas where further research is needed. Literature reviews are commonly used in academic research, as they provide a framework for developing new research and help to situate the research within the broader context of existing knowledge.

A literature review is a critical evaluation of existing research on a particular topic and is part of almost every research project. The literature review’s purpose is to identify gaps in current knowledge, synthesize existing research findings, and provide a foundation for further research. Over the years, numerous types of literature reviews have emerged. To empower you in coming to an informed decision, we briefly present the most common literature review methods.

- Narrative Review : A narrative review summarizes and synthesizes the existing literature on a particular topic in a narrative or story-like format. This type of review is often used to provide an overview of the current state of knowledge on a topic, for example in scientific papers or final theses.

- Systematic Review : A systematic review is a comprehensive and structured approach to reviewing the literature on a particular topic with the aim of answering a defined research question. It involves a systematic search of the literature using pre-specified eligibility criteria and a structured evaluation of the quality of the research.

- Meta-Analysis : A meta-analysis is a type of systematic review that uses statistical techniques to combine and analyze the results from multiple studies on the same topic. The goal of a meta-analysis is to provide a more robust and reliable estimate of the effect size than can be obtained from any single study.

- Scoping Review : A scoping review is a type of systematic review that aims to map the existing literature on a particular topic in order to identify the scope and nature of the research that has been done. It is often used to identify gaps in the literature and inform future research.
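To show what "combining results statistically" can mean in a meta-analysis, here is a minimal Python sketch of one common approach, fixed-effect inverse-variance pooling, in which each study is weighted by the inverse of its variance. The function name and the effect sizes and variances are made up for illustration; real meta-analyses also assess heterogeneity and often use random-effects models.

```python
import math


def pooled_effect(effects: list[float], variances: list[float]) -> tuple[float, float]:
    """Fixed-effect inverse-variance pooled estimate and its standard error."""
    weights = [1.0 / v for v in variances]          # precise studies get more weight
    total_weight = sum(weights)
    estimate = sum(w * y for w, y in zip(weights, effects)) / total_weight
    standard_error = math.sqrt(1.0 / total_weight)
    return estimate, standard_error


# Hypothetical effect sizes and variances from three studies.
effects = [0.2, 0.5, 0.3]
variances = [0.04, 0.01, 0.02]
estimate, se = pooled_effect(effects, variances)
print(f"pooled estimate = {estimate:.3f}, standard error = {se:.3f}")
```

The pooled standard error is smaller than that of any single study, which is why a meta-analysis can give a more reliable estimate than the individual studies it combines.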

There is no “best” way to do a literature review, as the process can vary depending on the research question, field of study, and personal preferences. However, here are some general guidelines that can help to ensure that your literature review is comprehensive and effective:

- Carefully plan your literature review : Before you start searching and analyzing literature you should define a research question and develop a search strategy (for example identify relevant databases, and search terms). A clearly defined research question and search strategy will help you to focus your search and ensure that you are gathering relevant information. MAXQDA’s Questions-Themes-Theories tool is the perfect place to store your analysis plan.

- Evaluate your sources : Screen your search results for relevance to your research question, for example by reading abstracts. Once you have identified relevant sources, read them critically and evaluate their quality and relevance to your research question. Consider factors such as the methodology used, the reliability of the data, and the overall strength of the argument presented.

- Synthesize your findings : After evaluating your sources, synthesize your findings by identifying common themes, arguments, and gaps in the existing research. This will help you to develop a comprehensive understanding of the current state of knowledge on your topic.

- Write up your review : Finally, write up your literature review, ensuring that it is well-structured and clearly communicates your findings. Include a critical analysis of the sources you have reviewed, and use evidence from the literature to support your arguments and conclusions.

Overall, the key to a successful literature review is to be systematic, critical, and comprehensive in your search and evaluation of sources.

As in all aspects of scientific work, preparation is the key to success. Carefully think about the purpose of your literature review, the questions you want to answer, and your search strategy. The writing process itself will differ depending on your literature review method. For example, when writing a narrative review, use the identified literature to support your arguments, approach, and conclusions. By contrast, a systematic review typically contains the same parts as other scientific papers: Abstract, Introduction (purpose and scope), Methods (search strategy, inclusion/exclusion characteristics, …), Results (identified sources, their main arguments, findings, …), Discussion (critical analysis of the sources you have reviewed), Conclusion (gaps or inconsistencies in the existing research, future research, implications, etc.).


- Med Educ Online

- v.27(1); 2022

Does developing multiple-choice Questions Improve Medical Students’ Learning? A Systematic Review

Youness Touissi

a Faculty of Medicine and Pharmacy of Rabat, Mohammed V University, Souissi, Rabat, Morocco

Ghita Hjiej

b Faculty of Medicine and Pharmacy of Oujda, Mohammed Premier University, Oujda, Morocco

Abderrazak Hajjioui

c Laboratory of Neurosciences, Faculty of Medicine and Pharmacy of Fes, Sidi Mohammed Ben Abdallah University, Fez, Morocco

Azeddine Ibrahimi

d Laboratory of Biotechnology, Mohammed V University, Souissi, Rabat, Morocco

Maryam Fourtassi

e Faculty of Medicine of Tangier, Abdelmalek Essaadi University, Tetouan, Morocco

Practicing multiple-choice questions (MCQs) is a popular learning method among medical students. While MCQs are commonly used in exams, creating them might provide another opportunity for students to boost their learning. Yet, the effectiveness of student-generated multiple-choice questions in medical education has been questioned. This study aims to verify the effects of student-generated MCQs on medical learning, in terms of students’ perceptions as well as their performance and behavior, and to define the circumstances that would make this activity more useful to the students. Articles were identified by searching four databases (MEDLINE, SCOPUS, Web of Science, and ERIC), as well as scanning references. The titles and abstracts were selected based on pre-established eligibility criteria, and the methodological quality of the articles included was assessed using the MERSQI scoring system. Eight hundred and eighty-four papers were identified. Eleven papers were retained after abstract and title screening, and 6 articles were recovered from cross-referencing, giving 17 articles in total. The mean MERSQI score was 10.42. Most studies showed a positive impact of developing MCQs on medical students’ learning in terms of both perception and performance. Few articles in the literature examined the influence of student-generated MCQs on medical students’ learning. Amid some concerns about time and needed effort, writing multiple-choice questions as a learning method appears to be a useful process for improving medical students’ learning.

Introduction

Active learning, where students are motivated to construct their understanding of things and make connections between the information they grasp, is proven to be more effective than passively absorbing mere facts [ 1 ]. However, medical students are still largely exposed to passive learning methods, such as lectures, with no active involvement in the learning process. In order to assimilate the vast amount of information they are supposed to learn, students adopt a variety of strategies, which are mostly oriented by the assessment methods used in examinations [ 2 ].

Multiple-choice questions (MCQs) represent the most common assessment tool in medical education worldwide [ 3 ]. Therefore, it is expected that students would favor practicing MCQs, either from old exams or commercial question banks, over other learning methods to get ready for their assessments [ 4 ]. Although this approach might seem practical for students as it strengthens their knowledge and gives them a prior exam experience, it might incite surface learning instead of constructing more elaborate learning skills, such as application and analysis [ 5 ].

Involving students in creating MCQs appears to be a potential learning strategy that combines students’ pragmatic approach and actual active learning. Developing good questions, in general, implies a deep understanding and a firm knowledge of the material that is evaluated [ 6 ]. Writing a good MCQ requires, in addition to a meticulously drafted stem, the ability to suggest erroneous but plausible distractors [ 7 , 8 ]. It has been suggested that creating distractors may reveal misconceptions and mistakes and highlight where students have a defective understanding of the course material [ 6 , 9 ]. In other words, creating a well-constructed MCQ requires more cognitive abilities than answering one [ 10 ]. Several studies have shown that the process of producing questions is an efficient way to motivate students and enhance their performance, and have linked MCQ generation to improved test performance [ 11–15 ]. Therefore, generating MCQs might develop desirable problem-solving skills and involve students in an activity that is immediately and clearly relevant to their final examinations.

In contrast, other studies indicated that this time-consuming MCQ development activity had no considerable impact on students’ learning [ 10 ] or that question generation might benefit only some categories of students [ 16 ].

Because different studies have reached conflicting conclusions about this approach, we conducted a systematic review to define and document the evidence on the effect of MCQ writing on students’ learning, and to understand how and under what circumstances it could benefit medical students. To our knowledge, there is no prior systematic review addressing the effect of student-generated multiple-choice questions on medical students’ learning.

Study design

This systematic review was conducted following the guidelines of the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) [ 17 ]. Ethical approval was not required because this is a systematic review of previously published research and does not include any individual participant information.

Inclusion and exclusion criteria

Table 1 summarizes the publications’ inclusion and exclusion criteria. The target population was undergraduate and graduate medical students. The intervention was generating MCQs of all types. The learning outcomes of the intervention had to be reported using validated or non-validated instruments. We excluded studies involving students from other health-related domains, studies in which the intervention was writing questions other than MCQs, and purely descriptive studies that did not evaluate the learning outcome. The absence of a comparison with other educational interventions was not treated as an exclusion criterion because much educational research in the literature is case-based.

Inclusion & exclusion criteria

Search strategy

On May 16, 2020, two reviewers separately conducted a systematic search of four databases, Medline (via PubMed), Scopus, Web of Science, and ERIC, using keywords such as Medical students, Multiple-choice questions, Learning, and Creating, together with their possible synonyms and abbreviations. These were combined with Boolean operators (AND, OR, NOT) using the appropriate search syntax for each database (Appendix 1). All references generated by the search were then imported into a reference management tool (Zotero®) [ 18 ]. The reviewers also manually checked the reference lists of selected publications for further relevant papers. Sections such as ‘Similar Articles’ displayed below articles (e.g., in PubMed) were also checked for possible additional articles. No restrictions regarding publication date, language, or country of origin were applied.

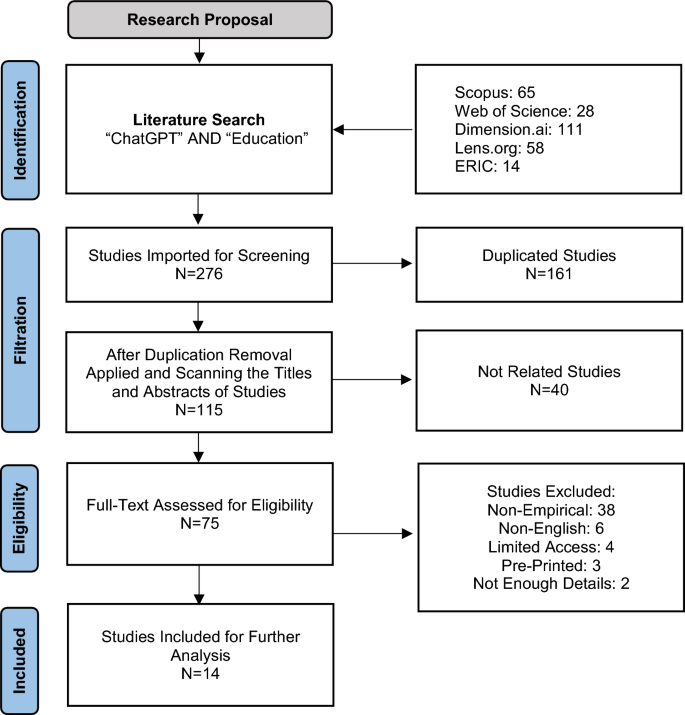

Study selection

The selection process was carried out independently by two reviewers. It started with the screening of all papers returned by the database searches, followed by the removal of all duplicates. All papers whose titles had a potential relation to the research subject were kept for abstract screening, while those with obviously irrelevant titles were eliminated. The reviewers then conducted the abstract screening; all selected studies were retrieved for a final full-text screening. Any disagreement between the reviewers concerning the inclusion of a paper was settled through consensus or arbitrated by a third reviewer if necessary.

Data collection

Two reviewers worked separately to create a provisional data extraction sheet, using a small sample of 4 articles. They then met to finalize the coding sheet by adding, editing, and deleting sections, leading to a final template implemented in Microsoft Excel® to ensure the consistency of the collected data. Each reviewer then extracted data independently using this framework. Finally, the two reviewers compared their work to ensure the accuracy of the collected data. The items listed in the sheet were article authorship and year of publication, country, study design, participants, subject, intervention and co-interventions, MCQ type and quality, assessment instruments, and findings.

Assessment of study methodological quality

A few scales are available to assess the methodological rigor and trustworthiness of quantitative research in medical education, among them the Best Medical Education Evaluation global scale [ 19 ], the Newcastle–Ottawa Scale [ 20 ], and the Medical Education Research Study Quality Instrument (MERSQI) [ 21 ]. We chose the latter to assess quantitative studies because it provides a detailed list of items with specified definitions, has solid validity evidence, and yields scores that correlate with the citation rate in the 3 years following publication and with the journal impact factor [ 22 , 23 ]. MERSQI evaluates study quality based on 10 items: study design, number of institutions studied, response rate, data type, internal structure, content validity, relationship to other variables, appropriateness of data analysis, complexity of analysis, and learning outcome. The 10 items are organized into six domains, each with a maximum score of 3 and a minimum score of 1. Items that are not reported are not scored, so the maximum MERSQI score is 18 [ 21 ].

Each article was assessed independently by two reviewers; any disagreement between the reviewers about MERSQI scoring was resolved by consensus or arbitrated by a third reviewer if necessary. If a study reported more than one outcome, the one with the highest score was taken into account.

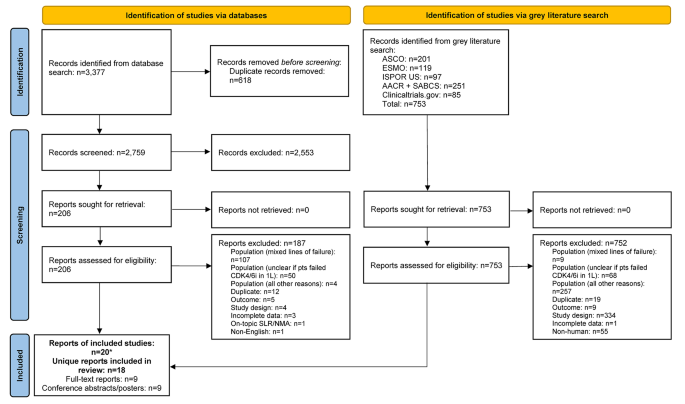

Study design and population characteristics

The initial database search identified 884 papers, of which 18 were retained after title and abstract screening (see Figure 1 ). Seven of these did not meet the inclusion criteria, for reasons such as the absence of a learning outcome or a target population other than medical students. This left 11 articles, to which another 6 articles retrieved by cross-referencing were added. Of the 17 included articles, the two reviewers agreed on 16; the remaining paper was discussed and then included.

Flow-chart of the study selection.
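The selection counts reported in this section can be checked with a few lines of arithmetic. The sketch below is only an illustrative tally: the variable names are hypothetical, the figure of 697 deduplicated records is taken from the appendix, and the agreement figure is a raw percentage computed on the final set of papers rather than a chance-corrected statistic.

```python
# Illustrative tally of the study-selection flow; variable names are hypothetical.
identified = 884                # records returned by the four database searches
after_deduplication = 697       # reported in the appendix
retained_after_screening = 18   # kept after title and abstract screening
excluded_at_full_text = 7       # no learning outcome, or population not medical students
from_cross_referencing = 6      # found by checking reference lists

duplicates_removed = identified - after_deduplication
from_databases = retained_after_screening - excluded_at_full_text
included = from_databases + from_cross_referencing

# Raw agreement between the two reviewers on the final set (16 of 17 papers).
percent_agreement = 100 * 16 / included

print(duplicates_removed, from_databases, included, round(percent_agreement, 1))
```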

The 17 included papers reported 18 studies, as one paper included two distinct studies. Thirteen of the eighteen studies were single-group studies, the most commonly used study design (see Table 2 ). Eleven of these single-group studies were cross-sectional, while two were pre-post-test studies. The second most frequent study design was the cohort study, adopted in three studies. The remaining two were randomized controlled trials (RCTs). The studies were conducted between 1996 and 2019, with 13 studies (79%) from 2012 to 2019.

Demographics, interventions, and outcome of the included studies

MCQs : Multiple-choice questions; N : Number; NR : Not reported; RCT : Randomized controlled trial

Regarding research methodology, 10 were quantitative studies, four were qualitative and four studies had mixed methods with a quantitative part and a qualitative one (students’ feedback).

Altogether, 2122 students participated in the 17 included papers. All participants were undergraduate medical students enrolled in the first five years of medical school. The preclinical stage was the most represented, with 13 out of the 17 papers including students enrolled in the first two years of medical studies.

Most studies used more than one data source; surveys were present as a main or parallel data collection instrument in eight studies. Other data sources were qualitative feedback (n = 8), qualitative feedback turned to quantitative data (n = 1), pre-post-test (n = 4), and post-test (n = 5).

Quality assessment

Overall, the MERSQI scores of the 14 quantitative studies were slightly above the reference value of 10.7, with a mean of 10.75 and a range of 7 to 14 (see details of the MERSQI score for each study in Table 3 ). Studies lost points on MERSQI for using a single-group design, limiting participants to a single institution, lacking validity evidence for the instrument (only two studies used a valid instrument), and measuring the learning outcome only in terms of students’ satisfaction and perceptions.

Methodological quality of included studies according to MERSQI

Details of MERSQI Scoring :

a. Study design: Single group cross-sectional/post-test only (1); single group pre- and post-test (1.5); nonrandomized 2 groups (2); randomized controlled experiment (3).

b. Sampling: Institutions studied: Single institution (0.5); 2 institutions (1); More than 2 institutions (1.5).

c. Sampling: Response rate: Not applicable (0); Response rate < 50% or not reported (0.5); Response rate 50–74% (1); Response rate > 75% (1.5).

d. Type of data: evaluation by study participants (1); Objective measurement (3).

e. Validity evidence for evaluation instrument scores: Content: Not reported/ Not applicable (0); Reported (1).

f. Validity evidence for evaluation instrument scores: Internal structure: Not reported/ Not applicable (0); Reported (1).

g. Validity evidence for evaluation instrument scores: Relationships to other variables: Not reported/ Not applicable (0); Reported (1).

h. Appropriateness of analysis: Inappropriate (0); appropriate (1)

i. Complexity of analysis: Descriptive analysis only (1); Beyond descriptive analysis (2).

j. Outcome: Satisfaction, attitudes, perceptions (1); Knowledge, skills (1.5); Behaviors (2); Patient/health care outcome (3)
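To make the scoring scheme concrete, the point values listed above can be encoded as a lookup table and summed per study. This is a minimal sketch, not the instrument's official implementation: the dictionary keys, level labels, and the `mersqi_score` helper are hypothetical names introduced for illustration, and the example study is invented.

```python
# Hypothetical encoding of the MERSQI point values listed above (items a to j).
VALIDITY = {"not_reported": 0, "reported": 1}
MERSQI_POINTS = {
    "study_design": {"single_group_cross_sectional": 1, "single_group_pre_post": 1.5,
                     "nonrandomized_two_groups": 2, "randomized": 3},
    "institutions": {"one": 0.5, "two": 1, "more_than_two": 1.5},
    "response_rate": {"not_applicable": 0, "below_50_or_not_reported": 0.5,
                      "50_to_74": 1, "75_or_more": 1.5},
    "data_type": {"participant_assessment": 1, "objective": 3},
    "validity_content": VALIDITY,
    "validity_internal_structure": VALIDITY,
    "validity_relationships": VALIDITY,
    "analysis_appropriateness": {"inappropriate": 0, "appropriate": 1},
    "analysis_complexity": {"descriptive": 1, "beyond_descriptive": 2},
    "outcome": {"satisfaction": 1, "knowledge_skills": 1.5,
                "behaviors": 2, "patient_outcome": 3},
}


def mersqi_score(ratings):
    """Sum the points for one study; `ratings` maps each item to a level label."""
    missing = set(MERSQI_POINTS) - set(ratings)
    if missing:
        raise ValueError(f"unrated items: {sorted(missing)}")
    return sum(MERSQI_POINTS[item][level] for item, level in ratings.items())


# Invented example: a single-institution, cross-sectional survey of student satisfaction.
survey_study = {
    "study_design": "single_group_cross_sectional", "institutions": "one",
    "response_rate": "50_to_74", "data_type": "participant_assessment",
    "validity_content": "not_reported", "validity_internal_structure": "not_reported",
    "validity_relationships": "not_reported", "analysis_appropriateness": "appropriate",
    "analysis_complexity": "descriptive", "outcome": "satisfaction",
}
print(mersqi_score(survey_study))
```

Taking the highest level of every item gives 18, which matches the maximum score stated earlier; the invented survey study illustrates how single-group, single-institution, perception-only designs lose points.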

The educational effect of MCQ writing was evaluated using objective measures, based on pre-post-tests or post-tests only, in 9 of the 18 included studies. Subjective assessments such as surveys and qualitative feedback were present as secondary data sources in 7 of these 9 studies, whereas they were the main measures in the remaining nine studies. Hence, 16 studies assessed the learning outcome in terms of students’ satisfaction with and perceptions of the activity, which represents the first level of the Kirkpatrick model, a four-level model for analyzing and evaluating the results of training and educational programs [ 24 ]. Of these 16 studies, 3 reported that students expressed dissatisfaction with the process and found it disadvantageous compared to other learning methods, whereas 4 found mixed results, as students acknowledged the value of the process but doubted its efficiency. On the other hand, nine studies reported favorable results: the exercise was considered of immense importance and helped students consolidate their understanding and knowledge, although students showed reservations about the time expense of the exercise in three studies.

Regarding the nine studies that used objective measures to assess students’ skills and knowledge, which represent the second level of the Kirkpatrick model, six studies reported a significant improvement in students’ grades after this activity, whereas two studies showed no noticeable difference in grades, and one showed a slight drop in grades.

One study suggested that students performed better when writing MCQs on certain modules compared to others. Two studies found the activity beneficial to all students’ categories while another two suggested the process was more beneficial for low performers.

Four studies also found that combining writing with peer review was more beneficial than writing MCQs alone. On the other hand, two studies revealed that peer-reviewing groups did not promote learning, and one study found mixed results.

Concerning the quality of the generated multiple-choice questions, most studies reported that the MCQs were of good or even high quality when compared to faculty-written MCQs, except for two studies where students created MCQs of poor quality. However, only a few studies (n = 2) reported whether students wrote MCQs that tested higher-order skills such as application and analysis or simply tested recalling facts and concepts.

The most common requirement was for students to write single-best-answer MCQs (n = 6), three of which were vignette MCQs. Assertion-reason MCQs were used in two studies. In one study, students were required to write only the stem of the MCQ, while in another they were asked to write the distractors and the answer. The remaining studies did not report the MCQ type.

Data and methodology

This paper systematically reviewed 17 articles investigating the impact of writing multiple-choice questions on medical students’ learning. Several studies specifically examined the effect of this activity on the learning process, whereas in others it represented only a small section of the article, and that section was used for the review. This is because many papers focused on other topics, such as assessing the quality of student-generated MCQs or the efficiency of online question platforms, which reflects how scarce research is on the impact of a promising learning strategy (creating MCQs) in medical education.

The mean MERSQI score of the quantitative studies was 10.75, which is slightly above the level suggestive of a solid methodology, set at 10.7 or higher [ 21 ]. This indicates that most of the included studies used an acceptable methodology. Yet only two studies [ 30 , 31 ] used an instrument that was valid in terms of internal structure, content, and relation to other variables. The lack of instrument validity, together with the use of a single institution and a single-group design, was therefore the main methodological issue identified.

Furthermore, the studies assessing the outcome in terms of knowledge and skills scored higher than those appraising the learning outcome in terms of perception and satisfaction. Hence, we recommend that future research provide more details on the validity parameters of the assessment instruments and focus on higher learning outcome levels, namely skills and knowledge, as these are more closely linked to the nature of the studied activity.

Relation with existing literature

Apart from medical education, the impact of students’ generated questions has been a relevant research question in a variety of educational environments. Fu-Yun & Chun-Ping demonstrated through hundreds of papers that student-generated questions promoted learning and led to personal growth [ 32 ]. For example, in Ecology, students who were asked to construct multiple-choice questions significantly improved their grades [ 33 ]. Also, in an undergraduate taxation module, students who were asked to create multiple-choice questions significantly improved their academic achievement [ 34 ].

A previous review explored the impact of student-generated questions on learning and concluded that the process of constructing questions improved students’ recall and promoted understanding of essential subjects as well as problem-solving skills [ 35 ]. Yet that review took a general view of the activity of generating questions and considered all question formats. Its conclusions will therefore not necessarily agree with those of our review, because medical students have a distinctive student profile [ 36 ] and multiple-choice questions have their own particularities. As far as we know, this is the first systematic review to appraise the pedagogical value of creating MCQs in medical education.

Students’ satisfaction and perceptions

Students’ viewpoints and attitudes toward the MCQ generation process were evaluated in multiple studies, and the results were generally encouraging, despite a few exceptions where students expressed negative impressions of the process and favored other learning methods over it [ 4 , 10 ]. The most pronounced remarks concerned the time the process consumed, which limited its efficiency. This was mainly related to the complexity of the task given to students, who were required to write MCQs in addition to other demanding assignments.

Since the learning method students prefer most is learning by doing, they presumably benefit more when instructions are conveyed in shorter segments and introduced in an engaging format [ 37 ]. Thus, some researchers tried more flexible strategies, such as providing the MCQ distractors and asking students for the stem or, better, providing the stem and requesting the distractors, as these were considered to be the most challenging parts of the process [ 38 ].

Some authors used online platforms to create and share questions making the MCQs generation smoother. Another approach to motivate students was including some generated MCQs in examinations, to boost students’ confidence and enhance their reflective learning [ 39 ]. These measures, supposed to facilitate the task, were perceived positively by students.

Students’ performance

Regarding students’ performance, the MCQ-generation exercise broadly improved students’ grades. However, not all studies reported positive results. Some noted no significant effect of writing MCQs on students’ exam scores [ 10 , 31 ]. This was explained by the small number of participants and the lack of instructor supervision. Moreover, students were tested on broader material than that on which they were instructed to write MCQs, meaning that students might have benefited from the process had they created a larger number of MCQs covering a wider range of material, or had the process been aligned with the whole curriculum content. In addition, some studies reported that low performers benefited more from writing MCQs, consistent with other studies indicating that activities promoting active learning benefit lower-performing students more than higher-performing ones [ 40 , 41 ]. Another suggested explanation was that low achievers tried to memorize student-generated MCQs when these were included in their examinations, which conversely favors surface learning instead of the deep learning anticipated from this activity. This created a dilemma between enticing students to participate in the activity and the disadvantage of memorizing MCQs. Therefore, including student-generated MCQs modified after instructors’ input, rather than the original student-generated versions, in the examination material might be a reasonable option, along with awarding extra points when students are more involved in the process of writing MCQs.

Determinant factors

Students’ performance tends to be related to their ability to generate high-quality questions. As suggested in preceding reviews [ 35 , 42 ], assisting students in constructing questions may enhance the quality of student-generated questions, encourage learning, and improve students’ achievement. Guiding students in writing MCQs also makes it possible to test higher-order skills such as application and analysis in addition to recall and comprehension. Accordingly, in several studies, students were given instructions on how to write high-quality multiple-choice questions, which resulted in high-quality student-generated MCQs [ 10 , 43–45 ]. Even so, such guidelines should avoid making the students’ task more demanding, so that the process remains enjoyable.

Several papers discussed factors that influence the learning outcome of the activity, such as working in groups and peer checking of MCQs, which were found to be associated with higher performance [ 30 , 38 , 43 , 44 , 46–49 ]. Students also viewed these factors favorably because of their potential to broaden and deepen one’s knowledge and to reveal misunderstandings or problems, in line with many studies that have highlighted a variety of beneficial outcomes of peer learning approaches in education [ 42 , 50 , 51 ]. However, in other studies, students preferred to work alone and asked that the time devoted to peer-reviewing MCQs be reduced [ 38 , 45 ]. This was mostly due to students’ lack of trust in the quality of MCQs created by peers; thus, instructor evaluation of students’ MCQs was also a component of an effective intervention.

Strengths and limitations

The main limitation of the present review is the scarcity of studies in the literature. We used narrow inclusion criteria, which led to the omission of articles published in non-indexed journals and of papers from other health-care fields that may have been instructive. However, the choice to limit the review to medical students was motivated by the specificity of medical education curricula and teaching methods compared to those of other health professions in most settings. Another limitation is the weak methodology of a non-negligible portion of the included studies, which makes drawing and generalizing conclusions a delicate exercise. On the other hand, this is the first review to summarize data on the learning benefits of creating MCQs in medical education and to shed light on this interesting learning tool.

Writing multiple-choice questions as a learning method might be a valuable way to enhance medical students’ learning, despite doubts raised about its real efficiency and its pitfalls in terms of time and effort.

There is presently a dearth of research examining the influence of student-generated MCQs on learning. Future research on the subject should use a strong study design, valid instruments, and simple and flexible interventions, measure learning based on performance and behavior, and explore the effect of the process on different categories of students (e.g., by performance, gender, or level), in order to identify the circumstances under which the activity is most beneficial.

Appendix: Search strategy.

Medline (via PubMed)

- Query: ((((Medical student) OR (Medical students)) AND (((Create) OR (Design)) OR (Generate))) AND ((((multiple-choice question) OR (Multiple-choice questions)) OR (MCQ)) OR (MCQs))) AND (Learning)

- Results: 300

Scopus

- Query: ALL (medical PRE/0 students) AND ALL (multiple PRE/0 choice PRE/0 questions) AND ALL (learning) AND ALL (create OR generate OR design)

- Results: 468

Web of Science

- Query: (ALL = ‘Multiple Choice Questions’ OR ALL = ‘Multiple Choice Question’ OR ALL = MCQ OR ALL = MCQs) AND (ALL = ‘Medical Students’ OR ALL = ‘Medical Student’) AND (ALL = Learning OR ALL = Learn) AND (ALL = Create OR ALL = Generate OR ALL = Design)

- Results: 109

ERIC

- Query: ‘Medical student’ AND ‘Multiple choice questions’ AND Learning AND (Create OR Generate OR Design)

Total = 884

After removing duplicate references: 697
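Because the same paper is often indexed in several databases, the merged search results have to be deduplicated before screening. The review used Zotero for reference management; the sketch below is not Zotero's algorithm but a simple illustration of the idea, matching records on DOI or on a normalized title. The function names, record fields, and sample records are all hypothetical.

```python
import re


def normalize_title(title):
    """Lowercase a title and collapse punctuation and whitespace so near-identical strings match."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def deduplicate(records):
    """Keep the first record seen for each DOI or normalized title."""
    seen = set()
    unique = []
    for record in records:
        keys = {("title", normalize_title(record["title"]))}
        if record.get("doi"):
            keys.add(("doi", record["doi"].lower()))
        if keys & seen:
            continue  # already have this paper from another database
        seen |= keys
        unique.append(record)
    return unique


# Toy records, invented for illustration only.
records = [
    {"source": "PubMed", "title": "Student-written MCQs and learning", "doi": "10.1000/example.1"},
    {"source": "Scopus", "title": "Student-Written MCQs and Learning.", "doi": None},
    {"source": "ERIC", "title": "Peer review of question banks", "doi": "10.1000/example.2"},
    {"source": "Web of Science", "title": "Peer Review of Question Banks", "doi": "10.1000/EXAMPLE.2"},
]
unique = deduplicate(records)
print(len(records), "records,", len(unique), "after deduplication")
```

Automatic matching of this kind can miss duplicates with differently worded titles, so a manual check of the remaining records is still advisable.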

Funding Statement

The author(s) reported there is no funding associated with the work featured in this article.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Systematic Reviews: Before you begin...


Welcome to the Library’s Systematic Review Subject Guide

New to systematic reviews?

This Subject Guide page highlights things you should consider before and while undertaking your review.

The systematic review process requires a lot of preparation, detailed searching, and analysing. It may take longer than you think to complete!

Any questions? Contact your Subject Librarian.

Before you begin your review...

Please be assured that your Subject Librarian will support you as best they can.

Subject Librarians are able to show QUB students and staff undertaking any type of literature search (e.g. literature review, scoping review, systematic review) how to:

- Structure searches using AND/OR

- Select appropriate databases

- Search selected databases

- Save and re-run searches

- Export database results

- Store and deduplicate results using EndNote

- Identify grey literature (if required)

At peak periods of demand, Subject Librarians might not be able to deliver all of the above. Please contact your Subject Librarian for guidance on this.

QUB students and staff must provide Subject Librarians with a clear search topic or question, along with a selection of appropriate keywords and synonyms. Students should discuss these with their supervisor before contacting Subject Librarians.

Subject Librarians are unable to do the following for QUB students and staff:

- Check review protocols

- Peer review, or approve, search strategies

- Create search strategies from scratch

- Search databases or grey literature sources

- Deduplicate results

- Screen results

- Demonstrate systematic review tools (e.g. Covidence, Rayyan)

- Create PRISMA flowcharts or similar documentation

Subject Librarians do not need to be listed as review co-authors. However, if reviewers wish to acknowledge the input of a specific Subject Librarian, they should contact the relevant Subject Librarian to ensure appropriate wording.


- Last Updated: May 8, 2024 2:56 PM

- URL: https://libguides.qub.ac.uk/systematicreviews

universe84a

Systematic review and meta-analysis MCQs: multiple-choice questions related to systematic review and meta-analysis theory, guidelines, and software.

Systematic Review and Meta-Analysis MCQs

Systematic Review and Meta-Analysis MCQs: This is a set of multiple-choice questions (MCQs) on the theory, guidelines, and software of systematic review and meta-analysis. Use it to test your knowledge and to prepare for examinations or wherever else you need it. Each question has four choices, of which you must choose the one correct answer.

A set of 20 Systematic Review and Meta-Analysis MCQs

- Which of the following is not always required in systematic review?

- Protocol development

- Search strategy

- Involvement of more than one author

- Meta-analysis

Correct answer: Meta-analysis

- A systematic review of evidence from qualitative studies is also known as a meta-analysis.

- None of them

Correct answer: False

- Which of the steps are included in the systematic review?

- Formulate a question and develop a protocol

- Conduct search

- Select studies, assess study quality, and extract data

- All of the above

Correct answer: All of the above

- Where do we register the protocol of systematic review that will be conducted at the national level?

- Health Research Council of country

- ClinicalTrials.gov

Correct answer: PROSPERO

- What does “S” stand for in PICOS?

- Systematic review

Correct answer: Study

- Which is not the effect size based on continuous variables?

- Absolute mean difference

- Standardized mean difference

- Response ratio

Correct answer: Odds ratio
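The distinction in this question can be illustrated numerically: a standardized mean difference is computed from group means and standard deviations (continuous outcomes), whereas an odds ratio is computed from event counts (binary outcomes). The sketch below uses the pooled-standard-deviation form of the standardized mean difference (Cohen's d); the function names and the sample numbers are hypothetical.

```python
import math


def standardized_mean_difference(mean1, sd1, n1, mean2, sd2, n2):
    """Cohen's d: difference in means divided by the pooled standard deviation."""
    pooled_sd = math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2))
    return (mean1 - mean2) / pooled_sd


def odds_ratio(events1, n1, events2, n2):
    """Odds of the event in group 1 divided by the odds in group 2."""
    odds1 = events1 / (n1 - events1)
    odds2 = events2 / (n2 - events2)
    return odds1 / odds2


# Continuous outcome (invented exam scores): means 75 vs 70, SD 10, 50 students per group.
d = standardized_mean_difference(75, 10, 50, 70, 10, 50)

# Binary outcome (invented pass counts): 30 of 50 pass vs 20 of 50 pass.
or_value = odds_ratio(30, 50, 20, 50)

print(round(d, 2), round(or_value, 2))
```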

- A forest plot displays effect estimates and confidence intervals for both individual studies and meta-analyses.

Correct answer: True

- Which is/are the advantage/s of the meta-analyses?

- To improve precision

- To answer questions not posed by the individual studies

- To settle controversies arising from apparently conflicting studies or to generate new hypotheses

- In the inverse-variance method, larger studies are given more weight than smaller studies.

- Which of the following methods uses the inverse-variance method?

- Fixed-effect method for meta-analysis

- Random-effects methods for meta-analysis

Correct answer: Both
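The two preceding questions can be made concrete with a small sketch of inverse-variance pooling. Each study is weighted by the reciprocal of its variance, so larger (more precise) studies count for more. For the random-effects case, the sketch assumes the DerSimonian-Laird estimator of between-study variance, which is one common choice among several; the function names and the sample numbers are hypothetical.

```python
def fixed_effect(effects, variances):
    """Inverse-variance pooled estimate and its variance."""
    weights = [1.0 / v for v in variances]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    return pooled, 1.0 / sum(weights)


def dersimonian_laird_tau2(effects, variances):
    """Between-study variance (tau squared), truncated at zero."""
    weights = [1.0 / v for v in variances]
    pooled, _ = fixed_effect(effects, variances)
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    c = sum(weights) - sum(w * w for w in weights) / sum(weights)
    return max(0.0, (q - (len(effects) - 1)) / c)


def random_effects(effects, variances):
    """Same inverse-variance formula, with tau squared added to each study's variance."""
    tau2 = dersimonian_laird_tau2(effects, variances)
    return fixed_effect(effects, [v + tau2 for v in variances])


# Invented effect estimates and variances for three studies.
effects = [0.2, 0.5, 0.8]
variances = [0.04, 0.01, 0.09]

print("fixed effect:  ", fixed_effect(effects, variances))
print("random effects:", random_effects(effects, variances))
```

Adding the between-study variance to every study makes the weights more similar to one another, which is why random-effects pooling gives small studies relatively more influence than fixed-effect pooling does.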

- Meta-regressions are similar in essence to simple regressions, in which an outcome variable is predicted according to the values of one or more explanatory variables.

Correct answer: True

- Which of the following reviews does not necessarily require methodological quality assessment of included studies?

- Narrative review

- Scoping review

- Both b and c

Correct answer: Both b and c

- Which of the following is not related to selection bias?

- Random sequence generation

- Allocation concealment

Correct answer: Attrition

- Which is true about GRADE?

- A framework for developing and presenting summaries of evidence and providing a systematic approach for making clinical practice recommendations

- Tool for grading the quality of evidence and for making recommendations

- A reproducible and transparent framework for grading certainty in evidence

- Which of the following checklists is used to report a systematic review?

Correct answer: PRISMA

- Which of the following is the most rigorous and methodologically complex kind of review article?

- Which of the following study designs provides the most robust evidence?

- Randomized clinical trials

- Cohort study

- Cross-sectional analytical study

- Systematic review and meta-analysis

Correct answer: Systematic review and meta-analysis

- Steps in a meta-analysis include all of the following except:

- Abstraction

- Randomization

Correct answer: Randomization

- A fixed-effects model is most appropriate in a meta-analysis when study findings are

- Heterogeneous

- Either homogeneous or heterogeneous

- Neither homogeneous nor heterogeneous

Correct answer: Homogeneous
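Whether study findings are homogeneous is usually judged with a heterogeneity statistic. The sketch below computes Cochran's Q and the I² statistic, the percentage of variability in effect estimates attributable to heterogeneity rather than chance. The function names and the sample numbers are hypothetical, and no particular cut-off for "low" or "high" heterogeneity is assumed here.

```python
def cochran_q(effects, variances):
    """Weighted sum of squared deviations from the inverse-variance pooled estimate."""
    weights = [1.0 / v for v in variances]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    return sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))


def i_squared(effects, variances):
    """I squared as a percentage, truncated at zero."""
    q = cochran_q(effects, variances)
    df = len(effects) - 1
    if q <= 0:
        return 0.0
    return max(0.0, (q - df) / q) * 100


# Invented data: three studies with similar effects, then two with very different effects.
similar = i_squared([0.30, 0.32, 0.31], [0.02, 0.03, 0.025])
different = i_squared([0.1, 0.9], [0.01, 0.01])

print(round(similar, 1), round(different, 1))
```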

- What is the full form of PRISMA?

- Providing Results for Systematic Review and Meta-Analyses

- Preferred Reporting Items for Systematic Reviews and Meta-Analyses

- Providing Reporting Items for Systematic Reviews and Meta-Analyses

- Provisional Reporting Items for Systematic Reviews and Meta-Analyses

Correct answer: Preferred Reporting Items for Systematic Reviews and Meta-Analyses

© 2024 Universe84a.com | All Rights Reserved

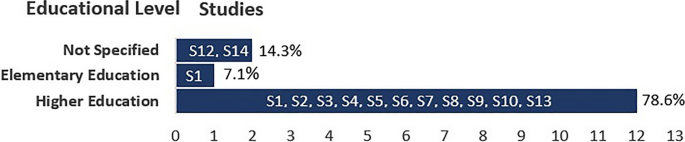

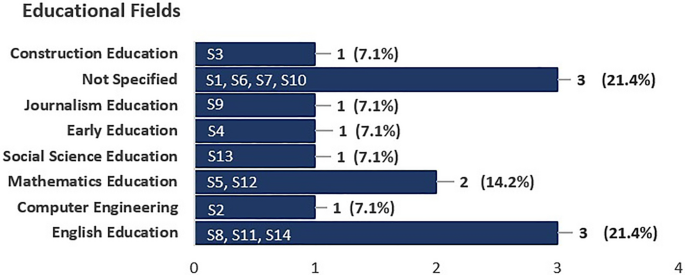

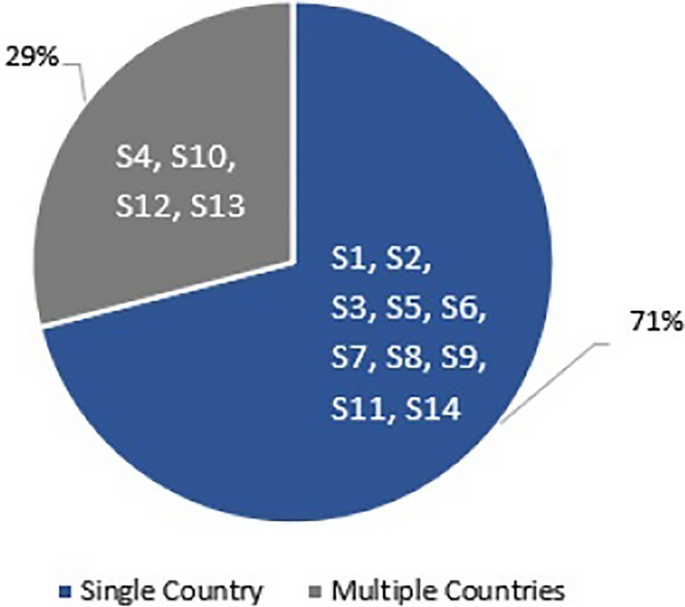

A systematic literature review of empirical research on ChatGPT in education

- Open access

- Published: 26 May 2024

- Volume 3 , article number 60 , ( 2024 )


- Yazid Albadarin (ORCID: orcid.org/0009-0005-8068-8902)

- Mohammed Saqr

- Nicolas Pope

- Markku Tukiainen

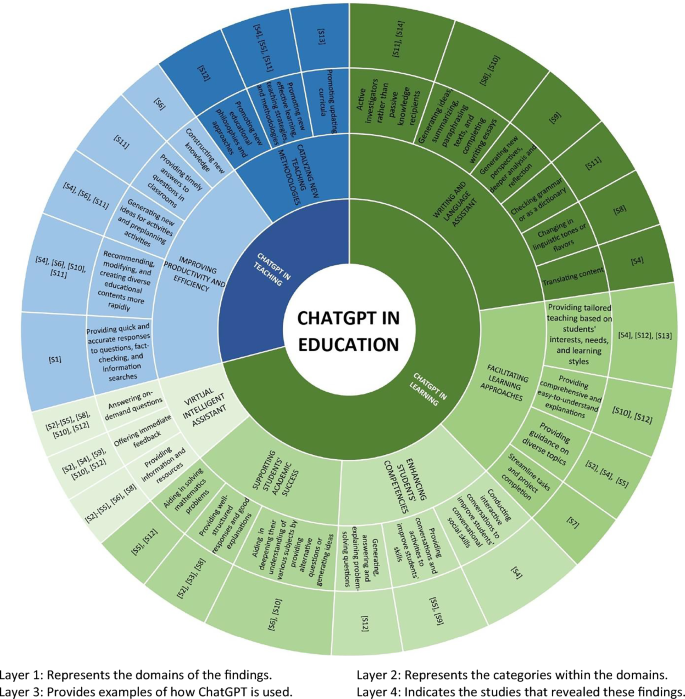

Over the last four decades, studies have investigated the incorporation of Artificial Intelligence (AI) into education. A recent prominent AI-powered technology that has impacted the education sector is ChatGPT. This article provides a systematic review of 14 empirical studies incorporating ChatGPT into various educational settings, published in 2022 and before the 10th of April 2023—the date of conducting the search process. It carefully followed the essential steps outlined in the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA 2020) guidelines, as well as Okoli’s (Okoli in Commun Assoc Inf Syst, 2015) steps for conducting a rigorous and transparent systematic review. In this review, we aimed to explore how students and teachers have utilized ChatGPT in various educational settings, as well as the primary findings of those studies. By employing Creswell’s (Creswell in Educational research: planning, conducting, and evaluating quantitative and qualitative research [Ebook], Pearson Education, London, 2015) coding techniques for data extraction and interpretation, we sought to gain insight into their initial attempts at ChatGPT incorporation into education. This approach also enabled us to extract insights and considerations that can facilitate its effective and responsible use in future educational contexts. The results of this review show that learners have utilized ChatGPT as a virtual intelligent assistant, where it offered instant feedback, on-demand answers, and explanations of complex topics. Additionally, learners have used it to enhance their writing and language skills by generating ideas, composing essays, summarizing, translating, paraphrasing texts, or checking grammar. Moreover, learners turned to it as an aiding tool to facilitate their directed and personalized learning by assisting in understanding concepts and homework, providing structured learning plans, and clarifying assignments and tasks. 
However, the results of specific studies (n = 3, 21.4%) show that overuse of ChatGPT may negatively impact innovative capacities and collaborative learning competencies among learners. Educators, on the other hand, have utilized ChatGPT to create lesson plans, generate quizzes, and provide additional resources, which helped them enhance their productivity and efficiency and promote different teaching methodologies. Despite these benefits, the majority of the reviewed studies recommend providing structured training, support, and clear guidelines for both learners and educators to mitigate the drawbacks. This includes developing critical evaluation skills to assess the accuracy and relevance of information provided by ChatGPT, as well as strategies for integrating human interaction and collaboration into learning activities that involve AI tools. Furthermore, they recommend ongoing research and proactive dialogue with policymakers, stakeholders, and educational practitioners to refine and enhance the use of AI in learning environments. This review could serve as an insightful resource for practitioners who seek to integrate ChatGPT into education and could stimulate further research in the field.

1 Introduction

Educational technology, a rapidly evolving field, plays a crucial role in reshaping the landscape of teaching and learning [ 82 ]. One of the most transformative technological innovations of our era that has influenced the field of education is Artificial Intelligence (AI) [ 50 ]. Over the last four decades, AI in education (AIEd) has gained remarkable attention for its potential to make significant advancements in learning, instructional methods, and administrative tasks within educational settings [ 11 ]. In particular, large language models (LLMs), a type of AI algorithm that applies artificial neural networks (ANNs) and uses massively large data sets to understand, summarize, generate, and predict new content that is often difficult to distinguish from human creations [ 79 ], have opened up novel possibilities for enhancing various aspects of education, from content creation to personalized instruction [ 35 ]. Chatbots that leverage the capabilities of LLMs to understand and generate human-like responses have also shown the capacity to enhance student learning and educational outcomes by engaging students, offering timely support, and fostering interactive learning experiences [ 46 ].

The ongoing and remarkable technological advancements in chatbots have made their use more convenient, increasingly natural and effortless, and have expanded their potential for deployment across various domains [ 70 ]. One prominent example of chatbot applications is the Chat Generative Pre-Trained Transformer, known as ChatGPT, which was introduced by OpenAI, a leading AI research lab, on November 30th, 2022. ChatGPT employs deep learning techniques to generate human-like text. It is built on the transformer architecture, a neural network architecture based on the self-attention mechanism rather than on recurrent neural networks (RNNs) or long short-term memory (LSTM) units. Self-attention allows it to weigh specific parts of the input, including earlier context, thereby enabling it to produce more natural-sounding and coherent output. Additionally, the unsupervised generative pre-training and the fine-tuning methods allow ChatGPT to generate more relevant and accurate text for specific tasks [ 31 , 62 ]. Furthermore, reinforcement learning from human feedback (RLHF), a machine learning approach that combines reinforcement learning techniques with human-provided feedback, has helped improve ChatGPT’s model by aligning its responses more closely with what human reviewers prefer.
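To make the self-attention mechanism mentioned above more concrete, the following is a minimal sketch of scaled dot-product attention in plain Python. It is an illustration only, not ChatGPT's implementation: the function names and the toy 2-dimensional token vectors are assumptions, and the raw token vectors stand in for the learned query, key, and value projections that a real transformer would use.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention.

    Each output row is a weighted average of the value rows, where the
    weights reflect how strongly that token's query matches every key.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this token to every token, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        mixed = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Toy sequence of three tokens with made-up 2-dimensional embeddings.
# Simplification: the embeddings are used directly as queries, keys, and values.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
```

In this toy run, the first token attends more strongly to the first and third tokens than to the second, because its vector overlaps with theirs. This is the sense in which self-attention lets a model focus on specific parts of the input.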

This cutting-edge natural language processing (NLP) tool is widely recognized as one of today's most advanced LLM-based chatbots [ 70 ], allowing users to ask questions and receive detailed, coherent, systematic, personalized, convincing, and informative human-like responses [ 55 ], even within complex and ambiguous contexts [ 63 , 77 ]. ChatGPT is considered the fastest-growing technology in history: in just three months following its public launch, it amassed an estimated 120 million monthly active users [ 16 ] with an estimated 13 million daily queries [ 49 ], surpassing all other applications [ 64 ]. This remarkable growth can be attributed to the unique features and user-friendly interface that ChatGPT offers. Its intuitive design allows users to interact seamlessly with the technology, making it accessible to a diverse range of individuals, regardless of their technical expertise [ 78 ]. Additionally, its exceptional performance, which results from a combination of advanced algorithms, continuous enhancements, and extensive training on a diverse dataset that includes various text sources such as books, articles, websites, and online forums [ 63 ], has contributed to a more engaging and satisfying user experience [ 62 ]. These factors collectively explain its remarkable global growth and set it apart from other chatbots such as Bard, Bing Chat, and ERNIE.

In this context, several studies have explored the technological advancements of chatbots. One noteworthy recent research effort, conducted by Schöbel et al. [ 70 ], stands out for its comprehensive analysis of more than 5,000 studies on communication agents. This study offered a comprehensive overview of the historical progression and future prospects of communication agents, including ChatGPT. Moreover, other studies have focused on making comparisons, particularly between ChatGPT and alternative chatbots like Bard, Bing Chat, ERNIE, LaMDA, BlenderBot, and various others. For example, O’Leary [ 53 ] compared two chatbots, LaMDA and BlenderBot, with ChatGPT and revealed that ChatGPT outperformed both. This superiority arises from ChatGPT’s capacity to handle a wider range of questions and generate slightly varied perspectives within specific contexts. Similarly, ChatGPT exhibited an impressive ability to formulate interpretable responses that were easily understood when compared with Google's featured snippets [ 34 ]. Additionally, ChatGPT was compared to other LLM-based chatbots, including Bard, BERT, and ERNIE. The findings indicated that ChatGPT exhibited strong performance in the given tasks, often outperforming the other models [ 59 ].

Furthermore, in the education context, a comprehensive study systematically compared a range of the most promising chatbots, including Bard, Bing Chat, ChatGPT, and ERNIE, across a multidisciplinary test that required higher-order thinking. The study revealed that ChatGPT achieved the highest score, surpassing Bing Chat and Bard [ 64 ]. Similarly, a comparative analysis was conducted to compare ChatGPT with Bard in answering a set of 30 mathematical questions and logic problems, grouped into two question sets. Set (A) is unavailable online, while Set (B) is available online. The results revealed ChatGPT's superiority over Bard in Set (A). Nevertheless, Bard held the advantage in Set (B) due to its capacity to access the internet directly and retrieve answers, a capability that ChatGPT did not possess at the time [ 57 ]. Overall, across these varied assessments, ChatGPT generally performed strongly relative to the alternatives in the ever-evolving field of chatbot technology.

The widespread adoption of chatbots, especially ChatGPT, by millions of students and educators has sparked extensive discussions regarding its incorporation into the education sector [ 64 ]. Accordingly, many scholars have contributed to the discourse, expressing both optimism and pessimism regarding the incorporation of ChatGPT into education. For example, ChatGPT has been highlighted for its capabilities in enriching the learning and teaching experience through its ability to support different learning approaches, including adaptive learning, personalized learning, and self-directed learning [ 58 , 60 , 91 ], deliver summative and formative feedback to students and provide real-time responses to questions, increase the accessibility of information [ 22 , 40 , 43 ], foster students’ performance, engagement and motivation [ 14 , 44 , 58 ], and enhance teaching practices [ 17 , 18 , 64 , 74 ].

On the other hand, concerns have also been raised regarding its potential negative effects on learning and teaching. These include the dissemination of false information and references [ 12 , 23 , 61 , 85 ], biased reinforcement [ 47 , 50 ], compromised academic integrity [ 18 , 40 , 66 , 74 ], and the potential decline in students' skills [ 43 , 61 , 64 , 74 ]. As a result, ChatGPT has been banned in multiple countries, including Russia, China, Venezuela, Belarus, and Iran, as well as in various educational institutions in India, Italy, Western Australia, France, and the United States [ 52 , 90 ].

Clearly, the advent of chatbots, especially ChatGPT, has provoked significant controversy due to their potential impact on learning and teaching. This indicates the necessity for further exploration to gain a deeper understanding of this technology and carefully evaluate its potential benefits, limitations, challenges, and threats to education [ 79 ]. Therefore, conducting a systematic literature review will provide valuable insights into the potential prospects and obstacles linked to its incorporation into education. This systematic literature review will primarily focus on ChatGPT, driven by the key factors outlined above.

However, the existing literature lacks a systematic literature review of empirical studies. Thus, this systematic literature review aims to address this gap by synthesizing the existing empirical studies conducted on chatbots, particularly ChatGPT, in the field of education, highlighting how ChatGPT has been utilized in educational settings, and identifying any existing gaps. This review may be particularly useful for researchers in the field and educators who are contemplating the integration of ChatGPT or any chatbot into education. The following research questions will guide this study:

What are students' and teachers' initial attempts at utilizing ChatGPT in education?

What are the main findings derived from empirical studies that have incorporated ChatGPT into learning and teaching?

2 Methodology

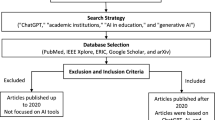

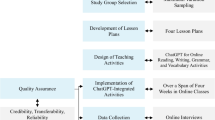

To conduct this study, the authors followed the essential steps of the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA 2020) and Okoli’s [ 54 ] steps for conducting a systematic review. These included identifying the study’s purpose, drafting a protocol, applying a practical screening process, searching the literature, extracting relevant data, evaluating the quality of the included studies, synthesizing the studies, and ultimately writing the review. The following subsections explain in detail how these steps were carried out in this study.

2.1 Identify the purpose

Given the widespread adoption of ChatGPT by students and teachers for various educational purposes, often without a thorough understanding of responsible and effective use or a clear recognition of its potential impact on learning and teaching, the authors recognized the need for further exploration of ChatGPT's impact on education in this early stage. Therefore, they have chosen to conduct a systematic literature review of existing empirical studies that incorporate ChatGPT into educational settings. Despite the limited number of empirical studies due to the novelty of the topic, their goal is to gain a deeper understanding of this technology and proactively evaluate its potential benefits, limitations, challenges, and threats to education. This effort could help to understand initial reactions and attempts at incorporating ChatGPT into education and bring out insights and considerations that can inform the future development of education.

2.2 Draft the protocol

The next step is formulating the protocol. This protocol serves to outline the study process in a rigorous and transparent manner, mitigating researcher bias in study selection and data extraction [ 88 ]. The protocol will include the following steps: generating the research question, predefining a literature search strategy, identifying search locations, establishing selection criteria, assessing the studies, developing a data extraction strategy, and creating a timeline.
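As an illustration of how predefined selection criteria can reduce bias in study selection, the sketch below encodes a screening rule in Python. It is a hypothetical example, not the authors' actual protocol: the class, field, and function names are assumptions, the candidate records are placeholders, and the criteria are limited to those stated elsewhere in this review (empirical studies that incorporate ChatGPT into educational settings, published in 2022 or later and before the search date of 10 April 2023).

```python
from dataclasses import dataclass
from datetime import date

# Assumption: "published in 2022" is read as starting on 1 January 2022.
WINDOW_START = date(2022, 1, 1)
# Search date stated in the review; included studies were published before it.
SEARCH_DATE = date(2023, 4, 10)


@dataclass(frozen=True)
class Candidate:
    """Hypothetical record for one study found during the literature search."""
    title: str
    published: date
    is_empirical: bool
    chatgpt_in_education: bool


def screen(candidate):
    """Return (included, reason) so that every decision is documented."""
    if not (WINDOW_START <= candidate.published < SEARCH_DATE):
        return False, "outside publication window"
    if not candidate.is_empirical:
        return False, "not an empirical study"
    if not candidate.chatgpt_in_education:
        return False, "does not incorporate ChatGPT into an educational setting"
    return True, "meets all criteria"


# Placeholder records, invented purely to exercise the screening rule.
candidates = [
    Candidate("Placeholder study A", date(2023, 2, 15), True, True),
    Candidate("Placeholder commentary B", date(2023, 3, 1), False, True),
    Candidate("Placeholder study C", date(2023, 5, 20), True, True),
]

decisions = {c.title: screen(c) for c in candidates}
included = [title for title, (ok, _) in decisions.items() if ok]
```

Recording a reason alongside each decision mirrors the transparency goal of the protocol: the same rule is applied to every candidate, and each exclusion can be traced to a criterion that was fixed in advance.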

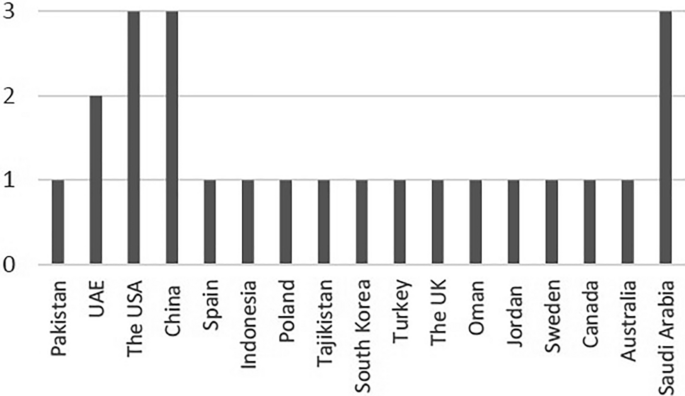

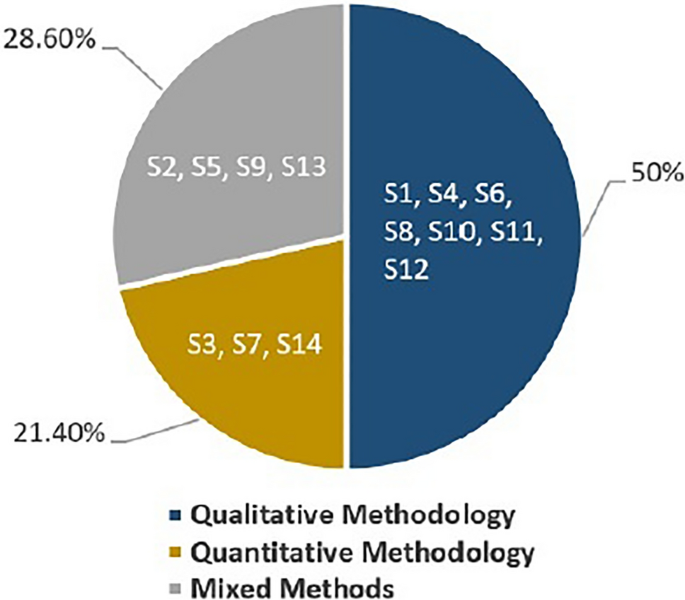

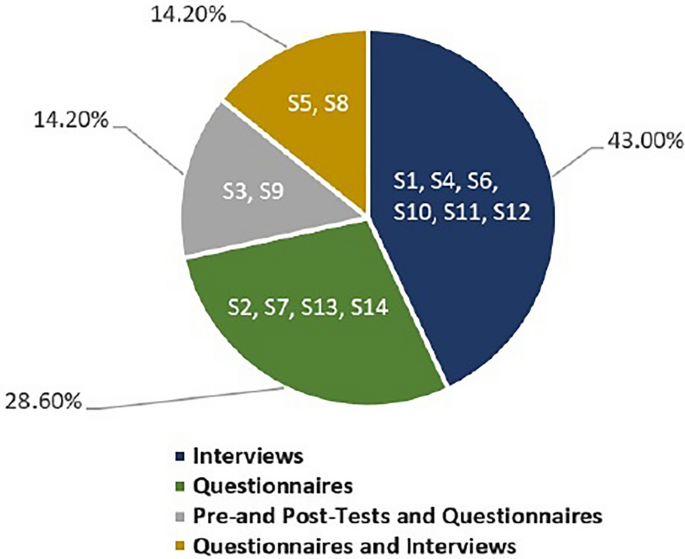

2.3 Apply practical screen