Small Sample Research: Considerations Beyond Statistical Power

- Published: 19 August 2015

- Volume 16, pages 1033–1036 (2015)

- Kathleen E. Etz 1 &

- Judith A. Arroyo 2

Small sample research presents a challenge to current standards of design and analytic approaches and the underlying notions of what constitutes good prevention science. Yet, small sample research is critically important as the research questions posed in small samples often represent serious health concerns in vulnerable and underrepresented populations. This commentary considers the Special Section on small sample research and also highlights additional challenges that arise in small sample research not considered in the Special Section, including generalizability, determining what constitutes knowledge, and ensuring that research designs match community desires. It also points to opportunities afforded by small sample research, such as a focus on and increased understanding of context and the emphasis it may place on alternatives to the randomized clinical trial. The commentary urges the development and adoption of innovative strategies to conduct research with small samples.

Small sample research presents a direct challenge to current standards of design and analytic approaches and the underlying notions of what constitutes good prevention science. While we can have confidence that our scientific methods have the ability to answer many research questions, we have been limited in our ability to take on research with small samples because we have not developed or adopted the means to support rigorous small sample research. This Special Section identifies some tools that can be used for small sample research. It reminds us that progress in this area will likely require expansion of our ideas of what constitutes rigor in analysis and design strategies that address the unique characteristics and accompanying challenges of small sample research. Advances will also require making room for the adoption of innovative design and statistical analysis approaches. The collection of papers makes a significant contribution to the literature and marks a major development in the field.

Innovations in small sample research are particularly critical because the research questions posed in small samples often focus on serious health concerns in vulnerable populations. Individuals most at risk for or afflicted by health disparities (e.g., racial and ethnic minorities) are by definition small in number when compared to the larger, dominant society. The current state of the art in design and statistical analysis in prevention science, which is highly dependent on large samples, has severely handicapped investigation of health disparities in these smaller populations. Unless we develop research techniques suitable for small group design and expand our concepts of what design and analytic strategies provide sufficient scientific rigor, health disparities will continue to lay waste to populations that live in smaller communities or who are difficult to recruit in large numbers. Particularly when considering high-risk, low base rate behaviors such as recurrent binge drinking or chronic drug use, investigators are often limited by small populations in many health disparity groups and by small numbers of potential participants in towns, villages, and rural communities. Even in larger, urban settings, researchers may experience constraints on recruitment such as difficulty identifying a sufficiently large sample, distrust of research, lack of transportation or time outside of work hours, or language issues. Until now, small sample sizes and the lack of accepted tools for small sample research have decreased our ability to harness the power of science to research preventive solutions to health disparities. The collection of articles in this Special Section helps to address this by bringing together multiple strategies and demonstrating their strength in addressing research questions with small samples.

Small sample research issues also arise in multi-level, group-based, or community-level intervention research (Trickett et al. 2011). An example of this is a study that uses a media campaign and compares the efficacy of that campaign across communities. In such cases, the unit of analysis is the group, and the limited number of units that can be feasibly involved in a study makes multi-level intervention research inevitably an analysis of small samples. The increasingly recognized importance of intervening in communities at multiple levels (Frohlich and Potvin 2008) and the desire to understand the efficacy and effectiveness of multi-level interventions (Hawe 1994) increase the need to devise strategies for assessing interventions conducted with small samples.

The Special Section makes a major contribution to small sample research, identifying tools that can be used to address small sample design and analytic challenges. The articles here can be grouped into four areas: (1) identification of refinements in statistical applications and measurement that can facilitate analyses with small samples, (2) alternatives to randomized clinical trial (RCT) designs that maintain rigor while maximizing power, (3) use of qualitative and mixed methods, and (4) Bayesian analysis. The Special Section provides a range of alternative strategies to those that are currently employed with larger samples. The first and last papers in the Special Section (Fok et al. 2015; Henry et al. 2015b) examine and elaborate on the contributions of these articles to the field. As this is considered elsewhere, we will focus our comments more on issues that are not already covered but that will be increasingly important as this field moves forward.

One challenge that is not addressed by the papers in this Special Section is the generalizability of small sample research findings, particularly when working with culturally distinct populations. Generalizability poses a different obstacle than those associated with design and analysis, in that it is not related to rigor or the confidence we can have in our conclusions. Rather, it limits our ability to assume the results will apply to populations other than those from whom a sample is drawn and, as such, can limit the application of the work. The need to discover prevention solutions for all people, even if they happen to be members of a small population, begs questions of the value of generalizability and of the importance ascribed to it. Further, existing research raises long-standing important questions about whether knowledge produced under highly controlled conditions can generalize to ethnoculturally diverse communities (Atkins et al. 2006; Beeker et al. 1998; Green and Glasgow 2006). Regardless, the inability to generalize beyond a small population can present a barrier to funding. When grant applications are reviewed, projects that are not seen as widely generalizable often receive poor ratings. Scientists conducting small sample research with culturally distinct groups are frequently stymied by how they can justify their research when it is not generalizable to large segments of the population. In some instances, the question that drives the research is that which limits generalizability. For example, research projects on cultural adaptations of established interventions are often highly specific. An adaptation that might be efficacious in one small sample might not be so in other contexts. This is particularly the case if the adaptation integrates local culture, such as preparing for winter and subsistence activities in Alaska or integrating the horse culture of the Great Plains. Even if local adaptation is not necessary, dissemination research to ascertain the efficacy and/or effectiveness of mainstream, evidence-based interventions when applied to diverse groups will be difficult to conduct if we cannot address concerns about generalizability.

It is not readily apparent how to address issues of generalizability, but it is clear that this will be challenging and will require creativity. One potential strategy is to go beyond questions of intervention efficacy to address additional research questions that have the potential to advance the field more generally. For example, Allen and colleagues’ (2014) scientific investigations extended beyond development of a prevention intervention in Alaska Native villages to identification and testing of the underlying prevention processes that were at the core of the culturally specific intervention. This isolation of the key components of the prevention process has the potential to inform and generalize across settings. The development of new statistical tools for small culturally distinct samples might also be helpful in other research contexts. Similarly, the identification of the most potent prevention processes for adaptation also might generalize. As small sample research evolves, we must remain open to how this work has the potential to be highly valuable despite recognizing that not all aspects of it will generalize and also take care to identify what can be applied generally.

While not exclusive to small sample research, additional difficulties that can arise in conducting research in some small, culturally distinct samples are the questions of what constitutes knowledge and how to include alternative forms of knowledge (e.g., indigenous ways of knowing, folk wisdom) in health research (Aikenhead and Ogawa 2007; Gone 2012). For many culturally distinct communities that turn to research to address their health challenges, the need for large samples and methods demanded by mainstream science might be incongruent with local epistemologies and cultural understandings of how the knowledge to inform prevention is generated and standards of evidence are established. Making sense of how or whether indigenous knowledge and western scientific approaches can work together is an immense challenge. The Henry, Dymnicki, Mohatt, Kelly, and Allen article in this Special Section recommends combining qualitative and quantitative methods as one way to address this conundrum. However, this strategy is not sufficient to address all of the challenges encountered by those who seek to integrate traditional knowledge into modern scientific inquiry. For culturally distinct groups who value forms of knowledge other than those generated by western science, the research team, including the community members, will need to work together to identify ways to best ensure that culturally valued knowledge is incorporated into the research endeavor. The scientific field will need to make room for approaches that stem from the integration of culturally valued knowledge.

Ensuring that the research design and methods correspond to community needs and desires can present an additional challenge. Investigations conducted with small, culturally distinct groups often use community-based participatory research (CBPR) approaches (Minkler and Wallerstein 2008). True CBPR mandates that community partners be equal participants in every phase of the research, including study design. From an academic researcher’s perspective, the primary obstacle for small sample research may be insufficient statistical power to conduct a classic RCT. However, for the small group partner, the primary obstacle may be the RCT design itself. Many communities will not allow an RCT because assignment of some community members to a no-treatment control condition can violate culturally based ethical principles that demand that all participants be treated equally. Particularly in communities experiencing severe health disparities, community members may want every person to receive the active intervention. While the RCT has become the gold standard because it is believed to be the most rigorous test of intervention efficacy, it is clear the RCT does not serve the needs of all communities.

While presenting challenges for current methods, it is important to note that small sample research can also expand our horizons. For example, attempts to truly comprehend culturally distinct groups will lead to a better understanding of the role of context in health outcomes. Current approaches more often attempt to control for extraneous variables rather than work to more accurately model potentially rich contextual variables. This blinds us to cultural differences between and among small groups that might contribute to outcomes and improve health. Analytical strategies that mask these nuances will fail to detect information about risk and resilience factors that could impact intervention. Multi-level intervention research (which we pointed out earlier qualifies as small sample research) that focuses on contextual changes as well as or instead of change in the individual will also inform our understanding of context, elucidating how to effectively intervene to change context to promote health outcomes. Thus, considering how prevailing methods limit our work in small samples can also expose ways that alternative methods may advance our science more broadly by enhancing both our understanding of context and how to intervene in context.

Small sample science requires us to consider alternatives to the RCT, and this consideration introduces additional opportunities. The last paper in this Special Section (Henry et al. 2015b) notes compelling critiques of the RCT. Small sample research demands that we incorporate alternative strategies that, in some instances, may use the available information more efficiently than the classic RCT and may be more aligned with community desires. Alternative designs for small sample research may offer means to enhance and ensure scientific rigor without depending on RCT design (Srinivasan et al. 2015). It is important to consider what alternative approaches can contribute rather than adhering rigidly to the RCT.

New challenges require innovative solutions. Innovation is the foundation of scientific advances. It is one of only five National Institutes of Health grant review criteria. Despite the value to science of innovation, research grant application reviewers are often skeptical of new strategies and are reluctant to support risk taking in science. As a field, we seem accustomed to the use of certain methods and statistics, generally accepting and rarely questioning if they are the best approach. Yet, it is clear that common methods that work well with large samples are not always appropriate for small samples. Progress will demand that new approaches be well justified and also that the field supports innovation and the testing of alternative approaches. Srinivasan and colleagues (2015) further recommend that it might be necessary to offer training to grant application peer reviewers on innovative small sample research methods, thus ensuring that they are knowledgeable in this area and score grant applications appropriately. Alternative approaches need to be accepted into the repertoire of available design and assessment tools. The articles in this Special Section all highlight such innovation for small sample research.

It would be a failure of science and the imagination if newly discovered or re-discovered (i.e., Bayesian) strategies are not employed to facilitate rigorous assessment of interventions in small samples. It is imperative that the tools of science do not limit our ability to address pressing public health questions. New approaches can be used to address contemporary research questions, including providing solutions to the undue burden of disease that can and often does occur in small populations. It must be the pressing nature of the questions, not the limitations of our methods, that determines what science is undertaken (see also Srinivasan et al. 2015). While small sample research presents a challenge for prevailing scientific approaches, the papers in this Special Section identify ways to move this science forward with rigor. It is imperative that the field accommodates these advances, and continues to be innovative in response to the challenge of small sample research, to ensure that science can provide answers for those most in need.

Aikenhead, G. S., & Ogawa, M. (2007). Indigenous knowledge and science revisited. Cultural Studies of Science Education, 2, 539–620.

Allen, J., Mohatt, G. V., Fok, C. C. T., Henry, D., Burkett, R., & People Awakening Project. (2014). A protective factors model for alcohol abuse and suicide prevention among Alaska Native youth. American Journal of Community Psychology, 54, 125–139.

Atkins, M. S., Frazier, S. L., & Cappella, E. (2006). Hybrid research models: Natural opportunities for examining mental health in context. Clinical Psychology: Science and Practice, 13, 105–108.

Beeker, C., Guenther-Grey, C., & Raj, A. (1998). Community empowerment paradigm drift and the primary prevention of HIV/AIDS. Social Science & Medicine, 46, 831–842.

Fok, C. C. T., Henry, D., & Allen, J. (2015). Maybe small is too small a term: Introduction to advancing small sample prevention science. Prevention Science.

Frohlich, K. L., & Potvin, L. (2008). Transcending the known in public health practice: The inequality paradox: The population approach and vulnerable populations. American Journal of Public Health, 98, 216–221.

Gone, J. P. (2012). Indigenous traditional knowledge and substance abuse treatment outcomes: The problem of efficacy evaluation. American Journal of Drug and Alcohol Abuse, 38, 493–497.

Green, L. W., & Glasgow, R. E. (2006). Evaluating the relevance, generalization, and applicability of research: Issues in external validation and translation methodology. Evaluation & the Health Professions, 29, 126–153.

Hawe, P. (1994). Capturing the meaning of “community” in community intervention evaluation: Some contributions from community psychology. Health Promotion International, 9, 199–210.

Henry, D., Dymnicki, A. B., Mohatt, N., Kelly, J. G., & Allen, J. (2015a). Clustering methods with qualitative data: A mixed methods approach for prevention research with small samples. Prevention Science. doi:10.1007/s11121-015-0561-z.

Henry, D., Fok, C. C. T., & Allen, J. (2015b). Why small is too small a term: Prevention science for health disparities, culturally distinct groups, and community-level intervention. Prevention Science.

Minkler, M., & Wallerstein, N. (Eds.). (2008). Community-based participatory research for health: From process to outcomes (2nd ed.). San Francisco: Jossey-Bass.

Srinivasan, S., Moser, R. P., Willis, G., Riley, W., Alexander, M., Berrigan, D., & Kobrin, S. (2015). Small is essential: Importance of subpopulation research in cancer control. American Journal of Public Health, 105, 371–373.

Trickett, E. J., Beehler, S., Deutsch, C., Green, L. W., Hawe, P., McLeroy, K., Miller, R. L., Rapkin, B. D., Schensul, J. J., Schulz, A. J., & Trimble, J. E. (2011). Advancing the science of community-level interventions. American Journal of Public Health, 101, 1410–1419.

Compliance with Ethical Standards

No external funding supported this work.

Conflict of Interest

The authors declare that they have no conflict of interest.

Ethical Approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Informed Consent

Because this article is a commentary, informed consent is not applicable.

Author information

Authors and affiliations.

National Institute on Drug Abuse, National Institutes of Health, 6001 Executive Blvd., Bethesda, MD, 20852, USA

Kathleen E. Etz

National Institute on Alcohol Abuse and Alcoholism, National Institutes of Health, 5635 Fishers Lane, Bethesda, MD, 20852, USA

Judith A. Arroyo

Corresponding author

Correspondence to Kathleen E. Etz .

Additional information

The opinions and conclusions here represent those of the authors and do not represent the National Institutes of Health, the National Institute on Drug Abuse, the National Institute on Alcohol Abuse and Alcoholism, or the US Government.

About this article

Etz, K.E., Arroyo, J.A. Small Sample Research: Considerations Beyond Statistical Power. Prev Sci 16, 1033–1036 (2015). https://doi.org/10.1007/s11121-015-0585-4

Issue Date: October 2015

DOI: https://doi.org/10.1007/s11121-015-0585-4

- Published: 10 April 2013

Power failure: why small sample size undermines the reliability of neuroscience

- Katherine S. Button 1 , 2 ,

- John P. A. Ioannidis 3 ,

- Claire Mokrysz 1 ,

- Brian A. Nosek 4 ,

- Jonathan Flint 5 ,

- Emma S. J. Robinson 6 &

- Marcus R. Munafò 1

Nature Reviews Neuroscience volume 14, pages 365–376 (2013)

- Molecular neuroscience

An Erratum to this article was published on 15 April 2013

This article has been updated

Low statistical power undermines the purpose of scientific research; it reduces the chance of detecting a true effect.

Perhaps less intuitively, low power also reduces the likelihood that a statistically significant result reflects a true effect.

Empirically, we estimate that the median statistical power of studies in the neurosciences is between ∼8% and ∼31%.

We discuss the consequences of such low statistical power, which include overestimates of effect size and low reproducibility of results.

There are ethical dimensions to the problem of low power; unreliable research is inefficient and wasteful.

Improving reproducibility in neuroscience is a key priority and requires attention to well-established, but often ignored, methodological principles.

We discuss how problems associated with low power can be addressed by adopting current best-practice and make clear recommendations for how to achieve this.

A study with low statistical power has a reduced chance of detecting a true effect, but it is less well appreciated that low power also reduces the likelihood that a statistically significant result reflects a true effect. Here, we show that the average statistical power of studies in the neurosciences is very low. The consequences of this include overestimates of effect size and low reproducibility of results. There are also ethical dimensions to this problem, as unreliable research is inefficient and wasteful. Improving reproducibility in neuroscience is a key priority and requires attention to well-established but often ignored methodological principles.

It has been claimed and demonstrated that many (and possibly most) of the conclusions drawn from biomedical research are probably false 1 . A central cause for this important problem is that researchers must publish in order to succeed, and publishing is a highly competitive enterprise, with certain kinds of findings more likely to be published than others. Research that produces novel results, statistically significant results (that is, typically p < 0.05) and seemingly 'clean' results is more likely to be published 2 , 3 . As a consequence, researchers have strong incentives to engage in research practices that make their findings publishable quickly, even if those practices reduce the likelihood that the findings reflect a true (that is, non-null) effect 4 . Such practices include using flexible study designs and flexible statistical analyses and running small studies with low statistical power 1 , 5 . A simulation of genetic association studies showed that a typical dataset would generate at least one false positive result almost 97% of the time 6 , and two efforts to replicate promising findings in biomedicine reveal replication rates of 25% or less 7 , 8 . Given that these publishing biases are pervasive across scientific practice, it is possible that false positives heavily contaminate the neuroscience literature as well, and this problem may affect at least as much, if not even more so, the most prominent journals 9 , 10 .

Here, we focus on one major aspect of the problem: low statistical power. The relationship between study power and the veracity of the resulting finding is under-appreciated. Low statistical power (because of low sample size of studies, small effects or both) negatively affects the likelihood that a nominally statistically significant finding actually reflects a true effect. We discuss the problems that arise when low-powered research designs are pervasive. In general, these problems can be divided into two categories. The first concerns problems that are mathematically expected to arise even if the research conducted is otherwise perfect: in other words, when there are no biases that tend to create statistically significant (that is, 'positive') results that are spurious. The second category concerns problems that reflect biases that tend to co-occur with studies of low power or that become worse in small, underpowered studies. We next empirically show that statistical power is typically low in the field of neuroscience by using evidence from a range of subfields within the neuroscience literature. We illustrate that low statistical power is an endemic problem in neuroscience and discuss the implications of this for interpreting the results of individual studies.

Low power in the absence of other biases

Three main problems contribute to producing unreliable findings in studies with low power, even when all other research practices are ideal. They are: the low probability of finding true effects; the low positive predictive value (PPV; see Box 1 for definitions of key statistical terms) when an effect is claimed; and an exaggerated estimate of the magnitude of the effect when a true effect is discovered. Here, we discuss these problems in more detail.

First, low power, by definition, means that the chance of discovering effects that are genuinely true is low. That is, low-powered studies produce more false negatives than high-powered studies. When studies in a given field are designed with a power of 20%, it means that if there are 100 genuine non-null effects to be discovered in that field, these studies are expected to discover only 20 of them 11 .

Second, the lower the power of a study, the lower the probability that an observed effect that passes the required threshold of claiming its discovery (that is, reaching nominal statistical significance, such as p < 0.05) actually reflects a true effect 1 , 12 . This probability is called the PPV of a claimed discovery. The formula linking the PPV to power is:

$$\mathrm{PPV} = \frac{(1 - \beta) \times R}{(1 - \beta) \times R + \alpha}$$

where (1 − β) is the power, β is the type II error, α is the type I error and R is the pre-study odds (that is, the odds that a probed effect is indeed non-null among the effects being probed). The formula is derived from a simple two-by-two table that tabulates the presence and non-presence of a non-null effect against significant and non-significant research findings 1 . The formula shows that, for studies with a given pre-study odds R, the lower the power and the higher the type I error, the lower the PPV. And for studies with a given pre-study odds R and a given type I error (for example, the traditional p = 0.05 threshold), the lower the power, the lower the PPV.
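The two-by-two table mentioned here can be written out as a short derivation. This is a sketch under stated assumptions: the symbols c (the number of effects probed), TP (true positives) and FP (false positives) are introduced for illustration only and do not appear in the text.

$$
\begin{aligned}
\text{non-null effects probed} &= c\,\frac{R}{R+1}, & \text{null effects probed} &= c\,\frac{1}{R+1},\\
TP &= (1-\beta)\,c\,\frac{R}{R+1}, & FP &= \alpha\,c\,\frac{1}{R+1},\\
\mathrm{PPV} &= \frac{TP}{TP+FP} = \frac{(1-\beta)\,R}{(1-\beta)\,R+\alpha}.
\end{aligned}
$$

The factor c / (R + 1) cancels in the last line, which is why the PPV depends only on the power, the type I error and the pre-study odds.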

For example, suppose that we work in a scientific field in which one in five of the effects we test are expected to be truly non-null (that is, R = 1 / (5 − 1) = 0.25) and that we claim to have discovered an effect when we reach p < 0.05; if our studies have 20% power, then PPV = 0.20 × 0.25 / (0.20 × 0.25 + 0.05) = 0.05 / 0.10 = 0.50; that is, only half of our claims for discoveries will be correct. If our studies have 80% power, then PPV = 0.80 × 0.25 / (0.80 × 0.25 + 0.05) = 0.20 / 0.25 = 0.80; that is, 80% of our claims for discoveries will be correct.
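The arithmetic in this example can be checked with a few lines of Python. This is a minimal sketch, not code from the article: the function names `positive_predictive_value` and `odds_from_fraction` are hypothetical, and the calculation simply applies PPV = (1 − β) × R / ((1 − β) × R + α) to the values quoted above.

```python
def odds_from_fraction(fraction_true: float) -> float:
    """Convert the fraction of probed effects that are non-null into pre-study odds R."""
    return fraction_true / (1.0 - fraction_true)


def positive_predictive_value(power: float, alpha: float, pre_study_odds: float) -> float:
    """PPV = power * R / (power * R + alpha), where power = 1 - beta."""
    expected_true_positives = power * pre_study_odds
    return expected_true_positives / (expected_true_positives + alpha)


# One in five probed effects is truly non-null, so R = 0.2 / 0.8 = 0.25.
R = odds_from_fraction(1 / 5)

for power in (0.20, 0.80):
    ppv = positive_predictive_value(power, alpha=0.05, pre_study_odds=R)
    print(f"power = {power:.2f} -> PPV = {ppv:.2f}")
# prints PPV = 0.50 for 20% power and PPV = 0.80 for 80% power
```

Varying `pre_study_odds` in the same function shows how quickly the PPV falls in more exploratory fields, where a smaller share of probed effects is truly non-null.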

Third, even when an underpowered study discovers a true effect, it is likely that the estimate of the magnitude of that effect provided by that study will be exaggerated. This effect inflation is often referred to as the 'winner's curse' 13 and is likely to occur whenever claims of discovery are based on thresholds of statistical significance (for example, p < 0.05) or other selection filters (for example, a Bayes factor better than a given value or a false-discovery rate below a given value). Effect inflation is worst for small, low-powered studies, which can only detect effects that happen to be large. If, for example, the true effect is medium-sized, only those small studies that, by chance, overestimate the magnitude of the effect will pass the threshold for discovery. To illustrate the winner's curse, suppose that an association truly exists with an effect size that is equivalent to an odds ratio of 1.20, and we are trying to discover it by performing a small (that is, underpowered) study. Suppose also that our study only has the power to detect an odds ratio of 1.20 on average 20% of the time. The results of any study are subject to sampling variation and random error in the measurements of the variables and outcomes of interest. Therefore, on average, our small study will find an odds ratio of 1.20 but, because of random errors, our study may in fact find an odds ratio smaller than 1.20 (for example, 1.00) or an odds ratio larger than 1.20 (for example, 1.60). Odds ratios of 1.00 or 1.20 will not reach statistical significance because of the small sample size. We can only claim the association as nominally significant in the third case, where random error creates an odds ratio of 1.60. The winner's curse means, therefore, that the 'lucky' scientist who makes the discovery in a small study is cursed by finding an inflated effect.
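The winner's curse can be illustrated with a small simulation. The sketch below is an illustration under stated assumptions rather than the authors' analysis: it treats each study's log odds ratio estimate as normally distributed around the true value, picks the standard error so that a two-sided z-test has roughly 20% power (ignoring the negligible chance of a significant result in the wrong direction), and uses a hypothetical function name, `simulate_winners_curse`.

```python
import math
import random
from statistics import NormalDist, mean


def simulate_winners_curse(true_or=1.20, power=0.20, alpha=0.05,
                           n_studies=100_000, seed=0):
    """Simulate many small studies of the same true odds ratio.

    Returns the share of significant studies and the geometric mean odds
    ratio across all studies and across the significant studies only.
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    true_log_or = math.log(true_or)
    # Assumed standard error that yields approximately the requested power.
    se = true_log_or / (z_crit + std_normal.inv_cdf(power))

    rng = random.Random(seed)
    estimates = [rng.gauss(true_log_or, se) for _ in range(n_studies)]
    significant = [e for e in estimates if abs(e) / se > z_crit]

    return {
        "share_significant": len(significant) / n_studies,
        "geometric_mean_or_all": math.exp(mean(estimates)),
        "geometric_mean_or_significant": math.exp(mean(significant)),
    }


result = simulate_winners_curse()
for name, value in result.items():
    print(f"{name}: {value:.3f}")
```

Under these assumptions the average over all simulated studies stays close to the true odds ratio of 1.20, whereas the average over the studies that reach significance is clearly larger, which is the inflation described above.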

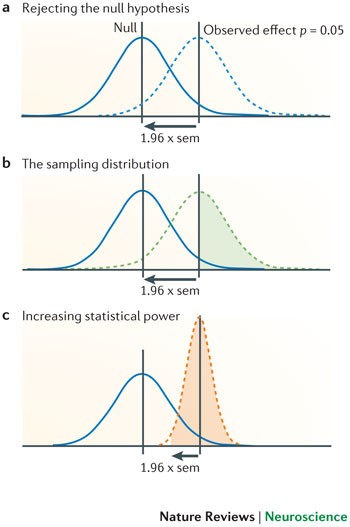

The winner's curse can also affect the design and conclusions of replication studies. If the original estimate of the effect is inflated (for example, an odds ratio of 1.60), then replication studies will tend to show smaller effect sizes (for example, 1.20), as findings converge on the true effect. By performing more replication studies, we should eventually arrive at the more accurate odds ratio of 1.20, but this may take time or may never happen if we only perform small studies. A common misconception is that a replication study will have sufficient power to replicate an initial finding if the sample size is similar to that in the original study 14 . However, a study that tries to replicate a significant effect that only barely achieved nominal statistical significance (that is, p ∼ 0.05) and that uses the same sample size as the original study, will only achieve ∼ 50% power, even if the original study accurately estimated the true effect size. This is illustrated in Fig. 1 . Many published studies only barely achieve nominal statistical significance 15 . This means that if researchers in a particular field determine their sample sizes by historical precedent rather than through formal power calculation, this will place an upper limit on average power within that field. As the true effect size is likely to be smaller than that indicated by the initial study — for example, because of the winner's curse — the actual power is likely to be much lower. Furthermore, even if power calculation is used to estimate the sample size that is necessary in a replication study, these calculations will be overly optimistic if they are based on estimates of the true effect size that are inflated owing to the winner's curse phenomenon. This will further hamper the replication process.

a | If a study finds evidence for an effect at p = 0.05, then the difference between the mean of the null distribution (indicated by the solid blue curve) and the mean of the observed distribution (dashed blue curve) is 1.96 × sem. b | Studies attempting to replicate an effect using the same sample size as that of the original study would have roughly the same sampling variation (that is, sem) as in the original study. Assuming, as one might in a power calculation, that the initially observed effect we are trying to replicate reflects the true effect, the potential distribution of these replication effect estimates would be similar to the distribution of the original study (dashed green curve). A study attempting to replicate a nominally significant effect ( p ∼ 0.05), which uses the same sample size as the original study, would therefore have (on average) a 50% chance of rejecting the null hypothesis (indicated by the coloured area under the green curve) and thus only 50% statistical power. c | We can increase the power of the replication study (coloured area under the orange curve) by increasing the sample size so as to reduce the sem. Powering a replication study adequately (that is, achieving a power ≥ 80%) therefore often requires a larger sample size than the original study, and a power calculation will help to decide the required size of the replication sample.
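The reasoning in Fig. 1 can be checked numerically. This is a minimal sketch under the same assumption as the figure (the original estimate is taken as the true effect) and uses a normal approximation. The function names and the `n_ratio` parameter (replication sample size divided by original sample size) are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()


def replication_power(original_z, n_ratio=1.0, alpha=0.05):
    """Two-sided power of a replication, treating the original estimate as true.

    original_z is the z statistic of the original study (1.96 for p = 0.05).
    The sem shrinks with the square root of the sample size, so the expected
    z statistic of the replication is original_z * sqrt(n_ratio).
    """
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)
    expected_z = original_z * sqrt(n_ratio)
    upper_tail = 1 - STD_NORMAL.cdf(z_crit - expected_z)
    lower_tail = STD_NORMAL.cdf(-z_crit - expected_z)
    return upper_tail + lower_tail


def required_n_ratio(original_z, power=0.80, alpha=0.05):
    """Sample size multiplier needed for the target power (upper tail only)."""
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)
    z_power = STD_NORMAL.inv_cdf(power)
    return ((z_crit + z_power) / original_z) ** 2


z_at_p05 = STD_NORMAL.inv_cdf(0.975)  # about 1.96
print(f"same n: power is about {replication_power(z_at_p05):.2f}")
print(f"n multiplier for 80% power: about {required_n_ratio(z_at_p05):.2f}")
```

With these assumptions, a same-size replication of a result at p = 0.05 has about 50% power, and roughly twice the original sample size is needed to reach 80% power.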

Low power in the presence of other biases

Low power is associated with several additional biases. First, low-powered studies are more likely to provide a wide range of estimates of the magnitude of an effect (which is known as 'vibration of effects' and is described below). Second, publication bias, selective data analysis and selective reporting of outcomes are more likely to affect low-powered studies. Third, small studies may be of lower quality in other aspects of their design as well. These factors can further exacerbate the low reliability of evidence obtained in studies with low statistical power.

Vibration of effects 13 refers to the situation in which a study obtains different estimates of the magnitude of the effect depending on the analytical options it implements. These options could include the statistical model, the definition of the variables of interest, the use (or not) of adjustments for certain potential confounders but not others, the use of filters to include or exclude specific observations and so on. For example, a recent analysis of 241 functional MRI (fMRI) studies showed that 223 unique analysis strategies were observed so that almost no strategy occurred more than once 16 . Results can vary markedly depending on the analysis strategy 1 . This is more often the case for small studies — here, results can change easily as a result of even minor analytical manipulations. In small studies, the range of results that can be obtained owing to vibration of effects is wider than in larger studies, because the results are more uncertain and therefore fluctuate more in response to analytical changes. Imagine, for example, dropping three observations from the analysis of a study of 12 samples because post-hoc they are considered unsatisfactory; this manipulation may not even be mentioned in the published paper, which may simply report that only nine patients were studied. A manipulation affecting only three observations could change the odds ratio from 1.00 to 1.50 in a small study but might only change it from 1.00 to 1.01 in a very large study. When investigators select the most favourable, interesting, significant or promising results among a wide spectrum of estimates of effect magnitudes, this is inevitably a biased choice.

Publication bias and selective reporting of outcomes and analyses are also more likely to affect smaller, underpowered studies 17 . Indeed, investigations into publication bias often examine whether small studies yield different results than larger ones 18 . Smaller studies more readily disappear into a file drawer than very large studies that are widely known and visible, and the results of which are eagerly anticipated (although this correlation is far from perfect). A 'negative' result in a high-powered study cannot be explained away as being due to low power 19 , 20 , and thus reviewers and editors may be more willing to publish it, whereas they more easily reject a small 'negative' study as being inconclusive or uninformative 21 . The protocols of large studies are also more likely to have been registered or otherwise made publicly available, so that deviations in the analysis plans and choice of outcomes may become obvious more easily. Small studies, conversely, are often subject to a higher level of exploration of their results and selective reporting thereof.

Third, smaller studies may have a worse design quality than larger studies. Several small studies may be opportunistic experiments, or the data collection and analysis may have been conducted with little planning. Conversely, large studies often require more funding and personnel resources. As a consequence, designs are examined more carefully before data collection, and analysis and reporting may be more structured. This relationship is not absolute — small studies are not always of low quality. Indeed, a bias in favour of small studies may occur if the small studies are meticulously designed and collect high-quality data (and therefore are forced to be small) and if large studies ignore or drop quality checks in an effort to include as large a sample as possible.

Empirical evidence from neuroscience

Any attempt to establish the average statistical power in neuroscience is hampered by the problem that the true effect sizes are not known. One solution to this problem is to use data from meta-analyses. Meta-analysis provides the best estimate of the true effect size, albeit with limitations, including the limitation that the individual studies that contribute to a meta-analysis are themselves subject to the problems described above. If anything, summary effects from meta-analyses, including power estimates calculated from meta-analysis results, may also be modestly inflated 22 .

Acknowledging this caveat, in order to estimate statistical power in neuroscience, we examined neuroscience meta-analyses published in 2011 that were retrieved using 'neuroscience' and 'meta-analysis' as search terms. Using the reported summary effects of the meta-analyses as the estimate of the true effects, we calculated the power of each individual study to detect the effect indicated by the corresponding meta-analysis.

Methods. Included in our analysis were articles published in 2011 that described at least one meta-analysis of previously published studies in neuroscience with a summary effect estimate (mean difference or odds/risk ratio) as well as study level data on group sample size and, for odds/risk ratios, the number of events in the control group.

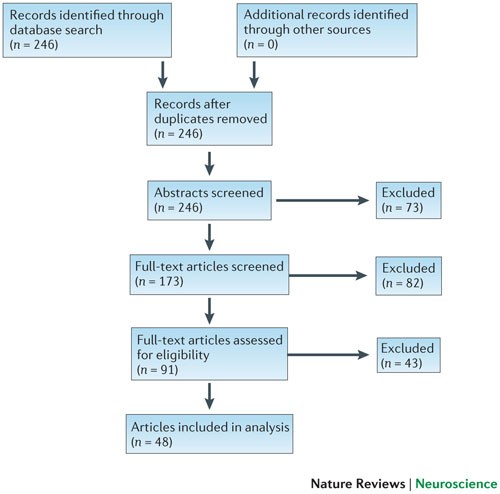

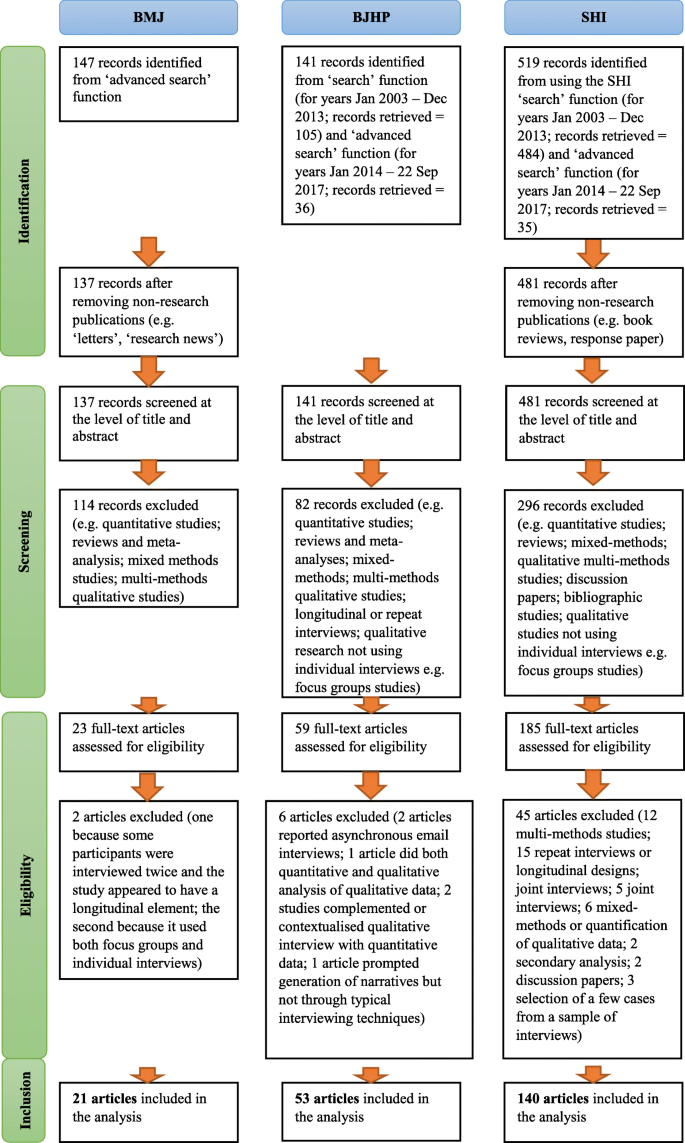

We searched computerized databases on 2 February 2012 via Web of Science for articles published in 2011, using the key words 'neuroscience' and 'meta-analysis'. All of the articles that were identified via this electronic search were screened independently for suitability by two authors (K.S.B. and M.R.M.). Articles were excluded if no abstract was electronically available (for example, conference proceedings and commentaries) or if both authors agreed, on the basis of the abstract, that a meta-analysis had not been conducted. Full texts were obtained for the remaining articles and again independently assessed for eligibility by two authors (K.S.B. and M.R.M.) ( Fig. 2 ).

Computerized databases were searched on 2 February 2012 via Web of Science for papers published in 2011, using the key words 'neuroscience' and 'meta-analysis'. Two authors (K.S.B. and M.R.M.) independently screened all of the papers that were identified for suitability ( n = 246). Articles were excluded if no abstract was electronically available (for example, conference proceedings and commentaries) or if both authors agreed, on the basis of the abstract, that a meta-analysis had not been conducted. Full texts were obtained for the remaining articles ( n = 173) and again independently assessed for eligibility by K.S.B. and M.R.M. Articles were excluded ( n = 82) if both authors agreed, on the basis of the full text, that a meta-analysis had not been conducted. The remaining articles ( n = 91) were assessed in detail by K.S.B. and M.R.M. or C.M. Articles were excluded at this stage if they could not provide the following data for extraction for at least one meta-analysis: first author and summary effect size estimate of the meta-analysis; and first author, publication year, sample size (by groups) and number of events in the control group (for odds/risk ratios) of the contributing studies. Data extraction was performed independently by K.S.B. and M.R.M. or C.M. and verified collaboratively. In total, n = 48 articles were included in the analysis.

Data were extracted from forest plots, tables and text. Some articles reported several meta-analyses. In those cases, we included multiple meta-analyses only if they contained distinct study samples. If several meta-analyses had overlapping study samples, we selected the most comprehensive (that is, the one containing the most studies) or, if the number of studies was equal, the first analysis presented in the article. Data extraction was independently performed by K.S.B. and either M.R.M. or C.M. and verified collaboratively.

The following data were extracted for each meta-analysis: first author and summary effect size estimate of the meta-analysis; and first author, publication year, sample size (by groups), number of events in the control group (for odds/risk ratios) and nominal significance ( p < 0.05, 'yes/no') of the contributing studies. For five articles, nominal study significance was unavailable and was therefore obtained from the original studies if they were electronically available. Studies with missing data (for example, due to unclear reporting) were excluded from the analysis.

The main outcome measure of our analysis was the achieved power of each individual study to detect the estimated summary effect reported in the corresponding meta-analysis to which it contributed, assuming an α level of 5%. Power was calculated using G*Power software 23 . We then calculated the mean and median statistical power across all studies.
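The kind of calculation described here can be sketched for a standardized mean difference. This is an illustration only: it uses a normal approximation rather than the noncentral t distribution that dedicated power software typically uses, it assumes two equal groups, and the effect size and sample sizes below are hypothetical values, not data from the analysis.

```python
from math import sqrt
from statistics import NormalDist, median

STD_NORMAL = NormalDist()


def two_group_power(d, n_per_group, alpha=0.05):
    """Approximate two-sided power to detect a standardized difference d.

    Normal approximation for two equal groups of size n_per_group;
    slightly optimistic for very small samples.
    """
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)
    shift = d * sqrt(n_per_group / 2)  # expected z statistic
    return (1 - STD_NORMAL.cdf(z_crit - shift)) + STD_NORMAL.cdf(-z_crit - shift)


# Hypothetical summary effect and per-group sample sizes, for illustration only.
summary_d = 0.5
hypothetical_group_sizes = [8, 11, 12, 20, 40]

powers = [two_group_power(summary_d, n) for n in hypothetical_group_sizes]
for n, p in zip(hypothetical_group_sizes, powers):
    print(f"n per group = {n:3d}: power is about {p:.2f}")
print(f"median power across studies: about {median(powers):.2f}")
```

Each study's power is computed against the same summary effect, and the median is then taken across studies, mirroring the outcome measure described above.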

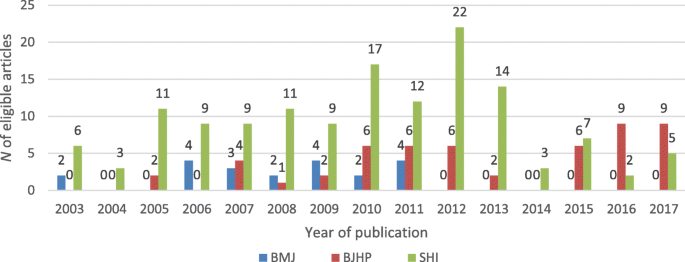

Results. Our search strategy identified 246 articles published in 2011, of which 155 were excluded after an initial screening of either the abstract or the full text. Of the remaining 91 articles, 48 were eligible for inclusion in our analysis 24–71, comprising data from 49 meta-analyses and 730 individual primary studies. A flow chart of the article selection process is shown in Fig. 2 , and the characteristics of included meta-analyses are described in Table 1 .

Our results indicate that the median statistical power in neuroscience is 21%. We also applied a test for an excess of statistical significance 72 . This test has recently been used to show that there is an excess significance bias in the literature of various fields, including in studies of brain volume abnormalities 73 , Alzheimer's disease genetics 70 , 74 and cancer biomarkers 75 . The test revealed that the actual number (349) of nominally significant studies in our analysis was significantly higher than the number expected (254; p < 0.0001). Importantly, these calculations assume that the summary effect size reported in each meta-analysis is close to the true effect size, but these summary effects are likely to be inflated owing to publication and other biases described above.
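The comparison of observed and expected counts can be illustrated with a simple chi-square calculation. This is a sketch of one common form of such a test, not necessarily the exact procedure used here. It assumes that all 730 primary studies entered the comparison. The function name is hypothetical.

```python
from math import erfc, sqrt


def excess_significance(observed, expected, n_studies):
    """Chi-square comparison of observed and expected significant studies.

    Returns the statistic (1 degree of freedom) and its p value.
    For 1 degree of freedom, P(X > x) equals erfc(sqrt(x / 2)).
    """
    diff = observed - expected
    chi2 = diff ** 2 / expected + diff ** 2 / (n_studies - expected)
    p_value = erfc(sqrt(chi2 / 2))
    return chi2, p_value


# Counts quoted in the text; using 730 as the denominator is our assumption.
chi2, p_value = excess_significance(observed=349, expected=254, n_studies=730)
print(f"chi-square is about {chi2:.1f}, p = {p_value:.1e}")
```

Under these assumptions the statistic is far beyond the threshold for p < 0.0001, in line with the result reported above.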

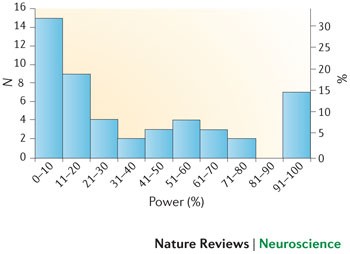

Interestingly, across the 49 meta-analyses included in our analysis, the average power demonstrated a clear bimodal distribution ( Fig. 3 ). Most meta-analyses comprised studies with very low average power — almost 50% of studies had an average power lower than 20%. However, seven meta-analyses comprised studies with high (>90%) average power 24 , 26 , 31 , 57 , 63 , 68 , 71 . These seven meta-analyses were all broadly neurological in focus and were based on relatively small contributing studies — four out of the seven meta-analyses did not include any study with over 80 participants. If we exclude these 'outlying' meta-analyses, the median statistical power falls to 18%.

The figure shows a histogram of median study power calculated for each of the n = 49 meta-analyses included in our analysis, with the number of meta-analyses ( N ) on the left axis and percent of meta-analyses (%) on the right axis. There is a clear bimodal distribution; n = 15 (31%) of the meta-analyses comprised studies with median power of less than 11%, whereas n = 7 (14%) comprised studies with high average power in excess of 90%. Despite this bimodality, most meta-analyses comprised studies with low statistical power: n = 28 (57%) had median study power of less than 31%. The meta-analyses ( n = 7) that comprised studies with high average power in excess of 90% had their broadly neurological subject matter in common.

Small sample sizes are appropriate if the true effects being estimated are genuinely large enough to be reliably observed in such samples. However, as small studies are particularly susceptible to inflated effect size estimates and publication bias, it is difficult to be confident in the evidence for a large effect if small studies are the sole source of that evidence. Moreover, many meta-analyses show small-study effects on asymmetry tests (that is, smaller studies have larger effect sizes than larger ones) but nevertheless use random-effect calculations, and this is known to inflate the estimate of summary effects (and thus also the power estimates). Therefore, our power calculations are likely to be extremely optimistic 76 .

Empirical evidence from specific fields

One limitation of our analysis is the under-representation of meta-analyses in particular subfields of neuroscience, such as research using neuroimaging and animal models. We therefore sought additional representative meta-analyses from these fields outside our 2011 sampling frame to determine whether a similar pattern of low statistical power would be observed.

Neuroimaging studies. Most structural and volumetric MRI studies are very small and have minimal power to detect differences between compared groups (for example, healthy people versus those with mental health diseases). A clear excess significance bias has been demonstrated in studies of brain volume abnormalities 73 , and similar problems appear to exist in fMRI studies of the blood-oxygen-level-dependent response 77 . In order to establish the average statistical power of studies of brain volume abnormalities, we applied the same analysis as described above to data that had been previously extracted to assess the presence of an excess of significance bias 73 . Our results indicated that the median statistical power of these studies was 8% across 461 individual studies contributing to 41 separate meta-analyses, which were drawn from eight articles that were published between 2006 and 2009. Full methodological details describing how studies were identified and selected are available elsewhere 73 .

Animal model studies. Previous analyses of studies using animal models have shown that small studies consistently give more favourable (that is, 'positive') results than larger studies 78 and that study quality is inversely related to effect size 79 , 80 , 81 , 82 . In order to examine the average power in neuroscience studies using animal models, we chose a representative meta-analysis that combined data from studies investigating sex differences in water maze performance (number of studies ( k ) = 19, summary effect size Cohen's d = 0.49) and radial maze performance ( k = 21, summary effect size d = 0.69) 80 . The summary effect sizes in the two meta-analyses provide evidence for medium to large effects, with the male and female performance differing by 0.49 and 0.69 standard deviations for water maze and radial maze, respectively. Our results indicate that the median statistical power for the water maze studies and the radial maze studies to detect these medium to large effects was 18% and 31%, respectively ( Table 2 ). The average sample size in these studies was 22 animals for the water maze and 24 for the radial maze experiments. Studies of this size can only detect very large effects ( d = 1.26 for n = 22, and d = 1.20 for n = 24) with 80% power, far larger than those indicated by the meta-analyses. These animal model studies were therefore severely underpowered to detect the summary effects indicated by the meta-analyses. Furthermore, the summary effects are likely to be inflated estimates of the true effects, given the problems associated with small studies described above.

The results described in this section are based on only two meta-analyses, and we should be appropriately cautious in extrapolating from this limited evidence. Nevertheless, it is notable that the results are so consistent with those observed in other fields, such as the neuroimaging and neuroscience studies that we have described above.

Implications

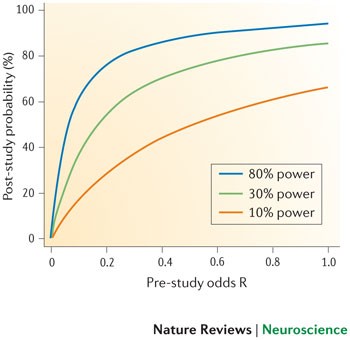

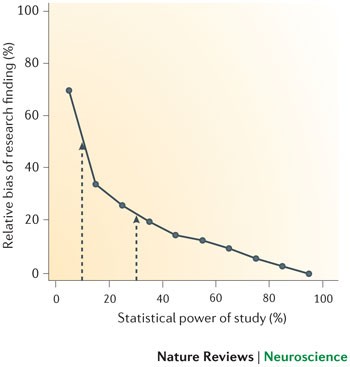

Implications for the likelihood that a research finding reflects a true effect. Our results indicate that the average statistical power of studies in the field of neuroscience is probably no more than between ∼ 8% and ∼ 31%, on the basis of evidence from diverse subfields within neuroscience. If the low average power we observed across these studies is typical of the neuroscience literature as a whole, this has profound implications for the field. A major implication is that the likelihood that any nominally significant finding actually reflects a true effect is small. As explained above, the probability that a research finding reflects a true effect (PPV) decreases as statistical power decreases for any given pre-study odds (R) and a fixed type I error level. It is easy to show the impact that this is likely to have on the reliability of findings. Figure 4 shows how the PPV changes for a range of values for R and for a range of values for the average power in a field. For effects that are genuinely non-null, Fig. 5 shows the degree to which an effect size estimate is likely to be inflated in initial studies, owing to the winner's curse phenomenon, for a range of values for statistical power.

The probability that a research finding reflects a true effect, also known as the positive predictive value (PPV), depends on both the pre-study odds of the effect being true (the ratio R of 'true effects' over 'null effects' in the scientific field) and the study's statistical power. The PPV can be calculated for given values of statistical power (1 − β), pre-study odds ratio (R) and type I error rate (α), using the formula PPV = ([1 − β] × R) / ([1 − β] × R + α). The median statistical power of studies in the neuroscience field is optimistically estimated to be between ∼ 8% and ∼ 31%. The figure illustrates how low statistical power consistent with this estimated range (that is, between 10% and 30%) detrimentally affects the association between the probability that a finding reflects a true effect (PPV) and pre-study odds, assuming α = 0.05. Compared with conditions of appropriate statistical power (that is, 80%), the probability that a research finding reflects a true effect is greatly reduced for 10% and 30% power, especially if pre-study odds are low. Notably, in an exploratory research field such as much of neuroscience, the pre-study odds are often low.
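The PPV formula can be evaluated directly. The sketch below implements the formula as stated; the function name and the example values of R are hypothetical choices for illustration.

```python
def positive_predictive_value(power, pre_study_odds, alpha=0.05):
    """PPV = ([1 - beta] x R) / ([1 - beta] x R + alpha).

    power is 1 - beta, pre_study_odds is R, alpha is the type I error rate.
    """
    true_positives = power * pre_study_odds
    return true_positives / (true_positives + alpha)


# Illustrative pre-study odds (assumed values, not estimates from the paper).
for pre_study_odds in (0.1, 0.25, 1.0):
    row = ", ".join(
        f"power {power:.0%}: PPV {positive_predictive_value(power, pre_study_odds):.2f}"
        for power in (0.10, 0.30, 0.80)
    )
    print(f"R = {pre_study_odds}: {row}")
```

For example, with R = 0.25 and α = 0.05, a study with 20% power gives a PPV of 0.5, whereas a study with 80% power gives a PPV of 0.8.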

The winner's curse refers to the phenomenon that studies that find evidence of an effect often provide inflated estimates of the size of that effect. Such inflation is expected when an effect has to pass a certain threshold — such as reaching statistical significance — in order for it to have been 'discovered'. Effect inflation is worst for small, low-powered studies, which can only detect effects that happen to be large. If, for example, the true effect is medium-sized, only those small studies that, by chance, estimate the effect to be large will pass the threshold for discovery (that is, the threshold for statistical significance, which is typically set at p < 0.05). In practice, this means that research findings of small studies are biased in favour of inflated effects. By contrast, large, high-powered studies can readily detect both small and large effects and so are less biased, as both over- and underestimations of the true effect size will pass the threshold for 'discovery'. We optimistically estimate the median statistical power of studies in the neuroscience field to be between ∼ 8% and ∼ 31%. The figure shows simulations of the winner's curse (expressed on the y-axis as relative bias of research findings). These simulations suggest that initial effect estimates from studies powered between ∼ 8% and ∼ 31% are likely to be inflated by 25% to 50% (shown by the arrows in the figure). Inflated effect estimates make it difficult to determine an adequate sample size for replication studies, increasing the probability of type II errors. Figure is modified, with permission, from Ref. 103 © (2007) Cell Press.

The estimates shown in Figs 4 , 5 are likely to be optimistic, however, because they assume that statistical power and R are the only considerations in determining the probability that a research finding reflects a true effect. As we have already discussed, several other biases are also likely to reduce the probability that a research finding reflects a true effect. Moreover, the summary effect size estimates that we used to determine the statistical power of individual studies are themselves likely to be inflated owing to bias — our excess of significance test provided clear evidence for this. Therefore, the average statistical power of studies in our analysis may in fact be even lower than the 8–31% range we observed.

Ethical implications. Low average power in neuroscience studies also has ethical implications. In our analysis of animal model studies, the average sample size of 22 animals for the water maze experiments was only sufficient to detect an effect size of d = 1.26 with 80% power, and the average sample size of 24 animals for the radial maze experiments was only sufficient to detect an effect size of d = 1.20. In order to achieve 80% power to detect, in a single study, the most probable true effects as indicated by the meta-analysis, a sample size of 134 animals would be required for the water maze experiment (assuming an effect size of d = 0.49) and 68 animals for the radial maze experiment (assuming an effect size of d = 0.69); to achieve 95% power, these sample sizes would need to increase to 220 and 112, respectively. What is particularly striking, however, is the inefficiency of a continued reliance on small sample sizes. Despite the apparently large numbers of animals required to achieve acceptable statistical power in these experiments, the total numbers of animals actually used in the studies contributing to the meta-analyses were even larger: 420 for the water maze experiments and 514 for the radial maze experiments.
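The sample sizes quoted above can be approximated with a standard closed-form calculation. This is a sketch, not the authors' procedure: it assumes a two-sided test comparing two equal groups and uses the normal approximation with a small-sample correction term (the critical z value squared, divided by four), a common approximation to the t-based result. The function name is hypothetical.

```python
from math import ceil
from statistics import NormalDist

STD_NORMAL = NormalDist()


def total_sample_size(d, power=0.80, alpha=0.05):
    """Approximate total n (two equal groups) to detect a standardized effect d.

    Per-group n = 2 * (z_alpha + z_power)^2 / d^2 + z_alpha^2 / 4,
    rounded up to a whole animal per group.
    """
    z_alpha = STD_NORMAL.inv_cdf(1 - alpha / 2)
    z_power = STD_NORMAL.inv_cdf(power)
    n_per_group = 2 * (z_alpha + z_power) ** 2 / d ** 2 + z_alpha ** 2 / 4
    return 2 * ceil(n_per_group)


for label, d in (("water maze", 0.49), ("radial maze", 0.69)):
    n80 = total_sample_size(d, power=0.80)
    n95 = total_sample_size(d, power=0.95)
    print(f"{label} (d = {d}): {n80} animals for 80% power, {n95} for 95% power")
```

Under these assumptions the approximation reproduces the totals given in the text for both effect sizes and both power levels.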

There is ongoing debate regarding the appropriate balance to strike between using as few animals as possible in experiments and the need to obtain robust, reliable findings. We argue that it is important to appreciate the waste associated with an underpowered study: even a study that achieves 80% power still presents a 20% possibility that the animals have been sacrificed without the study detecting the underlying true effect. If the average power in neuroscience animal model studies is between roughly 20% and 30%, as we observed in our analysis above, the ethical implications are clear.

Low power therefore has an ethical dimension — unreliable research is inefficient and wasteful. This applies to both human and animal research. The principles of the 'three Rs' in animal research (reduce, refine and replace) 83 require appropriate experimental design and statistics — both too many and too few animals present an issue as they reduce the value of research outputs. A requirement for sample size and power calculation is included in the Animal Research: Reporting In Vivo Experiments (ARRIVE) guidelines 84 , but such calculations require a clear appreciation of the expected magnitude of effects being sought.

Of course, it is also wasteful to continue data collection once it is clear that the effect being sought does not exist or is too small to be of interest. That is, studies are not just wasteful when they stop too early, they are also wasteful when they stop too late. Planned, sequential analyses are sometimes used in large clinical trials when there is considerable expense or potential harm associated with testing participants. Clinical trials may be stopped prematurely in the case of serious adverse effects, clear beneficial effects (in which case it would be unethical to continue to allocate participants to a placebo condition) or if the interim effects are so unimpressive that any prospect of a positive result with the planned sample size is extremely unlikely 85 . Within a significance testing framework, such interim analyses — and the protocol for stopping — must be planned for the assumptions of significance testing to hold. Concerns have been raised as to whether stopping trials early is ever justified given the tendency for such a practice to produce inflated effect size estimates 86 . Furthermore, the decision process around stopping is not often fully disclosed, increasing the scope for researcher degrees of freedom 86 . Alternative approaches exist. For example, within a Bayesian framework, one can monitor the Bayes factor and simply stop testing when the evidence is conclusive or when resources are expended 87 . Similarly, adopting conservative priors can substantially reduce the likelihood of claiming that an effect exists when in fact it does not 85 . At present, significance testing remains the dominant framework within neuroscience, but the flexibility of alternative (for example, Bayesian) approaches means that they should be taken seriously by the field.

Conclusions and future directions

A consequence of the remarkable growth in neuroscience over the past 50 years has been that the effects we now seek in our experiments are often smaller and more subtle than before, when mostly easily discernible 'low-hanging fruit' were targeted. At the same time, computational analysis of very large datasets is now relatively straightforward, so that an enormous number of tests can be run in a short time on the same dataset. These dramatic advances in the flexibility of research design and analysis have occurred without accompanying changes to other aspects of research design, particularly power. For example, the average sample size has not changed substantially over time 88 despite the fact that neuroscientists are likely to be pursuing smaller effects. The increase in research flexibility and the complexity of study designs 89 combined with the stability of sample size and search for increasingly subtle effects has a disquieting consequence: a dramatic increase in the likelihood that statistically significant findings are spurious. This may be at the root of the recent replication failures in the preclinical literature 8 and the correspondingly poor translation of these findings into humans 90 .

Low power is a problem in practice because of the normative publishing standards for producing novel, significant, clean results and the ubiquity of null hypothesis significance testing as the means of evaluating the truth of research findings. As we have shown, these factors result in biases that are exacerbated by low power. Ultimately, these biases reduce the reproducibility of neuroscience findings and negatively affect the validity of the accumulated findings. Unfortunately, publishing and reporting practices are unlikely to change rapidly. Nonetheless, existing scientific practices can be improved with small changes or additions that approximate key features of the idealized model 4 , 91 , 92 . We provide a summary of recommendations for future research practice in Box 2 .

Increasing disclosure. False positives occur more frequently and go unnoticed when degrees of freedom in data analysis and reporting are undisclosed 5 . Researchers can improve confidence in published reports by noting in the text: “We report how we determined our sample size, all data exclusions, all data manipulations, and all measures in the study.” 7 When such a statement is not possible, disclosure of the rationale and justification of deviations from what should be common practice (that is, reporting sample size, data exclusions, manipulations and measures) will improve readers' understanding and interpretation of the reported effects and, therefore, of what level of confidence in the reported effects is appropriate. In clinical trials, there is an increasing requirement to adhere to the Consolidated Standards of Reporting Trials (CONSORT), and the same is true for systematic reviews and meta-analyses, for which the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines are now being adopted. A number of reporting guidelines have been produced for application to diverse study designs and tools, and an updated list is maintained by the EQUATOR Network 93 . A ten-item checklist of study quality has been developed by the Collaborative Approach to Meta-Analysis and Review of Animal Data in Experimental Stroke (CAMARADES), but to the best of our knowledge, this checklist is not yet widely used in primary studies.

Registration of confirmatory analysis plan. Both exploratory and confirmatory research strategies are legitimate and useful. However, presenting the result of an exploratory analysis as if it arose from a confirmatory test inflates the chance that the result is a false positive. In particular, p -values lose their diagnostic value if they are not the result of a pre-specified analysis plan for which all results are reported. Pre-registration (and, ultimately, full reporting of analysis plans) clarifies the distinction between confirmatory and exploratory analysis, encourages well-powered studies (at least in the case of confirmatory analyses) and reduces the file-drawer effect. These subsequently reduce the likelihood of false positive accumulation. The Open Science Framework (OSF) offers a registration mechanism for scientific research. For observational studies, it would be useful to register datasets in detail, so that one can be aware of how extensive the multiplicity and complexity of analyses can be 94 .

Improving availability of materials and data. Making research materials available will improve the quality of studies aimed at replicating and extending research findings. Making raw data available will improve data aggregation methods and confidence in reported results. There are multiple repositories for making data more widely available, such as The Dataverse Network Project and Dryad for data in general and others such as OpenfMRI, INDI and OASIS for neuroimaging data in particular. Also, commercial repositories (for example, figshare) offer means for sharing data and other research materials. Finally, the OSF offers infrastructure for documenting, archiving and sharing data within collaborative teams and also making some or all of those research materials publicly available. Leading journals are increasingly adopting policies for making data, protocols and analytical codes available, at least for some types of studies. However, these policies are rarely adhered to 95 , and thus the ability of independent experts to repeat published analyses remains low 96 .

Incentivizing replication. Weak incentives for conducting and publishing replications are a threat to identifying false positives and accumulating precise estimates of research findings. There are many ways to alter replication incentives 97 . For example, journals could offer a submission option for registered replications of important research results (see, for example, a possible new submission format for Cortex 98 ). Groups of researchers can also collaborate on performing one or many replications to increase the total sample size (and therefore the statistical power) achieved while minimizing the labour and resource impact on any one contributor. Adoption of the gold standard of large-scale collaborative consortia and extensive replication in fields such as human genome epidemiology has transformed the reliability of the produced findings. Although previously almost all of the proposed candidate gene associations from small studies were false 99 (with some exceptions 100 ), collaborative consortia have substantially improved power, and the replicated results can be considered highly reliable. In another example, in the field of psychology, the Reproducibility Project is a collaboration of more than 100 researchers aiming to estimate the reproducibility of psychological science by replicating a large sample of studies published in 2008 in three psychology journals 92 . Each individual research study contributes just a small portion of time and effort, but the combined effect is substantial both for accumulating replications and for generating an empirical estimate of reproducibility.

Concluding remarks. Small, low-powered studies are endemic in neuroscience. Nevertheless, there are reasons to be optimistic. Some fields are confronting the problem of the poor reliability of research findings that arises from low-powered studies. For example, in genetic epidemiology sample sizes increased dramatically with the widespread understanding that the effects being sought are likely to be extremely small. This, together with an increasing requirement for strong statistical evidence and independent replication, has resulted in far more reliable results. Moreover, the pressure for emphasizing significant results is not absolute. For example, the Proteus phenomenon 101 suggests that refuting early results can be attractive in fields in which data can be produced rapidly. Nevertheless, we should not assume that science is effectively or efficiently self-correcting 102 . There is now substantial evidence that a large proportion of the evidence reported in the scientific literature may be unreliable. Acknowledging this challenge is the first step towards addressing the problematic aspects of current scientific practices and identifying effective solutions.

Box 1 | Key statistical terms

CAMARADES

The Collaborative Approach to Meta-Analysis and Review of Animal Data from Experimental Studies (CAMARADES) is a collaboration that aims to reduce bias and improve the quality of methods and reporting in animal research. To this end, CAMARADES provides a resource for data sharing, aims to provide a web-based stratified meta-analysis bioinformatics engine and acts as a repository for completed reviews.

Effect size

An effect size is a standardized measure that quantifies the size of the difference between two groups or the strength of an association between two variables. As standardized measures, effect sizes allow estimates from different studies to be compared directly and also to be combined in meta-analyses.
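
As one concrete illustration of a standardized effect size, the sketch below computes Cohen's d for two independent groups. The choice of Cohen's d, the function name `cohens_d` and the example scores are assumptions made for illustration; the text above does not single out any particular effect size measure.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Standardized mean difference between two independent groups."""
    n_a, n_b = len(group_a), len(group_b)
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_var = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)


# Hypothetical scores, for illustration only.
treated = [2.0, 4.0, 6.0]
control = [1.0, 3.0, 5.0]
d = cohens_d(treated, control)  # mean difference 1.0 over pooled SD 2.0
```

Because the difference is divided by the pooled standard deviation, the result is unit-free, which is what allows estimates from studies using different scales to be compared or combined.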

Excess significance

Excess significance is the phenomenon whereby the published literature has an excess of statistically significant results that are due to biases in reporting. Several mechanisms contribute to reporting bias, including study publication bias, where the results of statistically non-significant ('negative') studies are left unpublished; selective outcome reporting bias, where null results are omitted; and selective analysis bias, where data are analysed with different methods that favour 'positive' results.

Fixed and random effects

A fixed-effect meta-analysis assumes that the underlying effect is the same (that is, fixed) in all studies and that any variation is due to sampling errors. By contrast, a random-effect meta-analysis does not require this assumption and allows for heterogeneity between studies. A test of heterogeneity in between-study effects is often used to test the fixed-effect assumption.
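
The difference between the two models can be sketched with inverse-variance pooling. The random-effects version below uses the DerSimonian-Laird estimate of between-study variance, which is one common choice and an assumption of this sketch rather than a method named in the text; the function names and the example inputs are likewise hypothetical.

```python
def fixed_effect(effects, variances):
    """Inverse-variance pooled estimate and its variance (fixed-effect model)."""
    weights = [1.0 / v for v in variances]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    return pooled, 1.0 / sum(weights)


def cochran_q(effects, variances):
    """Cochran's Q, the usual statistic for testing between-study heterogeneity."""
    pooled, _ = fixed_effect(effects, variances)
    return sum((y - pooled) ** 2 / v for y, v in zip(effects, variances))


def random_effects_dl(effects, variances):
    """Random-effects pooling with the DerSimonian-Laird tau^2 (an assumed choice)."""
    k = len(effects)
    weights = [1.0 / v for v in variances]
    q = cochran_q(effects, variances)
    c = sum(weights) - sum(w ** 2 for w in weights) / sum(weights)
    # Between-study variance, truncated at zero when Q is below its expectation.
    tau2 = max(0.0, (q - (k - 1)) / c)
    # Each study's weight now reflects both sampling and between-study variance.
    star = [1.0 / (v + tau2) for v in variances]
    pooled = sum(w * y for w, y in zip(star, effects)) / sum(star)
    return pooled, 1.0 / sum(star), tau2


# Hypothetical effect sizes and sampling variances from three studies.
effects = [0.1, 0.5, 0.9]
variances = [0.01, 0.01, 0.01]
fixed_est, fixed_var = fixed_effect(effects, variances)
random_est, random_var, tau2 = random_effects_dl(effects, variances)
```

With heterogeneous inputs such as these, both models give the same point estimate here, but the random-effects variance is larger because it also carries the between-study variance.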

Meta-analysis

Meta-analysis refers to statistical methods for contrasting and combining results from different studies to provide more powerful estimates of the true effect size as opposed to a less precise effect size derived from a single study.

Positive predictive value

The positive predictive value (PPV) is the probability that a 'positive' research finding reflects a true effect (that is, the finding is a true positive). This probability of a research finding reflecting a true effect depends on the prior probability of it being true (before doing the study), the statistical power of the study and the level of statistical significance.
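
The dependence on these three quantities can be made concrete with the standard relation PPV = ((1 − β) × R) / ((1 − β) × R + α), where R is the pre-study odds, 1 − β is the power and α is the significance level. The sketch below evaluates it; the function name `ppv` and the example values are illustrative assumptions, not figures taken from the text.

```python
def ppv(power, pre_study_odds, alpha=0.05):
    """Probability that a nominally significant finding reflects a true effect."""
    true_positives = power * pre_study_odds
    # alpha is the rate of false positives per truly null effect probed.
    return true_positives / (true_positives + alpha)


# Hypothetical scenario: one in five probed effects is real (odds R = 0.25).
low_power_ppv = ppv(power=0.2, pre_study_odds=0.25)
high_power_ppv = ppv(power=0.8, pre_study_odds=0.25)
```

Under these assumed inputs, raising power from 0.2 to 0.8 moves the PPV from 0.5 to 0.8, so the same significant result is considerably more credible when it comes from a well-powered study.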

Proteus phenomenon

The Proteus phenomenon refers to the situation in which the first published study is often the most biased towards an extreme result (the winner's curse). Subsequent replication studies tend to be less biased towards the extreme, often finding evidence of smaller effects or even contradicting the findings from the initial study.

Statistical power

The statistical power of a test is the probability that it will correctly reject the null hypothesis when the null hypothesis is false (that is, the probability of not committing a type II error or making a false negative decision). The probability of committing a type II error is referred to as the false negative rate (β), and power is equal to 1 − β.
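
A rough numerical illustration of this definition follows. It approximates the power of a two-sided, two-sample comparison with a normal (z) approximation; the approximation, the function name `two_sample_power` and the example inputs are assumptions of this sketch, and an exact t-based calculation would give slightly lower values.

```python
from math import sqrt
from statistics import NormalDist


def two_sample_power(d, n_per_group, alpha=0.05):
    """Approximate power to detect a standardized difference d (z approximation)."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    # Expected value of the test statistic when the true effect is d.
    shift = d * sqrt(n_per_group / 2)
    # Probability of landing in either rejection region.
    return std_normal.cdf(shift - z_crit) + std_normal.cdf(-shift - z_crit)


power = two_sample_power(d=0.5, n_per_group=64)
beta = 1 - power  # false negative (type II error) rate
```

When d is zero the function returns α, as it should: with no true effect, the only rejections are false positives.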

Winner's curse

The winner's curse refers to the phenomenon whereby the 'lucky' scientist who makes a discovery is cursed by finding an inflated estimate of that effect. The winner's curse occurs when thresholds, such as statistical significance, are used to determine the presence of an effect and is most severe when thresholds are stringent and studies are too small and thus have low power.
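
A small simulation can illustrate the inflation. The sketch below draws effect estimates for many hypothetical small studies of the same true effect and compares the average of all estimates with the average of only those that reach significance. Every number here (true effect, sample size, number of studies, seed) is an assumption chosen for illustration, and estimates are drawn directly from a normal sampling distribution rather than from simulated raw data.

```python
import random
from math import sqrt
from statistics import mean


def simulate_winners_curse(true_d=0.2, n_per_group=20, n_studies=20000, seed=1):
    """Return (mean of all estimates, mean of significant ones, fraction significant)."""
    rng = random.Random(seed)
    # Approximate standard error of a standardized mean difference.
    se = sqrt(2 / n_per_group)
    z_crit = 1.96  # two-sided 5% threshold
    estimates = [rng.gauss(true_d, se) for _ in range(n_studies)]
    # Keep only the studies that would be declared statistically significant.
    significant = [e for e in estimates if abs(e) / se > z_crit]
    return mean(estimates), mean(significant), len(significant) / n_studies


all_mean, significant_mean, fraction_significant = simulate_winners_curse()
```

Across all simulated studies the average estimate sits close to the true effect, whereas the subset passing the significance threshold averages well above it, because in a small study only an overestimate is large enough to cross the threshold.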

Box 2 | Recommendations for researchers

Perform an a priori power calculation

Use the existing literature to estimate the size of effect you are looking for and design your study accordingly. If time or financial constraints mean your study is underpowered, make this clear and acknowledge this limitation (or limitations) in the interpretation of your results.
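
As a minimal sketch of such a calculation, the function below estimates the number of participants needed per group for a two-sided, two-sample comparison using a normal approximation. The approximation, the function name `n_per_group` and the default power and significance values are assumptions of this sketch; dedicated tools that use the t distribution give slightly larger numbers.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(d, power=0.80, alpha=0.05):
    """Approximate per-group sample size to detect standardized difference d."""
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)
    z_power = std_normal.inv_cdf(power)
    # Solve d * sqrt(n / 2) = z_alpha + z_power for n, then round up.
    return ceil(2 * ((z_alpha + z_power) / d) ** 2)


# Hypothetical effect sizes taken from a prior literature estimate.
medium_effect_n = n_per_group(0.5)
small_effect_n = n_per_group(0.2)
```

Because the required sample size scales with 1/d², halving the expected effect size roughly quadruples the number of participants needed, which is why an optimistic effect size estimate so easily leads to an underpowered design.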

Disclose methods and findings transparently

If the intended analyses produce null findings and you move on to explore your data in other ways, say so. Null findings locked in file drawers bias the literature, whereas exploratory analyses are only useful and valid if you acknowledge the caveats and limitations.

Pre-register your study protocol and analysis plan

Pre-registration clarifies whether analyses are confirmatory or exploratory, encourages well-powered studies and reduces opportunities for non-transparent data mining and selective reporting. Various mechanisms for this exist (for example, the Open Science Framework).

Make study materials and data available

Making research materials available will improve the quality of studies aimed at replicating and extending research findings. Making raw data available will enhance opportunities for data aggregation and meta-analysis, and allow external checking of analyses and results.

Work collaboratively to increase power and replicate findings

Combining data increases the total sample size (and therefore power) while minimizing the labour and resource impact on any one contributor. Large-scale collaborative consortia in fields such as human genetic epidemiology have transformed the reliability of findings in these fields.

Change history

15 April 2013

On page 2 of this article, the definition of R should have read: "R is the pre-study odds (that is, the odds that a probed effect is indeed non-null among the effects being probed)". This has been corrected in the online version.

Ioannidis, J. P. Why most published research findings are false. PLoS Med. 2 , e124 (2005). This study demonstrates that many (and possibly most) of the conclusions drawn from biomedical research are probably false. The reasons for this include using flexible study designs and flexible statistical analyses and running small studies with low statistical power.

Fanelli, D. Negative results are disappearing from most disciplines and countries. Scientometrics 90 , 891–904 (2012).

Greenwald, A. G. Consequences of prejudice against the null hypothesis. Psychol. Bull. 82 , 1–20 (1975).

Nosek, B. A., Spies, J. R. & Motyl, M. Scientific utopia: II. Restructuring incentives and practices to promote truth over publishability. Perspect. Psychol. Sci. 7 , 615–631 (2012).

Simmons, J. P., Nelson, L. D. & Simonsohn, U. False-positive psychology: undisclosed flexibility in data collection and analysis allows presenting anything as significant. Psychol. Sci. 22 , 1359–1366 (2011). This article empirically illustrates that flexible study designs and data analysis dramatically increase the possibility of obtaining a nominally significant result. However, conclusions drawn from these results are almost certainly false.

Sullivan, P. F. Spurious genetic associations. Biol. Psychiatry 61 , 1121–1126 (2007).

Begley, C. G. & Ellis, L. M. Drug development: raise standards for preclinical cancer research. Nature 483 , 531–533 (2012).

Prinz, F., Schlange, T. & Asadullah, K. Believe it or not: how much can we rely on published data on potential drug targets? Nature Rev. Drug Discov. 10 , 712 (2011).

Fang, F. C. & Casadevall, A. Retracted science and the retraction index. Infect. Immun. 79 , 3855–3859 (2011).

Munafo, M. R., Stothart, G. & Flint, J. Bias in genetic association studies and impact factor. Mol. Psychiatry 14 , 119–120 (2009).

Sterne, J. A. & Davey Smith, G. Sifting the evidence — what's wrong with significance tests? BMJ 322 , 226–231 (2001).

Ioannidis, J. P. A., Tarone, R. & McLaughlin, J. K. The false-positive to false-negative ratio in epidemiologic studies. Epidemiology 22 , 450–456 (2011).

Ioannidis, J. P. A. Why most discovered true associations are inflated. Epidemiology 19 , 640–648 (2008).

Tversky, A. & Kahneman, D. Belief in the law of small numbers. Psychol. Bull. 75 , 105–110 (1971).

Masicampo, E. J. & Lalande, D. R. A peculiar prevalence of p values just below .05. Q. J. Exp. Psychol. 65 , 2271–2279 (2012).

Carp, J. The secret lives of experiments: methods reporting in the fMRI literature. Neuroimage 63 , 289–300 (2012). This article reviews methods reporting and methodological choices across 241 recent fMRI studies and shows that there were nearly as many unique analytical pipelines as there were studies. In addition, many studies were underpowered to detect plausible effects.

Dwan, K. et al. Systematic review of the empirical evidence of study publication bias and outcome reporting bias. PLoS ONE 3 , e3081 (2008).

Sterne, J. A. et al. Recommendations for examining and interpreting funnel plot asymmetry in meta-analyses of randomised controlled trials. BMJ 343 , d4002 (2011).

Joy-Gaba, J. A. & Nosek, B. A. The surprisingly limited malleability of implicit racial evaluations. Soc. Psychol. 41 , 137–146 (2010).

Schmidt, K. & Nosek, B. A. Implicit (and explicit) racial attitudes barely changed during Barack Obama's presidential campaign and early presidency. J. Exp. Soc. Psychol. 46 , 308–314 (2010).

Evangelou, E., Siontis, K. C., Pfeiffer, T. & Ioannidis, J. P. Perceived information gain from randomized trials correlates with publication in high-impact factor journals. J. Clin. Epidemiol. 65 , 1274–1281 (2012).

Pereira, T. V. & Ioannidis, J. P. Statistically significant meta-analyses of clinical trials have modest credibility and inflated effects. J. Clin. Epidemiol. 64 , 1060–1069 (2011).

Faul, F., Erdfelder, E., Lang, A. G. & Buchner, A. G*Power 3: a flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav. Res. Methods 39 , 175–191 (2007).

Babbage, D. R. et al. Meta-analysis of facial affect recognition difficulties after traumatic brain injury. Neuropsychology 25 , 277–285 (2011).

Bai, H. Meta-analysis of 5, 10-methylenetetrahydrofolate reductase gene poymorphism as a risk factor for ischemic cerebrovascular disease in a Chinese Han population. Neural Regen. Res. 6 , 277–285 (2011).

Bjorkhem-Bergman, L., Asplund, A. B. & Lindh, J. D. Metformin for weight reduction in non-diabetic patients on antipsychotic drugs: a systematic review and meta-analysis. J. Psychopharmacol. 25 , 299–305 (2011).

Bucossi, S. et al. Copper in Alzheimer's disease: a meta-analysis of serum, plasma, and cerebrospinal fluid studies. J. Alzheimers Dis. 24 , 175–185 (2011).

Chamberlain, S. R. et al. Translational approaches to frontostriatal dysfunction in attention-deficit/hyperactivity disorder using a computerized neuropsychological battery. Biol. Psychiatry 69 , 1192–1203 (2011).

Chang, W. P., Arfken, C. L., Sangal, M. P. & Boutros, N. N. Probing the relative contribution of the first and second responses to sensory gating indices: a meta-analysis. Psychophysiology 48 , 980–992 (2011).

Chang, X. L. et al. Functional parkin promoter polymorphism in Parkinson's disease: new data and meta-analysis. J. Neurol. Sci. 302 , 68–71 (2011).