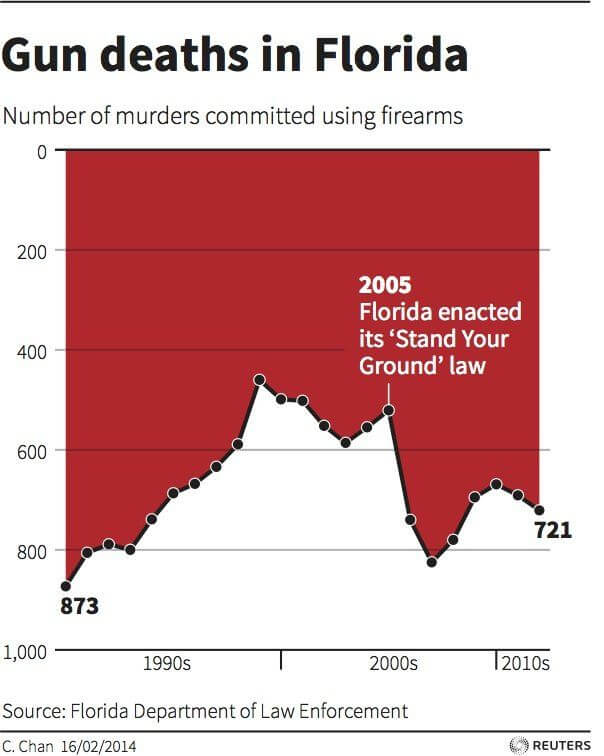

Bad Data Visualization: 5 Examples of Misleading Data

- 28 Jan 2021

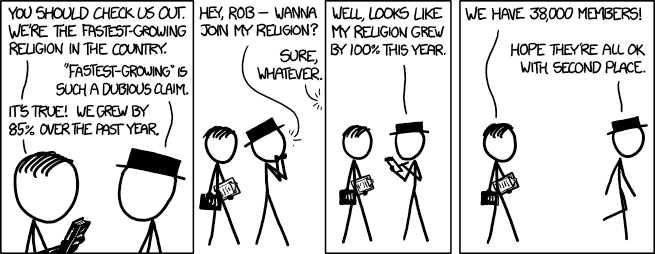

Data visualization, the process of creating visual representations of data, offers businesses various benefits. One of the most powerful is the ability to communicate data to a wider audience, both internally and externally. This, in turn, enables more stakeholders to make data-driven decisions.

In recent years, a proliferation of data visualization tools has made it easier than ever for professionals without a data background to create graphics and charts.

While this has primarily been a positive development, it’s likely given rise to one specific problem: poor or otherwise inaccurate data visualization. Just as well-crafted data visualizations benefit organizations that generate and use them, poorly crafted ones create several problems.


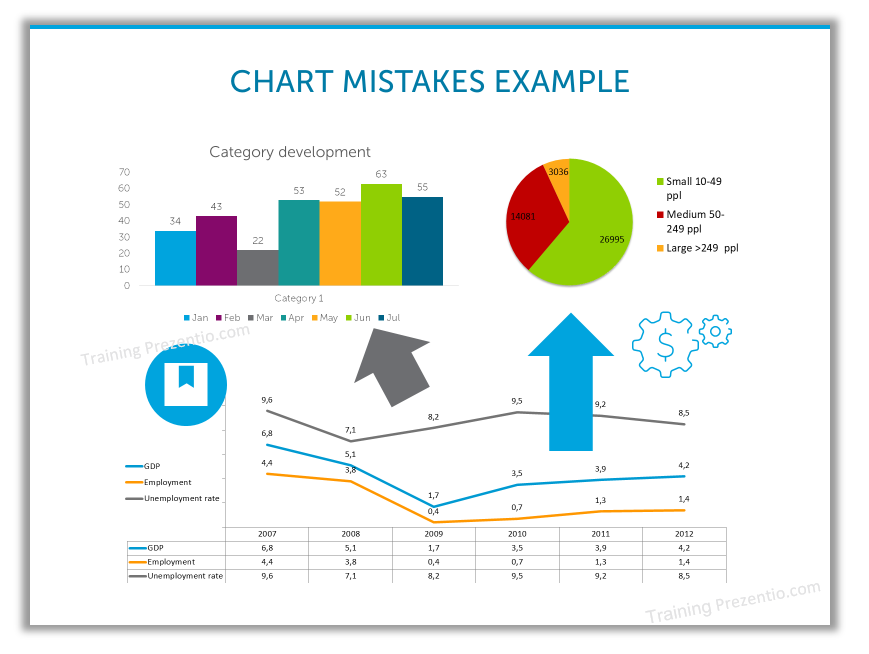

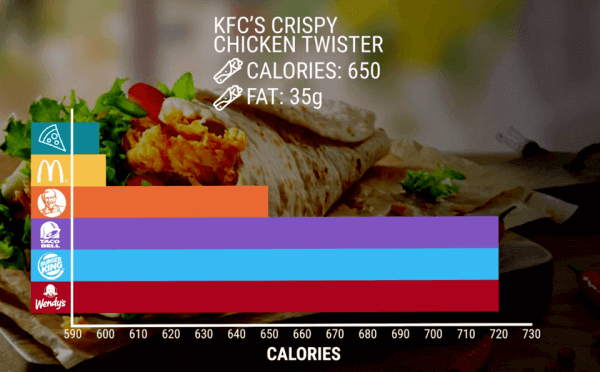

Data Visualization Mistakes to Avoid

When generating data visualizations, it can be easy to make mistakes that lead to faulty interpretation, especially if you’re just starting out. Below are five common mistakes you should be aware of and some examples that illustrate them.

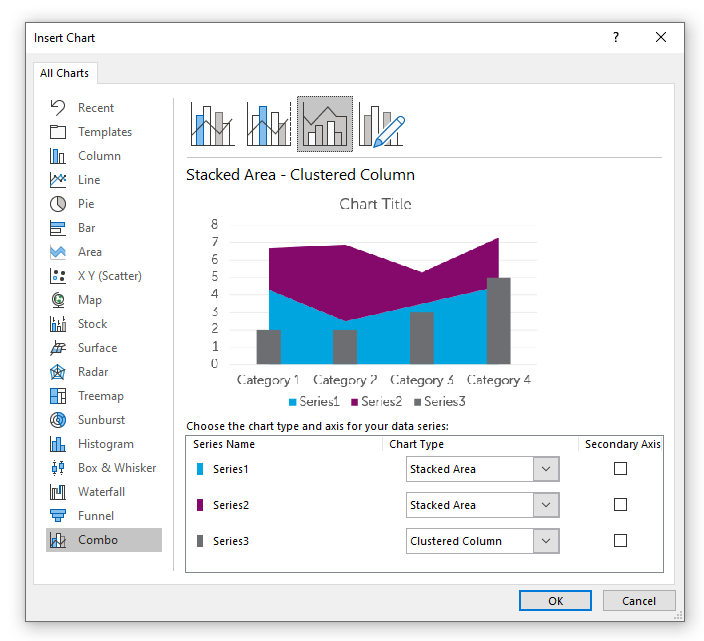

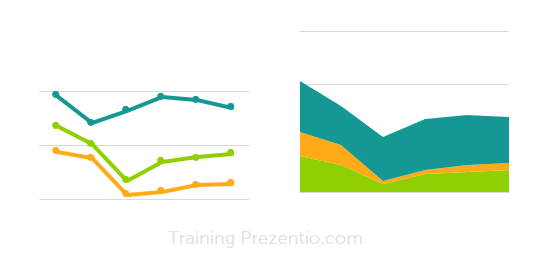

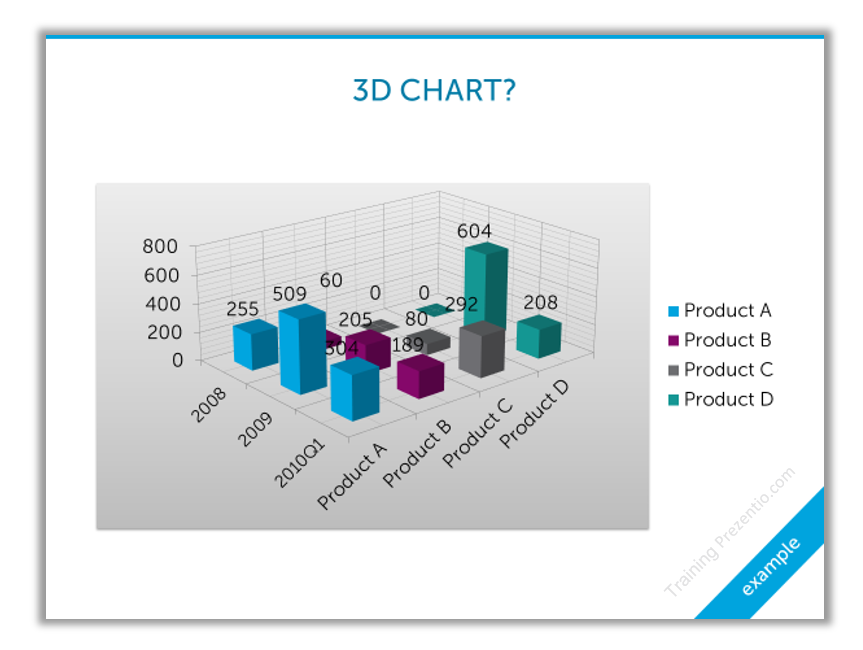

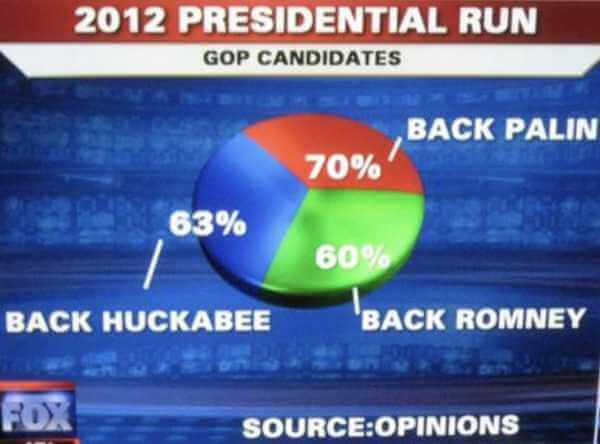

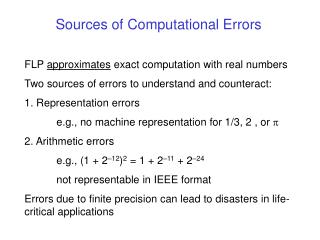

1. Using the Wrong Type of Chart or Graph

There are many types of charts or graphs you can leverage to represent data visually. This is largely beneficial because it allows you to include some variety in your data visualizations. It can, however, prove detrimental if you choose a graph that isn’t well suited to the insights you’re trying to illustrate.

Some graphs and charts work well for communicating specific types of information, but not others. Problems can arise when you try visualizing data using an unsuitable format.

The nature of your data usually dictates the format of your visualization. The most important characteristic is whether the data is qualitative (it describes or categorizes) or quantitative (it's measurable). Qualitative data tends to be better suited to bar graphs and pie charts, while quantitative data is best represented in formats like line graphs and histograms.

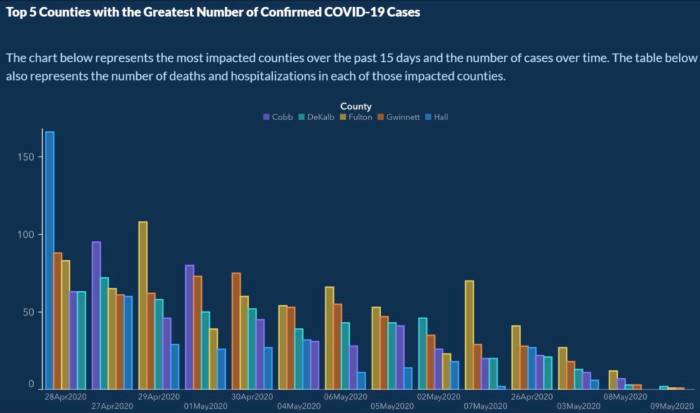

2. Including Too Many Variables

The point of generating a data visualization is to tell a story. As such, it’s your job to include as much relevant information as possible—while excluding irrelevant or unnecessary details. Doing so ensures your audience pays attention to the most important data.

For this reason, in conceptualizing your data visualization, you should first seek to identify the necessary variables. The number of variables you select will then inform your visualization’s format. Ask yourself: Which format will help communicate the data in the clearest manner possible?

A pie chart that compares too many variables, for example, will likely make it difficult to see the differences between values. It might also distract the viewer from the point you’re trying to make.
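One common way to cut down the number of slices is to merge the smallest ones into a single "Other" category. The sketch below illustrates that idea only; the function name `group_small_slices`, the 5% threshold, and the sample figures are all assumptions made for the example and are not taken from any dataset discussed here.

```python
def group_small_slices(values, min_share=0.05, other_label="Other"):
    """Merge every slice below min_share of the total into one 'Other' slice."""
    total = sum(values.values())
    grouped = {}
    other = 0
    for label, value in values.items():
        if value / total >= min_share:
            grouped[label] = value   # large enough to keep as its own slice
        else:
            other += value           # too small to read; fold into "Other"
    if other:
        grouped[other_label] = other
    return grouped


# Hypothetical category values, invented for illustration only.
shares = {"A": 40, "B": 30, "C": 20, "D": 4, "E": 3, "F": 2, "G": 1}
simplified = group_small_slices(shares)
print(simplified)
```

Whether grouping is appropriate depends on the story: if one of the small categories is the point of the chart, it should stay visible and a different format may serve better.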

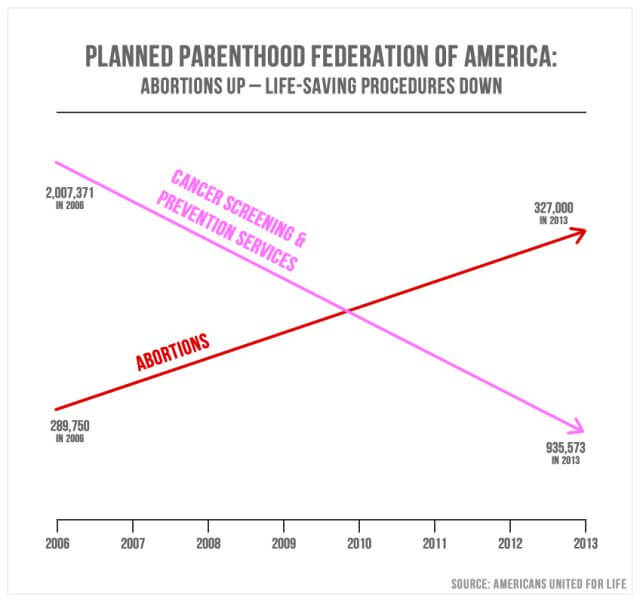

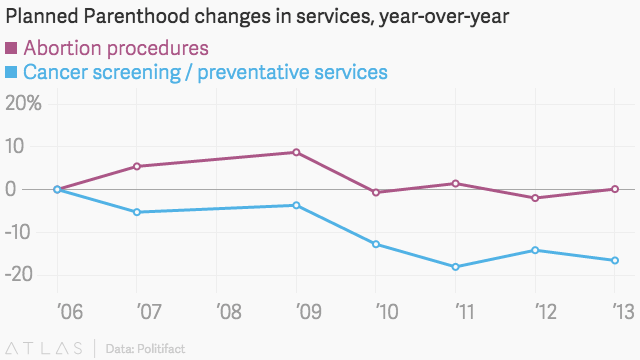

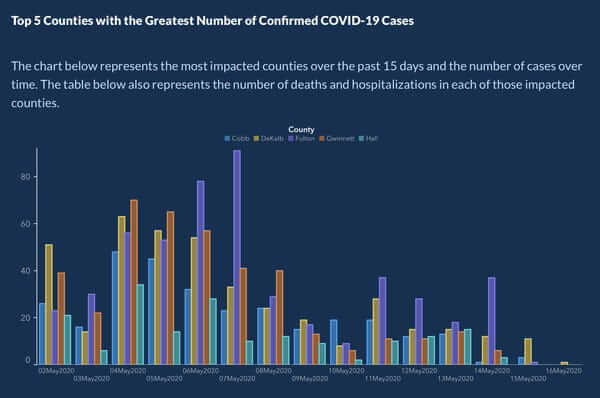

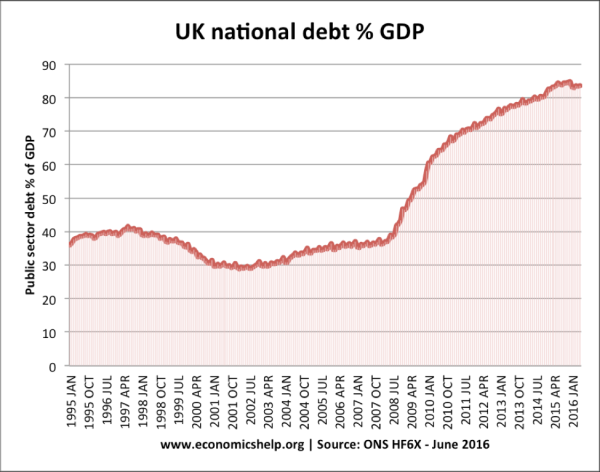

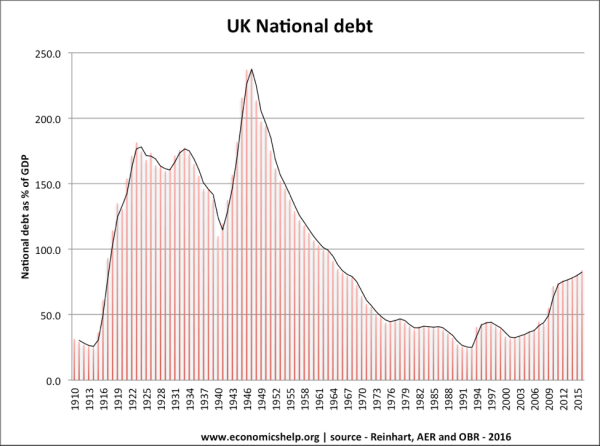

3. Using Inconsistent Scales

If your chart or graph is meant to show the difference between data points, your scale must remain consistent. If your visualization’s scale is inconsistent, it can cause significant confusion for the viewer.

For example, if you generate a pictogram that uses images to represent a measure of data within a bar graph, the images should remain the same size from column to column.

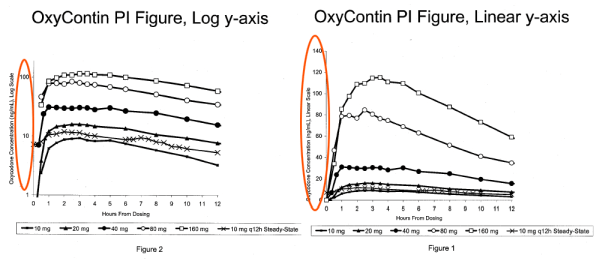

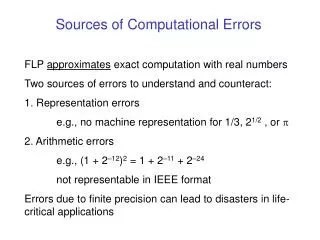

4. Unclear Linear vs. Logarithmic Scaling

The easiest way to understand the difference between a linear scale and a logarithmic one is to look at the axes that each is built on. On a linear scale, equal distances along an axis always represent equal differences in value. On a logarithmic scale, equal distances represent equal ratios: each step along the axis multiplies the value by a constant factor, such as 10.

While logarithmic scaling can be an effective means of communicating data, it must be clear that it’s being used in the graphic. When this is unclear, the viewer may, by default, assume they’re looking at a linear scale, which is more common. This can cause confusion and understate your data’s significance.

For example, the two graphics below communicate the same data. The primary difference is that the graphic on the left is built on a linear scale, while the one on the right is built on a logarithmic one.

This isn’t to say logarithmic scaling shouldn’t be used; simply that, when it’s used, it must be clearly stated and communicated to the viewer.
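To make the difference concrete, the following sketch computes where the same values would sit along an axis under each type of scale. The sample values and variable names are assumptions chosen for illustration; positions are expressed as a fraction of the axis length.

```python
import math

# Illustrative values, each ten times larger than the previous one.
values = [1, 10, 100, 1000, 10000]

# Linear scale: position is proportional to the value itself.
linear_positions = [v / max(values) for v in values]

# Logarithmic (base-10) scale: position is proportional to log10(value).
log_positions = [math.log10(v) / math.log10(max(values)) for v in values]

# Distance between neighbouring points on each scale.
linear_gaps = [b - a for a, b in zip(linear_positions, linear_positions[1:])]
log_gaps = [b - a for a, b in zip(log_positions, log_positions[1:])]

for v, lin, log in zip(values, linear_positions, log_positions):
    print(f"{v:>6}  linear={lin:.4f}  log={log:.2f}")
```

On the linear axis the first four values are crowded near zero, while on the logarithmic axis they are evenly spaced. A viewer who assumes a linear axis would read that even spacing as steady growth rather than tenfold jumps, which is why the scale needs to be labeled.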

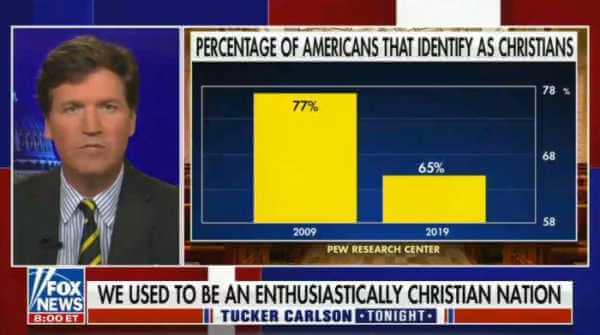

5. Poor Color Choices

Used carefully, color can make it easier for the viewer to understand the data you’re trying to communicate. When used incorrectly, however, it can cause significant confusion. It’s important to understand the story you’re hoping to tell with your data visualization and choose your colors wisely.

Some common issues that arise when incorporating color into your visualizations include:

- Using too many colors, making it difficult for the reader to quickly understand what they’re looking at

- Using familiar colors (for example, red and green) in surprising ways

- Using colors with little contrast

- Not accounting for viewers who may be colorblind

Consider a bar graph that’s meant to show changes in a technology’s adoption rate. Some of the bars indicate increases in adoption, while others indicate decreases. If you use red to represent increases and green to indicate decreases, it might confuse the viewer, who’s likely accustomed to red meaning negative and green meaning positive.

As another example, consider a US map chart that shows virus infection rates from state to state, with colors representing different concentrations of positive cases. Typically, map charts leverage different shades within the same color family. The lighter the shade, the fewer the cases in that state; the darker the shade, the more cases there are. If you go against this assumption and use a darker color to indicate fewer cases, it could confuse the viewer.
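The light-to-dark convention described above can be sketched as a simple mapping from a value to a shade within one color family. This is an illustrative sketch only: the function names, the HSL hue, the lightness range, and the per-state figures are assumptions, not data from any real map.

```python
def lightness_for(value, vmin, vmax, lightest=90.0, darkest=30.0):
    """Map a value to an HSL lightness: low values are light, high values dark."""
    if vmax == vmin:
        return (lightest + darkest) / 2
    fraction = (value - vmin) / (vmax - vmin)
    return lightest - fraction * (lightest - darkest)


def shade(value, vmin, vmax, hue=210):
    """Return a CSS-style HSL color string in a single color family."""
    return f"hsl({hue}, 70%, {lightness_for(value, vmin, vmax):.0f}%)"


# Hypothetical case rates per 100,000 residents, invented for illustration.
cases_per_100k = {"State A": 120, "State B": 480, "State C": 300}
low, high = min(cases_per_100k.values()), max(cases_per_100k.values())
colors = {state: shade(rate, low, high) for state, rate in cases_per_100k.items()}
print(colors)
```

Varying only lightness within one hue keeps the ordering readable for many colorblind viewers as well, since it does not rely on telling two hues apart.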

The Impact of Poor Data Visualization

While an inaccurate chart may seem like a small error in the grand scheme of your organization, it can have profound repercussions.

By their nature, inaccurate data visualizations lead your audience to have an inaccurate understanding of the data that’s presented in them. This misunderstanding can lead to faulty insights and poor business decisions—all under the guise that they’re backed by data.

Taken to the extreme, a chart or graph that’s improperly formatted could lead to legal or regulatory issues. For example, a misleading data visualization included in a financial report could cause investors to buy or sell shares of company stock.

For these reasons, a firm understanding of data science is an essential skill for professionals. Knowing when data is accurate and complete, and being able to identify discrepancies between numbers and any visualizations created from them, is a must-have in today’s business environment. An online course centered around business analytics can be an effective way to build these skills.



Understanding Data Presentations (Guide + Examples)

In this age of overwhelming information, the ability to convey data effectively has become extremely valuable. Choosing a data presentation type starts with the nature of your data and the message you aim to convey, since different types of visualizations serve distinct purposes. Whether you’re developing a report or simply trying to communicate complex information, how you present data influences how well your audience understands and engages with it. This guide walks you through the different ways of presenting data.

What is a Data Presentation?

A data presentation is a slide deck that aims to disclose quantitative information to an audience through the use of visual formats and narrative techniques derived from data analysis, making complex data understandable and actionable. This process requires a series of tools, such as charts, graphs, tables, infographics, dashboards, and so on, supported by concise textual explanations to improve understanding and boost retention rate.

Data presentations require us to organize data in a format that allows the presenter to highlight trends, patterns, and insights so that the audience can act upon the shared information. In short, the goal of data presentations is to enable viewers to grasp complicated concepts or trends quickly, facilitating informed decision-making or deeper analysis.

Data presentations go beyond the mere usage of graphical elements. Seasoned presenters combine visuals with the art of data storytelling, so the speech connects the points through a narrative that resonates with the audience. The purpose of the presentation (to inspire, persuade, inform, support decision-making, etc.) determines which data presentation format is best suited to it.

To nail your upcoming data presentation, make sure to include the following elements:

- Clear Objectives: Understand the intent of your presentation before selecting the graphical layout and metaphors to make content easier to grasp.

- Engaging introduction: Use a powerful hook from the get-go. For instance, you can ask a big question or present a problem that your data will answer. Take a look at our guide on how to start a presentation for tips & insights.

- Structured Narrative: Your data presentation must tell a coherent story. This means a beginning where you present the context, a middle section in which you present the data, and an ending that uses a call-to-action. Check our guide on presentation structure for further information.

- Visual Elements: These are the charts, graphs, and other elements of visual communication we ought to use to present data. This article will cover one by one the different types of data representation methods we can use, and provide further guidance on choosing between them.

- Insights and Analysis: This is not just showcasing a graph and letting people get an idea about it. A proper data presentation includes the interpretation of that data, the reason why it’s included, and why it matters to your research.

- Conclusion & CTA: Ending your presentation with a call to action is necessary. Whether you intend to wow your audience into acquiring your services, inspire them to change the world, or pursue any other purpose, there must be a stage in which you summarize all that you shared and show the path to staying in touch. Plan ahead whether you want to use a thank-you slide, a video presentation, or another method tailored to the kind of presentation you deliver.

- Q&A Session: After your speech is concluded, allocate 3-5 minutes for the audience to raise any questions about the information you disclosed. This is an extra chance to establish your authority on the topic. Check our guide on questions and answer sessions in presentations here.

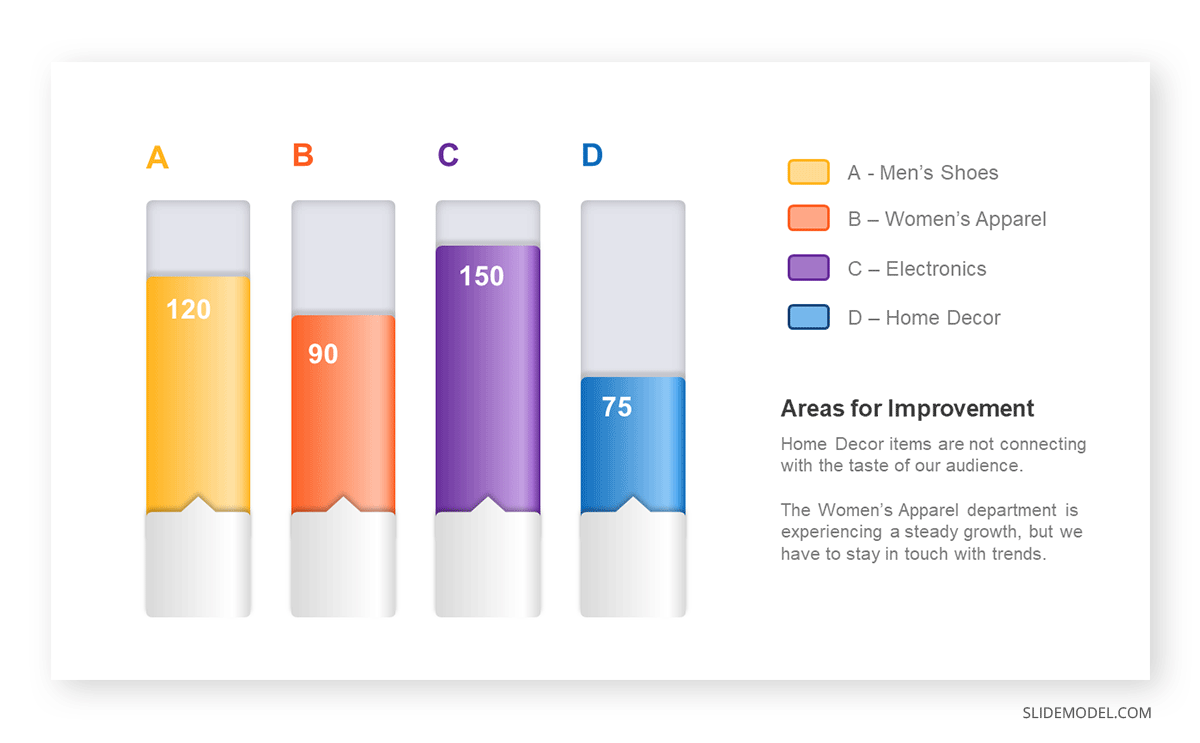

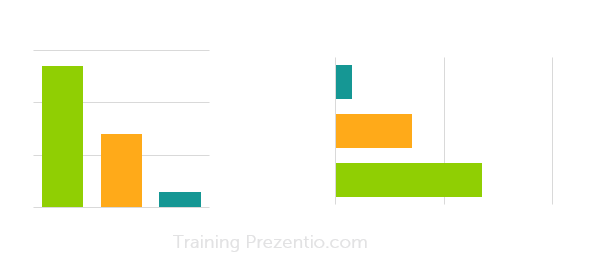

Bar charts are a graphical representation of data using rectangular bars to show quantities or frequencies in an established category. They make it easy for readers to spot patterns or trends. Bar charts can be horizontal or vertical, although the vertical format is commonly known as a column chart. They display categorical, discrete, or continuous variables grouped in class intervals [1]. They consist of an axis and a set of labeled bars arranged horizontally or vertically. These bars represent the frequencies of variable values or the values themselves. The numbers on the y-axis of a vertical bar chart or the x-axis of a horizontal bar chart are called the scale.

Real-Life Application of Bar Charts

Let’s say a sales manager is presenting sales figures to an audience. To build a bar chart, the manager follows these steps.

Step 1: Selecting Data

The first step is to identify the specific data you will present to your audience.

The sales manager has highlighted these products for the presentation.

- Product A: Men’s Shoes

- Product B: Women’s Apparel

- Product C: Electronics

- Product D: Home Decor

Step 2: Choosing Orientation

Opt for a vertical layout for simplicity. Vertical bar charts help compare different categories when there are not too many of them [1]. They can also help show different trends. Here, a vertical bar chart is used in which each bar represents one of the four chosen products. Once the data is plotted, the height of each bar directly represents the sales performance of the respective product.

The tallest bar (Electronics – Product C) shows the highest sales. However, the shorter bars (Women’s Apparel – Product B and Home Decor – Product D) need attention: they indicate areas that require further analysis or strategies for improvement.

Step 3: Colorful Insights

Different colors are used to differentiate each product. It is essential to show a color-coded chart where the audience can distinguish between products.

- Men’s Shoes (Product A): Yellow

- Women’s Apparel (Product B): Orange

- Electronics (Product C): Violet

- Home Decor (Product D): Blue

Bar charts are straightforward and easily understandable for presenting data. They are versatile when comparing products or any categorical data [2]. Bar charts adapt seamlessly to retail scenarios. Despite that, bar charts have a few shortcomings. They are less effective than line graphs at illustrating data trends over time. Besides, overloading the chart with numerous products can lead to visual clutter, diminishing its effectiveness.

For more information, check our collection of bar chart templates for PowerPoint.

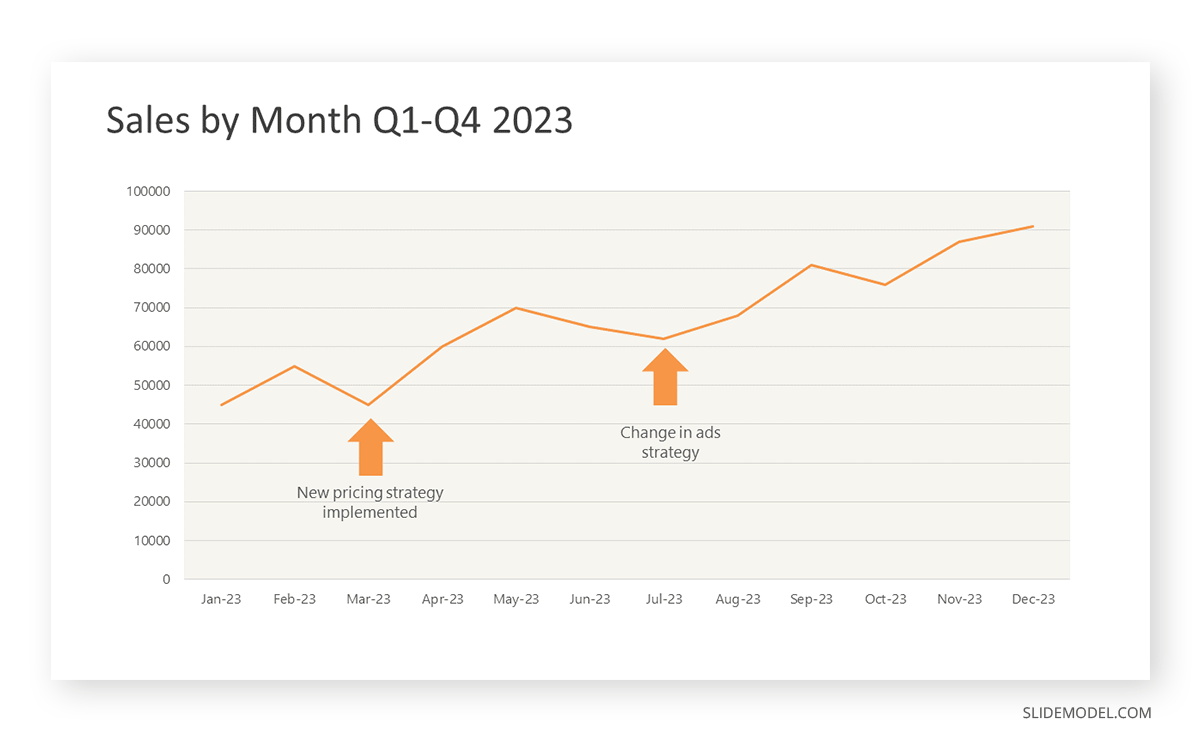

Line graphs help illustrate data trends, progressions, or fluctuations by connecting a series of data points called ‘markers’ with straight line segments. This provides a straightforward representation of how values change [5]. Their versatility makes them invaluable for scenarios requiring a visual understanding of continuous data. Plotting multiple lines on the same graph also lets us compare more than one dataset over the same timeline. Line graphs simplify complex information so the audience can quickly grasp the ups and downs of values. From tracking stock prices to analyzing experimental results, you can use line graphs to show how data changes over a continuous timeline.

Real-life Application of Line Graphs

To understand line graphs thoroughly, we will use a real case. Imagine you’re a financial analyst presenting a tech company’s monthly sales for a licensed product over the past year. Investors want insights into sales behavior by month, how market trends may have influenced sales performance, and how the new pricing strategy was received. To present data via a line graph, you will complete these steps.

Step 1: Gathering the Data

First, you need to gather the data. In this case, your data will be the sales numbers. For example:

- January: $45,000

- February: $55,000

- March: $45,000

- April: $60,000

- May: $70,000

- June: $65,000

- July: $62,000

- August: $68,000

- September: $81,000

- October: $76,000

- November: $87,000

- December: $91,000

Step 2: Choosing Orientation

After choosing the data, the next step is to select the orientation. Like bar charts, line graphs can be drawn vertically or horizontally. To keep this simple, we will place the timeline on the horizontal x-axis and the sales numbers on the vertical y-axis.

Step 3: Connecting Trends

After adding the data to your preferred software, you will plot a line graph. In the graph, each month’s sales are represented by data points connected by a line.
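Before plotting, it can help to work out what the connecting line segments will show. The sketch below uses the monthly sales figures listed in this example and computes the month-over-month change that each segment represents; the variable names are illustrative and no plotting library is assumed.

```python
# Monthly sales figures from the example, in dollars.
monthly_sales = {
    "January": 45_000, "February": 55_000, "March": 45_000,
    "April": 60_000, "May": 70_000, "June": 65_000,
    "July": 62_000, "August": 68_000, "September": 81_000,
    "October": 76_000, "November": 87_000, "December": 91_000,
}

months = list(monthly_sales)

# Each line segment connects one month to the next; its rise or fall
# is the difference between the two data points.
changes = {
    months[i]: monthly_sales[months[i]] - monthly_sales[months[i - 1]]
    for i in range(1, len(months))
}

peak_month = max(monthly_sales, key=monthly_sales.get)
largest_rise = max(changes, key=changes.get)

for month, change in changes.items():
    print(f"{month:<10} {change:+,}")
print("Peak month:", peak_month)
print("Largest month-over-month rise:", largest_rise)
```

Segments with a positive change slope upward on the graph and negative ones slope downward, so this table is a quick check that the plotted line matches the underlying numbers.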

Step 4: Adding Clarity with Color

If there are multiple lines, you can also add colors to highlight each one, making it easier to follow.

Line graphs excel at visually presenting trends over time. They help identify patterns, like upward or downward trends. However, too many data points can clutter the graph, making it harder to interpret. Line graphs work best with continuous data and are not suitable for unordered categories.

For more information, check our collection of line chart templates for PowerPoint and our article about how to make a presentation graph.

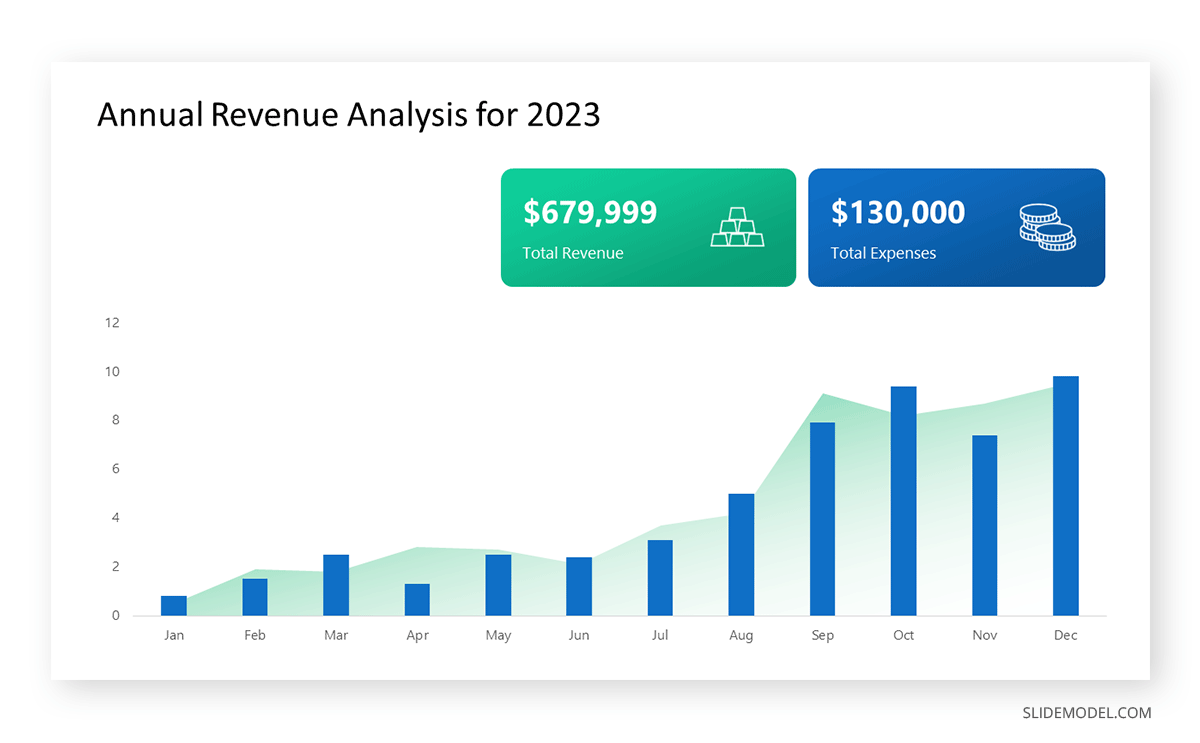

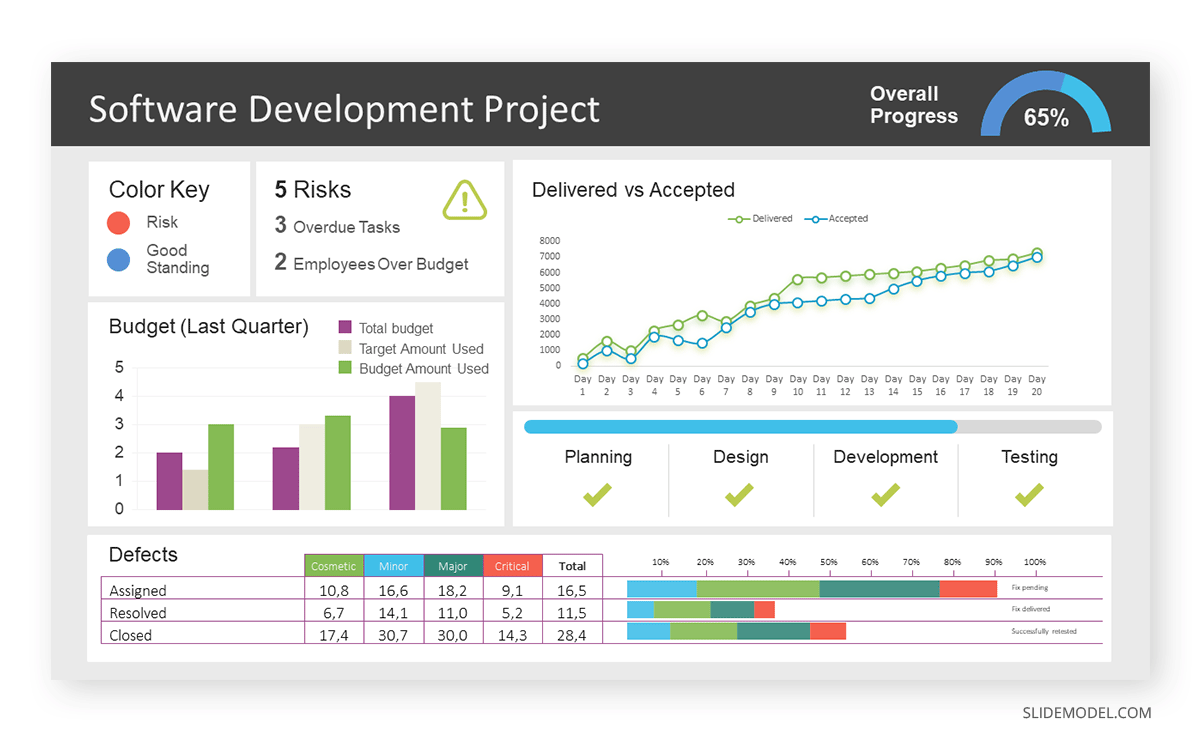

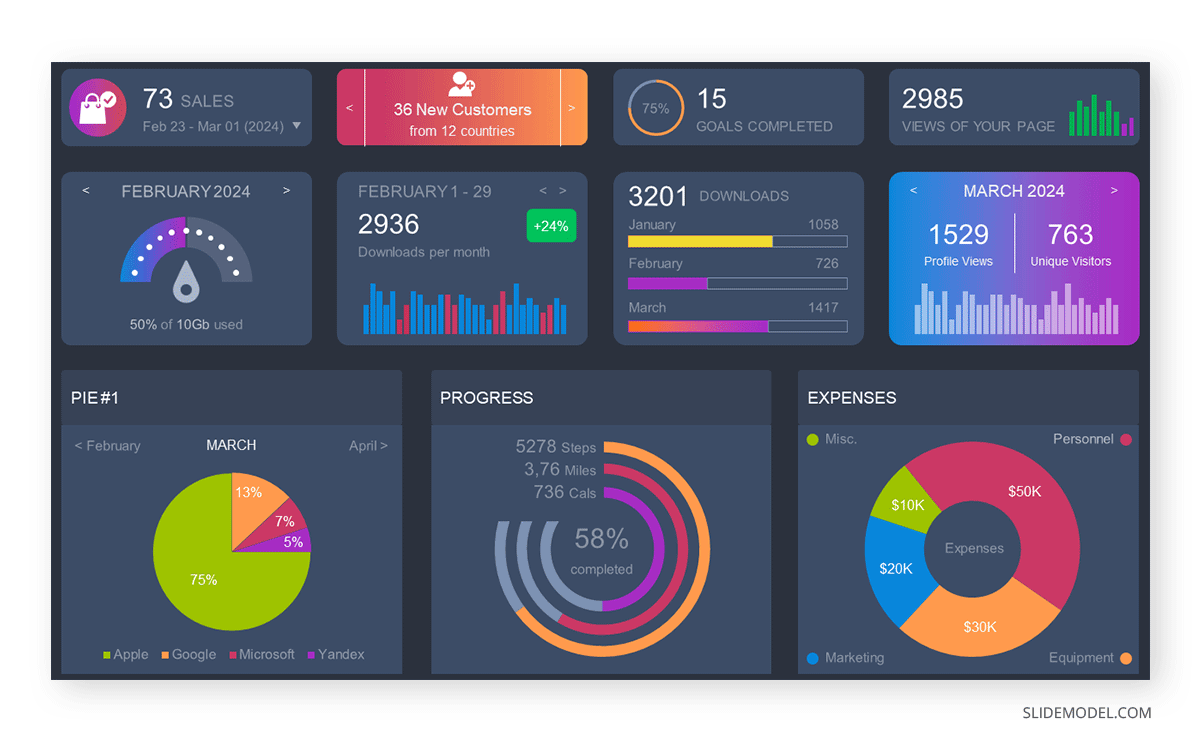

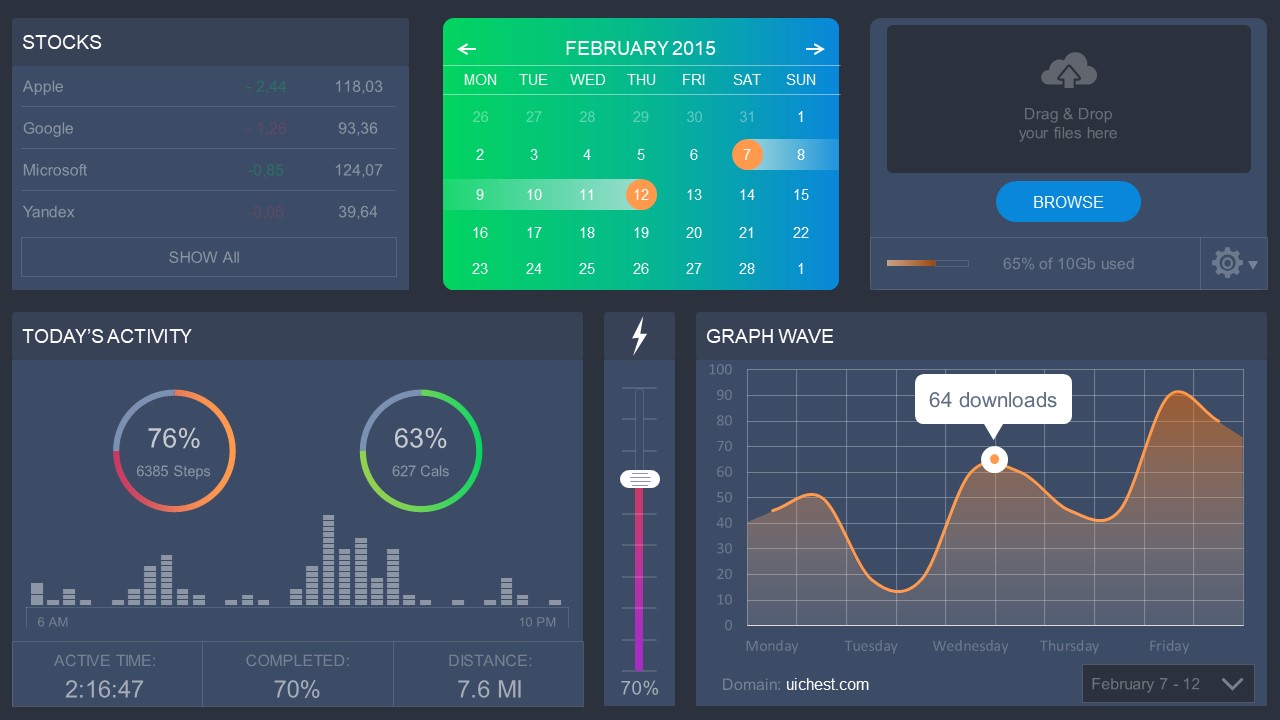

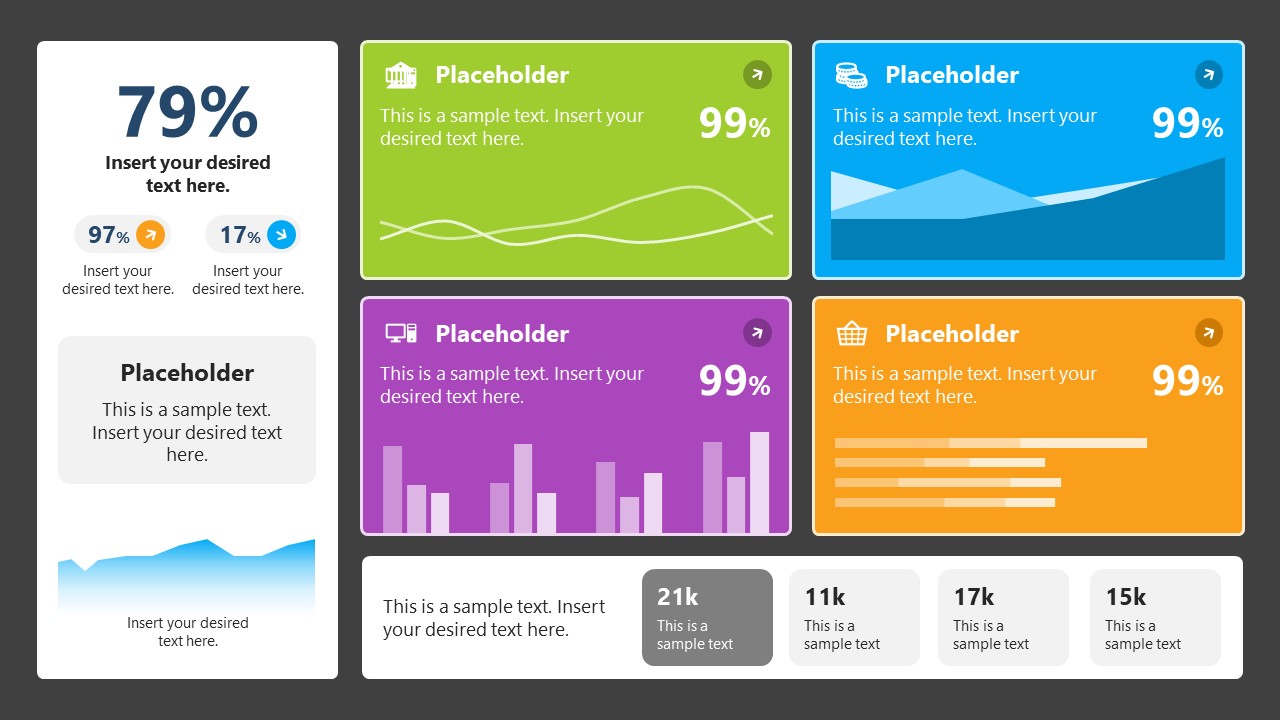

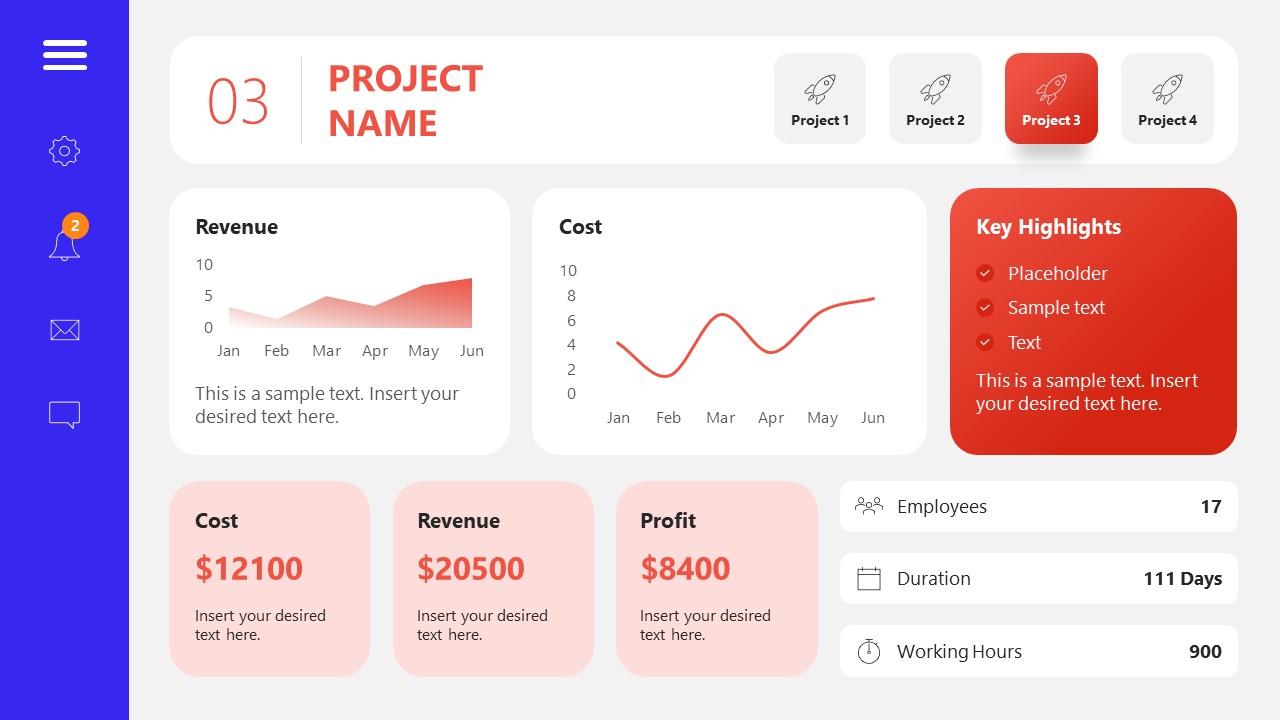

A data dashboard is a visual tool for analyzing information. Different graphs, charts, and tables are consolidated in a layout to showcase the information required to achieve one or more objectives. Dashboards help viewers quickly see Key Performance Indicators (KPIs). You don’t make new visuals in the dashboard; instead, you use it to display visuals you’ve already made in worksheets [3].

Keeping the number of visuals on a dashboard to three or four is recommended, since adding too many can make it hard to see the main points [4]. Dashboards can be used in business analytics to analyze sales, revenue, and marketing metrics at the same time. They are also used in the manufacturing industry, as they allow users to grasp the entire production scenario at a given moment while tracking the core KPIs for each line.

Real-Life Application of a Dashboard

Consider a project manager presenting a software development project’s progress to a tech company’s leadership team. The manager follows these steps.

Step 1: Defining Key Metrics

To effectively communicate the project’s status, identify key metrics such as completion status, budget, and bug resolution rates. Then, choose measurable metrics aligned with project objectives.

Step 2: Choosing Visualization Widgets

After finalizing the data, presentation aids that align with each metric are selected. For this project, the project manager chooses a progress bar for the completion status and uses bar charts for budget allocation. Likewise, he implements line charts for bug resolution rates.

Step 3: Dashboard Layout

Key metrics are prominently placed in the dashboard for easy visibility, and the manager ensures that it appears clean and organized.
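The three steps above can be sketched as a small layout specification that pairs each metric with its widget. This is a hypothetical structure for planning purposes, not the format of any particular dashboard tool; the `Widget` class, `MAX_VISUALS`, and `fits_recommendation` are names assumed for the example, and the limit of four reflects the three-to-four visuals recommendation mentioned earlier.

```python
from dataclasses import dataclass


@dataclass
class Widget:
    metric: str       # what is being tracked
    chart_type: str   # the presentation aid chosen for it


# Metric-to-widget choices made by the project manager in the example.
dashboard = [
    Widget("Completion status", "progress bar"),
    Widget("Budget allocation", "bar chart"),
    Widget("Bug resolution rate", "line chart"),
]

MAX_VISUALS = 4  # assumed upper bound, based on the three-to-four guideline


def fits_recommendation(widgets, max_visuals=MAX_VISUALS):
    """Return True when the dashboard stays within the recommended size."""
    return len(widgets) <= max_visuals


for widget in dashboard:
    print(f"{widget.metric}: {widget.chart_type}")
print("Within recommended size:", fits_recommendation(dashboard))
```

Writing the layout down this way before opening a tool makes it easier to notice when a dashboard is starting to accumulate more visuals than its audience can absorb.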

Dashboards provide a comprehensive view of key project metrics. Users can interact with data, customize views, and drill down for detailed analysis. However, creating an effective dashboard requires careful planning to avoid clutter. Besides, dashboards rely on the availability and accuracy of underlying data sources.

For more information, check our article on how to design a dashboard presentation, and discover our collection of dashboard PowerPoint templates.

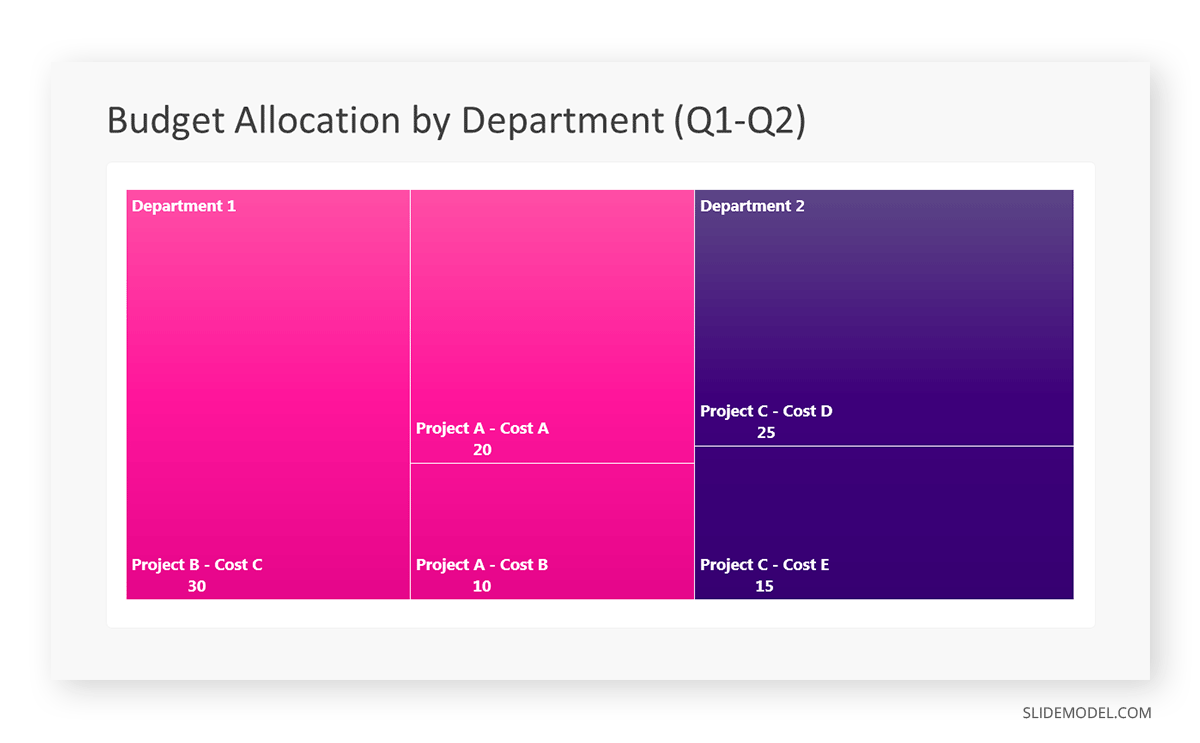

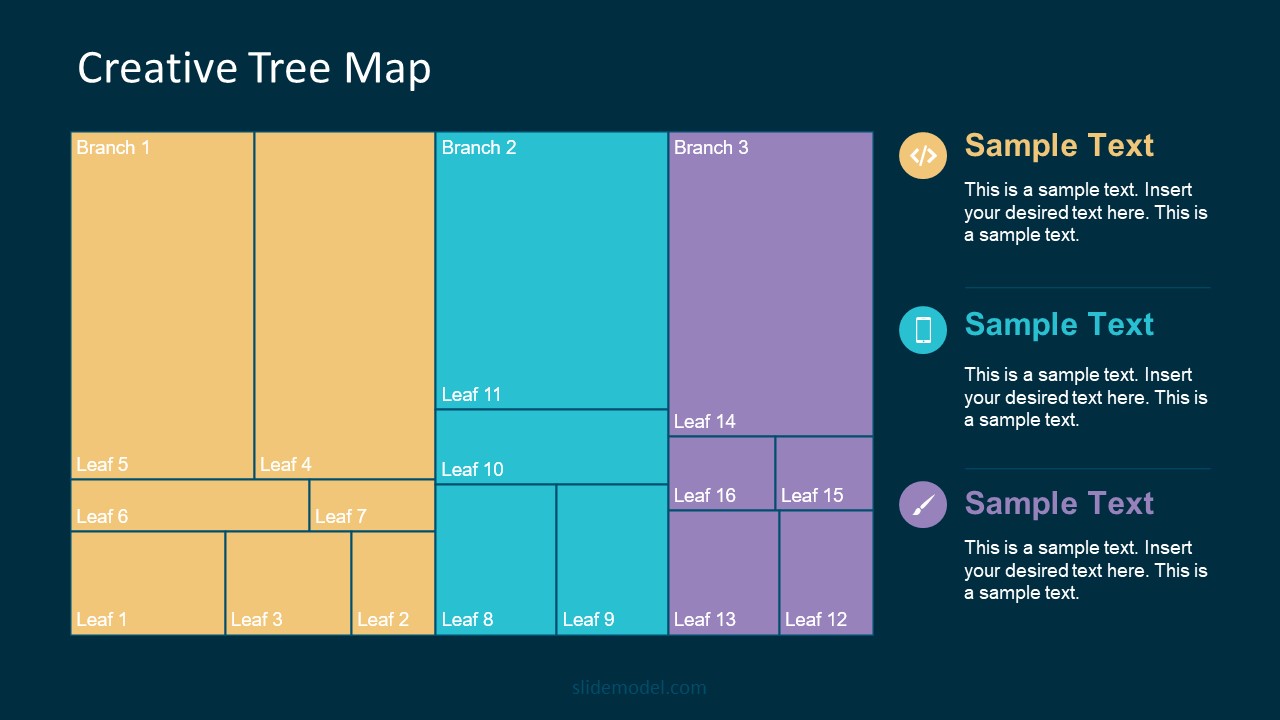

Treemap charts represent hierarchical data structured in a series of nested rectangles [6]. As each branch of the ‘tree’ is given a rectangle, smaller tiles can be seen representing sub-branches, meaning elements on a lower hierarchical level than the parent rectangle. Each rectangle has an area proportional to the specified data dimension.

Treemaps are useful for visualizing large datasets in compact space. It is easy to identify patterns, such as which categories are dominant. Common applications of the treemap chart are seen in the IT industry, such as resource allocation, disk space management, website analytics, etc. Also, they can be used in multiple industries like healthcare data analysis, market share across different product categories, or even in finance to visualize portfolios.

Real-Life Application of a Treemap Chart

Let’s consider a financial scenario where a financial team wants to represent the budget allocation of a company. There is a hierarchy in the process, so it is helpful to use a treemap chart. In the chart, the top-level rectangle could represent the total budget, and it would be subdivided into smaller rectangles, each denoting a specific department. Further subdivisions within these smaller rectangles might represent individual projects or cost categories.

Step 1: Define Your Data Hierarchy

While presenting data on the budget allocation, start by outlining the hierarchical structure. The sequence will be like the overall budget at the top, followed by departments, projects within each department, and finally, individual cost categories for each project.

- Top-level rectangle: Total Budget

- Second-level rectangles: Departments (Engineering, Marketing, Sales)

- Third-level rectangles: Projects within each department

- Fourth-level rectangles: Cost categories for each project (Personnel, Marketing Expenses, Equipment)
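The hierarchy outlined above maps naturally onto a nested structure in which each rectangle’s area is proportional to the total of everything beneath it. The sketch below shows that calculation only. All project names and dollar amounts are hypothetical placeholders invented for illustration; only the department and cost-category names come from the example.

```python
# Hypothetical budget hierarchy: department -> project -> cost category.
# Every amount below is a placeholder, not real data.
budget = {
    "Engineering": {
        "Project Alpha": {"Personnel": 300_000, "Equipment": 100_000},
    },
    "Marketing": {
        "Campaign Beta": {"Personnel": 100_000, "Marketing Expenses": 100_000},
    },
    "Sales": {
        "Project Gamma": {"Personnel": 150_000, "Equipment": 50_000},
    },
}


def total(node):
    """Sum all leaf amounts beneath a node of the hierarchy."""
    if isinstance(node, dict):
        return sum(total(child) for child in node.values())
    return node


def area_shares(node):
    """Share of the parent rectangle that each child rectangle occupies."""
    node_total = total(node)
    return {name: total(child) / node_total for name, child in node.items()}


print("Total budget:", total(budget))
print("Department shares:", area_shares(budget))
print("Engineering breakdown:", area_shares(budget["Engineering"]["Project Alpha"]))
```

Applying `area_shares` at each level gives the proportions a treemap tool would use to size the nested rectangles.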

Step 2: Choose a Suitable Tool

It’s time to select a data visualization tool supporting Treemaps. Popular choices include Tableau, Microsoft Power BI, PowerPoint, or even coding with libraries like D3.js. It is vital to ensure that the chosen tool provides customization options for colors, labels, and hierarchical structures.

Here, the team uses PowerPoint because of its user-friendly interface and Treemap capabilities.

Step 3: Make a Treemap Chart with PowerPoint

After opening the PowerPoint presentation, the team chooses “SmartArt” to form the chart. The SmartArt Graphic window has a “Hierarchy” category on the left, which offers multiple layouts. Any layout that resembles a Treemap will work; the “Table Hierarchy” or “Organization Chart” options can be adapted. The team selects the Table Hierarchy, as it looks closest to a Treemap.

Step 4: Input Your Data

A new window then opens with a basic structure. The team adds the data one item at a time by clicking on the text boxes, starting with the top-level rectangle, which represents the total budget.

Step 5: Customize the Treemap

By clicking on each shape, the team customizes its color, size, and label. They can also adjust the font size, style, and color of labels by using the options in the “Format” tab in PowerPoint. Using different colors for each level enhances the visual difference.

Treemaps excel at illustrating hierarchical structures. These charts make it easy to understand relationships and dependencies. They efficiently use space, compactly displaying a large amount of data, reducing the need for excessive scrolling or navigation. Additionally, using colors enhances the understanding of data by representing different variables or categories.

In some cases, treemaps can become complex, especially with deep hierarchies, which makes the chart challenging for some users to interpret. Displaying detailed information within each rectangle may also be constrained by space, which limits the amount of data that can be shown clearly. Without proper labeling and color coding, there’s a risk of misinterpretation.

A heatmap is a data visualization tool that uses color coding to represent values across a two-dimensional surface. In these, colors replace numbers to indicate the magnitude of each cell. This color-shaded matrix display is valuable for summarizing and understanding data sets at a glance [7]. The intensity of the color corresponds to the value it represents, making it easy to identify patterns, trends, and variations in the data.

As a tool, heatmaps help businesses analyze website interactions, revealing user behavior patterns and preferences to enhance overall user experience. In addition, companies use heatmaps to assess content engagement, identifying popular sections and areas of improvement for more effective communication. They excel at highlighting patterns and trends in large datasets, making it easy to identify areas of interest.

We can implement heatmaps to express multiple data types, such as numerical values, percentages, or even categorical data. Heatmaps help us easily spot areas with lots of activity, making them helpful in identifying clusters [8]. When making these maps, it is important to pick colors carefully. The colors need to show the differences between groups or levels clearly, and it is good practice to use colors that people with colorblindness can easily distinguish.
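The core of a heatmap is the step that turns each cell’s value into a color intensity. The following sketch shows one simple way to do that, using text characters in place of colors so it runs without any charting library. The grid of click counts is hypothetical, and the names `SHADES`, `normalize`, and `render` are assumptions made for this example.

```python
SHADES = " .:*#"  # stand-ins for color intensity, from lightest to darkest


def normalize(grid):
    """Rescale every cell to the 0-1 range based on the grid's min and max."""
    flat = [value for row in grid for value in row]
    low, high = min(flat), max(flat)
    span = high - low
    return [[(value - low) / span if span else 0.0 for value in row] for row in grid]


def render(grid):
    """Draw the grid with one shade character per cell."""
    lines = []
    for row in normalize(grid):
        cells = (SHADES[min(int(x * len(SHADES)), len(SHADES) - 1)] for x in row)
        lines.append("".join(cells))
    return "\n".join(lines)


# Hypothetical click counts for a 3x3 page layout, invented for illustration.
clicks = [
    [0, 5, 10],
    [20, 40, 20],
    [10, 5, 0],
]
print(render(clicks))
```

In a real heatmap the shade characters would be replaced by a color scale, ideally one that varies in lightness so that the ordering remains visible to colorblind viewers.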

Check our detailed guide on how to create a heatmap here. Also discover our collection of heatmap PowerPoint templates.

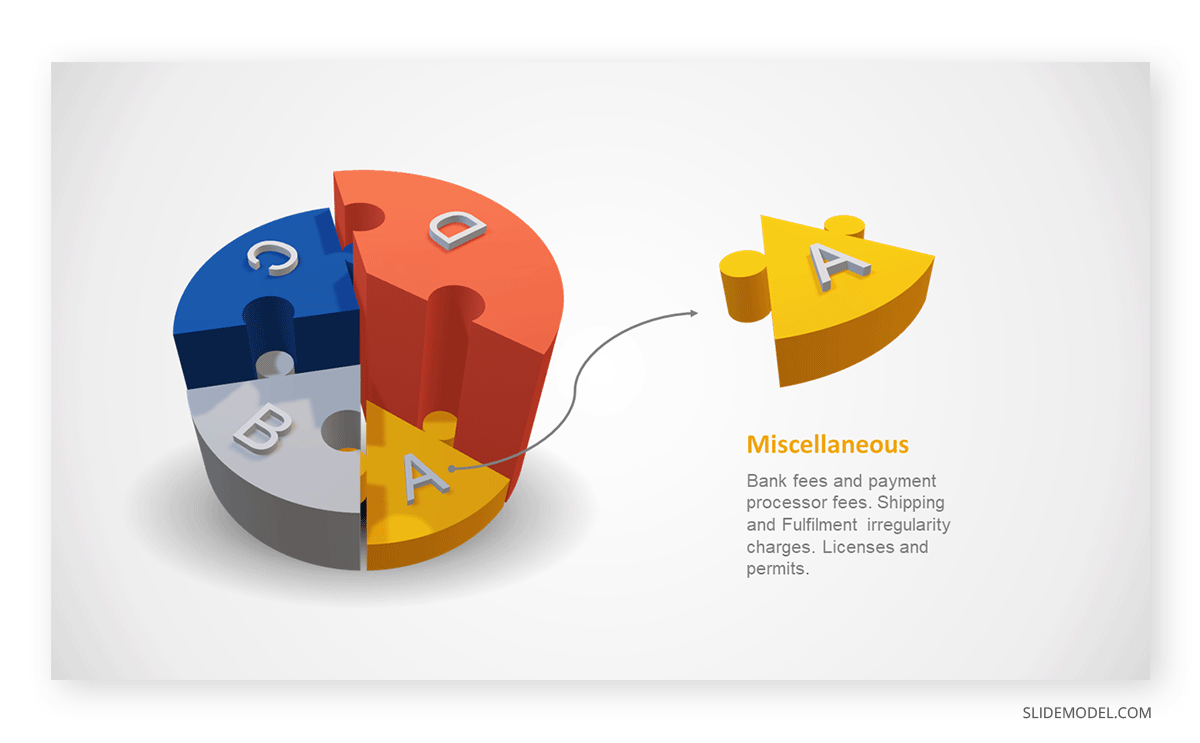

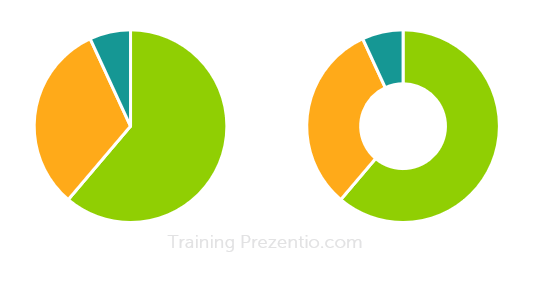

Pie charts are circular statistical graphics divided into slices to illustrate numerical proportions. Each slice represents a proportionate part of the whole, making it easy to visualize the contribution of each component to the total.

When several pie charts are shown together, the size of each pie is influenced by the values of the data points within it: the total of all data points in a pie determines its size. The pie with the highest total appears as the largest, whereas the others are proportionally smaller. However, you can present all pies at the same size if proportional representation is not required [9]. Sometimes pie charts are difficult to read, or additional information is required. In those cases, a variation known as the donut chart can be used instead; it has the same structure but a blank center, creating a ring shape. Presenters can add extra information in the center, and the ring shape helps to declutter the graph.

Pie charts are used in business to show percentage distribution, compare relative sizes of categories, or present straightforward data sets where visualizing ratios is essential.

Real-Life Application of Pie Charts

Consider a scenario where you want to represent how a total is distributed across categories. Each slice of the pie chart represents a different category, and the size of each slice indicates the percentage of the total allocated to that category.

Step 1: Define Your Data Structure

Imagine you are presenting the distribution of a project budget among different expense categories.

- Column A: Expense Categories (Personnel, Equipment, Marketing, Miscellaneous)

- Column B: Budget Amounts ($40,000, $30,000, $20,000, $10,000)

Column B represents the values of your categories in Column A.

Step 2: Insert a Pie Chart

Using any of the accessible tools, you can create a pie chart. The most convenient tools for forming a pie chart in a presentation are presentation tools such as PowerPoint or Google Slides. You will notice that the pie chart assigns each expense category a percentage of the total budget by dividing its amount by the total budget.

For instance:

- Personnel: $40,000 / ($40,000 + $30,000 + $20,000 + $10,000) = 40%

- Equipment: $30,000 / ($40,000 + $30,000 + $20,000 + $10,000) = 30%

- Marketing: $20,000 / ($40,000 + $30,000 + $20,000 + $10,000) = 20%

- Miscellaneous: $10,000 / ($40,000 + $30,000 + $20,000 + $10,000) = 10%
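The same arithmetic can be written as a short script. It uses the budget amounts from this example and also derives each slice’s angle, on the basis that a full pie spans 360 degrees; the variable names are illustrative.

```python
# Budget amounts from the example, in dollars.
budget = {
    "Personnel": 40_000,
    "Equipment": 30_000,
    "Marketing": 20_000,
    "Miscellaneous": 10_000,
}

total = sum(budget.values())

# Each category's share of the whole, as a percentage.
percentages = {name: amount * 100 / total for name, amount in budget.items()}

# The angle of each slice: the same share applied to a 360-degree circle.
angles = {name: amount * 360 / total for name, amount in budget.items()}

for name in budget:
    print(f"{name:<14} {percentages[name]:>5.1f}%  {angles[name]:>6.1f} degrees")
```

Checking that the percentages add up to 100 is a quick safeguard against a category being left out or counted twice before the chart is drawn.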

You can build a table from these figures first, or generate the pie chart directly from the data.

3D pie charts and 3D donut charts are quite popular with audiences. They stand out as visual elements in any presentation slide, so let’s take a look at how our pie chart example would look in 3D pie chart format.

Step 3: Results Interpretation

The pie chart visually illustrates the distribution of the project budget among different expense categories. Personnel constitutes the largest portion at 40%, followed by equipment at 30%, marketing at 20%, and miscellaneous at 10%. This breakdown provides a clear overview of where the project funds are allocated, which helps in informed decision-making and resource management. It is evident that personnel is a significant investment, which underlines its importance in the overall project budget.

Pie charts provide a straightforward way to represent proportions and percentages. They are easy to understand, even for individuals with limited data analysis experience. These charts work well for small datasets with a limited number of categories.

However, a pie chart can become cluttered and less effective in situations with many categories. Accurate interpretation may be challenging, especially when dealing with slight differences in slice sizes. In addition, these charts are static and do not effectively convey trends over time.

For more information, check our collection of pie chart templates for PowerPoint.

Histograms present the distribution of numerical variables. Unlike a bar chart that records each unique response separately, histograms organize numeric responses into bins and show the frequency of responses within each bin [10]. The x-axis of a histogram shows the range of values for a numeric variable, while the y-axis indicates the frequencies (counts, or relative frequencies expressed as a percentage of the total) for each range of values.

Whenever you want to understand the distribution of your data, check which values are more common, or identify outliers, histograms are your go-to. Think of them as a spotlight on the story your data is telling. A histogram can provide a quick and insightful overview if you’re curious about exam scores, sales figures, or any numerical data distribution.

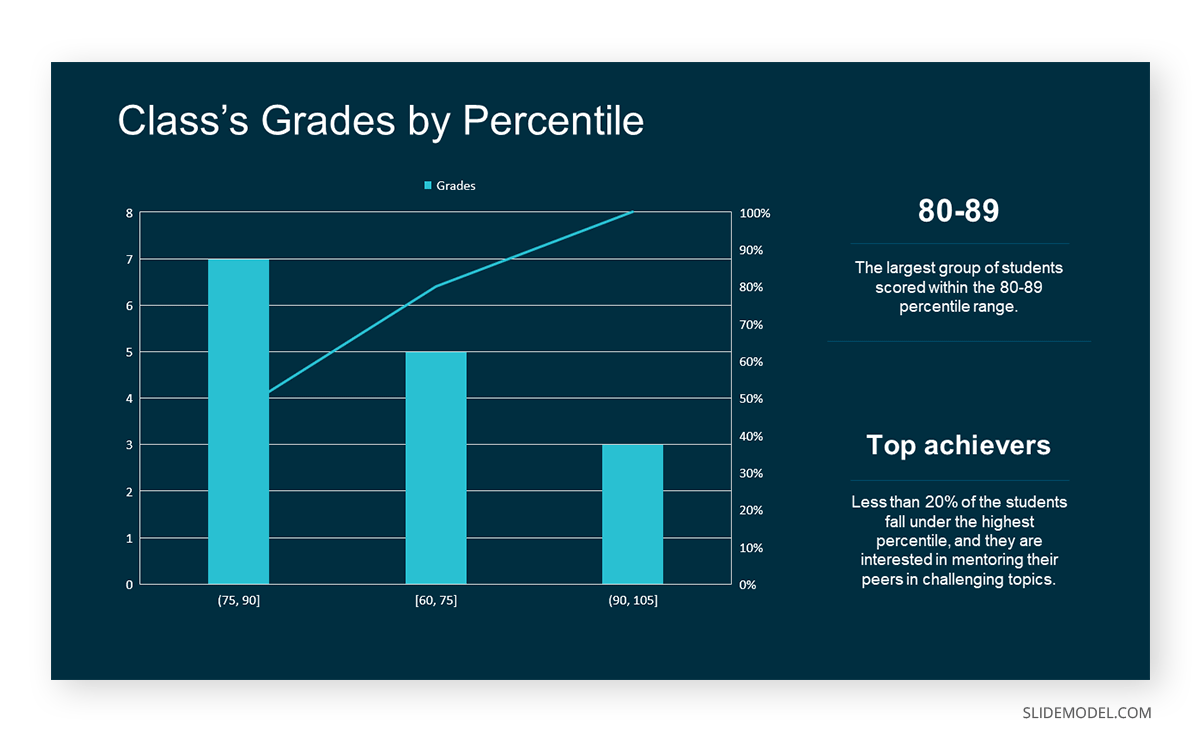

Real-Life Application of a Histogram

As an example, imagine an instructor analyzing a class’s grades to identify the most common score range. A histogram could effectively display the distribution. It will show whether most students scored in the average range or if there are significant outliers.

Step 1: Gather Data

The instructor begins by gathering the exam score of each student in the class.

After arranging the scores in ascending order, the instructor sets the bin ranges.

Step 2: Define Bins

Bins are like categories that group similar values. Think of them as buckets that organize your data. The presenter decides how wide each bin should be based on the range of the values. For instance, the instructor sets the bin ranges based on score intervals: 60-69, 70-79, 80-89, and 90-100.

Step 3: Count Frequency

Now, he counts how many data points fall into each bin. This step is crucial because it tells you how often specific ranges of values occur. The result is the frequency distribution, showing the occurrences of each group.

Here, the instructor counts the number of students in each category.

- 60-69: 1 student (Kate)

- 70-79: 4 students (David, Emma, Grace, Jack)

- 80-89: 7 students (Alice, Bob, Frank, Isabel, Liam, Mia, Noah)

- 90-100: 3 students (Clara, Henry, Olivia)

Step 4: Create the Histogram

It’s time to turn the data into a visual representation. Draw a bar for each bin on a graph. The width of the bar should correspond to the range of the bin, and the height should correspond to the frequency. To make your histogram understandable, label the X and Y axes.

In this case, the X-axis should represent the bins (e.g., test score ranges), and the Y-axis represents the frequency.

The histogram of the class grades reveals a clear pattern in the distribution. The largest group, seven of the 15 students, falls within the 80-89 score range. The histogram shows a concentration of grades in the upper-middle range, with fewer students at both ends. This analysis helps in understanding the overall academic standing of the class and identifies areas for potential improvement or recognition.
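The four steps above can be sketched in a few lines of Python. This is only an illustration: the individual scores are hypothetical, invented so that the bin counts match the example (1, 4, 7, and 3 students), and the function names are assumptions rather than part of any library.

```python
from collections import Counter

# Bin ranges from the example: 60-69, 70-79, 80-89, 90-100 (both ends inclusive).
BINS = [(60, 69), (70, 79), (80, 89), (90, 100)]


def bin_label(score):
    """Return the label of the bin that contains score, or None if out of range."""
    for low, high in BINS:
        if low <= score <= high:
            return f"{low}-{high}"
    return None


def frequency_table(scores):
    """Count how many scores fall into each bin, keeping the bins in order."""
    counts = Counter(bin_label(s) for s in scores)
    return {f"{low}-{high}": counts.get(f"{low}-{high}", 0) for low, high in BINS}


# Hypothetical scores (not from the article), chosen to reproduce the counts above.
scores = [65, 72, 75, 78, 79, 80, 82, 84, 85, 86, 88, 89, 91, 95, 100]

table = frequency_table(scores)
for label, count in table.items():
    share = count / len(scores)  # relative frequency for this bin
    print(f"{label:>6} | {'#' * count:<7} {count} ({share:.0%})")
```

Each printed row is a text version of one histogram bar: the row label is the bin, and the bar length is the frequency.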

Thus, histograms provide a clear visual representation of data distribution. They are easy to interpret, even for those without a statistical background. They apply to various types of data, including continuous and discrete variables. One weak point is that histograms group values into bins, so they do not capture detailed patterns in individual students’ data as well as some other visualization methods.

A scatter plot is a graphical representation of the relationship between two variables. It consists of individual data points on a two-dimensional plane. This plane plots one variable on the x-axis and the other on the y-axis. Each point represents a unique observation. It visualizes patterns, trends, or correlations between the two variables.

Scatter plots are also effective in revealing the strength and direction of relationships. They identify outliers and assess the overall distribution of data points. The points’ dispersion and clustering reflect the relationship’s nature, whether it is positive, negative, or lacks a discernible pattern. In business, scatter plots assess relationships between variables such as marketing cost and sales revenue. They help present data correlations and decision-making.

Real-Life Application of Scatter Plot

A group of scientists is conducting a study on the relationship between daily hours of screen time and sleep quality. After reviewing the data, they managed to create this table to help them build a scatter plot graph:

In the provided example, the x-axis represents Daily Hours of Screen Time, and the y-axis represents the Sleep Quality Rating.

The scientists observe a negative correlation between the amount of screen time and the quality of sleep. This is consistent with their hypothesis that blue light, especially before bedtime, has a significant impact on sleep quality and metabolic processes.

There are a few things to remember when using a scatter plot. Even when a scatter diagram indicates a relationship, it doesn’t mean one variable causes the other; a third factor can influence both variables. The more the plot resembles a straight line, the stronger the relationship is perceived [11] . If the plot suggests no relationship, any pattern that seems to appear might be due to random fluctuations in the data. When the scatter diagram depicts no correlation, it is worth considering whether the data might be stratified.
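One common way to put a number on how closely a scatter plot resembles a straight line is the Pearson correlation coefficient. The sketch below is a minimal illustration with hypothetical screen-time and sleep-quality values (the scientists' table is not reproduced in this article); `pearson_r` is an assumed helper name, not a library function.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equally long sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length with at least 2 points")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sums of products and squares of deviations from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical data: daily hours of screen time and a 1-10 sleep quality rating.
screen_hours = [1, 2, 3, 4, 5, 6, 7, 8]
sleep_quality = [9, 8, 8, 6, 6, 4, 3, 3]

r = pearson_r(screen_hours, sleep_quality)
print(f"r = {r:.2f}")  # a value near -1 indicates a strong negative relationship
```

A coefficient close to -1 or +1 says the points lie near a straight line; it still says nothing about whether one variable causes the other.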

Choosing the appropriate data presentation type is crucial when making a presentation . Understanding the nature of your data and the message you intend to convey will guide this selection process. For instance, when showcasing quantitative relationships, scatter plots become instrumental in revealing correlations between variables. If the focus is on emphasizing parts of a whole, pie charts offer a concise display of proportions. Histograms, on the other hand, prove valuable for illustrating distributions and frequency patterns.

Bar charts provide a clear visual comparison of different categories. Likewise, line charts excel in showcasing trends over time, while tables are ideal for detailed data examination. Starting a presentation on data presentation types involves evaluating the specific information you want to communicate and selecting the format that aligns with your message. This ensures clarity and resonance with your audience from the beginning of your presentation.

1. Fact Sheet Dashboard for Data Presentation

Convey all the data you need to present in this one-pager format, an ideal solution tailored for users looking for presentation aids. Global maps, donut charts, column graphs, and text are neatly arranged in a clean layout presented in light and dark themes.


2. 3D Column Chart Infographic PPT Template

Represent column charts in a highly visual 3D format with this PPT template. A creative way to present data, this template is entirely editable, and we can craft either a one-page infographic or a series of slides explaining what we intend to disclose point by point.

3. Data Circles Infographic PowerPoint Template

An alternative to the pie chart and donut chart diagrams, this template features a series of curved shapes with bubble callouts as ways of presenting data. Expand the information for each arch in the text placeholder areas.

4. Colorful Metrics Dashboard for Data Presentation

This versatile dashboard template helps us in the presentation of the data by offering several graphs and methods to convert numbers into graphics. Implement it for e-commerce projects, financial projections, project development, and more.

5. Animated Data Presentation Tools for PowerPoint & Google Slides

A slide deck filled with most of the tools mentioned in this article, including bar charts, column charts, treemap graphs, pie charts, and histograms. Animated effects make each slide look dynamic when sharing data with stakeholders.

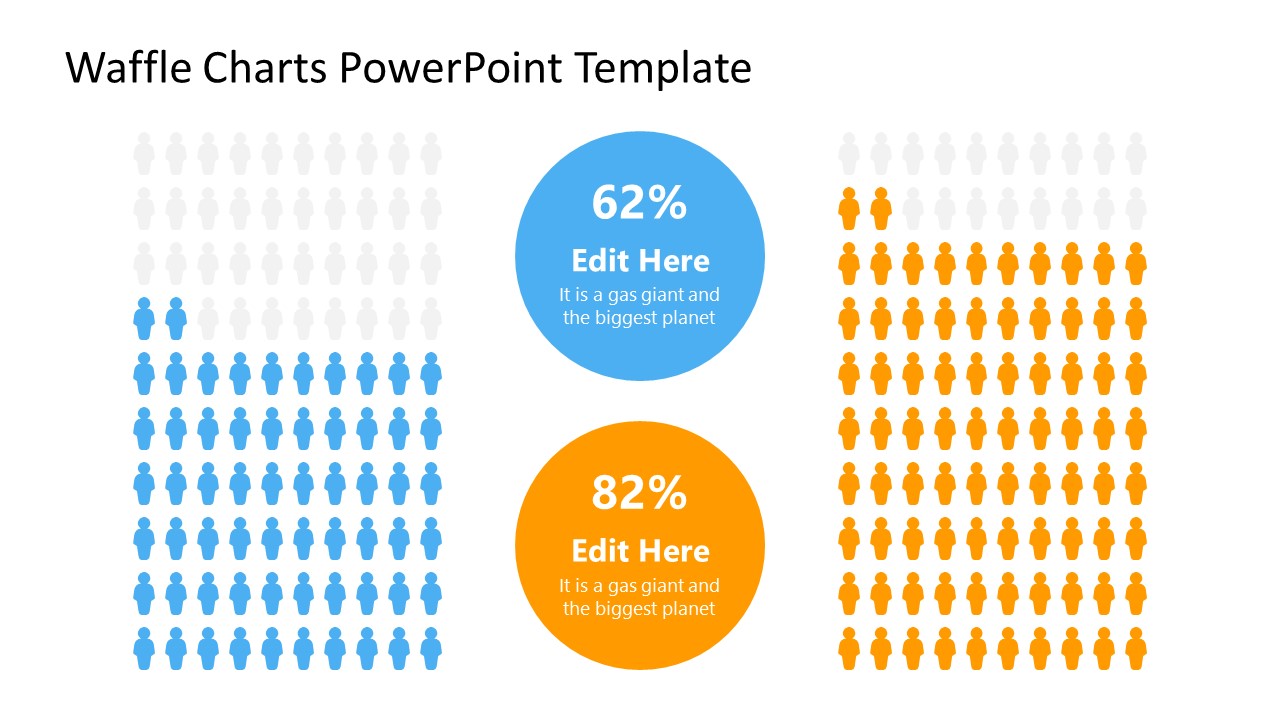

6. Statistics Waffle Charts PPT Template for Data Presentations

This PPT template helps us present data beyond the typical pie chart representation. It is widely used for demographics, so it’s a great fit for marketing teams, data science professionals, HR personnel, and more.

7. Data Presentation Dashboard Template for Google Slides

A compendium of tools in dashboard format featuring line graphs, bar charts, column charts, and neatly arranged placeholder text areas.

8. Weather Dashboard for Data Presentation

Share weather data for agricultural presentation topics, environmental studies, or any kind of presentation that requires a highly visual layout for weather forecasting on a single day. Two color themes are available.

9. Social Media Marketing Dashboard Data Presentation Template

Intended for marketing professionals, this dashboard template for data presentation is a tool for presenting data analytics from social media channels. Two slide layouts featuring line graphs and column charts.

10. Project Management Summary Dashboard Template

A tool crafted for project managers to deliver highly visual reports on a project’s completion, the profits it delivered for the company, and expenses/time required to execute it. 4 different color layouts are available.

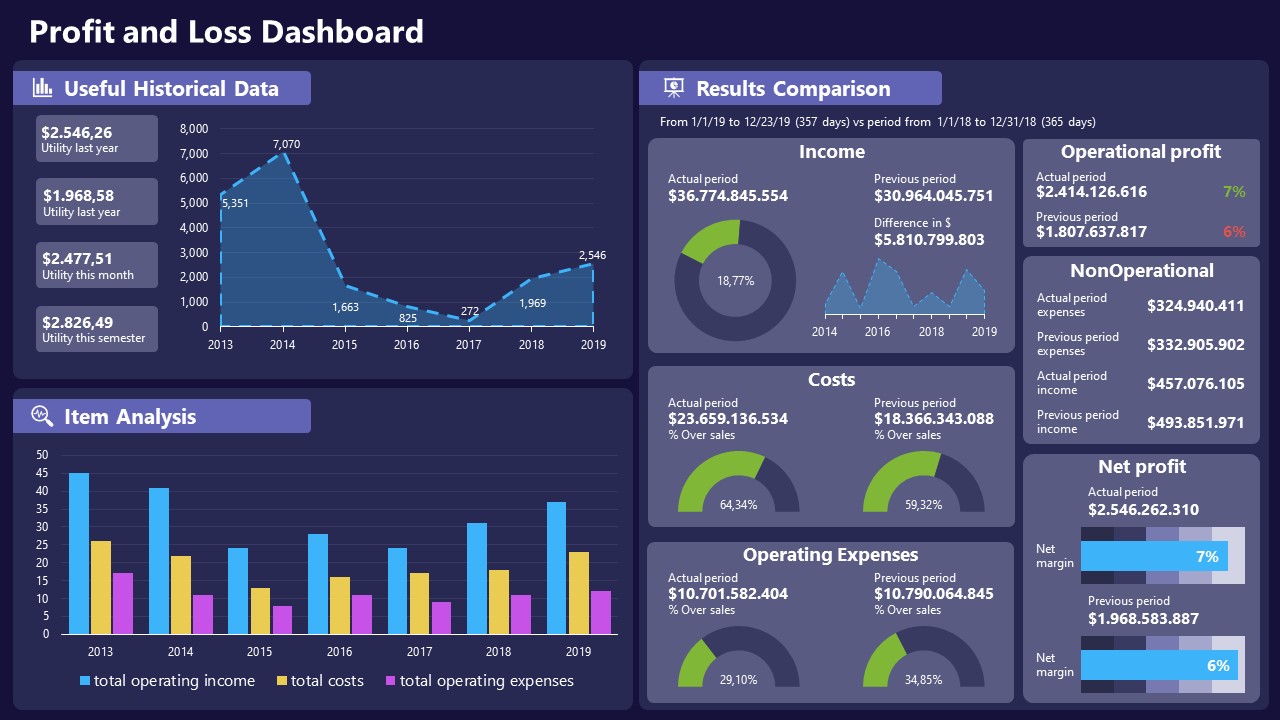

11. Profit & Loss Dashboard for PowerPoint and Google Slides

A must-have for finance professionals. This typical profit & loss dashboard includes progress bars, donut charts, column charts, line graphs, and everything that’s required to deliver a comprehensive report about a company’s financial situation.

Overwhelming visuals

One of the mistakes related to using data-presenting methods is including too much data or using overly complex visualizations. They can confuse the audience and dilute the key message.

Inappropriate chart types

Choosing the wrong type of chart for the data at hand can lead to misinterpretation. For example, a pie chart is inappropriate for data that doesn’t represent parts of a whole.

Lack of context

Failing to provide context or sufficient labeling can make it challenging for the audience to understand the significance of the presented data.

Inconsistency in design

Using inconsistent design elements and color schemes across different visualizations can create confusion and visual disarray.

Failure to provide details

Simply presenting raw data without offering clear insights or takeaways can leave the audience without a meaningful conclusion.

Lack of focus

Not having a clear focus on the key message or main takeaway can result in a presentation that lacks a central theme.

Visual accessibility issues

Overlooking the visual accessibility of charts and graphs can exclude certain audience members who may have difficulty interpreting visual information.

In order to avoid these mistakes in data presentation, presenters can benefit from using presentation templates . These templates provide a structured framework. They ensure consistency, clarity, and an aesthetically pleasing design, enhancing data communication’s overall impact.

Understanding and choosing data presentation types are pivotal in effective communication. Each method serves a unique purpose, so selecting the appropriate one depends on the nature of the data and the message to be conveyed. The diverse array of presentation types offers versatility in visually representing information, from bar charts showing values to pie charts illustrating proportions.

Using the proper method enhances clarity, engages the audience, and ensures that data sets are not just presented but comprehensively understood. By appreciating the strengths and limitations of different presentation types, communicators can tailor their approach to convey information accurately, developing a deeper connection between data and audience understanding.

[1] Government of Canada, S.C. (2021) 5 Data Visualization 5.2 Bar Chart , 5.2 Bar chart . https://www150.statcan.gc.ca/n1/edu/power-pouvoir/ch9/bargraph-diagrammeabarres/5214818-eng.htm

[2] Kosslyn, S.M., 1989. Understanding charts and graphs. Applied cognitive psychology, 3(3), pp.185-225. https://apps.dtic.mil/sti/pdfs/ADA183409.pdf

[3] Creating a Dashboard . https://it.tufts.edu/book/export/html/1870

[4] https://www.goldenwestcollege.edu/research/data-and-more/data-dashboards/index.html

[5] https://www.mit.edu/course/21/21.guide/grf-line.htm

[6] Jadeja, M. and Shah, K., 2015, January. Tree-Map: A Visualization Tool for Large Data. In GSB@ SIGIR (pp. 9-13). https://ceur-ws.org/Vol-1393/gsb15proceedings.pdf#page=15

[7] Heat Maps and Quilt Plots. https://www.publichealth.columbia.edu/research/population-health-methods/heat-maps-and-quilt-plots

[8] EIU QGIS WORKSHOP. https://www.eiu.edu/qgisworkshop/heatmaps.php

[9] About Pie Charts. https://www.mit.edu/~mbarker/formula1/f1help/11-ch-c8.htm

[10] Histograms. https://sites.utexas.edu/sos/guided/descriptive/numericaldd/descriptiven2/histogram/

[11] https://asq.org/quality-resources/scatter-diagram


Open Access

Peer-reviewed

Research Article

Poor statistical reporting, inadequate data presentation and spin persist despite editorial advice

Roles Data curation, Formal analysis, Investigation, Methodology, Project administration, Resources, Software, Visualization, Writing – original draft, Writing – review & editing

Affiliations Sydney Medical School, University of Sydney, Sydney, NSW, Australia, Neuroscience Research Australia (NeuRA), Randwick, NSW, Australia

Roles Investigation, Methodology, Project administration, Writing – review & editing

Affiliations Neuroscience Research Australia (NeuRA), Randwick, NSW, Australia, University of New South Wales, Randwick, NSW, Australia

Roles Funding acquisition, Investigation, Methodology, Project administration, Writing – review & editing


Roles Conceptualization, Formal analysis, Investigation, Methodology, Project administration, Resources, Software, Visualization, Writing – review & editing

- Joanna Diong,

- Annie A. Butler,

- Simon C. Gandevia,

- Martin E. Héroux

- Published: August 15, 2018

- https://doi.org/10.1371/journal.pone.0202121


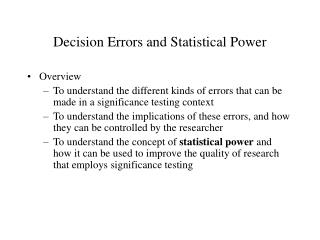

The Journal of Physiology and British Journal of Pharmacology jointly published an editorial series in 2011 to improve standards in statistical reporting and data analysis. It is not known whether reporting practices changed in response to the editorial advice. We conducted a cross-sectional analysis of reporting practices in a random sample of research papers published in these journals before (n = 202) and after (n = 199) publication of the editorial advice. Descriptive data are presented. There was no evidence that reporting practices improved following publication of the editorial advice. Overall, 76-84% of papers with written measures that summarized data variability used standard errors of the mean, and 90-96% of papers did not report exact p-values for primary analyses and post-hoc tests. 76-84% of papers that plotted measures to summarize data variability used standard errors of the mean, and only 2-4% of papers plotted raw data used to calculate variability. Of papers that reported p-values between 0.05 and 0.1, 56-63% interpreted these as trends or statistically significant. Implied or gross spin was noted incidentally in papers before (n = 10) and after (n = 9) the editorial advice was published. Overall, poor statistical reporting, inadequate data presentation and spin were present before and after the editorial advice was published. While the scientific community continues to implement strategies for improving reporting practices, our results indicate stronger incentives or enforcements are needed.

Citation: Diong J, Butler AA, Gandevia SC, Héroux ME (2018) Poor statistical reporting, inadequate data presentation and spin persist despite editorial advice. PLoS ONE 13(8): e0202121. https://doi.org/10.1371/journal.pone.0202121

Editor: Bart O. Williams, Van Andel Institute, UNITED STATES

Received: April 28, 2018; Accepted: July 27, 2018; Published: August 15, 2018

Copyright: © 2018 Diong et al. This is an open access article distributed under the terms of the Creative Commons Attribution License , which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Data Availability: All relevant data are within the paper and its Supporting Information files.

Funding: This work is supported by the National Health and Medical Research Council ( https://www.nhmrc.gov.au/ ), APP1055084. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Competing interests: The authors have declared that no competing interests exist.

Introduction

The accurate communication of scientific discovery depends on transparent reporting of methods and results. Specifically, information on data variability and results of statistical analyses are required to make accurate inferences.

The quality of statistical reporting and data presentation in scientific papers is generally poor. For example, one third of clinical trials in molecular drug interventions and breast cancer selectively report outcomes [ 1 ], 60-95% of biomedical research papers report statistical analyses that are not pre-specified or are different to published analysis plans [ 2 ], and one third of all graphs published in the prestigious Journal of the American Medical Association cannot be interpreted unambiguously [ 3 ]. In addition, reported results may differ from the actual statistical results. For example, distorted interpretation of statistically non-significant results (i.e. spin) is present in more than 40% of clinical trial reports [ 4 ].

Many reporting guidelines (e.g. the Consolidated Standards of Reporting Trials; CONSORT [ 5 ]) have been developed, endorsed and mandated by key journals to improve the quality of research reporting. Furthermore, journals have published editorial advice to advocate better reporting standards [ 6 – 9 ]. Nevertheless, it is arguable whether reporting standards have improved substantially [ 10 – 12 ].

In response to the poor quality of statistical reporting and data presentation in physiology and pharmacology, the Journal of Physiology published an editorial series to provide authors with clear, non-technical guidance on best-practice standards for data analysis, data presentation and reporting of results. Co-authored by the Journal of Physiology’s senior statistics editor and a medical statistician, the editorial series by Drummond and Vowler was jointly published in 2011 under a non-exclusive licence in the Journal of Physiology, the British Journal of Pharmacology, as well as several other journals. (The editorial series was simultaneously published, completely or in part, in Experimental Physiology, Advances in Physiology Education, Microcirculation, the British Journal of Nutrition, and Clinical and Experimental Pharmacology and Physiology.) The key recommendations by Drummond and Vowler include instructions to (1) report variability of continuous outcomes using standard deviations instead of standard errors of the mean, (2) report exact p-values for primary analyses and post-hoc tests, and (3) plot raw data used to calculate variability [ 13 – 15 ]. These recommendations were made so authors would implement them in future research reports. However, it is not known whether reporting practices in these journals have improved since the publication of this editorial advice.
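The first recommendation rests on the relationship between the two statistics: the standard error of the mean is the standard deviation divided by the square root of the sample size, so it shrinks as the sample grows even when the spread of the data does not. The sketch below illustrates this with hypothetical measurements; it is not taken from the editorial series or from the audited papers.

```python
import math
import statistics


def sd_and_sem(values):
    """Return (sample standard deviation, standard error of the mean)."""
    sd = statistics.stdev(values)        # sample SD, n - 1 denominator
    sem = sd / math.sqrt(len(values))    # SEM = SD / sqrt(n)
    return sd, sem


# Hypothetical measurements: the same five values, then repeated 20 times.
small = [4.0, 6.0, 5.0, 7.0, 3.0]
large = small * 20  # 100 observations with the same spread

sd_small, sem_small = sd_and_sem(small)
sd_large, sem_large = sd_and_sem(large)

print(f"n = {len(small):3d}: SD = {sd_small:.2f}, SEM = {sem_small:.2f}")
print(f"n = {len(large):3d}: SD = {sd_large:.2f}, SEM = {sem_large:.2f}")
```

In this toy case the standard deviation stays roughly the same while the standard error falls sharply, which is why error bars based on standard errors can make variable data look tight.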

We conducted a cross-sectional analysis of research papers published in the Journal of Physiology and the British Journal of Pharmacology to assess reporting practices. Specifically, we assessed statistical reporting, data presentation and spin in a random sample of papers published in the four years before and four years after the editorial advice by Drummond and Vowler was published.

Materials and methods

Pubmed search and eligibility criteria.

All papers published in the Journal of Physiology and the British Journal of Pharmacology in the years 2007-2010 and 2012-2015 and indexed on PubMed were extracted using the search strategy: (J Physiol[TA] OR Br J Pharmacol[TA]) AND yyyy: yyyy[DP] NOT (editorial OR review OR erratum OR comment OR rebuttal OR crosstalk). Papers were excluded if they were editorials, reviews, erratums, comments, rebuttals, or part of the Journal of Physiology’s Crosstalk correspondence series. Of the eligible papers, a random sample of papers published in the four years before the 2011 editorial advice by Drummond and Vowler was published (2007-2010) and four years after (2012-2015) was extracted ( S1 File ), and full-text PDFs were obtained.
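As a rough illustration of this step, the sketch below assembles the search string for a given period and draws a reproducible random sample from a list of identifiers. The year-range form `2007:2010[DP]`, the function names, the seed, and the example identifiers are all assumptions made for illustration; the authors' actual selection code is in S1 File.

```python
import random

JOURNALS = ["J Physiol", "Br J Pharmacol"]
EXCLUDED = ["editorial", "review", "erratum", "comment", "rebuttal", "crosstalk"]


def build_query(start_year, end_year):
    """Assemble the search string for one publication period (assumed format)."""
    journals = " OR ".join(f"{name}[TA]" for name in JOURNALS)
    excluded = " OR ".join(EXCLUDED)
    return f"({journals}) AND {start_year}:{end_year}[DP] NOT ({excluded})"


def draw_sample(pmids, size, seed):
    """Draw a reproducible random sample of paper identifiers."""
    rng = random.Random(seed)             # fixed seed so the draw can be repeated
    return rng.sample(sorted(pmids), size)


print(build_query(2007, 2010))

# Hypothetical identifiers standing in for the eligible papers of one period.
eligible = range(1000, 1100)
sample = draw_sample(eligible, 10, seed=1)
print(sample)
```

Fixing the seed is a design choice that lets others repeat the same draw from the same list of eligible papers.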

Question development and pilot testing

Ten questions and scoring criteria were developed to assess statistical reporting, data presentation and spin in the text and figures of the extracted papers. Questions assessing statistical reporting in the text (Q1-5) determined if and how written measures that summarize variability were defined, and if exact p-values were reported for primary analyses and post-hoc tests. Questions assessing data presentation in figures (Q6-8) determined if and how plotted measures that summarize variability were defined, and if raw data used to calculate the variability were plotted. Questions assessing the presence of spin (Q9-10) determined if p-values between 0.05 and 0.1 were interpreted as trends or statistically significant.

A random sample of 20 papers before and 20 papers after the editorial advice was used to assess the clarity of the scoring instructions and scoring agreement between raters. These papers were separate from those included in the full audit. All papers were independently scored by three raters (AAB, JD, MEH). Scores that differed between raters were discussed to reach agreement by consensus. The wording of the questions, scoring criteria, and scoring instructions were refined to avoid different interpretations by raters. The questions are shown in Fig 1 . Scoring criteria and additional details of the questions are provided in the scoring information sheets in the supporting information ( S2 File ).


Counts and proportions of papers that fulfilled scoring criteria for each question before (white) and after (gray) the editorial advice was published. Abbreviations are SEM: standard error of the mean, ANOVA: analysis of variance.

https://doi.org/10.1371/journal.pone.0202121.g001

Data collection, outcomes and analysis

Each rater (AAB, JD, MEH, SCG) had to score and extract data independently from 50 papers before and 50 papers after the editorial advice was published. For each rater, papers from the 2007-2010 and 2012-2015 periods were audited in an alternating pattern to avoid an order or block effect. One rater unintentionally audited an additional 2007-2010 paper, and another rater unintentionally audited a 2007-2010 paper instead of a 2012-2015 paper. Thus, data from a random sample of 202 papers before and 199 papers after the editorial advice were analysed. When scoring was completed, papers that were difficult or ambiguous to score (less than 5% of all papers) were reviewed by all raters and scoring determined by consensus.

It was difficult to score some papers unambiguously on some of the scoring criteria. For example in question 3, it was sometimes difficult to determine what a paper’s primary analyses, main effects and interactions were, in order to determine whether p-values for these were reported or implied. When raters could not unambiguously interpret the data, either individually or as a team, we scored papers to give authors the benefit of doubt.

Counts and proportions of papers that fulfilled the scoring criteria for each question were calculated; no statistical tests were performed. Descriptive data are reported. All data processing and analysis were performed using Python (v3.5). Raw data, computer analysis code and results are available in the supporting information ( S3 File ).
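A descriptive summary of this kind can be sketched as follows. The record layout, the function name, and the example scores are hypothetical and serve only to show how counts and proportions per question and period might be tallied; the authors' actual code is in S3 File.

```python
from collections import defaultdict


def summarize(records):
    """Tally (count fulfilled, total, proportion) per (period, question).

    records is an iterable of (period, question, fulfilled) tuples, where
    fulfilled is True when a paper met the scoring criterion.
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for period, question, fulfilled in records:
        key = (period, question)
        totals[key] += 1
        if fulfilled:
            hits[key] += 1
    return {key: (hits[key], totals[key], hits[key] / totals[key]) for key in totals}


# Hypothetical scores for a handful of papers (not the study's data).
records = [
    ("before", "Q2", True), ("before", "Q2", True),
    ("before", "Q2", True), ("before", "Q2", False),
    ("after", "Q2", True), ("after", "Q2", False),
]

summary = summarize(records)
for (period, question), (count, total, share) in sorted(summary.items()):
    print(f"{question} {period:>6}: {count}/{total} ({share:.0%})")
```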

The random sample of audited papers was reasonably representative of the number of papers published each year in the Journal of Physiology and the British Journal of Pharmacology in the two periods of interest ( Table 1 ).

https://doi.org/10.1371/journal.pone.0202121.t001

The proportions of audited papers that fulfilled the scoring criteria are presented in Fig 1 . The figure shows there is no substantial difference in statistical reporting, data presentation or the presence of spin after the editorial advice was published. Overall, 76-84% of papers with written measures that summarized data variability used standard errors of the mean, and 90-96% of papers did not report exact p-values for primary analyses and post-hoc tests. 76-84% of papers that plotted measures to summarize variability used standard errors of the mean, and only 2-4% of papers plotted raw data used to calculate variability.

Of papers that reported p-values between 0.05 and 0.1, 56-63% interpreted such p-values as trends or statistically significant. Examples of such interpretations include:

- “A P < 0.05 level of significance was used for all analyses. […] As a result, further increases in the tidal Poes/VT (by 2.64 and 1.41 cmH2O l−1, P = 0.041) and effort–displacement ratios (by 0.22 and 0.13 units, P = 0.060) were consistently greater during exercise …” (PMID 18687714)

- “The level of IL-15 mRNA tended to be lower in vastus lateralis than in triceps (P = 0.07) ( Fig 1A )” (PMID 17690139)

- “… where P < 0.05 indicates statistical significance […] was found to be slightly smaller than that of basal cells (−181 ± 21 pA, n = 7, P27–P30) but the difference was not quite significant (P = 0.05)” (PMID 18174213)

- “… resting activity of A5 neurons was marginally but not significantly higher in toxin-treated rats (0.9 ± 0.2 vs. 1.8 ± 0.5 Hz, P = 0.068)” (PMID 22526887)

- “… significantly smaller than with fura-6F alone (P = 0.009), and slightly smaller than with fura-6F and EGTA (P = 0.08).” (PMID 18832426)

- “The correlation becomes only marginally significant if the single experiment with the largest effect is removed (r = 0.41, P = 0.057, n = 22).” (PMID 17916607)

Implied or gross spin (i.e. spin other than interpreting p-values between 0.05 and 0.1 as trends or statistically significant) was noted incidentally in papers before (n = 10) and after (n = 9) the editorial advice was published. Examples of statements where implied or gross spin was present include:

- “However, analysis of large spontaneous events (>50 pA in four of six cells) ( Fig 1G–1I ) showed the frequency to be increased from 0.4 ± 0.1 Hz to 0.8 ± 0.2 Hz (P < 0.05) ( Fig 1G and 1H ) and the amplitude by 20.3 ± 15.6 pA (P > 0.1) …” (PMID 24081159)

- “… whereas there was only a non-significant trend in the older group.” (no p-value, PMID 18469848)

Post-hoc analyses revealed audit results were comparable between raters ( S4 File ). Additional post-hoc analyses revealed audit results were relatively consistent across years and journals ( S5 File ). A notable exception was the British Journal of Pharmacology and its lower rate of reporting p-values (3-27% lower; question 3) and exact p-values for main analyses (8-22% lower; question 4).

In 2011 the Journal of Physiology and the British Journal of Pharmacology jointly published editorial advice on best practice standards for statistical reporting and data presentation [ 13 ]. These recommendations were reiterated in the Journals’ Instructions to Authors. Our cross-sectional analysis shows there was no substantial improvement in statistical reporting and data presentation in the four years after publication of this editorial advice.

Our results confirm that the quality of statistical reporting is generally poor. We found that ∼80% of papers that plotted error bars used standard error of the mean. In line with this, a systematic review of 703 papers published in key physiology journals revealed 77% of papers plotted bar graphs with standard error of the mean [ 16 ]. Similarly, one of the authors (MH) audited all 2015 papers published in the Journal of Neurophysiology and found that in papers with error bars, 65% used standard error of the mean and ∼13% did not define their error bars. That audit also revealed ∼42% of papers did not report exact p-values and ∼57% of papers with p-values between 0.05 and 0.1 interpreted these p-values as trends or statistically significant [ 12 ]. Our current study found that ∼93% of papers included non-exact p-values and ∼60% of papers with p-values between 0.05 and 0.1 reported these with spin. It is unfortunate that authors adopt practices that distort the interpretation of results and mislead readers into viewing results more favorably. This problem was recently highlighted by a systematic review on the prevalence of spin in the biomedical literature [ 17 ]. Spin was present in 35% of randomized controlled trials with significant primary outcomes, 60% of randomized controlled trials with non-significant primary outcomes, 84% of non-randomized trials and 86% of observational studies. Overall, these results highlight the sheer magnitude of the problem: poor statistical reporting and questionable interpretation of results are truly common practice for many scientists.

Our findings also broadly agree with other observational data on the ineffectiveness of statistical reporting guidelines in biomedical and clinical research. For example, the CONSORT guidelines for the reporting of randomized controlled trials are widely supported and mandated by key medical journals, but the quality of statistical reporting and data presentation in randomized trial reports remains inadequate [ 18 – 20 ]. A scoping audit of papers published by American Physiological Society journals in 1996 showed most papers mistakenly reported standard errors of the mean as estimates of variability, not as estimates of uncertainty [ 21 ]. Consequently, in 2004 the Society published editorial guidelines to improve statistical reporting practices [ 22 ]. These guidelines instructed authors to report variability using standard deviations, and report uncertainty about scientific importance using confidence intervals. However, the authors of the guidelines audited papers published before and after their implementation and found no improvement in the proportion of papers reporting standard errors of the mean, standard deviations, confidence intervals, and exact p-values [ 10 ]. Likewise, in 1999 and 2001 the American Psychological Association published guidelines instructing authors to report effect sizes and confidence intervals [ 23 , 24 ]. Once again, an audit of papers published before and after the guidelines were implemented found no improvement in the proportion of figures with error bars defined as standard errors of the mean (43-59%) or worse, with error bars that were not defined (29-34%) [ 25 ].

One example where editorial instructions improved reporting practices occurred in public health. In the mid-80’s the American Journal of Public Health had an influential editor who advocated and enforced the use of confidence intervals rather than p-values. An audit of papers published before and during the tenure of this editor found that the reliance on p-values to interpret findings dropped from 63% to 5% and the reporting of confidence intervals increased from 10% to 54% [ 26 ]. However, few authors referred to confidence intervals when interpreting results. In psychology, when editors of Memory & Cognition and the Journal of Consulting and Clinical Psychology enforced the use of confidence intervals and effect sizes, the use of these statistics increased to some extent, even though long-term use was not maintained [ 27 ]. These examples provide evidence that editors with training in statistical interpretation may enforce editorial instructions more successfully, even if author understanding does not necessarily improve.

Why are reporting practices not improving? The pressure to publish may be partly to blame. Statistically significant findings that are visually and numerically clean are easier to publish. Thus, it should come as no surprise that p-values between 0.05 and 0.1 are interpreted as trends or statistically significant, and that researchers use standard errors of the mean to plot and report results. There is also a cultural component to these practices. The process of natural selection ensures that practices associated with higher publication rates are transmitted from one generation of successful researchers to the next [ 28 ]. Unfortunately, some of these practices include poor reporting practices. As was recently highlighted by Goodman [ 29 ], conventions die hard, even if they contribute to irreproducible research. In the article, citing a government report on creating change within a system, Goodman highlights that “culture will trump rules, standards and control strategies every single time”. Thus, researchers will often opt for reporting practices that make their papers look like others in their field, conscious or not that these reporting practices are inadequate and not in line with published reporting guidelines. A final contributing factor is that many researchers continue to misunderstand key statistical concepts, such as measures of variability and uncertainty, inferences made from independent and repeated-measures study designs, and error bars and how they reflect statistical significance [ 30 ]. This partly explains the resistance to statistical innovations and robust reporting practices [ 27 ].

The recent reproducibility crisis has seen all levels of the scientific community implement new strategies to improve how science is conducted and reported. For example, journals have introduced article series to promote awareness [ 9 , 31 ] and adopted more stringent reporting guidelines [ 32 , 33 ]. Whole disciplines have also taken steps to tackle these issues. For example, the Academy of Medical Sciences partnered with the Biotechnology and Biological Sciences Research Council, the Medical Research Council and the Wellcome Trust to host a symposium on improving reproducibility and reliability of biomedical research [ 34 ]. Funding bodies have also acted. For example, the NIH launched training modules to educate investigators on topics such as bias, blinding and experimental design [ 35 ], and the Wellcome Trust published guidelines on research integrity and good research practice [ 36 ]. Other initiatives include the Open Science Framework, which facilitates open collaboration [ 37 ], and the Transparency and Openness Promotion guidelines, which were developed to improve reproducibility in research and have been endorsed by many key journals [ 38 ]. To improve research practices, these initiatives aim to raise awareness of the issues, educate researchers and provide tools to implement the various recommendations. While the enduring success of these initiatives remains to be determined, we remain hopeful for the future. There is considerable momentum throughout science, and many leaders from various disciplines have stepped up to lead the way.

In summary, reporting practices have not improved despite published editorial advice. Journals and other members of the scientific community continue to advocate and implement strategies for change, but these have only had limited success. Stronger incentives, better education and widespread enforcement are needed for enduring improvements in reporting practices to occur.

Supporting information

S1 File. Random paper selection.

Python code and PubMed search results used to randomly select papers for the audit. See the included README.txt file for a full description.

https://doi.org/10.1371/journal.pone.0202121.s001

S2 File. Scoring information sheets.

Scoring criteria and details of questions 1-10.

https://doi.org/10.1371/journal.pone.0202121.s002

S3 File. Data and code.

Comma-separated-values (CSV) file of raw scores for questions 1-10 and the Python files used to analyse the data. See the included README.txt file for a full description.

https://doi.org/10.1371/journal.pone.0202121.s003

S4 File. Audit results across raters.

Comparison of audit results across raters indicates the scoring criteria were applied uniformly across raters.

https://doi.org/10.1371/journal.pone.0202121.s004

S5 File. Audit results across years and journals.

Comparison of audit results for each year and journal. The British Journal of Pharmacology consistently had lower reporting rates of p-values for main analyses, and exact p-values for main analyses.

https://doi.org/10.1371/journal.pone.0202121.s005

Acknowledgments

We thank Dr Gordon Drummond for reviewing a draft of the manuscript.

- 24. American Psychological Association. Publication manual of the American Psychological Association. American Psychological Association; 2001. Available from: http://www.apa.org/pubs/books/4200061.aspx .

- 25. Cumming G, Fidler F, Leonard M, Kalinowski P, Christiansen A, Kleinig A, et al. Statistical reform in psychology: is anything changing?; 2007. Available from: https://www.jstor.org/stable/40064724 .

- 31. Nature Special. Challenges in irreproducible research; 2014. Available from: https://www.nature.com/collections/prbfkwmwvz/ .

- 34. The Academy of Medical Sciences. Reproducibility and reliability of biomedical research; 2016. Available from: https://acmedsci.ac.uk/policy/policy-projects/reproducibility-and-reliability-of-biomedical-research .

- 35. National Institutes of Health. Clearinghouse for training modules to enhance data reproducibility; 2014. Available from: https://www.nigms.nih.gov/training/pages/clearinghouse-for-training-modules-to-enhance-data-reproducibility.aspx .

- 36. Wellcome Trust. Research practice; 2005. Available from: https://wellcome.ac.uk/what-we-do/our-work/research-practice .

5 Small Mistakes To Avoid While Presenting Data

Small mistakes are like bad breath. Your friends won’t point them out to you, and you can’t spot them yourself. Find the small mistakes people make in a data presentation and learn how to avoid them.

Small mistakes in presenting data go unnoticed:

It is easy to correct big mistakes in your presentations, because they are easily noticeable. Small mistakes are difficult to identify, but they irritate the audience. Here are the 5 common mistakes made while presenting data and the ways to solve them.

1. Retaining the small fonts of Excel in PowerPoint slides

Most presenters have the habit of pasting charts directly from an Excel sheet into PowerPoint. They don’t bother to increase the font size of the numbers (which is usually 10 when you paste a chart from an Excel sheet).

Want to know how hard it is to read that small font size from a distance?

Try reading the legend on the slide below:

Solution to issue with font size:

Increase the font size of text and numbers in the chart to at least 18 to 20. This is the minimum acceptable font size even in a small conference room.

2. Using images in chart background

Some presentation gurus advise putting pictures in the chart background for building an emotional connection with the audience. Here is the result:

Wow! That definitely strikes an emotional chord. But, where is the graph?

Remember, the fancy presentation methods used for presenting on culture and philosophy don’t work in business presentations. Remove all chart backgrounds. Let your audience understand your chart.

3. Flaunting thoroughness with footnotes

Some presenters boast about the thoroughness of their research and the ‘correctness’ of their observations by using slides like this:

The disclaimers and footnotes occupy half the slide in font size 6. This immediately puts a distance between the presenter and the audience.

Solution to complex tables:

- Remove footnotes from your slides. Include them in your handouts if you must.

- Don’t display raw data. Write your conclusions on your slides.

- Avoid using large tables on a slide. A table larger than 2×2 is quite difficult to follow on a slide during a presentation.

4. Showing lack of sensitivity while writing text and digits

Some presenters don’t care about audience convenience while writing their text on slides. Here is a quick list of small mistakes and their solution:

- Don’t write vertical text on the Y axis or slanting text on the X axis. Your audience can’t read it easily. If you have a lot of text to write on the X axis of your column chart, use a bar chart instead.

- Don’t write large numbers like 56798745.08 on your charts or tables. Express big numbers in millions or thousands. Round off the decimals when using big numbers. Use a space or comma between digit groups for easy reading. Example: 12 000 or 12,000 is easier to read than 12000.

- Use wide intervals for your Y axis if you want to show gridlines. Avoid showing minor gridlines at all costs.

- Order your lists by groups or by alphabetical order for easy reference.
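The advice on large numbers above can be sketched in a few lines of Python if you prepare your chart labels in a script before pasting them into a slide. This is only an illustration: the helper names `humanize` and `group_digits` are made up for this example, and the one-decimal rounding is an assumption you may want to change.

```python
def humanize(value: float, decimals: int = 1) -> str:
    """Express a large number in millions or thousands, with rounded decimals."""
    # Hypothetical helper: thresholds and suffix wording are assumptions.
    for threshold, suffix in ((1_000_000, " million"), (1_000, " thousand")):
        if abs(value) >= threshold:
            return f"{value / threshold:.{decimals}f}{suffix}"
    return f"{value:.{decimals}f}"


def group_digits(value: int, separator: str = ",") -> str:
    """Insert a separator between groups of three digits."""
    # Python's "," format option groups thousands; swap in another separator if asked.
    return f"{value:,}".replace(",", separator)


print(humanize(56798745.08))     # 56.8 million
print(group_digits(12000))       # 12,000
print(group_digits(12000, " "))  # 12 000
```

Whichever style you pick, use it consistently across all the charts and tables in the deck.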

5. Showing lack of preparedness by reading numbers

A lot of presenters lose audience trust because they don’t familiarize themselves with the charts and numbers on their slides. They try to ‘figure out’ their charts along with the audience. They read the numbers from the slide instead of talking about what the numbers mean.

Solution to reading numbers when presenting data:

- Rehearse your charts at least 3 times before presenting them to the audience.

- Practice the custom animations used in your charts.

- Provide insights from the numbers.

Summary of small mistakes to avoid while presenting data:

- Retaining the small fonts of Excel in PowerPoint slides

- Using images in chart background

- Flaunting thoroughness with footnotes

- Showing lack of audience sensitivity while writing text and digits

- Showing lack of preparedness by reading numbers

Avoid these small mistakes and you will succeed in presenting data with purpose.

Five Data Chart Mistakes to Avoid in Presentation

Are you presenting data graphs a lot on your slides? Make sure you are not falling into one of the data visualization traps. Here are the most common mistakes I see people make when they bring various Excel charts into PowerPoint.

How can you avoid those typical data visualization mistakes? I am sharing a few quick tips on how you can handle them using only PowerPoint tools.

This is a fragment of our training workshops. For more details see our Online Trainings on Presentation Design.

1. Inappropriate data chart

PowerPoint offers a dozen data charts you can choose from. Recent additions (from Office 365) include the fancy-looking Sunburst, Funnel and, finally, Waterfall charts.

However, for most presentation purposes you will need only a few basic charts or their variants: the Bar (or column) chart, the Pie chart (also doughnut or donut) and the Line chart (or area chart). Each of them fits a different purpose:

Line Charts and Area Charts

A line chart is best for showing how data changes over time, for example sales trends, website traffic over the last few days or global temperatures over decades.

Mistakes I have seen involve using line charts with categorical axes (e.g. specific products). Another thing to avoid with a line chart is showing too many variables on one chart.

Pie and Doughnut Charts

Those are nice-looking charts in the shape of a circle that are meant to represent data that make up one whole. Typically this is a market share or any kind of percentage data, e.g. the distribution of survey answers.

A logical error in a pie chart is when the percentage values do not sum up to 100%.

A visual mistake I see a lot is using pie charts with way too many categories. From a design point of view, to keep readability I’d recommend showing max 6 categories in a pie chart, ordered by value from biggest to smallest. If you have more categories, group the 7th and later ones into one category, “Others”. And if needed, show this category on another chart.
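If you prepare your data in a script before charting it, the pie chart rules above can be sketched as follows. This is a minimal illustration, not a PowerPoint feature: the function name `prepare_pie_slices`, its parameters and the sample market-share figures are all made up for this example.

```python
def prepare_pie_slices(values, max_slices=6, other_label="Others"):
    """Order categories from biggest to smallest, keep the largest ones,
    group the remainder into one slice and return percentages."""
    total = sum(values.values())
    if total <= 0:
        raise ValueError("pie chart data must add up to a positive total")
    ranked = sorted(values.items(), key=lambda item: item[1], reverse=True)
    top, rest = ranked[:max_slices], ranked[max_slices:]
    # Percentages are computed from the raw values, so they sum to 100%.
    slices = [(name, 100 * value / total) for name, value in top]
    if rest:
        slices.append((other_label, 100 * sum(value for _, value in rest) / total))
    return slices


# Hypothetical market-share data with eight categories.
shares = {"A": 40, "B": 25, "C": 12, "D": 8, "E": 6, "F": 4, "G": 3, "H": 2}
slices = prepare_pie_slices(shares)
for name, percent in slices:
    print(f"{name}: {percent:.0f}%")

# Guard against the logical error: the slices must add up to 100%.
assert abs(sum(percent for _, percent in slices) - 100) < 1e-9
```

With this sample data the six largest categories are kept and G and H end up in a 5% “Others” slice.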

Column and Bar Charts

Those charts represent a data value by the size of a vertical or horizontal bar. They are suitable for comparing multiple categories, e.g. sales of various products. Unlike line or pie charts, you can show more variables here and the chart will still be readable.

One thing I would suggest is to use color coding with some business logic behind it. Use various colors if you want to underline that the variables are different. Apply only one color if you want to focus more on the values than on the variety of categories.

How to choose a chart for your data

If the focus of your presentation is to show outcomes in an understandable way, and not to do exploratory data analysis, then it’s better to use those classical data charts. Avoid fancy new charts that may not be easy for your audience to comprehend.

When choosing one of those basic data graphs, consider what you want to show:

- Is it a share of some whole? Then consider a Pie or Doughnut chart.

- Is it a trend over time? Then a Line chart is your obvious choice.

- Or do you compare several items? Then go for a Bar chart.
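The three questions above boil down to a simple lookup. Here is a small sketch of that rule of thumb in Python; the `Purpose` names and the `suggest_chart` function are invented for this illustration and only cover the three basic cases discussed here.

```python
from enum import Enum


class Purpose(Enum):
    """What the slide is meant to show (hypothetical labels)."""
    SHARE_OF_WHOLE = "share of a whole"
    TREND_OVER_TIME = "trend over time"
    COMPARE_ITEMS = "comparison of several items"


# Rule of thumb from the checklist above.
CHART_FOR_PURPOSE = {
    Purpose.SHARE_OF_WHOLE: "pie or doughnut chart",
    Purpose.TREND_OVER_TIME: "line chart",
    Purpose.COMPARE_ITEMS: "bar chart",
}


def suggest_chart(purpose: Purpose) -> str:
    """Return the basic chart type that fits the given purpose."""
    return CHART_FOR_PURPOSE[purpose]


print(suggest_chart(Purpose.TREND_OVER_TIME))  # line chart
```

Treat it as a starting point only. Your data and your audience may still call for a different choice.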

To learn more about data charts I recommend the books Data Story by Nancy Duarte and Say It with Charts by Gene Zelazny.

2. Unclear reading flow of a data slide

When you are designing a slide that presents data, indicate clearly how the reader should look at it: where to start and where to go next.

Is the chart title the first thing a reader should look at? Or is it a trend line? A specific data value or outlier you want to highlight? Don’t let the reader guess what the most important part is.

How to tackle the reading flow mistake?

Use design elements to lead the reader’s eye: color contrast, font size or highlighter shapes such as an arrow or oval. Remember, the natural way to read a slide is from left to right and from top to bottom (at least in Western culture). People will tend to read the slide this way unless you show them another way.

Therefore, take care that your chart objects are aligned for a logical reading flow. This will ensure your slide is easy to read.

3. Too much data presented on a slide

You may be tempted to show all the details of your data analysis: how you got these results, exploring and presenting many data nuances. However, ask yourself: is this information also crucial for my audience? A presentation should focus on the final result of your analysis and explain it properly.