Validity & Reliability In Research

A Plain-Language Explanation (With Examples)

By: Derek Jansen (MBA) | Expert Reviewer: Kerryn Warren (PhD) | September 2023

Validity and reliability are two related but distinctly different concepts within research. Understanding what they are and how to achieve them is critically important to any research project. In this post, we’ll unpack these two concepts as simply as possible.

This post is based on our popular online course, Research Methodology Bootcamp. In the course, we unpack the basics of methodology using straightforward language and loads of examples. If you’re new to academic research, you definitely want to use this link to get 50% off the course (limited-time offer).

Overview: Validity & Reliability

- The big picture

- Validity 101

- Reliability 101

- Key takeaways

First, The Basics…

First, let’s start with a big-picture view and then we can zoom in to the finer details.

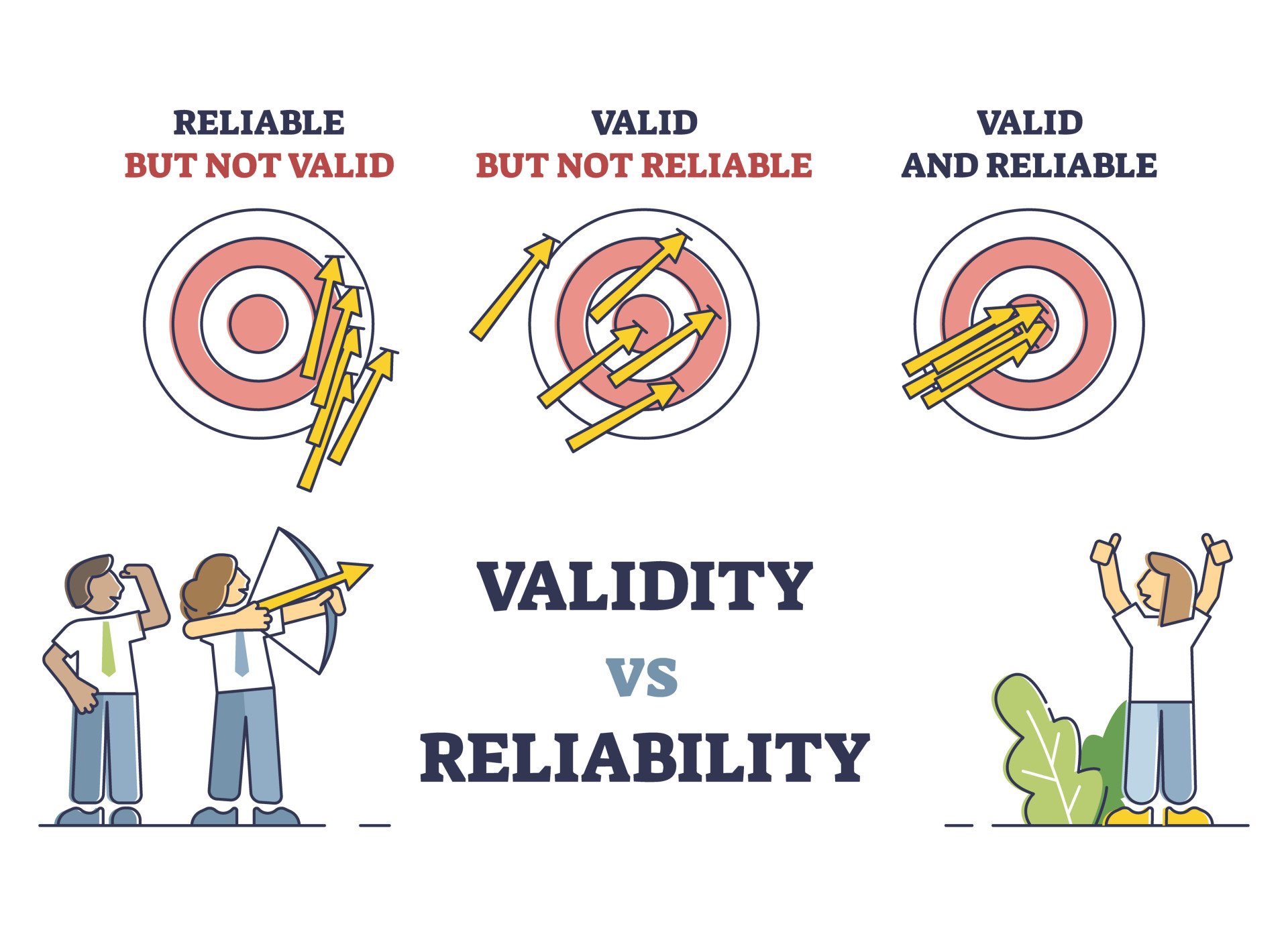

Validity and reliability are two incredibly important concepts in research, especially within the social sciences. Both validity and reliability have to do with the measurement of variables and/or constructs – for example, job satisfaction, intelligence, productivity, etc. When undertaking research, you’ll often want to measure these types of constructs and variables and, at the simplest level, validity and reliability are about ensuring the quality and accuracy of those measurements.

As you can probably imagine, if your measurements aren’t accurate or there are quality issues at play when you’re collecting your data, your entire study will be at risk. Therefore, validity and reliability are very important concepts to understand (and to get right). So, let’s unpack each of them.

What Is Validity?

In simple terms, validity (also called “construct validity”) is all about whether a research instrument accurately measures what it’s supposed to measure.

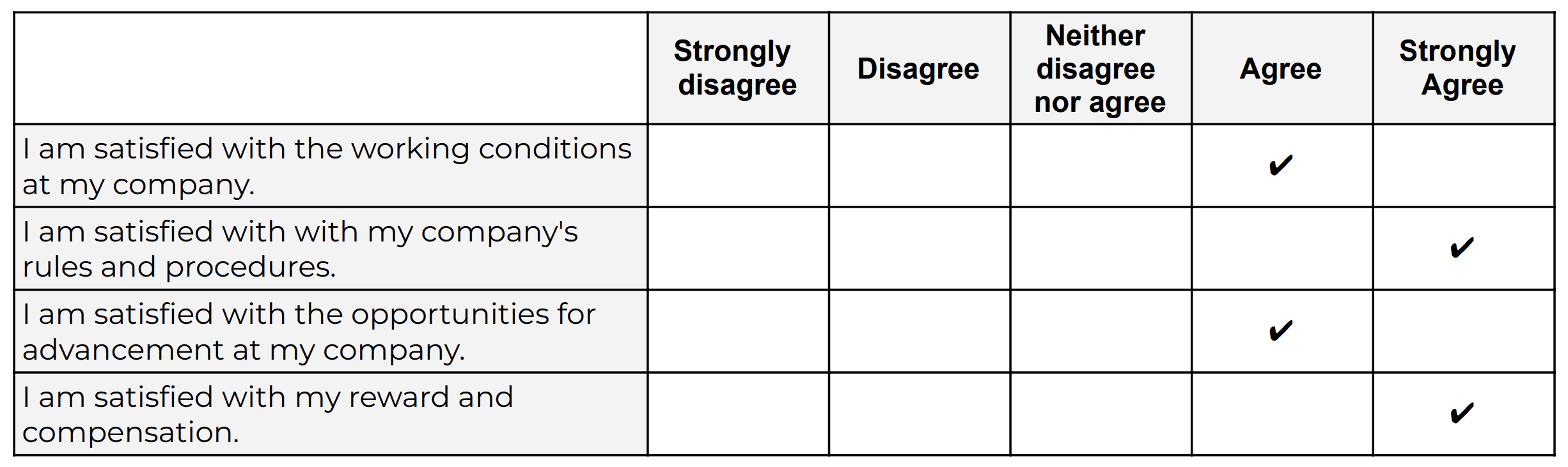

For example, let’s say you have a set of Likert scales that are supposed to quantify someone’s level of overall job satisfaction. If this set of scales focused purely on only one dimension of job satisfaction, say pay satisfaction, this would not be a valid measurement, as it only captures one aspect of the multidimensional construct. In other words, pay satisfaction alone is only one contributing factor toward overall job satisfaction, and therefore it’s not a valid way to measure someone’s job satisfaction.

Oftentimes in quantitative studies, the way in which the researcher or survey designer interprets a question or statement can differ from how the study participants interpret it. Given that respondents don’t have the opportunity to ask clarifying questions when taking a survey, it’s easy for these sorts of misunderstandings to crop up. Naturally, if the respondents are interpreting the question in the wrong way, the data they provide will be pretty useless. Therefore, ensuring that a study’s measurement instruments are valid – in other words, that they are measuring what they intend to measure – is incredibly important.

There are various types of validity and we’re not going to go down that rabbit hole in this post, but it’s worth quickly highlighting the importance of making sure that your research instrument is tightly aligned with the theoretical construct you’re trying to measure. In other words, you need to pay careful attention to how the key theories within your study define the thing you’re trying to measure – and then make sure that your survey presents it in the same way.

For example, sticking with the “job satisfaction” construct we looked at earlier, you’d need to clearly define what you mean by job satisfaction within your study (and this definition would of course need to be underpinned by the relevant theory). You’d then need to make sure that your chosen definition is reflected in the types of questions or scales you’re using in your survey. Simply put, you need to make sure that your survey respondents are perceiving your key constructs in the same way you are. Or, even if they’re not, that your measurement instrument is capturing the necessary information that reflects your definition of the construct at hand.

If all of this talk about constructs sounds a bit fluffy, be sure to check out Research Methodology Bootcamp, which will provide you with a rock-solid foundational understanding of all things methodology-related. Remember, you can take advantage of our 60% discount offer using this link.


What Is Reliability?

As with validity, reliability is an attribute of a measurement instrument – for example, a survey, a weight scale or even a blood pressure monitor. But while validity is concerned with whether the instrument is measuring the “thing” it’s supposed to be measuring, reliability is concerned with consistency and stability. In other words, reliability reflects the degree to which a measurement instrument produces consistent results when applied repeatedly to the same phenomenon, under the same conditions.

As you can probably imagine, a measurement instrument that achieves a high level of consistency is naturally more dependable (or reliable) than one that doesn’t – in other words, it can be trusted to provide consistent measurements. And that, of course, is what you want when undertaking empirical research. If you think about it within a more domestic context, just imagine if you found that your bathroom scale gave you a different number every time you hopped on and off it – you wouldn’t feel too confident in its ability to measure the variable that is your body weight 🙂

It’s worth mentioning that reliability also extends to the person using the measurement instrument. For example, if two researchers use the same instrument (let’s say a measuring tape) and they get different measurements, there’s likely an issue in terms of how one (or both) of them is using the measuring tape. So, when you think about reliability, consider both the instrument and the researcher as part of the equation.

As with validity, there are various types of reliability and various tests that can be used to assess the reliability of an instrument. A popular one that you’ll likely come across for survey instruments is Cronbach’s alpha, which is a statistical measure that quantifies the degree to which items within an instrument (for example, a set of Likert scales) measure the same underlying construct. In other words, Cronbach’s alpha indicates how closely related the items are and whether they consistently capture the same concept.
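To make Cronbach’s alpha a little more concrete, here is a minimal sketch of how it could be computed by hand using the standard formula (number of items, item variances and the variance of respondents’ total scores). The function name `cronbach_alpha` and the response data are hypothetical and purely illustrative; in practice you would typically rely on your statistics package.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of rows (one row of item scores per respondent)."""
    k = len(responses[0])  # number of items in the scale
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item (column) across respondents.
    item_vars = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of each respondent's total score.
    total_var = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical answers from five respondents to three Likert items (1 to 5).
responses = [
    [4, 5, 4],
    [2, 2, 3],
    [5, 4, 5],
    [3, 3, 3],
    [1, 2, 1],
]

alpha = cronbach_alpha(responses)
print(f"Cronbach's alpha: {alpha:.3f}")
```

Values closer to 1 suggest that the items move together. Keep in mind that a high alpha speaks to internal consistency only; it says nothing about whether the items measure the right construct.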

Recap: Key Takeaways

Alright, let’s quickly recap to cement your understanding of validity and reliability:

- Validity is concerned with whether an instrument (e.g., a set of Likert scales) is measuring what it’s supposed to measure

- Reliability is concerned with whether that measurement is consistent and stable when measuring the same phenomenon under the same conditions.

In short, validity and reliability are both essential to ensuring that your data collection efforts deliver high-quality, accurate data that help you answer your research questions. So, be sure to always pay careful attention to the validity and reliability of your measurement instruments when collecting and analysing data. As the adage goes, “rubbish in, rubbish out” – make sure that your data inputs are rock-solid.

Psst… there’s more!

This post is an extract from our bestselling short course, Methodology Bootcamp. If you want to work smart, you don't want to miss this.


Reliability and Validity – Definitions, Types & Examples

Published by Alvin Nicolas at August 16th, 2021, Revised On October 26, 2023

A researcher must test the collected data before drawing any conclusions. Every research design needs to address reliability and validity, because together they determine the quality of the research.

What is Reliability?

Reliability refers to the consistency of the measurement. It shows how trustworthy a test score is. If the collected data show the same results when the measurement is repeated with the same method under the same conditions, the information is reliable. Reliability is necessary for validity, but it does not guarantee it: a reliable method can still give consistently wrong results.

Example: If you weigh yourself on a weighing scale several times throughout the day, you should get roughly the same result each time. These are considered reliable results obtained through repeated measures.

Example: A teacher gives students a maths test and repeats it the following week with the same questions. If the students get similar scores both times, the reliability of the test is high.

What is Validity?

Validity refers to the accuracy of the measurement. It shows how suitable a specific test is for a particular situation. If the results are accurate according to the researcher’s situation, explanation, and prediction, then the research is valid.

If the method of measuring is accurate, then it’ll produce accurate results. A reliable method is not automatically valid, because it can be consistently wrong. In contrast, if a method is not reliable, it cannot be valid.

Example: Your weighing scale shows different results each time you weigh yourself within a day, even when you handle it carefully and weigh yourself both before and after meals. Your weighing scale might be malfunctioning. It means your method has low reliability. Hence you are getting inconsistent results that cannot be valid.

Example: Suppose a questionnaire is distributed among a group of people to check the quality of a skincare product, and the same questionnaire is then repeated with many groups. If you get similar responses from the various participants, the reliability of the questionnaire is high. This consistency alone does not show that the questionnaire is valid; that depends on whether its questions actually capture product quality.

Most of the time, validity is difficult to measure even when the process of measurement is reliable, because it isn’t easy to know the real situation.

Example: If the weighing scale shows the same result, let’s say 70 kg, each time, even though your actual weight is 55 kg, then the weighing scale is malfunctioning. It is showing consistent results, so it is reliable, but the results are wrong, so it cannot be considered valid. It means the method has low validity.
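The weighing-scale example can be made concrete by looking at two separate numbers: the spread of repeated readings (a rough stand-in for consistency) and their average distance from the true value (a rough stand-in for accuracy). The sketch below is illustrative only; the individual readings are hypothetical, and only the 70 kg and 55 kg figures come from the example.

```python
from statistics import mean, stdev

true_weight_kg = 55.0  # actual weight, as in the example above
# Hypothetical repeated readings from the faulty scale, all close to 70 kg.
readings_kg = [70.0, 70.1, 69.9, 70.0, 70.0]

# Spread of the readings: a small value means the scale is consistent.
spread = stdev(readings_kg)

# Average error against the true weight: a large value means the scale is inaccurate.
bias = mean(readings_kg) - true_weight_kg

print(f"spread: {spread:.2f} kg, bias: {bias:.2f} kg")
```

A small spread combined with a large bias is exactly the "consistent but wrong" situation: the readings agree with each other, yet none of them reflects the real weight.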

Internal Vs. External Validity

One of the key features of randomised designs is that they tend to have high internal validity; their external validity depends on how well the sample and setting represent the wider population.

Internal validity is the ability to draw a causal link between your treatment and the dependent variable of interest. It means the observed changes should be due to the experiment conducted, and any external factor should not influence the variables.

Example: external factors of this kind include age, level, height, and grade.

External validity is the ability to identify and generalise your study outcomes to the population at large. The relationship between the study’s situation and the situations outside the study is considered external validity.

Also, read about Inductive vs Deductive reasoning in this article.


Threats to Internal Validity

Threats to External Validity

How to Assess Reliability and Validity

Reliability can be measured by comparing the consistency of the procedure and its results. There are various methods to measure validity and reliability. Reliability can be measured through various statistical methods depending on the type of reliability, as explained below:

Types of Reliability

Types of Validity

As we discussed above, the reliability of a measurement alone cannot determine its validity. Validity is difficult to measure even if the method is reliable. The following types of tests are conducted for measuring validity.


How to Increase Reliability?

- Use an appropriate questionnaire to measure the competency level.

- Ensure a consistent environment for participants

- Make the participants familiar with the criteria of assessment.

- Train the participants appropriately.

- Analyse the research items regularly to avoid poor performance.

How to Increase Validity?

Ensuring validity is also not an easy job. Some practical ways to ensure validity are given below:

- Reactivity should be minimised as a first concern.

- The Hawthorne effect should be reduced.

- The respondents should be motivated.

- The intervals between the pre-test and post-test should not be lengthy.

- Dropout rates should be minimised.

- The inter-rater reliability should be ensured.

- Control and experimental groups should be matched with each other.

How to Implement Reliability and Validity in your Thesis?

According to experts, it is helpful to address reliability and validity explicitly in your work. These concepts are used especially often in theses and dissertations. The method for implementation is given below:

Frequently Asked Questions

What is reliability and validity in research?

Reliability in research refers to the consistency and stability of measurements or findings. Validity relates to the accuracy and truthfulness of results, measuring what the study intends to. Both are crucial for trustworthy and credible research outcomes.

What is validity?

Validity in research refers to the extent to which a study accurately measures what it intends to measure. It ensures that the results are truly representative of the phenomena under investigation. Without validity, research findings may be irrelevant, misleading, or incorrect, limiting their applicability and credibility.

What is reliability?

Reliability in research refers to the consistency and stability of measurements over time. If a study is reliable, repeating the experiment or test under the same conditions should produce similar results. Without reliability, findings become unpredictable and lack dependability, potentially undermining the study’s credibility and generalisability.

What is reliability in psychology?

In psychology, reliability refers to the consistency of a measurement tool or test. A reliable psychological assessment produces stable and consistent results across different times, situations, or raters. It ensures that an instrument’s scores are not due to random error, making the findings dependable and reproducible in similar conditions.

What is test-retest reliability?

Test-retest reliability assesses the consistency of measurements taken by a test over time. It involves administering the same test to the same participants at two different points in time and comparing the results. A high correlation between the scores indicates that the test produces stable and consistent results over time.
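As a rough illustration of the comparison described above, the sketch below correlates scores from two administrations of the same test using Pearson’s correlation coefficient. The helper `pearson_r` and both score lists are hypothetical; other coefficients (for example, an intraclass correlation) are also used for this purpose.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equally long lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of the same length (at least 2 scores)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products and sums of squares of the deviations from the means.
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cross / sqrt(ss_x * ss_y)


# Hypothetical scores for the same six participants at two points in time.
scores_time_1 = [12, 18, 25, 30, 22, 15]
scores_time_2 = [13, 17, 27, 29, 21, 16]

r = pearson_r(scores_time_1, scores_time_2)
print(f"test-retest correlation: {r:.2f}")
```

A correlation close to 1 suggests that participants kept roughly the same relative standing across the two sessions, which is what stable measurement over time would look like.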

How to improve reliability of an experiment?

- Standardise procedures and instructions.

- Use consistent and precise measurement tools.

- Train observers or raters to reduce subjective judgments.

- Increase sample size to reduce random errors.

- Conduct pilot studies to refine methods.

- Repeat measurements or use multiple methods.

- Address potential sources of variability.

What is the difference between reliability and validity?

Reliability refers to the consistency and repeatability of measurements, ensuring results are stable over time. Validity indicates how well an instrument measures what it’s intended to measure, ensuring accuracy and relevance. While a test can be reliable without being valid, a valid test must inherently be reliable. Both are essential for credible research.

Are interviews reliable and valid?

Interviews can be both reliable and valid, but they are susceptible to biases. The reliability and validity depend on the design, structure, and execution of the interview. Structured interviews with standardised questions improve reliability. Validity is enhanced when questions accurately capture the intended construct and when interviewer biases are minimised.

Are IQ tests valid and reliable?

IQ tests are generally considered reliable, producing consistent scores over time. Their validity, however, is a subject of debate. While they effectively measure certain cognitive skills, whether they capture the entirety of “intelligence” or predict success in all life areas is contested. Cultural bias and over-reliance on tests are also concerns.

Are questionnaires reliable and valid?

Questionnaires can be both reliable and valid if well-designed. Reliability is achieved when they produce consistent results over time or across similar populations. Validity is ensured when questions accurately measure the intended construct. However, factors like poorly phrased questions, respondent bias, and lack of standardisation can compromise their reliability and validity.


Reliability vs Validity in Research | Differences, Types & Examples

Published on 3 May 2022 by Fiona Middleton. Revised on 10 October 2022.

Reliability and validity are concepts used to evaluate the quality of research. They indicate how well a method, technique, or test measures something. Reliability is about the consistency of a measure, and validity is about the accuracy of a measure.

It’s important to consider reliability and validity when you are creating your research design, planning your methods, and writing up your results, especially in quantitative research.

Table of contents

- Understanding reliability vs validity

- How are reliability and validity assessed

- How to ensure validity and reliability in your research

- Where to write about reliability and validity in a thesis

Reliability and validity are closely related, but they mean different things. A measurement can be reliable without being valid. However, if a measurement is valid, it is usually also reliable.

What is reliability?

Reliability refers to how consistently a method measures something. If the same result can be consistently achieved by using the same methods under the same circumstances, the measurement is considered reliable.

What is validity?

Validity refers to how accurately a method measures what it is intended to measure. If research has high validity, that means it produces results that correspond to real properties, characteristics, and variations in the physical or social world.

High reliability is one indicator that a measurement is valid. If a method is not reliable, it probably isn’t valid.

However, reliability on its own is not enough to ensure validity. Even if a test is reliable, it may not accurately reflect the real situation.

Validity is harder to assess than reliability, but it is even more important. To obtain useful results, the methods you use to collect your data must be valid: the research must be measuring what it claims to measure. This ensures that your discussion of the data and the conclusions you draw are also valid.


Reliability can be estimated by comparing different versions of the same measurement. Validity is harder to assess, but it can be estimated by comparing the results to other relevant data or theory. Methods of estimating reliability and validity are usually split up into different types.

Types of reliability

Different types of reliability can be estimated through various statistical methods.

Types of validity

The validity of a measurement can be estimated based on three main types of evidence. Each type can be evaluated through expert judgement or statistical methods.

To assess the validity of a cause-and-effect relationship, you also need to consider internal validity (the design of the experiment) and external validity (the generalisability of the results).

The reliability and validity of your results depend on creating a strong research design, choosing appropriate methods and samples, and conducting the research carefully and consistently.

Ensuring validity

If you use scores or ratings to measure variations in something (such as psychological traits, levels of ability, or physical properties), it’s important that your results reflect the real variations as accurately as possible. Validity should be considered in the very earliest stages of your research, when you decide how you will collect your data.

- Choose appropriate methods of measurement

Ensure that your method and measurement technique are of high quality and targeted to measure exactly what you want to know. They should be thoroughly researched and based on existing knowledge.

For example, to collect data on a personality trait, you could use a standardised questionnaire that is considered reliable and valid. If you develop your own questionnaire, it should be based on established theory or the findings of previous studies, and the questions should be carefully and precisely worded.

- Use appropriate sampling methods to select your subjects

To produce valid generalisable results, clearly define the population you are researching (e.g., people from a specific age range, geographical location, or profession). Ensure that you have enough participants and that they are representative of the population.

Ensuring reliability

Reliability should be considered throughout the data collection process. When you use a tool or technique to collect data, it’s important that the results are precise, stable, and reproducible.

- Apply your methods consistently

Plan your method carefully to make sure you carry out the same steps in the same way for each measurement. This is especially important if multiple researchers are involved.

For example, if you are conducting interviews or observations, clearly define how specific behaviours or responses will be counted, and make sure questions are phrased the same way each time.

- Standardise the conditions of your research

When you collect your data, keep the circumstances as consistent as possible to reduce the influence of external factors that might create variation in the results.

For example, in an experimental setup, make sure all participants are given the same information and tested under the same conditions.

It’s appropriate to discuss reliability and validity in various sections of your thesis or dissertation or research paper. Showing that you have taken them into account in planning your research and interpreting the results makes your work more credible and trustworthy.


Middleton, F. (2022, October 10). Reliability vs Validity in Research | Differences, Types & Examples. Scribbr. Retrieved 31 May 2024, from https://www.scribbr.co.uk/research-methods/reliability-or-validity/


Reliability vs. Validity in Research: Types & Examples

When it comes to research, getting things right is crucial. That’s where the concepts of “Reliability vs Validity in Research” come in.

Imagine it like a balancing act – making sure your measurements are consistent and accurate at the same time. This is where test-retest reliability, having different researchers check things, and keeping things consistent within your research plays a big role.

As we dive into this topic, we’ll uncover the differences between reliability and validity, see how they work together, and learn how to use them effectively.

Understanding Reliability vs. Validity in Research

When it comes to collecting data and conducting research, two crucial concepts stand out: reliability and validity.

These pillars uphold the integrity of research findings, ensuring that the data collected and the conclusions drawn are both meaningful and trustworthy. Let’s dive into the heart of these two concepts, reliability and validity, to truly comprehend their significance in research.

What is reliability?

Reliability refers to the consistency and dependability of the data collection process. It’s like having a steady hand that produces the same result each time it reaches for a task.

In the research context, reliability is all about ensuring that if you were to repeat the same study using the same reliable measurement technique, you’d end up with the same results. It’s like having multiple researchers independently conduct the same experiment and getting outcomes that align perfectly.

Imagine you’re using a thermometer to measure the temperature of the water. You have a reliable measurement if you dip the thermometer into the water multiple times and get the same reading each time. This tells you that your method and measurement technique consistently produce the same results, whether it’s you or another researcher performing the measurement.

What is validity?

On the other hand, validity refers to the accuracy and meaningfulness of your data. It’s like ensuring that the puzzle pieces you’re putting together actually form the intended picture. When you have validity, you know that your method and measurement technique are consistent and capable of producing results aligned with reality.

Think of it this way: imagine you’re conducting a test that claims to measure a specific trait, like problem-solving ability. If the test consistently produces results that accurately reflect participants’ problem-solving skills, then the test has high validity. In this case, the test produces accurate results that truly correspond to the trait it aims to measure.

In essence, while reliability assures you that your data collection process is like a well-oiled machine producing the same results, validity steps in to ensure that these results are not only consistent but also accurate and relevant.

Together, these concepts provide researchers with the tools to conduct research that stands on a solid foundation of dependable methods and meaningful insights.

Types of Reliability

Let’s explore the various types of reliability that researchers consider to ensure their work stands on solid ground.

Test-retest reliability

Test-retest reliability involves assessing the consistency of measurements over time. It’s like taking the same measurement or test twice – once and then again after a certain period. If the results align closely, it indicates that the measurement is reliable over time. Think of it as capturing the essence of stability.

Inter-rater reliability

When multiple researchers or observers are part of the equation, inter-rater reliability comes into play. This type of reliability assesses the level of agreement between different observers when evaluating the same phenomenon. It’s like ensuring that different pairs of eyes perceive things in a similar way.
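One common way to put a number on agreement between two observers is Cohen’s kappa, which compares the agreement actually observed with the agreement expected by chance. The sketch below is a minimal illustration, not a prescribed method; the function name `cohens_kappa` and the ratings are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who labelled the same items."""
    n = len(rater_a)
    if n == 0 or n != len(rater_b):
        raise ValueError("both raters must label the same non-empty set of items")
    # Proportion of items on which the two raters gave the same label.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, based on how often each rater used each label.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1:
        return 1.0  # both raters used a single, identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical ratings: did each of eight observed behaviours occur?
rater_a = ["yes", "yes", "no", "no", "yes", "no", "yes", "no"]
rater_b = ["yes", "no", "no", "no", "yes", "no", "yes", "yes"]

kappa = cohens_kappa(rater_a, rater_b)
print(f"Cohen's kappa: {kappa:.2f}")
```

Here the raters agree on six of the eight items, but because half of that agreement would be expected by chance, kappa comes out well below the raw agreement rate. This is why chance-corrected measures are often preferred over a simple percentage.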

Internal consistency

Internal consistency dives into the harmony among different items within a measurement tool aiming to assess the same concept. This often comes into play in surveys or questionnaires, where participants respond to various items related to a single construct. If the responses to these items consistently reflect the same underlying concept, the measurement is said to have high internal consistency.

Types of validity

Let’s explore the various types of validity that researchers consider to ensure their work stands on solid ground.

Content validity

It delves into whether a measurement truly captures all dimensions of the concept it intends to measure. It’s about making sure your measurement tool covers all relevant aspects comprehensively.

Imagine designing a test to assess students’ understanding of a history chapter. It exhibits high content validity if the test includes questions about key events, dates, and causes. However, if it focuses solely on dates and omits causation, its content validity might be questionable.

Construct validity

It assesses how well a measurement aligns with established theories and concepts. It’s like ensuring that your measurement is a true representation of the abstract construct you’re trying to capture.

Criterion validity

Criterion validity examines how well your measurement corresponds to other established measurements of the same concept. It’s about making sure your measurement accurately predicts or correlates with external criteria.

Differences between reliability and validity in research

Let’s delve into the differences between reliability and validity in research.

While both reliability and validity contribute to trustworthy research, they address distinct aspects. Reliability ensures consistent results, while validity ensures accurate and relevant results that reflect the true nature of the measured concept.

Example of Reliability and Validity in Research

In this section, we’ll explore instances that highlight the differences between reliability and validity and how they play a crucial role in ensuring the credibility of research findings.

Example of reliability

Imagine you are studying the reliability of a smartphone’s battery life measurement. To collect data, you fully charge the phone and measure the battery life three times in the same controlled environment—same apps running, same brightness level, and same usage patterns.

If the measurements consistently show a similar battery life duration each time you repeat the test, it indicates that your measurement method is reliable. The consistent results under the same conditions assure you that the battery life measurement can be trusted to provide dependable information about the phone’s performance.

Example of validity

Researchers collect data from a group of participants in a study aiming to assess the validity of a newly developed stress questionnaire. To ensure validity, they compare the scores obtained from the stress questionnaire with the participants’ actual stress levels measured using physiological indicators such as heart rate variability and cortisol levels.

If participants’ scores correlate strongly with their physiological stress levels, this is evidence that the questionnaire is valid. In other words, the questionnaire appears to measure participants’ stress levels accurately, and its results correspond to real variations in their physiological responses to stress.

Validity assessed through the correlation between questionnaire scores and physiological measures helps confirm that the questionnaire is effectively measuring what it claims to measure: participants’ stress levels.

In the world of research, differentiating between reliability and validity is crucial. Reliability ensures consistent results, while validity confirms accurate measurements. Using tools like QuestionPro enhances data collection for both reliability and validity. For instance, measuring self-esteem over time showcases reliability, and aligning questions with theories demonstrates validity.

Reliability vs Validity in Research: Types & Examples

In everyday life, we often use “reliable” and “valid” as if they meant the same thing. However, in research and testing, reliability and validity are not the same thing.

When it comes to data analysis, reliability refers to how easily replicable an outcome is. For example, if you measure a cup of rice three times, and you get the same result each time, that result is reliable.

Validity, on the other hand, refers to the measurement’s accuracy. This means that if the standard weight for a cup of rice is, say, 200 grams, and you weigh a cup of rice, your scale should read 200 grams.

So, while reliability and validity are intertwined, they are not synonymous. If one of the measurement parameters, such as your scale, is distorted, the results will be consistent but invalid.

Data must be consistent and accurate to be used to draw useful conclusions. In this article, we’ll look at how to assess data reliability and validity, as well as how to apply it.

What is Reliability?

When a measurement is consistent, it’s reliable. Of course, reliability doesn’t mean your outcome will be exactly the same every time; it just means it will fall within the same range.

For example, if you scored 95% on a test the first time and 96% the next, your results are reliable. So, even if there is a minor difference in the outcomes, as long as it is within the error margin, your results are reliable.

Reliability allows you to assess the degree of consistency in your results. So, if you’re getting similar results, reliability provides an answer to the question of how similar your results are.

What is Validity?

A measurement or test is valid when it correlates with the expected result. It examines the accuracy of your result.

Here’s where things get tricky: to establish the validity of a test, the results must be consistent. Looking at most experiments (especially physical measurements), the standard value that establishes the accuracy of a measurement is the outcome of repeating the test to obtain a consistent result.

For example, before I can conclude that all 12-inch rulers are one foot, I must repeat the experiment several times and obtain very similar results, indicating that 12-inch rulers are indeed one foot.

Most scientific experiments are inextricably linked in terms of validity and reliability. For example, if you’re measuring distance or depth, valid answers are likely to be reliable.

But for social experiences, one isn’t an indication of the other. For example, many people believe that people who wear glasses are smart.

Of course, I’ll find examples of people who wear glasses and have high IQs (reliability), but the truth is that most people who wear glasses simply need their vision to be better (validity).

So reliable answers aren’t always correct but valid answers are always reliable.

How Are Reliability and Validity Assessed?

When assessing reliability, we want to know if the measurement can be replicated. Of course, we’d have to change some variables to ensure that this test holds, the most important of which are time, items, and observers.

If the main factor you change when performing a reliability test is time, you’re performing a test-retest reliability assessment.

However, if you are changing items, you are performing an internal consistency assessment. It means you’re measuring multiple items with a single instrument.

Finally, if you’re measuring the same item with the same instrument but using different observers or judges, you’re performing an inter-rater reliability test.

Assessing Validity

Evaluating validity can be more tedious than reliability. With reliability, you’re attempting to demonstrate that your results are consistent, whereas, with validity, you want to prove the correctness of your outcome.

Although validity is mainly categorized under two sections (internal and external), there are more than fifteen ways to check the validity of a test. In this article, we’ll be covering four.

First, content validity measures whether the test covers all the content it needs to provide the outcome you’re expecting.

Suppose I wanted to test the hypothesis that 90% of Generation Z uses social media polls for surveys while 90% of millennials use forms. I’d need a sample size that accounts for how Gen Z and millennials gather information.

Next, criterion validity is when you compare your results to what you’re supposed to get based on a chosen criterion. There are two ways this can be measured: predictive validity and concurrent validity.

Following that, we have face validity. It’s about whether a test looks the way we’d expect it to. For instance, when answering a customer service survey, I’d expect to be asked about how I feel about the service provided.

Lastly, there is construct-related validity. This is a little more complicated, but it helps to show how the validity of research is based on different findings.

As a result, it provides information that either proves or disproves that certain things are related.

Types of Reliability

We have three main types of reliability assessment and here’s how they work:

1) Test-retest Reliability

This assessment refers to the consistency of outcomes over time. Testing reliability over time does not imply changing the amount of time it takes to conduct an experiment; rather, it means repeating the experiment multiple times in a short time.

For example, if I measure the length of my hair today, and tomorrow, I’ll most likely get the same result each time.

A short period is relative in terms of reliability; two days for measuring hair length is considered short. But that’s far too long to test how quickly water dries on the sand.

A test-retest correlation is used to compare the consistency of your results. This is typically a scatter plot that shows how similar your values are between the two experiments.

If your answers are reliable, your scatter plots will most likely have a lot of overlapping points, but if they aren’t, the points (values) will be spread across the graph.

2) Internal Consistency

It’s also known as internal reliability. It refers to the consistency of results for various items when measured on the same scale.

This is particularly important in social science research, such as surveys, because it helps determine the consistency of people’s responses when asked the same questions.

Most introverts, for example, would say they enjoy spending time alone and having few friends. However, if some introverts claim that they either do not want time alone or prefer to be surrounded by many friends, it doesn’t add up.

Either these people aren’t really introverts, or this factor isn’t a reliable way of measuring introversion.

Internal reliability helps you prove the consistency of a test by varying factors. It’s a little tough to measure quantitatively, but you could use the split-half correlation.

The split-half correlation simply means dividing the factors used to measure the underlying construct into two and plotting them against each other in the form of a scatter plot.

Introverts, for example, are assessed on their need for alone time as well as their desire to have as few friends as possible. If this plot is dispersed, it is likely that one of the traits does not indicate introversion.

3) Inter-Rater Reliability

This method of measuring reliability helps prevent personal bias. Inter-rater reliability assessment helps judge outcomes from the different perspectives of multiple observers.

A good example is if you ordered a meal and found it delicious. You could be biased in your judgment for several reasons: your perception of the meal, your mood, and so on.

But it’s highly unlikely that six more people would agree that the meal is delicious if it isn’t. Another factor that could lead to bias is expertise. Professional dancers, for example, would perceive dance moves differently than non-professionals.

So, if a person dances and records it, and both groups (professional and non-professional dancers) rate the video, there is a high likelihood of a significant difference in their ratings.

But if they both agree that the person is a great dancer, despite their opposing viewpoints, the person is likely a great dancer.

Types of Validity

Researchers use validity to determine whether a measurement is accurate or not. The accuracy of measurement is usually determined by comparing it to the standard value.

When a measurement is consistent over time and has high internal consistency, it increases the likelihood that it is valid.

1) Content Validity

This refers to determining validity by evaluating what is being measured. So content validity tests if your research is measuring everything it should to produce an accurate result.

For example, if I were to measure what causes hair loss in women, I’d have to consider things like postpartum hair loss, alopecia, hair manipulation, dryness, and so on.

By omitting any of these critical factors, you risk significantly reducing the validity of your research because you won’t be covering everything necessary to make an accurate deduction.

For example, a certain woman is losing her hair due to postpartum hair loss, excessive manipulation, and dryness, but in my research, I only look at postpartum hair loss. My research will show that she has postpartum hair loss, which is only part of the picture.

Yes, my conclusion is correct as far as it goes, but it does not fully account for the reasons why this woman is losing her hair.

2) Criterion Validity

This measures how well your measurement correlates with the variables you want to compare it with to get your result. The two main classes of criterion validity are predictive and concurrent.

3) Predictive validity

It helps predict future outcomes based on the data you have. For example, if a large number of students performed exceptionally well in a test, you can use this to predict that they understood the concept on which the test was based and will perform well in their exams.

4) Concurrent validity

Concurrent validity, on the other hand, involves testing with different variables at the same time. For example, setting up a literature test for your students on two different books and assessing them at the same time.

You’re measuring your students’ literature proficiency with these two books. If your students truly understood the subject, they should be able to correctly answer questions about both books.

5) Face Validity

Quantifying face validity might be a bit difficult because you are measuring perceived validity, not validity itself. So, face validity is concerned with whether the method used for measurement appears likely to produce accurate results, rather than with the measurement itself.

If the method used for measurement doesn’t appear to test the accuracy of a measurement, its face validity is low.

Here’s an example: suppose you want to test the claim that fewer than 40% of men over the age of 20 in Texas, USA, are at least 6 feet tall. The most logical approach would be to collect height data from men over the age of twenty in Texas, USA.

However, asking men over the age of 20 what their favorite meal is to determine their height is pretty bizarre. The method I am using to assess the validity of my research is quite questionable because it lacks correlation to what I want to measure.

6) Construct-Related Validity

Construct-related validity assesses the accuracy of your research by collecting multiple pieces of evidence. It helps determine the validity of your results by comparing them to evidence that supports or refutes your measurement.

7) Convergent validity

If you’re assessing evidence that strongly correlates with the concept, that’s convergent validity.

8) Discriminant validity

Discriminant validity examines the validity of your research by determining what not to base it on. You are removing elements that are not a strong factor to help validate your research. Being a vegan, for example, does not imply that you are allergic to meat.

How to Ensure Validity and Reliability in Your Research

You need a bulletproof research design to ensure that your research is both valid and reliable. This means that your methods, sample, and even you, the researcher, shouldn’t be biased.

- Ensuring Reliability

To enhance the reliability of your research, you need to apply your measurement method consistently. The chances of reproducing the same results for a test are higher when you maintain the method you’re using to experiment.

For example, you want to determine the reliability of the weight of a bag of chips using a scale. You have to consistently use this scale to measure the bag of chips each time you experiment.

You must also keep the conditions of your research consistent. For instance, if you’re experimenting to see how quickly water dries on sand, you need to consider all of the weather elements that day.

So, if you experimented on a sunny day, the next experiment should also be conducted on a sunny day to obtain a reliable result.

- Ensuring Validity

There are several ways to determine the validity of your research, and the majority of them require the use of highly specific and high-quality measurement methods.

Before you begin your test, choose the best method for producing the desired results. This method should be pre-existing and proven.

Also, your sample should be very specific. If you’re collecting data on how dogs respond to fear, your results are more likely to be valid if you base them on a specific breed of dog rather than dogs in general.

Validity and reliability are critical for achieving accurate and consistent results in research. While reliability does not always imply validity, validity establishes that a result is reliable. Validity is heavily dependent on previous results (standards), whereas reliability is dependent on the similarity of your results.

Chapter 5: Psychological Measurement

Reliability and Validity of Measurement

Learning Objectives

- Define reliability, including the different types and how they are assessed.

- Define validity, including the different types and how they are assessed.

- Describe the kinds of evidence that would be relevant to assessing the reliability and validity of a particular measure.

Again, measurement involves assigning scores to individuals so that they represent some characteristic of the individuals. But how do researchers know that the scores actually represent the characteristic, especially when it is a construct like intelligence, self-esteem, depression, or working memory capacity? The answer is that they conduct research using the measure to confirm that the scores make sense based on their understanding of the construct being measured. This is an extremely important point. Psychologists do not simply assume that their measures work. Instead, they collect data to demonstrate that they work. If their research does not demonstrate that a measure works, they stop using it.

As an informal example, imagine that you have been dieting for a month. Your clothes seem to be fitting more loosely, and several friends have asked if you have lost weight. If at this point your bathroom scale indicated that you had lost 10 pounds, this would make sense and you would continue to use the scale. But if it indicated that you had gained 10 pounds, you would rightly conclude that it was broken and either fix it or get rid of it. In evaluating a measurement method, psychologists consider two general dimensions: reliability and validity.

Reliability

Reliability refers to the consistency of a measure. Psychologists consider three types of consistency: over time (test-retest reliability), across items (internal consistency), and across different researchers (inter-rater reliability).

Test-Retest Reliability

When researchers measure a construct that they assume to be consistent across time, then the scores they obtain should also be consistent across time. Test-retest reliability is the extent to which this is actually the case. For example, intelligence is generally thought to be consistent across time. A person who is highly intelligent today will be highly intelligent next week. This means that any good measure of intelligence should produce roughly the same scores for this individual next week as it does today. Clearly, a measure that produces highly inconsistent scores over time cannot be a very good measure of a construct that is supposed to be consistent.

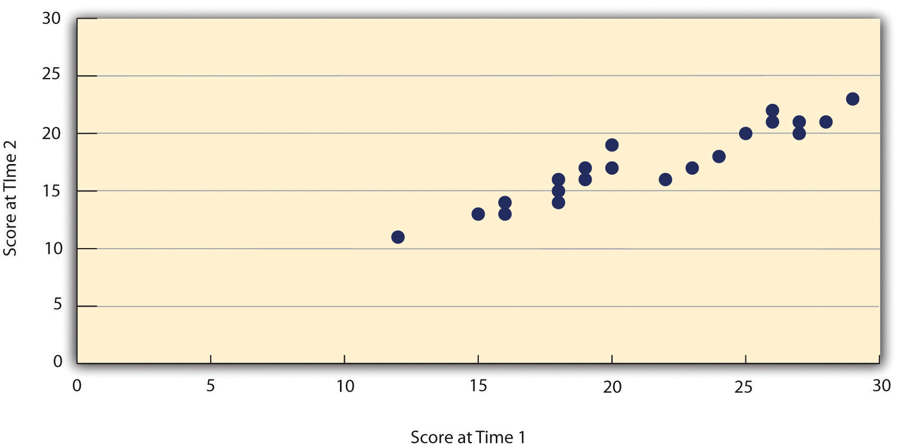

Assessing test-retest reliability requires using the measure on a group of people at one time, using it again on the same group of people at a later time, and then looking at the test-retest correlation between the two sets of scores. This is typically done by graphing the data in a scatterplot and computing Pearson’s r. Figure 5.2 shows the correlation between two sets of scores of several university students on the Rosenberg Self-Esteem Scale, administered two times, a week apart. Pearson’s r for these data is +.95. In general, a test-retest correlation of +.80 or greater is considered to indicate good reliability.

Again, high test-retest correlations make sense when the construct being measured is assumed to be consistent over time, which is the case for intelligence, self-esteem, and the Big Five personality dimensions. But other constructs are not assumed to be stable over time. The very nature of mood, for example, is that it changes. So a measure of mood that produced a low test-retest correlation over a period of a month would not be a cause for concern.
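As a rough illustration of the test-retest procedure described above, the following sketch computes Pearson’s r for two administrations of the same measure. The scores are invented for the example, and `pearson_r` is a hypothetical helper name. The +.80 cutoff is the rule of thumb mentioned in the text.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-products and sums of squared deviations from the means.
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined when one list is constant")
    return cross / sqrt(ss_x * ss_y)


# Hypothetical scores for six people, tested twice a week apart.
time_1 = [22, 25, 18, 30, 27, 20]
time_2 = [23, 24, 19, 29, 28, 21]

r = pearson_r(time_1, time_2)
print(f"test-retest r = {r:.2f}; meets +.80 rule of thumb: {r >= 0.80}")
```

With real data, it is still worth looking at the scatterplot, since a single outlier can inflate or deflate r.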

Internal Consistency

A second kind of reliability is internal consistency, which is the consistency of people’s responses across the items on a multiple-item measure. In general, all the items on such measures are supposed to reflect the same underlying construct, so people’s scores on those items should be correlated with each other. On the Rosenberg Self-Esteem Scale, people who agree that they are a person of worth should tend to agree that they have a number of good qualities. If people’s responses to the different items are not correlated with each other, then it would no longer make sense to claim that they are all measuring the same underlying construct. This is as true for behavioural and physiological measures as for self-report measures. For example, people might make a series of bets in a simulated game of roulette as a measure of their level of risk seeking. This measure would be internally consistent to the extent that individual participants’ bets were consistently high or low across trials.

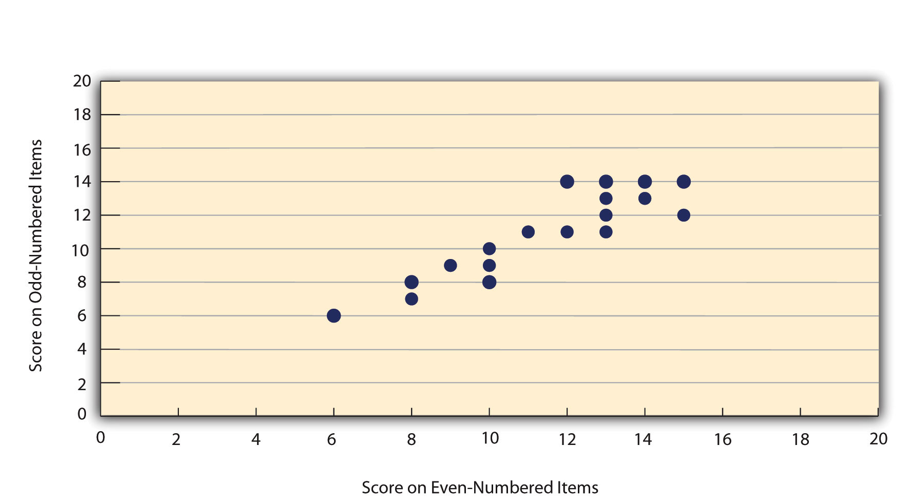

Like test-retest reliability, internal consistency can only be assessed by collecting and analyzing data. One approach is to look at a split-half correlation. This involves splitting the items into two sets, such as the first and second halves of the items or the even- and odd-numbered items. Then a score is computed for each set of items, and the relationship between the two sets of scores is examined. For example, Figure 5.3 shows the split-half correlation between several university students’ scores on the even-numbered items and their scores on the odd-numbered items of the Rosenberg Self-Esteem Scale. Pearson’s r for these data is +.88. A split-half correlation of +.80 or greater is generally considered good internal consistency.

Perhaps the most common measure of internal consistency used by researchers in psychology is a statistic called Cronbach’s α (the Greek letter alpha). Conceptually, α is the mean of all possible split-half correlations for a set of items. For example, there are 252 ways to split a set of 10 items into two sets of five. Cronbach’s α would be the mean of the 252 split-half correlations. Note that this is not how α is actually computed, but it is a correct way of interpreting the meaning of this statistic. Again, a value of +.80 or greater is generally taken to indicate good internal consistency.
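For readers curious about how α is usually computed in practice, here is a minimal sketch using the standard variance-based formula, α = k/(k − 1) × (1 − sum of item variances / variance of total scores), where k is the number of items. The data and the function name `cronbach_alpha` are made up for illustration.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a respondents-by-items matrix of scores."""
    k = len(rows[0])  # number of items
    if k < 2 or len(rows) < 2:
        raise ValueError("need at least 2 items and 2 respondents")
    items = list(zip(*rows))  # transpose: one tuple of scores per item
    sum_item_variances = sum(variance(item) for item in items)
    total_variance = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum_item_variances / total_variance)


# Hypothetical data: five respondents answering a four-item scale.
scores = [
    [3, 3, 4, 3],
    [1, 2, 1, 2],
    [4, 4, 4, 3],
    [2, 2, 3, 2],
    [3, 4, 3, 4],
]

alpha = cronbach_alpha(scores)
print(f"Cronbach's alpha = {alpha:.2f}")
```

Statistical packages report the same quantity, so in real projects it is usually safer to rely on an established implementation than on a hand-rolled one.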

Interrater Reliability

Many behavioural measures involve significant judgment on the part of an observer or a rater. Inter-rater reliability is the extent to which different observers are consistent in their judgments. For example, if you were interested in measuring university students’ social skills, you could make video recordings of them as they interacted with another student whom they are meeting for the first time. Then you could have two or more observers watch the videos and rate each student’s level of social skills. To the extent that each participant does in fact have some level of social skills that can be detected by an attentive observer, different observers’ ratings should be highly correlated with each other. Inter-rater reliability would also have been measured in Bandura’s Bobo doll study. In this case, the observers’ ratings of how many acts of aggression a particular child committed while playing with the Bobo doll should have been highly positively correlated. Interrater reliability is often assessed using Cronbach’s α when the judgments are quantitative or an analogous statistic called Cohen’s κ (the Greek letter kappa) when they are categorical.
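For categorical judgments, Cohen’s κ compares the observed agreement between two raters with the agreement expected by chance: κ = (p_o − p_e) / (1 − p_e). The sketch below assumes exactly two raters and uses invented labels for ten video clips. The function name `cohen_kappa` is hypothetical.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same cases."""
    n = len(rater_a)
    if n == 0 or n != len(rater_b):
        raise ValueError("both raters must label the same non-empty set of cases")
    # Proportion of cases on which the raters gave the same label.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement, from each rater's own label frequencies.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / n**2
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (observed - expected) / (1 - expected)


# Hypothetical ratings of ten clips: "agg" = aggressive act, "none" = no act.
rater_1 = ["agg", "agg", "none", "none", "agg", "none", "agg", "none", "none", "agg"]
rater_2 = ["agg", "agg", "none", "none", "agg", "none", "none", "none", "none", "agg"]

kappa = cohen_kappa(rater_1, rater_2)
print(f"Cohen's kappa = {kappa:.2f}")
```

Here the raters agree on 9 of 10 clips, but because half of that agreement would be expected by chance, κ is lower than the raw 90% agreement.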

Validity

Validity is the extent to which the scores from a measure represent the variable they are intended to. But how do researchers make this judgment? We have already considered one factor that they take into account: reliability. When a measure has good test-retest reliability and internal consistency, researchers should be more confident that the scores represent what they are supposed to. There has to be more to it, however, because a measure can be extremely reliable but have no validity whatsoever. As an absurd example, imagine someone who believes that people’s index finger length reflects their self-esteem and therefore tries to measure self-esteem by holding a ruler up to people’s index fingers. Although this measure would have extremely good test-retest reliability, it would have absolutely no validity. The fact that one person’s index finger is a centimetre longer than another’s would indicate nothing about which one had higher self-esteem.

Discussions of validity usually divide it into several distinct “types.” But a good way to interpret these types is that they are other kinds of evidence—in addition to reliability—that should be taken into account when judging the validity of a measure. Here we consider three basic kinds: face validity, content validity, and criterion validity.

Face Validity

Face validity is the extent to which a measurement method appears “on its face” to measure the construct of interest. Most people would expect a self-esteem questionnaire to include items about whether they see themselves as a person of worth and whether they think they have good qualities. So a questionnaire that included these kinds of items would have good face validity. The finger-length method of measuring self-esteem, on the other hand, seems to have nothing to do with self-esteem and therefore has poor face validity. Although face validity can be assessed quantitatively—for example, by having a large sample of people rate a measure in terms of whether it appears to measure what it is intended to—it is usually assessed informally.

Face validity is at best a very weak kind of evidence that a measurement method is measuring what it is supposed to. One reason is that it is based on people’s intuitions about human behaviour, which are frequently wrong. It is also the case that many established measures in psychology work quite well despite lacking face validity. The Minnesota Multiphasic Personality Inventory-2 (MMPI-2) measures many personality characteristics and disorders by having people decide whether each of 567 different statements applies to them, where many of the statements do not have any obvious relationship to the construct that they measure. For example, the items “I enjoy detective or mystery stories” and “The sight of blood doesn’t frighten me or make me sick” both measure the suppression of aggression. In this case, it is not the participants’ literal answers to these questions that are of interest, but rather whether the pattern of the participants’ responses to a series of questions matches those of individuals who tend to suppress their aggression.

Content Validity

Content validity is the extent to which a measure “covers” the construct of interest. For example, if a researcher conceptually defines test anxiety as involving both sympathetic nervous system activation (leading to nervous feelings) and negative thoughts, then his measure of test anxiety should include items about both nervous feelings and negative thoughts. Or consider that attitudes are usually defined as involving thoughts, feelings, and actions toward something. By this conceptual definition, a person has a positive attitude toward exercise to the extent that he or she thinks positive thoughts about exercising, feels good about exercising, and actually exercises. So to have good content validity, a measure of people’s attitudes toward exercise would have to reflect all three of these aspects. Like face validity, content validity is not usually assessed quantitatively. Instead, it is assessed by carefully checking the measurement method against the conceptual definition of the construct.

Criterion Validity

Criterion validity is the extent to which people’s scores on a measure are correlated with other variables (known as criteria) that one would expect them to be correlated with. For example, people’s scores on a new measure of test anxiety should be negatively correlated with their performance on an important school exam. If it were found that people’s scores were in fact negatively correlated with their exam performance, then this would be a piece of evidence that these scores really represent people’s test anxiety. But if it were found that people scored equally well on the exam regardless of their test anxiety scores, then this would cast doubt on the validity of the measure.

A criterion can be any variable that one has reason to think should be correlated with the construct being measured, and there will usually be many of them. For example, one would expect test anxiety scores to be negatively correlated with exam performance and course grades and positively correlated with general anxiety and with blood pressure during an exam. Or imagine that a researcher develops a new measure of physical risk taking. People’s scores on this measure should be correlated with their participation in “extreme” activities such as snowboarding and rock climbing, the number of speeding tickets they have received, and even the number of broken bones they have had over the years. When the criterion is measured at the same time as the construct, criterion validity is referred to as concurrent validity; however, when the criterion is measured at some point in the future (after the construct has been measured), it is referred to as predictive validity (because scores on the measure have “predicted” a future outcome).

Criteria can also include other measures of the same construct. For example, one would expect new measures of test anxiety or physical risk taking to be positively correlated with existing measures of the same constructs. This is known as convergent validity.

Assessing convergent validity requires collecting data using the measure. Researchers John Cacioppo and Richard Petty did this when they created their self-report Need for Cognition Scale to measure how much people value and engage in thinking (Cacioppo & Petty, 1982) [1]. In a series of studies, they showed that people’s scores were positively correlated with their scores on a standardized academic achievement test, and that their scores were negatively correlated with their scores on a measure of dogmatism (which represents a tendency toward obedience). In the years since it was created, the Need for Cognition Scale has been used in literally hundreds of studies and has been shown to be correlated with a wide variety of other variables, including the effectiveness of an advertisement, interest in politics, and juror decisions (Petty, Briñol, Loersch, & McCaslin, 2009) [2].

Discriminant Validity

Discriminant validity, on the other hand, is the extent to which scores on a measure are not correlated with measures of variables that are conceptually distinct. For example, self-esteem is a general attitude toward the self that is fairly stable over time. It is not the same as mood, which is how good or bad one happens to be feeling right now. So people’s scores on a new measure of self-esteem should not be very highly correlated with their moods. If the new measure of self-esteem were highly correlated with a measure of mood, it could be argued that the new measure is not really measuring self-esteem; it is measuring mood instead.

When they created the Need for Cognition Scale, Cacioppo and Petty also provided evidence of discriminant validity by showing that people’s scores were not correlated with certain other variables. For example, they found only a weak correlation between people’s need for cognition and a measure of their cognitive style—the extent to which they tend to think analytically by breaking ideas into smaller parts or holistically in terms of “the big picture.” They also found no correlation between people’s need for cognition and measures of their test anxiety and their tendency to respond in socially desirable ways. All these low correlations provide evidence that the measure is reflecting a conceptually distinct construct.
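The logic of convergent and discriminant validity described above can be sketched as a pair of correlations. The following is a minimal illustration only: the scores are hypothetical numbers invented for the example (not data from Cacioppo and Petty or any real study), and the variable names are assumptions. A new self-esteem measure is correlated with an existing self-esteem measure (where a high correlation is expected) and with current mood (where a low correlation is expected).

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


# Hypothetical scores for eight participants (illustrative only, not real data).
new_self_esteem = [12, 18, 25, 30, 22, 15, 28, 20]  # the new measure
old_self_esteem = [14, 17, 27, 29, 21, 16, 26, 22]  # existing measure, same construct
current_mood = [5, 3, 4, 6, 2, 6, 3, 5]             # conceptually distinct variable

# Convergent validity: expect a strong positive correlation.
convergent_r = pearson_r(new_self_esteem, old_self_esteem)

# Discriminant validity: expect a correlation near zero.
discriminant_r = pearson_r(new_self_esteem, current_mood)

print(f"convergent r   = {convergent_r:.2f}")
print(f"discriminant r = {discriminant_r:.2f}")
```

With real data, what counts as "high" or "low" is a judgment made in the context of the construct and the wider literature; a single pair of correlations is one piece of evidence rather than proof of validity.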

Key Takeaways

- Psychological researchers do not simply assume that their measures work. Instead, they conduct research to show that they work. If they cannot show that they work, they stop using them.

- There are two distinct criteria by which researchers evaluate their measures: reliability and validity. Reliability is consistency across time (test-retest reliability), across items (internal consistency), and across researchers (interrater reliability). Validity is the extent to which the scores actually represent the variable they are intended to.

- Validity is a judgment based on various types of evidence. The relevant evidence includes the measure’s reliability, whether it covers the construct of interest, and whether the scores it produces are correlated with other variables they are expected to be correlated with and not correlated with variables that are conceptually distinct.

- The reliability and validity of a measure is not established by any single study but by the pattern of results across multiple studies. The assessment of reliability and validity is an ongoing process.

- Practice: Ask several friends to complete the Rosenberg Self-Esteem Scale. Then assess its internal consistency by making a scatterplot to show the split-half correlation (even- vs. odd-numbered items). Compute Pearson’s r too if you know how.

- Discussion: Think back to the last college exam you took and think of the exam as a psychological measure. What construct do you think it was intended to measure? Comment on its face and content validity. What data could you collect to assess its reliability and criterion validity?

- Cacioppo, J. T., & Petty, R. E. (1982). The need for cognition. Journal of Personality and Social Psychology, 42, 116–131.

- Petty, R. E., Briñol, P., Loersch, C., & McCaslin, M. J. (2009). The need for cognition. In M. R. Leary & R. H. Hoyle (Eds.), Handbook of individual differences in social behaviour (pp. 318–329). New York, NY: Guilford Press.
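The practice exercise in the Key Takeaways above (a split-half correlation on even- vs. odd-numbered items) can be sketched as follows. This is a minimal illustration under stated assumptions: the response matrix is hypothetical (six invented respondents, ten items scored 0 to 3, with any reverse-keyed items assumed to be already reverse-scored), and the function names are made up for this example. Cronbach’s α is computed with the standard variance-based formula, which is the usual computational route rather than a literal average of every possible split-half correlation.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def split_half_r(responses):
    """Correlate each person's odd-item total with their even-item total."""
    odd_totals = [sum(row[0::2]) for row in responses]   # items 1, 3, 5, ...
    even_totals = [sum(row[1::2]) for row in responses]  # items 2, 4, 6, ...
    return pearson_r(odd_totals, even_totals)


def sample_variance(values):
    """Unbiased sample variance (divides by n - 1)."""
    n = len(values)
    mean = sum(values) / n
    return sum((v - mean) ** 2 for v in values) / (n - 1)


def cronbach_alpha(responses):
    """Cronbach's alpha: k/(k-1) * (1 - sum of item variances / variance of totals)."""
    k = len(responses[0])
    item_vars = [sample_variance([row[i] for row in responses]) for i in range(k)]
    total_var = sample_variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical data: one row per respondent, one column per item (not real data).
responses = [
    [3, 3, 2, 3, 3, 2, 3, 3, 3, 2],
    [1, 0, 1, 1, 0, 1, 1, 0, 1, 1],
    [2, 2, 2, 1, 2, 2, 3, 2, 2, 2],
    [0, 1, 0, 0, 1, 0, 0, 1, 0, 0],
    [3, 2, 3, 3, 2, 3, 3, 3, 2, 3],
    [1, 1, 2, 1, 1, 1, 2, 1, 1, 1],
]

half_r = split_half_r(responses)
alpha = cronbach_alpha(responses)
print(f"split-half r = {half_r:.2f}, Cronbach's alpha = {alpha:.2f}")
```

The odd and even totals computed inside `split_half_r` are the same two columns one would plot against each other in the scatterplot the exercise asks for.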

The consistency of a measure.

The consistency of a measure over time.

The consistency of a measure on the same group of people at different times.

Consistency of people’s responses across the items on a multiple-item measure.

Method of assessing internal consistency through splitting the items into two sets and examining the relationship between them.

A statistic in which α is the mean of all possible split-half correlations for a set of items.

The extent to which different observers are consistent in their judgments.

The extent to which the scores from a measure represent the variable they are intended to.

The extent to which a measurement method appears to measure the construct of interest.

The extent to which a measure “covers” the construct of interest.

The extent to which people’s scores on a measure are correlated with other variables that one would expect them to be correlated with.

In reference to criterion validity, variables that one would expect to be correlated with the measure.

When the criterion is measured at the same time as the construct.

When the criterion is measured at some point in the future (after the construct has been measured).

When new measures positively correlate with existing measures of the same constructs.

The extent to which scores on a measure are not correlated with measures of variables that are conceptually distinct.

Research Methods in Psychology - 2nd Canadian Edition Copyright © 2015 by Paul C. Price, Rajiv Jhangiani, & I-Chant A. Chiang is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License, except where otherwise noted.

- J Family Med Prim Care

- v.4(3); Jul-Sep 2015

Validity, reliability, and generalizability in qualitative research

Lawrence Leung

1 Department of Family Medicine, Queen's University, Kingston, Ontario, Canada

2 Centre of Studies in Primary Care, Queen's University, Kingston, Ontario, Canada

In general practice, qualitative research contributes as significantly as quantitative research, in particular regarding psycho-social aspects of patient care, health services provision, policy setting, and health administration. In contrast to quantitative research, qualitative research as a whole has been constantly critiqued, if not disparaged, for the lack of consensus on how to assess its quality and robustness. This article illustrates with five published studies how qualitative research can impact and reshape the discipline of primary care, spiraling out from clinic-based health screening to community-based disease monitoring, evaluation of out-of-hours triage services to provincial psychiatric care pathways model and finally, national legislation of core measures for children's healthcare insurance. Fundamental concepts of validity, reliability, and generalizability as applicable to qualitative research are then addressed with an update on the current views and controversies.

Nature of Qualitative Research versus Quantitative Research

The essence of qualitative research is to make sense of and recognize patterns among words in order to build up a meaningful picture without compromising its richness and dimensionality. Like quantitative research, qualitative research aims to seek answers to questions of “how, where, when, who and why” with a view to building a theory or refuting an existing theory. Unlike quantitative research, which deals primarily with numerical data and their statistical interpretation under a reductionist, logical and strictly objective paradigm, qualitative research handles nonnumerical information and its phenomenological interpretation, which inextricably ties in with human senses and subjectivity. While human emotions and perspectives from both subjects and researchers are considered undesirable biases confounding results in quantitative research, the same elements are considered essential and inevitable, if not treasurable, in qualitative research, as they invariably add extra dimensions and colors to enrich the corpus of findings. However, the issue of subjectivity and contextual ramifications has fueled incessant controversies regarding yardsticks for quality and trustworthiness of qualitative research results for healthcare.

Impact of Qualitative Research upon Primary Care

In many ways, qualitative research contributes as significantly as, if not more so than, quantitative research to the field of primary care at various levels. Five qualitative studies are chosen to illustrate how various methodologies of qualitative research helped in advancing primary healthcare, from novel monitoring of chronic obstructive pulmonary disease (COPD) via mobile-health technology,[1] informed decision-making for colorectal cancer screening,[2] triaging out-of-hours GP services,[3] evaluating care pathways for community psychiatry[4] and finally prioritization of healthcare initiatives for legislation purposes at national levels.[5] With the recent advances in information technology and mobile connected devices, self-monitoring and management of chronic diseases via tele-health technology may seem beneficial to both the patient and healthcare provider. Recruiting COPD patients who were given tele-health devices that monitored lung functions, Williams et al.[1] conducted phone interviews, analyzed the transcripts via a grounded theory approach, and identified themes that enabled them to conclude that such a mobile-health setup and application helped to engage patients, with better adherence to treatment and overall improvement in mood. Such positive findings were in contrast to previous studies, which opined that elderly patients were often challenged by operating computer tablets[6] or conversing with the tele-health software.[7] To explore the content of recommendations for colorectal cancer screening given out by family physicians, Wackerbarth et al.[2] conducted semi-structured interviews with subsequent content analysis and found that most physicians delivered information to enrich patient knowledge with little regard to patients’ true understanding, ideas, and preferences in the matter. These findings suggested room for improvement for family physicians to better engage their patients in recommending preventative care.

Faced with various models of out-of-hours triage services for GP consultations, Egbunike et al.[3] conducted thematic analysis on semi-structured telephone interviews with patients and doctors in various urban, rural and mixed settings. They found that the efficiency of triage services remained a prime concern for both users and providers, among issues of access to doctors and unfulfilled or mismatched expectations from users, which could arouse dissatisfaction and legal implications. In the UK, a care pathways model for community psychiatry had been introduced but its benefits were unclear. Khandaker et al.[4] hence conducted a qualitative study using semi-structured interviews with medical staff and other stakeholders; adopting a grounded-theory approach, they found that major themes emerged, which included improved equality of access, more focused logistics, increased work throughput and better accountability for community psychiatry provided under the care pathway model. Finally, at the US national level, Mangione-Smith et al.[5] employed a modified Delphi method to gather consensus from a panel of nominators who were recognized experts and stakeholders in their disciplines, and identified a core set of quality measures for children's healthcare under the Medicaid and Children's Health Insurance Program. These core measures were made transparent for public opinion and later passed on for full legislation, hence illustrating the impact of qualitative research upon social welfare and policy improvement.

Overall Criteria for Quality in Qualitative Research