
Research and Analysis of the Front-end Frameworks and Libraries in Web Development

2022, International Journal for Research in Applied Science & Engineering Technology (IJRASET)

With the rapid expansion of online technology in recent years, there is a strong trend toward Hypertext Markup Language 5 (HTML5) becoming a global web standard and taking center stage in front-end development. However, there is a slew of front-end development frameworks and libraries to choose from, Angular among them. Choosing an appropriate framework or library to launch an e-business and expand its reach has become a priority because of its impact on the user experience. This paper begins with an introduction and a list of the most popular frameworks and libraries in front-end development and web performance analysis services. It then examines the research findings from a variety of perspectives and describes the advantages and disadvantages of each framework. The study concludes with a summary and its contributions, and finishes by speculating on the future of front-end development.

Related Papers

International Journal for Research in Applied Science & Engineering Technology (IJRASET)

IJRASET Publication

An essential step in developing any program or app is choosing the appropriate front-end framework or library. Front-end web development today is closely tied to JavaScript frameworks, and both frameworks and libraries are available for web development requirements. Vue, React, and Angular all fall under the umbrella of JavaScript frameworks. Because developers encounter an extensive spectrum of issues daily, the industry offers a wide diversity of frameworks. With the help of the many accessible frameworks, a web application can be constructed as intended while taking all practical considerations into account. This paper discusses the advantages and disadvantages of the fundamental elements and distinctive features of these frameworks and offers a thorough analysis of the research on front-end frameworks. Using a systematic literature review as its methodology, the study gives an overview of the front-end frameworks discovered in the literature and outlines their essential components. The three most popular frameworks, Vue.js, Angular, and React, were examined for the necessary features.

IRJET Journal

The most fundamental step in software development is selecting the right front-end framework. The market offers a wide variety of frameworks because of the wide range of problems that developers face every day, and the number of new front-end frameworks continues to increase considerably. A web application can be built according to its design with the various frameworks available, taking into account all constraints and feasibility. This paper explains the benefits and drawbacks of frameworks in relation to their basic aspects and unique characteristics. It also provides a systematic review of the literature on front-end frameworks for single-page applications (SPAs). Using a systematic literature review as its methodology, the paper presents an overview of the front-end frameworks identified in the literature and the key features comprising them. The requisite characteristics were analyzed in the three most common frameworks: Vue.js, Angular, and React.

With the rapid transformation toward online services, web developers can create websites that are accessible from anywhere. However, the goal of deploying a website is not just to create an attractive front end; it is also to achieve responsiveness, compatibility across browsers and devices, the right choice of frameworks, and optimized loading time, response speed, and overall user experience. This paper studies web front-end development technology, discusses the key technologies used to achieve responsiveness, analyzes three popular JavaScript frameworks (Angular, Vue, and React) and methods for better optimization, and concludes that web front-end technology is an evolving field with a large scope for development and for the introduction of new methods and technologies.

10th WSEAS International Conference on APPLIED …

Mohammed Musthafa

International Journal IJRITCC

Everything you see, click, and interact with on a website is the work of front-end web development. Client-side frameworks, scripting languages like JavaScript, and JavaScript-based technologies such as AngularJS, jQuery, and Node.js have made it possible for developers to build interactive websites with improved performance. Web use has grown to such an extent that the web has evolved from simple text content served by one server into a complex ecosystem of varied content spread across several administrative domains. This content makes websites more complex and hence affects the user experience. So far, efforts have focused on improving server-side performance, by increasing back-end scalability or making the application more efficient, while client-side performance is either not measured or only tested for usability. Some widely used JavaScript benchmark suites measure the performance of web browsers, but their main focus is not the performance of a web application itself; it is the application's performance within a specific browser. A wide variety of literature is available on measuring the complexity of web pages and determining their load time. The aim of our project is to characterize the complexity of web pages built with different client-side web development technologies, such as AngularJS, jQuery, and AJAX, so as to compare them and determine which technique is appropriate. In this paper, we use AngularJS as a case study and evaluate its performance against jQuery and AJAX.

Nirjhor Anjum

Mohit Sharma

In recent years, JavaScript has become a very popular programming language. According to the Stack Overflow Survey 2023, 65.82% of professional developers use JavaScript to develop web software applications. Software developers therefore need to choose an effective and in-demand JavaScript framework. In this paper, we discuss different frameworks, their types, benefits, the security they provide, and their challenges. We also compare three of the leading frameworks in terms of complexity and security.

Devendra Kumar Shukla

Web development has undergone significant evolution over the past decade, driven by advancements in technology, changing user expectations, and the proliferation of internet usage. This research paper presents a comprehensive review of the latest trends and practices in web development, drawing upon a thorough analysis of relevant literature and industry reports. The paper begins by discussing the historical development of web development, from the early days of static HTML websites to the advent of dynamic web applications using technologies such as JavaScript, CSS, and server-side programming. The evolution of web development frameworks, libraries, and tools is examined, highlighting key milestones and breakthroughs that have shaped the landscape of modern web development. The paper then delves into the latest trends in web development, including the rise of mobile-first and responsive design, the increasing use of front-end frameworks such as React, Angular, and Vue, the growing ...

WARSE The World Academy of Research in Science and Engineering

This paper presents a comparative study of web applications built with and without web frameworks. Having experienced the ease of frameworks, many people no longer prefer the traditional method of developing web applications. The debate is heated: one side argues that a webpage can be a big hit and free from performance issues without web frameworks, while the other argues that websites are not appealing without frameworks and their features. Many people therefore generalize that web frameworks are best for large applications and the traditional method is best for small ones, so the pros and cons of developing with and without frameworks appear evenly distributed. This study addresses one part of that generalization by showing that some very large, successful applications built from scratch without any framework are still market hits. The other part is addressed by developing an application using both methods, which exposes the ups and downs of building an application each way. Together, these help clear up myths about web development that have formed over time. The paper covers the Introduction, Research Methodology, Related Work, and Conclusion, building step by step through the factors needed to resolve the argument.

Journal of Informatics Electrical and Electronics Engineering (JIEEE), A2Z Journals


Web development is like building a house. Just as a house requires a plan, a building permit, and a license from the city, web development requires documentation, an appropriate server, design, and a programming language. Since the standards of web design keep rising, and so does the complexity of the technology required, frameworks have become a crucial part of developing websites and web applications. It makes little sense to reinvent the wheel, so for designing rich and attractive websites, it is sensible to use frameworks endorsed by developers all over the world. Django, Angular, Spring, React, Vue, and Express are some of the well-known web development frameworks. In my project, I have used Angular.


The Research on Single Page Application Front-end development Based on Vue

Nian Li 1 and Bo Zhang 1

Published under licence by IOP Publishing Ltd. Journal of Physics: Conference Series, Volume 1883, 2021 2nd International Conference on Computer Information and Big Data Applications, 26-28 March 2021, Wuhan, China. Citation: Nian Li and Bo Zhang 2021 J. Phys.: Conf. Ser. 1883 012030. DOI: 10.1088/1742-6596/1883/1/012030


Author affiliations

1 School of Computer Science, Wuhan Donghu University, Wuhan, China


Vue.js is a popular front-end framework that uses the MVVM (Model-View-ViewModel) design pattern to support data-driven and component-based development. This paper discusses the basic working principle and development mode of Vue and, on this basis, uses the SPA (Single Page Application) approach to design and implement the front-end pages of a mobile mall based on Vue. The research demonstrates the advantages of front-end and back-end separation in web development and provides strong front-end support for full-stack development.


Content from this work may be used under the terms of the Creative Commons Attribution 3.0 licence . Any further distribution of this work must maintain attribution to the author(s) and the title of the work, journal citation and DOI.

Research of web front-end engineering solution in public cultural service project


Front-end deep learning web apps development and deployment: a review

- Published: 30 November 2022

- Volume 53, pages 15923–15945 (2023)


- Hock-Ann Goh¹ (ORCID: orcid.org/0000-0002-7730-5465),

- Chin-Kuan Ho² &

- Fazly Salleh Abas¹

8512 Accesses

5 Citations

1 Altmetric

Explore all metrics

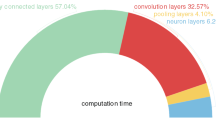

Machine learning and deep learning models are commonly developed using programming languages such as Python, C++, or R and deployed as web apps delivered from a back-end server or as mobile apps installed from an app store. Recently, however, front-end technologies and JavaScript libraries such as TensorFlow.js have been introduced to make machine learning more accessible to researchers and end users. Using JavaScript, TensorFlow.js can define, train, and run new or existing pre-trained machine learning models entirely in the browser on the client side, which improves the user experience through interaction while preserving privacy. Deep learning models deployed in front-end browsers must be small, have fast inference, and ideally be interactive in real time, so the emphasis in development and deployment differs. This paper reviews the development and deployment of these deep learning web apps to raise awareness of recent advancements and to encourage more researchers to take advantage of this technology in their own work. First, the rationale behind the deployment stack (front end, JavaScript, and TensorFlow.js) is discussed. Then, the development approach for obtaining deep learning models that are optimized and suitable for front-end deployment is described. The article also presents current web applications, divided into seven categories, to show the potential of deep learning on the front end: deep learning playgrounds; pose detection and gesture tracking; music and art creation; expression detection and facial recognition; video segmentation; image and signal analysis; and healthcare diagnosis, recognition, and identification.


1 Introduction

Since the early 2010s, the discipline of deep learning has made astounding progress, resolving previously unsolved problems and generating intriguing new possibilities. This progress is fueled by a stack of open-source tools, hardware advancements, greater availability of labeled data, and algorithmic breakthroughs [ 17 ].

Deep learning (DL) models can be deployed on various platforms for real-world use after thorough validation and testing. One approach is to host the DL model on a server or in the cloud, which lets developers launch DL-enabled services that clients access through API endpoints. Frameworks (for example, TF Serving Footnote 1 ) and cloud platforms (for example, Google Cloud Machine Learning Engine Footnote 2 ) can aid with this deployment. Apart from cloud frameworks, the model can also be served on a smaller scale using back-end web frameworks such as Django and Flask. However, because these back-end deployments require a cloud service or a dedicated computer to execute the model, they can be prohibitively expensive.

The introduction of deep learning libraries that specifically cater to client-side deployment has changed the landscape [ 78 ]. Previously, it was believed that computation-intensive deep learning models could only run effectively on platforms with GPU support and could not be deployed successfully in browsers or on mobile platforms owing to the limited processing power of client-side platforms. With the front-end option now available, the focus of the deployment process has shifted to adapting deep learning models to the front-end platform.

According to a study on the challenges of deep learning deployment [ 21 ], which analyzed relevant posts from 2015 to 2019 on Stack Overflow, a developer-focused Q&A website, questions about deployment have grown in popularity. Questions about front-end deployment only surfaced in 2018 and remain relatively few: by 2019, the volume of users and questions about front-end deployment was only a quarter of that for back-end and mobile deployment. The study agreed with Ma et al. [ 78 ] that deep learning in browsers is still in its infancy. According to the study, answering deployment questions takes approximately three times longer than answering non-deployment questions (404.9 min vs. only 145.8 min). These findings show that deployment issues in deep learning software are difficult to address, partially confirming a prior result [ 4 ] that model deployment is the most challenging step of the machine learning life cycle. Although deployment questions are the most time-consuming to resolve, browser-based (front-end) deployment questions seem to get faster responses: the median response times for server/cloud, mobile, and browser-related questions were 428.5, 405.2, and 180.9 min, respectively [ 21 ]. These findings suggest that the front end may be an excellent choice for deep learning deployments.
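The ratios behind these comparisons can be checked with a few lines of TypeScript. The sketch below only re-derives ratios from the figures quoted above from [ 21 ]; the variable and function names are illustrative, and no new data is introduced.

```typescript
// Response times in minutes, as quoted from [21].
const deploymentMinutes = 404.9;
const nonDeploymentMinutes = 145.8;

// Median response times by deployment target, also from [21].
const medianByTarget: Record<string, number> = {
  "server/cloud": 428.5,
  mobile: 405.2,
  browser: 180.9,
};

// How many times longer deployment questions take to answer.
const deploymentSlowdown = deploymentMinutes / nonDeploymentMinutes;

// One target's median expressed as a fraction of another target's median.
function relativeTo(target: string, baseline: string): number {
  const t = medianByTarget[target];
  const b = medianByTarget[baseline];
  if (t === undefined || b === undefined) {
    throw new Error(`unknown target: ${t === undefined ? target : baseline}`);
  }
  return t / b;
}

console.log(deploymentSlowdown.toFixed(2)); // 2.78
console.log(relativeTo("browser", "server/cloud").toFixed(2)); // 0.42
console.log(relativeTo("browser", "mobile").toFixed(2)); // 0.45
```

So "approximately three times" corresponds to roughly 2.8 times, and browser-related questions receive responses in well under half the median time of server/cloud or mobile questions.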

This paper aims to discuss and review the deployment stack for front-end deep learning and the development process that specifically targets this deployment. No other study has explicitly addressed and reviewed deep learning web apps development and deployment for the front end. In [ 29 ], however, a review of deep learning on mobile devices has been provided. In [ 109 ], a series of 52 review papers on deep learning are presented. The papers are grouped into topics, such as ‘computer vision, forecasting, image processing, adversarial cases, autonomous vehicles, natural language processing, recommender systems, and big data analytics’. These review papers focused on particular topics and not on model deployment. To close the gap, this paper reviews the development and deployment of deep learning models on the front-end, client-side browser.

Given the length of the paper, Table 1 presents its organization and a summary of each section, which aids the reader’s navigation and highlights key points.

2 Front-end deep learning deployment stack

Machine learning was previously restricted to experts in research laboratories; today, it is widely accessible. By leveraging popular front-end technologies, it is now much simpler for machine learning experts and non-experts alike to get started. This section explains the reasoning for developing real-world deep learning apps using the browser, JavaScript, and TensorFlow.js as the front-end stack. These technologies build on top of one another to form an ecosystem for front-end deep learning deployment.

2.1 Why not deploy deep learning on the client-side browser?

Once trained, a machine learning model should be deployed to generate predictions on real data; it is pointless to train a model that will never be used [ 17 ]. Given the benefits discussed below, the front end may be a viable deployment option.

The conventional approach is to deploy a deep learning model on the server back end and access it through complicated API calls sent as HTTP requests from JavaScript. Because of their semantics and requirements, machine learning APIs are challenging to use correctly and efficiently, and accuracy-performance trade-offs must be carefully considered. In [ 123 ], a study of 360 apps that use Google or AWS cloud-based ML APIs found that 70% of these applications misuse the APIs in ways that impair the functional, performance, or economic quality of the program.
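As a rough illustration of the conventional back-end pattern, the TypeScript sketch below sends model inputs to a server as JSON and validates the reply. The endpoint, the `{ inputs }`/`{ scores }` payload shape, and the `Transport` abstraction are all hypothetical; a real service defines its own contract. Checking the response shape explicitly is one simple guard against the kind of API misuse reported in [ 123 ], though it is not drawn from that study.

```typescript
// Hypothetical request/response contract for a server-hosted model.
interface PredictRequest {
  inputs: number[];
}
interface PredictResponse {
  scores: number[];
}

// Abstracts the HTTP call so the logic can run with or without a network.
// In a browser this could wrap fetch(url, { method: "POST", body, ... }).
type Transport = (url: string, body: string) => Promise<string>;

async function predictRemote(
  transport: Transport,
  url: string,
  inputs: number[],
): Promise<number[]> {
  const request: PredictRequest = { inputs };
  // Every prediction costs one request/response round trip to the server.
  const raw = await transport(url, JSON.stringify(request));
  const parsed = JSON.parse(raw) as Partial<PredictResponse>;
  // Validate the reply instead of trusting its shape blindly.
  if (
    !Array.isArray(parsed.scores) ||
    !parsed.scores.every((s) => typeof s === "number")
  ) {
    throw new Error("malformed response: expected numeric 'scores' array");
  }
  return parsed.scores;
}
```

Passing a stub transport, rather than a real network call, lets the same code path be exercised offline.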

The mobile platform is another potential deployment target. However, creating cross-platform mobile AI apps is not simple: developing and maintaining versions for iOS and Android, and testing and submitting the apps for distribution on an app store, is incredibly challenging [ 78 ]. A taxonomy of 23 types of fault symptoms given in [ 22 ] shows that deploying deep learning models on mobile devices involves a wide variety of problems.

However, with advancements in front-end development, researchers now have the much easier option of deploying on the front end [ 21 ], migrating away from back-end data centers and toward clients. Front-end deep learning applications offer the following advantages: wide reach, reduced server costs, timely response, and privacy [ 17 , 111 ].

Wide reach

The web environment is the most commonly used deployment platform for applications, and a growing number of users expect to use machine learning web applications while browsing the web [ 16 ].

Reduced server cost

Server cost is often an issue in deep learning deployments. Running deep learning models efficiently often requires GPU acceleration, and virtual machines with CUDA GPUs on Google Cloud or Amazon Web Services can be costly. Costs grow with traffic, as do the scalability demands and architectural complexity of the servers. A simplified front-end deployment stack lowers server expenses and developer concerns. For example, in [ 13 ], front-end deployment was chosen over cloud deployment to save server costs.

Timely response

Running on the client side is essential for low-latency applications, such as those processing real-time audio, image, and video data. If an image must be sent to a server for inference, every prediction incurs a network round trip (upload, server processing, and download) before a result can be displayed. With client-side inference, the data and processing remain on the device, reducing latency and connection issues. Because the data is close at hand, the front-end browser is an ideal platform for interaction and visualization in deep learning applications. In [ 19 ], for example, a real-time, interactive online experience is provided by creating models on the front end, avoiding the size and latency limitations associated with conventional neural audio synthesis models.
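The round-trip cost can be made concrete with a back-of-the-envelope latency model. In the TypeScript sketch below, server-side latency is approximated as network round-trip time plus upload time plus server inference time, while client-side latency is just local inference time. All numbers in the example are hypothetical, chosen only to show how the terms combine, and the model deliberately ignores download time, queuing, and compression.

```typescript
interface ServerPath {
  rttMs: number; // network round-trip time
  uplinkMbps: number; // client upload bandwidth in megabits per second
  serverInferenceMs: number; // model execution time on the server
}

// Upload time in ms: bits divided by bits per second, scaled to milliseconds.
function uploadMs(bytes: number, uplinkMbps: number): number {
  return (bytes * 8 * 1000) / (uplinkMbps * 1_000_000);
}

// Simplified server-side latency for one prediction.
function serverLatencyMs(payloadBytes: number, path: ServerPath): number {
  return path.rttMs + uploadMs(payloadBytes, path.uplinkMbps) + path.serverInferenceMs;
}

// Whether a latency fits the per-frame budget of a real-time video stream.
function fitsFrameBudget(latencyMs: number, fps: number): boolean {
  return latencyMs <= 1000 / fps;
}

// Hypothetical example: a 200 KB image over a 10 Mbps uplink.
const server = serverLatencyMs(200_000, { rttMs: 50, uplinkMbps: 10, serverInferenceMs: 10 });
const client = 25; // hypothetical in-browser inference time (ms)
console.log(server, fitsFrameBudget(server, 30), fitsFrameBudget(client, 30)); // 220 false true
```

Under these assumed numbers, upload time alone (160 ms) exceeds a 30 fps frame budget of about 33 ms, which is why keeping inference on the device matters for real-time media.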

Data privacy

Data privacy is essential for certain applications, such as health-related deep learning models that make inferences from medical data. Executing the model only on the client side effectively addresses this issue, since no data is sent to or stored outside the user’s device, guaranteeing the privacy of sensitive or personal data. For example, FreddieMeter Footnote 3 is an AI-powered singing challenge that determines how closely a singer’s voice (timbre, pitch, and melody) resembles Freddie Mercury’s while protecting user privacy.

Constraints and considerations

Deploying deep learning models to mobile devices and browsers has been found to cause compatibility and reliability problems [ 45 ], with variations in prediction accuracy and performance between models trained on PC platforms and the same models deployed to mobile devices and browsers. To bridge the gap, software design tools for development and deployment on the target platform are suggested.
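One simple way to quantify the gap described in [ 45 ] is to run the same inputs through the reference (PC) model and the deployed model and compare the outputs. The sketch below is an assumption about how such a check might look, not a tool from [ 45 ]: it reports the top-1 agreement rate and the largest absolute difference between corresponding output values.

```typescript
// Index of the largest element (the first one on ties).
function argmax(xs: number[]): number {
  let best = 0;
  for (let i = 1; i < xs.length; i++) {
    if (xs[i] > xs[best]) best = i;
  }
  return best;
}

interface Discrepancy {
  top1Agreement: number; // fraction of inputs with the same predicted class
  maxAbsDiff: number; // largest absolute difference across all outputs
}

// reference[i] and deployed[i] are the output vectors for the same input i.
function comparePredictions(reference: number[][], deployed: number[][]): Discrepancy {
  if (reference.length !== deployed.length || reference.length === 0) {
    throw new Error("need the same non-zero number of outputs from both models");
  }
  let agree = 0;
  let maxAbsDiff = 0;
  reference.forEach((ref, i) => {
    const dep = deployed[i];
    if (dep.length !== ref.length) throw new Error(`output ${i}: length mismatch`);
    if (argmax(ref) === argmax(dep)) agree++;
    ref.forEach((r, j) => {
      maxAbsDiff = Math.max(maxAbsDiff, Math.abs(r - dep[j]));
    });
  });
  return { top1Agreement: agree / reference.length, maxAbsDiff };
}
```

For instance, `comparePredictions([[0.9, 0.1], [0.2, 0.8]], [[0.85, 0.15], [0.6, 0.4]])` yields a top-1 agreement of 0.5, flagging that the deployed model changed one of the two predictions.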

One major drawback of using the browser to generate predictions is the security of the deep learning model. Once the model is in the user’s possession, it may be saved and used for unintended purposes. A more secure way of distribution is to keep the model in the cloud or on servers and offer services through APIs. Many production use cases, for example, require that the model be sent to the client securely so that it cannot be copied and used on other websites, especially if the model has financial value.

While traditional cybersecurity methods can safeguard deep learning models on back-end servers, deep learning models on mobile devices and browsers pose new security challenges. An adversarial attack on a neural network may cause misclassifications, and the model’s hidden states and outputs may be exploited to reconstruct user inputs, possibly compromising user privacy [ 59 ]. Protecting models against such attacks therefore needs additional attention if deep learning models are to be deployed securely and discreetly.

2.2 Why is JavaScript the language of choice?

Python is the preferred language for most machine learning projects. For browser-based machine learning web applications, however, JavaScript may be more practical because it works directly with front-end components.

Although alternative front-end languages such as TypeScript and CoffeeScript exist, they are compiled into JavaScript before being executed in the browser; jQuery, by contrast, is a JavaScript library rather than a separate language. The JavaScript engine, present in every browser, is responsible for creating a responsive, interactive environment.

The benefits of developing deep learning applications with JavaScript include universality , user interaction , no installation , and direct input access .

Universality

JavaScript has been the most widely used open-source programming language for nine years Footnote 4 and is often considered the universal language of browsers [ 67 ]. It has a thriving, rapidly growing ecosystem with broad client and server applications. Whereas back-end web development uses languages such as Python, Java, C#, or PHP, front-end web development uses JavaScript. JavaScript lets users execute code on almost any device, virtually unchanged, while providing a tightly integrated environment with direct access to different resources. Unlike native mobile apps, web apps can be deployed regardless of the underlying hardware or operating system.

User interaction

JavaScript enhances the user experience by adding responsive, interactive components to web pages. It can update webpage content, animate graphics, and manage multimedia, and this kind of user engagement is critical in deep learning applications. For example, a semantic image search web app Footnote 5 uses an InceptionV3 convolutional neural network to enhance user interaction: when a picture is chosen, the network examines the content of all the photos in the dataset and displays the 15 most similar images.
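The retrieval step of such an app can be sketched independently of the network itself. Assuming each image has already been mapped to a feature vector (for example, by a CNN such as InceptionV3; that step is not shown), finding the most similar images reduces to ranking the dataset by cosine similarity to the query vector and keeping the top k. The sketch below is a hypothetical illustration, not the cited app’s code; with k = 15 it would return the 15 nearest images.

```typescript
interface Embedded {
  id: string; // hypothetical image identifier
  vector: number[]; // precomputed feature vector for the image
}

// Cosine similarity: dot(a, b) / (|a| * |b|); 0 if either vector is all zeros.
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) throw new Error("dimension mismatch");
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0;
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Rank every image by similarity to the query and keep the k best.
function topKSimilar(
  query: number[],
  dataset: Embedded[],
  k: number,
): { id: string; score: number }[] {
  return dataset
    .map((item) => ({ id: item.id, score: cosineSimilarity(query, item.vector) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k);
}
```

A full sort costs O(n log n); for very large datasets a bounded heap would avoid sorting everything, but the sort keeps the sketch short.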

No installation

One of the main advantages of the JavaScript ecosystem is the ease with which JavaScript code and static resources can be shared via a Content Delivery Network (CDN) and executed without installation [ 17 ]. This eliminates potentially tedious and error-prone installation processes, as well as the potentially risky access permissions involved in installing new software. It contrasts with the Python environment, where dependencies can make installation difficult. Developers can also use a large variety of JavaScript APIs, such as the WebGL API. For example, in [ 43 ], DeepFrag, Footnote 6 a 3D CNN model, was deployed as a web app so that researchers can run optimization experiments in their browsers without submitting potentially proprietary structures to a server or installing any additional software. Similarly, the Cell Profiler Analyst Web [ 9 ] highlights that no installation or software update is needed to use the tool.

Direct input access

Web browsers offer an extensive set of tools for managing and displaying text, audio, and visual data. Data produced on and accessible to the client can be used directly in the browser by deep learning models, with the user’s consent. Beyond these data sources, modern web browsers can access sensor data, opening exciting possibilities for many deep learning applications. For example, in [ 77 ], running face recognition on the front end allowed developers to execute models on the client side, reducing latency and server load.

Deploying deep learning models in browsers presents developers with unique programming difficulties, such as converting models to the formats required by the target platform. Deployment hinges on the interplay between deep learning knowledge and web development, so developers must understand both disciplines, which makes the process complex.

Because machine learning development environments differ from deployment environments, moving a model from one to the other takes considerable time and effort. Deep learning models are often developed on powerful machines with GPUs, which speeds up training, but the resulting models may run inference slowly when deployed on low-end devices.

Developers who prefer Python often find JavaScript web applications challenging to build, which introduces additional hurdles. Moreover, far fewer publicly accessible deep learning packages and built-in functions are available for JavaScript than for Python, and this lack of library support can add complexity and lengthen development time.

2.3 Why is TensorFlow.js ideal for front-end deployment?

TensorFlow.js is a JavaScript library for building machine learning models whose APIs are compatible with the TensorFlow Python APIs [ 111 ], allowing it to benefit from TensorFlow’s strengths. Unlike the Python APIs, however, TensorFlow.js code can easily be shared and run on any platform without installation, which makes it more flexible to deploy. TensorFlow.js also provides high-level modeling APIs that support all Keras layers [ 106 ], making it easy to bring pre-trained Keras or TensorFlow models into the browser and run them with TensorFlow.js.

2.3.1 Hardware acceleration

Apart from offering the flexibility required for web-based machine learning applications, TensorFlow.js also performs well. Because the library integrates with the acceleration mechanisms offered by modern web browsers, TensorFlow.js enables high-performance machine learning and numerical computing in JavaScript [ 17 ].

TensorFlow.js’s first hardware acceleration method was WebGL [ 111 ]. Modern web browsers expose WebGL APIs, which were originally intended to accelerate the rendering of 2D and 3D graphics in web pages but have been repurposed by TensorFlow.js for parallel numerical computing in neural networks [ 111 ]. As a result, an integrated graphics card can speed up deep learning operations, eliminating the need for the dedicated graphics cards, such as those from NVIDIA, that native deep learning frameworks require. Although WebGL-based acceleration is not on a par with native GPU acceleration, it still yields speedups of orders of magnitude over a CPU-only backend. These performance improvements enable real-time inference for complex tasks such as body-pose estimation with PoseNet.
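The idea behind repurposing WebGL can be illustrated without any GPU code. In GPGPU-style computation, each output element is produced by an independent kernel invocation (on WebGL, typically a fragment shader run once per output pixel) that only reads the inputs, so all elements can be computed in parallel. The TypeScript sketch below mimics that structure on the CPU for a matrix multiplication; it is a conceptual model, not TensorFlow.js’s actual WebGL kernels, and the invocations that a GPU would run in parallel run sequentially here.

```typescript
// A kernel computes one output element from read-only inputs.
type Kernel = (row: number, col: number) => number;

// Evaluates the kernel once per output element. On a GPU these invocations
// run in parallel; this CPU sketch runs them one after another.
function runKernel(rows: number, cols: number, kernel: Kernel): Float32Array {
  const out = new Float32Array(rows * cols); // row-major, like a flattened texture
  for (let r = 0; r < rows; r++) {
    for (let c = 0; c < cols; c++) {
      out[r * cols + c] = kernel(r, c);
    }
  }
  return out;
}

// C = A (m x k) times B (k x n), with all matrices stored row-major.
function matMul(a: Float32Array, b: Float32Array, m: number, k: number, n: number): Float32Array {
  if (a.length !== m * k || b.length !== k * n) throw new Error("shape mismatch");
  return runKernel(m, n, (row, col) => {
    // Each invocation computes one dot product independently of the others.
    let sum = 0;
    for (let i = 0; i < k; i++) sum += a[row * k + i] * b[i * n + col];
    return sum;
  });
}

const product = matMul(Float32Array.from([1, 2, 3, 4]), Float32Array.from([5, 6, 7, 8]), 2, 2, 2);
console.log(Array.from(product)); // [ 19, 22, 43, 50 ]
```

Because no invocation depends on another’s result, the work maps naturally onto the many parallel units of even an integrated GPU.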

TensorFlow.js launched its WebAssembly (WASM) backend in 2020. Footnote 7 WASM is a portable, cross-browser binary instruction format optimized for the web that enables near-native code execution. Although WebGL is faster than WASM for most models, WASM may outperform WebGL for small models because of the fixed overhead of executing WebGL shaders. WASM is therefore a good alternative to the vanilla JavaScript CPU backend and the WebGL backend when models are tiny, or when low-end devices lack WebGL support or have less capable GPUs.

TensorFlow.js assigns a priority to each backend and automatically picks the best-supported one in the current environment. Presently, WebGL takes precedence over WASM, which in turn takes precedence over the vanilla JS backend. Footnote 8 An explicit call is needed to use a particular backend instead. Beyond WebGL and WebAssembly, a WebGPU backend was still experimental as of June 2022. Footnote 9
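The selection rule above can be pictured with the following sketch. It is a hypothetical model, not TensorFlow.js’s internal code: the `Backend` interface, `pickBackend`, `browserBackends`, and the numeric priorities are illustrative assumptions that only reproduce the WebGL > WASM > CPU precedence described in the text. In the real library, the explicit call for choosing a backend is `tf.setBackend()`.

```typescript
// Hypothetical model of priority-based backend selection (not TensorFlow.js internals).
interface Backend {
  name: string;
  priority: number;           // higher value = preferred (illustrative numbers)
  isSupported: () => boolean; // e.g. feature detection for WebGL or WebAssembly
}

function pickBackend(backends: Backend[], forced?: string): string {
  // An explicit request overrides the automatic choice, if that backend works here.
  if (forced !== undefined) {
    for (const b of backends) {
      if (b.name === forced && b.isSupported()) return b.name;
    }
    throw new Error(`Backend "${forced}" is not available`);
  }
  // Otherwise pick the supported backend with the highest priority.
  const usable = backends.filter((b) => b.isSupported());
  if (usable.length === 0) throw new Error("No supported backend");
  usable.sort((a, b) => b.priority - a.priority);
  return usable[0].name;
}

// Backends in the order of precedence described in the text: WebGL > WASM > CPU.
function browserBackends(hasWebGL: boolean, hasWasm: boolean): Backend[] {
  return [
    { name: "webgl", priority: 3, isSupported: () => hasWebGL },
    { name: "wasm", priority: 2, isSupported: () => hasWasm },
    { name: "cpu", priority: 1, isSupported: () => true }, // plain JavaScript always works
  ];
}

console.log(pickBackend(browserBackends(true, true)));         // webgl
console.log(pickBackend(browserBackends(false, true)));        // wasm
console.log(pickBackend(browserBackends(false, false)));       // cpu
console.log(pickBackend(browserBackends(true, true), "wasm")); // wasm (explicit choice)
```

The fallback chain is what lets the same page run on a laptop with a capable GPU and on a low-end device without WebGL, at different speeds.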

2.3.2 Robust ecosystem

The TensorFlow.js ecosystem supports all major stages of the model workflow [ 17 ], such as training, inference, serialization, deserialization, and model conversion. It also supports a wide variety of environments [ 17 , 106 ], including browsers, browser extensions, web servers (Node.js), cloud servers, mobile apps (React Native), desktop apps (Electron.js), app plugin platforms, and single-board computers. It also has built-in functionality for data input and a visualization API [ 106 ]. For example, in [ 95 ], a browser extension for assessing the credibility of online news Footnote 10 was developed with a collection of interactive visualizations that explain to the user the reasoning behind the automated credibility evaluation. In another example, in [ 11 ], a neural network that identifies geometric objects by their acoustic signatures, mimicking the way dolphins and bats perceive their surroundings through sound waves, was developed and exported to an Arduino Nano module.

2.3.3 Alternative libraries for front-end deep learning

Several JavaScript-based deep learning frameworks enable deep learning in browsers. In [ 78 ], seven JavaScript-based deep learning frameworks were assessed to determine how well browsers support deep learning tasks and to compare the frameworks’ performance on various tasks, although the study does not account for recent TensorFlow.js speed gains. It found that ConvNetJS Footnote 11 excelled in both training and inference. However, ConvNetJS is no longer being developed, so TensorFlow.js is recommended in its place because of its feature set and performance. Libraries such as ConvNetJS, Keras.js, Footnote 12 and Mind Footnote 13 are no longer supported, while WebDNN Footnote 14 supports only inference tasks. For training tasks, Brain.js Footnote 15 supports DNNs, RNNs, LSTMs, and GRUs, while Synaptic Footnote 16 supports first-order and even second-order RNNs.

In [ 94 ], several deep learning frameworks tailored to the browser environment, such as TensorFlow.js, Brain.js, Keras.js, and ConvNet, are reviewed and compared for object detection. TensorFlow.js and WebDNN were recommended for fast inference with acceleration support. TensorFlow.js’s integration of low-level programming (such as mathematical operations and data manipulation) with higher-level programming (such as deep learning model development, training, and execution in the browser) makes it a more versatile framework.

According to npmtrends.com, which tracks package download counts over time, TensorFlow.js is well ahead of other ML frameworks in popularity. None of the other frameworks (Brain.js, ConvNet.js, Synaptic, and WebDNN), nor the Onnx.js and Neataptic.js libraries, has yet reached 10% of TensorFlow.js’s 75,000 weekly downloads. Footnote 17

2.3.4 Available high-level API extensions

Several high-level deep learning libraries are built on top of TensorFlow.js, which allows them to take advantage of its extensive library and hardware-accelerated inference. These libraries, along with their functionality and use cases, are listed in Table 2 .

2.3.5 Constraints and considerations

Although TensorFlow.js is a practical and accessible deep learning framework, it has certain drawbacks. It is not aimed at resource-constrained IoT and embedded devices [ 26 ]; for these, TensorFlow Lite, another library in the TensorFlow ecosystem, is more appropriate.

In TensorFlow.js, memory management is essential: tensors that are no longer needed must be cleaned up manually with the tidy() and dispose() functions [ 101 , 111 ]. Tensors that are not explicitly released continue to occupy memory, causing memory leaks. In addition, JavaScript in the browser runs in a restricted, single-threaded environment, so computationally intensive tasks may cause the UI to stall [ 111 ]. Asynchronous functions should therefore be used for computationally demanding operations [ 101 ]. These issues are specific to the TensorFlow.js environment and must be taken into account.
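Why undisposed tensors leak can be illustrated with a toy model. It does not use the TensorFlow.js API: `TrackedTensor`, `Engine`, and `add` are hypothetical stand-ins that merely count live tensors, while `Engine.tidy()` imitates the behavior of `tf.tidy()`, which releases every tensor created inside its callback except the returned one. Error handling inside `tidy()` is omitted for brevity.

```typescript
// Toy model of manual tensor memory management; all names are hypothetical stand-ins.
class TrackedTensor {
  readonly values: number[];
  private readonly engine: Engine;
  private disposed = false;

  constructor(values: number[], engine: Engine) {
    this.values = values;
    this.engine = engine;
    engine.track(this); // every new tensor "allocates" memory
  }

  dispose(): void {
    if (!this.disposed) {
      this.disposed = true;
      this.engine.liveTensors--;
    }
  }
}

class Engine {
  liveTensors = 0;
  private scopes: TrackedTensor[][] = []; // tensors created inside each open tidy()

  track(t: TrackedTensor): void {
    this.liveTensors++;
    if (this.scopes.length > 0) this.scopes[this.scopes.length - 1].push(t);
  }

  // Run fn, then dispose every tensor it created except the one it returns.
  tidy(fn: () => TrackedTensor): TrackedTensor {
    this.scopes.push([]);
    const result = fn();
    const created = this.scopes.pop() || [];
    for (const t of created) {
      if (t !== result) t.dispose();
    }
    // The surviving result belongs to the enclosing scope, if there is one.
    if (this.scopes.length > 0) this.scopes[this.scopes.length - 1].push(result);
    return result;
  }
}

function add(engine: Engine, a: TrackedTensor, b: TrackedTensor): TrackedTensor {
  return new TrackedTensor(a.values.map((v, i) => v + b.values[i]), engine);
}

const engine = new Engine();
const x = new TrackedTensor([1, 2, 3], engine);

// Without tidy(): the intermediate x + x is never disposed.
const leaky = add(engine, add(engine, x, x), x);
// With tidy(): the intermediate is freed as soon as the callback returns.
const tidied = engine.tidy(() => add(engine, add(engine, x, x), x));

leaky.dispose();
tidied.dispose();
x.dispose();
console.log(engine.liveTensors); // 1: the intermediate from the untidied expression leaked
```

Disposing the final result does not help with the first expression, because the intermediate `x + x` tensor is no longer referenced anywhere and can never be freed; wrapping the computation in `tidy()` avoids this.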

3 Front-end deep learning development approach

Researchers often face new challenges and constraints when deploying deep learning models in the browser, because these models may not have been developed for client-side execution. Furthermore, a high-performance back-end server with hardware acceleration such as GPUs differs greatly from the resource-limited browser environment on the front end. Because of these environmental differences, the emphasis has shifted to adapting models for effective deployment and an improved user experience.

When a model is deployed on the front end, user experience is a crucial consideration. As a result, much thought goes into making models that are compact and execute quickly [ 17 ]. In fact, model loading time can matter more than inference time, because loading and warming up a deep learning model takes longer than performing the inference itself [ 78 ]. For example, an object detection model larger than 10MB takes a long time to load, slowing down the website considerably.
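A back-of-the-envelope calculation shows why a 10MB model is slow to load. The sketch assumes 1 MB = 8 megabits and ignores latency, caching, and protocol overhead; the connection speeds are illustrative values, not measurements from [ 78 ], and `downloadSeconds` is a hypothetical helper.

```typescript
// Rough time to download a model file, ignoring latency, caching and protocol overhead.
function downloadSeconds(modelSizeMB: number, bandwidthMbps: number): number {
  const megabits = modelSizeMB * 8; // 1 byte = 8 bits
  return megabits / bandwidthMbps;
}

// Illustrative connection speeds in megabits per second.
const connections: Array<[string, number]> = [
  ["slow mobile (2 Mbit/s)", 2],
  ["typical (10 Mbit/s)", 10],
  ["fast (100 Mbit/s)", 100],
];

for (const [label, mbps] of connections) {
  console.log(
    `${label}: 10 MB model -> ${downloadSeconds(10, mbps).toFixed(1)} s, ` +
      `1 MB model -> ${downloadSeconds(1, mbps).toFixed(1)} s`
  );
}
```

At 10 Mbit/s, a 10 MB model needs about 8 s to download before warm-up and the first inference can even begin, which is why shrinking the model often matters more than speeding up inference.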

Creating models from the ground up, particularly large and complicated ones, is not advisable for front-end deep learning. Because of the limitations of the browser environment, browser models must be small, have fast inference (ideally in real time), and be as easy to train as possible [ 17 ]. Decreasing a model’s size may reduce its accuracy, but most models still work adequately in a browser [ 45 ]. Generally, when accuracy is weighed against user experience, a minor loss of accuracy is acceptable because the user experience takes priority.

Researchers can choose among several options when implementing a deep learning solution in the browser. The most straightforward and most commonly used approach is to use pre-trained TensorFlow.js models that are ready to deploy. A model that has already solved a similar problem may also be reused by retraining it with transfer learning to adapt it to a particular application. However, if an existing model is built in Python, it must first be converted to a TensorFlow.js model. Before deployment, it is also critical to test and optimize the model. These points are addressed in more detail in the following sections.

3.1 Reusing pre-trained TensorFlow.js models

Creating machine learning models, which are at the heart of AI software development, is not a simple task. Building, training, and deploying modern deep learning models requires significant technical skill and resources. Applying deep learning algorithms also requires the ability to read and understand specialized AI literature. Training is resource-intensive as well: models that perform complicated tasks such as image classification, object recognition, or text embedding require extensive computation and take a long time to train on large-scale datasets.

The complexity of creating machine learning models encourages their reuse. To help with model discovery and reuse, public repositories that collect pre-trained models can be used. Once suitable models are found, they can also serve as a testing ground for researchers to check whether a concept is viable before investing in developing a model of their own.

3.1.1 Using models from the TensorFlow.js library

TensorFlow.js includes a collection of Google’s pre-trained models. These models, shown in Table 3 , are ready to perform tasks such as object detection, image segmentation, speech recognition, and text toxicity classification. They may be used directly or customized using transfer learning. TensorFlow.js pre-trained models can be grouped into object-utility models, face and pose models, and text and language models.

3.1.2 Using models from online model repositories

TensorFlow Hub Footnote 18 is a massively scalable, open repository and library of reusable machine learning models. It includes trained models that are ready for fine-tuning and can be deployed anywhere, and it provides a central location for searching and discovering trained, ready-to-deploy models. With a few lines of code, recent trained models such as BERT and Faster R-CNN can be downloaded and reused. Thousands of models are available, with a growing number in each of the four input domains: text, image, video, and audio. Apart from filtering by domain, models can be filtered by format (TensorFlow.js, TFLite, Coral, TF1, and TF2) and by architecture type, including BERT, EfficientDet, Inception, MobileNet, ResNet, Transformer, and VGG-style.

Other repositories are tied to a specific framework, such as PyTorch Hub, Footnote 19 which hosts models that can be accessed through the PyTorch framework’s APIs. Other such repositories include the Microsoft Cognitive Toolkit, Footnote 20 the Caffe/Caffe2 Model Zoo, Footnote 21 and the MXNet Model Zoo. Footnote 22

Two repositories that are not tied to any specific framework are Model Zoo Footnote 23 and ModelHub. Footnote 24 Model Zoo curates and hosts a repository of open-source deep learning code and pre-trained models for various platforms and applications, covering TensorFlow, Keras, PyTorch, and Caffe, and offers filtering capabilities to help users locate the models they need. ModelHub is another self-contained deep learning model repository: a crowdsourced platform for scientific research that highlights current developments in deep learning applications and aims to encourage reproducible science.

The following repositories focus on certain fields:

SRZoo Footnote 25 in [ 24 ] is a centralized repository for super-resolution tasks that collects super-resolution models in TensorFlow.

ARBML Footnote 26 in [ 6 ] provides a series of TensorFlow.js NLP models trained for Arabic that support NLP pipeline development.

EZ-MMLA toolkit Footnote 27 in [ 51 ] is a website that provides easy access to TensorFlow.js machine learning algorithms for collecting multimodal data streams.

Using an open-source pre-trained model may be very simple and effortless. However, some models do not disclose how they were created, the dataset on which they were trained, or even the method employed. These “black box” models can be a problem, as they can lead to legal liability [ 42 ] when an explanation of why the model made a certain prediction is required.

3.2 Customizing existing models using transfer learning

Although TensorFlow.js is not recommended for intensive training, it is suitable for small-scale interactive learning and experimentation. Adapting available pre-trained models to an appropriate use case can significantly accelerate the development process.

Transfer learning is a method for applying previously trained models to new use cases: a model trained on one task is repurposed for a second, similar task. Transfer learning combines a pre-trained model with customized training data, so that by simply adding custom samples, the model’s functionality can be leveraged without recreating everything from scratch.

Most projects use transfer learning to achieve the following recurring benefits: obtaining a solution with little data, getting a solution quicker, and reusing a time-tested model structure [ 101 ]. Transfer learning has the major advantage of using less training data to develop an effective model for new classes. Rather than performing time-consuming relearning, previously acquired features, weights, or biases may be transferred to another situation. Modern, state-of-the-art models often include millions of parameters and train slowly. Transfer learning simplifies this training process by reusing a model learned on one task for a second related task. For instance, if an image classification model has been trained on thousands of pictures, rather than starting from scratch, fresh, unique image samples may be merged with the pre-trained model to produce a new image classifier using transfer learning. This feature enables the user to quickly and easily create a more personalized classifier.

Transfer learning has been applied in a variety of front-end deep learning use cases. For example, in [ 118 ], a convolutional neural network (CNN) computer vision model was trained on approximately 10,000 pictures (5,500 snails and 5,100 cercariae). Because the image dataset was small, transfer learning was applied with seven pre-trained CNN models, of which InceptionResNetV2 performed best. After the CNN model was developed and trained, the best-performing Keras model was converted to TensorFlow.js for deployment. Other work that applies transfer learning includes [ 89 ], using InceptionV3, ResNet50, and MobileNet, and [ 32 ], using PoseNet.

Transfer learning is accomplished by retraining selected parts of a previously trained model [ 101 ]. This can be done by replacing the last layers of the pre-trained model with new layers and training the new, much smaller model on top of the truncated original using freshly tailored data. For example, in [ 1 ], transfer learning is accomplished using TensorFlow’s MobileNet: the last fully connected layer of the MobileNet architecture is removed, and the resulting higher-level features are fed into machine learning classifiers such as logistic regression, support vector machine, and random forest models to classify the dataset.
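The truncated-model-plus-classifier pattern described above can be sketched in a few lines, with two stated simplifications: `FROZEN_WEIGHTS` and `extractFeatures` are a hypothetical toy stand-in for a truncated pre-trained network such as MobileNet, and the classifier is a logistic-regression head trained with plain stochastic gradient descent (one of the classifier types mentioned for [ 1 ]). Only the head’s weights change during training; the base stays frozen.

```typescript
// FROZEN_WEIGHTS is a hypothetical toy stand-in for a truncated pre-trained network
// (for example MobileNet without its last fully connected layer); it is never updated.
const FROZEN_WEIGHTS: number[][] = [
  [0.5, -0.2, 0.1],
  [-0.3, 0.8, 0.4],
];

function extractFeatures(input: number[]): number[] {
  // One dense layer with ReLU, standing in for the pre-trained base.
  return FROZEN_WEIGHTS.map((row) =>
    Math.max(0, row.reduce((sum, w, i) => sum + w * input[i], 0)));
}

const sigmoid = (z: number): number => 1 / (1 + Math.exp(-z));

interface LogisticHead { weights: number[]; bias: number; }

// Train a logistic-regression head on the frozen features with plain SGD.
function trainHead(inputs: number[][], labels: number[], epochs: number, lr: number): LogisticHead {
  const feats = inputs.map(extractFeatures); // computed once, since the base is frozen
  const weights: number[] = [];
  for (let j = 0; j < feats[0].length; j++) weights.push(0);
  let bias = 0;
  for (let e = 0; e < epochs; e++) {
    for (let n = 0; n < feats.length; n++) {
      const z = feats[n].reduce((s, f, j) => s + f * weights[j], bias);
      const err = sigmoid(z) - labels[n]; // gradient of the log loss with respect to z
      for (let j = 0; j < weights.length; j++) weights[j] -= lr * err * feats[n][j];
      bias -= lr * err;
    }
  }
  return { weights, bias };
}

function predict(head: LogisticHead, input: number[]): number {
  const f = extractFeatures(input);
  const z = f.reduce((s, v, j) => s + v * head.weights[j], head.bias);
  return sigmoid(z) >= 0.5 ? 1 : 0;
}

// A tiny "new task" dataset: two samples of class 1 followed by two of class 0.
const classifierHead = trainHead(
  [[1, 0, 0], [2, 0, 1], [0, 1, 0], [0, 2, 1]],
  [1, 1, 0, 0],
  200,
  0.5
);
console.log(predict(classifierHead, [3, 0, 0]), predict(classifierHead, [0, 3, 0])); // 1 0
```

Because the frozen features are computed once and only three numbers are learned, training is fast even with very little data, which is the main appeal of transfer learning in the browser.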

Because models are implemented in different ways, they must also be accessed differently for transfer learning. For instance, a feature-vector model downloaded from TensorFlow Hub does not include the whole model; it is truncated to output a feature vector, to which new layers can be connected to exploit the model’s learned features. Fortunately, TensorFlow’s environment is flexible enough to support transfer learning, but implementing it correctly requires understanding the model and planning how the underlying model will be reused.

Two primary considerations must be addressed before starting transfer learning. First, the quality of the data must be validated: if the training data is of poor quality, the result of the training will be worthless [ 73 ]. Second, the model properties learned in the first task must be transferable; in short, the features should be suitable for both the first and second tasks.

When the machine learning problem is unique, transfer learning is not suitable, and developing a model from scratch is best. In other cases, the problem is generic, and pre-trained models exist that either fit the need exactly or can meet the requirements with minimal modification. Sharing and repurposing deep learning models and resources is expected to keep growing in popularity [ 17 ]. As modern deep learning models become more robust and general, reusing pre-trained models for direct inference or transfer learning is becoming more practical and cost-efficient.

3.3 Converting Python models into TensorFlow.js models

While many open-source pre-trained models are available online, far more of them are trained and distributed in TensorFlow and Keras Python formats than as TensorFlow.js models. These pre-trained Python models can, however, be converted to TensorFlow.js for front-end deployment. For example, in [ 127 ], a model is trained in Python using the TensorFlow library and then converted into a TensorFlow.js layers model.

The TensorFlow.js framework provides a converter tool Footnote 28 that converts models trained with Python frameworks, such as TensorFlow and Keras, allowing direct inference and transfer learning in web pages [ 73 ]. The converter accepts the following model formats: TensorFlow SavedModel, Keras model, and TensorFlow Hub module [ 106 ]. Its output is a JSON file describing the model’s architecture and weight layout, together with one or more binary files holding the weight values, such as weights and biases.
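The sketch below shows how the binary weight data relates to its JSON description. It assumes a simplified subset of the converter’s `weightsManifest` (name, shape, and a `float32` dtype only, with weights stored back to back in manifest order); real manifests may split the data across several shard files and can include other dtypes or quantization metadata. `WeightSpec` and `decodeWeights` are hypothetical names, not part of the TensorFlow.js API.

```typescript
// Simplified subset of one entry in a converted model's weightsManifest.
interface WeightSpec {
  name: string;
  shape: number[];
  dtype: "float32";
}

// Slice a binary weight buffer into named Float32Arrays, in manifest order.
function decodeWeights(specs: WeightSpec[], buffer: ArrayBufferLike): { [name: string]: Float32Array } {
  const out: { [name: string]: Float32Array } = {};
  let offset = 0; // byte offset into the concatenated weight data
  for (const spec of specs) {
    const count = spec.shape.reduce((a, b) => a * b, 1); // number of elements
    out[spec.name] = new Float32Array(buffer, offset, count);
    offset += count * Float32Array.BYTES_PER_ELEMENT; // 4 bytes per float32
  }
  if (offset !== buffer.byteLength) {
    throw new Error(`Manifest describes ${offset} bytes but the buffer has ${buffer.byteLength}`);
  }
  return out;
}

// Example: a dense layer with a 2x3 kernel and 3 biases (9 floats, 36 bytes).
const specs: WeightSpec[] = [
  { name: "dense/kernel", shape: [2, 3], dtype: "float32" },
  { name: "dense/bias", shape: [3], dtype: "float32" },
];
const binary = new Float32Array([1, 2, 3, 4, 5, 6, 0.5, 0.5, 0.5]).buffer;
const weights = decodeWeights(specs, binary);
console.log(weights["dense/bias"].join(",")); // 0.5,0.5,0.5
```

Keeping the topology in JSON and the numbers in raw binary lets the browser fetch and cache the weight files efficiently and map them directly onto typed arrays.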

While conversion works for TensorFlow SavedModel and Keras models, it fails if the model contains operations that TensorFlow.js does not support, in which case the original model must be modified. The full list of TensorFlow.js operations is available online. Footnote 29 For example, CamaLeon [ 30 ] was trained in Keras before being converted to TensorFlow.js for browser-based inference. After many rounds of optimization, the model was reported to have 485,817 parameters and a weight size of just 2MB when converted to TensorFlow.js. Other examples of conversions from Keras for use in web applications are reported in [ 6 , 88 , 99 ].
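The reported CamaLeon figures agree with simple arithmetic: stored as 32-bit floats, each parameter takes 4 bytes, so the weight size is roughly the parameter count times 4. The helper below is an illustrative sketch (the name `weightMegabytes` is hypothetical) that ignores the small JSON topology file and any padding.

```typescript
// Approximate size of a model's weight files from its parameter count.
function weightMegabytes(paramCount: number, bytesPerParam: number): number {
  return (paramCount * bytesPerParam) / 1e6; // decimal megabytes
}

const camaleonParams = 485817; // parameter count reported for CamaLeon [ 30 ]
console.log(weightMegabytes(camaleonParams, 4).toFixed(2)); // 1.94 (float32 weights)
```

485,817 × 4 = 1,943,268 bytes, or about 1.94 MB, which matches the reported weight size of roughly 2MB.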

Models created with PyTorch can also be used, but they require an extra conversion step before TensorFlow.js can run inference with them: they must first be converted to Keras or ONNX format and then to TensorFlow.js. Again, changes may be required if any operations are unsupported, because operation support differs across frameworks. For example, in [ 108 ], a PyTorch model was converted to the ONNX standard, then to TensorFlow, and finally to TensorFlow.js.

Converting models poses a challenge when the model is not compatible with the TensorFlow.js library and changes to the API calls need to be made. In [ 45 ], compatibility and reliability issues were highlighted, as well as accuracy loss in certain instances when moving and quantizing a deep learning model from one platform to another. They suggested implementing platform-agnostic deep learning solutions, particularly for mobile and browser platforms, to tackle the problem.

3.4 Optimizing the model prior to deployment

Before deploying TensorFlow.js models to production, testing and model optimization are strongly recommended. Optimizing the download and inference speeds is critical for the client-side deployment of TensorFlow.js models to succeed [ 17 ].

The TensorFlow.js converter supports two optimization methods: Footnote 30 graph-model conversion to improve inference speed, and post-training weight quantization to reduce the model size.

Inference speed is optimized via the use of graph optimization, which simplifies computation graphs and decreases the amount of computation needed for model inference.

Model size is optimized through weight quantization, which stores the model’s weights at lower numerical precision (for example, 8- or 16-bit integers instead of 32-bit floats). Weight quantization usually does not cause a significant drop in prediction accuracy; in most instances, it has a minimal effect on overall model accuracy [ 45 ]. However, if accuracy does fall, selectively excluding or including individual tensors in quantization can help identify the tensors whose quantized values cause the drop. It is therefore critical to verify that the model retains an acceptable level of accuracy after quantization.
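A minimal sketch of post-training weight quantization, assuming a per-tensor affine mapping (a minimum value plus a scale) from 32-bit floats to 8-bit codes. This is one common scheme shown for illustration; the function and type names are hypothetical, and the converter’s exact implementation may differ. Each weight shrinks from 4 bytes to 1, and the reconstruction error is bounded by half a quantization step.

```typescript
// Per-tensor affine quantization of float weights to 8-bit codes (illustrative).
interface QuantizedTensor {
  codes: Uint8Array; // one byte per weight instead of four
  min: number;       // value represented by code 0
  scale: number;     // value step between consecutive codes
}

function quantizeUint8(weights: number[]): QuantizedTensor {
  let min = Infinity;
  let max = -Infinity;
  for (const w of weights) {
    if (w < min) min = w;
    if (w > max) max = w;
  }
  const scale = max > min ? (max - min) / 255 : 1; // constant tensors: any scale works
  const codes = new Uint8Array(weights.length);
  for (let i = 0; i < weights.length; i++) {
    codes[i] = Math.round((weights[i] - min) / scale); // in 0..255
  }
  return { codes, min, scale };
}

function dequantize(q: QuantizedTensor): number[] {
  const out: number[] = [];
  for (let i = 0; i < q.codes.length; i++) out.push(q.min + q.codes[i] * q.scale);
  return out;
}

const original = [-0.42, -0.1, 0, 0.07, 0.33, 0.9];
const restored = dequantize(quantizeUint8(original));
const maxError = Math.max(...original.map((w, i) => Math.abs(w - restored[i])));
console.log(`max reconstruction error: ${maxError.toExponential(2)}`);
```

Checking the reconstruction error tensor by tensor like this is one way to find the tensors whose quantization hurts accuracy and should be excluded.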

Optimization has been used successfully in several use cases. For example, the original ssd_mobilenet_v2_coco model for COCO-SSD object detection is 187.8 MB in size, whereas its TensorFlow.js version is very lightweight and optimized for browser execution: the TensorFlow.js lite_mobilenet_v2 model is less than 1MB and has the fastest inference. In another example, in [ 53 ], post-training weight quantization compressed the downloaded weights to a 400KB payload with no discernible loss of quality. By using dilated depth-wise separable convolutions and fused operations, the researchers also reduced the model’s run time for one harmonization from 40s to 2s.

Research on model optimization

Because models must be both compact and powerful, a prominent research question in deep learning is how to construct the smallest possible neural network that still performs tasks as accurately as a larger one.

A method for expanding and describing neural net information in a model based on hierarchical choices is suggested in [ 40 ]. In addition, to identify performance bottlenecks and aid system design, a thorough characterization of the results for a selection of paradigmatic deep learning workloads was provided in [ 44 ].

To produce a smaller model and low-latency inference, context-aware pruning was introduced in [ 55 ] that takes into consideration latency, network state, and computational capability of the mobile device. A binary convolutional neural network was later introduced in [ 56 ]. The updated approach reduces ‘model size by 16x to 29x’ and reduces ‘end-to-end latency by 3x to 60x’ compared to existing methods.
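To see where size reductions of this magnitude can come from, the sketch below binarizes a weight tensor: each weight is reduced to its sign (1 bit) plus one shared scaling factor, here the mean absolute weight, in the style of XNOR-Net. This is a swapped-in, illustrative technique and not necessarily the method of [ 56 ]; the names are hypothetical. Storing 1 bit per weight instead of 32 caps the saving for the weights themselves at about 32x.

```typescript
// Binarize weights to signs plus one scaling factor (illustrative, XNOR-Net style).
interface BinarizedTensor {
  bits: Uint8Array; // bit i set => weight i is +alpha, clear => -alpha
  alpha: number;    // mean absolute value of the original weights
  length: number;   // number of weights encoded
}

function binarize(weights: number[]): BinarizedTensor {
  const bits = new Uint8Array(Math.ceil(weights.length / 8)); // 8 weights per byte
  let sumAbs = 0;
  for (let i = 0; i < weights.length; i++) {
    sumAbs += Math.abs(weights[i]);
    if (weights[i] >= 0) bits[i >> 3] |= 1 << (i & 7);
  }
  const alpha = weights.length > 0 ? sumAbs / weights.length : 0;
  return { bits, alpha, length: weights.length };
}

function debinarize(b: BinarizedTensor): number[] {
  const out: number[] = [];
  for (let i = 0; i < b.length; i++) {
    const isPositive = ((b.bits[i >> 3] >> (i & 7)) & 1) === 1;
    out.push(isPositive ? b.alpha : -b.alpha);
  }
  return out;
}

const sampleWeights = [0.5, -0.25, 0.75, -1];
const packed = binarize(sampleWeights);
console.log(debinarize(packed).join(", ")); // 0.625, -0.625, 0.625, -0.625
console.log(`${4 * sampleWeights.length} bytes as float32 vs ${packed.bits.length} byte packed`);
```

The approximation is coarse, which is why binary networks usually need training or fine-tuning with binarization in the loop to keep accuracy acceptable.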

A progressive transfer framework for deep learning models, inspired by the way large image files are progressively transferred over the web, is introduced in [ 76 ]. The framework divides a deep learning model into parts that are transferred in stages; each part that arrives builds a better model on the user’s device.

While model optimization is beneficial, the best way to ensure that a model works effectively is to design it with resource constraints in mind from the start. This means avoiding overly complicated architectures and, where feasible, reducing the number of parameters or weights.

If front-end performance is inadequate, back-end services can serve as a fallback. For example, in [ 53 ], in preparation for large-scale deployment, inference on Tensor Processing Units (TPUs) on Google Cloud was supported alongside front-end inference. A speed test determines whether the user’s device can run the model in the browser; if it cannot, requests are routed to remote servers.
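The routing decision can be sketched as a small benchmark. The sketch is hypothetical and not the implementation of [ 53 ]: the untimed warm-up run, the number of trials, the use of the median, and the latency budget are all assumptions. In a browser, `now` would normally be `performance.now()`; here a fake clock is injected so the example is deterministic.

```typescript
type Route = "browser" | "server";

// Decide where to run inference by timing a few local runs (hypothetical policy).
function chooseRoute(
  runLocalInference: () => void,
  now: () => number,
  budgetMs: number,
  trials: number
): Route {
  if (trials < 1) throw new Error("trials must be at least 1");
  runLocalInference(); // warm-up run: usually much slower, so it is not timed
  const timings: number[] = [];
  for (let t = 0; t < trials; t++) {
    const start = now();
    runLocalInference();
    timings.push(now() - start);
  }
  timings.sort((a, b) => a - b);
  const median = timings[Math.floor(timings.length / 2)];
  return median <= budgetMs ? "browser" : "server";
}

// Fake clock that advances by a fixed number of milliseconds per reading.
function fakeClock(stepMs: number): () => number {
  let t = 0;
  return () => (t += stepMs);
}

const noop = (): void => {};
console.log(chooseRoute(noop, fakeClock(40), 100, 5));  // browser: each run appears to take 40 ms
console.log(chooseRoute(noop, fakeClock(250), 100, 5)); // server: each run appears to take 250 ms
```

Using the median of several timed runs, rather than a single run, keeps one slow outlier (for example, a garbage-collection pause) from pushing a capable device onto the server path.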

4 Front-end deep learning web apps

Web browsers have drawn the interest of AI researchers because they offer a cross-platform computing target for client-side deep learning. Privacy, accessibility, and low-latency interactions are just a few advantages of deploying in web browsers. The browser’s access to components such as the webcam, microphone, and accelerometer allows simple integration of deep learning models with sensor data. Because this data can be processed locally, user data may be kept on the device, preserving user privacy and enabling personalized deep learning apps in fields such as medicine and education.

Deep learning improves many existing solutions while also bringing new practical applications. Sometimes, an entirely new area of application is introduced. A few applications of TensorFlow.js are available in TensorFlow.js’s gallery of applications. Footnote 31

This section focuses on research that leverages front-end deep learning web apps. It explores the question of what front-end deep learning can do by providing a glimpse of the work that has been deployed on the front end. Instead of delving deeply into any one field, the section concentrates on how working on the front end may help solve user problems.

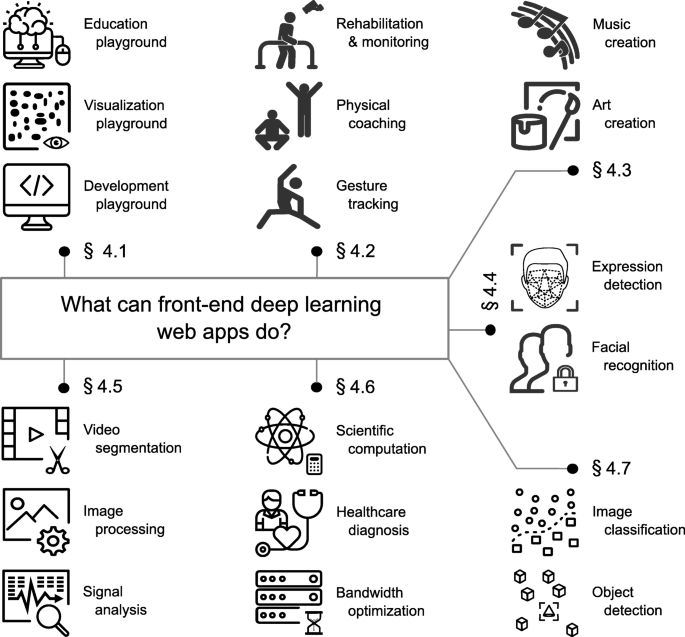

The collected works span 18 different fields, grouped into 7 categories based on the purpose of the web apps. To show how researchers build front-end deep learning web applications, the section also provides links to resources, such as the website where an app is deployed or the GitHub repository hosting its source code.

To help navigate the broad list of diverse topics, Fig. 1 has been designed to outline and highlight the major groupings of applications that will be covered. For example, if gesture tracking is of interest, following the section number (Section 4.2 ) will lead to examples of what has been done and how web apps are used to achieve gesture tracking.

Examples of front-end deep learning web apps

4.1 Deep-learning playground apps

Education playground

Teachable Machine, Footnote 32 in [ 18 ], is a web app that enables non-programmers to train their own machine learning classification models using videos, pictures, or sounds from their devices. To build a model, it uses transfer learning to find trends and patterns in the image or sound samples. The trained model can also be downloaded locally, eliminating the need to store large files, datasets, or models in the cloud. For users who want greater control over model training, Teachable Machine offers expandable panels for hyperparameter tuning and model evaluation visualizations.

Two other attempts have been made to create online AI learning applications comparable to Google’s Teachable Machine. In [ 107 ], the aim is to develop a web-based learning application Footnote 33 to assist novices in comprehending deep learning processes and resolving real-world issues. In [ 90 ], the aim is to create a web app for an open artificial intelligence platform that helps preprocess input data, trains artificial neural networks, and sets up future actions based on inference findings.

For children’s education, an interactive online tool based on image recognition is proposed in [ 100 ]. Prompted by the website’s instructional video, the user takes a picture of an object, and the website identifies it using a deep learning model built with ml5.js.

Visualization playground

GAN Lab, Footnote 34 in [ 66 ], is an interactive visual learning and experimentation app for Generative Adversarial Networks (GANs). Users can train GANs interactively and visually inspect the training process to understand how GANs work during training and inference. A follow-up in-person observational study investigated how GAN Lab is used and what users gain from it. Design considerations and challenges for interactive deep learning teaching systems have also been highlighted in [ 65 ].

The same research group presented CNN Explainer, Footnote 35 in [ 126 ] and [ 125 ], an interactive visualization application that helps explore convolutional neural networks. This app imports the pre-trained Tiny VGG model and uses TensorFlow.js to calculate results in real-time. They also presented a review of visualization and visual analytics in deep learning research, using a human-centered, interrogative approach [ 52 ].

Anomagram Footnote 36 is another interactive visualization app for studying deep learning models, and it looks at how an autoencoder model may be used for anomaly detection. The ECG5000 dataset was used for interactive training and testing, in which the autoencoder model predicts whether an ECG signal sample is normal or abnormal.

Two other tools that use visualization to support learning about deep learning are TensorSpace, Footnote 37 a framework for visualizing pre-trained neural network models in three dimensions, and LEGION, Footnote 38 in [ 28 ], a visual analytics app that enables users to compare and select regression models created through either hyperparameter tuning or feature engineering.

Development playground

Milo Footnote 39 in [ 97 ] is a web-based visual programming environment for data science. It uses graphical blocks (Blockly Footnote 40 ) to abstract away language-specific implementations of machine learning concepts and supports the creation of interactive visualizations. Similarly, DeepScratch, Footnote 41 in [ 5 ], is a Scratch programming language extension that adds powerful language features to aid in developing and exploring deep learning models. Likewise, Marcelle Footnote 42 is a toolkit built on the TensorFlow.js library that enables interactive machine learning development by composing or customizing component-based machine learning workflows [ 39 ].

To apply gamification to deep learning, [ 105 ] presented a series of tasks Footnote 43 that assess the human interpretability of generative model representations: users interactively change model parameters to recreate target instances. Similarly, in [ 64 ], students were given 30 ‘half-baked’ artificial intelligence projects Footnote 44 in the Snap! block-based programming system to explore and improve.

4.2 Body pose detection and gesture tracking apps

Rehabilitation and monitoring

To facilitate fall risk assessment and rehabilitation monitoring for the elderly, a web app was introduced in [ 98 ]. A similar home care monitoring system was reported in [ 20 ], where it can assess the quality of in-home postural alterations, such as posture conversion, body movement, and positional changes.

In a similar work, a fall detector is proposed in [ 7 ] using convolutional neural networks and recurrent neural networks. The suggested technique is appropriate for real-time detection of a single individual’s fall in a controlled setting, even on low-end computers.

Most recently, in [ 25 ], PoseNet was used for in-home rehabilitation where its skeletal tracking was used to identify and monitor patients’ angular motions as they conduct rehabilitation activities in front of a webcam. After patients have completed their rehabilitation activities, doctors may examine and assess the deviation rate of their angular motions across various days to find out their rate of recovery.

Physical activity coaching

In [ 104 ], a web-based video annotation tool was presented that supports multimodal annotations and can be used in a variety of scenarios, including dance rehearsals. Pose estimation detects a human skeleton in video frames, helping the user locate potential annotations. To make the tool interactive, voice integration was later added using the ml5.js speech and sound classifiers to provide human-computer interaction [ 103 ].

In another study, the PoseNet machine learning model from ml5.js was used to create an exercise tracking system that uses two cameras to track and assess the difficulty of an exercise while providing feedback [ 96 ]. According to them, a single RGB camera may miss certain essential information, and incorrect body movement during workouts may also be overlooked. A self-monitoring and coaching system for online squat fitness is also designed in [ 124 ].

In [ 32 ], a Digital Coaching System uses PoseNet to provide real-time feedback on exercise performance to the trainee by comparing keypoints from two video feeds, one from the trainer (using ResNet50) and one from the trainee (using MobileNet). The trainer’s video feed is pre-recorded and processed with a pre-trained pose estimation model to generate keypoints that will be compared to the keypoints from the trainee’s live video feed.

An assessment of pre-trained CNN models for player detection and motion analysis in pre-recorded squash games was carried out in [ 15 ]. One of the ml5.js-based models, built on the 28-layer MobileNetV1 architecture (the shallowest of the CNNs evaluated), achieved the fastest inference but the lowest accuracy at every threshold level in all videos.
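MobileNetV1's speed comes largely from replacing standard convolutions with depthwise separable ones. Following the multiply-add counts in the MobileNet paper, a standard DK x DK convolution over a DF x DF feature map with M input and N output channels costs DK·DK·M·N·DF·DF, whereas a depthwise convolution followed by a 1x1 pointwise convolution costs DK·DK·M·DF·DF + M·N·DF·DF, i.e. a fraction 1/N + 1/DK² of the original. The layer sizes below are illustrative and not taken from [ 15 ].

```python
def standard_conv_mult_adds(dk, m, n, df):
    """Multiply-adds of a standard dk x dk convolution (stride 1, 'same' padding)."""
    return dk * dk * m * n * df * df

def separable_conv_mult_adds(dk, m, n, df):
    """Depthwise dk x dk convolution followed by a 1x1 pointwise convolution."""
    depthwise = dk * dk * m * df * df
    pointwise = m * n * df * df
    return depthwise + pointwise

# Illustrative layer: 3x3 kernel, 512 -> 512 channels, 14x14 feature map.
std = standard_conv_mult_adds(3, 512, 512, 14)
sep = separable_conv_mult_adds(3, 512, 512, 14)
print(std, sep, round(std / sep, 2))  # prints: 462422016 52283392 8.84
```

Roughly nine times fewer multiply-adds per 3x3 layer is one reason MobileNet-based models trade some accuracy for speed.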

A computer vision-based mobile tool for assessing human posture is proposed in [ 82 ]; it estimates human pose and identifies anatomical points.

Gesture tracking

HeadbangZ, Footnote 45 in [ 81 ], is a web-based game that demonstrates 3D gesture-based input using deep pose estimation and user interaction. Likewise, Scroobly Footnote 46 and PoseAnimator Footnote 47 use the FaceMesh and PoseNet machine learning models to map the user’s real movements onto their animations: as the user moves, the animation shown on screen changes accordingly.

TensorFlow.js pose estimation was also used to develop Learn2Sign [ 92 ], which provides feedback to people learning signed languages. In contrast, [ 86 ] offers real-time translation of American Sign Language (ASL) into text.

4.3 Music and art creation apps

Music creation

Magenta.js [ 102 ] is an open-source toolkit with a simple JavaScript API that abstracts away technical complexities, allowing application developers to build new interfaces for generative models. While Magenta.js’s goal is far broader than music, the suite’s first package, @magenta/music, contains several state-of-the-art music-generating models.

MidiMe, Footnote 48 in [ 33 ], is a compact variational autoencoder (VAE) trained on the latent space of Magenta’s MusicVAE; it enables artists to summarize the musical characteristics of their input and create new customized samples based on them. Bach Doodle, Footnote 49 in [ 53 ], also powered by Magenta.js, aims to make music creation more accessible to amateurs and professionals alike. Users compose a tune in a simple sheet-music interface and have it harmonized in Baroque-style counterpoint by a machine learning model capable of filling in arbitrarily incomplete scores. In addition, Tone Transfer, Footnote 50 in [ 19 ], is an interactive online app that converts any audio input into the sound of various musical instruments using differentiable digital signal processing (DDSP) models.
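As a generic illustration of the two computations at the core of any VAE, not of MidiMe's actual code, the sketch below draws a latent sample with the reparameterization trick, z = mu + sigma * eps with eps ~ N(0, 1), and computes the closed-form KL divergence between the diagonal Gaussian N(mu, sigma²) and the standard normal prior, KL = ½ Σ (mu² + sigma² − log sigma² − 1). The encoder is assumed to output mean and log-variance vectors as plain lists.

```python
import math
import random

def reparameterize(mu, log_var, rng=random):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), elementwise."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """Closed-form KL( N(mu, sigma^2) || N(0, 1) ), summed over dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0
                     for m, lv in zip(mu, log_var))
```

During training the loss combines a reconstruction term with this KL term; at generation time a latent code is sampled and passed through the decoder.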

Similarly, Rhythm VAE, Footnote 51 in [ 122 ], is a system for exploring the latent spaces of musical rhythms. It requires minimal training data, which allows individual users to customize and explore it quickly. Likewise, JazzICat, Footnote 52 in [ 72 ], uses a deep LSTM network to simulate, in real time, a jazz improvisation scenario involving a human soloist and an artificial accompaniment. In addition, Essentia.js, Footnote 53 in [ 27 ], is a collection of publicly available pre-trained TensorFlow.js models for music-related tasks such as signal analysis, signal processing, and feature extraction.
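Exploring a latent space typically means decoding points along a path between two latent codes. A minimal sketch, assuming latent vectors are plain lists: linear interpolation, plus spherical linear interpolation (slerp), which is often preferred for Gaussian latent spaces because intermediate points keep a similar norm. Whether [ 122 ] uses either scheme is not stated here, and the decoder call is omitted.

```python
import math

def lerp(z0, z1, t):
    """Linear interpolation between latent vectors z0 and z1, t in [0, 1]."""
    return [(1 - t) * a + t * b for a, b in zip(z0, z1)]

def slerp(z0, z1, t):
    """Spherical linear interpolation; falls back to lerp for near-parallel vectors."""
    dot = sum(a * b for a, b in zip(z0, z1))
    n0 = math.sqrt(sum(a * a for a in z0))
    n1 = math.sqrt(sum(b * b for b in z1))
    omega = math.acos(max(-1.0, min(1.0, dot / (n0 * n1))))
    if math.sin(omega) < 1e-6:
        return lerp(z0, z1, t)
    s0 = math.sin((1 - t) * omega) / math.sin(omega)
    s1 = math.sin(t * omega) / math.sin(omega)
    return [s0 * a + s1 * b for a, b in zip(z0, z1)]

def interpolation_path(z0, z1, steps, fn=slerp):
    """Return `steps` evenly spaced latent codes from z0 to z1 inclusive."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    return [fn(z0, z1, i / (steps - 1)) for i in range(steps)]
```

Each code on the path would then be decoded into a rhythm, letting a user hear a gradual morph between two patterns.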

Art creation

In Sketcher, Footnote 54 in [ 36 ], a convolutional neural network model recognizes the user’s drawings, and the recognition results are used to offer human-like complimentary feedback.

In contrast, a web app for collaborative sketching is presented in [ 35 ]. It leverages Magenta.js’ sketch-rnn to be both cooperative and adaptable to the actions of its human partner. During collaborations, this app may suggest logical extensions of incomplete drawings.

Finally, building on earlier work, this time sketch-rnn and sketch-pix2seq, [ 127 ] constructs an image-to-sequence VAE model that guides the user through an interactive GUI environment by conditionally generating lines.
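sketch-rnn, on which [ 35 ] and [ 127 ] build, represents a drawing as a sequence of pen offsets rather than pixels, in the "stroke-5" format (dx, dy, p1, p2, p3): p1 = 1 while the pen stays down toward the next point, p2 = 1 when the pen is lifted after the current point, and p3 = 1 marks the end of the drawing. A minimal conversion sketch, assuming the input is a list of strokes, each a list of absolute (x, y) points:

```python
def to_stroke5(strokes):
    """Convert strokes [[(x, y), ...], ...] into stroke-5 rows [dx, dy, p1, p2, p3]."""
    rows = []
    prev_x, prev_y = 0, 0
    for stroke in strokes:
        for i, (x, y) in enumerate(stroke):
            lifted = i == len(stroke) - 1  # pen goes up after a stroke's last point
            rows.append([x - prev_x, y - prev_y,
                         0 if lifted else 1, 1 if lifted else 0, 0])
            prev_x, prev_y = x, y
    rows.append([0, 0, 0, 0, 1])  # end-of-sketch marker, never rendered
    return rows
```

Because only offsets are stored, the absolute position of any point is the running sum of the dx and dy values up to it.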

4.4 Facial expression detection and recognition apps

Expression detection

A study comparing two open-source emotion recognition software packages under a range of lighting and distance conditions found that face-api.js outperforms clmtrackr, with 64% vs. 28% average accuracy [ 8 ].

An intelligent environment capable of detecting students’ learning-related emotions was introduced in [ 99 ], allowing different interventions to be carried out automatically in response to students’ emotional states. In addition, an online report and dashboard were proposed in [ 46 ] that give instructors an assessment of students’ engagement and emotional states while they use an online tutoring system. Likewise, a head pose app that predicts students’ head direction in real time and reacts to potential disengagement was proposed in [ 128 ]. The app also includes a facial expression dashboard that detects students’ emotional states and relays this information to instructors, helping them assess student progress and identify students who need more help.

To support emotional healthcare monitoring during mental health treatment, a video analysis app for facial expression analysis and emotion visualization was created in [ 48 ] and [ 49 ]. Similarly, a WebRTC-based real-time video-conferencing application that detects participants’ emotions from their facial expressions was presented in [ 34 ].

Interestingly, the relationship between human mental fatigue level as measured by bio-signals (i.e., eye blink data) and plant health was studied in [ 68 ]. Face-api.js is also used to conduct neuromarketing research by identifying respondents’ emotions after seeing advertisements [ 38 ].
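How [ 68 ] extracts blink data is not detailed here. One widely used approach, shown as an assumption rather than the study's method, is the eye aspect ratio (EAR) of Soukupová and Čech, computed from six eye landmarks p1 to p6 (such as those of a 68-point landmark model): EAR = (|p2 − p6| + |p3 − p5|) / (2|p1 − p4|). EAR drops sharply when the eye closes, so a blink can be counted when it stays below a threshold for a few consecutive frames. The default threshold and frame count below are illustrative.

```python
import math

def eye_aspect_ratio(p):
    """p: six (x, y) eye landmarks ordered p1..p6 (p1/p4 at the eye corners,
    p2/p3 on the upper lid, p6/p5 on the lower lid)."""
    d = math.dist
    return (d(p[1], p[5]) + d(p[2], p[4])) / (2.0 * d(p[0], p[3]))

def count_blinks(ear_series, threshold=0.2, min_frames=2):
    """Count runs of at least `min_frames` consecutive frames with EAR < threshold."""
    blinks, run = 0, 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # a blink still in progress at the end of the series
        blinks += 1
    return blinks
```

Blink counts per minute derived this way are one possible fatigue-related bio-signal.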

Facial recognition

A 68-point facial landmark predictor under 200 KB in size, whose predicted landmarks can be matched with conventional 68-point face recognition landmarks, has been presented in [ 77 ]. In addition, to prevent unauthorized access, a system was created that uses facial recognition and landmark detection to perform identity verification and liveness detection [ 75 ]. Similarly, [ 2 ] presents a smart identification system for video conferencing applications based on face-api.js and a blockchain-based distributed hash table.
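face-api.js describes each face with a 128-dimensional descriptor, and its FaceMatcher compares descriptors by Euclidean distance against a threshold (0.6 by default). The sketch below illustrates that matching idea in plain Python; it averages the distances to each enrolled person's reference descriptors and is an illustration, not a port of the library or of the systems in [ 75 ] and [ 2 ]. The function names are hypothetical, and the test vectors are shortened toy descriptors.

```python
import math

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def best_match(query, enrolled, threshold=0.6):
    """Return (label, mean_distance) for the closest enrolled person, or
    ("unknown", distance) if even the closest exceeds `threshold`.

    enrolled maps a label to a non-empty list of reference descriptors.
    """
    best_label, best_dist = "unknown", math.inf
    for label, refs in enrolled.items():
        mean_dist = sum(euclidean(query, r) for r in refs) / len(refs)
        if mean_dist < best_dist:
            best_label, best_dist = label, mean_dist
    return (best_label if best_dist <= threshold else "unknown"), best_dist
```

Rejecting matches above the threshold is what lets such a system refuse access to faces that are not enrolled.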

4.5 Video, image and signal analysis apps

Video segmentation

To replace the background of a remote peer’s webcam feed with one that matches the receiver’s, a browser-based application in [ 30 ] uses U-Net and TensorFlow.js to perform real-time machine vision. In similar work, the TensorFlow.js BodyPix model is used to separate the person from the background to enhance remote user presence: the remote user’s background is replaced with a live image of the area behind the receiver [ 41 ].
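Both systems ultimately composite the segmented person over a new background. A minimal per-pixel sketch, assuming a segmentation model (such as BodyPix or a U-Net) has already produced a person mask with values in [0, 1], and that frames are equally sized nested lists of RGB tuples: output = m·foreground + (1 − m)·background. A soft mask blends the edges; a binary mask gives a hard cut.

```python
def composite(frame, background, mask):
    """Alpha-composite the person in `frame` over `background`.

    frame, background: H x W lists of (r, g, b) tuples with 0-255 channels.
    mask: H x W list of floats in [0, 1], 1 where the person is.
    """
    out = []
    for f_row, b_row, m_row in zip(frame, background, mask):
        out.append([
            tuple(round(m * fc + (1 - m) * bc) for fc, bc in zip(f_px, b_px))
            for f_px, b_px, m in zip(f_row, b_row, m_row)
        ])
    return out
```

In the browser this would normally run on typed arrays or in a WebGL shader for speed; nested lists only keep the arithmetic visible.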

A real-time painting algorithm is proposed in [ 12 ] that removes unwanted people from video by segmenting each frame with BodyPix and TensorFlow.js. Similarly, rather than altering the background, Invisibility Cloak Footnote 55 removes the person in real time, directly in the browser.

For real-time face augmentation, a pre-trained FaceMesh model was used in [ 115 ] to predict face regions and landmarks and to overlay various augmented reality filters and effects on the detected face regions.
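Placing an overlay such as virtual glasses reduces to a small geometric transform. A minimal sketch, assuming two eye-center points have already been derived from the face landmarks and that the sprite image has its own eye centers `sprite_eye_distance` pixels apart (a hypothetical parameter): the overlay is centered between the eyes, rotated by the head roll, and scaled by the ratio of eye distances.

```python
import math

def overlay_transform(left_eye, right_eye, sprite_eye_distance):
    """Return (center_x, center_y, angle_degrees, scale) for a face-anchored sprite."""
    cx = (left_eye[0] + right_eye[0]) / 2
    cy = (left_eye[1] + right_eye[1]) / 2
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    angle = math.degrees(math.atan2(dy, dx))  # head roll in image coordinates
    scale = math.hypot(dx, dy) / sprite_eye_distance
    return cx, cy, angle, scale
```

Recomputing this transform every frame makes the overlay follow the face as it moves, tilts, and approaches the camera.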

Interestingly, rather than adding or removing regions of the face and body in the video, [ 91 ] developed a web application that detects face touching. Using BodyPix 2.0, the application takes real-time input from the built-in camera, identifies face touching from the intersection of the hand and face regions, and warns the user.
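The intersection test can be sketched as follows. In a part-segmentation output where each pixel carries a single part label, the raw hand and face masks cannot overlap pixel for pixel, so this sketch, an assumption rather than the method of [ 91 ], intersects their bounding boxes instead. Masks are H x W boolean grids, and `min_fraction` is a hypothetical tuning parameter.

```python
def bbox(mask):
    """Inclusive bounding box (x0, y0, x1, y1) of True pixels, or None if empty."""
    pts = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not pts:
        return None
    xs, ys = zip(*pts)
    return min(xs), min(ys), max(xs), max(ys)

def overlap_area(a, b):
    """Area of the intersection of two inclusive pixel boxes (0 if disjoint)."""
    w = min(a[2], b[2]) - max(a[0], b[0]) + 1
    h = min(a[3], b[3]) - max(a[1], b[1]) + 1
    return max(0, w) * max(0, h)

def is_touching_face(face_mask, hand_mask, min_fraction=0.05):
    """Flag a touch when the hand box covers at least min_fraction of the face box."""
    face, hand = bbox(face_mask), bbox(hand_mask)
    if face is None or hand is None:
        return False
    face_area = (face[2] - face[0] + 1) * (face[3] - face[1] + 1)
    return overlap_area(face, hand) / face_area >= min_fraction
```

A real application would likely also require the condition to hold for several frames before warning, to avoid flicker.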

Image processing

Front-end deep learning web apps have also been used to provide a centralized tool that enables individuals working in disparate places and industries to interact.

An online Integrated Fingerprint Image system was created in [ 54 ] with web-based tools for viewing, editing, pattern recognition, analysis, and real-time interaction with images. The system can extract features and distinguish between normal and abnormal regions of an image, while allowing users to add comments. In addition, [ 62 ] showed that preprocessing the input image significantly reduces the amount of data that must be sent to the cloud service, which in turn improves server-side processing.
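The effect of preprocessing on upload size is simple arithmetic. Using illustrative numbers that are not taken from [ 62 ], an uncompressed full-HD RGB frame at one byte per channel is compared with the same frame downscaled to 224 x 224, a common CNN input size:

```python
def raw_image_bytes(width, height, channels, bytes_per_channel=1):
    """Size of an uncompressed image buffer in bytes."""
    return width * height * channels * bytes_per_channel

original = raw_image_bytes(1920, 1080, 3)     # full-HD RGB frame
preprocessed = raw_image_bytes(224, 224, 3)   # downscaled RGB model input
print(original, preprocessed, round(original / preprocessed, 1))  # prints: 6220800 150528 41.3
```

Compression would change the absolute numbers, but resizing on the client before upload still cuts the payload by roughly the ratio of pixel counts.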

CellProfiler Analyst Web (CPAW), Footnote 56 in [ 9 ], allows users to examine image-based data and classify complex biological traits through an interactive user interface, offering better accessibility, faster setup, and a simple workflow. During the active learning phase, cells are fetched, and a TensorFlow.js machine learning classifier is trained on them and assigns them to their appropriate classes. MedSeg Footnote 57 is another clinical web app that enables simple volumetric segmentation of organs, tissues, and pathologies in radiological images. Segmentation can be performed manually or automatically using deep learning models.
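How CPAW selects which cells to fetch during active learning is not described here. A common strategy, used here purely as an assumption, is uncertainty sampling: show the user the unlabeled cells whose predicted class probabilities are least confident, collect labels, retrain, and repeat. The sketch ranks cells by the entropy of the classifier's probability vector; the cell IDs are hypothetical.

```python
import math

def entropy(probs):
    """Shannon entropy (nats) of a probability vector; higher means less certain."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def select_for_labeling(predictions, k):
    """Pick the k unlabeled items with the most uncertain predictions.

    predictions maps an item id to its predicted class probabilities.
    """
    ranked = sorted(predictions, key=lambda i: entropy(predictions[i]), reverse=True)
    return ranked[:k]
```

Labeling the most ambiguous cells first tends to improve the classifier faster than labeling cells at random.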

Signal analysis

A virtual laboratory for EEG data that supports data analysis, pre-processing, and model development was built with TensorFlow.js in [ 3 ]. In a separate clinical study using real-time sensor measurements of rib-cage movement, [ 108 ] found that an LSTM network model can predict physical effort by relating how much air a person breathes per minute to their perceived workout intensity.

In [ 70 ], deep learning models were built to classify classroom activities from audio captured during class observations. In this study, the CNN classifier outperformed the KNN and random forest models.

A MobileNet-based real-time classification model for rolling bearing diagnostics from vibration signals was built in [ 129 ] and tested on a range of fault types. In that study, an improved ReLU activation function outperformed the standard ReLU.

4.6 Scientific computation, diagnosis and optimization apps

Scientific computation

MLitB [ 79 ] is the first prototype machine learning framework written entirely in JavaScript, without TensorFlow.js. It can perform large-scale distributed computing across diverse classes of devices through web browsers.

JSDoop Footnote 58 is a high-performance, volunteer-based web computing library introduced in [ 84 ] that splits a problem into tasks and distributes the computation through various queues. As a proof of concept, TensorFlow.js is used to train a recurrent neural network that produces or predicts the next letter of an input text. In a follow-up study [ 83 ], a dynamic and adaptable federated learning system was implemented to train common machine learning models. It was evaluated with up to 24 desktops collaborating via web browsers, allowing users to join or leave the computation while keeping personal data stored locally.
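The aggregation rule used in [ 83 ] is not specified here. The canonical choice in federated learning is federated averaging (FedAvg): each browser trains on its local data and returns only its updated weights, and the server replaces the global model with the average of those weights, weighted by each client's number of training examples. A minimal sketch of that aggregation step, with each model flattened to a list of floats for brevity:

```python
def federated_average(client_weights, client_sizes):
    """Weighted average of client weight vectors (the FedAvg aggregation step).

    client_weights: equal-length lists of floats, one per client.
    client_sizes: number of local training examples per client.
    """
    total = sum(client_sizes)
    if total == 0:
        raise ValueError("no training examples reported")
    dim = len(client_weights[0])
    avg = [0.0] * dim
    for weights, n in zip(client_weights, client_sizes):
        for j in range(dim):
            avg[j] += weights[j] * n / total
    return avg
```

Clients that join or leave simply appear in or drop out of the next round's lists, and raw training data never leaves the client.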

For exploratory analysis of high-dimensional data, a t-distributed Stochastic Neighbor Embedding (t-SNE) algorithm, Footnote 59 in [ 93 ], is proposed that reduces the computational cost by orders of magnitude while maintaining or improving on the accuracy of previous methods. In contrast, LatentMap [ 61 ], a GAN-based method, was developed to analyze the latent space of density maps. The method can be applied to any density map in spatiotemporal visualization; it may speed up front-end loading, complete or anticipate streaming data, and visualize missing data.
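For reference, the quantity t-SNE optimizes in the embedding is a Student-t (one degree of freedom) similarity between embedded points y_i and y_j: q_ij = (1 + |y_i − y_j|²)⁻¹ / Σ_{k≠l} (1 + |y_k − y_l|²)⁻¹, which is matched to the high-dimensional affinities p_ij by minimizing KL(P‖Q). The sketch below computes Q naively in O(n²); avoiding exactly this kind of cost is the point of faster methods such as [ 93 ], whose approximation is not reproduced here.

```python
def tsne_q_matrix(Y):
    """Low-dimensional t-SNE affinities q_ij for embedding points Y (list of tuples)."""
    n = len(Y)
    num = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                d2 = sum((a - b) ** 2 for a, b in zip(Y[i], Y[j]))
                num[i][j] = 1.0 / (1.0 + d2)  # heavy-tailed Student-t kernel
    z = sum(sum(row) for row in num)  # normalizer over all pairs k != l
    return [[v / z for v in row] for row in num]
```

The heavy tail of the Student-t kernel lets moderately distant points sit far apart in the embedding, which is what keeps clusters visually separated.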

DeepFrag is a deep learning application presented in [ 43 ] for lead optimization, an important step in early-stage drug development that involves making chemical modifications to a small-molecule ligand to improve properties such as binding affinity. In another study, a deep learning model, Footnote 60 in [ 23 ], was applied to unlabeled cell culture images to help predict drug responsiveness. The model was built by transfer learning on the MobileNetV2 architecture and converted to TensorFlow.js format before deployment in the browser.

Healthcare diagnosis

Front-end deep learning web apps have been applied to a wide variety of healthcare diagnosis tasks.

ImJoy, Footnote 61 in [ 88 ], is a versatile, open-source app that enables broad reuse of deep learning methods and plugins for interactive image analysis and genomics. For instance, its Skin-Lesion-Analyzer plugin uses a deep convolutional network in TensorFlow.js to classify skin images into seven distinct kinds of (possibly malignant) skin lesions. Similarly, a web application was created in [ 113 ] that lets users upload images and analyze them for melanoma lesions using a convolutional neural network previously trained with Google Teachable Machine. In [ 31 ], skin cancer lesions were classified with two different implementations, a basic CNN model and a transfer learning model built on ResNet50 pre-trained on ImageNet; the transfer learning model achieved higher accuracy.

For COVID-19 chest X-ray diagnosis, a model was trained with Custom Vision from Microsoft Azure Cognitive Services and exported to TensorFlow.js in [ 14 ]. Similarly, to detect and monitor abnormal skull shapes in children, a Google Inception V3 model was trained using transfer learning and converted to TensorFlow.js before deployment [ 117 ].