Press release

Global Education Monitoring (GEM) Report 2020

Fewer than 10% of countries have laws that help ensure full inclusion in education, according to UNESCO’s 2020 Global Education Monitoring Report: Inclusion and education – All means all.

The report provides an in-depth analysis of key factors for exclusion of learners in education systems worldwide, including background, identity and ability (i.e. gender, age, location, poverty, disability, ethnicity, indigeneity, language, religion, migration or displacement status, sexual orientation or gender identity expression, incarceration, beliefs and attitudes). It identifies an exacerbation of exclusion during the COVID-19 pandemic and estimates that about 40% of low- and lower-middle-income countries have not supported disadvantaged learners during temporary school shutdowns. The 2020 Global Education Monitoring (GEM) Report urges countries to focus on those left behind as schools reopen so as to foster more resilient and equal societies.

Persistence of exclusion: This year’s report is the fourth annual UNESCO GEM Report to monitor progress across 209 countries in achieving the education targets adopted by UN Member States in the 2030 Agenda for Sustainable Development. It notes that 258 million children and youth were entirely excluded from education, with poverty as the main obstacle to access. In low- and middle-income countries, adolescents from the richest 20% of all households were three times as likely to complete lower secondary school as were those from the poorest homes. Among those who did complete lower secondary education, students from the richest households were twice as likely to have basic reading and mathematics skills as those from the poorest households. Despite the proclaimed target of universal upper secondary completion by 2030, hardly any poor rural young women complete secondary school in at least 20 countries, most of them in sub-Saharan Africa.

Also according to the report, 10-year-old students in middle- and high-income countries who were taught in a language other than their mother tongue typically scored 34% below native speakers in reading tests. In ten low- and middle-income countries, children with disabilities were found to be 19% less likely to achieve minimum proficiency in reading than those without disabilities. In the United States, for example, LGBTI students were almost three times more likely to say that they had stayed home from school because of feeling unsafe.

Inequitable foundations: Alongside today’s publication, the UNESCO GEM Report team launched a new website, PEER, with information on laws and policies concerning inclusion in education for every country in the world. PEER shows that many countries still practice education segregation, which reinforces stereotyping, discrimination and alienation. Laws in a quarter of all countries require children with disabilities to be educated in separate settings, rising to over 40% in Latin America and the Caribbean, as well as in Asia.

Blatant exclusion: Two countries in Africa still ban pregnant girls from school, 117 countries allowed child marriages, and 20 had yet to ratify Convention 138 of the International Labour Organization, which bans child labour. In several central and eastern European countries, Roma children were segregated in mainstream schools. In Asia, displaced people, such as the Rohingya, were taught in parallel education systems. In OECD countries, more than two-thirds of students from immigrant backgrounds attended schools where they made up at least 50% of the student population, which reduced their chance of academic success.

Parents’ discriminatory beliefs were found to form one barrier to inclusion: Some 15% of parents in Germany and 59% in Hong Kong, China, feared that children with disabilities disturbed others’ learning. Parents with vulnerable children also wished to send them to schools that ensure their well-being and respond to their needs. In Queensland, Australia, 37% of students in special schools had moved away from mainstream establishments.

The Report shows that education systems often fail to take learners’ special needs into account. Just 41 countries worldwide officially recognized sign language and, globally, schools were more eager to get internet access than to cater for learners with disabilities. Some 335 million girls attended schools that did not provide them with the water, sanitation and hygiene services they required to continue attending class during menstruation.

Alienating learners: When learners are inadequately represented in curricula and textbooks they can feel alienated. Girls and women only made up 44% of references in secondary school English-language textbooks in Malaysia and Indonesia, 37% in Bangladesh and 24% in the province of Punjab in Pakistan. The curricula of 23 out of 49 European countries do not address issues of sexual orientation, gender identity, or expression.

Teachers need and want training on inclusion, which fewer than 1 in 10 primary school teachers in ten Francophone countries in sub-Saharan Africa said they had received. A quarter of teachers across 48 countries reported they wanted more training on teaching students with special needs.

Chronic lack of quality data on those left behind: Almost half of low- and middle-income countries do not collect enough education data about children with disabilities. Household surveys are key for breaking education data down by individual characteristics. But 41% of countries – home to 13% of the world’s population – did not conduct surveys or make available data from such surveys. Figures on learning are mostly taken from schools, failing to take into account those not attending.

Signs of progress towards inclusion: The Report and its PEER website note that many countries were using positive, innovative approaches to transition towards inclusion. Many were setting up resource centres for multiple schools and enabling mainstream establishments to accommodate children from special schools, as was the case in Malawi, Cuba and Ukraine. The Gambia, New Zealand and Samoa were using itinerant teachers to reach underserved populations.

Many countries were also seen to go out of their way to accommodate different learners’ needs: Odisha state in India, for example, used 21 tribal languages in its classrooms, Kenya adjusted its curriculum to the nomadic calendar and, in Australia, the curricula of 19% of students were adjusted by teachers so that their expected outcomes could match students’ needs.

The report includes material for a digital campaign, All means All, which promotes a set of key recommendations for the next ten years.


Education sector analysis, methodological guidelines - Volume 3

This present volume is the third in a series of education sector analysis (ESA) guidelines following two volumes published in 2014. The series provides methodologies and applied examples for diagnosing education systems and informing national education policies and plans. This volume proposes guidelines to strengthen national capacities in analyzing education systems in four areas: inclusive education system for children with disabilities, risk analysis for resilient education systems, functioning and effectiveness of the educational administration, and stakeholder mapping and problem-driven analysis (governance and political economy).

The present volume was prepared by experts from various backgrounds (including education, economics, sociology, political science and other social sciences) from UNESCO’s International Institute for Educational Planning, UNICEF, the United Kingdom’s Foreign, Commonwealth & Development Office and the Global Partnership for Education.


This site belongs to UNESCO's International Institute for Educational Planning

IIEP Learning Portal


Education sector analysis

An education sector analysis (ESA) is an in-depth, holistic diagnosis of an education system. It assists with understanding how an education system (and its subsectors) works, why it works that way, and how to improve it. An ESA provides the evidence base for decision-making and is the first step in preparing an education sector plan.

An ESA is a nationally driven process, involving collaboration and dialogue among different actors and institutions in a system. Empowering and consulting the different stakeholders throughout the process are essential, as ‘sustainable changes that lead to improved learning outcomes cannot be brought about in the absence of involvement of the individuals and groups who will implement the change’ (Faul and Martinez, 2019: 31).

The ESA process must therefore be participative and aim to create an understanding of the key stakeholders in the education system, their incentives, relationships and accountability, as well as how these dynamics shape education systems (IIEP-UNESCO et al., 2021).

What does an ESA cover?

An ESA includes context analysis, existing policy analysis, cost and finance analysis, education performance analysis, and system capacity analysis, including stakeholder analysis (IIEP-UNESCO and GPE, 2015). Any challenges identified through the ESA should be analysed through the lens of Sustainable Development Goal 4 (UNESCO, 2016). Quality of learning is one factor analysed in the performance of the education system along with issues related to access and coverage, equity and inclusion, and internal and external efficiency of the system. Quality of learning involves analysing the range of inputs and processes including teachers, learning and teaching materials, school facilities, and learning outcomes (IIEP-UNESCO and GPE, 2015; IIEP-UNESCO, World Bank, and UNICEF, 2014).

Teachers play a decisive role in ensuring learning quality. Teacher management features – ranging from recruitment and deployment to pre- and in-service training, career pathways, motivation and job satisfaction, absenteeism and effective teaching time – also need to be analysed. Typical indicators include (IIEP-UNESCO, World Bank, and UNICEF, 2014):

- Pupil/teacher ratio by level for primary education

- Pupil/trained teacher ratio

- Teacher utilization rate

- The consistency in teacher allocation (R² coefficient)

- Theoretical teaching time in relation to theoretical instruction time for secondary teachers

- The percentage of pre- and in-service teachers trained by level

- The number of teachers disaggregated by status (civil servants, contract, or community teachers)

- Qualifications and teaching experience
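Three of the indicators listed above can be sketched in a few lines of Python. This is a minimal illustration, not the method prescribed by the cited guidelines: the `School` record, its field names, and the sample figures are hypothetical. Reading the R² coefficient as the share of school-to-school variation in teacher numbers that is explained by enrolment is an assumption made here.

```python
from dataclasses import dataclass


@dataclass
class School:
    # Hypothetical record layout; real school census data will differ.
    name: str
    pupils: int
    teachers: int
    trained_teachers: int


def pupil_teacher_ratio(schools):
    """Total pupils divided by total teachers."""
    return sum(s.pupils for s in schools) / sum(s.teachers for s in schools)


def pupil_trained_teacher_ratio(schools):
    """Total pupils divided by total trained teachers."""
    return sum(s.pupils for s in schools) / sum(s.trained_teachers for s in schools)


def allocation_r2(schools):
    """R² of a simple linear regression of teachers on pupils across schools.

    Assumption: a value near 1 means teacher numbers closely follow
    enrolment; a lower value means allocation depends more on other factors.
    """
    xs = [s.pupils for s in schools]
    ys = [s.teachers for s in schools]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    if sxx == 0 or syy == 0:
        raise ValueError("R² is undefined when pupils or teachers do not vary")
    return sxy ** 2 / (sxx * syy)


# Hypothetical data, for illustration only.
SAMPLE = [
    School("A", 300, 6, 5),
    School("B", 450, 7, 4),
    School("C", 200, 5, 5),
    School("D", 600, 9, 6),
]

if __name__ == "__main__":
    print("Pupil/teacher ratio:", round(pupil_teacher_ratio(SAMPLE), 1))
    print("Pupil/trained teacher ratio:", round(pupil_trained_teacher_ratio(SAMPLE), 1))
    print("R² of teacher allocation:", round(allocation_r2(SAMPLE), 3))
```

The ratios are computed from totals rather than by averaging school-level ratios, so that large schools weigh in proportion to their enrolment. That is a design choice of this sketch.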

Learning and teaching materials

An ESA should analyse the equitable allocation of learning and teaching materials and other inputs among different schools and regions. An ESA should include indicators such as the proportion of teachers with teacher guides, pupil/textbook ratios, and the notion of useful pupil/textbook ratio (IIEP-UNESCO, World Bank, and UNICEF, 2014). Qualitative information gathered through teacher interviews, for example, can also be integrated into the analysis to complement quantitative data. For instance, in crisis-affected areas, quantitative data may be weak regarding the actual distribution and use of textbooks throughout the country (IIEP-UNESCO and GPE, 2016).

School facilities

School facilities (school buildings and infrastructure such as electricity or school landscaping) can have a significant impact on students’ learning achievements. Proper water, sanitation and hygiene (WASH) facilities in schools can improve access to education and learning outcomes, particularly for girls (UNICEF and WHO, 2018). Relevant indicators include classroom utilization rate and, when applicable, type of classroom (such as temporary, open air, permanent, or home-based classrooms); the percentage of schools with functioning WASH facilities; the percentage of schools with electricity; the percentage of schools with boundary walls for security reasons; and the percentage of classrooms that need to be rehabilitated (IIEP-UNESCO, World Bank, and UNICEF, 2014).
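As an illustration, the percentage indicators above can be computed nationally and by region to show how unevenly facilities are spread. The sketch below assumes a hypothetical record layout (`region`, `wash`, `electricity`, `classrooms`, `classrooms_to_rehabilitate`) and invented figures. It does not cover classroom utilization rate, whose definition is not given here.

```python
from collections import defaultdict

# Hypothetical school records, for illustration only.
SCHOOLS = [
    {"region": "North", "wash": True, "electricity": False,
     "classrooms": 8, "classrooms_to_rehabilitate": 2},
    {"region": "North", "wash": False, "electricity": False,
     "classrooms": 4, "classrooms_to_rehabilitate": 2},
    {"region": "South", "wash": True, "electricity": True,
     "classrooms": 10, "classrooms_to_rehabilitate": 1},
    {"region": "South", "wash": True, "electricity": True,
     "classrooms": 8, "classrooms_to_rehabilitate": 1},
]


def pct(part, whole):
    """Percentage of `part` in `whole`; 0.0 when `whole` is zero."""
    return 100.0 * part / whole if whole else 0.0


def facility_indicators(schools):
    """Facility indicators for one group of schools."""
    n = len(schools)
    classrooms = sum(s["classrooms"] for s in schools)
    to_rehabilitate = sum(s["classrooms_to_rehabilitate"] for s in schools)
    return {
        "pct_schools_with_wash": pct(sum(1 for s in schools if s["wash"]), n),
        "pct_schools_with_electricity": pct(
            sum(1 for s in schools if s["electricity"]), n
        ),
        "pct_classrooms_to_rehabilitate": pct(to_rehabilitate, classrooms),
    }


def indicators_by_region(schools):
    """Same indicators, broken down by region to expose disparities."""
    groups = defaultdict(list)
    for school in schools:
        groups[school["region"]].append(school)
    return {region: facility_indicators(group) for region, group in groups.items()}


if __name__ == "__main__":
    print("National:", facility_indicators(SCHOOLS))
    for region, values in indicators_by_region(SCHOOLS).items():
        print(region, values)
```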

Learning outcomes

Student assessments include national examinations and admission tests, national large-scale learning assessments, regional or international standardized assessments, citizen-led assessments, and household surveys. The analysis of learning assessments enables education planners and decision makers to understand whether the education system is transferring knowledge to students as expected, as well as whether this transfer is equitable or is leaving certain population groups or geographic areas behind. Learning assessments can further help countries track the progress of learning achievements over time, compare results with comparable countries, and identify plausible causes for weak learning outcomes (IIEP-UNESCO, World Bank, and UNICEF, 2014).

However, there are several risks when using learning data, such as the accuracy of data and their interpretation; the use of a single test score for decision-making; the use of learning assessment data to legitimize predefined agendas; and narrowing educational measurements to simplified indicators (Raudonyte, 2019).

Changes in learning assessment results over time should be interpreted with caution and cross-checked with other evidence. For instance, a sharp increase in enrolments may affect learning outcomes (IIEP-UNESCO, World Bank, and UNICEF, 2014).
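One way an enrolment surge can move average results is a composition effect: the tested population changes even though no group of learners performs worse. The sketch below is a hypothetical illustration of that mechanism. It assumes that newly enrolled learners score lower on average, and all figures are invented.

```python
def mean_score(groups):
    """Enrolment-weighted mean score over (number_of_students, mean_score) pairs."""
    total_students = sum(n for n, _ in groups)
    return sum(n * score for n, score in groups) / total_students


# Hypothetical figures, for illustration only.
# Before the surge: 1,000 students averaging 60 points.
before = [(1000, 60.0)]
# After the surge: the same 1,000 students still average 60 points,
# joined by 500 newly enrolled learners averaging 45 points.
after = [(1000, 60.0), (500, 45.0)]

if __name__ == "__main__":
    print("Average before:", mean_score(before))
    print("Average after:", mean_score(after))
```

In this example the system-wide average falls although the original cohort's results are unchanged. Results reported separately for comparable groups give a clearer picture than a single headline average.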

ESA data sources

An effective ESA relies on rigorous qualitative and quantitative data. Relevant data sources include (IIEP-UNESCO and GPE, 2015; IIEP-UNESCO et al., 2021; IIEP-UNESCO, World Bank, and UNICEF, 2014):

- National, regional and international learning assessments: provide information on whether the education system is transferring knowledge as expected; track progress on learning achievements over time; allow comparisons with comparable countries; and identify plausible reasons behind weak learning outcomes.

- School data on students, textbooks, teachers, and subsidies: provide information on resource distribution and learning time, among others.

- Administrative manuals: provide information on teacher management, teaching time, and other resources.

- Teacher training institute data: provide information on whether the capacities of teacher training institutes meet current and projected needs.

- Human resources data: provide information about teacher recruitment, deployment and utilization, among others.

- Sample surveys: can be used to assess teaching and learning time.

- Household surveys: provide information on the relationship between the level of literacy and the number of years of schooling.

- Specific research exercises: provide valuable information on relevant issues faced by education systems.

- Interviews and questionnaires of stakeholders: provide relevant qualitative information, for instance related to institutional capacity.

An ESA should further assess information gaps and whether primary data collection will need to be undertaken to obtain missing information (IIEP-UNESCO and GPE, 2015).

Plans and policies

- Liberia: Education Sector Analysis

- Somalia: Education Sector Analysis

- IIEP-UNESCO; Global Partnership for Education. 2015. Guidelines for Education Sector Plan Preparation

- IIEP-UNESCO; Global Partnership for Education; UNICEF; Foreign, Commonwealth and Development Office. 2021. Education Sector Analysis Methodological Guidelines: Vol. 3: Thematic Analyses

- IIEP-UNESCO; World Bank; UNICEF. 2014. Education Sector Analysis Methodological Guidelines: Vol 1: Sector-wide Analysis, With Emphasis on Primary and Secondary Education

- IIEP-UNESCO; World Bank; UNICEF. 2014. Education Sector Analysis Methodological Guidelines: Vol. 2: Sub-sector Specific Analysis

- UNESCO-UIS. 2009. Education Indicators: Technical Guidelines

Faul, M.; Martinez, R. 2019. Education System Diagnostics. What is an 'Education System Diagnostic', Why Might it be Useful, and What Currently Exists?

IIEP-UNESCO; GPE (Global Partnership for Education). 2015. Guidelines for Education Sector Plan Preparation. Paris: IIEP-UNESCO.

––––. 2016. Guidelines for Transitional Education Plan Preparation. Washington, DC: GPE.

IIEP-UNESCO; GPE (Global Partnership for Education); UNICEF; FCDO (Foreign, Commonwealth and Development Office). 2021. Education Sector Analysis Methodological Guidelines: Vol. 3: Thematic Analyses. Dakar: IIEP-UNESCO.

IIEP-UNESCO; World Bank; UNICEF. 2014. Education Sector Analysis Methodological Guidelines: Vol. 1: Sector-wide Analysis, with Emphasis on Primary and Secondary Education. Dakar: IIEP-UNESCO.

Raudonyte, I. 2019. Use of Learning Assessment Data in Education Policy-making. Paris: IIEP-UNESCO.

UNESCO. 2016. Mainstreaming SDG4-Education 2030 in Sector-wide Policy and Planning: Technical Guidelines for UNESCO Field Offices. Paris: UNESCO.

UNICEF; WHO (World Health Organization). 2018. Drinking Water, Sanitation and Hygiene in Schools: Global Baseline Report 2018. New York, NY: UNICEF and WHO.


How higher-education institutions can transform themselves using advanced analytics

Leaders in most higher-education institutions generally understand that using advanced analytics can significantly transform the way they work by enabling new ways to engage current and prospective students, increase student enrollment, improve student retention and completion rates, and even boost faculty productivity and research. However, many leaders of colleges and universities remain unsure of how to incorporate analytics into their operations and achieve intended outcomes and improvements. What really works? Is it a commitment to new talent, technologies, or operating models? Or all of the above?

To answer these questions, we interviewed more than a dozen senior leaders at colleges and universities known for their transformations through analytics. We also conducted in-depth, on-campus visits at the University of Maryland University College (UMUC), a public institution serving primarily working adults through distance learning, and Northeastern University, a private nonprofit institution in Boston, to understand how their transformations went. [1] We combined insights from these interviews and site visits with those gleaned from our work with more than 100 higher-education engagements across North America over the past five years, and we tapped McKinsey’s wide-ranging expertise in analytics-enabled transformations in both the public and private sectors.

[1] Our research base included presidents, vice presidents of enrollment management, chief data officers, provosts, and chief financial officers. In September 2017, we conducted on-campus visits to meet with leaders at several levels at both the University of Maryland University College (UMUC) and Northeastern University. We thank these leaders for generously agreeing to have their observations and experiences included in this article.

Our conversations and engagements revealed several potential pitfalls that organizations may face when building their analytics capabilities—as well as several practical steps education leaders can take to avoid these traps.

Understanding the challenges

Advanced analytics use cases

Northeastern used advanced analytics to help grow its U.S. News & World Report ranking among national universities from 115 in 2006 to 40 in 2017.

UMUC used advanced analytics to achieve a 20 percent increase in new student enrollment while spending 20 percent less on marketing.

Transformation through advanced analytics can be difficult for any organization; in higher education, the challenges are compounded by sector-specific factors related to governance and talent. Leaders in higher education cannot simply pay lip service to the power of analytics; they must first address some or all of the most common obstacles.

Being overly focused on external compliance. Many higher-education institutions’ data analytics teams focus most of their efforts on generating reports to satisfy operational, regulatory, or statutory compliance. The primary goal of these teams is to churn out university statistics that accrediting bodies and other third parties can use to assess each institution’s performance. Any requests outside the bounds of these activities are considered emergencies rather than standard, necessary assignments. Analytics teams in this scenario have very limited time to support strategic, data-driven decision making.

Isolating the analytics program in an existing department. In our experience, analytics teams in higher-education institutions usually report to the head of an existing function or department, typically the institutional research team or the enrollment-management group. As a result, the analytics function becomes associated with the agenda of that department rather than a central resource for all, with little to no contact with executive leadership. Under this common scenario, the impact of analytics remains limited, and analytics insights are not embedded into day-to-day decision making of the institution as a whole.

Failing to establish a culture of data sharing and hygiene. In many higher-education institutions, there is little incentive (and much reluctance) to share data. As a result, most higher-education institutions lack good data hygiene, that is, established rules for who can access various forms of data, as well as formal policies for how they can share those data across departments. For example, analytics groups in various university functions may use their own data sets to determine retention rates for different student segments, and when they get together, they often disagree on which set of numbers is right.

Compounding this challenge, many higher-education institutions struggle to link the myriad legacy data systems that teams use in different functions or working groups. Even with the help of a software platform vendor, installing new systems, training staff, and winning buy-in for these technical changes can take two to three years before institutions see tangible outcomes from their analytics programs. In the meantime, institutions struggle to instill a culture and processes built around the possibilities of data-driven decision making.

Lacking the appropriate talent. Budgets and other constraints can make it difficult for higher-education institutions to meet market rates for analytics talent. Colleges and universities could potentially benefit from sourcing analytics talent among their graduate students and faculty, but it can be a struggle to attract and retain them. Furthermore, to successfully pursue transformation through analytics, higher-education institutions need leaders who are fluent in not only management but also data analytics and can solve problems in both areas.


Deploying best practices

These challenges can seem overwhelming, but transformation through analytics is possible when senior leaders in higher-education institutions endeavor to change both operations and mind-sets.

Leaders point to five action steps to foster success:

Articulate an analytics mandate that goes beyond compliance. Senior leaders in higher education must signal that analytics is a strategic priority. Indeed, to realize the potential of analytics, the function cannot be considered solely as a cost center for compliance. Instead, this team must be seen as a source of innovation and an economic engine for the institution. As such, leaders must articulate the team’s broader mandate. According to the leaders we interviewed, the transformation narrative must focus on how analytics can help the institution facilitate the student journey from applicant to alumnus while providing unparalleled learning, research, and teaching opportunities, as well as foster a strong, financially sustainable institution.

Establish a central analytics team with direct reporting lines to executive leaders. To mitigate the downsides of analytics teams couched in existing departments or decentralized across several functions, higher-education leaders must explicitly allocate the requisite financial and human resources to establish a central department or function to oversee and manage the use of analytics across the institution. This team can be charged with managing a central, integrated platform for collecting, analyzing, and modeling data sets and producing insights quickly.

For example, UMUC has a designated “data czar” to help define standards for how information is captured, managed, shared, and stored online. When conflicts arise, the data czar weighs in and helps de-escalate problems. Having a central point of contact has improved the consistency and quality of the university’s data: there is now a central source of truth, and all analysts have access to the data. Most important, the university now has a data evangelist who can help cultivate an insights-driven culture at the institution.

In another example, leaders at Northeastern created an analytics center of excellence structured as a “virtual” entity. The center is its own entity and is governed by a series of rotating chairs to ensure the analytics team is aware of and paying equal attention to priorities from across the university.

In addition to enjoying autonomous status outside a subfunction or single department, the analytics team should report to the most-senior leaders in the institution—in some cases, the provost. When given a more substantial opportunity to influence decisions, analytics leaders gain a greater understanding of the issues facing the university and how they affect the institution’s overall strategy. Leaders can more easily identify the data sets that might provide relevant insights to university officials—not just in one area, but across the entire organization—and they can get a jump-start on identifying possible solutions.

Analysts at Northeastern, for instance, were able to quantify the impact of service-learning programs on student retention, graduation, and other factors, thereby providing support for key decisions about these programs.

Win analytics buy-in from the front line and create a culture of data-driven decision making. To overcome the cultural resistance to data sharing, the analytics team must take the lead on engendering meaningful communications about analytics across the institution. To this end, it helps to have members of the centralized analytics function interact formally and frequently with different departments across the university. A hub-and-spoke model can be particularly effective: analysts sit alongside staffers in the operating units to facilitate sharing and directly aid their decision making. These analysts can serve as translators, helping working groups understand how to apply analytics to tackle specific problems, while also taking advantage of data sets provided by other departments. The university leaders we spoke with noted that their analysts may rotate into different functional areas to learn more about the university’s departments and to ensure that the department leaders have a link back to the analytics function.


Of course, having standardized, unified systems for processing all university data can help enable robust analysis. However, universities seeking to create a culture of data-driven decision making need not wait two years until a new data platform is up and running. Instead, analysts can define use cases—that is, places where data already exist and where analysis can be conducted relatively quickly to yield meaningful insights. Teams can then share success stories and evangelize the impact of shared data analytics, thereby prompting others to take up their own analytics-driven initiatives.

The analysts from UMUC’s decision-support unit sometimes push relevant data and analyses to the relevant departments to kick-start reflection and action, rather than waiting for the departments to request the information. However, the central unit avoids producing canned reports; analysts tend to be successful only when they engage departments in an honest and objective exploration of the data without preexisting biases.

Strengthen in-house analytical capabilities. The skills gap is an obvious impediment to colleges’ and universities’ attempts to transform operations through advanced analytics; thus, it is perfectly acceptable to contract out work in the short term. However, while supplementing a skills gap with external expertise may help accelerate transformations, it can never fully replace the need for in-house capacity; the effort to push change across the institution must be owned and led internally.

To do so, institutions will need to change their approaches to talent acquisition and development. They may need to look outside usual sources to find professionals who understand core analytics technologies (cloud computing, data science, machine learning, and statistics, for instance) as well as design thinking and operations. Institutions may also need to appeal to new hires with competitive financial compensation and by emphasizing the opportunity to work autonomously on intellectually challenging projects that will make an impact on generations of students and contribute to an overarching mission.

Do not let great be the enemy of good. It takes time to launch a successful analytics program. At the outset, institutions may lack certain types of data, and not every assessment will yield insightful results, but that is no reason to pull back on experimentation. Colleges and universities can instead deploy a test-and-learn approach: identify areas with clear problems and good data, conduct analyses, launch necessary changes, collect feedback, and iterate as needed. These cases can help demonstrate the impact of analytics to other parts of the organization and generate greater interest and buy-in.


Realizing impact from analytics

It is easy to forget that analytics is a beginning, not an end. Analytics is a critical enabler to help colleges and universities solve tough problems, but leaders in higher-education institutions must devote just as much energy to acting on the insights from the data as they do on enabling analysis of the data. Implementation requires significant changes in culture, policy, and processes. When outcomes improve because a university successfully implemented change, even in a limited environment, the rest of the institution takes notice. This can strengthen the institutional will to push further and start tackling other areas of the organization that need improvement.

Some higher-education institutions have already overcome these implementation challenges and are realizing significant impact from their use of analytics. Northeastern University, for example, is using a predictive model to determine which applicants are most likely to be the best fit for the school if admitted. Its analytics team relies on a range of data to make forecasts, including students’ high school backgrounds, previous postsecondary enrollments, campus visit activity, and email response rates. According to the analytics team, an examination of the open rate for emails was particularly insightful as it was more predictive of whether students actually enrolled at Northeastern than what the students said or whether they visited campus.

Meanwhile, the university also looked at National Student Clearinghouse data, which tracks where applicants land at the end of the enrollment process, and learned that the institutions it had considered core competitors were not. Instead, competition was coming from sources it had not even considered. It also learned that half of its enrollees were coming from schools that the institution’s admissions office did not visit. The team’s overall analysis prompted Northeastern to introduce a number of changes to appeal to those individuals most likely to enroll once admitted, including offering combined majors. The leadership team also shifted some spending from little-used programs to bolster programs and features that were more likely to attract targeted students. Due in part to these changes, Northeastern improved its U.S. News & World Report ranking among national universities from 115 in 2006 to 40 in 2017.

In another example, in 2013 UMUC was trying to pinpoint the source of a decline in enrollment. It was investing significant dollars in advertising and was generating a healthy number of leads—however, conversion rates were low. Data analysts at the institution assessed the university’s returns on investment for various marketing efforts and discovered a bottleneck—UMUC’s call centers were overused and underresourced. The university invested in new call-center capabilities and within a year realized a 20 percent increase in new student enrollment while spending 20 percent less on advertising.
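The two UMUC figures can be combined into a rough back-of-the-envelope estimate of how advertising cost per new enrollment changed. The Python sketch below is illustrative only: it assumes (the article does not say this) that both 20 percent figures cover the same period and that advertising is the only cost considered, and the function name is hypothetical.

```python
def relative_cost_per_enrollment(enrollment_change: float, spend_change: float) -> float:
    """Return the new advertising cost per enrollment as a fraction of the old one.

    Both arguments are fractional changes, e.g. 0.20 for +20% and -0.20 for -20%.
    """
    return (1 + spend_change) / (1 + enrollment_change)


# Figures quoted in the text: +20% new enrollment, -20% advertising spend.
ratio = relative_cost_per_enrollment(enrollment_change=0.20, spend_change=-0.20)
print(f"New cost per enrollment is {ratio:.0%} of the previous level")
```

Under these assumptions, spending 0.8 times as much for 1.2 times as many enrollments puts the cost per enrollment at about two-thirds of its earlier level.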

The benefits we discussed barely scratch the surface; the next wave of advanced analytics will, among other things, enable bespoke, personalized student experiences, with teaching catered to students’ individual learning styles and competency levels. To realize the great promise of analytics in the years to come, senior leaders must focus on more than just making incremental improvements in business processes or transactions. Our conversations with leaders in higher education point to the need for colleges and universities to establish a strong analytics function as well as a culture of data-driven decision making and a focus on delivering measurable outcomes. In doing so, institutions can create significant value for students—and sustainable operations for themselves.

Marc Krawitz is an associate partner in McKinsey’s New Jersey office. Jonathan Law is a partner in the New York office and leads the Higher-Education Practice. Sacha Litman is an associate partner in the Washington, DC, office and leads public and social sector analytics.

The authors would like to thank business and technology leaders at the University of Maryland University College and Northeastern University for their contributions to this article.


- Review Article

- Open access

- Published: 22 June 2020

Teaching analytics, value and tools for teacher data literacy: a systematic and tripartite approach

- Ifeanyi Glory Ndukwe 1 &

- Ben Kei Daniel 1

International Journal of Educational Technology in Higher Education, volume 17, Article number: 22 (2020)


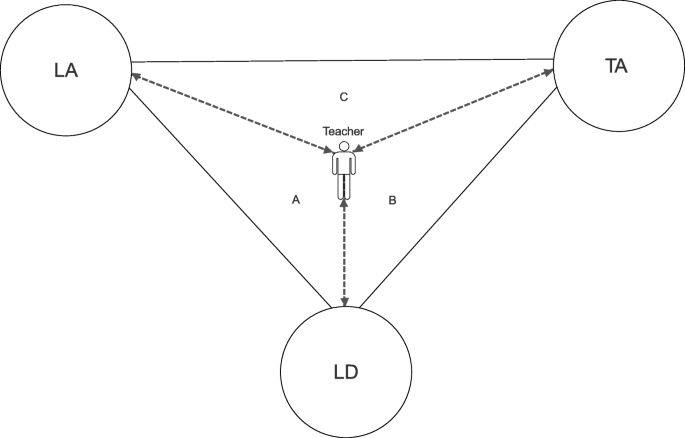

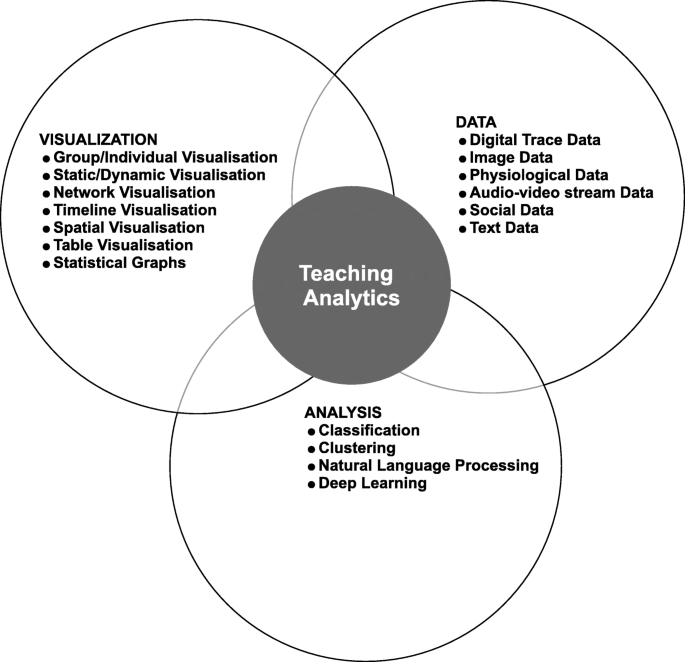

Teaching Analytics (TA) is a new theoretical approach that combines teaching expertise, visual analytics and design-based research to support teachers’ diagnostic pedagogical ability to use data and evidence to improve the quality of teaching. TA is now gaining prominence because it offers enormous opportunities to teachers. It also identifies optimal ways in which teaching performance can be enhanced. Further, TA provides a platform for teachers to use data to reflect on teaching outcomes. The outcome of TA can be used to engage teachers in a meaningful dialogue to improve the quality of teaching. Arguably, teachers need to develop their data literacy and data inquiry skills to learn about teaching challenges. These skills depend on understanding the connection between TA, Learning Analytics (LA) and Learning Design (LD). Additionally, teachers need to understand how the choice of particular pedagogies and the LD can enhance their teaching experience. In other words, teachers need to equip themselves with the knowledge necessary to understand the complexity of teaching and the learning environment. Providing teachers with access to analytics associated with their teaching practice and learning outcomes can improve the quality of teaching practice. This research aims to explore current TA-related discussions in the literature and to provide a generic conception of the meaning and value of TA. The review was intended to inform the establishment of a framework describing the various aspects of TA and to develop a model that can enable us to gain more insight into how TA can help teachers improve teaching practices and learning outcomes. The Tripartite model was adopted to carry out a comprehensive, systematic and critical analysis of the literature on TA. To understand the current state of the art relating to TA, and the implications for the future, we reviewed articles published from 2012 to 2019. The results of this review have led to the development of a conceptual framework for TA and established the boundaries between TA and LA. From the analysis of the literature, we proposed a Teaching Outcome Model (TOM) as a theoretical lens to guide teachers and researchers in engaging with data relating to teaching activities to improve the quality of teaching.

Introduction

Educational institutions today are operating in an information era, where data are generated automatically by machines rather than manually; hence the emergence of big data in education ( Daniel 2015 ). Analytics seeks to extract insightful information from data that would not ordinarily be visible to the naked eye, by applying state-of-the-art models and methods to reveal hidden patterns and relationships. Analytics plays a vital role in reforming the educational sector to catch up with the fast pace at which data is generated, and the extent to which such data can be used to transform our institutions effectively. For example, with the extensive use of online and blended learning platforms, the application of analytics will enable educators at all levels to gain new insights into how people learn and how teachers can teach better. However, the current discourses on the use of analytics in Higher Education (HE) are focused on the enormous opportunities analytics offers to various stakeholders, including learners, teachers, researchers and administrators.

In the last decade, extensive literature has proposed two waves of analytics to support learning and improve educational outcomes, operations and processes. The first form of Business Intelligence introduced in the educational industry is Academic Analytics (AA). AA describes data collected on the performance of academic programmes to inform policy. Learning Analytics (LA) then emerged as the second wave of analytics, and it is one of the fastest-growing areas of research within the broader use of analytics in the context of education. LA is defined as the "measurement, collection, analysis and reporting of data about the learner and their learning contexts for understanding and optimising learning and the environments in which it occurs" ( Elias 2011 ). LA was introduced to attend to teaching performance and learning outcome ( Anderson 2003 ; Macfadyen and Dawson 2012 ). Typical research areas in LA include student retention, prediction of at-risk students and personalised learning, all of which are highly student-driven ( Beer et al. 2009 ; Leitner et al. 2017 ; Pascual-Miguel et al. 2011 ; Ramos and Yudko 2008 ). For instance, Griffiths ( 2017 ) employed LA to monitor students’ engagement and behavioural patterns in a computer-supported collaborative learning environment to predict at-risk students. Similarly, Rienties et al. ( 2016 ) looked at LA approaches in their capacity to enhance learners’ retention, engagement and satisfaction. However, in the last decade, LA research has focused mostly on the learner and on data collection based on digital data traces from Learning Management Systems (LMS) ( Ferguson 2012 ), not the physical classroom.

Teaching Analytics (TA) is a new theoretical approach that combines teaching expertise, visual analytics and design-based research to support the teacher with diagnostic and analytic pedagogical ability to improve the quality of teaching. Though it is a new phenomenon, TA is now gaining prominence because it offers enormous opportunities to teachers.

Research on TA pays special attention to teacher professional practice, offering data literacy and visual analytics tools and methods ( Sergis et al. 2017 ). Hence, TA is the collection and use of data related to teaching and learning activities and environments to inform teaching practice and to attain specific learning outcomes. Some authors have combined the LA and TA approaches into Teaching and Learning Analytics (TLA) ( Sergis and Sampson 2017 ; Sergis and Sampson 2016 ). All these demonstrate the rising interest in collecting evidence from educational settings for awareness, reflection, or decision making, among other purposes. However, the data most frequently collected and analysed in TA focus on the students (e.g., different discussion and learning activities and some sensor data such as eye-tracking, position or physical actions) ( Sergis and Sampson 2017 ), rather than on monitoring teacher activities. Providing teachers with access to analytics of their teaching, and showing how they can effectively use such analytics to improve their teaching process, is a critical endeavour. Other human-mediated data gathering, in the form of student feedback, self and peer observations or teacher diaries, can also be employed to enrich TA further. For instance, visual representations such as dashboards can be used to present teaching data to help teachers reflect and make appropriate decisions to improve the quality of teaching. In other words, TA can be regarded as a reconceptualisation of LA for teachers to improve teaching performance and learning outcome. The concept of TA is central to the growing data-rich technology-enhanced learning and teaching environment ( Flavin 2017 ; Saye and Brush 2007 ). Further, it provides teachers with the opportunity to engage in data-informed pedagogical improvement.

While LA is undeniably an essential area of research in educational technology and the learning sciences, data extracted automatically from an educational platform mainly provide an overview of student activities and participation. Such data hardly indicate the role of the teacher in these activities and may not otherwise be relevant to teachers’ individual needs (for Teaching Professional Development (TPD) or improvement of their classroom practice). Many teachers generally lack adequate data literacy skills ( Sun et al. 2016 ). Teacher data literacy skill and teacher inquiry skill using data are the foundational concepts underpinning TA ( Kaser and Halbert 2014 ). The development of these two skills is dependent on understanding the connection between TA, LA and Learning Design (LD). In other words, teachers need to equip themselves with knowledge through interaction with sophisticated data structures and analytics. Hence, TA is critical to improving teachers’ low efficacy towards educational data.

Additionally, technology has expanded the horizon of analytics to various forms of educational settings. As such, the educational research landscape needs efficient tools for collecting and analysing data, which in turn requires explicit guidance on how to use the findings to inform teaching and learning ( McKenney and Mor 2015 ). Increasing the possibilities for teachers to engage with data to assess what works for the students and courses they teach is instrumental to quality ( Van Harmelen and Workman 2012 ). TA provides optimal ways of performing the analysis of data obtained from teaching activities and the environment in which instruction occurs. Hence, more research is required to explore how teachers can engage with data associated with teaching to encourage teacher reflection, improve the quality of teaching, and provide useful insights into ways teachers could be supported to interact with teaching data effectively. However, it is also essential to be aware that there are critical challenges associated with data collection. Moreover, designing the information flow that facilitates evidence-based decision-making requires addressing issues such as the potential risk of bias, ethical and privacy concerns, and inadequate knowledge of how to engage with analytics effectively.

To ensure that instructional design and learning support are evidence-based, it is essential to empower teachers with the necessary knowledge of analytics and data literacy. The lack of such knowledge can lead to poor interpretation of analytics, which in turn can lead to ill-informed decisions that can significantly affect students, creating more inequalities in access to learning opportunities and support regimes. Teacher data literacy refers to a teacher’s ability to effectively engage with data and analytics to make better pedagogical decisions.

The primary outcome of TA is to guide educational researchers to develop better strategies to support the development of teachers’ data literacy skills and knowledge. However, for teachers to embrace data-driven approaches to learning design, there is a need to implement bottom-up approaches that include teachers as main stakeholders of a data literacy project, rather than end-users of data.

The purpose of this research is to explore the current discussions in the literature relating to TA. First, the review aims to extend our understanding of the conceptions and value of TA. Secondly, we want to contextualise the notion of TA and develop various concepts around TA to establish a framework that describes multiple aspects of TA. Thirdly, we examine the different data collections and sources, machine learning algorithms, visualisations and actions associated with TA. The intended outcome is to develop a model that would provide a guide for the teacher to improve teaching practice and ultimately enhance learning outcomes.

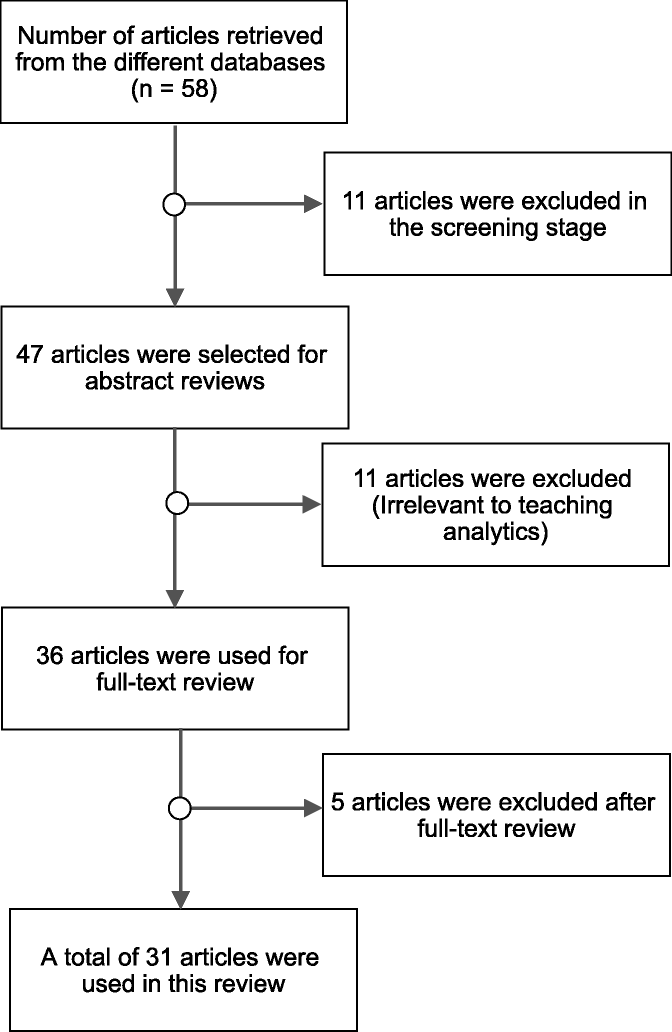

The research employed a systematic and critical analysis of articles published from 2012 to 2019. A total of 58 publications were initially identified and compiled from the Scopus database. After analysing the search results, 31 papers were selected for review. This review examined research relating to the utilisation of analytics associated with teaching and teacher activities and provided conceptual clarity on TA. We found that the literature relating to the conception and optimisation of TA is sporadic and scarce; as such, the notion of TA is theoretically underdeveloped.

Methods and procedures

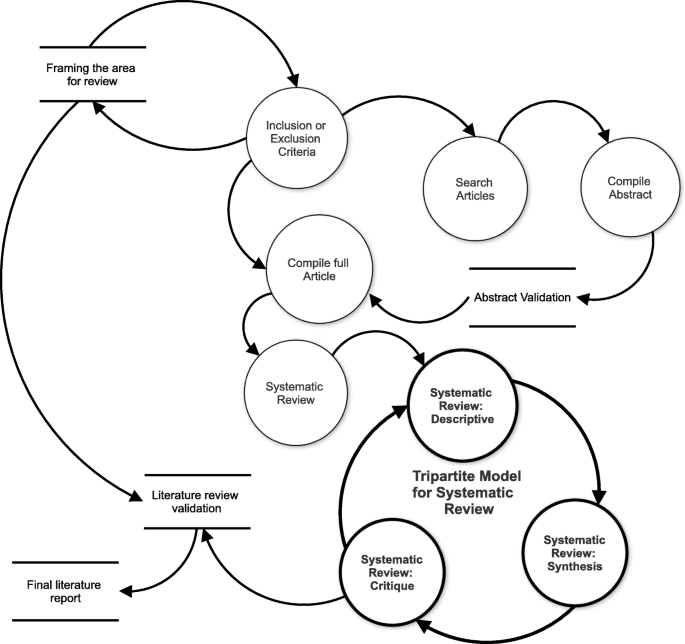

This research used the Tripartite model ( Daniel and Harland 2017 ), illustrated in Fig. 1 , to guide the systematic literature review. The Tripartite model draws from systematic review approaches such as Cochrane, widely used in the analysis of rigorous studies, to provide the best evidence. Moreover, the Tripartite model offers a comprehensive view and presentation of the reports. The model comprises three fundamental components: descriptive (providing a summary of the literature), synthesis (logically categorising the research based on related ideas, connections and rationales), and critique (critically appraising the literature, providing evidence to support or discard ideas, or offering new ones). Each of these phases is detailed fully in the following sections.

Fig. 1 Tripartite Model. The Tripartite Model: A Systematic Literature Review Process ( Daniel and Harland 2017 )

To provide clarity, the review first focused on describing how TA is conceptualised and utilised. This was followed by a synthesis of the literature on the various tools used to harvest, analyse and present teaching-related data to teachers. A critique of the research then led to the development of a conceptual framework describing various aspects of TA. Finally, this paper proposes a Teaching Outcome Model (TOM), which is intended to help teachers engage with and reflect on teaching data.

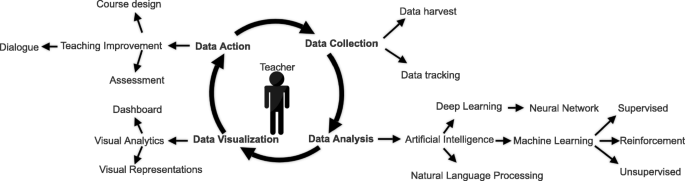

TOM is a TA life cycle that starts with the data collection stage, where the focus is on teaching data. Next comes the data analysis stage, in which different Machine Learning (ML) techniques are applied to the data to discover hidden patterns. The data visualisation stage follows, where the data are presented in the form of a Teaching Analytics Dashboard (TAD) for the teacher. This is the phase in which insight generation, critical thinking and teacher reflection take place. Finally, in the action phase, teachers implement actions to improve teaching practice. Some of these actions include improving the LD, changing the teaching method, providing appropriate feedback and assessment, or even carrying out more research. This research aims to inform future work in advancing the TA research field.
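The four TOM stages can be pictured as a simple pipeline. The Python sketch below is purely illustrative and is not the authors' implementation: the session names and ratings are invented, a plain mean stands in for the ML analysis step, and a text bar chart stands in for a Teaching Analytics Dashboard.

```python
from statistics import mean

# Stage 1, data collection: hypothetical teaching data
# (session name -> student ratings on a 1-5 scale).
teaching_data = {
    "week1_lecture": [4, 5, 4, 3],
    "week2_lab": [2, 3, 2, 2],
    "week3_seminar": [5, 4, 4, 5],
}


def analyse(data):
    """Stage 2, analysis: a plain mean stands in for an ML technique."""
    return {session: mean(ratings) for session, ratings in data.items()}


def visualise(summary):
    """Stage 3, visualisation: a text bar chart stands in for a dashboard."""
    return [
        f"{session:<14} {'#' * round(score * 2)} {score:.2f}"
        for session, score in summary.items()
    ]


def act(summary, threshold=3.0):
    """Stage 4, action: list sessions whose mean rating is below the threshold."""
    return [session for session, score in summary.items() if score < threshold]


summary = analyse(teaching_data)
for line in visualise(summary):
    print(line)
print("Sessions to review:", act(summary))
```

In this toy run only the lab session falls below the (arbitrary) threshold, which is the kind of prompt for reflection and follow-up action that the life cycle describes.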

Framing research area for review

As stated in the introduction, understanding current research on TA can provide teachers with strategies that help them utilise various forms of data to optimise teaching performance and outcomes. The review was framed by a set of guiding questions and proposed answers to those questions (see Table 1 ).

Inclusion and exclusion criteria

The current review started with a search of the Scopus database using the SciVal visualisation and analytical tool. The rationale for choosing Scopus is that it is the largest abstract and citation database of peer-reviewed research literature, with diverse titles from publishers worldwide. Hence, it offers a reasonable basis for finding a meaningful balance of the published content in the area of TA. The review included peer-reviewed journals and conference proceedings. We excluded other document and source types, such as book series, books, editorials and trade publications, on the understanding that such sources might lack research on TA. This review also excluded articles published in languages other than English.

Search strategy

This review used several keywords and combinations to search on terms related to TA. For instance: ’Teaching Analytics’ AND ’Learning Analytics’ OR ’Teacher Inquiry’ OR ’Data Literacy’ OR ’Learning Design’ OR ’Computer-Supported Collaborative Learning’ OR ’Open Learner Model’ OR ’Visualisation’ OR ’Learning Management System’ OR ’Intelligent Tutoring System’ OR ’Student Evaluation on Teaching’ OR ’Student Ratings’.
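As an illustration, the keyword combination above can be assembled programmatically. The sketch below is an assumption-laden example, not the authors' actual search string: the source does not state how the AND and OR operators were grouped, so the parenthesised grouping here is only one plausible reading, and `build_query` is a hypothetical helper.

```python
# Keywords taken from the text; the grouping of AND/OR is an assumption.
PRIMARY_TERM = "Teaching Analytics"
RELATED_TERMS = [
    "Learning Analytics",
    "Teacher Inquiry",
    "Data Literacy",
    "Learning Design",
    "Computer-Supported Collaborative Learning",
    "Open Learner Model",
    "Visualisation",
    "Learning Management System",
    "Intelligent Tutoring System",
    "Student Evaluation on Teaching",
    "Student Ratings",
]


def build_query(primary, related):
    """Combine one required phrase with a parenthesised OR-list of related phrases."""
    alternatives = " OR ".join(f'"{term}"' for term in related)
    return f'"{primary}" AND ({alternatives})'


query = build_query(PRIMARY_TERM, RELATED_TERMS)
print(query)
```

Making the grouping explicit matters because an ungrouped mix of AND and OR can be evaluated differently depending on a database's operator precedence.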

This review searched articles published between 2012 and 2019. The initial stage of the literature search yielded 58 papers. After subsequent screening and the removal of duplicates and of titles that did not relate to the area of research, 47 articles remained. Of these, a total of 36 studies proceeded to full-text review. Figure 2 shows the process of selecting the studies for this review.

Fig. 2 Inclusion Exclusion Criteria Flowchart. The selection of previous studies

Compiling the abstracts and the full articles

The review included both empirical and conceptual papers. The relevance of each article was confirmed by requiring that it contained the key phrases in the title, abstract and keywords and, subsequently, throughout the full text. In essence, the articles were reviewed with particular attention to those section(s) that expressly related to the field of TA, in order to extract essential perspectives on definitions, data sources, tools and technologies associated with analytics for teachers. This review also disregarded papers that did not, in any way, relate to analytics in the context of teachers. Finally, 31 articles were retained for this review.
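The selection counts reported in the search strategy and in this section can be tabulated to show how many records dropped out at each step. The Python sketch below uses only the four counts given in the text; the stage labels are paraphrased and the helper name is hypothetical.

```python
# Counts as reported in the text; the labels are paraphrased.
STAGES = [
    ("identified in Scopus", 58),
    ("after screening and duplicate removal", 47),
    ("taken to full-text review", 36),
    ("included in the review", 31),
]


def attrition(stages):
    """Return (label, remaining, dropped since the previous stage) for each later stage."""
    return [
        (label, count, previous - count)
        for (_, previous), (label, count) in zip(stages, stages[1:])
    ]


for label, remaining, dropped in attrition(STAGES):
    print(f"{label}: {remaining} remaining ({dropped} excluded at this step)")
```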

Systematic review: descriptive

Several studies have demonstrated that TA is an important area of inquiry ( Flanders 1970 ; Gorham 1988 ; Pennings et al. 2014 ; Schempp et al. 2004 ) that enables researchers to systematically explore analytics associated with the teaching process. Such analytics focus on data related to the teachers, students, subjects taught and teaching outcomes. The ultimate goal of TA is to improve professional teaching practice ( Huang 2001 ; Sergis et al. 2017 ). However, there is no consensus on what constitutes TA. Several studies suggest that TA is an approach used to analyse teaching activities ( Barmaki and Hughes 2015 ; Gauthier 2013 ; KU et al. 2018 ; Saar et al. 2017 ), including how teachers deliver lectures to students, tool usage patterns, or dialogue. Various other studies regard TA as the application of analytical methods to improve teacher awareness of student activities for appropriate intervention ( Ginon et al. 2016 ; Michos and Hernández Leo 2016 ; Pantazos et al. 2013 ; Taniguchi et al. 2017 ; Vatrapu et al. 2013 ). A handful of others treat TA as analytics that combine both teachers’ and students’ activities ( Chounta et al. 2016 ; Pantazos and Vatrapu 2016 ; Prieto et al. 2016 ; Suehiro et al. 2017 ). Hence, it is particularly challenging to carry out a systematic study in the area of analytics for teachers to improve teaching practice, since there is no shared understanding of what constitutes analytics and how best to approach TA.

Researchers have used various tools to automatically harvest important episodes of interactive teacher and student behaviour during teaching, for teacher reflection. For instance, KU et al. ( 2018 ) utilised instruments such as an Interactive Whiteboard (IWB), a Document Camera (DC) and an Interactive Response System (IRS) to collect classroom instructional data during instruction. Similarly, Vatrapu et al. ( 2013 ) employed eye-tracking tools to capture eye-gaze data on various visual representations. Thomas ( 2018 ) also extracted multimodal features from both the speaker’s and the students’ audio-video data, using digital devices such as cameras and high-definition cameras. Data collected from some of these tools not only provide academics with real-time data but also capture more detail about teaching and learning than the teacher may realise. However, the cost of using such digital tools for large-scale verification is high, and cheaper alternatives are sought after. For instance, Suehiro et al. ( 2017 ) proposed a novel approach of using e-books to efficiently extract teaching activity logs in a face-to-face class.

Vatrapu ( 2012 ) considers TA a subset of LA dedicated to supporting teachers to understand the learning and teaching process. However, this definition does not recognise that the learning and teaching processes are intertwined. Also, most of the research in LA collects data about student learning or behaviour to provide feedback to the teacher ( Vatrapu et al. 2013 ; Ginon et al. 2016 ; Goggins et al. 2016 ; Shen et al. 2018 ; Suehiro et al. 2017 ). See, for example, the iKlassroom conceptual proposal by Vatrapu et al. ( 2013 ), which highlights a map of the classroom to help contextualise real-time data about the learners in a lecture. A few studies, however, draw attention to the analysis of teacher-gathered data and teaching practice artefacts, such as lesson plans. Xu and Recker ( 2012 ) examined teachers’ tool usage patterns. Similarly, Gauthier ( 2013 ) analysed the reasoning of expert teachers and used such data to improve the quality of teaching.

Multimodal analytics is an emergent trend used to complement available digital traces with data captured from the physical world ( Prieto et al. 2017 ). Isolated examples include the smart school multimodal dataset conceptual proposal by Prieto et al. ( 2017 ), which features a plan for implementing a smart classroom to help contextualise real-time data about both the teachers and learners in a lecture. In another example, Prieto et al. ( 2016 ) explored the automatic extraction of orchestration graphs from a multimodal dataset gathered from only one teacher, one classroom space and a single instructional design. Results showed that ML techniques could achieve reasonable accuracy in the automated characterisation of teaching activities. Furthermore, Prieto et al. ( 2018 ) applied more advanced ML techniques to an extended version of the previous dataset to explore the different relationships that exist between datasets captured by multiple sources.

Previous studies have shown that teachers want to address common issues such as improving their TPD and making students learn effectively ( Charleer et al. 2013 ; Dana and Yendol-Hoppey 2019 ; Pennings et al. 2014 ). Reflection on teaching practice plays an essential role in helping teachers address these issues during the process of TPD ( Saric and Steh 2017 ; Verbert et al. 2013 ). More specifically, reflecting on personal teaching practice provides opportunities for teachers to re-examine what they have done in their classes ( Loughran 2002 ; Mansfield 2019 ; Osterman and Kottkamp 1993 ). This, in turn, helps them gain an in-depth understanding of their teaching practice, and thus improve their TPD. For instance, Gauthier ( 2013 ) used a visual teach-aloud method to help teaching practitioners reflect and gain insight into their teaching practices. Similarly, Saar et al. ( 2017 ) discussed self-reflection as a way to improve teaching practice. Lecturers can record and observe their classroom activities, analyse their teaching and make informed decisions about any necessary changes in their teaching method.

The network analysis approach is another promising field of teacher inquiry, especially if combined with systematic, effective qualitative research methods ( Goggins et al. 2016 ). However, researchers and teachers who wish to utilise social network analysis must be specific about what inquiry they want to pursue. Such queries must then be checked and validated against a particular ontology for analytics ( Goggins 2012 ). Goggins et al. ( 2016 ), for example, aimed at developing an awareness of the types of analytics that could help teachers in Massive Open Online Courses (MOOCs) participate and collaborate with student groups by making more informed decisions about which groups need help and which do not. Network theory offers a particularly useful framework for understanding how individuals and groups respond to each other as they evolve. Social Network Analysis (SNA) is the approach used by researchers to direct analytical studies informed by network theory. SNA has many specific forms, each informed to various degrees by graph theory, probability theory and algebraic modelling. There are gaps in our understanding of the link between analytics and pedagogy. For example, which approaches to combining qualitative research methods with network analysis would produce useful information for teachers in MOOCs? A host of previous work suggests that a reasonable path to scaling analytics for MOOCs will involve providing helpful TA perspectives ( Goggins 2012 ; Goggins et al. 2016 ; Vatrapu et al. 2012 ).
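As a small illustration of the graph-theoretic side of SNA, the sketch below computes degree centrality (the share of other participants each person is directly connected to) from a list of interactions. It is a generic textbook measure and is not taken from the studies cited above; the participant names and interaction pairs are invented.

```python
from collections import defaultdict

# Hypothetical undirected interactions (e.g. forum replies) within one student group.
INTERACTIONS = [
    ("ana", "ben"),
    ("ana", "chi"),
    ("ben", "chi"),
    ("chi", "dev"),
    ("dev", "eli"),
]


def degree_centrality(edges):
    """Return each participant's degree divided by the number of other participants."""
    neighbours = defaultdict(set)
    for a, b in edges:
        neighbours[a].add(b)
        neighbours[b].add(a)
    others = len(neighbours) - 1
    if others < 1:
        return {node: 0.0 for node in neighbours}
    return {node: len(adjacent) / others for node, adjacent in neighbours.items()}


centrality = degree_centrality(INTERACTIONS)
for name, score in sorted(centrality.items(), key=lambda item: item[1]):
    print(f"{name}: {score:.2f}")
```

A teacher reading such output might start by checking on the least connected participants, although what counts as needing help is a pedagogical judgement rather than something the measure decides.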

Teacher facilitation is considered a challenging and critical aspect of active learning ( Fischer et al. 2014 ). Both educational researchers and practitioners have paid particular attention to this process, using different data gathering and visualisation methods, such as classroom observation, student feedback, audio and video recordings, or teacher self-reflection. TA enables teachers to perform analytics through visual representations to enhance teachers’ experience ( Vatrapu et al. 2011 ). In a pedagogical environment, professionals have to monitor several kinds of data, such as questions, mood, ratings or progress. Hence, dashboards have become an essential factor in improving and conducting successful teaching. Dashboards are visualisation tools that enable teachers to monitor and observe teaching practice to enhance teacher self-reflection ( Yigitbasioglu and Velcu 2012 ). A TAD is a category of dashboard meant for teachers and holds a unique role and value [62]. First, a TAD could allow teachers to access students’ learning in an almost real-time and scalable manner ( Mor et al. 2015 ), consequently enabling teachers to improve their self-knowledge by monitoring and observing students’ activities. A TAD assists teachers in obtaining an overview of the whole classroom as well as drilling down into details about individual students and groups of students to identify student competencies, strengths and weaknesses. For instance, Pantazos and Vatrapu ( 2016 ) described a TAD for repertory grid data that enables teachers to conduct systematic visual analytics of classroom learning data for formative assessment purposes. Second, a TAD also allows for tracking of teachers’ own activities ( van Leeuwen et al. 2019 ), as well as students’ feedback about their teaching practice. For example, Barmaki and Hughes ( 2015 ) explored a TAD that provides automated real-time feedback based on the speaker’s posture, to support teachers in practising classroom management and content delivery skills. Pedagogically, dashboards can motivate teachers to reflect on teaching activities and help them improve teaching practice and learning outcome ( 2016 ). The literature has extensively described different teaching dashboards. For instance, Dix and Leavesley ( 2015 ) broadly discussed the idea of TADs and how they can serve as visual tools for academics to interface with learning analytics and other aspects of academic life. Such aspects may include schedules, such as preparing for class or updating materials, and meeting times, such as appointments with individual students or groups of students. Similarly, Vatrapu et al. ( 2013 ) explored a TAD that uses visual analytics techniques to allow teachers to conduct a joint analysis of students’ personal constructs and ratings of domain concepts from repertory grids for formative assessment.

Systematic review: synthesis

In this second part of the review process, we extracted selected ideas from previous studies. We then grouped them based on data sources, analytical methods used, types of visualisations performed and actions taken.

Data sources and tools

Several studies have used custom software and online applications, such as LMS and MOOCs, to collect online classroom activities ( Goggins et al. 2016 ; KU et al. 2018 ; Libbrecht et al. 2013 ; Müller et al. 2016 ; Shen et al. 2018 ; Suehiro et al. 2017 ; Vatrapu et al. 2013 ; Xu and Recker 2012 ). Others have used modern devices, including eye-trackers, portable electroencephalograms (EEG), gyroscopes, accelerometers and smartphones ( Prieto et al. 2016 ; Prieto et al. 2018 ; Saar et al. 2017 ; Saar et al. 2018 ; Vatrapu et al. 2013 ), and conventional instruments such as video and voice recorders ( Barmaki and Hughes 2015 ; Gauthier 2013 ; Thomas 2018 ), to record classroom activities. However, some authors have pointed out several issues with modern devices, such as expensive equipment, high human-resource demands and ethical concerns ( KU et al. 2018 ; Prieto et al. 2017 ; Prieto et al. 2016 ; Suehiro et al. 2017 ).

In particular, one study by Chounta et al. ( 2016 ) recorded classroom activities using humans to code tutor-student dialogue manually. However, they acknowledged that manual coding of lecture activities is complicated and cumbersome. Some authors also subscribe to this school of thought and have attempted to address this issue by applying Artificial Intelligence (AI) techniques to automate and scale the coding process to ensure quality in all platforms ( Prieto et al. 2018 ; Saar et al. 2017 ; Thomas 2018 ). Others have proposed re-designing the TA process to automate data collection and to make teachers autonomous in collecting data about their teaching ( Saar et al. 2018 ; Shen et al. 2018 ). This includes using technology that is easy to set up, effortless to use, requires little preparation and, at the same time, does not interrupt the flow of the class. In this way, teachers would not require researcher assistance or outside human observers. Table 2 summarises the various data sources and tools that are used to harvest teaching data for TA.

The collection of evidence from both online and real classroom practice is significant both for educational research and TPD. LA deals mostly with data captured from online and blended learning platforms (e.g., log data, social network and text data). Hence, LA provides teachers with data to monitor and observe students' online class activities (e.g., discussion boards, assignment submission, email communications, wiki activities and progress). However, LA neglects to capture physical occurrences in the classroom and does not always address individual teachers’ needs. TA requires more adaptable forms of classroom data collection (e.g., through video recordings, sensor recordings or human observers), which are tedious, human capital intensive and costly. Other methods have been explored to balance the trade-off between data collected online and data gathered from physical classroom settings by implementing alternative design approaches ( Saar et al. 2018 ; Suehiro et al. 2017 ).

Analysis methods

Multimodal analytics is an emergent trend that will complement readily available digital traces with data captured from the physical world. Several articles in the literature have used multimodal approaches to analyse teaching processes in the physical world ( Prieto et al. 2016 ; Prieto et al. 2017 ; Prieto et al. 2018 ; Saar et al. 2017 ; Thomas 2018 ). In university settings, unobtrusive computer vision approaches have been applied to assess student attention from facial features and other behavioural signs ( Thomas 2018 ). Most of the studies that have ventured into multimodal analytics applied ML algorithms to their captured datasets to build models of the phenomena under investigation ( Prieto et al. 2016 ; Prieto et al. 2018 ). Apart from research areas that involve multimodal analytics, other areas of TA research have also applied ML techniques, for example to teachers' tool usage patterns ( Xu and Recker 2012 ), online e-books ( Suehiro et al. 2017 ) and students' written notes ( Taniguchi et al. 2017 ). Table 3 outlines some of the ML techniques applied in the previous TA literature.

Visualisation methods

TA allows teachers to apply visual analytics and visualisation techniques to improve TPD. The most commonly used visualisation techniques in TA are statistical graphs such as line charts, bar charts, box plots and scatter plots. Other visualisation techniques include SNA, spatial, timeline, static and real-time visualisations. An essential factor in visualisation for TA is the number of users represented in a visualisation. Representing a single user allows the analyst to inspect the viewing behaviour of one participant. Visualising multiple users or groups at the same time can reveal the strategies of groups. However, these representations might suffer from visual clutter if too much data is displayed at the same time. Here, optimisation strategies such as averaging or bundling of lines might be used to achieve better results. Table 4 presents the visualisation techniques most often used in TA.

Systematic review: critique

Student evaluation on teaching (SET) data

Although the literature has extensively reported various data sources used for TA, this study also draws attention to student feedback on teaching as another form of data that originates from the classroom. The analytics of student feedback on teaching could support teacher reflection on teaching practice and add value to TA. Student feedback on teaching, also known as student ratings or SET, is a form of textual data. It can be described as a combination of both quantitative and qualitative data that express students' opinions about particular areas of teaching performance. It has existed since the 1920s ( Marsh 1987 ; Remmers and Brandenburg 1927 ) and has been used as a form of teacher feedback. In addition to serving as a source of input for academic improvement ( Linse 2017 ), many universities also rely heavily on SET for hiring, promoting and firing instructors ( Boring et al. 2016 ; Harland and Wald 2018 ).

Technological advancement has enabled institutions of Higher Education (HE) to administer course evaluations online, forgoing the traditional paper-and-pencil approach ( Adams and Umbach 2012 ). There has been much research around online teaching evaluations. Asare and Daniel ( 2017 ) investigated the factors influencing the rate at which students respond to online SET. While there is a variety of opinions as to the validity of SET as a measure of teaching performance, many teaching academics and administrators perceive that SET is still the primary measure that fills this gap ( Ducheva et al. 2013 ; Marlin Jr and Niss 1980 ). After all, who experiences teaching more directly than students? These evaluations generally consist of questions addressing the instructor’s teaching, the content and activities of the paper, and the students’ own learning experience, including assessment. However, it appears these schemes gather evaluation data and pass on the raw data to instructors and administrators, stopping short of deriving value from the data to facilitate improvements in instruction and learning experiences. This shortfall is especially critical as some teachers might not have the appropriate data literacy skills to interpret and use such data.

Further, there are countless debates over the validity of SET data ( Benton and Cashin 2014 ; MacNell et al. 2015 ). These debates have highlighted some shortcomings of student ratings of teaching in light of the quality of instruction rated ( Boring 2015 ; Braga et al. 2014 ). For Edström, what matters is how the individual teacher perceives an evaluation. That perception could be sufficient to undermine TPD, especially if teachers think they are the subjects of an audit ( Edström 2008 ). However, SET is today an integral part of universities' evaluation processes ( Ducheva et al. 2013 ). Research has also shown that there is substantial room for utilising student ratings to improve teaching practice, including the quality of instruction, learning outcomes, and the teaching and learning experience ( Linse 2017 ; Subramanya 2014 ). This research aligns with the side of the argument that supports using SET for instructional improvement and for the enhancement of the teaching experience.

Systematic analytics of SET could provide valuable insights that lead to improved teaching performance. For instance, visualising SET data can give teachers a way to benchmark their performance over time. SET could also provide evidence supporting some level of data fusion in TA, as argued in the conceptualisation subsection of TA.

Transformational TA

The growing research into big data in education has led to renewed interest in the use of various forms of analytics ( Borgman et al. 2008 ; Butson and Daniel 2017 ; Choudhury et al. 2002 ). Analytics seeks to acquire insightful information from hidden patterns and relationships in data that would not ordinarily be visible to the naked eye without the application of state-of-the-art models and methods. Big data analytics in HE provides lenses on students, teachers, administrators, programs, curriculum, procedures, and budgets ( Daniel 2015 ). Figure 3 illustrates the types of analytics that apply to TA to transform HE.

Types of analytics in higher education ( Daniel 2019 )

Descriptive Analytics Descriptive analytics aims to interpret historical data to better understand organisational changes that have occurred. It is used to answer the question "What happened?" regarding a regulatory process, for example, what the failure rates are in a particular program ( Olson and Lauhoff 2019 ). It applies simple statistical techniques such as mean, median, mode, standard deviation, variance, and frequency to model past behaviour ( Assunção et al. 2015 ; ur Rehman et al. 2016 ). Barmaki and Hughes ( 2015 ) carried out descriptive analytics to determine the mean view time, mean emotional activation, and area of interest analysis on the data generated from 27 stimulus images, in order to investigate the notational, informational and emotional aspects of TA. Similarly, Michos and Hernández-Leo ( 2016 ) demonstrated how descriptive analytics could support teachers in reflecting on and re-designing their learning scenarios.
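As a minimal sketch of the statistical techniques listed above, the following Python snippet computes the mean, median, mode, standard deviation, variance and a pass/fail frequency count for a set of final marks. The marks, the pass threshold and the names `describe` and `PASS_MARK` are hypothetical and are not drawn from any of the cited studies.

```python
import statistics
from collections import Counter

# Hypothetical final marks (out of 100) for one course offering.
marks = [35, 48, 52, 52, 61, 67, 70, 74, 88, 93]
PASS_MARK = 50  # assumed pass threshold, for illustration only


def describe(values):
    """Return the descriptive statistics named in the text for a list of numbers."""
    return {
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "mode": statistics.mode(values),
        "std_dev": statistics.stdev(values),      # sample standard deviation
        "variance": statistics.variance(values),  # sample variance
    }


summary = describe(marks)

# Frequency of outcomes answers "What happened?" for the failure rate.
outcomes = Counter("pass" if m >= PASS_MARK else "fail" for m in marks)
failure_rate = outcomes["fail"] / len(marks)

print(summary)
print(f"Failure rate: {failure_rate:.0%}")
```

Such a summary only describes past outcomes; it does not explain them, which is where the next type of analytics comes in.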

Diagnostic Analytics Diagnostic analytics is higher-level analytics that further diagnoses the results of descriptive analytics ( Olson and Lauhoff 2019 ). It is used to answer the question "Why did it happen?". For example, a teacher may need to carry out diagnostic analytics to know why there is a high failure rate in a particular programme or why students rated a course so low for a specific year compared to the previous year. Diagnostic analytics uses data mining techniques such as data discovery, drill-down and correlations to further explore trends, patterns and behaviours ( Banerjee et al. 2013 ). Previous research has applied the repertory grid technique as a pedagogical method to support teachers in performing knowledge diagnostics of students about a specific topic of study ( Pantazos and Vatrapu 2016 ; Vatrapu et al. 2013 ).
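The drill-down idea can be illustrated with a small Python sketch: an overall drop in course ratings between two years is broken down by question category to locate where the drop originates. The ratings, years, category names and the helper `mean_by` are hypothetical assumptions made for illustration.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical SET responses: (year, question category, rating on a 1-5 scale).
responses = [
    (2018, "teaching", 4), (2018, "teaching", 5),
    (2018, "content", 4), (2018, "content", 4),
    (2018, "assessment", 4), (2018, "assessment", 4),
    (2019, "teaching", 4), (2019, "teaching", 4),
    (2019, "content", 4), (2019, "content", 4),
    (2019, "assessment", 2), (2019, "assessment", 3),
]


def mean_by(rows, key):
    """Group rows by key(row) and return the mean rating of each group."""
    groups = defaultdict(list)
    for row in rows:
        groups[key(row)].append(row[2])
    return {k: mean(v) for k, v in groups.items()}


# Top level: the overall rating per year shows that something changed.
by_year = mean_by(responses, key=lambda r: r[0])

# Drill-down: the same ratings split by year and question category.
by_year_category = mean_by(responses, key=lambda r: (r[0], r[1]))

categories = sorted({category for _, category, _ in responses})
changes = {
    c: by_year_category[(2019, c)] - by_year_category[(2018, c)]
    for c in categories
}
largest_drop = min(changes, key=changes.get)

print(by_year)
print(changes)
print(f"Largest drop: {largest_drop}")
```

In this invented data set the overall decline is concentrated in the assessment questions, which is the kind of lead a teacher could then investigate further.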

Relational Analytics Relational analytics is the measure of relationships that exist between two or more variables. Correlation analysis is a typical example of relational analytics that measures the linear relationship between two variables ( Rayward-Smith 2007 ). For instance, Thomas ( 2018 ) applied correlation analysis to select the best features from the speaker and audience measurements. Some researchers have also used other forms of relational analytics, such as co-occurrence analysis, to reveal students' hidden abstract impressions from their written notes ( Taniguchi et al. 2017 ). Others have used relational analytics to differentiate critical formative assessment features of an individual student to assist teachers in understanding the primary components that affect student performance ( Pantazos et al. 2013 ; Michos and Hernández-Leo 2016 ). A few others have applied it to distinguish elements or terms used to express similarities or differences as they relate to their contexts ( Vatrapu et al. 2013 ). Insights generated from this kind of analysis can help improve teaching in future lectures and also compare different teaching styles. Sequential pattern mining is another type of relational analytics, used to determine the relationship that exists between subsequent events ( Romero and Ventura 2010 ). It can be applied in multimodal analytics to identify relationships between physical aspects of the learning and teaching process, such as the relationship between ambient factors and learning, or to investigate robust multimodal indicators of learning, to help in teacher decision-making ( Prieto et al. 2017 ).
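To make the correlation analysis mentioned above concrete, here is a minimal Python sketch of the Pearson correlation coefficient, which measures the strength of the linear relationship between two variables. The two measurement series and the function name `pearson_r` are hypothetical; the sketch does not reproduce the feature-selection procedure of any cited study.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2 values)")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical per-lecture measurements (illustrative values only).
speaking_rate = [110, 120, 130, 140, 150]    # speaker: words per minute
attention = [0.80, 0.74, 0.71, 0.62, 0.58]   # audience: share of attentive students

r = pearson_r(speaking_rate, attention)
print(f"r = {r:.2f}")
```

A coefficient close to -1 or +1 indicates a strong linear relationship, while a value near 0 indicates none; correlation alone does not establish that one variable causes the other.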

Predictive Analytics Predictive analytics aims to predict future outcomes based on historical and current data ( Gandomi and Haider 2015 ). As the name implies, predictive analytics attempts to predict future occurrences, patterns and trends under varying conditions ( Joseph and Johnson 2013 ). It makes use of different techniques such as regression analysis, forecasting, pattern matching, predictive modelling and multivariate statistics ( Gandomi and Haider 2015 ; Waller and Fawcett 2013 ). In prediction, the goal is to predict students' and teachers' activities to generate information that can support decision-making by the teacher ( Chatti et al. 2013 ). Predictive analytics is used to answer the question "What will happen?". For instance, what are the interventions and preventive measures a teacher can take to minimise the failure rate? Herodotou et al. ( 2019 ) provided evidence on how predictive analytics can be used by teachers to support active learning. An extensive body of literature suggests that predictive analytics can help teachers improve teaching practice ( Barmaki and Hughes 2015 ; Prieto et al. 2016 ; Prieto et al. 2018 ; Suehiro et al. 2017 ) and also identify groups of students that might need extra support to reach desired learning outcomes ( Goggins et al. 2016 ; Thomas 2018 ).
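Regression analysis, one of the techniques named above, can be sketched in a few lines of Python. The example fits a straight line by ordinary least squares to historical coursework and final marks, then uses it to flag current students whose predicted final mark falls below a pass threshold. All data, the threshold and the names `fit_line`, `predict` and `PASS_MARK` are hypothetical assumptions; real predictive models in the cited literature are considerably richer.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical historical data from a previous course offering.
coursework_marks = [40, 50, 60, 70, 80]
final_marks = [45, 52, 61, 68, 79]

slope, intercept = fit_line(coursework_marks, final_marks)


def predict(coursework_mark):
    """Predicted final mark for a given coursework mark."""
    return intercept + slope * coursework_mark


# Hypothetical current students and an assumed pass threshold.
PASS_MARK = 50
current_students = {"s1": 45, "s2": 55, "s3": 72}
at_risk = [
    student for student, mark in current_students.items()
    if predict(mark) < PASS_MARK
]

print(f"final = {intercept:.2f} + {slope:.2f} * coursework")
print("Students who may need extra support:", at_risk)
```

The list of flagged students is only a prompt for the teacher's judgement; a single-variable linear model fitted to five data points is far too simple to act on by itself.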

Prescriptive Analytics Prescriptive analytics provides recommendations or can automate actions in a feedback loop that might modify, optimise or pre-empt outcomes ( Williamson 2016 ). It is used to answer the question "How will it best happen?". For instance, how can teachers make the right interventions for students perceived to be at risk in order to minimise the student dropout rate, or what kinds of resources are needed to support students who might need them to succeed? It determines the optimal action that enhances business processes by providing the cause-effect relationship and applying techniques such as graph analysis, recommendation engines, heuristics, neural networks, machine learning and Markov processes ( Bihani and Patil 2014 ; ur Rehman et al. 2016 ). For example, a curriculum knowledge graph and learning path recommendation can be applied to support the teaching and learning process ( Shen et al. 2018 ).
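One simple way to picture learning path recommendation over a curriculum graph is a prerequisite graph that is traversed to produce an ordered list of topics a student should study before reaching a target topic. The Python sketch below assumes such a graph and a depth-first traversal; the topics, the graph and the function `learning_path` are hypothetical and do not reproduce the system of Shen et al. ( 2018 ).

```python
# Hypothetical prerequisite graph: topic -> topics that should be learned first.
PREREQUISITES = {
    "variables": [],
    "loops": ["variables"],
    "functions": ["variables"],
    "recursion": ["functions", "loops"],
}


def learning_path(target, mastered=(), graph=PREREQUISITES):
    """Recommend an ordered list of topics leading to `target`.

    Prerequisites always appear before the topics that depend on them, and
    topics the student has already mastered are skipped.
    Assumes the graph is acyclic.
    """
    path = []
    seen = set(mastered)

    def visit(topic):
        if topic in seen:
            return
        seen.add(topic)
        for prerequisite in graph[topic]:
            visit(prerequisite)
        path.append(topic)  # added only after all of its prerequisites

    visit(target)
    return path


print(learning_path("recursion"))
print(learning_path("recursion", mastered={"variables"}))
```

The recommendation answers "How will it best happen?" in a narrow sense: given what a student already knows, it prescribes the order in which the remaining topics should be tackled.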