If you're seeing this message, it means we're having trouble loading external resources on our website.

If you're behind a web filter, please make sure that the domains *.kastatic.org and *.kasandbox.org are unblocked.

To log in and use all the features of Khan Academy, please enable JavaScript in your browser.

AP®︎/College Art History

Course: ap®︎/college art history > unit 1.

- Contrapposto explained

- What is foreshortening?

- What is chiaroscuro?

- How one-point linear perspective works

- What is atmospheric perspective?

- The classical orders

Naturalism, realism, abstraction and idealization

Representational versus non-representation

Moving toward abstraction, non-representational art, idealization, naturalism and realism, want to join the conversation.

- Upvote Button navigates to signup page

- Downvote Button navigates to signup page

- Flag Button navigates to signup page

- school Campus Bookshelves

- menu_book Bookshelves

- perm_media Learning Objects

- login Login

- how_to_reg Request Instructor Account

- hub Instructor Commons

Margin Size

- Download Page (PDF)

- Download Full Book (PDF)

- Periodic Table

- Physics Constants

- Scientific Calculator

- Reference & Cite

- Tools expand_more

- Readability

selected template will load here

This action is not available.

1.5: Representational, Abstract, and Nonrepresentational Art

- Last updated

- Save as PDF

- Page ID 71923

\( \newcommand{\vecs}[1]{\overset { \scriptstyle \rightharpoonup} {\mathbf{#1}} } \)

\( \newcommand{\vecd}[1]{\overset{-\!-\!\rightharpoonup}{\vphantom{a}\smash {#1}}} \)

\( \newcommand{\id}{\mathrm{id}}\) \( \newcommand{\Span}{\mathrm{span}}\)

( \newcommand{\kernel}{\mathrm{null}\,}\) \( \newcommand{\range}{\mathrm{range}\,}\)

\( \newcommand{\RealPart}{\mathrm{Re}}\) \( \newcommand{\ImaginaryPart}{\mathrm{Im}}\)

\( \newcommand{\Argument}{\mathrm{Arg}}\) \( \newcommand{\norm}[1]{\| #1 \|}\)

\( \newcommand{\inner}[2]{\langle #1, #2 \rangle}\)

\( \newcommand{\Span}{\mathrm{span}}\)

\( \newcommand{\id}{\mathrm{id}}\)

\( \newcommand{\kernel}{\mathrm{null}\,}\)

\( \newcommand{\range}{\mathrm{range}\,}\)

\( \newcommand{\RealPart}{\mathrm{Re}}\)

\( \newcommand{\ImaginaryPart}{\mathrm{Im}}\)

\( \newcommand{\Argument}{\mathrm{Arg}}\)

\( \newcommand{\norm}[1]{\| #1 \|}\)

\( \newcommand{\Span}{\mathrm{span}}\) \( \newcommand{\AA}{\unicode[.8,0]{x212B}}\)

\( \newcommand{\vectorA}[1]{\vec{#1}} % arrow\)

\( \newcommand{\vectorAt}[1]{\vec{\text{#1}}} % arrow\)

\( \newcommand{\vectorB}[1]{\overset { \scriptstyle \rightharpoonup} {\mathbf{#1}} } \)

\( \newcommand{\vectorC}[1]{\textbf{#1}} \)

\( \newcommand{\vectorD}[1]{\overrightarrow{#1}} \)

\( \newcommand{\vectorDt}[1]{\overrightarrow{\text{#1}}} \)

\( \newcommand{\vectE}[1]{\overset{-\!-\!\rightharpoonup}{\vphantom{a}\smash{\mathbf {#1}}}} \)

Painting, sculpture, and other artforms can be divided into the categories of representational (sometimes also called figurative art although it doesn’t always contain figures), abstract and nonrepresentational art. Representational art describes artworks—particularly paintings and sculptures–that are clearly derived from real object sources, and therefore are by definition representing something with strong visual references to the real world. Most, but not all, abstract art is based on imagery from the real world. The most “extreme” form of abstract art is not connected to the visible world and is known as nonrepresentational.

- Representational art or figurative art represents objects or events in the real world, usually looking easily recognizable. For example, a painting of a cat looks very much like a cat– it’s quite obvious what the artist is depicting.

- Romanticism, Impressionism, and Expressionism contributed to the emergence of abstract art in the nineteenth century as artists became less interested in depicting things exactly like they really exist. Abstract art exists on a continuum, from somewhat realistic representational work, to work that is not based on anything visible from the real world. Even representational work is abstracted to some degree; entirely realistic art is elusive.

- Work that does not depict anything from the real world (figures, landscapes, animals, etc.) is called nonrepresentational . Nonrepresentational art may simply depict shapes, colors, lines, etc., but may also express things that are not visible– emotions or feelings for example.

This figurative or representational work from the seventeenth century depicts easily recognizable objects–ships, people, and buildings. But artistic independence was advanced during the nineteenth century, resulting in the emergence of abstract art. Three movements that contributed heavily to the development of these were Romanticism, Impressionism, and Expressionism.

Abstraction indicates a departure from reality in depiction of imagery in art. Abstraction exists along a continuum; abstract art can formally refer to compositions that are derived (or abstracted) from a figurative or other natural source. It can also refer to nonrepresentational (non-objective) art that has no derivation from figures or objects. Picasso is a well-known artist who used abstraction in many of his paintings and sculptures: figures are often simplified, distorted, exaggerated, or geometric.

Pablo Picasso, Girl Before a Mirror , 1932, MOMA Photo by Sharon Mollerus CC BY

Even art that aims for verisimilitude (accuracy and truthfulness) of the highest degree can be said to be abstract, at least theoretically, since perfect representation is likely to be exceedingly elusive. Artwork which takes liberties, altering for instance color and form in ways that are conspicuous, can be said to be partially abstract.

Delaunay’s work is a primary example of early abstract art. Nonrepresentational art is also sometimes called complete abstraction, bearing no trace of any reference to anything recognizable from the real world. In geometric abstraction, for instance, one is unlikely to find references to naturalistic entities. Figurative art and total abstraction are almost mutually exclusive. But representational (or realistic) art often contains partial abstraction. As you see, these terms are bit confusing, but do your best to understand the basic definitions of representational, abstract and nonrepresentational.

- Representational, Abstract, and Nonrepresentational Art. From Boundless Art History. Provided by : Boundless. Located at : www.boundless.com/art-history/textbooks/boundless-art-history-textbook/thinking-and-talking-about-art-1/content-42/representational-abstract-and-nonrepresentational-art-264-1615/. License : CC BY-SA: Attribution-ShareAlike

- Table of Contents

- Random Entry

- Chronological

- Editorial Information

- About the SEP

- Editorial Board

- How to Cite the SEP

- Special Characters

- Advanced Tools

- Support the SEP

- PDFs for SEP Friends

- Make a Donation

- SEPIA for Libraries

- Entry Contents

Bibliography

Academic tools.

- Friends PDF Preview

- Author and Citation Info

- Back to Top

Scientific Representation

Science provides us with representations of atoms, elementary particles, polymers, populations, pandemics, economies, rational decisions, aeroplanes, earthquakes, forest fires, irrigation systems, and the world’s climate. It’s through these representations that we learn about the world. This entry explores various different accounts of scientific representation, with a particular focus on how scientific models represent their target systems. As philosophers of science are increasingly acknowledging the importance, if not the primacy, of scientific models as representational units of science, it’s important to stress that how they represent plays a fundamental role in how we are to answer other questions in the philosophy of science (for instance in the scientific realism debate). This entry begins by disentangling “the” problem of scientific representation, and then critically evaluates the current options available in the literature.

1. Problems Concerning Scientific Representation

2. general griceanism and stipulative fiat, 3.1 similarity and er-problem, 3.2 accuracy, style, and ontology, 4.1 structures and the problem of ontology, 4.2 structuralism and the er-problem, 4.3 demarcation, accuracy, style, and target-end structure, 5.1 the ddi account, 5.2 deflationary inferentialism, 5.3 inflating inferentialism: interpretation, 6. the fiction view of models, 7.1 from art to science, 7.2 the deki account, other internet resources, related entries.

In most general terms, any representation that is the product of a scientific endeavour is a scientific representation. These representations are a heterogeneous group comprising anything from thermometer readings and flow charts to verbal descriptions, photographs, X-ray pictures, digital imagery, equations, models, and theories. How do these representations work?

The first thing that strikes the novice in the debate about scientific representation is that there seems to be little agreement about what the problem is. Different authors frame the problem of scientific representation in different ways, and eventually they examine different issues. So a discussion of scientific representation has to begin with a clarification of the problem itself. Reviewing the literature on the subject leads us to the conclusion that there is no such thing as the problem of scientific representation—in fact, there are at least five different problems concerning scientific representation (Frigg and Nguyen 2020: ch. 1). In this section we formulate these problems and articulate five conditions of adequacy that every account of scientific representation has to satisfy.

The first problem is: what turns something into a scientific representation of something else? It has become customary to phrase this problem in terms of necessary and sufficient conditions and ask: what fills the blank in “\(S\) is a scientific representation of \(T\) iff ___”, where “\(S\)” stands for the object doing the representing and “\(T\)” for “target system”, the part or aspect of the world the representation is about? [ 1 ] Let us call this the Scientific Representation Problem (or SR-Problem for short).

A number of contributors to the debate have emphasised that scientific representation is an intentional concept, depending on factors such as a user’s intentions, purposes and objectives, contextual standards of accuracy, intended audiences, and community practices (see, for instance, Boesch 2017; Giere 2010; Mäki 2011; Suárez 2004; and van Fraassen 2008). We will discuss these in detail below. At this point it needs to be emphasised that framing the problem in terms of a biconditional does not preclude such factors to be part of the analysis. The blank can be filled with a \((n+2)\)-ary relation \(C(S, T, x_1, \ldots, x_n)\) (\(C\) for “constitutes”), where \(n \ge 0\) is a natural number and the \(x_i\) are factors such as intentions and purposes.

A first important condition of adequacy on any reply to this problem is that scientific representations allow us to form hypotheses about their target systems. An X-ray picture provides information about the bones of the patient, and models allow investigators to discover features of the things models stands for. Every acceptable theory of scientific representation has to account for how reasoning conducted on representations can yield claims about their target systems. Swoyer (1991: 449) refers to this kind of representation-based thinking as “surrogative reasoning” and so we call this the Surrogative Reasoning Condition . [ 2 ] This condition distinguishes models from lexicographical and indexical representations, which do not allow for surrogative reasoning.

Unfortunately this condition does not constrain answers sufficiently because any account of representation that fills the blank in a way that satisfies the surrogative reasoning condition will almost invariably also cover other kinds of representations. Speed camera photographs give the police information about drivers breaking the law, a cardboard model of the palace instructs us about its layout and proportions, and a weather map shows you where to expect rain. These representations are therefore are likely to fall under an account of representation that explains surrogative reasoning. Hence, representations other than scientific representations also allow for surrogative reasoning, which raises the question: how do scientific representations differ from other kinds of representations that allow for surrogative reasoning? Callender and Cohen (2006: 68–69) point out that this is a version Popper’s demarcation problem, now phrased in terms of representation, and so we refer to it as the Representational Demarcation Problem .

Callender and Cohen voice scepticism about there being a solution to this problem and suggest that the distinction between scientific and non-scientific representations is circumstantial (2006: 83): scientific representations are representations that are used or developed by someone who is a scientist. Other authors do not explicitly discuss the representational demarcation problem, but stances similar to Callender and Cohen’s are implicit in any approach that analyses scientific representation alongside other kinds of representation. Elgin (2010), French (2003), Frigg (2006), Hughes (1997), Suárez (2004), and van Fraassen (2008), for instance, all draw parallels between scientific and pictorial representation, which would make little sense if pictorial and scientific representation were categorically different.

Those who reject the notion that there is an essential difference between scientific and non-scientific representation can follow a suggestion of Contessa’s (2007) and broaden the scope of the investigation. Rather than analysing scientific representation, they can analyse the broader category of epistemic representation . This category comprises scientific representations, but it also includes other representations that allow for surrogative reasoning. The task then becomes to fill the blank in “\(S\) is an epistemic representation of \(T\) iff ___”. We call this the Epistemic Representation Problem ( ER-Problem , for short), and the biconditional the ER-Scheme . So one can say that the ER-Problem is to fill the blank in the ER-Scheme.

Not all representations are of the same kind, not even if we restrict our attention to scientific representations (assuming they are found to be relevantly different to non-scientific epistemic representations). An X-ray photograph represents an ankle joint in a different way than a biomechanical model, a mercury thermometer represent the temperature of gas in a different way than statistical mechanics does, and chemical theory represents a C60 fullerene in different way that an electron-microscope image of the molecule. Even when restricting attention to the same kind of representation, there are important differences: Weizsäcker’s liquid drop model, for instance, represents the nucleus of an atom in a manner that seems to be different from the one of the shell model, and an electric circuit model represents the brain function in a different way than a neural network model. In brief, there seem to be different representational styles. This raises the question: what styles are there and how can they be characterised? [ 3 ] We call this the Problem of Style . [ 4 ] There is no expectation that a complete list of styles be provided in response. Indeed, it is unlikely that such a list can ever be drawn up, and new styles will be invented as science progresses. For this reason a response to the problem of style will always be open-ended, providing a taxonomy of what is currently available while leaving room for later additions.

Some representations are accurate; others aren’t. The quantum mechanical model is an accurate representation of the atom (at least by our current lights) but the Thomson model isn’t. On what grounds do we make such judgments? Morrison (2008: 70) reminds us that it is a task for theory of representation to identify what constitutes an accurate representation. We call this the problem of Standards of Accuracy . Providing such standards is one of the issues an account of representation has to address, which, however, is not to say that accurate representation is the sole epistemic virtue of scientific models. As Parker (2020) points out, there are numerous of considerations to take into account when evaluating a model’s adequacy for its purpose, see Downes (2021: ch. 5) for further discussion.

This problem goes hand in hand with a condition of adequacy: the Possibility of Misrepresentation . Asking what makes a representation an accurate representation ipso facto presupposes that inaccurate representations are representations too. And this is how it should be. If \(S\) does not accurately represent \(T\), then it is a misrepresentation but not a non-representation. It is therefore a general constraint on a theory of scientific representation that it has to make misrepresentation possible. [ 5 ]

A related condition concerns models that misrepresent in the sense that they lack target systems. Models of the ether, phlogiston, four-sex populations, and so on, are all deemed scientific models, but ether, phlogiston, and four-sex populations don’t exist. Such models lack (actual) target systems, and one hopes that an account of scientific representation would allow us to understand how these models work. This need not imply the claim that they are representations in the same sense as models with actual targets, and, as we discuss below, there are accounts that deny targetless models the status of being representations.

A further condition of adequacy for an account of scientific representation is that it must account for the directionality of representation. As Goodman points out (1976: 5), representations are about their targets, but (at least in general) targets are not about their representations: a photograph represents the cracks in the wing of aeroplane, but the wing does not represent the photograph. So there is an essential directionality to representations, and an account of scientific, or epistemic, representation has to identify the root of this directionality. We call this the Requirement of Directionality .

Some representations, in particular models and theories, are mathematized and their mathematical aspects are crucial to their cognitive and representational function. This forces us to reconsider a time-honoured philosophical puzzle: the applicability of mathematics in the empirical sciences. The problem can be traced back at least to Plato’s Timaeus , but its modern expression is due to Wigner who challenged us to find an explanation for the enormous usefulness of mathematics in the sciences (1960: 2). The question how a mathematized model represents its target implies the question how mathematics applies to a physical system (see Pincock 2012 for an explicit discussion of the relationship between scientific representation and the applicability of mathematics). For this reason, our fifth and final condition of adequacy is that an account of representation has to explain how mathematics is applied to the physical world. We call this the Applicability of Mathematics Condition .

In answering the above questions one invariably runs up against a further problem, the Problem of Ontology : what kinds of objects are representations? If representations are material objects the answer is straightforward: photographic plates, pieces of paper covered with ink, elliptical blocks of wood immersed in water, and so on. But not all representations are like this. As Hacking (1983: 216) puts it, some representations one holds in one’s head rather than one’s hands. The Newtonian model of the solar system, the Lotka-Volterra model of predator-prey interaction and the general theory of relativity are not things you can put on your laboratory table and look at. The problem of ontology is to come clear on our commitments and provide a list with things that we recognise—or don’t recognise—as entities performing a representational function and give an account of what they are in case these entities raise questions (what exactly do we mean by something that one holds in one’s head rather than one’s hands?). Contessa (2010), Frigg (2010a,b), Godfrey-Smith (2006), Levy (2015), Thomson-Jones (2010), Weisberg (2013), among others, have drawn attention to this problem in different ways.

In sum, a theory of scientific representation has to respond to the following issues:

- Address the Representational Demarcation Problem (the question how scientific representations differ from other kinds of representations).

- Those who demarcate scientific from non-scientific representations have to provide an answer to the Scientific Representation Problem (fill the blank in “\(S\) is a scientific representation of \(T\) iff ___”). Those who reject the representational demarcation problem can address the Epistemic Representation Problem (fill the blank in ER-Scheme: “\(S\) is an epistemic representation of \(T\) iff ___”).

- Respond to the Problem of Style (what styles are there and how can they be characterised?).

- Formulate Standards of Accuracy (how do we identify what constitutes an accurate representation?).

- Address the Problem of Ontology (what are the kind of objects that serve as representations?).

Any satisfactory answer to these five issues will have to meet the following five conditions of adequacy:

- Surrogative Reasoning (scientific representations allow us to generate hypotheses about their target systems).

- Possibility of Misrepresentation (if \(S\) does not accurately represent \(T\), then it is a misrepresentation but not a non-representation).

- Targetless Models (what are we to make of scientific representations that lack targets?).

- Requirement of Directionality (scientific representations are about their targets, but targets are not about their representations).

- Applicability of Mathematics (how does the mathematical apparatus used in some scientific representations latch onto the physical world).

Listing the problems in this way is not to say that these are separate and unrelated issues. This division is analytical, not factual. It serves to structure the discussion and to assess proposals; it does not imply that an answer to one of these questions can be dissociated from what stance we take on the other issues.

Any attempt to tackle these questions faces an immediate methodological problem. As per the problem of style, there are different kinds of representations: scientific models, theories, measurement outcomes, images, graphs, diagrams, and linguistic assertions are all scientific representations, and even within these groups there can be considerable variation. But every analysis has to start somewhere, and so the problem is where. One might adopt a universalist position, holding that the diversity of styles dissolves under analysis and at bottom all instances of scientific/epistemic representation function in the same way and are covered by the same overarching account. For such a universalist the problem loses its teeth because any starting point will lead to the same result. Those of particularist bent deny that there is such a theory. They will first divide the scientific/epistemic representations into relevant subclasses and then analyse each subclass separately.

Different authors assume different stances in this debate, and we will discuss their positions below. However, there are few, if any, thoroughgoing universalists and so a review like the current one has to discuss different cases. Unfortunately space constraints prevent us from examining all the different varieties of scientific/epistemic representation, and a selection has to be made. This invariably leads to the neglect of some kinds of representations, and the best we can do about this is to be explicit about our choices. We resolve to concentrate on scientific models, and therefore replace our variable \(S\) for the object doing the representing with the variable \(M\) for model. This is in line both with the more recent literature on scientific representation, which is predominantly concerned with scientific models, and with the prime importance that current philosophy of science attaches to models (see the SEP entry on models in science for a survey). [ 6 ]

It is, however, worth briefly mentioning some of the omissions that this brings with it. Various types of images have their place in science, and so do graphs, diagrams, and drawings. Perini (2010) and Elkins (1999) provide discussions of visual representation in science. Measurements also supply representations of processes in nature, sometimes together with the subsequent condensation of measurement results in the form of charts, curves, tables and the like (see the SEP entry on measurement in science ). Furthermore, theories represent their subject matter. At this point the vexing problem of the nature of theories rears again (see the SEP entry on the structure of scientific theories and also Portides (2017) for an extensive discussion). Proponents of the semantic view of theories construe theories as families of models, and so for them the question of how theories represent coincides with the question of how models represent. By contrast, those who regard theories as linguistic entities see theoretical representation as a special kind of linguistic representation and focus on the analysis of scientific languages, in particular the semantics of so-called theoretical terms (see the SEP entry on theoretical terms in science ).

Before delving into the discussion a common misconception needs to be dispelled. The misconception is that a representation is a mirror image, a copy, or an imitation of the thing it represents. On this view representation is ipso facto realistic representation. This is a mistake. Representations can be realistic, but they need not. And representations certainly need not be copies of the real thing, an observation exploited by Lewis Carroll and Jorge Luis Borges in their satires, Sylvie and Bruno and On Exactitude in Science respectively, about cartographers who produce maps as large as the country itself only to see them abandoned (for a discussion see Boesch 2021). Throughout this review we encounter positions that make room for non-realistic representation and hence testify to the fact that representation is a much broader notion than mirroring. [ 7 ]

Callender and Cohen (2006) give a radical answer to the demarcation problem: there is no difference between scientific representations and other kinds of representations, not even between scientific and artistic representation. Underlying this claim is a position they call “General Griceanism” (GG). The core of GG is the reductive claim that all representations owe their status as representations to a privileged core of fundamental representations. GG then comes with a practical prescription about how to proceed with the analysis of a representation:

the General Gricean view consists of two stages. First, it explains the representational powers of derivative representations in terms of those of fundamental representations; second, it offers some other story to explain representation for the fundamental bearers of content. (2006: 73)

Of these stages only the second requires serious philosophical work, and this work is done in the philosophy of mind because the fundamental form of representation is mental representation.

Scientific representation is a derivative kind of representation (2006: 71, 75) and hence falls under the first stage of the above recipe. It is reduced to mental representation by an act of stipulation. In Callender and Cohen’s own example, the salt shaker on the dinner table can represent Madagascar as long as someone stipulates that the former represents the latter, since

the representational powers of mental states are so wide-ranging that they can bring about other representational relations between arbitrary relata by dint of mere stipulation. (2006: 73–74)

So explaining any form of representation other than mental representation is a triviality—all it takes is an act of “stipulative fiat” (2006: 75). This supplies an answer to the ER-problem:

Stipulative Fiat : A scientific model \(M\) represents a target system \(T\) iff a model user stipulates that \(M\) represents \(T\).

The first problem facing Stipulative Fiat is whether or not stipulation, or the bare intentions of language users, suffice to establish representational relationships. In the philosophy of language this gets called the “Humpty Dumpty” problem. It concerns whether or not Lewis Carroll’s Humpty Dumpty could use the word “glory” to mean “a nice knockdown argument” (Donnellan 1968; MacKay 1968). (We ignore the difference between meaning and denotation here). In that context it doesn’t seem like he can, and analogous questions can be posed in the context of scientific representation: can a scientist make any model represent any target simply by stipulating that it does?

Even if stipulation were sufficient to establish some sort of representational relationship, Stipulative Fiat fails to meet the Surrogative Reasoning Condition: assuming a salt shaker represents Madagascar in virtue of someone’s stipulation that this is so, this tells us nothing about how the salt shaker could be used to learn about Madagascar in the way that scientific models are used to learn about their targets (Liu 2015: 46–47, for a related objections see Boesch 2017: 974–978, Bueno and French 2011: 871–873, Gelfert 2016: 33, Ruyant 2021: 535). And appealing to additional facts about the salt shaker (the salt shaker being to the right of the pepper mill might allow us to infer that Madagascar is to the east of Mozambique) in order to answer this objection goes beyond Stipulative Fiat . Callender and Cohen do admit some representations are more useful than others, but claim that

the questions about the utility of these representational vehicles are questions about the pragmatics of things that are representational vehicles, not questions about their representational status per se . (2006: 75)

But even if the Surrogative Reasoning Condition is relegated to the realm of “pragmatics” it seems reasonable to ask for an account of how it is met.

An important thing to note is that even if Stipulative Fiat is untenable, we needn’t give up on GG. GG only requires that there be some explanation of how derivative representations relate to fundamental representations; it does not require that this explanation be of a particular kind, much less that it consist in nothing but an act of stipulation (Toon 2010: 77–78). Ruyant (2021) has recently proposed what he calls “true Griceanism”, which differs from the original account in that it involves a non-trivial reduction of scientific representation to more fundamental representations (complex sequences mental states and purpose-directed behaviour) in a way that is also sensitive to the communal aspects of practices in which the models are embedded. This is a step into the direction indicated by Toon. More generally, as Callender and Cohen note, all that it requires is that there is a privileged class of representations and that other types of representations owe their representational capacities to their relationship with the primitive ones. So philosophers need an account of how members of this privileged class of representations represent, and how derivative representations, which includes scientific models, relate to this class. When stated like this, many recent contributors to the debate on scientific representation can be seen as falling under the umbrella of GG. Indeed, As we will see below, many of the more developed versions of the accounts of scientific representation discussed throughout this entry invoke the intentions of model users, albeit in a more complex manner than Stipulative Fiat .

3. The Similarity Conception

Similarity and representation initially appear to be two closely related concepts, and invoking the former to ground the latter has a philosophical lineage stretching back at least as far as Plato’s The Republic . [ 8 ] In its most basic guise the similarity conception of scientific representation asserts that scientific models represent their targets in virtue of being similar to them. This conception has universal aspirations in that it is taken to account for representation across a broad range of different domains. Paintings, statues, and drawings are said to represent by being similar to their subjects, and Giere proclaimed that it covers scientific models alongside “words, equations, diagrams, graphs, photographs, and, increasingly, computer-generated images” (2004: 243). So the similarity view repudiates the demarcation problem and submits that the same mechanism, namely similarity, underpins different kinds of representation in a broad variety of contexts.

The view offers an elegant account of surrogative reasoning. Similarities between model and target can be exploited to carry over insights gained in the model to the target. If the similarity between \(M\) and \(T\) is based on shared properties, then a property found in \(M\) would also have to be present in \(T\); and if the similarity holds between properties themselves, then \(T\) would have to instantiate properties similar to \(M\).

However, appeal to similarity in the context of representation leaves open whether similarity is offered as an answer to the ER-Problem or the Problem of Style, or whether it is meant to set Standards of Accuracy. Proponents of the similarity conception typically have offered little guidance on this issue. So we examine each option in turn and ask whether similarity offers a viable answer. We then turn to the question of how the similarity view deals with the Problem of Ontology.

Understood as response to the ER-Problem, the simplest similarity view is the following:

Similarity 1 : A scientific model \(M\) represents a target \(T\) iff \(M\) and \(T\) are similar.

A well-known objection to this account is that similarity has the wrong logical properties. Goodman (1976: 4–5) points out that similarity is symmetric and reflexive yet representation isn’t. If object \(A\) is similar to object \(B\), then \(B\) is similar to \(A\). But if \(A\) represents \(B\), then \(B\) need not (and in fact in most cases does not) represent \(A\). Everything is similar to itself, but most things do not represent themselves. So this account does not meet our fourth condition of adequacy for an account of scientific representation insofar as it does not provide a direction to representation.

There are accounts of similarity under which similarity is not a symmetric relation (see Tversky 1977; Weisberg 2012, 2013: ch. 8; and Poznic 2016: sec. 4.2). This raises the question of how to analyse similarity. We turn to this issue in the next subsection. However, even if we concede that similarity need not always be symmetrical, this does not solve Goodman’s problem with reflexivity; nor does it, as we will see, solve other problems of the similarity account.

The most significant problem facing Similarity 1 is that without constraints on what counts as similar, any two things can be considered similar (Aronson et al. 1995: 21; Goodman 1972: 443–444). This has the unfortunate consequence that anything represents anything else. A natural response to this difficulty is to delineate a set of relevant respects and degrees to which \(M\) and \(T\) have to be similar. This idea can be moulded into the following definition:

Similarity 2 : A scientific model \(M\) represents a target \(T\) iff \(M\) and \(T\) are similar in relevant respects and to the relevant degrees.

On this definition one is free to choose one’s respects and degrees so that unwanted similarities drop out of the picture. While this solves the last problem, it leaves the problem of logical properties untouched: similarity in relevant respects and to the relevant degrees is reflexive (and symmetrical, depending on one’s notion of similarity). Moreover, Similarity 2 faces three further problems.

Firstly, similarity, even restricted to relevant similarities, is too inclusive a concept to account for representation. In many cases neither one of a pair of similar objects represents the other. This point has been brought home in Putnam’s now-classical thought experiment due to Putnam (1981: 1–3). An ant is crawling on a patch of sand and leaves a trace that happens to resemble Winston Churchill. Has the ant produced a picture, a representation, of Churchill? Putnam’s answer is that it didn’t because the ant has never seen Churchill, had no intention to produce an image of him, was not causally connected to Churchill, and so on. Although someone else might see the trace as a depiction of Churchill, the trace itself does not represent Churchill. The fact that the trace is similar to Churchill does not suffice to establish that the trace represents him. And what is true of the trace and Churchill is true of every other pair of similar items: even relevant similarity on its own does not establish representation.

Secondly, as noted in Section 1 , allowing for the possibility of misrepresentation is a key desiderata required of any account of scientific representation. In the context of a similarity conception it would seem that a misrepresentation is one that portrays its target as having properties that are not similar in the relevant respects and to the relevant degree to the true properties of the target. But then, on Similarity 2 , \(M\) is not a representation at all. The account thus has difficulty distinguishing between misrepresentation and non-representation (Suárez 2003: 233–235).

Thirdly, there may simply be nothing to be similar to because some representations are not about an actual object. Some paintings represent elves or dragons, and some models represent phlogiston or the ether. None of these exist. This is a problem for the similarity view because models without targets cannot represent what they seem to represent because in order for two things to be similar to each other both have to exist. If there is no ether, then an ether model cannot be similar to the ether.

At least some of these problems can be resolved by taking the very act of asserting a specific similarity between a model and a target as constitutive of the scientific representation. Giere (1988: 81) suggests that models come equipped with what he calls “theoretical hypotheses”, statements asserting that model and target are similar in relevant respects and to certain degrees. He emphasises that “scientists are intentional agents with goals and purposes” (2004: 743) and proposes to build this insight explicitly into an account of representation. This involves adopting an agent-based notion of representation that focuses on “the activity of representing” (2004). Analysing representation in these terms amounts to analysing schemes like

Agents (1) intend; (2) to use model, \(M\); (3) to represent a part of the world \(W\); (4) for purposes, \(P\). So agents specify which similarities are intended and for what purpose. (2010: 274)

(see also Mäki 2009, 2011; although see Rusanen and Lappi 2012: 317 for arguments to the contrary). This leads to the following definition:

Similarity 3 : A scientific model \(M\) represents a target system \(T\) iff there is an agent \(A\) who uses \(M\) to represent a target system \(T\) by proposing a theoretical hypothesis \(H\) specifying a similarity (in certain respects and to certain degrees) between \(M\) and \(T\) for purpose \(P\).

This version of the similarity view avoids problems with misrepresentation because \(H\) being a hypothesis, there is no expectation that the assertions made in \(H\) are true. If they are, then the representation is accurate (or the representation is accurate to the extent that they hold). If they do not, then the representation is a misrepresentation. It also resolves the issue with directionality because \(H\) can be understood as introducing an asymmetry that is not present in the similarity relation. However, it fails to resolve the problem with representation without a target. If there is no ether, no hypotheses can be asserted about it, at least in any straightforward way.

Similarity 3 , by invoking an active role for the purposes and actions of scientists in constituting scientific representation, marks a significant change in emphasis for similarity-based accounts. Suárez (2003: 226–227), drawing on van Fraassen (2002) and Putnam (2002), defines “naturalistic” accounts of representation as ones where

whether or not representation obtains depends on facts about the world and does not in any way answer to the personal purposes, views or interests of enquirers.

By building the purposes of model users directly into an answer to the ER-problem, Similarity 3 is explicitly not a naturalistic account (in contrast to Similarity 1 ). The shift to users performing representational actions invites the question of it means for a scientist to perform such an action. Boesch (2019) offers an answer which draws on Anscombe’s (2000) account of intentional action: something is a “scientific, representational action” (Boesch 2019: 312) when a description of a scientist’s interaction with a model stands as an earlier description towards the final description which is some scientific aim such as explanation, predication, or theorizing.

Even though Similarity 3 resolves a number of issues that beset simpler versions, it does not seem to be a successful similarity-based solution to the ER-Problem. A closer look at Similarity 3 reveals that the role of similarity has shifted. As far as offering a solution to the ER-Problem is concerned, all the heavy lifting in Similarity 3 is done by the appeal to agents and their intentions. Giere implicitly concedes this when he observes that similarity is “the most important way, but probably not the only way” for models to function representationally (2004: 747). But if similarity is not the only way in which a model can be used as a representation, then similarity has become otiose in a reply to the ER-problem. In fact, being similar in the relevant respects to the relevant degree now plays the role either of a representational style or of a normative criterion for accurate representation, rather than constituting representation per se . We assess in the next section whether similarity offers cogent replies to the issues of style and accuracy, and we raise a further problem for any account of scientific representation that relies on the idea that models, specifically non-concrete models, are similar to their targets.

The fact that relevant properties can be delineated in different ways could potentially provide an answer to the Problem of Style. If \(M\) representing \(T\) involves the claim that \(M\) and \(T\) are similar in a certain respect, the respect chosen specifies the style of the representation; and if \(M\) and \(T\) are in fact similar in that respect (and to the specified degree), then \(M\) accurately represents \(T\) within that style. For example, if \(M\) and \(T\) are proposed to be similar with respect to their causal structure, then we might have a style of causal modelling; if \(M\) and \(T\) are proposed to be similar with respect to structural properties, then we might have a style of structural modelling; and so on and so forth.

A first step in the direction of such an understanding of styles is the explicit analysis of the notion of similarity. The standard way of cashing out what it means for an object to be similar to another object is to require that they co-instantiate properties. In fact, this is the idea that Quine (1969: 117–118) and Goodman (1972: 443) had in mind in their influential critiques of similarity. The two most prominent formal frameworks that develop this idea are the geometric and contrast accounts (see Decock and Douven 2011 for a discussion).

The geometric account, associated with Shepard (1980), assigns objects a place in a multidimensional space based on values assigned to their properties. This space is then equipped with a metric and the degree of (dis)similarity between two objects is the distance between the points representing the two objects in that space. This account is based on the strong assumptions that values can be assigned to all features relevant to similarity judgments, which is deemed unrealistic (and to the best of our knowledge no one has developed such an account in the context of scientific representation).

This problem is supposed to be overcome in Tversky’s contrast account (1977). This account defines a gradated notion of similarity based on a weighted comparison of properties. Weisberg has recently introduced this account into the philosophy of science where it serves as the starting point for his weighted feature matching account of model world-relations (for details see Weisberg 2012, 2013: ch. 8). Although the account has some advantages, questions remain whether it can capture the distinction between what Niiniluoto (1988: 272–274) calls “likeness” and “partial identity”. Two objects are alike to the extent that they co-instantiate similar properties (for example, a red phone box and a red London bus might be alike with respect to their colour, despite not instantiating the exact same shade of red). Two objects are partially identical to the extent that they co-instantiate identical properties. As Parker (2015: 273) notes, contrast based accounts of similarity like Weisberg’s have difficulties capturing the former, and this is often pertinent in the context of scientific representation where models and their targets need not co-instantiate the exact same property. Concerns of this sort have led Khosrowi (2020) to suggest that the notion of sharing features should be analysed in a pluralist manner. Sharing a feature sometimes means sharing the exact same feature; sometimes it means to share features which are sufficiently quantitatively close to one another; and sometimes it means having features which are themselves “sufficiently similar”.

A further question that remains for someone who uses the notion of similarity to answer to the Problem of Style and provide standards of accuracy in the manner under consideration here is whether it truly captures all of scientific practice. Similarity theorists are committed to the claim that whenever a scientific model represents its target system, this is established in virtue of a model user specifying a relevant similarity, and if the similarity holds, then the representational relationship is accurate. These universal aspirations require that the notion of similarity invoked capture the relationship that holds between diverse entities such as a basin-model of the San Francisco bay area, a tube map and an underground train system, and the Lotka-Volterra equations of predator-pray interaction. Whether all of these relationships can be captured in terms of similarity remains an open question. In addition, this view is commited to the idea that idealised aspects of scientific models, understood as dissimilarities between models and their targets, are misrepresenations and as such the view has difficulty capturing the positive epistemic role that such aspects can play (Nguyen 2020).

Another problem facing similarity based approaches concerns their treatment of the ontology of models. If models are supposed to be similar to their targets in the ways specified by theoretical hypotheses, then they must be the kind of things that can be so similar. For material models like the San Francisco Bay model (Weisberg 2013), ball and stick models of molecules (Toon 2011), the Phillips-Newlyn machine (Morgan and Boumans 2004), or model organisms (Ankeny and Leonelli 2021) this seems straightforward because they are of the same ontological kind as their respective targets. But many interesting scientific models are not like this: they are what Hacking aptly describes as “something you hold in your head rather than your hands” (1983: 216). Following Thomson-Jones (2012) we call such models non-concrete models . The question then is how such models can be similar to their targets. At the very least these models are “abstract” in the sense that they have no spatiotemporal location. But if so, then it remains unclear how they can instantiate the sorts of properties specified by theoretical hypotheses, since these properties are typically physical , and presumably being located in space and time is a necessary condition on instantiating such properties. For further discussion of this objection, and proposed solutions, see Teller (2001: 399), Thomson-Jones (2010; 2020), and Giere (2009), and Thomasson (2020).

4. The Structuralist Conception

The structuralist conception of model-representation originated in the so-called semantic view of theories that came to prominence in the second half of the 20 th century (see the SEP entry on the structure of scientific theories for further details). The semantic view was originally proposed as an account of theory structure rather than scientific representation. The driving idea behind the position is that scientific theories are best thought of as collections of models. This invites the questions: what are these models, and how do they represent their target systems? Most defenders of the semantic view of theories (with the notable exception of Giere, whose views on scientific representation were discussed in the previous section) take models to be structures, which represent their target systems in virtue of there being some kind of morphism (isomorphism, partial isomorphism, homomorphism, …) between the two.

This conception has two prima facie advantages. The first advantage is that it offers a straightforward answer to the ER-Problem (or SR-problem if the focus is on scientific representation), and one that accounts for surrogative reasoning: the mappings between the model and the target allow scientists to convert truths found in the model into claims about the target system. The second advantage concerns the applicability of mathematics. There is a time-honoured position in the philosophy of mathematics which sees mathematics as the study of structures; see, for instance Resnik (1997) and Shapiro (2000). It is a natural move for the scientific structuralist to adopt this point of view, which then provides a neat explanation of how mathematics is used in scientific modelling.

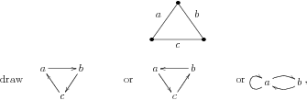

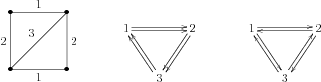

Almost anything from a concert hall to a kinship system can be referred to as a “structure”. So the first task for a structuralist account of representation is to articulate what notion of structure it employs. A number of different notions of structure have been discussed in the literature (for a review see Thomson-Jones 2011), but by far the most common is the notion of structure one finds in set theory and mathematical logic. A structure \(\mathcal{S}\) in that sense (sometimes “mathematical structure” or “set-theoretic structure”) is a composite entity consisting of the following: a non-empty set \(U\) of objects called the domain (or universe) of the structure and an indexed set \(R\) of relations on \(U\) (supporters of the partial structures approach, e.g., Da Costa and French (2003) and Bueno, French, and Ladyman (2002), use partial \(n\)-place relations, for which it may be undefined whether or not some \(n\)-tuples are in their extension). This definition of structure is widely used in mathematics and logic. We note, however, that in mathematical logic structures also contain a language and an interpretation function, interpreting symbols of the language in terms of \(U\) (see for instance Machover 1996 and Hodges 1997), which is absent from structures in the current context. It is convenient to write these as \(\mathcal{S}= \langle U, R \rangle\), where “\(\langle \, , \rangle\)” denotes an ordered tuple.

It is important to be clear on what we mean by “object” and “relation” in this context. As regards objects, all that matters from a structuralist point of view is that there are so and so many of them. Whether the objects are desks or planets is irrelevant. All we need are dummies or placeholders whose only property is “objecthood”. Similarly, when defining relations one disregards completely what the relation is “in itself”. Whether we talk about “being the mother of” or “standing to the left of” is of no concern in the context of a structure; all that matters is between which objects it holds. For this reason, a relation is specified purely extensionally: as a class of ordered \(n\)-tuples. The relation literally is nothing over and above this class. So a structure consists of dummy-objects between which purely extensionally defined relations hold.

The first basic posit of the structuralist theory of representation is that models are structures in this sense (the second is that models represent their targets by being suitably morphic to them; we discuss morphisms in the next subsection). Suppes has articulated this stance clearly when he declared that “the meaning of the concept of model is the same in mathematics and the empirical sciences” (1960 [1969]: 12), and many have followed suit. So we are presented with a clear answer to the Problem of Ontology: models are structures. The remaining issue is what structures themselves are. Are they Platonic entities, equivalence classes, modal constructs, or yet something else? In the context of a discussion of scientific representation one can push these questions off to the philosophy of mathematics (see the SEP entries on the philosophy of mathematics , nominalism in the philosophy of mathematics , and Platonism in the philosophy of mathematics for further details).

The most basic structuralist conception of scientific representation asserts that scientific models, understood as structures, represent their target systems in virtue of being isomorphic to them. An isomorphism between two structures \(\mathcal{S}\) and \(\mathcal{S}'\) is a bijective function from \(U\) to \(U'\) that preserves the relations on \(U\) (and inversely, the relations on \(U'\)). An isomorphism associates each object in \(U\) with an object in \(U'\) and pairs up each relation in \(R\) with a relation in \(R'\) so that a relation holds between certain objects in \(U\) iff the corresponding relation holds between the objects in \(U'\) that are associated with them. [ 9 ] Assume now that the target system \(T\) exhibits the structure \(\mathcal{S}_T\) and the model is the structure \(\mathcal{S}_M\). Then the model represents the target iff it is isomorphic to the target:

Structuralism 1 : A scientific model \(M\) represents its target \(T\) iff \(\mathcal{S}_T\) is isomorphic to \(\mathcal{S}_M\).

It bears noting that few adherents of the structuralist account of scientific representation, most closely associated with the semantic view of theories, explicitly defend this position (although see Ubbink 1960: 302). Representation was not the focus of attention in the semantic view, and the attribution of (something like) Structuralism 1 to its supporters is an extrapolation. Representation became a much-debated topic in the first decade of the 21 st century, and many proponents of the semantic view then either moved away from Structuralism 1 , or pointed out that they never held such a view. We turn to more advanced positions shortly, but to understand what motivates such positions it is helpful to understand why Structuralism 1 fails.

The first and most obvious problem is the same as with the similarity view: isomorphism is symmetrical and reflexive (and transitive) but representation isn’t. This problem could be addressed by replacing isomorphism with an alternative mapping. Bartels (2006), Lloyd (1984), and Mundy (1986) suggest homomorphism; van Fraassen (1980, 1997, 2008) and Redhead (2001) isomorphic embeddings; advocates of the partial structures approach prefer partial isomophisms (Bueno 1997; Bueno and French 2011; Da Costa and French 1990, 2003; French 2003, 2014; French and Ladyman 1999); and Swoyer (1991) introduces what he calls \(\Delta/\Psi\) morphisms. We refer to these collectively as “morphisms”. Pero and Suárez (2016) provide a comparative discussion of different morphisms.

These suggestions solve some, but not all problems. While many of these mappings are not symmetrical, they are all still reflexive. But even if these formal issues could be resolved in one way or another, a view based on structural mappings would still face other serious problems. For ease of presentation we discuss these problems in the context of the isomorphism view; mutatis mutandis other formal mappings suffer from the same difficulties. Like similarity, isomorphism is too inclusive: not all things that are isomorphic represent each other. In the case of similarity this case was brought home by Putnam’s thought experiment with the ant crawling on the beach; in the case of isomorphism a look at the history of science will do the job. Many mathematical structures were discovered and discussed long before they were used in science. Non-Euclidean geometries were studied by mathematicians long before Einstein used them in the context of spacetime theories, and Hilbert spaces were studied by mathematicians prior to their use in quantum theory. If representation was nothing over and above isomorphism, then we would have to conclude that Riemann discovered general relativity or that that Hilbert invented quantum mechanics. This does not seem correct, so it doesn’t seem like isomorphism on its own establishes scientific representation (Frigg 2002: 10).

Isomorphism is more restrictive than similarity: not everything is isomorphic to everything else. But isomorphism is still too abundant to correctly identify what a model represents. The root of the difficulties is that the same structures can be instantiated in different kinds of target systems. Certain geometrical structures are instantiated by many different systems; just think about how many spherical things we find in the world. The \(1/r^2\) law of Newtonian gravity is also the “mathematical skeleton” of Coulomb’s law of electrostatic attraction and the weakening of sound or light as a function of the distance to the source. The mathematical structure of the pendulum is also the structure of an electric circuit with a condenser and a solenoid (Kroes 1989). The same structure can be exhibited by more than one kind of target system, and so isomorphism by itself is too weak to identify a model’s target.

As we have seen in the last section, a misrepresentation is one that portrays its target as having features it doesn’t have. In the case of a structural account of representation, this means that the model portrays the target as having structural properties that it doesn’t have. However, isomorphism demands identity of structure: the structural properties of the model and the target must correspond to one another exactly. So a misrepresentation won’t be isomorphic to the target. By the lights of Structuralism 1 it therefore is not a representation at all. Like simple similarity accounts, Structuralism 1 conflates misrepresentation with non-representation (Suárez 2003: 234–235). Partial structures can avoid a mismatch due to a target relation being omitted in the model and hence go some way to shoring up the structuralist account (Bueno and French 2011: 888). It remains unclear, however, how they account for distortive representations (Pincock 2005).

Finally, like similarity accounts, Structuralism 1 has a problem with non-existent targets because no model can be isomorphic to something that doesn’t exist. If there is no ether, a model can’t be isomorphic to it. Hence models without target cannot represent what they seem to represent.

Most of these problems can be resolved by making moves similar to the ones that lead to Similarity 3 : introduce agents and hypothetical reasoning into the account of representation. Going through the motions one finds:

Structuralism 2 : A scientific model \(M\) represents a target system \(T\) iff there is an agent \(A\) who uses \(M\) to represent a target system \(T\) by proposing a theoretical hypothesis \(H\) specifying an isomorphism between \(\mathcal{S}_M\) and \(\mathcal{S}_T\).

This is in line with van Fraassen’s views on representation. He offers the following as the “Hauptstatz” of a theory of representation: “ There is no representation except in the sense that some things are used, made, or taken, to represent things as thus and so ” (2008: 23, original emphasis). Likewise, Bueno submits that “representation is an intentional act relating two objects” (2010: 94–95, original emphasis), and Bueno and French point out that using one thing to represent another thing is not only a function of (partial) isomorphism but also depends on “pragmatic” factors “having to do with the use to which we put the relevant models” (2011: 885).

As in the shift from Similarity 2 to Similarity 3 , this seems like a successful move, with many (although not all) of the aforementioned concerns being met. But, again, the role of isomorphism has shifted. The crucial ingredient is the agent’s intention and isomorphism has in fact become either a representational style or normative criterion for accurate representation. Let us now assess how well isomorphism fares as a response to these problems, and the others outlined above.

Structuralism’s stand on the Demarcation Problem is by and large an open question. Unlike similarity, which has been widely discussed across different domains, structural mappings are tied closely to the formal framework of set theory, and have been discussed only sparingly outside the context of the mathematized sciences. An exception is French (2003), who discusses isomorphism accounts in the context of pictorial representation. He considers in detail Budd’s (1993) account of pictorial representation and points out that it is based on the notion of a structural isomorphism between the structure of the surface of the painting and the structure of the relevant visual field. Therefore representation is the perceived isomorphism of structure (French 2003: 1475–1476) (this point is reaffirmed by Bueno and French (2011: 864–865); see Downes (2009: 423–425) and Isaac (2019) for critical discussions).

The Problem of Style is to identify representational styles and characterise them. A proposed structural mapping between the model and the target offers an obvious response to this challenge: one can represent a system by coming up with a model that is proposed to be appropriately morphic to it. This delivers the isomorphism-style, the homomorphism-style, the partial-isomorphism style and so on. We can call these “morphism-styles” when referring to them in general. Each of these styles also offers a clear-cut condition of accuracy: the representation is accurate if the hypothesised morphism holds; it is inaccurate if it doesn’t.

This is neat answer. The question is what status it has vis-à-vis the Problem of Style. Are morphism-styles merely a subgroup of styles or are they privileged? The former is uncontentious. However, the emphasis many structuralists place on structure preserving mappings suggests that they do not regard morphisms as merely one way among others to represent something. What they seem to have in mind is the stronger claim that a representation must be of that sort, or that morphism-styles are the only acceptable styles.

This claim seems to conflict with scientific practice in at least two respects. Firstly, many representations are inaccurate (and known to be) in some way. Some models distort, deform and twist properties of the target in ways that seem to undercut isomorphism, or indeed any of the proposed structure preserving mappings. Some models in statistical mechanics have an infinite number of particles and the Newtonian model of the solar system represents the sun as a perfect sphere where in reality it is fiery ball with no well-defined surface at all. It is at best unclear how isomorphism, partial or otherwise, or homomorphism can account for these kinds of idealisations. So it seems that styles of representation other than structure preserving mappings have to be recognised.

Secondly, the structuralist view is a rational reconstruction of scientific modelling, and as such it has some distance from the actual practice. Some philosophers have worried that this distance is too large and that the view is too far removed from the actual practice of science to be able to capture what matters to the practice of modelling (this is the thrust of many contributions to Morgan and Morrison 1999; see also Cartwright 1999). Although some models used by scientists may be best thought of as set theoretic structures, there are many where this seems to contradict how scientists actually talk about, and reason with, their models. Obvious examples include physical models like the San Francisco Bay model (Weisberg 2013), but also systems such as the idealized pendulum or imaginary populations of interbreeding animals. Such models have the strange property of being concrete-if-real and scientists talk about them as if they were real systems, despite the fact that they are obviously not (Godfrey-Smith 2006). Thomson-Jones (2010) dubs this “face value practice”, and there is a question whether structuralism can account for that practice.

There remains a final problem to be addressed in the context of structural accounts of scientific representation. Target systems are physical objects: atoms, planets, populations of rabbits, economic agents, etc. Isomorphism is a relation that holds between two structures and claiming that a set theoretic structure is isomorphic to a piece of the physical world is prima facie a category mistake. By definition, a morphism can only hold between two structures. If we are to make sense of the claim that the model is isomorphic to its target we have to assume that the target somehow exhibits a certain structure \(\mathcal{S}_T\). But what does it mean for a target system—a part of the physical world—to possess a structure, and where in the target system is the structure located?

There are two prominent suggestions in the literature. The first, originally suggested by Suppes (1962 [1969]), is that data models are the target-end structures represented by models. This approach faces a question whether we should be satisfied with an account of scientific representation that precludes phenomena being represented (see Bogen and Woodward (1988) for a discussion of the distinction between data and phenomena, and Brading and Landry (2006) for a discussion of the distinction in the context of scientific representation). Van Fraassen (2008) has addressed this problem and argues for a pragmatic resolution: in the context of use, there is no pragmatic difference between representing phenomena and data extracted from it (see Nguyen 2016 for a critical discussion). The alternative approach locates the target-end structure in the target system itself. One version of this approach sees structures as being instantiated in target systems. This view seems to be implicit in many versions of the semantic view, and it is explicitly held by authors arguing for a structuralist answer to the problem of the applicability of mathematics (Resnik 1997; Shapiro 1997). This approach faces underdetermination issues in that the same target can instantiate different structures. The issue can be seen as arising due to there being alternative descriptions of the system (Frigg 2006) or because a version of “Newman’s Objection” also bites in the current context (Newman 1928; see Ainsworth 2009 and Ketland 2004 for further discussion). A more radical version simply identifies targets with structures (Tegmark 2008). This approach is highly revisionary in particular when considering target systems like populations of breeding rabbits or economies. So the question remains for any structuralist account of scientific representation: where are the required target-end structures to be found?

5. The Inferential Conception

The core idea of the inferential conception is to analyse scientific representation in terms of the inferential function of scientific models. In the previous accounts discussed, a model’s inferential capacity dropped out of whatever it was that was supposed to answer the ER-problem (or SR-problem): proposed morphisms or similarity relations between models and their targets for example. The accounts discussed in this section reverse this order and explain scientific representation directly in terms of surrogative reasoning.

According to Hughes’ Denotation, Demonstration, and Interpretation (DDI) account of scientific representation (1997, 2010: ch. 5), models denote their targets; are such that model users can perform demonstrations on them; and interpret the results of such demonstrations in terms of the target. The last step is necessary because demonstrations establish results about the model itself, and in interpreting these results the model user draws inferences about the target from the model (1997: 333). Unfortunately Hughes has little to say about what it means to interpret a result of a demonstration on a model in terms of its target system, and so one has to retreat to an intuitive (and unanalysed) notion of drawing inferences about the target based on the model. [ 10 ]

Hughes is explicit that he is not attempting to answer the ER-problem, and that he does not offer denotation, demonstration, and interpretation as individually necessary and jointly sufficient conditions for scientific representation. He prefers the more

modest suggestion that, if we examine a theoretical model with these three activities in mind, we shall achieve some insight into the kind of representation that it provides. (1997: 339)

This is unsatisfactory because it ultimately remains unclear what allows scientists to use a model to draw inferences about the target, and it raises the question of what would have to be added to the DDI conditions to turn them into a full-fledged response to the ER-problem. If, alternatively, the conditions were taken to be necessary and sufficient, then the account would require further elaboration on what establishes the conditions.

Suárez argues that we should adopt a “deflationary or minimalist attitude and strategy” (2004: 770) when addressing the problem of scientific representation. Two different notions of deflationism are in operation in his account. The first is to abandon the aim of seeking necessary and sufficient conditions; necessary conditions will be good enough (2004: 771). The second notion is that we should seek “no deeper features to representation other than its surface features” (2004:771) or “platitudes” (Suárez and Solé 2006: 40), and that we should deny that an analysis of a concept “is the kind of analysis that will shed explanatory light on our use of the concept” (Suárez 2015: 39). Suárez intends his account of scientific representation to be deflationary in both senses, and dubs it “inferentialism”. Letting \(A\) stand for the model and \(B\) for the target, he offers the following analysis:

Inferentialism : “\(A\) represents \(B\) only if (i) the representational force of \(A\) points towards \(B\), and (ii) \(A\) allows competent and informed agents to draw specific inferences regarding \(B\)” (2004: 773).

The first condition addresses the Requirement of Directionality and ensures that \(A\) and \(B\) indeed enter into a representational relationship. On might worry that explaining representation in terms of representational force sheds little light on the matter as long as no analysis of representational force is offered. But Suárez resists attempts to explicate representational force in terms of a stronger relation, like denotation or reference, on grounds that this would violate deflationism (2015: 41). The second condition is in fact just the Surrogative Reasoning Condition, now taken as a necessary condition on scientific representation. Contessa (2007: 61) points out that it remains mysterious how these inferences are generated. An appeal to further analysis can, again, be blocked by appeal to deflationism because any attempt to explicate how inferences are drawn would go beyond “surface features”. So the tenability of Inferentialism in effect depends on the tenability of deflationism about scientific representation. Suárez (2015) defends deflationism by drawing analogies with three different deflationary theories about truth, Ramsey’s “redundancy” theory, Wright’s “abstract minimalism” and Horwich’s “use theory” (for more information of these theories see the SEP entry on the deflationary theory of truth ). An alternative defence builds on Brandom’s inferentialism in the philosophy of language (1994, 2000), a line of argument that is developed by de Donato Rodríguez and Zamora Bonilla (2009) and Kuorikoski and Lehtinen (2009).

Inferentialism provides a neat explanation of the possibility of misrepresentation because the inferences drawn about a target need not be true (Suárez 2004: 776). In as far as one accepts representational force as a cogent concept, targetless models are dealt with successfully because representational force (unlike denotation) does not require the existence of a target (2004: 772). Inferentialism repudiates the Representational Demarcation Problem and aims to offer an account of representation that also works in other domains such as painting (2004: 777). The account is ontologically non-committal because anything that has an internal structure that allows an agent to draw inferences can be a representation. Relatedly, since the account is supposed to apply to a wide variety of entities including equations and mathematical structures, the account implies that mathematics is successfully applied in the sciences, but in keeping with the spirit of deflationism no explanation is offered about how this is possible. The account does not directly address the Problem of Style.

In response to the difficulties with Inferentialism Contessa submits that “it is not clear why we should adopt a deflationary attitude from the start ” (2007: 50) and provides a “interpretational account” of scientific representation that is inspired by Suárez’s account, but without being deflationary. Contessa introduces the notion of an interpretation of a model, in terms of its target system, as a necessary and sufficient condition on epistemic representation (see also Ducheyne 2012 for a related account):

Interpretation : “A [model \(M\)] is an epistemic representation of a certain target [\(T\)] (for a certain user) if and only if the user adopts an interpretation of the [\(M\)] in terms of [\(T\)].” (Contessa 2007: 57; see also Contessa 2011: 126–127)

The leading idea of an interpretation is that the model user first identifies sets of relevant objects in the model and the target, and then pins down sets of properties and relations these objects instantiate both in the model and the target. The user then (a) takes \(M\) to denote \(T\); (b) takes every identified object in the model to denote exactly one object in the target (and every relevant object in the target has to be so denoted); (c) takes every property and relation in the model to denote a property or relation of the same type in the target (and, again, and every property and relation in the target has to be so denoted). A formal rendering of these conditions is what Contessa calls an “analytic interpretation” (see his 2007: 57–62 for details; he also includes an additional condition pertaining to functions in the model and target, which we suppress for brevity).

Interpretation offers a neat answer to the ER-problem. The account also explains the directionality of representation: interpreting a model in terms of a target does not entail interpreting a target in terms of a model. However, it has been noted that Interpretation has difficulty accounting for the possibility of misrepresentation, since it seems to require that the relevant objects, properties, and relations actually exist in the target (Shech 2015), although this objection turns on a very strict reading of Contessa’s account. This problem is solved in Díez’s (2020) “Ensemble-Plus-Standing-For” account of representation, which is based on conditions that rule out a mismatch concerning the number of objects in the collection. Contessa does not comment on the applicability of mathematics but since his account shares with the structuralist account an emphasis on relations and one-to-one model-target correspondence, Contessa can appeal to the same account of the applicability of mathematics as the structuralist. Like Suárez, Contessa takes his account to be universal and apply to non-scientific representations such as portraits and maps. But it remains unclear how Interpretation addresses the Problem of Style. As we have seen earlier, in particular visual representations fall into different categories and there is a question about how these can be classified within the interpretational framework. With respect to the Question of Ontology, Interpretation itself places few constraints on what scientific models are. All it requires is that they consist of objects, properties, relations, and functions (but see Contessa (2010) for further discussion of what he takes models to be, ontologically speaking).