
45 survey questions to understand student engagement in online learning.

In our work with K-12 school districts during the COVID-19 pandemic, countless district leaders and school administrators have told us how challenging it's been to build student engagement outside of the traditional classroom.

Not only that, but the challenges associated with online learning may have the largest impact on students from marginalized communities. Research suggests that some groups of students experience more difficulty with academic performance and engagement when course content is delivered online vs. face-to-face.

As you look to improve the online learning experience for students, take a moment to understand how students, caregivers, and staff are currently experiencing virtual learning. Where are the areas for improvement? How supported do students feel in their online coursework? Do teachers feel equipped to support students through synchronous and asynchronous facilitation? How confident do families feel in supporting their children at home?

Below, we've compiled a bank of 45 questions to understand student engagement in online learning. Interested in running a student, family, or staff engagement survey? Learn more about Panorama's survey analytics platform for K-12 school districts.


45 Questions to Understand Student Engagement in Online Learning

For Students (Grades 3-5 and 6-12):

1. How excited are you about going to your classes?

2. How often do you get so focused on activities in your classes that you lose track of time?

3. In your classes, how eager are you to participate?

4. When you are not in school, how often do you talk about ideas from your classes?

5. Overall, how interested are you in your classes?

6. What are the most engaging activities that happen in this class?

7. Which aspects of class have you found least engaging?

8. If you were teaching class, what is the one thing you would do to make it more engaging for all students?

9. How do you know when you are feeling engaged in class?

10. What projects/assignments/activities do you find most engaging in this class?

11. What does this teacher do to make this class engaging?

12. How much effort are you putting into your classes right now?

13. How difficult or easy is it for you to try hard on your schoolwork right now?

14. How difficult or easy is it for you to stay focused on your schoolwork right now?

15. If you have missed in-person school recently, why did you miss school?

16. If you have missed online classes recently, why did you miss class?

17. How would you like to be learning right now?

18. How happy are you with the amount of time you spend speaking with your teacher?

19. How difficult or easy is it to use the distance learning technology (computer, tablet, video calls, learning applications, etc.)?

20. What do you like about school right now?

21. What do you not like about school right now?

22. When you have online schoolwork, how often do you have the technology (laptop, tablet, computer, etc.) you need?

23. How difficult or easy is it for you to connect to the internet to access your schoolwork?

24. What has been the hardest part about completing your schoolwork?

25. How happy are you with how much time you spend in specials or enrichment (art, music, PE, etc.)?

26. Are you getting all the help you need with your schoolwork right now?

27. How sure are you that you can do well in school right now?

28. Are there adults at your school you can go to for help if you need it right now?

29. If you are participating in distance learning, how often do you hear from your teachers individually?

For Families, Parents, and Caregivers:

30. How satisfied are you with the way learning is structured at your child’s school right now?

31. Do you think your child should spend less or more time learning in person at school right now?

32. How difficult or easy is it for your child to use the distance learning tools (video calls, learning applications, etc.)?

33. How confident are you in your ability to support your child's education during distance learning?

34. How confident are you that teachers can motivate students to learn in the current model?

35. What is working well with your child’s education that you would like to see continued?

36. What is challenging with your child’s education that you would like to see improved?

37. Does your child have their own tablet, laptop, or computer available for schoolwork when they need it?

38. What best describes your child's typical internet access?

39. Is there anything else you would like us to know about your family’s needs at this time?

For Teachers and Staff:

40. In the past week, how many of your students regularly participated in your virtual classes?

41. In the past week, how engaged have students been in your virtual classes?

42. In the past week, how engaged have students been in your in-person classes?

43. Is there anything else you would like to share about student engagement at this time?

44. What is working well with the current learning model that you would like to see continued?

45. What is challenging about the current learning model that you would like to see improved?

Elevate Student, Family, and Staff Voices This Year With Panorama

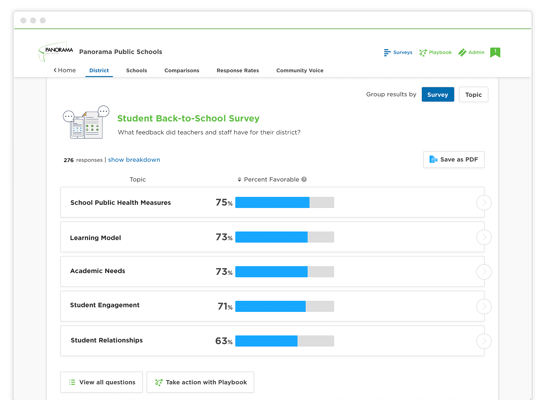

Schools and districts can use Panorama’s leading survey administration and analytics platform to quickly gather and take action on information from students, families, teachers, and staff. The questions are applicable to all types of K-12 school settings and grade levels, as well as to communities serving students from a range of socioeconomic backgrounds.

In the Panorama platform, educators can view and disaggregate results by topic, question, demographic group, grade level, school, and more to inform priority areas and action plans. Districts may use the data to improve teaching and learning models, build stronger academic and social-emotional support systems, improve stakeholder communication, and inform staff professional development.

To learn more about Panorama's survey platform, get in touch with our team.


Distance learning survey for students: Tips & examples

The COVID-19 pandemic changed learning in many unprecedented ways. Students had to move to online learning and also keep a social distance from their friends and family. Some found it quite challenging to adjust to the ‘new normal’ and missed in-person interaction with their teachers; for others, it simply meant spending more time with their parents. A student interest survey can help customize teaching methods and curriculum to make learning more engaging and relevant to students’ lives.

Schools need to know how students feel about distance education and learn more about their experiences. To collect this data, they can send out a remote learning survey to students. Once they have the results, the management team can see what students like about the existing setup and what they would like to change.

A classroom response system can also let students answer multiple-choice questions and take part in real-time discussions.

Here are examples of distance learning survey questions to ask students when collecting their feedback.


Examples of distance learning survey questions for students

1. How do you feel overall about distance education?

- Below Average

This question collects responses about students’ overall experience of online education. Schools can use this data to decide whether to continue teaching online or move to in-person learning.

2. Do you have access to a device for learning online?

- Yes, but it doesn’t work well

- No, I share with others

Students should have uninterrupted access to a device for learning online. Find out whether they face challenges with the device’s hardware quality, or whether they share the device with others in the house and can’t access it when they need it.

3. What device do you use for distance learning?

Find out whether students use a laptop, desktop, smartphone, or tablet for distance learning. A laptop or desktop is the ideal choice because of its screen size and quality. You can use a multiple-choice question type for this in your questionnaire for distance education students.

4. On average, how much time do you spend each day on distance education?

Find out how much time students spend on their online courses. Analyze whether they are spending too much time and find out why. Students should set aside some time to play and exercise while staying at home to take care of their health, and answers to this question show whether they have time for other activities as well.

5. How effective has remote learning been for you?

- Not at all effective

- Slightly effective

- Moderately effective

- Very effective

- Extremely effective

Depending on an individual’s personality, students may like to learn in the classroom with fellow students or alone at home. The classroom offers a more lively and interactive environment, whereas it is relatively calm at home. You can use this question to know if remote learning is working for students or not.

6. How helpful has your [School or University] been in offering you the resources to learn from home?

- Not at all helpful

- Slightly helpful

- Moderately helpful

- Very helpful

- Extremely helpful

School management teams need to fully support both teachers and students to make distance education comfortable and effective, in terms of both technological infrastructure and process framework. Given the pandemic, schools should allow more flexibility and adopt less strict policies.

7. How stressful is distance learning for you during the COVID-19 pandemic?

Studying during a pandemic can be quite stressful, especially if you or someone in your family is not doing well. Measure students’ stress levels and identify ways to reduce them; for instance, you can organize an online dance party or a LEGO game. The responses to this question can be crucial in deciding the future course of distance learning.

8. How well could you manage your time while learning remotely? (5 = extremely well, 1 = not at all)

- Academic schedule

Staying at home all the time and balancing multiple things can be stressful for many people. It requires students to have good time-management skills and self-discipline. Students can rate their experience on a scale of 1-5 and share it with the school authorities. Use a multiple-choice matrix question type for such questions in your distance learning questionnaire for students.


9. Do you enjoy learning remotely?

- Yes, absolutely

- Yes, but I would like to change a few things

- No, there are quite a few challenges

- No, not at all

Get a high-level view of whether students enjoy learning from home or are only doing it because they have to. Gain insights into how you can improve distance education and make it more interesting for them.

10. How helpful are your teachers while studying online?

Distance education lacks proximity to teachers and has its own unique challenges. Some students may find a subject difficult and take more time to understand it. This question measures how helpful students find their teachers.

You can also use a ready-made survey template to save time. The sample questionnaire for students can be easily customized to your requirements.


Other important distance learning survey questions for students

- How peaceful is the environment at home while learning?

- Are you satisfied with the technology and software you are using for online learning?

- How important is face-to-face communication for you while learning remotely?

- How often do you talk to your [School/University] classmates?

- How often do you have a 1-1 discussion with your teachers?

How to create a survey

The goal of a remote learning questionnaire for students should be to learn how schools and teachers can better support them. Use online survey software like ours to create a survey, or start from a template. Distribute the survey through email, a mobile app, your website, or a QR code.

Once you have the survey results, generate reports and share them with your team. You can also download them in formats like .pdf, .doc, and .xls. To analyze data from multiple sources, you can integrate the survey software with third-party apps.

If you need any help with designing a survey, customizing the look and feel, or deriving insights from it, get in touch with us. We’d be happy to help.


Original Research Article

Insights Into Students’ Experiences and Perceptions of Remote Learning Methods: From the COVID-19 Pandemic to Best Practice for the Future

- 1 Minerva Schools at Keck Graduate Institute, San Francisco, CA, United States

- 2 Ronin Institute for Independent Scholarship, Montclair, NJ, United States

- 3 Department of Physics, University of Toronto, Toronto, ON, Canada

In the spring of 2020, students across the globe transitioned from in-person classes to remote learning as a result of the COVID-19 pandemic. This unprecedented change to undergraduate education saw institutions adopting multiple online teaching modalities and instructional platforms. We sought to understand students’ experiences with and perspectives on those methods of remote instruction in order to inform pedagogical decisions during the current pandemic and in future development of online courses and virtual learning experiences. Our survey gathered quantitative and qualitative data regarding students’ experiences with synchronous and asynchronous methods of remote learning and specific pedagogical techniques associated with each. A total of 4,789 undergraduate participants representing institutions across 95 countries were recruited via Instagram. We find that most students prefer synchronous online classes, and students whose primary mode of remote instruction has been synchronous report being more engaged and motivated. Our qualitative data show that students miss the social aspects of learning on campus, and it is possible that synchronous learning helps to mitigate some feelings of isolation. Students whose synchronous classes include active-learning techniques (which are inherently more social) report significantly higher levels of engagement, motivation, enjoyment, and satisfaction with instruction. Respondents’ recommendations for changes emphasize increased engagement, interaction, and student participation. We conclude that active-learning methods, which are known to increase motivation, engagement, and learning in traditional classrooms, also have a positive impact in the remote-learning environment. Integrating these elements into online courses will improve the student experience.

Introduction

The COVID-19 pandemic has dramatically changed the demographics of online students. Previously, almost all students engaged in online learning had elected the online format, from individual online courses in the mid-1990s through today’s robust online degree and certificate programs. These students prioritize convenience, flexibility, and the ability to work while studying, and they are older than traditional college-age students (Harris and Martin, 2012; Levitz, 2016). They also find asynchronous elements of a course more useful than synchronous elements (Gillingham and Molinari, 2012). In contrast, students who choose to take courses in person prioritize face-to-face instruction and connection with others, and they skew considerably younger (Harris and Martin, 2012). This leaves open the question of whether students who prefer to learn in person but are forced to learn remotely will prefer synchronous or asynchronous methods. One study of student preferences following a switch to remote learning during the COVID-19 pandemic indicates that students enjoy synchronous course elements more than asynchronous ones and find them more effective (Gillis and Krull, 2020). Now that millions of traditional in-person courses have transitioned online, our survey expands the data on student preferences and explores whether those preferences align with pedagogical best practices.

An extensive body of research has explored what instructional methods improve student learning outcomes (Fink, 2013). Considerable evidence indicates that active-learning or student-centered approaches result in better learning outcomes than passive-learning or instructor-centered approaches, both in person and online (Freeman et al., 2014; Chen et al., 2018; Davis et al., 2018). Active-learning approaches include student activities or discussion in class, whereas passive-learning approaches emphasize extensive exposition by the instructor (Freeman et al., 2014). Constructivist learning theories argue that students must be active participants in creating their own learning, and that listening to expert explanations is seldom sufficient to trigger the neurological changes necessary for learning (Bostock, 1998; Zull, 2002). Some studies conclude that, while students learn more via active learning, they may perceive that they have learned more, and report greater enjoyment, when passive approaches are used (Deslauriers et al., 2019). We examine student perceptions of remote learning experiences in light of these previous findings.

In this study, we administered a survey focused on student perceptions of remote learning in late May 2020 through the social media account @unjadedjade to a global population of English-speaking undergraduate students representing institutions across 95 countries. We aim to explore how students were being taught, the relationship between pedagogical methods and student perceptions of their experience, and the reasons behind those perceptions. Here we present an initial analysis of the results and share our data set for further inquiry. We find that positive student perceptions correlate with synchronous courses that employ a variety of interactive pedagogical techniques, and that students overwhelmingly suggest behavioral and pedagogical changes that increase social engagement and interaction. We argue that these results support the importance of active learning in an online environment.

Materials and Methods

Participant Pool

Students were recruited through the Instagram account @unjadedjade. This account, run by influencer Jade Bowler, focuses on education, effective study tips, and ethical lifestyle, and promotes a positive mindset. For this reason, the audience is presumably academically inclined and interested in self-improvement. The survey was posted to her account and received 10,563 responses within the first 36 h. Here we analyze the 4,789 of those responses that came from undergraduates. While we did not collect demographic or identifying information, we suspect that women are overrepresented in these data, as 80% of @unjadedjade’s followers are women. A large minority of respondents were from the United Kingdom, as Jade Bowler is a British influencer. Specifically, 43.3% of participants attend United Kingdom institutions, followed by 6.7% attending university in the Netherlands, 6.1% in Germany, 5.8% in the United States, and 4.2% in Australia. Ninety additional countries are represented in these data (see Supplementary Figure 1).

Survey Design

The purpose of this survey is to learn about students’ instructional experiences following the transition to remote learning in the spring of 2020.

This survey was initially created for a student assignment for the undergraduate course Empirical Analysis at Minerva Schools at KGI. That version served as a robust pre-test and allowed for identification of the primary online platforms used, and the four primary modes of learning: synchronous (live) classes, recorded lectures and videos, uploaded or emailed materials, and chat-based communication. We did not adapt any open-ended questions based on the pre-test survey to avoid biasing the results and only corrected language in questions for clarity. We used these data along with an analysis of common practices in online learning to revise the survey. Our revised survey asked students to identify the synchronous and asynchronous pedagogical methods and platforms that they were using for remote learning. Pedagogical methods were drawn from literature assessing active and passive teaching strategies in North American institutions (Fink, 2013; Chen et al., 2018; Davis et al., 2018). Open-ended questions asked students to describe why they preferred certain modes of learning and how they could improve their learning experience. Students also reported on their affective response to learning and participation using a Likert scale.

The revised survey also asked whether students had responded to the earlier survey. No significant differences were found between the responses of those answering for the first and second times (data not shown). See Supplementary Appendix 1 for survey questions. Survey data were collected from May 21 to May 23, 2020.

Qualitative Coding

We applied a qualitative coding framework adapted from Gale et al. (2013) to analyze student responses to open-ended questions. Four researchers read several hundred responses and noted themes that surfaced. We then developed a list of themes inductively from the survey data and deductively from the literature on pedagogical practice (Garrison et al., 1999; Zull, 2002; Fink, 2013; Freeman et al., 2014). The initial codebook was revised collaboratively based on feedback from researchers after each had coded 20–80 qualitative comments. Before coding their assigned questions, researchers examined their alignment by coding 20 additional responses. Researchers aligned in identifying the same major themes, and discrepancies in the terms identified were resolved through discussion. Researchers continued to meet weekly to discuss progress and alignment. The majority of responses were coded by a single researcher using the final codebook (Supplementary Table 1). All responses to questions 3 (4,318 responses) and 8 (4,704 responses), and 2,512 of 4,776 responses to question 12, were analyzed. Valence was also indicated where necessary (i.e., positive or negative discussion of terms). This paper focuses on the most prevalent themes from our initial analysis of the qualitative responses. The corresponding author reviewed codes to ensure consistency and accuracy of reported data.

Statistical Analysis

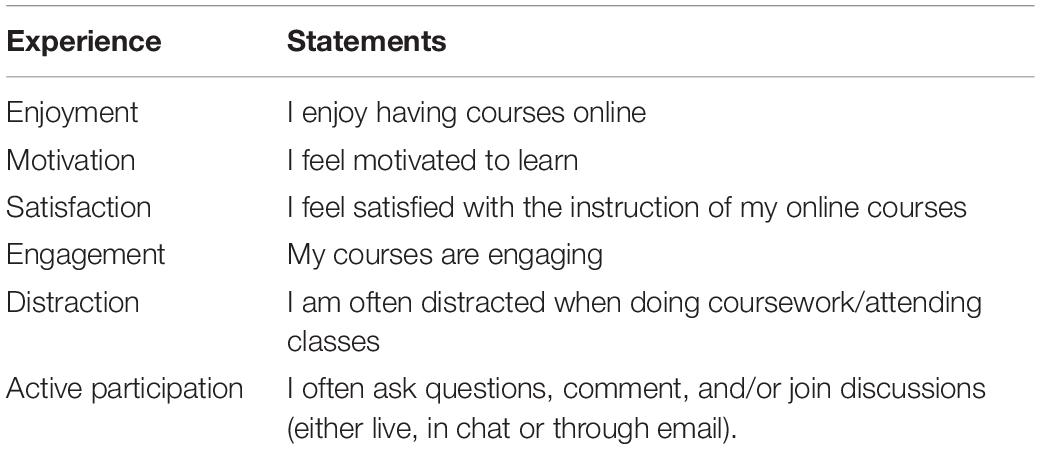

The survey included two sets of Likert-scale questions. The first consisted of six statements about students’ perceptions of their experiences following the transition to remote learning (Table 1). For each statement, students indicated their level of agreement on a five-point scale ranging from 1 (“Strongly Disagree”) to 5 (“Strongly Agree”). The second set asked students to respond to the same statements, but about their retrospective perceptions of their experiences with in-person instruction before the transition to remote learning. This set was not the subject of our analysis but is present in the published survey results. To explore correlations among student responses, we used CrossCat analysis to calculate the probability of dependence between Likert-scale responses (Mansinghka et al., 2016).

Table 1. Likert-scale questions.

Mean values are calculated based on the numerical scores associated with each response. Statistical significance for comparisons between different subgroups of respondents was calculated using a two-sided Mann-Whitney U-test, and the p-values reported here are based on this test statistic. We report effect sizes in pairwise comparisons using the common-language effect size, f, which is the probability that the response from a random sample from subgroup 1 is greater than the response from a random sample from subgroup 2. We also examined the effects of different modes of remote learning and technological platforms using ordinal logistic regression. With the exception of the mean values, all of these analyses treat Likert-scale responses as ordinal-scale rather than interval-scale data.
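The mean and the common-language effect size described above can be illustrated with a small, dependency-free Python sketch. It maps Likert labels to scores (only the endpoint labels come from the text; the three middle labels are assumptions), computes a mean, and derives f from the Mann-Whitney U statistic as f = U / (n1 * n2). Counting ties as half a win is the usual convention for this effect size but is an assumption here, since the text does not say how ties were handled. The responses are hypothetical, and the sketch does not compute p-values.

```python
# Hypothetical label set: the text names only the endpoints (1 = "Strongly Disagree",
# 5 = "Strongly Agree"); the three middle labels are assumptions for illustration.
LIKERT_SCORES = {
    "Strongly Disagree": 1,
    "Disagree": 2,
    "Neutral": 3,
    "Agree": 4,
    "Strongly Agree": 5,
}


def likert_mean(responses):
    """Mean of the numerical scores associated with each Likert response."""
    scores = [LIKERT_SCORES[r] for r in responses]
    return sum(scores) / len(scores)


def mann_whitney_u(group1, group2):
    """U statistic for group1: count of (x, y) pairs with x > y, ties counted as 0.5."""
    u = 0.0
    for x in group1:
        for y in group2:
            if x > y:
                u += 1.0
            elif x == y:
                u += 0.5
    return u


def common_language_effect_size(group1, group2):
    """f = U / (n1 * n2): chance a random group1 response exceeds a random group2 one."""
    if not group1 or not group2:
        raise ValueError("both subgroups need at least one response")
    return mann_whitney_u(group1, group2) / (len(group1) * len(group2))


# Hypothetical responses to one statement from two subgroups.
subgroup1 = ["Strongly Agree", "Agree", "Agree", "Neutral"]
subgroup2 = ["Neutral", "Disagree", "Agree", "Strongly Disagree"]
s1 = [LIKERT_SCORES[r] for r in subgroup1]
s2 = [LIKERT_SCORES[r] for r in subgroup2]

print(likert_mean(subgroup1), likert_mean(subgroup2))  # 4.0 2.5
print(mann_whitney_u(s1, s2))                          # 13.5
print(common_language_effect_size(s1, s2))             # 0.84375
```

An f of 0.5 means neither subgroup tends to answer higher. For p-values, SciPy's `scipy.stats.mannwhitneyu(x, y, alternative="two-sided")` returns a U statistic and a two-sided p-value; in recent SciPy releases the statistic refers to the first sample, so dividing it by `len(x) * len(y)` should reproduce f.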

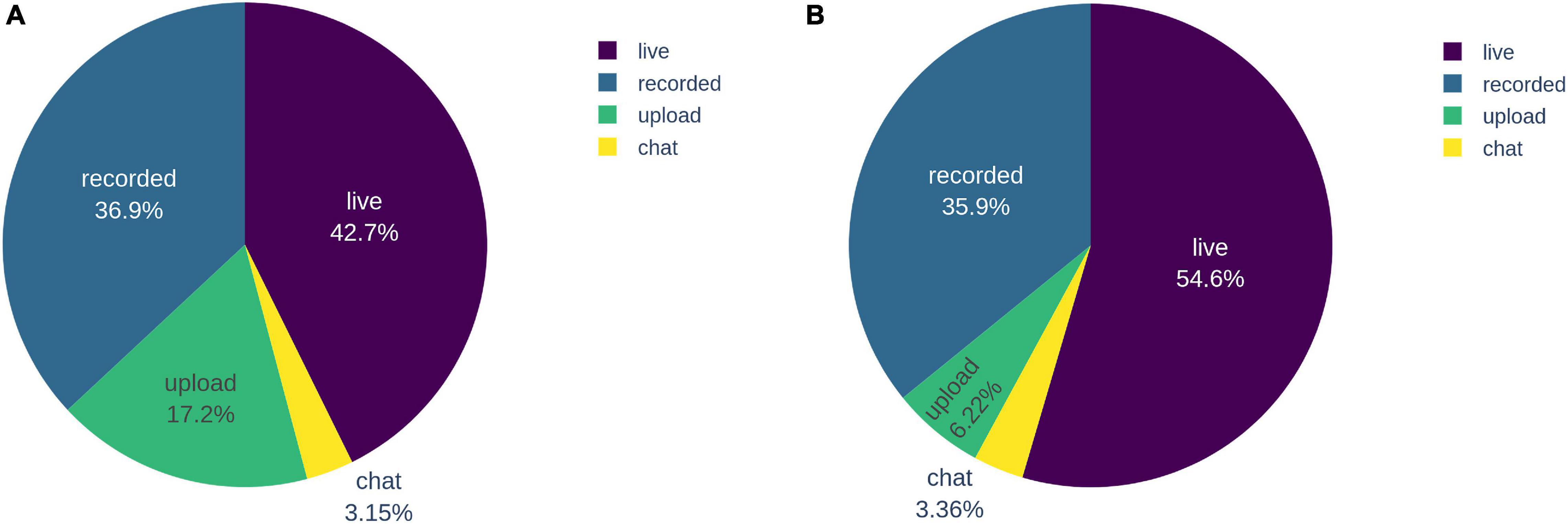

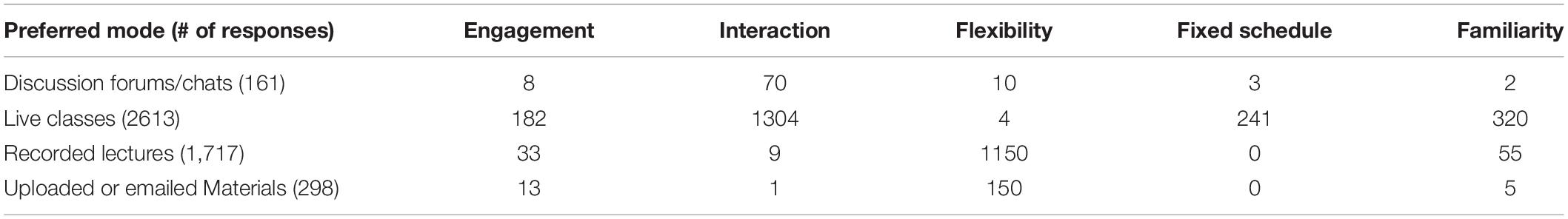

Students Prefer Synchronous Class Sessions

Students were asked to identify their primary mode of learning given four categories of remote course design that emerged from the pilot survey and across the literature on online teaching: live (synchronous) classes, recorded lectures and videos, emailed or uploaded materials, and chats and discussion forums. While 42.7% (n = 2,045) of students identified live classes as their primary mode of learning, 54.6% (n = 2,613) preferred this mode (Figure 1). Both recorded lectures and live classes were preferred over uploaded materials (6.22%, n = 298) and chat (3.36%, n = 161).
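As a quick arithmetic check, the reported shares can be recomputed from the counts. This sketch assumes every percentage uses the 4,789 undergraduate respondents as its denominator; the text does not state the denominator explicitly, so that is an assumption, though the rounded results do match.

```python
# Assumed denominator: all 4,789 undergraduate respondents analyzed in the study.
TOTAL_RESPONDENTS = 4789

# label: (count from the text, percentage reported in the text, decimals used there)
REPORTED = {
    "live classes as actual primary mode": (2045, 42.7, 1),
    "live classes as preferred mode": (2613, 54.6, 1),
    "uploaded materials as preferred mode": (298, 6.22, 2),
    "chat as preferred mode": (161, 3.36, 2),
}


def percent(count, total=TOTAL_RESPONDENTS, decimals=1):
    """Share of respondents as a percentage, rounded to the text's precision."""
    return round(100 * count / total, decimals)


results = {}
for label, (count, reported, decimals) in REPORTED.items():
    computed = percent(count, decimals=decimals)
    results[label] = computed
    status = "matches" if computed == reported else "differs from"
    print(f"{label}: {computed}% ({status} reported {reported}%)")
```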

Figure 1. Actual (A) and preferred (B) primary modes of learning.

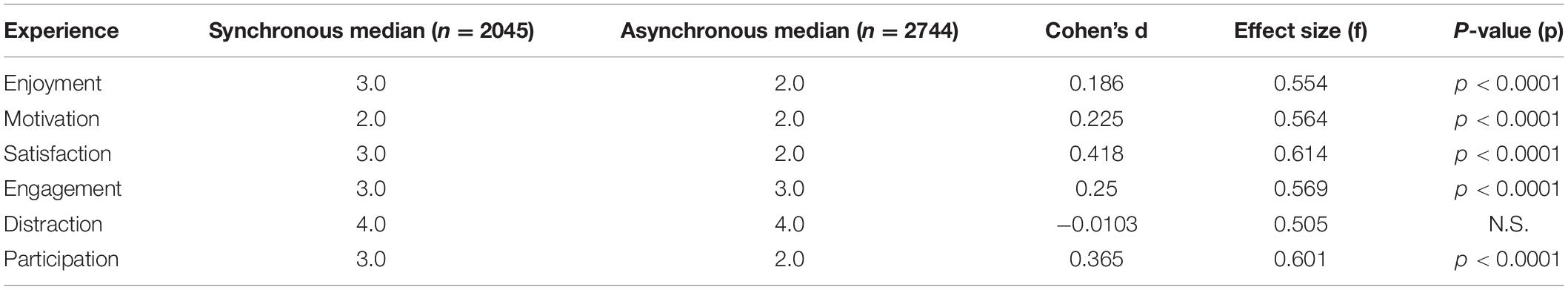

In addition to a preference for live classes, students whose primary mode was synchronous were more likely to enjoy the class, feel motivated and engaged, be satisfied with instruction, and report higher levels of participation (Table 2 and Supplementary Figure 2). Regardless of primary mode, over two-thirds of students reported that they are often distracted during remote courses.

Table 2. The effect of synchronous vs. asynchronous primary modes of learning on student perceptions.

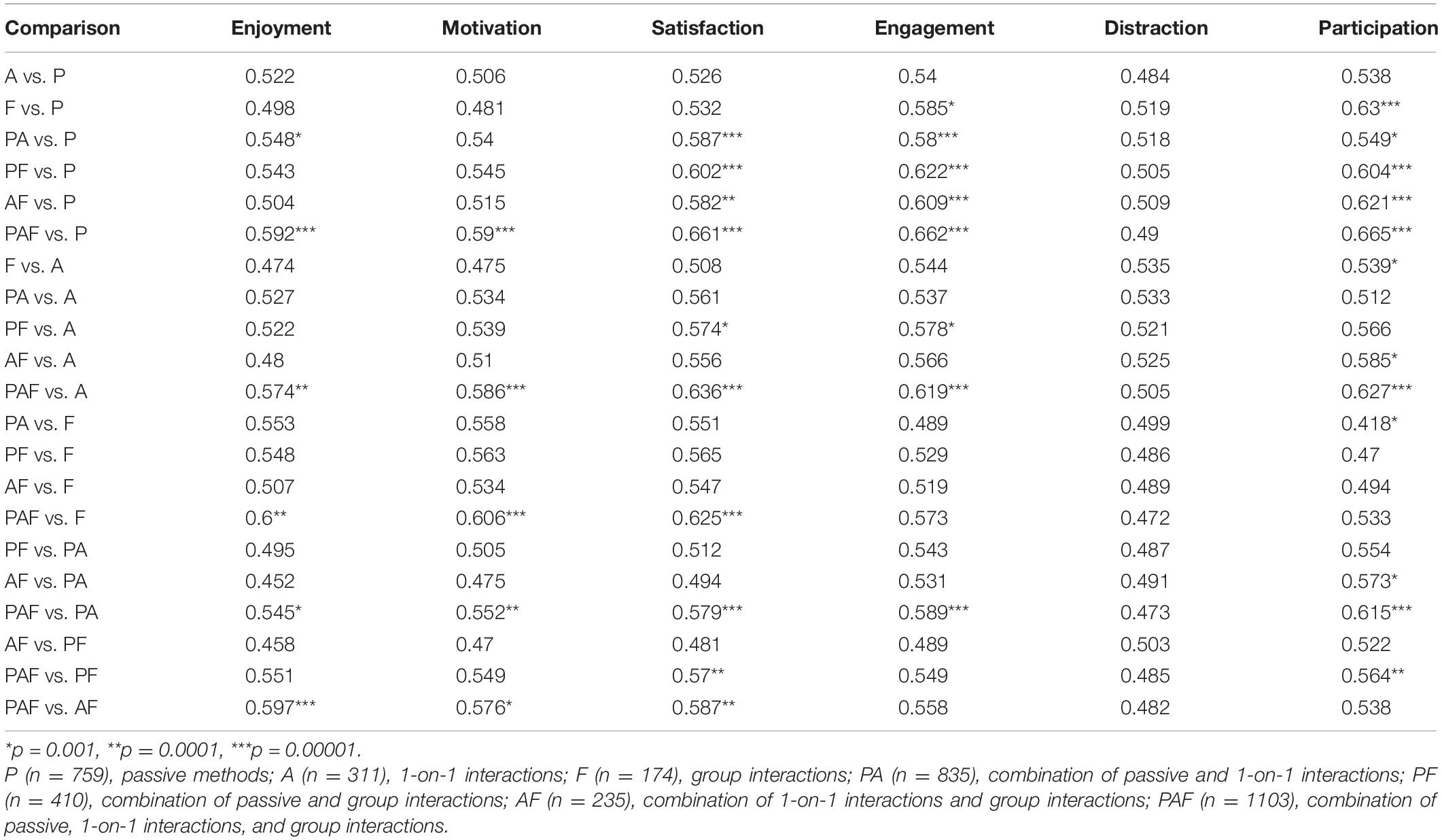

Variation in Pedagogical Techniques for Synchronous Classes Results in More Positive Perceptions of the Student Learning Experience

To survey the use of passive vs. active instructional methods, students reported the pedagogical techniques used in their live classes. Among the synchronous methods, we identify three categories (National Research Council, 2000; Freeman et al., 2014). Passive methods (P) include lectures, presentations, and explanation using diagrams, whiteboards, and/or other media. These methods all rely on instructor delivery rather than student participation. Our next category represents active learning through primarily one-on-one interactions (A); the methods in this group are in-class assessment, question-and-answer (Q&A), and classroom chat. Group interactions (F) include classroom discussions and small-group activities. Given these categories, Mann-Whitney U pairwise comparisons between the 7 possible combinations of categories on the Likert-scale responses about student experience showed that using a variety of methods resulted in higher ratings of experience than using a single method, whether that single method was active or passive (Table 3). Indeed, students whose classes used methods from every category (PAF) gave higher ratings of enjoyment, motivation, and satisfaction with instruction than those who chose only a single method (p < 0.0001), and also reported higher participation and engagement than students whose only method was passive (P) or active through one-on-one interactions (A) (p < 0.00001). Student ratings of distraction were not significantly different for any comparison. Given that sets of Likert responses often appeared significant together in these comparisons, we ran a CrossCat analysis to look at the probability of dependence across Likert responses. Responses have a high probability of dependence on each other, limiting what we can claim about any discrete response (Supplementary Figure 3).
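The seven combinations above are simply the non-empty subsets of the three categories P, A, and F (2^3 - 1 = 7). The sketch below enumerates them and labels a respondent by the categories their live classes used. The category assignments follow the text; the technique strings and the helper names are hypothetical, and this is an illustration rather than the authors' analysis code.

```python
from itertools import combinations

# Technique -> category, following the text: P = passive, A = active one-on-one,
# F = group interaction. The technique strings themselves are illustrative labels.
SYNC_CATEGORY = {
    "lecture": "P",
    "presentation": "P",
    "diagram/whiteboard explanation": "P",
    "in-class assessment": "A",
    "Q&A": "A",
    "classroom chat": "A",
    "classroom discussion": "F",
    "small-group activity": "F",
}
CATEGORY_ORDER = "PAF"


def all_combinations(categories=CATEGORY_ORDER):
    """Every non-empty subset of the categories, smallest subsets first."""
    return [
        "".join(combo)
        for size in range(1, len(categories) + 1)
        for combo in combinations(categories, size)
    ]


def combination_label(techniques):
    """Label a respondent by the categories their live classes used, e.g. 'PAF'."""
    used = {SYNC_CATEGORY[t] for t in techniques}
    return "".join(c for c in CATEGORY_ORDER if c in used)


print(all_combinations())  # ['P', 'A', 'F', 'PA', 'PF', 'AF', 'PAF']
print(combination_label(["lecture", "Q&A", "small-group activity"]))  # PAF
print(combination_label(["lecture", "presentation"]))  # P
```

Respondents grouped this way can then be compared pairwise on each Likert statement; a respondent who reported no techniques gets an empty label and falls outside the seven groups.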

Table 3. Comparison of combinations of synchronous methods on student perceptions. Effect size (f).

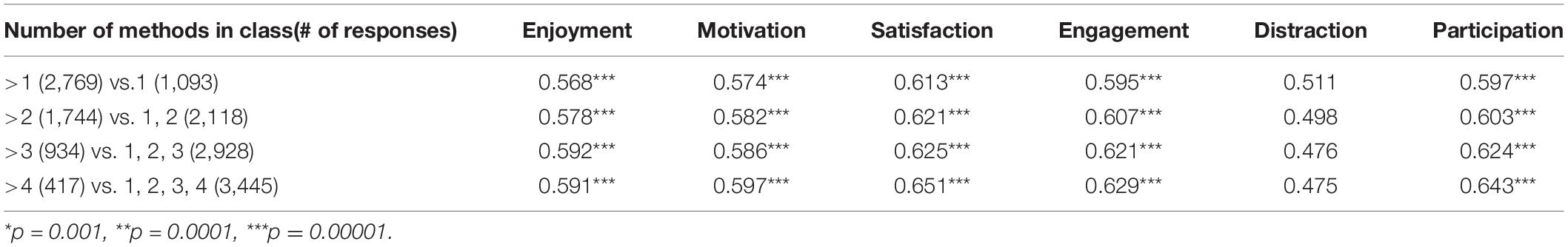

Mann-Whitney U pairwise comparisons were also used to check whether improvement in student experience was associated with the number of methods used vs. the variety of types of methods. For every comparison, we found that more methods resulted in higher scores on all Likert measures except distraction (Table 4). Even the comparison between four or fewer methods and more than four methods showed a 59% chance that the latter group enjoyed the courses more (p < 0.00001) and a 60% chance that they felt more motivated to learn (p < 0.00001). Students who selected more than four methods (n = 417) also had a 65.1% (p < 0.00001), 62.9% (p < 0.00001), and 64.3% (p < 0.00001) chance of reporting greater satisfaction with instruction, engagement, and active participation, respectively. Therefore, there was an overlap between how the number and variety of methods influenced students’ experiences. Since the number of techniques per category is 2–3, we cannot fully disentangle the effect of number vs. variety. Pairwise comparisons of subsets of data with 2–3 methods from a single group vs. 2–3 methods across groups controlled for this but had low sample numbers in most groups and resulted in no significant findings (data not shown). Therefore, from the data in our survey, there seems to be an interdependence between number and variety of methods in shaping students’ learning experiences.

Table 4. Comparison of the number of synchronous methods on student perceptions. Effect size (f).

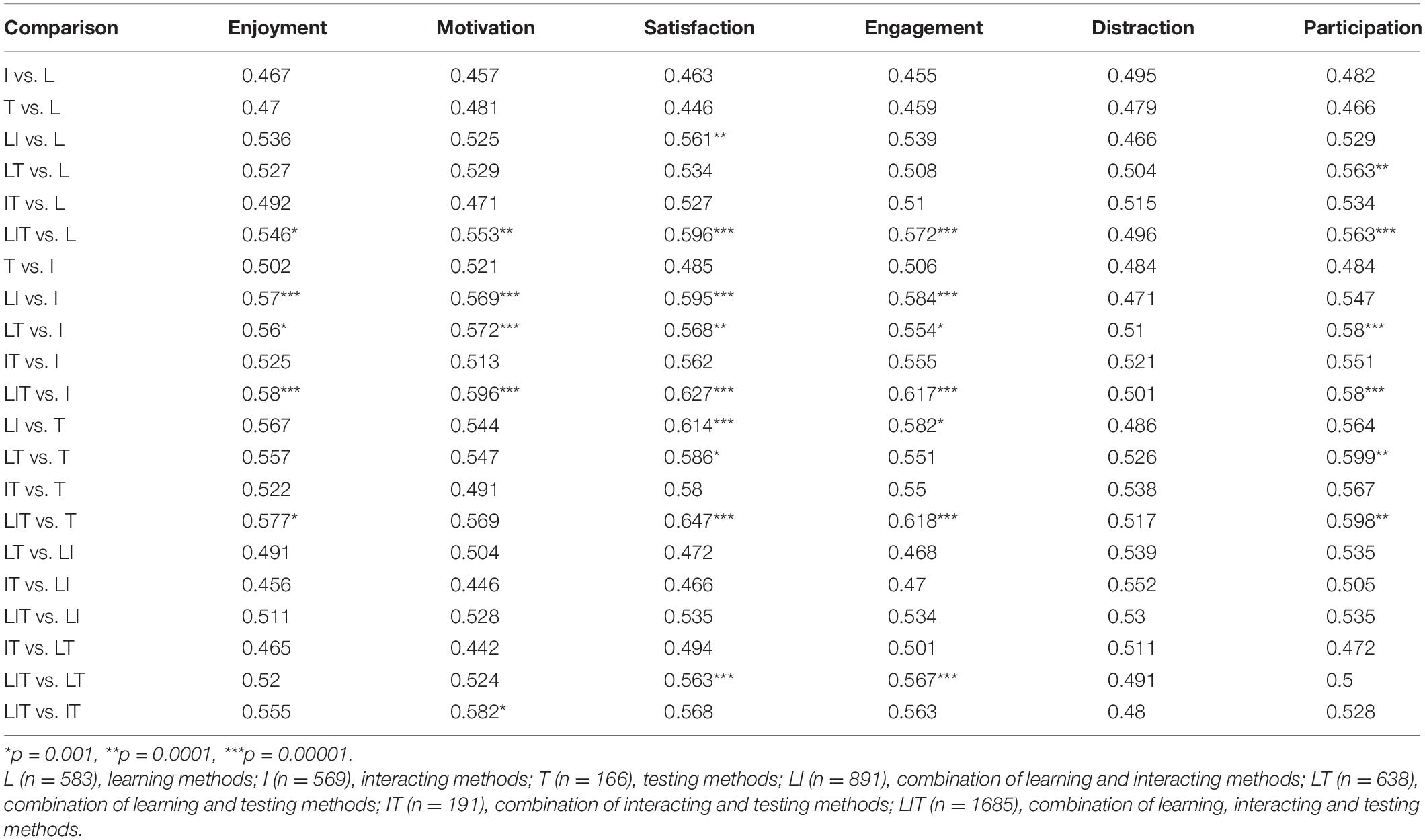
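The 59%–65% figures in the number-of-methods comparison read naturally as a probability of superiority. Assuming the effect size f in Tables 3–5 is the common-language effect size f = U / (n₁n₂) derived from the Mann-Whitney U statistic (the text does not define it explicitly), f is the chance that a randomly chosen respondent from one group gives a higher rating than one from the other, with ties counted as half. The sketch below computes U and f by brute-force pair counting; the ratings are invented for illustration. For large samples and p-values, a library routine such as SciPy's `scipy.stats.mannwhitneyu` would normally be used instead.

```python
def mann_whitney_u(x, y):
    """U statistic for sample x vs. y: pairs where x is higher, plus half of ties."""
    u = 0.0
    for xi in x:
        for yj in y:
            if xi > yj:
                u += 1.0
            elif xi == yj:
                u += 0.5
    return u


def common_language_effect_size(x, y):
    """f = U / (n_x * n_y): chance a random x rating exceeds a random y rating."""
    return mann_whitney_u(x, y) / (len(x) * len(y))


# Hypothetical 1-5 Likert ratings, not survey data.
more_methods = [5, 4, 4, 3, 5]
fewer_methods = [3, 4, 2, 3, 4]
f = common_language_effect_size(more_methods, fewer_methods)
print(round(f, 2))  # prints 0.8
```

Because ties count as half, f for one ordering and f for the reverse ordering always sum to 1, so f = 0.5 means neither group tends to rate higher.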

Variation in Asynchronous Pedagogical Techniques Results in More Positive Perceptions of the Student Learning Experience

Along with synchronous pedagogical methods, students reported the asynchronous methods used in their classes. We divided these methods into three main categories and conducted pairwise comparisons. Learning methods include video lectures, video content, and posted study materials. Interacting methods include discussion/chat forums, live office hours, and email Q&A with professors. Testing methods include assignments and exams. Our results again show the importance of variety in students’ perceptions (Table 5). For example, compared with providing learning materials only, providing learning materials, interaction, and testing was associated with higher enjoyment (f = 0.546, p < 0.001), motivation (f = 0.553, p < 0.0001), satisfaction with instruction (f = 0.596, p < 0.00001), engagement (f = 0.572, p < 0.00001), and active participation (f = 0.563, p < 0.00001) (row 6). Similarly, compared with interaction alone, the combination of all three categories was associated with higher ratings on five of the six measures, all except distraction in class (row 11).

Table 5. Comparison of combinations of asynchronous methods on student perceptions. Effect size (f).

We used ordinal logistic regression to assess whether the platforms students used predicted their perceptions (Supplementary Table 2). Platforms were identified from students’ answers to open-ended questions in the pre-test survey. The synchronous and asynchronous methods used were consistently more predictive of Likert responses than the specific platforms. As in the earlier analyses, distraction was the outlier, showing no differences across methods or platforms.
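Ordinal logistic regression is commonly specified as a proportional-odds (cumulative logit) model, P(Y ≤ k | x) = σ(θ_k − x·β), with increasing thresholds θ_k. Assuming that form (the text does not state which parameterization was used), the sketch below shows how fitted thresholds and coefficients turn into probabilities for each Likert level. The threshold and coefficient values and the binary platform indicator are hypothetical placeholders, not estimates from Supplementary Table 2, and the fitting step itself is omitted.

```python
import math


def logistic(z):
    """Standard logistic function sigma(z) = 1 / (1 + e^-z)."""
    return 1.0 / (1.0 + math.exp(-z))


def category_probabilities(thresholds, coefs, x):
    """P(Y = k | x) for k = 1..K under a proportional-odds model.

    thresholds: increasing cut points theta_1 < ... < theta_{K-1}
    coefs, x: one coefficient and one value per predictor
    """
    eta = sum(b * xi for b, xi in zip(coefs, x))  # linear predictor x . beta
    # Cumulative probabilities P(Y <= k); the top level is always 1.
    cumulative = [logistic(t - eta) for t in thresholds] + [1.0]
    probs, previous = [], 0.0
    for c in cumulative:
        probs.append(c - previous)  # P(Y = k) = P(Y <= k) - P(Y <= k - 1)
        previous = c
    return probs


# Hypothetical 5-point Likert item with one binary predictor
# (1 = class used a given platform, 0 = it did not); values are illustrative.
theta = [-2.0, -0.5, 0.5, 2.0]
beta = [0.3]
for used in (0, 1):
    p = category_probabilities(theta, beta, [used])
    print(used, [round(v, 3) for v in p])
```

A positive coefficient lowers every cumulative probability P(Y ≤ k), shifting probability mass toward the higher Likert levels; that single shared shift across all thresholds is the proportional-odds assumption.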

Students Prefer In-Person and Synchronous Online Learning Largely Due to Social-Emotional Reasoning

As expected, 86.1% (4,123) of survey participants report a preference for in-person courses, while 13.9% (666) prefer online courses. When asked to explain the reasons for their preference, students who prefer in-person courses most often mention the importance of social interaction (693 mentions), engagement (639 mentions), and motivation (440 mentions). These students are also more likely to mention a preference for a fixed schedule (185 mentions) vs. a flexible schedule (2 mentions).
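Mention counts like these come from tallying coded themes. A minimal sketch, assuming each open-ended response has already been hand-coded with zero or more theme labels and that a theme counts at most once per response (both assumptions; the coded responses below are hypothetical), groups the tallies by preferred mode with `collections.Counter`. The last lines check the reported split: 4,123 of 4,789 respondents is 86.1%, and 666 is 13.9%.

```python
from collections import Counter

# Hypothetical coded responses: (preferred mode, theme labels assigned by coders).
coded_responses = [
    ("in-person", ["social interaction", "motivation"]),
    ("in-person", ["engagement", "social interaction"]),
    ("in-person", ["fixed schedule"]),
    ("online", ["flexibility"]),
    ("online", ["flexibility", "anxiety"]),
]

mentions = {}
for preference, themes in coded_responses:
    # set(): assumed convention that a theme counts once per response
    mentions.setdefault(preference, Counter()).update(set(themes))

for preference, counter in mentions.items():
    print(preference, counter.most_common(1))

# Share of respondents by preference, using the totals reported in the text.
in_person, online = 4123, 666
total = in_person + online  # 4,789 responses
print(f"{in_person / total:.1%} in-person, {online / total:.1%} online")
```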

In addition to citing social reasons for preferring in-person learning, students’ suggestions for improving online learning focus primarily on increasing interaction and engagement: 845 mentions of live classes, 685 mentions of interaction, and 126 calls for increased participation. Students also suggested related changes, such as “Smaller teaching groups for live sessions so that everyone is encouraged to talk as some people don’t say anything and don’t participate in group work,” and “Make it less of the professor reading the pdf that was given to us and more interaction.”

Students who prefer online learning primarily cite independence and flexibility (214 mentions) and reasons related to anxiety and discomfort in in-person settings (41 mentions). By contrast, anxiety was mentioned only 12 times in the much larger group that prefers in-person learning.

The preference for synchronous vs. asynchronous modes of learning follows similar trends (Table 6). Students who prefer live classes most often mention engagement and interaction, while those who prefer recorded lectures mention flexibility.

Table 6. Most prevalent themes for students based on their preferred mode of remote learning.

Student Perceptions Align With Research on Active Learning

The first, and most robust, conclusion is that incorporation of active-learning methods correlates with more positive student perceptions of affect and engagement. We can see this clearly in the substantial differences on a number of measures, where students whose classes used only passive-learning techniques reported lower levels of engagement, satisfaction, participation, and motivation when compared with students whose classes incorporated at least some active-learning elements. This result is consistent with prior research on the value of active learning (Freeman et al., 2014).

Although research shows that students learn more in active-learning classes, on-campus students often rate their learning, enjoyment, and satisfaction with instruction lower in active-learning courses (Deslauriers et al., 2019). Our finding that students rate enjoyment and satisfaction with instruction higher for active learning online suggests that the on-campus preference for passive lectures may depend on elements outside the lecture itself. These might include the lecture-hall environment, the physical presence of peers, or the normalization of passive lectures as the expected mode for on-campus classes. If so, students may be more receptive to active learning online than in person.

A second result from our survey is that more positive student perceptions of affect and engagement are associated with experiencing a greater diversity of learning modalities. We see this in two results. First, beyond the finding that classes including active learning outperform classes relying solely on passive methods, the highest student ratings on all measures except distraction are associated with a combination of active and passive methods. Second, these higher scores are associated with classes that use a larger number of different methods.

This second result suggests that students benefit from classes that use multiple techniques, possibly involving a combination of passive and active methods. However, our data cannot tell us whether this effect is associated specifically with combining active and passive methods or simply with using multiple methods, irrespective of whether those methods are active, passive, or some combination. The problem is that the number of methods used is confounded with the diversity of methods (e.g., a classroom using only one method cannot use both active and passive methods). To address this question, we looked separately at the effect of the number and the diversity of methods while holding the other constant. Across a large number of such comparisons, we found few statistically significant differences, which may be a consequence of each comparison focusing on a small subset of the data.
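The confound can be made concrete by enumeration. Assuming the eight synchronous methods split 3/3/2 across the passive (P), one-on-one active (A), and group (F) categories, as the method lists earlier in this section suggest, the sketch below finds, for each possible number of methods, the fewest and most categories a class could draw on. Under that assumption, any class using four or more methods necessarily spans at least two categories and any class using seven or more spans all three, so number and variety cannot vary independently once classes use more than a few methods.

```python
from itertools import combinations

# Assumed category sizes, read off the method lists in the text:
# 3 passive (P), 3 one-on-one active (A), 2 group-interaction (F).
CATEGORY_SIZES = {"P": 3, "A": 3, "F": 2}
methods = [(cat, i) for cat, size in CATEGORY_SIZES.items() for i in range(size)]

category_range = {}
for k in range(1, len(methods) + 1):
    # Distinct categories covered by every possible set of k methods.
    spans = {len({cat for cat, _ in subset}) for subset in combinations(methods, k)}
    category_range[k] = (min(spans), max(spans))

for k, (fewest, most) in category_range.items():
    print(f"{k} methods: {fewest}-{most} categories")
```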

Thus, our data suggest that using a greater diversity of learning methods in the classroom may lead to more positive student experiences. This is supported by research on student attention span, which suggests varying delivery every 10–15 min to retain students’ attention (Bradbury, 2016). This is likely even more relevant for online learning, where students report high levels of distraction across methods, modalities, and platforms. Given that number and variety both matter, and that there are few passive-learning methods to choose from, we can infer that some combination of methods that includes active learning improves student experience. However, it is not clear whether this benefit comes simply from increasing the number of different methods used or whether there are benefits specific to combining particular methods. Disentangling these effects would be an interesting avenue for future research.

Students Value Social Presence in Remote Learning

Student responses across our open-ended survey questions reveal reasons for their preferences that differ strikingly from those of traditional online learners, who tend to prefer online courses for their flexibility (Harris and Martin, 2012; Levitz, 2016). Students’ reasons for preferring in-person and synchronous remote classes emphasize the desire for social interaction and echo research on the importance of social presence for learning in online courses.

Short et al. (1976) outlined Social Presence Theory to describe how communicators perceive one another as “real” across different telecommunication media. These ideas translate directly to online education, particularly to educational design for networked learning, where connections among learners and instructors, especially “Human-Human interaction,” improve learning outcomes (Goodyear, 2002, 2005; Tu, 2002). They also bear heavily on asynchronous vs. synchronous learning: Tu reports that students responded positively to synchronous “real-time discussion in pleasantness, responsiveness and comfort with familiar topics,” and that real-time discussion edged out asynchronous computer-mediated communication in immediacy of replies and responsiveness. Tu’s research indicates that students perceive more interaction in synchronous media such as live discussions because of their immediacy, which enhances social presence and supports the use of active-learning techniques (Gunawardena, 1995; Tu, 2002). Thus, verbal immediacy and communities with face-to-face interaction, such as those in synchronous classrooms, lessen the psychological distance between communicators online and can simultaneously improve instructional satisfaction and reported learning (Gunawardena and Zittle, 1997; Richardson and Swan, 2019; Shea et al., 2019). While synchronous learning may not be ideal for traditional online students or for a subset of our participants, this research suggests that non-traditional online learners are more likely to appreciate the value of social presence.

Social presence also connects to the importance of social connections in learning. Too often, current systems of education emphasize course content in narrow ways that fail to embrace the full humanity of students and instructors (Gay, 2000). With the COVID-19 pandemic leading to further social isolation for many students, social presence in courses, including live interactions that build connections with classmates and with instructors, may matter even more.

Limitations of These Data

Our undergraduate data consisted of 4,789 responses from 95 different countries, an unprecedented global scale for research on online learning. However, because respondents were followers of @unjadedjade, an account that focuses on learning and wellness, they may not represent the average student. Survey responses are often biased by the recruitment technique used; in our case, this bias likely produced more robust and thoughtful responses to free-response questions and may have influenced the preference for synchronous classes. It is unlikely to have changed students’ reports of the pedagogical methods used in their remote classes, since those methods are outside students’ control.

Though we surveyed a global population, our design was rooted in literature assessing pedagogy in North American institutions. Therefore, our survey may not represent a global array of teaching practices.

This survey was sent out during the initial phase of emergency remote learning in most countries. This has two important implications. First, perceptions of remote learning may be clouded by complications of the pandemic, which has increased social, mental, and financial stresses globally. Future research could disaggregate the impact of the pandemic from students’ learning experiences with a more detailed and holistic analysis of the impact of the pandemic on students.

Second, instructors, students and institutions were not able to fully prepare for effective remote education in terms of infrastructure, mentality, curriculum building, and pedagogy. Therefore, student experiences reflect this emergency transition. Single-modality courses may correlate with instructors who lacked the resources or time to learn or integrate more than one modality. Regardless, the main insights of this research align well with the science of teaching and learning and can be used to inform both education during future emergencies and course development for online programs that wish to attract traditional college students.

Global Student Voices Improve Our Understanding of the Experience of Emergency Remote Learning

Our survey shows that global student perspectives on remote learning agree with pedagogical best practices, in contrast to the negative reactions students often show toward these practices in traditional classrooms (Shekhar et al., 2020). Our analysis of open-ended questions and preferences shows that a majority of students prefer pedagogical approaches that promote both active learning and social interaction. These results can guide instructors as they design online classes, especially for students whose first choice may be in-person learning. Indeed, with the near-ubiquitous adoption of remote learning during the COVID-19 pandemic, remote learning may become the default for colleges during temporary emergencies. This approach is already in use at the K-12 level, where snow days are becoming virtual learning days (Aspergren, 2020).

In addition to informing pedagogical decisions, the results of this survey can inform future research. Although we surveyed a global population, our recruitment method selected for students who are English speakers, likely majority female, and interested in self-improvement. Repeating this study with a more diverse and representative sample of university students could improve the generalizability of our findings. While using a variety of pedagogical methods appears to be better than relying on a single method, more research is needed to determine the optimal combinations and implementations for courses in different disciplines. Though we identified social presence as the major trend in student responses, the more than 12,000 open-ended responses could be analyzed in greater detail to gain a more nuanced understanding of student preferences and suggestions for improvement. Likewise, outliers could shed light on the diversity of student perspectives that we may encounter in our own classrooms. Beyond this, our findings can inform research that collects demographic data and/or measures learning outcomes to understand the impact of remote learning on different populations.

Importantly, this paper focuses on a subset of responses from the full data set, which includes 10,563 students from secondary school, undergraduate, graduate, or professional school and additional questions about in-person learning. Our full data set is available for anyone to download for continued exploration (https://dataverse.harvard.edu/dataset.xhtml?persistentId=doi:10.7910/DVN/2TGOPH).

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

Ethical review and approval were not required for the study on human participants in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author Contributions

GS: project lead, survey design, qualitative coding, writing, review, and editing. TN: data analysis, writing, review, and editing. CN and PB: qualitative coding. JW: data analysis, writing, and editing. CS: writing, review, and editing. EV and KL: original survey design and qualitative coding. PP: data analysis. JB: original survey design and survey distribution. HH: data analysis. MP: writing. All authors contributed to the article and approved the submitted version.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We want to thank Minerva Schools at KGI for providing funding for summer undergraduate research internships. We also want to thank Josh Fost and Christopher V. H.-H. Chen for discussion that helped shape this project.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2021.647986/full#supplementary-material

Aspergren, E. (2020). Snow Days Canceled Because of COVID-19 Online School? Not in These School Districts. USA Today. Available online at: https://www.usatoday.com/story/news/education/2020/12/15/covid-school-canceled-snow-day-online-learning/3905780001/ (accessed December 15, 2020).

Bostock, S. J. (1998). Constructivism in mass higher education: a case study. Br. J. Educ. Technol. 29, 225–240. doi: 10.1111/1467-8535.00066

Bradbury, N. A. (2016). Attention span during lectures: 8 seconds, 10 minutes, or more? Adv. Physiol. Educ. 40, 509–513. doi: 10.1152/advan.00109.2016

Chen, B., Bastedo, K., and Howard, W. (2018). Exploring best practices for online STEM courses: active learning, interaction & assessment design. Online Learn. 22, 59–75. doi: 10.24059/olj.v22i2.1369

Davis, D., Chen, G., Hauff, C., and Houben, G.-J. (2018). Activating learning at scale: a review of innovations in online learning strategies. Comput. Educ. 125, 327–344. doi: 10.1016/j.compedu.2018.05.019

Deslauriers, L., McCarty, L. S., Miller, K., Callaghan, K., and Kestin, G. (2019). Measuring actual learning versus feeling of learning in response to being actively engaged in the classroom. Proc. Natl. Acad. Sci. 116, 19251–19257. doi: 10.1073/pnas.1821936116

Fink, L. D. (2013). Creating Significant Learning Experiences: An Integrated Approach to Designing College Courses. Somerset, NJ: John Wiley & Sons, Incorporated.

Freeman, S., Eddy, S. L., McDonough, M., Smith, M. K., Okoroafor, N., Jordt, H., et al. (2014). Active learning increases student performance in science, engineering, and mathematics. Proc. Natl. Acad. Sci. 111, 8410–8415. doi: 10.1073/pnas.1319030111

Gale, N. K., Heath, G., Cameron, E., Rashid, S., and Redwood, S. (2013). Using the framework method for the analysis of qualitative data in multi-disciplinary health research. BMC Med. Res. Methodol. 13:117. doi: 10.1186/1471-2288-13-117

Garrison, D. R., Anderson, T., and Archer, W. (1999). Critical inquiry in a text-based environment: computer conferencing in higher education. Internet High. Educ. 2, 87–105. doi: 10.1016/S1096-7516(00)00016-6

Gay, G. (2000). Culturally Responsive Teaching: Theory, Research, and Practice. Multicultural Education Series. New York, NY: Teachers College Press.

Gillingham, and Molinari, C. (2012). Online courses: student preferences survey. Internet Learn. 1, 36–45. doi: 10.18278/il.1.1.4

Gillis, A., and Krull, L. M. (2020). COVID-19 remote learning transition in spring 2020: class structures, student perceptions, and inequality in college courses. Teach. Sociol. 48, 283–299. doi: 10.1177/0092055X20954263

Goodyear, P. (2002). “Psychological foundations for networked learning,” in Networked Learning: Perspectives and Issues. Computer Supported Cooperative Work , eds C. Steeples and C. Jones (London: Springer), 49–75. doi: 10.1007/978-1-4471-0181-9_4

Goodyear, P. (2005). Educational design and networked learning: patterns, pattern languages and design practice. Australas. J. Educ. Technol. 21, 82–101. doi: 10.14742/ajet.1344

Gunawardena, C. N. (1995). Social presence theory and implications for interaction and collaborative learning in computer conferences. Int. J. Educ. Telecommun. 1, 147–166.

Gunawardena, C. N., and Zittle, F. J. (1997). Social presence as a predictor of satisfaction within a computer mediated conferencing environment. Am. J. Distance Educ. 11, 8–26. doi: 10.1080/08923649709526970

Harris, H. S., and Martin, E. (2012). Student motivations for choosing online classes. Int. J. Scholarsh. Teach. Learn. 6, 1–8. doi: 10.20429/ijsotl.2012.060211

Levitz, R. N. (2016). 2015-16 National Online Learners Satisfaction and Priorities Report. Cedar Rapids: Ruffalo Noel Levitz, 12.

Mansinghka, V., Shafto, P., Jonas, E., Petschulat, C., Gasner, M., and Tenenbaum, J. B. (2016). CrossCat: a fully Bayesian nonparametric method for analyzing heterogeneous, high dimensional data. J. Mach. Learn. Res. 17, 1–49. doi: 10.1007/978-0-387-69765-9_7

National Research Council (2000). How People Learn: Brain, Mind, Experience, and School: Expanded Edition. Washington, DC: National Academies Press, doi: 10.17226/9853

Richardson, J. C., and Swan, K. (2019). Examining social presence in online courses in relation to students’ perceived learning and satisfaction. Online Learn. 7, 68–88. doi: 10.24059/olj.v7i1.1864

Shea, P., Pickett, A. M., and Pelz, W. E. (2019). A Follow-up investigation of ‘teaching presence’ in the SUNY Learning Network. Online Learn. 7, 73–75. doi: 10.24059/olj.v7i2.1856

Shekhar, P., Borrego, M., DeMonbrun, M., Finelli, C., Crockett, C., and Nguyen, K. (2020). Negative student response to active learning in STEM classrooms: a systematic review of underlying reasons. J. Coll. Sci. Teach. 49, 45–54.

Short, J., Williams, E., and Christie, B. (1976). The Social Psychology of Telecommunications. London: John Wiley & Sons.

Tu, C.-H. (2002). The measurement of social presence in an online learning environment. Int. J. E Learn. 1, 34–45. doi: 10.17471/2499-4324/421

Zull, J. E. (2002). The Art of Changing the Brain: Enriching Teaching by Exploring the Biology of Learning , 1st Edn. Sterling, VA: Stylus Publishing.

Keywords: online learning, COVID-19, active learning, higher education, pedagogy, survey, international

Citation: Nguyen T, Netto CLM, Wilkins JF, Bröker P, Vargas EE, Sealfon CD, Puthipiroj P, Li KS, Bowler JE, Hinson HR, Pujar M and Stein GM (2021) Insights Into Students’ Experiences and Perceptions of Remote Learning Methods: From the COVID-19 Pandemic to Best Practice for the Future. Front. Educ. 6:647986. doi: 10.3389/feduc.2021.647986

Received: 30 December 2020; Accepted: 09 March 2021; Published: 09 April 2021.

Copyright © 2021 Nguyen, Netto, Wilkins, Bröker, Vargas, Sealfon, Puthipiroj, Li, Bowler, Hinson, Pujar and Stein. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY) . The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Geneva M. Stein

This article is part of the Research Topic

Covid-19 and Beyond: From (Forced) Remote Teaching and Learning to ‘The New Normal’ in Higher Education

A Systematic Review of the Research Topics in Online Learning During COVID-19: Documenting the Sudden Shift

- Min Young Doo, Kangwon National University (ORCID: http://orcid.org/0000-0003-3565-2159)

- Meina Zhu, Wayne State University

- Curtis J. Bonk, Indiana University Bloomington

Since most schools and learners had no choice but to learn online during the pandemic, online learning became the mainstream learning mode rather than a substitute for traditional face-to-face learning. Given this enormous change in online learning, we conducted a systematic review of 191 of the most recent online learning studies published during the COVID-19 era. The systematic review results indicated that the themes regarding “courses and instructors” became popular during the pandemic, whereas most online learning research has focused on “learners” pre-COVID-19. Notably, the research topics “course and instructors” and “course technology” received more attention than prior to COVID-19. We found that “engagement” remained the most common research theme even after the pandemic. New research topics included parents, technology acceptance or adoption of online learning, and learners’ and instructors’ perceptions of online learning.

An, H., Mongillo, G., Sung, W., & Fuentes, D. (2022). Factors affecting online learning during the COVID-19 pandemic: The lived experiences of parents, teachers, and administrators in U.S. high-needs K-12 schools. The Journal of Online Learning Research (JOLR), 8(2), 203-234. https://www.learntechlib.org/primary/p/220404/

Aslan, S., Li, Q., Bonk, C. J., & Nachman, L. (2022). An overnight educational transformation: How did the pandemic turn early childhood education upside down? Online Learning, 26(2), 52-77. DOI: http://dx.doi.org/10.24059/olj.v26i2.2748

Azizan, S. N., Lee, A. S. H., Crosling, G., Atherton, G., Arulanandam, B. V., Lee, C. E., &

Abdul Rahim, R. B. (2022). Online learning and COVID-19 in higher education: The value of IT models in assessing students’ satisfaction. International Journal of Emerging Technologies in Learning (iJET), 17(3), 245–278. https://doi.org/10.3991/ijet.v17i03.24871

Beatty, B. J. (2019). Hybrid-flexible course design (1st ed.). EdTech Books. https://edtechbooks.org/hyflex

Berge, Z., & Mrozowski, S. (2001). Review of research in distance education, 1990 to 1999. American Journal of Distance Education, 15(3), 5–19. https://doi.org/ 10.1080/08923640109527090

Bond, M. (2020). Schools and emergency remote education during the COVID-19 pandemic: A living rapid systematic review. Asian Journal of Distance Education, 15(2), 191-247. http://www.asianjde.com/ojs/index.php/AsianJDE/article/view/517

Bond, M., Bedenlier, S., Marín, V. I., & Händel, M. (2021). Emergency remote teaching in higher education: Mapping the first global online semester. International Journal of Educational Technology in Higher Education, 18(1), 1-24. https://doi.org/10.1186/s41239-021-00282-x

Bonk, C. J. (2020). Pandemic ponderings, 30 years to today: Synchronous signals, saviors, or survivors? Distance Education, 41(4), 589-599. https://doi.org/10.1080/01587919.2020.1821610

Bonk, C. J., & Graham, C. R. (Eds.) (2006). Handbook of blended learning: Global perspectives, local designs. Pfeiffer Publishing.

Bonk, C. J., Olson, T., Wisher, R. A., & Orvis, K. L. (2002). Learning from focus groups: An examination of blended learning. Journal of Distance Education, 17(3), 97-118.

Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77–101. https://doi.org/10.1191/1478088706qp063oa

Braun, V., Clarke, V., & Rance, N. (2014). How to use thematic analysis with interview data. In A. Vossler & N. Moller (Eds.), The counselling & psychotherapy research handbook, 183–197. Sage.

Canales-Romero, D., & Hachfeld, A (2021). Juggling school and work from home: Results from a survey on German families with school-aged children during the early COVID-19 lockdown. Frontiers in Psychology, 12. https://doi.org/10.3389/fpsyg.2021.734257

Cao, Y., Zhang, S., Chan, M.C.E., Kang. Y. (2021). Post-pandemic reflections: lessons from Chinese mathematics teachers about online mathematics instruction. Asia Pacific Education Review, 22, 157–168. https://doi.org/10.1007/s12564-021-09694-w

The Centers for Disease Control and Prevention (2022, May 4). COVID-19 forecasts: Deaths. Retrieved from https://www.cdc.gov/coronavirus/2019-ncov/science/forecasting/forecasting-us.html

Chang, H. M, & Kim. H. J. (2021). Predicting the pass probability of secondary school students taking online classes. Computers & Education, 164, 104110. https://doi.org/10.1016/j.compedu.2020.104110

Charumilind, S. Craven, M., Lamb, J., Sabow, A., Singhal, S., & Wilson, M. (2022, March 1). When will the COVID-19 pandemic end? McKinsey & Company. https://www.mckinsey.com/industries/healthcare-systems-and-services/our-insights/when-will-the-covid-19-pandemic-end

Cooper, H. (1988). The structure of knowledge synthesis: A taxonomy of literature reviews. Knowledge in Society, 1, 104–126.

Crompton, H., Burke, D., Jordan, K., & Wilson, S. W. (2021). Learning with technology during emergencies: A systematic review of K‐12 education. British Journal of Educational Technology, 52(4), 1554-1575. https://doi.org/10.1111/bjet.13114

Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly, 13(3), 319-340. https://doi.org/10.2307/249008

Erwin, B. (2021, November). A policymaker’s guide to virtual schools. Education Commission of the States. https://www.ecs.org/wp-content/uploads/Policymakers-Guide-to-Virtual-Schools.pdf

Gross, B. (2021). Surging enrollment in virtual schools during the pandemic spurs new questions for policymakers. Center on Reinventing Public Education, Arizona State University. https://crpe.org/surging-enrollment-in-virtual-schools-during-the-pandemic-spurs-new-questions-for-policymakers/

Hamaidi, D. D. A., Arouri, D. Y. M., Noufal, R. K., & Aldrou, I. T. (2021). Parents’ perceptions of their children’s experiences with distance learning during the COVID-19 pandemic. The International Review of Research in Open and Distributed Learning, 22(2), 224-241. https://doi.org/10.19173/irrodl.v22i2.5154

Heo, H., Bonk, C. J., & Doo, M. Y. (2022). Influences of depression, self-efficacy, and resource management on learning engagement in blended learning during COVID-19. The Internet and Higher Education, 54, https://doi.org/10.1016/j.iheduc.2022.100856

Hodges, C., Moore, S., Lockee, B., Trust, T., & Bond, A. (2020, March 27). The differences between emergency remote teaching and online learning. EDUCAUSE Review. https://er.educause.edu/articles/2020/3/the-difference-between-emergency-remote-teachingand-online-learning

Huang, L. & Zhang, T. (2021). Perceived social support, psychological capital, and subjective well-being among college students in the context of online learning during the COVID-19 pandemic. Asia-Pacific Education Researcher. https://doi.org/10.1007/s40299-021-00608-3

Kanwar, A., & Daniel, J. (2020). Report to Commonwealth education ministers: From response to resilience. Commonwealth of Learning. http://oasis.col.org/handle/11599/3592

Lederman, D. (2019). Online enrollments grow, but pace slows. Inside Higher Ed. https://www.insidehighered.com/digital-learning/article/2019/12/11/more-students-study-online-rate-growth-slowed-2018

Lee, K. (2019). Rewriting a history of open universities: (Hi)stories of distance teachers. The International Review of Research in Open and Distributed Learning, 20(4), 1-12. https://doi.org/10.19173/irrodl.v20i3.4070

Liu, Y., & Butzlaff, A. (2021). Where's the germs? The effects of using virtual reality on nursing students' hospital infection prevention during the COVID-19 pandemic. Journal of Computer Assisted Learning, 37(6), 1622–1628. https://doi.org/10.1111/jcal.12601

Maloney, E. J., & Kim, J. (2020, June 10). Learning in 2050. Inside Higher Ed. https://www.insidehighered.com/digital-learning/blogs/learning-innovation/learning-2050

Martin, F., Sun, T., & Westine, C. D. (2020). A systematic review of research on online teaching and learning from 2009 to 2018. Computers & Education, 159, 104009.

Miks, J., & McIlwaine, J. (2020, April 20). Keeping the world’s children learning through COVID-19. UNICEF. https://www.unicef.org/coronavirus/keeping-worlds-children-learning-through-covid-19

Mishra, S., Sahoo, S., & Pandey, S. (2021). Research trends in online distance learning during the COVID-19 pandemic. Distance Education, 42(4), 494-519. https://doi.org/10.1080/01587919.2021.1986373

Moore, M. G. (Ed.) (2007). The handbook of distance education (2nd Ed.). Lawrence Erlbaum Associates.

Moore, M. G., & Kearsley, G. (2012). Distance education: A systems view (3rd ed.). Wadsworth.

Munir, F., Anwar, A., & Kee, D. M. H. (2021). The online learning and students’ fear of COVID-19: Study in Malaysia and Pakistan. The International Review of Research in Open and Distributed Learning, 22(4), 1-21. https://doi.org/10.19173/irrodl.v22i4.5637

National Center for Education Statistics. (2015). Number of virtual schools by state and school type, magnet status, charter status, and shared-time status: School year 2013–14. https://nces.ed.gov/ccd/tables/201314_Virtual_Schools_table_1.asp

National Center for Education Statistics. (2020). Number of virtual schools by state and school type, magnet status, charter status, and shared-time status: School year 2018–19. https://nces.ed.gov/ccd/tables/201819_Virtual_Schools_table_1.asp

National Center for Education Statistics. (2021). Number of virtual schools by state and school type, magnet status, charter status, and shared-time status: School year 2019–20. https://nces.ed.gov/ccd/tables/201920_Virtual_Schools_table_1.asp

Nguyen, T., Netto, C. L. M., Wilkins, J. F., Bröker, P., Vargas, E. E., Sealfon, C. D., Puthipiroj, P., Li, K. S., Bowler, J. E., Hinson, H. R., Pujar, M., & Stein, G. M. (2021). Insights into students’ experiences and perceptions of remote learning methods: From the COVID-19 pandemic to best practice for the future. Frontiers in Education, 6, 647986. https://doi.org/10.3389/feduc.2021.647986

Oinas, S., Hotulainen, R., Koivuhovi, S., Brunila, K., & Vainikainen, M-P. (2022). Remote learning experiences of girls, boys and non-binary students. Computers & Education, 183, 104499. https://doi.org/10.1016/j.compedu.2022.104499

Park, A. (2022, April 29). The U.S. is in a 'Controlled Pandemic' Phase of COVID-19. But what does that mean? Time. https://time.com/6172048/covid-19-controlled-pandemic-endemic/

Petersen, G. B. L., Petkakis, G., & Makransky, G. (2022). A study of how immersion and interactivity drive VR learning. Computers & Education, 179, 104429. https://doi.org/10.1016/j.compedu.2021.104429

Picciano, A., Dziuban, C., & Graham, C. R. (Eds.). (2014). Blended learning: Research perspectives, Volume 2. Routledge.

Picciano, A., Dziuban, C., Graham, C. R., & Moskal, P. (Eds.). (2022). Blended learning: Research perspectives, Volume 3. Routledge.

Pollard, R., & Kumar, S. (2021). Mentoring graduate students online: Strategies and challenges. The International Review of Research in Open and Distributed Learning, 22(2), 267-284. https://doi.org/10.19173/irrodl.v22i2.5093

Salis-Pilco, S. Z., Yang, Y., & Zhang, Z. (2022). Student engagement in online learning in Latin American higher education during the COVID-19 pandemic: A systematic review. British Journal of Educational Technology, 53(3), 593-619. https://doi.org/10.1111/bjet.13190

Seabra, F., Teixeira, A., Abelha, M., & Aires, L. (2021). Emergency remote teaching and learning in Portugal: Preschool to secondary school teachers’ perceptions. Education Sciences, 11, 349. https://doi.org/10.3390/educsci11070349

Shen, Y. W., Reynolds, T. H., Bonk, C. J., & Brush, T. A. (2013). A case study of applying blended learning in an accelerated post-baccalaureate teacher education program. Journal of Educational Technology Development and Exchange, 6(1), 59-78.

Tallent-Runnels, M. K., Thomas, J. A., Lan, W. Y., Cooper, S., Ahern, T. C., Shaw, S. M., & Liu, X. (2006). Teaching courses online: A review of the research. Review of Educational Research, 76(1), 93–135. https://doi.org/10.3102/00346543076001093

Theirworld. (2020, March 20). Hundreds of millions of students now learning from home after coronavirus crisis shuts their schools. ReliefWeb. https://reliefweb.int/report/world/hundreds-millions-students-now-learning-home-after-coronavirus-crisis-shuts-their

UNESCO. (2020). UNESCO rallies international organizations, civil society and private sector partners in a broad Coalition to ensure #LearningNeverStops. https://en.unesco.org/news/unesco-rallies-international-organizations-civil-society-and-private-sector-partners-broad

VanLeeuwen, C. A., Veletsianos, G., Johnson, N., & Belikov, O. (2021). Never-ending repetitiveness, sadness, loss, and “juggling with a blindfold on:” Lived experiences of Canadian college and university faculty members during the COVID-19 pandemic. British Journal of Educational Technology, 52, 1306-1322. https://doi.org/10.1111/bjet.13065

Wedemeyer, C. A. (1981). Learning at the back door: Reflections on non-traditional learning in the lifespan. University of Wisconsin Press.

Zawacki-Richter, O., Backer, E., & Vogt, S. (2009). Review of distance education research (2000 to 2008): Analysis of research areas, methods, and authorship patterns. International Review of Research in Open and Distance Learning, 10(6), 30. https://doi.org/10.19173/irrodl.v10i6.741

Zhan, Z., Li, Y., Yuan, X., & Chen, Q. (2021). To be or not to be: Parents’ willingness to send their children back to school after the COVID-19 outbreak. The Asia-Pacific Education Researcher. https://doi.org/10.1007/s40299-021-00610-9

As a condition of publication, the author agrees to apply the Creative Commons – Attribution International 4.0 (CC-BY) License to OLJ articles. See: https://creativecommons.org/licenses/by/4.0/.

This licence allows anyone to reproduce OLJ articles at no cost and without further permission as long as they attribute the author and the journal. This permission includes printing, sharing and other forms of distribution.

Author(s) hold copyright in their work and retain publishing rights without restrictions.


What we know about online learning and the homework gap amid the pandemic

America’s K-12 students are returning to classrooms this fall after 18 months of virtual learning at home during the COVID-19 pandemic. Some students who lacked the home internet connectivity needed to finish schoolwork during this time – an experience often called the “homework gap” – may continue to feel the effects this school year.

Here is what Pew Research Center surveys found about the students most likely to be affected by the homework gap and their experiences learning from home.

Children across the United States are returning to physical classrooms this fall after 18 months at home, raising questions about how digital disparities at home will affect the existing homework gap between certain groups of students.

Methodology for each Pew Research Center poll can be found at the links in the post.

With the exception of the 2018 survey, everyone who took part in the surveys is a member of the Center’s American Trends Panel (ATP), an online survey panel that is recruited through national, random sampling of residential addresses. This way, nearly all U.S. adults have a chance of selection. The survey is weighted to be representative of the U.S. adult population by gender, race, ethnicity, partisan affiliation, education and other categories. Read more about the ATP’s methodology.

The 2018 data on U.S. teens comes from a Center poll of 743 U.S. teens ages 13 to 17 conducted March 7 to April 10, 2018, using the NORC AmeriSpeak panel. AmeriSpeak is a nationally representative, probability-based panel of the U.S. household population. Randomly selected U.S. households are sampled with a known, nonzero probability of selection from the NORC National Frame, and then contacted by U.S. mail, telephone or face-to-face interviewers. Read more details about the NORC AmeriSpeak panel methodology.

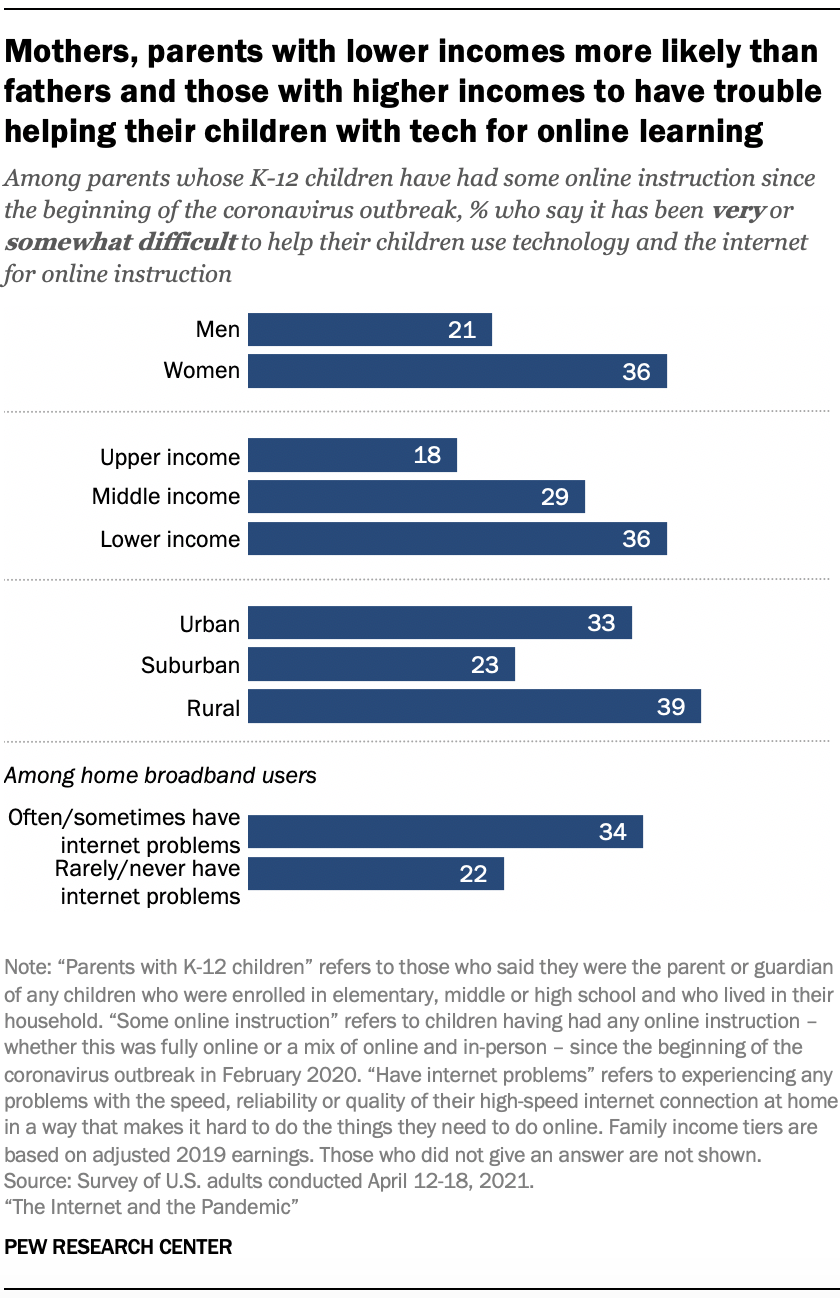

Around nine-in-ten U.S. parents with K-12 children at home (93%) said their children have had some online instruction since the coronavirus outbreak began in February 2020, and 30% of these parents said it has been very or somewhat difficult for them to help their children use technology or the internet as an educational tool, according to an April 2021 Pew Research Center survey.

Gaps existed for certain groups of parents. For example, parents with lower and middle incomes (36% and 29%, respectively) were more likely to report that this was very or somewhat difficult, compared with just 18% of parents with higher incomes.

This challenge was also prevalent for parents in certain types of communities – 39% of rural residents and 33% of urban residents said they have had at least some difficulty, compared with 23% of suburban residents.

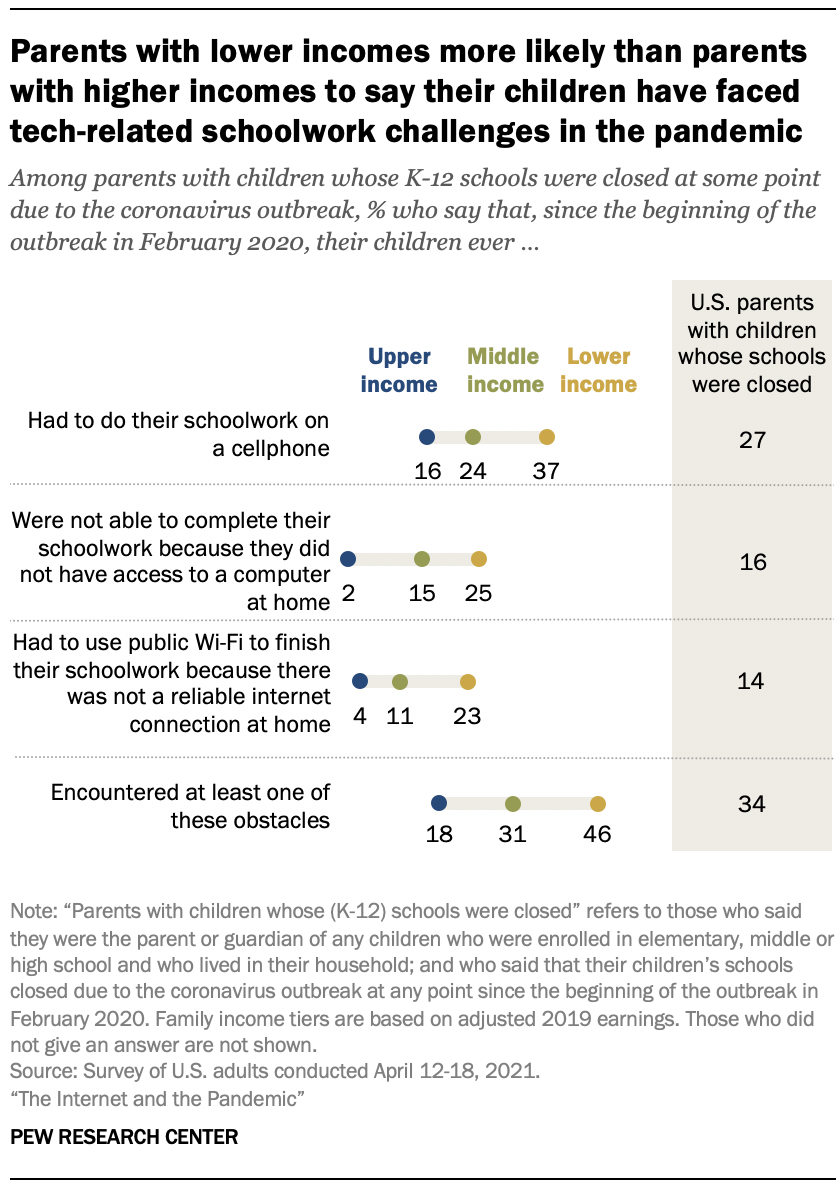

Around a third of parents with children whose schools were closed during the pandemic (34%) said that their child encountered at least one technology-related obstacle to completing their schoolwork during that time. In the April 2021 survey, the Center asked parents of K-12 children whose schools had closed at some point about whether their children had faced three technology-related obstacles. Around a quarter of parents (27%) said their children had to do schoolwork on a cellphone, 16% said their child was unable to complete schoolwork because of a lack of computer access at home, and another 14% said their child had to use public Wi-Fi to finish schoolwork because there was no reliable connection at home.

Parents with lower incomes whose children’s schools closed amid COVID-19 were more likely to say their children faced technology-related obstacles while learning from home. Nearly half of these parents (46%) said their child faced at least one of the three obstacles to learning asked about in the survey, compared with 31% of parents with midrange incomes and 18% of parents with higher incomes.

Of the three obstacles asked about in the survey, parents with lower incomes were most likely to say that their child had to do their schoolwork on a cellphone (37%). About a quarter said their child was unable to complete their schoolwork because they did not have computer access at home (25%), or that they had to use public Wi-Fi because they did not have a reliable internet connection at home (23%).

A Center survey conducted in April 2020 found that, at that time, 59% of parents with lower incomes who had children engaged in remote learning said their children would likely face at least one of the obstacles asked about in the 2021 survey.

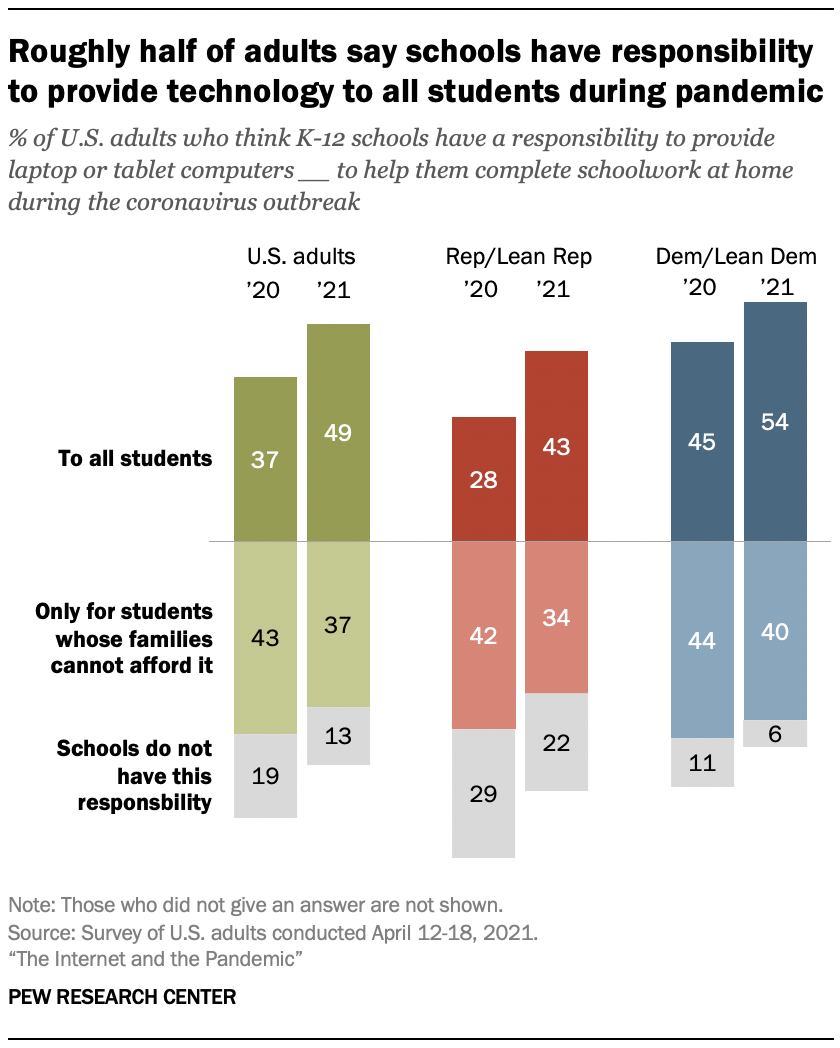

A year into the outbreak, an increasing share of U.S. adults said that K-12 schools have a responsibility to provide all students with laptop or tablet computers in order to help them complete their schoolwork at home during the pandemic. About half of all adults (49%) said this in the spring 2021 survey, up 12 percentage points from a year earlier. An additional 37% of adults said that schools should provide these resources only to students whose families cannot afford them, and just 13% said schools do not have this responsibility.

Larger shares of both Republicans and Democrats said in April 2021 than a year earlier that K-12 schools have a responsibility to provide computers to all students to help them complete schoolwork at home. Among Republicans and those who lean to the Republican Party, the share rose 15 points, from 28% in April 2020 to 43%. In the 2021 survey, 22% of Republicans also said schools do not have this responsibility at all, compared with 6% of Democrats and Democratic leaners.

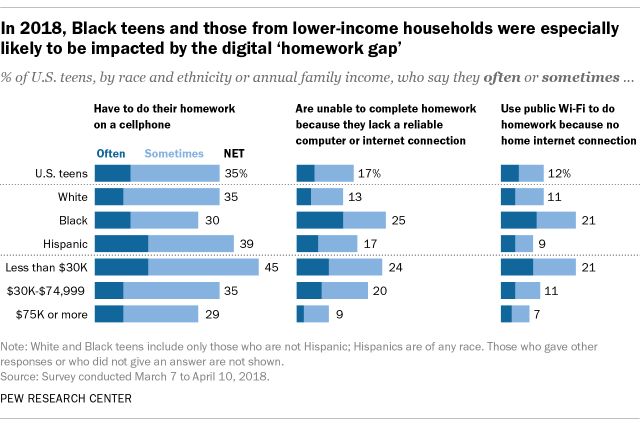

Even before the pandemic, Black teens and those living in lower-income households were more likely than other groups to report trouble completing homework assignments because they did not have reliable technology access. Nearly one-in-five teens ages 13 to 17 (17%) said they are often or sometimes unable to complete homework assignments because they do not have reliable access to a computer or internet connection, a 2018 Center survey of U.S. teens found.

One-quarter of Black teens said they were at least sometimes unable to complete their homework due to a lack of digital access, including 13% who said this happened to them often. Just 4% of White teens and 6% of Hispanic teens said this often happened to them. (There were not enough Asian respondents in the survey sample to be broken out into a separate analysis.)

A wide gap also existed by income level: 24% of teens whose annual family income was less than $30,000 said the lack of a dependable computer or internet connection often or sometimes prevented them from finishing their homework, compared with 9% of teens in households earning $75,000 or more a year.


Katherine Schaeffer is a research analyst at Pew Research Center.
