An open portfolio of interoperable, industry leading products

The Dotmatics digital science platform provides the first true end-to-end solution for scientific R&D, combining an enterprise data platform with the most widely used applications for data analysis, biologics, flow cytometry, chemicals innovation, and more.

Statistical analysis and graphing software for scientists

Bioinformatics, cloning, and antibody discovery software

Plan, visualize, & document core molecular biology procedures

Electronic Lab Notebook to organize, search and share data

Proteomics software for analysis of mass spec data

Modern cytometry analysis platform

Analysis, statistics, graphing and reporting of flow cytometry data

Software to optimize designs of clinical trials

The Ultimate Guide to ANOVA

Get all of your ANOVA questions answered here

ANOVA is the go-to analysis tool for classical experimental design, which forms the backbone of scientific research.

In this article, we’ll guide you through what ANOVA is, how to determine which version to use to evaluate your particular experiment, and provide detailed examples for the most common forms of ANOVA.

This includes a (brief) discussion of crossed, nested, fixed and random factors, and covers the majority of ANOVA models that a scientist would encounter before requiring the assistance of a statistician or modeling expert.

What is ANOVA used for?

ANOVA, or (Fisher’s) analysis of variance, is a critical analytical technique for evaluating differences between three or more sample means from an experiment. As the name implies, it partitions out the variance in the response variable based on one or more explanatory factors.

As you will see there are many types of ANOVA such as one-, two-, and three-way ANOVA as well as nested and repeated measures ANOVA. The graphic below shows a simple example of an experiment that requires ANOVA in which researchers measured the levels of neutrophil extracellular traps (NETs) in plasma across patients with different viral respiratory infections.

Many researchers may not realize that, for the majority of experiments, the characteristics of the experiment that you run dictate the ANOVA that you need to use to test the results. While it’s a massive topic (with professional training needed for some of the advanced techniques), this is a practical guide covering what most researchers need to know about ANOVA.

When should I use ANOVA?

If your response variable is numeric, and you’re looking for how that number differs across several categorical groups, then ANOVA is an ideal place to start. After running an experiment, ANOVA is used to analyze whether there are differences between the mean response of one or more of these grouping factors.

ANOVA can handle a large variety of experimental factors such as repeated measures on the same experimental unit (e.g., before/during/after).

If instead of evaluating treatment differences, you want to develop a model using a set of numeric variables to predict that numeric response variable, see linear regression and t tests .

What is the difference between one-way, two-way and three-way ANOVA?

The number of “ways” in ANOVA (e.g., one-way, two-way, …) is simply the number of factors in your experiment.

Although the difference in names sounds trivial, the complexity of ANOVA increases greatly with each added factor. To use an example from agriculture, let’s say we have designed an experiment to research how different factors influence the yield of a crop.

An experiment with a single factor

In the most basic version, we want to evaluate three different fertilizers. Because we have more than two groups, we have to use ANOVA. Since there is only one factor (fertilizer), this is a one-way ANOVA. One-way ANOVA is the easiest to analyze and understand, but probably not that useful in practice, because having only one factor is a pretty simplistic experiment.

What happens when you add a second factor?

If we have two different fields, we might want to add a second factor to see if the field itself influences growth. Within each field, we apply all three fertilizers (which is still the main interest). This is called a crossed design. In this case we have two factors, field and fertilizer, and would need a two-way ANOVA.

As you might imagine, this makes interpretation more complicated (although still very manageable) simply because more factors are involved. There is now a fertilizer effect, as well as a field effect, and there could be an interaction effect, where the fertilizer behaves differently on each field.

How about adding a third factor?

Finally, it is possible to have more than two factors in an ANOVA. In our example, perhaps you also wanted to test out different irrigation systems. You could have a three-way ANOVA due to the presence of fertilizer, field, and irrigation factors. This greatly increases the complication.

Now in addition to the three main effects (fertilizer, field and irrigation), there are three two-way interaction effects (fertilizer by field, fertilizer by irrigation, and field by irrigation), and one three-way interaction effect.

If any of the interaction effects are statistically significant, then presenting the results gets quite complicated. “Fertilizer A works better on Field B with Irrigation Method C ….”

In practice, two-way ANOVA is often as complex as many researchers want to get before consulting with a statistician. That being said, three-way ANOVAs are cumbersome, but manageable when each factor only has two levels.

What are crossed and nested factors?

In addition to increasing the difficulty with interpretation, experiments (or the resulting ANOVA) with more than one factor add another level of complexity, which is determining whether the factors are crossed or nested.

With crossed factors, every combination of levels among each factor is observed. For example, each fertilizer is applied to each field (so the fields are subdivided into three sections in this case).

With nested factors, different levels of a factor appear within another factor. An example is applying different fertilizers to each field, such as fertilizers A and B to field 1 and fertilizers C and D to field 2. See more about nested ANOVA here .

What are fixed and random factors?

Another challenging concept with two or more factors is determining whether to treat the factors as fixed or random.

Fixed factors are used when all levels of a factor (e.g., Fertilizer A, Fertilizer B, Fertilizer C) are specified and you want to determine the effect that factor has on the mean response.

Random factors are used when only some levels of a factor are observed (e.g., Field 1, Field 2, Field 3) out of a large or infinite possible number (e.g., all fields), but rather than specify the effect of the factor, which you can’t do because you didn’t observe all possible levels, you want to quantify the variability that’s within that factor (variability added within each field).

Many introductory courses on ANOVA only discuss fixed factors, and we will largely follow suit other than with two specific scenarios (nested factors and repeated measures).

What are the (practical) assumptions of ANOVA?

These are one-way ANOVA assumptions, but also carryover for more complicated two-way or repeated measures ANOVA.

- Categorical treatment or factor variables - ANOVA evaluates mean differences between one or more categorical variables (such as treatment groups), which are referred to as factors or “ways.”

- Three or more groups - There must be at least three distinct groups (or levels of a categorical variable) across all factors in an ANOVA. The possibilities are endless: one factor of three different groups, two factors of two groups each (2x2), and so on. If you have fewer than three groups, you can probably get away with a simple t-test.

- Numeric Response - While the groups are categorical, the data measured in each group (i.e., the response variable) still needs to be numeric. ANOVA is fundamentally a quantitative method for measuring the differences in a numeric response between groups. If your response variable isn’t continuous, then you need a more specialized modelling framework such as logistic regression or chi-square contingency table analysis to name a few.

- Random assignment - The makeup of each experimental group should be determined by random selection.

- Normality - The distribution within each factor combination should be approximately normal, although ANOVA is fairly robust to this assumption as the sample size increases due to the central limit theorem.

What is the formula for ANOVA?

The formula to calculate ANOVA varies depending on the number of factors, assumptions about how the factors influence the model (blocking variables, fixed or random effects, nested factors, etc.), and any potential overlap or correlation between observed values (e.g., subsampling, repeated measures).

The good news about running ANOVA in the 21st century is that statistical software handles the majority of the tedious calculations. The main thing that a researcher needs to do is select the appropriate ANOVA.

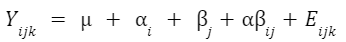

An example formula for a two-factor crossed ANOVA is:

How do I know which ANOVA to use?

As statisticians, we like to imagine that you’re reading this before you’ve run your experiment. You can save a lot of headache by simplifying an experiment into a standard format (when possible) to make the analysis straightforward.

Regardless, we’ll walk you through picking the right ANOVA for your experiment and provide examples for the most popular cases. The first question is:

Do you only have a single factor of interest?

If you have only measured a single factor (e.g., fertilizer A, fertilizer B, .etc.), then use one-way ANOVA . If you have more than one, then you need to consider the following:

Are you measuring the same observational unit (e.g., subject) multiple times?

This is where repeated measures come into play and can be a really confusing question for researchers, but if this sounds like it might describe your experiment, see repeated measures ANOVA . Otherwise:

Are any of the factors nested, where the levels are different depending on the levels of another factor?

In this case, you have a nested ANOVA design. If you don’t have nested factors or repeated measures, then it becomes simple:

Do you have two categorical factors?

Then use two-way ANOVA.

Do you have three categorical factors?

Use three-way ANOVA.

Do you have variables that you recorded that aren’t categorical (such as age, weight, etc.)?

Although these are outside the scope of this guide, if you have a single continuous variable, you might be able to use ANCOVA, which allows for a continuous covariate. With multiple continuous covariates, you probably want to use a mixed model or possibly multiple linear regression.

Prism does offer multiple linear regression but assumes that all factors are fixed. A full “mixed model” analysis is not yet available in Prism, but is offered as options within the one- and two-way ANOVA parameters.

How do I perform ANOVA?

Once you’ve determined which ANOVA is appropriate for your experiment, use statistical software to run the calculations. Below, we provide detailed examples of one, two and three-way ANOVA models.

How do I read and interpret an ANOVA table?

Interpreting any kind of ANOVA should start with the ANOVA table in the output. These tables are what give ANOVA its name, since they partition out the variance in the response into the various factors and interaction terms. This is done by calculating the sum of squares (SS) and mean squares (MS), which can be used to determine the variance in the response that is explained by each factor.

If you have predetermined your level of significance, interpretation mostly comes down to the p-values that come from the F-tests. The null hypothesis for each factor is that there is no significant difference between groups of that factor. All of the following factors are statistically significant with a very small p-value.

One-way ANOVA Example

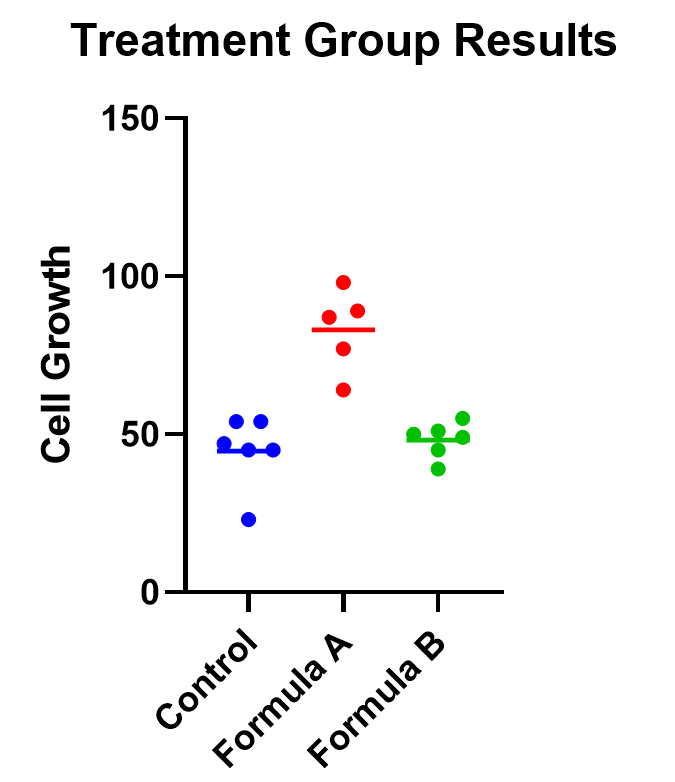

An example of one-way ANOVA is an experiment of cell growth in petri dishes. The response variable is a measure of their growth, and the variable of interest is treatment, which has three levels: formula A, formula B, and a control.

Classic one-way ANOVA assumes equal variances within each sample group. If that isn’t a valid assumption for your data, you have a number of alternatives .

Calculating a one-way ANOVA

Using Prism to do the analysis, we will run a one-way ANOVA and will choose 95% as our significance threshold. Since we are interested in the differences between each of the three groups, we will evaluate each and correct for multiple comparisons (more on this later!).

For the following, we’ll assume equal variances within the treatment groups. Consider

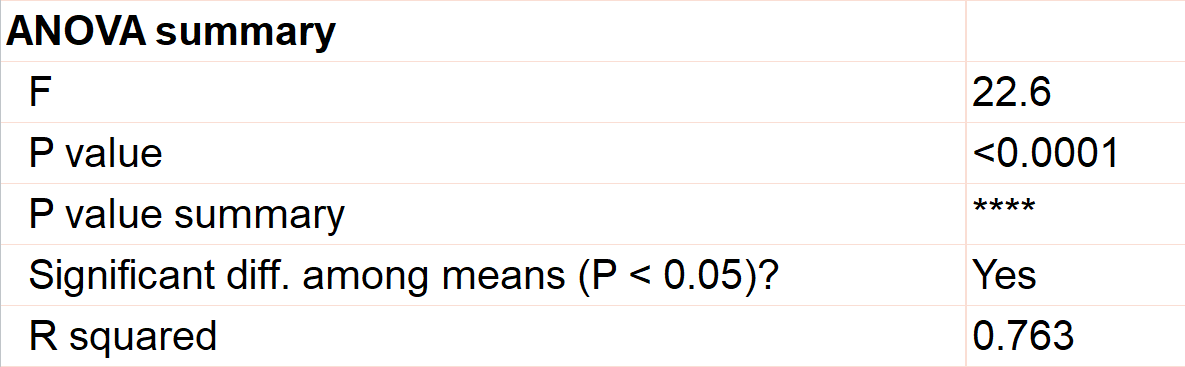

The first test to look at is the overall (or omnibus) F-test, with the null hypothesis that there is no significant difference between any of the treatment groups. In this case, there is a significant difference between the three groups (p<0.0001), which tells us that at least one of the groups has a statistically significant difference.

Now we can move to the heart of the issue, which is to determine which group means are statistically different. To learn more, we should graph the data and test the differences (using a multiple comparison correction).

Graphing one-way ANOVA

The easiest way to visualize the results from an ANOVA is to use a simple chart that shows all of the individual points. Rather than a bar chart, it’s best to use a plot that shows all of the data points (and means) for each group such as a scatter or violin plot.

As an example, below you can see a graph of the cell growth levels for each data point in each treatment group, along with a line to represent their mean. This can help give credence to any significant differences found, as well as show how closely groups overlap.

Determining statistical significance between groups

In addition to the graphic, what we really want to know is which treatment means are statistically different from each other. Because we are performing multiple tests, we’ll use a multiple comparison correction . For our example, we’ll use Tukey’s correction (although if we were only interested in the difference between each formula to the control, we could use Dunnett’s correction instead).

In this case, the mean cell growth for Formula A is significantly higher than the control (p<.0001) and Formula B ( p=0.002 ), but there’s no significant difference between Formula B and the control.

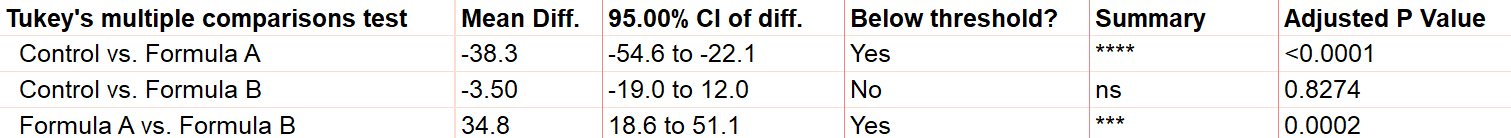

Two-way ANOVA example

For two-way ANOVA, there are two factors involved. Our example will focus on a case of cell lines. Suppose we have a 2x2 design (four total groupings). There are two different treatments (serum-starved and normal culture) and two different fields. There are 19 total cell line “experimental units” being evaluated, up to 5 in each group (note that with 4 groups and 19 observational units, this study isn’t balanced). Although there are multiple units in each group, they are all completely different replicates and therefore not repeated measures of the same unit.

As with one-way ANOVA, it’s a good idea to graph the data as well as look at the ANOVA table for results.

Graphing two-way ANOVA

There are many options here. Like our one-way example, we recommend a similar graphing approach that shows all the data points themselves along with the means.

Determining statistical significance between groups in two-way ANOVA

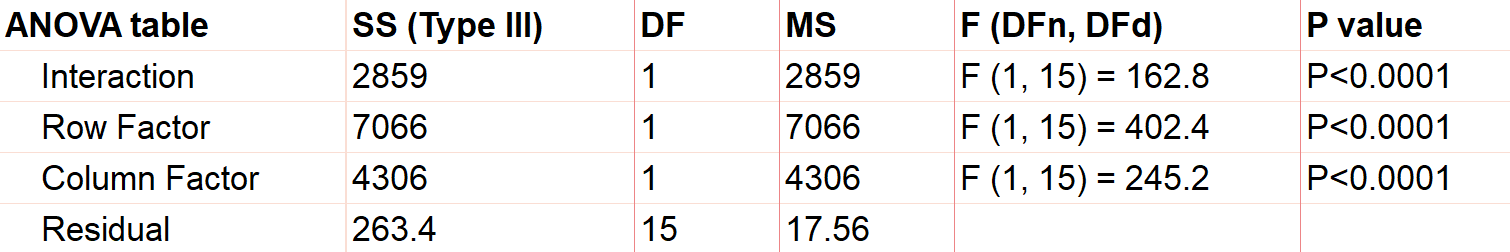

Let’s use a two-way ANOVA with a 95% significance threshold to evaluate both factors’ effects on the response, a measure of growth.

Feel free to use our two-way ANOVA checklist as often as you need for your own analysis.

First, notice there are three sources of variation included in the model, which are interaction, treatment, and field.

The first effect to look at is the interaction term, because if it’s significant, it changes how you interpret the main effects (e.g., treatment and field). The interaction effect calculates if the effect of a factor depends on the other factor. In this case, the significant interaction term (p<.0001) indicates that the treatment effect depends on the field type.

A significant interaction term muddies the interpretation, so that you no longer have the simple conclusion that “Treatment A outperforms Treatment B.” In this case, the graphic is particularly useful. It suggests that while there may be some difference between three of the groups, the precise combination of serum starved in field 2 outperformed the rest.

To confirm whether there is a statistically significant result, we would run pairwise comparisons (comparing each factor level combination with every other one) and account for multiple comparisons.

Do I need to correct for multiple comparisons for two-way ANOVA?

If you’re comparing the means for more than one combination of treatment groups, then absolutely! Here’s more information about multiple comparisons for two-way ANOVA .

Repeated measures ANOVA

So far we have focused almost exclusively on “ordinary” ANOVA and its differences depending on how many factors are involved. In all of these cases, each observation is completely unrelated to the others. Other than the combination of factors that may be the same across replicates, each replicate on its own is independent.

There is a second common branch of ANOVA known as repeated measures . In these cases, the units are related in that they are matched up in some way. Repeated measures are used to model correlation between measurements within an individual or subject. Repeated measures ANOVA is useful (and increases statistical power) when the variability within individuals is large relative to the variability among individuals.

It’s important that all levels of your repeated measures factor (usually time) are consistent. If they aren’t, you’ll need to consider running a mixed model, which is a more advanced statistical technique.

There are two common forms of repeated measures:

- You observe the same individual or subject at different time points. If you’re familiar with paired t-tests, this is an extension to that. (You can also have the same individual receive all of the treatments, which adds another level of repeated measures.)

- You have a randomized block design, where matched elements receive each treatment. For example, you split a large sample of blood taken from one person into 3 (or more) smaller samples, and each of those smaller samples gets exactly one treatment.

Repeated measures ANOVA can have any number of factors. See analysis checklists for one-way repeated measures ANOVA and two-way repeated measures ANOVA .

What does it mean to assume sphericity with repeated measures ANOVA?

Repeated measures are almost always treated as random factors, which means that the correlation structure between levels of the repeated measures needs to be defined. The assumption of sphericity means that you assume that each level of the repeated measures has the same correlation with every other level.

This is almost never the case with repeated measures over time (e.g., baseline, at treatment, 1 hour after treatment), and in those cases, we recommend not assuming sphericity. However, if you used a randomized block design, then sphericity is usually appropriate .

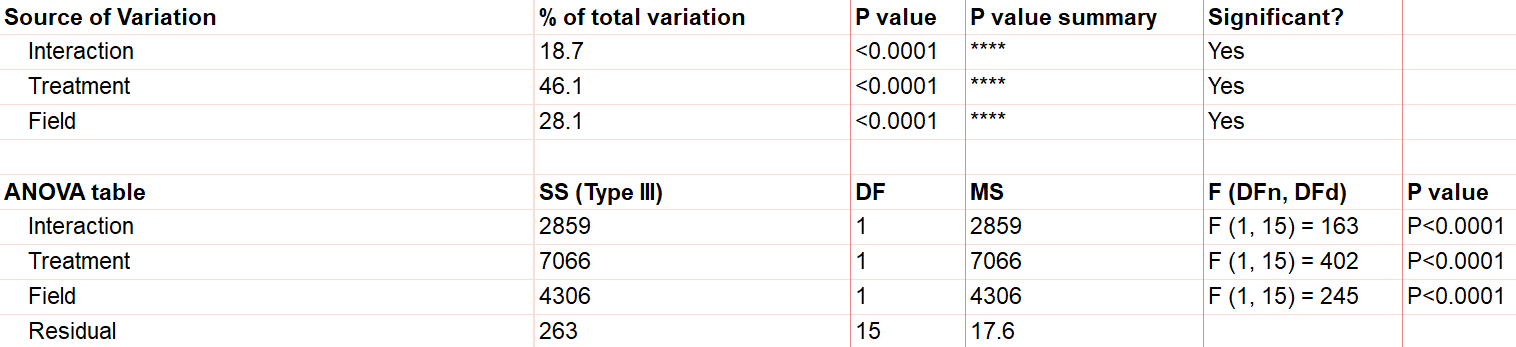

Example two-way ANOVA with repeated measures

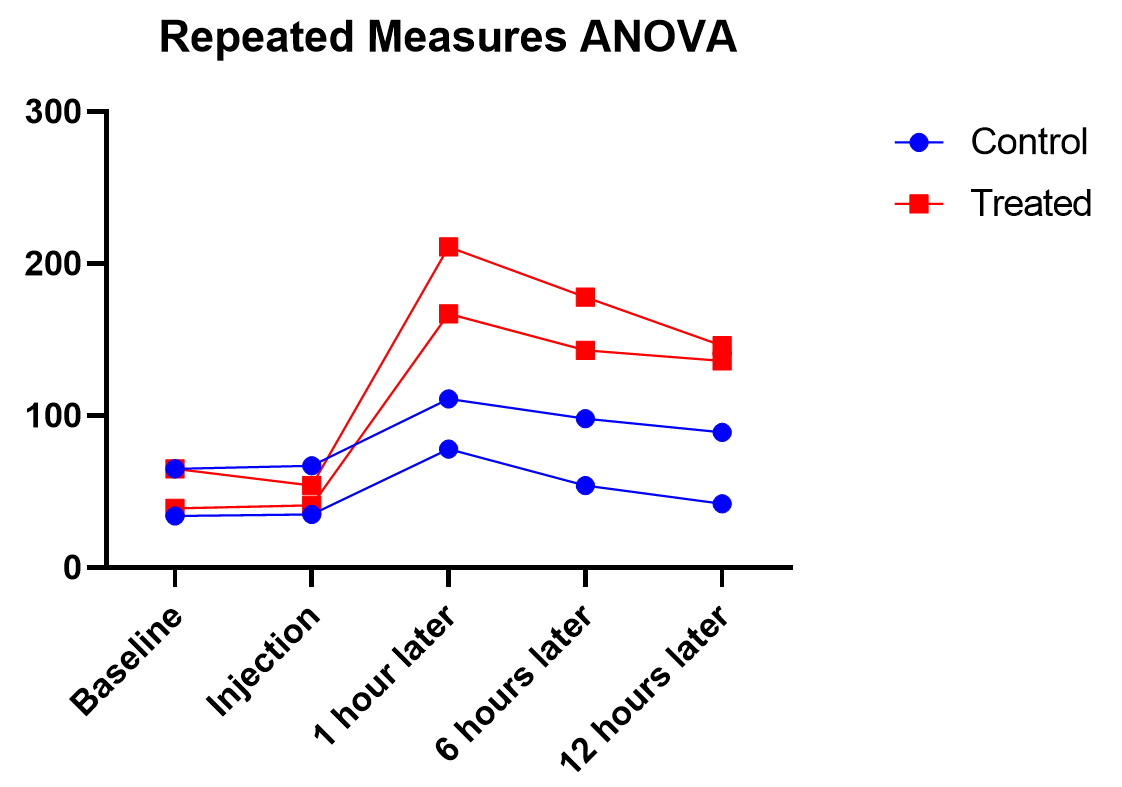

Say we have two treatments (control and treatment) to evaluate using test animals. We’ll apply both treatments to each two animals (replicates) with sufficient time in between the treatments so there isn’t a crossover (or carry-over) effect. Also, we’ll measure five different time points for each treatment (baseline, at time of injection, one hour after, …). This is repeated measures because we will need to measure matching samples from the same animal under each treatment as we track how its stimulation level changes over time.

The output shows the test results from the main and interaction effects. Due to the interaction between time and treatment being significant (p<.0001), the fact that the treatment main effect isn’t significant (p=.154) isn’t noteworthy.

Graphing repeated measures ANOVA

As we’ve been saying, graphing the data is useful, and this is particularly true when the interaction term is significant. Here we get an explanation of why the interaction between treatment and time was significant, but treatment on its own was not. As soon as one hour after injection (and all time points after), treated units show a higher response level than the control even as it decreases over those 12 hours. Thus the effect of time depends on treatment. At the earlier time points, there is no difference between treatment and control.

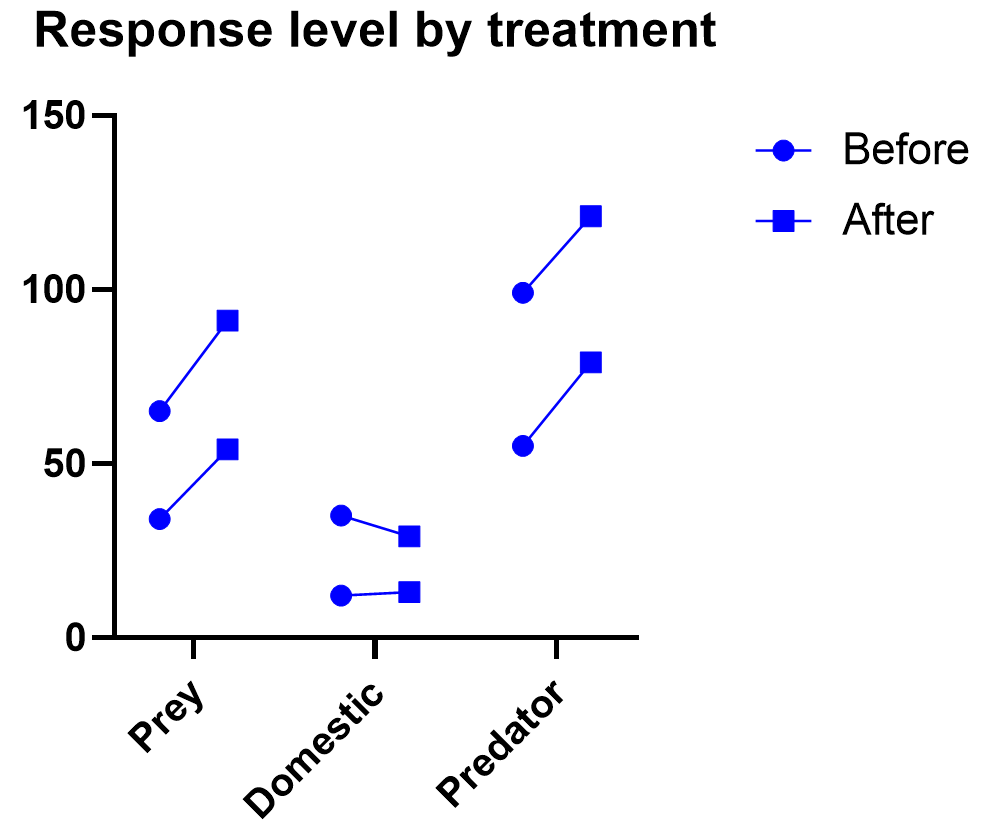

Graphing repeated measures data is an art, but a good graphic helps you understand and communicate the results. For example, it’s a completely different experiment, but here’s a great plot of another repeated measures experiment with before and after values that are measured on three different animal types.

What if I have three or more factors?

Interpreting three or more factors is very challenging and usually requires advanced training and experience .

Just as two-way ANOVA is more complex than one-way, three-way ANOVA adds much more potential for confusion. Not only are you dealing with three different factors, you will now be testing seven hypotheses at the same time. Two-way interactions still exist here, and you may even run into a significant three-way interaction term.

It takes careful planning and advanced experimental design to be able to untangle the combinations that will be involved ( see more details here ).

Non-parametric ANOVA alternatives

As with t-tests (or virtually any statistical method), there are alternatives to ANOVA for testing differences between three groups. ANOVA is means-focused and evaluated in comparison to an F-distribution.

The two main non-parametric cousins to ANOVA are the Kruskal-Wallis and Friedman’s tests. Just as is true with everything else in ANOVA, it is likely that one of the two options is more appropriate for your experiment.

Kruskal-Wallis tests the difference between medians (rather than means) for 3 or more groups. It is only useful as an “ordinary ANOVA” alternative, without matched subjects like you have in repeated measures. Here are some tips for interpreting Kruskal-Wallis test results.

Friedman’s Test is the opposite, designed as an alternative to repeated measures ANOVA with matched subjects. Here are some tips for interpreting Friedman's Test .

What are simple, main, and interaction effects in ANOVA?

Consider the two-way ANOVA model setup that contains two different kinds of effects to evaluate:

The 𝛼 and 𝛽 factors are “main” effects, which are the isolated effect of a given factor. “Main effect” is used interchangeably with “simple effect” in some textbooks.

The interaction term is denoted as “𝛼𝛽”, and it allows for the effect of a factor to depend on the level of another factor. It can only be tested when you have replicates in your study. Otherwise, the error term is assumed to be the interaction term.

What are multiple comparisons?

When you’re doing multiple statistical tests on the same set of data, there’s a greater propensity to discover statistically significant differences that aren’t true differences. Multiple comparison corrections attempt to control for this, and in general control what is called the familywise error rate. There are a number of multiple comparison testing methods , which all have pros and cons depending on your particular experimental design and research questions.

What does the word “way” mean in one-way vs two-way ANOVA?

In statistics overall, it can be hard to keep track of factors, groups, and tails. To the untrained eye “two-way ANOVA” could mean any of these things.

The best way to think about ANOVA is in terms of factors or variables in your experiment. Suppose you have one factor in your analysis (perhaps “treatment”). You will likely see that written as a one-way ANOVA. Even if that factor has several different treatment groups, there is only one factor, and that’s what drives the name.

Also, “way” has absolutely nothing to do with “tails” like a t-test. ANOVA relies on F tests, which can only test for equal vs unequal because they rely on squared terms. So ANOVA does not have the “one-or-two tails” question .

What is the difference between ANOVA and a t-test?

ANOVA is an extension of the t-test. If you only have two group means to compare, use a t-test. Anything more requires ANOVA.

What is the difference between ANOVA and chi-square?

Chi-square is designed for contingency tables, or counts of items within groups (e.g., type of animal). The goal is to see whether the counts in a particular sample match the counts you would expect by random chance.

ANOVA separates subjects into groups for evaluation, but there is some numeric response variable of interest (e.g., glucose level).

Can ANOVA evaluate effects on multiple response variables at the same time?

Multiple response variables makes things much more complicated than multiple factors. ANOVA (as we’ve discussed it here) can obviously handle multiple factors but it isn’t designed for tracking more than one response at a time.

Technically, there is an expansion approach designed for this called Multivariate (or Multiple) ANOVA, or more commonly written as MANOVA. Things get complicated quickly, and in general requires advanced training.

Can ANOVA evaluate numeric factors in addition to the usual categorical factors?

It sounds like you are looking for ANCOVA (analysis of covariance). You can treat a continuous (numeric) factor as categorical, in which case you could use ANOVA, but this is a common point of confusion .

What is the definition of ANOVA?

ANOVA stands for analysis of variance, and, true to its name, it is a statistical technique that analyzes how experimental factors influence the variance in the response variable from an experiment.

What is blocking in Anova?

Blocking is an incredibly powerful and useful strategy in experimental design when you have a factor that you think will heavily influence the outcome, so you want to control for it in your experiment. Blocking affects how the randomization is done with the experiment. Usually blocking variables are nuisance variables that are important to control for but are not inherently of interest.

A simple example is an experiment evaluating the efficacy of a medical drug and blocking by age of the subject. To do blocking, you must first gather the ages of all of the participants in the study, appropriately bin them into groups (e.g., 10-30, 30-50, etc.), and then randomly assign an equal number of treatments to the subjects within each group.

There’s an entire field of study around blocking. Some examples include having multiple blocking variables, incomplete block designs where not all treatments appear in all blocks, and balanced (or unbalanced) blocking designs where equal (or unequal) numbers of replicates appear in each block and treatment combination.

What is ANOVA in statistics?

For a one-way ANOVA test, the overall ANOVA null hypothesis is that the mean responses are equal for all treatments. The ANOVA p-value comes from an F-test.

Can I do ANOVA in R?

While Prism makes ANOVA much more straightforward, you can use open-source coding languages like R as well. Here are some examples of R code for repeated measures ANOVA, both one-way ANOVA in R and two-way ANOVA in R .

Perform your own ANOVA

Are you ready for your own Analysis of variance? Prism makes choosing the correct ANOVA model simple and transparent .

Start your 30 day free trial of Prism and get access to:

- A step by step guide on how to perform ANOVA

- Sample data to save you time

- More tips on how Prism can help your research

With Prism, in a matter of minutes you learn how to go from entering data to performing statistical analyses and generating high-quality graphs.

Thank you for visiting nature.com. You are using a browser version with limited support for CSS. To obtain the best experience, we recommend you use a more up to date browser (or turn off compatibility mode in Internet Explorer). In the meantime, to ensure continued support, we are displaying the site without styles and JavaScript.

- View all journals

- My Account Login

- Explore content

- About the journal

- Publish with us

- Sign up for alerts

- Open access

- Published: 16 March 2018

An ANOVA approach for statistical comparisons of brain networks

- Daniel Fraiman ORCID: orcid.org/0000-0002-0482-9137 1 , 2 &

- Ricardo Fraiman 3 , 4

Scientific Reports volume 8 , Article number: 4746 ( 2018 ) Cite this article

20k Accesses

14 Citations

3 Altmetric

Metrics details

- Data processing

- Statistical methods

The study of brain networks has developed extensively over the last couple of decades. By contrast, techniques for the statistical analysis of these networks are less developed. In this paper, we focus on the statistical comparison of brain networks in a nonparametric framework and discuss the associated detection and identification problems. We tested network differences between groups with an analysis of variance (ANOVA) test we developed specifically for networks. We also propose and analyse the behaviour of a new statistical procedure designed to identify different subnetworks. As an example, we show the application of this tool in resting-state fMRI data obtained from the Human Connectome Project. We identify, among other variables, that the amount of sleep the days before the scan is a relevant variable that must be controlled. Finally, we discuss the potential bias in neuroimaging findings that is generated by some behavioural and brain structure variables. Our method can also be applied to other kind of networks such as protein interaction networks, gene networks or social networks.

Similar content being viewed by others

Functional brain networks reflect spatial and temporal autocorrelation

Null models in network neuroscience

Telling functional networks apart using ranked network features stability

Introduction.

Understanding how individual neurons, groups of neurons and brain regions connect is a fundamental issue in neuroscience. Imaging and electrophysiology have allowed researchers to investigate this issue at different brain scales. At the macroscale, the study of brain connectivity is dominated by MRI, which is the main technique used to study how different brain regions connect and communicate. Researchers use different experimental protocols in an attempt to describe the true brain networks of individuals with disorders as well as those of healthy individuals. Understanding resting state networks is crucial for understanding modified networks, such as those involved in emotion, pain, motor learning, memory, reward processing, and cognitive development, among others. Comparing brain networks accurately can also lead to the precise early diagnosis of neuropsychiatric and neurological disorders 1 , 2 . Rigorous mathematical methods are needed to conduct such comparisons.

Currently, the two main techniques used to measure brain networks at the whole brain scale are Diffusion Tensor Imaging (DTI) and resting-state functional magnetic resonance imaging (rs-fMRI). In DTI, large white-matter fibres are measured to create a connectional neuroanatomy brain network, while in rs-fMRI, functional connections are inferred by measuring the BOLD activity at each voxel and creating a whole brain functional network based on functionally-connected voxels (i.e., those with similar behaviour). Despite technical limitations, both techniques are routinely used to provide a structural and dynamic explanation for some aspects of human brain function. These magnetic resonance neuroimages are typically analysed by applying network theory 3 , 4 , which has gained considerable attention for the analysis of brain data over the last 10 years.

The space of networks with as few as 10 nodes (brain regions) contains as many as 10 13 different networks. Thus, one can imagine the number of networks if one analyses brain network populations (e.g. healthy and unhealthy) with, say, 1000 nodes. However, most studies currently report data with few subjects, and the neuroscience community has recently begun to address this issue 5 , 6 , 7 and question the reproducibility of such findings 8 , 9 , 10 . In this work, we present a tool for comparing samples of brain networks. This study contributes to a fast-growing area of research: network statistics of network samples 11 , 12 , 13 , 14 .

We organized the paper as follows: In the Results section, we first present a discussion about the type of differences that can be observed when comparing brain networks. Second, we present the method for comparing brain networks and identifying network differences that works well even with small samples. Third, we present an example that illustrates in greater detail the concept of comparing networks. Next, we apply the method to resting-state fMRI data from the Human Connectome Project and discuss the potential biases generated by some behavioural and brain structural variables. Finally, in the Discussion section, we discuss possible improvements, the impact of sample size, and the effects of confounding variables.

Preliminars

Most studies that compare brain networks (e.g., in healthy controls vs. patients) try to identify the subnetworks, hubs, modules, etc. that are affected in the particular disease. There is a widespread belief (largely supported by data) that the brain network modifications induced by the factor studied (disease, age, sex, stimulus) are specific . This means that the factor will similarly affect the brains of different people.

On the other hand, labeled networks can be modified in many different ways while preserving the nodes, and these modifications can be categorized into three. In the first category, called here localized modifications , some particular identified links suffer changes by the factor. In the second, called unlocalized modifications , some links change, but the changed links differ among subjects. For example, the degree of interconnection of some nodes may decrease/increase by 50%, but in some individuals, this happens in the frontal lobe, in others in the right parietal lobe or the occipital lobe, and so on. In this case, the localization of the links/nodes affected by the factor can be considered random. In the third category, called here global modifications , some links (not the same across subjects) are changed, and these changes produce a global alteration of the network. For example, they can notably decrease/increase the average path length, the average degree, or the number of modules, or just produce more heterogeneous networks in a population of homogeneous ones. This last category is similar to the unlocalized modifications case, but in this case, an important global change in the network occurs.

In all cases, there are changes in the links influenced by the “factor”, while nodes are fixed. How to detect if any of these changes have occurred (hereinafter called detection) is one of the main challenges of this work. And, once their occurrence has been determined, we aim to identify where they occurred (hereinafter called identification). The difficulty lies in statistically asserting that the factor produced true modifications in the huge space of labeled networks. We aim to detect all three types of network modifications. Clearly, as is always true in statistics, more precise methods can be proposed when hypotheses regarding the data are more accurate (e.g., that the differences belong to the global modifications category). However, this last approach requires one to make many more assumptions about the brain’s behaviour. The assumptions are generally unverifiable; for this reason, we use a nonparametric approach, following the adage “less is more”, which is often very useful in statistics. For the detection problem, we developed an analysis of variance (ANOVA) test specifically for networks. As is well known, ANOVA is designed to test differences among the means of the subpopulations, and one may observe that equal means have different distributions. However, we propose a definition of means that will differ in the presence of any of the three modification categories mentioned above. As is well known, the identification stage is computationally far more complicated, and we address it partially looking at the subset of links or a subnetwork that present the highest network differences between groups.

Network Theory Framework

A network (or graph), denoted by G = ( V , E ), is an object described by a set V of nodes (vertices) and a set E ⊂ V × V of links (edges) between them. In what follows, we consider families of networks defined over the same fixed finite set of n nodes (brain regions). A network is completely described by its adjacency matrix A ∈ {0, 1} n × n , where A ( i , j ) = 1 if and only if the link ( i , j ) ∈ E . If the matrix A is symmetric, then the graph is undirected; otherwise, we have a directed graph.

Let us suppose we are interested in studying the brain network of a given population, where most likely brain networks differ from each other to some extent. If we randomly choose a person from this population and study his/her brain network, what we obtain is a random network. This random network, G , will have a given probability of being network G 1 , another probability of being network G 2 , and so on until \({G}_{\tilde{n}}\) . Therefore, a random network is completely characterized by its probability law,

Likewise, a random variable is also completely characterized by its probability law. In this case, the most common test for comparing many subpopulations is the analysis of variance test (ANOVA). This test rejects the null hypothesis of equal means if the averages are statistically different. Here, we propose an ANOVA test designed specifically to compare networks.

To develop this test, we first need to specify the null assumption in terms of some notion of mean network and a statistic to base the test on. We only have at hand two main tools for that: the adjacency matrices of the networks and a notion of distance between networks.

The first step for comparing networks is to define a distance or metric between them. Given two networks G 1 , G 2 we consider the most classical distance, the edit distance 15 defined as

This distance corresponds to the minimum number of links that must be added and subtracted to transform G 1 into G 2 (i.e. the number of different links), and is the L 1 distance between the two matrices. We will also use equation ( 2 ) for the case of weighted networks, i.e. for matrices with A ( i , j ) taking values between 0 and 1. It is important to mention that the results presented here are still valid under other metrics 16 , 17 , 18 .

Next, we consider the average weighted network - hereinafter called the average network - defined as the network whose adjacency matrix is the average of the adjacency matrices in the sample of networks. More precisely, we consider the following definitions.

Definition 1

Given a sample of networks { G 1 , …, G l } with the same distribution

The average network \( {\mathcal M} \) that has as adjacency matrix the average of the adjacency matrices

which in terms of the population version corresponds to the mean matrix \( {\mathcal M} (i,\,j)={\mathbb{E}}({A}_{{\bf{G}}}(i,\,j))=:{p}_{ij}\) .

The average distance around a graph H is defined as

which corresponds to the mean population distance

With these definitions in mind, the natural way to define a measure of network variability is

which measures the average distance (variability) of the networks around the average weighted network.

Given m subpopulations G 1 , …, G m the null assumption for our ANOVA test will be that the means of the m subpopulations \({\tilde{{ {\mathcal M} }}}_{1},\,\ldots ,\,{\tilde{{ {\mathcal M} }}}_{m}\) are the same. The test statistic will be based on a normalized version of the sum of the differences between \({\bar{d}}_{{G}^{i}}({{ {\mathcal M} }}_{i})\) and \({\bar{d}}_{G}({{ {\mathcal M} }}_{i})\) , where \({\bar{d}}_{{G}^{i}}\) and \({\bar{d}}_{G}\) are calculated according to (4) using the i –sample and the pooled sample respectively. This is developed in more detail in the next section.

Detecting and identifying network differences

Now we address the testing problem. Let \({G}_{1}^{1},{G}_{2}^{1},\ldots ,{G}_{{n}_{1}}^{1}\) denote the networks from subpopulation 1, \({G}_{1}^{2},{G}_{2}^{2},\ldots ,{G}_{{n}_{2}}^{2}\) the ones from subpopulation 2, and so on until \({G}_{1}^{m},{G}_{2}^{m},\ldots ,{G}_{{n}_{m}}^{m}\) the networks of subpopulation m . Let G 1 , G 2 , …, G n denote, without superscript, the complete pooled sample of networks, where \(n={\sum }_{i\mathrm{=1}}^{m}{n}_{i}\) . And finally, let \({{ {\mathcal M} }}_{i}\) and σ i denote the average network and the variability of the i -subpopulation of networks. We want to test (H 0 )

that all the subpopulations have the same mean network, under the alternative that at least one subpopulation has a different mean network.

It is interesting to note that for objects that are networks, the average network ( \({ {\mathcal M} }\) ) and the variability ( σ ) are not independent summary measures. In fact, the relationship between them is given by

Therefore, the proposed test can also be considered a test for equal variability. The proposed statistic for testing the null hypothesis is:

where a is a normalization constant given in Supplementary Information 1.3 . This statistic measures the difference between the network variability of each specific subpopulation and the average distance between all the populations and the specific average network. Theorem 1 states that under the null hypothesis (items (i) and (ii)) T is asymptotically Normal(0, 1), and if H 0 is false (item (iii)) T will be smaller than some negative constant c . This specific value is obtained by the following theorem (see the Supplementary Information 1 for the proof).

. Under the null hypothesis, the T statistic fulfills (i) and (ii), while T is sensitive to the alternative hypothesis, and (iii) holds true.

\({\mathbb{E}}(T)=0\)

T is asymptotically ( K : = min { n 1 , n 2 , .., n m } → ∞) Normal(0, 1).

Under the alternative hypothesis, T will be smaller than any negative value if K is large enough (The test is consistent).

This theorem provides a procedure for testing whether two or more groups of networks are different. Although having a procedure like the one described is important, we not only want to detect network differences, we also want to identify the specific network changes or differences. We discuss this issue next.

Identification

. Let us suppose that the ANOVA test for networks rejects the null hypothesis, and now the main goal is to identify network differences. Two main objectives are discussed:

Identification of all the links that show statistical differences between groups.

Identification of a set of nodes (a subnetwork) that present the highest network differences between groups.

The identification procedure we describe below aims to eliminate the noise (links or nodes without differences between subpopulations) while keeping the signal (links or nodes with differences between subpopulations).

Given a network G = ( V , E ) and a subset of links \(\tilde{E}\subset E\) , let us generically denote \({G}_{\tilde{E}}\) the subnetwork with the same nodes but with links identified by the set \(\tilde{E}\) . The rest of the links are erased. Given a subset of nodes \(\tilde{V}\subset V\) let us denote \({G}_{\tilde{V}}\) the subnetwork that only has the nodes (with the links between them) identified by the set \(\tilde{V}\) . The T statistic for the sample of networks with only the set of \(\tilde{E}\) links is denoted by \({T}_{\tilde{E}}\) , and the T statistic computed for all the sample networks with only the nodes that belong to \(\tilde{V}\) is denoted by \({T}_{\tilde{V}}\) .

The procedure we propose for identifying all the links that show statistical differences between groups is based on the minimization for \(\tilde{E}\subset E\) of \({T}_{\tilde{E}}\) . The set of links, \(\bar{E}\) , defined by

contain all the links that show statistical differences between subpopulations. One limitation of this identification procedure is that the space E is huge (# E = 2 n ( n −1)/2 where n is the number of nodes) and an efficient algorithm is needed to find the minimum. That is why we focus on identifying a group of nodes (or a subnetwork) expressing the largest differences.

The procedure proposed for identifying the subnetwork with the highest statistical differences between groups is similar to the previous one. It is based on the minimization of \({T}_{\tilde{V}}\) . The set of nodes, N , defined by

contains all relevant nodes. These nodes make up the subnetwork with the largest difference between groups. In this case, the complexity is smaller, since the space V is not so big (# V = 2 n − n − 1).

As in other well-known statistical procedures such as cluster analysis or selection of variables in regression models, finding the size \(\tilde{j}:=\#N\) of the number of nodes in the true subnetwork is a difficult problem due to possible overestimation of noisy data. The advantage of knowing \(\tilde{j}\) is that it reduces the computational complexity for finding the minimum to an order of \({n}^{\tilde{j}}\) instead of 2 n if we have to look for all possible sizes. However, the problem in our setup is less severe than other cases since the objective function ( \({T}_{\tilde{V}}\) ) is not monotonic when the size of the space increases. To solve this problem, we suggest the following algorithm.

Let V { j } be the space of networks with j distinguishable nodes, j ∈ {2, 3, …, n } and \(V=\mathop{\cup }\limits_{j}{V}_{\{j\}}\) . The nodes N j

define a subnetwork. In order to find the true subnetwork with differences between the groups, we now study the sequence T 2 , T 3 , …, T n . We continue with the search (increasing j ) until we find \(\tilde{j}\) fulfilling

where g is a positive function that decreases together with the sample size (in practice, a real value). \({N}_{\tilde{j}}\) are the nodes that make up the subnetwork with the largest differences among the groups or subpopulations studied.

It is important to mention that the procedures described above do not impose any assumption regarding the real connectivity differences between the populations. With additional hypotheses, the procedure can be improved. For instance, in 14 , 19 the authors proposed a methodology for the edge-identification problem that is powerful only when the real difference connection between the populations form a large unique connected component.

Examples and Applications

A relevant problem in the current neuroimaging research agenda is how to compare populations based on their brain networks. The ANOVA test presented above deals with this problem. Moreover, the ANOVA procedure allows the identification of the variables related to the brain network structure. In this section, we show an example and application of this procedure in neuroimaging (EEG, MEG, fMRI, eCoG). In the example we show the robustness of the procedures for testing and identification of different sample sizes. In the application, we analyze fMRI data to understand which variables in the dataset are dependent on the brain network structure. Identifying these variables is also very important because any fair comparison between two or more populations requires these variables be controlled (similar values).

Let us suppose we have three groups of subjects with equal sample size, K , and the brain network of each subject is studied using 16 regions (electrodes or voxels). Studies show connectivity between certain brain regions is different in certain neuropathologies, in aging, under the influence of psychedelic drugs, and more recently, in motor learning 20 , 21 . Recently, we have shown that a simple way to study connectivity is by what the physics community calls “the correlation function” 22 . This function describes the correlation between regions as a function of the distance between them. Although there exist long range connections, on average, regions (voxels or electrodes) closer to each other interact strongly, while distant ones interact more weakly. We have shown that the way in which this function decays with distance is a marker of certain diseases 23 , 24 , 25 . For example, patients with a traumatic brachial plexus lesion with root avulsions revealed a faster correlation decay as a function of distance in the primary motor cortex region corresponding to the arm 24 .

Next we present a toy model that analyses the method’s performance. In a network context, the behaviour described above can be modeled in the following way: since the probability that two regions are connected is a monotonic function of the correlation between them (i.e. on average, distant regions share fewer links than nearby regions) we decided to skip the correlations and directly model the link probability as an exponential function that decays with distance. We assume that the probability that region i is connected with j is defined as

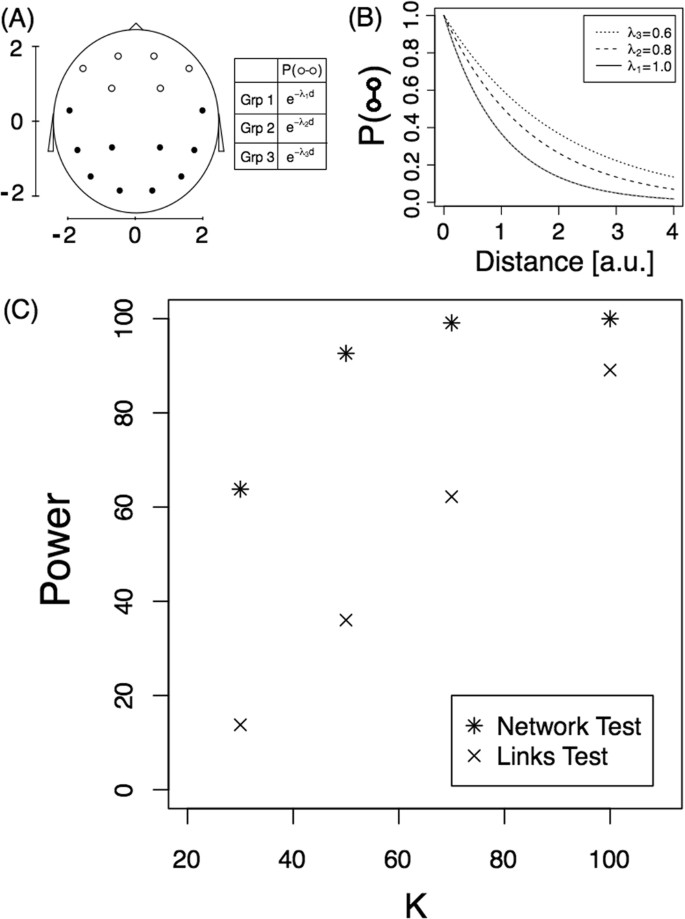

where d ( i , j ) is the distance between regions i and j . For the alternative hypothesis, we consider that there are six frontal brain regions (see Fig. 1 Panel A) that interact with a different decay rate in each of the three subpopulations. Figure 1 panel (A) shows the 16 regions analysed on an x-y scale. Panel (B) shows the link probability function for all electrodes and for each subpopulation. As shown, there is a slight difference between the decay of the interactions between the frontal electrodes in each subpopulation ( λ 1 = 1, λ 2 = 0.8 and λ 3 = 0.6 for groups 1, 2 and 3, respectively). The aim is to determine whether the ANOVA test for networks detects the network differences that are induced by the link probability function.

Detection problem. ( A ) Diagram of the scalp (each node represent a EEG electrode) on an x-y scale and the link probability. The three groups confirm the equation P ( ○ ↔ •) = P (• ↔ •) = e − d . ( B ) Link probability of frontal electrodes, P ( ○ ↔ ○ ), as a function of the distance for the three subpopulations. (C) Power of the tests as a function of sample size, K . Both tests are presented.

Here we investigated the power of the proposed test by simulating the model under different sample sizes ( K ). K networks were computed for each of the three subpopulations and the T statistic was computed for each of 10,000 replicates. The proportion of replicates with a T value smaller than −1.65 is an estimation of the power of the test for a significance level of 0.05 (unilateral hypothesis testing). Star symbols in Fig. 1C represent the power of the test for the different sample sizes. For example, for a sample size of 100, the test detects this small difference between the networks 100% of the time. As expected, the test has less power for small sample sizes, and if we change the values λ 2 and λ 3 in the model to 0.66 and 0.5, respectively, power increases. In this last case, the power changed from 64% to 96% for a sample size of 30 (see Supplementary Fig. S1 for the complete behaviour).

To the best of our knowledge, the T statistic is the first proposal of an ANOVA test for networks. Thus, here we compare it with a naive test where each individual link is compared among the subpopulations. The procedure is as follows: for each link, we calculate a test for equal proportions between the three groups to obtain a p-value for each link. Since we are conducting multiple comparisons, we apply the Benjamini-Hochberg procedure controlling at a significance level of α = 0.05. The procedure is as follows:

1. Compute the p-value of each link comparison, pv 1 , pv 2 , …, pv m .

2. Find the j largest p-value such that \(p{v}_{(j)}\le \frac{j}{m}\alpha \mathrm{.}\)

3. Declare that the link probability is different for all links that have a p-value ≤ pv ( j ) .

This procedure detects differences in the individual links while controlling for multiple comparisons. Finally, we consider the networks as being different if at least one link (of the 15 that have real differences) was detected to have significant differences. We will call this procedure the “Links Test”. Crosses in Fig. 1C correspond to the power of this test as a function of the sample size. As can be observed, the test proposed for testing equal mean networks is much more powerful than the previous test.

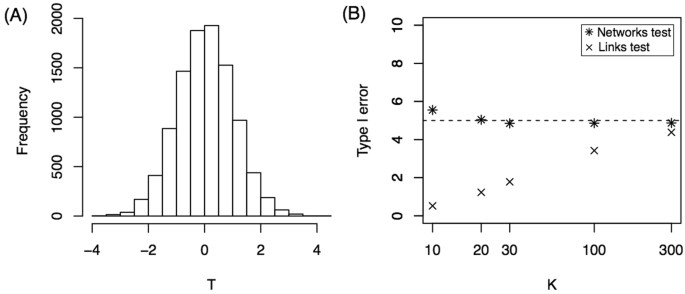

Theorem 1 States that T is asymptotically (sample size → ∞) Normal(0, 1) under the Null hypothesis. Next we investigated how large the sample size must be to obtain a good approximation. Moreover, we applied Theorem 1 in the simulations above for K = {30, 50, 70, 100}, but we did not show that the approximation is valid for K = 30, for example. Here, we show that the normal approximation is valid even for K = 30 in the case of 16-node networks. We simulated 10,000 replicates of the model considering that all three groups have exactly the same probability law given by group 1, i.e. all brain connections confirm the equation \(P(i\leftrightarrow j)={e}^{-{\lambda }_{1}d(i,j)}\) for the three groups (H 0 hypothesis). The T value is computed for each replicate of sample size K = 30, and the distribution is shown in Fig. 2(A) . The histogram shows that the distribution is very close to normal. Moreover, the Kolmogorov-Smirnov test against a normal distribution did not reject the hypothesis of a normal distribution for the T statistic (p-value = 0.52). For sample sizes smaller than 30, the distribution has more variance. For example, for K = 10, the standard deviation of T is 1.1 instead of 1 (see Supplementary Fig. S2 ). This deviation from a normal distribution can also be observed in panel B where we show the percentage of Type I errors as a function of the sample size ( K ). For sample sizes smaller than 30, this percentage is slightly greater than 5%, which is consistent with a variance greater than 1. The Links test procedure yielded a Type I error percentage smaller than 5% for small sample sizes.

Null hypothesis. ( A ) Histogram of T statistics for K = 30. ( B ) Percentage of Type I Error as a function of sample size, K . Both tests are presented.

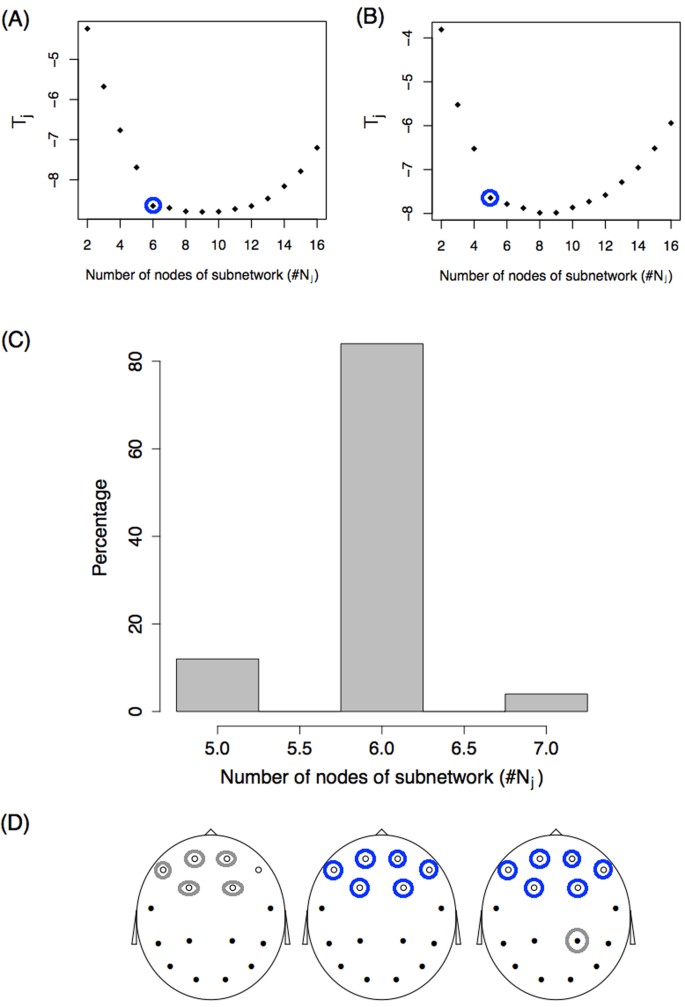

Finally, we applied the subnetwork identification procedure described before to this example. Fifty simulations were performed for the model with a sample size of K = 100. For each replication, the minimum statistic T j was studied as a function of the number of j nodes in the subnetwork. Figure 3A and B show two of the 50 simulation outcomes for the T j function of ( j ) number of nodes. Panel A shows that as nodes are incorporated into the subnetwork, the statistic sharply decreases to six nodes, and further incorporating nodes produces a very small decay in T j in the region between six and nine nodes. Finally, adding even more nodes results in a statistical increase. A similar behaviour is observed in the simulation shown in panel B, but the “change point” appears for a number of nodes equal to five. If we define that the number of nodes with differences, \(\tilde{j}\) , confirms

we obtain the values circled. For each of the 50 simulations, we studied the value \(\tilde{j}\) and a histogram of the results is shown in Panel C. With the criteria defined, most of the simulations (85%) result in a subnetwork of 6 nodes, as expected. Moreover, these 6 nodes correspond to the real subnetwork with differences between subpopulations (white nodes in Fig. 1A ). This was observed in 100% of simulations with \(\tilde{j}\) = 6 (blue circles in Panel D). In the simulations where this value was 5, five of the six true nodes were identified, and five of the six nodes with differences vary between simulations (represented with grey circles in Panel D). For the simulations where \(\tilde{j}\) = 7, all six real nodes were identified and a false node (grey circle) that changed between simulations was identified as being part of the subnetwork with differences.

Identification problem. ( A , B ) Statistic T j as a function of the number of nodes of the subnetwork ( j ) for two simulations. Blue circles represent the value \(\tilde{j}\) following the criteria described in the text. ( C ) Histogram of the number of subnetwork nodes showing differences, \(\tilde{j}\) . ( D ) Identification of the nodes. Blue and grey circles represent the nodes identified from the set \({N}_{\tilde{j}}\) . Circled blue nodes are those identified 100% of the time. Grey circles represent nodes that are identified some of the time. On the left, grey circles alternate between the six white nodes. On the right, the grey circle alternates between the black nodes.

The identification procedure was also studied for a smaller sample size of K = 30, and in this case, the real subnetwork was identified only 28% of the time (see Suppplementary Fig. S3 for more details). Identifying the correct subnetwork is more difficult (larger sample sizes are needed) than detecting global differences between group networks.

Resting-state fMRI functional networks

In this section, we analysed resting-state fMRI data from the 900 participants in the 2015 Human Connectome Project (HCP 26 ). We included data from the 812 healthy participants who had four complete 15-minute rs-fMRI runs, for a total of one hour of brain activity. We partitioned the 812 participants into three subgroups and studied the differences between the brain groups. Clearly, if the participants are randomly divided into groups, no brain subgroup differences are expected, but if the participants are divided in an intentional way, differences may appear. For example, if we divided the 812 by the amount of hours slept before the scan ( G 1 less than 6 hours, G 2 between 6 and 7 hours, and G 3 more than 7) it might be expected 27 , 28 to observe differences in brain connectivity on the day of the scan. Moreover, as a by-product, we obtain that this variable is an important factoring variable to be controlled before the scan. Fortunately, HCP provides interesting individual socio-demographic, behavioural and structural brain data to facilitate this analysis. Moreover, using a previous release of the HCP data (461 subjects), Smith et al . 29 , using a multivariate analysis (canonical correlation), showed that a linear combination of demographics and behavior variables highly correlates with a linear combination of functional interactions between brain parcellations (obtained by Independent Component Analysis). Our approach has the same spirit, but has some differences. In our case, the main objective is to identify variables that “explain” (that are dependent with) the individual brain network. We do not impose a linear relationship between non-imaging and imaging variables, and we study the brain network as a whole object without different “loads” in each edge. Our method does not impose any kind of linearity, and it also detects linear and non-linear dependence structures.

Data were pre-processed by HCP 30 , 31 , 32 (details can be found in 30 ), yielding the following outputs:

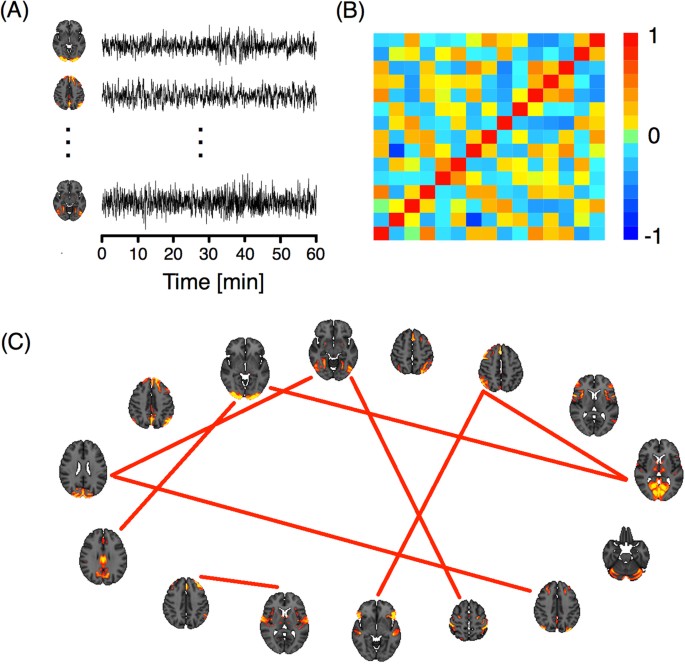

Group-average brain regional parcellations obtained by means of group-Independent Component Analysis (ICA 33 ). Fifteen components are described.

Subject-specific time series per ICA component.

Figure 4(A) shows three of the 15 ICA components with the specific one hour time series for a particular subject. These signals were used to construct an association matrix between pairs of ICA components per subject. This matrix represents the strength of the association between each pair of components, which can be quantified by different functional coupling metrics, such as the Pearson correlation coefficient between the signals of the component, which we adopted in the present study (panel (B)). For each of the 812 subjects, we studied functional connectivity by transforming each correlation matrix, Σ, into binary matrices or networks, G , (panel (C)). Two criteria for this transformation were used 34 , 35 , 36 : a fixed correlation threshold and a fixed number of links criterion. In the first criterion, the matrix was thresholded by a value ρ affording networks with varying numbers of links. In the second, a fixed number of link criteria were established and a specific threshold was chosen for each subject.

( A ) ICA components and their corresponding time series. ( B ) Correlation matrix of the time series. ( C ) Network representation. The links correspond to the nine highest correlations.

As we have already mentioned, HCP provides interesting individual socio-demographic, behavioural and structural brain data. Variables are grouped into seven main categories: alertness, motor response, cognition, emotion, personality, sensory, and brain anatomy. Volume, thickness and areas of different brain regions were computed using the T1-weighted images of each subject in Free Surfer 37 . Thus, for each subject, we obtained a brain functional network, G , and a multivariate vector X that contains this last piece of information.

The main focus of this section is to analyse the “impact” of each of these variables ( X ) on the brain networks (i.e., on brain activity). To this end, we first selected a variable such as k , X k , and grouped each subject according to his/her value into only one of three categories (Low, Medium, or High) just by placing the values in ascending and using the 33.3% percentile. In this way, we obtained three groups of subjects, each identified by its correlation matrix \({{\rm{\Sigma }}}_{1}^{L},\,\ldots ,\,{{\rm{\Sigma }}}_{{n}_{L}}^{L}\) , \({{\rm{\Sigma }}}_{1}^{M},\,\ldots ,\,{{\rm{\Sigma }}}_{{n}_{M}}^{M}\) , and \({{\rm{\Sigma }}}_{1}^{H},\,\ldots ,\,{{\rm{\Sigma }}}_{{n}_{H}}^{H}\) , or by its corresponding network (once the criteria and the parameter are chosen) \({G}_{1}^{L},\,\ldots ,\,{G}_{{n}_{L}}^{L},\,\,\,{G}_{1}^{M},\,\ldots ,\,{G}_{{n}_{M}}^{M}\) , and \({G}_{1}^{H},\,\ldots ,\,{G}_{{n}_{H}}^{H}\) . The sample size of each group ( n L , n M , and n H ) is approximately 1/3 of 812, except in cases where there were ties. Once we obtained these three sets of networks, we applied the developed test. If differences exist between all three groups, then we are confirming an interdependence between the factoring variable and the functional networks. However, we cannot yet elucidate directionality (i.e., different networks lead to different sleeping patterns or vice versa?).

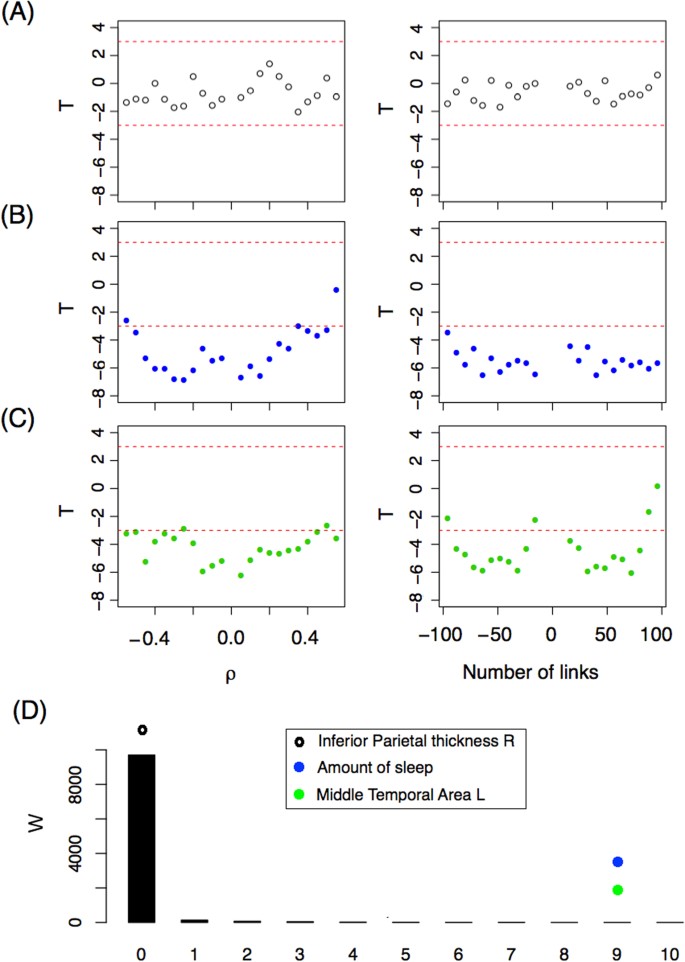

After filtering the data, we identified 221 variables with 100% complete information for the 812 subjects, and 90 other variables with almost complete information, giving a total of 311 variables. We applied the network ANOVA test for each of these 311 variables and report the T statistic. Figure 5(A) shows the T statistic for the variable Thickness of the right Inferior Parietal region. All values of the T statistic are between −2 and 2 for all ρ values using the fixed correlation criterion (left panel) for constructing the networks. The same occurs when a fixed number of link criteria is used (right panel). According to Theorem 1, when there are no differences between groups, T is asymptotically normal (0, 1), and therefore a value smaller than −3 is very unlikely (p-value = 0.00135). Since all T values are between −2 and 2, we assert that Thickness of the right Inferior Parietal region is not associated with the resting-state functional interactions. In panel (B), we show the T statistic for the variable Amount of hours spent sleeping on the 30 nights prior to the scan (“During the past month, how many hours of actual sleep did you get at night? (This may be different than the number of hours you spent in bed.)”) which corresponds to the alertness category. As one can see, most T values are much lower than −3, rejecting the hypothesis of equal mean network. Importantly, this shows that the number of hours a person sleeps is associated with their brain functional networks (or brain activity). However, as explained above, we do not know whether the number of hours slept the nights before represent these individuals’ habitual sleeping patterns, complicating any effort to infer causation. In other words, six hours of sleep for an individual who habitually sleeps six hours may not produce the same network pattern as six hours in an individual who normally sleeps eight hours (and is likely tired during the scan). Alternatively, different activity observed during waking hours may “produce” different sleep behaviours. Nevertheless, we know that the amount of hours slept before the scan should be measured and controlled when scanning a subject. In Panel (C), we show that brain volumetric variables can also influence resting-state fMRI networks. In that panel, we show the T value for the variable Area of the left Middle temporal region. Significant differences for both network criteria are also observed for this variable.

( A – C ) T –statistics as a function of (left panel) ρ and (right panel) the number of links for three variables: ( A ) Right Inferioparietal Thickness, ( B ) Number of hours slept the nights prior to the scan. ( C ) Left Middle temporal Area. ( D ) W -statistic distribution (black bars) based on a bootstrap strategy. The W -statistic of the three variables studied is depicted with dots.

Under the hypothesis of equal mean networks between groups, we expect not to obtain a T statistic less than −3 when comparing the sample networks. We tested several different thresholds and numbers of links in order to present a more robust methodology. However, in this way, we generate sets of networks that are dependent on each criterion and between criteria, similarly to what happens when studying dynamic networks with overlapping sliding windows. This makes the statistical inference more difficult. To address this problem, we decided to define a new statistic based on T , W 3 , and study its distribution using the bootstrap resampling technique. The new statistic is defined as,

where Δ is the number of values of T that are lower than −3 for the resolution (grid of thresholds) studied. The supraindex in Δ indicates the criteria (correlation threshold, ρ or number of links fixed, L ) and the subindex indicates whether it is for positive or negative parameter values ( ρ or number of links). For example, Fig. 5(C) reveals that the variable Area of the left Middle temporal confirms having \({{\rm{\Delta }}}_{+}^{\rho }=10\) , \({{\rm{\Delta }}}_{-}^{\rho }=10\) , \({{\rm{\Delta }}}_{+}^{L}=9\) , and \({{\rm{\Delta }}}_{-}^{L}=9\) , and therefore W 3 = 9. The distribution of W 3 under the null hypothesis is studied numerically. Ten thousand random resamplings of the real networks were selected and the W 3 statistic was computed for each one. Figure 5(D) shows the W empirical distribution (under the null hypothesis) with black bars. Most W 3 values are zero, as expected. In this figure, the W 3 values of the three variables described are also represented by dots. The extreme values of W 3 for the variables Amount of Sleep and Middle Temporal Area L confirm that these differences are not a matter of chance. Both variables are related to brain network connectivity.

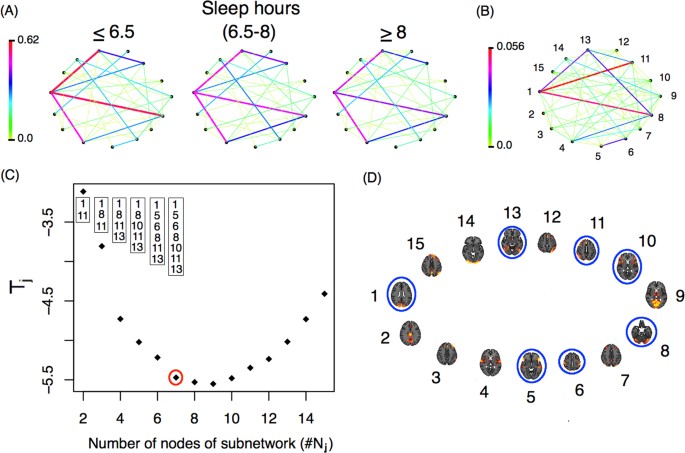

So far we have shown, among other things, that functional networks differ between individuals who get more or fewer hours of sleep, but how do these networks differ exactly? Fig. 6(A) shows the average networks for the three groups of subjects. There are differences in connectivity strength between some of the nodes (ICA components). These differences are more evident in panel (B), which presents a weighted network Ψ with links showing the variability among the subpopulation’s average networks. This weighted network is defined as

where \(\overline{{ {\mathcal M} }}(i,\,j)=\frac{1}{3}\mathop{\sum _{s\mathrm{=1}}}\limits^{3}{{ {\mathcal M} }}^{{\rm{grp}}s}\) . The role of Ψ is to highlight the differences between the mean networks. The greatest difference is observed between nodes 1 and 11. Individuals that sleep 6.5 hours or less show the strongest connection between ICA component number 1 (which corresponds to the occipital pole and the cuneal cortex in the occipital lobe) and ICA component number 11 (which includes the middle and superior frontal gyri in the frontal lobe, the superior parietal lobule and the angular gyrus in the parietal lobe). Another important connection that differs between groups is the one between ICA components 1 and 8, which corresponds to the anterior and posterior lobes of the cerebellum. Using the subnetwork identification procedure previously described (see Fig. 6C ) we identified a 7-node subnetwork as the most significant for network differences. The nodes that make up that network are presented in panel D.

( A ) Average network for each subgroup defined by hours of sleep ( B ) Weighted network with links that represent the differences among the subpopulation mean networks. ( C ) T j -statistic as a function of the number of nodes in each subnetwork ( j ). The nodes identified by the minimum T j are presented in the boxes, while the number of nodes identified by the procedure are represented with a red circle. ( D ) Nodes from the identified subnetwork are circled in blue. The nodes identified in ( D ) correspond to those in panel ( B ).

The results described above refer to only three of the 311 variables we analysed. In terms of the remaining variables, we observed more variables that partitioned the subjects into groups presenting statistical differences between the corresponding brain networks. Two more behavioral variables were identified the variable Dimensional Change Card Sort (CardSort_AgeAdj and CardSort_Unadj) which is a measure of cognitive flexibility, and the variable motor strength (Strength_AgeAdj and Strength_Unadj). Also 20 different brain volumetric variables were identified, the complete list of these variables is shown in Suppl. Table S1 . It is important to note that these brain volumetric variables are largely dependent on each other; for example, individuals with larger inferior-temporal areas often have a greater supratentorial volume, and so on (see Suppl. Fig. S4 ).

We have reported only those variables for which there is very strong statistical evidence in favor of the existence of dependence between the functional networks and the “behavioral” variables, irrespectively of the threshold used to build up the networks. There are other variables that show this dependence only for some levels of the threshold parameter, but we do not report these to avoid reporting results that may not be significant. Our results complement those observed in 29 . In particular, Smith et al . report that the variable Picture Vocabulary test is the most significant. With a less restrictive criterion, this variable can also be considered significant with our methodology. In fact, the W 3 value equals 3 (see Supplementary Fig. S5 for details), which supports the notion (see panel D in Fig. 5 ) that the variable Picture Vocabulary test is also relevant for explaining the functional networks. On the other hand, the variable we found to vary significantly ( W 3 = 9) the Amount of sleep is not reported by Smith et al . Perhaps the canonical correlation cannot find the variable because it looks for linear correlations in a high dimensional space. It is well known that non-linearities appear typically in high dimensional statistical problems (See for instance 38 ). To capture nonlinear associations, a kernel CCA method was introduced, see 39 , 40 and the references therein. By contrast, our method does not impose any kind of linearity, and detects linear as well as non-linear dependence structures. The variable “Cognitive flexibility” (Card Sort) found here was also reported in 38 . Finally, the brain volumetric variables we found to be relevant here were not analyzed in 29 .

So far, we apply the methodology presented here to analyse brain data by using only 15 brain ICA dimensions (provided by HCP). But, what is the impact of working with more ICA components? Does we identify more covariables? Fortunately, we can respond these questions since more ICA dimensions were recently made available on HCP webpage. Three new cognitive variables, Working memory , Relational processing and Self-regulation/Impulsivity were identified for higher network dimension (50 and 300 ICA dimensions, see Suppl. Table S2 for details).

Performing statistical inference on brain networks is important in neuroimaging. In this paper, we presented a new method for comparing anatomical and functional brain networks of two or more subgroups of subjects. Two problems were studied: the detection of differences between the groups and the identification of the specific network differences. For the first problem, we developed an ANOVA test based on the distance between networks. This test performed well in terms of detecting existing differences (high statistical power). Finally, based on the statistics developed for the testing problem, we proposed a way of solving the identification problem. Next, we discuss our findings.

Based on the minimization of the T statistic, we propose a method for identifying the subnetwork that differs among the subgroups. This subnetwork is very useful. On the one hand, it allows us to understand which brain regions are involved in the specific comparison study (neurobiological interpretation), and on the other, it allows us to identify/diagnose new subjects with greater accuracy.

The relationship between the minimum T value for a fixed number of nodes as a function of the number of nodes ( T j vs. j ) is very informative. A large decrease in T j incorporating a new node into the subnetwork ( T j + 1 << T j ) means that the new node and its connections explain much of the difference between groups. A very small decrease shows that the new node explains only some of the difference because either the subgroup difference is small for the connections of the new node, or because there is a problem of overestimation.

The correct number of nodes in each subnetwork must verify

In this paper, we present ad hoc criteria in each example (a certain constant for g ( sample size )) and we do not give a general formula for g ( sample size ). We believe that this could be improved in theory, but in practice, one can propose a natural way to define the upper bound and subsequently identify the subnetwork, as we showed in the example and in the application by observing T j as a function of j . Statistical methods such as the one developed for change-point detection may be useful in solving this problem.

Sample size

What is the adequate sample size for comparing brain networks? This is typically the first question in any comparison study. Clearly, the response depends on the magnitude of the network differences between the groups and the power of the test. If the subpopulations differ greatly, then a moderate number of networks in each group is enough. On the other hand, if the differences are not very big, then a larger sample size is required to have a reasonable power of detection. The problem gets more complicated when it comes to identification. We showed in Example 1 that we obtain a good identification rate when a sample size of 100 networks is selected from each subgroup. Thus, the rate of correct identification is small for a sample size of for example 30.

Confounding variables in Neuroimaging

Humans are highly variable in their brain activity, which can be influenced, in turn, by their level of alertness, mood, motivation, health and many other factors. Even the amount of coffee drunk prior to the scan can greatly influence resting-state neural activity. What variables must be controlled to make a fair comparison between two or more groups? Certainly age, gender, and education are among those variables, and in this study we found that the amount of hours slept the nights prior to the scan is also relevant. Although this might seem pretty obvious, to the best of our knowledge, most studies do not control for this variable. Five other variables were identified, each one related with some dimensions of cognitive flexibility, self-regulation/impulsivity, relational processing, working memory or motor strength. Finally, we identified as being relevant a set of 20 highly interdependent brain volumetric variables. In principle, the role of these variables is not surprising, since comparing brain activity between individuals requires one to pre-process the images by realigning and normalizing them to a standard brain. In other words, the relevance of specific area volumes may simply be a by-product of the standardization process. However, if our finding that brain volumetric variables affect functional networks is replicated in other studies, this poses a problem for future experimental designs. Specifically, groups will not only have to be matched by variables such as age, gender and education level, but also in terms of volumetric variables, which can only be observed in the scanner. Therefore, several individuals would have to be scanned before selecting the final study groups.

In sum, a large number of subjects in each group must be tested to obtain highly reproducible findings when analysing resting-state data with network methodologies. Also, whenever possible, the same participants should be tested both as controls and as the treatment group (paired samples) in order to minimize the impact of brain volumetric variables.

Deco, G. & Kringelbach, M. L. Great expectations: using whole-brain computational connectomics for understanding neuropsychiatric disorders. Neuron 84 , 892–905 (2014).

Article CAS PubMed Google Scholar

Stephan, K. E., Iglesias, S., Heinzle, J. & Diaconescu, A. O. Translational perspectives for computational neuroimaging. Neuron 87 , 716–732 (2015).