
How to Write a Great Hypothesis

Hypothesis Definition, Format, Examples, and Tips

Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."


Amy Morin, LCSW, is a psychotherapist and international bestselling author. Her books, including "13 Things Mentally Strong People Don't Do," have been translated into more than 40 languages. Her TEDx talk, "The Secret of Becoming Mentally Strong," is one of the most viewed talks of all time.


- The Scientific Method

Hypothesis Format

Falsifiability of a hypothesis.

- Operationalization

Hypothesis Types

Hypotheses examples.

- Collecting Data

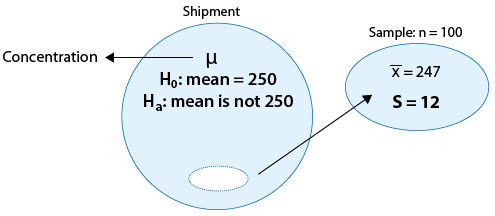

A hypothesis is a tentative statement about the relationship between two or more variables. It is a specific, testable prediction about what you expect to happen in a study. It is a preliminary answer to your question that helps guide the research process.

Consider a study designed to examine the relationship between sleep deprivation and test performance. The hypothesis might be: "This study is designed to assess the hypothesis that sleep-deprived people will perform worse on a test than individuals who are not sleep-deprived."

At a Glance

A hypothesis is crucial to scientific research because it offers a clear direction for what the researchers are looking to find. This allows them to design experiments to test their predictions and add to our scientific knowledge about the world. This article explores how a hypothesis is used in psychology research, how to write a good hypothesis, and the different types of hypotheses you might use.

The Hypothesis in the Scientific Method

In the scientific method, whether it involves research in psychology, biology, or some other area, a hypothesis represents what the researchers think will happen in an experiment. The scientific method involves the following steps:

- Forming a question

- Performing background research

- Creating a hypothesis

- Designing an experiment

- Collecting data

- Analyzing the results

- Drawing conclusions

- Communicating the results

The hypothesis is a prediction, but it involves more than a guess. Most of the time, the hypothesis begins with a question, which is then explored through background research. Only then do researchers begin to develop a testable hypothesis.

Unless you are creating an exploratory study, your hypothesis should always explain what you expect to happen.

In a study exploring the effects of a particular drug, the hypothesis might be that researchers expect the drug to have some type of effect on the symptoms of a specific illness. In psychology, the hypothesis might focus on how a certain aspect of the environment might influence a particular behavior.

Remember, a hypothesis does not have to be correct. While the hypothesis predicts what the researchers expect to see, the goal of the research is to determine whether this guess is right or wrong. When conducting an experiment, researchers might explore numerous factors to determine which ones might contribute to the ultimate outcome.

In many cases, researchers may find that the results of an experiment do not support the original hypothesis. When writing up these results, the researchers might suggest other options that should be explored in future studies.

Often, researchers draw a hypothesis from a specific theory or build on previous research. For example, prior research has shown that stress can impact the immune system. So a researcher might hypothesize: "People with high stress levels will be more likely to contract a common cold after being exposed to the virus than people who have low stress levels."

In other instances, researchers might look at commonly held beliefs or folk wisdom. "Birds of a feather flock together" is one example of a folk adage that a psychologist might try to investigate. The researcher might pose a specific hypothesis that "People tend to select romantic partners who are similar to them in interests and educational level."

Elements of a Good Hypothesis

So how do you write a good hypothesis? When trying to come up with a hypothesis for your research or experiments, ask yourself the following questions:

- Is your hypothesis based on your research on a topic?

- Can your hypothesis be tested?

- Does your hypothesis include independent and dependent variables?

Before you come up with a specific hypothesis, spend some time doing background research. Once you have completed a literature review, start thinking about potential questions you still have. Pay attention to the discussion section in the journal articles you read. Many authors will suggest questions that still need to be explored.

How to Formulate a Good Hypothesis

To form a hypothesis, you should take these steps:

- Collect as many observations about a topic or problem as you can.

- Evaluate these observations and look for possible causes of the problem.

- Create a list of possible explanations that you might want to explore.

- After you have developed some possible hypotheses, think of ways that you could confirm or disprove each hypothesis through experimentation. This is known as falsifiability.

In the scientific method, falsifiability is an important part of any valid hypothesis. In order to test a claim scientifically, it must be possible that the claim could be proven false.

Students sometimes assume that falsifiability means that something is false, which is not the case. Falsifiability means that if a claim were false, it would be possible to demonstrate that it is false.

One of the hallmarks of pseudoscience is that it makes claims that cannot be refuted or proven false.

The Importance of Operational Definitions

A variable is a factor or element that can be changed and manipulated in ways that are observable and measurable. However, the researcher must also define how the variable will be manipulated and measured in the study.

Operational definitions are specific definitions for all relevant factors in a study. This process helps make vague or ambiguous concepts detailed and measurable.

For example, a researcher might operationally define the variable "test anxiety" as the results of a self-report measure of anxiety experienced during an exam. A "study habits" variable might be defined by the amount of studying that actually occurs, as measured by time.

These precise descriptions are important because many things can be measured in various ways. Clearly defining these variables and how they are measured helps ensure that other researchers can replicate your results.

Replicability

One of the basic principles of any type of scientific research is that the results must be replicable.

Replication means repeating an experiment in the same way to produce the same results. By clearly detailing the specifics of how the variables were measured and manipulated, other researchers can better understand the results and repeat the study if needed.

Some variables are more difficult than others to define. For example, how would you operationally define a variable such as aggression? For obvious ethical reasons, researchers cannot create a situation in which a person behaves aggressively toward others.

To measure this variable, the researcher must devise a measurement that assesses aggressive behavior without harming others. The researcher might utilize a simulated task to measure aggressiveness in this situation.

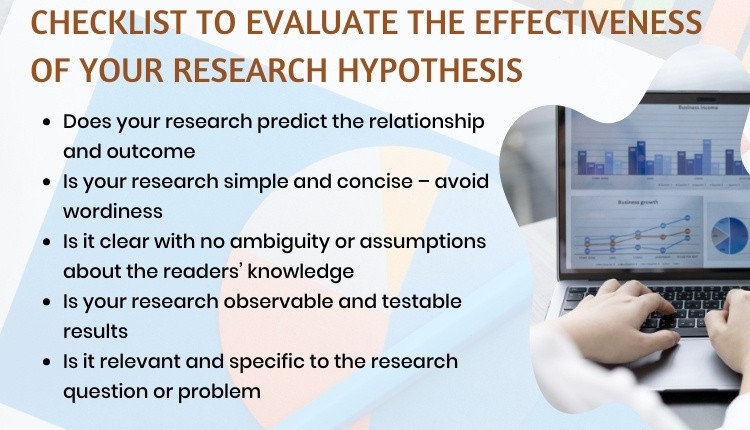

Hypothesis Checklist

- Does your hypothesis focus on something that you can actually test?

- Does your hypothesis include both an independent and dependent variable?

- Can you manipulate the variables?

- Can your hypothesis be tested without violating ethical standards?

The hypothesis you use will depend on what you are investigating and hoping to find. Some of the main types of hypotheses that you might use include:

- Simple hypothesis: This type of hypothesis suggests there is a relationship between one independent variable and one dependent variable.

- Complex hypothesis: This type suggests a relationship between three or more variables, such as two independent variables and one dependent variable.

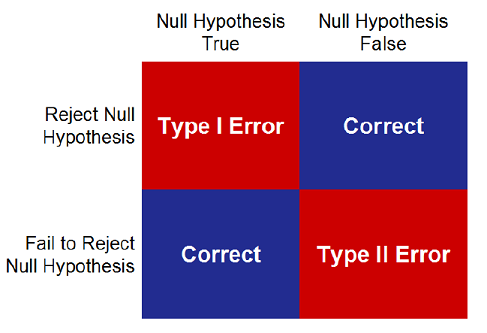

- Null hypothesis: This hypothesis suggests no relationship exists between two or more variables.

- Alternative hypothesis: This hypothesis states the opposite of the null hypothesis.

- Statistical hypothesis: This hypothesis uses statistical analysis to evaluate a representative population sample and then generalizes the findings to the larger group.

- Logical hypothesis: This hypothesis assumes a relationship between variables without collecting data or evidence.

A hypothesis often follows a basic format of "If {this happens} then {this will happen}." One way to structure your hypothesis is to describe what will happen to the dependent variable if you change the independent variable.

The basic format might be: "If {these changes are made to a certain independent variable}, then we will observe {a change in a specific dependent variable}."
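As a rough sketch, this template can be filled in programmatically. The function name `if_then_hypothesis` is hypothetical; it only checks that both variables are actually stated and does not judge whether the resulting hypothesis is testable or ethical.

```python
def if_then_hypothesis(iv_change: str, dv_change: str) -> str:
    """Fill the 'If ..., then we will observe ...' template.

    iv_change: the change made to the independent variable
    dv_change: the predicted change in the dependent variable
    """
    # A hypothesis missing either variable cannot be tested, so reject it early.
    if not iv_change.strip() or not dv_change.strip():
        raise ValueError("both the independent and dependent variable must be stated")
    return f"If {iv_change.strip()}, then we will observe {dv_change.strip()}."
```

Calling `if_then_hypothesis("students eat breakfast before a math exam", "higher exam scores than in students who skip breakfast")` produces "If students eat breakfast before a math exam, then we will observe higher exam scores than in students who skip breakfast."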

A few examples of simple hypotheses:

- "Students who eat breakfast will perform better on a math exam than students who do not eat breakfast."

- "Students who experience test anxiety before an English exam will get lower scores than students who do not experience test anxiety."

- "Motorists who talk on the phone while driving will be more likely to make errors on a driving course than those who do not talk on the phone."

- "Children who receive a new reading intervention will have higher reading scores than children who do not receive the intervention."

Examples of a complex hypothesis include:

- "People with high-sugar diets and sedentary activity levels are more likely to develop depression."

- "Younger people who are regularly exposed to green, outdoor areas have better subjective well-being than older adults who have limited exposure to green spaces."

Examples of a null hypothesis include:

- "There is no difference in anxiety levels between people who take St. John's wort supplements and those who do not."

- "There is no difference in scores on a memory recall task between children and adults."

- "There is no difference in aggression levels between children who play first-person shooter games and those who do not."

Examples of an alternative hypothesis:

- "People who take St. John's wort supplements will have less anxiety than those who do not."

- "Adults will perform better on a memory task than children."

- "Children who play first-person shooter games will show higher levels of aggression than children who do not."
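A null hypothesis and its alternative are usually evaluated with a statistical test. The sketch below uses a permutation test, one of several possible choices, picked here because it needs only the Python standard library: if the null hypothesis is true, the group labels are interchangeable, so shuffling them shows how often a difference at least as large as the observed one arises by chance. The function name, its parameters, and the memory scores are hypothetical.

```python
import random
from statistics import mean

def permutation_test(group_a, group_b, alternative="two-sided",
                     n_resamples=10_000, seed=0):
    """Estimate a p-value for H0: the two groups do not differ in mean score.

    alternative="two-sided": H1 says the means differ (non-directional).
    alternative="greater":   H1 says group_a scores higher (directional).
    """
    if alternative not in ("two-sided", "greater"):
        raise ValueError("alternative must be 'two-sided' or 'greater'")
    rng = random.Random(seed)  # fixed seed so the analysis can be replicated
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)  # under H0, the group labels are interchangeable
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        if alternative == "two-sided":
            extreme += abs(diff) >= abs(observed)
        else:
            extreme += diff >= observed
    # Share of shuffles at least as extreme as the observed difference,
    # with +1 in numerator and denominator so the estimate is never exactly 0.
    return (extreme + 1) / (n_resamples + 1)
```

For the alternative hypothesis "Adults will perform better on a memory task than children," a researcher might call `permutation_test(adult_scores, child_scores, alternative="greater")`. A small p-value (conventionally below 0.05) would lead them to reject the null hypothesis in favor of the alternative; a large one means the data give no evidence against the null.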

Collecting Data on Your Hypothesis

Once a researcher has formed a testable hypothesis, the next step is to select a research design and start collecting data. The research method depends largely on exactly what they are studying. There are two basic types of research methods: descriptive research and experimental research.

Descriptive Research Methods

Descriptive research methods such as case studies, naturalistic observations, and surveys are often used when conducting an experiment is difficult or impossible. These methods are best used to describe different aspects of a behavior or psychological phenomenon.

Once a researcher has collected data using descriptive methods, a correlational study can examine how the variables are related. This research method might be used to investigate a hypothesis that is difficult to test experimentally.
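A correlational study typically summarizes how two measured variables move together with a correlation coefficient. The minimal sketch below computes Pearson's r by hand so the formula is visible (Python 3.10 and later also provide `statistics.correlation`). The function name and any sample data, such as hours of sleep paired with test scores, are hypothetical.

```python
from math import sqrt

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two paired samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of the same length (at least 2 values)")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    # Covariance term and the spread of each variable around its mean
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined when a variable does not vary")
    return cov / (sx * sy)
```

Values near +1 or -1 indicate a strong linear relationship and values near 0 indicate little linear relationship. Even a strong r does not show which variable, if either, causes the other.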

Experimental Research Methods

Experimental methods are used to demonstrate causal relationships between variables. In an experiment, the researcher systematically manipulates a variable of interest (known as the independent variable) and measures the effect on another variable (known as the dependent variable).

Unlike correlational studies, which can only be used to determine if there is a relationship between two variables, experimental methods can be used to determine the actual nature of the relationship—whether changes in one variable actually cause another to change.
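One common way experimenters manipulate an independent variable fairly, though the text does not go into it, is random assignment: chance, not the researcher, decides which condition each participant receives. The sketch below is an illustrative version; the function name, the default condition labels, and the participant IDs are hypothetical.

```python
import random

def randomly_assign(participants, conditions=("treatment", "control"), seed=None):
    """Randomly assign participants to conditions in (near-)equal numbers.

    Shuffling first and then dealing participants out in turn means each
    person's condition is decided by chance rather than by the researcher.
    """
    rng = random.Random(seed)  # pass a seed to make the assignment reproducible
    shuffled = list(participants)
    rng.shuffle(shuffled)
    groups = {c: [] for c in conditions}
    for i, person in enumerate(shuffled):
        groups[conditions[i % len(conditions)]].append(person)
    return groups
```

For example, `randomly_assign([f"P{i}" for i in range(10)], seed=42)` places five participants in each condition, and calling it again with the same seed reproduces the same split.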

The hypothesis is a critical part of any scientific exploration. It represents what researchers expect to find in a study or experiment. In situations where the hypothesis is unsupported by the research, the research still has value. Such research helps us better understand how different aspects of the natural world relate to one another. It also helps us develop new hypotheses that can then be tested in the future.



How to Develop a Good Research Hypothesis

The story of a research study begins by asking a question. Researchers all around the globe are asking curious questions and formulating research hypotheses. However, whether a research study reaches an effective conclusion depends on how well the researcher develops the research hypothesis. Research hypothesis examples can help researchers get an idea of how to write a good one.

This blog will help you understand what a research hypothesis is, what its characteristics are, and how to formulate one.


What Is a Hypothesis?

A hypothesis is an assumption or an idea proposed for the sake of argument so that it can be tested. It is a precise, testable statement of what the researchers predict will be the outcome of the study. A hypothesis usually proposes a relationship between two variables: the independent variable (what the researchers change) and the dependent variable (what the researchers measure).

What is a Research Hypothesis?

A research hypothesis is a statement that introduces a research question and proposes an expected result. It is an integral part of the scientific method that forms the basis of scientific experiments. Therefore, you need to be careful and thorough when building your research hypothesis. A minor flaw in the construction of your hypothesis could have an adverse effect on your experiment. In research, there is a convention that the hypothesis is written in two forms: the null hypothesis and the alternative hypothesis (called the experimental hypothesis when the method of investigation is an experiment).

Characteristics of a Good Research Hypothesis

A good research hypothesis is a specific, testable prediction about what you expect to happen in a study. You may consider drawing your hypothesis from previously published research or from theory.

A good research hypothesis involves more effort than just a guess. In particular, your hypothesis may begin with a question that could be further explored through background research.

To help you formulate a promising research hypothesis, you should ask yourself the following questions:

- Is the language clear and focused?

- What is the relationship between your hypothesis and your research topic?

- Is your hypothesis testable? If yes, then how?

- What are the possible explanations that you might want to explore?

- Does your hypothesis include both an independent and dependent variable?

- Can you manipulate your variables without violating ethical standards?

- Does your hypothesis predict the relationship and the outcome?

- Is your hypothesis simple and concise (does it avoid wordiness)?

- Is it clear, with no ambiguity or assumptions about the reader's knowledge?

- Does your hypothesis predict observable and testable results?

- Is it relevant and specific to the research question or problem?

The questions listed above can be used as a checklist to make sure your hypothesis is based on a solid foundation. They can also help you identify weaknesses in your hypothesis and revise it if necessary.


How to Formulate a Research Hypothesis

A testable hypothesis is not a simple statement. Rather, it is an intricate statement that needs to offer a clear introduction to a scientific experiment, its intentions, and its possible outcomes. There are some important things to consider when building a compelling hypothesis.

1. State the problem that you are trying to solve.

Make sure that the hypothesis clearly defines the topic and the focus of the experiment.

2. Try to write the hypothesis as an if-then statement.

Follow this template: If a specific action is taken, then a certain outcome is expected.

3. Define the variables

Independent variables are the ones that are manipulated, controlled, or changed by the researcher. They are isolated from, and not influenced by, other factors of the study.

Dependent variables, as the name suggests, depend on other factors of the study. They are influenced by changes in the independent variable.

4. Scrutinize the hypothesis

Evaluate assumptions, predictions, and evidence rigorously to refine your understanding.

Types of Research Hypotheses

The types of research hypothesis are stated below:

1. Simple Hypothesis

It predicts the relationship between a single dependent variable and a single independent variable.

2. Complex Hypothesis

It predicts the relationship between two or more independent and dependent variables.

3. Directional Hypothesis

It specifies the expected direction of the relationship between variables and is derived from theory. Furthermore, it implies the researcher's intellectual commitment to a particular outcome.

4. Non-directional Hypothesis

It does not predict the exact direction or nature of the relationship between the two variables. The non-directional hypothesis is used when there is no theory involved or when findings contradict previous research.
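Written formally for a comparison of two group means, the null hypothesis and the two kinds of alternative look like this. The symbols $\mu_1$ and $\mu_2$ (the population means of group 1 and group 2) are introduced here for illustration.

```latex
\begin{aligned}
H_0 &: \mu_1 = \mu_2     && \text{(null: no difference between the groups)} \\
H_1 &: \mu_1 \neq \mu_2  && \text{(non-directional: a difference in either direction)} \\
H_1 &: \mu_1 > \mu_2     && \text{(directional: group 1 is predicted to score higher)}
\end{aligned}
```

A directional alternative corresponds to a one-tailed statistical test, and a non-directional alternative to a two-tailed test.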

5. Associative and Causal Hypothesis

An associative hypothesis describes interdependency between variables: a change in one variable is accompanied by a change in the other variable. A causal hypothesis, on the other hand, proposes an effect on the dependent variable due to manipulation of the independent variable.

6. Null Hypothesis

The null hypothesis is a negative statement: it proposes that there is no relationship between two variables. There will be no change in the dependent variable due to the manipulation of the independent variable. Furthermore, it states that any observed results are due to chance and are not significant in terms of supporting the idea being investigated.

7. Alternative Hypothesis

It states that there is a relationship between the two variables of the study and that the results are significant to the research topic. An experimental hypothesis predicts what changes will take place in the dependent variable when the independent variable is manipulated. Also, it states that the results are not due to chance and that they are significant in terms of supporting the theory being investigated.

Research Hypothesis Examples of Independent and Dependent Variables

Research Hypothesis Example 1: A greater number of coal plants in a region (independent variable) increases water pollution (dependent variable). If you change the independent variable (building more coal plants), it will change the dependent variable (amount of water pollution).

Research Hypothesis Example 2: What is the effect of diet or regular soda (independent variable) on blood sugar levels (dependent variable)? If you change the independent variable (the type of soda you consume), it will change the dependent variable (blood sugar levels).

You should not ignore the importance of the above steps. The validity of your experiment and its results relies on a robust, testable hypothesis. Developing a strong testable hypothesis has a few advantages: it compels us to think intensely and specifically about the outcomes of a study. Consequently, it enables us to understand the implications of the question and the different variables involved in the study. Furthermore, it helps us make precise predictions based on prior research. Hence, forming a hypothesis is of great value to the research.

More importantly, you need to build a robust testable research hypothesis for your scientific experiments. A testable hypothesis is a hypothesis that can be proved or disproved as a result of experimentation.

Importance of a Testable Hypothesis

To devise and perform an experiment using the scientific method, you need to make sure that your hypothesis is testable. To be considered testable, it must meet some essential criteria:

- There must be a possibility to prove that the hypothesis is true.

- There must be a possibility to prove that the hypothesis is false.

- The results of the hypothesis must be reproducible.

Without these criteria, the hypothesis and the results will be vague. As a result, the experiment will not prove or disprove anything significant.


Frequently Asked Questions

The steps to write a research hypothesis are:

1. Stating the problem: Ensure that the hypothesis defines the research problem.

2. Writing the hypothesis as an 'if-then' statement: Include the action and the expected outcome of your study by following an 'if-then' structure.

3. Defining the variables: Define the variables as dependent or independent based on their dependency on other factors.

4. Scrutinizing the hypothesis: Identify the type of your hypothesis.

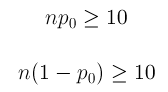

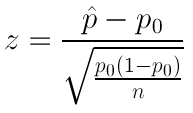

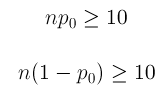

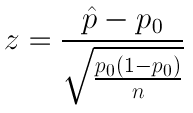

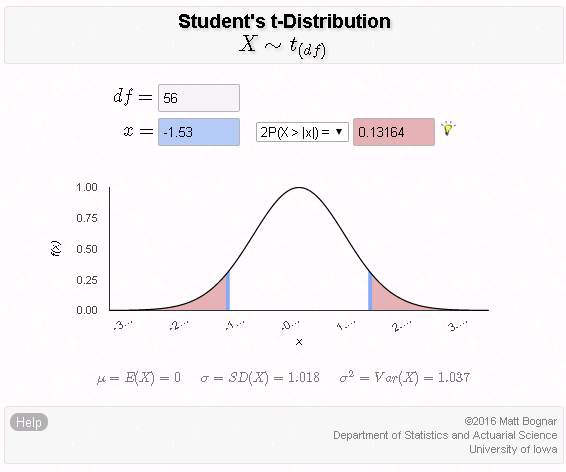

Hypothesis testing is a statistical tool used to make inferences about population data in order to draw conclusions about a particular hypothesis.

A hypothesis in statistics is a formal statement about the nature of a population within the structured framework of a statistical model. It is tested by studying data drawn from that population.

Research hypothesis is a statement that introduces a research question and proposes an expected result. It forms the basis of scientific experiments.

The different types of hypothesis in research are:

- Null hypothesis: A null hypothesis is a negative statement proposing that there is no relationship between two variables.

- Alternative hypothesis: An alternative hypothesis predicts a relationship between the two variables of the study.

- Directional hypothesis: A directional hypothesis specifies the expected direction of the relationship between variables.

- Non-directional hypothesis: A non-directional hypothesis does not predict the exact direction or nature of the relationship between the two variables.

- Simple hypothesis: A simple hypothesis predicts the relationship between a single dependent variable and a single independent variable.

- Complex hypothesis: A complex hypothesis predicts the relationship between two or more independent and dependent variables.

- Associative and causal hypothesis: An associative hypothesis describes interdependency between variables, while a causal hypothesis proposes that manipulating the independent variable affects the dependent variable.

- Empirical hypothesis: An empirical hypothesis can be tested via experiments and observation.

- Statistical hypothesis: A statistical hypothesis uses statistical models to draw conclusions about broader populations.


Hypothesis Maker Online

Looking for a hypothesis maker? This online tool for students will help you formulate a beautiful hypothesis quickly, efficiently, and for free.

Are you looking for an effective hypothesis maker online? Worry no more; try our online tool for students and formulate your hypothesis in no time.


📄 Hypothesis Maker: How to Use It

Our hypothesis maker is a simple and efficient tool you can access online for free.

If you want to create a research hypothesis quickly, you should fill out the research details in the given fields on the hypothesis generator.

Below are the fields you should complete to generate your hypothesis:

- Who or what is your research based on? For instance, the subject can be research group 1.

- What does the subject (research group 1) do?

- What does the subject affect? - This shows the predicted outcome, which is the object.

- Who or what will be compared with research group 1? (research group 2).

Once you fill in the fields, you can click the ‘Make a hypothesis’ tab and get your results.
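This page does not say how the tool combines these fields. The sketch below is a hypothetical illustration of how the four answers could be assembled into one comparative hypothesis; the function name, the sentence pattern, and the assumptions that the subject is a group of people (it uses "who") and that the effect is phrased comparatively are all choices made for this example.

```python
def make_hypothesis(subject: str, action: str, effect: str, comparison: str) -> str:
    """Combine the four fields into one comparative hypothesis sentence.

    subject:    who or what the research is based on (research group 1)
    action:     what the subject does
    effect:     the predicted outcome (the object), phrased comparatively
    comparison: who or what research group 1 is compared with (research group 2)
    """
    fields = {"subject": subject, "action": action,
              "effect": effect, "comparison": comparison}
    missing = [name for name, value in fields.items() if not value.strip()]
    if missing:
        raise ValueError(f"missing field(s): {', '.join(missing)}")
    s = subject.strip()
    s = s[0].upper() + s[1:]  # capitalize only the first letter
    return f"{s} who {action.strip()} will {effect.strip()} than {comparison.strip()}."
```

With the answers "students", "meditate daily", "report lower stress levels", and "students who do not meditate", the sketch returns "Students who meditate daily will report lower stress levels than students who do not meditate."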

⚗️ What Is a Hypothesis in the Scientific Method?

A hypothesis is a statement describing an expectation or prediction of your research through observation.

It is similar to academic speculation and reasoning that predicts the outcome of your scientific test. An effective hypothesis, therefore, should be crafted carefully and with precision.

A good hypothesis should have dependent and independent variables. These variables are the elements you will test in your research method; each can be a concept, an event, or an object, as long as it is observable.

You change the independent variables during the experiment and observe how the dependent variables respond.

In a nutshell, a hypothesis directs and organizes the research methods you will use, forming a large section of research paper writing.

Hypothesis vs. Theory

A hypothesis is a realistic expectation that researchers make before any investigation. It is formulated and tested to determine whether the statement is true. A theory, on the other hand, is a well-substantiated explanation supported by a large body of evidence. Thus, a theory is more fact-backed than a hypothesis.

Another difference is that a hypothesis is presented as a single statement, while a theory can be an assortment of things. Hypotheses concern future possibilities toward a specific projection, and their results are uncertain. Theories are backed by results that have been repeatedly confirmed through proper substantiation.

When it comes to data, a hypothesis relies on limited information, while a theory is established on an extensive data set tested under various conditions.

A hypothesis is an assumption that you must test through observation to establish whether it is accurate.

Since hypotheses have observable variables, their outcome is usually based on a specific occurrence. Conversely, theories are grounded on a general principle involving multiple experiments and research tests.

This general principle can apply to many specific cases.

The primary purpose of formulating a hypothesis is to present a tentative prediction for researchers to explore further through tests and observations. Theories, in their turn, aim to explain plausible occurrences in the form of a scientific study.

It helps to rely on several criteria to establish a good hypothesis and to analyze its quality.

🧭 6 Steps to Making a Good Hypothesis

Writing a hypothesis becomes way simpler if you follow a tried-and-tested algorithm. Let’s explore how you can formulate a good hypothesis in a few steps:

Step #1: Ask Questions

The first step in hypothesis creation is asking real questions about the surrounding reality.

Why do things happen as they do? What are the causes of some occurrences?

Your curiosity will trigger great questions that you can use to formulate a stellar hypothesis. So, ensure you pick a research topic of interest to scrutinize the world’s phenomena, processes, and events.

Step #2: Do Initial Research

Carry out preliminary research and gather essential background information about your topic of choice.

The extent of the information you collect will depend on what you want to prove.

Your initial research may involve only a few academic books or a simple Internet search for quick answers and relevant statistics.

Still, keep in mind that at this stage it is too early to prove or disprove your hypothesis.

Step #3: Identify Your Variables

Now that you have a basic understanding of the topic, choose the dependent and independent variables.

Note that the independent variable is the one you change or control, while the dependent variable is the one you measure. Understand the limitations of your test before settling on a final hypothesis.

Step #4: Formulate Your Hypothesis

You can write your hypothesis as an ‘if – then’ expression. This format is useful because it describes the cause-and-effect relationship you want to test.

For instance: If I study every day, then I will get good grades.
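The if-then structure maps directly onto the variables from Step #3: the "if" clause names the change in the independent variable, and the "then" clause names the expected change in the dependent variable. The minimal Python sketch below assumes a hypothetical `Hypothesis` record (not part of any library) that simply keeps the two parts separate and renders them in that order.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    """Hypothetical container pairing a manipulated cause with a measured effect."""
    independent_change: str  # what you change (the cause)
    dependent_change: str    # what you expect to observe (the effect)

    def as_if_then(self) -> str:
        # The independent variable goes in the "if" clause and the
        # dependent variable in the "then" clause.
        return f"If {self.independent_change}, then {self.dependent_change}."


study = Hypothesis("I study every day", "I will get good grades")
print(study.as_if_then())
```

Keeping the two clauses as separate fields makes it harder to write a "hypothesis" that never says what will be measured.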

Step #5: Gather Relevant Data

Once you have identified your variables and formulated the hypothesis, you can start the experiment. Remember, your conclusion will either support or refute your initial assumption.

So, gather relevant information, whether for a simple or statistical hypothesis, because you need evidence to back your statement.

Step #6: Record Your Findings

Finally, write down your conclusions in a research paper.

Outline in detail whether the test has proved or disproved your hypothesis.

Edit and proofread your work, using a plagiarism checker to ensure the authenticity of your text.



Updated: Oct 25th, 2023




How to Write a Hypothesis

Improve your research report and learn how to develop a precise and thorough hypothesis for your research.

A hypothesis is simply a testable statement that proposes an answer to a specific question; formalizing a hypothesis forces you to think about what results to expect in an experiment.

Because of this, a hypothesis can be used for almost anything: testing different outcomes in daily tasks, predicting a possible outcome in research, forming the basis of a scientific experiment, and so on.

In this article, you will learn the reasoning behind hypotheses, the various types, and how to write one clearly.

What is a Hypothesis?

A hypothesis is a form of prediction: a proposed answer to something that has not yet been tested, or an idea based on limited evidence.

In most cases, this entails proposing relationships between two variables (or more): the independent variable (the change made) and the dependent variable (the measure). For example, suppose you’re used to studying all night before a test, but you’re always too tired to understand the subject clearly, resulting in poor grades.

So, the hypothesis is that if you study during the day, you will understand the subject and, as a result, receive a good grade. In this example, the independent variable is the study time and the dependent variables are the understanding of the subject and the grade.

As you can see, a hypothesis can be used in almost any situation, but it is most commonly found in research papers or scientific experiments.

When writing a hypothesis, it is critical to be cautious and thorough before you begin. Because any hypothesis must be tested against facts, direct observation, and data, even minor flaws or misunderstandings in how it is constructed can harm the quality of your research and its results.

Types of Research Hypothesis and Examples

There are various types of hypotheses available depending on the nature or purpose of your hypothesis, whether it is for research or a scientific experiment.

Before we get into how to write a hypothesis, let’s go over the different types to see which one is best for you.

Simple Hypothesis

A simple hypothesis tests the relationship between only two variables, the independent variable and the dependent one, as in the earlier example of study time and grades.

Complex Hypothesis

A more complex hypothesis involves a relationship between more than two variables, let’s say: two independent variables and one dependent variable or vice versa.

Example: The higher the poverty and illiteracy in a society, the higher its crime rate.

Null Hypothesis

A null hypothesis, abbreviated as H0, states that there is no relationship between the variables.

Example: Poverty has nothing to do with a society’s crime rate.

Alternative Hypothesis

An alternative hypothesis (H1 or HA) is used in conjunction with a null hypothesis. It states the opposite of the null hypothesis; because the two are mutually exclusive, only one of them can be true.

Example: Poverty affects a society’s crime rate.

Composite Hypothesis

A composite hypothesis is one that does not predict the dependent variable’s exact parameters, distribution, or range.

Often, we predict an exact outcome, for example, “23-year-old men are on average 189cm tall.” Here we provide an exact parameter, so the hypothesis is not composite.

However, we cannot always precisely hypothesize something. In these cases, we might say, “On average, 23-year-old men are not 189cm tall.” We have not established a distribution range or precise parameters for the average height of 23-year-old men. As a result, we’ve introduced a composite hypothesis rather than an exact hypothesis.

An alternative hypothesis (as discussed above) is generally composite because it is defined as anything other than the null hypothesis. Because this ‘anything except’ does not specify parameters or distribution, it is an example of a composite hypothesis.
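The height example can be made concrete. In the sketch below, the null hypothesis is a point hypothesis (μ = 189 cm), satisfied by exactly one value, while the alternative (μ ≠ 189 cm) is satisfied by every other value and is therefore composite. A one-sample t statistic then measures how far a sample mean sits from the single value the null specifies. The heights are invented for illustration, and the helper names are hypothetical; turning t into a p-value would additionally require the t distribution (for example, via a statistics library).

```python
import math
import statistics

MU_0 = 189.0  # the single value fixed by the point null hypothesis (cm)


def null_holds(mu: float) -> bool:
    # Point null: exactly one parameter value satisfies it.
    return mu == MU_0


def alternative_holds(mu: float) -> bool:
    # Composite alternative: every value except MU_0 satisfies it.
    return mu != MU_0


def one_sample_t(sample: list[float], mu0: float) -> float:
    """t = (sample mean - mu0) / (s / sqrt(n)), with s the sample standard deviation."""
    n = len(sample)
    mean = statistics.mean(sample)
    s = statistics.stdev(sample)  # uses n - 1 in the denominator
    return (mean - mu0) / (s / math.sqrt(n))


heights = [180.0, 185.0, 190.0, 175.0, 182.0]  # hypothetical sample of 23-year-old men
t = one_sample_t(heights, MU_0)
print(round(t, 2))
```

For this made-up sample the mean is 182.4 cm and t is about -2.64; the negative sign shows the sample mean lies below the value stated by the null hypothesis.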

Logical Hypothesis

A logical hypothesis proposes a relationship between variables that can be supported by reasoning, without direct empirical evidence.

Example: Alligators have green scales; therefore, the dinosaurs closely related to them most likely had green scales as well. Because those dinosaurs are extinct, we must rely on logic rather than empirical data.

Empirical Hypothesis

An empirical hypothesis is the inverse of a logical hypothesis: it is tested through scientific investigation and relies on concrete data. This is also known as a ‘working hypothesis.’

Example: Cows’ lifespan is reduced by feeding them 1 pound of corn per day.

Statistical Hypothesis

A statistical hypothesis uses representative statistical models to draw conclusions about larger populations. Instead of examining an entire population, you test a sample and generalize to the rest of the population based on the data you collect.

Example: Natural red hair is found in about 2% of the world’s population.
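To see how a sample stands in for the whole population, the sketch below estimates a proportion from a sample and attaches an approximate 95% confidence interval using the normal (Wald) approximation. The counts are invented for illustration and are not data on red hair; `proportion_ci` is a hypothetical helper, not a library function.

```python
import math


def proportion_ci(successes: int, n: int, z: float = 1.96) -> tuple[float, float, float]:
    """Return (estimate, lower, upper) for a population proportion.

    Uses the Wald interval p_hat +/- z * sqrt(p_hat * (1 - p_hat) / n),
    a rough approximation that works poorly for very small samples or
    proportions very close to 0 or 1.
    """
    p_hat = successes / n
    margin = z * math.sqrt(p_hat * (1 - p_hat) / n)
    # Clip to [0, 1] because a proportion cannot fall outside that range.
    return p_hat, max(0.0, p_hat - margin), min(1.0, p_hat + margin)


# Hypothetical sample: 21 of 1,000 surveyed people have the trait.
estimate, low, high = proportion_ci(21, 1000)
print(f"{estimate:.3f} (95% CI {low:.3f} to {high:.3f})")
```

Here the interval runs from roughly 0.012 to 0.030. Because the hypothesized 2% falls inside it, this made-up sample would be consistent with the statement rather than contradict it.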

Directional Hypothesis

A directional hypothesis predicts whether the effect of an intervention will be positive or negative before the test itself.

Example: Does rainy weather affect the amount of moderate to vigorous exercise people do per week? Directional hypothesis: rain reduces the amount of moderate to vigorous exercise people do per week.
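Stating a direction changes how the data are evaluated. The sketch below uses an exact permutation test (a swapped-in technique chosen for illustration, not one the text prescribes) on invented weekly exercise minutes. Under the null hypothesis the "rainy" and "dry" labels are interchangeable, so every relabeling of the pooled data is equally likely. The directional version counts only relabelings at least as extreme in the predicted direction; the non-directional version counts both directions. The function name and data are hypothetical.

```python
from itertools import combinations
from statistics import mean


def permutation_p_value(group_a, group_b, alternative="less"):
    """Exact permutation test on the difference in means (group_a - group_b).

    alternative="less":      directional H1, mean(group_a) < mean(group_b)
    alternative="two-sided": non-directional H1, the means differ
    Enumerates every split, so it is only practical for small samples.
    """
    if alternative not in ("less", "two-sided"):
        raise ValueError("alternative must be 'less' or 'two-sided'")
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    observed = mean(group_a) - mean(group_b)

    extreme = 0
    total = 0
    # Consider every way of assigning n_a of the pooled values to group A.
    for idx in combinations(range(len(pooled)), n_a):
        chosen = set(idx)
        a = [pooled[i] for i in idx]
        b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        diff = mean(a) - mean(b)
        if alternative == "less":
            is_extreme = diff <= observed
        else:
            is_extreme = abs(diff) >= abs(observed)
        if is_extreme:
            extreme += 1
        total += 1
    return extreme / total


rainy_weeks = [90, 100, 85, 110]  # hypothetical minutes of exercise per week
dry_weeks = [150, 140, 160, 120]

print(permutation_p_value(rainy_weeks, dry_weeks, "less"))       # directional
print(permutation_p_value(rainy_weeks, dry_weeks, "two-sided"))  # non-directional
```

With these made-up numbers, only 1 of the 70 possible splits is as extreme in the predicted direction (p ≈ 0.014), while the two-sided test also counts the mirror-image split (p ≈ 0.029). A directional prediction made before the data are collected is what justifies the one-sided calculation.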

How to Write a Hypothesis in 6 Steps

1. Ask a Question

Writing a hypothesis implies that you have a question to answer. The question should be direct, focused, and specific. To help you identify it, frame the question with one of the classic six question words: who, what, where, when, why, or how. But remember that the hypothesis itself must be a statement, not a question.

2. Gather Background Research

Collecting background information on the topic may require reading several books, academic journals, experiments, and observations, or it may be as simple as an internet search.

Remember to consider your question from multiple perspectives. Conflicting research can be extremely useful when developing a hypothesis: you can treat its findings as potential rebuttals and frame your study to address those concerns.

3. Define Your Variables

Once you’ve determined what the question will be, you must identify the independent and dependent variables, as well as the type of hypothesis that applies.

4. Put It in The Form of an If-Then Statement

When constructing a hypothesis, using an if-then format can be helpful. For example: “If I exercise more, then I will lose more weight.” This format can be tricky when dealing with multiple variables, but in general, it’s a reliable way of expressing the cause-and-effect relationship you’re testing.

5. Collect Data to Test Your Hypothesis

The purpose of a hypothesis is to answer the question by determining whether the prediction holds. Once you’ve established your hypothesis and determined your variables, you can begin your experiments and gather data that will either support or refute your hypothesis.

6. Write it Down

Finally, once you’ve tested your hypothesis, analyze all of the data you’ve gathered and present your conclusions in a research paper format.


About Fabricio Pamplona

Fabricio Pamplona is the founder of Mind the Graph - a tool used by over 400K users in 60 countries. He has a Ph.D. and solid scientific background in Psychopharmacology and experience as a Guest Researcher at the Max Planck Institute of Psychiatry (Germany) and Researcher in D'Or Institute for Research and Education (IDOR, Brazil). Fabricio holds over 2500 citations in Google Scholar. He has 10 years of experience in small innovative businesses, with relevant experience in product design and innovation management. Connect with him on LinkedIn - Fabricio Pamplona .


Six Steps of the Scientific Method

Learn What Makes Each Stage Important

ThoughtCo. / Hugo Lin


- Ph.D., Biomedical Sciences, University of Tennessee at Knoxville

- B.A., Physics and Mathematics, Hastings College

The scientific method is a systematic way of learning about the world around us and answering questions. The key difference between the scientific method and other ways of acquiring knowledge is forming a hypothesis and then testing it with an experiment.

The Six Steps

The number of steps can vary from one description to another (mainly when data and analysis are treated as separate steps). However, this is a fairly standard list of the six scientific method steps that you are expected to know for any science class:

- Purpose/Question: Ask a question.

- Research: Conduct background research. Write down your sources so you can cite your references. In the modern era, much of your research may be conducted online. Scroll to the bottom of articles to check the references. Even if you can't access the full text of a published article, you can usually view the abstract to see a summary of other experiments. Interview experts on a topic. The more you know about a subject, the easier it will be to conduct your investigation.

- Hypothesis: Propose a hypothesis. This is a sort of educated guess about what you expect. It is a statement used to predict the outcome of an experiment. Usually, a hypothesis is written in terms of cause and effect. Alternatively, it may describe the relationship between two phenomena. One type of hypothesis is the null hypothesis, or the no-difference hypothesis. This is an easy type of hypothesis to test because it assumes changing a variable will have no effect on the outcome. In reality, you probably expect a change, but rejecting a hypothesis may be more useful than accepting one.

- Experiment: Design and perform an experiment to test your hypothesis. An experiment has an independent and a dependent variable. You change or control the independent variable and record the effect it has on the dependent variable. It's important to change only one variable per experiment rather than try to combine the effects of several variables. For example, if you want to test the effects of light intensity and fertilizer concentration on the growth rate of a plant, you're really looking at two separate experiments.

- Data/Analysis: Record observations and analyze the meaning of the data. Often, you'll prepare a table or graph of the data. Don't throw out data points you think are bad or that don't support your predictions. Some of the most incredible discoveries in science were made because the data looked wrong! Once you have the data, you may need to perform a mathematical analysis to support or refute your hypothesis.

- Conclusion: Conclude whether to accept or reject your hypothesis. There is no right or wrong outcome to an experiment, so either result is fine. Accepting a hypothesis does not necessarily mean it's correct! Sometimes repeating an experiment may give a different result. In other cases, a hypothesis may predict an outcome, yet you might draw an incorrect conclusion. Communicate your results. The results may be compiled into a lab report or formally submitted as a paper. Whether you accept or reject the hypothesis, you likely learned something about the subject and may wish to revise the original hypothesis or form a new one for a future experiment.
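In statistical work, the accept-or-reject step is usually phrased in terms of the null hypothesis and a significance level (α) chosen before the data are collected. The sketch below assumes the common but arbitrary threshold α = 0.05, and the p-values passed to the hypothetical `decide` helper are placeholders rather than results from a real experiment. Note the wording it returns: a large p-value means the data "fail to reject" the null hypothesis, which echoes the point above that accepting a hypothesis does not make it correct.

```python
def decide(p_value: float, alpha: float = 0.05) -> str:
    """Map a p-value to a decision about the null hypothesis (H0).

    A small p-value means the observed data would be unusual if H0 were true.
    A large p-value means we fail to reject H0; it does not prove H0 true.
    """
    if not 0.0 <= p_value <= 1.0:
        raise ValueError("p_value must be between 0 and 1")
    return "reject H0" if p_value < alpha else "fail to reject H0"


print(decide(0.01))
print(decide(0.30))
```

Fixing α in advance matters: choosing the threshold after seeing the p-value makes it easy to talk yourself into whichever conclusion you were hoping for.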

When Are There Seven Steps?

Sometimes the scientific method is taught with seven steps instead of six. In this model, the first step of the scientific method is to make observations. Really, even if you don't make observations formally, you think about prior experiences with a subject in order to ask a question or solve a problem.

Formal observations are a type of brainstorming that can help you find an idea and form a hypothesis. Observe your subject and record everything about it. Include colors, timing, sounds, temperatures, changes, behavior, and anything that strikes you as interesting or significant.

When you design an experiment, you are controlling and measuring variables. There are three types of variables:

- Controlled Variables: You can have as many controlled variables as you like. These are parts of the experiment that you try to keep constant throughout an experiment so that they won't interfere with your test. Writing down controlled variables is a good idea because it helps make your experiment reproducible, which is important in science! If you have trouble duplicating results from one experiment to another, there may be a controlled variable that you missed.

- Independent Variable: This is the variable you control.

- Dependent Variable: This is the variable you measure. It is called the dependent variable because it depends on the independent variable.
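One way to check the one-variable-at-a-time rule before running, say, a plant-growth experiment is to list the planned conditions for each trial and confirm that only the independent variable changes. The factor names and values below are hypothetical, and `varying_factors` is an illustrative helper. The dependent variable (for example, growth in centimeters) is left out because it is measured after each trial rather than set beforehand.

```python
def varying_factors(trials: list[dict]) -> set[str]:
    """Return the names of the factors whose values differ across trials.

    Assumes a non-empty list of trials that all share the same keys
    and use hashable values.
    """
    keys = trials[0].keys()
    return {k for k in keys if len({t[k] for t in trials}) > 1}


trials = [
    # light_hours is the independent variable; water_ml and temp_c are controlled.
    {"light_hours": 4, "water_ml": 200, "temp_c": 22},
    {"light_hours": 8, "water_ml": 200, "temp_c": 22},
    {"light_hours": 12, "water_ml": 200, "temp_c": 22},
]

changed = varying_factors(trials)
if changed != {"light_hours"}:
    print("Warning: more than one factor changes:", changed)
else:
    print("Only the independent variable changes.")
```

If watering were accidentally increased in one trial, `water_ml` would appear in the result, flagging a controlled variable that is no longer held constant.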


How to Write a Hypothesis: A Step-by-Step Guide

Introduction

An overview of the research hypothesis, different types of hypotheses, variables in a hypothesis, how to formulate an effective research hypothesis, designing a study around your hypothesis.

The scientific method involves deriving predictions and testing them as hypotheses. Empirical research can then provide support (or a lack of support) for those hypotheses. Even failing to find support for a hypothesis is a valuable contribution to scientific knowledge. Let's look more closely at the idea of the hypothesis and the role it plays in research.

Although the term is common in everyday language, the word "hypothesis" has a more precise meaning when applied to research. A good research hypothesis is informed by prior research and guides research design and data analysis, so it is important to understand how researchers define and use it.

What is the simple definition of a hypothesis?

A hypothesis is a testable prediction about the relationship between two or more variables. It functions as a navigational tool in the research process, directing what you aim to predict and how.

What is a hypothesis for in research?

In research, a hypothesis serves as the cornerstone for your empirical study. It not only lays out what you aim to investigate but also provides a structured approach for your data collection and analysis.

Essentially, it bridges the gap between the theoretical and the empirical, guiding your investigation throughout its course.

What is an example of a hypothesis?

If you are studying the relationship between physical exercise and mental health, a suitable hypothesis could be: "Regular physical exercise leads to improved mental well-being among adults."

This statement constitutes a specific and testable hypothesis that directly relates to the variables you are investigating.

What makes a good hypothesis?

A good hypothesis possesses several key characteristics. Firstly, it must be testable, allowing you to analyze data through empirical means, such as observation or experimentation, to assess if there is significant support for the hypothesis. Secondly, a hypothesis should be specific and unambiguous, giving a clear understanding of the expected relationship between variables. Lastly, it should be grounded in existing research or theoretical frameworks, ensuring its relevance and applicability.

Understanding the types of hypotheses can greatly enhance how you construct and work with hypotheses. While all hypotheses serve the essential function of guiding your study, there are varying purposes among the types of hypotheses. In addition, all hypotheses stand in contrast to the null hypothesis, or the assumption that there is no significant relationship between the variables.

Here, we explore various kinds of hypotheses to provide you with the tools needed to craft effective hypotheses for your specific research needs. Bear in mind that many of these hypothesis types may overlap with one another, and the specific type that is typically used will likely depend on the area of research and methodology you are following.

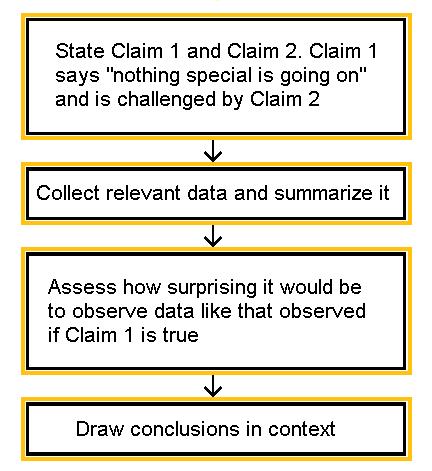

Null hypothesis

The null hypothesis is a statement that there is no effect or relationship between the variables being studied. In statistical terms, it serves as the default assumption that any observed differences are due to random chance.

For example, if you're studying the effect of a drug on blood pressure, the null hypothesis might state that the drug has no effect.

Alternative hypothesis

Contrary to the null hypothesis, the alternative hypothesis suggests that there is a significant relationship or effect between variables.

Using the drug example, the alternative hypothesis would posit that the drug does indeed affect blood pressure. This is usually the hypothesis researchers hope to find support for.

Simple hypothesis

A simple hypothesis makes a prediction about the relationship between two variables, and only two variables.

For example, "Increased study time results in better exam scores." Here, "study time" and "exam scores" are the only variables involved.

Complex hypothesis

A complex hypothesis, as the name suggests, involves more than two variables. For instance, "Increased study time and access to resources result in better exam scores." Here, "study time," "access to resources," and "exam scores" are all variables.

This hypothesis involves more than one independent variable. Other hypotheses could also include predictions about variables that mediate or moderate the relationship between the independent variable and dependent variable.

Directional hypothesis

A directional hypothesis specifies the direction of the expected relationship between variables. For example, "Eating more fruits and vegetables leads to a decrease in heart disease."

Here, heart disease is explicitly predicted to decrease as a result of eating more fruits and vegetables. Many hypotheses specify the expected direction of the relationship between the independent and dependent variable so that researchers can test whether this prediction holds in their data analysis.

Statistical hypothesis

A statistical hypothesis is one that is testable through statistical methods, providing a numerical value that can be analyzed. This is commonly seen in quantitative research.

For example, "There is a statistically significant difference in test scores between students who study for one hour and those who study for two."
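A comparison like this is often analyzed with a two-sample t-test. The sketch below computes Welch's t statistic (which does not assume the two groups have equal variances) and its approximate degrees of freedom for invented test scores; `welch_t` is a hypothetical helper. It stops short of a p-value, which requires the t distribution; in practice a library routine such as SciPy's `scipy.stats.ttest_ind(a, b, equal_var=False)` performs Welch's test and reports one.

```python
import math
import statistics


def welch_t(a: list[float], b: list[float]) -> tuple[float, float]:
    """Welch's two-sample t statistic and its approximate degrees of freedom."""
    va = statistics.variance(a) / len(a)  # squared standard error of mean(a)
    vb = statistics.variance(b) / len(b)  # squared standard error of mean(b)
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(va + vb)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


one_hour = [70, 75, 68, 72, 80]   # hypothetical test scores after one hour of study
two_hours = [78, 85, 82, 88, 79]  # hypothetical test scores after two hours
t, df = welch_t(one_hour, two_hours)
print(f"t = {t:.2f}, df = {df:.1f}")
```

For these made-up scores, t is about -3.35 with roughly 7.9 degrees of freedom; whether that counts as "statistically significant" depends on the significance level chosen before the analysis.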

Empirical hypothesis

An empirical hypothesis is derived from observations and is tested through empirical methods, often through experimentation or survey data. Empirical hypotheses may also be assessed with statistical analyses.

For example, "Regular exercise is correlated with a lower incidence of depression," could be tested through surveys that measure exercise frequency and depression levels.

Causal hypothesis

A causal hypothesis proposes that one variable causes a change in another. This type of hypothesis is often tested through controlled experiments.

For example, "Smoking causes lung cancer," assumes a direct causal relationship.

Associative hypothesis

Unlike causal hypotheses, associative hypotheses suggest a relationship between variables but do not imply causation.

For instance, "People who smoke are more likely to get lung cancer," notes an association but doesn't claim that smoking causes lung cancer directly.

Relational hypothesis

A relational hypothesis explores the relationship between two or more variables but doesn't specify the nature of the relationship.

For example, "There is a relationship between diet and heart health," leaves the nature of the relationship (causal, associative, etc.) open to interpretation.

Logical hypothesis

A logical hypothesis is based on sound reasoning and logical principles. It's often used in theoretical research to explore abstract concepts, rather than being based on empirical data.

For example, "If all men are mortal and Socrates is a man, then Socrates is mortal," employs logical reasoning to make its point.


In any research hypothesis, variables play a critical role. These are the elements or factors that the researcher manipulates, controls, or measures. Understanding variables is essential for crafting a clear, testable hypothesis and for the stages of research that follow, such as data collection and analysis.

In the realm of hypotheses, there are generally two types of variables to consider: independent and dependent. The independent variable is what you, as the researcher, manipulate or change in your study; it is considered the cause in the relationship you're investigating. For instance, in a study examining the impact of sleep duration on academic performance, the independent variable would be the amount of sleep participants get.

Conversely, the dependent variable is the outcome you measure to gauge the effect of your manipulation. It's the effect in the cause-and-effect relationship. The dependent variable thus refers to the main outcome of interest in your study. In the same sleep study example, the academic performance, perhaps measured by exam scores or GPA, would be the dependent variable.

Beyond these two primary types, you might also encounter control variables. These are variables that could potentially influence the outcome and are therefore kept constant to isolate the relationship between the independent and dependent variables. For example, in the sleep and academic performance study, control variables could include age, diet, or even the subject of study.

By clearly identifying and understanding the roles of these variables in your hypothesis, you set the stage for a methodologically sound research project. It helps you develop focused research questions, design appropriate experiments or observations, and carry out meaningful data analysis. It's a step that lays the groundwork for the success of your entire study.

Crafting a strong, testable hypothesis is crucial for the success of any research project. It sets the stage for everything from your study design to data collection and analysis. Below are some key considerations to keep in mind when formulating your hypothesis:

- Be specific: A vague hypothesis can lead to ambiguous results and interpretations. Clearly define your variables and the expected relationship between them.

- Ensure testability: A good hypothesis should be testable through empirical means, whether by observation, experimentation, or other forms of data analysis.

- Ground in literature: Before creating your hypothesis, consult existing research and theories. This not only helps you identify gaps in current knowledge but also gives you valuable context and credibility for crafting your hypothesis.

- Use simple language: While your hypothesis should be conceptually sound, it doesn't have to be complicated. Aim for clarity and simplicity in your wording.

- State direction, if applicable: If your hypothesis involves a directional outcome (e.g., "increase" or "decrease"), make sure to specify this. You also need to think about how you will measure whether or not the outcome moved in the direction you predicted.

- Keep it focused: One of the common pitfalls in hypothesis formulation is trying to answer too many questions at once. Keep your hypothesis focused on a specific issue or relationship.

- Account for control variables: Identify any variables that could potentially impact the outcome and consider how you will control for them in your study.

- Be ethical: Make sure your hypothesis and the methods for testing it comply with ethical standards, particularly if your research involves human or animal subjects.

Designing your study involves multiple key phases that help ensure the rigor and validity of your research. Here we discuss these crucial components in more detail.

Literature review

Starting with a comprehensive literature review is essential. This step allows you to understand the existing body of knowledge related to your hypothesis and helps you identify gaps that your research could fill. Your research should aim to contribute some novel understanding to existing literature, and your hypotheses can reflect this. A literature review also provides valuable insights into how similar research projects were executed, thereby helping you fine-tune your own approach.

Research methods

Choosing the right research methods is critical. Whether it's a survey, an experiment, or observational study, the methodology should be the most appropriate for testing your hypothesis. Your choice of methods will also depend on whether your research is quantitative, qualitative, or mixed-methods. Make sure the chosen methods align well with the variables you are studying and the type of data you need.

Preliminary research

Before diving into a full-scale study, it’s often beneficial to conduct preliminary research or a pilot study. This allows you to test your research methods on a smaller scale, refine your tools, and identify any potential issues. For instance, a pilot survey can help you determine if your questions are clear and if the survey effectively captures the data you need. This step can save you both time and resources in the long run.

Data analysis

Finally, planning your data analysis in advance is crucial for a successful study. Decide which statistical or analytical tools are most suited for your data type and research questions. For quantitative research, you might opt for t-tests, ANOVA, or regression analyses. For qualitative research, thematic analysis or grounded theory may be more appropriate. This phase is integral for interpreting your results and drawing meaningful conclusions in relation to your research question.


How to Write a Hypothesis

Last Updated: May 2, 2023 Fact Checked

This article was co-authored by Bess Ruff, MA . Bess Ruff is a Geography PhD student at Florida State University. She received her MA in Environmental Science and Management from the University of California, Santa Barbara in 2016. She has conducted survey work for marine spatial planning projects in the Caribbean and provided research support as a graduate fellow for the Sustainable Fisheries Group. There are 9 references cited in this article, which can be found at the bottom of the page. This article has been fact-checked, ensuring the accuracy of any cited facts and confirming the authority of its sources. This article has been viewed 1,033,015 times.

A hypothesis is a description of a pattern in nature or an explanation about some real-world phenomenon that can be tested through observation and experimentation. The most common way a hypothesis is used in scientific research is as a tentative, testable, and falsifiable statement that explains some observed phenomenon in nature. [1] Many academic fields, from the physical sciences to the life sciences to the social sciences, use hypothesis testing as a means of testing ideas to learn about the world and advance scientific knowledge. Whether you are a beginning scholar or a beginning student taking a class in a science subject, understanding what hypotheses are and being able to generate hypotheses and predictions yourself is very important. These instructions will help get you started.

Preparing to Write a Hypothesis

- If you are writing a hypothesis for a school assignment, this step may be taken care of for you.

- Focus on academic and scholarly writing. You need to be certain that your information is unbiased, accurate, and comprehensive. Scholarly search databases such as Google Scholar and Web of Science can help you find relevant articles from reputable sources.

- You can find information in textbooks, at a library, and online. If you are in school, you can also ask for help from teachers, librarians, and your peers.

- For example, if you are interested in the effects of caffeine on the human body, but notice that nobody seems to have explored whether caffeine affects males differently than it does females, this could be something to formulate a hypothesis about. Or, if you are interested in organic farming, you might notice that no one has tested whether organic fertilizer results in different growth rates for plants than non-organic fertilizer.

- You can sometimes find holes in the existing literature by looking for statements like “it is unknown” in scientific papers or places where information is clearly missing. You might also find a claim in the literature that seems far-fetched, unlikely, or too good to be true, like that caffeine improves math skills. If the claim is testable, you could provide a great service to scientific knowledge by doing your own investigation. If you confirm the claim, the claim becomes even more credible. If you do not find support for the claim, you are helping with the necessary self-correcting aspect of science.

- Examining these types of questions provides an excellent way for you to set yourself apart by filling in important gaps in a field of study.

- Following the examples above, you might ask: "How does caffeine affect females as compared to males?" or "How does organic fertilizer affect plant growth compared to non-organic fertilizer?" The rest of your research will be aimed at answering these questions.

- Following the examples above, if you discover in the literature that some other stimulants seem to affect females more than males, this could be a clue that the same pattern holds for caffeine. Similarly, if you observe that organic fertilizer seems to be associated with smaller plants overall, you might explain this pattern with the hypothesis that plants exposed to organic fertilizer grow more slowly than plants exposed to non-organic fertilizer.

Formulating Your Hypothesis

- You can think of the independent variable as the one that causes some kind of difference or effect to occur. In the examples, the independent variables would be biological sex (whether a person is male or female) and fertilizer type (whether the fertilizer is organic or non-organic).

- The dependent variable is what is affected by (i.e. "depends" on) the independent variable. In the examples above, the dependent variable would be the measured impact of caffeine or fertilizer.

- Your hypothesis should only suggest one relationship. Most importantly, it should only have one independent variable. If you have more than one, you won't be able to determine which one is actually the source of any effects you might observe.

- Don't worry too much at this point about being precise or detailed.

- In the examples above, one hypothesis would make a statement about whether a person's biological sex might affect the way the person responds to caffeine. At this point, your hypothesis might simply be: "a person's biological sex is related to how caffeine affects his or her heart rate." The other hypothesis would make a general statement about plant growth and fertilizer; for example, your simple explanatory hypothesis might be: "plants given different types of fertilizer are different sizes because they grow at different rates."

- Using our example, our non-directional hypotheses would be "there is a relationship between a person's biological sex and how much caffeine increases the person's heart rate," and "there is a relationship between fertilizer type and the speed at which plants grow."

- Directional predictions using the same example hypotheses above would be: "Females will experience a greater increase in heart rate after consuming caffeine than will males," and "plants fertilized with non-organic fertilizer will grow faster than those fertilized with organic fertilizer." Indeed, these predictions and the hypotheses that allow for them are very different kinds of statements. More on this distinction below.

- If the literature provides any basis for making a directional prediction, it is better to do so, because it provides more information. Especially in the physical sciences, non-directional predictions are often seen as inadequate.

- Where necessary, specify the population (i.e. the people or things) about which you hope to uncover new knowledge. For example, if you were only interested in the effects of caffeine on elderly people, your prediction might read: "Females over the age of 65 will experience a greater increase in heart rate than will males of the same age." If you were interested only in how fertilizer affects tomato plants, your prediction might read: "Tomato plants treated with non-organic fertilizer will grow faster in the first three months than will tomato plants treated with organic fertilizer."

- For example, you would not want to make the hypothesis: "red is the prettiest color." This statement is an opinion and it cannot be tested with an experiment. However, proposing the generalizing hypothesis that red is the most popular color is testable with a simple random survey. If you do indeed confirm that red is the most popular color, your next step may be to ask: Why is red the most popular color? The answer you propose is your explanatory hypothesis.

- An easy way to get to the hypothesis for this method and prediction is to ask yourself why you think heart rates will increase if children are given caffeine. Your explanatory hypothesis in this case may be that caffeine is a stimulant. At this point, some scientists write a research hypothesis, a statement that includes the hypothesis, the experiment, and the prediction all in one statement.

- For example: "If caffeine is a stimulant, and some children are given a drink with caffeine while others are given a drink without caffeine, then the heart rates of the children given a caffeinated drink will increase more than the heart rates of the children given a non-caffeinated drink."

- Using the above example, if you were to test the effects of caffeine on the heart rates of children, evidence against your hypothesis would be finding no difference between the two groups. For instance, the heart rates of the children given the caffeinated drink and of the children given the non-caffeinated drink (called the placebo control) might both stay the same, or might rise or fall by the same amount. The statement that there is no such difference is sometimes called the null hypothesis.

- It is important to note here that the null hypothesis becomes much more useful when researchers test the significance of their results with statistics. When statistics are applied to the results of an experiment, the researcher is testing the statistical null hypothesis: for example, that there is no relationship between two variables or that there is no difference between two groups. [8]
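The caffeine example can be turned into an actual test of the null hypothesis. The sketch below uses a permutation test, which is only one of several tests that could be used; the article does not name a specific one. It is written in Python with only the standard library. The function name `permutation_test`, its parameters, and the heart-rate numbers are all hypothetical. They are made up for illustration and are not results from any study.

The test estimates how often randomly relabeling children as "caffeine" or "placebo" produces a difference in mean heart-rate increase at least as large as the one observed. A small p-value counts as evidence against the null hypothesis of no difference. The `alternative` parameter mirrors the directional/non-directional distinction:

- `"greater"` tests the directional prediction that the caffeine group's heart rates increase more.
- `"two-sided"` tests the non-directional prediction that the groups differ in either direction.

```python
import random
import statistics


def permutation_test(group_a, group_b, n_resamples=10_000, alternative="two-sided", seed=0):
    """Hypothetical helper: estimate a p-value for the null hypothesis that
    group_a and group_b do not differ in mean.

    alternative="two-sided": non-directional (a difference in either direction)
    alternative="greater":   directional (mean of group_a > mean of group_b)
    """
    if alternative not in ("two-sided", "greater"):
        raise ValueError("alternative must be 'two-sided' or 'greater'")
    rng = random.Random(seed)  # seeded so results are reproducible
    observed = statistics.mean(group_a) - statistics.mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_resamples):
        # Under the null hypothesis the group labels are arbitrary,
        # so shuffle everyone and split them into two groups again.
        rng.shuffle(pooled)
        diff = statistics.mean(pooled[:n_a]) - statistics.mean(pooled[n_a:])
        if alternative == "two-sided":
            if abs(diff) >= abs(observed):
                extreme += 1
        elif diff >= observed:
            extreme += 1
    # Common +1 correction so the estimated p-value is never exactly zero
    p_value = (extreme + 1) / (n_resamples + 1)
    return observed, p_value


# Hypothetical heart-rate increases (beats per minute), for illustration only
caffeine = [8, 11, 9, 12, 7, 10, 13, 9]
placebo = [2, 4, 1, 3, 5, 2, 3, 4]

diff, p_two = permutation_test(caffeine, placebo, alternative="two-sided")
_, p_one = permutation_test(caffeine, placebo, alternative="greater")
print(f"observed difference in means: {diff:.3f} bpm")
print(f"two-sided p approx. {p_two:.4f}, one-sided p approx. {p_one:.4f}")
```

The one-sided test counts only differences in the predicted direction. With the same seed, and therefore the same shuffles, its p-value can never exceed the two-sided one. This is one way to see why a directional prediction, when the literature supports it, gives a more informative test. These made-up groups do not overlap at all, so both p-values come out very small. Real data are rarely this clean.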


- Remember that science is not necessarily a linear process and can be approached in various ways. [10]

- When examining the literature, look for research that is similar to what you want to do, and try to build on the findings of other researchers. But also look for claims that you think are suspicious, and test them yourself.

- Be specific in your hypotheses, but not so specific that your hypothesis can't be applied to anything outside your specific experiment. You definitely want to be clear about the population about which you are interested in drawing conclusions, but nobody (except your roommates) will be interested in reading a paper with the prediction: "my three roommates will each be able to do a different amount of pushups."

References

1. https://undsci.berkeley.edu/for-educators/prepare-and-plan/correcting-misconceptions/#a4

2. https://owl.purdue.edu/owl/general_writing/common_writing_assignments/research_papers/choosing_a_topic.html

3. https://owl.purdue.edu/owl/subject_specific_writing/writing_in_the_social_sciences/writing_in_psychology_experimental_report_writing/experimental_reports_1.html

4. https://www.grammarly.com/blog/how-to-write-a-hypothesis/

5. https://grammar.yourdictionary.com/for-students-and-parents/how-create-hypothesis.html

6. https://flexbooks.ck12.org/cbook/ck-12-middle-school-physical-science-flexbook-2.0/section/1.19/primary/lesson/hypothesis-ms-ps/

7. https://iastate.pressbooks.pub/preparingtopublish/chapter/goal-1-contextualize-the-studys-methods/

8. http://mathworld.wolfram.com/NullHypothesis.html

9. http://undsci.berkeley.edu/article/scienceflowchart

About This Article

Before writing a hypothesis, think of what questions are still unanswered about a specific subject and make an educated guess about what the answer could be. Then, determine the variables in your question and write a simple statement about how they might be related. Try to focus on specific predictions and variables, such as age or segment of the population, to make your hypothesis easier to test.


Hypothesis Testing
