19+ Experimental Design Examples (Methods + Types)

Ever wondered how scientists discover new medicines, psychologists learn about behavior, or even how marketers figure out what kind of ads you like? Well, they all have something in common: they use a special plan or recipe called an "experimental design."

Imagine you're baking cookies. You can't just throw random amounts of flour, sugar, and chocolate chips into a bowl and hope for the best. You follow a recipe, right? Scientists and researchers do something similar. They follow a "recipe" called an experimental design to make sure their experiments are set up in a way that the answers they find are meaningful and reliable.

Experimental design is the roadmap researchers use to answer questions. It's a set of rules and steps that researchers follow to collect information, or "data," in a way that is fair, accurate, and makes sense.

Long ago, people didn't have detailed game plans for experiments. They often just tried things out and saw what happened. But over time, people got smarter about this. They started creating structured plans—what we now call experimental designs—to get clearer, more trustworthy answers to their questions.

In this article, we'll take you on a journey through the world of experimental designs. We'll talk about the different types, or "flavors," of experimental designs, where they're used, and even give you a peek into how they came to be.

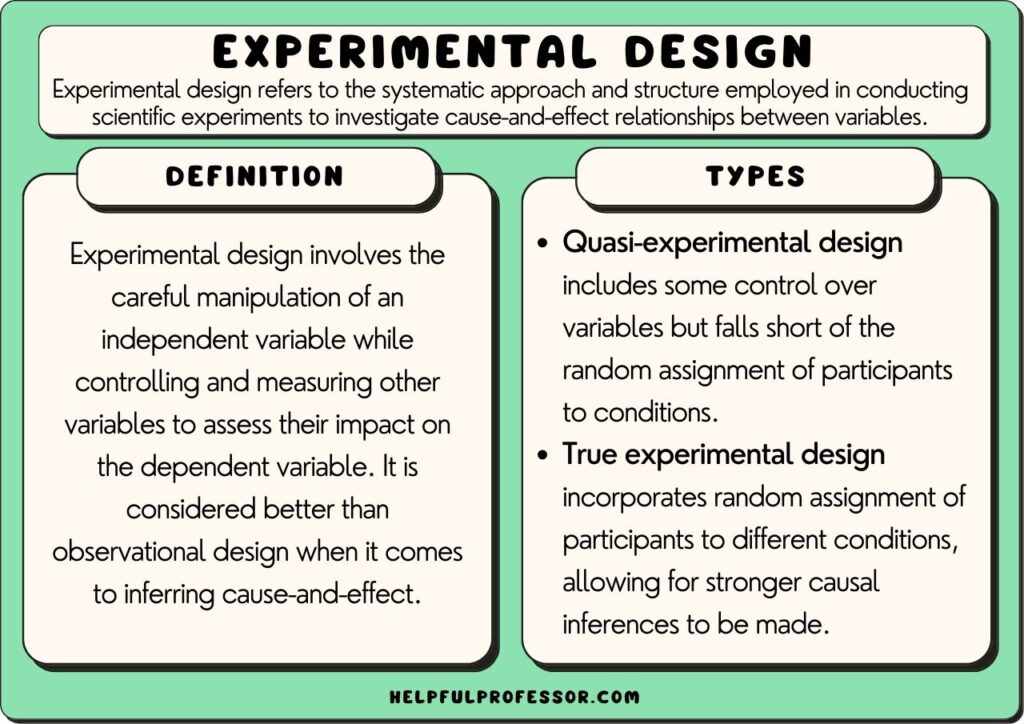

What Is Experimental Design?

Alright, before we dive into the different types of experimental designs, let's get crystal clear on what experimental design actually is.

Imagine you're a detective trying to solve a mystery. You need clues, right? Well, in the world of research, experimental design is like the roadmap that helps you find those clues. It's like the game plan in sports or the blueprint when you're building a house. Just like you wouldn't start building without a good blueprint, researchers won't start their studies without a strong experimental design.

So, why do we need experimental design? Think about baking a cake. If you toss ingredients into a bowl without measuring, you'll end up with a mess instead of a tasty dessert.

Similarly, in research, if you don't have a solid plan, you might get confusing or incorrect results. A good experimental design helps you ask the right questions ( think critically ), decide what to measure ( come up with an idea ), and figure out how to measure it (test it). It also helps you consider things that might mess up your results, like outside influences you hadn't thought of.

For example, let's say you want to find out if listening to music helps people focus better. Your experimental design would help you decide things like: Who are you going to test? What kind of music will you use? How will you measure focus? And, importantly, how will you make sure that it's really the music affecting focus and not something else, like the time of day or whether someone had a good breakfast?

In short, experimental design is the master plan that guides researchers through the process of collecting data, so they can answer questions in the most reliable way possible. It's like the GPS for the journey of discovery!

History of Experimental Design

Around 350 BCE, people like Aristotle were trying to figure out how the world works, but they mostly just thought really hard about things. They didn't test their ideas much. So while they were super smart, their methods weren't always the best for finding out the truth.

Fast forward to the Renaissance (14th to 17th centuries), a time of big changes and lots of curiosity. People like Galileo started to experiment by actually doing tests, like rolling balls down inclined planes to study motion. Galileo's work was cool because he combined thinking with doing. He'd have an idea, test it, look at the results, and then think some more. This approach was a lot more reliable than just sitting around and thinking.

Now, let's zoom ahead to the 18th and 19th centuries. This is when people like Francis Galton, an English polymath, started to get really systematic about experimentation. Galton was obsessed with measuring things. Seriously, he even tried to measure how good-looking people were ! His work helped create the foundations for a more organized approach to experiments.

Next stop: the early 20th century. Enter Ronald A. Fisher , a brilliant British statistician. Fisher was a game-changer. He came up with ideas that are like the bread and butter of modern experimental design.

Fisher invented the concept of the " control group "—that's a group of people or things that don't get the treatment you're testing, so you can compare them to those who do. He also stressed the importance of " randomization ," which means assigning people or things to different groups by chance, like drawing names out of a hat. This makes sure the experiment is fair and the results are trustworthy.

Around the same time, American psychologists like John B. Watson and B.F. Skinner were developing " behaviorism ." They focused on studying things that they could directly observe and measure, like actions and reactions.

Skinner even built boxes—called Skinner Boxes —to test how animals like pigeons and rats learn. Their work helped shape how psychologists design experiments today. Watson performed a very controversial experiment called The Little Albert experiment that helped describe behaviour through conditioning—in other words, how people learn to behave the way they do.

In the later part of the 20th century and into our time, computers have totally shaken things up. Researchers now use super powerful software to help design their experiments and crunch the numbers.

With computers, they can simulate complex experiments before they even start, which helps them predict what might happen. This is especially helpful in fields like medicine, where getting things right can be a matter of life and death.

Also, did you know that experimental designs aren't just for scientists in labs? They're used by people in all sorts of jobs, like marketing, education, and even video game design! Yes, someone probably ran an experiment to figure out what makes a game super fun to play.

So there you have it—a quick tour through the history of experimental design, from Aristotle's deep thoughts to Fisher's groundbreaking ideas, and all the way to today's computer-powered research. These designs are the recipes that help people from all walks of life find answers to their big questions.

Key Terms in Experimental Design

Before we dig into the different types of experimental designs, let's get comfy with some key terms. Understanding these terms will make it easier for us to explore the various types of experimental designs that researchers use to answer their big questions.

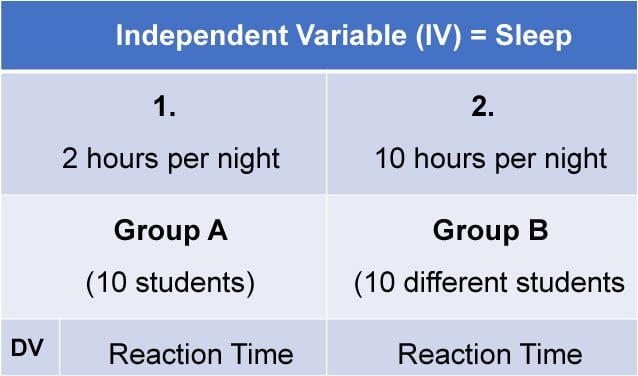

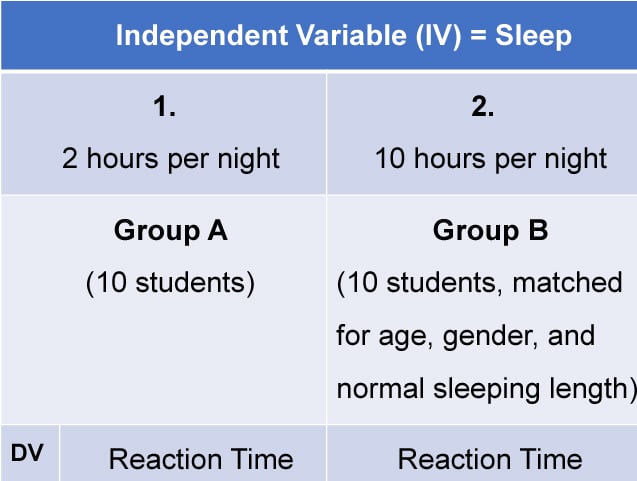

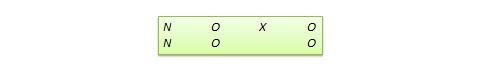

Independent Variable : This is what you change or control in your experiment to see what effect it has. Think of it as the "cause" in a cause-and-effect relationship. For example, if you're studying whether different types of music help people focus, the kind of music is the independent variable.

Dependent Variable : This is what you're measuring to see the effect of your independent variable. In our music and focus experiment, how well people focus is the dependent variable—it's what "depends" on the kind of music played.

Control Group : This is a group of people who don't get the special treatment or change you're testing. They help you see what happens when the independent variable is not applied. If you're testing whether a new medicine works, the control group would take a fake pill, called a placebo , instead of the real medicine.

Experimental Group : This is the group that gets the special treatment or change you're interested in. Going back to our medicine example, this group would get the actual medicine to see if it has any effect.

Randomization : This is like shaking things up in a fair way. You randomly put people into the control or experimental group so that each group is a good mix of different kinds of people. This helps make the results more reliable.

Sample : This is the group of people you're studying. They're a "sample" of a larger group that you're interested in. For instance, if you want to know how teenagers feel about a new video game, you might study a sample of 100 teenagers.

Bias : This is anything that might tilt your experiment one way or another without you realizing it. Like if you're testing a new kind of dog food and you only test it on poodles, that could create a bias because maybe poodles just really like that food and other breeds don't.

Data : This is the information you collect during the experiment. It's like the treasure you find on your journey of discovery!

Replication : This means doing the experiment more than once to make sure your findings hold up. It's like double-checking your answers on a test.

Hypothesis : This is your educated guess about what will happen in the experiment. It's like predicting the end of a movie based on the first half.

Steps of Experimental Design

Alright, let's say you're all fired up and ready to run your own experiment. Cool! But where do you start? Well, designing an experiment is a bit like planning a road trip. There are some key steps you've got to take to make sure you reach your destination. Let's break it down:

- Ask a Question : Before you hit the road, you've got to know where you're going. Same with experiments. You start with a question you want to answer, like "Does eating breakfast really make you do better in school?"

- Do Some Homework : Before you pack your bags, you look up the best places to visit, right? In science, this means reading up on what other people have already discovered about your topic.

- Form a Hypothesis : This is your educated guess about what you think will happen. It's like saying, "I bet this route will get us there faster."

- Plan the Details : Now you decide what kind of car you're driving (your experimental design), who's coming with you (your sample), and what snacks to bring (your variables).

- Randomization : Remember, this is like shuffling a deck of cards. You want to mix up who goes into your control and experimental groups to make sure it's a fair test.

- Run the Experiment : Finally, the rubber hits the road! You carry out your plan, making sure to collect your data carefully.

- Analyze the Data : Once the trip's over, you look at your photos and decide which ones are keepers. In science, this means looking at your data to see what it tells you.

- Draw Conclusions : Based on your data, did you find an answer to your question? This is like saying, "Yep, that route was faster," or "Nope, we hit a ton of traffic."

- Share Your Findings : After a great trip, you want to tell everyone about it, right? Scientists do the same by publishing their results so others can learn from them.

- Do It Again? : Sometimes one road trip just isn't enough. In the same way, scientists often repeat their experiments to make sure their findings are solid.

So there you have it! Those are the basic steps you need to follow when you're designing an experiment. Each step helps make sure that you're setting up a fair and reliable way to find answers to your big questions.

Let's get into examples of experimental designs.

1) True Experimental Design

In the world of experiments, the True Experimental Design is like the superstar quarterback everyone talks about. Born out of the early 20th-century work of statisticians like Ronald A. Fisher, this design is all about control, precision, and reliability.

Researchers carefully pick an independent variable to manipulate (remember, that's the thing they're changing on purpose) and measure the dependent variable (the effect they're studying). Then comes the magic trick—randomization. By randomly putting participants into either the control or experimental group, scientists make sure their experiment is as fair as possible.

No sneaky biases here!

True Experimental Design Pros

The pros of True Experimental Design are like the perks of a VIP ticket at a concert: you get the best and most trustworthy results. Because everything is controlled and randomized, you can feel pretty confident that the results aren't just a fluke.

True Experimental Design Cons

However, there's a catch. Sometimes, it's really tough to set up these experiments in a real-world situation. Imagine trying to control every single detail of your day, from the food you eat to the air you breathe. Not so easy, right?

True Experimental Design Uses

The fields that get the most out of True Experimental Designs are those that need super reliable results, like medical research.

When scientists were developing COVID-19 vaccines, they used this design to run clinical trials. They had control groups that received a placebo (a harmless substance with no effect) and experimental groups that got the actual vaccine. Then they measured how many people in each group got sick. By comparing the two, they could say, "Yep, this vaccine works!"

So next time you read about a groundbreaking discovery in medicine or technology, chances are a True Experimental Design was the VIP behind the scenes, making sure everything was on point. It's been the go-to for rigorous scientific inquiry for nearly a century, and it's not stepping off the stage anytime soon.

2) Quasi-Experimental Design

So, let's talk about the Quasi-Experimental Design. Think of this one as the cool cousin of True Experimental Design. It wants to be just like its famous relative, but it's a bit more laid-back and flexible. You'll find quasi-experimental designs when it's tricky to set up a full-blown True Experimental Design with all the bells and whistles.

Quasi-experiments still play with an independent variable, just like their stricter cousins. The big difference? They don't use randomization. It's like wanting to divide a bag of jelly beans equally between your friends, but you can't quite do it perfectly.

In real life, it's often not possible or ethical to randomly assign people to different groups, especially when dealing with sensitive topics like education or social issues. And that's where quasi-experiments come in.

Quasi-Experimental Design Pros

Even though they lack full randomization, quasi-experimental designs are like the Swiss Army knives of research: versatile and practical. They're especially popular in fields like education, sociology, and public policy.

For instance, when researchers wanted to figure out if the Head Start program , aimed at giving young kids a "head start" in school, was effective, they used a quasi-experimental design. They couldn't randomly assign kids to go or not go to preschool, but they could compare kids who did with kids who didn't.

Quasi-Experimental Design Cons

Of course, quasi-experiments come with their own bag of pros and cons. On the plus side, they're easier to set up and often cheaper than true experiments. But the flip side is that they're not as rock-solid in their conclusions. Because the groups aren't randomly assigned, there's always that little voice saying, "Hey, are we missing something here?"

Quasi-Experimental Design Uses

Quasi-Experimental Design gained traction in the mid-20th century. Researchers were grappling with real-world problems that didn't fit neatly into a laboratory setting. Plus, as society became more aware of ethical considerations, the need for flexible designs increased. So, the quasi-experimental approach was like a breath of fresh air for scientists wanting to study complex issues without a laundry list of restrictions.

In short, if True Experimental Design is the superstar quarterback, Quasi-Experimental Design is the versatile player who can adapt and still make significant contributions to the game.

3) Pre-Experimental Design

Now, let's talk about the Pre-Experimental Design. Imagine it as the beginner's skateboard you get before you try out for all the cool tricks. It has wheels, it rolls, but it's not built for the professional skatepark.

Similarly, pre-experimental designs give researchers a starting point. They let you dip your toes in the water of scientific research without diving in head-first.

So, what's the deal with pre-experimental designs?

Pre-Experimental Designs are the basic, no-frills versions of experiments. Researchers still mess around with an independent variable and measure a dependent variable, but they skip over the whole randomization thing and often don't even have a control group.

It's like baking a cake but forgetting the frosting and sprinkles; you'll get some results, but they might not be as complete or reliable as you'd like.

Pre-Experimental Design Pros

Why use such a simple setup? Because sometimes, you just need to get the ball rolling. Pre-experimental designs are great for quick-and-dirty research when you're short on time or resources. They give you a rough idea of what's happening, which you can use to plan more detailed studies later.

A good example of this is early studies on the effects of screen time on kids. Researchers couldn't control every aspect of a child's life, but they could easily ask parents to track how much time their kids spent in front of screens and then look for trends in behavior or school performance.

Pre-Experimental Design Cons

But here's the catch: pre-experimental designs are like that first draft of an essay. It helps you get your ideas down, but you wouldn't want to turn it in for a grade. Because these designs lack the rigorous structure of true or quasi-experimental setups, they can't give you rock-solid conclusions. They're more like clues or signposts pointing you in a certain direction.

Pre-Experimental Design Uses

This type of design became popular in the early stages of various scientific fields. Researchers used them to scratch the surface of a topic, generate some initial data, and then decide if it's worth exploring further. In other words, pre-experimental designs were the stepping stones that led to more complex, thorough investigations.

So, while Pre-Experimental Design may not be the star player on the team, it's like the practice squad that helps everyone get better. It's the starting point that can lead to bigger and better things.

4) Factorial Design

Now, buckle up, because we're moving into the world of Factorial Design, the multi-tasker of the experimental universe.

Imagine juggling not just one, but multiple balls in the air—that's what researchers do in a factorial design.

In Factorial Design, researchers are not satisfied with just studying one independent variable. Nope, they want to study two or more at the same time to see how they interact.

It's like cooking with several spices to see how they blend together to create unique flavors.

Factorial Design became the talk of the town with the rise of computers. Why? Because this design produces a lot of data, and computers are the number crunchers that help make sense of it all. So, thanks to our silicon friends, researchers can study complicated questions like, "How do diet AND exercise together affect weight loss?" instead of looking at just one of those factors.

Factorial Design Pros

This design's main selling point is its ability to explore interactions between variables. For instance, maybe a new study drug works really well for young people but not so great for older adults. A factorial design could reveal that age is a crucial factor, something you might miss if you only studied the drug's effectiveness in general. It's like being a detective who looks for clues not just in one room but throughout the entire house.

Factorial Design Cons

However, factorial designs have their own bag of challenges. First off, they can be pretty complicated to set up and run. Imagine coordinating a four-way intersection with lots of cars coming from all directions—you've got to make sure everything runs smoothly, or you'll end up with a traffic jam. Similarly, researchers need to carefully plan how they'll measure and analyze all the different variables.

Factorial Design Uses

Factorial designs are widely used in psychology to untangle the web of factors that influence human behavior. They're also popular in fields like marketing, where companies want to understand how different aspects like price, packaging, and advertising influence a product's success.

And speaking of success, the factorial design has been a hit since statisticians like Ronald A. Fisher (yep, him again!) expanded on it in the early-to-mid 20th century. It offered a more nuanced way of understanding the world, proving that sometimes, to get the full picture, you've got to juggle more than one ball at a time.

So, if True Experimental Design is the quarterback and Quasi-Experimental Design is the versatile player, Factorial Design is the strategist who sees the entire game board and makes moves accordingly.

5) Longitudinal Design

Alright, let's take a step into the world of Longitudinal Design. Picture it as the grand storyteller, the kind who doesn't just tell you about a single event but spins an epic tale that stretches over years or even decades. This design isn't about quick snapshots; it's about capturing the whole movie of someone's life or a long-running process.

You know how you might take a photo every year on your birthday to see how you've changed? Longitudinal Design is kind of like that, but for scientific research.

With Longitudinal Design, instead of measuring something just once, researchers come back again and again, sometimes over many years, to see how things are going. This helps them understand not just what's happening, but why it's happening and how it changes over time.

This design really started to shine in the latter half of the 20th century, when researchers began to realize that some questions can't be answered in a hurry. Think about studies that look at how kids grow up, or research on how a certain medicine affects you over a long period. These aren't things you can rush.

The famous Framingham Heart Study , started in 1948, is a prime example. It's been studying heart health in a small town in Massachusetts for decades, and the findings have shaped what we know about heart disease.

Longitudinal Design Pros

So, what's to love about Longitudinal Design? First off, it's the go-to for studying change over time, whether that's how people age or how a forest recovers from a fire.

Longitudinal Design Cons

But it's not all sunshine and rainbows. Longitudinal studies take a lot of patience and resources. Plus, keeping track of participants over many years can be like herding cats—difficult and full of surprises.

Longitudinal Design Uses

Despite these challenges, longitudinal studies have been key in fields like psychology, sociology, and medicine. They provide the kind of deep, long-term insights that other designs just can't match.

So, if the True Experimental Design is the superstar quarterback, and the Quasi-Experimental Design is the flexible athlete, then the Factorial Design is the strategist, and the Longitudinal Design is the wise elder who has seen it all and has stories to tell.

6) Cross-Sectional Design

Now, let's flip the script and talk about Cross-Sectional Design, the polar opposite of the Longitudinal Design. If Longitudinal is the grand storyteller, think of Cross-Sectional as the snapshot photographer. It captures a single moment in time, like a selfie that you take to remember a fun day. Researchers using this design collect all their data at one point, providing a kind of "snapshot" of whatever they're studying.

In a Cross-Sectional Design, researchers look at multiple groups all at the same time to see how they're different or similar.

This design rose to popularity in the mid-20th century, mainly because it's so quick and efficient. Imagine wanting to know how people of different ages feel about a new video game. Instead of waiting for years to see how opinions change, you could just ask people of all ages what they think right now. That's Cross-Sectional Design for you—fast and straightforward.

You'll find this type of research everywhere from marketing studies to healthcare. For instance, you might have heard about surveys asking people what they think about a new product or political issue. Those are usually cross-sectional studies, aimed at getting a quick read on public opinion.

Cross-Sectional Design Pros

So, what's the big deal with Cross-Sectional Design? Well, it's the go-to when you need answers fast and don't have the time or resources for a more complicated setup.

Cross-Sectional Design Cons

Remember, speed comes with trade-offs. While you get your results quickly, those results are stuck in time. They can't tell you how things change or why they're changing, just what's happening right now.

Cross-Sectional Design Uses

Also, because they're so quick and simple, cross-sectional studies often serve as the first step in research. They give scientists an idea of what's going on so they can decide if it's worth digging deeper. In that way, they're a bit like a movie trailer, giving you a taste of the action to see if you're interested in seeing the whole film.

So, in our lineup of experimental designs, if True Experimental Design is the superstar quarterback and Longitudinal Design is the wise elder, then Cross-Sectional Design is like the speedy running back—fast, agile, but not designed for long, drawn-out plays.

7) Correlational Design

Next on our roster is the Correlational Design, the keen observer of the experimental world. Imagine this design as the person at a party who loves people-watching. They don't interfere or get involved; they just observe and take mental notes about what's going on.

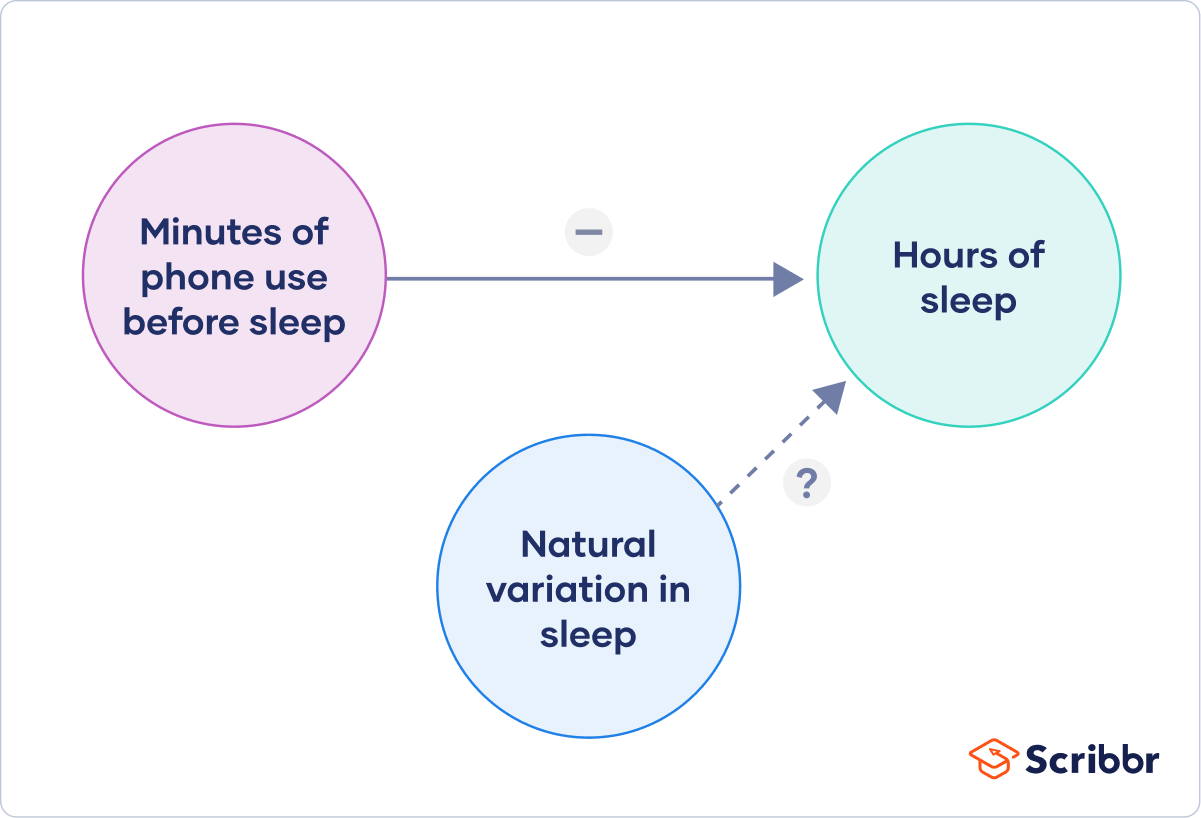

In a correlational study, researchers don't change or control anything; they simply observe and measure how two variables relate to each other.

The correlational design has roots in the early days of psychology and sociology. Pioneers like Sir Francis Galton used it to study how qualities like intelligence or height could be related within families.

This design is all about asking, "Hey, when this thing happens, does that other thing usually happen too?" For example, researchers might study whether students who have more study time get better grades or whether people who exercise more have lower stress levels.

One of the most famous correlational studies you might have heard of is the link between smoking and lung cancer. Back in the mid-20th century, researchers started noticing that people who smoked a lot also seemed to get lung cancer more often. They couldn't say smoking caused cancer—that would require a true experiment—but the strong correlation was a red flag that led to more research and eventually, health warnings.

Correlational Design Pros

This design is great at proving that two (or more) things can be related. Correlational designs can help prove that more detailed research is needed on a topic. They can help us see patterns or possible causes for things that we otherwise might not have realized.

Correlational Design Cons

But here's where you need to be careful: correlational designs can be tricky. Just because two things are related doesn't mean one causes the other. That's like saying, "Every time I wear my lucky socks, my team wins." Well, it's a fun thought, but those socks aren't really controlling the game.

Correlational Design Uses

Despite this limitation, correlational designs are popular in psychology, economics, and epidemiology, to name a few fields. They're often the first step in exploring a possible relationship between variables. Once a strong correlation is found, researchers may decide to conduct more rigorous experimental studies to examine cause and effect.

So, if the True Experimental Design is the superstar quarterback and the Longitudinal Design is the wise elder, the Factorial Design is the strategist, and the Cross-Sectional Design is the speedster, then the Correlational Design is the clever scout, identifying interesting patterns but leaving the heavy lifting of proving cause and effect to the other types of designs.

8) Meta-Analysis

Last but not least, let's talk about Meta-Analysis, the librarian of experimental designs.

If other designs are all about creating new research, Meta-Analysis is about gathering up everyone else's research, sorting it, and figuring out what it all means when you put it together.

Imagine a jigsaw puzzle where each piece is a different study. Meta-Analysis is the process of fitting all those pieces together to see the big picture.

The concept of Meta-Analysis started to take shape in the late 20th century, when computers became powerful enough to handle massive amounts of data. It was like someone handed researchers a super-powered magnifying glass, letting them examine multiple studies at the same time to find common trends or results.

You might have heard of the Cochrane Reviews in healthcare . These are big collections of meta-analyses that help doctors and policymakers figure out what treatments work best based on all the research that's been done.

For example, if ten different studies show that a certain medicine helps lower blood pressure, a meta-analysis would pull all that information together to give a more accurate answer.

Meta-Analysis Pros

The beauty of Meta-Analysis is that it can provide really strong evidence. Instead of relying on one study, you're looking at the whole landscape of research on a topic.

Meta-Analysis Cons

However, it does have some downsides. For one, Meta-Analysis is only as good as the studies it includes. If those studies are flawed, the meta-analysis will be too. It's like baking a cake: if you use bad ingredients, it doesn't matter how good your recipe is—the cake won't turn out well.

Meta-Analysis Uses

Despite these challenges, meta-analyses are highly respected and widely used in many fields like medicine, psychology, and education. They help us make sense of a world that's bursting with information by showing us the big picture drawn from many smaller snapshots.

So, in our all-star lineup, if True Experimental Design is the quarterback and Longitudinal Design is the wise elder, the Factorial Design is the strategist, the Cross-Sectional Design is the speedster, and the Correlational Design is the scout, then the Meta-Analysis is like the coach, using insights from everyone else's plays to come up with the best game plan.

9) Non-Experimental Design

Now, let's talk about a player who's a bit of an outsider on this team of experimental designs—the Non-Experimental Design. Think of this design as the commentator or the journalist who covers the game but doesn't actually play.

In a Non-Experimental Design, researchers are like reporters gathering facts, but they don't interfere or change anything. They're simply there to describe and analyze.

Non-Experimental Design Pros

So, what's the deal with Non-Experimental Design? Its strength is in description and exploration. It's really good for studying things as they are in the real world, without changing any conditions.

Non-Experimental Design Cons

Because a non-experimental design doesn't manipulate variables, it can't prove cause and effect. It's like a weather reporter: they can tell you it's raining, but they can't tell you why it's raining.

The downside? Since researchers aren't controlling variables, it's hard to rule out other explanations for what they observe. It's like hearing one side of a story—you get an idea of what happened, but it might not be the complete picture.

Non-Experimental Design Uses

Non-Experimental Design has always been a part of research, especially in fields like anthropology, sociology, and some areas of psychology.

For instance, if you've ever heard of studies that describe how people behave in different cultures or what teens like to do in their free time, that's often Non-Experimental Design at work. These studies aim to capture the essence of a situation, like painting a portrait instead of taking a snapshot.

One well-known example you might have heard about is the Kinsey Reports from the 1940s and 1950s, which described sexual behavior in men and women. Researchers interviewed thousands of people but didn't manipulate any variables like you would in a true experiment. They simply collected data to create a comprehensive picture of the subject matter.

So, in our metaphorical team of research designs, if True Experimental Design is the quarterback and Longitudinal Design is the wise elder, Factorial Design is the strategist, Cross-Sectional Design is the speedster, Correlational Design is the scout, and Meta-Analysis is the coach, then Non-Experimental Design is the sports journalist—always present, capturing the game, but not part of the action itself.

10) Repeated Measures Design

Time to meet the Repeated Measures Design, the time traveler of our research team. If this design were a player in a sports game, it would be the one who keeps revisiting past plays to figure out how to improve the next one.

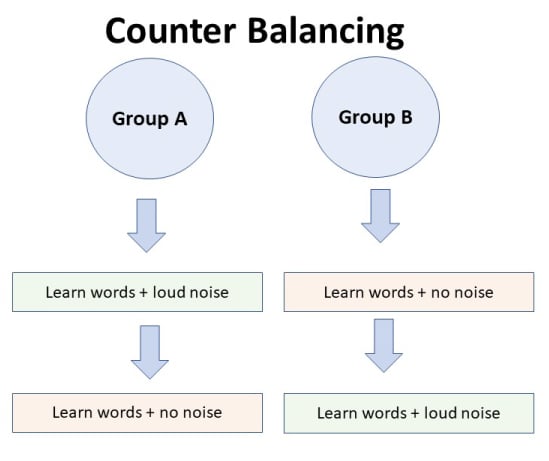

Repeated Measures Design is all about studying the same people or subjects multiple times to see how they change or react under different conditions.

The idea behind Repeated Measures Design isn't new; it's been around since the early days of psychology and medicine. You could say it's a cousin to the Longitudinal Design, but instead of looking at how things naturally change over time, it focuses on how the same group reacts to different things.

Imagine a study looking at how a new energy drink affects people's running speed. Instead of comparing one group that drank the energy drink to another group that didn't, a Repeated Measures Design would have the same group of people run multiple times—once with the energy drink, and once without. This way, you're really zeroing in on the effect of that energy drink, making the results more reliable.

Repeated Measures Design Pros

The strong point of Repeated Measures Design is that it's super focused. Because it uses the same subjects, you don't have to worry about differences between groups messing up your results.

Repeated Measures Design Cons

But the downside? Well, people can get tired or bored if they're tested too many times, which might affect how they respond.

Repeated Measures Design Uses

A famous example of this design is the "Little Albert" experiment, conducted by John B. Watson and Rosalie Rayner in 1920. In this study, a young boy was exposed to a white rat and other stimuli several times to see how his emotional responses changed. Though the ethical standards of this experiment are often criticized today, it was groundbreaking in understanding conditioned emotional responses.

In our metaphorical lineup of research designs, if True Experimental Design is the quarterback and Longitudinal Design is the wise elder, Factorial Design is the strategist, Cross-Sectional Design is the speedster, Correlational Design is the scout, Meta-Analysis is the coach, and Non-Experimental Design is the journalist, then Repeated Measures Design is the time traveler—always looping back to fine-tune the game plan.

11) Crossover Design

Next up is Crossover Design, the switch-hitter of the research world. If you're familiar with baseball, you'll know a switch-hitter is someone who can bat both right-handed and left-handed.

In a similar way, Crossover Design allows subjects to experience multiple conditions, flipping them around so that everyone gets a turn in each role.

This design is like the utility player on our team—versatile, flexible, and really good at adapting.

The Crossover Design has its roots in medical research and has been popular since the mid-20th century. It's often used in clinical trials to test the effectiveness of different treatments.

Crossover Design Pros

The neat thing about this design is that it allows each participant to serve as their own control group. Imagine you're testing two new kinds of headache medicine. Instead of giving one type to one group and another type to a different group, you'd give both kinds to the same people but at different times.

Crossover Design Cons

What's the big deal with Crossover Design? Its major strength is in reducing the "noise" that comes from individual differences. Since each person experiences all conditions, it's easier to see real effects. However, there's a catch. This design assumes that there's no lasting effect from the first condition when you switch to the second one. That might not always be true. If the first treatment has a long-lasting effect, it could mess up the results when you switch to the second treatment.

Crossover Design Uses

A well-known example of Crossover Design is in studies that look at the effects of different types of diets—like low-carb vs. low-fat diets. Researchers might have participants follow a low-carb diet for a few weeks, then switch them to a low-fat diet. By doing this, they can more accurately measure how each diet affects the same group of people.

In our team of experimental designs, if True Experimental Design is the quarterback and Longitudinal Design is the wise elder, Factorial Design is the strategist, Cross-Sectional Design is the speedster, Correlational Design is the scout, Meta-Analysis is the coach, Non-Experimental Design is the journalist, and Repeated Measures Design is the time traveler, then Crossover Design is the versatile utility player—always ready to adapt and play multiple roles to get the most accurate results.

12) Cluster Randomized Design

Meet the Cluster Randomized Design, the team captain of group-focused research. In our imaginary lineup of experimental designs, if other designs focus on individual players, then Cluster Randomized Design is looking at how the entire team functions.

This approach is especially common in educational and community-based research, and it's been gaining traction since the late 20th century.

Here's how Cluster Randomized Design works: Instead of assigning individual people to different conditions, researchers assign entire groups, or "clusters." These could be schools, neighborhoods, or even entire towns. This helps you see how the new method works in a real-world setting.

Imagine you want to see if a new anti-bullying program really works. Instead of selecting individual students, you'd introduce the program to a whole school or maybe even several schools, and then compare the results to schools without the program.

Cluster Randomized Design Pros

Why use Cluster Randomized Design? Well, sometimes it's just not practical to assign conditions at the individual level. For example, you can't really have half a school following a new reading program while the other half sticks with the old one; that would be way too confusing! Cluster Randomization helps get around this problem by treating each "cluster" as its own mini-experiment.

Cluster Randomized Design Cons

There's a downside, too. Because entire groups are assigned to each condition, there's a risk that the groups might be different in some important way that the researchers didn't account for. That's like having one sports team that's full of veterans playing against a team of rookies; the match wouldn't be fair.

Cluster Randomized Design Uses

A famous example is the research conducted to test the effectiveness of different public health interventions, like vaccination programs. Researchers might roll out a vaccination program in one community but not in another, then compare the rates of disease in both.

In our metaphorical research team, if True Experimental Design is the quarterback, Longitudinal Design is the wise elder, Factorial Design is the strategist, Cross-Sectional Design is the speedster, Correlational Design is the scout, Meta-Analysis is the coach, Non-Experimental Design is the journalist, Repeated Measures Design is the time traveler, and Crossover Design is the utility player, then Cluster Randomized Design is the team captain—always looking out for the group as a whole.

13) Mixed-Methods Design

Say hello to Mixed-Methods Design, the all-rounder or the "Renaissance player" of our research team.

Mixed-Methods Design uses a blend of both qualitative and quantitative methods to get a more complete picture, just like a Renaissance person who's good at lots of different things. It's like being good at both offense and defense in a sport; you've got all your bases covered!

Mixed-Methods Design is a fairly new kid on the block, becoming more popular in the late 20th and early 21st centuries as researchers began to see the value in using multiple approaches to tackle complex questions. It's the Swiss Army knife in our research toolkit, combining the best parts of other designs to be more versatile.

Here's how it could work: Imagine you're studying the effects of a new educational app on students' math skills. You might use quantitative methods like tests and grades to measure how much the students improve—that's the 'numbers part.'

But you also want to know how the students feel about math now, or why they think they got better or worse. For that, you could conduct interviews or have students fill out journals—that's the 'story part.'

Mixed-Methods Design Pros

So, what's the scoop on Mixed-Methods Design? The strength is its versatility and depth; you're not just getting numbers or stories, you're getting both, which gives a fuller picture.

Mixed-Methods Design Cons

But, it's also more challenging. Imagine trying to play two sports at the same time! You have to be skilled in different research methods and know how to combine them effectively.

Mixed-Methods Design Uses

A high-profile example of Mixed-Methods Design is research on climate change. Scientists use numbers and data to show temperature changes (quantitative), but they also interview people to understand how these changes are affecting communities (qualitative).

In our team of experimental designs, if True Experimental Design is the quarterback, Longitudinal Design is the wise elder, Factorial Design is the strategist, Cross-Sectional Design is the speedster, Correlational Design is the scout, Meta-Analysis is the coach, Non-Experimental Design is the journalist, Repeated Measures Design is the time traveler, Crossover Design is the utility player, and Cluster Randomized Design is the team captain, then Mixed-Methods Design is the Renaissance player—skilled in multiple areas and able to bring them all together for a winning strategy.

14) Multivariate Design

Now, let's turn our attention to Multivariate Design, the multitasker of the research world.

If our lineup of research designs were like players on a basketball court, Multivariate Design would be the player dribbling, passing, and shooting all at once. This design doesn't just look at one or two things; it looks at several variables simultaneously to see how they interact and affect each other.

Multivariate Design is like baking a cake with many ingredients. Instead of just looking at how flour affects the cake, you also consider sugar, eggs, and milk all at once. This way, you understand how everything works together to make the cake taste good or bad.

Multivariate Design has been a go-to method in psychology, economics, and social sciences since the latter half of the 20th century. With the advent of computers and advanced statistical software, analyzing multiple variables at once became a lot easier, and Multivariate Design soared in popularity.

Multivariate Design Pros

So, what's the benefit of using Multivariate Design? Its power lies in its complexity. By studying multiple variables at the same time, you can get a really rich, detailed understanding of what's going on.

Multivariate Design Cons

But that complexity can also be a drawback. With so many variables, it can be tough to tell which ones are really making a difference and which ones are just along for the ride.

Multivariate Design Uses

Imagine you're a coach trying to figure out the best strategy to win games. You wouldn't just look at how many points your star player scores; you'd also consider assists, rebounds, turnovers, and maybe even how loud the crowd is. A Multivariate Design would help you understand how all these factors work together to determine whether you win or lose.

A well-known example of Multivariate Design is in market research. Companies often use this approach to figure out how different factors—like price, packaging, and advertising—affect sales. By studying multiple variables at once, they can find the best combination to boost profits.

In our metaphorical research team, if True Experimental Design is the quarterback, Longitudinal Design is the wise elder, Factorial Design is the strategist, Cross-Sectional Design is the speedster, Correlational Design is the scout, Meta-Analysis is the coach, Non-Experimental Design is the journalist, Repeated Measures Design is the time traveler, Crossover Design is the utility player, Cluster Randomized Design is the team captain, and Mixed-Methods Design is the Renaissance player, then Multivariate Design is the multitasker—juggling many variables at once to get a fuller picture of what's happening.

15) Pretest-Posttest Design

Let's introduce Pretest-Posttest Design, the "Before and After" superstar of our research team. You've probably seen those before-and-after pictures in ads for weight loss programs or home renovations, right?

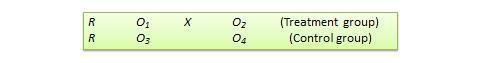

Well, this design is like that, but for science! Pretest-Posttest Design checks out what things are like before the experiment starts and then compares that to what things are like after the experiment ends.

This design is one of the classics, a staple in research for decades across various fields like psychology, education, and healthcare. It's so simple and straightforward that it has stayed popular for a long time.

In Pretest-Posttest Design, you measure your subject's behavior or condition before you introduce any changes—that's your "before" or "pretest." Then you do your experiment, and after it's done, you measure the same thing again—that's your "after" or "posttest."

Pretest-Posttest Design Pros

What makes Pretest-Posttest Design special? It's pretty easy to understand and doesn't require fancy statistics.

Pretest-Posttest Design Cons

But there are some pitfalls. For example, what if the kids in our math example get better at multiplication just because they're older or because they've taken the test before? That would make it hard to tell if the program is really effective or not.

Pretest-Posttest Design Uses

Let's say you're a teacher and you want to know if a new math program helps kids get better at multiplication. First, you'd give all the kids a multiplication test—that's your pretest. Then you'd teach them using the new math program. At the end, you'd give them the same test again—that's your posttest. If the kids do better on the second test, you might conclude that the program works.

One famous use of Pretest-Posttest Design is in evaluating the effectiveness of driver's education courses. Researchers will measure people's driving skills before and after the course to see if they've improved.

16) Solomon Four-Group Design

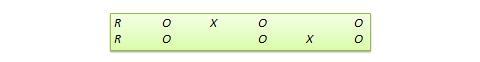

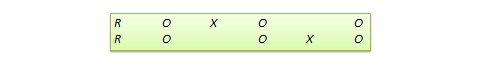

Next up is the Solomon Four-Group Design, the "chess master" of our research team. This design is all about strategy and careful planning. Named after Richard L. Solomon who introduced it in the 1940s, this method tries to correct some of the weaknesses in simpler designs, like the Pretest-Posttest Design.

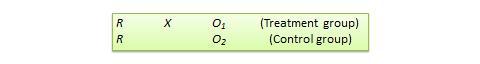

Here's how it rolls: The Solomon Four-Group Design uses four different groups to test a hypothesis. Two groups get a pretest, then one of them receives the treatment or intervention, and both get a posttest. The other two groups skip the pretest, and only one of them receives the treatment before they both get a posttest.

Sound complicated? It's like playing 4D chess; you're thinking several moves ahead!

Solomon Four-Group Design Pros

What's the pro and con of the Solomon Four-Group Design? On the plus side, it provides really robust results because it accounts for so many variables.

Solomon Four-Group Design Cons

The downside? It's a lot of work and requires a lot of participants, making it more time-consuming and costly.

Solomon Four-Group Design Uses

Let's say you want to figure out if a new way of teaching history helps students remember facts better. Two classes take a history quiz (pretest), then one class uses the new teaching method while the other sticks with the old way. Both classes take another quiz afterward (posttest).

Meanwhile, two more classes skip the initial quiz, and then one uses the new method before both take the final quiz. Comparing all four groups will give you a much clearer picture of whether the new teaching method works and whether the pretest itself affects the outcome.

The Solomon Four-Group Design is less commonly used than simpler designs but is highly respected for its ability to control for more variables. It's a favorite in educational and psychological research where you really want to dig deep and figure out what's actually causing changes.

17) Adaptive Designs

Now, let's talk about Adaptive Designs, the chameleons of the experimental world.

Imagine you're a detective, and halfway through solving a case, you find a clue that changes everything. You wouldn't just stick to your old plan; you'd adapt and change your approach, right? That's exactly what Adaptive Designs allow researchers to do.

In an Adaptive Design, researchers can make changes to the study as it's happening, based on early results. In a traditional study, once you set your plan, you stick to it from start to finish.

Adaptive Design Pros

This method is particularly useful in fast-paced or high-stakes situations, like developing a new vaccine in the middle of a pandemic. The ability to adapt can save both time and resources, and more importantly, it can save lives by getting effective treatments out faster.

Adaptive Design Cons

But Adaptive Designs aren't without their drawbacks. They can be very complex to plan and carry out, and there's always a risk that the changes made during the study could introduce bias or errors.

Adaptive Design Uses

Adaptive Designs are most often seen in clinical trials, particularly in the medical and pharmaceutical fields.

For instance, if a new drug is showing really promising results, the study might be adjusted to give more participants the new treatment instead of a placebo. Or if one dose level is showing bad side effects, it might be dropped from the study.

The best part is, these changes are pre-planned. Researchers lay out in advance what changes might be made and under what conditions, which helps keep everything scientific and above board.

In terms of applications, besides their heavy usage in medical and pharmaceutical research, Adaptive Designs are also becoming increasingly popular in software testing and market research. In these fields, being able to quickly adjust to early results can give companies a significant advantage.

Adaptive Designs are like the agile startups of the research world—quick to pivot, keen to learn from ongoing results, and focused on rapid, efficient progress. However, they require a great deal of expertise and careful planning to ensure that the adaptability doesn't compromise the integrity of the research.

18) Bayesian Designs

Next, let's dive into Bayesian Designs, the data detectives of the research universe. Named after Thomas Bayes, an 18th-century statistician and minister, this design doesn't just look at what's happening now; it also takes into account what's happened before.

Imagine if you were a detective who not only looked at the evidence in front of you but also used your past cases to make better guesses about your current one. That's the essence of Bayesian Designs.

Bayesian Designs are like detective work in science. As you gather more clues (or data), you update your best guess on what's really happening. This way, your experiment gets smarter as it goes along.

In the world of research, Bayesian Designs are most notably used in areas where you have some prior knowledge that can inform your current study. For example, if earlier research shows that a certain type of medicine usually works well for a specific illness, a Bayesian Design would include that information when studying a new group of patients with the same illness.

Bayesian Design Pros

One of the major advantages of Bayesian Designs is their efficiency. Because they use existing data to inform the current experiment, often fewer resources are needed to reach a reliable conclusion.

Bayesian Design Cons

However, they can be quite complicated to set up and require a deep understanding of both statistics and the subject matter at hand.

Bayesian Design Uses

Bayesian Designs are highly valued in medical research, finance, environmental science, and even in Internet search algorithms. Their ability to continually update and refine hypotheses based on new evidence makes them particularly useful in fields where data is constantly evolving and where quick, informed decisions are crucial.

Here's a real-world example: In the development of personalized medicine, where treatments are tailored to individual patients, Bayesian Designs are invaluable. If a treatment has been effective for patients with similar genetics or symptoms in the past, a Bayesian approach can use that data to predict how well it might work for a new patient.

This type of design is also increasingly popular in machine learning and artificial intelligence. In these fields, Bayesian Designs help algorithms "learn" from past data to make better predictions or decisions in new situations. It's like teaching a computer to be a detective that gets better and better at solving puzzles the more puzzles it sees.

19) Covariate Adaptive Randomization

Now let's turn our attention to Covariate Adaptive Randomization, which you can think of as the "matchmaker" of experimental designs.

Picture a soccer coach trying to create the most balanced teams for a friendly match. They wouldn't just randomly assign players; they'd take into account each player's skills, experience, and other traits.

Covariate Adaptive Randomization is all about creating the most evenly matched groups possible for an experiment.

In traditional randomization, participants are allocated to different groups purely by chance. This is a pretty fair way to do things, but it can sometimes lead to unbalanced groups.

Imagine if all the professional-level players ended up on one soccer team and all the beginners on another; that wouldn't be a very informative match! Covariate Adaptive Randomization fixes this by using important traits or characteristics (called "covariates") to guide the randomization process.

Covariate Adaptive Randomization Pros

The benefits of this design are pretty clear: it aims for balance and fairness, making the final results more trustworthy.

Covariate Adaptive Randomization Cons

But it's not perfect. It can be complex to implement and requires a deep understanding of which characteristics are most important to balance.

Covariate Adaptive Randomization Uses

This design is particularly useful in medical trials. Let's say researchers are testing a new medication for high blood pressure. Participants might have different ages, weights, or pre-existing conditions that could affect the results.

Covariate Adaptive Randomization would make sure that each treatment group has a similar mix of these characteristics, making the results more reliable and easier to interpret.

In practical terms, this design is often seen in clinical trials for new drugs or therapies, but its principles are also applicable in fields like psychology, education, and social sciences.

For instance, in educational research, it might be used to ensure that classrooms being compared have similar distributions of students in terms of academic ability, socioeconomic status, and other factors.

Covariate Adaptive Randomization is like the wise elder of the group, ensuring that everyone has an equal opportunity to show their true capabilities, thereby making the collective results as reliable as possible.

20) Stepped Wedge Design

Let's now focus on the Stepped Wedge Design, a thoughtful and cautious member of the experimental design family.

Imagine you're trying out a new gardening technique, but you're not sure how well it will work. You decide to apply it to one section of your garden first, watch how it performs, and then gradually extend the technique to other sections. This way, you get to see its effects over time and across different conditions. That's basically how Stepped Wedge Design works.

In a Stepped Wedge Design, all participants or clusters start off in the control group, and then, at different times, they 'step' over to the intervention or treatment group. This creates a wedge-like pattern over time where more and more participants receive the treatment as the study progresses. It's like rolling out a new policy in phases, monitoring its impact at each stage before extending it to more people.

Stepped Wedge Design Pros

The Stepped Wedge Design offers several advantages. Firstly, it allows for the study of interventions that are expected to do more good than harm, which makes it ethically appealing.

Secondly, it's useful when resources are limited and it's not feasible to roll out a new treatment to everyone at once. Lastly, because everyone eventually receives the treatment, it can be easier to get buy-in from participants or organizations involved in the study.

Stepped Wedge Design Cons

However, this design can be complex to analyze because it has to account for both the time factor and the changing conditions in each 'step' of the wedge. And like any study where participants know they're receiving an intervention, there's the potential for the results to be influenced by the placebo effect or other biases.

Stepped Wedge Design Uses

This design is particularly useful in health and social care research. For instance, if a hospital wants to implement a new hygiene protocol, it might start in one department, assess its impact, and then roll it out to other departments over time. This allows the hospital to adjust and refine the new protocol based on real-world data before it's fully implemented.

In terms of applications, Stepped Wedge Designs are commonly used in public health initiatives, organizational changes in healthcare settings, and social policy trials. They are particularly useful in situations where an intervention is being rolled out gradually and it's important to understand its impacts at each stage.

21) Sequential Design

Next up is Sequential Design, the dynamic and flexible member of our experimental design family.

Imagine you're playing a video game where you can choose different paths. If you take one path and find a treasure chest, you might decide to continue in that direction. If you hit a dead end, you might backtrack and try a different route. Sequential Design operates in a similar fashion, allowing researchers to make decisions at different stages based on what they've learned so far.

In a Sequential Design, the experiment is broken down into smaller parts, or "sequences." After each sequence, researchers pause to look at the data they've collected. Based on those findings, they then decide whether to stop the experiment because they've got enough information, or to continue and perhaps even modify the next sequence.

Sequential Design Pros

This allows for a more efficient use of resources, as you're only continuing with the experiment if the data suggests it's worth doing so.

One of the great things about Sequential Design is its efficiency. Because you're making data-driven decisions along the way, you can often reach conclusions more quickly and with fewer resources.

Sequential Design Cons

However, it requires careful planning and expertise to ensure that these "stop or go" decisions are made correctly and without bias.

Sequential Design Uses

In terms of its applications, besides healthcare and medicine, Sequential Design is also popular in quality control in manufacturing, environmental monitoring, and financial modeling. In these areas, being able to make quick decisions based on incoming data can be a big advantage.

This design is often used in clinical trials involving new medications or treatments. For example, if early results show that a new drug has significant side effects, the trial can be stopped before more people are exposed to it.

On the flip side, if the drug is showing promising results, the trial might be expanded to include more participants or to extend the testing period.

Think of Sequential Design as the nimble athlete of experimental designs, capable of quick pivots and adjustments to reach the finish line in the most effective way possible. But just like an athlete needs a good coach, this design requires expert oversight to make sure it stays on the right track.

22) Field Experiments

Last but certainly not least, let's explore Field Experiments—the adventurers of the experimental design world.

Picture a scientist leaving the controlled environment of a lab to test a theory in the real world, like a biologist studying animals in their natural habitat or a social scientist observing people in a real community. These are Field Experiments, and they're all about getting out there and gathering data in real-world settings.

Field Experiments embrace the messiness of the real world, unlike laboratory experiments, where everything is controlled down to the smallest detail. This makes them both exciting and challenging.

Field Experiment Pros

On one hand, the results often give us a better understanding of how things work outside the lab.

While Field Experiments offer real-world relevance, they come with challenges like controlling for outside factors and the ethical considerations of intervening in people's lives without their knowledge.

Field Experiment Cons

On the other hand, the lack of control can make it harder to tell exactly what's causing what. Yet, despite these challenges, they remain a valuable tool for researchers who want to understand how theories play out in the real world.

Field Experiment Uses

Let's say a school wants to improve student performance. In a Field Experiment, they might change the school's daily schedule for one semester and keep track of how students perform compared to another school where the schedule remained the same.

Because the study is happening in a real school with real students, the results could be very useful for understanding how the change might work in other schools. But since it's the real world, lots of other factors—like changes in teachers or even the weather—could affect the results.

Field Experiments are widely used in economics, psychology, education, and public policy. For example, you might have heard of the famous "Broken Windows" experiment in the 1980s that looked at how small signs of disorder, like broken windows or graffiti, could encourage more serious crime in neighborhoods. This experiment had a big impact on how cities think about crime prevention.

From the foundational concepts of control groups and independent variables to the sophisticated layouts like Covariate Adaptive Randomization and Sequential Design, it's clear that the realm of experimental design is as varied as it is fascinating.

We've seen that each design has its own special talents, ideal for specific situations. Some designs, like the Classic Controlled Experiment, are like reliable old friends you can always count on.

Others, like Sequential Design, are flexible and adaptable, making quick changes based on what they learn. And let's not forget the adventurous Field Experiments, which take us out of the lab and into the real world to discover things we might not see otherwise.

Choosing the right experimental design is like picking the right tool for the job. The method you choose can make a big difference in how reliable your results are and how much people will trust what you've discovered. And as we've learned, there's a design to suit just about every question, every problem, and every curiosity.

So the next time you read about a new discovery in medicine, psychology, or any other field, you'll have a better understanding of the thought and planning that went into figuring things out. Experimental design is more than just a set of rules; it's a structured way to explore the unknown and answer questions that can change the world.

Related posts:

- Experimental Psychologist Career (Salary + Duties + Interviews)

- 40+ Famous Psychologists (Images + Biographies)

- 11+ Psychology Experiment Ideas (Goals + Methods)

- The Little Albert Experiment

- 41+ White Collar Job Examples (Salary + Path)

Reference this article:

About The Author

Free Personality Test

Free Memory Test

Free IQ Test

PracticalPie.com is a participant in the Amazon Associates Program. As an Amazon Associate we earn from qualifying purchases.

Follow Us On:

Youtube Facebook Instagram X/Twitter

Psychology Resources

Developmental

Personality

Relationships

Psychologists

Serial Killers

Psychology Tests

Personality Quiz

Memory Test

Depression test

Type A/B Personality Test

© PracticalPsychology. All rights reserved

Privacy Policy | Terms of Use

Experimental Research Design — 6 mistakes you should never make!

Since school days’ students perform scientific experiments that provide results that define and prove the laws and theorems in science. These experiments are laid on a strong foundation of experimental research designs.

An experimental research design helps researchers execute their research objectives with more clarity and transparency.

In this article, we will not only discuss the key aspects of experimental research designs but also the issues to avoid and problems to resolve while designing your research study.

Table of Contents

What Is Experimental Research Design?

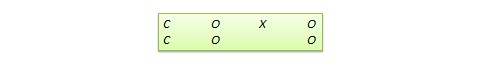

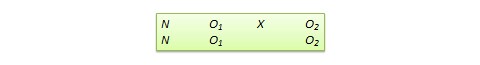

Experimental research design is a framework of protocols and procedures created to conduct experimental research with a scientific approach using two sets of variables. Herein, the first set of variables acts as a constant, used to measure the differences of the second set. The best example of experimental research methods is quantitative research .

Experimental research helps a researcher gather the necessary data for making better research decisions and determining the facts of a research study.

When Can a Researcher Conduct Experimental Research?

A researcher can conduct experimental research in the following situations —

- When time is an important factor in establishing a relationship between the cause and effect.

- When there is an invariable or never-changing behavior between the cause and effect.

- Finally, when the researcher wishes to understand the importance of the cause and effect.

Importance of Experimental Research Design

To publish significant results, choosing a quality research design forms the foundation to build the research study. Moreover, effective research design helps establish quality decision-making procedures, structures the research to lead to easier data analysis, and addresses the main research question. Therefore, it is essential to cater undivided attention and time to create an experimental research design before beginning the practical experiment.

By creating a research design, a researcher is also giving oneself time to organize the research, set up relevant boundaries for the study, and increase the reliability of the results. Through all these efforts, one could also avoid inconclusive results. If any part of the research design is flawed, it will reflect on the quality of the results derived.

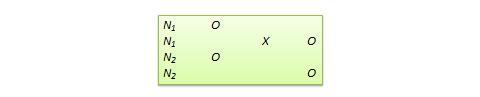

Types of Experimental Research Designs

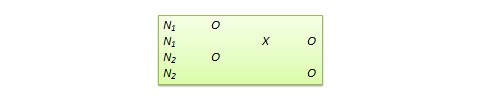

Based on the methods used to collect data in experimental studies, the experimental research designs are of three primary types:

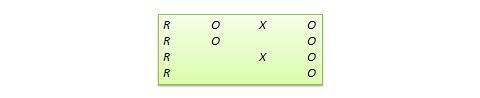

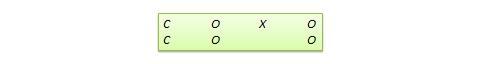

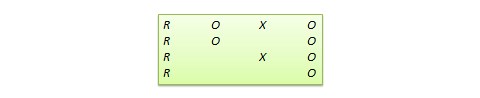

1. Pre-experimental Research Design

A research study could conduct pre-experimental research design when a group or many groups are under observation after implementing factors of cause and effect of the research. The pre-experimental design will help researchers understand whether further investigation is necessary for the groups under observation.

Pre-experimental research is of three types —

- One-shot Case Study Research Design

- One-group Pretest-posttest Research Design

- Static-group Comparison

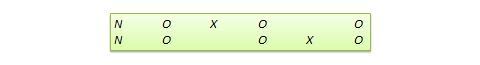

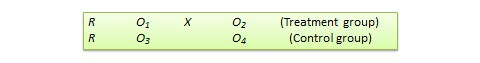

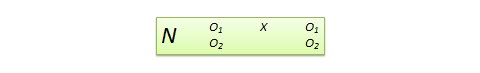

2. True Experimental Research Design

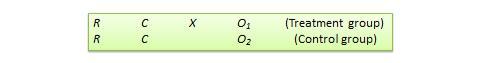

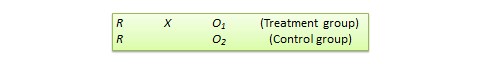

A true experimental research design relies on statistical analysis to prove or disprove a researcher’s hypothesis. It is one of the most accurate forms of research because it provides specific scientific evidence. Furthermore, out of all the types of experimental designs, only a true experimental design can establish a cause-effect relationship within a group. However, in a true experiment, a researcher must satisfy these three factors —

- There is a control group that is not subjected to changes and an experimental group that will experience the changed variables

- A variable that can be manipulated by the researcher

- Random distribution of the variables

This type of experimental research is commonly observed in the physical sciences.

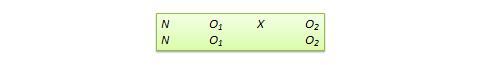

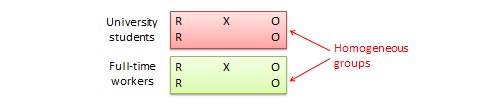

3. Quasi-experimental Research Design

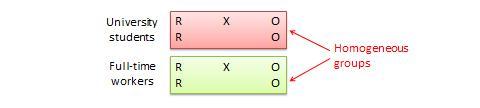

The word “Quasi” means similarity. A quasi-experimental design is similar to a true experimental design. However, the difference between the two is the assignment of the control group. In this research design, an independent variable is manipulated, but the participants of a group are not randomly assigned. This type of research design is used in field settings where random assignment is either irrelevant or not required.

The classification of the research subjects, conditions, or groups determines the type of research design to be used.

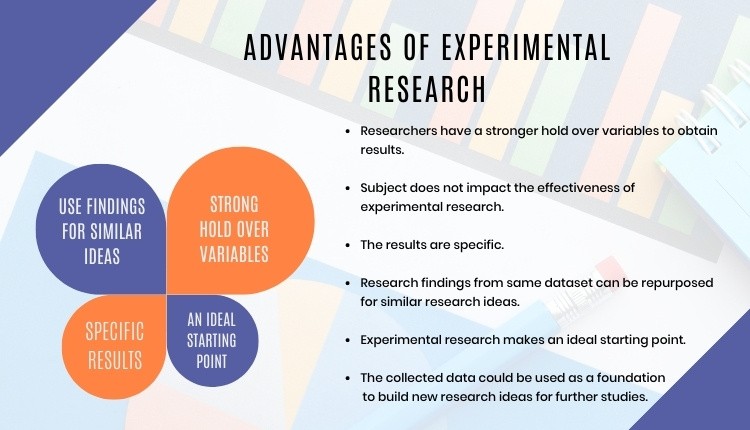

Advantages of Experimental Research

Experimental research allows you to test your idea in a controlled environment before taking the research to clinical trials. Moreover, it provides the best method to test your theory because of the following advantages:

- Researchers have firm control over variables to obtain results.

- The subject does not impact the effectiveness of experimental research. Anyone can implement it for research purposes.

- The results are specific.

- Post results analysis, research findings from the same dataset can be repurposed for similar research ideas.

- Researchers can identify the cause and effect of the hypothesis and further analyze this relationship to determine in-depth ideas.

- Experimental research makes an ideal starting point. The collected data could be used as a foundation to build new research ideas for further studies.

6 Mistakes to Avoid While Designing Your Research

There is no order to this list, and any one of these issues can seriously compromise the quality of your research. You could refer to the list as a checklist of what to avoid while designing your research.

1. Invalid Theoretical Framework

Usually, researchers miss out on checking if their hypothesis is logical to be tested. If your research design does not have basic assumptions or postulates, then it is fundamentally flawed and you need to rework on your research framework.

2. Inadequate Literature Study

Without a comprehensive research literature review , it is difficult to identify and fill the knowledge and information gaps. Furthermore, you need to clearly state how your research will contribute to the research field, either by adding value to the pertinent literature or challenging previous findings and assumptions.

3. Insufficient or Incorrect Statistical Analysis

Statistical results are one of the most trusted scientific evidence. The ultimate goal of a research experiment is to gain valid and sustainable evidence. Therefore, incorrect statistical analysis could affect the quality of any quantitative research.

4. Undefined Research Problem

This is one of the most basic aspects of research design. The research problem statement must be clear and to do that, you must set the framework for the development of research questions that address the core problems.

5. Research Limitations

Every study has some type of limitations . You should anticipate and incorporate those limitations into your conclusion, as well as the basic research design. Include a statement in your manuscript about any perceived limitations, and how you considered them while designing your experiment and drawing the conclusion.

6. Ethical Implications

The most important yet less talked about topic is the ethical issue. Your research design must include ways to minimize any risk for your participants and also address the research problem or question at hand. If you cannot manage the ethical norms along with your research study, your research objectives and validity could be questioned.

Experimental Research Design Example

In an experimental design, a researcher gathers plant samples and then randomly assigns half the samples to photosynthesize in sunlight and the other half to be kept in a dark box without sunlight, while controlling all the other variables (nutrients, water, soil, etc.)

By comparing their outcomes in biochemical tests, the researcher can confirm that the changes in the plants were due to the sunlight and not the other variables.

Experimental research is often the final form of a study conducted in the research process which is considered to provide conclusive and specific results. But it is not meant for every research. It involves a lot of resources, time, and money and is not easy to conduct, unless a foundation of research is built. Yet it is widely used in research institutes and commercial industries, for its most conclusive results in the scientific approach.

Have you worked on research designs? How was your experience creating an experimental design? What difficulties did you face? Do write to us or comment below and share your insights on experimental research designs!

Frequently Asked Questions

Randomization is important in an experimental research because it ensures unbiased results of the experiment. It also measures the cause-effect relationship on a particular group of interest.

Experimental research design lay the foundation of a research and structures the research to establish quality decision making process.

There are 3 types of experimental research designs. These are pre-experimental research design, true experimental research design, and quasi experimental research design.

The difference between an experimental and a quasi-experimental design are: 1. The assignment of the control group in quasi experimental research is non-random, unlike true experimental design, which is randomly assigned. 2. Experimental research group always has a control group; on the other hand, it may not be always present in quasi experimental research.

Experimental research establishes a cause-effect relationship by testing a theory or hypothesis using experimental groups or control variables. In contrast, descriptive research describes a study or a topic by defining the variables under it and answering the questions related to the same.

good and valuable

Very very good

Good presentation.

Rate this article Cancel Reply

Your email address will not be published.

Enago Academy's Most Popular Articles

![experimental research design sample study What is Academic Integrity and How to Uphold it [FREE CHECKLIST]](https://www.enago.com/academy/wp-content/uploads/2024/05/FeatureImages-59-210x136.png)

Ensuring Academic Integrity and Transparency in Academic Research: A comprehensive checklist for researchers

Academic integrity is the foundation upon which the credibility and value of scientific findings are…

- Publishing Research

- Reporting Research

How to Optimize Your Research Process: A step-by-step guide

For researchers across disciplines, the path to uncovering novel findings and insights is often filled…

- Industry News

- Trending Now

Breaking Barriers: Sony and Nature unveil “Women in Technology Award”

Sony Group Corporation and the prestigious scientific journal Nature have collaborated to launch the inaugural…

Achieving Research Excellence: Checklist for good research practices

Academia is built on the foundation of trustworthy and high-quality research, supported by the pillars…

- Promoting Research

Plain Language Summary — Communicating your research to bridge the academic-lay gap

Science can be complex, but does that mean it should not be accessible to the…

Choosing the Right Analytical Approach: Thematic analysis vs. content analysis for…

Comparing Cross Sectional and Longitudinal Studies: 5 steps for choosing the right…

Research Recommendations – Guiding policy-makers for evidence-based decision making

Sign-up to read more

Subscribe for free to get unrestricted access to all our resources on research writing and academic publishing including:

- 2000+ blog articles

- 50+ Webinars

- 10+ Expert podcasts

- 50+ Infographics

- 10+ Checklists

- Research Guides

We hate spam too. We promise to protect your privacy and never spam you.

I am looking for Editing/ Proofreading services for my manuscript Tentative date of next journal submission:

As a researcher, what do you consider most when choosing an image manipulation detector?