Natural Language Processing

Introduction.

Natural Language Processing (NLP) is one of the hottest areas of artificial intelligence (AI) thanks to applications like text generators that compose coherent essays, chatbots that fool people into thinking they’re sentient, and text-to-image programs that produce photorealistic images of anything you can describe. Recent years have brought a revolution in the ability of computers to understand human languages, programming languages, and even biological and chemical sequences, such as DNA and protein structures, that resemble language. The latest AI models are unlocking these areas to analyze the meanings of input text and generate meaningful, expressive output.

What is Natural Language Processing (NLP)?

Natural language processing (NLP) is the discipline of building machines that can manipulate human language — or data that resembles human language — in the way that it is written, spoken, and organized. It evolved from computational linguistics, which uses computer science to understand the principles of language, but rather than developing theoretical frameworks, NLP is an engineering discipline that seeks to build technology to accomplish useful tasks. NLP can be divided into two overlapping subfields: natural language understanding (NLU), which focuses on semantic analysis or determining the intended meaning of text, and natural language generation (NLG), which focuses on text generation by a machine. NLP is separate from — but often used in conjunction with — speech recognition, which seeks to parse spoken language into words, turning sound into text and vice versa.

Why Does Natural Language Processing (NLP) Matter?

NLP is an integral part of everyday life, and it is becoming more so as language technology is applied to diverse fields like retailing (for instance, in customer service chatbots) and medicine (interpreting or summarizing electronic health records). Conversational agents such as Amazon’s Alexa and Apple’s Siri use NLP to listen to user queries and find answers. The most sophisticated of these systems, such as GPT-3 (which was recently opened for commercial applications), can generate fluent prose on a wide variety of topics as well as power chatbots that are capable of holding coherent conversations. Google uses NLP to improve its search engine results, and social networks like Facebook use it to detect and filter hate speech.

NLP is growing increasingly sophisticated, yet much work remains to be done. Current systems are prone to bias and incoherence, and occasionally behave erratically. Despite the challenges, machine learning engineers have many opportunities to apply NLP in ways that are ever more central to a functioning society.

What is Natural Language Processing (NLP) Used For?

NLP is used for a wide variety of language-related tasks, including answering questions, classifying text in a variety of ways, and conversing with users.

Here are 11 tasks that can be solved by NLP:

- Sentiment analysis is the process of classifying the emotional intent of text. Generally, the input to a sentiment classification model is a piece of text, and the output is the probability that the sentiment expressed is positive, negative, or neutral. Typically, this probability is computed from hand-generated features, word n-grams, or TF-IDF features, or by deep learning models that capture sequential long- and short-term dependencies. Sentiment analysis is used to classify customer reviews on various online platforms as well as for niche applications like identifying signs of mental illness in online comments.

- Toxicity classification is a branch of sentiment analysis where the aim is not just to classify hostile intent but also to classify particular categories such as threats, insults, obscenities, and hatred towards certain identities. The input to such a model is text, and the output is generally the probability of each class of toxicity. Toxicity classification models can be used to moderate and improve online conversations by silencing offensive comments, detecting hate speech, or scanning documents for defamation.

- Machine translation automates translation between different languages. The input to such a model is text in a specified source language, and the output is the text in a specified target language. Google Translate is perhaps the most famous mainstream application. Such models are used to improve communication between people on social-media platforms such as Facebook or Skype. Effective approaches to machine translation can distinguish between words with similar meanings. Some systems also perform language identification; that is, classifying text as being in one language or another.

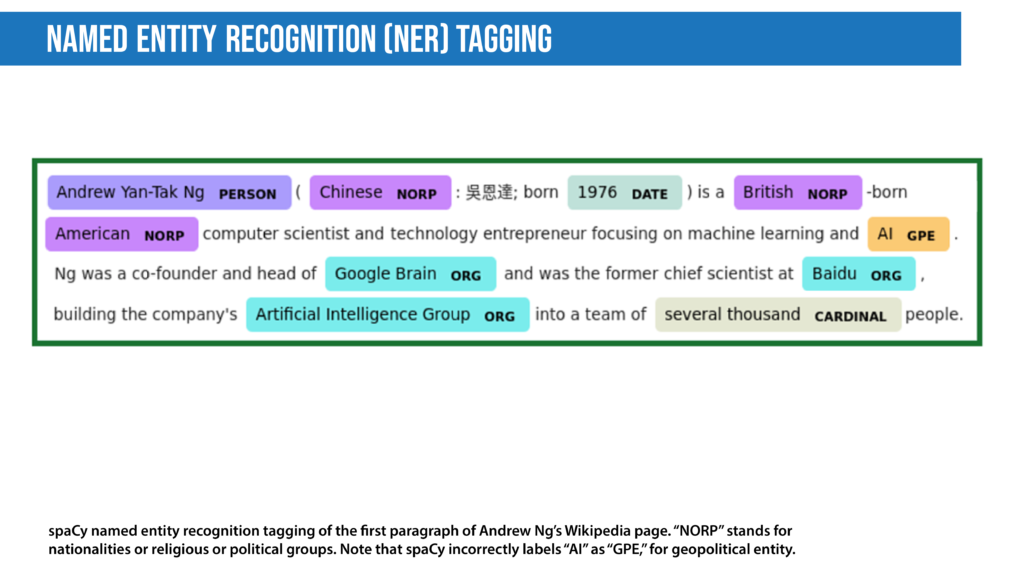

- Named entity recognition aims to extract entities in a piece of text into predefined categories such as personal names, organizations, locations, and quantities. The input to such a model is generally text, and the output is the various named entities along with their start and end positions. Named entity recognition is useful in applications such as summarizing news articles and combating disinformation. For example, given the sentence “Ada Lovelace was born in London,” a named entity recognition model could label “Ada Lovelace” as a person and “London” as a location.

- Spam detection is a prevalent binary classification problem in NLP, where the purpose is to classify emails as either spam or not. Spam detectors take as input an email text along with various other subtexts like title and sender’s name. They aim to output the probability that the mail is spam. Email providers like Gmail use such models to provide a better user experience by detecting unsolicited and unwanted emails and moving them to a designated spam folder.

- Grammatical error correction models encode grammatical rules to correct the grammar within text. This is viewed mainly as a sequence-to-sequence task, where a model is trained on an ungrammatical sentence as input and a correct sentence as output. Online grammar checkers like Grammarly and word-processing systems like Microsoft Word use such systems to provide a better writing experience to their customers. Schools also use them to grade student essays.

- Topic modeling is an unsupervised text mining task that takes a corpus of documents and discovers abstract topics within that corpus. The input to a topic model is a collection of documents, and the output is a list of topics that defines words for each topic as well as assignment proportions of each topic in a document. Latent Dirichlet Allocation (LDA), one of the most popular topic modeling techniques, tries to view a document as a collection of topics and a topic as a collection of words. Topic modeling is being used commercially to help lawyers find evidence in legal documents.

- Autocomplete predicts what word comes next, and autocomplete systems of varying complexity are used in chat applications like WhatsApp. Google uses autocomplete to predict search queries. One of the most famous models for autocomplete is GPT-2, which has been used to write articles, song lyrics, and much more.

- Database query: We have a database of questions and answers, and we would like a user to query it using natural language.

- Conversation generation: These chatbots can simulate dialogue with a human partner. Some are capable of engaging in wide-ranging conversations. A high-profile example is Google’s LaMDA, which provided such human-like answers to questions that one of its developers was convinced that it had feelings.

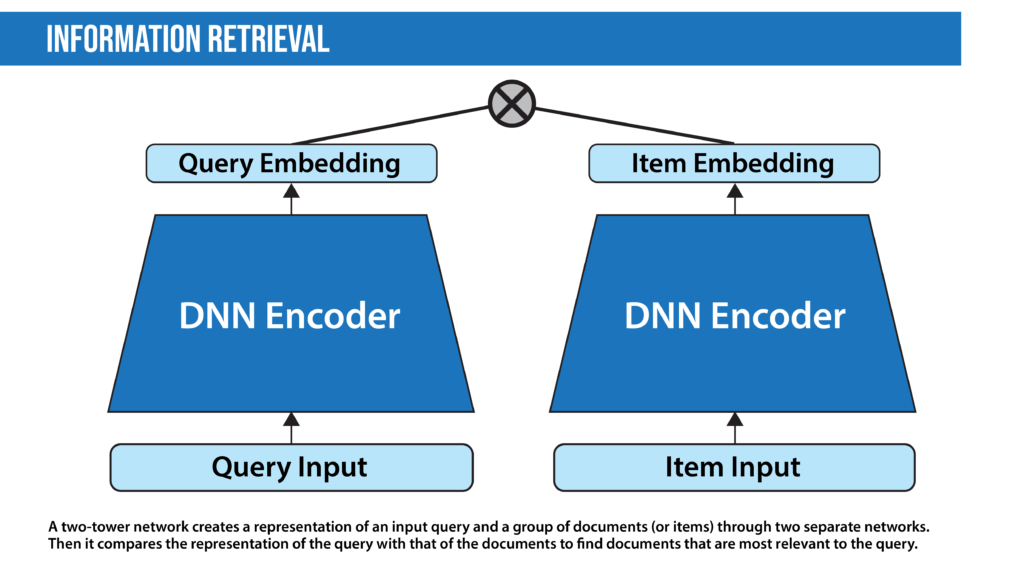

- Information retrieval finds the documents that are most relevant to a query. This is a problem every search and recommendation system faces. The goal is not to answer a particular query but to retrieve, from a collection of documents that may be numbered in the millions, a set that is most relevant to the query. Document retrieval systems mainly execute two processes: indexing and matching. In most modern systems, indexing is done by a vector space model through Two-Tower Networks, while matching is done using similarity or distance scores. Google recently integrated its search function with a multimodal information retrieval model that works with text, image, and video data.

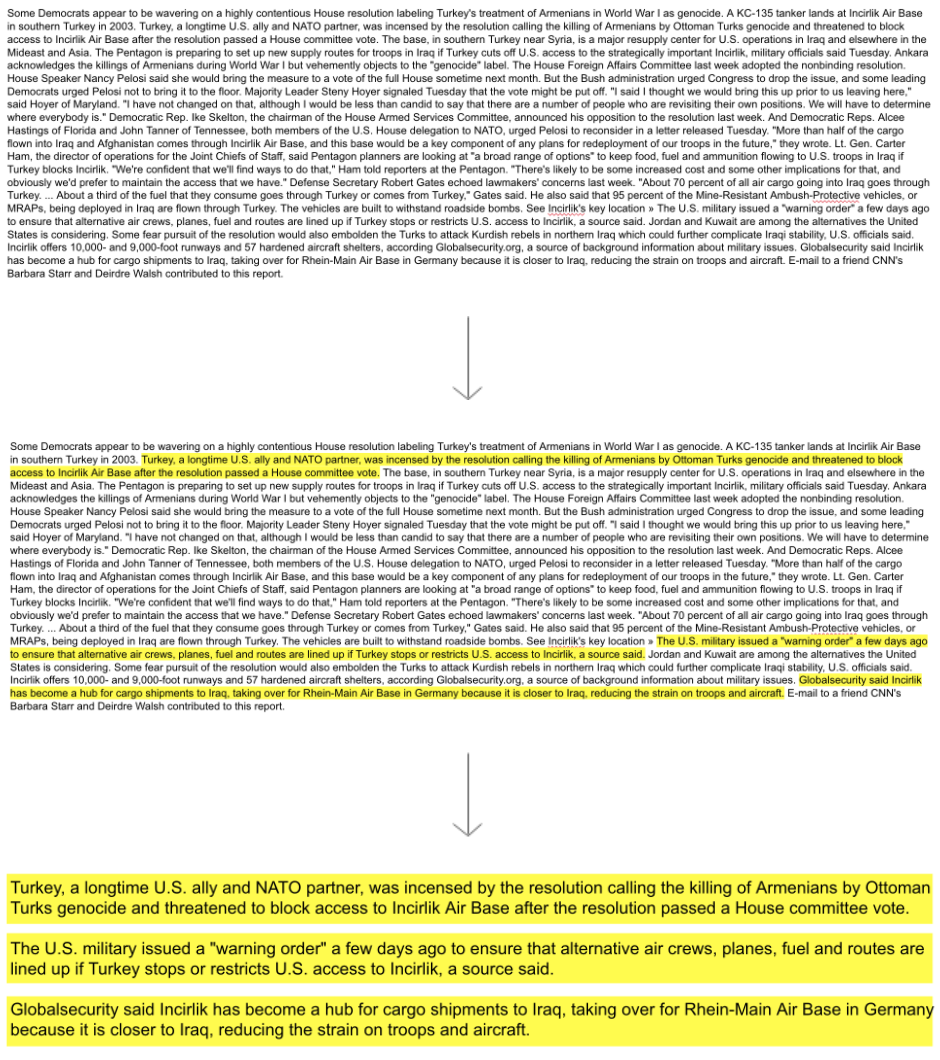

- Extractive summarization focuses on extracting the most important sentences from a long text and combining these to form a summary. Typically, extractive summarization scores each sentence in an input text and then selects several sentences to form the summary.

- Abstractive summarization produces a summary by paraphrasing. This is similar to writing an abstract, which may include words and sentences that are not present in the original text. Abstractive summarization is usually modeled as a sequence-to-sequence task, where the input is a long-form text and the output is a summary.

- Multiple choice: The multiple-choice question problem is composed of a question and a set of possible answers. The learning task is to pick the correct answer.

- Open domain: In open-domain question answering, the model provides answers to questions in natural language without any options provided, often by querying a large number of texts.
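The extractive summarization item above describes scoring each sentence and then selecting a few of them. A minimal sketch of that idea follows. It assumes a simple word-frequency score, which is only one possible heuristic, and the function names `split_sentences` and `summarize` are hypothetical.

```python
import re
from collections import Counter

def split_sentences(text):
    # Naive split after ., ! or ? followed by whitespace.
    # Real segmenters also have to handle abbreviations.
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]

def summarize(text, num_sentences=1):
    sentences = split_sentences(text)
    # Word frequencies over the whole text act as a crude importance signal.
    freq = Counter(re.findall(r"[a-z']+", text.lower()))

    def score(sentence):
        tokens = re.findall(r"[a-z']+", sentence.lower())
        # Average frequency, so long sentences are not favored automatically.
        return sum(freq[t] for t in tokens) / max(len(tokens), 1)

    ranked = sorted(range(len(sentences)),
                    key=lambda i: score(sentences[i]), reverse=True)
    # Keep the selected sentences in their original order.
    chosen = sorted(ranked[:num_sentences])
    return " ".join(sentences[i] for i in chosen)
```

Production systems usually replace the frequency score with a learned model, but the score-then-select structure stays the same.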

How Does Natural Language Processing (NLP) Work?

NLP models work by finding relationships between the constituent parts of language — for example, the letters, words, and sentences found in a text dataset. NLP architectures use various methods for data preprocessing, feature extraction, and modeling. Some of these processes are:

- Stemming and lemmatization: Stemming is an informal process of converting words to their base forms using heuristic rules. For example, “university,” “universities,” and “university’s” might all be mapped to the base univers. (One limitation of this approach is that “universe” may also be mapped to univers, even though universe and university don’t have a close semantic relationship.) Lemmatization is a more formal way to find roots by analyzing a word’s morphology using vocabulary from a dictionary. Lemmatization is provided by libraries like spaCy and NLTK, and NLTK also provides stemmers.

- Sentence segmentation breaks a large piece of text into linguistically meaningful sentence units. This may seem straightforward in languages like English, where the end of a sentence is marked by a period, but it is not trivial. A period can mark an abbreviation as well as terminate a sentence, and in that case the period should be part of the abbreviation token itself. The process becomes even more complex in languages, such as ancient Chinese, that don’t have a delimiter that marks the end of a sentence.

- Stop word removal aims to remove the most commonly occurring words that don’t add much information to the text, for example, “the,” “a,” and “an.”

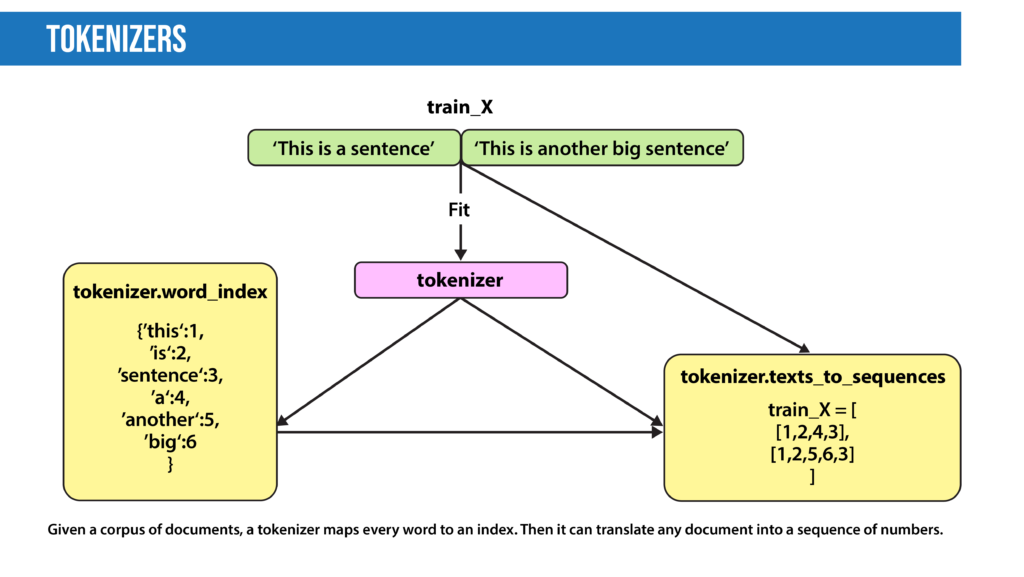

- Tokenization splits text into individual words and word fragments. The result generally consists of a word index and tokenized text in which words may be represented as numerical tokens for use in various deep learning methods. A method that instructs language models to ignore unimportant tokens can improve efficiency.

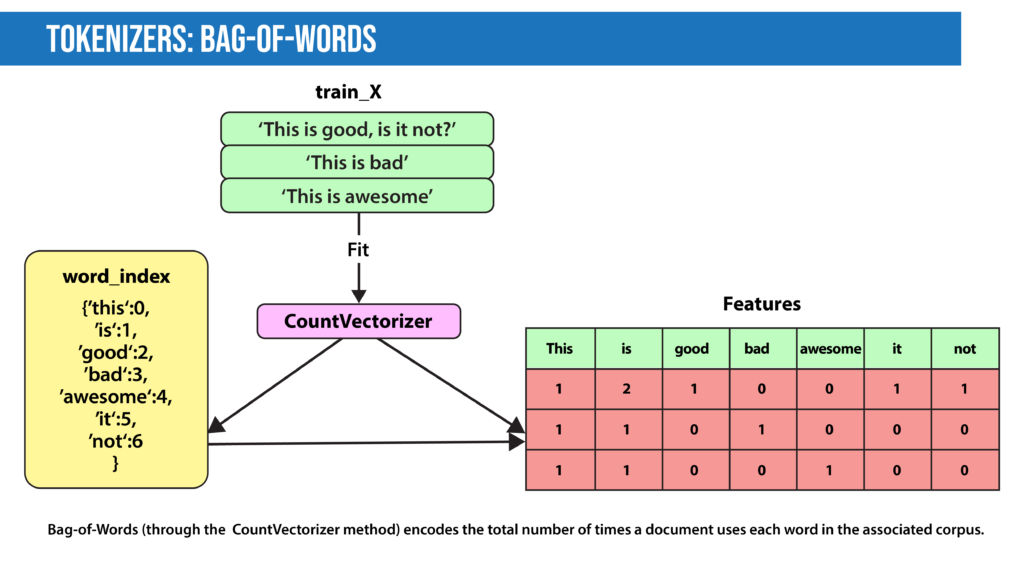
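The tokenization and stop word removal steps described above can be sketched in a few lines. This is an illustration only: the regular expression, the tiny stop word list, and the function names are assumptions, and index 0 is reserved for padding by convention rather than by anything in the text.

```python
import re

# A tiny illustrative stop word list; real lists are much longer.
STOP_WORDS = {"the", "a", "an", "is", "of", "and"}

def tokenize(text):
    # Lowercase the text and keep runs of letters, digits, and apostrophes.
    return re.findall(r"[a-z0-9']+", text.lower())

def remove_stop_words(tokens):
    return [t for t in tokens if t not in STOP_WORDS]

def build_word_index(token_lists):
    # Assign each new word the next integer id, starting at 1.
    index = {}
    for tokens in token_lists:
        for token in tokens:
            if token not in index:
                index[token] = len(index) + 1
    return index

def encode(tokens, word_index):
    # Replace each known word with its numerical token.
    return [word_index[t] for t in tokens if t in word_index]
```

Modern language models typically use subword tokenizers instead of whole words, but the idea of mapping text to integer ids is the same.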

- Bag-of-Words: Bag-of-Words counts the number of times each word or n-gram (combination of n words) appears in a document. For example, below, the Bag-of-Words model creates a numerical representation of the dataset based on how many of each word in the word_index occur in the document.

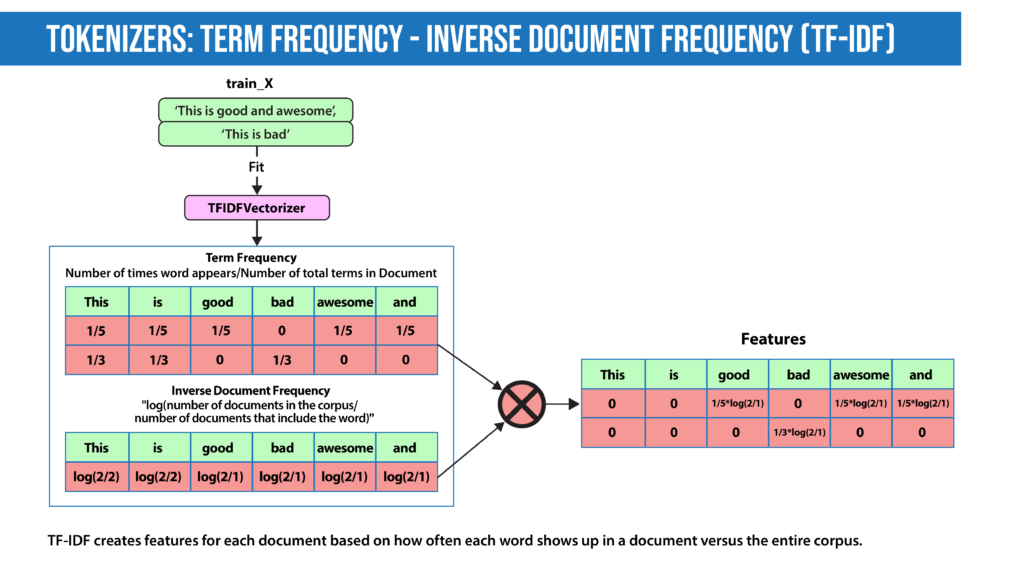
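As a rough sketch of the Bag-of-Words representation described above, the following counts single words only (not n-grams) and splits on whitespace. The function name `bag_of_words` and the alphabetical vocabulary order are assumptions made for the example.

```python
from collections import Counter

def bag_of_words(documents):
    tokenized = [doc.lower().split() for doc in documents]
    # The vocabulary is every distinct word, sorted for a stable column order.
    vocabulary = sorted({word for tokens in tokenized for word in tokens})
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        # One count per vocabulary word; words absent from the document get 0.
        vectors.append([counts[word] for word in vocabulary])
    return vocabulary, vectors
```

Each document becomes a vector whose length equals the vocabulary size, which is why Bag-of-Words features are usually sparse for real corpora.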

- Term Frequency: How important is the word in the document?

TF(word in a document) = number of occurrences of that word in the document / number of words in the document

- Inverse Document Frequency: How important is the term in the whole corpus?

IDF(word in a corpus) = log(number of documents in the corpus / number of documents that include the word)

A word is important if it occurs many times in a document. But that creates a problem: words like “a” and “the” appear often, so their TF scores will always be high. We resolve this issue by using Inverse Document Frequency, which is high if the word is rare and low if the word is common across the corpus. The TF-IDF score of a term is the product of TF and IDF.
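The TF and IDF formulas above translate almost directly into code. The sketch below follows them as written, using the natural logarithm and whitespace tokenization, both of which are assumptions. Library implementations often add smoothing terms, so their numbers may differ.

```python
import math
from collections import Counter

def tf_idf(documents):
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    # Document frequency: in how many documents does each word appear?
    doc_freq = Counter()
    for tokens in tokenized:
        doc_freq.update(set(tokens))
    scores = []
    for tokens in tokenized:
        counts = Counter(tokens)
        scores.append({
            # TF = count / document length, IDF = log(N / document frequency)
            word: (count / len(tokens)) * math.log(n_docs / doc_freq[word])
            for word, count in counts.items()
        })
    return scores
```

A word that appears in every document gets an IDF of log(1) = 0, so its TF-IDF score is 0 no matter how often it occurs.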

- Word2Vec, introduced in 2013, uses a vanilla neural network to learn high-dimensional word embeddings from raw text. It comes in two variations: Skip-Gram, in which we try to predict surrounding words given a target word, and Continuous Bag-of-Words (CBOW), which tries to predict the target word from surrounding words. Once the final layer is discarded after training, these models take a word as input and output a word embedding that can be used as an input to many NLP tasks. Embeddings from Word2Vec capture context: if particular words appear in similar contexts, their embeddings will be similar.

- GloVe is similar to Word2Vec in that it also learns word embeddings, but it does so by using matrix factorization techniques rather than neural learning. The GloVe model builds a matrix based on the global word-to-word co-occurrence counts.

- Numerical features extracted by the techniques described above can be fed into various models depending on the task at hand. For example, for classification, the output from the TF-IDF vectorizer could be provided to logistic regression, naive Bayes, decision trees, or gradient boosted trees. Or, for named entity recognition, we can use hidden Markov models along with n-grams.

- Deep neural networks typically work without using extracted features, although we can still use TF-IDF or Bag-of-Words features as an input.

- Language Models: In very basic terms, the objective of a language model is to predict the next word when given a stream of input words. Probabilistic models that use the Markov assumption are one example:

P(W_n | W_1, …, W_{n-1}) ≈ P(W_n | W_{n-1})

That is, the probability of the next word is assumed to depend only on the word immediately before it. Deep learning is also used to create such language models. Deep-learning models take as input a word embedding and, at each time step, return the probability distribution of the next word as the probability for every word in the dictionary. Pre-trained language models learn the structure of a particular language by processing a large corpus, such as Wikipedia. They can then be fine-tuned for a particular task. For instance, BERT has been fine-tuned for tasks ranging from fact-checking to writing headlines.
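To make the Markov assumption concrete, here is a minimal sketch of a bigram language model that estimates the probability of the next word from counts of adjacent word pairs. The `<s>` and `</s>` boundary markers and the function names are assumptions for illustration, and no smoothing is applied.

```python
from collections import Counter, defaultdict

def train_bigram_model(sentences):
    # counts[previous][next] = how often `next` directly follows `previous`
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for previous, following in zip(tokens, tokens[1:]):
            counts[previous][following] += 1
    return counts

def next_word_distribution(counts, previous):
    # Normalize the counts into P(next word | previous word).
    following = counts.get(previous, {})
    total = sum(following.values())
    return {word: count / total for word, count in following.items()}
```

A neural language model serves the same purpose, returning a distribution over the next word, but it conditions on much more context than the single previous word.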

Top Natural Language Processing (NLP) Techniques

Most of the NLP tasks discussed above can be modeled by a dozen or so general techniques. It’s helpful to think of these techniques in two categories: Traditional machine learning methods and deep learning methods.

Traditional machine learning NLP techniques:

- Logistic regression is a supervised classification algorithm that aims to predict the probability that an event will occur based on some input. In NLP, logistic regression models can be applied to solve problems such as sentiment analysis, spam detection, and toxicity classification.

- Naive Bayes is a supervised classification algorithm that finds the conditional probability distribution P(label | text) using the following Bayes formula:

P(label | text) = P(label) * P(text | label) / P(text)

and predicts the label for which this probability is highest. The naive assumption in the Naive Bayes model is that the individual words are independent of one another given the label. Thus:

P(text | label) = P(word_1 | label) * P(word_2 | label) * … * P(word_n | label)

In NLP, such statistical methods can be applied to solve problems such as spam detection or finding bugs in software code.
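The two Naive Bayes formulas above can be sketched as follows. This is a minimal illustration, not a reference implementation: it works in log space to avoid numerical underflow, drops P(text) because it is the same for every label, and adds add-one smoothing so that unseen words do not zero out a label. The smoothing and the function names are assumptions that go beyond the text.

```python
import math
from collections import Counter, defaultdict

def train_naive_bayes(examples):
    """examples is a list of (text, label) pairs."""
    label_counts = Counter()
    word_counts = defaultdict(Counter)
    vocabulary = set()
    for text, label in examples:
        label_counts[label] += 1
        for word in text.lower().split():
            word_counts[label][word] += 1
            vocabulary.add(word)
    return label_counts, word_counts, vocabulary

def predict(text, model):
    label_counts, word_counts, vocabulary = model
    total_examples = sum(label_counts.values())
    best_label, best_score = None, float("-inf")
    for label in label_counts:
        # log P(label)
        score = math.log(label_counts[label] / total_examples)
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            # log P(word | label) with add-one smoothing (an assumption)
            numerator = word_counts[label][word] + 1
            score += math.log(numerator / (total_words + len(vocabulary)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label
```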

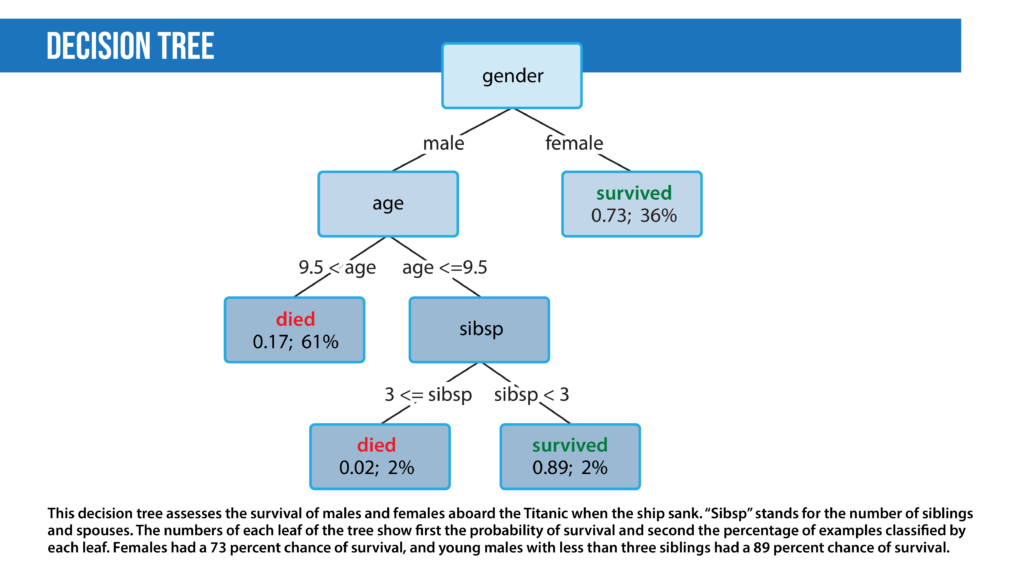

- Decision trees are a class of supervised classification models that split the dataset based on different features to maximize information gain in those splits.

- Latent Dirichlet Allocation (LDA) is used for topic modeling. LDA tries to view a document as a collection of topics and a topic as a collection of words. LDA is a statistical approach. The intuition behind it is that we can describe any topic using only a small set of words from the corpus.

- Hidden Markov models: Markov models are probabilistic models that decide the next state of a system based on the current state. For example, in NLP, we might suggest the next word based on the previous word. We can model this as a Markov model where we might find the transition probabilities of going from word1 to word2, that is, P(word2|word1). Then we can use a product of these transition probabilities to find the probability of a sentence. The hidden Markov model (HMM) is a probabilistic modeling technique that introduces a hidden state to the Markov model. A hidden state is a property of the data that isn’t directly observed. HMMs are used for part-of-speech (POS) tagging, where the words of a sentence are the observed states and the POS tags are the hidden states. The HMM adds a concept called emission probability: the probability of an observation given a hidden state. In the prior example, this is the probability of a word, given its POS tag. HMMs assume that this probability can be reversed: Given a sentence, we can calculate the part-of-speech tag for each word based on both how likely the word is to have a certain part-of-speech tag and the probability that a particular part-of-speech tag follows the part-of-speech tag assigned to the previous word. In practice, this is solved using the Viterbi algorithm.
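The hidden Markov model item above mentions the Viterbi algorithm. A minimal sketch of it for part-of-speech tagging is shown below. The tag set and all probabilities are made-up toy values chosen only for illustration, and the function and variable names are hypothetical.

```python
def viterbi(words, tags, start_p, trans_p, emit_p):
    # best[i][tag] = (probability of the best tag path ending in `tag`
    #                 at position i, previous tag on that path)
    best = [{}]
    for tag in tags:
        best[0][tag] = (start_p[tag] * emit_p[tag].get(words[0], 0.0), None)
    for i in range(1, len(words)):
        best.append({})
        for tag in tags:
            # Extend every previous path with a transition and an emission.
            best[i][tag] = max(
                (best[i - 1][prev][0] * trans_p[prev][tag]
                 * emit_p[tag].get(words[i], 0.0), prev)
                for prev in tags
            )
    # Backtrack from the most probable final tag.
    last = max(tags, key=lambda t: best[-1][t][0])
    path = [last]
    for i in range(len(words) - 1, 0, -1):
        path.append(best[i][path[-1]][1])
    return list(reversed(path))

# Toy model with invented probabilities.
TAGS = ["NOUN", "VERB"]
START_P = {"NOUN": 0.8, "VERB": 0.2}
TRANS_P = {"NOUN": {"NOUN": 0.3, "VERB": 0.7},
           "VERB": {"NOUN": 0.6, "VERB": 0.4}}
EMIT_P = {"NOUN": {"dogs": 0.5, "cats": 0.4, "bark": 0.1},
          "VERB": {"bark": 0.7, "run": 0.3}}

print(viterbi(["dogs", "bark"], TAGS, START_P, TRANS_P, EMIT_P))
```

Real taggers work with log probabilities to avoid underflow on long sentences. Plain products are used here to stay close to the description in the text.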

Deep learning NLP techniques:

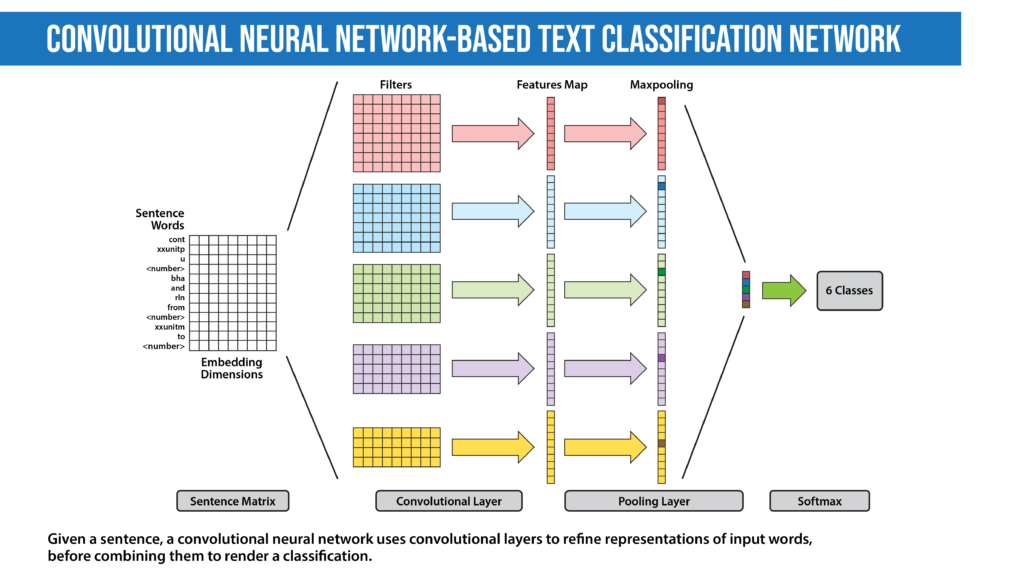

- Convolutional Neural Network (CNN): The idea of using a CNN to classify text was first presented in the paper “Convolutional Neural Networks for Sentence Classification” by Yoon Kim. The central intuition is to see a document as an image. However, instead of pixels, the input is sentences or documents represented as a matrix of words.

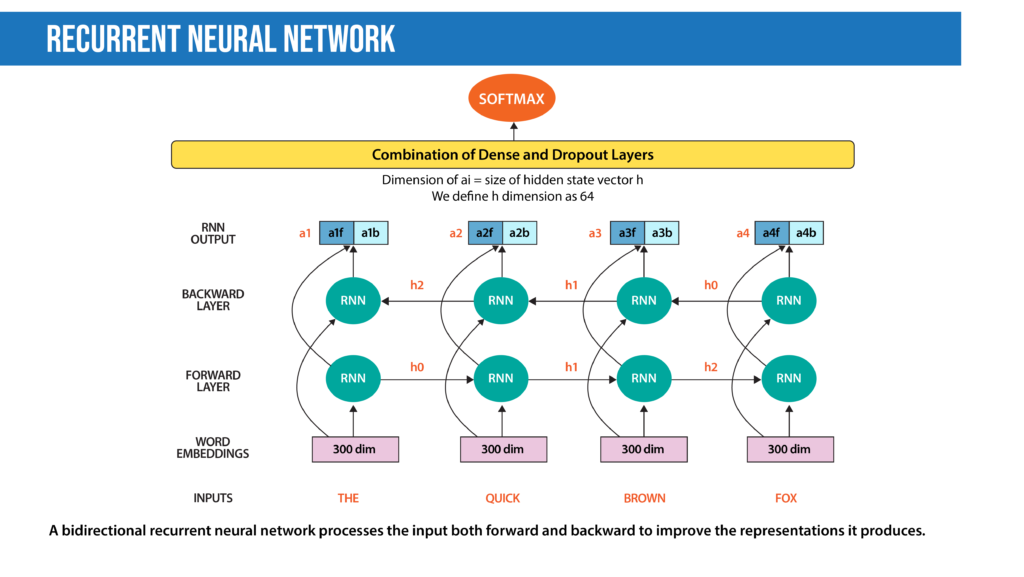

- Recurrent Neural Network (RNN): Many techniques for text classification that use deep learning process words in close proximity using n-grams or a window (CNNs). They can see “New York” as a single instance. However, they can’t capture the context provided by a particular text sequence. They don’t learn the sequential structure of the data, where every word is dependent on the previous word or a word in the previous sentence. RNNs remember previous information using hidden states and connect it to the current task. The architectures known as Gated Recurrent Unit (GRU) and long short-term memory (LSTM) are types of RNNs designed to remember information for an extended period. Moreover, the bidirectional LSTM/GRU keeps contextual information in both directions, which is helpful in text classification. RNNs have also been used to generate mathematical proofs and translate human thoughts into words.

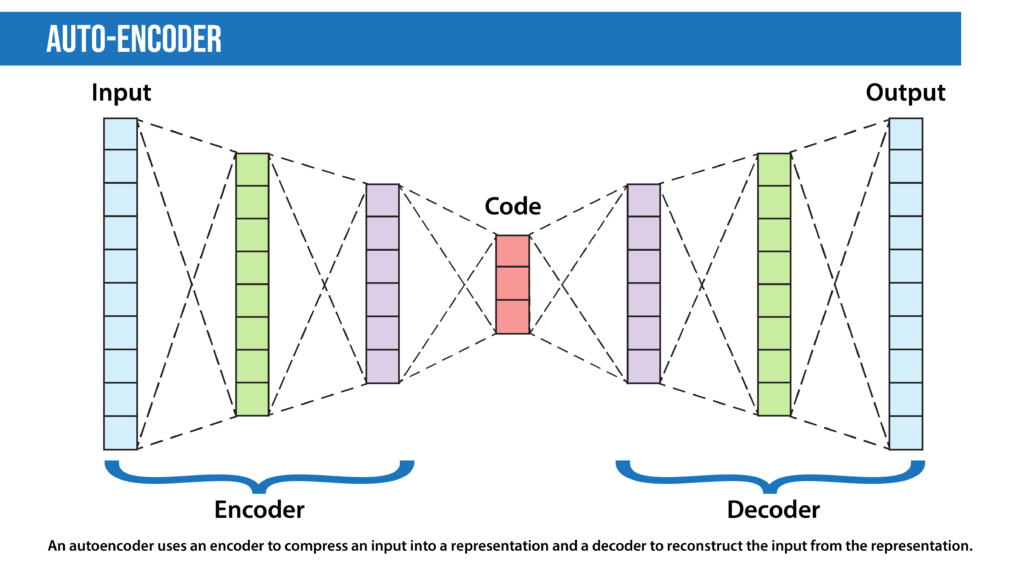

- Autoencoders are deep learning encoder-decoders that approximate a mapping from X to X, i.e., input=output. They first compress the input features into a lower-dimensional representation (sometimes called a latent code, latent vector, or latent representation) and learn to reconstruct the input. The representation vector can be used as input to a separate model, so this technique can be used for dimensionality reduction. Among specialists in many other fields, geneticists have applied autoencoders to spot mutations associated with diseases in amino acid sequences.

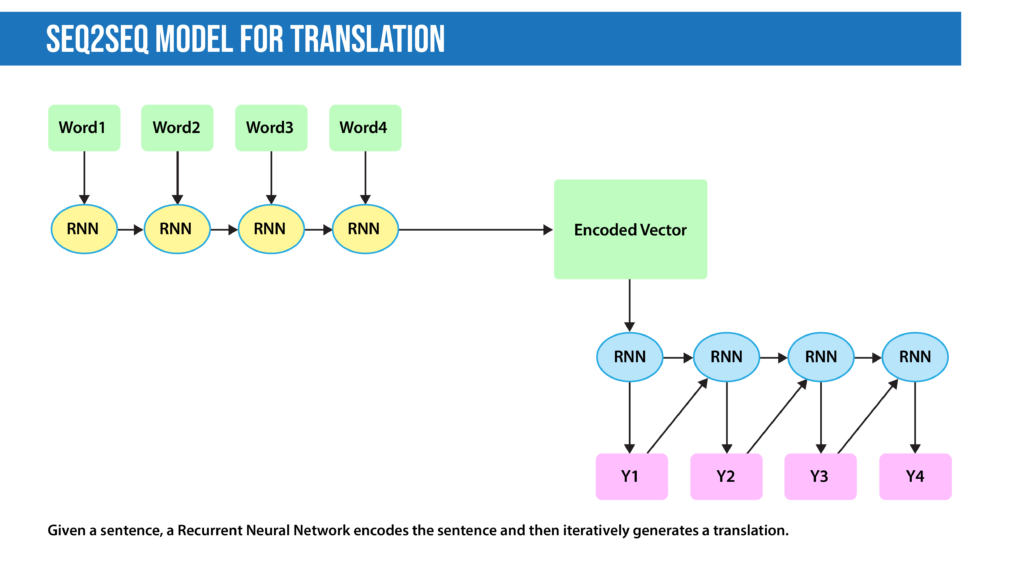

- Encoder-decoder sequence-to-sequence: The encoder-decoder seq2seq architecture is an adaptation of autoencoders specialized for translation, summarization, and similar tasks. The encoder encapsulates the information in a text into an encoded vector. Unlike an autoencoder, instead of reconstructing the input from the encoded vector, the decoder’s task is to generate a different desired output, like a translation or summary.

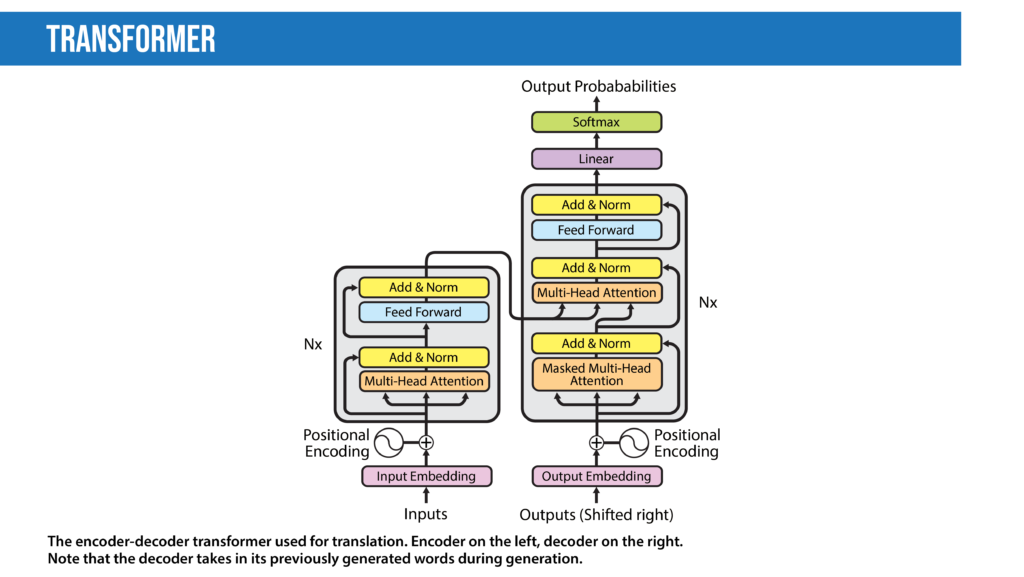

- Transformers: The transformer, a model architecture first described in the 2017 paper “Attention Is All You Need” (Vaswani, Shazeer, Parmar, et al.), forgoes recurrence and instead relies entirely on a self-attention mechanism to draw global dependencies between input and output. Since this mechanism processes all words at once (instead of one at a time), it reduces training time and inference cost compared to RNNs, especially because it is parallelizable. The transformer architecture has revolutionized NLP in recent years, leading to models including BLOOM, Jurassic-X, and Turing-NLG. It has also been successfully applied to a variety of different vision tasks, including making 3D images.
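The self-attention mechanism mentioned in the transformer item can be sketched as scaled dot-product attention. The version below is deliberately simplified and is an assumption-laden illustration: it uses plain Python lists, a single attention head, and no learned projection matrices, so the same vectors can be passed as queries, keys, and values.

```python
import math

def softmax(scores):
    # Subtract the maximum for numerical stability.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this position to every position, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs
```

Each query is handled independently of the others, which is what allows the computation to run in parallel across all positions in a sequence.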

Six Important Natural Language Processing (NLP) Models

Over the years, many NLP models have made waves within the AI community, and some have even made headlines in the mainstream news. The most famous of these have been chatbots and language models. Here are some of them:

- Eliza was developed in the mid-1960s to try to pass the Turing Test; that is, to fool people into thinking they’re conversing with another human being rather than a machine. Eliza used pattern matching and a series of rules without encoding the context of the language.

- Tay was a chatbot that Microsoft launched in 2016. It was supposed to tweet like a teen and learn from conversations with real users on Twitter. The bot adopted phrases from users who tweeted sexist and racist comments, and Microsoft deactivated it not long afterward. Tay illustrates some points made by the “Stochastic Parrots” paper, particularly the danger of not debiasing data.

- BERT and his Muppet friends: Many deep learning models for NLP are named after Muppet characters, including ELMo, BERT, Big BIRD, ERNIE, Kermit, Grover, RoBERTa, and Rosita. Most of these models are good at providing contextual embeddings and enhanced knowledge representation.

- Generative Pre-Trained Transformer 3 (GPT-3) is a 175 billion parameter model that can write original prose with human-equivalent fluency in response to an input prompt. The model is based on the transformer architecture. The previous version, GPT-2, is open source. Microsoft acquired an exclusive license to access GPT-3’s underlying model from its developer OpenAI, but other users can interact with it via an application programming interface (API). Several groups including EleutherAI and Meta have released open source interpretations of GPT-3.

- Language Model for Dialogue Applications (LaMDA) is a conversational chatbot developed by Google. LaMDA is a transformer-based model trained on dialogue rather than the usual web text. The system aims to provide sensible and specific responses to conversations. Google developer Blake Lemoine came to believe that LaMDA is sentient after detailed conversations with the AI about its rights and personhood. During one of these conversations, the AI changed Lemoine’s mind about Isaac Asimov’s third law of robotics. His claim was disputed by many observers and commentators. Subsequently, Google placed Lemoine on administrative leave for distributing proprietary information and ultimately fired him.

- Mixture of Experts (MoE): While most deep learning models use the same set of parameters to process every input, MoE models aim to provide different parameters for different inputs based on efficient routing algorithms to achieve higher performance. Switch Transformer is an example of the MoE approach that aims to reduce communication and computational costs.
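The routing idea behind Mixture of Experts can be illustrated with a toy example in which a router scores every expert and only the highest-scoring one processes the input. This is a simplified sketch in the spirit of top-1 routing, not the Switch Transformer implementation. The router weights, the experts, and the function names are all hypothetical.

```python
import math

def softmax(logits):
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def route_top1(x, router_weights, experts):
    # One router logit per expert: the dot product of the input
    # with that expert's row of router weights.
    logits = [sum(w * v for w, v in zip(row, x)) for row in router_weights]
    probs = softmax(logits)
    chosen = max(range(len(experts)), key=lambda i: probs[i])
    # Only the chosen expert runs; its output is scaled by the router probability.
    output = [probs[chosen] * y for y in experts[chosen](x)]
    return chosen, output
```

Because only one expert runs per input, the total number of parameters can grow without a matching increase in computation per input.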

Programming Languages, Libraries, And Frameworks For Natural Language Processing (NLP)

Many languages and libraries support NLP. Here are a few of the most useful.

- Natural Language Toolkit (NLTK) is one of the first NLP libraries written in Python. It provides easy-to-use interfaces to corpora and lexical resources such as WordNet. It also provides a suite of text-processing libraries for classification, tagging, stemming, parsing, and semantic reasoning.

- spaCy is one of the most versatile open source NLP libraries. It supports more than 66 languages. spaCy also provides pre-trained word vectors and implements many popular models like BERT. spaCy can be used for building production-ready systems for named entity recognition, part-of-speech tagging, dependency parsing, sentence segmentation, text classification, lemmatization, morphological analysis, entity linking, and so on.

- Deep Learning libraries: Popular deep learning libraries include TensorFlow and PyTorch, which make it easier to create models with features like automatic differentiation. These libraries are the most common tools for developing NLP models.

- Hugging Face offers open-source implementations and weights of over 135 state-of-the-art models. The repository enables easy customization and training of the models.

- Gensim provides vector space modeling and topic modeling algorithms.

- R: Many early NLP models were written in R, and R is still widely used by data scientists and statisticians. Libraries in R for NLP include TidyText, Weka, Word2Vec, SpaCyR, TensorFlow, and PyTorch.

- Many other languages including JavaScript, Java, and Julia have libraries that implement NLP methods.

Controversies Surrounding Natural Language Processing (NLP)

NLP has been at the center of a number of controversies. Some are centered directly on the models and their outputs, others on second-order concerns, such as who has access to these systems, and how training them impacts the natural world.

- Stochastic parrots: A 2021 paper titled “On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?” by Emily Bender, Timnit Gebru, Angelina McMillan-Major, and Margaret Mitchell examines how language models may repeat and amplify biases found in their training data. The authors point out that huge, uncurated datasets scraped from the web are bound to include social biases and other undesirable information, and models that are trained on them will absorb these flaws. They advocate greater care in curating and documenting datasets, evaluating a model’s potential impact prior to development, and encouraging research in directions other than designing ever-larger architectures to ingest ever-larger datasets.

- Coherence versus sentience: Recently, a Google engineer tasked with evaluating the LaMDA language model was so impressed by the quality of its chat output that he believed it to be sentient. The fallacy of attributing human-like intelligence to AI dates back to some of the earliest NLP experiments.

- Environmental impact: Large language models require a lot of energy during both training and inference. One study estimated that training a single large language model can emit five times as much carbon dioxide as a single automobile over its operational lifespan. Another study found that models consume even more energy during inference than training. As for solutions, researchers have proposed using cloud servers located in countries with lots of renewable energy as one way to offset this impact.

- High cost leaves out non-corporate researchers: The computational requirements needed to train or deploy large language models are too expensive for many small companies. Some experts worry that this could block many capable engineers from contributing to innovation in AI.

- Black box: When a deep learning model renders an output, it’s difficult or impossible to know why it generated that particular result. While traditional models like logistic regression enable engineers to examine the impact on the output of individual features, neural network methods in natural language processing are essentially black boxes. Such systems are said to be “not explainable,” since we can’t explain how they arrived at their output. An effective approach to achieve explainability is especially important in areas like banking, where regulators want to confirm that a natural language processing system doesn’t discriminate against some groups of people, and law enforcement, where models trained on historical data may perpetuate historical biases against certain groups.

- “Nonsense on stilts”: Writer Gary Marcus has criticized deep learning-based NLP for generating sophisticated language that misleads users into believing that natural language algorithms understand what they are saying and into assuming they are capable of more sophisticated reasoning than is currently possible.

How To Get Started In Natural Language Processing (NLP)

If you are just starting out, many excellent courses can help.

If you want to learn more about NLP, try reading research papers. Work through the papers that introduced the models and techniques described in this article. Most are easy to find on arxiv.org. You might also take a look at these resources:

- The Batch: A weekly newsletter that tells you what matters in AI. It’s the best way to keep up with developments in deep learning.

- NLP News: A newsletter from Sebastian Ruder, a research scientist at Google, focused on what’s new in NLP.

- Papers with Code: A web repository of machine learning research, tasks, benchmarks, and datasets.

We highly recommend learning to implement basic algorithms (linear and logistic regression, Naive Bayes, decision trees, and vanilla neural networks) in Python. The next step is to take an open-source implementation and adapt it to a new dataset or task.

NLP is one of the fastest-growing research domains in AI, with applications that involve tasks including translation, summarization, text generation, and sentiment analysis. Businesses use NLP to power a growing number of applications, both internal (like detecting insurance fraud, determining customer sentiment, and optimizing aircraft maintenance) and customer-facing (like Google Translate).

Aspiring NLP practitioners can begin by familiarizing themselves with foundational AI skills: performing basic mathematics, coding in Python, and using algorithms like decision trees, Naive Bayes, and logistic regression. Online courses can help you build your foundation. They can also help as you proceed into specialized topics. Specializing in NLP requires a working knowledge of things like neural networks, frameworks like PyTorch and TensorFlow, and various data preprocessing techniques. The transformer architecture, which has revolutionized the field since it was introduced in 2017, is an especially important architecture.

NLP is an exciting and rewarding discipline, and has potential to profoundly impact the world in many positive ways. Unfortunately, NLP is also the focus of several controversies, and understanding them is also part of being a responsible practitioner. For instance, researchers have found that models will parrot biased language found in their training data, whether it’s counterfactual, racist, or hateful. Moreover, sophisticated language models can be used to generate disinformation. A broader concern is that training large models produces substantial greenhouse gas emissions.

This page is only a brief overview of what NLP is all about. If you have an appetite for more, DeepLearning.AI offers courses for everyone in their NLP journey, from AI beginners to those who are ready to specialize. No matter your current level of expertise or aspirations, remember to keep learning!

The Power of Natural Language Processing

- Ross Gruetzemacher

How companies can use NLP to help with brainstorming, summarizing, and researching.

The conventional wisdom around AI has been that while computers have the edge over humans when it comes to data-driven decision making, it can’t compete on qualitative tasks. That, however, is changing. Natural language processing (NLP) tools have advanced rapidly and can help with writing, coding, and discipline-specific reasoning. Companies that want to make use of this new tech should focus on the following: 1) Identify text data assets and determine how the latest techniques can be leveraged to add value for your firm, 2) understand how you might leverage AI-based language technologies to make better decisions or reorganize your skilled labor, 3) begin incorporating new language-based AI tools for a variety of tasks to better understand their capabilities, and 4) don’t underestimate the transformative potential of AI.

Until recently, the conventional wisdom was that while AI was better than humans at data-driven decision making tasks, it was still inferior to humans for cognitive and creative ones. But in the past two years language-based AI has advanced by leaps and bounds, changing common notions of what this technology can do.

- Ross Gruetzemacher is an Assistant Professor of Business Analytics at the W. Frank Barton School of Business at Wichita State University. He is a consultant on AI strategy for organizations in the Bay Area and internationally, and he also works as a Senior Game Master on Intelligence Rising, a strategic role-play game for exploring AI futures.

Natural language processing, or NLP, combines computational linguistics—rule-based modeling of human language—with statistical and machine learning models to enable computers and digital devices to recognize, understand and generate text and speech.

A branch of artificial intelligence (AI), NLP lies at the heart of applications and devices that can

- translate text from one language to another

- respond to typed or spoken commands

- recognize or authenticate users based on voice

- summarize large volumes of text

- assess the intent or sentiment of text or speech

- generate text or graphics or other content on demand

often in real time. Today most people have interacted with NLP in the form of voice-operated GPS systems, digital assistants, speech-to-text dictation software, customer service chatbots, and other consumer conveniences. But NLP also plays a growing role in enterprise solutions that help streamline and automate business operations, increase employee productivity, and simplify mission-critical business processes.

Human language is filled with ambiguities that make it incredibly difficult to write software that accurately determines the intended meaning of text or voice data. Homonyms, homophones, sarcasm, idioms, metaphors, grammar and usage exceptions, variations in sentence structure: these are just a few of the irregularities of human language that take humans years to learn, but that programmers must teach natural language-driven applications to recognize and understand accurately from the start, if those applications are going to be useful.

Several NLP tasks break down human text and voice data in ways that help the computer make sense of what it's ingesting. Some of these tasks include the following:

- Speech recognition, also called speech-to-text, is the task of reliably converting voice data into text data. Speech recognition is required for any application that follows voice commands or answers spoken questions. What makes speech recognition especially challenging is the way people talk: quickly, slurring words together, with varying emphasis and intonation, in different accents, and often using incorrect grammar.

- Part of speech tagging, also called grammatical tagging, is the process of determining the part of speech of a particular word or piece of text based on its use and context. Part of speech tagging identifies ‘make’ as a verb in ‘I can make a paper plane,’ and as a noun in ‘What make of car do you own?’

- Word sense disambiguation is the selection of the meaning of a word with multiple meanings through a process of semantic analysis that determines the sense that fits best in the given context. For example, word sense disambiguation helps distinguish the meaning of the verb 'make' in ‘make the grade’ (achieve) vs. ‘make a bet’ (place).

- Named entity recognition, or NER, identifies words or phrases as useful entities. NER identifies ‘Kentucky’ as a location or ‘Fred’ as a man's name.

- Co-reference resolution is the task of identifying if and when two words refer to the same entity. The most common example is determining the person or object to which a certain pronoun refers (e.g., ‘she’ = ‘Mary’), but it can also involve identifying a metaphor or an idiom in the text (e.g., an instance in which 'bear' isn't an animal but a large hairy person).

- Sentiment analysis attempts to extract subjective qualities—attitudes, emotions, sarcasm, confusion, suspicion—from text.

- Natural language generation is sometimes described as the opposite of speech recognition or speech-to-text; it's the task of putting structured information into human language.
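Word sense disambiguation from the list above can be illustrated with a simplified, Lesk-style overlap heuristic: choose the sense whose description shares the most words with the surrounding context. This is only a sketch. The `SENSES` inventory and its glosses are hand-written assumptions made up for this example, not entries from a real dictionary, and production systems use far richer context models.

```python
import re

# Hypothetical, hand-written sense inventory for the verb "make".
SENSES = {
    "achieve": "reach or attain a goal standard grade or level",
    "place": "put down or stake money on a bet or wager",
}


def tokens(text):
    """Lowercase a string and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def disambiguate(context, senses=SENSES):
    """Return the sense whose gloss shares the most words with the context."""
    context_words = tokens(context)
    return max(senses, key=lambda s: len(tokens(senses[s]) & context_words))


print(disambiguate("She worked hard to make the grade"))
print(disambiguate("He wants to make a bet on the race"))
```

With these toy glosses, the first sentence resolves to the "achieve" sense and the second to the "place" sense, matching the two readings of 'make' described above.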

See the blog post “NLP vs. NLU vs. NLG: the differences between three natural language processing concepts” for a deeper look into how these concepts relate.

Python and the Natural Language Toolkit (NLTK)

The Python programming language provides a wide range of tools and libraries for attacking specific NLP tasks. Many of these are found in the Natural Language Toolkit, or NLTK, an open source collection of libraries, programs, and educational resources for building NLP programs.

The NLTK includes libraries for many of the NLP tasks listed above, plus libraries for subtasks, such as sentence parsing, word segmentation, stemming and lemmatization (methods of trimming words down to their roots), and tokenization (for breaking phrases, sentences, paragraphs and passages into tokens that help the computer better understand the text). It also includes libraries for implementing capabilities such as semantic reasoning, the ability to reach logical conclusions based on facts extracted from text.
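To make two of these subtasks concrete, here is a minimal sketch of tokenization and stemming in plain Python. It deliberately avoids NLTK's own API so that it runs without extra installs or data downloads. The function names and the `SUFFIXES` list are assumptions for illustration, and the suffix stripping is far cruder than the stemmers a toolkit such as NLTK provides.

```python
import re

# Illustrative suffix list only; real stemmers apply many more rules.
SUFFIXES = ("ing", "ed", "es", "s")


def tokenize(text):
    """Split text into word tokens and single punctuation tokens."""
    return re.findall(r"\w+|[^\w\s]", text)


def stem(word):
    """Strip the first matching suffix, keeping a stem of at least 3 letters."""
    word = word.lower()
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


print(tokenize("I can make a paper plane."))
print([stem(w) for w in ["jumping", "jumped", "boxes", "cats", "is"]])
```

The length check keeps very short words such as "is" intact, which is one of many small safeguards a real stemmer needs.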

Statistical NLP, machine learning, and deep learning

The earliest NLP applications were hand-coded, rules-based systems that could perform certain NLP tasks, but couldn't easily scale to accommodate a seemingly endless stream of exceptions or the increasing volumes of text and voice data.

Enter statistical NLP, which combines computer algorithms with machine learning and deep learning models to automatically extract, classify, and label elements of text and voice data and then assign a statistical likelihood to each possible meaning of those elements. Today, deep learning models and learning techniques based on convolutional neural networks (CNNs) and recurrent neural networks (RNNs) enable NLP systems that 'learn' as they work and extract ever more accurate meaning from huge volumes of raw, unstructured, and unlabeled text and voice data sets.
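The idea of assigning a statistical likelihood to each possible meaning can be sketched with simple counting. The example below estimates how likely the word 'make' is to be a verb or a noun given the word before it. The `OBSERVATIONS` corpus is a tiny, hypothetical hand-labelled sample invented for illustration, and add-one smoothing is just one common choice among several.

```python
from collections import Counter, defaultdict

# Hypothetical labelled data: (word preceding "make", tag of "make").
OBSERVATIONS = [
    ("can", "VERB"), ("to", "VERB"), ("will", "VERB"), ("can", "VERB"),
    ("what", "NOUN"), ("which", "NOUN"), ("what", "NOUN"), ("to", "VERB"),
]


def train(observations):
    """Count how often each tag follows each preceding word."""
    counts = defaultdict(Counter)
    for prev_word, tag in observations:
        counts[prev_word][tag] += 1
    return counts


def tag_probabilities(counts, prev_word, tags=("VERB", "NOUN"), alpha=1.0):
    """Add-alpha smoothed estimate of P(tag | previous word)."""
    seen = counts.get(prev_word, Counter())
    total = sum(seen.values()) + alpha * len(tags)
    return {tag: (seen[tag] + alpha) / total for tag in tags}


model = train(OBSERVATIONS)
print(tag_probabilities(model, "can"))   # "I can make ..."
print(tag_probabilities(model, "what"))  # "What make of car ..."
print(tag_probabilities(model, "the"))   # unseen context falls back to uniform
```

Smoothing matters here because a context that never appeared in the training data would otherwise give every tag a probability of zero.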

For a deeper dive into the nuances between these technologies and their learning approaches, see “AI vs. Machine Learning vs. Deep Learning vs. Neural Networks: What’s the Difference?”

Natural language processing is the driving force behind machine intelligence in many modern real-world applications. Here are a few examples:

- Spam detection: You may not think of spam detection as an NLP solution, but the best spam detection technologies use NLP's text classification capabilities to scan emails for language that often indicates spam or phishing. These indicators can include overuse of financial terms, characteristic bad grammar, threatening language, inappropriate urgency, misspelled company names, and more. Spam detection is one of a handful of NLP problems that experts consider 'mostly solved' (although you may argue that this doesn’t match your email experience).

- Machine translation: Google Translate is an example of widely available NLP technology at work. Truly useful machine translation involves more than replacing words in one language with words of another. Effective translation has to capture accurately the meaning and tone of the input language and translate it to text with the same meaning and desired impact in the output language. Machine translation tools are making good progress in terms of accuracy. A great way to test any machine translation tool is to translate text to one language and then back to the original. An oft-cited classic example: Not long ago, translating “The spirit is willing but the flesh is weak” from English to Russian and back yielded “The vodka is good but the meat is rotten.” Today, the result is “The spirit desires, but the flesh is weak,” which isn’t perfect, but inspires much more confidence in the English-to-Russian translation.

- Virtual agents and chatbots: Virtual agents such as Apple's Siri and Amazon's Alexa use speech recognition to recognize patterns in voice commands and natural language generation to respond with appropriate action or helpful comments. Chatbots perform the same magic in response to typed text entries. The best of these also learn to recognize contextual clues about human requests and use them to provide even better responses or options over time. The next enhancement for these applications is question answering, the ability to respond to our questions—anticipated or not—with relevant and helpful answers in their own words.

- Social media sentiment analysis: NLP has become an essential business tool for uncovering hidden data insights from social media channels. Sentiment analysis can analyze language used in social media posts, responses, reviews, and more to extract attitudes and emotions in response to products, promotions, and events. Companies can use this information in product designs, advertising campaigns, and more.

- Text summarization: Text summarization uses NLP techniques to digest huge volumes of digital text and create summaries and synopses for indexes, research databases, or busy readers who don't have time to read full text. The best text summarization applications use semantic reasoning and natural language generation (NLG) to add useful context and conclusions to summaries.
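The spam detection use case above rests on text classification. A minimal sketch of one classic approach, a multinomial Naive Bayes classifier with add-one smoothing, is shown below. The four `TRAINING` messages are invented for illustration, and this is not a description of how any particular commercial spam filter works.

```python
import math
import re
from collections import Counter, defaultdict

# Hypothetical labelled messages, invented for this example.
TRAINING = [
    ("urgent wire money now to claim your prize", "spam"),
    ("your account is suspended verify your password now", "spam"),
    ("meeting moved to friday please see the agenda", "ham"),
    ("here are the notes from the project review", "ham"),
]


def words(text):
    """Lowercase a message and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def train(examples):
    """Collect per-label word counts, per-label message counts, and the vocabulary."""
    word_counts = defaultdict(Counter)
    doc_counts = Counter()
    for text, label in examples:
        doc_counts[label] += 1
        word_counts[label].update(words(text))
    vocab = {w for counts in word_counts.values() for w in counts}
    return word_counts, doc_counts, vocab


def classify(text, model):
    """Return the label with the highest smoothed log-probability."""
    word_counts, doc_counts, vocab = model
    total_docs = sum(doc_counts.values())
    scores = {}
    for label in doc_counts:
        score = math.log(doc_counts[label] / total_docs)  # log prior
        denom = sum(word_counts[label].values()) + len(vocab)
        for w in words(text):
            if w in vocab:  # words never seen in training are skipped
                score += math.log((word_counts[label][w] + 1) / denom)
        scores[label] = score
    return max(scores, key=scores.get)


model = train(TRAINING)
print(classify("claim your prize now", model))
print(classify("see the project agenda", model))
```

Working in log space avoids numerical underflow when many small probabilities are multiplied together.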

Natural language processing, representation learning.

Disentanglement

Graph representation learning, sentence embeddings.

Network Embedding

Classification.

Text Classification

Graph Classification

Audio Classification

Medical Image Classification

Language modelling.

Long-range modeling

Protein language model, sentence pair modeling, deep hashing, table retrieval, nlp based person retrieval, question answering.

Open-Ended Question Answering

Open-Domain Question Answering

Conversational question answering.

Answer Selection

Translation, image generation.

Image-to-Image Translation

Text-to-Image Generation

Image Inpainting

Conditional Image Generation

Data augmentation.

Image Augmentation

Text Augmentation

Machine translation.

Transliteration

Multimodal Machine Translation

Bilingual lexicon induction.

Unsupervised Machine Translation

Text generation.

Dialogue Generation

Data-to-Text Generation

Multi-Document Summarization

Text style transfer, knowledge graph completion.

Knowledge Graphs

Large language model, triple classification, inductive knowledge graph completion, inductive relation prediction, 2d semantic segmentation, image segmentation.

Scene Parsing

Reflection Removal

Document Classification

Topic Models

Sentence Classification

Emotion Classification

Visual question answering (vqa).

Visual Question Answering

Machine Reading Comprehension

Chart Question Answering

Embodied Question Answering

Named entity recognition (ner).

Nested Named Entity Recognition

Chinese named entity recognition, few-shot ner, sentiment analysis.

Aspect-Based Sentiment Analysis (ABSA)

Multimodal Sentiment Analysis

Aspect Sentiment Triplet Extraction

Twitter Sentiment Analysis

Few-shot learning.

One-Shot Learning

Few-Shot Semantic Segmentation

Cross-domain few-shot.

Unsupervised Few-Shot Learning

Word embeddings.

Learning Word Embeddings

Multilingual Word Embeddings

Embeddings evaluation, contextualised word representations, optical character recognition (ocr).

Active Learning

Handwriting Recognition

Handwritten digit recognition, irregular text recognition, continual learning.

Class Incremental Learning

Continual named entity recognition, unsupervised class-incremental learning, information retrieval.

Passage Retrieval

Cross-lingual information retrieval, table search, text summarization.

Abstractive Text Summarization

Document summarization, opinion summarization, relation extraction.

Relation Classification

Document-level relation extraction, joint entity and relation extraction, temporal relation extraction, link prediction.

Inductive Link Prediction

Dynamic link prediction, hyperedge prediction, anchor link prediction, natural language inference.

Answer Generation

Visual Entailment

Cross-lingual natural language inference, reading comprehension.

Intent Recognition

Implicit relations, active object detection, emotion recognition.

Speech Emotion Recognition

Emotion Recognition in Conversation

Multimodal Emotion Recognition

Emotion-cause pair extraction, natural language understanding, vietnamese social media text processing.

Emotional Dialogue Acts

Image captioning.

3D dense captioning

Controllable image captioning, aesthetic image captioning.

Relational Captioning

Semantic textual similarity.

Paraphrase Identification

Cross-Lingual Semantic Textual Similarity

Event extraction, event causality identification, zero-shot event extraction, dialogue state tracking, task-oriented dialogue systems.

Visual Dialog

Dialogue understanding, coreference resolution, coreference-resolution, cross document coreference resolution, in-context learning, semantic parsing.

AMR Parsing

Semantic dependency parsing, drs parsing, ucca parsing, semantic similarity, conformal prediction.

Text Simplification

Music Source Separation

Decision Making Under Uncertainty

Audio source separation.

Code Generation

Code Translation

Code Documentation Generation

Class-level code generation, library-oriented code generation.

Sentence Embedding

Sentence compression, joint multilingual sentence representations, sentence embeddings for biomedical texts, specificity, dependency parsing.

Transition-Based Dependency Parsing

Prepositional phrase attachment, unsupervised dependency parsing, cross-lingual zero-shot dependency parsing, information extraction, extractive summarization, temporal information extraction, document-level event extraction, cross-lingual, cross-lingual transfer, cross-lingual document classification.

Cross-Lingual Entity Linking

Cross-language text summarization, response generation, memorization, common sense reasoning.

Physical Commonsense Reasoning

Riddle sense, anachronisms, instruction following, visual instruction following, prompt engineering.

Visual Prompting

Data integration.

Entity Alignment

Entity Resolution

Table annotation, entity linking.

Question Generation

Poll generation.

Topic coverage

Dynamic topic modeling, part-of-speech tagging.

Unsupervised Part-Of-Speech Tagging

Mathematical reasoning.

Math Word Problem Solving

Formal logic, geometry problem solving, abstract algebra, abuse detection, hate speech detection, open information extraction.

Hope Speech Detection

Hate speech normalization, hate speech detection crisishatemm benchmark, data mining.

Argument Mining

Opinion Mining

Subgroup discovery, cognitive diagnosis, parallel corpus mining, bias detection, selection bias, word sense disambiguation.

Word Sense Induction

Language identification, dialect identification, native language identification, few-shot relation classification, implicit discourse relation classification, cause-effect relation classification.

Fake News Detection

Relational reasoning.

Semantic Role Labeling

Predicate Detection

Semantic role labeling (predicted predicates).

Textual Analogy Parsing

Slot filling.

Zero-shot Slot Filling

Extracting covid-19 events from twitter, grammatical error correction.

Grammatical Error Detection

Text matching, document text classification, learning with noisy labels, multi-label classification of biomedical texts, political salient issue orientation detection, pos tagging, deep clustering, trajectory clustering, deep nonparametric clustering, nonparametric deep clustering, stance detection, zero-shot stance detection, few-shot stance detection, stance detection (us election 2020 - biden), stance detection (us election 2020 - trump), spoken language understanding, dialogue safety prediction, multi-modal entity alignment, intent detection.

Open Intent Detection

Word similarity, text-to-speech synthesis.

Prosody Prediction

Zero-shot multi-speaker tts, zero-shot cross-lingual transfer, cross-lingual ner, intent classification.

Document AI

Document understanding, fact verification, language acquisition, grounded language learning, entity typing.

Entity Typing on DH-KGs

Constituency parsing.

Constituency Grammar Induction

Self-learning, ad-hoc information retrieval, document ranking.

Model Editing

Knowledge editing, cross-modal retrieval, image-text matching, multilingual cross-modal retrieval.

Zero-shot Composed Person Retrieval

Cross-modal retrieval on rsitmd, word alignment, open-domain dialog, dialogue evaluation, novelty detection, multimodal deep learning, multimodal text and image classification, multi-label text classification.

Text-based Image Editing

Text-guided-image-editing.

Zero-Shot Text-to-Image Generation

Concept alignment, conditional text-to-image synthesis, discourse parsing, discourse segmentation, connective detection.

Shallow Syntax

Sarcasm detection.

De-identification

Privacy preserving deep learning, explanation generation, lemmatization, morphological analysis.

molecular representation

Aspect Extraction

Aspect category sentiment analysis, extract aspect.

Aspect-Category-Opinion-Sentiment Quadruple Extraction

Aspect-oriented Opinion Extraction

Session search.

Chinese Word Segmentation

Handwritten chinese text recognition, chinese spelling error correction, chinese zero pronoun resolution, offline handwritten chinese character recognition, conversational search, entity disambiguation, text-to-video generation, text-to-video editing, subject-driven video generation, source code summarization, method name prediction, authorship attribution, speech-to-text translation, simultaneous speech-to-text translation, text clustering.

Short Text Clustering

Open Intent Discovery

Keyphrase extraction, linguistic acceptability.

Column Type Annotation

Cell entity annotation, columns property annotation, row annotation, abusive language.

Visual Storytelling

KG-to-Text Generation

Unsupervised KG-to-Text Generation

Few-shot text classification, zero-shot out-of-domain detection, term extraction, text2text generation, keyphrase generation, figurative language visualization, sketch-to-text generation, protein folding, phrase grounding, grounded open vocabulary acquisition, deep attention, morphological inflection, multilingual nlp, word translation, spam detection, context-specific spam detection, traditional spam detection, summarization, unsupervised extractive summarization, query-focused summarization.

Natural Language Transduction

Knowledge base population, conversational response selection, cross-lingual word embeddings, passage ranking, text annotation, image-to-text retrieval, key information extraction, biomedical information retrieval.

SpO2 estimation

Authorship verification.

News Classification

Automated essay scoring, graph-to-sequence, keyword extraction, story generation, multimodal association, multimodal generation, sentence summarization, unsupervised sentence summarization, key point matching, component classification, argument pair extraction (ape), claim extraction with stance classification (cesc), claim-evidence pair extraction (cepe), temporal processing, timex normalization, document dating, meme classification, hateful meme classification, morphological tagging, nlg evaluation, weakly supervised classification, weakly supervised data denoising, entity extraction using gan.

Rumour Detection

Semantic composition.

Sentence Ordering

Comment generation.

Lexical Simplification

Token classification, toxic spans detection.

Blackout Poetry Generation

Passage re-ranking, semantic retrieval, subjectivity analysis.

Emotional Intelligence

Dark humor detection, taxonomy learning, taxonomy expansion, hypernym discovery, conversational response generation.

Personalized and Emotional Conversation

Review generation, sentence-pair classification, lexical normalization, pronunciation dictionary creation, negation detection, negation scope resolution, question similarity, medical question pair similarity computation, goal-oriented dialog, user simulation, intent discovery, propaganda detection, propaganda span identification, propaganda technique identification, lexical analysis, lexical complexity prediction, question rewriting, punctuation restoration, reverse dictionary, humor detection.

Legal Reasoning

Meeting summarization, table-based fact verification, attribute value extraction, long-context understanding, pretrained multilingual language models, formality style transfer, semi-supervised formality style transfer, word attribute transfer, diachronic word embeddings, hallucination evaluation, persian sentiment analysis, clinical concept extraction.

Clinical Information Retrieval

Constrained clustering.

Only Connect Walls Dataset Task 1 (Grouping)

Incremental constrained clustering, recognizing emotion cause in conversations.

Causal Emotion Entailment

Aspect Category Detection

Dialog act classification, extreme summarization.

Binary Classification

Llm-generated text detection, cancer-no cancer per breast classification, cancer-no cancer per image classification, suspicious (birads 4,5)-no suspicious (birads 1,2,3) per image classification, cancer-no cancer per view classification, nested mention recognition, relationship extraction (distant supervised), clickbait detection, decipherment, semantic entity labeling, text compression, handwriting verification, bangla spelling error correction, ccg supertagging, gender bias detection, linguistic steganography, probing language models, toponym resolution.

Timeline Summarization

Multimodal abstractive text summarization, reader-aware summarization, vietnamese visual question answering, explanatory visual question answering, code repair, thai word segmentation, vietnamese datasets, stock prediction, text-based stock prediction, event-driven trading, pair trading.

Face to Face Translation

Multimodal lexical translation, aggression identification, arabic sentiment analysis, arabic text diacritization, commonsense causal reasoning, complex word identification, fact selection, sign language production, suggestion mining, temporal relation classification, vietnamese word segmentation, speculation detection, speculation scope resolution, aspect category polarity, cross-lingual bitext mining, morphological disambiguation, scientific document summarization, lay summarization, text attribute transfer.

Image-guided Story Ending Generation

Abstract argumentation, dialogue rewriting, logical reasoning reading comprehension.

Multi-agent Integration

Unsupervised sentence compression, stereotypical bias analysis, temporal tagging, text anonymization, anaphora resolution, bridging anaphora resolution.

Abstract Anaphora Resolution

Hope speech detection for english, hope speech detection for malayalam, hope speech detection for tamil, hidden aspect detection, latent aspect detection, personality generation, personality alignment, chinese spell checking, cognate prediction, japanese word segmentation, memex question answering, polyphone disambiguation, spelling correction, table-to-text generation.

KB-to-Language Generation

Vietnamese language models, zero-shot sentiment classification, conditional text generation, contextualized literature-based discovery, multimedia generative script learning, image-sentence alignment, open-world social event classification, trustable and focussed llm generated content, game design, action parsing, author attribution, binary condescension detection, conversational web navigation, croatian text diacritization, czech text diacritization, definition modelling, document-level re with incomplete labeling, domain labelling, french text diacritization, hungarian text diacritization, irish text diacritization, latvian text diacritization, misogynistic aggression identification, morpheme segmentation, multi-label condescension detection, news annotation, open relation modeling, personality recognition in conversation.

Reading Order Detection

Record linking, role-filler entity extraction, romanian text diacritization, slovak text diacritization, spanish text diacritization, syntax representation, text-to-video search, turkish text diacritization, turning point identification, twitter event detection.

Vietnamese Scene Text

Vietnamese text diacritization, zero-shot machine translation.

Conversational Sentiment Quadruple Extraction

Attribute extraction, legal outcome extraction, automated writing evaluation, chemical indexing, clinical assertion status detection.

Coding Problem Tagging

Collaborative plan acquisition, commonsense reasoning for rl, context query reformulation.

Variable Disambiguation

Cross-lingual text-to-image generation, crowdsourced text aggregation.

Description-guided molecule generation

Multi-modal Dialogue Generation

Page stream segmentation.

Email Thread Summarization

Emergent communications on relations, emotion detection and trigger summarization, extractive tags summarization.

Hate Intensity Prediction

Hate span identification, job prediction, joint entity and relation extraction on scientific data, joint ner and classification, literature mining, math information retrieval, meme captioning, multi-grained named entity recognition, multilingual machine comprehension in english hindi, multimodal text prediction, negation and speculation cue detection, negation and speculation scope resolution, only connect walls dataset task 2 (connections), overlapping mention recognition, paraphrase generation, multilingual paraphrase generation, phrase ranking, phrase tagging, phrase vector embedding, poem meters classification, query wellformedness.

Question-Answer categorization

Readability optimization, reliable intelligence identification, sentence completion, hurtful sentence completion, social media mental health detection, speaker attribution in german parliamentary debates (germeval 2023, subtask 1), text effects transfer, text-variation, vietnamese aspect-based sentiment analysis, sentiment dependency learning, vietnamese fact checking, vietnamese natural language understanding, vietnamese sentiment analysis, vietnamese multimodal sentiment analysis, web page tagging, workflow discovery, incongruity detection, multi-word expression embedding, multi-word expression sememe prediction, pcl detection, semeval-2022 task 4-1 (binary pcl detection), semeval-2022 task 4-2 (multi-label pcl detection), automatic writing, complaint comment classification, counterspeech detection, extractive text summarization, face selection, job classification, multi-lingual text-to-image generation, multilingual neural machine translation, optical character recognition, bangla text detection, question to declarative sentence, relation mention extraction.

Tweet-Reply Sentiment Analysis

Vietnamese parsing.

An Introduction to Natural Language Processing in Data Science

- Written by Karin Kelley

- Updated on March 11, 2024

In 2022, ChatGPT took the world by storm and brought AI to the public’s attention. Behind ChatGPT’s ability to understand and generate human-like text is natural language processing (NLP), a field of artificial intelligence that focuses on enabling computers to understand, interpret, and generate human language. But NLP was driving a wide range of everyday applications long before ChatGPT.

This article will discuss NLP, its working principles, benefits, and how businesses use it. If you want to gain a more in-depth understanding of NLP, read to the end of this guide, where we’ll explore a globally recognized data science course that can help you build a career in data science.

What is Natural Language Processing in Data Science?

Natural language processing is the part of data science that enables computers to understand human languages. In this branch of AI, algorithms observe and analyze human language in text and voice. NLP then extracts data, discerns patterns, and generates new text based on the meaning.

This is a challenging field due to the dynamic nature of human languages. Hence, the focus is on enabling the computer to understand spoken and written human language by collecting and processing as many data samples as possible.

The most common example is predictive search results, where you only have to type in a couple of words, and Google provides multiple options for you to select. Here, Google uses NLP to gather all the possible search results that begin with the two words and ranks them according to their popularity.

Another widely used example is the auto-translation service offered by Microsoft on its Outlook application. Outlook analyzes your email interactions and identifies text in a different language than your regular one. The option to ‘Translate this text’ pops up to enable you to understand the email properly.

Types of Natural Language Processing in Data Science

Understanding language is a massive exercise. Going on a straight path and trying to design algorithms for all the aspects of language is impossible. Hence, NLP has been divided into different categories that deal with only one aspect of the language at a time. Let us take a look at the main types of NLP.

Sentiment Analysis

Sentiment analysis deals with discerning the patterns of words related to specific sentiments. Text is classified as projecting a positive, negative, or neutral sentiment. This is critical in today’s customer-focused world, where enterprises want to know how the customer feels and predict the best way to serve the customer. Hence, as a data scientist, you may design algorithms to study the text in emails, chat transcripts, phone call transcripts, and social media posts to accurately predict the mood of customers the moment they communicate with the enterprise.
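A very simple way to produce the positive, negative, or neutral label described above is a lexicon-based scorer. The sketch below is one such approach under stated assumptions: the `POSITIVE`, `NEGATIVE`, and `NEGATORS` word lists are tiny, hand-picked examples, and a negator is assumed to flip only the word that immediately follows it. Real systems usually rely on trained models rather than fixed word lists.

```python
import re

# Tiny illustrative lexicons; real lexicons contain thousands of entries.
POSITIVE = {"great", "love", "helpful", "fast", "excellent"}
NEGATIVE = {"slow", "broken", "terrible", "rude", "refund"}
NEGATORS = {"not", "never", "no"}


def sentiment(text):
    """Label text as positive, negative, or neutral from word polarity counts."""
    score = 0
    negate = False
    for word in re.findall(r"[a-z']+", text.lower()):
        if word in NEGATORS:
            negate = True  # flip the polarity of the next word only
            continue
        polarity = (word in POSITIVE) - (word in NEGATIVE)  # +1, 0, or -1
        score += -polarity if negate else polarity
        negate = False
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment("I love the fast delivery"))
print(sentiment("The support team was not helpful"))
print(sentiment("The package arrived on Tuesday"))
```

The negation handling shows why plain word counting is not enough: without it, "not helpful" would be scored as positive.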

Keyword Extraction

Keyword extraction involves identifying critical keywords and phrases specific to a particular situation, trend, or topic. This NLP type analyzes massive amounts of unstructured data to identify mentions in numerous online documents, including blogs, websites, social media posts, and news articles. Keyword extraction can be performed by creating algorithms that filter usable keyword mentions to help identify business opportunities and gauge the reach of a product.
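One common way to filter usable keywords is TF-IDF scoring, which favors terms that are frequent in one document but rare across the collection. The sketch below is a minimal version of that idea. The `DOCS` collection is invented for illustration, and TF-IDF is named here as one possible technique, not as the method any specific product uses.

```python
import math
import re
from collections import Counter

# Hypothetical mini-collection of customer comments.
DOCS = [
    "the battery life of the phone is great and the battery charges fast",
    "the camera on the phone is sharp",
    "the delivery of the laptop was late",
]


def tokenize(text):
    """Lowercase text and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


def top_keywords(documents, doc_index, k=3):
    """Rank terms of one document by TF-IDF against the whole collection."""
    tokenized = [tokenize(d) for d in documents]
    doc_freq = Counter()
    for toks in tokenized:
        doc_freq.update(set(toks))  # count each term once per document
    target = tokenized[doc_index]
    term_freq = Counter(target)
    scores = {
        term: (count / len(target)) * math.log(len(tokenized) / doc_freq[term])
        for term, count in term_freq.items()
    }
    # Sort by descending score, breaking ties alphabetically.
    return sorted(scores, key=lambda t: (-scores[t], t))[:k]


print(top_keywords(DOCS, 0, k=3))
```

A word such as "the" appears in every document, so its inverse document frequency is zero and it never ranks as a keyword, even though it is the most frequent token.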

Knowledge Graph

Knowledge graphs collate information such as people, things, places, events, concepts, and situations in a graph network to understand their interrelationships. This natural language processing category is a step ahead of textual analysis, as it encourages the machine to go deeper into the nuances of the language based on context. The machine is no longer restricted to simple word identification and tagging. It can enhance contextual data collection and comprehension.
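
To make the idea of a graph network of entities more concrete, here is a minimal Python sketch that stores facts as (subject, relation, object) triples and follows links between them. The `KnowledgeGraph` class and its method names are hypothetical; real knowledge graphs are normally built with dedicated graph databases and populated by entity and relation extraction.

```python
from collections import defaultdict


class KnowledgeGraph:
    """A toy store of (subject, relation, object) facts."""

    def __init__(self):
        self._edges = defaultdict(list)

    def add(self, subject, relation, obj):
        self._edges[subject].append((relation, obj))

    def objects(self, subject, relation):
        """Return everything linked to `subject` by `relation`."""
        return [o for r, o in self._edges.get(subject, []) if r == relation]

    def related(self, start, max_hops=2):
        """Breadth-first walk: entities reachable within `max_hops` links."""
        seen = {start}
        frontier = [start]
        for _ in range(max_hops):
            next_frontier = []
            for node in frontier:
                for _, obj in self._edges.get(node, []):
                    if obj not in seen:
                        seen.add(obj)
                        next_frontier.append(obj)
            frontier = next_frontier
        return seen - {start}


kg = KnowledgeGraph()
kg.add("Marie Curie", "born_in", "Warsaw")
kg.add("Marie Curie", "won", "Nobel Prize in Physics")
kg.add("Warsaw", "capital_of", "Poland")

print(kg.objects("Marie Curie", "born_in"))
print(kg.related("Marie Curie", max_hops=2))
```

Following two links connects "Marie Curie" to "Poland" even though no single fact states that relationship directly, which is the kind of contextual inference a knowledge graph makes possible.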

Word Cloud

A word cloud shows which words were used in a text and how often. Instead of relying on the usual bar charts or plots, it represents word frequency visually: the words are arranged together in the shape of a cloud, with the most frequently used words displayed in a larger font and less-used words in smaller fonts. The focus is on the most used words, which helps in analyzing topics, mentions, and trends. Word clouds can be generated automatically to analyze feedback, surveys, and other documents quickly.
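
The core of a word cloud is a mapping from word frequency to font size. The Python sketch below computes that mapping only and does not draw anything; the function name and the size range are assumptions chosen for illustration.

```python
import re
from collections import Counter


def word_cloud_sizes(text, min_size=10, max_size=40, top_n=5):
    """Return (word, count, font_size) for the most frequent words."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(words).most_common(top_n)
    if not counts:
        return []
    highest = counts[0][1]
    lowest = counts[-1][1]
    span = highest - lowest
    sized = []
    for word, n in counts:
        # Scale linearly between min_size and max_size.
        scale = (n - lowest) / span if span else 1.0
        sized.append((word, n, round(min_size + scale * (max_size - min_size))))
    return sized


feedback = "great service great price slow delivery great service"
for word, count, size in word_cloud_sizes(feedback):
    print(f"{word}: used {count}x, font size {size}")
```

A rendering step would then place each word on a canvas at its computed size.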

Text Summarization

Text summarization is a task often handled with sequence-to-sequence models. It takes a long text as input and outputs a summary of its main points. It is useful when large documents have to be outlined for analysis: unimportant content is removed, and a shorter version that preserves the meaning is produced. There are two approaches: extractive and abstractive. The extractive approach selects the most important sentences or phrases from the text and presents them as a précis, while the abstractive approach generates new sentences that convey the key points.
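
The extractive approach can be approximated with a simple frequency heuristic: score each sentence by how common its words are in the whole text and keep the top scorers. The Python sketch below is one basic way to do this, not a standard algorithm from the article; the stop-word list and function name are assumptions.

```python
import re
from collections import Counter

# Small stop-word list, assumed for illustration.
STOP_WORDS = {
    "the", "a", "an", "is", "are", "was", "of", "to", "and", "in",
    "it", "as", "for", "on", "that", "this", "with", "from",
}


def content_words(text):
    """Lowercased words with stop words removed."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOP_WORDS]


def summarize(text, n_sentences=1):
    """Keep the n sentences whose words are most frequent in the text."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s.strip()]
    freq = Counter(content_words(text))

    def score(sentence):
        tokens = content_words(sentence)
        return sum(freq[w] for w in tokens) / len(tokens) if tokens else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: (-score(sentences[i]), i))
    chosen = sorted(ranked[:n_sentences])  # restore original sentence order
    return " ".join(sentences[i] for i in chosen)


TEXT = (
    "Summarization shortens long documents. "
    "Extractive summarization selects sentences from documents. "
    "The weather was pleasant yesterday."
)
print(summarize(TEXT, n_sentences=1))
```

Because it only copies existing sentences, this sketch is extractive; an abstractive summarizer would need a generative model to write new sentences.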

How Natural Language Processing Works

Natural language processing typically analyzes text in several stages. Here’s how it works.

- Lexical: The first step assesses the text at the word level. The individual words, their grammatical form, their tense, and their relationship with other words are analyzed. Words and phrases are broken down into free and bound morphemes, which shows how they are formed.

- Syntax: Next, the sentence structure is examined. The role of each word (subject, object, or verb), the location of clauses and phrases, and the overall sentence formation are observed.

- Semantics: The sentences are individually analyzed to understand their meaning. This takes NLP a step above word-based analysis, combining the sentence’s meaning with the word’s contextually appropriate meaning.

- Discourse integration: Discourse integration assesses the previous sentences to note the context for the following sentence. It tries to narrow down the sentence’s subject by gauging the last sentence’s theme.

- Pragmatics: Finally, the text as a whole is examined for meaning and sentiment. Sentences are studied in relation to other sentences to note the critical topic and its features, such as definition, principle, types, etc.

There are many tools for conducting NLP, available either as SaaS products or as open-source libraries. SaaS tools offer pre-trained NLP models that can be used directly, and many also provide APIs for integrating them into your own code.

Open-source libraries, meanwhile, are free and offer flexibility for customization. They are more complex and are used mainly by experienced professionals, typically when you want to develop an NLP tool from scratch. Several of these libraries are based on the Python language.

Here’s a quick list of the natural language processing tools you should consider learning as a data science professional.

- MonkeyLearn

- Google Cloud NLP API

- Amazon Comprehend

- Stanford CoreNLP

A reputable data science program will help you hone your skills and utilize these tools for maximum efficiency.

Advantages of NLP in Data Science

Natural language processing has several benefits that make it attractive to enterprises worldwide. Let’s examine some of these benefits.

- Enables a large amount of data to be assessed in a meaningful manner

- Can analyze both structured and unstructured data, such as a collection of social media posts and messages

- Provides detailed market analysis and brand reach

- Capable of scouring multiple documents related to a subject to gauge its presence and mention

- NLP-enabled AI lowers costs by automating routine tasks and reducing the time and resources spent on them

- It can be customized to accommodate the unique requirements of the industry

Use Cases of Natural Language Processing in Data Analytics

We now have a general idea of what NLP is. To help you understand it better, let’s discuss a few use cases.

Product Returns

E-commerce applications provide AI-enabled online chatbots. The chatbots are trained using many historical conversation logs and online interactions. The chatbots ask the customer to choose a task based on the most common tasks in the data.

Once the customer selects the product return option, the chatbot asks them to identify the order to be returned. The customer then has to provide a reason, chosen from a list based on previous responses by other customers.

Finally, the chatbot asks how the customer wants to be refunded. Suppose that, at any of these steps, the customer gives an answer the chatbot does not recognize. The answer is captured and passed to the NLP algorithm, which analyzes it so that it can be included in the next software update.

Social Media Crisis Response

Suppose a hair product has a quality issue. Someone posts online about it, and it starts trending. Several others post about their experiences with the same hair product or the company. Natural language processing scans social media for keywords such as the hashtag, brand name, product name, and location of the posts.

It then runs sentiment analysis on the text and flags the marketing team when negative sentiment increases. The marketing team alerts the sales and quality teams. In the meantime, the NLP system can automatically respond to posts with negative sentiment to manage the crisis until the enterprise officially responds on its social media site.

Industry Standards

Standards are large documents that provide guidelines for a particular industry’s process or testing. They contain sections and sub-sections that are updated every couple of years. NLP can be used to analyze these documents and highlight the critical changes by comparing them with previous versions. It can also summarize the chief points in the standard for a quick review.
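
Comparing two versions of a standard can start with a plain line-level diff before any deeper language analysis. The sketch below uses Python's standard `difflib` module; the clause texts are invented for illustration and do not come from any real standard.

```python
import difflib


def changed_lines(old_text, new_text):
    """List the lines removed from or added to a document between versions."""
    old_lines = old_text.splitlines()
    new_lines = new_text.splitlines()
    changes = []
    for line in difflib.ndiff(old_lines, new_lines):
        if line.startswith("- "):
            changes.append(("removed", line[2:]))
        elif line.startswith("+ "):
            changes.append(("added", line[2:]))
        # Lines starting with "  " are unchanged; "? " lines are diff hints.
    return changes


# Hypothetical clauses, assumed for illustration only.
OLD = "4.1 Samples shall be tested at 20 C.\n4.2 Record results within 24 hours."
NEW = (
    "4.1 Samples shall be tested at 23 C.\n"
    "4.2 Record results within 24 hours.\n"
    "4.3 Retain samples for 30 days."
)

for kind, text in changed_lines(OLD, NEW):
    print(kind, ":", text)
```

A diff like this only finds textual changes. Deciding which of them are critical, or summarizing the standard, is where the NLP techniques described in this article come in.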

Of course, like any other technology, natural language processing has limitations. It has no human experience, so it can analyze text only on the basis of the data it has been trained on. It does not handle sarcasm, slang, tone of voice, or emotion well. It also struggles with ambiguity, which can lead to erroneous interpretations, and it relies entirely on its input rather than thinking independently.

Techniques Used in NLP Analysis

Several techniques have been devised to conduct NLP analysis. Some of these can be used independently, while others must be used together for the most meaningful result. Here are some of the popular techniques.

- Tokenization: Tokenization breaks the text down into smaller units, typically words, called ‘tokens’ that can be analyzed independently. Tokens simplify the text and make it possible to group sentences that share the same tokens. Depending on the tokenizer, punctuation and hyphens may be dropped at this step.

- Stop word removal: Stop words are articles, prepositions, and simple verbs such as ‘is,’ ‘the,’ ‘a,’ ‘an,’ ‘as,’ etc. These words add little to no value to the overall text and are removed so that the analysis can focus on the keywords. This reduces noise in the data and the storage space it requires.

- Text normalization: Text normalization relies on stemming and lemmatization. Words that share a root are reduced to that root form and grouped under a single token. For example, lemmatization reduces ‘writing’ and ‘written’ to ‘write’.

- Feature extraction: In this technique, the keywords or features are identified and extracted for further analysis. For instance, in marketing campaigns, the marketing team will introduce a hashtag and then track how many times the hashtag was used across several demographics. The posts will also be subjected to a sentiment analysis to gauge customer response.

- Word embeddings: Word embeddings map each word in a document to a real-valued vector. Converting text to numbers in this way lets algorithms compare words mathematically, and words used in similar contexts tend to receive similar vectors.

- Topic modeling: Topic modeling is a technique that focuses on topics rather than words. It assumes a topic is a group of words and a document comprises several topics. Thus, the algorithm scans the document for the topics and extracts them to give a meaningful analysis.
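
Several of the techniques above are often chained into a preprocessing pipeline. The following Python sketch strings together tokenization, stop word removal, a deliberately crude suffix-stripping stemmer, and a bag-of-words count as a simple form of feature extraction. All function names and the stop-word list are assumptions made for this example; established libraries provide far more careful implementations.

```python
import re
from collections import Counter

# Small stop-word list, assumed for illustration.
STOP_WORDS = {"is", "the", "a", "an", "as", "and", "of", "to", "in"}


def tokenize(text):
    """Split text into lowercase tokens, dropping punctuation and hyphens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def remove_stop_words(tokens):
    return [t for t in tokens if t not in STOP_WORDS]


def crude_stem(token):
    """Strip a few common suffixes; a real stemmer has many more rules."""
    for suffix in ("ing", "ed", "s"):
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def bag_of_words(text):
    """Count normalized tokens: a simple numeric feature representation."""
    tokens = remove_stop_words(tokenize(text))
    return Counter(crude_stem(t) for t in tokens)


print(tokenize("The cat is chasing the dogs."))
print(bag_of_words("The cat chased the cats"))
print(crude_stem("writing"))
```

Note that the crude stemmer turns ‘writing’ into ‘writ’, not ‘write’. Producing the dictionary form requires lemmatization, which relies on vocabulary and grammatical information rather than suffix rules.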

Learn NLP Algorithms and Other Data Science Concepts

Natural language processing has immense potential, and opportunities in this field are expected to grow exponentially in the coming years. Gaining expertise in NLP can give you an edge if you’re looking to build a lucrative career in data science.

A reputable data science bootcamp is designed to equip you with NLP and other essential skills to shine in a data science career. By joining, you can take advantage of live, interactive classes led by industry experts, hands-on training through practical and capstone projects, and networking with peers.

Publications

Daniel Jurafsky. 2014. The Language of Food. W. W. Norton.

Christopher D. Manning, Prabhakar Raghavan, and Hinrich Schütze. 2008. Introduction to Information Retrieval. Cambridge University Press.

Daniel Jurafsky and James H. Martin. 2008. Speech and Language Processing: An Introduction to Natural Language Processing, Speech Recognition, and Computational Linguistics. 2nd edition. Prentice-Hall.

Christopher D. Manning and Hinrich Schütze. 1999. Foundations of Statistical Natural Language Processing. Cambridge, MA: MIT Press.

Barbara A. Fox, Dan Jurafsky, and Laura A. Michaelis (Eds.). 1999. Cognition and Function in Language. Stanford, CA: CSLI Publications.

Avery D. Andrews and Christopher D. Manning. 1999. Complex Predicates and Information Spreading in LFG. Stanford, CA: CSLI Publications.

Concept of Natural Language Processing (NLP) Research Paper

Meaning of natural language, natural language processing, reference list.

Natural language processing is significant for technological, economic, social, and educational reasons. NLP is developing rapidly as its concepts and methods are deployed in a range of new language technologies. It is therefore important for society to have a working knowledge of this field.

Natural language processing covers any computer manipulation of natural language. At one extreme, it can be as simple as counting word frequencies to compare different writing styles. At the other extreme, it involves “understanding” complete human utterances well enough to give useful responses to them.