
Radiology Thesis – More than 400 Research Topics (2022)!


Introduction

A thesis or dissertation, as some people would like to call it, is an integral part of the Radiology curriculum, be it MD, DNB, or DMRD. We have tried to aggregate radiology thesis topics from various sources for reference.

Not everyone is interested in research, and writing a Radiology thesis can be daunting. But there is no escape from preparing, so it is better that you accept this bitter truth and start working on it instead of cribbing about it (like other things in life. #PhilosophyGyan!)

Start working on your thesis as early as possible and finish your thesis well before your exams, so you do not have that stress at the back of your mind. Also, your thesis may need multiple revisions, so be prepared and allocate time accordingly.

Tips for Choosing Radiology Thesis and Research Topics

Keep it simple, silly (KISS).

Retrospective > Prospective

Retrospective studies are a more practical choice than prospective ones, as the data you need already exist when you choose a retrospective study. Prospective studies are of better quality, but as a resident, you may not have the time (or the energy and enthusiasm) to complete them.

Choose a simple topic that answers a single/few questions

Original research is challenging, especially if you do not have prior experience. I would suggest you choose a topic that answers a single or few questions. Most topics that I have listed are along those lines. Alternatively, you can choose a broad topic such as “Role of MRI in evaluation of perianal fistulas.”

You can choose a novel topic if you are genuinely interested in research AND have a good mentor who will guide you. If you do, make sure that you publish your study once it is complete.

Get it done ASAP.

In most cases, it makes sense to stick to a thesis topic that will not take much time. That does not mean you should ignore your thesis and ‘Ctrl C + Ctrl V’ from a friend from another university. Thesis writing is your first step toward research methodology so do it as sincerely as possible. Do not procrastinate in preparing the thesis. As soon as you have been allotted a guide, start researching topics and writing a review of the literature.

At the same time, do not invest a lot of time in writing/collecting data for your thesis. You should not be busy finishing your thesis a few months before the exam. Some people could not appear for the exam because they could not submit their thesis in time. So DO NOT TAKE your thesis lightly.

Do NOT Copy-Paste

To reiterate, do not simply choose someone else’s thesis topic. Find out what kind of cases your hospital caters to. It is better to do a good thesis on a common topic than a crappy one on a rare one.

Books to help you write a Radiology Thesis

Every country/university has a different format for the thesis; hence these book recommendations may not work for everyone.

- Amazon Kindle Edition

- Gupta, Piyush (Author)

- English (Publication Language)

- 206 Pages - 10/12/2020 (Publication Date) - Jaypee Brothers Medical Publishers (P) Ltd. (Publisher)


List of Radiology Research / Thesis / Dissertation Topics

- State of the art of MRI in the diagnosis of hepatic focal lesions

- Multimodality imaging evaluation of sacroiliitis in newly diagnosed patients of spondyloarthropathy

- Multidetector computed tomography in oesophageal varices

- Role of positron emission tomography with computed tomography in the diagnosis of cancer Thyroid

- Evaluation of focal breast lesions using ultrasound elastography

- Role of MRI diffusion tensor imaging in the assessment of traumatic spinal cord injuries

- Sonographic imaging in male infertility

- Comparison of color Doppler and digital subtraction angiography in occlusive arterial disease in patients with lower limb ischemia

- The role of CT urography in Haematuria

- Role of functional magnetic resonance imaging in making brain tumor surgery safer

- Prediction of pre-eclampsia and fetal growth restriction by uterine artery Doppler

- Role of grayscale and color Doppler ultrasonography in the evaluation of neonatal cholestasis

- Validity of MRI in the diagnosis of congenital anorectal anomalies

- Role of sonography in assessment of clubfoot

- Role of diffusion MRI in preoperative evaluation of brain neoplasms

- Imaging of upper airways for pre-anaesthetic evaluation purposes and for laryngeal afflictions.

- A study of multivessel (arterial and venous) Doppler velocimetry in intrauterine growth restriction

- Multiparametric 3 Tesla MRI of suspected prostatic malignancy.

- Role of Sonography in Characterization of Thyroid Nodules for differentiating benign from Malignant Lesions-A Prospective Study.

- Role of advanced magnetic resonance imaging sequences in multiple sclerosis

- Role of multidetector computed tomography in evaluation of jaw lesions

- Role of Ultrasound and MR Imaging in the Evaluation of Musculotendinous Pathologies of Shoulder Joint

- Role of perfusion computed tomography in the evaluation of cerebral blood flow, blood volume and vascular permeability of cerebral neoplasms

- MRI flow quantification in the assessment of the commonest CSF flow abnormalities

- Role of diffusion-weighted MRI in evaluation of prostate lesions and its histopathological correlation

- CT enterography in evaluation of small bowel disorders

- Comparison of perfusion magnetic resonance imaging (PMRI), magnetic resonance spectroscopy (MRS) and positron emission tomography-computed tomography (PET/CT) in post radiotherapy treated gliomas to detect recurrence

- Role of multidetector computed tomography in evaluation of paediatric retroperitoneal masses

- Role of Multidetector computed tomography in neck lesions

- Estimation of standard liver volume in Indian population

- Role of MRI in evaluation of spinal trauma

- Role of modified sonohysterography in female factor infertility: a pilot study.

- The role of PET-CT in the evaluation of hepatic tumors

- Role of 3D magnetic resonance imaging tractography in assessment of white matter tracts compromise in supratentorial tumors

- Role of dual phase multidetector computed tomography in gallbladder lesions

- Role of multidetector computed tomography in assessing anatomical variants of nasal cavity and paranasal sinuses in patients of chronic rhinosinusitis.

- Magnetic resonance spectroscopy in multiple sclerosis

- Evaluation of thyroid nodules by ultrasound elastography using acoustic radiation force impulse (ARFI) imaging

- Role of Magnetic Resonance Imaging in Intractable Epilepsy

- Evaluation of suspected and known coronary artery disease by 128 slice multidetector CT.

- Role of regional diffusion tensor imaging in the evaluation of intracranial gliomas and its histopathological correlation

- Role of chest sonography in diagnosing pneumothorax

- Role of CT virtual cystoscopy in diagnosis of urinary bladder neoplasia

- Role of MRI in assessment of valvular heart diseases

- High resolution computed tomography of temporal bone in unsafe chronic suppurative otitis media

- Multidetector CT urography in the evaluation of hematuria

- Contrast-induced nephropathy in diagnostic imaging investigations with intravenous iodinated contrast media

- Comparison of dynamic susceptibility contrast-enhanced perfusion magnetic resonance imaging and single photon emission computed tomography in patients with Little’s disease

- Role of Multidetector Computed Tomography in Bowel Lesions.

- Role of diagnostic imaging modalities in evaluation of post liver transplantation recipient complications.

- Role of multislice CT scan and barium swallow in the estimation of oesophageal tumour length


- Value of ultrasonography in assessment of acute abdominal diseases in pediatric age group

- Role of three dimensional multidetector CT hysterosalpingography in female factor infertility

- Comparative evaluation of multi-detector computed tomography (MDCT) virtual tracheo-bronchoscopy and fiberoptic tracheo-bronchoscopy in airway diseases

- Role of Multidetector CT in the evaluation of small bowel obstruction

- Sonographic evaluation in adhesive capsulitis of shoulder

- Utility of MR Urography Versus Conventional Techniques in Obstructive Uropathy

- MRI of the postoperative knee

- Role of 64 slice-multi detector computed tomography in diagnosis of bowel and mesenteric injury in blunt abdominal trauma.

- Sonoelastography and triphasic computed tomography in the evaluation of focal liver lesions

- Evaluation of Role of Transperineal Ultrasound and Magnetic Resonance Imaging in Urinary Stress incontinence in Women

- Multidetector computed tomographic features of abdominal hernias

- Evaluation of lesions of major salivary glands using ultrasound elastography

- Transvaginal ultrasound and magnetic resonance imaging in female urinary incontinence

- MDCT colonography and double-contrast barium enema in evaluation of colonic lesions

- Role of MRI in diagnosis and staging of urinary bladder carcinoma

- Spectrum of imaging findings in children with febrile neutropenia.

- Spectrum of radiographic appearances in children with chest tuberculosis.

- Role of computerized tomography in evaluation of mediastinal masses in pediatric patients

- Diagnosing renal artery stenosis: Comparison of multimodality imaging in diabetic patients

- Role of multidetector CT virtual hysteroscopy in the detection of the uterine & tubal causes of female infertility

- Role of multislice computed tomography in evaluation of Crohn’s disease

- CT quantification of parenchymal and airway parameters on 64 slice MDCT in patients of chronic obstructive pulmonary disease

- Comparative evaluation of MDCT and 3T MRI in radiographically detected jaw lesions.

- Evaluation of diagnostic accuracy of ultrasonography, colour Doppler sonography and low dose computed tomography in acute appendicitis

- Ultrasonography, magnetic resonance cholangio-pancreatography (MRCP) in assessment of pediatric biliary lesions

- Multidetector computed tomography in hepatobiliary lesions.

- Evaluation of peripheral nerve lesions with high resolution ultrasonography and colour Doppler

- Multidetector computed tomography in pancreatic lesions

- Multidetector Computed Tomography in Paediatric abdominal masses.

- Evaluation of focal liver lesions by colour Doppler and MDCT perfusion imaging

- Sonographic evaluation of clubfoot correction during Ponseti treatment

- Role of multidetector CT in characterization of renal masses

- Study to assess the role of Doppler ultrasound in evaluation of arteriovenous (AV) hemodialysis fistula and the complications of hemodialysis vascular access

- Comparative study of multiphasic contrast-enhanced CT and contrast-enhanced MRI in the evaluation of hepatic mass lesions

- Sonographic spectrum of rheumatoid arthritis

- Diagnosis & staging of liver fibrosis by ultrasound elastography in patients with chronic liver diseases

- Role of multidetector computed tomography in assessment of jaw lesions.

- Role of high-resolution ultrasonography in the differentiation of benign and malignant thyroid lesions

- Radiological evaluation of aortic aneurysms in patients selected for endovascular repair

- Role of conventional MRI, and diffusion tensor imaging tractography in evaluation of congenital brain malformations

- To evaluate the status of coronary arteries in patients with non-valvular atrial fibrillation using 256 multirow detector CT scan

- A comparative study of ultrasonography and CT arthrography in diagnosis of chronic ligamentous and meniscal injuries of knee

- Multi detector computed tomography evaluation in chronic obstructive pulmonary disease and correlation with severity of disease

- Diffusion weighted and dynamic contrast enhanced magnetic resonance imaging in chemoradiotherapeutic response evaluation in cervical cancer.

- High resolution sonography in the evaluation of non-traumatic painful wrist

- The role of trans-vaginal ultrasound versus magnetic resonance imaging in diagnosis & evaluation of cancer cervix

- Role of multidetector row computed tomography in assessment of maxillofacial trauma

- Imaging of vascular complication after liver transplantation.

- Role of magnetic resonance perfusion weighted imaging & spectroscopy for grading of glioma by correlating perfusion parameter of the lesion with the final histopathological grade

- Magnetic resonance evaluation of abdominal tuberculosis.

- Diagnostic usefulness of low dose spiral HRCT in diffuse lung diseases

- Role of dynamic contrast enhanced and diffusion weighted magnetic resonance imaging in evaluation of endometrial lesions

- Contrast enhanced digital mammography and digital breast tomosynthesis in early diagnosis of breast lesion

- Evaluation of Portal Hypertension with Colour Doppler flow imaging and magnetic resonance imaging

- Evaluation of musculoskeletal lesions by magnetic resonance imaging

- Role of diffusion magnetic resonance imaging in assessment of neoplastic and inflammatory brain lesions

- Radiological spectrum of chest diseases in HIV infected children

- High resolution ultrasonography in neck masses in children

- Sonographic evaluation of peripheral nerves in type 2 diabetes mellitus.

- Role of perfusion computed tomography in the evaluation of neck masses and correlation with surgical findings

- Role of ultrasonography in the diagnosis of knee joint lesions

- Role of ultrasonography in evaluation of various causes of pelvic pain in first trimester of pregnancy.

- Role of Magnetic Resonance Angiography in the Evaluation of Diseases of Aorta and its Branches

- MDCT fistulography in evaluation of fistula in ano

- Role of multislice CT in diagnosis of small intestine tumors

- Role of high resolution CT in differentiation between benign and malignant pulmonary nodules in children

- A study of multidetector computed tomography urography in urinary tract abnormalities

- Role of high resolution sonography in assessment of ulnar nerve in patients with leprosy.

- Pre-operative radiological evaluation of locally aggressive and malignant musculoskeletal tumours by computed tomography and magnetic resonance imaging.

- The role of ultrasound & MRI in acute pelvic inflammatory disease

- Ultrasonography compared to computed tomographic arthrography in the evaluation of shoulder pain

- Role of Multidetector Computed Tomography in patients with blunt abdominal trauma.

- The Role of Extended field-of-view Sonography and compound imaging in Evaluation of Breast Lesions

- Evaluation of focal pancreatic lesions by Multidetector CT and perfusion CT

- Evaluation of breast masses on sono-mammography and colour Doppler imaging

- Role of CT virtual laryngoscopy in evaluation of laryngeal masses

- Triple phase multi detector computed tomography in hepatic masses

- Role of transvaginal ultrasound in diagnosis and treatment of female infertility

- Role of ultrasound and color Doppler imaging in assessment of acute abdomen due to female genital causes

- High resolution ultrasonography and color Doppler ultrasonography in scrotal lesion

- Evaluation of diagnostic accuracy of ultrasonography with colour Doppler vs low dose computed tomography in salivary gland disease

- Role of multidetector CT in diagnosis of salivary gland lesions

- Comparison of diagnostic efficacy of ultrasonography and magnetic resonance cholangiopancreatography in obstructive jaundice: A prospective study

- Evaluation of varicose veins-comparative assessment of low dose CT venogram with sonography: pilot study

- Role of mammotome in breast lesions

- The role of interventional imaging procedures in the treatment of selected gynecological disorders

- Role of transcranial ultrasound in diagnosis of neonatal brain insults

- Role of multidetector CT virtual laryngoscopy in evaluation of laryngeal mass lesions

- Evaluation of adnexal masses on sonomorphology and color Doppler imaging

- Role of radiological imaging in diagnosis of endometrial carcinoma

- Comprehensive imaging of renal masses by magnetic resonance imaging

- The role of 3D & 4D ultrasonography in abnormalities of fetal abdomen

- Diffusion weighted magnetic resonance imaging in diagnosis and characterization of brain tumors in correlation with conventional MRI

- Role of diffusion weighted MRI imaging in evaluation of cancer prostate

- Role of multidetector CT in diagnosis of urinary bladder cancer

- Role of multidetector computed tomography in the evaluation of paediatric retroperitoneal masses.

- Comparative evaluation of gastric lesions by double contrast barium upper G.I. and multi detector computed tomography

- Evaluation of hepatic fibrosis in chronic liver disease using ultrasound elastography

- Role of MRI in assessment of hydrocephalus in pediatric patients

- The role of sonoelastography in characterization of breast lesions

- The influence of volumetric tumor doubling time on survival of patients with intracranial tumours

- Role of perfusion computed tomography in characterization of colonic lesions

- Role of proton MRI spectroscopy in the evaluation of temporal lobe epilepsy

- Role of Doppler ultrasound and multidetector CT angiography in evaluation of peripheral arterial diseases.

- Role of multidetector computed tomography in paranasal sinus pathologies

- Role of virtual endoscopy using MDCT in detection & evaluation of gastric pathologies

- High resolution 3 Tesla MRI in the evaluation of ankle and hindfoot pain.

- Transperineal ultrasonography in infants with anorectal malformation

- CT portography using MDCT versus color Doppler in detection of varices in cirrhotic patients

- Role of CT urography in the evaluation of a dilated ureter

- Characterization of pulmonary nodules by dynamic contrast-enhanced multidetector CT

- Comprehensive imaging of acute ischemic stroke on multidetector CT

- The role of fetal MRI in the diagnosis of intrauterine neurological congenital anomalies

- Role of Multidetector computed tomography in pediatric chest masses

- Multimodality imaging in the evaluation of palpable & non-palpable breast lesion.

- Sonographic Assessment Of Fetal Nasal Bone Length At 11-28 Gestational Weeks And Its Correlation With Fetal Outcome.

- Role Of Sonoelastography And Contrast-Enhanced Computed Tomography In Evaluation Of Lymph Node Metastasis In Head And Neck Cancers

- Role Of Renal Doppler And Shear Wave Elastography In Diabetic Nephropathy

- Evaluation Of Relationship Between Various Grades Of Fatty Liver And Shear Wave Elastography Values

- Evaluation and characterization of pelvic masses of gynecological origin by USG, color Doppler and MRI in females of reproductive age group

- Radiological evaluation of small bowel diseases using computed tomographic enterography

- Role of coronary CT angiography in patients of coronary artery disease

- Role of multimodality imaging in the evaluation of pediatric neck masses

- Role of CT in the evaluation of craniocerebral trauma

- Role of magnetic resonance imaging (MRI) in the evaluation of spinal dysraphism

- Comparative evaluation of triple phase CT and dynamic contrast-enhanced MRI in patients with liver cirrhosis

- Evaluation of the relationship between carotid intima-media thickness and coronary artery disease in patients evaluated by coronary angiography for suspected CAD

- Assessment of hepatic fat content in fatty liver disease by unenhanced computed tomography

- Correlation of vertebral marrow fat on spectroscopy and diffusion-weighted MRI imaging with bone mineral density in postmenopausal women.

- Comparative evaluation of CT coronary angiography with conventional catheter coronary angiography

- Ultrasound evaluation of kidney length & descending colon diameter in normal and intrauterine growth-restricted fetuses

- A prospective study of hepatic vein waveform and splenoportal index in liver cirrhosis: correlation with Child-Pugh classification and presence of esophageal varices.

- CT angiography to evaluate coronary artery bypass graft patency in symptomatic patients

- Functional assessment of myocardium by cardiac MRI in patients with myocardial infarction

- MRI evaluation of HIV positive patients with central nervous system manifestations

- MDCT evaluation of mediastinal and hilar masses

- Evaluation of rotator cuff & labro-ligamentous complex lesions by MRI & MRI arthrography of shoulder joint

- Role of imaging in the evaluation of soft tissue vascular malformation

- Role of MRI and ultrasonography in the evaluation of multifidus muscle pathology in chronic low back pain patients

- Role of ultrasound elastography in the differential diagnosis of breast lesions

- Role of magnetic resonance cholangiopancreatography in evaluating dilated common bile duct in patients with symptomatic gallstone disease.

- Comparative study of CT urography & hybrid CT urography in patients with haematuria.

- Role of MRI in the evaluation of anorectal malformations

- Comparison of ultrasound-Doppler and magnetic resonance imaging findings in rheumatoid arthritis of hand and wrist

- Role of Doppler sonography in the evaluation of renal artery stenosis in hypertensive patients undergoing coronary angiography for coronary artery disease.

- Comparison of radiography, computed tomography and magnetic resonance imaging in the detection of sacroiliitis in ankylosing spondylitis.

- MR evaluation of painful hip

- Role of MRI imaging in pretherapeutic assessment of oral and oropharyngeal malignancy

- Evaluation of diffuse lung diseases by high resolution computed tomography of the chest

- MR evaluation of brain parenchyma in patients with craniosynostosis.

- Diagnostic and prognostic value of cardiovascular magnetic resonance imaging in dilated cardiomyopathy

- Role of multiparametric magnetic resonance imaging in the detection of early carcinoma prostate

- Role of magnetic resonance imaging in white matter diseases

- Role of sonoelastography in assessing the response to neoadjuvant chemotherapy in patients with locally advanced breast cancer.

- Role of ultrasonography in the evaluation of carotid and femoral intima-media thickness in predialysis patients with chronic kidney disease

- Role of H1 MRI spectroscopy in focal bone lesions of peripheral skeleton

- Choline detection by MRI spectroscopy in breast cancer and its correlation with biomarkers and histological grade.

- Ultrasound and MRI evaluation of axillary lymph node status in breast cancer.

- Role of sonography and magnetic resonance imaging in evaluating chronic lateral epicondylitis.

- Comparative study of sonography including Doppler and sonoelastography in cervical lymphadenopathy.

- Evaluation of Umbilical Coiling Index as Predictor of Pregnancy Outcome.

- Computerized Tomographic Evaluation of Azygoesophageal Recess in Adults.

- Lumbar Facet Arthropathy in Low Backache.

- Urethral Injuries After Pelvic Trauma: Evaluation with Urethrography

- Role Of CT In Diagnosis Of Inflammatory Renal Diseases

- Role Of CT Virtual Laryngoscopy In Evaluation Of Laryngeal Masses

- “CT Portography Using MDCT Versus Color Doppler In Detection Of Varices In Cirrhotic Patients”

- Role Of Multidetector CT In Characterization Of Renal Masses

- Role Of CT Virtual Cystoscopy In Diagnosis Of Urinary Bladder Neoplasia

- Role Of Multislice CT In Diagnosis Of Small Intestine Tumors

- “MRI Flow Quantification In The Assessment Of The Commonest CSF Flow Abnormalities”

- “The Role Of Fetal MRI In Diagnosis Of Intrauterine Neurological Congenital Anomalies”

- Role Of Transcranial Ultrasound In Diagnosis Of Neonatal Brain Insults

- “The Role Of Interventional Imaging Procedures In The Treatment Of Selected Gynecological Disorders”

- Role Of Radiological Imaging In Diagnosis Of Endometrial Carcinoma

- “Role Of High-Resolution CT In Differentiation Between Benign And Malignant Pulmonary Nodules In Children”

- Role Of Ultrasonography In The Diagnosis Of Knee Joint Lesions

- “Role Of Diagnostic Imaging Modalities In Evaluation Of Post Liver Transplantation Recipient Complications”

- “Diffusion-Weighted Magnetic Resonance Imaging In Diagnosis And Characterization Of Brain Tumors In Correlation With Conventional MRI”

- The Role Of PET-CT In The Evaluation Of Hepatic Tumors

- “Role Of Computerized Tomography In Evaluation Of Mediastinal Masses In Pediatric patients”

- “Trans Vaginal Ultrasound And Magnetic Resonance Imaging In Female Urinary Incontinence”

- Role Of Multidetector CT In Diagnosis Of Urinary Bladder Cancer

- “Role Of Transvaginal Ultrasound In Diagnosis And Treatment Of Female Infertility”

- Role Of Diffusion-Weighted MRI Imaging In Evaluation Of Cancer Prostate

- “Role Of Positron Emission Tomography With Computed Tomography In Diagnosis Of Cancer Thyroid”

- The Role Of CT Urography In Case Of Haematuria

- “Value Of Ultrasonography In Assessment Of Acute Abdominal Diseases In Pediatric Age Group”

- “Role Of Functional Magnetic Resonance Imaging In Making Brain Tumor Surgery Safer”

- The Role Of Sonoelastography In Characterization Of Breast Lesions

- “Ultrasonography, Magnetic Resonance Cholangiopancreatography (MRCP) In Assessment Of Pediatric Biliary Lesions”

- “Role Of Ultrasound And Color Doppler Imaging In Assessment Of Acute Abdomen Due To Female Genital Causes”

- “Role Of Multidetector CT Virtual Laryngoscopy In Evaluation Of Laryngeal Mass Lesions”

- MRI Of The Postoperative Knee

- Role Of MRI In Assessment Of Valvular Heart Diseases

- The Role Of 3D & 4D Ultrasonography In Abnormalities Of Fetal Abdomen

- State Of The Art Of MRI In Diagnosis Of Hepatic Focal Lesions

- Role Of Multidetector CT In Diagnosis Of Salivary Gland Lesions

- “Role Of Virtual Endoscopy Using MDCT In Detection & Evaluation Of Gastric Pathologies”

- The Role Of Ultrasound & MRI In Acute Pelvic Inflammatory Disease

- “Diagnosis & Staging Of Liver Fibrosis By Ultrasound Elastography In Patients With Chronic Liver Diseases”

- Role Of MRI In Evaluation Of Spinal Trauma

- Validity Of MRI In Diagnosis Of Congenital Anorectal Anomalies

- Imaging Of Vascular Complication After Liver Transplantation

- “Contrast-Enhanced Digital Mammography And Digital Breast Tomosynthesis In Early Diagnosis Of Breast Lesion”

- Role Of Mammotome In Breast Lesions

- “Role Of MRI Diffusion Tensor Imaging (DTI) In Assessment Of Traumatic Spinal Cord Injuries”

- “Prediction Of Pre-eclampsia And Fetal Growth Restriction By Uterine Artery Doppler”

- “Role Of Multidetector Row Computed Tomography In Assessment Of Maxillofacial Trauma”

- “Role Of Diffusion Magnetic Resonance Imaging In Assessment Of Neoplastic And Inflammatory Brain Lesions”

- Role Of Diffusion MRI In Preoperative Evaluation Of Brain Neoplasms

- “Role Of Multidetector CT Virtual Hysteroscopy In The Detection Of The Uterine & Tubal Causes Of Female Infertility”

- Role Of Advanced Magnetic Resonance Imaging Sequences In Multiple Sclerosis

- Magnetic Resonance Spectroscopy In Multiple Sclerosis

- “Role Of Conventional MRI, And Diffusion Tensor Imaging Tractography In Evaluation Of Congenital Brain Malformations”

- Role Of MRI In Evaluation Of Spinal Trauma

- Diagnostic Role Of Diffusion-weighted MR Imaging In Neck Masses

- “The Role Of Transvaginal Ultrasound Versus Magnetic Resonance Imaging In Diagnosis & Evaluation Of Cancer Cervix”

- “Role Of 3D Magnetic Resonance Imaging Tractography In Assessment Of White Matter Tracts Compromise In Supratentorial Tumors”

- Role Of Proton MR Spectroscopy In The Evaluation Of Temporal Lobe Epilepsy

- Role Of Multislice Computed Tomography In Evaluation Of Crohn’s Disease

- Role Of MRI In Assessment Of Hydrocephalus In Pediatric Patients

- The Role Of MRI In Diagnosis And Staging Of Urinary Bladder Carcinoma

- USG and MRI correlation of congenital CNS anomalies

- HRCT in interstitial lung disease

- X-Ray, CT and MRI correlation of bone tumors

- “Study on the diagnostic and prognostic utility of X-Rays for cases of pulmonary tuberculosis under RNTCP”

- “Role of magnetic resonance imaging in the characterization of female adnexal pathology”

- “CT angiography of carotid atherosclerosis and NECT brain in cerebral ischemia, a correlative analysis”

- Role of CT scan in the evaluation of paranasal sinus pathology

- USG and MRI correlation on shoulder joint pathology

- “Radiological evaluation of a patient presenting with extrapulmonary tuberculosis”

- CT and MRI correlation in focal liver lesions

- Comparison of MDCT virtual cystoscopy with conventional cystoscopy in bladder tumors

- “Bleeding vessels in life-threatening hemoptysis: Comparison of 64 detector row CT angiography with conventional angiography prior to endovascular management”

- “Role of transarterial chemoembolization in unresectable hepatocellular carcinoma”

- “Comparison of color flow duplex study with digital subtraction angiography in the evaluation of peripheral vascular disease”

- “A Study to assess the efficacy of magnetization transfer ratio in differentiating tuberculoma from neurocysticercosis”

- “MR evaluation of uterine mass lesions in correlation with transabdominal, transvaginal ultrasound using HPE as a gold standard”

- “The Role of power Doppler imaging with trans rectal ultrasonogram guided prostate biopsy in the detection of prostate cancer”

- “Lower limb arteries assessed with doppler angiography – A prospective comparative study with multidetector CT angiography”

- “Comparison of sildenafil with papaverine in penile doppler by assessing hemodynamic changes”

- “Evaluation of efficacy of sonosalpingogram for assessing tubal patency in infertile patients with hysterosalpingogram as the gold standard”

- Role of CT enteroclysis in the evaluation of small bowel diseases

- “MRI colonography versus conventional colonoscopy in the detection of colonic polyposis”

- “Magnetic Resonance Imaging of anteroposterior diameter of the midbrain – differentiation of progressive supranuclear palsy from Parkinson disease”

- “MRI Evaluation of anterior cruciate ligament tears with arthroscopic correlation”

- “The Clinicoradiological profile of cerebral venous sinus thrombosis with prognostic evaluation using MR sequences”

- “Role of MRI in the evaluation of pelvic floor integrity in stress incontinent patients”

- “Doppler ultrasound evaluation of hepatic venous waveform in portal hypertension before and after propranolol”

- “Role of transrectal sonography with colour doppler and MRI in evaluation of prostatic lesions with TRUS guided biopsy correlation”

- “Ultrasonographic evaluation of painful shoulders and correlation of rotator cuff pathologies and clinical examination”

- “Colour Doppler Evaluation of Common Adult Hepatic tumors More Than 2 Cm with HPE and CECT Correlation”

- “Clinical Relevance of MR Urethrography in Obliterative Posterior Urethral Stricture”

- “Prediction of Adverse Perinatal Outcome in Growth Restricted Fetuses with Antenatal Doppler Study”

- Radiological evaluation of spinal dysraphism using CT and MRI

- “Evaluation of temporal bone in cholesteatoma patients by high resolution computed tomography”

- “Radiological evaluation of primary brain tumours using computed tomography and magnetic resonance imaging”

- “Three dimensional colour doppler sonographic assessment of changes in volume and vascularity of fibroids – before and after uterine artery embolization”

- “In phase opposed phase imaging of bone marrow differentiating neoplastic lesions”

- “Role of dynamic MRI in replacing the isotope renogram in the functional evaluation of PUJ obstruction”

- Characterization of adrenal masses with contrast-enhanced CT – washout study

- A study on accuracy of magnetic resonance cholangiopancreatography

- “Evaluation of median nerve in carpal tunnel syndrome by high-frequency ultrasound & color doppler in comparison with nerve conduction studies”

- “Correlation of Agatston score in patients with obstructive and nonobstructive coronary artery disease following STEMI”

- “Doppler ultrasound assessment of tumor vascularity in locally advanced breast cancer at diagnosis and following primary systemic chemotherapy.”

- “Validation of two-dimensional perineal ultrasound and dynamic magnetic resonance imaging in pelvic floor dysfunction.”

- “Role of MR urethrography compared to conventional urethrography in the surgical management of obliterative urethral stricture.”

Search Diagnostic Imaging Research Topics

You can also search research-related resources on our custom search engine.

Free Resources for Preparing Radiology Thesis

- Radiology thesis topics – Benha University – Free to download thesis

- Radiology thesis topics – Faculty of Medical Science Delhi

- Radiology thesis topics – IPGMER

- Fetal Radiology thesis Protocols

- Radiology thesis and dissertation topics

- Radiographics

Proofreading Your Thesis:

Make sure you use Grammarly to check your thesis for spelling, grammar, and plagiarism. Grammarly has affordable paid subscriptions, Windows/macOS apps, and FREE browser extensions. It is an excellent tool to avoid inadvertent spelling mistakes in your research projects. It has an extensive built-in vocabulary, but you should make an account and add your own medical glossary to it.

Guidelines for Writing a Radiology Thesis:

These are general guidelines and not about radiology specifically. You can share these with colleagues from other departments as well. Special thanks to Dr. Sanjay Yadav sir for these. This section is best seen on a desktop. Here are a couple of handy presentations to start writing a thesis:

Read the general guidelines for writing a thesis (the page will take some time to load, as it has more than 70 pages!).

A format for thesis protocol with a sample patient information sheet, sample patient consent form, sample application letter for thesis, and sample certificate.

Resources and References:

- Guidelines for thesis writing.

- Format for thesis protocol

- Thesis protocol writing guidelines DNB

- Informed consent form for Research studies from AIIMS

- Radiology Informed consent forms in local Indian languages.

- Sample Informed Consent form for Research in Hindi

- Guide to write a thesis by Dr. P R Sharma

- Guidelines for thesis writing by Dr. Pulin Gupta.

- Preparing MD/DNB thesis by A Indrayan

- Another good thesis reference protocol

Hopefully, this post will make the tedious task of writing a Radiology thesis a little bit easier for you. Best of luck with writing your thesis and your residency too!

More guides for residents:

- Guide for the MD/DMRD/DNB radiology exam!

- Guide for First-Year Radiology Residents

- FRCR Exam: THE Most Comprehensive Guide (2022)!

- Radiology Practical Exams Questions compilation for MD/DNB/DMRD!

- Radiology Exam Resources (Oral Recalls, Instruments, etc.)!

- Tips and Tricks for DNB/MD Radiology Practical Exam

- FRCR 2B exam – Tips and Tricks!

- FRCR exam preparation – An alternative take!

- Why did I take up Radiology?

- Radiology Conferences – A comprehensive guide!

- ECR (European Congress Of Radiology)

- European Diploma in Radiology (EDiR) – The Complete Guide!

- Radiology NEET PG guide – How to select THE best college for post-graduation in Radiology (includes personal insights)!

- Interventional Radiology – All Your Questions Answered!

- What It Means To Be A Radiologist: A Guide For Medical Students!

- Radiology Mentors for Medical Students (Post NEET-PG)

- MD vs DNB Radiology: Which Path is Right for Your Career?

- DNB Radiology OSCE – Tips and Tricks

More radiology resources here: Radiology resources. This page will be updated regularly. Kindly leave your feedback in the comments or send us a message here. Also, you can comment below regarding your department’s thesis topics.

Note: All topics have been compiled from available online resources. If anyone has an issue with any radiology thesis topics displayed here, you can message us here, and we can delete them. These are only sample guidelines. Thesis guidelines differ from institution to institution.

Image source: Thesis complete! (2018). Flickr. Retrieved 12 August 2018, from https://www.flickr.com/photos/cowlet/354911838 by Victoria Catterson

About The Author

Dr. Amar Udare, MD



Generalizable and Explainable Deep Learning in Medical Imaging with Small Data


A holistic overview of deep learning approach in medical imaging

- Regular Paper

- Published: 21 January 2022

- Volume 28, pages 881–914 (2022)


- Rammah Yousef

- Gaurav Gupta

- Nabhan Yousef

- Manju Khari (ORCID: 0000-0001-5395-5335)


Medical images are a rich source of information used by clinicians. Recent technologies have introduced many advancements for exploiting this information and using it to generate better analyses. Deep learning (DL) techniques have been applied to medical image analysis in computer-assisted imaging contexts, offering many solutions and improvements to the analysis of these images by radiologists and other specialists. In this paper, we present a survey of DL techniques used for a variety of tasks across different medical imaging modalities, to provide a critical review of recent developments in this direction. We have organized our paper to introduce the traits and concepts of deep learning, which is helpful for non-experts in the medical community. We then present several applications of deep learning (e.g., segmentation, classification, and detection) that are commonly used for clinical purposes at different anatomical sites, and we also present the key terms for DL attributes such as basic architecture, data augmentation, transfer learning, and feature selection methods. Medical images as inputs to deep learning architectures will be mainstream in the coming years, and novel DL techniques are predicted to be at the core of medical image analysis. We conclude by addressing some research challenges and the solutions suggested for them in the literature, as well as future promises and directions for further development.


1 Introduction

Health is no doubt at the top of the hierarchy of concerns in our lives. Throughout history, humans have struggled with diseases that cause death; in our own lifetime, we are fighting an enormous number of diseases while significantly improving life expectancy and health status. Historically, medicine could not find a cure for numerous diseases for many reasons, ranging from clinical equipment and sensors to the analytical tools for the collected medical data. The fields of big data, AI, and cloud computing have played a massive role in every aspect of handling these data. Worldwide, Artificial Intelligence (AI) has become well known to most people because of the rapid progress achieved in almost every domain of life. The importance of AI comes from the remarkable progress made within the last two decades alone; it is still growing, and specialists from different fields are investing in it. The success of AI algorithms is attributed to the availability of big data and the efficiency of modern computing that has recently become available.

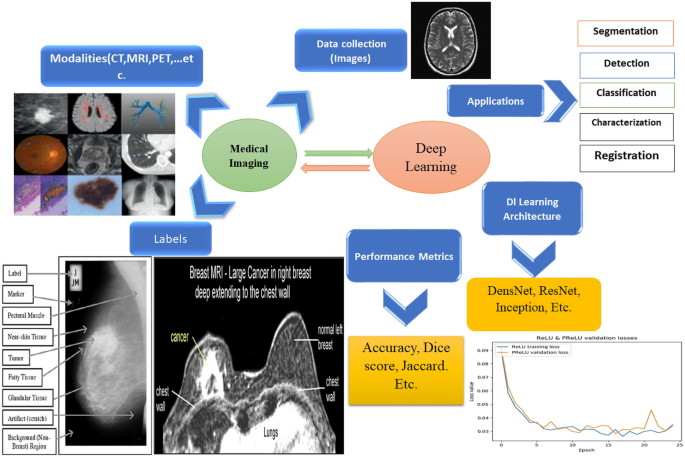

This paper aims to give a holistic overview of healthcare as an application of AI, and of deep learning in particular. The paper starts with an overview of medical imaging as an application of deep learning and then moves on to why we need AI in healthcare; in that section, we give the key terms of how AI is used for the two main medical data types, medical imaging and medical signals. To provide a balanced and rich general perspective, we also mention the well-known datasets that are widely used for generalization, as well as the main pathologies, covering the range from classification and detection of a disease, through segmentation and treatment, to survival rate and prognosis. We discuss each pathology in detail, with its relevant key features and the significant results found in the literature. In the last section, we discuss the challenges of deep learning and the future scope of AI in healthcare. Generally, AI is becoming a fundamental part of today's medicine. In short, it is software that can learn from data as a human being does, develop experience systematically, and finally deliver a solution or diagnosis even faster than humans. AI has become an assistive tool in medicine, with benefits such as error reduction, improved accuracy, fast computing, and better diagnosis that help doctors work efficiently. From a clinical perspective, AI is now used to help doctors in decision-making because of its faster pattern recognition from medical data, which are in turn recorded more precisely by computers than by humans; moreover, AI can manage and monitor patients' data and create personalized medical plans for future treatments. Ultimately, AI has proved helpful in the medical field at different levels, such as telemedicine, disease diagnosis, decision-making assistance, and drug discovery and development.

Machine learning (ML) and deep learning (DL) have many uses in healthcare, such as clinical decision support (CDS) systems, which incorporate human knowledge or large datasets to provide clinical recommendations. Another application is analyzing large historical datasets to gain insights that can predict a patient's future cases through pattern identification. In this paper, we highlight the top deep learning advances and applications in medical imaging. Figure 1 shows the workflow chart of the paper's highlights.

Fig. 1 Deep learning implementation and traits for medical imaging application

2 Background concepts

2.1 Medical imaging

Deep learning in medical imaging [ 1 ] is a contemporary area of AI, which has achieved top breakthroughs in numerous scientific domains, including computer vision [ 2 ], Natural Language Processing (NLP) [ 3 ], and chemical structure analysis, where deep learning specializes in highly complicated processes. Recently, because of its robustness in dealing with images, deep learning has attracted great interest in medical imaging, and it holds a promising future for this field. The main reason DL is preferred is that medical data are large and come in different varieties, such as medical images, medical signals, and medical logs of patient monitoring and body-sensed information. Analyzing these data, especially historical data, by learning very complex mathematical models and extracting meaningful information is the key area where DL schemes have outperformed humans. In other words, a DL framework will not replace doctors, but it will assist them in decision-making and enhance the accuracy of the final diagnosis. Our workflow procedure is shown in Fig. 1 .

2.1.1 Types of medical imaging

There are many types of medical images, and the choice of type depends on the intended use. A study conducted in the US [ 4 ] found that the use of several basic and widely used modalities has increased. Magnetic Resonance Imaging (MRI), Computed Tomography (CT), and Positron Emission Tomography (PET) top the list, alongside other common modalities such as X-ray, ultrasound, and histology slides. Medical images are known to be complex, and in some cases acquiring them is a long process with specific technical requirements; for example, an MRI may need over 100 MB of storage.

Because of a lack of standardization in image acquisition and the diversity of scanner settings, a phenomenon called “distribution drift” may arise, in which images acquired under non-standard conditions differ from the data a model was built on. From a clinical perspective, medical images are a key part of diagnosing a disease and then treating it. In traditional diagnosis, a radiologist reviews the image and then provides the doctors with a report of the findings. Images are also an important part of invasive procedures used in further treatment, e.g., surgical operations or radiation therapy [ 5 , 6 ].

2.2 DL frameworks

Conceptually, Artificial Neural Networks (ANNs) mimic the human nervous system in structure and function. Medical imaging [ 7 ] is a field that specializes in observing and analyzing the physical status of the human body by generating visual representations, such as images of internal tissues or organs, through either invasive or non-invasive procedures.

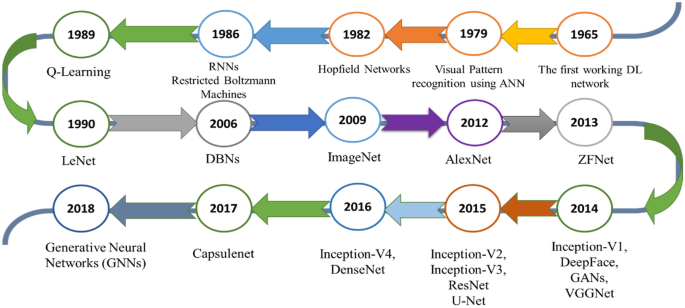

2.2.1 Key technologies and deep learning

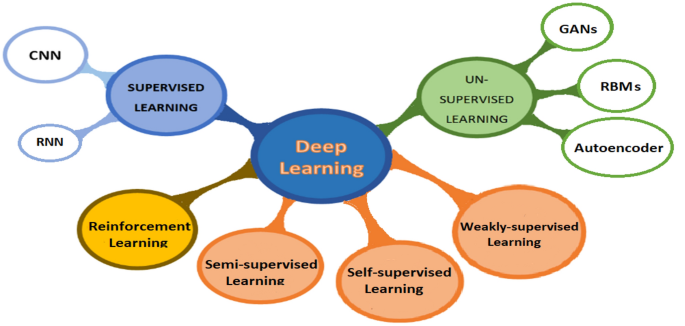

Historically, the AI scheme was proposed in the 1970s, and it has two major subcategories: Machine Learning (ML) and Deep Learning (DL). Early AI used heuristics-based techniques for extracting features from data; later developments moved to handcrafted feature extraction and finally to supervised learning, where Convolutional Neural Networks (CNNs) [ 8 ] are the basic tool for images, and for medical images specifically. CNNs are known to be data hungry, which makes them well suited to image data, and recent developments in hardware and GPUs have helped greatly in running CNN algorithms for medical image analysis. The generalized formulation of how CNNs work was proposed by LeCun et al. [ 9 ], who used error backpropagation for the first example of handwritten digit recognition. Ultimately, CNNs have become the predominant architecture among AI algorithms; the volume of CNN research has increased, especially in medical image analysis, and many new modalities have been proposed. In this section, we explain the fundamentals of DL and its algorithmic path in medical imaging. The commonly known categories of deep learning and their subcategories are discussed in this section and are shown in Fig. 2 .

Fig. 2 DL basic categories as per paper organization

2.2.2 Supervised learning

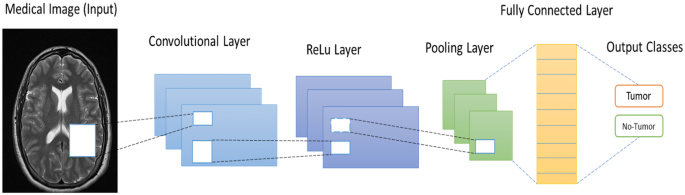

Convolutional neural networks: CNNs [ 10 ] have taken the major role in many areas and have led the work in image-based tasks, including image reconstruction, enhancement, classification, segmentation, registration, and localization. CNNs are considered the leading deep learning algorithm for images and visual processing because of their robustness in reducing image dimensionality without losing the image's important features; in this way, a CNN deals with fewer parameters, which increases computational efficiency. Another key point is that this architecture is suitable for hospital use because it can handle both 2D and 3D images: some medical imaging modalities, such as X-ray, produce 2D images, while MRI and CT produce 3D images. In this section, we explain the framework of the CNN architecture as the heart of deep learning in medical imaging.

Convolutional layer: Before deep learning and CNNs, the term convolution was used in image processing for extracting specific features from an image, such as corners, edges (e.g., the Sobel filter), and noise, by applying particular filters or kernels to the image. The operation is performed by sliding the filter over the image in a sliding-window fashion until the whole image is covered. In a CNN, the early layers are usually designed to extract low-level features, such as lines and edges, and the deeper layers are built to extract higher-level features, such as full objects within an image. An advantage of modern CNNs is that the filters can be 2D or 3D, with multiple filters forming a volume, depending on the application. The main distinguishing feature of a CNN is that the elements of a filter are the network weights. The idea behind the CNN architecture is the convolution operation, denoted by the symbol *. Equation ( 1 ) represents the convolution operation

$$s(t) = (I * K)(t) = \sum_{a} I(a)\, K(t - a) \tag{1}$$

where s ( t ) is the output feature map and I ( t ) is the original image to be convolved with the filter K ( a ).
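The sliding-window operation described above can be sketched in a few lines of plain Python. This is a minimal one-dimensional illustration only; the function name `conv1d` and the toy step-edge input are assumptions made for this example and do not come from the paper.

```python
def conv1d(signal, kernel):
    """Full discrete convolution: s(t) = sum over a of signal[a] * kernel[t - a]."""
    n, m = len(signal), len(kernel)
    out = [0.0] * (n + m - 1)
    for t in range(n + m - 1):
        for a in range(n):
            k = t - a
            if 0 <= k < m:  # only terms where the kernel index is valid
                out[t] += signal[a] * kernel[k]
    return out

# Hypothetical image row containing a step edge, and a difference kernel
row = [0, 0, 0, 1, 1, 1]
edge_kernel = [1, -1]
response = conv1d(row, edge_kernel)
print(response)  # [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, -1.0]
```

The non-zero responses mark where the intensity changes, which is the sense in which early filters act as edge detectors. Most deep learning libraries implement this layer as cross-correlation (no kernel flip); because the kernel weights are learned, the distinction does not change what the layer can represent.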

Activation function: Activation functions act as the on/off switch of a neuron. In CNNs, several popular activation functions are widely used, such as sigmoid, tanh, ReLU, Leaky ReLU, and Randomized ReLU. In medical imaging especially, most papers in the literature use the ReLU activation function, which is defined by the formula

$$f(x) = \max(0, x)$$

where x represents the input of a neuron.

Other activation functions used in CNNs include sigmoid, tanh, and Leaky ReLU.
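For reference, the activation functions named above can be written as short scalar functions. This is a sketch using their standard textbook definitions; the Leaky ReLU slope of 0.01 is a commonly used default chosen here as an assumption, not a value taken from the paper.

```python
import math

def relu(x):
    """ReLU: passes positive inputs through and zeroes out the rest."""
    return max(0.0, x)

def leaky_relu(x, slope=0.01):
    """Leaky ReLU: like ReLU, but keeps a small slope for negative inputs."""
    return x if x > 0 else slope * x

def sigmoid(x):
    """Sigmoid: squashes any input into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def tanh(x):
    """Tanh: squashes any input into the range (-1, 1)."""
    return math.tanh(x)

for x in (-2.0, 0.0, 2.0):
    print(x, relu(x), leaky_relu(x), round(sigmoid(x), 3), round(tanh(x), 3))
```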

Pooling layer: This layer is mainly used to reduce the number of parameters to be computed; it reduces the spatial size of the feature map but not the number of channels. There are a few types of pooling layer, such as max pooling, average pooling, and L2-norm pooling, of which max pooling is the most widely used. Max pooling takes the maximum value within each window of the feature map produced by the convolution operation.
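As a small illustration of max pooling, the sketch below applies a 2×2 window with stride 2 to a single-channel feature map. The function name `max_pool2d`, the window size, and the example values are assumptions for this example.

```python
def max_pool2d(feature_map, size=2, stride=2):
    """Keep the maximum of each size x size window of a 2D feature map."""
    rows, cols = len(feature_map), len(feature_map[0])
    pooled = []
    for r in range(0, rows - size + 1, stride):
        pooled_row = []
        for c in range(0, cols - size + 1, stride):
            window = [feature_map[r + i][c + j]
                      for i in range(size) for j in range(size)]
            pooled_row.append(max(window))
        pooled.append(pooled_row)
    return pooled

# Hypothetical 4x4 feature map produced by a convolutional layer
fmap = [
    [1, 3, 2, 0],
    [4, 6, 1, 1],
    [0, 2, 9, 5],
    [1, 1, 3, 7],
]
pooled = max_pool2d(fmap)
print(pooled)  # [[6, 2], [2, 9]]
```

The 4×4 map shrinks to 2×2, so later layers process a quarter of the values while the strongest response in each region is preserved.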

Fully connected layer: This is the same layer used in a conventional ANN, where each neuron is usually connected to all neurons in both the previous and the next layer, which makes the computation very expensive. A CNN model can use stochastic gradient descent to learn significant associations from the training examples. The benefit of a CNN is that it gradually reduces the feature map size before the result is finally flattened and fed to the fully connected layer, which in turn computes the probability scores of the target classes for classification. The fully connected (FC) layer is the last layer in a CNN model; it processes the strong features extracted from an image by the preceding convolutional and pooling layers and finally indicates which class the image belongs to.
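The final step, turning flattened features into class probability scores, can be sketched as follows. The use of a softmax to obtain probabilities is an assumption (it is the usual choice, but the paper does not name it), and the weights and feature values are hypothetical.

```python
import math

def dense(features, weights, biases):
    """One fully connected layer: every output unit sees every input feature."""
    return [sum(w * x for w, x in zip(row, features)) + b
            for row, b in zip(weights, biases)]

def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    top = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical flattened feature vector and a two-class output layer
flattened = [0.5, 1.0, -1.0]
weights = [[1.0, 0.0, 0.0],
           [0.0, 1.0, 0.0]]
biases = [0.0, 0.0]

scores = dense(flattened, weights, biases)
probs = softmax(scores)
predicted_class = probs.index(max(probs))
```

Here the second class receives the higher raw score, so it also receives the higher probability and becomes the prediction.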

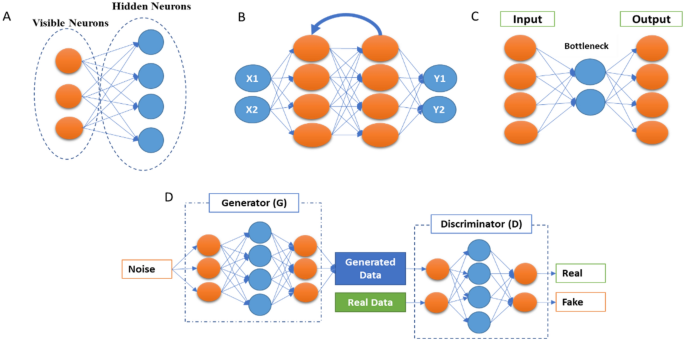

Recurrent neural networks: The RNN is a major class of supervised deep learning models, and it specializes in analyzing sequential data and time series. We can picture an RNN as a conventional neural network in which each layer represents the observations at a particular time ( t ). In [ 11 ], an RNN was used for text generation, which is further connected to speech recognition, text prediction, and other applications. RNNs are called recurrent because the same operation is performed for every element in a sequence, and the output depends on the computation for the previous element in that sequence. In general, the output of a layer is fed back as an input to the same layer, as shown in Fig. 3 . Moreover, because backpropagation through time suffers from vanishing gradients, variants such as the Long Short-Term Memory (LSTM) network and bidirectional gated recurrent units (BGRU) are commonly used to help the RNN hold long-term dependencies.

Fig. 3 Basic common deep learning architectures. A Restricted Boltzmann machine. B Recurrent Neural Network (RNN). C Autoencoders. D GANs
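The recurrence at the heart of an RNN, where the state from the previous element is fed back in alongside the current input, can be sketched with a single hidden unit. The tanh update rule is the standard simple-RNN form; the weight values and function names are assumptions for illustration.

```python
import math

def rnn_step(x_t, h_prev, w_x, w_h, b):
    """One recurrent update: h_t = tanh(w_x * x_t + w_h * h_prev + b)."""
    return math.tanh(w_x * x_t + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Apply the same step to every element, carrying the hidden state forward."""
    h = 0.0
    states = []
    for x_t in sequence:
        h = rnn_step(x_t, h, w_x, w_h, b)
        states.append(h)
    return states

# A single impulse at the first time step, followed by zeros
states = run_rnn([1.0, 0.0, 0.0])
```

The hidden state stays non-zero after the input has returned to zero, but it shrinks at every step. This fading memory is a simple picture of why plain RNNs struggle with long-term dependencies.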

Few papers on RNNs in medical imaging, and particularly in segmentation, were found in the literature. In [ 12 ], Chen et al. used an RNN together with a CNN for segmenting fungal and neuronal structures from 3D images. Another application of RNNs is image caption generation [ 13 ], where these models can be used to annotate medical images such as X-rays with text captions learned from radiologists' reports [ 14 ]. Ruoxuan Cui et al. [ 15 ] used a combination of a CNN and an RNN for diagnosing Alzheimer's disease: the CNN model was used for the classification task, and its output was then fed to an RNN with cascaded bidirectional gated recurrent unit (BGRU) layers to extract the longitudinal features of the disease. In [ 16 ], the authors developed a novel RNN for speeding up an iterative MAP estimation algorithm. In summary, RNNs are commonly used together with a CNN model in medical imaging.

2.2.3 Unsupervised deep learning

Besides the CNN as a supervised machine learning algorithm in medical imaging, there are also a few unsupervised learning algorithms for this purpose, such as Deep Belief Networks (DBNs), autoencoders, and Generative Adversarial Networks (GANs); the last has been used not only for image-based tasks but also for data synthesis and augmentation. Unsupervised learning models have been used for different medical imaging applications, such as motion tracking [ 17 ], general modeling, classification improvement [ 18 ], artifact reduction [ 19 ], and medical image registration [ 20 ]. In this section, we list the most commonly used unsupervised learning structures.

2.2.3.1 Autoencoders

Autoencoders [ 21 , 22 ] are an unsupervised deep learning algorithm in which the model retains the important features of the input data and discards the rest. These compact representations of features are called ‘codings’, and the process is commonly called representation learning. The basic architecture is shown in Fig. 3 . The robustness of autoencoders stems from their ability to reconstruct output data that are similar to the input data, because the cost function penalizes the model when the output and input differ. Moreover, autoencoders are considered automatic feature detectors because, being unsupervised, they do not need labeled data to learn from. The autoencoder architecture is similar to a conventional CNN model, with the constraint that the number of input neurons must equal the number of neurons in the output layer. Reducing the dimensionality of the raw input data is one use of autoencoders, and in some cases they are used for denoising [ 23 ], in which case they are called denoising autoencoders. There are several kinds of autoencoders used for different purposes; we mention the common ones here. In Sparse Autoencoders [ 24 ], neurons in the hidden layer are deactivated through a threshold, which limits the number of active neurons: to extract the most informative features while still reproducing the input at the output, most of the hidden-layer neurons are set to zero. Variational autoencoders (VAEs) [ 25 ] are generative models with two networks (an encoder and a decoder): the encoder network projects the input into a latent representation using a Gaussian distribution approximation, and the decoder network maps the latent representation to the output data. Other variants include contractive autoencoders [ 26 ] and adversarial autoencoders, the latter being closely related to Generative Adversarial Networks (GANs).
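The encode, decode, and penalize-the-difference loop can be made concrete with a deliberately tiny example. The encoder and decoder below use fixed, untrained weights (pair averaging and duplication) purely as an assumption for illustration; a real autoencoder learns these mappings by minimizing the reconstruction loss.

```python
def encode(x):
    """Compress 4 inputs into a 2-value code (fixed, untrained weights)."""
    return [(x[0] + x[1]) / 2, (x[2] + x[3]) / 2]

def decode(code):
    """Expand the 2-value code back to 4 outputs."""
    return [code[0], code[0], code[1], code[1]]

def reconstruction_loss(x, x_hat):
    """Mean squared error between the input and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)

smooth = [1.0, 1.0, 3.0, 3.0]   # fits the 2-value code exactly
rough = [1.0, 2.0, 3.0, 5.0]    # loses detail when compressed

loss_smooth = reconstruction_loss(smooth, decode(encode(smooth)))
loss_rough = reconstruction_loss(rough, decode(encode(rough)))
print(loss_smooth, loss_rough)  # 0.0 0.625
```

The output has as many values as the input, while the code in the middle is smaller. The loss is zero only when the code captures everything needed to rebuild the input, which is the pressure that drives representation learning.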

2.2.3.2 Generative Adversarial Networks

GANs [ 27 , 28 ] were first introduced by Ian Goodfellow in 2014. A GAN basically consists of a combination of two CNN networks: the first is called the generator model and the other the discriminator model. To explain how GANs work, scientists describe the two networks as two players competing against each other: the generator network tries to fool the discriminator network by generating near-authentic data (e.g., artificial images), while the discriminator network tries to distinguish between the generator's output and the real data (Fig. 3 ). The name of the network comes from the generator's objective of overcoming the discriminator. During training, both the generator and the discriminator improve: the first generates more realistic data, and the second learns to differentiate better between real and generated data, until the end point of the process, where the discriminator is unable to distinguish between real and artificial data (images). Both networks are trained using backpropagation and dropout, without the need for Markov chains. Recently, GANs have seen tremendous use in different medical imaging applications, such as synthesizing new images to improve the efficiency of deep learning models by increasing the number of training images in the dataset [ 29 ], classification [ 30 , 31 ], detection [ 32 ], segmentation [ 33 , 34 ], image-to-image translation [ 35 ], and other applications. In a study by Kazeminia et al. [ 36 ], the authors listed the applications of GANs in medical imaging and found that the two most common applications of these unsupervised models are image synthesis and segmentation.
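The two-player game can be expressed through the losses each network minimizes. The sketch below assumes the binary cross-entropy form of the discriminator loss and the commonly used non-saturating generator loss; the probability values are hypothetical discriminator outputs, not results from any cited study.

```python
import math

def discriminator_loss(d_real, d_fake):
    """D wants to output 1 for real samples and 0 for generated samples."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake):
    """G wants D to output 1 for generated samples (non-saturating form)."""
    return -math.log(d_fake)

# Early in training: D easily separates real (0.9) from generated (0.1)
early = (discriminator_loss(0.9, 0.1), generator_loss(0.1))

# At the end point described above: D cannot tell them apart (0.5 for both)
balanced = (discriminator_loss(0.5, 0.5), generator_loss(0.5))
```

Moving from the early state to the balanced state, the generator's loss falls while the discriminator's loss rises to 2 ln 2, reflecting that the discriminator is reduced to guessing.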

2.2.3.3 Restricted Boltzmann machines

Ackley et al. were the first to introduce Boltzmann machines in 1985 [ 37 ] (Fig. 3 ), which are based on the Boltzmann distribution, also known as the Gibbs distribution, and Smolensky later modified them into what are known as Restricted Boltzmann Machines (RBMs) [ 38 ]. RBMs consist of two layers of neural networks with stochastic, generative, and probabilistic capabilities, and they can learn probability distributions and internal representations from the dataset. RBMs work by passing the input data along a backward path to generate a reconstruction and estimate the probability distribution of the original input, using a gradient descent loss. These unsupervised models are used mostly for dimensionality reduction, filtering, classification, and feature representation learning. In medical imaging, Tulder et al. [ 39 ] modified RBMs and introduced novel convolutional RBMs for lung tissue classification using CT scan images; they extracted the features using different learning objectives (generative, discriminative, or mixed) to construct the filters, after which a Random Forest (RF) classifier was used for classification. Finally, a stacked version of RBMs is called a Deep Belief Network (DBN) [ 40 ]. Each RBM performs a non-linear transformation whose output is the input to the next RBM; performing this process progressively gives the network a great deal of flexibility as it expands.

DBNs are generative models, which allows them to be used in supervised or unsupervised settings. Feature learning is done in an unsupervised manner through layer-by-layer pre-training. For the classification task, backpropagation (gradient descent) through the RBM stack is used for fine-tuning on the labeled dataset. DBNs have been widely used in medical imaging applications; for example, Khatami et al. [ 41 ] used this model to classify X-ray images by anatomic region and orientation, and in [ 42 ], AVN Reddy et al. proposed a hybrid deep belief network (DBN) for glioblastoma tumor classification from MRI images. Another significant application of DBNs was reported in [ 43 ], where a novel DBN framework was used for medical image fusion.

2.2.4 Self-supervised learning

Self-supervised learning is basically a subtype of unsupervised learning in which feature representations are learned using a proxy task whose supervisory signals come from the data themselves. After representation learning, the model is fine-tuned using annotated data. The benefit of self-supervised learning is that it eliminates the need for humans to label the data: the system extracts naturally available, relevant context from the data and assigns metadata to the representations as supervisory signals. It resembles unsupervised learning in that both learn representations without explicitly provided labels; the difference is that self-supervised learning does not learn the inherent structure of the data and is not centered on clustering, anomaly detection, dimensionality reduction, or density estimation. The genesis model of this system can restore the original image from a distorted one (e.g., after non-linear gray-value transformation, image in-painting, image out-painting, or pixel shuffling) as its proxy task [ 44 ]. Zhu et al. [ 45 ] used self-supervised learning with a Rubik's cube proxy task, which mainly comprises three operations (rotating, masking, and ordering); the resulting network is robust to noise and learns features that are invariant to rotation and translation. Shekoofeh et al. [ 46 ] exploited the effectiveness of self-supervised learning as a pre-training strategy for classifying medical images in two tasks (dermatology skin condition classification and multi-label chest X-ray classification). Their study improved classification accuracy by using two self-supervised learning systems: the first trained on the ImageNet dataset and the second on unlabeled, domain-specific medical images.
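To show how a proxy task produces supervisory signals without human annotation, the sketch below builds a rotation-prediction dataset from unlabeled images. Rotation prediction is used here as a generic, well-known proxy task chosen as an assumption for illustration; it is not a reproduction of the method in any of the cited works.

```python
def rotate90(image):
    """Rotate a 2D list-of-lists image clockwise by 90 degrees."""
    return [list(row) for row in zip(*image[::-1])]

def make_rotation_task(images):
    """Build (rotated_image, label) pairs; label k means k * 90 degrees."""
    samples = []
    for image in images:
        rotated = image
        for k in range(4):
            samples.append((rotated, k))
            rotated = rotate90(rotated)
    return samples

# One hypothetical unlabeled 2x2 image yields four labeled training samples
unlabeled = [[[1, 2], [3, 4]]]
samples = make_rotation_task(unlabeled)
print(samples[1])  # ([[3, 1], [4, 2]], 1)
```

A network trained to predict the label `k` must learn about image content and orientation, and those learned features can then be fine-tuned on a small annotated dataset.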

2.2.5 Semi-supervised learning

Semi-supervised learning stands between supervised and unsupervised learning: for example, it is used for a classification task (supervised learning) but without having all the data labeled (unsupervised learning). The system is trained on a small labeled dataset and then generates pseudo-labels to obtain a larger labeled dataset, and the final model is trained on a mix of the original and the generated data. Nie et al. [ 47 ] proposed a semi-supervised deep network for image segmentation; the method adversarially trains a segmentation model, from which a confidence map is computed, and the semi-supervised strategy is used to generate labeled data. Semi-supervised learning has also been applied to cardiac MRI segmentation [ 48 ]. Liu et al. [ 49 ] presented a novel relation-driven semi-supervised model to classify medical images. They introduced a Sample Relation Consistency (SRC) paradigm that uses unlabeled data by modeling the relationship information between different samples. In their experiments, they applied the method to two benchmark medical image classification tasks, skin lesion diagnosis from the ISIC 2018 challenge and thorax disease classification from the public ChestX-ray14 dataset, and the results reached state-of-the-art performance.
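The pseudo-labeling loop described above (train on a small labeled set, label the confident unlabeled samples, retrain on the mix) can be sketched with a toy nearest-centroid classifier on one-dimensional features. The classifier, the confidence margin, and all values are assumptions for illustration only.

```python
def fit_centroids(samples):
    """samples: list of (value, label). Returns {label: mean value}."""
    sums, counts = {}, {}
    for value, label in samples:
        sums[label] = sums.get(label, 0.0) + value
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}

def predict(centroids, value):
    """Return (label, margin); margin is the gap between the two nearest centroids."""
    ranked = sorted(centroids, key=lambda label: abs(value - centroids[label]))
    best, second = ranked[0], ranked[1]
    margin = abs(value - centroids[second]) - abs(value - centroids[best])
    return best, margin

def pseudo_label(labeled, unlabeled, min_margin=1.0):
    """Label only confident samples, then retrain on labeled + pseudo-labeled data."""
    centroids = fit_centroids(labeled)
    confident = []
    for value in unlabeled:
        label, margin = predict(centroids, value)
        if margin >= min_margin:
            confident.append((value, label))
    return fit_centroids(labeled + confident), confident

labeled = [(0.0, "normal"), (10.0, "lesion")]
unlabeled = [1.0, 2.0, 5.2, 8.0, 9.0]
model, added = pseudo_label(labeled, unlabeled)
```

Four of the five unlabeled samples receive pseudo-labels; the ambiguous value 5.2 sits too close to the decision boundary and is left out, which is the role a confidence threshold plays in practice.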

2.2.6 Weakly (partially) supervised learning

Weak supervision is a branch of machine learning that labels unlabeled data by exploiting noisy or limited sources as a supervision signal, so that a large amount of training data can be labeled and used in a supervised manner. In general, the newly labeled data in weakly supervised learning are imperfect, but they can still be used to build a robust predictive model. Weakly supervised methods use image-level annotations and weak annotations (e.g., dots and scribbles) [ 50 ]. A weakly supervised multi-label disease system was used for chest X-ray classification [ 51 ]. Weak supervision has also been used for multi-organ segmentation [ 52 ] by learning a single multi-class network from a combination of multiple datasets, each of which contains partially labeled organs and a low sample size. Roth et al. [ 53 ] used weakly supervised learning for medical image segmentation, and their results sped up the generation of new training datasets for developing deep learning in medical image analysis. Schleg et al. [ 54 ] used this type of deep learning approach to detect abnormal regions in test images. Hu et al. [ 55 ] proposed an end-to-end CNN approach that predicts a displacement field to align multiple labeled corresponding structures; the work was applied to medical image registration for prostate cancer between T2-weighted MRI and 3D transrectal ultrasound images and reached a mean Dice score of 0.87. Another application is diabetic retinopathy detection in a retinal image dataset [ 56 ].

2.2.7 Reinforcement learning

Reinforcement learning (RL) is a subtype of machine learning in which an agent takes actions that maximize the reward in a given situation. The main difference between supervised learning and reinforcement learning is that in the former the training data contain the answer, whereas in the latter there is no labeled training dataset: the agent decides how to act on the task and learns from its own experience. Al Walid et al. [ 57 ] used reinforcement learning for landmark localization in 3D medical images. They introduced partial policy-based RL, which learns optimal policies on smaller partial domains; the method was applied to three different localization tasks in 3D CT scans and MR images and showed that learning the optimal behavior requires a significantly smaller number of trials. In [ 58 ], RL was used for object detection in PET images. RL was also used for color image classification on a neuromorphic system [ 59 ].
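To make the agent, action, and reward vocabulary concrete, the following sketch trains a tabular Q-learning agent to walk along a one-dimensional line toward a fixed landmark, receiving +1 when a move brings it closer and -1 otherwise. This is a generic textbook-style toy, not the partial policy-based method of [ 57 ]; the grid size, landmark position, reward design, and hyperparameters are all assumptions.

```python
import random

N_POSITIONS, LANDMARK = 10, 7   # toy 1D "image" and target location (assumed)
ACTIONS = (-1, +1)              # move one step left or right

def step(position, action):
    """Move the agent; reward +1 if it gets closer to the landmark, else -1."""
    new_position = min(max(position + action, 0), N_POSITIONS - 1)
    closer = abs(new_position - LANDMARK) < abs(position - LANDMARK)
    return new_position, (1.0 if closer else -1.0)

def greedy_action(q, position):
    """Index of the action with the highest estimated value."""
    return 0 if q[position][0] >= q[position][1] else 1

def train(episodes=500, alpha=0.5, gamma=0.5, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_POSITIONS)]
    starts = [p for p in range(N_POSITIONS) if p != LANDMARK]
    for _ in range(episodes):
        position = rng.choice(starts)
        for _ in range(20):  # cap on steps per episode
            # Epsilon-greedy: explore occasionally, otherwise exploit.
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                a = greedy_action(q, position)
            new_position, reward = step(position, ACTIONS[a])
            future = 0.0 if new_position == LANDMARK else max(q[new_position])
            # Q-learning update toward reward plus discounted future value.
            q[position][a] += alpha * (reward + gamma * future - q[position][a])
            position = new_position
            if position == LANDMARK:
                break
    return q

def greedy_path(q, start):
    """Follow the learned policy from a start position."""
    path = [start]
    while path[-1] != LANDMARK and len(path) < 2 * N_POSITIONS:
        a = greedy_action(q, path[-1])
        path.append(step(path[-1], ACTIONS[a])[0])
    return path

q_table = train()
print(greedy_path(q_table, 0))
print(greedy_path(q_table, 9))
```

No position is ever labeled with the "correct" move; the agent infers it from the rewards it collects, which is the distinction from supervised learning drawn above.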

2.2.7.1 Transfer learning

Transfer learning is one of the powerful enablers of deep learning [ 60 ]. It involves training a deep learning model by reusing a model that has already been trained on a large related or unrelated dataset. Medical data are often scarce and insufficient for training deep learning models well, so transfer learning can supply CNN models with features learned from large sets of non-medical images, which can be useful in this case [ 61 ]. Furthermore, transfer learning helps with the long training time of deep neural networks, because it reuses the frozen weights and the hyperparameters of another model. In the usual setup, the weights already trained on different data (images) are frozen and reused in another CNN model; only the last few layers are modified, and these layers are trained on the target data to tune their weights. For these reasons, transfer learning has been widely used in medical imaging, for example for classifying interstitial lung disease [ 61 ] and detecting thoraco-abdominal lymph nodes in CT scans; transfer learning was found to be effective despite the disparity between medical images and natural images. Transfer learning can also be used with different CNN models (e.g., VGG-16, ResNet-50, and Inception-V3). Xue et al. [ 62 ] developed transfer learning-based models for these architectures and, furthermore, proposed an Ensembled Transfer Learning (ETL) framework to enhance the classification of cervical histopathological images.
Overall, in many computer vision tasks, tuning only the last classification layers (fully connected layers), which is called “shallow tuning,” is probably sufficient, but in medical imaging, deeper tuning of more layers is needed [ 63 ]. The authors of [ 63 ] studied the benefit of transfer learning in four applications within three imaging modalities (including polyp detection from colonoscopy videos, segmentation of the layers of the carotid artery wall from ultrasound scans, and colonoscopy video frame classification); their results showed that fine-tuning more CNN layers on medical images is more effective than training from scratch.
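Shallow tuning can be sketched without any deep learning framework: a fixed feature extractor stands in for the pre-trained layers, and only a final logistic layer is trained on the target data. The weights in `FROZEN_WEIGHTS`, the toy samples, and the learning rate are made-up values for illustration; a real pipeline would load pre-trained CNN weights instead.

```python
import math

# Stand-in for layers pre-trained on another dataset (values are assumed).
FROZEN_WEIGHTS = [[1.0, -1.0], [0.5, 0.5]]

def extract_features(x):
    """Frozen layer: linear map followed by ReLU; never updated below."""
    return [max(0.0, sum(w * v for w, v in zip(row, x))) for row in FROZEN_WEIGHTS]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_head(samples, labels, epochs=200, lr=0.5):
    """Shallow tuning: fit only the final logistic layer on the target data."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            features = extract_features(x)
            p = sigmoid(sum(w * f for w, f in zip(weights, features)) + bias)
            error = p - y  # gradient of the log loss w.r.t. the logit
            weights = [w - lr * error * f for w, f in zip(weights, features)]
            bias -= lr * error
    return weights, bias

def predict(head, x):
    weights, bias = head
    features = extract_features(x)
    return int(sigmoid(sum(w * f for w, f in zip(weights, features)) + bias) >= 0.5)

# Toy "target domain" data.
samples = [[2.0, 0.0], [3.0, 1.0], [0.0, 2.0], [1.0, 3.0]]
labels = [1, 1, 0, 0]

head = train_head(samples, labels)
predictions = [predict(head, x) for x in samples]
print(predictions)
```

Deep tuning, in this picture, would additionally allow gradient updates to reach `FROZEN_WEIGHTS`, which is what [ 63 ] reports to be beneficial for medical images.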

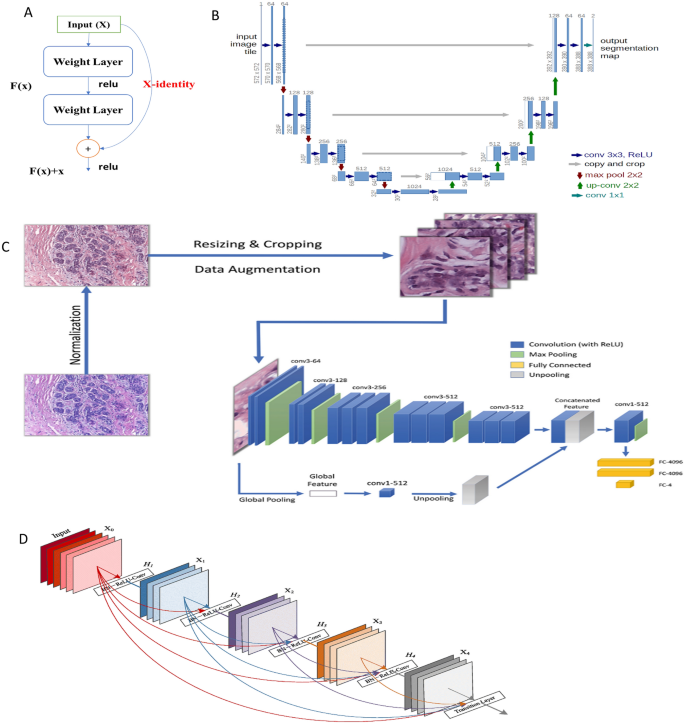

2.3 Best deep learning models and practices

Convolutional neural network (CNN)-based models are used in different ways, keeping in mind that the CNN remains the heart of the model. In general, a CNN can be trained from scratch on the available dataset when that dataset is very large, to perform a specific task (e.g., segmentation, classification, or detection), or a model pre-trained on a large dataset (e.g., ImageNet) can be applied to a new dataset (e.g., CT scans) by fine-tuning only some layers; this approach is called transfer learning (TL) [ 60 ]. Moreover, CNN models can be used purely as feature extractors, producing representations of the input images with more representation power before these features are passed to the next processing stage. Several CNN models in the literature are commonly used and have proven their effectiveness, and further developments have been built on them; here we mention the most effective and widely used deep learning models in medical image analysis. The first is AlexNet, introduced by Alex Krizhevsky [ 64 ]; Siyuan Lu et al. [ 65 ] used transfer learning with a pre-trained AlexNet, replacing the parameters of the last three layers with random parameters, for pathological brain detection. Another frequently used model is Visual Geometry Group (VGG-16) [ 66 ], where 16 refers to the number of layers; variants such as VGG-19 were proposed later, and [ 67 ] lists medical imaging applications that use different VGGNet architectures. The Inception network [ 68 ] is one of the most common CNN architectures and aims to limit resource consumption; further modifications of this basic network were reported as new versions [ 69 ]. Gao et al. [ 70 ] proposed a new architecture, the Residual Inception Encoder–Decoder Neural Network (RIEDNet), for medical image synthesis. The Inception network is also known as GoogLeNet [ 71 ].
ResNet [ 72 ] is a powerful architecture for very deep networks, sometimes with more than 100 layers. It helps limit the vanishing of gradients in the deeper layers because it adds residual connections between some convolutional layers (Fig. 4). In medical imaging, ResNet models are mostly used for robust classification [ 73 , 74 ], for example of pulmonary nodules and intracranial hemorrhage.
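The effect of a residual connection can be seen in a small sketch that compares a plain two-layer block with a residual block computing F(x) + x. Fully connected layers on short vectors replace the convolutions here, and the all-zero weights are an assumption chosen to show the extreme case where the learned transformation contributes nothing.

```python
def linear(weights, x):
    """Matrix-vector product for a weight matrix given as a list of rows."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]

def relu(values):
    return [max(0.0, v) for v in values]

def plain_block(x, w1, w2):
    """Two stacked layers without a shortcut."""
    return relu(linear(w2, relu(linear(w1, x))))

def residual_block(x, w1, w2):
    """Same two layers, but the input is added back before the final ReLU."""
    transformed = linear(w2, relu(linear(w1, x)))
    return relu([t + s for t, s in zip(transformed, x)])

zeros = [[0.0, 0.0], [0.0, 0.0]]
x = [1.0, 2.0]

plain_out = plain_block(x, zeros, zeros)        # the signal is lost
residual_out = residual_block(x, zeros, zeros)  # the input passes through
print(plain_out, residual_out)
```

Because the shortcut carries the input forward unchanged, the same path carries gradients backward during training, which is the intuition behind ResNet's ability to train very deep stacks.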

The basic models used in medical imaging: A ResNet architecture, B U-Net architecture [ 75 ], C CNN AlexNet architecture for breast cancer [ 76 ], and D Dense Net architecture [ 77 ]

DenseNet exploits the same idea as the residual CNN (ResNet) but in a more compact form to achieve good representations and feature extraction. Each layer of the network receives as input the outputs of all previous layers, so DenseNet contains more connections than a traditional CNN: a traditional CNN with L layers has L connections, whereas a DenseNet has L(L + 1)/2. DenseNet is widely used with medical images. Mahmood et al. [ 78 ] proposed a Multimodal DenseNet that fuses multimodal data, giving the model the flexibility to combine information from multiple sources, and used it for polyp characterization and landmark identification in endoscopy. Another application used transfer learning with DenseNet for fundus images [ 79 ].
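The connection count depends on what is counted, so a short enumeration may help. In the sketch below, node 0 is the block input and nodes 1 to L are the layers; counting every link into a layer gives L(L + 1)/2 for a dense block, while counting only layer-to-layer links (leaving out the block input) gives L(L - 1)/2. The function names are illustrative only.

```python
def plain_connections(num_layers):
    """Chain network: each layer receives one input from its predecessor."""
    return [(layer - 1, layer) for layer in range(1, num_layers + 1)]

def dense_connections(num_layers):
    """Dense block: each layer receives the block input and all earlier layers."""
    return [
        (source, layer)
        for layer in range(1, num_layers + 1)
        for source in range(0, layer)
    ]

L = 5
plain = plain_connections(L)
dense = dense_connections(L)
layer_to_layer = [(s, t) for s, t in dense if s != 0]  # exclude the block input

print(len(plain), len(dense), len(layer_to_layer))
```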

U-Net [ 80 ] is one of the most popular network architectures and is used mostly for segmentation (Fig. 4). It is widely used in medical imaging because it can localize and highlight the borders between classes (e.g., normal and malignant brain tissue) by classifying each pixel. It is called U-Net because the network architecture has the shape of the letter U, and it contains concatenation connections; Fig. 4 shows the basic structure of the U-Net. One development of U-Net is U-Net++ [ 75 ], a new architecture proposed for medical image segmentation; in the reported experiments, U-Net++ outperformed both U-Net and wide U-Net on multiple medical image segmentation tasks, such as liver segmentation from CT scans, polyp segmentation in colonoscopy videos, and nuclei segmentation from microscopy images. Other models were inspired by these popular basic DL models, and some of the basic models themselves rely on insights from one another (e.g., Inception and ResNet); Fig. 5 shows the timeline of the models mentioned here and of other popular models.

Timeline of mostly used DL models in medical imaging

3 Deep learning applications in medical imaging

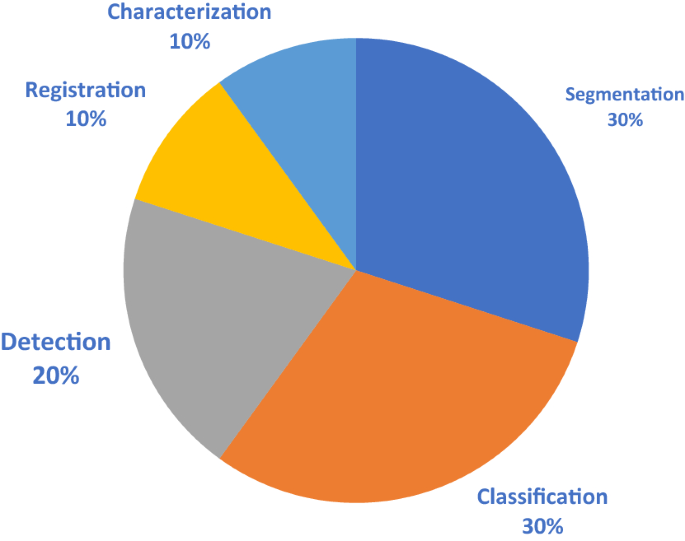

To study the main applications of deep learning in medical imaging, we organized a survey based on the most-cited papers in the literature from 2015 to 2021; the numbers of surveyed papers for segmentation, detection, classification, registration, and characterization are 30, 20, 30, 10, and 10, respectively. Figure 6 shows a pie chart of these applications.

Surveyed DL applications in medical imaging

3.1 Image segmentation

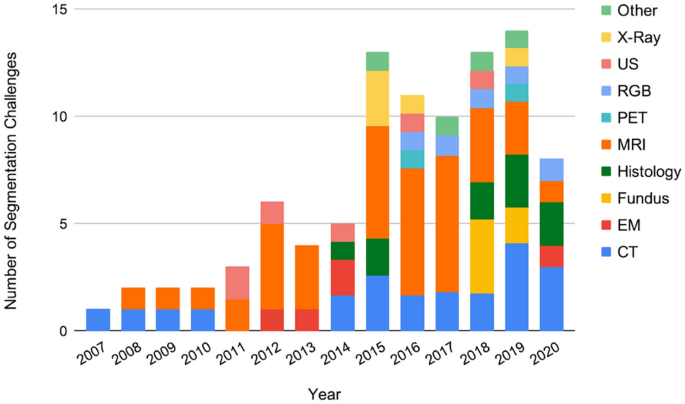

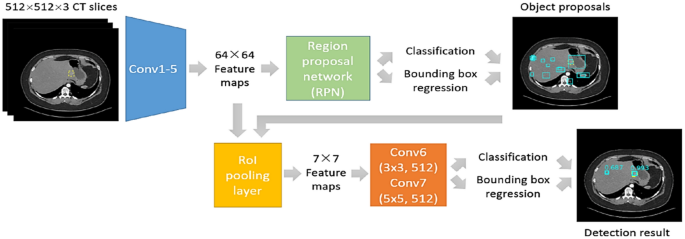

Deep learning is used to segment different body structures in different imaging modalities such as MRI, CT, PET, and ultrasound. Segmentation means partitioning an image into segments that usually belong to specific classes (tissue classes, organs, or biological structures) [ 81 ]. In general, there are two main approaches to segmenting a medical image with CNN models: the first uses the entire image as input, and the second uses patches from the image. Both approaches work well in generating an output map that provides the segmented image; the segmentation of a liver tumor with a CNN architecture, according to Li et al., is shown in Fig. 7. Segmentation has potential for surgical planning and for determining the exact boundaries of sub-regions (e.g., tumor tissue) to give better guidance during surgical resection. Segmentation is most common in neuroimaging, and brain segmentation is studied more than segmentation of other organs. Akkus et al. [ 82 ] reviewed different DL models for segmenting different organs, together with their datasets. Because CNN architectures can handle both 2D and 3D images, they are considered suitable for MRI, which is volumetric; Milletari et al. [ 83 ] used 3D MRI images and applied a 3D CNN to segment the prostate. They proposed a new CNN architecture, V-Net, which builds on the insights of U-Net [ 80 ], and achieved a Dice similarity coefficient of 0.869; this is considered effective given the small dataset (50 MRI volumes for training and 30 for testing). Havaei et al. [ 84 ] worked on glioma segmentation from BRATS-2013 with a 2D CNN model, which took only 3 min to run.
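The two input strategies mentioned above, whole image versus patches, differ only in how the network input is prepared. The sketch below shows a sliding-window patch extractor on a toy 2D image; the function name `extract_patches`, the patch size, and the stride are assumptions for illustration.

```python
def extract_patches(image, size, stride):
    """Slide a size x size window over a 2D image given as a list of rows."""
    patches = []
    for top in range(0, len(image) - size + 1, stride):
        for left in range(0, len(image[0]) - size + 1, stride):
            patches.append([row[left:left + size] for row in image[top:top + size]])
    return patches

# Toy 4 x 4 image with intensities 0..15.
image = [[r * 4 + c for c in range(4)] for r in range(4)]

whole_image_input = [image]                                  # approach 1: the full image
patch_inputs = extract_patches(image, size=2, stride=2)      # approach 2: non-overlapping patches
overlapping_inputs = extract_patches(image, size=2, stride=1)

print(len(whole_image_input), len(patch_inputs), len(overlapping_inputs))
```

Patch-based input multiplies the number of training samples obtained from each scan, at the cost of giving the network less spatial context per sample.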
From a clinical point of view, organ segmentation is used to calculate clinical parameters (e.g., volume) and to improve the performance of Computer-Aided Detection (CAD) by defining regions accurately. Taghanaki et al. [ 85 ] listed the segmentation challenges from 2007 to 2020 across different imaging modalities; Fig. 8 shows the number of these challenges. Table 1 summarizes deep learning models for segmenting different organs, selected on the basis of highly cited papers and variations in the deep learning models.
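Segmentation results in this section are reported as Dice scores, so a minimal reference computation may be useful. The Dice similarity coefficient between a predicted mask A and a reference mask B is 2|A ∩ B| / (|A| + |B|). The sketch below assumes binary masks stored as lists of rows and treats two empty masks as a perfect match, which is a convention chosen here rather than something the cited works specify.

```python
def dice_coefficient(prediction, reference):
    """Dice = 2|A ∩ B| / (|A| + |B|) for two binary masks (lists of rows)."""
    intersection = 0
    total = 0
    for p_row, r_row in zip(prediction, reference):
        for p, r in zip(p_row, r_row):
            intersection += p * r  # 1 only where both masks are foreground
            total += p + r
    # Convention assumed here: two empty masks agree perfectly.
    return 1.0 if total == 0 else 2.0 * intersection / total

predicted_mask = [[0, 1, 1],
                  [0, 1, 0]]
reference_mask = [[0, 1, 0],
                  [0, 1, 1]]

score = dice_coefficient(predicted_mask, reference_mask)
print(round(score, 3))
```

A score of 1 means the masks coincide and 0 means they do not overlap at all, which puts values such as 0.869 in context.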

Liver tumor segmentation using CNN architecture [ 86 ]

The number of segmentation challenges in medical imaging from 2007 to 2020 listed on Grand Challenges, by imaging modality

3.2 Image detection/localization