A Comprehensive Study of Regression Analysis and the Existing Techniques


A not-for-profit organization, IEEE is the world's largest technical professional organization dedicated to advancing technology for the benefit of humanity. © Copyright 2024 IEEE - All rights reserved. Use of this web site signifies your agreement to the terms and conditions.


- Published: 01 December 2015

Points of Significance

Multiple linear regression

- Martin Krzywinski

- Naomi Altman

Nature Methods volume 12, pages 1103–1104 (2015)


When multiple variables are associated with a response, the interpretation of a prediction equation is seldom simple.


Last month we explored how to model a simple relationship between two variables, such as the dependence of weight on height (ref. 1). In the more realistic scenario of dependence on several variables, we can use multiple linear regression (MLR). Although MLR is similar to simple linear regression, the interpretation of MLR regression coefficients is confounded by the way in which the predictor variables relate to one another.

In simple linear regression (ref. 1), we model how the mean of variable $Y$ depends linearly on the value of a predictor variable $X$; this relationship is expressed as the conditional expectation $E(Y \mid X) = \beta_0 + \beta_1 X$. For more than one predictor variable $X_1, \ldots, X_p$, this becomes $E(Y \mid X_1, \ldots, X_p) = \beta_0 + \sum_{j=1}^{p} \beta_j X_j$. As for simple linear regression, one can use the least-squares estimator (LSE) to determine estimates $b_j$ of the regression parameters $\beta_j$ by minimizing the residual sum of squares, $\mathrm{SSE} = \sum_i (y_i - \hat{y}_i)^2$, where $\hat{y}_i = b_0 + \sum_j b_j x_{ij}$. Using the regression sum of squares, $\mathrm{SSR} = \sum_i (\hat{y}_i - \bar{y})^2$, the ratio $R^2 = \mathrm{SSR}/(\mathrm{SSR} + \mathrm{SSE})$ is the fraction of the variation in $Y$ explained by the regression model; in multiple regression it is called the coefficient of determination.
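The least-squares fit and the $R^2$ definition above can be sketched in Python with NumPy. This is a minimal illustration, not the authors' code: `fit_mlr` and `r_squared` are hypothetical helper names, the toy data are made up, and the intercept $b_0$ is modeled by prepending a column of ones to the predictors.

```python
import numpy as np


def fit_mlr(X, y):
    """Least-squares fit of y on the columns of X plus an intercept.

    Returns (b, y_hat): b[0] is the intercept b_0, b[1:] are the slopes b_j.
    """
    X = np.asarray(X, dtype=float)
    if X.ndim == 1:
        X = X[:, None]  # accept a single predictor given as a 1-D array
    design = np.column_stack([np.ones(len(X)), X])  # column of ones estimates b_0
    b, *_ = np.linalg.lstsq(design, y, rcond=None)
    return b, design @ b


def r_squared(y, y_hat):
    """R^2 = SSR / (SSR + SSE) for a fit that includes an intercept."""
    sse = np.sum((y - y_hat) ** 2)         # residual sum of squares
    ssr = np.sum((y_hat - y.mean()) ** 2)  # regression sum of squares
    return ssr / (ssr + sse)


# Toy data (illustrative values): y depends linearly on two predictors plus noise.
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 2))
y = 1.0 + 2.0 * X[:, 0] - 0.5 * X[:, 1] + rng.normal(scale=0.1, size=50)

b, y_hat = fit_mlr(X, y)
print(np.round(b, 2), round(r_squared(y, y_hat), 3))
```

Because the fit includes an intercept, SSR + SSE equals the total sum of squares $\sum_i (y_i - \bar{y})^2$, which is why $R^2$ can also be written as $1 - \mathrm{SSE}/\mathrm{SST}$.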

The slope $\beta_j$ is the expected change in $Y$ when predictor $j$ increases by one unit and the others are held constant. When the normality and independence assumptions are fulfilled, we can test whether any single slope is zero using a $t$-test, or whether all of the slopes are zero using the regression $F$-test. Although the interpretation of $\beta_j$ seems identical to its interpretation in the simple linear regression model, the innocuous phrase “and others are held constant” turns out to have profound implications.
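As a rough sketch of those tests, the hypothetical helper below computes each slope's $t$ statistic from the estimated covariance $\hat{\sigma}^2(\mathbf{X}^\top\mathbf{X})^{-1}$ and the overall $F$ statistic $(\mathrm{SSR}/p)/(\mathrm{SSE}/(n-p-1))$. It assumes the normality and independence conditions mentioned above. Converting the statistics to $P$ values would use the $t_{n-p-1}$ and $F_{p,\,n-p-1}$ distributions (for example via SciPy's `scipy.stats.t` and `scipy.stats.f`), which are left out here to keep the example NumPy-only.

```python
import numpy as np


def slope_tests(X, y):
    """Return (t, F): t statistics for each slope and the overall regression F statistic.

    Assumes independent, normally distributed errors with constant variance.
    """
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    design = np.column_stack([np.ones(n), X])
    b, *_ = np.linalg.lstsq(design, y, rcond=None)
    y_hat = design @ b
    sse = np.sum((y - y_hat) ** 2)
    ssr = np.sum((y_hat - y.mean()) ** 2)
    df_resid = n - p - 1
    sigma2 = sse / df_resid                            # residual variance estimate
    cov_b = sigma2 * np.linalg.inv(design.T @ design)  # estimated covariance of b
    t = b[1:] / np.sqrt(np.diag(cov_b)[1:])            # H0: beta_j = 0, df = n - p - 1
    f = (ssr / p) / (sse / df_resid)                   # H0: all slopes = 0, df = (p, n - p - 1)
    return t, f


rng = np.random.default_rng(1)
X = rng.normal(size=(40, 2))
y = 3.0 + 2.0 * X[:, 0] + rng.normal(size=40)  # the second predictor has no effect
t, f = slope_tests(X, y)
print(np.round(t, 2), round(f, 1))
```

With a single predictor the two tests coincide: the $F$ statistic equals the square of the slope's $t$ statistic.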

To illustrate MLR, and some of its perils, here we simulate predicting the weight ($W$, in kilograms) of adult males from their height ($H$, in centimeters) and their maximum jump height ($J$, in centimeters). We use a model similar to that presented in our previous column (ref. 1), but we now include the effect of $J$ as $W = \beta_H H + \beta_J J + \beta_0 + \varepsilon$, so that $E(W \mid H, J) = \beta_H H + \beta_J J + \beta_0$, with $\beta_H = 0.7$, $\beta_J = -0.08$, $\beta_0 = -46.5$ and normally distributed noise $\varepsilon$ with zero mean and $\sigma = 1$ (Table 1). We set $\beta_J$ negative because we expect a negative correlation between $W$ and $J$ when height is held constant (i.e., among men of the same height, lighter men will tend to jump higher). For this example we simulated a sample of size $n = 40$ with $H$ and $J$ normally distributed with means of 165 cm ($\sigma = 3$) and 50 cm ($\sigma = 12.5$), respectively.
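The simulation described above can be approximated as follows. This is a sketch under stated assumptions, not the authors' code: the random seed is arbitrary, `fit` is a hypothetical helper, and $H$ and $J$ are drawn independently, so their sample correlation is near, but not exactly, zero. The printed estimates will therefore not reproduce Table 1.

```python
import numpy as np

rng = np.random.default_rng(2015)  # arbitrary seed
n = 40
beta_H, beta_J, beta_0 = 0.7, -0.08, -46.5

H = rng.normal(165, 3, n)      # height (cm), sd 3
J = rng.normal(50, 12.5, n)    # jump height (cm), sd 12.5, drawn independently of H
W = beta_H * H + beta_J * J + beta_0 + rng.normal(0, 1, n)  # weight (kg), noise sd 1


def fit(*predictors):
    """Intercept and slopes of the least-squares fit of W on the given predictors, plus R^2."""
    design = np.column_stack([np.ones(n), *predictors])
    b, *_ = np.linalg.lstsq(design, W, rcond=None)
    resid = W - design @ b
    r2 = 1 - resid @ resid / np.sum((W - W.mean()) ** 2)
    return b, r2


for label, predictors in [("H only", (H,)), ("J only", (J,)), ("H and J", (H, J))]:
    b, r2 = fit(*predictors)
    print(f"{label:8s} b = {np.round(b, 3)}  R^2 = {r2:.2f}")
```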

Although the statistical theory for MLR seems similar to that for simple linear regression, the interpretation of the results is much more complex. Problems in interpretation arise entirely as a result of the sample correlation (ref. 2) among the predictors. We do, in fact, expect a positive correlation between $H$ and $J$: tall men will tend to jump higher than short ones. To illustrate how this correlation can affect the results, we generated values using the model for weight with samples of $J$ and $H$ with different amounts of correlation.

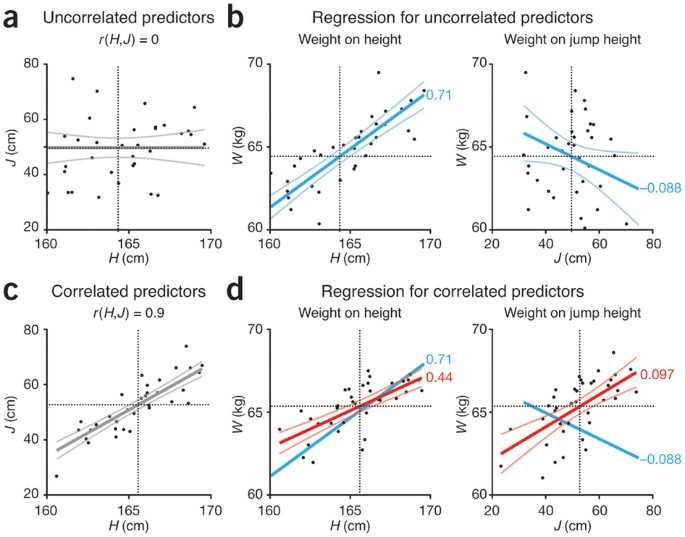

Let's look first at the regression coefficients estimated when the predictors are uncorrelated, $r(H, J) = 0$, as evidenced by the zero slope in the association between $H$ and $J$ (Fig. 1a). Here $r$ is the Pearson correlation coefficient (ref. 2). If we ignore the effect of $J$ and regress $W$ on $H$, we find $\hat{W} = 0.71H - 51.7$ ($R^2 = 0.66$) (Table 1 and Fig. 1b). Ignoring $H$, we find $\hat{W} = -0.088J + 69.3$ ($R^2 = 0.19$). If both predictors are fitted in the regression, we obtain $\hat{W} = 0.71H - 0.088J - 47.3$ ($R^2 = 0.85$). This regression fit is a plane in three dimensions ($H$, $J$, $W$) and is not shown in Figure 1. In all three cases, the $F$-test for zero slopes is highly significant ($P \le 0.005$).

Figure 1. (a) Simulated values of uncorrelated predictors, $r(H, J) = 0$. The thick gray line is the regression line, and thin gray lines show the 95% confidence interval of the fit. (b) Regression of weight ($W$) on height ($H$) and of weight on jump height ($J$) for the uncorrelated predictors shown in a. Regression slopes are shown ($b_H = 0.71$, $b_J = -0.088$). (c) Simulated values of correlated predictors, $r(H, J) = 0.9$. Regression line and 95% confidence interval are denoted as in a. (d) Regression (red lines) using the correlated predictors shown in c. Light red lines denote the 95% confidence interval. Notice that $b_J = 0.097$ is now positive. The regression line from b is shown in blue. In all graphs, horizontal and vertical dotted lines show average values.

When the sample correlation of the predictors is exactly zero, the regression slopes ($b_H$ and $b_J$) from the “one predictor at a time” regressions and from the multiple regression are identical, and the $R^2$ values of the simple regressions sum to the $R^2$ of the multiple regression ($0.66 + 0.19 = 0.85$; Fig. 2). The intercept still changes when we add a predictor with a nonzero mean, because the least-squares fit must pass through the point of sample means, which is always true when the regression model includes an intercept.
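Both properties can be checked numerically. The sketch below is an illustration, not the procedure used for Fig. 2: it forces the sample correlation to be exactly zero (up to rounding) by removing from $J$ its projection onto centered $H$, then compares the one-predictor and two-predictor fits. The seed and the helper name `fit` are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(7)  # arbitrary seed
n = 40
H = rng.normal(165, 3, n)
J_raw = rng.normal(50, 12.5, n)

# Remove from J the component proportional to centered H. This makes the sample
# covariance (and correlation) of H and J zero up to rounding and keeps J's mean.
Hc = H - H.mean()
J = J_raw - (Hc @ (J_raw - J_raw.mean())) / (Hc @ Hc) * Hc
W = 0.7 * H - 0.08 * J - 46.5 + rng.normal(0, 1, n)


def fit(*predictors):
    """Least-squares coefficients and R^2 for W on the given predictors (with intercept)."""
    design = np.column_stack([np.ones(n), *predictors])
    b, *_ = np.linalg.lstsq(design, W, rcond=None)
    resid = W - design @ b
    return b, 1 - resid @ resid / np.sum((W - W.mean()) ** 2)


(b_H, r2_H), (b_J, r2_J), (b_HJ, r2_HJ) = fit(H), fit(J), fit(H, J)
print(np.corrcoef(H, J)[0, 1])    # zero up to rounding error
print(b_H[1], b_J[1], b_HJ[1:])   # same slopes in one- and two-predictor fits
print(r2_H + r2_J, r2_HJ)         # R^2 values add up
```

The intercepts `b_H[0]`, `b_J[0]` and `b_HJ[0]` differ, as the paragraph above explains.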

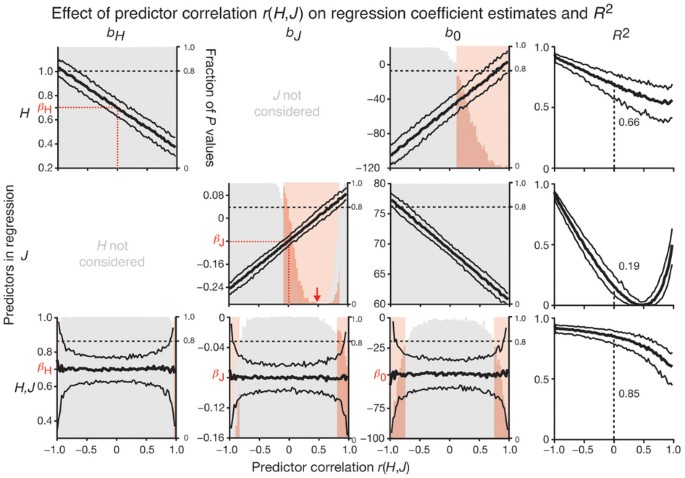

Figure 2. Shown are the values of the regression coefficient estimates ($b_H$, $b_J$, $b_0$) and $R^2$, and the significance of the test of whether each coefficient is zero, from 250 simulations at each value of predictor sample correlation $-1 < r(H, J) < 1$, for each scenario in which $H$, $J$ or both predictors are fitted in the regression. Thick and thin black curves show the median coefficient estimate and the boundaries of the 10th–90th percentile range, respectively. Histograms show the fraction of estimated $P$ values in different significance ranges, and correlation intervals are highlighted in red where >20% of the $P$ values are >0.01. Actual regression coefficients ($\beta_H$, $\beta_J$, $\beta_0$) are marked on vertical axes. The decrease in significance for $b_J$ when jump height is the only predictor and $r(H, J)$ is moderate (red arrow) is due to insufficient statistical power ($b_J$ is close to zero). When predictors are uncorrelated, $r(H, J) = 0$, the $R^2$ values of the individual regressions sum to the $R^2$ of the multiple regression ($0.66 + 0.19 = 0.85$). Panels are organized to correspond to Table 1, which shows estimates from a single trial at two different predictor correlations.

Balanced factorial experiments show a sample correlation of zero among the predictors when their levels have been fixed. For example, we might fix three heights and three jump heights and select two men representative of each combination, for a total of 18 subjects to be weighed. But if we select the samples and then measure the predictors and response, the predictors are unlikely to have zero correlation.
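A quick check of that claim for the 3 × 3 design with two men per cell. The specific height and jump-height levels below are hypothetical, chosen only to make the design concrete.

```python
import itertools

import numpy as np

heights = [160, 165, 170]  # hypothetical fixed height levels (cm)
jumps = [40, 50, 60]       # hypothetical fixed jump-height levels (cm)
replicates = 2             # two men per height x jump-height combination

design = [(h, j) for h, j in itertools.product(heights, jumps) for _ in range(replicates)]
H = np.array([h for h, _ in design], dtype=float)
J = np.array([j for _, j in design], dtype=float)
print(len(design), np.corrcoef(H, J)[0, 1])
```

Because every height level is paired equally often with every jump-height level, the centered cross-products cancel and the sample correlation is zero for any choice of levels.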

When we simulate highly correlated predictors, $r(H, J) = 0.9$ (Fig. 1c), we find that the regression parameters change depending on whether we use one or both predictors (Table 1 and Fig. 1d). If we consider only the effect of $H$, the coefficient $\beta_H = 0.7$ is inaccurately estimated as $b_H = 0.44$. If we include only $J$, we estimate $\beta_J = -0.08$ inaccurately, and even with the wrong sign ($b_J = 0.097$). When we use both predictors, the estimates are quite close to the actual coefficients ($b_H = 0.63$, $b_J = -0.056$).

In fact, as the correlation between predictors $r(H, J)$ changes, the estimates of the slopes ($b_H$, $b_J$) and intercept ($b_0$) vary greatly when only one predictor is fitted. We show the effects of this variation for all values of predictor correlation (both positive and negative) across 250 trials at each value (Fig. 2). We include negative correlation because although $J$ and $H$ are likely to be positively correlated, other scenarios might use negatively correlated predictors (e.g., lung capacity and smoking habits). For example, if we include only $H$ in the regression and ignore the effect of $J$, $b_H$ steadily decreases from about 1 to 0.35 as $r(H, J)$ increases. Why is this? For a given height, larger values of $J$ (an indicator of fitness) are associated with lower weight. If $J$ and $H$ are negatively correlated, as $J$ increases, $H$ decreases, and both changes result in a lower value of $W$. Conversely, as $J$ decreases, $H$ increases, and thus $W$ increases. If we use only $H$ as a predictor, $J$ is lurking in the background, depressing $W$ at low values of $H$ and enhancing $W$ at high values of $H$, so that the effect of $H$ is overestimated ($b_H$ increases). The opposite effect occurs when $J$ and $H$ are positively correlated. A similar effect occurs for $b_J$, which increases in magnitude (becomes more negative) when $J$ and $H$ are negatively correlated. Supplementary Figure 1 shows the effect of correlation when both regression coefficients are positive.
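The direction and size of these shifts follow from the standard omitted-variable formula. If $W = \beta_0 + \beta_H H + \beta_J J + \varepsilon$ and $W$ is regressed on $H$ alone, then in large samples

$$b_H \to \beta_H + \beta_J \frac{\operatorname{Cov}(H, J)}{\operatorname{Var}(H)} = \beta_H + \beta_J\, r(H, J)\, \frac{\sigma_J}{\sigma_H},$$

and symmetrically $b_J \to \beta_J + \beta_H\, r(H, J)\, \sigma_H/\sigma_J$ when $J$ is the only predictor. With the column's values, $\beta_J \sigma_J/\sigma_H = -0.08 \times 12.5/3 \approx -0.33$, so $b_H \approx 0.7 - 0.33\,r$, about 1.03 at $r = -1$ and 0.37 at $r = 1$; likewise $b_J \approx -0.08 + 0.168\,r$, which changes sign near $r \approx 0.48$. These are limits for the center of the sampling distribution, not single-trial values. The sketch below checks the formula by simulation with a large, hypothetical sample size; the function names are illustrative.

```python
import numpy as np

beta_H, beta_J, beta_0 = 0.7, -0.08, -46.5
means = np.array([165.0, 50.0])  # mean height and jump height (cm)
sds = np.array([3.0, 12.5])      # sd of height and jump height (cm)


def single_predictor_slopes(r, n, rng):
    """Simulate n men with corr(H, J) = r; return slopes of W on H alone and on J alone."""
    cov = np.array([[sds[0] ** 2, r * sds[0] * sds[1]],
                    [r * sds[0] * sds[1], sds[1] ** 2]])
    H, J = rng.multivariate_normal(means, cov, size=n).T
    W = beta_H * H + beta_J * J + beta_0 + rng.normal(0, 1, n)
    slope_H = np.polyfit(H, W, 1)[0]  # polyfit with deg=1 returns [slope, intercept]
    slope_J = np.polyfit(J, W, 1)[0]
    return slope_H, slope_J


def limiting_slopes(r):
    """Large-sample limits given by the omitted-variable formula."""
    return (beta_H + beta_J * r * sds[1] / sds[0],
            beta_J + beta_H * r * sds[0] / sds[1])


rng = np.random.default_rng(3)
for r in (-0.9, 0.0, 0.5, 0.9):
    simulated = single_predictor_slopes(r, 100_000, rng)
    print(r, np.round(simulated, 3), np.round(limiting_slopes(r), 3))
```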

When both predictors are fitted (Fig. 2), the regression coefficient estimates ($b_H$, $b_J$, $b_0$) are centered at the actual coefficients ($\beta_H$, $\beta_J$, $\beta_0$) with the correct sign and magnitude regardless of the correlation of the predictors. However, the standard error of the estimates steadily increases as the absolute value of the predictor correlation increases.
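For two predictors this growth has a closed form: $\operatorname{Var}(b_H) = \sigma^2 / \left[\sum_i (H_i - \bar{H})^2 (1 - r^2)\right]$, where $r$ is the sample correlation of $H$ and $J$. Correlation therefore inflates the variance by the factor $1/(1 - r^2)$, often called the variance inflation factor. At $r = 0.9$ that factor is about 5.3, so the standard error is about 2.3 times larger than with uncorrelated predictors. The sketch below, with a hypothetical helper name, confirms the factor by building predictors with an exact sample correlation and unit sum of squares.

```python
import numpy as np


def slope_variance_factor(r, n=40, seed=0):
    """Var(b_H) / sigma^2 in a two-predictor fit whose predictors have sample correlation r.

    H and J are built to be centered with unit sum of squares, so the result is 1 / (1 - r^2).
    """
    rng = np.random.default_rng(seed)
    z = rng.normal(size=(n, 2))
    z -= z.mean(axis=0)                              # center both columns
    q, _ = np.linalg.qr(z)                           # orthonormal columns, still centered
    H = q[:, 0]
    J = r * q[:, 0] + np.sqrt(1 - r ** 2) * q[:, 1]  # sample corr(H, J) equals r
    design = np.column_stack([np.ones(n), H, J])
    return np.linalg.inv(design.T @ design)[1, 1]


for r in (0.0, 0.5, 0.9, 0.99):
    vif = slope_variance_factor(r)
    print(f"r = {r:4.2f}  variance x{vif:6.2f}  standard error x{np.sqrt(vif):5.2f}")
```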

Neglecting important predictors has implications not only for $R^2$, which is a measure of the predictive power of the regression, but also for the interpretation of the regression coefficients. Omitted variables that may have a strong effect on the estimated regression coefficients are sometimes called 'lurking variables'. For example, muscle mass might be a lurking variable with a causal effect on both body weight and jump height. The results and interpretation of the regression will also change if other predictors are added.

Given that missing predictors can affect the regression, should we try to include as many predictors as possible? No, for three reasons. First, any correlation among predictors will increase the standard error of the estimated regression coefficients. Second, having more slope parameters in our model will reduce interpretability and cause problems with multiple testing. Third, the model may suffer from overfitting. As the number of predictors approaches the sample size, we begin fitting the model to the noise. As a result, we may seem to have a very good fit to the data but still make poor predictions.
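Overfitting is easy to demonstrate. In the sketch below (illustrative, with an arbitrary sample size and seed), the response and every candidate predictor are pure noise, yet $R^2$ never decreases as predictors are added and reaches 1 once the model has as many parameters as observations.

```python
import numpy as np

rng = np.random.default_rng(42)  # arbitrary seed
n = 20
y = rng.normal(size=n)               # response: pure noise
X_all = rng.normal(size=(n, n - 1))  # candidate predictors: also pure noise


def r_squared(X):
    """R^2 of the least-squares fit of y on the columns of X plus an intercept."""
    design = np.column_stack([np.ones(n), X])
    b, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ b
    return 1 - resid @ resid / np.sum((y - y.mean()) ** 2)


r2_by_p = [r_squared(X_all[:, :p]) for p in range(1, n)]
for p in (1, 5, 10, 15, n - 1):
    print(p, round(r2_by_p[p - 1], 3))
```

The perfect in-sample fit at $p = n - 1$ says nothing about new observations, which are just as unpredictable as before.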

MLR is powerful for incorporating many predictors and for estimating the effects of a predictor on the response in the presence of other covariates. However, the estimated regression coefficients depend on the predictors in the model, and they can be quite variable when the predictors are correlated. Accurate prediction of the response is not an indication that regression slopes reflect the true relationship between the predictors and the response.

1. Altman, N. & Krzywinski, M. Nat. Methods 12, 999–1000 (2015).


2. Altman, N. & Krzywinski, M. Nat. Methods 12, 899–900 (2015).


Author information

Authors and affiliations

Naomi Altman is a Professor of Statistics at The Pennsylvania State University.


Martin Krzywinski is a staff scientist at Canada's Michael Smith Genome Sciences Centre.


Ethics declarations

Competing interests

The authors declare no competing financial interests.

Integrated supplementary information

Supplementary Figure 1: Regression coefficients and $R^2$

The significance and value of the regression coefficients and $R^2$ for a model with both regression coefficients positive, $W = 0.7H + 0.08J - 46.5 + \varepsilon$. The format of the figure is the same as that of Figure 2.


About this article

Cite this article.

Krzywinski, M., Altman, N. Multiple linear regression. Nat Methods 12 , 1103–1104 (2015). https://doi.org/10.1038/nmeth.3665


Published: 01 December 2015

Issue Date: December 2015

DOI: https://doi.org/10.1038/nmeth.3665


This article is cited by

Income and oral and general health-related quality of life: the modifying effect of sense of coherence, findings of a cross-sectional study.

- Mehrsa Zakershahrak

- Sergio Chrisopoulos

- David Brennan

Applied Research in Quality of Life (2023)

Outcomes of a novel all-inside arthroscopic anterior talofibular ligament repair for chronic ankle instability

- Xiao’ao Xue

- Yinghui Hua

International Orthopaedics (2023)

Predicting financial losses due to apartment construction accidents utilizing deep learning techniques

- Ji-Myong Kim

- Sang-Guk Yum

Scientific Reports (2022)

Regression modeling of time-to-event data with censoring

- Tanujit Dey

- Stuart R. Lipsitz

Nature Methods (2022)

A Systematic Analysis for Energy Performance Predictions in Residential Buildings Using Ensemble Learning

- Monika Goyal

- Mrinal Pandey

Arabian Journal for Science and Engineering (2021)


Title: All of Linear Regression

Abstract: Least squares linear regression is one of the oldest and most widely used data analysis tools. Although the theoretical analysis of the ordinary least squares (OLS) estimator is as old, several fundamental questions are yet to be answered. Suppose regression observations $(X_1,Y_1),\ldots,(X_n,Y_n)\in\mathbb{R}^d\times\mathbb{R}$ (not necessarily independent) are available. Some of the questions we deal with are as follows: under what conditions does the OLS estimator converge, and what is the limit? What happens if the dimension is allowed to grow with $n$? What happens if the observations are dependent, with dependence possibly strengthening with $n$? How can statistical inference be done under these kinds of misspecification? What happens to the OLS estimator under variable selection? How can inference be done under misspecification and variable selection? We answer all the questions raised above with one simple deterministic inequality that holds for any set of observations and any sample size. This implies that all our results are finite-sample (non-asymptotic) in nature. In the end, one only needs to bound certain random quantities under specific settings of interest to get concrete rates, and we derive these bounds for the case of independent observations. In particular, the problem of inference after variable selection is studied, for the first time, when $d$, the number of covariates, increases (almost exponentially) with sample size $n$. We provide comments on the "right" statistic to consider for inference under variable selection and on efficient computation of quantiles.
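As a narrow illustration of the first question, in the simplest setting (independent, identically distributed observations and fixed $d$) a misspecified OLS fit converges to the population least-squares line, whose slope is $\operatorname{Cov}(X, Y)/\operatorname{Var}(X)$ for a single covariate. For $X \sim \mathrm{Uniform}(0, 1)$ and $E(Y \mid X) = X^2$, $\operatorname{Cov}(X, X^2) = E[X^3] - E[X]E[X^2] = 1/4 - 1/6 = 1/12 = \operatorname{Var}(X)$, so the limiting slope is 1 and the limiting intercept is $E[Y] - E[X] = 1/3 - 1/2 = -1/6$. The sketch below is not the paper's method (which also covers dependent data and growing $d$); it only checks this limit by simulation.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 200_000
x = rng.uniform(0, 1, n)
y = x ** 2 + rng.normal(0, 0.1, n)  # true mean is curved, so a straight-line model is misspecified

slope, intercept = np.polyfit(x, y, 1)  # OLS straight-line fit: [slope, intercept]
print(slope, intercept)                 # close to 1 and -1/6, the population least-squares line
```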


Linear Regression in Medical Research

Patrick Schober

* Department of Anesthesiology, Amsterdam UMC, Vrije Universiteit Amsterdam, Amsterdam, the Netherlands

Thomas R. Vetter

† Department of Surgery and Perioperative Care, Dell Medical School at the University of Texas at Austin, Austin, Texas.

Related Article, see p 110

Linear regression is used to quantify the relationship between ≥1 independent (predictor) variables and a continuous dependent (outcome) variable.

In this issue of Anesthesia & Analgesia, Müller-Wirtz et al (ref. 1) report results of a study in which they used linear regression to assess the relationship in a rat model between tissue propofol concentrations and exhaled propofol concentrations (Figure).

Figure. Table 2 from Müller-Wirtz et al (ref. 1), showing the estimated relationships between tissue (or plasma) propofol concentrations and exhaled propofol concentrations. The authors appropriately report the 95% confidence intervals as a measure of the precision of their estimates, as well as the coefficient of determination ($R^2$). The presented values indicate, for example, that (1) the exhaled propofol concentrations are estimated to increase on average by 4.6 units, equal to the slope (regression) coefficient, for each 1-unit increase of plasma propofol concentration; (2) the “true” mean increase could plausibly be expected to lie anywhere between 3.6 and 5.7 units, as indicated by the slope coefficient’s confidence interval; and (3) the $R^2$ suggests that about 71% of the variability in the exhaled concentration can be explained by its relationship with plasma propofol concentrations.

Linear regression is used to estimate the association of ≥1 independent (predictor) variables with a continuous dependent (outcome) variable (ref. 2). In the simplest case, referred to as “simple linear regression,” there is only one independent variable. Simple linear regression fits the straight line that best characterizes the relationship between the dependent ($Y$) variable and the independent ($X$) variable, with the $y$-axis intercept ($b_0$) and the regression coefficient being the slope ($b_1$) of this line:

$$Y = b_0 + b_1 X$$

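A model that includes several independent variables is referred to as “multiple linear regression” or “multivariable linear regression.” Even though the term linear regression suggests otherwise, it can also be used to model curved relationships, because “linear” refers to the model being linear in the regression coefficients, not in the independent variables; for example, including both $X$ and $X^2$ as independent variables fits a parabola.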
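A minimal sketch of that point, with made-up coefficients and noise: the fitted curve is quadratic in $x$, but the model is still linear in $b_0$, $b_1$, $b_2$, so ordinary least squares applies unchanged.

```python
import numpy as np

rng = np.random.default_rng(5)
x = np.linspace(0, 10, 50)
y = 2.0 + 0.5 * x - 0.3 * x ** 2 + rng.normal(0, 0.5, x.size)  # curved (quadratic) truth

# Each design-matrix column is a known function of x; the unknowns b0, b1, b2 enter linearly.
design = np.column_stack([np.ones_like(x), x, x ** 2])
b, *_ = np.linalg.lstsq(design, y, rcond=None)
print(np.round(b, 2))  # estimates of b0, b1, b2
```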

Linear regression is an extremely versatile technique that can be used to address a variety of research questions and study aims. Researchers may want to test whether there is evidence for a relationship between a categorical (grouping) variable (eg, treatment group or patient sex) and a quantitative outcome (eg, blood pressure). The 2-sample t test and analysis of variance (ref. 3), which are commonly used for this purpose, are essentially special cases of linear regression. However, linear regression is more flexible, allowing for >1 independent variable and for continuous independent variables. Moreover, when there is >1 independent variable, researchers can also test for the interaction of variables, in other words, whether the effect of 1 independent variable depends on the value or level of another independent variable.
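To see the special-case relationship concretely, the sketch below (hypothetical data) compares the pooled-variance 2-sample t statistic with the t statistic of the slope in a regression on a 0/1 group indicator. The two agree exactly, the intercept equals the reference-group mean, and the slope equals the difference in group means.

```python
import numpy as np

rng = np.random.default_rng(11)
group_a = rng.normal(120, 10, 25)  # hypothetical outcome, reference group
group_b = rng.normal(128, 10, 30)  # hypothetical outcome, comparison group

# Pooled-variance (equal-variance) 2-sample t statistic.
n_a, n_b = group_a.size, group_b.size
sp2 = ((n_a - 1) * group_a.var(ddof=1) + (n_b - 1) * group_b.var(ddof=1)) / (n_a + n_b - 2)
t_pooled = (group_b.mean() - group_a.mean()) / np.sqrt(sp2 * (1 / n_a + 1 / n_b))

# The same comparison as a regression on a 0/1 indicator (1 = comparison group).
y = np.concatenate([group_a, group_b])
indicator = np.concatenate([np.zeros(n_a), np.ones(n_b)])
design = np.column_stack([np.ones(y.size), indicator])
coef, *_ = np.linalg.lstsq(design, y, rcond=None)
resid = y - design @ coef
sigma2 = resid @ resid / (y.size - 2)
se_slope = np.sqrt(sigma2 * np.linalg.inv(design.T @ design)[1, 1])
t_regression = coef[1] / se_slope

print(coef[0], group_a.mean())                   # intercept = reference-group mean
print(coef[1], group_b.mean() - group_a.mean())  # slope = difference in means
print(t_pooled, t_regression)                    # identical t statistics
```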

Linear regression not only tests for relationships but also quantifies their direction and strength. The regression coefficient describes the average (expected) change in the dependent variable for each 1-unit change in the independent variable for continuous independent variables or the expected difference versus a reference category for categorical independent variables. The coefficient of determination, commonly referred to as $R^2$, describes the proportion of the variability in the outcome variable that can be explained by the independent variables. With simple linear regression, the coefficient of determination is also equal to the square of the Pearson correlation between the x and y values.
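A quick numerical check of the last statement, using simulated data chosen only for illustration. It also confirms the related identity that the fitted slope equals $r \cdot s_y/s_x$.

```python
import numpy as np

rng = np.random.default_rng(8)
x = rng.normal(size=30)
y = 1.5 * x + rng.normal(size=30)

slope, intercept = np.polyfit(x, y, 1)
y_hat = intercept + slope * x
r2 = 1 - np.sum((y - y_hat) ** 2) / np.sum((y - y.mean()) ** 2)  # coefficient of determination
r = np.corrcoef(x, y)[0, 1]                                       # Pearson correlation
print(r2, r ** 2)
```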

When including several independent variables, the regression model estimates the effect of each independent variable while holding the values of all other independent variables constant (ref. 4). Thus, linear regression is useful (1) to distinguish the effects of different variables on the outcome and (2) to control for other variables, such as systematic confounding in observational studies or baseline imbalances due to chance in a randomized controlled trial. Ultimately, linear regression can be used to predict the value of the dependent outcome variable based on the value(s) of the independent predictor variable(s).

Valid inferences from linear regression rely on its assumptions being met, including

- the residuals (the differences between the observed values and the values predicted by the regression model) must be approximately normally distributed and have approximately the same variance over the range of predicted values;

- the residuals are also assumed to be uncorrelated. In simple language, the observations must be independent of each other; for example, there must not be repeated measurements within the same subjects. Other techniques, like linear mixed-effects models, are required for correlated data (ref. 5); and

- the model must be correctly specified, as explained in more detail in the next paragraph.

Whereas Müller-Wirtz et al (ref. 1) used simple linear regression to address their research question, researchers often need to specify a multivariable model and make choices about which independent variables to include and how to model the functional relationship between variables (eg, straight line versus curve; inclusion of interaction terms).

Variable selection is a much-debated topic, and the details are beyond the scope of this Statistical Minute. Basically, variable selection depends on whether the purpose of the model is to understand the relationship between variables or to make predictions. It also depends on whether an informed a priori theory exists to guide variable selection, and on whether the model needs to control for confounders: variables that are not of primary interest but could distort the relationship between other variables.

Omitting important variables or interactions can lead to biased estimates and a model that poorly describes the true underlying relationships, whereas including too many variables leads to modeling the noise (sampling error) in the data and reduces the precision of the estimates. Various statistics and plots, including adjusted $R^2$, Mallows $C_p$, and residual plots, are available to assess the goodness of fit of the chosen linear regression model.
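The two numerical criteria can be written as $R^2_{\text{adj}} = 1 - (1 - R^2)\frac{n - 1}{n - p - 1}$ and $C_p = \mathrm{SSE}_p/\hat{\sigma}^2 - n + 2(p + 1)$, where $p$ is the number of independent variables in the candidate model and $\hat{\sigma}^2$ is the residual variance estimate from the largest model considered. The sketch below applies both to nested models on simulated data in which only 2 of 5 predictors matter. The helper names are hypothetical, and a model with little bias is expected to have $C_p$ close to $p + 1$.

```python
import numpy as np


def sse_and_r2(X, y):
    """Residual sum of squares and R^2 for the least-squares fit of y on X plus an intercept."""
    design = np.column_stack([np.ones(len(y)), X])
    b, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ b
    sse = resid @ resid
    return sse, 1 - sse / np.sum((y - y.mean()) ** 2)


def adjusted_r2(r2, n, p):
    """Adjusted R^2 for a model with p independent variables plus an intercept."""
    return 1 - (1 - r2) * (n - 1) / (n - p - 1)


def mallows_cp(sse_p, p, sigma2_full, n):
    """Mallows C_p for a submodel with p independent variables plus an intercept."""
    return sse_p / sigma2_full - n + 2 * (p + 1)


rng = np.random.default_rng(4)
n, k = 60, 5
X = rng.normal(size=(n, k))
y = 1.0 + 2.0 * X[:, 0] - 1.0 * X[:, 1] + rng.normal(size=n)  # only the first two predictors matter

sse_full, _ = sse_and_r2(X, y)
sigma2_full = sse_full / (n - k - 1)  # residual variance from the largest model
for p in range(1, k + 1):             # nested models using the first p columns
    sse_p, r2_p = sse_and_r2(X[:, :p], y)
    print(p, round(r2_p, 3), round(adjusted_r2(r2_p, n, p), 3),
          round(mallows_cp(sse_p, p, sigma2_full, n), 1))
```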


Linear Regression

Linear Regression is a method for modelling a relationship between a dependent variable and independent variables. These models can be fit with numerous approaches. The most common is least squares, where we minimize the mean squared error between the predicted values $\hat{y} = \textbf{X}\hat{\beta}$ and the actual values $y$: $\frac{1}{n}\lVert y - \textbf{X}\beta \rVert_2^2$. When $\textbf{X}$ has full column rank, the minimizer is $\hat{\beta} = (\textbf{X}^\top\textbf{X})^{-1}\textbf{X}^\top y$.
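Setting the gradient of the squared error to zero gives the normal equations $(\textbf{X}^\top\textbf{X})\hat{\beta} = \textbf{X}^\top y$. A short NumPy sketch (simulated data, arbitrary coefficients) compares solving them directly with `numpy.linalg.lstsq`:

```python
import numpy as np

rng = np.random.default_rng(0)
X = np.column_stack([np.ones(100), rng.normal(size=(100, 3))])  # first column: intercept
beta = np.array([0.5, 1.0, -2.0, 3.0])
y = X @ beta + rng.normal(scale=0.2, size=100)

# Normal equations; solve() avoids forming an explicit matrix inverse.
beta_normal = np.linalg.solve(X.T @ X, X.T @ y)
# lstsq uses an SVD-based LAPACK solver, which is safer when X is ill-conditioned.
beta_lstsq, *_ = np.linalg.lstsq(X, y, rcond=None)
print(np.round(beta_normal, 3), np.round(beta_lstsq, 3))
```

Forming $\textbf{X}^\top\textbf{X}$ squares the condition number of $\textbf{X}$, which is why library routines usually prefer QR- or SVD-based solvers over the normal equations.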

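We can also define the problem in probabilistic terms as a generalized linear model (GLM) with Gaussian errors and the identity link, and then perform maximum likelihood estimation to estimate $\hat{\beta}$; under this model the maximum likelihood estimate of $\beta$ coincides with the least-squares solution.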
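The link to least squares follows from the Gaussian log-likelihood, $\ell(\beta, \sigma^2) = -\frac{n}{2}\log(2\pi\sigma^2) - \frac{\lVert y - \textbf{X}\beta\rVert_2^2}{2\sigma^2}$: for any fixed $\sigma^2$, maximizing over $\beta$ is the same as minimizing the squared error, and the maximizing variance is $\hat{\sigma}^2 = \mathrm{SSE}/n$. The sketch below, with a hypothetical helper name and simulated data, checks that perturbing the least-squares solution only lowers the likelihood.

```python
import numpy as np


def gaussian_loglik(beta, sigma2, X, y):
    """Log-likelihood of y under y = X beta + eps, with eps ~ N(0, sigma2 I)."""
    resid = y - X @ beta
    n = y.size
    return -0.5 * n * np.log(2 * np.pi * sigma2) - resid @ resid / (2 * sigma2)


rng = np.random.default_rng(1)
X = np.column_stack([np.ones(80), rng.normal(size=(80, 2))])
y = X @ np.array([1.0, 0.5, -0.5]) + rng.normal(size=80)

beta_ols, *_ = np.linalg.lstsq(X, y, rcond=None)
sigma2_mle = np.mean((y - X @ beta_ols) ** 2)  # the MLE divides SSE by n, not n - p
best = gaussian_loglik(beta_ols, sigma2_mle, X, y)

# Random perturbations of the least-squares coefficients all give a lower log-likelihood.
perturbed = [gaussian_loglik(beta_ols + rng.normal(scale=0.05, size=3), sigma2_mle, X, y)
             for _ in range(100)]
print(best, max(perturbed))
```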


Multiple Linear Regression: Recently Published Documents


The Effect of Conflict and Termination of Employment on Employee's Work Spirit

This study aims to determine whether conflict and termination of employment, both partially and simultaneously, have a significant effect on the morale of employees at PT. Medan Technical Benefits, and how large that effect is. The method used in this research is quantitative, with several tests, namely reliability analysis, classical assumption tests and linear regression. Based on regression of primary data processed using SPSS 20, the following multiple linear regression equation was obtained: Y = 1.031 + 0.329 X1 + 0.712 X2. Partially, the conflict variable (X1) has a significant effect on the employee's work spirit (Y) at PT. Medan Technical Benefits. This means that the hypothesis in this study was accepted, as shown by the calculated t value exceeding the critical t value (3.952 > 2.052). The termination of employment variable (X2) also has a significant influence on the work spirit of employees (Y) at PT. Medan Technical Benefits. This means that the hypothesis in this study was accepted, as shown by the calculated t value exceeding the critical t value (7.681 > 2.052). Simultaneously, conflict (X1) and termination of employment (X2) have a significant influence on the morale of employees (Y) at PT. Medan Technical Benefits. This means that the hypothesis in this study was accepted, as evidenced by the calculated F value exceeding the critical F value (221.992 > 3.35). Conflict (X1) and termination of employment (X2) together account for 94.3% of the variation in employee morale (Y), while the remaining 5.7% is influenced by other variables not examined in this study. From the above conclusions, the author advises that employees and leaders should reduce prolonged conflict so that work spirit can increase. Leaders should be more selective in severing employment relationships so that decent employees are not dismissed unilaterally. Employees should work with high spirit so that the company can see the quality that employees have.

Prediction of Local Government Revenue using Data Mining Method

Local Government Revenue, commonly abbreviated as PAD, is the part of regional income that serves as a source of financing for running a regional government. Each local government must plan its Local Government Revenue for the coming year, so a forecasting method is needed to determine the Local Government Revenue value for the coming year. This study discusses several methods for predicting Local Government Revenue using data on its realization in previous years. This study proposes three methods for forecasting Local Government Revenue: Multiple Linear Regression, Artificial Neural Network, and Deep Learning. The data used are Local Revenue data from 2010 to 2020. The research was conducted using RapidMiner software and the CRISP-DM framework. The tests carried out showed an RMSE of 97 billion and an R2 of 0.942 for the Multiple Linear Regression method, an RMSE of 135 billion and an R2 of 0.911 for the ANN method, and an RMSE of 104 billion and an R2 of 0.846 for the Deep Learning method. This study shows that for the prediction of Local Government Revenue, the Multiple Linear Regression method is better than the ANN or Deep Learning method. Keywords: Local Government Revenue, Multiple Linear Regression, Artificial Neural Network, Deep Learning, Coefficient of Determination

Analysis of the Role of Motivation as a Mediator of the Effect of the Leadership Trilogy and Job Satisfaction on the Work Productivity of Employees of PT. Mataram Tunggal Garment

The purpose of this study is to determine whether motivation mediates the effect of the leadership trilogy and job satisfaction on employee work productivity at PT. Mataram Tunggal Garment. The method used in this study is quantitative. Primary data were obtained from questionnaires completed by 78 respondents, selected with a saturated sampling technique. The data were then analyzed using descriptive analysis, multiple linear regression, partial t tests, the coefficient of determination (R2) and Sobel tests. The results showed that job satisfaction had a significant influence on motivation; leadership trilogy and job satisfaction had a significant influence on employee work productivity; leadership trilogy and motivation had no significant effect on employee work productivity; and motivation did not significantly mediate the effect of leadership trilogy and job satisfaction on employee work productivity. Keywords: Leadership Trilogy, Motivation, Job Satisfaction and Employee Productivity.

Prevalence of asymptomatic hyperuricemia and its association with prediabetes, dyslipidemia and subclinical inflammation markers among young healthy adults in Qatar

Aim: The aim of this study is to investigate the prevalence of asymptomatic hyperuricemia in Qatar and to examine its association with changes in markers of dyslipidemia, prediabetes and subclinical inflammation. Methods: A cross-sectional study of young adult participants aged 18–40 years without comorbidities, with data collected between 2012 and 2017. Exposure was defined as uric acid level, and outcomes were defined as levels of different blood markers. De-identified data were collected from Qatar Biobank. T-tests, correlation tests and multiple linear regression were used to investigate the effects of hyperuricemia on blood markers. Statistical analyses were conducted using STATA 16. Results: The prevalence of asymptomatic hyperuricemia is 21.2% among young adults in Qatar. Differences between hyperuricemic and normouricemic groups were observed using multiple linear regression analysis and found to be statistically and clinically significant after adjusting for age, gender, BMI, smoking and exercise. Significant associations were found between uric acid level and HDL-c (p = 0.019; correlation coefficient -0.07, 95% CI [-0.14, -0.01]), c-peptide (p = 0.018; correlation coefficient 0.38, 95% CI [0.06, 0.69]) and monocyte to HDL ratio (MHR) (p = 0.026; correlation coefficient 0.47, 95% CI [0.06, 0.89]). Conclusions: Asymptomatic hyperuricemia is prevalent among young adults and associated with markers of prediabetes, dyslipidemia, and subclinical inflammation.

Screen Time, Age and Sunshine Duration Rather Than Outdoor Activity Time Are Related to Nutritional Vitamin D Status in Children With ASD

Objective: This study aimed to investigate the possible associations among vitamin D, screen time and other factors that might affect the concentration of vitamin D in children with autism spectrum disorder (ASD). Methods: In total, 306 children with ASD were recruited, and data on their age, sex, height, weight, screen time, time of outdoor activity, ASD symptoms [including Autism Behavior Checklist (ABC), Childhood Autism Rating Scale (CARS) and Autism Diagnostic Observation Schedule–Second Edition (ADOS-2)] and vitamin D concentrations were collected. A multiple linear regression model was used to analyze the factors related to the vitamin D concentration. Results: A multiple linear regression analysis showed that screen time (β = −0.122, P = 0.032), age (β = −0.233, P < 0.001), and blood collection month (reflecting sunshine duration) (β = 0.177, P = 0.004) were statistically significant. The vitamin D concentration in the children with ASD was negatively correlated with screen time and age and positively correlated with sunshine duration. Conclusion: The vitamin D levels in children with ASD are related to electronic screen time, age and sunshine duration. Since age and season cannot be controlled, assessing screen time in children with ASD could provide a basis for the clinical management of their vitamin D nutritional status.
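The abstract does not say whether the β values are standardized coefficients. If they are, each one equals the raw slope rescaled by the ratio of standard deviations, β = b · s_x / s_y, which puts predictors measured in different units on a common scale. The sketch below is a minimal illustration with hypothetical screen-time and vitamin D values, not data from the study. With a single predictor, the standardized slope reduces to Pearson's r, which the sketch uses as a check.

```python
import math
import statistics


def standardized_beta(b, x, y):
    """Rescale an unstandardized slope b to standard-deviation units: b * s_x / s_y."""
    return b * statistics.stdev(x) / statistics.stdev(y)


# Hypothetical data (not from the study): screen time (h/day) and vitamin D (ng/mL)
screen = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
vitd = [30.0, 29.0, 26.0, 27.0, 23.0, 22.0]

mx, my = statistics.fmean(screen), statistics.fmean(vitd)
sxy = sum((a - mx) * (c - my) for a, c in zip(screen, vitd))
sxx = sum((a - mx) ** 2 for a in screen)
syy = sum((c - my) ** 2 for c in vitd)

b = sxy / sxx                       # raw slope: ng/mL per hour of screen time
beta = standardized_beta(b, screen, vitd)
r = sxy / math.sqrt(sxx * syy)      # Pearson correlation; equals beta with one predictor
print(f"b = {b:.3f}, beta = {beta:.3f}, r = {r:.3f}")
```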

Determining Factors of Fraud in Local Government

This research analyzes the determinants of fraud in local government. Internal control effectiveness, compliance with accounting rules, compensation compliance, and unethical behavior are the independent variables, and fraud is the dependent variable. The research was conducted in the Bantul local government agencies (OPD). The sample consisted of 86 respondents selected by purposive sampling, and their responses were analyzed with multiple linear regression. The results show that internal control effectiveness affects fraud, compliance with accounting rules does not affect fraud, compensation compliance does not affect fraud, and unethical behavior affects fraud.

THE EFFECT OF THE EFFECTIVENESS OF CASH, RECEIVABLES, AND WORKING CAPITAL TURNOVER ON ECONOMIC RENTABILITY AT THE GROGOLAN BARU MARKET TRADERS COOPERATIVE (KOPPASGOBA) FOR THE 2016-2020 PERIOD

This study aims to test and analyze the effect of the effectiveness of cash turnover, receivables turnover, and working capital turnover on economic rentability in the New Grogolan Market Traders Cooperative of Pekalongan City from 2016 to 2020. The study used a quantitative method with documentation as the data collection technique, and the data were analyzed with multiple linear regression. The results show that (1) the effectiveness of cash turnover has no significant effect on economic rentability, (2) the effectiveness of receivables turnover has no significant effect on economic rentability, (3) the effectiveness of working capital turnover has a positive and significant effect on economic rentability, and (4) the effectiveness of cash, receivables, and working capital turnover together has a positive and significant effect on economic rentability. Keywords: Turnover of cash, turnover of receivables, turnover of working capital, and economic rentability.
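Finding (4), a joint effect of the three turnover ratios, corresponds to the overall F-test of a multiple regression. This test compares explained and residual variance: F = (R²/k) / ((1 − R²)/(n − k − 1)), with k and n − k − 1 degrees of freedom. The sketch below computes only the statistic. The R², sample size and helper name `overall_f` are hypothetical and not taken from the study. If SciPy is available, a p-value could be obtained from the F distribution's upper tail, for example `scipy.stats.f.sf(F, k, n - k - 1)`.

```python
def overall_f(r2: float, n: int, k: int) -> float:
    """Overall F statistic for H0: all k slope coefficients equal zero.

    r2: coefficient of determination of the fitted model
    n:  number of observations
    k:  number of predictors, excluding the intercept
    """
    if not 0.0 <= r2 < 1.0:
        raise ValueError("r2 must lie in [0, 1)")
    df_model, df_resid = k, n - k - 1
    if df_model < 1 or df_resid < 1:
        raise ValueError("need k >= 1 and n > k + 1")
    return (r2 / df_model) / ((1.0 - r2) / df_resid)


# Hypothetical inputs: three turnover ratios as predictors, 60 observations
F = overall_f(r2=0.45, n=60, k=3)
print(f"F(3, 56) = {F:.2f}")
```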

Improvement of AHMES Using AI Algorithms

This research aims to improve the rationality and intelligence of the AUTOMATICALLY HIGHER MATHEMATICALLY EXAM SYSTEM (AHMES) through several AI algorithms. AHMES is an intelligent, high-quality higher-mathematics examination solution for the Department of Computer Engineering at Pai Chai University. This research redesigned the difficulty system of AHMES and used AI algorithms to initialize it and adjust it continuously. This paper describes the multiple linear regression algorithm used in this research and the AHMES learning (AL) algorithm, which was improved with the Q-learning algorithm. Simulation tests of the upgraded AHMES show that these algorithms are effective.
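The abstract does not describe the AL algorithm in detail. However, the Q-learning rule it builds on is standard: Q(s, a) ← Q(s, a) + α[r + γ max_a' Q(s', a') − Q(s, a)]. Below is a generic tabular sketch of that update under the following assumptions: hypothetical states (exam difficulty levels), hypothetical actions (lower, keep, or raise difficulty) and arbitrary rewards. The learning rate α = 0.1 and discount γ = 0.9 are illustrative defaults. This is not the AHMES implementation.

```python
from collections import defaultdict

# Hypothetical action set: lower, keep, or raise the exam difficulty level
ACTIONS = (-1, 0, 1)


def q_update(Q, state, action, reward, next_state, alpha=0.1, gamma=0.9):
    """Apply one tabular Q-learning update and return the new Q(state, action).

    Q(s, a) <- Q(s, a) + alpha * (reward + gamma * max_a' Q(s', a') - Q(s, a))
    """
    best_next = max(Q[(next_state, a)] for a in ACTIONS)
    td_target = reward + gamma * best_next
    Q[(state, action)] += alpha * (td_target - Q[(state, action)])
    return Q[(state, action)]


Q = defaultdict(float)  # unvisited (state, action) pairs start at 0.0

# Hypothetical transition: at difficulty level 2 the system raises the level (+1),
# reaches level 3, and receives an arbitrary reward of 1.0.
first = q_update(Q, state=2, action=1, reward=1.0, next_state=3)
Q[(3, 0)] = 0.5  # suppose "keep" at level 3 already has some estimated value
second = q_update(Q, state=2, action=1, reward=1.0, next_state=3)
print(first, second)
```

The second update is larger than the first because the bootstrapped term γ·max Q(s', ·) now contributes the value already learned for level 3.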

ANALYSIS OF THE EFFECT OF SERVICE QUALITY, PROMOTION AND PRICE ON CUSTOMER SATISFACTION WITH JNE PARCEL DELIVERY SERVICES IN BESUKI

This research examined the effect of service quality, promotion and price on customer satisfaction at the Besuki branch of JNE. Its aim was to help the branch increase customer satisfaction through these three factors. The sample was drawn from the population by random sampling. Multiple linear regression was used to determine the effect of service quality, promotion and price on customer satisfaction. The results show that service quality, promotion and price each affect customer satisfaction. Keywords: service quality, promotion, price, customer satisfaction

THE EFFECT OF PRODUCT QUALITY, PRICE AND INFLUENCER MARKETING ON PURCHASE DECISIONS FOR SCARLETT BODY WHITENING

This research examined the influence of product quality, price and influencer marketing on the purchasing decision for Scarlett Body Whitening in East Java. A questionnaire was used to collect data from Scarlett Body Whitening consumers in East Java. Because no valid data on the number of consumers were available, the sample was drawn using Roscoe's method. The data were analyzed using multiple linear regression. Product quality and price have a positive and significant effect on purchasing decisions, whereas influencer marketing had no significant effect on the purchase decision for Scarlett Body Whitening. Further research is needed to establish whether influencer marketing affects purchase decisions. Keywords: Product quality, price, influencer marketing, buying decision

Linear regression is a statistical procedure for calculating the value of a dependent variable from an independent variable. Linear regression measures the association between two variables. It is ...

Linear regression refers to the mathematical technique of fitting given data to a function of a certain type. It is best known for fitting straight lines. In this paper, we explain the theory behind linear regression and illustrate this technique with a real-world data set. These data relate the earnings of a food truck to the population size of the city where the food truck sells its food.

Example: regression line for a multivariable regression, $Y = -120.07 + 100.81 \times X_1 + 0.38 \times X_2 + 3.41 \times X_3$, where $X_1$ = height (meters), $X_2$ = age (years), ... The study of relationships between variables and the generation of risk scores are very important elements of medical research. The proper performance of regression analysis ...
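In an equation like Y = -120.07 + 100.81 × X1 + 0.38 × X2 + 3.41 × X3, each coefficient is the change in Y for a one-unit change in its predictor while the other predictors are held fixed. The sketch below evaluates that equation. The definition of X3 is truncated in the source, so the value used for it is an arbitrary placeholder, as are the height and age inputs. The helper name `predict` is illustrative.

```python
def predict(height_m, age_years, x3):
    """Evaluate Y = -120.07 + 100.81*X1 + 0.38*X2 + 3.41*X3.

    X1 = height (meters), X2 = age (years); X3's definition is truncated in the
    source, so any value passed for it here is a placeholder.
    """
    return -120.07 + 100.81 * height_m + 0.38 * age_years + 3.41 * x3


# Hypothetical inputs; x3 = 25.0 is arbitrary
base = predict(1.75, 40, 25.0)
one_year_older = predict(1.75, 41, 25.0)

# Holding X1 and X3 fixed, one extra year of age shifts Y by the age coefficient
print(round(one_year_older - base, 2))
```

The same 0.38 shift appears whatever fixed values are chosen for height and X3. This is what "holding the other predictors constant" means in a model without interaction terms.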

Clinical & Experimental Ophthalmology is an official RANZCO journal, publishing peer-reviewed papers covering all aspects of eye disease clinical practice and research. Abstract: Linear regression (LR) is a powerful statistical model when used correctly.

This paper examines and compares various regression models and machine learning algorithms. The selected techniques include multiple linear regression (MLR), ridge regression (RR), least absolute shrinkage and selection operator (LASSO) regression, multilayer perceptron (MLP), radial basis function (RBF), decision tree (DT), support vector ...
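Ridge regression and LASSO differ from ordinary MLR by adding a penalty on coefficient size. For a single centered predictor, both estimates have closed forms that make the shrinkage easy to see. Ridge divides S_xy by S_xx + λ. LASSO soft-thresholds S_xy at λ/2 and can set the slope exactly to zero. The sketch below is minimal and rests on stated assumptions: one predictor, an unpenalized intercept, and penalties λb² and λ|b| added to the residual sum of squares. The data are made up, and these are not the settings used in the compared study.

```python
import statistics


def centered_sums(x, y):
    """Return (S_xx, S_xy) computed on mean-centered data."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sxx, sxy


def ridge_slope(x, y, lam):
    """Minimizer of sum((y_c - b*x_c)^2) + lam * b^2 for centered x_c, y_c."""
    sxx, sxy = centered_sums(x, y)
    return sxy / (sxx + lam)


def lasso_slope(x, y, lam):
    """Minimizer of sum((y_c - b*x_c)^2) + lam * |b|: soft-threshold S_xy at lam/2."""
    sxx, sxy = centered_sums(x, y)
    shrunk = max(abs(sxy) - lam / 2.0, 0.0)
    return shrunk / sxx if sxy >= 0 else -shrunk / sxx


# Hypothetical data
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]
for lam in (0.0, 10.0, 50.0):
    print(lam, round(ridge_slope(x, y, lam), 3), round(lasso_slope(x, y, lam), 3))
```

With λ = 0 both reduce to the ordinary least-squares slope. As λ grows, ridge shrinks the slope smoothly, while LASSO reaches exactly zero once λ/2 exceeds |S_xy|.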

The most basic regression relationship is a simple linear regression. In this case, $E(Y \mid X) = \mu(X) = \beta_0 + \beta_1 X$, a line with intercept $\beta_0$ and slope $\beta_1$. We can interpret this as Y having a ...
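For the simple model, the least-squares estimates have a closed form: β̂1 = S_xy / S_xx and β̂0 = ȳ − β̂1·x̄. Here S_xy and S_xx are the centered cross-product and the sum of squares of X. The short sketch below shows this; the function name `simple_linear_regression` is illustrative.

```python
def simple_linear_regression(x, y):
    """Least-squares intercept and slope for E(Y | X) = b0 + b1 * X."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x must vary")
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b1 = sxy / sxx          # slope
    b0 = my - b1 * mx       # intercept: the fitted line passes through (mean x, mean y)
    return b0, b1


# Points lying exactly on Y = 3 + 2X recover beta0 = 3 and beta1 = 2
print(simple_linear_regression([0, 1, 2, 3], [3, 5, 7, 9]))  # (3.0, 2.0)
```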

When we use the regression sum of squares, $SSR = \sum_i (\hat{y}_i - \bar{y})^2$, the ratio $R^2 = SSR/(SSR + SSE)$ is the proportion of variation explained by the regression model and in multiple regression is ...
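The decomposition SST = SSR + SSE holds for a least-squares fit that includes an intercept. Under that fit, R² = SSR/(SSR + SSE) equals the familiar 1 − SSE/SST. The small sketch below fits a line to hypothetical data and computes R² from SSR and SSE. The helper names `fit_line` and `r_squared` are illustrative.

```python
def fit_line(x, y):
    """Least-squares fit with an intercept; returns (b0, b1)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    b1 = (sum((a - mx) * (b - my) for a, b in zip(x, y))
          / sum((a - mx) ** 2 for a in x))
    return my - b1 * mx, b1


def r_squared(y, y_hat):
    """R^2 = SSR / (SSR + SSE); matches 1 - SSE/SST for OLS with an intercept."""
    y_bar = sum(y) / len(y)
    ssr = sum((yh - y_bar) ** 2 for yh in y_hat)             # regression sum of squares
    sse = sum((yi - yh) ** 2 for yi, yh in zip(y, y_hat))    # residual sum of squares
    return ssr / (ssr + sse)


# Hypothetical data
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0, 4.1, 5.9, 8.2, 9.8]
b0, b1 = fit_line(x, y)
y_hat = [b0 + b1 * xi for xi in x]
print(f"R^2 = {r_squared(y, y_hat):.4f}")
```

For fitted values that do not come from an intercept-including least-squares fit, the two formulas can disagree. In that case neither is guaranteed to measure "variance explained".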

All of Linear Regression. Least squares linear regression is one of the oldest and most widely used data analysis tools. Although the theoretical analysis of the ordinary least squares (OLS) estimator is as old, several fundamental questions are yet to be answered. Suppose regression observations $(X_1, Y_1), \ldots, (X_n, Y_n) \in \mathbb{R}^d \times$ ...

Linear regression is an extremely versatile technique that can be used to address a variety of research questions and study aims. Researchers may want to test whether there is evidence for a relationship between a categorical (grouping) variable (eg, treatment group or patient sex) and a quantitative outcome (eg, blood pressure).
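A two-level grouping variable such as treatment group enters a linear regression as a 0/1 indicator. The fitted intercept is then the mean of the reference group, and the slope is the difference between the two group means. The sketch below uses hypothetical blood-pressure values; `fit_indicator` is an illustrative name, and the function handles only the two-group case.

```python
def fit_indicator(groups, y, reference):
    """Regress y on a 0/1 indicator D (D = 0 for the reference group, 1 otherwise).

    Returns (b0, b1): b0 is the reference-group mean, b1 the difference in means.
    """
    if len(set(groups)) != 2 or reference not in groups:
        raise ValueError("this sketch handles exactly two groups, including the reference")
    d = [0.0 if g == reference else 1.0 for g in groups]
    n = len(d)
    md, my = sum(d) / n, sum(y) / n
    b1 = (sum((di - md) * (yi - my) for di, yi in zip(d, y))
          / sum((di - md) ** 2 for di in d))
    b0 = my - b1 * md
    return b0, b1


# Hypothetical systolic blood pressure (mmHg) by treatment group
groups = ["control", "control", "control", "drug", "drug", "drug"]
bp = [140.0, 135.0, 142.0, 128.0, 131.0, 126.0]
b0, b1 = fit_indicator(groups, bp, reference="control")
print(f"control mean = {b0:.2f}, drug - control = {b1:.2f}")
```

Testing whether b1 differs from zero in this regression is equivalent to a pooled-variance two-sample t-test of the group means.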

In both cases, we still use the term 'linear' because we assume that the response variable is directly related to a linear combination of the explanatory variables. The equation for multiple linear regression has the same form as that for simple linear regression but has more terms: $Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \cdots + \beta_k X_k$.

Linear Regression is a method for modelling a relationship between a dependent variable and independent variables. These models can be fit with numerous approaches. ...

In this study, the data for the multilinear regression analysis come from Sakarya University Education Faculty students' course scores (measurement and evaluation, educational psychology, program development, counseling and instructional techniques) and their 2012 KPSS scores. Assumptions of multilinear regression analysis: normality, linearity, no ...
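The list of assumptions is truncated in the source. One check commonly run for MLR, though not necessarily one this study lists, is the variance inflation factor (VIF) for multicollinearity: VIF_j = 1/(1 − R_j²), where R_j² comes from regressing predictor j on the other predictors. With exactly two predictors, R_j² is the squared Pearson correlation between them, and the sketch below relies on that simplification. The score values and helper names are hypothetical. Values above roughly 5 or 10 are often treated as a warning sign, but that threshold is a rule of thumb.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def vif_two_predictors(x1, x2):
    """VIF = 1 / (1 - R_j^2); with two predictors, R_j^2 = r(x1, x2)^2 for both."""
    r2 = pearson_r(x1, x2) ** 2
    if r2 >= 1.0 - 1e-12:
        raise ValueError("predictors are (almost) perfectly collinear")
    return 1.0 / (1.0 - r2)


# Hypothetical course scores for two predictors
measurement = [70.0, 75.0, 80.0, 85.0, 90.0, 65.0]
psychology = [72.0, 70.0, 83.0, 80.0, 88.0, 68.0]
print(f"VIF = {vif_two_predictors(measurement, psychology):.2f}")
```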

Linear regression is used to quantify the relationship between ≥1 independent (predictor) variables and a continuous dependent (outcome) variable. In this issue of Anesthesia & Analgesia, Müller-Wirtz et al.¹ report results of a study in which they used linear regression to assess the relationship in a rat model between tissue propofol ...

The method used in this research is a quantitative method with several tests, namely reliability analysis, classical assumption tests and linear regression. Based on regression of the primary data processed using SPSS 20, the following multiple linear regression equation was obtained: $Y = 1.031 + 0.329 X_1 + 0.712 X_2$. Partially, the ...

Regression allows you to estimate how a dependent variable changes as the independent variable(s) change. Simple linear regression example: you are a social researcher interested in the relationship between income and happiness. You survey 500 people whose incomes range from 15k to 75k and ask them to rank their happiness on a scale from 1 to ...

Home environment and reading achievement research has been largely dominated by a focus on early reading acquisition, while research on the relationship between home environments and reading success with preadolescents (Grades 4-6) has been largely overlooked. There are other limitations as well. Clarke and Kurtz-Costes (1997) argued that prior ...

Predictive modeling of PV solar power plant efficiency considering weather conditions: A comparative analysis of artificial neural networks and multiple linear regression ... According to the linear regression plot, there was a strong positive correlation between the two variables. The ANOVA p-value was smaller than 0.0001 (p < 0.0001) ...

Building on existing research, the marginal contribution of this paper may lie in the following aspects. First, from the perspective of the level of digitalization, this paper examines in depth the non-linear relationship between digital inclusive finance and green innovation in SMEs, so as to develop new ideas for digital inclusive finance to ...