What Is A Research (Scientific) Hypothesis? A plain-language explainer + examples

By: Derek Jansen (MBA) | Reviewed By: Dr Eunice Rautenbach | June 2020

If you’re new to the world of research, or it’s your first time writing a dissertation or thesis, you’re probably noticing that the words “research hypothesis” and “scientific hypothesis” are used quite a bit, and you’re wondering what they mean in a research context.

“Hypothesis” is one of those words that people use loosely, thinking they understand what it means. However, it has a very specific meaning within academic research. So, it’s important to understand the exact meaning before you start hypothesizing.

Research Hypothesis 101

- What is a hypothesis?

- What is a research hypothesis (scientific hypothesis)?

- Requirements for a research hypothesis

- Definition of a research hypothesis

- The null hypothesis

What is a hypothesis?

Let’s start with the general definition of a hypothesis (not a research hypothesis or scientific hypothesis), according to the Cambridge Dictionary:

Hypothesis: an idea or explanation for something that is based on known facts but has not yet been proved.

In other words, it’s a statement that provides an explanation for why or how something works, based on facts (or some reasonable assumptions), but that has not yet been specifically tested. For example, a hypothesis might look something like this:

Hypothesis: sleep impacts academic performance.

This statement predicts that academic performance will be influenced by the amount and/or quality of sleep a student gets – sounds reasonable, right? It’s based on reasonable assumptions, underpinned by what we currently know about sleep and health (from the existing literature). So, loosely speaking, we could call it a hypothesis, at least by the dictionary definition.

But that’s not good enough…

Unfortunately, that’s not quite sophisticated enough to describe a research hypothesis (also sometimes called a scientific hypothesis), and it wouldn’t be acceptable in a dissertation, thesis or research paper. In the world of academic research, a statement needs a few more criteria to constitute a true research hypothesis.

What is a research hypothesis?

A research hypothesis (also called a scientific hypothesis) is a statement about the expected outcome of a study (for example, a dissertation or thesis). To constitute a quality hypothesis, the statement needs to have three attributes – specificity, clarity and testability.

Let’s take a look at these more closely.

Hypothesis Essential #1: Specificity & Clarity

A good research hypothesis needs to be extremely clear and articulate about both what’s being assessed (who or what variables are involved) and the expected outcome (for example, a difference between groups, a relationship between variables, etc.).

Let’s stick with our sleepy students example and look at how this statement could be more specific and clear.

Hypothesis: Students who sleep at least 8 hours per night will, on average, achieve higher grades in standardised tests than students who sleep less than 8 hours a night.

As you can see, the statement is very specific as it identifies the variables involved (sleep hours and test grades), the parties involved (two groups of students), as well as the predicted relationship type (a positive relationship). There’s no ambiguity or uncertainty about who or what is involved in the statement, and the expected outcome is clear.

Contrast that with the original hypothesis we looked at – “Sleep impacts academic performance” – and you can see the difference. “Sleep” and “academic performance” are both comparatively vague, and there’s no indication of what the expected relationship direction is (more sleep or less sleep). As you can see, specificity and clarity are key.

Hypothesis Essential #2: Testability (Provability)

A statement must be testable to qualify as a research hypothesis. In other words, there needs to be a way to prove (or disprove) the statement. If it’s not testable, it’s not a hypothesis – simple as that.

For example, consider the hypothesis we mentioned earlier:

Hypothesis: Students who sleep at least 8 hours per night will, on average, achieve higher grades in standardised tests than students who sleep less than 8 hours a night.

We could test this statement by undertaking a quantitative study involving two groups of students, one that gets 8 or more hours of sleep per night for a fixed period, and one that gets less. We could then compare the standardised test results for both groups to see if there’s a statistically significant difference.
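To make the comparison step a little more concrete, here is a minimal Python sketch of one common way to compare two group means: Welch's t statistic. Treat it as an illustration under stated assumptions rather than a prescribed method. The test scores are invented, the function name `welch_t` is hypothetical, and the choice of Welch's test is our assumption (nothing above commits to a specific statistical test).

```python
import math
import statistics


def welch_t(group_a, group_b):
    """Return Welch's t statistic and its approximate degrees of freedom."""
    mean_a, mean_b = statistics.fmean(group_a), statistics.fmean(group_b)
    # Sample variances (n - 1 in the denominator).
    var_a, var_b = statistics.variance(group_a), statistics.variance(group_b)
    n_a, n_b = len(group_a), len(group_b)
    # Squared standard error of each group mean.
    se2_a, se2_b = var_a / n_a, var_b / n_b
    t = (mean_a - mean_b) / math.sqrt(se2_a + se2_b)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (se2_a + se2_b) ** 2 / (se2_a ** 2 / (n_a - 1) + se2_b ** 2 / (n_b - 1))
    return t, df


# Hypothetical standardised test scores, invented purely for illustration.
slept_8_plus = [78, 85, 90, 72, 88, 81, 79, 93]
slept_less = [70, 65, 80, 75, 68, 72, 77, 66]

t_stat, dof = welch_t(slept_8_plus, slept_less)
print(f"t = {t_stat:.2f}, df = {dof:.1f}")
```

A t value that is large in absolute terms, relative to the degrees of freedom, suggests the gap between the groups is unlikely to be down to chance. In a real study you would convert it to a p-value using statistical software and check the test's assumptions first.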

Again, if you compare this to the original hypothesis we looked at – “Sleep impacts academic performance” – you can see that it would be quite difficult to test that statement, primarily because it isn’t specific enough. How much sleep? By whom? What type of academic performance?

So, remember the mantra – if you can’t test it, it’s not a hypothesis 🙂

Defining A Research Hypothesis

You’re still with us? Great! Let’s recap and pin down a clear definition of a hypothesis.

A research hypothesis (or scientific hypothesis) is a statement about an expected relationship between variables, or explanation of an occurrence, that is clear, specific and testable.

So, when you write up hypotheses for your dissertation or thesis, make sure that they meet all these criteria. If they do, you’ll not only have rock-solid hypotheses but you’ll also ensure a clear focus for your entire research project.

What about the null hypothesis?

You may have also heard the terms null hypothesis, alternative hypothesis, or H-zero thrown around. At a simple level, the null hypothesis is the counter-proposal to the original hypothesis.

For example, if the hypothesis predicts that there is a relationship between two variables (for example, sleep and academic performance), the null hypothesis would predict that there is no relationship between those variables.

At a more technical level, the null hypothesis proposes that there is no real effect or relationship in the population being studied, and that any differences observed in the sample are due to chance alone.
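One way to see what “due to chance alone” means is a permutation test, sketched below in Python. If the null hypothesis were true, the group labels would be interchangeable, so we shuffle them many times and count how often a shuffled difference is at least as large as the one we observed. This is only a sketch: the scores are invented, the function name `permutation_p_value` is hypothetical, and the permutation test is our choice of illustration, not the only way to test a null hypothesis.

```python
import random
import statistics


def permutation_p_value(group_a, group_b, n_shuffles=10_000, seed=0):
    """Estimate how often chance alone produces a gap this large."""
    rng = random.Random(seed)  # fixed seed so the result is repeatable
    observed = abs(statistics.fmean(group_a) - statistics.fmean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    at_least_as_extreme = 0
    for _ in range(n_shuffles):
        # Under the null hypothesis the labels are arbitrary, so reassign them.
        rng.shuffle(pooled)
        diff = abs(statistics.fmean(pooled[:n_a]) - statistics.fmean(pooled[n_a:]))
        if diff >= observed:
            at_least_as_extreme += 1
    # Add one to both counts so the estimate is never exactly zero.
    return (at_least_as_extreme + 1) / (n_shuffles + 1)


# Hypothetical standardised test scores, invented purely for illustration.
slept_8_plus = [78, 85, 90, 72, 88, 81, 79, 93]
slept_less = [70, 65, 80, 75, 68, 72, 77, 66]

p_value = permutation_p_value(slept_8_plus, slept_less)
print(f"estimated p-value: {p_value:.4f}")
```

A small p-value means that shuffled labels rarely reproduce the observed gap, which counts as evidence against the null hypothesis. A large one means the data are compatible with chance alone.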

And there you have it – hypotheses in a nutshell.

What is and How to Write a Good Hypothesis in Research?

One of the most important aspects of conducting research is constructing a strong hypothesis. But what makes a hypothesis in research effective? In this article, we’ll look at the difference between a hypothesis and a research question, as well as the elements of a good hypothesis in research. We’ll also include some examples of effective hypotheses, and what pitfalls to avoid.

What is a Hypothesis in Research?

Simply put, a hypothesis is a research question that also includes the predicted or expected result of the research. Without a hypothesis, there can be no basis for a scientific or research experiment. As such, it is critical that you carefully construct your hypothesis by being deliberate and thorough, even before you set pen to paper. Unless your hypothesis is clearly and carefully constructed, any flaw can have an adverse, and even grave, effect on the quality of your experiment and its subsequent results.

Research Question vs Hypothesis

It’s easy to confuse research questions with hypotheses, and vice versa. While they’re both critical to the Scientific Method, they have very specific differences. Primarily, a research question, just like a hypothesis, is focused and concise. But a hypothesis includes a prediction based on the proposed research, and is designed to forecast the relationship between two (or more) variables. Research questions are open-ended, and invite debate and discussion, while hypotheses are closed, e.g. “The relationship between A and B will be C.”

A hypothesis is generally used if your research topic is fairly well established, and you are relatively certain about the relationship between the variables that will be presented in your research. Since a hypothesis is ideally suited for experimental studies, it will, by its very existence, affect the design of your experiment. The research question is typically used for new topics that have not yet been researched extensively. Here, the relationship between different variables is less known. There is no prediction made, but there may be variables explored. The research question can be causal in nature, simply trying to understand if a relationship even exists, descriptive or comparative.

How to Write a Hypothesis in Research

Writing an effective hypothesis starts before you even begin to type. Like any task, preparation is key, so you start first by conducting research yourself, and reading all you can about the topic that you plan to research. From there, you’ll gain the knowledge you need to understand where your focus within the topic will lie.

Remember that a hypothesis is a prediction of the relationship that exists between two or more variables. Your job is to write a hypothesis, and design the research, to “prove” whether or not your prediction is correct. A common pitfall is to use judgments that are subjective and inappropriate for the construction of a hypothesis. It’s important to keep the focus and language of your hypothesis objective.

An effective hypothesis in research is clearly and concisely written, with any key terms defined. Specific language must also be used to avoid any generalities or assumptions.

Use the following points as a checklist to evaluate the effectiveness of your research hypothesis:

- Predicts the relationship and outcome

- Simple and concise – avoid wordiness

- Clear with no ambiguity or assumptions about the readers’ knowledge

- Observable and testable results

- Relevant and specific to the research question or problem

Research Hypothesis Example

Perhaps the best way to evaluate whether or not your hypothesis is effective is to compare it to those of your colleagues in the field. There is no need to reinvent the wheel when it comes to writing a powerful research hypothesis. As you’re reading and preparing your hypothesis, you’ll also read other hypotheses. These can help guide you on what works, and what doesn’t, when it comes to writing a strong research hypothesis.

Here are a few generic examples to get you started.

Eating an apple each day, after the age of 60, will result in a reduction of frequency of physician visits.

Budget airlines are more likely than traditional full-service airlines to receive customer complaints. A budget airline is defined as an airline that offers lower fares and fewer amenities than a traditional full-service airline. (Note that the term “budget airline” is included in the hypothesis.)

Workplaces that offer flexible working hours report higher levels of employee job satisfaction than workplaces with fixed hours.

Each of the above examples is specific, observable and measurable, and the statement of prediction can be verified or shown to be false by utilizing standard experimental practices. It should be noted, however, that often your hypothesis will change as your research progresses.

Perspective: Dimensions of the scientific method

Eberhard O. Voit

Affiliation: Department of Biomedical Engineering, Georgia Institute of Technology and Emory University, Atlanta, Georgia, United States of America

Published: September 12, 2019

https://doi.org/10.1371/journal.pcbi.1007279

The scientific method has been guiding biological research for a long time. It not only prescribes the order and types of activities that give a scientific study validity and a stamp of approval but also has substantially shaped how we collectively think about the endeavor of investigating nature. The advent of high-throughput data generation, data mining, and advanced computational modeling has thrown the formerly undisputed, monolithic status of the scientific method into turmoil. On the one hand, the new approaches are clearly successful and expect the same acceptance as the traditional methods, but on the other hand, they replace much of the hypothesis-driven reasoning with inductive argumentation, which philosophers of science consider problematic. Intrigued by the enormous wealth of data and the power of machine learning, some scientists have even argued that significant correlations within datasets could make the entire quest for causation obsolete. Many of these issues have been passionately debated during the past two decades, often with scant agreement. It is proffered here that hypothesis-driven, data-mining–inspired, and “allochthonous” knowledge acquisition, based on mathematical and computational models, are vectors spanning a 3D space of an expanded scientific method. The combination of methods within this space will most certainly shape our thinking about nature, with implications for experimental design, peer review and funding, sharing of results, education, medical diagnostics, and even questions of litigation.

Citation: Voit EO (2019) Perspective: Dimensions of the scientific method. PLoS Comput Biol 15(9): e1007279. https://doi.org/10.1371/journal.pcbi.1007279

Editor: Jason A. Papin, University of Virginia, UNITED STATES

Copyright: © 2019 Eberhard O. Voit. This is an open access article distributed under the terms of the Creative Commons Attribution License , which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Funding: This work was supported in part by grants from the National Science Foundation ( https://www.nsf.gov/div/index.jsp?div=MCB ) grant NSF-MCB-1517588 (PI: EOV), NSF-MCB-1615373 (PI: Diana Downs) and the National Institute of Environmental Health Sciences ( https://www.niehs.nih.gov/ ) grant NIH-2P30ES019776-05 (PI: Carmen Marsit). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Competing interests: The author has declared that no competing interests exist.

The traditional scientific method: Hypothesis-driven deduction

Research is the undisputed core activity defining science. Without research, the advancement of scientific knowledge would come to a screeching halt. While it is evident that researchers look for new information or insights, the term “research” is somewhat puzzling. Never mind the prefix “re,” which simply means “coming back and doing it again and again,” the word “search” seems to suggest that the research process is somewhat haphazard, that not much of a strategy is involved in the process. One might argue that research a few hundred years ago had the character of hoping for enough luck to find something new. The alchemists come to mind in their quest to turn mercury or lead into gold, or to discover an elixir for eternal youth, through methods we nowadays consider laughable.

Today’s sciences, in stark contrast, are clearly different. Yes, we still try to find something new, and may need a good dose of luck, but the process is anything but unstructured. In fact, it is prescribed in such rigor that it has been given the widely known moniker “scientific method.” This scientific method has deep roots going back to Aristotle and Herophilus (approximately 300 BC), Avicenna and Alhazen (approximately 1000 AD), Grosseteste and Roger Bacon (approximately 1250 AD), and many others, but solidified and crystallized into the gold standard of quality research during the 17th and 18th centuries [ 1 – 7 ]. In particular, Sir Francis Bacon (1561–1626) and René Descartes (1596–1650) are often considered the founders of the scientific method, because they insisted on careful, systematic observations of high quality, rather than metaphysical speculations that were en vogue among the scholars of the time [ 1 , 8 ]. In contrast to their peers, they strove for objectivity and insisted that observations, rather than an investigator’s preconceived ideas or superstitions, should be the basis for formulating a research idea [ 7 , 9 ].

Bacon and his 19th century follower John Stuart Mill explicitly proposed gaining knowledge through inductive reasoning: Based on carefully recorded observations, or from data obtained in a well-planned experiment, generalized assertions were to be made about similar yet (so far) unobserved phenomena [ 7 ]. Expressed differently, inductive reasoning attempts to derive general principles or laws directly from empirical evidence [ 10 ]. An example is the 19th century epigram of the physician Rudolf Virchow, Omnis cellula e cellula . There is no proof that indeed “every cell derives from a cell,” but like Virchow, we have made the observation time and again and never encountered anything suggesting otherwise.

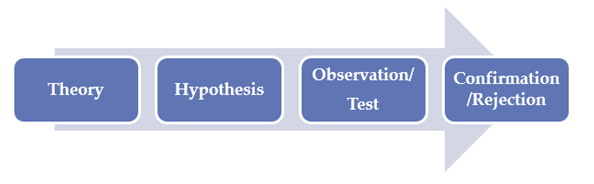

In contrast to induction, the widely accepted, traditional scientific method is based on formulating and testing hypotheses. From the results of these tests, a deduction is made whether the hypothesis is presumably true or false. This type of hypotheticodeductive reasoning goes back to William Whewell, William Stanley Jevons, and Charles Peirce in the 19th century [ 1 ]. By the 20th century, the deductive, hypothesis-based scientific method had become deeply ingrained in the scientific psyche, and it is now taught as early as middle school in order to teach students valid means of discovery [ 8 , 11 , 12 ]. The scientific method has not only guided most research studies but also fundamentally influenced how we think about the process of scientific discovery.

Alas, because biology has almost no general laws, deduction in the strictest sense is difficult. It may therefore be preferable to use the term abduction, which refers to the logical inference toward the most plausible explanation, given a set of observations, although this explanation cannot be proven and is not necessarily true.

Over the decades, the hypothesis-based scientific method did experience variations here and there, but its conceptual scaffold remained essentially unchanged ( Fig 1 ). Its key is a process that begins with the formulation of a hypothesis that is to be rigorously tested, either in the wet lab or computationally; nonadherence to this principle is seen as lacking rigor and can lead to irreproducible results [ 1 , 13 – 15 ].

Fig 1. The central concept of the traditional scientific method is a falsifiable hypothesis regarding some phenomenon of interest. This hypothesis is to be tested experimentally or computationally. The test results support or refute the hypothesis, triggering a new round of hypothesis formulation and testing.

https://doi.org/10.1371/journal.pcbi.1007279.g001

Going further, the prominent philosopher of science Sir Karl Popper argued that a scientific hypothesis can never be verified but that it can be disproved by a single counterexample. He therefore demanded that scientific hypotheses had to be falsifiable, because otherwise, testing would be moot [ 16 , 17 ] (see also [ 18 ]). As Gillies put it, “successful theories are those that survive elimination through falsification” [ 19 ]. Kelley and Scott agreed to some degree but warned that complete insistence on falsifiability is too restrictive as it would mark many computational techniques, statistical hypothesis testing, and even Darwin’s theory of evolution as nonscientific [ 20 ].

While the hypothesis-based scientific method has been very successful, its exclusive reliance on deductive reasoning is dangerous because according to the so-called Duhem–Quine thesis, hypothesis testing always involves an unknown number of explicit or implicit assumptions, some of which may steer the researcher away from hypotheses that seem implausible, although they are, in fact, true [ 21 ]. According to Kuhn, this bias can obstruct the recognition of paradigm shifts [ 22 ], which require the rethinking of previously accepted “truths” and the development of radically new ideas [ 23 , 24 ]. The testing of simultaneous alternative hypotheses [ 25 – 27 ] ameliorates this problem to some degree but not entirely.

The traditional scientific method is often presented in discrete steps, but it should really be seen as a form of critical thinking, subject to review and independent validation [ 8 ]. It has proven very influential, not only by prescribing valid experimentation, but also for affecting the way we attempt to understand nature [ 18 ], for teaching [ 8 , 12 ], reporting, publishing, and otherwise sharing information [ 28 ], for peer review and the awarding of funds by research-supporting agencies [ 29 , 30 ], for medical diagnostics [ 7 ], and even in litigation [ 31 ].

A second dimension of the scientific method: Data-mining–inspired induction

A major shift in biological experimentation occurred with the -omics revolution of the early 21st century. All of a sudden, it became feasible to perform high-throughput experiments that generated thousands of measurements, typically characterizing the expression or abundances of very many, if not all, genes, proteins, metabolites, or other biological quantities in a sample.

The strategy of measuring large numbers of items in a nontargeted fashion is fundamentally different from the traditional scientific method and constitutes a new, second dimension of the scientific method. Instead of hypothesizing and testing whether gene X is up-regulated under some altered condition, the leading question becomes which of the thousands of genes in a sample are up- or down-regulated. This shift in focus elevates the data to the supreme role of revealing novel insights by themselves ( Fig 2 ). As an important, generic advantage over the traditional strategy, this second dimension is free of a researcher’s preconceived notions regarding the molecular mechanisms governing the phenomenon of interest, which are otherwise the key to formulating a hypothesis. The prominent biologists Patrick Brown and David Botstein commented that “the patterns of expression will often suffice to begin de novo discovery of potential gene functions” [ 32 ].

Fig 2. Data-driven research begins with an untargeted exploration, in which the data speak for themselves. Machine learning extracts patterns from the data, which suggest hypotheses that are to be tested in the lab or computationally.

https://doi.org/10.1371/journal.pcbi.1007279.g002

This data-driven, discovery-generating approach is at once appealing and challenging. On the one hand, very many data are explored simultaneously and essentially without bias. On the other hand, the large datasets supporting this approach create a genuine challenge to understanding and interpreting the experimental results because the thousands of data points, often superimposed with a fair amount of noise, make it difficult to detect meaningful differences between sample and control. This situation can only be addressed with computational methods that first “clean” the data, for instance, through the statistically valid removal of outliers, and then use machine learning to identify statistically significant, distinguishing molecular profiles or signatures. In favorable cases, such signatures point to specific biological pathways, whereas other signatures defy direct explanation but may become the launch pad for follow-up investigations [ 33 ].
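The difficulty of separating signal from noise in an untargeted screen can be illustrated with a small simulation. The Python sketch below is a toy under loudly stated assumptions: all numbers are invented, the names `measure` and `score` are hypothetical, and a simple per-gene standardized difference with a fixed cutoff stands in for the outlier removal and machine learning steps described above. It is not a method taken from the cited literature.

```python
import random
import statistics

rng = random.Random(42)          # fixed seed so the simulation is repeatable
N_GENES, N_REPLICATES = 2000, 6  # hypothetical screen size
TRULY_CHANGED = set(range(20))   # assumption: only genes 0..19 really differ
SHIFT = 3.0                      # assumed true effect, in units of noise SD


def measure(gene, treated):
    """Simulate noisy replicate measurements for one gene."""
    base = SHIFT if (treated and gene in TRULY_CHANGED) else 0.0
    return [rng.gauss(base, 1.0) for _ in range(N_REPLICATES)]


def score(control, sample):
    """Difference in means, scaled by the pooled standard deviation."""
    pooled_sd = ((statistics.variance(control) + statistics.variance(sample)) / 2) ** 0.5
    return (statistics.fmean(sample) - statistics.fmean(control)) / pooled_sd


# Untargeted screen: score every gene without a prior hypothesis about any of them.
scores = {g: score(measure(g, False), measure(g, True)) for g in range(N_GENES)}

# Flag genes whose standardized difference exceeds an arbitrary cutoff.
flagged = [g for g, s in scores.items() if abs(s) > 2.0]
false_hits = [g for g in flagged if g not in TRULY_CHANGED]
print(len(flagged), "genes flagged;", len(false_hits), "of them are pure noise")
```

With many genes and few replicates, some unchanged genes cross the cutoff by chance alone, which is one reason flagged signatures are better treated as candidate hypotheses for follow-up testing than as conclusions.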

Today’s scientists are very familiar with this discovery-driven exploration of “what’s out there” and might consider it a quaint quirk of history that this strategy was at first widely chastised and ridiculed as a “fishing expedition” [ 30 , 34 ]. Strict traditionalists were outraged that rigor was leaving science with the new approach and that sufficient guidelines were unavailable to assure the validity and reproducibility of results [ 10 , 35 , 36 ].

From the viewpoint of philosophy of science, this second dimension of the scientific method uses inductive reasoning and reflects Bacon’s idea that observations can and should dictate the research question to be investigated [ 1 , 7 ]. Allen [ 36 ] forcefully rejected this type of reasoning, stating “the thinking goes, we can now expect computer programs to derive significance, relevance and meaning from chunks of information, be they nucleotide sequences or gene expression profiles… In contrast with this view, many are convinced that no purely logical process can turn observation into understanding.” His conviction goes back to the 18th century philosopher David Hume and again to Popper, who identified as the overriding problem with inductive reasoning that it can never truly reveal causality, even if a phenomenon is observed time and again [ 16 , 17 , 37 , 38 ]. No number of observations, even if they always have the same result, can guard against an exception that would violate the generality of a law inferred from these observations [ 1 , 35 ]. Worse, Popper argued, through inference by induction, we cannot even know the probability of something being true [ 10 , 17 , 36 ].

Others argued that data-driven and hypothesis-driven research actually do not differ all that much in principle, as long as there is cycling between developing new ideas and testing them with care [ 27 ]. In fact, Kell and Oliver [ 34 ] maintained that the exclusive acceptance of hypothesis-driven programs misrepresents the complexities of biological knowledge generation. Similarly refuting the prominent rule of deduction, Platt [ 26 ] and Beard and Kushmerick [ 27 ] argued that repeated inductive reasoning, called strong inference, corresponds to a logically sound decision tree of disproving or refining hypotheses that can rapidly yield firm conclusions; nonetheless, Platt had to admit that inductive inference is not as certain as deduction, because it projects into the unknown. Lander compared the task of obtaining causality by induction to the problem of inferring the design of a microprocessor from input-output readings, which in a strict sense is impossible, because the microprocessor could be arbitrarily complicated; even so, inference often leads to novel insights and therefore is valuable [ 39 ].

An interesting special case of almost pure inductive reasoning is epidemiology, where hypothesis-driven reasoning is rare and instead, the fundamental question is whether data-based evidence is sufficient to associate health risks with specific causes [ 31 , 34 ].

Recent advances in machine learning and “big-data” mining have driven the use of inductive reasoning to unprecedented heights. As an example, machine learning can greatly assist in the discovery of patterns, for instance, in biological sequences [ 40 ]. Going a step further, a pithy article by Andersen [ 41 ] proffered that we may not need to look for causality or mechanistic explanations anymore if we just have enough correlation: “With enough data, the numbers speak for themselves, correlation replaces causation, and science can advance even without coherent models or unified theories.”

Of course, the proposal to abandon the quest for causality caused pushback on philosophical as well as mathematical grounds. Allen [ 10 , 35 ] considered the idea “absurd” that data analysis could enhance understanding in the absence of a hypothesis. He felt confident “that even the formidable combination of computing power with ease of access to data cannot produce a qualitative shift in the way that we do science: the making of hypotheses remains an indispensable component in the growth of knowledge” [ 36 ]. Succi and Coveney [ 42 ] refuted the “most extravagant claims” of big-data proponents very differently, namely by analyzing the theories on which machine learning is founded. They contrasted the assumptions underlying these theories, such as the law of large numbers, with the mathematical reality of complex biological systems. Specifically, they carefully identified genuine features of these systems, such as nonlinearities, nonlocality of effects, fractal aspects, and high dimensionality, and argued that they fundamentally violate some of the statistical assumptions implicitly underlying big-data analysis, like independence of events. They concluded that these discrepancies “may lead to false expectations and, at their nadir, even to dangerous social, economical and political manipulation.” To ameliorate the situation, the field of big-data analysis would need new strong theorems characterizing the validity of its methods and the numbers of data required for obtaining reliable insights. Succi and Coveney go as far as stating that too many data are just as bad as insufficient data [ 42 ].

While philosophical doubts regarding inductive methods will always persist, one cannot deny that -omics-based, high-throughput studies, combined with machine learning and big-data analysis, have been very successful [ 43 ]. Yes, induction cannot truly reveal general laws, no matter how large the datasets, but such studies do provide insights that are very different from what science had offered before and may at least suggest novel patterns, trends, or principles. As a case in point, if many transcriptomic studies indicate that a particular gene set is involved in certain classes of phenomena, there is probably some truth to the observation, even though it is not mathematically provable. Kepler’s laws of astronomy were arguably derived solely from inductive reasoning [ 34 ].

Notwithstanding the opposing views on inductive methods, successful strategies shape how we think about science. Thus, to take advantage of all experimental options while ensuring quality of research, we must not allow that “anything goes” but instead identify and characterize standard operating procedures and controls that render this emerging scientific method valid and reproducible. A laudable step in this direction was the wide acceptance of “minimum information about a microarray experiment” (MIAME) standards for microarray experiments [ 44 ].

A third dimension of the scientific method: Allochthonous reasoning

Parallel to the blossoming of molecular biology and the rapid rise in the power and availability of computing in the late 20th century, the use of mathematical and computational models became increasingly recognized as relevant and beneficial for understanding biological phenomena. Indeed, mathematical models eventually achieved cornerstone status in the new field of computational systems biology.

Mathematical modeling has been used as a tool of biological analysis for a long time [ 27 , 45 – 48 ]. Interesting for the discussion here is that the use of mathematical and computational modeling in biology follows a scientific approach that is distinctly different from the traditional and the data-driven methods, because it is distributed over two entirely separate domains of knowledge. One consists of the biological reality of DNA, elephants, and roses, whereas the other is the world of mathematics, which is governed by numbers, symbols, theorems, and abstract work protocols. Because the ways of thinking—and even the languages—are different in these two realms, I suggest calling this type of knowledge acquisition “allochthonous” (literally Greek: in or from a “piece of land different from where one is at home”; one could perhaps translate it into modern lingo as “outside one’s comfort zone”). De facto, most allochthonous reasoning in biology presently refers to mathematics and computing, but one might also consider, for instance, the application of methods from linguistics in the analysis of DNA sequences or proteins [ 49 ].

One could argue that biologists have employed “models” for a long time, for instance, in the form of “model organisms,” cell lines, or in vitro experiments, which more or less faithfully reflect features of the organisms of true interest but are easier to manipulate. However, this type of biological model use is rather different from allochthonous reasoning, as it does not leave the realm of biology and uses the same language and often similar methodologies.

A brief discussion of three experiences from our lab may illustrate the benefits of allochthonous reasoning. (1) In a case study of renal cell carcinoma, a dynamic model was able to explain an observed yet nonintuitive metabolic profile in terms of the enzymatic reaction steps that had been altered during the disease [ 50 ]. (2) A transcriptome analysis had identified several genes as displaying significantly different expression patterns during malaria infection in comparison to the state of health. Considered by themselves and focusing solely on genes coding for specific enzymes of purine metabolism, the findings showed patterns that did not make sense. However, integrating the changes in a dynamic model revealed that purine metabolism globally shifted, in response to malaria, from guanine compounds to adenine, inosine, and hypoxanthine [ 51 ]. (3) Data capturing the dynamics of malaria parasites suggested growth rates that were biologically impossible. Speculation regarding possible explanations led to the hypothesis that many parasite-harboring red blood cells might “hide” from circulation and therewith from detection in the blood stream. While experimental testing of the feasibility of the hypothesis would have been expensive, a dynamic model confirmed that such a concealment mechanism could indeed quantitatively explain the apparently very high growth rates [ 52 ]. In all three cases, the insights gained inductively from computational modeling would have been difficult to obtain purely with experimental laboratory methods. Purely deductive allochthonous reasoning is the ultimate goal of the search for design and operating principles [ 53 – 55 ], which strives to explain why certain structures or functions are employed by nature time and again. An example is a linear metabolic pathway, in which feedback inhibition is essentially always exerted on the first step [ 56 , 57 ]. 
This generality allows the deduction that a so far unstudied linear pathway is most likely (or even certain to be) inhibited at the first step. Not strictly deductive—but rather abductive—was a study in our lab in which we analyzed time series data with a mathematical model that allowed us to infer the most likely regulatory structure of a metabolic pathway [ 58 , 59 ].

A typical allochthonous investigation begins in the realm of biology with the formulation of a hypothesis ( Fig 3 ). Instead of testing this hypothesis with laboratory experiments, the system encompassing the hypothesis is moved into the realm of mathematics. This move requires two sets of ingredients. One set consists of the simplification and abstraction of the biological system: Any distracting details that seem unrelated to the hypothesis and its context are omitted or represented collectively with other details. This simplification step carries the greatest risk of the entire modeling approach, as omission of seemingly negligible but, in truth, important details can easily lead to wrong results. The second set of ingredients consists of correspondence rules that translate every biological component or process into the language of mathematics [ 60 , 61 ].

Fig 3. This mathematical and computational approach is distributed over two realms, which are connected by correspondence rules. (https://doi.org/10.1371/journal.pcbi.1007279.g003)

Once the system is translated, it has become an entirely mathematical construct that can be analyzed purely with mathematical and computational means. The results of this analysis are also strictly mathematical. They typically consist of values of variables, magnitudes of processes, sensitivity patterns, signs of eigenvalues, or qualitative features like the onset of oscillations or the potential for limit cycles. Correspondence rules are used again to move these results back into the realm of biology. As an example, the mathematical result that “two eigenvalues have positive real parts” does not make much sense to many biologists, whereas the interpretation that “the system is not stable at the steady state in question” is readily explained. New biological insights may lead to new hypotheses, which are tested either by experiments or by returning once more to the realm of mathematics. The model design, diagnosis, refinements, and validation consist of several phases, which have been discussed widely in the biomathematical literature. Importantly, each iteration of a typical modeling analysis consists of a move from the biological to the mathematical realm and back.
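The step of moving a mathematical result back into biology can be made concrete with a small sketch. The Python snippet below, which uses only the standard library, computes the eigenvalues of a hypothetical 2×2 Jacobian matrix evaluated at a steady state and rephrases the outcome in the biological wording used above. The matrix entries and the function names are illustrative assumptions and are not taken from any model discussed in this article.

```python
import cmath


def eigenvalues_2x2(a, b, c, d):
    """Eigenvalues of the matrix [[a, b], [c, d]].

    They are the roots of the characteristic polynomial
    lambda^2 - trace * lambda + det = 0.
    """
    trace = a + d
    det = a * d - b * c
    root = cmath.sqrt(trace * trace - 4 * det)
    return (trace + root) / 2, (trace - root) / 2


def interpret_stability(eigs):
    """Translate the signs of the real parts into a biological statement."""
    if all(lam.real < 0 for lam in eigs):
        return "the system is stable at this steady state"
    if any(lam.real > 0 for lam in eigs):
        return "the system is not stable at this steady state"
    # Zero real parts: the linearization alone cannot decide.
    return "linear analysis is inconclusive at this steady state"


if __name__ == "__main__":
    # Hypothetical Jacobian, chosen only for illustration.
    eigs = eigenvalues_2x2(1.0, -2.0, 2.0, 1.0)
    print("eigenvalues:", eigs)
    print("interpretation:", interpret_stability(eigs))
```

For realistic models with many variables, a numerical linear algebra library would replace the closed-form 2×2 formula, but the interpretive step stays the same.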

The reasoning within the realm of mathematics is often deductive, in the form of an Aristotelian syllogism, such as the well-known “All men are mortal; Socrates is a man; therefore, Socrates is mortal.” However, the reasoning may also be inductive, as is the case with large-scale Monte-Carlo simulations that generate arbitrarily many “observations,” although they cannot reveal universal principles or theorems. An example is a simulation randomly drawing numbers in an attempt to show that every real number has an inverse. The simulation will always attest to this hypothesis but fail to discover the truth because it will never randomly draw 0. Generically, computational models may be considered sets of hypotheses, formulated as equations or as algorithms that reflect our perception of a complex system [ 27 ].
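The Monte-Carlo example can be sketched in a few lines of Python. The snippet is a minimal illustration under stated assumptions: the sampling interval, the number of draws, the seed, and the function names are arbitrary choices made here, not part of the original argument.

```python
import random


def inverse_exists(x):
    """Return True if 1/x can be computed, False if x is zero."""
    try:
        1.0 / x
        return True
    except ZeroDivisionError:
        return False


def monte_carlo_check(n_draws, seed=0):
    """Draw random numbers and report whether every draw had an inverse.

    Each draw is one inductive "observation" in favor of the hypothesis
    that every real number has an inverse.
    """
    rng = random.Random(seed)
    return all(inverse_exists(rng.uniform(-1.0, 1.0)) for _ in range(n_draws))


if __name__ == "__main__":
    # The simulation supports the hypothesis on every draw ...
    print("all sampled numbers had an inverse:", monte_carlo_check(10_000))
    # ... while a single deliberately chosen counterexample refutes it.
    print("zero has an inverse:", inverse_exists(0.0))
```

The random draws essentially never land exactly on 0, so the simulation keeps confirming a false statement, whereas one targeted test case settles the question.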

Impact of the multidimensional scientific method on learning

Almost all we know in biology has come from observation, experimentation, and interpretation. The traditional scientific method not only offered clear guidance for this knowledge gathering, but it also fundamentally shaped the way we think about the exploration of nature. When presented with a new research question, scientists were trained to think immediately in terms of hypotheses and alternatives, pondering the best feasible ways of testing them, and designing in their minds strong controls that would limit the effects of known or unknown confounders. Shaped by the rigidity of this ever-repeating process, our thinking became trained to move forward one well-planned step at a time. This modus operandi was rigid and exact. It also minimized the erroneous pursuit of long speculative lines of thought, because every step required testing before a new hypothesis was formed. While effective, the process was also very slow and driven by ingenuity—as well as bias—on the scientist’s part. This bias was sometimes a hindrance to necessary paradigm shifts [ 22 ].

High-throughput data generation, big-data analysis, and mathematical-computational modeling changed all that within a few decades. In particular, the acceptance of inductive principles and of the allochthonous use of nonbiological strategies to answer biological questions created an unprecedented mix of successes and chaos. To the horror of traditionalists, the importance of hypotheses became minimized, and the suggestion spread that the data would speak for themselves [ 36 ]. Importantly, within this fog of “anything goes,” the fundamental question arose of how to determine whether an experiment was valid.

Because agreed-upon operating procedures affect research progress and interpretation, thinking, teaching, and sharing of results, this question requires a deconvolution of scientific strategies. Here I proffer that the single scientific method of the past should be expanded toward a vector space of scientific methods, with spanning vectors that correspond to different dimensions of the scientific method ( Fig 4 ).

Fig 4. The traditional hypothesis-based deductive scientific method is expanded into a 3D space that allows for synergistic blends of methods that include data-mining–inspired, inductive knowledge acquisition, and mathematical model-based, allochthonous reasoning. (https://doi.org/10.1371/journal.pcbi.1007279.g004)

Obviously, all three dimensions have their advantages and drawbacks. The traditional, hypothesis-driven deductive method is philosophically “clean,” except that it is confounded by preconceptions and assumptions. The data-mining–inspired inductive method cannot offer universal truths but helps us explore very large spaces of factors that contribute to a phenomenon. Allochthonous, model-based reasoning can be performed mentally, with paper and pencil, through rigorous analysis, or with a host of computational methods that are precise and disprovable [ 27 ]. At the same time, they are incomparably faster, cheaper, and much more comprehensive than experiments in molecular biology. This reduction in cost and time, and the increase in coverage, may eventually have far-reaching consequences, as we can already fathom from much of modern physics.

Due to its long history, the traditional dimension of the scientific method is supported by clear and very strong standard operating procedures. Similarly, strong procedures need to be developed for the other two dimensions. The MIAME rules for microarray analysis provide an excellent example [ 44 ]. On the mathematical modeling front, no such rules are generally accepted yet, but trends toward them seem to be emerging on the horizon. For instance, it seems to be becoming common practice to include sensitivity analyses in typical modeling studies and to assess the identifiability or sloppiness of ensembles of parameter combinations that fit a given dataset well [ 62 , 63 ].

From a philosophical point of view, it seems unlikely that objections against inductive reasoning will disappear. However, instead of pitting hypothesis-based deductive reasoning against inductivism, it seems more beneficial to determine how the different methods can be synergistically blended ( cf . [ 18 , 27 , 34 , 42 ]) as linear combinations of the three vectors of knowledge acquisition ( Fig 4 ). It is at this point unclear to what degree the identified three dimensions are truly independent of each other, whether additional dimensions should be added [ 24 ], or whether the different versions could be amalgamated into a single scientific method [ 18 ], especially if it is loosely defined as a form of critical thinking [ 8 ]. Nobel Laureate Percy Bridgman even concluded that “science is what scientists do, and there are as many scientific methods as there are individual scientists” [ 8 , 64 ].

Combinations of the three spanning vectors of the scientific method have been emerging for some time. Many biologists already use inductive high-throughput methods to develop specific hypotheses that are subsequently tested with deductive or further inductive methods [ 34 , 65 ]. In terms of including mathematical modeling, physics and geology have been leading the way for a long time, often by beginning an investigation in theory, before any actual experiment is performed. It will benefit biology to look into this strategy and to develop best practices of allochthonous reasoning.

The blending of methods may take quite different shapes. Early on, Ideker and colleagues [ 65 ] proposed an integrated experimental approach for pathway analysis that offered a glimpse of new experimental strategies within the space of scientific methods. In a similar vein, Covert and colleagues [ 66 ] included computational methods into such an integrated approach. Additional examples of blended analyses in systems biology can be seen in other works, such as [ 43 , 67 – 73 ]. Generically, it is often beneficial to start with big data, determine patterns in associations and correlations, then switch to the mathematical realm in order to filter out spurious correlations in a high-throughput fashion. If this procedure is executed in an iterative manner, the “surviving” associations have an increased level of confidence and are good candidates for further experimental or computational testing (personal communication from S. Chandrasekaran).

If each component of a blended scientific method follows strict, commonly agreed guidelines, “linear combinations” within the 3D space can also be checked objectively, per deconvolution. In addition, guidelines for synergistic blends of component procedures should be developed. If we carefully monitor such blends, time will presumably indicate which method is best for which task and how the different approaches optimally inform each other. For instance, it will be interesting to study whether there is an optimal sequence of experiments along the three axes for a particular class of tasks. Big-data analysis together with inductive reasoning might be optimal for creating initial hypotheses and possibly refuting wrong speculations (“we had thought this gene would be involved, but apparently it isn’t”). If the logic of an emerging hypothesis can be tested with mathematical and computational tools, it will almost certainly be faster and cheaper than an immediate launch into wet-lab experimentation. It is also likely that mathematical reasoning will be able to refute some apparently feasible hypothesis and suggest amendments. Ultimately, the “surviving” hypotheses must still be tested for validity through conventional experiments. Deconvolving current practices and optimizing the combination of methods within the 3D or higher-dimensional space of scientific methods will likely result in better planning of experiments and in synergistic blends of approaches that have the potential capacity of addressing some of the grand challenges in biology.

Acknowledgments

The author is very grateful to Dr. Sriram Chandrasekaran and Ms. Carla Kumbale for superb suggestions and invaluable feedback.

- 2. Gauch HGJ. Scientific Method in Brief. Cambridge, UK: Cambridge University Press; 2012.

- 3. Gimbel S (Ed). Exploring the Scientific Method: Cases and Questions. Chicago, IL: The University of Chicago Press; 2011.

- 8. McLelland CV. The nature of science and the scientific method. Boulder, CO: The Geological Society of America; 2006.

- 9. Ladyman J. Understanding Philosophy of Science. Abingdon, Oxon: Routledge; 2002.

- 16. Popper KR. Conjectures and Refutations: The Growth of Scientific Knowledge. Abingdon, Oxon: Routledge and Kegan Paul; 1963.

- 17. Popper KR. The Logic of Scientific Discovery. Abingdon, Oxon: Routledge; 2002.

- 21. Harding SE. Can theories be refuted?: Essays on the Duhem-Quine thesis. Dordrecht-Holland / Boston, MA: D. Reidel Publ. Co; 1976.

- 22. Kuhn TS. The Structure of Scientific Revolutions. Chicago, IL: University of Chicago Press; 1962.

- 37. Hume D. An enquiry concerning human understanding. Oxford, U.K.: Oxford University Press; 1748/1999.

- 38. Popper KR. Objective knowledge. An evolutionary approach. Oxford, U.K.: Oxford University Press; 1972.

- 47. von Bertalanffy L. General System Theory: Foundations, Development, Applications. New York: George Braziller; 1968.

- 48. May RM. Stability and Complexity in Model Ecosystems. Princeton, NJ: Princeton University Press; 1973.

- 57. Savageau MA. Biochemical Systems Analysis: A Study of Function and Design in Molecular Biology. Reading, Mass: Addison-Wesley Pub. Co. Advanced Book Program (reprinted 2009); 1976.

- 60. Reither F. Über das Denken mit Analogien und Modellen. In: Schaefer G, Trommer G, editors. Denken in Modellen. Braunschweig, Germany: Georg Westermann Verlag; 1977.

- 61. Voit EO. A First Course in Systems Biology, 2nd Ed. New York, NY: Garland Science; 2018.

- 64. Bridgman PW. Reflections of a Physicist. New York, NY: reprinted by Kessinger Legacy Reprints, 2010; 1955.

The Research Hypothesis: Role and Construction

- First Online: 01 January 2012

- Phyllis G. Supino EdD

A hypothesis is a logical construct, interposed between a problem and its solution, which represents a proposed answer to a research question. It gives direction to the investigator’s thinking about the problem and, therefore, facilitates a solution. There are three primary modes of inference by which hypotheses are developed: deduction (reasoning from a general proposition to specific instances), induction (reasoning from specific instances to a general proposition), and abduction (formulation/acceptance on probation of a hypothesis to explain a surprising observation).

A research hypothesis should reflect an inference about variables; be stated as a grammatically complete, declarative sentence; be expressed simply and unambiguously; provide an adequate answer to the research problem; and be testable. Hypotheses can be classified as conceptual versus operational, single versus bi- or multivariable, causal or not causal, mechanistic versus nonmechanistic, and null or alternative. Hypotheses most commonly entail statements about “variables” which, in turn, can be classified according to their level of measurement (scaling characteristics) or according to their role in the hypothesis (independent, dependent, moderator, control, or intervening).

A hypothesis is rendered operational when its broadly (conceptually) stated variables are replaced by operational definitions of those variables. Hypotheses stated in this manner are called operational hypotheses, specific hypotheses, or predictions and facilitate testing.

Wrong hypotheses, rightly worked from, have produced more results than unguided observation

—Augustus De Morgan, 1872[ 1 ]—

De Morgan A, De Morgan S. A budget of paradoxes. London: Longmans Green; 1872.

Leedy Paul D. Practical research. Planning and design. 2nd ed. New York: Macmillan; 1960.

Bernard C. Introduction to the study of experimental medicine. New York: Dover; 1957.

Erren TC. The quest for questions—on the logical force of science. Med Hypotheses. 2004;62:635–40.

Peirce CS. Collected papers of Charles Sanders Peirce, vol. 7. In: Hartshorne C, Weiss P, editors. Boston: The Belknap Press of Harvard University Press; 1966.

Aristotle. The complete works of Aristotle: the revised Oxford Translation. In: Barnes J, editor. vol. 2. Princeton/New Jersey: Princeton University Press; 1984.

Polit D, Beck CT. Conceptualizing a study to generate evidence for nursing. In: Polit D, Beck CT, editors. Nursing research: generating and assessing evidence for nursing practice. 8th ed. Philadelphia: Wolters Kluwer/Lippincott Williams and Wilkins; 2008. Chapter 4.

Jenicek M, Hitchcock DL. Evidence-based practice. Logic and critical thinking in medicine. Chicago: AMA Press; 2005.

Bacon F. The novum organon or a true guide to the interpretation of nature. A new translation by the Rev G.W. Kitchin. Oxford: The University Press; 1855.

Popper KR. Objective knowledge: an evolutionary approach (revised edition). New York: Oxford University Press; 1979.

Morgan AJ, Parker S. Translational mini-review series on vaccines: the Edward Jenner Museum and the history of vaccination. Clin Exp Immunol. 2007;147:389–94.

Pead PJ. Benjamin Jesty: new light in the dawn of vaccination. Lancet. 2003;362:2104–9.

Lee JA. The scientific endeavor: a primer on scientific principles and practice. San Francisco: Addison-Wesley Longman; 2000.

Allchin D. Lawson’s shoehorn, or should the philosophy of science be rated, ‘X’? Science and Education. 2003;12:315–29.

Lawson AE. What is the role of induction and deduction in reasoning and scientific inquiry? J Res Sci Teach. 2005;42:716–40.

Peirce CS. Collected papers of Charles Sanders Peirce, vol. 2. In: Hartshorne C, Weiss P, editors. Boston: The Belknap Press of Harvard University Press; 1965.

Bonfantini MA, Proni G. To guess or not to guess? In: Eco U, Sebeok T, editors. The sign of three: Dupin, Holmes, Peirce. Bloomington: Indiana University Press; 1983. Chapter 5.

Peirce CS. Collected papers of Charles Sanders Peirce, vol. 5. In: Hartshorne C, Weiss P, editors. Boston: The Belknap Press of Harvard University Press; 1965.

Flach PA, Kakas AC. Abductive and inductive reasoning: background issues. In: Flach PA, Kakas AC, editors. Abduction and induction. Essays on their relation and integration. The Netherlands: Kluwer; 2000. Chapter 1.

Murray JF. Voltaire, Walpole and Pasteur: variations on the theme of discovery. Am J Respir Crit Care Med. 2005;172:423–6.

Danemark B, Ekstrom M, Jakobsen L, Karlsson JC. Methodological implications, generalization, scientific inference, models (Part II) In: explaining society. Critical realism in the social sciences. New York: Routledge; 2002.

Pasteur L. Inaugural lecture as professor and dean of the faculty of sciences. In: Peterson H, editor. A treasury of the world’s greatest speeches. Douai, France: University of Lille 7 Dec 1954.

Swineburne R. Simplicity as evidence for truth. Milwaukee: Marquette University Press; 1997.

Sakar S, editor. Logical empiricism at its peak: Schlick, Carnap and Neurath. New York: Garland; 1996.

Popper K. The logic of scientific discovery. New York: Basic Books; 1959. 1934, trans. 1959.

Caws P. The philosophy of science. Princeton: D. Van Nostrand Company; 1965.

Popper K. Conjectures and refutations. The growth of scientific knowledge. 4th ed. London: Routledge and Kegan Paul; 1972.

Feyerabend PK. Against method, outline of an anarchistic theory of knowledge. London, UK: Verso; 1978.

Smith PG. Popper: conjectures and refutations (Chapter IV). In: Theory and reality: an introduction to the philosophy of science. Chicago: University of Chicago Press; 2003.

Blystone RV, Blodgett K. WWW: the scientific method. CBE Life Sci Educ. 2006;5:7–11.

Kleinbaum DG, Kupper LL, Morgenstern H. Epidemiological research. Principles and quantitative methods. New York: Van Nostrand Reinhold; 1982.

Fortune AE, Reid WJ. Research in social work. 3rd ed. New York: Columbia University Press; 1999.

Kerlinger FN. Foundations of behavioral research. 1st ed. New York: Holt, Rinehart and Winston; 1970.

Hoskins CN, Mariano C. Research in nursing and health. Understanding and using quantitative and qualitative methods. New York: Springer; 2004.

Tuckman BW. Conducting educational research. New York: Harcourt, Brace, Jovanovich; 1972.

Wang C, Chiari PC, Weihrauch D, Krolikowski JG, Warltier DC, Kersten JR, Pratt Jr PF, Pagel PS. Gender-specificity of delayed preconditioning by isoflurane in rabbits: potential role of endothelial nitric oxide synthase. Anesth Analg. 2006;103:274–80.

Beyer ME, Slesak G, Nerz S, Kazmaier S, Hoffmeister HM. Effects of endothelin-1 and IRL 1620 on myocardial contractility and myocardial energy metabolism. J Cardiovasc Pharmacol. 1995;26(Suppl 3):S150–2.

Stone J, Sharpe M. Amnesia for childhood in patients with unexplained neurological symptoms. J Neurol Neurosurg Psychiatry. 2002;72:416–7.

Naughton BJ, Moran M, Ghaly Y, Michalakes C. Computer tomography scanning and delirium in elder patients. Acad Emerg Med. 1997;4:1107–10.

Easterbrook PJ, Berlin JA, Gopalan R, Matthews DR. Publication bias in clinical research. Lancet. 1991;337:867–72.

Stern JM, Simes RJ. Publication bias: evidence of delayed publication in a cohort study of clinical research projects. BMJ. 1997;315:640–5.

Stevens SS. On the theory of scales and measurement. Science. 1946;103:677–80.

Knapp TR. Treating ordinal scales as interval scales: an attempt to resolve the controversy. Nurs Res. 1990;39:121–3.

The Cochrane Collaboration. Open Learning Material. www.cochrane-net.org/openlearning/html/mod14-3.htm . Accessed 12 Oct 2009.

MacCorquodale K, Meehl PE. On a distinction between hypothetical constructs and intervening variables. Psychol Rev. 1948;55:95–107.

Baron RM, Kenny DA. The moderator-mediator variable distinction in social psychological research: conceptual, strategic and statistical considerations. J Pers Soc Psychol. 1986;51:1173–82.

Williamson GM, Schultz R. Activity restriction mediates the association between pain and depressed affect: a study of younger and older adult cancer patients. Psychol Aging. 1995;10:369–78.

Song M, Lee EO. Development of a functional capacity model for the elderly. Res Nurs Health. 1998;21:189–98.

MacKinnon DP. Introduction to statistical mediation analysis. New York: Routledge; 2008.

Author information

Authors and affiliations.

Department of Medicine, College of Medicine, SUNY Downstate Medical Center, 450 Clarkson Avenue, 1199, Brooklyn, NY, 11203, USA

Phyllis G. Supino EdD

Corresponding author

Correspondence to Phyllis G. Supino EdD .

Editor information

Editors and affiliations.

Cardiovascular Medicine, SUNY Downstate Medical Center, 450 Clarkson Avenue, Box 1199, Brooklyn, NY, 11203, USA

Phyllis G. Supino

Cardiovascular Medicine, SUNY Downstate Medical Center, 450 Clarkson Avenue, Brooklyn, NY, 11203, USA

Jeffrey S. Borer

Copyright information

© 2012 Springer Science+Business Media, LLC

About this chapter

Supino, P.G. (2012). The Research Hypothesis: Role and Construction. In: Supino, P., Borer, J. (eds) Principles of Research Methodology. Springer, New York, NY. https://doi.org/10.1007/978-1-4614-3360-6_3

DOI : https://doi.org/10.1007/978-1-4614-3360-6_3

Published : 18 April 2012

Publisher Name : Springer, New York, NY

Print ISBN : 978-1-4614-3359-0

Online ISBN : 978-1-4614-3360-6

eBook Packages : Medicine Medicine (R0)

Research Hypothesis In Psychology: Types, & Examples

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

A research hypothesis (plural: “hypotheses”) is a specific, testable prediction about the anticipated results of a study, established at its outset. It is a key component of the scientific method.

Hypotheses connect theory to data and guide the research process towards expanding scientific understanding.

Some key points about hypotheses:

- A hypothesis expresses an expected pattern or relationship. It connects the variables under investigation.

- It is stated in clear, precise terms before any data collection or analysis occurs. This makes the hypothesis testable.

- A hypothesis must be falsifiable. It should be possible, even if unlikely in practice, to collect data that disconfirms rather than supports the hypothesis.

- Hypotheses guide research. Scientists design studies to explicitly evaluate hypotheses about how nature works.

- For a hypothesis to be valid, it must be testable against empirical evidence. The evidence can then confirm or disprove the testable predictions.

- Hypotheses are informed by background knowledge and observation, but go beyond what is already known to propose an explanation of how or why something occurs.

Predictions typically arise from a thorough knowledge of the research literature, curiosity about real-world problems or implications, and integrating this to advance theory. They build on existing literature while providing new insight.

Types of Research Hypotheses

Alternative Hypothesis

The research hypothesis is often called the alternative or experimental hypothesis in experimental research.

It typically suggests a potential relationship between two key variables: the independent variable, which the researcher manipulates, and the dependent variable, which is measured based on those changes.

The alternative hypothesis states a relationship exists between the two variables being studied (one variable affects the other).

In summary, a hypothesis is a precise, testable statement of what researchers expect to happen in a study and why. Hypotheses connect theory to data and guide the research process towards expanding scientific understanding.

An experimental hypothesis predicts what change(s) will occur in the dependent variable when the independent variable is manipulated.

It states that the results are not due to chance and are significant in supporting the theory being investigated.

The alternative hypothesis can be directional, indicating a specific direction of the effect, or non-directional, suggesting a difference without specifying its nature. It’s what researchers aim to support or demonstrate through their study.

Null Hypothesis

The null hypothesis states no relationship exists between the two variables being studied (one variable does not affect the other). There will be no changes in the dependent variable due to manipulating the independent variable.

It states results are due to chance and are not significant in supporting the idea being investigated.

The null hypothesis, positing no effect or relationship, is a foundational contrast to the research hypothesis in scientific inquiry. It establishes a baseline for statistical testing, promoting objectivity by initiating research from a neutral stance.

Many statistical methods are tailored to test the null hypothesis, determining the likelihood of observed results if no true effect exists.
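As a rough illustration of "the likelihood of observed results if no true effect exists," the sketch below computes an exact binomial tail probability. It assumes a simple hypothetical setup that is not from the article: each of 10 participants either improves or does not, and under the null hypothesis each outcome is equally likely (`p_null = 0.5`). The function name and the numbers are invented for illustration only.

```python
from math import comb


def binomial_tail(successes, trials, p_null=0.5):
    """Return P(X >= successes) for X ~ Binomial(trials, p_null).

    This is the chance of seeing a result at least this extreme
    if the null hypothesis (no true effect) were correct.
    """
    return sum(
        comb(trials, k) * p_null**k * (1 - p_null) ** (trials - k)
        for k in range(successes, trials + 1)
    )


# Hypothetical result: 9 of 10 participants scored higher after the treatment.
p_value = binomial_tail(9, 10)
print(f"P(9 or more of 10 | no effect) = {p_value:.4f}")
```

A small value here says only that the observed result would be unusual if there were no effect. It does not, on its own, prove the research hypothesis.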

This dual-hypothesis approach provides clarity, ensuring that research intentions are explicit, and fosters consistency across scientific studies, enhancing the standardization and interpretability of research outcomes.

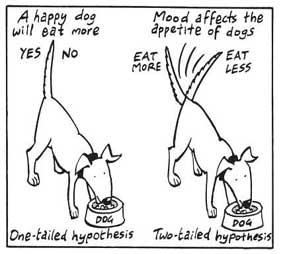

Non-directional Hypothesis

A non-directional hypothesis, also known as a two-tailed hypothesis, predicts that there is a difference or relationship between two variables but does not specify the direction of this relationship.

It merely indicates that a change or effect will occur without predicting which group will have higher or lower values.

For example, “There is a difference in performance between Group A and Group B” is a non-directional hypothesis.

Directional Hypothesis

A directional (one-tailed) hypothesis predicts the nature of the effect of the independent variable on the dependent variable. It predicts the direction in which the change will take place (e.g., greater, smaller, less, more).

It specifies whether one variable will be greater or lesser than another, rather than just indicating that there is a difference.

For example, “Exercise increases weight loss” is a directional hypothesis.
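The difference between a non-directional (two-tailed) and a directional (one-tailed) hypothesis can be sketched with a small exact permutation test. This is one possible way to test such hypotheses, not a method prescribed by the article. The function name, the `alternative` parameter, and the scores are all hypothetical.

```python
from itertools import combinations


def permutation_p_value(group_a, group_b, alternative="two-sided"):
    """Exact permutation test for a difference in group means.

    alternative="two-sided": non-directional (A differs from B).
    alternative="greater":   directional (A is greater than B).
    """
    pooled = list(group_a) + list(group_b)
    n_a, n_b = len(group_a), len(group_b)
    total = sum(pooled)

    def mean_diff(sum_a):
        # Mean of the "A" relabeling minus mean of the remaining values.
        return sum_a / n_a - (total - sum_a) / n_b

    observed = mean_diff(sum(group_a))
    tol = 1e-12  # guards against floating-point ties
    extreme = 0
    count = 0
    # Under the null hypothesis the group labels are interchangeable,
    # so every way of choosing n_a values as "group A" is equally likely.
    for idx in combinations(range(len(pooled)), n_a):
        diff = mean_diff(sum(pooled[i] for i in idx))
        if alternative == "greater":
            hit = diff >= observed - tol
        elif alternative == "two-sided":
            hit = abs(diff) >= abs(observed) - tol
        else:
            raise ValueError("alternative must be 'two-sided' or 'greater'")
        extreme += hit
        count += 1
    return extreme / count


# Hypothetical scores, invented purely for illustration.
group_a = [12, 14, 15, 16, 18]
group_b = [8, 9, 10, 11, 13]
print("two-tailed p:", permutation_p_value(group_a, group_b, "two-sided"))
print("one-tailed p:", permutation_p_value(group_a, group_b, "greater"))
```

With these made-up scores the one-tailed p-value is half the two-tailed one, because only differences in the predicted direction count as evidence against the null hypothesis.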

Falsifiability

The Falsification Principle, proposed by Karl Popper, is a way of demarcating science from non-science. It suggests that for a theory or hypothesis to be considered scientific, it must be testable and capable of being refuted.

Falsifiability emphasizes that scientific claims shouldn’t just be confirmable but should also have the potential to be proven wrong.

It means that there should exist some potential evidence or experiment that could prove the proposition false.

However many confirming instances exist for a theory, it only takes one counter observation to falsify it. For example, the hypothesis that “all swans are white,” can be falsified by observing a black swan.

For Popper, science should attempt to disprove a theory rather than attempt to continually provide evidence to support a research hypothesis.

Can a Hypothesis be Proven?

Hypotheses make probabilistic predictions. They state the expected outcome if a particular relationship exists. However, a study result supporting a hypothesis does not definitively prove it is true.

All studies have limitations. There may be unknown confounding factors or issues that limit the certainty of conclusions. Additional studies may yield different results.

In science, hypotheses can realistically only be supported with some degree of confidence, not proven. The process of science is to incrementally accumulate evidence for and against hypothesized relationships in an ongoing pursuit of better models and explanations that best fit the empirical data. But hypotheses remain open to revision and rejection if that is where the evidence leads.

- Disproving a hypothesis is definitive. Solid disconfirmatory evidence will falsify a hypothesis and require altering or discarding it based on the evidence.

- However, confirming evidence is always open to revision. Other explanations may account for the same results, and additional or contradictory evidence may emerge over time.

We can never 100% prove the alternative hypothesis. Instead, we see if we can disprove, or reject, the null hypothesis.

Rejecting the null hypothesis does not mean that the alternative hypothesis is correct, but it does provide support for the alternative/experimental hypothesis.

Upon analysis of the results, an alternative hypothesis can be rejected or supported, but it can never be proven to be correct. We must avoid any reference to results proving a theory as this implies 100% certainty, and there is always a chance that evidence may exist which could refute a theory.

How to Write a Hypothesis

- Identify variables. The researcher manipulates the independent variable; the dependent variable is the measured outcome.

- Operationalize the variables being investigated. Operationalization is the process of making the variables physically measurable or testable. For example, if you are studying aggression, you might count the number of punches thrown by participants.

- Decide on a direction for your prediction. If there is evidence in the literature to support a specific effect of the independent variable on the dependent variable, write a directional (one-tailed) hypothesis. If there are limited or ambiguous findings in the literature regarding the effect of the independent variable on the dependent variable, write a non-directional (two-tailed) hypothesis.

- Make it testable. Ensure your hypothesis can be tested through experimentation or observation. It should be possible to show that it is false (the principle of falsifiability).

- Use clear and concise language. A strong hypothesis is concise (typically one to two sentences long) and formulated in clear, straightforward language, so that it is easily understood and testable.

Consider a hypothesis many teachers might subscribe to: students work better on Monday morning than on Friday afternoon (IV = day, DV = standard of work).

Now, if we decide to study this by giving the same group of students a lesson on a Monday morning and a Friday afternoon and then measuring their immediate recall of the material covered in each session, we would end up with the following:

- The alternative hypothesis states that students will recall significantly more information on a Monday morning than on a Friday afternoon.

- The null hypothesis states that there will be no significant difference in the amount recalled on a Monday morning compared to a Friday afternoon. Any difference will be due to chance or confounding factors.
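Because the same students are tested in both sessions, the two hypotheses above could be evaluated with a paired test. The sketch below uses an exact sign-flip permutation test on within-student differences. This is one reasonable choice and not a procedure stated in the article. The function name and the recall scores are hypothetical.

```python
from itertools import product


def paired_sign_flip_p_value(first, second):
    """One-tailed exact sign-flip test for paired scores.

    Tests the directional hypothesis that `first` scores are higher
    than `second` scores for the same participants.
    """
    diffs = [a - b for a, b in zip(first, second)]
    observed = sum(diffs)
    extreme = 0
    total = 0
    # Under the null hypothesis, each student's difference is equally
    # likely to be positive or negative, so we enumerate every sign pattern.
    for signs in product((1, -1), repeat=len(diffs)):
        flipped = sum(s * d for s, d in zip(signs, diffs))
        if flipped >= observed:
            extreme += 1
        total += 1
    return extreme / total


# Hypothetical recall scores (items remembered) for eight students.
monday = [14, 12, 15, 11, 13, 16, 12, 14]
friday = [11, 12, 13, 9, 12, 13, 10, 13]
print("one-tailed p:", paired_sign_flip_p_value(monday, friday))
```

If the resulting p-value falls below the chosen significance level, the null hypothesis is rejected and the alternative hypothesis is supported, though not proven.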

More Examples

- Memory : Participants exposed to classical music during study sessions will recall more items from a list than those who studied in silence.

- Social Psychology : Individuals who frequently engage in social media use will report higher levels of perceived social isolation compared to those who use it infrequently.

- Developmental Psychology : Children who engage in regular imaginative play have better problem-solving skills than those who don’t.

- Clinical Psychology : Cognitive-behavioral therapy will be more effective in reducing symptoms of anxiety over a 6-month period compared to traditional talk therapy.

- Cognitive Psychology : Individuals who multitask between various electronic devices will have shorter attention spans on focused tasks than those who single-task.

- Health Psychology : Patients who practice mindfulness meditation will experience lower levels of chronic pain compared to those who don’t meditate.

- Organizational Psychology : Employees in open-plan offices will report higher levels of stress than those in private offices.

- Behavioral Psychology : Rats rewarded with food after pressing a lever will press it more frequently than rats who receive no reward.

How to Write a Great Hypothesis

Hypothesis Definition, Format, Examples, and Tips

Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."

Amy Morin, LCSW, is a psychotherapist and international bestselling author. Her books, including "13 Things Mentally Strong People Don't Do," have been translated into more than 40 languages. Her TEDx talk, "The Secret of Becoming Mentally Strong," is one of the most viewed talks of all time.

A hypothesis is a tentative statement about the relationship between two or more variables. It is a specific, testable prediction about what you expect to happen in a study. It is a preliminary answer to your question that helps guide the research process.

Consider a study designed to examine the relationship between sleep deprivation and test performance. The hypothesis might be: "This study is designed to assess the hypothesis that sleep-deprived people will perform worse on a test than individuals who are not sleep-deprived."

At a Glance

A hypothesis is crucial to scientific research because it offers a clear direction for what the researchers are looking to find. This allows them to design experiments to test their predictions and add to our scientific knowledge about the world. This article explores how a hypothesis is used in psychology research, how to write a good hypothesis, and the different types of hypotheses you might use.

The Hypothesis in the Scientific Method

In the scientific method, whether it involves research in psychology, biology, or some other area, a hypothesis represents what the researchers think will happen in an experiment. The scientific method involves the following steps:

- Forming a question

- Performing background research

- Creating a hypothesis

- Designing an experiment

- Collecting data

- Analyzing the results

- Drawing conclusions

- Communicating the results

The hypothesis is a prediction, but it involves more than a guess. Most of the time, the hypothesis begins with a question which is then explored through background research. At this point, researchers then begin to develop a testable hypothesis.

Unless you are creating an exploratory study, your hypothesis should always explain what you expect to happen.

In a study exploring the effects of a particular drug, the hypothesis might be that researchers expect the drug to have some type of effect on the symptoms of a specific illness. In psychology, the hypothesis might focus on how a certain aspect of the environment might influence a particular behavior.

Remember, a hypothesis does not have to be correct. While the hypothesis predicts what the researchers expect to see, the goal of the research is to determine whether this guess is right or wrong. When conducting an experiment, researchers might explore numerous factors to determine which ones might contribute to the ultimate outcome.

In many cases, researchers may find that the results of an experiment do not support the original hypothesis. When writing up these results, the researchers might suggest other options that should be explored in future studies.

In many cases, researchers might draw a hypothesis from a specific theory or build on previous research. For example, prior research has shown that stress can impact the immune system. So a researcher might hypothesize: "People with high-stress levels will be more likely to contract a common cold after being exposed to the virus than people who have low-stress levels."