Z-Test for Statistical Hypothesis Testing Explained

The Z-test is a statistical hypothesis test that applies when the test statistic we are measuring, like the mean, follows a normal distribution.

There are multiple types of Z-tests. However, we’ll focus on the simplest and best-known one, the one-sample mean test. It is used to determine whether the difference between the mean of a sample and the mean of a population is statistically significant.

What Is a Z-Test?

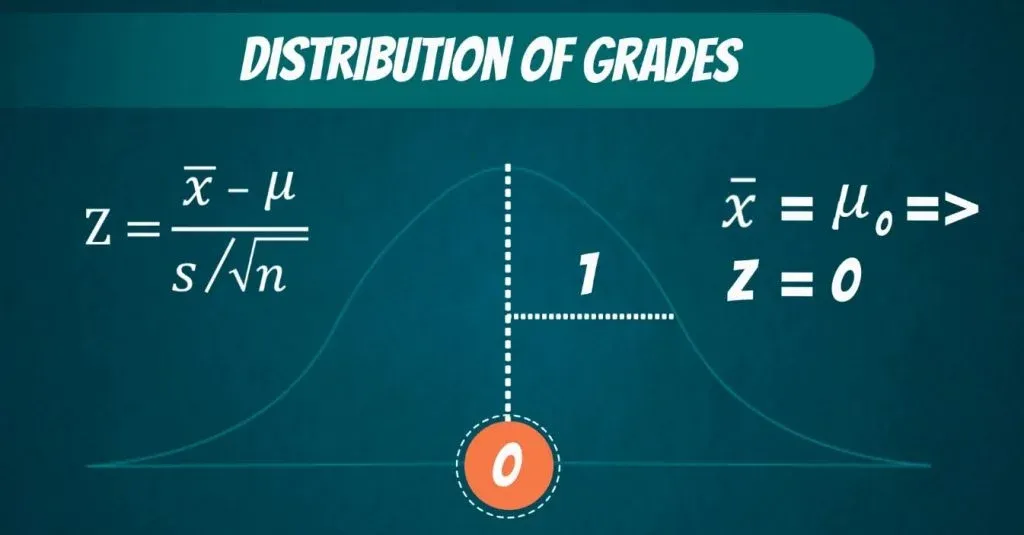

A Z-test is a type of statistical hypothesis test where the test-statistic follows a normal distribution.

The name Z-test comes from the Z-score of the normal distribution. This is a measure of how many standard deviations a raw score or sample statistic is from the population’s mean.

Z-tests are among the most common statistical tests in fields such as healthcare and data science, so they are an essential concept to understand.

Requirements for a Z-Test

In order to conduct a Z-test, your statistics need to meet a few requirements, including:

- A sample size that’s greater than 30. This is because we want to ensure our sample mean comes from a distribution that is approximately normal. According to the central limit theorem, the sampling distribution of the mean approaches a normal distribution as the sample size grows, and more than 30 data points is a common rule of thumb for the approximation to be adequate.

- The standard deviation and mean of the population are known.

- The sample data is collected/acquired randomly .
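The sample-size requirement can be explored with a small simulation. The sketch below is only an illustration: the exponential population, the seed, and the function name `coverage_of_sample_means` are assumptions chosen for the example. It draws many samples of size 30 from a strongly skewed population and reports how often the standardized sample mean lands within ±1.96, which should be close to 95 percent if the normal approximation is reasonable.

```python
import random
from math import sqrt


def coverage_of_sample_means(n=30, trials=4000, seed=0):
    """Fraction of standardized sample means that fall within +/-1.96.

    Samples come from an exponential distribution with mean 1 and
    standard deviation 1, which is strongly right-skewed.
    """
    rng = random.Random(seed)
    inside = 0
    for _ in range(trials):
        sample_mean = sum(rng.expovariate(1.0) for _ in range(n)) / n
        # Standardize with the known population mean and standard deviation.
        z = (sample_mean - 1.0) / (1.0 / sqrt(n))
        if abs(z) < 1.96:
            inside += 1
    return inside / trials


print(f"Share of sample means within +/-1.96: {coverage_of_sample_means():.3f}")
```

Trying smaller values of `n` in the same function shows how the approximation degrades for small samples from skewed populations.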


Z-Test Steps

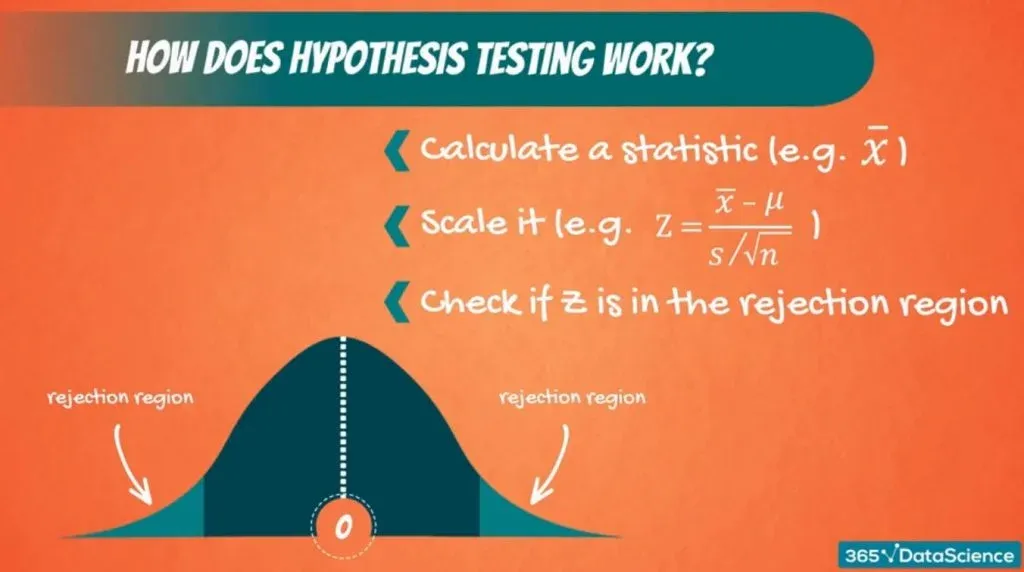

There are four steps to complete a Z-test. Let’s examine each one.

4 Steps to a Z-Test

- State the null hypothesis.

- State the alternate hypothesis.

- Choose your critical value.

- Calculate your Z-test statistic.

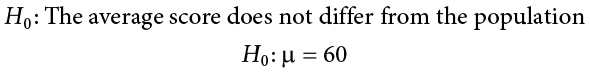

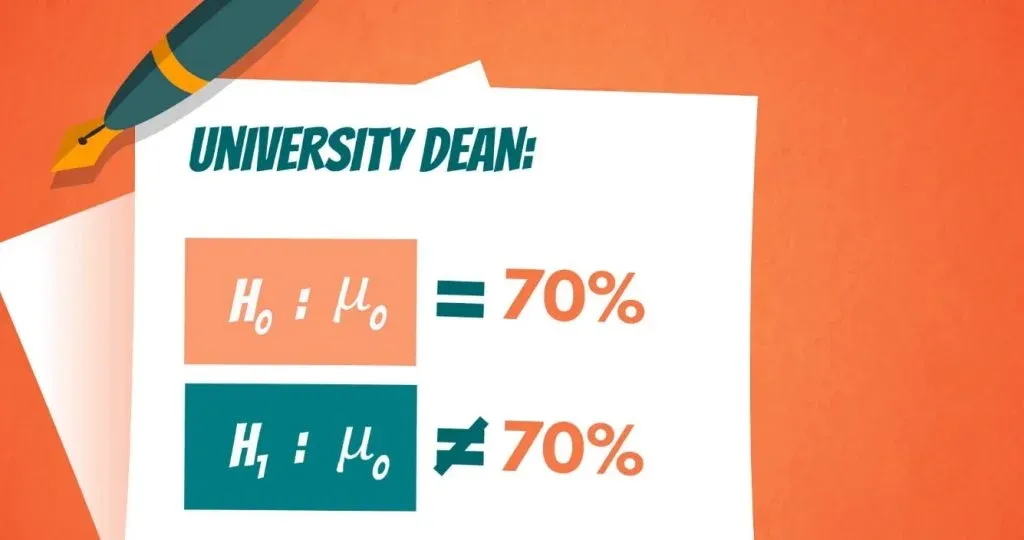

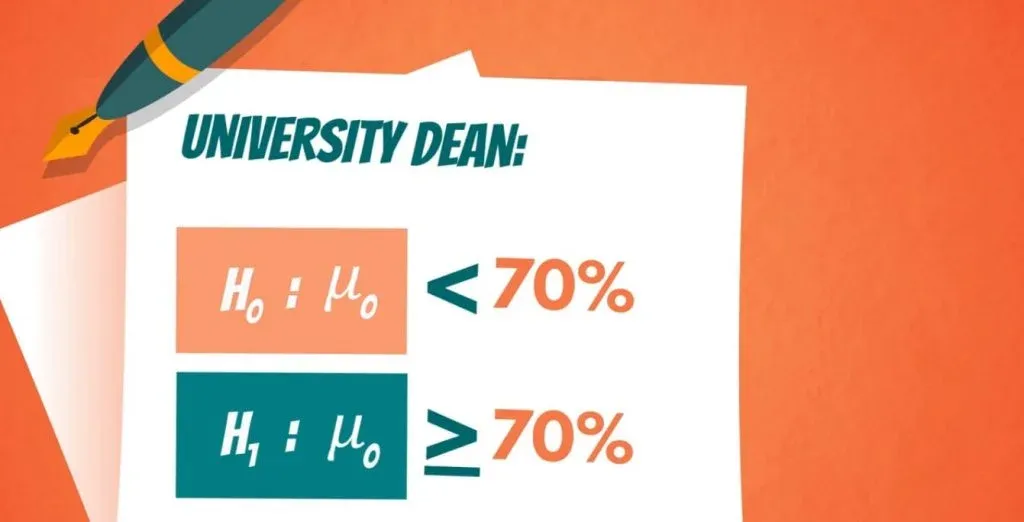

1. State the Null Hypothesis

The first step in a Z-test is to state the null hypothesis, H_0. This is what you believe to be true about the population, such as a value for the mean of the population, μ_0:

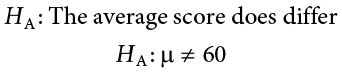

2. State the Alternate Hypothesis

Next, state the alternate hypothesis, H_1. This is the claim you test against the null hypothesis, usually motivated by what you observe in your sample. If you suspect the mean is different from the population’s mean, then the alternate hypothesis is that the mean is not equal to μ_0:

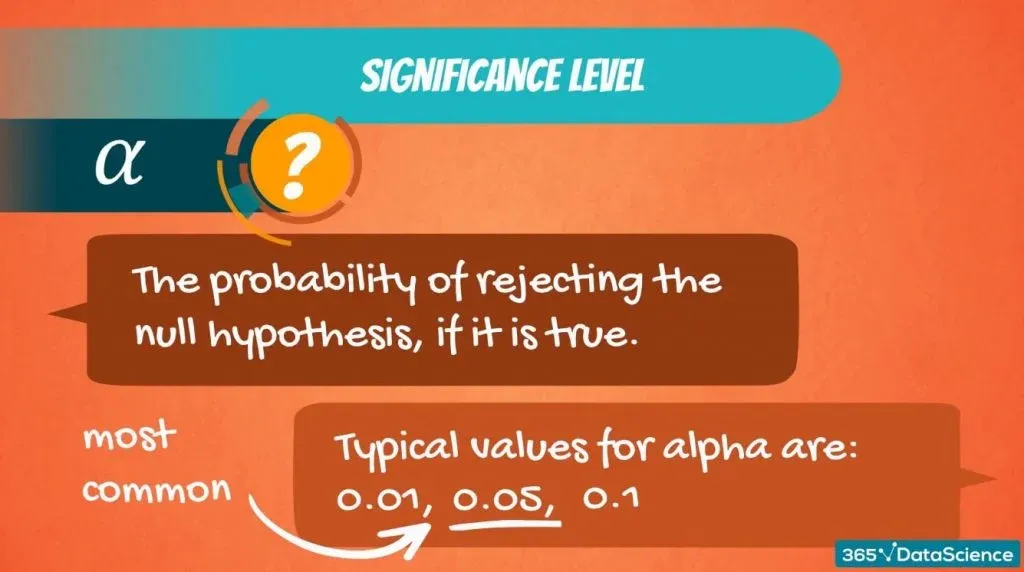

3. Choose Your Critical Value

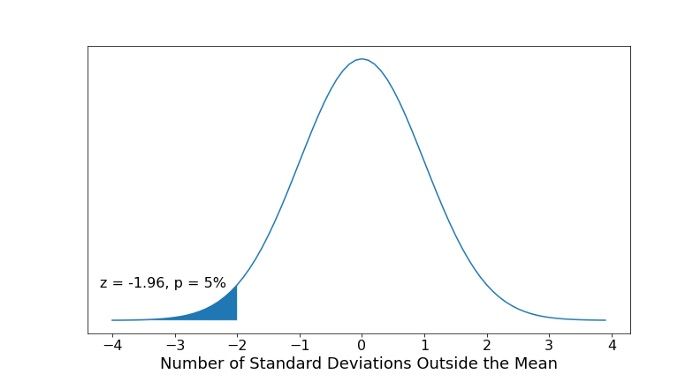

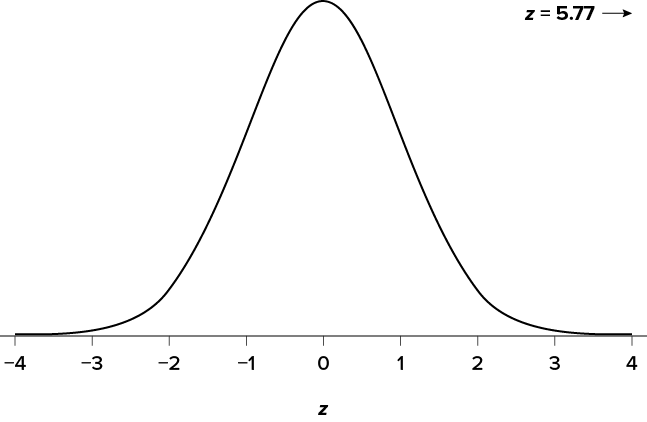

Then, choose your significance level, α, which sets the critical value used to decide whether you reject or fail to reject the null hypothesis. Typically for a Z-test we use a significance level of 5 percent, which for a two-tailed test corresponds to z = +/- 1.96 standard deviations from the population’s mean in the normal distribution.

This critical value is based on confidence intervals.

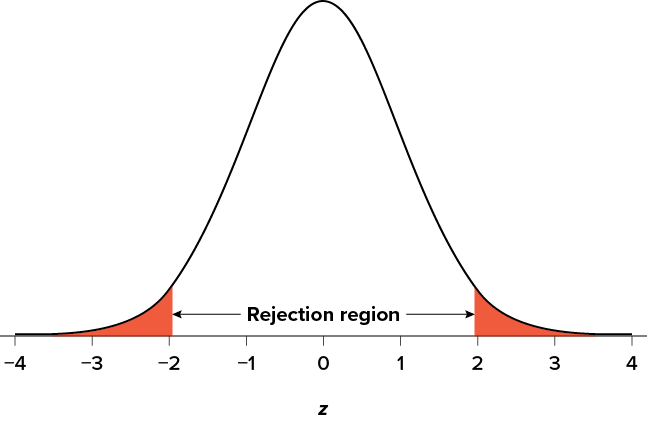

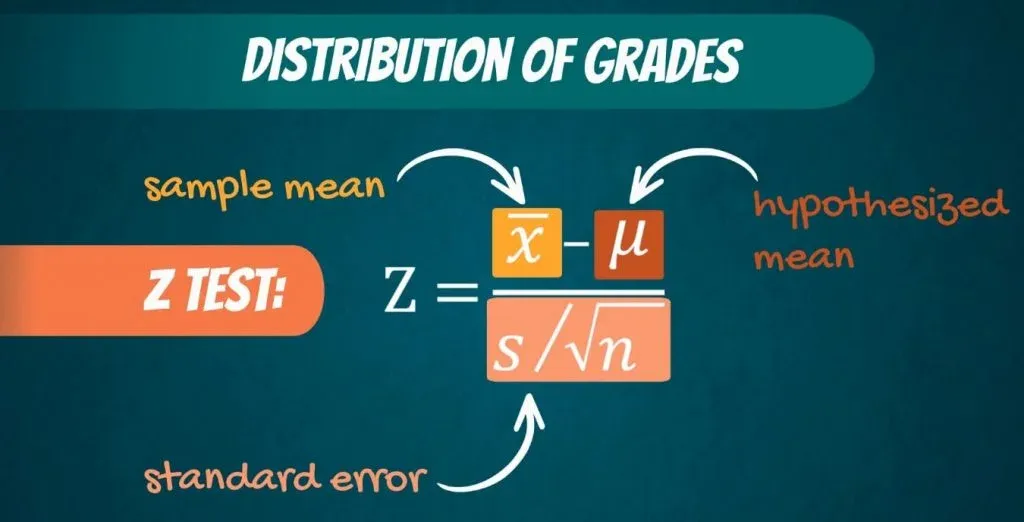

4. Calculate Your Z-Test Statistic

Compute the Z-test statistic using the sample mean, μ_1, the population mean, μ_0, the number of data points in the sample, n, and the population’s standard deviation, σ:

Z = (μ_1 − μ_0) / (σ / √n)

If the test statistic is greater (or lower, depending on the test we are conducting) than the critical value, then we reject the null hypothesis in favor of the alternate hypothesis, because the sample’s mean differs from the population mean by a statistically significant amount.

Another way to think about this: if the sample mean is very far from the population mean, then either the null hypothesis is wrong or the sample is a rare anomaly.
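The four steps can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the function name `one_sample_z_test` and its `tail` argument are hypothetical, and the critical value is taken from the standard normal distribution in Python’s standard library.

```python
from math import sqrt
from statistics import NormalDist


def one_sample_z_test(sample_mean, pop_mean, pop_sd, n, alpha=0.05, tail="two-sided"):
    """Return (z, critical value, reject?) for a one-sample mean Z-test."""
    # Step 4: standardize the difference between sample and population means.
    z = (sample_mean - pop_mean) / (pop_sd / sqrt(n))
    std_normal = NormalDist()  # mean 0, standard deviation 1
    # Step 3: the critical value depends on alpha and on the direction of H_1.
    if tail == "two-sided":
        z_crit = std_normal.inv_cdf(1 - alpha / 2)
        reject = abs(z) > z_crit
    elif tail == "right":
        z_crit = std_normal.inv_cdf(1 - alpha)
        reject = z > z_crit
    elif tail == "left":
        z_crit = std_normal.inv_cdf(alpha)
        reject = z < z_crit
    else:
        raise ValueError("tail must be 'two-sided', 'right', or 'left'")
    return z, z_crit, reject


# Made-up numbers purely for illustration.
z, z_crit, reject = one_sample_z_test(52.0, 50.0, 8.0, 64)
print(f"z = {z:.2f}, critical value = {z_crit:.2f}, reject H_0: {reject}")
```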


Z-Test Example

Let’s go through an example to fully understand the one-sample mean Z-test.

A school says that its pupils are, on average, smarter than those at other schools. It takes a sample of 50 students whose average IQ is measured to be 110. The population, or the rest of the schools, has an average IQ of 100 and a standard deviation of 20. Is the school’s claim correct?

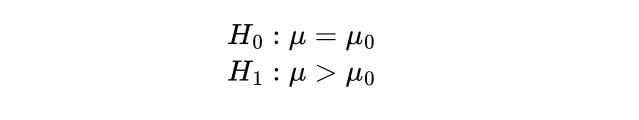

The null and alternate hypotheses are:

H_0: μ = 100

H_1: μ > 100

Here we are saying that our sample, the school, has a higher mean IQ than the population mean.

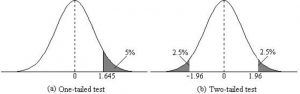

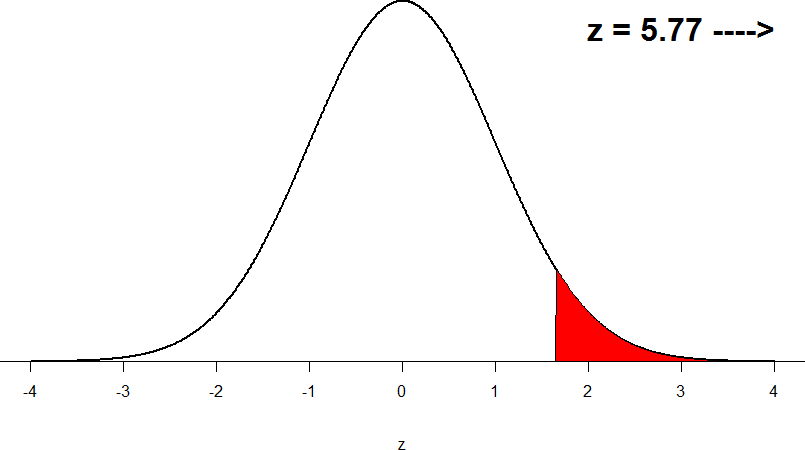

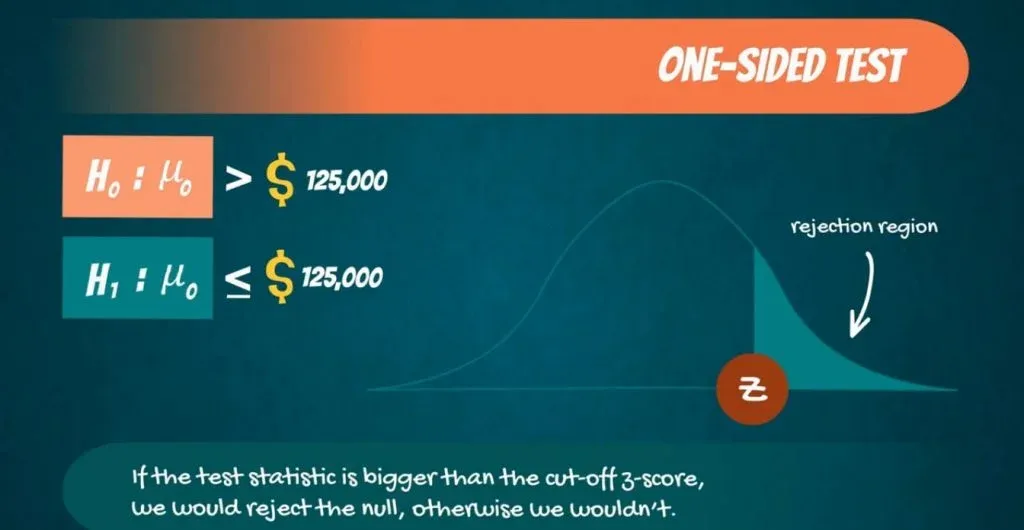

Now, this is what’s called a right-sided, one-tailed test as our sample mean is greater than the population’s mean. So, choosing a critical value of 5 percent, which equals a Z-score of 1.96 , we can only reject the null hypothesis if our Z-test statistic is greater than 1.96.

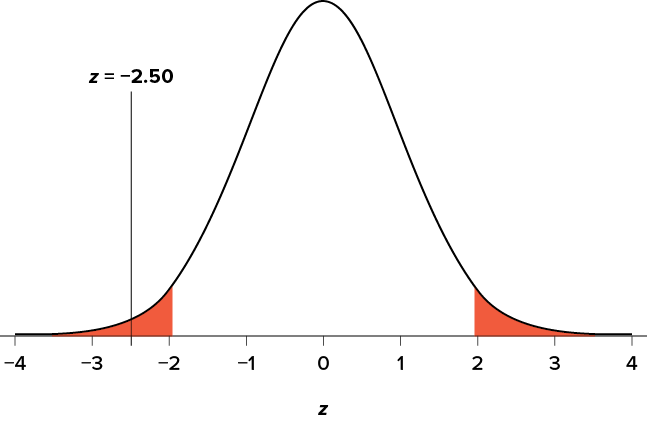

If the school claimed its students’ IQs were an average of 90, then we would use a left-tailed test, as shown in the figure above. We would then only reject the null hypothesis if our Z-test statistic is less than -1.96.

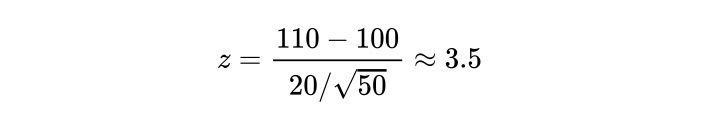

Computing our Z-test statistic, we see:

Z = (110 − 100) / (20 / √50) ≈ 3.54

Since 3.54 exceeds the critical value, we have sufficient evidence to reject the null hypothesis, and the data support the school’s claim.
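As a quick check of the arithmetic, the school example can be reproduced with Python’s standard library. This is a sketch that uses only the figures stated in the example; the variable names are illustrative, and the one-tailed 5 percent cutoff of about 1.645 is computed from the standard normal distribution.

```python
from math import sqrt
from statistics import NormalDist

sample_mean, pop_mean, pop_sd, n = 110, 100, 20, 50
alpha = 0.05

# Z-test statistic for the school's sample.
z = (sample_mean - pop_mean) / (pop_sd / sqrt(n))

# Right-tailed critical value and p-value from the standard normal distribution.
z_crit = NormalDist().inv_cdf(1 - alpha)
p_value = 1 - NormalDist().cdf(z)

print(f"z = {z:.2f}, critical value = {z_crit:.3f}, p = {p_value:.4f}")
print("Reject H_0" if z > z_crit else "Fail to reject H_0")
```

The statistic comes out at roughly 3.54, well beyond the cutoff, so the null hypothesis is rejected.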

I hope you enjoyed this article on Z-tests. In this post, we only addressed the simplest case, the one-sample mean test. There are other types of Z-tests, but they all follow the same process with some small nuances.


Chapter 10: Hypothesis Testing with Z

Setting up the hypotheses.

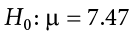

When setting up the hypotheses with z, the parameter is a population mean, and the null hypothesis specifies a value for it (in the previous chapter’s examples, the null value was 0). Using z is an occasion in which the null hypothesis can be a value other than 0. For example, if we are working with mothers in the U.S. whose children are at risk of low birth weight, we can use 7.47 pounds, the average birth weight in the U.S., as our null value and test for differences against that. For now, we will focus on testing the value of a single mean against what we expect from the population.

Using birthweight as an example, our null hypothesis takes the form:

H_0: μ = 7.47

Notice that we are testing the value for μ, the population parameter, NOT the sample statistic X̄ (or M). We are referring to the data in raw form (we have not standardized it using z yet). Again, using inferential statistics, we are interested in understanding the population by drawing on our sample observations. For the research question, we have a mean value from the sample: it is specific, observed data that we compare against a set point.

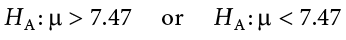

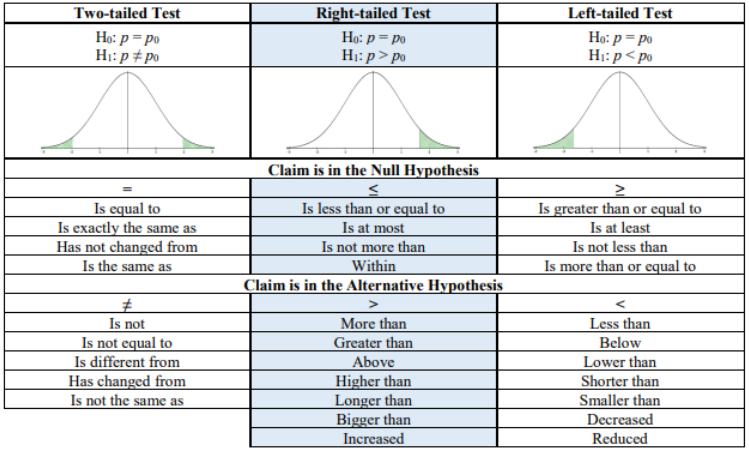

As mentioned earlier, the alternative hypothesis is simply the reverse of the null hypothesis, and there are three options, depending on where we expect the difference to lie. We will set the criteria for rejecting the null hypothesis based on the directionality (greater than, less than, or not equal to) of the alternative.

If we expect our obtained sample mean to be above or below the null hypothesis value (knowing which direction), we set a directional hypothesis. Our alternative hypothesis takes its form from the research question itself. In our example with birthweight, this could be presented as H_A: μ > 7.47 or H_A: μ < 7.47.

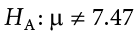

Note that we should only use a directional hypothesis if we have a good reason, based on prior observations or research, to suspect a particular direction. When we do not know the direction, such as when we are entering a new area of research, we use a non-directional alternative hypothesis. In our birthweight example, this could be set as H_A: μ ≠ 7.47.

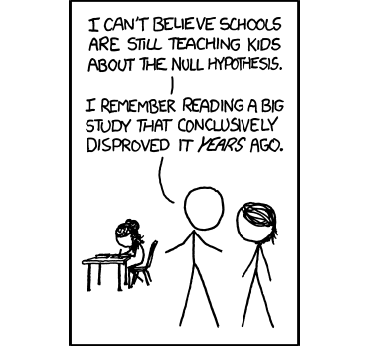

When working with data in this course, we will need to set a significance level, alpha (α), and use it to look up the critical value of the test statistic in the tables at the back of the book. The critical value marks the boundary of the critical rejection region.

Determining Critical Value from α

We set alpha (α) before collecting data in order to determine whether or not we should reject the null hypothesis. We set this value beforehand to avoid biasing ourselves by viewing our results and then determining what criteria we should use.

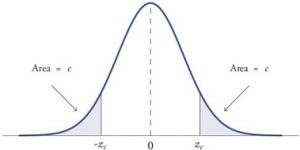

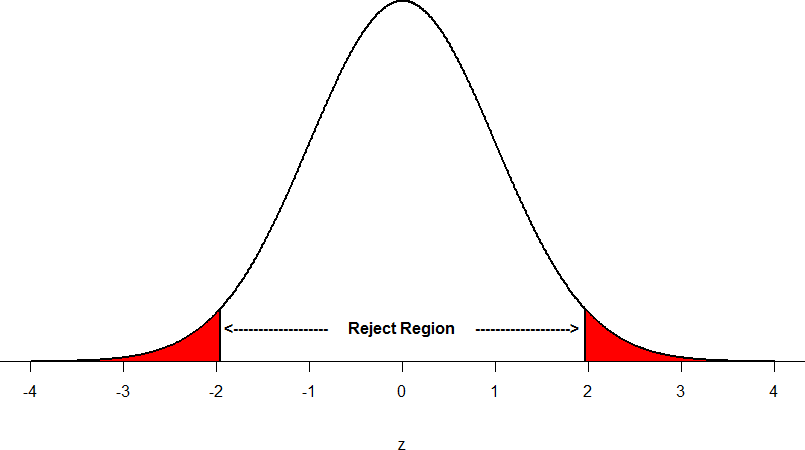

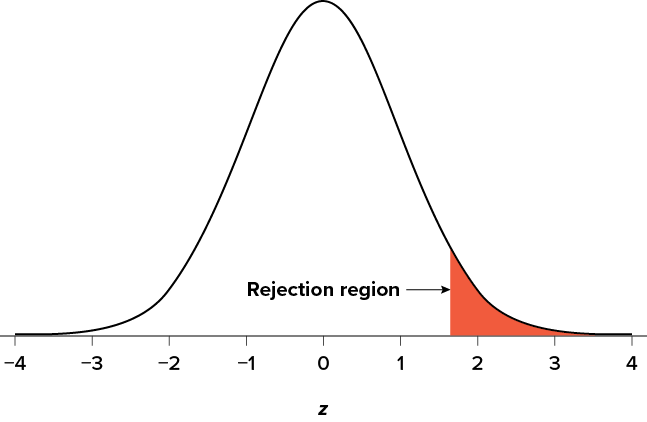

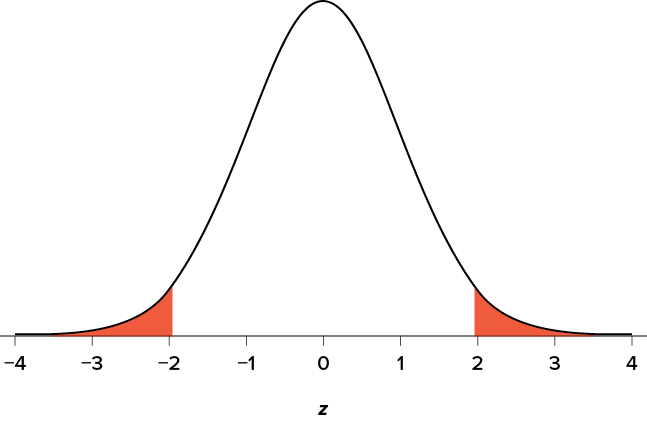

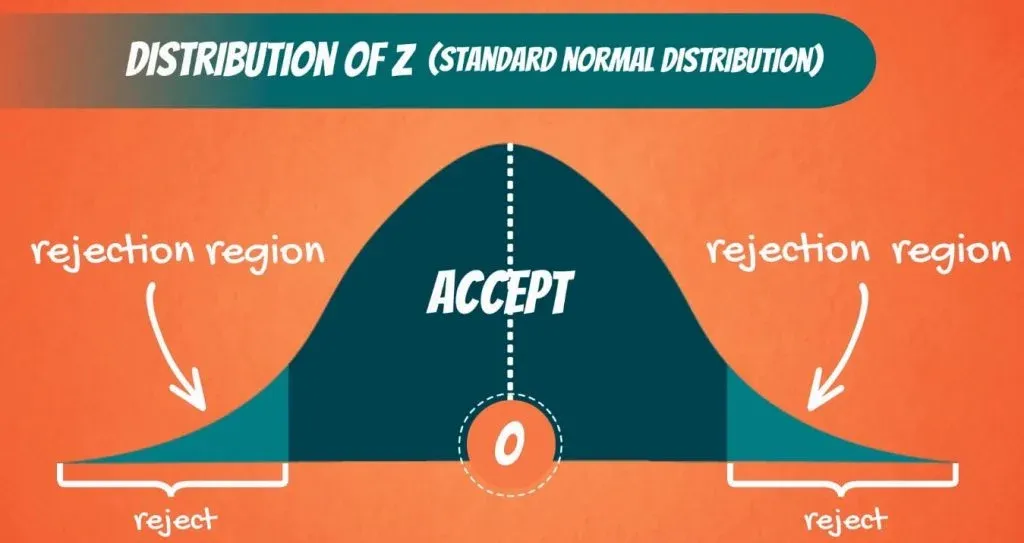

When a research hypothesis predicts an effect but does not predict a direction for the effect, it is called a non-directional hypothesis . To test the significance of a non-directional hypothesis, we have to consider the possibility that the sample could be extreme at either tail of the comparison distribution. We call this a two-tailed test .

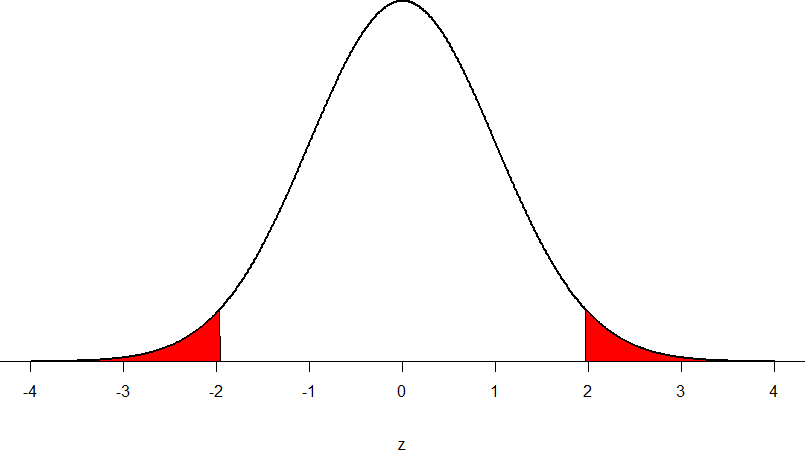

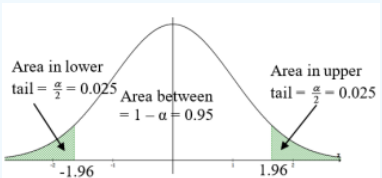

Figure 1. A two-tailed test for a non-directional hypothesis for z; area C is the critical rejection region.

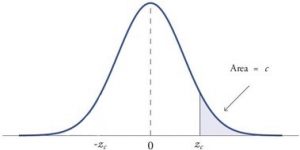

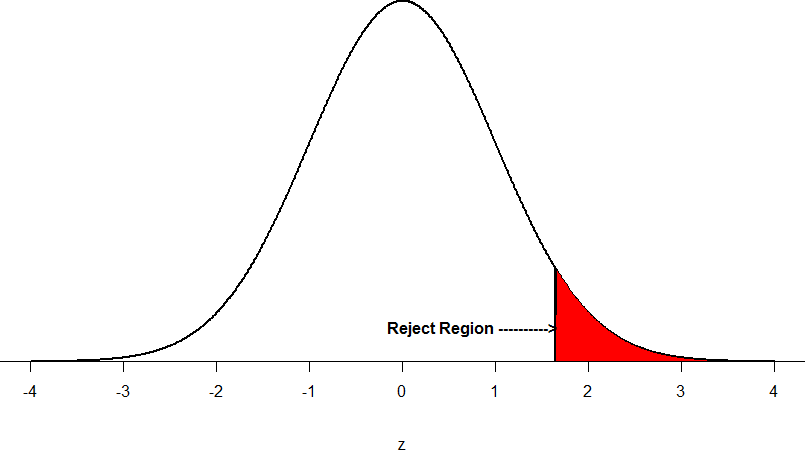

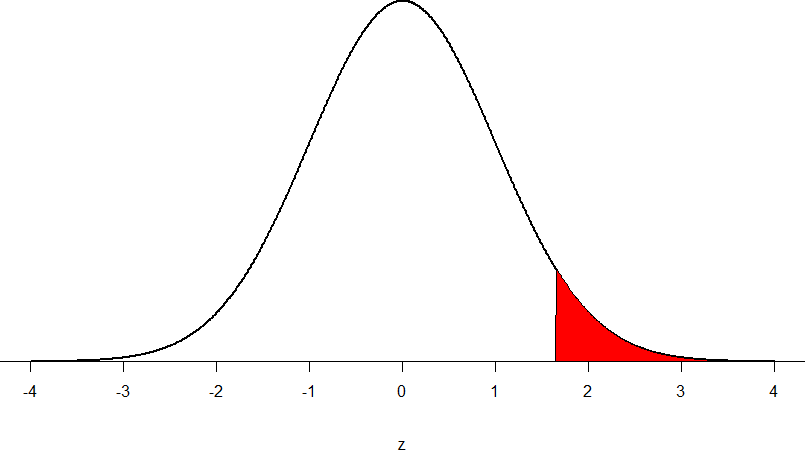

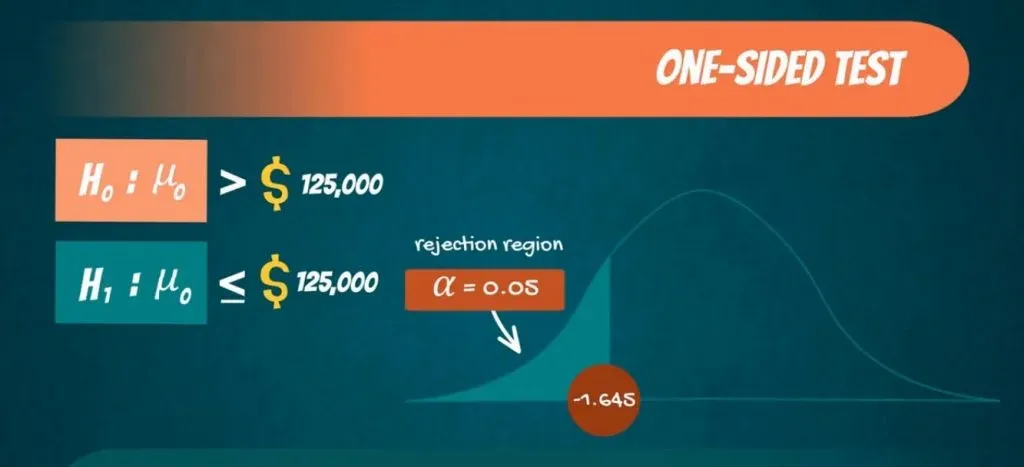

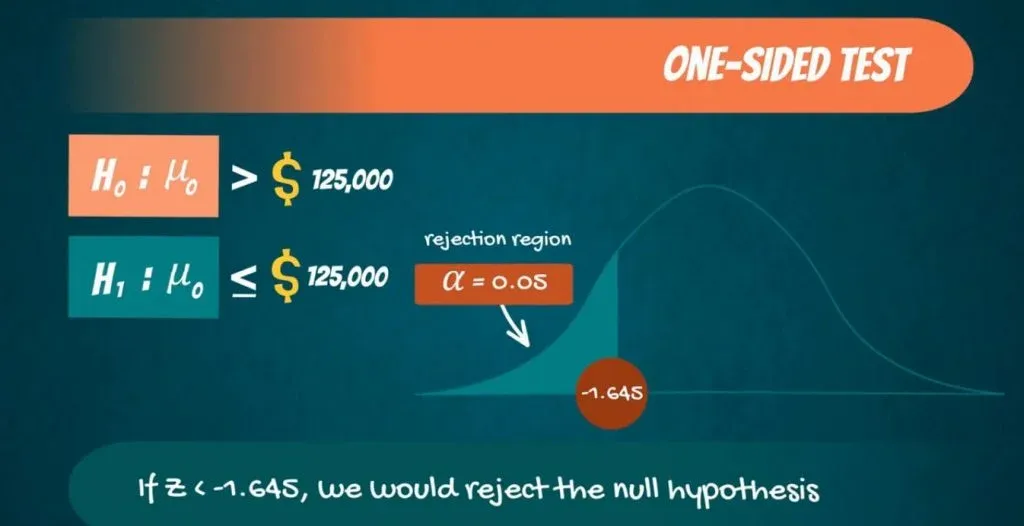

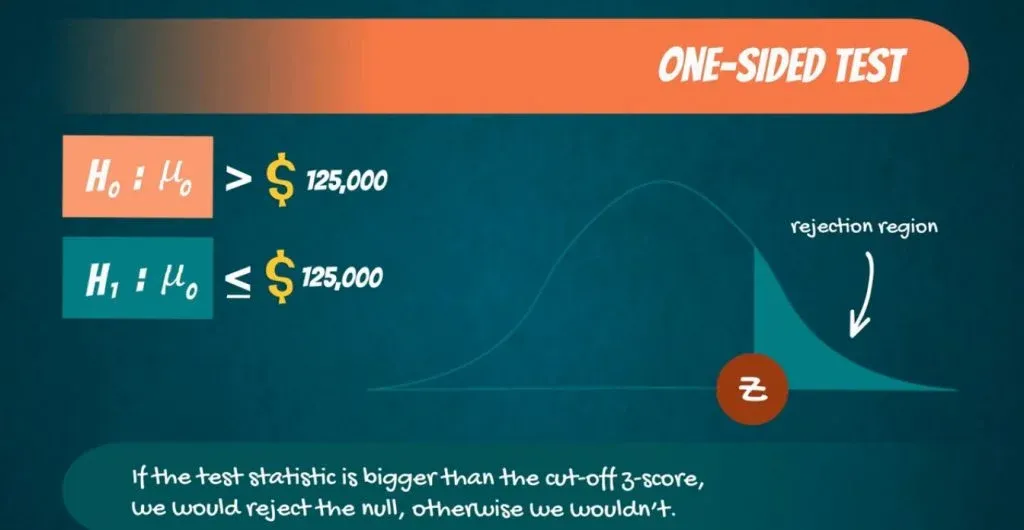

When a research hypothesis predicts a direction for the effect, it is called a directional hypothesis . To test the significance of a directional hypothesis, we have to consider the possibility that the sample could be extreme at one-tail of the comparison distribution. We call this a one-tailed test .

Figure 2. A one-tailed test for a directional hypothesis (predicting an increase) for z; area C is the critical rejection region.

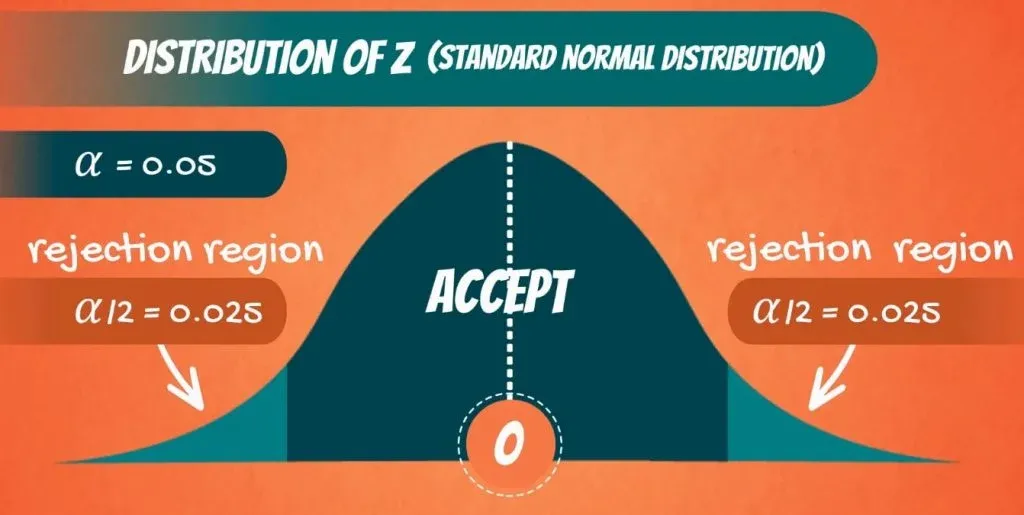

Determining Cutoff Scores with Two-Tailed Tests

Typically we specify an α level before analyzing the data. If the data analysis results in a probability value below the α level, then the null hypothesis is rejected; if it is not, then the null hypothesis is not rejected. In other words, if our data produce values that meet or exceed this threshold, then we have sufficient evidence to reject the null hypothesis; if not, we fail to reject the null (we never “accept” the null). According to this perspective, if a result is significant, then it does not matter how significant it is. Moreover, if it is not significant, then it does not matter how close to being significant it is. Therefore, if the 0.05 level is being used, then probability values of 0.049 and 0.001 are treated identically. Similarly, probability values of 0.06 and 0.34 are treated identically. Note that we will later discuss effect size, which helps address this limitation of null hypothesis significance testing (NHST).

When setting the probability value, there is a special complication in a two-tailed test. We have to divide the significance percentage between the two tails. For example, with a 5% significance level, we reject the null hypothesis only if the sample is so extreme that it is in either the top 2.5% or the bottom 2.5% of the comparison distribution. This keeps the overall level of significance at a total of 5%. A one-tailed test also has a cutoff for extreme values, but only one side of the distribution is considered, so the full 5% sits in that one tail.

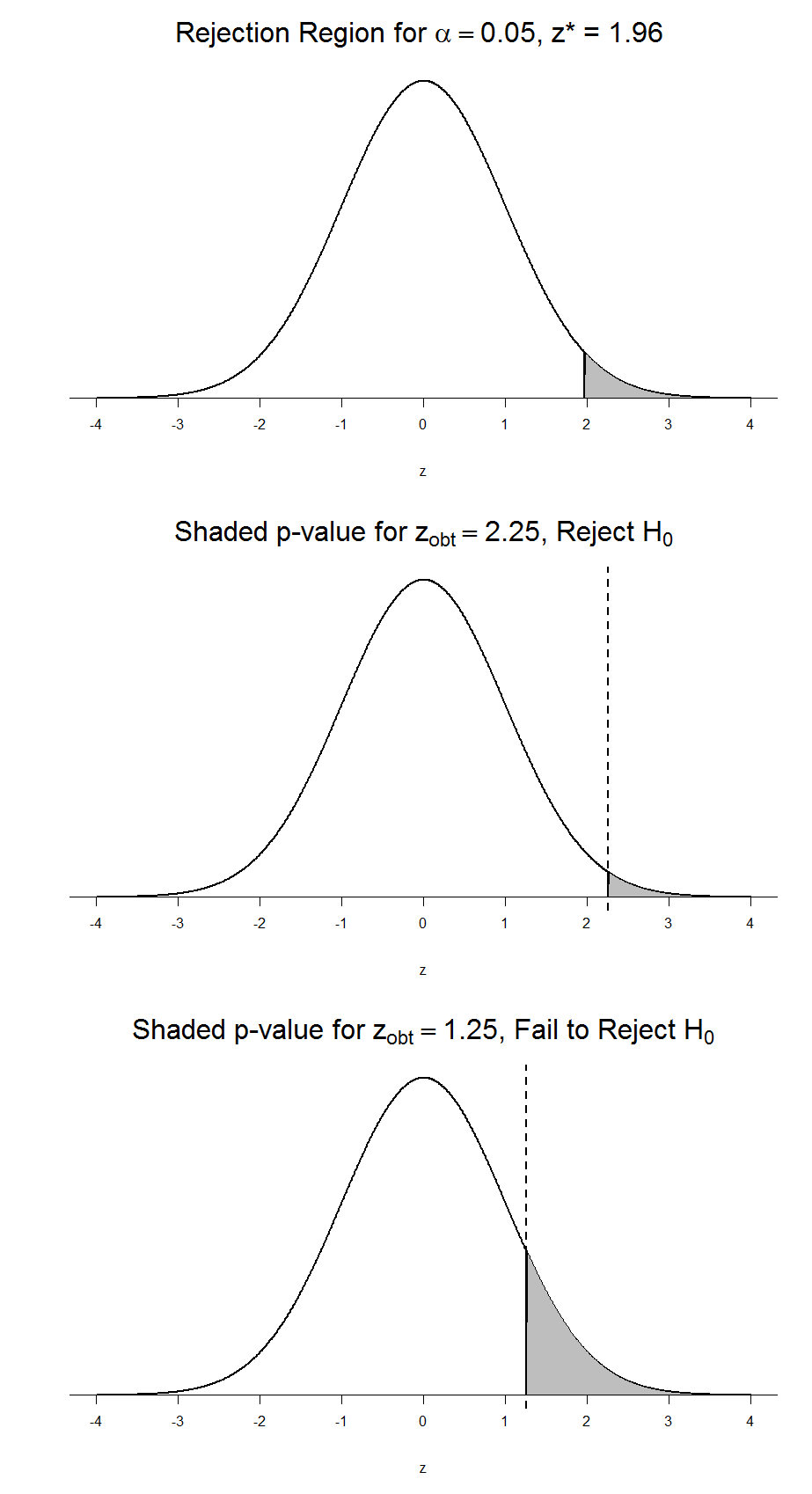

Figure 3. Critical value differences in one and two-tail tests.

Let’s review the set critical values for Z.

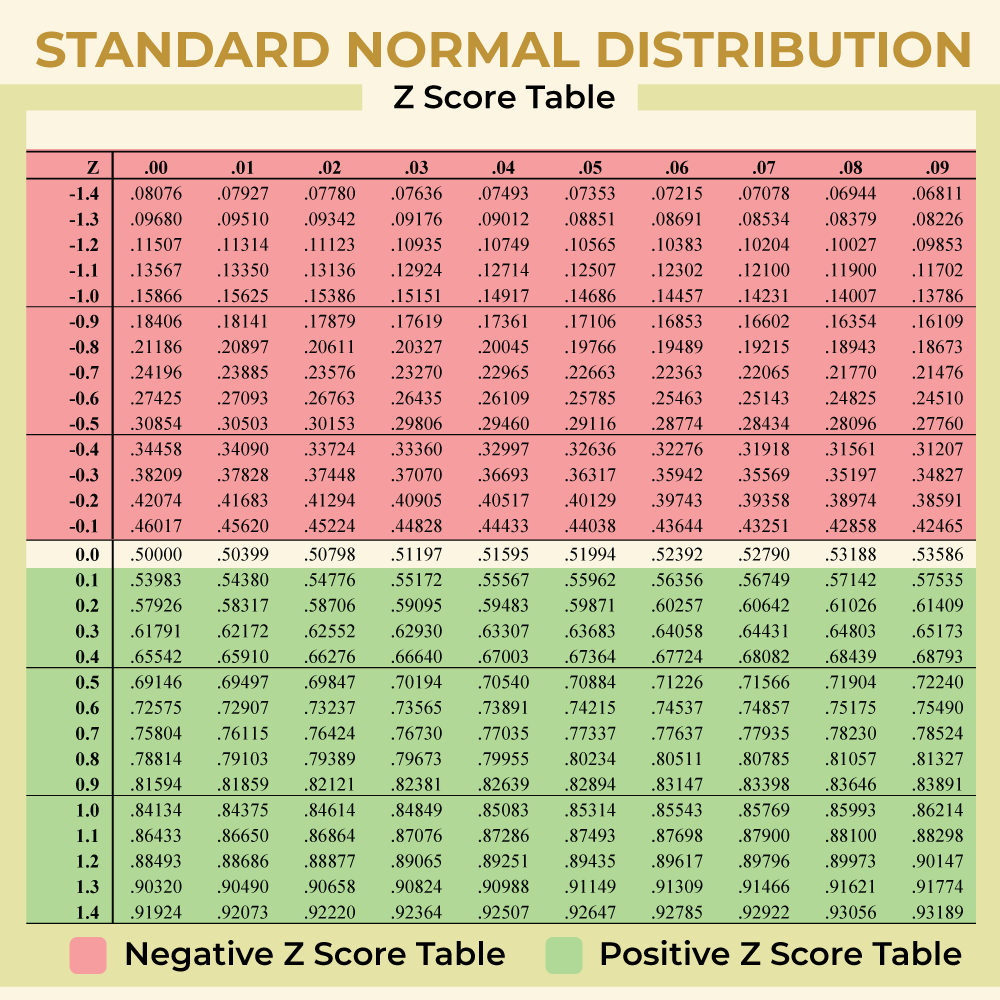

We discussed z-scores and probability in chapter 8. If we revisit the z-score for 5% and 1%, we can identify the critical regions for the critical rejection areas from the unit standard normal table.

- A two-tailed test at the 5% level has a critical boundary Z score of +1.96 and -1.96

- A one-tailed test at the 5% level has a critical boundary Z score of +1.64 or -1.64

- A two-tailed test at the 1% level has a critical boundary Z score of +2.58 and -2.58

- A one-tailed test at the 1% level has a critical boundary Z score of +2.33 or -2.33.
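These cutoffs can also be recovered without a printed table. The sketch below assumes Python’s standard-library `statistics.NormalDist` as a stand-in for the unit normal table; the helper name `critical_z` is hypothetical.

```python
from statistics import NormalDist


def critical_z(alpha, two_tailed):
    """Positive critical z-score for a given significance level."""
    # A two-tailed test splits alpha evenly between the two tails.
    tail_area = alpha / 2 if two_tailed else alpha
    return NormalDist().inv_cdf(1 - tail_area)


for alpha in (0.05, 0.01):
    for two_tailed in (True, False):
        label = "two-tailed" if two_tailed else "one-tailed"
        print(f"alpha = {alpha}, {label}: z = {critical_z(alpha, two_tailed):.3f}")
```

The printed values agree with the list above once rounded to two decimals; the one-tailed 5% cutoff is 1.645 to three decimals, which is why it appears as 1.64 or 1.645 depending on rounding.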

Review: Critical values, p-values, and significance level

There are two criteria we use to assess whether our data meet the thresholds established by our chosen significance level, and they both have to do with our discussions of probability and distributions. Recall that probability refers to the likelihood of an event, given some situation or set of conditions. In hypothesis testing, that situation is the assumption that the null hypothesis value is the correct value, or that there is no effect. The value laid out in H_0 is our condition under which we interpret our results. To reject this assumption, and thereby reject the null hypothesis, we need results that would be very unlikely if the null was true.

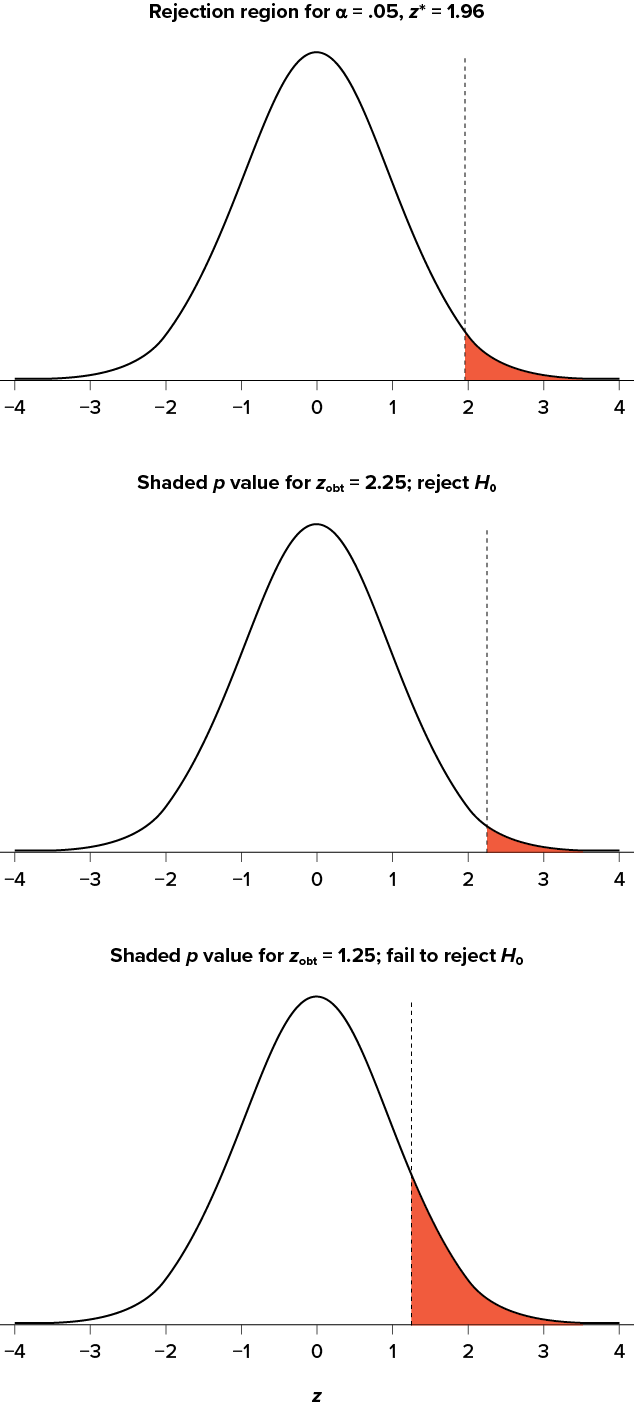

Now recall that values of z which fall in the tails of the standard normal distribution represent unlikely values. That is, the proportion of the area under the curve as or more extreme than z is very small as we get into the tails of the distribution. Our significance level corresponds to the area under the tail that is exactly equal to α: if we use our normal criterion of α = .05, then 5% of the area under the curve becomes what we call the rejection region (also called the critical region) of the distribution. This is illustrated in Figure 4.

Figure 4: The rejection region for a one-tailed test

The shaded rejection region takes up 5% of the area under the curve. Any result which falls in that region is sufficient evidence to reject the null hypothesis.

The rejection region is bounded by a specific z-value, as is any area under the curve. In hypothesis testing, the value corresponding to a specific rejection region is called the critical value, z_crit (“z-crit”) or z* (hence the other name “critical region”). Finding the critical value works exactly the same as finding the z-score corresponding to any area under the curve like we did in Unit 1. If we go to the normal table, we will find that the z-score corresponding to 5% of the area under the curve is equal to 1.645 (z = 1.64 corresponds to 0.0505 and z = 1.65 corresponds to 0.0495, so .05 is exactly in between them) if we go to the right and -1.645 if we go to the left. The direction must be determined by your alternative hypothesis, and drawing then shading the distribution is helpful for keeping directionality straight.

Suppose, however, that we want to do a non-directional test. We need to put the critical region in both tails, but we don’t want to increase the overall size of the rejection region (for reasons we will see later). To do this, we simply split it in half so that an equal proportion of the area under the curve falls in each tail’s rejection region. For α = .05, this means 2.5% of the area is in each tail, which, based on the z-table, corresponds to critical values of z* = ±1.96. This is shown in Figure 5.

Figure 5: Two-tailed rejection region

Thus, any z-score falling outside ±1.96 (greater than 1.96 in absolute value) falls in the rejection region. When we use z-scores in this way, the obtained value of z (sometimes called z-obtained) is something known as a test statistic, which is simply an inferential statistic used to test a null hypothesis.

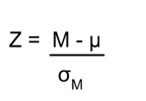

Calculate the test statistic: Z

Now that we understand setting up the hypothesis and determining the outcome, let’s examine hypothesis testing with z! The next step is to carry out the study and get the actual results for our sample. Central to a hypothesis test is the comparison of the population and sample means. To determine where the sample falls in the hypothesized distribution, we calculate the Z for the sample data.

Make a decision

To decide whether to reject the null hypothesis, we compare our sample’s Z score to the Z score that marks our critical boundary. If our sample Z score falls inside the rejection region of the comparison distribution (is greater than the z-score critical boundary) we reject the null hypothesis.

The formula for our z-statistic has not changed:

z = (X̄ − μ) / (σ / √n)

To formally test our hypothesis, we compare our obtained z-statistic to our critical z-value. If z_obt > z_crit (comparing absolute values for a two-tailed test), that means it falls in the rejection region (to see why, draw a line for z = 2.5 on Figure 1 or Figure 2) and so we reject H_0. If z_obt < z_crit, we fail to reject. Remember that as z gets larger, the corresponding area under the curve beyond z gets smaller. Thus, the proportion, or p-value, will be smaller than the area for α. Specifically, the probability of obtaining that result, or a more extreme result, under the condition that the null hypothesis is true gets smaller.

Conversely, if we fail to reject, we know that the proportion will be larger than α because the z-statistic will not be as far into the tail. This is illustrated for a one-tailed test in Figure 6.
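The link between the obtained z, its p-value, and α can be made concrete with a short sketch. It assumes Python’s standard-library normal distribution in place of the table; the function name `p_value` and its `tail` argument are hypothetical.

```python
from statistics import NormalDist


def p_value(z, tail="two-sided"):
    """Area under the standard normal curve as or more extreme than z."""
    std_normal = NormalDist()
    if tail == "right":
        return 1 - std_normal.cdf(z)
    if tail == "left":
        return std_normal.cdf(z)
    # Two-sided: count both tails beyond |z|.
    return 2 * (1 - std_normal.cdf(abs(z)))


alpha = 0.05
for z in (1.0, 2.5):
    p = p_value(z, tail="right")
    decision = "reject H_0" if p < alpha else "fail to reject H_0"
    print(f"z = {z}: one-tailed p = {p:.4f} -> {decision}")
```

For z = 2.5 the one-tailed area is about 0.006, smaller than α = 0.05, while z = 1.0 leaves about 0.16 in the tail, so the decision from the p-value matches the decision from comparing z to the critical value.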

Figure 6. Relation between α, z_obt, and p

When the null hypothesis is rejected, the effect is said to be statistically significant . Do not confuse statistical significance with practical significance. A small effect can be highly significant if the sample size is large enough.

Why does the word “significant” in the phrase “statistically significant” mean something so different from other uses of the word? Interestingly, this is because the meaning of “significant” in everyday language has changed. It turns out that when the procedures for hypothesis testing were developed, something was “significant” if it signified something. Thus, finding that an effect is statistically significant signifies that the effect is real and not due to chance. Over the years, the meaning of “significant” changed, leading to the potential misinterpretation.

Review: Steps of the Hypothesis Testing Process

The process of testing hypotheses follows a simple four-step procedure. This process will be what we use for the remainder of the textbook and course, and though the hypotheses and statistics we use will change, this process will not.

Step 1: State the Hypotheses

Your hypotheses are the first thing you need to lay out. Otherwise, there is nothing to test! You have to state the null hypothesis (which is what we test) and the alternative hypothesis (which is what we expect). These should be stated mathematically as they were presented above AND in words, explaining in normal English what each one means in terms of the research question.

Step 2: Find the Critical Values

Next, we formally lay out the criteria we will use to test our hypotheses. There are two pieces of information that inform our critical values: α, which determines how much of the area under the curve composes our rejection region, and the directionality of the test, which determines where the region will be.

Step 3: Compute the Test Statistic

Once we have our hypotheses and the standards we use to test them, we can collect data and calculate our test statistic, in this case z . This step is where the vast majority of differences in future chapters will arise: different tests used for different data are calculated in different ways, but the way we use and interpret them remains the same.

Step 4: Make the Decision

Finally, once we have our obtained test statistic, we can compare it to our critical value and decide whether we should reject or fail to reject the null hypothesis. When we do this, we must interpret the decision in relation to our research question, stating what we concluded, what we based our conclusion on, and the specific statistics we obtained.

Example: Movie Popcorn

Let’s see how hypothesis testing works in action by working through an example. Say that a movie theater owner likes to keep a very close eye on how much popcorn goes into each bag sold, so he knows that the average bag has 8 cups of popcorn and that this varies a little bit, about half a cup. That is, the known population mean is μ = 8.00 and the known population standard deviation is σ =0.50. The owner wants to make sure that the newest employee is filling bags correctly, so over the course of a week he randomly assesses 25 bags filled by the employee to test for a difference (n = 25). He doesn’t want bags overfilled or under filled, so he looks for differences in both directions. This scenario has all of the information we need to begin our hypothesis testing procedure.

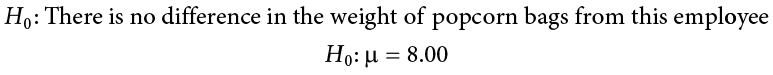

Our manager is looking for a difference in the mean cups of popcorn bags compared to the population mean of 8. We will need both a null and an alternative hypothesis written both mathematically and in words. We’ll always start with the null hypothesis:

H_0: There is no difference in the cups of popcorn bags from this employee

H_0: μ = 8.00

Notice that we phrase the hypothesis in terms of the population parameter μ, which in this case would be the true average cups of bags filled by the new employee. Our assumption of no difference, the null hypothesis, is that this mean is exactly the same as the known population mean value we want it to match, 8.00. Now let’s do the alternative:

H_A: There is a difference in the cups of popcorn bags from this employee

H_A: μ ≠ 8.00

In this case, we don’t know if the bags will be too full or not full enough, so we do a two-tailed alternative hypothesis that there is a difference.

Our critical values are based on two things: the directionality of the test and the level of significance. We decided in step 1 that a two-tailed test is the appropriate directionality. We were given no information about the level of significance, so we assume that α = 0.05 is what we will use. As stated earlier in the chapter, the critical values for a two-tailed z-test at α = 0.05 are z* = ±1.96. These will be the criteria we use to test our hypothesis. We can now draw out our distribution so we can visualize the rejection region and make sure it makes sense.

Figure 7: Rejection region for z* = ±1.96

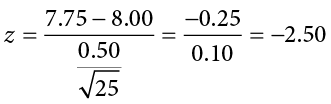

Step 3: Calculate the Test Statistic

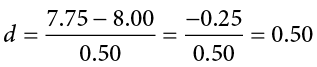

Now we come to our formal calculations. Let’s say that the manager collects data and finds that the average cups of this employee’s popcorn bags is ̅X = 7.75 cups. We can now plug this value, along with the values presented in the original problem, into our equation for z:

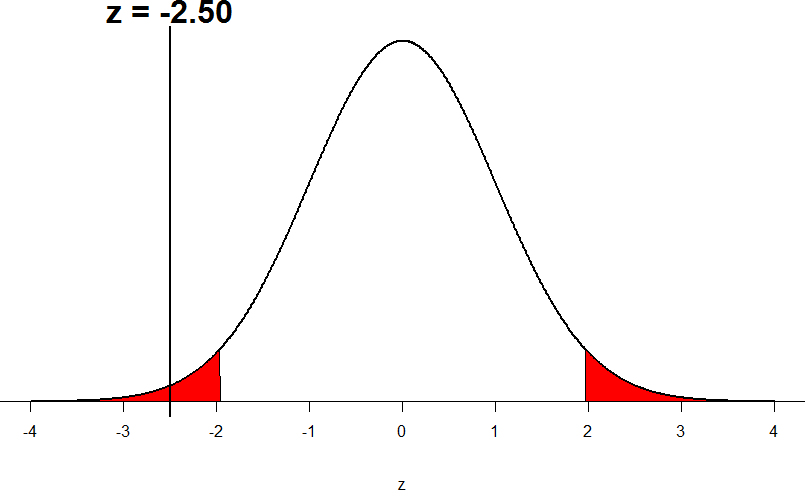

So our test statistic is z = -2.50, which we can draw onto our rejection region distribution:

Figure 8: Test statistic location

Looking at Figure 8, we can see that our obtained z-statistic falls in the rejection region. We can also directly compare it to our critical value: in terms of absolute value, 2.50 > 1.96, so we reject the null hypothesis. We can now write our conclusion:

When we write our conclusion, we write out the words to communicate what it actually means, but we also include the sample mean we calculated (the exact location doesn’t matter, just somewhere that flows naturally and makes sense) and the z-statistic and p-value. We don’t know the exact p-value, but we do know that because we rejected the null, it must be less than α.
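The popcorn calculation can be checked with a few lines of Python. This is a sketch using only the numbers given in the example; the variable names are illustrative, and the p-value is computed from the standard normal distribution rather than read from the table.

```python
from math import sqrt
from statistics import NormalDist

pop_mean, pop_sd = 8.00, 0.50   # known population values
sample_mean, n = 7.75, 25       # the new employee's bags
alpha = 0.05

z = (sample_mean - pop_mean) / (pop_sd / sqrt(n))

# Two-tailed test: critical value and p-value use both tails.
z_crit = NormalDist().inv_cdf(1 - alpha / 2)
p_two_tailed = 2 * (1 - NormalDist().cdf(abs(z)))

print(f"z = {z:.2f}, critical values = ±{z_crit:.2f}, p = {p_two_tailed:.4f}")
print("Reject H_0" if abs(z) > z_crit else "Fail to reject H_0")
```

The statistic is -2.50, and the two-tailed p-value comes out near 0.012, which is indeed less than α = 0.05.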

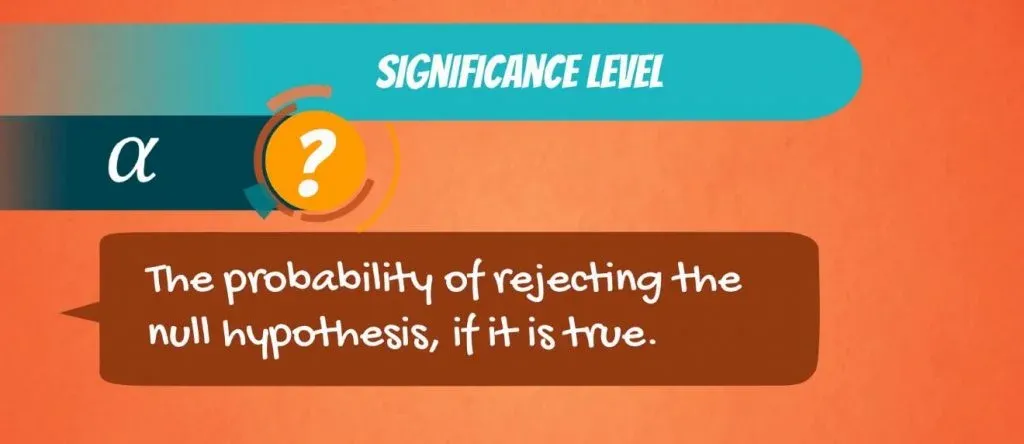

Effect Size

When we reject the null hypothesis, we are stating that the difference we found was statistically significant, but we have mentioned several times that this tells us nothing about practical significance. To get an idea of the actual size of what we found, we can compute a new statistic called an effect size. Effect sizes give us an idea of how large, important, or meaningful a statistically significant effect is.

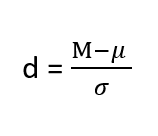

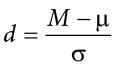

For mean differences like we calculated here, our effect size is Cohen’s d:

d = (X̄ − μ) / σ

Effect sizes are incredibly useful and provide important information and clarification that overcomes some of the weakness of hypothesis testing. Whenever you find a significant result, you should always calculate an effect size.
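As a small illustration, Cohen’s d for a one-sample comparison can be computed as the mean difference divided by the population standard deviation. The function name `cohens_d` is hypothetical, and the numbers are those of the popcorn example.

```python
def cohens_d(sample_mean, pop_mean, pop_sd):
    """Standardized mean difference between a sample and a known population."""
    if pop_sd <= 0:
        raise ValueError("pop_sd must be positive")
    return (sample_mean - pop_mean) / pop_sd


# Popcorn example: sample mean 7.75 cups, population mean 8.00, sigma 0.50.
d = cohens_d(7.75, 8.00, 0.50)
print(f"d = {d:.2f}")
```

Unlike z, d does not depend on the sample size, which is why it speaks to the size of an effect rather than to its statistical significance. The sign only records direction; the magnitude is what gets interpreted.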

Table 1. Interpretation of Cohen’s d

Example: Office Temperature

Let’s do another example to solidify our understanding. Let’s say that the office building you work in is supposed to be kept at 74 degrees Fahrenheit but is allowed to vary by 1 degree in either direction. You suspect that, as a cost saving measure, the temperature was secretly set higher. You set up a formal way to test your hypothesis.

You start by laying out the null hypothesis:

H_0: There is no difference in the average building temperature

H_0: μ = 74

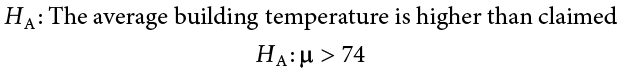

Next you state the alternative hypothesis. You have reason to suspect a specific direction of change, so you make a one-tailed test:

H_A: The average building temperature is higher than claimed

H_A: μ > 74

Now that you have everything set up, you spend one week collecting temperature data:

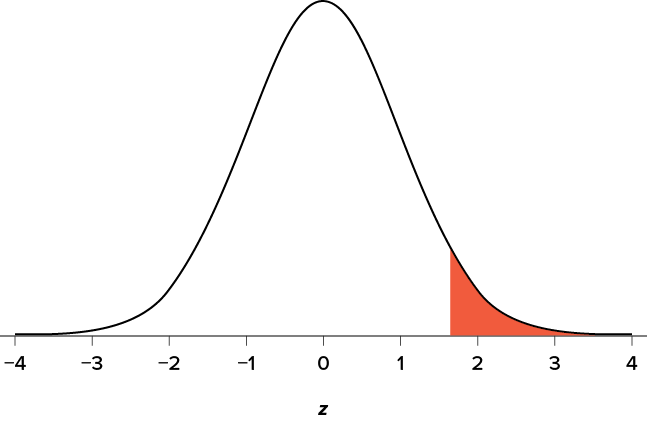

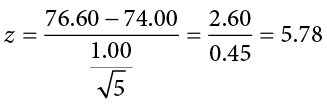

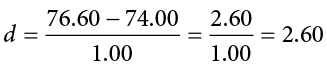

You calculate the average of these scores to be 𝑋̅ = 76.6 degrees. You use this to calculate the test statistic, using μ = 74 (the supposed average temperature), σ = 1.00 (how much the temperature should vary), and n = 5 (how many data points you collected):

z = (76.60 − 74.00) / (1.00 / √5) = 2.60 / 0.45 = 5.78

This value falls so far into the tail that it cannot even be plotted on the distribution!

Figure 7: Obtained z-statistic

You compare your obtained z-statistic, z = 5.78, to the critical value, z* = 1.645, and find that z > z*. Therefore you reject the null hypothesis, concluding: Based on 5 observations, the average temperature (X̄ = 76.6 degrees) is statistically significantly higher than it is supposed to be, z = 5.78, p < .05.

d = (76.60 − 74.00) / 1.00 = 2.60

The effect size you calculate is definitely large, meaning someone has some explaining to do!
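The same numbers can be run through a short script. This sketch uses only the summary values stated in the example (the individual temperature readings are not reproduced here), and the variable names are illustrative. Because it does not round the standard error to 0.45 partway through, the z it prints is about 5.81, slightly above the hand-rounded figure.

```python
from math import sqrt
from statistics import NormalDist

pop_mean, pop_sd = 74.0, 1.00   # claimed temperature and allowed variation
sample_mean, n = 76.6, 5        # one week of readings
alpha = 0.05

standard_error = pop_sd / sqrt(n)
z = (sample_mean - pop_mean) / standard_error

# One-tailed (right) test, since H_A is mu > 74.
z_crit = NormalDist().inv_cdf(1 - alpha)
d = (sample_mean - pop_mean) / pop_sd

print(f"standard error = {standard_error:.3f}")
print(f"z = {z:.2f}, critical value = {z_crit:.3f}, d = {d:.2f}")
print("Reject H_0" if z > z_crit else "Fail to reject H_0")
```

The decision is unaffected by the rounding: either way the statistic is far beyond the critical value.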

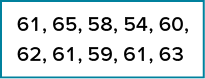

Example: Different Significance Level

First, let’s take a look at an example phrased in generic terms, rather than in the context of a specific research question, to see the individual pieces one more time. This time, however, we will use a stricter significance level, α = 0.01, to test the hypothesis.

We will use 60 as an arbitrary null hypothesis value:

H_0: The average score does not differ from the population

H_0: μ = 60

We will assume a two-tailed test:

H_A: The average score does differ

H_A: μ ≠ 60

We have seen the critical values for z-tests at the α = 0.05 level of significance several times. To find the values for α = 0.01, we will go to the standard normal table and find the z-score cutting off 0.005 (0.01 divided by 2 for a two-tailed test) of the area in the tail, which is z_crit = ±2.575. Notice that this cutoff is much higher than it was for α = 0.05. This is because we need much less of the area in the tail, so we need to go very far out to find the cutoff. As a result, this will require a much larger effect or much larger sample size in order to reject the null hypothesis.

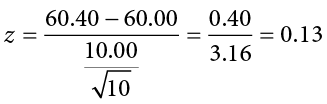

We can now calculate our test statistic. The average of 10 scores is M = 60.40, with µ = 60. We will use σ = 10 as our known population standard deviation. From this information, we calculate our z-statistic as:

z = (60.40 − 60.00) / (10 / √10) = 0.40 / 3.16 = 0.13

Our obtained z-statistic, z = 0.13, is very small. It is much less than our critical value of 2.575. Thus, this time, we fail to reject the null hypothesis. Our conclusion would look something like:

Notice two things about the end of the conclusion. First, we wrote that p is greater than instead of p is less than, like we did in the previous two examples. This is because we failed to reject the null hypothesis. We don’t know exactly what the p-value is, but we know it must be larger than the α level we used to test our hypothesis. Second, we used 0.01 instead of the usual 0.05, because this time we tested at a different level. The number you compare to the p-value should always be the significance level you test at. Because we did not detect a statistically significant effect, we do not need to calculate an effect size. Note: some statisticians suggest always calculating an effect size, because a non-significant result may reflect a Type II error. Here, although the result is not significant, d = (60.4 − 60)/10 = .04, which suggests no effect (and so little reason to suspect a Type II error).
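For completeness, this example can also be verified in code. The sketch below assumes the standard-library normal distribution in place of the table, so the critical value prints as about 2.576 rather than the table’s 2.575; the variable names are illustrative.

```python
from math import sqrt
from statistics import NormalDist

pop_mean, pop_sd = 60.0, 10.0
sample_mean, n = 60.40, 10
alpha = 0.01

z = (sample_mean - pop_mean) / (pop_sd / sqrt(n))

# Two-tailed test at the stricter alpha = 0.01 level.
z_crit = NormalDist().inv_cdf(1 - alpha / 2)
p_two_tailed = 2 * (1 - NormalDist().cdf(abs(z)))
d = (sample_mean - pop_mean) / pop_sd

print(f"z = {z:.2f}, critical values = ±{z_crit:.3f}")
print(f"p = {p_two_tailed:.2f}, d = {d:.2f}")
print("Reject H_0" if abs(z) > z_crit else "Fail to reject H_0")
```

The p-value is far above 0.01, which is consistent with failing to reject the null hypothesis.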

Review Considerations in Hypothesis Testing

Errors in hypothesis testing.

Keep in mind that rejecting the null hypothesis is not an all-or-nothing decision. A Type I error occurs when we reject a null hypothesis that is actually true. The Type I error rate is affected by the α level: the lower the α level, the lower the Type I error rate. It might seem that α is the probability of a Type I error. However, this is not correct. Instead, α is the probability of a Type I error given that the null hypothesis is true. If the null hypothesis is false, then it is impossible to make a Type I error. The second type of error that can be made in significance testing is failing to reject a false null hypothesis. This kind of error is called a Type II error.
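The statement that α is the Type I error rate when the null hypothesis is true can be illustrated with a simulation. This is a sketch under stated assumptions: the population values, number of trials, seed, and the function name `simulated_type_i_rate` are all arbitrary choices for the example. Every sample is drawn from a population where the null hypothesis really holds, so each rejection is a Type I error.

```python
import random
from math import sqrt


def simulated_type_i_rate(mu0=8.0, sigma=0.5, n=25, z_crit=1.96,
                          trials=5000, seed=42):
    """Share of two-tailed z-tests that reject H_0 when H_0 is actually true."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(trials):
        # Draw a sample from a population whose mean really is mu0.
        sample_mean = sum(rng.gauss(mu0, sigma) for _ in range(n)) / n
        z = (sample_mean - mu0) / (sigma / sqrt(n))
        if abs(z) > z_crit:
            rejections += 1
    return rejections / trials


print(f"Simulated Type I error rate: {simulated_type_i_rate():.3f}")
```

With a cutoff of ±1.96, the simulated rate should land close to 0.05; raising the cutoff to the 1% value lowers it accordingly.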

Statistical Power

The statistical power of a research design is the probability of rejecting the null hypothesis given the sample size and expected relationship strength. Statistical power is the complement of the probability of committing a Type II error. Clearly, researchers should be interested in the power of their research designs if they want to avoid making Type II errors. In particular, they should make sure their research design has adequate power before collecting data. A common guideline is that a power of .80 is adequate. This means that there is an 80% chance of rejecting the null hypothesis for the expected relationship strength.

Given that statistical power depends primarily on relationship strength and sample size, there are essentially two steps you can take to increase statistical power: increase the strength of the relationship or increase the sample size. Increasing the strength of the relationship can sometimes be accomplished by using a stronger manipulation or by more carefully controlling extraneous variables to reduce the amount of noise in the data (e.g., by using a within-subjects design rather than a between-subjects design). The usual strategy, however, is to increase the sample size. For any expected relationship strength, there will always be some sample large enough to achieve adequate power.
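For the one-sample z-test, power can be computed directly. The sketch below makes two assumptions that go beyond the text: the expected relationship strength is expressed as Cohen’s d (the true mean shift in population standard deviation units), and the test is two-tailed. The function names are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def z_test_power(effect_size_d, n, alpha=0.05):
    """Power of a two-tailed one-sample z-test for a true standardized shift d."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    # Under the alternative, the z-statistic is centered at d * sqrt(n).
    shift = effect_size_d * sqrt(n)
    upper_tail = 1 - std_normal.cdf(z_crit - shift)
    lower_tail = std_normal.cdf(-z_crit - shift)
    return upper_tail + lower_tail


def smallest_n_for_power(effect_size_d, target_power=0.80, alpha=0.05):
    """Smallest sample size whose power reaches the target."""
    if effect_size_d == 0:
        raise ValueError("no sample size gives extra power for a zero effect")
    n = 2
    while z_test_power(effect_size_d, n, alpha) < target_power:
        n += 1
    return n


for d in (0.2, 0.5, 0.8):
    print(f"d = {d}: n needed for 80% power = {smallest_n_for_power(d)}")
```

The loop makes the trade-off in the paragraph above visible: the weaker the expected effect, the larger the sample needed to reach the same power.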

Inferential statistics uses data from a sample of individuals to reach conclusions about the whole population. The degree to which our inferences are valid depends upon how we selected the sample (sampling technique) and the characteristics (parameters) of population data. Statistical analyses assume that sample(s) and population(s) meet certain conditions called statistical assumptions.

It is easy to check assumptions when using statistical software and it is important as a researcher to check for violations; if violations of statistical assumptions are not appropriately addressed then results may be interpreted incorrectly.

Learning Objectives

Having read the chapter, students should be able to:

- Conduct a hypothesis test using a z-score statistic, including locating the critical region and making a statistical decision.

- Explain the purpose of measuring effect size and power, and be able to compute Cohen’s d.

Exercises – Ch. 10

- List the main steps for hypothesis testing with the z-statistic. When and why do you calculate an effect size?

- z = 1.99, two-tailed test at α = 0.05

- z = 1.99, two-tailed test at α = 0.01

- z = 1.99, one-tailed test at α = 0.05

- You are part of a trivia team and have tracked your team’s performance since you started playing, so you know that your scores are normally distributed with μ = 78 and σ = 12. Recently, a new person joined the team, and you think the scores have gotten better. Use hypothesis testing to see if the average score has improved based on the following 8 weeks’ worth of score data: 82, 74, 62, 68, 79, 94, 90, 81, 80.

- A study examines self-esteem and depression in teenagers. A sample of 25 teens with a low self-esteem are given the Beck Depression Inventory. The average score for the group is 20.9. For the general population, the average score is 18.3 with σ = 12. Use a two-tail test with α = 0.05 to examine whether teenagers with low self-esteem show significant differences in depression.

- You get hired as a server at a local restaurant, and the manager tells you that servers’ tips are $42 on average but vary about $12 (μ = 42, σ = 12). You decide to track your tips to see if you make a different amount, but because this is your first job as a server, you don’t know if you will make more or less in tips. After working 16 shifts, you find that your average nightly amount is $44.50 from tips. Test for a difference between this value and the population mean at the α = 0.05 level of significance.

Answers to Odd-Numbered Exercises – Ch. 10

1. List the hypotheses. Determine the critical region. Calculate z. Compare z to the critical region. Draw a conclusion. We calculate an effect size when we find a statistically significant result, to see whether the result is practically meaningful or important.

5. Step 1: H_0: μ = 42, “My average tips do not differ from those of other servers”; H_A: μ ≠ 42, “My average tips do differ from those of other servers”

Introduction to Statistics for Psychology Copyright © 2021 by Alisa Beyer is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.


Z-Score: Definition, Formula, Calculation & Interpretation

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.


Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.


A z-score is a statistical measure that describes the position of a raw score in terms of its distance from the mean, measured in standard deviation units. A positive z-score indicates that the value lies above the mean, while a negative z-score indicates that the value lies below the mean.

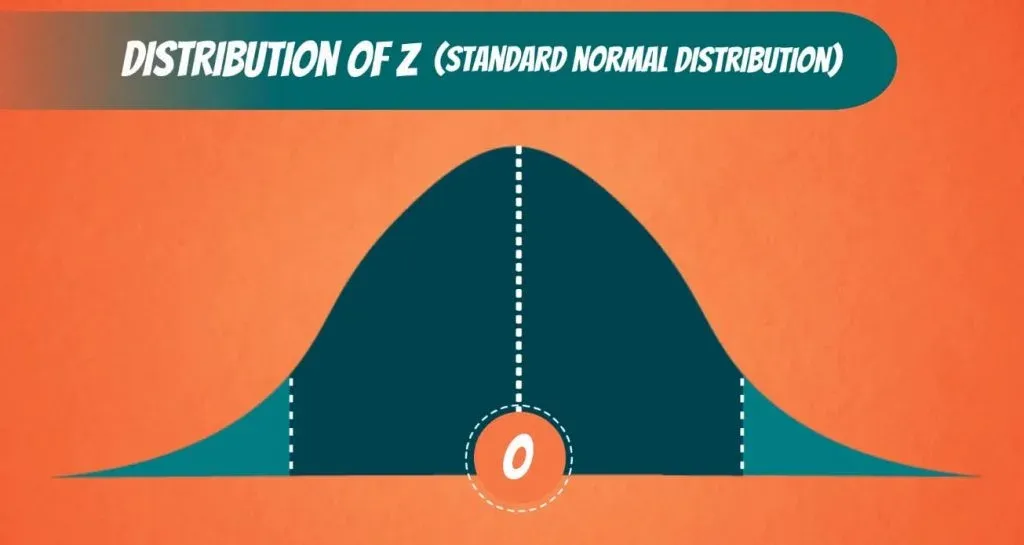

It is also known as a standard score because it allows scores on different variables to be compared by standardizing the distribution. A standard normal distribution (SND) is a normally shaped distribution with a mean of 0 and a standard deviation (SD) of 1 (see Fig. 1).

Why Are Z-Scores Important?

It is useful to standardize the values (raw scores) of a normal distribution by converting them into z-scores because:

- Probability estimation : Z-scores can be used to estimate the probability of a particular data point occurring within a normal distribution. By converting z-scores to percentiles or using a standard normal distribution table, you can determine the likelihood of a value being above or below a certain threshold.

- Hypothesis testing : Z-scores are used in hypothesis testing to determine the significance of results. By comparing the z-score of a sample statistic to critical values, you can decide whether to reject or fail to reject a null hypothesis.

- Comparing datasets : Z-scores allow you to compare data points from different datasets by standardizing the values. This is useful when the datasets have different scales or units.

- Identifying outliers : Z-scores help identify outliers, which are data points significantly different from the rest of the dataset. Typically, data points with z-scores greater than 3 or less than -3 are considered potential outliers and may warrant further investigation.

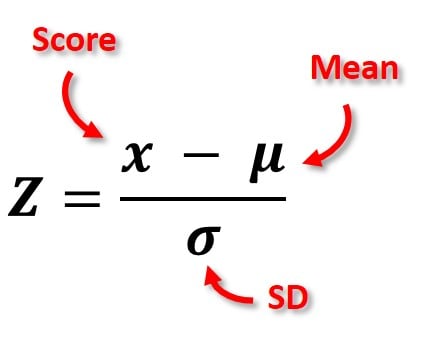

How To Calculate

The formula for calculating a z-score is z = (x-μ)/σ, where x is the raw score, μ is the population mean, and σ is the population standard deviation.

As the formula shows, the z-score is simply the raw score minus the population mean, divided by the population standard deviation.

When the population mean and the population standard deviation are unknown, the standard score may be calculated using the sample mean (x̄) and sample standard deviation (s) as estimates of the population values.

To calculate a z-score, follow these steps:

- Identify the individual score ( x ) you want to convert to a z-score.

- Determine the mean ( μ or mu ) of the dataset. The mean is the average of all the scores.

- Calculate the standard deviation ( σ or sigma ) of the dataset. The standard deviation measures how spread out the scores are from the mean.

- Subtract the mean ( μ ) from the individual score ( x ). This will give you the difference between the score and the mean.

- Divide the difference you calculated in step 4 by the standard deviation ( σ ). The result is the z-score.
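The steps above can be sketched as a small Python function. The function name `z_score` and the example values (a score of 82 on a scale with mean 70 and standard deviation 8) are hypothetical and chosen only for illustration.

```python
def z_score(x, mean, sd):
    """Return how many standard deviations x lies from the mean."""
    if sd <= 0:
        raise ValueError("standard deviation must be positive")
    difference = x - mean      # subtract the mean from the raw score
    return difference / sd     # divide by the standard deviation

# Hypothetical example values
print(z_score(82, 70, 8))  # 1.5
```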

Interpretation

The value of the z-score tells you how many standard deviations you are away from the mean. A larger absolute value indicates a greater distance from the mean.

- Positive z-score : If a z-score is positive, it indicates that the data point is above the mean. For example, a z-score of 1.5 means the data point is 1.5 standard deviations above the mean.

- Negative z-score : If a z-score is negative, it indicates that the data point is below the mean. For example, a z-score of -2 means the data point is 2 standard deviations below the mean.

- Zero z-score : A z-score of zero indicates that the data point is equal to the mean.

Another way to interpret z-scores is by creating a standard normal distribution, also known as the z-score distribution, or probability distribution (see Fig. 3).

Probability Estimation

When working with z-scores, the data is assumed to follow a standard normal distribution with a mean of 0 and a standard deviation of 1. This allows for the use of standard normal distribution tables or calculators to determine probabilities.

The z-score tells us how many standard deviations a data point is from the mean. Once we know the z-score, we can estimate the probability of a data point falling within a specific range or being above or below a certain value.

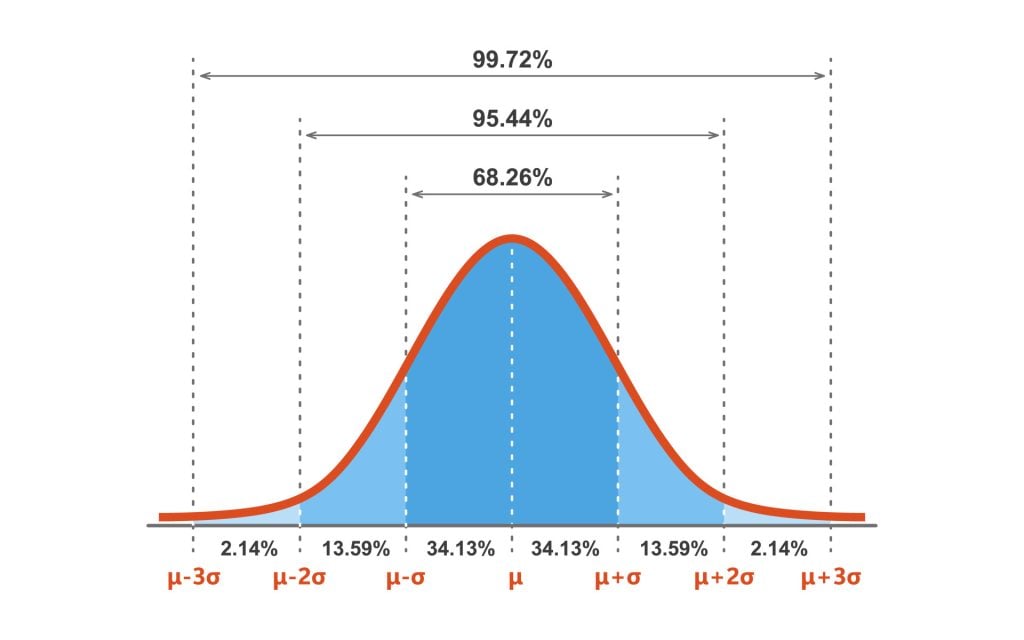

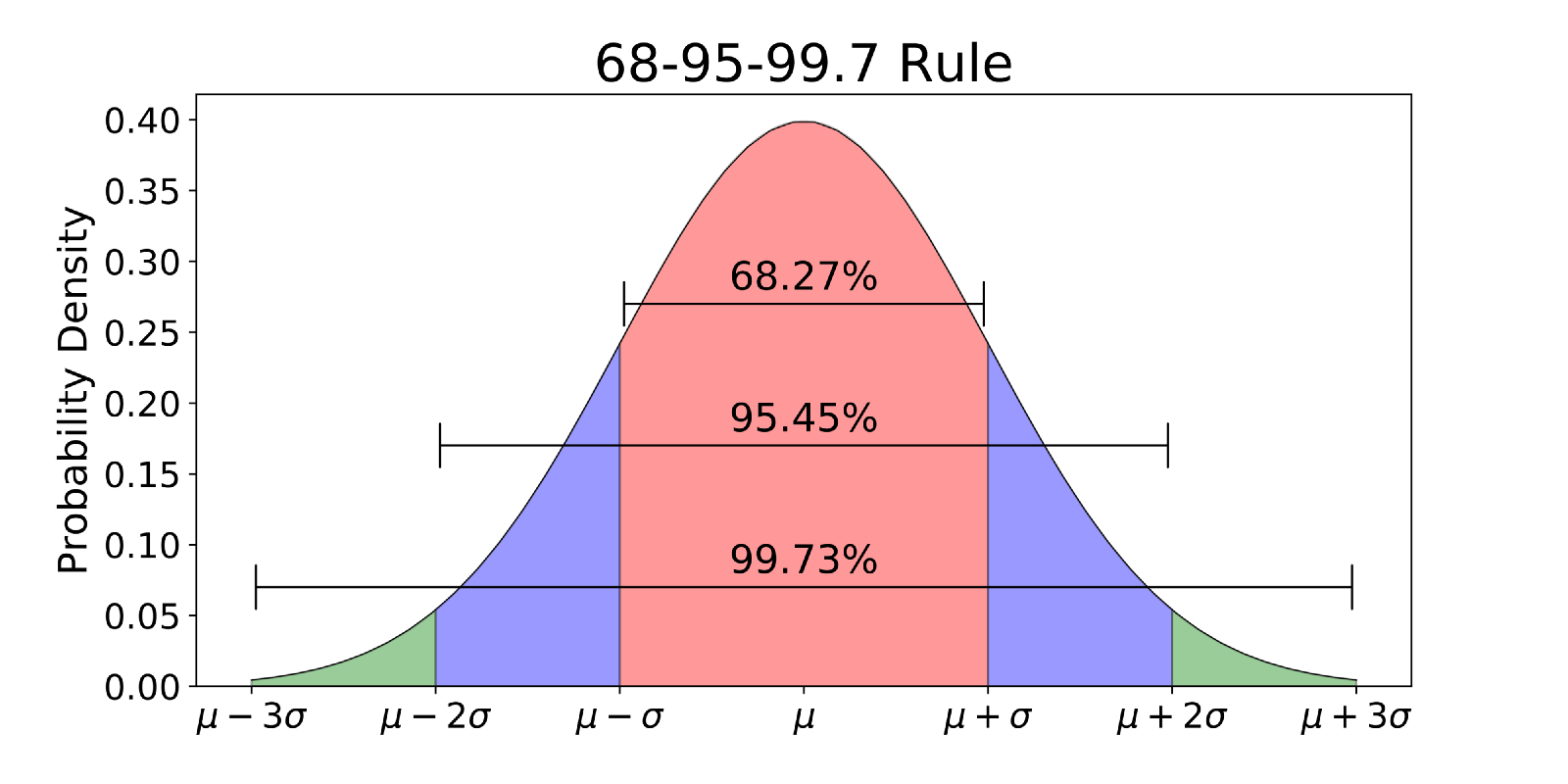

In a standard normal distribution, there’s a handy rule called the empirical rule, or the 68-95-99.7 rule. This rule states that:

- Approximately 68% of the data falls within one standard deviation of the mean (z-scores between -1 and 1).

- Around 95% of the data falls within two standard deviations of the mean (z-scores between -2 and 2).

- Nearly 99.7% of the data falls within three standard deviations of the mean (z-scores between -3 and 3).

Figure 3 shows the proportion of a standard normal distribution in percentages. As you can see, there’s a 95% probability of randomly selecting a score between -1.96 and +1.96 standard deviations from the mean.

Using the standard normal distribution, researchers can calculate the probability of randomly obtaining a score within a given range. For example, there’s a 68% chance of randomly selecting a score between -1 and +1 standard deviations from the mean.
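As a quick check of these percentages, the following sketch uses `NormalDist` from Python's standard `statistics` module (available in Python 3.8 and later). The helper name `prob_between` is an assumption made for this example.

```python
from statistics import NormalDist

std_normal = NormalDist(mu=0, sigma=1)

def prob_between(z_low, z_high):
    """Area under the standard normal curve between two z-scores."""
    return std_normal.cdf(z_high) - std_normal.cdf(z_low)

# Empirical rule: within 1, 2, and 3 standard deviations of the mean
for k in (1, 2, 3):
    print(k, round(prob_between(-k, k), 4))

# The 95% interval quoted above uses z = 1.96 rather than exactly 2
print(round(prob_between(-1.96, 1.96), 4))
```

The first three results are close to 0.68, 0.95, and 0.997, and the last is very close to 0.95, which is why ±1.96 rather than ±2 is used for exact 95% statements.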

Hypothesis Testing

Using a z-score table lets you quickly determine the probability associated with a specific value in a dataset, helping you make decisions and draw conclusions based on your data.

- If you have a one-tailed test, you will look for the area to the left (for a left-tailed test) or right (for a right-tailed test) of your z-score.

- If you have a two-tailed test, you will look for the area in both tails combined.

The significance level (α) is the probability threshold for rejecting the null hypothesis. Common significance levels are 0.01, 0.05, and 0.10. The critical values are the z-scores that correspond to the chosen significance level. These values can be found using a standard normal distribution table or calculator.

A Z-score table shows the percentage of values (usually a decimal figure) to the left of a given Z-score on a standard normal distribution.

1. Identify the parts of the z-score:

- Split the z-score into its value to the first decimal place and its second decimal place

- For example, if your z-score is 1.24, the first part is 1.2, and the second decimal place is 0.04

2. Find the corresponding probability in the z-score table:

- Z-score tables are usually organized with the whole number and first decimal place of the z-score in the leftmost column and the second decimal place across the top row

- Locate the row for the first part of your z-score (1.2) in the leftmost column

- Move across the row until you reach the column that matches the second decimal place (0.04)

- The value at the intersection of the row and column is the probability (area under the curve to the left) associated with your z-score

3. Interpret the probability:

- For a left-tailed test, the probability you found in the table is your p-value

- For a right-tailed test, subtract the probability you found from 1 to get your p-value

- For a two-tailed test, if your z-score is negative, double the probability you found to get your p-value; if your z-score is positive, subtract the probability from 1 and then double the result to get your p-value

- Compare the p-value to your chosen alpha level (commonly 0.05 or 0.01). If the p-value is less than the alpha level, the result is considered statistically significant
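The table lookup can also be done in code. The sketch below is one way to turn a z-score into a p-value, assuming the table value is the area to the left of z; the function name `p_value` and its `tail` argument are illustrative. For a two-tailed test it doubles the smaller of the two tail areas, so it works for both positive and negative z-scores.

```python
from statistics import NormalDist

def p_value(z, tail="two"):
    """p-value for a z statistic; tail is 'left', 'right', or 'two'."""
    left_area = NormalDist().cdf(z)   # what a z-table reports
    if tail == "left":
        return left_area
    if tail == "right":
        return 1 - left_area
    if tail == "two":
        # Double the smaller tail so the sign of z does not matter
        return 2 * min(left_area, 1 - left_area)
    raise ValueError("tail must be 'left', 'right', or 'two'")

alpha = 0.05
p = p_value(1.24, tail="two")
print(round(p, 4), "significant" if p < alpha else "not significant")
```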

In statistical analysis, if there is less than a 5% chance of randomly selecting a particular raw score, it is considered a statistically significant result. This means the result is unlikely to have occurred by chance alone and is more likely to be a real effect or difference.

Practice Problems for Z-Scores

Calculate the z-scores for the following:

Sample Questions

- Scores on a psychological well-being scale range from 1 to 10, with an average score of 6 and a standard deviation of 2. What is the z-score for a person who scored 4?

- On a measure of anxiety, a group of participants show a mean score of 35 with a standard deviation of 5. What is the z-score corresponding to a score of 30?

- A depression inventory has an average score of 50 with a standard deviation of 10. What is the z-score corresponding to a score of 70?

- In a study on sleep, participants report an average of 7 hours of sleep per night, with a standard deviation of 1 hour. What is the z-score for a person reporting 5 hours of sleep?

- On a memory test, the average score is 100, with a standard deviation of 15. What is the z-score corresponding to a score of 85?

- A happiness scale has an average score of 75 with a standard deviation of 10. What is the z-score corresponding to a score of 95?

- An intelligence test has a mean score of 100 with a standard deviation of 15. What is the z-score that corresponds to a score of 130?

Answers for Sample Questions

Double-check your answers with these solutions. Remember, for each problem, you subtract the average from your value, then divide by how much values typically vary (the standard deviation).

- Z-score = (4 – 6)/2 = -1

- Z-score = (30 – 35)/5 = -1

- Z-score = (70 – 50)/10 = 2

- Z-score = (5 – 7)/1 = -2

- Z-score = (85 – 100)/15 = -1

- Z-score = (95 – 75)/10 = 2

- Z-score = (130 – 100)/15 = 2

Calculating a Raw Score

Sometimes, we know a z-score and want to find the corresponding raw score. The formula for converting a z-score into a raw score is given below:

X = (z)(SD) + mean

As the formula shows, the z-score and standard deviation are multiplied together, and this figure is added to the mean.

Check your answer makes sense: If we have a negative z-score, the corresponding raw score should be less than the mean, and a positive z-score must correspond to a raw score higher than the mean.
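A minimal sketch of this conversion in Python follows. The function name `raw_score` and the example values (mean 100, standard deviation 15) are hypothetical.

```python
def raw_score(z, mean, sd):
    """Convert a z-score back to a raw score: X = (z)(SD) + mean."""
    return z * sd + mean

# Hypothetical scale with mean 100 and SD 15
print(raw_score(-1.5, 100, 15))  # 77.5, below the mean as expected
print(raw_score(2, 100, 15))     # 130, above the mean as expected
```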

Calculating a Z-Score using Excel

To calculate the z-score of a specific value, x, first, you must calculate the mean of the sample by using the AVERAGE formula.

For example, if the range of scores in your sample begins at cell A1 and ends at cell A20, the formula =AVERAGE(A1:A20) returns the average of those numbers.

Next, you must calculate the standard deviation of the sample by using the STDEV.S formula. For example, if the range of scores in your sample begins at cell A1 and ends at cell A20, the formula =STDEV.S(A1:A20) returns the standard deviation of those numbers.

Now, to calculate the z-score, type the following formula in an empty cell: =(x – mean)/standard deviation, substituting your own values.

To make things easier, instead of typing the mean and SD values into the formula, you can refer to the cells that contain them. For example, =(A12-B1)/C1, where A12 holds the raw score, B1 the mean, and C1 the standard deviation.

Then, to calculate the probability for a SMALLER z-score, which is the probability of observing a value less than x (the area under the curve to the LEFT of x), type the following into a blank cell: =NORMSDIST(z), replacing z with the z-score you calculated.

To find the probability of a LARGER z-score, which is the probability of observing a value greater than x (the area under the curve to the RIGHT of x), type: =1-NORMSDIST(z), again replacing z with the z-score you calculated.
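For readers working outside Excel, the same workflow can be sketched in Python with the standard `statistics` module. The scores below are made-up data, and the function name `z_and_tail_probabilities` is an assumption of this example; `stdev` computes the sample standard deviation, matching STDEV.S.

```python
from statistics import NormalDist, mean, stdev

def z_and_tail_probabilities(scores, x):
    """Return (z, P(smaller), P(larger)) for the value x within scores."""
    sample_mean = mean(scores)         # like =AVERAGE(A1:A20)
    sample_sd = stdev(scores)          # like =STDEV.S(A1:A20)
    z = (x - sample_mean) / sample_sd
    smaller = NormalDist().cdf(z)      # like =NORMSDIST(z)
    larger = 1 - smaller               # like =1-NORMSDIST(z)
    return z, smaller, larger

scores = [12, 15, 9, 20, 14, 17, 11, 18]  # hypothetical sample
z, smaller, larger = z_and_tail_probabilities(scores, 18)
print(round(z, 2), round(smaller, 3), round(larger, 3))
```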

Frequently Asked Questions

Can z-scores be used with any type of data, regardless of distribution?

Z-scores are commonly used to standardize and compare data across different distributions. They are most appropriate for data that follows a roughly symmetric and bell-shaped distribution.

However, they can still provide useful insights for other types of data, as long as certain assumptions are met. Yet, for highly skewed or non-normal distributions, alternative methods may be more appropriate.

It’s important to consider the characteristics of the data and the goals of the analysis when determining whether z-scores are suitable or if other approaches should be considered.

How can understanding z-scores contribute to better research and statistical analysis in psychology?

Understanding z-scores enhances research and statistical analysis in psychology. Z-scores standardize data for meaningful comparisons, identify outliers, and assess likelihood.

They aid in interpreting practical significance, applying statistical tests, and making accurate conclusions. Z-scores provide a common metric, facilitating communication of findings.

By using z-scores, researchers improve rigor, objectivity, and clarity in their work, leading to better understanding and knowledge in psychology.

Can a z-score be used to determine the likelihood of an event occurring?

No, a z-score itself cannot directly determine the likelihood of an event occurring. However, it provides information about the relative position of a data point within a distribution.

By converting data to z-scores, researchers can assess how unusual or extreme a value is compared to the rest of the distribution. This can help estimate the probability or likelihood of obtaining a particular score or more extreme values.

So, while z-scores provide insights into the relative rarity of an event, they do not directly determine the likelihood of the event occurring on their own.



Chapter 7: Introduction to Hypothesis Testing

alternative hypothesis

critical value

effect size

null hypothesis

probability value

rejection region

significance level

statistical power

statistical significance

test statistic

Type I error

Type II error

This chapter lays out the basic logic and process of hypothesis testing. We will perform z tests, which use the z score formula from Chapter 6 and data from a sample mean to make an inference about a population.

Logic and Purpose of Hypothesis Testing

A hypothesis is a prediction that is tested in a research study. The statistician R. A. Fisher explained the concept of hypothesis testing with a story of a lady tasting tea. Here we will present an example based on James Bond who insisted that martinis should be shaken rather than stirred. Let’s consider a hypothetical experiment to determine whether Mr. Bond can tell the difference between a shaken martini and a stirred martini. Suppose we gave Mr. Bond a series of 16 taste tests. In each test, we flipped a fair coin to determine whether to stir or shake the martini. Then we presented the martini to Mr. Bond and asked him to decide whether it was shaken or stirred. Let’s say Mr. Bond was correct on 13 of the 16 taste tests. Does this prove that Mr. Bond has at least some ability to tell whether the martini was shaken or stirred?

This result does not prove that he does; it could be he was just lucky and guessed right 13 out of 16 times. But how plausible is the explanation that he was just lucky? To assess its plausibility, we determine the probability that someone who was just guessing would be correct 13/16 times or more. This probability can be computed to be .0106. This is a pretty low probability, and therefore someone would have to be very lucky to be correct 13 or more times out of 16 if they were just guessing. So either Mr. Bond was very lucky, or he can tell whether the drink was shaken or stirred. The hypothesis that he was guessing is not proven false, but considerable doubt is cast on it. Therefore, there is strong evidence that Mr. Bond can tell whether a drink was shaken or stirred.
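The probability quoted above can be reproduced with a short binomial calculation. This is a minimal sketch using only Python's standard library; the function name `binomial_tail` is an illustrative choice.

```python
from math import comb

def binomial_tail(k, n, p=0.5):
    """P(X >= k) when X counts successes in n independent trials."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k, n + 1))

# Chance of 13 or more correct out of 16 by pure guessing
print(round(binomial_tail(13, 16), 4))  # 0.0106
```

Under pure guessing each taste test is a fair coin flip, so the tail probability is the sum of the binomial probabilities for 13, 14, 15, and 16 correct answers.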

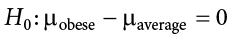

Let’s consider another example. The case study Physicians’ Reactions sought to determine whether physicians spend less time with obese patients. Physicians were sampled randomly and each was shown a chart of a patient complaining of a migraine headache. They were then asked to estimate how long they would spend with the patient. The charts were identical except that for half the charts, the patient was obese and for the other half, the patient was of average weight. The chart a particular physician viewed was determined randomly. Thirty-three physicians viewed charts of average-weight patients and 38 physicians viewed charts of obese patients.

The mean time physicians reported that they would spend with obese patients was 24.7 minutes as compared to a mean of 31.4 minutes for normal-weight patients. How might this difference between means have occurred? One possibility is that physicians were influenced by the weight of the patients. On the other hand, perhaps by chance, the physicians who viewed charts of the obese patients tend to see patients for less time than the other physicians. Random assignment of charts does not ensure that the groups will be equal in all respects other than the chart they viewed. In fact, it is certain the groups differed in many ways by chance. The two groups could not have exactly the same mean age (if measured precisely enough such as in days). Perhaps a physician’s age affects how long the physician sees patients. There are innumerable differences between the groups that could affect how long they view patients. With this in mind, is it plausible that these chance differences are responsible for the difference in times?

To assess the plausibility of the hypothesis that the difference in mean times is due to chance, we compute the probability of getting a difference as large or larger than the observed difference (31.4 − 24.7 = 6.7 minutes) if the difference were, in fact, due solely to chance. Using methods presented in later chapters, this probability can be computed to be .0057. Since this is such a low probability, we have confidence that the difference in times is due to the patient’s weight and is not due to chance.

The Probability Value

It is very important to understand precisely what the probability values mean. In the James Bond example, the computed probability of .0106 is the probability he would be correct on 13 or more taste tests (out of 16) if he were just guessing. It is easy to mistake this probability of .0106 as the probability he cannot tell the difference. This is not at all what it means.

The probability of .0106 is the probability of a certain outcome (13 or more out of 16) assuming a certain state of the world (James Bond was only guessing). It is not the probability that a state of the world is true. Although this might seem like a distinction without a difference, consider the following example. An animal trainer claims that a trained bird can determine whether or not numbers are evenly divisible by 7. In an experiment assessing this claim, the bird is given a series of 16 test trials. On each trial, a number is displayed on a screen and the bird pecks at one of two keys to indicate its choice. The numbers are chosen in such a way that the probability of any number being evenly divisible by 7 is .50. The bird is correct on 9/16 choices. We can compute that the probability of being correct nine or more times out of 16 if one is only guessing is .40. Since a bird who is only guessing would do this well 40% of the time, these data do not provide convincing evidence that the bird can tell the difference between the two types of numbers. As a scientist, you would be very skeptical that the bird had this ability. Would you conclude that there is a .40 probability that the bird can tell the difference? Certainly not! You would think the probability is much lower than .0001.

To reiterate, the probability value is the probability of an outcome (9/16 or better) and not the probability of a particular state of the world (the bird was only guessing). In statistics, it is conventional to refer to possible states of the world as hypotheses since they are hypothesized states of the world. Using this terminology, the probability value is the probability of an outcome given the hypothesis. It is not the probability of the hypothesis given the outcome.

This is not to say that we ignore the probability of the hypothesis. If the probability of the outcome given the hypothesis is sufficiently low, we have evidence that the hypothesis is false. However, we do not compute the probability that the hypothesis is false. In the James Bond example, the hypothesis is that he cannot tell the difference between shaken and stirred martinis. The probability value is low (.0106), thus providing evidence that he can tell the difference. However, we have not computed the probability that he can tell the difference.

The Null Hypothesis

The hypothesis that an apparent effect is due to chance is called the null hypothesis, written H_0 (“H-naught”). In the Physicians’ Reactions example, the null hypothesis is that in the population of physicians, the mean time expected to be spent with obese patients is equal to the mean time expected to be spent with average-weight patients. This null hypothesis can be written as:

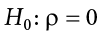

The null hypothesis in a correlational study of the relationship between high school grades and college grades would typically be that the population correlation is 0. This can be written as

H_0: ρ = 0

Although the null hypothesis is usually that the value of a parameter is 0, there are occasions in which the null hypothesis is a value other than 0. For example, if we are working with mothers in the U.S. whose children are at risk of low birth weight, we can use 7.47 pounds, the average birth weight in the U.S., as our null value and test for differences against that.

For now, we will focus on testing a value of a single mean against what we expect from the population. Using birth weight as an example, our null hypothesis takes the form:

H_0: μ = 7.47

Keep in mind that the null hypothesis is typically the opposite of the researcher’s hypothesis. In the Physicians’ Reactions study, the researchers hypothesized that physicians would expect to spend less time with obese patients. The null hypothesis that the two types of patients are treated identically is put forward with the hope that it can be discredited and therefore rejected. If the null hypothesis were true, a difference as large as or larger than the sample difference of 6.7 minutes would be very unlikely to occur. Therefore, the researchers rejected the null hypothesis of no difference and concluded that in the population, physicians intend to spend less time with obese patients.

In general, the null hypothesis is the idea that nothing is going on: there is no effect of our treatment, no relationship between our variables, and no difference in our sample mean from what we expected about the population mean. This is always our baseline starting assumption, and it is what we seek to reject. If we are trying to treat depression, we want to find a difference in average symptoms between our treatment and control groups. If we are trying to predict job performance, we want to find a relationship between conscientiousness and evaluation scores. However, until we have evidence against it, we must use the null hypothesis as our starting point.

The Alternative Hypothesis

If the null hypothesis is rejected, then we will need some other explanation, which we call the alternative hypothesis, H_A or H_1. The alternative hypothesis is simply the reverse of the null hypothesis, and there are three options, depending on where we expect the difference to lie. Thus, our alternative hypothesis is the mathematical way of stating our research question. If we expect our obtained sample mean to be above or below the null hypothesis value, which we call a directional hypothesis, then our alternative hypothesis takes the form

H_A: μ > 7.47 or H_A: μ < 7.47

based on the research question itself. We should only use a directional hypothesis if we have good reason, based on prior observations or research, to suspect a particular direction. When we do not know the direction, such as when we are entering a new area of research, we use a non-directional alternative:

H_A: μ ≠ 7.47

We will set different criteria for rejecting the null hypothesis based on the directionality (greater than, less than, or not equal to) of the alternative. To understand why, we need to see where our criteria come from and how they relate to z scores and distributions.

Critical Values, p Values, and Significance Level

The significance level is a threshold we set before collecting data in order to determine whether or not we should reject the null hypothesis. We set this value beforehand to avoid biasing ourselves by viewing our results and then determining what criteria we should use. If our data produce values that meet or exceed this threshold, then we have sufficient evidence to reject the null hypothesis; if not, we fail to reject the null (we never “accept” the null).

Figure 7.1. The rejection region for a one-tailed test. (“ Rejection Region for One-Tailed Test ” by Judy Schmitt is licensed under CC BY-NC-SA 4.0 .)

The rejection region is bounded by a specific z value, as is any area under the curve. In hypothesis testing, the value corresponding to a specific rejection region is called the critical value, z_crit (“z crit”), or z* (hence the other name “critical region”). Finding the critical value works exactly the same as finding the z score corresponding to any area under the curve as we did in Unit 1. If we go to the normal table, we will find that the z score corresponding to 5% of the area under the curve is equal to 1.645 (z = 1.64 corresponds to .0505 and z = 1.65 corresponds to .0495, so .05 is exactly in between them) if we go to the right and −1.645 if we go to the left. The direction must be determined by your alternative hypothesis, and drawing and shading the distribution is helpful for keeping directionality straight.

Suppose, however, that we want to do a non-directional test. We need to put the critical region in both tails, but we don’t want to increase the overall size of the rejection region (for reasons we will see later). To do this, we simply split it in half so that an equal proportion of the area under the curve falls in each tail’s rejection region. For α = .05, this means 2.5% of the area is in each tail, which, based on the z table, corresponds to critical values of z* = ±1.96. This is shown in Figure 7.2.

Figure 7.2. Two-tailed rejection region. (“ Rejection Region for Two-Tailed Test ” by Judy Schmitt is licensed under CC BY-NC-SA 4.0 .)
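The critical values read from the normal table can also be obtained from the inverse of the standard normal cumulative distribution. The sketch below uses `NormalDist.inv_cdf` from Python's standard library; the function name `critical_values` and its `tail` argument are assumptions made for this example.

```python
from statistics import NormalDist

def critical_values(alpha=0.05, tail="two"):
    """Return the critical z value(s) bounding the rejection region."""
    std_normal = NormalDist()
    if tail == "two":
        z = std_normal.inv_cdf(1 - alpha / 2)  # alpha split across both tails
        return (-z, z)
    if tail == "right":
        return (std_normal.inv_cdf(1 - alpha),)
    if tail == "left":
        return (std_normal.inv_cdf(alpha),)
    raise ValueError("tail must be 'two', 'right', or 'left'")

print([round(z, 3) for z in critical_values(0.05, "right")])  # [1.645]
print([round(z, 2) for z in critical_values(0.05, "two")])    # [-1.96, 1.96]
```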

Thus, any z score falling outside ±1.96 (greater than 1.96 in absolute value) falls in the rejection region. When we use z scores in this way, the obtained value of z (sometimes called z obtained and abbreviated z_obt) is something known as a test statistic, which is simply an inferential statistic used to test a null hypothesis. The formula for our z statistic has not changed:

z = (M − μ) / (σ / √n)

Figure 7.3. Relationship between α, z_obt, and p. (“ Relationship between alpha, z-obt, and p ” by Judy Schmitt is licensed under CC BY-NC-SA 4.0 .)

When the null hypothesis is rejected, the effect is said to have statistical significance , or be statistically significant. For example, in the Physicians’ Reactions case study, the probability value is .0057. Therefore, the effect of obesity is statistically significant and the null hypothesis that obesity makes no difference is rejected. It is important to keep in mind that statistical significance means only that the null hypothesis of exactly no effect is rejected; it does not mean that the effect is important, which is what “significant” usually means. When an effect is significant, you can have confidence the effect is not exactly zero. Finding that an effect is significant does not tell you about how large or important the effect is.

Do not confuse statistical significance with practical significance. A small effect can be highly significant if the sample size is large enough.

Why does the word “significant” in the phrase “statistically significant” mean something so different from other uses of the word? Interestingly, this is because the meaning of “significant” in everyday language has changed. It turns out that when the procedures for hypothesis testing were developed, something was “significant” if it signified something. Thus, finding that an effect is statistically significant signifies that the effect is real and not due to chance. Over the years, the meaning of “significant” changed, leading to the potential misinterpretation.

The Hypothesis Testing Process

A Four-Step Procedure

The process of testing hypotheses follows a simple four-step procedure. This process will be what we use for the remainder of the textbook and course, and although the hypothesis and statistics we use will change, this process will not.

Step 1: State the Hypotheses

Your hypotheses are the first thing you need to lay out. Otherwise, there is nothing to test! You have to state the null hypothesis (which is what we test) and the alternative hypothesis (which is what we expect). These should be stated mathematically as they were presented above and in words, explaining in normal English what each one means in terms of the research question.

Step 2: Find the Critical Values

Step 3: Calculate the Test Statistic and Effect Size

Once we have our hypotheses and the standards we use to test them, we can collect data and calculate our test statistic—in this case z . This step is where the vast majority of differences in future chapters will arise: different tests used for different data are calculated in different ways, but the way we use and interpret them remains the same. As part of this step, we will also calculate effect size to better quantify the magnitude of the difference between our groups. Although effect size is not considered part of hypothesis testing, reporting it as part of the results is approved convention.

Step 4: Make the Decision

Finally, once we have our obtained test statistic, we can compare it to our critical value and decide whether we should reject or fail to reject the null hypothesis. When we do this, we must interpret the decision in relation to our research question, stating what we concluded, what we based our conclusion on, and the specific statistics we obtained.
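The four steps can be sketched in code as follows. This is a minimal illustration, assuming a two-tailed one-sample z-test with a known population standard deviation and the usual statistic z = (M − μ) / (σ / √n). The function name `one_sample_z_test` and the numbers in the example (sample mean 106, null mean 100, σ = 15, n = 36) are hypothetical and not taken from this chapter.

```python
from math import sqrt
from statistics import NormalDist

def one_sample_z_test(sample_mean, mu_0, sigma, n, alpha=0.05):
    """Two-tailed one-sample z-test; returns (z_obt, z_crit, reject_null)."""
    # Step 1 (stated in words): H_0: mu = mu_0, H_A: mu != mu_0
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)        # Step 2: critical value
    z_obt = (sample_mean - mu_0) / (sigma / sqrt(n))    # Step 3: test statistic
    reject_null = abs(z_obt) > z_crit                   # Step 4: decision
    return z_obt, z_crit, reject_null

# Hypothetical data, for illustration only
z_obt, z_crit, reject = one_sample_z_test(106, 100, 15, 36)
print(round(z_obt, 2), round(z_crit, 2), reject)  # 2.4 1.96 True
```

Returning the obtained and critical values alongside the decision keeps the specific statistics available for the written interpretation that Step 4 calls for.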

Example A Movie Popcorn

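Our manager is looking for a difference in the mean weight of popcorn bags compared to the population mean of 8 cups. We will need both a null and an alternative hypothesis written both mathematically and in words. We’ll always start with the null hypothesis:

H0: μ = 8 (there is no difference between the mean weight of this employee’s popcorn bags and the population mean)

In this case, we don’t know if the bags will be too full or not full enough, so we do a two-tailed alternative hypothesis that there is a difference.

HA: μ ≠ 8 (the mean weight of this employee’s popcorn bags differs from the population mean)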

Our critical values are based on two things: the directionality of the test and the level of significance. We decided in Step 1 that a two-tailed test is the appropriate directionality. We were given no information about the level of significance, so we assume that α = .05 is what we will use. As stated earlier in the chapter, the critical values for a two-tailed z test at α = .05 are z* = ±1.96. These will be the criteria we use to test our hypothesis. We can now draw out our distribution, as shown in Figure 7.4, so we can visualize the rejection region and make sure it makes sense.

Figure 7.4. Rejection region for z * = ±1.96. (“ Rejection Region z+-1.96 ” by Judy Schmitt is licensed under CC BY-NC-SA 4.0 .)
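The critical values quoted above can also be looked up numerically instead of from a printed table. The following is a minimal sketch using Python's standard library; the function name `z_critical` is a hypothetical helper introduced here for illustration and is not part of the original text.

```python
from statistics import NormalDist


def z_critical(alpha: float, two_tailed: bool = True) -> float:
    """Return the positive critical z value for a given significance level."""
    # A two-tailed test splits alpha evenly between the two tails.
    tail_area = alpha / 2 if two_tailed else alpha
    # inv_cdf returns the z score that has the requested area to its left.
    return NormalDist().inv_cdf(1 - tail_area)


# Two-tailed cutoff at alpha = .05 (rejection regions are beyond +/- this value).
print(round(z_critical(0.05), 2))
# One-tailed cutoff at alpha = .05.
print(round(z_critical(0.05, two_tailed=False), 3))
```

For a two-tailed test the rejection region lies beyond both the positive and the negative value; for a one-tailed test only the tail named in the alternative hypothesis is used.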

Now we come to our formal calculations. Let’s say that the manager collects data and finds that the average weight of this employee’s popcorn bags is M = 7.75 cups. We can now plug this value, along with the values presented in the original problem, into our equation for z :

So our test statistic is z = −2.50, which we can draw onto our rejection region distribution as shown in Figure 7.5 .

Figure 7.5. Test statistic location. (“ Test Statistic Location z-2.50 ” by Judy Schmitt is licensed under CC BY-NC-SA 4.0 .)
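As a rough check on the arithmetic, the z calculation can be sketched in Python. The population mean (8) and sample mean (7.75) come from the text, and n = 25 comes from the conclusion of this example. The population standard deviation is not restated in this section, so σ = 0.50 below is an assumption, inferred from the reported z = −2.50 and d = 0.50. The helper name `z_statistic` is hypothetical.

```python
import math


def z_statistic(sample_mean, pop_mean, pop_sd, n):
    """One-sample z: distance of the sample mean from mu, in standard errors."""
    standard_error = pop_sd / math.sqrt(n)
    return (sample_mean - pop_mean) / standard_error


# mu = 8 and M = 7.75 are from the text; n = 25 is from the conclusion.
# sigma = 0.50 is an ASSUMED value inferred from the reported z and d.
z = z_statistic(sample_mean=7.75, pop_mean=8.00, pop_sd=0.50, n=25)
print(round(z, 2))
```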

Effect Size

When we reject the null hypothesis, we are stating that the difference we found was statistically significant, but we have mentioned several times that this tells us nothing about practical significance. To get an idea of the actual size of what we found, we can compute a new statistic called an effect size. Effect size gives us an idea of how large, important, or meaningful a statistically significant effect is. For mean differences like we calculated here, our effect size is Cohen’s d:

d = (M − μ) / σ

This is very similar to our formula for z , but we no longer take into account the sample size (since overly large samples can make it too easy to reject the null). Cohen’s d is interpreted in units of standard deviations, just like z . For our example:

Cohen’s d is interpreted as small, moderate, or large. Specifically, d = 0.20 is small, d = 0.50 is moderate, and d = 0.80 is large. Obviously, values can fall in between these guidelines, so we should use our best judgment and the context of the problem to make our final interpretation of size. Our effect size happens to be exactly equal to one of these, so we say that there is a moderate effect.
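The effect size for the popcorn example can be sketched the same way. As before, σ = 0.50 is an assumption inferred from the reported results rather than a value stated in this section. The function names are hypothetical, and treating the 0.20 / 0.50 / 0.80 guidelines as hard cutoffs (with a "negligible" label below 0.20) is a simplification made for illustration; the text itself recommends judgment for values that fall between the guidelines.

```python
def cohens_d(sample_mean, pop_mean, pop_sd):
    """Mean difference expressed in population standard deviations."""
    return (sample_mean - pop_mean) / pop_sd


def describe_effect(d):
    """Label |d| using the 0.20 / 0.50 / 0.80 guidelines as rough cutoffs."""
    size = abs(d)
    if size >= 0.80:
        return "large"
    if size >= 0.50:
        return "moderate"
    if size >= 0.20:
        return "small"
    return "negligible"  # label assumed here; not taken from the text


# sigma = 0.50 is an ASSUMED value (see the lead-in above).
d = cohens_d(sample_mean=7.75, pop_mean=8.00, pop_sd=0.50)
print(abs(d), describe_effect(d))
```

The sign of d only records the direction of the difference, which is why the label is based on its absolute value.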

Effect sizes are incredibly useful and provide important information and clarification that overcomes some of the weakness of hypothesis testing. Any time you perform a hypothesis test, whether statistically significant or not, you should always calculate and report effect size.

Looking at Figure 7.5, we can see that our obtained z statistic falls in the rejection region. We can also directly compare it to our critical value: in terms of absolute value, 2.50 > 1.96, so we reject the null hypothesis. We can now write our conclusion:

Reject H0. Based on the sample of 25 bags, we can conclude that the average popcorn bag from this employee is smaller (M = 7.75 cups) than the average weight of popcorn bags at this movie theater, and the effect size was moderate, z = −2.50, p < .05, d = 0.50.

Example B Office Temperature

Let’s do another example to solidify our understanding. Let’s say that the office building you work in is supposed to be kept at 74 degrees Fahrenheit during the summer months but is allowed to vary by 1 degree in either direction. You suspect that, as a cost saving measure, the temperature was secretly set higher. You set up a formal way to test your hypothesis.

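You start by laying out the null hypothesis:

H0: μ = 74 (the average office temperature is the 74 degrees it is supposed to be)

Next you state the alternative hypothesis. You have reason to suspect a specific direction of change, so you make a one-tailed test:

HA: μ > 74 (the average office temperature is higher than 74 degrees)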

You know that the most common level of significance is α = .05, so you keep that the same and know that the critical value for a one-tailed z test is z* = 1.645. To keep track of the directionality of the test and rejection region, you draw out your distribution as shown in Figure 7.6.

Figure 7.6. Rejection region. (“ Rejection Region z1.645 ” by Judy Schmitt is licensed under CC BY-NC-SA 4.0 .)

Now that you have everything set up, you spend one week collecting temperature data:

This value falls so far into the tail that it cannot even be plotted on the distribution ( Figure 7.7 )! Because the result is significant, you also calculate an effect size:

The effect size you calculate is definitely large, meaning someone has some explaining to do!

Figure 7.7. Obtained z statistic. (“ Obtained z5.77 ” by Judy Schmitt is licensed under CC BY-NC-SA 4.0 .)

You compare your obtained z statistic, z = 5.77, to the critical value, z* = 1.645, and find that z > z*. Therefore you reject the null hypothesis, concluding:

Reject H0. Based on 5 observations, the average temperature (M = 76.6 degrees) is statistically significantly higher than it is supposed to be, and the effect size was large, z = 5.77, p < .05, d = 2.60.
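The office temperature example can be re-traced with a short sketch. The target of 74 degrees, the sample mean of 76.6, and the 5 observations come from the text; σ = 1 is an assumption based on the "vary by 1 degree" wording and on the reported d = 2.60. Note that carrying full precision gives a z of about 5.81 rather than the reported 5.77; the reported value is roughly what results if the standard error is rounded to 0.45 before dividing. The decision is the same either way.

```python
import math
from statistics import NormalDist

mu = 74.0      # temperature the office is supposed to be kept at
sigma = 1.0    # ASSUMED population standard deviation (see lead-in)
M, n = 76.6, 5  # sample mean and number of observations from the conclusion

standard_error = sigma / math.sqrt(n)
z = (M - mu) / standard_error
d = (M - mu) / sigma

# One-tailed (upper) cutoff at alpha = .05.
z_star = NormalDist().inv_cdf(0.95)

print(round(z, 2), round(d, 2), z > z_star)
```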

Example C Different Significance Level

Finally, let’s take a look at an example phrased in generic terms, rather than in the context of a specific research question, to see the individual pieces one more time. This time, however, we will use a stricter significance level, a = .01, to test the hypothesis.

We will use 60 as an arbitrary null hypothesis value:

H0: μ = 60

We will assume a two-tailed test:

HA: μ ≠ 60

We have seen the critical values for z tests at α = .05 levels of significance several times. To find the values for α = .01, we will go to the Standard Normal Distribution Table and find the z score cutting off .005 (.01 divided by 2 for a two-tailed test) of the area in the tail, which is z* = ±2.575. Notice that this cutoff is much higher than it was for α = .05. This is because we need much less of the area in the tail, so we need to go very far out to find the cutoff. As a result, this will require a much larger effect or much larger sample size in order to reject the null hypothesis.

We can now calculate our test statistic. We will use σ = 10 as our known population standard deviation and the following data to calculate our sample mean:

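The average of these scores is M = 60.40. From this we calculate our z statistic as:

z = (M − μ) / (σ / √n) = (60.40 − 60.00) / (10 / √10) = 0.40 / 3.16 = 0.13

The Cohen’s d effect size calculation is:

d = (M − μ) / σ = (60.40 − 60.00) / 10 = 0.04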

Our obtained z statistic, z = 0.13, is very small. It is much less than our critical value of 2.575. Thus, this time, we fail to reject the null hypothesis. Our conclusion would look something like:

Fail to reject H0. Based on the sample of 10 scores, we cannot conclude that there is an effect causing the mean (M = 60.40) to be statistically significantly different from 60.00, z = 0.13, p > .01, d = 0.04, and the effect size supports this interpretation.
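All the numbers for this example are stated in the text (μ = 60, σ = 10, M = 60.40, n = 10, α = .01), so the whole test can be sketched end to end. The computed two-tailed cutoff is about 2.576; the text's 2.575 is the usual table approximation and the difference does not affect the decision. The p value line is an addition for illustration, using the standard two-tailed definition.

```python
import math
from statistics import NormalDist

mu, sigma = 60.0, 10.0   # null value and known population standard deviation
M, n = 60.40, 10         # sample mean and sample size from the text
alpha = 0.01

std_normal = NormalDist()
z = (M - mu) / (sigma / math.sqrt(n))
d = (M - mu) / sigma

# Two-tailed test: alpha is split between the two tails.
z_star = std_normal.inv_cdf(1 - alpha / 2)
p_value = 2 * (1 - std_normal.cdf(abs(z)))

reject = abs(z) > z_star
print(round(z, 2), round(d, 2), round(z_star, 3), reject)
```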

Other Considerations in Hypothesis Testing

There are several other considerations we need to keep in mind when performing hypothesis testing.

Errors in Hypothesis Testing

In the Physicians’ Reactions case study, the probability value associated with the significance test is .0057. Therefore, the null hypothesis was rejected, and it was concluded that physicians intend to spend less time with obese patients. Despite the low probability value, it is possible that the null hypothesis of no true difference between obese and average-weight patients is true and that the large difference between sample means occurred by chance. If this is the case, then the conclusion that physicians intend to spend less time with obese patients is in error. This type of error is called a Type I error. More generally, a Type I error occurs when a significance test results in the rejection of a true null hypothesis.

The second type of error that can be made in significance testing is failing to reject a false null hypothesis. This kind of error is called a Type II error . Unlike a Type I error, a Type II error is not really an error. When a statistical test is not significant, it means that the data do not provide strong evidence that the null hypothesis is false. Lack of significance does not support the conclusion that the null hypothesis is true. Therefore, a researcher should not make the mistake of incorrectly concluding that the null hypothesis is true when a statistical test was not significant. Instead, the researcher should consider the test inconclusive. Contrast this with a Type I error in which the researcher erroneously concludes that the null hypothesis is false when, in fact, it is true.

A Type II error can only occur if the null hypothesis is false. If the null hypothesis is false, then the probability of a Type II error is called β (“beta”). The probability of correctly rejecting a false null hypothesis equals 1 − β and is called statistical power. Power is simply our ability to correctly detect an effect that exists. It is influenced by the size of the effect (larger effects are easier to detect), the significance level we set (making it easier to reject the null makes it easier to detect an effect, but increases the likelihood of a Type I error), and the sample size used (larger samples make it easier to reject the null).
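The three influences on power listed above can be explored with a small sketch for the two-tailed one-sample z test. It relies on the fact that, when the true effect is d standard deviations, the z statistic is normally distributed around d·√n with standard deviation 1. The function name `power_two_tailed` and the effect sizes and sample sizes tried below are hypothetical choices for illustration.

```python
import math
from statistics import NormalDist


def power_two_tailed(effect_d, n, alpha=0.05):
    """Power (1 - beta) of a two-tailed one-sample z test for a true effect d."""
    std_normal = NormalDist()
    z_star = std_normal.inv_cdf(1 - alpha / 2)
    shift = effect_d * math.sqrt(n)  # mean of the z statistic when H0 is false
    # Probability that z lands in the upper or the lower rejection region.
    upper = 1 - std_normal.cdf(z_star - shift)
    lower = std_normal.cdf(-z_star - shift)
    return upper + lower


# Larger samples, larger effects, and a more lenient alpha all raise power.
for n in (10, 25, 100):
    print(n, round(power_two_tailed(0.5, n), 3))
```

Setting `effect_d` to 0 returns the significance level itself, which matches the definition of a Type I error rate.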

Misconceptions in Hypothesis Testing

Misconceptions about significance testing are common. This section lists three important ones.

- Misconception: The probability value ( p value) is the probability that the null hypothesis is false. Proper interpretation: The probability value ( p value) is the probability of a result as extreme or more extreme given that the null hypothesis is true. It is the probability of the data given the null hypothesis. It is not the probability that the null hypothesis is false.

- Misconception: A low probability value indicates a large effect. Proper interpretation: A low probability value indicates that the sample outcome (or an outcome more extreme) would be very unlikely if the null hypothesis were true. A low probability value can occur with small effect sizes, particularly if the sample size is large.

- Misconception: A non-significant outcome means that the null hypothesis is probably true. Proper interpretation: A non-significant outcome means that the data do not conclusively demonstrate that the null hypothesis is false.
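The second misconception can be made concrete with a sketch that holds a small effect fixed and only increases the sample size. It uses the identity z = d·√n, which follows from the z and Cohen's d formulas for a one-sample test. The effect size of 0.05 and the sample sizes are hypothetical values chosen for illustration.

```python
import math
from statistics import NormalDist


def two_tailed_p(d, n):
    """Two-tailed p value when the observed effect is d standard deviations."""
    z = d * math.sqrt(n)
    return 2 * (1 - NormalDist().cdf(abs(z)))


d = 0.05  # hypothetical, very small observed effect
for n in (100, 1_000, 10_000):
    # The effect size never changes, but the p value shrinks as n grows.
    print(n, two_tailed_p(d, n))
```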

- In your own words, explain what the null hypothesis is.

- What are Type I and Type II errors?

- Why do we phrase null and alternative hypotheses with population parameters and not sample means?

- Why do we state our hypotheses and decision criteria before we collect our data?

- Why do you calculate an effect size?

- z = 1.99, two-tailed test at a = .05

- z = 0.34, z * = 1.645

- p = .03, a = .05

- p = .015, a = .01

Answers to Odd-Numbered Exercises

Your answer should include mention of the baseline assumption of no difference between the sample and the population.

Alpha is the significance level. It is the criterion we use when deciding to reject or fail to reject the null hypothesis, corresponding to a given proportion of the area under the normal distribution and a probability of finding extreme scores assuming the null hypothesis is true.

We always calculate an effect size to see if our research is practically meaningful or important. NHST (null hypothesis significance testing) is influenced by sample size but effect size is not; therefore, they provide complementary information.

“ Null Hypothesis ” by Randall Munroe/xkcd.com is licensed under CC BY-NC 2.5 .)

Introduction to Statistics in the Psychological Sciences Copyright © 2021 by Linda R. Cote Ph.D.; Rupa G. Gordon Ph.D.; Chrislyn E. Randell Ph.D.; Judy Schmitt; and Helena Marvin is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.


A z test is a statistical test conducted on data that approximately follow a normal distribution. The z test can be performed on one sample, two samples, or on proportions for hypothesis testing. It checks whether the means of two large samples differ when the population variance is known.

A z test can further be classified into left-tailed, right-tailed, and two-tailed hypothesis tests depending upon the parameters of the data. In this article, we will learn more about the z test, its formula, the z test statistic, and how to perform the test for different types of data using examples.

What is Z Test?

A z test is a test that is used to check if the means of two populations are different or not provided the data follows a normal distribution. For this purpose, the null hypothesis and the alternative hypothesis must be set up and the value of the z test statistic must be calculated. The decision criterion is based on the z critical value.

Z Test Definition

A z test is conducted on a population that follows a normal distribution with independent data points and has a sample size that is greater than or equal to 30. It is used to check whether the means of two populations are equal to each other when the population variance is known. The null hypothesis of a z test can be rejected if the z test statistic is statistically significant when compared with the critical value.

Z Test Formula

The z test formula compares the z statistic with the z critical value to test whether there is a difference in the means of two populations. In hypothesis testing, the z critical value divides the distribution graph into the acceptance and the rejection regions. If the test statistic falls in the rejection region, the null hypothesis can be rejected; otherwise, it cannot be rejected. The z test formulas to set up the required hypothesis tests for a one-sample and a two-sample z test are given below.

One-Sample Z Test

A one-sample z test is used to check if there is a difference between the sample mean and the population mean when the population standard deviation is known. The formula for the z test statistic is given as follows:

z = \(\frac{\overline{x}-\mu}{\frac{\sigma}{\sqrt{n}}}\). \(\overline{x}\) is the sample mean, \(\mu\) is the population mean, \(\sigma\) is the population standard deviation and n is the sample size.
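A minimal sketch of this formula applied to raw data is shown below. The function name `one_sample_z`, the data values, and the population parameters are all hypothetical and serve only to illustrate the calculation.

```python
import math
from statistics import fmean


def one_sample_z(sample, mu, sigma):
    """z = (x_bar - mu) / (sigma / sqrt(n)) for a sample of raw observations."""
    n = len(sample)
    x_bar = fmean(sample)
    return (x_bar - mu) / (sigma / math.sqrt(n))


# Hypothetical data: 36 measurements (four values repeated nine times).
sample = [102, 98, 105, 110] * 9
z = one_sample_z(sample, mu=100, sigma=12)
print(z)
```

Here the sample mean is 103.75 and the standard error is 12 / 6 = 2, so the statistic measures how many standard errors the sample mean lies above the hypothesized population mean.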

The algorithm to set a one sample z test based on the z test statistic is given as follows:

Left Tailed Test:

Null Hypothesis: \(H_{0}\) : \(\mu = \mu_{0}\)

Alternate Hypothesis: \(H_{1}\) : \(\mu < \mu_{0}\)

Decision Criteria: If the z statistic < z critical value then reject the null hypothesis.

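Right Tailed Test:

Null Hypothesis: \(H_{0}\) : \(\mu = \mu_{0}\)

Alternate Hypothesis: \(H_{1}\) : \(\mu > \mu_{0}\)

Decision Criteria: If the z statistic > z critical value then reject the null hypothesis.

Two Tailed Test:

Null Hypothesis: \(H_{0}\) : \(\mu = \mu_{0}\)

Alternate Hypothesis: \(H_{1}\) : \(\mu \neq \mu_{0}\)

Decision Criteria: If the absolute value of the z statistic > z critical value then reject the null hypothesis.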
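The decision criteria for the three kinds of test can be sketched as a single function. The function name `z_test_decision` and its `tail` argument are hypothetical. The two-tailed rule used here, rejecting when the absolute value of z exceeds the critical value for α/2, is the standard convention.

```python
from statistics import NormalDist


def z_test_decision(z, alpha=0.05, tail="two"):
    """Return True when H0 is rejected for a 'left', 'right', or 'two' tailed test."""
    std_normal = NormalDist()
    if tail == "left":
        # The critical value is negative; reject when z falls below it.
        return z < std_normal.inv_cdf(alpha)
    if tail == "right":
        return z > std_normal.inv_cdf(1 - alpha)
    if tail == "two":
        # Alpha is split between the tails, so compare |z| to the upper cutoff.
        return abs(z) > std_normal.inv_cdf(1 - alpha / 2)
    raise ValueError("tail must be 'left', 'right', or 'two'")


print(z_test_decision(1.7, tail="right"))  # compared with about 1.645
print(z_test_decision(1.7, tail="two"))    # compared with about 1.96
```

The same z statistic can lead to different decisions depending on the tail, which is why the alternative hypothesis has to be fixed before the data are examined.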

Two Sample Z Test