What is a research repository, and why do you need one?

Last updated

31 January 2024

Reviewed by

Miroslav Damyanov

Without one organized source of truth, research can be left in silos, making it incomplete, redundant, and useless when it comes to gaining actionable insights.

A research repository can act as one cohesive place where teams can collate research in meaningful ways. This helps streamline the research process and ensures the insights gathered make a real difference.

- What is a research repository?

A research repository acts as a centralized database where information is gathered, stored, analyzed, and archived in one organized space.

In this single source of truth, raw data, documents, reports, observations, and insights can be viewed, managed, and analyzed. This allows teams to organize raw data into themes, gather actionable insights , and share those insights with key stakeholders.

Ultimately, the research repository can make the research you gain much more valuable to the wider organization.

- Why do you need a research repository?

Information gathered through the research process can be disparate, challenging to organize, and difficult to obtain actionable insights from.

Some of the most common challenges researchers face include the following:

Information being collected in silos

No single source of truth

Research being conducted multiple times unnecessarily

No seamless way to share research with the wider team

Reports get lost and go unread

Without a way to store information effectively, it can become disparate and inconclusive, lacking utility. This can lead to research being completed by different teams without new insights being gathered.

A research repository can streamline the information gathered to address those key issues, improve processes, and boost efficiency. Among other things, an effective research repository can:

Optimize processes: it can ensure the process of storing, searching, and sharing information is streamlined and optimized across teams.

Minimize redundant research: when all information is stored in one accessible place for all relevant team members, the chances of research being repeated are significantly reduced.

Boost insights: having one source of truth boosts the chances of being able to properly analyze all the research that has been conducted and draw actionable insights from it.

Provide comprehensive data: there’s less risk of gaps in the data when it can be easily viewed and understood. The overall research is also likely to be more comprehensive.

Increase collaboration: given that information can be more easily shared and understood, there’s a higher likelihood of better collaboration and positive actions across the business.

- What to include in a research repository

Including the right things in your research repository from the start can help ensure that it provides maximum benefit for your team.

Here are some of the things that should be included in a research repository:

An overall structure

There are many ways to organize the data you collect. To organize it in a way that’s valuable for your organization, you’ll need an overall structure that aligns with your goals.

You might wish to organize projects by research type, project, department, or when the research was completed. This will help you better understand the research you’re looking at and find it quickly.

Including information about the research—such as authors, titles, keywords, a description, and dates—can make searching through raw data much faster and make the organization process more efficient.

All key data and information

It’s essential to include all of the key data you’ve gathered in the repository, including supplementary materials. This prevents information gaps, and stakeholders can easily stay informed. You’ll need to include the following information, if relevant:

Research and journey maps

Tools and templates (such as discussion guides, email invitations, consent forms, and participant tracking)

Raw data and artifacts (such as videos, CSV files, and transcripts)

Research findings and insights in various formats (including reports, desks, maps, images, and tables)

Version control

It’s important to use a system that has version control. This ensures the changes (including updates and edits) made by various team members can be viewed and reversed if needed.

- What makes a good research repository?

The following key elements make up a good research repository that’s useful for your team:

Access: all key stakeholders should be able to access the repository to ensure there’s an effective flow of information.

Actionable insights: a well-organized research repository should help you get from raw data to actionable insights faster.

Effective searchability : searching through large amounts of research can be very time-consuming. To save time, maximize search and discoverability by clearly labeling and indexing information.

Accuracy: the research in the repository must be accurately completed and organized so that it can be acted on with confidence.

Security: when dealing with data, it’s also important to consider security regulations. For example, any personally identifiable information (PII) must be protected. Depending on the information you gather, you may need password protection, encryption, and access control so that only those who need to read the information can access it.

- How to create a research repository

Getting started with a research repository doesn’t have to be convoluted or complicated. Taking time at the beginning to set up the repository in an organized way can help keep processes simple further down the line.

The following six steps should simplify the process:

1. Define your goals

Before diving in, consider your organization’s goals. All research should align with these business goals, and they can help inform the repository.

As an example, your goal may be to deeply understand your customers and provide a better customer experience . Setting out this goal will help you decide what information should be collated into your research repository and how it should be organized for maximum benefit.

2. Choose a platform

When choosing a platform, consider the following:

Will it offer a single source of truth?

Is it simple to use

Is it relevant to your project?

Does it align with your business’s goals?

3. Choose an organizational method

To ensure you’ll be able to easily search for the documents, studies, and data you need, choose an organizational method that will speed up this process.

Choosing whether to organize your data by project, date, research type, or customer segment will make a big difference later on.

4. Upload all materials

Once you have chosen the platform and organization method, it’s time to upload all the research materials you have gathered. This also means including supplementary materials and any other information that will provide a clear picture of your customers.

Keep in mind that the repository is a single source of truth. All materials that relate to the project at hand should be included.

5. Tag or label materials

Adding metadata to your materials will help ensure you can easily search for the information you need. While this process can take time (and can be tempting to skip), it will pay off in the long run.

The right labeling will help all team members access the materials they need. It will also prevent redundant research, which wastes valuable time and money.

6. Share insights

For research to be impactful, you’ll need to gather actionable insights. It’s simpler to spot trends, see themes, and recognize patterns when using a repository. These insights can be shared with key stakeholders for data-driven decision-making and positive action within the organization.

- Different types of research repositories

There are many different types of research repositories used across organizations. Here are some of them:

Data repositories: these are used to store large datasets to help organizations deeply understand their customers and other information.

Project repositories: data and information related to a specific project may be stored in a project-specific repository. This can help users understand what is and isn’t related to a project.

Government repositories: research funded by governments or public resources may be stored in government repositories. This data is often publicly available to promote transparent information sharing.

Thesis repositories: academic repositories can store information relevant to theses. This allows the information to be made available to the general public.

Institutional repositories: some organizations and institutions, such as universities, hospitals, and other companies, have repositories to store all relevant information related to the organization.

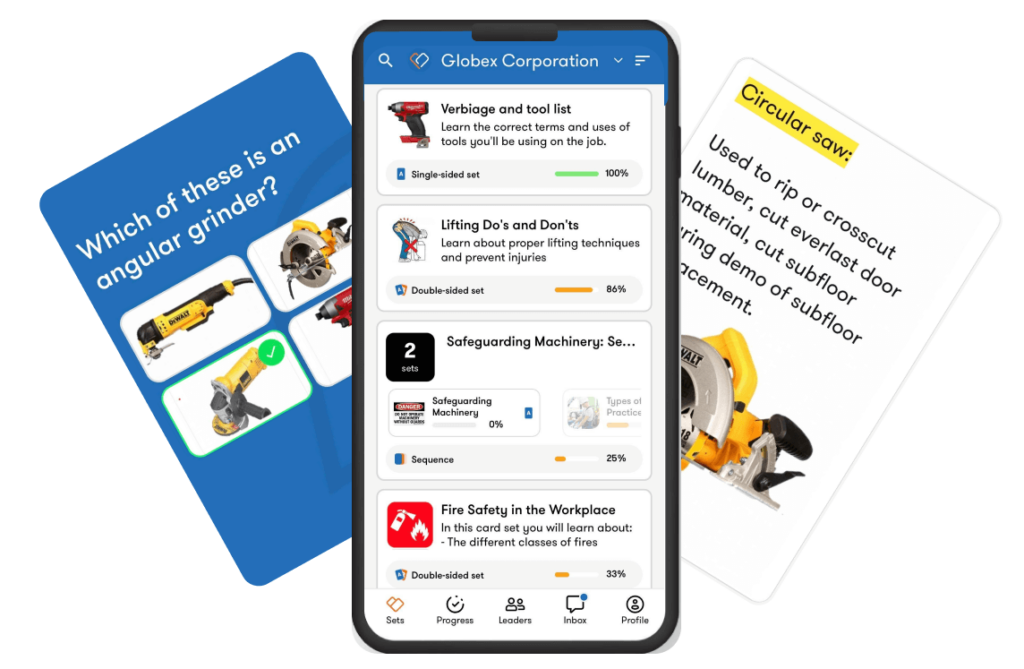

- Build your research repository in Dovetail

With Dovetail, building an insights hub is simple. It functions as a single source of truth where research can be gathered, stored, and analyzed in a streamlined way.

1. Get started with Dovetail

Dovetail is a scalable platform that helps your team easily share the insights you gather for positive actions across the business.

2. Assign a project lead

It’s helpful to have a clear project lead to create the repository. This makes it clear who is responsible and avoids duplication.

3. Create a project

To keep track of data, simply create a project. This is where you’ll upload all the necessary information.

You can create projects based on customer segments, specific products, research methods , or when the research was conducted. The project breakdown will relate back to your overall goals and mission.

4. Upload data and information

Now, you’ll need to upload all of the necessary materials. These might include data from customer interviews , sales calls, product feedback , usability testing , and more. You can also upload supplementary information.

5. Create a taxonomy

Create a taxonomy to organize the data effectively by ensuring that each piece of information will be tagged and organized.

When creating a taxonomy, consider your goals and how they relate to your customers. Ensure those tags are relevant and helpful.

6. Tag key themes

Once the taxonomy is created, tag each piece of information to ensure you can easily filter data, group themes, and spot trends and patterns.

With Dovetail, automatic clustering helps quickly sort through large amounts of information to uncover themes and highlight patterns. Sentiment analysis can also help you track positive and negative themes over time.

7. Share insights

With Dovetail, it’s simple to organize data by themes to uncover patterns and share impactful insights. You can share these insights with the wider team and key stakeholders, who can use them to make customer-informed decisions across the organization.

8. Use Dovetail as a source of truth

Use your Dovetail repository as a source of truth for new and historic data to keep data and information in one streamlined and efficient place. This will help you better understand your customers and, ultimately, deliver a better experience for them.

Should you be using a customer insights hub?

Do you want to discover previous research faster?

Do you share your research findings with others?

Do you analyze research data?

Start for free today, add your research, and get to key insights faster

Editor’s picks

Last updated: 13 April 2023

Last updated: 14 February 2024

Last updated: 27 January 2024

Last updated: 18 April 2023

Last updated: 8 February 2023

Last updated: 23 January 2024

Last updated: 30 January 2024

Last updated: 7 February 2023

Last updated: 7 March 2023

Last updated: 18 May 2023

Last updated: 13 May 2024

Latest articles

Related topics, .css-je19u9{-webkit-align-items:flex-end;-webkit-box-align:flex-end;-ms-flex-align:flex-end;align-items:flex-end;display:-webkit-box;display:-webkit-flex;display:-ms-flexbox;display:flex;-webkit-flex-direction:row;-ms-flex-direction:row;flex-direction:row;-webkit-box-flex-wrap:wrap;-webkit-flex-wrap:wrap;-ms-flex-wrap:wrap;flex-wrap:wrap;-webkit-box-pack:center;-ms-flex-pack:center;-webkit-justify-content:center;justify-content:center;row-gap:0;text-align:center;max-width:671px;}@media (max-width: 1079px){.css-je19u9{max-width:400px;}.css-je19u9>span{white-space:pre;}}@media (max-width: 799px){.css-je19u9{max-width:400px;}.css-je19u9>span{white-space:pre;}} decide what to .css-1kiodld{max-height:56px;display:-webkit-box;display:-webkit-flex;display:-ms-flexbox;display:flex;-webkit-align-items:center;-webkit-box-align:center;-ms-flex-align:center;align-items:center;}@media (max-width: 1079px){.css-1kiodld{display:none;}} build next, decide what to build next.

Users report unexpectedly high data usage, especially during streaming sessions.

Users find it hard to navigate from the home page to relevant playlists in the app.

It would be great to have a sleep timer feature, especially for bedtime listening.

I need better filters to find the songs or artists I’m looking for.

Log in or sign up

Get started for free

- Skip to main content

- Skip to primary sidebar

- Skip to footer

- QuestionPro

- Solutions Industries Gaming Automotive Sports and events Education Government Travel & Hospitality Financial Services Healthcare Cannabis Technology Use Case NPS+ Communities Audience Contactless surveys Mobile LivePolls Member Experience GDPR Positive People Science 360 Feedback Surveys

- Resources Blog eBooks Survey Templates Case Studies Training Help center

Home Market Research Research Tools and Apps

What is a Data Repository? Definition, Types and Examples

Collecting data isn’t that hard, but what’s hard is creating and maintaining a data repository. Even harder is making sense out of a data repository.

The concept of a data repository has grown in popularity to manage and utilize data efficiently. A data repository is a centralized storage site that allows for easy access, data management, and analysis.

Here, we start by defining a data repository, explaining how to create one for research insights, and outlining its benefits.

What is a data repository?

A data repository is a data library or archive. It may refer to large database management systems or several databases that collect, manage, and store sensitive data sets for data analysis , sharing, and reporting.

Authorized users can easily access and retrieve data by using query and search tools, which helps with research and decision-making. It gives a complete and unified view of the data by combining data from different sources, like databases, apps, and external systems.

Data can be collected and stored in different ways, like aggregated data, which is usually collected from multiple sources or segments of a business. Then, it can be stored in a structured or unstructured manner and later tagged with different metadata.

The data repository uses structured organization methods, standardized schemas, and metadata to ensure that the data is always the same and easy to find. It has tools for storing, managing, and protecting data, such as compression, indexing, access controls, encryption, and reporting.

Data repositories generally maintain subscriptions to licensed data resources for their users to access the information.

Data Repository Examples

In the data management industry, various types of data repositories allow users to make the most of the information they have available, each with its own limitations and characteristics.

Security is crucial as more organizations adopt data repositories to manage and store data. Data repositories are generally categorized into four types of data repositories:

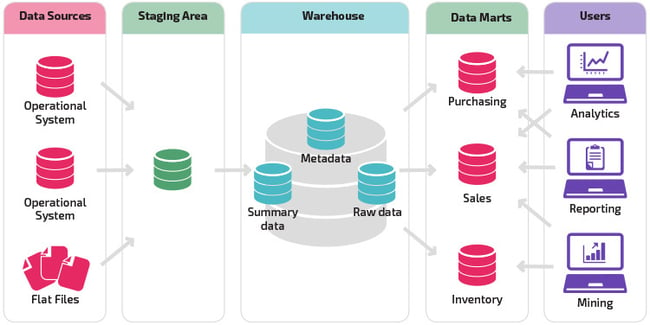

Data warehouse

This is the largest repository type, where data is collected from several business segments or sources. The data stored in this repository is generally used for analysis and reporting, which will help the data users or teams make the correct decisions in their business or project.

In this type of repository, data can be in any form, whether structured, semi-structured, or unstructured. It is a huge storehouse of unstructured data categorized and labeled with metadata.

The main reason for the existence of a data lake is the limitation of the data warehouses. It helps to gain better data governance and data governance framework total control of the data it has in it.

Data marts are often confused with data warehouses. However, they serve different functions.

This subset of the data warehouse is focused on a particular subject, department, or other specific area. Since the data is stored for a specific area, a user can swiftly access the insights without spending much time searching an entire data warehouse, ultimately making users’ lives easy.

This repository contains the most complex data in it. It may be described as the multidimensional extensions of different tables, and they’re generally used to represent data that is too complex to be described by just tables, rows, and columns.

So basically, a data cube can be used when we analyze data available to us and beyond 3-D. Here, we’ll particularly talk about data repositories used in market research.

Benefits of using a research data repository

Using research data repositories has many benefits for both researchers and the scientific community as a whole. Here are some significant benefits:

Greater visibility

Data saved in data repositories can be viewed anytime. Keeping it siloed in Excel sheets or applications not used by a team reduces its visibility and usability, wasting time and resources.

Enhanced discoverability

Saving data in digital format makes it more accessible. Just search for the piece of data you’re looking for, and voila! Also, the metadata added along with the data repository enables others to understand the large context and make more sense of it.

A data repository contains many pieces of data. However, it’s more than just a warehouse. Discrete datasets are joined so that you can derive interesting insights into your area of research and generate various types of reports using the same datasets.

For instance, if you conduct an online survey and collect data from your target audience , you can generate a comparison report to compare responses from various demographic groups. You can also generate trend reports to understand how people’s choices have changed over time. Both of these reports use the same data.

Gain insights from multiple sources of data

Integrating data repositories with other applications lets you see a multi-dimensional view of your data. For instance, you can analyze the historical survey data and the actual sales data to understand the accuracy of insights gained in the past.

How to create a Data Repository using online tools?

Creating data repositories for your research data is simple with the right online tools. If you are conducting your research using surveys , communities, focus groups , or any other method, here are some of the ways to create one.

Create a questionnaire

Many online tools allow you to drag and drop question types . You can create a survey in under 5 minutes! You can also save time by using a ready-to-use survey template. Customize the template per your needs, and you’re ready.

Brand your survey

Customize the header and footer, and add a logo to look more professional. You can also choose a font style and color that suit your brand voice. Branding your surveys increases the chances of getting more responses.

Distribute your survey

Many tools offer different ways to distribute your survey, such as email, embedding data on the website, or sharing it on social media sites. You can also generate a QR code or let your audience answer questions using a mobile app.

Analyze the data

Finally, once you have collected your data, generating the reports is just a matter of time. Use tools that let you create dashboards and generate reports with ease.

How does QuestionPro help implement data repositories?

QuestionPro is a powerful online survey and research platform that collects, analyzes, and manages data. It mostly creates surveys, collects data, and helps establish and maintain data repositories. QuestionPro helps data repository management in several ways:

- Data collection : QuestionPro lets you develop and send surveys to collect data. Surveys can use multiple choice, rating scales, open-ended questions , and more. Your data repositories get important data from this data collection process.

- Data Management : With QuestionPro, you can effectively organize and manage your gathered data. It filters, categorizes, and validates data to ensure accuracy and quality. These management tools help maintain a well-organized and ordered data repository.

- Data Analysis: QuestionPro has built-in tools to help you examine and visualize your data. You can create reports, charts, and graphs based on survey answers to help you find trends, patterns, and insights. The analysis results can be saved in your data repository.

- Real-time Reporting: Real-time reporting lets you view and analyze your data. After collecting replies, you may instantly generate reports to assess trends and progress and make data-driven decisions.

- Data Security: QuestionPro prioritizes data security. It encrypts, transfers, and restricts data access to prevent data breaches. This makes sure that the data in your repository is safe and that users’ privacy is protected.

- Data Integration: QuestionPro integrates with Excel, Google Sheets, and SPSS. This connection lets you import external data or survey responses into your data repositories for analysis and storage.

Data collecting, customer data integration , management, analysis, and security features in QuestionPro can help you manage your repository. It’s useful for data repository management since it centralizes data collection, storage, and analysis.

Learn more about best data collection tools to help you choose the best one.

If you need any help conducting research or creating a data repository, connect with our team of experts. We can guide you through the process and help you make the most of your data.

Frequently Asking Questions (FAQ)

Your data repository should suit your demands. You should choose a repository that is popular and relevant to your research domain. Your data format should be supported by the repository.

Data repositories are managed digital environments that specialize in gathering, characterizing, distributing, and tracking research data. Sharing data in a repository is a best practice that is frequently mandated by federal authorities.

The difference between a database and a data repository lies in its functionality, the former is only a data storage system while a data repository is a data management system.

MORE LIKE THIS

Cannabis Industry Business Intelligence: Impact on Research

May 28, 2024

Top 10 Dynata Alternatives & Competitors

May 27, 2024

What Are My Employees Really Thinking? The Power of Open-ended Survey Analysis

May 24, 2024

I Am Disconnected – Tuesday CX Thoughts

May 21, 2024

Other categories

- Academic Research

- Artificial Intelligence

- Assessments

- Brand Awareness

- Case Studies

- Communities

- Consumer Insights

- Customer effort score

- Customer Engagement

- Customer Experience

- Customer Loyalty

- Customer Research

- Customer Satisfaction

- Employee Benefits

- Employee Engagement

- Employee Retention

- Friday Five

- General Data Protection Regulation

- Insights Hub

- Life@QuestionPro

- Market Research

- Mobile diaries

- Mobile Surveys

- New Features

- Online Communities

- Question Types

- Questionnaire

- QuestionPro Products

- Release Notes

- Research Tools and Apps

- Revenue at Risk

- Survey Templates

- Training Tips

- Uncategorized

- Video Learning Series

- What’s Coming Up

- Workforce Intelligence

Research Data Management

- Data Management Plans

- National Institutes of Health (NIH)

- National Endowment for the Humanities (NEH)

Data Repositories

- Resources on Campus

- File Formats

- Learning Resources

UDSpace - UD's Institutional Repository

University of Delaware original research in digital form. Includes articles, technical reports, working & conference papers, images, data sets, & more.

The UDSpace Institutional Repository collects and disseminates research material from the University of Delaware. The information is organized by Communities, corresponding to administrative entities such as college, school, department, and research center, and then by Collection. Select a Community to browse its collections, or perform a keyword search and then filter by author, subject, or date.

- What are data repositories?

- Where can I find a data repository?

- About Open Data

A data repository is simply a place to store datasets for the long-term. Using a repository or archive to store your data (versus simply hosting it on your website) facilitates its discovery and preservation, ensuring that it will be found by others looking for data and that care will be taken to preserve the data over time.

There are thousands of data repositories, often for a specific subject or type of data, and many organized by universities or scholarly organizations.

Released in May 2022 by OSTP, the report Desirable Characteristics of Data Repositories for Federally Funded Research aims to improve consistency across Federal departments and agencies in the instructions they provide to researchers about selecting repositories for data resulting from Federally funded research. This document contains clearly defined desirable characteristics for two classes of online research data repositories: a general class appropriate for all types of Federally funded data—including free and easy access—and a specific class that has special considerations for the sharing of human data, including additional data security and privacy considerations.

For subject data repositories, please also visit your discipline's research guide or contact your subject librarian directly. Note that some subjects may not have discipline specific repositories.

See the Open Data tab for other repositories

- DataCite Commons Web portal for users to search for works, people, organizations or repositories based on the DataCite metadata catalog

- figshare figshare helps academic institutions store, share and manage all of their research outputs. Upload files up to 5GB. We accept any file format and aim to preview all of them in the browser.

- ICPSR Data Portal An international consortium of more than 750 academic institutions and research organizations, Inter-university Consortium for Political and Social Research (ICPSR) provides leadership and training in data access, curation, and methods of analysis for the social science research community. ICPSR maintains a data archive of more than 250,000 files of research in the social and behavioral sciences. It hosts 21 specialized collections of data in education, aging, criminal justice, substance abuse, terrorism, and other fields.

- re3data.org re3data.org is a global registry of research data repositories that covers research data repositories from different academic disciplines.

- Simmons Data Repositories Listing A listing of various subject oriented data repositories, part of the Open Access Directory.

Open Data is part of the broader Open Access movement, promoting the idea that research, especially publicly funded research, should be made widely available. This allows for more and more rapid development and dissemination of knowledge.

Open Data is data that is permanently and freely available for the world to use, allowing for maximum exposure and benefit from current research.

- Open Access Directory The Open Access Directory (OAD) is a compendium of simple factual lists about open access (OA) to science and scholarship, maintained by the OA community at large.

- Open Data Commons Provides a set of legal tools and licenses to help you publish, provide and use open data

- Harvard Dataverse Repository A free data repository open to all researchers from any discipline, both inside and outside of the Harvard community, where you can share, archive, cite, access, and explore research data.

- Dryad Digital Repository The Dryad Digital Repository is a curated resource that makes research data discoverable, freely reusable, and citable. Dryad provides a general-purpose home for a wide diversity of data types.

- << Previous: National Endowment for the Humanities (NEH)

- Next: Resources on Campus >>

- Last Updated: May 16, 2024 8:35 AM

- URL: https://guides.lib.udel.edu/researchdata

University Libraries

- Ohio University Libraries

- Library Guides

Research Data Repositories: Finding and Storing Data

- Business & Economics

- Crime, Government, & Law

- Sociology, Anthropology, & Archaeology

- Biological Sciences

- Engineering and Computer Science

- Health, Medicine, and Psychology

- Physics and Astronomy

- Chemistry and Biochemistry

- General Humanities

- Literature, Linguistics, & Languages

- Contact/Need Help?

We have three guides about data: Which one do you need?

- Research Data Literacy 101 This guide covers research data generally, what data is, the difference between data and statistics, understanding open data, library databases that offer statistics and data, and other overview topics.

- Research Data Repositories: Finding and Storing Data This guide is a annotated list of data repositories by subject where a researcher can deposit their data per gov standards and find data sets from research done by others. Note: For library databases that offer statistics and data, see Research Data Literacy 101: Find Data and Statistics.

- Research Data Management This guide covers how to create a Data Management Plan, including funders, metadata, and other important aspects. Includes help using DMPTool.

Often, the place to find and store data are the very same. Researchers will place the data they collect into general or disciplinary repositories. While other researchers can search those repositories for data and datasets on their topic. Some repositories are costly while others are considered "open" and offer data freely for anyone to download.

Data and Statistics Are Not Equivalent

Although both terms are commonly used synonymously, they are, in fact, very different. Before you start searching for either, think about which one best applies to your needs.

- Data: are collected raw numbers or bits of information that have not been analyzed or organized.

- Statistics: are the product of collected data after it has been analyzed or organized that will help derive meaning from the data.

The National Library of Medicine has a great resource full of other data-related definitions.

What to Consider When Choosing a Data Repository

A data repository is a storage space for researchers to deposit data sets associated with their research. And if you’re an author seeking to comply with a journal or funder data sharing policy, you’ll need to identify a suitable repository for your data.

An open access data repository openly stores data in a way that allows immediate user access to anyone. There are no limitations to the repository access.

When choosing a repository for your data, keep in mind the following:

- It is likely that your funder or journal will have specific guidelines for sharing your data

- Ensure the repository issues a persistent identifier (like a DOI) or you can link to your ORCID account

- Repository has a preservation plan in perpetuity

- Does the repository have a cost to store your data? There may also be a cost to access datasets.

- Is the repository certified or indexed?

- Is the repository completely open or are there restrictions to access?

- Consider FAIR data Principles - Data should be Findable, Accessible, Interoperable, and Re-usable

NIH guidelines for selecting a data repository

3 Ways to use Google to find Data

Google has a Dataset Search! Here is a video tutorial on how to use this search tool .

You can search for specific file types in Google, for example CSV files for datasets. By typing into Google filetype:csv in the search bar you are "telling" Google to only search for things that have that specific file type. For example: (poverty AND ohio) filetype:xls will result in XLS (Excel) files mentioning Poverty in Ohio.

Limit search results by web domain by typing into Google: site:.gov (YOUR TOPIC HERE) . This will limit datasets, files, etc. from specific websites. You could even do .org for professional organizations.

- Next: Social Sciences >>

Research Data Management

- Data Repositories

A key aspect of data management involves not only making articles available, but also the data, code, and materials used to conduct that research. Data repositories are a centralized place to hold data, make data available for use, and organize data in a logical manner.

Which Repository Should I Use?

The best repository for sharing your data depends on your discipline. If there is a national or subject-level repository in your discipline, that would be your first choice. To determine if such repositories exist, you can search the registry of repositories re3data , or check the Open Access Directory of Data Repositories at Simmons University .

It is also possible that your funding agency or your state has a repository you can use. In the absence of these, there are a number of general subject repositories which can take your data. The NNLM Data Repository Finder is a tool that was developed to help locate NIH-supported repositories for sharing research data

- << Previous: Unique Identifiers

- Data Management Plans

- Data Policies & Compliance

- Directory Structures

- File Naming Conventions

- Roles & Responsibilities

- Collaborative Tools & Software

- Electronic Lab Notebooks

- Documentation & Metadata

- Reproducibility

- Analysis Ready Datasets

- Image Management

- Version Control

- Data Storage

- Data & Safety Monitoring

- Data Privacy & Confidentiality

- Retention & Preservation

- Data Destruction

- Data Sharing

- Public Access

- Data Transfer Agreements

- Intellectual Property & Copyright

- Unique Identifiers

Research Support

Request a data management services consultation.

Email [email protected] to schedule a consultation related to the organization, storage, preservation, and sharing of data.

- Last Updated: May 23, 2024 10:37 AM

- URL: https://guides.library.ucdavis.edu/data-management

When you choose to publish with PLOS, your research makes an impact. Make your work accessible to all, without restrictions, and accelerate scientific discovery with options like preprints and published peer review that make your work more Open.

- PLOS Biology

- PLOS Climate

- PLOS Complex Systems

- PLOS Computational Biology

- PLOS Digital Health

- PLOS Genetics

- PLOS Global Public Health

- PLOS Medicine

- PLOS Mental Health

- PLOS Neglected Tropical Diseases

- PLOS Pathogens

- PLOS Sustainability and Transformation

- PLOS Collections

- About This Blog

- Official PLOS Blog

- EveryONE Blog

- Speaking of Medicine

- PLOS Biologue

- Absolutely Maybe

- DNA Science

- PLOS ECR Community

- All Models Are Wrong

- About PLOS Blogs

What a difference a data repository makes: Six ways depositing data maximizes the impact of your science

Data is key to verification, replication, reuse, and enhanced understanding of research conclusions. When your data is in a repository—instead of an old hard drive, say, or even a Supporting Information file—its impact and its relevance are magnified. Here are six ways that putting your data in a public repository can help your research go further.

1. You can’t lose data that’s in a public data repository

Have you ever lost track of a dataset? Maybe you’ve upgraded your computer or moved to a new institution. Maybe you deleted a file by mistake, or simply can’t remember the name of the file you’re looking for. No matter the cause, lost data can be embarrassing and time consuming. You’re unable to supply requested information to journals during the submission process or to readers after publication. Future meta analyses or systematic reviews are impossible. And you may end up redoing experiments in order to move forward with your line of inquiry. With data securely deposited in a repository with a unique DOI for tracking, archival standards to prevent loss, and metadata and readme materials to make sure your data is used correctly, fulfilling journal requests or revisiting past work is easy.

2. Public data repositories support understanding, reanalysis and reuse

Transparently posting raw data to a public repository supports trustworthy, reproducible scientific research. Insight into the data and analysis gives readers a deeper understanding of published research articles. Offering the opportunity for others to interpret results demonstrates integrity and opens new avenues for discussion and collaboration. Machine-readable data formatting allows the work to be incorporated into future systematic reviews or meta analyses, expanding its usefulness.

3. Public data repositories facilitate discovery

Even the best data can’t be used unless it can be found. Detailed metadata, database indexing, and bidirectional linking to and from related articles helps to make data in public repositories easily searchable—so that it reaches the readers who need it most, maximizing the impact and influence of the study as a whole.

4. Public data repositories reflect the true value of data

Data shouldn’t be treated like an ancillary bi-product of a research article. Data is research . And researchers deserve academic credit for collecting, capturing and curating the data they generate through their work. Public repositories help to illustrate the true importance and lasting relevance of datasets by assigning them their own unique DOI, distinct from that of related research articles—so that datasets can accumulate citations in their own right.

5. Public data demonstrates rigor

There’s no better way to illustrate the rigor of your results than explaining exactly how you achieved them. Sharing data lets you demonstrate your credibility and inspires confidence in readers by contextualizing results and facilitating reproducibility.

6. Research with data in public data repositories attracts more citations

A 2020 study of more than 500,000 published research articles found articles that link to data in a public repository have a 25% higher citation rate on average than articles where data is available on request or as Supporting Information. The precise reasons for the association remain unclear. Are researchers who deposit carefully curated data in a repository also more likely to produce rigorous, citation-worthy research? Are researchers with the time and resources to devote to data curation and deposition more established in their careers, and therefore more highly cited? Are readers more likely to cite research when they trust that they can verify the conclusions with data? Perhaps some combination?

What do you see as the most important reason for posting data in a repository?

Access to raw scientific data enhances understanding, enables replication and reanalysis, and increases trust in published research. The vitality and utility of…

The latest quarterly update to the Open Science Indicators (OSIs) dataset was released in December, marking the one year anniversary of OSIs…

For PLOS, increasing data-sharing rates—and especially increasing the amount of data shared in a repository—is a high priority. Research data is a…

Home / Blogs / Data Repository: Definition, Types, and Benefits with Best Practices

The Automated, No-Code Data Stack

Learn how Astera Data Stack can simplify and streamline your enterprise’s data management.

Data Repository: Definition, Types, and Benefits with Best Practices

With time, data is becoming more significant to business decision-making. This means you need solutions to gather, store, and analyze data. A data repository is a virtual storage entity that can help you consolidate and manage critical enterprise data.

In this blog, we’ll give a brief overview of a data repository, its common examples, and critical benefits.

What is a Data Repository?

A data repository , often called a data archive or library, is a generic terminology that refers to a segmented data set used for reporting or analysis.

A data repository serves as a centralized storage facility for managing and storing various datasets . It encompasses:

- Large database management systems: These systems efficiently collect, organize, and store extensive datasets.

- Data archives: These archives securely preserve sensitive data sets for analysis, sharing, and reporting purposes.

Data repositories facilitate data management, ensuring accessibility, security, and efficiency in handling diverse datasets.

It’s a vast database infrastructure that gathers, manages, and stores varying data sets for analysis, distribution, and reporting.

Types of Data Repositories

Some common types of data repositories include:

Data Warehouse

A data warehouse is a large central data repository that gathers data from several sources or business segments. The stored data is generally used for reporting and analysis to help users make critical business decisions.

In a broader perspective, a data warehouse offers a consolidated view of either a physical or logical data repository gathered from numerous systems. The main objective of a data warehouse is to establish a connection between data from current systems, such as product catalog data stored in one system and procurement orders for a client stored in another one.

A data lake is a unified data repository that allows you to store structured, semi-structured, and unstructured enterprise data at any scale. Data can be in raw form and used for different tasks like reporting, visualizations, advanced analytics, and machine learning.

A data mart is a subject-oriented data repository often a segregated section of a data warehouse. It holds a subset of data usually aligned with a specific business department, such as marketing, finance, or support.

Due to its smaller size, a data mart can fast-track business procedures as you can easily access relevant data within days instead of months. As it only includes the data pertinent to a specific area, a data mart is an economical way to acquire actionable insights swiftly.

Metadata Repositories

While metadata incorporates information about the structures that include the actual data, metadata repositories contain information about the data model that store and share this data. They describe where the data source is, how it was collected, and what it signifies. It may define the arrangement of any data or subject deposited in any format.

For businesses, metadata repositories are essential in helping people understand administrative changes, as they contain detailed information about the data.

Data cubes are data lists with multidimensions (usually three or more dimensions) stored as a table. They are used to describe the time sequence of an image’s data and help assess gathered data from a range of standpoints.

Each dimension of a data cube signifies specific database characteristics such as day-to-day, monthly or annual sales. The data within a data cube allows you to analyze all the information for almost any client, sales representative, products, and more. Consequently, a data cube can help you identify trends and scrutinize business performance.

Why Do You Need A Data Repository?

A data repository can help businesses fast-track decision-making by offering a consolidated space to store data critical to your operations. This segmentation enables easier data access and troubleshooting and streamlines reporting and analysis.

For instance, if you want to find out which of your workplaces incur the most cost, you can create an information repository for leases, energy expenses, amenities, security, and utilities, excluding employees or business function information. Storing this data in one place can make it easier for you to come to a decision.

Challenges Associated with a Data Repository

Although an information repository offers many benefits, it also includes several challenges that you must manage efficiently to alleviate possible data security risks.

Some challenges in maintaining data repositories include:

- An increase in data sets can reduce your system’s speed. To rectify this problem, ensure that the database management system can scale with data expansion.

- In case a system crashes, it can negatively impact your data. It’s best to maintain a backup of all the databases and restrict access to control the system risk.

- Unauthorized operators can access sensitive data more quickly if stored in a single location than if it’s dispersed across numerous sources. On the contrary, implementing security protocols on a single data storage location is more accessible than multiple ones.

Best Practices to Create and Manage Data Repositories

When creating and maintaining software repositories, you have to make several hardware and software decisions. Therefore, it is best to involve all stakeholders during the development and usage phase of the data repositories. For example, in case of building a clinical data repository architecture, it is a good idea to involve doctors, data experts, analysts and data pipeline engineers in the initial planning stages.

Here are some of the best practices to help you make the most of this storage solution:

1. Select the Right Tool

Using ETL tools to create a data repository and transfer data can help ensure data quality is maintained during the process. But keep in mind that different data repository tools offer additional features to create, maintain, and control the repository. So, find a tool that provides the features that support your business requirements.

2. Limit the Scope Initially

It’s best to narrow down the scope of your information repository in the initial days. Accumulate smaller data sets and limit the number of subject areas. Gradually increase the complexity as the data operators get familiar with the system.

3. Automate as Much as Possible

Automating the process for loading and maintaining the data repository saves the user from manual efforts and reduces the chances of errors.

4. Prioritize Flexibility

The data repository should be scalable enough to accommodate evolving data types and increase volumes. So, make flexible plans that make allowance for alterations in technology.

As more and more businesses adopt data repositories to store and administer their ever-increasing data, a secure approach becomes imperative for your company’s overall security. Creating comprehensive access rules to permit only authorized operators to access, change, or transfer data will help secure your enterprise data.

Astera Centerprise is an automated data integration tool that helps in data management with features such as data cleansing, profiling, and transformation all in one solution. Contact our team for a personalized demo .

You MAY ALSO LIKE

What is olap (online analytical processing).

What is Online Analytical Processing (OLAP)? Online Analytical Processing (OLAP) is a computing technology through which users can extract and...

The Best Data Ingestion Tools in 2024

Data ingestion is important in collecting and transferring data from various sources to storage or processing systems. In this blog,...

Data Ingestion vs. ETL: Understanding the Difference

Working with large volumes of data requires effective data management practices and tools, and two of the frequently used processes...

Considering Astera For Your Data Management Needs?

Establish code-free connectivity with your enterprise applications, databases, and cloud applications to integrate all your data.

This article provides an overview of the current state of research data repositories, what they are, why your library needs one, and pragmatic steps toward actualization. It surveys the present changing data repository landscape and uses practical examples from a large, current Texas consortial effort to create a research data repository for universities across the Lone Star State. Libraries in general need to think seriously about data repositories to partner with state, national, and global efforts to begin providing the next generation of information services and infrastructures.

Data Research Repositories: Definitions

Online research data repositories are large database infrastructures set up to manage, share, access, and archive researchers’ datasets. Repositories may be specialized and relegated to aggregating disciplinary data or more general, collecting over larger knowledge areas, such as the sciences or social sciences. Online repositories may also aggregate experts’ data globally or locally, collecting a university or consortium of universities researcher’s data for mutual benefit. The simple idea is that sharing data improves results and drives research and discovery forward. A repository allows examination, proof, review, transparency, and validation of a researcher’s results by other experts beyond the published refereed academic article. Placing research data online allows instantaneous access by a globally dispersed group of researchers to share, understand, and synthesize results. This aggregation and synthesis provide an opportunity for insight, progress, and that uniquely human quest for larger understanding. Data repositories also allow for the publication of previously hidden negative data, essentially experiments that didn’t work. This enables other researchers to avoid previous dead ends of those who have tried a path before them to better find their way toward more fertile territory. A global community of experts benefits from online sharing and the aggregation of research data.

The Nuts and Bolts

Data repositories allow long-term archiving and preservation of data by the ingestion/uploading of various data types. This includes simple Excel files, SPSS, and more exotic disciplinary formats (i.e., GIS shapefiles and Genome data-specific formats). Usually, a repository will also provide a permalink strategy for online citation and instant access so that researchers may offer a direct link to their data and ancillary files in the later published article or conference paper. This is usually provided through a digital object identifier (DOI) or universal numerical fingerprint (UNF), which allows later linking of data and possibilities for interoperability and mashing up of data archives. Within data archives, para-textual research material is also stored for later archiving and sharing. Users include social scientists and hard scientists. Data files include spreadsheets, field notes, lab methodology recipes, multimedia, and specific software programs for analyzing and working with the accompanying datasets.

The data repository infrastructure trajectory moves through a lifecycle. It begins with the experiment or research project and initial data capture and progresses to uploading, cataloging, adding disciplinary metadata schema, and assigning DOI and/or UNF (see above). Repositories will typically allow instant searching, retrievability, linking, and downloading of data. As data repositories progress, they will allow synthesis of datasets and data fields to facilitate insight, discovery, and verification. In an online global networked environment, this is accomplished through data harvesting and the possibilities of linked data and data visualization with current applications such as Tableau.

Why a Repository Now?

Besides being a good thing for the sharing and verification of data-driven research results, data research repositories are now necessary for university campuses. Placing one’s research data online has become mandatory for any researcher wishing to receive grants from any public U.S. agency. This includes the National Institutes of Health (NIH), National Science Foundation (NSF), U.S. Department of Agriculture (USDA), and National Endowment for the Humanities (NEH). The rational is that if a researcher is drawing from the public taxpayers’ trough, the research must be publicly accessible through both the article and original data. Sharing this data helps keep the wider economy vital, facilitating healthy competition toward commercialization and dissemination of discovery. If researchers do not have data management plans in place, their chance of obtaining a grant decreases. Currently, a majority of grant-funded researchers do not share data. With recent mandated changes, this situation is rapidly changing. Ivy League institutions have already capitalized on it—sharing data leverages and enhances faculty, departmental, and a university’s global research standing.

The Current State of Affairs

Among research-intensive institutions and academic research libraries, about 74% provide data archiving services (see figure below). Of this group, only 13% provide data-specific repositories. Another 13% use more general digital repositories, and 74% use temporary stopgaps—text-centric repositories such as DSpace—to accommodate current grant stipulations until new data-centered applications can be put in place. The vast majority of academic libraries lag behind this cohort. In terms of Roger’s technological adoption curve, phases of innovators and early adopters of data repositories are complete; we are entering the early majority and primary adoption phases (see the bottom figure). It is a great time to be thinking about a data repository.

Research Data Repository Software

There are currently several possibilities with regard to research data repository software, some specifically created for data (i.e., Dataverse, HUBzero, and Chronopolis), others cobbled together from previous text-based institutional repository/digital library sources (i.e., DSpace, Fedora, and Hydra). The software may be hosted or installed on university servers. Different infrastructures also contain various ranges of data management and data collaboration options. There are both well-established open source software (notably, Dataverse and HUBzero) and proprietary/commercial sources (Interuniversity Consortium for Political and Social Resource [ICSPR], figshare, and Digital Commons).

Repositories, Institutions, and Consortiums

Currently, at Texas State University, we are part of a Texas-wide university effort championed by the Texas Digital Library (TDL) to implement a statewide consortial data repository based on Harvard University’s open source solution, Dataverse. Dataverse is a software framework that enables institutions to host research data repositories and has its roots in the social sciences and Harvard’s Institute for Quantitative Social Sciences (IQSS).

Because of Dataverse’s largely customizable metadata schema abilities and open source flexibilities, TDL is using it as a data archiving infrastructure for the state (officially scheduled for launch in summer/fall 2016). The software allows data sharing, persistent data citation, data publishing, and various administrative management functions. The architecture also allows customization for a consortial effort for future systemwide sharing and interoperability of datasets for a stronger data research network (see diagram directly above).

If an institution is looking seriously at open source data repositories, other software also worth considering is Purdue’s data repository system—HUBzero ( hubzero.org )—and a customized instance, Purdue University Research Repository (PURR; purr.purdue.edu ). Different from Dataverse’s social science antecedents, HUBzero originally began as a platform for hard scientific collaboration (nanoHUB; nanohub.org). In re-creating or customizing one’s institution’s or consortium’s data repository, PURR’s interface is particularly user-friendly. It is worth looking at how different data repositories step their researchers through the data management process via various online examples (see PURR example below).

Other more proprietary data repositories such as figshare, bepress’s Digital Commons, or ICPSR are worth looking at, depending on an institution’s size, needs, and present infrastructure. As the landscape is changing quickly, an environmental scan is a good idea. A good example scan recently conducted by TDL prior to choosing Harvard’s Dataverse is available here: tinyurl.com/h36w93v .

Data Size Matters

Beyond the specific data repository an institution chooses, another factor that needs to be considered is size of datasets. To generalize, researcher, project, and data storage needs come in all different shapes and sizes. Preliminarily thinking about these factors will be important as an institution moves from implementation and customization to setting policy and data storage requirements.

Research data projects may be divided into size categories of 1) small/medium, 2) large, and 3) very large. For small-to-medium datasets, these are data projects that can be stored on a researcher’s current desktop hard drive, typically sets of Excel or other specialized disciplinary data files. These may be uploaded by a researcher, emailed, or transferred through university network drives to a server or the cloud and/or uploaded by a data archivist into a repository. Many of the current data-specific repositories allow researchers’ self-uploading processes to begin or facilitate this process.

For medium-to-large projects, data may require special back-end storage systems or relationships engendered with core university IT to set up larger storage options (i.e., dedicated network space allocation and RAID). Typically, these types of datasets can still be linked online, but there is a larger weight toward data curation, adding robust metadata for access points and considering logical divisions of datasets/fields in consultation with researchers.

For very large projects, relationships may be required to be engendered with consortial, national, or proprietary data preservation and archiving efforts. For example, TDL partners with both state and national organizations—the Texas Advanced Computing Center (TACC) and the Digital Preservation Network (DPN)—and proprietary solutions, DuraCloud and Amazon Web Services (Amazon Glacier and Amazon S3). Funds become a factor here.

Typically, a university will have a spectrum of researchers with low to very high data storage requirements. Infrastructure bridges should be set up to accommodate the range of possibilities that will arise. Longer-term storage needs that a university or consortial environment anticipates and will require should also be factored in here.

Data Management Planning: The Wide Angle

Data management repositories are an important, but single, piece in any researcher’s larger data management plans. Other infrastructure bridges will necessarily involve offices of sponsored research, university core IT, and library personnel working together to build these new paradigms. Fortunately, several good planning tools have been created. A good starting place for planning considerations is the California Digital Library’s DMPTool ( dmp.cdlib.org ). This will help researchers, libraries, and other infrastructure personnel begin thinking and stepping through the multi-tiered process of managing their data.

With an institutional or consortial data repository initially in place and a few key staff members to help researchers navigate this new world, a data repository infrastructure may be enabled. This article has given a whirlwind tour of the fast-changing and now required area of data repositories. A larger presentation with more detailed links and references for further exploration and research is available here: tinyurl.com/jljmmcz .

The Sheridan Libraries

- Data Management and Sharing

- Sheridan Libraries

- How to Find a Data Repository

- Write A Proposal

- Write a Data Management and Sharing Plan

- Human Participant Research Considerations

- JHU Policies for Data Management and Sharing

- Access and Collect Data

- Manage Data

- Analyze and Visualize Data

- Document Data

- Store and Preserve Data

- Overview of Data Sharing

- Prepare Your Data For Sharing

Find a Repository

Repository-specific data submission guidelines.

- Share Code or Software

- Conditions for Access and Reuse

When sharing your research data code, and documentation, there are many repositories to choose from. Some repositories are domain specific, and focus on a very narrow type of research. Other repositories, known as generalists, accept data from multiple disciplines and in various file formats. Another aspect of repositories is the ease with which data is accessible (controlled-access or public).

Qualities to Look For in a Repository

- Data Services selecting a repository for data deposit : Tips and set of questions researchers can use in determining whether a particular research data repository will work for their circumstances.

- NIH guidance on selecting a repository : List of desirable characteristics to look for when choosing a repository to manage and share data resulting from Federally funded research.

- Nature Data Repository guidance: Because Nature does not host data, this is guide for researchers who are publishing with them on qualities to look for when determining where to share their data.

- Data Sharing Tiers for Broad Sharing of JHM Clinically Derived Data : Written by JHU Data Trust , a guide to determining the types of repositories you can use to share clinical data. Requires JHU affiliation JHED to login .

- General JHU IRB Expectations for Sharing of Individual Level Research Data : Written by JHU IRBs. The table outlines considerations researchers should be aware of when developing plans for data sharing when the data to be shared is from human research participants.

Data Repository Registries/Lists

- re3data.org : A global registry of data repositories that covers research data repositories from different academic disciplines and with varying access controls.

- NIH-supported Scientific Data Repositories : A browsable list of NIH-supported repositories including a description and data submission instructions for each repository.

- Welch Medical Library Research Data Repositories & Databases guide : Description of medically-related databases and repositories to find datasets for secondary analysis. Many of those listed are also options for data submission.

- Welch Medical Library Data Catalogs & Search Engines : A list of repositories that you can find secondary data and publish your own data in.

- FAIRsharing Database Registry : A registry of knowledgebases and repositories of data and other digital assets.

- Johns Hopkins Research Data Repository

- Johns Hopkins Research Data Repository : Open access repository for the long-term management and preservation of research data. Johns Hopkins researchers can use the JHU Data Archive to meet their funder and/or journal data sharing requirements.

- Description of the Repository : an overview of the benefits of using the JHU Data Archive and the procedures for getting started

- FAQs about the Johns Hopkins Research Data Repository

Inter-university Consortium for Political and Social Research (ICPSR)

ICPSR has one of the largest archives of social science datasets in the world and is free to deposit your data into. It is designed for both public-use and restricted-use data.

- Information on how to deposit

- FAQs for depositing

Prior to depositing into a repository you should review the submission guidelines as they likely will require you to format your data a particular way and include specific metadata with your submission.

- Submitting data to the Gene Expression Omnibus

- dbGaP Study Submission Guide

- Data Sharing for Next-Generation Sequencing : an online course created by Welch medical library that discusses preparing genomic data for sharing including into dbGaP.

- SRA Submission Quick Start

- << Previous: Prepare Your Data For Sharing

- Next: Share Code or Software >>

- Last Updated: May 10, 2024 9:46 AM

- URL: https://guides.library.jhu.edu/dataservices

What Is a Data Repository? [+ Examples and Tools]

Published: April 19, 2022

Businesses are collecting, storing, and using more data than ever before. This data is being used to improve the customer experience, support marketing and advertising efforts, and drive decision making. But more data means more challenges.

In a survey on customer experience (CX) among businesses in the United States , 49.8% identified the lack of reliability and integrity of available data as the main challenge affecting data analysis capability for CX. Data security, data privacy, and too many data sources were also identified as challenges.

To help you overcome these issues and get the most out of your data, you can store it in a data repository. Let’s take a close look at this term, then walk through some examples, benefits, and tools that can help you store and manage your data .

![define research data repository Download Now: Introduction to Data Analytics [Free Guide]](https://no-cache.hubspot.com/cta/default/53/8982b6b5-d870-4c07-9ccd-49a31e661036.png)

What is a data repository?

A data repository is a data storage entity in which data has been isolated for analytical or reporting purposes. Since it provides long-term storage and access to data, it is a type of sustainable information infrastructure.

While commonly used for scientific research, a data repository can also be used to manage business data. Let’s take a look at some challenges and benefits below.

What are the challenges of a data repository?

The challenges of a data repository all revolve around management. For example, data repositories can slow down enterprise systems as they grow so it’s important you have a software or mechanism in place to scale your repository. You also need to ensure your repository is backed up and secure. That’s because a system crash or attack could compromise all your data since it’s stored in one place instead of distributed across multiple locations.

These challenges can be addressed by a solid data management strategy that addresses data quality, privacy, and other data trends .

To create your own, check out our guide Everything You Need to Know About Data Management .

What are the benefits of a data repository?

Having data from multiple sources in one place makes it easier to manage, analyze, and report on. A data repository makes it faster and easier to analyze and report data because it’s stored in one place and compartmentalized. It also improves the quality of data since it’s aggregated and preserved. Without a single repository, you’ll likely deal with duplicate data, missing data, and other issues that affect the quality of your analysis.

Now that we understand both the challenges and benefits of a data repository, let’s look at some examples.

Data Repository Examples

Data repository is a general term. There are several more specific terms or subtypes. Let’s take a look at some of these examples below.

Data Warehouse

A data warehouse is a centralized repository that stores large volumes of data from multiple sources in order to more efficiently organize, analyze, and report on it. Unlike a data mart and lake, it covers multiple subjects and is already filtered, cleaned, and defined for a specific use.

We’ll take a closer look at the difference between a data repository and warehouse below (jump link).

Data Repository Software

Choosing a data repository software comes down to a few key factors, including sustainability, usability, and flexibility. Here are some questions to ask when evaluating different software:

- Is the repository supported by a company or community?

- What does the user interface look like?

- Is the documentation clear and comprehensive?

- What data formats does it support?

Answering these and other questions will help you pick the software that best meets your needs. Let’s take a look at some popular data repository software options below.

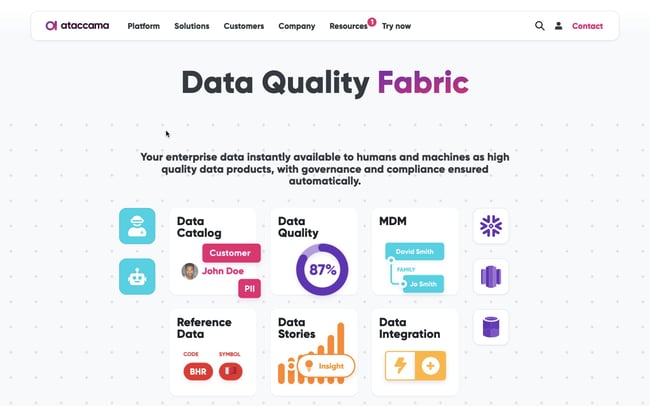

1. Ataccama

Best for: Multinational corporations and mid-sized businesses

Don't forget to share this post!

Related articles.

Materialized View: What You Need to Know [+Best Practices]

What Is Data Hygiene?: Why You Need It & How to Do It Right

API Management: What Is It & Why Does It Matter?

5 Best Data Governance Tools

How to Create a Data Quality Management Plan

Single Source of Truth: Benefits, Challenges, & Examples

Data Governance (DG): A Straightforward Guide

What Is Event-Driven Architecture? Everything You Need to Know

Data Stream: Use Cases, Benefits, & Examples

ETL vs. ELT: What's the Difference & Which Is Better?

Unlock the power of data and transform your business with HubSpot's comprehensive guide to data analytics.

Marketing software that helps you drive revenue, save time and resources, and measure and optimize your investments — all on one easy-to-use platform

.png)

How to build a research repository: a step-by-step guide to getting started

Research repositories have the potential to be incredibly powerful assets for any research-driven organisation. But when it comes to building one, it can be difficult to know where to start. In this post, we provide some practical tips to define a clear vision and strategy for your repository.

Done right, research repositories have the potential to be incredibly powerful assets for any research-driven organisation. But when it comes to building one, it can be difficult to know where to start.

As a result, we see tons of teams jumping in without clearly defining upfront what they actually hope to achieve with the repository, and ending up disappointed when it doesn't deliver the results.

Aside from being frustrating and demoralising for everyone involved, building an unused repository is a waste of money, time, and opportunity.

So how can you avoid this?

In this post, we provide some practical tips to define a clear vision and strategy for your repository in order to help you maximise your chances of success.

🚀 This post is also available as a free, interactive Miro template that you can use to work through each exercise outlined below - available for download here .

Defining the end goal for your repository

To start, you need to define your vision.

Only by setting a clear vision, can you start to map out the road towards realising it.

Your vision provides something you can hold yourself accountable to - acting as a north star. As you move forward with the development and roll out of your repository, this will help guide you through important decisions like what tool to use, and who to engage with along the way.

The reality is that building a research repository should be approached like any other product - aiming for progress, over perfection with each iteration of the solution.

Starting with a very simple question like "what do we hope to accomplish with our research repository within the first 12 months?" is a great starting point.

You need to be clear on the problems that you’re looking to solve - and the desired outcomes from building your repository - before deciding on the best approach.

Building a repository is an investment, so it’s important to consider not just what you want to achieve in the next few weeks or months, but also in the longer term to ensure your repository is scalable.

Whatever the ultimate goal (or goals), capturing the answer to this question will help you to focus on outcomes over output .

🔎 How to do this in practice…

1. complete some upfront discovery.

In a previous post we discussed how to conduct some upfront discovery to help with understanding today’s biggest challenges when it comes to accessing and leveraging research insights.

⏰ You should aim to complete your upfront discovery within a couple of hours, spending 20-30 mins interviewing each stakeholder (we recommend talking with at least 5 people, both researchers and non-researchers).

2. Prioritise the problems you want to solve

Start by spending some time reviewing the current challenges your team and organisation are facing when it comes to leveraging research and insights.

You can run a simple affinity mapping exercise to highlight the common themes from your discovery and prioritise the top 1-3 problems that you’d like to solve using your repository.

💡 Example challenges might include:

Struggling to understand what research has already been conducted to-date, leading to teams repeating previous research

Looking for better ways to capture and analyse raw data e.g. user interviews

Spending lots of time packaging up research findings for wider stakeholders

Drowning in research reports and artefacts, and in need of a better way to access and leverage existing insights

Lacking engagement in research from key decision makers across the organisation

⏰ You should aim to confirm what you want to focus on solving with your repository within 45-60 mins (based on a group of up to 6 people).

3. Consider what future success looks like

Next you want to take some time to think about what success looks like one year from now, casting your mind to the future and capturing what you’d like to achieve with your repository in this time.

A helpful exercise is to imagine the headline quotes for an internal company-wide newsletter talking about the impact that your new research repository has had across the business.

The ‘ Jobs to be done ’ framework provides a helpful way to format the outputs for this activity, helping you to empathise with what the end users of your repository might expect to experience by way of outcomes.

💡 Example headlines might include:

“When starting a new research project, people are clear on the research that’s already been conducted, so that we’re not repeating previous research” Research Manager

“During a study, we’re able to quickly identify and share the key insights from our user interviews to help increase confidence around what our customers are currently struggling with” Researcher

“Our designers are able to leverage key insights when designing the solution for a new user journey or product feature, helping us to derisk our most critical design decisions” Product Design Director

“Our product roadmap is driven by customer insights, and building new features based on opinion is now a thing of the past” Head of Product

“We’ve been able to use the key research findings from our research team to help us better articulate the benefits of our product and increase the number of new deals” Sales Lead

“Our research is being referenced regularly by C-level leadership at our quarterly townhall meetings, which has helped to raise the profile of our team and the research we’re conducting” Head of Research

Ask yourself what these headlines might read and add these to the front page of a newspaper image.

You then want to discuss each of these headlines across the group and fold these into a concise vision statement for your research repository - something memorable and inspirational that you can work towards achieving.

💡Example vision statements:

‘Our research repository makes it easy for anyone at our company to access the key learnings from our research, so that key decisions across the organisation are driven by insight’

‘Our research repository acts as a single source of truth for all of our research findings, so that we’re able to query all of our existing insights from one central place’

‘Our research repository helps researchers to analyse and synthesise the data captured from user interviews, so that we’re able to accelerate the discovery of actionable insights’

‘Our research repository is used to drive collaborative research across researchers and teams, helping to eliminate data silos, foster innovation and advance knowledge across disciplines’

‘Our research repository empowers people to make a meaningful impact with their research by providing a platform that enables the translation of research findings into remarkable products for our customers’

⏰ You should aim to agree the vision for your repository within 45-60 mins (based on a group of up to 6 people).

Creating a plan to realise your vision

Having a vision alone isn't going to make your repository a success. You also need to establish a set of short-term objectives, which you can use to plan a series of activities to help you make progress towards this.