Present Your Data Like a Pro

- Joel Schwartzberg

Demystify the numbers. Your audience will thank you.

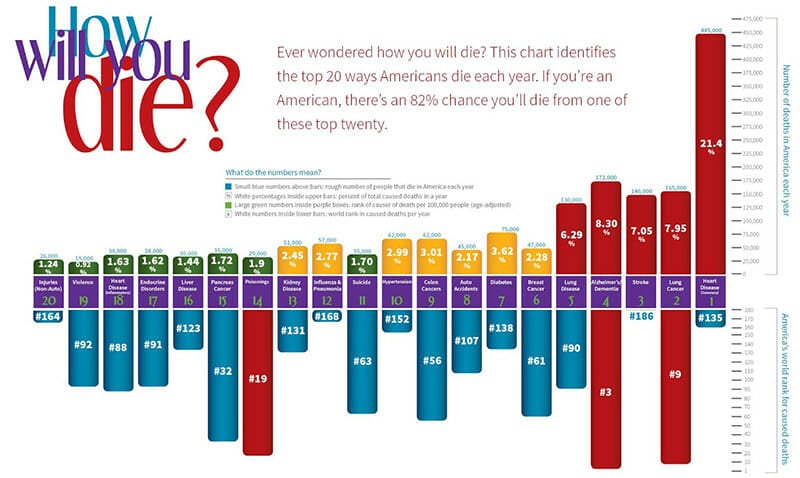

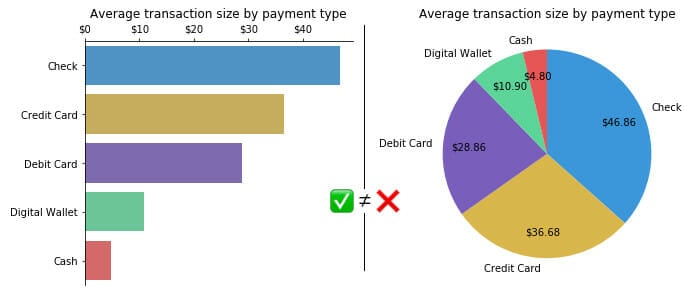

While a good presentation has data, data alone doesn’t guarantee a good presentation. It’s all about how that data is presented. The quickest way to confuse your audience is by sharing too many details at once. The only data points you should share are those that significantly support your point — and ideally, one point per chart. To avoid the debacle of sheepishly translating hard-to-see numbers and labels, rehearse your presentation with colleagues sitting as far away as the actual audience would. While you’ve been working with the same chart for weeks or months, your audience will be exposed to it for mere seconds. Give them the best chance of comprehending your data by using simple, clear, and complete language to identify X and Y axes, pie pieces, bars, and other diagrammatic elements. Try to avoid abbreviations that aren’t obvious, and don’t assume labeled components on one slide will be remembered on subsequent slides. Every valuable chart or pie graph has an “Aha!” zone — a number or range of data that reveals something crucial to your point. Make sure you visually highlight the “Aha!” zone, reinforcing the moment by explaining it to your audience.

With so many ways to spin and distort information these days, a presentation needs to do more than simply share great ideas — it needs to support those ideas with credible data. That’s true whether you’re an executive pitching new business clients, a vendor selling her services, or a CEO making a case for change.

- Joel Schwartzberg oversees executive communications for a major national nonprofit, is a professional presentation coach, and is the author of Get to the Point! Sharpen Your Message and Make Your Words Matter and The Language of Leadership: How to Engage and Inspire Your Team. You can find him on LinkedIn and on X as TheJoelTruth.


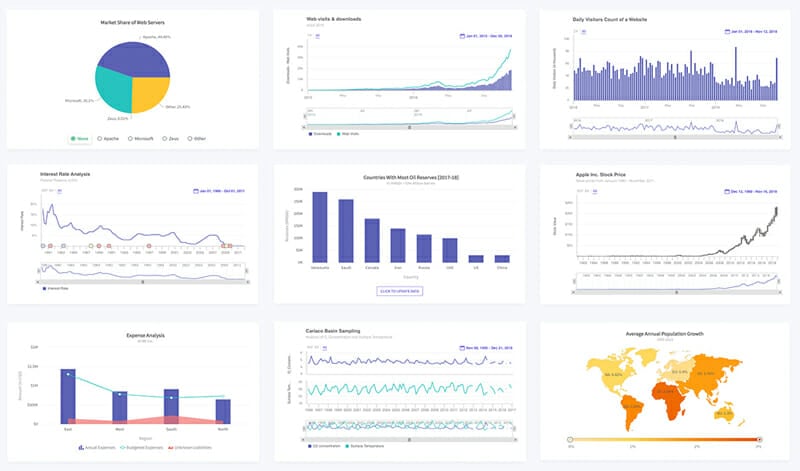

23 Best Data Visualization Tools of 2024 (with Examples)

If you are dissatisfied with what you have achieved with your current data visualization software and want to try a different one, you have come to the right place.

If you have never tried data visualization software and first want to understand how it works and what the market offers, you are also in the right place.

Through extensive research, we have compiled a list of the absolute best data visualization tools in the industry, ranging from free solutions to enterprise packages.

Read on to find the right fit for your needs, whether that is a JavaScript library, a tool built for non-programmers, an industry- or concept-specific product, or a fully featured mapping, charting, and dashboard solution.

What are Data Visualization Tools?

Data Visualization Tools refer to all forms of software designed to visualize data.

Different tools can contain varying features but, at their most basic, data visualization tools will provide you with the capabilities to input datasets and visually manipulate them.

Helping you showcase raw data in a visually digestible graphical format, data visualization tools can ensure you produce customizable bar, pie, Gantt, column, area, doughnut charts, and more.

When you need to handle datasets that contain up to millions of data points, you will need a program that will help you explore, source, trim, implement and provide insights for the data you work with.

A data visualization tool will enable you to automate these processes, so you can interpret information immediately, whether that is needed for your annual reports, sales and marketing materials, identifying trends and disruptions in your audience's product consumption, investor slide decks, or something else.

After you have collected and studied the trends, outliers, and patterns in data you gathered through the data visualization tools, you can make necessary adjustments in business strategy and propel your team closer to better results.

In addition, the more you can implement the valuable insights gained from the graphs, charts, and maps into your work, the more interested and adept you will become at generating intelligent data visualizations, and this loops back into getting actionable insights from the reports.

Through data visualization tools, you build a constructive feedback loop that keeps your team on the right path.

Comparison of Best Data Visualization Tools

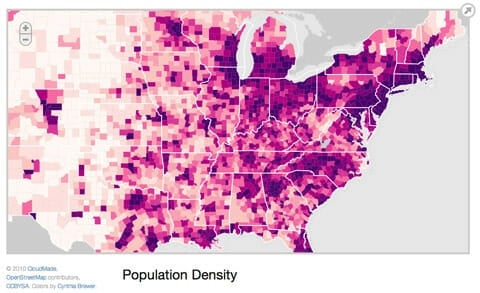

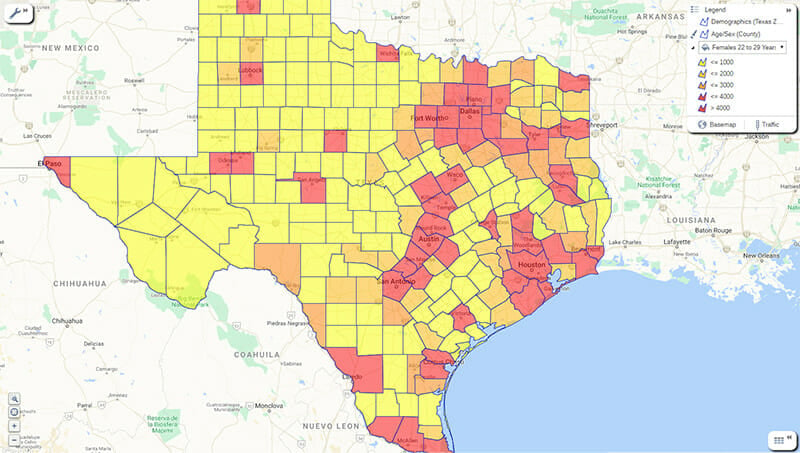

1. Tableau

Best data visualization software for creating maps and public-facing visualizations.

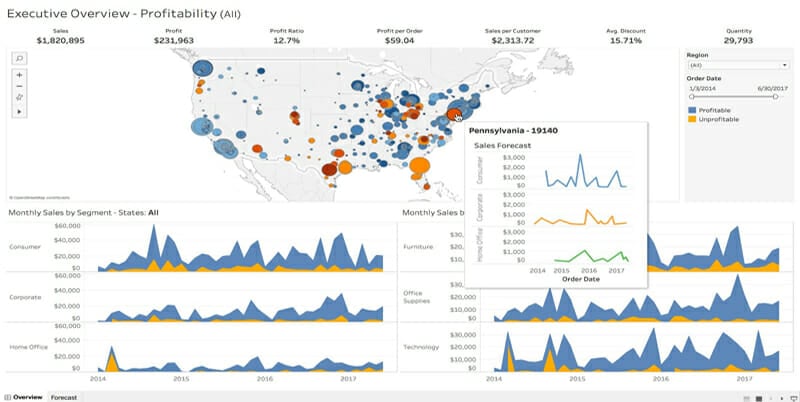

Available in several editions, including desktop, server, online, prep, and a free public option, Tableau provides an enormous collection of data connectors and visualizations.

Establishing connections to your data sources is straightforward, and you can import everything from CSV files, Google Ads and Analytics to Microsoft Excel, JSON, PDF files, and Salesforce data.

A variety of chart formats and a robust mapping capability ensure that the designers can create color-coded maps that show geographically important data in the most visually digestible way.

The tool offers a public version that is free for everyone to use. It helps you create interactive visualizations and connect to CSV, text, and statistical files, Google Sheets, web data connectors, and Excel documents.

Tableau Desktop can help you transform, process, and store huge volumes of data with exceptional analytics and powerful calculations from existing data, drag-and-drop reference lines and forecasts, and statistical summaries.

The Desktop option lets you connect to data on-prem or in the cloud, access and combine disparate data without coding, pivot, split, and manage metadata, and there is no limit to how much data it can store, process, or share.

Whereas Tableau Desktop is more suitable for analysts and BI professionals, Tableau Public is for anyone interested in understanding data and sharing those insights through data visualizations (students, journalists, writers, bloggers).

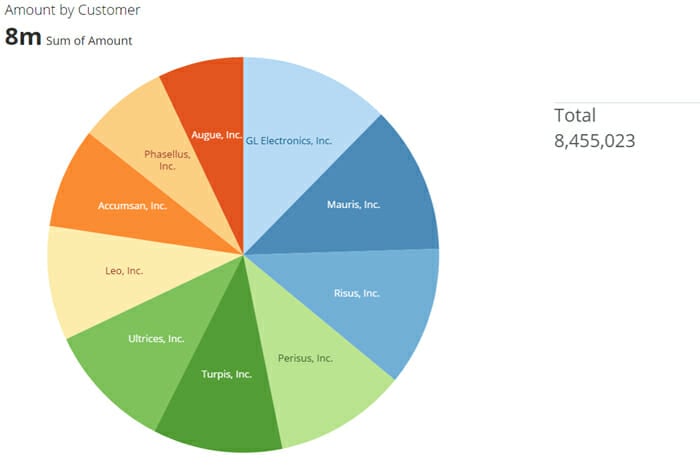

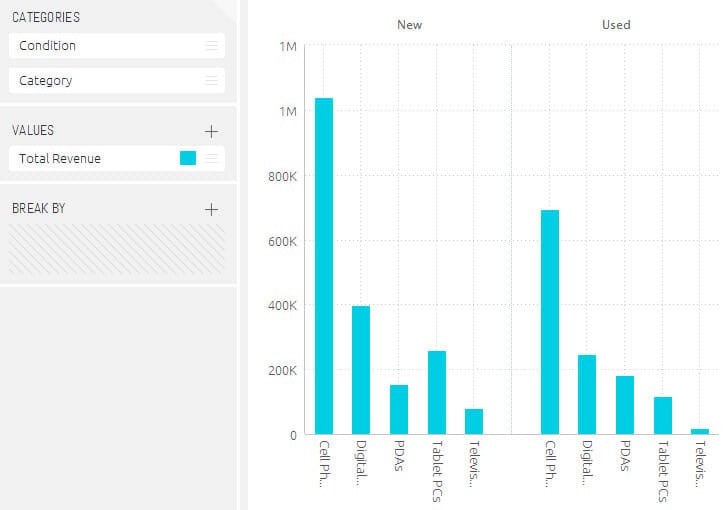

Data visualization example

You can purchase Tableau Creator for $70 per user per month.

- The tool comes in desktop, cloud, server, prep, online options

- Free public version

- Extensive options for securing data without scripting

- Convert unstructured statistical information into comprehensive logical results

- Fully functional, interactive, and appealing dashboards

- Arrange raw data into catchy diagrams

- Support for connections with many data sources, such as Hadoop, SAP, and DB Technologies

- More than 250 app integrations

With a user-friendly design and a substantial collection of data connectors and visualizations, Tableau will help you attain high performance with a thriving community & forum and mobile-friendly capacity.

2. Infogram

Fully-featured data visualization tool for non-designers and designers.

With more than 550 maps, 35 charts, and 20 predesigned templates, Infogram lets you prepare a fully responsive, professional presentation on any device.

You can import data from online or PC sources, and you can download infographics in HD quality, supporting multiple file types (PNG, JPG, PDF, GIF, HTML).

If the majority of your data sources are in Excel XLS, JPG, or HTML files, and you want them imported in Infogram as PDF files, you can do a little search and find the best PDF converters.

The drag-and-drop editor simplifies the process of creating effective visualizations for marketing reports, infographics, social media posts, and maps, allowing non-designers or people without much technical knowledge to generate slick-looking reports, one-pagers, and diagrams.

Interactive visualizations are perfect for embedding into websites and apps, and you can update new data to a chart periodically and automatically for updating dashboards, reports, presentations, live feeds, and articles through Infogram's live options.

The software allows you to upload CSV or XLS files from your Google Drive, Dropbox, or OneDrive accounts easily by connecting with the accounts, making any design changes, publishing, and embedding them.

Outside of the free Basic plan, the Pro subscription is $25 per month.

- 550+ map types and 35+ chart types

- Enhanced interactive charts and maps with tooltips, tabs, clickable legends, linking

- Object animations

- Move your graphics to social media, slide decks like Prezi, and other platforms easily

- Make changes on the fly without wholesale revisions

- View and restore earlier versions of your projects

- Generate 13 different reports on website traffic and automatically update the charts with data

- Facilitate top referring sites, mobile usage, number of pages per session, top keywords, and other reports through Google Analytics

- Publish your content on Facebook Instant Articles, Medium, or through WordPress

With a wide selection of chart types and map types that are easy to implement by anyone and a free plan that allows you to publish your content online, you will find Infogram is an excellent solution for downloading data, sharing it privately, and accessing beautiful templates and images.

3. ChartBlocks

Best data visualization tool for embedding charts on any website.

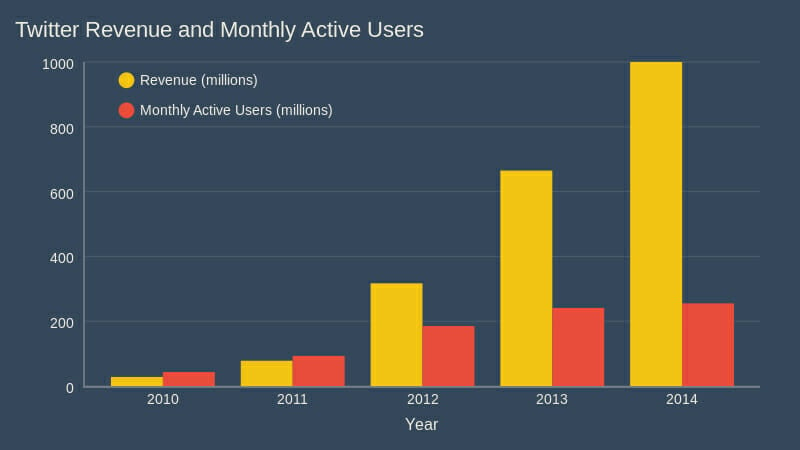

The cloud-based chart-building tool allows you to customize any charts and sync them with any data source, letting you share charts on social media websites, including Facebook and Twitter.

You can import data from any source using their API, including live feeds, with the chart building wizard helping you select the optimal data for your charts before importing on any device of any screen size.

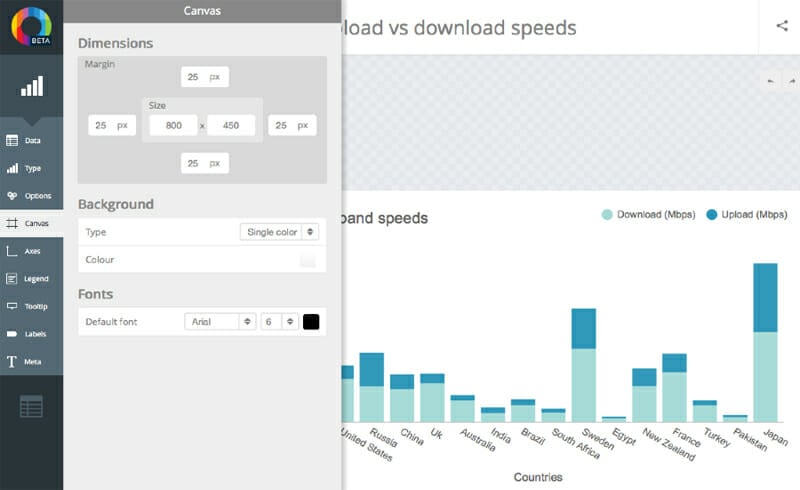

Control all aspects of your charts with hundreds of customization options, allowing you to configure everything from colors, fonts, and sizes to grids and number ticks on your axes.

ChartBlocks produces responsive HTML5 charts that work on any browser or device, and it uses D3.js to render your charts as scalable vector graphics, making them ready for retina screens and high-quality printed documents.

Grab the embed code and share the charts on your website, Twitter, Facebook, and other social media sites.

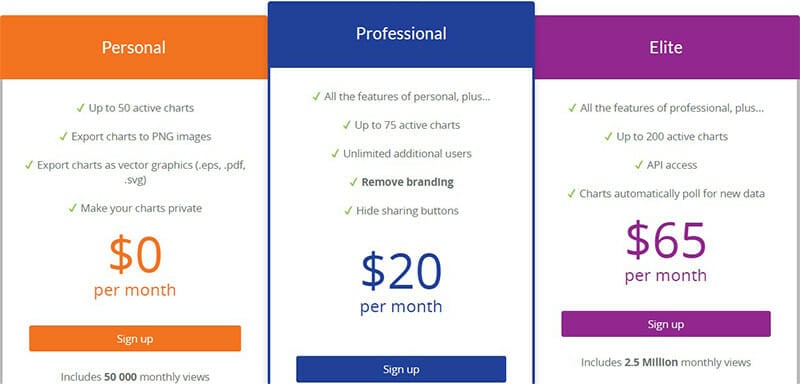

The Personal plan is $0 per month, and you can scale up to the Professional subscription for $20.

- HTML5 charts that work on any browser, device, and screen size

- Import data from spreadsheets, databases, and live feeds

- Pick the right data for your chart with the chart building wizard

- Design all elements of your charts with hundreds of customization options

- Embed your charts on websites, articles, and across social media

- Set up scheduled imports in the ChartBlocks app

- Optimize your charts for retina screens and high-quality printed documents through D3.js

- Export charts to PNG images

- Export charts as vector graphics (eps, PDF, SVG)

- Remove branding for $20 per month

Helping you create charts on any device and any screen size along with optimizing the charts for high-quality prints, ChartBlocks can offer up to 50 active charts for free for up to 50K monthly views.

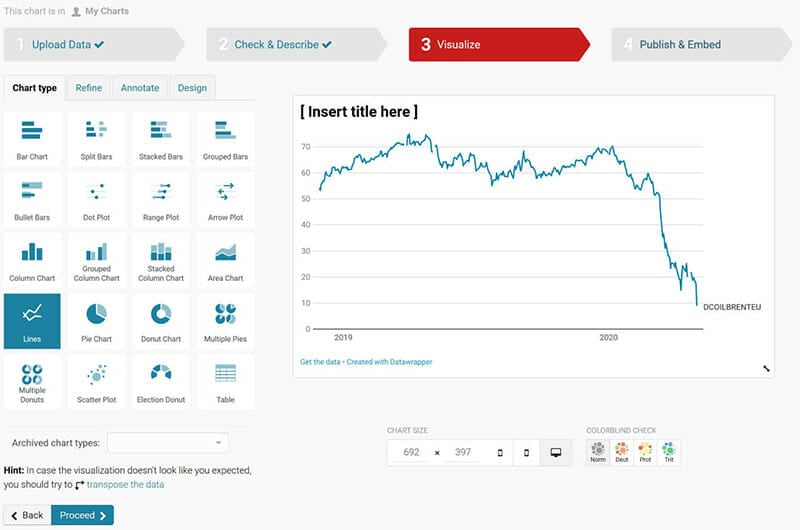

4. Datawrapper

Best data visualization software for adding charts and maps to news stories.

Created specifically for adding charts and maps to news stories, Datawrapper is an open-source tool that supports Windows, Mac, and Linux and enables you to connect your visualization to Google Sheets.

Select one of 19 interactive and responsive chart types, ranging from simple bars and lines to arrow, range, and scatter plots, or one of three map types: locator maps, thematic choropleth maps, and symbol maps.

Table capabilities provide you with a range of styling options for the responsive bars, columns & line charts, heatmaps, images, search bars & pagination.

Copy your data from the web, Excel, or Google Sheets, upload CSV/XLS files, or provide links to URLs or Google Sheets for live-updating charts.

Copy the embed code into your CMS or website to access the interactive version, or export the chart as a PNG, SVG, or PDF for printing.

Outside the free plan, which offers unlimited visualizations, you can purchase the Custom plan for $599 per month.

- 19 interactive and responsive charts and 3 map types

- No limits to charts, maps, and tables you can create in the free plan

- All visualizations are private until you activate the publish capability

- Utilize shared folders, Slack & Team integrations, and admin permissions

- Datawrapper will design a custom chart theme according to the style guides you send

- Export all visualizations as PNG, SVG, PDF formats

- Update charts and tables automatically without republishing through live updating

- Access print-ready PDFs with defined CMYK colors

Datawrapper will create finished visualizations similar to those in the New York Times, with tons of stylization options and practicality for creating graphics and web maps that you can easily copy and share.

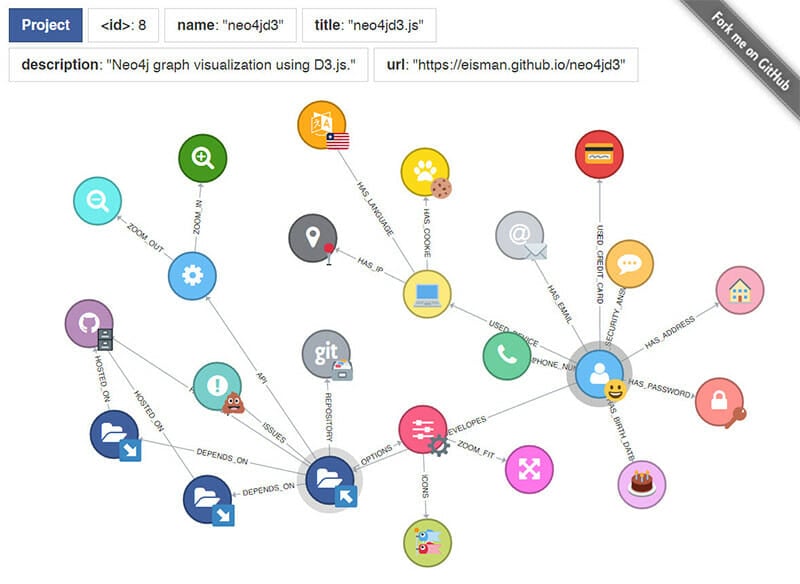

5. D3.js

JavaScript Library for Manipulating Documents through Data with Reusable Charts.

D3.js is a JavaScript library for manipulating documents based on data, helping you bring data to life using HTML, SVG, and CSS.

The tool is extremely fast and it supports large datasets and dynamic behaviors for interaction and animation, enabling you to generate an HTML table from an array of numbers or use the same data to create an interactive SVG bar chart with smooth transitions and interaction.
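To make the "same data, two outputs" idea concrete, here is a minimal sketch in plain JavaScript. It deliberately does not use D3.js or its API; it only illustrates mapping one array of numbers to an HTML table and to SVG bars. The function names and sample values are hypothetical, and the bar scaling assumes non-negative numbers.

```javascript
// Plain-JavaScript illustration of turning data into markup.
// This is NOT the D3.js API; all names here are hypothetical.

// Build an HTML table with one row per number.
function numbersToHtmlTable(values) {
  const rows = values.map((v) => `<tr><td>${v}</td></tr>`).join("");
  return `<table>${rows}</table>`;
}

// Build an SVG bar chart from the same numbers: one <rect> per value,
// scaled so the largest value fills the chart height.
// Assumes non-negative values.
function numbersToSvgBars(values, width = 200, height = 100) {
  const max = Math.max(...values) || 1; // fall back to 1 when every value is 0
  const barWidth = width / values.length;
  const bars = values
    .map((v, i) => {
      const h = (v / max) * height;
      // Leave a 2-unit gap between neighbouring bars.
      return `<rect x="${i * barWidth}" y="${height - h}" width="${barWidth - 2}" height="${h}"/>`;
    })
    .join("");
  return `<svg xmlns="http://www.w3.org/2000/svg" width="${width}" height="${height}">${bars}</svg>`;
}

// Made-up sample data.
const sampleNumbers = [4, 8, 15, 16];
console.log(numbersToHtmlTable(sampleNumbers));
console.log(numbersToSvgBars(sampleNumbers));
```

A library like D3.js adds what this sketch leaves out, such as updating existing elements when the data changes and animating the transition between states.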

While the platform requires some JavaScript knowledge, there are apps like NVD3 that allow non-programmers to utilize the library, providing reusable charts and chart components without taking away the power of D3.js.

Other similar apps include Plotly's Chart Studio that enables you to import data, compose interactive charts, publish static, printed versions or share interactively.

Ember Charts lets you extend and modify the time series, bar, pie, and scatter charts within the D3.js frameworks.

D3.js is free.

- Free, open-source, customizable

- Extremely fast and supportive of large datasets

- Generate HTML tables from numbers

- Create interactive SVG bar charts with smooth transitions and interaction

- Non-programmers can create complex visualizations

- A diverse collection of official and community-developed modules allows code reuse

- Utilize NVD3, Plotly's Chart Studio, and Ember Charts to get the most out of D3.js's library without coding

Emphasizing web standards, D3.js will help you create high-quality visualizations quickly and share them on the web without anyone having to install any software to view your work.

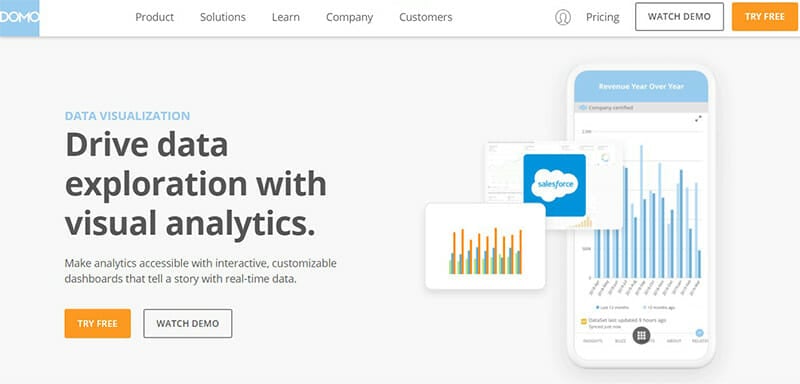

6. Domo

Best Data Visualization Software for Companies with BI Experience.

Domo is a cloud platform that has a powerful BI tool with a lot of data connectors and a robust data visualization capability that helps you conduct analysis and generate interactive visualizations.

The app helps you simplify data administration and examine important data using graphs and pie charts. Its engine lets you run ETL operations and cleanse data after loading, with no limit on how much data you can store.

With more than 450 available connectors, some of which are accessible by default and others after requesting URLs from the support team, Domo is highly flexible, and it lets you load locally stored CSV files easily.

Explore data in the interactive format through the data warehouse functionality, and conduct data prep, data joining, and ETL tasks.

Access more than 85 different visualizations, create and customize cards and pages, handling everything from text editing and single-data points to creating the apps for the app store.

You need to contact Domo's sales team for a personalized quote.

- Limitless data storage and an extensive range of connectors

- Create advanced charts and maps with filters and drill-downs

- Guide people through analysis with interactive data stories by combining cards, text, and images

- Ensure the teams can self-service while governing access to data

- Refine data with data points, calculated fields, and filters

- Annotate chart data for further commentary

- Define how cards on a dashboard interact with custom links and filters

- Dashboards with KPIs for retail, marketing, data science through different apps

- Encrypt your data with the Workbench tool or use an on-premise VM with Domo querying engine behind your firewall

Domo's powerful BI tool with a lot of data connectors isn't suited for newcomers and is best-suited for businesses that have BI experience that will benefit from the tool's excellent sharing features, limitless data storage, and terrific collaboration capabilities.

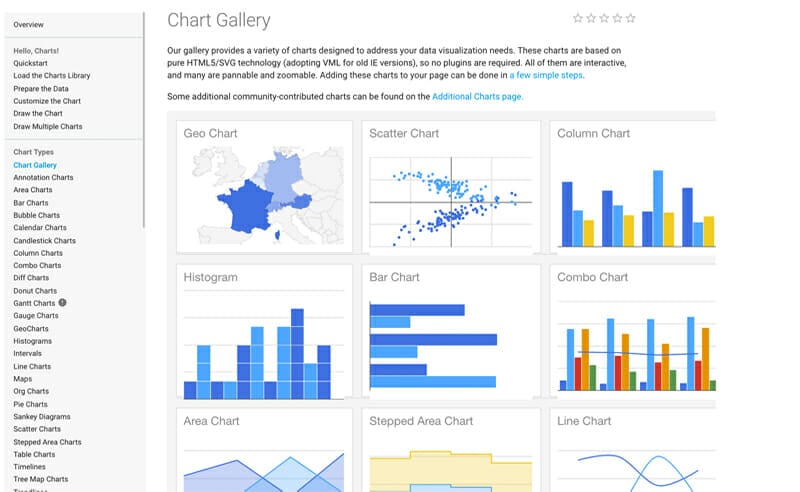

7. Google Charts

Best data visualization tool for creating simple line charts and complex hierarchical trees.

The powerful and free data visualization tool Google Charts is specifically designed for creating interactive charts that communicate data and points of emphasis clearly.

The charts are embeddable online, and you can select the most fitting ones from a rich interactive gallery and configure them according to your taste.

Supporting HTML5 and SVG output, Google Charts works in browsers without additional plugins and can pull data from Google Spreadsheets, Google Fusion Tables, Salesforce, and SQL databases.

Visualize data through pictographs, pie charts, histograms, maps, scatter charts, column and bar charts, area charts, treemaps, timelines, gauges, and many more.

Google Charts is free.

- Rich interactive chart gallery

- Cross-browser compatibility

- Dynamic data support

- Combo, calendar, candlestick, diff, gauge, Gantt, histogram, interval, org, scatter, and stepped area charts

- Animate modifications made to a chart

- Draw multiple charts on one web page

- Compatible with Android and iOS platforms

Google Charts is a free data visualization platform that supports dynamic data, provides you with a rich gallery of interactive charts to choose from, and allows you to configure them however you want.

8. FusionCharts

Best data visualization tool for building beautiful web and mobile dashboards.

FusionCharts is a JavaScript-based solution for creating web and mobile dashboards that can integrate with popular JS frameworks like React, jQuery, Ember, and Angular and server-side programming languages like PHP, Java, Django, and Ruby on Rails.

The tool equips you with 100+ interactive chart types and 2,000+ data-driven maps, including popular options like the bar, column, line, area, and pie, or domain-specific charts like treemaps, heatmaps, Gantt charts, Marimekko charts, gauges, spider charts, and waterfall charts.

In addition to these, FusionCharts provides 2K+ choropleth maps that cover countries and even cities, and the powerful engine supports millions of data points in your browser with no glitches.

Generate charts on the server-side, export the dashboards as PDFs, send reports via email, and FusionCharts will have you covered.

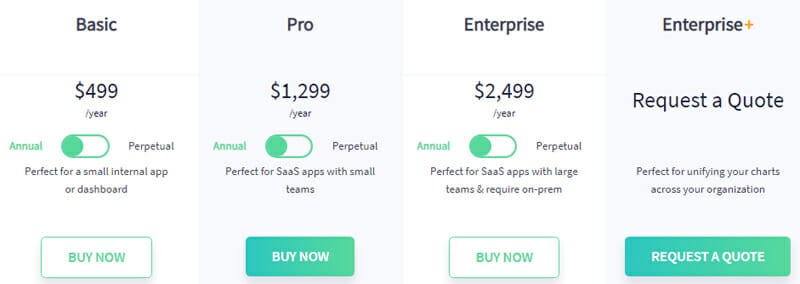

The Basic plan is $499 per year.

- Integrates with popular JS frameworks and server-side programming languages

- 100+ interactive chart types and 2K+ data-driven choropleth maps

- Construct complex charts through dashboards easily with consistent API

- Plot your crucial business data by regions with over 2,000 choropleth maps

- Common charts are supported on older browsers

- Comprehensive documentation for each library or programming language

- Ready-to-use chart examples, industry-specific dashboards and data stories with source codes

With extensive documentation, cross-browser support, and a huge number of chart and map format options, FusionCharts will allow you to build beautiful dashboards for your web and mobile projects while keeping even the most complex charts performing on a high level with consistent API.

9. Chart.js

Simple and flexible data visualization software for including animated, interactive graphs on your website.

Chart.js is a simple and flexible JavaScript charting library that provides eight chart types in total and allows animation and interaction.

Using the HTML5 Canvas for output, Chart.js renders charts across all modern browsers effectively.

You can mix and match bar and line charts to provide a clear visual distinction between datasets, and plot complex, sparse datasets on date-time, logarithmic, and fully custom scales.
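As a rough illustration of mixing bar and line datasets on a logarithmic scale, the sketch below builds a configuration object in the shape Chart.js expects, assuming Chart.js version 3 or later. The labels and numbers are made up, and the canvas id `sales` is a hypothetical name. The `new Chart(...)` call is left as a comment because it needs a browser page with Chart.js loaded.

```javascript
// Configuration for a mixed bar + line chart.
// Sample values are made up for illustration.
const mixedChartConfig = {
  type: "bar", // default type for datasets that do not set their own
  data: {
    labels: ["Jan", "Feb", "Mar", "Apr"],
    datasets: [
      // Each dataset can declare its own chart type.
      { type: "bar", label: "Units sold", data: [12, 190, 30, 1500] },
      { type: "line", label: "Target", data: [50, 100, 200, 1000] },
    ],
  },
  options: {
    scales: {
      // A logarithmic y-axis keeps small and large values readable together.
      y: { type: "logarithmic" },
    },
  },
};

// In a browser page where Chart.js is loaded and a <canvas id="sales"> exists
// (both are assumptions), the chart would be created like this:
// new Chart(document.getElementById("sales"), mixedChartConfig);

console.log(mixedChartConfig.data.datasets.map((d) => d.type));
```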

Animate anything with out-of-the-box stunning transitions for data changes.

Chart.js is free.

- The learning curve is almost non-existent

- Compatible with all screen sizes

- Modernized, eye-catching, and pleasing graphs

- Open-source and free

- Visualize your data through 8 different animated, customizable chart types

- Continuous rendering performance across all modern browsers through HTML5 Canvas

- Mix and match bar and line charts for a clear visual distinction between datasets

- Plot complex, sparse datasets on date time, logarithmic, and entirely custom scales

- Redraw charts on window resize

Not only are the Chart.js graphs easy to digest and eye-catching, but the tool allows you to combine different graph forms to translate data into a more tangible output and add numerical JSON data into the Canvas for free.

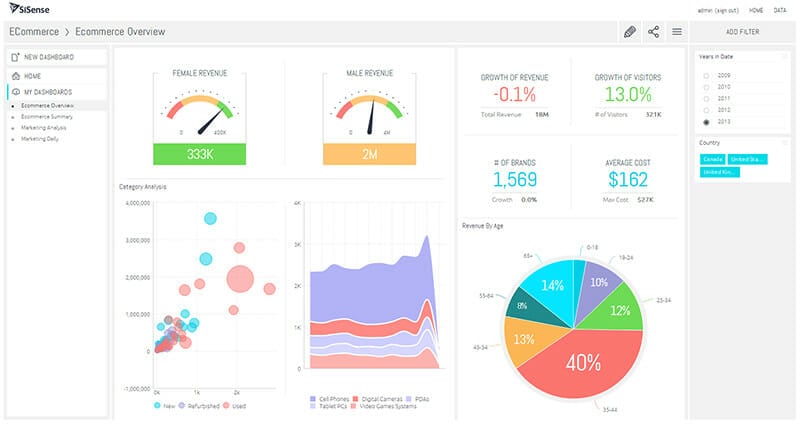

10. Sisense

#1 data visualization tool for simplifying complex data from multiple sources.

Crunch large datasets and visualize them with beautiful pictures, graphs, charts, maps, and more from a single dashboard.

One of the best data visualization tools that can help you transform data into actionable, applicable components or visualizations, Sisense lets you simplify data analysis by unlocking data from the cloud and on-prem and embed analytics anywhere with a customizable feature.

Create custom experiences and automated multi-step actions to accelerate workflows and integrate AI-powered analytics into workflows, processes, applications, and products.

You can preview and mash up a couple of data sources before adding them to your schema.

Instead of valuing visualizations for the number of designs and formats it offers, Sisense places the emphasis on the depth of insights the charts expose, providing multidimensional widgets that render interactive visualizations and generate a ton of insights by scrolling the mouse over them or clicking on different sections.

With no subscription plans displayed publicly, you will need to request a quote.

- Assemble and share dashboards

- Crunch large datasets and visualize them through graphs, charts, maps, and pictures

- Transform raw data into actionable, applicable components for visualizations

- Preview and mash up multiple data sources before adding them to your schema

- Enable self-service analytics for your customers code-free

- Advanced predictive intelligence and natural language querying

- Leverage robust embedding capabilities from iFrames to APIs and SDKs

- Pull in data from eBay, Facebook, Quickbooks, PayPal

- Leverage cached data for minimized query costs

- Resolve bottlenecks with in-chip processing

Appealing to seasoned BI users with its comprehensive features, Sisense will help you mash up data and create an analytics app, deploy your work on the cloud, recover your data and safeguard against errors, and help you export data to Excel, CSV, PDF.

11. Workday Adaptive Planning

#1 data visualization tool with the best planning, budgeting, and forecasting capabilities.

Workday's Adaptive Planning data visualization tool is designed to boost your business by helping you make more lucrative decisions, allowing you to plan, budget, and forecast while evaluating multiple scenarios across large datasets.

Collaborate through the web, mobile, or Excel and deliver stunning reports in minutes while quickly optimizing sales resources, increasing rep performances, and improving predictability.

Create dashboards that integrate your actuals and plans, easily manage models and forecasts across integrated data sources, and always extract real-time data.

Automate data consolidation from all sources, and use flexible modeling that lets you build on the fly, adjusting the dimensions if needed.

Making the right decisions based on the insights gathered through a comprehensive budgeting and forecasting tool like Workday Adaptive Planning will be more effective when implemented hand in hand with Net Present Value calculation for fostering a more budget-optimized workspace and better investment decisions.
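For readers unfamiliar with the Net Present Value calculation mentioned above, here is a minimal sketch. It assumes the standard textbook formula, in which each cash flow is divided by (1 + rate) raised to its period index, with period 0 being today. The function name and the sample cash flows are hypothetical and are not taken from Workday Adaptive Planning.

```javascript
// Net Present Value: the sum of each cash flow discounted back to today.
// cashFlows[0] is the amount at period 0 (usually a negative investment).
function netPresentValue(rate, cashFlows) {
  return cashFlows.reduce(
    (total, cashFlow, period) => total + cashFlow / Math.pow(1 + rate, period),
    0
  );
}

// Made-up example: invest 1,000 today, receive 500 in each of the next 3 periods,
// discounted at 10% per period.
const exampleNpv = netPresentValue(0.1, [-1000, 500, 500, 500]);
console.log(exampleNpv.toFixed(2));
```

A positive result suggests the discounted inflows outweigh the initial outlay at the chosen rate; a negative one suggests the opposite.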

Before starting your free trial and receiving a quote, you will need to write a request to the support team.

- Create dashboards that integrate your actuals and plans

- Drag-and-drop report building features

- Create rep capacity plans to meet topline bookings targets

- Deploy the right quotas and set up balanced territories

- Collaborate on what-if scenarios

- Access audit trails to see what changed, where, and who did it

- Export operational data from GL, payroll, purchasing

When the planning process is collaborative, comprehensive, and continuous, as it is with Adaptive Planning, the tools and information the software provides for quickly building complex dashboards are easy to deploy.

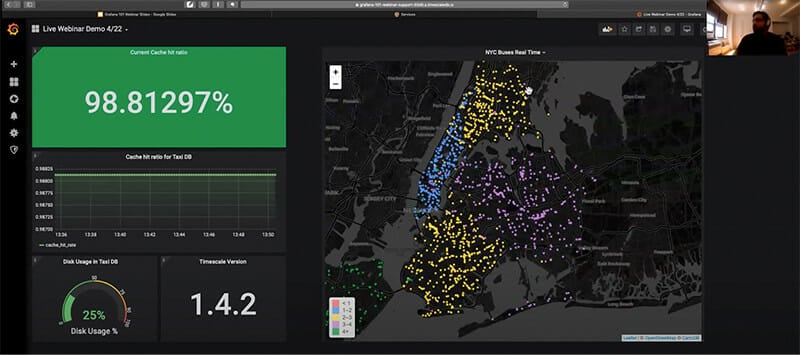

12. Grafana

Open-source data visualization tool for integrating with all data sources and using the smoothest graphs.

In Grafana, you can package and present information through a variety of chart types, and few visualization tools make building dynamic dashboards simpler.

Grafana's open-source data visualization software allows you to create dynamic dashboards and other visualizations.

You can query, visualize, alert on, and understand your metrics no matter where they are stored, and deploy data source permissions, reporting, and usage insights.

Extract data from mixed data sources, apply annotations and customizable alert functions, and extend the software's capabilities via hundreds of available plugins.

Share snapshots of dashboards and invite other users to collaborate through the export functions.

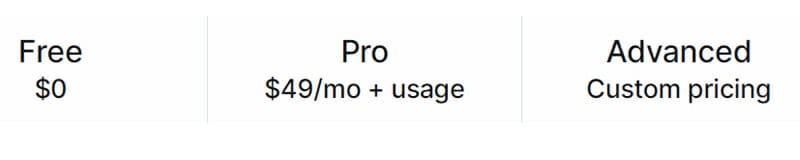

Outside of the Free plan, you can purchase the Pro subscription for $49 per month + usage.

- Creating dynamic dashboards is easy

- Variety of chart types and data sources

- Support for mixed data feeds

- Access for up to 3 members in the Free plan

- Query, visualize, alert on, and understand your metrics

- Data source permissions

- Usage insights

- Apply annotations

- Hundreds of plugins

- Share snapshots of the dashboard

One of the best tools for monitoring and alerting, Grafana allows you to write queries to create graphs and alerts, integrate with almost all cloud platforms, and invite other users to collaborate for free.

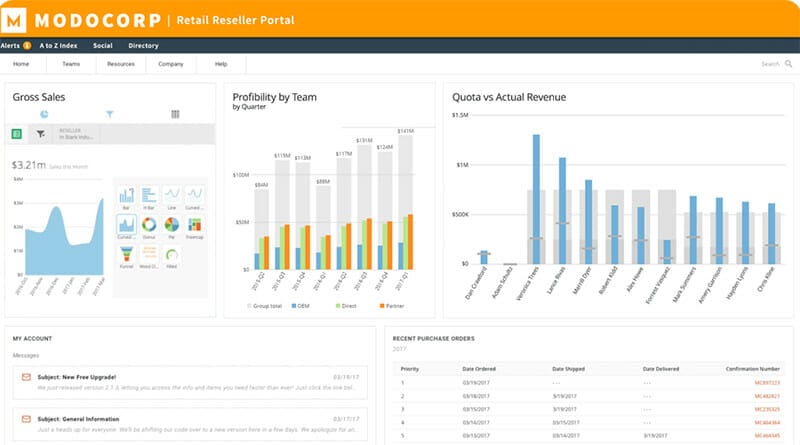

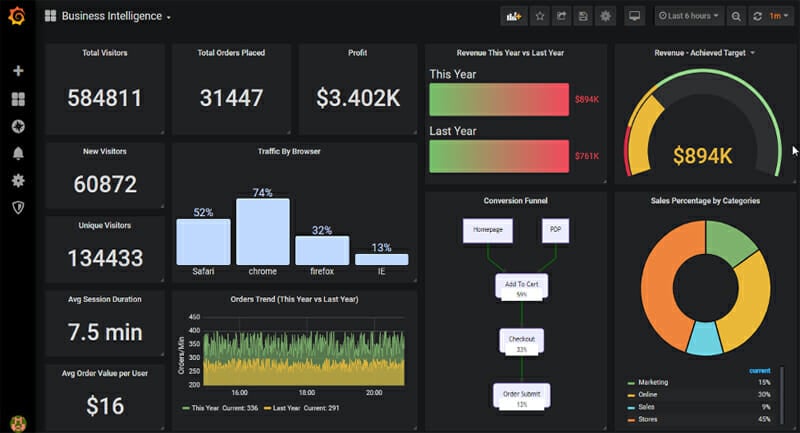

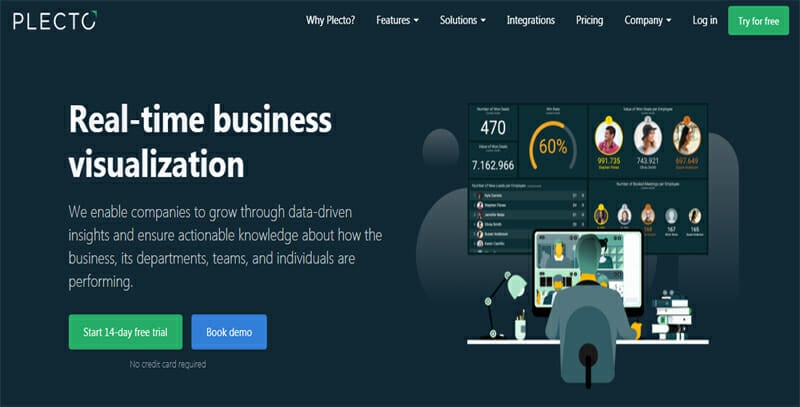

13. Plecto

Best Data Visualization Software to Motivate and Engage Your Employees to Perform Better through Dashboards.

By visualizing performance indicators openly and engagingly, Plecto helps keep your team's morale at a high level and motivates your employees to keep improving.

Plecto allows you to integrate with an unlimited number of data sources, and you can even import data from different sources and filter these across sources.

Visualize your most important KPIs on real-time dashboards and engage your team with the addictive gamification features, sales contests, leaderboards, and instant notifications.

Add data through Excel, SQL, Zapier, or Plecto's REST-based API, display your Plecto account on a TV, and access your dashboard on the go through mobile apps for Android, iPhone, and Apple Watch.

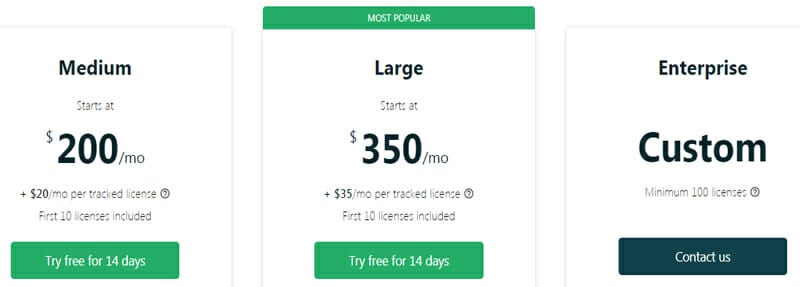

The Medium subscription starts at $250 per month when billed monthly.

- Provide data-driven, actionable knowledge about the business, departments, and individuals' performances

- Motivate your team to keep improving through gamification

- Integrate with an unlimited number of data sources

- Import data from different sources and apply filters

- Engage your team through sales contests, leaderboards, and instant notifications

- Add data through Excel, SQL, Zapier, or the software's REST-based API

- Access your Plecto account via TV or through the Android, iPhone, and Apple Watch apps

Plecto will allow the teams and individuals to keep progressing and provide teams with actionable, data-driven knowledge delivered through encouraging gamification practices while connecting them with one of 50+ pre-built integrations or public API.

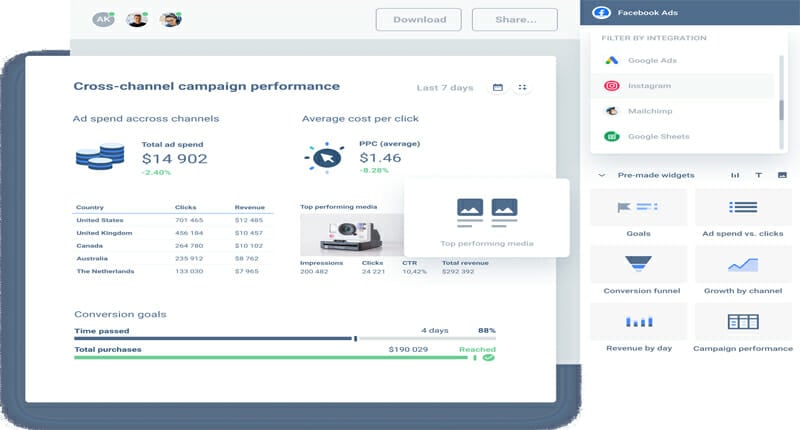

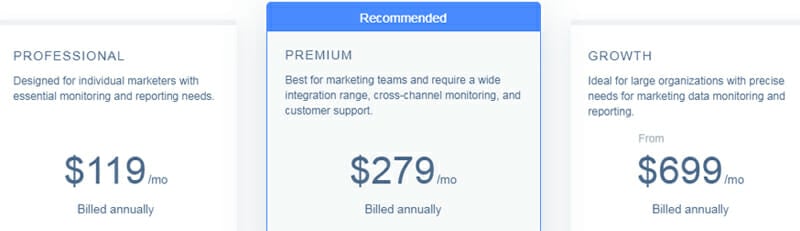

14. Whatagraph

Best data visualization tool for monitoring and comparing performances of multiple campaigns.

The Whatagraph application allows you to transfer custom data from Google Sheets and API.

Commonly used by marketing professionals for visualizing data and building tailored cross-channel reports, Whatagraph is the best tool for performance monitoring and reporting.

Blend data from different sources and create cross-channel reports so you can compare how the same campaign is performing across different channels.

Create custom reports or use the pre-made widgets and ready-made report templates for different marketing channels like SEO, PPC, and social media, then share links with your colleagues so they can access the reports at all times.

Choose from 30+ integrations that include Facebook Ads, Google Analytics, HubSpot, and more.

The Professional plan will cost you $119 per month.

- Monitor and compare performances of multiple channels and campaigns

- Customize the reports with brand colors, logos, custom domains

- Add custom data with Google Sheets and Public API integrations

- Blend data from different sources and create cross-channel reports

- Ready-made templates for different marketing channels

- Google Analytics, Google Ads, Facebook Ads, Instagram, Twitter, Linkedin, Simplifi, and more integrations

- Automatically deliver reports to clients

Whatagraph allows you to style your reports according to your preferences, monitor and compare performances across multiple campaigns and channels, and blend data from different sources for cross-channel reports.

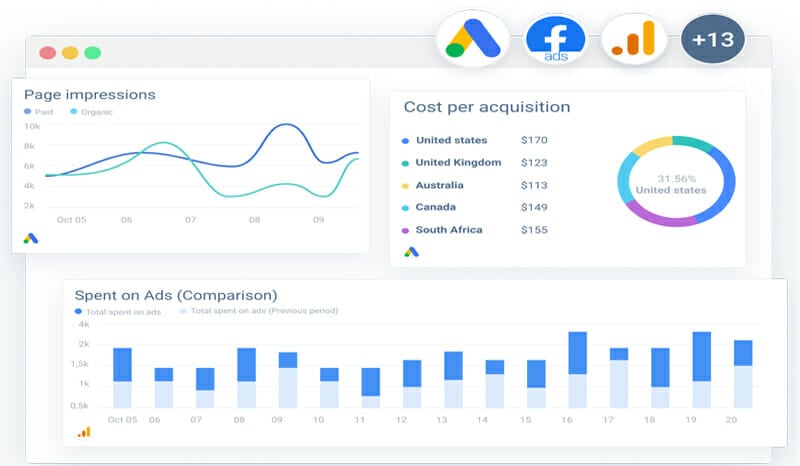

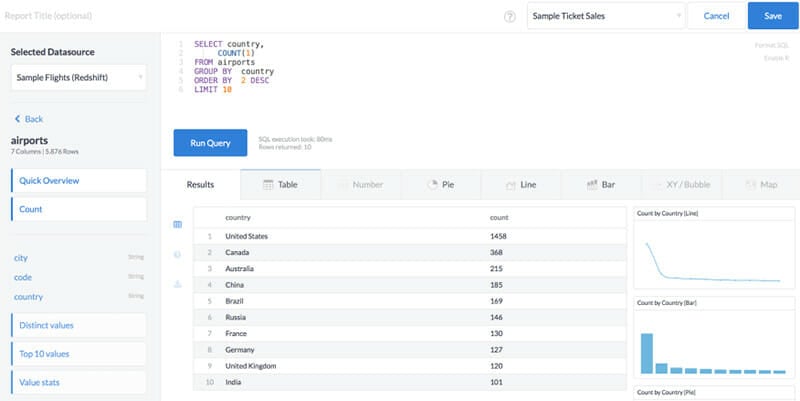

15. Cluvio

Best-in-Class Data Visualization Software for Running SQL Queries.

Cluvio lets you use SQL and R to analyze your data and create appealing, interactive dashboards in a few minutes.

Translate your raw data into numerous professional charts and graphs, and share your dashboard with clients and colleagues without a mandatory log-in.

Cluvio can also share dashboards and reports on a schedule via email (as an image, PDF, Excel, or CSV file), prompting recipients to review the information, get a regular snapshot, or start a conversation.

Cluvio's customer service team is also worth mentioning: many users praise it as fast, informative, accurate, and helpful.

Outside of the Free plan, Cluvio's Pro plan is $249 per month.

- Change aggregation, select a specific time range, and filter dashboards by any individual attributes

- Code completion, syntax highlighting, and parameterized queries in the SQL editor

- Turn your code into reusable snippets

- Monitor data in real-time with SQL alerts

- Automatic suggestions for best data visualization practices

- Run custom R scripts

- Invite an unlimited number of employees in the Pro and Business plans

Not only does Cluvio offer a free plan with three dashboards and 1,000 query executions, but the software comes with complete monitoring and sharing capabilities while allowing you to dig deeper into your statistical analysis and extract more value through SQL and R queries.
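The parameterized queries and time-range filters mentioned above can be illustrated with any SQL engine. This is a minimal sketch using Python's built-in `sqlite3` module rather than Cluvio's own editor; the `orders` table, its columns, and the sample rows are assumptions made up for the example.

```python
import sqlite3

# In-memory database with a hypothetical orders table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (region TEXT, order_date TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        ("EU", "2024-01-05", 120.0),
        ("EU", "2024-02-10", 80.0),
        ("US", "2024-01-20", 200.0),
    ],
)


def revenue_by_region(connection, start_date, end_date):
    """Aggregate revenue per region for a date range passed as parameters."""
    query = """
        SELECT region, SUM(amount) AS revenue
        FROM orders
        WHERE order_date BETWEEN :start AND :end
        GROUP BY region
        ORDER BY region
    """
    # Named placeholders keep user input out of the SQL text itself.
    return connection.execute(query, {"start": start_date, "end": end_date}).fetchall()


january = revenue_by_region(conn, "2024-01-01", "2024-01-31")
print(january)
```

Because the date range is a parameter rather than part of the query string, the same query can back a dashboard filter without being rewritten for each selection.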

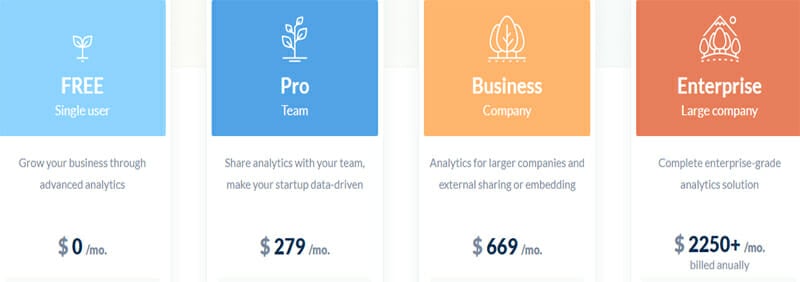

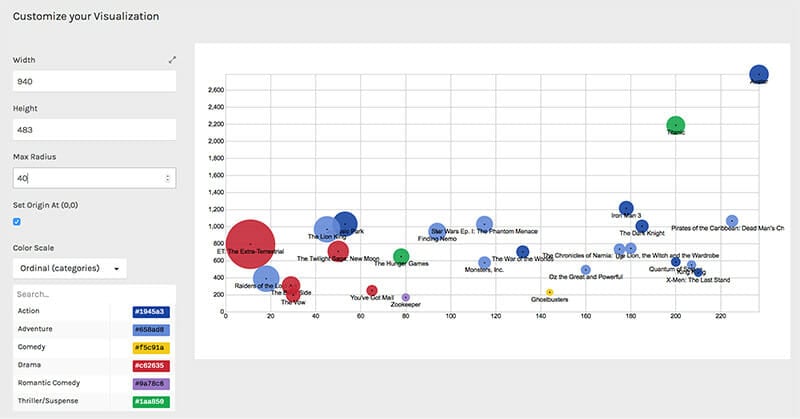

16. RAWGraphs

Best data visualization tool for simplifying complicated data through striking visual representation.

RAWGraphs lets you quickly generate data visualizations from data uploaded as XLSX or CSV files, loaded from a URL, or pasted from a spreadsheet.

The software lets you map your data to a chart, customize the result with additional parameters, and export it as an SVG or PNG image.

You can work with delimiter-separated values (CSV and TSV files), as well as text copied and pasted from other applications (TextEdit, Excel) and data from CORS-enabled endpoints (APIs).

Data here is processed only by the web browser, and the charts are available in conventional and unconventional layouts.

RAWGraphs is a free, open-source tool.

- Work with CSV, TSV files, copy-paste texts from other applications, and APIs

- Receive visual feedback after mapping dataset dimensions

- Export visualizations as SVG and PNG images and embed them on your web page

- No server-side processing or storage, so no one else can see or copy your data

- Unconventional charts that are hard to produce with other tools

- Simple pie and column charts

- Map the dimensions of your datasets with the visual variables of the selected layouts

- Open the visualizations in your favorite vector graphics editor and improve them

Designed as a tool to provide the missing link between spreadsheet applications (Microsoft Excel, Apple Numbers, OpenRefine) and vector graphics editors (Adobe Illustrator, Inkscape, Sketch), RAWGraphs will help you simplify complex data through powerful visualizations.
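The two steps described for RAWGraphs, reading delimiter-separated text and mapping dataset dimensions to visual variables, can be sketched in a few lines. This is an illustration only, not RAWGraphs code: the sample data, the column names, and the helper functions `parse_delimited` and `map_dimensions` are hypothetical.

```python
import csv
import io

# Hypothetical tab-separated data, e.g. pasted from a spreadsheet.
TSV_TEXT = "country\tgdp\tpopulation\nA\t1.5\t10\nB\t3.0\t25\n"


def parse_delimited(text, delimiter):
    """Parse delimiter-separated text into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(text), delimiter=delimiter))


def map_dimensions(rows, mapping, numeric=("x", "y", "size")):
    """Map dataset columns onto visual variables (mapping: visual -> column)."""
    marks = []
    for row in rows:
        mark = {}
        for visual, column in mapping.items():
            value = row[column]
            # Positions and sizes must be numbers; labels stay as text.
            mark[visual] = float(value) if visual in numeric else value
        marks.append(mark)
    return marks


rows = parse_delimited(TSV_TEXT, delimiter="\t")
marks = map_dimensions(rows, {"label": "country", "x": "gdp", "size": "population"})
print(marks)
```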

17. Visually

Fast and affordable data visualization solution for infographics and interactive websites.

Visually is a data visualization and infographics platform that will help you turn your data into a compelling story, allowing you to convert your numbers into image-based visualizations and streamline the product design processes.

To create your memorable data visualizations, Visually's team will handpick from a selection of 1,000 of the best data journalists, designers, and developers to deliver your designs in record time.

Collaborate with world-class designers to create infographics that stand out, with the software keeping you in direct contact with your creative team and assigning you a dedicated rep to be at your disposal during the streamlining of the production.

Create presentations and slideshows that leave long-lasting impressions with a normal delivery time of 19 days.


You will need to submit a request to get your quote.

- Create world-class presentations and slideshows and attention-grabbing infographics

- Usual delivery time of 16 days for infographics and 19 days for slideshows and presentations

- Corporate reports, sales decks, and slideshares for startups, Fortune 50 companies

- Share content on social media channels

- Collaboration with premium data journalists, designers, and developers

- Convert your numbers into image-based visualizations

- Keep direct contact with the creative team during the infographic and presentation production process

Visually creates content designed to grab attention on social media and convey your message in a captivating way. It connects you with experienced data journalists, designers, and developers and keeps you in direct contact with them throughout production.

18. Looker

#1 Data Visualization Tool for Converting Data into Useful Diagrams.

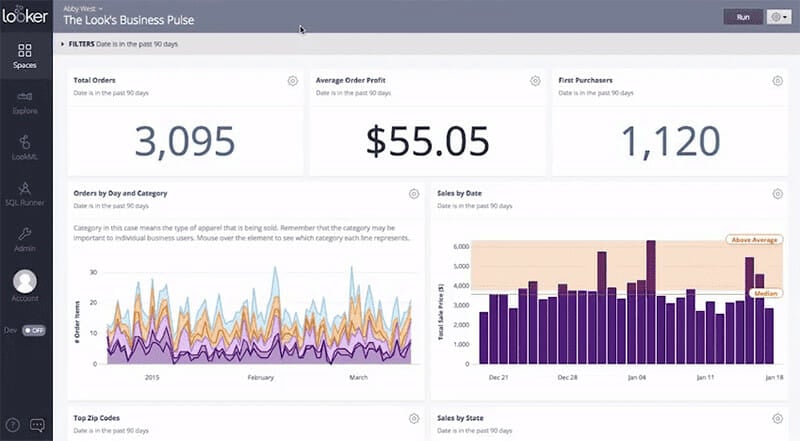

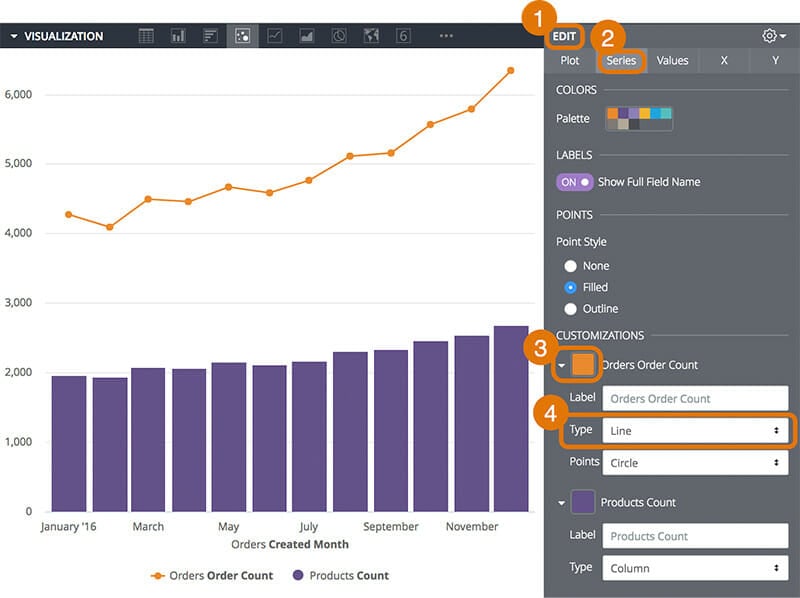

Looker will help you develop and streamline accurate data models and visualize your code in interactive diagrams.

Equipping you with a dashboard through which you can explore and analyze your data deeply, Looker lets you choose from funnel, map, timeline, donut multiples, Sankey, treemap, combined chart, or gauge multiple visualizations.

You can configure your specific visualization, and the software will add it to your Looker workflow, helping you maximize your impact and tell a compelling story.

Without proper organization, any data you pull for visualizations will give subpar results, which is why you need to properly manage, update, and track data by choosing one of the best database software that will help you generate realistic and productive projections in your visualizations.

Set up filters for individuals or groups dynamically, separating one dashboard for sales reps, one for customer success managers, and another for external viewers.

Develop robust and accurate data models and reduce errors while understanding the relationships, behaviors, and extensions of different LookML objects.

As with many other vendors, you will need to send a request to receive your quote.

- Visualize your code in interactive diagrams

- Explore and analyze your data deeply

- Choose from a variety of chart types, multiple chart and map frameworks, or configure your own

- Build effective, action-oriented dashboards and presentations

- Easy to detect changes and irregularities in your data

- The software adds your tailored visualizations to your Looker workflows

- Set up dynamic filters for groups and individuals and separate the dashboards for sales reps and external viewers

- Visualize data with subtotal in tables

Providing a modern API to integrate your workflows, Looker allows you to explore your data in intricate detail and bring your stories to life through compelling visualizations while compartmentalizing the dashboards for different uses.

19. Chartist.js

Best data visualization tool for smaller teams in need of simple, responsive charts.

Chartist.js is an open-source charting JavaScript library that has a lightweight interface that is flexible for integrations.

Create responsive, scalable, and great-looking charts with simple handling, a clear separation of concerns (styling with CSS and controlling with JS), SVG for illustration, and more.

The library is fully responsive and DPI independent, so charts are displayed at a consistent size regardless of screen resolution.

Chartist.js ensures responsive configuration with media queries, allowing compatibility with a wide variety of devices and screen sizes.

Specifying the style of your chart in the CSS will enable you to use the amazing CSS animations and transitions and apply them to your SVG elements.

Chartist's SVG animation API ensures almost limitless animation possibilities, and you can style your charts with CSS in @media queries.

The configuration override mechanism based on media queries helps you conditionally control the behavior of your charts when necessary.
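The idea behind a media-query-based override mechanism is that a base configuration is patched by every rule whose condition matches the current screen. The sketch below shows that idea conceptually in Python; it is not Chartist.js code or its API, and the option names and breakpoints are assumptions chosen for illustration.

```python
# Base chart options (hypothetical names, not Chartist.js options).
BASE_OPTIONS = {"show_labels": True, "bar_width": 30, "axis_offset": 40}

# Each rule: (minimum viewport width in px, options to override).
# Rules are applied in order, so later matching rules win.
RESPONSIVE_OVERRIDES = [
    (0, {"show_labels": False, "bar_width": 10}),
    (640, {"show_labels": True, "bar_width": 20}),
    (1024, {"bar_width": 30}),
]


def resolve_options(viewport_width, base=BASE_OPTIONS, overrides=RESPONSIVE_OVERRIDES):
    """Return the effective options for a given viewport width."""
    resolved = dict(base)  # copy so the base configuration is never mutated
    for min_width, patch in overrides:
        if viewport_width >= min_width:
            resolved.update(patch)
    return resolved


for width in (320, 800, 1280):
    print(width, resolve_options(width))
```

Keeping the base options untouched and layering small patches on top means each breakpoint only has to state what differs from the default.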

Lastly, you should know that the library is fully built and customizable with Sass.

Chartist.js is free.

- Create responsive, scalable, great-looking charts

- A lightweight interface that's flexible for integrations

- Implement your style through the DOM hooks

- Rich, responsive support for multiple screen sizes

- Comprehensive grid, color, label layout options

- Advanced CSS animations

- Multi-line labels

- SVG animations with SMIL

- Control the behavior of your charts with the configuration override mechanism

Offering great response configuration to media queries and high flexibility for use in the separation of concerns, Chartist.js is here to help you create highly customized, responsive charts and allow you to utilize SVG for illustrations.

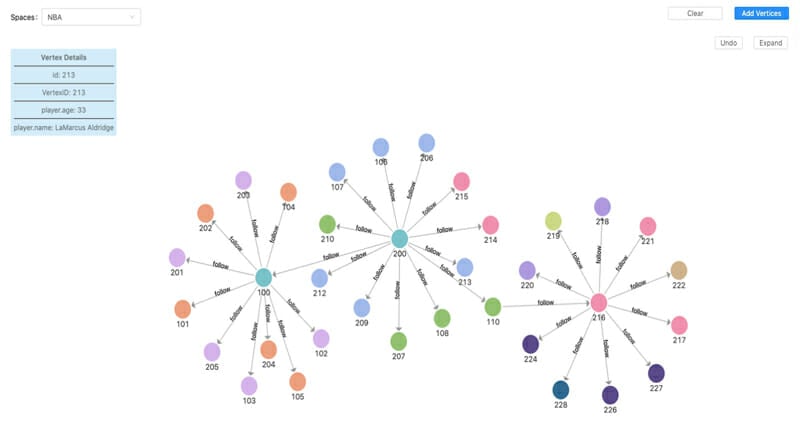

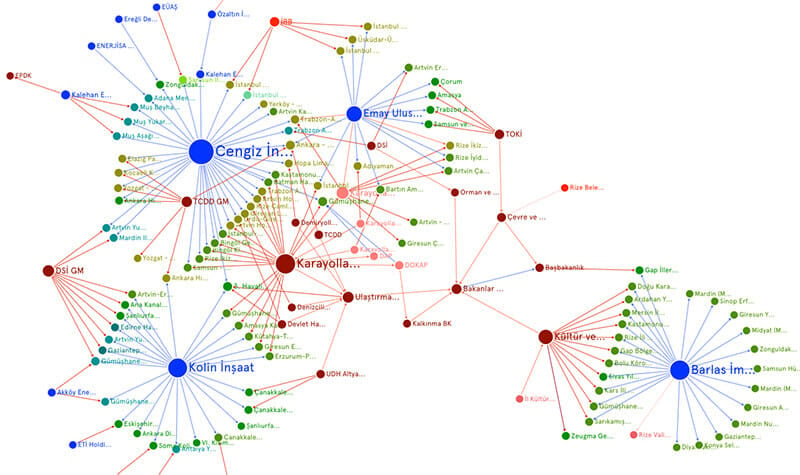

20. Sigma.js

Single-purpose data visualization tool for creating network graphs.

Sigma.js allows you to create embeddable, interactive, and responsive graphs, helping you customize your drawing and allowing you to publish the final result on any website.

To make the networks' manipulation on web pages as smooth and as fast as possible, Sigma.js will equip you with features such as Canvas and WebGL renderers, as well as mouse & touch support.

You can add your functions directly to your scripts and render the nodes and edges how you want them to be.

Through the Public API, you can modify data, move the camera, refresh the rendering, listen to events, and more.

Sigma.js can load JSON- and GEXF-encoded graphs, with the related plugins handling the loading and parsing of the files.

Sigma.js is a free, open-source tool.

- WebGL and Canvas rendering

- Rescale when the container's size changes

- Render nodes and edges according to your liking

- Move the camera, refresh the rendering, listen to events, and modify your data through the Public API

- Display simple interactive publications of network and rich web applications

- Update data and refresh the drawing when you want

- Use plugins for animating the graphs or applying force-directed layouts to your graphs

Sigma.js is a dedicated graph drawing service that will help you embed graphs in websites and apps easily while allowing you to make changes and refresh the graphs anytime you want.
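A network graph of the kind described above boils down to a list of nodes and a list of edges that can be serialized as JSON. The sketch below prepares such a structure in Python; the field names (`id`, `label`, `size`, `source`, `target`) are assumptions for illustration, so check the Sigma.js documentation for the exact format your version expects.

```python
import json
from collections import Counter

# Hypothetical relationships to draw as a network.
EDGES = [("alice", "bob"), ("bob", "carol"), ("alice", "carol"), ("carol", "dave")]


def build_graph(edges):
    """Build a node/edge structure, sizing each node by its number of links."""
    degree = Counter(node for edge in edges for node in edge)
    return {
        "nodes": [
            {"id": node, "label": node, "size": 1 + degree[node]}
            for node in sorted(degree)
        ],
        "edges": [
            {"id": f"e{index}", "source": source, "target": target}
            for index, (source, target) in enumerate(edges)
        ],
    }


graph = build_graph(EDGES)
payload = json.dumps(graph, indent=2)  # text a web page could fetch and render
print(payload)
```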

21. Qlik

Best Data Visualization Software for Building Complex Data Models Quickly through its Associative Engine.

Innovatively providing data visualization services, Qlik will help you attain data from various sources quickly while automatically maintaining data association and supporting numerous forms of data presentation.

Capture large volumes of data and generate reports quickly and automatically, extract valuable insights from transparent reporting functionality, and identify trends and information that help you make best-practice decisions.

Get an understanding of the information quickly through powerful global search and selections with interactive dashboards.

Combine, load, visualize, and explore your data, and activate the assistance from the insight advisor for chart creation, association recommendation, and data preparation.

Qlik Sense Business plan will cost you $30 per user per month.

- Build complex data models and dashboards quickly

- Simplifies data load and data modeling

- Aggregate structured data from different sources and build simple data models through snowflake or star schemas

- Simplified operation querying

- Generate reports quickly and automatically

- Identify trends to make best-practice decisions

- An attentive, knowledgeable support team that is receptive to feedback

- Get assistance on chart creation, association recommendation, and data preparation with the insight advisor

Allowing you to discover important insights through conversational analytics and insight advisor, rapidly develop custom apps, new visualizations, or extensions, and embed fully interactive analytics within the apps and processes, Qlik will let you visualize with clear intent and context through the most engaging graphs.

22. Polymaps

Dedicated JavaScript library for mapping and complete data visualization.

Designed specifically for mapping, Polymaps is a free, open-source JavaScript library for creating interactive, dynamic maps, using CSS to design and SVG to display your data through numerous types of visual presentation styles.

You can use the CSS mechanism to customize the visuals of your maps, after which you can easily embed them onto any website or apps.

The software supports large-scale and rich data overlays on interactive maps and SVG-rendered vector files, along with powerful graphical operations like compositing, geometric transformations, and image processing.

Apply styling via CSS operations, and utilize the CSS3 animations and transitions.

The software provides a factory method for constructing the required objects internally which results in shorter code and faster execution when compared to the traditional JS constructors.

Polymaps is 100% free.

- Large-scale data overlays

- CSS3 animations and transitions

- Private members can hide the internal state

- Refine the geometry to display greater details when zooming in

- Compositing, geometric transformations, and image processing

- Shorter code and faster execution

- Compatible and robust API

Polymaps is known for its speed when loading large amounts of data in full range, allowing it to run compositing, image processing, and geometric transforms, as well as supporting and processing of rich data on dynamic maps.

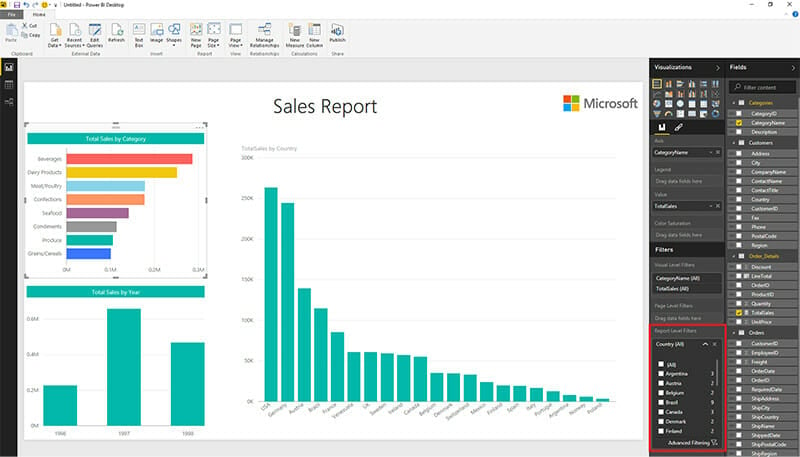

23. Microsoft Power BI

Best data visualization tool for fostering a data-driven culture with business intelligence for all.

Microsoft's Power BI is a data visualization and business intelligence tool combined into one that allows you to convert data from various data sources into interactive, engaging, and story-presenting dashboards and reports.

Providing reliable connections to your data sources on-prem and in the cloud, Power BI is ready to equip you with data exploration through natural language querying and real-time updates on the dashboard.

Save time and make data prep easier with modeling tools, and reclaim hours in a day using the self-service power query, ingestion, transforming, and integration tools.

Dig deeper into data and find patterns that lead to actionable insights, and use features like quick measures, grouping, forecasting, and clustering.

Activate the powerful DAX formula language and give advanced users full control over their models.

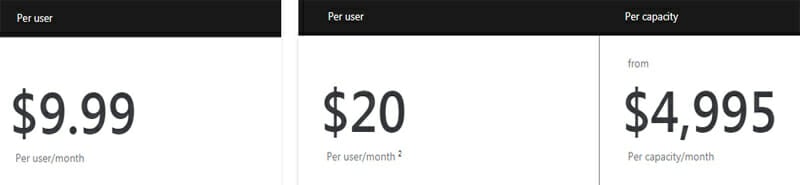

Power BI Pro is $9.99 per user per month.

- Access data from Dynamics 365, Salesforce, Azure SQL DB, Excel, SharePoint, and hundreds of other supported sources

- Pre-built and custom data connectors

- Natural language querying

- Real-time dashboard updates

- Design your reports with theming, formatting, and layout tools

- Quick measures, grouping, forecasting, and clustering

- Assign full control over models to advanced users through the DAX formula language

- Sensitivity labeling, end-to-end encryption, and real-time access monitoring

In Power BI, you can handle everything from managing reports using SaaS solutions to engaging in data exploration using the natural language query while accessing reliable data sources, which you can easily convert into interactive dashboards and reports that you can share across the whole organization.

Types of Data Visualization Methods

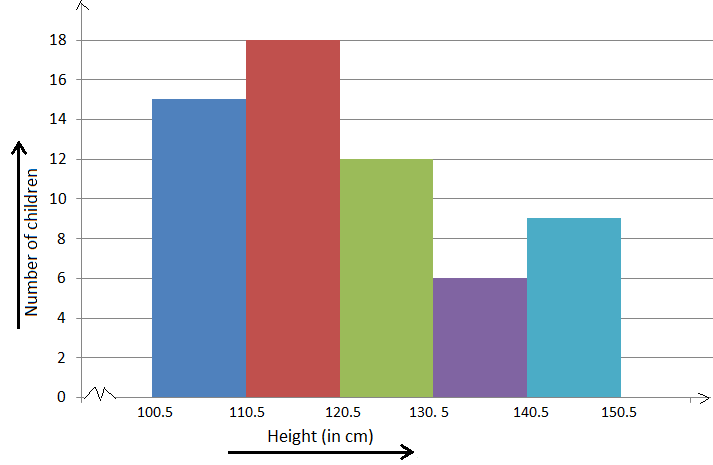

Starting with the most familiar one, column charts are a time-efficient method of showing comparisons among different sets of data.

A column chart will contain data labels along the horizontal axis with measured metrics or values presented on the vertical axis.

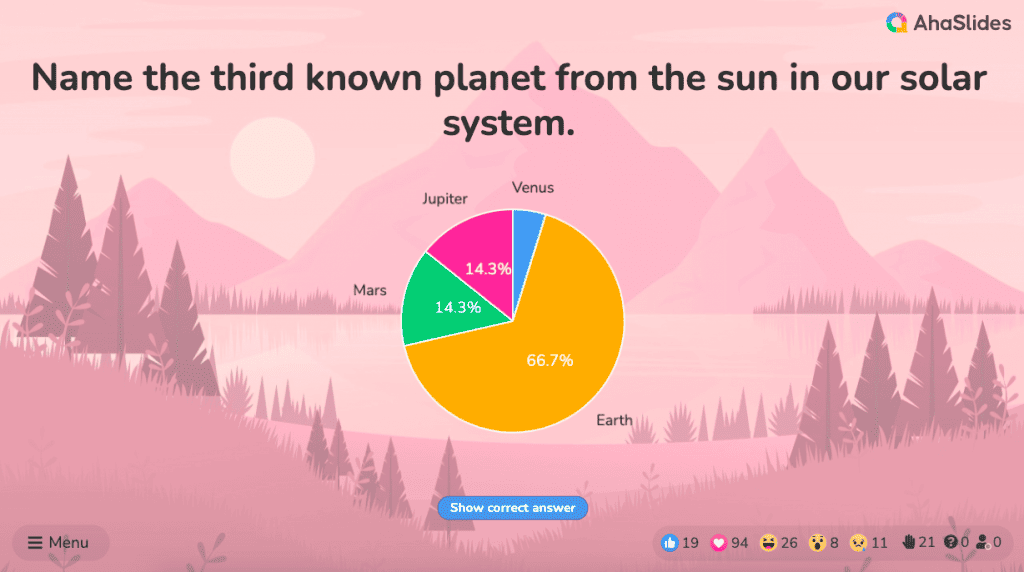

With column charts, you can track monthly sales figures, revenue per landing page, and similar information, while you can use the pie charts to demonstrate components or proportions between the elements of one whole.

You can find many more chart types, such as Mekko, bar, line, scatter plot, area, and waterfall charts.
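To make the difference between the two chart types concrete, the sketch below takes one small dataset and derives what each chart encodes: bar lengths proportional to the values for a column chart, and shares of the whole for a pie chart. The sales figures are made up, and the bars are drawn as rows of text purely for illustration.

```python
# Hypothetical monthly sales figures.
monthly_sales = {"Jan": 120, "Feb": 90, "Mar": 150}


def pie_shares(values):
    """Fraction of the whole that each category represents (pie chart)."""
    total = sum(values.values())
    return {label: value / total for label, value in values.items()}


def text_bars(values, width=30):
    """Bars scaled so the largest value spans `width` characters (column chart)."""
    peak = max(values.values())
    return {label: "#" * round(value / peak * width) for label, value in values.items()}


shares = pie_shares(monthly_sales)
bars = text_bars(monthly_sales)
for label in monthly_sales:
    print(f"{label:<4}{bars[label]:<31}{shares[label]:.0%}")
```

The bars answer "which month was highest?", while the shares answer "how much of the quarter did each month contribute?", which is why the two chart types suit different questions.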

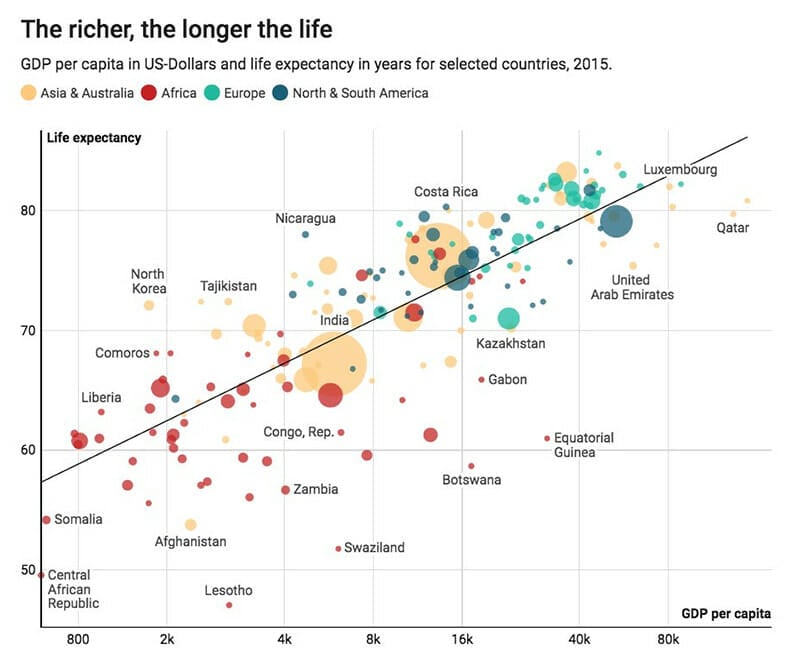

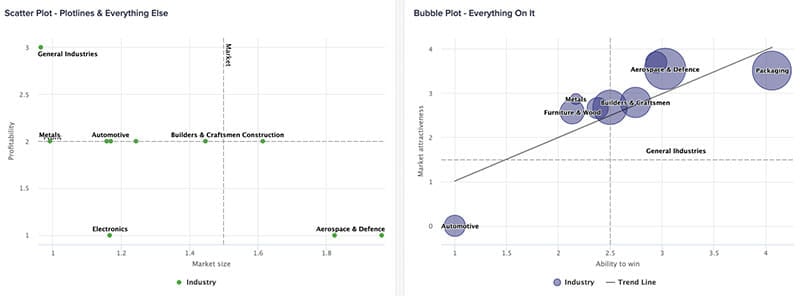

Plots are data visualization methods used to distribute two or more datasets over a 2D or 3D space to represent the relationship between these sets and the parameters on the plot.

Scatter and bubble plots are some of the most commonly used data visualization methods, while the more complex box plots are more frequently utilized for visualizing relationships between large volumes of data.

A bubble plot is an extension of the scatter plot used to look at the relationships between three numeric variables.
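A practical detail when encoding the third variable is how the value maps to bubble size. A common convention is to make the bubble's area, not its radius, proportional to the value, so large values are not visually exaggerated. The sketch below shows that scaling; the sample points and the `max_radius` default are assumptions.

```python
import math

# Hypothetical points: x, y, and a third numeric variable.
points = [
    {"x": 1.0, "y": 2.0, "value": 25.0},
    {"x": 2.0, "y": 1.0, "value": 100.0},
]


def bubble_radius(value, max_value, max_radius=40.0):
    """Scale the radius with the square root so bubble AREA tracks the value."""
    if max_value <= 0 or value < 0:
        raise ValueError("value must be non-negative and max_value positive")
    return max_radius * math.sqrt(value / max_value)


max_value = max(point["value"] for point in points)
bubbles = [
    {"x": p["x"], "y": p["y"], "radius": bubble_radius(p["value"], max_value)}
    for p in points
]
print(bubbles)
```

With this scaling, a value four times larger produces a bubble with twice the radius and therefore four times the area.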

A box plot is a data visualization method used in exploratory data analysis. It visually displays the distribution and skewness of numerical data by showing the data quartiles and averages.

Maps allow you to locate elements on relevant objects and areas, and they can be divided into geographical maps, building plans, and website layouts. The most popular map visualization types include heat maps, distribution maps, and cartograms.
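The numbers a box plot draws can be computed directly. The sketch below uses Python's `statistics` module to derive the quartiles, the whisker ends, and the outliers; the 1.5 × IQR fence rule is a widely used convention assumed here, and the sample data is made up.

```python
import statistics


def box_plot_summary(values):
    """Compute the quantities a box plot displays."""
    data = sorted(values)
    q1, median, q3 = statistics.quantiles(data, n=4, method="inclusive")
    iqr = q3 - q1
    # Assumed convention: points beyond 1.5 * IQR from the box are outliers.
    low_fence, high_fence = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    inside = [v for v in data if low_fence <= v <= high_fence]
    return {
        "q1": q1,
        "median": median,
        "q3": q3,
        "mean": statistics.mean(data),
        "whisker_low": inside[0],
        "whisker_high": inside[-1],
        "outliers": [v for v in data if v < low_fence or v > high_fence],
    }


summary = box_plot_summary([2, 4, 4, 5, 7, 9, 10, 12, 30])
print(summary)
```

In this sample the mean sits above the median because of the single large value, which is the kind of skew a box plot makes visible at a glance.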

Heat maps are graphical representations of data where values are depicted by different colors.
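Depicting values by color usually means mapping each value onto a color scale. The sketch below does this with a simple linear blend between two colors; the white-to-red scale and the sample grid are assumptions, and real tools typically offer more carefully designed palettes.

```python
def value_to_hex(value, low, high, cold=(255, 255, 255), hot=(255, 0, 0)):
    """Linearly blend from `cold` to `hot` as value goes from low to high."""
    t = 0.0 if high == low else (value - low) / (high - low)
    t = min(1.0, max(0.0, t))  # clamp values outside the expected range
    red, green, blue = (round(c + (h - c) * t) for c, h in zip(cold, hot))
    return f"#{red:02x}{green:02x}{blue:02x}"


# Hypothetical grid of measurements, e.g. clicks per page region.
grid = [
    [0, 5],
    [10, 2],
]
colors = [[value_to_hex(cell, 0, 10) for cell in row] for row in grid]
for row in colors:
    print(row)
```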

Distribution maps are data visualization arrangements used to indicate the distribution of a particular feature in an area. They can be qualitative, exploring qualities or characteristics, or quantitative, showing the value of data as counts and numbers.

The distribution of continuous variables such as temperature, pressure, and rainfall is represented by lines of equal value.

4. Diagrams and Matrices

Diagrams are used to illustrate complex data relationships and links, and they include various types of data in one visualization.

They can be hierarchical, network, flowchart, Venn, multidimensional, tree-like, etc.

Matrix is one of the advanced data visualization techniques that help determine and process the correlation between multiple continuously updating datasets.
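One common form of matrix visualization is the correlation matrix, where each cell holds the correlation between a pair of datasets. The sketch below computes such a matrix with the Pearson coefficient; the choice of Pearson and the metric names and values are assumptions for illustration.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs)
    spread_y = sum((y - mean_y) ** 2 for y in ys)
    return covariance / math.sqrt(spread_x * spread_y)


def correlation_matrix(columns):
    """Nested dict: matrix[a][b] is the correlation between columns a and b."""
    names = list(columns)
    return {a: {b: pearson(columns[a], columns[b]) for b in names} for a in names}


# Hypothetical weekly metrics.
metrics = {
    "visits": [10, 20, 30, 40],
    "signups": [1, 2, 3, 4],
    "bounce_rate": [40, 30, 20, 10],
}
matrix = correlation_matrix(metrics)
for name, row in matrix.items():
    print(name, {other: round(value, 2) for other, value in row.items()})
```

When the underlying datasets update, recomputing the matrix and recoloring its cells keeps the visualization current.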

What to Look for In Data Visualization Software Tools

Before getting into the specific functionalities, let's establish the fundamentals required when purchasing a data visualization tool.

1. Ease of Use

Any data visualization software you choose must have easy-to-use features and a user-friendly interface for the less technically skilled employees.

While there are code-heavy data visualization tools packed with advanced features, these tools need to be well balanced if your team consists of both seasoned IT users and less-experienced workers.

Tableau has a steeper learning curve than other platforms on this list, for example, but this is balanced out by an extremely user-friendly design and a large community of users.

That's not to say Tableau doesn't require substantial training, but the completeness of the tool in all aspects makes the training process worth the effort.

Similarly, Sisense will appeal to seasoned BI users while potentially causing frustration with the newcomers.

Apart from the natural language query in the third-party apps, Sisense's UI doesn't match the level of user-friendliness needed to satisfy less-knowledgeable users.

Online training systems with well-organized support teams have helped battle this significantly.

Most importantly, Sisense provides phenomenal data visualization service and equips their target intermediate and highly-skilled business analysts with transparency, and lifts most of their burden without having to buy additional tools.

2. Data Connectivity

Quality data visualization software will equip you with the capability to connect with or extract important information from external sources when you encounter data absence problems.

If you want to import data from both online and PC sources while being able to download infographics in HD quality and connect with numerous file types like PNG, JPG, PDF, GIF, HTML, and more, Infogram could be the comprehensive data connectivity solution you need.

What if you want good data connectivity for free?

Thanks to D3.js, this is possible as well: the tool is a JavaScript library for manipulating documents based on data, letting you work with your data through HTML, SVG, and CSS.

All this flexibility comes with additional benefits of the tool being extremely fast, supporting large datasets and dynamic behaviors for interaction and animation.

D3.js pairs this robust data connectivity with companion libraries like NVD3, which non-programmers can use to still get good results.

When you search for a tool that supports SVG and HTML5 output, yet enables you to work in browsers without additional plugins, it's hard to rival Google Charts, as it allows you to extract data from Google Spreadsheets, Google Fusion Tables, Salesforce (and Salesforce alternatives), and other SQL databases.

3. Employee Skill Level

Now that you have determined the fundamentals you look for in the tool, you should turn inward and see what your team can offer to the tool.

Not only will you avoid sudden training costs during the learning process, but knowing the limitations of your employees will help you select a data visualization tool to get you the results you strive for and challenge the employees to develop faster.

4. Let's Talk Refinements

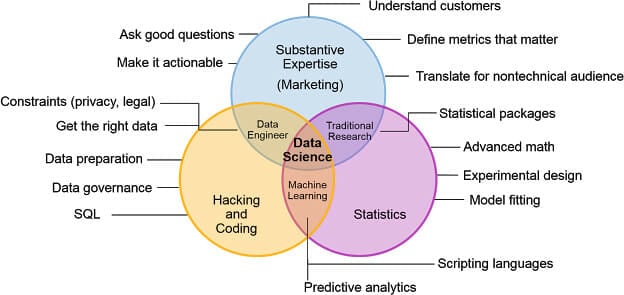

- Data visualization – Analyze data in visual form, such as patterns, charts, graphs, maps, trends, correlations, and so on.

- Role-based access management – Regulate access levels for individuals, including data and administration.

- Historical snapshots – Create snapshots of your data samples and workspace and access them as records later in the process.

- Template creation – Save previously used color schemes and combinations as templates and reuse them again in future projects.

- Visual analytics – Analyze enormous amounts of data through powerful and interactive reporting capabilities.

- Visual discovery – Find patterns, sequences, and outliers in datasets through visual analysis without necessarily creating data models.

- Data cleansing – Filter through the redundant and inaccurate residual information from various formats, and keep your database pure.

- In-place filtering – Filter off specific data by value, type, category, or other criteria with dropdowns, checkboxes, radio buttons, sliders, and more.

- Email reporting – Receive constant information and visual statistical reports about your data through scheduled emails.

- Mobile user support – Access your data and monitor ongoing operations outside of the working environment.
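Two of the features above, data cleansing and in-place filtering, are simple to picture in code. The sketch below removes exact duplicates and incomplete rows, then filters by the kind of criteria a dropdown or checkbox would supply; the rows, field names, and helper functions are hypothetical.

```python
# Hypothetical report rows, including a duplicate and a missing value.
rows = [
    {"channel": "email", "region": "EU", "revenue": 120.0},
    {"channel": "email", "region": "EU", "revenue": 120.0},
    {"channel": "social", "region": "US", "revenue": None},
    {"channel": "social", "region": "EU", "revenue": 75.0},
    {"channel": "search", "region": "US", "revenue": 210.0},
]


def cleanse(records):
    """Drop exact duplicates and rows with missing values."""
    seen = set()
    clean = []
    for record in records:
        key = tuple(sorted(record.items()))
        if key in seen or any(value is None for value in record.values()):
            continue
        seen.add(key)
        clean.append(record)
    return clean


def apply_filters(records, **criteria):
    """Keep rows whose fields fall within the allowed values per criterion."""
    return [
        record
        for record in records
        if all(record[field] in allowed for field, allowed in criteria.items())
    ]


clean_rows = cleanse(rows)
eu_rows = apply_filters(clean_rows, region={"EU"})
print(eu_rows)
```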

Which Data Visualization Tool Should I Choose?

Do you want a tool that gives you access to an enormous collection of data connectors and visualizations, lets you create maps and public-facing visualizations that stand out, and generates the most accurate forecasts and statistical summaries?

Of course, you do.

Everyone looking for a data visualization solution wants those things, and there is no better tool today to help you master self-service business intelligence than Tableau.

Maybe empowering your employees to perform better is at the top of your priorities, and you would like to engage them in an alternative way.

Plecto is a tool that will let you add data from SQL databases, Zapier, and REST-based APIs, and allow you to integrate with an unlimited number of them while proactively motivating your team to improve through gamification and other unique features.

If you want to construct complex data models but you want to do it quickly with a tool that will help you attain data from various sources and even advise you on the best practices for chart creation, association recommendation, and data preparation, look no further than Qlik.

Just like with Tableau, with Sisense you know you are getting the cream of the crop in picture, graph, chart, and map visualizations and high-volume dataset management, with a depth of insight through actionable visualizations that is unrivaled.


Martin Luenendonk

Martin loves entrepreneurship and has helped dozens of entrepreneurs by validating the business idea, finding scalable customer acquisition channels, and building a data-driven organization. During his time working in investment banking, tech startups, and industry-leading companies he gained extensive knowledge in using different software tools to optimize business processes.

These insights and his love for researching SaaS products enable him to provide in-depth, fact-based software reviews that help software buyers make better decisions.

Data Collection, Presentation and Analysis

- First Online: 25 May 2023


- Uche M. Mbanaso

- Lucienne Abrahams

- Kennedy Chinedu Okafor


This chapter covers the topics of data collection, data presentation and data analysis. It gives attention to data collection for studies based on experiments, on data derived from existing published or unpublished data sets, on observation, on simulation and digital twins, on surveys, on interviews and on focus group discussions. One of the interesting features of this chapter is the section dealing with using measurement scales in quantitative research, including nominal scales, ordinal scales, interval scales and ratio scales. It explains key facets of qualitative research including ethical clearance requirements. The chapter discusses the importance of data visualization as key to effective presentation of data, including tabular forms, graphical forms and visual charts such as those generated by Atlas.ti analytical software.



Author information

Authors and affiliations.

Centre for Cybersecurity Studies, Nasarawa State University, Keffi, Nigeria

Uche M. Mbanaso

LINK Centre, University of the Witwatersrand, Johannesburg, South Africa

Lucienne Abrahams

Department of Mechatronics Engineering, Federal University of Technology, Owerri, Nigeria

Kennedy Chinedu Okafor


Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Mbanaso, U.M., Abrahams, L., Okafor, K.C. (2023). Data Collection, Presentation and Analysis. In: Research Techniques for Computer Science, Information Systems and Cybersecurity. Springer, Cham. https://doi.org/10.1007/978-3-031-30031-8_7


DOI : https://doi.org/10.1007/978-3-031-30031-8_7

Published : 25 May 2023

Publisher Name : Springer, Cham

Print ISBN : 978-3-031-30030-1

Online ISBN : 978-3-031-30031-8

eBook Packages : Engineering, Engineering (R0)


- Malays Fam Physician

- v.1(2-3); 2006

How To Present Research Data?

Tong Seng Fah

MMed (FamMed UKM), Department of Family Medicine, Universiti Kebangsaan Malaysia

Aznida Firzah Abdul Aziz

INTRODUCTION

The results section of an original research paper answers the question “What was found?” A typical research project generates far more findings than a medical journal can accommodate in one article, so the author’s first task is to select what is worth presenting. Having decided that, he/she needs to convey the message effectively using a mixture of text, tables and graphics. The level of detail required depends a great deal on the target audience of the paper. Hence it is important to check the requirements of the journal we intend to send the paper to (e.g. the Uniform Requirements for Manuscripts Submitted to Medical Journals 1). This article condenses some common general rules on the presentation of research data that we find useful.

SOME GENERAL RULES

- Keep it simple. This golden rule seems obvious, but authors immersed in their data sometimes fail to realise that readers are lost in the mass of data they are a little too keen to present. Presenting too much information tends to cloud the most pertinent facts that we wish to convey.

- First general, then specific. Start with the response rate and a description of the research participants (this information gives the readers an idea of the representativeness of the research data), then the key findings and relevant statistical analyses.

- Data should answer the research questions identified earlier.

- Leave the process of data collection to the methods section. Do not include any discussion. These errors are surprisingly common.

- Always use past tense in describing results.

- Text, tables or graphics? These complement each other in providing clear reporting of research findings. Do not repeat the same information in more than one format. Select the best method to convey the message.

Consider these two lines:

- Mean baseline HbA1c of 73 diabetic patients before intervention was 8.9% and mean HbA1c after intervention was 7.8%.

- Mean HbA1c of 73 diabetic patients decreased from 8.9% to 7.8% after an intervention.

In line 1, the author presents only the data (i.e. what exactly was found in the study), so the reader is forced to analyse them and draw their own conclusion (“mean HbA1c decreased”), which makes the result more difficult to read. In line 2, the preferred way of writing, the data are presented together with their interpretation.

- Data, which are often numbers and figures, are better presented in tables and graphics, while the interpretation is better stated in text. By doing so, we do not need to repeat the values of HbA1c in the text (they will be illustrated in tables or graphics), and we can interpret the data for the readers. However, if there are only a few variables, the data can easily be described in a simple sentence that includes their interpretation. For example, the majority of diabetic patients enrolled in the study were male (80%) compared to female (20%).

- Using qualitative words to attract the readers’ attention is not helpful. Words such as “remarkably” decreased, “extremely” different and “obviously” higher are redundant. The exact values in the data will show just how remarkable, how extreme and how obvious the findings are.

“It is clearly evident from Figure 1B that there was a significant difference (p=0.001) in HbA1c level at 6, 12 and 18 months after the diabetic self-management program between 96 patients in the intervention group and 101 patients in the control group, but no difference was seen from 24 months onwards.” [Too wordy]

Figure 1: Changes of HbA1c level after diabetic self-management program.

The above can be rewritten as:

“Statistically significant differences were observed only at 6, 12 and 18 months after the diabetic self-management program between the intervention and control groups (Fig 1B).” [The p values and numbers of patients are already presented in Figure 1B and need not be repeated.]

- Avoid redundant words and information. Do not repeat the same result across the text, tables and figures. Well-constructed tables and graphics should be self-explanatory, so detailed explanation in the text is not required. Only important points and results need to be highlighted in the text.

Tables are useful for highlighting precise numerical values; proportions or trends are better illustrated with charts or graphics. Tables summarise large amounts of related data clearly and allow comparisons to be made among groups of variables. Generally, well-constructed tables should be self-explanatory with four main parts: title, columns, rows and footnotes.

- Title. Keep it brief and relate it clearly to the content of the table. Words in the title should represent and summarise the variables used in the columns and rows rather than repeat the column and row titles. For example, “Comparing full blood count results among different races” is clearer and simpler than “Comparing haemoglobin, platelet count, and total white cell count among Malays, Chinese and Indians”.

Table footnotes: *WC, waist circumference (in cm); †SBP, systolic blood pressure (in mmHg); ‡DBP, diastolic blood pressure (in mmHg); £LDL-cholesterol (in mmol/L).

Table footnotes: *Odds ratio (95% confidence interval); †p=0.04; ‡p=0.01.

- Footnotes. These add clarity to the data presented. They are listed at the bottom of tables. They are used to define unconventional abbreviations, symbols and statistical analyses, and to give acknowledgement (if the table is adapted from a published table). Generally the font size is smaller in the footnotes, which follow a sequence of footnote signs (*, †, ‡, §, ‖, ¶, **, ††, #). 1 These symbols and abbreviations should be standardised across all tables to avoid confusion and unnecessarily long lists of footnotes. Proper use of footnotes reduces the need for multiple columns (e.g. replacing a list of p values) and the width of columns (abbreviating waist circumference to WC as in Table 1B).

- Use units and decimal places consistently. The data on systolic blood pressure in Table 1B are neater than the similar data in Table 1A.

- Arrange dates and timings from left to right.

- Round off numbers to the fewest decimal places needed to convey meaningful precision. A mean systolic blood pressure of 165.1 mmHg (as in Table 1B) does not add much precision compared to 165 mmHg. Furthermore, 0.1 mmHg has no clinical importance. Hence blood pressure is best rounded off to the nearest 1 mmHg.

- Avoid listing numerous zeros, which make comparison difficult. For example, total white cell count is best represented as 11.3 × 10⁶/L rather than 11,300,000/L. This way, we only need to write 11.3 in the cell of the table.

- Avoid too many lines in a table. Often it is sufficient to have just three horizontal lines in a table: one below the title, one dividing the column titles and data, and one dividing the data and footnotes. Vertical lines are not necessary. They only make a table more difficult to read (compare Tables 1A and 1B).

- Standard deviation can be added to show the precision of the data in our table. Placement of the standard deviation can be difficult to decide. If we place the standard deviation at the side of our data, it allows clear comparison when we read down (Table 1B). On the other hand, if we place the standard deviation below our data, it makes comparison across columns easier. Hence, we should decide what we want the readers to compare.

- It is neater and saves space if we highlight statistically significant findings with an asterisk (*) or other symbols instead of listing all the p values (Table 2). It is not necessary to add an extra column to report the details of Student’s t-test or chi-square values.
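The rounding, scaling and asterisk rules above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed method: the function names, the "mean (SD)" cell layout and the default significance level of 0.05 are all assumptions made for the example, and the sample values are hypothetical.

```python
def format_mean_sd(mean, sd, decimals=0):
    """Format a table cell as 'mean (SD)' with a consistent number of decimals.

    The 'mean (SD)' layout is an assumption for this sketch; it corresponds
    to placing the standard deviation at the side of the data.
    """
    return f"{mean:.{decimals}f} ({sd:.{decimals}f})"


def scale_count(value, exponent=6):
    """Express a large count as a multiple of 10**exponent (one decimal place).

    The unit (e.g. x10^6/L) then belongs in the column title, not the cell.
    """
    return f"{value / 10 ** exponent:.1f}"


def mark_significant(cell, p_value, alpha=0.05, symbol="*"):
    """Append a footnote symbol when p < alpha instead of printing the p value.

    alpha=0.05 is an assumed default; use whichever level the study chose.
    """
    return f"{cell}{symbol}" if p_value < alpha else cell


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    cell = format_mean_sd(165.1, 12.4)      # blood pressure to the nearest 1 mmHg
    print(mark_significant(cell, 0.01))     # 165 (12)*
    print(scale_count(11_300_000))          # 11.3
```

Keeping the formatting in small helpers like these makes it easier to apply the same decimal places and footnote symbols across every table in a paper.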

Graphics are particularly good for demonstrating a trend in the data that would not be apparent in tables. They provide visual emphasis and avoid lengthy text description. However, presenting numerical data as graphs loses the precise values that tables are able to provide. The authors have to decide on the best format for getting the intended message across: is it for data precision, or for emphasis on a particular trend and pattern? Likewise, if the data are easily described in text, then text is the preferred method, as it is more costly to print graphics than text. For example, a nicely drawn age histogram takes up a lot of space but carries little extra information. It is better to summarise it as mean ±SD or median, depending on whether age is normally distributed or skewed. Since graphics should be self-explanatory, all information provided has to be clear. Briefly, a well-constructed graphic should have a title, figure legend and footnotes along with the figure. As with tables, titles should contain words that describe the data succinctly. Define symbols and lines used in legends clearly.
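As a rough sketch of summarising age in text rather than drawing a histogram, the Python snippet below returns either mean and SD or the median. It assumes the author has already judged whether the variable is normally distributed (the `normally_distributed` flag is supplied by the caller, not tested here), and the function name, wording of the output and sample ages are hypothetical.

```python
import statistics


def summarise(values, normally_distributed, unit="years"):
    """Return a one-line text summary of a continuous variable.

    normally_distributed is an assumption passed in by the caller; this
    sketch does not perform any normality test itself.
    """
    if normally_distributed:
        mean = statistics.mean(values)
        sd = statistics.stdev(values)  # sample standard deviation
        return f"mean {mean:.1f} {unit} (SD={sd:.1f})"
    return f"median {statistics.median(values):.1f} {unit}"


if __name__ == "__main__":
    # Hypothetical ages, for illustration only.
    print(summarise([40, 45, 50], normally_distributed=True))
    print(summarise([30, 32, 35, 41, 78], normally_distributed=False))
```

A single sentence built this way carries the same information as the histogram in far less space.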

Some general guides to graphic presentation are:

- Bar charts, either horizontal or column bars, are used to display categorical data. Strictly speaking, bar charts with continuous data should be drawn as histograms or line graphs. Usually, data presented in bar charts are better illustrated in tables unless there are important patterns or trends that need to be emphasised.

- Line graphs are most appropriate for tracking changing values between variables over a period of time, or when the changing values are continuous data. Independent variables (e.g. time) are usually on the X-axis and dependent variables (for example, HbA1c) are usually on the Y-axis. The trend of HbA1c changes is much more apparent in Figure 1B than in Figure 1A, and the HbA1c level at any time after intervention can be accurately read from Figure 1B.

- Pie charts should not be used often, as any data in a pie chart are better represented in bar charts (if there is a specific data trend to be emphasised) or in a simple text description (if there are only a few variables). A common error is presenting the sex distribution of study subjects in a pie chart. It is simpler to state the percentage of males and females in the text.

- Patients’ identity in all illustrations, for example pictures of the patients, x-ray films, and investigation results, should remain confidential. Use patients’ initials instead of their real names. Cover or black out the eyes whenever possible. Obtain consent if pictures are used. Highlight and label areas in the illustration that need emphasis. Do not let the readers search for details in the illustration, as this may result in misinterpretation. Remember, we write to avoid misunderstanding whilst maintaining clarity of data.
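The chart-selection guides above can be restated as a small decision function. The Python sketch below is only one reading of those guides: the function name, the category labels and the cut-off of two categories for "only a few variables" are assumptions introduced for illustration.

```python
def suggest_format(data_kind, n_categories=0, emphasise_trend=False):
    """Suggest a presentation format following the general guides above.

    data_kind: 'time_series', 'continuous' or 'categorical' (labels are
    hypothetical). The threshold of 2 categories for 'a few variables'
    is an assumption, not a rule from the article.
    """
    if data_kind == "time_series":
        return "line graph"
    if data_kind == "continuous":
        return "histogram or line graph"
    if data_kind == "categorical":
        if n_categories <= 2:
            # e.g. sex distribution: state the percentages in the text
            return "text"
        return "bar chart" if emphasise_trend else "table"
    raise ValueError(f"unknown data kind: {data_kind!r}")


if __name__ == "__main__":
    print(suggest_format("categorical", n_categories=2))   # text
    print(suggest_format("time_series"))                    # line graph
```

Note that a pie chart is never returned, in line with the advice that a bar chart or a sentence serves the same data better.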

Papers are often rejected because the wrong statistical tests are used or the tests are interpreted incorrectly. A simple approach is to consult a statistician early. Bearing in mind that most readers are not statisticians, the reporting of any statistical test should aim to be understandable by the average audience but sufficiently rigorous to withstand the critique of experts.

- Simple statistics such as mean and standard deviation, median, and normality testing are better reported in text. For example, the age of group A subjects was normally distributed with a mean of 45.4 years (SD=5.6). More complicated statistical tests involving many variables are better illustrated in tables or graphs, with their interpretation in the text. (See section on Tables).

- We should quote and interpret the p value correctly. It is preferable to quote the exact p value, since it is now easily obtained from standard statistical software. This applies even more when the p value is not statistically significant: quote the exact value rather than just p>0.05 or p=ns. It is not necessary to report the exact p value when it is smaller than 0.001 (quoting p<0.001 is sufficient); it is incorrect to report p=0.0000 (as some software is apt to do for very small p values).

- We should refrain from reporting statements such as: “mean systolic blood pressure for group A (135mmHg, SD=12.5) was higher than group B (130mmHg, SD= 9.8) but did not reach statistical significance (t=4.5, p=0.56).” When the p value does not reach statistical significance (the threshold might be 0.01 or 0.05, depending on the level chosen), it simply means no difference among groups.

- Confidence intervals. It is now preferable to report the 95% confidence intervals (95%CI) together with the p value, especially if hypothesis testing has been performed.
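The p value and confidence interval rules above lend themselves to a short formatting helper. The Python sketch below is illustrative only: the function names, the choice of two or three decimal places for exact p values, and the layout of the combined string are assumptions, and the numbers in the usage lines are hypothetical.

```python
def format_p(p, floor=0.001):
    """Format a p value: exact where possible, 'p<0.001' for very small values.

    Never produces 'p=0.0000'. The number of decimals shown for exact
    values is an assumed convention for this sketch.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p value must lie between 0 and 1")
    if p < floor:
        return f"p<{floor}"
    return f"p={p:.3f}" if p < 0.01 else f"p={p:.2f}"


def format_estimate(estimate, ci_low, ci_high, p, decimals=1):
    """Report an estimate with its 95% confidence interval and p value."""
    return (
        f"{estimate:.{decimals}f} "
        f"(95% CI {ci_low:.{decimals}f} to {ci_high:.{decimals}f}, {format_p(p)})"
    )


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    print(format_p(0.56))        # exact value, not 'p=ns'
    print(format_p(0.0000001))   # reported as p<0.001
    print(format_estimate(1.8, 1.1, 2.9, 0.04))
```

Routing every reported p value through one function keeps the style consistent and prevents software output such as p=0.0000 from reaching the manuscript.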