How Should Data Analysis Impact Your Decision-Making Process?

Contrary to popular belief, no statistician can turn uncertainty into certainty for you. If you’re looking for facts or truth, you won’t find them by adding more equations to the mix; the only way is to collect so much data that you don’t need a statistician. How much is that? Bluntly put, all of it.

So, what’s a statistician for, in that case?

As a trained statistician myself, I’ve seen many people struggle to grasp what it means to do hypothesis testing in the face of uncertainty, so in this article, I’ll take a stab at clarifying those slippery stumbling blocks by taking an unusual approach: a touch of mythology!

More From Cassie Kozyrkov What Does It Mean to ‘Work With AI’?

How Does Hypothesis Testing Work?

Let’s put ourselves in the shoes of supernatural all-knowing beings … were they to have shoes at all. If you’re a lover of the classics, perhaps you might imagine yourself among the Greek gods and goddesses, looking down upon us mortals from Mount Olympus. In a slight departure from Homer’s deities — ever fallible and bickering — let’s imagine that we know everything about past, present, and future.

There we are on Olympus, watching little mortals go about their daily business, when we notice (aha!) that one of them has a default action. Because we have a perfect grasp of reality, we naturally know all about statistical decision-making, including how to set up a hypothesis test. It’s fun to be all-knowing. So let’s summarize what we know so we can get back to our usual business of laughing at the ridiculousness of the human condition:

Statistical Decision-Making 101

- The default action is the option that you find palatable under ignorance. It’s what you’ll do if you’re forced to make a snap decision.

- The alternative action is what you’ll do if the data analysis talks you out of your default action.

- The null hypothesis is a (mathematical) description of all the realities in which our default action would be a happy choice. If that sounds confusing, here’s a straightforward example.

- The alternative hypothesis is a description of all the realities not covered by the null hypothesis.

A default action is the physical action/decision that you commit to making if you don’t gather any (more) evidence. When I’m performing the role of decision advisor, one of my early questions tends to be, “What would you commit to doing if you knew you had to make the decision right this moment?” If you’re curious to learn more about default actions, I have a whole article for you here.

Picking your default action is one of the most important judgments in a statistical decision process, but I’ve never seen it taught explicitly in a traditional statistics textbook. It is the pivotal concept, though, so I prefer to drag it out into the open and name it explicitly since it will shape the entire hypothesis test.
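To make the four terms concrete, here is a minimal sketch of how they might map onto code. Everything in it is an illustrative assumption rather than a method from this article: the function name `choose_action`, the made-up score differences, the 0.05 threshold, and the choice of a one-sided z-test with a normal approximation.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def choose_action(score_differences, alpha=0.05):
    """Return "default" or "alternative" from a one-sided z-test.

    score_differences: hypothetical per-customer values of
    (new-version score minus current-version score).
    Null hypothesis: the true mean difference is <= 0, i.e. every
    reality in which the default action (do not launch) is a happy choice.
    """
    n = len(score_differences)
    standard_error = stdev(score_differences) / sqrt(n)
    z = mean(score_differences) / standard_error
    # Probability of seeing a z at least this large if the true mean were 0.
    p_value = 1 - NormalDist().cdf(z)
    # Only strong enough evidence talks us out of the default action.
    return "alternative" if p_value < alpha else "default"


# Made-up data for illustration only.
weak_evidence = [0.1, -0.2, 0.05, -0.1, 0.0, 0.15, -0.05, 0.1, -0.15, 0.05] * 3
strong_evidence = [1.0, 1.2, 0.8, 1.1, 0.9] * 6

print(choose_action(weak_evidence))    # stays with the default
print(choose_action(strong_evidence))  # switches to the alternative
```

Notice that the default action is baked into the code before any data arrives: it decides which direction counts as "evidence" and which action wins when the data is unconvincing.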

But hush, the mortal may be about to do something stupid! Let’s watch.

What Can We Learn From Data?

This little mortal’s default action is to not launch a new version of their product. Because we deities are omniscient, we know that it’s the best action to take — we happen to know that the product is expensive to launch but customers won’t like it as much as the current version. Everyone would be better off if the new version were abandoned. Unfortunately, the mortal can’t know this — the poor thing has to live with uncertainty and limited information. But we deities know all things.

We start laughing ourselves silly, “Look at this ridiculous mortal!” If the mortal is too lazy to be bothered with data analysis, they’ll execute the default action (which we happen to know is correct) and everything will be dandy with their little life. But this mortal is not lazy. This mortal is honorable and diligent … and is going to analyze data! They want to be data-driven in all things!!

The mortal goes off to collect market data, then toils and toils over the numbers. What are they going to learn from their foray into statistics?

Well, in the best possible case, they’ll learn nothing. That, in a nutshell, is the right thing to learn when you perform a hypothesis test and find no evidence to reject your null hypothesis in favor of the alternative, which would have triggered a switch away from the default action. If that explanation zoomed past you too quickly, I’ve got a gentle primer on the logic of hypothesis testing for you here.

Learning nothing would be a wonderful outcome for this little mortal, though it’s hard on the emotions: it’s awfully disappointing to spend all that effort on data analysis and come away without anything that feels like a eureka moment serenaded by celestial trumpets. But the important thing is that the poor dear will end up taking the right action. They won’t know it’s the right action (that would be, as Shakespeare might put it, more than mortal knowledge), but they’ll end up in the same happy place as the couch potato who spent their time binging Netflix. Ah, these mortals and their Sisyphean data analysis. They were going to do the right thing anyway!

What Is the Point of Statistical Analysis?

The key insight for you, dear reader, is that the poor mortal can’t possibly know this. That’s why we are doing this rather odd exercise of putting ourselves on Mount Olympus. It’s not a normal perspective for readers to take during your statistics-article-reading loo break.

What we have been snickering at so far was the absolute best case for this mortal’s data analysis: learning nothing at all and performing their default action. Now, what’s the worst possible thing this little mortal could learn from the data? Something!

Because upon learning something, they will do … something stupid. In classical statistical inference, learning something about the population means rejecting the null hypothesis, feeling ridiculous about the default action, and switching their course of action to the (incorrect) alternative. This mortal will pat themselves on the back for statistical significance and launch a bad product.

That’s so tragicomic that we’re falling out of our supernatural chairs with laughter.

Thanks to the silly mortal’s data-driven diligence and their mathematical savvy, they’ve managed to talk themselves out of doing what they should have done. If they’d been lazy, they would have been better off! Puny mortals, so brave and so good — so hilarious.

Why would a mortal end up learning something incorrectly like that? Unfortunately, randomness is random. There’s a luck of the draw element to data. Your sample might be a freak accident that leads you to the wrong conclusion. Alas, when luck’s involved, bad things can happen to good people.
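The luck of the draw can be seen in a small simulation. The sketch below is an illustration under assumptions of my own choosing (normally distributed data, a one-sided z-test, a 0.05 threshold, hypothetical function names): it generates many studies in which the null hypothesis is true and counts how often the data still talks the mortal out of the default action.

```python
import random
from math import sqrt
from statistics import NormalDist, mean, stdev


def rejects_null(sample, alpha=0.05):
    """One-sided z-test: True if the sample talks us out of the default."""
    z = mean(sample) / (stdev(sample) / sqrt(len(sample)))
    return 1 - NormalDist().cdf(z) < alpha


def false_alarm_rate(trials=2000, sample_size=50, alpha=0.05, seed=0):
    """Share of simulated studies that reject a null that is actually true."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(trials):
        # The true mean difference is exactly 0, so the default action is fine.
        sample = [rng.gauss(0.0, 1.0) for _ in range(sample_size)]
        rejections += rejects_null(sample, alpha)
    return rejections / trials


rate = false_alarm_rate()
print(f"Rejected a true null in {rate:.1%} of simulated studies")
```

The rate should land in the neighborhood of the chosen threshold. The simulation sits at the edge of the null hypothesis (a true difference of exactly zero); if customers actually liked the new version less, as in our story, freak samples that favor launching would be rarer still, but never impossible.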

Omniscient beings may have the privilege of reasoning in advance about what the right decision is, but mortals aren’t so lucky. Mortals must contend with uncertainty and incomplete information, which means they can make mistakes. Unlike supernatural, omniscient beings, people haven’t got enough information to say which actions are correct or not.

They’ll only find that out later, in hindsight, once the universe catches up with them. In the meantime, all people can do is make the best decision they can with the incomplete information they have. Sometimes that leads them off a cliff. Uncertainty is a jerk like that, which is why I do sympathize with the desire to shower data gurus with boatloads of cash in the hopes that they’ll make the uncertainty go away. But there’s a name for someone who promises you that certainty is for sale when your data is incomplete: a charlatan. Unfortunately, data charlatans are everywhere these days. Buyer beware!


Am I Making Decisions Intelligently?

So, let’s put ourselves back in the shoes we belong in: those of puny mortals. All we have is our data set, which — when we’re doing statistics — is an incomplete snapshot of our world. We don’t have the facts that allow us to be sure we’re making the right decision. We can’t know if we’ve made a mistake or not until it’s too late. We only know what our data set looks like.

It’s always possible that, thanks to uncertainty, all our mathematical huffing and puffing talks us out of a perfectly reasonable default action. We can never be sure that we’re not making the deeply embarrassing error of toiling ourselves into a worse decision than what we would have had by spending the time with a trashy novel instead of with our data. We must remember that we are not gods and thus we haven’t got the privilege of reasoning as though we’re all-knowing.

That’s why we mortals must ask ourselves, “Am I making the decision intelligently?” instead of “Am I making the right decision?”

The mortal’s mistake — actively mathing themselves into a stupid course of action — adds insult to injury. There’s an asymmetry here that makes the mistake extra painful. It comes from the fact that they have a preferred default action in the first place. Our default action is what we fundamentally lean towards doing, even under ignorance.

The analysis would be different if there were no default action. In that case, staying lazily on the couch would not be an option: indifference between options forces you to glance at the data, but it lets you get away with a less involved approach, as I explain here.

Alas, true indifference seems rather rare in the human animal. We often enter the decision-making process with a preference for one course of action over another, which means we do have a default action that represents a happy, comfortable choice we’d need the data to talk us out of.

Instead, we’re hardly indifferent. If we were indifferent, we’d be doing this a different way — we’d simply be making a best guess based on the data. It wouldn’t matter how much data you used — just grab hold of as much data as you can afford and go with the best-looking action, statistical significance be damned.
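For contrast, the indifferent decision-maker’s approach fits in a few lines. This is a sketch with hypothetical names and made-up numbers: no null hypothesis, no significance threshold, just the best-looking option in the data at hand.

```python
from statistics import mean


def best_looking_action(outcomes_by_action):
    """Pick the action whose observed outcomes have the highest mean."""
    return max(outcomes_by_action, key=lambda action: mean(outcomes_by_action[action]))


# Made-up observations for two options we are indifferent between.
observed = {
    "option_a": [3.1, 2.9, 3.4],
    "option_b": [3.0, 3.6, 3.5],
}
print(best_looking_action(observed))  # option_b
```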

By preferring one of the actions by default, you’re making a value judgment about what you consider to be the worst mistake you can make: stupidly leaving this cozy default action. You’re only okay with abandoning it if you get a strong-enough cease-and-desist signal from your data. Otherwise, you’re happy to stay there. You’d appreciate avoiding the mistake of staying with a bad default action, but that situation doesn’t represent as grievous a wound to you as the other mistake you could make: leaving your comfort zone incorrectly.

Statistics Is the Science of Changing Your Mind

Statistics is the science of changing your mind, and the mechanics of its most popular methods are powered by the imbalance in your preferences about the actions that are on the table for you. The worst possible thing that you could do is talk yourself into stupidly changing your mind. And yet, because randomness is random, you could get some bad luck; it’s entirely possible that this will be exactly what happens to you. You’re a mere mortal, after all. Is there anything you can do about it? Sort of. You can’t guarantee you’ll make the right decision, but you can turn the dial on the size of the gamble you’re willing to take. It’s your best attempt at indemnifying yourself against the vagaries of chance.

In fact, that’s the main payoff to formal statistical hypothesis testing: it gives you control over the maximum up-front probability of stupidly changing your mind. It allows you to search your soul, discover your own appetite for risk, and make your decision in a way that delivers the action that best blends your data, your assumptions, and your risk preferences.
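In a textbook one-sided z-test, that dial is the significance level, usually written alpha. The sketch below assumes that setup (the function name `critical_z` is hypothetical) and shows how a smaller appetite for risk raises the bar the evidence must clear before you abandon the default action.

```python
from statistics import NormalDist


def critical_z(alpha):
    """Smallest z-score that rejects the null in a one-sided z-test."""
    return NormalDist().inv_cdf(1 - alpha)


for alpha in (0.10, 0.05, 0.01):
    print(f"alpha={alpha:.2f} -> reject only if z > {critical_z(alpha):.3f}")
# alpha=0.10 -> reject only if z > 1.282
# alpha=0.05 -> reject only if z > 1.645
# alpha=0.01 -> reject only if z > 2.326
```

Turning alpha down makes it harder to stupidly change your mind, at the price of making it harder to correctly change it too.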

When you’re dealing with uncertainty, truly knowing is not for us mortals. You can’t make certainty out of uncertainty and you certainly can’t get it by paying a statistician to mumble some equation-filled mumbo jumbo for you. All you can know is how your data pans out in light of your assumptions. That’s what your friendly statistician helps you with. Hypothesis testing is a decision tool. What it gives you is powerful but not perfect: the ability to control your decision’s risk settings mathematically.


Statistics Offers Control, Not Certainty

To summarize our discussion: you’d have to have omniscience to know if your decision is correct. Until it’s too late, of course. With uncertainty and partial information (a sample of your population), mistakes are possible even though you’ll have done the best you can with what little you know. There’s always the possibility that you, dear little mortal, will make the terrible mistake of analyzing yourself out of a perfectly good default action. What statistics allows you to do is to control the probability of that sad event.

I may be biased in my love for statistics — I’ve been a statistician since my teens, after all — but when there are really important data-driven decisions to make, I’m deeply grateful that I’m able to have control over the risk and quality of my decision process. This is why I’m frequently baffled that decision-makers engage in the pantomime of statistics without ever availing themselves of that control panel. It defeats the entire point of all that mathematical jiujitsu!

Built In’s expert contributor network publishes thoughtful, solutions-oriented stories written by innovative tech professionals. It is the tech industry’s definitive destination for sharing compelling, first-person accounts of problem-solving on the road to innovation.


The Role of Data Analysis in Decision-Making: A Comprehensive Guide

In today’s data-driven world, organizations of all sizes are recognizing the immense value of data in making informed decisions. Data analysis has emerged as a critical process in extracting meaningful insights from raw data, enabling businesses to identify patterns, trends, and correlations that can guide strategic decision-making.

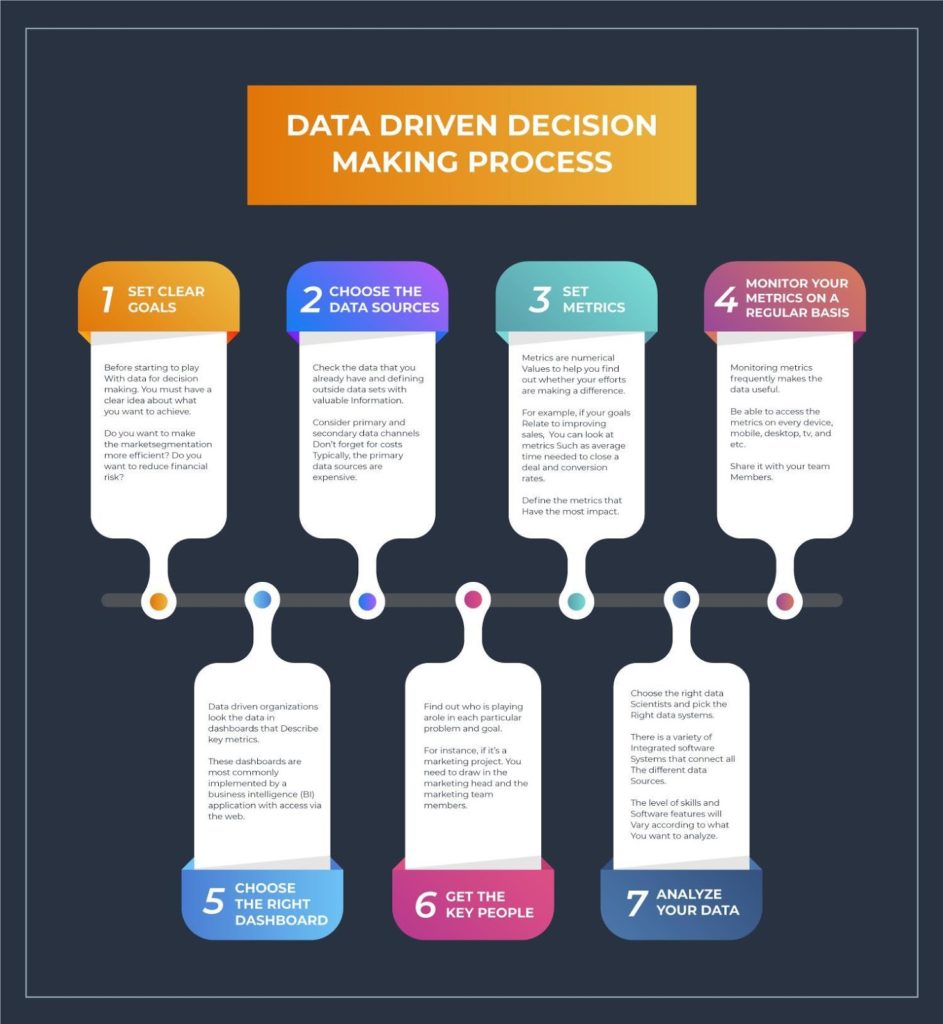

Understanding Data-Driven Decision Making

Data-driven decision-making refers to the process of making informed choices and taking actions based on objective analysis of relevant data. It involves collecting, analyzing, and interpreting data to extract meaningful insights that guide decision-making processes. By relying on data rather than intuition or assumptions, organizations can reduce biases, improve accuracy, and increase the chances of making optimal decisions.

Data-driven decision-making enables businesses to identify trends, patterns, and correlations, uncover hidden opportunities, mitigate risks, and drive strategic initiatives. It empowers decision-makers to rely on evidence-based insights, ultimately leading to better outcomes and a competitive edge in today’s data-driven world.

Understanding the Importance of Data Analysis

Types of Data Analysis Techniques

There are several techniques employed in data analysis, each serving a specific purpose. Some common types of data analysis techniques include:

Descriptive Analysis

Diagnostic Analysis

Diagnostic analysis aims to uncover the root causes of specific events or trends. By examining data and applying various statistical methods, it helps identify factors that contribute to observed outcomes, allowing organizations to address underlying issues.

Predictive Analysis

Predictive analysis makes use of statistical modeling techniques and historical data to predict future outcomes. By identifying patterns and relationships in data, organizations can make predictions about future trends, customer behavior, market dynamics, and more.

Prescriptive Analysis

Prescriptive analysis goes beyond predicting future outcomes. It suggests optimal courses of action based on the available data, taking into account constraints and objectives. It helps decision-makers explore different scenarios and make informed choices.

The Data Analysis Process

Data Collection

Gathering relevant data from various sources, ensuring data quality and accuracy.

Data Cleaning and Preparation

Cleaning and transforming the data to remove errors, inconsistencies, and missing values. This step also involves organizing and formatting the data for analysis.
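As a rough illustration of this step, the sketch below drops rows with missing values, normalizes text fields, and removes duplicates. The record layout, field names, and the function `clean_records` are hypothetical, and real pipelines typically need rules specific to their own data.

```python
def clean_records(records):
    """Drop rows with missing values and duplicates; tidy text fields."""
    cleaned, seen = [], set()
    for row in records:
        if any(value is None or value == "" for value in row.values()):
            continue  # skip rows with a missing value
        # Normalize text so " North " and "north" count as the same value.
        tidy = {
            key: value.strip().lower() if isinstance(value, str) else value
            for key, value in row.items()
        }
        fingerprint = tuple(sorted(tidy.items()))
        if fingerprint in seen:
            continue  # skip rows that duplicate an earlier one after tidying
        seen.add(fingerprint)
        cleaned.append(tidy)
    return cleaned


# Made-up records for illustration only.
raw = [
    {"region": " North ", "sales": 120},
    {"region": "north", "sales": 120},   # duplicate once case and spaces are fixed
    {"region": "South", "sales": None},  # missing value
    {"region": "East", "sales": 95},
]
print(clean_records(raw))
```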

Exploratory Data Analysis (EDA)

Statistical Analysis

Applying statistical methods to analyze data, test hypotheses, and draw meaningful conclusions. Techniques such as regression analysis, hypothesis testing, and correlation analysis are commonly used.
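Two of the techniques named above, correlation analysis and simple linear regression, can be sketched with nothing but the standard library. The variable names and numbers are made up for illustration, and the formulas are the usual Pearson correlation and least-squares line.

```python
from math import sqrt
from statistics import mean


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


def fit_line(xs, ys):
    """Least-squares slope and intercept for y = slope * x + intercept."""
    mx, my = mean(xs), mean(ys)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum(
        (x - mx) ** 2 for x in xs
    )
    return slope, my - slope * mx


# Made-up data that lies exactly on the line revenue = 2 * ad_spend + 1.
ad_spend = [1, 2, 3, 4, 5]
revenue = [3, 5, 7, 9, 11]

print(pearson_r(ad_spend, revenue))
print(fit_line(ad_spend, revenue))
```

Because the made-up points sit on a perfect line, the correlation comes out at 1 and the fitted slope and intercept recover 2 and 1; real data will be noisier.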

Data Visualization

Presenting the findings of the analysis through visual representations like charts, graphs, and dashboards to enhance understanding and facilitate decision-making.

Interpretation and Decision-Making

Interpreting the results of the analysis, extracting actionable insights, and using them to inform decision-making processes.

Tools and Technologies for Data Analysis

Data analysis relies on a variety of tools and technologies to handle and process vast amounts of data efficiently. Some popular tools include:

Spreadsheet Software

Tools like Microsoft Excel and Google Sheets offer basic data analysis capabilities, including sorting, filtering, and basic statistical functions.

Statistical Software

Business Intelligence (BI) Tools

BI tools like Tableau, Power BI, and QlikView enable businesses to create interactive visualizations, reports, and dashboards for data analysis and decision-making.

Machine Learning (ML) Platforms

ML platforms like TensorFlow and scikit-learn allow organizations to build predictive models and perform advanced data analysis tasks.

Challenges and Best Practices in Data Analysis

Data analysis comes with its own set of challenges, including data quality issues, data privacy concerns, and the need for skilled analysts. To ensure successful data analysis, organizations should adopt best practices such as:

Defining Clear Objectives

Data Governance

Continuous Learning

Encourage a culture of continuous learning and upskilling among data analysts to keep up with evolving techniques and technologies.

Companies Who Put Analytics Into Practice

Companies across various industries harness the power of analytics to gain insights, improve operational efficiency, enhance customer experiences, and drive business growth.

Netflix is renowned for its data-driven approach to content recommendations and production. By analyzing user viewing patterns, ratings, and feedback, Netflix can tailor personalized recommendations to its subscribers. Additionally, they leverage data analysis to make strategic decisions in content creation and acquisition. Netflix analyzes viewer data to identify popular genres, cast members, and storylines, which helps them develop original content that aligns with audience preferences, increasing the likelihood of successful shows and attracting and retaining subscribers.


5 Reasons Why Data Analytics is Important in Problem Solving

Data analytics is important in problem solving and it is a key sub-branch of data science. Even though there are endless data analytics applications in a business, one of the most crucial roles it plays is problem-solving.

Using data analytics not only boosts your problem-solving skills, but it also makes problem-solving a whole lot faster and more efficient by automating a majority of the long and repetitive processes.

Whether you’re a fresh university graduate or a professional who works for an organization, having top-notch problem-solving skills is a necessity and always comes in handy.

Everybody keeps facing new kinds of complex problems every day, and a lot of time is invested in overcoming these obstacles. Moreover, much valuable time is lost while trying to find solutions to unexpected problems, and your plans also get disrupted often.

This is where data analytics comes in. It lets you find and analyze the relevant data without too much human support. It’s a real time-saver and has become a necessity in problem-solving nowadays. So if you don’t already use data analytics in solving these problems, you’re probably missing out on a lot!

As the chief analytics officer of TIBCO puts it:

“Think analytically, rigorously, and systematically about a business problem and come up with a solution that leverages the available data.”

– Michael O’Connell

In this article, I will explain the importance of data analytics in problem-solving and go through the top 5 reasons why it cannot be ignored. So, let’s dive into it right away.

What is Data Analytics?

Data analytics is the practice of using automated, algorithm-driven processes to collect raw data from multiple sources and transform it. The result is data that’s ready to be studied and used for analytical purposes, such as finding trends, patterns, and so forth.

Why is Data Analytics Important in Problem Solving?

Problem-solving and data analytics often proceed hand in hand. When a particular problem is faced, everybody’s first instinct is to look for supporting data. Data analytics plays a pivotal role in finding this data and analyzing it to be used for tackling that specific problem.

Although the analytical part sometimes adds further complexities, since it’s a whole different process that might get challenging sometimes, it eventually helps you get a better hold of the situation.

Also, you come up with a more informed solution, not leaving anything out of the equation.

Having strong analytical skills helps you dig deeper into the problem and get all the insights you need. Once you have extracted enough relevant knowledge, you can proceed with solving the problem.

However, you need to make sure you’re using the right and complete data, or data analytics may backfire. Misleading data can make you believe things that don’t exist, and that’s bound to take you off track, making the problem appear more complex or simpler than it is.

Let’s look at a straightforward everyday example to examine the importance of data analytics in problem-solving: what would you do if a question appeared on your exam but didn’t provide enough data for you to solve it?

Obviously, you won’t be able to solve that problem. You need a certain level of facts and figures about the situation first, or you’ll be wandering in the dark.

However, once you get the information you need, you can analyze the situation and quickly develop a solution. Moreover, getting more and more knowledge of the situation will further ease your ability to solve the given problem. This is precisely how data analytics assists you. It eases the process of collecting information and processing it to solve real-life problems.

5 Reasons Why Data Analytics Is Important in Problem Solving

Now that we’ve established a general idea of how strongly connected analytical skills and problem-solving are, let’s dig deeper into the top 5 reasons why data analytics is important in problem-solving.

1. Uncover Hidden Details

Data analytics is great at putting the minor details out in the spotlight. Sometimes, even the most qualified data scientists might not be able to spot tiny details existing in the data used to solve a certain problem. However, computers don’t miss. This enhances your ability to solve problems, and you might be able to come up with solutions a lot quicker.

Data analytics tools have a wide variety of features that let you study the given data very thoroughly and catch any hidden or recurring trends using built-in features without needing any effort. These tools are entirely automated and require very little programming support to work. They’re great at excavating the depths of data, going back way into the past.

2. Automated Models

Automation is the future. Businesses have neither the time nor the budget to let manual workforces go through tons of data to solve business problems.

Instead, what they do is hire a data analyst who automates problem-solving processes, and once that’s done, problem-solving becomes completely independent of any human intervention.

The tools can collect, combine, clean, and transform the relevant data all by themselves and finally use it to predict the solutions. Pretty impressive, right?

However, there might be some complex problems appearing now and then, which cannot be handled by algorithms since they’re completely new and nothing similar has come up before. But a lot of the work is still done using the algorithms, and it’s only once in a blue moon that they face something that rare.

However, there’s one thing to note here; the process of automation by designing complex analytical and ML algorithms might initially be a bit challenging. Many factors need to be kept in mind, and a lot of different scenarios may occur. But once it goes up and running, you’ll be saving a significant amount of manpower as well as resources.

3. Explore Similar Problems

If you’re using a data analytics approach for solving your problems, you will have a lot of data available at your disposal. Most of the data would indirectly help you in the form of similar problems, and you only have to figure out how these problems are related.

Once you’re there, the process gets a lot smoother because you get references to how such problems were tackled in the past.

Such data is available all over the internet and is automatically extracted by the data analytics tools according to the current problems. People run into difficulties all over the world, and there’s no harm if you follow the guidelines of someone who has gone through a similar situation before.

Even though exploring similar problems is also possible without the help of data analytics, we’re generating a lot of data nowadays, and searching through tons of this data isn’t as easy as you might think. So, using analytical tools is the smart choice since they’re quite fast and will save a lot of your time.

4. Predict Future Problems

While we have already gone through the fact that data analytics tools let you analyze the data available from the past and use it to predict the solutions to the problems you’re facing in the present, it also goes the other way around.

Whenever you use data analytics to solve any present problem, the tools you’re using store the data related to the problem and save it in the form of variables forever. This way, similar problems faced in the future don’t need to be analyzed again. Instead, you can reuse the previous solutions you have, or the algorithms can predict the solutions for you even if the problems have evolved a bit.

This way, you’re not wasting any time on the problems that are recurring in nature. You jump directly onto the solution whenever you face a situation, and this makes the job quite simple.

5. Faster Data Extraction

However, with the latest tools, the time spent on data extraction is greatly reduced, and everything is done automatically with no human intervention whatsoever.

Moreover, once the appropriate data is mined and cleaned, there are not many hurdles that remain, and the rest of the processes are done without a lot of delays.

When businesses come across a problem, around 70%-80% of their time is consumed gathering the relevant data and transforming it into usable forms. So, you can estimate how quick the process could get if data analytics tools automate all of it.

Even though many of the tools are open-source, if you’re a bigger organization that can spend a bit on paid tools, problem-solving could get even better. The paid tools are literal workhorses, and in addition to generating the data, they could also develop the models to your solutions, unless it’s a very complex one, without needing any support of data analysts.

What problems can data analytics solve? 3 Real-World Examples

Employee Performance Problems

Imagine a call center with over 100 agents.

By analyzing data sets of employee attendance, productivity, and issues that tend to be delayed in resolution, you can prepare refresher training plans and mentorship plans according to the key weak areas identified.

Sales Efficiency Problems

Imagine a business that is spread out across multiple cities or regions.

By analyzing the number of sales per area, the size of the sales reps’ team, the overall income and disposable income of potential customers, you can come up with interesting insights as to why some areas sell more or less than the others. Through that, prepping a recruitment and training plan or area expansion in order to boost sales could be a good move.
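A first pass at this kind of comparison could look like the sketch below. The regions, figures, and field names are made up for illustration; the idea is simply to normalize sales by team size so that areas with different headcounts can be compared.

```python
def sales_per_rep(rows):
    """Map each region to its sales divided by the size of its sales team."""
    return {row["region"]: row["sales"] / row["reps"] for row in rows}


# Made-up figures for illustration only.
regions = [
    {"region": "North", "sales": 480, "reps": 6},
    {"region": "South", "sales": 300, "reps": 3},
    {"region": "East", "sales": 350, "reps": 7},
]

efficiency = sales_per_rep(regions)
# List regions from most to least sales per rep.
for region in sorted(efficiency, key=efficiency.get, reverse=True):
    print(f"{region}: {efficiency[region]:.0f} sales per rep")
```

In this toy data, the region with the highest total sales is not the one with the highest sales per rep, which is the kind of insight that might feed a recruitment or training plan.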

Business Investment Decisions Problems

Imagine an investor with a portfolio of apps/software.

By analyzing the number of subscribers, sales, the trends in usage, and the demographics, you can decide which piece of software has a better return on investment over the long term.

Throughout the article, we’ve seen various reasons why data analytics is very important for problem-solving.

Many different problems that may seem very complex in the start are made seamless using data analytics, and there are hundreds of analytical tools that can help us solve problems in our everyday lives.

Emidio Amadebai

I am an IT engineer who is passionate about learning and sharing. I have worked with and learned quite a bit from data engineers, data analysts, business analysts, and key decision makers for almost 5 years. I am interested in learning more about data science and how to leverage it for better decision-making in my business, and I hope to help you do the same in yours.

Data-driven decision making: A step-by-step guide

Data-driven decision making is the process of collecting data based on your company’s key performance indicators (KPIs) and transforming that data into actionable insights. This process is a crucial element of modern business strategy. In this article, we’ll discuss the benefits of data-driven decision making and provide tips so you can make informed decisions at work.

If there’s a looming decision ahead of you at work, it’s often hard to know which direction to go. If you go with your gut feeling, you may feel more confident in your choices, but will those choices be right for your team members? When you use facts to make decisions, you can feel more at ease knowing your choices are based on data and meant to maximize business impact.

Whether outshining competitors or increasing profitability, data-driven decision making is a crucial part of business strategy in the modern world. Below, we dive into the benefits of data-driven decision making and provide tips for making these decisions at work.


What is data-driven decision making (DDDM)?

![how data analysis can aid problem solving and decision making [inline illustration] What is data-driven decision making (DDDM)? (infographic)](https://assets.asana.biz/transform/44316339-5613-4dc2-8c6b-4a0b8357b4f5/inline-project-management-data-driven-decision-making-1-2x?io=transform:fill,width:2560&format=webp)

You can use business intelligence (BI) reporting tools during this process, which make big data collection fast and fruitful. These tools simplify data visualization, making data analytics accessible to those without advanced technical know-how.

What does being data-driven mean?

In short, the concept of being data-driven refers to using facts, or data, to find patterns, inferences, and insights to inform your decision making process.

Essentially, being data-driven means that you try to make decisions without bias or emotion. As a result, you can ensure that your company’s goals and roadmap are based on evidence and the patterns you’ve extracted from it, rather than what you like or dislike.

Why is data-driven decision making important?

Data-driven decision making is important because it helps you make decisions based on facts instead of biases. If you’re in a leadership position, making objective decisions is the best way to remain fair and balanced.

The most informed decisions stem from data that measures your business goals and populates in real time. You can aggregate the data you need to see patterns and make predictions with reporting software.

Some decisions you can make with support from data include:

- How to drive profits and sales

- How to establish good management behavior

- How to optimize operations

- How to improve team performance

While not every decision will have data to back it up, many of the most important decisions will.

5 steps for making data-driven decisions

Making data-driven decisions takes practice. If you want to improve your leadership skills, then you’ll need to know how to turn raw data into actionable steps that work toward your company initiatives. The following steps can help you make better decisions when analyzing data.

![how data analysis can aid problem solving and decision making [inline illustration] 5 steps for making data-driven decisions (infographic)](https://assets.asana.biz/transform/0accfea3-a273-4adf-ad41-b1cc118b6f2b/inline-project-management-data-driven-decision-making-2-2x?io=transform:fill,width:2560&format=webp)

1. Know your vision

Before you can make informed decisions, you need to understand your company’s vision for the future. This helps you use both data and strategy to form your decisions. Graphs and figures have little meaning without context to support them.

Tip: Use your company’s yearly objectives and key results (OKRs) or quarterly team KPIs to make data-backed decisions.

2. Find data sources

Once you’ve identified the goal you’re working towards, you can start collecting data.

The tools and data sources you use will depend on the type of data you’re collecting. If your goal is to analyze data sets pertaining to internal company processes, use a universal reporting tool. Reporting tools offer a single point of reference for keeping track of how work across your organization is progressing. Some reporting tools like Microsoft’s Power BI let you gather data from various external sources. If you want to analyze marketing trends or competitor metrics, you can use one of those tools.

Some general success metrics you may want to measure include:

- Gross profit margin: Gross profit is the company's net sales minus the cost of goods sold. Gross profit margin expresses that figure as a percentage of net sales.

- Return on investment (ROI): The ratio of the net return on an investment to its cost, ROI is commonly used to decide whether or not an initiative is worth investing time or money in. When used as a business metric, it often tracks how well an investment is performing.

- Productivity: This is the measurement of how efficiently your company is producing goods or services. You can calculate this by dividing the total output by the total input.

- Total number of customers: This is a simple but effective metric to track. The more paying customers, the more money earned for the business.

- Recurring revenue: Commonly used by SaaS companies, this is the amount of revenue generated by all of your current active subscribers during a specific period. It's commonly measured either monthly or annually.
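As a rough illustration, the metrics above can be computed with a few lines of Python. This is a minimal sketch, not a reporting tool: the function names and all figures are hypothetical, gross profit margin is taken as gross profit divided by net sales, and ROI is taken as net profit divided by the cost of the investment.

```python
from typing import Iterable


def gross_profit_margin(net_sales: float, cost_of_goods_sold: float) -> float:
    """Gross profit (net sales minus COGS) as a fraction of net sales."""
    return (net_sales - cost_of_goods_sold) / net_sales


def return_on_investment(net_profit: float, investment_cost: float) -> float:
    """Net profit from an investment as a fraction of what it cost."""
    return net_profit / investment_cost


def productivity(total_output: float, total_input: float) -> float:
    """Units of output produced per unit of input."""
    return total_output / total_input


def recurring_revenue(subscription_fees: Iterable[float]) -> float:
    """Sum of the fees paid by all active subscribers in one period."""
    return sum(subscription_fees)


# Hypothetical figures, for illustration only.
margin = gross_profit_margin(net_sales=200_000, cost_of_goods_sold=120_000)
roi = return_on_investment(net_profit=5_000, investment_cost=20_000)
units_per_hour = productivity(total_output=1_200, total_input=400)
mrr = recurring_revenue([50, 50, 100])

print(f"Gross profit margin: {margin:.0%}")
print(f"ROI: {roi:.0%}")
print(f"Productivity: {units_per_hour} units per hour")
print(f"Monthly recurring revenue: {mrr}")
```

Keeping each metric in its own small function makes it easier to compare them side by side when you look for connections between them.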

You can measure a variety of other data sets based on your job role and the vision you’re working toward. Machine learning makes aggregating real time data simpler than ever before.

Tip: Try to create a connected story through these metrics. If revenue is down, look at productivity and see if you can draw a connection. Keep digging through these metrics until you find a “why” for whatever problem you’re trying to solve.

3. Organize your data

Organizing your data to improve data visualization is crucial for making effective business decisions. If you can’t see all your relevant data in one place and understand how it connects, then it’s difficult to ensure you’re making the most informed decisions.

Tip: One way to organize your data is with an executive dashboard. An executive dashboard is a customizable interface that usually comes as a feature of your universal reporting tool. This dashboard will display the data that’s most critical to achieving your goals, whether those goals are strategic, tactical, analytical, or operational.

![how data analysis can aid problem solving and decision making [Product UI] Universal reporting interactive dashboards in Asana (Search & Reporting)](https://assets.asana.biz/transform/4a845a7b-5c6d-4e87-9968-4f67d30e46da/TG23-web-hero-039-optimization-project-goals2-static-2x?io=transform:fill,width:2560&format=webp)

4. Perform data analysis

Once you’ve organized your data, you can begin your data-driven analysis. This is when you’ll extract actionable insights from your data that will help you in the decision-making process.

Depending on your goals, you may want to analyze the data from your executive dashboard in tandem with user research such as case studies, surveys, or testimonials so your conclusions include the customer experience.

Does your team want to improve its SEO tool to make it more competitive with other options on the market? The data sets you can use to determine necessary improvements may include:

- Competitors’ performance data

- Current SEO software performance data

- Current customer satisfaction data

- User research on a variety of SEO/marketing tools

While some of this information will come from your organization, you may need to obtain some of it from external sources. Analyzing these data sets as a whole can be helpful because you’ll draw a different conclusion than you would if you were to analyze each data set individually.

Tip: Share your analytics tools with your whole team or organization. Just like any collaborative effort, data analysis is most effective when viewed from many perspectives. While you may notice one pattern in the data, it’s entirely possible a teammate may see something completely different.

5. Draw conclusions

As you perform your data analysis, you’ll likely begin to draw conclusions about what you see. However, your conclusions deserve their own section because it’s important to flesh out what you see in the data so you can share your findings with others.

The main questions to ask yourself when drawing conclusions include:

- What am I seeing that I already knew about this data?

- What new information did I learn from this data?

- How can I use the information I’ve gained to meet my business goals?

Once you can answer these questions, you’ve successfully performed data analysis and should be ready to make data-driven decisions for your business.

Tip: A natural next step after data analysis is writing down some SMART goals. Now that you’ve dug into the facts, you can establish achievable goals based on what you’ve learned.

Data-driven decision making examples

While the data analysis itself happens behind the scenes, the way data-driven decisions affect the consumer is very apparent. Some examples of data-driven decision making in different industries include:

E-commerce

Have you ever been shopping online and wondered why you’re getting certain recommendations? Well, it’s probably because you bought something similar in the past or clicked on a certain product.

Online marketplaces like Amazon track customer journeys and use metrics like click-through rate and bounce rate to identify what items you’re engaging with most. Using this data, retailers are able to show you what you might want without you having to search for it.

Finance

Financial institutions use data in a multitude of different ways, ranging from assessing risk to customer segmentation. Risk is especially prevalent in the financial sector, so it’s important that companies are able to determine the risk factor before making any significant decisions. Historical data is the best way to understand potential risks, threats, and the likelihood that they occur.

Financial institutions also use customer data to determine their target market. By grouping consumers based on socioeconomic status, spending habits, and more, financial companies can infer which consumers have the greatest lifetime value and target them.

Transportation

Data science additionally plays a huge role in determining safe transportation. The U.S. Department of Transportation’s Safety Data Initiative underscores the role that data plays in improving transportation safety.

The report pulls data from all types of motor crashes and evaluates factors like weather and road conditions to discover the source of problems. Using the hard facts, the department can work toward implementing more safety measures.

Benefits of data-driven decision making

Analytics-based decision making is more than just a helpful skill—it’s a crucial one if you want to lead by example and foster a data-driven culture.

When you use data to make decisions, you can ensure your business remains fair, goal-oriented, and focused on improvement.

![how data analysis can aid problem solving and decision making [inline illustration] Benefits of data driven decision-making (infographic)](https://assets.asana.biz/transform/4832ff3d-bbd5-4458-92db-f360cf5fab84/inline-project-management-data-driven-decision-making-3-2x?io=transform:fill,width:2560&format=webp)

Make confident decisions

The businesses that outlast their competitors do so because they’re confident in their ability to succeed. If the decision-makers within a business waver in their choices, it can lead to mistakes, high team member turnover, and poor risk management.

When you use data to make the most important business decisions, you’ll feel confident in those decisions, which will push you and your team forward. Confidence can lead to higher team morale and better performance.

Guard against biases

Using data to make decisions will guard against any biases among business leaders. While you may not be aware of your biases, having internal favoritism or values can affect the way you make decisions.

Making decisions directly based on the facts and numbers keeps your decisions objective and fair. It also means you have something to back up your decisions when team members or stakeholders ask why you chose to do what you did.

Find unresolved questions

Without using data, there are many questions that go unanswered. There may also be questions you didn’t know you had until your data sets revealed them. Any amount of data can benefit your team by providing better visualization into areas you can’t see without statistics, graphs, and charts.

When you bring those questions to the surface, you can feel confident knowing your decisions were made by considering every bit of relevant information.

Set measurable goals

Using data is one of the simplest ways to set measurable goals for your team and successfully meet those goals. By looking at internal data on past performance, you can determine what you need to improve and get as granular as possible with your targets. For example, your team may use data to identify the following goals:

- Increase number of customers by 20% year over year

- Reduce overall budget spend by $20,000 each quarter

- Reduce project budget spend by $500

- Increase hiring by 10 team members each quarter

- Reduce cost per hire by $500

Without data, it would be difficult for your company to see where they’re spending their money and where they’d like to cut costs. Setting measurable goals ultimately leads to data-driven decisions because once these goals are set, you’ll determine how to reduce the overall budget or increase the number of customers.

Improve company processes

There are ways to improve company processes without using data, but when you observe trends in team member performance using numbers or analyzing company spending patterns with graphs, the process improvements you make will be based on more than observation alone.

Processes you can improve with data may include:

- Risk management based on financial data

- Cost estimation based on market pricing data

- Team member onboarding based on new hire performance data

- Customer service based on customer feedback data

Changing a company process can be difficult if you aren’t sure about the result, but you can be confident in your decisions when the facts are in front of you.

Tips for becoming more data-driven

Data-driven organizations are able to parse through the numbers and charts and find the meaning behind them. Creating a more data-driven culture starts with simply using data more often. However, this is easier said than done. If you’re ready to get started, try these tips to become more data-driven.

![how data analysis can aid problem solving and decision making [inline illustration] tips for becoming more data-driven (infographic)](https://assets.asana.biz/transform/edfeb377-175f-4f21-adfc-a6b18d719c1c/inline-project-management-data-driven-decision-making-1-2x?io=transform:fill,width:2560&format=webp)

Find the story

The key to analyzing data, numbers, and charts is to look for the story. Without the “why,” the data itself isn’t much help, and the decision process is far more difficult. If you’re trying to become more data-driven in your decision making, look for the story the data is telling. This will be integral in making the right decisions.

Consult the data

Before making any organizational decision, ask yourself: Does the data support this? Data is everywhere and can be applied to any major decision. So why not consult it when making tough choices? Data is so helpful because it’s naturally void of bias, so make sure you’re consulting the facts before any decision.

Learn data visualization

Finding the story behind the data becomes easier when you’re able to visualize it clearly. While learning how to visualize data is often the toughest aspect of establishing a data-driven culture, it’s the best way to recognize patterns and discrepancies in the data.

Familiarize yourself with different tools and techniques for data visualization. Try to get creative with the different ways to present data. If you’re well-versed in data visualization, your data storytelling skills will skyrocket.

Make data-driven decisions easy with reporting software

You’ll need the right data in front of you to make meaningful decisions for your team. Universal reporting software aggregates data from your company and presents it on your executive dashboard so you can view it in an organized and graphical way.


How to analyze a problem

May 7, 2023 Companies that harness the power of data have the upper hand when it comes to problem solving. Rather than defaulting to solving problems by developing lengthy—sometimes multiyear—road maps, they’re empowered to ask how innovative data techniques could resolve challenges in hours, days or weeks, write senior partner Kayvaun Rowshankish and coauthors. But when organizations have more data than ever at their disposal, which data should they leverage to analyze a problem? Before jumping in, it’s crucial to plan the analysis, decide which analytical tools to use, and ensure rigor. Check out these insights to uncover ways data can take your problem-solving techniques to the next level, and stay tuned for an upcoming post on the potential power of generative AI in problem-solving.


What is Data Analysis?

Data are everywhere nowadays. And with each passing year, the amount of data we are producing will only continue to increase.

There is a large amount of data available, but what do we do with all that data? How is it all used? And what does all that data mean?

It’s not much use if we just collect and store data in a spreadsheet or database and don't look at it, explore it, or research it.

Data analysts use tools and processes to derive meaning from data. They are responsible for collecting, manipulating, investigating, and analyzing data in order to gather insights and gain knowledge from it.

This is one of the reasons data analysts are in very high demand: they play an integral role in business and science.

In this article, I will first go over what data analysis means as a term and explain why it is so important.

I will also break down the data analysis process and list some of the necessary skills required for conducting data analysis.

Here is an overview of what we will cover:

- What is data?

- What is data analysis?

- Why is data analysis important?

  - Effective customer targeting

  - Measure success and performance

  - Problem solving

- An overview of the data analysis process

  - Step 1: recognising and identifying the questions that need answering

  - Step 2: collecting raw data

  - Step 3: cleaning the data

  - Step 4: analyzing the data

  - Step 5: sharing the results

- What skills are required for data analysis?

  - A good grasp of maths and statistics

  - Knowledge of SQL and relational databases

  - Knowledge of a programming language

  - Knowledge of data visualization tools

  - Knowledge of Excel

What Is Data? Meaning and Definition of Data

Data refers to collections of facts and individual pieces of information.

Data is vital for decision-making, planning, and even telling a story.

There are two broad and general types of data:

- Qualitative data

- Quantitative data

Qualitative data is data expressed in non-numerical characters.

It is expressed as images, videos, text documents, or audio.

This type of data can’t be measured or counted.

It is used to determine how people feel about something – it’s about people's feelings, motivations, opinions, perceptions and involves bias.

It is descriptive and aims to answer questions such as ‘Why’, ‘How’, and ‘What’.

Qualitative data is gathered from observations, surveys, or user interviews.

Quantitative data is expressed in numerical characters.

This type of data is countable, measurable, and comparable.

It deals with amounts and involves things such as quantities and averages.

It aims to answer questions such as ‘How much’, ‘How many’, ‘How often’, and ‘How long’.

The act of collecting, analyzing, and interpreting quantitative data is known as performing statistical analysis.

Statistical analysis helps uncover underlying patterns and trends in data.
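To make this concrete, here is a minimal sketch of a basic statistical summary using Python's built-in `statistics` module. The daily order counts are made-up numbers chosen for illustration, and comparing two weekly averages is only one simple way to look for a trend.

```python
import statistics

# Hypothetical quantitative data: number of orders per day over two weeks.
daily_orders = [12, 15, 11, 14, 18, 21, 19, 13, 16, 12, 15, 20, 22, 18]

# Summarize the data set with a few common descriptive statistics.
summary = {
    "count": len(daily_orders),
    "mean": statistics.mean(daily_orders),
    "median": statistics.median(daily_orders),
    "stdev": statistics.stdev(daily_orders),
    "min": min(daily_orders),
    "max": max(daily_orders),
}

# Compare the two weeks to see whether orders are trending up or down.
week_one_mean = statistics.mean(daily_orders[:7])
week_two_mean = statistics.mean(daily_orders[7:])

for name, value in summary.items():
    print(f"{name}: {value}")
print(f"week one average: {week_one_mean:.2f}")
print(f"week two average: {week_two_mean:.2f}")
```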

What Is Data Analysis? A Definition For Beginners

Data analysis is the act of turning raw, messy data into useful insights by cleaning the data up, transforming it, manipulating it, and inspecting it.

The insights gathered from the data are then presented visually in the form of charts, graphs, or dashboards.

The insights discovered can help aid the company’s or organization’s growth. Decision-makers will be able to come to an actionable conclusion and make the right business decisions.

Extracting knowledge from raw data will help the company/organization take steps towards achieving greater customer reach, improving performance, and increasing profit.

At its core, data analysis is about identifying and predicting trends and figuring out patterns, correlations, and relationships in the available data, and finding solutions to complex problems.

Why Is Data Analysis Important?

Data equals knowledge.

This means that data analysis is integral for every business.

It can be useful and greatly beneficial for every department, whether it's administration, accounting, logistics, marketing, design, or engineering, to name a few.

Below I will explain why exploring data and giving data context and meaning is really important.

Data Analysis Improves Customer Targeting

By analyzing data, you understand your competitors, and you will be able to match your product/service to the current market needs.

It also helps you determine the appropriate audience and demographic best suited to your product or service.

This way, you will be able to come up with an effective pricing strategy to make sure that your product/service will be profitable.

You will also be able to create more targeted campaigns and know what methods and forms of advertising and content to use to reach your audience directly and effectively.

Knowing the right audience for your product or service will transform your whole strategy. It will become more customer-oriented and customized to fit customers' needs.

Essentially, with the appropriate information and tools, you will be able to figure out how your product or service can be of value and high quality.

You'll also be able to make sure that your product or service helps solve a problem for your customers.

This is especially important in the product development phases since it cuts down on expenses and saves time.

Data Analysis Measures Success and Performance

By analyzing data, you can measure how well your product/service performs in the market compared to others.

You are able to identify the stronger areas that have seen the most success and desired results. And you will be able to identify weaker areas that are facing problems.

Additionally, you can predict what areas could possibly face problems before the problem actually occurs. This way, you can take action and prevent the problem from happening.

Analyzing data will give you a better idea of what you should focus more on and what you should focus less on going forward.

By creating performance maps, you can then go on to set goals and identify potential opportunities.

Data Analysis Can Aid Problem Solving

By performing data analysis on relevant, correct, and accurate data, you will have a better understanding of the right choices you need to make and how to make more informed and wiser decisions.

Data analysis means having better insights, which helps improve decision-making and leads to solving problems.

All the above will help a business grow.

Not analyzing data, or having insufficient data, could be one of the reasons why your business is not growing.

If that is the case, performing data analysis will help you come up with a more effective strategy for the future.

And if your business is growing, analyzing data will help it grow even further.

It will help reach its full potential and meet different goals – such as boosting customer retention, finding new customers, or providing a smoother and more pleasant customer experience.

An Overview Of The Data Analysis Process

Step 1: Recognising And Identifying The Questions That Need Answering

The first step in the data analysis process is setting a clear objective.

Before setting out to gather a large amount of data, it is important to think of why you are actually performing the data analysis in the first place.

What problem are you trying to solve?

What is the purpose of this data analysis?

What are you trying to do?

What do you want to achieve?

What is the end goal?

What do you want to gain from the analysis?

Why do you even need data analysis?

At this stage, it is paramount to have an insight and understanding of your business goals.

Start by defining the right questions you want to answer and the immediate and long-term business goals.

Identify what is needed for the analysis, what kind of data you would need, what data you want to track and measure, and think of a specific problem you want to solve.

Step 2: Collecting Raw Data

The next step is to identify what type of data you want to collect – whether it will be qualitative (non-numerical, descriptive) or quantitative (numerical).

The way you go about collecting the data and the sources you gather from will depend on whether it is qualitative or quantitative.

Some of the ways you could collect relevant and suitable data are:

- By viewing the results of user groups, surveys, forms, questionnaires, internal documents, and interviews that have already been conducted in the business.

- By viewing customer reviews and feedback on customer satisfaction.

- By viewing transactions and purchase history records, as well as sales and financial figure reports created by the finance or marketing department of the business.

- By using a customer relationship management system (CRM) in the company.

- By monitoring website and social media activity and monthly visitors.

- By monitoring social media engagement.

- By tracking commonly searched keywords and search queries.

- By checking which ads are regularly clicked on.

- By checking customer conversion rates.

- By checking email open rates.

- By comparing the company’s data to competitors using third-party services.

- By querying a database.

- By gathering data through open data sets using web scraping. Web scraping is the act of extracting and collecting data and content from websites.

Step 3: Cleaning The Data

Once you have gathered the data from multiple sources, it is important to understand the structure of that data.

It is also important to check if you have gathered all the data you needed and if any crucial data is missing.

If you used multiple sources for the data collection, your data will likely be unstructured.

Raw, unstructured data is not usable. Not all data is necessarily good data.

Cleaning data is arguably the most important part of the data analysis process, and it is often where data analysts spend much of their time.

Data needs to be cleaned, which means correcting errors, polishing, and sorting through the data.

This could include:

- Looking for outliers (values that are unusually big or small).

- Fixing typos.

- Removing errors.

- Removing duplicate data.

- Managing inconsistencies in the format.

- Checking for missing values or correcting incorrect data.

- Checking for inconsistencies.

- Getting rid of irrelevant data and data that is not useful or needed for the analysis.

This step will ensure that you are focusing on and analyzing the correct and appropriate data and that your data is high-quality.

If you analyze irrelevant or incorrect data, it will affect the results of your analysis and have a negative impact overall.

So, the accuracy of your end analysis will depend on this step.
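Several of the cleaning tasks listed above can be sketched in plain Python. The example below is only an illustration under stated assumptions: the records and field names are hypothetical, rows with a missing value are simply dropped (one possible policy among several), and outliers are flagged with the common 1.5 × interquartile range rule rather than removed automatically.

```python
import statistics

# Hypothetical raw records merged from two sources.
raw_records = [
    {"customer": " Alice ", "country": "uk", "order_total": "120.50"},
    {"customer": "Bob", "country": "UK", "order_total": "95"},
    {"customer": "Bob", "country": "UK", "order_total": "95"},      # duplicate
    {"customer": "Carla", "country": "U.K.", "order_total": ""},    # missing value
    {"customer": "Dan", "country": "UK", "order_total": "110"},
    {"customer": "Eve", "country": "UK", "order_total": "105"},
    {"customer": "Frank", "country": "UK", "order_total": "9999"},  # suspicious
]


def clean(records):
    """Normalize formats, drop rows with missing totals, remove duplicates."""
    cleaned, seen = [], set()
    for record in records:
        name = record["customer"].strip()
        country = record["country"].replace(".", "").upper()
        total_text = record["order_total"].strip()
        if not total_text:
            continue  # assumption: rows without a total are not usable
        row = (name, country, float(total_text))
        if row in seen:
            continue  # exact duplicate of an earlier row
        seen.add(row)
        cleaned.append({"customer": name, "country": country, "order_total": row[2]})
    return cleaned


def flag_outliers(rows, k=1.5):
    """Return rows whose total lies more than k * IQR outside the quartiles."""
    totals = [row["order_total"] for row in rows]
    q1, _, q3 = statistics.quantiles(totals, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [row for row in rows if not low <= row["order_total"] <= high]


cleaned = clean(raw_records)
outliers = flag_outliers(cleaned)

print(f"{len(raw_records)} raw rows, {len(cleaned)} after cleaning")
print("Rows to review as possible outliers:", [row["customer"] for row in outliers])
```

Flagging outliers for review instead of deleting them keeps the decision with the analyst, since an unusually large value may be a typo or a genuine large order.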

Step 4: Analyzing The Data

The next step is to analyze the data based on the questions and objectives from step 1.

There are four different data analysis techniques used, and they depend on the goals and aims of the business:

- Descriptive Analysis: This step is the initial and fundamental step in the analysis process. It provides a summary of the collected data and aims to answer the question: “What happened?” It goes over the key points in the data and emphasizes what has already taken place.

- Diagnostic Analysis: This step is about using the collected data and trying to understand the cause behind the issue at hand and identify patterns. It aims to answer the question: “Why has this happened?”

- Predictive Analysis: This step is about detecting and predicting future trends and is important for the future growth of the business. It aims to answer the question: “What is likely to happen in the future?”

- Prescriptive Analysis: This step is about gathering all the insights from the three previous steps, making recommendations for the future, and creating an actionable plan. It aims to answer the question: “What needs to be done?”
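The difference between descriptive and predictive analysis can be shown with a small sketch. The monthly sales figures are hypothetical, and the straight-line least-squares fit is just one simple forecasting approach chosen for illustration. Real predictive work usually calls for more data and more careful modelling.

```python
import statistics

# Hypothetical monthly sales figures (in thousands) for six months.
monthly_sales = [100, 104, 110, 113, 119, 124]

# Descriptive analysis: summarize what has already happened.
average_sales = statistics.mean(monthly_sales)
total_growth = monthly_sales[-1] - monthly_sales[0]


def fit_line(values):
    """Fit y = slope * x + intercept by ordinary least squares, x = 0, 1, 2, ..."""
    n = len(values)
    xs = range(n)
    x_mean = sum(xs) / n
    y_mean = sum(values) / n
    covariance = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, values))
    variance = sum((x - x_mean) ** 2 for x in xs)
    slope = covariance / variance
    intercept = y_mean - slope * x_mean
    return slope, intercept


# Predictive analysis: extend the fitted trend one month into the future.
slope, intercept = fit_line(monthly_sales)
next_month_forecast = slope * len(monthly_sales) + intercept

print(f"Average monthly sales: {average_sales:.1f}")
print(f"Growth over the period: {total_growth}")
print(f"Estimated trend per month: {slope:.1f}")
print(f"Forecast for next month: {next_month_forecast:.1f}")
```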

Step 5: Sharing The Results

The last step is to interpret your findings.

This is usually done by creating reports, charts, graphs, or interactive dashboards using data visualization tools.

All the above will help support the presentation of your findings and the results of your analysis to stakeholders, business executives, and decision-makers.

Data analysts are storytellers, which means having strong communication skills is important.

They need to showcase the findings and present the results in a clear, concise, and straightforward way by taking the data and creating a narrative.

This step will influence decision-making and the future steps of the business.

What Skills Are Required For Data Analysis?

A good grasp of math and statistics.

The amount of math you will use as a data analyst varies from job to job. Some roles require more of it than others.

You don’t necessarily need to be a math wizard, but with that said, having at least a fundamental understanding of math basics can be of great help.

Here are some math courses to get you started:

- College Algebra – Learn College Math Prerequisites with this Free 7-Hour Course

- Precalculus – Learn College Math Prerequisites with this Free 5-Hour Course

- Math for Programmers Course

Data analysts need to have good knowledge of statistics and probability for gathering and analyzing data, figuring out patterns, and drawing conclusions from the data.

To get started, take an intro to statistics course, and then you can move on to more advanced topics:

- Learn College-level Statistics in this free 8-hour course

- If you want to learn Data Science, take a few of these statistics classes

Data analysts need to know how to interact with relational databases to extract data.

A database is an electronic storage location for data, organized so that the data can be easily searched and retrieved.

A relational database stores data in a structured format, typically in tables, and the stored data items have pre-defined relationships with each other.

SQL stands for Structured Query Language and is the language used for querying and interacting with relational databases.

By writing SQL queries you can perform CRUD (Create, Read, Update, and Delete) operations on data.
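As a rough illustration of the four CRUD operations, the sketch below runs SQL statements against a temporary in-memory SQLite database using Python's built-in `sqlite3` module. The `customers` table and its rows are made up for the example, and other database systems may use slightly different SQL dialects.

```python
import sqlite3

# An in-memory database that disappears when the connection closes
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL, city TEXT)"
)

# Create: insert new rows
conn.executemany(
    "INSERT INTO customers (name, city) VALUES (?, ?)",
    [("Ada", "London"), ("Grace", "New York")],
)

# Read: query the rows back
rows = conn.execute("SELECT id, name, city FROM customers ORDER BY id").fetchall()
print(rows)

# Update: change an existing row
conn.execute("UPDATE customers SET city = ? WHERE name = ?", ("Paris", "Ada"))

# Delete: remove a row
conn.execute("DELETE FROM customers WHERE name = ?", ("Grace",))

remaining = conn.execute("SELECT name, city FROM customers").fetchall()
print(remaining)

conn.close()
```

The `?` placeholders pass values separately from the SQL text, which avoids building queries by string concatenation.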

To learn SQL, check out the following resources:

- SQL Commands Cheat Sheet – How to Learn SQL in 10 Minutes

- Learn SQL – Free Relational Database Courses for Beginners

- Relational Database Certification

Knowledge Of A Programming Language

To further organize and manipulate databases, data analysts benefit from knowing a programming language.

Two of the most popular ones used in the data analysis field are Python and R.

Python is a general-purpose programming language, and it is very beginner-friendly thanks to its syntax that resembles the English language. It is also one of the most used technical tools for data analysis.

Python offers a wealth of packages and libraries for data manipulation, such as Pandas and NumPy, as well as for data visualization, such as Matplotlib.

To get started, first see how to go about learning Python as a complete beginner.

Once you understand the fundamentals, you can move on to learning about Pandas, NumPy, and Matplotlib.

Here are some resources to get you started:

- How to Get Started with Pandas in Python – a Beginner's Guide

- The Ultimate Guide to the Pandas Library for Data Science in Python

- The Ultimate Guide to the NumPy Package for Scientific Computing in Python

- Learn NumPy and start doing scientific computing in Python

- How to Analyze Data with Python, Pandas & Numpy - 10 Hour Course

- Matplotlib Course – Learn Python Data Visualization

- Python Data Science – A Free 12-Hour Course for Beginners. Learn Pandas, NumPy, Matplotlib, and More.

R is a language used for statistical analysis and data analysis. That said, it is not as beginner-friendly as Python.

To get started learning it, check out the following courses:

- R Programming Language Explained

- Learn R programming language basics in just 2 hours with this free course on statistical programming

Data visualization is the graphical interpretation and presentation of data.

This includes creating graphs, charts, interactive dashboards, or maps that can be easily shared with other team members and important stakeholders.

Data visualization tools are essentially used to tell a story with data and drive decision-making.

One of the most popular data visualization tools used is Tableau.

To learn Tableau, check out the following course:

- Tableau for Data Science and Data Visualization - Crash Course

Excel is one of the most essential tools used in data analysis.

It is used for storing, structuring, and formatting data, performing calculations, summarizing data and identifying trends, sorting data into categories, and creating reports.

You can also use Excel to create charts and graphs.

To learn how to use Excel, check out the following courses:

- Learn Microsoft Excel - Full Video Course

- Excel Classes Online – 11 Free Excel Training Courses

- Data Analysis with Python for Excel Users Course

This marks the end of the article – thank you so much for making it to the end!

Hopefully this guide was helpful, and it gave you some insight into what data analysis is, why it is important, and what skills you need to enter the field.

Thank you for reading!

