Purdue Online Writing Lab Purdue OWL® College of Liberal Arts

Writing a Literature Review


This page is brought to you by the OWL at Purdue University. When printing this page, you must include the entire legal notice.

Copyright ©1995-2018 by The Writing Lab & The OWL at Purdue and Purdue University. All rights reserved. This material may not be published, reproduced, broadcast, rewritten, or redistributed without permission. Use of this site constitutes acceptance of our terms and conditions of fair use.

A literature review is a document or section of a document that collects key sources on a topic and discusses those sources in conversation with each other (also called synthesis). The lit review is an important genre in many disciplines, not just literature (i.e., the study of works of literature such as novels and plays). When we say “literature review” or refer to “the literature,” we are talking about the research (scholarship) in a given field. You will often see the terms “the research,” “the scholarship,” and “the literature” used mostly interchangeably.

Where, when, and why would I write a lit review?

There are a number of different situations where you might write a literature review, each with slightly different expectations; different disciplines, too, have field-specific expectations for what a literature review is and does. For instance, in the humanities, authors might include more overt argumentation and interpretation of source material in their literature reviews, whereas in the sciences, authors are more likely to report study designs and results in their literature reviews; these differences reflect these disciplines’ purposes and conventions in scholarship. You should always look at examples from your own discipline and talk to professors or mentors in your field to be sure you understand your discipline’s conventions, for literature reviews as well as for any other genre.

A literature review can be part of a research paper or scholarly article, usually falling after the introduction and before the methods section. In these cases, the lit review just needs to cover scholarship that is important to the issue you are writing about; sometimes it will also cover key sources that informed your research methodology.

Lit reviews can also be standalone pieces, either as assignments in a class or as publications. In a class, a lit review may be assigned to help students familiarize themselves with a topic and with scholarship in their field, get an idea of the other researchers working on the topic they’re interested in, find gaps in existing research in order to propose new projects, and/or develop a theoretical framework and methodology for later research. As a publication, a lit review usually is meant to help make other scholars’ lives easier by collecting and summarizing, synthesizing, and analyzing existing research on a topic. This can be especially helpful for students or scholars getting into a new research area, or for directing an entire community of scholars toward questions that have not yet been answered.

What are the parts of a lit review?

Most lit reviews use a basic introduction-body-conclusion structure; if your lit review is part of a larger paper, the introduction and conclusion pieces may be just a few sentences while you focus most of your attention on the body. If your lit review is a standalone piece, the introduction and conclusion take up more space and give you a place to discuss your goals, research methods, and conclusions separately from where you discuss the literature itself.

Introduction:

- An introductory paragraph that explains what your working topic and thesis are

- A forecast of key topics or texts that will appear in the review

- Potentially, a description of how you found sources and how you analyzed them for inclusion and discussion in the review (more often found in published, standalone literature reviews than in lit review sections in an article or research paper)

Body:

- Summarize and synthesize: Give an overview of the main points of each source and combine them into a coherent whole

- Analyze and interpret: Don’t just paraphrase other researchers; add your own interpretations where possible, discussing the significance of findings in relation to the literature as a whole

- Critically evaluate: Mention the strengths and weaknesses of your sources

- Write in well-structured paragraphs: Use transition words and topic sentences to draw connections, comparisons, and contrasts.

Conclusion:

- Summarize the key findings you have taken from the literature and emphasize their significance

- Connect it back to your primary research question

How should I organize my lit review?

Lit reviews can take many different organizational patterns depending on what you are trying to accomplish with the review. Here are some examples:

- Chronological: The simplest approach is to trace the development of the topic over time, which helps familiarize the audience with the topic (for instance, if you are introducing something that is not commonly known in your field). If you choose this strategy, be careful to avoid simply listing and summarizing sources in order. Try to analyze the patterns, turning points, and key debates that have shaped the direction of the field. Give your interpretation of how and why certain developments occurred (as mentioned previously, this may not be appropriate in your discipline; check with a teacher or mentor if you’re unsure).

- Thematic: If you have found some recurring central themes that you will continue working with throughout your piece, you can organize your literature review into subsections that address different aspects of the topic. For example, if you are reviewing literature about women and religion, key themes could include the role of women in churches and religious attitudes toward women.

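- Methodological: You can also group sources by the research approach they take and compare the results and conclusions that emerge from each approach, for example:

  - Qualitative versus quantitative research

  - Empirical versus theoretical scholarship

  - Dividing the research by sociological, historical, or cultural sources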

- Theoretical: In many humanities articles, the literature review is the foundation for the theoretical framework. You can use it to discuss various theories, models, and definitions of key concepts. You can argue for the relevance of a specific theoretical approach or combine various theoretical concepts to create a framework for your research.

What are some strategies or tips I can use while writing my lit review?

Any lit review is only as good as the research it discusses; make sure your sources are well-chosen and your research is thorough. Don’t be afraid to do more research if you discover a new thread as you’re writing. More information on the research process is available in our "Conducting Research" resources.

As you’re doing your research, create an annotated bibliography (see our page on this type of document). Much of the information used in an annotated bibliography can also be used in a literature review, so you’ll be not only partially drafting your lit review as you research, but also developing your sense of the larger conversation going on among scholars, professionals, and any other stakeholders in your topic.

Usually you will need to synthesize research rather than just summarizing it. This means drawing connections between sources to create a picture of the scholarly conversation on a topic over time. Many student writers struggle to synthesize because they feel they don’t have anything to add to the scholars they are citing; here are some strategies to help you:

- It often helps to remember that the point of these kinds of syntheses is to show your readers how you understand your research, to help them read the rest of your paper.

- Writing teachers often say synthesis is like hosting a dinner party: imagine all your sources are together in a room, discussing your topic. What are they saying to each other?

- Look at the in-text citations in each paragraph. Are you citing just one source for each paragraph? This usually indicates summary only. When you have multiple sources cited in a paragraph, you are more likely to be synthesizing them (not always, but often).

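The citation-counting check described in the list above can be roughed out in code. The sketch below is a hypothetical helper, not an OWL tool: it assumes your draft separates paragraphs with blank lines and uses simple parenthetical author-year citations such as “(Thomas et al., 2004)”. It counts the distinct sources cited in each paragraph and flags paragraphs that cite one source or none, which may be summary only. Narrative citations like “Pautasso (2013)” and grouped citations separated by semicolons are not detected, so treat the output as a prompt to reread a paragraph, not a verdict.

```python
import re

# Simple parenthetical author-year citation, e.g. "(Thomas et al., 2004)"
# or "(Parmesan & Yohe, 2003)". Brackets containing ";" are not matched.
CITATION = re.compile(r"\(([^();]+?),\s*(\d{4})\)")


def sources_per_paragraph(draft):
    """Return (paragraph_number, distinct_source_count) for each paragraph."""
    paragraphs = [p for p in draft.split("\n\n") if p.strip()]
    report = []
    for number, paragraph in enumerate(paragraphs, start=1):
        # A set, so citing the same source twice in a paragraph counts once.
        cited = {(author.strip(), year) for author, year in CITATION.findall(paragraph)}
        report.append((number, len(cited)))
    return report


def flag_possible_summary(draft):
    """Paragraph numbers that cite at most one distinct source."""
    return [n for n, count in sources_per_paragraph(draft) if count <= 1]


draft = (
    "Warming shifts species ranges (Parmesan & Yohe, 2003), while habitat "
    "loss raises extinction risk (Thomas et al., 2004).\n\n"
    "Ocean acidification threatens coral reefs (Hoegh-Guldberg et al., 2007)."
)
print(sources_per_paragraph(draft))  # [(1, 2), (2, 1)]
print(flag_possible_summary(draft))  # [2]
```

A flagged paragraph is a candidate for bringing in a second source that agrees, disagrees, or extends the first.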

The most interesting literature reviews are often written as arguments (again, as mentioned at the beginning of the page, this is discipline-specific and doesn’t work for all situations). Often, the literature review is where you can establish your research as filling a particular gap or as relevant in a particular way. You have some chance to do this in your introduction in an article, but the literature review section gives a more extended opportunity to establish the conversation in the way you would like your readers to see it. You can choose the intellectual lineage you would like to be part of and whose definitions matter most to your thinking (mostly humanities-specific, but this goes for sciences as well). In addressing these points, you argue for your place in the conversation, which tends to make the lit review more compelling than a simple reporting of other sources.


What is a Literature Review? How to Write It (with Examples)

A literature review is a critical analysis and synthesis of existing research on a particular topic. It provides an overview of the current state of knowledge, identifies gaps, and highlights key findings in the literature [1]. The purpose of a literature review is to situate your own research within the context of existing scholarship, demonstrating your understanding of the topic and showing how your work contributes to the ongoing conversation in the field. Knowing how to write a literature review is a critical research skill: your ability to summarize and synthesize prior research on a topic demonstrates your grasp of that topic and supports your own learning.

Table of Contents

- What is the purpose of literature review?

- a. Habitat Loss and Species Extinction:

- b. Range Shifts and Phenological Changes:

- c. Ocean Acidification and Coral Reefs:

- d. Adaptive Strategies and Conservation Efforts:

- How to write a good literature review

- Choose a Topic and Define the Research Question:

- Decide on the Scope of Your Review:

- Select Databases for Searches:

- Conduct Searches and Keep Track:

- Review the Literature:

- Organize and Write Your Literature Review:

- Frequently asked questions

What is a literature review?

A well-conducted literature review demonstrates the researcher’s familiarity with the existing literature, establishes the context for their own research, and contributes to scholarly conversations on the topic. A literature review also helps researchers avoid duplicating previous work and ensures that their research is informed by and builds upon the existing body of knowledge.

What is the purpose of literature review?

A literature review serves several important purposes within academic and research contexts. Here are some key objectives and functions of a literature review [2]:

- Contextualizing the Research Problem: The literature review provides a background and context for the research problem under investigation. It helps to situate the study within the existing body of knowledge.

- Identifying Gaps in Knowledge: By identifying gaps, contradictions, or areas requiring further research, the researcher can shape the research question and justify the significance of the study. This is crucial for ensuring that the new research contributes something novel to the field.

- Understanding Theoretical and Conceptual Frameworks: Literature reviews help researchers gain an understanding of the theoretical and conceptual frameworks used in previous studies. This aids in the development of a theoretical framework for the current research.

- Providing Methodological Insights: Literature reviews also let researchers learn about the methodologies employed in previous studies. This can help in choosing appropriate research methods for the current study and avoiding pitfalls that others have encountered.

- Establishing Credibility: A well-conducted literature review demonstrates the researcher’s familiarity with existing scholarship, establishing their credibility and expertise in the field. It also helps in building a solid foundation for the new research.

- Informing Hypotheses or Research Questions: The literature review guides the formulation of hypotheses or research questions by highlighting relevant findings and areas of uncertainty in existing literature.

Literature review example

Consider an example: suppose your literature review is about the impact of climate change on biodiversity. You might organize your literature review into sections such as the effects of climate change on habitat loss and species extinction, phenological changes, and marine biodiversity. Each section would then summarize and analyze relevant studies in those areas, highlighting key findings and identifying gaps in the research. The review would conclude by emphasizing the need for further research on specific aspects of the relationship between climate change and biodiversity. The following literature review template gives a glimpse of the recommended structure and content, demonstrating how research findings are organized around specific themes within a broader topic.

Literature Review on Climate Change Impacts on Biodiversity:

Climate change is a global phenomenon with far-reaching consequences, including significant impacts on biodiversity. This literature review synthesizes key findings from various studies:

a. Habitat Loss and Species Extinction:

Climate change-induced alterations in temperature and precipitation patterns contribute to habitat loss, affecting numerous species (Thomas et al., 2004). The review discusses how these changes increase the risk of extinction, particularly for species with specific habitat requirements.

b. Range Shifts and Phenological Changes:

Observations of range shifts and changes in the timing of biological events (phenology) are documented in response to changing climatic conditions (Parmesan & Yohe, 2003). These shifts affect ecosystems and may lead to mismatches between species and their resources.

c. Ocean Acidification and Coral Reefs:

The review explores the impact of climate change on marine biodiversity, emphasizing ocean acidification’s threat to coral reefs (Hoegh-Guldberg et al., 2007). Changes in pH levels negatively affect coral calcification, disrupting the delicate balance of marine ecosystems.

d. Adaptive Strategies and Conservation Efforts:

Recognizing the urgency of the situation, the literature review discusses various adaptive strategies adopted by species and conservation efforts aimed at mitigating the impacts of climate change on biodiversity (Hannah et al., 2007). It emphasizes the importance of interdisciplinary approaches for effective conservation planning.

How to write a good literature review

Writing a literature review involves summarizing and synthesizing existing research on a particular topic. A good literature review format should include the following elements.

Introduction: The introduction sets the stage for your literature review, providing context and introducing the main focus of your review.

- Opening Statement: Begin with a general statement about the broader topic and its significance in the field.

- Scope and Purpose: Clearly define the scope of your literature review. Explain the specific research question or objective you aim to address.

- Organizational Framework: Briefly outline the structure of your literature review, indicating how you will categorize and discuss the existing research.

- Significance of the Study: Highlight why your literature review is important and how it contributes to the understanding of the chosen topic.

- Thesis Statement: Conclude the introduction with a concise thesis statement that outlines the main argument or perspective you will develop in the body of the literature review.

Body: The body of the literature review is where you provide a comprehensive analysis of existing literature, grouping studies based on themes, methodologies, or other relevant criteria.

- Organize by Theme or Concept: Group studies that share common themes, concepts, or methodologies. Discuss each theme or concept in detail, summarizing key findings and identifying gaps or areas of disagreement.

- Critical Analysis: Evaluate the strengths and weaknesses of each study. Discuss the methodologies used, the quality of evidence, and the overall contribution of each work to the understanding of the topic.

- Synthesis of Findings: Synthesize the information from different studies to highlight trends, patterns, or areas of consensus in the literature.

- Identification of Gaps: Discuss any gaps or limitations in the existing research and explain how your review contributes to filling these gaps.

- Transition between Sections: Provide smooth transitions between different themes or concepts to maintain the flow of your literature review.

Conclusion: The conclusion of your literature review should summarize the main findings, highlight the contributions of the review, and suggest avenues for future research.

- Summary of Key Findings: Recap the main findings from the literature and restate how they contribute to your research question or objective.

- Contributions to the Field: Discuss the overall contribution of your literature review to the existing knowledge in the field.

- Implications and Applications: Explore the practical implications of the findings and suggest how they might impact future research or practice.

- Recommendations for Future Research: Identify areas that require further investigation and propose potential directions for future research in the field.

- Final Thoughts: Conclude with a final reflection on the importance of your literature review and its relevance to the broader academic community.

Conducting a literature review

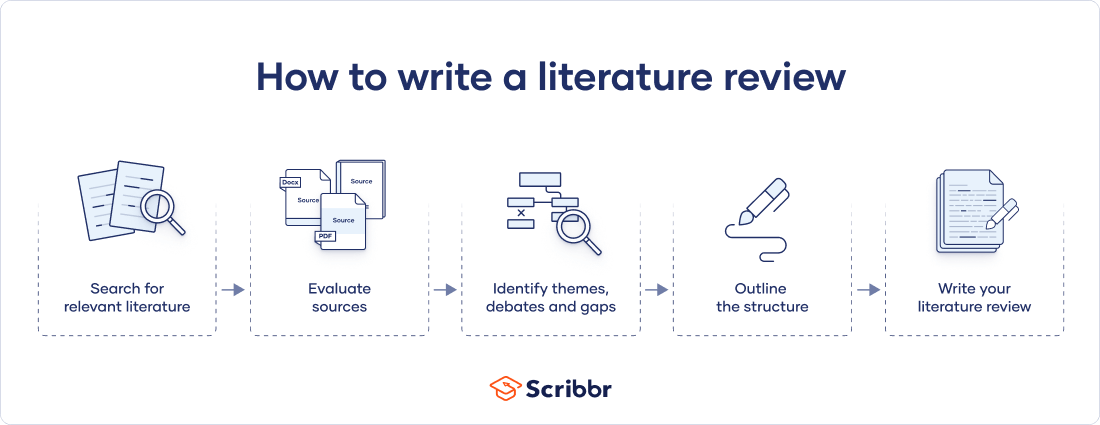

Conducting a literature review is an essential step in research that involves reviewing and analyzing existing literature on a specific topic. It’s important to know how to do a literature review effectively, so here are the steps to follow [1]:

Choose a Topic and Define the Research Question:

- Select a topic that is relevant to your field of study.

- Clearly define your research question or objective. Determine which specific aspect of the topic you want to explore.

Decide on the Scope of Your Review:

- Determine the timeframe for your literature review. Are you focusing on recent developments, or do you want a historical overview?

- Consider the geographical scope. Is your review global, or are you focusing on a specific region?

- Define the inclusion and exclusion criteria. What types of sources will you include? Are there specific types of studies or publications you will exclude?
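If you keep candidate sources in a spreadsheet or a reference-manager export, the timeframe, geographical, and source-type criteria above can be applied consistently with a small filter. The sketch below is hypothetical: the `Source` fields, the `screen` function, and the sample records are illustrative assumptions, not part of any tool named in this guide. It records a reason for every exclusion, which makes your criteria easier to report later.

```python
from dataclasses import dataclass


@dataclass
class Source:
    # Hypothetical minimal record for one candidate source.
    title: str
    year: int
    source_type: str  # e.g. "journal article", "book", "blog post"
    region: str       # geographical focus, e.g. "global", "Europe"


def screen(sources, start_year, end_year, include_types, regions=None):
    """Split sources into (included, excluded); each exclusion carries a reason."""
    included, excluded = [], []
    for s in sources:
        if not (start_year <= s.year <= end_year):
            excluded.append((s, "outside timeframe"))
        elif s.source_type not in include_types:
            excluded.append((s, f"excluded type: {s.source_type}"))
        elif regions is not None and s.region not in regions:
            excluded.append((s, f"outside geographical scope: {s.region}"))
        else:
            included.append(s)
    return included, excluded


candidates = [
    Source("Study A", 2015, "journal article", "global"),
    Source("Study B", 1998, "journal article", "global"),
    Source("Study C", 2019, "blog post", "Europe"),
]
kept, dropped = screen(candidates, 2010, 2024, {"journal article", "book"})
for source, reason in dropped:
    print(source.title, "->", reason)
```

Checking the criteria in a fixed order means each excluded source gets exactly one reason, which keeps the exclusion counts easy to tally.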

Select Databases for Searches:

- Identify relevant databases for your field. Examples include PubMed, IEEE Xplore, Scopus, Web of Science, and Google Scholar.

- Consider searching in library catalogs, institutional repositories, and specialized databases related to your topic.

Conduct Searches and Keep Track:

- Develop a systematic search strategy using keywords, Boolean operators (AND, OR, NOT), and other search techniques.

- Record and document your search strategy for transparency and replicability.

- Keep track of the articles, including publication details, abstracts, and links. Use citation management tools like EndNote, Zotero, or Mendeley to organize your references.
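Boolean syntax differs between databases (for example in phrase quoting, truncation symbols, and field tags), so check each database’s own help pages before relying on a query string. The hypothetical helpers below only illustrate the usual pattern: synonyms for one concept are joined with OR inside parentheses, separate concepts are joined with AND, and unwanted terms are excluded with NOT. Each search is appended to a simple log so the strategy can be reported and rerun. The function names are assumptions, and the result count in the example is a made-up placeholder.

```python
import datetime


def quote(term):
    """Wrap multi-word phrases in double quotes so they are searched as phrases."""
    return f'"{term}"' if " " in term else term


def build_query(concepts, exclude=()):
    """OR synonyms within each concept, AND the concepts, then append NOT terms."""
    groups = ["(" + " OR ".join(quote(t) for t in synonyms) + ")" for synonyms in concepts]
    query = " AND ".join(groups)
    for term in exclude:
        query += f" NOT {quote(term)}"
    return query


search_log = []


def log_search(database, query, n_results, searched_on=None):
    """Record when, where, and how you searched, and how many hits you got."""
    search_log.append({
        "date": (searched_on or datetime.date.today()).isoformat(),
        "database": database,
        "query": query,
        "results": n_results,
    })


query = build_query(
    [["climate change", "global warming"], ["biodiversity", "species richness"]],
    exclude=["agriculture"],
)
print(query)
# ("climate change" OR "global warming") AND (biodiversity OR "species richness") NOT agriculture

# 412 is a placeholder; record the count the database actually reports.
log_search("Web of Science", query, 412, searched_on=datetime.date(2024, 3, 1))
```

Keeping the exact query string alongside the date and database is what makes the search replicable; the log can be exported into your methods description or a reference manager note.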

Review the Literature:

- Evaluate the relevance and quality of each source. Consider the methodology, sample size, and results of studies.

- Organize the literature by themes or key concepts. Identify patterns, trends, and gaps in the existing research.

- Summarize key findings and arguments from each source. Compare and contrast different perspectives.

- Identify areas where there is a consensus in the literature and where there are conflicting opinions.

- Provide critical analysis and synthesis of the literature. What are the strengths and weaknesses of existing research?

Organize and Write Your Literature Review:

- Base your literature review outline on themes, chronological order, or methodological approaches.

- Write a clear and coherent narrative that synthesizes the information gathered.

- Use proper citations for each source and ensure consistency in your citation style (APA, MLA, Chicago, etc.).

- Conclude your literature review by summarizing key findings, identifying gaps, and suggesting areas for future research.

The literature review sample and detailed advice on writing and conducting a review will help you produce a well-structured report. But remember that a literature review is an ongoing process, and it may be necessary to revisit and update it as your research progresses.

Frequently asked questions

A literature review is a critical and comprehensive analysis of existing literature (published and unpublished works) on a specific topic or research question and provides a synthesis of the current state of knowledge in a particular field. A well-conducted literature review is crucial for researchers to build upon existing knowledge, avoid duplication of efforts, and contribute to the advancement of their field. It also helps researchers situate their work within a broader context and facilitates the development of a sound theoretical and conceptual framework for their studies.

A literature review is a crucial component of research writing, providing a solid background for a research paper’s investigation. The aim is to keep professionals up to date by providing an understanding of ongoing developments within a specific field, including the research methods and experimental techniques used in that field, and to present that knowledge in the form of a written report. The depth and breadth of a literature review also establish the scholar’s credibility in his or her field.

Before writing a literature review, it’s essential to undertake several preparatory steps to ensure that your review is well-researched, organized, and focused. This includes choosing a topic of general interest to you and doing exploratory research on that topic, writing an annotated bibliography, and noting major points, especially those that relate to the position you have taken on the topic.

Literature reviews and academic research papers are essential components of scholarly work but serve different purposes within the academic realm [3]. A literature review aims to provide a foundation for understanding the current state of research on a particular topic, identify gaps or controversies, and lay the groundwork for future research. Therefore, it draws heavily from existing academic sources, including books, journal articles, and other scholarly publications. In contrast, an academic research paper aims to present new knowledge, contribute to the academic discourse, and advance the understanding of a specific research question. Therefore, it involves a mix of existing literature (in the introduction and literature review sections) and original data or findings obtained through research methods.

Literature reviews are essential components of academic and research papers, and various strategies can be employed to conduct them effectively. If you want to know how to write a literature review for a research paper, here are four common approaches that researchers often use:

- Chronological Review: This strategy involves organizing the literature based on the chronological order of publication. It helps to trace the development of a topic over time, showing how ideas, theories, and research have evolved.

- Thematic Review: Thematic reviews focus on identifying and analyzing themes or topics that cut across different studies. Instead of organizing the literature chronologically, it is grouped by key themes or concepts, allowing for a comprehensive exploration of various aspects of the topic.

- Methodological Review: This strategy involves organizing the literature based on the research methods employed in different studies. It helps to highlight the strengths and weaknesses of various methodologies and allows the reader to evaluate the reliability and validity of the research findings.

- Theoretical Review: A theoretical review examines the literature based on the theoretical frameworks used in different studies. This approach helps to identify the key theories that have been applied to the topic and assess their contributions to the understanding of the subject.

These strategies are not mutually exclusive, and a literature review may combine elements of more than one approach. The choice of strategy depends on the research question, the nature of the literature available, and the goals of the review. Other strategies, such as integrative reviews or systematic reviews, may also be employed depending on the specific requirements of the research.

The literature review format can vary depending on the specific publication guidelines. However, there are some common elements and structures that are often followed. Here is a general guideline for the format of a literature review:

Introduction:

- Provide an overview of the topic.

- Define the scope and purpose of the literature review.

- State the research question or objective.

Body:

- Organize the literature by themes, concepts, or chronology.

- Critically analyze and evaluate each source.

- Discuss the strengths and weaknesses of the studies.

- Highlight any methodological limitations or biases.

- Identify patterns, connections, or contradictions in the existing research.

Conclusion:

- Summarize the key points discussed in the literature review.

- Highlight the research gap.

- Address the research question or objective stated in the introduction.

- Highlight the contributions of the review and suggest directions for future research.

Both annotated bibliographies and literature reviews involve the examination of scholarly sources. While annotated bibliographies focus on individual sources with brief annotations, literature reviews provide a more in-depth, integrated, and comprehensive analysis of existing literature on a specific topic. The key differences are as follows:

References

1. Denney, A. S., & Tewksbury, R. (2013). How to write a literature review. Journal of Criminal Justice Education, 24(2), 218-234.

2. Pan, M. L. (2016). Preparing literature reviews: Qualitative and quantitative approaches. Taylor & Francis.

3. Cantero, C. (2019). How to write a literature review. San José State University Writing Center.




7 Writing a Literature Review

Hundreds of original investigation research articles on health science topics are published each year. It is becoming harder and harder to keep on top of all new findings in a topic area and – more importantly – to work out how they all fit together to determine our current understanding of a topic. This is where literature reviews come in.

In this chapter, we explain what a literature review is and outline the stages involved in writing one. We also provide practical tips on how to communicate the results of a review of current literature on a topic in the format of a literature review.

7.1 What is a literature review?

Literature reviews provide a synthesis and evaluation of the existing literature on a particular topic with the aim of gaining a new, deeper understanding of the topic.

Published literature reviews are typically written by scientists who are experts in that particular area of science. Usually, they will be widely published as authors of their own original work, making them highly qualified to author a literature review.

However, literature reviews are still subject to peer review before being published. Literature reviews provide an important bridge between the expert scientific community and many other communities, such as science journalists, teachers, and medical and allied health professionals. When the most up-to-date knowledge reaches such audiences, it is more likely that this information will find its way to the general public. When this happens, the ultimate good of science can be realised.

A literature review is structured differently from an original research article. It is developed based on themes, rather than stages of the scientific method.

In the article Ten simple rules for writing a literature review, Marco Pautasso explains the importance of literature reviews:

Literature reviews are in great demand in most scientific fields. Their need stems from the ever-increasing output of scientific publications. For example, compared to 1991, in 2008 three, eight, and forty times more papers were indexed in Web of Science on malaria, obesity, and biodiversity, respectively. Given such mountains of papers, scientists cannot be expected to examine in detail every single new paper relevant to their interests. Thus, it is both advantageous and necessary to rely on regular summaries of the recent literature. Although recognition for scientists mainly comes from primary research, timely literature reviews can lead to new synthetic insights and are often widely read. For such summaries to be useful, however, they need to be compiled in a professional way (Pautasso, 2013, para. 1).

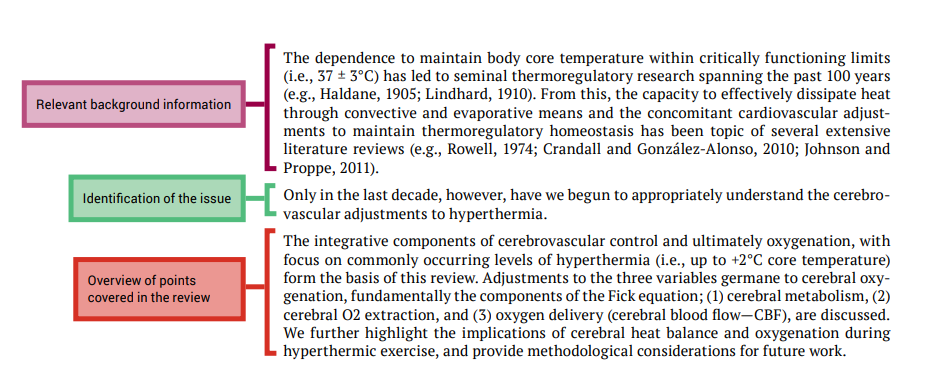

An example of a literature review is shown in Figure 7.1.

Video 7.1: What is a literature review? [2 mins, 11 secs]

Watch this video created by Steely Library at Northern Kentucky University called ‘What is a literature review?’

Examples of published literature reviews

- Strength training alone, exercise therapy alone, and exercise therapy with passive manual mobilisation each reduce pain and disability in people with knee osteoarthritis: a systematic review

- Traveler’s diarrhea: a clinical review

- Cultural concepts of distress and psychiatric disorders: literature review and research recommendations for global mental health epidemiology

7.2 Steps of writing a literature review

Writing a literature review is a very challenging task. Figure 7.2 summarises the steps of writing a literature review. Depending on why you are writing your literature review, you may be given a topic area, or may choose a topic that particularly interests you or is related to a research project that you wish to undertake.

Chapter 6 provides instructions on finding scientific literature that would form the basis for your literature review.

Once you have your topic and have accessed the literature, the next stages (analysis, synthesis and evaluation) are challenging. Next, we look at these important cognitive skills student scientists will need to develop and employ to successfully write a literature review, and provide some guidance for navigating these stages.

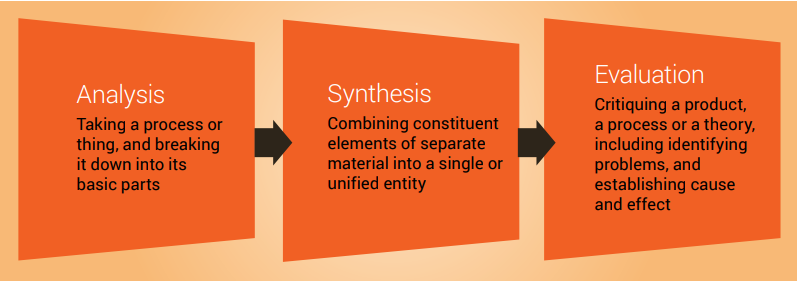

Analysis, synthesis and evaluation

Analysis, synthesis and evaluation are three essential skills required by scientists, and you will need to develop these skills if you are to write a good literature review (Figure 7.3). These important cognitive skills are discussed in more detail in Chapter 9.

The first step in writing a literature review is to analyse the original investigation research papers that you have gathered related to your topic.

Analysis requires examining the papers methodically and in detail, so you can understand and interpret aspects of the study described in each research article.

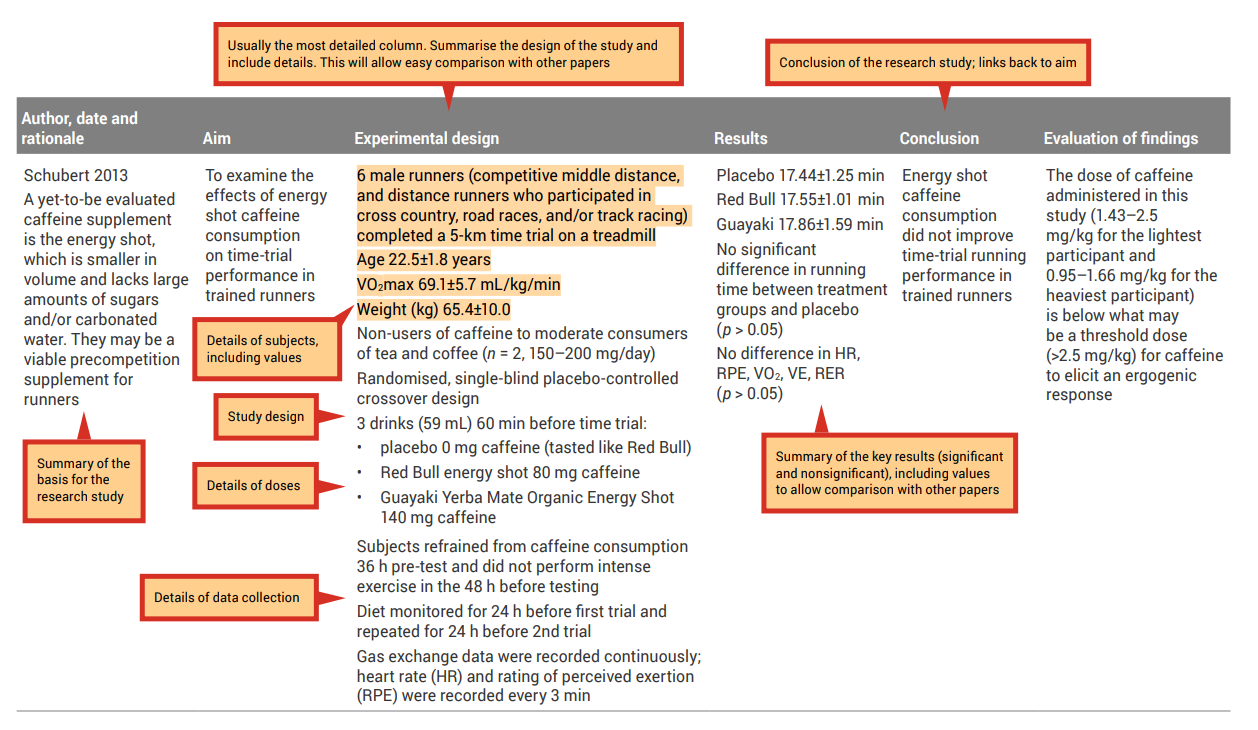

An analysis grid is a simple tool you can use to help with the careful examination and breakdown of each paper. This tool will allow you to create a concise summary of each research paper; see Table 7.1 for an example of an analysis grid. When filling in the grid, the aim is to draw out key aspects of each research paper. Use a different row for each paper and a different column for each aspect of the paper (Tables 7.2 and 7.3 show how a completed analysis grid may look).

Before completing your own grid, look at these examples and note the types of information that have been included, as well as the level of detail. Completing an analysis grid with a sufficient level of detail will help you to complete the synthesis and evaluation stages effectively. This grid will allow you to more easily observe similarities and differences across the findings of the research papers and to identify possible explanations (e.g., differences in methodologies employed) for observed differences between the findings of different research papers.
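The structure of an analysis grid can be made concrete with a minimal Python sketch. It stores one row per paper and one field per aspect, then prints the grid as a plain-text table. The column names (`reference`, `aim`, `participants`, `experimental_design`, `results`) and the two "Study A"/"Study B" rows are hypothetical placeholders. They are not the contents of Table 7.1 or the findings of any real paper, so substitute the columns your own grid uses.

```python
from dataclasses import dataclass, fields


@dataclass
class GridRow:
    """One row of an analysis grid: one research paper (columns are illustrative)."""
    reference: str
    aim: str
    participants: str
    experimental_design: str
    results: list[str]  # one entry per key finding (dependent variable)


def render_grid(rows: list[GridRow]) -> str:
    """Render the grid as plain text: a header line, then one line per paper."""
    headers = [f.name for f in fields(GridRow)]
    lines = [" | ".join(headers)]
    for row in rows:
        cells = []
        for name in headers:
            value = getattr(row, name)
            # Multi-valued cells (e.g. several results) are joined with semicolons
            cells.append("; ".join(value) if isinstance(value, list) else value)
        lines.append(" | ".join(cells))
    return "\n".join(lines)


# Hypothetical placeholder rows; not data from any published study
grid = [
    GridRow("Study A", "Effect of caffeine on endurance running", "Trained runners",
            "Placebo-controlled crossover",
            ["performance: improved", "oxygen uptake: no effect"]),
    GridRow("Study B", "Effect of caffeine on time trial performance", "Recreational runners",
            "Placebo-controlled crossover",
            ["performance: no effect", "oxygen uptake: no effect"]),
]

print(render_grid(grid))
```

Keeping every paper in the same fixed set of columns is what makes the later synthesis step easy, because the same aspect can be read down a single column across all papers.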

Table 7.1: Example of an analysis grid

Table 7.3: Sample filled-in analysis grid for research article by Ping and colleagues

Source: Ping, WC, Keong, CC & Bandyopadhyay, A 2010, ‘Effects of acute supplementation of caffeine on cardiorespiratory responses during endurance running in a hot and humid climate’, Indian Journal of Medical Research, vol. 132, pp. 36–41. Used under a CC-BY-NC-SA licence.

Step two of writing a literature review is synthesis.

Synthesis describes combining separate components or elements to form a connected whole.

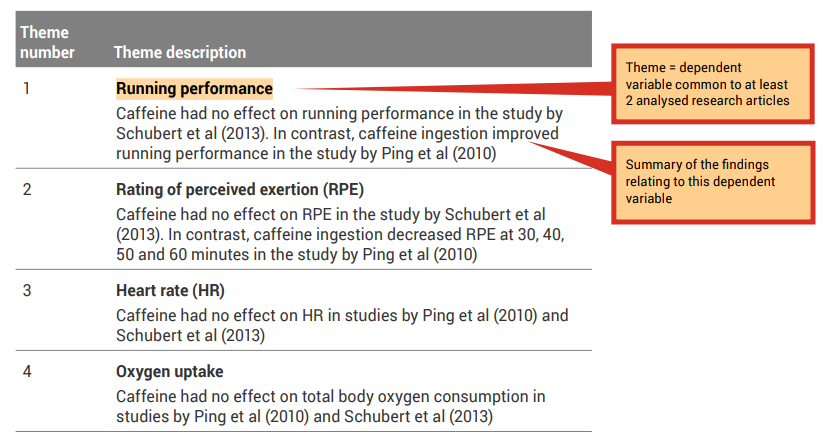

You will use the results of your analysis to find themes to build your literature review around. Each of the themes identified will become a subheading within the body of your literature review.

A good place to start when identifying themes is with the dependent variables (results/findings) that were investigated in the research studies.

Because all of the research articles you are incorporating into your literature review are related to your topic, it is likely that they have similar study designs and have measured similar dependent variables. Review the ‘Results’ column of your analysis grid. You may like to collate the common themes in a synthesis grid (see, for example, Table 7.4).
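Collating themes amounts to pivoting the ‘Results’ column. Instead of one row per paper, the synthesis grid has one row per theme (dependent variable) and shows what each paper reported. The sketch below assumes each paper's results have already been reduced to a theme-to-outcome mapping. The paper names, themes and outcomes are hypothetical placeholders.

```python
from collections import defaultdict

# Hypothetical 'Results' column from an analysis grid:
# each paper maps a theme (dependent variable) to the outcome it reported.
results_column = {
    "Study A": {"running performance": "improved", "oxygen uptake": "no effect"},
    "Study B": {"running performance": "no effect", "oxygen uptake": "no effect", "RPE": "lower"},
}


def build_synthesis_grid(results: dict[str, dict[str, str]]) -> dict[str, dict[str, str]]:
    """Pivot per-paper results into per-theme rows: theme -> {paper: outcome}."""
    grid: dict[str, dict[str, str]] = defaultdict(dict)
    for paper, findings in results.items():
        for theme, outcome in findings.items():
            grid[theme][paper] = outcome
    return dict(grid)


synthesis = build_synthesis_grid(results_column)
for theme, outcomes in synthesis.items():
    # Each theme is a candidate subheading for the body of the review
    print(f"{theme}: {outcomes}")
```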

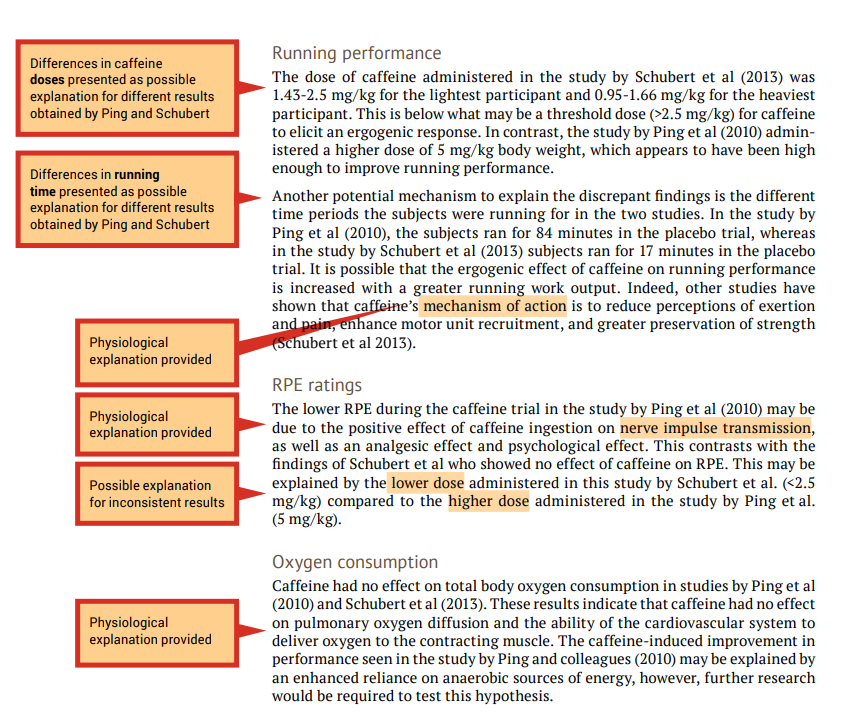

Step three of writing a literature review is evaluation, which can only be done after carefully analysing your research papers and synthesising the common themes (findings).

During the evaluation stage, you are making judgements on the themes presented in the research articles that you have read. This includes providing physiological explanations for the findings. It may be useful to refer to the discussion section of published original investigation research papers, or another literature review, where the authors may mention tested or hypothetical physiological mechanisms that may explain their findings.

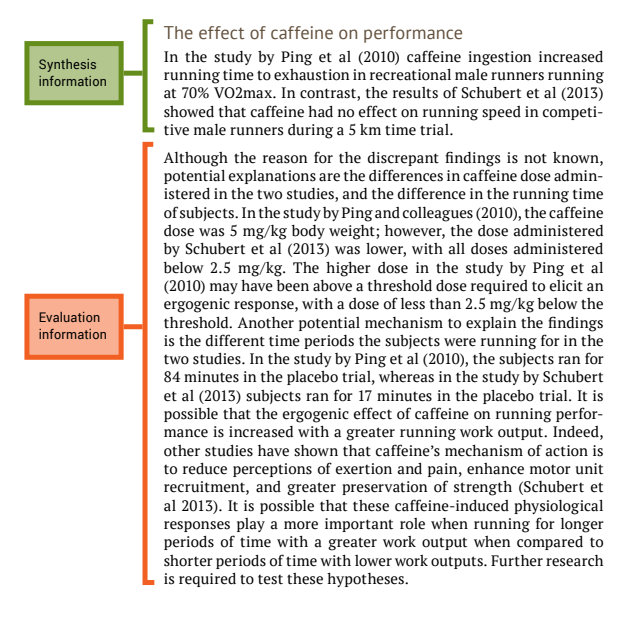

When the findings of the investigations related to a particular theme are inconsistent (e.g., one study shows that caffeine affects performance and another study shows that caffeine had no effect on performance), you should attempt to provide explanations of why the results differ, including physiological explanations. A good place to start is by comparing the methodologies to determine whether there are any differences that may explain the differences in the findings (see the ‘Experimental design’ column of your analysis grid). An example of evaluation is shown in the examples that follow in this section, under ‘Running performance’ and ‘RPE ratings’.

When the findings of the papers related to a particular theme are consistent (e.g., caffeine had no effect on oxygen uptake in both studies) an evaluation should include an explanation of why the results are similar. Once again, include physiological explanations. It is still a good idea to compare methodologies as a background to the evaluation. An example of evaluation is shown in the following under ‘Oxygen consumption’.
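The bookkeeping part of this stage can be sketched in a few lines of Python. For each theme, the code checks whether the papers agree. It then lists the experimental-design features whose values differ between those papers, since those are the places to start looking for an explanation. The synthesis grid, the design features (`environment`, `caffeine dose`, `participants`) and their values are hypothetical. The code only points to where to look: the physiological explanation itself still has to come from your reading, for example the discussion sections of the papers.

```python
# Hypothetical synthesis grid (theme -> {paper: outcome}) and experimental designs
synthesis = {
    "running performance": {"Study A": "improved", "Study B": "no effect"},
    "oxygen uptake": {"Study A": "no effect", "Study B": "no effect"},
}
designs = {
    "Study A": {"environment": "temperate", "caffeine dose": "moderate", "participants": "trained"},
    "Study B": {"environment": "hot and humid", "caffeine dose": "moderate", "participants": "trained"},
}


def evaluation_checklist(
    synthesis: dict[str, dict[str, str]], designs: dict[str, dict[str, str]]
) -> dict[str, tuple[str, list[str]]]:
    """For each theme, report whether findings agree and which design features differ."""
    checklist = {}
    for theme, outcomes in synthesis.items():
        papers = list(outcomes)
        status = "consistent" if len(set(outcomes.values())) == 1 else "inconsistent"
        # Every design feature recorded for the papers that address this theme
        features = set().union(*(designs[p].keys() for p in papers))
        # Features whose values differ between papers are candidate explanations
        differing = sorted(f for f in features if len({designs[p].get(f) for p in papers}) > 1)
        checklist[theme] = (status, differing)
    return checklist


for theme, (status, differing) in evaluation_checklist(synthesis, designs).items():
    print(f"{theme}: findings {status}; compare design features: {differing}")
```

Methodologies are compared for consistent themes as well as inconsistent ones, which mirrors the advice above to compare methods as background to every evaluation.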

7.3 Writing your literature review

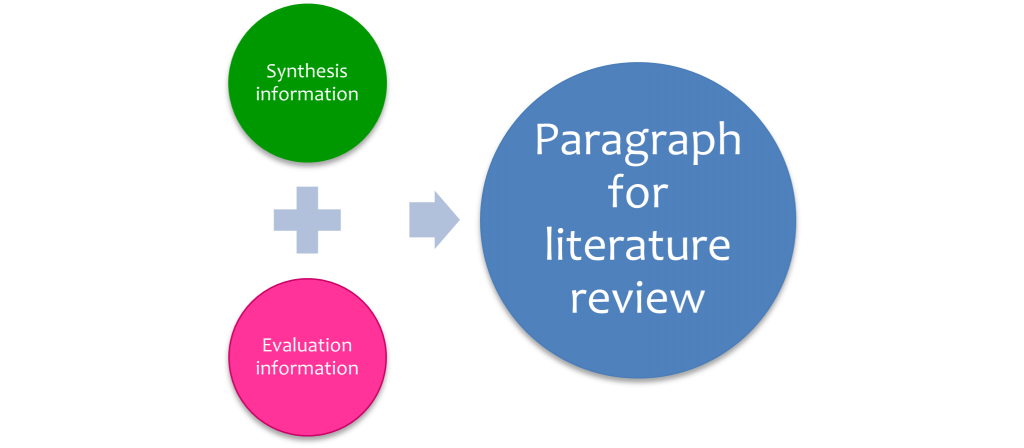

Once you have completed the analysis and synthesis grids and written your evaluation of the research papers, you can combine synthesis and evaluation information to create a paragraph for a literature review (Figure 7.4).

The following paragraphs are an example of combining the outcome of the synthesis and evaluation stages to produce a paragraph for a literature review.

Note that this is an example using only two papers; most literature reviews would present information on many more papers than this (e.g., 106 papers in the review article by Bain and colleagues discussed later in this chapter). However, the same principle applies regardless of the number of papers reviewed.

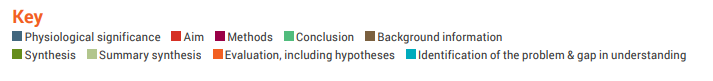

The next part of this chapter looks at each section of a literature review and explains how to write it, referring to a review article that was published in Frontiers in Physiology and is shown in Figure 7.1. Each section from the published article is annotated to highlight important features of the format of the review article and to identify the synthesis and evaluation information.

In the examination of each review article section we will point out examples of how the authors have presented certain information and where they display application of important cognitive processes; we will use the colour code shown below:

Abstract

This should be one paragraph that accurately reflects the contents of the review article.

Introduction

The introduction should establish the context and importance of the review.

Body of literature review

References

The reference section provides a list of the references that you cited in the body of your review article. The format will depend on the journal of publication, as each journal has its own specific referencing format.

It is important to accurately cite references in research papers to acknowledge your sources and ensure credit is appropriately given to authors of work you have referred to. An accurate and comprehensive reference list also shows your readers that you are well-read in your topic area and are aware of the key papers that provide the context to your research.

It is important to keep track of your resources and to reference them consistently in the format required by the publication in which your work will appear. Most scientists will use reference management software to store details of all of the journal articles (and other sources) they use while writing their review article. This software also automates the process of adding in-text references and creating a reference list. In the review article by Bain et al. (2014) used as an example in this chapter, the reference list contains 106 items, so you can imagine how much help referencing software would be. Chapter 5 shows you how to use EndNote, one example of reference management software.
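Reference management software works by storing each source as a structured record and generating citations from that record. The Python sketch below imitates the idea for the author-date style used in this chapter's own reference list. It is not how EndNote works internally. The record fields and the rule that three or more authors collapse to "et al." in text are assumptions, chosen to reproduce the Bain et al. (2014) and Pautasso (2013) entries.

```python
from dataclasses import dataclass


@dataclass
class Reference:
    authors: list[str]  # "Surname, Initials." in citation order
    year: int
    title: str
    journal: str
    volume: str   # volume, with issue in parentheses if any
    locator: str  # page range or article number

    def in_text(self) -> str:
        """Parenthetical citation; three or more authors collapse to 'et al.'."""
        surnames = [a.split(",")[0] for a in self.authors]
        if len(surnames) == 1:
            names = surnames[0]
        elif len(surnames) == 2:
            names = f"{surnames[0]} & {surnames[1]}"
        else:
            names = f"{surnames[0]} et al."
        return f"({names}, {self.year})"

    def entry(self) -> str:
        """Reference-list entry in the style of this chapter's list."""
        if len(self.authors) == 1:
            authors = self.authors[0]
        else:
            authors = ", ".join(self.authors[:-1]) + ", & " + self.authors[-1]
        return f"{authors} ({self.year}). {self.title}. {self.journal}, {self.volume}, {self.locator}."


def reference_list(refs: list[Reference]) -> str:
    """Alphabetise by first author and separate entries with blank lines."""
    return "\n\n".join(r.entry() for r in sorted(refs, key=lambda r: r.authors[0]))


refs = [
    Reference(["Pautasso, M."], 2013, "Ten simple rules for writing a literature review",
              "PLoS Computational Biology", "9(7)", "e1003149"),
    Reference(["Bain, A.R.", "Morrison, S.A.", "Ainslie, P.N."], 2014,
              "Cerebral oxygenation and hyperthermia", "Frontiers in Physiology", "5", "92"),
]

print(refs[1].in_text())  # (Bain et al., 2014)
print(reference_list(refs))
```

Because every citation is generated from the same record, a correction made once (e.g. a page number) propagates to all in-text citations and the reference list. That consistency is the main benefit of dedicated software when a list grows to 106 items.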


Copyright note:

- The quotation from Pautasso, M 2013, ‘Ten simple rules for writing a literature review’, PLoS Computational Biology, is used under a CC-BY licence.

- Content from the annotated article and tables is based on Schubert, MM, Astorino, TA & Azevedo, JJL 2013, ‘The effects of caffeinated ‘energy shots’ on time trial performance’, Nutrients, vol. 5, no. 6, pp. 2062–2075 (used under a CC-BY 3.0 licence) and Ping, WC, Keong, CC & Bandyopadhyay, A 2010, ‘Effects of acute supplementation of caffeine on cardiorespiratory responses during endurance running in a hot and humid climate’, Indian Journal of Medical Research, vol. 132, pp. 36–41 (used under a CC-BY-NC-SA 4.0 licence).

Bain, A.R., Morrison, S.A., & Ainslie, P.N. (2014). Cerebral oxygenation and hyperthermia. Frontiers in Physiology, 5, 92.

Pautasso, M. (2013). Ten simple rules for writing a literature review. PLoS Computational Biology, 9(7), e1003149.

How To Do Science Copyright © 2022 by University of Southern Queensland is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.


Career Feature, 04 December 2020 (Correction 09 December 2020)

How to write a superb literature review

Andy Tay is a freelance writer based in Singapore.


Literature reviews are important resources for scientists. They provide historical context for a field while offering opinions on its future trajectory. Creating them can provide inspiration for one’s own research, as well as some practice in writing. But few scientists are trained in how to write a review — or in what constitutes an excellent one. Even picking the appropriate software to use can be an involved decision (see ‘Tools and techniques’). So Nature asked editors and working scientists with well-cited reviews for their tips.


doi: https://doi.org/10.1038/d41586-020-03422-x

Interviews have been edited for length and clarity.

Updates & Corrections

Correction 09 December 2020 : An earlier version of the tables in this article included some incorrect details about the programs Zotero, Endnote and Manubot. These have now been corrected.



USC Libraries Research Guides: Organizing Your Social Sciences Research Paper

5. The Literature Review


A literature review surveys prior research published in books, scholarly articles, and any other sources relevant to a particular issue, area of research, or theory, and by so doing, provides a description, summary, and critical evaluation of these works in relation to the research problem being investigated. Literature reviews are designed to provide an overview of sources you have used in researching a particular topic and to demonstrate to your readers how your research fits within existing scholarship about the topic.

Fink, Arlene. Conducting Research Literature Reviews: From the Internet to Paper. Fourth edition. Thousand Oaks, CA: SAGE, 2014.

Importance of a Good Literature Review

A literature review may consist of simply a summary of key sources, but in the social sciences, a literature review usually has an organizational pattern and combines both summary and synthesis, often within specific conceptual categories. A summary is a recap of the important information of the source, but a synthesis is a re-organization, or a reshuffling, of that information in a way that informs how you are planning to investigate a research problem. The analytical features of a literature review might:

- Give a new interpretation of old material or combine new with old interpretations,

- Trace the intellectual progression of the field, including major debates,

- Depending on the situation, evaluate the sources and advise the reader on the most pertinent or relevant research, or

- Usually in the conclusion of a literature review, identify where gaps exist in how a problem has been researched to date.

Given this, the purpose of a literature review is to:

- Place each work in the context of its contribution to understanding the research problem being studied.

- Describe the relationship of each work to the others under consideration.

- Identify new ways to interpret prior research.

- Reveal any gaps that exist in the literature.

- Resolve conflicts amongst seemingly contradictory previous studies.

- Identify areas of prior scholarship to prevent duplication of effort.

- Point the way in fulfilling a need for additional research.

- Locate your own research within the context of existing literature [very important].

Fink, Arlene. Conducting Research Literature Reviews: From the Internet to Paper. 2nd ed. Thousand Oaks, CA: Sage, 2005; Hart, Chris. Doing a Literature Review: Releasing the Social Science Research Imagination. Thousand Oaks, CA: Sage Publications, 1998; Jesson, Jill. Doing Your Literature Review: Traditional and Systematic Techniques. Los Angeles, CA: SAGE, 2011; Knopf, Jeffrey W. "Doing a Literature Review." PS: Political Science and Politics 39 (January 2006): 127-132; Ridley, Diana. The Literature Review: A Step-by-Step Guide for Students. 2nd ed. Los Angeles, CA: SAGE, 2012.

Types of Literature Reviews

It is important to think of knowledge in a given field as consisting of three layers. First, there are the primary studies that researchers conduct and publish. Second are the reviews of those studies that summarize and offer new interpretations built from and often extending beyond the primary studies. Third, there are the perceptions, conclusions, opinions, and interpretations that are shared informally among scholars and that become part of the body of epistemological traditions within the field.

In composing a literature review, it is important to note that it is often this third layer of knowledge that is cited as "true" even though it often has only a loose relationship to the primary studies and secondary literature reviews. Given this, while literature reviews are designed to provide an overview and synthesis of pertinent sources you have explored, there are a number of approaches you could adopt depending upon the type of analysis underpinning your study.

Argumentative Review This form examines literature selectively in order to support or refute an argument, deeply embedded assumption, or philosophical problem already established in the literature. The purpose is to develop a body of literature that establishes a contrarian viewpoint. Given the value-laden nature of some social science research [e.g., educational reform; immigration control], argumentative approaches to analyzing the literature can be a legitimate and important form of discourse. However, note that they can also introduce problems of bias when they are used to make summary claims of the sort found in systematic reviews [see below].

Integrative Review Considered a form of research that reviews, critiques, and synthesizes representative literature on a topic in an integrated way such that new frameworks and perspectives on the topic are generated. The body of literature includes all studies that address related or identical hypotheses or research problems. A well-done integrative review meets the same standards as primary research in regard to clarity, rigor, and replication. This is the most common form of review in the social sciences.

Historical Review Few things rest in isolation from historical precedent. Historical literature reviews focus on examining research throughout a period of time, often starting with the first time an issue, concept, theory, or phenomenon emerged in the literature, then tracing its evolution within the scholarship of a discipline. The purpose is to place research in a historical context to show familiarity with state-of-the-art developments and to identify the likely directions for future research.

Methodological Review A review does not always focus on what someone said [findings], but on how they came to say it [method of analysis]. Reviewing methods of analysis provides a framework for understanding, at different levels [i.e., those of theory, substantive fields, research approaches, and data collection and analysis techniques], how researchers draw upon a wide variety of knowledge, ranging from the conceptual level to practical documents for use in fieldwork, in the areas of ontological and epistemological consideration, quantitative and qualitative integration, sampling, interviewing, data collection, and data analysis. This approach helps highlight ethical issues that you should be aware of and consider as you go through your own study.

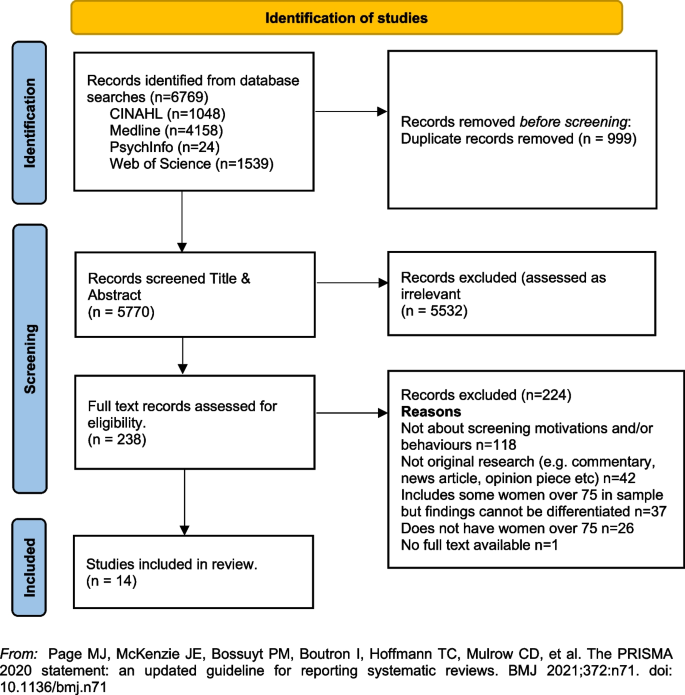

Systematic Review This form consists of an overview of existing evidence pertinent to a clearly formulated research question, which uses pre-specified and standardized methods to identify and critically appraise relevant research, and to collect, report, and analyze data from the studies that are included in the review. The goal is to deliberately document, critically evaluate, and summarize scientifically all of the research about a clearly defined research problem. Typically it focuses on a very specific empirical question, often posed in a cause-and-effect form, such as "To what extent does A contribute to B?" This type of literature review is primarily applied to examining prior research studies in clinical medicine and allied health fields, but it is increasingly being used in the social sciences.

Theoretical Review The purpose of this form is to examine the corpus of theory that has accumulated in regard to an issue, concept, theory, or phenomenon. The theoretical literature review helps to establish what theories already exist and the relationships between them, to determine to what degree the existing theories have been investigated, and to develop new hypotheses to be tested. Often this form is used to help establish a lack of appropriate theories or reveal that current theories are inadequate for explaining new or emerging research problems. The unit of analysis can focus on a theoretical concept or a whole theory or framework.

NOTE: Most often the literature review will incorporate some combination of types. For example, a review that examines literature supporting or refuting an argument, assumption, or philosophical problem related to the research problem will also need to include writing supported by sources that establish the history of these arguments in the literature.

Baumeister, Roy F. and Mark R. Leary. "Writing Narrative Literature Reviews." Review of General Psychology 1 (September 1997): 311-320; Fink, Arlene. Conducting Research Literature Reviews: From the Internet to Paper. 2nd ed. Thousand Oaks, CA: Sage, 2005; Hart, Chris. Doing a Literature Review: Releasing the Social Science Research Imagination. Thousand Oaks, CA: Sage Publications, 1998; Kennedy, Mary M. "Defining a Literature." Educational Researcher 36 (April 2007): 139-147; Petticrew, Mark and Helen Roberts. Systematic Reviews in the Social Sciences: A Practical Guide. Malden, MA: Blackwell Publishers, 2006; Torraco, Richard. "Writing Integrative Literature Reviews: Guidelines and Examples." Human Resource Development Review 4 (September 2005): 356-367; Rocco, Tonette S. and Maria S. Plakhotnik. "Literature Reviews, Conceptual Frameworks, and Theoretical Frameworks: Terms, Functions, and Distinctions." Human Resource Development Review 8 (March 2008): 120-130; Sutton, Anthea. Systematic Approaches to a Successful Literature Review. Los Angeles, CA: Sage Publications, 2016.

Structure and Writing Style

I. Thinking About Your Literature Review

The structure of a literature review should include the following in support of understanding the research problem:

- An overview of the subject, issue, or theory under consideration, along with the objectives of the literature review,

- Division of works under review into themes or categories [e.g. works that support a particular position, those against, and those offering alternative approaches entirely],

- An explanation of how each work is similar to and how it varies from the others,

- Conclusions as to which pieces are best considered in their argument, are most convincing of their opinions, and make the greatest contribution to the understanding and development of their area of research.

The critical evaluation of each work should consider:

- Provenance -- what are the author's credentials? Are the author's arguments supported by evidence [e.g. primary historical material, case studies, narratives, statistics, recent scientific findings]?

- Methodology -- were the techniques used to identify, gather, and analyze the data appropriate to addressing the research problem? Was the sample size appropriate? Were the results effectively interpreted and reported?

- Objectivity -- is the author's perspective even-handed or prejudicial? Is contrary data considered or is certain pertinent information ignored to prove the author's point?

- Persuasiveness -- which of the author's theses are most convincing or least convincing?

- Validity -- are the author's arguments and conclusions convincing? Does the work ultimately contribute in any significant way to an understanding of the subject?

II. Development of the Literature Review

Four Basic Stages of Writing

1. Problem formulation -- which topic or field is being examined and what are its component issues?

2. Literature search -- finding materials relevant to the subject being explored.

3. Data evaluation -- determining which literature makes a significant contribution to the understanding of the topic.

4. Analysis and interpretation -- discussing the findings and conclusions of pertinent literature.

Consider the following issues before writing the literature review:

Clarify

If your assignment is not specific about what form your literature review should take, seek clarification from your professor by asking these questions:

1. Roughly how many sources would be appropriate to include?

2. What types of sources should I review (books, journal articles, websites; scholarly versus popular sources)?

3. Should I summarize, synthesize, or critique sources by discussing a common theme or issue?

4. Should I evaluate the sources in any way beyond evaluating how they relate to understanding the research problem?

5. Should I provide subheadings and other background information, such as definitions and/or a history?

Find Models

Use the exercise of reviewing the literature to examine how authors in your discipline or area of interest have composed their literature review sections. Read them to get a sense of the types of themes you might want to look for in your own research or to identify ways to organize your final review. The bibliography or reference section of sources you've already read, such as required readings in the course syllabus, is also an excellent entry point into your own research.

Narrow the Topic

The narrower your topic, the easier it will be to limit the number of sources you need to read in order to obtain a good survey of relevant resources. Your professor will probably not expect you to read everything that's available about the topic, but you'll make the act of reviewing easier if you first limit the scope of the research problem. A good strategy is to begin by searching the USC Libraries Catalog for recent books about the topic and review the table of contents for chapters that focus on specific issues. You can also review the indexes of books to find references to specific issues that can serve as the focus of your research. For example, a book surveying the history of the Israeli-Palestinian conflict may include a chapter on the role Egypt has played in mediating the conflict, or you can look in the index for the pages where Egypt is mentioned in the text.

Consider Whether Your Sources are Current

Some disciplines require that you use information that is as current as possible. This is particularly true in medicine and the sciences, where research becomes obsolete very quickly as new discoveries are made. However, when writing a review in the social sciences, a survey of the history of the literature may be required. In other words, a complete understanding of the research problem requires you to deliberately examine how knowledge and perspectives have changed over time. Sort through other current bibliographies or literature reviews in the field to get a sense of what your discipline expects. You can also use this method to explore what is considered by scholars to be a "hot topic" and what is not.

III. Ways to Organize Your Literature Review

Chronology of Events

If your review follows the chronological method, you could write about the materials according to when they were published. This approach should only be followed if a clear path of research building on previous research can be identified and these trends follow a clear chronological order of development. For example, this approach could suit a literature review that focuses on continuing research about the emergence of German economic power after the fall of the Soviet Union.

By Publication

Order your sources by publication chronology only if the order demonstrates a more important trend. For instance, you could order a review of literature on environmental studies of brownfields this way if the progression revealed, for example, a change in the soil collection practices of the researchers who wrote and/or conducted the studies.

Thematic [“conceptual categories”]

A thematic literature review is the most common approach to summarizing prior research in the social and behavioral sciences. Thematic reviews are organized around a topic or issue rather than the progression of time, although the progression of time may still be incorporated into a thematic review. For example, a review of the Internet’s impact on American presidential politics could focus on the development of online political satire. While the study focuses on one topic, the Internet’s impact on American presidential politics, it would still be organized chronologically, reflecting technological developments in media. The difference in this example between a "chronological" and a "thematic" approach is what is emphasized the most: themes related to the role of the Internet in presidential politics. Note that more authentic thematic reviews tend to break away from chronological order. A review organized in this manner would shift between time periods within each section according to the point being made.

Methodological

A methodological approach focuses on the methods used by the researcher. For the Internet in American presidential politics project, one methodological approach would be to look at cultural differences between the portrayal of American presidents on American, British, and French websites. Alternatively, the review might focus on the fundraising impact of the Internet on a particular political party. A methodological scope will influence either the types of documents in the review or the way in which these documents are discussed.

Other Sections of Your Literature Review

Once you've decided on the organizational method for your literature review, the sections you need to include in the paper should be easy to figure out because they arise from your organizational strategy. In other words, a chronological review would have subsections for each vital time period; a thematic review would have subtopics based upon factors that relate to the theme or issue. However, sometimes you may need to add sections that are necessary for your study but do not fit the organizational strategy of the body. Which other sections you include in the body is up to you. However, only include what is necessary for the reader to locate your study within the larger scholarship about the research problem.

Here are examples of other sections, usually in the form of a single paragraph, you may need to include depending on the type of review you write:

- Current Situation: Information necessary to understand the current topic or focus of the literature review.

- Sources Used: Describes the methods and resources [e.g., databases] you used to identify the literature you reviewed.

- History: The chronological progression of the field, the research literature, or an idea that is necessary to understand the literature review, if the body of the literature review is not already a chronology.

- Selection Methods: Criteria you used to select (and perhaps exclude) sources in your literature review. For instance, you might explain that your review includes only peer-reviewed [i.e., scholarly] sources.

- Standards: Description of the way in which you present your information.

- Questions for Further Research: What questions about the field has the review sparked? How will you further your research as a result of the review?

IV. Writing Your Literature Review

Once you've settled on how to organize your literature review, you're ready to write each section. When writing your review, keep the following issues in mind.

Use Evidence

In this respect, a literature review section is like any other academic research paper: your interpretation of the available sources must be backed up with evidence [citations] that demonstrates that what you are saying is valid.

Be Selective

Select only the most important points in each source to highlight in the review. The type of information you choose to mention should relate directly to the research problem, whether it is thematic, methodological, or chronological. Related items that provide additional information but are not key to understanding the research problem can be included in a list of further readings.

Use Quotes Sparingly

Some short quotes are appropriate if you want to emphasize a point, or if what an author stated cannot be easily paraphrased. Sometimes you may need to quote certain terminology that was coined by the author, is not common knowledge, or is taken directly from the study. Do not use extensive quotes as a substitute for using your own words in reviewing the literature.

Summarize and Synthesize

Remember to summarize and synthesize your sources within each thematic paragraph as well as throughout the review. Recapitulate important features of a research study, but then synthesize it by rephrasing the study's significance and relating it to your own work and the work of others.

Keep Your Own Voice

While the literature review presents others' ideas, your voice [the writer's] should remain front and center. For example, weave references to other sources into what you are writing, but maintain your own voice by starting and ending each paragraph with your own ideas and wording.

Use Caution When Paraphrasing

When paraphrasing a source, be sure to represent the author's information or opinions accurately and in your own words. Even when paraphrasing an author’s work, you still must provide a citation to that work.

V. Common Mistakes to Avoid

These are the most common mistakes made in reviewing social science research literature.

- You include sources that do not clearly relate to the research problem;

- You do not take sufficient time to define and identify the most relevant sources to use in the literature review related to the research problem;

- You rely exclusively on secondary analytical sources rather than including relevant primary research studies or data;

- You uncritically accept another researcher's findings and interpretations as valid, rather than critically examining all aspects of the research design and analysis;

- You do not describe the search procedures that were used in identifying the literature to review;

- You report isolated statistical results rather than synthesizing them using chi-squared or meta-analytic methods; and,

- You include only research that validates your assumptions and do not consider contrary findings and alternative interpretations found in the literature.

Cook, Kathleen E. and Elise Murowchick. “Do Literature Review Skills Transfer from One Course to Another?” Psychology Learning and Teaching 13 (March 2014): 3-11; Fink, Arlene. Conducting Research Literature Reviews: From the Internet to Paper. 2nd ed. Thousand Oaks, CA: Sage, 2005; Hart, Chris. Doing a Literature Review: Releasing the Social Science Research Imagination. Thousand Oaks, CA: Sage Publications, 1998; Jesson, Jill. Doing Your Literature Review: Traditional and Systematic Techniques. London: SAGE, 2011; Literature Review Handout. Online Writing Center. Liberty University; Literature Reviews. The Writing Center. University of North Carolina; Onwuegbuzie, Anthony J. and Rebecca Frels. Seven Steps to a Comprehensive Literature Review: A Multimodal and Cultural Approach. Los Angeles, CA: SAGE, 2016; Randolph, Justus J. “A Guide to Writing the Dissertation Literature Review.” Practical Assessment, Research, and Evaluation 14 (June 2009); Ridley, Diana. The Literature Review: A Step-by-Step Guide for Students. 2nd ed. Los Angeles, CA: SAGE, 2012; Sutton, Anthea. Systematic Approaches to a Successful Literature Review. Los Angeles, CA: Sage Publications, 2016; Taylor, Dena. The Literature Review: A Few Tips On Conducting It. University College Writing Centre. University of Toronto; Writing a Literature Review. Academic Skills Centre. University of Canberra.

Writing Tip

Break Out of Your Disciplinary Box!

Thinking interdisciplinarily about a research problem can be a rewarding exercise in applying new ideas, theories, or concepts to an old problem. For example, what might cultural anthropologists say about the continuing conflict in the Middle East? In what ways might geographers view the need for better distribution of social service agencies in large cities differently from social workers? You don’t want to substitute studies conducted in other fields for a thorough review of the core research literature in your discipline. However, particularly in the social sciences, thinking about research problems from multiple vectors is a key strategy for finding new solutions to a problem or gaining a new perspective. Consult with a librarian about identifying research databases in other disciplines; almost every field of study has at least one comprehensive database devoted to indexing its research literature.

Frodeman, Robert. The Oxford Handbook of Interdisciplinarity. New York: Oxford University Press, 2010.

Another Writing Tip

Don't Just Review for Content!

While conducting a review of the literature, make the most of the time you devote to writing this part of your paper by thinking broadly about what you should be looking for and evaluating. Review not just what scholars are saying, but how they are saying it. Some questions to ask:

- How are they organizing their ideas?

- What methods have they used to study the problem?

- What theories have been used to explain, predict, or understand their research problem?

- What sources have they cited to support their conclusions?

- How have they used non-textual elements [e.g., charts, graphs, figures, etc.] to illustrate key points?

When you begin to write your literature review section, you'll be glad you dug deeper into how the research was designed and constructed because it establishes a means for developing more substantial analysis and interpretation of the research problem.

Hart, Chris. Doing a Literature Review: Releasing the Social Science Research Imagination. Thousand Oaks, CA: Sage Publications, 1998.

Yet Another Writing Tip

When Do I Know I Can Stop Looking and Move On?

Here are several strategies you can utilize to assess whether you've thoroughly reviewed the literature:

- Look for repeating patterns in the research findings. If the same thing is being said, just by different people, then this likely demonstrates that the research problem has hit a conceptual dead end. At this point, consider: Does your study extend current research? Does it forge a new path? Or does it merely add more of the same thing being said?

- Look at sources the authors cite in their work. If you begin to see the same researchers cited again and again, then this is often an indication that no new ideas have been generated to address the research problem.

- Search Google Scholar to identify who has subsequently cited leading scholars already identified in your literature review. This is called citation tracking, and there are a number of sources that can help you identify who has cited whom, particularly scholars from outside of your discipline. Here again, if the same authors are being cited again and again, this may indicate that no new literature has been written on the topic.

Onwuegbuzie, Anthony J. and Rebecca Frels. Seven Steps to a Comprehensive Literature Review: A Multimodal and Cultural Approach. Los Angeles, CA: Sage, 2016; Sutton, Anthea. Systematic Approaches to a Successful Literature Review. Los Angeles, CA: Sage Publications, 2016.

- Last Updated: Apr 24, 2024 10:51 AM

- URL: https://libguides.usc.edu/writingguide

What is a Literature Review? | Guide, Template, & Examples

Published on 22 February 2022 by Shona McCombes. Revised on 7 June 2022.

What is a literature review? A literature review is a survey of scholarly sources on a specific topic. It provides an overview of current knowledge, allowing you to identify relevant theories, methods, and gaps in the existing research.

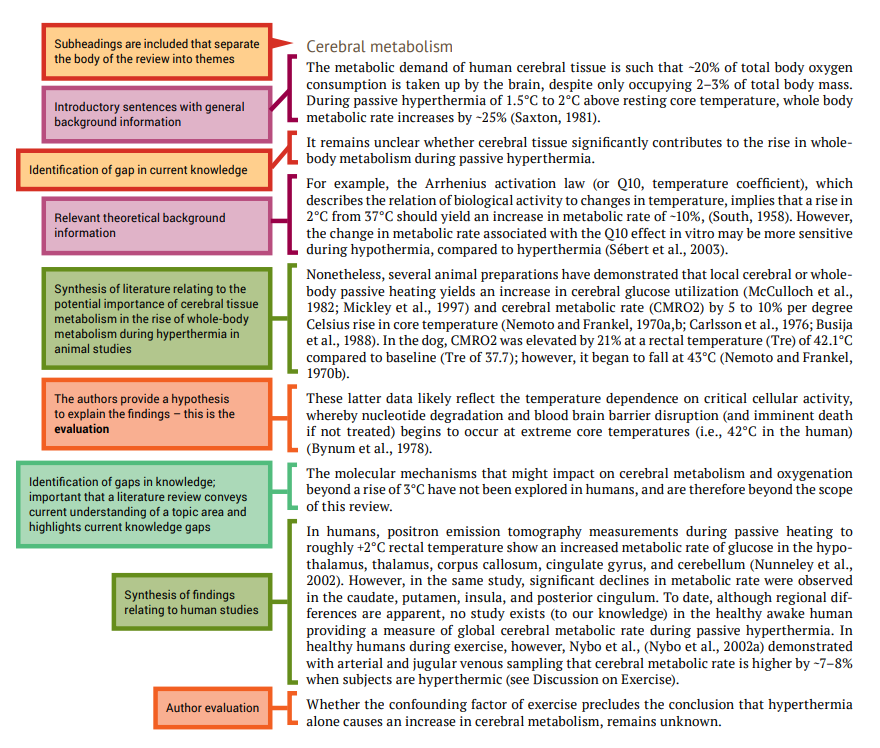

There are five key steps to writing a literature review:

- Search for relevant literature

- Evaluate sources

- Identify themes, debates and gaps

- Outline the structure

- Write your literature review

A good literature review doesn’t just summarise sources – it analyses, synthesises, and critically evaluates to give a clear picture of the state of knowledge on the subject.

Table of contents

- Why write a literature review

- Examples of literature reviews

- Step 1: Search for relevant literature

- Step 2: Evaluate and select sources

- Step 3: Identify themes, debates and gaps

- Step 4: Outline your literature review’s structure

- Step 5: Write your literature review

- Frequently asked questions about literature reviews
When you write a dissertation or thesis, you will have to conduct a literature review to situate your research within existing knowledge. The literature review gives you a chance to:

- Demonstrate your familiarity with the topic and scholarly context

- Develop a theoretical framework and methodology for your research

- Position yourself in relation to other researchers and theorists

- Show how your dissertation addresses a gap or contributes to a debate

You might also have to write a literature review as a stand-alone assignment. In this case, the purpose is to evaluate the current state of research and demonstrate your knowledge of scholarly debates around a topic.

The content will look slightly different in each case, but the process of conducting a literature review follows the same steps. We’ve written a step-by-step guide that you can follow below.

Writing literature reviews can be quite challenging! A good starting point could be to look at some examples, depending on what kind of literature review you’d like to write.

- Example literature review #1: “Why Do People Migrate? A Review of the Theoretical Literature” (Theoretical literature review about the development of economic migration theory from the 1950s to today.)

- Example literature review #2: “Literature review as a research methodology: An overview and guidelines” (Methodological literature review about interdisciplinary knowledge acquisition and production.)

- Example literature review #3: “The Use of Technology in English Language Learning: A Literature Review” (Thematic literature review about the effects of technology on language acquisition.)

- Example literature review #4: “Learners’ Listening Comprehension Difficulties in English Language Learning: A Literature Review” (Chronological literature review about how the concept of listening skills has changed over time.)

Before you begin searching for literature, you need a clearly defined topic.