Mastering Backtracking: A Comprehensive Guide to Solving Complex Problems in DAA

Learn the general method of backtracking in DAA and how to apply it to solve complex problems. Understand the concept, algorithm, and examples of backtracking.

Create an image featuring JavaScript code snippets and interview-related icons or graphics. Use a color scheme of yellows and blues. Include the title '7 Essential JavaScript Interview Questions for Freshers'.

General Method of Backtracking in DAA: A Comprehensive Guide

Backtracking is a fundamental concept in computer science, particularly in the field of Design and Analysis of Algorithms (DAA). It is a powerful technique used to solve complex problems by systematically exploring all possible solutions. In this blog post, we will delve into the general method of backtracking in DAA, its applications, and examples.

What is Backtracking?

Backtracking is a problem-solving strategy that involves recursively exploring all possible solutions to a problem. It is a form of brute force approach that systematically generates all possible solutions and checks if any of them satisfy the problem's constraints. The backtracking algorithm is particularly useful for solving constraint satisfaction problems, where the goal is to find a solution that satisfies a set of constraints.

The General Method of Backtracking

The general method of backtracking involves the following steps:

1. Choose a Variable

Select a variable from the problem's domain. This variable will be used to generate possible solutions.

2. Generate a Possible Solution

Generate a possible solution by assigning a value to the chosen variable.

3. Constraint Checking

Check if the generated solution satisfies the problem's constraints. If it does, then recursively explore the solution space by assigning values to the remaining variables.

4. Backtrack

If the generated solution does not satisfy the constraints, then backtrack by undoing the last assignment and exploring alternative solutions.

Repeat steps 2-4 until a solution is found or all possible solutions have been explored.

Examples of Backtracking Algorithms

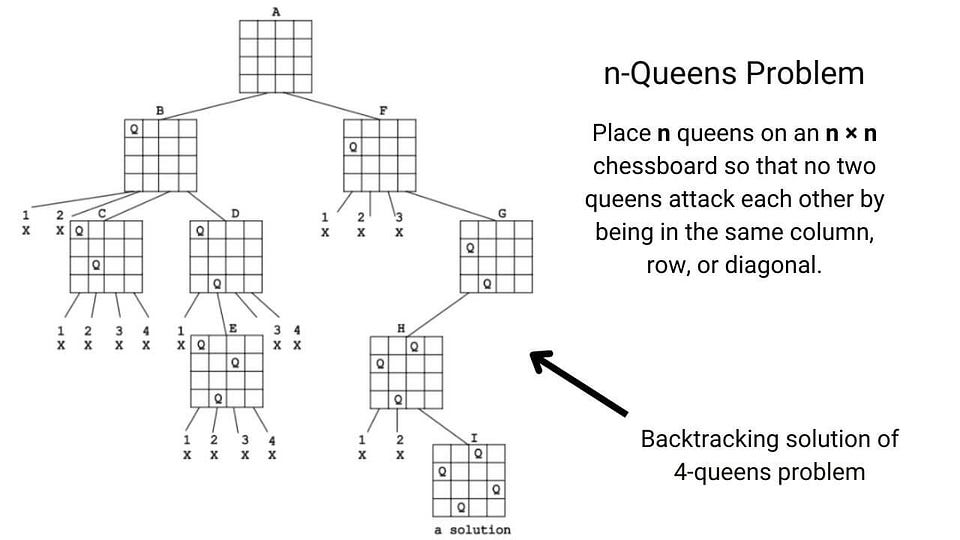

1. n-queens problem.

The N-Queens problem is a classic example of a backtracking algorithm. The problem involves placing N queens on an NxN chessboard such that no queen attacks another. The backtracking algorithm works by placing queens one by one on the board, checking for conflicts, and backtracking when a conflict is found.

Sudoku is another popular puzzle that can be solved using backtracking. The algorithm works by filling in the puzzle grid one cell at a time, checking for validity, and backtracking when an invalid solution is found.

3. Traveling Salesman Problem

The Traveling Salesman Problem is a classic problem in computer science that involves finding the shortest possible tour that visits a set of cities and returns to the starting city. The backtracking algorithm works by generating all possible tours and checking for the shortest tour.

Advantages of Backtracking

1. guaranteed solution.

Backtracking guarantees a solution to the problem, provided the problem has a solution.

2. Flexibility

Backtracking can be used to solve a wide range of problems, from simple puzzles to complex optimization problems.

3. Easy to Implement

Backtracking algorithms are relatively easy to implement, especially for small problems.

Disadvantages of Backtracking

1. computational complexity.

Backtracking algorithms can be computationally expensive, especially for large problems.

2. Space Complexity

Backtracking algorithms require a significant amount of memory to store the solution space.

3. Difficulty in Handling Constraints

Backtracking algorithms can struggle to handle complex constraints, leading to inefficient solutions.

Real-World Applications of Backtracking

1. cryptography.

Backtracking is used in cryptography to break certain encryption algorithms.

2. Scheduling

Backtracking is used in scheduling algorithms to find the optimal schedule for a set of tasks.

3. Resource Allocation

Backtracking is used in resource allocation algorithms to allocate resources efficiently.

In conclusion, backtracking is a powerful technique used to solve complex problems in computer science. The general method of backtracking involves choosing a variable, generating a possible solution, constraint checking, backtracking, and repeating the process until a solution is found. Backtracking has numerous advantages, including guaranteed solutions, flexibility, and ease of implementation. However, it also has disadvantages, including high computational complexity, space complexity, and difficulty in handling constraints. Despite these limitations, backtracking has numerous real-world applications in cryptography, scheduling, and resource allocation.

Algorithm Design Techniques in DAA

When it comes to solve problems, especially in computer science, algorithms are something that play a crucial role. An algorithm is a set of rules for solving a certain problem quickly. But the question is how to design an algorithm to solve that problem? So IT professionals are upgrading their knowledge and developing different approaches to construct an effective algorithm to fulfil the expanding demands of industry. Different algorithm design techniques and their applications are discussed in this article.

Brute-Force Search

It is a simple approach of addressing a problem that relies on huge processing power and testing of all possibilities to improve efficiency. Suppose you forgot the combination of a 4-digit padlock and to avoid purchasing the new one, you have to open the lock using brute-force search method. You will have to try all possible 4-digit combinations from 0 to 9 to unlock it. That combination could be anything between 0000 to 9999, hence there are 10,000 combinations. So we can say that in the worst case, you have to try 10, 000 times to find your actual combination.

Brute Force Search for combination of padlock

The time complexity of brute force is O(mn), which can also be written as O(n*m). This means that if we need to search a string of “n” characters in a string of “m” characters, then it would take “n*m” tries.

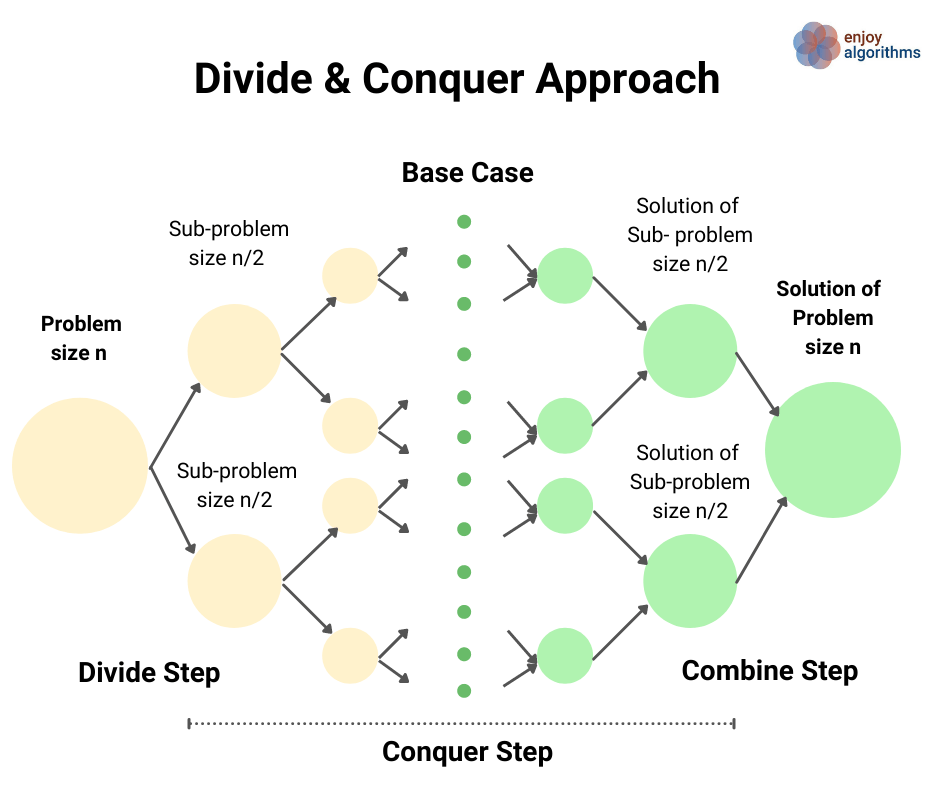

Divide and Conquer

Divide and conquer algorithm works on top-down approach and is preferred for large problems. As the name says divide and conquer, it follows following steps:

Step 1: Divide the problem into several subproblems.

Step 2: Conquer or solve each sub-problem.

Step 3: Combine each sub-problem to get the required result.

Divide and Conquer solve each subproblem recursively, so each subproblem will be the smaller original problem.

Greedy Algorithm

In Greedy Algorithm, resources are divided in a loop depending on the maximum and immediate availability at any given stage of execution. This method is used to solve optimization problems in which set of input values are given, that are required either to be increased or decreased according to the objective. Greedy Algorithm always chooses the option, which appears to be the best at the moment. That is why it is known as greedy algorithm. It may not always give the optimized solution. There are two stages to solve the problem using greedy algorithm:

- Examining the list of Items.

- Optimization

This means that a greedy algorithm selects the best immediate option and does not rethink its decisions. When it comes to optimizing a solution, this simply implies that the greedy solution will seek out local optimum solutions, which could be multiple, and may skip a global optimal solution. For example, greedy algorithm in animation below aims to locate the path with the largest sum.

With a goal of reaching the largest sum, at each step, the greedy algorithm will choose what appears to be the optimal immediate choice, so it will choose 12 instead of 3 at the second step and will not reach the best solution, which contains 99.

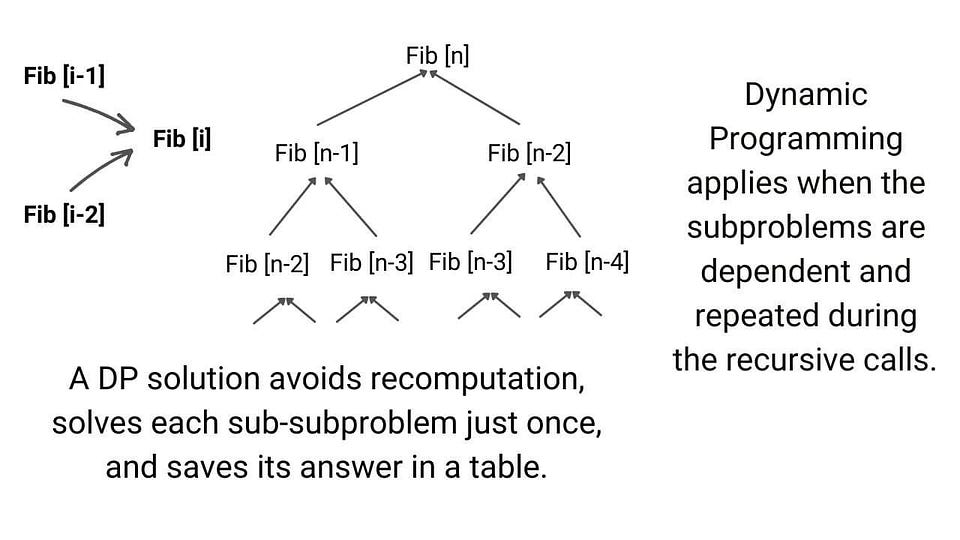

Dynamic Programming

Dynamic Programming (DP) is an algorithmic technique for solving optimization problems by breaking them into simpler sub-problems and storing each sub-solution so that the corresponding sub-problem can be solved only once. Dynamic Programming is a good methodology for optimization problems that seek the maximal or minimal solution with restrictions as it searches through all possible sub-problems and never recomputes the conclusion to any sub-problem.

It’s an algorithmic strategy for breaking down an optimization problem into smaller sub-problems and leveraging the fact that the best solution for the overall problem is defined by the best solution for its sub-problems. For example in case of Fibonacci Series in which each number is the sum of the two preceding numbers. Suppose the first two numbers of the series are 0, 1. If it is asked to find the nth number of the series, we can do that as follows:

Dynamic Programming Example

Here, to solve the overall problem i.e., Fib(n) , we have to break it down into two smaller sub-problems i.e., Fib(n-1) and Fib(n-2). Hence, we can use Dynamic Programming to solve above mentioned problem, which is elaborated in more detail in the following figure:

Fibonacci Series using Dynamic Programming

Branch and Bound Algorithm

For combinatory, discrete, and general mathematical optimization problems, branch and bound algorithms are applied to determine the optimal solution. A branch and bound algorithm searches the set of all possible solutions before recommending the best one. This algorithm enumerates possible candidate solutions in a stepwise manner by exploring all possible set of solutions.

Branch and Bound Algorithm Example

First of all we build a rooted decision tree where the root node represents the entire search space. Each child node is a part of the solution set and is a partial solution. Based on the optimal solution, we set an upper and lower bound for a given problem before constructing the rooted decision tree and we need to make a decision about which node to include in the solution set at each level. It is very important to find upper and lower bound and to find upper bound any local optimization method can be used. It can also be found by picking any point in the search space and convex relaxation. Whereas, duality can be used for finding lower bound.

Randomized Algorithm

Randomized Algorithm refers to an algorithm that uses random numbers to determine what to do next at any point in its logic. In a standard algorithm, it is usually used to reduce either the running time, or time complexity, or the memory used, or space complexity. The algorithm works by creating a random number, r, from a set of numbers and making decisions based on its value. This algorithm could assist in making a decision in a situation of doubt by flipping a coin or drawing a card from a deck.

Randomized Algorithm Flowchart

When utilizing a randomized method, keep the following two considerations in mind:

- It takes source of random numbers and makes random choices during execution along with the input.

- Behavior of an algorithm varies even on fixed inputs.

Backtracking Algorithms

Backtracking means that if the current solution isn’t working, you should go back and attempt another option. It is a method for resolving issues recursively by attempting to construct a solution incrementally, one piece at a time, discarding any solutions that do not satisfy the problem’s constraints at any point in time. This approach is used to resolve problems having multiple solutions. For example if we want to find all the possible ways of arranging 2 boys and 1 girl on 3 benches with a constraint that Girl should not be on the middle bench. So there will be 3! = 6 possibilities to solve this problem. We will try all possible ways recursively to get the required solution. Those possibilities are as follows:

Backtracking Example Possibilities

Following diagram shows the possible solutions for the above mentioned problem:

Solutions of Backtracking

Related Posts

Abstract Data Types

Radix Sort in Data Structure

Master Method in DAA

Program Performance Measurement

Doubly Linked List in Data Structure

Analyzing Algorithm Control Structure in DAA

Add comment cancel reply.

PrepBytes Blog

ONE-STOP RESOURCE FOR EVERYTHING RELATED TO CODING

Sign in to your account

Forgot your password?

Login via OTP

We will send you an one time password on your mobile number

An OTP has been sent to your mobile number please verify it below

Register with PrepBytes

Design and analysis of algorithms.

Last Updated on April 23, 2024 by Abhishek Sharma

Algorithms are the fundamental building blocks of computer science, enabling us to solve complex problems efficiently. The design and analysis of algorithms is a crucial area of study that focuses on creating efficient algorithms and understanding their behavior. In this article, we will explore the key concepts of algorithm design and analysis, including algorithmic strategies, complexity analysis, and the role of data structures in algorithm efficiency.

What is Algorithm Design?

Algorithm design is the process of creating step-by-step instructions for solving a problem. It involves selecting appropriate data structures and algorithmic techniques to optimize the solution.

Strategies used in Algorithm Design

There are several common strategies used in algorithm design, including:

- Divide and Conquer: This strategy involves breaking down a problem into smaller, more manageable subproblems, solving each subproblem recursively, and then combining the solutions to the subproblems to solve the original problem.

- Dynamic Programming: Dynamic programming is a technique used to solve problems by breaking them down into simpler subproblems and storing the results of subproblems to avoid redundant computations.

- Greedy Algorithms: Greedy algorithms make decisions based on the current best choice without considering the future consequences. While greedy algorithms are easy to implement, they may not always produce optimal solutions.

- Backtracking: Backtracking is a technique used to solve problems by exploring all possible solutions recursively and backtracking from those paths that do not lead to a valid solution.

- Randomized Algorithms: Randomized algorithms use randomness to make decisions during the computation, which can lead to more efficient solutions in some cases.

What are Algorithm Analysis?

Algorithm analysis is the process of evaluating the performance of an algorithm in terms of time complexity and space complexity. Time complexity refers to the amount of time an algorithm takes to run as a function of the input size, while space complexity refers to the amount of memory space an algorithm requires to run.

The Big O notation is commonly used to express the time complexity of an algorithm. It provides an upper bound on the growth rate of the algorithm’s running time as the input size increases. For example, an algorithm with a time complexity of O(n) has a linear growth rate, meaning its running time increases linearly with the input size.

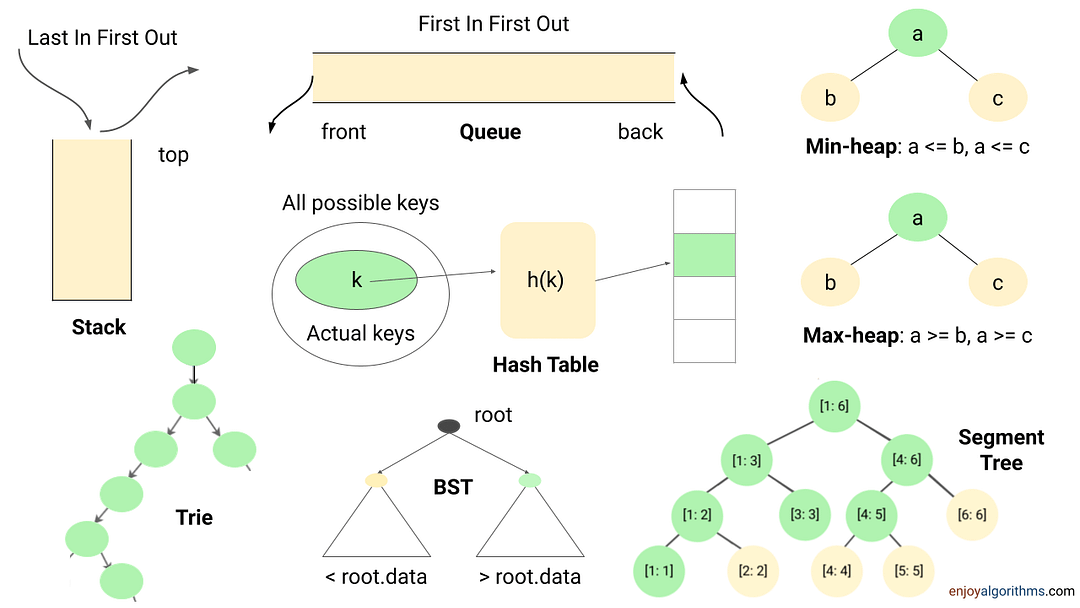

What is Data Structures?

Data structures play a crucial role in algorithm efficiency. They are used to store and organize data in a way that allows algorithms to access and manipulate it quickly and efficiently. Common data structures include arrays, linked lists, stacks, queues, trees, and graphs.

The choice of data structure can significantly impact the performance of an algorithm. For example, using a hash table to store key-value pairs can lead to faster lookups compared to using a linear list.

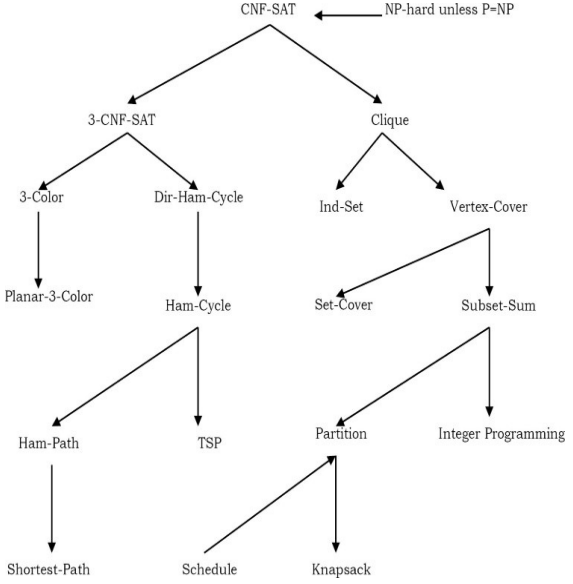

Algorithm Complexity Classes

Algorithm complexity classes classify algorithms based on their worst-case behavior. Some common complexity classes include:

- P: The class of problems that can be solved in polynomial time, meaning the running time of the algorithm is bounded by a polynomial function of the input size.

- NP: The class of problems for which a solution can be verified in polynomial time, but no efficient algorithm is known to exist for finding a solution.

- NP-hard: The class of problems that are at least as hard as the hardest problems in NP. Solving an NP-hard problem in polynomial time would imply P = NP.

- NP-complete: The class of problems that are both in NP and NP-hard. NP-complete problems are among the hardest problems in NP.

Conclusion In conclusion, the design and analysis of algorithms is a fundamental aspect of computer science that enables us to solve complex problems efficiently. By understanding algorithmic strategies, complexity analysis, and the role of data structures, we can create algorithms that are both correct and efficient. Algorithm design and analysis continue to be active areas of research, driving advancements in computing and technology.

By employing the right algorithms and data structures, developers can optimize the performance of their software applications and solve complex problems with ease. As technology continues to evolve, the importance of algorithm design and analysis will only continue to grow, making it a critical skill for any aspiring computer scientist or software developer.

FAQs related to the Design and Analysis of Algorithms

Here are some of the FAQs related to Design and Analysis of Algorithms:

1. What are some common algorithm design strategies? Common algorithm design strategies include divide and conquer, dynamic programming, greedy algorithms, backtracking, and randomized algorithms.

2. How do data structures impact algorithm efficiency? Data structures impact algorithm efficiency by providing a way to store and organize data in a way that allows algorithms to access and manipulate it quickly and efficiently. The choice of data structure can significantly impact the performance of an algorithm.

3. What are complexity classes in algorithm analysis? Complexity classes are categories that classify algorithms based on their worst-case behavior. Common complexity classes include P, NP, NP-hard, and NP-complete.

4. What is the Big O notation? The Big O notation is used to express the upper bound on the growth rate of an algorithm’s running time or space requirements. It provides a way to describe the efficiency of an algorithm in terms of its input size.

5. Why is algorithm design and analysis important in computer science? Algorithm design and analysis are important in computer science because they form the foundation of efficient problem-solving. By understanding how to design and analyze algorithms, computer scientists can create better software, solve complex problems, and advance the field of computing.

Leave a Reply Cancel reply

Your email address will not be published. Required fields are marked *

Save my name, email, and website in this browser for the next time I comment.

- Linked List

- Segment Tree

- Backtracking

- Dynamic Programming

- Greedy Algorithm

- Operating System

- Company Placement

- Interview Tips

- General Interview Questions

- Data Structure

- Other Topics

- Computational Geometry

- Game Theory

Related Post

Introduction to amortized analysis, types of complexity classes, difference between big oh, big omega, and big theta, basics of analysis of algorithms, types of algorithm analysis, why analysis of algorithms is important.

DAA Tutorial: Design and Analysis of Algorithms

DAA Tutorial Summary

This Design and Analysis of Algorithms Tutorial is designed for beginners with little or no coding experience. It covers algorithm Design and Analysis process concepts.

What is an Algorithm?

An Algorithm is a set of well-defined instructions designed to perform a specific set of tasks. Algorithms are used in Computer science to perform calculations, automatic reasoning, data processing, computations, and problem-solving. Designing an algorithm is important before writing the program code as the algorithm explains the logic even before the code is developed.

DAA Syllabus

Introduction, advanced stuff, why study design and analysis of algorithm.

Design and Analysis of Algorithm help to design the algorithms for solving different types of problems in Computer Science. It also helps to design and analyze the logic on how the program will work before developing the actual code for a program.

Prerequisites for learning DAA Tutorial

For learning this DAA tutorial, you should know the basic programming and mathematics concepts and data structure concepts. The basic knowledge of algorithms will also help you learn and understand the DAA concepts easily and quickly.

What will you learn in this Design and Analysis of Algorithms Tutorial?

In this Design and Analysis of Algorithms tutorial, you will learn the basic concepts about DAA like the introduction to Algorithm, Greedy algorithm, linked list, and arrays in a data structure. You will also learn advanced concepts like Trees in a data structure, search algorithms, sorting algorithms, hash tables, and interview questions related to Algorithms.

DAA Tutorial

Design and Analysis of Algorithms is the process of creating step-by-step instructions for solving problems using computers and then evaluating those instructions to see how efficient they are. It’s like devising a recipe for a specific dish (the algorithm) and then figuring out how long it takes to cook (the analysis) and how much ingredients it requires.

Welcome to our comprehensive tutorial guide on Design and Analysis of Algorithms (DAA)! Whether you’re a beginner looking to dive into the world of algorithms or an experienced professional seeking to enhance your skills, this guide is designed to cater to all levels of expertise. In this Tutorial, we’ll cover everything you need to know to master DBMS, from the basics of relational databases to advanced topics like Introduction to algorithm , Asymptotic notation, Space and Time Complexity, Divide and Conquer algorithm , Greedy Algorithm.

Our DAA will guide you to learn Design and Analysis of Algorithms one step at a time.

In this Tutorial you will get well maintain DAA topic wise in the form of PDF…

Topics Covered

About daa .

- Problem Solving Framework: Design and Analysis of Algorithms (DAA) provides a systematic approach to solving computational problems by devising efficient step-by-step procedures or algorithms.

- Efficiency Evaluation: DAA involves evaluating the performance of algorithms in terms of time complexity (how long an algorithm takes to run) and space complexity (how much memory an algorithm uses).

- Algorithm Design Paradigms: DAA encompasses various design paradigms such as divide and conquer, dynamic programming, greedy algorithms, and backtracking, offering diverse strategies for solving different types of problems.

- Optimization Techniques: DAA focuses on optimizing algorithms to improve their efficiency, often balancing trade-offs between time and space complexity to achieve optimal solutions.

- Real-World Applications: DAA is fundamental to numerous real-world applications, including computer science, engineering, data science, artificial intelligence, and operations research, where efficient problem-solving algorithms are essential for tackling complex computational tasks.

Why Learn DAA ?

- Efficient Problem Solving: Learn DAA for optimized algorithms and systematic problem-solving. Algorithmic Thinking: Master DAA for critical thinking in complex computational challenges.

- Performance Optimization: Enhance efficiency in time and space with DAA expertise.

- Foundation for Computer Science: DAA is fundamental for software development and system optimization.

- Career Advancement: Proficiency in DAA unlocks diverse opportunities in software engineering and data science.

100+ Questions

- Practice Backtracking

- Interview Problems on Backtracking

- MCQs on Backtracking

- Tutorial on Backtracking

- Backtracking vs Recursion

- Backtracking vs Branch & Bound

- Print Permutations

- Subset Sum Problem

- N-Queen Problem

- Knight's Tour

- Sudoku Solver

- Rat in Maze

- Hamiltonian Cycle

- Graph Coloring

Backtracking Algorithm

- Introduction to Backtracking - Data Structure and Algorithm Tutorials

- Difference between Backtracking and Branch-N-Bound technique

- What is the difference between Backtracking and Recursion?

Standard problems on backtracking

- The Knight's tour problem

- Rat in a Maze

- N Queen Problem

- Subset Sum Problem using Backtracking

- M-Coloring Problem

- Algorithm to Solve Sudoku | Sudoku Solver

- Magnet Puzzle

- Remove Invalid Parentheses

- A backtracking approach to generate n bit Gray Codes

- Permutations of given String

Easy Problems on Backtracking

- Print all subsets of a given Set or Array

- Check if a given string is sum-string

- Count all possible Paths between two Vertices

- Find all distinct subsets of a given set using BitMasking Approach

- Find if there is a path of more than k length from a source

- Print all paths from a given source to a destination

- Print all possible strings that can be made by placing spaces

Medium prblems on Backtracking

- 8 queen problem

- Combinational Sum

- Warnsdorff's algorithm for Knight’s tour problem

- Find paths from corner cell to middle cell in maze

- Find Maximum number possible by doing at-most K swaps

- Rat in a Maze with multiple steps or jump allowed

- N Queen in O(n) space

Hard problems on Backtracking

- Power Set in Lexicographic order

- Word Break Problem using Backtracking

- Partition of a set into K subsets with equal sum

- Longest Possible Route in a Matrix with Hurdles

- Find shortest safe route in a path with landmines

- Printing all solutions in N-Queen Problem

- Print all longest common sub-sequences in lexicographical order

- Top 20 Backtracking Algorithm Interview Questions

Backtracking algorithms are like problem-solving strategies that help explore different options to find the best solution. They work by trying out different paths and if one doesn’t work, they backtrack and try another until they find the right one. It’s like solving a puzzle by testing different pieces until they fit together perfectly.

- Backtracking

Table of Content

What is Backtracking Algorithm?

How does a backtracking algorithm work, example of backtracking algorithm, when to use a backtracking algorithm, applications of backtracking algorithm.

- Basic of Backtracking Algorithm

- Standard Problems on Backtracking Algorithm

- Easy Problems on Backtracking Algorithm

- Medium Problems on Backtracking Algorithm

- Hard Problems on Backtracking Algorithm

Backtracking is a problem-solving algorithmic technique that involves finding a solution incrementally by trying different options and undoing them if they lead to a dead end .

It is commonly used in situations where you need to explore multiple possibilities to solve a problem, like searching for a path in a maze or solving puzzles like Sudoku . When a dead end is reached, the algorithm backtracks to the previous decision point and explores a different path until a solution is found or all possibilities have been exhausted.

A backtracking algorithm works by recursively exploring all possible solutions to a problem. It starts by choosing an initial solution, and then it explores all possible extensions of that solution. If an extension leads to a solution, the algorithm returns that solution. If an extension does not lead to a solution, the algorithm backtracks to the previous solution and tries a different extension.

The following is a general outline of how a backtracking algorithm works:

- Choose an initial solution.

- Explore all possible extensions of the current solution.

- If an extension leads to a solution, return that solution.

- If an extension does not lead to a solution, backtrack to the previous solution and try a different extension.

- Repeat steps 2-4 until all possible solutions have been explored.

Example: Finding the shortest path through a maze

Input: A maze represented as a 2D array, where 0 represents an open space and 1 represents a wall.

- Start at the starting point.

- For each of the four possible directions (up, down, left, right), try moving in that direction.

- If moving in that direction leads to the ending point, return the path taken.

- If moving in that direction does not lead to the ending point, backtrack to the previous position and try a different direction.

- Repeat steps 2-4 until the ending point is reached or all possible paths have been explored.

Backtracking algorithms are best used to solve problems that have the following characteristics:

- There are multiple possible solutions to the problem.

- The problem can be broken down into smaller subproblems.

- The subproblems can be solved independently.

Backtracking algorithms are used in a wide variety of applications, including:

- Solving puzzles (e.g., Sudoku, crossword puzzles)

- Finding the shortest path through a maze

- Scheduling problems

- Resource allocation problems

- Network optimization problems

Basic of Backtracking Algorithm:

- Introduction to Backtracking – Data Structure and Algorithm Tutorials

Standard Problems on Backtracking Algorithm:

- The Knight’s tour problem

- N Queen Problem | Backtracking-3

- Subset Sum problem

- m Coloring Problem

- Sudoku | Backtracking-7

- Write a program to print all permutations of a given string

Easy Problems on Backtracking Algorithm:

- Backtracking to find all subsets

- Count all possible paths between two vertices

- Find all distinct subsets of a given set

Medium Problems on Backtracking Algorithm:

- Warnsdorff’s algorithm for Knight’s tour problem

Hard Problems on Backtracking Algorithm:

- Print all palindromic partitions of a string

Quick Links :

- Learn Data Structure and Algorithms | DSA Tutorial

- ‘Practice Problems’ on Backtracking

- ‘Quiz’ on Backtracking

- ‘Videos’ on Backtracking

Please Login to comment...

Similar reads.

- Algorithms-Backtracking

- Algorithms-Recursion

Improve your Coding Skills with Practice

What kind of Experience do you want to share?

DAA (Design and Analysis of Algorithms)

Dcrust syllabus.

CSE 206C Design and Analysis of Algorithms

CATEGORY : ENGINEERING SCIENCE COURSE

Course Objectives:

- To analyze worst-case running times of algorithms based on asymptotic analysis and justify the correctness of algorithms.

- To apply the algorithms and design techniques to solve problems.

- To explain the major graph algorithms and their analyses and to employ graphs to model engineering problems.

- To understand the concepts of tractable and intractable problems and the classes P, NP and NP-complete problems.

Introduction: Characteristics of algorithm. Analysis of algorithm: Asymptotic analysis of complexity bounds – best, average and worst-case behavior; Performance measurements of Algorithm, Time and space trade-offs, Analysis of recursive algorithms through recurrence relations: Substitution method, Recursion tree method and Masters’ theorem.

Click on any topic to read about the topic.

Fundamental Algorithmic Strategies: Brute-Force, Greedy, Dynamic Programming, Branch- and-Bound and Backtracking methodologies for the design of algorithms; Illustrations of these techniques for Problem-Solving, Bin Packing, Knap Sack TSP. Heuristics-characteristics and their application domains.

Graph and Tree Algorithms: Traversal algorithms: Depth First Search (DFS) and Breadth First Search (BFS); Shortest path algorithms, Transitive closure, Minimum Spanning Tree, Topological sorting, Network Flow Algorithm.

Tractable and Intractable Problems: Computability of Algorithms, Computability classes – P, NP, NP-complete and NP-hard. Cook’s theorem, Standard NP-complete problems and Reduction techniques.

Advanced Topics: Approximation algorithms, Randomized algorithms, Class of problems beyond NP – P SPACE

TEXT BOOKS:

- Introduction to Algorithms, 4TH Edition, Thomas H Cormen, MIT Press/McGraw-Hill.Fundamentals of Algorithms – E. Horowitz et al.

REFERENCE BOOKS:

- Algorithm Design, 1ST Edition, Jon Kleinberg and ÉvaTardos, Pearson.

- Algorithm Design: Foundations, Analysis, and Internet Examples, Second Edition, Michael T Goodrich and Roberto Tamassia, Wiley.

- Algorithms — A Creative Approach, 3 RD Edition, UdiManber, Addison-Wesley, Reading, MA.

Course Outcomes: After successful completion of the course students will be able to:

- Analyze worst-case running times of algorithms based on asymptotic analysis and justify the correctness of algorithms.

- Apply the algorithms and design techniques to solve problems;

- Explain the major graph algorithms and their analyses. Employ graphs to model engineering problems,

- Understand the concepts of tractable and intractable problems and the classes P, NP and NP-complete problems.

Related Posts

Aca (advanced computer architecture), ai (artificial intelligence and expert system), aj (advanced java), aj lab (advanced java lab), cd (compiler design), cd lab (compiler design lab), cfcl (cyber forensics and cyber laws), cg (computer graphics), cn (computer networks), coa (computer organisation and architecture, cs (cyber security), daa lab (design and analysis of algorithms lab), dbms (database management systems), dbms lab (database management system lab ), dsa (data structure and algorithms), dsa lab (data structures and algorithms lab), flat (formal languages & automata theory), itw (it workshop) / matlab, mm (multimedia technology), oop (object oriented programming).

- Digital Electronics

- Embedded System

- Mobile Computing

- Data Structures

- Computer Concepts

- Data Science

- Machine Learning

- Operating System

- Parallel Algorithm

- Principal of Programming Languages

- Software Engineering

- Artificial Intelligence

- Compiler Design

- Computer Concept

- Computer Architecture

- Data Structure

- Electronics

- Write For Us

- Yuvayana Home

- Mock Test Zone

- Write for Us

In the previous article, we learned about the relationship between Complexity Classes in DAA , In this article, we will learn about the reductions (complexity).

Before discussing reductions, let us consider the following scenario. Assume that we want to solve problem X but feel it’s very complicated. In this case, what do we do? The first thing that comes to mind is, if we have a similar problem to that of X (let us say Y), then we try to map X to Y and use Y’s solution to solve X also. This process is called reduction.

process of reduction

In order to map problem X to problem Y, we need some algorithm and that may take the linear time or more. Based on this discussion the cost of solving problem X can be given as: Cost of solving X = Cost of solving Y + Reduction time Now, let us consider the other scenario.

For solving problem X, sometimes we may need to use Y’s algorithm (solution) multiple times. In that case, the Cost of solving X = Number of Times * Cost of solving X + Reduction time The main thing in NP-Complete is reducibility. That means, we reduce (or transform) given NP-complete problems to other known NP-Complete problems. Since the NP-Complete problems are hard to solve and in order to prove that a given NP-Complete problem is hard, we take one existing hard problem (which we can prove is hard) and try to map the given problem to that and finally we prove that the given problem is hard.

Note: It’s not compulsory to reduce the given problem to a known hard problem to prove its hardness. Sometimes, we reduce the known hard problem to a given problem.

Important NP-Complete Problems (Reductions) –

Satisfiability problem:.

A boolean formula is in conjunctive normal form (CNF) if it is a conjunction (AND) of several clauses, each of which is the disjunction (OR) of several literals, each of which is either a variable or its negation. For example (a ∨ b ∨ c ∨ d ∨ e)∧(b ∨ ~c ∨ ~d) ∧ (~a ∨ c ∨ d) ∨ (a ∨ ~b) A 3-CNF formula is a CNF formula with exactly three literals per clause. The previous example is not a 3-CNF formula, since its first clause has five literals and its last clause has only two.

2-SAT Problem: 3-SAT is just SAT restricted to 3-CNF formulas: Given a 3-CNF formula, is there an assignment to the variables so that the formula evaluates to TRUE? 2-SAT Problem: 2-SAT is just SAT restricted to 2-CNF formulas: Given a 2-CNF formula, is there an assignment to the variables so that the formula evaluates to TRUE?

Circuit-Satisfiability Problem:

Given a boolean combinational circuit composed of AND, OR and NOT gates, is it satisfiable?. That means, given a boolean circuit consisting of AND, OR and NOT gates properly connected by wires, the Circuit-SAT problem is to decide whether there exists an input assignment for which the output is TRUE.

Hamiltonian Path Problem (Ham-Path):

Given an undirected graph, is there a path that visits every vertex exactly once?

Hamiltonian Cycle Problem (Ham-Cycle):

Given an undirected graph, is there a cycle (where start and end vertices are same) that visits every vertex exactly once?

Directed Hamiltonian Cycle Problem (Dir-Ham-Cycle):

Given a directed graph, is there a cycle (where start and end vertices are same) that visits every vertex exactly once?

Travelling Salesman Problem (TSP):

Given a list of cities and their pair-wise distances, the problem is to find the shortest possible tour that visits each city exactly once.

Shortest Path Problem (Shortest-Path):

Given a directed graph and two vertices s and t, check whether there is a shortest simple path from s to t.

Graph Coloring:

A k-coloring of a graph is to map one of k ‘colors’ to each vertex, so that every edge has two different colors at its endpoints. The graph coloring problem is to find the smallest possible number of colors in a legal coloring.

3-Color problem:

Given a graph, is it possible to color the graph with 3 colors in such a way that every edge has two different colors?

Clique (also called complete graph):

Given a graph, the CLIQUE problem is to compute the number of nodes in its largest complete subgraph. That means, we need to find the maximum subgraph which is also a complete graph.

Independent Set Problem (Ind_Set):

Let G be an arbitrary graph. An independent set in G is a subset of the vertices of G with no edges between them. The maximum independent set problem is the size of the largest independent set in a given graph.

Vertex Cover Problem (Vertex-Cover):

A vertex cover of a graph is a set of vertices that touches every edge in the graph. The vertex cover problem is to find the smallest vertex cover in a given graph.

Subset Sum Problem (Subset-Sum):

Given a set S of integers and an integer T, determine whether 5 has a subset whose elements sum to T.

Note: Since the problems are NP-Complete, they are NP and NP-hard too. For simplicity, we can ignore the proofs for these reductions.

Attempt free Data Structures MCQ Test

RELATED ARTICLES MORE FROM AUTHOR

Bitwise Programming

Relationship between P, NP Co-NP, NP-Hard and NP-Complete

Complexity Classes

Leave a reply cancel reply.

Save my name, email, and website in this browser for the next time I comment.

This site uses Akismet to reduce spam. Learn how your comment data is processed .

Introduction to Data Structures and Arrays

Operations on arrays – data structures traversal, insertion, deletion, searching and sorting, binary search – arrays, bubble sort in data structures, selection sort algorithm in data structures, quick sort algorithm in data structures, merge sort algorithm in data structures, insertion sort algorithm in data structures, what is stack in data structures, what is queue in data structures, what is circular queue in data structures, double ended queue/deque in data structures, what is hashing in data structures, binary tree and its types in data structures, representation and traversal techniques of binary tree, insertion operation in a binary tree, deletion in a binary tree, what is an avl tree in data structures, insertion and deletion in heaps, graphs in data structures : definition, types and terminologies, graph representation and graph traversal in data structure, dijkstra shortest path algorithm : procedure, code, uses, bellman ford algorithm, spanning tree, prims algorithm and kruskal algorithm, huffman tree and huffman algorithm, huffman coding – letter encoding using huffman tree, kth smallest element using quick select algorithm, what is dynamic programming : properties & examples, graph data structure cheat sheet for coding interviews, memoization and fibonacci.

- Privacy Policy

- Terms and Conditions

TheDeveloperBlog.com

Home | Contact Us

C-Sharp | Java | Python | Swift | GO | WPF | Ruby | Scala | F# | JavaScript | SQL | PHP | Angular | HTML

- DAA Tutorial | Design and Analysis of Algorithms Tutorial

Related Links:

- DAA Selection Sort

- DAA Recursion Tree Method

- DAA Binary Search

- DAA Stable Sorting

- DAA Lower bound Theory

- DAA Open Addressing Techniques

- DAA Linear Time Sorting

- DAA Counting Sort

- DAA Bucket Sort

- DAA Radix Sort

- DAA Hashing

- DAA Hash Tables

- DAA Hashing Method

- DAA Binary Search Trees

- DAA Red Black Tree

- DAA Master Method

- DAA Bubble Sort

- DAA | Network Flow Problems

- DAA Hash Function

- DAA Analyzing Algorithm Control Structure

- DAA Recurrence Relation

- DAA | Flow Networks and Flows

- DAA Insertion Sort

- DAA | Ford Fulkerson Algorithm

- DAA | Maximum bipartite matching

- DAA | Comparison Network

- DAA | NP-Completeness

- DAA | Bitonic Sorting Network

- DAA | Merging Network

- DAA | Complexity Classes

- DAA | Polynomial Time Verification

- DAA | Circuit Satisfiability

- DAA | 3-CNF Satisfiability

- DAA | Clique Problem

- DAA | Vertex Cover Problem

- DAA | Subset-Sum Problem

- DAA | Approximation Algorithm

- DAA | Approximation Algorithm Vertex Cover

- DAA Knuth-Morris-Pratt Algorithm

- DAA Boyer-Moore Algorithm

- DAA | Travelling Salesman Problem

- DAA String Matching Introduction

- DAA Naive String Matching Algorithm

- DAA Rabin-Karp Algorithm

- DAA String Matching with Finite Automata

- DAA Quick Sort

- Top 40 DAA Interview Questions (2021)

- DAA Max-Min Problem

- DAA Merge Sort

- DAA Tower of Hanoi

- DAA Binary Heap Sort

- DAA Algorithm

- DAA Need of Algorithm

- DAA Complexity of Algorithm

- DAA Algorithm Design Techniques

- DAA Asymptotic Analysis of Algorithms

Related Links

EnjoyMathematics

Problem-Solving Approaches in Data Structures and Algorithms

This blog highlights some popular problem-solving strategies for solving problems in DSA. Learning to apply these strategies could be one of the best milestones for the learners in mastering data structure and algorithms.

An Incremental approach using Single and Nested loops

One of the simple ideas of our daily problem-solving activities is that we build the partial solution step by step using a loop. There is a different variation to it:

- Input-centric strategy: At each iteration step, we process one input and build the partial solution.

- Output-centric strategy: At each iteration step, we add one output to the solution and build the partial solution.

- Iterative improvement strategy: Here, we start with some easily available approximations of a solution and continuously improve upon it to reach the final solution.

Here are some approaches based on loop: Using a single loop and variables, Using nested loops and variables, Incrementing the loop by a constant (more than 1), Using the loop twice (Double traversal), Using a single loop and prefix array (or extra memory), etc.

Example problems: Insertion Sort , Finding max and min in an array , Valid mountain array , Find equilibrium index of an array , Dutch national flag problem , Sort an array in a waveform .

Decrease and Conquer Approach

This strategy is based on finding the solution to a given problem via its one sub-problem solution. Such an approach leads naturally to a recursive algorithm, which reduces the problem to a sequence of smaller input sizes. Until it becomes small enough to be solved, i.e., it reaches the recursion’s base case.

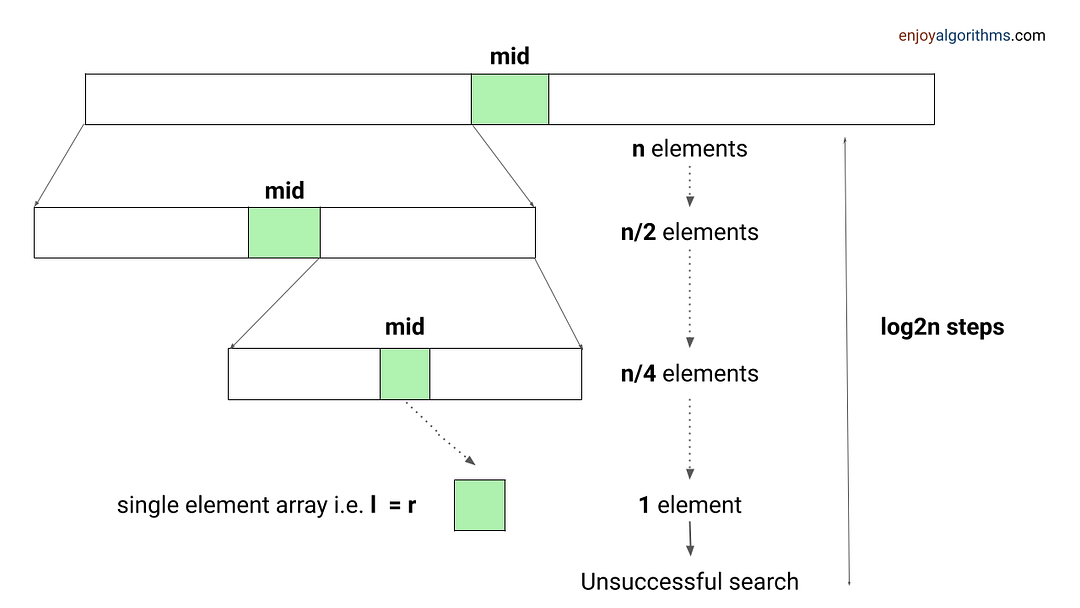

Example problems: Euclid algorithm of finding GCD , Binary Search , Josephus problem

Problem-solving using Binary Search

When an array has some order property similar to the sorted array, we can use the binary search idea to solve several searching problems efficiently in O(logn) time complexity. For doing this, we need to modify the standard binary search algorithm based on the conditions given in the problem. The core idea is simple: calculate the mid-index and iterate over the left or right half of the array.

Example problems: Find Peak Element , Search a sorted 2D matrix , Find the square root of an integer , Search in Rotated Sorted Array

Divide and Conquer Approach

This strategy is about dividing a problem into more than one subproblems, solving each of them, and then, if necessary, combining their solutions to get a solution to the original problem. We solve many fundamental problems efficiently in computer science by using this strategy.

Example problems: Merge Sort , Quick Sort , Median of two sorted arrays

Two Pointers Approach

The two-pointer approach helps us optimize time and space complexity in the case of many searching problems on arrays and linked lists. Here pointers can be pairs of array indices or pointer references to an object. This approach aims to simultaneously iterate over two different input parts to perform fewer operations. There are three variations of this approach:

Pointers are moving in the same direction with the same pace: Merging two sorted arrays or linked lists, Finding the intersection of two arrays or linked lists , Checking an array is a subset of another array , etc.

Pointers are moving in the same direction at a different pace (Fast and slow pointers): Partition process in the quick sort , Remove duplicates from the sorted array , Find the middle node in a linked list , Detect loop in a linked list , Move all zeroes to the end , Remove nth node from list end , etc.

Pointers are moving in the opposite direction: Reversing an array, Check pair sum in an array , Finding triplet with zero-sum , Rainwater trapping problem , Container with most water , etc.

Sliding Window Approach

A sliding window concept is commonly used in solving array/string problems. Here, the window is a contiguous sequence of elements defined by the start and ends indices. We perform some operations on elements within the window and “slide” it in a forward direction by incrementing the left or right end.

This approach can be effective whenever the problem consists of tasks that must be performed on a contiguous block of a fixed or variable size. This could help us improve time complexity in so many problems by converting the nested loop solution into a single loop solution.

Example problems: Longest substring without repeating characters , Count distinct elements in every window , Max continuous series of 1s , Find max consecutive 1's in an array , etc.

Transform and Conquer Approach

This approach is based on transforming a coding problem into another coding problem with some particular property that makes the problem easier to solve. In other words, here we solve the problem is solved in two stages:

- Transformation stage: We transform the original problem into another easier problem to solve.

- Conquering stage: Now, we solve the transformed problem.

Example problems: Pre-sorting based algorithms (Finding the closest pair of points, checking whether all the elements in a given array are distinct, etc.)

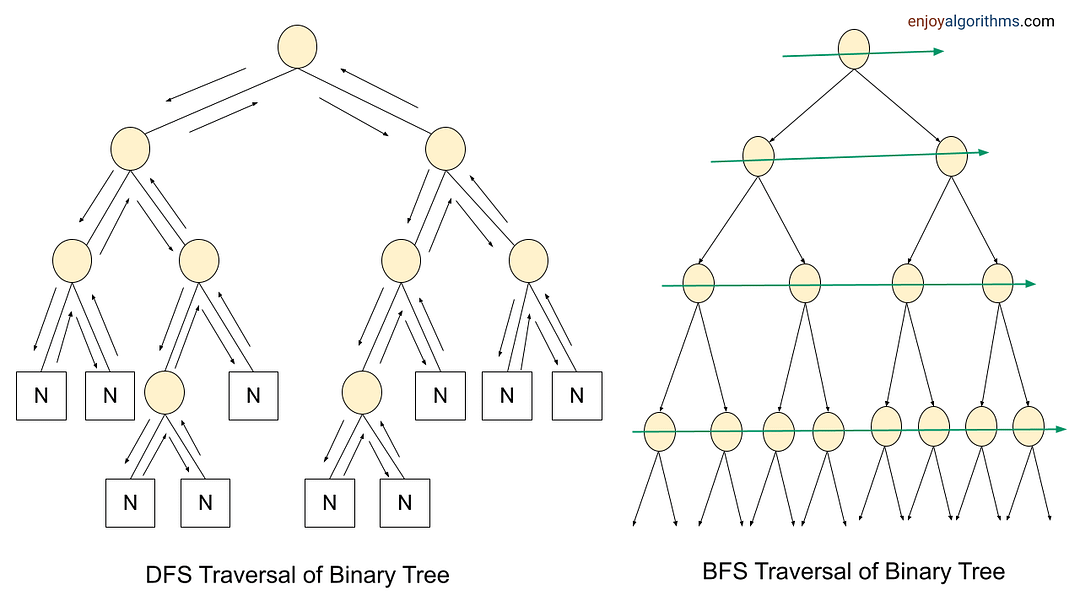

Problem-solving using BFS and DFS Traversal

Most tree and graph problems can be solved using DFS and BFS traversal. If the problem is to search for something closer to the root (or source node), we can prefer BFS, and if we need to search for something in-depth, we can choose DFS.

Sometimes, we can use both BFS and DFS traversals when node order is not required. But in some cases, such things are not possible. We need to identify the use case of both traversals to solve the problems efficiently. For example, in binary tree problems:

- We use preorder traversal in a situation when we need to explore all the tree nodes before inspecting any leaves.

- Inorder traversal of BST generates the node's data in increasing order. So we can use inorder to solve several BST problems.

- We can use postorder traversal when we need to explore all the leaf nodes before inspecting any internal nodes.

- Sometimes, we need some specific information about some level. In this situation, BFS traversal helps us to find the output easily.

To solve tree and graph problems, sometimes we pass extra variables or pointers to the function parameters, use helper functions, use parent pointers, store some additional data inside the node, and use data structures like the stack, queue, and priority queue, etc.

Example problems: Find min depth of a binary tree , Merge two binary trees , Find the height of a binary tree , Find the absolute minimum difference in a BST , The kth largest element in a BST , Course scheduling problem , bipartite graph , Find the left view of a binary tree , etc.

Problem-solving using the Data Structures

The data structure is one of the powerful tools of problem-solving in algorithms. It helps us perform some of the critical operations efficiently and improves the time complexity of the solution. Here are some of the key insights:

- Many coding problems require an effcient way to perform the search, insert and delete operations. We can perform all these operations using the hash table in O(1) time average. It's a kind of time-memory tradeoff, where we use extra space to store elements in the hash table to improve performance.

- Sometimes we need to store data in the stack (LIFO order) or queue (FIFO order) to solve several coding problems.

- Suppose there is a requirement to continuously insert or remove maximum or minimum element (Or element with min or max priority). In that case, we can use a heap (or priority queue) to solve the problem efficiently.

- Sometimes, we store data in Trie, AVL Tree, Segment Tree, etc., to perform some critical operations efficiently.

Example problems: Next greater element , Valid Parentheses , Largest rectangle in a histogram , Sliding window maximum , kth smallest element in an array , Top k frequent elements , Longest common prefix , Range sum query , Longest consecutive sequence , Check equal array , LFU cache , LRU cache , Counting sort

Dynamic Programming

Dynamic programming is one of the most popular techniques for solving problems with overlapping or repeated subproblems. Here rather than solving overlapping subproblems repeatedly, we solve each smaller subproblems only once and store the results in memory. We can solve a lot of optimization and counting problems using the idea of dynamic programming.

Example problems: Finding nth Fibonacci, Longest Common Subsequence , Climbing Stairs Problem , Maximum Subarray Sum , Minimum number of Jumps to reach End , Minimum Coin Change

Greedy Approach

This solves an optimization problem by expanding a partially constructed solution until a complete solution is reached. We take a greedy choice at each step and add it to the partially constructed solution. This idea produces the optimal global solution without violating the problem’s constraints.

- The greedy choice is the best alternative available at each step is made in the hope that a sequence of locally optimal choices will yield a (globally) optimal solution to the entire problem.

- This approach works in some cases but fails in others. Usually, it is not difficult to design a greedy algorithm itself, but a more difficult task is to prove that it produces an optimal solution.

Example problems: Fractional Knapsack, Dijkstra algorithm, The activity selection problem

Exhaustive Search

This strategy explores all possibilities of solutions until a solution to the problem is found. Therefore, problems are rarely offered to a person to solve the problem using this strategy.

The most important limitation of exhaustive search is its inefficiency. As a rule, the number of solution candidates that need to be processed grows at least exponentially with the problem size, making the approach inappropriate not only for a human but often for a computer as well.

But in some situations, there is a need to explore all possible solution spaces in a coding problem. For example: Find all permutations of a string , Print all subsets , etc.

Backtracking

Backtracking is an improvement over the approach of exhaustive search. It is a method for generating a solution by avoiding unnecessary possibilities of the solutions! The main idea is to build a solution one piece at a time and evaluate each partial solution as follows:

- If a partial solution can be developed further without violating the problem’s constraints, it is done by taking the first remaining valid option at the next stage. ( Think! )

- Suppose there is no valid option at the next stage, i.e., If there is a violation of the problem constraint, the algorithm backtracks to replace the partial solution’s previous stage with the following option for that stage. ( Think! )

In simple words, backtracking involves undoing several wrong choices — the smaller this number, the faster the algorithm finds a solution. In the worst-case scenario, a backtracking algorithm may end up generating all the solutions as an exhaustive search, but this rarely happens!

Example problems: N-queen problem , Find all k combinations , Combination sum , Sudoku solver , etc.

Problem-solving using Bit manipulation and Numbers theory

Some of the coding problems are, by default, mathematical, but sometimes we need to identify the hidden mathematical properties inside the problem. So the idea of number theory and bit manipulation is helpful in so many cases.

Sometimes understanding the bit pattern of the input and processing data at the bit level help us design an efficient solution. The best part is that the computer performs each bit-wise operation in constant time. Even sometimes, bit manipulation can reduce the requirement of extra loops and improve the performance by a considerable margin.

Example problems: Reverse bits , Add binary string , Check the power of two , Find the missing number , etc.

Hope you enjoyed the blog. Later we will write a separate blog on each problem-solving approach. Enjoy learning, Enjoy algorithms!

Share Your Insights

Don’t fill this out if you’re human:

More from EnjoyAlgorithms

Self-paced courses and blogs, coding interview, machine learning, system design, oop concepts, our newsletter.

Subscribe to get well designed content on data structure and algorithms, machine learning, system design, object orientd programming and math.

©2023 Code Algorithms Pvt. Ltd.

All rights reserved.

- Interview Q

DAA Tutorial

Asymptotic analysis, analysis of sorting, divide and conquer, lower bound theory, sorting in linear time, binary search trees, red black tree, dynamic programming, greedy algorithm, backtracking, shortest path, all-pairs shortest paths, maximum flow, sorting networks, complexity theory, approximation algo, string matching.

Interview Questions

- Send your Feedback to [email protected]

Help Others, Please Share

Learn Latest Tutorials

Transact-SQL

Reinforcement Learning

R Programming

React Native

Python Design Patterns

Python Pillow

Python Turtle

Preparation

Verbal Ability

Company Questions

Trending Technologies

Artificial Intelligence

Cloud Computing

Data Science

Machine Learning

B.Tech / MCA

Data Structures

Operating System

Computer Network

Compiler Design

Computer Organization

Discrete Mathematics

Ethical Hacking

Computer Graphics

Software Engineering

Web Technology

Cyber Security

C Programming

Control System

Data Mining

Data Warehouse

- Data Structures & Algorithms

- DSA - Overview

- DSA - Environment Setup

- DSA - Algorithms Basics

- DSA - Asymptotic Analysis

- Data Structures

- DSA - Data Structure Basics

- DSA - Data Structures and Types

- DSA - Array Data Structure

- Linked Lists

- DSA - Linked List Data Structure

- DSA - Doubly Linked List Data Structure

- DSA - Circular Linked List Data Structure

- Stack & Queue

- DSA - Stack Data Structure

- DSA - Expression Parsing

- DSA - Queue Data Structure

- Searching Algorithms

- DSA - Searching Algorithms

- DSA - Linear Search Algorithm

- DSA - Binary Search Algorithm

- DSA - Interpolation Search

- DSA - Jump Search Algorithm

- DSA - Exponential Search

- DSA - Fibonacci Search

- DSA - Sublist Search

- DSA - Hash Table

- Sorting Algorithms

- DSA - Sorting Algorithms

- DSA - Bubble Sort Algorithm

- DSA - Insertion Sort Algorithm

- DSA - Selection Sort Algorithm

- DSA - Merge Sort Algorithm

- DSA - Shell Sort Algorithm

- DSA - Heap Sort

- DSA - Bucket Sort Algorithm

- DSA - Counting Sort Algorithm

- DSA - Radix Sort Algorithm

- DSA - Quick Sort Algorithm

- Graph Data Structure

- DSA - Graph Data Structure

- DSA - Depth First Traversal

- DSA - Breadth First Traversal

- DSA - Spanning Tree

- Tree Data Structure

- DSA - Tree Data Structure

- DSA - Tree Traversal

- DSA - Binary Search Tree

- DSA - AVL Tree

- DSA - Red Black Trees

- DSA - B Trees

- DSA - B+ Trees

- DSA - Splay Trees

- DSA - Tries

- DSA - Heap Data Structure

- DSA - Recursion Algorithms

- DSA - Tower of Hanoi Using Recursion

- DSA - Fibonacci Series Using Recursion

- Divide and Conquer

- DSA - Divide and Conquer

- DSA - Max-Min Problem

- DSA - Strassen's Matrix Multiplication

- DSA - Karatsuba Algorithm

- Greedy Algorithms

- DSA - Greedy Algorithms

- DSA - Travelling Salesman Problem (Greedy Approach)

- DSA - Prim's Minimal Spanning Tree

- DSA - Kruskal's Minimal Spanning Tree

- DSA - Dijkstra's Shortest Path Algorithm

- DSA - Map Colouring Algorithm

- DSA - Fractional Knapsack Problem

- DSA - Job Sequencing with Deadline

- DSA - Optimal Merge Pattern Algorithm

- Dynamic Programming

- DSA - Dynamic Programming

- DSA - Matrix Chain Multiplication

- DSA - Floyd Warshall Algorithm

- DSA - 0-1 Knapsack Problem

- DSA - Longest Common Subsequence Algorithm

- DSA - Travelling Salesman Problem (Dynamic Approach)

- Approximation Algorithms

- DSA - Approximation Algorithms

- DSA - Vertex Cover Algorithm

- DSA - Set Cover Problem

- DSA - Travelling Salesman Problem (Approximation Approach)

- Randomized Algorithms

- DSA - Randomized Algorithms

- DSA - Randomized Quick Sort Algorithm

- DSA - Karger’s Minimum Cut Algorithm

- DSA - Fisher-Yates Shuffle Algorithm

- DSA Useful Resources

- DSA - Questions and Answers

- DSA - Quick Guide

- DSA - Useful Resources

- DSA - Discussion

- Selected Reading

- UPSC IAS Exams Notes

- Developer's Best Practices

- Questions and Answers

- Effective Resume Writing

- HR Interview Questions

- Computer Glossary

DSA - Backtracking Algorithm

The backtracking algorithm is a problem-solving approach that tries out all the possible solutions and chooses the best or desired ones. Generally, it is used to solve problems that have multiple solutions. The term backtracking suggests that for a given problem, if the current solution is not suitable, eliminate it and then backtrack to try other solutions.

When do we use backtracking algorithm?

Backtracking algorithm can be used for the following problems −

The problem has multiple solutions or requires finding all possible solutions.

When the given problem can be broken down into smaller subproblems that are similar to the original problem.

If the problem has some constraints or rules that must be satisfied by the solution

How does backtracking algorithm work?

The backtracking algorithm explores various paths to find a sequence path that takes us to the solution. Along these paths, it establishes some small checkpoints from where the problem can backtrack if no feasible solution is found. This process continues until the best solution is found.

In the above figure, green is the start point, blue is the intermediate point, red are points with no feasible solution, grey is the end solution.

When backtracking algorithm reaches the end of the solution, it checks whether this path is a solution or not. If it is the solution path, it returns that otherwise, it backtracks to one step behind in order to find a solution.

Following is the algorithm for backtracking −

Complexity of Backtracking

Generally, the time complexity of backtracking algorithm is exponential (0(k n )). In some cases, it is observed that its time complexity is factorial (0(N!)).

Types of Backtracking Problem

The backtracking algorithm is applied to some specific types of problems. They are as follows −

Decision problem − It is used to find a feasible solution of the problem.

Optimization problem − It is used to find the best solution that can be applied.

Enumeration problem − It is used to find the set of all feasible solutions of the problem.

Examples of Backtracking Algorithm

The following list shows examples of Backtracking Algorithm −

- Hamiltonian Cycle

- M-Coloring Problem

- N Queen Problem

- Rat in Maze Problem

- Cryptarithmetic Puzzle

- Subset Sum Problem

- Sudoku Solving Algorithm

- Knight-Tour Problem

- Tug-Of-War Problem

- Word Break Problem

- Maximum number by swapping problem

To Continue Learning Please Login

IMAGES

VIDEO

COMMENTS

The general method of backtracking involves the following steps: 1. Choose a Variable. Select a variable from the problem's domain. This variable will be used to generate possible solutions. 2. Generate a Possible Solution. Generate a possible solution by assigning a value to the chosen variable. 3.

The divide-and-conquer strategy DAA 2022 2. Divide and Conquer Algorithms - 3 / 60 The divide-and-conquer strategy solves a problem by: 1. Breaking it into subproblems (smaller instances of the same problem) 2. Recursively solving these subproblems [Base case: If the subproblems are small enough, just solve them by brute force.] 3.

Algorithm Design Techniques. 1. Divide and Conquer Approach: It is a top-down approach. The algorithms which follow the divide & conquer techniques involve three steps: Divide the original problem into a set of subproblems. Solve every subproblem individually, recursively. Combine the solution of the subproblems (top level) into a solution of ...

Divide and conquer algorithm works on top-down approach and is preferred for large problems. As the name says divide and conquer, it follows following steps: Step 1: Divide the problem into several subproblems. Step 2: Conquer or solve each sub-problem. Step 3: Combine each sub-problem to get the required result.

Strategies used in Algorithm Design. There are several common strategies used in algorithm design, including: Divide and Conquer: This strategy involves breaking down a problem into smaller, more manageable subproblems, solving each subproblem recursively, and then combining the solutions to the subproblems to solve the original problem.

An Algorithm is a set of well-defined instructions designed to perform a specific set of tasks. Algorithms are used in Computer science to perform calculations, automatic reasoning, data processing, computations, and problem-solving. Designing an algorithm is important before writing the program code as the algorithm explains the logic even ...

Design and Analysis of Algorithms. Design and Analysis of Algorithms is a fundamental aspect of computer science that involves creating efficient solutions to computational problems and evaluating their performance. DSA focuses on designing algorithms that effectively address specific challenges and analyzing their efficiency in terms of time ...

Algorithm Design Techniques. 1. Divide and Conquer Approach: It is a top-down approach. The algorithms which follow the divide & conquer techniques involve three steps: Divide the original problem into a set of subproblems. Solve every subproblem individually, recursively. Combine the solution of the subproblems (top level) into a solution of ...

Problem Solving: Different problems require different algorithms, and by having a classification, it can help identify the best algorithm for a particular problem. Performance Comparison: By classifying algorithms, it is possible to compare their performance in terms of time and space complexity, making it easier to choose the best algorithm for a particular use case.

Problem Solving Framework: Design and Analysis of Algorithms (DAA) provides a systematic approach to solving computational problems by devising efficient step-by-step procedures or algorithms. Efficiency Evaluation: DAA involves evaluating the performance of algorithms in terms of time complexity (how long an algorithm takes to run) and space complexity (how much memory an algorithm uses).

Backtracking is a problem-solving algorithmic technique that involves finding a solution incrementally by trying different options and undoing them if they lead to a dead end. It is commonly used in situations where you need to explore multiple possibilities to solve a problem, like searching for a path in a maze or solving puzzles like Sudoku .

Fundamental Algorithmic Strategies: Brute-Force, Greedy, Dynamic Programming, Branch- and-Bound and Backtracking methodologies for the design of algorithms; Illustrations of these techniques for Problem-Solving, Bin Packing, Knap Sack TSP. Heuristics-characteristics and their application domains.

The algorithm design process entails creating a set of instructions that a computer can use to solve the problem. The algorithm analysis process entails determining the method's efficiency in terms of time and space complexity. Finally, the algorithm optimization process involves enhancing the method's efficiency by making changes to the design ...

This process is called reduction. process of reduction. In order to map problem X to problem Y, we need some algorithm and that may take the linear time or more. Based on this discussion the cost of solving problem X can be given as: Cost of solving X = Cost of solving Y + Reduction time Now, let us consider the other scenario. For solving ...

Analysis of algorithm is the process of analyzing the problem-solving capability of the algorithm in terms of the time and size required (the size of memory for storage while implementation). However, the main concern of analysis of algorithms is the required time or performance.

DAA Tutorial. Our DAA Tutorial is designed for beginners and professionals both. Our DAA Tutorial includes all topics of algorithm, asymptotic analysis, algorithm control structure, recurrence, master method, recursion tree method, simple sorting algorithm, bubble sort, selection sort, insertion sort, divide and conquer, binary search, merge ...

Learning to apply these strategies could be one of the best milestones for the learners in mastering data structure and algorithms. An Incremental approach using Single and Nested loops. One of the simple ideas of our daily problem-solving activities is that we build the partial solution step by step using a loop. There is a different variation ...

The divide-and-conquer strategy DAA 2019 2. Divide and Conquer Algorithms - 3 / 52 The divide-and-conquer strategy solves a problem by: 1. Breaking it into subproblems (smaller instances of the same problem) 2. Recursively solving these subproblems [Base case: If the subproblems are small enough, just solve them by brute force.] 3.

Analysis of algorithm is the process of analyzing the problem-solving capability of the algorithm in terms of the time and size required (the size of memory for storage while implementation ...

The first strategy you might try when solving a routine problem is called an algorithm. Algorithms are step-by-step strategies or processes for how to solve a problem or achieve a goal. Another solution that many people use to solve problems is called heuristics. Heuristics are general strategies used to make quick, short-cut

DAA Tutorial. Our DAA Tutorial is designed for beginners and professionals both. Our DAA Tutorial includes all topics of algorithm, asymptotic analysis, algorithm control structure, recurrence, master method, recursion tree method, simple sorting algorithm, bubble sort, selection sort, insertion sort, divide and conquer, binary search, merge ...

The backtracking algorithm is a problem-solving approach that tries out all the possible solutions and chooses the best or desired ones. Generally, it is used to solve problems that have multiple solutions. The term backtracking suggests that for a given problem, if the current solution is not suitable, eliminate it and then backtrack to try other solutions.

6. Approximate algorithms are a strategy for overcoming NP-completeness in optimisation problems. The optimal answer is not always ensured by this method. The approximation algorithm aims to reach ...