PLoS Comput Biol, v.11(6); 2015 Jun

Computational Biology: Moving into the Future One Click at a Time

Christiana N. Fogg

1 Freelance Science Writer, Kensington, Maryland, United States of America

Diane E. Kovats

2 Executive Director, International Society for Computational Biology, La Jolla, California, United States of America

Computational biology has grown and matured into a discipline at the heart of biological research. In honor of the tenth anniversary of PLOS Computational Biology, Phil Bourne, Win Hide, Janet Kelso, Scott Markel, Ruth Nussinov, and Janet Thornton shared their memories of the heady beginnings of computational biology and their thoughts on the field’s promising and provocative future.

Philip E. Bourne

Philip Bourne ( Fig 1 ) began his scientific career in the wet lab, like many of his computational biology contemporaries. He earned his PhD in physical chemistry from the Flinders University of South Australia and pursued postdoctoral training at the University of Sheffield, where he began studying protein structure. Bourne accepted his first academic position in 1995 in the Department of Pharmacology at the University of California, San Diego (UCSD), rose to the rank of professor, and was associate vice chancellor for Innovation and Industry Alliances of the Office of Research Affairs. During his time at UCSD, he built a broad research program that used bioinformatics and systems biology to examine protein structure and function, evolution, drug discovery, disease, and immunology. Bourne also developed the Research Collaboratory for Structural Bioinformatics (RCSB) Protein Data Bank (PDB) and Immune Epitope Database (IEDB), which have become valuable data resources for the research community. In 2014, Bourne accepted the newly created position of associate director for data science (ADDS) at the National Institutes of Health (NIH), and he has been tasked with leading an NIH-wide initiative to better utilize the vast and growing collections of biomedical data in more effective and innovative ways.

Fig 1. Associate director for data science, NIH.

Bourne has been deeply involved with the International Society for Computational Biology (ISCB) throughout his career and is the founding editor-in-chief (EIC) of PLOS Computational Biology, an official journal of ISCB. He has been a firm believer in open access to scientific literature and the effective dissemination of data and results, for which PLOS Computational Biology is an exemplary model. Bourne believes that open access is more than just the ability to read free articles, and he said, “The future is using this content in really effective ways.” He referenced an article he cowrote with J. Lynn Fink and Mark Gerstein in 2008, titled “Open Access: Taking Full Advantage of the Content,” which argued that the full potential of open access had not been realized, as “no killer apps” exist that troll the literature and cross-reference other databases to come up with new discoveries [1]. Bourne believes that the scientific literature will become more and more open in this digital age and that new tools will be developed to harness the potential of this expansive information treasure chest.

Beyond scientific literature, Bourne sees data as a powerful catalyst that is often trapped in individual servers or hardware from defunct projects. As ADDS, he is steering the development of the Commons that he sees as a virtual space in which data sets, tools, and results can be stored, indexed, and accessed. Bourne anticipates that the Commons can be a way for tools and data to live on and benefit other scientists or, in other cases, act as a way to flag problematic or unusable contributions. He is hopeful this new approach can change the way biomedical research data is used, believing that “it offers the opportunity for serendipitous discovery.” Software tools that scientists store in the Commons will have better exposure and offer new opportunities for their use and attribution.

Bourne was in attendance as President Obama launched the President’s Precision Medicine Initiative on January 30, 2015, at the White House. This initiative aims to revolutionize medicine by harnessing information about an individual’s genome, environment, and lifestyle, and it will support research projects focused on transforming cancer treatment [ 2 ]. A bold long-term goal of the initiative is to create a cohort of 1 million American volunteers who will share their genetic and health information. Bourne sees this potentially revolutionary project as a powerful way to promote collaboration between computational biologists working on basic research problems and medical information scientists focused more on clinical and electronic health record information analysis.

Collaboration will be paramount to the success of projects like the Precision Medicine Initiative, and Bourne has observed how scientists are taking novel approaches to form collaborations in this increasingly connected world. He sees communities self-organizing and springing up spontaneously, and some have become influential and respected advocates for research and data sharing, like the Global Alliance for Genomics and Health and the Research Data Alliance. He said, “These are groups of volunteers [who are] funded to do something else but see [the] value of doing things together.” These communities and alliances offer new ways for scientists with shared research interests to come together both in person and virtually and may offer valuable lessons to scientific societies wanting to remain relevant and useful to their members.

Scientific communities are often grounded in shared ideas, and these ideas can be captured in a community’s specialized publications. During his tenure as EIC of PLOS Computational Biology, Bourne began the “Ten Simple Rules” collection, which has become one of the most-viewed article collections in any journal, with over 1 million views. This collection has become a treasured source of ideas and information for the computational biology community and has been relevant and helpful to biomedical scientists and trainees from many disciplines. Bourne considers the popularity of these articles an indicator of the information trainees and scientists are seeking but don’t get during their training. He thinks of the “Ten Simple Rules” articles as starting points and hopes that they genuinely help readers find information or guidance. If not, their entertainment value is remarkably therapeutic for the beleaguered scientific masses.

Bourne is looking forward to the next ten years and beyond as computational biology becomes ever more entwined with biomedical research and medicine.

Winston (Win) Hide

Win Hide ( Fig 2 ) has witnessed the transformation of biological research firsthand, from his early wet-lab training in molecular genetics to his present-day research using computational approaches to understand neurodegenerative diseases. Hide graduated from Temple University with a PhD in molecular genetics, and after his postdoctoral training and time spent in Silicon Valley, he founded the South African National Bioinformatics Institute in 1996. He accepted a position at the Harvard School of Public Health in 2008 and became director of the Harvard Stem Cell Institute Center for Stem Cell Bioinformatics. In 2014, Hide accepted a position at the Sheffield Institute for Translational Neuroscience, University of Sheffield, and became a professor of Computational Biology and Bioinformatics.

Fig 2. Professor of Computational Biology and Bioinformatics, Sheffield Institute for Translational Neuroscience, the University of Sheffield, United Kingdom.

Hide considers the drive to sequence the human genome in the 1990s as a major point at which computational biology transformed into a research field. He recalls remarks made around that time, by Lee Hood of the Institute for Systems Biology, contending that biology was becoming a data science. By 1998, SmithKline Beecham had organized the largest corporate bioinformatics department, headed by computational linguist David Searls, and this investment brought new attention to the power and scale of computational biology [ 3 ].

Biomedical researchers are beginning to acknowledge that biology is changing from a hypothesis-driven science to a data-driven science. But Hide thinks this shift is causing an uncomfortable and unsustainable tension between scientists working in these different realms. He has witnessed the tendency of hypothesis-driven experimentalists to pick the data they want to use, and these choices work against the innate objectivity of data-driven analysis. “People choose the pieces of data-driven science that make sense to them,” he said. “We haven’t reached the point in computational biology to judge how right we are. We’ve reached the probability but not summation of how good a model is in data-driven science. So we leave it to the biologists to decide on the pieces they know might be right and move forward.”

Hide sees a significant and urgent need for the computational biology domains, including text mining, crowdsourcing, algorithmics, systems biology, and large surveys, to converge and use machine learning to determine how correct models are. He acknowledges that these sorts of projects are difficult to take on but are likely the only way to arrive at models that are actionable.

Biologists across the board need to become more comfortable with data analysis and coding. Hide highlighted a talk given by Sean Eddy of the Howard Hughes Medical Institute at the “High Throughput Sequencing for Neuroscience” meeting in 2014 that was a gentle but compelling challenge to experimental biologists to reclaim their data analysis [4]. Eddy said, “We are not confident in our ability to analyze our own data. Biology is struggling to deal with the volume and complexity of data that sequencing generates. So far our solution has been to outsource our analysis to bioinformaticians.” He spoke about the widespread outsourcing of sequencing analysis to bioinformatics core facilities. “It is true that sequencing generates a lot of data, and it is currently true that the skills needed to do sequencing data analysis are specialized and in short supply,” he said. “What I want to tell you, though, is that those data analysis skills are easily acquired by biologists, that they must be acquired by biologists, and that they will be. We need to rethink how we’re doing bioinformatics.” He urged biologists to learn scripting, saying, “The most important thing I want you to take away from this talk tonight is that writing scripts in Perl or Python is both essential and easy, like learning to pipette. Writing a script is not software programming. To write scripts, you do not need to take courses in computer science or computer engineering. Any biologist can write a Perl script.”

Hide also sees a great need for computational biologists to be trained to collaborate better. He has witnessed the increasingly collaborative and multidisciplinary nature of biological and biomedical research and contends that computational approaches are becoming a fundamental part of collaborations. In the future, Hide expects that some of the strongest and most successful computational biologists will be specialists in particular fields (e.g., machine learning, semantic web) or domains (e.g., cancer, neuroscience) who excel at reaching across disciplines in nonthreatening and productive ways.

Many experimental biologists first became acquainted with computational biology and bioinformatics through collaborations with researchers running core facilities. Most computational biologists recognize that providing service work is an unavoidable part of their job, but this work is often not appropriately recognized or attributed. Hide believes that computational biologists, collaborators, administrators, and funding agencies must better differentiate between work done for research or as a form of service. Recognition of service work is critical to ensuring that core facilities remain a vibrant part of the research infrastructure and can attract highly skilled computational biologists.

Janet Kelso

Janet Kelso ( Fig 3 ) is working at the cutting edge of computational biology with some of the world’s most ancient DNA. Her research interests include human genetics and genome evolution, with a particular interest in the ancestry of modern Homo sapiens and their ancestors, including the extinct Neanderthal species.

Fig 3. Bioinformatics research group leader, Max-Planck Institute for Evolutionary Anthropology, Leipzig, Germany.

Kelso pursued her PhD studies in bioinformatics in the early 2000s under the guidance of Winston Hide at the South African National Bioinformatics Institute. She has watched firsthand as the era of personal genome sequencing has become a reality. “It has become possible in the last eight years to sequence whole genomes so rapidly and inexpensively that the sequencing of the genomes of individual people is now possible,” she said. Kelso thinks the lowered cost and rapid speed of whole genome sequencing will transform our knowledge of human genetics and change the way that medicine is practiced. She sees a prodigious job ahead for computational biologists when it comes to what we do with this data and how we interpret it. “Reference databases created by computational biologists, with information like sequence variants, will capture the effects of ordinary variation in our genomes on our phenotype—how we get sick, how we age, how we metabolize pharmaceutical drugs,” she explained. “This information will be used for diagnosis and will be important for tailoring treatments to individuals.” Kelso expects there will be insight into how genetic variations impact the effect of different treatments, even though these variations may have nothing to do with the disease itself.

As personal genomics becomes a reality, Kelso thinks computational biologists will have to consider that the public will want access to their tools and resources. “The computational biologist’s role is to provide good resources and tools that allow both biomedical researchers and ordinary people to understand and interpret their genome sequence data. It’s a really hard problem, to go from sequence variants in a genome of 3 billion bases to understanding the effects they may have on how long you live or if you develop a disease.”

Kelso sees that computational biology tools will also have immense value in fields other than human genetics. “Many of the tools we develop can be applied in other domains such as agriculture,” she explained. “For example, how do variations in a plant genome allow it to respond to environment, and what additional nutrients do you need to provide to optimize crop production? Similar computational biology tools can be applied in these different systems.”

Kelso considers many of the technical improvements in the field to have been among the major developments in computational biology over the last decade. She explained, “To me, the biggest contributions of computational biology are developments in how to store and annotate data, how to mine that data and to visualize large quantities of biological data. Our ability to integrate large volumes of data and to extract meaningful information and knowledge from that is a huge contribution and has moved the field forward substantially.”

Kelso thinks students are now more comfortable with integrating computation into their training and research. “Compared with ten years ago, lab-oriented students are becoming more skilled in bioinformatics. There will always be a place for specialists in both computational and molecular biology, but there is a larger zone in the middle now where people from these different disciplines understand each other.” Kelso has observed that many students now realize that you can’t be a molecular biologist and not know anything about informatics. “Students who come into our program now spend a lot of time learning bioinformatics and are able to work on reasonably sized data sets.”

Kelso is optimistic about the future of computational biology: “Computational biology is now a mature discipline that has cemented itself as integral to modern biology. As we enter a period of unparalleled data accumulation and analysis, computational biology will undoubtedly continue to contribute to important advances in our understanding of molecular systems.”

Scott Markel

Scott Markel ( Fig 4 ) has spent most of his career working as a software developer in industry. He pursued his PhD in mathematics at the University of Wisconsin–Madison, and like many of his contemporaries, he discovered that he could apply his degree to bioinformatics software development. He said, “I have probably made more of a career in industry than others have by leveraging open source tools, giving back to that community where and when I can.” Indeed, it was the culture of open source software supported by ISCB, especially members of the Bioinformatics Open Source Conference (BOSC) community, which drew Markel to the Society.

Fig 4. Secretary, ISCB. BIOVIA principal bioinformatics architect at Dassault Systèmes.

Markel, like many of his ISCB colleagues, considers the sequencing of the human genome to be a major research landmark for computational biology and a powerful driver of the technologies and software developed over the last two decades for sequencing and genomics. Sequencing technology continues to become cheaper, faster, and more portable. Next-generation sequencing (NGS) technology has been adopted widely over the last five years, but Markel also sees increasing use of newer technologies, like those being developed by Oxford Nanopore, which will offer longer sequence reads. Markel has observed that researchers and bioinformatics information technology (IT) support staff are faced with the challenges of storing vast amounts of digital data and are shifting their mind-set in this era of technological flux. He said, “As sequencing gets cheaper, it’s better not to save all the data; just run the sequence analysis again.”

As an industry-oriented computational biologist, Markel has a different view of how software is developed and used. Markel has learned how to listen to clients’ needs while also balancing out what kind of product can be built, sold, and maintained. “Customers don’t want something like BLAST [Basic Local Alignment Search Tool]; they want BLAST. As team sizes get smaller and broader, it’s not worth building something the equivalent of BLAST, which will need maintenance, need to be sold as a product, and users will have to be convinced scientifically…is as good as BLAST.” Markel’s primary product, Pipeline Pilot, is a graphic scientific authoring application that supports data management and analysis. He has observed that clients in biotechnology and pharmaceutical research are working more on biologics, like therapeutic antibodies, and they are handling an increasingly diverse spectrum of data types. Markel has noticed that clients are drawn to software like the new Biotherapeutics Workbench, built for antibody researchers using Pipeline Pilot, because it provides decision support, and he explained, “Lab time is more expensive than doing things computationally. This type of application can identify subsets of candidates that you can take forward.”

Markel’s software development experiences highlight how computational biology is transforming research in industry settings, and he suspects that industry will continue to invest in computational biology-driven technologies. “If you make the programming part easier,” he said, “like being able to modify the workflow by changing settings or deploying a program through a web interface, users are thrilled to be self-enabled. For some people, especially those without a programming background, this is a revelation.”

Computational biology is likely to become a part of routine health care in the future, and Markel suspects that one area in which we will see this change is the “internet of things.” Computational biology applications are not limited to research and drug discovery but are already being adapted for clinical use, like implantable devices, home health monitoring, and diagnostics. Markel took notice of Apple’s venture into the clinical trial sector through the launch of the ResearchKit platform [5], which provides clinical researchers with tools to build clinical trial apps that can be accessed by iPhone users. Markel sees this type of technology as potentially transformative, and he took note of a comment made by Alan Yeung, medical director of Stanford Cardiovascular Health and an investigator involved with the ResearchKit cardiovascular app. Within the first 24 hours of its launch, 11,000 iPhone users signed up for this app, and Yeung said to Bloomberg News, “To get 10,000 people enrolled in a medical study, it would take a year and 50 medical centers around the country. That’s the power of the phone” [6]. This approach to clinical research is not without controversy, as observers are concerned that iPhone-based apps can result in a biased selection of users. Others have reservations about the privacy of clinical trial data collected from these sources.

Personal health data are being collected and shared at record volumes in this era of smart phones and wearable devices. Although the openness of this data is up for debate, more intimate and personal data have caused even greater contention in recent years. Open Humans is an open online platform that asks users to share their genomes and other personal information, which can be accessed by anyone who signs into the website, and is intended to make more data available to researchers [ 7 ]. Markel sees this sort of platform as a powerful and rich source of data for computational biologists, but it’s not without controversy. Although users can share their data using an anonymous profile, the data may contain enough unique information to reveal an individual’s identity, which could have unintended consequences.

The success and wide acceptance of these open data projects will impact how the general public sees computational biology as a field, and it may take decades for the public to decide how data should be shared. Nonetheless, it’s an exciting time for computational biology according to Markel, as he sees aspects of the field coming into daily life more and has witnessed how researchers in industry labs have leveraged the power of computation.

Ruth Nussinov

Ruth Nussinov ( Fig 5 ) heads the computational structural biology group in the Laboratory of Experimental Immunology at the National Cancer Institute (NCI)/NIH and is editor-in-chief of PLOS Computational Biology . She earned a PhD in biochemistry from Rutgers University and did her postdoctoral training at the Weizmann Institute. She has spent her career working as a computational biologist and is a pioneer of DNA sequence analysis and RNA structure prediction. Nussinov began her training at a time when the term “computational biology” was poorly understood by biologists and mathematicians and no formal training programs that combined computer science, mathematics, and biology existed. In 1985, Nussinov accepted a position as an associate professor at the Tel Aviv University Medical School, where she began an independent research program. She recalled a conversation with a dean at the school. He said, “Ruth, what are you? A mathematician?” To his chagrin and befuddlement, she replied, “No, I’m a biologist, a computational biologist.” Now computational biology is one of the hottest and fastest growing fields in biology, and training programs are in high demand.

Fig 5. Senior investigator and head of computational structural biology group, Laboratory of Experimental Immunology, Cancer and Inflammation Program, NCI, NIH, and professor in the School of Medicine, Tel Aviv University.

As editor-in-chief of PLOS Computational Biology, Nussinov has gained a unique perspective of the field. The breadth of expertise across the journal’s Editorial Board and community of peer reviewers is vast because computational biology as a discipline is so broad; it seems to cover everything.

The vibrancy of the field is clear to Nussinov. “I think we can say it is a field that is very much alive and at the forefront of the sciences,” she said. “It reflects the fact that biology has been shifting from descriptive to a quantitative science.” Nussinov also acknowledges that computation-driven research can’t move forward without a strong relationship with experimental biology. “Computational biology is strongly tied to experiments, and experimental biology is becoming more quantitative. More and more studies provide quantitation, and this type of information is essential for making comparisons across experiments.” Nussinov recalls that, as a student, she read papers about transcription in which transcription levels were classified as + or ++ and were clearly subjective estimates. Now transcript levels are quantified with exquisite sensitivity using real-time PCR.

In spite of biology’s shift toward quantitation, Nussinov recognizes some of the field’s limitations. She has worked closely with mathematicians throughout her career, and she recalls one conversation with an algorithm development mathematician. He was trying to understand all the parameters of her experiments, and she kept saying, “It depends.” She, like many biologists, is all too familiar with the numerous variables and experimental conditions that come along with seemingly messy biology experiments, and computational biologists spend much of their time contending with this issue. New technologies, especially those based in biophysics, have contributed to improvements in the quality of data used for quantitation, but some variability will always exist.

Nussinov feels that data storage and organization are critical issues facing the future of computational biology. “Data is accumulating fast, and it is extremely diverse.” One of the challenges she sees is how the community should organize the data. She said, “The data relates to populations, disease associations, symptoms, therapeutic drugs, and more. How do you organize it and make it open and shared? By disease, by countries, more isolated or less isolated areas?” These are not easy issues to address and will only become more important as data accumulate. She also considers noise to be a major issue with this data. She said, “How do you overcome the problem of noise, an inevitable problem with vast quantities of data? How do you sift through it and see real trends? You still need cross validation.”

Nussinov believes that some of the major challenges facing computational biologists deal with developing modes of analyses that can validate or negate common beliefs or expectations, uncover unknown trends, obtain insights into fundamental processes, and exploit this information to improve predictions and design. For these, the computational biologist needs data that are openly accessible, shared software, computational power, and importantly, in-depth understanding.

In the end, Nussinov sees immeasurable value in fostering collaborations between experimental and computational biologists. “Experimentalists can’t check all possible models. Computation can provide leads, and experiments can check it. That is the ideal scenario.”

Janet Thornton

Janet Thornton ( Fig 6 ) has spent her research career studying protein structure and is considered a leading researcher in the field of structural bioinformatics. She pursued her PhD in biophysics in the 1970s, when very little information existed on protein structure and nucleotide sequences [ 8 ]. Thornton’s early research career at Oxford included using protein sequences to predict structure, and this type of research marked the earliest beginnings of bioinformatics. She became the director of the European Molecular Biology Laboratory–European Bioinformatics Institute (EMBL-EBI) in 2001, just as genomics and bioinformatics were growing rapidly, and her institution maintained valuable bioinformatics databases with data from throughout Europe. EBI also developed a thriving bioinformatics research community.

Fig 6. Senior scientist and outgoing director of EMBL-EBI.

Thornton’s experiences as a structural biologist and EBI director have given her a unique viewpoint on the evolution of computational biology and bioinformatics from its infancy to the present day. She considers developments in five different areas to have been critical to the progress of computational biology:

- Development of new methods for new data-generating technologies (next-generation sequencing, proteomics/metabolomics, genome-wide association studies [GWAS], and image processing). Without these methods, the new technologies would have been useless and interpretation of the data impossible.

- Development of methods in systems biology.

- Ontology development and text mining. This area is fundamental to everything in computational biology. Defining the ontologies and the science behind them will ultimately allow for the data integration and comparison needed to understand the biology of life. The opportunities presented by open literature and open data cannot be overestimated, and new methods for text mining are being developed.

- Algorithm development. The effectiveness and efficiency of old algorithms (sequence alignment and protein folding) are constantly being refined, and new algorithms are being developed alongside new technologies.

- Technical development. New methods for handling, validating, transferring, and storing data at all levels are under development, and cloud computing for the biological sciences is emerging.

Thornton reflected on some of the most interesting observations and results to come out of the increasingly diverse corpus of computational biology research, in particular the use of genomics to identify how microbes evolve during an epidemic, genomic approaches to understanding human evolution, GWAS to discern how genetic variants impact disease, the discovery of the breadth of the microbiome and how bacterial populations interact and influence each other, the use of electronic health records to extract clinical data, and the observation that regulatory processes evolve relatively quickly in comparison to protein sequences and structures.

Thornton’s research has changed over time as bioinformatics tools and algorithms improved and protein data flooded the databases. She explained, “The evolution of protein function, especially understanding how the majority of enzyme functions have developed during their evolution from other functions, has been helped by new sequence data for the construction of better [phylogenetic] trees that reveal yet more interesting changes in function. New algorithms developed in our group have changed the way we compare enzyme functions and have made it quantitative rather than qualitative.” Thornton is also studying how variants affect structure and function, and the 1000 Genomes data have greatly enhanced this work. She said, “The major difference in this area is new data. We can now look at germ-line changes in many individuals. Relationships to diseases are emerging, and many new paradigms will be revealed with 100,000 genomes from individuals with rare diseases.”

The convergence of computational and experimental biology is already underway, and Thornton considers that several pressing biological questions can only be addressed by combining these approaches—in particular, in areas such as building predictive models of the cell, organelles, and organs, understanding aging, designing enzymes, and improving drug design and target validation.

Thornton believes that one of the biggest challenges facing computational biology, and one that could hinder these areas of research, is sharing data, especially medical data. She also believes that the computational biology community must make engagement of medical professionals and the public a top priority. She said, “This is really important. At EMBL-EBI, we are training medical professionals in bioinformatics, working on more and more public engagement, which is a huge challenge to do across Europe, especially with limited funds, and we are training scientists to do more public engagement.” It seems clear that computational biology will become a part of everyday life, especially in medicine, and these efforts are critical for gaining support from the medical community and the greater public.

The thoughts shared by these accomplished computational biologists make it clear that biology is becoming a data science, and future breakthroughs will depend on strong collaborations between experimental and computational biologists. Biologists will need to adapt to the data-driven nature of the discipline, and the training of future researchers is likely to reflect these changes as well. Aspects of computational biology are integrating into all levels of medicine and health care. Medical professionals as well as the public need to be well informed and educated about these changes in order to realize the full potential of this new frontier in medicine without fear of the technological advances.

Funding Statement

CNF was paid by ISCB to write this article.

Journal of Computational Biology

Editor-in-Chief: Mona Singh, PhD

Impact Factor: 1.7 (2022 Journal Citation Reports™, Clarivate, 2023)

Citescore™: 3.2.

The leading peer-reviewed journal in computational biology and bioinformatics, publishing in-depth statistical, mathematical, and computational analysis of methods, as well as their practical impact.

Aims & Scope

Journal of Computational Biology publishes articles whose primary contributions are the development and application of new methods in computational biology, including algorithmic, statistical, mathematical, machine learning and artificial intelligence contributions. The journal welcomes novel methods that tackle established problems within computational biology; novel methods and frameworks that anticipate new problems and data types arising in computational biology; and novel methods that are inspired by the study of natural computation. Methods should be tested on real and/or simulated biological data whenever feasible. Papers whose primary contributions are theoretical are also welcome. Available only online, this is an essential journal for scientists and students who want to keep abreast of developments in bioinformatics and computational biology.

Research Articles: Research articles describe new methodology development and application in computational biology. It is recommended that manuscripts should be approximately 3,000 words, excluding tables, figures, legends, abstract, disclosure or references; longer articles can also be submitted. Research articles should include the following sections, in order: abstract, introduction, methods, results, discussion and references.

Software articles: Short 2-4 page articles describing implementations of new or recently developed computational methods for applications in computational biology. The approaches underlying the software should have methodologically interesting components. Software articles can be published as companion articles to primary research articles which describe the main methodological contributions. Software article submissions should be accompanied by a cover letter that concisely states the novel implementation and algorithmic challenges the software tackles.

*Research and software articles should report unique findings not previously published.

Tutorials: These articles highlight important concepts in computational biology. The journal especially welcomes tutorials on algorithms, data structures, machine learning paradigms, and other computational formalisms that are newly being utilized in computational biology. Prospective contributors should contact the journal ( [email protected] ) with brief outlines before proceeding.

Reviews: Brief outlines from prospective contributors are welcome, and these will also be solicited on specific subjects. Articles that benchmark existing approaches are also welcome.

News/Perspectives/Book Reviews: These article types should typically be 2–4 pages long. Contacting the journal before beginning such a paper is suggested.

Conference and other special issues: The Journal of Computational Biology welcomes proposals for special issues related to topics within the scope of the journal.

Journal of Computational Biology coverage includes:

- Algorithms for computational biology

- Mathematical modeling and simulation

- AI / Machine learning

- Statistical formulations

- Software for applied bioinformatics

- Genomics and systems biology

- Evolution and population genomics

- Biomedical applications

- Biocomputing and biology-inspired algorithms

Specific topics of interest include, but are not limited to:

- Molecular sequence analysis

- Sequencing and genotyping technologies

- Regulation and epigenomics

- Transcriptomics, including single-cell

- Metagenomics

- Population and statistical genetics

- Evolutionary, compressive and comparative genomics

- Structure and function of non-coding RNAs

- Computational proteomics and proteogenomics

- Protein structure and function

- Biological networks

- Computational systems biology

- Privacy of biomedical data

Journal of Computational Biology is under the editorial leadership of Editor-in-Chief Mona Singh, PhD, Princeton University, and other leading investigators.

Audience: Computational biologists, bioinformaticians, data scientists, applied mathematicians, and computer scientists, among others.

Indexing/Abstracting:

- PubMed/MEDLINE

- PubMed Central

- Web of Science: Science Citation Index Expanded™ (SCIE)

- Current Contents®/Life Sciences

- Biotechnology Citation Index®

- Biological Abstracts

- BIOSIS Citation Index™

- Journal Citation Reports/Science Edition

- EMBASE/Excerpta Medica

- Chemical Abstracts

- ProQuest databases

- CAB Abstracts

- Global Health

- The DBLP Computer Science Bibliography

2022 August

Special Issue: Professor Michael Waterman's 80th Birthday, Part 2

Editors-in-Chief

Janet Kelso

Alfonso Valencia

The leading journal in its field, Bioinformatics publishes the highest quality scientific papers and review articles of interest to academic and industrial researchers. Its main focus is on new developments in genome bioinformatics and computational biology.

Why publish with Bioinformatics ?

Are you looking for a home for your research? Publish with Bioinformatics and enjoy a variety of benefits, including:

- Strong reputation and Impact Factor

- Distinguished and supportive editors

- Fully open access

Read our author guidelines to find out more about:

- Manuscript types

- Review processes

- Open access options

- Manuscript preparation

Format free submissions

At first submission, Bioinformatics authors are no longer required to format their manuscript according to journal guidelines.

Bioinformatics is now fully OA

Bioinformatics is now fully open access. Visit the journal's open access page to learn more about the change.

High-Impact Research Collection

Explore the most read, most cited, and most discussed articles published in Bioinformatics in recent years and discover what has caught the interest of your peers.

International Society for Computational Biology

Bioinformatics is an official journal of the International Society for Computational Biology, the leading professional society for computational biology and bioinformatics. Members of the society receive a 15% discount on article processing charges when publishing Open Access in the journal.

Browse by subject

- Genome analysis

- Sequence analysis

- Phylogenetics

- Structural bioinformatics

- Gene expression

- Genetics and population analysis

- Systems biology

- Data and text mining

- Databases and ontologies

- Bioimage informatics

Bioinformatics and Publons

Bioinformatics is part of a trial with Publons to recognise our expert peer reviewers and raise the status of peer review.

Email alerts

Register to receive table of contents email alerts as soon as new issues of Bioinformatics are published online.

Discover a more complete picture of how readers engage with research in Bioinformatics through Altmetric data. Now available on article pages.

Committee on Publication Ethics (COPE)

This journal is a member of and subscribes to the principles of the Committee on Publication Ethics (COPE)

publicationethics.org

Recommend to your library

Fill out our simple online form to recommend Bioinformatics to your library.

- Online ISSN 1367-4811

- Copyright © 2024 Oxford University Press

Volume 21 Supplement 9

Selected Articles from the 20th International Conference on Bioinformatics & Computational Biology (BIOCOMP 2019)

- Introduction

- Open access

- Published: 03 December 2020

Current trend and development in bioinformatics research

- Yuanyuan Fu 1,

- Zhougui Ling 1,2,

- Hamid Arabnia 3 &

- Youping Deng 1

BMC Bioinformatics volume 21, Article number: 538 (2020)

This is an editorial report on the supplement to BMC Bioinformatics that includes 6 papers selected from BIOCOMP’19, the 2019 International Conference on Bioinformatics and Computational Biology. These articles reflect current trends and developments in bioinformatics research.

The supplement to BMC Bioinformatics was proposed for launch during BIOCOMP’19, the 2019 International Conference on Bioinformatics and Computational Biology, held from July 29 to August 1, 2019, in Las Vegas, Nevada. A variety of research areas were discussed at the congress. Bioinformatics was one of the major focuses because of its rapid development and the growing need for bioinformatics approaches in biological data analysis, especially for large omics datasets. Six manuscripts were selected after strict peer review, providing an overview of bioinformatics research trends and their application in interdisciplinary collaboration.

Cancer is one of the leading causes of morbidity and mortality worldwide, and there is an urgent need to identify new biomarkers or signatures for early detection and prognosis. Mona et al. identified biomarker genes from a functional network based on 407 differentially expressed genes between lung cancer and healthy populations in a public Gene Expression Omnibus dataset. Lower expression of the sixteen-gene signature is associated with favorable lung cancer survival, DNA repair, and cell regulation [1]. A new class of biomarkers, alternative splicing variants (ASV), has been studied in recent years. Various platforms and methods, for example the Affymetrix Exon-Exon Junction Array, RNA-seq, and liquid chromatography tandem mass spectrometry (LC–MS/MS), have been developed to explore the role of ASV in human disease. Zhang et al. developed a bioinformatics workflow that combines LC–MS/MS with RNA-seq, providing new opportunities in biomarker discovery. In their study, they identified twenty-six alternative splicing biomarker peptides with one single intron event and one exon skipping event; further pathway analysis indicated that the 26 peptides may be involved in cancer, signaling, metabolism, regulation, immune system, and hemostasis pathways, which was validated by the RNA-seq analysis [2].

Proteins serve crucial functions in essentially all biological processes, and their function depends directly on their three-dimensional structures. Traditional approaches to elucidating protein structures by NMR spectroscopy are time consuming and expensive, yet faster and more cost-effective methods are critical to the development of personalized medicine. Cole et al. improved the REDCRAFT software package in the important areas of usability, accessibility, and core methodology, which resulted in the ability to fold proteins [3].

The human microbiome is the aggregation of microorganisms that reside on or within human bodies. Rebecca et al. discussed tissue-associated microbial detection in cancer using next generation sequencing (NGS). Various computational frameworks could shed light on the role of microbiota in cancer pathogenesis [4]. Analyzing human microbiome data efficiently is a huge challenge. Zhang et al. developed a nonparametric test based on inter-point distance to evaluate statistical significance from a Bayesian point of view. The proposed test is more efficient and more sensitive to compositional differences than the traditional mean-based method [5].

Human disease is also considered to result from the interaction between genetic and environmental factors. In recent decades, there has been growing interest in the effect of metal toxicity on human health. Evaluating the toxicity of chemical mixtures and their possible mechanisms of action is still a challenge for humans and other organisms: traditional methods are time consuming, inefficient, and expensive, so only a limited number of chemicals can be tested. To develop efficient and accurate predictive models, Yu et al. compared results across classification algorithms and identified 15 gene biomarkers with 100% accuracy for metal toxicants using a microarray classifier analysis [6].

Currently, there is a growing need to convert biological data into knowledge through bioinformatics approaches. We hope these articles provide up-to-date information on research developments and trends in the bioinformatics field.

Availability of data and materials

Not applicable.

Abbreviations

BIOCOMP’19: The 2019 International Conference on Bioinformatics and Computational Biology

LC–MS/MS: Liquid chromatography tandem mass spectrometry

ASV: Alternative splicing variants

NMR: Nuclear Magnetic Resonance

REDCRAFT: Residual Dipolar Coupling based Residue Assembly and Filter Tool

NGS: Next generation sequencing

References

1. Mona Maharjan RBT, Chowdhury K, Duan W, Mondal AM. Computational identification of biomarker genes for lung cancer considering treatment and non-treatment studies. 2020. https://doi.org/10.1186/s12859-020-3524-8.

2. Zhang F, Deng CK, Wang M, Deng B, Barber R, Huang G. Identification of novel alternative splicing biomarkers for breast cancer with LC/MS/MS and RNA-Seq. Mol Cell Proteomics. 2020;16:1850–63. https://doi.org/10.1186/s12859-020-03824-8.

3. Casey Cole CP, Rachele J, Valafar H. Increased usability, algorithmic improvements and incorporation of data mining for structure calculation of proteins with REDCRAFT software package. 2020. https://doi.org/10.1186/s12859-020-3522-x.

4. Rebecca M, Rodriguez VSK, Menor M, Hernandez BY, Deng Y. Tissue-associated microbial detection in cancer using human sequencing data. 2020. https://doi.org/10.1186/s12859-020-03831-9.

5. Qingyang Zhang TD. A distance based multisample test for high-dimensional compositional data with applications to the human microbiome. 2020. https://doi.org/10.1186/s12859-020-3530-x.

6. Yu Z, Fu Y, Ai J, Zhang J, Huang G, Deng Y. Development of predictive models to distinguish metals from non-metal toxicants, and individual metal from one another. 2020. https://doi.org/10.1186/s12859-020-3525-7.

Acknowledgements

This supplement would not have been possible without the support of the International Society of Intelligent Biological Medicine (ISIBM).

About this supplement

This article has been published as part of BMC Bioinformatics Volume 21 Supplement 9, 2020: Selected Articles from the 20th International Conference on Bioinformatics & Computational Biology (BIOCOMP 2019). The full contents of the supplement are available online at https://bmcbioinformatics.biomedcentral.com/articles/supplements/volume-21-supplement-9 .

Publication of this supplement has been supported by NIH grants R01CA223490 and R01 CA230514 to Youping Deng and 5P30GM114737, P20GM103466, 5U54MD007601 and 5P30CA071789.

Author information

Authors and affiliations.

Department of Quantitative Health Sciences, John A. Burns School of Medicine, University of Hawaii at Manoa, Honolulu, HI, 96813, USA

Yuanyuan Fu, Zhougui Ling & Youping Deng

Department of Pulmonary and Critical Care Medicine, The Fourth Affiliated Hospital of Guangxi Medical University, Liuzhou, 545005, China

Zhougui Ling

Department of Computer Science, University of Georgia, Athens, GA, 30602, USA

Hamid Arabnia

Contributions

YF drafted the manuscript; ZL, HA, and YD revised the manuscript. All authors read and approved the final manuscript.

Corresponding author

Correspondence to Youping Deng.

Ethics declarations

Ethics approval and consent to participate, consent for publication, competing interests.

The authors declare that they have no competing interests.

Additional information

Publisher's note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ . The Creative Commons Public Domain Dedication waiver ( http://creativecommons.org/publicdomain/zero/1.0/ ) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article.

Fu, Y., Ling, Z., Arabnia, H. et al. Current trend and development in bioinformatics research. BMC Bioinformatics 21 (Suppl 9), 538 (2020). https://doi.org/10.1186/s12859-020-03874-y

Published: 03 December 2020

DOI: https://doi.org/10.1186/s12859-020-03874-y

- Bioinformatics

- Human disease

BMC Bioinformatics

ISSN: 1471-2105

MIT News | Massachusetts Institute of Technology

Computational biology

Search algorithm reveals nearly 200 new kinds of CRISPR systems

By analyzing bacterial data, researchers have discovered thousands of rare new CRISPR systems that have a range of functions and could enable gene editing, diagnostics, and more.

November 23, 2023

Making genetic prediction models more inclusive

MIT computer scientists developed a way to calculate polygenic scores that makes them more accurate for people across diverse ancestries.

October 26, 2023

Cracking the code that relates brain and behavior in a simple animal

MIT researchers model and create an atlas for how neurons of the worm C. elegans encode its behaviors, make findings available on their “WormWideWeb.”

August 23, 2023

2022-23 Takeda Fellows: Leveraging AI to positively impact human health

New fellows are working on health records, robot control, pandemic preparedness, brain injuries, and more.

January 12, 2023

An interdisciplinary journey through living machines

With NEET, Sherry Nyeo is discovering MIT’s undergraduate research community at the intersection of computer science and biological engineering.

October 18, 2022

New CRISPR-based map ties every human gene to its function

Jonathan Weissman and collaborators used their single-cell sequencing tool Perturb-seq on every expressed gene in the human genome, linking each to its job in the cell.

June 9, 2022

New computational tool predicts cell fates and genetic perturbations

The technique can help predict a cell’s path over time, such as what type of cell it will become.

February 3, 2022

The promise and pitfalls of artificial intelligence explored at TEDxMIT event

MIT scientists discuss the future of AI with applications across many sectors, as a tool that can be both beneficial and harmful.

January 11, 2022

Inaugural fund supports early-stage collaborations between MIT and Jordan

MIT-Jordan Abdul Hameed Shoman Foundation Seed Fund winners announced.

July 29, 2021

The power of two

Graduate student Ellen Zhong helped biologists and mathematicians reach across departmental lines to address a longstanding problem in electron microscopy.

June 30, 2021

Nature-inspired CRISPR enzymes for expansive genome editing

Applied computational biology discoveries vastly expand the range of CRISPR’s access to DNA sequences.

May 19, 2020

3 Questions: Greg Britten on how marine life can recover by 2050

Committing to aggressive conservation efforts could rebuild ocean habitats and species populations in a few decades.

April 3, 2020

Cleaning up hydrogen peroxide production

Solugen’s engineered enzymes offer a biologically-inspired method for producing the chemical.

September 5, 2019

Microbial communities demonstrate high turnover

New research provides insight into the behavior of microbial communities in the ocean.

January 18, 2018

Determined to make a change

Senior Ruth Park draws on her background as she strives for educational reform.

May 12, 2016

Connecting Computational and Systems Biology for Biodefense

Retooling security with bioagent-agnostic signatures

Historically, the biodefense community has relied on lists of known agents, pathogens and biotoxins like anthrax and ricin, that have been identified and prioritized as threats. In Health Security, a team of researchers at Pacific Northwest National Laboratory (PNNL) and the University of Texas at El Paso (UTEP) discuss the computational challenges of thinking beyond the list and developing bioagent-agnostic signatures to assess threats.

“As biological threats evolve, we face more unknowns and an increased sense of urgency to quickly detect and characterize disease agents to increase our biopreparedness and drive rapid responses. By shifting from an identification-based approach to a characterization-based one—with the right computational and data capabilities—we can accurately and reproducibly assess impacts without prior knowledge of an agent,” said Andy Lin, data scientist at PNNL.

The article “Computational and Systems Biology Advances to Enable Bioagent Agnostic Signatures” explores the computational data challenges of threat-agnostic biodefense, or the ability to characterize an unknown agent’s likely impact on human, animal, and plant health. The researchers discuss how the biodefense community can make the shift to a dual list-based and bioagent-agnostic signatures approach, but the shift will not be without its challenges. It will require policy changes, technological improvements, and improved data analytics.

The research brought together PNNL’s Lin, Errett Hobbs, Karen Taylor, Tony Chiang, and Jay Bardhan with UTEP’s Cameron Torres, Stephen Aley, and Charles Spencer, convening diverse expertise across data science, computational systems biology, cytometry, immunology, and more. The research showcases the power of cross-institution collaboration, building on PNNL and UTEP’s long-standing partnership and joint appointment program to accelerate the science mission of both institutions. The UTEP team performed experiments that helped demonstrate the challenges discussed in the paper.

The research also builds on PNNL’s previous effort, highlighted in a 2021 Pathogens publication, “Beyond the List: Bioagent-Agnostic Signatures Could Enable a More Flexible and Resilient Biodefense Posture Than an Approach Based on Priority Agent Lists Alone,” which addressed how traditional list-based approaches are ill-equipped to accommodate threats posed by emergent, reemergent, or novel pathogens. A threat-agnostic model could present a means to more effectively surveil for and treat known and novel agents alike.

“While the biodefense community may have only just begun to develop technologies for a threat-agnostic approach, we’re highlighting promising new immunological approaches and the data challenges that need to be overcome to build exciting new possible paths forward,” said Lin.

The article was published in the March 2024 issue of Health Security and featured in the March 17 issue of the Global Biodefense headlines.

Published: May 15, 2024

Lin, A., C. M. Torres, E. C. Hobbs, J. Bardhan, S. B. Aley, C. T. Spencer, K. L. Taylor, and T. Chiang. 2024. “Computational and Systems Biology Advances to Enable Bioagent Agnostic Signatures.” Health Security. https://doi.org/10.1089/hs.2023.0076

AlphaFold 3 predicts the structure and interactions of all of life’s molecules

Introducing AlphaFold 3, a new AI model developed by Isomorphic Labs and Google DeepMind. By accurately predicting the structure of proteins, DNA, RNA, ligands and more, and how they interact, we hope it will help to transform our understanding of the biological world and drug discovery.

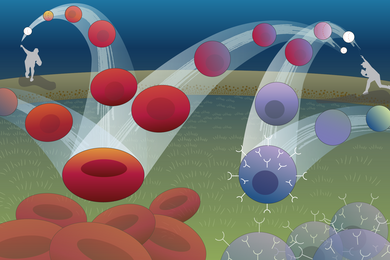

Inside every plant, animal, and human cell are billions of molecular machines. They’re made up of proteins, DNA, and other molecules, but no single piece works on its own. Only by seeing how they interact together, across millions of types of combinations, can we start to truly understand life’s processes.

In a paper published in Nature, we introduce AlphaFold 3, a revolutionary model that can predict the structure and interactions of all life’s molecules with unprecedented accuracy. For the interactions of proteins with other molecule types we see at least a 50% improvement compared with existing prediction methods, and for some important categories of interaction we have doubled prediction accuracy.

We hope AlphaFold 3 will help transform our understanding of the biological world and drug discovery. Scientists can access the majority of its capabilities, for free, through the newly launched AlphaFold Server, an easy-to-use research tool. To build on AlphaFold 3’s potential for drug design, we at Isomorphic Labs are already collaborating with pharmaceutical companies to apply it to real-world drug design challenges and, ultimately, develop new life-changing treatments for patients.

Our new model builds on the foundations of AlphaFold 2, which in 2020 made a fundamental breakthrough in protein structure prediction. So far, millions of researchers globally have used AlphaFold 2 to make discoveries in areas including malaria vaccines, cancer treatments, and enzyme design. AlphaFold has been cited more than 20,000 times and its scientific impact has been recognized through many prizes, most recently the Breakthrough Prize in Life Sciences. AlphaFold 3 takes us beyond proteins to a broad spectrum of biomolecules. This leap could unlock more transformative science, from accelerating drug design and genomics research, to developing biorenewable materials and more resilient crops.

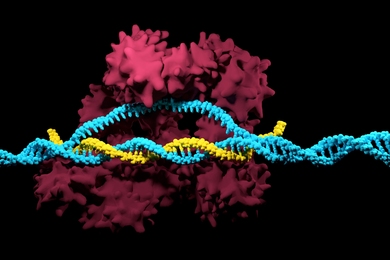

7PNM - Spike protein of a common cold virus (Coronavirus OC43): AlphaFold 3’s structural prediction for a spike protein (blue) of a cold virus as it interacts with antibodies (turquoise) and simple sugars (yellow) accurately matches the true structure (gray). The animation shows the protein interacting with an antibody, then a sugar. Advancing our knowledge of such immune-system processes helps us better understand coronaviruses, including COVID-19, raising possibilities for improved treatments.

How AlphaFold 3 reveals life’s molecules

Given an input list of molecules, AlphaFold 3 generates their joint 3D structure, revealing how they all fit together. It models large biomolecules such as proteins, DNA, and RNA, as well as small molecules, also known as ligands, a category that encompasses many drugs. Furthermore, AlphaFold 3 can model chemical modifications to these molecules, which control the healthy functioning of cells and can lead to disease when disrupted.

AlphaFold 3’s capabilities come from its next-generation architecture and training that now covers all of life’s molecules. At the core of the model is an improved version of our Evoformer module – a deep learning architecture that underpinned AlphaFold 2’s incredible performance. After processing the inputs, AlphaFold 3 assembles its predictions using a diffusion network, akin to those found in AI image generators. The diffusion process starts with a cloud of atoms, and over many steps converges on its final, most accurate molecular structure.
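The idea of starting from a cloud of atoms and converging over many steps can be illustrated with a toy refinement loop. This is a minimal sketch under loud assumptions, not AlphaFold 3's diffusion network: the `toy_denoiser` function is a hypothetical stand-in that simply returns a known target, whereas a real model must learn to predict the clean structure. The step size, noise schedule, and four-atom target are likewise invented for the example.

```python
import math
import random


def toy_denoiser(noisy_coords, target_coords):
    """Hypothetical stand-in for a learned network.

    A real model has to predict the clean structure from the noisy one;
    this placeholder returns a known target so the loop can run.
    """
    return target_coords


def rmsd(a, b):
    """Root-mean-square deviation between two equal-length lists of 3D points."""
    squared = sum((x - y) ** 2 for p, q in zip(a, b) for x, y in zip(p, q))
    return math.sqrt(squared / len(a))


def refine(target_coords, steps=50, step_size=0.2, seed=0):
    """Move a random point cloud toward the denoiser's estimate, step by step."""
    rng = random.Random(seed)
    # Start from a random "cloud of atoms".
    coords = [[rng.gauss(0.0, 10.0) for _ in range(3)] for _ in target_coords]
    for step in range(steps):
        # The injected noise shrinks to zero by the final step.
        noise_scale = 0.1 * (1.0 - (step + 1) / steps)
        estimate = toy_denoiser(coords, target_coords)
        coords = [
            [c + step_size * (e - c) + rng.gauss(0.0, noise_scale)
             for c, e in zip(atom, est_atom)]
            for atom, est_atom in zip(coords, estimate)
        ]
    return coords


# Assumed four-atom target, chosen arbitrarily for the demonstration.
target = [[0.0, 0.0, 0.0], [1.5, 0.0, 0.0], [1.5, 1.5, 0.0], [0.0, 1.5, 1.5]]
final = refine(target)
print(f"RMSD after refinement: {rmsd(final, target):.4f}")
```

Running `refine` with more steps leaves the cloud closer to the target, which mirrors the gradual convergence described above. All of the difficulty in a real system lies in the part this sketch replaces with a placeholder.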

AlphaFold 3’s predictions of molecular interactions surpass the accuracy of all existing systems. As a single model that computes entire molecular complexes in a holistic way, it’s uniquely able to unify scientific insights.

7BBV - Enzyme: AlphaFold 3’s prediction for a molecular complex featuring an enzyme protein (blue), an ion (yellow sphere) and simple sugars (yellow), along with the true structure (gray). This enzyme is found in a soil-borne fungus (Verticillium dahliae) that damages a wide range of plants. Insights into how this enzyme interacts with plant cells could help researchers develop healthier, more resilient crops.

Leading drug discovery at Isomorphic Labs

AlphaFold 3 creates capabilities for drug design with predictions for molecules commonly used in drugs, such as ligands and antibodies, that bind to proteins to change how they interact in human health and disease.

AlphaFold 3 achieves unprecedented accuracy in predicting drug-like interactions, including the binding of proteins with ligands and antibodies with their target proteins. AlphaFold 3 is 50% more accurate than the best traditional methods on the PoseBusters benchmark, without needing the input of any structural information, making AlphaFold 3 the first AI system to surpass physics-based tools for biomolecular structure prediction. The ability to predict antibody-protein binding is critical to understanding aspects of the human immune response and the design of new antibodies, a growing class of therapeutics.

Using AlphaFold 3 in combination with a complementary suite of in-house AI models, we are working on drug design for internal projects as well as with pharmaceutical partners. We are using AlphaFold 3 to accelerate and improve the success of drug design - by helping understand how to approach new disease targets, and developing novel ways to pursue existing ones that were previously out of reach.

AlphaFold Server: A free and easy-to-use research tool

8AW3 - RNA modifying protein: AlphaFold 3’s prediction for a molecular complex featuring a protein (blue), a strand of RNA (purple), and two ions (yellow) closely matches the true structure (gray). This complex is involved with the creation of other proteins, a cellular process fundamental to life and health.

Google DeepMind’s newly launched AlphaFold Server is the most accurate tool in the world for predicting how proteins interact with other molecules throughout the cell. It is a free platform that scientists around the world can use for non-commercial research. With just a few clicks, biologists can harness the power of AlphaFold 3 to model structures composed of proteins, DNA, RNA, and a selection of ligands, ions, and chemical modifications.

AlphaFold Server helps scientists make novel hypotheses to test in the lab, speeding up workflows and enabling further innovation. This gives researchers an accessible way to generate predictions, regardless of their access to computational resources or their expertise in machine learning.

Determining a protein structure experimentally can take about the length of a PhD and cost hundreds of thousands of dollars. Google DeepMind's previous model, AlphaFold 2, has been used to predict hundreds of millions of structures, which would have taken hundreds of millions of researcher-years at the current rate of experimental structural biology.
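The scale of that comparison can be checked with back-of-the-envelope arithmetic. The sketch below assumes 200 million structures (the database size quoted later in this article) and treats "the length of a PhD" as a range of 1 to 5 years per structure. Both figures are assumptions made for illustration, not numbers reported for any particular study.

```python
def researcher_years(num_structures: int, years_per_structure: float) -> float:
    """Total effort if every structure were solved experimentally, one at a time."""
    return num_structures * years_per_structure


# Assumption: the 200 million figure quoted for the free structure database.
NUM_STRUCTURES = 200_000_000

# Assumption: 1 to 5 years per structure, a rough reading of "the length of a PhD".
for years in (1, 3, 5):
    total = researcher_years(NUM_STRUCTURES, years)
    print(f"{years} yr/structure -> {total / 1e6:,.0f} million researcher-years")
```

Under these assumptions the total runs from 200 million to 1 billion researcher-years, which agrees in order of magnitude with the "hundreds of millions" cited above.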

"With AlphaFold Server, it’s not only about predicting structures anymore, it’s about generously giving access: allowing researchers to ask daring questions and accelerate discoveries.”

Céline Bouchoux, The Francis Crick Institute

Sharing the power of AlphaFold 3 responsibly

Alongside Google DeepMind, we’ve sought to understand the broad impact of the technology. Working together with the research and safety community to take a science-led approach, we have conducted extensive assessments to mitigate potential risks and share the widespread benefits to biology and humanity.

Building on the external consultations we carried out for AlphaFold 2, Google DeepMind have now engaged with more than 50 domain experts, in addition to specialist third parties, across biosecurity, research, and industry, to understand the capabilities of successive AlphaFold models and any potential risks. We also participated in community-wide forums and discussions ahead of AlphaFold 3’s launch.

AlphaFold Server reflects the ongoing commitment to share the benefits of AlphaFold, including the free database of 200 million protein structures. We’ll continue to work with the scientific community and policy makers to develop and deploy AI technologies responsibly.

Opening up the future of AI-powered cell biology

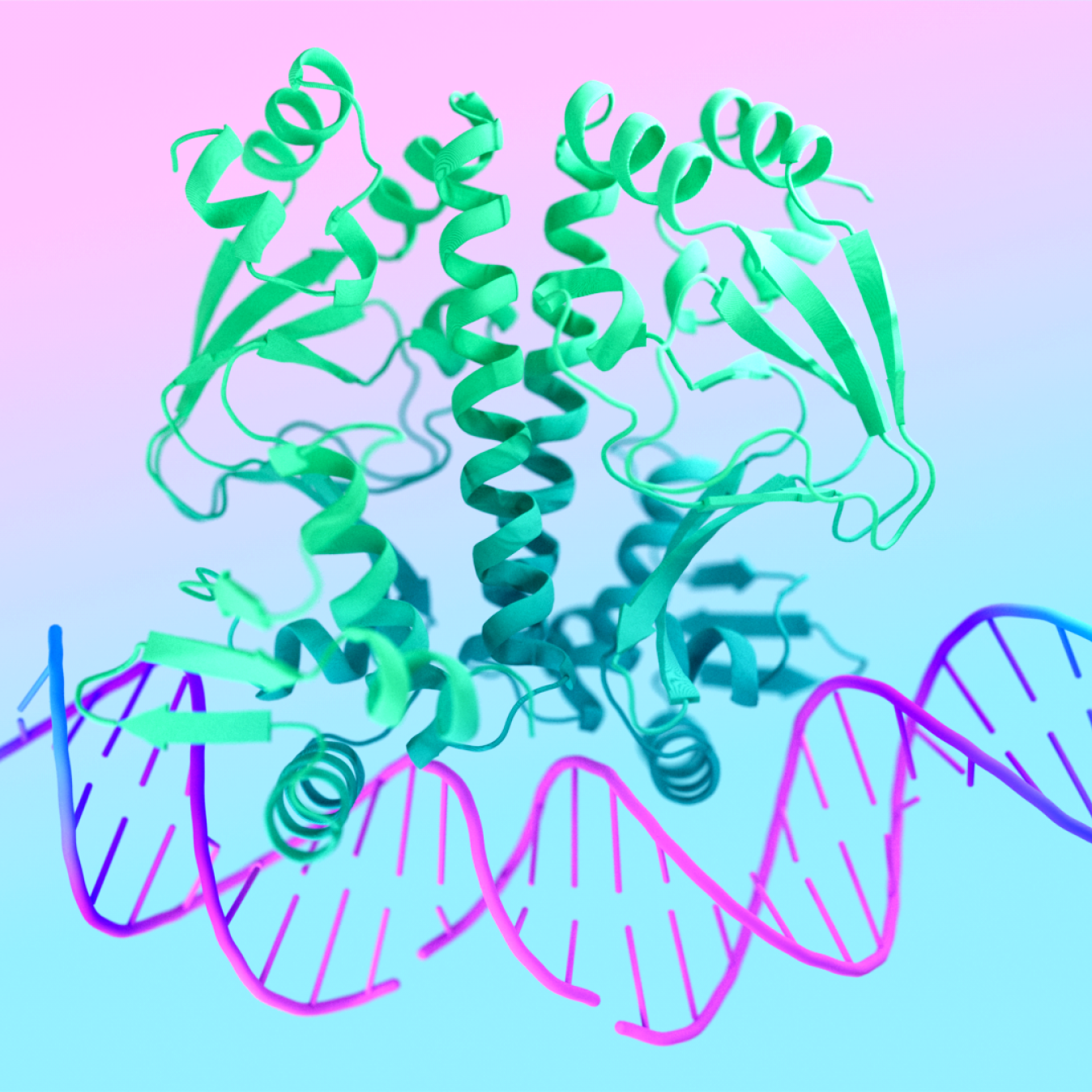

7R6R - DNA binding protein: AlphaFold 3’s prediction for a molecular complex featuring a protein (blue) bound to a double helix of DNA (pink) is a near-perfect match to the true molecular structure discovered through painstaking experiments (gray).

AlphaFold 3 brings the biological world into high definition. It allows scientists to see cellular systems in all their complexity, across structures, interactions, and modifications. This new window on the molecules of life reveals how they’re all connected and helps us understand how those connections affect biological functions, such as the actions of drugs, the production of hormones, and the health-preserving process of DNA repair.

The impacts of AlphaFold 3 and the free AlphaFold Server will be realised through how they empower scientists to accelerate discovery across open questions in biology and new lines of research. We’re just beginning to tap into AlphaFold 3’s potential and can’t wait to see what the future holds.

AI advancements make the leap into 3D pathology possible

Human tissue is intricate, complex and, of course, three dimensional. But the thin slices of tissue that pathologists most often use to diagnose disease are two dimensional, offering only a limited glimpse at the tissue's true complexity. There is a growing push in the field of pathology toward examining tissue in its three-dimensional form. But 3D pathology datasets can contain hundreds of times more data than their 2D counterparts, making manual examination infeasible.