
A Consensus-Based Checklist for Reporting of Survey Studies (CROSS)

- Research and Reporting Methods

- Published: 22 April 2021

- Volume 36, pages 3179–3187 (2021)


- Akash Sharma MBBS (ORCID: orcid.org/0000-0002-6822-4946), affiliations 1, 2; contributed equally

- Nguyen Tran Minh Duc MD (ORCID: orcid.org/0000-0002-9333-7539), affiliations 2, 3; contributed equally

- Tai Luu Lam Thang MD (ORCID: orcid.org/0000-0003-1062-2463), affiliations 2, 4

- Nguyen Hai Nam MD (ORCID: orcid.org/0000-0001-5184-6936), affiliations 2, 5

- Sze Jia Ng MD (ORCID: orcid.org/0000-0001-5353-6499), affiliations 2, 6

- Kirellos Said Abbas MBCH (ORCID: orcid.org/0000-0003-0339-9339), affiliations 2, 7

- Nguyen Tien Huy MD, PhD (ORCID: orcid.org/0000-0002-9543-9440), affiliation 8

- Ana Marušić MD, PhD (ORCID: orcid.org/0000-0001-6272-0917), affiliation 9

- Christine L. Paul PhD, affiliation 10

- Janette Kwok MBBS (ORCID: orcid.org/0000-0003-0038-1897), affiliation 11

- Juntra Karbwang MD, PhD, affiliation 12

- Chiara de Waure MD, MSc, PhD (ORCID: orcid.org/0000-0002-4346-1494), affiliation 13

- Frances J. Drummond PhD (ORCID: orcid.org/0000-0002-7802-776X), affiliation 14

- Yoshiyuki Kizawa MD, PhD (ORCID: orcid.org/0000-0003-2456-5092), affiliation 15

- Erik Taal PhD (ORCID: orcid.org/0000-0002-9822-4488), affiliation 16

- Joeri Vermeulen MSN, CM (ORCID: orcid.org/0000-0002-9568-3208), affiliations 17, 18

- Gillian H. M. Lee PhD (ORCID: orcid.org/0000-0002-6192-4923), affiliation 19

- Adam Gyedu MD, MPH (ORCID: orcid.org/0000-0002-4186-2403), affiliation 20

- Kien Gia To PhD (ORCID: orcid.org/0000-0001-5038-5584), affiliation 21

- Martin L. Verra PhD (ORCID: orcid.org/0000-0002-3933-8020), affiliation 22

- Évelyne M. Jacqz-Aigrain MD, PhD (ORCID: orcid.org/0000-0002-4285-7067), affiliation 23

- Wouter K. G. Leclercq MD (ORCID: orcid.org/0000-0003-1159-1857), affiliation 24

- Simo T. Salminen PhD, affiliation 25

- Cathy Donald Sherbourne PhD, affiliation 26

- Barbara Mintzes PhD (ORCID: orcid.org/0000-0002-8671-915X), affiliation 27

- Sergi Lozano PhD (ORCID: orcid.org/0000-0003-1895-9327), affiliation 28

- Ulrich S. Tran DSc (ORCID: orcid.org/0000-0002-6589-3167), affiliation 29

- Mitsuaki Matsui MD, MSc, PhD (ORCID: orcid.org/0000-0003-4075-1266), affiliation 12

- Mohammad Karamouzian DVM, MSc, PhD candidate (ORCID: orcid.org/0000-0002-5631-4469), affiliations 30, 31


INTRODUCTION

A survey is a list of questions aiming to extract a set of desired data or opinions from a particular group of people. 1 Surveys can be administered more quickly than some other methods of data gathering and facilitate data collection from a large number of participants. Because a survey can include numerous questions, it offers flexibility in evaluating several research areas, such as risk factors, treatment outcomes, disease trends, cost-effectiveness of care, and quality of life. Surveys can be conducted by phone, by mail, face to face, or online using web-based software and applications. Online surveys can help reduce geographical dependence and increase the validity, reliability, and statistical power of studies. Moreover, online surveys facilitate rapid survey administration as well as data collection and analysis. 2

Surveys are frequently used in a variety of research areas. For example, a PubMed search of the keyword “survey” on January 7, 2021, generated over 1,519,000 results. These studies serve many purposes, including opinion polls, trend analyses, policy evaluation, and measurement of disease prevalence. 3 , 4 , 5 , 6 , 7 , 8 , 9 , 10 , 11 , 12 Although many surveys have been published in high-impact journals, comprehensive reporting guidelines for survey research are limited, 13 , 14 and the reporting of survey studies shows substantial variability and inconsistency. Indeed, studies have used widely varying survey designs and reported their results in various non-systematic ways. 15 , 16 , 17

Evidence-based tools developed by experts could help standardize the procedures authors follow to produce reproducible, higher-quality studies. 18 , 19 , 20 Research studies with transparent and accurate reporting may be more reliable and could have a greater impact on their audience. 19 However, the reporting of research findings often falls short of this standard. For example, Moher et al. 20 reported that, although over 63,000 new studies are published in PubMed every month, many publications are inadequately reported. Given this lack of standardization and the poor quality of reporting, the Enhancing the QUAlity and Transparency Of health Research (EQUATOR) Network was created to help researchers publish high-impact health research. 20 Several important guidelines for various types of research studies have been created and listed on the EQUATOR website, including the Consolidated Standards of Reporting Trials (CONSORT) for randomized controlled trials, Strengthening the Reporting of Observational studies in Epidemiology (STROBE) for observational studies, and Preferred Reporting Items for Systematic Reviews and Meta-analyses (PRISMA) for systematic reviews and meta-analyses. The introduction of the PRISMA checklist in 2009 led to a substantial increase in the quality of systematic reviews and is a good example of how poor reporting, biases, and unsatisfactory results can be substantially reduced by implementing and following a validated reporting guideline. 21

SURGE 22 and CHERRIES 23 are frequently recommended for reporting of non-web and web-based surveys, respectively. However, a report by Turk et al. 14 found that authors had omitted many items of the SURGE and CHERRIES guidelines (e.g., development, description, and testing of the questionnaire; advertisement and administration of the questionnaire; sample representativeness; response rates; informed consent; and statistical analysis). The authors therefore concluded that a single universal guideline was needed as a standard quality-reporting tool for surveys. Moreover, neither guideline was developed using a structured approach. For example, CHERRIES, which was developed in 2004, was not based on a comprehensive literature review or a Delphi exercise. These steps are crucial in developing guidelines because they help identify potential gaps and capture the opinions of different experts in the field. 20 , 24 While the SURGE checklist used a literature review to generate its items, it also lacks a Delphi exercise and is limited to self-administered postal surveys. Little information is available about the experts involved in developing these checklists. SURGE’s limited citations since its publication suggest that it is not commonly used by authors or recommended by journals. Furthermore, even after the development of SURGE and CHERRIES, there has been limited improvement in the reporting of surveys. For example, Li et al. reviewed 102 surveys in top nephrology journals, found that the quality of survey reporting was suboptimal, and highlighted the need for new reporting guidelines to improve reporting quality and increase transparency. 25 Similarly, Shankar et al. found significant heterogeneity in the reporting of surveys published in major radiology journals and suggested the need for guidelines to increase the homogeneity and generalizability of survey results. 26 Duffett et al. also found several deficiencies in survey methodologies and reporting practices and suggested establishing minimum reporting standards for survey studies. 27 Similar concerns regarding survey quality have been raised in other medical fields. 28 , 29 , 30 , 31 , 32 , 33

Because of concerns about survey quality and the lack of well-developed guidelines, a single comprehensive tool is needed that can serve as a standard reporting checklist for survey research and address significant discrepancies in the reporting of survey studies. 13 , 25 , 26 , 27 , 28 , 31 , 32 The purpose of this study was to develop a universal checklist for both web- and non-web-based surveys. First, we established a workgroup to search the literature for potential items to include in our checklist. Second, we collected information about experts in the field of survey research and emailed them an invitation letter. Finally, we conducted three rounds of rating using the Delphi method.

Our study was performed from January 2018 to December 2019 using the Delphi method, which is encouraged in scientific research as a feasible and reliable approach for reaching consensus among experts. 34 The process of checklist development included five phases: (i) planning; (ii) drafting of checklist items; (iii) consensus building using the Delphi method; (iv) dissemination of guidelines; and (v) maintenance of guidelines.

Planning Phase

In the planning phase, we established a workgroup, secured resources, reviewed existing reporting guidelines, and drafted the plan and timeline of our project. To facilitate the development of the Checklist for Reporting of Survey Studies (CROSS), we set up a reporting checklist workgroup with seven members from five countries. Expert panel members were identified by searching original survey-based studies published between January 2004 and December 2016 and were selected based on their number of high-impact and highly cited publications using survey research methods. Members of the EQUATOR Network and contributors to the PRISMA checklist were also involved. Panel members’ information, such as current affiliation, email address, and the number of survey studies they had been involved in, was collected from their ResearchGate profiles (see Supplement 1). We then created a list of potential panel members and emailed each expert an invitation letter asking whether they were interested in participating in our study. Consenting experts received a follow-up email with a detailed explanation of the research objectives and the Delphi approach.

Drafting the Checklist

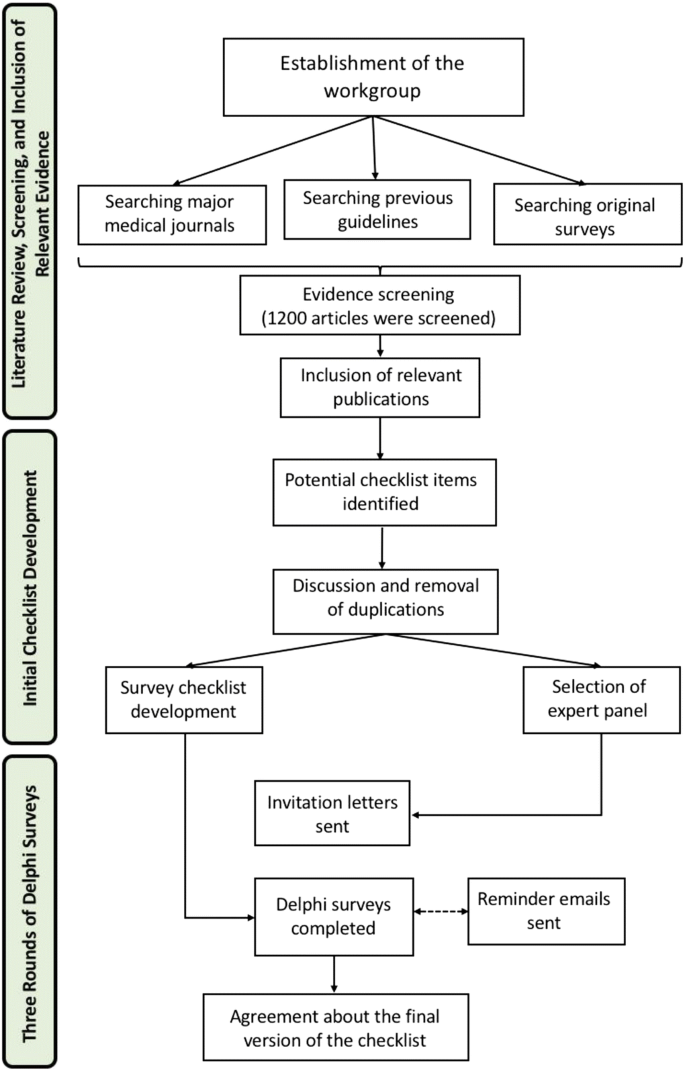

Drafting produced a list of potential items for the checklist. It involved searching the literature for candidate items, building a draft checklist from those items, and revising it. First, we conducted a literature review to identify survey studies published in major medical journals and extracted relevant information for drafting potential checklist items (see Supplement 2 for a sample search strategy). Second, we searched the EQUATOR Network for previously published checklists for reporting of survey studies. Third, three teams of two researchers independently extracted potential items for our checklist. Finally, our group members worked together to revise the checklist and remove duplicates (Fig. 1). We discussed the importance and relevance of each potential item and compared each of them with the selected literature.

Fig. 1 Different stages of developing the checklist.

Consensus Phase Using the Delphi Method

The first round of Delphi was conducted using SurveyMonkey (SurveyMonkey Inc., San Mateo, CA, USA; www.surveymonkey.com). An email was sent to the expert panel containing information about the Delphi process, the timeline of each Delphi round, and a detailed overview of the project. Experts rated each item on a Likert scale from 1 (strongly disagree) to 5 (strongly agree) and were encouraged to comment, modify items, or propose new items that they felt should be included in the checklist. Nonresponding experts were sent weekly follow-up reminders. The main objectives of the first round were to identify unnecessary items and incomplete items in the draft checklist. A pre-set threshold of 70% agreement (at least 70% of experts rating an item 4 or 5) was used as the cutoff for including an item in the final checklist. 35 Items that did not reach this threshold were adjusted according to experts’ feedback and redistributed to the panelists for rerating in round 2, along with modified and newly proposed items. In the second round, experts were also shown their own round-one scores so that they could revise or keep their previous responses. Finally, a third round of Delphi was launched to resolve disagreements about items that had not reached consensus in the second round.
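The inclusion rule above reduces to a short computation. The Python sketch below is illustrative only: the item names and ratings are hypothetical, and `agreement` and `split_round` are our own names, not part of any tool used in the study. It counts ratings of 4 or 5, compares the proportion with the 70% cutoff using exact fractions (so an item at exactly 70% is not misclassified by floating-point rounding), and separates included items from those carried forward to the next round.

```python
from fractions import Fraction
from typing import Dict, List, Tuple

# Pre-set cutoff from the text: at least 70% of experts must rate an item 4 or 5.
CONSENSUS_THRESHOLD = Fraction(7, 10)
AGREE_SCORES = {4, 5}  # "agree" and "strongly agree" on the 1-5 Likert scale


def agreement(ratings: List[int]) -> Fraction:
    """Exact proportion of experts who rated the item 4 or 5."""
    if not ratings:
        raise ValueError("an item needs at least one rating")
    agree = sum(1 for r in ratings if r in AGREE_SCORES)
    return Fraction(agree, len(ratings))


def split_round(items: Dict[str, List[int]]) -> Tuple[List[str], List[str]]:
    """Split one Delphi round into included items and items carried forward
    (to be revised from feedback and rerated in the next round)."""
    included: List[str] = []
    carried_forward: List[str] = []
    for name, ratings in items.items():
        if agreement(ratings) >= CONSENSUS_THRESHOLD:
            included.append(name)
        else:
            carried_forward.append(name)
    return included, carried_forward


if __name__ == "__main__":
    # Hypothetical ratings from ten experts; not data from the CROSS study.
    round_one = {
        "item_A": [5, 5, 4, 4, 4, 5, 3, 4, 5, 2],  # 8 of 10 agree
        "item_B": [3, 4, 2, 5, 3, 4, 3, 2, 4, 3],  # 4 of 10 agree
        "item_C": [4, 4, 4, 5, 5, 5, 4, 3, 2, 1],  # 7 of 10 agree, exactly at the cutoff
    }
    included, carried = split_round(round_one)
    print("included:", included)
    print("carried forward:", carried)
```

With ten raters, an item needs at least seven ratings of 4 or 5 to be included; in the hypothetical data, `item_C` sits exactly on the cutoff and is included, while `item_B` would be revised and rerated.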

A total of 24 experts, with a median (Q1, Q3) of 20 (15.75, 31) years of research experience, participated in our study. In the first round, 24 items were accepted in their original form, and 27 items were carried forward for review in the second round. Of these 27 items, 10 were merged into five, and 11 were modified based on experts’ comments. In the second round, 24 experts participated and 18 items were included. Eighteen experts responded in the third round, in which only one additional item was included.
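A summary such as median (Q1, Q3) depends on the quartile convention chosen, which the text does not specify. As a hedged sketch, the Python code below uses hypothetical values (not the panel's actual data) and the standard-library `statistics.quantiles` function to compute the median and quartiles, showing that its two supported methods can give different quartiles for the same sample. The helper names `median_q1_q3` and `format_median_iqr` are our own.

```python
import statistics
from typing import Sequence, Tuple


def median_q1_q3(values: Sequence[float], method: str = "inclusive") -> Tuple[float, float, float]:
    """Return (median, Q1, Q3) for a sample.

    `method` is passed to statistics.quantiles: "inclusive" uses the same
    linear interpolation as R's default quantile type 7, while "exclusive"
    (Python's default) can give different quartiles for the same data.
    """
    if len(values) < 2:
        raise ValueError("need at least two values to compute quartiles")
    q1, _, q3 = statistics.quantiles(values, n=4, method=method)
    return statistics.median(values), q1, q3


def format_median_iqr(values: Sequence[float], method: str = "inclusive") -> str:
    """Format as 'median (Q1, Q3)', the style used in the text."""
    med, q1, q3 = median_q1_q3(values, method)
    return f"{med:g} ({q1:g}, {q3:g})"


if __name__ == "__main__":
    # Hypothetical years of research experience; not the CROSS panel's data.
    years = [8, 12, 15, 16, 20, 20, 22, 30, 31, 35]
    print(format_median_iqr(years))               # inclusive quartiles
    print(format_median_iqr(years, "exclusive"))  # Python's default method
```

For this hypothetical sample the "inclusive" method gives 20 (15.25, 28) and the "exclusive" method gives 20 (14.25, 30.25), which is why it can help to state the quartile definition alongside the summary.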

The percentage agreement, mean, and standard deviation (SD) for each item included in the checklist are presented in Table 1. CROSS contains 19 sections with 40 different items, including “Title and abstract” (section 1); “Introduction” (sections 2 and 3); “Methods” (sections 4–10); “Results” (sections 11–13); “Discussion” (sections 14–16); and other items (sections 17–19). Please see Supplement 3 for the final checklist.
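The per-item statistics reported in Table 1 can be computed as in the following sketch. It is an illustration under stated assumptions: the ratings are hypothetical, percentage agreement is taken to be the share of ratings of 4 or 5 (matching the consensus rule described earlier), and the sample standard deviation is used because the text does not say which SD definition was applied. `summarize_item` is a hypothetical helper name.

```python
import statistics
from typing import Dict, List


def summarize_item(ratings: List[int]) -> Dict[str, float]:
    """Percentage agreement (share of 4 or 5 ratings), mean, and SD for one item."""
    if len(ratings) < 2:
        raise ValueError("need at least two ratings to compute an SD")
    agree = sum(1 for r in ratings if r >= 4)
    return {
        "percent_agreement": 100 * agree / len(ratings),
        "mean": statistics.mean(ratings),
        # Assumption: sample SD (n - 1 denominator); the text does not specify.
        "sd": statistics.stdev(ratings),
    }


if __name__ == "__main__":
    # Hypothetical 1-5 ratings for two items; not values from Table 1.
    ratings_by_item = {
        "item_A": [5, 5, 4, 4, 4, 5, 3, 4, 5, 2],
        "item_B": [5, 5, 5, 5, 4, 4, 4, 4, 4, 3],
    }
    for name, ratings in ratings_by_item.items():
        s = summarize_item(ratings)
        print(f"{name}: {s['percent_agreement']:.1f}% agreement, "
              f"mean {s['mean']:.2f}, SD {s['sd']:.2f}")
```

Switching to `statistics.pstdev` would give the population SD instead; for small expert panels the two can differ noticeably, so the choice is worth reporting.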

The development of CROSS is the result of a literature review and a Delphi process involving international experts with significant expertise in developing and implementing survey studies. CROSS includes both evidence-informed and expert consensus-based items, which are intended to serve as a tool to help improve the quality of survey studies.

We describe the methods and procedures used to develop this guideline in detail so that other scholars can assess the quality of the checklist. Our Delphi panel comprised experts with backgrounds in different disciplines. We also spent considerable time researching and debating the potential items to be included in our checklist. During the Delphi process, participants rated their agreement with each potential item on a 5-point Likert scale. The entire process was conducted electronically: we gathered data and feedback from participants via email rather than through the Skype or face-to-face discussions suggested by the EQUATOR Network. 13

In comparison with the CHERRIES and SURGE checklists, CROSS provides a single, comprehensive tool organized according to the typical primary sections required for peer-reviewed publications. It also helps researchers develop a comprehensive research protocol before conducting a survey. The “Introduction” section provides a clear overview of the aim of the survey. In the “Methods” section, our checklist provides a detailed explanation of initiating and developing the survey, including study design, data collection methods, sample size calculation, survey administration, study preparation, ethical considerations, and statistical analysis. The “Results” section of CROSS covers respondent characteristics followed by descriptive and main results, which the CHERRIES and SURGE checklists do not address. CROSS can also be used for both web-based and non-web-based surveys, so it serves all types of survey-based studies. New items were added to our checklist to address gaps in the available tools. For example, item 10b asks researchers to report any modification of variables, which helps researchers justify, and readers understand, why the variables needed to be modified. In item 11b, we encourage researchers to state the reasons for non-participation at each stage; publishing these reasons can be useful for future researchers intending to conduct a similar survey. Finally, we added components related to limitations, interpretation, and generalizability of study results to the “Discussion” section. These components, which are important for increasing transparency and external validity, are missing from previous checklists (i.e., CHERRIES and SURGE).

Dissemination and Maintenance of the Checklist

Following the consensus phase, we will publish our checklist statement together with a detailed Explanation and Elaboration (E&E) document providing an in-depth explanation of the scientific rationale for each recommendation. To disseminate the final checklist widely, we aim to promote it in various journals, make it easily available on multiple websites including EQUATOR, and present it at relevant conferences if necessary. We will also use social media to reach specific audiences, including key people in research organizations who regularly conduct surveys in different specialties. We also aim to seek endorsement of CROSS by journal editors, professional societies, and researchers, and to collect feedback from scholars about their experience using it.

Incorporating comments, critiques, and suggestions from experts when revising and correcting our guidelines could help maintain the relevance of the checklist. Lastly, we plan to publish CROSS in several non-English languages to increase its accessibility across the scientific community.

Limitations

We acknowledge the limitations of our study. First, the use of the Delphi consensus method may involve some subjectivity in interpreting experts’ responses and suggestions. Second, six experts were lost to follow-up: 24 experts took part in the first two rounds, but only 18 responded in the third. Nonetheless, we think our checklist could improve the quality of the reporting of survey studies. Like other reporting checklists, CROSS needs to be re-evaluated and revised over time to ensure that it remains relevant and up to date with evolving survey research methodologies. We therefore welcome feedback, comments, critiques, and suggestions for improvement from the research community.

CONCLUSIONS

We think CROSS has the potential to be a beneficial resource for researchers who are designing and conducting survey studies. Following CROSS before and during survey administration could help researchers ensure that their surveys are sufficiently reliable, reproducible, and transparent.

Wikipedia contributors. (2020). Survey (human research). In Wikipedia, The Free Encyclopedia . Retrieved 19:59, December 26, 2020, from https://en.wikipedia.org/w/index.php?title=Survey_(human_research)&oldid=994953597 .

Maymone MBC, Venkatesh S, Secemsky E, Reddy K, Vashi NA. Research Techniques Made Simple: Web-Based Survey Research in Dermatology: Conduct and Applications. J Invest Dermatol 2018;138(7):1456-1462. doi: https://doi.org/10.1016/j.jid.2018.02.032 .


Alcock I, White MP, Pahl S, Duarte-Davidson R, Fleming LE. Associations Between Pro-environmental Behaviour and Neighbourhood Nature, Nature Visit Frequency and Nature Appreciation: Evidence from a Nationally Representative Survey in England, Environ Int 2020;136:105441. doi: https://doi.org/10.1016/j.envint.2019.105441 .


Siddiqui J, Brown K, Zahid A, Young CJ. Current Practices and Barriers to Referral for Cytoreductive Surgery and HIPEC Among Colorectal Surgeons: a Binational Survey. Eur J Surg Oncol 2020;46(1):166-172. doi: https://doi.org/10.1016/j.ejso.2019.09.007

Lee JG, Park CH, Chung H, Park JC, Kim DH, Lee BI, Byeon JS, Jung HY. Current Status and Trend in Training for Endoscopic Submucosal Dissection: a Nationwide Survey in Korea. PLoS One 2020;15(5):e0232691. doi: https://doi.org/10.1371/journal.pone.0232691


McChesney SL, Zelhart MD, Green RL, Nichols RL. Current U.S. Pre-Operative Bowel Preparation Trends: a 2018 Survey of the American Society of Colon and Rectal Surgeons Members. Surg Infect 2020;21(1):1-8. doi: https://doi.org/10.1089/sur.2019.125


Núñez A, Manzano CA, Chi C. Health Outcomes, Utilization, and Equity in Chile: an Evolution from 1990 to 2015 and the Effects of the Last Health Reform. Public Health 2020;178:38-48. doi: https://doi.org/10.1016/j.puhe.2019.08.017

Blackwell AKM, Kosīte D, Marteau TM, Munafò MR. Policies for Tobacco and E-Cigarette Use: a Survey of All Higher Education Institutions and NHS Trusts in England. Nicotine Tob Res 2020;22(7):1235-1238. doi: https://doi.org/10.1093/ntr/ntz192

Liu S, Zhu Y, Chen W, Wang L, Zhang X, Zhang Y. Demographic and Socioeconomic Factors Influencing the Incidence of Ankle Fractures, a National Population-Based Survey of 512187 Individuals. Sci Rep 2018;8(1):10443. doi: https://doi.org/10.1038/s41598-018-28722-1

Tamanini JTN, Pallone LV, Sartori MGF, Girão MJBC, Dos Santos JLF, de Oliveira Duarte YA, van Kerrebroeck PEVA. A Populational-Based Survey on the Prevalence, Incidence, and Risk Factors of Urinary Incontinence in Older Adults-Results from the “SABE STUDY”. Neurourol Urodyn 2018;37(1):466-477. doi: https://doi.org/10.1002/nau.23331

Tink W, Tink JC, Turin TC, Kelly M. Adverse childhood experiences: survey of resident practice, knowledge, and attitude. Fam Med 2017;49(1):7-13.


Shi S, Lio J, Dong H, Jiang I, Cooper B, Sherer R. Evaluation of Geriatrics Education at a Chinese University: a Survey of Attitudes and Knowledge Among Undergraduate Medical Students. Gerontol Geriatr Educ 2020;41(2):242-249. doi: https://doi.org/10.1080/02701960.2018.1468324

Bennett C, Khangura S, Brehaut JC, Graham ID, Moher D, Potter BK, Grimshaw JM. Reporting Guidelines for Survey Research: an Analysis of Published Guidance and Reporting Practices. PLoS Med 2011;8(8):e1001069. doi: https://doi.org/10.1371/journal.pmed.1001069

Turk T, Elhady MT, Rashed S, Abdelkhalek M, Nasef SA, Khallaf AM, Mohammed AT, Attia AW, Adhikari P, Amin MA, Hirayama K, Huy NT. Quality of Reporting Web-Based and Non-web-based Survey Studies: What Authors, Reviewers and Consumers Should Consider. PLoS One 2018;13(6):e0194239. doi: https://doi.org/10.1371/journal.pone.0194239

Jones TL, Baxter MA, Khanduja V. A Quick Guide to Survey Research. Ann R Coll Surg Engl 2013;95(1):5-7. doi: https://doi.org/10.1308/003588413X13511609956372

Jones D, Story D, Clavisi O, Jones R, Peyton P. An Introductory Guide to Survey Research in Anaesthesia. Anaesth Intensive Care 2006;34(2):245-53. doi: https://doi.org/10.1177/0310057X0603400219

Alderman AK, Salem B. Survey Research. Plast Reconstr Surg 2010;126(4):1381-9. doi: https://doi.org/10.1097/PRS.0b013e3181ea44f9

Moher D, Weeks L, Ocampo M, Seely D, Sampson M, Altman DG, Schulz KF, Miller D, Simera I, Grimshaw J, Hoey J. Describing Reporting Guidelines for Health Research: a Systematic Review. J Clin Epidemiol 2011;64(7):718-42. doi: https://doi.org/10.1016/j.jclinepi.2010.09.013

Simera I, Moher D, Hirst A, et al. Transparent and Accurate Reporting Increases Reliability, Utility, and Impact of Your Research: Reporting Guidelines and the EQUATOR Network. BMC Med 2010;8:24. doi: https://doi.org/10.1186/1741-7015-8-24


Moher D, Schulz KF, Simera I, Altman DG. Guidance for Developers of Health Research Reporting Guidelines. PLoS Med 2010;7(2):e1000217. doi: https://doi.org/10.1371/journal.pmed.1000217

Tan WK, Wigley J, Shantikumar S. The Reporting Quality of Systematic Reviews and Meta-analyses in Vascular Surgery Needs Improvement: a Systematic Review. Int J Surg 2014;12(12):1262-5. doi: https://doi.org/10.1016/j.ijsu.2014.10.015

Grimshaw J. SURGE (The SUrvey Reporting GuidelinE). In: Moher D, Altman DG, Schulz KF, Simera I, Wager E, eds. Guidelines for Reporting Health Research: a User’s Manual. 2014. doi: https://doi.org/10.1002/9781118715598.ch20

Eysenbach G. Improving the Quality of Web Surveys: The Checklist for Reporting Results of Internet E-Surveys (CHERRIES). J Med Internet Res 2004;6(3):e34. doi: https://doi.org/10.2196/jmir.6.3.e34

EQUATOR Network. Developing your reporting guideline. 3 July 2018 [cited 28 December 2020]. Available from: https://www.equator-network.org/toolkits/developing-a-reporting-guideline/developing-your-reporting-guideline/

Li AH, Thomas SM, Farag A, Duffett M, Garg AX, Naylor KL. Quality of Survey Reporting in Nephrology Journals: a Methodologic Review. Clin J Am Soc Nephrol 2014;9(12):2089-94. doi: https://doi.org/10.2215/CJN.02130214

Shankar PR, Maturen KE. Survey Research Reporting in Radiology Publications: a Review of 2017 to 2018. J Am Coll Radiol 2019;16(10):1378-1384. doi: https://doi.org/10.1016/j.jacr.2019.07.012

Duffett M, Burns KE, Adhikari NK, Arnold DM, Lauzier F, Kho ME, Meade MO, Hayani O, Koo K, Choong K, Lamontagne F, Zhou Q, Cook DJ. Quality of Reporting of Surveys in Critical Care Journals: a Methodologic Review. Crit Care Med 2012 Feb;40(2):441-9. doi: https://doi.org/10.1097/CCM.0b013e318232d6c6

Story DA, Gin V, na Ranong V, Poustie S, Jones D; ANZCA Trials Group. Inconsistent Survey Reporting in Anesthesia Journals. Anesth Analg 2011;113(3):591-5. doi: https://doi.org/10.1213/ANE.0b013e3182264aaf

Marcopulos BA, Guterbock TM, Matusz EF. Survey Research in Neuropsychology: a Systematic Review. Clin Neuropsychol 2020;34(1):32-55. doi: https://doi.org/10.1080/13854046.2019.1590643

Rybakov KN, Beckett R, Dilley I, Sheehan AH. Reporting Quality of Survey Research Articles Published in the Pharmacy Literature. Res Soc Adm Pharm 2020;16(10):1354-1358. doi: https://doi.org/10.1016/j.sapharm.2020.01.005

Pagano MB, Dunbar NM, Tinmouth A, Apelseth TO, Lozano M, Cohn CS, Stanworth SJ; Biomedical Excellence for Safer Transfusion (BEST) Collaborative. A Methodological Review of the Quality of Reporting of Surveys in Transfusion Medicine. Transfusion 2018;58(11):2720-2727. doi: https://doi.org/10.1111/trf.14937

Mulvany JL, Hetherington VJ, VanGeest JB. Survey Research in Podiatric Medicine: an Analysis of the Reporting of Response Rates and Non-response Bias. Foot (Edinb) 2019;40:92-97. doi: https://doi.org/10.1016/j.foot.2019.05.005

Tabernero P, Parker M, Ravinetto R, Phanouvong S, Yeung S, Kitutu FE, Cheah PY, Mayxay M, Guerin PJ, Newton PN. Ethical Challenges in Designing and Conducting Medicine Quality Surveys. Tropical Med Int Health 2016 Jun;21(6):799-806. doi: https://doi.org/10.1111/tmi.12707

Keeney S, Hasson F, McKenna H. Consulting the Oracle: Ten Lessons from Using the Delphi Technique in Nursing Research. J Adv Nurs 2006;53(2):205-12. doi: https://doi.org/10.1111/j.1365-2648.2006.03716.x

Zamanzadeh V, Rassouli M, Abbaszadeh A, Alavi-Majd H, Nikanfar A, Ghahramanian A. Details of content validity index and objectifying it in instrument development. Nursing Pract Today 2014;1(3):163-71.


Acknowledgements

We are thankful to Dr. David Moher (Ottawa Hospital Research Institute, Canada) and Dr. Masahiro Hashizume (Department of Global Health Policy, Graduate School of Medicine, The University of Tokyo, Tokyo, Japan) for their initial contributions to the project and for rating items and helping develop the checklist. We are also grateful to Obaida Istanbuly (Keele University, UK) and Omar Diab (Private Dental Practice, Jordan) for their contributions in the earlier phases of the project.

Author information

Akash Sharma and Minh Duc Nguyen Tran contributed equally to this work.

Authors and Affiliations

University College of Medical Sciences and Guru Teg Bahadur Hospital, Dilshad Garden, Delhi, India

Akash Sharma MBBS

Online Research Club, Nagasaki, Japan

Akash Sharma MBBS, Nguyen Tran Minh Duc MD, Tai Luu Lam Thang MD, Nguyen Hai Nam MD, Sze Jia Ng MD & Kirellos Said Abbas MBCH

Faculty of Medicine, University of Medicine and Pharmacy, Ho Chi Minh City, Vietnam

Nguyen Tran Minh Duc MD

Department of Emergency, City’s Children Hospital, Ho Chi Minh City, Vietnam

Tai Luu Lam Thang MD

Division of Hepato-Biliary-Pancreatic Surgery and Transplantation, Department of Surgery, Graduate School of Medicine, Kyoto University, Kyoto, Japan

Nguyen Hai Nam MD

Department of Medicine, Crozer Chester Medical Center, Upland, PA, USA

Sze Jia Ng MD

Faculty of Medicine, Alexandria University, Alexandria, Egypt

Kirellos Said Abbas MBCH

Institute of Tropical Medicine (NEKKEN) and School of Tropical Medicine and Global Health, Nagasaki University, Nagasaki, 852-8523, Japan

Nguyen Tien Huy MD, PhD

Department of Research in Biomedicine and Health, University of Split School of Medicine, Split, Croatia

Ana Marušić MD, PhD

School of Medicine and Public Health, University of Newcastle, Callaghan, Australia

Christine L. Paul PhD

Division of Transplantation and Immunogenetics, Department of Pathology, Queen Mary Hospital Hong Kong, Pok Fu Lam, Hong Kong

Janette Kwok MBBS

School of Tropical Medicine and Global Health, Nagasaki University, Nagasaki, 852-8523, Japan

Juntra Karbwang MD, PhD & Mitsuaki Matsui MD, MSc, PhD

Department of Medicine and Surgery, University of Perugia, Perugia, Italy

Chiara de Waure MD, MSc, PhD

Cancer Research at UCC, University College Cork, Cork, Ireland

Frances J. Drummond PhD

Department of Palliative Medicine, Kobe University School of Medicine, Hyogo, Japan

Yoshiyuki Kizawa MD, PhD

Department of Psychology, Health & Technology, Faculty of Behavioural, Management and Social Sciences, University of Twente, Enschede, Netherlands

Erik Taal PhD

Department of Public Health, Biostatistics and Medical Informatics Research Group, Vrije Universiteit Brussel (VUB), Brussels, Belgium

Joeri Vermeulen MSN, CM

Department of Health Care, Knowledge Centre Brussels Integrated Care, Erasmus Brussels University of Applied Sciences and Arts, Brussels, Belgium

Joeri Vermeulen MSN, CM

Paediatric Dentistry and Orthodontics, Faculty of Dentistry, University of Hong Kong, Pok Fu Lam, Hong Kong

Gillian H. M. Lee PhD

Department of Surgery, School of Medicine and Dentistry, Kwame Nkrumah University of Science and Technology, Kumasi, Ghana

Adam Gyedu MD, MPH

Faculty of Public Health, University of Medicine and Pharmacy, Ho Chi Minh City, Vietnam

Kien Gia To PhD

Department of Physiotherapy, Bern University Hospital, Insel Group, Bern, Switzerland

Martin L. Verra PhD

Hôpital Robert-Debré AP-HP, Clinical Investigation Center, Paris, France

Évelyne M. Jacqz-Aigrain MD, PhD

Department of Surgery, Máxima Medical Center, Veldhoven, the Netherlands

Wouter K. G. Leclercq MD

Department of Social Psychology, University of Helsinki, Helsinki, Finland

Simo T. Salminen PhD

RAND, Santa Monica, CA, USA

Cathy Donald Sherbourne PhD

School of Pharmacy and Charles Perkins Centre, Faculty of Medicine and Health, The University of Sydney, Sydney, Australia

Barbara Mintzes PhD

School of Economics, University of Barcelona, Barcelona, Spain

Sergi Lozano PhD

Department of Cognition, Emotion, and Methods in Psychology, School of Psychology, University of Vienna, Vienna, Austria

Ulrich S. Tran DSc

School of Population and Public Health, University of British Columbia, Vancouver, BC, Canada

Mohammad Karamouzian DVM, MSc, PhD candidate

HIV/STI Surveillance Research Center, and WHO Collaborating Center for HIV Surveillance, Institute for Futures Studies in Health, Kerman University of Medical Sciences, Kerman, Iran

Mohammad Karamouzian DVM, MSc, PhD candidate

Contributions

NTH conceived the idea, supervised the project, and helped with writing, reviewing, and mediating the Delphi process; AS participated in drafting the guidelines, mediating the Delphi process, analyzing results, writing, and validating the process; TLT helped draft the guidelines and analyze results; MNT helped draft the checklist and mediate the Delphi process; NNH, NSJ, KSA, and MK helped with writing and mediating the Delphi process; AM, JK, CLP, JKB, CDW, FJD, MH, YK, EK, JV, GHL, AG, KGT, ML, EMJ, WKL, STS, CDS, BM, SL, UST, MM, and MK helped rate items in the Delphi rounds and review the manuscript.

Corresponding author

Correspondence to Nguyen Tien Huy MD, PhD.

Ethics declarations

Conflict of interest.

The authors declare that they do not have a conflict of interest.

Ethics approval

Ethics approval was not required for the study.

Additional information

Publisher’s note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

(DOCX 22 kb)


About this article

Sharma, A., Minh Duc, N., Luu Lam Thang, T. et al. A Consensus-Based Checklist for Reporting of Survey Studies (CROSS). J GEN INTERN MED 36 , 3179–3187 (2021). https://doi.org/10.1007/s11606-021-06737-1


Received : 15 September 2020

Accepted : 17 March 2021

Published : 22 April 2021

Issue Date : October 2021

DOI : https://doi.org/10.1007/s11606-021-06737-1


- Surveys and Questionnaires

- Delphi technique


AAPOR Reporting Guidelines for Survey Studies

- 1 Department of Surgery, University of Wisconsin School of Medicine and Public Health, Madison

- 2 Department of Biostatistics, Gillings School of Global Public Health, University of North Carolina at Chapel Hill

- 3 Statistical Editor, JAMA Surgery

- 4 Department of Cardiothoracic Surgery, University of Pittsburgh School of Medicine, Pittsburgh, Pennsylvania


Although survey studies allow researchers to gather unique information from a specific population on disease epidemiology, human behaviors and beliefs, and knowledge about health care topics that is not readily available from other data sources, they may be fraught with bias if not well designed and executed. The American Association for Public Opinion Research (AAPOR) Survey Disclosure Checklist (2009) and Code of Professional Ethics and Practices (2015) can guide researchers in their efforts. 1 , 2 The standards were first proposed in 1948 in direct response to that year’s presidential election, in which Harry Truman defeated Thomas Dewey. 3 Truman’s victory surprised the country because Gallup and other polls had predicted a Dewey win. This divergence forced pollsters and statisticians to recognize flaws in their quota sampling methods, which had produced nonrepresentative samples and led them to mispredict the outcome of the race. The confusion over the election prompted leaders in the field to propose standards for survey research. Despite the long-standing nature of these guidelines, recent data show that survey reporting is often subpar. 4 Compliance with disclosure requirements is often lacking; on average, articles in some specialties report only 75% of the required methodologic elements. 4


Pitt SC , Schwartz TA , Chu D. AAPOR Reporting Guidelines for Survey Studies. JAMA Surg. 2021;156(8):785–786. doi:10.1001/jamasurg.2021.0543


© 2024


Best Practices for Survey Research

Below you will find recommendations on how to produce the best survey possible.

Included are suggestions on the design, data collection, and analysis of a quality survey. For more detailed information on assessing the rigor of survey methodology, see the AAPOR Transparency Initiative.


"The quality of a survey is best judged not by its size, scope, or prominence, but by how much attention is given to [preventing, measuring and] dealing with the many important problems that can arise."

“What is a Survey?”, American Statistical Association

1. Planning Your Survey

Is a survey the best method for answering your research question?

Surveys are an important research tool for learning about the feelings, thoughts, and behaviors of groups of individuals. However, surveys may not always be the best tool for answering your research questions. They may be appropriate when timely and relevant data on the topic of study do not already exist. Researchers should consider the following questions when deciding whether to conduct a survey:

- What are the objectives of the research? Are they unambiguous and specific?

- Have other surveys already collected the necessary data?

- Are other research methods such as focus groups or content analyses more appropriate?

- Is a survey alone enough to answer the research questions, or will you also need to use other types of data (e.g., administrative records)?

Surveys should not be used to produce predetermined results or as a vehicle for campaigning, fundraising, or selling. Doing so is a violation of the AAPOR Code of Professional Ethics.

Should the survey be offered online, by mail, in person, on the phone, or in some combination of these modes?

Once you have decided to conduct a survey, you will need to decide in what mode(s) to offer it. The most common modes are online, on the phone, in person, or by mail. The choice of mode will depend at least in part on the type of information in your survey frame and the quality of the contact information. Each mode has unique advantages and disadvantages, and the decision should balance the data quality needs of the research alongside practical considerations such as the budget and time requirements.

- Compared with other modes, online surveys can be administered quickly and at lower cost. However, older respondents, those with lower incomes, and respondents living in rural areas are less likely to have reliable internet access or to be comfortable using computers. Online surveys may work well when the primary way you contact respondents is via email. They may also elicit more honest answers on sensitive topics because respondents do not have to disclose sensitive information directly to another person (an interviewer).

- Telephone surveys are often more costly than online surveys because they require the use of interviewers. Well-trained interviewers can help guide respondents through questions that might be hard to understand and encourage them to keep going if they start to lose interest, reducing the number of people who do not complete the survey. Telephone surveys are often used when the sampling frame consists of telephone numbers. Quality standards can be easier to maintain in telephone surveys if interviewers work in one centralized location.

- In-person, or face-to-face, surveys tend to cost the most and generally take more time than either online or telephone surveys. With an in-person survey, the interviewer can build a rapport with the respondent and help with questions that might be hard to understand. This is particularly relevant for long or complex surveys. In-person surveys are often used when the sampling frame consists of addresses.

- Mailed paper surveys can work well when the mailing addresses of the survey respondents are known. Respondents can complete the survey at their own convenience and do not need to have computer or internet access. Like online surveys, they can work well for surveys on sensitive topics. However, since mail surveys cannot be automated, they work best when the flow of the questionnaire is relatively straightforward. Surveys with complex skip patterns based on prior responses may be confusing to respondents and therefore better suited for other modes.

Some surveys use multiple modes, particularly if a subset of the people in the sample are more reachable via a different mode. Often, a less costly method is employed first or used concurrently with another method, for example offering a choice between online and telephone response, or mailing a paper survey with a telephone follow-up with those who have not yet responded.

2. Designing Your Sample

How to design your sample.

When you run a survey, the people selected to take part are called your sample because they are a subset of the larger population you are studying, such as adults who live in the U.S. A sampling frame is a list that allows you to contact potential respondents (your sample) from that population; ultimately, it is the sampling frame that allows you to draw a sample from the larger population. For a mail-based survey, the frame is a list of addresses in the geographic area where your population is located; for an online panel survey, it is the people in the panel; for a telephone survey, it is a list of phone numbers. Thinking through how to design your sample to best match the population of study can help you run a more accurate survey that requires fewer adjustments afterward to match the population.
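Drawing a sample from a frame can be illustrated with a simple random sample, in which every unit on the frame has the same chance, n/N, of being selected. The sketch below is a minimal illustration under that assumption, not an AAPOR procedure; the address frame and the function name `draw_simple_random_sample` are hypothetical.

```python
import random


def draw_simple_random_sample(frame, n, seed=None):
    """Select n units without replacement; each unit's chance is n / len(frame)."""
    if not 0 < n <= len(frame):
        raise ValueError("sample size must be between 1 and the frame size")
    rng = random.Random(seed)  # a seeded generator makes the draw reproducible
    return rng.sample(frame, n)


# Hypothetical frame: 10 made-up addresses standing in for a real address list.
frame = [f"{100 + i} Main St" for i in range(10)]
sample = draw_simple_random_sample(frame, 3, seed=42)
inclusion_probability = len(sample) / len(frame)  # the same for every unit on the frame
print(sample, inclusion_probability)
```

Because each unit's selection probability is known, its inverse can later serve as a base weight; that is what separates a probability sample from an opt-in one.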

One approach is to use multiple sampling frames. For example, a phone survey can combine a frame of cell phone numbers with a frame of landline numbers, even though some people can be reached through both; this dual-frame design is now considered a best practice for phone surveys.
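People reachable through both frames have two chances of selection, so their selection probability, and therefore their weight, differs from that of people listed in only one frame. The sketch below assumes the two frames are sampled independently and uses hypothetical per-frame probabilities and function names; production dual-frame estimators (for example, composite estimators) are more involved.

```python
def dual_frame_selection_probability(p_cell, p_landline, in_cell, in_landline):
    """Chance a person is selected through at least one of two independent frames.

    p_cell and p_landline are hypothetical per-person selection probabilities
    within each frame; in_cell / in_landline say which frames list the person.
    """
    pc = p_cell if in_cell else 0.0
    pl = p_landline if in_landline else 0.0
    # Union of two independent events: P(A or B) = P(A) + P(B) - P(A) * P(B)
    return pc + pl - pc * pl


def base_weight(probability):
    """The design (base) weight is the inverse of the selection probability."""
    if probability <= 0:
        raise ValueError("person is not covered by either frame")
    return 1 / probability


for label, cell, landline in [("cell only", True, False),
                              ("landline only", False, True),
                              ("both", True, True)]:
    prob = dual_frame_selection_probability(0.01, 0.02, cell, landline)
    print(label, round(prob, 4), round(base_weight(prob), 2))
```

People in both frames receive a smaller weight than they would in either frame alone, which keeps them from being overrepresented in the combined sample.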

Surveys can be either probability-based or nonprobability-based. For decades, probability samples, often used for telephone surveys, were the gold standard for public opinion polling. In these types of samples, there is a frame that covers all or almost all of the population of interest, such as a list of all the phone numbers in the U.S. or all the residential addresses, and individuals are selected using random methods to complete the survey. More recently, nonprobability samples and online surveys have gained popularity due to the rising cost of conducting probability-based surveys. A survey conducted online can use probability samples, such as those recruited using residential addresses, or nonprobability samples, such as “opt-in” online panels or participants recruited through social media or personal networks. Analyzing and reporting nonprobability-based survey results often requires special statistical techniques and great care to ensure transparency about the methodology.

3. Designing Your Questionnaire

What are some best practices for writing survey questions?

- Questions should be specific and ask only about one concept at a time. For example, respondents may interpret a question about the role of “government” differently – some may think of the federal government, while others may think of state governments.

- Write questions that are short and simple and use words and concepts that the target audience will understand. Keep in mind that knowledge, literacy skills, and English proficiency vary widely among respondents.

- Keep questions free of bias by avoiding language that pushes respondents to respond in a certain way or that presents only one side of an issue. Also be aware that respondents may tend toward a socially desirable answer or toward saying “yes” or “agree” in an effort to please the interviewer, even if unconsciously.

- Arrange questions in an order that will be logical to respondents but not influence how they answer. Often, it’s better for general questions to come earlier than specific questions about the same concept in the survey. For example, asking respondents whether they favor or oppose certain policy positions of a political leader prior to asking a general question about the favorability of that leader may prime them to weigh those certain policy positions more heavily than they otherwise would in determining how to answer about favorability.

- Choose whether a question should be closed-ended or open-ended. Closed-ended questions, which provide a list of response options to choose from, place less of a burden on respondents to come up with an answer and are easier to interpret, but they are more likely to influence how a respondent answers. Open-ended questions allow respondents to respond in their own words but require coding in order to be interpreted quantitatively.

- Response options for closed-ended questions should be chosen with care. They should be mutually exclusive, include all reasonable options (including, in some cases, options such as “don’t know” or “does not apply” or neutral choices such as “neither agree nor disagree”), and be in a logical order. In some circumstances, response options should be rotated (for example, half the respondents see the options in one order while the other half see them in reverse order) because respondents tend to pick the first answer in self-administered surveys and the last answer in interviewer-administered surveys. Randomizing the order also allows researchers to check whether there are order effects.

- Consider what languages you will offer the survey in. Many U.S. residents speak limited or no English. Most nationally representative surveys in the U.S. offer questionnaires in both English and Spanish, with bilingual interviewers available in interviewer-administered modes.

- See AAPOR’s resources on question wording for more details.
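The rotation described above for closed-ended response options can be sketched as a random assignment of respondents to the listed or the reversed order. This is a minimal illustration; the function name, scale labels, and respondent IDs are hypothetical, and survey platforms typically provide this as a built-in setting.

```python
import random


def assign_option_order(respondent_ids, options, seed=None):
    """Randomly give half of respondents the listed order and half the reverse."""
    rng = random.Random(seed)
    ids = list(respondent_ids)
    rng.shuffle(ids)  # random assignment, so order is unrelated to respondent traits
    half = len(ids) // 2
    forward, backward = tuple(options), tuple(reversed(options))
    return {rid: (forward if i < half else backward) for i, rid in enumerate(ids)}


options = ["Strongly agree", "Agree", "Disagree", "Strongly disagree"]
orders = assign_option_order(range(1, 11), options, seed=7)
reversed_count = sum(order[0] == "Strongly disagree" for order in orders.values())
print(reversed_count)
```

Reversing, rather than fully shuffling, keeps an ordinal scale in a logical order for every respondent. Storing each respondent's assigned order alongside the answers is what later makes it possible to test for order effects.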

How can I measure change over time?

If you want to measure change, don’t change the measure.

To determine whether an observed change between surveys taken at two points in time reflects a true shift in public attitudes or behaviors, it is critical to keep the question wording, framing, and methodology of the survey as similar as possible across the two surveys. Changes in question wording, or even in the questions that precede it, can influence how respondents answer. This can make it appear that public opinion has changed when the only change is in how respondents interpret the question, or it can mask an actual shift in opinion.

Changes in mode, such as comparing a survey conducted by telephone with one conducted online, can also mimic a real change, because many people answer certain questions differently when speaking to an interviewer on the phone than when responding privately to a web survey. Questions that are very personal, or that have a response option respondents see as socially undesirable or embarrassing, are particularly sensitive to this mode effect.

If changing the measure is necessary — perhaps due to flawed question wording or a desire to switch modes for logistical reasons — the researcher can employ a split-ballot experiment to test whether respondents will be sensitive to the change. This would involve fielding two versions of a survey — one with the previous mode or question wording and one with the new mode or question wording — with all other factors kept as similar as possible across the two versions. If respondents answer both versions similarly, there is evidence that any change over time is likely due to a real shift in attitudes or behaviors rather than an artifact of the change in measurement. If response patterns differ according to which version respondents see, then change over time should be interpreted cautiously if the researcher moves ahead with the change in measurement.
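One common way to judge whether respondents answered the two ballot versions differently is a two-proportion z-test on the share giving a particular answer. The sketch below assumes respondents were randomly assigned to versions and that the data are unweighted; the counts and function name are hypothetical, and weighted data call for design-based tests instead.

```python
import math


def two_proportion_z_test(x_a, n_a, x_b, n_b):
    """Compare the share giving an answer under ballot versions A and B."""
    p_a, p_b = x_a / n_a, x_b / n_b
    pooled = (x_a + x_b) / (n_a + n_b)  # common proportion under "no difference"
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p-value
    return z, p_value


# Hypothetical split ballot: 260 of 500 agree with the old wording, 240 of 500 with the new.
z, p = two_proportion_z_test(260, 500, 240, 500)
print(round(z, 2), round(p, 3))
```

A small p-value suggests the change in measurement itself shifts answers, so trend comparisons across the change should be interpreted cautiously. A large p-value is weaker evidence: it means no difference was detected at this sample size.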

How can I ensure the safety, confidentiality, and comfort of respondents?

- Follow your institution’s guidance and policies on protecting personally identifiable information, and determine whether any data privacy laws apply to the study. If releasing individual responses in a public dataset, keep in mind that demographic information and survey responses may make it possible to identify respondents even after personally identifiable information such as names and addresses has been removed.

- Consult an Institutional Review Board for recommendations on how to mitigate risks to respondents, even if such review is not required by your institution.

- If the survey covers sensitive topics, disclose this at the beginning of the survey or just before the relevant questions appear, and inform respondents that they can skip those questions if they are not comfortable answering them (be sure to program an online survey to allow skipping, or instruct interviewers to accept refusals without probing).

- Provide links or hotlines to resources that can help respondents who were affected by the sensitive questions (for example, a hotline that provides help for those suffering from eating disorders if the survey asks about disordered eating behaviors).

- Build rapport with a respondent by beginning with easy and not-too-personal questions and keeping sensitive topics for later in the survey.

- Keep respondent burden low by keeping questionnaires and individual questions short and limiting the number of difficult, sensitive, or open-ended questions.

- Allow respondents to skip a question or provide an explicit “don’t know” or “don’t want to answer” response, especially for difficult or sensitive questions. Requiring an answer increases the risk of respondents choosing to leave the survey early.
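Before releasing individual responses, one rough screen for the re-identification risk mentioned above is to count how many respondents share each combination of demographic variables; combinations held by very few people are the riskiest. This is a k-anonymity-style check, not a complete disclosure review, and the threshold `k=5`, the variable names, and the records are hypothetical.

```python
from collections import Counter


def rare_combinations(records, quasi_identifiers, k=5):
    """Return combinations of quasi-identifiers shared by fewer than k respondents."""
    keys = [tuple(record[q] for q in quasi_identifiers) for record in records]
    counts = Counter(keys)
    return {key: n for key, n in counts.items() if n < k}


# Hypothetical public-use records with names and addresses already removed.
records = (
    [{"age_group": "18-29", "county": "A", "occupation": "teacher"}] * 6
    + [{"age_group": "65+", "county": "B", "occupation": "surgeon"}]
)
print(rare_combinations(records, ["age_group", "county", "occupation"]))
```

Flagged combinations are candidates for coarsening (for example, broader age groups) or suppression before release.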

4. Fielding Your Survey

If I am using interviewers, how should they be trained?

Interviewers need to undergo training that covers both recruiting respondents into the survey and administering the survey. Recruitment training should cover topics such as contacting sampled respondents and convincing reluctant respondents to participate. Interviewers should be comfortable navigating the hardware and software used to conduct the survey and pronouncing difficult names or terms. They should have familiarity with the concepts the survey questions are asking about and know how to help respondents without influencing their answers. Training should also involve practice interviews to familiarize the interviewers with the variety of situations they are likely to encounter. If the survey is being administered in languages other than English, interviewers should demonstrate language proficiency and cultural awareness. Training should address how to conduct non-English interviews appropriately.

Interviewers should be trained in protocols on how best to protect the health and well-being of themselves and respondents, as needed. As an example, during the COVID-19 pandemic, training in the proper use of personal protective equipment and social distancing would be appropriate for field staff.

What kinds of testing should I do before fielding a survey?

Before fielding a survey, it is important to pretest the questionnaire. This typically consists of conducting cognitive interviews or using another qualitative research method to understand respondents’ thought processes, including their interpretation of the questions and how they came up with their answers. Pretesting should be conducted with respondents who are similar to those who will be in the survey (e.g., students if the survey sample is college students).

Conducting a pilot test to ensure that all survey procedures (e.g., recruiting respondents, administering the survey, cleaning data) work as intended is recommended. If it is unclear what question-wording or survey design choice is best, implementing an experiment during data collection can help systematically compare the effects of two or more alternatives.

What kinds of monitoring or quality checks should I do on my survey?

Checks must be made at every step of the survey life cycle to ensure that the sample is selected properly, the questionnaire is programmed accurately, interviewers do their work properly, information from questionnaires is edited and coded accurately, and proper analyses are used. The data should be monitored while it is being collected by using techniques such as observation of interviewers, replication of some interviews (re-interviews), and monitoring of response and paradata distributions. Odd patterns of responses may reflect a programming error or interviewer training issue that needs to be addressed immediately.
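As one example of monitoring paradata during fielding, interviews completed far faster than typical may signal a programming error, a respondent rushing through, or an interviewer problem. The sketch flags completes shorter than a fraction of the median duration; the 30% cutoff, the function name, and the timings are illustrative assumptions, not an AAPOR standard.

```python
from statistics import median


def flag_speeders(durations, fraction=0.3):
    """Return IDs whose interview took less than `fraction` of the median time."""
    cutoff = fraction * median(durations.values())
    return sorted(rid for rid, seconds in durations.items() if seconds < cutoff)


# Hypothetical paradata: completion time in seconds for each respondent ID.
durations = {"r1": 600, "r2": 640, "r3": 120, "r4": 580, "r5": 700}
print(flag_speeders(durations))
```

Flagged cases warrant review rather than automatic deletion, since some respondents legitimately skip long sections.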

How do I get as many people to respond to the survey as possible?

It is important to monitor responses and attempt to maximize the number of people who respond to your survey. If very few people respond to your survey, there is a risk that you may be missing some types of respondents entirely, and your survey estimates may be biased. There are a variety of ways to encourage people to participate in your survey, including offering monetary or non-monetary incentives, contacting them multiple times in different ways and at different times of the day, and using different persuasive messages. Interviewers can also help convince reluctant respondents to participate. Ideally, reasonable efforts should be made to convince both respondents who have not acknowledged the survey request and those who have refused to participate.
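The section does not name a metric for monitoring participation, but a common one is the response rate defined in AAPOR’s Standard Definitions. The sketch follows our understanding of two of those rates: RR1 counts only complete interviews, and RR2 also counts partial interviews. Both treat all cases of unknown eligibility as eligible. The function name and disposition counts are hypothetical; consult the Standard Definitions for the full disposition codes and the other rates.

```python
def response_rates(complete, partial, refusal, noncontact, other,
                   unknown_household=0, unknown_other=0):
    """Return (RR1, RR2) from final disposition counts.

    complete/partial: interviews; refusal: refusals and break-offs;
    noncontact/other: eligible cases with no interview;
    unknown_*: cases of unknown eligibility, all treated as eligible in RR1 and RR2.
    """
    denominator = ((complete + partial) + (refusal + noncontact + other)
                   + (unknown_household + unknown_other))
    rr1 = complete / denominator              # only completes count as responses
    rr2 = (complete + partial) / denominator  # partial interviews also count
    return rr1, rr2


# Hypothetical final dispositions for 1,000 sampled cases.
rr1, rr2 = response_rates(complete=400, partial=50, refusal=300,
                          noncontact=200, other=50)
print(f"RR1 = {rr1:.0%}, RR2 = {rr2:.0%}")
```

Tracking these rates by mode or subgroup during fielding can show where extra contact attempts or incentives are most needed.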

5. Analyzing and Reporting the Survey Results

What are the common methods of analyzing survey data?

Analyzing survey data is, in many ways, similar to data analysis in other fields. However, there are a few details unique to survey data analysis to take note of. It is important to be as transparent as possible, including about any statistical techniques used to adjust the data.

Depending on your survey mode, some respondents may answer only part of your survey and then end it before finishing. These are called partial responses, drop-offs, or breakoffs. Make sure to flag these cases in your data and use a dedicated value to indicate that a question received no response. That value should differ from the codes for answer options such as “none of the above,” “I don’t know,” or “I prefer not to answer.” The same applies if your survey allows respondents to skip questions but continue in the survey.
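The coding rule above can be sketched as a function that keeps three kinds of non-answers distinct: questions never reached because of a breakoff, questions shown but skipped, and explicit “don’t know” answers. The numeric codes and the function name are hypothetical; many organizations use their own conventions, such as reserved negative values.

```python
BREAKOFF = -1  # hypothetical code: question never shown because the respondent quit
SKIPPED = -2   # hypothetical code: question shown but left unanswered
DONT_KNOW = 8  # hypothetical code for an explicit "I don't know" answer


def code_missing(questions, answers, last_shown):
    """Code one respondent's answers, keeping each kind of non-answer distinct.

    questions: question names in display order.
    answers: dict of question -> recorded answer (absent or None if left blank).
    last_shown: index of the last question the respondent reached.
    """
    coded = {}
    for index, question in enumerate(questions):
        if index > last_shown:
            coded[question] = BREAKOFF
        elif answers.get(question) is None:
            coded[question] = SKIPPED
        else:
            coded[question] = answers[question]
    return coded


questions = ["q1", "q2", "q3", "q4"]
print(code_missing(questions, {"q1": 3, "q3": DONT_KNOW}, last_shown=2))
```

Special codes like these must be excluded or recoded before computing means or other statistics on the substantive answers.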

A common way to report survey data is with cross-tabulated results, or crosstabs for short. A crosstab is a table with one question’s answers as the column headers and another question’s answers as the row labels. The values in a crosstab can be either counts (the number of respondents who chose that specific pair of answers to the two questions) or percentages. Typically, when percentages are shown, each column totals 100%.
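A crosstab with column percentages can be built from two lists of answers given in the same respondent order. This minimal sketch is unweighted; with survey weights, each cell would sum respondents’ weights instead of counting them. The function name and the answers are hypothetical.

```python
from collections import Counter


def column_percent_crosstab(row_answers, column_answers):
    """Cross-tabulate two questions; each column's percentages sum to 100."""
    cell_counts = Counter(zip(row_answers, column_answers))
    column_totals = Counter(column_answers)
    return {
        row: {col: 100 * cell_counts[(row, col)] / total
              for col, total in sorted(column_totals.items())}
        for row in sorted(set(row_answers))
    }


# Hypothetical answers from five respondents, listed in the same respondent order.
approve = ["yes", "no", "yes", "no", "no"]
age_group = ["18-49", "18-49", "18-49", "50+", "50+"]
table = column_percent_crosstab(approve, age_group)
print(table)
```

Reporting the unweighted column counts alongside the percentages helps readers see when a column rests on very few respondents.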

Analyzing survey data allows us to estimate characteristics of the population under study by using a sample of people from that population. An industry standard is to calculate and report the margin of sampling error, often shortened to the margin of error. The margin of error expresses how far a survey estimate may plausibly differ from the true population value because of sampling variability, at a stated level of confidence (typically 95%). To learn more about the margin of error and the credibility interval, a similar measure used for nonprobability surveys, please see AAPOR’s Margin of Error resources.
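For a proportion from a probability sample, the usual 95% margin of error is 1.96 × √(p(1 − p)/n), widened by the design effect when weighting or clustering is used. The sketch assumes simple random sampling by default; the function name is hypothetical, and it does not apply to nonprobability samples, for which AAPOR points to the credibility interval instead.

```python
import math


def margin_of_error(p, n, z=1.96, design_effect=1.0):
    """Margin of sampling error for a proportion p from a probability sample of size n.

    z = 1.96 corresponds to 95% confidence; design_effect > 1 widens the margin
    to reflect weighting or clustering (1.0 assumes simple random sampling).
    """
    return z * math.sqrt(design_effect * p * (1 - p) / n)


moe = margin_of_error(0.5, 1000)
print(f"±{100 * moe:.1f} percentage points")
```

Using p = 0.5 gives the largest possible margin for a given n, which is why it is often reported as the margin for the whole survey.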

What is weighting and why is it important?

Ideally, the composition of your sample would match the population under study on all characteristics relevant to the topic of your survey, such as age, sex, race/ethnicity, location, educational attainment, and political party identification. However, this is rarely the case in practice, which can skew your survey results. Weighting is a statistical technique that adjusts the relative contributions of your respondents so that the sample more closely matches the population’s characteristics. Learn more about weighting.
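A simple form of weighting is post-stratification on a single variable: each respondent receives the ratio of their group’s population share to its sample share. The sketch below is a minimal illustration with hypothetical population shares. Adjusting for several variables at once is often done with raking (iterative proportional fitting), which this does not implement.

```python
from collections import Counter


def poststratification_weights(sample_groups, population_shares):
    """Weight each respondent by population share / sample share of their group."""
    n = len(sample_groups)
    sample_shares = {g: count / n for g, count in Counter(sample_groups).items()}
    # Raises KeyError if a sampled group has no population share, which flags a gap.
    return [population_shares[g] / sample_shares[g] for g in sample_groups]


# Hypothetical sample that over-represents women (6 of 10) relative to a 50/50 population.
sex = ["female"] * 6 + ["male"] * 4
weights = poststratification_weights(sex, {"female": 0.5, "male": 0.5})
print([round(w, 3) for w in weights])
```

The weights sum to the sample size, and the weighted share of each group now matches the population share. Very large weights inflate the design effect and therefore the margin of error.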

What are the common industry standards for transparency in reporting data?

Because there are so many different ways to run surveys, it’s important to be transparent about how a survey was run and analyzed so that people know how to interpret and draw conclusions from it. AAPOR’s Transparency Initiative has established a list of items to report with your survey results that uphold the industry transparency standards. These items include sample size, margin of sampling error, weighting attributes, the full text of the questions and answer options, the survey mode, the population under study, the way the sample was constructed, recruitment, and several other details of how the survey was run. The list of items to report can vary based on the mode of your survey (online, phone, face-to-face, etc.). Organizations that want to commit to upholding these standards can also become members of the Transparency Initiative.


2019 Presidential Address from the 74th Annual Conference

David Dutwin May 2019

“Many of you know me primarily as a methodologist. But in fact, my path to AAPOR had nothing to do with methodology. My early papers, in fact, wholly either provided criticism of, or underscored the critical value of, public opinion and public opinion polls.

And so, in some respects, this Presidential Address completes, for me, a full circle of thought and passion I have for AAPOR, for today I would like to discuss matters pertaining to the need to reconsider, strengthen, and advance the mission of survey research in democracy.

Historically, there has been much to say on the role of public opinion in democracy. George Gallup summarized the role of polls quite succinctly when he said, “Without polls, [elites] would be guided only by letters to congressmen, the lobbying of pressure groups, and the reports of political henchmen.”

Further, democratic theory notes the critical, if not pivotal, role of public opinion in democratic practice. Storied political scientist V.O. Key said: “The poll furnishes a means for the deflation of the extreme claims of pressure groups and for the testing of their extravagant claims of public sentiment in support of their demands.”

Moreover, surveys provide a critical check and balance on other claims of what the American public demands in terms of policies and their government. Without polls, it would be that much harder to verify and combat claims of public sentiment made by politicians, elites, lobbyists, and interest groups. [“No policy that does not rest upon some public opinion can be permanently maintained.” – Abe Lincoln; “Public opinion is a thermometer a monarch should constantly consult” – Napoleon]

It is sometimes asked whether leaders do consult polls and whether polls have any impact on policy. The relationship here is complex, but time and again researchers have found a meaningful and significant effect of public opinion, typically as measured by polling, on public policy. As one example, Page and Shapiro explored trends in American public opinion from the 1930s to the 1980s and found no fewer than 231 different changes in public policy following shifts in public opinion.

And certainly, in modern times around the world, there is recognition that the loss of public opinion would be, indeed, the loss of democracy itself. [“Where there is no public opinion, there is likely to be bad government, which, sooner or later, becomes autocratic government.” – William Lyon Mackenzie King]

And yet, not all agree. Some twist polling into a tool that works against democratic principles. [“The polls are just being used as another tool for voter suppression.” – Rush Limbaugh]

And certainly, public opinion itself is imperfect: it is filled with non-attitudes, reflects the will of the crowd, and can often lead to the tyranny of the majority, as Jon Stewart nicely pointed out. [“You have to remember one thing about the will of the people: It wasn’t that long ago that we were swept away by the Macarena.” – Jon Stewart]

If these latter quotes were the extent of criticism of the role of public opinion and survey research in liberal democracy, I would not be up here today discussing what follows in this address. Unfortunately, however, we live in a world in which many of the institutions of democracy and society are under attack.

It is important to start by recognizing that AAPOR is a scientific organization. Whether you are a quantitative or qualitative researcher, a political pollster or developer of official statistics, a sociologist or a political scientist, someone who works for a commercial entity or nonprofit, we are all survey scientists, and we come together as a great community of scientists within AAPOR, no matter our differences.

And so we, AAPOR, should be as concerned as any other scientific community about the current environment, in which science is under attack, devalued, and delegitimized. According to the Sabin Center at Columbia University, since the 2016 election the federal government has moved to censor, misrepresent, curtail, or suppress scientific data and discoveries over 200 times. Not only is this a concern to AAPOR as a community of scientists, but we should also be concerned about the impact of these attacks on public opinion itself.

Just as concerning is the attack on democratic information in general. Farrell and Schneier argue that there are two key types of knowledge in democracy: common and contested. We should be free to argue and disagree about policy choices, our pick of democratic leaders, and even many of the rules and mores that guide us as a society; this is what is called contested knowledge. What cannot be up for debate is the common knowledge of democracy: for example, the legitimacy of the electoral process itself, the validity of data obtained by the Census, or even more so, I would argue, the fact that public opinion polls tell us what the public thinks.

As the many quotes I provided earlier attest, democracy is dependent upon a reliable and nonideological measure of the will of the people. For more than half a century, survey research has been the principal and predominant vehicle by which such knowledge is generated.

And yet, we are on the doorstep of a time when common knowledge is becoming contested. We are entering, I fear, a new phase of poll delegitimization. I am not here to advocate any political ideology, and it is critical for pollsters to remain within the confines of science. Yet there has been a sea change in how polls are discussed by the current administration. To constantly call out polls for being fake is to delegitimize public opinion itself and is a threat to our profession.

Worse still, many call out polls as mere propaganda (see Joondeph, 2018). Such statements are an even more direct attack on our science, our field, and frankly, the entire AAPOR community. And yet even worse would be for anyone to actually rig poll results. Perhaps nothing could undermine the science and legitimacy of polling more.

More pernicious still, we are on the precipice of an age in which faking anything is possible. The technology now exists to fake videos of politicians, or anyone for that matter, and to create realistic false statements. The faking of poll results merely moves in lockstep with these developments.

There are, perhaps, many of you in this room who don’t feel directly connected to this. You do not do political polling. You do government statistics. Sociology. Research on health, on education, or consumer research. But we must all realize that polling is the tip of the spear. It is what the ordinary citizen sees of our trade and our science. As Andy Kohut once noted, it represents all of survey research. [Political polling is the “most visible expression of the validity of the survey research method.” – Andrew Kohut]

With attacks on science at an all-time high in the modern age, including attacks on the science of surveys; with denigration of common knowledge, the glue that holds democracy together, including denunciation of the reliability of official statistics; with slander of polling that goes beyond deliberation on the validity of good methods and instead attacks good methods as junk, as propaganda, and as fake news; and worst of all, with a future that, by all indications, will if anything include an increased frequency of fake polls and fake data, well, what are we, AAPOR, to do?

We must respond. We must react. And we must speak out. What does this mean, exactly? First, AAPOR must be able to respond. Specifically, AAPOR must have vehicles and avenues of communication and the tools by which it can communicate. Second, AAPOR must know how to respond. That is to say, AAPOR must have effective and timely means of responding. We are in an every-minute-of-the-day news cycle. AAPOR must adapt to this environment and maximize its impact by speaking effectively within it. And third, AAPOR must, quite simply, have the willpower to respond. AAPOR is a fabulous member organization, providing great service to its members in terms of education, a code of ethics, guidelines for best practices, and promotion of transparency and diversity in the field of survey research. But we have to do more. We have to learn to professionalize our communication and advocate for our members and our field. There is no such thing as the sidelines anymore. We must do our part to defend survey science, polling, and the very role of public opinion in a functioning democracy.

This might seem to many of you like a fresh idea and a bold new step for AAPOR. But in fact, there has been a common and consistent call for improved communication abilities, communicative outreach, and advocacy by many past Presidents, from Diane Colasanto to Nancy Belden to Andy Kohut.

Past President Frank Newport, for example, was and is a strong supporter of the role of public opinion in democracy, underscoring in his Presidential Address that “the collective views of the people…are absolutely vital to the decision-making that ultimately affects them.” He argued in that address that AAPOR must protect the role of public opinion in society.

A number of Past Presidents have rightly noted that AAPOR must recognize the central role of journalists in this regard, who have the power to frame polling as a positive or negative influence on society. Past President Nancy Mathiowetz rightly pointed out that AAPOR must play a role in, and even financially support, endeavors to ensure that journalists support AAPOR’s positions on the role of polling in society and on the treatment of polls in the press. And Nancy’s vision, in fact, launched a relationship with Poynter to build a number of resources for educating journalists about polling.

Past President Scott Keeter also noted the need for AAPOR to do everything it can to promote public opinion research. He said that “we all do everything we can to defend high-quality survey research, its producers, and those who distribute it.” But at the same time, Scott noted clearly that, unfortunately, “At AAPOR we are fighting a mostly defensive war.”

And finally, Past President Cliff Zukin got straight to the point in his Presidential Address, noting that “AAPOR needs to increase its organizational capacity to respond and communicate, both internally and externally. We need to communicate our positions and values to the outside world, and we need to diffuse ideas more quickly within our profession.”

AAPOR is a wonderful organization and, in my biased opinion, the best professional organization I know. How have we responded to the call of past Presidents? I would say we responded with vigor, with energy, and with passion. But we are only a volunteer organization of social scientists. And so, we make task forces. We write reports. These reports are well researched and well written, but, I would argue, they do not work effectively to create impact in the modern communication environment.

We have taken one small step to ameliorate this with the report on polling in the 2016 election, which was publicly released via a quite successful live Facebook video event. But we can still do better. For one, we need to be more timely: that event occurred 177 days after the election, when far fewer people were listening and the narrative had largely already been written. We also need to find ways to give such events greater reach and impact. And of course, we need more than just one event every four years.

I have been proud to be part of, and even to chair, a number of excellent task force reports. But we cannot, I submit, continue to respond only with task force reports. AAPOR is composed of the greatest survey researchers in the world. But it is not composed of professional communication strategists, plain and simple. We need help, and we need professional help.

In the growth of many organizations, there comes a time when the next step must be taken. The ASA, for example, many years ago hired a full-time strategic communications firm. Other organizations, including the NCA and APSA, chose instead to hire their own full-time professional communication strategist.

AAPOR has desired to better advocate for itself for decades. We recognize that we have to get into the fight, that, again, there are no more sidelines. And we have put forward a commendable effort in this regard, building educational resources for journalists and writing excellent reports on elections, best practices, sugging and frugging, data falsification, and other issues. But we need to do more, and in the context of the world outside of us, we need to speak a language that resonates with journalists, political elites, and perhaps most importantly, the public.

I want to stop right here and make it clear that the return on investment in such efforts is not going to be quick. And the goal here is not to improve response rates, though I would like that very much! No, it is unlikely that any effort will reverse trends in nonresponse in the near term.

It may very well be that our efforts only slow or, at best, stop the decline. But that would be an important development. The Washington Post says that democracy dies in darkness. If I may, I would argue that AAPOR must say democracy dies in silence, when the vehicle for public opinion, surveys, has been twisted into something distrusted by the very people who need it most, ordinary citizens. For the most part, AAPOR has been silent. We can be silent no more.

This year, Executive Council has deliberated on the issues outlined in this address, and we have chosen to act. The road will be long, and at this time, I cannot tell you where it will lead. But I can tell you our intentions and aspirations. We have begun to execute a five-point plan, which I present to you here.

First, AAPOR Executive Council developed and released a request for proposals for professional strategic communication services. Five highly regarded firms responded. After careful deliberation and in-person meetings with the best of these firms, we have chosen Stanton Communications to help AAPOR become a more professionalized association. Our goals in the short term are as follows.