Critical Value

A critical value is a cut-off value that marks the start of a region in which the test statistic, obtained in hypothesis testing, is unlikely to fall if the null hypothesis is true. In hypothesis testing, the critical value is compared with the obtained test statistic to determine whether the null hypothesis has to be rejected or not.

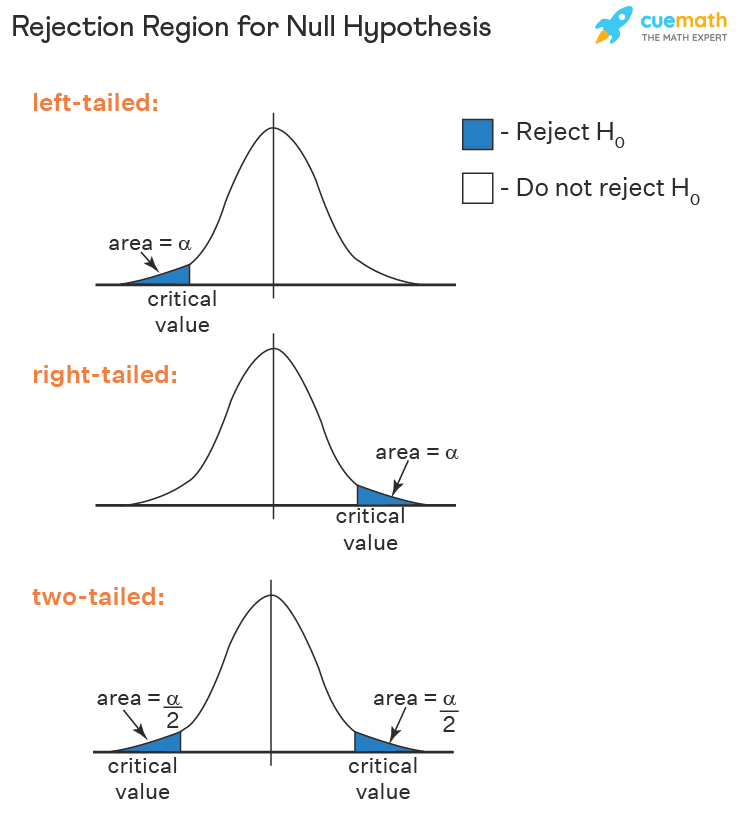

Graphically, the critical value splits the graph into the acceptance region and the rejection region for hypothesis testing. It helps to check the statistical significance of a test statistic. In this article, we will learn more about the critical value, its formula, types, and how to calculate its value.

What is Critical Value?

A critical value can be calculated for different types of hypothesis tests. The critical value of a particular test can be interpreted from the distribution of the test statistic and the significance level. A one-tailed hypothesis test will have one critical value while a two-tailed test will have two critical values.

Critical Value Definition

Critical value can be defined as a value that is compared to a test statistic in hypothesis testing to determine whether the null hypothesis is to be rejected or not. If the value of the test statistic is less extreme than the critical value, then the null hypothesis cannot be rejected. However, if the test statistic is more extreme than the critical value, the null hypothesis is rejected and the alternative hypothesis is accepted. In other words, the critical value divides the distribution graph into the acceptance and the rejection region. If the value of the test statistic falls in the rejection region, then the null hypothesis is rejected otherwise it cannot be rejected.

Critical Value Formula

Depending upon the type of distribution the test statistic belongs to, there are different formulas to compute the critical value. The confidence interval or the significance level can be used to determine a critical value. Given below are the different critical value formulas.

Critical Value Confidence Interval

The critical value for a one-tailed or two-tailed test can be computed using the confidence level. Suppose a confidence level of 95% has been specified for conducting a hypothesis test. The critical value can be determined as follows:

- Step 1: Subtract the confidence level from 100%. 100% - 95% = 5%.

- Step 2: Convert this value to decimals to get \(\alpha\). Thus, \(\alpha\) = 0.05.

- Step 3: If it is a one-tailed test then the alpha level will be the same value as in step 2. However, if it is a two-tailed test, the alpha level will be divided by 2.

- Step 4: Depending on the type of test conducted the critical value can be looked up from the corresponding distribution table using the alpha value.

The process used in step 4 will be elaborated in the upcoming sections.
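Steps 1 to 3 can be sketched in a few lines of Python. The function name `tail_area` is a hypothetical helper introduced here for illustration only; it returns the area that would be looked up in a table in step 4.

```python
def tail_area(confidence_pct: float, two_tailed: bool) -> float:
    """Return the tail area used to look up a critical value."""
    # Steps 1-2: subtract the confidence level from 100% and convert to a decimal.
    alpha = (100.0 - confidence_pct) / 100.0
    # Step 3: a two-tailed test splits alpha equally between both tails.
    return alpha / 2 if two_tailed else alpha


print(tail_area(95, two_tailed=False))  # one-tailed test at 95% confidence
print(tail_area(95, two_tailed=True))   # two-tailed test at 95% confidence
```

For a 95% confidence level this gives a tail area of 0.05 for a one-tailed test and 0.025 per tail for a two-tailed test, up to floating-point rounding.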

T Critical Value

A t-test is used when the population standard deviation is not known and the sample size is less than 30. It applies when the test statistic follows a Student's t distribution under the null hypothesis. The t critical value can be calculated as follows:

- Determine the alpha level.

- Subtract 1 from the sample size. This gives the degrees of freedom (df).

- If the hypothesis test is one-tailed then use the one-tailed t distribution table. Otherwise, use the two-tailed t distribution table for a two-tailed test.

- Match the corresponding df value (left side) and the alpha value (top row) of the table. Find the intersection of this row and column to give the t critical value.

Test Statistic for one sample t test: t = \(\frac{\overline{x}-\mu}{\frac{s}{\sqrt{n}}}\). \(\overline{x}\) is the sample mean, \(\mu\) is the population mean, s is the sample standard deviation and n is the size of the sample.

Test Statistic for two samples t test: t = \(\frac{(\overline{x_{1}}-\overline{x_{2}})-(\mu_{1}-\mu_{2})}{\sqrt{\frac{s_{1}^{2}}{n_{1}}+\frac{s_{2}^{2}}{n_{2}}}}\).

Decision Criteria:

- Reject the null hypothesis if test statistic > t critical value (right-tailed hypothesis test).

- Reject the null hypothesis if test statistic < t critical value (left-tailed hypothesis test).

- Reject the null hypothesis if the test statistic does not lie in the acceptance region (two-tailed hypothesis test).

This decision criterion is used for all tests. Only the test statistic and critical value change.
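As a minimal sketch of the one-sample t statistic and the decision criteria above, the snippet below uses made-up numbers (sample mean 52, hypothesized mean 50, s = 4, n = 16). The function names are hypothetical. The critical value 1.753 is the standard table entry for df = 15 at a one-tailed alpha of 0.05, and the two-tailed branch assumes a distribution symmetric about 0, such as t or z.

```python
import math


def one_sample_t(sample_mean: float, mu: float, s: float, n: int) -> float:
    """t = (x_bar - mu) / (s / sqrt(n))."""
    return (sample_mean - mu) / (s / math.sqrt(n))


def reject_null(stat: float, critical: float, tail: str) -> bool:
    """Apply the decision criteria for a right-, left- or two-tailed test."""
    if tail == "right":
        return stat > critical
    if tail == "left":
        return stat < critical          # critical is negative for t and z
    if tail == "two":
        # Symmetric case only: the acceptance region is (-|c|, |c|).
        return abs(stat) > abs(critical)
    raise ValueError("tail must be 'right', 'left' or 'two'")


# Hypothetical data, for illustration only.
t_stat = one_sample_t(sample_mean=52, mu=50, s=4, n=16)
t_crit = 1.753  # table value for df = 16 - 1 = 15, alpha = 0.05, one-tailed
print(t_stat, reject_null(t_stat, t_crit, "right"))
```

With these numbers the test statistic is 2.0, which exceeds 1.753, so the right-tailed test rejects the null hypothesis.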

Z Critical Value

A z test is conducted on a normal distribution when the population standard deviation is known and the sample size is greater than or equal to 30. The z critical value can be calculated as follows:

- Find the alpha level.

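- For a one-tailed test, subtract the alpha level from 0.5. For a two-tailed test, first divide the alpha level by 2 and then subtract the result from 0.5. This gives the area between the mean and the critical value.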

- Look up the area from the z distribution table to obtain the z critical value. For a left-tailed test, a negative sign needs to be added to the critical value at the end of the calculation.

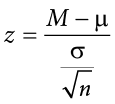

Test statistic for one sample z test: z = \(\frac{\overline{x}-\mu}{\frac{\sigma}{\sqrt{n}}}\). \(\sigma\) is the population standard deviation.

Test statistic for two samples z test: z = \(\frac{(\overline{x_{1}}-\overline{x_{2}})-(\mu_{1}-\mu_{2})}{\sqrt{\frac{\sigma_{1}^{2}}{n_{1}}+\frac{\sigma_{2}^{2}}{n_{2}}}}\).
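The two z statistics can be written out directly. This is a sketch with hypothetical function names and made-up inputs; `hypothesized_diff` stands for \(\mu_{1}-\mu_{2}\) under the null hypothesis and is assumed to default to 0.

```python
import math


def one_sample_z(sample_mean: float, mu: float, sigma: float, n: int) -> float:
    """z = (x_bar - mu) / (sigma / sqrt(n))."""
    return (sample_mean - mu) / (sigma / math.sqrt(n))


def two_sample_z(mean_1: float, mean_2: float,
                 sigma_1: float, sigma_2: float,
                 n_1: int, n_2: int,
                 hypothesized_diff: float = 0.0) -> float:
    """z = ((x1_bar - x2_bar) - (mu1 - mu2)) / sqrt(sigma1^2/n1 + sigma2^2/n2)."""
    standard_error = math.sqrt(sigma_1 ** 2 / n_1 + sigma_2 ** 2 / n_2)
    return ((mean_1 - mean_2) - hypothesized_diff) / standard_error


# Hypothetical inputs, for illustration only.
print(one_sample_z(sample_mean=103, mu=100, sigma=15, n=36))
print(two_sample_z(mean_1=52, mean_2=50, sigma_1=6, sigma_2=8, n_1=36, n_2=64))
```

Either result is then compared with the z critical value using the decision criteria given for the t test.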

F Critical Value

The F test is largely used to compare the variances of two samples. The test statistic so obtained is also used for regression analysis. The f critical value is given as follows:

- Subtract 1 from the size of the first sample. This gives the first degree of freedom. Say, x.

- Similarly, subtract 1 from the second sample size to get the second df. Say, y.

- Using the f distribution table, the intersection of the x column and y row will give the f critical value.

Test Statistic for large samples: f = \(\frac{\sigma_{1}^{2}}{\sigma_{2}^{2}}\). \(\sigma_{1}^{2}\) is the variance of the first sample and \(\sigma_{2}^{2}\) is the variance of the second sample.

Test Statistic for small samples: f = \(\frac{s_{1}^{2}}{s_{2}^{2}}\). \(s_{1}^{2}\) is the variance of the first sample and \(s_{2}^{2}\) is the variance of the second sample.
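A short sketch of the small-sample statistic, computed from raw data with Python's standard `statistics.variance` (which uses the n - 1 denominator). The function name and the two samples are hypothetical.

```python
from statistics import variance


def f_statistic(sample_1, sample_2):
    """Return (f, df1, df2) with f = s1^2 / s2^2."""
    s1_sq = variance(sample_1)   # sample variance of the first sample
    s2_sq = variance(sample_2)   # sample variance of the second sample
    df1 = len(sample_1) - 1      # first degree of freedom (x)
    df2 = len(sample_2) - 1      # second degree of freedom (y)
    return s1_sq / s2_sq, df1, df2


# Hypothetical samples, for illustration only.
f, df1, df2 = f_statistic([4, 8, 6, 10, 12], [5, 7, 6, 8, 4, 6])
print(f, df1, df2)
```

Here the sample variances are 10 and 2, so f = 5 with (4, 5) degrees of freedom; that pair is what gets looked up in the f distribution table.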

Chi-Square Critical Value

The chi-square test is used to check if the sample data matches the population data. It can also be used to compare two variables to see if they are related. The chi-square critical value is given as follows:

- Identify the alpha level.

- Determine the degrees of freedom (df). For a goodness-of-fit test this is the number of categories minus 1; for a test on a variance it is the sample size minus 1.

- Using the chi-square distribution table, the intersection of the row of the df and the column of the alpha value yields the chi-square critical value.

Test statistic for the chi-square test: \(\chi ^{2} = \sum \frac{(O_{i}-E_{i})^{2}}{E_{i}}\), where \(O_{i}\) are the observed counts and \(E_{i}\) are the expected counts.
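The chi-square sum is easy to compute by hand for small tables, but a small sketch makes the term-by-term structure explicit. The function name and the counts are hypothetical.

```python
def chi_square_statistic(observed, expected):
    """Sum of (O_i - E_i)^2 / E_i over all categories."""
    if len(observed) != len(expected):
        raise ValueError("observed and expected must have the same length")
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))


# Hypothetical counts for three categories, for illustration only.
chi_sq = chi_square_statistic(observed=[18, 22, 20], expected=[20, 20, 20])
print(chi_sq)
```

For these counts the terms are 4/20, 4/20 and 0, giving a statistic of about 0.4.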

Critical Value Calculation

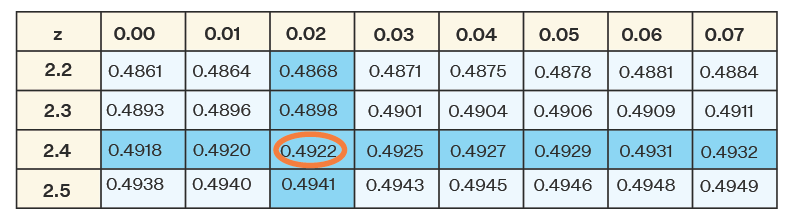

Suppose a right-tailed z test is being conducted. The critical value needs to be calculated for a 0.0079 alpha level. Then the steps are as follows:

- Subtract the alpha level from 0.5. Thus, 0.5 - 0.0079 = 0.4921

- Using the z distribution table, find the area closest to 0.4921. The closest area is 0.4922. As this value is at the intersection of the 2.4 row and the 0.02 column, the z critical value = 2.42.
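The table lookup can be cross-checked with the inverse normal CDF from Python's standard library (`statistics.NormalDist.inv_cdf`). This is only a sanity check of the worked example, not part of the table method. Because a printed table lists areas to four decimals, the software value should fall between the neighbouring table entries 2.41 and 2.42 rather than match 2.42 exactly.

```python
from statistics import NormalDist

alpha = 0.0079
table_area = 0.5 - alpha                 # area between the mean and z

# The table stores areas from the mean, so add back the left half (0.5)
# to get the cumulative area expected by inv_cdf.
z_exact = NormalDist().inv_cdf(0.5 + table_area)

# Area from the mean to the table-based answer z = 2.42.
area_at_table_z = NormalDist().cdf(2.42) - 0.5

print(z_exact, area_at_table_z)
```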


Important Notes on Critical Value

- Critical value can be defined as a value that is useful in checking whether the null hypothesis can be rejected or not by comparing it with the test statistic.

- It is the point that divides the distribution graph into the acceptance and the rejection region.

- There are 4 types of critical values - z, f, chi-square, and t.

Examples on Critical Value

Example 1: Find the critical value for a left tailed z test where \(\alpha\) = 0.012.

Solution: First subtract \(\alpha\) from 0.5. Thus, 0.5 - 0.012 = 0.488.

Using the z distribution table, z = 2.26.

However, as this is a left-tailed z test, z = -2.26.

Answer: Critical value = -2.26

Example 2: Find the critical value for a two-tailed f test conducted on the following samples at \(\alpha\) = 0.025.

Sample 1: Variance = 110, Sample size = 41

Sample 2: Variance = 70, Sample size = 21

Solution: \(n_{1}\) = 41, \(n_{2}\) = 21,

\(n_{1}\) - 1= 40, \(n_{2}\) - 1 = 20,

Sample 1 df = 40, Sample 2 df = 20

Using the F distribution table for \(\alpha\) = 0.025, the value at the intersection of the 40th column and 20th row is

F(40, 20) = 2.287

Answer: Critical Value = 2.287

Example 3: Suppose a one-tailed t-test is being conducted on data with a sample size of 8 at \(\alpha\) = 0.05. Then find the critical value.

Solution: n = 8

df = 8 - 1 = 7

Using the one tailed t distribution table t(7, 0.05) = 1.895.

Answer: Critical Value = 1.895


FAQs on Critical Value

What is the Critical Value in Statistics?

Critical value in statistics is a cut-off value that is compared with a test statistic in hypothesis testing to check whether the null hypothesis should be rejected or not.

What are the Different Types of Critical Value?

There are 4 types of critical values depending upon the type of distributions they are obtained from. These distributions are given as follows:

- Normal distribution (z critical value).

- Student t distribution (t).

- Chi-squared distribution (chi-squared).

- F distribution (f).

What is the Critical Value Formula for an F test?

To find the critical value for an f test the steps are as follows:

- Determine the degrees of freedom for both samples by subtracting 1 from each sample size.

- Find the corresponding value in the f distribution table for the given alpha level.

- This will give the critical value.

What is the T Critical Value?

The t critical value is used when the test statistic follows a t distribution. The steps to find the t critical value are as follows:

- Subtract 1 from the sample size to get the df.

- Use the t distribution table for the alpha value to get the required critical value.

How to Find the Critical Value Using a Confidence Interval for a Two-Tailed Z Test?

The steps to find the critical value using a confidence interval are as follows:

- Subtract the confidence level from 100% and convert the result into a decimal value to get the alpha level.

- Divide the alpha level by 2 and subtract the result from 1.

- Find the z value whose cumulative area equals this value using the normal distribution table to get the critical value. The two critical values are this z value and its negative.

Can a Critical Value be Negative?

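For a z or t test, the critical value of a left-tailed test will be negative. This is because the critical value lies to the left of the mean, which is 0 for these distributions. Chi-square and f critical values are never negative because those distributions only take non-negative values.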

How to Reject Null Hypothesis Based on Critical Value?

The rejection criteria for the null hypothesis are given as follows:

- Right-tailed test: Test statistic > critical value.

- Left-tailed test: Test statistic < critical value.

- Two-tailed test: Reject if the test statistic does not lie in the acceptance region.

Critical Value Calculator


Welcome to the critical value calculator! Here you can quickly determine the critical value(s) for two-tailed tests, as well as for one-tailed tests. It works for most common distributions in statistical testing: the standard normal distribution N(0,1) (that is when you have a Z-score), t-Student, chi-square, and F-distribution.

What is a critical value? And what is the critical value formula? Scroll down – we provide you with the critical value definition and explain how to calculate critical values in order to use them to construct rejection regions (also known as critical regions).

The critical value calculator is your go-to tool for swiftly determining critical values in statistical tests, be it one-tailed or two-tailed. To effectively use the calculator, follow these steps:

1. In the first field, input the distribution of your test statistic under the null hypothesis: is it a standard normal N(0,1), t-Student, chi-squared, or Snedecor's F? If you are not sure, check the sections below devoted to those distributions, and try to locate the test you need to perform.

2. In the field "What type of test?" choose the alternative hypothesis: two-tailed, right-tailed, or left-tailed.

3. If needed, specify the degrees of freedom of the test statistic's distribution. If you need more clarification, check the description of the test you are performing. You can learn more about the meaning of this quantity in statistics from the degrees of freedom calculator.

4. Set the significance level, \(\alpha\). By default, we pre-set it to the most common value, 0.05, but you can adjust it to your needs.

5. The critical value calculator will display your critical value(s) and the rejection region(s).

6. Click the advanced mode if you need to increase the precision with which the critical values are computed.

For example, let's envision a scenario where you are conducting a one-tailed hypothesis test using a t-Student distribution with 15 degrees of freedom. You have opted for a right-tailed test and set a significance level (α) of 0.05. The results indicate that the critical value is 1.7531, and the critical region is (1.7531, ∞). This implies that if your test statistic exceeds 1.7531, you will reject the null hypothesis at the 0.05 significance level.

👩🏫 Want to learn more about critical values? Keep reading!

In hypothesis testing, critical values are one of the two approaches which allow you to decide whether to retain or reject the null hypothesis. The other approach is to calculate the p-value (for example, using the p-value calculator).

The critical value approach consists of checking if the value of the test statistic generated by your sample belongs to the so-called rejection region, or critical region, which is the region where the test statistic is highly unlikely to lie if the null hypothesis is true. A critical value is a cut-off value (or two cut-off values in the case of a two-tailed test) that constitutes the boundary of the rejection region(s). In other words, critical values divide the scale of your test statistic into the rejection region and the non-rejection region.

Once you have found the rejection region, check if the value of the test statistic generated by your sample belongs to it:

- If so, it means that you can reject the null hypothesis and accept the alternative hypothesis; and

- If not, then there is not enough evidence to reject \(H_0\).

But how to calculate critical values? First of all, you need to set a significance level, \(\alpha\), which quantifies the probability of rejecting the null hypothesis when it is actually correct. The choice of \(\alpha\) is arbitrary; in practice, we most often use a value of 0.05 or 0.01. Critical values also depend on the alternative hypothesis you choose for your test, as explained below.

To determine critical values, you need to know the distribution of your test statistic under the assumption that the null hypothesis holds. Critical values are then points with the property that the probability of your test statistic assuming values at least as extreme as those critical values is equal to the significance level \(\alpha\). Wow, quite a definition, isn't it? Don't worry, we'll explain what it all means.

First, let us point out it is the alternative hypothesis that determines what "extreme" means. In particular, if the test is one-sided, then there will be just one critical value; if it is two-sided, then there will be two of them: one to the left and the other to the right of the median value of the distribution.

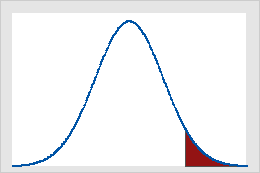

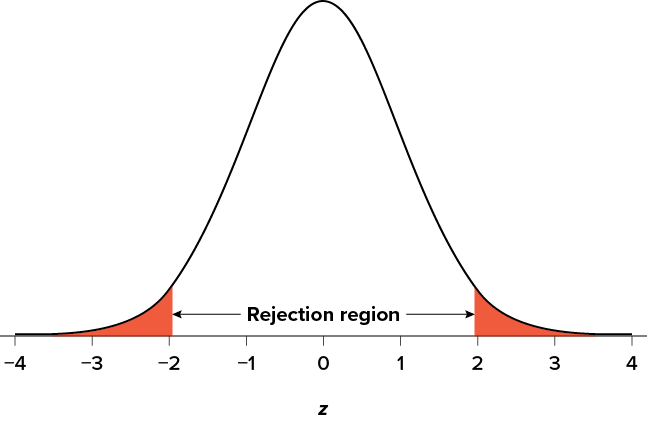

Critical values can be conveniently depicted as the points with the property that the area under the density curve of the test statistic from those points to the tails is equal to \(\alpha\):

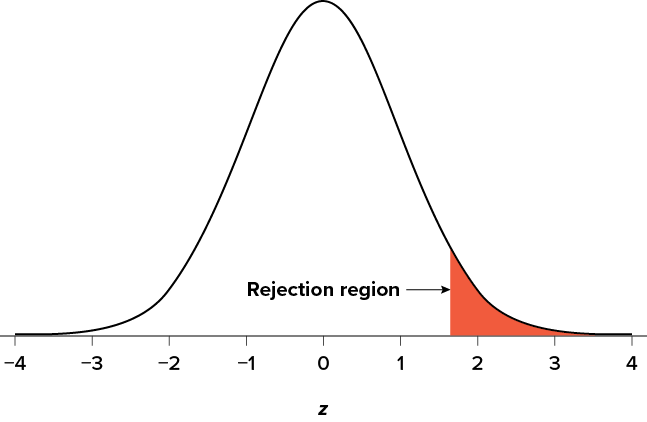

- Left-tailed test: the area under the density curve from the critical value to the left is equal to \(\alpha\);

- Right-tailed test: the area under the density curve from the critical value to the right is equal to \(\alpha\); and

- Two-tailed test: the area under the density curve from the left critical value to the left is equal to \(\alpha/2\), and the area under the curve from the right critical value to the right is equal to \(\alpha/2\) as well; thus, the total area equals \(\alpha\).

As you can see, finding the critical values for a two-tailed test with significance \(\alpha\) boils down to finding both one-tailed critical values with a significance level of \(\alpha/2\).

The formulae for the critical values involve the quantile function, \(Q\), which is the inverse of the cumulative distribution function (\(\mathrm{cdf}\)) for the test statistic distribution (calculated under the assumption that \(H_0\) holds!): \(Q = \mathrm{cdf}^{-1}\).

Once we have agreed upon the value of \(\alpha\), the critical value formulae are the following:

- Left-tailed test: \(Q(\alpha)\)

- Right-tailed test: \(Q(1 - \alpha)\)

- Two-tailed test: \(Q(\alpha/2)\) and \(Q(1 - \alpha/2)\)

In the case of a distribution symmetric about 0, the critical values for the two-tailed test are symmetric as well: \(\pm Q(1 - \alpha/2)\).

Unfortunately, the probability distributions that are the most widespread in hypothesis testing have somewhat complicated \(\mathrm{cdf}\) formulae. To find critical values by hand, you would need to use specialized software or statistical tables. In these cases, the best option is, of course, our critical value calculator! 😁
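The general recipe, critical values as quantiles of the null distribution, can be sketched as a function that accepts any quantile function \(Q\). The name `critical_values` is hypothetical. The demo plugs in the standard normal quantile function from Python's standard library (`statistics.NormalDist().inv_cdf`); any other quantile function with the same call shape could be passed instead.

```python
from statistics import NormalDist


def critical_values(quantile, alpha: float, tail: str):
    """Return the critical value(s) for a test, given a quantile function Q."""
    if tail == "left":
        return (quantile(alpha),)
    if tail == "right":
        return (quantile(1 - alpha),)
    if tail == "two":
        # One critical value in each tail, each cutting off an area of alpha/2.
        return (quantile(alpha / 2), quantile(1 - alpha / 2))
    raise ValueError("tail must be 'left', 'right' or 'two'")


std_normal_q = NormalDist().inv_cdf   # Q for the standard normal N(0,1)
print(critical_values(std_normal_q, 0.05, "left"))
print(critical_values(std_normal_q, 0.05, "right"))
print(critical_values(std_normal_q, 0.05, "two"))
```

For N(0,1) at a significance level of 0.05, the two-tailed pair comes out at roughly -1.96 and 1.96, illustrating the symmetry about 0.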

Use the Z (standard normal) option if your test statistic follows (at least approximately) the standard normal distribution N(0,1).

In the formulae below, \(u\) denotes the quantile function of the standard normal distribution N(0,1):

- Left-tailed Z critical value: \(u(\alpha)\)

- Right-tailed Z critical value: \(u(1 - \alpha)\)

- Two-tailed Z critical value: \(\pm u(1 - \alpha/2)\)

Check out the Z-test calculator to learn more about the most common Z-test used on the population mean. There are also Z-tests for the difference between two population means, in particular, one between two proportions.

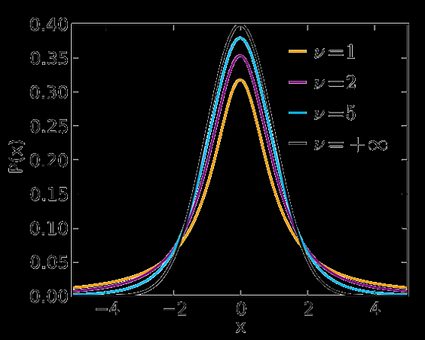

Use the t-Student option if your test statistic follows the t-Student distribution. This distribution is similar to N(0,1), but its tails are fatter; the exact shape depends on the number of degrees of freedom. If this number is large (>30), which generically happens for large samples, then the t-Student distribution is practically indistinguishable from N(0,1). Check our t-statistic calculator to compute the related test statistic.

In the formulae below, \(Q_{\text{t}, d}\) is the quantile function of the t-Student distribution with \(d\) degrees of freedom:

- Left-tailed t critical value: \(Q_{\text{t}, d}(\alpha)\)

- Right-tailed t critical value: \(Q_{\text{t}, d}(1 - \alpha)\)

- Two-tailed t critical values: \(\pm Q_{\text{t}, d}(1 - \alpha/2)\)

Visit the t-test calculator to learn more about various t-tests: the one for a population mean with an unknown population standard deviation, those for the difference between the means of two populations (with either equal or unequal population standard deviations), as well as about the t-test for paired samples.
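Python's standard library has no t quantile function, so the sketch below approximates one numerically: it integrates the t density with Simpson's rule to get the CDF and then inverts the CDF by bisection. This is an illustrative approach chosen here, not how the calculator is implemented. The function names, the step count and the search bounds of ±100 are assumptions, and very large degrees of freedom would overflow `math.gamma`.

```python
import math


def t_pdf(x: float, d: float) -> float:
    """Density of the t distribution with d degrees of freedom."""
    coeff = math.gamma((d + 1) / 2) / (math.sqrt(d * math.pi) * math.gamma(d / 2))
    return coeff * (1 + x * x / d) ** (-(d + 1) / 2)


def t_cdf(x: float, d: float, steps: int = 2000) -> float:
    """CDF via composite Simpson's rule on [0, |x|], using symmetry about 0."""
    a = abs(x)
    h = a / steps                      # steps must be even for Simpson's rule
    total = t_pdf(0.0, d) + t_pdf(a, d)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, d)
    half = total * h / 3               # area between 0 and |x|
    return 0.5 + half if x >= 0 else 0.5 - half


def t_quantile(p: float, d: float) -> float:
    """Invert the CDF by bisection (assumes the answer lies in [-100, 100])."""
    lo, hi = -100.0, 100.0
    for _ in range(60):
        mid = (lo + hi) / 2
        if t_cdf(mid, d) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


alpha, d = 0.05, 15
print(t_quantile(alpha, d))            # left-tailed critical value
print(t_quantile(1 - alpha, d))        # right-tailed critical value
print(t_quantile(1 - alpha / 2, d))    # two-tailed: use with a +/- sign
```

For 15 degrees of freedom and a right-tailed test at 0.05, the result should agree with the value 1.7531 quoted in the calculator example earlier, to about three decimals.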

Use the χ² (chi-square) option when performing a test in which the test statistic follows the χ²-distribution.

You need to determine the number of degrees of freedom of the χ²-distribution of your test statistic; below, we list them for the most commonly used χ²-tests.

Here we give the formulae for chi square critical values; \(Q_{\chi^2, d}\) is the quantile function of the χ²-distribution with \(d\) degrees of freedom:

- Left-tailed χ² critical value: \(Q_{\chi^2, d}(\alpha)\)

- Right-tailed χ² critical value: \(Q_{\chi^2, d}(1 - \alpha)\)

- Two-tailed χ² critical values: \(Q_{\chi^2, d}(\alpha/2)\) and \(Q_{\chi^2, d}(1 - \alpha/2)\)

Several different tests lead to a χ²-score:

- Goodness-of-fit test: does the empirical distribution agree with the expected distribution? This test is right-tailed. Its test statistic follows the χ²-distribution with \(k - 1\) degrees of freedom, where \(k\) is the number of classes into which the sample is divided.

- Independence test: is there a statistically significant relationship between two variables? This test is also right-tailed, and its test statistic is computed from the contingency table. There are \((r - 1)(c - 1)\) degrees of freedom, where \(r\) is the number of rows, and \(c\) is the number of columns in the contingency table.

- Test for the variance of normally distributed data: does this variance have some pre-determined value? This test can be one- or two-tailed! Its test statistic has the χ²-distribution with \(n - 1\) degrees of freedom, where \(n\) is the sample size.
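As an illustration of the independence test, the sketch below computes the statistic and the \((r - 1)(c - 1)\) degrees of freedom for a made-up 2×3 contingency table. The critical value uses a special case: with exactly 2 degrees of freedom the χ² cdf is \(1 - e^{-x/2}\), so the right-tailed critical value is \(-2\ln\alpha\). That shortcut does not apply to other degrees of freedom, and the function name and data are hypothetical.

```python
import math


def independence_test(table):
    """Return (chi-square statistic, degrees of freedom) for a contingency table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if the two variables were independent.
            expected = row_totals[i] * col_totals[j] / grand_total
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df


# Hypothetical 2x3 table of counts, for illustration only.
stat, df = independence_test([[20, 30, 50], [30, 30, 40]])

alpha = 0.05
# Closed form valid ONLY for df == 2: Q(1 - alpha) = -2 * ln(alpha).
critical = -2 * math.log(alpha)

print(stat, df, critical, stat > critical)
```

With this table the statistic is about 3.11 on 2 degrees of freedom, below the critical value of about 5.99, so the test does not reject independence at the 0.05 level.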

Finally, choose F (Fisher-Snedecor) if your test statistic follows the F-distribution. This distribution has a pair of degrees of freedom.

Let us see how those degrees of freedom arise. Assume that you have two independent random variables, \(X\) and \(Y\), that follow χ²-distributions with \(d_1\) and \(d_2\) degrees of freedom, respectively. If you now consider the ratio \(\left(\frac{X}{d_1}\right) : \left(\frac{Y}{d_2}\right)\), it turns out it follows the F-distribution with \((d_1, d_2)\) degrees of freedom. That's the reason why we call \(d_1\) and \(d_2\) the numerator and denominator degrees of freedom, respectively.

In the formulae below, \(Q_{\text{F}, d_1, d_2}\) stands for the quantile function of the F-distribution with \((d_1, d_2)\) degrees of freedom:

- Left-tailed F critical value: \(Q_{\text{F}, d_1, d_2}(\alpha)\)

- Right-tailed F critical value: \(Q_{\text{F}, d_1, d_2}(1 - \alpha)\)

- Two-tailed F critical values: \(Q_{\text{F}, d_1, d_2}(\alpha/2)\) and \(Q_{\text{F}, d_1, d_2}(1 - \alpha/2)\)

Here we list the most important tests that produce F-scores: each of them is right-tailed.

- ANOVA: tests the equality of means in three or more groups that come from normally distributed populations with equal variances. There are \((k - 1, n - k)\) degrees of freedom, where \(k\) is the number of groups, and \(n\) is the total sample size (across every group).

- Overall significance in regression analysis. The test statistic has \((k - 1, n - k)\) degrees of freedom, where \(n\) is the sample size, and \(k\) is the number of variables (including the intercept).

- Compare two nested regression models. The test statistic follows the F-distribution with \((k_2 - k_1, n - k_2)\) degrees of freedom, where \(k_1\) and \(k_2\) are the number of variables in the smaller and bigger models, respectively, and \(n\) is the sample size.

- The equality of variances in two normally distributed populations. There are \((n - 1, m - 1)\) degrees of freedom, where \(n\) and \(m\) are the respective sample sizes.
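The degrees-of-freedom pairs listed above are simple enough to encode as small helpers, which can reduce bookkeeping mistakes before a table or quantile lookup. The function names are hypothetical.

```python
def anova_df(k: int, n: int):
    """ANOVA with k groups and total sample size n."""
    return (k - 1, n - k)


def regression_overall_df(k: int, n: int):
    """Overall significance with k variables (including the intercept)."""
    return (k - 1, n - k)


def nested_models_df(k1: int, k2: int, n: int):
    """Nested models with k1 (smaller) and k2 (bigger) variables."""
    return (k2 - k1, n - k2)


def variance_ratio_df(n: int, m: int):
    """Equality of variances with sample sizes n and m."""
    return (n - 1, m - 1)


print(anova_df(k=3, n=30))
print(nested_models_df(k1=2, k2=5, n=50))
print(variance_ratio_df(n=41, m=21))
```

For example, sample sizes of 41 and 21 give (40, 20), the pair used in the F example earlier in this article.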

I'm Anna, the mastermind behind the critical value calculator and a PhD in mathematics from Jagiellonian University.

The idea for creating the tool originated from my experiences in teaching and research. Recognizing the need for a tool that simplifies the critical value determination process across various statistical distributions, I built a user-friendly calculator accessible to both students and professionals. After publishing the tool, I soon found myself using the calculator in my research and as a teaching aid.

Trust in this calculator is paramount to me. Each tool undergoes a rigorous review process, with peer-reviewed insights from experts and meticulous proofreading by native speakers. This commitment to accuracy and reliability ensures that users can be confident in the content. Please check the Editorial Policies page for more details on our standards.

What is a Z critical value?

A Z critical value is the value that defines the critical region in hypothesis testing when the test statistic follows the standard normal distribution. If the value of the test statistic falls into the critical region, you should reject the null hypothesis and accept the alternative hypothesis.

How do I calculate Z critical value?

To find a Z critical value for a given significance level α:

1. Check if you perform a one- or two-tailed test.

2. For a one-tailed test:

   - Left-tailed: critical value is the α-th quantile of the standard normal distribution N(0,1).

   - Right-tailed: critical value is the (1-α)-th quantile.

3. Two-tailed test: critical value equals ±(1-α/2)-th quantile of N(0,1).

4. No quantile tables? Use CDF tables! (The quantile function is the inverse of the CDF.)

5. Verify your answer with an online critical value calculator.

Is a t critical value the same as Z critical value?

In theory, no. In practice, very often, yes. The t-Student distribution is similar to the standard normal distribution, but it is not the same. However, if the number of degrees of freedom (which is, roughly speaking, the size of your sample) is large enough (>30), then the two distributions are practically indistinguishable, and so the t critical value has practically the same value as the Z critical value.

What is the Z critical value for 95% confidence?

The Z critical value for a 95% confidence interval is:

- 1.96 for a two-tailed test;

- 1.64 for a right-tailed test; and

- -1.64 for a left-tailed test.


How To Find Critical Value In Statistics

10.28.2022 • 13 min read

Sarah Thomas

Subject Matter Expert

Learn how to find critical value, its importance, the different systems, and the steps to follow when calculating it.


In baseball, an ump cries “foul ball” any time a batter hits the ball into foul territory. In statistics, we have something similar to a foul zone. It’s called a rejection region. While foul lines, poles, and the stadium fence mark off the foul territory in baseball, in statistics numbers called critical values mark off rejection regions.

A critical value is a number that defines the rejection region of a hypothesis test. Critical values vary depending on the type of hypothesis test you run and the type of data you are working with.

In a hypothesis test called a two-tailed Z-test with a 95% confidence level, the critical values are 1.96 and -1.96. In this test, if the test statistic is greater than 1.96 or less than -1.96, we reject the null hypothesis in favor of the alternative hypothesis.

In Outlier's Intro to Statistics course, Dr. Gregory Matthews explains more about hypothesis testing and why to use it:

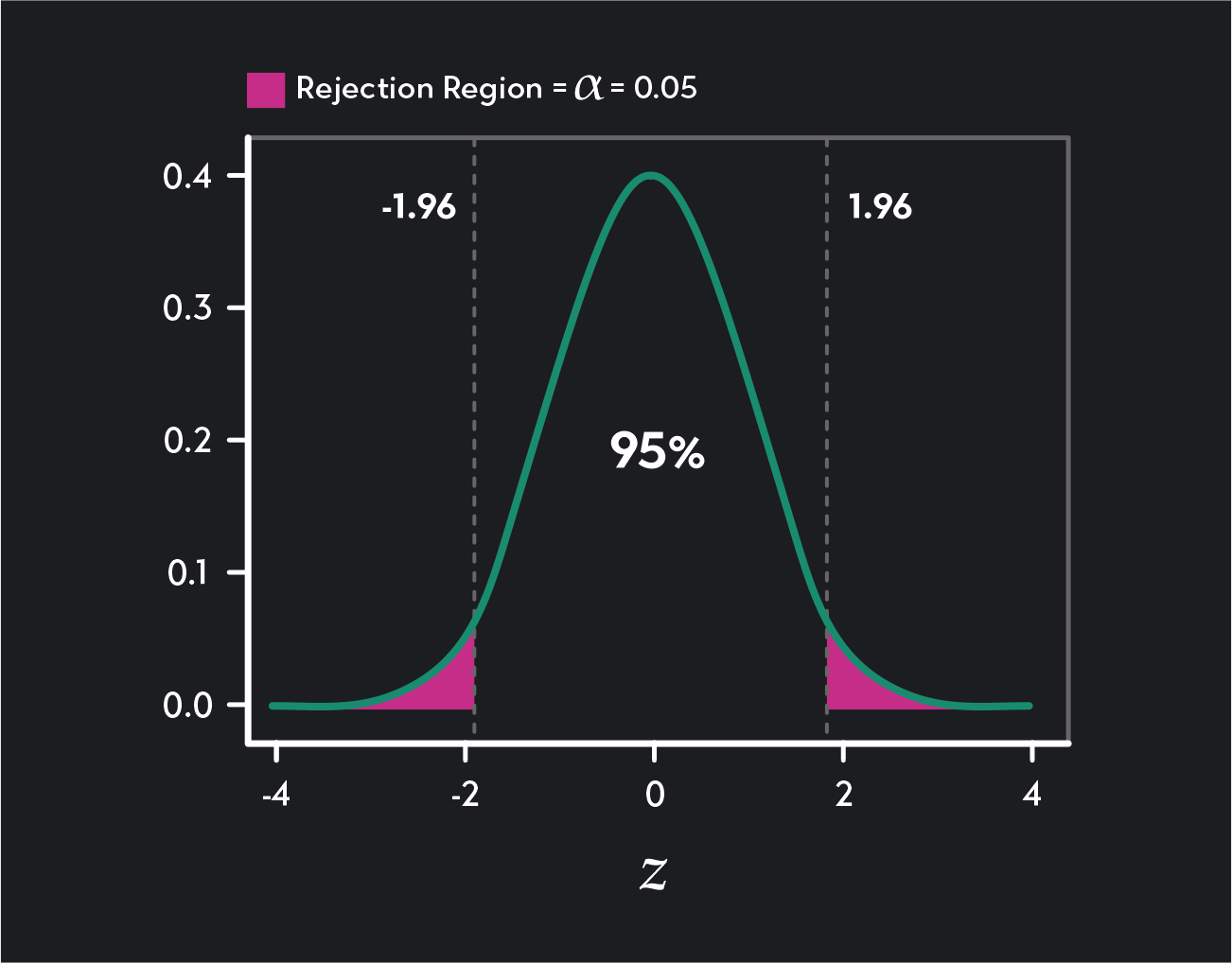

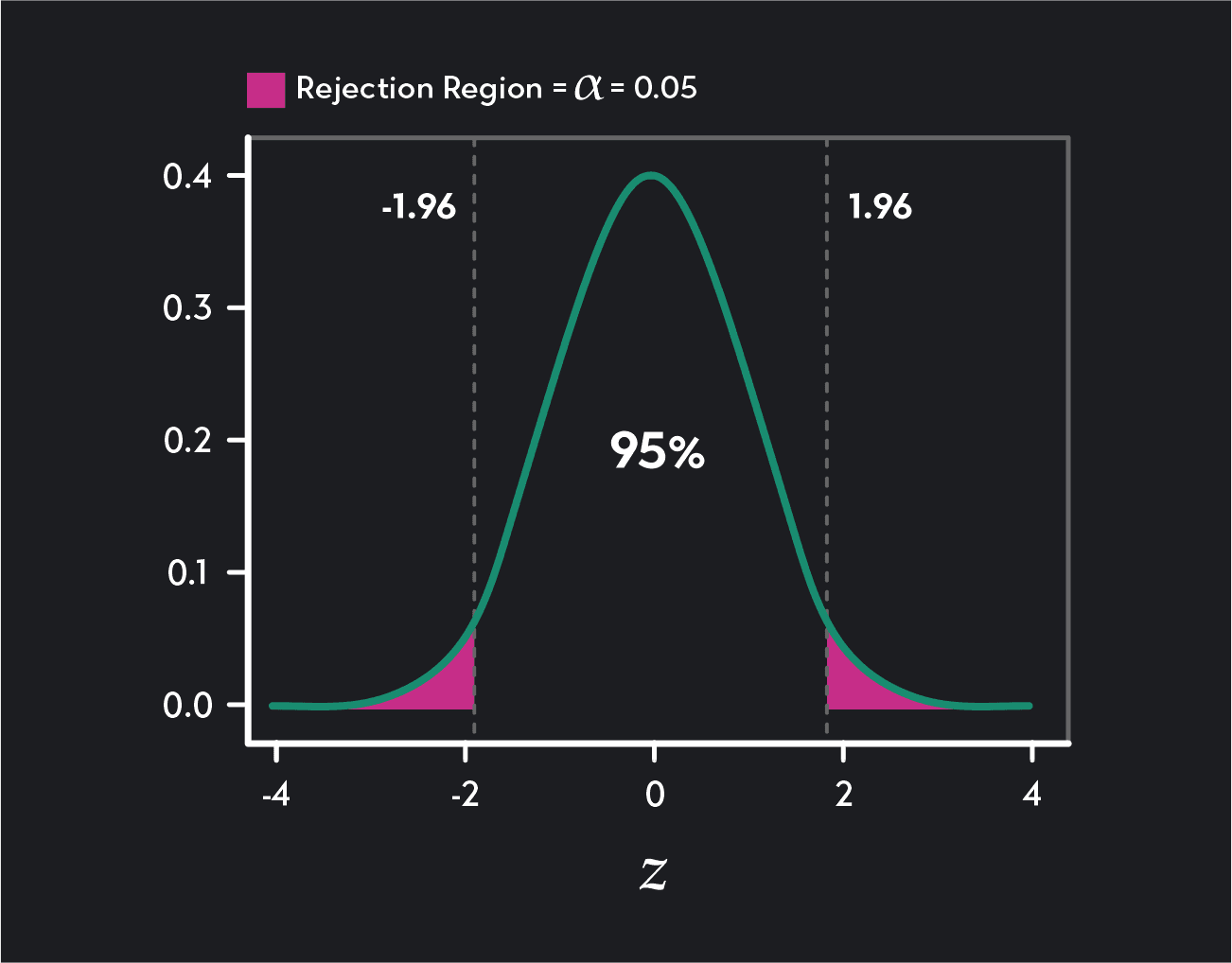

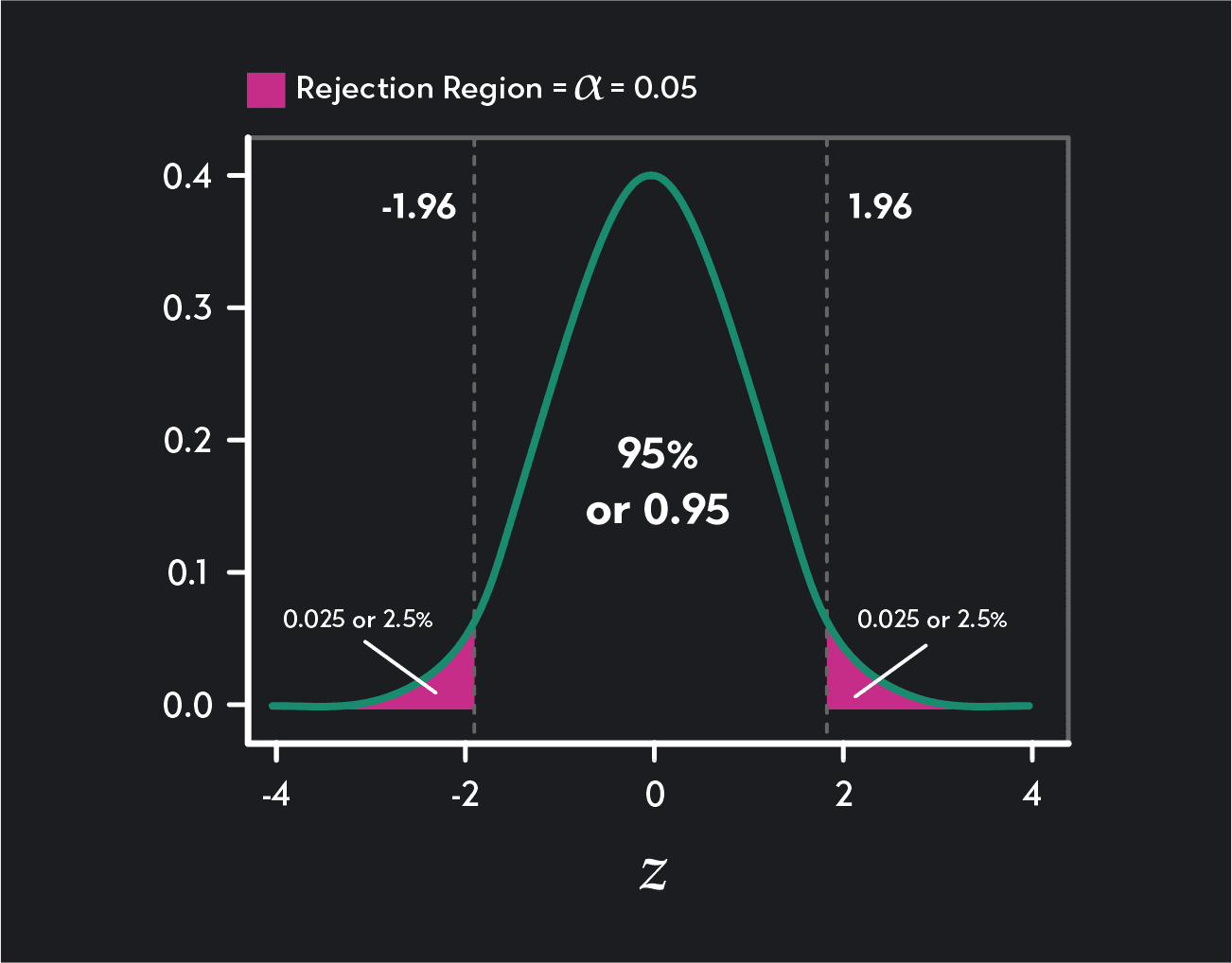

The figure below shows how the critical values mark the boundaries of two rejection regions (shaded in pink). Any test result greater than 1.96 falls into the rejection region in the distribution’s right tail, and any test result below -1.96 falls into the rejection region in the left tail of the distribution.

A two-tailed Z-test with a 95% confidence level (or a significance level of ɑ = 0.05) has two critical values 1.96 and -1.96.

Before we dive deeper, let’s do a quick refresher on hypothesis testing. In statistics, a hypothesis test is a statistical test where you test an “alternative” hypothesis against a “null” hypothesis. The null hypothesis represents the default hypothesis or the status quo. It typically represents what the academic community or the general public believes to be true. The alternative hypothesis represents what you suspect could be true in place of the null hypothesis.

For example, I may hypothesize that as times have changed, the average age of first-time mothers in the U.S. has increased and that first-time mothers, on average, are now older than 25. Meanwhile, conventional wisdom or existing research may say that the average age of first-time mothers in the U.S. is 25 years old.

In this example, my hypothesis is the alternative hypothesis, and the conventional wisdom is the null hypothesis.

Alternative Hypothesis \(H_a\): Average age of first-time mothers in the U.S. > 25

Null Hypothesis \(H_0\): Average age of first-time mothers in the U.S. = 25

In a hypothesis test, the goal is to draw inferences about a population parameter (such as the population mean of first-time mothers in the U.S.) from sample data randomly drawn from the population.

The basic intuition behind hypothesis testing is this. If we assume that the null hypothesis is true, data collected from a random sample of first-time mothers should have a sample average that’s close to 25 years old. We don’t expect the sample to have the same average as the population, but we expect it to be pretty close. If we find this to be the case, we have evidence favoring the null hypothesis. If our sample average is far enough above 25, we have evidence that favors the alternative hypothesis.

A major conundrum in hypothesis testing is deciding what counts as “close to 25” and what counts as being “far enough above 25”? If you randomly sample a thousand first-time mothers and the sample mean is 26 or 27 years old, should you favor the null hypothesis or the alternative?

To make this determination, you need to do the following:

1. First, you convert your sample statistic into a test statistic.

In our first-time mother example, the sample statistic we have is the average age of the first-time mothers in our sample. Depending on the data we have, we might map this average to a Z-test statistic or a T-test statistic.

A test statistic is just a number that maps a sample statistic to a value on a standardized distribution such as a normal distribution or a T-distribution. By converting our sample statistic to a test statistic, we can easily see how likely or unlikely it is to get our sample statistic under the assumption that the null hypothesis is true.

2. Next, you select a significance level (also known as an alpha (ɑ) level) for your test.

The significance level is the probability of rejecting the null hypothesis when it is actually true, so it reflects how cautious you want to be before rejecting the null in favor of the alternative. A commonly used significance level in hypothesis testing is 5% (or ɑ=0.05). An alpha level of 0.05 means that, when the null hypothesis is true, there is only a 5% chance that the test will wrongly reject it.

3. Third, you find the critical values that correspond to your test statistic and significance level.

The critical value(s) tell you how small or large your test statistic has to be in order to reject the null hypothesis at your chosen significance level.

4. You check to see if your test statistic falls into the rejection region.

Check the value of the test statistic. Any test statistic that falls above a critical value in the right tail of the distribution is in the rejection region. Any test statistic located below a critical value in the left tail of the distribution is also in the rejection region. If your test statistic falls into the rejection region, you reject the null hypothesis in favor of the alternative hypothesis. If your test statistic does not fall into the rejection region, you fail to reject the null hypothesis.
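The decision rule in step 4 can be sketched in a few lines of Python for the Z-test case. This is an illustration rather than a general-purpose routine: the function name `in_rejection_region` and the `tail` labels are hypothetical, and the critical values come from `statistics.NormalDist` in the standard library.

```python
from statistics import NormalDist

def in_rejection_region(z, alpha=0.05, tail="two"):
    """Return True if z falls in the rejection region of a Z-test."""
    std_normal = NormalDist()  # mean 0, standard deviation 1
    if tail == "two":
        critical = std_normal.inv_cdf(1 - alpha / 2)
        return abs(z) > critical
    if tail == "upper":
        return z > std_normal.inv_cdf(1 - alpha)
    if tail == "lower":
        return z < std_normal.inv_cdf(alpha)
    raise ValueError("tail must be 'two', 'upper', or 'lower'")

print(in_rejection_region(2.10))                # True: 2.10 is beyond 1.96
print(in_rejection_region(1.50, tail="upper"))  # False: 1.50 is below 1.645
```

A result of `True` corresponds to rejecting the null hypothesis; `False` corresponds to failing to reject it.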

Notice that critical values play a crucial role in hypothesis testing. Without knowing what your critical values are, you cannot make the final determination of whether or not to reject the null hypothesis.

Critical values vary with the following traits of a hypothesis test.

What test statistic are you using?

This will depend on the type of research question you have and the type of data you are working with. In a first-year statistics course, you will often conduct hypothesis tests using Z-statistics (these correspond to a standard normal distribution), T-statistics (these correspond to a T-distribution), or chi-squared test statistics (these correspond to a chi-square distribution).

What significance level have you selected?

This is up to the person conducting the test. A significance level (or alpha level) is the probability of mistakenly rejecting the null hypothesis when it is actually true. By choosing a significance level, you are deciding how careful you want to be in avoiding such a mistake.

You might also hear a hypothesis test being described by a confidence level. Confidence levels are closely related to statistical significance. The confidence level of a test is equal to one minus the significance level or 1-ɑ.

Is it a one-tailed test or a two-tailed test?

Hypothesis tests can be one-tailed or two-tailed, depending on the alternative hypothesis. Null and alternative hypotheses are always mutually exclusive statements, but they can take different forms. If your alternative hypothesis is only concerned with positive effects or the right tail of the distribution, you will likely use a one-tailed upper-tail test.

If your alternative hypothesis is only concerned with negative effects or the left tail of the distribution, you will likely use a one-tailed lower-tail test. Finally, if your alternative hypothesis proposes a deviation in either direction from what the null hypothesis proposes, you’ll use a two-tailed test.

The number of critical values in a hypothesis test depends on whether the test is a one-tailed test or a two-tailed test.

Critical Values for Two-Tailed Tests

In a two-tailed test, we divide the rejection region into two equal parts: one in the right tail of the distribution and one in the left tail of the distribution. Each of these rejection regions will contain an area of the distribution equal to ɑ/2. For example, in a two-tailed test with a significance level of 0.05, each rejection region will contain 0.05/2 = 0.025 = 2.5% of the area under the distribution. Because we split the rejection region, a two-tailed test has two critical values.

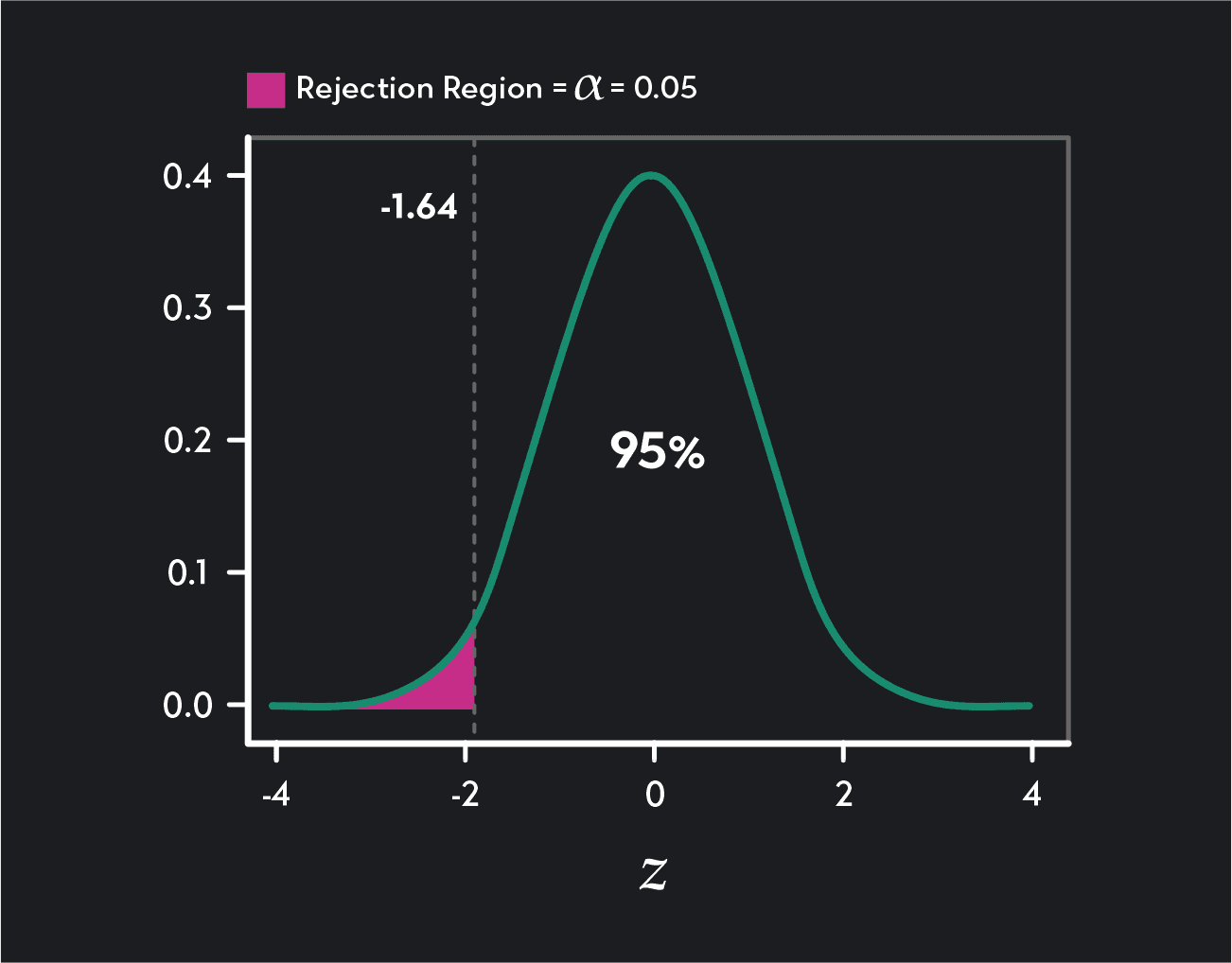

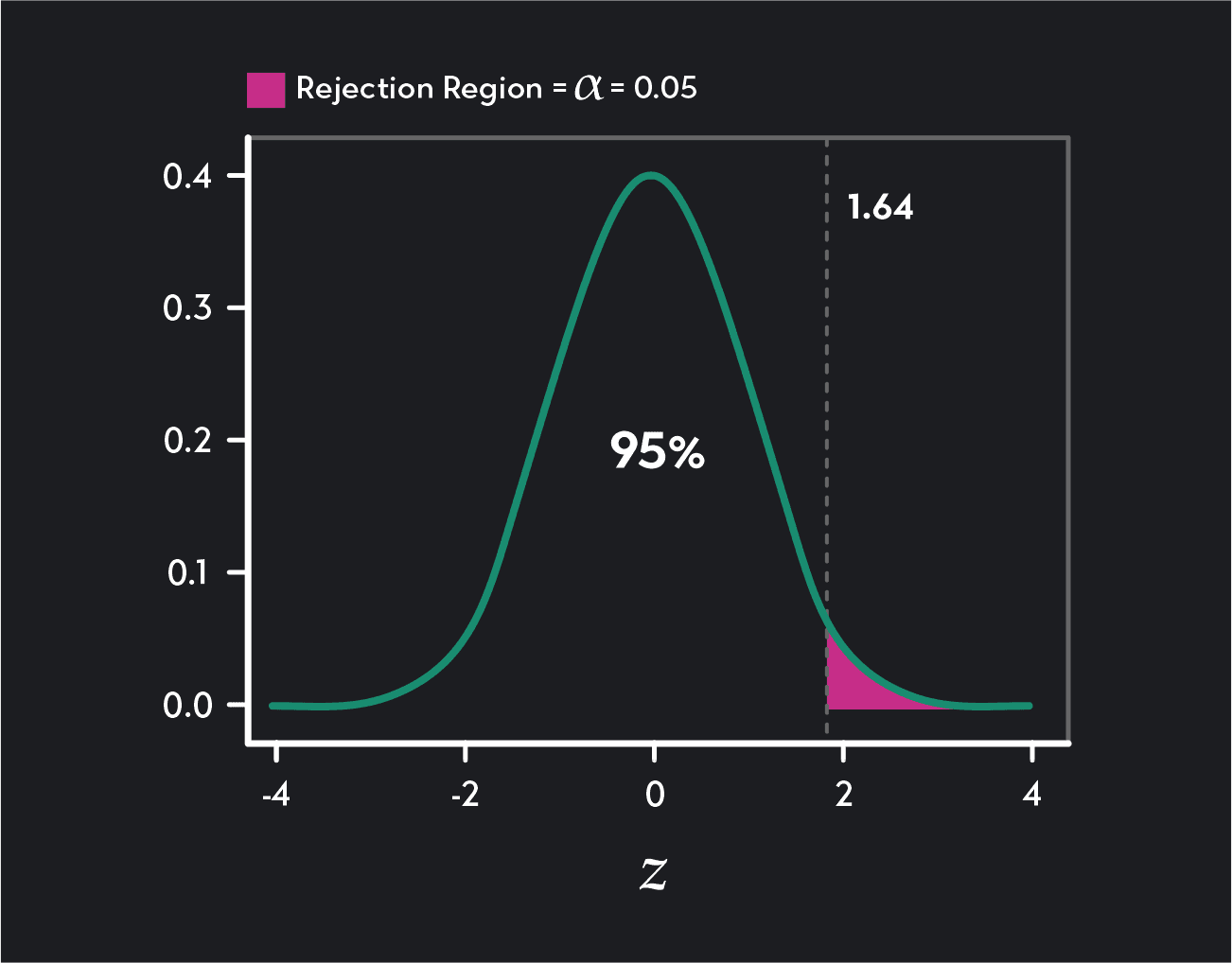
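To see the ɑ/2 split numerically, the short Python sketch below measures the area in each tail of the standard normal distribution beyond ±1.96, the two-tailed critical values for ɑ=0.05. It relies only on `statistics.NormalDist` from the standard library; the variable names are illustrative.

```python
from statistics import NormalDist

std_normal = NormalDist()  # standard normal: mean 0, standard deviation 1

lower_critical, upper_critical = -1.96, 1.96

# Area to the left of the lower critical value (left rejection region).
left_tail_area = std_normal.cdf(lower_critical)
# Area to the right of the upper critical value (right rejection region).
right_tail_area = 1 - std_normal.cdf(upper_critical)

print(round(left_tail_area, 3))                    # 0.025
print(round(right_tail_area, 3))                   # 0.025
print(round(left_tail_area + right_tail_area, 3))  # 0.05
```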

Critical Values for One-Tailed Tests

A one-tailed test has one rejection region (either in the right tail or the left tail of the distribution) and one critical value. In a lower tail (or left-tailed) test, the critical value and rejection region will be in the left tail of the distribution. In an upper tail (or right-tailed) test, the critical value and rejection region will be in the right tail of the distribution.

Figures: rejection regions for a two-tailed test, a one-tailed lower tail test, and a one-tailed upper tail test.

The tables below provide a list of critical values that are commonly used in hypothesis testing.

Z-Test Statistics (Using a Normal Distribution)

T-Test Statistics (Using a T-Distribution)

Finding a Critical Value for a Two-Tailed Z-Test

Suppose you don’t remember what the critical values for a two-sided Z-test are. How would you go about finding them?

To find the critical value, you start with the significance level of your hypothesis test. Your significance level is equal to the total area of the rejection region. For example, with a 0.05 significance level, the entire rejection region will be equal to 5% of the area under the normal distribution.

In a two-tailed Z-test, we split the rejection region equally into two parts. One part is in the distribution’s right tail, and the other is in the left tail. Each part contains half of the total area of the rejection region. For a two-tailed Z-test with a significance level of ɑ=0.05, each rejection region will contain ɑ/2 = 0.025 or 2.5% of the distribution. This leaves 0.95 (or 95%) of the distribution between the two rejection regions, which corresponds to a 95% confidence level.

To find the critical values, you need to find the corresponding values (or Z-scores) in the Z-distribution. Make sure the percentage lying to the left of the first critical value is equal to ɑ/2. Also, check that the percentage of the distribution lying to the right of the second critical value is equal to ɑ/2. You can use a Z-table to look up these figures.

Solved Example: Two-Tailed Z-Test

For a two-tailed Z-test with a significance level of ɑ=0.05, we are looking for two critical values such that ɑ/2 or 2.5% of the normal distribution lies to the left of the first critical value and ɑ/2 or 0.025 of the normal distribution lies to the right of the second critical value.

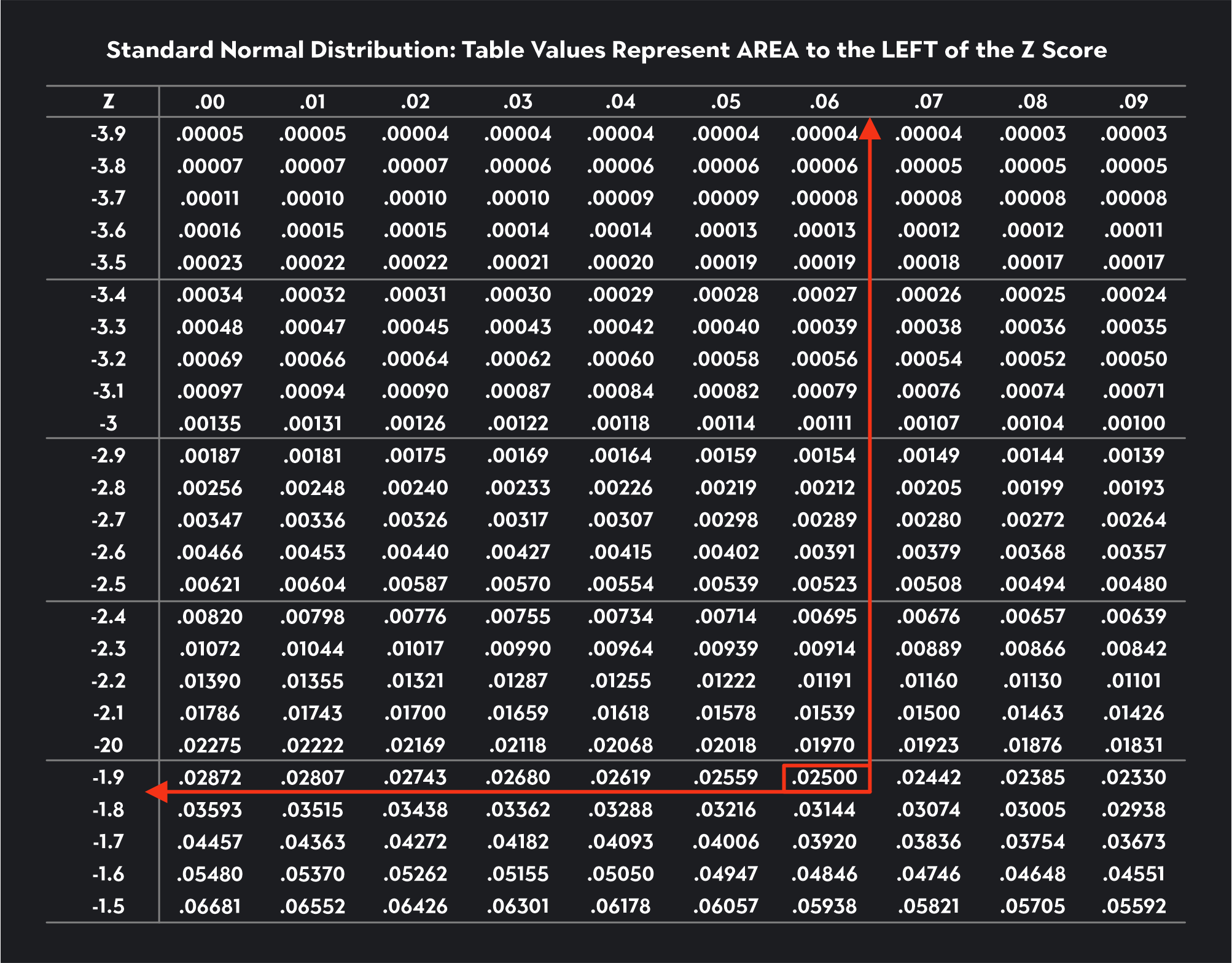

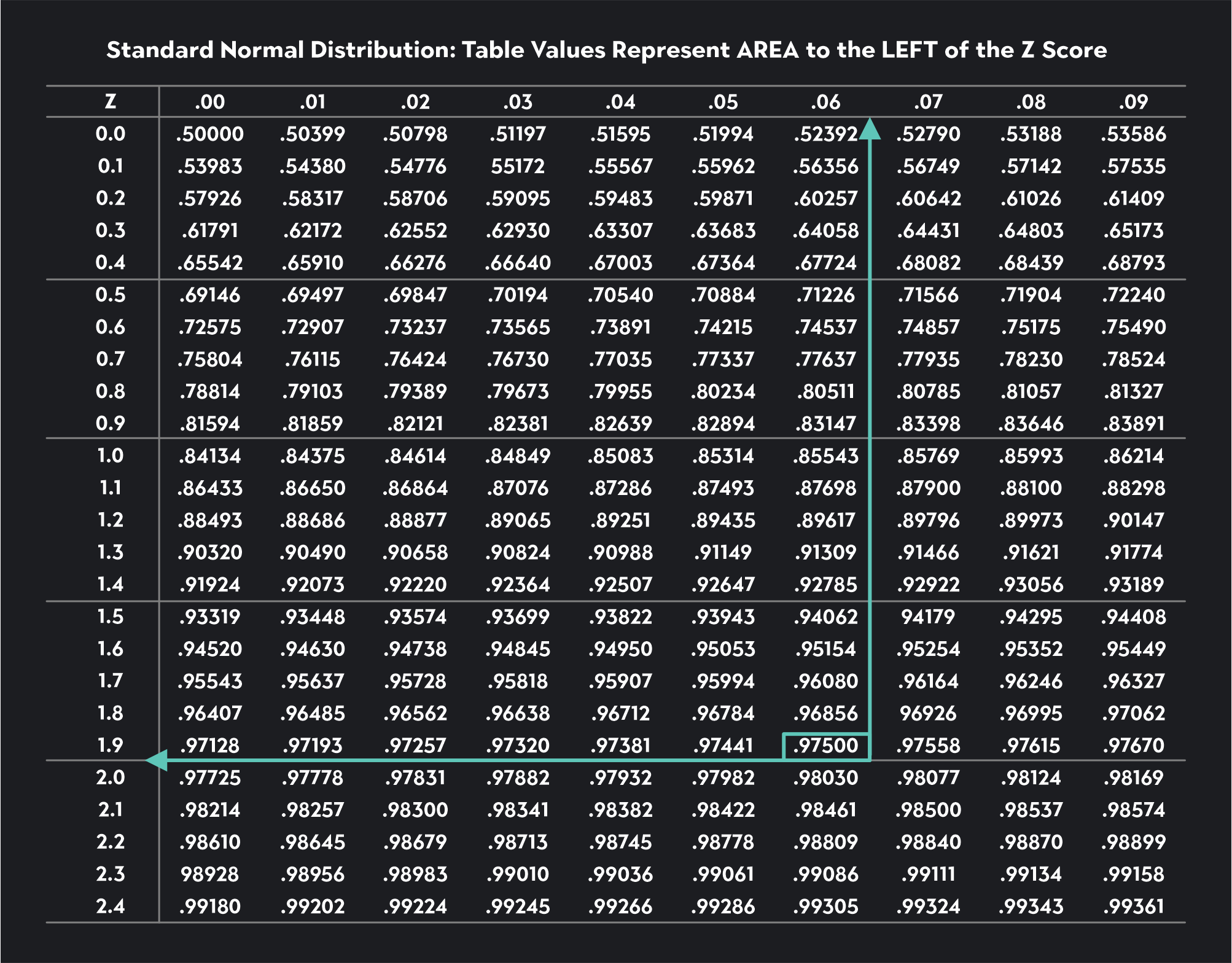

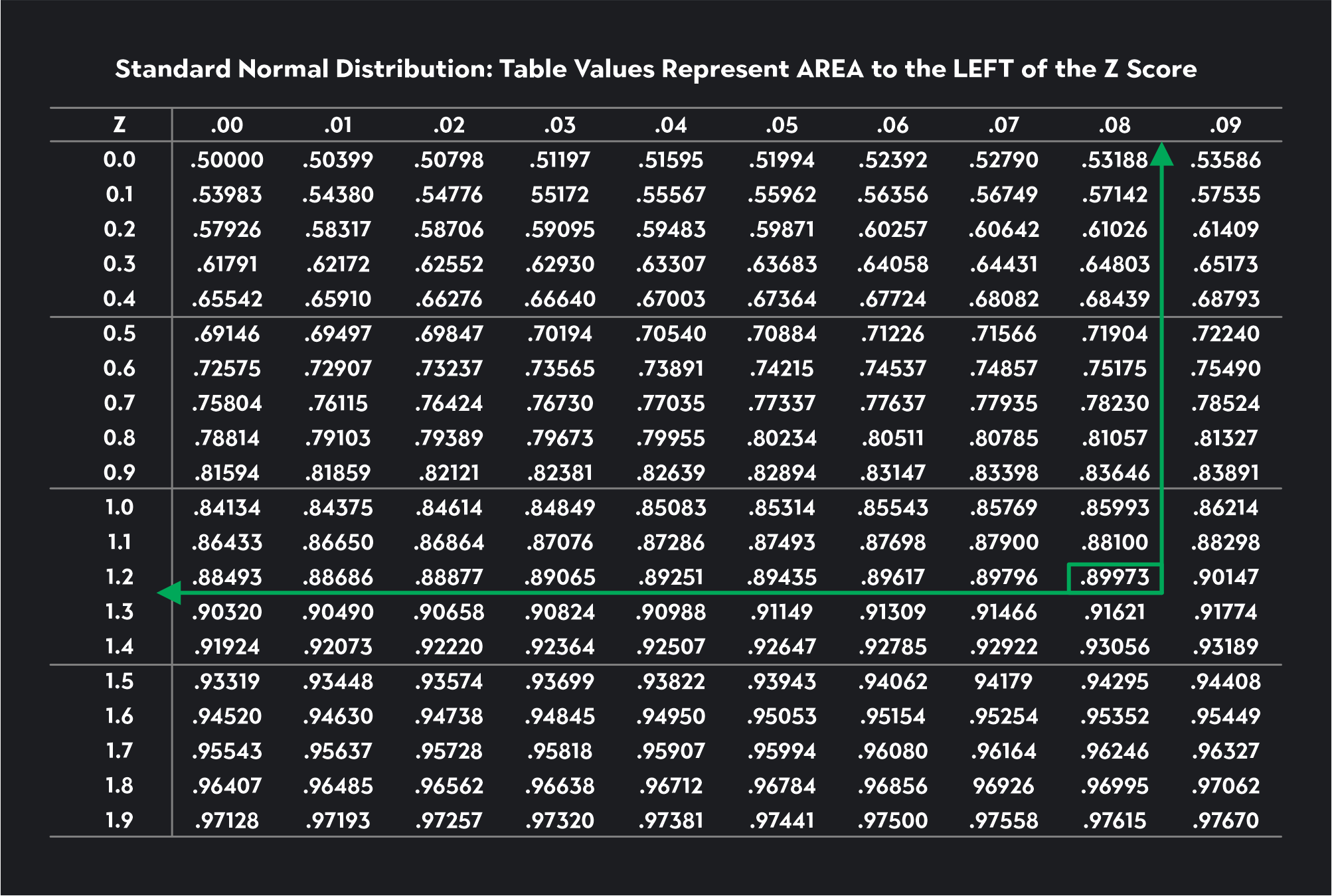

Z-tables will either show you probabilities to the LEFT or to the RIGHT of a particular value. We’ll stick to Z-tables showing probabilities to the LEFT.

For the first critical value, if the area to the left of the critical value is 0.025, we use the Z-table to find the number 0.025 in the table (we’ve shown this figure highlighted in an orange box). We then trace that value to the left to find the first two digits of the critical value (-1.9) and then up to the top to find the last digit (-0.06). If we put these together, we have the critical value -1.96. Z-tables provide Z-scores that are usually rounded to two decimal places.

For the second critical value, 2.5% of the distribution will lie to the right, meaning 97.5% of the distribution will lie to the left of the critical value (1-0.025=0.975). To find this critical value, we look for the number 0.975 in the Z-table (we’ve shown this figure highlighted in a green box). We trace that value to the left to find the first two digits of the critical value (1.9) and then up to the top to find the last digit (0.06). Our second critical value is 1.96.

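Following similar steps, see if you can find the critical values for a two-tailed Z-test with a significance level of ɑ=0.10. The critical values you find should be approximately -1.645 and 1.645. Because a Z-table only shows two decimal places, you will usually see them rounded to ±1.64 or ±1.65.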
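If no Z-table is at hand, the same lookup can be reproduced with the inverse CDF of the standard normal distribution. The Python sketch below uses `statistics.NormalDist` from the standard library; the helper name `two_tailed_z_critical` is an illustrative choice, not an established function.

```python
from statistics import NormalDist

def two_tailed_z_critical(alpha):
    """Return the (lower, upper) critical values of a two-tailed Z-test."""
    std_normal = NormalDist()
    lower = std_normal.inv_cdf(alpha / 2)      # area alpha/2 to the left
    upper = std_normal.inv_cdf(1 - alpha / 2)  # area alpha/2 to the right
    return lower, upper

for alpha in (0.05, 0.10):
    lower, upper = two_tailed_z_critical(alpha)
    print(f"alpha={alpha}: {lower:.3f}, {upper:.3f}")
```

To three decimal places this gives -1.960 and 1.960 for ɑ=0.05, and -1.645 and 1.645 for ɑ=0.10.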

Finding a Critical Value for a One-Tailed Z-Test

In a one-tailed test, there is just one rejection region, and the area of the rejection region is equal to the significance level.

For a one-tailed lower tail test, use the z-table to find a critical value where the total area to the left of the critical value is equal to alpha.

For a one-tailed upper tail test, use the z-table to find a critical value where the total area to the left of the critical value is equal to 1- alpha.

Solved Example: One-Tailed Z-Test

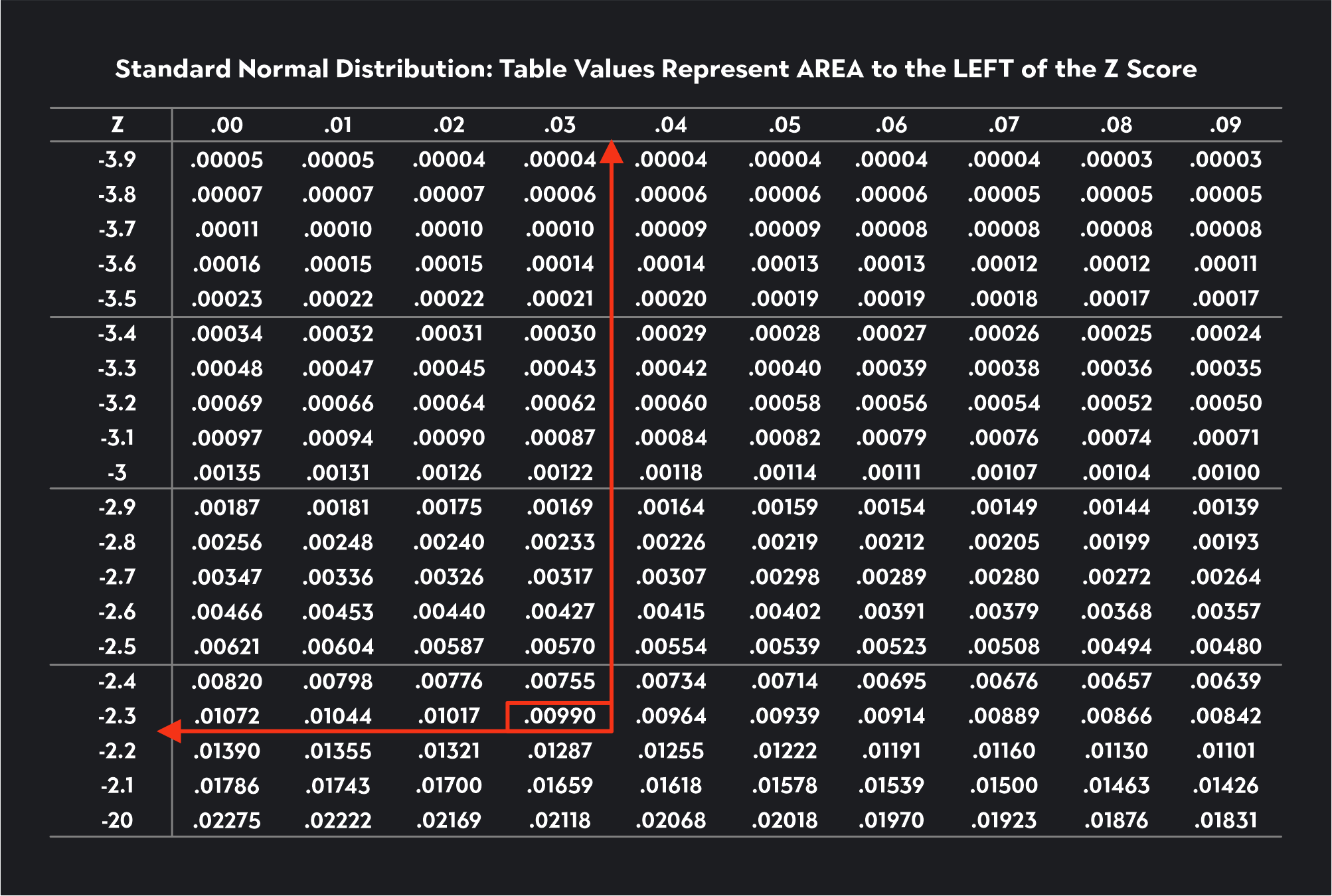

Let’s see if we can use the Z-table to find the critical value for a lower tail Z-test with a significance level of 0.01.

Since alpha equals 0.01, we are looking for this number in the Z-table. If you can’t find the exact number, you look for the closest number, which in this case is 0.0099. Once we’ve found this number, we trace the value to the first column to find the first two digits of the critical value and then up to the first row to find the last digit. The critical value is -2.33.

Now let’s see if we can use the Z-table to find the critical value for an upper tail Z-test with a significance level of 0.10.

Since this is an upper tail test, we need to use the Z-table to look for a critical value corresponding to 0.90 (1-ɑ = 1-0.10 = 0.90). The closest number to 0.90 we can find in the table is 0.89973. We trace this number to the left and then up to the top of the table to find a critical value of 1.28.
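Both one-tailed lookups can be double-checked in Python. This sketch assumes only `statistics.NormalDist` from the standard library and uses illustrative variable names.

```python
from statistics import NormalDist

std_normal = NormalDist()

# Lower tail test, alpha = 0.01: area alpha lies to the left.
lower_tail_critical = std_normal.inv_cdf(0.01)
# Upper tail test, alpha = 0.10: area 1 - alpha lies to the left.
upper_tail_critical = std_normal.inv_cdf(1 - 0.10)

print(round(lower_tail_critical, 2))  # -2.33
print(round(upper_tail_critical, 2))  # 1.28
```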

To find a critical value in R, you can use the qnorm() function for a Z-test or the qt() function for a T-test.

Here are some examples of how you could use these functions in your critical value approach.

Z-Critical Values Using R

For a two-tailed Z-test with a 0.05 significance level, you would type:

qnorm(p=0.05/2, lower.tail=FALSE)

This will give you one of your critical values. The second critical value is just the negative value of the first.

For a one-tailed lower tail Z-test with a 0.01 significance level, you would type:

qnorm(p=0.01, lower.tail=TRUE)

For a one-tailed upper tail Z-test with a 0.01 significance level, you would type:

qnorm(p=0.01, lower.tail=FALSE)

T-Critical Values Using R

For a two-tailed T-test with 15 degrees of freedom and a 0.1 significance level, you would type:

qt(p=0.1/2, df=15, lower.tail=FALSE)

For a one-tailed lower tail T-test with 10 degrees of freedom and a 0.05 significance level, you would type:

qt(p=0.05, df=10, lower.tail=TRUE)

For a one-tailed upper tail T-test with 20 degrees of freedom and a 0.01 significance level, you would type:

qt(p=0.01, df=20, lower.tail=FALSE)

Now that you know the ins and outs of critical values, you’re one step closer to conducting hypothesis tests with ease!


Critical Value Approach in Hypothesis Testing

by Nathan Sebhastian

Posted on Jun 05, 2023


The critical value is the cut-off point that determines whether you reject or fail to reject the null hypothesis, given the distribution of your test statistic.

The critical value approach provides a standardized method for hypothesis testing, enabling you to make informed decisions based on the evidence obtained from sample data.

After calculating the test statistic using the sample data, you compare it to the critical value(s) corresponding to the chosen significance level (α).

The critical value(s) represent the boundary beyond which you reject the null hypothesis. Depending on the type of test, you will have one or two rejection regions and a non-rejection region:

Two-sided test

A two-sided hypothesis test has 2 rejection regions, so you need 2 critical values, one on each side. Because there are 2 rejection regions, you must split the significance level in half.

Each rejection region has a probability of α/2, making the total probability for both areas equal to the significance level.

In this test, the null hypothesis H0 gets rejected when the test statistic is too small or too large.

Left-tailed test

The left-tailed test has 1 rejection region, and the null hypothesis only gets rejected when the test statistic is too small.

Right-tailed test

The right-tailed test is similar to the left-tailed test, only the null hypothesis gets rejected when the test statistic is too large.

Now that you understand the definition of critical values, let’s look at how to use critical values to construct a confidence interval.

Using Critical Values to Construct Confidence Intervals

Confidence intervals use the same critical values as the test you’re running.

If you’re running a z-test at a 95% confidence level (α = 0.05), then:

- For a two-sided test, the critical values are -1.96 and 1.96

- For a one-tailed test, the critical value is -1.65 (left) or 1.65 (right)

To calculate the upper and lower bounds of the confidence interval, you need to calculate the sample mean and then add or subtract the margin of error from it.

To get the margin of error, multiply the critical value by the standard error:

$$\text{Margin of error} = \text{critical value} \times \text{standard error}$$

Let’s see an example. Suppose you are estimating the population mean with a 95% confidence level.

You have a sample mean of 50, a sample size of 100, and a standard deviation of 10. Using a z-table, the critical value for a 95% confidence level is approximately 1.96.

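Calculate the standard error:

$$\text{Standard error} = \frac{\text{standard deviation}}{\sqrt{n}} = \frac{10}{\sqrt{100}} = 1$$

Determine the margin of error:

$$\text{Margin of error} = 1.96 \times 1 = 1.96$$

Compute the lower bound and upper bound:

$$\text{Lower bound} = 50 - 1.96 = 48.04, \qquad \text{Upper bound} = 50 + 1.96 = 51.96$$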

The 95% confidence interval is (48.04, 51.96). This means that we are 95% confident that the true population mean falls within this interval.
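The same calculation can be sketched in Python using the numbers from this example. The variable names are illustrative, and the critical value is taken from `statistics.NormalDist` in the standard library instead of a z-table.

```python
import math
from statistics import NormalDist

sample_mean = 50
sample_size = 100
standard_deviation = 10
confidence_level = 0.95

alpha = 1 - confidence_level
critical_value = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96

standard_error = standard_deviation / math.sqrt(sample_size)
margin_of_error = critical_value * standard_error

lower_bound = sample_mean - margin_of_error
upper_bound = sample_mean + margin_of_error
print(round(lower_bound, 2), round(upper_bound, 2))  # 48.04 51.96
```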

Finding the Critical Value

The formula to find critical values depends on the specific distribution associated with the hypothesis test or confidence interval you’re using.

Here are the formulas for some commonly used distributions.

Standard Normal Distribution (Z-distribution):

The critical value for a given significance level (α) in the standard normal distribution is found using the inverse cumulative distribution function (inverse CDF) or a standard normal table.

$$\text{Critical value} = z(\alpha)$$

where z(α) represents the z-score corresponding to the desired significance level α.

Student’s t-Distribution (t-distribution):

The critical value for a given significance level (α) and degrees of freedom (df) in the t-distribution is found using the inverse cumulative distribution function (inverse CDF) or a t-distribution table.

$$\text{Critical value} = t(\alpha, df)$$

where t(α, df) represents the t-score corresponding to the desired significance level α and degrees of freedom df.

Chi-Square Distribution (χ²-distribution):

The critical value for a given significance level (α) and degrees of freedom (df) in the chi-square distribution is found using the inverse cumulative distribution function (inverse CDF) or a chi-square distribution table.

$$\text{Critical value} = \chi^2(\alpha, df)$$

where χ²(α, df) represents the chi-square value corresponding to the desired significance level α and degrees of freedom df.

F-Distribution:

The critical value for a given significance level (α), degrees of freedom for the numerator (df₁), and degrees of freedom for the denominator (df₂) in the F-distribution is found using the inverse cumulative distribution function (inverse CDF) or an F-distribution table.

$$\text{Critical value} = F(\alpha, df_1, df_2)$$

where F(α, df₁, df₂) represents the F-value corresponding to the desired significance level α, df₁, and df₂.

As you can see, the specific formula to find critical values depends on the distribution and the parameters associated with the problem at hand.

Usually, you don’t calculate the critical values manually as you can use statistical tables or statistical software to determine the critical values.

I will update this tutorial with statistical tables that you can use later.

The critical value serves as a threshold for making a decision based on the observed test statistic and its relation to the significance level.

It provides a predetermined point of reference to objectively evaluate the strength of the evidence against the null hypothesis and guide the acceptance or rejection of the hypothesis.

If the test statistic falls in the critical region (beyond the critical value), it means the observed data provide strong evidence against the null hypothesis.

In this case, you reject the null hypothesis in favor of the alternative hypothesis, indicating that there is sufficient evidence to support the claim or relationship stated in the alternative hypothesis.

On the other hand, if the test statistic falls in the non-critical region (within the critical value), it means the observed data do not provide enough evidence to reject the null hypothesis.

In this case, you fail to reject the null hypothesis, indicating that there is insufficient evidence to support the alternative hypothesis.


What is a critical value?

A critical value is a point on the distribution of the test statistic under the null hypothesis that defines a set of values that call for rejecting the null hypothesis. This set is called the critical or rejection region. Usually, one-sided tests have one critical value and two-sided tests have two critical values. The critical values are determined so that the probability that the test statistic has a value in the rejection region of the test when the null hypothesis is true equals the significance level (denoted as α or alpha).

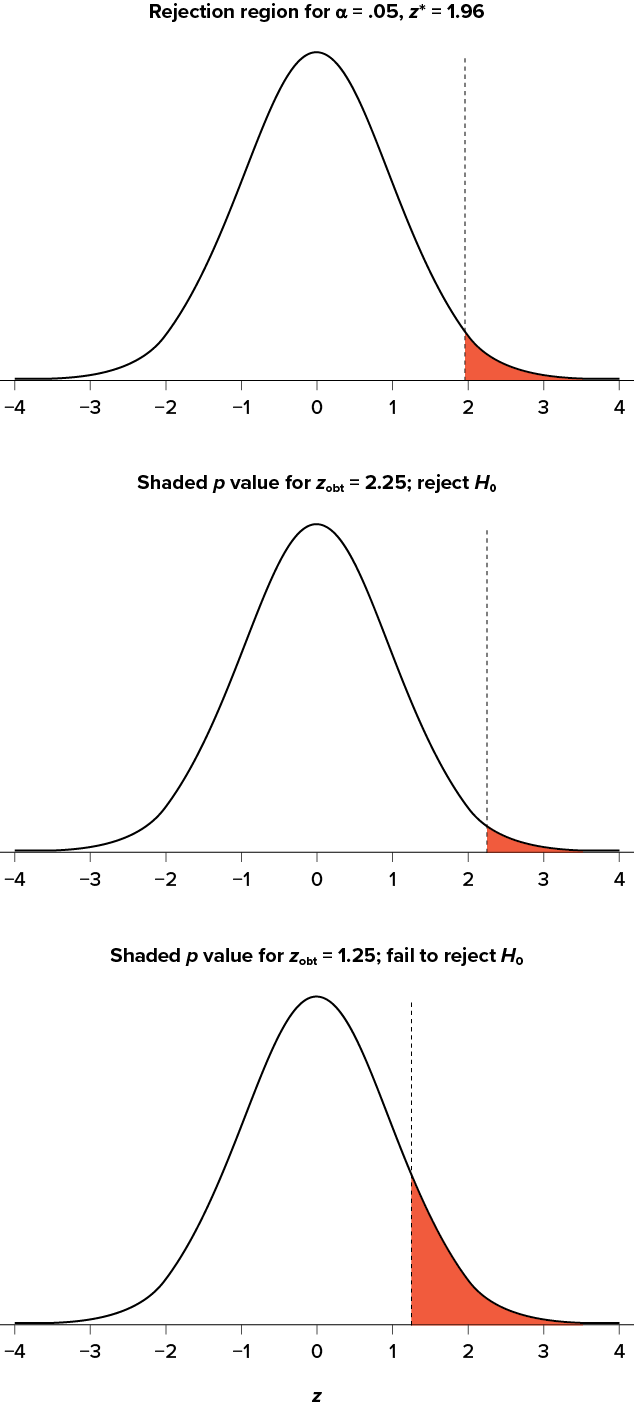

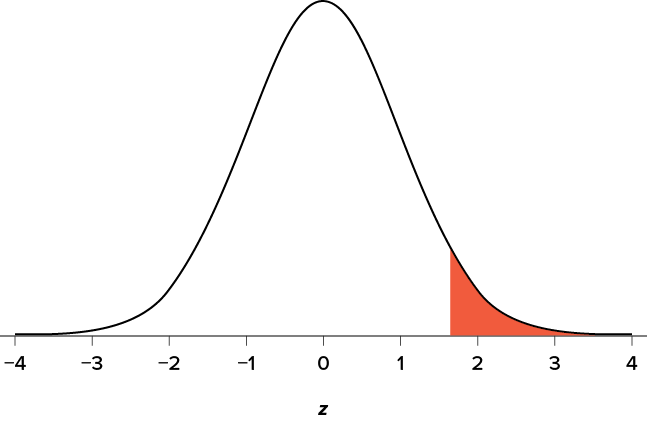

Critical values on the standard normal distribution for α = 0.05

Figure A shows that results of a one-tailed Z-test are significant if the value of the test statistic is equal to or greater than 1.64, the critical value in this case. The shaded area represents the probability of a type I error (α = 5% of the area under the curve in this example). Figure B shows that results of a two-tailed Z-test are significant if the absolute value of the test statistic is equal to or greater than 1.96, the critical value in this case. The two shaded areas sum to 5% (α) of the area under the curve.

Examples of calculating critical values

In hypothesis testing, there are two ways to determine whether there is enough evidence from the sample to reject H0 or to fail to reject H0. The most common way is to compare the p-value with a pre-specified value of α, where α is the probability of rejecting H0 when H0 is true. However, an equivalent approach is to compare the calculated value of the test statistic based on your data with the critical value. The following are examples of how to calculate the critical value in Minitab for a 1-sample t-test and a One-Way ANOVA.

Calculating a critical value for a 1-sample t-test

- Select Calc > Probability Distributions > t.

- Select Inverse cumulative probability.

- In Degrees of freedom, enter 9 (the number of observations minus one).

- In Input constant, enter 0.95 (one minus one-half alpha).

This gives you an inverse cumulative probability, which equals the critical value, of 1.83311. If the absolute value of the t-statistic is greater than this critical value, then you can reject the null hypothesis, H0, at the 0.10 level of significance.
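For readers without Minitab, the same inverse cumulative probability can be approximated with the Python standard library alone. The sketch below is a rough numerical illustration, not how any statistics package computes it: it integrates the t density with Simpson's rule and inverts the CDF by bisection. The function names `t_pdf`, `t_cdf`, and `t_critical` are hypothetical, and in practice a dedicated statistics library would be the usual choice.

```python
import math

def t_pdf(x, df):
    """Density of Student's t-distribution with df degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)

def t_cdf(x, df, steps=2000):
    """CDF via Simpson's rule on [0, |x|], using symmetry about 0."""
    a = abs(x)
    if a == 0:
        return 0.5
    h = a / steps  # steps must be even for Simpson's rule
    total = t_pdf(0, df) + t_pdf(a, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3
    return 0.5 + area if x > 0 else 0.5 - area

def t_critical(p, df, lo=-50.0, hi=50.0):
    """Inverse CDF by bisection: the x with t_cdf(x, df) close to p."""
    for _ in range(60):
        mid = (lo + hi) / 2
        if t_cdf(mid, df) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

critical_value = t_critical(0.95, df=9)
print(round(critical_value, 4))  # approximately 1.8331
```

The result agrees with the 1.83311 reported above to the precision shown.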

Calculating a critical value for an analysis of variance (ANOVA)

- Choose Calc > Probability Distributions > F.

- In Numerator degrees of freedom, enter 2 (the number of factor levels minus one).

- In Denominator degrees of freedom, enter 9 (the degrees of freedom for error).

- In Input constant, enter 0.95 (one minus alpha).

This gives you an inverse cumulative probability (critical value) of 4.25649. If the F-statistic is greater than this critical value, then you can reject the null hypothesis, H0, at the 0.05 level of significance.


Chapter 7: Introduction to Hypothesis Testing

alternative hypothesis

critical value

effect size

null hypothesis

probability value

rejection region

significance level

statistical power

statistical significance

test statistic

Type I error

Type II error

This chapter lays out the basic logic and process of hypothesis testing. We will perform z tests, which use the z score formula from Chapter 6 and data from a sample mean to make an inference about a population.

Logic and Purpose of Hypothesis Testing

A hypothesis is a prediction that is tested in a research study. The statistician R. A. Fisher explained the concept of hypothesis testing with a story of a lady tasting tea. Here we will present an example based on James Bond who insisted that martinis should be shaken rather than stirred. Let’s consider a hypothetical experiment to determine whether Mr. Bond can tell the difference between a shaken martini and a stirred martini. Suppose we gave Mr. Bond a series of 16 taste tests. In each test, we flipped a fair coin to determine whether to stir or shake the martini. Then we presented the martini to Mr. Bond and asked him to decide whether it was shaken or stirred. Let’s say Mr. Bond was correct on 13 of the 16 taste tests. Does this prove that Mr. Bond has at least some ability to tell whether the martini was shaken or stirred?

This result does not prove that he does; it could be he was just lucky and guessed right 13 out of 16 times. But how plausible is the explanation that he was just lucky? To assess its plausibility, we determine the probability that someone who was just guessing would be correct 13/16 times or more. This probability can be computed to be .0106. This is a pretty low probability, and therefore someone would have to be very lucky to be correct 13 or more times out of 16 if they were just guessing. So either Mr. Bond was very lucky, or he can tell whether the drink was shaken or stirred. The hypothesis that he was guessing is not proven false, but considerable doubt is cast on it. Therefore, there is strong evidence that Mr. Bond can tell whether a drink was shaken or stirred.
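The probability quoted above can be reproduced with a short binomial calculation. In this Python sketch the function name `binomial_upper_tail` is an illustrative choice; the inputs (13 or more correct out of 16, with a 0.5 chance of guessing each one right) come from the example.

```python
from math import comb

def binomial_upper_tail(successes, trials, p=0.5):
    """P(X >= successes) when X ~ Binomial(trials, p)."""
    return sum(
        comb(trials, k) * p**k * (1 - p) ** (trials - k)
        for k in range(successes, trials + 1)
    )

p_value = binomial_upper_tail(13, 16)
print(round(p_value, 4))  # 0.0106
```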

Let’s consider another example. The case study Physicians’ Reactions sought to determine whether physicians spend less time with obese patients. Physicians were sampled randomly and each was shown a chart of a patient complaining of a migraine headache. They were then asked to estimate how long they would spend with the patient. The charts were identical except that for half the charts, the patient was obese and for the other half, the patient was of average weight. The chart a particular physician viewed was determined randomly. Thirty-three physicians viewed charts of average-weight patients and 38 physicians viewed charts of obese patients.

The mean time physicians reported that they would spend with obese patients was 24.7 minutes as compared to a mean of 31.4 minutes for normal-weight patients. How might this difference between means have occurred? One possibility is that physicians were influenced by the weight of the patients. On the other hand, perhaps by chance, the physicians who viewed charts of the obese patients tend to see patients for less time than the other physicians. Random assignment of charts does not ensure that the groups will be equal in all respects other than the chart they viewed. In fact, it is certain the groups differed in many ways by chance. The two groups could not have exactly the same mean age (if measured precisely enough such as in days). Perhaps a physician’s age affects how long the physician sees patients. There are innumerable differences between the groups that could affect how long they view patients. With this in mind, is it plausible that these chance differences are responsible for the difference in times?

To assess the plausibility of the hypothesis that the difference in mean times is due to chance, we compute the probability of getting a difference as large or larger than the observed difference (31.4 − 24.7 = 6.7 minutes) if the difference were, in fact, due solely to chance. Using methods presented in later chapters, this probability can be computed to be .0057. Since this is such a low probability, we have confidence that the difference in times is due to the patient’s weight and is not due to chance.

The Probability Value

It is very important to understand precisely what the probability values mean. In the James Bond example, the computed probability of .0106 is the probability he would be correct on 13 or more taste tests (out of 16) if he were just guessing. It is easy to mistake this probability of .0106 as the probability he cannot tell the difference. This is not at all what it means.

The probability of .0106 is the probability of a certain outcome (13 or more out of 16) assuming a certain state of the world (James Bond was only guessing). It is not the probability that a state of the world is true. Although this might seem like a distinction without a difference, consider the following example. An animal trainer claims that a trained bird can determine whether or not numbers are evenly divisible by 7. In an experiment assessing this claim, the bird is given a series of 16 test trials. On each trial, a number is displayed on a screen and the bird pecks at one of two keys to indicate its choice. The numbers are chosen in such a way that the probability of any number being evenly divisible by 7 is .50. The bird is correct on 9/16 choices. We can compute that the probability of being correct nine or more times out of 16 if one is only guessing is .40. Since a bird who is only guessing would do this well 40% of the time, these data do not provide convincing evidence that the bird can tell the difference between the two types of numbers. As a scientist, you would be very skeptical that the bird had this ability. Would you conclude that there is a .40 probability that the bird can tell the difference? Certainly not! You would think the probability is much lower than .0001.
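The .40 figure for the bird can be checked by counting outcomes directly. The Python sketch below assumes, as the example does, that a guessing bird is right on each trial with probability .50, so all 2^16 sequences of right and wrong answers are equally likely. Note that it computes the probability of the outcome given guessing, not the probability that the bird was guessing.

```python
from math import comb

trials = 16
# Number of ways to get 9, 10, ..., 16 correct answers out of 16.
favorable = sum(comb(trials, k) for k in range(9, trials + 1))
# Under pure guessing every one of the 2**16 answer sequences is equally likely.
probability = favorable / 2**trials

print(favorable)              # 26333
print(round(probability, 2))  # 0.4
```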

To reiterate, the probability value is the probability of an outcome (9/16 or better) and not the probability of a particular state of the world (the bird was only guessing). In statistics, it is conventional to refer to possible states of the world as hypotheses since they are hypothesized states of the world. Using this terminology, the probability value is the probability of an outcome given the hypothesis. It is not the probability of the hypothesis given the outcome.

This is not to say that we ignore the probability of the hypothesis. If the probability of the outcome given the hypothesis is sufficiently low, we have evidence that the hypothesis is false. However, we do not compute the probability that the hypothesis is false. In the James Bond example, the hypothesis is that he cannot tell the difference between shaken and stirred martinis. The probability value is low (.0106), thus providing evidence that he can tell the difference. However, we have not computed the probability that he can tell the difference.

The Null Hypothesis

The hypothesis that an apparent effect is due to chance is called the null hypothesis, written H0 (“H-naught”). In the Physicians’ Reactions example, the null hypothesis is that in the population of physicians, the mean time expected to be spent with obese patients is equal to the mean time expected to be spent with average-weight patients. This null hypothesis can be written as:

$$H_0: \mu_{\text{obese}} - \mu_{\text{average}} = 0$$

The null hypothesis in a correlational study of the relationship between high school grades and college grades would typically be that the population correlation is 0. This can be written as

$$H_0: \rho = 0$$

Although the null hypothesis is usually that the value of a parameter is 0, there are occasions in which the null hypothesis is a value other than 0. For example, if we are working with mothers in the U.S. whose children are at risk of low birth weight, we can use 7.47 pounds, the average birth weight in the U.S., as our null value and test for differences against that.

For now, we will focus on testing a value of a single mean against what we expect from the population. Using birth weight as an example, our null hypothesis takes the form:

$$H_0: \mu = 7.47$$

Keep in mind that the null hypothesis is typically the opposite of the researcher’s hypothesis. In the Physicians’ Reactions study, the researchers hypothesized that physicians would expect to spend less time with obese patients. The null hypothesis that the two types of patients are treated identically is put forward with the hope that it can be discredited and therefore rejected. If the null hypothesis were true, a difference as large as or larger than the sample difference of 6.7 minutes would be very unlikely to occur. Therefore, the researchers rejected the null hypothesis of no difference and concluded that in the population, physicians intend to spend less time with obese patients.

In general, the null hypothesis is the idea that nothing is going on: there is no effect of our treatment, no relationship between our variables, and no difference in our sample mean from what we expected about the population mean. This is always our baseline starting assumption, and it is what we seek to reject. If we are trying to treat depression, we want to find a difference in average symptoms between our treatment and control groups. If we are trying to predict job performance, we want to find a relationship between conscientiousness and evaluation scores. However, until we have evidence against it, we must use the null hypothesis as our starting point.

The Alternative Hypothesis

If the null hypothesis is rejected, then we will need some other explanation, which we call the alternative hypothesis, HA or H1. The alternative hypothesis is simply the reverse of the null hypothesis, and there are three options, depending on where we expect the difference to lie. Thus, our alternative hypothesis is the mathematical way of stating our research question. If we expect our obtained sample mean to be above or below the null hypothesis value, which we call a directional hypothesis, then our alternative hypothesis takes the form

$$H_A: \mu > 7.47 \quad \text{or} \quad H_A: \mu < 7.47$$

based on the research question itself. We should only use a directional hypothesis if we have good reason, based on prior observations or research, to suspect a particular direction. When we do not know the direction, such as when we are entering a new area of research, we use a non-directional alternative:

$$H_A: \mu \neq 7.47$$

We will set different criteria for rejecting the null hypothesis based on the directionality (greater than, less than, or not equal to) of the alternative. To understand why, we need to see where our criteria come from and how they relate to z scores and distributions.

Critical Values, p Values, and Significance Level

The significance level is a threshold we set before collecting data in order to determine whether or not we should reject the null hypothesis. We set this value beforehand to avoid biasing ourselves by viewing our results and then determining what criteria we should use. If our data produce values that meet or exceed this threshold, then we have sufficient evidence to reject the null hypothesis; if not, we fail to reject the null (we never “accept” the null).

Figure 7.1. The rejection region for a one-tailed test. (“ Rejection Region for One-Tailed Test ” by Judy Schmitt is licensed under CC BY-NC-SA 4.0 .)

The rejection region is bounded by a specific z value, as is any area under the curve. In hypothesis testing, the value corresponding to a specific rejection region is called the critical value, z_crit (“z crit”), or z* (hence the other name “critical region”). Finding the critical value works exactly the same as finding the z score corresponding to any area under the curve as we did in Unit 1. If we go to the normal table, we will find that the z score corresponding to 5% of the area under the curve is equal to 1.645 (z = 1.64 corresponds to .0505 and z = 1.65 corresponds to .0495, so .05 is exactly in between them) if we go to the right and −1.645 if we go to the left. The direction must be determined by your alternative hypothesis, and drawing and shading the distribution is helpful for keeping directionality straight.

Suppose, however, that we want to do a non-directional test. We need to put the critical region in both tails, but we don’t want to increase the overall size of the rejection region (for reasons we will see later). To do this, we simply split it in half so that an equal proportion of the area under the curve falls in each tail’s rejection region. For α = .05, this means 2.5% of the area is in each tail, which, based on the z table, corresponds to critical values of z* = ±1.96. This is shown in Figure 7.2.

Figure 7.2. Two-tailed rejection region. (“ Rejection Region for Two-Tailed Test ” by Judy Schmitt is licensed under CC BY-NC-SA 4.0 .)
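Instead of reading the normal table, the same critical values can be obtained from the inverse of the standard normal cumulative distribution. The following is a minimal Python sketch using only the standard library; the function name `critical_values` and the tail labels `"right"`, `"left"`, and `"two"` are illustrative choices of ours, not notation from this chapter.

```python
from statistics import NormalDist


def critical_values(alpha, tail):
    """Return the z critical value(s) for a significance level and tail.

    tail is "right", "left", or "two" (labels invented for this sketch).
    """
    std_normal = NormalDist()  # mean 0, standard deviation 1
    if tail == "right":
        # All of alpha sits in the upper tail.
        return (std_normal.inv_cdf(1 - alpha),)
    if tail == "left":
        # All of alpha sits in the lower tail.
        return (std_normal.inv_cdf(alpha),)
    if tail == "two":
        # Split alpha evenly, half in each tail.
        upper = std_normal.inv_cdf(1 - alpha / 2)
        return (-upper, upper)
    raise ValueError("tail must be 'right', 'left', or 'two'")


print([round(z, 3) for z in critical_values(0.05, "right")])  # [1.645]
print([round(z, 3) for z in critical_values(0.05, "left")])   # [-1.645]
print([round(z, 3) for z in critical_values(0.05, "two")])    # [-1.96, 1.96]
```

The computed values match the table lookups described above: 1.645 in one tail and ±1.96 when the 5% is split across both tails.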

Thus, any z score falling outside ±1.96 (greater than 1.96 in absolute value) falls in the rejection region. When we use z scores in this way, the obtained value of z (sometimes called z obtained and abbreviated z_obt) is something known as a test statistic, which is simply an inferential statistic used to test a null hypothesis. The formula for our z statistic has not changed:

$$z = \frac{M - \mu}{\sigma / \sqrt{n}}$$

Figure 7.3. Relationship between α, z_obt, and p. (“Relationship between alpha, z-obt, and p” by Judy Schmitt is licensed under CC BY-NC-SA 4.0.)

When the null hypothesis is rejected, the effect is said to have statistical significance , or be statistically significant. For example, in the Physicians’ Reactions case study, the probability value is .0057. Therefore, the effect of obesity is statistically significant and the null hypothesis that obesity makes no difference is rejected. It is important to keep in mind that statistical significance means only that the null hypothesis of exactly no effect is rejected; it does not mean that the effect is important, which is what “significant” usually means. When an effect is significant, you can have confidence the effect is not exactly zero. Finding that an effect is significant does not tell you about how large or important the effect is.

Do not confuse statistical significance with practical significance. A small effect can be highly significant if the sample size is large enough.
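To see why a small effect can become statistically significant with a large enough sample, we can hold the mean difference fixed and let only the sample size grow. The sketch below uses made-up numbers (a population mean of 100, a standard deviation of 15, and a sample mean of 101); none of these values come from the chapter, and `z_statistic` is simply a helper name chosen for illustration.

```python
from math import sqrt


def z_statistic(sample_mean, pop_mean, pop_sd, n):
    """One-sample z: mean difference divided by the standard error."""
    return (sample_mean - pop_mean) / (pop_sd / sqrt(n))


# Hypothetical values: a 1-point difference on a scale with sigma = 15.
pop_mean, pop_sd, sample_mean = 100, 15, 101
z_crit = 1.96  # two-tailed critical value at alpha = .05

for n in (25, 100, 900, 3600):
    z = z_statistic(sample_mean, pop_mean, pop_sd, n)
    print(f"n = {n:4d}  z = {z:.2f}  significant: {abs(z) > z_crit}")
```

The difference between the means never changes, yet the test moves from non-significant at n = 25 to significant at n = 900, purely because the standard error shrinks as n grows.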

Why does the word “significant” in the phrase “statistically significant” mean something so different from other uses of the word? Interestingly, this is because the meaning of “significant” in everyday language has changed. It turns out that when the procedures for hypothesis testing were developed, something was “significant” if it signified something. Thus, finding that an effect is statistically significant signifies that the effect is real and not due to chance. Over the years, the meaning of “significant” changed, leading to the potential misinterpretation.

The Hypothesis Testing Process


The process of testing hypotheses follows a simple four-step procedure. This process will be what we use for the remainder of the textbook and course, and although the hypothesis and statistics we use will change, this process will not.

Step 1: State the Hypotheses

Your hypotheses are the first thing you need to lay out. Otherwise, there is nothing to test! You have to state the null hypothesis (which is what we test) and the alternative hypothesis (which is what we expect). These should be stated mathematically as they were presented above and in words, explaining in normal English what each one means in terms of the research question.

Step 2: Find the Critical Values

Step 3: Calculate the Test Statistic and Effect Size

Once we have our hypotheses and the standards we use to test them, we can collect data and calculate our test statistic—in this case z . This step is where the vast majority of differences in future chapters will arise: different tests used for different data are calculated in different ways, but the way we use and interpret them remains the same. As part of this step, we will also calculate effect size to better quantify the magnitude of the difference between our groups. Although effect size is not considered part of hypothesis testing, reporting it as part of the results is approved convention.

Step 4: Make the Decision

Finally, once we have our obtained test statistic, we can compare it to our critical value and decide whether we should reject or fail to reject the null hypothesis. When we do this, we must interpret the decision in relation to our research question, stating what we concluded, what we based our conclusion on, and the specific statistics we obtained.
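The four steps can be sketched as a single function. This is only an illustration under stated assumptions: it applies to a one-sample z test with a known population standard deviation, the function name `z_test` and its parameters are our own, and the numbers in the usage lines are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def z_test(sample_mean, null_mean, pop_sd, n, alpha=0.05, tail="two"):
    """Sketch of the four-step procedure for a one-sample z test.

    Step 1 (stating the hypotheses) is represented by null_mean and tail,
    where tail is "two", "right", or "left".
    """
    std_normal = NormalDist()

    # Step 2: find the critical value for the chosen tail and alpha.
    if tail == "two":
        z_crit = std_normal.inv_cdf(1 - alpha / 2)
    elif tail == "right":
        z_crit = std_normal.inv_cdf(1 - alpha)
    elif tail == "left":
        z_crit = std_normal.inv_cdf(alpha)
    else:
        raise ValueError("tail must be 'two', 'right', or 'left'")

    # Step 3: calculate the test statistic and the effect size.
    z_obt = (sample_mean - null_mean) / (pop_sd / sqrt(n))
    d = abs(sample_mean - null_mean) / pop_sd

    # Step 4: make the decision by comparing z_obt to the critical value.
    if tail == "two":
        reject = abs(z_obt) > z_crit
    elif tail == "right":
        reject = z_obt > z_crit
    else:
        reject = z_obt < z_crit

    return {"z_crit": z_crit, "z_obt": z_obt, "d": d, "reject": reject}


# Hypothetical data: M = 52, null value 50, sigma = 6, n = 36.
result = z_test(52, 50, 6, 36)
print(result["reject"])           # True
print(round(result["z_obt"], 2))  # 2.0
```

Interpreting the decision in words, in relation to the research question, is still up to us; the function only covers the arithmetic.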

Example A Movie Popcorn

Our manager is looking for a difference in the mean weight of popcorn bags compared to the population mean of 8. We will need both a null and an alternative hypothesis written both mathematically and in words. We’ll always start with the null hypothesis:

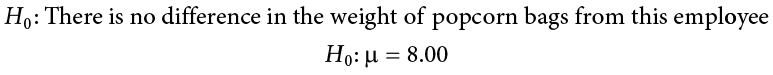

In this case, we don’t know if the bags will be too full or not full enough, so we do a two-tailed alternative hypothesis that there is a difference.

Our critical values are based on two things: the directionality of the test and the level of significance. We decided in Step 1 that a two-tailed test is the appropriate directionality. We were given no information about the level of significance, so we assume that α = .05 is what we will use. As stated earlier in the chapter, the critical values for a two-tailed z test at α = .05 are z* = ±1.96. These will be the criteria we use to test our hypothesis. We can now draw out our distribution, as shown in Figure 7.4, so we can visualize the rejection region and make sure it makes sense.

Figure 7.4. Rejection region for z* = ±1.96. (“Rejection Region z+-1.96” by Judy Schmitt is licensed under CC BY-NC-SA 4.0.)

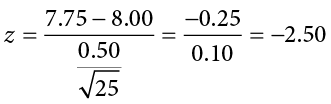

Now we come to our formal calculations. Let’s say that the manager collects data and finds that the average weight of this employee’s popcorn bags is M = 7.75 cups. We can now plug this value, along with the values presented in the original problem, into our equation for z :

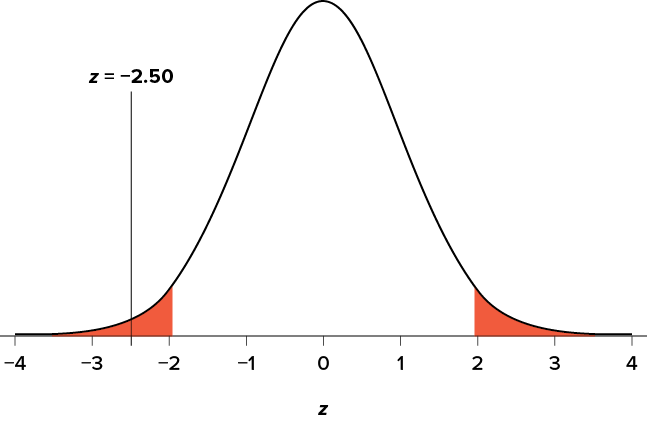

So our test statistic is z = −2.50, which we can draw onto our rejection region distribution as shown in Figure 7.5 .

Figure 7.5. Test statistic location. (“ Test Statistic Location z-2.50 ” by Judy Schmitt is licensed under CC BY-NC-SA 4.0 .)
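The popcorn calculation can be checked with a few lines of Python. The population standard deviation of 0.50 cups and the sample size of 25 are taken as assumptions here: n = 25 appears in the written conclusion of this example, and a standard deviation of 0.50 is the value implied by the reported effect size of d = 0.50. The two-tailed p value is included only to illustrate the reported p < .05.

```python
from math import sqrt
from statistics import NormalDist

mu = 8.00     # population mean under the null hypothesis (cups)
sigma = 0.50  # assumed population standard deviation (implied by d = 0.50)
n = 25        # number of bags sampled, per the conclusion of this example
M = 7.75      # observed sample mean (cups)

standard_error = sigma / sqrt(n)
z_obt = (M - mu) / standard_error

std_normal = NormalDist()
z_crit = std_normal.inv_cdf(1 - 0.05 / 2)        # two-tailed, alpha = .05
p_two_tailed = 2 * std_normal.cdf(-abs(z_obt))   # area in both tails beyond |z|

print(f"z_obt = {z_obt:.2f}")                    # z_obt = -2.50
print(f"p = {p_two_tailed:.4f}")
print("reject H_0:", abs(z_obt) > z_crit)        # reject H_0: True
```

Under these assumptions the p value comes out to roughly .012, which is consistent with reporting p < .05.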

Effect Size

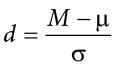

When we reject the null hypothesis, we are stating that the difference we found was statistically significant, but we have mentioned several times that this tells us nothing about practical significance. To get an idea of the actual size of what we found, we can compute a new statistic called an effect size. Effect size gives us an idea of how large, important, or meaningful a statistically significant effect is. For mean differences like we calculated here, our effect size is Cohen’s d:

$$d = \frac{M - \mu}{\sigma}$$

This is very similar to our formula for z , but we no longer take into account the sample size (since overly large samples can make it too easy to reject the null). Cohen’s d is interpreted in units of standard deviations, just like z . For our example:

Cohen’s d is interpreted as small, moderate, or large. Specifically, d = 0.20 is small, d = 0.50 is moderate, and d = 0.80 is large. Obviously, values can fall in between these guidelines, so we should use our best judgment and the context of the problem to make our final interpretation of size. Our effect size happens to be exactly equal to one of these, so we say that there is a moderate effect.
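As a rough check on the effect size for the popcorn example, the sketch below computes Cohen’s d and attaches the nearest guideline label. The standard deviation of 0.50 is an assumption (it is the value implied by the reported d = 0.50), and `nearest_benchmark` is a hypothetical helper of ours: picking the closest guideline is a crude rule, not a substitute for the judgment the text calls for.

```python
def cohens_d(sample_mean, pop_mean, pop_sd):
    """Effect size for a one-sample mean difference, reported as a magnitude."""
    return abs(sample_mean - pop_mean) / pop_sd


# Guideline values quoted in the text.
BENCHMARKS = {0.20: "small", 0.50: "moderate", 0.80: "large"}


def nearest_benchmark(d):
    """Hypothetical helper: label d by whichever guideline value is closest."""
    closest = min(BENCHMARKS, key=lambda b: abs(d - b))
    return BENCHMARKS[closest]


d = cohens_d(7.75, 8.00, 0.50)  # sigma = 0.50 is assumed, see above
print(d, nearest_benchmark(d))  # 0.5 moderate
```

Note that the sample size does not appear anywhere in the calculation, which is what separates d from z.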

Effect sizes are incredibly useful and provide important information and clarification that overcomes some of the weakness of hypothesis testing. Any time you perform a hypothesis test, whether statistically significant or not, you should always calculate and report effect size.

Looking at Figure 7.5, we can see that our obtained z statistic falls in the rejection region. We can also directly compare it to our critical value: in terms of absolute value, |−2.50| = 2.50 is greater than 1.96, so we reject the null hypothesis. We can now write our conclusion:

Reject H_0. Based on the sample of 25 bags, we can conclude that the average popcorn bag from this employee is smaller (M = 7.75 cups) than the average weight of popcorn bags at this movie theater, and the effect size was moderate, z = −2.50, p < .05, d = 0.50.

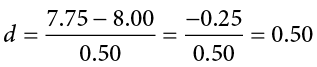

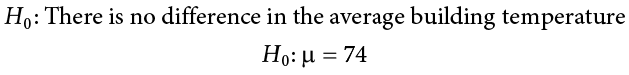

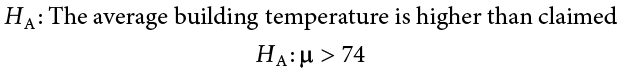

Example B Office Temperature

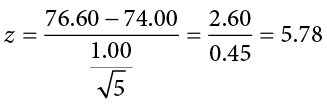

Let’s do another example to solidify our understanding. Let’s say that the office building you work in is supposed to be kept at 74 degrees Fahrenheit during the summer months but is allowed to vary by 1 degree in either direction. You suspect that, as a cost saving measure, the temperature was secretly set higher. You set up a formal way to test your hypothesis.

You start by laying out the null hypothesis:

$$H_0: \mu = 74$$

Next you state the alternative hypothesis. You have reason to suspect a specific direction of change, so you make a one-tailed test:

$$H_A: \mu > 74$$

You know that the most common level of significance is α = .05, so you keep that the same and know that the critical value for a one-tailed z test is z* = 1.645. To keep track of the directionality of the test and rejection region, you draw out your distribution as shown in Figure 7.6.

Figure 7.6. Rejection region. (“ Rejection Region z1.645 ” by Judy Schmitt is licensed under CC BY-NC-SA 4.0 .)

Now that you have everything set up, you spend one week collecting temperature data:

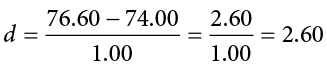

This value falls so far into the tail that it cannot even be plotted on the distribution ( Figure 7.7 )! Because the result is significant, you also calculate an effect size:

The effect size you calculate is definitely large, meaning someone has some explaining to do!

Figure 7.7. Obtained z statistic. (“ Obtained z5.77 ” by Judy Schmitt is licensed under CC BY-NC-SA 4.0 .)

You compare your obtained z statistic, z = 5.77, to the critical value, z* = 1.645, and find that z > z*. Therefore you reject the null hypothesis, concluding:

Reject H_0. Based on 5 observations, the average temperature (M = 76.6 degrees) is statistically significantly higher than it is supposed to be, and the effect size was large, z = 5.77, p < .05, d = 2.60.
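The office temperature example can be reproduced the same way. The sketch assumes a population standard deviation of 1 degree, which matches both the "vary by 1 degree" wording and the reported d = 2.60; the mean of 76.6 and the 5 observations come from the conclusion above.

```python
from math import sqrt
from statistics import NormalDist

mu_0 = 74.0   # temperature the building is supposed to hold
sigma = 1.0   # assumed population standard deviation (1 degree)
n = 5         # number of observations
M = 76.6      # observed mean temperature

z_crit = NormalDist().inv_cdf(1 - 0.05)   # one-tailed (right), alpha = .05
z_obt = (M - mu_0) / (sigma / sqrt(n))
d = abs(M - mu_0) / sigma

print(f"z_crit = {z_crit:.3f}")           # z_crit = 1.645
print(f"z_obt = {z_obt:.2f}")
print(f"d = {d:.2f}")                     # d = 2.60
print("reject H_0:", z_obt > z_crit)      # reject H_0: True
```

Carried at full precision, the statistic comes out near 5.81 instead of 5.77. A plausible explanation, though only a guess on our part, is that the reported value used a standard error rounded to 0.45 before dividing. The decision is the same either way.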

Example C Different Significance Level

Finally, let’s take a look at an example phrased in generic terms, rather than in the context of a specific research question, to see the individual pieces one more time. This time, however, we will use a stricter significance level, α = .01, to test the hypothesis.

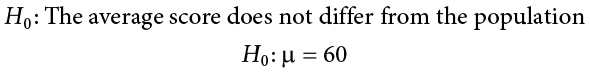

We will use 60 as an arbitrary null hypothesis value:

$$H_0: \mu = 60$$

We will assume a two-tailed test:

$$H_A: \mu \neq 60$$

We have seen the critical values for z tests at α = .05 levels of significance several times. To find the values for α = .01, we will go to the Standard Normal Distribution Table and find the z score cutting off .005 (.01 divided by 2 for a two-tailed test) of the area in the tail, which is z* = ±2.575. Notice that this cutoff is much higher than it was for α = .05. This is because we need much less of the area in the tail, so we need to go very far out to find the cutoff. As a result, this will require a much larger effect or much larger sample size in order to reject the null hypothesis.
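To make the cost of a stricter significance level concrete, the sketch below compares the two-tailed cutoffs at the two levels and converts each into the smallest mean difference that would lead to rejection. It assumes the standard deviation of 10 and sample size of 10 used in this example; `two_tailed_cutoff` is an illustrative helper name.

```python
from math import sqrt
from statistics import NormalDist


def two_tailed_cutoff(alpha):
    """Positive z critical value with alpha split evenly across both tails."""
    return NormalDist().inv_cdf(1 - alpha / 2)


sigma, n = 10, 10                 # values assumed from this example
standard_error = sigma / sqrt(n)

for alpha in (0.05, 0.01):
    z_crit = two_tailed_cutoff(alpha)
    # Smallest |M - mu| whose z statistic would reach the cutoff.
    min_difference = z_crit * standard_error
    print(f"alpha = {alpha}: z* = {z_crit:.3f}, "
          f"|M - mu| must exceed {min_difference:.2f}")
```

The exact cutoff for the stricter level is about 2.576; the table-based value of 2.575 is the midpoint between the two nearest table entries. With these assumed values, the required mean difference rises from roughly 6.2 to roughly 8.1 points.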

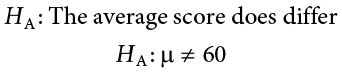

We can now calculate our test statistic. We will use σ = 10 as our known population standard deviation and the following data to calculate our sample mean:

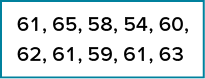

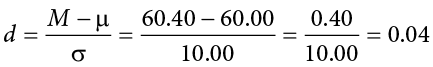

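The average of these scores is M = 60.40. From this we calculate our z statistic as:

$$z = \frac{M - \mu}{\sigma / \sqrt{n}} = \frac{60.40 - 60.00}{10 / \sqrt{10}} = \frac{0.40}{3.16} = 0.13$$

The Cohen’s d effect size calculation is:

$$d = \frac{M - \mu}{\sigma} = \frac{60.40 - 60.00}{10} = 0.04$$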

Our obtained z statistic, z = 0.13, is very small. It is much less than our critical value of 2.575. Thus, this time, we fail to reject the null hypothesis. Our conclusion would look something like:

Fail to reject H_0. Based on the sample of 10 scores, we cannot conclude that there is an effect causing the mean (M = 60.40) to be statistically significantly different from 60.00, and the effect size supports this interpretation, z = 0.13, p > .01, d = 0.04.
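The numbers in this conclusion can be verified with a short script. All inputs come from the example itself (null value 60, standard deviation 10, 10 scores, sample mean 60.40); the p value line is an addition of ours to illustrate the reported p > .01.

```python
from math import sqrt
from statistics import NormalDist

mu_0 = 60.0    # null hypothesis value
sigma = 10.0   # known population standard deviation
n = 10         # number of scores
M = 60.40      # sample mean
alpha = 0.01

std_normal = NormalDist()
z_crit = std_normal.inv_cdf(1 - alpha / 2)       # two-tailed cutoff
z_obt = (M - mu_0) / (sigma / sqrt(n))
d = abs(M - mu_0) / sigma
p_two_tailed = 2 * std_normal.cdf(-abs(z_obt))   # area in both tails beyond |z|

print(f"z_obt = {z_obt:.2f}")                    # z_obt = 0.13
print(f"d = {d:.2f}")                            # d = 0.04
print("reject H_0:", abs(z_obt) > z_crit)        # reject H_0: False
print("p > alpha:", p_two_tailed > alpha)        # p > alpha: True
```

The obtained statistic is nowhere near the cutoff, so the decision would be the same at α = .05 as well.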

Other Considerations in Hypothesis Testing

There are several other considerations we need to keep in mind when performing hypothesis testing.

Errors in Hypothesis Testing

In the Physicians’ Reactions case study, the probability value associated with the significance test is .0057. Therefore, the null hypothesis was rejected, and it was concluded that physicians intend to spend less time with obese patients. Despite the low probability value, it is possible that the null hypothesis of no true difference between obese and average-weight patients is true and that the large difference between sample means occurred by chance. If this is the case, then the conclusion that physicians intend to spend less time with obese patients is in error. This type of error is called a Type I error. More generally, a Type I error occurs when a significance test results in the rejection of a true null hypothesis.
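A small simulation can make the idea of a Type I error more tangible. The sketch below repeatedly draws samples from a population in which the null hypothesis is true by construction, runs a two-tailed z test on each, and counts how often the null is rejected anyway. Everything here is an assumption for illustration: the standard normal population, the sample size of 25, the number of trials, the seed, and the function name `type_one_error_rate`. In the long run, the proportion of false rejections should sit close to the significance level.

```python
import random
from math import sqrt
from statistics import NormalDist, fmean


def type_one_error_rate(alpha=0.05, n=25, trials=4000, seed=0):
    """Estimate how often a true null hypothesis is rejected by chance."""
    rng = random.Random(seed)           # fixed seed so the run is repeatable
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    mu, sigma = 0.0, 1.0                # the null hypothesis is exactly true

    rejections = 0
    for _ in range(trials):
        sample = [rng.gauss(mu, sigma) for _ in range(n)]
        z_obt = (fmean(sample) - mu) / (sigma / sqrt(n))
        if abs(z_obt) > z_crit:
            rejections += 1             # a Type I error: true null rejected
    return rejections / trials


rate = type_one_error_rate()
print(f"estimated Type I error rate: {rate:.3f}")
```

Every rejection counted here is an error, because no real effect exists in the simulated population.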