Research Methodologies: Research Instruments

Types of Research Instruments

A research instrument is a tool you will use to help you collect, measure and analyze the data you use as part of your research. The choice of research instrument will usually be yours to make as the researcher and will be whichever best suits your methodology.

There are many different research instruments you can use in collecting data for your research:

- Interviews (either as a group or one-on-one). You can carry out interviews in many different ways. For example, your interview can be structured, semi-structured, or unstructured. They differ in how formal and fixed the set of questions put to the interviewee is. In a group interview, you may choose to ask the interviewees to give you their opinions or perceptions on certain topics.

- Surveys (online or in-person). In survey research, you pose questions and ask the person taking the survey to respond. You may wish to use free-answer questions, such as essay-style questions, or closed questions, such as multiple choice. You may even wish to make the survey a mixture of both.

- Focus Groups. Similar to the group interview above, you may wish to ask a focus group to discuss a particular topic or opinion while you make a note of the answers given.

- Observations. This is a good research instrument to use if you are looking into human behaviors. Different ways of researching this include studying the spontaneous behavior of participants in their everyday life, or something more structured. A structured observation is research conducted at a set time and place where researchers observe behavior as planned and agreed upon with participants.

These are the most common ways of carrying out research, but it is really dependent on your needs as a researcher and what approach you think is best to take. It is also possible to combine a number of research instruments if this is necessary and appropriate in answering your research problem.

- Last Updated: Sep 27, 2022 12:28 PM

- URL: https://paperpile.libguides.com/research-methodologies

What is a Research Instrument?

- By DiscoverPhDs

- October 9, 2020

The term research instrument refers to any tool that you may use to collect or obtain data, measure data and analyse data that is relevant to the subject of your research.

Research instruments are often used in the fields of social sciences and health sciences. These tools can also be found within education that relates to patients, staff, teachers and students.

The format of a research instrument may consist of questionnaires, surveys, interviews, checklists or simple tests. The choice of which specific research instrument to use is made by the researcher. It will also be strongly related to the actual methods that will be used in the specific study.

What Makes a Good Research Instrument?

A good research instrument is one that has been validated and has proven reliability. It should be one that can collect data in a way that’s appropriate to the research question being asked.

The research instrument must be able to assist in answering the research aims, objectives and research questions, as well as prove or disprove the hypothesis of the study.

It should not introduce any bias in the way that data is collected, and it should be clear how the research instrument should be used appropriately.

What are the Different Types of Interview Research Instruments?

The general format of an interview is where the interviewer asks the interviewee to answer a set of questions which are normally asked and answered verbally. There are several different types of interview research instruments that may exist.

- A structured interview may be used, in which a specific number of questions are formally asked of the interviewee and their responses are recorded using a systematic and standard methodology.

- An unstructured interview on the other hand may still be based on the same general theme of questions but here the person asking the questions (the interviewer) may change the order the questions are asked in and the specific way in which they’re asked.

- A focused interview is one in which the interviewer will adapt their line or content of questioning based on the responses from the interviewee.

- A focus group interview is one in which a group of volunteers or interviewees are asked questions to understand their opinion or thoughts on a specific subject.

- A non-directive interview is one in which there are no specific questions agreed upon but instead the format is open-ended and more reactionary in the discussion between interviewer and interviewee.

What are the Different Types of Observation Research Instruments?

An observation research instrument is one in which a researcher makes observations and records of the behaviour of individuals. There are several different types.

Structured observations occur when the study is performed at a predetermined location and time, in which the volunteers or study participants are observed using standardised methods.

Naturalistic observations are focused on volunteers or participants being in more natural environments in which their reactions and behaviour are also more natural or spontaneous.

A participant observation occurs when the person conducting the research actively becomes part of the group of volunteers or participants that he or she is researching.

Final Comments

The types of research instruments will depend on the format of the research study being performed: qualitative, quantitative or a mixed methodology. You may for example utilise questionnaires when a study is more qualitative or use a scoring scale in more quantitative studies.

Uncomplicated Reviews of Educational Research Methods

- Instrument, Validity, Reliability

Part I: The Instrument

Instrument is the general term that researchers use for a measurement device (survey, test, questionnaire, etc.). To help distinguish between instrument and instrumentation, consider that the instrument is the device and instrumentation is the course of action (the process of developing, testing, and using the device).

Instruments fall into two broad categories, researcher-completed and subject-completed, distinguished by those instruments that researchers administer versus those that are completed by participants. Researchers choose which type of instrument, or instruments, to use based on the research question.

Usability refers to the ease with which an instrument can be administered, interpreted by the participant, and scored/interpreted by the researcher. Example usability problems include:

- Students are asked to rate a lesson immediately after class, but there are only a few minutes before the next class begins (problem with administration).

- Students are asked to keep self-checklists of their after school activities, but the directions are complicated and the item descriptions confusing (problem with interpretation).

- Teachers are asked about their attitudes regarding school policy, but some questions are worded poorly which results in low completion rates (problem with scoring/interpretation).

Validity and reliability concerns (discussed below) will help alleviate usability issues. For now, we can identify five usability considerations:

- How long will it take to administer?

- Are the directions clear?

- How easy is it to score?

- Do equivalent forms exist?

- Have any problems been reported by others who used it?

It is best to use an existing instrument, one that has been developed and tested numerous times, such as can be found in the Mental Measurements Yearbook. We will turn to why next.

Part II: Validity

Validity is the extent to which an instrument measures what it is supposed to measure and performs as it is designed to perform. It is rare, if not nearly impossible, for an instrument to be 100% valid, so validity is generally measured in degrees. As a process, validation involves collecting and analyzing data to assess the accuracy of an instrument. There are numerous statistical tests and measures to assess the validity of quantitative instruments, which generally involve pilot testing. The remainder of this discussion focuses on external validity and content validity.

External validity is the extent to which the results of a study can be generalized from a sample to a population. Establishing external validity for an instrument, then, follows directly from sampling. Recall that a sample should be an accurate representation of a population, because the total population may not be available. An instrument that is externally valid helps obtain population generalizability, or the degree to which a sample represents the population.

Content validity refers to the appropriateness of the content of an instrument. In other words, do the measures (questions, observation logs, etc.) accurately assess what you want to know? This is particularly important with achievement tests. Consider that a test developer wants to maximize the validity of a unit test for 7th grade mathematics. This would involve taking representative questions from each of the sections of the unit and evaluating them against the desired outcomes.

Part III: Reliability

Reliability can be thought of as consistency. Does the instrument consistently measure what it is intended to measure? Reliability cannot be calculated exactly; it can only be estimated. There are four general estimators that you may encounter in reading research:

- Inter-Rater/Observer Reliability: The degree to which different raters/observers give consistent answers or estimates.

- Test-Retest Reliability: The consistency of a measure evaluated over time.

- Parallel-Forms Reliability: The reliability of two tests constructed the same way, from the same content.

- Internal Consistency Reliability: The consistency of results across items, often measured with Cronbach’s Alpha.
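Two of these estimators can be sketched in a few lines of code. The example below is a minimal illustration, not a prescribed procedure: it computes Cronbach's Alpha for internal consistency and, as one common choice for inter-rater reliability that the text above does not name, Cohen's kappa for two raters. The function names and the sample data are hypothetical.

```python
from collections import Counter
from statistics import variance


def cronbach_alpha(scores):
    """Estimate internal consistency.

    scores: one row per respondent, each row holding that
    respondent's scores on the k items of the instrument.
    """
    k = len(scores[0])
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    # Sample variance of each item (column) across respondents.
    item_variances = [variance(column) for column in zip(*scores)]
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in scores])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


def cohen_kappa(rater_a, rater_b):
    """Estimate agreement between two raters, corrected for chance.

    Assumption: both raters labelled the same subjects in the same order.
    Undefined (division by zero) if chance agreement is exactly 1.
    """
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / n ** 2
    return (observed - expected) / (1 - expected)


# Hypothetical data: four respondents answering three items identically.
consistent_scores = [[1, 1, 1], [2, 2, 2], [3, 3, 3], [4, 4, 4]]
alpha = cronbach_alpha(consistent_scores)

# Hypothetical data: two observers coding the same four behaviours.
kappa = cohen_kappa(["yes", "yes", "no", "no"], ["yes", "no", "no", "no"])
```

With perfectly consistent items the alpha estimate reaches its maximum of 1; real instruments will score lower, and what counts as acceptable depends on the field and the purpose of the instrument.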

Relating Reliability and Validity

Reliability is directly related to the validity of the measure. There are several important principles. First, a test can be considered reliable, but not valid. Consider the SAT, used as a predictor of success in college. It is a reliable test (high scores relate to high GPA), though only a moderately valid indicator of success (due to the lack of structured environment – class attendance, parent-regulated study, and sleeping habits – each holistically related to success).

Second, validity is more important than reliability. Using the above example, college admissions may consider the SAT a reliable test, but not necessarily a valid measure of other quantities colleges seek, such as leadership capability, altruism, and civic involvement. The combination of these aspects, alongside the SAT, is a more valid measure of the applicant’s potential for graduation, later social involvement, and generosity (alumni giving) toward the alma mater.

Finally, the most useful instrument is both valid and reliable. Proponents of the SAT argue that it is both. It is a moderately reliable predictor of future success and a moderately valid measure of a student’s knowledge in Mathematics, Critical Reading, and Writing.

Part IV: Validity and Reliability in Qualitative Research

Thus far, we have discussed instrumentation as related to mostly quantitative measurement. Establishing validity and reliability in qualitative research can be less precise, though participant/member checks, peer evaluation (another researcher checks the researcher’s inferences based on the instrument; Denzin & Lincoln, 2005), and multiple methods (keyword: triangulation) are convincingly used. Some qualitative researchers reject the concept of validity due to the constructivist viewpoint that reality is unique to the individual and cannot be generalized. These researchers argue for a different standard for judging research quality. For a more complete discussion of trustworthiness, see Lincoln and Guba’s (1985) chapter.

About Research Rundowns

Research Rundowns was made possible by support from the Dewar College of Education at Valdosta State University .

Research Instruments: Overview

About This Guide

This guide shows users how to identify, assess, and obtain research instruments for reuse.

A research instrument is the tool or method a researcher uses to collect, measure, and analyze data related to the subject or participant, and can be:

- tests, surveys, scales, questionnaires, checklists

- Finding Research Instruments & Tools Notes on the process of locating research instruments and tools in the Briscoe Library databases.

Already Know Your Instrument's Name?

If you already:

- Know the full name of your instrument OR

- Have a citation for the instrument AND

- Have assessed the instrument for quality, applicability, and something else

you can go straight to the section on obtaining the instrument.

Research Tip: Talk to your faculty. They may have expertise in working with research instruments and can offer pointers on the process, or even recommend an instrument by name.

- Last Updated: Sep 1, 2023 8:28 AM

- URL: https://libguides.uthscsa.edu/research_instruments

- An Bras Dermatol

- v.89(6); Nov-Dec 2014

Field work I: selecting the instrument for data collection *

Joao Luiz Bastos

1 Universidade Federal de Santa Catarina (UFSC) - Florianópolis (SC), Brazil.

Rodrigo Pereira Duquia

2 Universidade Federal de Ciências da Saúde de Porto Alegre (UFCSPA) - Porto Alegre (RS), Brazil.

David Alejandro González-Chica

Jeovany Martínez Mesa

3 Latin American Cooperative Oncology Group (LACOG) - Porto Alegre (RS), Brazil.

Renan Rangel Bonamigo

The selection of instruments that will be used to collect data is a crucial step in the research process. Validity and reliability of the collected data and, above all, their potential comparability with data from previous investigations must be prioritized during this phase. We present a decision tree, which is intended to guide the selection of the instruments employed in research projects. Studies conducted along these lines have greater potential to broaden the knowledge on the studied subject and contribute to addressing truly socially relevant needs.

INTRODUCTION

This article discusses one of the most commonplace aspects of a researcher's daily tasks: selecting, from the various available options, the instruments for data collection that meet the intended objectives and, at the same time, respect budgetary and time constraints as well as other equally relevant issues in conducting research. The instrument for data collection is a key element of the traditional questionnaires, which are used to investigate various topics of interest among participants of scientific studies. It is through questionnaires / instruments aimed to assess, for example, sun exposure, family history of skin diseases and mental disorders, that it is possible to measure these phenomena and analyze their associations in health surveys. In this paper, we discuss only questionnaires and their elementary components - the instruments; the reader should refer to specialized literature for knowledge and proper management of other resources available for data collection, including, for example, equipment to measure blood pressure, exams on cutaneous surface lesions and collection of biological material in studies focused on biochemical markers, such as blood parameters. Even so, it is argued that the guiding principles presented in this text widely apply, with minor adaptations, to all data collection processes.

As discussed earlier, all scientific investigations, including those in the field of Dermatology, must start with a clear and predefined question. 1 Only after formulating a pertinent research question may the researcher and his/her team plan and implement a series of procedures capable of answering that question with acceptable levels of validity and reliability. This means that the scientific activity is organized by framing questions and executing a series of procedures to address them, including, for example, the use of questionnaires and their constituent instruments. Such procedures should be recognized as processes that respect ethical research guidelines and whose results are accepted by the scientific community, i.e. they are valid and reliable. Before moving forward, however, we should clarify, albeit briefly and partially, what is commonly meant by validity and reliability in science.

In general, validity is considered to be present in an instrument, procedure or research as a whole, when they produce results that reflect what they initially aimed to evaluate or measure. 2 A study can be judged both in terms of internal validity, when its conclusions are correct for that sample of studied individuals, and external validity, when its results can be generalized to other contexts and population domains. 3 For example, in a survey that estimates the frequency of pediatric atopic dermatitis in Southeast Brazil, the closer the results are to the examined subjects' reality, the greater their internal validity. In other words, if the actual frequency of atopic dermatitis were 12.5% for this region and population, a study that achieved a similar result would be considered internally valid. 4 The ability to generalize or extrapolate those results to other regions in the country would be reflected in the study's external validity. Furthermore, to be valid in any dimension this study should have used an established instrument, able to distinguish individuals who actually have this dermatological condition from those who do not have it. So, the study's validity depends on the validity of the very instruments that are used.

A research instrument is deemed reliable when it is able to consistently generate the same results after being applied repeatedly to the same group of subjects. This concept is often used in multiple stages of the research process including, for example, when a data collection supervisor performs a quality control check, reapplying some questions to the same subjects already interviewed, or during the construction of a new instrument, in the test-retest phase, in which the reliability and consistency of the given answers are examined. The Acne-Specific Quality of Life Questionnaire was considered reliable after recording consistent data on the same individuals in an interval of seven days between the first and second administrations. 5 Moreover, a study will be more reliable as more precise instruments are used in data collection and as more subjects are recruited - studies with a large number of participants present results with a smaller margin of error. It is noteworthy that, although the concept of reliability extends beyond the question of temporal consistency (test-retest), we will address this aspect in a more limited fashion in this article. The interested reader should consult specific publications for further discussion of this topic. 6
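Both ideas in the paragraph above lend themselves to a short numerical sketch. The example below is illustrative only: it summarizes test-retest consistency with a Pearson correlation between two administrations (one common summary, not necessarily the one used in the cited acne study) and shows how the margin of error of an estimated proportion shrinks as the sample grows. The scores are invented, the 12.5% prevalence is borrowed from the atopic dermatitis example purely as an input value, and the 1.96 multiplier assumes a 95% confidence level with a normal approximation.

```python
from math import sqrt
from statistics import mean


def pearson_r(first, second):
    """Pearson correlation between two administrations of an instrument."""
    mean_1, mean_2 = mean(first), mean(second)
    covariance = sum((a - mean_1) * (b - mean_2) for a, b in zip(first, second))
    spread_1 = sqrt(sum((a - mean_1) ** 2 for a in first))
    spread_2 = sqrt(sum((b - mean_2) ** 2 for b in second))
    return covariance / (spread_1 * spread_2)


def margin_of_error(proportion, sample_size, z=1.96):
    """Approximate margin of error for an estimated proportion.

    Assumption: simple random sampling and a normal approximation.
    """
    return z * sqrt(proportion * (1 - proportion) / sample_size)


# Hypothetical scores for the same five subjects, seven days apart.
day_0 = [10, 14, 18, 22, 30]
day_7 = [11, 13, 19, 21, 31]
retest_r = pearson_r(day_0, day_7)

# Quadrupling the sample halves the margin of error.
moe_400 = margin_of_error(0.125, 400)
moe_1600 = margin_of_error(0.125, 1600)
```

A correlation close to 1 indicates that subjects kept roughly the same relative position between the two administrations; it does not by itself show that the instrument is valid.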

Returning to our original question, it must be noted that the careful selection of instruments to be used in scientific investigations must have a solid theoretical basis and should not be considered a mere fad. Ultimately, the wrong choice of an instrument can compromise the internal validity of the study, producing misleading results, which are therefore unable to answer the research question originally formulated. Besides, the choice of an instrument also has implications for the ability to generalize the research results (external validity), and to compare them with those of other studies conducted nationally or internationally on the same subject - researchers using equivalent instruments can establish an effective dialogue, which enables a more comprehensive analysis of the phenomenon in question, including its antecedents and consequences. 7

In order to justify the need to carefully select the instruments to be used in scientific research and also provide basic guidelines so that these decisions are based on solid grounds, we will divide this article into the following sections: (1) On the comparative nature of scientific research; (2) How to select the most appropriate instrument for my research when there are prototypes available in the scientific literature; and (3) What to do when there are no available instruments to assess the phenomenon of interest to the researcher.

ON THE COMPARATIVE NATURE OF SCIENTIFIC RESEARCH

The inherently comparative nature of scientific research represents an aspect that may sometimes pass unnoticed even to the more experienced researcher. However, the careful examination of a project's theoretical framework, the discussion of a scientific article and also the study results are sufficient to easily demonstrate this comparative nature.

Investigations in the field of Social Anthropology, for example, are based on comparisons of complex cultural systems; the identification of idiosyncrasies in a particular cultural system is only possible after its confrontation with the characteristics of another system. 8 So, the conclusion that a specific South American indigenous population exhibits distinct kinship relations from those observed in the hegemonic Western family composition only occurs when these two forms of cultural systems are compared.

The same occurs in the healthcare field - comparisons are crucial to arrive at conclusions, including the evaluation of consistency of certain scientific findings among a set of previously conducted studies. Likewise, if a researcher is interested in examining the quality of life of patients affected by the pain caused by lower-limb ulcers, he and his team should necessarily make comparisons.

In this case, the comparison is between two distinct groups of subjects with lower-limb ulcers, one of them with pain and the other without it, to ascertain whether the levels of quality of life found in both groups are similar or not. If the researcher observes, by comparison, that the group with pain has a diminished quality of life compared to the group without pain, he may conclude that there is a negative correlation between quality of life and pain related to lower-limb ulcers.
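The two-group comparison described above can be sketched numerically. This is a minimal illustration under stated assumptions: the quality-of-life scores are invented, higher scores are assumed to mean better quality of life, and the standardized difference (Cohen's d) is a summary chosen here for illustration rather than one named in the article.

```python
from math import sqrt
from statistics import mean, stdev


def cohens_d(group_a, group_b):
    """Standardized mean difference between two independent groups."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_variance = (
        (n_a - 1) * stdev(group_a) ** 2 + (n_b - 1) * stdev(group_b) ** 2
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_variance)


# Hypothetical quality-of-life scores for patients with lower-limb ulcers.
with_pain = [52, 48, 55, 50, 45]
without_pain = [68, 72, 65, 70, 75]

# A negative difference means the group with pain scores lower on average.
mean_difference = mean(with_pain) - mean(without_pain)
effect_size = cohens_d(with_pain, without_pain)
```

The comparison is only meaningful if both groups were measured with the same instrument, which is the point the article goes on to make about comparability across studies.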

However, the comparative principle goes beyond contrasting internal groups in a study, as illustrated above. Researchers of a particular subject, for example, the development of melanocytic lesions, can only confirm that the use of sunscreen prevents their occurrence, when multiple scientific studies evaluating this question have previously shown it. In other words, by comparing the results generated by several investigations on the same topic, the scientific community can judge the consistency of the findings and thus make a solid conclusion about the subject matter.

Considering that the comparison of results from different studies is a key aspect of the production and consolidation of scientific knowledge, the following question arises: How should one conduct scientific studies so that their results are comparable to each other? Invariably, the answer to this question includes the use of scientific research instruments that are valid, reliable, and equivalent in different studies. So, what are the basic elements of the selection and use of these tools that enable this scientific dialogue? This is exactly what the subsequent section aims to answer.

HOW TO SELECT THE MOST APPROPRIATE INSTRUMENTS FOR MY RESEARCH, WHEN THERE ARE PROTOTYPES AVAILABLE IN THE SCIENTIFIC LITERATURE

We assume that the researcher has already formulated a clear and pertinent research question, which he or she wants to answer by conducting a scientific study. To illustrate the situation, imagine that a researcher is interested in estimating the frequency of depression and anxiety in a population of caregivers of pediatric patients with chronic dermatoses. The research question could be worded specifically in this way: What is the frequency of anxiety and depression in caregivers of children under five years of age, with chronic dermatoses (atopic dermatitis, vitiligo and psoriasis) residing in the city of Porto Alegre in 2014?

Considering that the phenomenon to be evaluated is restricted to anxiety and depression, how should the investigator proceed in this regard? There are at least two possible alternatives: the researcher can develop a set of entirely original items (instrument) to measure both mental disorders cited or select valid and reliable instruments already available in the scientific literature to assess such disorders.

Both alternatives have their own implications. Developing a new instrument means conducting an additional research project that will require considerable effort and time to be carried out. The scientific literature on the development and adaptation of instruments emphatically condemns this decision. 9 Often, researchers who choose to develop new instruments overestimate the deficiencies of the existing ones and disregard the time and effort needed to construct a new and appropriate prototype. In most cases, the optimistic and to some extent naive expectations of these researchers are frustrated by the development of a new instrument whose flaws are potentially similar to or even greater than the ones found in existing instruments, but with an additional aggravating factor: the possibility of comparing the results of a study performed with the newly developed instrument to those of previous studies employing other measuring tools is, at least initially, nonexistent. In general, we recommend developing new instruments only when there are no other options for measuring the phenomenon in question or when the existing ones have huge and confirmed limitations.

If the researcher has taken the (right) decision to use an existing instrument to assess anxiety and depression, we suggest that he or she should cover the following steps: 9

- Conduct a very broad and thorough literature search to retrieve the instruments that assess the phenomenon in question. The bibliographic search can start in the traditional bibliographical resources in healthcare, such as PubMed, but it must also take into consideration those available in other scientific fields, such as psychology and education, whenever necessary;

- Identify all the available instruments to measure the phenomenon of interest. Occasionally, some may not have been published in books, book chapters or as scientific articles. In these cases, it is essential to make contact with the researchers working in the area to ask them about the existence of unpublished measuring instruments (gray literature);

Aspects regarding validity and reliability (quality) of measurement instruments *

- Select an instrument that meets the goals of your study, considering ethical, budgetary and time constraints, among others. Whenever the chosen instrument has been created in a research context significantly distinct from that of your investigation, search the literature for studies of cross-cultural adaptation that aimed to produce an equivalent version of the instrument for the language and cultural specificities of your research context. 11 Thus, as argued by Reichenheim & Moraes, "the process of cross-cultural adaptation should be a combination between a component of literal translation of words and phrases from one language to another and a meticulous tuning process, that addresses the cultural context and lifestyle of the target-population to which the version will be applied." 10

Proceeding as described above, the privileged scenario will be the one in which studies addressing the same phenomena shall be conducted with equivalent instruments to assess them and therefore their results will be readily comparable. This would be the same as having a study conducted in different countries on the topics of depression and anxiety in caregivers of pediatric patients with chronic skin diseases and each one would use a version of the instrument adapted for the respective research contexts. So, while in Brazil the Hospital Anxiety and Depression Scale would be used in a version adapted to Brazilian Portuguese, the equivalent version of this same instrument in Japanese would be used in Japan. Therefore, the rates of these common mental disorders, estimated by both studies would be directly comparable at the end of each survey.

WHAT TO DO WHEN THERE ARE NO AVAILABLE INSTRUMENTS FOR MEASURING THE PHENOMENON OF INTEREST TO THE RESEARCHER

Whenever the researcher is confronted with the lack of instruments for measuring the phenomenon of interest, it is possible to follow at least one of these leads:

- Ultimately, review the research question and replace it with one that does not involve the assessment of the phenomenon for which there are no measurement tools available;

- Develop an ancillary research program whose main objective is to perform a cross-cultural adaptation of a measurement instrument to the context in which the investigation will be conducted. In this case, one must consider the need to postpone the original study until the adapted version of the instrument is available, something that takes, in the most optimistic prediction, two to three years; or

- Temporarily suspend the research initiative, waiting until other researchers have provided an adapted version of the selected instrument, making it possible to execute the study in a similar sociocultural context.

The synthesis of the entire process suggested in this article is illustrated in the decision tree depicted in Figure 1. We believe that the conduct of studies along these lines has an even greater potential to increase the knowledge on the particularities of any topic of interest and, ultimately, contribute to approaching socially relevant demands.

Figure 1. Decision tree to guide the process of choosing an instrument to collect scientific research data

Conflict of Interest: None

Financial Support: None

How to cite this article: Bastos JL, Duquia RP, González-Chica DA, Mesa JM, Bonamigo RR. Field work I: selecting the instrument for data collection. An Bras Dermatol. 2014;89(6):918-23.

* Work performed at the Universidade Federal de Santa Catarina, Porto Alegre Health Sciences Federal University and Latin American Cooperative Oncology Group (LACOG) - Porto Alegre (RS), Brazil.

Research Instruments

What are Research Instruments?

A research instrument is a tool used to collect, measure, and analyze data related to your subject.

Research instruments can be tests, surveys, scales, questionnaires, or even checklists.

To assure the strength of your study, it is important to use previously validated instruments!

Getting Started

Finding a research instrument can be very time-consuming!

This process involves three concrete steps: identifying an instrument, assessing it, and obtaining the full instrument.

It is common that sources will not provide the full instrument, but they will provide a citation with the publisher. In some cases, you may have to contact the publisher to obtain the full text.

Research Tip: Talk to your departmental faculty. Many of them have expertise in working with research instruments and can help you with this process.

- Last Updated: Aug 27, 2023 9:34 AM

- URL: https://guides.library.duq.edu/researchinstruments

Occupational Science & Occupational Therapy

About Research Instruments

Databases for finding research instruments, find research instruments in instrument databases, find research instruments in literature databases.

- Research instruments are measurement tools, such as questionnaires, scales, and surveys, that researchers use to measure variables in research studies.

- In most cases, it is better to use a previously validated instrument rather than create one from scratch.

- Always evaluate instruments for relevancy, validity, and reliability.

- Many older yet relevant, valid and reliable instruments are still popular today. Validating instruments is time-consuming and costly, so re-using instruments is common and helps connect your study with an existing body of research.

- Although you can conduct an internet search to find research instruments on publisher and organization websites, library databases are usually the best resources for identifying relevant, validated and reliable research instruments.

- Locating instruments takes time and requires you to follow multiple references until you reach the source.

- Databases provide information about instruments, but they do not provide access to the instruments themselves.

- In most cases, to access and use the actual instruments, you must contact the author or purchase the instrument from the publisher.

- In many cases, you will have to pay a fee to use the instrument.

- Even if the full instrument is freely available, you should contact the owner for permission to use and for any instructions and training necessary to use the instrument properly.

- CINAHL Complete: Most comprehensive database of full text for nursing & allied health journals from 1937 to present. Includes access to scholarly journal articles, dissertations, magazines, pamphlets, evidence-based care sheets, books, and research instruments.

- Health and Psychosocial Instruments (HAPI): Locate measurement instruments such as surveys, questionnaires, tests, coding schemes, checklists, rating scales, vignettes, etc. Scope includes medicine, nursing, public health, psychology, social work, communication, sociology, etc.

- Mental Measurements Yearbook (MMY): Use MMY to find REVIEWS of testing instruments. Actual test instruments are NOT provided. Most reviews discuss the validity and reliability of the tool. To purchase or obtain the actual test materials, you will need to contact the test publisher(s).

- PsycINFO: Abstract and citation database of scholarly literature in psychological, social, behavioral, and health sciences. Includes journal articles, books, reports, theses, and dissertations from 1806 to present.

- PsycTESTS: A research database that provides access to psychological tests, measures, scales, surveys, and other assessments as well as descriptive information about the test and its development. Records also discuss the reliability and validity of the tool. Some records include the full text of the test.

- Rehabilitation Measures Database (RMD): A web-based, searchable database of assessment instruments designed to help clinicians and researchers select appropriate measures for screening, monitoring patient progress, and assessing outcomes in rehabilitation. This database allows clinicians and researchers to search for instruments using a word search or specific characteristics of an instrument, such as the area of assessment, diagnosis, length of test, and cost. The search function returns relevant results and provides the user with the ability to refine the search. The instruments listed in the database are described with specific details regarding their reliability, validity, mode of administration, cost, and equipment required. Additionally, information to support the user in interpreting the results, such as minimal detectable change scores, cut-offs, and normative values, is included. A sample copy of the instrument is also provided when available.

- Last Updated: May 28, 2024 3:30 PM

- URL: https://libguides.usc.edu/healthsciences/ot

Finding Research Instruments, Surveys, and Tests: Home

What are Research Instruments?

A research instrument is a survey, questionnaire, test, scale, rating, or tool designed to measure the variable(s), characteristic(s), or information of interest, often a behavioral or psychological characteristic. Research instruments can be helpful tools for your research study.

"Careful planning for data collection can help with setting realistic goals. Data collection instrumentation, such as surveys, physiologic measures (blood pressure or temperature), or interview guides, must be identified and described. Using previously validated collection instruments can save time and increase the study's credibility. Once the data collection procedure has been determined, a time line for completion should be established." (Pierce, 2009, p. 159)

- Pierce, L.L. (2009). Twelve steps for success in the nursing research journey. Journal of Continuing Education in Nursing, 40(4), 154-162.

Researchers develop a research instrument as a method of data generation, and they share information about it in order to establish the credibility and validity of the method. Whether other researchers may use the instrument is the decision of the original author-researchers. They may make it publicly available for free or for a price, or they may not share it at all. Sources about research instruments aim to describe the instrument. They may or may not provide the instrument itself or the contact information of the author-researcher. The onus is on the reader-researcher to find the instrument itself or to contact the author-researcher to request permission for its use, if necessary.

How to choose the right one?

Are you trying to find background information about a research instrument? Or are you trying to find and obtain an actual copy of the instrument?

If you need information about a research instrument, what kind of information do you need? Do you need information on the structure of the instrument, its content, its development, its psychometric reliability or validity? What do you need?

If you plan to obtain an actual copy of the instrument to use in research, you need to be concerned not only with obtaining the instrument, but also obtaining permission to use the instrument. Research instruments may be copyrighted. To obtain permission, contact the copyright holder in writing (print or email).

If someone posts a published test or instrument without the permission of the copyright holder, they may be violating copyright and could be legally liable.

What are you trying to measure? For example, if you are studying depression, are you trying to measure the duration of depression, the intensity of depression, the change over time of the episodes, … what? The instrument must measure what you need or it is useless to you.

Factors to consider when selecting an instrument are:

- Well-tested factorial structure, validity & reliability

- Availability of supportive materials and technology for entering, analyzing and interpreting results

- Availability of normative data as a reference for evaluating, interpreting, or placing in context individual test scores

- Applicable to wide range of participants

- Can also be used as personal development tool/exercise

- User-friendliness & administrative ease

- Availability; can you obtain it?

- Does it require permission from the owner to use it?

- Financial cost

- Amount of time required

Check the validity and reliability of tests and instruments. Do they really measure what they claim to measure? Do they measure consistently over time, with different research subjects and ethnic groups, and after repeated use? Research articles that used the test will often include reliability and validity data.
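
The consistency question above can be made concrete with a reliability statistic. As a minimal sketch, assuming you have item-level scores for each respondent, the Python below computes Cronbach's alpha, one commonly reported measure of internal consistency. This guide does not name that statistic; the function name `cronbach_alpha` and the sample scores are hypothetical, and alpha covers only one facet of reliability (it says nothing about validity or about stability over time).

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a table of item scores.

    responses: list of rows, one row per respondent,
    each row holding that respondent's score on every item.
    """
    if len(responses) < 2:
        raise ValueError("need at least two respondents")
    n_items = len(responses[0])
    if n_items < 2:
        raise ValueError("need at least two items")
    if any(len(row) != n_items for row in responses):
        raise ValueError("every respondent must answer every item")

    # Sample variance of each item (column) across respondents.
    item_variances = [variance(column) for column in zip(*responses)]
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("total scores do not vary; alpha is undefined")

    return (n_items / (n_items - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical data: four respondents, three items scored 1 to 5.
scores = [
    [4, 5, 4],
    [2, 2, 3],
    [5, 4, 5],
    [1, 2, 1],
]
print(round(cronbach_alpha(scores), 3))
```

Values closer to 1 indicate that the items tend to vary together. Treat a figure you compute yourself as a check on your own sample, to be read alongside the reliability and validity data reported in published studies of the instrument.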

How to Locate an Instrument

Realize that searching for an instrument may take a lot of time. Instruments may be published in a book or article on a particular subject. They may be published and described in a dissertation. They may be posted on the Internet and freely available. A specific instrument may be found in multiple publications and have been used for a long time. Or it may be new and only described in a few places. It may only be available by contacting the person who developed it, who may or may not respond to your inquiry in a timely manner.

There are a variety of sources that may be used to search for research instruments. They include books, databases, Internet search engines, Web sites, journal articles, and dissertations.

A few key sources and search tips are listed in this guide.

Permission to Use the Test

If you plan to obtain an actual copy of the instrument to use in research, you need to be concerned not only with obtaining the instrument, but also with obtaining permission to use it. Research instruments may be copyrighted. To obtain permission, contact the copyright holder in writing (print or email). Written permission serves as a record that you obtained it.

It is a good idea to have them state in writing that they are indeed the copyright holder and that they grant you permission to use the instrument. If you wish to publish the actual instrument in your paper, get permission for that, too. You may write about the instrument without obtaining permission. (But remember to cite it!)

If someone posts a published test or instrument without the permission of the copyright holder, they are violating copyright and could be legally liable.

- Last Updated: Mar 12, 2024 5:10 PM

- URL: https://library.indianastate.edu/instruments

What Is Research, and Why Do People Do It?

- First Online: 03 December 2022

- James Hiebert

- Jinfa Cai

- Stephen Hwang

- Anne K Morris

- Charles Hohensee

Part of the book series: Research in Mathematics Education (RME)

Abstract

Every day people do research as they gather information to learn about something of interest. In the scientific world, however, research means something different than simply gathering information. Scientific research is characterized by its careful planning and observing, by its relentless efforts to understand and explain, and by its commitment to learn from everyone else seriously engaged in research. We call this kind of research scientific inquiry and define it as “formulating, testing, and revising hypotheses.” By “hypotheses” we do not mean the hypotheses you encounter in statistics courses. We mean predictions about what you expect to find and rationales for why you made these predictions. Throughout this and the remaining chapters we make clear that the process of scientific inquiry applies to all kinds of research studies and data, both qualitative and quantitative.

Part I. What Is Research?

Have you ever studied something carefully because you wanted to know more about it? Maybe you wanted to know more about your grandmother’s life when she was younger so you asked her to tell you stories from her childhood, or maybe you wanted to know more about a fertilizer you were about to use in your garden so you read the ingredients on the package and looked them up online. According to the dictionary definition, you were doing research.

Recall your high school assignments asking you to “research” a topic. The assignment likely included consulting a variety of sources that discussed the topic, perhaps including some “original” sources. Often, the teacher referred to your product as a “research paper.”

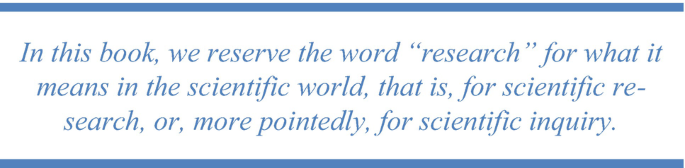

Were you conducting research when you interviewed your grandmother or wrote high school papers reviewing a particular topic? Our view is that you were engaged in part of the research process, but only a small part. In this book, we reserve the word “research” for what it means in the scientific world, that is, for scientific research or, more pointedly, for scientific inquiry.

Exercise 1.1

Before you read any further, write a definition of what you think scientific inquiry is. Keep it short: two to three sentences. You will periodically update this definition as you read this chapter and the remainder of the book.

This book is about scientific inquiry—what it is and how to do it. For starters, scientific inquiry is a process, a particular way of finding out about something that involves a number of phases. Each phase of the process constitutes one aspect of scientific inquiry. You are doing scientific inquiry as you engage in each phase, but you have not done scientific inquiry until you complete the full process. Each phase is necessary but not sufficient.

In this chapter, we set the stage by defining scientific inquiry—describing what it is and what it is not—and by discussing what it is good for and why people do it. The remaining chapters build directly on the ideas presented in this chapter.

A first thing to know is that scientific inquiry is not all or nothing. “Scientificness” is a continuum. Inquiries can be more scientific or less scientific. What makes an inquiry more scientific? You might be surprised there is no universally agreed upon answer to this question. None of the descriptors we know of are sufficient by themselves to define scientific inquiry. But all of them give you a way of thinking about some aspects of the process of scientific inquiry. Each one gives you different insights.

Exercise 1.2

As you read about each descriptor below, think about what would make an inquiry more or less scientific. If you think a descriptor is important, use it to revise your definition of scientific inquiry.

Creating an Image of Scientific Inquiry

We will present three descriptors of scientific inquiry. Each provides a different perspective and emphasizes a different aspect of scientific inquiry. We will draw on all three descriptors to compose our definition of scientific inquiry.

Descriptor 1. Experience Carefully Planned in Advance

Sir Ronald Fisher, often called the father of modern statistical design, once referred to research as “experience carefully planned in advance” (1935, p. 8). He said that humans are always learning from experience, from interacting with the world around them. Usually, this learning is haphazard rather than the result of a deliberate process carried out over an extended period of time. Research, Fisher said, was learning from experience, but experience carefully planned in advance.

This phrase can be fully appreciated by looking at each word. The fact that scientific inquiry is based on experience means that it is based on interacting with the world. These interactions could be thought of as the stuff of scientific inquiry. In addition, it is not just any experience that counts. The experience must be carefully planned. The interactions with the world must be conducted with an explicit, describable purpose, and steps must be taken to make the intended learning as likely as possible. This planning is an integral part of scientific inquiry; it is not just a preparation phase. It is one of the things that distinguishes scientific inquiry from many everyday learning experiences. Finally, these steps must be taken beforehand and the purpose of the inquiry must be articulated in advance of the experience. Clearly, scientific inquiry does not happen by accident, by just stumbling into something. Stumbling into something unexpected and interesting can happen while engaged in scientific inquiry, but learning does not depend on it and serendipity does not make the inquiry scientific.

Descriptor 2. Observing Something and Trying to Explain Why It Is the Way It Is

When we were writing this chapter and googled “scientific inquiry,” the first entry was: “Scientific inquiry refers to the diverse ways in which scientists study the natural world and propose explanations based on the evidence derived from their work.” The emphasis is on studying, or observing, and then explaining. This descriptor takes the image of scientific inquiry beyond carefully planned experience and includes explaining what was experienced.

According to the Merriam-Webster dictionary, “explain” means “(a) to make known, (b) to make plain or understandable, (c) to give the reason or cause of, and (d) to show the logical development or relations of” (Merriam-Webster, n.d.). We will use all these definitions. Taken together, they suggest that to explain an observation means to understand it by finding reasons (or causes) for why it is as it is. In this sense of scientific inquiry, the following are synonyms: explaining why, understanding why, and reasoning about causes and effects. Our image of scientific inquiry now includes planning, observing, and explaining why.

We need to add a final note about this descriptor. We have phrased it in a way that suggests “observing something” means you are observing something in real time, that is, observing the way things are or the way things are changing. This is often true. But observing could also mean observing data that already have been collected, maybe by someone else making the original observations (e.g., secondary analysis of NAEP data or analysis of existing video recordings of classroom instruction). We will address secondary analyses more fully in Chap. 4. For now, what is important is that the process requires explaining why the data look like they do.

We must note that for us, the term “data” is not limited to numerical or quantitative data such as test scores. Data can also take many nonquantitative forms, including written survey responses, interview transcripts, journal entries, video recordings of students, teachers, and classrooms, text messages, and so forth.

Exercise 1.3

What are the implications of the statement that just “observing” is not enough to count as scientific inquiry? Does this mean that a detailed description of a phenomenon is not scientific inquiry?

Find sources that define research in education that differ with our position, that say description alone, without explanation, counts as scientific research. Identify the precise points where the opinions differ. What are the best arguments for each of the positions? Which do you prefer? Why?

Descriptor 3. Updating Everyone’s Thinking in Response to More and Better Information

This descriptor focuses on a third aspect of scientific inquiry: updating and advancing the field’s understanding of phenomena that are investigated. This descriptor foregrounds a powerful characteristic of scientific inquiry: the reliability (or trustworthiness) of what is learned and the ultimate inevitability of this learning to advance human understanding of phenomena. Humans might choose not to learn from scientific inquiry, but history suggests that scientific inquiry always has the potential to advance understanding and that, eventually, humans take advantage of these new understandings.

Before exploring these bold claims a bit further, note that this descriptor uses “information” in the same way the previous two descriptors used “experience” and “observations.” These are the stuff of scientific inquiry and we will use them often, sometimes interchangeably. Frequently, we will use the term “data” to stand for all these terms.

An overriding goal of scientific inquiry is for everyone to learn from what one scientist does. Much of this book is about the methods you need to use so others have faith in what you report and can learn the same things you learned. This aspect of scientific inquiry has many implications.

One implication is that scientific inquiry is not a private practice. It is a public practice available for others to see and learn from. Notice how different this is from everyday learning. When you happen to learn something from your everyday experience, often only you gain from the experience. The fact that research is a public practice means it is also a social one. It is best conducted by interacting with others along the way: soliciting feedback at each phase, taking opportunities to present work-in-progress, and benefitting from the advice of others.

A second implication is that you, as the researcher, must be committed to sharing what you are doing and what you are learning in an open and transparent way. This allows all phases of your work to be scrutinized and critiqued. This is what gives your work credibility. The reliability or trustworthiness of your findings depends on your colleagues recognizing that you have used all appropriate methods to maximize the chances that your claims are justified by the data.

A third implication of viewing scientific inquiry as a collective enterprise is the reverse of the second—you must be committed to receiving comments from others. You must treat your colleagues as fair and honest critics even though it might sometimes feel otherwise. You must appreciate their job, which is to remain skeptical while scrutinizing what you have done in considerable detail. To provide the best help to you, they must remain skeptical about your conclusions (when, for example, the data are difficult for them to interpret) until you offer a convincing logical argument based on the information you share. A rather harsh but good-to-remember statement of the role of your friendly critics was voiced by Karl Popper, a well-known twentieth century philosopher of science: “. . . if you are interested in the problem which I tried to solve by my tentative assertion, you may help me by criticizing it as severely as you can” (Popper, 1968, p. 27).

A final implication of this third descriptor is that, as someone engaged in scientific inquiry, you have no choice but to update your thinking when the data support a different conclusion. This applies to your own data as well as to those of others. When data clearly point to a specific claim, even one that is quite different than you expected, you must reconsider your position. If the outcome is replicated multiple times, you need to adjust your thinking accordingly. Scientific inquiry does not let you pick and choose which data to believe; it mandates that everyone update their thinking when the data warrant an update.

Doing Scientific Inquiry

We define scientific inquiry in an operational sense—what does it mean to do scientific inquiry? What kind of process would satisfy all three descriptors: carefully planning an experience in advance; observing and trying to explain what you see; and, contributing to updating everyone’s thinking about an important phenomenon?

We define scientific inquiry as formulating, testing, and revising hypotheses about phenomena of interest.

Of course, we are not the only ones who define it in this way. The definition for the scientific method posted by the editors of Britannica is: “a researcher develops a hypothesis, tests it through various means, and then modifies the hypothesis on the basis of the outcome of the tests and experiments” (Britannica, n.d.).

Notice how defining scientific inquiry this way satisfies each of the descriptors. “Carefully planning an experience in advance” is exactly what happens when formulating a hypothesis about a phenomenon of interest and thinking about how to test it. “Observing a phenomenon” occurs when testing a hypothesis, and “explaining” what is found is required when revising a hypothesis based on the data. Finally, “updating everyone’s thinking” comes from comparing publicly the original with the revised hypothesis.

Doing scientific inquiry, as we have defined it, underscores the value of accumulating knowledge rather than generating random bits of knowledge. Formulating, testing, and revising hypotheses is an ongoing process, with each revised hypothesis begging for another test, whether by the same researcher or by new researchers. The editors of Britannica signaled this cyclic process by adding the following phrase to their definition of the scientific method: “The modified hypothesis is then retested, further modified, and tested again.” Scientific inquiry creates a process that encourages each study to build on the studies that have gone before. Through collective engagement in this process of building study on top of study, the scientific community works together to update its thinking.

Before exploring more fully the meaning of “formulating, testing, and revising hypotheses,” we need to acknowledge that this is not the only way researchers define research. Some researchers prefer a less formal definition, one that includes more serendipity, less planning, less explanation. You might have come across more open definitions such as “research is finding out about something.” We prefer the tighter hypothesis formulation, testing, and revision definition because we believe it provides a single, coherent map for conducting research that addresses many of the thorny problems educational researchers encounter. We believe it is the most useful orientation toward research and the most helpful to learn as a beginning researcher.

A final clarification of our definition is that it applies equally to qualitative and quantitative research. This is a familiar distinction in education that has generated much discussion. You might think our definition favors quantitative methods over qualitative methods because the language of hypothesis formulation and testing is often associated with quantitative methods. In fact, we do not favor one method over another. In Chap. 4, we will illustrate how our definition fits research using a range of quantitative and qualitative methods.

Exercise 1.4

Look for ways to extend what the field knows in an area that has already received attention by other researchers. Specifically, you can search for a program of research carried out by more experienced researchers that has some revised hypotheses that remain untested. Identify a revised hypothesis that you might like to test.

Unpacking the Terms Formulating, Testing, and Revising Hypotheses

To get a full sense of the definition of scientific inquiry we will use throughout this book, it is helpful to spend a little time with each of the key terms.

We first want to make clear that we use the term “hypothesis” as it is defined in most dictionaries and as it is used in many scientific fields rather than as it is usually defined in educational statistics courses. By “hypothesis,” we do not mean a null hypothesis that is accepted or rejected by statistical analysis. Rather, we use “hypothesis” in the sense conveyed by the following definitions: “An idea or explanation for something that is based on known facts but has not yet been proved” (Cambridge University Press, n.d.), and “An unproved theory, proposition, or supposition, tentatively accepted to explain certain facts and to provide a basis for further investigation or argument” (Agnes & Guralnik, 2008).

We distinguish two parts to “hypotheses.” Hypotheses consist of predictions and rationales. Predictions are statements about what you expect to find when you inquire about something. Rationales are explanations for why you made the predictions you did, why you believe your predictions are correct. So, for us “formulating hypotheses” means making explicit predictions and developing rationales for the predictions.

“Testing hypotheses” means making observations that allow you to assess in what ways your predictions were correct and in what ways they were incorrect. In education research, it is rarely useful to think of your predictions as either right or wrong. Because of the complexity of most issues you will investigate, most predictions will be right in some ways and wrong in others.

By studying the observations you make (data you collect) to test your hypotheses, you can revise your hypotheses to better align with the observations. This means revising your predictions plus revising your rationales to justify your adjusted predictions. Even though you might not run another test, formulating revised hypotheses is an essential part of conducting a research study. Comparing your original and revised hypotheses informs everyone of what you learned by conducting your study. In addition, a revised hypothesis sets the stage for you or someone else to extend your study and accumulate more knowledge of the phenomenon.

We should note that not everyone makes a clear distinction between predictions and rationales as two aspects of hypotheses. In fact, common, non-scientific uses of the word “hypothesis” may limit it to only a prediction or only an explanation (or rationale). We choose to explicitly include both prediction and rationale in our definition of hypothesis, not because we assert this should be the universal definition, but because we want to foreground the importance of both parts acting in concert. Using “hypothesis” to represent both prediction and rationale could hide the two aspects, but we make them explicit because they provide different kinds of information. It is usually easier to make predictions than develop rationales because predictions can be guesses, hunches, or gut feelings about which you have little confidence. Developing a compelling rationale requires careful thought plus reading what other researchers have found plus talking with your colleagues. Often, while you are developing your rationale you will find good reasons to change your predictions. Developing good rationales is the engine that drives scientific inquiry. Rationales are essentially descriptions of how much you know about the phenomenon you are studying. Throughout this guide, we will elaborate on how developing good rationales drives scientific inquiry. For now, we simply note that it can sharpen your predictions and help you to interpret your data as you test your hypotheses.

Hypotheses in education research take a variety of forms or types. This is because there are a variety of phenomena that can be investigated. Investigating educational phenomena is sometimes best done using qualitative methods, sometimes using quantitative methods, and most often using mixed methods (e.g., Hay, 2016; Weis et al., 2019a; Weisner, 2005). This means that, given our definition, hypotheses are equally applicable to qualitative and quantitative investigations.

Hypotheses take different forms when they are used to investigate different kinds of phenomena. Two very different activities in education research could be labeled experiments and descriptions. In an experiment, a hypothesis makes a prediction about anticipated changes, say the changes that occur when a treatment or intervention is applied. You might investigate how students’ thinking changes during a particular kind of instruction.

A second type of hypothesis, relevant for descriptive research, makes a prediction about what you will find when you investigate and describe the nature of a situation. The goal is to understand a situation as it exists rather than to understand a change from one situation to another. In this case, your prediction is what you expect to observe. Your rationale is the set of reasons for making this prediction; it is your current explanation for why the situation will look like it does.

You will probably read, if you have not already, that some researchers say you do not need a prediction to conduct a descriptive study. We will discuss this point of view in Chap. 2. For now, we simply claim that scientific inquiry, as we have defined it, applies to all kinds of research studies. Descriptive studies, like others, not only benefit from formulating, testing, and revising hypotheses, but also need hypothesis formulating, testing, and revising.

One reason we define research as formulating, testing, and revising hypotheses is that if you think of research in this way you are less likely to go wrong. It is a useful guide for the entire process, as we will describe in detail in the chapters ahead. For example, as you build the rationale for your predictions, you are constructing the theoretical framework for your study (Chap. 3). As you work out the methods you will use to test your hypothesis, every decision you make will be based on asking, “Will this help me formulate or test or revise my hypothesis?” (Chap. 4). As you interpret the results of testing your predictions, you will compare them to what you predicted and examine the differences, focusing on how you must revise your hypotheses (Chap. 5). By anchoring the process to formulating, testing, and revising hypotheses, you will make smart decisions that yield a coherent and well-designed study.

Exercise 1.5

Compare the concept of formulating, testing, and revising hypotheses with the descriptions of scientific inquiry contained in Scientific Research in Education (NRC, 2002). How are they similar or different?

Exercise 1.6

Provide an example to illustrate and emphasize the differences between everyday learning/thinking and scientific inquiry.

Learning from Doing Scientific Inquiry

We noted earlier that a measure of what you have learned by conducting a research study is found in the differences between your original hypothesis and the hypothesis you revise in light of the data you collected to test it. We will elaborate on this statement in later chapters, but we preview our argument here.

Even before collecting data, scientific inquiry requires cycles of making a prediction, developing a rationale, refining your predictions, reading and studying more to strengthen your rationale, refining your predictions again, and so forth. And, even if you have run through several such cycles, you still will likely find that when you test your prediction you will be partly right and partly wrong. The results will support some parts of your predictions but not others, or the results will “kind of” support your predictions. A critical part of scientific inquiry is making sense of your results by interpreting them against your predictions. Carefully describing what aspects of your data supported your predictions, what aspects did not, and what data fell outside of any predictions is not an easy task, but you cannot learn from your study without doing this analysis.

Analyzing the matches and mismatches between your predictions and your data allows you to formulate different rationales that would have accounted for more of the data. The best revised rationale is the one that accounts for the most data. Once you have revised your rationales, you can think about the predictions they best justify or explain. It is by comparing your original rationales to your new rationales that you can sort out what you learned from your study.

Suppose your study was an experiment. Maybe you were investigating the effects of a new instructional intervention on students’ learning. Your original rationale was your explanation for why the intervention would change the learning outcomes in a particular way. Your revised rationale explained why the changes that you observed occurred like they did and why your revised predictions are better. Maybe your original rationale focused on the potential of the activities if they were implemented in ideal ways and your revised rationale included the factors that are likely to affect how teachers implement them. By comparing the before and after rationales, you are describing what you learned—what you can explain now that you could not before. Another way of saying this is that you are describing how much more you understand now than before you conducted your study.

Revised predictions based on carefully planned and collected data usually exhibit some of the following features compared with the originals: more precision, more completeness, and broader scope. Revised rationales have more explanatory power and become more complete, more aligned with the new predictions, sharper, and overall more convincing.

Part II. Why Do Educators Do Research?

Doing scientific inquiry is a lot of work. Each phase of the process takes time, and you will often cycle back to improve earlier phases as you engage in later phases. Because of the significant effort required, you should make sure your study is worth it. So, from the beginning, you should think about the purpose of your study. Why do you want to do it? And, because research is a social practice, you should also think about whether the results of your study are likely to be important and significant to the education community.

If you are doing research in the way we have described (as scientific inquiry), then one purpose of your study is to understand, not just to describe or evaluate or report. As we noted earlier, when you formulate hypotheses, you are developing rationales that explain why things might be like they are. In our view, trying to understand and explain is what separates research from other kinds of activities, like evaluating or describing.

One reason understanding is so important is that it allows researchers to see how or why something works like it does. When you see how something works, you are better able to predict how it might work in other contexts, under other conditions. And, because conditions, or contextual factors, matter a lot in education, gaining insights into applying your findings to other contexts increases the contributions of your work and its importance to the broader education community.

Consequently, the purposes of research studies in education often include the more specific aim of identifying and understanding the conditions under which the phenomena being studied work like the observations suggest. A classic example of this kind of study in mathematics education was reported by William Brownell and Harold Moser in 1949. They were trying to establish which method of subtracting whole numbers could be taught most effectively: the regrouping method or the equal additions method. However, they realized that effectiveness might depend on the conditions under which the methods were taught: “meaningfully” versus “mechanically.” So, they designed a study that crossed the two instructional approaches with the two different methods (regrouping and equal additions). Among other results, they found that these conditions did matter. The regrouping method was more effective under the meaningful condition than the mechanical condition, but the same was not true for the equal additions method.
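For readers who find it easier to see a crossed design laid out concretely, the short Python sketch below enumerates the four cells that result from crossing the two subtraction methods with the two instructional conditions, and shows one simple way to ask whether the effect of the condition depends on the method. The function names and the cell means are hypothetical and chosen only for illustration. The numbers are placeholders, not data from Brownell and Moser, and the sketch is not their analysis.

```python
from itertools import product

# Factor levels named in the text.
METHODS = ("regrouping", "equal additions")
CONDITIONS = ("meaningful", "mechanical")


def crossed_design(methods=METHODS, conditions=CONDITIONS):
    """Return every (method, condition) cell of the fully crossed design."""
    return list(product(methods, conditions))


def condition_effect(cell_means, method):
    """Meaningful-minus-mechanical difference in mean score for one method."""
    return cell_means[(method, "meaningful")] - cell_means[(method, "mechanical")]


def interaction(cell_means):
    """Difference between the two methods' condition effects.

    A value far from zero suggests that the effect of the instructional
    condition depends on which method is being taught.
    """
    return condition_effect(cell_means, "regrouping") - condition_effect(
        cell_means, "equal additions"
    )


# Hypothetical placeholder means, NOT data from Brownell and Moser (1949).
example_means = {
    ("regrouping", "meaningful"): 8,
    ("regrouping", "mechanical"): 5,
    ("equal additions", "meaningful"): 6,
    ("equal additions", "mechanical"): 6,
}

for cell in crossed_design():
    print(cell, example_means[cell])

print("Condition effect, regrouping:", condition_effect(example_means, "regrouping"))
print("Condition effect, equal additions:", condition_effect(example_means, "equal additions"))
print("Interaction contrast:", interaction(example_means))
```

With these made-up values, the condition makes a difference for one method and none for the other, which is the general pattern of result the paragraph describes. A real study would also need to consider sampling variability before drawing such a conclusion.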

What do education researchers want to understand? In our view, the ultimate goal of education is to offer all students the best possible learning opportunities. So, we believe the ultimate purpose of scientific inquiry in education is to develop understanding that supports the improvement of learning opportunities for all students. We say “ultimate” because there are lots of issues that must be understood to improve learning opportunities for all students. Hypotheses about many aspects of education are connected, ultimately, to students’ learning. For example, formulating and testing a hypothesis that preservice teachers need to engage in particular kinds of activities in their coursework in order to teach particular topics well is, ultimately, connected to improving students’ learning opportunities. So is hypothesizing that school districts often devote relatively few resources to instructional leadership training or hypothesizing that positioning mathematics as a tool students can use to combat social injustice can help students see the relevance of mathematics to their lives.

We do not exclude the importance of research on educational issues more removed from improving students’ learning opportunities, but we do think the argument for their importance will be more difficult to make. If there is no way to imagine a connection between your hypothesis and improving learning opportunities for students, even a distant connection, we recommend you reconsider whether it is an important hypothesis within the education community.

Notice that we said the ultimate goal of education is to offer all students the best possible learning opportunities. For too long, educators have been satisfied with a goal of offering rich learning opportunities for lots of students, sometimes even for just the majority of students, but not necessarily for all students. Evaluations of success often are based on outcomes that show high averages. In other words, if many students have learned something, or even a smaller number have learned a lot, educators may have been satisfied. The problem is that there is usually a pattern in the groups of students who receive lower quality opportunities—students of color and students who live in poor areas, urban and rural. This is not acceptable. Consequently, we emphasize the premise that the purpose of education research is to offer rich learning opportunities to all students.

One way to make sure you will be able to convince others of the importance of your study is to consider investigating some aspect of teachers’ shared instructional problems. Historically, researchers in education have set their own research agendas, regardless of the problems teachers are facing in schools. It is increasingly recognized that teachers have had trouble applying to their own classrooms what researchers find. To address this problem, a researcher could partner with a teacher (better yet, a small group of teachers) and talk with them about instructional problems they all share. These discussions can create a rich pool of problems researchers can consider. If researchers pursued one of these problems (preferably alongside teachers), the connection to improving learning opportunities for all students could be direct and immediate. “Grounding a research question in instructional problems that are experienced across multiple teachers’ classrooms helps to ensure that the answer to the question will be of sufficient scope to be relevant and significant beyond the local context” (Cai et al., 2019b, p. 115).

For a beginning researcher, determining the relevance and importance of a research problem is especially challenging. We recommend talking with advisors, other experienced researchers, and peers to test the educational importance of possible research problems and topics of study. You will also learn much more about the issue of research importance when you read Chap. 5.

Exercise 1.7