Reliability and validity: Importance in Medical Research

Affiliations.

- 1 Al-Nafees Medical College, Isra University, Islamabad, Pakistan.

- 2 Fauji Foundation Hospital, Foundation University Medical College, Islamabad, Pakistan.

- PMID: 34974579

- DOI: 10.47391/JPMA.06-861

Reliability and validity are among the most important and fundamental domains in the assessment of any measuring methodology for data collection in good research. Validity is about what an instrument measures and how well it does so, whereas reliability concerns the truthfulness of the data obtained and the degree to which any measuring tool controls random error. The current narrative review was planned to discuss the importance of reliability and validity of data-collection or measurement techniques used in research. It comprehensively describes and explores the reliability and validity of research instruments and also discusses different forms of reliability and validity with concise examples. An attempt has been made to give a brief literature review regarding the significance of reliability and validity in medical sciences.

Keywords: Validity, Reliability, Medical research, Methodology, Assessment, Research tools.

Publication types

- Biomedical Research*

- Reproducibility of Results

- Volume 18, Issue 3

- Validity and reliability in quantitative studies

- Roberta Heale 1 ,

- Alison Twycross 2

- 1 School of Nursing, Laurentian University, Sudbury, Ontario, Canada

- 2 Faculty of Health and Social Care, London South Bank University, London, UK

- Correspondence to: Dr Roberta Heale, School of Nursing, Laurentian University, Ramsey Lake Road, Sudbury, Ontario, Canada P3E2C6; rheale{at}laurentian.ca

https://doi.org/10.1136/eb-2015-102129

Evidence-based practice includes, in part, implementation of the findings of well-conducted quality research studies. So being able to critique quantitative research is an important skill for nurses. Consideration must be given not only to the results of the study but also the rigour of the research. Rigour refers to the extent to which the researchers worked to enhance the quality of the studies. In quantitative research, this is achieved through measurement of the validity and reliability. 1

Types of validity

The first category is content validity. This category looks at whether the instrument adequately covers all the content that it should with respect to the variable. In other words, does the instrument cover the entire domain related to the variable, or construct, it was designed to measure? In an undergraduate nursing course with instruction about public health, an examination with content validity would cover all the content in the course, with greater emphasis on the topics that had received greater coverage or more depth. A subset of content validity is face validity, where experts are asked their opinion about whether an instrument measures the concept intended.

Construct validity refers to whether you can draw inferences about test scores related to the concept being studied. For example, if a person has a high score on a survey that measures anxiety, does this person truly have a high degree of anxiety? In another example, a test of knowledge of medications that requires dosage calculations may instead be testing maths knowledge.

There are three types of evidence that can be used to demonstrate a research instrument has construct validity:

Homogeneity: the instrument measures one construct.

Convergence: the instrument measures concepts similar to those measured by other instruments. This cannot be demonstrated if no similar instruments are available.

Theory evidence: behaviour is similar to theoretical propositions of the construct measured in the instrument. For example, when an instrument measures anxiety, one would expect to see that participants who score high on the instrument for anxiety also demonstrate symptoms of anxiety in their day-to-day lives. 2

The final measure of validity is criterion validity. A criterion is any other instrument that measures the same variable. Correlations can be conducted to determine the extent to which the different instruments measure the same variable. Criterion validity is measured in three ways:

Convergent validity: shows that an instrument is highly correlated with instruments measuring similar variables.

Divergent validity: shows that an instrument is poorly correlated with instruments that measure different variables. In this case, for example, there should be a low correlation between an instrument that measures motivation and one that measures self-efficacy.

Predictive validity: means that the instrument should have high correlations with future criteria. 2 For example, a score of high self-efficacy related to performing a task should predict the likelihood of a participant completing the task.
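As a rough illustration of the correlations described above, the following minimal sketch computes a Pearson correlation between a new instrument and two comparison measures. The scores, the variable names and the choice of Pearson's r are assumptions made for illustration only; they do not come from the article.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


# Hypothetical scores for six participants (invented for illustration).
new_anxiety_scale = [12, 18, 25, 31, 36, 40]
established_anxiety_scale = [10, 20, 24, 33, 35, 42]  # similar variable
unrelated_measure = [7, 9, 6, 8, 7, 8]                # different variable

# Convergent evidence: expect a high correlation with the similar instrument.
convergent_r = pearson_r(new_anxiety_scale, established_anxiety_scale)

# Divergent evidence: expect a low correlation with the unrelated measure.
divergent_r = pearson_r(new_anxiety_scale, unrelated_measure)

print(f"convergent r = {convergent_r:.2f}, divergent r = {divergent_r:.2f}")
```

With real data, the comparison instruments would be chosen on theoretical grounds, and the sample would need to be far larger than six participants.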

Reliability

Reliability relates to the consistency of a measure. A participant completing an instrument meant to measure motivation should have approximately the same responses each time the test is completed. Although it is not possible to give an exact calculation of reliability, an estimate of reliability can be achieved through different measures. The three attributes of reliability are outlined in table 2. How each attribute is tested for is described below.

Attributes of reliability

Homogeneity (internal consistency) is assessed using item-to-total correlation, split-half reliability, Kuder-Richardson coefficient and Cronbach's α. In split-half reliability, the results of a test, or instrument, are divided in half. Correlations are calculated comparing both halves. Strong correlations indicate high reliability, while weak correlations indicate the instrument may not be reliable. The Kuder-Richardson test is a more complicated version of the split-half test. In this process the average of all possible split half combinations is determined and a correlation between 0–1 is generated. This test is more accurate than the split-half test, but can only be completed on questions with two answers (eg, yes or no, 0 or 1). 3
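The two calculations can be sketched as follows, assuming yes/no items coded 1 or 0. The response matrix is invented, the odd/even split is only one of many possible splits, and the Kuder-Richardson function uses the conventional KR-20 formula, which the article does not spell out.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def split_half_r(responses):
    """Correlate each participant's score on odd-position items with even-position items."""
    first_half = [sum(row[0::2]) for row in responses]
    second_half = [sum(row[1::2]) for row in responses]
    return pearson_r(first_half, second_half)


def kr20(responses):
    """KR-20 for dichotomous (0/1) items, using population variances throughout."""
    n = len(responses)
    k = len(responses[0])
    totals = [sum(row) for row in responses]
    mean_total = sum(totals) / n
    var_total = sum((t - mean_total) ** 2 for t in totals) / n
    pq_sum = 0.0
    for j in range(k):
        p = sum(row[j] for row in responses) / n  # proportion answering 1
        pq_sum += p * (1 - p)
    return (k / (k - 1)) * (1 - pq_sum / var_total)


# Hypothetical data: rows are participants, columns are yes/no items.
responses = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
    [1, 1, 1, 1],
]

print(f"split-half r = {split_half_r(responses):.2f}")
print(f"KR-20 = {kr20(responses):.2f}")
```

A single split depends on how the items happen to be divided, which is one reason a coefficient that does not depend on a particular split is often preferred.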

Cronbach's α is the most commonly used test to determine the internal consistency of an instrument. In this test, the average of all correlations in every combination of split-halves is determined. Instruments with questions that have more than two responses can be used in this test. The Cronbach's α result is typically a number between 0 and 1. An acceptable reliability score is one that is 0.7 or higher. 1 , 3
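A minimal sketch of the calculation, assuming the standard variance-based formula for Cronbach's α (the article describes the coefficient only in words) and an invented set of Likert-style responses:

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha from a participants-by-items matrix of numeric scores.

    Uses alpha = k/(k-1) * (1 - sum of item variances / variance of total scores),
    with sample variances for both the items and the totals.
    """
    k = len(responses[0])
    items = list(zip(*responses))              # one tuple of scores per item
    item_var_sum = sum(variance(item) for item in items)
    total_var = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - item_var_sum / total_var)


# Hypothetical data: five participants answering three 1-5 Likert items.
likert_responses = [
    [4, 5, 4],
    [2, 3, 2],
    [5, 5, 4],
    [1, 2, 1],
    [3, 3, 3],
]

alpha = cronbach_alpha(likert_responses)
print(f"Cronbach's alpha = {alpha:.2f}")
print("acceptable" if alpha >= 0.7 else "below the 0.7 threshold")
```

The 0.7 cut-off in the last line is the threshold quoted in the text. If items are negatively related to one another, this formula can return a negative value, which signals a problem with the items or their scoring.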

Stability is tested using test–retest and parallel or alternate-form reliability testing. Test–retest reliability is assessed when an instrument is given to the same participants more than once under similar circumstances. A statistical comparison is made between participants' test scores for each of the times they have completed it. This provides an indication of the reliability of the instrument. Parallel-form reliability (or alternate-form reliability) is similar to test–retest reliability except that a different form of the original instrument is given to participants in subsequent tests. The domain, or concepts being tested, are the same in both versions of the instrument but the wording of items is different. 2 For an instrument to demonstrate stability there should be a high correlation between the scores each time a participant completes the test. Generally speaking, a correlation coefficient of less than 0.3 signifies a weak correlation, 0.3–0.5 is moderate and greater than 0.5 is strong. 4
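The test–retest comparison can be sketched as a correlation between two administrations of the same instrument, labelled with the cut-offs quoted above. The scores and function names are hypothetical, and Pearson's r is assumed as the correlation coefficient.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def describe_strength(r):
    """Label a correlation using the cut-offs in the text: <0.3, 0.3-0.5, >0.5."""
    size = abs(r)
    if size < 0.3:
        return "weak"
    if size <= 0.5:
        return "moderate"
    return "strong"


# Hypothetical scores for the same five participants at two time points.
first_administration = [20, 25, 30, 35, 40]
second_administration = [22, 24, 31, 33, 41]

retest_r = pearson_r(first_administration, second_administration)
print(f"test-retest r = {retest_r:.2f} ({describe_strength(retest_r)})")
```

The same comparison applies to parallel-form testing, with the second list holding scores from the alternate version of the instrument.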

Equivalence is assessed through inter-rater reliability. This test includes a process for qualitatively determining the level of agreement between two or more observers. A good example of the process used in assessing inter-rater reliability is the scores of judges for a skating competition. The level of consistency across all judges in the scores given to skating participants is the measure of inter-rater reliability. An example in research is when researchers are asked to give a score for the relevancy of each item on an instrument. Consistency in their scores relates to the level of inter-rater reliability of the instrument.
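Agreement between raters can also be summarised numerically. The sketch below computes simple percent agreement and Cohen's kappa for two raters judging item relevancy. Cohen's kappa is not mentioned in the article and is used here as one common, assumed choice; the ratings are invented.

```python
from collections import Counter


def percent_agreement(ratings_a, ratings_b):
    """Proportion of items on which the two raters gave the same rating."""
    matches = sum(a == b for a, b in zip(ratings_a, ratings_b))
    return matches / len(ratings_a)


def cohens_kappa(ratings_a, ratings_b):
    """Cohen's kappa: observed agreement corrected for agreement expected by chance."""
    n = len(ratings_a)
    observed = percent_agreement(ratings_a, ratings_b)
    counts_a, counts_b = Counter(ratings_a), Counter(ratings_b)
    categories = set(ratings_a) | set(ratings_b)
    expected = sum(counts_a[c] * counts_b[c] for c in categories) / (n * n)
    return (observed - expected) / (1 - expected)


# Hypothetical relevancy ratings from two researchers for eight items.
rater_a = ["relevant", "relevant", "not", "relevant", "not", "not", "relevant", "relevant"]
rater_b = ["relevant", "relevant", "not", "not", "not", "not", "relevant", "relevant"]

print(f"percent agreement = {percent_agreement(rater_a, rater_b):.3f}")
print(f"Cohen's kappa = {cohens_kappa(rater_a, rater_b):.2f}")
```

Percent agreement is easier to explain, while kappa discounts the agreement two raters would reach by chance alone. With more than two raters, a different statistic would be needed.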

Determining how rigorously the issues of reliability and validity have been addressed in a study is an essential component in the critique of research and influences the decision about whether to implement the study findings in nursing practice. In quantitative studies, rigour is determined through an evaluation of the validity and reliability of the tools or instruments utilised in the study. A good quality research study will provide evidence of how all these factors have been addressed. This will help you to assess the validity and reliability of the research and help you decide whether or not you should apply the findings in your area of clinical practice.

- Lobiondo-Wood G ,

- Shuttleworth M

- Laerd Statistics. Determining the correlation coefficient. 2013. https://statistics.laerd.com/premium/pc/pearson-correlation-in-spss-8.php

Competing interests None declared.

Reliability vs Validity in Research | Differences, Types & Examples

Published on 3 May 2022 by Fiona Middleton. Revised on 10 October 2022.

Reliability and validity are concepts used to evaluate the quality of research. They indicate how well a method, technique, or test measures something. Reliability is about the consistency of a measure, and validity is about the accuracy of a measure.

It’s important to consider reliability and validity when you are creating your research design, planning your methods, and writing up your results, especially in quantitative research.

Table of contents

- Understanding reliability vs validity

- How are reliability and validity assessed?

- How to ensure validity and reliability in your research

- Where to write about reliability and validity in a thesis

Reliability and validity are closely related, but they mean different things. A measurement can be reliable without being valid. However, if a measurement is valid, it is usually also reliable.

What is reliability?

Reliability refers to how consistently a method measures something. If the same result can be consistently achieved by using the same methods under the same circumstances, the measurement is considered reliable.

What is validity?

Validity refers to how accurately a method measures what it is intended to measure. If research has high validity, that means it produces results that correspond to real properties, characteristics, and variations in the physical or social world.

High reliability is one indicator that a measurement is valid. If a method is not reliable, it probably isn’t valid.

However, reliability on its own is not enough to ensure validity. Even if a test is reliable, it may not accurately reflect the real situation.

Validity is harder to assess than reliability, but it is even more important. To obtain useful results, the methods you use to collect your data must be valid: the research must be measuring what it claims to measure. This ensures that your discussion of the data and the conclusions you draw are also valid.

Reliability can be estimated by comparing different versions of the same measurement. Validity is harder to assess, but it can be estimated by comparing the results to other relevant data or theory. Methods of estimating reliability and validity are usually split up into different types.

Types of reliability

Different types of reliability can be estimated through various statistical methods.

Types of validity

The validity of a measurement can be estimated based on three main types of evidence. Each type can be evaluated through expert judgement or statistical methods.

To assess the validity of a cause-and-effect relationship, you also need to consider internal validity (the design of the experiment) and external validity (the generalisability of the results).

The reliability and validity of your results depend on creating a strong research design, choosing appropriate methods and samples, and conducting the research carefully and consistently.

Ensuring validity

If you use scores or ratings to measure variations in something (such as psychological traits, levels of ability, or physical properties), it’s important that your results reflect the real variations as accurately as possible. Validity should be considered in the very earliest stages of your research, when you decide how you will collect your data.

- Choose appropriate methods of measurement

Ensure that your method and measurement technique are of high quality and targeted to measure exactly what you want to know. They should be thoroughly researched and based on existing knowledge.

For example, to collect data on a personality trait, you could use a standardised questionnaire that is considered reliable and valid. If you develop your own questionnaire, it should be based on established theory or the findings of previous studies, and the questions should be carefully and precisely worded.

- Use appropriate sampling methods to select your subjects

To produce valid generalisable results, clearly define the population you are researching (e.g., people from a specific age range, geographical location, or profession). Ensure that you have enough participants and that they are representative of the population.

Ensuring reliability

Reliability should be considered throughout the data collection process. When you use a tool or technique to collect data, it’s important that the results are precise, stable, and reproducible.

- Apply your methods consistently

Plan your method carefully to make sure you carry out the same steps in the same way for each measurement. This is especially important if multiple researchers are involved.

For example, if you are conducting interviews or observations, clearly define how specific behaviours or responses will be counted, and make sure questions are phrased the same way each time.

- Standardise the conditions of your research

When you collect your data, keep the circumstances as consistent as possible to reduce the influence of external factors that might create variation in the results.

For example, in an experimental setup, make sure all participants are given the same information and tested under the same conditions.

It’s appropriate to discuss reliability and validity in various sections of your thesis or dissertation or research paper. Showing that you have taken them into account in planning your research and interpreting the results makes your work more credible and trustworthy.

Middleton, F. (2022, October 10). Reliability vs Validity in Research | Differences, Types & Examples. Scribbr. Retrieved 14 May 2024, from https://www.scribbr.co.uk/research-methods/reliability-or-validity/

Validity & Reliability In Research

A Plain-Language Explanation (With Examples)

By: Derek Jansen (MBA) | Expert Reviewer: Kerryn Warren (PhD) | September 2023

Validity and reliability are two related but distinctly different concepts within research. Understanding what they are and how to achieve them is critically important to any research project. In this post, we’ll unpack these two concepts as simply as possible.

Overview: Validity & Reliability

- The big picture

- Validity 101

- Reliability 101

- Key takeaways

First, The Basics…

First, let’s start with a big-picture view and then we can zoom in to the finer details.

Validity and reliability are two incredibly important concepts in research, especially within the social sciences. Both validity and reliability have to do with the measurement of variables and/or constructs – for example, job satisfaction, intelligence, productivity, etc. When undertaking research, you’ll often want to measure these types of constructs and variables and, at the simplest level, validity and reliability are about ensuring the quality and accuracy of those measurements.

As you can probably imagine, if your measurements aren’t accurate or there are quality issues at play when you’re collecting your data, your entire study will be at risk. Therefore, validity and reliability are very important concepts to understand (and to get right). So, let’s unpack each of them.

What Is Validity?

In simple terms, validity (also called “construct validity”) is all about whether a research instrument accurately measures what it’s supposed to measure.

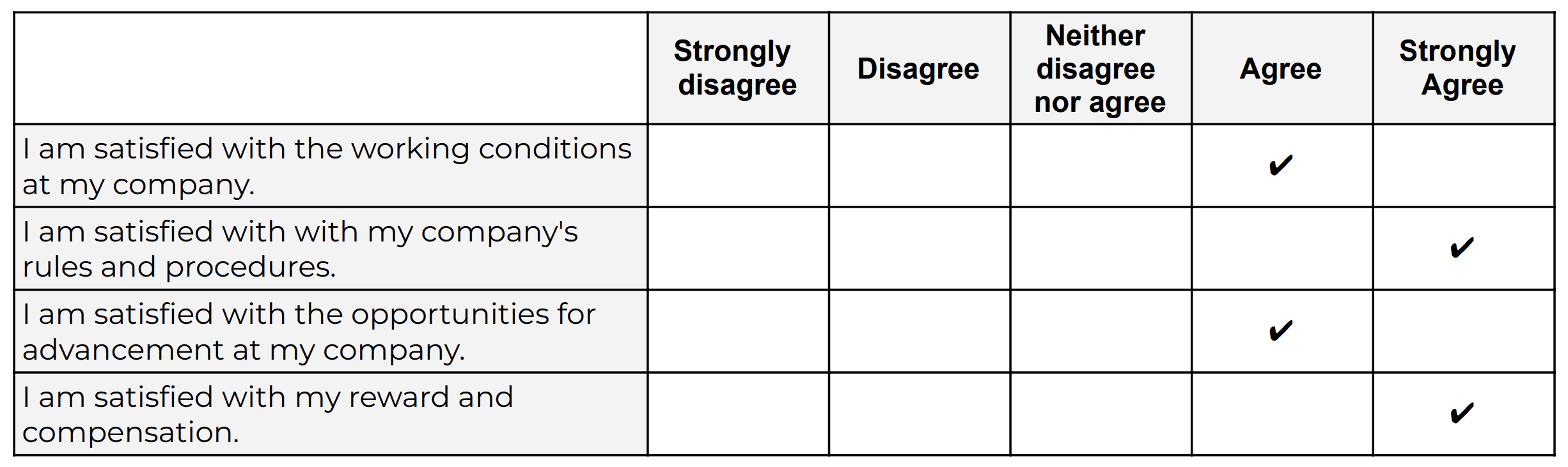

For example, let’s say you have a set of Likert scales that are supposed to quantify someone’s level of overall job satisfaction. If this set of scales focused purely on only one dimension of job satisfaction, say pay satisfaction, this would not be a valid measurement, as it only captures one aspect of the multidimensional construct. In other words, pay satisfaction alone is only one contributing factor toward overall job satisfaction, and therefore it’s not a valid way to measure someone’s job satisfaction.

Oftentimes in quantitative studies, the way in which the researcher or survey designer interprets a question or statement can differ from how the study participants interpret it. Given that respondents don’t have the opportunity to ask clarifying questions when taking a survey, it’s easy for these sorts of misunderstandings to crop up. Naturally, if the respondents are interpreting the question in the wrong way, the data they provide will be pretty useless. Therefore, ensuring that a study’s measurement instruments are valid – in other words, that they are measuring what they intend to measure – is incredibly important.

There are various types of validity and we’re not going to go down that rabbit hole in this post, but it’s worth quickly highlighting the importance of making sure that your research instrument is tightly aligned with the theoretical construct you’re trying to measure. In other words, you need to pay careful attention to how the key theories within your study define the thing you’re trying to measure – and then make sure that your survey presents it in the same way.

For example, sticking with the “job satisfaction” construct we looked at earlier, you’d need to clearly define what you mean by job satisfaction within your study (and this definition would of course need to be underpinned by the relevant theory). You’d then need to make sure that your chosen definition is reflected in the types of questions or scales you’re using in your survey. Simply put, you need to make sure that your survey respondents are perceiving your key constructs in the same way you are. Or, even if they’re not, that your measurement instrument is capturing the necessary information that reflects your definition of the construct at hand.

What Is Reliability?

As with validity, reliability is an attribute of a measurement instrument – for example, a survey, a weight scale or even a blood pressure monitor. But while validity is concerned with whether the instrument is measuring the “thing” it’s supposed to be measuring, reliability is concerned with consistency and stability. In other words, reliability reflects the degree to which a measurement instrument produces consistent results when applied repeatedly to the same phenomenon, under the same conditions.

As you can probably imagine, a measurement instrument that achieves a high level of consistency is naturally more dependable (or reliable) than one that doesn’t – in other words, it can be trusted to provide consistent measurements. And that, of course, is what you want when undertaking empirical research. If you think about it within a more domestic context, just imagine if you found that your bathroom scale gave you a different number every time you hopped on and off of it – you wouldn’t feel too confident in its ability to measure the variable that is your body weight 🙂

It’s worth mentioning that reliability also extends to the person using the measurement instrument. For example, if two researchers use the same instrument (let’s say a measuring tape) and they get different measurements, there’s likely an issue in terms of how one (or both) of them are using the measuring tape. So, when you think about reliability, consider both the instrument and the researcher as part of the equation.

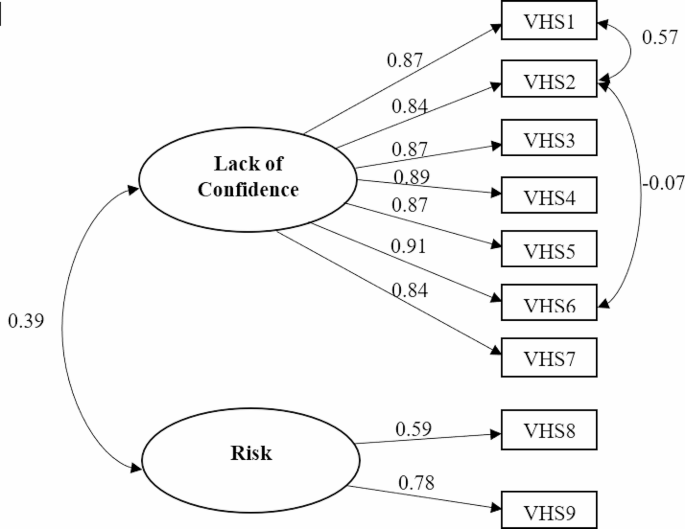

As with validity, there are various types of reliability and various tests that can be used to assess the reliability of an instrument. A popular one that you’ll likely come across for survey instruments is Cronbach’s alpha, which is a statistical measure that quantifies the degree to which items within an instrument (for example, a set of Likert scales) measure the same underlying construct. In other words, Cronbach’s alpha indicates how closely related the items are and whether they consistently capture the same concept.

Recap: Key Takeaways

Alright, let’s quickly recap to cement your understanding of validity and reliability:

- Validity is concerned with whether an instrument (e.g., a set of Likert scales) is measuring what it’s supposed to measure

- Reliability is concerned with whether that measurement is consistent and stable when measuring the same phenomenon under the same conditions.

In short, validity and reliability are both essential to ensuring that your data collection efforts deliver high-quality, accurate data that help you answer your research questions. So, be sure to always pay careful attention to the validity and reliability of your measurement instruments when collecting and analysing data. As the adage goes, “rubbish in, rubbish out” – make sure that your data inputs are rock-solid.

Reliability and Validity – Definitions, Types & Examples

Published by Alvin Nicolas on August 16th, 2021. Revised on October 26, 2023

A researcher must test the collected data before making any conclusion. Every research design needs to be concerned with reliability and validity to measure the quality of the research.

What is Reliability?

Reliability refers to the consistency of the measurement. Reliability shows how trustworthy the score of the test is. If the collected data shows the same results after being tested using various methods and sample groups, the information is reliable. A reliable method is a precondition for valid results, but reliability alone does not guarantee validity.

Example: If you weigh yourself on a weighing scale several times throughout the day and get the same result each time, the results are considered reliable because they were obtained consistently through repeated measures.

Example: A teacher gives students a math test and repeats it the next week with the same questions. If the students get the same scores, the reliability of the test is high.

What is Validity?

Validity refers to the accuracy of the measurement. Validity shows how a specific test is suitable for a particular situation. If the results are accurate according to the researcher’s situation, explanation, and prediction, then the research is valid.

If the method of measuring is accurate, then it’ll produce accurate results. A reliable method is not necessarily valid. In contrast, if a method is not reliable, it cannot be valid.

Example: Your weighing scale shows different results each time you weigh yourself within a day, even after handling it carefully and weighing before and after meals. Your weighing machine might be malfunctioning. It means your method has low reliability. Hence you are getting inaccurate or inconsistent results that are not valid.

Example: Suppose a questionnaire is distributed among a group of people to check the quality of a skincare product, and the same questionnaire is repeated with many groups. If you get the same response from various participants, the questionnaire has high reliability, which supports, but does not by itself establish, its validity.

Most of the time, validity is difficult to measure even though the process of measurement is reliable. It isn’t easy to interpret the real situation.

Example: If the weighing scale shows the same result, let’s say 70 kg each time, even if your actual weight is 55 kg, then it means the weighing scale is malfunctioning. It shows consistent results, so it is reliable, but the results are inaccurate, so it cannot be considered valid. It means the method has low validity.
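The scale that always reads 70 kg for a person who weighs 55 kg can be sketched numerically by separating the spread of repeated readings (consistency) from their offset from the true value (accuracy). The function name and the 0.5 kg tolerances are hypothetical choices made for illustration.

```python
from statistics import mean, pstdev


def assess(readings, true_value, max_spread=0.5, max_bias=0.5):
    """Summarise repeated readings: spread reflects consistency, bias reflects accuracy.

    The tolerance defaults are arbitrary illustrative thresholds, not standards.
    """
    spread = pstdev(readings)            # how much the readings vary
    bias = mean(readings) - true_value   # how far they sit from the truth
    return {
        "spread": spread,
        "bias": bias,
        "consistent": spread <= max_spread,
        "accurate": abs(bias) <= max_bias,
    }


# Readings from the example: the scale shows 70 kg every time,
# while the person's actual weight is 55 kg.
result = assess([70.0, 70.0, 70.0, 70.0, 70.0], true_value=55.0)
print(result)
```

Here the readings do not vary at all, yet every one of them is 15 kg too high, so consistency by itself says nothing about whether the number is right.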

Internal Vs. External Validity

One of the key features of randomised designs is that they have significantly high internal and external validity.

Internal validity is the ability to draw a causal link between your treatment and the dependent variable of interest. It means the observed changes should be due to the experiment conducted, and any external factor should not influence the variables .

Examples of such external factors: age, level, height, and grade.

External validity is the ability to identify and generalise your study outcomes to the population at large. The relationship between the study’s situation and the situations outside the study is considered external validity.

Threats to Internal Validity

Threats to External Validity

How to Assess Reliability and Validity

Reliability can be measured by comparing the consistency of the procedure and its results. There are various methods to measure validity and reliability. Reliability can be measured through various statistical methods depending on the type of reliability, as explained below:

Types of Reliability

Types of Validity

As we discussed above, the reliability of the measurement alone cannot determine its validity. Validity is difficult to measure even if the method is reliable. The following types of tests are conducted for measuring validity.

How to Increase Reliability?

- Use an appropriate questionnaire to measure the competency level.

- Ensure a consistent environment for participants.

- Make the participants familiar with the criteria of assessment.

- Train the participants appropriately.

- Analyse the research items regularly to avoid poor performance.

How to Increase Validity?

Ensuring validity is also not an easy job. Ways to help ensure validity are given below:

- Minimising reactivity should be the first concern.

- The Hawthorne effect should be reduced.

- The respondents should be motivated.

- The intervals between the pre-test and post-test should not be lengthy.

- Dropout rates should be kept low.

- The inter-rater reliability should be ensured.

- Control and experimental groups should be matched with each other.

How to Implement Reliability and Validity in your Thesis?

According to experts, it is helpful to address reliability and validity explicitly, especially in a thesis or dissertation, where these concepts are widely used. The method for implementation is given below:

Frequently Asked Questions

What is reliability and validity in research?

Reliability in research refers to the consistency and stability of measurements or findings. Validity relates to the accuracy and truthfulness of results, measuring what the study intends to. Both are crucial for trustworthy and credible research outcomes.

What is validity?

Validity in research refers to the extent to which a study accurately measures what it intends to measure. It ensures that the results are truly representative of the phenomena under investigation. Without validity, research findings may be irrelevant, misleading, or incorrect, limiting their applicability and credibility.

What is reliability?

Reliability in research refers to the consistency and stability of measurements over time. If a study is reliable, repeating the experiment or test under the same conditions should produce similar results. Without reliability, findings become unpredictable and lack dependability, potentially undermining the study’s credibility and generalisability.

What is reliability in psychology?

In psychology, reliability refers to the consistency of a measurement tool or test. A reliable psychological assessment produces stable and consistent results across different times, situations, or raters. It ensures that an instrument’s scores are not due to random error, making the findings dependable and reproducible in similar conditions.

What is test retest reliability?

Test-retest reliability assesses the consistency of measurements taken by a test over time. It involves administering the same test to the same participants at two different points in time and comparing the results. A high correlation between the scores indicates that the test produces stable and consistent results over time.

How to improve reliability of an experiment?

- Standardise procedures and instructions.

- Use consistent and precise measurement tools.

- Train observers or raters to reduce subjective judgments.

- Increase sample size to reduce random errors.

- Conduct pilot studies to refine methods.

- Repeat measurements or use multiple methods.

- Address potential sources of variability.

What is the difference between reliability and validity?

Reliability refers to the consistency and repeatability of measurements, ensuring results are stable over time. Validity indicates how well an instrument measures what it’s intended to measure, ensuring accuracy and relevance. While a test can be reliable without being valid, a valid test must inherently be reliable. Both are essential for credible research.

Are interviews reliable and valid?

Interviews can be both reliable and valid, but they are susceptible to biases. The reliability and validity depend on the design, structure, and execution of the interview. Structured interviews with standardised questions improve reliability. Validity is enhanced when questions accurately capture the intended construct and when interviewer biases are minimised.

Are IQ tests valid and reliable?

IQ tests are generally considered reliable, producing consistent scores over time. Their validity, however, is a subject of debate. While they effectively measure certain cognitive skills, whether they capture the entirety of “intelligence” or predict success in all life areas is contested. Cultural bias and over-reliance on tests are also concerns.

Are questionnaires reliable and valid?

Questionnaires can be both reliable and valid if well-designed. Reliability is achieved when they produce consistent results over time or across similar populations. Validity is ensured when questions accurately measure the intended construct. However, factors like poorly phrased questions, respondent bias, and lack of standardisation can compromise their reliability and validity.

- Open access

- Published: 27 October 2021

A narrative review on the validity of electronic health record-based research in epidemiology

- Milena A. Gianfrancesco 1 &

- Neal D. Goldstein ORCID: orcid.org/0000-0002-9597-5251 2

BMC Medical Research Methodology volume 21, Article number: 234 (2021)

Electronic health records (EHRs) are widely used in epidemiological research, but the validity of the results is dependent upon the assumptions made about the healthcare system, the patient, and the provider. In this review, we identify four overarching challenges in using EHR-based data for epidemiological analysis, with a particular emphasis on threats to validity. These challenges include representativeness of the EHR to a target population, the availability and interpretability of clinical and non-clinical data, and missing data at both the variable and observation levels. Each challenge reveals layers of assumptions that the epidemiologist is required to make, from the point of patient entry into the healthcare system, to the provider documenting the results of the clinical exam and follow-up of the patient longitudinally; all with the potential to bias the results of analysis of these data. Understanding the extent of as well as remediating potential biases requires a variety of methodological approaches, from traditional sensitivity analyses and validation studies, to newer techniques such as natural language processing. Beyond methods to address these challenges, it will remain crucial for epidemiologists to engage with clinicians and informaticians at their institutions to ensure data quality and accessibility by forming multidisciplinary teams around specific research projects.

The proliferation of electronic health records (EHRs), spurred on by federal government incentives over the past few decades, has resulted in an adoption rate greater than 80% at hospitals [ 1 ] and close to 90% in office-based practices [ 2 ] in the United States. A natural consequence of the availability of electronic health data is the conduct of research with these data, both observational and experimental [ 3 ], due to lower overhead costs and lower burden of study recruitment [ 4 ]. Indeed, a search on PubMed for publications indexed by the MeSH term “electronic health records” reveals an exponential growth in biomedical literature, especially over the last 10 years, with more than 50,000 publications.

An emerging literature is beginning to recognize the many challenges that still lie ahead in using EHR data for epidemiological investigations. Researchers in Europe identified 13 potential sources of “bias” (bias was defined as a contamination of the data) in EHR-based data covering almost every aspect of care delivery, from selective entrance into the healthcare system, to variation in care and documentation practices, to identification and extraction of the right data for analysis [ 5 ]. Many of the identified contaminants are directly relevant to traditional epidemiological threats to validity [ 4 ]. Data quality has consistently been invoked as a central challenge in EHRs. From a qualitative perspective, healthcare workers have described challenges in the healthcare environment (e.g., heavy workload), imperfect clinical documentation practices, and concerns over data extraction and reporting tools, all of which would impact the quality of data in the EHR [ 6 ]. From a quantitative perspective, researchers have noted limited sensitivity of diagnostic codes in the EHR when relying on discrete codings, noting that upon a manual chart review free text fields often capture the missed information, motivating such techniques as natural language processing (NLP) [ 7 ]. A systematic review of EHR-based studies also identified data quality as an overarching barrier to the use of EHRs in managing the health of the community, i.e. “population health” [ 8 ]. Encouragingly, this same review also identified more facilitators than barriers to the use of EHRs in public health, suggesting that opportunities outweigh the challenges. Shortreed et al. further explored these opportunities, discussing how EHRs can enhance pragmatic trials, bring additional sophistication to observational studies, aid in predictive modeling, and be linked together to create more comprehensive views of patients’ health [ 9 ]. Yet, as Shortreed and others have noted, significant challenges still remain.

It is our intention with this narrative review to discuss some of these challenges in further detail. In particular, we focus on specific epidemiological threats to validity -- internal and external -- and how EHR-based epidemiological research in particular can exacerbate some of these threats. We note that while there is some overlap in the challenges we discuss with traditional paper-based medical record research that has occurred for decades, the scale and scope of an EHR-based study is often well beyond what was traditionally possible in the manual chart review era and our applied examples attempt to reflect this. We also describe existing and emerging approaches for remediating these potential biases as they arise. A summary of these challenges may be found in Table 1 . Our review is grounded in the healthcare system in the United States, although we expect many of the issues we describe to be applicable regardless of locale; where necessary, we have flagged our comments as specific to the U.S.

Challenge #1: Representativeness

The selection process for how patients are captured in the EHR is complex and a function of geographic, social, demographic, and economic determinants [ 10 ]. This can be termed the catchment of the EHR. For a patient record to appear in the EHR the patient must have been registered in the system, typically to capture their demographic and billing information, and upon a clinical visit, their health details. While this process is not new to clinical epidemiology, what tends to separate EHR-based records from traditional paper-based records is the scale and scope of the data. Patient data may be available for longer periods of time longitudinally, as well as have data corresponding to interactions with multiple, potentially disparate, healthcare systems [ 11 ]. Given the consolidation of healthcare [ 12 ] and aggregated views of multiple EHRs through health information networks or exchanges [ 11 ] the ability to have a complete view of the patients’ total health is increasing. Importantly, the epidemiologist must ascertain whether the population captured within the EHR or EHR-derived data is representative of the population targeted for inference. This is particularly true under the paradigm of population health and inferring the health status of a community from EHR-based records [ 13 ]. For example, a study of Clostridium difficile infection at an urban safety net hospital in Philadelphia, Pennsylvania demonstrated notable differences in risk factors in the hospital’s EHR compared to national surveillance data, suggesting how catchment can influence epidemiologic measures [ 14 ]. Even health-related data captured through health information exchanges may be incomplete [ 15 ].

Several hypothetical study settings can further help the epidemiologist appreciate the relationship between representativeness and validity in EHR research. In the first hypothetical, an EHR-based study is conducted from a single-location federally qualified health center, and in the second hypothetical, an EHR-based study is conducted from a large academic health system. Suppose both studies occur in the same geographic area. It is reasonable to believe the patient populations captured in both EHRs will be quite different and the catchment process could lead to divergent estimates of disease or risk factor prevalence. The large academic health system may be less likely to capture primary care visits, as specialty care may drive the preponderance of patient encounters. However, this is not a bias per se: if the target of inference from these two hypothetical EHR-based studies is the local community, then selection bias becomes a distinct possibility. The epidemiologist must also consider the potential for generalizability and transportability -- two facets of external validity that respectively relate to the extrapolation of study findings to the source population or a different population altogether -- if there are unmeasured effect modifiers, treatment interference, or compound treatments in the community targeted for inference [ 16 ].

There are several approaches for ascertaining representativeness of EHR-based data. Comparing the EHR-derived sample to Census estimates of demography is straightforward but has several important limitations. First, as previously described, the catchment process may be driven by discordant geographical areas, especially for specialty care settings. Second and third, the EHR may have limited or inaccurate information on socioeconomic status, race, and ethnicity that one may wish to compare [ 17 , 18 ], and conversely the Census has limited estimates of health, chiefly disability, fertility, and insurance and payments [ 19 ]. If selection bias is suspected as a result of missing visits in a longitudinal study [ 20 ] or the catchment process in a cross-sectional study [ 21 ], using inverse probability weighting may remediate its influence. Comparing the weighted estimates to the original, non-weighted estimates provides insight into differences in the study participants. In the population health paradigm whereby the EHR is used as a surveillance tool to identify community health disparities [ 13 ], one also needs to be concerned about representativeness. There are emerging approaches for producing such small area community estimates from large observational datasets [ 22 , 23 ]. Conceivably, these approaches may also be useful for identifying issues of representativeness, for example by comparing stratified estimates across sociodemographic or other factors that may relate to catchment. Approaches for issues concerning representativeness specifically as it applies to external validity may be found in these references [ 24 , 25 ].
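The inverse probability weighting idea mentioned above can be illustrated with a small sketch. Everything here is hypothetical: the two strata, the record counts, and especially the capture probabilities, which in a real study would have to be estimated (for example from a selection model) rather than assumed known.

```python
# Hypothetical EHR sample: each record is (stratum, has_condition).
# Stratum "A" is over-represented in the EHR relative to stratum "B".
sample = [("A", 1)] * 30 + [("A", 0)] * 70 + [("B", 1)] * 5 + [("B", 0)] * 20

# Assumed probability that a community member in each stratum is captured
# by the EHR. These values are illustrative only.
capture_prob = {"A": 0.5, "B": 0.125}

def naive_prevalence(records):
    """Unweighted share of records with the condition."""
    return sum(y for _, y in records) / len(records)

def ipw_prevalence(records, probs):
    """Prevalence with each record weighted by 1 / P(captured in EHR)."""
    weights = [1.0 / probs[stratum] for stratum, _ in records]
    weighted_cases = sum(w * y for w, (_, y) in zip(weights, records))
    return weighted_cases / sum(weights)

naive = naive_prevalence(sample)
weighted = ipw_prevalence(sample, capture_prob)
print(f"naive: {naive:.3f}, weighted: {weighted:.3f}")
```

Comparing the two printed estimates mirrors the comparison of weighted and non-weighted estimates described in the text: a gap between them hints at how much the catchment process shapes the sample.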

Challenge #2: Data availability and interpretation

Sub-challenge #2.1: billing versus clinical versus epidemiological needs.

There is an inherent tension in the use of EHR-based data for research purposes: the EHR was never originally designed for research. In the U.S., the Health Information Technology for Economic and Clinical Health Act, which promoted EHRs as a platform for comparative effectiveness research, was an attempt to address this deficiency [ 26 ]. A brief history of the evolution of the modern EHR reveals a technology that was optimized for capturing health details relevant for billing, scheduling, and clinical record keeping [ 27 ]. As such, the availability of data for fundamental markers of upstream health that are important for identifying inequities, such as socioeconomic status, race, ethnicity, and other social determinants of health (SDOH), may be insufficiently captured in the EHR [ 17 , 18 ]. Similarly, behavioral risk factors, such as being a sexual minority person, have historically been insufficiently recorded as discrete variables. It is only recently that such data are beginning to be captured in the EHR [ 28 , 29 ], or techniques such as NLP have made it possible to extract these details when stored in free text notes (described further in “ Unstructured data: clinical notes and reports ” section).

As an example, assessing clinical morbidities in the EHR may be done on the basis of extracting appropriate International Classification of Diseases (ICD) codes, used for billing and reimbursement in the U.S. These codes are known to have low sensitivity despite high specificity for accurate diagnostic status [ 30 , 31 ]. Expressed as predictive values, which depend upon prevalence, presence of a diagnostic code is a likely indicator of a disease state, whereas absence of a diagnostic code is a less reliable indicator of the absence of that morbidity. There may further be variation by clinical domain in that ICD codes may exist but not be used in some specialties [ 32 ], variation by coding vocabulary such as the use of SNOMED for clinical documentation versus ICD for billing necessitating an ontology mapper [ 33 ], and variation by the use of “rule-out” diagnostic codes resulting in false-positive diagnoses [ 34 , 35 , 36 ]. Related is the notion of upcoding, or the billing of tests, procedures, or diagnoses to receive inflated reimbursement, which, although posited to be problematic in EHRs [ 37 ], was not shown to have occurred in at least one study [ 38 ]. In the U.S., the billing and reimbursement model, such as fee-for-service versus managed care, may result in varying diagnostic code sensitivities and specificities, especially if upcoding is occurring [ 39 ]. In short, there is potential for misclassification of key health data in the EHR.
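To make the dependence of predictive values on prevalence concrete, the following sketch applies Bayes' rule to a diagnostic code treated as a test. The sensitivity, specificity, and prevalence figures are hypothetical and are not taken from the cited studies.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) for a binary classifier such as a diagnostic code."""
    true_pos = sensitivity * prevalence
    false_neg = (1 - sensitivity) * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    true_neg = specificity * (1 - prevalence)
    ppv = true_pos / (true_pos + false_pos)   # P(disease | code present)
    npv = true_neg / (true_neg + false_neg)   # P(no disease | code absent)
    return ppv, npv

# Illustrative code with low sensitivity and high specificity.
for prevalence in (0.05, 0.30, 0.60):
    ppv, npv = predictive_values(sensitivity=0.5, specificity=0.98,
                                 prevalence=prevalence)
    print(f"prevalence={prevalence:.2f}  PPV={ppv:.3f}  NPV={npv:.3f}")
```

Under these assumed inputs, the negative predictive value falls as the condition becomes more common, which is one way to see why a missing code is weaker evidence than a present one.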

Misclassification can potentially be addressed through a validation study (resources permitting) or application of quantitative bias analysis, and there is a rich literature regarding the treatment of misclassified data in statistics and epidemiology. Readers are referred to these texts as a starting point [ 40 , 41 ]. Duda et al. and Shepherd et al. have described an innovative data audit approach applicable to secondary analysis of observational data, such as EHR-derived data, that incorporates the audit error rate directly in the regression analysis to reduce information bias [ 42 , 43 ]. Outside of methodological tricks in the face of imperfect data, researchers must proactively engage with clinical and informatics colleagues to ensure that the right data for the research interests are available and accessible.

Sub-challenge #2.2: Consistency in data and interpretation

For the epidemiologist, abstracting data from the EHR into a research-ready analytic dataset presents a host of complications surrounding data availability, consistency, and interpretation. It is easy to conflate the total volume of data in the EHR with data that are usable for research; however, expectations should be tempered. Weiskopf et al. have noted such challenges for the researcher: in their study, fewer than 50% of patient records had “complete” data for research purposes per their four definitions of completeness [ 44 ]. Decisions made about the treatment of incomplete data can induce selection bias or impact precision of estimates (see Challenges #1, #3, and #4). The COVID-19 pandemic has further demonstrated the challenge of obtaining research data from EHRs across multiple health systems [ 45 ]. On the other hand, EHRs have a key advantage of providing near real-time data as opposed to many epidemiological studies that have a specific endpoint or are retrospective in nature. Such real-time data availability was leveraged during COVID-19 to help healthcare systems manage their pandemic response [ 46 , 47 ]. Logistical and technical issues aside, healthcare and documentation practices are nuanced to their local environments. In fact, researchers have demonstrated how the same research question analyzed in distinct clinical databases can yield different results [ 48 ].

Once the data are obtained, choices regarding operationalization of variables have the potential to induce information bias. Several hypothetical examples can help demonstrate this point. As a first example, differences in laboratory reporting may result in measurement error or misclassification. While the order for a particular laboratory assay is likely consistent within the healthcare system, patients frequently have a choice where to have that order fulfilled. Given the breadth of assays and reporting differences that may differ lab to lab [ 49 ], it is possible that the researcher working with the raw data may not consider all possible permutations. In other words, there may be lack of consistency in the reporting of the assay results. As a second example, raw clinical data requires interpretation to become actionable. A researcher interested in capturing a patient’s Charlson comorbidity index, which is based on 16 potential diagnoses plus the patient’s age [ 50 ], may never find such a variable in the EHR. Rather, this would require operationalization based on the raw data, each of which may be misclassified. Use of such composite measures introduces the notion of “differential item functioning”, whereby a summary indicator of a complexly measured health phenomenon may differ from group to group [ 51 ]. In this case, as opposed to a measurement error bias, this is one of residual confounding in that a key (unmeasured) variable is driving the differences. Remediation of these threats to validity may involve validation studies to determine the accuracy of a particular classifier, sensitivity analysis employing alternative interpretations when the raw data are available, and omitting or imputing biased or latent variables [ 40 , 41 , 52 ]. Importantly, in all cases, the epidemiologists should work with the various health care providers and personnel who have measured and recorded the data present in the EHR, as they likely understand it best.

Furthermore and related to “Billing versus Clinical versus Epidemiological Needs” section, the healthcare system in the U.S. is fragmented with multiple payers, both public and private, potentially exacerbating the data quality issues we describe, especially when linking data across healthcare systems. Single payer systems have enabled large and near-complete population-based studies due to data availability and consistency [ 53 , 54 , 55 ]. Data may also be inconsistent for retrospective longitudinal studies spanning many years if there have been changes to coding standards or practices over time, for example due to the transition from ICD-9 to ICD-10 largely occurring in the mid 2010s or the adoption of the Patient Protection and Affordable Care Act in the U.S. in 2010 with its accompanying changes in billing. Exploratory data analysis may reveal unexpected differences in key variables, by place or time, and recoding, when possible, can enforce consistency.

Sub-challenge #2.3: Unstructured data: clinical notes and reports

There may also be scenarios where structured data fields, while available, are not traditionally or consistently used within a given medical center or by a given provider. For example, reporting of adverse events of medications, disease symptoms, and vaccinations or hospitalizations occurring at different facility/health networks may not always be entered by providers in structured EHR fields. Instead, these types of patient experiences may be more likely to be documented in an unstructured clinical note, report (e.g. pathology or radiology report), or scanned document. Therefore, reliance on structured data to identify and study such issues may result in underestimation and potentially biased results.

Advances in NLP currently allow for information to be extracted from unstructured clinical notes and text fields in a reliable and accurate manner using computational methods. NLP utilizes a range of different statistical, machine learning, and linguistic techniques, and when applied to EHR data, has the potential to facilitate more accurate detection of events not traditionally located or consistently used in structured fields. Various NLP methods can be implemented in medical text analysis, ranging from simplistic and fast term recognition systems to more advanced, commercial NLP systems [ 56 ]. Several studies have successfully utilized text mining to extract information on a variety of health-related issues within clinical notes, such as opioid use [ 57 ], adverse events [ 58 , 59 ], symptoms (e.g., shortness of breath, depression, pain) [ 60 ], and disease phenotype information documented in pathology or radiology reports, including cancer stage, histology, and tumor grade [ 61 ], and lupus nephritis [ 32 ]. It is worth noting that scanned documents involve an additional layer of computation, relying on techniques such as optical character recognition, before NLP can be applied.
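At the simplest end of that range, a term recognition system can be sketched with regular expressions. The lexicon, the negation cues, and the sentence splitting below are all hypothetical simplifications for illustration; they are not the method of any cited study, and production systems handle abbreviations, context, and negation far more carefully.

```python
import re

# Hypothetical symptom lexicon; a real project would use a curated vocabulary.
SYMPTOM_PATTERNS = {
    "shortness_of_breath": re.compile(
        r"\b(shortness of breath|dyspnea|sob)\b", re.IGNORECASE),
    "pain": re.compile(r"\bpain\b", re.IGNORECASE),
}

# Very rough negation cue: a negating word earlier in the same sentence.
NEGATION = re.compile(r"\b(no|denies|without|negative for)\b", re.IGNORECASE)

def find_symptoms(note):
    """Return labels of symptoms with at least one non-negated mention."""
    found = set()
    for sentence in re.split(r"[.;\n]", note):
        for label, pattern in SYMPTOM_PATTERNS.items():
            match = pattern.search(sentence)
            # Skip the mention if a negation cue precedes it in the sentence.
            if match and not NEGATION.search(sentence[:match.start()]):
                found.add(label)
    return found

note = "Patient reports shortness of breath on exertion. Denies chest pain."
print(find_symptoms(note))
```

Note that a symptom label missing from the result only means no affirmative mention was found, not that the symptom was absent.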

Hybrid approaches that combine both narrative and structured data, such as ICD codes, to improve accuracy of detecting phenotypes have also demonstrated high performance. Banerji et al. found that using ICD-9 codes to identify allergic drug reactions in the EHR had a positive predictive value of 46%, while an NLP algorithm in conjunction with ICD-9 codes resulted in a positive predictive value of 86%; negative predictive value also increased in the combined algorithm (76%) compared to ICD-9 codes alone (39%) [ 62 ]. In another example, researchers found that the combination of unstructured clinical notes with structured data for prediction tasks involving in-hospital mortality and 30-day hospital readmission outperformed models using either clinical notes or structured data alone [ 63 ]. As we move forward in analyzing EHR data, it will be important to take advantage of the wealth of information buried in unstructured data to assist in phenotyping patient characteristics and outcomes, capture missing confounders used in multivariate analyses, and develop prediction models.

Challenge #3: Missing measurements

While clinical notes may be useful to recover incomplete information from structured data fields, it may be the case that certain variables are not collected within the EHR at all. As mentioned above, it is important to remember that EHRs were not developed as a research tool (see “ Billing versus clinical versus epidemiological needs ” section), and important variables often used in epidemiologic research may not be typically included in EHRs including socioeconomic status (education, income, occupation) and SDOH [ 17 , 18 ]. Depending upon the interest of the provider or clinical importance placed upon a given variable, this information may be included in clinical notes. While NLP could be used to capture these variables, because they may not be consistently captured, there may be bias in identifying those with a positive mention as a positive case and those with no mention as a negative case. For example, if a given provider inquires about homelessness of a patient based on knowledge of the patient’s situation or other external factors and documents this in the clinical note, we have greater assurance that this is a true positive case. However, lack of mention of homelessness in a clinical note should not be assumed as a true negative case for several reasons: not all providers may feel comfortable asking about and/or documenting homelessness, they may not deem this variable worth noting, or implicit bias among clinicians may affect what is captured. As a result, such cases (i.e. no mention of homelessness) may be incorrectly identified as “not homeless,” leading to selection bias should a researcher form a cohort exclusively of patients who are identified as homeless in the EHR.

Not adjusting for certain measurements missing from EHR data can also lead to biased results if the measurement is an important confounder. Consider the example of distinguishing between prevalent and incident cases of disease when examining associations between disease treatments and patient outcomes [ 64 ]. The first date of an ICD code entered for a given patient may not necessarily be the true date of diagnosis, but rather documentation of an existing diagnosis. This limits the ability to adjust for disease duration, which may be an important confounder in studies comparing various treatments with patient outcomes over time, and may also lead to reverse causality if disease sequelae are assumed to be risk factors.

Methods to supplement EHR data with external data have been used to capture missing information. These methods may include imputation if information (e.g. race, lab values) is collected on a subset of patients within the EHR. It is important to examine whether missingness occurs completely at random or at random (“ignorable”), or not at random (“non-ignorable”), using the data available to determine factors associated with missingness, which will also inform the best imputation strategy to pursue, if any [ 65 , 66 ]. As an example, suppose we are interested in ascertaining a patient's BMI from the EHR. If men were less likely to have BMI measured than women, the probability of missing data (BMI) depends on the observed data (gender) and may therefore be predictable and imputable. On the other hand, suppose underweight individuals were less likely to have BMI measured; the probability of missing data depends on its own value, and as such is non-predictable and may require a validation study to confirm. As an alternative to imputing missing data, surrogate measures may be used, such as inferring area-based SES indicators, including median household income, percent poverty, or area deprivation index, by zip code [ 67 , 68 ]. Lastly, validation studies utilizing external datasets may prove helpful, such as supplementing EHR data with claims data that may be available for a subset of patients (see Challenge #4 ).
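The BMI example can be explored with a small simulation. This is a sketch under stated assumptions: the population, the BMI distributions, and the recording probabilities are invented for illustration, and gender-standardised averaging stands in for a fuller imputation model.

```python
import random

rng = random.Random(42)  # fixed seed so the sketch is reproducible

def mean(values):
    return sum(values) / len(values)

# Hypothetical population: BMI differs slightly by gender (illustrative only).
people = []
for _ in range(20000):
    gender = rng.choice(["M", "F"])
    bmi = rng.gauss(28.0 if gender == "M" else 26.0, 5.0)
    people.append((gender, bmi))

true_mean = mean([bmi for _, bmi in people])

# Missing at random: recording of BMI depends only on observed gender.
mar = [(g, b) for g, b in people if rng.random() < (0.5 if g == "M" else 0.9)]

# Missing not at random: recording depends on the BMI value itself.
mnar = [(g, b) for g, b in people if rng.random() < (0.4 if b < 18.5 else 0.9)]

def gender_standardised_mean(observed, population):
    """Average gender-specific observed means using the population gender mix."""
    total = 0.0
    for gender in ("M", "F"):
        share = sum(1 for g, _ in population if g == gender) / len(population)
        total += share * mean([b for g, b in observed if g == gender])
    return total

mar_naive = mean([b for _, b in mar])
mar_adjusted = gender_standardised_mean(mar, people)
mnar_adjusted = gender_standardised_mean(mnar, people)

print(f"true mean BMI:             {true_mean:.2f}")
print(f"MAR, naive observed mean:  {mar_naive:.2f}")
print(f"MAR, adjusted for gender:  {mar_adjusted:.2f}")
print(f"MNAR, adjusted for gender: {mnar_adjusted:.2f}")
```

In this setup, adjusting for the observed variable brings the estimate back toward the true mean in the first scenario, while the same adjustment does not remove the bias in the second, because the driver of missingness is the unobserved value itself.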

As EHRs are increasingly being used for research, there are active pushes to include more structured data fields that are important to population health research, such as SDOH [ 69 ]. Inclusion of such factors is likely to result in improved patient care and outcomes, through increased precision in disease diagnosis, more effective shared decision making, identification of risk factors, and tailoring services to a given population’s needs [ 70 ]. In fact, a recent review found that when individual level SDOH were included in predictive modeling, they overwhelmingly improved performance in medication adherence, risk of hospitalization, 30-day rehospitalizations, suicide attempts, and other healthcare services [ 71 ]. Whether or not these fields will be utilized after their inclusion in the EHR may ultimately depend upon federal and state incentives, as well as support from local stakeholders, and this does not address historic, retrospective analyses of these data.

Challenge #4: Missing visits

Beyond missing variable data that may not be captured during a clinical encounter, either through structured data or clinical notes, there also may be missing information for a patient as a whole. This can occur in a variety of ways; for example, a patient may have one or two documented visits in the EHR and then is never seen again (i.e. right censoring due to lost to follow-up), or a patient is referred from elsewhere to seek specialty care, with no information captured regarding other external issues (i.e. left censoring). This may be especially common in circumstances where a given EHR is more likely to capture specialty clinics versus primary care (see Challenge #1 ). A third scenario may include patients who appear, then are not observed for a long period of time, and then reappear: this case is particularly problematic as it may appear the patient was never lost to follow up but simply had fewer visits. In any of these scenarios, a researcher will lack a holistic view of the patient’s experiences, diagnoses, results, and more. As discussed above, assuming absence of a diagnostic code as absence of disease may lead to information and/or selection bias. Further, it has been demonstrated that one key source of bias in EHRs is “informed presence” bias, where those with more medical encounters are more likely to be diagnosed with various conditions (similar to Berkson’s bias) [ 72 ].

Several solutions to these issues have been proposed. For example, it is common for EHR studies to condition on observation time (i.e. ≥n visits required to be eligible for the cohort); however, this may exclude a substantial number of patients with certain characteristics, incurring a selection bias or limiting the generalizability of study findings (see Challenge #1 ). Other strategies attempt to account for missing visit biases through longitudinal imputation approaches; for example, if a patient missed a visit, a disease activity score can be imputed for that point in time, given other data points [ 73 , 74 ]. Surrogate measures may also be used to infer patient outcomes, such as controlling for “informative” missingness as an indicator variable or using actual number of missed visits that were scheduled as a proxy for external circumstances influencing care [ 20 ]. To address “informed presence” bias described above, conditioning on the number of health-care encounters may be appropriate [ 72 ]. Understanding the reason for the missing visit may help identify the best course of action, and before imputing, one should be able to identify the type of missingness, whether “informative” or not [ 65 , 66 ]. For example, if distance to a healthcare location is related to appointment attendance, being able to account for this in analysis would be important: researchers have shown how the catchment of a healthcare facility can induce selection bias [ 21 ]. Relatedly, as telehealth becomes more common, fueled by the COVID-19 pandemic [ 75 , 76 ], virtual visits may generate missingness of data recorded in the presence of a provider (e.g., blood pressure if the patient does not have access to a sphygmomanometer; see Challenge #3 ), or necessitate a stratified analysis by visit type to assess for effect modification.

Another common approach is to supplement EHR information with external data sources, such as insurance claims data, when available. Unlike a given EHR, claims data are able to capture a patient’s interaction with the health care system across organizations, and additionally include pharmacy data, such as whether a prescription was filled or refilled. Often researchers examine a subset of patients eligible for Medicaid/Medicare and compare what is documented in claims with information available in the EHR [ 77 ]. That is, are there additional medications, diagnoses, or hospitalizations found in the claims dataset that were not present in the EHR? In a study by Franklin et al., researchers utilized a linked database of Medicare Advantage claims and comprehensive EHR data from a multi-specialty outpatient practice to determine which dataset would be more accurate in predicting medication adherence [ 77 ]. They found that both datasets were comparable in identifying those with poor adherence, though each dataset incorporated different variables.

While validation studies such as those using claims data allow researchers to understand how accurate and complete a given EHR is, this understanding may be limited to the specific subpopulation examined (i.e., those eligible for Medicaid, or those over 65 years for Medicare). One study examined congruence between the EHR of a community health center and Medicaid claims with respect to diabetes [ 78 ]. The authors found that patients who were older, male, Spanish-speaking, above the federal poverty level, or who had discontinuous insurance were more likely to have services documented in the EHR as compared to Medicaid claims data. Therefore, while claims data may help supplement and validate information in the EHR, on their own they may underestimate care in certain populations.

Research utilizing EHR data has undoubtedly benefited the field of public health through its ability to provide large-scale, longitudinal data on a diverse set of patients, and will continue to do so as more epidemiologists take advantage of this data source. Because EHR data capture individuals who traditionally are not included in clinical trials, cohort studies, and even claims datasets, researchers can measure longitudinal outcomes in these patients and perhaps change the understanding of potential risk factors.

However, as outlined in this review, there are important caveats to EHR analysis that need to be taken into account; failure to do so may threaten study validity. The representativeness of EHR data depends on the catchment area of the center and the corresponding target population. Tools are available to evaluate and remedy these issues, which are critical to study validity as well as to extrapolation of study findings. Data availability and interpretation, missing measurements, and missing visits are also key challenges, as EHRs were not specifically developed for research purposes, despite their common use for such. Taking advantage of all available EHR data, whether in structured fields or in unstructured fields accessed through NLP, will be important in understanding the patient experience and identifying key phenotypes. Beyond methods to address these concerns, it will remain crucial for epidemiologists and data analysts to engage with clinicians and informaticians at their institutions to ensure data quality and accessibility by forming multidisciplinary teams around specific research projects. Lastly, integration across multiple EHRs, or use of datasets that encompass multi-institutional EHR records, adds an additional layer of data quality and validity issues, with the potential to exacerbate the above-stated challenges found within a single EHR. At minimum, such studies should account for correlated errors [ 79 , 80 ], and investigate whether modularization, or submechanisms that determine whether data are observed or missing in each EHR, exist [ 65 ].

The identified challenges may also apply to secondary analysis of other large healthcare databases, such as claims data, although it is important not to conflate the two types of data. EHR data are driven by clinical care, whereas claims data are driven by the reimbursement process, where there is a financial incentive to capture diagnoses, procedures, and medications [ 48 ]. The source of data likely influences the availability, accuracy, and completeness of data. The fundamental representation of data may also differ, as a record in a claims database corresponds to a “claim” as opposed to an “encounter” in the EHR. As such, the representativeness of the database populations, the sensitivity and specificity of variables, as well as the mechanisms of missingness in claims data may differ from EHR data. One study that evaluated pediatric quality care measures, such as BMI, noted inferior sensitivity based on claims data alone [ 81 ]. Linking claims data to EHR data has been proposed to enhance study validity, but many of the caveats raised herein still apply [ 82 ].
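
Sensitivity and specificity of a claims-derived variable can be computed once a reference standard is chosen. The sketch below assumes, purely for illustration, that an EHR-based or chart-reviewed classification serves as the reference and that both sources have been reduced to one binary flag per patient in the same order; which source is treated as the reference is itself a study-design decision, and the data shown are invented.

```python
def sensitivity_specificity(reference, candidate):
    """Return (sensitivity, specificity) of `candidate` against `reference`.

    Both arguments are equal-length sequences of booleans or 0/1 values,
    one entry per patient. A measure is None when its denominator is zero.
    """
    if len(reference) != len(candidate):
        raise ValueError("reference and candidate must have the same length")

    pairs = list(zip(reference, candidate))
    tp = sum(1 for r, c in pairs if r and c)          # true positives
    fn = sum(1 for r, c in pairs if r and not c)      # false negatives
    tn = sum(1 for r, c in pairs if not r and not c)  # true negatives
    fp = sum(1 for r, c in pairs if not r and c)      # false positives

    sensitivity = tp / (tp + fn) if (tp + fn) else None
    specificity = tn / (tn + fp) if (tn + fp) else None
    return sensitivity, specificity


# Toy data (assumed): 1 = condition recorded, 0 = not recorded.
reference_flags = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]  # e.g., chart-reviewed EHR
claims_flags = [1, 1, 0, 0, 0, 0, 0, 0, 0, 1]     # same patients, claims only

sens, spec = sensitivity_specificity(reference_flags, claims_flags)
print("sensitivity:", sens)
print("specificity:", spec)
```

In this toy example the claims flag identifies only half of the reference-positive patients, the kind of reduced sensitivity that would motivate linking the two sources rather than relying on claims alone.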

Although we focused on epidemiological challenges related to study validity, there are other important considerations for researchers working with EHR data. Privacy and security of data as well as institutional review board (IRB) or ethics board oversight of EHR-based studies should not be taken for granted. For researchers in the U.S., Goldstein and Sarwate described Health Insurance Portability and Accountability Act (HIPAA)-compliant approaches to ensure the privacy and security of EHR data used in epidemiological research, and presented emerging approaches to analyses that separate the data from analysis [ 83 ]. The IRB oversees the data collection process for EHR-based research and through the HIPAA Privacy Rule these data typically do not require informed consent provided they are retrospective and reside at the EHR’s institution [ 84 ]. Such research will also likely receive an exempt IRB review provided subjects are non-identifiable.

Conclusions

As EHRs are increasingly being used for research, epidemiologists can take advantage of the many tools and methods that already exist and apply them to the key challenges described above. By being aware of the limitations that the data present and proactively addressing them, researchers can make EHR studies more robust, informative, and important to the understanding of health and disease in the population.

Availability of data and materials

All data and materials used in this review are described herein.

Abbreviations

BMI: Body Mass Index

EHR: Electronic Health Record

ICD: International Classification of Diseases

IRB: Institutional review board/ethics board

HIPAA: Health Insurance Portability and Accountability Act

NLP: Natural Language Processing

SDOH: Social Determinants of Health

SES: Socioeconomic Status

References

Adler-Milstein J, Holmgren AJ, Kralovec P, et al. Electronic health record adoption in US hospitals: the emergence of a digital “advanced use” divide. J Am Med Inform Assoc. 2017;24(6):1142–8.

Office of the National Coordinator for Health Information Technology. ‘Office-based physician electronic health record adoption’, Health IT quick-stat #50. dashboard.healthit.gov/quickstats/pages/physician-ehr-adoption-trends.php . Accessed 15 Jan 2019.

Cowie MR, Blomster JI, Curtis LH, et al. Electronic health records to facilitate clinical research. Clin Res Cardiol. 2017;106(1):1–9.

Casey JA, Schwartz BS, Stewart WF, et al. Using electronic health records for population health research: a review of methods and applications. Annu Rev Public Health. 2016;37:61–81.

Verheij RA, Curcin V, Delaney BC, et al. Possible sources of bias in primary care electronic health record data use and reuse. J Med Internet Res. 2018;20(5):e185.

Ni K, Chu H, Zeng L, et al. Barriers and facilitators to data quality of electronic health records used for clinical research in China: a qualitative study. BMJ Open. 2019;9(7):e029314.

Coleman N, Halas G, Peeler W, et al. From patient care to research: a validation study examining the factors contributing to data quality in a primary care electronic medical record database. BMC Fam Pract. 2015;16:11.

Kruse CS, Stein A, Thomas H, et al. The use of electronic health records to support population health: a systematic review of the literature. J Med Syst. 2018;42(11):214.

Shortreed SM, Cook AJ, Coley RY, et al. Challenges and opportunities for using big health care data to advance medical science and public health. Am J Epidemiol. 2019;188(5):851–61.

In: Smedley BD, Stith AY, Nelson AR, editors. Unequal treatment: confronting racial and ethnic disparities in health care. Washington (DC) 2003.

Chaudhry B, Wang J, Wu S, et al. Systematic review: impact of health information technology on quality, efficiency, and costs of medical care. Ann Intern Med. 2006;144(10):742–52.

Cutler DM, Scott Morton F. Hospitals, market share, and consolidation. JAMA. 2013;310(18):1964–70.

Cocoros NM, Kirby C, Zambarano B, et al. RiskScape: a data visualization and aggregation platform for public health surveillance using routine electronic health record data. Am J Public Health. 2021;111(2):269–76.

Vader DT, Weldie C, Welles SL, et al. Hospital-acquired Clostridioides difficile infection among patients at an urban safety-net hospital in Philadelphia: demographics, neighborhood deprivation, and the transferability of national statistics. Infect Control Hosp Epidemiol. 2020;42:1–7.

Dixon BE, Gibson PJ, Frederickson Comer K, et al. Measuring population health using electronic health records: exploring biases and representativeness in a community health information exchange. Stud Health Technol Inform. 2015;216:1009.

Hernán MA, VanderWeele TJ. Compound treatments and transportability of causal inference. Epidemiology. 2011;22(3):368–77.

Casey JA, Pollak J, Glymour MM, et al. Measures of SES for electronic health record-based research. Am J Prev Med. 2018;54(3):430–9.

Polubriaginof FCG, Ryan P, Salmasian H, et al. Challenges with quality of race and ethnicity data in observational databases. J Am Med Inform Assoc. 2019;26(8-9):730–6.

U.S. Census Bureau. Health. Available at: https://www.census.gov/topics/health.html . Accessed 19 Jan 2021.

Gianfrancesco MA, McCulloch CE, Trupin L, et al. Reweighting to address nonparticipation and missing data bias in a longitudinal electronic health record study. Ann Epidemiol. 2020;50:48–51.e2.

Goldstein ND, Kahal D, Testa K, Burstyn I. Inverse probability weighting for selection bias in a Delaware community health center electronic medical record study of community deprivation and hepatitis C prevalence. Ann Epidemiol. 2021;60:1–7.

Gelman A, Lax J, Phillips J, et al. Using multilevel regression and poststratification to estimate dynamic public opinion. Unpublished manuscript, Columbia University. 2016 Sep 11. Available at: http://www.stat.columbia.edu/~gelman/research/unpublished/MRT(1).pdf . Accessed 22 Jan 2021.

Quick H, Terloyeva D, Wu Y, et al. Trends in tract-level prevalence of obesity in Philadelphia by race-ethnicity, space, and time. Epidemiology. 2020;31(1):15–21.

Lesko CR, Buchanan AL, Westreich D, Edwards JK, Hudgens MG, Cole SR. Generalizing study results: a potential outcomes perspective. Epidemiology. 2017;28(4):553–61.

Westreich D, Edwards JK, Lesko CR, Stuart E, Cole SR. Transportability of trial results using inverse odds of sampling weights. Am J Epidemiol. 2017;186(8):1010–4.

Congressional Research Service (CRS). The Health Information Technology for Economic and Clinical Health (HITECH) Act. 2009. Available at: https://crsreports.congress.gov/product/pdf/R/R40161/9 . Accessed 22 Jan 2021.

Hersh WR. The electronic medical record: promises and problems. J Am Soc Inf Sci. 1995;46(10):772–6.

Collecting sexual orientation and gender identity data in electronic health records: workshop summary. Washington (DC) 2013.

Committee on the Recommended Social and Behavioral Domains and Measures for Electronic Health Records; Board on Population Health and Public Health Practice; Institute of Medicine. Capturing social and behavioral domains and measures in electronic health records: phase 2. Washington (DC): National Academies Press (US); 2015.

Goff SL, Pekow PS, Markenson G, et al. Validity of using ICD-9-CM codes to identify selected categories of obstetric complications, procedures and co-morbidities. Paediatr Perinat Epidemiol. 2012;26(5):421–9.

Schneeweiss S, Avorn J. A review of uses of health care utilization databases for epidemiologic research on therapeutics. J Clin Epidemiol. 2005;58(4):323–37.

Gianfrancesco MA. Application of text mining methods to identify lupus nephritis from electronic health records. Lupus Science & Medicine. 2019;6:A142.

National Library of Medicine. SNOMED CT to ICD-10-CM Map. Available at: https://www.nlm.nih.gov/research/umls/mapping_projects/snomedct_to_icd10cm.html . Accessed 2 Jul 2021.

Klabunde CN, Harlan LC, Warren JL. Data sources for measuring comorbidity: a comparison of hospital records and Medicare claims for cancer patients. Med Care. 2006;44(10):921–8.

Burles K, Innes G, Senior K, Lang E, McRae A. Limitations of pulmonary embolism ICD-10 codes in emergency department administrative data: let the buyer beware. BMC Med Res Methodol. 2017;17(1):89.

Asgari MM, Wu JJ, Gelfand JM, Salman C, Curtis JR, Harrold LR, et al. Validity of diagnostic codes and prevalence of psoriasis and psoriatic arthritis in a managed care population, 1996-2009. Pharmacoepidemiol Drug Saf. 2013;22(8):842–9.

Hoffman S, Podgurski A. Big bad data: law, public health, and biomedical databases. J Law Med Ethics. 2013;41(Suppl 1):56–60.

Adler-Milstein J, Jha AK. Electronic health records: the authors reply. Health Aff. 2014;33(10):1877.

Geruso M, Layton T. Upcoding: evidence from Medicare on squishy risk adjustment. J Polit Econ. 2020;128(3):984–1026.

Lash TL, Fox MP, Fink AK. Applying quantitative bias analysis to epidemiologic data. New York: Springer-Verlag New York; 2009.

Gustafson P. Measurement error and misclassification in statistics and epidemiology: impacts and Bayesian adjustments. Boca Raton: Chapman and Hall/CRC; 2004.

Duda SN, Shepherd BE, Gadd CS, et al. Measuring the quality of observational study data in an international HIV research network. PLoS One. 2012;7(4):e33908.