Nursing Research Nursing Test Bank and Practice Questions (60 Items)

Welcome to your nursing test bank and practice questions for nursing research.

Nursing Research Test Bank

Nursing research is highly significant for contemporary and future professional nursing practice, which makes it an essential component of the educational process. Research is typically not among the traditional responsibilities of an entry-level nurse; many nurses are involved in either direct patient care or the administrative aspects of health care. However, nursing research is a growing field to which members of the profession can contribute a variety of skills and experiences to advance the science of nursing care. It is critical to the nursing profession and necessary for the continuing advances that promote optimal nursing care. Test your knowledge of nursing research with this 60-item nursing test bank.

Quiz Guidelines

Before you start, here are some examination guidelines and reminders you must read:

- Practice Exams: Engage with our Practice Exams to hone your skills in a supportive, low-pressure environment. These exams provide immediate feedback and explanations, helping you grasp core concepts, identify areas for improvement, and build confidence in your knowledge and abilities.

- You’re given 2 minutes per item.

- For Challenge Exams, click the “Start Quiz” button to begin the quiz.

- Complete the quiz: Answer every item. The score and rationales are shown only after you have answered all items.

- Learn from the rationales: After each quiz, click the “View Questions” button to read the explanation for each answer.

- Free access: Our test banks are 100% free, with no sign-ups or registrations. A sincere promise from Nurseslabs: we have never asked, and never will ask, for your credit card details or personal information for our practice questions. We’re dedicated to keeping this service accessible and cost-free, especially for students and nurses.

- Share your thoughts: We’d love your feedback, scores, and questions! Please share them in the comments below.




Research Methods in Early Childhood: An Introductory Guide

Student resources: multiple choice quiz

Test your understanding of each chapter by taking the quiz below. Good luck!

1. A literature review is best described as:

- A list of relevant articles and other published material you have read about your topic, describing the content of each source

- An internet search for articles describing research relevant to your topic, criticising the methodology and reliability of the findings

- An evaluative overview of what is known about a topic, based on published research and theoretical accounts, which serves as a basis for future research or policy decisions

- An essay looking at the theoretical background to your research study

2. Choose the best answer. A literature review:

- Is conducted after you have decided upon your research question

- Helps in the formulation of your research aim and research question

- Is the last thing to be written in your research report

- Is not part of a research proposal

3. Choose the best answer. Which is the most reliable source of information for your literature review?

- A TV documentary

- A newspaper article

- A peer reviewed research article

- A relevant chapter from a textbook

4. Choose the best answer. Critical analysis means:

- Subjecting the literature to a process of interrogation in order to assess the relevance, authenticity and reliability of the literature together with the summarising of common thematic areas of discussion

- An evaluation of past research being critical of the methodology used and describing how your methodology will be an improvement

- An analysis of theoretical approaches showing how they are no longer valid according to our current state of knowledge

- Looking at the way articles are structured, pointing out logical inconsistencies

5. Which is not a reason for accurate referencing in your literature review?

- Accurate referencing is needed so that tutors can follow up your sources and check that you have reported them accurately

- Accurate referencing is needed so that researchers who read your work are alerted to sources that might be helpful to them

- Referencing shows that you go to the library when not in lectures

- Accurate referencing is required because it is an academic convention


J Korean Med Sci. 2023 Dec 11;38(48). PMC10713437

Designing, Conducting, and Reporting Survey Studies: A Primer for Researchers

Olena Zimba

1 Department of Clinical Rheumatology and Immunology, University Hospital in Krakow, Krakow, Poland.

2 National Institute of Geriatrics, Rheumatology and Rehabilitation, Warsaw, Poland.

3 Department of Internal Medicine N2, Danylo Halytsky Lviv National Medical University, Lviv, Ukraine.

Armen Yuri Gasparyan

4 Departments of Rheumatology and Research and Development, Dudley Group NHS Foundation Trust (Teaching Trust of the University of Birmingham, UK), Russells Hall Hospital, Dudley, UK.

Survey studies have become instrumental in accumulating evidence in rapidly developing medical disciplines such as medical education, public health, and nursing. The global medical community saw an upsurge of surveys covering the experiences and perceptions of health specialists, patients, and public representatives in the peri-pandemic coronavirus disease 2019 period. Surveys can now play a central role in increasing research activity in non-mainstream science countries, where limited research funding and other barriers hinder the growth of science. Planning a survey starts with an overview of related reviews and other publications, which may help in designing questionnaires that comprehensively cover all relevant points. The validity and reliability of questionnaires rely on input from experts and potential respondents, who may suggest pertinent revisions that yield forms with attractive designs, easily understandable questions, and correctly ordered points that appeal to target respondents. Numerous online platforms, such as Google Forms and SurveyMonkey, are available for running online surveys and collecting responses from large numbers of respondents. Online surveys benefit from disseminating questionnaires via social media and other online platforms, which facilitates the internationalization of surveys and the participation of large groups of respondents. Survey reporting can be arranged in line with related recommendations and reporting standards, all of which have their strengths and limitations. This article overviews the available recommendations and presents pointers on designing, conducting, and reporting surveys.

INTRODUCTION

Surveys are increasingly popular research studies aimed at collecting and analyzing the opinions of diverse subject groups at specific points in time. Initially and predominantly employed for applied social science research, 1 surveys have retained their social dimension and become indispensable tools for analyzing knowledge, perceptions, the prevalence of clinical conditions, and practices in the medical sciences. 2 In rapidly developing disciplines with social dimensions, such as medical education, public health, and nursing, online surveys have become essential for monitoring and auditing healthcare and education services 3 , 4 and for generating new hypotheses and research questions. 5 In non-mainstream science countries with uninterrupted Internet access, online surveys have also been praised as useful for increasing research activity. 6

In 2016, the Medical Subject Headings (MeSH) vocabulary of the US National Library of Medicine introduced "surveys and questionnaires" as a structured keyword, defining survey studies as "collections of data obtained from voluntary subjects" ( https://www.ncbi.nlm.nih.gov/mesh/?term=surveys+and+questionnaires ). Such studies are instrumental in the absence of evidence from randomized controlled trials, systematic reviews, and cohort studies. Tagging survey reports with this MeSH term is advisable for increasing the retrieval of relevant documents while searching through Medline, Scopus, and other global databases.

Surveys are relatively easy to conduct by distributing web-based and non-web-based questionnaires to large groups of potential respondents. The ease of conduct depends primarily on how potential respondents are approached. Face-to-face interviews, postal mail, e-mails, phone calls, and social media posts can all be used to reach numerous potential respondents. Digitization and the popularity of social media have improved the distribution of questionnaires, expanded respondent engagement, and facilitated swift data processing and the globalization of survey studies. 7

SURVEY REPORTING GUIDANCE

Despite the ease of conducting survey studies and their importance for maintaining research activity across academic disciplines, their methodological quality, reproducibility, and implications vary widely. Deficiencies in design and reporting are the main reason some surveys fall short. For instance, systematic analyses of survey methodologies in nephrology, transfusion medicine, and radiology have indicated that fewer than one-third of related reports provide valid and reliable data. 8 , 9 , 10 Additionally, the absence of any discussion of respondent representativeness, reasons for nonresponse, and the generalizability of results has been identified as a drawback of some survey reports. These deficiencies justify designing surveys and processing data in line with reporting recommendations, including those listed on the EQUATOR Network website ( https://www.equator-network.org/ ).

Arguably, survey studies lack discipline-specific and globally acceptable reporting guidance. The diversity of surveyed subjects and populations is perhaps the main obstacle. Although most questionnaires contain socio-demographic questions, no reporting guidelines are specifically tailored to comprehensively surveying specialists across different academic disciplines, patients, and public representatives.

The EQUATOR Network platform currently lists several widely promoted documents with statements on conducting and reporting web-based and non-web-based surveys ( Table 1 ). 11 , 12 , 13 , 14 The oldest published recommendation provides guidance on postal, face-to-face, and telephone interviews. 1 One of its critical points highlights the need to formulate a clear and explicit question or objective to run a focused survey and to design questionnaires with a respondent-friendly layout and content. 1 The Checklist for Reporting Results of Internet E-Surveys (CHERRIES) is the most widely used document for reporting online surveys. 11 The CHERRIES checklist includes points on ensuring the reliability of online surveys and preventing multiple entries by the same users. 11 A specific set of recommendations, listed by the EQUATOR Network, is available for specialists who plan web-based and non-web-based surveys of knowledge, attitudes, and practice in clinical medicine. 12 These recommendations help in designing valid questionnaires, surveying representative subjects with clinical knowledge, and reporting the obtained results completely and transparently. 12


From January 2018 to December 2019, three rounds of surveying experts with an interest in surveys and questionnaires led to consensus on a set of points for reporting web-based and non-web-based surveys. 13 The Consensus-Based Checklist for Reporting of Survey Studies included a rating of 19 items of survey reports, from titles to acknowledgments. 13 Finally, rapid recommendations on online surveys amid the coronavirus disease 2019 (COVID-19) pandemic were published to guide authors on choosing social media and other online platforms for disseminating questionnaires and targeting representative groups of respondents. 14

Adhering to a combination of these recommendations is advisable to minimize the limitations of each document and increase the transparency of survey reports. For cross-sectional analyses of large sample sizes, additionally consulting the STROBE standard of the EQUATOR Network may further improve the accuracy of reporting respondents' inclusion and exclusion criteria. In fact, there are examples of online survey reports adhering to both CHERRIES and STROBE recommendations. 15 , 16

ETHICS CONSIDERATIONS

Although health research authorities in some countries do not mandate full ethics review of survey studies, obtaining formal review protocols or ethics waivers is advisable for most surveys involving respondents from more than one country. Following country-based regulations and ethical norms of research is also mandatory. 14 , 17

Full ethics review or exemption procedures are important steps in planning and conducting ethically sound surveys. Given the non-interventional nature of surveys and the absence of immediate health risks for participants, ethics committees may approve survey protocols without a full ethics review. 18 A full ethics review is, however, required when informational and psychological harms raise the risk to participants. 18 Informational harms may result from unauthorized access to respondents' personal data and from stigmatization of respondents when information about social diseases is leaked. Psychological harms may include anxiety, depression, and exacerbation of underlying psychiatric diseases.

Survey questionnaires submitted for evaluation should indicate how informed consent is obtained from respondents. 13 Additionally, information about confidentiality, anonymity, questionnaire delivery modes, compensations, and mechanisms preventing unauthorized access to questionnaires should be provided. 13 , 14 Ethical considerations and validation are especially important in studies involving vulnerable and marginalized subjects with diminished autonomy and poor social status due to dementia, substance abuse, inappropriate sexual behavior, and certain infections. 18 , 19 , 20 Precautions should be taken to avoid confidentiality breaches and bot activities when surveying via insecure online platforms. 21

Monetary compensation helps attract respondents to complete lengthy questionnaires. However, such incentives may encourage respondents primarily interested in the compensation to game the system. 22 Ethics review protocols may include points on recording online respondents' IP addresses and blocking duplicate submissions from the same Internet locations. 22 IP addresses are viewed as personal information in the EU but not in the US. Notably, IP identification may deter some potential respondents in the EU. 21
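The IP-based duplicate blocking described above can be sketched as follows. This is a minimal illustration under stated assumptions, not a feature of any particular survey platform: the record layout (`ip` and `answers` keys), the function names, and the salted SHA-256 fingerprint used to avoid storing raw addresses are all hypothetical choices. Hashing reduces, but does not remove, the EU privacy concern, because a salted hash of an IP address may still count as personal data.

```python
import hashlib
from typing import Iterable


def ip_fingerprint(ip: str, salt: str) -> str:
    """Return a salted SHA-256 digest so raw IP addresses need not be stored."""
    return hashlib.sha256((salt + ip).encode("utf-8")).hexdigest()


def drop_duplicate_ips(responses: Iterable[dict], salt: str) -> list[dict]:
    """Keep only the first submission seen from each IP address.

    Each response is assumed (hypothetically) to be a dict with an "ip" key
    and an "answers" key; the raw IP is replaced by its fingerprint.
    """
    seen: set[str] = set()
    kept: list[dict] = []
    for response in responses:
        fp = ip_fingerprint(response["ip"], salt)
        if fp in seen:
            continue  # later submission from an already-seen IP: discard it
        seen.add(fp)
        kept.append({"ip_hash": fp, "answers": response["answers"]})
    return kept


submissions = [
    {"ip": "203.0.113.5", "answers": ["yes", "no"]},
    {"ip": "198.51.100.7", "answers": ["no", "no"]},
    {"ip": "203.0.113.5", "answers": ["yes", "yes"]},  # duplicate IP
]
clean = drop_duplicate_ips(submissions, salt="study-specific-secret")
print(len(clean))  # 2
```

Keeping the first submission is only one possible policy. Households or institutions that share a single IP address would lose legitimate later responses, so some studies may prefer to flag duplicates for review rather than drop them.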

PATIENT KNOWLEDGE AND PERCEPTION SURVEYS

The design of patient knowledge and perception surveys is insufficiently defined and poorly explored. Although such surveys aim to consistently cover research questions on clinical presentation, prevention, and treatment, more emphasis is now placed on the psychometric aspects of designing related questionnaires. 23 , 24 , 25 Targeting responsive patient groups to collect reliable answers is another challenge, which can be addressed by distributing questionnaires to patients with good knowledge of their diseases, particularly those registered with university-affiliated clinics and those representing patient associations. 26 , 27 , 28

The structure of questionnaires may differ for surveys of patient groups with various age-dependent health issues. Care should be taken when targeting children, since they often report a variety of modifiable conditions that affect their quality of life, such as anxiety and depression, musculoskeletal problems, and pain. 29 Likewise, gender and age differences should be considered in questionnaires addressing quality of life in association with mental health and social status. 30 Questionnaires for older adults may benefit from including questions about social support and assistance in the context of caring for age-related diseases. 31 Finally, addressing the need for digital technologies and home-care applications may help ensure the completeness of questionnaires for older adults with sedentary lifestyles and mobility disabilities. 32 , 33

SOCIAL MEDIA FOR QUESTIONNAIRE DISTRIBUTION

The widespread use of social media has made it easier to distribute questionnaires to a large number of potential responders. Employing popular platforms such as Twitter and Facebook has become particularly useful for conducting nationwide surveys on awareness and concerns about global health and pandemic issues. 34 , 35 When various social media platforms are simultaneously employed, participants' sociodemographic factors such as gender, age, and level of education may confound the study results. 36 Knowing targeted groups' preferred online networking and communication sites may better direct the questionnaire distribution. 37 , 38 , 39

Preliminary evidence suggests that distributing survey links via the social media accounts of individual users and organized e-groups with an interest in specific health issues may increase respondent engagement and the accuracy of responses. 40 , 41

Since surveys distributed via social media are publicly accessible, their questionnaires should be professionally edited so that target populations can answer them easily, sensitive and disturbing points are avoided, and privacy and confidentiality are ensured. 42 , 43 Although counting post views is feasible, the response rates of questionnaires distributed via social media are practically impossible to record. This is an inherent limitation of such surveys.

SURVEY SAMPLING

Establishing connections with target populations and diversifying questionnaire dissemination may increase the rigor of today's abundantly administered surveys. 44 Sample sizes depend on various factors, including the chosen topic, aim, and sampling strategy (random or non-random). 12 Some topics, such as COVID-19 and global health, may easily attract the attention of large groups of respondents motivated to answer a variety of questions. At the beginning of the pandemic, most surveys employed non-random (non-probability) sampling strategies, which resulted in analyses of numerous responses without response rate calculations. These qualitative studies mainly aimed to analyze the opinions of specialists and patients exposed to COVID-19 in order to develop rapid guidelines and initiate clinical trials.

Outside the pandemic and beyond hot topics, there is a growing trend of low response rates and inadequate representation of target populations. 45 This trend makes it difficult to design and conduct random (probability) surveys. Consequently, the hypotheses of current online surveys often omit points on randomization and sample size calculation, and such studies end up as qualitative analyses and pilot studies. In fact, convenience (non-random or non-probability) sampling can be particularly suitable for previously unexplored and emerging topics, when a literature overview cannot help estimate optimal samples and entirely new questionnaires must be designed and tested. However, convenience sampling limits the generalizability of the conclusions, since the representativeness of the sample is uncertain. 45
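When a probability sample is feasible, the sample size calculation mentioned above is often done with Cochran's formula for estimating a proportion, n0 = z^2 * p * (1 - p) / e^2, optionally adjusted with the finite population correction n = n0 / (1 + (n0 - 1) / N). The article does not prescribe a formula, so treat this as one common textbook approach rather than the authors' method; the function names and the 40% anticipated response rate in the example are illustrative assumptions.

```python
import math
from typing import Optional


def required_sample_size(
    margin: float,
    z: float = 1.96,
    p: float = 0.5,
    population: Optional[int] = None,
) -> int:
    """Cochran-style sample size for estimating a proportion.

    margin: desired margin of error (0.05 means +/- 5 percentage points)
    z: critical value (1.96 for roughly 95% confidence)
    p: anticipated proportion; 0.5 gives the most conservative (largest) n
    population: finite population size N, if known
    """
    n0 = z**2 * p * (1 - p) / margin**2
    if population is not None:
        # Finite population correction: shrinks n0 when N is not much larger.
        n0 = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n0)


def invitations_needed(sample_size: int, expected_response_rate: float) -> int:
    """People to invite so that completed responses reach sample_size."""
    return math.ceil(sample_size / expected_response_rate)


# Worst case p = 0.5, +/- 5 points at about 95% confidence.
n_large = required_sample_size(0.05)                    # 385 for a very large population
n_small = required_sample_size(0.05, population=2000)   # 323 when N = 2000
print(n_large, n_small, invitations_needed(n_small, 0.4))  # 385 323 808
```

Dividing by the anticipated response rate shows why the low response rates noted above matter: at 40% response, roughly 2.5 times as many people must be invited as the sample requires, and non-response may still bias who ends up in the sample.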

Researchers often employ 'snowball' sampling, in which initial respondents forward the questionnaire to other interested respondents, thereby maximizing the sample size. Another common technique for obtaining more responses relies on regular social media reminders and resending e-mails to interested individuals and groups. Such tactics can lengthen the study but cannot eliminate participation bias and non-response.

Purposive or targeted sampling is perhaps the most precise technique when the size of the target population is known and respondents are ready to complete the questionnaire correctly; it allows an exact estimate of the response rate, which may approach 100%. 46
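For a purposive sample with a known target population, the response rate reduces to completed questionnaires divided by eligible people invited. The sketch below assumes that simplified definition (formal standards, such as those published by AAPOR, distinguish further categories of non-response and eligibility) and uses a hypothetical function name. It returns `None` when the denominator is unknown, as with the social-media distribution described earlier, where response rates cannot be recorded.

```python
from typing import Optional


def response_rate(completed: int, eligible_invited: Optional[int]) -> Optional[float]:
    """Completed questionnaires divided by eligible people invited.

    Returns None when the number of eligible invitees is unknown, for
    example when a survey link is posted openly on social media.
    """
    if eligible_invited is None:
        return None
    if eligible_invited <= 0:
        raise ValueError("eligible_invited must be positive")
    if not 0 <= completed <= eligible_invited:
        raise ValueError("completed must be between 0 and eligible_invited")
    return completed / eligible_invited


print(response_rate(48, 50))     # 0.96: a purposive sample of 50 known members
print(response_rate(120, None))  # None: open social-media link, denominator unknown
```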

DESIGNING QUESTIONNAIRES

Correctness, confidentiality, privacy, and anonymity are critical points of inquiry in questionnaires. 47 Correctly worded and convincingly presented survey invitations with consenting options and reassurances of secure data processing may increase response rates and ensure the validity of responses. 47 Online surveys are believed to be more advantageous than offline inquiries for ensuring anonymity and privacy, particularly for targeting socially marginalized and stigmatized subjects. Online study design is indeed optimal for collecting more responses in surveys of sex- and gender-related and otherwise sensitive topics.

Comprehensive literature reviews, consultations with subject experts, and Delphi exercises may all help to specify survey objectives, identify questionnaire domains, and formulate pertinent questions. Literature searches are required for in-depth coverage of the topic and for identifying previously published relevant surveys. Analyzing the characteristics of previous questionnaires can inform modifications when designing new self-administered surveys. The justification for a new study should correctly acknowledge similar published reports to avoid redundancy.

The initial part of a questionnaire usually includes a short introduction, preamble, or cover letter that specifies the objectives, target respondents, potential benefits and risks, and the moderators' contact details for further inquiries. This part may motivate potential respondents to consent and answer the questions. The specifics, volume, and format of the other parts depend on revisions made in response to pretesting and pilot testing. 48 Pretesting usually involves co-authors, other contributors, and colleagues interested in the subject, while pilot testing usually involves 5-10 target respondents who are familiar with the subject and can complete the questionnaire swiftly. The guidance obtained during pretesting and pilot testing allows questionnaire sections to be edited, shortened, or expanded. Although guidance on questionnaire length and the number of questions is scarce, some experts empirically consider 5 domains with 5 questions each to be optimal. 12 Lengthy questionnaires may be biased due to respondent fatigue and the inability to answer numerous complicated questions. 46

Questionnaire revisions aim to ensure the validity and consistency of questions, so that they appeal to relevant respondents and accurately cover all essential points. 45 Valid questionnaires enable reliable and reproducible survey studies that yield the same responses to variably worded and positioned questions. 45
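The article does not name a statistic for checking the consistency of questions. One widely used index of internal consistency is Cronbach's alpha, alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores), where k is the number of items. The sketch below assumes answers are already coded as numbers (for example, Likert codes) and laid out as one row per respondent; the function name, layout, and pilot data are hypothetical.

```python
from statistics import pvariance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items matrix of coded answers.

    scores[r][i] is respondent r's numeric answer to item i.
    """
    if len(scores) < 2:
        raise ValueError("need at least two respondents")
    k = len(scores[0])
    if k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need at least two items and equal-length rows")
    # Variance of each item across respondents.
    item_variances = [pvariance([row[i] for row in scores]) for i in range(k)]
    # Variance of each respondent's summed score.
    total_variance = pvariance([sum(row) for row in scores])
    if total_variance == 0:
        raise ValueError("total scores do not vary; alpha is undefined")
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Four pilot respondents answering three items on a 1-5 scale (made-up data).
pilot = [
    [4, 5, 4],
    [2, 2, 3],
    [3, 3, 3],
    [5, 4, 5],
]
print(round(cronbach_alpha(pilot), 2))
```

Population and sample variance give the same alpha here, because the n / (n - 1) factor cancels in the ratio. Higher values indicate items that vary together; alpha assumes the items measure a single underlying construct, so it is usually computed per questionnaire domain rather than across the whole form.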

Combining open-ended and close-ended questions is advisable to comprehensively cover all pertinent points while keeping questionnaires easy and quick to complete. Open-ended questions are usually included in small numbers, since they require more time to answer. 46 Also, the interpretation and analysis of responses to open-ended questions hardly contribute to generating robust qualitative data. 49 Close-ended questions with single- and multiple-choice answers form the main part of a questionnaire, with single answers being easier to analyze and report. Questions with single answers can be presented on Likert-type scales with 3 or more points (e.g., yes/no/do not know).

Avoiding overly simplistic (yes/no) questions and replacing them with Likert-scale items may increase the robustness of questionnaire analyses. 50 Additionally, constructing easily understandable questions, excluding merged items that combine two or more points, and moving sophisticated questions to the beginning of a questionnaire may improve the quality and feasibility of the study. 50
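The coding step behind Likert-scale analysis can be sketched as follows. This is a minimal illustration assuming a hypothetical 5-point agreement scale; the labels and numeric codes are not specified in the article. It reports the median and the full distribution, which are commonly preferred over the mean for ordinal answers, and it rejects unexpected labels instead of silently skipping them.

```python
from collections import Counter
from statistics import median

# Hypothetical 5-point agreement scale; labels and codes are assumptions.
LIKERT_5 = {
    "strongly disagree": 1,
    "disagree": 2,
    "neutral": 3,
    "agree": 4,
    "strongly agree": 5,
}


def code_responses(labels: list[str]) -> list[int]:
    """Convert text answers to ordinal codes, rejecting unexpected labels."""
    codes = []
    for label in labels:
        key = label.strip().lower()
        if key not in LIKERT_5:
            raise ValueError(f"unrecognised response: {label!r}")
        codes.append(LIKERT_5[key])
    return codes


def summarise(codes: list[int]) -> dict:
    """Sample size, median, and count of every scale point (including zeros)."""
    counts = Counter(codes)
    return {
        "n": len(codes),
        "median": median(codes),
        "distribution": {code: counts.get(code, 0) for code in sorted(LIKERT_5.values())},
    }


answers = ["Agree", "agree", "Neutral", "Strongly agree", "Disagree"]
print(summarise(code_responses(answers)))
```

Reporting every scale point, including those nobody chose, keeps the full shape of the answers visible, which a single yes/no split would hide.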

Survey studies are increasingly conducted by health professionals to swiftly explore the opinions of diverse groups of specialists, patients, and public representatives on a wide range of topics. Arguably, quality surveys with generalizable results can be instrumental in guiding health practitioners in times of crisis, such as the COVID-19 pandemic, when clinical trials, systematic reviews, and other evidence-based reports are scarce or absent. Online surveys can be particularly valuable for collecting and analyzing the responses of specialists, patients, and other subjects in non-mainstream science countries, where top evidence-based studies are scarce commodities and research funding is limited. Accumulated expertise in drafting quality questionnaires and conducting robust surveys is valuable for producing new data and generating new hypotheses and research questions.

The main advantages of surveys relate to the ease of conducting such studies with limited or no research funding. Advances in digitization and social media have further contributed to the ease of surveying and to the growing global interest in surveys among health professionals. Some disadvantages of current surveys perhaps relate to imperfections of the digital platforms used to disseminate questionnaires and analyze responses.

Although some survey reporting standards and recommendations are available, none comprehensively covers all questionnaire items and surveying steps. None of the survey reporting standards is based on summarizing the guidance of a large number of contributors involved in related research projects. As such, presenting the current guidance with a list of items for survey reports ( Table 2 ) may help researchers better design and publish related articles.

Disclosure: The authors have no potential conflicts of interest to disclose.

Author Contributions:

- Conceptualization: Zimba O.

- Formal analysis: Zimba O, Gasparyan AY.

- Writing - original draft: Zimba O.

- Writing - review & editing: Zimba O, Gasparyan AY.

Tool in School: Quizlet

- Posted May 22, 2018

- By Lory Hough

When 15-year-old Andrew Sutherland created a software program in 2005 to help him study 111 French terms for a test on animals, little did he imagine that the program would eventually become one of the fastest-growing free education tools, with 30 million monthly users from 130 countries.

“Quizlet has absolutely become a valuable tool,” Sutherland says. “In the United States, half of all high school students and a third of all college students use us every month. That’s not something I expected to happen when I made it in high school, and it speaks to how essential it has become.”

Part of the appeal is that Quizlet takes a simple idea — picture paper flash cards — but gives it a modern twist. Online users create study sets (terms and definitions) or use study sets created by others, including classmates. They then have multiple ways to study the information: virtual flashcards or typing in answers to written or audio prompts. There are also two games: match (drag the correct answer) and gravity (type the correct answer as asteroids fall).

The online format is key, he says. “The appeal of a digital learning tool is that it can ask much more dynamic questions than what you can do with paper. Quizlet can figure out what material you’re struggling with and just focus on that. It can also verify what you know and coach you to only stop studying when it thinks you’re ready.”

Recently, they launched Quizlet Live for students to work in teams during class. Teacher feedback was key, he says, but adds, “My favorite type of feedback is hearing from teachers about new use cases. The other day I was at a chocolate store and wearing my Quizlet shirt. The woman there said she uses Quizlet to train all their new employees about their chocolate. I want that job!”

Ed. Magazine

The magazine of the Harvard Graduate School of Education


9 Survey research

Survey research is a research method involving the use of standardised questionnaires or interviews to collect data about people and their preferences, thoughts, and behaviours in a systematic manner. Although census surveys were conducted as early as Ancient Egypt, survey as a formal research method was pioneered in the 1930–40s by sociologist Paul Lazarsfeld to examine the effects of radio on political opinion formation in the United States. It has since become a very popular method for quantitative research in the social sciences.

The survey method can be used for descriptive, exploratory, or explanatory research. This method is best suited for studies that have individual people as the unit of analysis. Although other units of analysis, such as groups, organisations or dyads—pairs of organisations, such as buyers and sellers—are also studied using surveys, such studies often use a specific person from each unit as a ‘key informant’ or a ‘proxy’ for that unit. Consequently, such surveys may be subject to respondent bias if the chosen informant does not have adequate knowledge or has a biased opinion about the phenomenon of interest. For instance, Chief Executive Officers may not adequately know employees’ perceptions or teamwork in their own companies, and may therefore be the wrong informant for studies of team dynamics or employee self-esteem.

Survey research has several inherent strengths compared to other research methods. First, surveys are an excellent vehicle for measuring a wide variety of unobservable data, such as people’s preferences (e.g., political orientation), traits (e.g., self-esteem), attitudes (e.g., toward immigrants), beliefs (e.g., about a new law), behaviours (e.g., smoking or drinking habits), or factual information (e.g., income). Second, survey research is ideally suited for remotely collecting data about a population that is too large to observe directly. A large area, such as an entire country, can be covered by postal, email, or telephone surveys using meticulous sampling to ensure that the population is adequately represented in a small sample. Third, due to their unobtrusive nature and the ability to respond at one’s convenience, questionnaire surveys are preferred by some respondents. Fourth, interviews may be the only way of reaching certain population groups, such as the homeless or illegal immigrants, for whom there is no sampling frame available. Fifth, large sample surveys may allow detection of small effects even while analysing multiple variables and, depending on the survey design, may also allow comparative analysis of population subgroups (i.e., within-group and between-group analysis). Sixth, survey research is more economical in terms of researcher time, effort and cost than other methods such as experimental research and case research. At the same time, survey research also has some unique disadvantages. It is subject to a large number of biases, such as non-response bias, sampling bias, social desirability bias, and recall bias, as discussed at the end of this chapter.

Depending on how the data is collected, survey research can be divided into two broad categories: questionnaire surveys (which may be postal, group-administered, or online surveys), and interview surveys (which may be personal, telephone, or focus group interviews). Questionnaires are instruments that are completed in writing by respondents, while interviews are completed by the interviewer based on verbal responses provided by respondents. As discussed below, each type has its own strengths and weaknesses in terms of cost, coverage of the target population, and the researcher’s flexibility in asking questions.

Questionnaire surveys

Invented by Sir Francis Galton, a questionnaire is a research instrument consisting of a set of questions (items) intended to capture responses from respondents in a standardised manner. Questions may be unstructured or structured. Unstructured questions ask respondents to provide a response in their own words, while structured questions ask respondents to select an answer from a given set of choices. Subjects’ responses to individual questions (items) on a structured questionnaire may be aggregated into a composite scale or index for statistical analysis. Questions should be designed in such a way that respondents are able to read, understand, and respond to them in a meaningful way, and hence the survey method may not be appropriate or practical for certain demographic groups such as children or the illiterate.
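The aggregation of structured item responses into a composite scale can be sketched in a few lines of Python. This is a minimal illustration, assuming a hypothetical three-item scale scored on a 1–5 Likert range in which one negatively worded item is reverse-coded before averaging; the item names, the range and the choice to average rather than sum are all assumptions, not a prescribed scoring rule.

```python
# Minimal sketch: aggregate Likert items into a composite scale score.
# The 1-5 range, item names and reverse-coded item are hypothetical.
LIKERT_MIN, LIKERT_MAX = 1, 5


def reverse_code(value: int) -> int:
    """Flip a response so a high score always means more of the construct."""
    return LIKERT_MIN + LIKERT_MAX - value


def composite_score(responses: dict, reverse_items: set) -> float:
    """Average item responses after reverse-coding negatively worded items."""
    scored = []
    for item, value in responses.items():
        if not LIKERT_MIN <= value <= LIKERT_MAX:
            raise ValueError(f"{item}: {value} is outside {LIKERT_MIN}-{LIKERT_MAX}")
        scored.append(reverse_code(value) if item in reverse_items else value)
    return sum(scored) / len(scored)


# Hypothetical three-item scale in which 'se3' is negatively worded.
answers = {"se1": 4, "se2": 5, "se3": 2}
print(composite_score(answers, reverse_items={"se3"}))
```

Some instruments sum item scores instead of averaging them; averaging keeps the composite on the same 1–5 metric as the individual items.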

Most questionnaire surveys tend to be self-administered postal surveys, where the same questionnaire is posted to a large number of people, and willing respondents can complete the survey at their convenience and return it in prepaid envelopes. Postal surveys are advantageous in that they are unobtrusive and inexpensive to administer, since bulk postage is cheap in most countries. However, response rates from postal surveys tend to be quite low since most people ignore survey requests. There may also be long delays (several months) before respondents complete and return the survey, or they may simply lose it. Hence, the researcher must continuously monitor responses as they are returned, and track non-respondents and send them repeated reminders (two or three reminders at intervals of one to one and a half months is ideal). Questionnaire surveys are also not well suited for issues that require clarification on the part of the respondent or those that require detailed written responses. Longitudinal designs can be used to survey the same set of respondents at different times, but response rates tend to fall precipitously from one survey to the next.
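The monitoring and reminder routine for a postal survey can be illustrated with a small Python sketch. It assumes each mailed questionnaire carries a respondent ID, and it fixes the reminder interval at six weeks (within the one to one and a half month range given above) with at most three reminders; these choices and all function names are hypothetical.

```python
from datetime import date, timedelta

# Assumptions: reminders every six weeks, at most three of them.
REMINDER_INTERVAL = timedelta(weeks=6)
MAX_REMINDERS = 3


def reminder_dates(mailed_on: date) -> list:
    """Dates on which reminders fall due after the initial mailing."""
    return [mailed_on + REMINDER_INTERVAL * k for k in range(1, MAX_REMINDERS + 1)]


def non_respondents(mailed: set, returned: set) -> set:
    """IDs of people who were sent a questionnaire but have not returned it."""
    return mailed - returned


def response_rate(mailed: set, returned: set) -> float:
    """Share of mailed questionnaires that have come back so far."""
    return len(mailed & returned) / len(mailed)


mailed = {"R001", "R002", "R003", "R004"}
returned = {"R002"}
print(sorted(non_respondents(mailed, returned)))
print(response_rate(mailed, returned))
print(reminder_dates(date(2024, 1, 8)))
```

In practice the set of non-respondents would be recomputed before each reminder, so that people who have already replied are not contacted again.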

A second type of survey is a group-administered questionnaire. A sample of respondents is brought together at a common place and time, and each respondent is asked to complete the survey questionnaire while in that room. Respondents enter their responses independently without interacting with one another. This format is convenient for the researcher, and a high response rate is assured. If respondents do not understand any specific question, they can ask for clarification. In many organisations, it is relatively easy to assemble a group of employees in a conference room or lunch room, especially if the survey is approved by corporate executives.

A more recent type of questionnaire survey is an online or web survey. These surveys are administered over the Internet using interactive forms. Respondents may receive an email request for participation in the survey with a link to a website where the survey may be completed. Alternatively, the survey may be embedded into an email, and can be completed and returned via email. These surveys are very inexpensive to administer, results are instantly recorded in an online database, and the survey can be easily modified if needed. However, if the survey website is not password-protected or designed to prevent multiple submissions, the responses can be easily compromised. Furthermore, sampling bias may be a significant issue since the survey cannot reach people who do not have computer or Internet access, such as many of the poor, senior, and minority groups, and the respondent sample is skewed toward a younger demographic who are online much of the time and have the time and ability to complete such surveys. Computing the response rate may be problematic if the survey link is posted on LISTSERVs or bulletin boards instead of being emailed directly to targeted respondents. For these reasons, many researchers prefer dual-media surveys (e.g., postal survey and online survey), allowing respondents to select their preferred method of response.
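One common way to prevent multiple submissions, and to keep the response rate computable, is to email each invitee a unique, single-use link. The sketch below is a hypothetical in-memory version built on Python's standard `secrets` module; a real survey platform would store tokens in its database, and the class, attribute and method names here are illustrative only.

```python
import secrets


class SurveyInvitations:
    """Hypothetical in-memory store of single-use survey tokens."""

    def __init__(self, emails):
        # One unguessable token per invited respondent.
        self.token_of = {email: secrets.token_urlsafe(16) for email in emails}
        self._owner = {token: email for email, token in self.token_of.items()}
        self.responses = {}

    def submit(self, token, answers) -> bool:
        """Accept answers only for a known token that has not been used yet."""
        email = self._owner.get(token)
        if email is None or email in self.responses:
            return False  # unknown link or duplicate submission
        self.responses[email] = answers
        return True

    def response_rate(self) -> float:
        """Completed responses divided by invitations actually sent."""
        return len(self.responses) / len(self.token_of)


invites = SurveyInvitations(["a@example.org", "b@example.org"])
token = invites.token_of["a@example.org"]
print(invites.submit(token, {"q1": "yes"}))  # first use is accepted
print(invites.submit(token, {"q1": "no"}))   # a second use is rejected
print(invites.response_rate())
```

Because every invitation is tracked, the denominator of the response rate is known, which is not the case when a single link is posted to a LISTSERV or bulletin board.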

Constructing a survey questionnaire is an art. Numerous decisions must be made about the content of questions, their wording, format, and sequencing, all of which can have important consequences for the survey responses.

Response formats. Survey questions may be structured or unstructured. Responses to structured questions are captured using one of the following response formats:

Dichotomous response, where respondents are asked to select one of two possible choices, such as true/false, yes/no, or agree/disagree. An example of such a question is: Do you think that the death penalty is justified under some circumstances? (circle one): yes / no.

Nominal response, where respondents are presented with more than two unordered options, such as: What is your industry of employment?: manufacturing / consumer services / retail / education / healthcare / tourism and hospitality / other.

Ordinal response, where respondents have more than two ordered options, such as: What is your highest level of education?: high school / bachelor’s degree / postgraduate degree.

Interval-level response, where respondents are presented with a 5-point or 7-point Likert scale, semantic differential scale, or Guttman scale. Each of these scale types was discussed in a previous chapter.

Continuous response, where respondents enter a continuous (ratio-scaled) value with a meaningful zero point, such as their age or tenure in a firm. These responses generally tend to be of the fill-in-the-blank type.
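The five response formats differ in what counts as a valid answer and in which operations are meaningful on it. The following Python sketch encodes them, assuming hypothetical `ResponseFormat` and `Question` types; treating interval items as integers from 1 to the number of scale points, and continuous answers as non-negative numbers, are conventions chosen for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class ResponseFormat(Enum):
    DICHOTOMOUS = "dichotomous"  # exactly two choices
    NOMINAL = "nominal"          # more than two unordered choices
    ORDINAL = "ordinal"          # more than two ordered choices
    INTERVAL = "interval"        # e.g. a 5- or 7-point Likert item
    CONTINUOUS = "continuous"    # ratio-scaled value with a true zero


CATEGORICAL = {ResponseFormat.DICHOTOMOUS, ResponseFormat.NOMINAL, ResponseFormat.ORDINAL}


@dataclass
class Question:
    text: str
    fmt: ResponseFormat
    options: tuple = ()  # choices, listed in order for ordinal questions
    points: int = 5      # number of scale points for interval items

    def is_valid(self, answer) -> bool:
        if self.fmt in CATEGORICAL:
            return answer in self.options
        if self.fmt is ResponseFormat.INTERVAL:
            return isinstance(answer, int) and 1 <= answer <= self.points
        # Continuous (assumed non-negative here, since zero is meaningful).
        return isinstance(answer, (int, float)) and answer >= 0

    def rank(self, answer) -> int:
        """Order of an ordinal answer; supports comparison, not arithmetic."""
        if self.fmt is not ResponseFormat.ORDINAL:
            raise TypeError("only ordinal responses have a rank")
        return self.options.index(answer)


education = Question(
    "What is your highest level of education?",
    ResponseFormat.ORDINAL,
    ("high school", "bachelor's degree", "postgraduate degree"),
)
age = Question("What is your age?", ResponseFormat.CONTINUOUS)
print(education.is_valid("bachelor's degree"), education.rank("postgraduate degree"))
print(age.is_valid(34), age.is_valid(-1))
```

Keeping ordinal answers as ranks rather than numbers is a deliberate design choice: it allows ordering respondents without implying that the gaps between categories are equal.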

Question content and wording. Responses obtained in survey research are very sensitive to the types of questions asked. Poorly framed or ambiguous questions will likely result in meaningless responses with very little value. Dillman (1978) [1] recommends several rules for creating good survey questions. Every single question in a survey should be carefully scrutinised for the following issues:

Is the question clear and understandable?: Survey questions should be stated in very simple language, preferably in active voice, and without complicated words or jargon that may not be understood by a typical respondent. All questions in the questionnaire should be worded in a similar manner to make it easy for respondents to read and understand them. The only exception is if your survey is targeted at a specialised group of respondents, such as doctors, lawyers and researchers, who use such jargon in their everyday environment.

Is the question worded in a negative manner?: Negatively worded questions such as ‘Should your local government not raise taxes?’ tend to confuse many respondents and lead to inaccurate responses. Double negatives should be avoided when designing survey questions.

Is the question ambiguous?: Survey questions should not use words or expressions that may be interpreted differently by different respondents (e.g., words like ‘any’ or ‘just’). For instance, if you ask a respondent, ‘What is your annual income?’, it is unclear whether you are referring only to salary/wages or also to dividend, rental, and other income, and whether you are referring to personal income, family income (including spouse’s wages), or personal and business income. Different interpretations by different respondents will lead to incomparable responses that cannot be interpreted correctly.

Does the question have biased or value-laden words?: Bias refers to any property of a question that encourages subjects to answer in a certain way. Kenneth Rasinski (1989) [2] examined several studies on people’s attitudes toward government spending, and observed that respondents tend to indicate stronger support for ‘assistance to the poor’ and less for ‘welfare’, even though both terms had the same meaning. In this study, more support was also observed for ‘halting rising crime rate’ and less for ‘law enforcement’, more for ‘solving problems of big cities’ and less for ‘assistance to big cities’, and more for ‘dealing with drug addiction’ and less for ‘drug rehabilitation’. Biased language or tone tends to skew observed responses. It is often difficult to anticipate biasing wording in advance, but to the greatest extent possible, survey questions should be carefully scrutinised to avoid biased language.

Is the question double-barrelled?: Double-barrelled questions are those that ask about two or more issues at once but allow only one answer. For example, ‘Are you satisfied with the hardware and software provided for your work?’. In this example, how should a respondent answer if they are satisfied with the hardware but not with the software, or vice versa? It is always advisable to split double-barrelled questions into separate questions: ‘Are you satisfied with the hardware provided for your work?’ and ‘Are you satisfied with the software provided for your work?’. Another example: ‘Does your family favour public television?’. Some people may favour public TV for themselves but prefer certain cable TV programs for their children.

Is the question too general?: Sometimes, questions that are too general may not accurately convey respondents’ perceptions. If you ask someone how they liked a certain book and provide a response scale ranging from ‘not at all’ to ‘extremely well’, and that person selects ‘extremely well’, what do they mean? Instead, ask more specific behavioural questions, such as ‘Will you recommend this book to others?’ or ‘Do you plan to read other books by the same author?’. Likewise, instead of asking ‘How big is your firm?’ (which may be interpreted differently by respondents), ask ‘How many people work for your firm?’ and/or ‘What is the annual revenue of your firm?’, which are both measures of firm size.

Is the question too detailed?: Avoid unnecessarily detailed questions that serve no specific research purpose. For instance, do you need the age of each child in a household, or is the number of children in the household sufficient? However, if unsure, it is better to err on the side of detail than generality.

Is the question presumptuous?: If you ask, ‘What do you see as the benefits of a tax cut?’, you are presuming that the respondent sees the tax cut as beneficial. Many people may not view tax cuts as beneficial, because tax cuts generally lead to less funding for public schools, larger class sizes, and fewer public services such as police, ambulance, and fire services. Avoid questions with built-in presumptions.

Is the question imaginary?: A popular question in many television game shows is, ‘If you won a million dollars on this show, how would you spend it?’. Most respondents have never been faced with such an amount of money and have never thought about it (they may not even know that after taxes, they will get only about $640,000 or so in the United States, and in many cases that amount is spread over a 20-year period), and so their answers tend to be quite random, such as taking a tour around the world, buying a restaurant or bar, spending on education, saving for retirement, helping parents or children, or having a lavish wedding. Imaginary questions have imaginary answers, which cannot be used for making scientific inferences.

Do respondents have the information needed to correctly answer the question?: Oftentimes, we assume that subjects have the necessary information to answer a question when, in reality, they do not. Even if a response is obtained, it tends to be inaccurate given the subjects’ lack of knowledge about the question being asked. For instance, we should not ask the CEO of a company about day-to-day operational details that they may not be aware of, or ask teachers about how much their students are learning, or ask high-schoolers, ‘Do you think the US Government acted appropriately in the Bay of Pigs invasion?’.

Question sequencing. In general, questions should flow logically from one to the next. To achieve the best response rates, questions should flow from the least sensitive to the most sensitive, from the factual and behavioural to the attitudinal, and from the more general to the more specific. Some general rules for question sequencing:

Start with easy non-threatening questions that can be easily recalled. Good options are demographics (age, gender, education level) for individual-level surveys and firmographics (employee count, annual revenues, industry) for firm-level surveys.

Never start with an open-ended question.

If following a historical sequence of events, follow a chronological order from earliest to latest.

Ask about one topic at a time. When switching topics, use a transition, such as, ‘The next section examines your opinions about…’

Use filter or contingency questions as needed, such as, ‘If you answered “yes” to question 5, please proceed to Section 2. If you answered “no”, go to Section 3’.
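Filter or contingency questions amount to skip logic, which online survey tools can apply automatically. A minimal Python sketch, assuming a hypothetical nine-question instrument in which a ‘no’ to question 5 skips Section 2 (questions 6 and 7) and jumps to Section 3 (question 8), while a ‘yes’ continues through Section 2 into Section 3:

```python
# Hypothetical instrument: q1-q5 form Section 1, q6-q7 Section 2, q8-q9 Section 3.
QUESTIONS = ["q1", "q2", "q3", "q4", "q5", "q6", "q7", "q8", "q9"]

# Skip rules: (question, answer) -> question to jump to next.
SKIP_RULES = {("q5", "no"): "q8"}


def questions_presented(answers: dict) -> list:
    """Walk the questionnaire in order, applying skip rules after each answer."""
    shown = []
    i = 0
    while i < len(QUESTIONS):
        question = QUESTIONS[i]
        shown.append(question)
        target = SKIP_RULES.get((question, answers.get(question)))
        if target is None:
            i += 1
        else:
            j = QUESTIONS.index(target)
            if j <= i:
                raise ValueError("skip rules may only jump forward")
            i = j
    return shown


print(questions_presented({"q5": "yes"}))
print(questions_presented({"q5": "no"}))
```

Restricting rules to forward jumps keeps the respondent's path through the questionnaire finite and in the intended order.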

Other golden rules. Do unto your respondents what you would have them do unto you. Be attentive to and appreciative of respondents’ time, attention, and trust, and protect the confidentiality of their personal information. Always practice the following strategies for all survey research:

People’s time is valuable. Be respectful of their time. Keep your survey as short as possible and limit it to what is absolutely necessary. Respondents do not like spending more than 10-15 minutes on any survey, no matter how important it is. Longer surveys tend to dramatically lower response rates.

Always assure respondents about the confidentiality of their responses, and how you will use their data (e.g., for academic research) and how the results will be reported (usually, in the aggregate).

For organisational surveys, assure respondents that you will send them a copy of the final results, and make sure that you follow through on your promise.

Thank your respondents for their participation in your study.

Finally, always pretest your questionnaire, at least using a convenience sample, before administering it to respondents in a field setting. Such pretesting may uncover ambiguity, lack of clarity, or biases in question wording, which should be eliminated before administering to the intended sample.

Interview surveys

Interviews are a more personalised data collection method than questionnaires, and are conducted by trained interviewers using the same research protocol as questionnaire surveys (i.e., a standardised set of questions). However, unlike a questionnaire, the interview script may contain special instructions for the interviewer that are not seen by respondents, and may include space for the interviewer to record personal observations and comments. In addition, unlike postal surveys, the interviewer has the opportunity to clarify any issues raised by the respondent or ask probing or follow-up questions. However, interviews are time-consuming and resource-intensive. Interviewers need special interviewing skills as they are considered to be part of the measurement instrument, and must proactively strive not to artificially bias the observed responses.

The most typical form of interview is a personal or face-to-face interview, where the interviewer works directly with the respondent to ask questions and record their responses. Personal interviews may be conducted at the respondent’s home or office location. This approach may even be favoured by some respondents, while others may feel uncomfortable allowing a stranger into their homes. However, skilled interviewers can persuade respondents to co-operate, dramatically improving response rates.

A variation of the personal interview is a group interview, also called a focus group. In this technique, a small group of respondents (usually 6–10 respondents) is interviewed together in a common location. The interviewer is essentially a facilitator whose job is to lead the discussion and ensure that every person has an opportunity to respond. Focus groups allow deeper examination of complex issues than other forms of survey research, because when people hear others talk, it often triggers responses or ideas that they did not think about before. However, a focus group discussion may be dominated by a single strong personality, and some individuals may be reluctant to voice their opinions in front of their peers or superiors, especially when dealing with a sensitive issue such as employee underperformance or office politics. Because of their small sample size, focus groups are usually used for exploratory research rather than descriptive or explanatory research.

A third type of interview survey is a telephone interview. In this technique, interviewers contact potential respondents over the phone, typically based on a random selection of people from a telephone directory, to ask a standard set of survey questions. A more recent and technologically advanced approach is computer-assisted telephone interviewing (CATI), which is increasingly being used by academic, government, and commercial survey researchers. Here the interviewer is a telephone operator who is guided through the interview process by a computer program displaying instructions and questions to be asked. The system also selects respondents randomly using a random digit dialling technique, and records responses using voice capture technology. Once respondents are on the phone, higher response rates can be obtained. This technique is not ideal for rural areas where telephone density is low, and also cannot be used for communicating non-audio information such as graphics or product demonstrations.
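Random digit dialling is often implemented by appending randomly generated final digits to area-code and exchange prefixes known to be in service, so that, within those prefixes, unlisted numbers are as likely to be dialled as listed ones. The Python sketch below illustrates that idea under stated assumptions: ten-digit numbers, hypothetical six-digit prefixes, and no screening of business or non-working numbers. It is not the selection routine of any particular CATI product.

```python
import random


def random_digit_sample(prefixes, n, seed=None):
    """Draw n distinct ten-digit numbers by appending four random digits
    to hypothetical six-digit area-code + exchange prefixes."""
    unique_prefixes = sorted(set(prefixes))
    capacity = len(unique_prefixes) * 10_000
    if n > capacity:
        raise ValueError(f"only {capacity} numbers exist under these prefixes")
    rng = random.Random(seed)  # seeded so a sample can be reproduced
    numbers = set()
    while len(numbers) < n:
        prefix = rng.choice(unique_prefixes)
        numbers.add(f"{prefix}{rng.randrange(10_000):04d}")
    return sorted(numbers)


sample = random_digit_sample(["617555", "617556"], n=5, seed=42)
print(sample)
```

Generating numbers rather than drawing them from a directory addresses the unlisted-number problem described under sampling bias, although it cannot reach households without a telephone.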

Role of interviewer. The interviewer has a complex and multi-faceted role in the interview process, which includes the following tasks:

Prepare for the interview: Since the interviewer is in the forefront of the data collection effort, the quality of data collected depends heavily on how well the interviewer is trained to do the job. The interviewer must be trained in the interview process and the survey method, and also be familiar with the purpose of the study, how responses will be stored and used, and sources of interviewer bias. They should also rehearse and time the interview prior to the formal study.

Locate and enlist the co-operation of respondents: Particularly in personal, in-home surveys, the interviewer must locate specific addresses, and work around respondents’ schedules at sometimes undesirable times such as during weekends. They should also act like a salesperson, selling the idea of participating in the study.

Motivate respondents: Respondents often feed off the motivation of the interviewer. If the interviewer is uninterested or inattentive, respondents will not be motivated to provide useful or informative responses either. The interviewer must demonstrate enthusiasm about the study, communicate the importance of the research to respondents, and be attentive to respondents’ needs throughout the interview.

Clarify any confusion or concerns: Interviewers must be able to think on their feet and address unanticipated concerns or objections raised by respondents to the respondents’ satisfaction. Additionally, they should ask probing questions as necessary even if such questions are not in the script.

Observe quality of response: The interviewer is in the best position to judge the quality of information collected, and may supplement responses obtained using personal observations of gestures or body language as appropriate.

Conducting the interview. Before the interview, the interviewer should prepare a kit to carry to the interview session, consisting of a cover letter from the principal investigator or sponsor, adequate copies of the survey instrument, photo identification, and a telephone number for respondents to call to verify the interviewer’s authenticity. The interviewer should also try to call respondents ahead of time to set up an appointment if possible. To start the interview, they should speak in an imperative and confident tone, such as, ‘I’d like to take a few minutes of your time to interview you for a very important study’, instead of, ‘May I come in to do an interview?’. They should introduce themselves, present personal credentials, explain the purpose of the study in one to two sentences, and assure respondents that their participation is voluntary and their comments are confidential, all in less than a minute. No big words or jargon should be used, and no details should be provided unless specifically requested. If the interviewer wishes to record the interview, they should ask for respondents’ explicit permission before doing so. Even if the interview is recorded, the interviewer must take notes on key issues, probes, or verbatim phrases.

During the interview, the interviewer should follow the questionnaire script and ask questions exactly as written, and not change the words to make the question sound friendlier. They should also not change the order of questions or skip any question that may have been answered earlier. Any issues with the questions should be discussed during rehearsal prior to the actual interview sessions. The interviewer should not finish the respondent’s sentences. If the respondent gives a brief cursory answer, the interviewer should probe the respondent to elicit a more thoughtful, thorough response. Some useful probing techniques are:

The silent probe: Just pausing and waiting without going on to the next question may suggest to respondents that the interviewer is waiting for a more detailed response.

Overt encouragement: An occasional ‘uh-huh’ or ‘okay’ may encourage the respondent to go into greater detail. However, the interviewer must not express approval or disapproval of what the respondent says.

Ask for elaboration: Such as, ‘Can you elaborate on that?’ or ‘A minute ago, you were talking about an experience you had in high school. Can you tell me more about that?’.

Reflection: The interviewer can try the psychotherapist’s trick of repeating what the respondent said. For instance, ‘What I’m hearing is that you found that experience very traumatic’ and then pause and wait for the respondent to elaborate.

After the interview is completed, the interviewer should thank respondents for their time, tell them when to expect the results, and not leave hastily. Immediately after leaving, they should write down any notes or key observations that may help interpret the respondent’s comments better.

Biases in survey research

Despite all of its strengths and advantages, survey research is often tainted with systematic biases that may invalidate some of the inferences derived from such surveys. Five such biases are the non-response bias, sampling bias, social desirability bias, recall bias, and common method bias.

Non-response bias. Survey research is generally notorious for its low response rates. A response rate of 15-20 per cent is typical in a postal survey, even after two or three reminders. If the majority of the targeted respondents fail to respond to a survey, this may indicate a systematic reason for the low response rate, which may in turn raise questions about the validity of the study’s results. For instance, dissatisfied customers tend to be more vocal about their experience than satisfied customers, and are therefore more likely to respond to questionnaire surveys or interview requests than satisfied customers. Hence, any respondent sample is likely to have a higher proportion of dissatisfied customers than the underlying population from which it is drawn. In this instance, not only will the results lack generalisability, but the observed outcomes may also be an artefact of the biased sample. Several strategies may be employed to improve response rates:
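The customer example can be made concrete with a short calculation. Assume, hypothetically, that 30 per cent of customers are dissatisfied and respond at a rate of 30 per cent, while satisfied customers respond at 10 per cent. The overall response rate is then 0.3 × 0.3 + 0.7 × 0.1 = 16 per cent, typical of a postal survey, yet dissatisfied customers make up 0.09 / 0.16 ≈ 56 per cent of respondents. A Python sketch of the calculation, with hypothetical function names:

```python
def overall_response_rate(group_share: float, group_rate: float, other_rate: float) -> float:
    """Response rate across the whole sample, mixing two subgroups."""
    return group_share * group_rate + (1 - group_share) * other_rate


def share_among_respondents(group_share: float, group_rate: float, other_rate: float) -> float:
    """Proportion of respondents who belong to the group (Bayes' rule)."""
    responders_in_group = group_share * group_rate
    return responders_in_group / overall_response_rate(group_share, group_rate, other_rate)


# Hypothetical figures: 30% dissatisfied, who respond at 30%; others respond at 10%.
rate = overall_response_rate(0.30, 0.30, 0.10)
share = share_among_respondents(0.30, 0.30, 0.10)
print(f"response rate {rate:.0%}, dissatisfied among respondents {share:.0%}")
```

When both groups respond at the same rate, the respondent share equals the population share, which is why it is the difference in response rates, not the low rate itself, that produces the bias.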

Advance notification: Sending a short letter to the targeted respondents soliciting their participation in an upcoming survey can prepare them in advance and improve their propensity to respond. The letter should state the purpose and importance of the study, mode of data collection (e.g., via a phone call, a survey form in the mail, etc.), and appreciation for their co-operation. A variation of this technique may be to ask the respondent to return a prepaid postcard indicating whether or not they are willing to participate in the study.

Relevance of content: People are more likely to respond to surveys examining issues of relevance or importance to them.

Respondent-friendly questionnaire: Shorter survey questionnaires tend to elicit higher response rates than longer questionnaires. Furthermore, questions that are clear, non-offensive, and easy to respond to tend to attract higher response rates.

Endorsement: For organisational surveys, it helps to gain endorsement from a senior executive attesting to the importance of the study to the organisation. Such endorsement can be in the form of a cover letter or a letter of introduction, which can improve the researcher’s credibility in the eyes of the respondents.

Follow-up requests: Multiple follow-up requests may coax some non-respondents to respond, even if their responses are late.

Interviewer training: Response rates for interviews can be improved with skilled interviewers trained in how to request interviews, use computerised dialling techniques to identify potential respondents, and schedule call-backs for respondents who could not be reached.

Incentives: Incentives in the form of cash or gift cards, giveaways such as pens or stress balls, entry into a lottery, draw or contest, discount coupons, promise of contribution to charity, and so forth may increase response rates.

Non-monetary incentives: Businesses, in particular, are more prone to respond to non-monetary incentives than financial incentives. An example of such a non-monetary incentive is a benchmarking report comparing the business’s individual response against the aggregate of all responses to a survey.

Confidentiality and privacy: Finally, assurances that respondents’ private data or responses will not fall into the hands of any third party may help improve response rates.

Sampling bias. Telephone surveys conducted by calling a random sample of publicly available telephone numbers will systematically exclude people with unlisted telephone numbers, mobile phone numbers, and people who are unable to answer the phone when the survey is being conducted—for instance, if they are at work—and will include a disproportionate number of respondents who have landline telephone services with listed phone numbers and people who are home during the day, such as the unemployed, the disabled, and the elderly. Likewise, online surveys tend to include a disproportionate number of students and younger people who are constantly on the Internet, and systematically exclude people with limited or no access to computers or the Internet, such as the poor and the elderly. Similarly, questionnaire surveys tend to exclude children and the illiterate, who are unable to read, understand, or meaningfully respond to the questionnaire. A different kind of sampling bias relates to sampling the wrong population, such as asking teachers (or parents) about their students’ (or children’s) academic learning, or asking CEOs about operational details in their company. Such biases make the respondent sample unrepresentative of the intended population and hurt generalisability claims about inferences drawn from the biased sample.

Social desirability bias. Many respondents tend to avoid negative opinions or embarrassing comments about themselves, their employers, family, or friends. With negative questions such as ‘Do you think that your project team is dysfunctional?’, ‘Is there a lot of office politics in your workplace?’, or ‘Have you ever illegally downloaded music files from the Internet?’, the researcher may not get truthful responses. This tendency among respondents to ‘spin the truth’ in order to portray themselves in a socially desirable manner is called ‘social desirability bias’, which hurts the validity of responses obtained from survey research. There is practically no way of overcoming social desirability bias in a questionnaire survey, but in an interview setting, an astute interviewer may be able to spot inconsistent answers and ask probing questions or use personal observations to supplement respondents’ comments.

Recall bias. Responses to survey questions often depend on subjects’ motivation, memory, and ability to respond. Particularly when dealing with events that happened in the distant past, respondents may not adequately remember their own motivations or behaviours, or their memory of such events may have changed over time and may no longer be retrievable. For instance, if respondents are asked to describe their utilisation of computer technology one year ago, or even memorable childhood events like birthdays, their responses may be inaccurate because of difficulties with recall. One possible way of overcoming recall bias is to anchor the respondent’s memory in specific events as they happened, rather than asking them to recall their perceptions and motivations from memory.

Common method bias. Common method bias refers to the amount of spurious covariance shared between independent and dependent variables that are measured at the same point in time (as in a cross-sectional survey) using the same instrument (such as a questionnaire). In such cases, the phenomenon under investigation may not be adequately separated from measurement artefacts. Standard statistical tests are available to test for common method bias, such as Harman’s single-factor test (Podsakoff, MacKenzie, Lee & Podsakoff, 2003), [3] Lindell and Whitney’s (2001) [4] marker variable technique, and so forth. This bias can potentially be avoided if the independent and dependent variables are measured at different points in time using a longitudinal survey design, or if these variables are measured using different methods, such as computerised recording of the dependent variable versus questionnaire-based self-rating of the independent variables.
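
Harman’s single-factor test is usually run by entering all questionnaire items (for both the independent and the dependent variables) into an unrotated factor analysis and checking whether a single factor accounts for most of the variance. The sketch below approximates this with the first principal component of the item correlation matrix. The function names, the simulated data, and the 50% threshold are illustrative assumptions rather than a prescribed procedure, and the test is generally regarded as a weak diagnostic that cannot rule out common method bias on its own.

```python
import numpy as np


def first_factor_share(responses: np.ndarray) -> float:
    """Share of total item variance captured by the first unrotated component.

    responses: (n_respondents, n_items) array containing every questionnaire
    item, covering both the independent and the dependent variables.
    """
    # Correlate items (columns), which standardizes them implicitly.
    corr = np.corrcoef(responses, rowvar=False)
    # eigvalsh returns the eigenvalues of a symmetric matrix in ascending order.
    eigenvalues = np.linalg.eigvalsh(corr)
    # For a correlation matrix the eigenvalues sum to the number of items.
    return float(eigenvalues[-1] / eigenvalues.sum())


def suggests_common_method_bias(responses: np.ndarray, threshold: float = 0.5) -> bool:
    # Assumed rule of thumb: one factor explaining more than half of the
    # variance is read as a warning sign of common method bias.
    return first_factor_share(responses) > threshold


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_respondents, n_items = 500, 6
    # Simulated items with no shared source of variance.
    unrelated = rng.normal(size=(n_respondents, n_items))
    # Simulated items dominated by one shared "method" factor.
    method = rng.normal(size=(n_respondents, 1))
    contaminated = method + 0.3 * rng.normal(size=(n_respondents, n_items))
    for label, data in [("unrelated", unrelated), ("contaminated", contaminated)]:
        share = first_factor_share(data)
        print(f"{label}: first factor explains {share:.0%}, "
              f"flagged={suggests_common_method_bias(data)}")
```

A principal-component shortcut is used here only because it needs nothing beyond NumPy; a full exploratory factor analysis would estimate communalities rather than assume unit variance per item.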

- Dillman, D. (1978). Mail and telephone surveys: The total design method. New York: Wiley.

- Rasinski, K. (1989). The effect of question wording on public support for government spending. Public Opinion Quarterly, 53(3), 388–394.

- Podsakoff, P. M., MacKenzie, S. B., Lee, J.-Y., & Podsakoff, N. P. (2003). Common method biases in behavioral research: A critical review of the literature and recommended remedies. Journal of Applied Psychology, 88(5), 879–903. http://dx.doi.org/10.1037/0021-9010.88.5.879

- Lindell, M. K., & Whitney, D. J. (2001). Accounting for common method variance in cross-sectional research designs. Journal of Applied Psychology, 86(1), 114–121.

Social Science Research: Principles, Methods and Practices (Revised edition) Copyright © 2019 by Anol Bhattacherjee is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.

SYSTEMATIC REVIEW article

Quantifying cognitive and affective impacts of Quizlet on learning outcomes: a systematic review and comprehensive meta-analysis

- 1 Foreign Language Education, School of Foreign Languages, Selcuk University, Konya, Türkiye

- 2 Foreign Language Education, School of Foreign Languages, Akdeniz University, Antalya, Türkiye

Background: This study synthesizes research on the impact of Quizlet on learners’ vocabulary learning achievement, retention, and attitude. Quizlet is posited to enhance language learning by making the assimilation of new linguistic concepts more efficient and engaging. The study aims to determine the extent to which Quizlet influences each of these three outcomes.

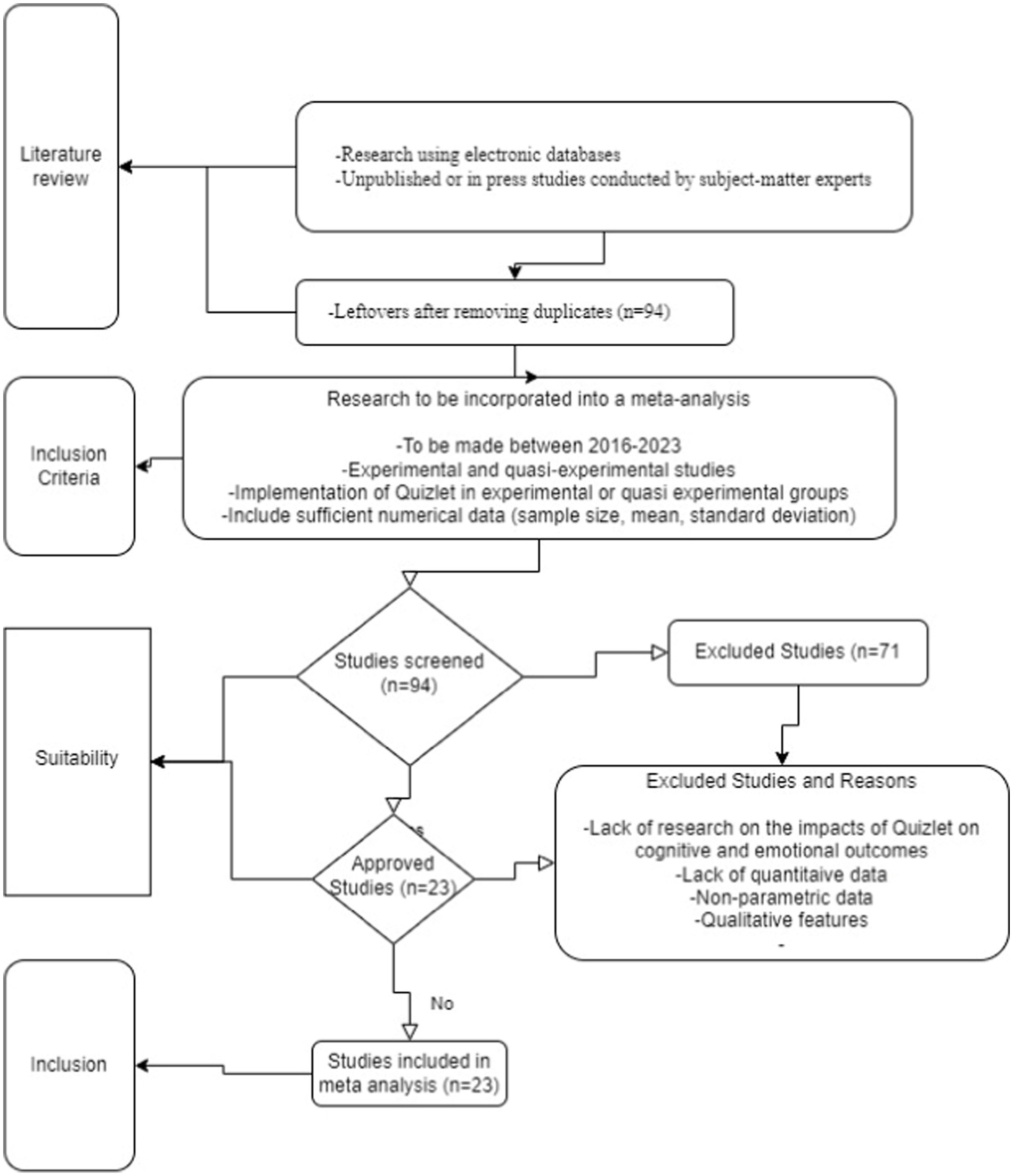

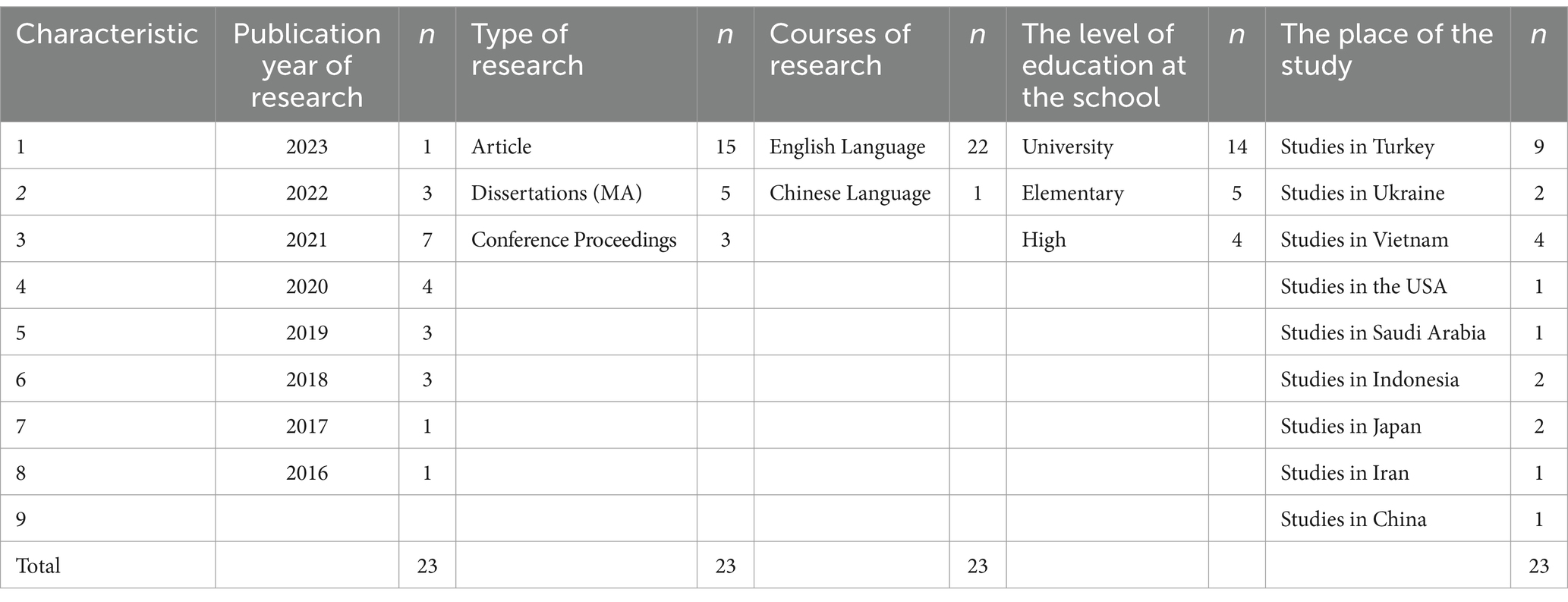

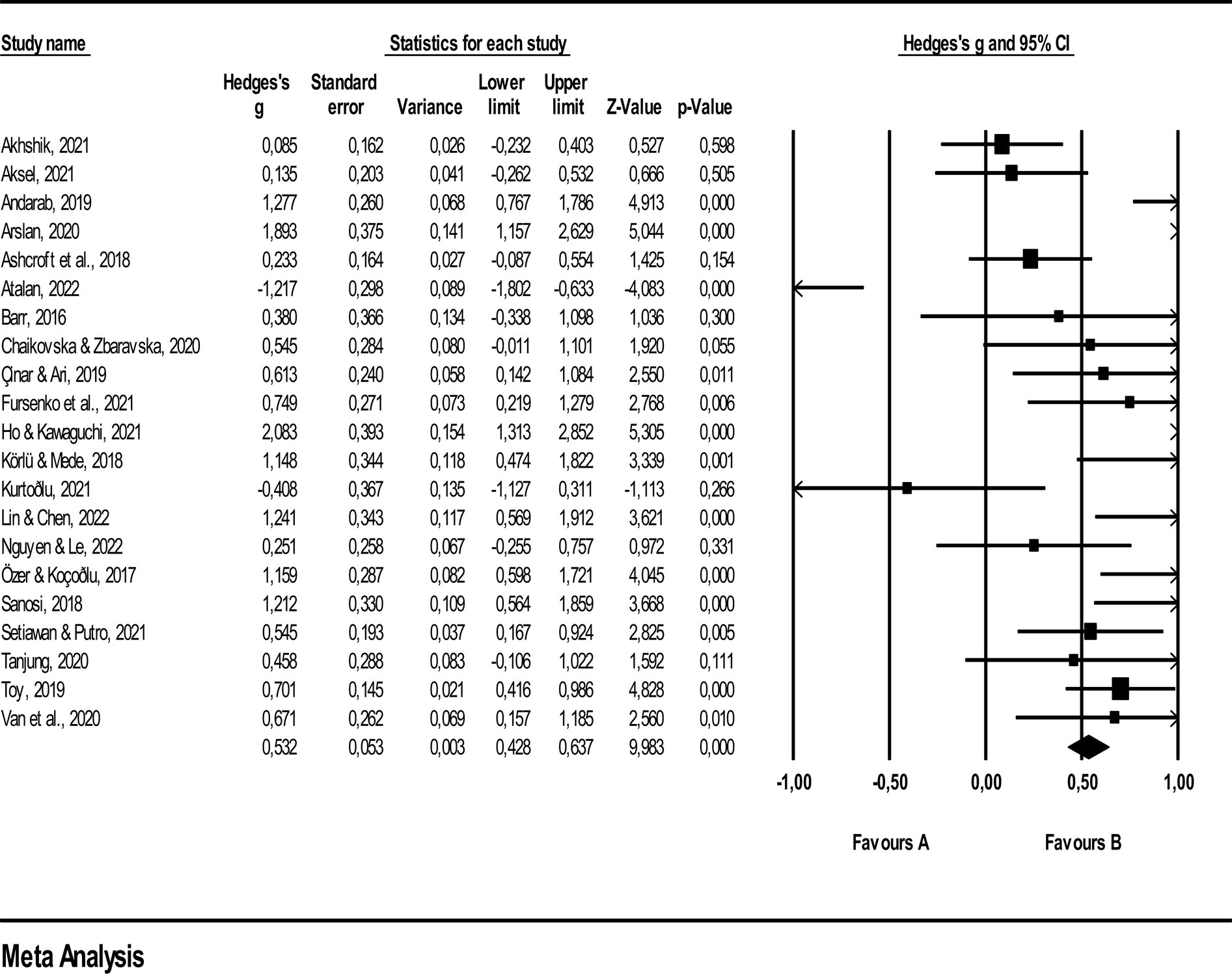

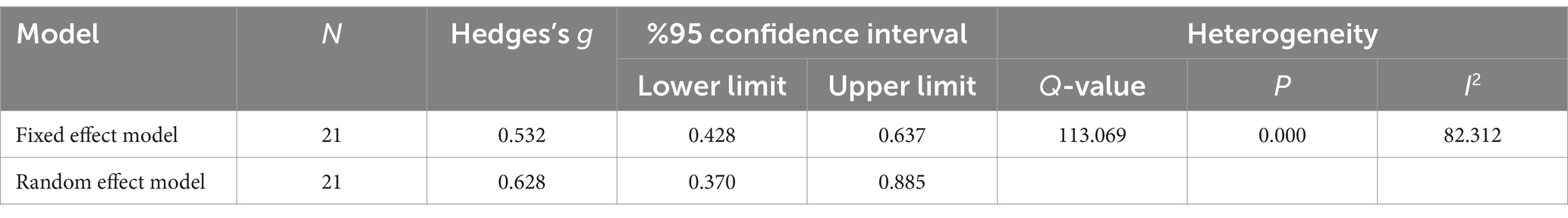

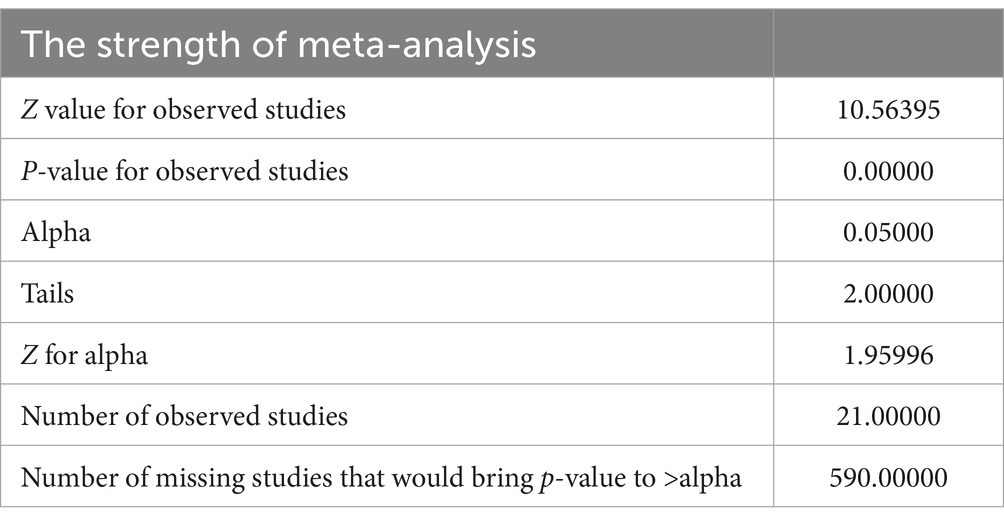

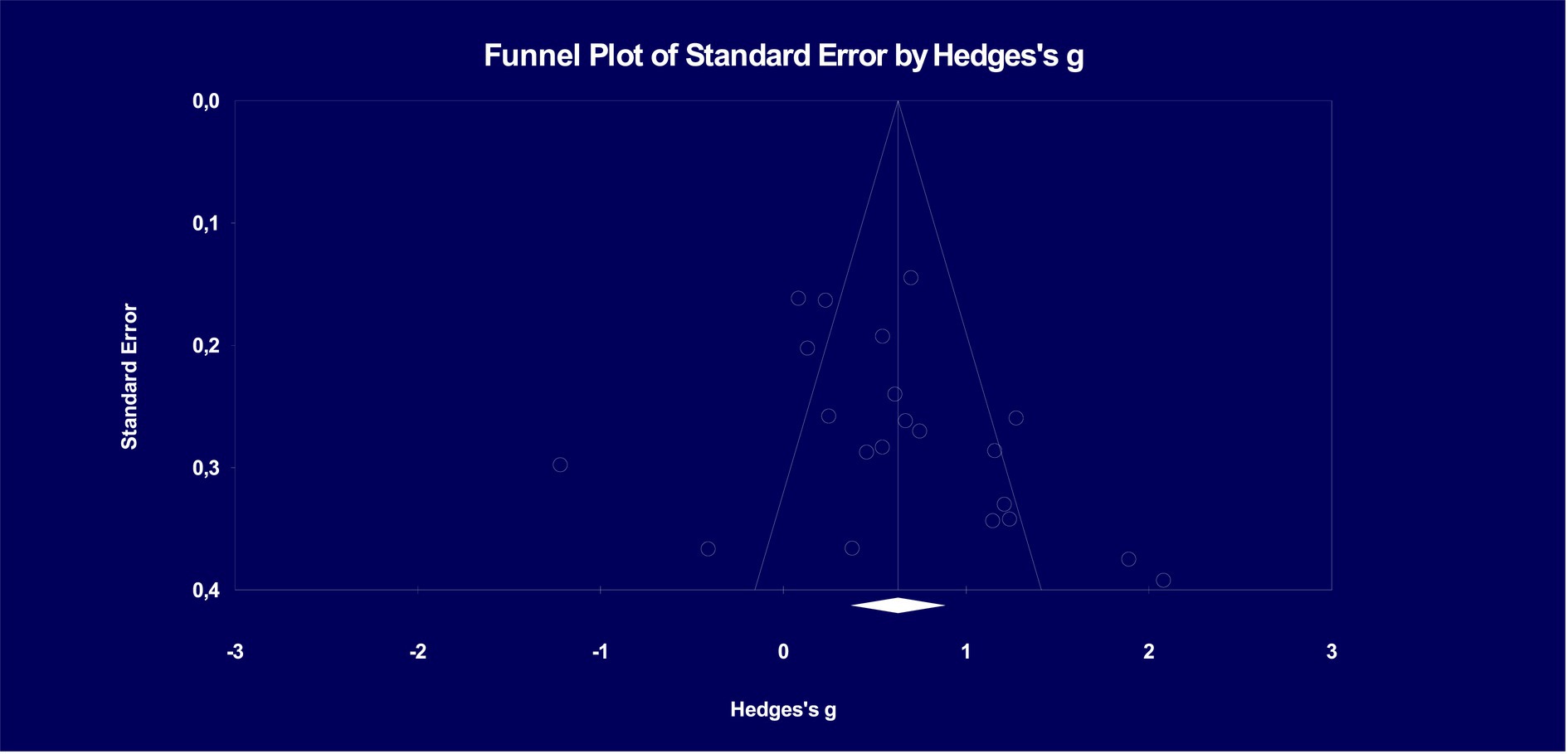

Methods: Employing a meta-analytic approach, this study investigates the primary research question: “Does Quizlet affect students’ vocabulary learning achievement, learning retention, and attitude?” A search of various databases identified 94 studies, of which 23 met the inclusion criteria. Coding reliability was 98%, indicating a high degree of agreement among the expert coders. Random-effects and fixed-effects models were used, as appropriate, to estimate the effect size of Quizlet on each outcome variable.
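
The abstract does not say which reliability coefficient produced the 98% figure. One common and simple option is percent agreement between two coders, computed as agreements / (agreements + disagreements) × 100. The sketch below assumes two coders making categorical coding decisions; the function name and the example codes are hypothetical. Chance-corrected indices such as Cohen’s kappa are a stricter alternative.

```python
from typing import Sequence


def percent_agreement(coder_a: Sequence[str], coder_b: Sequence[str]) -> float:
    """Percentage of coding decisions on which two coders agree.

    Equivalent to agreements / (agreements + disagreements) * 100.
    """
    if len(coder_a) != len(coder_b) or len(coder_a) == 0:
        raise ValueError("both coders must code the same non-empty set of items")
    # Count the positions where both coders assigned the same code.
    agreements = sum(a == b for a, b in zip(coder_a, coder_b))
    return 100.0 * agreements / len(coder_a)


if __name__ == "__main__":
    # Hypothetical example: 50 coding decisions with a single disagreement.
    coder_a = ["include"] * 50
    coder_b = ["include"] * 49 + ["exclude"]
    print(percent_agreement(coder_a, coder_b))  # 98.0
```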

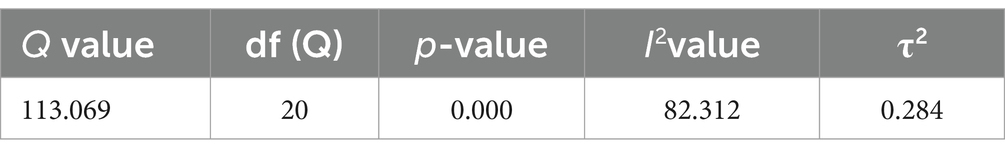

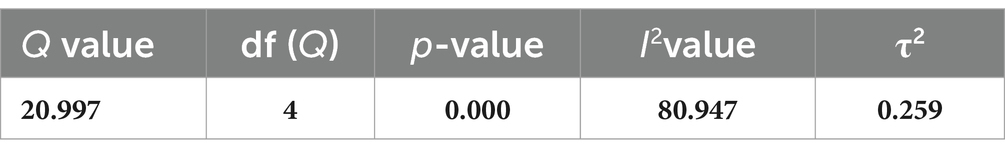

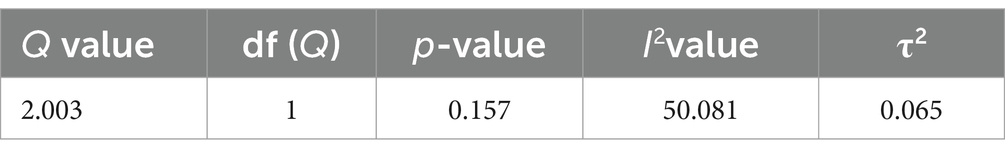

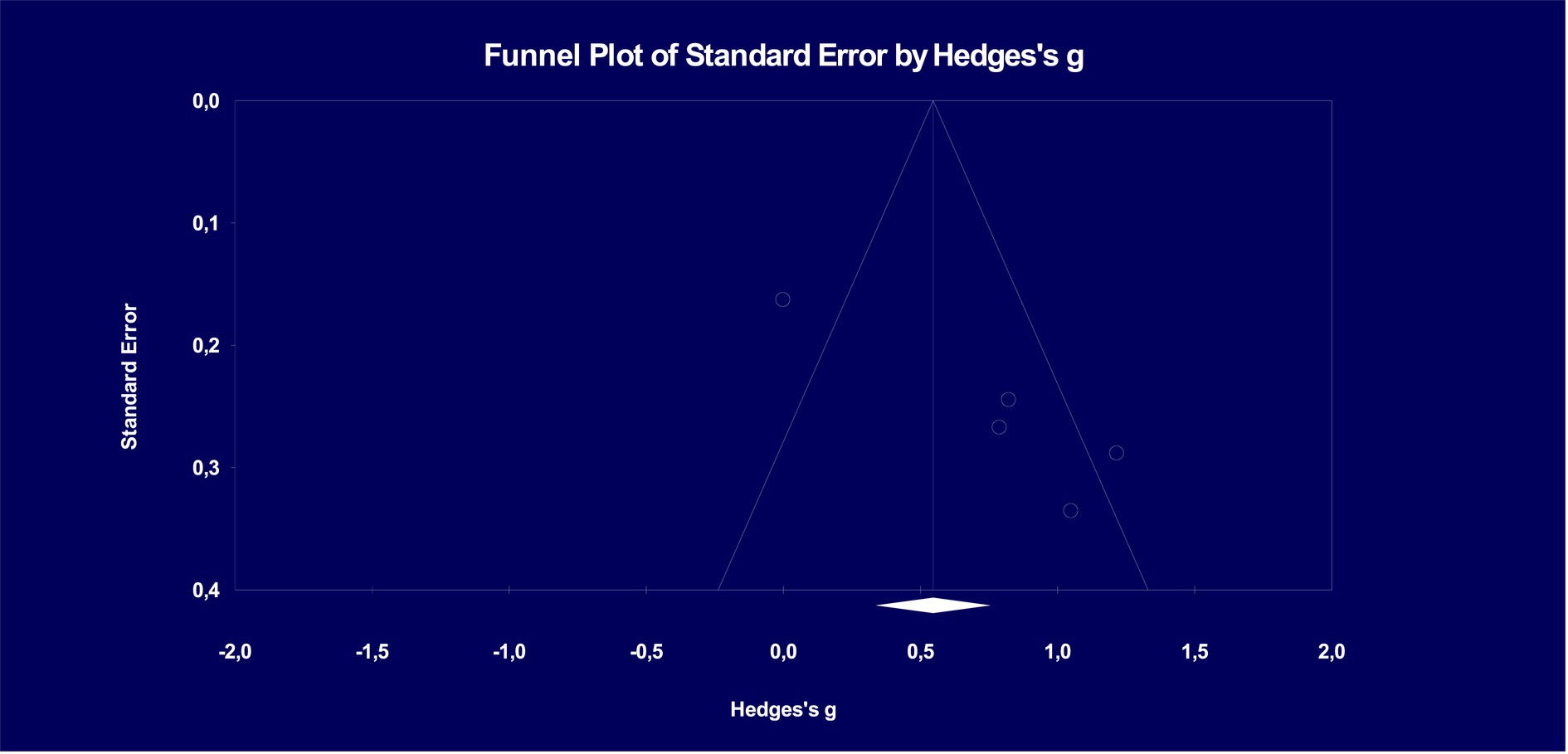

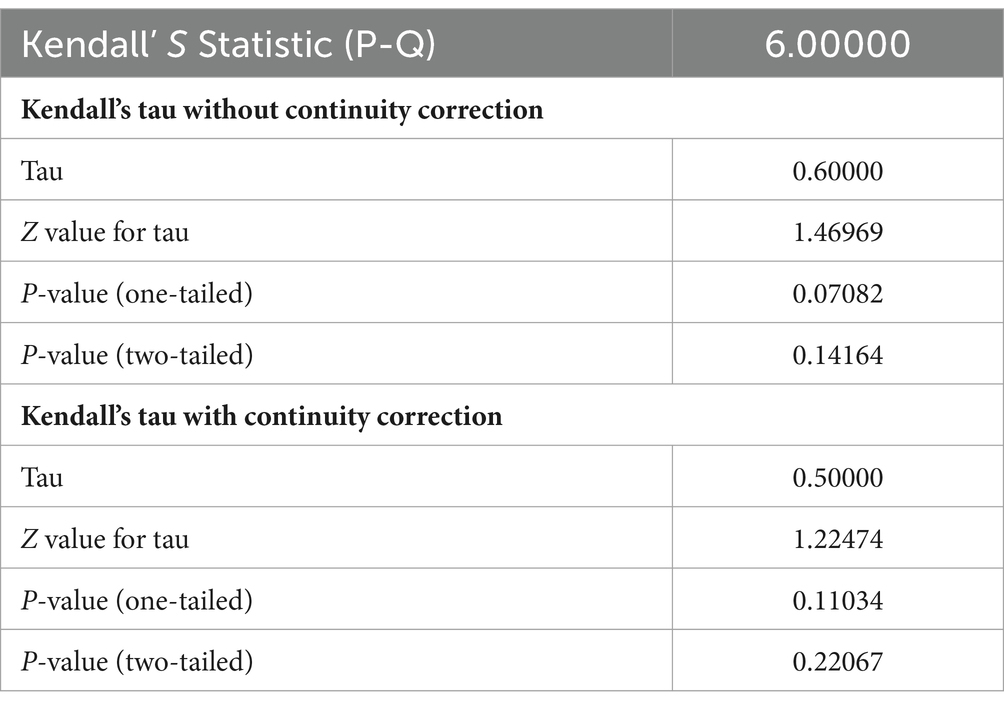

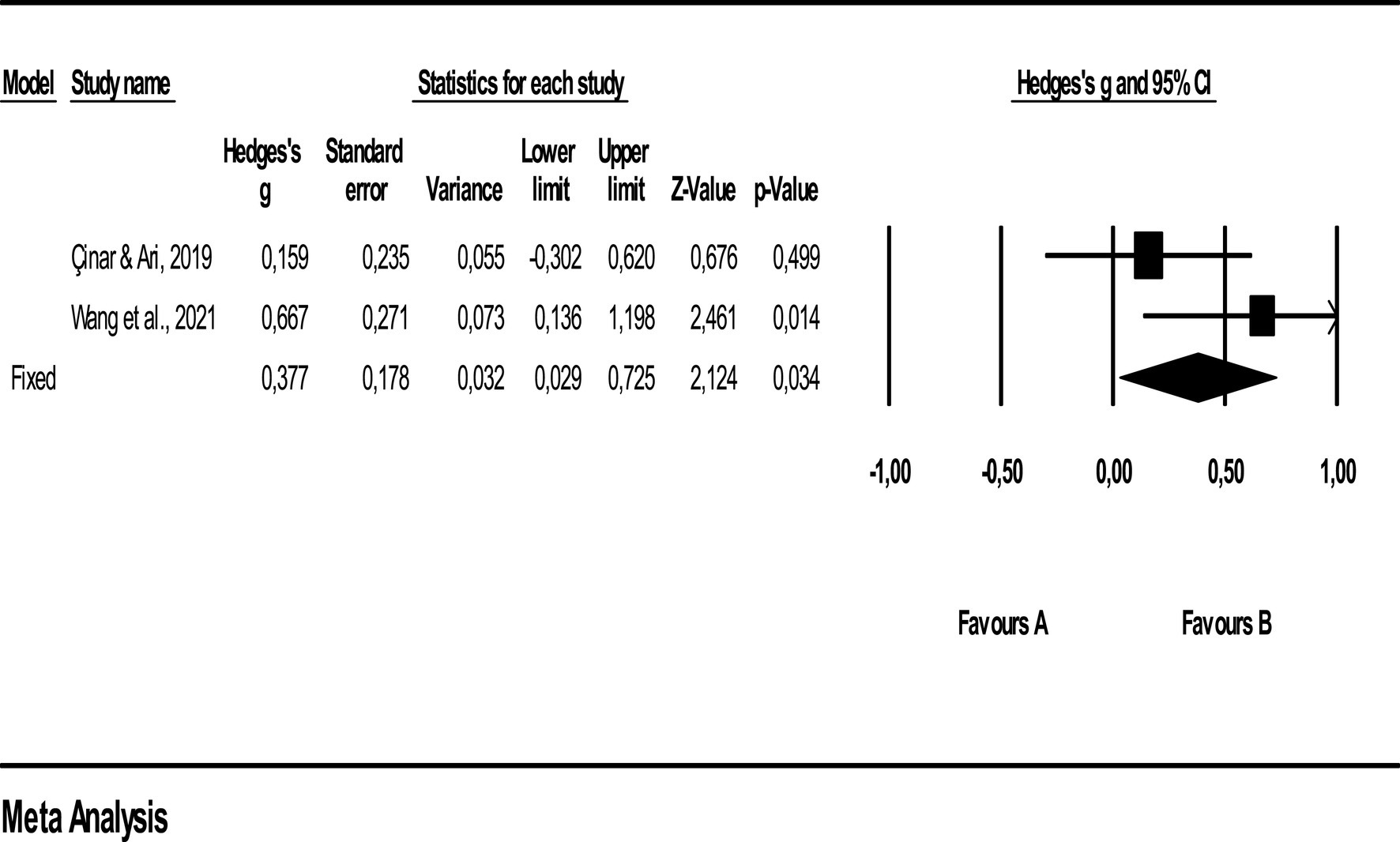

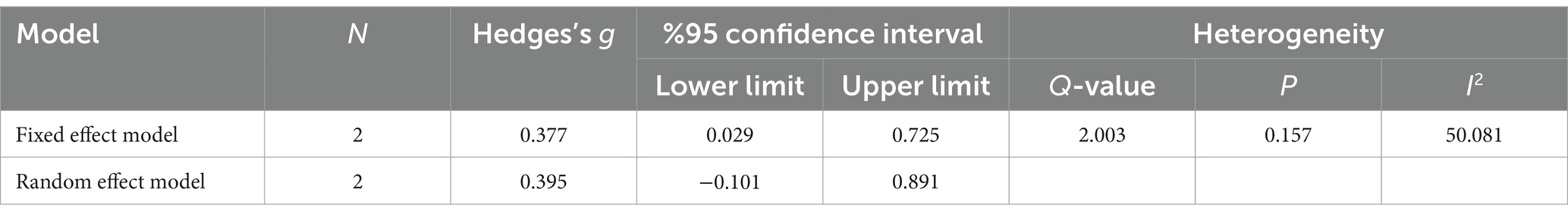

Results: Quizlet was found to have a statistically significant impact on learners’ vocabulary learning achievement, retention, and attitude. Specifically, it showed moderate effects on vocabulary learning achievement (g = 0.62) and retention (g = 0.74), and a small effect on student attitude (g = 0.37). A fixed-effects model was adopted for attitude because its effect sizes were homogeneously distributed, whereas a random-effects model was used for achievement and retention because their effect sizes were heterogeneous.
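
The quantities reported above can be illustrated with a minimal sketch: Hedges’ g for each study (a standardized mean difference with a small-sample correction), an inverse-variance fixed-effect pooled estimate, the Q statistic and I² as measures of heterogeneity, and a DerSimonian–Laird random-effects estimate. This is not the authors’ analysis code. The study values in the example are hypothetical, and switching to the random-effects model when I² exceeds 50% is an assumed rule, since the source only states that the choice followed from homogeneity or heterogeneity.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


def hedges_g(m1: float, s1: float, n1: int,
             m2: float, s2: float, n2: int) -> Tuple[float, float]:
    """Return (g, variance of g) for a treatment-vs-control comparison."""
    df = n1 + n2 - 2
    pooled_sd = math.sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / df)
    d = (m1 - m2) / pooled_sd
    j = 1 - 3 / (4 * df - 1)  # small-sample correction factor
    var_d = (n1 + n2) / (n1 * n2) + d**2 / (2 * (n1 + n2))
    return j * d, j**2 * var_d


@dataclass
class PooledResult:
    fixed: float
    fixed_se: float
    random: float
    random_se: float
    q: float
    i2: float
    tau2: float


def pool(effects: List[Tuple[float, float]]) -> PooledResult:
    """Fixed-effect and DerSimonian-Laird random-effects pooling.

    effects: one (g, variance) pair per study.
    """
    w = [1 / v for _, v in effects]  # inverse-variance weights
    sw = sum(w)
    fixed = sum(wi * g for wi, (g, _) in zip(w, effects)) / sw
    # Cochran's Q: weighted squared deviations from the fixed-effect mean.
    q = sum(wi * (g - fixed) ** 2 for wi, (g, _) in zip(w, effects))
    df = len(effects) - 1
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0
    # DerSimonian-Laird estimate of the between-study variance tau^2.
    c = sw - sum(wi**2 for wi in w) / sw
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0
    w_re = [1 / (v + tau2) for _, v in effects]
    random = sum(wi * g for wi, (g, _) in zip(w_re, effects)) / sum(w_re)
    return PooledResult(fixed, math.sqrt(1 / sw), random,
                        math.sqrt(1 / sum(w_re)), q, i2, tau2)


def choose_model(result: PooledResult, i2_cutoff: float = 0.5) -> str:
    # Hypothetical decision rule: treat I^2 above the cutoff as heterogeneous.
    return "random" if result.i2 > i2_cutoff else "fixed"


if __name__ == "__main__":
    # Hypothetical (treatment mean, SD, n, control mean, SD, n) per study.
    studies = [(78, 10, 30, 71, 11, 30), (65, 12, 25, 60, 12, 27),
               (82, 9, 40, 70, 10, 38)]
    result = pool([hedges_g(*s) for s in studies])
    print(choose_model(result), result)
```

When the studies are homogeneous, tau² is estimated as zero and the two pooled estimates coincide; when they are heterogeneous, the random-effects weights become more even and the standard error widens.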

Conclusion: Quizlet enhances vocabulary learning achievement and retention and has a small positive effect on learner attitude. Its integration into language education curricula is recommended to leverage these benefits. Further research is encouraged on how Quizlet and similar platforms can be optimized for educational success.

1 Introduction

The rapid expansion of digital technologies in education has sparked growing interest in their effects on students’ academic achievement. This surge in interest calls for a thorough examination of how these technological tools are reshaping educational practices and outcomes. Students are frequently referred to as “Digital Natives” because of their innate fluency with technologies such as computers, the internet, and video games (Prensky, 2009). This proficiency has been pivotal in driving the integration of digital tools into educational settings. The incorporation of these technologies into classrooms, particularly language learning classrooms, highlights the evolving dynamics of modern education and underscores the need for empirical research to assess their impact. The field of language education has been significantly transformed by the growing influence of technology, which has led to the integration of computers, mobile devices, and other technologies into teaching and learning practices (Aprilani, 2021). This paradigm shift demonstrates the role of technology in enabling innovative teaching and learning strategies and thereby improving the quality and accessibility of education. It has not only reshaped traditional teaching methods but has also made the incorporation of information technology (IT) into the teaching and learning process a necessity (Eady and Lockyer, 2013). As a result, the academic community is increasingly focused on understanding the impact of these changes on pedagogical practices, teaching and learning processes, and student outcomes.
In language education, mobile technology can overcome the spatial and temporal constraints of conventional learning methods, thereby catering to the individual learning needs of today’s tertiary-level students (Lin and Chen, 2022). This emphasizes the importance of investigating how effectively mobile technologies improve the quality of the learning process in language education and meet learners’ different needs. Moreover, the global application of technology in English teaching and learning has benefited both teachers and learners by facilitating classroom activities and accelerating language acquisition (Nguyen and Van Le, 2022). Integrating technology into the language learning environment also fosters more self-directed and flexible learning. Studies in Computer-Assisted Language Learning (CALL) and Mobile-Assisted Language Learning (MALL) have concluded that technological tools can be an effective strategy in language learning, especially for vocabulary acquisition by non-native speakers (McLean et al., 2013; Chatterjee, 2022). This body of research provides a compelling rationale for investigating specific digital tools such as Quizlet and their potential to enhance language learning. As the digital transformation of language education continues, it becomes critical to examine the unique contributions of specific tools such as Quizlet, especially how they combine traditional methods with innovative technology-based strategies.
By focusing on Quizlet, this study aims to fill a gap in the literature regarding the effectiveness of digital tools in enhancing vocabulary acquisition among language learners. Tools such as Quizlet, part of the broader trend toward digital education, are important because they sit at the intersection of traditional learning methods and modern, technology-enhanced approaches. This study therefore seeks to contribute to the broader discourse on digital education by examining the impact of Quizlet on vocabulary learning, retention, and attitude. By systematically reviewing and meta-analyzing the existing literature, it aims to provide a comprehensive assessment of Quizlet’s role in the digital education landscape and of its potential as a transformative educational tool.

2 Literature review

In light of this reality, numerous applications aimed at improving learners’ cognitive and affective outcomes have appeared on the internet, many of which can be downloaded and used free of charge. This proliferation of digital resources gives language learners a convenient and accessible means of supporting their vocabulary acquisition and retention and of strengthening their motivation and attitude. One example of such technological solutions is Quizlet, a widely used online platform that provides a diverse array of educational tools, including interactive flashcards, gamified activities, and assessments.

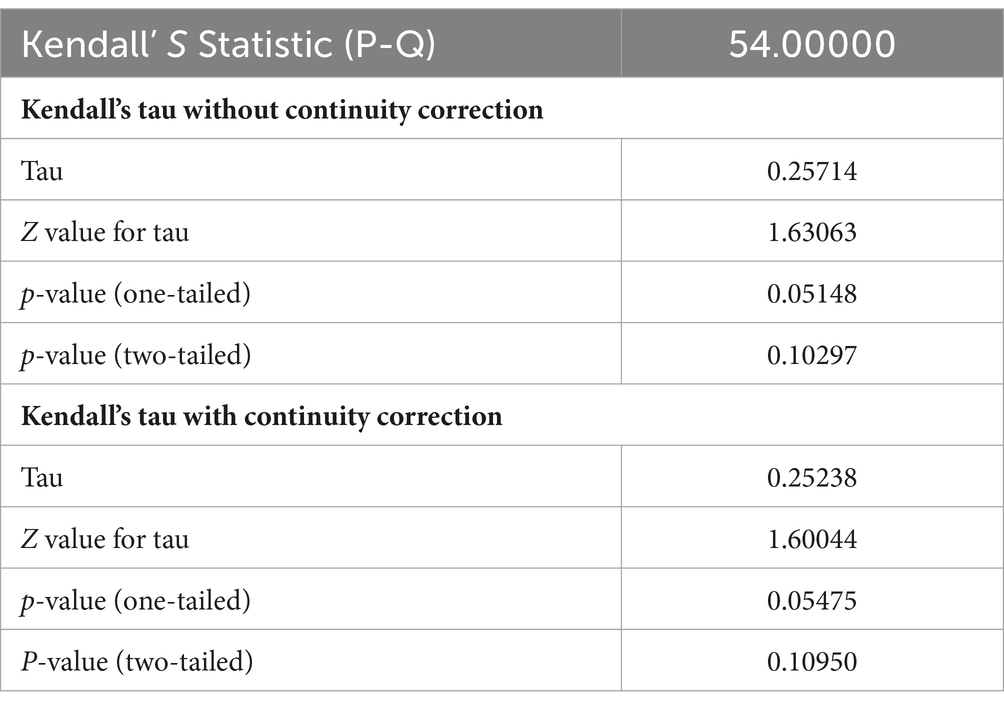

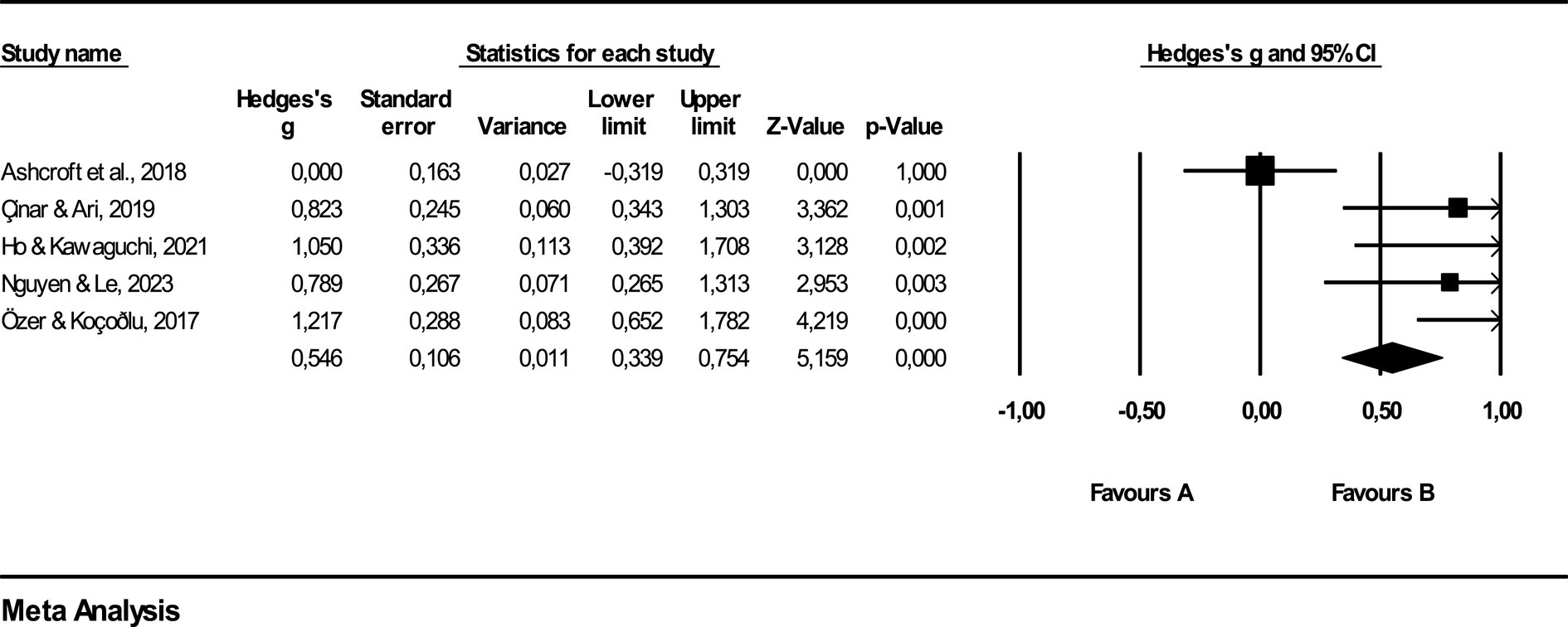

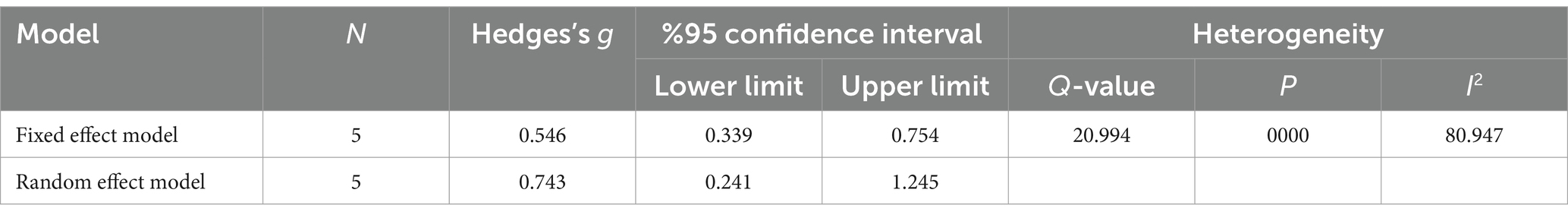

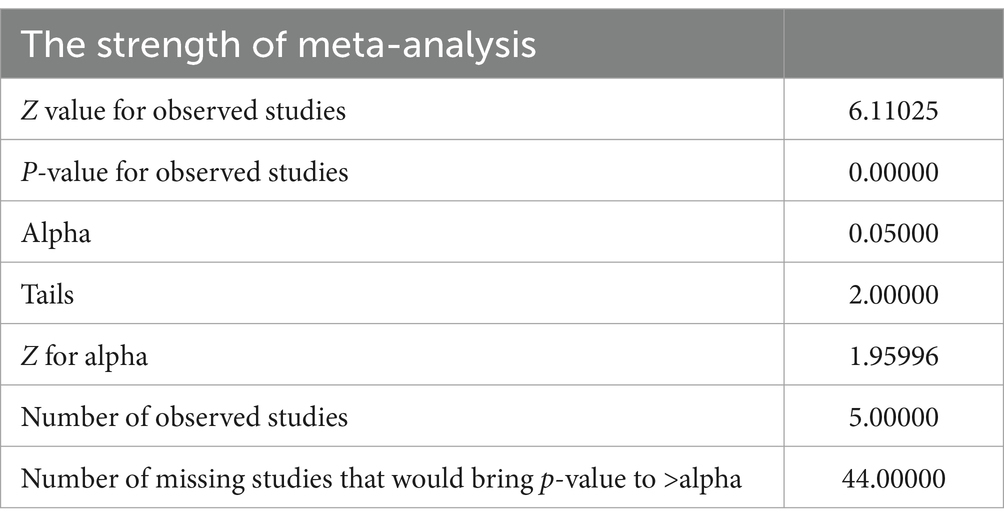

Andrew Sutherland created a learning aid in 2005 that helped him excel on a French vocabulary test. He shared it with his classmates, and they achieved similar results on their tests. Quizlet has since become a highly popular educational resource, serving more than 60 million students and learners each month, and is used across a wide range of disciplines, including mathematics, medicine, and foreign language learning (Quizlet, 2023). The platform enables learners to create personalized study materials consisting of terms paired with their definitions or explanations, and to work with these materials through varied modes of learning, such as flashcards, games, and quizzes (Fursenko et al., 2021). According to Quizlet (2023), it can improve learning in a variety of contexts and subject areas. The Quizlet mobile application is particularly well suited to creating vocabulary content, and it has been proposed as a convenient and enjoyable way of acquiring vocabulary knowledge (Davie and Hilber, 2015). Within the app, users can access vocabulary “sets” created by other users, or they can create their own sets and study them as flashcards or through a gaming interface (Senior, 2022). Quizlet is known for its flashcard creation features, multilingual support, and the ability to incorporate images, among a variety of other exercise types. However, it does not provide review scheduling or retrieval intervals that expand as learning progresses.
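
To make the missing feature concrete, the sketch below shows what scheduling with expanding retrieval intervals can look like: each successful recall lengthens the gap before the next review, and a failed recall resets it. It is a hypothetical, simplified scheduler (the one-day starting interval, the doubling factor, and the reset rule are all assumptions); it does not reproduce any particular spaced-repetition algorithm or any Quizlet feature.

```python
from datetime import date, timedelta
from typing import List


def expanding_review_dates(start: date, outcomes: List[bool],
                           first_interval_days: int = 1,
                           factor: float = 2.0) -> List[date]:
    """Return the date of each review, given whether each review succeeded.

    outcomes[i] is True if the learner recalled the item at review i.
    """
    interval = first_interval_days
    current = start
    review_dates = []
    for recalled in outcomes:
        # Schedule the next review after the current interval.
        current = current + timedelta(days=interval)
        review_dates.append(current)
        # Expand the interval after a success; reset it after a failure.
        interval = round(interval * factor) if recalled else first_interval_days
    return review_dates


if __name__ == "__main__":
    # Three successful recalls, then one failure, then one success.
    for d in expanding_review_dates(date(2024, 1, 1), [True, True, True, False, True]):
        print(d.isoformat())
```
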
Quizlet vocabulary sets can be shared in various ways, including printing, embedding, URL links, and QR codes. These options give learners a range of ways to study at their preferred pace, allowing for individualized and autonomous learning experiences (Waluyo and Bucol, 2021). Such features place Quizlet within the broader movement towards digitalization in education, alongside other emerging educational technologies.