
Snowflake Architecture & Concepts: A Comprehensive Guide

By: Suresh H Published: January 18, 2019

This article provides an in-depth look at Snowflake architecture: how it stores and manages data, and how its micro-partitioning works. By the end of this blog, you will also understand how Snowflake's architecture differs from other cloud-based Massively Parallel Processing (MPP) databases.

Table of Contents

- What Is a Data Warehouse?

- What Is the Snowflake Data Warehouse?

- Features of the Snowflake Data Warehouse

- Types of Data Warehouse Architecture

- Components of Data Warehouse Architecture

- Overview of Shared-Disk Architecture

- Overview of Shared-Nothing Architecture

- Snowflake Architecture: A Hybrid Model

- Connecting to Snowflake

Businesses today are overflowing with data, and the amount produced every day is staggering. With this explosion of data, it has become increasingly difficult to capture, process, and store large or complex datasets. Organizations therefore need a Central Repository where all their data is stored securely and can be analyzed to make informed decisions. This is where Data Warehouses come into the picture.

A Data Warehouse, also referred to as a “Single Source of Truth”, is a Central Repository of information that supports Data Analytics and Business Intelligence (BI) activities. Data Warehouses store large amounts of data from multiple sources in a single place and are designed to run the queries and analysis that help organizations optimize their business. Their analytical capabilities allow organizations to derive valuable business insights from their data and improve decision-making.

Snowflake is a cloud-based Data Warehouse solution provided as SaaS (Software-as-a-Service) with full support for ANSI SQL. Its unique architecture lets users simply create tables and start querying data, with very little administration or DBA work required.

Let’s discuss some major features of Snowflake data warehouse:

- Security and Data Protection: Snowflake data warehouse offers enhanced authentication through Multi-Factor Authentication (MFA), federated authentication and Single Sign-On (SSO), and OAuth. All communication between the client and server is protected by TLS.

- Standard and Extended SQL Support: Snowflake data warehouse supports most DDL and DML commands of SQL. It also supports advanced DML, transactions, lateral views, stored procedures, etc.

- Connectivity: Snowflake data warehouse supports an extensive set of client connectors and drivers such as Python connector, Spark connector, Node.js driver, .NET driver, etc.

- Data Sharing: You can securely share your data with other Snowflake accounts.

Let’s now learn about Snowflake architecture in detail.

Hevo is a No-code Data Pipeline. It supports pre-built data integrations from 100+ data sources at a reasonable price. It can automate your entire data migration process in minutes, and it is compatible with several databases and data warehouses.

Let’s see some unbeatable features of Hevo:

- Simple: Hevo has a simple and intuitive user interface.

- Fault-Tolerant: Hevo offers a fault-tolerant architecture. It can automatically detect anomalies and notify you instantly. Any affected record is set aside for correction.

- Real-Time: Hevo has a real-time streaming structure, which ensures that your data is always ready for analysis.

- Schema Mapping: Hevo automatically detects the schema of your incoming data and maps it to your destination schema.

- Data Transformation: It provides a simple interface to perfect, modify, and enrich the data you want to transfer.

- Live Support: The Hevo team is available round the clock to provide support through chat, email, and support calls.

There are mainly 3 ways of developing a Data Warehouse:

- Single-tier Architecture: This type of architecture aims to deduplicate data in order to minimize the amount of stored data.

- Two-tier Architecture: This type of architecture separates the physical Data Sources from the Data Warehouse. However, it is not easily expandable and cannot support a large number of end-users.

- Three-tier Architecture: This type of architecture has 3 tiers in it. The bottom tier consists of the Database of the Data Warehouse Servers, the middle tier is an Online Analytical Processing (OLAP) Server used to provide an abstracted view of the Database, and finally, the top tier is a Front-end Client Layer consisting of the tools and APIs used for extracting data.

The 4 components of a Data Warehouse are as follows.

1. Data Warehouse Database

A Database forms an essential component of a Data Warehouse. A Database stores and provides access to company data. Amazon Redshift and Azure SQL come under Cloud-based Database services.

2. Extraction, Transformation, and Loading Tools (ETL)

All the operations associated with the Extraction, Transformation, and Loading (ETL) of data into the warehouse come under this component. Traditional ETL tools are used to extract data from multiple sources, transform it into a digestible format, and finally load it into a Data Warehouse.

3. Metadata

Metadata provides a framework and descriptions of data, enabling the construction, storage, handling, and use of the data.

4. Data Warehouse Access Tools

Access Tools allow users to access actionable and business-ready information from a Data Warehouse. These Warehouse Tools include Data Reporting tools, Data Querying Tools, Application Development tools, Data Mining tools, and OLAP tools.

Snowflake Architecture

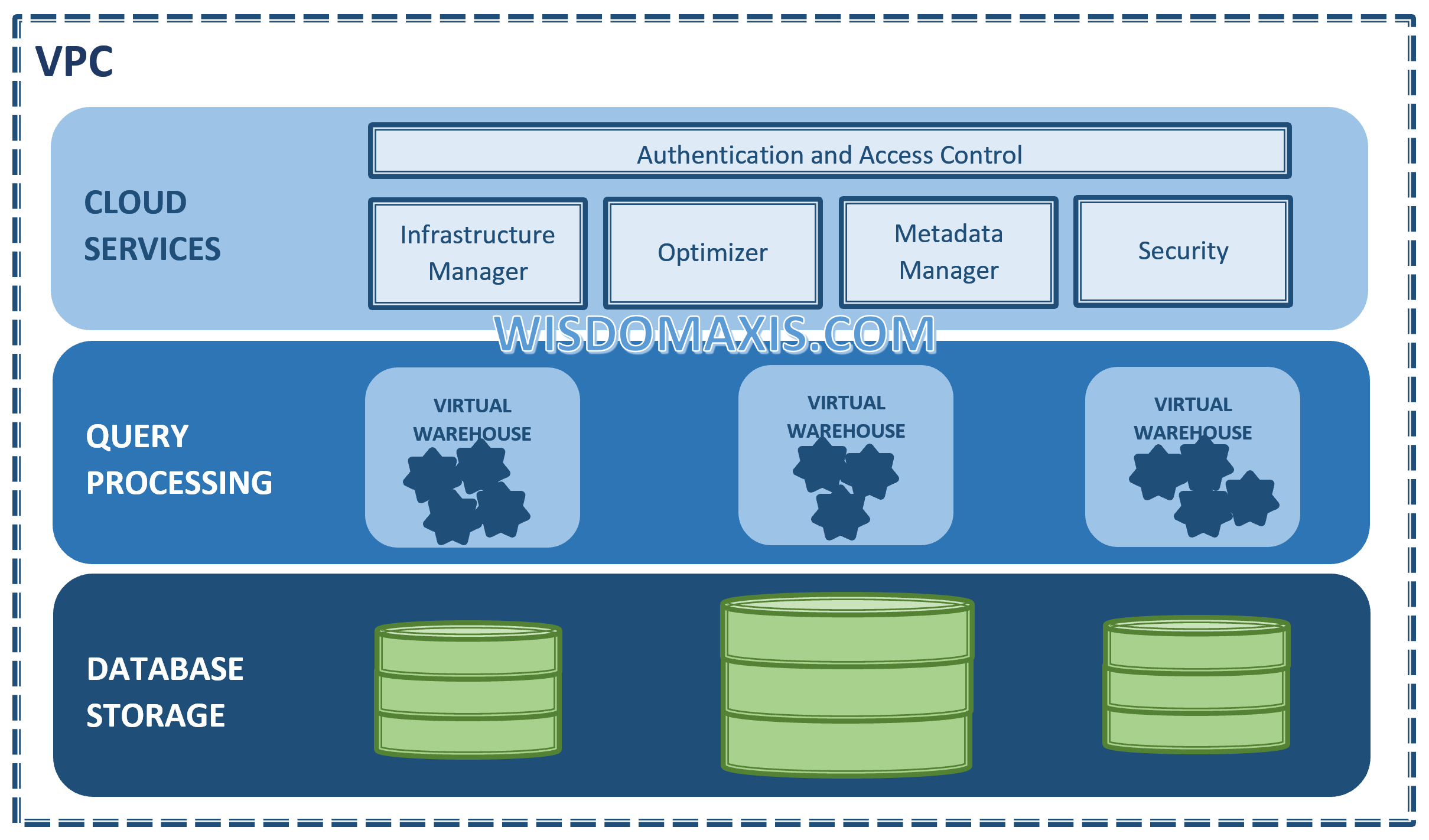

Snowflake's architecture is a hybrid of the traditional shared-disk and shared-nothing architectures, designed to offer the best of both. Let us walk through these architectures and see how Snowflake combines them into a new hybrid architecture made up of three layers:

- Storage Layer

- Compute Layer

- Cloud Services Layer

Used in traditional databases, a shared-disk architecture has one storage layer accessible by all cluster nodes. Multiple cluster nodes, each with its own CPU and memory but no disk storage of its own, communicate with the central storage layer to get the data and process it.

Contrary to Shared-Disk architecture, Shared-Nothing architecture has distributed cluster nodes along with disk storage, their own CPU, and Memory. The advantage here is that the data can be partitioned and stored across these cluster nodes as each cluster node has its own disk storage.
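To make the contrast concrete, here is a minimal Python sketch of how a shared-nothing system might spread rows across nodes. It assumes hash partitioning on a chosen key, which is one common scheme and not necessarily what any particular database uses; `node_for_key` and `distribute_shared_nothing` are hypothetical names. In a shared-disk system there would instead be a single store that every node reads from.

```python
import zlib


def node_for_key(key, n_nodes):
    """Pick the node that owns a key using a stable CRC32 hash."""
    # crc32 is used instead of hash() because Python randomizes str hashes per process.
    return zlib.crc32(str(key).encode("utf-8")) % n_nodes


def distribute_shared_nothing(rows, key, n_nodes):
    """Hash-partition rows so each node stores only its own slice of the data."""
    nodes = [[] for _ in range(n_nodes)]
    for row in rows:
        nodes[node_for_key(row[key], n_nodes)].append(row)
    return nodes


orders = [{"customer": f"c{i % 7}", "amount": i} for i in range(20)]
nodes = distribute_shared_nothing(orders, "customer", 3)
```

Because every row for a given customer lands on the same node, a lookup by customer touches only one node. The flip side is that adding or removing nodes means redistributing data, which is the data-distribution work a shared storage layer spares its users.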

Snowflake's high-level architecture, depicted in the diagram above, has 3 different layers:

1. Storage Layer

Snowflake organizes data into multiple micro-partitions that are internally optimized and compressed, and it stores them in a columnar format. Data is kept in cloud storage that works as a shared-disk model, which simplifies data management: users do not have to worry about distributing data across multiple nodes as they would in a shared-nothing model.
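The benefit of per-partition metadata can be illustrated with a toy model of partition pruning. Snowflake records metadata for each micro-partition, including the range of values for each column, so a query filter can skip partitions whose range cannot match. The sketch below assumes only min/max metadata and a simple range filter; the row-oriented dicts, the partition size, and the names `build_partitions` and `scan_range` are illustrative assumptions, not Snowflake's implementation (real micro-partitions are columnar and much larger).

```python
from dataclasses import dataclass


@dataclass
class MicroPartition:
    rows: list      # row dicts (real micro-partitions are columnar)
    min_max: dict   # column name -> (min value, max value) in this partition


def build_partitions(rows, rows_per_partition):
    """Split rows into fixed-size chunks and record per-column min/max metadata."""
    partitions = []
    for start in range(0, len(rows), rows_per_partition):
        chunk = rows[start:start + rows_per_partition]
        stats = {
            col: (min(r[col] for r in chunk), max(r[col] for r in chunk))
            for col in chunk[0]
        }
        partitions.append(MicroPartition(chunk, stats))
    return partitions


def scan_range(partitions, col, lo, hi):
    """Return rows with lo <= col <= hi and how many partitions were actually read."""
    scanned, matches = 0, []
    for part in partitions:
        p_min, p_max = part.min_max[col]
        if p_max < lo or p_min > hi:
            continue  # pruned from metadata alone; the partition's rows are never read
        scanned += 1
        matches.extend(r for r in part.rows if lo <= r[col] <= hi)
    return matches, scanned
```

With 1,000 rows loaded in day order (100 rows per day, 100 rows per partition), a filter on days 3 to 4 reads only 2 of the 10 partitions. If the same rows were shuffled before loading, each partition's range would span most days and little could be pruned.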

Compute nodes connect to the storage layer to fetch data for query processing. Because the storage layer is independent, we pay only for the average monthly storage used. Since Snowflake is provisioned in the cloud, storage is elastic and is charged monthly per TB used.
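A rough way to see what “average monthly storage” means is to average a month of daily storage snapshots and apply a flat per-TB rate. The sketch assumes you already have daily totals in TB; the rate passed in is a placeholder rather than a Snowflake price, and `monthly_storage_cost` is a hypothetical helper.

```python
def monthly_storage_cost(daily_tb, price_per_tb):
    """Bill the average of the daily storage snapshots at a flat per-TB rate."""
    if not daily_tb:
        raise ValueError("need at least one daily storage snapshot")
    average_tb = sum(daily_tb) / len(daily_tb)
    return average_tb * price_per_tb


# 15 days at 1 TB followed by 15 days at 3 TB averages to 2 TB for the month.
cost = monthly_storage_cost([1.0] * 15 + [3.0] * 15, price_per_tb=10.0)
```

Because the charge follows the average, a short-lived spike in stored data costs far less than keeping the peak volume for the whole month.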

2. Compute Layer

Snowflake uses “Virtual Warehouses” (explained below) to run queries. Snowflake separates this query processing layer from disk storage; queries execute in this layer using data from the storage layer.

Virtual Warehouses are MPP compute clusters consisting of multiple nodes with CPU and memory, provisioned in the cloud by Snowflake. Multiple Virtual Warehouses can be created for different requirements, depending on the workload. All virtual warehouses access the same shared storage layer, but each one has its own independent compute cluster and does not share compute resources with other virtual warehouses.

Advantages of Virtual Warehouse

Some of the advantages of virtual warehouses are listed below:

- Virtual Warehouses can be started or stopped at any time and also can be scaled at any time without impacting queries that are running.

- They can also be set to auto-suspend and auto-resume, so that a warehouse is suspended after a specified period of inactivity and resumed automatically when a query is submitted.

- Multi-cluster warehouses can also be set to auto-scale between a minimum and a maximum number of clusters. For example, with a minimum of 1 and a maximum of 3, Snowflake can run between 1 and 3 clusters depending on the load.
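These settings correspond to parameters of Snowflake's CREATE WAREHOUSE statement: WAREHOUSE_SIZE, AUTO_SUSPEND (in seconds), AUTO_RESUME, MIN_CLUSTER_COUNT, and MAX_CLUSTER_COUNT. The Python helper below only builds the SQL text, so it can be checked without a Snowflake account. The helper name, its defaults, and the reduced list of accepted sizes are assumptions for illustration. Cluster counts above 1 require multi-cluster warehouses, which are available in Enterprise Edition or higher.

```python
import re

# A subset of the sizes Snowflake accepts; extend as needed.
ALLOWED_SIZES = {"XSMALL", "SMALL", "MEDIUM", "LARGE", "XLARGE", "XXLARGE"}
# Unquoted identifiers: letter or underscore first, then letters, digits, _ or $.
IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_$]*")


def create_warehouse_sql(name, size="XSMALL", auto_suspend_s=60,
                         auto_resume=True, min_clusters=1, max_clusters=1):
    """Build a CREATE WAREHOUSE statement with auto-suspend/resume and auto-scaling."""
    if not IDENTIFIER.fullmatch(name):
        raise ValueError(f"not a plain identifier: {name!r}")
    if size not in ALLOWED_SIZES:
        raise ValueError(f"unsupported size: {size!r}")
    if not 1 <= min_clusters <= max_clusters:
        raise ValueError("need 1 <= min_clusters <= max_clusters")
    return (
        f"CREATE WAREHOUSE IF NOT EXISTS {name} WITH "
        f"WAREHOUSE_SIZE = '{size}' "
        f"AUTO_SUSPEND = {int(auto_suspend_s)} "
        f"AUTO_RESUME = {'TRUE' if auto_resume else 'FALSE'} "
        f"MIN_CLUSTER_COUNT = {min_clusters} "
        f"MAX_CLUSTER_COUNT = {max_clusters};"
    )


# The 1-to-3 cluster example, suspending after 5 idle minutes.
sql = create_warehouse_sql("reporting_wh", auto_suspend_s=300,
                           min_clusters=1, max_clusters=3)
```

The generated statement could then be run from any Snowflake client, such as SnowSQL or the Snowflake Connector for Python. Validating the name and size before interpolating them keeps arbitrary text out of the SQL.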

3. Cloud Services Layer

All the activities that coordinate across Snowflake, such as authentication, security, metadata management of the loaded data, and query optimization, happen in this layer.

Examples of services handled in this layer:

- Every login request goes through this layer.

- A query submitted to Snowflake is sent to the optimizer in this layer and then forwarded to the Compute Layer for query processing.

- Metadata required to optimize a query or to filter data is stored in this layer.

These three layers scale independently, and Snowflake charges for storage and virtual warehouse usage separately. Cloud services usage is billed only when its daily consumption exceeds 10% of the daily virtual warehouse usage, so for many workloads it adds little or nothing to the bill.
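The cloud services billing rule is simple enough to express as arithmetic. Per Snowflake's documentation, cloud services usage is billed only for the part of a day's consumption that exceeds 10% of that day's virtual warehouse usage. The function below is a simplified sketch of that daily adjustment; its name and the sample credit figures are hypothetical, and it ignores other serverless charges.

```python
def billable_cloud_services(warehouse_credits, cloud_services_credits):
    """Credits billed for cloud services on one day after the 10% allowance."""
    allowance = warehouse_credits / 10  # 10% of the day's warehouse credits
    return max(0.0, cloud_services_credits - allowance)


# 100 warehouse credits leave a 10-credit allowance for that day.
light_day = billable_cloud_services(100, 4)    # fully covered by the allowance
heavy_day = billable_cloud_services(100, 15)   # 5 credits above the allowance
```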

The advantage of this Snowflake architecture is that each layer can be scaled independently of the others. For example, storage scales elastically and is billed separately, while multiple virtual warehouses can be provisioned and scaled when additional resources are needed for faster query processing and better performance.

Now that you’re familiar with Snowflake’s architecture, it’s now time to discuss how you can connect to Snowflake. Let’s take a look at some of the best third-party tools and technologies that form the extended ecosystem for connecting to Snowflake.

- Third-party partners and technologies are certified to provide native connectivity to Snowflake.

- Data Integration or ETL tools are known to provide native connectivity to Snowflake.

- Business intelligence (BI) tools simplify analyzing, discovering, and reporting on business data to help organizations make informed business decisions.

- Machine Learning & Data Science cover a broad category of vendors, tools, and technologies that extend Snowflake’s functionality to provide advanced capabilities for statistical and predictive modeling.

- Security & Governance tools ensure that your data is stored and maintained securely.

- Snowflake also provides native SQL Development and Data Querying interfaces.

- Snowflake supports developing applications using many popular programming languages and development platforms.

- Snowflake Partner Connect — This list will take you through Snowflake partners who offer free trials for connecting to Snowflake.

- General Configuration (All Clients) — This is a set of general configuration instructions that is applicable to all Snowflake-provided Clients (CLI, connectors, and drivers).

- SnowSQL (CLI Client) — SnowSQL is a next-generation command-line utility for connecting to Snowflake. It allows you to execute SQL queries and perform all DDL and DML operations.

- Snowflake Connector for Python

- Snowflake Connector for Spark

- Snowflake Connector for Kafka

- Node.js Driver

- Go Snowflake Driver

- .NET Driver

- JDBC Driver

- ODBC Driver

- PHP PDO Driver for Snowflake

You can always connect to Snowflake via the above-mentioned tools/technologies.

Ever since 2014, Snowflake has been simplifying how organizations store and interact with their data. In this blog, you have learned about Snowflake’s data warehouse, Snowflake architecture, and how it stores and manages data. You learned about the various layers of the hybrid model in Snowflake architecture. Check out more articles about the Snowflake data warehouse to learn about vital Snowflake data warehouse features and Snowflake best practices for ETL. You can build a good working knowledge of Snowflake by understanding Snowflake Create Table.

Hevo, an official Snowflake ETL Partner, can help bring your data from various sources to Snowflake in real-time. You can reach out to us or take up a free trial if you need help in setting up your Snowflake Architecture or connecting your data sources to Snowflake.

Give Hevo a try! Sign Up here for a 14-day free trial today.

If you still have any queries related to Snowflake Architecture, feel free to discuss them in the comment section below.

Suresh is enthusiastic about writing on data science and uses his problem-solving approach for helping data teams stay ahead of their competition.



October 4, 2022 | Posted by: Dave Mariani

Getting Started with a Semantic Layer with Snowflake and AtScale


More than 10 years after the explosion of big data analytics technologies, data scientists still spend nearly half their time on data preparation. Despite all their hard work preparing data for use, 86% of the data used to create insights is out of date and 41% of insights are based on data that is two months old or older.

In other words, despite major investments in powerful cloud data platforms like Snowflake , many businesses are still struggling to make the most of their data.

The problem is usually that it is difficult to bridge the gap between the data in Snowflake and the tools that business users work with (such as Power BI, Tableau, or Excel). Many business users fall back on static data extracts (such as TDE files for Tableau), creating multiple data silos and fragmented insights.

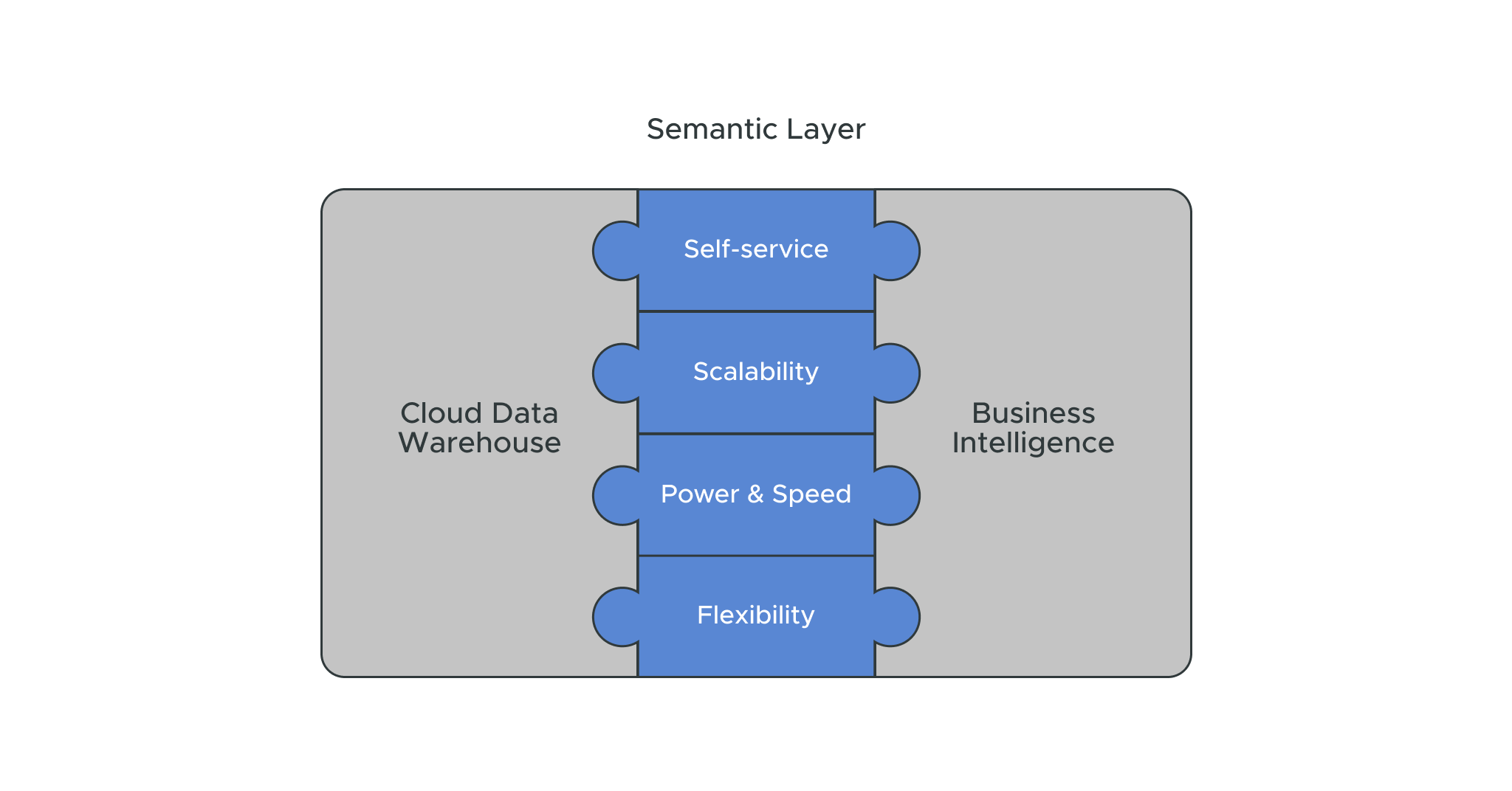

The solution? Adding a semantic layer into the data stack between your Snowflake cloud and your business intelligence tools.

What is a semantic layer?

A semantic layer gives you a single source of truth by introducing a business view of your data for business analysts and data scientists to work with. A semantic layer ensures that you have fast, secure, and governed data for your business and data analysts.

Instead of moving data in and out of the Snowflake Data Cloud, the semantic layer enables you to leave the data where it is. You can work within the semantic layer to prepare the data for analysis across any BI, ML, or data science tool. Your data team will no longer need to remodel the data in your BI tools to make it usable – instead, every user has a live connection to the real data in Snowflake. As more data is added to Snowflake, changes are immediately propagated to every user connected to the data models in the semantic layer – so your data is always accurate.
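A semantic layer can be pictured as a shared model that maps business-friendly names to SQL expressions, so every tool that asks for “Revenue by Region” gets the same generated query against Snowflake. The sketch below is a generic illustration of that idea, not AtScale's model format or query engine; the table `sales.orders`, its columns, and the function `to_sql` are hypothetical.

```python
MODEL = {
    "table": "sales.orders",  # hypothetical Snowflake table
    "dimensions": {
        "Region": "region",
        "Order Month": "DATE_TRUNC('month', order_date)",
    },
    "measures": {
        "Revenue": "SUM(amount)",
        "Order Count": "COUNT(*)",
    },
}


def to_sql(model, dimensions, measures):
    """Translate business names into one aggregate SQL query."""
    select = [f'{model["dimensions"][d]} AS "{d}"' for d in dimensions]
    select += [f'{model["measures"][m]} AS "{m}"' for m in measures]
    sql = f"SELECT {', '.join(select)} FROM {model['table']}"
    if dimensions:
        # GROUP BY by position: one ordinal per dimension column.
        sql += " GROUP BY " + ", ".join(str(i + 1) for i in range(len(dimensions)))
    return sql


revenue_by_region = to_sql(MODEL, ["Region"], ["Revenue"])
```

Because the definition of Revenue lives in one place, changing it changes every report that uses it, which is the consistency argument for a semantic layer.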

Why AtScale and Snowflake?

AtScale isn’t the only company offering a semantic layer technology – but it is the ideal technology for Snowflake. Here’s why:

- Self-service analytics for everyone

AtScale dramatically accelerates the time-to-insight from the Snowflake Data Cloud. Because AtScale’s semantic layer is universal, data teams can deliver analysis-ready data across any BI, ML, or data science tool, with measures, dimensions, and a consistent view of your data in Snowflake tables. As a result, you can democratize access to data across your whole organization, while enabling centralized governance and consistency.

- A data analytics capacity that scales with your data

When you use AtScale with Snowflake, there’s no need to scale a separate analytics infrastructure. AtScale’s semantic layer is integrated into Snowflake’s existing security, so it scales securely even as your data grows. AtScale also adds its own security features, including row-level and column-level security and data masking.

- Take full advantage of the power and speed of Snowflake

AtScale automatically creates data aggregates to optimize Snowflake’s performance. When you run queries through AtScale, the technology learns from real user behavior and identifies opportunities to create and employ aggregates. This makes queries dramatically faster, and also means you can add more users to an existing Snowflake warehouse without adding additional costs.

- Get true flexibility working with Snowflake

With AtScale, you can modernize legacy OLAP analytics with a full dimensional analysis engine on live data in Snowflake with no data extracts. You can extend Snowflake support into other languages, like DAX, MDX, and Python, allowing you to continue working with your current BI tools like Power BI and Excel. You can easily translate queries from BI tools and run them directly on the Snowflake SQL physical data layer, without any need to create unreliable sources of data (like data cube builds or extracts).
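The idea of learning from query patterns to decide which aggregates to build can be sketched generically. The class below counts how often each (dimensions, measure) combination is requested and, once a threshold is reached, keeps the rolled-up result so later requests skip the base-table scan. This is an illustrative technique under stated assumptions (an in-memory fact table, SUM only, a fixed threshold), not AtScale's algorithm; all names are hypothetical.

```python
from collections import Counter, defaultdict


class AggregateCache:
    """Toy aggregate advisor: keep a SUM rollup once it has been requested often enough."""

    def __init__(self, fact_rows, threshold=3):
        self.fact_rows = fact_rows
        self.threshold = threshold
        self.demand = Counter()   # (dims, measure) -> times requested
        self.aggregates = {}      # (dims, measure) -> {dimension values: sum}
        self.base_scans = 0       # how many times the full fact table was scanned

    def _rollup(self, dims, measure):
        self.base_scans += 1
        out = defaultdict(float)
        for row in self.fact_rows:
            out[tuple(row[d] for d in dims)] += row[measure]
        return dict(out)

    def query_sum(self, dims, measure):
        key = (tuple(dims), measure)
        if key in self.aggregates:
            return self.aggregates[key]  # served from the stored aggregate
        self.demand[key] += 1
        result = self._rollup(dims, measure)
        if self.demand[key] >= self.threshold:
            self.aggregates[key] = result  # popular enough to keep
        return result
```

A real system would also have to refresh or invalidate stored aggregates when the underlying Snowflake tables change; the sketch omits that.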

AtScale allows you to do multidimensional analysis directly on data in Snowflake

When you work with AtScale, it can literally take you minutes to create a multidimensional semantic model, pulling in whatever metrics are needed from your entire Snowflake Data Cloud. Those metrics will be unified, analysis-ready, and available to consumers with live queries using any BI, data science, and ML services of your choice. Instead of relying on legacy “cube” architectures like SSAS, the AtScale semantic layer enables fast dimensional analysis by creating aggregates on the Snowflake Data Cloud, with dialect support for DAX, MDX, Python, and SQL.

For a full deep dive into using AtScale with Snowflake, join Dave Mariani, AtScale’s CTO and founder, and Bob Kelly, AtScale’s Director of Education and Enablement, for an on-demand demo or check out our step-by-step How To guide. Or, if you’d like to experience the power of Snowflake and AtScale for yourself, schedule a live demo today!


Snowflake Storage Layer: Understanding Snowflake Architectural Layers

Snowflake architectural layers include storage, computing, and cloud services, where data fetching, processing, and cleaning occur. In the data-driven world, enterprises produce a large amount of data that should be analyzed to make better business decisions. Snowflake, a cloud-based data storage solution, has a unique architecture that makes it one of the best data warehousing solutions for small and large enterprises.

The Snowflake data warehouse is a hybrid of the traditional shared-disk and shared-nothing architectures. It uses a central data repository for persisted data that is accessible from all compute nodes. This guide discusses the three Snowflake architectural layers in detail and how each layer functions to store, process, analyze, and clean data.

3 Key Snowflake Architectural Layers

Because it is designed “in and for the cloud,” Snowflake can be used both as a data lake and as a data warehouse. This eliminates the need for two separate applications and reduces the workload on the business team. In addition, organizations can scale up and down depending on their computing needs thanks to the platform's high scalability. Below, we briefly describe each Snowflake architectural layer.

Storage Layer

Snowflake internally optimizes and compresses data after organizing it into multiple micro partitions. All the data in the organization is stored in the cloud, simplifying the business team’s data management process. It works as a shared-disk model, ensuring that the data team does not have to deal with data distribution across multiple nodes.

The compute layer connects to the storage layer and fetches the data needed to process a query. The advantage of the Snowflake storage layer is that enterprises pay for their average monthly storage used rather than a fixed amount.

Compute Layer

Snowflake uses virtual warehouses, MPP (massively parallel processing) compute clusters consisting of multiple nodes with memory and CPU, to run queries. The data warehouse solution separates the query processing layer from the disk storage.

In addition, each virtual warehouse has an independent compute cluster, so it doesn’t interact with other virtual warehouses. Data teams can start, stop, or scale a virtual warehouse at any time without impacting other running queries.

Cloud Service Layer

The cloud service layer is the last yet most essential Snowflake architectural layer among the three. All the critical data-related activities, such as security, metadata management, authentication, query optimization, etc., are conducted in the cloud service layer.

Whenever a user submits a query to Snowflake, it is first sent to the query optimizer and then to the compute layer for processing. In addition, the metadata required for data filtering and query optimization is stored in the cloud services layer.

All three Snowflake architectural layers scale independently, and users pay separately for virtual warehouses and storage.

Inferenz data migration experts understand the ins and outs of Snowflake architectural layers and how to migrate data from traditional databases to modern data cloud systems. We have helped a US-based eCommerce company with data engineering and predictive analytics solutions that involved Snowflake implementation. Read the case study here .

Understanding Snowflake Data Architecture Layers & Process

According to IDC (International Data Corporation), the world’s data is expected to grow to 175ZB by 2025, at a CAGR of 61%. This massive growth in business data opens opportunities for adopting cloud-based data storage solutions. In the hyper-competitive era, enterprises store data in disparate sources, such as Excel, SQL Server, Oracle, etc.

Analyzing, processing, and cleaning information from different data sources is a challenge for in-house teams. This is where Snowflake helps the teams by being a single data source. Below we have mentioned a few steps that will help enterprises and teams understand the exact process of Snowflake.

Data Acquisition

The initial step is to collect data from various sources, such as data lakes, streaming sources, data files, data sharing, on-premises databases, and SaaS and data applications. ETL tools then extract the business data, convert it into a readable format, and load it into the Snowflake data warehouse for the next step.

Data Cleaning & Processing

After the data is ingested into Snowflake, processes such as cleaning, integration, enrichment, and modeling take place. The acquired data is thoroughly analyzed and cleaned by removing repetitive and unstructured data. Lastly, the information is governed, secured, and monitored so that business teams can access structured and accurate data to make strategic business decisions.
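The cleaning step can be illustrated with a small Python function that drops incomplete records and duplicates. In a Snowflake pipeline this logic would more typically run as SQL inside Snowflake after ingestion; the field names, the rule of keeping the first occurrence of a key, and the function names here are assumptions for illustration.

```python
def normalize(record):
    """Trim surrounding whitespace from string fields."""
    return {k: v.strip() if isinstance(v, str) else v for k, v in record.items()}


def clean_records(records, required, key):
    """Drop rows missing required fields and duplicate keys (first occurrence wins).

    `key` should be one of the `required` fields so it is always present.
    """
    seen, cleaned = set(), []
    for raw in records:
        record = normalize(raw)
        if any(record.get(field) in (None, "") for field in required):
            continue  # incomplete row
        if record[key] in seen:
            continue  # duplicate of a row already kept
        seen.add(record[key])
        cleaned.append(record)
    return cleaned
```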

Data Consumers

The cleaned data is then available for the teams for further action. Using business intelligence solutions and data science technologies, they can use the data to accelerate business growth.


Switch To Snowflake Data Warehouse With Inferenz

Cloud data platforms such as Snowflake are high-performing and cost-effective data storage solutions for any enterprise that uses big data to make strategic decisions. Snowflake architectural layers and hybrid model make the platform a secure, scalable, and pocket-friendly solution to all data storing needs.

Inferenz, a company with certified data migration experts, can help you learn more about the concept and migrate from on-premise solutions to cloud-based data-storing applications. The experts of Inferenz will help you understand Snowflake architectural layers and transfer data from one repository to another without data breach threats.


How to Integrate Snowflake Into PowerPoint

Snowflake is a powerful data warehouse solution that provides organizations with the capability to store, analyze, and visualize large amounts of data efficiently. It offers a range of features and functionalities that make it an excellent choice for data analysis and reporting. However, contrary to common belief, Snowflake cannot integrate directly with Microsoft PowerPoint for real-time data updates. Instead, users can leverage the power of Snowflake to analyze and visualize data, and then manually incorporate these insights into their PowerPoint presentations.

While it would be convenient to directly integrate Snowflake into PowerPoint, unfortunately, this is not currently possible. PowerPoint does not have the capability to directly establish a data connection with Snowflake or import data as linked tables. Instead, users can export data from Snowflake into a format that PowerPoint can handle, such as CSV or Excel, and then manually import this data into PowerPoint.
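As a sketch of the export step, the function below writes query results to CSV text with Python's standard `csv` module. It assumes the rows have already been fetched; with the Snowflake Connector for Python, which follows the Python DB-API, the column names would come from `cursor.description` and the rows from `cursor.fetchall()`. The sample data and the function name are hypothetical.

```python
import csv
import io


def rows_to_csv(columns, rows):
    """Serialize a header plus result rows to CSV text."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(columns)
    writer.writerows(rows)
    return buffer.getvalue()


csv_text = rows_to_csv(["region", "revenue"], [("EU", 1200), ("US", 900)])

# To save to disk, open the file with newline="" so line endings are written unchanged:
# with open("revenue.csv", "w", newline="") as f:
#     f.write(csv_text)
```

The resulting file can be opened in Excel to build a chart, which can then be copied into a slide.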

While it’s true that Power Query and Snowflake’s REST API can be used for data integration, it’s important to note that these tools are not compatible with PowerPoint. Instead, these tools can be used with other applications, such as Excel, to manipulate and analyze data from Snowflake before incorporating it into a PowerPoint presentation.

Preparing data in Snowflake is crucial to ensure the accuracy and reliability of the data that will be manually incorporated into PowerPoint. This involves cleaning and validating the data within Snowflake before exporting it into a format that can be imported into PowerPoint. Users should ensure that the data is accurate, consistent, and appropriately formatted. Additionally, any necessary calculations or transformations should be applied to the data within Snowflake to ensure that the exported data aligns with the requirements of the PowerPoint presentation.

While it’s important to optimize Snowflake’s query performance, it’s also crucial to understand that PowerPoint does not handle large datasets or integrate directly with Snowflake. Instead, users should focus on optimizing their PowerPoint presentations to effectively communicate the insights derived from the data analyzed in Snowflake. This includes using appropriate data visualizations, such as charts and graphs, and considering the design and layout of the presentation.

When creating a presentation that incorporates data analyzed in Snowflake, follow general best practices for data visualization and presentation design. There are no Snowflake-specific practices to apply in PowerPoint, since the two tools do not integrate directly.

Because Snowflake and PowerPoint are not directly integrated, connectivity problems between them are not a concern. Issues are more likely to arise when exporting data from Snowflake or importing it into PowerPoint; refer to each tool’s documentation to troubleshoot them.

In conclusion, while direct integration of Snowflake into PowerPoint is not currently possible, users can still leverage the powerful data analysis and visualization capabilities of Snowflake to enhance their PowerPoint presentations. By manually incorporating insights derived from Snowflake into PowerPoint, users can create compelling presentations that showcase the power of data analysis. By understanding the limitations of PowerPoint in terms of data integration, users can effectively navigate these challenges and maximize the impact of their data-driven presentations.


Note: This is an experimental AI-generated article. Your help is welcome. Share your feedback with us and help us improve.

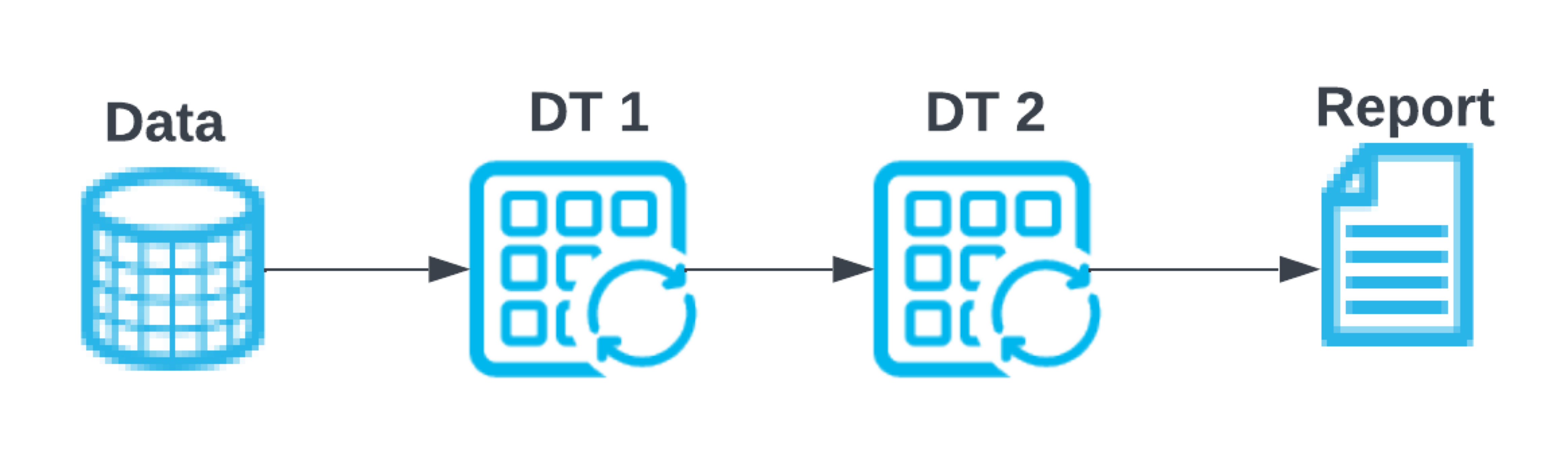

Understanding dynamic table refresh

Dynamic table content is based on the results of a specific query. When the underlying data that the dynamic table is based on changes, the table is updated to reflect those changes. These updates are referred to as a refresh. This process is automated, and it involves analyzing the query that underlies the table.

Dynamic table refresh timeouts are determined by the STATEMENT_TIMEOUT_IN_SECONDS parameter, which defines the maximum amount of time, set at the account or warehouse level, that a refresh can run before it is automatically canceled.

The following sections explain dynamic table refresh in more detail:

- Understanding dynamic table initialization

- Dynamic table refresh modes

- Understanding target lag

- Supported queries in incremental refresh

- How operators incrementally refresh

- Supported non-deterministic functions in full refresh

- How data is refreshed when dynamic tables depend on other dynamic tables

Dynamic table refresh modes

The dynamic table refresh process operates in one of two ways:

- Incremental refresh: This automated process analyzes the dynamic table’s query and calculates the changes since the last refresh. It then merges these changes into the table. See Supported queries in incremental refresh for details on supported queries.

- Full refresh: When the automated process can’t perform an incremental refresh, it conducts a full refresh. This involves executing the query for the dynamic table and completely replacing the previous materialized results.
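The difference between the two modes can be shown with a toy dynamic table that computes SUM(amount) and COUNT(*) per key. A full refresh recomputes everything from the base rows; an incremental refresh merges only the inserted and deleted rows into the previous result. The change-record format and function names are assumptions for illustration; Snowflake's actual change tracking is more involved.

```python
def full_refresh(base_rows):
    """Recompute (SUM(amount), COUNT(*)) per key from every base row."""
    result = {}
    for key, amount in base_rows:
        total, count = result.get(key, (0, 0))
        result[key] = (total + amount, count + 1)
    return result


def incremental_refresh(previous, changes):
    """Merge only the changed base rows into the previous result."""
    result = dict(previous)
    for op, key, amount in changes:
        total, count = result.get(key, (0, 0))
        if op == "INSERT":
            total, count = total + amount, count + 1
        elif op == "DELETE":
            total, count = total - amount, count - 1
        else:
            raise ValueError(f"unknown change type: {op!r}")
        if count == 0:
            result.pop(key, None)  # every row of this group was deleted
        else:
            result[key] = (total, count)
    return result


v1 = [("a", 5), ("b", 3), ("a", 2)]
changes = [("DELETE", "b", 3), ("INSERT", "c", 4), ("INSERT", "a", 1)]
v2 = [("a", 5), ("a", 2), ("c", 4), ("a", 1)]  # v1 with the changes applied
refreshed = incremental_refresh(full_refresh(v1), changes)
```

Keeping COUNT(*) alongside the sum is what lets the incremental path drop a group once all of its rows are deleted; a sum of zero alone would not reveal that.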

The constructs used in the query determine whether an incremental refresh can be used. After you create a dynamic table, you can monitor the table to determine whether incremental or full refreshes are used to update that table.

Understanding target lag

Dynamic table refresh is triggered based on how out of date the data might be, which is commonly referred to as target lag. When you set the target lag for a dynamic table, it’s measured relative to the base tables at the root of the graph, not the dynamic tables directly upstream. So, account for the time needed to refresh each dynamic table in the chain back to the root. If you don’t, some refreshes might be skipped, leading to a higher actual lag.

To see the graph of tables connected to your dynamic table, see Use Snowsight to examine the graph of dynamic tables.

Target lag is specified in one of the following ways:

Measure of freshness: Defines the maximum amount of time that the dynamic table’s content should lag behind updates to the base tables. The following example sets the product dynamic table so that its content stays no more than one hour behind the base tables:

ALTER DYNAMIC TABLE product SET TARGET_LAG = '1 hour';

Downstream: Specifies that the dynamic table should be refreshed on demand, when other dynamic tables that depend on it need to refresh. Updates are inferred from upstream database objects. A dynamic table with a downstream target lag is only updated when a dynamic table that depends on it requires fresh data.

In the following example, product is based on other dynamic tables and is set to refresh based on the target lag of its downstream dynamic tables:

ALTER DYNAMIC TABLE product SET TARGET_LAG = DOWNSTREAM;

Target lag is inversely related to the dynamic table’s refresh frequency: a lower target lag requires more frequent refreshes.
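One rough way to reason about DOWNSTREAM lag, including the DT1/DT2 example that follows, is to treat it as "refresh whenever a dependent dynamic table needs to." The Python sketch below is a simplification built on that assumption. The function name and the seconds-based representation are illustrative, not part of Snowflake. Under this model, a DOWNSTREAM table with no dependent dynamic tables is never refreshed automatically. One example is a table that is read only by a report.

```python
from typing import Dict, List, Optional, Union

Lag = Union[int, str]  # target lag in seconds, or the string "DOWNSTREAM"

def effective_lag(table: str,
                  lags: Dict[str, Lag],
                  dependents: Dict[str, List[str]]) -> Optional[int]:
    """Return how stale `table` may get (seconds), or None if no automatic
    refresh would be triggered for it. Simplified model, not Snowflake's scheduler."""
    lag = lags[table]
    if lag != "DOWNSTREAM":
        return int(lag)  # measure of freshness: the table's own target lag
    # DOWNSTREAM: refresh only when a dependent dynamic table needs fresh data,
    # so the most demanding (smallest) dependent lag drives the refreshes.
    needed = [effective_lag(d, lags, dependents) for d in dependents.get(table, [])]
    needed_lags = [n for n in needed if n is not None]
    return min(needed_lags) if needed_lags else None

dependents = {"DT1": ["DT2"]}  # DT2 reads from DT1; a report (not a dynamic table) reads DT2
print(effective_lag("DT1", {"DT1": "DOWNSTREAM", "DT2": 600}, dependents))
print(effective_lag("DT2", {"DT1": 300, "DT2": "DOWNSTREAM"}, dependents))
```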

Consider the following example where Dynamic Table 2 (DT2) is defined based on Dynamic Table 1 (DT1). DT2 must read from DT1 to materialize its contents. In addition, a report consumes DT2 data via a query.

The following results are possible, depending on how each dynamic table specifies its lag:

Supported queries in incremental refresh

The following table describes the expressions, keywords, and clauses that currently support incremental refresh. For a list of queries that do not support incremental refresh, see Limitations on support for incremental refresh.

If a query uses expressions that are not supported for incremental refresh, the automated refresh process uses a full refresh, which may incur an additional cost. To determine which refresh mode is used, see Determine whether an incremental or full refresh is used.

Replacing an IMMUTABLE user-defined function (UDF) while it’s in use by a dynamic table that uses incremental refresh results in undefined behavior in that table. VOLATILE UDFs are not supported with incremental refresh.

How operators incrementally refresh

The following table outlines how each operator is incrementalized (that is, how it’s transformed into a new query fragment that generates changes instead of full results), along with its performance and other important factors to consider.
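Two textbook cases from incremental view maintenance show the general idea behind incrementalizing an operator. A filter can be applied to the change rows alone. A SUM ... GROUP BY can be updated by adding each change's signed delta to its group. This is a generic Python illustration, not Snowflake's implementation. The (multiplicity, region, amount) change format and the example query are assumptions.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# A change row is (multiplicity, region, amount): +1 for an inserted row,
# -1 for a deleted row; an update is a delete followed by an insert.
Change = Tuple[int, str, int]

def filter_changes(changes: List[Change], min_amount: int) -> List[Change]:
    """WHERE amount >= min_amount: apply the predicate to the changes only."""
    return [change for change in changes if change[2] >= min_amount]

def apply_sum_changes(totals: Dict[str, int], changes: List[Change]) -> Dict[str, int]:
    """SUM(amount) GROUP BY region: add each change's signed delta to its group."""
    updated = defaultdict(int, totals)
    for multiplicity, region, amount in changes:
        updated[region] += multiplicity * amount
    return dict(updated)

# Previous result of: SELECT region, SUM(amount) FROM sales WHERE amount >= 10 GROUP BY region
totals = {"east": 100, "west": 50}
changes = [(+1, "east", 30), (-1, "west", 50), (+1, "west", 5)]
totals = apply_sum_changes(totals, filter_changes(changes, min_amount=10))
```

The work done is proportional to the number of changes, not the size of the table. A production system would also track row counts per group so that it can remove a group when its last row is deleted. This sketch instead leaves "west" at 0.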

Supported non-deterministic functions in full refresh

The following non-deterministic functions are supported in dynamic tables. Note that these functions are only supported for full refreshes. For a list of what is not supported for incremental refresh, see Limitations on support for incremental refresh.

VOLATILE user-defined functions

Sequence functions (e.g., SEQ1, SEQ2)

The following context functions:

CURRENT_ROLE

CURRENT_WAREHOUSE

CURRENT_ACCOUNT

CURRENT_REGION

The following date and time functions (with their respective aliases):

CURRENT_DATE

CURRENT_TIME

CURRENT_TIMESTAMP
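The list above can be turned into a quick pre-check for a dynamic table query, sketched here in Python. This is only a heuristic with a hypothetical helper name. It represents sequence functions by SEQ1 and SEQ2 only, it ignores function aliases, and other unsupported constructs can still force a full refresh. The authoritative answer comes from monitoring which refresh mode the table actually uses.

```python
import re
from typing import Iterable

# Functions listed above as supported only for full refresh. Sequence functions
# are represented by SEQ1 and SEQ2 only, and function aliases are not included.
FULL_REFRESH_ONLY = {
    "SEQ1", "SEQ2",
    "CURRENT_ROLE", "CURRENT_WAREHOUSE", "CURRENT_ACCOUNT", "CURRENT_REGION",
    "CURRENT_DATE", "CURRENT_TIME", "CURRENT_TIMESTAMP",
}

def likely_refresh_mode(sql: str, volatile_udfs: Iterable[str] = ()) -> str:
    """Rough pre-check of a dynamic table query.

    Returns "full" if the query mentions a full-refresh-only function or one of
    the given VOLATILE UDFs; otherwise "maybe incremental", because other
    unsupported constructs can still force a full refresh.
    """
    identifiers = {token.upper() for token in re.findall(r"[A-Za-z_][A-Za-z0-9_$]*", sql)}
    blockers = FULL_REFRESH_ONLY | {name.upper() for name in volatile_udfs}
    return "full" if identifiers & blockers else "maybe incremental"

print(likely_refresh_mode("select id, current_timestamp() as loaded_at from t"))
print(likely_refresh_mode("select id, amount from orders where amount > 0"))
```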

How data is refreshed when dynamic tables depend on other dynamic tables

When a dynamic table’s target lag is specified as a measure of time, the automated refresh process determines the schedule for refreshes based on the target lag times of the dynamic tables. The process chooses a schedule that best meets the target lag times of the tables.

Target lag is not a guarantee. Instead, it is a target that Snowflake attempts to meet. Data in dynamic tables is refreshed as closely as possible within the target lag. However, target lag may be exceeded due to factors such as warehouse size, data size, and query complexity.

To keep data consistent when one dynamic table depends on another, the process refreshes all dynamic tables in an account at compatible times. The timing of less frequent refreshes coincides with the timing of more frequent refreshes.

For example, suppose that dynamic table A has a target lag of two minutes and queries dynamic table B, which has a target lag of one minute. The process might determine that A should be refreshed every 96 seconds, and B every 48 seconds. As a result, the process might refresh A and B together at 0:00 and 1:36, and B alone at 0:48 and 2:24.

This means that at any given time, when you query a set of dynamic tables that depend on each other, you are querying the same “snapshot” of the data across these tables.
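The 96/48-second example can be checked with a small Python sketch that lists which tables refresh at each instant. The alignment rule, where every slower period is a multiple of the shortest one, is a simplifying assumption that fits this example. It is not a description of the real scheduler. Every refresh of A coincides with a refresh of B, which is what lets a query see the same snapshot across both tables.

```python
from typing import Dict, List, Tuple

def refresh_schedule(periods: Dict[str, int], horizon: int) -> List[Tuple[int, List[str]]]:
    """Return (second, tables refreshed) pairs for 0 <= second < horizon.

    Assumes each refresh period is a whole multiple of the shortest one, so every
    slower refresh lands on the same instant as a faster one.
    """
    step = min(periods.values())
    if any(period % step for period in periods.values()):
        raise ValueError("refresh periods must be multiples of the shortest period")
    schedule = []
    for second in range(0, horizon, step):
        due = sorted(name for name, period in periods.items() if second % period == 0)
        schedule.append((second, due))
    return schedule

for second, tables in refresh_schedule({"A": 96, "B": 48}, horizon=192):
    print(f"{second // 60}:{second % 60:02d}", " and ".join(tables))
# 0:00 A and B
# 0:48 B
# 1:36 A and B
# 2:24 B
```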

Note that the target lag of a dynamic table cannot be shorter than the target lag of the dynamic tables it depends on. For example, suppose that:

Dynamic table A queries the dynamic tables B and C.

Dynamic table B has a target lag of five minutes.

Dynamic table C has a target lag of one minute.

This means that the target lag time for A must not be shorter than five minutes (that is, not shorter than the longer of the lag times for B and C).

If you set the lag for A to five minutes, the process sets up a refresh schedule with these goals:

Refresh C often enough to keep its lag below one minute.

Refresh A and B together and often enough to keep their lags below five minutes.

Ensure that the refresh for A and B coincides with a refresh of C to ensure snapshot isolation.

Note: If refreshes take too long, the scheduler may skip refreshes to try to stay up to date. However, snapshot isolation is preserved.
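The lag rule from the A, B, and C example can be expressed as a small check. This Python sketch uses hypothetical function names and lags in seconds. It only covers time-based lags, so DOWNSTREAM is not modelled, and it encodes just the "not shorter than the longest upstream lag" rule stated above.

```python
from typing import Dict, List

def min_allowed_lag(table: str, lags: Dict[str, int], upstream: Dict[str, List[str]]) -> int:
    """Shortest target lag (seconds) `table` may use: the longest lag among
    the dynamic tables it queries directly (0 if it reads only base tables)."""
    return max((lags[name] for name in upstream.get(table, [])), default=0)

def check_target_lag(table: str, proposed: int,
                     lags: Dict[str, int], upstream: Dict[str, List[str]]) -> None:
    """Raise if `proposed` is shorter than the lag of something `table` depends on."""
    floor = min_allowed_lag(table, lags, upstream)
    if proposed < floor:
        raise ValueError(f"target lag for {table} must be at least {floor} s, got {proposed} s")

lags = {"B": 5 * 60, "C": 60}
upstream = {"A": ["B", "C"]}
check_target_lag("A", 5 * 60, lags, upstream)  # accepted: equals B's lag
```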

Quickstart for the dbt Cloud Semantic Layer and Snowflake

Introduction

The dbt Semantic Layer, powered by MetricFlow, simplifies the setup of key business metrics. It centralizes definitions, avoids duplicate code, and ensures easy access to metrics in downstream tools. MetricFlow makes it easier to manage company metrics, allowing you to define metrics in your dbt project and query them in dbt Cloud with MetricFlow commands.

Explore our dbt Semantic Layer on-demand course to learn how to define and query metrics in your dbt project.

Additionally, dive into mini-courses for querying the dbt Semantic Layer in your favorite tools: Tableau (beta), Hex, and Mode.

This quickstart guide is designed for dbt Cloud users using Snowflake as their data platform. It focuses on building and defining metrics, setting up the dbt Semantic Layer in a dbt Cloud project, and querying metrics in Google Sheets.

For users on a different data platform

If you're using a data platform other than Snowflake, this guide also applies to you. You can adapt the setup for your specific platform by following the account setup and data loading instructions for each respective platform, listed below.

The rest of this guide applies universally across all supported platforms, ensuring you can fully leverage the dbt Semantic Layer.

Open a new tab and follow these quick steps for account setup and data loading instructions:

BigQuery

- Step 2: Create a new GCP project

- Step 3: Create BigQuery dataset

- Step 4: Generate BigQuery credentials

- Step 5: Connect dbt Cloud to BigQuery

Databricks

- Step 2: Create a Databricks workspace

- Step 3: Load data

- Step 4: Connect dbt Cloud to Databricks

Microsoft Fabric

- Step 2: Load data into your Microsoft Fabric warehouse

- Step 3: Connect dbt Cloud to Microsoft Fabric

Redshift

- Step 2: Create a Redshift cluster

- Step 4: Connect dbt Cloud to Redshift

Starburst Galaxy

- Step 2: Load data to an Amazon S3 bucket

- Step 3: Connect Starburst Galaxy to Amazon S3 bucket data

- Step 4: Create tables with Starburst Galaxy

- Step 5: Connect dbt Cloud to Starburst Galaxy

Prerequisites

You need a dbt Cloud Trial, Team, or Enterprise account for all deployments. Contact your representative for Single-tenant setup; otherwise, create an account using this guide.

Have the correct dbt Cloud license and permissions based on your plan:

- Enterprise — Developer license with Account Admin permissions. Or "Owner" with a Developer license, assigned Project Creator, Database Admin, or Admin permissions.

- Team — "Owner" access with a Developer license.

- Trial — Automatic "Owner" access on a trial of the Team plan.

Production and development environments must be on dbt version 1.6 or higher. Alternatively, set your environment to Keep on latest version to always remain on the latest version.

Create a trial Snowflake account:

- Select the Enterprise Snowflake edition with ACCOUNTADMIN access. Consider organizational questions when choosing a cloud provider. For more information, refer to Snowflake's Introduction to Cloud Platforms.

- Select a cloud provider and region. All cloud providers and regions will work, so choose whichever you prefer.

Basic understanding of SQL and dbt. For example, you've used dbt before or have completed the dbt Fundamentals course.

What you'll learn

This guide will cover the following topics:

- Create a new Snowflake worksheet and set up your environment

- Load sample data into your Snowflake account

- Connect dbt Cloud to Snowflake

- Set up a dbt Cloud managed repository

- Initialize a dbt Cloud project and start developing

- Build your dbt Cloud project

- Create a semantic model in dbt Cloud

- Define metrics in dbt Cloud

- Add a second semantic model

- Test and query metrics in dbt Cloud

- Run a production job in dbt Cloud

- Set up dbt Semantic Layer in dbt Cloud

- Connect and query metrics with Google Sheets

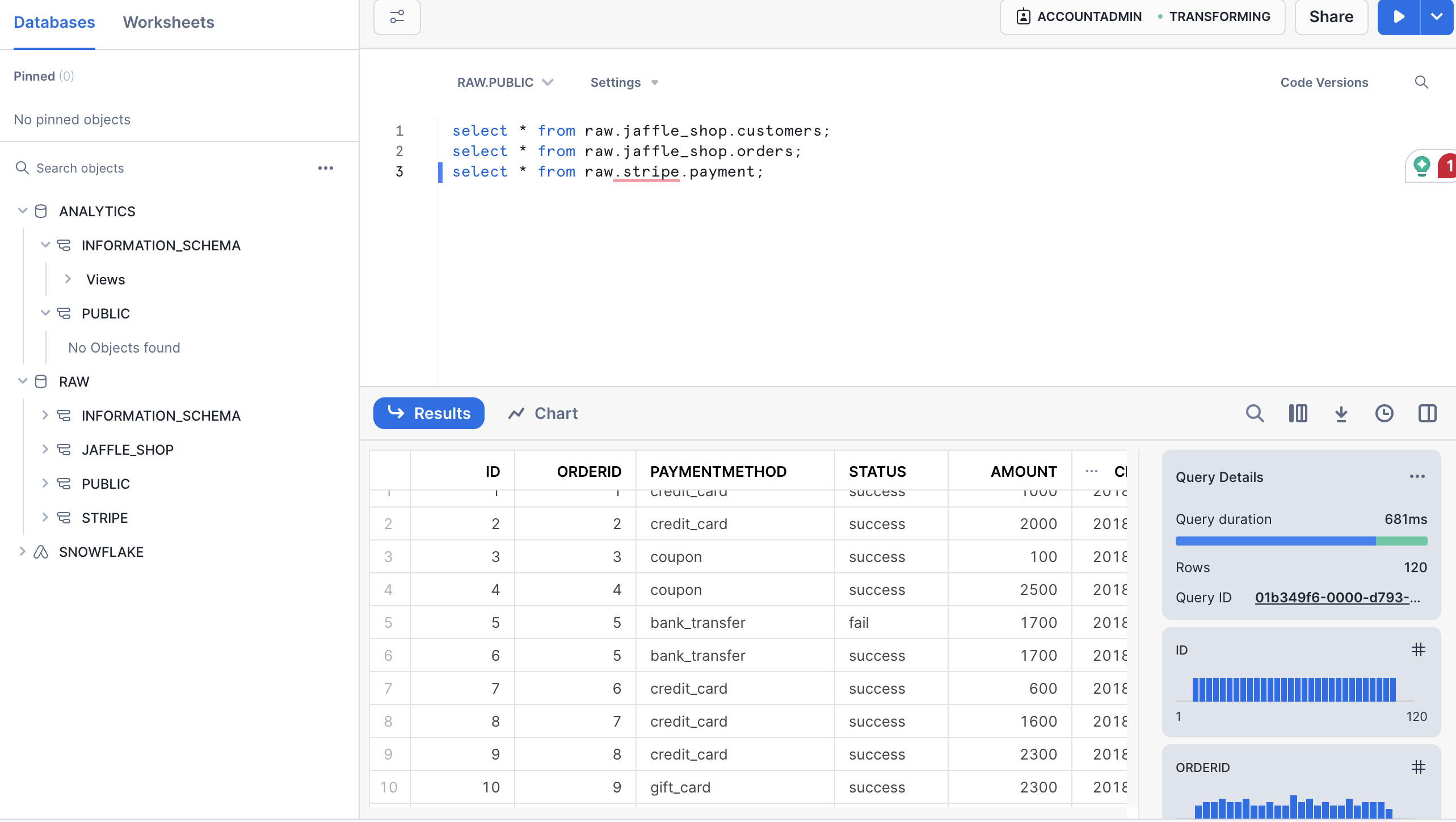

Create new Snowflake worksheet and set up environment

- Log in to your trial Snowflake account.

- In the Snowflake user interface (UI), click + Worksheet in the upper right corner.

- Select SQL Worksheet to create a new worksheet.

Set up Snowflake environment

The data used here is stored as CSV files in a public S3 bucket. The following steps guide you through preparing your Snowflake account for that data and uploading it.

Create a new virtual warehouse, two new databases (one for raw data, the other for future dbt development), and two new schemas (one for jaffle_shop data, the other for stripe data).

Run the following SQL commands one by one by typing them into the Editor of your new Snowflake SQL worksheet to set up your environment.

Click Run in the upper right corner of the UI for each one:
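If you would rather script this step than type commands into a worksheet, here is a minimal Python sketch. The statements are an assumption. They are reconstructed from the objects this guide names: the transforming warehouse, the raw and analytics databases, and the jaffle_shop and stripe schemas. Compare them with the guide's own commands before running anything. The function names are hypothetical. run_one_by_one accepts any DB-API style cursor.

```python
from typing import List, Protocol, Sequence

class Cursor(Protocol):
    """Anything with a DB-API style execute() method."""
    def execute(self, sql: str) -> object: ...

def setup_statements(warehouse: str = "transforming",
                     raw_database: str = "raw",
                     dev_database: str = "analytics",
                     raw_schemas: Sequence[str] = ("jaffle_shop", "stripe")) -> List[str]:
    """Assumed DDL: one warehouse, a raw and a development database, and one
    schema per raw data set inside the raw database."""
    statements = [
        f"create warehouse {warehouse}",
        f"create database {raw_database}",
        f"create database {dev_database}",
    ]
    statements += [f"create schema {raw_database}.{schema}" for schema in raw_schemas]
    return statements

def run_one_by_one(cursor: Cursor, statements: List[str]) -> None:
    """Execute each statement separately, mirroring 'run them one by one'."""
    for sql in statements:
        cursor.execute(sql)
```

With the snowflake-connector-python package installed, you could pass it a cursor from snowflake.connector.connect(user=..., password=..., account=...).cursor(). Use the account identifier format described later in this guide.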

Load data into Snowflake

Now that your environment is set up, you can start loading data into it. You will be working within the raw database, using the jaffle_shop and stripe schemas to organize your tables.

Create customer table. First, clear all contents in the Editor of the Snowflake worksheet. Then, run this SQL command to create the customer table in the jaffle_shop schema:

You should see a ‘Table CUSTOMERS successfully created.’ message.

Load data. After creating the table, delete all contents in the Editor. Run this command to load data from the S3 bucket into the customer table:

You should see a confirmation message after running the command.

Create orders table. Delete all contents in the Editor. Run the following command to create…

Load data. Delete all contents in the Editor, then run this command to load data into the orders table:

Create payment table. Delete all contents in the Editor. Run the following command to create the payment table:

Load data. Delete all contents in the Editor. Run the following command to load data into the payment table:

Verify data. Verify that the data is loaded by running these SQL queries. Confirm that you can see output for each one, like the following confirmation image.
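The same check can be scripted in Python by counting rows in each loaded table through a DB-API style cursor. The table names are taken from this guide. Placing the payment table in raw.stripe is an assumption based on the schema layout described earlier. The count(*) queries and helper names are illustrative stand-ins for the guide's own verification queries.

```python
from typing import Dict, Iterable, List

# Table names as used in this guide; the payment table is assumed to live in raw.stripe.
LOADED_TABLES = (
    "raw.jaffle_shop.customers",
    "raw.jaffle_shop.orders",
    "raw.stripe.payment",
)

def row_counts(cursor, tables: Iterable[str] = LOADED_TABLES) -> Dict[str, int]:
    """Run one count query per table through a DB-API style cursor."""
    counts: Dict[str, int] = {}
    for table in tables:
        cursor.execute(f"select count(*) from {table}")
        counts[table] = cursor.fetchone()[0]
    return counts

def empty_tables(counts: Dict[str, int]) -> List[str]:
    """Tables whose load apparently produced no rows."""
    return [table for table, count in counts.items() if count == 0]
```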

Connect dbt Cloud to Snowflake

There are two ways to connect dbt Cloud to Snowflake. The first option is Partner Connect, which provides a streamlined setup to create your dbt Cloud account from within your new Snowflake trial account. The second option is to create your dbt Cloud account separately and build the Snowflake connection yourself (connect manually). If you want to get started quickly, dbt Labs recommends using Partner Connect. If you want to customize your setup from the very beginning and gain familiarity with the dbt Cloud setup flow, dbt Labs recommends connecting manually.

- Use Partner Connect

- Connect manually

Using Partner Connect allows you to create a complete dbt account with your Snowflake connection, a managed repository, environments, and credentials.

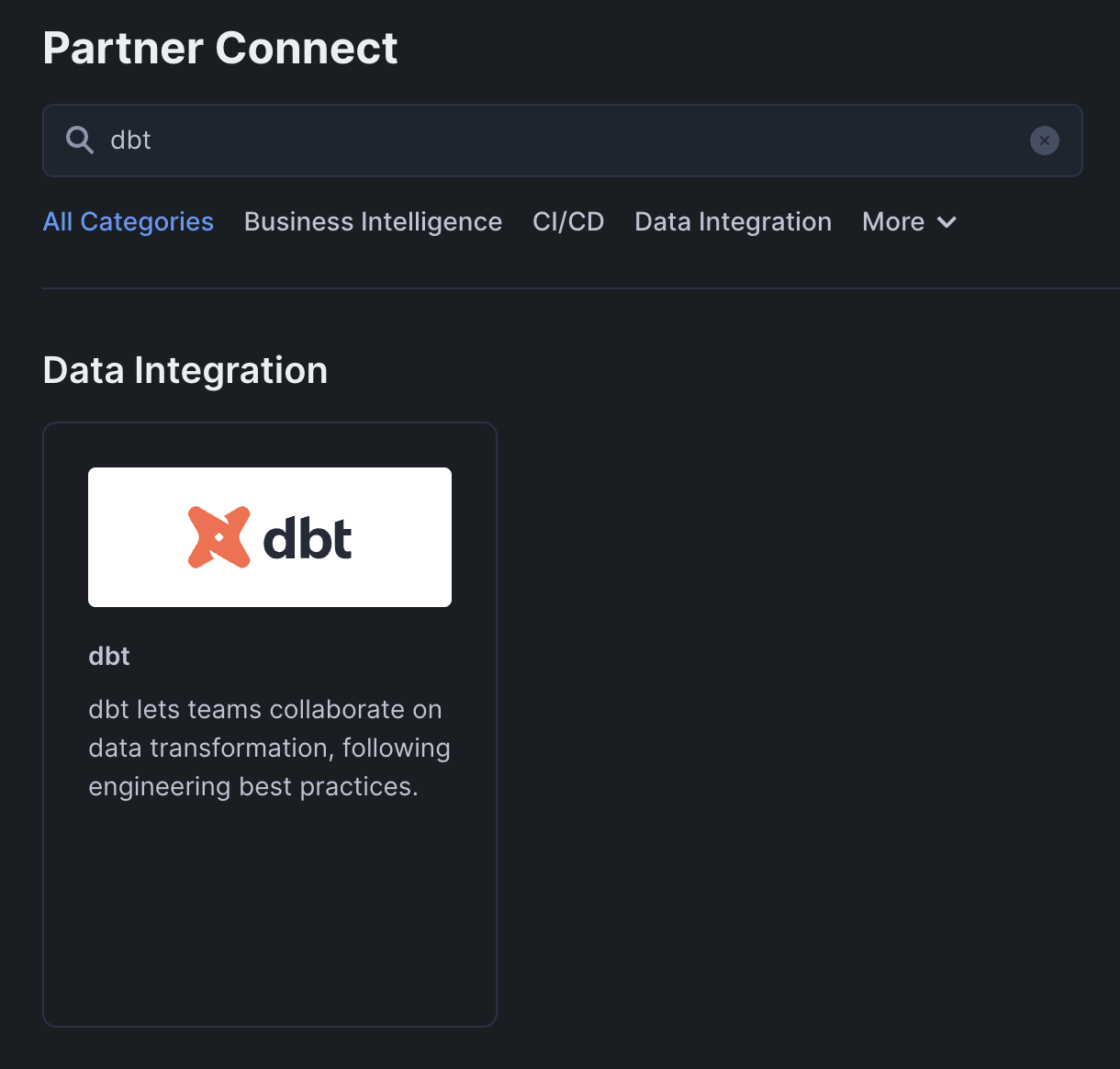

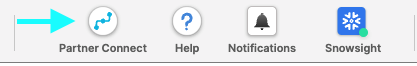

In the Snowflake UI, click on the home icon in the upper left corner. In the left sidebar, select Data Products. Then, select Partner Connect. Find the dbt tile by scrolling or by searching for dbt in the search bar. Click the tile to connect to dbt.

If you’re using the classic version of the Snowflake UI, you can click the Partner Connect button in the top bar of your account. From there, click on the dbt tile to open up the connect box.

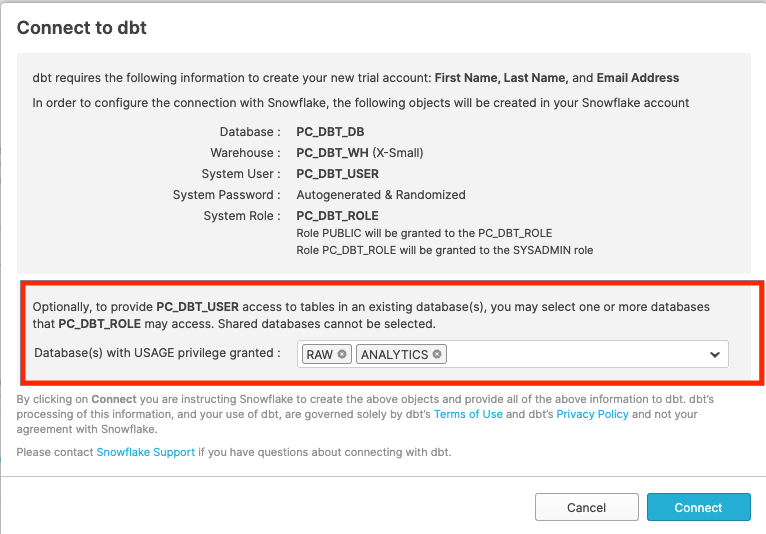

In the Connect to dbt popup, find the Optional Grant option and select the RAW and ANALYTICS databases. This will grant access for your new dbt user role to each database. Then, click Connect.

Click Activate when a popup appears:

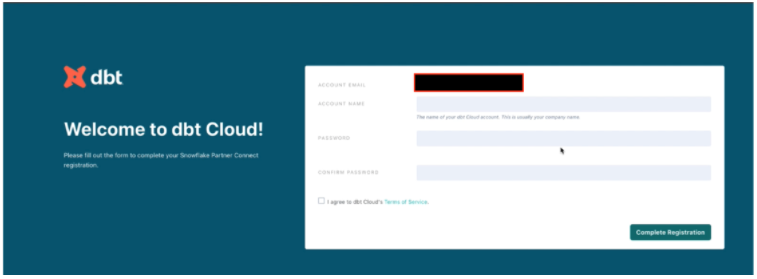

- After the new tab loads, you will see a form. If you already created a dbt Cloud account, you will be asked to provide an account name. If you haven't created an account, you will be asked to provide an account name and password.

After you have filled out the form and clicked Complete Registration, you will be logged into dbt Cloud automatically.

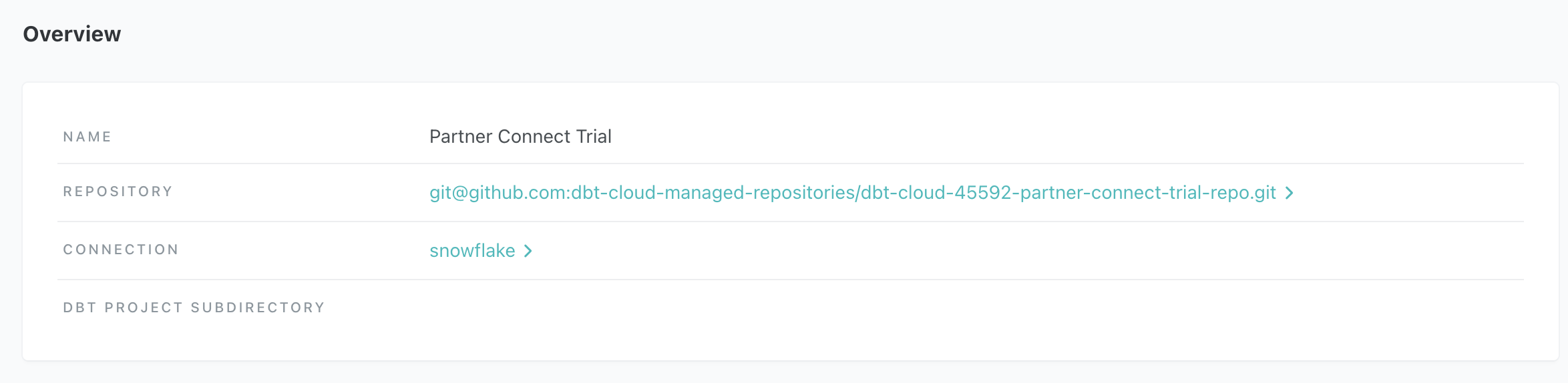

From your Account Settings in dbt Cloud (using the gear menu in the upper right corner), choose the "Partner Connect Trial" project and select snowflake in the overview table. Select edit and update the fields Database and Warehouse to be analytics and transforming, respectively.

To connect manually instead, create a new project in dbt Cloud. From Account settings (using the gear menu in the top right corner), click + New Project.

Enter a project name and click Continue.

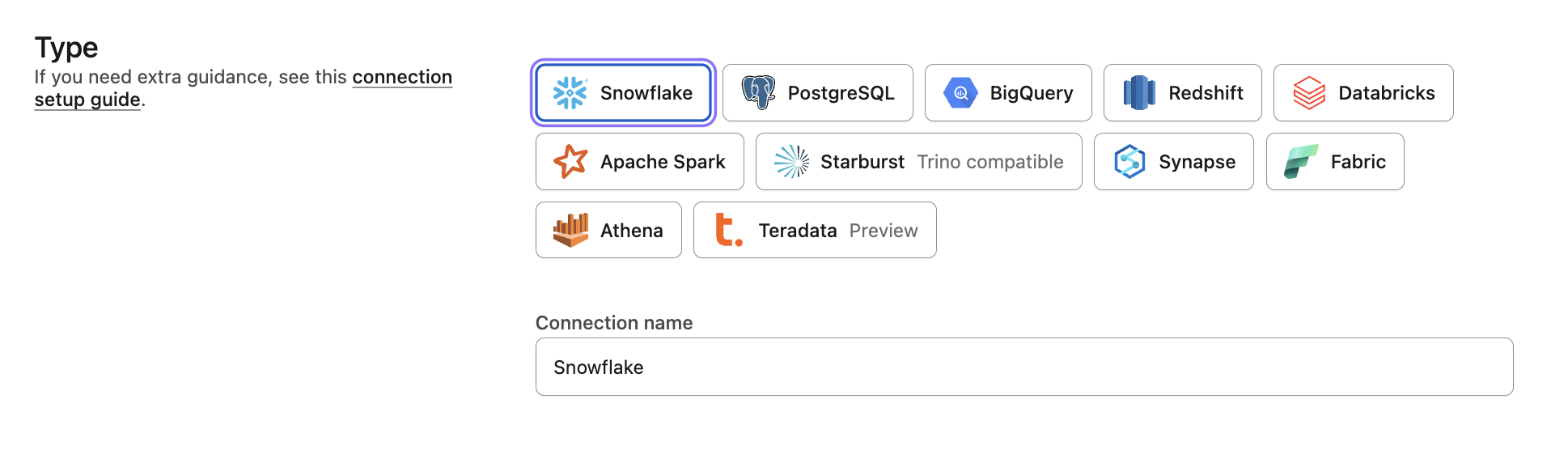

For the warehouse, click Snowflake then Next to set up your connection.

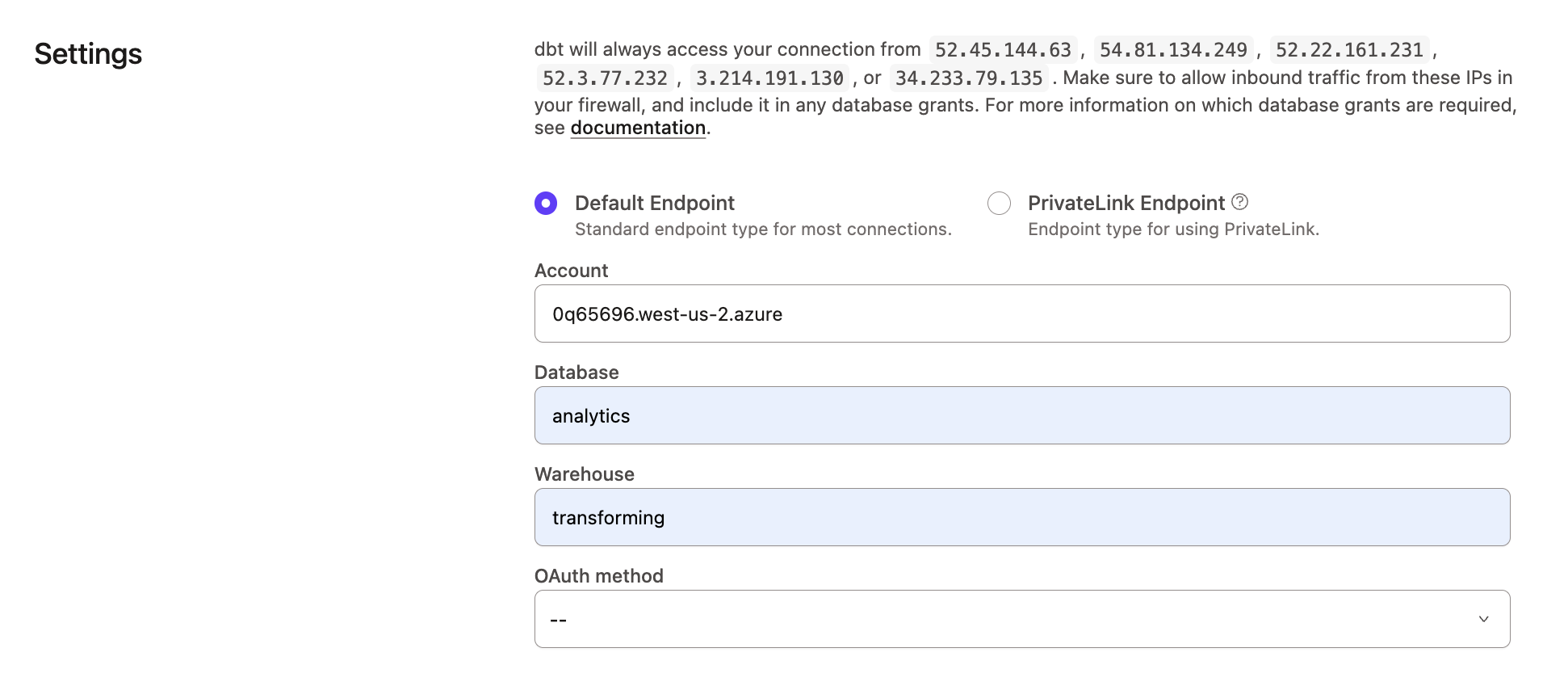

Enter your Settings for Snowflake with:

Account — Find your account by using the Snowflake trial account URL and removing snowflakecomputing.com. The order of your account information will vary by Snowflake version. For example, Snowflake's Classic console URL might look like: oq65696.west-us-2.azure.snowflakecomputing.com. The AppUI or Snowsight URL might look more like: snowflakecomputing.com/west-us-2.azure/oq65696. In both examples, your account will be: oq65696.west-us-2.azure. For more information, see Account Identifiers in the Snowflake docs.

✅ db5261993 or db5261993.east-us-2.azure

❌ db5261993.eu-central-1.snowflakecomputing.com

Role — Leave blank for now. You can update this to a default Snowflake role later.

Database — analytics. This tells dbt to create new models in the analytics database.

Warehouse — transforming. This tells dbt to use the transforming warehouse that was created earlier.
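The Account rule above can be sketched in Python as a helper that handles both URL shapes shown in the examples. The helper name is hypothetical. The Snowsight branch assumes the URL path ends with /region/locator, as in the example, so a URL that continues past the locator (for example, into a worksheet path) would need trimming first.

```python
SUFFIX = ".snowflakecomputing.com"

def account_identifier(url: str) -> str:
    """Return the value for the dbt Cloud Account field from a Snowflake URL.

    Handles the two URL shapes described above:
      Classic console: <locator>.<region>.snowflakecomputing.com
      Snowsight-style: <host>/<region>/<locator>
    """
    url = url.strip()
    for scheme in ("https://", "http://"):
        if url.startswith(scheme):
            url = url[len(scheme):]
    host, _, path = url.rstrip("/").partition("/")
    if path:
        parts = path.split("/")
        if len(parts) < 2:
            raise ValueError(f"expected <region>/<locator> in {url!r}")
        region, locator = parts[-2], parts[-1]
        return f"{locator}.{region}"
    if host.endswith(SUFFIX):
        return host[: -len(SUFFIX)]
    raise ValueError(f"unrecognized Snowflake URL: {url!r}")

print(account_identifier("oq65696.west-us-2.azure.snowflakecomputing.com"))  # oq65696.west-us-2.azure
print(account_identifier("snowflakecomputing.com/west-us-2.azure/oq65696"))  # oq65696.west-us-2.azure
```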

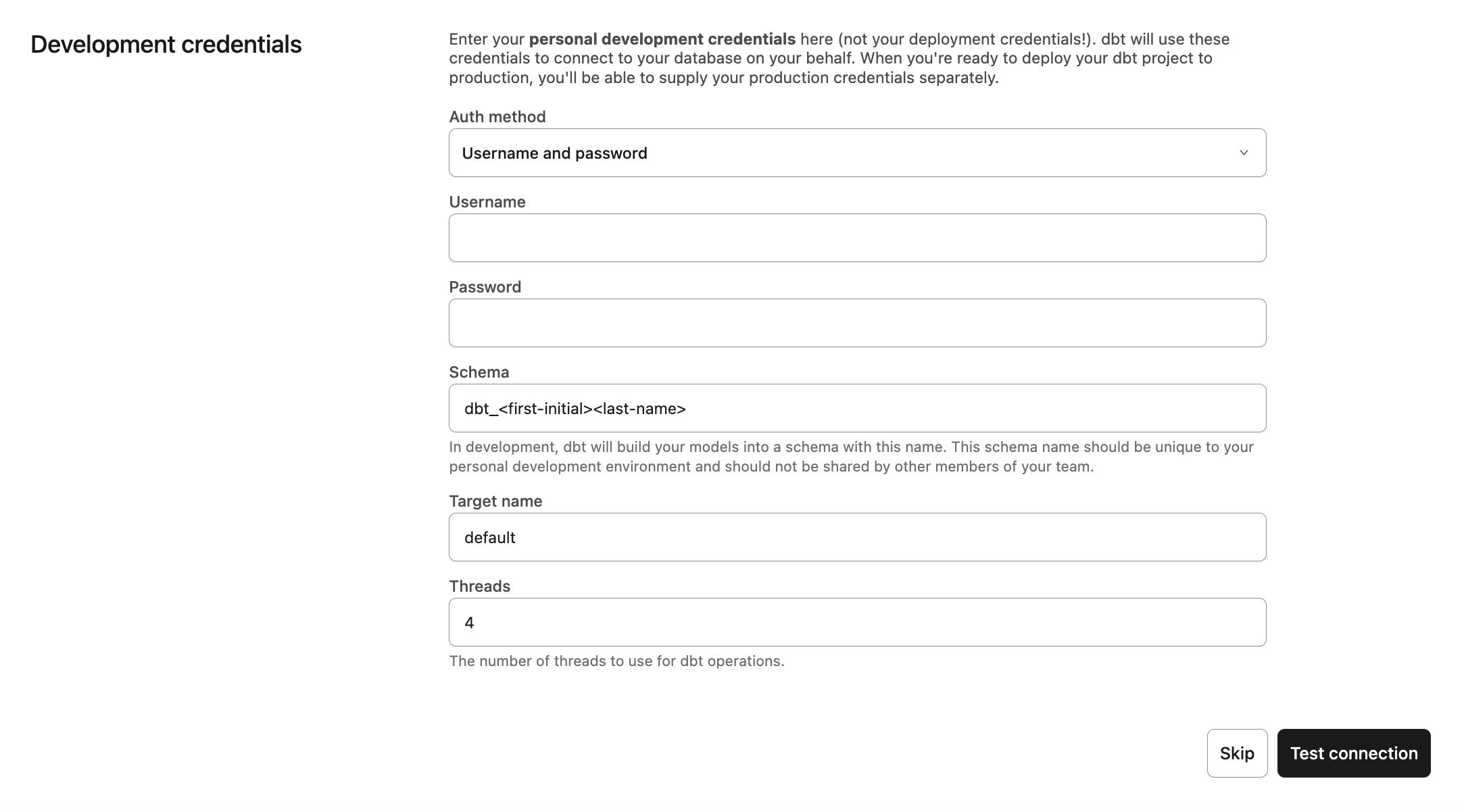

Enter your Development Credentials for Snowflake with:

- Username — The username you created for Snowflake. The username is not your email address and is usually your first and last name together in one word.

- Password — The password you set when creating your Snowflake account.

- Schema — You’ll notice that the schema name has been auto-created for you. By convention, this is dbt_<first-initial><last-name>. This is the schema connected directly to your development environment, and it's where your models will be built when running dbt within the Cloud IDE.

- Target name — Leave as the default.

- Threads — Leave as 4. This is the number of simultaneous connections that dbt Cloud will make to build models concurrently.
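The schema naming convention above can be sketched in Python as a helper that produces the default name. The function name default_dev_schema is hypothetical. Lowercasing the result and dropping characters other than letters, digits, and underscores are assumptions for illustration, not documented dbt Cloud behavior.

```python
import re

def default_dev_schema(first_name: str, last_name: str) -> str:
    """Build a dbt_<first-initial><last-name> schema name (assumed lowercase)."""
    raw = (first_name.strip()[:1] + last_name.strip()).lower()
    return "dbt_" + re.sub(r"[^a-z0-9_]", "", raw)

print(default_dev_schema("Bilbo", "Baggins"))  # dbt_bbaggins
```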

Click Test Connection. This verifies that dbt Cloud can access your Snowflake account.

If the connection test succeeds, click Next . If it fails, you may need to check your Snowflake settings and credentials.

Set up a dbt Cloud managed repository

If you used Partner Connect, you can skip to initializing your dbt project, because Partner Connect provides you with a managed repository. Otherwise, you will need to create your repository connection.

When you develop in dbt Cloud, you can leverage Git to version control your code.

To connect to a repository, you can either set up a dbt Cloud-hosted managed repository or directly connect to a supported git provider. Managed repositories are a great way to trial dbt without needing to create a new repository. In the long run, it's better to connect to a supported git provider to use features like automation and continuous integration.

To set up a managed repository:

- Under "Setup a repository", select Managed.

- Type a name for your repo, such as bbaggins-dbt-quickstart.

- Click Create. It will take a few seconds for your repository to be created and imported.

- Once you see the "Successfully imported repository" message, click Continue.

Initialize your dbt project and start developing

This guide assumes you use the dbt Cloud IDE to develop your dbt project and define metrics. However, the dbt Cloud IDE doesn't support using MetricFlow commands to query or preview metrics (support coming soon).

To query and preview metrics in your development tool, you can use the dbt Cloud CLI to run the MetricFlow commands.

Now that you have a repository configured, you can initialize your project and start development in dbt Cloud using the IDE:

- Click Start developing in the dbt Cloud IDE. It might take a few minutes for your project to spin up for the first time as it establishes your git connection, clones your repo, and tests the connection to the warehouse.

- Above the file tree to the left, click Initialize your project. This builds out your folder structure with example models.

- Make your initial commit by clicking Commit and sync. Use the commit message initial commit. This creates the first commit to your managed repo and allows you to open a branch where you can add new dbt code.

- Delete the models/examples folder in the File Explorer.

- Click + Create new file, add this query to the new file, and click Save as to save the new file: select * from raw.jaffle_shop.customers

- In the command line bar at the bottom, enter dbt run and press Enter. You should see a dbt run succeeded message.

Build your dbt project

The next step is to build your project. This involves adding sources, staging models, business-defined entities, and packages to your project.

Add sources

Sources in dbt are the raw data tables you'll transform. By organizing your source definitions, you document the origin of your data. It also makes your project and transformation more reliable, structured, and understandable.

You have two options for working with files in the dbt Cloud IDE:

- Create a new branch (recommended) — Create a new branch to edit and commit your changes. Navigate to Version Control on the left sidebar and click Create branch.

- Edit in the protected primary branch — If you prefer to edit, format, or lint files and execute dbt commands directly in your primary git branch, use this option. The dbt Cloud IDE prevents commits to the protected branch, so you'll be prompted to commit your changes to a new branch.

Name the new branch build-project.

- Hover over the models directory and click the three-dot menu (...), then select Create file.

- Name the file staging/jaffle_shop/src_jaffle_shop.yml, then click Create.

- Copy the following text into the file and click Save.

In your source file, you can also use the Generate model button to create a new model file for each source. This creates a new file in the models directory with the given source name and fills in the SQL code of the source definition.

- Name the file staging/stripe/src_stripe.yml , then click Create .
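Declaring sources pays off because models refer to a logical name, and dbt resolves it to the physical table. In a model, you write {{ source('jaffle_shop', 'customers') }}. The Python sketch below roughly models that lookup. It assumes the two source files declare customers and orders in raw.jaffle_shop and payment in raw.stripe. resolve_source is a hypothetical stand-in for dbt's source() function, not its implementation.

```python
from typing import Dict, NamedTuple, Tuple

class Source(NamedTuple):
    database: str
    schema: str
    tables: Tuple[str, ...]

# Assumed in-memory equivalent of src_jaffle_shop.yml and src_stripe.yml.
SOURCES: Dict[str, Source] = {
    "jaffle_shop": Source("raw", "jaffle_shop", ("customers", "orders")),
    "stripe": Source("raw", "stripe", ("payment",)),
}

def resolve_source(source_name: str, table_name: str,
                   sources: Dict[str, Source] = SOURCES) -> str:
    """Turn a (source, table) pair into the fully qualified relation name."""
    src = sources[source_name]
    if table_name not in src.tables:
        raise KeyError(f"{table_name!r} is not declared in source {source_name!r}")
    return f"{src.database}.{src.schema}.{table_name}"

print(resolve_source("jaffle_shop", "customers"))  # raw.jaffle_shop.customers
```

Because the physical location lives in one place, moving the raw data later only requires editing the source definition, not every model.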

Add staging models

Staging models are the first transformation step in dbt. They clean and prepare your raw data, making it ready for more complex transformations and analyses. Follow these steps to add your staging models to your project.

- In the jaffle_shop sub-directory, create the file stg_customers.sql. Or, you can use the Generate model button to create a new model file for each source.

- Copy the following query into the file and click Save.

- In the same jaffle_shop sub-directory, create the file stg_orders.sql.

- In the stripe sub-directory, create the file stg_payments.sql.

- Enter dbt run in the command prompt at the bottom of the screen. You should get a successful run and see the three models.

Add business-defined entities

This phase involves creating models that serve as the entity layer or concept layer of your dbt project, making the data ready for reporting and analysis. It also includes adding packages and the MetricFlow time spine, which extend dbt's functionality.

This phase is the marts layer, which brings together modular pieces into a wide, rich vision of the entities an organization cares about.

- Create the file models/marts/fct_orders.sql.

- In the models/marts directory, create the file dim_customers.sql.

- In your main directory, create the file packages.yml.

- In the models directory, create the file metrics/metricflow_time_spine.sql.

- Enter dbt run in the command prompt at the bottom of the screen. You should get a successful run message and also see in the run details that dbt has successfully built five models.
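A time spine is essentially a gap-free calendar table. The Python sketch below (not SQL) shows why that matters. Joining daily values onto the spine makes days with no orders visible instead of silently missing them, and cumulative metrics over a window depend on that. The daily grain and the function names are illustrative assumptions. The actual metricflow_time_spine.sql model is written in SQL.

```python
from datetime import date, timedelta
from typing import Dict, List, Optional, Tuple

def time_spine(start: date, end: date) -> List[date]:
    """One entry per day from start to end (inclusive), with no gaps."""
    return [start + timedelta(days=offset) for offset in range((end - start).days + 1)]

def densify(spine: List[date], values: Dict[date, int]) -> List[Tuple[date, Optional[int]]]:
    """Left-join daily values onto the spine so days without data still appear."""
    return [(day, values.get(day)) for day in spine]

spine = time_spine(date(2024, 1, 30), date(2024, 2, 2))
orders = {date(2024, 1, 30): 3, date(2024, 2, 2): 1}
for day, count in densify(spine, orders):
    print(day, count)
```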

Create semantic models

Semantic models contain many object types (such as entities, measures, and dimensions) that allow MetricFlow to construct the queries for metric definitions.

- Each semantic model will be 1:1 with a dbt SQL/Python model.

- Each semantic model will contain at most one primary or natural entity.

- Each semantic model will contain zero, one, or many foreign or unique entities used to connect to other entities.

- Each semantic model may also contain dimensions, measures, and metrics. This is what actually gets fed into and queried by your downstream BI tool.
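The structural rules above can be encoded as a Python lint-style check. The SemanticModel class and rule_violations function are hypothetical and are not part of dbt or MetricFlow. MetricFlow performs its own validation when you parse the project. This sketch only covers the 1:1 model mapping, the single primary or natural entity, and the entity types named in this guide.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import List, Tuple

ENTITY_TYPES = {"primary", "natural", "foreign", "unique"}

@dataclass
class SemanticModel:
    name: str
    model: str  # the single dbt model this semantic model is built on
    entities: List[Tuple[str, str]] = field(default_factory=list)  # (entity_name, entity_type)

def rule_violations(semantic_models: List[SemanticModel]) -> List[str]:
    """Check the structural rules listed above (hypothetical helper)."""
    problems: List[str] = []
    usage = Counter(sm.model for sm in semantic_models)
    for sm in semantic_models:
        if usage[sm.model] > 1:
            problems.append(f"{sm.name}: dbt model {sm.model} is used by more than one semantic model")
        main = [name for name, kind in sm.entities if kind in ("primary", "natural")]
        if len(main) > 1:
            problems.append(f"{sm.name}: more than one primary/natural entity {main}")
        for name, kind in sm.entities:
            if kind not in ENTITY_TYPES:
                problems.append(f"{sm.name}: entity {name} has unknown type {kind!r}")
    return problems

orders = SemanticModel("orders", "fct_orders", [("order_id", "primary"), ("customer", "foreign")])
customers = SemanticModel("customers", "dim_customers", [("customer", "primary")])
print(rule_violations([orders, customers]))  # []
```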

In the following steps, semantic models enable you to define how to interpret the data related to orders. It includes entities (like ID columns serving as keys for joining data), dimensions (for grouping or filtering data), and measures (for data aggregations).

- In the metrics sub-directory, create a new file fct_orders.yml.

- Add the following code to that newly created file:

The following sections explain dimensions, entities, and measures in more detail, showing how they each play a role in semantic models.

- Entities act as unique identifiers (like ID columns) that link data together from different tables.

- Dimensions categorize and filter data, making it easier to organize.

- Measures calculate data, providing valuable insights through aggregation.

Entities

Entities are a real-world concept in a business, serving as the backbone of your semantic model. These are going to be ID columns (like order_id) in our semantic models. These will serve as join keys to other semantic models.

Add entities to your fct_orders.yml semantic model file:

Dimensions

Dimensions are a way to group or filter information based on categories or time.

Add dimensions to your fct_orders.yml semantic model file:

Measures

Measures are aggregations performed on columns in your model. Often, you’ll find yourself using them as final metrics themselves. Measures can also serve as building blocks for more complicated metrics.

Add measures to your fct_orders.yml semantic model file:
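The Python sketch below shows how the three pieces interact on a few rows. Entities (order_id, customer_id) identify rows and serve as join keys, the order_date dimension groups them, and two measures aggregate them. The rows, the column names, and the measure names order_total and order_count are illustrative assumptions, not the contents of the guide's YAML file.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical fct_orders rows: (order_id, customer_id, order_date, amount)
Row = Tuple[int, int, str, int]

def measures_by_order_date(rows: List[Row]) -> Dict[str, Dict[str, int]]:
    """Aggregate two measures, grouped by the order_date dimension.

    order_id and customer_id play the role of entities (join keys); they
    are not aggregated here.
    """
    grouped: Dict[str, Dict[str, int]] = defaultdict(lambda: {"order_total": 0, "order_count": 0})
    for _order_id, _customer_id, order_date, amount in rows:
        grouped[order_date]["order_total"] += amount  # a sum measure
        grouped[order_date]["order_count"] += 1       # a count measure
    return dict(sorted(grouped.items()))

rows = [(1, 10, "2024-01-01", 20), (2, 11, "2024-01-01", 5), (3, 10, "2024-01-02", 12)]
print(measures_by_order_date(rows))
```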

Define metrics

Metrics are the language your business users speak, and they measure business performance. They are an aggregation over a column in your warehouse that you enrich with dimensional cuts.

There are different types of metrics you can configure:

- Conversion metrics — Track when a base event and a subsequent conversion event occur for an entity within a set time period.

- Cumulative metrics — Aggregate a measure over a given window. If no window is specified, the window will accumulate the measure over all of the recorded time period. Note that you must create the time spine model before you add cumulative metrics.

- Derived metrics — Allow you to do calculations on top of metrics.

- Simple metrics — Directly reference a single measure without any additional measures involved.

- Ratio metrics — Involve a numerator metric and a denominator metric. A constraint string can be applied to both the numerator and denominator or separately to the numerator or denominator.
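The semantics of each metric type can be illustrated with plain Python functions over already-aggregated values. These are conceptual sketches with hypothetical names, not MetricFlow's implementation, and they skip details such as constraint strings on ratio metrics. In a real project, you declare metrics in YAML and MetricFlow generates the SQL.

```python
from typing import Callable, Dict, List, Optional

def simple_metric(measure_values: List[float]) -> float:
    """Simple: directly expose one measure (here, a sum)."""
    return sum(measure_values)

def ratio_metric(numerator: float, denominator: float) -> Optional[float]:
    """Ratio: numerator metric divided by denominator metric (None when dividing by zero)."""
    return None if denominator == 0 else numerator / denominator

def derived_metric(metrics: Dict[str, float],
                   expression: Callable[[Dict[str, float]], float]) -> float:
    """Derived: a calculation on top of other metrics."""
    return expression(metrics)

def cumulative_metric(daily: List[float], window: Optional[int] = None) -> List[float]:
    """Cumulative: running total over a trailing window of days, or over all
    recorded time when no window is given."""
    totals = []
    for i in range(len(daily)):
        start = 0 if window is None else max(0, i - window + 1)
        totals.append(sum(daily[start:i + 1]))
    return totals

def conversion_rate(base_events: Dict[str, int], conversions: Dict[str, int], within: int) -> float:
    """Conversion: share of entities whose conversion event follows their base
    event within `within` time units (event times are plain integers here)."""
    if not base_events:
        return 0.0
    converted = sum(
        1 for entity, start in base_events.items()
        if entity in conversions and 0 <= conversions[entity] - start <= within
    )
    return converted / len(base_events)

average_order_value = derived_metric(
    {"order_total": 100, "order_count": 4},
    lambda m: m["order_total"] / m["order_count"],
)
print(average_order_value)  # 25.0
```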

Once you've created your semantic models, it's time to start referencing those measures you made to create some metrics:

Add metrics to your fct_orders.yml semantic model file:

Add a second semantic model to your project

Great job, you've successfully built your first semantic model! It has all the required elements: entities, dimensions, measures, and metrics.

Let’s expand your project's analytical capabilities by adding another semantic model for your other marts model, dim_customers.

After setting up your orders model:

- In the metrics sub-directory, create the file dim_customers.yml.

This semantic model uses simple metrics to focus on customer metrics and emphasizes customer dimensions like name, type, and order dates. It uniquely analyzes customer behavior, lifetime value, and order patterns.

Test and query metrics

To work with metrics in dbt, you have several tools to validate or run commands. Here's how you can test and query metrics depending on your setup:

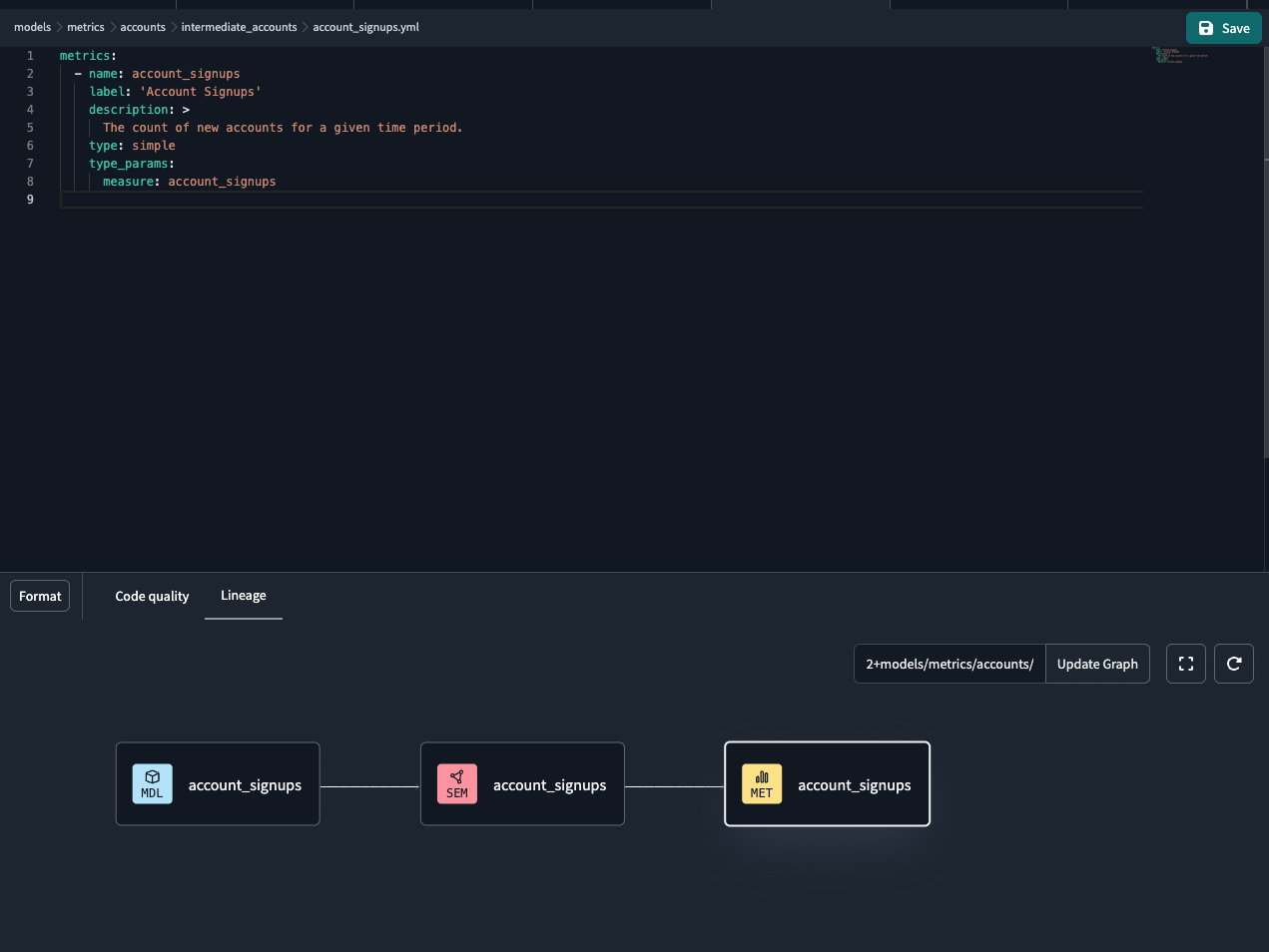

- dbt Cloud IDE users — Currently, running MetricFlow commands directly in the dbt Cloud IDE isn't supported, but is coming soon. You can view metrics visually through the DAG in the Lineage tab without directly running commands.

- dbt Cloud CLI users — The dbt Cloud CLI enables you to run MetricFlow commands to query and preview metrics directly in your command line interface.

- dbt Core users — Use the MetricFlow CLI for command execution. While this guide focuses on dbt Cloud users, dbt Core users can find detailed MetricFlow CLI setup instructions in the MetricFlow commands page. Note that to use the dbt Semantic Layer, you need to have a Team or Enterprise account.

Alternatively, you can run commands with SQL client tools like DataGrip, DBeaver, or RazorSQL.

dbt Cloud IDE users

You can view your metrics in the dbt Cloud IDE by viewing them in the Lineage tab. The dbt Cloud IDE Status button (located in the bottom right of the editor) displays an Error status if there's an error in your metric or semantic model definition. You can click the button to see the specific issue and resolve it.

Once viewed, make sure you commit and merge your changes in your project.

dbt Cloud CLI users

This section is for dbt Cloud CLI users. MetricFlow commands are integrated with dbt Cloud, which means you can run MetricFlow commands as soon as you install the dbt Cloud CLI. Your account will automatically manage version control for you.

Refer to the following steps to get started:

- Install the dbt Cloud CLI (if you haven't already). Then, navigate to your dbt project directory.

- Run a dbt command, such as dbt parse , dbt run , dbt compile , or dbt build . If you don't, you'll receive an error message that begins with: "ensure that you've ran an artifacts....".

- MetricFlow builds a semantic graph and generates a semantic_manifest.json file in dbt Cloud, which is stored in the /target directory. If using the Jaffle Shop example, run dbt seed && dbt run to ensure the required data is in your data platform before proceeding.

Any time you make changes to metrics, you need to run dbt parse at a minimum. This ensures the semantic_manifest.json file is updated and you can have your changes reflected when querying metrics.

Run dbt sl --help to confirm you have MetricFlow installed and that you can view the available commands.

Run dbt sl query --metrics <metric_name> --group-by <dimension_name> to query the metrics and dimensions. For example, to query the order_total and order_count (both metrics), and then group them by the order_date (dimension), you would run:

Verify that the metric values are what you expect. To further understand how the metric is being generated, you can view the generated SQL if you type --compile in the command line.
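To script this step (for example, in a check you run after dbt parse), here is a minimal Python sketch that builds and runs the dbt sl query command. It assumes that several metrics or dimensions are passed as one comma-separated value. Check dbt sl --help for the exact syntax in your version. The helper names are hypothetical, and running the command requires the dbt Cloud CLI on your PATH.

```python
import shlex
import subprocess
from typing import List, Sequence

def sl_query_command(metrics: Sequence[str], group_by: Sequence[str] = (),
                     compile_sql: bool = False) -> List[str]:
    """Build argv for `dbt sl query`; multiple values are joined with commas."""
    command = ["dbt", "sl", "query", "--metrics", ",".join(metrics)]
    if group_by:
        command += ["--group-by", ",".join(group_by)]
    if compile_sql:
        command.append("--compile")  # show the generated SQL
    return command

def run_sl_query(command: List[str]) -> str:
    """Run the command (requires the dbt Cloud CLI on PATH) and return its output."""
    return subprocess.run(command, check=True, capture_output=True, text=True).stdout

print(shlex.join(sl_query_command(["order_total", "order_count"], ["order_date"])))
# dbt sl query --metrics order_total,order_count --group-by order_date
```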

Commit and merge the code changes that contain the metric definitions.

Run a production job

Once you’ve committed and merged your metric changes in your dbt project, you can perform a job run in your deployment environment in dbt Cloud to materialize your metrics. Currently, the dbt Semantic Layer supports only the deployment environment.

- Note: Only the deployment environment is currently supported (development experience coming soon).

- To create a new environment, navigate to Deploy in the navigation menu, select Environments , and then select Create new environment .

- Fill in your deployment credentials with your Snowflake username and password. You can name the schema anything you want. Click Save to create your new production environment.

- Create a new deploy job that runs in the environment you just created. Go back to the Deploy menu, select Jobs, select Create job, and click Deploy job.

- Set the job to run a dbt build and select the Generate docs on run checkbox.

- Run the job and make sure it runs successfully.

- Merging the code into your main branch allows dbt Cloud to pull those changes and build the definitions in the manifest produced by the run.

- Re-running the job in the deployment environment materializes the models that the metrics depend on in the data platform. It also makes sure that the manifest is up to date.

- The Semantic Layer APIs pull in the most recent manifest and allow your integrations to extract metadata from it.

Set up dbt Semantic Layer

You can set up the dbt Semantic Layer in dbt Cloud at the environment and project level. Before you begin:

- Enterprise plan — Developer license with Account Admin permissions. Or Owner with a Developer license, assigned Project Creator, Database Admin, or Admin permissions.

- Team plan — Owner with a Developer license.

- If you are using a free trial dbt Cloud account, you are on a trial of the Team plan as an Owner, so you're good to go.

- You must have a successful run in your new environment.

Now that we've created and successfully run a job in your environment, you're ready to configure the semantic layer.

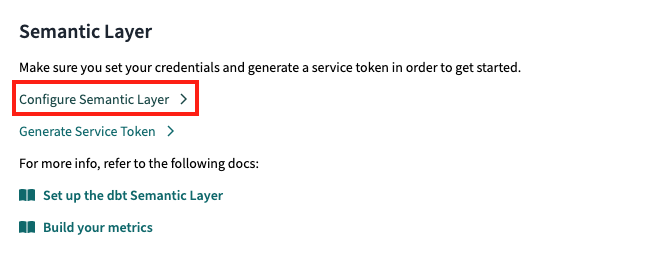

- Navigate to Account Settings in the navigation menu.

- Use the sidebar to select your project settings. Select the specific project you want to enable the Semantic Layer for.

- In the Project Details page, navigate to the Semantic Layer section, and select Configure Semantic Layer.

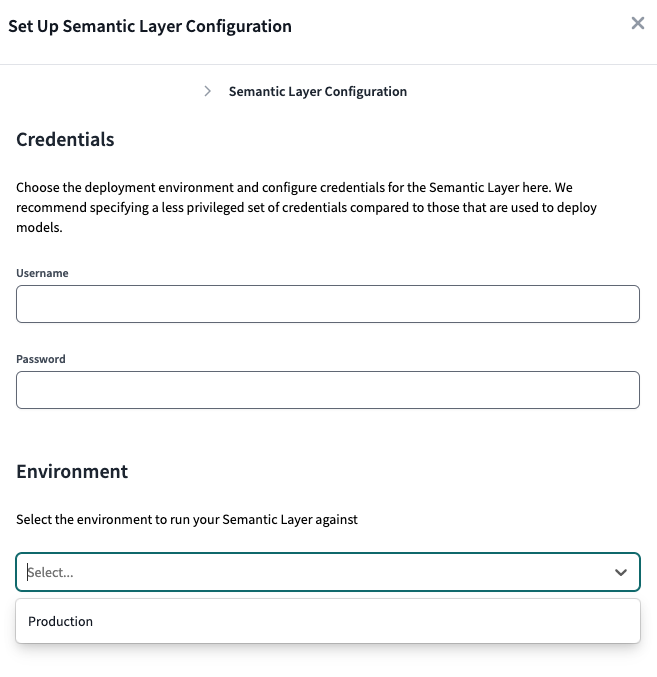

In the Set Up Semantic Layer Configuration page, enter the credentials you want the Semantic Layer to use specific to your data platform.

- Use credentials with minimal privileges, since the Semantic Layer needs only read access to the schema(s) containing the dbt models used in your semantic models for downstream applications.

- Note that environment variables such as {{env_var('DBT_WAREHOUSE')}} aren't supported in the dbt Semantic Layer yet. You must use the actual credentials.

- Select the deployment environment you want for the Semantic Layer and click Save.

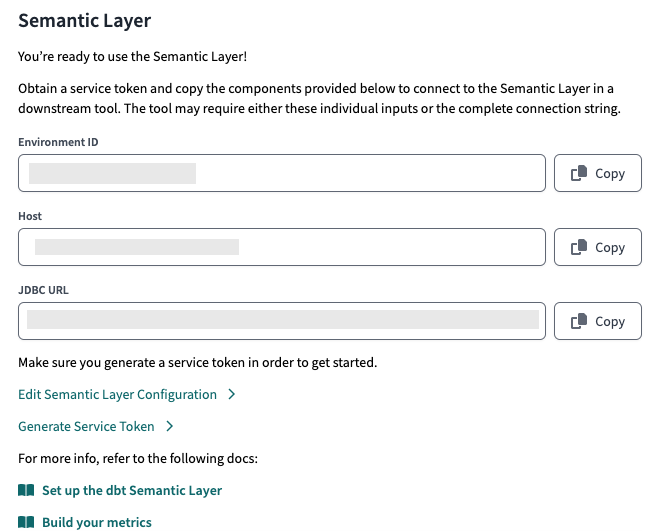

- After saving it, you'll be provided with the connection information that allows you to connect to downstream tools. If your tool supports JDBC, save the JDBC URL or individual components (like environment id and host). If it uses the GraphQL API, save the GraphQL API host information instead.

Copy and save your environment ID and host, along with the service token you'll generate in the next step. You'll need all three in your downstream tools. For more on partner integrations, refer to Available integrations.
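Tools that connect over JDBC usually take these three values combined into a single URL. The sketch below assembles one in Python. The jdbc:arrow-flight-sql:// scheme, port 443, and the environmentId and token query parameters follow the format dbt's documentation showed at the time of writing. Treat that format as an assumption, and copy the exact URL from the Semantic Layer configuration page. The host, environment ID, and token used here are placeholders.

```python
from urllib.parse import urlencode


def build_jdbc_url(host: str, environment_id: int, service_token: str) -> str:
    """Assemble a dbt Semantic Layer JDBC URL (format assumed; verify in dbt Cloud)."""
    # Assumed shape: jdbc:arrow-flight-sql://<host>:443?&environmentId=<id>&token=<token>
    # urlencode percent-escapes characters such as '+' that may appear in a token.
    params = urlencode({"environmentId": environment_id, "token": service_token})
    return f"jdbc:arrow-flight-sql://{host}:443?&{params}"


if __name__ == "__main__":
    # Placeholder values only; never hard-code or commit a real service token.
    print(build_jdbc_url("semantic-layer.cloud.getdbt.com", 123456, "SERVICE_TOKEN"))
```

In real use, load the token from a secret manager rather than from source code.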

Return to the Project Details page and click the Generate a Service Token button. Make sure the token has Semantic Layer Only and Metadata Only permissions. Name the token and save it. Once the token is generated, you won't be able to view it again, so record it somewhere safe.
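Tools that use the GraphQL API instead send the service token as a bearer token in the Authorization header. This minimal sketch makes three assumptions to check against dbt's GraphQL documentation: the endpoint is served at https://<host>/api/graphql, it exposes a metrics field that takes a BigInt environmentId argument, and the host value is a placeholder. The code only builds the request and never sends it.

```python
import json
import urllib.request


def build_metrics_request(host: str, environment_id: int, service_token: str) -> urllib.request.Request:
    """Build (but do not send) a GraphQL request that lists metric names.

    The /api/graphql path and the metrics(environmentId: ...) field are
    assumptions to verify against the dbt Semantic Layer GraphQL docs.
    """
    query = "query ($env: BigInt!) { metrics(environmentId: $env) { name } }"
    body = json.dumps({"query": query, "variables": {"env": environment_id}}).encode("utf-8")
    return urllib.request.Request(
        url=f"https://{host}/api/graphql",
        data=body,
        headers={
            "Authorization": f"Bearer {service_token}",  # the service token generated above
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

To actually run the query, pass the request to urllib.request.urlopen and parse the JSON response with json.load.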

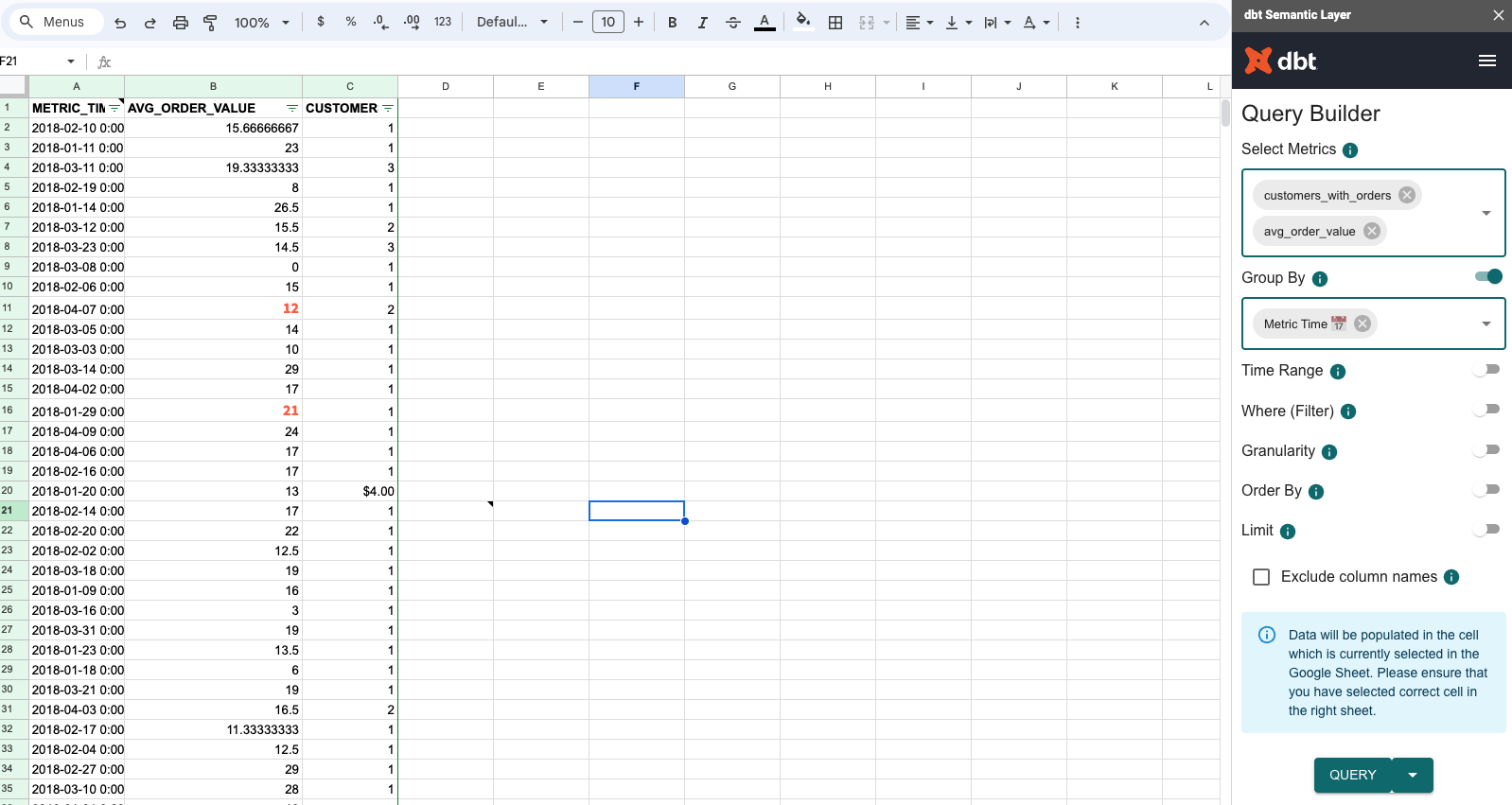

Connect and query with Google Sheets

This section shows how to use the Google Sheets integration. You can also query metrics using other tools:

- First-class integrations with Tableau, Hex, and more.

- The dbt Semantic Layer APIs.

- Exports, which expose tables of metrics and dimensions in your data platform so you can create a custom integration with tools like Power BI.

To query your metrics using Google Sheets:

- Make sure you have a Gmail account.

- To set up Google Sheets and query your metrics, follow the detailed instructions in Google Sheets integration.

- Query a metric, like order_total, and filter it with a dimension, like order_date.

- You can also use the group_by parameter to group your metrics by a specific dimension.
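Conceptually, querying order_total with a filter on order_date and a group_by on a dimension asks for one aggregate per group over the matching rows. The sketch below reproduces that logic on a few made-up, in-memory order rows to illustrate the idea. It is not how the Semantic Layer executes queries: MetricFlow generates SQL that runs in your data platform. The row fields (including the hypothetical customer_type dimension) and the use of a sum are assumptions.

```python
from collections import defaultdict
from datetime import date

# Hypothetical order rows; in practice this data lives in your warehouse.
ORDERS = [
    {"order_date": date(2024, 1, 1), "customer_type": "new", "order_total": 20.0},
    {"order_date": date(2024, 1, 1), "customer_type": "returning", "order_total": 35.0},
    {"order_date": date(2024, 1, 2), "customer_type": "new", "order_total": 15.0},
    {"order_date": date(2023, 12, 31), "customer_type": "new", "order_total": 50.0},
]


def query_metric(rows, metric, group_by, since):
    """Sum `metric` per value of `group_by`, keeping only rows with order_date >= since."""
    totals = defaultdict(float)
    for row in rows:
        if row["order_date"] >= since:  # the dimension filter
            totals[row[group_by]] += row[metric]  # aggregate within each group
    return dict(totals)


print(query_metric(ORDERS, "order_total", "order_date", date(2024, 1, 1)))
```

Swapping group_by from order_date to customer_type changes only the grouping key. The metric definition stays the same, which is the reuse the Semantic Layer is built around.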

What's next

Great job on completing the comprehensive dbt Semantic Layer guide 🎉! You should now have a clear understanding of what the dbt Semantic Layer is, its purpose, and when to use it in your projects.

You've learned how to:

- Set up your Snowflake environment and dbt Cloud, including creating worksheets and loading data.

- Connect and configure dbt Cloud with Snowflake.

- Build, test, and manage dbt Cloud projects, focusing on metrics and semantic layers.

- Run production jobs and query metrics with Google Sheets.

For next steps, you can start defining your own metrics and learn additional configuration options such as exports, fill null values, and more.

Here are some additional resources to help you continue your journey:

- dbt Semantic Layer FAQs

- Available integrations

- Demo on how to define and query metrics with MetricFlow

- Join our live demos

The cloud services layer is a collection of services that coordinate activities across Snowflake. These services tie together all of the different components of Snowflake in order to process user requests, from login to query dispatch. The cloud services layer also runs on compute instances provisioned by Snowflake from the cloud provider.

Data Warehouse Layers in Snowflake

Data Landing Layer: This is the first layer (also referred to as the Landing Zone), where all your data lands from multiple sources, such as operational databases ...

Snowflake supports a high-level architecture as depicted in the diagram below. Snowflake has three layers: the Storage Layer, the Compute Layer, and the Cloud Services Layer. In the Storage Layer, Snowflake organizes the data into multiple micro-partitions that are internally optimized and compressed.
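Snowflake keeps metadata such as each column's minimum and maximum value for every micro-partition, so a query can skip partitions that cannot match its filter. Below is a simplified Python model of that pruning idea. It assumes only per-partition min/max metadata on a single date column and uses hypothetical names. Snowflake's real metadata and pruning logic are richer and internal.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List


@dataclass
class MicroPartition:
    """Toy stand-in for a micro-partition: its rows plus min/max metadata for one column."""
    rows: List[date]
    min_value: date = field(init=False)
    max_value: date = field(init=False)

    def __post_init__(self) -> None:
        # Metadata is computed once at write time, so pruning never has to read the rows.
        self.min_value = min(self.rows)
        self.max_value = max(self.rows)


def prune(partitions: List[MicroPartition], lo: date, hi: date) -> List[MicroPartition]:
    """Keep only partitions whose [min, max] range overlaps the filter range [lo, hi]."""
    return [p for p in partitions if p.max_value >= lo and p.min_value <= hi]


partitions = [
    MicroPartition([date(2024, 1, 1), date(2024, 1, 5)]),
    MicroPartition([date(2024, 2, 1), date(2024, 2, 9)]),
    MicroPartition([date(2024, 3, 3), date(2024, 3, 7)]),
]
scanned = prune(partitions, date(2024, 2, 1), date(2024, 2, 28))
print(f"scanning {len(scanned)} of {len(partitions)} partitions")
```

A filter on February 2024 touches only the second partition, because the other two partitions' value ranges fall entirely outside the filter.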

Snowflake Deployment Framework uses a layered approach to data management. It has a source layer, a cloud layer, and Snowflake's raw, integration, presentation, and share layers.

Snowflake Data Marketplace includes many data sets that you can incorporate into your existing business data, such as weather, demographics, or traffic, for greater data ...

Both internal (i.e. Snowflake) and external (Amazon S3, Google Cloud Storage, or Microsoft Azure) stage references can include a path (or prefix in AWS terminology). When staging regular data sets, we recommend partitioning the data into logical paths that include identifying details such as geographical location or other source identifiers ...
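A small helper can keep staged file paths consistent with that recommendation. The sketch below assumes a hypothetical region/source/year/month/day layout under a stage named @my_stage. The stage name and the path scheme are illustrative only, not a Snowflake requirement.

```python
from datetime import date


def stage_path(stage: str, region: str, source: str, load_date: date) -> str:
    """Build a logical stage path such as @my_stage/eu/orders_db/2024/03/15/."""
    # Normalize identifiers so the same source always lands under the same prefix.
    parts = [
        region.strip().lower(),
        source.strip().lower(),
        f"{load_date:%Y}",
        f"{load_date:%m}",
        f"{load_date:%d}",
    ]
    return f"@{stage.lstrip('@')}/" + "/".join(parts) + "/"


print(stage_path("my_stage", "EU", "orders_db", date(2024, 3, 15)))
```

With a consistent prefix, a LIST or COPY INTO command can target a single region, source, or day instead of the whole stage.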

Snowflake's unique architecture, designed for faster analytical queries, comes from its separation of the storage and compute layers. This separation contributes to the benefits we've mentioned earlier.

Storage layer

In Snowflake, the storage layer is a critical component that stores data efficiently and at scale.

Virtual Presentation Layer: Snowflake's scaling abilities make a virtual presentation layer completely viable. History tables containing only current or historical datasets can be encapsulated ...

With specialized technology in the cloud analytics layer, such as materialized views, organizations can use a cloud data warehouse to store all of their data and enjoy a level of external table performance that is comparable to data ingested directly into a data lake. With this versatile architecture, organizations can have seamless, high ...

The following topics help guide efforts to improve the performance of Snowflake. Gain insights into the historical performance of queries using the web interface or by writing queries against data in the ACCOUNT_USAGE schema. Learn about strategies to fine-tune computing power in order to improve the performance of a query or set of queries ...
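For the ACCOUNT_USAGE route, the sketch below builds a query against the SNOWFLAKE.ACCOUNT_USAGE.QUERY_HISTORY view to list the slowest recent queries. It runs that query with the snowflake-connector-python package if the package is installed. Several details are assumptions: the seven-day window, the row limit, and the connection parameters you pass in. The role you connect with also needs access to the SNOWFLAKE database, and ACCOUNT_USAGE data lags behind real time.

```python
def slowest_queries_sql(days: int = 7, limit: int = 10) -> str:
    """Return SQL listing the slowest queries started in the last `days` days."""
    if days <= 0 or limit <= 0:
        raise ValueError("days and limit must be positive")
    # TOTAL_ELAPSED_TIME is reported in milliseconds, so divide by 1000 for seconds.
    return (
        "SELECT query_id, warehouse_name, total_elapsed_time / 1000 AS elapsed_s, "
        "LEFT(query_text, 80) AS query_preview\n"
        "FROM snowflake.account_usage.query_history\n"
        f"WHERE start_time >= DATEADD(day, -{int(days)}, CURRENT_TIMESTAMP())\n"
        "ORDER BY total_elapsed_time DESC\n"
        f"LIMIT {int(limit)}"
    )


def fetch_slowest_queries(conn_params: dict, days: int = 7, limit: int = 10):
    """Run the query via snowflake-connector-python (pip install snowflake-connector-python)."""
    import snowflake.connector  # imported lazily so the SQL builder works without the package

    conn = snowflake.connector.connect(**conn_params)  # e.g. account, user, password, role
    try:
        cur = conn.cursor()
        cur.execute(slowest_queries_sql(days, limit))
        return cur.fetchall()
    finally:
        conn.close()


if __name__ == "__main__":
    print(slowest_queries_sql())
```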

A semantic layer ensures that you have fast, secure, and governed data for your business and data analysts. Instead of moving data in and out of the Snowflake Data Cloud, the semantic layer enables you to leave the data where it is. You can work within the semantic layer to prepare the data for analysis across any BI, ML, or data science tool.

The Snowflake data warehouse is a hybrid of traditional shared-disk and shared-nothing architectures. It uses a central data repository for persisted data, ensuring the data is accessible from all compute nodes. This ultimate guide will discuss the three Snowflake architectural layers in detail and how each ...
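The hybrid idea can be modelled in a few lines of Python. There is one central store that every compute cluster can read, which is the shared-disk side. Within a cluster, the partitions are split among the cluster's own nodes, and each node processes its share independently, which is the shared-nothing side. This is a toy illustration with hypothetical names (VirtualWarehouse, CENTRAL_STORAGE), not a description of how Snowflake schedules work internally.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List

# Central storage shared by all compute clusters: partition id -> rows (order amounts).
CENTRAL_STORAGE: Dict[int, List[float]] = {
    0: [10.0, 20.0],
    1: [5.0],
    2: [7.5, 2.5],
    3: [40.0],
}


class VirtualWarehouse:
    """Toy compute cluster: its nodes share nothing but read the same central storage."""

    def __init__(self, name: str, nodes: int) -> None:
        self.name = name
        self.nodes = nodes

    def total(self, storage: Dict[int, List[float]]) -> float:
        partition_ids = sorted(storage)
        # Assign partitions round-robin so each node works on its own subset.
        assignments = [partition_ids[i::self.nodes] for i in range(self.nodes)]

        def node_sum(ids: List[int]) -> float:
            return sum(sum(storage[pid]) for pid in ids)

        # Each node computes a partial result; the cluster combines the partials.
        with ThreadPoolExecutor(max_workers=self.nodes) as pool:
            return sum(pool.map(node_sum, assignments))


# Two independently sized warehouses query the same data without copying it.
etl = VirtualWarehouse("etl_wh", nodes=2)
bi = VirtualWarehouse("bi_wh", nodes=4)
print(etl.total(CENTRAL_STORAGE), bi.total(CENTRAL_STORAGE))
```

Both warehouses return the same answer because they read the same storage. Only the degree of parallelism differs, which mirrors how compute can be resized independently of storage.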

SNOWFLAKE: DATA WAREHOUSE BUILT FOR THE CLOUD

At Snowflake, as we considered the limitations of existing systems, we realized that the cloud is the perfect foundation to build this ideal data warehouse. The cloud offers near-infinite resources in a wide array of configurations, available at any time, and you only pay for what you use. Public

9. Database Layer

When you load data into Snowflake, Snowflake reorganizes that data into its internal optimized, compressed, columnar format and stores this optimized data in cloud storage. Snowflake manages all aspects of how this data is stored: the organization, file size, structure, compression, metadata, statistics, and other aspects of data storage are handled by Snowflake.
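To see why a columnar layout compresses well, the sketch below pivots a few hypothetical row-oriented records into one list per column, then applies run-length encoding to each column. Run-length encoding is just one simple scheme chosen for illustration. Snowflake's actual file format and compression choices are internal and are not shown here.

```python
from itertools import groupby
from typing import Dict, List, Tuple

ROWS = [
    {"region": "EU", "status": "shipped", "amount": 10},
    {"region": "EU", "status": "shipped", "amount": 12},
    {"region": "EU", "status": "pending", "amount": 7},
    {"region": "US", "status": "pending", "amount": 7},
]


def to_columns(rows: List[dict]) -> Dict[str, list]:
    """Pivot row-oriented records into one list per column."""
    return {name: [row[name] for row in rows] for name in rows[0]}


def run_length_encode(values: list) -> List[Tuple[object, int]]:
    """Collapse consecutive repeats into (value, count) pairs."""
    return [(value, len(list(group))) for value, group in groupby(values)]


columns = to_columns(ROWS)
encoded = {name: run_length_encode(values) for name, values in columns.items()}
print(encoded["region"])  # [('EU', 3), ('US', 1)]
```

Values within a single column tend to repeat, so storing columns together gives a compressor long runs to work with. A row-oriented layout interleaves unrelated values and breaks those runs up.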

Preparing data in Snowflake is crucial to ensure the accuracy and reliability of the data that will be manually incorporated into PowerPoint. This involves cleaning and validating the data within Snowflake before exporting it into a format that can be imported into PowerPoint. Users should ensure that the data is accurate, consistent, and ...

Many Snowflake customers have successfully implemented the Functional+Access Role Model. Besides scaling more easily, it has the added benefit of simplifying external IdP integration.
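In a Functional+Access Role Model, access roles hold privileges on objects, and functional roles, which represent job functions, are granted access roles. The sketch below generates the corresponding Snowflake GRANT statements. The role, database, and schema names are hypothetical. The read-only privilege set is a minimal assumption that you would extend for your own objects, for example with future grants.

```python
from typing import Dict, List


def access_role_grants(access_role: str, database: str, schema: str) -> List[str]:
    """Read-only privileges held by an access role (privilege set is an assumption)."""
    return [
        f"CREATE ROLE IF NOT EXISTS {access_role};",
        f"GRANT USAGE ON DATABASE {database} TO ROLE {access_role};",
        f"GRANT USAGE ON SCHEMA {database}.{schema} TO ROLE {access_role};",
        f"GRANT SELECT ON ALL TABLES IN SCHEMA {database}.{schema} TO ROLE {access_role};",
    ]


def functional_role_grants(mapping: Dict[str, List[str]]) -> List[str]:
    """Grant access roles to functional roles; users only ever receive functional roles."""
    statements: List[str] = []
    for functional_role, access_roles in mapping.items():
        statements.append(f"CREATE ROLE IF NOT EXISTS {functional_role};")
        statements += [f"GRANT ROLE {ar} TO ROLE {functional_role};" for ar in access_roles]
    return statements


sql = access_role_grants("AR_SALES_RO", "SALES", "MARTS")
sql += functional_role_grants({"FR_ANALYST": ["AR_SALES_RO"]})
print("\n".join(sql))
```

Because users receive only functional roles, an IdP group can map onto a single functional role. This is one plausible reason the model can simplify IdP integration.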

Dynamic table content is based on the results of a specific query. When the underlying data on which the dynamic table is based changes, the table is updated to reflect those changes. These updates are referred to as a refresh. This process is automated, and it involves analyzing the query that underlies the table.
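The refresh behaviour can be modelled with a version counter. The dynamic table remembers which version of its source it was last computed from, and it recomputes its query only when that version changes. This toy model uses hypothetical class names and fully recomputes on every refresh. Snowflake itself schedules refreshes against a configured target lag and can refresh incrementally when the query allows it, which this sketch does not attempt.

```python
from typing import Callable, List


class SourceTable:
    """Toy base table that bumps a version number on every change."""

    def __init__(self, rows: List[int]) -> None:
        self.rows = list(rows)
        self.version = 0

    def insert(self, value: int) -> None:
        self.rows.append(value)
        self.version += 1


class DynamicTable:
    """Toy dynamic table whose content is the result of `query` over a source table."""

    def __init__(self, source: SourceTable, query: Callable[[List[int]], List[int]]) -> None:
        self.source = source
        self.query = query
        self.rows: List[int] = []
        self.refreshed_version = -1  # forces the first refresh to run
        self.refresh_count = 0

    def refresh(self) -> bool:
        """Recompute only if the source changed since the last refresh; return True if it ran."""
        if self.source.version == self.refreshed_version:
            return False
        self.rows = self.query(self.source.rows)
        self.refreshed_version = self.source.version
        self.refresh_count += 1
        return True


orders = SourceTable([5, 20, 50])
big_orders = DynamicTable(orders, lambda rows: [r for r in rows if r >= 20])
big_orders.refresh()  # initial build
orders.insert(75)
big_orders.refresh()  # source changed, so the query is re-run
big_orders.refresh()  # no change since the last refresh, so nothing is recomputed
print(big_orders.rows, big_orders.refresh_count)
```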

Introduction

The dbt Semantic Layer, powered by MetricFlow, simplifies the setup of key business metrics. It centralizes definitions, avoids duplicate code, and ensures easy access to metrics in downstream tools. MetricFlow makes company metrics easier to manage by letting you define metrics in your dbt project and query them in dbt Cloud with MetricFlow commands.