Case Study: How a bank turned challenges into opportunities to serve its customers using NoSQL Database

Acknowledgements: Michael Brey, Director of NoSQL Database Development, Oracle

An industry in flux

Financial services industries are at a crossroads and are experiencing massive changes in response to shifting customer demands. With the increasing adoption of cloud technologies, digital-only enterprises are offering innovative solutions at the lowest cost.

Customer experience is a strategic imperative for most organizations today, but delivering an engaging experience across the growing number of digital customer touchpoints can be challenging, especially if they have an aging technology stack.

Additionally, organizations have to navigate these transformational changes while managing the volume, variety, and velocity of digital transactions without straining their business systems or experiencing data loss, breaches, or downtime.

The graphic below shows the IT priorities of financial services institutions. Unsurprisingly, 25% of them want to modernize their systems, and the same percentage want to expand their digital touchpoints.

This blog will examine how one of India's leading private banks modernized and expedited its digital presence, providing an enhanced experience for its customers using Oracle NoSQL Database.

Some of the bank's challenges:

- Exceeding customer expectations: India has more than 50% of its population below age 25 and more than 65% below age 35. Bank customers increasingly compare banking experiences to other areas of their digital lives. These digital natives aren't looking only to check their balances and deposit checks. They want more meaningful online experiences; for example, they want to start and finish an application to open an account without ever walking into a bank, and they want it to happen fast. The bank was looking for a system that could provide an engaging and personalized digital customer experience in real time under a strict SLA (e.g., processing a loan in under 10 seconds).

- Ability to provide comprehensive services: Provide 'always-on' digital services and delight customers by assisting them through chatbot interactions. Additionally, the bank wanted to experiment with and deliver new services valued by its customers, such as enhanced payments and blockchain technologies.

- Provide customer 360 experience: The bank offers various services, and its customers interact with those services in many different ways. However, customers want a consistent experience, regardless of the business division they are interacting with or the device they use in the process. Delivering an engaging and personalized customer experience with a single customer view and a unified view of all interactions encompassing each touchpoint with the bank is challenging.

- Managing change without disruption: The bank needed agility to launch new services and make its development staff more productive. It also wanted to minimize outages with high availability built into the system.

Choosing the right data management strategy

A comprehensive data management strategy sets the stage for establishing a deeper understanding of customer experience. It can offer a single view by collecting all the customer's structured and unstructured data from across the organization and other relevant external sources into one place. A NoSQL database is an ideal choice for this. It can store personal and demographic information and customer interactions with the company, including calls, chats, emails, texts, social media responses, product and service activity history, and past and present purchases. McKinsey's study suggests that data-driven companies tend to be 19 times more profitable when they use data as a differentiator, as they tend to acquire 23 times more customers and retain 6 times more customers.

Source: https://www.mckinsey.com/business-functions/marketing-and-sales/our-insights/five-facts-how-customer-analytics-boosts-corporate-performance

Why Oracle NoSQL Database

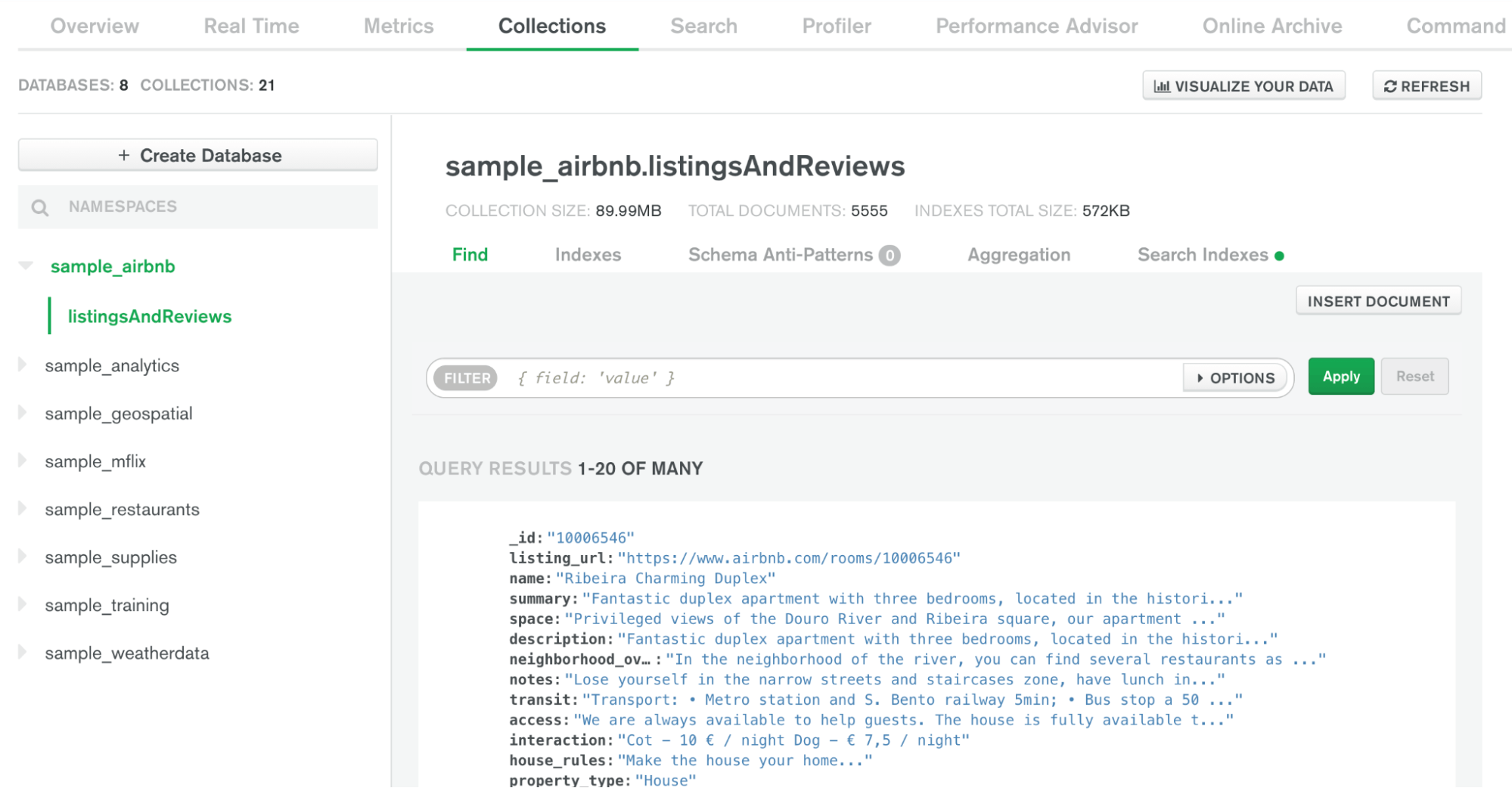

Oracle NoSQL Database's multi-model support makes it easy for developers to store and combine data of any structure within the database without giving up sophisticated validation rules to govern data quality.

- Support for flexible data model:

With the JSON document model, the schema can be dynamically modified without application or database downtime. The bank can localize all data for a given entity, such as a financial asset class or user class, into a single document, rather than spreading it across multiple relational tables. Customers can access entire documents in a single database operation, rather than joining separate tables spread across the database. As a result of this data localization, application performance is often much higher when using Oracle NoSQL Database, which can be the decisive factor in improving customer experience.
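As a rough illustration of the data localization described above, the sketch below keeps everything about one customer in a single JSON-style document and reads it back with one lookup. A plain Python dictionary stands in for the database; the field names and the `put_customer` and `get_customer` helpers are hypothetical and are not part of any Oracle NoSQL Database API.

```python
import json

# Stand-in for a document table: primary key -> JSON document.
# A real deployment would use a database driver instead of a dict.
customers = {}


def put_customer(customer_id, document):
    """Store the whole customer document under one key."""
    # Round-trip through JSON to confirm the document is serializable.
    customers[customer_id] = json.loads(json.dumps(document))


def get_customer(customer_id):
    """Fetch the entire document in a single lookup, with no joins."""
    return customers[customer_id]


put_customer("c-1001", {
    "name": "A. Customer",
    "accounts": [{"type": "savings", "balance": 2500}],
    "interactions": [{"channel": "chat", "topic": "loan enquiry"}],
})

# Adding a new attribute later needs no schema migration.
doc = get_customer("c-1001")
doc["preferred_language"] = "en"
put_customer("c-1001", doc)
```

In a relational design, the accounts and interactions would typically live in separate tables joined on the customer ID; here they travel with the customer record.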

- Predictable scalability with always-on availability

As banking use cases evolve, data sources and attributes grow. As additional applications, digital channels, and users are onboarded, demands on processing and storage capacity grow quickly.

Oracle NoSQL Database supports a scale-out architecture and sharding technology. With sharding, the data is distributed across multiple database instances spread across different machines, thus overcoming the limitations of a single server and its associated resources such as CPU, RAM, or I/O. An Oracle NoSQL cluster can be expanded horizontally online without incurring any application downtime, and the expansion is fully transparent to the application. Oracle NoSQL Database maintains multiple copies of data for high availability purposes.
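The general idea of sharding can be sketched in a few lines. The example below routes each key to one of several in-memory shards using a stable hash. It is a simplified illustration only: the `shard_for` function and `ShardedStore` class are hypothetical names, and this is not a description of how Oracle NoSQL Database partitions data internally.

```python
import hashlib


def shard_for(key, num_shards):
    """Map a key to a shard index using a stable hash."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


class ShardedStore:
    """Toy key-value store whose data is spread across several shards."""

    def __init__(self, num_shards):
        # Each dict stands in for a database instance on its own machine.
        self.shards = [dict() for _ in range(num_shards)]

    def put(self, key, value):
        self.shards[shard_for(key, len(self.shards))][key] = value

    def get(self, key):
        return self.shards[shard_for(key, len(self.shards))].get(key)


store = ShardedStore(num_shards=4)
for i in range(100):
    store.put(f"account-{i}", {"balance": i * 10})
```

The application calls only `put` and `get`, so it never needs to know which shard holds a record. Simple modulo routing like this would move many keys when the shard count changes; production systems generally use more elaborate partitioning to keep online expansion cheap.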

- Scale-out architecture for business continuity

The bank needed the ability to deploy the system across multiple data centers for disaster recovery purposes and also for the ability to perform local writes to the data center. Oracle NoSQL Database supports active-active architecture with multi-region tables. A multi-region architecture is two or more independent, geographically distributed Oracle NoSQL Database clusters bridged by bi-directional replication, ensuring the customers always have fast access to services and the latest data.

- Simplify application development with rich query and APIs

Oracle NoSQL provides a rich query language and extensive secondary indexes, giving users fast and flexible access to data with any query pattern. This can range from simple key-value lookups to complex search, traversals, and aggregations across rich data structures, including embedded sub-documents and arrays. It also supports several easy-to-use SDKs in various programming languages; in particular, the customer was looking at Node.js drivers.

High-level architecture of the proposed solution

Critical components in the architecture include:

- Applications Layer: This layer manages all user input applications, e.g., loan or credit card applications. The applications are based on forms technology, allowing the developers to create adaptive and responsive documents to capture information. The forms have a notion of fragments that allows for pulling out standard segments such as personal details like name and address, family details, income details, etc. The application layer is responsible for doing all the "application plumbing": interacting with the database, enforcing validation at event points, etc. It interacts with the bank's backend system through the API gateway and doesn't store any personal or sensitive information.

- Database Layer: A CRM system is used primarily for lead generation to target customers. Also available in this layer is the ELK stack (Elasticsearch, Logstash, Kibana), which is primarily used to audit the log data stored in the NoSQL Database. Oracle NoSQL Database has an out-of-box integration with Elasticsearch. Oracle NoSQL Database also feeds the user drop-off (incomplete form activity) data to the orchestration framework primarily used for retargeting the users.

- Marketing Layer: This layer hosts various servers that drive the business decision process. It comprises servers and tools used for customer segmentation (identifying groups of individuals who are similar in attitudes, demographic profile, etc.) and customer journey analysis (the sum of all customer experiences with the bank). Additionally, it handles personalization (showing the product or service a customer would be interested in buying) and retargeting (persuading potential customers to reconsider the bank's products and services after they left or dropped off from the app) based on the drop-off campaign data coming out of the Oracle NoSQL Database.

Banking experience re-imagined

A typical user journey, such as loan processing, starts with a user interacting with the bank's loan processing applications via the web, a mobile device, email, or even a branch. The application is served from the forms in the application layer. At this stage, the user fills in details and submits the scanned supporting documents. These scanned forms are classified, the information is extracted, and the data is sent to the NoSQL Database store. The data is then sent to the processing system, which triggers the underwriting process, beginning with the rule engine and credit scoring engine. Depending on the underwriting results, an application is approved, denied, or sent back to the user for additional information. If the application is approved, the loan amount is deposited into the user's account. If the user drops off at any point while filling in the form, the drop-off information is stored in the NoSQL Database and feeds into the orchestration system to kick-start a retargeting campaign that allows the bank to target the customer who dropped off. The process is repeated with specific ads, emails, or WhatsApp messages retargeting the customer. If the customer returns, they can resume the journey where they left off.
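The journey above can be sketched as two small pieces: an underwriting step with three possible outcomes, and a drop-off store that lets a returning user resume. This is a toy model under stated assumptions; the field names, the score and amount thresholds, and the `underwrite`, `save_progress`, and `resume` helpers are all invented for illustration and do not reflect the bank's actual rules.

```python
from enum import Enum


class Outcome(Enum):
    APPROVED = "approved"
    DENIED = "denied"
    NEEDS_INFO = "needs_info"


def underwrite(application):
    """Toy rule engine plus credit-score check; thresholds are invented."""
    required = ("name", "income", "credit_score", "amount")
    if any(field not in application for field in required):
        return Outcome.NEEDS_INFO  # sent back to the user
    if application["credit_score"] < 600:
        return Outcome.DENIED
    if application["amount"] > application["income"] * 5:
        return Outcome.DENIED
    return Outcome.APPROVED


# Stand-in for the drop-off data kept in the database.
drop_offs = {}


def save_progress(user_id, partial_form):
    """Record an incomplete form so retargeting can pick it up."""
    drop_offs[user_id] = dict(partial_form)


def resume(user_id):
    """Return the saved fields (if any) so the user continues where they left off."""
    return drop_offs.pop(user_id, {})
```

For example, a user who abandons the form after entering a name would be saved with `save_progress("u1", {"name": "A"})`, and `resume("u1")` would later return those fields to prefill the form.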

In conclusion, one of India's leading private banks modernized and expedited its digital presence and provided an enhanced experience for its customers using Oracle NoSQL Database.

More information

Oracle NoSQL Database is a multi-model, multi-region database designed to provide a highly available, scalable, flexible, high-performance, and reliable data management solution to meet today's most demanding workloads. It is well-suited for high-volume, high-velocity workloads such as the Internet of Things, customer 360, online contextual advertising, fraud detection, mobile applications, user personalization, and online gaming. Developers can use a single application interface to quickly build applications that run in on-premises and cloud environments. Visit the NoSQL Database Cloud Service page to learn more.

NoSQL Database Use Cases

Applications of NoSQL Databases

- NoSQL for Big Data

- NoSQL for IoT

- NoSQL for Ecommerce

- NoSQL for Content Management

- NoSQL for Time Series Data

- NoSQL for Retail

- NoSQL for Social Media

- NoSQL for Cybersecurity

- NoSQL for Fraud Detection

- NoSQL for AdTech

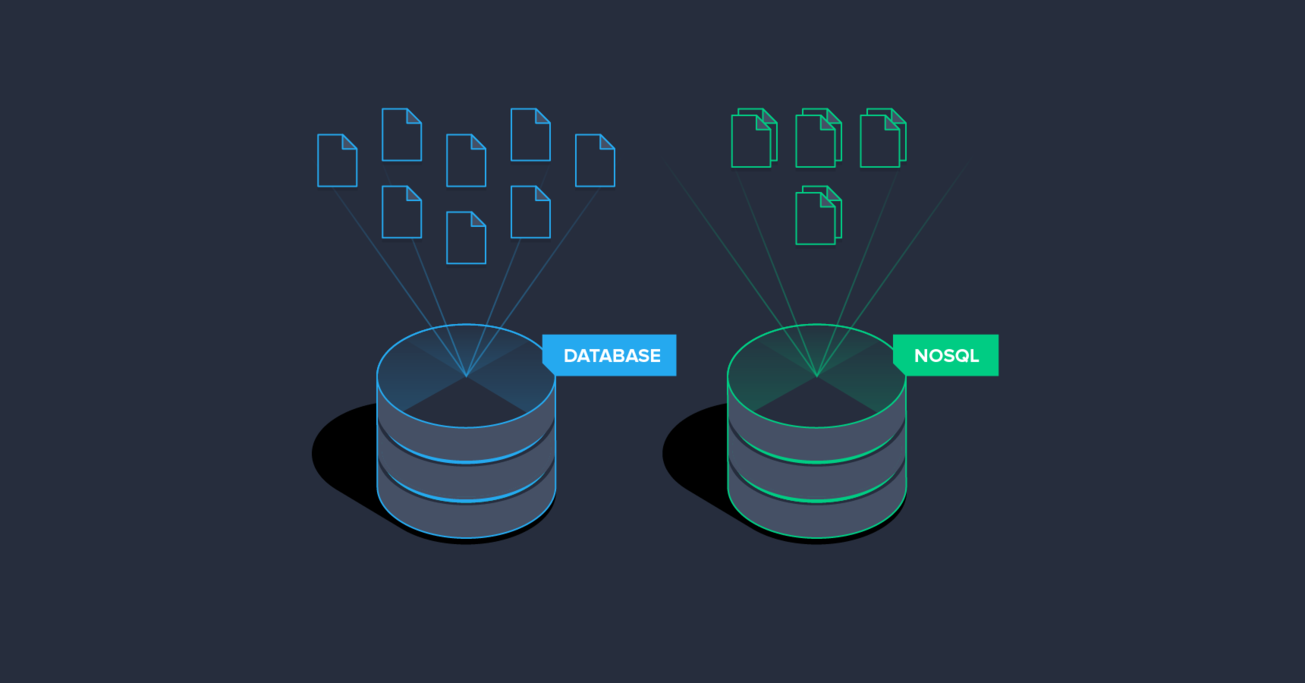

Relational databases impose fairly rigid, schema-based structures on data models: tables consisting of columns and rows, which can be joined to enable ‘relations’ among entities. Each table typically defines an entity. Each row in a table holds one entry, and each column contains a specific piece of information for that record. The relationships among tables are clearly defined and usually enforced by schemas and database rules.

Unlike RDBMSs, NoSQL databases encourage ‘application-first’ or API-first development patterns. Following these models, developers first consider queries that support the functionality specific to an application, rather than considering the data models and entities. This developer-friendly architecture paved the path to the success of the first generation of NoSQL databases.

NoSQL databases are often preferred when:

- There are large quantities and varieties of data

- Scalability is important

- Continuous availability is a priority

- Real-time analytics or big data work is required


What are some of the top NoSQL database use cases? Here are some of the most common:

NoSQL for Big Data

NoSQL is a good option for organizations with data workloads directed toward rapid processing and analysis of massive quantities of unstructured data, termed “big data” back in the 1990s. A flexible data model, continuous application availability, optimized database architecture, and modern transaction support are all important for processing big data. In contrast to relational databases, NoSQL databases are flexible because they are not confined by a fixed schema model.

In action, organizations that can process and act on fresh data rapidly achieve greater bottom-line value, business agility, and operational efficiency from it. A typical approach to real-time big data processing ingests new data with stream processing, analyzes historical data, and integrates both with a NoSQL database. For example, big data stored in a NoSQL database can be used for customer segmentation, delivering personalized ads to customers, data mining, and fraud detection.


NoSQL for IoT

Tens of billions of IoT devices, such as mobile devices, smart vehicles, home appliances, factory sensors, and healthcare systems, are now online. These devices continuously generate a massive amount of diverse, semi-structured data (approximately 847 zettabytes) that NoSQL databases are better equipped to ingest and analyze than their relational cousins. There are three ways to consider this:

Scalability. Scalability is difficult for SQL databases because IoT use cases often experience unpredictable traffic bursts and are frequently write-heavy to start with. NoSQL databases are a good option for managing system load when easy scaling of write capacity is a priority.

Consistency. Although relational databases deliver strong consistency guarantees, IoT applications are often well-suited for eventual consistency models.

Flexibility. While validation and schema capabilities are built into SQL databases, IoT data often needs more flexibility. NoSQL databases allow users to push schema enforcement logic to application code.
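To make the last point concrete, here is a minimal sketch of schema enforcement living in application code rather than in the database. The field names, the temperature range, and the `validate_reading` helper are assumptions made up for this example; a real project would choose its own rules or use a validation library.

```python
def validate_reading(reading):
    """Return a list of problems; an empty list means the reading is accepted."""
    problems = []
    # Fields every device must send.
    if not isinstance(reading.get("device_id"), str):
        problems.append("device_id must be a string")
    if not isinstance(reading.get("timestamp"), (int, float)):
        problems.append("timestamp must be a number")
    # Sensor-specific fields are optional, so a new device type can add
    # its own attributes without a database schema change.
    temp = reading.get("temperature_c")
    if temp is not None and not (
        isinstance(temp, (int, float)) and -90 <= temp <= 150
    ):
        problems.append("temperature_c out of range")
    return problems


good = {"device_id": "sensor-1", "timestamp": 1700000000, "humidity": 41}
bad = {"device_id": 17, "timestamp": 1700000000, "temperature_c": 900}
```

Here `good` passes even though it carries a `humidity` field the validator has never heard of, while `bad` is rejected for two reasons. The trade-off is that every writer must apply the same checks, since the database will not do it for them.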


NoSQL for Ecommerce

NoSQL may offer better affordability, availability, performance, scalability, and flexibility for ecommerce applications compared to relational databases. Most ecommerce applications are characterized by frequent queries, massive, rapidly updating and expanding online catalogs, and huge amounts of inventory.

NoSQL databases often respond more rapidly to queries and are known for their cost-effective, predictable, horizontal scalability and high availability. With NoSQL databases, organizations can:

- Handle massive volumes of data and traffic growth

- Scale easily for a good price

- Analyze inventory and catalog in real time

- Provide catalog refreshes more rapidly

- Expand online catalog and product offerings


NoSQL for Content Management

Multimedia content such as user-generated/social media reviews and imagery drives online sales most when it is curated and delivered to shoppers at the moment of interaction. Content management systems serve and store data assets and the metadata associated with them to a range of applications such as online publications, websites, and archives.

NoSQL databases offer an open-ended, flexible data model that is optimal for storing a mix of content, including structured, semi-structured, and unstructured data. NoSQL also allows users to aggregate and incorporate user data within a single catalog database to serve multiple business applications. In contrast, fixed RDBMS data models often cause many overlapping catalogs with different goals to proliferate.


NoSQL for Time Series Data

Except for very small datasets, extracting high performance for time series data with an SQL database demands significant customization and configuration, and any such configuration is nontrivial.

Time series data is unique in that it is generally monitoring data gathered to assess the health of a host, system, patient, environment, etc. To optimize for time series use cases, NoSQL databases typically add data in time-ascending order and delete against a large range of old data. This ensures high query and write performance.

SQL databases are generally equally focused on creating, reading, updating, and deleting data, while NoSQL is less so. In addition, in contrast to the more loosely structured NoSQL database, SQL databases are typically designed with the ideas of atomicity, consistency, isolation, durability (ACID principles) in mind.

Time series databases typically accommodate time series data: they collect data in time order in real time and handle extremely high volumes of data by holding it as immutable and append-only. Relational databases accommodate only lower ingest volumes and are optimized for transactions. Overall, NoSQL databases trade ACID principles for the basic availability, soft state, and eventual consistency (BASE) model, depending on their particular use case. In other words, generally speaking, the important notion for time series data is the aggregate trend, not a single point in the series.
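The access pattern described in this section (append in time-ascending order, delete a large range of old data, and query aggregates rather than single points) can be sketched with two parallel lists. The `TimeSeries` class below is a hypothetical in-memory illustration, not the storage layout of any particular database.

```python
import bisect


class TimeSeries:
    """Append-only series kept in time-ascending order."""

    def __init__(self):
        self.timestamps = []
        self.values = []

    def append(self, ts, value):
        # Points are immutable once written and must arrive in order.
        if self.timestamps and ts < self.timestamps[-1]:
            raise ValueError("points must arrive in time-ascending order")
        self.timestamps.append(ts)
        self.values.append(value)

    def expire_before(self, cutoff):
        """Drop every point older than cutoff; return how many were dropped."""
        idx = bisect.bisect_left(self.timestamps, cutoff)
        del self.timestamps[:idx]
        del self.values[:idx]
        return idx

    def mean(self, start, end):
        """Average of values with start <= timestamp < end, or None if empty."""
        lo = bisect.bisect_left(self.timestamps, start)
        hi = bisect.bisect_left(self.timestamps, end)
        window = self.values[lo:hi]
        return sum(window) / len(window) if window else None


series = TimeSeries()
for ts in range(10):
    series.append(ts, ts * 2)
```

Because the data is sorted by time, both the range delete and the windowed aggregate reduce to a binary search plus a slice, which is why this layout suits monitoring workloads.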


NoSQL for Retail

To create differentiating, engaging digital customer experiences, it is essential to build on time-critical, data-intensive capabilities such as user profile management, personalization, and a unified customer view across touch points. This massive load of behavioral, demographic, and logistical data taxes RDBMS infrastructure that is designed to scale up in different ways.

Distributed NoSQL databases allow users to manage increasing attributes with less work, scale more cost-effectively, and enjoy reduced latency—the essence of satisfying online interactions for users in real-time. A personalized, high-quality, fast, consistent experience is no longer a standout feature; it’s what customers demand, across all devices.

NoSQL platforms help deliver positive customer support experiences across multiple verticals by capturing data from massive quantities of omnichannel interactions and relating it to the accounts and service status of individual customers. NoSQL databases:

- Allow for expanding customer bases with extremely low latency and fast response times

- Handle structured and unstructured data from a range of sources

- Scale cost-effectively by design, and manage, store, query, and modify massive quantities of data while delivering personalized experiences

- Flex to enable innovations in the customer experience

- Seamlessly collect, integrate, and analyze new data in real-time

- Serve as the backbone for artificial intelligence (AI) and machine learning (ML) engine algorithms that drive personalization with recommendations


NoSQL for Social Media

The bulk of social networking platforms consist of posts, media, profiles, relationships, and APIs. Posts allow users to share thoughts, while media allows them to share videos and photos. Profiles store basic user information, and relationships connect them. Through APIs, the platform and users can interact with other sites and apps. These features demand that social network data be more flexible, which also makes it more difficult to process.

Massive amounts of data present social media platforms with both daily maintenance and development problems. Storing huge quantities of data in SQL databases makes it difficult to process unstructured and unpredictable information. Social media networks demand a flexible, occurrence-oriented database that operates on a schemaless data model, which fits poorly with the fixed schemas of SQL databases. Also, scaling SQL databases vertically requires upgrading hardware, which makes processing large batches of data expensive.

NoSQL can store generic objects, such as JSON, and support huge volumes of read-write operations. This contributes to data consistency across the distributed system, making NoSQL databases a good option for processing the big, unstructured patterns of data access typical of social media platforms.


NoSQL for Cybersecurity

To react in real time to a threat landscape that evolves constantly, cybersecurity demands speed and scale. To collect, store, and analyze the billions of events that reveal insight into the activities of malicious actors, cybersecurity providers are adopting cloud-native infrastructure. There are several reasons why a high-performance, low-latency NoSQL database offers a cybersecurity advantage, most linking back to improved speed and scalability:

Intrusion detection. Greater speed supports real-time analytics and insights that users can compare rapidly to events and operational data contained in a single database to detect problems.

Threat analysis. Real-time updates enable more proactive responses to security breaches and other attacks—including prevention.

Compliance and governance. A NoSQL structure can collect and store events and telemetry when deployed across diverse topologies, either on-premises or in the cloud, to ensure compliance.

Virus and malware protection. Enables machine learning and file analysis to identify malware within harmless content to defend users and endpoints from threats and intrusions.


NoSQL for Fraud Detection and Identity Authentication

Ensuring only authentic users have access to applications and protecting sensitive personal data is a top priority that is heightened for banking, financial services, payments, and insurance.

It is sometimes possible to identify anomalies and patterns to detect fraud in real-time or even in advance. This demands real-time analysis of large quantities of both live and historic data, including environment, user profile, biometric data, geographic data, and context. For example, a $500 withdrawal may be typical until it occurs after hours in the wrong zip code.

Reputational stakes are amplified with mistakes over social media, yet excessively high false positive rates hurt the customer experience. This is why a fast and highly available NoSQL database is so important to support complex data analysis of website interactions, the CRM system, historical shopping data, and other data that fraud detection and identity authentication demand.
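The withdrawal example above can be expressed as a small rule-based check that compares a live transaction against a stored user profile. This is only a sketch: the profile fields, the zip codes, the two-flag threshold, and the `risk_flags` and `is_suspicious` helpers are hypothetical, and production systems typically combine far more signals.

```python
def risk_flags(txn, profile):
    """Compare one transaction to the user's historical profile."""
    flags = []
    if txn["zip_code"] not in profile["usual_zip_codes"]:
        flags.append("unusual_location")
    start, end = profile["usual_hours"]
    if not start <= txn["hour"] < end:
        flags.append("unusual_hour")
    if txn["amount"] > profile["typical_max_amount"]:
        flags.append("unusual_amount")
    return flags


def is_suspicious(txn, profile, threshold=2):
    """Require several independent signals to limit false positives."""
    return len(risk_flags(txn, profile)) >= threshold


profile = {
    "usual_zip_codes": {"10001"},
    "usual_hours": (8, 20),  # 08:00 up to, but not including, 20:00
    "typical_max_amount": 1000,
}
daytime = {"amount": 500, "zip_code": "10001", "hour": 14}
after_hours_elsewhere = {"amount": 500, "zip_code": "94105", "hour": 2}
```

The same $500 amount is unremarkable in `daytime` but is flagged in `after_hours_elsewhere`. The check needs the profile lookup to be fast, because it runs while the customer is waiting for the transaction to complete.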


NoSQL for AdTech

The speed that NoSQL databases are well-suited to deliver is a critical competitive advantage for AdTech and MarTech businesses:

SLAs. To meet strict SLAs, these platforms must capture ad space during page loads—and this demands single-digit-millisecond latencies.

Real-time bidding. Consistently responsive, available databases allow users to win more available ad inventory and avoid latency spikes.

Precision ad targeting. High-volume ad service based on revenue optimization, impressions, and campaign goals can allow a team to target audiences and determine the most engaging content for individual users rapidly.

Highly scalable personalization engines. AdTech and MarTech services rely on personalization engines. These engines analyze behavioral, demographic, and geo-location data in real time to ensure each user has a tailored experience each time they visit.

Real-time analytics. Drive real-time decision-making with actionable insights extracted from masses of data.

Mobile device metadata stores. A geographically-distributed metadata store for mobile devices can improve user conversion and retention.

User behavior and impressions. Engage in real-time capture and analysis of clickstreams to identify trends, understand sentiment, and optimize campaigns.

Machine learning. Run analytics and operational workloads at high velocity on the same infrastructure against the same datasets.

The Definitive Guide to NoSQL Databases

Limited SQL scalability has prompted the industry to develop and deploy a number of NoSQL database management systems, with a focus on performance, reliability, and consistency. The trend was driven by proprietary NoSQL databases developed by Google and Amazon. Eventually, open-source systems like MongoDB, Cassandra, and Hypertable brought NoSQL within reach of everyone.

In this post, Senior Software Engineer Mohamad Altarade dives into some of them and explains why NoSQL will probably be with us for years to come.

By Mohammad Altarade

Mohammad is a highly motivated, high-energy individual with a passion for writing useful software and working with the latest technologies.

There is no doubt that the way web applications deal with data has changed significantly over the past decade. More data is being collected and more users are accessing this data concurrently than ever before. This means that scalability and performance are more of a challenge than ever for relational databases that are schema-based and therefore can be harder to scale.

The Evolution of NoSQL

The SQL scalability issue was recognized by Web 2.0 companies with huge, growing data and infrastructure needs, such as Google, Amazon, and Facebook. They came up with their own solutions to the problem: technologies like BigTable, DynamoDB, and Cassandra.

This growing interest resulted in a number of NoSQL Database Management Systems (DBMSs), with a focus on performance, reliability, and consistency. A number of existing indexing structures were reused and improved upon with the purpose of enhancing search and read performance.

First, there were proprietary (closed source) types of NoSQL databases developed by big companies to meet their specific needs, such as Google’s BigTable, which is believed to be the first NoSQL system, and Amazon’s DynamoDB.

The success of these proprietary systems initiated development of a number of similar open-source and proprietary database systems, the most popular ones being Hypertable, Cassandra, MongoDB, DynamoDB, HBase, and Redis.

What Makes NoSQL Different?

One key difference between NoSQL databases and traditional relational databases is the fact that NoSQL is a form of unstructured storage.

This means that NoSQL databases do not have a fixed table structure like the ones found in relational databases.

Advantages and Disadvantages of NoSQL Databases

Advantages

NoSQL databases have many advantages compared to traditional, relational databases.

One major, underlying difference is that NoSQL databases have a simple and flexible structure. They are schema-free.

Unlike relational databases, NoSQL databases are based on key-value pairs.

NoSQL store types include column stores, document stores, key value stores, graph stores, object stores, XML stores, and other data store modes.

Usually, each value in the database has a key. Some NoSQL database stores also allow developers to store serialized objects into the database, not just simple string values.

Open-source NoSQL databases don’t require expensive licensing fees and can run on inexpensive hardware, rendering their deployment cost-effective.

Also, when working with NoSQL databases, whether they are open-source or proprietary, expansion is easier and cheaper than when working with relational databases. This is because it’s done by horizontally scaling and distributing the load on all nodes, rather than the type of vertical scaling that is usually done with relational database systems, which is replacing the main host with a more powerful one.

Disadvantages

Of course, NoSQL databases are not perfect, and they are not always the right choice.

For one thing, most NoSQL databases do not support reliability features that are natively supported by relational database systems. These reliability features can be summed up as atomicity, consistency, isolation, and durability. This also means that NoSQL databases, which don’t support those features, trade consistency for performance and scalability.

In order to support reliability and consistency features, developers must implement their own proprietary code, which adds more complexity to the system.

This might limit the number of applications that can rely on NoSQL databases for secure and reliable transactions, like banking systems.

Other forms of complexity found in most NoSQL databases include incompatibility with SQL queries. This means that a manual or proprietary querying language is needed, adding even more time and complexity.

NoSQL vs. Relational Databases

This table provides a brief feature comparison between NoSQL and relational databases:

It should be noted that the table shows a comparison on the database level, not the various database management systems that implement both models. These systems provide their own proprietary techniques to overcome some of the problems and shortcomings in both systems, and in some cases, significantly improve performance and reliability.

NoSQL Data Store Types

Key Value Store

In the Key Value store type, a hash table is used in which a unique key points to an item.

Keys can be organized into logical groups of keys, only requiring keys to be unique within their own group. This allows for identical keys in different logical groups. The following table shows an example of a key-value store, in which the key is the name of the city, and the value is the address for Ulster University in that city.

Some implementations of the key value store provide caching mechanisms, which greatly enhance their performance.

All that is needed to deal with the items stored in the database is the key. Data is stored in the form of a string, JSON, or BLOB (Binary Large OBject).
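As a rough illustration of the ideas above, here is a minimal in-memory sketch in Python. The `KeyValueStore` class, its method names, and the sample group names and values are all hypothetical placeholders, not the API of any real product; the sketch only shows a hash table per logical group, with keys that need to be unique only inside their own group.

```python
import json


class KeyValueStore:
    """Toy key-value store: one hash table (dict) per logical group of keys."""

    def __init__(self):
        self._groups = {}

    def put(self, group, key, value):
        # Create the group's hash table on first use, then store the value.
        self._groups.setdefault(group, {})[key] = value

    def get(self, group, key, default=None):
        # The key is all that is needed to find an item inside its group.
        return self._groups.get(group, {}).get(key, default)

    def delete(self, group, key):
        self._groups.get(group, {}).pop(key, None)


store = KeyValueStore()
# The same key ("Belfast") lives in three groups without conflict.
# Values are placeholders: a plain string, a JSON string, and raw bytes.
store.put("campus_addresses", "Belfast", "<address placeholder>")
store.put("campus_metadata", "Belfast", json.dumps({"note": "placeholder"}))
store.put("campus_photos", "Belfast", b"\x00\x01\x02")
```

Note that nothing in this sketch validates or coordinates updates, which mirrors the point about consistency being left to the application.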

One of the biggest flaws in this form of database is the lack of consistency at the database level. This can be added by the developers with their own code, but as mentioned before, this adds more effort, complexity, and time.

The most famous NoSQL database that is built on a key value store is Amazon’s DynamoDB.

Document Store

Document stores are similar to key value stores in that they are schema-less and based on a key-value model. Both, therefore, share many of the same advantages and disadvantages. Both lack consistency on the database level, which makes way for applications to provide more reliability and consistency features.

There are, however, key differences between the two.

In Document Stores, the values (documents) provide encoding for the data stored. Those encodings can be XML, JSON, or BSON (Binary encoded JSON).

Also, documents can be queried based on the data they contain, not only retrieved by their key.

The most popular database application that relies on a Document Store is MongoDB.

Column Store

In a Column Store database, data is stored in columns, as opposed to being stored in rows as is done in most relational database management systems.

A Column Store is comprised of one or more Column Families that logically group certain columns in the database. A key is used to identify and point to a number of columns in the database, with a keyspace attribute that defines the scope of this key. Each column contains tuples of names and values, ordered and comma separated.

Column Stores have fast read/write access to the data stored. In a column store, rows that correspond to a single column are stored as a single disk entry. This makes for faster access during read/write operations.
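The difference between the two layouts can be sketched in a few lines of Python. This is only an in-memory illustration with made-up sample records and a hypothetical `to_columns` helper; it assumes every row has the same fields and says nothing about how a real column store arranges data on disk.

```python
# Row-oriented layout: each record keeps all of its fields together.
rows = [
    {"id": 1, "name": "Ann", "city": "Derry"},
    {"id": 2, "name": "Bob", "city": "Belfast"},
    {"id": 3, "name": "Cat", "city": "Belfast"},
]


def to_columns(rows):
    """Pivot a list of row dicts into one list of values per column."""
    columns = {}
    for row in rows:
        for name, value in row.items():
            columns.setdefault(name, []).append(value)
    return columns


# Column-oriented layout: all values of one column sit next to each other.
columns = to_columns(rows)

# Reading a single attribute for every record touches one list only,
# instead of visiting every full record.
belfast_count = columns["city"].count("Belfast")
```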

The most popular databases that use the column store include Google’s BigTable, HBase, and Cassandra.

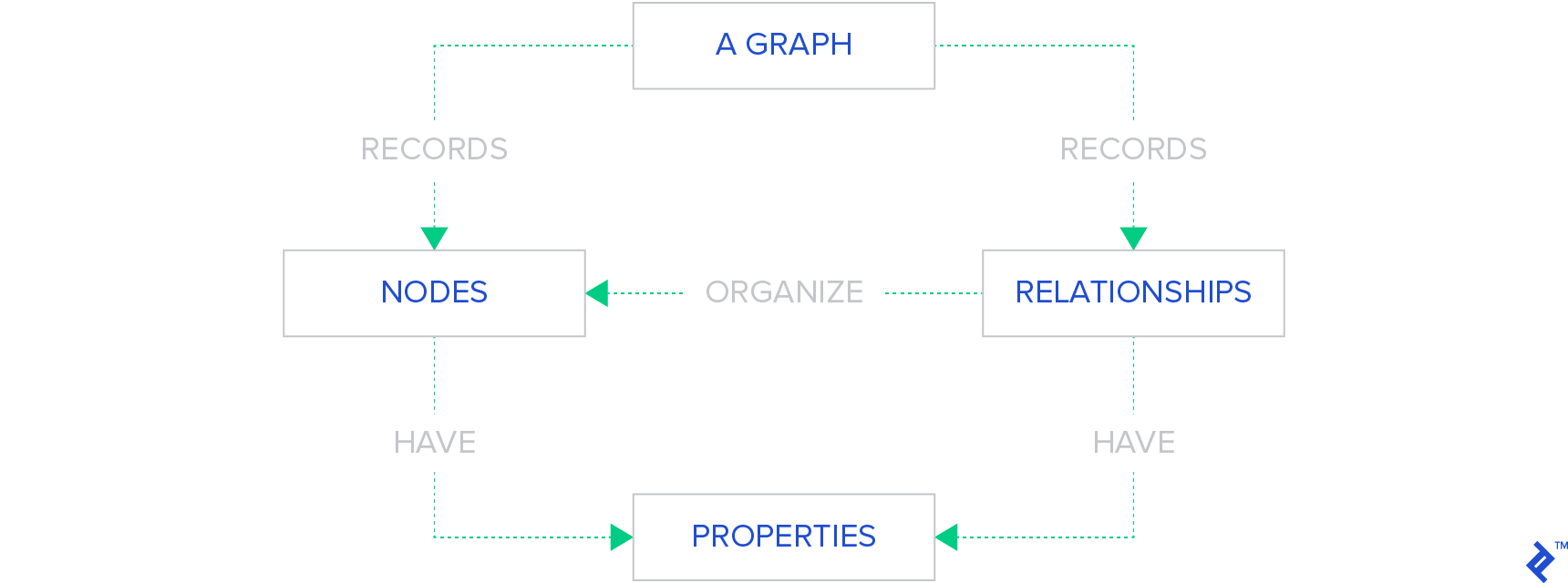

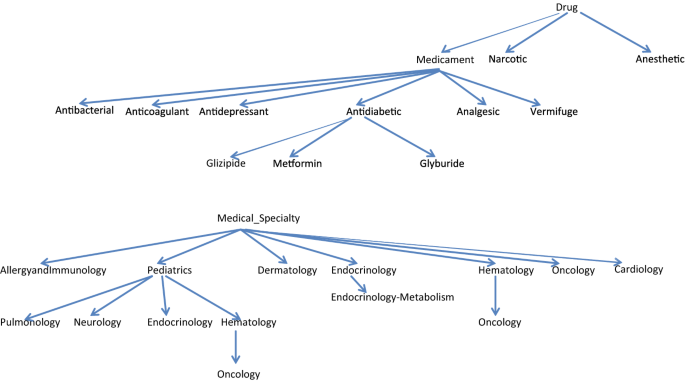

In a Graph Base NoSQL Database, a directed graph structure is used to represent the data. The graph is comprised of edges and nodes.

Formally, a graph is a representation of a set of objects, where some pairs of the objects are connected by links. The interconnected objects are represented by mathematical abstractions, called vertices, and the links that connect some pairs of vertices are called edges. A set of vertices and the edges that connect them is said to be a graph.

This illustrates the structure of a graph base database that uses edges and nodes to represent and store data. These nodes are organized by some relationships with one another, which is represented by edges between the nodes. Both the nodes and the relationships have some defined properties.

Graph databases are most typically used in social networking applications. Graph databases allow developers to focus more on relations between objects rather than on the objects themselves. In this context, they indeed allow for a scalable and easy-to-use environment.
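To make the node, edge, and property model concrete, here is a small Python sketch of a directed property graph with a typical social networking query. The `Graph` class, the `FRIEND` label, and the sample names are hypothetical and do not correspond to any graph database's API.

```python
class Graph:
    """Toy directed property graph: nodes and edges both carry properties."""

    def __init__(self):
        self.nodes = {}  # node id -> properties
        self.edges = {}  # source id -> list of (label, target id, properties)

    def add_node(self, node_id, **props):
        self.nodes[node_id] = props

    def add_edge(self, source, label, target, **props):
        self.edges.setdefault(source, []).append((label, target, props))

    def neighbors(self, node_id, label):
        """Targets reachable from node_id over edges with the given label."""
        return [t for (l, t, _) in self.edges.get(node_id, []) if l == label]

    def friends_of_friends(self, node_id):
        """Nodes two FRIEND hops away that are not already direct friends."""
        direct = set(self.neighbors(node_id, "FRIEND"))
        second_hop = set()
        for friend in direct:
            second_hop.update(self.neighbors(friend, "FRIEND"))
        return second_hop - direct - {node_id}


g = Graph()
for person in ("alice", "bob", "carol"):
    g.add_node(person, kind="person")
g.add_edge("alice", "FRIEND", "bob", since=2014)
g.add_edge("bob", "FRIEND", "alice", since=2014)
g.add_edge("bob", "FRIEND", "carol", since=2015)
suggestions = g.friends_of_friends("alice")
```

The query walks relationships directly rather than joining tables, which is the sense in which the focus shifts from the objects to the relations between them.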

Currently, InfoGrid and InfiniteGraph are the most popular graph databases.

NoSQL Database Management Systems

The following table provides a brief comparison between different NoSQL database management systems.

MongoDB has flexible schema storage, which means stored objects are not necessarily required to have the same structure or fields. MongoDB also has some optimization features that distribute the data collections across nodes, resulting in overall performance improvement and a more balanced system.

Other NoSQL database systems, such as Apache CouchDB, are also document store type databases and share a lot of features with MongoDB, with the exception that the database can be accessed using RESTful APIs.

REST is an architectural style consisting of a coordinated set of architectural constraints applied to components, connectors, and data elements, within the World Wide Web. It relies on a stateless, client-server, cacheable communications protocol (e.g., the HTTP protocol).

RESTful applications use HTTP requests to post, read data, and delete data.

As for column base databases, Hypertable is a NoSQL database written in C++ and is based on Google’s BigTable.

Hypertable supports distributing data stores across nodes to maximize scalability, just like MongoDB and CouchDB.

One of the most widely used NoSQL databases is Cassandra, developed by Facebook.

Cassandra is a column store database that includes a lot of features aimed at reliability and fault tolerance.

Rather than providing an in-depth look at each NoSQL DBMS, Cassandra and MongoDB, two of the most widely used NoSQL database management systems, will be explored in the next subsections.

Cassandra is a database management system developed by Facebook.

The goal behind Cassandra was to create a DBMS that has no single point of failure and provides maximum availability.

Cassandra is mostly a column store database. Some studies referred to Cassandra as a hybrid system, inspired by Google’s BigTable, which is a column store database, and Amazon’s DynamoDB, which is a key-value database.

This is achieved by providing a key-value system, but the keys in Cassandra point to a set of column families, with reliance on Google’s BigTable distributed file system and Dynamo’s availability features (distributed hash table).

Cassandra is a DBMS designed to handle massive amounts of data spread out across many servers, while providing a highly available service with no single point of failure, which is essential for a big service like Facebook.

The main features of Cassandra include:

- No single point of failure. For this to be achieved, Cassandra must run on a cluster of nodes, rather than a single machine. That doesn’t mean that the data on each node is the same, but the management software is. When a failure in one of the nodes happens, the data on that node will be inaccessible. However, other nodes (and data) will still be accessible.

- Distributed Hashing is a scheme that provides hash table functionality in a way that the addition or removal of one slot does not significantly change the mapping of keys to slots. This provides the ability to distribute the load to servers or nodes according to their capacity, and in turn, minimize downtime.

- Relatively easy to use Client Interface. Cassandra uses Apache Thrift for its client interface. Apache Thrift provides a cross-language RPC client, but most developers prefer open-source alternatives built on top of Apache Thrift, such as Hector.

- Other availability features. One of Cassandra’s features is data replication. Basically, it mirrors data to other nodes in the cluster. Replication can be random, or specific to maximize data protection, for example by placing a replica on a node in a different data center. Another feature found in Cassandra is the partitioning policy. The partitioning policy decides on which node to place the key. This can also be random or in order. When using both types of partitioning policies, Cassandra can strike a balance between load balancing and query performance optimization.

- Consistency. Features like replication make consistency challenging. This is due to the fact that all nodes must be up-to-date at any point in time with the latest values, or at the time a read operation is triggered. Eventually, though, Cassandra tries to maintain a balance between replication actions and read/write actions by providing this customizability to the developer.

- Read/Write Actions. The client sends a request to a single Cassandra node. The node, according to the replication policy, stores the data to the cluster. Each node first performs the data change in the commit log, and then updates the table structure with the change, both done synchronously. The read operation is very similar: a read request is sent to a single node, and that node determines which node holds the data, according to the partitioning/placement policy.
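The distributed hashing idea from the list above can be sketched as a consistent hash ring. The following Python is a minimal illustration, not Cassandra's actual partitioner: the `HashRing` class, the use of SHA-256, the number of virtual positions per node, and the node names are all assumptions made for the example.

```python
import bisect
import hashlib


def _hash(value):
    """Map a string to a large integer position on the ring."""
    return int(hashlib.sha256(value.encode("utf-8")).hexdigest(), 16)


class HashRing:
    """Toy consistent hash ring; each node owns several positions on it."""

    def __init__(self, nodes=(), vnodes=64):
        self._vnodes = vnodes
        self._ring = []  # sorted list of (position, node name)
        for node in nodes:
            self.add_node(node)

    def add_node(self, node):
        for i in range(self._vnodes):
            bisect.insort(self._ring, (_hash(f"{node}#{i}"), node))

    def remove_node(self, node):
        self._ring = [(pos, n) for pos, n in self._ring if n != node]

    def node_for(self, key):
        """Return the node owning the first position at or after the key."""
        if not self._ring:
            raise LookupError("ring has no nodes")
        # ("" sorts before any node name, so this finds position >= hash.)
        idx = bisect.bisect_left(self._ring, (_hash(key), ""))
        return self._ring[idx % len(self._ring)][1]


ring = HashRing(["node-a", "node-b", "node-c"])
keys = [f"user:{i}" for i in range(1000)]
before = {k: ring.node_for(k) for k in keys}

ring.add_node("node-d")
after = {k: ring.node_for(k) for k in keys}
# Only keys that now belong to the new node change owner.
moved = [k for k in keys if before[k] != after[k]]
```

Every key in `moved` ends up on `node-d`; the keys that stay put keep their original owner, which is the property that limits data movement when nodes join or leave.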

MongoDB is a schema-free, document-oriented database written in C++. The database is document store based, which means it stores values (referred to as documents) in the form of encoded data.

The choice of encoded format in MongoDB is JSON (stored internally in its binary form, BSON). This is powerful, because even if the data is nested inside JSON documents, it will still be queryable and indexable.

The subsections that follow describe some of the key features available in MongoDB.

Sharding is the partitioning and distributing of data across multiple machines (nodes). A shard is a collection of MongoDB nodes, in contrast to Cassandra where nodes are symmetrically distributed. Using shards also means the ability to horizontally scale across multiple nodes. If an application uses a single database server, it can be converted to a sharded cluster with very few changes to the original application code, because the way MongoDB performs sharding is almost completely decoupled from the public APIs exposed to the client side.

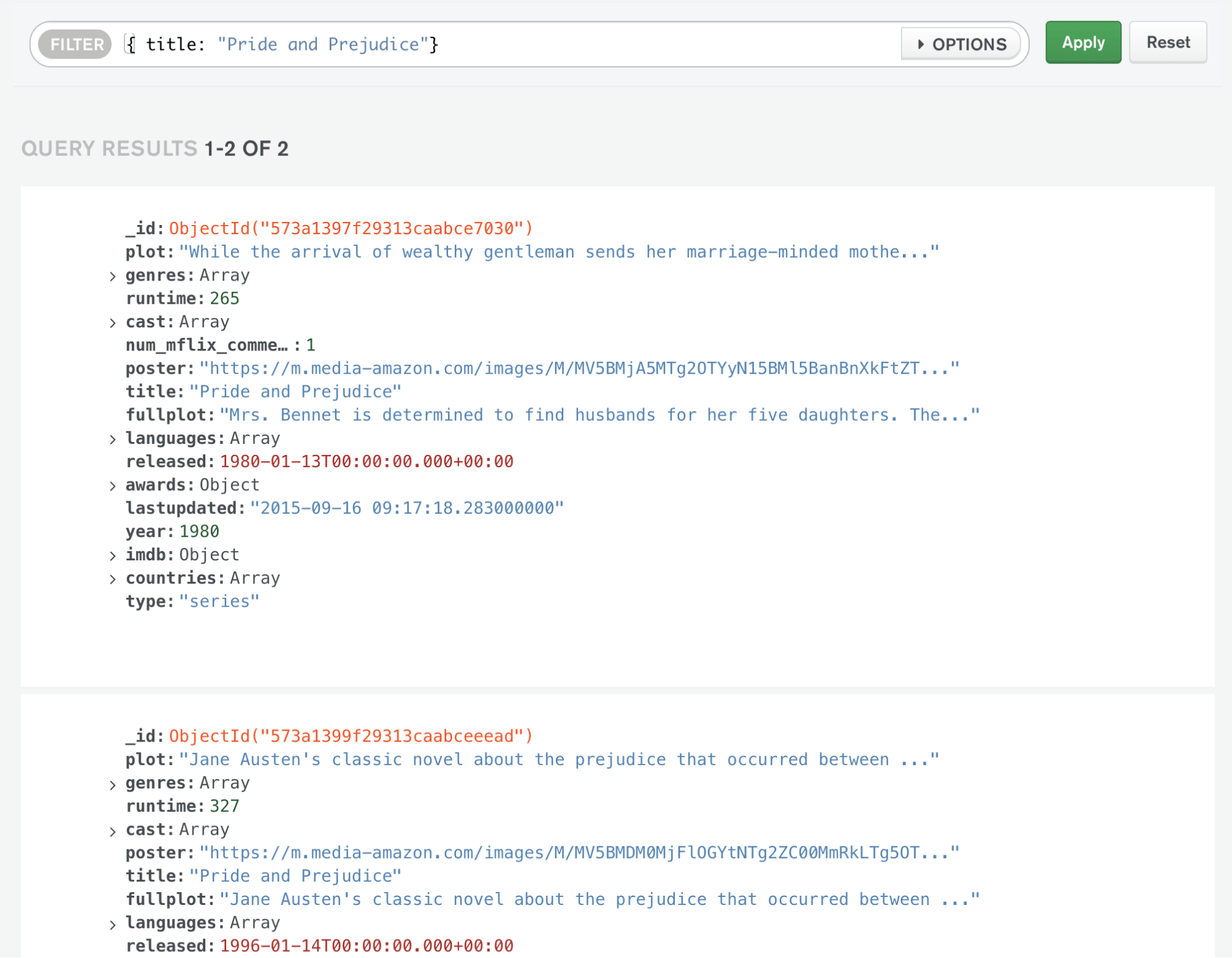

Mongo Query Language

In MongoDB, queries are themselves expressed as documents. To retrieve certain documents from a db collection, a query document is created containing the fields that the desired documents should match.

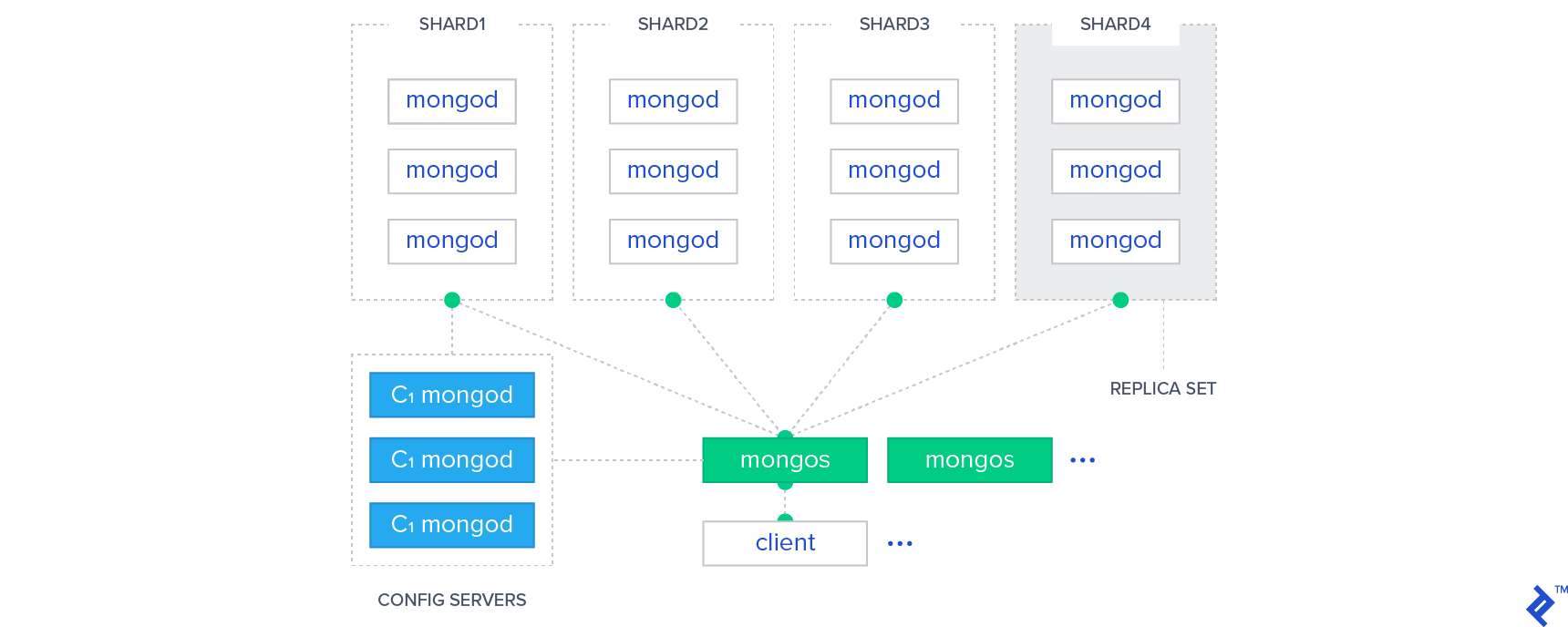
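The matching rule described above can be imitated in plain Python. This is a simplified sketch, not MongoDB's query engine or driver API: the helpers `get_path`, `matches`, and `find` are hypothetical, only equality matching is handled, and the dotted-path notation for nested fields is an assumption of the example.

```python
def get_path(document, dotted_path):
    """Follow a dotted path such as "address.city" into nested dicts."""
    current = document
    for part in dotted_path.split("."):
        if not isinstance(current, dict) or part not in current:
            return None
        current = current[part]
    return current


def matches(document, query):
    """True when every field named in the query document has an equal value."""
    return all(get_path(document, path) == expected
               for path, expected in query.items())


def find(collection, query):
    """Return the documents in the collection that match the query document."""
    return [doc for doc in collection if matches(doc, query)]


# Documents in one collection need not share the same fields.
people = [
    {"name": "Ann", "age": 31, "address": {"city": "Belfast"}},
    {"name": "Bob", "age": 31, "address": {"city": "Derry"}},
    {"name": "Cat", "address": {"city": "Belfast"}, "tags": ["admin"]},
]

same_age = find(people, {"age": 31})
in_belfast = find(people, {"address.city": "Belfast"})
both = find(people, {"age": 31, "address.city": "Belfast"})
```

The query document lists only the fields of interest, so documents with extra or missing unrelated fields are handled without any schema changes.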

In MongoDB, there is a group of servers called routers. Each one acts as a server for one or more clients. Similarly, the cluster contains a group of servers called configuration servers. Each one holds a copy of the metadata indicating which shard contains what data. Read or write actions are sent from the clients to one of the router servers in the cluster, and are automatically routed by that server to the appropriate shards that contain the data with the help of the configuration servers.
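The routing flow just described can be sketched as follows. This is a toy model in Python, not MongoDB's implementation: the `ConfigServer` and `Router` classes are hypothetical, shards are plain dictionaries, and range-based partitioning on a string shard key is an assumption made to keep the example short.

```python
import bisect


class ConfigServer:
    """Holds the metadata: which shard owns which range of shard-key values."""

    def __init__(self, split_points, shard_names):
        # split_points ["m", "t"] with shards [s0, s1, s2] means:
        # key < "m" -> s0, "m" <= key < "t" -> s1, key >= "t" -> s2
        if len(shard_names) != len(split_points) + 1:
            raise ValueError("need exactly one more shard than split points")
        self.split_points = list(split_points)
        self.shard_names = list(shard_names)

    def shard_for(self, shard_key):
        return self.shard_names[bisect.bisect_right(self.split_points, shard_key)]


class Router:
    """Receives client requests and forwards them to the owning shard."""

    def __init__(self, config, shards):
        self.config = config
        self.shards = shards  # shard name -> dict standing in for shard storage

    def write(self, shard_key, document):
        self.shards[self.config.shard_for(shard_key)][shard_key] = document

    def read(self, shard_key):
        return self.shards[self.config.shard_for(shard_key)].get(shard_key)


shards = {"shard0": {}, "shard1": {}, "shard2": {}}
router = Router(ConfigServer(["m", "t"], ["shard0", "shard1", "shard2"]), shards)
router.write("alice", {"plan": "basic"})
router.write("mike", {"plan": "pro"})
router.write("zoe", {"plan": "basic"})
```

The client only ever talks to the router; which shard holds a document is decided entirely by the metadata, so the client code does not change when data is split across more shards.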

Similar to Cassandra, a shard in MongoDB has a data replication scheme, which creates a replica set of each shard that holds exactly the same data. There are two types of replica schemes in MongoDB: Master-Slave replication and Replica-Set replication. Replica-Set provides more automation and better handling of failures, while Master-Slave sometimes requires administrator intervention. Regardless of the replication scheme, at any point in time in a replica set, only one shard acts as the primary shard; all other replica shards are secondary shards. All write and read operations go to the primary shard, and are then distributed evenly (if needed) to the other secondary shards in the set.

In the graphic below, we see the MongoDB architecture explained above, showing the router servers in green, the configuration servers in blue, and the shards that contain the MongoDB nodes.

It should be noted that sharding (or sharing the data between shards) in MongoDB is completely automatic, which reduces the failure rate and makes MongoDB a highly scalable database management system.

Indexing Structures for NoSQL Databases

Indexing is the process of associating a key with the location of a corresponding data record in a DBMS. There are many indexing data structures used in NoSQL databases. The following sections will briefly discuss some of the more common methods; namely, B-Tree indexing, T-Tree indexing, and O2-Tree indexing.

B-Tree Indexing

B-Tree is one of the most common index structures in DBMS’s.

In B-trees, internal nodes can have a variable number of child nodes within some predefined range.

One major difference from other tree structures, such as AVL, is that B-Tree allows nodes to have a variable number of child nodes, meaning less tree balancing but more wasted space.

The B+-Tree is one of the most popular variants of B-Trees. The B+-Tree is an improvement over B-Tree that requires all keys to reside in the leaves.
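To illustrate how a lookup moves through nodes with a variable number of children, here is a minimal Python sketch of searching a plain B-Tree (keys in internal nodes as well as leaves). The `BTreeNode` class and the hand-built sample tree are hypothetical; insertion, node splitting, and the B+-Tree leaf layout are deliberately left out.

```python
import bisect


class BTreeNode:
    def __init__(self, keys, children=None):
        self.keys = keys                # sorted keys stored in this node
        self.children = children or []  # empty for a leaf, else len(keys) + 1 subtrees

    @property
    def is_leaf(self):
        return not self.children


def search(node, key):
    """Return True if key is stored anywhere in the subtree rooted at node."""
    while True:
        # Binary search inside the node for the first key >= the target.
        i = bisect.bisect_left(node.keys, key)
        if i < len(node.keys) and node.keys[i] == key:
            return True
        if node.is_leaf:
            return False
        # Child i holds every key between keys[i - 1] and keys[i].
        node = node.children[i]


# A small hand-built tree: the root has two keys and three children,
# and the children hold different numbers of keys.
root = BTreeNode(
    [10, 20],
    [BTreeNode([2, 5]), BTreeNode([12, 17]), BTreeNode([25, 30, 40])],
)
found = search(root, 17)
missing = search(root, 13)
```

Because each node holds several keys, the tree stays shallow, so a lookup visits few nodes even for large indexes.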

T-Tree Indexing

The data structure of T-Trees was designed by combining features from AVL-Trees and B-Trees.

AVL-Trees are a type of self-balancing binary search tree, while B-Trees are balanced multiway search trees in which each node can have a different number of children.

In a T-Tree, the structure is very similar to the AVL-Tree and the B-Tree.

Each node stores more than one {key-value, pointer} tuple. Also, binary search is utilized in combination with the multiple-tuple nodes to produce better storage and performance.

A T-Tree has three types of nodes: A T-Node that has a right and left child, a leaf node with no children, and a half-leaf node with only one child.

It is believed that T-Trees have better overall performance than AVL-Trees.

O2-Tree Indexing

The O2-Tree is basically an improvement over Red-Black trees, a form of a Binary-Search tree, in which the leaf nodes contain the {key value, pointer} tuples.

O2-Tree was proposed to enhance the performance of current indexing methods. An O2-Tree of order m (m ≥ 2), where m is the minimum degree of the tree, satisfies the following properties:

- Every node is either red or black. The root is black.

- Every leaf node is colored black and consists of a block or page that holds “key value, record-pointer” pairs.

- If a node is red, then both its children are black.

- For each internal node, all simple paths from the node to descendant leaf-nodes contain the same number of black nodes. Each internal node holds a single key value.

- Leaf-nodes are blocks that have between ⌈m/2⌉ and m “key-value, record-pointer” pairs.

- If a tree has a single node, then it must be a leaf, which is the root of the tree, and it can have between 1 and m key data items.

- Leaf nodes are double-linked in forward and backward directions.

Here, we see a straightforward performance comparison between O2-Tree, T-Tree, B+-Tree, AVL-Tree, and Red-Black Tree:

The order of the T-Tree, B+-Tree, and the O2-Tree used was m = 512.

Time is recorded for operations of search, insert, and delete with update ratios varying between 0%-100% for an index of 50M records, with the operations resulting in adding another 50M records to the index.

It is clear that with an update ratio of 0-10%, B-Tree and T-Tree perform better than O2-Tree. However, with the update ratio increasing, O2-Tree index performs significantly better than most other data structures, with the B-Tree and Red-Black Tree structures suffering the most.

The Case for NoSQL?

A quick introduction to NoSQL databases, highlighting the key areas where traditional relational databases fall short, leads to the first takeaway:

While relational databases offer consistency, they are not optimized for high performance in applications where massive data is stored and processed frequently.

NoSQL databases gained a lot of popularity due to high performance, high scalability and ease of access; however, they still lack features that provide consistency and reliability.

Fortunately, a number of NoSQL DBMSs address these challenges by offering new features to enhance scalability and reliability.

Not all NoSQL database systems perform better than relational databases.

MongoDB and Cassandra have similar, and in most cases better, performance than relational databases in write and delete operations.

There is no direct correlation between the store type and the performance of a NoSQL DBMS. NoSQL implementations undergo changes, so performance may vary.

Therefore, performance measurements across database types in different studies should always be updated with the latest versions of database software in order for those numbers to be accurate.

While I can’t offer a definitive verdict on performance, here are a few points to keep in mind:

- Traditional B-Tree and T-Tree indexing is commonly used in traditional databases.

- One study offered improvements and enhancements by combining the characteristics of multiple indexing structures to come up with the O2-Tree.

- The O2-Tree outperformed other structures in most tests, especially with huge datasets and high update ratios.

- The B-Tree structure delivered the worst performance of all indexing structures covered in this article.

Further work can and should be done to enhance the consistency of NoSQL DBMSs. The integration of both systems, NoSQL and relational databases, is an area to further explore.

Finally, it’s important to note that NoSQL is a good addition to existing database standards, but with a few important caveats. NoSQL trades reliability and consistency features for sheer performance and scalability. This renders it a specialized solution, as the number of applications that can rely on NoSQL databases remains limited.

The upside? Specialization might not offer much in the way of flexibility, but when you want to get a specialized job done as quickly and efficiently as possible, you don’t need a Swiss Army Knife. You need NoSQL.


- Open access

- Published: 14 August 2015

Choosing the right NoSQL database for the job: a quality attribute evaluation

- João Ricardo Lourenço 1 ,

- Bruno Cabral 1 ,

- Paulo Carreiro 2 ,

- Marco Vieira 1 &

- Jorge Bernardino 1 , 3

Journal of Big Data volume 2, Article number: 18 (2015)


For over forty years, relational databases have been the leading model for data storage, retrieval and management. However, due to increasing needs for scalability and performance, alternative systems have emerged, namely NoSQL technology. The rising interest in NoSQL technology, as well as the growth in the number of use case scenarios, over the last few years resulted in an increasing number of evaluations and comparisons among competing NoSQL technologies. While most research work mostly focuses on performance evaluation using standard benchmarks, it is important to notice that the architecture of real world systems is not only driven by performance requirements, but has to comprehensively include many other quality attribute requirements. Software quality attributes form the basis from which software engineers and architects develop software and make design decisions. Yet, there has been no quality attribute focused survey or classification of NoSQL databases where databases are compared with regards to their suitability for quality attributes common on the design of enterprise systems. To fill this gap, and aid software engineers and architects, in this article, we survey and create a concise and up-to-date comparison of NoSQL engines, identifying their most beneficial use case scenarios from the software engineer point of view and the quality attributes that each of them is most suited to.

Introduction

Relational databases have been the stronghold of modern computing applications for decades. ACID properties (Atomicity, Consistency, Isolation, Durability) made relational databases the solution for almost all data management systems. However, the need to handle data in web-scale systems [ 1 – 3 ], in particular Big Data systems [ 4 ], has led to the creation of numerous NoSQL databases.

The term NoSQL was first coined in 1998 to name a relational database that did not have a SQL (Structured Query Language) interface [ 5 ]. It was then brought back in 2009 for naming an event which highlighted new non-relational databases, such as BigTable [ 3 ] and Dynamo [ 6 ], and has since been used without an “official” definition. Generally speaking, a NoSQL database is one that uses a different approach to data storage and access when compared with relational database management systems [ 7 , 8 ]. NoSQL databases lose the support for ACID transactions as a trade-off for increased availability and scalability [ 1 , 7 ]. Brewer created the term BASE for these systems: they are Basically Available, have a Soft state (during which they are not yet consistent), and are Eventually consistent, as opposed to ACID systems [ 9 ]. This BASE model forfeits the essential ACID properties of consistency and isolation in order to favor “availability, graceful degradation, and performance” [ 9 ]. While originally the term stood for “No SQL”, it has recently been restated as “Not Only SQL” [ 1 , 7 , 10 ] to highlight that these systems rarely fully drop the relational model. Thus, in spite of being a recurrent theme in literature, NoSQL is a very broad term, encompassing very distinct database systems.

There are hundreds of readily available NoSQL databases, and each has different use case scenarios [ 11 ]. They are usually divided into four categories [ 2 , 7 , 12 ], according to their data model and storage: Key-Value Stores, Document Stores, Column Stores and Graph databases. This classification is due to the fact that each kind of database offers different solutions for specific contexts. The “one size fits all” approach of relational databases no longer applies.

There has been extensive research in the comparison of relational and non-relational databases in terms of their performance for different applications. However, when developing enterprise systems, performance is only one of many quality attributes to be considered. Unfortunately, there has not yet been a comprehensive assessment of NoSQL technology in what concerns software quality attributes. The goal of this article is to fill this gap, by clearly identifying which NoSQL databases better promote the several quality attributes, thus becoming a reference for software engineers and architects.

This article is a revised and extended version of our WorldCIST 2015 paper [ 13 ]. It improves and complements the former in the following aspects:

- Three more quality attributes (Consistency, Robustness and Maintainability) were evaluated.

- A new section describing the evaluated NoSQL databases was introduced.

- The state of the art was extended to provide more up to date and thorough information.

- All of the previously evaluated quality attributes were reevaluated in light of new studies and new developments in the NoSQL ecosystem.

- New conclusions and insights derived from the quality attribute based analysis of several NoSQL databases.

Henceforth, the main contributions of this article can be summarized as follows:

- The development of a quality-attribute oriented evaluation of NoSQL databases (Table 2). Software architects may use this information to assess which NoSQL database best fits their quality attribute requirements.

- A survey of the literature on the evaluation of NoSQL databases from a historic perspective.

- The identification of several future research directions towards the full coverage of software quality attributes in the evaluation of NoSQL databases.

The remainder of this article is structured as follows. In Section ‘ Background and literature review ’, we perform a review of the literature and evaluation surrounding NoSQL systems. In Section ‘ Research design and methodology ’, we introduce the methodology used to select the quality attributes and NoSQL databases that we evaluated, as well as the methodology used in that evaluation. In Section ‘ Evaluated NoSQL databases ’, we present and describe the evaluated NoSQL databases. In Section ‘ Software quality attributes ’, we analyze the different quality attributes and identify the best NoSQL solutions for each of these quality attributes according to the literature. In Section ‘ Results and discussion ’, a summary table and analysis of the results of this evaluation is provided. Finally, Section ‘ Conclusions ’ presents future work and draws the conclusions.

Background and literature review

The word NoSQL was re-introduced in 2009 during an event about distributed databases [ 5 ]. The event intended to discuss the new technologies being presented by Google (Google BigTable [ 3 ]) and Amazon (Dynamo [ 14 ]) to handle high amounts of data. Interest in the research of NoSQL technologies has bloomed since then, and led to the publication of works such as those by Stonebraker and Cattell [ 12 , 15 , 16 ]. Stonebraker began his research by describing different types of NoSQL technology and differences among those when compared to relational technology. The author argues that the main reasons to move to NoSQL databases are performance and flexibility. Performance is mainly focused on sharing and management of distributed data (i.e. dealing with “Big Data”), while flexibility relates to the semi-structured or unstructured data that may arise on the web.

By 2011, the NoSQL ecosystem was thriving, with several databases being the center of multiple studies [ 17 – 20 ]. These included Cassandra, Amazon SimpleDB, SciDB, CouchDB, MongoDB, Riak, Redis, and many others. Researchers categorized existing databases, and identified what kinds of NoSQL databases existed according to different architectures and goals. Ultimately, the majority agreed on four categories of databases [ 11 ]: Document Store, Column Store, Key-value Store and Graph-oriented databases.

Hecht and Jablonski [ 11 ] described the main characteristics offered by different NoSQL solutions, such as availability and horizontal scalability. Konstantinou et al. [ 19 ] performed a study based on the elasticity of non-relational solutions and compared HBase, Cassandra and Riak during execution of read and update operations. The authors concluded that HBase provided high elasticity and fast reads while Cassandra was capable of delivering fast inserts (writes). On the other hand, according to the authors, Riak did not show good scaling and high performance increase, regardless of the type of access. Many studies focused on evaluating performance [ 4 , 11 , 21 ].

Performance evaluations were made easier by the popularization of the Yahoo! Cloud Serving Benchmark (YCSB), proposed and implemented by Cooper et al. [ 21 ]. This benchmark, still widely used today, allows testing the read/write, latency and elasticity capabilities of any database, in particular NoSQL databases. The first studies using YCSB evaluated Cassandra, HBase, PNUTS and MySQL to conclude that each database offers its own set of trade-offs. The authors warn that each database performs at its best in different circumstances and, thus, a careful choice of the one to use must be made according to the nature of each project.

Since 2012, NoSQL databases have been most often evaluated and compared to RDBMSs (Relational Database Management Systems). Performance evaluation carried out by [ 22 ] compared Cassandra, MongoDB and PostgreSQL, concluding that MongoDB is capable of providing high throughput, but mainly when it is used as a single server instance. On the other hand, the best choice for supporting a large distributed sensor system was considered to be Cassandra due to its horizontal scalability. Floratou et al. [ 4 ] used YCSB and TPC-H to compare the performance of MongoDB and MS SQL Server, as well as Hive. The authors state that NoSQL technology has room for improvement and should be further updated. Ashram and Anderson [ 7 ] studied the data model of Twitter and found that using non-relational technology creates additional difficulties on the programmers’ side. Parker et al. [ 23 ] also chose MongoDB and compared its performance with MS SQL Server using only one server instance. According to their results, when performing inserts, updates and selects, MongoDB is faster, but MS SQL Server outperforms MongoDB when running complex queries instead of simpler key-value access. In [ 24 ], Kashyap et al. compare the performance, scalability and availability of HBase, MongoDB, Cassandra and CouchDB by using YCSB. Their results show that Cassandra and HBase shared similar behaviour, but the former scaled better, and that MongoDB performed better than HBase by factors in the hundreds for their particular workload. The authors are prudent, and note that NoSQL is constantly evolving and that evaluations can quickly become obsolete. Rabl et al. [ 25 ] studied Cassandra, Voldemort, HBase, Redis, VoltDB and MySQL Cluster with regards to throughput, latency and scalability. Cassandra’s throughput is consistently better than that of the other databases, but it exhibits high latency. Voldemort, HBase and Cassandra all show linear scalability, and Voldemort has the most stable, lowest latency. Of the tested NoSQL databases, VoltDB has the worst results and HBase also lagged behind the other databases in terms of throughput.

Already in 2013, with the research focus on performance, Thumbtack Technologies produced two white papers comparing Aerospike, Cassandra, Couchbase and MongoDB [ 26 , 27 ]. In [ 26 ], the authors compare the durability and performance trade-offs of several state of the art NoSQL systems. Their results firstly show that Couchbase and Aerospike have good in-memory performance, and that MongoDB and Cassandra lagged behind in bulk loading capabilities. Regarding durability, Aerospike beats the competition in large balanced and read-heavy datasets. For in-memory datasets, Couchbase performed similarly to Aerospike as well. In their second paper [ 27 ], the authors study failover characteristics. Their results allow for many conclusions, but overall tend to indicate that Aerospike, Cassandra and Couchbase give strong availability guarantees.

In [ 28 ], MongoDB and Cassandra are compared in terms of their features and their capabilities by using YCSB. MongoDB is shown to be impacted by high workloads, whereas Cassandra seemed to experience performance boosts with increasing amounts of data. Additionally, Cassandra was found to be superior for update operations. In [ 29 ], the authors studied the applicability of NoSQL to RDF (Resource Description Framework data) processing, and make several key observations: 1) distributed NoSQL systems can be competitive with RDF stores with regards to query times; 2) NoSQL systems scale more gracefully than RDF stores when loading data in parallel; 3) complex SPARQL (SPARQL Protocol and RDF Query Language) queries, particularly with joins, perform poorly on NoSQL systems; 4) classical query optimization techniques work well on NoSQL RDF systems; 5) MapReduce-like operations introduce higher latency. As their final conclusion, the authors state that NoSQL represents a compelling alternative to native RDF stores for simple workloads. Several other studies were performed in the same year regarding the applicability of NoSQL to diverse scenarios, such as [ 30 – 32 ].

More recently, as of 2014, experiments have become less focused on performance and more focused on applicability. NoSQL has seen validation and widespread usage, and so, in [ 10 ], a survey of some of the most popular NoSQL solutions is described. The authors state some of the advantages and main uses according to the NoSQL database type. In another evaluation, the authors of [ 33 ] performed their tests on real medical scenarios using MongoDB and CouchDB. They concluded that MongoDB and CouchDB have similar performance and drawbacks and note that, while applicable to medical imaging archiving, NoSQL still has to improve. In [ 34 ], the Yahoo! Cloud Serving Benchmark is used with a middleware layer that translates SQL queries into NoSQL commands. The authors tested Cassandra and MongoDB with and without the middleware layer, noting that it was possible to build middleware to ease the move from relational data stores to NoSQL databases. In [ 35 ], a write-intensive enterprise application is used as the basis for comparing Cassandra, MongoDB and Couchbase with MS SQL Server. The results show that Cassandra outperforms the other NoSQL databases in a four-node setup, and that MS SQL Server running on a single node vastly outperforms all NoSQL contenders for this particular setup and scenario.

The latest trends in NoSQL research, although still related to applicability and performance, have also concerned the validity of the benchmarking processes and tools used throughout the years. The authors of [ 36 ] propose an improvement of YCSB, called YCSB++, to deal with several shortcomings of the benchmark. In [ 37 ], the author proposes a method to validate previously proposed benchmarks of NoSQL databases, claiming that rigorous algorithms should be used for benchmarking methodology before any practical use. Chen et al., in [ 38 ], survey benchmarking tools such as YCSB and TPC-C, list shortcomings and difficulties in implementing MapReduce and Big Data related benchmark systems, and propose methods for overcoming these difficulties. Similar work had already been done in [ 39 ], where benchmarks are reviewed and suggestions are given on building better benchmarks.

As we have seen, to the best of our knowledge, there are no studies focused on quality attributes and how each NoSQL system fits each of these attributes. Our work attempts to fill in this gap, by reviewing the literature, in Section ‘ Software quality attributes ’, with regards to the different quality attributes, finally presenting our findings in a summary table in Section ‘ Results and discussion ’.

It is important to note that the analysis of NoSQL systems is inherently bound to the CAP theorem [ 40 ]. The CAP theorem, proposed by Brewer, states that no distributed system can simultaneously guarantee Consistency, Availability and Partition-Tolerance. In the context of the CAP theorem [ 40 , 41 ], consistency is often viewed as the premise that all nodes see the same data at the same time [ 42 ]. In terms of Brewer’s CAP theorem, most databases choose to be “AP”, meaning they provide Availability and Partition-Tolerance. Since Partition-Tolerance is a property that often cannot be traded off, Availability and Consistency are juggled, with most databases sacrificing more consistency than availability [ 43 ]. In Fig. 1 , an illustration of CAP is shown.

Fig. 1. CAP theorem with databases that “choose” CA, CP and AP

Some authors (Brewer being one of them) have come to criticize the way the CAP theorem is interpreted and some have claimed that much has been written in literature under false assumptions [ 41 , 44 – 46 ]. The idea of CA (systems which ensure Consistency and Availability) is now most often looked at as a trade-off on a micro-scale [ 41 ], where individual operations can have their consistency guarantees explicitly defined. This means that some operations can be tied to full consistency (in the ACID semantics sense), or to one of a vast range of possible consistency options. Modern NoSQL systems allow for this consistency tuning and should therefore not be looked at under such a simplistic view which narrows the whole system to “CA”, “CP” or “AP”.
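The per-operation view of consistency described above can be illustrated with a minimal sketch. It assumes a Dynamo-style replicated store in which each operation chooses how many replicas must respond; the class and field names are hypothetical, and the overlap rule `r + w > n` is the standard quorum condition rather than the behavior of any specific system discussed here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QuorumConfig:
    """Hypothetical per-operation settings for a store with n replicas."""
    n: int  # replicas holding each item
    w: int  # replica acknowledgements required before a write succeeds
    r: int  # replicas consulted before a read returns

    def reads_see_latest_write(self) -> bool:
        # A read is guaranteed to touch at least one replica that holds
        # the latest acknowledged write only if the two sets overlap.
        return self.r + self.w > self.n


# The same three-replica cluster, tuned differently per operation.
strict = QuorumConfig(n=3, w=2, r=2)   # quorum reads and writes
relaxed = QuorumConfig(n=3, w=1, r=1)  # lower latency, reads may be stale

print(strict.reads_see_latest_write())
print(relaxed.reads_see_latest_write())
```

Under these assumptions, the same cluster behaves as strongly consistent for one operation and eventually consistent for another, which is why a single “CA”, “CP” or “AP” label is too coarse.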

Research design and methodology

This work was developed to answer the following research question: “Is there currently enough knowledge on quality attributes in NoSQL systems to aid a software engineer’s decision process?” In our literature survey, we did not find any similar work attempting to provide a quality attribute guided evaluation of NoSQL databases. Thus, we devised a methodology to develop this work and answer our original research question.

We began by identifying several desirable quality attributes to evaluate in NoSQL databases. There are hundreds of quality attributes, yet some are nearly ubiquitous to every software project [ 47 ], and others are intimately tied to the topic of database systems, storage models and web applications (where the database backend often requires certain quality attributes) [ 48 ]. This led us to identify the following quality attributes to evaluate: Availability, Consistency, Durability, Maintainability, Read and Write performance, Recovery Time, Reliability, Robustness, Scalability and Stabilization Time. These attributes are interdependent and have an impact on most software projects. Most of these attributes have also been the target (even if indirectly) of some studies [ 18 , 27 , 27 , 49 , 50 ], rendering them ideal picks for this work.

Once these quality attributes had been identified, we determined which NoSQL systems were the most popular and widely used, so as to narrow our research to a fixed set of NoSQL databases. This search led us to select Aerospike, Cassandra, Couchbase, CouchDB, HBase, MongoDB and Voldemort as the systems to evaluate. These are often found in literature [ 6 , 10 , 11 , 26 , 51 , 52 ] and other sources [ 53 ] as the most popular and widely used systems, as well as the most versatile or appropriate to certain scenarios. For instance, while Couchbase and CouchDB share source code and several similar original design goals, they have evolved into different systems, both highly successful and with different characteristics. In much the same way, MongoDB and Cassandra, which are probably the most used NoSQL databases in the market, have fundamentally different approaches to the storage model. Thus, our selection of databases attempted to find not only the most popular and mature databases in general, but also those that find high applicability in specific areas.

We surveyed the literature to evaluate the selected quality attributes on the aforementioned databases. This survey took into account already available evaluations regarding certain quality attributes, such as performance [ 51 , 54 ], consistency [ 43 ] or durability [ 26 ]. Each of the surveyed papers was taken into account according to the versions of the database tested (e.g. papers with outdated versions were given less relevance), generality of results and overall relevance to this evaluation. The summary table presented in Section ‘ Results and discussion ’ is the result of this careful evaluation of the NoSQL literature, technical knowledge found on the NoSQL ecosystem and expert opinions and positions. We also took into account the overall architectures of each NoSQL system (e.g. systems built with durability limitations are intrinsically limited in terms of this quality attribute). The result of this methodology is the aforementioned summary table, which we hope will aid software engineers and architects in their decision process when selecting a given NoSQL database according to a certain quality attribute.

In the following sections, we present the databases that we evaluated from the literature, as well as that evaluation.

Evaluated NoSQL databases

There are several popular NoSQL databases which have gained recognition and are usually considered before other NoSQL alternatives. We studied several of these databases (Aerospike, Cassandra, Couchbase, CouchDB, HBase, MongoDB and Voldemort) by performing a literature review and introduce the first quality attribute based evaluation of NoSQL databases. In this section, these selected databases are presented, with a summary table at the end (Table 1 ) detailing their characteristics.

Aerospike (formerly known as Citrusleaf [ 10 ] and recently open-sourced) is a NoSQL shared-nothing key-value database which offers mainly AP (Availability and Partition-Tolerance) characteristics. Additionally, the developers claim that it provides high consistency [ 55 ] by trading off availability and consistency at low granularity in specific subsystems, restricting communication latencies, minimizing cluster size, maximizing consistency and availability during failover situations, and resolving conflicts automatically. Consistency is guaranteed by using synchronous writes to replicas, which provides immediate consistency. This immediate consistency can be relaxed if the software architects deem it necessary. Durability is ensured by guaranteeing the use of flash/SSD on every node and performing direct reads from flash, as well as by replication on several different layers.

Failover can be handled in two different ways [ 55 ]: focusing on high consistency in AP mode, or on Availability in CP (Consistency and Partition-Tolerance) mode. The former uses techniques to “virtually eliminate network based partitioning”, including fast heartbeats and consistent Paxos based cluster formation. These techniques favor Consistency over Availability to ensure that the system does not enter a state of network partition. If, however, partitioning occurs, Aerospike offers two conflict handling policies: one relies on the database’s auto-merging capabilities, and the other offloads the conflict to the application layer so that application developers can resolve the conflicts themselves and write the correct data back to the database. The second way that Aerospike manages failover is to provide Availability while in CP mode. In this mode, availability needs to be sacrificed by, for instance, forcing the minority quorum(s) to halt, thus avoiding data inconsistency if a network split occurs.
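The idea of halting minority partitions in CP mode can be sketched as a simple majority check. This is an illustration under stated assumptions, not Aerospike's actual implementation: the function name and its parameters are hypothetical, and each node is assumed to know the configured cluster size and how many nodes it can currently reach (including itself).

```python
def may_serve_requests(reachable_nodes: int, cluster_size: int) -> bool:
    """Return True if this side of a partition holds a strict majority.

    Hypothetical helper: a side that cannot reach more than half of the
    configured cluster halts, so two sides never accept writes at once.
    """
    if cluster_size <= 0 or not 0 <= reachable_nodes <= cluster_size:
        raise ValueError("reachable_nodes must be within 0..cluster_size")
    return 2 * reachable_nodes > cluster_size


# A five-node cluster split into groups of three and two nodes.
print(may_serve_requests(3, 5))  # majority side keeps serving
print(may_serve_requests(2, 5))  # minority side halts

# An even split of a four-node cluster: neither side has a majority.
print(may_serve_requests(2, 4))
```

The even-split case shows the availability cost of this policy: when no side holds a strict majority, the whole cluster stops accepting requests rather than risk diverging data.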

In summary, Aerospike is an in-memory database with disk persistence, automatic data partitioning and synchronous replication, offering cross data center replication and a configurable failover handling mechanism that favors either full consistency or high consistency [ 10 , 52 , 55 ].

Cassandra is an open-source shared-nothing NoSQL column-store database developed and used at Facebook [ 10 , 52 , 56 ]. It is based on the ideas behind Google BigTable [ 3 ] and Amazon Dynamo [ 14 ].

Cassandra is similar to BigTable in what concerns the data model. The minimal unit of storage is a column, with rows consisting of columns or super columns (nested columns). Columns themselves consist of the name, value and timestamp, all of which are provided by the client. Since it is column-based, rows need not have the same number of columns [ 10 ].

Cassandra supports a SQL-like language called CQL, together with other protocols [ 10 ]. Indexes and secondary indexes are supported, and atomicity is guaranteed at the level of one table row. Persistence is ensured by logging. Consistency is highly tunable per operation: the application developer can specify the desired level of consistency, trading off latency and consistency. Conflicts are resolved based on timestamps (the newest record is kept). The database operates in master-master mode [ 52 ], where no node is different from another, and combines disk persistence with in-memory caching of results, resulting in high write throughput [ 52 , 56 ]. The master-master architecture makes horizontal scaling straightforward [ 56 ]. There are several different partitioning techniques, and replication can be automatically managed by the database [ 56 ].
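To make the column model and the timestamp-based conflict resolution more concrete, the following is a minimal sketch and not Cassandra's implementation. The `Column` type, the `merge_rows` helper and the sample data are hypothetical; the sketch assumes that two replicas hold diverging copies of one row and that, on a timestamp tie, the first replica's value is kept (an arbitrary choice made for this example).

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Column:
    """A column as described in the text: name, value and timestamp."""
    name: str
    value: str
    timestamp: int  # supplied by the client


# A row maps column names to columns; rows may have different columns.
Row = Dict[str, Column]


def merge_rows(a: Row, b: Row) -> Row:
    """Merge two replica copies of a row, keeping the newest column."""
    merged = dict(a)
    for name, col in b.items():
        current = merged.get(name)
        if current is None or col.timestamp > current.timestamp:
            merged[name] = col
    return merged


replica_1: Row = {
    "email": Column("email", "old@example.com", 100),
    "city": Column("city", "Lisbon", 120),
}
replica_2: Row = {"email": Column("email", "new@example.com", 150)}

merged = merge_rows(replica_1, replica_2)
for col in merged.values():
    print(col.name, col.value, col.timestamp)
```

Because resolution happens per column, the merged row keeps the newer `email` from the second replica together with the `city` column that only the first replica holds.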

Apache CouchDB is another open-source project, written in Erlang, and following a document-oriented approach [ 10 ]. Documents are written in JSON and are meant to be accessed with CouchDB’s specific implementation of MapReduce views written in JavaScript.

This database uses a B-tree index [ 10 ], updated during data modifications. These modifications have ACID properties on the document level and the use of MVCC (Multi-Version Concurrency Control) enables readers to never block [ 10 ]. CouchDB’s document manipulation uses optimistic locks by updating an append-only B-tree for data storage, meaning that data must be periodically compressed. This compression, in spite of maintaining availability, may hinder performance [ 10 ].
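The combination of optimistic locking, append-only storage and periodic compaction can be sketched with a toy in-memory store. This is an illustration only and does not reflect CouchDB's API or on-disk format: the `DocumentStore` class, its methods and the integer revision numbers are all hypothetical.

```python
class ConflictError(Exception):
    """Raised when an update is based on a stale revision."""


class DocumentStore:
    """Toy append-only store with optimistic, revision-checked updates."""

    def __init__(self):
        # doc_id -> list of (revision, body); old revisions are kept
        # until compaction, so readers never block writers.
        self._history = {}

    def get(self, doc_id):
        rev, body = self._history[doc_id][-1]
        return rev, dict(body)

    def put(self, doc_id, body, expected_rev=None):
        history = self._history.get(doc_id, [])
        current_rev = history[-1][0] if history else None
        if expected_rev != current_rev:
            raise ConflictError(f"{doc_id}: expected revision {current_rev}")
        new_rev = (current_rev or 0) + 1
        # Append instead of overwriting the previous revision.
        self._history[doc_id] = history + [(new_rev, dict(body))]
        return new_rev

    def compact(self, doc_id):
        # Drop all but the latest revision to reclaim space.
        self._history[doc_id] = self._history[doc_id][-1:]

    def revision_count(self, doc_id):
        return len(self._history[doc_id])


store = DocumentStore()
rev1 = store.put("patient-1", {"status": "admitted"})
rev2 = store.put("patient-1", {"status": "discharged"}, expected_rev=rev1)

try:
    # A second writer still holding rev1 is rejected instead of blocking.
    store.put("patient-1", {"status": "transferred"}, expected_rev=rev1)
except ConflictError as exc:
    print("conflict:", exc)

store.compact("patient-1")
print(store.get("patient-1"))
```

The rejected writer has to re-read the document and retry, which is the cost of never taking locks; compaction is the cost of never overwriting in place.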

Regarding fault-tolerant replication mechanisms [ 57 ], CouchDB supports both master-slave and master-master replication that can be used between different instances of CouchDB or on a single instance. Scaling in CouchDB is achieved by replicating data, a process which is performed asynchronously. It does not natively support sharding/partitioning [ 10 ]. Consistency is guaranteed in the form of strengthened eventual consistency [ 10 ], and conflict resolution is performed by selecting the most up to date version (the application layer can later try to merge conflicting changes, if possible, back into the document). CouchDB’s programming interface is REST-based [ 10 , 57 ]. Ideally, CouchDB should be able to fit the whole dataset into the RAM of the cluster, as it is primarily a RAM-based database.

Couchbase is a combination of Membase (a key-value system with memcached compatibility) and CouchDB. It can be used in key-value fashion, but is considered a document store working with JSON documents (similarly to CouchDB) [ 10 ].