
Binary Classification

What is binary classification?

In machine learning, binary classification is a supervised learning task in which a model assigns each new observation to one of two classes.

The following are a few binary classification applications, where the 0 and 1 columns are two possible classes for each observation:

Quick example

In a medical diagnosis, a binary classifier for a specific disease could take a patient's symptoms as input features and predict whether the patient is healthy or has the disease. The possible outcomes of the diagnosis are positive and negative.

Evaluation of binary classifiers

If the model correctly predicts that a diseased patient is positive, the case is called a True Positive (TP). If the model correctly predicts that a healthy patient is negative, the case is called a True Negative (TN). The binary classifier may also misdiagnose some patients. If a diseased patient is classified as healthy by a negative test result, the error is called a False Negative (FN). Similarly, if a healthy patient is classified as diseased by a positive test result, the error is called a False Positive (FP).

We can evaluate a binary classifier based on the following parameters:

- True Positive (TP): The patient is diseased and the model predicts "diseased"

- False Positive (FP): The patient is healthy but the model predicts "diseased"

- True Negative (TN): The patient is healthy and the model predicts "healthy"

- False Negative (FN): The patient is diseased and the model predicts "healthy"

After obtaining these values, we can compute the accuracy score of the binary classifier as follows: $$ accuracy = \frac {TP + TN}{TP+FP+TN+FN} $$

The following is a confusion matrix , which represents the above parameters:
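As a rough illustration of how the four counts and the accuracy formula fit together, here is a minimal sketch in plain Python. The function names and the eight example labels are hypothetical and chosen only for illustration; they do not come from the article's dataset.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, fp, tn, fn), treating label 1 as 'diseased' (positive)."""
    tp = fp = tn = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == 1 and actual == 1:
            tp += 1  # diseased, predicted diseased
        elif predicted == 1 and actual == 0:
            fp += 1  # healthy, predicted diseased
        elif predicted == 0 and actual == 0:
            tn += 1  # healthy, predicted healthy
        else:
            fn += 1  # diseased, predicted healthy
    return tp, fp, tn, fn


def accuracy(tp, fp, tn, fn):
    """Fraction of all predictions that were correct."""
    return (tp + tn) / (tp + fp + tn + fn)


# Hypothetical labels for eight patients (1 = diseased, 0 = healthy)
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 0, 0, 1]

tp, fp, tn, fn = confusion_counts(y_true, y_pred)
print("TP, FP, TN, FN:", tp, fp, tn, fn)       # 3 1 3 1
print("accuracy:", accuracy(tp, fp, tn, fn))   # 0.75
```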

In machine learning, many methods utilize binary classification. The most common are:

- Support Vector Machines

- Naive Bayes

- Nearest Neighbor

- Decision Trees

- Logistic Regression

- Neural Networks

The following Python example will demonstrate using binary classification in a logistic regression problem.

A Python example for binary classification

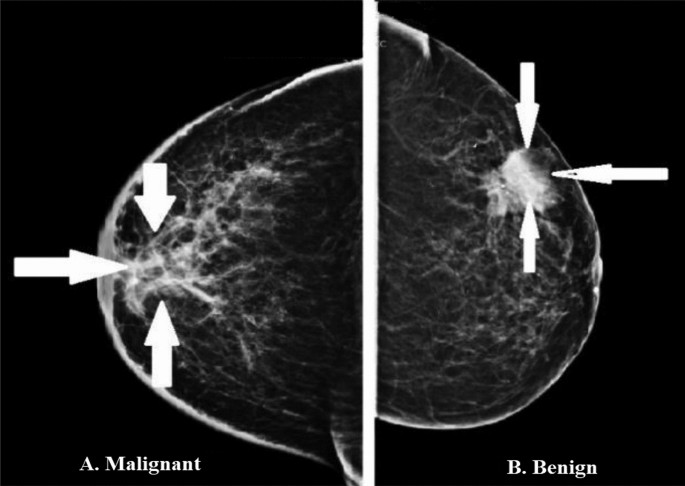

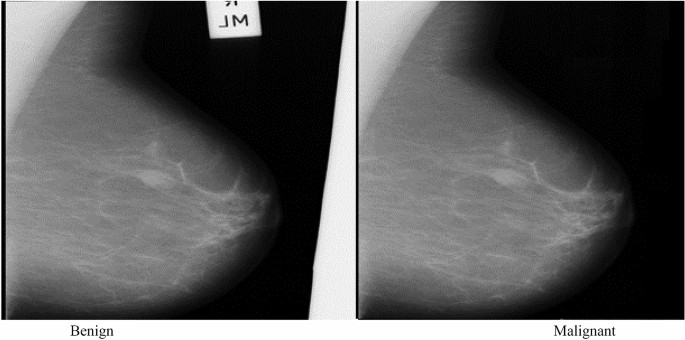

For our data, we will use the breast cancer dataset from scikit-learn. This dataset contains tumor observations and corresponding labels for whether the tumor was malignant or benign.

First, we'll import a few libraries and then load the data. When loading the data, we'll specify as_frame=True so we can work with pandas objects (see our pandas tutorial for an introduction).

The dataset contains a DataFrame for the observation data and a Series for the target data.

Let's see what the first few rows of observations look like:

5 rows × 30 columns

The output shows five observations with a column for each feature we'll use to predict malignancy.

Now, for the targets:

The targets for the first five observations are all zero, meaning the tumors are malignant (in scikit-learn's version of this dataset, 0 denotes malignant and 1 denotes benign). Here's how many malignant and benign tumors are in our dataset:

So we have 357 benign tumors, denoted as 1, and 212 malignant, denoted as 0. With exactly two classes, this is a binary classification problem.

To perform binary classification using logistic regression with sklearn, we must accomplish the following steps.

Step 1: Define explanatory and target variables

We'll store the rows of observations in a variable X and the corresponding class of those observations (0 or 1) in a variable y.

Step 2: Split the dataset into training and testing sets

We use 75% of the data for training and 25% for testing. Setting random_state=0 will ensure your results are the same as ours.
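To make the idea of a seeded 75/25 split concrete, the following is a minimal plain-Python sketch. It is not scikit-learn's implementation: `split_train_test` is a hypothetical helper, the toy rows are invented, and a seed here will not reproduce the same partition that `random_state=0` gives in sklearn.

```python
import random


def split_train_test(rows, labels, test_fraction=0.25, seed=0):
    """Shuffle row indices with a fixed seed, then cut off a test portion."""
    indices = list(range(len(rows)))
    random.Random(seed).shuffle(indices)  # same seed -> same shuffle
    n_test = int(round(len(rows) * test_fraction))
    test_idx, train_idx = indices[:n_test], indices[n_test:]
    X_train = [rows[i] for i in train_idx]
    X_test = [rows[i] for i in test_idx]
    y_train = [labels[i] for i in train_idx]
    y_test = [labels[i] for i in test_idx]
    return X_train, X_test, y_train, y_test


# Toy data: 20 observations with two features each, and alternating labels
rows = [[i, i * 2] for i in range(20)]
labels = [i % 2 for i in range(20)]

X_train, X_test, y_train, y_test = split_train_test(rows, labels)
print(len(X_train), len(X_test))  # 15 5
```

Keeping features and labels indexed by the same shuffled positions is what guarantees each observation stays paired with its own target.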

Step 3: Normalize the data for numerical stability

Note that we normalize after splitting the data. It's good practice to learn the parameters of any data transformation from the training data only and then apply that transformation to both the training and testing data, which prevents data leakage.
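One common way to avoid leakage is to compute the scaling statistics on the training portion and reuse them for the test portion. The sketch below shows that pattern for a single feature in plain Python; the helper names and numbers are hypothetical, and the use of the population standard deviation is an assumption made for this example.

```python
import statistics


def fit_standardizer(column):
    """Learn the mean and (population) standard deviation from training data."""
    return statistics.mean(column), statistics.pstdev(column)


def standardize(column, mean, std):
    """Apply previously learned statistics to any column of values."""
    return [(value - mean) / std for value in column]


train_feature = [2.0, 4.0, 6.0, 8.0]  # hypothetical training values
test_feature = [5.0, 10.0]            # hypothetical test values

# Statistics come from the training data only...
mean, std = fit_standardizer(train_feature)

# ...and are then applied to both sets.
train_scaled = standardize(train_feature, mean, std)
test_scaled = standardize(test_feature, mean, std)
print(train_scaled)
print(test_scaled)
```

Because the test values never influence `mean` or `std`, the test set stays a fair stand-in for unseen data.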

Step 4: Fit a logistic regression model to the training data

This step effectively trains the model to predict the targets from the data.

Step 5: Make predictions on the testing data

With the model trained, we now ask the model to predict targets based on the test data.

Step 6: Calculate the accuracy score by comparing the actual values and predicted values.

We can now calculate how well the model performed by comparing the model's predictions to the true target values, which we reserved in the y_test variable.

First, we'll calculate the confusion matrix to get the necessary parameters:

With these values, we can now calculate an accuracy score:

Other binary classifiers in the scikit-learn library

Logistic regression is just one of many classification algorithms defined in scikit-learn. We'll compare several of the most common, but feel free to read more about these algorithms in the sklearn docs here.

We'll also use the sklearn Accuracy, Precision, and Recall metrics for performance evaluation. See the docs here if you'd like to read more about the available metrics.
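For readers who want to see what precision and recall measure, here is a small plain-Python sketch computed from confusion-matrix counts. The counts are made up for illustration, and the functions are hypothetical stand-ins rather than the sklearn metric functions.

```python
def precision(tp, fp):
    """Of everything predicted positive, how much was truly positive?"""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    """Of everything truly positive, how much did the model find?"""
    return tp / (tp + fn) if (tp + fn) else 0.0


# Hypothetical counts from a test set of 100 observations
tp, fp, tn, fn = 40, 10, 45, 5

print("precision:", precision(tp, fp))  # 0.8
print("recall:", recall(tp, fn))        # 40 / 45
```

Precision penalizes false positives and recall penalizes false negatives, so the two can disagree even when accuracy looks the same.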

Initializing each binary classifier

To quickly train each model in a loop, we'll initialize each model and store it by name in a dictionary:

Performance evaluation of each binary classifier

Now that we've initialized the models, we'll loop over each one, train it by calling .fit(), make predictions, calculate metrics, and store each result in a dictionary.

With all metrics stored, we can use pandas to view the data as a table:

Finally, here's a quick bar chart to compare the classifiers' performance:

Since we're only using the default model parameters, we won't know which classifier is better. We should optimize each algorithm's parameters first to know which one has the best performance.


Meet the Authors

Associate Professor of Computer Engineering. Author/co-author of over 30 journal publications. Instructor of graduate/undergraduate courses. Supervisor of Graduate thesis. Consultant to IT Companies.


Binary Classification with TensorFlow Tutorial

Binary classification is a fundamental task in machine learning, where the goal is to categorize data into one of two classes or categories.

Binary classification is used in a wide range of applications, such as spam email detection, medical diagnosis, sentiment analysis, fraud detection, and many more.

In this article, we'll explore binary classification using TensorFlow, one of the most popular deep learning libraries.

Before getting into binary classification, let's briefly discuss classification problems in machine learning.

What is a Classification Problem?

A Classification problem is a type of machine learning or statistical problem in which the goal is to assign a category or label to a set of input data based on their characteristics or features. The objective is to learn a mapping between input data and predefined classes or categories, and then use this mapping to predict the class labels of new, unseen data points.

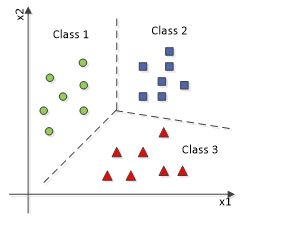

The above diagram represents a multi-classification problem in which the data will be classified into more than two (three here) types of classes.

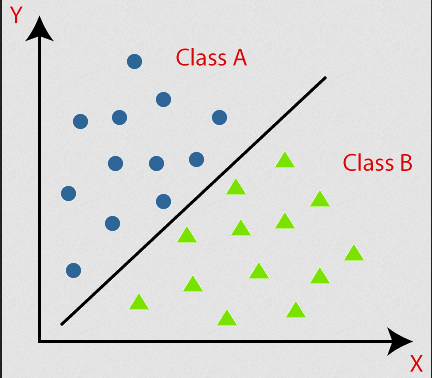

This diagram defines Binary Classification, where data is classified into two type of classes.

This simple concept is enough to understand classification problems. Let's explore this with a real-life example.

Heart Attack Analytics Prediction Using Binary Classification

In this article, we will embark on the journey of constructing a predictive model for heart attack analysis utilizing straightforward deep learning libraries.

The model that we'll be building, while being a relatively simple neural network, is capable of achieving an accuracy level of approximately 80%.

Solving real-world problems through the lens of machine learning entails a series of essential steps:

- Data Collection and Analytics

- Data Preprocessing

- Building ML Model

- Train the Model

- Prediction and Evaluation

It's worth noting that for this project, I obtained the dataset from Kaggle, a popular platform for data science competitions and datasets.

I encourage you to take a closer look at its contents. Understanding the dataset is crucial as it allows you to grasp the nuances and intricacies of the data, which can help you make informed decisions throughout the machine learning pipeline.

This dataset is well-structured, and there's no immediate need for further analysis. However, if you are collecting the dataset on your own, you will need to perform data analytics and visualization independently to achieve better accuracy.

Let's put on our coding shoes.

Here I am using Google Colab. You can use your own machine (in which case you will need to create a .ipynb file) or Google Colab on your account to run the notebook. You can find my source code here.

As the first step, let's import the required libraries.

I have the dataset in my drive and I'm reading it from there. You can download the same dataset here.

Remember to replace the path of your file in the read_csv method:

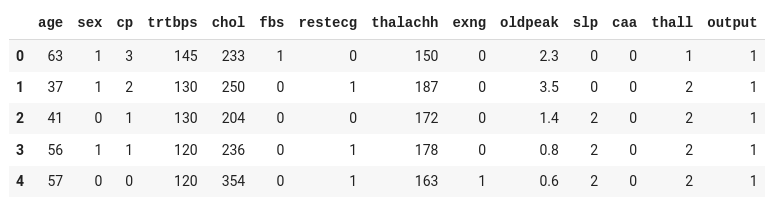

The dataset contains thirteen input columns (age, sex, cp, and so on) and one output column (output), which contains either 0 or 1.

Given the input readings, 0 in the output means the person will not have a heart attack, while 1 means the person will have a heart attack.

Let's split our input and output from the above dataset to train our model:

Since our objective is to predict the likelihood of a heart attack (0 or 1), represented by the target column, we split that into a separate dataset.

Data preprocessing is a crucial step in the machine learning pipeline, and binary classification is no exception. It involves the cleaning, transformation, and organization of raw data into a format that is suitable for training machine learning models.

A dataset can contain multiple types of data, such as numerical data, categorical data, timestamp data, and so on.

However, most machine learning algorithms are designed to work with numerical data. They require input data to be in a numeric format for mathematical operations, optimization, and model training.

In this dataset, all the columns contain numerical data, so we don't need to encode the data. We can proceed with simple normalization.

Remember that if you have any non-numerical columns in your dataset, you may have to convert them to numerical values by performing one-hot encoding or using another encoding method.

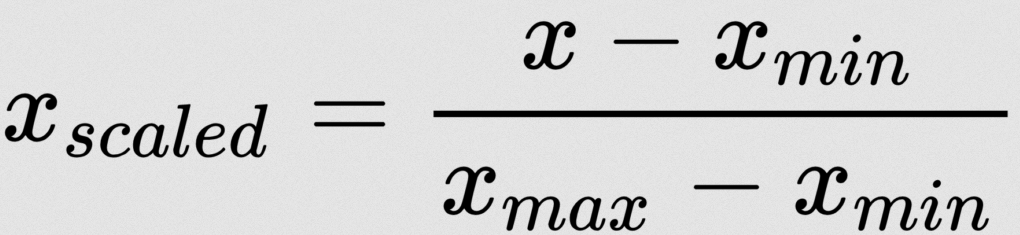

There are a lot of normalization strategies. Here I am using Min-Max Normalization:

Don't worry – we don't need to apply this formula manually. We have some machine learning libraries to do this. Here I am using MinMaxScaler from sklearn:

scaler.fit(df) computes the scaling parameters needed for the operation. For MinMaxScaler, these are the minimum and maximum of each column (a standardizing scaler would instead learn the mean and standard deviation). The fit method essentially learns these parameters from the data.

t_df = scaler.transform(df): After fitting the scaler, we need to transform the dataset. With Min-Max scaling, the transformation maps each feature to a specific range ([0, 1] by default), whereas standardization would rescale features to have a mean of 0 and a standard deviation of 1.
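To show what Min-Max normalization does to a single column, here is a minimal plain-Python sketch of the usual formula, x' = (x - min) / (max - min). The helper names and the example ages are hypothetical, and this is an illustration of the idea rather than the MinMaxScaler implementation.

```python
def fit_min_max(column):
    """Learn the scaling parameters: the column's minimum and maximum."""
    return min(column), max(column)


def transform_min_max(column, lo, hi):
    """Map values to [0, 1] using x' = (x - min) / (max - min)."""
    span = hi - lo
    if span == 0:
        # A constant column carries no information; map it to zeros.
        return [0.0 for _ in column]
    return [(value - lo) / span for value in column]


ages = [29, 45, 61, 77]  # hypothetical values for one feature
lo, hi = fit_min_max(ages)
scaled = transform_min_max(ages, lo, hi)
print(scaled)  # smallest value maps to 0.0, largest to 1.0
```

Splitting the work into a fit step and a transform step mirrors the two calls described above: the parameters are learned once and can then be applied to any data.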

We have completed the preprocessing. The next crucial step is to split the dataset into training and testing sets.

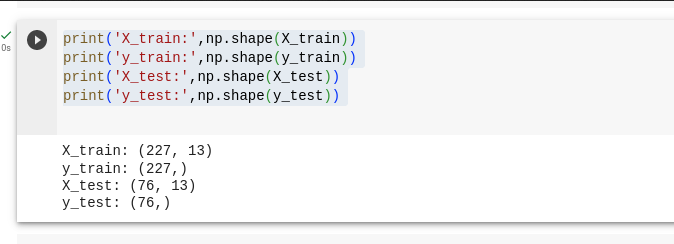

To accomplish this, I will utilize the train_test_split function from scikit-learn .

X_train and X_test are the variables that hold the independent variables.

y_train and y_test are the variables that hold the dependent variable, which represents the output we are aiming to predict.

We split the dataset by 75% and 25%, where 75% goes for training our model and 25% goes for testing our model.

A machine learning model is a computational representation of a problem or a system that is designed to learn patterns, relationships, and associations from data. It serves as a mathematical and algorithmic framework capable of making predictions, classifications, or decisions based on input data.

In essence, a model encapsulates the knowledge extracted from data, allowing it to generalize and make informed responses to new, previously unseen data.

Here, I am building a simple sequential model with one input layer and one output layer. Because this is a simple model, I am not using any additional hidden layers, as they might increase the complexity of the concept.

Initialize Sequential Model

Sequential is a type of model in Keras that allows you to create neural networks layer by layer in a sequential manner. Each layer is added on top of the previous one.

Input Layer

Dense is a type of layer in Keras, representing a fully connected layer. It has 16 units, which means it has 16 neurons.

activation='relu' specifies the Rectified Linear Unit (ReLU) activation function, which is commonly used in input or hidden layers of neural networks.

input_shape=(13,) indicates the shape of the input data for this layer. In this case, we are using 13 input features (columns).

Output Layer

This line adds the output layer to the model.

It has a single neuron (1 unit) because this is a binary classification problem, where you're predicting one of two classes (0 or 1).

The activation function used here is 'sigmoid', which is commonly used for binary classification tasks. It squashes the output to a range between 0 and 1, representing the probability of belonging to the positive class (class 1).
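The squashing behaviour of the sigmoid is easy to check numerically. The following plain-Python sketch is written only for illustration; it is not the Keras implementation, and the branch on the sign of `z` is simply one way to avoid overflow for large negative inputs.

```python
import math


def sigmoid(z):
    """Map any real number to the interval (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    # For negative z, rewrite the formula so exp() never overflows.
    exp_z = math.exp(z)
    return exp_z / (1.0 + exp_z)


for z in (-5, -1, 0, 1, 5):
    print(z, round(sigmoid(z), 4))
```

An input of 0 maps to exactly 0.5, large positive inputs approach 1, and large negative inputs approach 0, which is why the output can be read as a probability.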

This line initializes the Adam optimizer with a learning rate of 0.001. The optimizer is responsible for updating the model's weights during training to minimize the defined loss function.

Compile Model

Here, we'll compile the model.

loss='binary_crossentropy' is the loss function used for binary classification. It measures the difference between the predicted and actual values and is minimized during training.
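To give a feel for what this loss measures, here is a plain-Python sketch of the standard binary cross-entropy formula, averaged over the examples. The function is a hypothetical illustration, and the clipping constant `eps` is an assumption used only to keep `log` away from zero.

```python
import math


def binary_cross_entropy(y_true, y_prob, eps=1e-7):
    """Average of -[y*log(p) + (1 - y)*log(1 - p)] over all examples."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)


labels = [1, 0]
print(binary_cross_entropy(labels, [0.9, 0.1]))  # confident and right: small loss
print(binary_cross_entropy(labels, [0.5, 0.5]))  # unsure: log(2)
print(binary_cross_entropy(labels, [0.1, 0.9]))  # confident and wrong: large loss
```

The loss grows sharply when the model assigns a high probability to the wrong class, which is what pushes the weights toward better-calibrated predictions during training.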

metrics=["accuracy"] : During training, we want to monitor the accuracy metric, which tells you how well the model is performing in terms of correct predictions.

Train model with dataset

Hurray, we built the model. Now it's time to train the model with our training dataset.

X_train represents the training data, which consists of the independent variables (features). The model will learn from these features to make predictions or classifications.

y_train are the corresponding target labels or dependent variables for the training data. The model will use this information to learn the patterns and relationships between the features and the target variable.

epochs=100: The epochs parameter specifies the number of times the model will iterate over the entire training dataset. Each pass through the dataset is called an epoch. In this case, we have 100 epochs, meaning the model will see the entire training dataset 100 times during training.
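The role of epochs can be seen in a stripped-down training loop. The sketch below trains a single sigmoid neuron with plain stochastic gradient descent on an invented one-feature dataset. It is not what Keras does internally: it swaps in simple gradient descent instead of the Adam optimizer, and the function name, learning rate, and data are all assumptions for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_single_neuron(xs, ys, epochs=100, learning_rate=0.5):
    """Fit weight and bias of one sigmoid neuron by gradient descent."""
    weight, bias = 0.0, 0.0
    for _ in range(epochs):           # one epoch = one full pass over the data
        for x, y in zip(xs, ys):
            prediction = sigmoid(weight * x + bias)
            error = prediction - y    # gradient of the cross-entropy loss w.r.t. the pre-activation
            weight -= learning_rate * error * x
            bias -= learning_rate * error
    return weight, bias


# Invented data: small inputs belong to class 0, large inputs to class 1
xs = [0.0, 0.1, 0.2, 0.8, 0.9, 1.0]
ys = [0, 0, 0, 1, 1, 1]

weight, bias = train_single_neuron(xs, ys)
print("weight:", weight, "bias:", bias)
print("P(class 1 | x=0.0):", sigmoid(bias))
print("P(class 1 | x=1.0):", sigmoid(weight + bias))
```

Each additional epoch gives the weights another chance to move in the direction that reduces the loss, which is why too few epochs can leave a model underfit.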

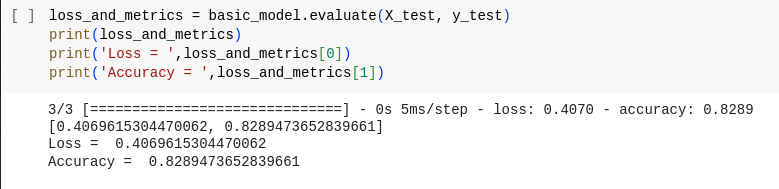

The evaluate method is used to assess how well the trained model performs on the test dataset. It computes the loss (often the same loss function used during training) and any specified metrics (for example, accuracy) for the model's predictions on the test data.

Here we got around 82% accuracy.

The predict method is used to generate predictions from the model based on the input data (X_test in this case). The output (predicted) will contain the model's predictions for each data point in the test dataset.

Since I have only a small dataset, I am using the test dataset for prediction. However, it is recommended practice to set aside a part of the dataset (say 10%) to use as a validation dataset.

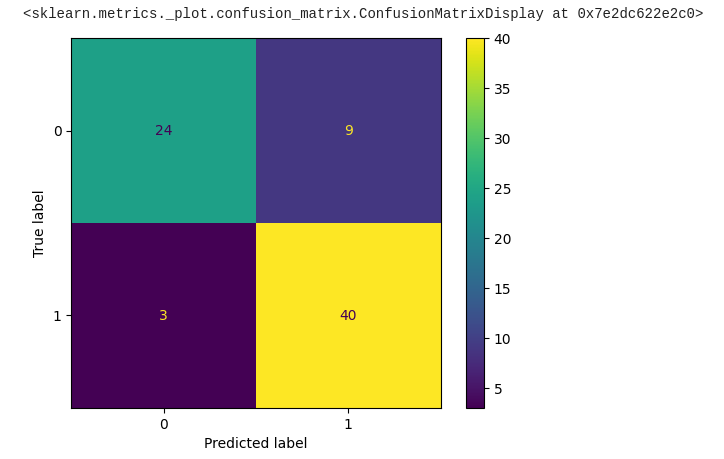

Evaluating predictions in machine learning is a crucial step to assess the performance of a model.

One commonly used tool for evaluating classification models is the confusion matrix. Let's explore what a confusion matrix is and how it's used for model evaluation:

In a binary classification problem (two classes, for example, "positive" and "negative"), a confusion matrix typically looks like this:

Here's the code to plot the confusion matrix from the predicted data of our model:
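The author's plotting code is not reproduced here. As a stand-in, the following plain-Python sketch shows the two steps that sit behind such a plot: turning sigmoid probabilities into class labels with a 0.5 threshold, and tallying a 2x2 matrix. The probabilities, labels, and helper names are hypothetical, and the layout (rows are actual classes, columns are predicted classes) is an assumption of this example.

```python
def to_labels(probabilities, threshold=0.5):
    """Convert predicted probabilities into 0/1 class labels."""
    return [1 if p >= threshold else 0 for p in probabilities]


def confusion_matrix_2x2(y_true, y_pred):
    """Rows index the actual class, columns index the predicted class."""
    matrix = [[0, 0], [0, 0]]
    for actual, predicted in zip(y_true, y_pred):
        matrix[actual][predicted] += 1
    return matrix  # [[TN, FP], [FN, TP]]


# Hypothetical model outputs and true labels for six test rows
probabilities = [0.91, 0.12, 0.55, 0.48, 0.73, 0.05]
y_test = [1, 0, 0, 1, 1, 0]

predicted_labels = to_labels(probabilities)
matrix = confusion_matrix_2x2(y_test, predicted_labels)
for row in matrix:
    print(row)
# [2, 1]
# [1, 2]
```

The diagonal holds the correct predictions, so the accuracy is the sum of the diagonal divided by the total number of rows.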

Bravo! We've made significant progress toward obtaining the required output, with approximately 84% of the predictions appearing to be correct.

It's worth noting that we can further optimize this model by leveraging a larger dataset and fine-tuning the hyper-parameters. However, for a foundational understanding, what we've accomplished so far is quite impressive.

Given that this dataset and the corresponding machine learning models are at a very basic level, it's important to acknowledge that real-world scenarios often involve much more complex datasets and machine learning tasks.

While this model may perform adequately for simple problems, it may not be suitable for tackling more intricate challenges.

In real-world applications, datasets can be vast and diverse, containing a multitude of features, intricate relationships, and hidden patterns. Consequently, addressing such complexities often demands a more sophisticated approach.

Here are some key factors to consider when working with complex datasets.

- Complex Data Preprocessing

- Advanced Data Encoding

- Understanding Data Correlation

- Multiple Neural Network Layers

- Feature Engineering

- Regularization

If you're already familiar with building a basic neural network, I highly recommend delving into these concepts to excel in the world of Machine Learning.

In this article, we embarked on a journey into the fascinating world of machine learning, starting with the basics.

We explored the fundamentals of binary classification, a core machine learning task. From understanding the problem to building a simple model, we've gained insights into the foundational concepts that underpin this powerful field.

So, whether you're just starting or already well along the path, keep exploring, experimenting, and pushing the boundaries of what's possible with machine learning. I'll see you in another exciting article!

If you wish to learn more about artificial intelligence / machine learning / deep learning, subscribe to my article by visiting my site , which has a consolidated list of all my articles.

Project Manager by Profession. Software Developer by Passion. Curious to explore technologies. Live in Chennai



Title: Handling Imbalanced Data: A Case Study for Binary Class Problems

Abstract: For several years, imbalanced data has been one of the major issues in solving classification problems. Because the majority of machine learning algorithms assume by default that all data are balanced, they do not take the distribution of the sample classes into consideration. The results tend to be unsatisfactory and skewed towards the majority class. This implies that a model built on imbalanced data, without handling the imbalance, could be misleading in both practice and theory. Most researchers have applied the Synthetic Minority Oversampling Technique (SMOTE) and the Adaptive Synthetic (ADASYN) Sampling Approach independently in their works and have not explained the algorithms behind these techniques with computed examples. This paper focuses on both synthetic oversampling techniques and manually computes synthetic data points to make the algorithms easier to understand. We analyze the application of these synthetic oversampling techniques to binary classification problems with different imbalance ratios and sample sizes.
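The core step of SMOTE-style oversampling is an interpolation between a minority sample and one of its nearest minority neighbors: new = sample + gap * (neighbor - sample), with gap drawn from [0, 1). The plain-Python sketch below illustrates that step only. It is not the paper's computation or a library implementation; the function names, the toy points, and the choice of k are assumptions for illustration.

```python
import math
import random


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def k_nearest(sample, candidates, k):
    """Return the k minority points closest to `sample` (excluding itself)."""
    others = [c for c in candidates if c is not sample]
    return sorted(others, key=lambda c: euclidean(sample, c))[:k]


def interpolate(sample, neighbor, gap):
    """Place a synthetic point on the segment between sample and neighbor."""
    return [s + gap * (n - s) for s, n in zip(sample, neighbor)]


def smote_like(minority, n_new, k=2, seed=0):
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        sample = rng.choice(minority)
        neighbor = rng.choice(k_nearest(sample, minority, k))
        synthetic.append(interpolate(sample, neighbor, rng.random()))
    return synthetic


# Hypothetical minority-class points with two features each
minority = [[1.0, 1.0], [2.0, 1.0], [1.5, 2.0], [3.0, 3.0]]
new_points = smote_like(minority, n_new=3)
print(new_points)
print(interpolate([1.0, 1.0], [3.0, 5.0], 0.5))  # [2.0, 3.0]
```

Because every synthetic point lies on a line segment between two real minority points, the new data stays inside the region the minority class already occupies.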


Open Access

Peer-reviewed

Research Article

The Role of Balanced Training and Testing Data Sets for Binary Classifiers in Bioinformatics

Affiliation Institute for Cancer Research, Fox Chase Cancer Center, Philadelphia, Pennsylvania, United States of America

* E-mail: [email protected]

- Qiong Wei,

- Roland L. Dunbrack Jr

- Published: July 9, 2013

- https://doi.org/10.1371/journal.pone.0067863


Training and testing of conventional machine learning models on binary classification problems depend on the proportions of the two outcomes in the relevant data sets. This may be especially important in practical terms when real-world applications of the classifier are either highly imbalanced or occur in unknown proportions. Intuitively, it may seem sensible to train machine learning models on data similar to the target data in terms of proportions of the two binary outcomes. However, we show that this is not the case using the example of prediction of deleterious and neutral phenotypes of human missense mutations in human genome data, for which the proportion of the binary outcome is unknown. Our results indicate that using balanced training data (50% neutral and 50% deleterious) results in the highest balanced accuracy (the average of True Positive Rate and True Negative Rate), Matthews correlation coefficient, and area under ROC curves, no matter what the proportions of the two phenotypes are in the testing data. Besides balancing the data by undersampling the majority class, other techniques in machine learning include oversampling the minority class, interpolating minority-class data points and various penalties for misclassifying the minority class. However, these techniques are not commonly used in either the missense phenotype prediction problem or in the prediction of disordered residues in proteins, where the imbalance problem is substantial. The appropriate approach depends on the amount of available data and the specific problem at hand.

Citation: Wei Q, Dunbrack RL Jr (2013) The Role of Balanced Training and Testing Data Sets for Binary Classifiers in Bioinformatics. PLoS ONE 8(7): e67863. https://doi.org/10.1371/journal.pone.0067863

Editor: Iddo Friedberg, Miami University, United States of America

Received: November 10, 2012; Accepted: May 23, 2013; Published: July 9, 2013

Copyright: © 2013 Wei, Dunbrack. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Funding: Funding was provided by National Institutes of Health (NIH) Grant GM84453, NIH Grant GM73784, and the Pennsylvania Department of Health. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Competing interests: I have read the journal’s policy and have the following conflicts. I (Roland Dunbrack) have previously served as a guest editor for PLOS ONE. This does not alter our adherence to all the PLOS ONE policies on sharing data and materials.

Introduction

In several areas of bioinformatics, binary classifiers are common tools that have been developed for applications in the biological community. Based on input or calculated feature data, the classifiers predict the probability of a positive (or negative) outcome with probability P(+) = 1 - P(-). Examples of this kind of classifier in bioinformatics include the prediction of the phenotypes of missense mutations in the human genome [1]–[8], the prediction of disordered residues in proteins [9]–[17], and the presence/absence of beta turn, regular secondary structures, and transmembrane helices in proteins [18]–[21].

While studying the nature of sequence and structure features for predicting the phenotypes of missense mutations [22]–[25], we were confronted by the fact that we do not necessarily know the rate of actual deleterious phenotypes in human genome sequence data. Recently, very large amounts of such data have become available, especially from cancer genome projects comparing tumor and non-tumor samples [26]. This led us to question the nature of our training and testing data sets, and how the proportions of positive and negative data points would affect our results. If we trained a classifier with balanced data sets (50% deleterious, 50% neutral), but ultimately genomic data have much lower rates of deleterious mutations, would we overpredict deleterious phenotypes? Or should we try to create training data that resembles the potential application data? Should we choose neutral data that closely resembles potential input, for example human missense mutations in SwissVar, or should we use more distinct data, for example data from close orthologues of human sequences in other organisms, in particular primates?

Traditional learning methods are designed primarily for balanced data sets. The most commonly used classification algorithms, such as Support Vector Machines (SVM), neural networks and decision trees, aim to optimize objective functions that usually lead to the maximum overall accuracy, that is, the ratio of correct predictions to all predictions made. When these methods are trained on very imbalanced data sets, they often tend to produce majority classifiers, which over-predict the presence of the majority class. For a majority positive training data set, these methods will have a high true positive rate (TPR) but a low true negative rate (TNR). Many studies have shown that for several base classifiers, a balanced data set provides improved overall classification performance compared to an imbalanced data set [27]–[29].
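The effect of a majority classifier on these rates can be shown with a small plain-Python sketch. The 90/10 class split and the always-positive predictions are invented for illustration and are not data from the paper.

```python
# Hypothetical test set: 90 positives (majority) and 10 negatives (minority)
y_true = [1] * 90 + [0] * 10

# A majority classifier predicts the majority class for every input
y_pred = [1] * len(y_true)

tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)

overall_accuracy = (tp + tn) / len(y_true)
tpr = tp / (tp + fn)  # true positive rate
tnr = tn / (tn + fp)  # true negative rate

print("accuracy:", overall_accuracy)          # 0.9
print("TPR:", tpr, "TNR:", tnr)               # 1.0 0.0
print("balanced accuracy:", (tpr + tnr) / 2)  # 0.5
```

The overall accuracy looks strong even though the classifier never identifies a single minority-class example, which is the failure mode that metrics such as balanced accuracy are meant to expose.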

There are several methods in machine learning for dealing with imbalanced data sets such as random undersampling and oversampling [29] , [30] , informed undersampling [31] , generating synthetic (interpolated) data [32] , [33] , sampling with data cleaning techniques [34] , cluster-based sampling [35] and cost-sensitive learning in which there is an additional cost to misclassifying a minority class member compared to a majority class member [36] , [37] . Provost has given a general overview of machine learning from imbalanced data sets [38] , and He and Garcia [39] show the major opportunities, challenges and potential important research directions for learning from imbalanced data.
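Of the approaches listed above, random undersampling is the simplest to sketch: keep every minority example and draw an equally sized random subset of the majority class. The plain-Python version below is a hypothetical illustration (the function name, seed, and toy data are assumptions), not the procedure used in any of the cited works.

```python
import random


def undersample_majority(rows, labels, seed=0):
    """Randomly shrink every class to the size of the smallest class."""
    rng = random.Random(seed)
    by_class = {}
    for row, label in zip(rows, labels):
        by_class.setdefault(label, []).append(row)

    smallest = min(len(members) for members in by_class.values())

    balanced_rows, balanced_labels = [], []
    for label, members in by_class.items():
        for row in rng.sample(members, smallest):  # sample without replacement
            balanced_rows.append(row)
            balanced_labels.append(label)
    return balanced_rows, balanced_labels


# Toy data: nine examples of class 1 and three of class 0
rows = [[i] for i in range(12)]
labels = [1] * 9 + [0] * 3

balanced_rows, balanced_labels = undersample_majority(rows, labels)
print(balanced_labels.count(1), balanced_labels.count(0))  # 3 3
```

The trade-off is that undersampling discards majority-class data, which is why the choice of technique depends on how much data is available.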

Despite the significant literature in machine learning from imbalanced data sets, this issue is infrequently discussed in the bioinformatics literature. In the missense mutation prediction field, training and testing data are frequently not balanced and the methods developed in machine learning for dealing with imbalanced data are not utilized. Table 1 shows the number of mutations and the percentage of deleterious mutations in training data set and testing data set for 11 publicly available servers for missense phenotype prediction [1] – [3] , [6] , [7] , [40] – [42] . Most of them were trained on imbalanced data sets, especially, nsSNPAnalyzer [3] , PMut [2] , [43] , [44] , SeqProfCod [41] , [45] and MuStab [46] . With a few exceptions, the balanced or imbalanced nature of the training and testing set in phenotype prediction was not discussed in the relevant publications. In one exception, Dobson et al. [47] determined that measures of prediction performance are greatly affected by the level of imbalance in the training data set. They found that the use of balanced training data sets increases the phenotype prediction accuracy compared to imbalanced data sets as measured by the Matthews Correlation Coefficient (MCC). The developers of the web servers SNAP [5] , [6] and MuD [7] also employed balanced training data sets, citing the work of Dobson et al. [47] .


https://doi.org/10.1371/journal.pone.0067863.t001

The sources of deleterious and neutral mutation data are also of some concern. These are also listed in Table 1 for several available programs. The largest publicly available data set of disease-associated (or deleterious) mutations is the SwissVar database [48] . Data in SwissVar are derived from annotations in the UniprotKB database [49] . Care et al. assessed the effect of choosing different sources for neutral data sets [50] , including SwissVar human polymorphisms for which phenotypes are unknown, sequence differences between human and mammalian orthologues, and the neutral variants in the Lac repressor [51] and lysozyme data sets [52] . They argue that the SwissVar human polymorphism data set is closer to what one would expect from random mutations under no selection pressure, and therefore represent the best “neutral” data set. They show convincingly that the possible accuracy one may achieve depends on the choice of neutral data set.

In this paper, we investigate two methodological aspects of the binary classification problem. First, we consider the general problem of what effect the proportion of positive and negative cases in the training and testing sets has on the performance as assessed by some commonly used metrics. The basic question is how to achieve the best results, especially in the case where the proportion in future applications of the classifier is unknown. We show that the best results are obtained when training on balanced data sets, regardless of the rate of proportions of positives and negatives in the testing set. This is true as long as the method of assessment on the testing set appropriately accounts for any imbalance in the testing set. Our results indicate that “balanced accuracy” (the mean of TPR and TNR) is quite flat with respect to testing proportions, but is quite sensitive to balance in the training set, reaching a maximum for balanced training sets. The Matthews’ correlation coefficient is sensitive to the proportions in both the testing set and the training set, while the area under the ROC curve is not very sensitive to the testing set proportions and also not to the training set proportions when the minority class is at least 30% of the training data. Thus, while the testing measures depend to greater or lesser extents on the balance of the training and/or testing sets, they all achieve the best results on the combined use of balanced training sets and balanced testing sets.

Second, for the specific case of missense mutations, we show data that mutations derived from human/non-human-primate sequence comparisons may provide a better data set compared to the human polymorphism data. This is precisely because the primate sequence differences with human proteins are more consistent with what we would expect on biophysical grounds than the human variants. The latter are of unknown phenotype and may be the result of recent mutations in the human genome, some of which may be at least mildly to moderately deleterious.

To compile a human mutation data set, we downloaded data on mutations from the SwissVar database (release 57.8 of 22-Sep-2009) [48]. We removed unclassified variants, variants in very long proteins (sequences of more than 2000 amino acids, excluded to reduce computation time), redundant variants, and variants that are not accessible by single-site nucleotide substitutions (just 150 mutation types are accessible by a single-site nucleotide change). From the remaining variants we compiled two separate data sets: human disease mutations, used as the deleterious mutations and labeled HumanDisease, and human polymorphisms, used as the neutral mutations and labeled HumanPoly.

Non-human primate sequences were obtained from UniprotKB [49]. We used PSI-BLAST [53], [54] to identify likely primate orthologues of human proteins in the SwissVar data sets using a sequence identity cutoff of 90% between the human and primate sequences. More than 75% of the human-primate pairs we identified in this procedure have sequence identity greater than 95%, and are very probably orthologues. Amino acid differences in the PSI-BLAST alignments that had no insertions or deletions within 10 amino acids on either side were compiled into a data set of human/primate sequence differences, PrimateMut. Only differences consistent with single-site nucleotide substitutions were included in PrimateMut, although we did not directly check DNA sequences to see if this is how the sequence changes occurred. Finally, where possible, we mapped the human mutation sites in the HumanDisease, HumanPoly, and PrimateMut data sets to known structures of human proteins in the PDB using SIFTS [55], which provides Uniprot sequence identifiers and sequence positions for residues in the PDB. This mapping produced three data sets, HumanDiseaseStr, HumanPolyStr, and PrimateMutStr.

To produce an independent test set, we compared SwissVar release 2012_03 (March 21, 2012) with release 57.8 (Sep. 22, 2009), which was used in the previous calculations. We selected the human disease mutations and human polymorphisms contained in the new release, and searched all human proteins in Uniprot/SwissProt against primate sequences to obtain additional primate polymorphisms. We then compared these human disease mutations and primate polymorphisms with our training data set and kept those not contained in the training data set as our independent testing data set. The resulting independent testing data set contains 2316 primate polymorphisms, 1407 human polymorphisms and 1405 human disease mutations.

The data sets are available in Data S1 .

Calculation of Sequence and Structure Features

We used PSI-BLAST [53] , [54] to search human and primate protein sequences against the database UniRef90 [49] for two rounds with an E-value cutoff of 10 to calculate the PSSM score for the mutations. From the position-specific scoring matrices (PSSMs) output by PSI-BLAST, we obtained the dPSSM score which is the difference between the PSSM score of the wildtype residues and the PSSM scores of the mutant residues.
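The dPSSM feature described above is a simple difference of two matrix entries. The following is a minimal sketch, assuming the PSSM row for one sequence position has already been parsed into a dictionary keyed by one-letter amino acid codes; the function name `dpssm` and the example scores are hypothetical and are not taken from the paper's data.

```python
def dpssm(pssm_row, wildtype, mutant):
    """Return the wildtype PSSM score minus the mutant PSSM score.

    pssm_row is assumed to map one-letter amino acid codes to the
    PSSM scores at a single sequence position.
    """
    return pssm_row[wildtype] - pssm_row[mutant]


# Hypothetical scores at one position where arginine (R) is conserved.
row = {"R": 5, "K": 2, "W": -3}

print(dpssm(row, "R", "K"))  # 3: conservative substitution, small difference
print(dpssm(row, "R", "W"))  # 8: non-conservative substitution, large difference
```

A large positive value indicates that the mutant residue is much less favored than the wildtype residue among the aligned homologues at that position.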

To calculate a conservation score, we parsed the PSI-BLAST output to select homologues with sequence identity greater than 20% for each human and primate protein. We used BLASTCLUST to cluster the homologues of each query using a threshold of 35%, so that the sequences in each cluster were all homologous to each other with a sequence identity ≥35%. A multiple sequence alignment of the sequences in the cluster containing the query was created with the program Muscle [56], [57]. Finally, the multiple sequence alignment was input to the program AL2CO [58] to calculate the conservation score for human and primate proteins.

For each human mutation position, we determined if the amino acid was present in the coordinates of the associated structures (according to SIFTS). Similarly, for each primate mutation, we determined whether the amino acid of the human query homologue was present in the PDB structures. For each protein in our human and primate data sets whose (human) structure was available in the PDB according to SIFTS, we obtained the symmetry operators for creating the biological assemblies from the PISA website and applied these symmetry operators to create coordinates for their predicted biological assemblies. We used the program Naccess [59] to calculate surface area for each wildtype position in the biological assemblies as well as in the monomer chains containing the mutation site (i.e., from coordinate files containing only a single protein with no biological assembly partners or ligands). If the amino acid at a human mutation position was present in the coordinates of more than one associated structure, we calculated the surface area in each of those structures and took the minimum as the surface area for that mutation.

Contingency Tables for Mutations

Because the two sets of data are independent and being compared to their average, there are $2k-1$ degrees of freedom (299 for the $k = 150$ mutation types accessible by single-nucleotide changes).

Accuracy Measures

The ROC curve is a plot of the true positive rate versus the false positive rate for a given predictor. A random predictor would give a value of 0.5 for the area under the ROC curve, and a perfect predictor would give 1.0. The area measures discrimination, that is, the ability of the prediction score to correctly sort positive and negative cases.
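The interpretation of the area as a measure of sorting ability can be made concrete. The sketch below assumes the standard rank-based formulation, in which the AUC equals the fraction of positive/negative pairs that the score orders correctly, with ties counted as one half. The function name `roc_auc` and the toy scores are illustrative only.

```python
def roc_auc(scores, labels):
    """Area under the ROC curve computed as a pairwise ranking statistic.

    Counts the fraction of (positive, negative) pairs in which the positive
    case receives the higher score; tied pairs contribute 0.5.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


labels = [1, 1, 0, 0]
print(roc_auc([0.9, 0.8, 0.2, 0.1], labels))  # 1.0: every positive outranks every negative
print(roc_auc([0.5, 0.5, 0.5, 0.5], labels))  # 0.5: uninformative scores
print(roc_auc([0.9, 0.4, 0.6, 0.1], labels))  # 0.75: one of four pairs is misordered
```

Because only the ordering of scores matters, the value does not depend on any particular decision cutoff.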

The Selection of Neutral Data Sets

From SwissVar, we obtained a set of human missense mutations associated with disease and a set of polymorphisms of unknown phenotype, often presumed to be neutral. From the same set of proteins in SwissVar, we identified single-site mutations between human proteins and orthologous primate sequences with PSI-BLAST (see Methods). Table 2 gives the number of proteins and mutations in each of six data sets: HumanPoly, HumanDisease, PrimateMut and those subsets observable in experimental three-dimensional structures of the human proteins, HumanPolyStr, HumanDiseaseStr, and PrimateMutStr .

https://doi.org/10.1371/journal.pone.0067863.t002

By contrast, the values of G when comparing two different data sets exhibit much larger values. Table 3 shows G for various pairs of data sets. According to the G values in Table 3 , the large data sets HumanPoly and PrimateMut are the most similar, while HumanDisease is quite different from either. However, HumanPoly is closer to HumanDisease than PrimateMut , which brings up the question of which is the better neutral data set. The values of G for the subsets with structure follow a similar pattern ( Table 3 ). P-values for the values of G in Table 3 are all less than 0.001.

https://doi.org/10.1371/journal.pone.0067863.t003

Care et al. [50] showed that the Swiss-Prot polymorphism data are closer to nucleotide changes in non-coding sequence regions than human/non-human mammal mutations are. However, the non-coding sequences are not under the same selection pressure as coding regions are. While positions with mutations leading to disease are likely to be under strong selective pressure (depending on the nature of the disease), it is still likely that positions of known neutral mutations are under some selection pressure to retain basic biophysical properties of the amino acids at those positions.

Only those 150 mutations accessible by single-nucleotide changes are shown in color; others are shown in gray. Wildtype residue types are given along the x-axis and mutant residue types are given along the y-axis. Blue squares indicate substitution types that are overrepresented in PrimateMut , while orange squares indicate substitution types that are overrepresented in HumanPoly .

https://doi.org/10.1371/journal.pone.0067863.g001

It is immediately obvious from Figure 1 that mutations we would consider on biophysical grounds to be largely neutral (R→K, F→Y, V→I and vice versa) are overrepresented in the PrimateMut data compared to the HumanPoly data. Conversely, mutations that on biophysical grounds we would expect to be deleterious (R→W, mutations of C, G, or P to other residue types, large aromatic to charged or polar residues) are overrepresented in the HumanPoly data compared to the PrimateMut data.

We calculated predicted disorder regions for the proteins in each of the data sets using the programs IUpred [10] , Espritz [65] , and VSL2 [66] . Residues were predicted to be disordered if two of the three programs predicted disorder. According to predicted disorder regions, we calculated whether the mutation positions in each data set were in regions predicted to be ordered or disordered. In the HumanPoly and PrimateMut data sets, 31% and 23.6% of the mutations were predicted to be in disordered regions respectively, while in the HumanDisease set only 14.3% of the mutations were in predicted disordered regions. Thus, the differences between HumanPoly and PrimateMut are not due to differences in one important factor that may lead to additional mutability of amino acids, in that disordered regions are more highly divergent in sequence than folded protein domains. This result does explain why the proportion of residues in HumanDisease that can be found in known structures ( HumanDiseaseStr ), 36.4%, is so much higher than that for HumanPoly and PrimateMut , 11.3% and 15.7% respectively.
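The two-of-three consensus rule used above can be sketched as a per-residue vote. This is a minimal illustration, assuming each predictor's output has already been reduced to one boolean per residue and reading "two of the three" as "at least two"; the function name and the toy inputs are hypothetical and do not reproduce the actual output formats of IUpred, Espritz, or VSL2.

```python
def consensus_disorder(iupred, espritz, vsl2):
    """Per-residue consensus: disordered if at least two of three predictors agree.

    Each argument is a list of booleans (True = predicted disordered),
    one entry per residue, all of the same length.
    """
    if not (len(iupred) == len(espritz) == len(vsl2)):
        raise ValueError("all predictions must cover the same residues")
    return [(a + b + c) >= 2 for a, b, c in zip(iupred, espritz, vsl2)]


# Toy predictions for a four-residue stretch.
calls = consensus_disorder(
    [True, True, False, False],
    [True, False, False, True],
    [False, True, False, False],
)
print(calls)  # [True, True, False, False]
```

The fraction of mutations in predicted disordered regions is then the share of mutation positions whose consensus call is `True`.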

Further, we checked if the proteins in the different sets had different numbers of homologues in Uniref100, considering that the disease-related proteins may occur in more conserved pathways in a variety of organisms. We calculated the average number of proteins in clusters of sequences related to each protein in the three sets using BLASTCLUST, as described in the Methods. Proteins in each cluster containing a query protein were at least 35% identical to each other and the query. Proteins in the HumanDisease, HumanPoly, and PrimateMut had 26.4, 25.8, and 28.5 proteins on average respectively (standard deviations of 89.6, 103.2, and 92.0 respectively). Thus the HumanDisease proteins are intermediate in nature between the PrimateMut and HumanPoly proteins in terms of the number of homologues, although the numbers are not substantially different.

It appears then that the PrimateMut data show higher selection pressure (due to longer divergence times) for conserving biophysical properties than the HumanPoly data. Since polymorphisms among individuals of a species, whether human or primate, are relatively rare, the majority of sequence differences between a single primate’s genome and the reference human genome are likely to be true species differences. Thus, they are likely to be either neutral or specifically selected for in each species. On the other hand, the SwissVar polymorphisms exist specifically because they are variations among individuals of a single species. They are of unknown phenotype, especially if they are not significantly represented in the population. We therefore argue that the PrimateMut data are a better representation of neutral mutations than the HumanPoly data. In what follows, we use the PrimateMut data as the neutral mutation data set, unless otherwise specified.

We calculated two sequence-based and two structure-based features for the mutations in data sets HumanPolyStr, HumanDiseaseStr and PrimateMutStr to compare the prediction of missense phenotypes when the neutral data consist of human polymorphisms or primate sequences. From HumanDiseaseStr, we selected a sufficient number of human disease mutations to combine with human polymorphisms (called Train_HumanPoly) and primate polymorphisms (called Train_Primate) to construct two balanced training data sets. From our independent testing data set (described in the Methods section), we selected sufficient human disease mutations to combine with human polymorphisms (called Test_HumanPoly) and primate polymorphisms (called Test_Primate) to create two balanced independent testing data sets. Table 4 shows the results of SVM models trained on the training data sets Train_HumanPoly and Train_Primate, and tested on the independent testing data sets Test_HumanPoly and Test_Primate.

https://doi.org/10.1371/journal.pone.0067863.t004

The results in Table 4 show that the primate polymorphisms achieve higher cross-validation accuracy than the human polymorphisms on all measures. This confirms that the primate polymorphisms are more distinct in their distribution from the human disease mutations than the human polymorphisms are. In particular, the true negative rate for the primate cross-validation results is much higher than for the human polymorphism results. Further, we tested each model (Train_Primate and Train_HumanPoly) on independent data sets. The two testing data sets, Test_Primate and Test_HumanPoly, contain the same disease mutations but different neutral mutations. The Train_Primate model achieves the same TPR of 82.5% on each of the independent testing sets, since the disease mutations are the same in each of the testing sets. Similarly, Train_HumanPoly achieves the same TPR on each of the testing sets, at a lower rate of 78.1%, since the human disease mutations are easier to distinguish from the primate mutations than from the human polymorphisms. As may be expected, the TNR of Train_HumanPoly is better with Test_HumanPoly (70.6%) than is that of Train_Primate (67.3%), since the negatives are from similar data sources (human polymorphisms).

It is interesting that regardless of the training data set, the balanced measures of accuracy are relatively similar for a given testing data set. For Test_Primate , the BACC is 82.1% and 80.1% for the primate and human training data sets respectively. For Test_HumanPoly , the BACC values are 74.9% and 74.4% respectively. The MCC and AUC measures in Table 4 show a similar phenomenon. Thus, the choice of neutral mutations in the testing set has a strong influence on the results, while the choice of the neutral mutations in the training data set less so.

The Importance of Balanced Training Sets

The more general question we ask is how predictors behave depending on the level of imbalance in either the training set or testing set or both. In the case of missense mutations, we do not know a priori what the deleterious mutation rate may be in human genome data. To examine this, we produced five training data sets (train_10, train_30, train_50, train_70 and train_90) using the same number of training examples, but with a different class distribution ranging from 10% deleterious (train_10) to 90% deleterious (train_90). We trained SVMs on these data sets using four features: the difference in PSSM scores between wildtype and mutant residues, a conservation score, and the surface accessibility of residues in biological assemblies and in protein monomers.
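The construction of equally sized training sets with different class distributions can be sketched as follows. This is an illustrative sketch only: the function name `sample_with_ratio`, the total of 1000 examples, the random seed, and the placeholder mutation identifiers are all assumptions, and the paper does not state how its subsets were drawn.

```python
import random


def sample_with_ratio(positives, negatives, n_total, percent_positive, seed=0):
    """Draw n_total labeled examples of which percent_positive percent are positive.

    Returns a shuffled list of (example, label) pairs, with label 1 for
    deleterious (positive) and 0 for neutral (negative) examples.
    """
    n_pos = n_total * percent_positive // 100
    n_neg = n_total - n_pos
    if n_pos > len(positives) or n_neg > len(negatives):
        raise ValueError("not enough examples for the requested class ratio")
    rng = random.Random(seed)
    sample = [(x, 1) for x in rng.sample(positives, n_pos)]
    sample += [(x, 0) for x in rng.sample(negatives, n_neg)]
    rng.shuffle(sample)
    return sample


# Placeholder pools of mutation identifiers (hypothetical).
positives = [f"del_{i}" for i in range(2000)]
negatives = [f"neu_{i}" for i in range(2000)]

# Analogues of train_10 ... train_90: same size, different class balance.
training_sets = {
    pct: sample_with_ratio(positives, negatives, 1000, pct)
    for pct in (10, 30, 50, 70, 90)
}
for pct, data in training_sets.items():
    print(pct, len(data), sum(label for _, label in data))
```

Holding the total size fixed separates the effect of class balance from the effect of simply having more training data.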

Figure 2a shows the performance of the five SVM models in 10-fold cross-validation calculations in terms of true positive rate (TPR), true negative rate (TNR), positive predictive value (PPV), and negative predictive value (NPV) as defined in Equation 5 . In cross validation, the training and testing sets contain the same frequency of positive and negative data points. Thus on train_10 , the TPR is very low while the TNR is very high. This is a majority classifier and most predictions are negative. Train_90 shows a similar pattern but with negatives and positives reversed. The PPV and NPV show a much less drastic variation as a function of the deleterious and neutral content of the data sets. For instance, PPV ranges from about 65% to 90% while TNR ranges from 35% to 100% for the five data sets.

(a) Values for TPR, TNR, PPV, and NPV. (b) Values for MCC, BACC, AUC, and ACC.

https://doi.org/10.1371/journal.pone.0067863.g002

In Figure 2b , we show four measures of accuracy: ACC, BACC, MCC, and AUC. Overall accuracy, ACC, reaches maximum values on the extreme data sets, train_10 and train_90. These data sets have highly divergent values of TPR and TNR as shown in Figure 2a and are essentially majority classifiers. By contrast, the other three measures are designed to account for imbalanced data in the testing data sets. BACC is the mean of TPR and TNR. It achieves the highest result in the balanced data set, train_50 , and the lowest results for the extreme data sets. The range of BACC is 59% to 81%, which is quite large. Similarly, the MCC and AUC measures also achieve cross-validation maximum values on train_50 and the lowest values on train_10 and train_90 . The balanced accuracy and Matthews Correlation Coefficient are highly correlated, although BACC is a more intuitive measure of accuracy.
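The contrast between overall accuracy and the balance-aware measures can be illustrated with a small calculation. The sketch below uses the standard definitions of ACC, BACC, and MCC in terms of confusion-matrix counts; the counts themselves are invented for illustration, and returning an MCC of 0 when its denominator vanishes is a common convention that is assumed here rather than taken from the paper.

```python
import math


def summary_metrics(tp, fp, tn, fn):
    """Compute ACC, BACC, and MCC from confusion-matrix counts."""
    tpr = tp / (tp + fn) if (tp + fn) else 0.0
    tnr = tn / (tn + fp) if (tn + fp) else 0.0
    acc = (tp + tn) / (tp + fp + tn + fn)
    bacc = (tpr + tnr) / 2
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # Assumed convention: MCC is reported as 0 when the denominator is zero.
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {"ACC": acc, "BACC": bacc, "MCC": mcc}


# A majority classifier on data with 10% positives: everything is called negative.
majority = summary_metrics(tp=0, fp=0, tn=900, fn=100)
# A classifier with TPR = TNR = 80% on balanced data.
balanced = summary_metrics(tp=80, fp=20, tn=80, fn=20)

print(majority)
print(balanced)
```

The majority classifier reaches an ACC of 0.9 while its BACC is 0.5 and its MCC is 0, which is the pattern seen for the extreme training sets. The balanced example gives 0.8 for both ACC and BACC and an MCC of 0.6.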

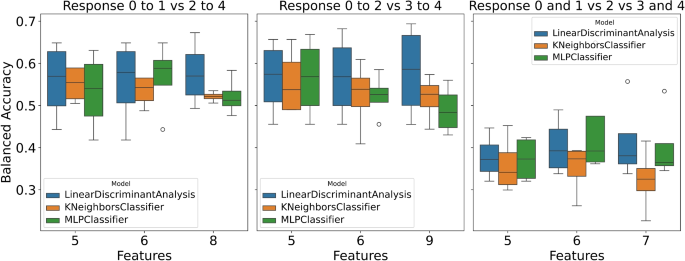

To explore these results further, we created 9 independent testing data sets using the same number of testing examples, but with different class distribution (the percentage of deleterious mutations from 10%–90%) to test the five SVM models described above ( train_10 , train_30 , etc.). Figure 3 shows the performance of those five SVM models tested by the 9 different testing data sets.

https://doi.org/10.1371/journal.pone.0067863.g003

In Figure 3a and Figure 3b, we show that the true positive and true negative rates are highly dependent on the fraction of positives in the training data set but nearly independent of the fraction of positives in the testing data set. The true positive rate and true negative rate curves of the five SVM models are flat and indicate that the true positive rate and true negative rate are determined by the percentage of the deleterious mutations in the training data – a higher percentage of deleterious mutations in training data leads to a higher true positive rate and a lower true negative rate. Figure 3c shows the positive predictive value, which is defined as the proportion of the true positives among all the positive predictions (both true positives and false positives). Figure 3d shows the negative predictive value, which is defined similarly for negative predictions. In both cases, the results are highly correlated with the percentages of positives and negatives in the training data. The curves in Figure 3c show that the positive predictive value of the five SVM models increases with increasing percentage of deleterious (positive) mutations in both the training and testing data sets. The SVM model trained on data set train_10 achieves the best PPV, while Figure 3a shows that this model also has the lowest TPR (less than 30%) for all nine testing data sets, because its number of false positives is very low (it classifies nearly all data points as negative). The NPV results are similar but the order of training sets is reversed, and the NPV numbers are positively correlated with the percentage of negative data points in the testing data.
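The reason that PPV and NPV shift with the testing proportions while TPR and TNR stay flat follows from their definitions. For a classifier with fixed TPR and TNR and a testing set in which a fraction p of the cases are positive, PPV = TPR·p / (TPR·p + (1 − TNR)(1 − p)), and NPV is the analogous ratio for negatives. The sketch below evaluates these expressions; the rates of 0.8 are illustrative values, not results from the paper.

```python
def ppv(tpr, tnr, prevalence):
    """Positive predictive value for fixed TPR/TNR at a given positive fraction."""
    true_pos = tpr * prevalence
    false_pos = (1 - tnr) * (1 - prevalence)
    total = true_pos + false_pos
    return true_pos / total if total else 0.0


def npv(tpr, tnr, prevalence):
    """Negative predictive value for fixed TPR/TNR at a given positive fraction."""
    true_neg = tnr * (1 - prevalence)
    false_neg = (1 - tpr) * prevalence
    total = true_neg + false_neg
    return true_neg / total if total else 0.0


# Same classifier (TPR = TNR = 0.8), testing sets with 10%, 50%, and 90% positives.
for p in (0.1, 0.5, 0.9):
    print(f"p={p:.1f}  PPV={ppv(0.8, 0.8, p):.3f}  NPV={npv(0.8, 0.8, p):.3f}")
```

With these rates the PPV rises from roughly 0.31 to 0.97 as the positive fraction goes from 10% to 90%, and the NPV falls by the same amount, even though the classifier itself is unchanged.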

In Figure 4 , we show four measures that assess the overall performance of each training set model on each testing data set – the overall accuracy (ACC) in Figure 4a , the balanced accuracy (BACC) in Figure 4b , the Matthews correlation coefficient (MCC) in Figure 4c , and the area under the ROC curve (AUC) in Figure 4d . The overall shapes of the curves for the different measures are different. The ACC curves, except for train_50 , are significantly slanted, especially the train_10 and train_90 curves. The BACC curves are all quite flat. The MCC curves are all concave down, showing diminished accuracy for imbalanced testing data sets on each end. The AUC curves are basically flat but bumpier than the BACC curves. The figures indicate that the various measures are not equivalent.

https://doi.org/10.1371/journal.pone.0067863.g004

The balanced accuracy, BACC, while nearly flat with respect to the testing data sets, is highly divergent with respect to the training data sets. The SVM model train_50 achieves the best balanced accuracy for all nine different testing data sets. The SVM models trained on data sets train_30 and train_70 are worse than train_50 by up to 8 points, which would be viewed as a significant effect in the missense mutation field, as shown in Table 1. The train_10 and train_90 sets are much worse, although these are significantly more imbalanced than the sets used in training missense mutation classifiers. In Figure 4c, the MCC of train_50 achieves the best results for most of the testing data sets; train_30 is just a bit higher for testing at 0.2 and 0.3, and train_70 is a bit higher at 0.9. The MCC can be as much as 10 points higher when trained and tested on balanced data than when trained on imbalanced data (train_70). Figure 4d shows that the area under the ROC curve (AUC) behaves similarly to BACC in Figure 4b. The AUC distinguishes train_50 from train_30 and train_70 to only a small extent, but the difference between these curves and train_10 and train_90 is fairly large.

A common objective in bioinformatics is to provide tools that make predictions of binary classifiers for use in many areas of biology. Many techniques in machine learning have been applied to such problems. All of them depend on the choice of features of the data that must differentiate the positive and negative data points as well as on the nature of the training and testing data sets. While computer scientists have studied the nature of training and testing data, particularly on whether such data sets are balanced or imbalanced [38] , the role of this aspect of the data is not necessarily well appreciated in bioinformatics.

In this article, we have examined two aspects of the binary classification problem: the source of the input data sets and whether the training and testing sets are balanced or not. On the first issue, we found that a negative data set that is more distinct from the positive data set results in higher prediction rates. This result makes sense of course, but in the context of predicting missense mutation phenotypes it is critical that the neutral data points are truly neutral. We compared the ability of primate/human sequence differences and human polymorphisms to predict disease phenotypes. The primate/human sequence differences come from a small number of animal samples and the reference human genome, which is also from a small number of donors. The majority of intraspecies differences are rare, and thus the majority of primate/human differences are likely to reflect true species differences rather than polymorphisms within each species. It seems likely that they should be mostly neutral mutations, or the result of selected adaptations of the different species.

On the other hand, the polymorphisms in the SwissVar database are differences among hundreds or thousands of human donors. Their phenotypes and prevalence in the population are unknown. It is more likely that they are recent sequence changes which may or may not have deleterious consequences and may or may not survive in the population. Some authors have tried to estimate the percentage of SNPs that are deleterious. For instance, Yue and Moult estimated by various feature sets that 33–40% of missense SNPs in dbSNP are deleterious [67] . However, the training set for their SVMs contained 38% deleterious mutations and it may be that these numbers are correlated. In our case, we predict that 40% of the SwissVar polymorphisms are deleterious, while only 20.6% of the primate mutations are predicted as deleterious. With a positive predictive value of 80.4%, then perhaps 32.4% of the SwissVar polymorphisms are deleterious.

In any case, the accuracy of missense mutation prediction that one may obtain is directly affected by the different sources of neutral data and deleterious data, separately from the choice of features used or machine learning method employed. Results from the published literature should be evaluated accordingly.

We have examined the role of balanced and imbalanced training and testing data sets in binary classifiers, using the example of missense phenotype prediction as our benchmark. We were interested in how we should train such a classifier, given that we do not know the rate of deleterious mutations in real-world data such as those being generated by high-throughput sequencing projects of human genomes. Our results indicate that regardless of the rates of positives and negatives in any future testing data set such as human genome data, support vector machines trained on balanced data sets rather than imbalanced data sets performed better on each of the measures of accuracy commonly used in binary classification, i.e. balanced accuracy (BACC), the Matthews correlation coefficient (MCC), and the area under ROC curves (AUC). Balanced training data sets result in high, steady values for both TPR and TNR ( Figure 3a and 3b ) and good tradeoffs in the values of PPV and NPV ( Figure 3c and 3d ).

Even at the mild levels of training imbalance shown in Table 1 (30–40% in the minority class), there would be what would be considered significant differences in balanced accuracy of about 8% and MCC of 10%. The AUC is considerably less sensitive to the imbalance in the training set from 30–70% deleterious mutation range, probably because it measures only the ordering of the predictions rather than a single cutoff to make one prediction or the other.

For the programs listed in Table 1, it is interesting to examine their efforts in considering the consequences of potential imbalance in the training data sets. The authors of both SNAP [5], [6] and MuD [7] used very nearly balanced training data sets and noted the effect of using imbalanced data sets in their papers. In MuD's case, they eliminated one third of the deleterious mutations from their initial data set in order to balance the training data. SNPs3D-stability [67] was derived with the program SVMLight [68]–[70], which allows for a cost model to upweight the misclassification cost of the minority class, which the authors availed themselves of. MuStab [46] also used SVMLight but the authors did not use its cost model to account for the imbalance in their training data set (31% deleterious). The program LIBSVM [71] also allows users to use a cost factor for the minority class in training. Two of the programs in Table 1, SeqProfCod [41], [45] and PHD-SNP [40], used this program, but did not use this feature to deal with imbalance in their training data sets. Finally, programs using other methods such as a Random Forest (SeqSubPred [72] and nsSNPAnalyzer [3]), a neural network (PMut [2], [43], [44]), and empirical rules (PolyPhen2 [73]) also did not address the issue of training set imbalance.

In any case, given that relatively large training and testing data sets can be obtained for the missense mutation classification problem (see Table 1 ), it is clear that balancing the data in the training set is the simplest way of dealing with the problem, rather than employing methods that treat the problem in other ways (oversampling the minority class, asymmetric cost functions, etc.).

In light of the analysis presented in this paper, it is useful to examine one other group of binary classifiers in bioinformatics – that of predicting disordered regions of proteins. These classifiers predict whether a residue is disordered or ordered based on features such as local amino acid composition and secondary structure prediction. However, the typical training and testing data sets come from structures in the Protein Data Bank, which typically consist of 90–95% ordered residues. Only 5–10% of residues in X-ray structures are disordered and therefore missing from the coordinates. We examined the top five predictors in the most recent CASP experiment [74] in terms of how the methods were trained and tested. These methods were Prdos2 [14] , Disopred3C [75] , Zhou-Spine-D [16] , CBRC_Poodle [17] , and Multicom-refine [76] . Some parameters of the data sets from the published papers and the prediction rates from the CASP9 results are shown in Table 5 . All five methods were trained on highly imbalanced data sets, ranging from just 2.5% disordered (DisoPred3C) to 10% disordered (Zhou-Spine-D). DisoPred3C also had the lowest TPR and highest TNR of these five methods, which is consistent with the results shown in Figure 3a and 3b . It was also the only method that specifically upweighted misclassified examples of the minority class (disordered residues) during the training of a support vector machine using SVMlight, although they did not specify the actual weights used. The developers of Zhou-Spine-D used a marginally imbalanced training set to predict regions of long disorder (45% disordered), arguing that this situation is easier than predicting disorder in protein structures, where the disorder rate is about 10%. In the latter case, they use oversampling of the minority class of disordered residues in order to train a neural network. 
The other three methods listed in Table 5 did not use available cost models in the machine learning methods they used, including LIBSVM (CBRC-Poodle) or SVMLight (Prdos2) or any form of weighting or oversampling in a neural network (Multicom-refine). Because the percentage of disordered residues in protein structures is relatively low, it may be appropriate to apply asymmetric costs and oversampling techniques in attempting to account for the skew in training data in the disorder prediction problem, but these techniques have not been widely applied for the disorder prediction problem.

https://doi.org/10.1371/journal.pone.0067863.t005

In summary, the problem of imbalanced training data occurs frequently in bioinformatics. Even mild levels of imbalance – at 30–40% of the data in the minority class – are sufficient to alter the values of the measures commonly used to assess performance in ways that authors of new studies would think of as notable differences. When large amounts of data in the minority class are easy to obtain, the simplest solution is to undersample the majority class and effectively balance the data sets. When these data are sparse, then bioinformatics researchers would do well to consider techniques such as oversampling and cost-sensitive learning developed in machine learning in recent years [30], [77]–[79].
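Undersampling the majority class, the simplest of the remedies mentioned above, can be sketched in a few lines. This is a minimal illustration using random undersampling down to the size of the smallest class; the function name, the seed, and the toy data are assumptions, and more elaborate selection schemes exist.

```python
import random


def undersample_majority(examples, labels, seed=0):
    """Randomly discard examples so that every class has as many as the smallest class.

    Returns a shuffled list of (example, label) pairs.
    """
    by_class = {}
    for x, y in zip(examples, labels):
        by_class.setdefault(y, []).append(x)
    n_min = min(len(members) for members in by_class.values())
    rng = random.Random(seed)
    balanced = []
    for y, members in by_class.items():
        balanced.extend((x, y) for x in rng.sample(members, n_min))
    rng.shuffle(balanced)
    return balanced


# Toy data: 30 positive and 70 negative examples.
examples = list(range(100))
labels = [1] * 30 + [0] * 70

balanced = undersample_majority(examples, labels)
n_pos = sum(1 for _, y in balanced if y == 1)
n_neg = sum(1 for _, y in balanced if y == 0)
print(len(balanced), n_pos, n_neg)  # 60 30 30
```

The cost of this approach is that majority-class examples are thrown away, which is why it is most attractive when data are plentiful.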

Supporting Information

https://doi.org/10.1371/journal.pone.0067863.s001

Acknowledgments

We thank Qifang Xu for providing PDB coordinate files for biological assemblies from PISA.

Author Contributions

Conceived and designed the experiments: QW RLD. Performed the experiments: QW. Analyzed the data: QW RLD. Contributed reagents/materials/analysis tools: QW. Wrote the paper: QW RLD.


- 27. Weiss GM, Provost F (2001) The effect of class distribution on classifier learning: An empirical study. Department of Computer Science.

- 28. Laurikkala J (2001) Improving identification of difficult small classes by balancing class distribution. 63–66.

- 31. Liu XY, Wu J, Zhou ZH (2006) Exploratory under sampling for class imbalanced learning. 965–969.

- 32. Han H, Wang WY, Mao BH (2005) Borderline-SMOTE: A new over-sampling method in imbalanced data sets learning. 878–887.

- 33. He H, Bai Y, Garcia EA, Li S (2008) ADASYN: Adaptive synthetic sampling approach for imbalanced learning. 1322–1328.

- 36. Elkan C (2001) The Foundations of cost-sensitive learning. 973–978.

- 38. Provost F (2000) Learning with imbalanced data sets 101. AAAI workshop on imbalanced data sets.

- 59. Hubbard SJ, Thornton JM (1993) NACCESS. London: Department of Biochemistry and Molecular Biology, University College London.

- 60. Sokal RR, Rohlf FJ (1995) Biometry: the principles and practice of statistics in biological research. New York: W.H. Freeman. xix, 887 p.

- 64. Hanley JA, McNeil BJ (1982) The meaning and use of the area under a receiver operating characteristic (ROC) curve. Radiology: 29–36.

- 68. Vapnik VN (1995) The nature of statistical learning theory. New York: Springer-Verlag New York, Inc.

- 69. Joachims T (1999) Making large-scale support vector machine learning practical. Cambridge: MIT Press.

- 70. Joachims T (2002) Learning to classify text using support vector machines: Springer.

- 72. Li S, Xi L, Li J, Wang C, Lei B, et al. (2010) In silico prediction of deleterious single amino acid polymorphisms from amino acid sequence. J Comput Chem.

- 77. Zhou Z-H. Cost-sensitive learning; 2011; Berlin. Springer-Verlag. 17–18.

- 79. Thai-Nghe N, Gantner Z, Schmidt-Thieme L (2010) Cost-sensitive learning methods for imbalanced data. The 2010 International Joint Conference on Neural Network. 1–8.

Research Article

Machine learning: a review on binary classification.

Roshan Kumari, Saurabh Kr. Srivastava . Machine Learning: A Review on Binary Classification. International Journal of Computer Applications. 160, 7 ( Feb 2017), 11-15. DOI=10.5120/ijca2017913083

In information extraction and retrieval, binary classification is the process of assigning a given document or account to one of two predefined classes. Sockpuppet detection is a binary classification problem in which each account is labeled either sockpuppet or non-sockpuppet. Sockpuppets have become a significant issue: one person can maintain a fake identity for a specific purpose or for malicious use. Text categorization is also performed with binary classification. This review surveys various approaches to binary classification.

Index Terms

Computer Science Information Sciences

Sockpuppets, non-sockpuppets, multiple identity deception, text categorization, NB, SVM, Random Forest, ensemble methods, binary classification

Theory of Machine Learning

Chapter 4 binary classification.

(This chapter was scribed by Paul Barber. Proofread and polished by Baozhen Wang.)

In this chapter, we focus on analyzing a particular problem: binary classification. Focus on binary classification is justified because

- It encompasses much of what we have to do in practice

- \(Y\) is bounded.

In particular, there are some nasty surprises lurking in multicategory classification, so we avoid more complicated general classification here.

4.1 Bayes Classification (Rule)

Suppose \((X_{1},Y_{1}) , \ldots , (X_{n}, Y_{n})\) are iid \(P(X,Y)\) , where \(X\) is in some feature space, and \(Y \in \left\{ 0,1\right\}\) . Denote the marginal distribution of \(X\) by \(P_{X}\) . The conditional distribution is \(Y|X = x \sim \text{Ber}(\eta (x))\) , where \(\eta(x)\triangleq P(Y=1| X=x)=E(Y|X=x)\) . \(\eta\) is sometimes called the regression function.

Next, we consider an optimal classifier that knows \(\eta\) perfectly, that is, as if we had perfect access to the distribution of \(Y|X\) .

Definition 4.1 (Bayes Classifier) \[h^{\ast}(x) = \begin{cases} 1 & \text{ if } \eta(x)> \frac{1}{2} \\ 0 & \text{ if } \eta(x) \le \frac{1}{2} \end{cases} .\]

In other words,

\[h^{\ast} (x)=1 \iff P(Y=1|X=x)>P(Y=0|X=x)\]

The performance metric of \(h\) is the classification error \(L(h)=P(h(X)\neq Y)\) , i.e., the risk function under \(0-1\) loss. The Bayes risk is \(L^{\ast}=L(h^{\ast})\) , the classification error associated with \(h^{\ast}\) .

Theorem 4.1 For any binary classifier \(h\) , the following identity holds:

\[L(h)-L(h^{\ast}) = \int_{\left\{ h \neq h^{\ast} \right\} } |2 \eta(x)-1| \, P_{X}(dx) = E_{X \sim P_{X}} \left( |2 \eta(X) -1| \mathbb{1}[h(X) \neq h^{\ast}(X)] \right)\]

where \(\left\{h\neq h^{\ast}\right\}\triangleq\left\{x\in X:\ h(x)\neq h^{\ast}(x)\right\}\) .

In particular, the integrand is non-negative, which implies \(L(h^{\ast}) \le L(h),\ \forall h\) . Moreover,

\[L(h^{\ast}) = E_{X}[\text{min}(\eta(x),1-\eta(x))] \le \frac{1}{2}\]

Proof . We begin by deriving an expression for \(L(h)\) that holds for every \(h\) ; the last part of the theorem follows from it.

\[\begin{align*} L(h) &= P(Y \neq h(x)) \\ &= P(Y=0, h(x)=1)+P(Y=1, h(x)=0) \\ &= E(\mathbb{1}[Y=0,h(x)=1])+E(\mathbb{1}[Y=1,h(x)=0]) \\ &= E_X\left\{E_{Y|X} \mathbb{1}[Y=0,h(x)=1]|X \right\}+ E_X\left\{E_{Y|X} \mathbb{1}[Y=1,h(x)=0]|X \right\} .\end{align*}\]

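Now, \(h(X)\) is measurable with respect to \(X\) , so conditionally on \(X=x\) it is fixed and only \(Y\) is averaged over its two values \(1\) and \(0\) :

\[E_{Y|X}\left(\mathbb{1}[Y=0,h(x)=1] \mid X=x\right) = \mathbb{1}[1=0,h(x)=1]\eta(x) +\mathbb{1}[0=0,h(x)=1](1-\eta(x))=\mathbb{1}[h(x)=1](1-\eta(x)) ,\]

and similarly \(E_{Y|X}\left(\mathbb{1}[Y=1,h(x)=0] \mid X=x\right)=\mathbb{1}[h(x)=0]\eta(x)\) .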

Then \(\forall h\) ,

\[\begin{equation} L(h)= E_{X}\left[\mathbb{1}[h(x)=1] (1-\eta(x))\right]+ E_{X}\left[\mathbb{1}[h(x)=0] \eta(x)\right] \tag{4.1} \end{equation}\]

\[L(h^{\ast})=E[\mathbb{1}[h^{\ast}(x)=1](1-\eta(x))+\mathbb{1}[h^{\ast}(x)=0] \eta(x)] = E[\min(\eta(x),1-\eta(x))]\le \frac{1}{2}\]

Now apply (4.1) to both \(h\) and \(h^{\ast}\) ,

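\[\begin{align*} L(h)-L(h^{\ast})&=E[\mathbb{1}[h(x)=1](1-\eta(x))+\mathbb{1}[h(x)=0]\eta(x)-\mathbb{1}[h^{\ast}(x)=1](1-\eta(x))-\mathbb{1}[h^{\ast}(x)=0] \eta(x)]\\ &=E\left\{[\mathbb{1}[h(x)=1]-\mathbb{1}[h^{\ast}(x)=1]](1-\eta(x)) + [\mathbb{1}[h(x)=0]-\mathbb{1}[h^{\ast}(x)=0]]\eta(x)\right\}\\ &=E\left\{[\mathbb{1}[h(x)=1]-\mathbb{1}[h^{\ast}(x)=1]]\cdot (1-2\eta(x))\right\}\\ &=E[\mathbb{1}[h^{\ast}(x)\neq h(x)]\cdot \text{sgn}(1-2\eta(x))(1-2\eta(x))]\\ &=E[\mathbb{1}[h^{\ast}(x)\neq h(x)]\cdot |1-2\eta(x)|] . \end{align*}\]

The third line uses \(\mathbb{1}[h(x)=0]=1-\mathbb{1}[h(x)=1]\) , and likewise for \(h^{\ast}\) . The fourth line uses the fact that on \(\left\{h\neq h^{\ast}\right\}\) the difference \(\mathbb{1}[h(x)=1]-\mathbb{1}[h^{\ast}(x)=1]\) equals \(1\) when \(\eta(x)\le\frac{1}{2}\) and \(-1\) when \(\eta(x)>\frac{1}{2}\) , which agrees with \(\text{sgn}(1-2\eta(x))\) whenever \(1-2\eta(x)\neq 0\) , where

\[\text{sgn}(1-2\eta) = \begin{cases} 1 & \text{ if } \eta<\frac{1}{2} \\ 0 & \text{ if } \eta =\frac{1}{2} \\ -1 & \text{ if } \eta>\frac{1}{2} \end{cases} .\]

This implies \(L(h) \ge L(h^{\ast})\) . \(\square\)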

- \(L(h)-L(h^{\ast})\) is the excess risk.

- \(L(h^{\ast})=\frac{1}{2}\iff\eta(x)=\frac{1}{2}\text{ a.s. }\iff\) \(X\) contains no useful information about \(Y\) .

- Excess risk weights the discrepancy between \(h\) and \(h^{\ast}\) according to how far \(\eta\) is from \(\frac{1}{2}\) .
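Theorem 4.1 can be checked numerically on a small example. The sketch below uses a hypothetical four-point feature space with made-up values of \(P_X\) and \(\eta\) (both are assumptions for illustration), enumerates all 16 classifiers, and verifies the excess-risk identity and the formula for the Bayes risk.

```python
import itertools
import numpy as np

# Hypothetical toy distribution on a four-point feature space {0, 1, 2, 3}.
p_x = np.array([0.1, 0.2, 0.3, 0.4])   # marginal P_X
eta = np.array([0.9, 0.5, 0.3, 0.6])   # eta(x) = P(Y=1 | X=x)

def risk(h):
    """Classification error via (4.1): E[1{h=1}(1-eta) + 1{h=0}eta]."""
    h = np.asarray(h)
    return float(np.sum(p_x * np.where(h == 1, 1 - eta, eta)))

h_star = (eta > 0.5).astype(int)       # Bayes classifier from Definition 4.1
bayes_risk = risk(h_star)

# The Bayes risk equals E[min(eta, 1 - eta)].
assert np.isclose(bayes_risk, np.sum(p_x * np.minimum(eta, 1 - eta)))

# Check the excess-risk identity for every classifier on this space.
for h in itertools.product([0, 1], repeat=len(p_x)):
    h = np.array(h)
    lhs = risk(h) - bayes_risk
    rhs = float(np.sum(p_x * np.abs(2 * eta - 1) * (h != h_star)))
    assert np.isclose(lhs, rhs)
    assert lhs >= -1e-12               # no classifier beats the Bayes rule

print(round(bayes_risk, 4))  # 0.36
```

Note that the point with \(\eta(x)=\frac{1}{2}\) contributes nothing to the excess risk whichever label is predicted there, consistent with the weight \(|2\eta(x)-1|\) .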

As noted earlier, LDA puts a model (distribution) on the data, e.g.

\[X| Y=y \sim N(\mu_{y}, \Sigma)\]

Generally, one can compute the Bayes rule by applying the Bayes theorem

\[\eta(x)=P(Y=1|X=x) = \frac{P(X|Y=1)P(Y=1)}{P(X|Y=1)P(Y=1)+P(X|Y=0)P(Y=0)}\]

where \(P(X|Y=y)\) is density or pmf. For \(\pi\triangleq P(Y=1)\) , \(P_j\triangleq P(X|Y=j)\) :

\[\eta(x) = \frac{P_{1}(x) \pi}{P_{1}(x) \pi + P_{0}(x) (1-\pi) }\]

The Bayes rule is \(h^{\ast} (x) = \mathbb{1}[\eta (x) > \frac{1}{2}]\) , and for \(0<\pi<1\)

\[\frac{P_{1}(x) \pi}{P_{1}(x) \pi + P_{0}(x)(1-\pi)} > \frac{1}{2} \iff \frac{\pi P_{1}(x)}{(1-\pi)P_{0}(x)} >1 \iff \frac{P_{1}(x)}{P_{0}(x)} > \frac{1-\pi}{\pi}\]

When \(\pi=\frac{1}{2}\) , Bayes rule amounts to comparing \(P_{1}(x)\) with \(P_{0}(x)\) .
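To make the likelihood-ratio form concrete, here is a minimal sketch for one-dimensional Gaussian class conditionals with a common variance. The means, the variance, and the function names are assumptions chosen for illustration; the code checks that thresholding \(\eta(x)\) at \(\frac{1}{2}\) and comparing \(P_1(x)/P_0(x)\) with \((1-\pi)/\pi\) give the same labels.

```python
import math

def gaussian_pdf(x, mu, sigma):
    """Density of N(mu, sigma^2) at x."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))

def eta(x, pi=0.5, mu0=0.0, mu1=2.0, sigma=1.0):
    """Posterior P(Y=1 | X=x) from Bayes' theorem (parameter values are hypothetical)."""
    p1 = gaussian_pdf(x, mu1, sigma)
    p0 = gaussian_pdf(x, mu0, sigma)
    return p1 * pi / (p1 * pi + p0 * (1 - pi))

def bayes_rule(x, pi=0.5, mu0=0.0, mu1=2.0, sigma=1.0):
    """h*(x) = 1[eta(x) > 1/2]."""
    return int(eta(x, pi, mu0, mu1, sigma) > 0.5)

def likelihood_ratio_rule(x, pi=0.5, mu0=0.0, mu1=2.0, sigma=1.0):
    """Equivalent form: 1[P_1(x) / P_0(x) > (1 - pi) / pi]."""
    ratio = gaussian_pdf(x, mu1, sigma) / gaussian_pdf(x, mu0, sigma)
    return int(ratio > (1 - pi) / pi)

# The two forms agree on a grid, for balanced and unbalanced priors.
grid = [-3 + 0.25 * i for i in range(33)]
for prior in (0.5, 0.3):
    assert all(bayes_rule(x, prior) == likelihood_ratio_rule(x, prior) for x in grid)

print(bayes_rule(0.5), bayes_rule(1.5))  # 0 1
```

With \(\pi=\frac{1}{2}\) and equal variances the decision boundary sits at the midpoint of the two means; a smaller \(\pi\) raises the threshold on the likelihood ratio and moves the boundary toward \(\mu_1\) .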

Given data \(D_{n} = \left\{ (X_{1},Y_{1}) , \ldots, (X_{n},Y_{n}) \right\}\) we build a classifier \(\hat{h}_{n} (x)\) which is random in two ways:

- \(X\) is a random variable

- \(\hat{h}_{n}\) depends on the random data \(D_{n}\) explicitly.

Our performance metric is still \(L(\hat{h}_{n}) =P(\hat{h}_{n}(X) \neq Y)\) . Although we have integrated out \((X,Y)\) , \(L(\hat{h}_{n})\) still depends on the data \(D_{n}\) . Since this is random, we will consider bounding both \(E(L(\hat{h}_{n})-L(h^{\ast}))\) and \(L(\hat{h}_{n})-L(h^{\ast})\) with high probability.

4.2.1 Plug-in rule

Earlier we discussed two approaches to classification: generative vs. discriminative. The middle ground is the plug-in rule.

Recall \(h^{\ast}(x) = \mathbb{1}[\eta(x) > \frac{1}{2}]\) . The plug-in rule estimates \(\eta(x)\) by \(\hat{\eta}_{n}(x)\) and then plugs the estimate into the Bayes rule to produce \(\hat{h}(x)\triangleq\mathbb{1}[\hat{\eta}_{n}(x) >\frac{1}{2}]\) .

Many possibilities:

- If \(\eta(x)\) is smooth, use non-parametric regression to estimate \(E(Y|X=x)\) .

- Nadaraya-Watson kernel regression

- \(k\) -nearest neighbor.

- If \(\eta(x)\) has parametric form, logistic regression

\[\log\left( \frac{\eta(x)}{1-\eta(x)} \right) = x^{T} \beta\]

Widely used, performs well and easy to compute, but not our focus here.
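As a small illustration of the plug-in idea, the sketch below estimates \(\eta\) with a \(k\) -nearest-neighbor average and thresholds it at \(\frac{1}{2}\) . The simulated data, the logistic form of the true \(\eta\) , the choice of \(k\) , and the function names are all assumptions made for this example.

```python
import numpy as np

def knn_eta_hat(x_train, y_train, x_query, k=15):
    """k-NN estimate of eta(x): fraction of label-1 points among the k nearest training points."""
    x_train = np.asarray(x_train, dtype=float)
    y_train = np.asarray(y_train)
    x_query = np.atleast_1d(np.asarray(x_query, dtype=float))
    # Pairwise distances between query points and training points (1-D features).
    dist = np.abs(x_query[:, None] - x_train[None, :])
    nearest = np.argsort(dist, axis=1)[:, :k]
    return y_train[nearest].mean(axis=1)

def plug_in_classifier(x_train, y_train, x_query, k=15):
    """Plug-in rule: 1[eta_hat(x) > 1/2]."""
    return (knn_eta_hat(x_train, y_train, x_query, k) > 0.5).astype(int)

# Simulated data with a hypothetical smooth regression function.
rng = np.random.default_rng(0)
n = 2000
x = rng.uniform(-3, 3, size=n)
true_eta = 1 / (1 + np.exp(-2 * x))
y = (rng.uniform(size=n) < true_eta).astype(int)

x_test = np.array([-2.0, 2.0])
eta_hat = knn_eta_hat(x, y, x_test, k=25)
pred = plug_in_classifier(x, y, x_test, k=25)
print(eta_hat, pred)
```

The same wrapper works with any estimate of \(\eta\) , for example fitted probabilities from a logistic regression; only `knn_eta_hat` would change.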

4.3 Learning with finite hypothesis class \(\mathcal{H}\) .

Recall the following definitions:

- \(L(h) = P(Y \neq h(X))\) .

- \(\hat{L}_{n}(h) = \frac{1}{n} \sum_{i=1}^{n} \mathbb{1}[Y_{i} \neq h(X_{i})]\) .

- \(\hat{h}_{n} = \text{argmin}_{h \in \mathcal{H}} \hat{L}_{n}(h)\) .

- \(\bar{h} = \text{argmin}_{h \in \mathcal{H}} L(h)\) .

- Excess risk wrt \(\mathcal{H}\) : \(L(\hat{h}_{n})-L(\bar{h})\) .

- Excess risk: \(L (\hat{h}_{n})-L(h^{\ast})\)

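Ideally, we want to bound the excess risk, which decomposes as \[\begin{align*} L(\hat{h}_{n}) -L(h^{\ast}) &= [L(\hat{h}_{n})-L(\bar{h})]+[L(\bar{h})-L(h^{\ast})] .\end{align*}\] The first bracket is the excess risk with respect to \(\mathcal{H}\) ; the second depends only on the choice of \(\mathcal{H}\) , not on the data.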

Goal: \(P\left(L(\hat{h}_{n})-L(\bar{h}) \le \Delta_{n , \delta} (\mathcal{H})\right)\ge 1- \delta\) . How? Try to bound \(|\hat{L}_{n}(h)-L(h)|\) for all \(h \in \mathcal{H}\) . For a fixed \(h\) ,

\[\begin{align*} |\hat{L}_{n}(h)-L(h)| &= | \frac{1}{n} \sum_{i=1}^{n} \mathbb{1}[h(X_{i}) \neq Y_{i}] - E(\mathbb{1}[h(X_{i}) \neq Y_{i}]) | \\ &= | \bar{Z}-\mu| .\end{align*}\]

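Here \(Z_{i} \triangleq \mathbb{1}[h(X_{i}) \neq Y_{i}]\) , \(\bar{Z}\) is the sample mean of the \(Z_{i}\) , and \(\mu = E(Z_{i}) = L(h)\) . \(Z_{i} \in [0,1]\) a.s. by definition. Applying Hoeffding’s inequality with \(a_i=0\) and \(b_i=1\) , we have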

\[P(|\bar{Z}-\mu| \le \epsilon) \ge 1- 2 \exp\left( - \frac{2n^2 \epsilon^2}{\sum_{i=1}^{n}(b_i-a_i)^2} \right) = 1- 2 \exp\left( -2n \epsilon^2\right)\]

Let \(\delta=2\exp(-2n\epsilon^2)\) , then \(\epsilon=\sqrt{\frac{ \log\left( 2/\delta \right)}{2n}}\) so that, for each fixed \(h\) , \[|\bar{Z}-\mu| \le \sqrt{\frac{ \log\left( 2/\delta \right)}{2n} }\] with probability at least \(1-\delta\) .
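For a feel of the numbers, the following sketch evaluates \(\epsilon\) for given \(n\) and \(\delta\) and checks the coverage by simulation for a single fixed classifier. The values of \(n\) , \(\delta\) and \(\mu\) , and the helper name `hoeffding_epsilon`, are assumptions for illustration.

```python
import math
import numpy as np

def hoeffding_epsilon(n, delta):
    """Deviation epsilon = sqrt(log(2/delta) / (2n)) for [0,1]-valued losses."""
    return math.sqrt(math.log(2 / delta) / (2 * n))

n, delta, mu = 500, 0.05, 0.3    # mu plays the role of L(h) for one fixed h
eps = hoeffding_epsilon(n, delta)

# Each draw is the empirical risk on one data set of n Bernoulli(mu) losses
# Z_i = 1[h(X_i) != Y_i]; we repeat over 10,000 independent data sets.
rng = np.random.default_rng(1)
z_bar = rng.binomial(n, mu, size=10_000) / n
coverage = float(np.mean(np.abs(z_bar - mu) <= eps))

print(round(eps, 4))             # 0.0607
print(coverage >= 1 - delta)     # True
```

The guarantee holds for one classifier chosen before seeing the data. Controlling all \(h\in\mathcal{H}\) simultaneously requires a union bound, which is where the factor \(M\) in Theorem 4.2 comes from.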

Theorem 4.2 If \(\mathcal{H} = \left\{ h_{1},h_{2},\ldots, h_{M} \right\}\) , then \[L(\hat{h}_{n})-L(\bar{h}) \le \sqrt{ \frac{2 \log \left( 2M/\delta \right)}{n} }\] with probability at least \(1-\delta\) , and \[E(L(\hat{h}_{n})-L(\bar{h})) \le \sqrt{ \frac{2 \log (2M)}{n}} .\]
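The bound in Theorem 4.2 can be illustrated with empirical risk minimization over a small finite class. The sketch below assumes a hypothetical model ( \(X\sim\text{Uniform}(0,1)\) , \(\eta(x)=0.9\) for \(x>0.5\) and \(0.1\) otherwise) and a class of \(M=11\) threshold classifiers \(h_t(x)=\mathbb{1}[x>t]\) ; none of these choices come from the text.

```python
import math
import numpy as np

def excess_risk_bound(n, M, delta):
    """High-probability bound sqrt(2 log(2M/delta) / n) from Theorem 4.2."""
    return math.sqrt(2 * math.log(2 * M / delta) / n)

rng = np.random.default_rng(2)
n, delta = 1000, 0.05
thresholds = np.linspace(0.0, 1.0, 11)   # finite class H of M = 11 classifiers
M = len(thresholds)

# Simulate data from the hypothetical model described in the lead-in.
x = rng.uniform(size=n)
eta = np.where(x > 0.5, 0.9, 0.1)
y = (rng.uniform(size=n) < eta).astype(int)

def true_risk(t):
    """Exact L(h_t) under the assumed model: 0.1 + 0.8 * |t - 0.5|."""
    return 0.1 + 0.8 * abs(t - 0.5)

# Empirical risk minimizer over H.
emp_risk = np.array([np.mean((x > t).astype(int) != y) for t in thresholds])
t_hat = thresholds[int(np.argmin(emp_risk))]

best_in_class = min(true_risk(t) for t in thresholds)   # L(h_bar)
excess = true_risk(t_hat) - best_in_class               # L(h_hat_n) - L(h_bar)
bound = excess_risk_bound(n, M, delta)

print(round(bound, 4))     # 0.1103
print(excess <= bound)
```

The bound grows only logarithmically in \(M\) , so enlarging the class from 11 to 1,100 thresholds would increase it by well under a factor of two at the same \(n\) and \(\delta\) .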