Forests are the best case studies for economic excellence – UPSC CSE PYQ 2022

Forests are intricate ecosystems that have evolved over millions of years, thriving on principles that enable their growth, adaptation, and delivery of benefits to the environment and society.

These virtues can serve as a valuable guide for economic systems to flourish, thrive, and yield positive outcomes. This essay explores how economic systems can embody and operate on the virtues that forests possess, along with a few case studies that demonstrate the excellence an economy can achieve by emulating forests.

Firstly, forests are characterized by their resilience: the ability to recover and bounce back from challenging situations. For example, forests can withstand catastrophic events such as forest fires, droughts, and floods. Similarly, in the economy, we need to diversify supply chains to increase resilience to supply shocks caused by political shifts or natural disasters. The COVID-19 pandemic has highlighted the importance of robust and resilient programs that play a critical role in economic adaptation and survival during crises and beyond.

Secondly, diversity is another crucial characteristic of forests, encompassing genetic and species diversity. Forests consist of numerous species that interact with each other in complex ways, creating a dynamic ecosystem capable of withstanding external pressures. Higher diversity fosters a healthier natural ecosystem, making forests more productive, stable, and sustainable. Similarly, in the economy, diversity is essential. In rural areas, the focus should shift beyond agriculture to allied areas like fishery, agroforestry, and apiculture.

In urban and suburban areas, emphasis should be placed on small and medium enterprises in addition to heavy industries. Thus, a well-rounded economy should encompass a balanced mix of primary, secondary, and tertiary sectors to ensure sustainability, stability, and productivity.

Another principle of forests is mutual symbiosis, where all organisms in an ecosystem depend on each other directly or indirectly. For instance, bees depend on nectar from flowers, while flowers rely on bees for pollination, benefiting the entire system. Similarly, creating symbiosis in the economy can be advantageous.

For example, establishing agro-processing industries in agricultural areas benefits both sides: farmers gain higher productivity and profit, while the industries gain access to raw materials at a lower cost. Connecting ancillary micro, small, and medium enterprises (MSMEs) with larger units is also beneficial. MSMEs need steady demand to survive, while large units source the inputs they need from them.

Forests demonstrate the principle of adaptation, where species adapt to changing temperatures and rainfall patterns. Similarly, in the economy, agricultural methods should adapt to climate change by employing techniques such as micro-irrigation or dryland farming in regions with low rainfall. Manufacturing industries should also upgrade to modern technologies like artificial intelligence and the Internet of Things to address changing demands and better align with needs.

Self-regulation is another important principle of forests. Forest ecosystems possess built-in mechanisms that maintain balance and stability. Predators, for example, help control prey populations, preventing overgrazing and ecological damage. Similarly, economic systems can learn from this principle by developing self-regulating mechanisms that prevent excesses and imbalances. The Reserve Bank of India (RBI) serves as an example of such a mechanism. The RBI regulates the Indian banking system, ensuring banks operate within ethical and financial standards. Its monetary policy framework aims to maintain price stability while supporting economic growth and preventing harm to society and the economy.

Forests exhibit a long-term perspective, taking decades or even centuries to grow and adapt to changing conditions.

Economic systems can adopt a similar perspective by considering the needs of future generations. Norway’s sovereign wealth fund serves as an example of economic excellence achieved through a long-term perspective. Designed to withstand fluctuations in global financial markets, the fund has provided stable revenue for the country’s social welfare programs, allowing Norway to weather financial crises successfully.

Several case studies illustrate the importance of aligning economic models with local ecosystems. Just as a species cannot be forced to live in different types of forests, an economic model cannot be applied uniformly across all regions. Instead, localized approaches, such as promoting locally grown or unique crops, ensure sustainability and a stable economy.

Furthermore, invasive species can adversely affect domestic forests, just as unregulated foreign companies can impact domestic economies. Recognizing complementary niches is crucial, as species with identical niches cannot coexist. Similarly, businesses must identify their strengths and weaknesses to develop niche strategies effectively.

Mangroves, acting as buffer zones, protect territorial landmasses from disasters like cyclones. Similarly, countries like India, in the face of a changing global scenario and widespread globalization, need buffers to sustain their markets and navigate unforeseen events such as the COVID-19 pandemic.

Lastly, it is essential to avoid exploiting forests and natural resources beyond their regenerative capacities, as it can lead to the collapse of the forest ecosystem or the economy in the long run. In essence, sustainable development promotes economic growth with justice and environmental conservation, which is urgently needed on both national and global scales. Forests and economies are interconnected, and their elements coexist and maintain balance. The goal is to maintain an economically viable and ecologically sustainable society by embracing the virtues of forests, ultimately achieving the highest forms of economic excellence.

Frequently Asked Questions (FAQs)

Question: How can forests serve as case studies for economic excellence?

Answer: Forests contribute significantly to economic excellence through various avenues such as timber production, non-timber forest products, ecotourism, and carbon sequestration. Sustainable forest management practices can ensure long-term economic benefits while preserving ecological balance.

Question: What role do forests play in supporting rural economies and livelihoods?

Answer: Forests play a crucial role in supporting rural economies by providing employment opportunities in activities like forestry, agroforestry, and non-timber forest product collection. Additionally, forests contribute to the livelihoods of local communities through ecosystem services like water regulation, soil fertility, and climate regulation.

Question: How can the conservation of forests contribute to economic sustainability?

Answer: Forest conservation is essential for maintaining biodiversity, regulating climate, and ensuring sustainable resource use. Preserving forests helps safeguard ecosystem services that have direct economic implications, such as clean water supply, pollination of crops, and mitigating climate change impacts. Long-term economic sustainability is linked to the responsible management and conservation of forests.

Edukemy Team

Investing in Forests: The Business Case

Forest destruction and degradation is accelerating the severe climate and nature crises facing the world. Halting business practices that contribute to this degradation is a vital priority and investment in forest conservation and restoration is urgently needed. Investing in forests fulfils multiple corporate priorities. Beyond contributing to tackling the nature and climate crises, it has potential to sustain business resilience, embody values-led leadership and boost profitability and growth – the economic value of forests is vast, with one estimate suggesting the total value of intact forests and their ecosystem services to be as much as $150 trillion, around double the value of global stock markets.

World Economic Forum reports may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

- Open access

- Published: 13 July 2022

The 2019–2020 Australian forest fires are a harbinger of decreased prescribed burning effectiveness under rising extreme conditions

Hamish Clarke, Brett Cirulis, Trent Penman, Owen Price, Matthias M. Boer & Ross Bradstock

Scientific Reports volume 12, Article number: 11871 (2022)

- Environmental sciences

- Natural hazards

There is an imperative for fire agencies to quantify the potential for prescribed burning to mitigate risk to life, property and environmental values while facing changing climates. The 2019–2020 Black Summer fires in eastern Australia raised questions about the effectiveness of prescribed burning in mitigating risk under unprecedented fire conditions. We performed a simulation experiment to test the effects of different rates of prescribed burning treatment on risks posed by wildfire to life, property and infrastructure. In four forested case study landscapes, we found that the risks posed by wildfire were substantially higher under the fire weather conditions of the 2019–2020 season, compared to the full range of long-term historic weather conditions. For area burnt and house loss, the 2019–2020 conditions resulted in more than a doubling of residual risk across the four landscapes, regardless of treatment rate (mean increase of 230%, range 164–360%). Fire managers must prepare for a higher level of residual risk as climate change increases the likelihood of similar or even more dangerous fire seasons.

Introduction

Intrinsic to the earth system for hundreds of millions of years, wildfires are increasingly interacting with humans and the things we value 1 , 2 , 3 . Mega-fires in recent years have caused loss of life and property and widespread environmental and economic impacts in many countries, challenging society’s ability to respond effectively 4 , 5 , 6 . Climate change has already caused changes in some fire regimes, with greater changes projected throughout this century 7 , 8 , 9 . There is a broad network of anthropogenic influences on fire likelihood, exposure and vulnerability including land-use planning, building construction and design, insurance, household and community actions, Indigenous cultural land management, ecosystem management, and research and development. Within this network, fire management agencies play a critical role in wildfire risk mitigation, although our understanding of the interactions between, and relative contributions of, these varied factors towards risk mitigation remains limited. Addressing these gaps is required to support the development and implementation of cost-effective risk management strategies 10 .

Prescribed burning is commonly used in contemporary fire management to alter fuels, with the intention of mitigating risks posed by wildfires to assets. This involves the controlled application of fire in order to modify fuel properties and increase the likelihood of suppressing any wildfires that subsequently occur in the area of the burn 11 , 12 , 13 . Although the effects and effectiveness of prescribed burning have come under intense scientific scrutiny 14 , major knowledge gaps remain in the design of locally tailored, cost-effective treatment strategies that aim to optimise risk mitigation across a range of management values 15 . Crucially, these values may sometimes be in conflict e.g. smoke health impacts from prescribed fire and wildfire 16 or biodiversity conservation and asset protection 17 , necessitating methods for making trade-offs explicit 18 .

The 2019–2020 fires in south-eastern Australia resulted in 33 direct deaths, over 400 smoke-related premature deaths, the loss of over 3000 houses and new records for high severity fire extent and the proportion of area burnt for any forest biome globally 4 , 19 , 20 , 21 . These fires were an important opportunity to test the risk mitigation effects of prescribed burning. One empirical study found that about half the prescribed fires examined resulted in a significant decrease in fire severity, with effects greater for more recent burns and weaker for older burns 22 . Two other empirical studies 6 , 23 found decreases in the probability of high severity fire and house loss after past fire (either prescribed fire or wildfire), but also that this effect was significantly weakened under extreme fire weather conditions, consistent with prior research 24 , 25 . Large ensemble fire behaviour modelling can complement these empirical studies by exploring far more variation in weather conditions, treatment strategies and ignition location than would be possible from the historical record 26 , 27 , 28 . Simulation modelling facilitates estimates of residual risk: the percentage of maximum bushfire risk remaining, in a given area, following a particular fire management scenario, with maximum typically based on a control scenario with no prescribed burning treatment 29 . Simulation modelling also enables tracking of the trajectory of risk in the aftermath of seasons such as the 2019–2020 one, where very large burned areas might be expected to have reduced landscape fuel loads and hence residual risk.
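The definition of residual risk given above can be made concrete with a small calculation. The following is a minimal sketch, not the authors' code: the function name and the area-burnt figures are hypothetical and chosen only for illustration.

```python
def residual_risk(scenario_impact: float, control_impact: float) -> float:
    """Residual risk as a percentage of the control (no prescribed burning).

    Both arguments are in the same unit (e.g. hectares burnt or houses lost).
    """
    if control_impact <= 0:
        raise ValueError("control_impact must be positive")
    return 100.0 * scenario_impact / control_impact


# Hypothetical mean area burnt per simulated fire (ha); not values from the study.
control_area_burnt = 4000.0  # no prescribed burning, long-term weather
treated_area_burnt = 3400.0  # same weather, with some prescribed burning

print(residual_risk(treated_area_burnt, control_area_burnt))
```

A value below 100 means the scenario leaves less risk than the untreated control, while a value above 100 (as reported for the 2019–2020 weather conditions) means the scenario is riskier than doing nothing under long-term weather.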

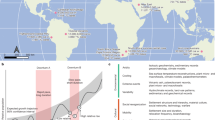

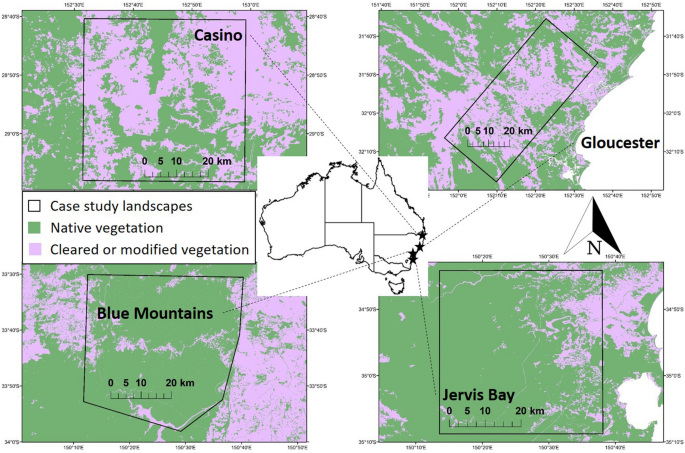

Here we perform a simulation experiment on the effects of different rates of prescribed burning treatment on area burnt and the risks posed by wildfire to multiple values. We consider life, property and infrastructure across four case study landscapes (Fig. 1 ). In particular, we asked:

- How much risk mitigation does prescribed burning provide in the weather conditions of 2019–2020 compared to average fire season weather distributions, based on long-term records?

- How much subsequent risk reduction did the Black Summer fires provide?

- Over what time period will risk reduction be measurable?

Figure 1. Fire behaviour simulations were carried out for four case study landscapes in south-eastern Australia: Casino, Gloucester, Blue Mountains and Jervis Bay. See Table 1 and Study Area in the Methods section for more information. This figure was generated using ArcGIS version 10.8 (https://www.esri.com/en-us/home).

The effect of 2019-20 fire weather conditions on risk mitigation from prescribed burning

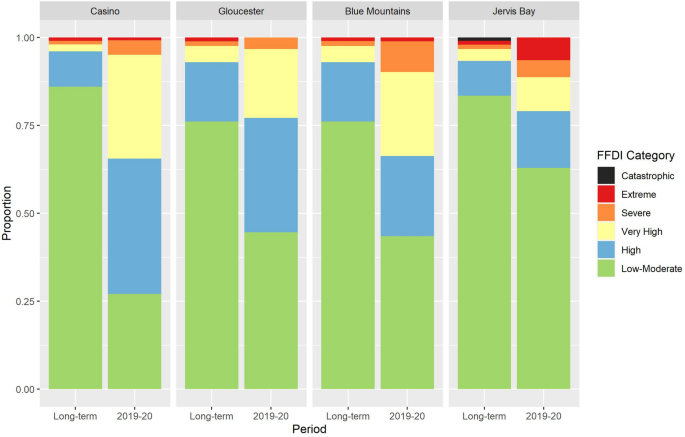

Fire weather conditions during the 2019–2020 season were markedly different to preceding years (Fig. 2 ). In all four case study landscapes there were fewer Low-Moderate days (Forest Fire Danger Index (FFDI): 0–12) and often considerably more High, Very High and Severe days (FFDI: 12–74). Only in the Jervis Bay landscape were there substantially more Extreme days (FFDI: 75–99) during the 2019–2020 season, while there were no Catastrophic days (FFDI ≥ 100) in any of the landscapes during 2019–2020.

Figure 2. Relative frequency of FFDI categories from half-hourly weather station data during the long-term record (1995–2014 for Casino, 1991–2014 for Gloucester and Blue Mountains, 2000–2014 for Jervis Bay) and during the 2019–2020 season.
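The relative frequencies described here can be sketched as a simple binning exercise. The example below uses the coarse FFDI bands as grouped in the text (0–12, 12–74, 75–99, 100 or more). How values on a band boundary are assigned is an assumption, and the sample readings are hypothetical rather than station data.

```python
from collections import Counter

# (label, inclusive lower bound), checked from the highest band down.
# Assumption: a boundary value such as 12 falls in the higher band.
BANDS = [
    ("Catastrophic", 100.0),
    ("Extreme", 75.0),
    ("High to Severe", 12.0),
    ("Low-Moderate", 0.0),
]


def ffdi_category(ffdi: float) -> str:
    """Map a Forest Fire Danger Index value to one of the coarse bands."""
    if ffdi < 0:
        raise ValueError("FFDI cannot be negative")
    for label, lower in BANDS:
        if ffdi >= lower:
            return label
    raise AssertionError("unreachable: the lowest band starts at 0")


def relative_frequency(values: list[float]) -> dict[str, float]:
    """Share of observations falling in each band (shares sum to 1)."""
    if not values:
        raise ValueError("need at least one observation")
    counts = Counter(ffdi_category(v) for v in values)
    return {label: counts.get(label, 0) / len(values) for label, _ in BANDS}


# Hypothetical half-hourly readings, for illustration only.
sample = [5.0, 8.0, 20.0, 80.0]
print(relative_frequency(sample))
```

Running the same function over a long-term record and over a single season gives the two distributions that the figure compares.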

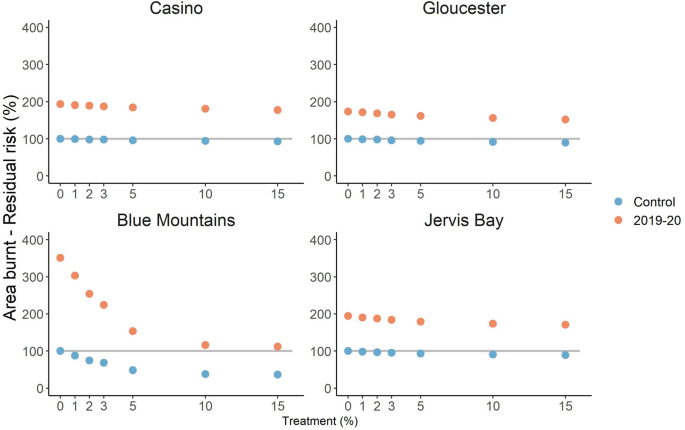

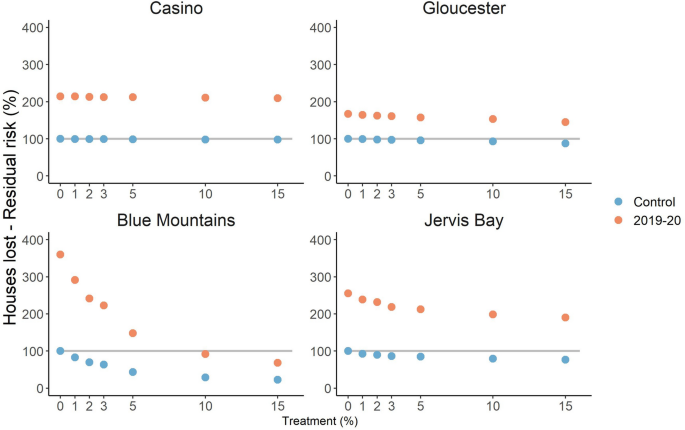

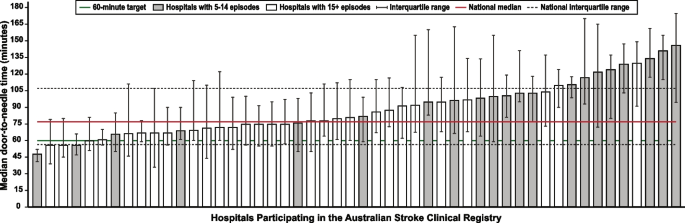

The 2019–2020 weather conditions strongly increased the residual risk of area burnt by wildfire and house loss due to wildfire (Figs. 3 , 4 ). For any given treatment rate, the residual risk under 2019–2020 weather conditions far exceeded control conditions (i.e. conditions based on long-term historic weather). For area burnt there was a mean 220% increase in residual risk (range 170–351%), while for house loss the mean increase in residual risk was 244% (range 164–360%). Only under very high rates of treatment was prescribed burning under 2019–2020 conditions able to achieve a residual risk below that of zero treatment in the control scenario, and only for house loss in the Blue Mountains (Fig. 4 ). Elsewhere even the highest rates of treatment (well above rates achieved historically) resulted in a residual risk above that of zero treatment in the control scenario.

Figure 3. Residual risk trajectory of area burnt by wildfire in Casino, Gloucester, Blue Mountains and Jervis Bay. Risk is relative to a scenario with no prescribed burning and long-term weather (the 100% level on the y-axis). Markers represent different annual rates of treatment, colours represent different weather conditions (blue = control i.e. long-term, orange = 2019–2020 fire season).

Figure 4. Residual risk trajectory of houses lost due to wildfire in Casino, Gloucester, Blue Mountains and Jervis Bay. Risk is relative to a scenario with no prescribed burning and long-term weather (the 100% level on the y-axis). Markers represent different annual rates of treatment, colours represent different weather conditions (blue = control i.e. long-term, orange = 2019–2020 fire season).

Prescribed burning resulted in a reduction in residual risk in all landscapes regardless of weather conditions, even though in almost all cases the risk remained higher than for zero treatment in the control scenario (see gradient of markers in Figs. 3, 4). The effect of increasing treatment was much stronger in the Blue Mountains, with a minimum residual risk of area burnt by wildfire under long-term weather conditions of 35%, and a minimum residual risk of house loss of 22%. In the other three landscapes the minimum residual risk was 89% for area burnt and 77% for house loss. The marginal effect of prescribed burning (i.e. the rate of change in risk mitigation with incremental changes in treatment rate) was greater under the extreme 2019–2020 weather conditions, even though the residual risk was much higher as described above. Results for life loss and infrastructure damage were similar (Supplementary Figures 1–3).
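The marginal effect described above is a slope: the change in residual risk per additional percentage point of annual treatment. A minimal sketch using finite differences follows. The treatment rates are those tested in the study, but both residual-risk series are hypothetical numbers chosen only to mimic the reported pattern (higher risk, yet steeper slope, under extreme weather).

```python
def marginal_effect(rates: list[float], risk: list[float]) -> list[float]:
    """Change in residual risk (percentage points) per 1% p.a. of extra treatment,
    computed between each pair of consecutive treatment rates."""
    if len(rates) != len(risk) or len(rates) < 2:
        raise ValueError("need two equal-length series with at least two points")
    return [
        (risk[i + 1] - risk[i]) / (rates[i + 1] - rates[i])
        for i in range(len(rates) - 1)
    ]


rates = [0, 1, 2, 3, 5, 10, 15]  # % of treatable area burnt per year (from the text)

# Hypothetical residual risk (%) for each rate; not results from the paper.
control_weather = [100, 98, 96, 95, 93, 90, 89]
extreme_weather = [230, 224, 218, 213, 205, 190, 180]

print(marginal_effect(rates, control_weather))
print(marginal_effect(rates, extreme_weather))
```

More negative slopes mean each extra unit of treatment removes more risk. In this illustration the extreme-weather slopes are steeper at every step even though every extreme-weather value sits far above the control series.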

Risk in the aftermath of 2019–2020 fire season

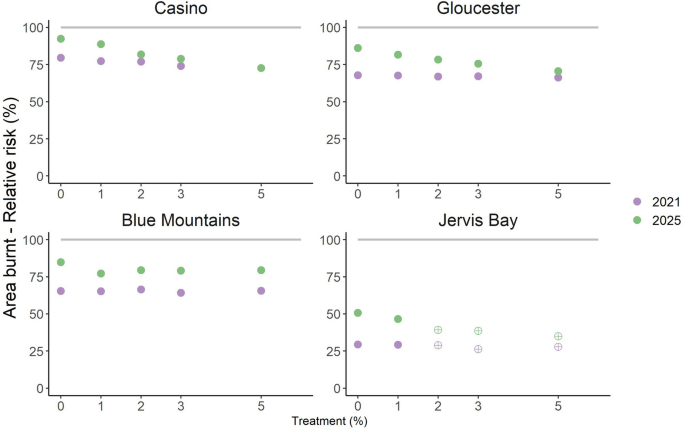

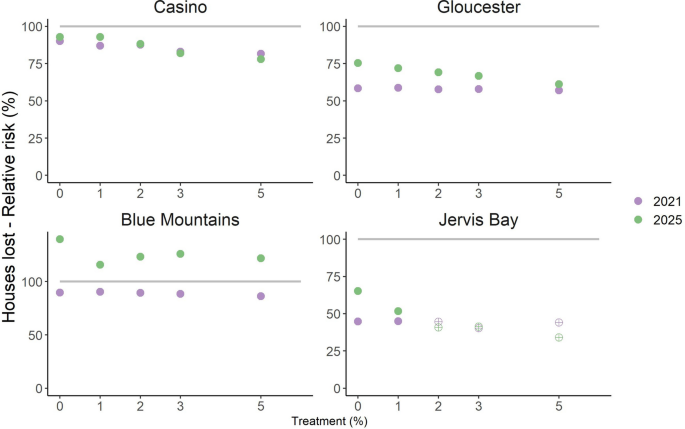

The estimated fuel load reductions due to the 2019–2020 fire season were predicted to cause widespread short-term reductions in residual risk to area burnt by wildfire and house loss, regardless of treatment level (Figs. 5, 6). The potential area burnt by wildfire in 2021 was predicted to be at 30–80% of control (i.e. pre-2019–2020 levels) depending on landscape (Fig. 5, circles). The predicted reduction in area burnt was greatest in Jervis Bay and Gloucester, which experienced the greatest and second greatest proportion burnt during the 2019–2020 season respectively (Table 1). By 2025, the residual risk of area burnt by wildfire climbed to 50–90% of control levels across the four study areas (Fig. 6). Results are similar for house loss (Fig. 6) i.e. the reductions in future wildfire risk due to the 2019–2020 season are partial and temporary, with residual risk actually exceeding control levels in the Blue Mountains by 2025. The re-accumulation of fuel over time is predicted to lead to greater risk mitigation from prescribed burning by 2025 than by 2021 (compare the gradients of the crosses and the circles in Figs. 5, 6). As with the previous analysis, results for life loss and infrastructure damage were similar (Supplementary Figures 4–6).

Figure 5. Future residual risk trajectory of area burnt by wildfire in the Casino, Gloucester, Blue Mountains and Jervis Bay case study areas. Risk is relative to a control scenario with pre-2019–2020 fuel load and no prescribed burning (the 100% level on the y-axis, indicated by line). Markers represent different annual treatment rates, colour indicates time period (blue = 2021 i.e. two years after 2019–2020 fire season, orange = 2025 i.e. six years after 2019–2020 fire season). In Jervis Bay the markers for 2, 3 and 5% p.a. treatment reflect edge treatment rates, with landscape treatment capped at 1% p.a. due to the very large area burnt during the 2019–2020 season (81% of the study area).

Figure 6. Future residual risk trajectory of houses lost due to wildfire in the Casino, Gloucester, Blue Mountains and Jervis Bay case study areas. Risk is relative to a control scenario with pre-2019–2020 fuel load and no prescribed burning (the 100% level on the y-axis, indicated by line). Markers represent different annual treatment rates, colour indicates time period (blue = 2021 i.e. two years after 2019–2020 fire season, orange = 2025 i.e. six years after 2019–2020 fire season). In Jervis Bay the markers for 2, 3 and 5% p.a. treatment reflect edge treatment rates, with landscape treatment capped at 1% p.a. due to the very large area burnt during the 2019–2020 season (81% of the study area).

Weather conditions during the 2019–2020 Australian fire season were a substantial risk multiplier compared to long-term weather conditions. The relative risks due to wildfire, quantified in terms of area burnt or house loss, doubled in three of four forested landscapes and more than tripled in the other. While prescribed burning partially mitigated these risks, the effect size was typically dwarfed by the effect of extreme weather conditions. In most cases zero treatment under long-term historic weather conditions yielded a lower residual risk than even the highest prescribed burning rates when combined with the 2019–2020 fire weather conditions. We also found that wildfire risk was likely to be reduced in the aftermath of the 2019–2020 fires, based on the implied fuel reduction associated with the unprecedented area burnt during the 2019–2020 season. However, the residual risk was still substantial in some areas and was predicted to rise steadily in the coming years, regardless of prescribed burning treatment rates.

Prescribed burning can mitigate a range of risks posed by wildfire, however residual risk can be substantial and is likely to increase strongly during severe fire weather conditions 6 , 24 . We found that the risk mitigation available from prescribed burning varies considerably depending on where it is carried out and which management values are being targeted, consistent with previous modelling studies that suggest there is no ‘one size fits all’ solution to prescribed burning treatment 15 , 16 . Of the factors influencing regional variation in prescribed burning effectiveness, the configuration of assets and the type, amount and condition of native vegetation are likely to be important. The Blue Mountains landscape, where area burnt by wildfire responded most strongly to treatment, has a relatively high proportion of native vegetation compared to the other landscapes, particularly Casino and Gloucester which are mostly cleared. The Blue Mountains also has an unusual combination of a high population concentrated in a linear strip of settlements surrounded by forest, which may contribute to greater returns on treatment (Fig. 1 ). Future research could systematically investigate the relationship between risk mitigation and properties of key variables such as asset distribution, vegetation and burn blocks for an expanded selection of landscapes. Although residual risk was greatly reduced in some areas after the 2019–2020 fire season, it remained substantial in other areas and was generally predicted to rise rapidly with fuel re-accumulation over the following five years. More work is needed to understand potential feedbacks between increasing fire activity, fuel accumulation and subsequent fire activity 8 .

Our conclusions are dependent on a number of assumptions associated with our fire behaviour simulation approach, including the foundational premise that fire spread is a function of fire weather, fuel load and factors such as topography. Fire behaviour simulators built on these assumptions have known biases and perform better when these are addressed, although their tendency to underestimate extreme fire behaviour suggests our results may be conservative 30 , 31 , 32 . The approach also assumes that both wildfires and prescribed burns consume equivalent quantities of fuel and that this fuel starts to re-accumulate after fire as a negative exponential function of time since fire, eventually stabilising at an equilibrium amount. In fact fuel consumption rates vary considerably within a given fire but also between wildfires and prescribed fires, which consume less fuel 33 , 34 . This also points towards our results being conservative due to potentially overestimating the mitigation effect of prescribed burning. Furthermore the accumulation of fuel post fire depends on the vegetation type, soil and climate 35 . Our experiments on the trajectory of risk after the 2019–2020 fire season may be limited by the relatively short amount of time allowed to elapse, which may be insufficient for prescribed burning treatment effects to become apparent. More broadly, our study design involves repeated instances of a single wildfire and thus does not capture the fire regime i.e. the effects of multiple fires in space and time, nor does it factor in future changes in climate, fuel or fuel moisture 36 . We did not model suppression, which is a complex function of fuel type, fuel load, fire behaviour, weather, topography and fire management decision making 37 . Suppression can reduce a range of risks although it is less effective under extreme weather conditions 38 , 39 , 40 .
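The fuel re-accumulation assumption mentioned above can be illustrated with a short sketch. It assumes the commonly used form F(t) = F_eq (1 − e^(−kt)), in which fuel starts from zero immediately after fire and approaches an equilibrium load. The exact curve and parameters used in the simulations are not given in the text, so the functional form, the equilibrium load and the rate constant below are all assumptions.

```python
import math


def fuel_load(years_since_fire: float, equilibrium_load: float, rate: float) -> float:
    """Fuel load (t/ha) after a fire, assuming F(t) = F_eq * (1 - exp(-k * t))."""
    if years_since_fire < 0:
        raise ValueError("years_since_fire must be non-negative")
    return equilibrium_load * (1.0 - math.exp(-rate * years_since_fire))


# Hypothetical parameters for one vegetation type; not taken from the study.
F_EQ = 20.0  # equilibrium fuel load, t/ha
K = 0.3      # accumulation rate constant, per year

for years in (0, 2, 6, 20):
    print(years, round(fuel_load(years, F_EQ, K), 1))
```

Under these assumed parameters most of the fuel returns within the first several years, which is consistent in spirit with the reported rise in residual risk between 2021 and 2025.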

Fire-prone landscapes around the world have experienced increasingly severe fire weather conditions 20 , 41 . The extreme conditions of the 2019–2020 fire season are projected to occur more frequently in the 21st century 42 . Our results suggest that climate change could seriously undermine the role played by prescribed burning in wildfire risk mitigation, as found in previous studies 43 , 44 . Using landscape simulation modelling in the Blue Mountains and the Woronora Plateau (about 100 km north of our Jervis Bay landscape), Bradstock et al. 43 found that the rate of prescribed burning treatment would need to quintuple or more by 2050 to counteract the effects of climate change on risk mitigation in terms of measures such as area burned and intensity of unplanned fire. Our study assumes that similar or greater treatment rates will be possible in future, which may not be the case depending on the prevalence of suitable prescribed burning weather conditions 45 , 46 . These findings demonstrate that there can be no wildfire risk mitigation without effective climate change mitigation 47 . Our research reinforces the need for comprehensive, transparent and objective evaluation of the effectiveness of existing attempts to mitigate wildfire risk across a range of management objectives, with future work potentially targeting additional management values such as smoke production and associated health impacts, agriculture and tourism impacts, and more nuanced measures of environmental impact. Such an evaluation could inform the trial and implementation of a range of locally tailored risk mitigation measures that address the full complexity of fire across preparation, response and recovery phases, such as prescribed burning, mechanical fuel reduction, anthropogenic ignition management, suppression, planning, construction and community engagement.

We selected four case study landscapes that were extensively impacted during the 2019–2020 fire season: Casino (69,362 ha burnt), Gloucester (132,281 ha), Blue Mountains (119,626 ha) and Jervis Bay (137,049 ha) (Fig. 1; Table 1). All landscapes are forested, have considerable Wildland Urban Interface (WUI), and have a history of both wildfire and prescribed fire. Case study landscapes were approximately 200,000 ha (Table 1), intended to align with the upper limit of the size distribution of wildfires in local ecosystems (during the 2019–2020 fire season the Gospers Mountain fire, the result of mergers between several large fires in the Blue Mountains World Heritage Area and neighbouring areas, had a final burned area of over 500,000 ha).

The dominant land cover in the Casino landscape is cleared or modified vegetation (58%). The main native vegetation is dry sclerophyll forest with a shrub/grass understorey (17% of the study area) followed by wet sclerophyll forest with a grassy understorey (9%). The Casino area has a population of about 12,000, mostly concentrated in the town of Casino with a small number dispersed on semi-rural properties. Cleared or modified vegetation is also the dominant land cover in the Gloucester landscape (60%). The main native vegetation is wet sclerophyll forest with a grassy understorey (23% of the study area) followed by wet sclerophyll forest with a shrubby understorey (8%). The population is about 30,000, most of which live in the town of Taree on the eastern edge of the landscape with the remainder in smaller towns and semi-rural properties. The main native vegetation in the Blue Mountains landscape is dry sclerophyll forest with a shrubby understorey (63% of the study area) followed by dry sclerophyll forest with a shrub/grass understorey (9%). About 11% of the landscape is cleared or modified vegetation. Around 100,000 people live within the area, mainly living in a string of suburbs along a highway which bisects the region. The main native vegetation in the Jervis Bay landscape is dry sclerophyll forest with a shrubby understorey (40% of the study area) followed by wet sclerophyll forest with a grassy understorey (17%). Around 14% of the landscape is cleared or modified vegetation. About 50,000 people live within the area, mostly in the township of Nowra in the northeast with most of the remainder in coastal suburbs in the southeast.

All four landscapes are examples of the temperate eucalypt forest fire regime niche, characterised by high productivity, with infrequent low-intensity litter fires in spring and medium-intensity shrub fires in spring and summer 48. Fire intensity typically ranges from 1000 to 5000 kW m⁻¹, although extreme weather conditions may support crown fires where fire intensity can reach 10,000–50,000 kW m⁻¹. Fire interval is around 5–20 years, although it can be as long as 20–100 years 48. Contemporary prescribed burning rates average 2.5% p.a. in the Blue Mountains landscape and range from 0.4 to 0.6% p.a. in the Casino, Gloucester and Jervis Bay landscapes.

Phoenix fire simulator

Fires were simulated using PHOENIX RapidFire v4.0.0.7 49, which is commonly applied in operations across south-eastern Australian states, including NSW 17. Fire growth and rate of spread are calculated from Huygens’ propagation principle of fire edge 50, a modified McArthur Mk5 forest fire behaviour model 51, 52 and a generalisation of the CSIRO southern grassland fire spread model 53. A 30-m resolution digital elevation model was included to allow PHOENIX to incorporate topographic effects on fire behaviour. Vegetation mapping and fuel accumulation models for major vegetation types of the case study landscapes were supplied by the NSW Rural Fire Service. Simulations were run at 180-m grid resolution and model output included flame length, ember density, convection and intensity.

Scenario parameterisation

PHOENIX estimates fuel loads using separate fuel accumulation curves for combined surface and near-surface, elevated and bark fuels 54. These curves are based on a negative exponential growth function and varied among vegetation types 55. The treatable portion of each case study landscape was defined as all fuels except crop, farm and urban landcover, and comprised 38% of the Casino landscape, 52% of the Gloucester landscape, 70% of the Blue Mountains landscape and 83% of the Jervis Bay landscape. Treatable fuels were separated into two types of management-sized ‘burn blocks’. Edge blocks were adjacent to property and settlements, while landscape blocks were more remote and larger. For edge blocks, a minimum burn interval of 5 years was used as it reflects what is feasible for agencies to achieve while allowing fuel recovery after burning. For landscape blocks, the minimum burn interval is the minimum tolerable fire interval for the majority of the vegetation type within each block, as represented by NSW Department of Planning and Environment mapping. In each case study landscape, 1000 ignition locations were selected based on an empirical model developed and tested for similar forest types 56. Individual fires were ignited at 11:00 h local time and propagated for 12 h, unless self-extinguished within this period. This time period provides a standardised approach for risk estimation 15, 57 and was chosen as a compromise between allowing sufficient time for significant wildfire impacts to be realised 58 and avoiding the factorial multiplication of weather conditions spanning multiple days. We tested seven combinations of equal edge and landscape treatment (0, 1, 2, 3, 5, 10, 15% p.a.), resulting in a range of fuel age classes for each simulation (Supplementary Figures 7–10).
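The negative exponential fuel accumulation curve mentioned at the start of this subsection is commonly written as F(t) = F_max (1 − e^(−kt)), where t is time since fire. The following is a minimal sketch of that general form only. The function name, the steady-state load and the rate constant are hypothetical illustration values, not parameters taken from PHOENIX or from the NSW Rural Fire Service fuel models.

```python
import math


def fuel_load(years_since_fire: float, steady_state_load: float, rate: float) -> float:
    """Fuel load (t/ha) under a negative exponential accumulation curve.

    steady_state_load and rate are hypothetical inputs; real values differ
    by vegetation type and fuel stratum.
    """
    if years_since_fire < 0:
        raise ValueError("years_since_fire must be non-negative")
    return steady_state_load * (1.0 - math.exp(-rate * years_since_fire))


if __name__ == "__main__":
    # Illustrative values only: 20 t/ha steady state, rate constant 0.3 per year.
    for age in (0, 2, 5, 10, 20):
        print(age, round(fuel_load(age, 20.0, 0.3), 2))
```

Under this form fuel is zero immediately after a burn and approaches the steady-state load asymptotically, which is why the minimum burn interval determines how much of the fuel recovers between treatments.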
Half-hourly weather data was drawn from the full record of observations at the nearest Bureau of Meteorology automatic weather station for each case study landscape (Casino 1995–2014, Gloucester 1991–2014, Blue Mountains 1991–2014, Jervis Bay 2000–2014). Simulations were repeated for each of the fire danger categories that had been recorded during the fire season (spring–summer) in each case study landscape, i.e. Low–Moderate (0–11), High (12–24), Very High (25–49), Severe (50–74), Extreme (75–99) and, in Jervis Bay only, Catastrophic (100+). The results from the simulated fires were used to estimate the impact on five management values (see “Impact estimation” section below) and then adjusted for the frequency of fire weather conditions contributing to ignitions and fire spread to estimate annualised risk (see “Risk estimation” section below).
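The fire danger categories listed above can be expressed as a simple lookup. This is a sketch under one stated assumption: the source gives integer bands, so a fractional value that falls between bands (for example 11.5) is assigned here to the lower band. The function name is hypothetical.

```python
def ffdi_category(ffdi: float) -> str:
    """Map a fire danger index value to the category bands listed in the text.

    Assumption: fractional values between bands fall into the lower band.
    """
    if ffdi < 0:
        raise ValueError("fire danger index must be non-negative")
    # Each entry is (exclusive upper bound, category name).
    bands = [
        (12, "Low-Moderate"),
        (25, "High"),
        (50, "Very High"),
        (75, "Severe"),
        (100, "Extreme"),
    ]
    for upper, name in bands:
        if ffdi < upper:
            return name
    return "Catastrophic"


if __name__ == "__main__":
    for value in (5, 12, 49, 74, 99, 120):
        print(value, ffdi_category(value))
```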

Two sets of simulations were run to explore the effect of 2019–2020 fire weather conditions on prescribed burning effectiveness: (1) with weather drawn from the long-term historical record of fire season observations, referred to as “control”, and (2) with weather drawn only from the 2019–2020 fire season, referred to as “2019–2020”. For Casino the period of active fire in the 2019–2020 fire season was September 2019 to December 2019, for Gloucester and the Blue Mountains this was October 2019 to December 2019, and for Jervis Bay this was December 2019 to January 2020. The relative frequency of fire weather conditions in each scenario was incorporated into risk estimation through a Bayesian decision network (see “Risk estimation” section below).

Three sets of simulations were run to explore the trajectory of risk in the aftermath of the 2019–2020 fire season: (1) with a fire history excluding the 2019–2020 fire season and with no prescribed burning, referred to as “control”, (2) with a fire history including the 2019–2020 fire season, and with prescribed burning and fuel accumulation through to 2021, i.e. 2 years after the 2019–2020 season (“2021”), and (3) the same as (2) except through to 2025 (“2025”). Due to the very large area burnt during the 2019–2020 season, prescribed burning treatment rates (edge and landscape) were capped at 5% p.a. for Casino, Gloucester and the Blue Mountains. In Jervis Bay, where 81% of the study area was burned by the 2019–2020 fires, edge treatment was capped at 5% p.a. and landscape treatment rate was capped at 1% p.a.

Impact estimation

Effectiveness of prescribed burning at mitigating wildfire impacts was assessed based on area burnt and four management values: house loss, loss of human life, length of powerline damaged and length of road damaged. Area burnt was a direct output from the fire behaviour simulations. The probability of house loss was calculated as a function of predicted ember density, flame length and convection as presented in 59. House loss was calculated per 180-m model grid cell and then multiplied by the number of houses in that grid cell to estimate the number of houses lost per fire. Statistical loss of human life was based on house loss (using the house loss function), the number of houses exposed (using simulation output) and the number of people exposed to fire 60. House location and population density data were derived from national datasets (61, Australian Bureau of Statistics) and combined to give the total number of people exposed to fire. Road and powerline location data was supplied by the NSW Department of Planning and Environment. In the absence of empirical data, a simple fire intensity threshold of 10,000 kW m⁻¹ was used to classify roads or powerlines within each 180-m grid cell as damaged by fire or not. Impacts were estimated from simulation output and the datasets described above, resulting in a distribution of area burnt and impacts on the four management values, corresponding to different weather, treatment and ignition scenarios.
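The per-cell aggregation described above can be sketched as follows. This is an illustration, not the authors' code: the `Cell` structure and function names are hypothetical, the house loss probability is taken as a given input rather than computed from ember density, flame length and convection, and treating a cell at exactly 10,000 kW m⁻¹ as damaged is an assumption, since the text does not say whether the threshold is inclusive.

```python
from dataclasses import dataclass

# Intensity threshold from the text; inclusive comparison is an assumption.
DAMAGE_THRESHOLD_KW_PER_M = 10_000.0


@dataclass
class Cell:
    """One 180-m grid cell of simulation output (hypothetical structure)."""
    house_loss_probability: float  # output of the house loss function, 0..1
    houses: int                    # number of houses located in the cell
    intensity_kw_per_m: float      # simulated fire intensity
    road_length_m: float           # length of road inside the cell


def expected_houses_lost(cells: list[Cell]) -> float:
    """Sum of per-cell loss probability times houses in that cell."""
    return sum(c.house_loss_probability * c.houses for c in cells)


def road_length_damaged(cells: list[Cell]) -> float:
    """Total road length in cells at or above the intensity threshold."""
    return sum(
        c.road_length_m
        for c in cells
        if c.intensity_kw_per_m >= DAMAGE_THRESHOLD_KW_PER_M
    )


if __name__ == "__main__":
    fire = [
        Cell(0.5, 4, 12_000.0, 180.0),
        Cell(0.1, 10, 3_000.0, 200.0),
        Cell(0.0, 0, 10_000.0, 50.0),
    ]
    print(expected_houses_lost(fire), road_length_damaged(fire))
```

The same threshold logic would apply to powerline length, using a powerline length field in place of the road length.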

Risk estimation

Building on previous studies 15, 57, a Bayesian decision network (BDN) approach was used to generate residual risk estimates and hence evaluate the risk mitigation available from prescribed burning. We adopted the recommendations of Marcot et al. 62 and Chen and Pollino 63 in designing our BDN. A conceptual model was adapted from previous studies of fire management 64 and used to create an influence diagram. In this model fire weather affected ignition probability; fire weather and treatment option (a decision node) affected the distribution of fire sizes; and fire weather, fire size and fire management affected the amount of loss for a given management value. To translate the influence diagram into risk estimates, probability distribution tables were populated for the fire weather node (based on weather station data) and the fire size and management value impact nodes (based on the impact estimation step described above) of the BDN. The BDN then generated output values for each of the different prescribed burning treatment scenarios, based on the influence diagram.

Continuous data were discretised on a log scale across the range of values, iterating to obtain a relatively even distribution across non-zero values. For each Forest Fire Danger Index (FFDI) category, we calculated the average maximum daily FFDI during the fire season for each study area, using the same weather station data used to drive PHOENIX. FFDI values were then separated into fire days (fire recorded within 200 km of the weather station) and non-fire days. The relative frequency of fire days was then used to drive ignitions in the BDN. Raw risk values were the expected node likelihoods for area burnt, house loss, life loss, length of powerline damaged and length of road damaged. These raw values were converted into residual risk values by dividing them by the risk value associated with the zero edge, zero landscape treatment scenario. These risks can be validly compared between regions because they reflect the observed distribution of fire weather conditions in each area. Further details of fire behaviour simulations, impact estimation and risk estimation can be found in 15, 57.
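The normalisation step described above, dividing each raw risk value by the value for the zero edge, zero landscape treatment scenario, can be sketched in a few lines. This is a deliberately simplified illustration and not the Bayesian decision network itself: the frequency-weighted sum stands in for the BDN's expected values, and all function names, keys and numbers are hypothetical.

```python
def expected_risk(loss_by_weather: dict[str, float],
                  weather_frequency: dict[str, float]) -> float:
    """Frequency-weighted mean loss across fire weather categories."""
    return sum(weather_frequency[k] * loss_by_weather[k] for k in weather_frequency)


def residual_risk(raw_risk: dict[tuple[float, float], float],
                  baseline: tuple[float, float] = (0.0, 0.0)) -> dict[tuple[float, float], float]:
    """Divide each scenario's raw risk by the no-treatment scenario's raw risk.

    Keys are (edge treatment % p.a., landscape treatment % p.a.).
    """
    base = raw_risk[baseline]
    if base == 0:
        raise ValueError("baseline risk is zero; residual risk is undefined")
    return {scenario: value / base for scenario, value in raw_risk.items()}


if __name__ == "__main__":
    # Hypothetical raw risk values (e.g. expected houses lost per year).
    raw = {(0.0, 0.0): 200.0, (5.0, 5.0): 150.0}
    print(residual_risk(raw))
```

A residual risk of 1 means no reduction relative to no treatment; values below 1 indicate the proportion of risk remaining after treatment.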

Data availability

The datasets generated from fire simulation and risk estimation for the current study are available from the corresponding author on reasonable request. Weather data is available from the Australian Bureau of Meteorology (http://www.bom.gov.au). Vegetation mapping and fuel accumulation models are available from the NSW Rural Fire Service (https://www.rfs.nsw.gov.au). Fire-sensitive vegetation, road and powerline location data is available from the NSW Department of Planning and Environment (https://www.environment.nsw.gov.au).

Code availability

Code to prepare the plots is available on request.

Bowman, D. M. J. S. et al. Fire in the earth system. Science 324 , 481–484 (2009).

Gill, A. M., Stephens, S. L. & Cary, G. J. The worldwide “wildfire” problem. Ecol. Appl. 23 , 438–454 (2013).

Moritz, M. A. et al. Learning to coexist with wildfire. Nature 515 , 58–66 (2014).

Filkov, A. I., Ngo, T., Matthews, S., Telfer, S. & Penman, T. D. Impact of Australia’s catastrophic 2019/20 bushfire season on communities and environment: Retrospective analysis and current trends. J. Saf. Sci. Res. 1 , 44–56 (2020).

Duane, A., Castellnou, M. & Brotons, L. Towards a comprehensive look at global drivers of novel extreme wildfire events. Clim. Chang. 165 , 43. https://doi.org/10.1007/s10584-021-03066-4 (2021).

Nolan, R. H. et al. What do the Australian Black Summer fires signify for the global fire crisis?. Fire. 4 , 97. https://doi.org/10.3390/fire4040097 (2021).

Williams, A. P. et al. Observed impacts of anthropogenic climate change on wildfire in California. Earth’s Future 7 , 892–910 (2019).

Abatzoglou, J. T. et al. Projected increases in western US forest fire despite growing fuel constraints. Commun. Earth. Environ. 2 , 227. https://doi.org/10.1038/s43247-021-00299-0 (2021).

Canadell, J. G. et al. Multi-decadal increase of forest burned area in Australia is linked to climate change. Nat. Commun. 12 , 6921. https://doi.org/10.1038/s41467-021-27225-4 (2021).

Wunder, S. et al. Resilient landscapes to prevent catastrophic forest fires: Socioeconomic insights towards a new paradigm. For. Policy Econ. 128 , 102458. https://doi.org/10.1016/j.forpol.2021.102458 (2021).

Burrows, N. & McCaw, L. Prescribed burning in southwestern Australian forests. Front. Ecol. Environ. 11 , e25–e34. https://doi.org/10.1890/120356 (2013).

Duff, T. J., Cawson, J. G. & Penman, T. D. Prescribed burning. In Encyclopedia of Wildfires and Wildland-Urban Interface (WUI) Fires (ed. Manzello, S. L.) 1–11 (Springer International Publishing, 2019).

Penman, T. D., Collins, L., Duff, T. J., Price, O. F. & Cary, G. J. Scientific evidence regarding effectiveness of prescribed burning. In Prescribed Burning in Australasia: The Science, Practice and Politics of Burning the Bush (ed. Bushfire and Natural Hazards CRC) 99–111 (AFAC, 2020).

Russell-Smith, J., McCaw, L. & Leavesley, A. Adaptive prescribed burning in Australia for the early 21st Century—Context, status, challenges. Int. J. Wildland Fire. 29 , 305 (2020).

Cirulis, B. et al. Quantification of inter-regional differences in risk mitigation from prescribed burning across multiple management values. Int. J. Wildland Fire. 29 , 414–426 (2019).

Borchers-Arriagada, N. et al. Smoke health costs and the calculus for wildfires fuel management: A modelling study. Lancet Planet. Health 5 , e608–e619. https://doi.org/10.1016/S2542-5196(21)00198-4 (2021).

Bentley, P. D. & Penman, T. D. Is there an inherent conflict in managing fire for people and conservation?. Int. J. Wildland Fire 26 , 455–468 (2017).

Driscoll, D. A. et al. Resolving future fire management conflicts using multicriteria decision making. Conserv. Biol. 30 , 196–205 (2016).

Johnston, F. H. et al. Unprecedented health costs of smoke-related PM2.5 from the 2019–20 Australian megafires. Nat. Sustain. 4 , 42–47. https://doi.org/10.1038/s41893-020-00610-5 (2021).

Collins, L. et al. The 2019/2020 mega-fires exposed Australian ecosystems to an unprecedented extent of high-severity fire. Environ. Res. Lett. 16 , 044029. https://doi.org/10.1088/1748-9326/abeb9e (2021).

Boer, M. M., Resco de Dios, V. & Bradstock, R. A. Unprecedented burn area of Australian mega forest fires. Nat. Clim. Change. 10 , 171–172. https://doi.org/10.1038/s41558-020-0716-1 (2020).

Hislop, S., Stone, C., Haywood, A. & Skidmore, A. The effectiveness of fuel reduction burning for wildfire mitigation in sclerophyll forests. Aust. For. 83 , 255–264 (2020).

Bowman, D. M. J. S., Williamson, G. J., Gibson, R. K., Bradstock, R. A. & Keenan, R. J. The severity and extent of the Australia 2019–20 Eucalyptus forest fires are not the legacy of forest management. Nat. Ecol. Evol. 5 , 1003–1010 (2021).

Price, O. F. & Bradstock, R. A. The efficacy of fuel treatment in mitigating property loss during wildfires: Insights from analysis of the severity of the catastrophic fires in 2009 in Victoria, Australia. J. Environ. Manag. 113 , 146–157 (2012).

Parks, S. A., Holsinger, L. M., Miller, C. & Nelson, C. R. Wildland fire as a self-regulating mechanism: The role of previous burns and weather in limiting fire progression. Ecol. Appl. 25 , 1478–1492 (2015).

Ager, A. A., Houtman, R. M., Day, M. A., Ringo, C. & Palaiologou, P. Tradeoffs between US national forest harvest targets and fuel management to reduce wildfire transmission to the wildland urban interface. For. Ecol. Manag. 434 , 99–109 (2019).

Alcasena, F. J., Ager, A. A., Bailey, J. D., Pineda, N. & Vega-García, C. Towards a comprehensive wildfire management strategy for Mediterranean areas: Framework development and implementation in Catalonia Spain. J. Environ. Manag. 231 , 303–320 (2019).

Ager, A. A. et al. Predicting paradise: Modeling future wildfire disasters in the western US. Sci. Total Environ. 784 , 147057. https://doi.org/10.1016/j.scitotenv.2021.147057 (2021).

Victorian Government. Safer Together: A new approach to reducing the risk of bushfire in Victoria (The State of Victoria, 2015).

Faggian, N. et al. Final Report: An evaluation of fire spread simulators used in Australia (Bureau of Meteorology, 2017).

Penman, T. D. et al. Effect of weather forecast errors on fire growth model projections. Int. J. Wildland Fire. 29 , 983–994 (2020).

Penman, T. D. et al. Improved accuracy of wildfire simulations using fuel hazard estimates based on environmental data. J. Environ. Manag. 301 , 113789. https://doi.org/10.1016/j.jenvman.2021.113789 (2022).

Price, O., Nolan, R. H. & Samson, S. A. Fuel consumption rates in eucalypt forest during hazard reduction burns, cultural burns and wildfires. For. Ecol. Manag. 505 , 119894. https://doi.org/10.1016/j.foreco.2021.119894 (2022).

Nolan, R. H. et al. Framework for assessing live fine fuel loads and biomass consumption during fire. For. Ecol. Manag. 504 , 119830. https://doi.org/10.1016/j.foreco.2021.119830 (2022).

McColl-Gausden, S. C., Bennett, L. T., Duff, T. J., Cawson, J. G. & Penman, T. D. Climatic and edaphic gradients predict variation in wildland fuel hazard in south-eastern Australia. Ecography 43 , 443–455 (2020).

McColl-Gausden, S. C., Bennett, L. T., Ababei, D. A., Clarke, H. G. & Penman, T. D. Future fire regimes increase risks to obligate-seeder forests. Divers. Distrib. https://doi.org/10.1111/ddi.13417 (2021).

Arienti, M. C., Cumming, S. G. & Boutin, S. Empirical models of forest fire initial attack success probabilities: The effects of fuels, anthropogenic linear features, fire weather, and management. Can. J. For. Res. 36 , 3155–3166 (2006).

Plucinski, M. P. Factors affecting containment area and time of Australian forest fires featuring aerial suppression. For. Sci. 58 , 390–398 (2012).

Penman, T. D. et al. Examining the relative effects of fire weather, suppression and fuel treatment on fire behaviour—A simulation study. J. Environ. Manag. 131 , 325–333 (2013).

Cary, G. J., Davies, I. D., Bradstock, R. A., Keane, R. E. & Flannigan, M. D. Importance of fuel treatment for limiting moderate-to-high intensity fire: Findings from comparative fire modelling. Landsc. Ecol. 32 , 1473–1483 (2017).

Jolly, W. M. et al. Climate-induced variations in global wildfire danger from 1979 to 2013. Nat. Commun. 6 , 7537 (2015).

Clarke, H. & Evans, J. P. Exploring the future change space for fire weather in southeast Australia. Theor. Appl. Climatol. 136 , 513–527 (2018).

Bradstock, R. A. et al. Wildfires, fuel treatment and risk mitigation in Australian eucalypt forests: Insights from landscape-scale simulation. J. Environ. Manag. 105 , 66–75 (2012).

King, K. J., Cary, G. J., Bradstock, R. A. & Marsden-Smedley, J. B. Contrasting fire responses to climate and management: Insights from two Australian ecosystems. Glob. Change Biol. 19 , 1223–1235 (2013).

Clarke, H. et al. Climate change effects on the frequency, seasonality and interannual variability of suitable prescribed burning weather conditions in southeastern Australia. Agric. For. Meteorol. 271 , 148–157 (2019).

Kupfer, J. A., Terando, A. J., Gao, P., Teske, C. & Hiers, J. K. Climate change projected to reduce prescribed burning opportunities in the south-eastern United States. Int. J. Wildland Fire 29 , 764–778 (2020).

Abram, N. J. et al. Connections of climate change and variability to large and extreme forest fires in southeast Australia. Commun. Earth Environ. 2 , 8. https://doi.org/10.1038/s43247-020-00065-8 (2021).

Murphy, B. P. et al. Fire regimes of Australia: A pyrogeographic model system. J. Biogeogr. 40 , 1048–1058 (2013).

Tolhurst, K., Shields, B. & Chong, D. PHOENIX: development and application of a bushfire risk-management tool. Aust. J. Emerg. Manag. 23 , 47–54 (2008).

Knight, I. & Coleman, J. A fire perimeter expansion algorithm-based on Huygens wavelet propagation. Int. J. Wildland Fire. 3 , 73–84 (1993).

McArthur, A. G. Fire behaviour in eucalypt forests. In Leaflet 107 (Commonwealth of Australia, 1967).

Noble, I., Gill, A. & Bary, G. McArthur’s fire-danger meters expressed as equations. Aust. J. Ecol. 5 , 201–203 (1980).

Cheney, N., Gould, J. & Catchpole, W. R. Prediction of fire spread in grasslands. Int. J. Wildland Fire. 8 , 1–13 (1998).

Hines, F., Tolhurst, K. G., Wilson, A. A. G. & McCarthy, G. J. Overall Fuel Hazard Assessment Guide , 4th edition (Department of Sustainability and Environment, 2010).

Watson, P. J. Fuel Load Dynamics in NSW Vegetation. Part 1: Forests and Grassy Woodlands. Report to the NSW Rural Fire Service (Centre for Environmental Risk Management of Bushfires, 2011).

Clarke, H., Gibson, R., Cirulis, B., Bradstock, R. A. & Penman, T. D. Developing and testing models of the drivers of anthropogenic and lightning-caused ignition in southeastern Australia. J. Environ. Manag. 235 , 34–41 (2019).

Penman, T. et al. Cost-effective prescribed burning solutions vary between landscapes in eastern Australia. Front. For. Glob. Change. https://doi.org/10.3389/ffgc.2020.00079 (2020).

Cruz, M. G. et al. Anatomy of a catastrophic wildfire: The Black Saturday Kilmore East fire in Victoria, Australia. For. Ecol. Manag. 284 , 269–285 (2012).

Tolhurst, K. G. & Chong, D. M. Assessing potential house losses using PHOENIX RapidFire. In Proceedings of Bushfire CRC & Australasian Fire and Emergency Service Authorities Council (AFAC) 2011 Conference Science Day (ed. Thornton, R. P.) 74-76 (Bushfire CRC, 2011).

Harris, S., Anderson, W., Kilinc, M. & Fogarty, L. The relationship between fire behaviour measures and community loss: An exploratory analysis for developing a bushfire severity scale. Nat. Hazards 63 , 391–415 (2012).

Public Sector Mapping Agencies. Geocoded National Address File Database. https://www.psma.com.au/products/g-naf (2016).

Marcot, B. G., Steventon, J. D., Sutherland, G. D. & McCann, R. K. Guidelines for developing and updating Bayesian belief networks applied to ecological modeling and conservation. Can. J. For. Res. 36 , 3063–3074 (2006).

Chen, S. H. & Pollino, C. A. Good practice in Bayesian network modelling. Environ. Model. Softw. 37 , 134–145 (2012).

Penman, T. D., Cirulis, B. & Marcot, B. G. Bayesian decision network modeling for environmental risk management: A wildfire case study. J. Environ. Manag. 270 , 110735. https://doi.org/10.1016/j.jenvman.2020.110735 (2020).

Acknowledgements

We acknowledge the New South Wales Government's Department of Planning & Environment for providing funds to support this research via the NSW Bushfire Risk Management Research Hub. Thank you to the NSW Rural Fire Service and NSW Department of Planning and Environment for providing data. The authors declare no conflicts of interest.

Author information

Authors and affiliations.

Centre for Environmental Risk Management of Bushfires, Centre for Sustainable Ecosystem Solutions, University of Wollongong, Wollongong, NSW, 2522, Australia

Hamish Clarke, Owen Price & Ross Bradstock

NSW Bushfire Risk Management Research Hub, University of Wollongong, Wollongong, NSW, 2522, Australia

Hamish Clarke, Owen Price, Matthias M. Boer & Ross Bradstock

Hawkesbury Institute for the Environment, Western Sydney University, Locked Bag 1797, Penrith, NSW, 2751, Australia

Hamish Clarke, Matthias M. Boer & Ross Bradstock

FLARE Wildfire Research, School of Ecosystem and Forest Sciences, The University of Melbourne, Melbourne, Victoria, 3363, Australia

Hamish Clarke, Brett Cirulis & Trent Penman

NSW Department of Planning and Environment, Science, Economics and Insights Division, Parramatta, NSW, Australia

Ross Bradstock

Contributions

H.C.: conceptualisation, formal analysis, writing—original draft, visualization. B.C.: conceptualisation, software, investigation. T.P.: conceptualisation, methodology, writing—review and editing. O.P.: methodology, writing—review and editing. M.M.B.: methodology, writing—review and editing. R.B.: conceptualization, methodology, writing—review and editing.

Corresponding author

Correspondence to Hamish Clarke.

Ethics declarations

Competing interests.

The authors declare no competing interests.

Additional information

Publisher's note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ .

About this article

Cite this article.

Clarke, H., Cirulis, B., Penman, T. et al. The 2019–2020 Australian forest fires are a harbinger of decreased prescribed burning effectiveness under rising extreme conditions. Sci Rep 12, 11871 (2022). https://doi.org/10.1038/s41598-022-15262-y

Received: 02 March 2022

Accepted: 21 June 2022

Published: 13 July 2022

DOI: https://doi.org/10.1038/s41598-022-15262-y

Vibrant Cities Lab

Urban forests case studies: challenges, potential and success in a dozen cities.

Cities face many challenges in the 21st century: aging gray infrastructure, social and economic inequality, maxed-out systems and grids, and extensive urban development. With more than 80 percent of the U.S. population now calling urban areas home, finding solutions to these issues that fit within a city’s budgetary constraints, while also improving the city, is of paramount importance.

Case Study: The Amazon Rainforest

The Amazon in context

Tropical rainforests are often considered to be the “cradles of biodiversity.” Though they cover only about 6% of the Earth’s land surface, they are home to over 50% of global biodiversity. Rainforests also take in massive amounts of carbon dioxide and release oxygen through photosynthesis, which has earned them the nickname “lungs of the planet.” They store very large amounts of carbon, so cutting and burning their biomass contributes to global climate change. Many modern medicines are derived from rainforest plants, and several very important food crops originated in the rainforest, including bananas, mangos, chocolate, coffee, and sugar cane.

In order to qualify as a tropical rainforest, an area must receive over 250 centimeters of rainfall each year and have an average temperature above 24 degrees centigrade, as well as never experience frosts. The Amazon rainforest in South America is the largest in the world. The second largest is the Congo in central Africa, and other important rainforests can be found in Central America, the Caribbean, and Southeast Asia. Brazil contains about 40% of the world’s remaining tropical rainforest. Its rainforest covers an area of land about 2/3 the size of the continental United States.

There are countless reasons, both anthropocentric and ecocentric, to value rainforests. But they are one of the most threatened types of ecosystems in the world today. It is difficult to measure how quickly rainforests are being cut down, but estimates range from 50,000 to 170,000 square kilometers per year. Even the most conservative estimates project that if we keep cutting down rainforests at today's rate, within about 100 years there will be none left.

How does a rainforest work?

Rainforests are incredibly complex ecosystems, but understanding a few basics about their ecology will help us understand why clear-cutting and fragmentation are such destructive activities for rainforest biodiversity.

High biodiversity in tropical rainforests means that the interrelationships between organisms are very complex. A single tree may house more than 40 different ant species, each of which has a different ecological function and may alter the habitat in distinct and important ways. Ecologists debate whether systems that have high biodiversity are stable and resilient, like a spider web composed of many strong individual strands, or fragile, like a house of cards. Both metaphors are likely appropriate in some cases. One thing we can be certain of is that it is very difficult in a rainforest system, as in most other ecosystems, to affect just one type of organism. Clear-cutting even one small area may damage hundreds or thousands of established species interactions that reach beyond the cleared area.

Pollination is a challenge for rainforest trees because there are so many different species, unlike forests in the temperate regions that are often dominated by fewer than a dozen tree species. One solution is for individual trees to grow close together, making pollination simpler, but this can make that species vulnerable to extinction if the one area where it lives is clear-cut. Another strategy is to develop a mutualistic relationship with a long-distance pollinator, like a specific bee or hummingbird species. These pollinators develop mental maps of where each tree of a particular species is located and then travel between them on a sort of “trap-line” that allows trees to pollinate each other. One problem is that if a forest is fragmented, these trap-line connections can be disrupted, so trees can fail to be pollinated and reproduce even if they haven’t been cut.

The quality of rainforest soils is perhaps the most surprising aspect of their ecology. We might expect a lush rainforest to grow from incredibly rich, fertile soils, but actually, the opposite is true. While some rainforest soils that are derived from volcanic ash or from river deposits can be quite fertile, generally rainforest soils are very poor in nutrients and organic matter. Rainforests hold most of their nutrients in their live vegetation, not in the soil. Their soils do not retain nutrients very well either, which means that existing nutrients quickly “leach” out, carried away by water as it percolates through the soil. Also, soils in rainforests tend to be acidic, which makes it difficult for plants to access even the few existing nutrients. The section on slash and burn agriculture in the previous module describes some of the challenges that farmers face when they attempt to grow crops on tropical rainforest soils, but perhaps the most important lesson is that once a rainforest is cut down and cleared away, very little fertility is left to help a forest regrow.

What is driving deforestation in the Amazon?

Many factors contribute to tropical deforestation, but consider this typical set of circumstances and processes that result in rapid and unsustainable rates of deforestation. This story fits well with the historical experience of Brazil and other countries with territory in the Amazon Basin.

Population growth and poverty encourage poor farmers to clear new areas of rainforest, and this pressure is exacerbated by government policies that permit landless peasants to establish legal title to land that they have cleared.

At the same time, international lending institutions like the World Bank provide money to the national government for large-scale projects like mining, construction of dams, new roads, and other infrastructure that directly reduces the forest or makes it easier for farmers to access new areas to clear.

The activities that most often encourage new road development are timber harvesting and mining. Loggers cut out the best timber for domestic use or export, and in the process knock over many other less valuable trees. Those trees are eventually cleared and used for wood pulp, or burned, and the area is converted into cattle pastures. After a few years, the vegetation is so degraded that raising cattle is no longer profitable, and the land is sold to poor farmers seeking a subsistence living.

Regardless of how poor farmers get their land, they are often able to get only a few years of decent crop yields before the poor quality of the soil overwhelms their efforts, and then they are forced to move on to another plot of land. Small-scale farmers also hunt for meat in the remaining fragmented forest areas, which reduces the biodiversity in those areas as well.

Another important factor not mentioned in the scenario above is the clearing of rainforest for industrial agriculture plantations of bananas, pineapples, and sugar cane. These crops are primarily grown for export, and so an additional driver to consider is consumer demand for these crops in countries like the United States.

These cycles of land use, which are driven by poverty and population growth as well as government policies, have led to the rapid loss of tropical rainforests. What is lost in many cases is not simply biodiversity, but also valuable renewable resources that could sustain many generations of humans to come. Efforts to protect rainforests and other areas of high biodiversity are the topic of the next section.

Forest Biodiversity

Case Study: Seeing the Forest for the Trees

What is so important about forests?

Biodiversity and Healthy Forests

The forests of Maine are among the most diverse in North America. They include 14 coniferous (cone-bearing) and 52 deciduous (broadleaf) tree species. Since their establishment nearly 6,000 years ago, the composition and extent of the forests of Maine have changed as a result of both natural and man-made events. In the early days of European settlement, much of the forest of Maine (68%) was cleared to make way for farming. Since the early 1900s, the forest has regenerated.

Become Involved in Forest Monitoring

Project Learning Tree (PLT) is a multi-disciplinary environmental education program for educators and students in Pre-K through Grade 12. The American Forest Foundation supports Project Learning Tree. Any citizen scientist can use the PLT forest-monitoring techniques illustrated in this chapter. To learn more about Project Learning Tree and forest-monitoring techniques, consult the Teaching Notes for resource links.

Citing and Terms of Use

Material on this page is offered under a Creative Commons license unless otherwise noted below.

- Initial Publication Date: August 10, 2010

- Short URL: https://serc.carleton.edu/48483

Sustainable Landscapes

Forest landscape restoration, protected & conserved areas, forest sector transformation & valuation, forests & climate, deforestation- and conversion-free supply chains & governance.

Case studies

Lessons from practitioners on conserving forests for nature and people.

Insights from WWF experts on solutions to safeguard forests.

Stories of people and places behind WWF's forest conservation work around the world.

Explore our work through the eyes of our people and partners.

WWF approach

- Livestock farmers lead the way in implementing sustainable land use practices and reducing deforestation in Peru

- How Argentina could emerge as a leader in mainstreaming beef free from deforestation

- Using wood forensic science to deter corruption and illegality in the timber trade

- Community-based forest monitoring in Colombia

- Brazil's Amazon Soy Moratorium

Share your experience

Replication of successful approaches and learning lessons from other forest practitioners can make conservation work more impactful. Take a few minutes to capture and share your experience and tips.

Machine Learning and image analysis towards improved energy management in Industry 4.0: a practical case study on quality control

- Original Article

- Open access

- Published: 13 May 2024

- Volume 17, article number 48 (2024)

- Mattia Casini 1

- Paolo De Angelis 1

- Marco Porrati 2

- Paolo Vigo 1

- Matteo Fasano 1

- Eliodoro Chiavazzo 1

- Luca Bergamasco 1 (ORCID: orcid.org/0000-0001-6130-9544)

With the advent of Industry 4.0, Artificial Intelligence (AI) has created a favorable environment for the digitalization of manufacturing and processing, helping industries to automate and optimize operations. In this work, we focus on a practical case study of a brake caliper quality control operation, which is usually accomplished by human inspection and requires a dedicated handling system, resulting in a slow production rate and thus inefficient energy usage. We report on a Machine Learning (ML) methodology, based on Deep Convolutional Neural Networks (D-CNNs), that automatically extracts information from images in order to automate the process. A complete workflow has been developed on the target industrial test case. To find the best compromise between accuracy and computational demand of the model, several D-CNN architectures have been tested. The results show that a judicious choice of the ML model, with proper training, allows fast and accurate quality control; thus, the proposed workflow could be implemented for an ML-powered version of the considered problem. This would eventually enable better management of the available resources, in terms of time consumption and energy usage.

Introduction

An efficient use of energy resources in industry is key for a sustainable future (Bilgen, 2014; Ocampo-Martinez et al., 2019). The advent of Industry 4.0 and of Artificial Intelligence has created a favorable context for the digitalisation of manufacturing processes. In this view, Machine Learning (ML) techniques have the potential to assist industries in a better and smarter usage of the available data, helping to automate and improve operations (Narciso & Martins, 2020; Mazzei & Ramjattan, 2022). For example, ML tools can be used to analyze sensor data from industrial equipment for predictive maintenance (Carvalho et al., 2019; Dalzochio et al., 2020), which allows potential failures to be identified in advance and thus maintenance operations to be planned better, with reduced downtime. Similarly, energy consumption optimization (Shen et al., 2020; Qin et al., 2020) can be achieved via ML-enabled analysis of available consumption data, with consequent adjustments of the operating parameters, schedules, or configurations to minimize energy consumption while maintaining optimal production efficiency. Energy consumption forecasting (Liu et al., 2019; Zhang et al., 2018) can also be improved, especially in industrial plants relying on renewable energy sources (Bologna et al., 2020; Ismail et al., 2021), by analyzing historical data on weather patterns and forecasts, in order to optimize the usage of energy resources, avoid energy peaks, and leverage alternative energy sources or storage systems (Li & Zheng, 2016; Ribezzo et al., 2022; Fasano et al., 2019; Trezza et al., 2022; Mishra et al., 2023). Finally, ML tools can also serve for fault or anomaly detection (Angelopoulos et al., 2019; Md et al., 2022), which allows prompt corrective actions to optimize energy usage and prevent energy inefficiencies.
Within this context, ML techniques for image analysis (Casini et al., 2024) are also gaining increasing interest (Chen et al., 2023), for their application to, e.g., materials design and optimization (Choudhury, 2021), quality control (Badmos et al., 2020), process monitoring (Ho et al., 2021), or detection of machine failures by converting time series data from sensors to 2D images (Wen et al., 2017).
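As an illustration of the last idea, converting sensor time series into 2D images, the following minimal Python sketch shows one simple way such a conversion could work: a window of samples is rescaled to 0-255 gray levels and laid out row by row in a square grid. The function name `series_to_image`, the min-max scaling, and the row-major layout are assumptions made for this example, not necessarily the procedure used in the cited work.

```python
def series_to_image(samples, side):
    """Map the first side*side samples to a side x side grid of 0-255 gray levels.

    Hypothetical helper for illustration; scaling and layout are assumptions.
    """
    needed = side * side
    if len(samples) < needed:
        raise ValueError("need at least side*side samples")
    window = samples[:needed]
    lo, hi = min(window), max(window)
    span = hi - lo
    # A constant signal has no contrast; map it to all zeros
    # instead of dividing by zero.
    pixels = [0 if span == 0 else round((v - lo) / span * 255) for v in window]
    # Row-major reshape: consecutive samples fill each row left to right.
    return [pixels[r * side:(r + 1) * side] for r in range(side)]


# Toy sensor reading that rises and then falls.
signal = [0.0, 1.0, 2.0, 3.0, 4.0, 3.0, 2.0, 1.0, 0.0]
image = series_to_image(signal, 3)
for row in image:
    print(row)
```

The resulting grid can then be fed to any image-based model; in practice the window length and normalization would need to be chosen for the sensor at hand.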

Incorporating digitalisation and ML techniques into Industry 4.0 has led to significant energy savings (Maggiore et al., 2021; Nota et al., 2020). Projects adopting these technologies can achieve an average of 15% to 25% improvement in energy efficiency in the processes where they were implemented (Arana-Landín et al., 2023). For instance, in predictive maintenance, ML can reduce energy consumption by optimizing the operation of machinery (Agrawal et al., 2023; Pan et al., 2024). In process optimization, ML algorithms can improve energy efficiency by 10% to 20% by analyzing and adjusting machine operations for optimal performance, thereby reducing unnecessary energy usage (Leong et al., 2020). Furthermore, the implementation of ML algorithms for optimal control can lead to energy savings of 30%, because these systems can make real-time adjustments to production lines, ensuring that machines operate at peak energy efficiency (Rahul & Chiddarwar, 2023).

In automotive manufacturing, ML-driven quality control can lead to energy savings by reducing the need for redoing parts or running inefficient production cycles (Vater et al., 2019). In high-volume production environments such as consumer electronics, novel computer vision models that automatically distinguish damaged packages from intact ones can speed up operations and reduce waste (Shahin et al., 2023). In heavy industries like steel or chemical manufacturing, ML can optimize the energy consumption of large machinery. By predicting the optimal operating conditions and maintenance schedules, these systems can save energy costs (Mypati et al., 2023). Compressed air generation is one of the most energy-intensive processes in manufacturing. ML can optimize the performance of these systems, potentially leading to energy savings by continuously monitoring and adjusting the air compressors for peak efficiency, avoiding energy losses due to leaks or inefficient operation (Benedetti et al., 2019). ML can also contribute to reducing energy consumption and minimizing incorrectly produced parts in polymer processing enterprises (Willenbacher et al., 2021).

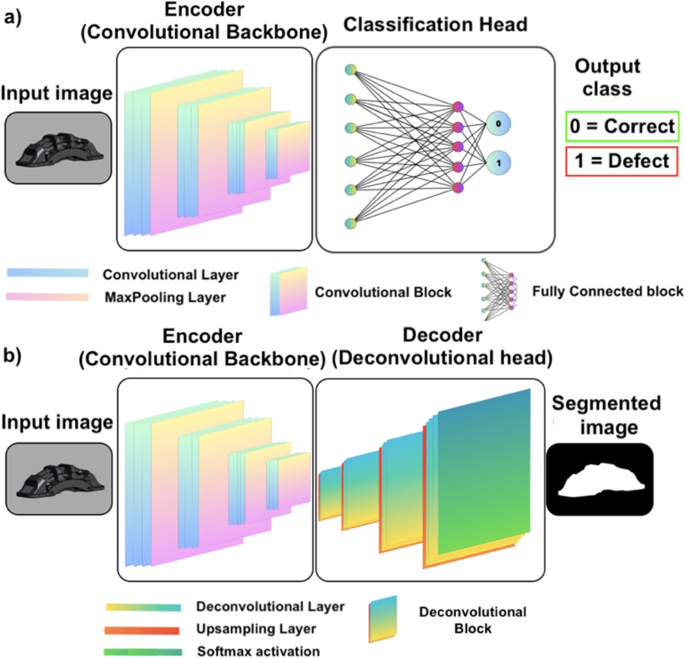

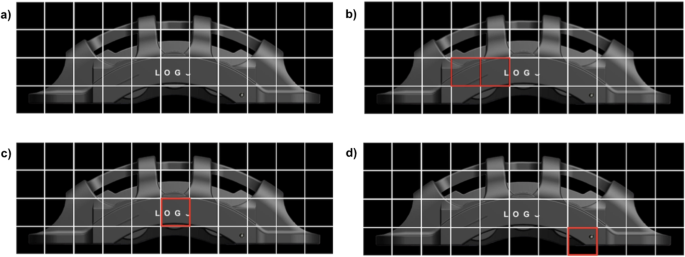

Here we focus on a practical industrial case study of brake caliper processing. In detail, we focus on the quality control operation, which is typically accomplished by human visual inspection and requires a dedicated handling system. This implies a slower production rate and inefficient energy usage. We thus propose the integration of an ML-based system that performs the quality control operation automatically, without the need for a dedicated handling system and thus with reduced operation time. To this end, we rely on ML tools able to analyze and extract information from images, that is, deep convolutional neural networks, D-CNNs (Alzubaidi et al., 2021; Chai et al., 2021).
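To give a feel for the basic operation that convolutional networks stack many times, the sketch below slides a small kernel over a toy grayscale patch and records the response at each position (the cross-correlation that deep learning libraries conventionally call convolution). It is only an illustration under stated assumptions: the 5x5 patch, the hand-picked `line_kernel`, and the helper `conv2d_valid` are hypothetical, whereas a trained D-CNN learns its kernels from labeled images and is not the model described here.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` without padding and return the response map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Weighted sum of the image window under the kernel.
            acc = 0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


# Toy patch: bright uniform surface (9) with a dark vertical "scratch" in column 2.
patch = [[9, 9, 1, 9, 9] for _ in range(5)]

# Hand-picked kernel (an assumption) that responds strongly when the center
# column is darker than its neighbors.
line_kernel = [[1, -2, 1] for _ in range(3)]

response = conv2d_valid(patch, line_kernel)
for row in response:
    print(row)
```

The response is largest where the kernel is centered on the dark column, which is how a learned filter can flag a scratch-like feature for later layers to classify.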

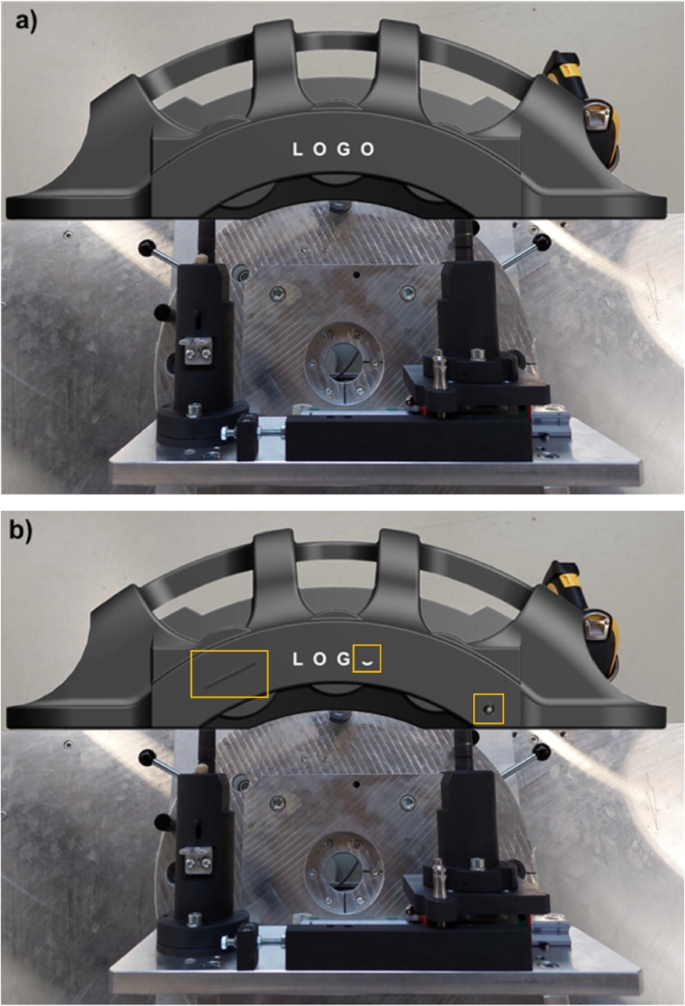

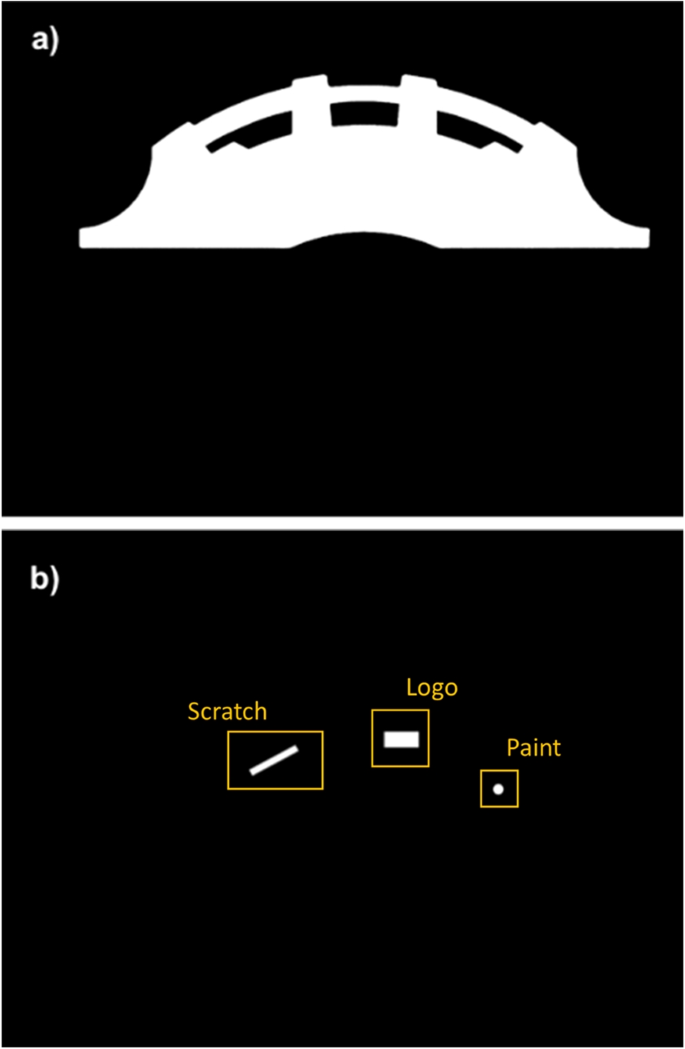

Sample 3D model (GrabCAD) of the considered brake caliper: (a) part without defects, and (b) part with three sample defects, namely a scratch, a partially missing letter in the logo, and a circular painting defect (shown by the yellow squares, from left to right respectively)