data science Recently Published Documents

Total documents.

- Latest Documents

- Most Cited Documents

- Contributed Authors

- Related Sources

- Related Keywords

Assessing the effects of fuel energy consumption, foreign direct investment and GDP on CO2 emission: New data science evidence from Europe & Central Asia

Documentation matters: human-centered ai system to assist data science code documentation in computational notebooks.

Computational notebooks allow data scientists to express their ideas through a combination of code and documentation. However, data scientists often pay attention only to the code, and neglect creating or updating their documentation during quick iterations. Inspired by human documentation practices learned from 80 highly-voted Kaggle notebooks, we design and implement Themisto, an automated documentation generation system to explore how human-centered AI systems can support human data scientists in the machine learning code documentation scenario. Themisto facilitates the creation of documentation via three approaches: a deep-learning-based approach to generate documentation for source code, a query-based approach to retrieve online API documentation for source code, and a user prompt approach to nudge users to write documentation. We evaluated Themisto in a within-subjects experiment with 24 data science practitioners, and found that automated documentation generation techniques reduced the time for writing documentation, reminded participants to document code they would have ignored, and improved participants’ satisfaction with their computational notebook.

Data science in the business environment: Insight management for an Executive MBA

Adventures in financial data science, gecoagent: a conversational agent for empowering genomic data extraction and analysis.

With the availability of reliable and low-cost DNA sequencing, human genomics is relevant to a growing number of end-users, including biologists and clinicians. Typical interactions require applying comparative data analysis to huge repositories of genomic information for building new knowledge, taking advantage of the latest findings in applied genomics for healthcare. Powerful technology for data extraction and analysis is available, but broad use of the technology is hampered by the complexity of accessing such methods and tools. This work presents GeCoAgent, a big-data service for clinicians and biologists. GeCoAgent uses a dialogic interface, animated by a chatbot, for supporting the end-users’ interaction with computational tools accompanied by multi-modal support. While the dialogue progresses, the user is accompanied in extracting the relevant data from repositories and then performing data analysis, which often requires the use of statistical methods or machine learning. Results are returned using simple representations (spreadsheets and graphics), while at the end of a session the dialogue is summarized in textual format. The innovation presented in this article is concerned with not only the delivery of a new tool but also our novel approach to conversational technologies, potentially extensible to other healthcare domains or to general data science.

Differentially Private Medical Texts Generation Using Generative Neural Networks

Technological advancements in data science have offered us affordable storage and efficient algorithms to query a large volume of data. Our health records are a significant part of this data, which is pivotal for healthcare providers and can be utilized in our well-being. The clinical note in electronic health records is one such category that collects a patient’s complete medical information during different timesteps of patient care available in the form of free-texts. Thus, these unstructured textual notes contain events from a patient’s admission to discharge, which can prove to be significant for future medical decisions. However, since these texts also contain sensitive information about the patient and the attending medical professionals, such notes cannot be shared publicly. This privacy issue has thwarted timely discoveries on this plethora of untapped information. Therefore, in this work, we intend to generate synthetic medical texts from a private or sanitized (de-identified) clinical text corpus and analyze their utility rigorously in different metrics and levels. Experimental results promote the applicability of our generated data as it achieves more than 80\% accuracy in different pragmatic classification problems and matches (or outperforms) the original text data.

Impact on Stock Market across Covid-19 Outbreak

Abstract: This paper analysis the impact of pandemic over the global stock exchange. The stock listing values are determined by variety of factors including the seasonal changes, catastrophic calamities, pandemic, fiscal year change and many more. This paper significantly provides analysis on the variation of listing price over the world-wide outbreak of novel corona virus. The key reason to imply upon this outbreak was to provide notion on underlying regulation of stock exchanges. Daily closing prices of the stock indices from January 2017 to January 2022 has been utilized for the analysis. The predominant feature of the research is to analyse the fact that does global economy downfall impacts the financial stock exchange. Keywords: Stock Exchange, Matplotlib, Streamlit, Data Science, Web scrapping.

Information Resilience: the nexus of responsible and agile approaches to information use

AbstractThe appetite for effective use of information assets has been steadily rising in both public and private sector organisations. However, whether the information is used for social good or commercial gain, there is a growing recognition of the complex socio-technical challenges associated with balancing the diverse demands of regulatory compliance and data privacy, social expectations and ethical use, business process agility and value creation, and scarcity of data science talent. In this vision paper, we present a series of case studies that highlight these interconnected challenges, across a range of application areas. We use the insights from the case studies to introduce Information Resilience, as a scaffold within which the competing requirements of responsible and agile approaches to information use can be positioned. The aim of this paper is to develop and present a manifesto for Information Resilience that can serve as a reference for future research and development in relevant areas of responsible data management.

qEEG Analysis in the Diagnosis of Alzheimers Disease; a Comparison of Functional Connectivity and Spectral Analysis

Alzheimers disease (AD) is a brain disorder that is mainly characterized by a progressive degeneration of neurons in the brain, causing a decline in cognitive abilities and difficulties in engaging in day-to-day activities. This study compares an FFT-based spectral analysis against a functional connectivity analysis based on phase synchronization, for finding known differences between AD patients and Healthy Control (HC) subjects. Both of these quantitative analysis methods were applied on a dataset comprising bipolar EEG montages values from 20 diagnosed AD patients and 20 age-matched HC subjects. Additionally, an attempt was made to localize the identified AD-induced brain activity effects in AD patients. The obtained results showed the advantage of the functional connectivity analysis method compared to a simple spectral analysis. Specifically, while spectral analysis could not find any significant differences between the AD and HC groups, the functional connectivity analysis showed statistically higher synchronization levels in the AD group in the lower frequency bands (delta and theta), suggesting that the AD patients brains are in a phase-locked state. Further comparison of functional connectivity between the homotopic regions confirmed that the traits of AD were localized in the centro-parietal and centro-temporal areas in the theta frequency band (4-8 Hz). The contribution of this study is that it applies a neural metric for Alzheimers detection from a data science perspective rather than from a neuroscience one. The study shows that the combination of bipolar derivations with phase synchronization yields similar results to comparable studies employing alternative analysis methods.

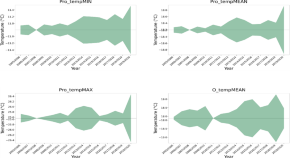

Big Data Analytics for Long-Term Meteorological Observations at Hanford Site

A growing number of physical objects with embedded sensors with typically high volume and frequently updated data sets has accentuated the need to develop methodologies to extract useful information from big data for supporting decision making. This study applies a suite of data analytics and core principles of data science to characterize near real-time meteorological data with a focus on extreme weather events. To highlight the applicability of this work and make it more accessible from a risk management perspective, a foundation for a software platform with an intuitive Graphical User Interface (GUI) was developed to access and analyze data from a decommissioned nuclear production complex operated by the U.S. Department of Energy (DOE, Richland, USA). Exploratory data analysis (EDA), involving classical non-parametric statistics, and machine learning (ML) techniques, were used to develop statistical summaries and learn characteristic features of key weather patterns and signatures. The new approach and GUI provide key insights into using big data and ML to assist site operation related to safety management strategies for extreme weather events. Specifically, this work offers a practical guide to analyzing long-term meteorological data and highlights the integration of ML and classical statistics to applied risk and decision science.

Export Citation Format

Share document.

Advertisement

Data Science and Analytics: An Overview from Data-Driven Smart Computing, Decision-Making and Applications Perspective

- Review Article

- Published: 12 July 2021

- Volume 2 , article number 377 , ( 2021 )

Cite this article

- Iqbal H. Sarker ORCID: orcid.org/0000-0003-1740-5517 1 , 2

68k Accesses

129 Citations

Explore all metrics

The digital world has a wealth of data, such as internet of things (IoT) data, business data, health data, mobile data, urban data, security data, and many more, in the current age of the Fourth Industrial Revolution (Industry 4.0 or 4IR). Extracting knowledge or useful insights from these data can be used for smart decision-making in various applications domains. In the area of data science, advanced analytics methods including machine learning modeling can provide actionable insights or deeper knowledge about data, which makes the computing process automatic and smart. In this paper, we present a comprehensive view on “Data Science” including various types of advanced analytics methods that can be applied to enhance the intelligence and capabilities of an application through smart decision-making in different scenarios. We also discuss and summarize ten potential real-world application domains including business, healthcare, cybersecurity, urban and rural data science, and so on by taking into account data-driven smart computing and decision making. Based on this, we finally highlight the challenges and potential research directions within the scope of our study. Overall, this paper aims to serve as a reference point on data science and advanced analytics to the researchers and decision-makers as well as application developers, particularly from the data-driven solution point of view for real-world problems.

Similar content being viewed by others

Machine Learning: Algorithms, Real-World Applications and Research Directions

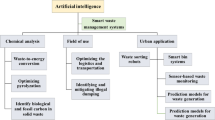

Artificial intelligence for waste management in smart cities: a review

AI-Based Modeling: Techniques, Applications and Research Issues Towards Automation, Intelligent and Smart Systems

Avoid common mistakes on your manuscript.

Introduction

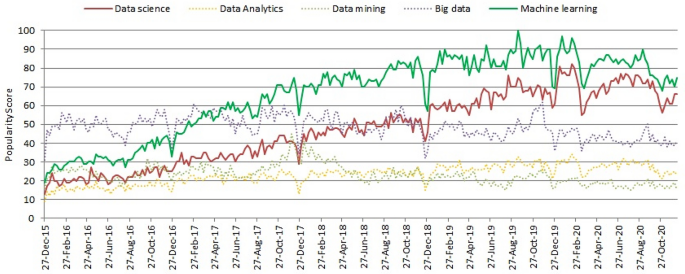

We are living in the age of “data science and advanced analytics”, where almost everything in our daily lives is digitally recorded as data [ 17 ]. Thus the current electronic world is a wealth of various kinds of data, such as business data, financial data, healthcare data, multimedia data, internet of things (IoT) data, cybersecurity data, social media data, etc [ 112 ]. The data can be structured, semi-structured, or unstructured, which increases day by day [ 105 ]. Data science is typically a “concept to unify statistics, data analysis, and their related methods” to understand and analyze the actual phenomena with data. According to Cao et al. [ 17 ] “data science is the science of data” or “data science is the study of data”, where a data product is a data deliverable, or data-enabled or guided, which can be a discovery, prediction, service, suggestion, insight into decision-making, thought, model, paradigm, tool, or system. The popularity of “Data science” is increasing day-by-day, which is shown in Fig. 1 according to Google Trends data over the last 5 years [ 36 ]. In addition to data science, we have also shown the popularity trends of the relevant areas such as “Data analytics”, “Data mining”, “Big data”, “Machine learning” in the figure. According to Fig. 1 , the popularity indication values for these data-driven domains, particularly “Data science”, and “Machine learning” are increasing day-by-day. This statistical information and the applicability of the data-driven smart decision-making in various real-world application areas, motivate us to study briefly on “Data science” and machine-learning-based “Advanced analytics” in this paper.

The worldwide popularity score of data science comparing with relevant areas in a range of 0 (min) to 100 (max) over time where x -axis represents the timestamp information and y -axis represents the corresponding score

Usually, data science is the field of applying advanced analytics methods and scientific concepts to derive useful business information from data. The emphasis of advanced analytics is more on anticipating the use of data to detect patterns to determine what is likely to occur in the future. Basic analytics offer a description of data in general, while advanced analytics is a step forward in offering a deeper understanding of data and helping to analyze granular data, which we are interested in. In the field of data science, several types of analytics are popular, such as "Descriptive analytics" which answers the question of what happened; "Diagnostic analytics" which answers the question of why did it happen; "Predictive analytics" which predicts what will happen in the future; and "Prescriptive analytics" which prescribes what action should be taken, discussed briefly in “ Advanced analytics methods and smart computing ”. Such advanced analytics and decision-making based on machine learning techniques [ 105 ], a major part of artificial intelligence (AI) [ 102 ] can also play a significant role in the Fourth Industrial Revolution (Industry 4.0) due to its learning capability for smart computing as well as automation [ 121 ].

Although the area of “data science” is huge, we mainly focus on deriving useful insights through advanced analytics, where the results are used to make smart decisions in various real-world application areas. For this, various advanced analytics methods such as machine learning modeling, natural language processing, sentiment analysis, neural network, or deep learning analysis can provide deeper knowledge about data, and thus can be used to develop data-driven intelligent applications. More specifically, regression analysis, classification, clustering analysis, association rules, time-series analysis, sentiment analysis, behavioral patterns, anomaly detection, factor analysis, log analysis, and deep learning which is originated from the artificial neural network, are taken into account in our study. These machine learning-based advanced analytics methods are discussed briefly in “ Advanced analytics methods and smart computing ”. Thus, it’s important to understand the principles of various advanced analytics methods mentioned above and their applicability to apply in various real-world application areas. For instance, in our earlier paper Sarker et al. [ 114 ], we have discussed how data science and machine learning modeling can play a significant role in the domain of cybersecurity for making smart decisions and to provide data-driven intelligent security services. In this paper, we broadly take into account the data science application areas and real-world problems in ten potential domains including the area of business data science, health data science, IoT data science, behavioral data science, urban data science, and so on, discussed briefly in “ Real-world application domains ”.

Based on the importance of machine learning modeling to extract the useful insights from the data mentioned above and data-driven smart decision-making, in this paper, we present a comprehensive view on “Data Science” including various types of advanced analytics methods that can be applied to enhance the intelligence and the capabilities of an application. The key contribution of this study is thus understanding data science modeling, explaining different analytic methods for solution perspective and their applicability in various real-world data-driven applications areas mentioned earlier. Overall, the purpose of this paper is, therefore, to provide a basic guide or reference for those academia and industry people who want to study, research, and develop automated and intelligent applications or systems based on smart computing and decision making within the area of data science.

The main contributions of this paper are summarized as follows:

To define the scope of our study towards data-driven smart computing and decision-making in our real-world life. We also make a brief discussion on the concept of data science modeling from business problems to data product and automation, to understand its applicability and provide intelligent services in real-world scenarios.

To provide a comprehensive view on data science including advanced analytics methods that can be applied to enhance the intelligence and the capabilities of an application.

To discuss the applicability and significance of machine learning-based analytics methods in various real-world application areas. We also summarize ten potential real-world application areas, from business to personalized applications in our daily life, where advanced analytics with machine learning modeling can be used to achieve the expected outcome.

To highlight and summarize the challenges and potential research directions within the scope of our study.

The rest of the paper is organized as follows. The next section provides the background and related work and defines the scope of our study. The following section presents the concepts of data science modeling for building a data-driven application. After that, briefly discuss and explain different advanced analytics methods and smart computing. Various real-world application areas are discussed and summarized in the next section. We then highlight and summarize several research issues and potential future directions, and finally, the last section concludes this paper.

Background and Related Work

In this section, we first discuss various data terms and works related to data science and highlight the scope of our study.

Data Terms and Definitions

There is a range of key terms in the field, such as data analysis, data mining, data analytics, big data, data science, advanced analytics, machine learning, and deep learning, which are highly related and easily confusing. In the following, we define these terms and differentiate them with the term “Data Science” according to our goal.

The term “Data analysis” refers to the processing of data by conventional (e.g., classic statistical, empirical, or logical) theories, technologies, and tools for extracting useful information and for practical purposes [ 17 ]. The term “Data analytics”, on the other hand, refers to the theories, technologies, instruments, and processes that allow for an in-depth understanding and exploration of actionable data insight [ 17 ]. Statistical and mathematical analysis of the data is the major concern in this process. “Data mining” is another popular term over the last decade, which has a similar meaning with several other terms such as knowledge mining from data, knowledge extraction, knowledge discovery from data (KDD), data/pattern analysis, data archaeology, and data dredging. According to Han et al. [ 38 ], it should have been more appropriately named “knowledge mining from data”. Overall, data mining is defined as the process of discovering interesting patterns and knowledge from large amounts of data [ 38 ]. Data sources may include databases, data centers, the Internet or Web, other repositories of data, or data dynamically streamed through the system. “Big data” is another popular term nowadays, which may change the statistical and data analysis approaches as it has the unique features of “massive, high dimensional, heterogeneous, complex, unstructured, incomplete, noisy, and erroneous” [ 74 ]. Big data can be generated by mobile devices, social networks, the Internet of Things, multimedia, and many other new applications [ 129 ]. Several unique features including volume, velocity, variety, veracity, value (5Vs), and complexity are used to understand and describe big data [ 69 ].

In terms of analytics, basic analytics provides a summary of data whereas the term “Advanced Analytics” takes a step forward in offering a deeper understanding of data and helps to analyze granular data. Advanced analytics is characterized or defined as autonomous or semi-autonomous data or content analysis using advanced techniques and methods to discover deeper insights, predict or generate recommendations, typically beyond traditional business intelligence or analytics. “Machine learning”, a branch of artificial intelligence (AI), is one of the major techniques used in advanced analytics which can automate analytical model building [ 112 ]. This is focused on the premise that systems can learn from data, recognize trends, and make decisions, with minimal human involvement [ 38 , 115 ]. “Deep Learning” is a subfield of machine learning that discusses algorithms inspired by the human brain’s structure and the function called artificial neural networks [ 38 , 139 ].

Unlike the above data-related terms, “Data science” is an umbrella term that encompasses advanced data analytics, data mining, machine, and deep learning modeling, and several other related disciplines like statistics, to extract insights or useful knowledge from the datasets and transform them into actionable business strategies. In [ 17 ], Cao et al. defined data science from the disciplinary perspective as “data science is a new interdisciplinary field that synthesizes and builds on statistics, informatics, computing, communication, management, and sociology to study data and its environments (including domains and other contextual aspects, such as organizational and social aspects) to transform data to insights and decisions by following a data-to-knowledge-to-wisdom thinking and methodology”. In “ Understanding data science modeling ”, we briefly discuss the data science modeling from a practical perspective starting from business problems to data products that can assist the data scientists to think and work in a particular real-world problem domain within the area of data science and analytics.

Related Work

In the area, several papers have been reviewed by the researchers based on data science and its significance. For example, the authors in [ 19 ] identify the evolving field of data science and its importance in the broader knowledge environment and some issues that differentiate data science and informatics issues from conventional approaches in information sciences. Donoho et al. [ 27 ] present 50 years of data science including recent commentary on data science in mass media, and on how/whether data science varies from statistics. The authors formally conceptualize the theory-guided data science (TGDS) model in [ 53 ] and present a taxonomy of research themes in TGDS. Cao et al. include a detailed survey and tutorial on the fundamental aspects of data science in [ 17 ], which considers the transition from data analysis to data science, the principles of data science, as well as the discipline and competence of data education.

Besides, the authors include a data science analysis in [ 20 ], which aims to provide a realistic overview of the use of statistical features and related data science methods in bioimage informatics. The authors in [ 61 ] study the key streams of data science algorithm use at central banks and show how their popularity has risen over time. This research contributes to the creation of a research vector on the role of data science in central banking. In [ 62 ], the authors provide an overview and tutorial on the data-driven design of intelligent wireless networks. The authors in [ 87 ] provide a thorough understanding of computational optimal transport with application to data science. In [ 97 ], the authors present data science as theoretical contributions in information systems via text analytics.

Unlike the above recent studies, in this paper, we concentrate on the knowledge of data science including advanced analytics methods, machine learning modeling, real-world application domains, and potential research directions within the scope of our study. The advanced analytics methods based on machine learning techniques discussed in this paper can be applied to enhance the capabilities of an application in terms of data-driven intelligent decision making and automation in the final data product or systems.

Understanding Data Science Modeling

In this section, we briefly discuss how data science can play a significant role in the real-world business process. For this, we first categorize various types of data and then discuss the major steps of data science modeling starting from business problems to data product and automation.

Types of Real-World Data

Typically, to build a data-driven real-world system in a particular domain, the availability of data is the key [ 17 , 112 , 114 ]. The data can be in different types such as (i) Structured—that has a well-defined data structure and follows a standard order, examples are names, dates, addresses, credit card numbers, stock information, geolocation, etc.; (ii) Unstructured—has no pre-defined format or organization, examples are sensor data, emails, blog entries, wikis, and word processing documents, PDF files, audio files, videos, images, presentations, web pages, etc.; (iii) Semi-structured—has elements of both the structured and unstructured data containing certain organizational properties, examples are HTML, XML, JSON documents, NoSQL databases, etc.; and (iv) Metadata—that represents data about the data, examples are author, file type, file size, creation date and time, last modification date and time, etc. [ 38 , 105 ].

In the area of data science, researchers use various widely-used datasets for different purposes. These are, for example, cybersecurity datasets such as NSL-KDD [ 127 ], UNSW-NB15 [ 79 ], Bot-IoT [ 59 ], ISCX’12 [ 15 ], CIC-DDoS2019 [ 22 ], etc., smartphone datasets such as phone call logs [ 88 , 110 ], mobile application usages logs [ 124 , 149 ], SMS Log [ 28 ], mobile phone notification logs [ 77 ] etc., IoT data [ 56 , 11 , 64 ], health data such as heart disease [ 99 ], diabetes mellitus [ 86 , 147 ], COVID-19 [ 41 , 78 ], etc., agriculture and e-commerce data [ 128 , 150 ], and many more in various application domains. In “ Real-world application domains ”, we discuss ten potential real-world application domains of data science and analytics by taking into account data-driven smart computing and decision making, which can help the data scientists and application developers to explore more in various real-world issues.

Overall, the data used in data-driven applications can be any of the types mentioned above, and they can differ from one application to another in the real world. Data science modeling, which is briefly discussed below, can be used to analyze such data in a specific problem domain and derive insights or useful information from the data to build a data-driven model or data product.

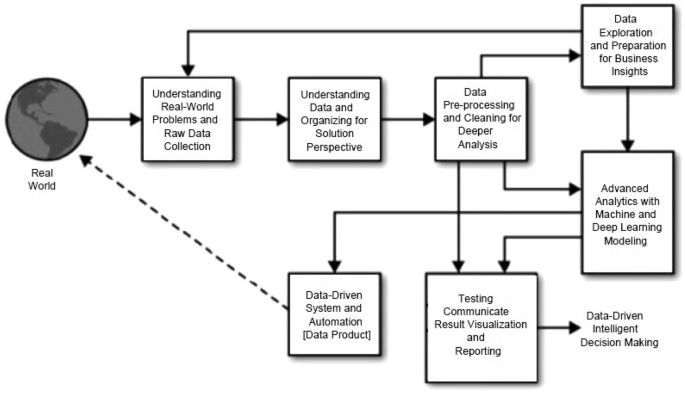

Steps of Data Science Modeling

Data science is typically an umbrella term that encompasses advanced data analytics, data mining, machine, and deep learning modeling, and several other related disciplines like statistics, to extract insights or useful knowledge from the datasets and transform them into actionable business strategies, mentioned earlier in “ Background and related work ”. In this section, we briefly discuss how data science can play a significant role in the real-world business process. Figure 2 shows an example of data science modeling starting from real-world data to data-driven product and automation. In the following, we briefly discuss each module of the data science process.

An example of data science modeling from real-world data to data-driven system and decision making

Understanding business problems: This involves getting a clear understanding of the problem that is needed to solve, how it impacts the relevant organization or individuals, the ultimate goals for addressing it, and the relevant project plan. Thus to understand and identify the business problems, the data scientists formulate relevant questions while working with the end-users and other stakeholders. For instance, how much/many, which category/group, is the behavior unrealistic/abnormal, which option should be taken, what action, etc. could be relevant questions depending on the nature of the problems. This helps to get a better idea of what business needs and what we should be extracted from data. Such business knowledge can enable organizations to enhance their decision-making process, is known as “Business Intelligence” [ 65 ]. Identifying the relevant data sources that can help to answer the formulated questions and what kinds of actions should be taken from the trends that the data shows, is another important task associated with this stage. Once the business problem has been clearly stated, the data scientist can define the analytic approach to solve the problem.

Understanding data: As we know that data science is largely driven by the availability of data [ 114 ]. Thus a sound understanding of the data is needed towards a data-driven model or system. The reason is that real-world data sets are often noisy, missing values, have inconsistencies, or other data issues, which are needed to handle effectively [ 101 ]. To gain actionable insights, the appropriate data or the quality of the data must be sourced and cleansed, which is fundamental to any data science engagement. For this, data assessment that evaluates what data is available and how it aligns to the business problem could be the first step in data understanding. Several aspects such as data type/format, the quantity of data whether it is sufficient or not to extract the useful knowledge, data relevance, authorized access to data, feature or attribute importance, combining multiple data sources, important metrics to report the data, etc. are needed to take into account to clearly understand the data for a particular business problem. Overall, the data understanding module involves figuring out what data would be best needed and the best ways to acquire it.

Data pre-processing and exploration: Exploratory data analysis is defined in data science as an approach to analyzing datasets to summarize their key characteristics, often with visual methods [ 135 ]. This examines a broad data collection to discover initial trends, attributes, points of interest, etc. in an unstructured manner to construct meaningful summaries of the data. Thus data exploration is typically used to figure out the gist of data and to develop a first step assessment of its quality, quantity, and characteristics. A statistical model can be used or not, but primarily it offers tools for creating hypotheses by generally visualizing and interpreting the data through graphical representation such as a chart, plot, histogram, etc [ 72 , 91 ]. Before the data is ready for modeling, it’s necessary to use data summarization and visualization to audit the quality of the data and provide the information needed to process it. To ensure the quality of the data, the data pre-processing technique, which is typically the process of cleaning and transforming raw data [ 107 ] before processing and analysis is important. It also involves reformatting information, making data corrections, and merging data sets to enrich data. Thus, several aspects such as expected data, data cleaning, formatting or transforming data, dealing with missing values, handling data imbalance and bias issues, data distribution, search for outliers or anomalies in data and dealing with them, ensuring data quality, etc. could be the key considerations in this step.

Machine learning modeling and evaluation: Once the data is prepared for building the model, data scientists design a model, algorithm, or set of models, to address the business problem. Model building is dependent on what type of analytics, e.g., predictive analytics, is needed to solve the particular problem, which is discussed briefly in “ Advanced analytics methods and smart computing ”. To best fits the data according to the type of analytics, different types of data-driven or machine learning models that have been summarized in our earlier paper Sarker et al. [ 105 ], can be built to achieve the goal. Data scientists typically separate training and test subsets of the given dataset usually dividing in the ratio of 80:20 or data considering the most popular k -folds data splitting method [ 38 ]. This is to observe whether the model performs well or not on the data, to maximize the model performance. Various model validation and assessment metrics, such as error rate, accuracy, true positive, false positive, true negative, false negative, precision, recall, f-score, ROC (receiver operating characteristic curve) analysis, applicability analysis, etc. [ 38 , 115 ] are used to measure the model performance, which can guide the data scientists to choose or design the learning method or model. Besides, machine learning experts or data scientists can take into account several advanced analytics such as feature engineering, feature selection or extraction methods, algorithm tuning, ensemble methods, modifying existing algorithms, or designing new algorithms, etc. to improve the ultimate data-driven model to solve a particular business problem through smart decision making.

Data product and automation: A data product is typically the output of any data science activity [ 17 ]. A data product, in general terms, is a data deliverable, or data-enabled or guide, which can be a discovery, prediction, service, suggestion, insight into decision-making, thought, model, paradigm, tool, application, or system that process data and generate results. Businesses can use the results of such data analysis to obtain useful information like churn (a measure of how many customers stop using a product) prediction and customer segmentation, and use these results to make smarter business decisions and automation. Thus to make better decisions in various business problems, various machine learning pipelines and data products can be developed. To highlight this, we summarize several potential real-world data science application areas in “ Real-world application domains ”, where various data products can play a significant role in relevant business problems to make them smart and automate.

Overall, we can conclude that data science modeling can be used to help drive changes and improvements in business practices. The interesting part of the data science process indicates having a deeper understanding of the business problem to solve. Without that, it would be much harder to gather the right data and extract the most useful information from the data for making decisions to solve the problem. In terms of role, “Data Scientists” typically interpret and manage data to uncover the answers to major questions that help organizations to make objective decisions and solve complex problems. In a summary, a data scientist proactively gathers and analyzes information from multiple sources to better understand how the business performs, and designs machine learning or data-driven tools/methods, or algorithms, focused on advanced analytics, which can make today’s computing process smarter and intelligent, discussed briefly in the following section.

Advanced Analytics Methods and Smart Computing

As mentioned earlier in “ Background and related work ”, basic analytics provides a summary of data whereas advanced analytics takes a step forward in offering a deeper understanding of data and helps in granular data analysis. For instance, the predictive capabilities of advanced analytics can be used to forecast trends, events, and behaviors. Thus, “advanced analytics” can be defined as the autonomous or semi-autonomous analysis of data or content using advanced techniques and methods to discover deeper insights, make predictions, or produce recommendations, where machine learning-based analytical modeling is considered as the key technologies in the area. In the following section, we first summarize various types of analytics and outcome that are needed to solve the associated business problems, and then we briefly discuss machine learning-based analytical modeling.

Types of Analytics and Outcome

In the real-world business process, several key questions such as “What happened?”, “Why did it happen?”, “What will happen in the future?”, “What action should be taken?” are common and important. Based on these questions, in this paper, we categorize and highlight the analytics into four types such as descriptive, diagnostic, predictive, and prescriptive, which are discussed below.

Descriptive analytics: It is the interpretation of historical data to better understand the changes that have occurred in a business. Thus descriptive analytics answers the question, “what happened in the past?” by summarizing past data such as statistics on sales and operations or marketing strategies, use of social media, and engagement with Twitter, Linkedin or Facebook, etc. For instance, using descriptive analytics through analyzing trends, patterns, and anomalies, etc., customers’ historical shopping data can be used to predict the probability of a customer purchasing a product. Thus, descriptive analytics can play a significant role to provide an accurate picture of what has occurred in a business and how it relates to previous times utilizing a broad range of relevant business data. As a result, managers and decision-makers can pinpoint areas of strength and weakness in their business, and eventually can take more effective management strategies and business decisions.

Diagnostic analytics: It is a form of advanced analytics that examines data or content to answer the question, “why did it happen?” The goal of diagnostic analytics is to help to find the root cause of the problem. For example, the human resource management department of a business organization may use these diagnostic analytics to find the best applicant for a position, select them, and compare them to other similar positions to see how well they perform. In a healthcare example, it might help to figure out whether the patients’ symptoms such as high fever, dry cough, headache, fatigue, etc. are all caused by the same infectious agent. Overall, diagnostic analytics enables one to extract value from the data by posing the right questions and conducting in-depth investigations into the answers. It is characterized by techniques such as drill-down, data discovery, data mining, and correlations.

Predictive analytics: Predictive analytics is an important analytical technique used by many organizations for various purposes such as to assess business risks, anticipate potential market patterns, and decide when maintenance is needed, to enhance their business. It is a form of advanced analytics that examines data or content to answer the question, “what will happen in the future?” Thus, the primary goal of predictive analytics is to identify and typically answer this question with a high degree of probability. Data scientists can use historical data as a source to extract insights for building predictive models using various regression analyses and machine learning techniques, which can be used in various application domains for a better outcome. Companies, for example, can use predictive analytics to minimize costs by better anticipating future demand and changing output and inventory, banks and other financial institutions to reduce fraud and risks by predicting suspicious activity, medical specialists to make effective decisions through predicting patients who are at risk of diseases, retailers to increase sales and customer satisfaction through understanding and predicting customer preferences, manufacturers to optimize production capacity through predicting maintenance requirements, and many more. Thus predictive analytics can be considered as the core analytical method within the area of data science.

Prescriptive analytics: Prescriptive analytics focuses on recommending the best way forward with actionable information to maximize overall returns and profitability, which typically answer the question, “what action should be taken?” In business analytics, prescriptive analytics is considered the final step. For its models, prescriptive analytics collects data from several descriptive and predictive sources and applies it to the decision-making process. Thus, we can say that it is related to both descriptive analytics and predictive analytics, but it emphasizes actionable insights instead of data monitoring. In other words, it can be considered as the opposite of descriptive analytics, which examines decisions and outcomes after the fact. By integrating big data, machine learning, and business rules, prescriptive analytics helps organizations to make more informed decisions to produce results that drive the most successful business decisions.

In summary, to clarify what happened and why it happened, both descriptive analytics and diagnostic analytics look at the past. Historical data is used by predictive analytics and prescriptive analytics to forecast what will happen in the future and what steps should be taken to impact those effects. In Table 1 , we have summarized these analytics methods with examples. Forward-thinking organizations in the real world can jointly use these analytical methods to make smart decisions that help drive changes in business processes and improvements. In the following, we discuss how machine learning techniques can play a big role in these analytical methods through their learning capabilities from the data.

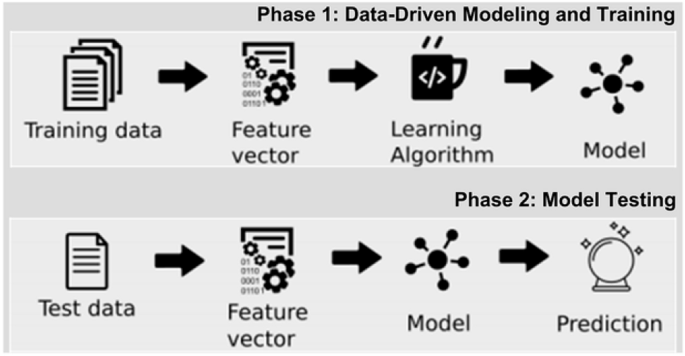

Machine Learning Based Analytical Modeling

In this section, we briefly discuss various advanced analytics methods based on machine learning modeling, which can make the computing process smart through intelligent decision-making in a business process. Figure 3 shows a general structure of a machine learning-based predictive modeling considering both the training and testing phase. In the following, we discuss a wide range of methods such as regression and classification analysis, association rule analysis, time-series analysis, behavioral analysis, log analysis, and so on within the scope of our study.

A general structure of a machine learning based predictive model considering both the training and testing phase

Regression Analysis

In data science, one of the most common statistical approaches used for predictive modeling and data mining tasks is regression techniques [ 38 ]. Regression analysis is a form of supervised machine learning that examines the relationship between a dependent variable (target) and independent variables (predictor) to predict continuous-valued output [ 105 , 117 ]. The following equations Eqs. 1 , 2 , and 3 [ 85 , 105 ] represent the simple, multiple or multivariate, and polynomial regressions respectively, where x represents independent variable and y is the predicted/target output mentioned above:

Regression analysis is typically conducted for one of two purposes: to predict the value of the dependent variable in the case of individuals for whom some knowledge relating to the explanatory variables is available, or to estimate the effect of some explanatory variable on the dependent variable, i.e., finding the relationship of causal influence between the variables. Linear regression cannot be used to fit non-linear data and may cause an underfitting problem. In that case, polynomial regression performs better, however, increases the model complexity. The regularization techniques such as Ridge, Lasso, Elastic-Net, etc. [ 85 , 105 ] can be used to optimize the linear regression model. Besides, support vector regression, decision tree regression, random forest regression techniques [ 85 , 105 ] can be used for building effective regression models depending on the problem type, e.g., non-linear tasks. Financial forecasting or prediction, cost estimation, trend analysis, marketing, time-series estimation, drug response modeling, etc. are some examples where the regression models can be used to solve real-world problems in the domain of data science and analytics.

Classification Analysis

Classification is one of the most widely used and best-known data science processes. This is a form of supervised machine learning approach that also refers to a predictive modeling problem in which a class label is predicted for a given example [ 38 ]. Spam identification, such as ‘spam’ and ‘not spam’ in email service providers, can be an example of a classification problem. There are several forms of classification analysis available in the area such as binary classification—which refers to the prediction of one of two classes; multi-class classification—which involves the prediction of one of more than two classes; multi-label classification—a generalization of multiclass classification in which the problem’s classes are organized hierarchically [ 105 ].

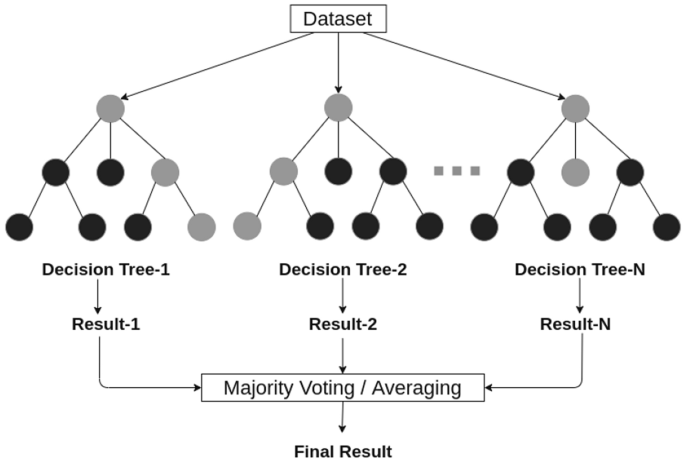

An example of a random forest structure considering multiple decision trees

Several popular classification techniques, such as k-nearest neighbors [ 5 ], support vector machines [ 55 ], navies Bayes [ 49 ], adaptive boosting [ 32 ], extreme gradient boosting [ 85 ], logistic regression [ 66 ], decision trees ID3 [ 92 ], C4.5 [ 93 ], and random forests [ 13 ] exist to solve classification problems. The tree-based classification technique, e.g., random forest considering multiple decision trees, performs better than others to solve real-world problems in many cases as due to its capability of producing logic rules [ 103 , 115 ]. Figure 4 shows an example of a random forest structure considering multiple decision trees. In addition, BehavDT recently proposed by Sarker et al. [ 109 ], and IntrudTree [ 106 ] can be used for building effective classification or prediction models in the relevant tasks within the domain of data science and analytics.

Cluster Analysis

Clustering is a form of unsupervised machine learning technique and is well-known in many data science application areas for statistical data analysis [ 38 ]. Usually, clustering techniques search for the structures inside a dataset and, if the classification is not previously identified, classify homogeneous groups of cases. This means that data points are identical to each other within a cluster, and different from data points in another cluster. Overall, the purpose of cluster analysis is to sort various data points into groups (or clusters) that are homogeneous internally and heterogeneous externally [ 105 ]. To gain insight into how data is distributed in a given dataset or as a preprocessing phase for other algorithms, clustering is often used. Data clustering, for example, assists with customer shopping behavior, sales campaigns, and retention of consumers for retail businesses, anomaly detection, etc.

Many clustering algorithms with the ability to group data have been proposed in machine learning and data science literature [ 98 , 138 , 141 ]. In our earlier paper Sarker et al. [ 105 ], we have summarized this based on several perspectives, such as partitioning methods, density-based methods, hierarchical-based methods, model-based methods, etc. In the literature, the popular K-means [ 75 ], K-Mediods [ 84 ], CLARA [ 54 ] etc. are known as partitioning methods; DBSCAN [ 30 ], OPTICS [ 8 ] etc. are known as density-based methods; single linkage [ 122 ], complete linkage [ 123 ], etc. are known as hierarchical methods. In addition, grid-based clustering methods, such as STING [ 134 ], CLIQUE [ 2 ], etc.; model-based clustering such as neural network learning [ 141 ], GMM [ 94 ], SOM [ 18 , 104 ], etc.; constrained-based methods such as COP K-means [ 131 ], CMWK-Means [ 25 ], etc. are used in the area. Recently, Sarker et al. [ 111 ] proposed a hierarchical clustering method, BOTS [ 111 ] based on bottom-up agglomerative technique for capturing user’s similar behavioral characteristics over time. The key benefit of agglomerative hierarchical clustering is that the tree-structure hierarchy created by agglomerative clustering is more informative than an unstructured set of flat clusters, which can assist in better decision-making in relevant application areas in data science.

Association Rule Analysis

Association rule learning is known as a rule-based machine learning system, an unsupervised learning method is typically used to establish a relationship among variables. This is a descriptive technique often used to analyze large datasets for discovering interesting relationships or patterns. The association learning technique’s main strength is its comprehensiveness, as it produces all associations that meet user-specified constraints including minimum support and confidence value [ 138 ].

Association rules allow a data scientist to identify trends, associations, and co-occurrences between data sets inside large data collections. In a supermarket, for example, associations infer knowledge about the buying behavior of consumers for different items, which helps to change the marketing and sales plan. In healthcare, to better diagnose patients, physicians may use association guidelines. Doctors can assess the conditional likelihood of a given illness by comparing symptom associations in the data from previous cases using association rules and machine learning-based data analysis. Similarly, association rules are useful for consumer behavior analysis and prediction, customer market analysis, bioinformatics, weblog mining, recommendation systems, etc.

Several types of association rules have been proposed in the area, such as frequent pattern based [ 4 , 47 , 73 ], logic-based [ 31 ], tree-based [ 39 ], fuzzy-rules [ 126 ], belief rule [ 148 ] etc. The rule learning techniques such as AIS [ 3 ], Apriori [ 4 ], Apriori-TID and Apriori-Hybrid [ 4 ], FP-Tree [ 39 ], Eclat [ 144 ], RARM [ 24 ] exist to solve the relevant business problems. Apriori [ 4 ] is the most commonly used algorithm for discovering association rules from a given dataset among the association rule learning techniques [ 145 ]. The recent association rule-learning technique ABC-RuleMiner proposed in our earlier paper by Sarker et al. [ 113 ] could give significant results in terms of generating non-redundant rules that can be used for smart decision making according to human preferences, within the area of data science applications.

Time-Series Analysis and Forecasting

A time series is typically a series of data points indexed in time order particularly, by date, or timestamp [ 111 ]. Depending on the frequency, the time-series can be different types such as annually, e.g., annual budget, quarterly, e.g., expenditure, monthly, e.g., air traffic, weekly, e.g., sales quantity, daily, e.g., weather, hourly, e.g., stock price, minute-wise, e.g., inbound calls in a call center, and even second-wise, e.g., web traffic, and so on in relevant domains.

A mathematical method dealing with such time-series data, or the procedure of fitting a time series to a proper model is termed time-series analysis. Many different time series forecasting algorithms and analysis methods can be applied to extract the relevant information. For instance, to do time-series forecasting for future patterns, the autoregressive (AR) model [ 130 ] learns the behavioral trends or patterns of past data. Moving average (MA) [ 40 ] is another simple and common form of smoothing used in time series analysis and forecasting that uses past forecasted errors in a regression-like model to elaborate an averaged trend across the data. The autoregressive moving average (ARMA) [ 12 , 120 ] combines these two approaches, where autoregressive extracts the momentum and pattern of the trend and moving average capture the noise effects. The most popular and frequently used time-series model is the autoregressive integrated moving average (ARIMA) model [ 12 , 120 ]. ARIMA model, a generalization of an ARMA model, is more flexible than other statistical models such as exponential smoothing or simple linear regression. In terms of data, the ARMA model can only be used for stationary time-series data, while the ARIMA model includes the case of non-stationarity as well. Similarly, seasonal autoregressive integrated moving average (SARIMA), autoregressive fractionally integrated moving average (ARFIMA), autoregressive moving average model with exogenous inputs model (ARMAX model) are also used in time-series models [ 120 ].

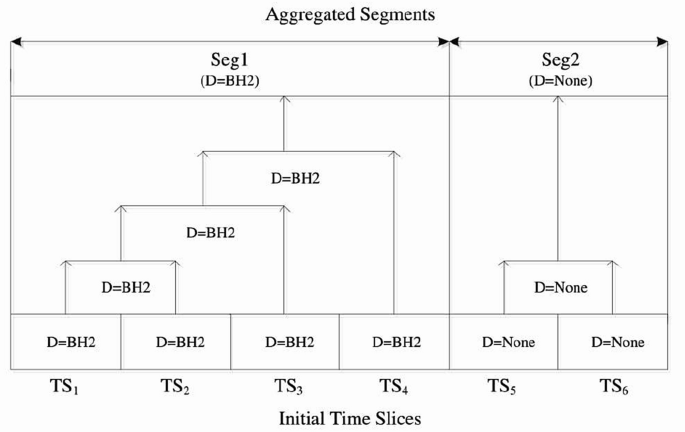

An example of producing aggregate time segments from initial time slices based on similar behavioral characteristics

In addition to the stochastic methods for time-series modeling and forecasting, machine and deep learning-based approach can be used for effective time-series analysis and forecasting. For instance, in our earlier paper, Sarker et al. [ 111 ] present a bottom-up clustering-based time-series analysis to capture the mobile usage behavioral patterns of the users. Figure 5 shows an example of producing aggregate time segments Seg_i from initial time slices TS_i based on similar behavioral characteristics that are used in our bottom-up clustering approach, where D represents the dominant behavior BH_i of the users, mentioned above [ 111 ]. The authors in [ 118 ], used a long short-term memory (LSTM) model, a kind of recurrent neural network (RNN) deep learning model, in forecasting time-series that outperform traditional approaches such as the ARIMA model. Time-series analysis is commonly used these days in various fields such as financial, manufacturing, business, social media, event data (e.g., clickstreams and system events), IoT and smartphone data, and generally in any applied science and engineering temporal measurement domain. Thus, it covers a wide range of application areas in data science.

Opinion Mining and Sentiment Analysis

Sentiment analysis or opinion mining is the computational study of the opinions, thoughts, emotions, assessments, and attitudes of people towards entities such as products, services, organizations, individuals, issues, events, topics, and their attributes [ 71 ]. There are three kinds of sentiments: positive, negative, and neutral, along with more extreme feelings such as angry, happy and sad, or interested or not interested, etc. More refined sentiments to evaluate the feelings of individuals in various situations can also be found according to the problem domain.

Although the task of opinion mining and sentiment analysis is very challenging from a technical point of view, it’s very useful in real-world practice. For instance, a business always aims to obtain an opinion from the public or customers about its products and services to refine the business policy as well as a better business decision. It can thus benefit a business to understand the social opinion of their brand, product, or service. Besides, potential customers want to know what consumers believe they have when they use a service or purchase a product. Document-level, sentence level, aspect level, and concept level, are the possible levels of opinion mining in the area [ 45 ].

Several popular techniques such as lexicon-based including dictionary-based and corpus-based methods, machine learning including supervised and unsupervised learning, deep learning, and hybrid methods are used in sentiment analysis-related tasks [ 70 ]. To systematically define, extract, measure, and analyze affective states and subjective knowledge, it incorporates the use of statistics, natural language processing (NLP), machine learning as well as deep learning methods. Sentiment analysis is widely used in many applications, such as reviews and survey data, web and social media, and healthcare content, ranging from marketing and customer support to clinical practice. Thus sentiment analysis has a big influence in many data science applications, where public sentiment is involved in various real-world issues.

Behavioral Data and Cohort Analysis

Behavioral analytics is a recent trend that typically reveals new insights into e-commerce sites, online gaming, mobile and smartphone applications, IoT user behavior, and many more [ 112 ]. The behavioral analysis aims to understand how and why the consumers or users behave, allowing accurate predictions of how they are likely to behave in the future. For instance, it allows advertisers to make the best offers with the right client segments at the right time. Behavioral analytics, including traffic data such as navigation paths, clicks, social media interactions, purchase decisions, and marketing responsiveness, use the large quantities of raw user event information gathered during sessions in which people use apps, games, or websites. In our earlier papers Sarker et al. [ 101 , 111 , 113 ] we have discussed how to extract users phone usage behavioral patterns utilizing real-life phone log data for various purposes.

In the real-world scenario, behavioral analytics is often used in e-commerce, social media, call centers, billing systems, IoT systems, political campaigns, and other applications, to find opportunities for optimization to achieve particular outcomes. Cohort analysis is a branch of behavioral analytics that involves studying groups of people over time to see how their behavior changes. For instance, it takes data from a given data set (e.g., an e-commerce website, web application, or online game) and separates it into related groups for analysis. Various machine learning techniques such as behavioral data clustering [ 111 ], behavioral decision tree classification [ 109 ], behavioral association rules [ 113 ], etc. can be used in the area according to the goal. Besides, the concept of RecencyMiner, proposed in our earlier paper Sarker et al. [ 108 ] that takes into account recent behavioral patterns could be effective while analyzing behavioral data as it may not be static in the real-world changes over time.

Anomaly Detection or Outlier Analysis

Anomaly detection, also known as Outlier analysis is a data mining step that detects data points, events, and/or findings that deviate from the regularities or normal behavior of a dataset. Anomalies are usually referred to as outliers, abnormalities, novelties, noise, inconsistency, irregularities, and exceptions [ 63 , 114 ]. Techniques of anomaly detection may discover new situations or cases as deviant based on historical data through analyzing the data patterns. For instance, identifying fraud or irregular transactions in finance is an example of anomaly detection.

It is often used in preprocessing tasks for the deletion of anomalous or inconsistency in the real-world data collected from various data sources including user logs, devices, networks, and servers. For anomaly detection, several machine learning techniques can be used, such as k-nearest neighbors, isolation forests, cluster analysis, etc [ 105 ]. The exclusion of anomalous data from the dataset also results in a statistically significant improvement in accuracy during supervised learning [ 101 ]. However, extracting appropriate features, identifying normal behaviors, managing imbalanced data distribution, addressing variations in abnormal behavior or irregularities, the sparse occurrence of abnormal events, environmental variations, etc. could be challenging in the process of anomaly detection. Detection of anomalies can be applicable in a variety of domains such as cybersecurity analytics, intrusion detections, fraud detection, fault detection, health analytics, identifying irregularities, detecting ecosystem disturbances, and many more. This anomaly detection can be considered a significant task for building effective systems with higher accuracy within the area of data science.

Factor Analysis

Factor analysis is a collection of techniques for describing the relationships or correlations between variables in terms of more fundamental entities known as factors [ 23 ]. It’s usually used to organize variables into a small number of clusters based on their common variance, where mathematical or statistical procedures are used. The goals of factor analysis are to determine the number of fundamental influences underlying a set of variables, calculate the degree to which each variable is associated with the factors, and learn more about the existence of the factors by examining which factors contribute to output on which variables. The broad purpose of factor analysis is to summarize data so that relationships and patterns can be easily interpreted and understood [ 143 ].

Exploratory factor analysis (EFA) and confirmatory factor analysis (CFA) are the two most popular factor analysis techniques. EFA seeks to discover complex trends by analyzing the dataset and testing predictions, while CFA tries to validate hypotheses and uses path analysis diagrams to represent variables and factors [ 143 ]. Factor analysis is one of the algorithms for unsupervised machine learning that is used for minimizing dimensionality. The most common methods for factor analytics are principal components analysis (PCA), principal axis factoring (PAF), and maximum likelihood (ML) [ 48 ]. Methods of correlation analysis such as Pearson correlation, canonical correlation, etc. may also be useful in the field as they can quantify the statistical relationship between two continuous variables, or association. Factor analysis is commonly used in finance, marketing, advertising, product management, psychology, and operations research, and thus can be considered as another significant analytical method within the area of data science.

Log Analysis

Logs are commonly used in system management as logs are often the only data available that record detailed system runtime activities or behaviors in production [ 44 ]. Log analysis is thus can be considered as the method of analyzing, interpreting, and capable of understanding computer-generated records or messages, also known as logs. This can be device log, server log, system log, network log, event log, audit trail, audit record, etc. The process of creating such records is called data logging.

Logs are generated by a wide variety of programmable technologies, including networking devices, operating systems, software, and more. Phone call logs [ 88 , 110 ], SMS Logs [ 28 ], mobile apps usages logs [ 124 , 149 ], notification logs [ 77 ], game Logs [ 82 ], context logs [ 16 , 149 ], web logs [ 37 ], smartphone life logs [ 95 ], etc. are some examples of log data for smartphone devices. The main characteristics of these log data is that it contains users’ actual behavioral activities with their devices. Similar other log data can be search logs [ 50 , 133 ], application logs [ 26 ], server logs [ 33 ], network logs [ 57 ], event logs [ 83 ], network and security logs [ 142 ] etc.

Several techniques such as classification and tagging, correlation analysis, pattern recognition methods, anomaly detection methods, machine learning modeling, etc. [ 105 ] can be used for effective log analysis. Log analysis can assist in compliance with security policies and industry regulations, as well as provide a better user experience by encouraging the troubleshooting of technical problems and identifying areas where efficiency can be improved. For instance, web servers use log files to record data about website visitors. Windows event log analysis can help an investigator draw a timeline based on the logging information and the discovered artifacts. Overall, advanced analytics methods by taking into account machine learning modeling can play a significant role to extract insightful patterns from these log data, which can be used for building automated and smart applications, and thus can be considered as a key working area in data science.

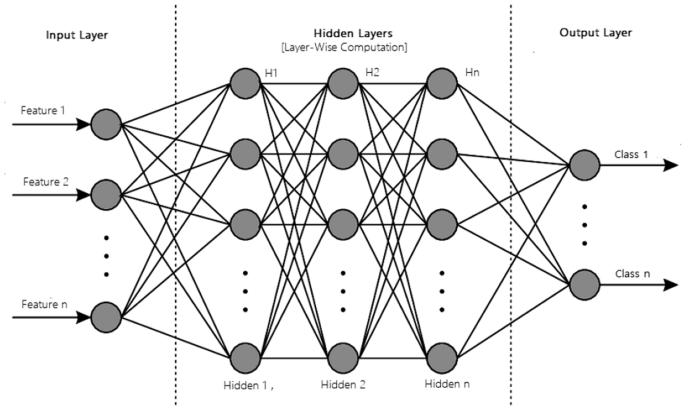

Neural Networks and Deep Learning Analysis

Deep learning is a form of machine learning that uses artificial neural networks to create a computational architecture that learns from data by combining multiple processing layers, such as the input, hidden, and output layers [ 38 ]. The key benefit of deep learning over conventional machine learning methods is that it performs better in a variety of situations, particularly when learning from large datasets [ 114 , 140 ].

The most common deep learning algorithms are: multi-layer perceptron (MLP) [ 85 ], convolutional neural network (CNN or ConvNet) [ 67 ], long short term memory recurrent neural network (LSTM-RNN) [ 34 ]. Figure 6 shows a structure of an artificial neural network modeling with multiple processing layers. The Backpropagation technique [ 38 ] is used to adjust the weight values internally while building the model. Convolutional neural networks (CNNs) [ 67 ] improve on the design of traditional artificial neural networks (ANNs), which include convolutional layers, pooling layers, and fully connected layers. It is commonly used in a variety of fields, including natural language processing, speech recognition, image processing, and other autocorrelated data since it takes advantage of the two-dimensional (2D) structure of the input data. AlexNet [ 60 ], Xception [ 21 ], Inception [ 125 ], Visual Geometry Group (VGG) [ 42 ], ResNet [ 43 ], etc., and other advanced deep learning models based on CNN are also used in the field.

In addition to CNN, recurrent neural network (RNN) architecture is another popular method used in deep learning. Long short-term memory (LSTM) is a popular type of recurrent neural network architecture used broadly in the area of deep learning. Unlike traditional feed-forward neural networks, LSTM has feedback connections. Thus, LSTM networks are well-suited for analyzing and learning sequential data, such as classifying, sorting, and predicting data based on time-series data. Therefore, when the data is in a sequential format, such as time, sentence, etc., LSTM can be used, and it is widely used in the areas of time-series analysis, natural language processing, speech recognition, and so on.

A structure of an artificial neural network modeling with multiple processing layers

In addition to the most popular deep learning methods mentioned above, several other deep learning approaches [ 104 ] exist in the field for various purposes. The self-organizing map (SOM) [ 58 ], for example, uses unsupervised learning to represent high-dimensional data as a 2D grid map, reducing dimensionality. Another learning technique that is commonly used for dimensionality reduction and feature extraction in unsupervised learning tasks is the autoencoder (AE) [ 10 ]. Restricted Boltzmann machines (RBM) can be used for dimensionality reduction, classification, regression, collaborative filtering, feature learning, and topic modeling, according to [ 46 ]. A deep belief network (DBN) is usually made up of a backpropagation neural network and unsupervised networks like restricted Boltzmann machines (RBMs) or autoencoders (BPNN) [ 136 ]. A generative adversarial network (GAN) [ 35 ] is a deep learning network that can produce data with characteristics that are similar to the input data. Transfer learning is common worldwide presently because it can train deep neural networks with a small amount of data, which is usually the re-use of a pre-trained model on a new problem [ 137 ]. These deep learning methods can perform well, particularly, when learning from large-scale datasets [ 105 , 140 ]. In our previous article Sarker et al. [ 104 ], we have summarized a brief discussion of various artificial neural networks (ANN) and deep learning (DL) models mentioned above, which can be used in a variety of data science and analytics tasks.

Real-World Application Domains

Almost every industry or organization is impacted by data, and thus “Data Science” including advanced analytics with machine learning modeling can be used in business, marketing, finance, IoT systems, cybersecurity, urban management, health care, government policies, and every possible industries, where data gets generated. In the following, we discuss ten most popular application areas based on data science and analytics.

Business or financial data science: In general, business data science can be considered as the study of business or e-commerce data to obtain insights about a business that can typically lead to smart decision-making as well as taking high-quality actions [ 90 ]. Data scientists can develop algorithms or data-driven models predicting customer behavior, identifying patterns and trends based on historical business data, which can help companies to reduce costs, improve service delivery, and generate recommendations for better decision-making. Eventually, business automation, intelligence, and efficiency can be achieved through the data science process discussed earlier, where various advanced analytics methods and machine learning modeling based on the collected data are the keys. Many online retailers, such as Amazon [ 76 ], can improve inventory management, avoid out-of-stock situations, and optimize logistics and warehousing using predictive modeling based on machine learning techniques [ 105 ]. In terms of finance, the historical data is related to financial institutions to make high-stakes business decisions, which is mostly used for risk management, fraud prevention, credit allocation, customer analytics, personalized services, algorithmic trading, etc. Overall, data science methodologies can play a key role in the future generation business or finance industry, particularly in terms of business automation, intelligence, and smart decision-making and systems.

Manufacturing or industrial data science: To compete in global production capability, quality, and cost, manufacturing industries have gone through many industrial revolutions [ 14 ]. The latest fourth industrial revolution, also known as Industry 4.0, is the emerging trend of automation and data exchange in manufacturing technology. Thus industrial data science, which is the study of industrial data to obtain insights that can typically lead to optimizing industrial applications, can play a vital role in such revolution. Manufacturing industries generate a large amount of data from various sources such as sensors, devices, networks, systems, and applications [ 6 , 68 ]. The main categories of industrial data include large-scale data devices, life-cycle production data, enterprise operation data, manufacturing value chain sources, and collaboration data from external sources [ 132 ]. The data needs to be processed, analyzed, and secured to help improve the system’s efficiency, safety, and scalability. Data science modeling thus can be used to maximize production, reduce costs and raise profits in manufacturing industries.

Medical or health data science: Healthcare is one of the most notable fields where data science is making major improvements. Health data science involves the extrapolation of actionable insights from sets of patient data, typically collected from electronic health records. To help organizations, improve the quality of treatment, lower the cost of care, and improve the patient experience, data can be obtained from several sources, e.g., the electronic health record, billing claims, cost estimates, and patient satisfaction surveys, etc., to analyze. In reality, healthcare analytics using machine learning modeling can minimize medical costs, predict infectious outbreaks, prevent preventable diseases, and generally improve the quality of life [ 81 , 119 ]. Across the global population, the average human lifespan is growing, presenting new challenges to today’s methods of delivery of care. Thus health data science modeling can play a role in analyzing current and historical data to predict trends, improve services, and even better monitor the spread of diseases. Eventually, it may lead to new approaches to improve patient care, clinical expertise, diagnosis, and management.

IoT data science: Internet of things (IoT) [ 9 ] is a revolutionary technical field that turns every electronic system into a smarter one and is therefore considered to be the big frontier that can enhance almost all activities in our lives. Machine learning has become a key technology for IoT applications because it uses expertise to identify patterns and generate models that help predict future behavior and events [ 112 ]. One of the IoT’s main fields of application is a smart city, which uses technology to improve city services and citizens’ living experiences. For example, using the relevant data, data science methods can be used for traffic prediction in smart cities, to estimate the total usage of energy of the citizens for a particular period. Deep learning-based models in data science can be built based on a large scale of IoT datasets [ 7 , 104 ]. Overall, data science and analytics approaches can aid modeling in a variety of IoT and smart city services, including smart governance, smart homes, education, connectivity, transportation, business, agriculture, health care, and industry, and many others.

Cybersecurity data science: Cybersecurity, or the practice of defending networks, systems, hardware, and data from digital attacks, is one of the most important fields of Industry 4.0 [ 114 , 121 ]. Data science techniques, particularly machine learning, have become a crucial cybersecurity technology that continually learns to identify trends by analyzing data, better detecting malware in encrypted traffic, finding insider threats, predicting where bad neighborhoods are online, keeping people safe while surfing, or protecting information in the cloud by uncovering suspicious user activity [ 114 ]. For instance, machine learning and deep learning-based security modeling can be used to effectively detect various types of cyberattacks or anomalies [ 103 , 106 ]. To generate security policy rules, association rule learning can play a significant role to build rule-based systems [ 102 ]. Deep learning-based security models can perform better when utilizing the large scale of security datasets [ 140 ]. Thus data science modeling can enable professionals in cybersecurity to be more proactive in preventing threats and reacting in real-time to active attacks, through extracting actionable insights from the security datasets.

Behavioral data science: Behavioral data is information produced as a result of activities, most commonly commercial behavior, performed on a variety of Internet-connected devices, such as a PC, tablet, or smartphones [ 112 ]. Websites, mobile applications, marketing automation systems, call centers, help desks, and billing systems, etc. are all common sources of behavioral data. Behavioral data is much more than just data, which is not static data [ 108 ]. Advanced analytics of these data including machine learning modeling can facilitate in several areas such as predicting future sales trends and product recommendations in e-commerce and retail; predicting usage trends, load, and user preferences in future releases in online gaming; determining how users use an application to predict future usage and preferences in application development; breaking users down into similar groups to gain a more focused understanding of their behavior in cohort analysis; detecting compromised credentials and insider threats by locating anomalous behavior, or making suggestions, etc. Overall, behavioral data science modeling typically enables to make the right offers to the right consumers at the right time on various common platforms such as e-commerce platforms, online games, web and mobile applications, and IoT. In social context, analyzing the behavioral data of human being using advanced analytics methods and the extracted insights from social data can be used for data-driven intelligent social services, which can be considered as social data science.

Mobile data science: Today’s smart mobile phones are considered as “next-generation, multi-functional cell phones that facilitate data processing, as well as enhanced wireless connectivity” [ 146 ]. In our earlier paper [ 112 ], we have shown that users’ interest in “Mobile Phones” is more and more than other platforms like “Desktop Computer”, “Laptop Computer” or “Tablet Computer” in recent years. People use smartphones for a variety of activities, including e-mailing, instant messaging, online shopping, Internet surfing, entertainment, social media such as Facebook, Linkedin, and Twitter, and various IoT services such as smart cities, health, and transportation services, and many others. Intelligent apps are based on the extracted insight from the relevant datasets depending on apps characteristics, such as action-oriented, adaptive in nature, suggestive and decision-oriented, data-driven, context-awareness, and cross-platform operation [ 112 ]. As a result, mobile data science, which involves gathering a large amount of mobile data from various sources and analyzing it using machine learning techniques to discover useful insights or data-driven trends, can play an important role in the development of intelligent smartphone applications.