- BMC Health Serv Res

Application of four-dimension criteria to assess rigour of qualitative research in emergency medicine

Roberto Forero

1 The Simpson Centre for Health Services Research, South Western Sydney Clinical School and the Ingham Institute for Applied Research, Liverpool Hospital, UNSW, Liverpool, NSW 1871 Australia

Shizar Nahidi

Josephine De Costa, Mohammed Mohsin

2 Psychiatry Research and Teaching Unit, Liverpool Hospital, NSW Health, Sydney, Australia

3 School of Psychiatry, Faculty of Medicine, University of New South Wales, Sydney, Australia

Gerry Fitzgerald

4 School - Public Health and Social Work, Queensland University of Technology (QUT), Brisbane, Qld Australia

5 Australasian College for Emergency Medicine (ACEM), Melbourne, VIC Australia

Nick Gibson

6 School of Nursing and Midwifery, Edith Cowan University (ECU), Perth, WA Australia

Sally McCarthy

7 Emergency Care Institute (ECI), NSW Agency for Clinical Innovation (ACI), Sydney, Australia

Patrick Aboagye-Sarfo

8 Clinical Support Directorate, System Policy & Planning Division, Department of Health WA, Perth, WA Australia

Associated Data

All data generated or analysed during this study are included in this published article and its supplementary information files, which are provided in the appendix. No individual data will be available.

The main objective of this methodological manuscript was to illustrate the role of using qualitative research in emergency settings. We outline rigorous criteria applied to a qualitative study assessing perceptions and experiences of staff working in Australian emergency departments.

We used an integrated mixed-methodology framework to identify different perspectives and experiences of emergency department staff during the implementation of a time target government policy. The qualitative study comprised interviews with 119 participants across 16 hospitals. The interviews were conducted in 2015–2016 and the data were managed using NVivo version 11. We conducted the analysis in three stages, namely: conceptual framework, comparison and contrast, and hypothesis development. We concluded with the implementation of the four-dimension criteria (credibility, dependability, confirmability and transferability) to assess the robustness of the study.

We adapted four-dimension criteria to assess the rigour of a large-scale qualitative research in the emergency department context. The criteria comprised strategies such as building the research team; preparing data collection guidelines; defining and obtaining adequate participation; reaching data saturation and ensuring high levels of consistency and inter-coder agreement.

Based on the findings, the proposed framework satisfied the four-dimension criteria and generated potential qualitative research applications to emergency medicine research. We have added a methodological contribution to the ongoing debate about rigour in qualitative research, which we hope will guide future studies on this topic in emergency care research. It also provided recommendations for conducting future mixed-methods studies. Future papers in this series will use the results from qualitative data and the empirical findings from longitudinal data linkage to further identify factors associated with ED performance; they will be reported separately.

Electronic supplementary material

The online version of this article (10.1186/s12913-018-2915-2) contains supplementary material, which is available to authorized users.

Qualitative research methods have been used in emergency settings in a variety of ways to address important problems that cannot be explored in another way, such as attitudes, preferences and reasons for presenting to the emergency department (ED) versus other types of clinical services (e.g., general practice) [ 1 – 4 ].

The methodological contribution of this research is part of the ongoing debate about scientific rigour in emergency care, such as the importance of qualitative research in evidence-based medicine and its contribution to tool development and policy evaluation [ 2 , 3 , 5 – 7 ]. For instance, the Four-Hour Rule and the National Emergency Access Target (4HR/NEAT) was an important policy implemented in Australia to reduce ED crowding and boarding (access block) [ 8 – 13 ]. This policy generated the right conditions for using mixed methods to investigate the impact of 4HR/NEAT policy implementation on people attending, working in or managing these types of problems in emergency departments [ 2 , 3 , 5 – 7 , 14 – 17 ].

The rationale of our study was to address the perennial question of how to assess and establish methodological robustness in these types of studies. For that reason, we conducted this mixed method study to explore the impact of the 4HR/NEAT in 16 metropolitan hospitals in four Australian states and territories, namely: Western Australia (WA), Queensland (QLD), New South Wales (NSW), and the Australian Capital Territory (ACT) [ 18 , 19 ].

The main objectives of the qualitative component were to understand the personal, professional and organisational perspectives reported by ED staff during the implementation of 4HR/NEAT, and to explore their perceptions and experiences associated with the implementation of the policy in their local environment.

This is part of an Australian National Health and Medical Research Council (NH&MRC) Partnership project to assess the impact of the 4HR/NEAT on Australian EDs. It is intended to complement the quantitative streams of a large data-linkage/dynamic modelling study using a mixed-methods approach to understand the impact of the implementation of the four-hour rule policy.

Methodological rigour

This section describes the methods used to assess the rigour of the qualitative study. Researchers conducting quantitative studies use conventional terms such as internal validity, reliability, objectivity and external validity [ 17 ]. In establishing trustworthiness, Lincoln and Guba created stringent criteria in qualitative research, known as credibility, dependability, confirmability and transferability [ 17 – 20 ]. These are referred to in this article as “the Four-Dimensions Criteria” (FDC). Other studies have used different variations of these categories to establish rigour [ 18 , 19 ]. In our case, we adapted the criteria point by point by selecting those strategies that applied to our study systematically. Table 1 illustrates which strategies were adapted in our study.

Key FDC strategies adapted from Lincoln and Guba [ 23 ]

Study procedure

We carefully planned and conducted a series of semi-structured interviews based on the four-dimension criteria (credibility, dependability, confirmability and transferability) to assess and ensure the robustness of the study. These criteria have been used in other contexts of qualitative health research, but this is the first time they have been used in the emergency setting [ 20 – 26 ].

Sampling and recruitment

We employed a combination of stratified purposive sampling (quota sampling), criterion-based and maximum variation sampling strategies to recruit potential participants [ 27 , 28 ]. The hospitals selected for the main longitudinal quantitative data linkage study were also purposively selected in this qualitative component.

We targeted potential individuals from four groups, namely: ED Directors, ED physicians, ED nurses, and data/admin staff. The investigators identified local site coordinators who arranged the recruitment in each of the participating 16 hospitals (6 in NSW, 4 in QLD, 4 in WA and 2 in the ACT) and facilitated on-site access to the research team. These coordinators provided a list of potential participants for each professional group. By using this list, participants within each group were selected through purposive sampling technique. We initially planned to recruit at least one ED director, two ED physicians, two ED nurses and one data/admin staff per hospital. Invitation emails were circulated by the site coordinators to all potential participants who were asked to contact the main investigators if they required more information.

We also employed criterion-based purposive sampling to ensure that those with experience relating to 4HR/NEAT were eligible. For ethical on-site restrictions, the primary condition of the inclusion criteria was that eligible participants needed to be working in the ED during the period that the 4HR/NEAT policy was implemented. Those who were not working in that ED during the implementation period were not eligible to participate, even if they had previous working experience in other EDs.

We used maximum variation sampling to ensure that the sample reflects a diverse group in terms of skill level, professional experience and policy implementation [ 28 ]. We included study participants irrespective of whether their role/position was changed (for example, if they received a promotion during their term of service in ED).

In summary, over a period of 7 months (August 2015 to March 2016), we identified all the potential participants (124) and conducted 119 interviews (5 were unable to participate due to workload availability). The overall sample comprised a cohort of people working in different roles across 16 hospitals. Table 2 presents the demographic and professional characteristics of the participants.

Demographic and professional characteristics of the staff who participated in the study

Dir represents ‘Director’, NUM Nursing unit manager, CNC Clinical nurse consultant
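The recruitment figures reported in this section can be cross-checked with a short calculation. The following is a minimal sketch only: the variable names are assumptions introduced for illustration, while the numbers are those stated in the text (the per-hospital quota of one ED director, two ED physicians, two ED nurses and one data/admin staff member; 124 potential participants; 119 interviews).

```python
# Illustrative cross-check of the recruitment figures reported in the text.
# Variable names are hypothetical; the numbers are taken from the article.

hospitals_per_state = {"NSW": 6, "QLD": 4, "WA": 4, "ACT": 2}

# Minimum number of participants initially planned per hospital, by role.
planned_quota_per_hospital = {
    "ED director": 1,
    "ED physician": 2,
    "ED nurse": 2,
    "data/admin staff": 1,
}

total_hospitals = sum(hospitals_per_state.values())
planned_minimum = total_hospitals * sum(planned_quota_per_hospital.values())

identified = 124   # potential participants identified
interviewed = 119  # interviews actually conducted
participation_rate = interviewed / identified

print(f"Hospitals: {total_hospitals}")
print(f"Planned minimum sample: {planned_minimum}")
print(f"Participation rate: {participation_rate:.1%}")
```

Under these figures, the achieved sample of 119 exceeds the planned minimum implied by the per-hospital quota.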

Data collection

We employed a semi-structured interview technique. Six experienced investigators (3 in NSW, 1 in ACT, 1 in QLD and 1 in WA) conducted the interviews (117 face-to-face on site and 2 by telephone). We used an integrated interview protocol which consisted of a demographically-oriented question and six open-ended questions about different aspects of the 4HR/NEAT policy (see Additional file 1 : Appendix 1).

With the participant’s permission, interviews were audio-recorded. All the hospitals provided a quiet interview room that ensured privacy and confidentiality for participants and investigators.

All the interviews were transcribed verbatim by a professional transcriber with reference to a standardised transcription protocol [ 29 ]. The data analysis team followed a stepwise process for data cleaning, and de-identification. Transcripts were imported to qualitative data analysis software NVivo version 11 for management and coding [ 30 ].

Data analysis

The analyses were carried out in three stages. In the first stage, we identified key concepts using content analysis and a mind-mapping process from the research protocol and developed a conceptual framework to organise the data [ 31 ]. The analysis team reviewed and coded a selected number of transcripts, then juxtaposed the codes against the domains incorporated in the interview protocol as indicated in the three stages of analysis with the conceptual framework (Fig. 1 ).

Conceptual framework with the three stages of analysis used for the analysis of the qualitative data

In this stage, two cycles of coding were conducted. In the first, all the transcripts were reviewed and initially coded, and key concepts were identified throughout the full data set. The second cycle comprised an in-depth exploration and creation of additional categories to generate the codebook (see Additional file 2 : Appendix 2). This codebook was a summary document encompassing all the concepts identified as primary and subsequent levels. It presented hierarchical categorisation of key concepts developed from the domains indicated in Fig. 1.

A summarised list of key concepts and their definitions is presented in Table 3 . We show the total number of interviews for each of the key concepts, and the number of times (i.e., total citations) a concept appeared in the whole dataset.

Summary of key concepts, their definition, total number of citations and total number of interviews

Citations refer to the number of times a coded term was counted in NVivo
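The two counts summarised in Table 3 (total citations per key concept and number of interviews in which the concept appears) can be illustrated with a small tally. This is a sketch under stated assumptions, not the NVivo procedure used in the study: the function name `summarise_concepts`, the interview identifiers and the example passages are all hypothetical.

```python
from collections import defaultdict


def summarise_concepts(coded_segments):
    """Return {concept: (total_citations, interviews_with_concept)}.

    coded_segments is an iterable of (interview_id, concept) pairs,
    one pair per coded passage.
    """
    citations = defaultdict(int)
    interviews = defaultdict(set)
    for interview_id, concept in coded_segments:
        citations[concept] += 1                # every coded passage is one citation
        interviews[concept].add(interview_id)  # each interview is counted once
    return {c: (citations[c], len(interviews[c])) for c in citations}


# Hypothetical coded passages, not data from the study.
segments = [
    ("int01", "staff relationships"),
    ("int01", "staff relationships"),
    ("int01", "patient flow"),
    ("int02", "staff relationships"),
    ("int03", "patient flow"),
]

summary = summarise_concepts(segments)
for concept, (n_citations, n_interviews) in sorted(summary.items()):
    print(f"{concept}: {n_citations} citations in {n_interviews} interviews")
```

Keeping the two counts separate distinguishes a concept raised repeatedly by a few participants from one raised once by many.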

The second stage of analysis compared and contrasted the experiences, perspectives and actions of participants by role and location. The third and final stage of analysis aimed to generate theory-driven hypotheses and provided an in-depth understanding of the impact of the policy. At this stage, the research team explored different theoretical perspectives such as the carousel model and models of care approach [ 16 , 32 – 34 ]. We also used iterative sampling to reach saturation and interpret the findings.

Ethics approval and consent to participate

Ethics approval was obtained for all participating hospitals and the qualitative methods are based on the original research protocol approved by the funding organisations [ 18 ].

This section described the FDC and provided a detailed description of the strategies used in the analysis. It was adapted from the FDC methodology described by Lincoln and Guba [ 23 – 26 ] as the framework to ensure a high level of rigour in qualitative research. In Table 1, we have provided examples of how the process was implemented for each criterion and techniques to ensure compliance with the purpose of FDC.

Credibility

Prolonged and varied engagement with each setting

All the investigators had the opportunity to have a continued engagement with each ED during the data collection process. They received a supporting material package, comprising background information about the project, consent forms and the interview protocol (see Additional file 1 : Appendix 1). They were introduced to each setting by the local coordinator and had the chance to meet the ED directors and potential participants. They also identified local issues and salient characteristics of each site, and had time to get acquainted with the study’s participants. This process allowed the investigators to check their personal perspectives and predispositions, and enhance their familiarity with the study setting. This strategy also allowed participants to become familiar with the project and the research team.

Interviewing process and techniques

In order to increase credibility of the data collected and of the subsequent results, we took a further step of calibrating the level of awareness and knowledge of the research protocol. The research team conducted training sessions, teleconferences, induction meetings and pilot interviews with the local coordinators. Each of the interviewers conducted one or two pilot interviews to refine the overall process using the interview protocol, time-management and the overall running of the interviews.

The semi-structured interview procedure also allowed focus and flexibility during the interviews. The interview protocol (Additional file 1 : Appendix 1) included several prompts that allowed the expansion of answers and the opportunity for requesting more information, if required.

Establishing investigators’ authority

In relation to credibility, Miles and Huberman [ 35 ] expanded the concept to the trustworthiness of investigators’ authority as ‘human instruments’ and recommended the research team should present the following characteristics:

- Familiarity with phenomenon and research context : In our study, the research team had several years’ experience in the development and implementation of 4HR/NEAT in Australian EDs and extensive ED-based research experience and track records conducting this type of work.

- Investigative skills: Investigators who were involved in data collections had three or more years’ experience in conducting qualitative data collection, specifically individual interview techniques.

- Theoretical knowledge and skills in conceptualising large datasets: Investigators had post-graduate experience in qualitative data analysis, in using NVivo software to manage data, and in applying qualitative research skills to code and interpret large amounts of qualitative data.

- Ability to take a multidisciplinary approach: The multidisciplinary background of the team in public health, nursing, emergency medicine, health promotion, social sciences, epidemiology and health services research enabled us to explore different theoretical perspectives and use an eclectic approach to interpret the findings.

These characteristics ensured that the data collection and content were consistent across states and participating hospitals.

Collection of referential adequacy materials

In accordance with Guba’s recommendation to collect any additional relevant resources, investigators maintained a separate set of materials from on-site data collection which included documents and field notes that provided additional information in relation to the context of the study, its findings and interpretation of results. These materials were collected and used during the different levels of data analysis and kept for future reference and secure storage of confidential material [ 26 ].

Peer debriefing

We conducted several sessions of peer debriefing with some of the Project Management Committee (PMC) members. They were asked at different stages throughout the analysis to reflect and cast their views on the conceptual analysis framework, the key concepts identified during the first level of analysis and eventually the whole set of findings (see Fig. 1). We have also reported and discussed preliminary methods and general findings at several scientific meetings of the Australasian College for Emergency Medicine.

Dependability

Rich description of the study protocol

This study was developed from the early stages through a systematic search of the existing literature about the four-hour rule and time-target care delivery in ED. A detailed draft of the study protocol was delivered in consultation with the PMC. After incorporating all the comments, a final draft was generated for the purpose of obtaining the required ethics approvals for each ED setting in different states and territories.

To maintain consistency, we documented all the changes and revisions to the research protocol, and kept a trackable record of when and how changes were implemented.

Establishing an audit trail

Steps were taken to keep a track record of the data collection process [ 24 ]: we have had sustained communication within the research team to ensure the interviewers were abiding by an agreed-upon protocol to recruit participants. As indicated before, we provided the investigators with a supporting material package. We also instructed the interviewers on how to securely transfer the data to the transcriber. The data-analysis team systematically reviewed the transcripts against the audio files for accuracy and clarifications provided by the transcriber.

All the steps in coding the data and identification of key concepts were agreed upon by the research team. The progress of the data analysis was monitored on a weekly basis. Any modifications of the coding system were discussed and verified by the team to ensure correct and consistent interpretation throughout the analysis.

The codebook (see Additional file 2 : Appendix 2) was revised and updated during the cycles of coding. Utilisation of the mind-mapping process described above helped to verify consistency and allowed us to determine how precisely the participants’ original information was preserved in the coding [ 31 ].

As required by relevant Australian legislation [ 36 ], we maintained complete records of the correspondence and minutes of meetings, as well as all qualitative data files in NVivo and Excel on the administrative organisation’s secure drive. Back-up files were kept in a secure external storage device, for future access if required.

Stepwise replication—measuring the inter-coders’ agreement

To assess the interpretative rigour of the analysis, we applied inter-coder agreement to control the coding accuracy and monitor inter-coder reliability among the research team throughout the analysis stage [ 37 ]. This step was crucially important in the study given the changes of staff that our team experienced during the analysis stage. At the initial stages of coding, we tested the inter-coder agreement using the following protocol:

- Step 1 – Two data analysts and principal investigator coded six interviews, separately.

- Step 2 – The team discussed the interpretation of the emerging key concepts, and resolved any coding discrepancies.

- Step 3 – The initial codebook was composed and used for developing the respective conceptual framework.

- Step 4 – The inter-coder agreement was calculated, yielding a weighted Kappa coefficient of 0.765 (76.5%), which indicates very good agreement.

With the addition of a new analyst to the team, we applied another round of inter-coder agreement assessment. We followed the same steps to ensure inter-coder reliability along the trajectory of data analysis, except for step 3: the a priori codebook was used as a benchmark to compare and contrast the codes developed by the new analyst. The calculated Kappa coefficient of 0.822 indicates very good agreement (see Table 4 ).

Inter-coder analysis using Cohen’s Kappa coefficients
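For readers unfamiliar with the statistic, the following minimal sketch shows how Cohen's Kappa corrects the observed agreement between two coders for the agreement expected by chance, kappa = (p_o - p_e) / (1 - p_e). It is illustrative only: it computes the unweighted coefficient (the study reports a weighted Kappa, whose weighting scheme is not described here), the function name `cohen_kappa` is an assumption, and the ratings are hypothetical rather than study data.

```python
from collections import Counter


def cohen_kappa(ratings_a, ratings_b):
    """Unweighted Cohen's kappa for two coders rating the same items."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("Both coders must rate the same non-empty set of items")
    n = len(ratings_a)

    # Observed agreement: share of items on which the coders agree.
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n

    # Chance agreement: product of the coders' marginal proportions per category.
    freq_a = Counter(ratings_a)
    freq_b = Counter(ratings_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)

    if expected == 1.0:
        # Both coders used a single identical category for every item.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical example: 1 = code applied to a passage, 0 = code not applied.
coder_a = [1, 1, 0, 1, 0, 0, 1, 1, 0, 1]
coder_b = [1, 1, 0, 1, 0, 1, 1, 0, 0, 1]

kappa = cohen_kappa(coder_a, coder_b)
print(f"kappa = {kappa:.3f}")
```

In this hypothetical example the coders agree on 8 of 10 passages (80%), yet Kappa is lower because part of that agreement would be expected by chance alone, which is why Kappa and raw percentage agreement are not interchangeable.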

Confirmability

Reflexivity

The analysis was conducted by the research team who brought different perspectives to the data interpretation. To appreciate the collective interpretation of the findings, each investigator used a separate reflexive journal to record the issues about sensitive topics or any potential ethical issues that might have affected the data analysis. These were discussed in the weekly meetings.

After completion of the data collection, reflection and feedback from all the investigators conducting the interviews were sought in both written and verbal format.

Triangulation

To assess the confirmability and credibility of the findings, the following four triangulation processes were considered: methodological, data source, investigators and theoretical triangulation.

Methodological triangulation is in the process of being implemented using the mixed methods approach with linked data from our 16 hospitals.

Data source triangulation was achieved by using several groups of ED staff working in different states/territories and performing different roles. This triangulation offered a broad source of data that contributed to gain a holistic understanding of the impact of 4HR/NEAT on EDs across Australia. We expect to use data triangulation with linked-data in future secondary analysis.

Investigator triangulation was obtained by consensus decision making through collaboration, discussion and participation of team members holding different perspectives. We also used the investigators’ field notes, memos and reflexive journals as a form of triangulation to validate the data collected. This approach enabled us to balance out the potential bias of individual investigators and enabled the research team to reach a satisfactory consensus level.

Theoretical triangulation was achieved by using and exploring different theoretical perspectives, such as the carousel model and models of care approach [ 16 , 32 – 34 ], that could be applied in the context of the study to generate hypotheses and theory-driven codes [ 16 , 32 , 38 ].

Transferability

Purposive sampling to form a nominated sample

As outlined in the methods section, we used a combination of three purposive sampling techniques to make sure that the selected participants were representative of the variety of views of ED staff across settings. This representativeness was critical for conducting comparative analysis across different groups.

Data saturation

We employed two methods to ensure data saturation was reached, namely: operational and theoretical. The operational method was used to quantify the number of new codes per interview over time. It indicates that the majority of codes were identified in the first interviews, followed by a decreasing frequency of codes identified from other interviews.

Theoretical saturation and iterative sampling were achieved through regular meetings where progress of coding and identification of variations in each of the key concepts were reported and discussed. We also used iterative sampling to reach saturation and interpret the findings. We continued this iterative process until no new codes emerged from the dataset and all the variations of an observed phenomenon were identified [ 39 ] (Fig. 2 ).

Data saturation gain per interview added based on the chronological order of data collection in the hospitals. Y axis = number of new codes, X axis = number of interviews over time
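The operational method described above, counting the new codes contributed by each interview in chronological order, can be sketched as follows. This is an illustration under stated assumptions rather than the procedure run in NVivo: the function names, the example code labels and the stopping rule (a run of `window` consecutive interviews with no new codes) are all hypothetical.

```python
def new_codes_per_interview(interview_codes):
    """Count codes appearing for the first time in each interview.

    interview_codes is a list of collections of code labels,
    ordered chronologically by interview.
    """
    seen = set()
    counts = []
    for codes in interview_codes:
        fresh = set(codes) - seen  # codes not observed in any earlier interview
        counts.append(len(fresh))
        seen |= fresh
    return counts


def saturation_point(counts, window=2):
    """Return the 1-based position where a run of `window` consecutive
    interviews with zero new codes begins, or None if there is no such run."""
    run = 0
    for position, count in enumerate(counts, start=1):
        run = run + 1 if count == 0 else 0
        if run == window:
            return position - window + 1
    return None


# Hypothetical code labels per interview, not data from the study.
interviews = [
    {"a", "b", "c"},
    {"b", "d"},
    {"a", "d"},
    {"e"},
    {"a", "b"},
    {"c", "e"},
]

counts = new_codes_per_interview(interviews)
print(counts)
print(saturation_point(counts, window=2))
```

Plotting `counts` against interview order gives a curve of the kind described in the figure caption, with most new codes appearing in the earliest interviews.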

Scientific rigour in qualitative research assessing trustworthiness is not new. Qualitative researchers have used rigour criteria widely [ 40 – 42 ]. The novelty of the method described in this article rests on the systematic application of these criteria in a large-scale qualitative study in the context of emergency medicine.

According to the FDC, similar findings should be obtained if the process is repeated with the same cohort of participants in the same settings and organisational context. By employing the FDC and the proposed strategies, we could enhance the dependability of the findings. As indicated in the literature, the validity and credibility of qualitative research have often been questioned [ 3 , 20 , 43 , 44 ].

Nevertheless, if the work is done properly, based on the suggested tools and techniques, any qualitative work can become a solid piece of evidence. This study suggests that emergency medicine researchers can improve their qualitative research if conducted according to the suggested criteria. The triangulation and reflexivity strategies helped us to minimise the investigators’ bias, and affirm that the findings were objective and accurately reflect the participants’ perspectives and experiences. Abiding by a consistent method of data collection (e.g., interview protocol) and conducting the analysis with a team of investigators, helped us minimise the risk of interpretation bias.

Employing several purposive sampling techniques enabled us to have a diverse range of opinions and experiences which at the same time enhanced the credibility of the findings. We expect that the outcomes of this study will show a high degree of applicability, because any resultant hypotheses may be transferable across similar settings in emergency care. The systematic quantification of data saturation at this scale of qualitative data has not been demonstrated in the emergency medicine literature before.

As indicated, the objective of this study was to contribute to the ongoing debate about rigour in qualitative research by using our mixed methods study as an example. In relation to innovative application of mixed-methods, the findings from this qualitative component can be used to explain specific findings from the quantitative component of the study. For example, different trends of 4HR/NEAT performance can be explained by variations in staff relationships across states (see key concept 1, Table 3). In addition, some experiences from doctors and nurses may explain variability of performance indicators across participating hospitals. The robustness of the qualitative data will allow us to generate hypotheses that in turn can be tested in future research.

Careful planning is essential in any type of research project, and this includes allocating sufficient human and financial resources. It is also necessary to make precise arrangements for building the research team, preparing data collection guidelines, and defining and obtaining adequate participation. This may allow other researchers in emergency care to replicate the use of the FDC in the future.

This study has several limitations. Some limitations of the qualitative component include recall bias or lack of reliable information collected about interventions conducted in the past (before the implementation of the policy). As Weber and colleagues [ 45 ] point out, conducting interviews with clinicians at a single point in time may be affected by recall bias. Moreover, ED staff may have left the organisation or have progressed in their careers (from junior to senior clinical roles, e.g. junior nursing staff or junior medical officers, registrars, etc.), so obtaining information about pre/during/post-4HR/NEAT was a difficult undertaking. Although the use of criterion-based and maximum-variation sampling techniques minimised this effect, we could not guarantee that the sampling techniques reached all those who might have been eligible to participate.

In terms of recruitment, we could not select potential participants who were not working in that particular ED during the implementation, even if they had previous working experience in other hospital EDs. This is a limitation because people who worked in other hospitals during the implementation, and who might have provided valuable input to the overall project, could not participate.

In addition, one could claim that the findings may have been ‘ED-biased’ because we did not interview staff or administrators outside the ED. Unfortunately, interviews outside the ED were beyond the resources and scope of the project.

With respect to the rigour criteria, we could not carry out a systematic member checking as we did not have the required resources for such an expensive follow-up. Nevertheless, we have taken extensive measures to ensure confirmation of the integrity of the data.

Conclusions

The FDC presented in this manuscript provides an important and systematic approach to achieve trustworthy qualitative findings. As indicated before, qualitative research credentials have been questioned. However, if the work is done properly based on the suggested tools and techniques described in this manuscript, any work can become a very notable piece of evidence. This study concludes that the FDC is effective; any investigator in emergency medicine research can improve their qualitative research if conducted accordingly.

Important indicators such as saturation levels and inter-coder reliability should be considered in all types of qualitative projects. One important aspect is that by using FDC we can demonstrate that qualitative research is not less rigorous than quantitative methods.

We also conclude that the FDC is a valid framework to be used in qualitative research in the emergency medicine context. We recommend that future research in emergency care should consider the FDC to achieve trustworthy qualitative findings. We can conclude that our method confirms the credibility (validity) and dependability (reliability) of the analysis which are a true reflection of the perspectives reported by the group of participants across different states/territories.

We can also conclude that our method confirms the objectivity of the analyses and reduces the risk for interpretation bias. We encourage adherence to practical frameworks and strategies like those presented in this manuscript.

Finally, we have highlighted the importance of allocating sufficient resources. This is essential if other researchers in emergency care would like to replicate the use of the FDC in the future.

Following papers in this series will use the empirical findings from longitudinal data linkage analyses and the results from the qualitative study to further identify factors associated with ED performance before and after the implementation of the 4HR/NEAT.

Additional files

Appendix 1. Interview form. Text. (PDF 445 kb)

Appendix 2. Codebook NVIVO. Text code. (PDF 335 kb)

Appendix 3. Acknowledgements. Text. (PDF 104 kb)

Acknowledgements

We acknowledge Brydan Lenne, who was employed in the preliminary stages of the project for ethics application preparation and ethics submissions; her contribution to the planning stages, the data collection for the qualitative analysis and the preliminary coding of the conceptual framework is appreciated. We also acknowledge Fenglian Xu, who was employed in the initial stages of the project in the data linkage component; Jenine Beekhuyzen, CEO of Adroit Research, for consultancy and advice on qualitative aspects of the manuscript; Liz Brownlee, owner/manager of Bostock Transcripts services, for the transcription of the interviews; and Brydan Lenne, Karlene Dickens, Cecily Scutt and Tracey Hawkins, who conducted the interviews across states. We also thank Anna Holdgate, Michael Golding, Michael Hession, Amith Shetty, Drew Richardson, Daniel Fatovich, David Mountain, Nick Gibson, Sam Toloo, Conrad Loten, John Burke and Vijai Joseph, who acted as site contacts in each State/Territory. We also thank all the participants for their contribution of time and information. A full acknowledgement of all investigators and partner organisations is enclosed as an attachment (see Additional file 3: Appendix 3).

This project was funded by the Australian National Health and Medical Research Council (NH&MRC) Partnership Grant No APP1029492 with cash contributions from the following organisations: Department of Health of Western Australia, Australasian College for Emergency Medicine, Ministry of Health of NSW and the Emergency Care Institute, NSW Agency for Clinical Innovation, and Emergency Medicine Foundation, Queensland.

Availability of data and materials

Authors’ contributions

RF, GF and SMC made substantial contributions to the conception, design and funding of the study. RF, SN, NG and SMC contributed to the acquisition of data. RF, SN and JDC contributed to the analysis and interpretation of data. RF, SN, JDC, MM, GF, NG, SMC and PA were involved in drafting the manuscript and revising it critically for important intellectual content, and gave final approval of the version to be published. All authors have participated sufficiently in the work to take public responsibility for appropriate portions of the content, and agreed to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

As indicated in the background, our study received ethics approval from the respective Human Research Ethics Committees of Western Australian Department of Health (DBL.201403.07), Cancer Institute NSW (HREC/14/CIPHS/30), ACT Department of Health (ETH.3.14.054) and Queensland Health (HREC/14/QGC/30) as well as governance approval from the 16 participating hospitals. All participants received information about the project; received an invitation to participate and signed a consent form and agreed to allow an audio recording to be conducted.

Consent for publication

All the data used from the interviews were de-identified for the analysis. No individual details, images or recordings, were used apart from the de-identified transcription.

Competing interests

RF is an Associate Editor of the Journal. No other authors have declared any competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Part II. rigour in qualitative research: complexities and solutions

Affiliation.

- 1 University of Queensland, School of Nursing, Australia.

- PMID: 16220839

- DOI: 10.7748/nr2005.07.13.1.29.c5998

Anthony G Tuckett outlines the strategies and operational techniques he used to attain rigour in a qualitative research study through relying on Guba and Lincoln's trustworthiness criterion. Research strategies such as use of personal journals, audio recording and transcript auditing, and operational techniques including triangulation strategies and peer review, are examined.

Publication types

- Attitude of Health Personnel

- Attitude to Health

- Data Collection / methods

- Data Collection / standards

- Data Interpretation, Statistical

- Geriatric Nursing / education

- Geriatric Nursing / organization & administration

- Nursing Methodology Research / methods*

- Nursing Methodology Research / standards

- Peer Review, Research / methods

- Periodicals as Topic

- Qualitative Research*

- Reproducibility of Results

- Research Design / standards*

- Tape Recording

Separating "Fact" from Fiction: Strategies to Improve Rigour in Historical Research

- Tanya Langtree James Cook University http://orcid.org/0000-0002-0622-8044

- Melanie Birks James Cook University http://orcid.org/0000-0001-7611-4272

- Narelle Biedermann James Cook University http://orcid.org/0000-0002-8344-5069

Since the 1980s, many fields of qualitative research have adopted LINCOLN and GUBA's (1985) four criteria for determining rigour (credibility, confirmability, dependability and transferability) to evaluate the quality of research outputs. Historical research is one field of qualitative inquiry where this is not the case. While most historical researchers recognise the need to be rigorous in their methods in order to improve the trustworthiness of their results, ambiguity exists about how rigour is demonstrated in historical research. As a result, strategies to establish rigour remain focused on piecemeal activities (e.g., source criticism) rather than adopting a whole-of-study approach. Using a piecemeal approach makes it difficult for others to understand the researcher's rationale for the methods used and decisions made during the research process. Fragmenting approaches to rigour may contribute to questioning of the legitimacy of historical methods. In this article, we provide a critique of the challenges to achieving rigour that currently exist in historical research. We then offer practical strategies that can be incorporated into historical methods to address these challenges with the aim of producing a more transparent historical narrative.

Author Biographies

Tanya Langtree, James Cook University

Tanya LANGTREE , MNSt is a PhD candidate in the College of Healthcare Sciences, Division of Tropical Health and Medicine, James Cook University, Australia. Her research interests include nursing education, simulation and history. Tanya's PhD project is using historical methods to conceptualise the development of nursing practice prior to professionalisation.

Melanie Birks, James Cook University

Melanie BIRKS , PhD, is professor and Head of Nursing and Midwifery at James Cook University, Australia. Her research interests are in the areas of accessibility, innovation, relevance and quality in nursing education.

Narelle Biedermann, James Cook University

Narelle BIEDERMANN , PhD, is a senior lecturer in Nursing and Midwifery at James Cook University, Australia. Her research interests are in the areas of nursing and military history and nursing education.

Copyright (c) 2019 Tanya Langtree, Melanie Birks, Narelle Biedermann

This work is licensed under a Creative Commons Attribution 4.0 International License .

2000-2024 Forum Qualitative Sozialforschung / Forum: Qualitative Social Research (ISSN 1438-5627) Institut für Qualitative Forschung , Internationale Akademie Berlin gGmbH

Criteria for Good Qualitative Research: A Comprehensive Review

- Regular Article

- Open access

- Published: 18 September 2021

- Volume 31 , pages 679–689, ( 2022 )

- Drishti Yadav ORCID: orcid.org/0000-0002-2974-0323 1

This review aims to synthesize a published set of evaluative criteria for good qualitative research. The aim is to shed light on existing standards for assessing the rigor of qualitative research encompassing a range of epistemological and ontological standpoints. Using a systematic search strategy, published journal articles that deliberate criteria for rigorous research were identified. Then, references of relevant articles were surveyed to find noteworthy, distinct, and well-defined pointers to good qualitative research. This review presents an investigative assessment of the pivotal features in qualitative research that can permit the readers to pass judgment on its quality and to judge it as good research when objectively and adequately utilized. Overall, this review underlines the crux of qualitative research and accentuates the necessity to evaluate such research by the very tenets of its being. It also offers some prospects and recommendations to improve the quality of qualitative research. Based on the findings of this review, it is concluded that quality criteria are the aftereffect of socio-institutional procedures and existing paradigmatic conducts. Owing to the paradigmatic diversity of qualitative research, a single and specific set of quality criteria is neither feasible nor anticipated. Since qualitative research is not a cohesive discipline, researchers need to educate and familiarize themselves with applicable norms and decisive factors to evaluate qualitative research from within its theoretical and methodological framework of origin.

Introduction

“… It is important to regularly dialogue about what makes for good qualitative research” (Tracy, 2010 , p. 837)

What counts as good qualitative research is highly debatable. Qualitative research comprises numerous methods that rest on diverse philosophical perspectives. Bryman et al. (2008, p. 262) suggest that “It is widely assumed that whereas quality criteria for quantitative research are well‐known and widely agreed, this is not the case for qualitative research.” Hence, the question of how to evaluate the quality of qualitative research has been continuously debated. These debates on the assessment of qualitative research have taken place in many areas of science and technology. Examples include various areas of psychology: general psychology (Madill et al., 2000); counseling psychology (Morrow, 2005); and clinical psychology (Barker & Pistrang, 2005), and other disciplines of social sciences: social policy (Bryman et al., 2008); health research (Sparkes, 2001); business and management research (Johnson et al., 2006); information systems (Klein & Myers, 1999); and environmental studies (Reid & Gough, 2000). In the literature, these debates are driven by the view that the blanket application of criteria for good qualitative research developed around the positivist paradigm is improper. Such debates are based on the wide range of philosophical backgrounds within which qualitative research is conducted (e.g., Sandberg, 2000; Schwandt, 1996). The existence of methodological diversity led to the formulation of different sets of criteria applicable to qualitative research.

Among qualitative researchers, the dilemma of governing the measures to assess the quality of research is not a new phenomenon, especially when the virtuous triad of objectivity, reliability, and validity (Spencer et al., 2004 ) are not adequate. Occasionally, the criteria of quantitative research are used to evaluate qualitative research (Cohen & Crabtree, 2008 ; Lather, 2004 ). Indeed, Howe ( 2004 ) claims that the prevailing paradigm in educational research is scientifically based experimental research. Hypotheses and conjectures about the preeminence of quantitative research can weaken the worth and usefulness of qualitative research by neglecting the prominence of harmonizing match for purpose on research paradigm, the epistemological stance of the researcher, and the choice of methodology. Researchers have been reprimanded concerning this in “paradigmatic controversies, contradictions, and emerging confluences” (Lincoln & Guba, 2000 ).

In general, qualitative research tends to come from a very different paradigmatic stance and intrinsically demands distinctive and out-of-the-ordinary criteria for evaluating good research and varieties of research contributions that can be made. This review attempts to present a series of evaluative criteria for qualitative researchers, arguing that their choice of criteria needs to be compatible with the unique nature of the research in question (its methodology, aims, and assumptions). This review aims to assist researchers in identifying some of the indispensable features or markers of high-quality qualitative research. In a nutshell, the purpose of this systematic literature review is to analyze the existing knowledge on high-quality qualitative research and to verify the existence of research studies dealing with the critical assessment of qualitative research based on the concept of diverse paradigmatic stances. Contrary to the existing reviews, this review also suggests some critical directions to follow to improve the quality of qualitative research in different epistemological and ontological perspectives. This review is also intended to provide guidelines for the acceleration of future developments and dialogues among qualitative researchers in the context of assessing the qualitative research.

The rest of this review article is structured as follows: Sect. Methods describes the method followed for performing this review. Section Criteria for Evaluating Qualitative Studies provides a comprehensive description of the criteria for evaluating qualitative studies. This section is followed by a summary of the strategies to improve the quality of qualitative research in Sect. Improving Quality: Strategies. Section How to Assess the Quality of the Research Findings? provides details on how to assess the quality of the research findings. After that, some of the quality checklists (as tools to evaluate quality) are discussed in Sect. Quality Checklists: Tools for Assessing the Quality. Finally, the review ends with the concluding remarks presented in Sect. Conclusions, Future Directions and Outlook. Some prospects in qualitative research for enhancing its quality and usefulness in the social and techno-scientific research community are also presented in Sect. Conclusions, Future Directions and Outlook.

Methods

For this review, a comprehensive literature search was performed across several databases using generic search terms such as Qualitative Research, Criteria, etc. The following databases were chosen for the literature search based on the high number of results: IEEE Xplore, ScienceDirect, PubMed, Google Scholar, and Web of Science. The following keywords (and their combinations using Boolean connectives OR/AND) were adopted for the literature search: qualitative research, criteria, quality, assessment, and validity. The synonyms for these keywords were collected and arranged in a logical structure (see Table 1). All publications in journals and conference proceedings from 1950 to 2021 were considered for the search. Other articles extracted from the references of the papers identified in the electronic search were also included. A large number of publications on qualitative research were retrieved during the initial screening. Hence, to restrict the results to works focused mainly on criteria for good qualitative research, an inclusion criterion was added to the search string.
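To make the keyword combination concrete, here is a minimal sketch of how a Boolean search string could be assembled from the keywords listed above. The grouping of terms (synonyms joined with OR, groups joined with AND) and the helper name `build_query` are assumptions for illustration; the actual search strings and the synonym structure of Table 1 are not reproduced here.

```python
def build_query(groups):
    """Join terms within a group with OR, and join the groups with AND."""
    clauses = []
    for terms in groups:
        quoted = [f'"{term}"' for term in terms]  # quote each term as an exact phrase
        clauses.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(clauses)


# Hypothetical grouping of the keywords named in the text.
groups = [
    ["qualitative research"],
    ["criteria", "quality", "assessment", "validity"],
]
query = build_query(groups)
print(query)
```

Each database has its own query syntax, so a string like this would normally need adapting (field tags, truncation symbols) before use.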

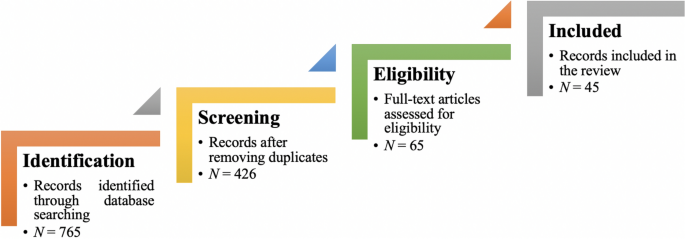

From the selected databases, the search retrieved a total of 765 publications. Then, the duplicate records were removed. After that, based on the title and abstract, the remaining 426 publications were screened for their relevance by using the following inclusion and exclusion criteria (see Table 2 ). Publications focusing on evaluation criteria for good qualitative research were included, whereas those works which delivered theoretical concepts on qualitative research were excluded. Based on the screening and eligibility, 45 research articles were identified that offered explicit criteria for evaluating the quality of qualitative research and were found to be relevant to this review.

Figure 1 illustrates the complete review process in the form of PRISMA flow diagram. PRISMA, i.e., “preferred reporting items for systematic reviews and meta-analyses” is employed in systematic reviews to refine the quality of reporting.

PRISMA flow diagram illustrating the search and inclusion process. N represents the number of records
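The counts reported above can be checked with simple arithmetic. This sketch assumes, as the text implies, that 426 is the number of records remaining after duplicate removal; the numbers of duplicates and of excluded records are derived here and are not stated in the source.

```python
# Counts stated in the text.
retrieved = 765  # records returned by the database search
screened = 426   # records remaining after duplicates were removed (assumed reading)
included = 45    # articles retained after screening and eligibility checks

# Derived values (not reported in the source).
duplicates_removed = retrieved - screened
excluded = screened - included

print(f"duplicates removed: {duplicates_removed}")
print(f"excluded during screening/eligibility: {excluded}")
```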

Criteria for Evaluating Qualitative Studies

Fundamental Criteria: General Research Quality

Various researchers have put forward criteria for evaluating qualitative research, which have been summarized in Table 3 . Also, the criteria outlined in Table 4 effectively deliver the various approaches to evaluate and assess the quality of qualitative work. The entries in Table 4 are based on Tracy’s “Eight big‐tent criteria for excellent qualitative research” (Tracy, 2010 ). Tracy argues that high-quality qualitative work should formulate criteria focusing on the worthiness, relevance, timeliness, significance, morality, and practicality of the research topic, and the ethical stance of the research itself. Researchers have also suggested a series of questions as guiding principles to assess the quality of a qualitative study (Mays & Pope, 2020 ). Nassaji ( 2020 ) argues that good qualitative research should be robust, well informed, and thoroughly documented.

Qualitative Research: Interpretive Paradigms

All qualitative researchers follow highly abstract principles which bring together beliefs about ontology, epistemology, and methodology. These beliefs govern how the researcher perceives and acts. The net, which encompasses the researcher’s epistemological, ontological, and methodological premises, is referred to as a paradigm, or an interpretive structure, a “Basic set of beliefs that guides action” (Guba, 1990 ). Four major interpretive paradigms structure the qualitative research: positivist and postpositivist, constructivist interpretive, critical (Marxist, emancipatory), and feminist poststructural. The complexity of these four abstract paradigms increases at the level of concrete, specific interpretive communities. Table 5 presents these paradigms and their assumptions, including their criteria for evaluating research, and the typical form that an interpretive or theoretical statement assumes in each paradigm. Moreover, for evaluating qualitative research, quantitative conceptualizations of reliability and validity are proven to be incompatible (Horsburgh, 2003 ). In addition, a series of questions have been put forward in the literature to assist a reviewer (who is proficient in qualitative methods) for meticulous assessment and endorsement of qualitative research (Morse, 2003 ). Hammersley ( 2007 ) also suggests that guiding principles for qualitative research are advantageous, but methodological pluralism should not be simply acknowledged for all qualitative approaches. Seale ( 1999 ) also points out the significance of methodological cognizance in research studies.

Table 5 reflects that criteria for assessing the quality of qualitative research are the aftermath of socio-institutional practices and existing paradigmatic standpoints. Owing to the paradigmatic diversity of qualitative research, a single set of quality criteria is neither possible nor desirable. Hence, the researchers must be reflexive about the criteria they use in the various roles they play within their research community.

Improving Quality: Strategies

Another critical question is “How can the qualitative researchers ensure that the abovementioned quality criteria can be met?” Lincoln and Guba (1986) delineated several strategies to strengthen each criterion of trustworthiness. Other researchers (Merriam & Tisdell, 2016; Shenton, 2004) also presented such strategies. A brief description of these strategies is shown in Table 6.

It is worth mentioning that generalizability is also an integral part of qualitative research (Hays & McKibben, 2021 ). In general, the guiding principle pertaining to generalizability speaks about inducing and comprehending knowledge to synthesize interpretive components of an underlying context. Table 7 summarizes the main metasynthesis steps required to ascertain generalizability in qualitative research.

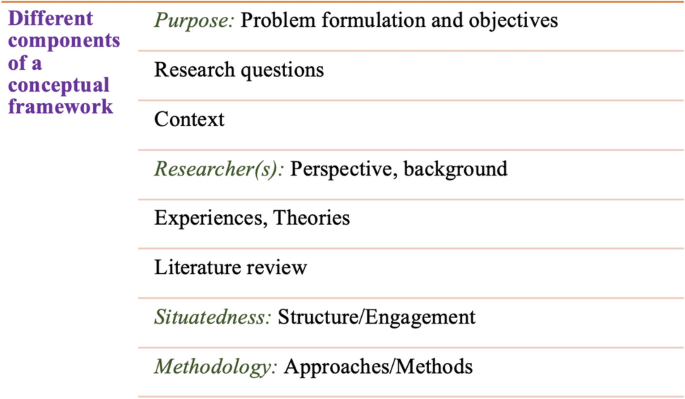

Figure 2 reflects the crucial components of a conceptual framework and their contribution to decisions regarding research design, implementation, and applications of results to future thinking, study, and practice (Johnson et al., 2020 ). The synergy and interrelationship of these components signifies their role to different stances of a qualitative research study.

Essential elements of a conceptual framework

In a nutshell, to assess the rationale of a study, its conceptual framework and research question(s), quality criteria must take account of the following: lucid context for the problem statement in the introduction; well-articulated research problems and questions; precise conceptual framework; distinct research purpose; and clear presentation and investigation of the paradigms. These criteria would enhance the quality of qualitative research.

How to Assess the Quality of the Research Findings?

The inclusion of quotes or similar research data enhances the confirmability in the write-up of the findings. The use of expressions (for instance, “80% of all respondents agreed that” or “only one of the interviewees mentioned that”) may also quantify qualitative findings (Stenfors et al., 2020 ). On the other hand, the persuasive reason for “why this may not help in intensifying the research” has also been provided (Monrouxe & Rees, 2020 ). Further, the Discussion and Conclusion sections of an article also prove robust markers of high-quality qualitative research, as elucidated in Table 8 .
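A statement such as “80% of all respondents agreed that” can be derived directly from coded data. The sketch below is illustrative only: the participant IDs, theme names, and the helper `theme_prevalence` are hypothetical and do not come from any study cited here.

```python
def theme_prevalence(coded_interviews, theme):
    """Return (count, total, percentage) of interviews in which `theme` was coded."""
    total = len(coded_interviews)
    if total == 0:
        raise ValueError("no interviews supplied")
    count = sum(1 for codes in coded_interviews.values() if theme in codes)
    return count, total, 100.0 * count / total


# Hypothetical coding results: participant ID -> set of themes coded in that interview.
coded_interviews = {
    "P01": {"time pressure", "training"},
    "P02": {"time pressure"},
    "P03": {"training"},
    "P04": {"time pressure", "teamwork"},
    "P05": {"time pressure", "training"},
}
count, total, pct = theme_prevalence(coded_interviews, "time pressure")
summary = f"{count} of {total} interviewees ({pct:.0f}%) mentioned time pressure"
print(summary)
```

Reporting the raw count alongside the percentage keeps the small sample size visible, which speaks to the caution against over-quantifying noted in the paragraph above.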

Quality Checklists: Tools for Assessing the Quality

Numerous checklists are available to speed up the assessment of the quality of qualitative research. However, if used uncritically and without regard to the research context, these checklists may be counterproductive. I suggest that such lists and guiding principles may assist in pinpointing the markers of high-quality qualitative research. However, considering the enormous variations in authors’ theoretical and philosophical contexts, I would emphasize that heavy reliance on such checklists may say little about whether the findings can be applied in your setting. A combination of such checklists might be appropriate for novice researchers. Some of these checklists are listed below:

The most commonly used framework is Consolidated Criteria for Reporting Qualitative Research (COREQ) (Tong et al., 2007 ). This framework is recommended by some journals to be followed by the authors during article submission.

Standards for Reporting Qualitative Research (SRQR) is another checklist that has been created particularly for medical education (O’Brien et al., 2014 ).

Also, Tracy ( 2010 ) and Critical Appraisal Skills Programme (CASP, 2021 ) offer criteria for qualitative research relevant across methods and approaches.

Further, researchers have also outlined different criteria as hallmarks of high-quality qualitative research. For instance, the “Road Trip Checklist” (Epp & Otnes, 2021 ) provides a quick reference to specific questions to address different elements of high-quality qualitative research.

Conclusions, Future Directions, and Outlook

This work presents a broad review of the criteria for good qualitative research. In addition, this article presents an exploratory analysis of the essential elements in qualitative research that can enable the readers of qualitative work to judge it as good research when objectively and adequately utilized. In this review, some of the essential markers that indicate high-quality qualitative research have been highlighted. I scope them narrowly to achieve rigor in qualitative research and note that they do not completely cover the broader considerations necessary for high-quality research. This review points out that a universal and versatile one-size-fits-all guideline for evaluating the quality of qualitative research does not exist. In other words, this review also emphasizes the non-existence of a set of common guidelines among qualitative researchers. At the same time, this review reinforces that each qualitative approach should be treated uniquely on account of its own distinctive features for different epistemological and disciplinary positions. Because the worth of qualitative research is sensitive to the specific context and the type of paradigmatic stance, researchers should themselves analyze which approaches can be, and must be, tailored to suit the distinct characteristics of the phenomenon under investigation. Although this article does not claim to put forward a magic bullet or to provide a one-stop solution for dealing with dilemmas about how, why, or whether to evaluate the “goodness” of qualitative research, it offers a platform to assist researchers in improving their qualitative studies. This work provides an assembly of concerns to reflect on, a series of questions to ask, and multiple sets of criteria to look at, when attempting to determine the quality of qualitative research. Overall, this review underlines the crux of qualitative research and accentuates the need to evaluate such research by the very tenets of its being. Bringing together the vital arguments and delineating the requirements that good qualitative research should satisfy, this review strives to equip researchers as well as reviewers to make well-informed judgments about the worth and significance of the qualitative research under scrutiny. In a nutshell, a comprehensive portrayal of the research process (from the context of research to the research objectives, research questions and design, speculative foundations, and from approaches of collecting data to analyzing the results, to deriving inferences) frequently enhances the quality of qualitative research.

Prospects : A Road Ahead for Qualitative Research

Irrefutably, qualitative research is a vivacious and evolving discipline wherein different epistemological and disciplinary positions have their own characteristics and importance. In addition, not surprisingly, owing to the emerging and varied features of qualitative research, no consensus has been reached to date. Researchers have raised various concerns and proposed several recommendations for editors and reviewers on conducting reviews of critical qualitative research (Levitt et al., 2021; McGinley et al., 2021). Following are some prospects and a few recommendations put forward towards the maturation of qualitative research and its quality evaluation:

In general, most of the manuscript and grant reviewers are not qualitative experts. Hence, it is more likely that they would prefer to adopt a broad set of criteria. However, researchers and reviewers need to keep in mind that it is inappropriate to utilize the same approaches and conducts among all qualitative research. Therefore, future work needs to focus on educating researchers and reviewers about the criteria to evaluate qualitative research from within the suitable theoretical and methodological context.

There is an urgent need to refurbish and augment critical assessment of some well-known and widely accepted tools (including checklists such as COREQ, SRQR) to interrogate their applicability on different aspects (along with their epistemological ramifications).

Efforts should be made towards creating more space for creativity, experimentation, and a dialogue between the diverse traditions of qualitative research. This would potentially help to avoid the enforcement of one's own set of quality criteria on the work carried out by others.

Moreover, journal reviewers need to be aware of various methodological practices and philosophical debates.

It is pivotal to highlight the expressions and considerations of qualitative researchers and bring them into a more open and transparent dialogue about assessing qualitative research in techno-scientific, academic, sociocultural, and political rooms.

Frequent debates on the use of evaluative criteria are required to resolve some still-unresolved issues (including the applicability of a single set of criteria in multi-disciplinary aspects). Such debates would not only benefit the group of qualitative researchers themselves, but primarily assist in augmenting the well-being and vivacity of the entire discipline.

To conclude, I speculate that the criteria, and my perspective, may transfer to other methods, approaches, and contexts. I hope that they spark dialog and debate – about criteria for excellent qualitative research and the underpinnings of the discipline more broadly – and, therefore, help improve the quality of a qualitative study. Further, I anticipate that this review will help researchers reflect on the quality of their own research and substantiate their research design, and help reviewers review qualitative research for journals. On a final note, I highlight the need to formulate a framework (encompassing the prerequisites of a qualitative study) through the joint efforts of qualitative researchers of different disciplines with different theoretic-paradigmatic origins. I believe that tailoring such a framework (of guiding principles) would pave the way for qualitative researchers to consolidate the status of qualitative research in the wide-ranging open science debate. Dialogue on this issue across different approaches is crucial for the future prospects of socio-techno-educational research.

References

Amin, M. E. K., Nørgaard, L. S., Cavaco, A. M., Witry, M. J., Hillman, L., Cernasev, A., & Desselle, S. P. (2020). Establishing trustworthiness and authenticity in qualitative pharmacy research. Research in Social and Administrative Pharmacy, 16 (10), 1472–1482.

Barker, C., & Pistrang, N. (2005). Quality criteria under methodological pluralism: Implications for conducting and evaluating research. American Journal of Community Psychology, 35 (3–4), 201–212.

Bryman, A., Becker, S., & Sempik, J. (2008). Quality criteria for quantitative, qualitative and mixed methods research: A view from social policy. International Journal of Social Research Methodology, 11 (4), 261–276.

Caelli, K., Ray, L., & Mill, J. (2003). ‘Clear as mud’: Toward greater clarity in generic qualitative research. International Journal of Qualitative Methods, 2 (2), 1–13.

CASP (2021). CASP checklists. Retrieved May 2021 from https://casp-uk.net/casp-tools-checklists/

Cohen, D. J., & Crabtree, B. F. (2008). Evaluative criteria for qualitative research in health care: Controversies and recommendations. The Annals of Family Medicine, 6 (4), 331–339.

Denzin, N. K., & Lincoln, Y. S. (2005). Introduction: The discipline and practice of qualitative research. In N. K. Denzin & Y. S. Lincoln (Eds.), The sage handbook of qualitative research (pp. 1–32). Sage Publications Ltd.

Google Scholar

Elliott, R., Fischer, C. T., & Rennie, D. L. (1999). Evolving guidelines for publication of qualitative research studies in psychology and related fields. British Journal of Clinical Psychology, 38 (3), 215–229.

Epp, A. M., & Otnes, C. C. (2021). High-quality qualitative research: Getting into gear. Journal of Service Research . https://doi.org/10.1177/1094670520961445

Guba, E. G. (1990). The paradigm dialog. In Alternative paradigms conference, mar, 1989, Indiana u, school of education, San Francisco, ca, us . Sage Publications, Inc.

Hammersley, M. (2007). The issue of quality in qualitative research. International Journal of Research and Method in Education, 30 (3), 287–305.

Haven, T. L., Errington, T. M., Gleditsch, K. S., van Grootel, L., Jacobs, A. M., Kern, F. G., & Mokkink, L. B. (2020). Preregistering qualitative research: A Delphi study. International Journal of Qualitative Methods, 19 , 1609406920976417.

Hays, D. G., & McKibben, W. B. (2021). Promoting rigorous research: Generalizability and qualitative research. Journal of Counseling and Development, 99 (2), 178–188.

Horsburgh, D. (2003). Evaluation of qualitative research. Journal of Clinical Nursing, 12 (2), 307–312.

Howe, K. R. (2004). A critique of experimentalism. Qualitative Inquiry, 10 (1), 42–46.

Johnson, J. L., Adkins, D., & Chauvin, S. (2020). A review of the quality indicators of rigor in qualitative research. American Journal of Pharmaceutical Education, 84 (1), 7120.

Johnson, P., Buehring, A., Cassell, C., & Symon, G. (2006). Evaluating qualitative management research: Towards a contingent criteriology. International Journal of Management Reviews, 8 (3), 131–156.

Klein, H. K., & Myers, M. D. (1999). A set of principles for conducting and evaluating interpretive field studies in information systems. MIS Quarterly, 23 (1), 67–93.

Lather, P. (2004). This is your father’s paradigm: Government intrusion and the case of qualitative research in education. Qualitative Inquiry, 10 (1), 15–34.

Levitt, H. M., Morrill, Z., Collins, K. M., & Rizo, J. L. (2021). The methodological integrity of critical qualitative research: Principles to support design and research review. Journal of Counseling Psychology, 68 (3), 357.

Lincoln, Y. S., & Guba, E. G. (1986). But is it rigorous? Trustworthiness and authenticity in naturalistic evaluation. New Directions for Program Evaluation, 1986 (30), 73–84.

Lincoln, Y. S., & Guba, E. G. (2000). Paradigmatic controversies, contradictions and emerging confluences. In N. K. Denzin & Y. S. Lincoln (Eds.), Handbook of qualitative research (2nd ed., pp. 163–188). Sage Publications.

Madill, A., Jordan, A., & Shirley, C. (2000). Objectivity and reliability in qualitative analysis: Realist, contextualist and radical constructionist epistemologies. British Journal of Psychology, 91 (1), 1–20.

Mays, N., & Pope, C. (2020). Quality in qualitative research. Qualitative Research in Health Care . https://doi.org/10.1002/9781119410867.ch15

McGinley, S., Wei, W., Zhang, L., & Zheng, Y. (2021). The state of qualitative research in hospitality: A 5-year review 2014 to 2019. Cornell Hospitality Quarterly, 62 (1), 8–20.

Merriam, S., & Tisdell, E. (2016). Qualitative research: A guide to design and implementation. San Francisco, US.

Meyer, M., & Dykes, J. (2019). Criteria for rigor in visualization design study. IEEE Transactions on Visualization and Computer Graphics, 26 (1), 87–97.

Monrouxe, L. V., & Rees, C. E. (2020). When I say… quantification in qualitative research. Medical Education, 54 (3), 186–187.

Morrow, S. L. (2005). Quality and trustworthiness in qualitative research in counseling psychology. Journal of Counseling Psychology, 52 (2), 250.

Morse, J. M. (2003). A review committee’s guide for evaluating qualitative proposals. Qualitative Health Research, 13 (6), 833–851.

Nassaji, H. (2020). Good qualitative research. Language Teaching Research, 24 (4), 427–431.

O’Brien, B. C., Harris, I. B., Beckman, T. J., Reed, D. A., & Cook, D. A. (2014). Standards for reporting qualitative research: A synthesis of recommendations. Academic Medicine, 89 (9), 1245–1251.

O’Connor, C., & Joffe, H. (2020). Intercoder reliability in qualitative research: Debates and practical guidelines. International Journal of Qualitative Methods, 19 , 1609406919899220.

Reid, A., & Gough, S. (2000). Guidelines for reporting and evaluating qualitative research: What are the alternatives? Environmental Education Research, 6 (1), 59–91.

Rocco, T. S. (2010). Criteria for evaluating qualitative studies. Human Resource Development International . https://doi.org/10.1080/13678868.2010.501959

Sandberg, J. (2000). Understanding human competence at work: An interpretative approach. Academy of Management Journal, 43 (1), 9–25.

Schwandt, T. A. (1996). Farewell to criteriology. Qualitative Inquiry, 2 (1), 58–72.

Seale, C. (1999). Quality in qualitative research. Qualitative Inquiry, 5 (4), 465–478.

Shenton, A. K. (2004). Strategies for ensuring trustworthiness in qualitative research projects. Education for Information, 22 (2), 63–75.

Sparkes, A. C. (2001). Myth 94: Qualitative health researchers will agree about validity. Qualitative Health Research, 11 (4), 538–552.

Spencer, L., Ritchie, J., Lewis, J., & Dillon, L. (2004). Quality in qualitative evaluation: A framework for assessing research evidence.

Stenfors, T., Kajamaa, A., & Bennett, D. (2020). How to assess the quality of qualitative research. The Clinical Teacher, 17 (6), 596–599.

Taylor, E. W., Beck, J., & Ainsworth, E. (2001). Publishing qualitative adult education research: A peer review perspective. Studies in the Education of Adults, 33 (2), 163–179.

Tong, A., Sainsbury, P., & Craig, J. (2007). Consolidated criteria for reporting qualitative research (COREQ): A 32-item checklist for interviews and focus groups. International Journal for Quality in Health Care, 19 (6), 349–357.

Tracy, S. J. (2010). Qualitative quality: Eight “big-tent” criteria for excellent qualitative research. Qualitative Inquiry, 16 (10), 837–851.

Open access funding provided by TU Wien (TUW).

Author information

Authors and affiliations.

Faculty of Informatics, Technische Universität Wien, 1040, Vienna, Austria

Drishti Yadav

Corresponding author

Correspondence to Drishti Yadav.

Ethics declarations

Conflict of interest.

The author declares no conflict of interest.

Additional information

Publisher's note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access. This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Yadav, D. Criteria for Good Qualitative Research: A Comprehensive Review. Asia-Pacific Edu Res 31, 679–689 (2022). https://doi.org/10.1007/s40299-021-00619-0

Accepted: 28 August 2021

Published: 18 September 2021

Issue Date: December 2022

DOI: https://doi.org/10.1007/s40299-021-00619-0

- Qualitative research

- Evaluative criteria

Anti-Semitic Attitudes of the Mass Public: Estimates and Explanations Based on a Survey of the Moscow Oblast

James L. Gibson, Raymond M. Duch, Anti-Semitic Attitudes of the Mass Public: Estimates and Explanations Based on a Survey of the Moscow Oblast, Public Opinion Quarterly, Volume 56, Issue 1, Spring 1992, Pages 1–28, https://doi.org/10.1086/269293

In this article we examine anti-Semitism as expressed by a sample of residents of the Moscow Oblast (Soviet Union). Based on a survey conducted in 1990, we begin by describing anti-Jewish prejudice and support for official discrimination against Jews. We discover a surprisingly low level of expressed anti-Semitism among these Soviet respondents and virtually no support for state policies that discriminate against Jews. At the same time, many of the conventional hypotheses predicting anti-Semitism are supported in the Soviet case. Anti-Semitism is concentrated among those with lower levels of education, those whose personal financial condition is deteriorating, and those who oppose further democratization of the Soviet Union. We do not take these findings as evidence that anti-Semitism is a trivial problem in the Soviet Union but, rather, suggest that efforts to combat anti-Jewish movements would likely receive considerable support from ordinary Soviet people.

- Online ISSN 1537-5331

- Copyright © 2024 American Association for Public Opinion Research

The Unique Burial of a Child of Early Scythian Time at the Cemetery of Saryg-Bulun (Tuva)

Pages: 379-406

In 1988, the Tuvan Archaeological Expedition (led by M. E. Kilunovskaya and V. A. Semenov) discovered a unique burial of the early Iron Age at Saryg-Bulun in Central Tuva. The site comprises two burial mounds of the Aldy-Bel culture dated to the 7th century BC. Within the barrows, which adjoined one another to form a figure of eight, seven burials were discovered, yielding a representative collection of artifacts. Burial 5 was the most remarkable: it was found in a coffin made of a larch trunk with a tightly closed lid. Owing to the preservative properties of larch and the lack of air access, the coffin contained a well-preserved mummy of a child with an accompanying set of grave goods. The interred individual retained the skin on the face and wore a leather headdress painted with red pigment and a coat sewn from jerboa fur. The coat was fastened with a leather belt bearing bronze ornaments and buckles. In addition, a leather quiver holding arrows with painted shafts, a fully preserved battle pick, and a bow were buried in the coffin. Unexpectedly, full-genome analysis showed that the individual was female. This finding opens a new aspect in the study of the social history of Scythian society and perhaps brings us back to the myth of the Amazons discussed by Herodotus. The discovery is unique in its state of preservation for the Scythian culture of Tuva and requires careful study and conservation.

Keywords: Tuva, Early Iron Age, early Scythian period, Aldy-Bel culture, barrow, burial in the coffin, mummy, full genome sequencing, aDNA