Data Collection – Methods Types and Examples

Data Collection

Definition:

Data collection is the process of gathering information from various sources so that it can be analyzed and used to make informed decisions. Common methods include surveys, interviews, experiments, and observation.

For data collection to be effective, you need a clear understanding of what data is needed and why it is being collected. This involves identifying the population or sample being studied, determining the variables to be measured, and selecting appropriate methods for collecting and recording the data.

Types of Data Collection

Types of Data Collection are as follows:

Primary Data Collection

Primary data collection is the process of gathering original and firsthand information directly from the source or target population. This type of data collection involves collecting data that has not been previously gathered, recorded, or published. Primary data can be collected through various methods such as surveys, interviews, observations, experiments, and focus groups. The data collected is usually specific to the research question or objective and can provide valuable insights that cannot be obtained from secondary data sources. Primary data collection is often used in market research, social research, and scientific research.

Secondary Data Collection

Secondary data collection is the process of gathering information that has already been collected and analyzed by someone else, rather than conducting new research to collect primary data. Secondary data can be drawn from various sources, such as published reports, books, journals, newspapers, websites, government publications, and other documents.

Qualitative Data Collection

Qualitative data collection is used to gather non-numerical data such as opinions, experiences, perceptions, and feelings, through techniques such as interviews, focus groups, observations, and document analysis. It seeks to understand the deeper meaning and context of a phenomenon or situation and is often used in social sciences, psychology, and humanities. Qualitative data collection methods allow for a more in-depth and holistic exploration of research questions and can provide rich and nuanced insights into human behavior and experiences.

Quantitative Data Collection

Quantitative data collection is used to gather numerical data that can be analyzed using statistical methods. This data is typically collected through surveys, experiments, and other structured data collection methods. Quantitative data collection seeks to quantify and measure variables, such as behaviors, attitudes, and opinions, in a systematic and objective way. This data is often used to test hypotheses, identify patterns, and establish correlations between variables. Quantitative data collection methods allow for precise measurement and generalization of findings to a larger population. It is commonly used in fields such as economics, psychology, and natural sciences.

Data Collection Methods

Data Collection Methods are as follows:

Surveys

Surveys involve asking questions to a sample of individuals or organizations to collect data. Surveys can be conducted in person, over the phone, or online.

Interviews

Interviews involve a one-on-one conversation between the interviewer and the respondent. Interviews can be structured or unstructured and can be conducted in person or over the phone.

Focus Groups

Focus groups are group discussions that are moderated by a facilitator. Focus groups are used to collect qualitative data on a specific topic.

Observation

Observation involves watching and recording the behavior of people, objects, or events in their natural setting. Observation can be done overtly or covertly, depending on the research question.

Experiments

Experiments involve manipulating one or more variables and observing the effect on another variable. Experiments are commonly used in scientific research.

Case Studies

Case studies involve in-depth analysis of a single individual, organization, or event. Case studies are used to gain detailed information about a specific phenomenon.

Secondary Data Analysis

Secondary data analysis involves using existing data that was collected for another purpose. Secondary data can come from various sources, such as government agencies, academic institutions, or private companies.

How to Collect Data

The following are some steps to consider when collecting data:

- Define the objective: Before you start collecting data, you need to define the objective of the study. This will help you determine what data you need to collect and how to collect it.

- Identify the data sources: Identify the sources of data that will help you achieve your objective. These sources can be primary sources, such as surveys, interviews, and observations, or secondary sources, such as books, articles, and databases.

- Determine the data collection method: Once you have identified the data sources, you need to determine the data collection method. This could be through online surveys, phone interviews, or face-to-face meetings.

- Develop a data collection plan: Develop a plan that outlines the steps you will take to collect the data. This plan should include the timeline, the tools and equipment needed, and the personnel involved.

- Test the data collection process: Before you start collecting data, test the data collection process to ensure that it is effective and efficient.

- Collect the data: Collect the data according to the data collection plan you developed. Make sure you record the data accurately and consistently.

- Analyze the data: Once you have collected the data, analyze it to draw conclusions and make recommendations.

- Report the findings: Report the findings of your data analysis to the relevant stakeholders. This could be in the form of a report, a presentation, or a publication.

- Monitor and evaluate the data collection process: After the data collection process is complete, monitor and evaluate the process to identify areas for improvement in future data collection efforts.

- Ensure data quality: Ensure that the collected data is of high quality and free from errors. This can be achieved by validating the data for accuracy, completeness, and consistency.

- Maintain data security: Ensure that the collected data is secure and protected from unauthorized access or disclosure. This can be achieved by implementing data security protocols and using secure storage and transmission methods.

- Follow ethical considerations: Follow ethical considerations when collecting data, such as obtaining informed consent from participants, protecting their privacy and confidentiality, and ensuring that the research does not cause harm to participants.

- Use appropriate data analysis methods: Use appropriate data analysis methods based on the type of data collected and the research objectives. This could include statistical analysis, qualitative analysis, or a combination of both.

- Record and store data properly: Record and store the collected data properly, in a structured and organized format. This will make it easier to retrieve and use the data in future research or analysis.

- Collaborate with other stakeholders: Collaborate with other stakeholders, such as colleagues, experts, or community members, to ensure that the data collected is relevant and useful for the intended purpose.
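The data quality step above (validating for accuracy, completeness, and consistency) can be sketched in a few lines of Python. This is a minimal illustration rather than a prescribed method: the field names (`respondent_id`, `age`, `satisfaction`), the allowed ranges, and the `validate_records` helper are all hypothetical.

```python
from collections import Counter

# Hypothetical schema: required fields and the range each numeric field must fall in.
REQUIRED_FIELDS = ("respondent_id", "age", "satisfaction")
VALID_RANGES = {"age": (18, 120), "satisfaction": (1, 5)}


def validate_records(records):
    """Return a list of (record_index, problem) tuples for a list of dict records."""
    problems = []

    # Consistency: the same respondent_id should not appear twice.
    id_counts = Counter(r.get("respondent_id") for r in records)

    for i, record in enumerate(records):
        # Completeness: every required field must be present and non-empty.
        for field in REQUIRED_FIELDS:
            if record.get(field) in (None, ""):
                problems.append((i, f"missing {field}"))

        # Accuracy (plausibility): numeric values must fall inside the allowed range.
        for field, (low, high) in VALID_RANGES.items():
            value = record.get(field)
            if isinstance(value, (int, float)) and not low <= value <= high:
                problems.append((i, f"{field} out of range: {value}"))

        # Consistency: flag duplicated identifiers.
        rid = record.get("respondent_id")
        if rid not in (None, "") and id_counts[rid] > 1:
            problems.append((i, f"duplicate respondent_id: {rid}"))

    return problems


sample = [
    {"respondent_id": "r1", "age": 34, "satisfaction": 4},
    {"respondent_id": "r2", "age": 210, "satisfaction": 5},    # implausible age
    {"respondent_id": "r2", "age": 41, "satisfaction": None},  # duplicate id, missing answer
]

for index, problem in validate_records(sample):
    print(index, problem)
```

Running checks like these before analysis makes it easier to decide whether a record should be corrected, followed up with the respondent, or excluded.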

Applications of Data Collection

Data collection methods are widely used in different fields, including social sciences, healthcare, business, education, and more. Here are some examples of how data collection methods are used in different fields:

- Social sciences: Social scientists often use surveys, questionnaires, and interviews to collect data from individuals or groups. They may also use observation to collect data on social behaviors and interactions. This data is often used to study topics such as human behavior, attitudes, and beliefs.

- Healthcare: Data collection methods are used in healthcare to monitor patient health and track treatment outcomes. Electronic health records and medical charts are commonly used to collect data on patients’ medical history, diagnoses, and treatments. Researchers may also use clinical trials and surveys to collect data on the effectiveness of different treatments.

- Business: Businesses use data collection methods to gather information on consumer behavior, market trends, and competitor activity. They may collect data through customer surveys, sales reports, and market research studies. This data is used to inform business decisions, develop marketing strategies, and improve products and services.

- Education: In education, data collection methods are used to assess student performance and measure the effectiveness of teaching methods. Standardized tests, quizzes, and exams are commonly used to collect data on student learning outcomes. Teachers may also use classroom observation and student feedback to gather data on teaching effectiveness.

- Agriculture: Farmers use data collection methods to monitor crop growth and health. Sensors and remote sensing technology can be used to collect data on soil moisture, temperature, and nutrient levels. This data is used to optimize crop yields and minimize waste.

- Environmental sciences: Environmental scientists use data collection methods to monitor air and water quality, track climate patterns, and measure the impact of human activity on the environment. They may use sensors, satellite imagery, and laboratory analysis to collect data on environmental factors.

- Transportation: Transportation companies use data collection methods to track vehicle performance, optimize routes, and improve safety. GPS systems, on-board sensors, and other tracking technologies are used to collect data on vehicle speed, fuel consumption, and driver behavior.

Examples of Data Collection

Examples of Data Collection are as follows:

- Traffic Monitoring: Cities collect real-time data on traffic patterns and congestion through sensors on roads and cameras at intersections. This information can be used to optimize traffic flow and improve safety.

- Social Media Monitoring: Companies can collect real-time data on social media platforms such as Twitter and Facebook to monitor their brand reputation, track customer sentiment, and respond to customer inquiries and complaints in real-time.

- Weather Monitoring: Weather agencies collect real-time data on temperature, humidity, air pressure, and precipitation through weather stations and satellites. This information is used to provide accurate weather forecasts and warnings.

- Stock Market Monitoring: Financial institutions collect real-time data on stock prices, trading volumes, and other market indicators to make informed investment decisions and respond to market fluctuations in real-time.

- Health Monitoring: Medical devices such as wearable fitness trackers and smartwatches can collect real-time data on a person’s heart rate, blood pressure, and other vital signs. This information can be used to monitor health conditions and detect early warning signs of health issues.

Purpose of Data Collection

The purpose of data collection can vary depending on the context and goals of the study, but generally, it serves to:

- Provide information: Data collection provides information about a particular phenomenon or behavior that can be used to better understand it.

- Measure progress: Data collection can be used to measure the effectiveness of interventions or programs designed to address a particular issue or problem.

- Support decision-making: Data collection provides decision-makers with evidence-based information that can be used to inform policies, strategies, and actions.

- Identify trends: Data collection can help identify trends and patterns over time that may indicate changes in behaviors or outcomes.

- Monitor and evaluate: Data collection can be used to monitor and evaluate the implementation and impact of policies, programs, and initiatives.

When to use Data Collection

Data collection is used when there is a need to gather information or data on a specific topic or phenomenon. It is typically used in research, evaluation, and monitoring and is important for making informed decisions and improving outcomes.

Data collection is particularly useful in the following scenarios:

- Research: When conducting research, data collection is used to gather information on variables of interest to answer research questions and test hypotheses.

- Evaluation: Data collection is used in program evaluation to assess the effectiveness of programs or interventions, and to identify areas for improvement.

- Monitoring: Data collection is used in monitoring to track progress towards achieving goals or targets, and to identify any areas that require attention.

- Decision-making: Data collection is used to provide decision-makers with information that can be used to inform policies, strategies, and actions.

- Quality improvement: Data collection is used in quality improvement efforts to identify areas where improvements can be made and to measure progress towards achieving goals.

Characteristics of Data Collection

Several characteristics help to ensure the quality and accuracy of the data gathered. These characteristics include:

- Validity: Validity refers to the accuracy and relevance of the data collected in relation to the research question or objective.

- Reliability: Reliability refers to the consistency and stability of the data collection process, ensuring that the results obtained are consistent over time and across different contexts.

- Objectivity: Objectivity refers to the impartiality of the data collection process, ensuring that the data collected is not influenced by the biases or personal opinions of the data collector.

- Precision: Precision refers to the level of detail and exactness of the data collected, ensuring that the data is specific enough to answer the research question or objective.

- Timeliness: Timeliness refers to how quickly the data is collected, ensuring that it is available in time to meet the needs of the research or evaluation.

- Ethical considerations: Ethical considerations refer to the ethical principles that must be followed when collecting data, such as ensuring confidentiality and obtaining informed consent from participants.

Advantages of Data Collection

There are several advantages of data collection that make it an important process in research, evaluation, and monitoring. These advantages include:

- Better decision-making: Data collection provides decision-makers with evidence-based information that can be used to inform policies, strategies, and actions, leading to better decision-making.

- Improved understanding: Data collection helps to improve our understanding of a particular phenomenon or behavior by providing empirical evidence that can be analyzed and interpreted.

- Evaluation of interventions: Data collection is essential in evaluating the effectiveness of interventions or programs designed to address a particular issue or problem.

- Identifying trends and patterns: Data collection can help identify trends and patterns over time that may indicate changes in behaviors or outcomes.

- Increased accountability: Data collection increases accountability by providing evidence that can be used to monitor and evaluate the implementation and impact of policies, programs, and initiatives.

- Validation of theories: Data collection can be used to test hypotheses and validate theories, leading to a better understanding of the phenomenon being studied.

- Improved quality: Data collection is used in quality improvement efforts to identify areas where improvements can be made and to measure progress towards achieving goals.

Limitations of Data Collection

While data collection has several advantages, it also has some limitations that must be considered. These limitations include:

- Bias: Data collection can be influenced by the biases and personal opinions of the data collector, which can lead to inaccurate or misleading results.

- Sampling bias: The sample from which data is collected may not be representative of the entire population, which leads to inaccurate results.

- Cost: Data collection can be expensive and time-consuming, particularly for large-scale studies.

- Limited scope: Data collection is limited to the variables being measured, which may not capture the entire picture or context of the phenomenon being studied.

- Ethical considerations: Data collection must follow ethical principles to protect the rights and confidentiality of the participants, which can limit the type of data that can be collected.

- Data quality issues: Data collection may result in data quality issues such as missing or incomplete data, measurement errors, and inconsistencies.

- Limited generalizability: Findings from the collected data may not apply to other contexts or populations.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

Data Collection: What It Is, Methods & Tools + Examples

Let’s face it, no one wants to make decisions based on guesswork or gut feelings. The most important objective of data collection is to ensure that the data gathered is reliable and packed to the brim with juicy insights that can be analyzed and turned into data-driven decisions. There’s nothing better than good statistical analysis.

Collecting high-quality data is essential for conducting market research, analyzing user behavior, or just trying to get a handle on business operations. With the right approach and a few handy tools, you can gather reliable and informative data.

So, let’s get ready to collect some data because when it comes to data collection, it’s all about the details.

What is Data Collection?

Data collection is the procedure of collecting, measuring, and analyzing accurate insights for research using standard validated techniques.

Put simply, data collection is the process of gathering information for a specific purpose. It can be used to answer research questions, make informed business decisions, or improve products and services.

To collect data, we must first identify what information we need and how we will collect it. We can also evaluate a hypothesis based on collected data. In most cases, data collection is the primary and most important step for research. The approach to data collection is different for different fields of study, depending on the required information.

There are many ways to collect information when doing research. The data collection methods that the researcher chooses will depend on the research question posed. Some data collection methods include surveys, interviews, tests, physiological evaluations, observations, reviews of existing records, and biological samples. Let’s explore them.

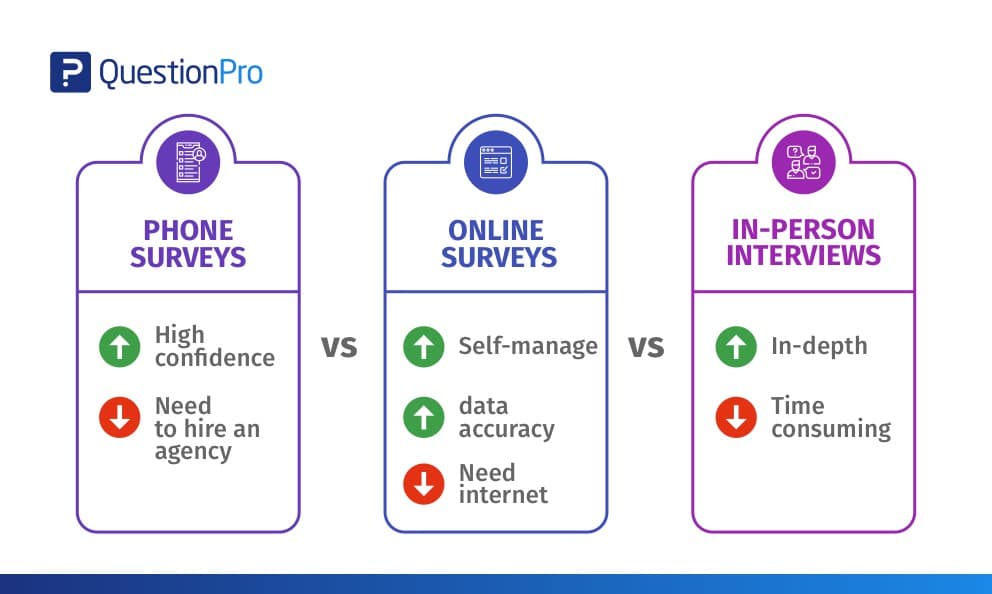

Phone vs. Online vs. In-Person Interviews

Essentially there are four choices for data collection – in-person interviews, mail, phone, and online. There are pros and cons to each of these modes.

In-person interviews

- Pros: In-depth and a high degree of confidence in the data

- Cons: Time-consuming, expensive, and can be dismissed as anecdotal

Mail

- Pros: Can reach anyone and everyone, with no barrier

- Cons: Expensive, data collection errors, lag time

Phone

- Pros: High degree of confidence in the data collected, reach almost anyone

- Cons: Expensive, cannot self-administer, need to hire an agency

Online

- Pros: Cheap, can self-administer, very low probability of data errors

- Cons: Not all your customers might have an email address or be on the internet, and customers may be wary of divulging information online.

In-person interviews are always better, but the big drawback is the trap you might fall into if you don’t do them regularly. Conducting interviews regularly is expensive, and conducting too few might give you false positives. Validating your research is almost as important as designing and conducting it.

We’ve seen many instances where, after the research is conducted, the results do not match the “gut feel” of upper management and are dismissed as anecdotal, a “one-time” phenomenon. To avoid such traps, we strongly recommend that data collection be done on an “ongoing and regular” basis.

This will help you compare and analyze how perceptions change in response to the marketing of your products and services. The other issue here is sample size. To be confident in your research, you must interview enough people to weed out the fringe elements.
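How many people count as “enough” depends on the margin of error you can tolerate. As a rough sketch, Cochran’s widely used formula for estimating a proportion, n0 = z² · p(1 − p) / e², with an optional finite-population correction, can be computed as shown below. The function name and the default values (about 95% confidence, a 5% margin of error, p = 0.5) are illustrative assumptions, and the formula itself assumes simple random sampling.

```python
import math


def required_sample_size(margin_of_error=0.05, z=1.96, p=0.5, population=None):
    """Cochran's formula for the sample size needed to estimate a proportion.

    z=1.96 corresponds to roughly 95% confidence; p=0.5 is the most
    conservative assumption about the true proportion.
    """
    n0 = (z ** 2) * p * (1 - p) / margin_of_error ** 2
    if population is not None:
        # Finite-population correction, useful for small customer bases.
        n0 = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n0)


print(required_sample_size())                 # very large population
print(required_sample_size(population=2000))  # hypothetical customer base of 2,000
```

A tighter margin of error or a higher confidence level raises the required number of interviews quickly, which is one reason the cost trade-offs between modes matter.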

A couple of years ago there was a lot of discussion about online surveys and their statistical analysis plan. The fact that not every customer had internet connectivity was one of the main concerns.

Although some of the discussions are still valid, the reach of the internet as a means of communication has become vital in the majority of customer interactions. According to the US Census Bureau, the number of households with computers doubled between 1997 and 2001.

In 2001, nearly 50% of households had a computer. Nearly 55% of all households with an income of more than $35,000 have internet access, which jumps to 70% for households with an annual income of $50,000. This data is from the US Census Bureau for 2001.

There are primarily three modes of data collection that can be employed to gather feedback – Mail, Phone, and Online. The method actually used for data collection is really a cost-benefit analysis. There is no slam-dunk solution but you can use the table below to understand the risks and advantages associated with each of the mediums:

Keep in mind, the reach here is defined as “All U.S. Households.” In most cases, you need to look at how many of your own customers are online and determine your reach from that. If all your customers have email addresses, you have a 100% reach of your customers.

Another important thing to keep in mind is the ever-increasing dominance of cellular phones over landline phones. United States FCC rules prevent automated dialing and calling cellular phone numbers and there is a noticeable trend towards people having cellular phones as the only voice communication device.

This makes it hard to reach cellular phone customers who are dropping home phone lines in favor of going entirely wireless. Even if automated dialing is not used, another FCC rule prohibits phoning anyone who would have to pay for the call.

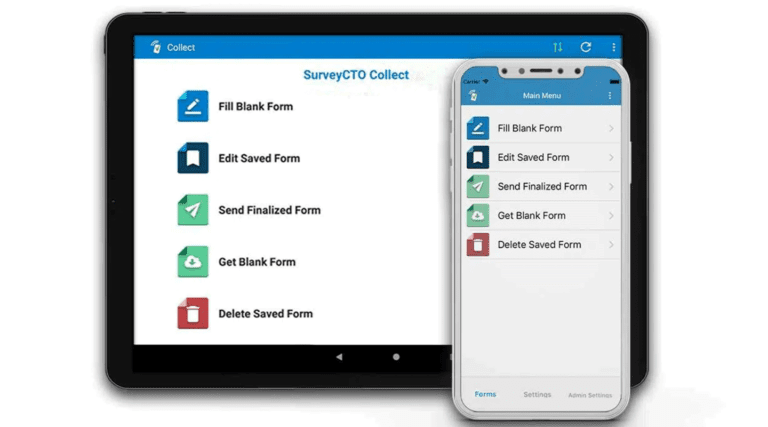

Multi-Mode Surveys

Surveys in which the data is collected via different modes (online, paper, phone, etc.) are another way to go. It is fairly straightforward to run an online survey and have data-entry operators enter data from the phone and paper surveys into the same system. The same system can also be used to collect data directly from the respondents.
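One way to picture a multi-mode setup is a single response store in which every record carries the mode it arrived through. The sketch below is purely illustrative: the `Response` record, its field names, and the `responses_by_mode` helper are hypothetical and do not describe any particular survey tool.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Response:
    respondent_id: str
    mode: str         # "online", "phone", or "paper"
    entered_by: str   # "respondent" or the name of a data-entry operator
    answers: dict


def responses_by_mode(responses):
    """Count how many responses arrived through each collection mode."""
    return Counter(r.mode for r in responses)


# Online responses are entered by respondents themselves; phone and paper
# responses are keyed in by (hypothetical) data-entry operators.
store = [
    Response("r1", "online", "respondent", {"q1": "Yes"}),
    Response("r2", "phone", "operator_a", {"q1": "No"}),
    Response("r3", "paper", "operator_b", {"q1": "Yes"}),
    Response("r4", "online", "respondent", {"q1": "Yes"}),
]

print(responses_by_mode(store))
```

Keeping the mode on each record makes it possible to compare results across modes later, for example to check whether phone and online respondents answer differently.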

Data collection is an important aspect of research. Let’s consider an example of a mobile manufacturer, company X, which is launching a new product variant. To conduct research about features, price range, target market, competitor analysis, etc., data has to be collected from appropriate sources.

The marketing team can conduct various data collection activities such as online surveys or focus groups.

The survey should have all the right questions about features and pricing, such as “What are the top 3 features expected from an upcoming product?” or “How much are you likely to spend on this product?” or “Which competitors provide similar products?” etc.

For conducting a focus group, the marketing team should decide the participants and the mediator. The topic of discussion and objective behind conducting a focus group should be clarified beforehand to conduct a conclusive discussion.

Data collection methods are chosen depending on the available resources. For example, conducting questionnaires and surveys would require the least resources, while focus groups require moderately high resources.

Feedback is a vital part of any organization’s growth. Whether you conduct regular focus groups to elicit information from key players, or your account manager calls up all your marquee accounts to find out how things are going, these are all essentially processes for finding out, through your customers’ eyes: How are we doing? What can we do better?

Online surveys are just another medium to collect feedback from your customers, employees, and anyone your business interacts with. With the advent of do-it-yourself tools for online surveys, data collection on the internet has become really easy, cheap, and effective.

It is a well-established marketing fact that acquiring a new customer is 10 times more difficult and expensive than retaining an existing one. This is one of the fundamental driving forces behind the extensive adoption and interest in CRM and related customer retention tactics.

In a research study conducted by Rice University professor Dr. Paul Dholakia and Dr. Vicki Morwitz, published in Harvard Business Review, the researchers found that simply asking customers how an organization was performing proved, by itself, to be an effective customer retention strategy.

In the research study, conducted over the course of a year, one set of customers was sent a satisfaction and opinion survey and the other set was not surveyed. Over the following year, the group that took the survey had twice as many people continuing and renewing their loyalty towards the organization.

The research study provided a couple of interesting reasons on the basis of consumer psychology, behind this phenomenon:

- Satisfaction surveys boost the customers’ desire to be coddled and induce positive feelings. This stems from a part of human psychology that wants to “appreciate” a product or service they already like or prefer. The survey feedback collection method is solely a medium to convey this. The survey is a vehicle to “interact” with the company and reinforces the customer’s commitment to the company.

- Surveys may increase awareness of auxiliary products and services. Surveys can be considered modes of both inbound as well as outbound communication. Surveys are generally considered to be a data collection and analysis source. Most people are unaware of the fact that consumer surveys can also serve as a medium for distributing data. It is important to note a few caveats here.

  a. In most countries, including the US, “selling under the guise of research” is illegal.

  b. However, we all know that information is distributed while collecting information.

  c. Other disclaimers may be included in the survey to ensure users are aware of this fact. For example: “We will collect your opinion and inform you about products and services that have come online in the last year…”

- Induced Judgments: The very act of asking people for their feedback can prompt them to form an opinion on something they otherwise would not have thought about. This is a subtle yet powerful effect that can be compared to the “product placement” strategy currently used for marketing products in mass media like movies and television shows. One example is the extensive and exclusive use of the Mini Cooper in the blockbuster movie “The Italian Job.” This strategy is questionable and should be used with great caution.

Surveys should be considered a critical tool in the customer journey dialog. The best thing about surveys is their ability to carry “bi-directional” information. The research conducted by Paul Dholakia and Vicki Morwitz shows that surveys not only get you the information that is critical for your business, but also enhance and build upon the established relationship you have with your customers.

Recent technological advances have made it incredibly easy to conduct real-time surveys and opinion polls. Online tools make it easy to frame questions and answers and create surveys on the Web. Distributing surveys via email, website links, or even integration with online CRM tools like Salesforce.com has made online surveying a quick-win solution.

So, you’ve decided to conduct an online survey. There are a few questions in your mind that you would like answered, and you are looking for a fast and inexpensive way to find out more about your customers, clients, etc.

First and foremost, you need to decide what the smart objectives of the study are. Ensure that you can phrase these objectives as questions or measurements. If you can’t, you are better off looking at other data sources like focus groups and other qualitative methods. The data collected via online surveys is predominantly quantitative in nature.

Review the basic objectives of the study. What are you trying to discover? What actions do you want to take as a result of the survey? Answers to these questions help in validating collected data. Online surveys are just one way of collecting and quantifying data.

- Visualize all of the relevant information items you would like to have. What will the output survey research report look like? What charts and graphs will be prepared? What information do you need to be assured that action is warranted?

- Assign ranks to each topic (1 and 2) according to their priority, including the most important topics first. Revisit these items again to ensure that the objectives, topics, and information you need are appropriate. Remember, you can’t solve the research problem if you ask the wrong questions.

- How easy or difficult is it for the respondent to provide information on each topic? If it is difficult, is there an alternative medium to gain insights by asking a different question? This is probably the most important step. Online surveys have to be Precise, Clear and Concise. Due to the nature of the internet and the fluctuations involved, if your questions are too difficult to understand, the survey dropout rate will be high.

- Create a sequence for the topics that is unbiased. Make sure that the questions asked first do not bias the results of the next questions. Sometimes providing too much information, or disclosing the purpose of the study, can create bias. Once you have decided on a series of topics, you have the basic structure of a survey. It is always advisable to add an “Introductory” paragraph before the survey to explain the project objective and what is expected of the respondent. It is also sensible to have a “Thank You” text as well as information about where to find the results of the survey when they are published.

- Page Breaks – The attention span of respondents can be very low when it comes to a long scrolling survey. Add page breaks wherever possible. Having said that, a single question per page can also hamper response rates, as it increases the time needed to complete the survey as well as the chance of dropouts.

- Branching – Create smart and effective surveys by implementing branching wherever required. Eliminate text such as “If you answered No to Q1 then Answer Q4”; it annoys respondents, which increases survey dropout rates. Design online surveys with branching logic so that appropriate questions are automatically routed based on previous responses.

- Write the questions. Initially, write a large number of survey questions, then select the ones best suited to the survey. Divide the survey into sections so that respondents are not confused by a long list of questions.

- Sequence the questions so that they are unbiased.

- Repeat all of the steps above to find any major holes. Are the questions really answered? Have someone review it for you.

- Time the length of the survey. A survey should take less than five minutes. At three to four research questions per minute, you are limited to about 15 questions. One open-ended text question counts as three multiple-choice questions. Most online software tools will record the time taken for the respondents to answer questions.

- Include a few open-ended survey questions that support your survey objective. This will be a type of feedback survey.

- Email the project survey to your test group, and then email the feedback survey afterward.

- This way, you can have your test group provide their opinion about the functionality as well as usability of your project survey by using the feedback survey.

- Make changes to your questionnaire based on the received feedback.

- Send the survey out to all your respondents!
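
The timing rule of thumb from the checklist above (three to four questions per minute, one open-ended question counting as three multiple-choice questions, and a five-minute ceiling) can be turned into a quick estimate. The sketch below is illustrative only: the function names and the default rate of three questions per minute are assumptions made for this example, not part of any survey tool.

```python
def estimate_minutes(closed_questions, open_questions, questions_per_minute=3.0):
    """Estimate completion time using the rule of thumb from the checklist.

    Each open-ended question is weighted as three multiple-choice questions.
    """
    weighted_count = closed_questions + 3 * open_questions
    return weighted_count / questions_per_minute


def within_limit(closed_questions, open_questions, limit_minutes=5.0):
    """Return True if the estimated time does not exceed the limit."""
    return estimate_minutes(closed_questions, open_questions) <= limit_minutes


if __name__ == "__main__":
    # 9 multiple-choice questions plus 2 open-ended ones: (9 + 6) / 3 minutes
    print(estimate_minutes(9, 2))
    print(within_limit(12, 2))
```

An estimate like this is only a planning aid; timing the survey with a real test group remains the more reliable check.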

Online surveys have, over the course of time, evolved into an effective alternative to expensive mail or telephone surveys. However, you must be aware of a few conditions that need to be met for online surveys. If you are trying to survey a sample representing the target population, please remember that not everyone is online.

Moreover, not everyone is receptive to an online survey. Generally, younger individuals are more inclined to respond to an online survey.

Good survey design is crucial for accurate data collection. From question wording to response options, let’s explore how to create effective surveys that yield valuable insights with our tips for survey design.

- Writing Great Questions for data collection

Writing great questions can be considered an art. Art always requires a significant amount of hard work, practice, and help from others.

The questions in a survey need to be clear, concise, and unbiased. A poorly worded question or a question with leading language can result in inaccurate or irrelevant responses, ultimately impacting the data’s validity.

Moreover, the questions should be relevant and specific to the research objectives. Questions that are irrelevant or do not capture the necessary information can lead to incomplete or inconsistent responses too.

- Avoid loaded or leading words or questions

A small change in wording can produce a large change in results. Words such as could, should, and might are all used for almost the same purpose, but may produce a 20% difference in agreement with a question. For example, “The management could/should/might have shut the factory.”

Strong words that represent control or action, such as prohibit, produce similar results. For example, “Do you believe Donald Trump should prohibit insurance companies from raising rates?”

Sometimes the content is just biased. For instance, “You wouldn’t want to go to Rudolpho’s Restaurant for the organization’s annual party, would you?”

- Misplaced questions

Questions should always reference the intended context; avoid questions that are placed out of order or that are not required. Generally, a funnel approach should be implemented: generic questions should be included in the initial section of the questionnaire as a warm-up, and specific ones should follow. Toward the end, demographic or geographic questions should be included.

- Mutually non-overlapping response categories

Multiple-choice answers should be mutually exclusive so that they provide distinct choices. Overlapping answer options frustrate the respondent and make interpretation difficult at best. Also, the questions should always be precise.

For example: “Do you like orange juice?”

This question is vague. In what terms should the liking for orange juice be rated: sweetness, texture, price, nutrition, etc.?
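
Returning to the point about overlapping answer options: for numeric categories such as age or income brackets, an overlap can be caught mechanically before the survey goes out. The following is a minimal sketch; the `find_overlaps` helper and the age brackets are hypothetical and assume each option is an inclusive numeric range.

```python
def find_overlaps(options):
    """Return pairs of labels whose inclusive numeric ranges overlap.

    `options` is a list of (label, low, high) tuples.
    """
    # Sort by lower bound so each range only needs comparing with later ones.
    ordered = sorted(options, key=lambda option: option[1])
    overlaps = []
    for i, (label_a, _low_a, high_a) in enumerate(ordered):
        for label_b, low_b, _high_b in ordered[i + 1:]:
            if low_b <= high_a:
                overlaps.append((label_a, label_b))
    return overlaps


if __name__ == "__main__":
    # A respondent aged 25 could tick either of the first two options.
    age_options = [("18-25", 18, 25), ("25-35", 25, 35), ("36-50", 36, 50)]
    print(find_overlaps(age_options))
```

An empty result means every respondent falls into at most one category; it does not check for gaps between categories.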

- Avoid the use of confusing/unfamiliar words

Asking about industry-related terms such as caloric content, bits, bytes, and MBS, as well as other terms and acronyms, can confuse respondents. Ensure that the audience understands your language level, terminology, and, above all, the question you ask.

- Non-directed questions give respondents excessive leeway

In survey design for data collection, non-directed questions can give respondents excessive leeway, which can lead to vague and unreliable data. These types of questions are also known as open-ended questions, and they do not provide any structure for the respondent to follow.

For instance, a non-directed question like “What suggestions do you have for improving our shoes?” can elicit a wide range of answers, some of which may not be relevant to the research objectives. Some respondents may give short answers, while others may provide lengthy and detailed responses, making comparing and analyzing the data challenging.

To avoid these issues, it’s essential to ask direct questions that are specific and have a clear structure. Closed-ended questions, for example, offer structured response options and can be easier to analyze as they provide a quantitative measure of respondents’ opinions.

- Never force questions

Some questions will always touch on matters that respondents consider private. Since privacy is an important issue for most people, these questions should either be eliminated from the survey or not be made mandatory. Survey questions about income, family income, status, religious and political beliefs, etc., are considered intrusive and should be avoided where possible; if they are asked, respondents should be able to choose not to answer them.

- Unbalanced answer options in scales

Unbalanced answer options in scales such as the Likert scale and semantic scale may be appropriate for some situations and biased in others. When analyzing a pattern in eating habits, a study used a quantity scale that made obese people appear in the middle of the scale, with the polar ends reflecting a state where people starve and an irrational amount to consume. Conversely, there are settings, such as hospitals, where poor service is not usually expected, so an unbalanced scale may be appropriate.

- Questions that cover two points

In survey design for data collection, questions that cover two points can be problematic for several reasons. These types of questions are often called “double-barreled” questions and can cause confusion for respondents, leading to inaccurate or irrelevant data.

For instance, a question like “Do you like the food and the service at the restaurant?” covers two points, the food and the service, and it assumes that the respondent has the same opinion about both. If the respondent only liked the food, their opinion of the service could affect their answer.

It’s important to ask one question at a time to avoid confusion and ensure that the respondent’s answer is focused and accurate. This also applies to questions with multiple concepts or ideas. In these cases, it’s best to break down the question into multiple questions that address each concept or idea separately.
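
A rough first pass for spotting candidate double-barreled questions is to flag any question that joins items with “and” or “or”. The sketch below is a deliberately naive heuristic, and the function name is an assumption for this example. It will also flag legitimate single-topic questions (such as one about “fruit and vegetables”), so treat its output as a list to review by hand rather than a verdict.

```python
import re

# Whole-word match, so words like "for" or "brand" are not flagged.
CONJUNCTIONS = re.compile(r"\b(and|or)\b", re.IGNORECASE)


def looks_double_barreled(question):
    """Return True if the question contains a joining 'and' or 'or'."""
    return bool(CONJUNCTIONS.search(question))


if __name__ == "__main__":
    draft = [
        "Do you like the food and the service at the restaurant?",
        "Do you like the food at the restaurant?",
    ]
    for question in draft:
        print(looks_double_barreled(question), question)
```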

- Dichotomous questions

Dichotomous questions are used in case you want a distinct answer, such as: Yes/No or Male/Female. For example, the question “Do you think this candidate will win the election?” can be Yes or No.

- Avoid the use of long questions

The use of long questions will definitely increase the time taken for completion, which will generally lead to an increase in the survey dropout rate. Multiple-choice questions are the longest and most complex, and open-ended questions are the shortest and easiest to answer.

Data collection is an essential part of the research process, whether you’re conducting scientific experiments, market research, or surveys. The methods and tools used for data collection will vary depending on the research type, the sample size required, and the resources available.

Data collection methods include surveys, observations, interviews, and focus groups. Each method has advantages and disadvantages, and choosing the one that best suits the research goals is important.

With the rise of technology, many tools are now available to facilitate data collection, including online survey software and data visualization tools. These tools can help researchers collect, store, and analyze data more efficiently and with greater accuracy.

By understanding the various methods and tools available for data collection, we can develop a solid foundation for conducting research. With these research skills, we can make informed decisions, solve problems, and contribute to advancing our understanding of the world around us.

What is Data Collection? Methods, Types, Tools, Examples

Appinio Research · 09.11.2023 · 33min read

Are you ready to unlock the power of data? In today's data-driven world, understanding the art and science of data collection is the key to informed decision-making and achieving your objectives.

This guide will walk you through the intricate data collection process, from its fundamental principles to advanced strategies and ethical considerations. Whether you're a business professional, researcher, or simply curious about the world of data, this guide will equip you with the knowledge and tools needed to harness the potential of data collection effectively.

What is Data Collection?

Data collection is the systematic process of gathering and recording information or data from various sources for analysis, interpretation, and decision-making. It is a fundamental step in research, business operations, and virtually every field where information is used to understand, improve, or make informed choices.

Key Elements of Data Collection

- Sources: Data can be collected from a wide range of sources, including surveys, interviews, observations, sensors, databases, social media, and more.

- Methods: Various methods are employed to collect data, such as questionnaires, data entry, web scraping, and sensor networks. The choice of method depends on the type of data, research objectives, and available resources.

- Data Types: Data can be qualitative (descriptive) or quantitative (numerical), structured (organized into a predefined format) or unstructured (free-form text or media), and primary (collected directly) or secondary (obtained from existing sources).

- Data Collection Tools: Technology plays a significant role in modern data collection, with software applications, mobile apps, sensors, and data collection platforms facilitating efficient and accurate data capture.

- Ethical Considerations: Ethical guidelines, including informed consent and privacy protection, must be followed to ensure that data collection respects the rights and well-being of individuals.

- Data Quality: The accuracy, completeness, and reliability of collected data are critical to its usefulness. Data quality assurance measures are implemented to minimize errors and biases.

- Data Storage: Collected data needs to be securely stored and managed to prevent loss, unauthorized access, and breaches. Data storage solutions range from on-premises servers to cloud-based platforms.

Importance of Data Collection in Modern Businesses

Data collection is of paramount importance in modern businesses for several compelling reasons:

- Informed Decision-Making: Collected data serves as the foundation for informed decision-making at all levels of an organization. It provides valuable insights into customer behavior, market trends, operational efficiency, and more.

- Competitive Advantage: Businesses that effectively collect and analyze data gain a competitive edge. Data-driven insights help identify opportunities, optimize processes, and stay ahead of competitors.

- Customer Understanding: Data collection allows businesses to better understand their customers, their preferences, and their pain points. This insight is invaluable for tailoring products, services, and marketing strategies.

- Performance Measurement: Data collection enables organizations to assess the performance of various aspects of their operations, from marketing campaigns to production processes. This helps identify areas for improvement.

- Risk Management: Businesses can use data to identify potential risks and develop strategies to mitigate them. This includes financial risks, supply chain disruptions, and cybersecurity threats.

- Innovation: Data collection supports innovation by providing insights into emerging trends and customer demands. Businesses can use this information to develop new products or services.

- Resource Allocation: Data-driven decision-making helps allocate resources efficiently. For example, marketing budgets can be optimized based on the performance of different channels.

Goals and Objectives of Data Collection

The goals and objectives of data collection depend on the specific context and the needs of the organization or research project. However, there are some common overarching objectives:

- Information Gathering: The primary goal is to gather accurate, relevant, and reliable information that addresses specific questions or objectives.

- Analysis and Insight: Collected data is meant to be analyzed to uncover patterns, trends, relationships, and insights that can inform decision-making and strategy development.

- Measurement and Evaluation: Data collection allows for the measurement and evaluation of various factors, such as performance, customer satisfaction, or market potential.

- Problem Solving: Data collection can be directed toward solving specific problems or challenges faced by an organization, such as identifying the root causes of quality issues.

- Monitoring and Surveillance: In some cases, data collection serves as a continuous monitoring or surveillance function, allowing organizations to track ongoing processes or conditions.

- Benchmarking: Data collection can be used for benchmarking against industry standards or competitors, helping organizations assess their performance relative to others.

- Planning and Strategy: Data collected over time can support long-term planning and strategy development, ensuring that organizations adapt to changing circumstances.

In summary, data collection is a foundational activity with diverse applications across industries and sectors. Its objectives range from understanding customers and making informed decisions to improving processes, managing risks, and driving innovation. The quality and relevance of collected data are pivotal in achieving these goals.

How to Plan Your Data Collection Strategy?

Before kicking things off, we'll review the crucial steps of planning your data collection strategy. Your success in data collection largely depends on how well you define your objectives, select suitable sources, set clear goals, and choose appropriate collection methods.

Defining Your Research Questions

Defining your research questions is the foundation of any effective data collection effort. The more precise and relevant your questions, the more valuable the data you collect.

- Specificity is Key: Make sure your research questions are specific and focused. Instead of asking, "How can we improve customer satisfaction?" ask, "What specific aspects of our service do customers find most satisfying or dissatisfying?"

- Prioritize Questions: Determine the most critical questions that will have the most significant impact on your goals. Not all questions are equally important, so allocate your resources accordingly.

- Alignment with Objectives: Ensure that your research questions directly align with your overall objectives. If your goal is to increase sales, your research questions should be geared toward understanding customer buying behaviors and preferences.

Identifying Key Data Sources

Identifying the proper data sources is essential for gathering accurate and relevant information. Here are some examples of key data sources for different industries and purposes.

- Customer Data: This can include customer demographics, purchase history, website behavior, and feedback from customer service interactions.

- Market Research Reports: Utilize industry reports, competitor analyses, and market trend studies to gather external data and insights.

- Internal Records: Your organization's databases, financial records, and operational data can provide valuable insights into your business's performance.

- Social Media Platforms: Monitor social media channels to gather customer feedback, track brand mentions, and identify emerging trends in your industry.

- Web Analytics: Collect data on website traffic, user behavior, and conversion rates to optimize your online presence.

Setting Clear Data Collection Goals

Setting clear and measurable goals is essential to ensure your data collection efforts remain on track and deliver valuable results. Goals should be:

- Specific: Clearly define what you aim to achieve with your data collection. For instance, increasing website traffic by 20% in six months is a specific goal.

- Measurable: Establish criteria to measure your progress and success. Use metrics such as revenue growth, customer satisfaction scores, or conversion rates.

- Achievable: Set realistic goals that your team can work towards. Overly ambitious goals can lead to frustration and burnout.

- Relevant: Ensure your goals align with your organization's broader objectives and strategic initiatives.

- Time-Bound: Set a timeframe within which you plan to achieve your goals. This adds a sense of urgency and helps you track progress effectively.
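
To make the criteria above concrete, a goal such as "increase website traffic by 20% in six months" can be written down as a small record with a target, a deadline, and a progress measure. This is only a sketch: the `Goal` class, its field names, and the traffic figures are hypothetical and chosen for illustration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Goal:
    metric: str             # Specific: what is being measured
    baseline: float         # Measurable: value at the start
    target_increase: float  # e.g. 0.20 for a 20% increase
    start: date
    deadline: date          # Time-bound

    def target_value(self):
        return self.baseline * (1 + self.target_increase)

    def progress(self, current):
        """Fraction of the planned increase achieved so far."""
        return (current - self.baseline) / (self.target_value() - self.baseline)

    def time_elapsed(self, today):
        """Fraction of the time window that has passed."""
        return (today - self.start).days / (self.deadline - self.start).days


if __name__ == "__main__":
    goal = Goal("monthly visits", 10_000, 0.20, date(2024, 1, 1), date(2024, 7, 1))
    # Compare progress toward the target with the share of time used.
    print(goal.progress(11_000), goal.time_elapsed(date(2024, 4, 1)))
```

Comparing the two fractions gives a quick signal of whether the goal is on track.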

Choosing Data Collection Methods

Selecting the correct data collection methods is crucial for obtaining accurate and reliable data. Your choice should align with your research questions and goals. Here's a closer look at various data collection methods and their practical applications.

Types of Data Collection Methods

Now, let's explore different data collection methods in greater detail, including examples of when and how to use them effectively:

Surveys and Questionnaires

Surveys and questionnaires are versatile tools for gathering data from a large number of respondents. They are commonly used for:

- Customer Feedback: Collecting opinions and feedback on products, services, and overall satisfaction.

- Market Research: Assessing market preferences, identifying trends, and evaluating consumer behavior.

- Employee Surveys: Measuring employee engagement, job satisfaction, and feedback on workplace conditions.

Example: If you're running an e-commerce business and want to understand customer preferences, you can create an online survey asking customers about their favorite product categories, preferred payment methods, and shopping frequency.

Interviews

Interviews involve one-on-one or group conversations with participants to gather detailed insights. They are particularly useful for:

- Qualitative Research: Exploring complex topics, motivations, and personal experiences.

- In-Depth Analysis: Gaining a deep understanding of specific issues or situations.

- Expert Opinions: Interviewing industry experts or thought leaders to gather valuable insights.

Example: If you're a healthcare provider aiming to improve patient experiences, conducting interviews with patients can help you uncover specific pain points and suggestions for improvement.

Observations

Observations entail watching and recording behaviors or events in their natural context. This method is ideal for:

- Behavioral Studies: Analyzing how people interact with products or environments.

- Field Research: Collecting data in real-world settings, such as retail stores, public spaces, or classrooms.

- Ethnographic Research: Immersing yourself in a specific culture or community to understand their practices and customs.

Example: If you manage a retail store, observing customer traffic flow and purchasing behaviors can help optimize store layout and product placement.

Document Analysis

Document analysis involves reviewing and extracting information from written or digital documents. It is valuable for:

- Historical Research: Studying historical records, manuscripts, and archives.

- Content Analysis: Analyzing textual or visual content from websites, reports, or publications.

- Legal and Compliance: Reviewing contracts, policies, and legal documents for compliance purposes.

Example: If you're a content marketer, you can analyze competitor blog posts to identify common topics and keywords used in your industry.

Web Scraping

Web scraping is the automated process of extracting data from websites. It's suitable for:

- Competitor Analysis: Gathering data on competitor product prices, descriptions, and customer reviews.

- Market Research: Collecting data on product listings, reviews, and trends from e-commerce websites.

- News and Social Media Monitoring: Tracking news articles, social media posts, and comments related to your brand or industry.

Example: If you're in the travel industry, web scraping can help you collect pricing data for flights and accommodations from various travel booking websites to stay competitive.
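
As a rough illustration of the extraction step in web scraping, the sketch below pulls names and prices out of a small HTML fragment using Python's standard `html.parser` module. The markup, the class names (`offer`, `name`, `price`), and the `OfferParser` class are all invented for this example; a real booking site will use different markup, and a real scraper would also need to download the pages and follow the site's terms of use.

```python
from html.parser import HTMLParser

# Hypothetical markup standing in for a downloaded listing page.
SAMPLE_HTML = """
<ul>
  <li class="offer"><span class="name">Hotel A</span><span class="price">120.00</span></li>
  <li class="offer"><span class="name">Hotel B</span><span class="price">95.50</span></li>
</ul>
"""


class OfferParser(HTMLParser):
    """Collect the name and price text inside each <li class="offer">."""

    def __init__(self):
        super().__init__()
        self.offers = []
        self._field = None  # field currently being read, if any

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "li" and css_class == "offer":
            self.offers.append({})
        elif tag == "span" and css_class in ("name", "price"):
            self._field = css_class

    def handle_data(self, data):
        if self._field and self.offers:
            self.offers[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


def parse_offers(html):
    parser = OfferParser()
    parser.feed(html)
    return [(offer["name"], float(offer["price"])) for offer in parser.offers]


if __name__ == "__main__":
    print(parse_offers(SAMPLE_HTML))
```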

Social Media Monitoring

Social media monitoring involves tracking and analyzing conversations and activities on social media platforms. It's valuable for:

- Brand Reputation Management: Monitoring brand mentions and sentiment to address customer concerns or capitalize on positive feedback.

- Competitor Analysis: Keeping tabs on competitors' social media strategies and customer engagement.

- Trend Identification: Identifying emerging trends and viral content within your industry.

Example: If you run a restaurant, social media monitoring can help you track customer reviews, comments, and hashtags related to your establishment, allowing you to respond promptly to customer feedback and trends.
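
At its simplest, monitoring means filtering posts that mention your brand and counting the hashtags people use. The sketch below assumes the posts have already been exported as plain strings; the function names and the sample posts are made up for illustration, and no social media API is involved.

```python
import re
from collections import Counter


def brand_mentions(posts, brand):
    """Return the posts that mention the brand, ignoring letter case."""
    return [post for post in posts if brand.lower() in post.lower()]


def count_hashtags(posts):
    """Count hashtags across posts, treating #Pasta and #pasta as the same tag."""
    tags = Counter()
    for post in posts:
        tags.update(tag.lower() for tag in re.findall(r"#(\w+)", post))
    return tags


if __name__ == "__main__":
    posts = [
        "Loved dinner at Luigi's! #pasta #datenight",
        "Slow service at luigi's tonight #Pasta",
        "Trying a new cafe #brunch",
    ]
    print(len(brand_mentions(posts, "Luigi's")))
    print(count_hashtags(posts).most_common(1))
```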

By understanding the nuances and applications of these data collection methods, you can choose the most appropriate approach to gather valuable insights for your specific objectives. Remember that a well-thought-out data collection strategy is the cornerstone of informed decision-making and business success.

How to Design Your Data Collection Instruments?

Now that you've defined your research questions, identified data sources, set clear goals, and chosen appropriate data collection methods, it's time to design the instruments you'll use to collect data effectively.

Design Effective Survey Questions

Designing survey questions is a crucial step in gathering accurate and meaningful data. Here are some key considerations:

- Clarity: Ensure that your questions are clear and concise. Avoid jargon or ambiguous language that may confuse respondents.

- Relevance: Ask questions that directly relate to your research objectives. Avoid unnecessary or irrelevant questions that can lead to survey fatigue.

- Avoid Leading Questions: Formulate questions that do not guide respondents toward a particular answer. Maintain neutrality to get unbiased responses.

- Response Options: Provide appropriate response options, including multiple-choice, Likert scales, or open-ended formats, depending on the type of data you need.

- Pilot Testing: Before deploying your survey, conduct pilot tests with a small group to identify any issues with question wording or response options.

Craft Interview Questions for Insightful Conversations

Developing interview questions requires thoughtful consideration to elicit valuable insights from participants:

- Open-Ended Questions: Use open-ended questions to encourage participants to share their thoughts, experiences, and perspectives without being constrained by predefined answers.

- Probing Questions: Prepare follow-up questions to delve deeper into specific topics or clarify responses.

- Structured vs. Semi-Structured Interviews: Decide whether your interviews will follow a structured format with predefined questions or a semi-structured approach that allows flexibility.

- Avoid Biased Questions: Ensure your questions do not steer participants toward desired responses. Maintain objectivity throughout the interview.

Build an Observation Checklist for Data Collection

When conducting observations, having a well-structured checklist is essential:

- Clearly Defined Variables: Identify the specific variables or behaviors you are observing and ensure they are well-defined.

- Checklist Format: Create a checklist format that is easy to use and follow during observations. This may include checkboxes, scales, or space for notes.

- Training Observers: If you have a team of observers, provide thorough training to ensure consistency and accuracy in data collection.

- Pilot Observations: Before starting formal data collection, conduct pilot observations to refine your checklist and ensure it captures the necessary information.

Streamline Data Collection with Forms and Templates

Creating user-friendly data collection forms and templates helps streamline the process:

- Consistency: Ensure that all data collection forms follow a consistent format and structure, making it easier to compare and analyze data.

- Data Validation: Incorporate data validation checks to reduce errors during data entry. This can include dropdown menus, date pickers, or required fields.

- Digital vs. Paper Forms: Decide whether digital forms or traditional paper forms are more suitable for your data collection needs. Digital forms often offer real-time data validation and remote access.

- Accessibility: Make sure your forms and templates are accessible to all team members involved in data collection. Provide training if necessary.
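
The validation checks mentioned above (required fields, a fixed set of dropdown values, and well-formed dates) might look like the following when applied to one submitted record. The field names, the allowed channel values, and the `validate_record` function are assumptions made for this sketch, not the behavior of any particular form tool.

```python
from datetime import datetime

REQUIRED_FIELDS = ("respondent_id", "visit_date", "channel")
ALLOWED_CHANNELS = {"email", "phone", "in_person"}  # hypothetical dropdown values


def validate_record(record):
    """Return a list of error messages; an empty list means the record passed."""
    errors = []
    for field in REQUIRED_FIELDS:
        value = record.get(field)
        if value is None or not str(value).strip():
            errors.append(f"{field} is required")
    channel = record.get("channel")
    if channel and channel not in ALLOWED_CHANNELS:
        errors.append("channel must be one of the listed options")
    visit_date = record.get("visit_date")
    if visit_date:
        try:
            datetime.strptime(visit_date, "%Y-%m-%d")
        except ValueError:
            errors.append("visit_date must use YYYY-MM-DD")
    return errors


if __name__ == "__main__":
    print(validate_record({"respondent_id": "r1", "visit_date": "2024-13-01", "channel": "fax"}))
```

Returning every error at once, rather than stopping at the first, lets the person entering data fix the whole record in one pass.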

The Data Collection Process

Now that your data collection instruments are ready, it's time to embark on the data collection process itself. This section covers the practical steps involved in collecting high-quality data.

1. Preparing for Data Collection

Adequate preparation is essential to ensure a smooth data collection process:

- Resource Allocation: Allocate the necessary resources, including personnel, technology, and materials, to support data collection activities.

- Training: Train data collection teams or individuals on the use of data collection instruments and adherence to protocols.

- Pilot Testing: Conduct pilot data collection runs to identify and resolve any issues or challenges that may arise.

- Ethical Considerations: Ensure that data collection adheres to ethical standards and legal requirements. Obtain necessary permissions or consent as applicable.

2. Conducting Data Collection

During data collection, it's crucial to maintain consistency and accuracy:

- Follow Protocols: Ensure that data collection teams adhere to established protocols and procedures to maintain data integrity.

- Supervision: Supervise data collection teams to address questions, provide guidance, and resolve any issues that may arise.

- Documentation: Maintain detailed records of the data collection process, including dates, locations, and any deviations from the plan.

- Data Security: Implement data security measures to protect collected information from unauthorized access or breaches.

3. Ensuring Data Quality and Reliability

After collecting data, it's essential to validate and ensure its quality:

- Data Cleaning: Review collected data for errors, inconsistencies, and missing values. Clean and preprocess the data to ensure accuracy.

- Quality Checks: Perform quality checks to identify outliers or anomalies that may require further investigation or correction.

- Data Validation: Cross-check data with source documents or original records to verify its accuracy and reliability.

- Data Auditing: Conduct periodic audits to assess the overall quality of the collected data and make necessary adjustments.
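
Two of the checks above lend themselves to a short sketch: listing missing values and flagging outliers. The outlier test here uses the common 1.5 × IQR rule, which is one convention among several and is an assumption of this example rather than something the steps above prescribe. The function names and sample data are likewise illustrative.

```python
import statistics


def missing_fields(rows, required):
    """Return (row_index, field) pairs where a required value is absent or blank."""
    return [
        (index, field)
        for index, row in enumerate(rows)
        for field in required
        if row.get(field) in (None, "")
    ]


def iqr_outliers(values):
    """Return values lying more than 1.5 * IQR outside the quartiles."""
    q1, _median, q3 = statistics.quantiles(values, n=4)
    spread = q3 - q1
    low, high = q1 - 1.5 * spread, q3 + 1.5 * spread
    return [value for value in values if value < low or value > high]


if __name__ == "__main__":
    rows = [{"id": 1, "age": 34}, {"id": 2, "age": None}, {"id": 3}]
    print(missing_fields(rows, ["id", "age"]))
    print(iqr_outliers([10, 12, 11, 13, 12, 11, 95]))
```

A flagged value is a prompt for investigation, not automatically an error; it should be checked against the source record before it is corrected or dropped.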

4. Managing Data Collection Teams

If you have multiple team members involved in data collection, effective management is crucial:

- Communication: Maintain open and transparent communication channels with team members to address questions, provide guidance, and ensure consistency.

- Performance Monitoring: Regularly monitor the performance of data collection teams, identifying areas for improvement or additional training.

- Problem Resolution: Be prepared to promptly address any challenges or issues that arise during data collection.

- Feedback Loop: Establish a feedback loop for data collection teams to share insights and best practices, promoting continuous improvement.

By following these steps and best practices in the data collection process, you can ensure that the data you collect is reliable, accurate, and aligned with your research objectives. This lays the foundation for meaningful analysis and informed decision-making.

How to Store and Manage Data?

It's time to explore the critical aspects of data storage and management, which are pivotal in ensuring the security, accessibility, and usability of your collected data.

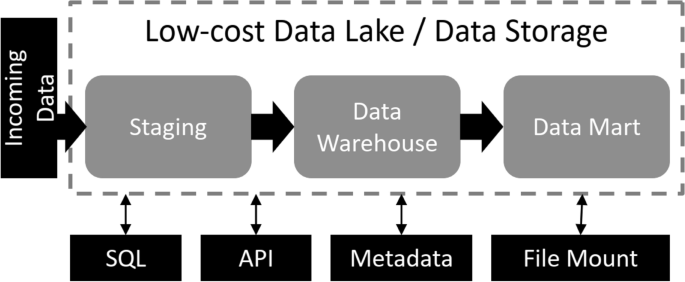

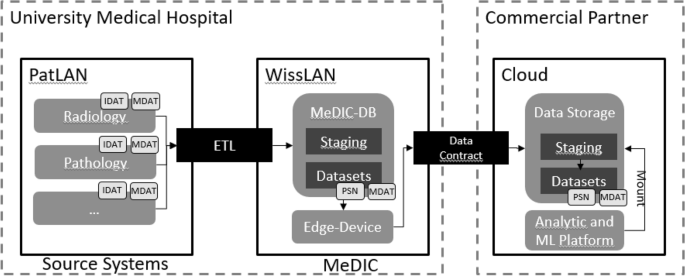

Choosing Data Storage Solutions

Selecting the proper data storage solutions is a strategic decision that impacts data accessibility, scalability, and security. Consider the following factors:

- Cloud vs. On-Premises: Decide whether to store your data in the cloud or on-premises. Cloud solutions typically offer scalability, remote accessibility, and managed backup options, while on-premises solutions provide more control but require significant infrastructure investment and ongoing maintenance.

- Data Types: Assess the types of data you're collecting, such as structured, semi-structured, or unstructured data. Choose storage solutions that accommodate your data formats efficiently.

- Scalability: Ensure that your chosen solution can scale as your data volume grows. This is crucial for preventing storage bottlenecks.

- Data Accessibility: Opt for storage solutions that provide easy and secure access to authorized users, whether they are on-site or remote.

- Data Recovery and Backup: Implement robust data backup and recovery mechanisms to safeguard against data loss due to hardware failures or disasters.
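
One small piece of a backup mechanism can be sketched as follows: copy a file, then confirm the copy is intact by comparing SHA-256 checksums. The file name `responses.csv`, the `backup` directory, and the helper names are hypothetical; a real setup would also cover scheduling, off-site copies, and restore tests.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path

def sha256_of(path):
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()

def backup_and_verify(source, backup_dir):
    """Copy source into backup_dir and raise if the checksums differ."""
    backup_dir = Path(backup_dir)
    backup_dir.mkdir(parents=True, exist_ok=True)
    target = backup_dir / Path(source).name
    shutil.copy2(source, target)
    if sha256_of(source) != sha256_of(target):
        raise IOError(f"backup of {source} failed verification")
    return target

# Demonstration in a throwaway directory (hypothetical file contents).
with tempfile.TemporaryDirectory() as workdir:
    source = Path(workdir) / "responses.csv"
    source.write_text("id,rating\n1,5\n2,3\n")
    copy = backup_and_verify(source, Path(workdir) / "backup")
    backup_ok = copy.exists() and sha256_of(copy) == sha256_of(source)
```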

Data Security and Privacy

Data security and privacy are paramount, especially when handling sensitive or personal information.

- Encryption: Implement encryption for data at rest and in transit. Use TLS (the successor to the now-deprecated SSL) for communication and well-vetted encryption algorithms such as AES-256 for storage.

- Access Control: Set up role-based access control (RBAC) to restrict access to data based on job roles and responsibilities. Limit access to only those who need it.

- Compliance: Ensure that your data storage and management practices comply with relevant data protection regulations, such as GDPR, HIPAA, or CCPA.

- Data Masking: Use data masking techniques to conceal sensitive information in non-production environments.

- Monitoring and Auditing: Continuously monitor access logs and perform regular audits to detect unauthorized activities and maintain compliance.
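
To make access control and data masking more concrete, here is a simplified sketch in which a role determines whether a reader sees raw or masked records. The role names, permission names, record fields, and the hard-coded salt are all assumptions for illustration; production systems usually rely on the access controls of the database or platform and on managed secrets.

```python
import hashlib

# Hypothetical role-to-permission mapping (RBAC).
ROLE_PERMISSIONS = {
    "analyst": {"read_masked"},
    "data_steward": {"read_masked", "read_raw"},
}

def can(role, permission):
    """Return True if the role has been granted the permission."""
    return permission in ROLE_PERMISSIONS.get(role, set())

def mask_email(email):
    """Keep the first character and the domain, hide the rest."""
    local, _, domain = email.partition("@")
    return local[:1] + "***@" + domain

def pseudonymize(value, salt):
    """Replace a value with a short salted hash (pseudonymous, not anonymous)."""
    return hashlib.sha256((salt + value).encode("utf-8")).hexdigest()[:12]

def read_record(record, role, salt="demo-salt"):
    """Return the raw or masked view of a record depending on the role."""
    if can(role, "read_raw"):
        return dict(record)
    if can(role, "read_masked"):
        return {
            "respondent": pseudonymize(record["email"], salt),
            "email": mask_email(record["email"]),
            "rating": record["rating"],
        }
    raise PermissionError(f"role {role!r} may not read this dataset")
```

For example, `read_record({"email": "jane@example.com", "rating": 4}, "analyst")` returns the email as `j***@example.com`, while an unknown role raises `PermissionError`.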

Data Organization and Cataloging

Organizing and cataloging your data is essential for efficient retrieval, analysis, and decision-making.

- Metadata Management: Maintain detailed metadata for each dataset, including data source, date of collection, data owner, and description. This makes it easier to locate and understand your data.

- Taxonomies and Categories: Develop taxonomies or data categorization schemes to classify data into logical groups, making it easier to find and manage.

- Data Versioning: Implement data versioning to track changes and updates over time. This ensures data lineage and transparency.

- Data Catalogs: Use data cataloging tools and platforms to create a searchable inventory of your data assets, facilitating discovery and reuse.

- Data Retention Policies: Establish clear data retention policies that specify how long data should be retained and when it should be securely deleted or archived.
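
The metadata, versioning, and retention ideas above could be sketched as a tiny in-memory catalog. The `DatasetEntry` fields, dataset names, and retention periods are hypothetical; dedicated cataloging tools provide far more than this.

```python
from dataclasses import dataclass
from datetime import date, timedelta

@dataclass
class DatasetEntry:
    """Metadata kept for one dataset (illustrative fields only)."""
    name: str
    source: str
    owner: str
    collected_on: date
    description: str
    version: int = 1
    retention_days: int = 365

    def expires_on(self):
        return self.collected_on + timedelta(days=self.retention_days)

    def is_expired(self, today):
        return today > self.expires_on()

# Hypothetical catalog entries.
catalog = [
    DatasetEntry("store_feedback_2023", "in-store kiosk survey", "research team",
                 date(2023, 1, 15), "Customer feedback on store layout"),
    DatasetEntry("web_feedback_2024", "website survey", "research team",
                 date(2024, 6, 1), "Customer feedback on online checkout",
                 version=2, retention_days=730),
]

def search(entries, keyword):
    """Return names of datasets whose name or description mentions the keyword."""
    keyword = keyword.lower()
    return [e.name for e in entries
            if keyword in e.name.lower() or keyword in e.description.lower()]

def due_for_deletion(entries, today):
    """Return names of datasets whose retention period has passed."""
    return [e.name for e in entries if e.is_expired(today)]
```

With these entries, `due_for_deletion(catalog, date(2024, 7, 1))` lists only `store_feedback_2023`, whose one-year retention period ended on 2024-01-15.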

How to Analyze and Interpret Data?

Once you've collected your data, the next step is to extract valuable insights from it through analysis and interpretation.

Data Cleaning and Preprocessing

Data cleaning and preprocessing are essential steps to ensure that your data is accurate and ready for analysis.

- Handling Missing Data: Develop strategies for dealing with missing data, such as imputation or removal, based on the nature of your data and research objectives.

- Outlier Detection: Identify and address outliers that can skew analysis results. Consider whether outliers should be corrected, removed, or retained based on their significance.

- Normalization and Scaling: Normalize or scale data to bring it within a common range, making it suitable for certain algorithms and models.

- Data Transformation: Apply data transformations, such as logarithmic scaling or categorical encoding, to prepare data for specific types of analysis.

- Data Imbalance: Address class imbalance issues in datasets, particularly in machine learning applications, to avoid biased model training.
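
A few of these preprocessing steps can be sketched with the Python standard library: median imputation, min-max scaling, a log transform, and one-hot encoding. The function names and sample values are illustrative assumptions, and the right strategy depends on the data and the research objectives.

```python
import math
from statistics import median

def impute_median(values):
    """Replace None with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]

def min_max_scale(values):
    """Rescale values linearly to the range [0, 1]."""
    low, high = min(values), max(values)
    if high == low:
        return [0.0 for _ in values]  # constant column: nothing to scale
    return [(v - low) / (high - low) for v in values]

def log_transform(values):
    """Apply log(1 + v); valid only for v > -1."""
    return [math.log1p(v) for v in values]

def one_hot(labels):
    """Encode each label as a 0/1 vector over the sorted categories."""
    categories = sorted(set(labels))
    return [[1 if label == c else 0 for c in categories] for label in labels]

incomes = [20, None, 40, 60]            # hypothetical values, in thousands
filled = impute_median(incomes)         # [20, 40, 40, 60]
scaled = min_max_scale(filled)          # [0.0, 0.5, 0.5, 1.0]
encoded = one_hot(["email", "kiosk", "email"])  # [[1, 0], [0, 1], [1, 0]]
```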

Exploratory Data Analysis (EDA)

EDA is the process of visually and statistically exploring your data to uncover patterns, trends, and potential insights.

- Descriptive Statistics: Calculate basic statistics like mean, median, and standard deviation to summarize data distributions.

- Data Visualization: Create visualizations such as histograms, scatter plots, and heatmaps to reveal relationships and patterns within the data.

- Correlation Analysis: Examine correlations between variables to understand how they relate to each other, keeping in mind that correlation alone does not establish causation.

- Hypothesis Testing: Conduct hypothesis tests to assess the significance of observed differences or relationships in your data.
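
As a rough illustration of the first EDA steps, the sketch below computes descriptive statistics and a Pearson correlation coefficient by hand. The `visits` and `spend` figures are made-up sample data, and `describe` and `pearson_r` are hypothetical helper names.

```python
from math import sqrt
from statistics import mean, median, stdev

def describe(values):
    """Summarize a numeric column with basic descriptive statistics."""
    return {
        "n": len(values),
        "mean": mean(values),
        "median": median(values),
        "stdev": stdev(values),  # sample standard deviation
    }

def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)  # undefined if either sequence is constant

# Made-up data: store visits per month and spend per customer.
visits = [1, 2, 3, 4, 5]
spend = [12, 19, 31, 42, 48]

summary = describe(spend)
r = pearson_r(visits, spend)  # close to 1: strong positive linear association
```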

Statistical Analysis Techniques

Choose appropriate statistical analysis techniques based on your research questions and data types.

- Descriptive Statistics: Use descriptive statistics to summarize and describe your data, providing an initial overview of key features.

- Inferential Statistics: Apply inferential statistics, including t-tests, ANOVA, or regression analysis, to test hypotheses and draw conclusions about population parameters.

- Non-parametric Tests: Employ non-parametric tests when assumptions of normality are not met or when dealing with ordinal or nominal data.

- Time Series Analysis: Analyze time-series data to uncover trends, seasonality, and temporal patterns.
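
To show what an inferential test computes, here is a sketch of Welch's two-sample t-statistic and its approximate degrees of freedom, written with the standard library only. The two groups are made-up data. Turning the statistic into a p-value requires the t-distribution, which a statistics library such as SciPy (`scipy.stats.ttest_ind` with `equal_var=False`) handles.

```python
from math import sqrt
from statistics import mean, variance

def welch_t(sample_a, sample_b):
    """Return Welch's t-statistic and Welch-Satterthwaite degrees of freedom."""
    na, nb = len(sample_a), len(sample_b)
    # Squared standard error contributed by each group.
    se2_a = variance(sample_a) / na
    se2_b = variance(sample_b) / nb
    t = (mean(sample_a) - mean(sample_b)) / sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (se2_a ** 2 / (na - 1) + se2_b ** 2 / (nb - 1))
    return t, df

# Made-up satisfaction scores for two store layouts.
group_a = [1, 2, 3, 4, 5]
group_b = [2, 3, 4, 5, 6]

t_stat, dof = welch_t(group_a, group_b)  # t = -1.0 with 8 degrees of freedom
```

Welch's version is used here because it does not assume equal variances in the two groups; with ordinal or clearly non-normal data, a non-parametric test is the safer choice.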

Data Visualization

Data visualization is a powerful tool for conveying complex information in a digestible format.

- Charts and Graphs: Utilize various charts and graphs, such as bar charts, line charts, pie charts, and heatmaps, to represent data visually.

- Interactive Dashboards: Create interactive dashboards using tools like Tableau, Power BI, or custom web applications to allow stakeholders to explore data dynamically.

- Storytelling: Use data visualization to tell a compelling data-driven story, highlighting key findings and insights.

- Accessibility: Ensure that data visualizations are accessible to all audiences, including those with disabilities, by following accessibility guidelines.

Drawing Conclusions and Insights

Finally, drawing conclusions and insights from your data analysis is the ultimate goal.

- Contextual Interpretation: Interpret your findings in the context of your research objectives and the broader business or research landscape.

- Actionable Insights: Identify actionable insights that can inform decision-making, strategy development, or future research directions.

- Report Generation: Create comprehensive reports or presentations that communicate your findings clearly and concisely to stakeholders.

- Validation: Cross-check your conclusions with domain experts or subject matter specialists to ensure accuracy and relevance.

By following these steps in data analysis and interpretation, you can transform raw data into valuable insights that drive informed decisions, optimize processes, and create new opportunities for your organization.

How to Report and Present Data?

Now, let's explore the crucial steps of reporting and presenting data effectively, ensuring that your findings are communicated clearly and meaningfully to stakeholders.

1. Create Data Reports

Data reports are the culmination of your data analysis efforts, presenting your findings in a structured and comprehensible manner.

- Report Structure: Organize your report with a clear structure, including an introduction, methodology, results, discussion, and conclusions.

- Visualization Integration: Incorporate data visualizations, charts, and graphs to illustrate key points and trends.

- Clarity and Conciseness: Use clear and concise language, avoiding technical jargon, to make your report accessible to a diverse audience.

- Actionable Insights: Highlight actionable insights and recommendations that stakeholders can use to make informed decisions.

- Appendices: Include appendices with detailed methodology, data sources, and any additional information that supports your findings.

2. Leverage Data Visualization Tools

Data visualization tools can significantly enhance your ability to convey complex information effectively. Widely used options include:

- Tableau: Tableau offers a wide range of visualization options and interactive dashboards, making it a popular choice for data professionals.

- Power BI: Microsoft's Power BI provides powerful data visualization and business intelligence capabilities, suitable for creating dynamic reports and dashboards.

- Python Libraries: Utilize Python libraries such as Matplotlib, Seaborn, and Plotly for custom data visualizations and analysis.

- Excel: Microsoft Excel remains a versatile tool for creating basic charts and graphs, particularly for smaller datasets.

- Custom Development: Consider custom development for specialized visualization needs or when existing tools don't meet your requirements.

3. Communicate Findings to Stakeholders

Effectively communicating your findings to stakeholders is essential for driving action and decision-making.

- Audience Understanding: Tailor your communication to the specific needs and background knowledge of your audience. Avoid technical jargon when speaking to non-technical stakeholders.

- Visual Storytelling: Craft a narrative that guides stakeholders through the data, highlighting key insights and their implications.

- Engagement: Use engaging and interactive presentations or reports to maintain the audience's interest and encourage participation.

- Question Handling: Be prepared to answer questions and provide clarifications during presentations or discussions. Anticipate potential concerns or objections.

- Feedback Loop: Encourage feedback and open dialogue with stakeholders to ensure your findings align with their objectives and expectations.

Data Collection Examples

To better understand the practical application of data collection in various domains, let's explore some real-world examples, including those in the business context. These examples illustrate how data collection can drive informed decision-making and lead to meaningful insights.

Business Customer Feedback Surveys

Scenario: A retail company wants to enhance its customer experience and improve product offerings. To achieve this, they initiate customer feedback surveys.

Data Collection Approach:

- Survey Creation: The company designs a survey with specific questions about customer preferences, shopping experiences, and product satisfaction.

- Distribution: Surveys are distributed through various channels, including email, in-store kiosks, and the company's website.

- Data Gathering: Responses from thousands of customers are collected and stored in a centralized database.

Data Analysis and Insights:

- Customer Sentiment Analysis: Using natural language processing (NLP) techniques, the company analyzes open-ended responses to gauge customer sentiment.

- Product Performance: Analyzing survey data, the company identifies which products receive the highest and lowest ratings, leading to decisions on which products to improve or discontinue.

- Store Layout Optimization: By examining feedback related to in-store experiences, the company can adjust store layouts and signage to enhance customer flow and convenience.
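
The product performance step in this scenario might look like the following sketch, which averages ratings per product and picks out the highest and lowest. The product names and ratings are invented for illustration and do not come from any real survey.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical survey responses (rating on a 1-5 scale).
responses = [
    {"product": "kettle", "rating": 5},
    {"product": "kettle", "rating": 4},
    {"product": "toaster", "rating": 2},
    {"product": "toaster", "rating": 3},
    {"product": "blender", "rating": 4},
]

def average_ratings(rows):
    """Group ratings by product and return the mean rating for each."""
    grouped = defaultdict(list)
    for row in rows:
        grouped[row["product"]].append(row["rating"])
    return {product: mean(ratings) for product, ratings in grouped.items()}

averages = average_ratings(responses)
best = max(averages, key=averages.get)   # highest average rating
worst = min(averages, key=averages.get)  # lowest average rating
```

With only a handful of responses per product the averages are unstable, so in practice the number of responses behind each figure should be reported alongside it.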

Healthcare Patient Record Digitization

Scenario: A healthcare facility aims to transition from paper-based patient records to digital records for improved efficiency and patient care.

Data Collection Approach:

- Scanning and Data Entry: Existing paper records are scanned, and data entry personnel convert them into digital format.

- Electronic Health Record (EHR) Implementation: The facility adopts an EHR system to store and manage patient data securely.

- Continuous Data Entry: As new patient information is collected, it is directly entered into the EHR system.

Data Analysis and Insights:

- Patient History Access: Physicians and nurses gain instant access to patient records, improving diagnostic accuracy and treatment.

- Data Analytics: Aggregated patient data can be analyzed to identify trends in diseases, treatment outcomes, and healthcare resource utilization.

- Resource Optimization: Analysis of patient data allows the facility to allocate resources more efficiently, such as staff scheduling based on patient admission patterns.
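
As a simple sketch of the admission-pattern analysis mentioned above, the code below counts admissions by day of the week. The dates are fabricated sample data, and a real analysis would draw on de-identified records exported from the EHR system.

```python
from collections import Counter
from datetime import date

WEEKDAYS = ["Monday", "Tuesday", "Wednesday", "Thursday",
            "Friday", "Saturday", "Sunday"]

# Fabricated admission dates for illustration.
admission_dates = [
    date(2024, 3, 4),   # Monday
    date(2024, 3, 4),   # Monday
    date(2024, 3, 5),   # Tuesday
    date(2024, 3, 9),   # Saturday
    date(2024, 3, 11),  # Monday
]

def admissions_by_weekday(dates):
    """Count admissions per weekday name."""
    return Counter(WEEKDAYS[d.weekday()] for d in dates)

counts = admissions_by_weekday(admission_dates)
busiest_day, busiest_count = counts.most_common(1)[0]
```

Counts like these could feed staff scheduling, though seasonal effects and small samples should be checked before acting on them.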

Social Media Engagement Monitoring

Scenario: A digital marketing agency manages social media campaigns for various clients and wants to track campaign performance and audience engagement.

- Social Media Monitoring Tools: The agency employs social media monitoring tools to collect data on post engagement, reach, likes, shares, and comments.

- Custom Tracking Links: Unique tracking links are created for each campaign to monitor traffic and conversions.