From the Editors

Notes from The Conversation newsroom

How we edit science part 1: the scientific method


We take science seriously at The Conversation and we work hard to report it accurately. This series of five posts is adapted from an internal presentation on how to understand and edit science by our Australian Science & Technology Editor, Tim Dean. We thought you might also find it useful.

Introduction

If I told you that science was a truth-seeking endeavour that uses a single robust method to prove scientific facts about the world, steadily and inexorably driving towards objective truth, would you believe me?

Many would. But you shouldn’t.

The public perception of science is often at odds with how science actually works. Science is often seen as a separate domain of knowledge, framed as superior to other forms of knowledge by virtue of its objectivity, which is sometimes described as a “view from nowhere”.

But science is actually far messier than this - and far more interesting. It is not without its limitations and flaws, but it’s still the most effective tool we have to understand the workings of the natural world around us.

In order to report or edit science effectively - or to consume it as a reader - it’s important to understand what science is, how the scientific method (or methods) work, and also some of the common pitfalls in practising science and interpreting its results.

This guide will give a short overview of what science is and how it works, with a more detailed treatment of both these topics in the final post in the series.

What is science?

Science is special, not because it claims to provide us with access to the truth, but because it admits it can’t provide truth.

Other means of producing knowledge, such as pure reason, intuition or revelation, might be appealing because they give the impression of certainty, but when this knowledge is applied to make predictions about the world around us, reality often finds them wanting.

Rather, science consists of a bunch of methods that enable us to accumulate evidence to test our ideas about how the world is, and why it works the way it does. Science works precisely because it enables us to make predictions that are borne out by experience.

Science is not a body of knowledge. Facts are facts; it’s just that some are known with a higher degree of certainty than others. What we often call “scientific facts” are just facts that are backed by the rigours of the scientific method, but they are not intrinsically different from other facts about the world.

What makes science so powerful is that it’s intensely self-critical. In order for a hypothesis to pass muster and enter a textbook, it must survive a battery of tests designed specifically to show that it could be wrong. If it passes, it has cleared a high bar.

The scientific method(s)

Despite what some philosophers have stated, there is a method for conducting science. In fact, there are many. And not all revolve around performing experiments.

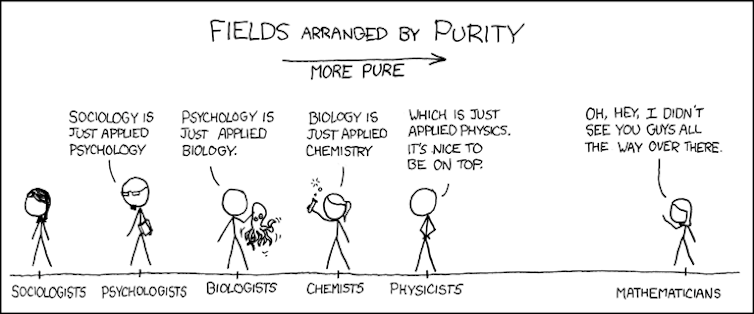

One method involves simple observation, description and classification, such as in taxonomy. (Some physicists look down on this – and every other – kind of science, but they’re only greasing a slippery slope.)

However, when most of us think of The Scientific Method, we’re thinking of a particular kind of experimental method for testing hypotheses.

This begins with observing phenomena in the world around us, and then moves on to positing hypotheses for why those phenomena happen the way they do. A hypothesis is just an explanation, usually in the form of a causal mechanism: X causes Y. An example would be: gravitation causes the ball to fall back to the ground.

A scientific theory is just a collection of well-tested hypotheses that hang together to explain a great deal of stuff.

Crucially, a scientific hypothesis needs to be testable and falsifiable.

An untestable hypothesis would be something like “the ball falls to the ground because mischievous invisible unicorns want it to”. If these unicorns are not detectable by any scientific instrument, then the hypothesis that they’re responsible for gravity is not scientific.

An unfalsifiable hypothesis is one where no amount of testing can prove it wrong. An example might be the psychic who claims the experiment to test their powers of ESP failed because the scientific instruments were interfering with their abilities.

(Caveat: some hypotheses are untestable only because we choose not to test them. That doesn’t make them unscientific in principle; it’s just that the experiments needed to test them have been ruled out by an ethics committee or other regulation.)

Experimentation

There are often many hypotheses that could explain any particular phenomenon. Does the rock fall to the ground because an invisible force pulls on the rock? Or is it because the mass of the Earth warps spacetime, and the rock follows the lowest-energy path, thus colliding with the ground? Or is it that all substances have a natural tendency to fall towards the centre of the Universe, which happens to be at the centre of the Earth?

The trick is figuring out which hypothesis is the right one. That’s where experimentation comes in.

A scientist will take their hypothesis and use that to make a prediction, and they will construct an experiment to see if that prediction holds. But any observation that confirms one hypothesis will likely confirm several others as well. If I lift and drop a rock, it supports all three of the hypotheses on gravity above.

Furthermore, you can keep accumulating evidence to confirm a hypothesis, and it will never prove it to be absolutely true. This is because you can’t rule out the possibility of another similar hypothesis being correct, or of making some new observation that shows your hypothesis to be false. But if one day you drop a rock and it shoots off into space, that ought to cast doubt on all of the above hypotheses.

So while you can never prove a hypothesis true simply by making more confirmatory observations, you need only one solid contrary observation to prove a hypothesis false. This notion is at the core of the hypothetico-deductive model of science.
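The asymmetry between confirmation and refutation can be sketched in a few lines of Python. This is a minimal illustration added for clarity, not part of the original series: the function `first_counterexample`, the hypothesis encoded in `rock_falls` and the (start height, end height) observations are all hypothetical. Note that the function can report "refuted" or "not yet refuted", but never "proved".

```python
from typing import Callable, Iterable, Optional, TypeVar

T = TypeVar("T")


def first_counterexample(hypothesis: Callable[[T], bool],
                         observations: Iterable[T]) -> Optional[T]:
    """Return the first observation that contradicts the hypothesis, or None.

    None means "not yet refuted", never "proved true": the next
    observation could still be a counterexample.
    """
    for obs in observations:
        if not hypothesis(obs):
            return obs
    return None


# Hypothetical hypothesis: every dropped rock ends up lower than it started.
# Each observation is a hypothetical (start_height_m, end_height_m) pair.
def rock_falls(obs: tuple[float, float]) -> bool:
    start, end = obs
    return end < start


confirming = [(1.5, 0.0), (2.0, 0.0), (0.8, 0.0)]
print(first_counterexample(rock_falls, confirming))    # None: survives, not proved

# One rock "shoots off into space": a single contrary observation.
with_anomaly = confirming + [(1.5, 1000.0)]
print(first_counterexample(rock_falls, with_anomaly))  # (1.5, 1000.0): refuted
```

Adding more confirming pairs to `confirming` never changes the first answer from "not yet refuted" to "true", while one anomalous pair is enough to flip the verdict.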

This is why a great deal of science is focused on testing hypotheses, pushing them to their limits and attempting to break them through experimentation. If the hypothesis survives repeated testing, our confidence in it grows.

So even crazy-sounding theories like general relativity and quantum mechanics can become well accepted, because both enable very precise predictions, and these have been exhaustively tested and come through unscathed.

The next post will cover hypothesis testing in greater detail.


What Is a Testable Hypothesis?


- Ph.D., Biomedical Sciences, University of Tennessee at Knoxville

- B.A., Physics and Mathematics, Hastings College

A hypothesis is a tentative answer to a scientific question. A testable hypothesis is a hypothesis that can be proved or disproved as a result of testing, data collection, or experience. Only testable hypotheses can be used to conceive and perform an experiment using the scientific method.

Requirements for a Testable Hypothesis

In order to be considered testable, three criteria must be met:

- It must be possible to prove that the hypothesis is true.

- It must be possible to prove that the hypothesis is false.

- It must be possible to reproduce the results of testing the hypothesis.

Examples of a Testable Hypothesis

All the following hypotheses are testable. It's important, however, to note that while it's possible to say that the hypothesis is correct, much more research would be required to answer the question "why is this hypothesis correct?"

- Students who attend class have higher grades than students who skip class. This is testable because it is possible to compare the grades of students who do and do not skip class and then analyze the resulting data. Another person could conduct the same research and come up with the same results.

- People exposed to high levels of ultraviolet light have a higher incidence of cancer than the norm. This is testable because it is possible to find a group of people who have been exposed to high levels of ultraviolet light and compare their cancer rates to the average.

- If you put people in a dark room, then they will be unable to tell when an infrared light turns on. This hypothesis is testable because it is possible to put a group of people into a dark room, turn on an infrared light, and ask the people in the room whether or not an infrared light has been turned on.

Examples of a Hypothesis Not Written in a Testable Form

- It doesn't matter whether or not you skip class. This hypothesis can't be tested because it doesn't make any actual claim regarding the outcome of skipping class. "It doesn't matter" doesn't have any specific meaning, so it can't be tested.

- Ultraviolet light could cause cancer. The word "could" makes a hypothesis extremely difficult to test because it is very vague. There "could," for example, be UFOs watching us at every moment, even though it's impossible to prove that they are there!

- Goldfish make better pets than guinea pigs. This is not a hypothesis; it's a matter of opinion. There is no agreed-upon definition of what a "better" pet is, so while it is possible to argue the point, there is no way to prove it.

How to Propose a Testable Hypothesis

Now that you know what a testable hypothesis is, here are tips for proposing one.

- Try to write the hypothesis as an if-then statement. If you take an action, then a certain outcome is expected.

- Identify the independent and dependent variables in the hypothesis. The independent variable is what you are controlling or changing. You measure the effect this has on the dependent variable.

- Write the hypothesis in such a way that you can prove or disprove it. For example, if a person has skin cancer, you can't prove they got it from being out in the sun. However, you can demonstrate a relationship between exposure to ultraviolet light and increased risk of skin cancer.

- Make sure you are proposing a hypothesis you can test with reproducible results. If your face breaks out, you can't prove the breakout was caused by the french fries you had for dinner last night. However, you can measure whether or not eating french fries is associated with breaking out. It's a matter of gathering enough data to be able to reproduce results and draw a conclusion.
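As a rough sketch of the tips above, an if-then hypothesis can be modelled as a small Python data structure that names its independent variable, its dependent variable and a predicted direction, which is what makes it possible to say in advance which result would count against it. The class `IfThenHypothesis` and its methods are hypothetical, invented for illustration; comparing two raw numbers also ignores random variation, which a real analysis would handle with a statistical test.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IfThenHypothesis:
    """Hypothetical model of an if-then hypothesis.

    If the independent variable is increased, the dependent variable is
    predicted to move in `direction` (+1 = increase, -1 = decrease).
    """
    independent: str  # the variable the experimenter controls or changes
    dependent: str    # the variable that is measured
    direction: int    # predicted direction of the effect: +1 or -1

    def __post_init__(self) -> None:
        if self.direction not in (1, -1):
            raise ValueError("direction must be +1 or -1")

    def statement(self) -> str:
        change = "increase" if self.direction > 0 else "decrease"
        return f"If we increase {self.independent}, then {self.dependent} will {change}."

    def is_contradicted(self, baseline: float, after_change: float) -> bool:
        """True if the measured dependent variable fails to move as predicted.

        A falsifiable hypothesis names an outcome that would count against
        it: here, no change or a change in the opposite direction.
        """
        return (after_change - baseline) * self.direction <= 0


h = IfThenHypothesis("class attendance", "average grade", +1)
print(h.statement())
print(h.is_contradicted(baseline=70.0, after_change=75.0))  # False: prediction holds
print(h.is_contradicted(baseline=70.0, after_change=68.0))  # True: prediction fails
```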


What is a scientific hypothesis?


A scientific hypothesis is a tentative, testable explanation for a phenomenon in the natural world. It's the initial building block in the scientific method. Many describe it as an "educated guess" based on prior knowledge and observation. While this is true, a hypothesis is more informed than a guess: an "educated guess" suggests an offhand prediction based on a person's expertise, whereas developing a hypothesis requires active observation and background research.

The basic idea of a hypothesis is that there is no predetermined outcome. For an explanation to be termed a scientific hypothesis, it has to be an idea that can be supported or refuted through carefully crafted experimentation or observation. This requirement, known as falsifiability or testability, was advanced in the mid-20th century by Austrian-British philosopher Karl Popper in his famous book "The Logic of Scientific Discovery" (Routledge, 1959).

A key function of a hypothesis is to derive predictions about the results of future experiments and then perform those experiments to see whether they support the predictions.

A hypothesis is usually written in the form of an if-then statement, which gives a possibility (if) and explains what may happen because of the possibility (then). The statement could also include "may," according to California State University, Bakersfield.

Here are some examples of hypothesis statements:

- If garlic repels fleas, then a dog that is given garlic every day will not get fleas.

- If sugar causes cavities, then people who eat a lot of candy may be more prone to cavities.

- If ultraviolet light can damage the eyes, then maybe this light can cause blindness.

A useful hypothesis should be testable and falsifiable. That means that it should be possible to prove it wrong. A theory that can't be proved wrong is nonscientific, according to Karl Popper's 1963 book "Conjectures and Refutations."

An example of an untestable statement is, "Dogs are better than cats." That's because the definition of "better" is vague and subjective. However, an untestable statement can be reworded to make it testable. For example, the previous statement could be changed to this: "Owning a dog is associated with higher levels of physical fitness than owning a cat." With this statement, the researcher can take measures of physical fitness from dog and cat owners and compare the two.

Types of scientific hypotheses

In an experiment, researchers generally state their hypotheses in two ways. The null hypothesis predicts that there will be no relationship between the variables tested, or no difference between the experimental groups. The alternative hypothesis predicts the opposite: that there will be a difference between the experimental groups. This is usually the hypothesis scientists are most interested in, according to the University of Miami.

For example, a null hypothesis might state, "There will be no difference in the rate of muscle growth between people who take a protein supplement and people who don't." The alternative hypothesis would state, "There will be a difference in the rate of muscle growth between people who take a protein supplement and people who don't."

If the results of the experiment show a statistically significant relationship between the variables, then the null hypothesis is rejected in favor of the alternative hypothesis, according to the book "Research Methods in Psychology" (BCcampus, 2015).
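One common way to check whether results are strong enough to reject the null hypothesis is a permutation test. The sketch below is a swapped-in illustration, not a method described by the sources cited here: it assumes the protein-supplement example above, uses invented muscle-gain figures, and takes the conventional significance level of 0.05 as an assumption. Under the null hypothesis the group labels are arbitrary, so repeatedly shuffling them shows how often a difference at least as large as the observed one arises by chance.

```python
import random
from statistics import mean


def permutation_test(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided permutation test of the null hypothesis that group
    membership makes no difference to the measured outcome.

    Returns (observed difference in means, p-value).
    """
    rng = random.Random(seed)
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # under the null, any relabelling is equally likely
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        if abs(diff) >= abs(observed):
            extreme += 1
    # Adding 1 to both counts includes the observed labelling itself
    # and keeps the p-value from being exactly zero.
    p_value = (extreme + 1) / (n_permutations + 1)
    return observed, p_value


supplement = [1.9, 2.4, 2.1, 2.8, 2.5, 2.2]     # invented muscle gain (kg)
no_supplement = [1.2, 1.5, 1.1, 1.8, 1.4, 1.6]  # invented muscle gain (kg)

alpha = 0.05  # conventional significance level (an assumption)
observed, p = permutation_test(supplement, no_supplement)
print("reject null hypothesis" if p < alpha else "fail to reject null hypothesis")
```

A small p-value means the observed difference would be unusual if the null hypothesis were true; it does not prove the alternative hypothesis, which is why the wording is "reject" or "fail to reject" rather than "accept".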

There are other ways to describe an alternative hypothesis. The alternative hypothesis above does not specify a direction of the effect, only that there will be a difference between the two groups. That type of prediction is called a two-tailed hypothesis. If a hypothesis specifies a certain direction (for example, that people who take a protein supplement will gain more muscle than people who don't), it is called a one-tailed hypothesis, according to William M. K. Trochim, a professor of Policy Analysis and Management at Cornell University.
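To see how the one-tailed versus two-tailed distinction can change a conclusion, the sketch below converts a single test statistic into both kinds of p-value. It assumes a z statistic that is approximately standard normal under the null hypothesis, a simplifying assumption that the cited sources do not specify; the value 1.8 is arbitrary.

```python
import math


def upper_tail(z: float) -> float:
    """P(Z >= z) for a standard normal random variable Z."""
    return 0.5 * math.erfc(z / math.sqrt(2))


def p_values(z: float) -> dict[str, float]:
    return {
        # One-tailed: the alternative predicts an increase, so only large
        # positive z counts as evidence against the null.
        "one_tailed": upper_tail(z),
        # Two-tailed: the alternative predicts "a difference" in either
        # direction, so extreme values in both tails count.
        "two_tailed": 2 * upper_tail(abs(z)),
    }


result = p_values(1.8)
print(result)  # one_tailed ≈ 0.036, two_tailed ≈ 0.072
```

With this statistic and a 0.05 threshold, the one-tailed test rejects the null hypothesis while the two-tailed test does not. For that reason the direction of a one-tailed hypothesis should be fixed before the data are seen.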

Sometimes, the conclusion drawn from an experiment is wrong. This can happen in one of two ways. A type I error occurs when the null hypothesis is rejected even though it is true. This is also known as a false positive. A type II error occurs when the null hypothesis is not rejected even though it is false. This is also known as a false negative, according to the University of California, Berkeley.

A hypothesis can be rejected or modified, but it can never be proved correct with absolute certainty. For example, a scientist can form a hypothesis stating that if a certain type of tomato has a gene for red pigment, that type of tomato will be red. During research, the scientist then finds that each tomato of this type is red. Though the findings support the hypothesis, there may be a tomato of that type somewhere in the world that isn't red. Thus, the hypothesis is supported by the evidence, but it cannot be shown to hold in every case.
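The two error types can be made concrete with a small simulation. This sketch assumes normally distributed measurements with a known standard deviation and a two-sided z-test at a significance level of 0.05; the sample size, effect size and function names are hypothetical choices for illustration. When the null hypothesis is true, every rejection is a type I error, so the rejection rate should come out close to 0.05; when a real effect exists, every failure to reject is a type II error.

```python
import math
import random


def z_test_rejects(a, b, sigma, alpha=0.05):
    """Two-sided z-test for a difference in means, assuming both groups
    share a known standard deviation sigma."""
    se = sigma * math.sqrt(1 / len(a) + 1 / len(b))
    z = (sum(a) / len(a) - sum(b) / len(b)) / se
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * P(Z >= |z|)
    return p < alpha


def rejection_rate(true_effect, n=20, sigma=1.0, trials=4000, seed=1):
    """Fraction of simulated experiments in which the null hypothesis
    (no difference between groups) is rejected."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(trials):
        control = [rng.gauss(0.0, sigma) for _ in range(n)]
        treated = [rng.gauss(true_effect, sigma) for _ in range(n)]
        rejections += z_test_rejects(treated, control, sigma)
    return rejections / trials


# Null hypothesis true: every rejection is a type I error (false positive).
type_i_rate = rejection_rate(true_effect=0.0)
# Null hypothesis false (true effect of half a standard deviation):
# every failure to reject is a type II error (false negative).
type_ii_rate = 1 - rejection_rate(true_effect=0.5)
print(f"type I error rate:  {type_i_rate:.3f}")
print(f"type II error rate: {type_ii_rate:.3f}")
```

With these small groups the type II rate is high, so a real effect is missed more often than not; increasing `n` lowers it while the type I rate stays near the chosen significance level.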

Scientific theory vs. scientific hypothesis

The best hypotheses are simple. They deal with a relatively narrow set of phenomena. But theories are broader; they generally combine multiple hypotheses into a general explanation for a wide range of phenomena, according to the University of California, Berkeley. For example, a hypothesis might state, "If animals adapt to suit their environments, then birds that live on islands with lots of seeds to eat will have differently shaped beaks than birds that live on islands with lots of insects to eat." After testing many hypotheses like these, Charles Darwin formulated an overarching theory: the theory of evolution by natural selection.

"Theories are the ways that we make sense of what we observe in the natural world," Tanner said. "Theories are structures of ideas that explain and interpret facts."

- Read more about writing a hypothesis, from the American Medical Writers Association.

- Find out why a hypothesis isn't always necessary in science, from The American Biology Teacher.

- Learn about null and alternative hypotheses, from Prof. Essa on YouTube.

Encyclopedia Britannica, "Scientific Hypothesis," Jan. 13, 2022. https://www.britannica.com/science/scientific-hypothesis

Karl Popper, "The Logic of Scientific Discovery," Routledge, 1959.

California State University, Bakersfield, "Formatting a testable hypothesis." https://www.csub.edu/~ddodenhoff/Bio100/Bio100sp04/formattingahypothesis.htm

Karl Popper, "Conjectures and Refutations," Routledge, 1963.

P. Price, R. Jhangiani, and I. Chiang, "Research Methods in Psychology, 2nd Canadian Edition," BCcampus, 2015.

University of Miami, "The Scientific Method." http://www.bio.miami.edu/dana/161/evolution/161app1_scimethod.pdf

William M. K. Trochim, "Research Methods Knowledge Base." https://conjointly.com/kb/hypotheses-explained/

University of California, Berkeley, "Multiple Hypothesis Testing and False Discovery Rate." https://www.stat.berkeley.edu/~hhuang/STAT141/Lecture-FDR.pdf

University of California, Berkeley, "Science at multiple levels." https://undsci.berkeley.edu/article/0_0_0/howscienceworks_19


Understanding Science


Science works with ideas that can be tested using evidence from the natural world.

Science works with testable ideas

If an explanation is equally compatible with all possible observations, then it is not testable and hence, not within the reach of science. This is frequently the case with ideas about supernatural entities. For example, consider the idea that an all-powerful supernatural being controls our actions. Is there anything we could do to test that idea? No. Because this supernatural being is all-powerful, anything we observe could be chalked up to the whim of that being. Or not. The point is that we can’t use the tools of science to gather any information about whether or not this being exists — so such an idea is outside the realm of science.


Even ideas about things that occurred long ago, that happen in distant galaxies, or that are too small for us to observe directly can be testable. For some examples of testing ideas about the very old, very distant, and very tiny, explore these stories:

- Asteroids and dinosaurs

- The structure of DNA: Cooperation and competition


4.14: Experiments and Hypotheses


Now we’ll focus on the methods of scientific inquiry. Science often involves making observations and developing hypotheses. Experiments and further observations are often used to test the hypotheses.

A scientific experiment is a carefully organized procedure in which the scientist intervenes in a system to change something, then observes the result of the change. Scientific inquiry often involves doing experiments, though not always. For example, a scientist studying the mating behaviors of ladybugs might begin with detailed observations of ladybugs mating in their natural habitats. While this research may not be experimental, it is scientific: it involves careful and verifiable observation of the natural world. The same scientist might then treat some of the ladybugs with a hormone hypothesized to trigger mating and observe whether these ladybugs mated sooner or more often than untreated ones. This would qualify as an experiment because the scientist is now making a change in the system and observing the effects.

Forming a Hypothesis

When conducting scientific experiments, researchers develop hypotheses to guide experimental design. A hypothesis is a suggested explanation that is both testable and falsifiable. You must be able to test your hypothesis, and it must be possible to prove your hypothesis true or false.

For example, Michael observes that maple trees lose their leaves in the fall. He might then propose a possible explanation for this observation: “cold weather causes maple trees to lose their leaves in the fall.” This statement is testable. He could grow maple trees in a warm enclosed environment such as a greenhouse and see if their leaves still dropped in the fall. The hypothesis is also falsifiable. If the leaves still dropped in the warm environment, then clearly temperature was not the main factor in causing maple leaves to drop in autumn.

In the questions below, you can practice recognizing scientific hypotheses. As you consider each statement, try to think as a scientist would: can I test this hypothesis with observations or experiments? Is the statement falsifiable? If the answer to either of these questions is “no,” the statement is not a valid scientific hypothesis.

Practice Questions

Determine whether each of the following statements is a scientific hypothesis.

- a: No. This statement is not testable or falsifiable.

- b: No. This statement is not testable.

- c: No. This statement is not falsifiable.

- d: Yes. This statement is testable and falsifiable.

Answers:

- d: Yes. This statement is testable and falsifiable. This could be tested with a number of different kinds of observations and experiments, and it is possible to gather evidence that indicates that air pollution is not linked with asthma.

- a: No. This statement is not testable or falsifiable. “Bad thoughts and behaviors” are excessively vague and subjective variables that would be impossible to measure or agree upon in a reliable way. The statement might be “falsifiable” if you came up with a counterexample: a “wicked” place that was not punished by a natural disaster. But some would question whether the people in that place were really wicked, and others would continue to predict that a natural disaster was bound to strike that place at some point. There is no reason to suspect that people’s immoral behavior affects the weather unless you bring up the intervention of a supernatural being, making this idea even harder to test.

Testing a Vaccine

Let’s examine the scientific process by discussing an actual scientific experiment conducted by researchers at the University of Washington. These researchers investigated whether a vaccine may reduce the incidence of human papillomavirus (HPV) infection. The experimental process and results were published in an article titled “A controlled trial of a human papillomavirus type 16 vaccine.”

Preliminary observations made by the researchers who conducted the HPV experiment are listed below:

- Human papillomavirus (HPV) is the most common sexually transmitted virus in the United States.

- There are about 40 different types of HPV. A significant number of people who have HPV are unaware of it because many of these viruses cause no symptoms.

- Some types of HPV can cause cervical cancer.

- About 4,000 women a year die of cervical cancer in the United States.

Practice Question

Researchers have developed a potential vaccine against HPV and want to test it. What is the first testable hypothesis that the researchers should study?

- A: HPV causes cervical cancer.

- B: People should not have unprotected sex with many partners.

- C: People who get the vaccine will not get HPV.

- D: The HPV vaccine will protect people against cancer.

Answer: Hypothesis A is not the best choice because this information is already known from previous studies. Hypothesis B is not testable because scientific hypotheses are not value statements; they do not include judgments like “should,” “better than,” etc. Scientific evidence certainly might support this value judgment, but a hypothesis would take a different form: “Having unprotected sex with many partners increases a person’s risk for cervical cancer.” Before the researchers can test whether the vaccine protects against cancer (hypothesis D), they want to test whether it protects against the virus; hypothesis D will make an excellent hypothesis for the next study. The researchers should first test hypothesis C: whether or not the new vaccine can prevent HPV.

Experimental Design

You’ve successfully identified a hypothesis for the University of Washington’s study on HPV: People who get the HPV vaccine will not get HPV.

The next step is to design an experiment that will test this hypothesis. There are several important factors to consider when designing a scientific experiment. First, scientific experiments must have an experimental group. This is the group that receives the experimental treatment necessary to address the hypothesis.

The experimental group receives the vaccine, but how can we know if the vaccine made a difference? Many things may change HPV infection rates in a group of people over time. To clearly show that the vaccine was effective in helping the experimental group, we need to include in our study an otherwise similar control group that does not get the treatment. We can then compare the two groups and determine if the vaccine made a difference. The control group shows us what happens in the absence of the factor under study.

However, the control group cannot get “nothing.” Instead, the control group often receives a placebo. A placebo is a procedure that has no expected therapeutic effect—such as giving a person a sugar pill or a shot containing only plain saline solution with no drug. Scientific studies have shown that the “placebo effect” can alter experimental results because when individuals are told that they are or are not being treated, this knowledge can alter their actions or their emotions, which can then alter the results of the experiment.

Moreover, if the doctor knows which group a patient is in, this can also influence the results of the experiment. Without saying so directly, the doctor may show, through body language or other subtle cues, his or her views about whether the patient is likely to get well. These cues can then alter the patient’s experience and change the results of the experiment. Therefore, many clinical studies are “double blind.” In these studies, neither the doctor nor the patient knows which group the patient is in until all experimental results have been collected.

Both placebo treatments and double-blind procedures are designed to prevent bias. Bias is any systematic error that makes a particular experimental outcome more or less likely. Errors can happen in any experiment: people make mistakes in measurement, instruments fail, computer glitches can alter data. But most such errors are random and don’t favor one outcome over another. Bias, by contrast, pushes results in one direction: for example, patients’ belief in a treatment can make it more likely to appear to “work.” Placebos and double-blind procedures are used to level the playing field so that both groups of study subjects are treated equally and share similar beliefs about their treatment.
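A double-blind allocation can be sketched as follows. This is a simplified, hypothetical illustration, not a description of how the University of Washington trial was actually run: participants are randomly split into equal vaccine and placebo arms, and each receives an opaque vial code. Doctors and patients see only the codes; the key linking codes to arms (`unblinding_key`) is assumed to be held by someone outside the study team until all results are in. The function name and the code format are invented for the example.

```python
import random


def blinded_allocation(participant_ids, seed=None):
    """Randomly split participants into equal 'vaccine' and 'placebo' arms
    and hide each assignment behind an opaque vial code.

    Returns (vial_codes, unblinding_key):
      vial_codes:     participant id -> vial code (all doctors and patients see)
      unblinding_key: vial code -> arm (kept sealed until results are collected)
    """
    rng = random.Random(seed)
    ids = list(participant_ids)
    half = len(ids) // 2
    # With an odd count, the extra participant goes to the vaccine arm.
    arms = ["vaccine"] * (len(ids) - half) + ["placebo"] * half
    rng.shuffle(arms)  # random assignment: every split is equally likely
    # Unique, meaningless labels; sample() never repeats a number.
    codes = [f"V{n:06d}" for n in rng.sample(range(1_000_000), len(ids))]
    vial_codes = dict(zip(ids, codes))
    unblinding_key = dict(zip(codes, arms))
    return vial_codes, unblinding_key


vial_codes, unblinding_key = blinded_allocation(range(2392), seed=42)
print(vial_codes[0])  # a code such as "V123456"; it reveals nothing about the arm
print(list(unblinding_key.values()).count("vaccine"))  # 1196
```

A real trial would typically rely on a secure random source and dedicated trial-management procedures; `random.Random` with a seed is used here only so the example is reproducible.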

The scientists who are researching the effectiveness of the HPV vaccine will test their hypothesis by separating 2,392 young women into two groups: a control group and an experimental group. Which of the following describes the control group, and which describes the experimental group?

- a. This group is given a placebo.

- b. This group is deliberately infected with HPV.

- c. This group is given nothing.

- d. This group is given the HPV vaccine.


- a: This group is given a placebo, so it is the control group. A placebo will be a shot, just like the HPV vaccine, but with no active ingredient. Receiving a shot may change people’s thinking or behavior, but the placebo will not stimulate the subjects’ immune systems in the way the vaccine is predicted to.

- d: This group is given the HPV vaccine, so it is the experimental group. Researchers can then see whether the vaccine works by comparing this group with the control group.

Experimental Variables

A variable is a characteristic of a subject (in this case, of a person in the study) that can vary over time or among individuals. Sometimes a variable takes the form of a category, such as male or female; often a variable can be measured precisely, such as body height. Ideally, only one variable is different between the control group and the experimental group in a scientific experiment. Otherwise, the researchers will not be able to determine which variable caused any differences seen in the results. For example, imagine that the people in the control group were, on average, much more sexually active than the people in the experimental group. If, at the end of the experiment, the control group had a higher rate of HPV infection, could you confidently determine why? Maybe the experimental subjects were protected by the vaccine, but maybe they were protected by their low level of sexual contact.
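The sexual-activity example can be worked through with a few lines of arithmetic. In this sketch the infection risks (20% for highly active people, 5% for others over the study period) and the group compositions are invented for illustration, and the vaccine is deliberately given no effect at all.

```python
def expected_infection_rate(share_high_activity, risk_high, risk_low, vaccine_effect=0.0):
    """Expected infection rate for a group mixing high- and low-activity people.

    vaccine_effect is the proportional risk reduction from the vaccine
    (0.0 means the vaccine does nothing).
    """
    baseline = share_high_activity * risk_high + (1 - share_high_activity) * risk_low
    return baseline * (1 - vaccine_effect)


# Hypothetical risks over the study period, for illustration only.
RISK_HIGH, RISK_LOW = 0.20, 0.05

# The vaccine has NO effect here, but the groups differ in sexual activity:
# 70% of the control group is highly active versus 30% of the vaccinated group.
control = expected_infection_rate(0.70, RISK_HIGH, RISK_LOW)
vaccinated = expected_infection_rate(0.30, RISK_HIGH, RISK_LOW, vaccine_effect=0.0)
print(f"control {control:.3f}, vaccinated {vaccinated:.3f}")
```

With these numbers the control group's expected infection rate is 0.155 and the vaccinated group's is 0.095. The whole gap comes from the difference in sexual activity, yet it would look like a benefit of the vaccine.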

To avoid this situation, experimenters make sure that their subject groups are as similar as possible in all variables except for the variable that is being tested in the experiment. This variable, or factor, will be deliberately changed in the experimental group. The one variable that is different between the two groups is called the independent variable. An independent variable is known or hypothesized to cause some outcome. Imagine an educational researcher investigating the effectiveness of a new teaching strategy in a classroom. The experimental group receives the new teaching strategy, while the control group receives the traditional strategy. It is the teaching strategy that is the independent variable in this scenario. In an experiment, the independent variable is the variable that the scientist deliberately changes or imposes on the subjects.

Dependent variables are known or hypothesized consequences: the effects that result from changes or differences in an independent variable. In an experiment, the dependent variables are those the scientist measures before, during, and especially at the end of the experiment to see whether they have changed as expected. The dependent variable must be stated so that it is clear how it will be observed or measured. Rather than comparing “learning” among students (a vague concept that is difficult to measure), an educational researcher might compare test scores, which are specific and easy to measure.

In any real-world study, many variables might affect the outcome of an experiment, yet only one or a few independent variables can be tested. The other variables must be kept as similar as possible between the study groups; these are called control variables. In our educational research example, if the control group consisted only of people aged 18 to 20 and the experimental group contained people aged 30 to 35, we would not know whether the teaching strategy or the students’ ages played a larger role in the results. To avoid this problem, a good study is set up so that each group contains students with a similar age profile. In a well-designed educational research study, student age will be a control variable, along with other possibly important factors such as gender, past educational achievement, and pre-existing knowledge of the subject area.
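The roles of the three kinds of variable can be made explicit in a small data model. In the Python sketch below, the `Student` record, the example scores and ages, and the 0.1 threshold for a "similar enough" age profile are all assumptions made for illustration. Comparing group means in units of pooled standard deviation is one common way to check balance, not the only one.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass(frozen=True)
class Student:
    strategy: str      # independent variable: the teaching strategy assigned
    test_score: float  # dependent variable: measured at the end of the study
    age: int           # control variable: should be similar across groups


def summarize(students, strategy):
    """Mean test score (the outcome) and mean age (a control variable) for one group."""
    members = [s for s in students if s.strategy == strategy]
    return mean(s.test_score for s in members), mean(s.age for s in members)


def age_gap_in_sds(students, group_a, group_b):
    """Difference in mean age between two groups, in pooled standard deviations.

    Each group needs at least two students so that stdev() is defined.
    """
    ages_a = [s.age for s in students if s.strategy == group_a]
    ages_b = [s.age for s in students if s.strategy == group_b]
    gap = abs(mean(ages_a) - mean(ages_b))
    pooled_sd = ((stdev(ages_a) ** 2 + stdev(ages_b) ** 2) / 2) ** 0.5
    if pooled_sd == 0:
        return 0.0 if gap == 0 else float("inf")
    return gap / pooled_sd


# Hypothetical, badly designed study: the two groups differ sharply in age.
students = (
    [Student("traditional", score, age)
     for score, age in zip([70, 72, 68, 75, 71], [18, 19, 20, 19, 18])]
    + [Student("new", score, age)
       for score, age in zip([78, 80, 76, 82, 79], [30, 32, 35, 31, 33])]
)

THRESHOLD = 0.1  # assumed rule of thumb for "similar enough"; not from the source
gap = age_gap_in_sds(students, "traditional", "new")
for strategy in ("traditional", "new"):
    print(strategy, summarize(students, strategy))
print("age imbalance:", round(gap, 2),
      "-> possible confounding" if gap > THRESHOLD else "-> OK")
```

Here the new strategy's higher scores cannot be separated from the students' higher ages, which is exactly the problem the paragraph above describes.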

What is the independent variable in this experiment?

- a. Sex (all of the subjects will be female)

- b. Presence or absence of the HPV vaccine

- c. Presence or absence of HPV (the virus)

Answer b. Presence or absence of the HPV vaccine. This is the variable that is different between the control and the experimental groups. All the subjects in this study are female, so this variable is the same in all groups. In a well-designed study, the two groups will be of similar age. The presence or absence of the virus is what the researchers will measure at the end of the experiment. Ideally the two groups will both be HPV-free at the start of the experiment.

List three control variables other than age.

Some possible control variables would be: general health of the women, sexual activity, lifestyle, diet, socioeconomic status, etc.

What is the dependent variable in this experiment?

- a. Sex (male or female)

- b. Rates of HPV infection

- c. Age (years)

Answer b. Rates of HPV infection. The researchers will measure how many individuals got infected with HPV after a given period of time.

Contributors and Attributions

- Revision and adaptation. Authored by: Shelli Carter and Lumen Learning. Provided by: Lumen Learning. License: CC BY-NC-SA: Attribution-NonCommercial-ShareAlike

- Scientific Inquiry. Provided by: Open Learning Initiative. Located at: https://oli.cmu.edu/jcourse/workbook/activity/page?context=434a5c2680020ca6017c03488572e0f8. Project: Introduction to Biology (Open + Free). License: CC BY-NC-SA: Attribution-NonCommercial-ShareAlike

Are Untestable Scientific Theories Acceptable?

Richard Dawid: String Theory and the Scientific Method, 2nd edn, Cambridge University Press, New York, 2013, ISBN 978-1-107-02971-2, 202 pp, US$ 95.00 (hardback)

- Book Review

- Published: 15 February 2015

- Volume 25, pages 443–448 (2016)


- Osvaldo Pessoa Jr.



Author information

Authors and affiliations.

Philosophy Department, University of São Paulo, São Paulo, Brazil

Osvaldo Pessoa Jr.


Corresponding author

Correspondence to Osvaldo Pessoa Jr.


About this article

Pessoa, O. Are Untestable Scientific Theories Acceptable? Sci & Educ 25, 443–448 (2016). https://doi.org/10.1007/s11191-015-9748-8


Issue Date: May 2016

DOI: https://doi.org/10.1007/s11191-015-9748-8


Hypothesis Examples

A hypothesis is a prediction of the outcome of a test. It forms the basis for designing an experiment in the scientific method. A good hypothesis is testable, meaning it makes a prediction you can check with observation or experimentation. Here are different hypothesis examples.

Null Hypothesis Examples

The null hypothesis (H₀) is also known as the zero-difference or no-difference hypothesis. It predicts that changing one variable (the independent variable) will have no effect on the variable being measured (the dependent variable). Here are null hypothesis examples:

- Plant growth is unaffected by temperature.

- Solubility of salt is unaffected by temperature.

- Incidence of skin cancer is unrelated to ultraviolet light exposure.

- All brands of light bulb last equally long.

- Cats have no preference for the color of cat food.

- All daisies have the same number of petals.

You often state a null hypothesis precisely when you suspect a relationship between two variables. For example, if you think plant growth is affected by temperature, you state the null hypothesis: “Plant growth is not affected by temperature.” Why do this, rather than say “If you change temperature, plant growth will be affected”? Because statistical tests are built around the null hypothesis: they tell you, at a chosen level of confidence, whether your data give enough evidence to reject it. Failing to reject the null hypothesis does not prove it true; it means only that the data did not show a clear effect.
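To show what testing a null hypothesis can look like in practice, here is a minimal sketch of an exact permutation test, one of several possible tests, applied to made-up plant-growth data at two temperatures. If the null hypothesis ("plant growth is not affected by temperature") is true, the temperature labels are interchangeable, so the test counts how often relabelling the plants produces a difference in mean growth at least as large as the one observed. A small p-value is evidence against the null; a large one means only that the data fail to reject it. Enumerating every relabelling is practical only for small groups; larger studies usually sample relabellings at random or use a different test.

```python
from itertools import combinations
from statistics import mean


def permutation_p_value(group_a, group_b):
    """Exact two-sided permutation test for a difference in group means.

    Under the null hypothesis the group labels are interchangeable, so every
    way of splitting the pooled data into groups of the original sizes is
    equally likely. The p-value is the fraction of splits whose mean
    difference is at least as large as the one actually observed.
    """
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    observed = abs(mean(group_a) - mean(group_b))
    as_extreme = 0
    total = 0
    for chosen in combinations(range(len(pooled)), n_a):
        chosen_set = set(chosen)
        a = [pooled[i] for i in chosen]
        b = [pooled[i] for i in range(len(pooled)) if i not in chosen_set]
        # A small tolerance guards against floating-point ties.
        if abs(mean(a) - mean(b)) >= observed - 1e-12:
            as_extreme += 1
        total += 1
    return as_extreme / total


# Hypothetical plant growth (cm) over two weeks; not real data.
cool = [4.1, 3.8, 4.4, 3.9, 4.0]
warm = [5.2, 5.6, 4.9, 5.4, 5.1]
p = permutation_p_value(cool, warm)
print(f"p = {p:.4f}")  # prints p = 0.0079 (2 of 252 possible splits)
```

Only the observed split and its mirror image separate the groups this cleanly, so p = 2/252. At the conventional 0.05 level that would lead you to reject the null hypothesis for these made-up data.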

Research Hypothesis Examples

A research hypothesis (H₁) is the type of hypothesis used to design an experiment. It is often written as an if-then statement because that form makes it easy to identify the independent and dependent variables and to see how one is predicted to affect the other. If-then statements explore cause and effect. In other cases, the hypothesis states a correlation between two variables. Here are some research hypothesis examples:

- If you leave the lights on, then it takes longer for people to fall asleep.

- If you refrigerate apples, they last longer before going bad.

- If you keep the curtains closed, then you need less electricity to heat or cool the house (the electric bill is lower).

- If you leave a bucket of water uncovered, then it evaporates more quickly.

- Goldfish lose their color if they are not exposed to light.

- Workers who take vacations are more productive than those who never take time off.

Is It Okay to Disprove a Hypothesis?

Yes! You may even choose to write your hypothesis so that it can be disproved, because it is easier to show that a statement is wrong than to prove that it is right. And if your prediction turns out to be incorrect, that doesn’t mean the science is bad. Revising a hypothesis is common, and it shows you learned something you did not know before you conducted the experiment.
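The asymmetry between disproving and proving a general claim can be shown in a few lines. In this sketch the claim "every daisy has 21 petals" and the petal counts are hypothetical, a variant of the daisy example in the null hypothesis list.

```python
def find_counterexample(claim, observations):
    """Return the first observation that contradicts a universal claim, or None.

    None means only that the claim survived *these* observations; it does
    not prove the claim for cases nobody has observed yet.
    """
    for observation in observations:
        if not claim(observation):
            return observation
    return None


def has_21_petals(petal_count):
    # Hypothetical universal claim for illustration: "every daisy has 21 petals".
    return petal_count == 21


first_field = [21, 21, 21, 21, 21]   # made-up petal counts
second_field = [21, 21, 34, 21]      # made-up petal counts

print(find_counterexample(has_21_petals, first_field))   # None: claim survives, not proven
print(find_counterexample(has_21_petals, second_field))  # 34: one daisy disproves the claim
```

No number of 21-petal daisies can prove the claim, but a single 34-petal daisy is enough to reject it.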




Module 1: Introduction to Biology

Experiments and Hypotheses

Learning Outcomes

- Form a hypothesis and use it to design a scientific experiment

Now we’ll focus on the methods of scientific inquiry. Science often involves making observations and developing hypotheses. Experiments and further observations are often used to test the hypotheses.

A scientific experiment is a carefully organized procedure in which the scientist intervenes in a system to change something, then observes the result of the change. Scientific inquiry often involves doing experiments, though not always. For example, a scientist studying the mating behaviors of ladybugs might begin with detailed observations of ladybugs mating in their natural habitats. While this research may not be experimental, it is scientific: it involves careful and verifiable observation of the natural world. The same scientist might then treat some of the ladybugs with a hormone hypothesized to trigger mating and observe whether these ladybugs mated sooner or more often than untreated ones. This would qualify as an experiment because the scientist is now making a change in the system and observing the effects.

Forming a Hypothesis

When conducting scientific experiments, researchers develop hypotheses to guide experimental design. A hypothesis is a suggested explanation that is both testable and falsifiable: you must be able to test it with observations or experiments, and it must be possible for some outcome of those tests to show that it is false.

For example, Michael observes that maple trees lose their leaves in the fall. He might then propose a possible explanation for this observation: “cold weather causes maple trees to lose their leaves in the fall.” This statement is testable. He could grow maple trees in a warm enclosed environment such as a greenhouse and see if their leaves still dropped in the fall. The hypothesis is also falsifiable. If the leaves still dropped in the warm environment, then clearly temperature was not the main factor in causing maple leaves to drop in autumn.

In the practice questions below, you can practice recognizing scientific hypotheses. As you consider each statement, try to think as a scientist would: can I test this hypothesis with observations or experiments? Is the statement falsifiable? If the answer to either of these questions is “no,” the statement is not a valid scientific hypothesis.

Practice Questions

Determine whether each of the following statements is a scientific hypothesis.

Air pollution from automobile exhaust can trigger symptoms in people with asthma.

- a. No. This statement is not testable or falsifiable.

- b. No. This statement is not testable.

- c. No. This statement is not falsifiable.

- d. Yes. This statement is testable and falsifiable.

Natural disasters, such as tornadoes, are punishments for bad thoughts and behaviors.

a: No. This statement is not testable or falsifiable. “Bad thoughts and behaviors” are excessively vague and subjective variables that would be impossible to measure or agree upon in a reliable way. The statement might be “falsifiable” if you came up with a counterexample: a “wicked” place that was not punished by a natural disaster. But some would question whether the people in that place were really wicked, and others would continue to predict that a natural disaster was bound to strike that place at some point. There is no reason to suspect that people’s immoral behavior affects the weather unless you bring up the intervention of a supernatural being, making this idea even harder to test.

Testing a Vaccine

Let’s examine the scientific process by discussing an actual scientific experiment conducted by researchers at the University of Washington. These researchers investigated whether a vaccine may reduce the incidence of the human papillomavirus (HPV). The experimental process and results were published in an article titled “A controlled trial of a human papillomavirus type 16 vaccine.”

Preliminary observations made by the researchers who conducted the HPV experiment are listed below:

- Human papillomavirus (HPV) is the most common sexually transmitted virus in the United States.

- About 40 types of HPV infect the genital area. A significant number of people who have HPV are unaware of it, because many of these viruses cause no symptoms.

- Some types of HPV can cause cervical cancer.

- About 4,000 women a year die of cervical cancer in the United States.

Practice Question

Researchers have developed a potential vaccine against HPV and want to test it. What is the first testable hypothesis that the researchers should study?

- HPV causes cervical cancer.

- People should not have unprotected sex with many partners.

- People who get the vaccine will not get HPV.

- The HPV vaccine will protect people against cancer.

Experimental Design

You’ve successfully identified a hypothesis for the University of Washington’s study on HPV: People who get the HPV vaccine will not get HPV.

The next step is to design an experiment that will test this hypothesis. There are several important factors to consider when designing a scientific experiment. First, scientific experiments must have an experimental group. This is the group that receives the experimental treatment necessary to address the hypothesis.


Exploring Our Fluid Earth

Teaching science as inquiry.


Practices of Science: Opinion, Hypothesis & Theory

An opinion is a statement describing a personal belief or thought that cannot be tested (or has not been tested) and is unsupported by evidence. A hypothesis is usually a prediction based on some observation or evidence. Hypotheses must be testable, and once tested, they can be supported by evidence. If a statement is made that cannot be tested and disproved, then it is not a hypothesis. Sometimes it is possible to restate an opinion so that it can become a hypothesis.

A scientific theory is a hypothesis that has been extensively tested, evaluated by the scientific community, and strongly supported. Theories often describe a large set of observations and provide a cohesive explanation for those observations. No single individual can establish a theory: theories require extensive testing and agreement within the scientific community. Theories are not described as true or right, but as the best-supported explanation of the world based on the evidence.

SF Fig. 7.9. Alfred Wegener first proposed the idea of continental drift.

Image courtesy of Deutsches Dokumentationszentrum für Kunstgeschichte - Bildarchiv Foto Marburg, Wikimedia Commons

German-born geophysicist Alfred Wegener is credited with proposing a hypothesis of continental drift in the early 1900s (he first presented it in 1912), but it was not until the 1960s that his concept became widely accepted by the scientific community. Part of the problem Wegener faced in presenting his hypothesis was that he did not have sufficient evidence to propose a mechanism for continental movement. Wegener suggested that the continents moved across the ocean floor, but the lack of disturbance on the ocean floor did not support this part of his hypothesis. Continental drift was elevated to the status of a theory largely through evidence supporting new ideas about the mechanism of plate movement: plate tectonics. Only over time, as more scientists evaluated and added to Wegener’s original hypothesis, did it become widely accepted as a theory.

For each statement below, decide whether it is an opinion, a hypothesis, or a theory.

- Arc-shaped island chains like the Aleutian Islands are found at subduction zones.

- Dinosaurs were mean animals.

- Mammals are superior to reptiles.

- An asteroid impact contributed to the extinction of dinosaurs.

- Science can answer any question.

- The climate on Antarctica was once warmer than it is now.

- The center of the earth is made of platinum.

- You have a hypothesis that the land near your school was once at the bottom of the ocean, but due to continental movement, it is now miles inland from any water source. How would you test your hypothesis? What evidence would you use to support your claim?


Exploring Our Fluid Earth, a product of the Curriculum Research & Development Group (CRDG), College of Education. © University of Hawai‘i, . This document may be freely reproduced and distributed for non-profit educational purposes.

The Unfalsifiable Hypothesis Paradox

What Is the Unfalsifiable Hypothesis Paradox?

Imagine someone tells you a story about a dragon that breathes not fire, but invisible, heatless fire. You grab a thermometer to test the claim but no matter what, you can’t prove it’s not true because you can’t measure something that’s invisible and has no heat. This is what we call an ‘unfalsifiable hypothesis’—it’s a claim that’s made in such a way that it can’t be proven wrong, no matter what.

Now, the paradox is this: in science, a claim gains strength from being testable, that is, from being open to being proven wrong. If nobody could ever prove a hypothesis wrong, you’d think it was completely reliable, right? But in science, that actually makes it weak! If we can’t test a claim, it isn’t really playing by the rules of science. So the paradox is that being impossible to prove wrong can make a claim scientifically useless, even though it might seem like the ultimate truth.
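One way to make falsifiability concrete is to treat a hypothesis as a rule that says which observations it allows. The sketch below is a deliberately simplified model: it assumes we can list every possible observation in advance (real science rarely can), and the thermometer range and the 100 °C cut-off are arbitrary choices for illustration. In this model, a hypothesis is falsifiable if at least one possible reading would contradict it.

```python
def is_falsifiable(hypothesis, possible_observations):
    """True if at least one possible observation would contradict the hypothesis."""
    return any(not hypothesis(obs) for obs in possible_observations)


# Thermometer readings (deg C) we could imagine taking near the dragon's mouth.
POSSIBLE_READINGS = range(-20, 1001, 5)


def hot_fire(reading):
    # "The dragon breathes ordinary fire": predicts a reading well above 100 C.
    return reading > 100


def heatless_fire(reading):
    # "The dragon breathes invisible, heatless fire": compatible with ANY reading.
    return True


print(is_falsifiable(hot_fire, POSSIBLE_READINGS))       # True
print(is_falsifiable(heatless_fire, POSSIBLE_READINGS))  # False
```

The heatless-fire claim comes out unfalsifiable because it forbids nothing: whatever the thermometer shows, the claim survives.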

Key Arguments

- An unfalsifiable hypothesis is a claim that can’t be proven wrong, but just because we can’t disprove it, that doesn’t make it automatically true.

- Science grows and improves through testing ideas; if we can’t test a claim, we can’t know if it’s really valid.

- Being able to show that an idea could be wrong is a fundamental part of scientific thinking. Without this testability, a claim is more like a personal belief or a philosophical idea than a scientific one.

- An unfalsifiable hypothesis might look like it’s scientific, but it’s misleading since it doesn’t stick to the strict rules of testing and evidence that science needs.

- Using unfalsifiable claims can block our paths to understanding since they stop us from asking questions and looking for verifiable answers.

Examples

- The dragon with invisible, heatless fire: This is an example of an unfalsifiable hypothesis because no test or observation could ever show that the dragon’s fire isn’t real, since it can’t be detected in any way.

- Saying a celestial teapot orbits the Sun between Earth and Mars: This teapot is said to be small and far enough away that no telescope could spot it. Because it’s undetectable, we can’t disprove its existence.

- A claim that angels are responsible for keeping us gravitationally bound to Earth: Since we can’t test for the presence or actions of angels, we can’t refute the claim, which makes it unfalsifiable.

- The statement that the world’s sorrow is caused by invisible spirits: It sounds serious, but if we can’t measure or observe these spirits, we can’t possibly prove this idea right or wrong.

Answer or Resolution

Dealing with the Unfalsifiable Hypothesis Paradox means finding a balance. We can’t just ignore all ideas that can’t be tested because some might lead to real scientific breakthroughs one day. On the other side, we can’t treat untestable claims as true science. It’s about being open to possibilities but also clear about what counts as scientific evidence.

Some people might say we should only focus on what can be proven wrong. Others think even wild ideas have their place at the starting line of science—they inspire us and can evolve into something testable later on.

Major Criticism

Some people criticize the idea of rejecting all unfalsifiable ideas because doing so could block new ways of thinking. Sometimes a wild guess turns into a real scientific discovery. Plus, falsifiability is just one part of what makes a theory scientific. We shouldn’t throw away potentially good ideas just because they fail one rule, especially in their early stages, before they have been developed far enough to be tested.

Another point is that some important ideas have been unfalsifiable at first but later became testable. So, we have to recognize that science itself can change and grow.

Practical Applications

You might wonder, “Why does this matter to me?” Well, knowing about the Unfalsifiable Hypothesis Paradox actually affects a lot of real-world situations, like how we learn things in school, the kinds of products we buy, and even the rules and laws that are made.

- Education: By learning what makes science solid, students can tell the difference between real science and just a bunch of fancy words that sound scientific but aren’t based on testable ideas.

- Consumer Protection: Sometimes companies try to sell things by using science-sounding claims that can’t be proven wrong—and that’s where knowing about unfalsifiable hypotheses helps protect us from buying into false promises.

- Legal and Policy Making: For people who make laws or guide big decisions, understanding this concept helps them judge if a study or report is really based on solid science.

Related Topics

The Unfalsifiable Hypothesis Paradox is linked with several other important ideas you might hear about:

- Scientific Method: This is the set of steps scientists use to learn about the world. Part of the process is making sure ideas can be tested.

- Pseudoscience: These are beliefs or practices that try to appear scientific but don’t follow the scientific method properly, often using unfalsifiable claims.

- Empiricism: This big word just means learning by observation and experiment. It is the backbone of science and the opposite of relying on unfalsifiable concepts.

Wrapping up, the Unfalsifiable Hypothesis Paradox shows us that science isn’t just about coming up with ideas—it’s about being able to test them, too. Untestable claims may be interesting, but they can’t help us understand the world in a scientific way. But remember, just because an idea is unfalsifiable now doesn’t mean it will be forever. The best approach is using that creative spark but always grounding it in what we can observe and prove. This balance keeps our imaginations soaring but our facts checked, forming a bridge between our wildest ideas and the world we can measure and know.


Learn about 'testable questions'

A testable question is one that students can answer through hands-on experimentation. The key to a testable question is to find something where you change only one thing (or a very small number of things) and observe the effect.

Untestable: What makes something sink or float? Testable: How well do different materials sink or float in water?

Untestable: How do rockets work? Testable: How does changing the shape of a rocket's fins change its flight?

Untestable: What makes a magnet attract things? Testable: Does temperature have an effect on a magnet's strength?

Untestable: What happens when water expands as it freezes? Testable: How much force is needed to keep water from expanding as it freezes?

Untestable: What is bread mold? Testable: What conditions keep bread mold from growing on bread?


November 25, 2019

Multiverse Theories Are Bad for Science

New books by a physicist and science journalist mount aggressive but ultimately unpersuasive defenses of multiverses

By John Horgan

Here a universe, there a universe... Credit: Igor Sokalski/Getty Images

This article was published in Scientific American’s former blog network and reflects the views of the author, not necessarily those of Scientific American

In 1990 I wrote a bit of fluff for Scientific American about whether our cosmos might be just one in an “infinitude,” as several theories of physics implied. I titled my piece “Here a Universe, There a Universe . . .” and kept the tone light, because I didn’t want readers to take these cosmic conjectures too seriously. After all, there was no way of proving, or disproving, the existence of other universes.*

Today, physicists still lack evidence of other universes, or even good ideas for obtaining evidence. Many nonetheless insist our cosmos really is just a mote of dust in a vast “multiverse.” One especially eloquent and passionate multiverse theorist is Sean Carroll. His faith in the multiverse stems from his faith in quantum mechanics, which he sees as our best account of reality.

In his book Something Deeply Hidden, Carroll asserts that quantum mechanics describes not just very small things but everything, including us. “As far as we currently know,” he writes, “quantum mechanics isn’t just an approximation to the truth; it is the truth.” And however preposterous it might seem, a multiverse, Carroll argues, is an inescapable consequence of quantum mechanics.


To make his case, he takes us deep into the surreal quantum world. Our world! The basic mathematical object of quantum theory, called a wave function, shows a particle (an electron, say) inhabiting many possible positions, with different probabilities assigned to each one. Aim an instrument at the electron to determine where it is, and you’ll find it in just one place. You might reasonably assume that the wave function is just a statistical approximation of the electron’s behavior, which can’t be more precise because electrons are tiny and our instruments crude. But you would be wrong, according to Carroll. The electron exists as a kind of probabilistic blur until you observe it, when it “collapses,” in physics lingo, into a single position.

Physicists and philosophers have been arguing about this “measurement problem” for almost a century now. Various other explanations have been proposed, but most are either implausible, making human consciousness a necessary component of reality, or kludgy, requiring ad hoc tweaks of the wave function. The only solution that makes sense to Carroll—because it preserves quantum mechanics in its purest form—was proposed in 1957 by a Princeton graduate student, Hugh Everett III. He conjectured that the electron actually inhabits all the positions allowed by the wave function, but in different universes.

This hypothesis, which came to be called the many-worlds theory, has been refined over the decades. It no longer entails acts of measurement, or consciousness (sorry New Agers). The universe supposedly splits, or branches, whenever one quantum particle jostles against another, making their wave functions collapse. This process, called “decoherence,” happens all the time, everywhere. It is happening to you right now. And now. And now. Yes, zillions of your doppelgangers are out there at this very moment, probably having more fun than you. Asked why we don’t feel ourselves splitting, Everett replied, “Do you feel the motion of the earth?”

Carroll addresses the problem of evidence, sort of. He says philosopher Karl Popper, who popularized the notion that scientific theories should be precise enough to be testable, or falsifiable, “had good things to say about” Everett’s hypothesis, calling it “a completely objective discussion of quantum mechanics.” (Popper, I must add, had doubts about natural selection, so his taste wasn’t irreproachable.)

Carroll proposes furthermore that because quantum mechanics is falsifiable, the many-worlds hypothesis “is the most falsifiable theory ever invented”—even if we can never directly observe any of those many worlds. The term “many,” by the way, is a gross understatement. The number of universes created since the big bang, Carroll estimates, is 2 to the power of 10 to the power of 112. Like I said, an infinitude.

And that’s just the many-worlds multiverse. Physicists have proposed even stranger multiverses, which science writer Tom Siegfried describes in his book The Number of the Heavens. String theory, which posits that all the forces of nature stem from stringy thingies wriggling in nine or more dimensions, implies that our cosmos is just a hillock in a sprawling “landscape” of universes, some with radically different laws and dimensions than ours. Chaotic inflation, a supercharged version of the big bang theory, suggests that our universe is a minuscule bubble in a boundless, frothy sea.

In addition to describing these and other multiverses, Siegfried provides a history of the idea of other worlds, which goes back to the ancient Greeks. (Is there anything they didn’t think of first?) Acknowledging that “nobody can say for sure” whether other universes exist, Siegfried professes neutrality on their existence. But he goes on to construct an almost comically partisan defense of the multiverse, declaring that “it makes much more sense for a multiverse to exist than not.”

Siegfried blames historical resistance to the concept of other worlds on Aristotle, who “argued with Vulcan-like assuredness” that earth is the only world. Because Aristotle was wrong about that, Siegfried seems to suggest, maybe modern multiverse skeptics are wrong too. After all, the known universe has expanded enormously since Aristotle’s era. We learned only a century ago that the Milky Way is just one of many galaxies.

The logical next step, Siegfried contends, would be for us to discover that our entire cosmos is one of many. Rebutting skeptics who call multiverse theories “unscientific” because they are untestable, Siegfried retorts that the skeptics are unscientific, because they are “pre-supposing a definition of science that rules out multiverses to begin with.” He calls skeptics “deniers”—a term usually linked to doubts about real things, like vaccines, climate change and the Holocaust.

I am not a multiverse denier, any more than I am a God denier. Science cannot resolve the existence of either God or the multiverse, making agnosticism the only sensible position. I see some value in multiverse theories. Particularly when presented by a writer as gifted as Sean Carroll, they goad our imaginations and give us intimations of infinity. They make us feel really, really small—in a good way.

But I’m less entertained by multiverse theories than I once was, for a couple of reasons. First, science is in a slump, for reasons both internal and external. Science is ill-served when prominent thinkers tout ideas that can never be tested and hence are, sorry, unscientific. Moreover, at a time when our world, the real world, faces serious problems, dwelling on multiverses strikes me as escapism—akin to billionaires fantasizing about colonizing Mars. Shouldn’t scientists do something more productive with their time?

Maybe in another universe Carroll and Siegfried have convinced me to take multiverses seriously, but I doubt it.

*This is a slightly modified version of a review published in The Wall Street Journal last month.

Further Reading:

Jeffrey Epstein and the Decadence of Science

String Theory Does Not Win a Nobel, and I Win a Bet

Is speculation in multiverses as immoral as speculation in subprime mortgages?

Meta-Post: Posts on Physics

I spoke with Sean Carroll in 2008 on Bloggingheads.tv.

See also my free, online book Mind-Body Problems: Science, Subjectivity & Who We Really Are.


CAREER FEATURE

08 May 2024

Illuminating ‘the ugly side of science’: fresh incentives for reporting negative results

Rachel Brazil

Rachel Brazil is a freelance journalist in London, UK.

You can also search for this author in PubMed Google Scholar

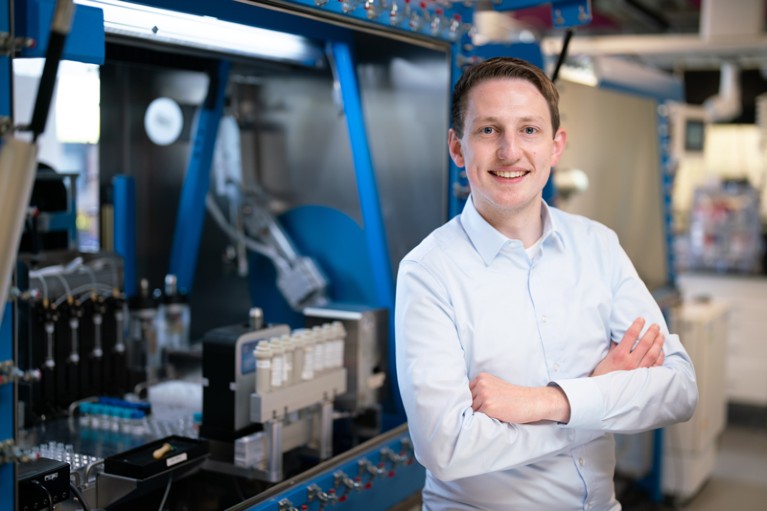

Sarahanne Field, editor-in-chief of the Journal of Trial & Error, wants to publish the messy, null and negative results sitting in researchers’ file drawers. Credit: Sander Martens

Editor-in-chief Sarahanne Field describes herself and her team at the Journal of Trial & Error as wanting to highlight the “ugly side of science — the parts of the process that have gone wrong”.