Text to speech

An AI Speech feature that converts text to lifelike speech.

Bring your apps to life with natural-sounding voices

Build apps and services that speak naturally. Differentiate your brand with a customized, realistic voice generator, and access voices with different speaking styles and emotional tones to fit your use case—from text readers and talkers to customer support chatbots.

Lifelike synthesized speech

Enable fluid, natural-sounding text to speech that matches the intonation and emotion of human voices.

Customizable text-talker voices

Create a unique AI voice generator that reflects your brand's identity.

Fine-grained text-to-talk audio controls

Tune voice output for your scenarios by easily adjusting rate, pitch, pronunciation, pauses, and more.

Flexible deployment

Run Text to Speech anywhere—in the cloud, on-premises, or at the edge in containers.

Tailor your speech output

Fine-tune synthesized speech audio to fit your scenario. Define lexicons and control speech parameters such as pronunciation, pitch, rate, pauses, and intonation with Speech Synthesis Markup Language (SSML) or with the audio content creation tool.
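As a rough illustration of the SSML controls mentioned above, the following Python sketch builds a small SSML document that changes rate and pitch and inserts a pause. The function name, the default values, and the sample sentences are assumptions made for this example. A given speech service may also require further elements (for example, a voice selection) that are not shown here.

```python
import xml.etree.ElementTree as ET


def build_ssml(text_before: str, text_after: str, rate: str = "slow",
               pitch: str = "+2st", pause: str = "500ms",
               lang: str = "en-US") -> str:
    """Return an SSML string that adjusts rate and pitch and inserts a
    pause between two pieces of text (illustrative helper, not an SDK call)."""
    speak = ET.Element("speak", {
        "version": "1.0",
        "xmlns": "http://www.w3.org/2001/10/synthesis",
        "xml:lang": lang,
    })
    # <prosody> carries the rate and pitch adjustments.
    prosody = ET.SubElement(speak, "prosody", {"rate": rate, "pitch": pitch})
    prosody.text = text_before
    # <break> inserts a pause; the text after it is stored as the element's tail.
    pause_el = ET.SubElement(prosody, "break", {"time": pause})
    pause_el.tail = text_after
    return ET.tostring(speak, encoding="unicode")


ssml = build_ssml("Your order has shipped.", " It should arrive on Friday.")
print(ssml)
```

The resulting string can be passed to any synthesis call that accepts SSML input in place of plain text.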

Deploy Text to Speech anywhere, from the cloud to the edge

Run Text to Speech wherever your data resides. Build lifelike speech synthesis into applications optimized for both robust cloud capabilities and edge locality using containers.

Build a custom voice for your brand

Differentiate your brand with a unique custom voice. Develop a highly realistic voice for more natural conversational interfaces using the Custom Neural Voice capability, starting with 30 minutes of audio.

Fuel App Innovation with Cloud AI Services

Learn five key ways your organization can get started with AI to realize value quickly.

Comprehensive privacy and security

AI Speech, part of Azure AI Services, is certified by SOC, FedRAMP, PCI DSS, HIPAA, HITECH, and ISO.

View and delete your custom voice data and synthesized speech models at any time. Your data is encrypted while it’s in storage.

Your data remains yours. Your text data isn't stored during data processing or audio voice generation.

Backed by Azure infrastructure, AI Speech offers enterprise-grade security, availability, compliance, and manageability.

Comprehensive security and compliance, built in

Microsoft invests more than $1 billion annually on cybersecurity research and development.

We employ more than 3,500 security experts who are dedicated to data security and privacy.

Azure has more certifications than any other cloud provider. View the comprehensive list.

Flexible pricing gives you the power and control you need

Pay only for what you use, with no upfront costs. With Text to Speech, you pay as you go based on the number of characters you convert to audio.

Get started with an Azure free account

After your credit, move to pay as you go to keep building with the same free services. Pay only if you use more than your free monthly amounts.

Guidelines for building responsible synthetic voices

Learn about responsible deployment

Synthetic voices must be designed to earn the trust of others. Learn the principles of building synthesized voices that create confidence in your company and services.

Obtain consent from voice talent

Help voice talent understand how neural text-to-speech (TTS) works and get information on recommended use cases.

Be transparent

Transparency is foundational to responsible use of computer voice generators and synthetic voices. Help ensure that users understand when they’re hearing a synthetic voice and that voice talent is aware of how their voice will be used. Learn more with our disclosure design guidelines.

Documentation and resources

Get started.

Read the documentation

Take the Microsoft Learn course

Get started with a 30-day learning journey

Explore code samples

Check out the sample code

See customization resources

Customize your speech solution with Speech Studio. No code required.

Start building with AI Services

Advanced Text to Speech API

- ~400ms latency

- High quality at speed

Highest Quality Audio Output

Contextual Awareness

Understands text nuances for appropriate intonation and resonance.

Emotional Range

Adapt the emotional tone to suit any narrative required.

Multilingual Capability

Authentic speech across 29 languages, with each voice maintaining its original characteristics.

Voice Variety

Use voice design and a comprehensive library to discover voices for every use-case.

High Quality Output

Supreme audio quality at 128 kbps to elevate the listener's experience.

Audio Streaming

Quickly generate long-form content, at no loss to quality.

Low Latency Turbo Model

Build Faster Than Ever

3 Months Free

11M characters, API features, 1000s of HQ voices.

Create custom voices by cloning your own voice, create a new one from scratch or explore our library.

Real-time Latency

Get the fastest response time in the industry with our real-time API. Achieve ~400ms audio generation times at 128kbps.

Contextual awareness

Our text to speech model understands the context of the text to deliver the most natural sounding voices.

Enterprise-ready Security

Trusted security and data controls, SOC 2 and GDPR.

Compliant with the highest security and data handling standards

Full Privacy Mode

Optional Full Privacy mode that enables zero content and data retention on ElevenLabs servers. Exclusively for Enterprise.

End-To-End Encryption

Content and data sent to and from our models are always protected

Explore our resources

Python library

React text to speech guide

Gaming AI voice guide

Multilingual text to speech API in 29 languages

Developer API

Enterprise scale

Frequently asked questions

What makes ElevenLabs API the best TTS API?

It offers unparalleled quality, multilingual capabilities, and low latency (<500ms), ensuring optimal user experience. It also provides a comprehensive library of voices and a variety of voice settings to suit any use-case.

What is a text to speech & AI voice API?

It is an application programming interface that allows developers to integrate text-to-speech and voice cloning capabilities into their applications. It works by leveraging deep learning to convert text into speech, and speech into a different voice. The technology has had significant growth in recent months due to its ability to create a more immersive user experience. It is used to create audiobooks, podcasts, voice assistants, and more. It can also be used to create custom voices for gaming, movies, and other media.

How do I get started with the text to speech API?

You can get started by signing up for a free account. After registering, find your xi-api-key in your profile settings; this key is required to authenticate API requests. You can then generate audio from text in a variety of languages by sending a POST request to the API with the desired text and voice settings. The API returns an audio file in response. You can send these requests from any programming language, such as Python.
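The request described above might be assembled as in the following Python sketch. Only the xi-api-key header comes from the text. The base URL, the path layout, and the voice_settings field names are assumptions that should be checked against the current API reference, and the key and voice ID are placeholders. The request is built but not sent, so the sketch runs without credentials.

```python
import json
import urllib.request

# Assumption: base URL and path layout; verify against the API reference.
API_BASE = "https://api.elevenlabs.io/v1/text-to-speech"


def build_tts_request(api_key: str, voice_id: str, text: str) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying text and voice settings."""
    body = {
        "text": text,
        # Assumed setting names and values, shown for illustration only.
        "voice_settings": {"stability": 0.5, "similarity_boost": 0.75},
    }
    return urllib.request.Request(
        url=f"{API_BASE}/{voice_id}",
        data=json.dumps(body).encode("utf-8"),
        headers={"xi-api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )


request = build_tts_request("YOUR_API_KEY", "YOUR_VOICE_ID", "Hello from the API.")
print(request.get_method(), request.full_url)

# To actually send it and save the returned audio, something like:
# with urllib.request.urlopen(request) as response:
#     with open("speech.mp3", "wb") as out:
#         out.write(response.read())
```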

How does the API ensure high-quality output?

It delivers audio at 128 kbps, allowing for a premium listening experience. It also offers a variety of voice settings to suit any use-case, including emotional range, contextual awareness, and voice variety.

Can I get support during the integration process?

Yes, extensive resources, an active developer community, and a responsive support team are available to assist you.

How many languages does the API support?

Our text to speech API supports 29 languages including Hindi, Spanish, German, Arabic & Chinese. Each voice maintains its unique characteristics across all languages.

What is the latency of the text to speech API?

The API boasts ultra-low latency, achieving approximately 400ms audio generation times with its Turbo model. This ensures a quick turnaround from text input to audio output. Multiple latency optimization modes are available, enabling significant improvements and responsiveness.

What are the use cases for the ElevenLabs TTS API?

The API can be used to create audiobooks, podcasts, voice assistants, and more. It can also be used to create custom voices for gaming, movies, and other media.

What is an AI voice API and how does it work?

An AI voice API is an application programming interface that allows developers to integrate text-to-speech and voice cloning capabilities into their applications. It works by leveraging deep learning to convert text into speech, and speech into a different voice.

What is the best text to speech (TTS) API?

The best text to speech API is one that offers high-quality output, multilingual capabilities, and low latency. It should also provide a comprehensive library of voices and a variety of voice settings to suit any use-case. You can find all of these features and more with ElevenLabs.

Best Text to Speech APIs

List of the Top Text-to-Speech APIs (also known as TTS APIs) available on RapidAPI.

About this Collection:

Text to Speech APIs

About TTS APIs

TTS APIs (text to speech APIs) can be used to enable speech-based text output in an app or program in addition to providing text on a screen.

What is text to speech?

Text to speech (TTS), also known as speech synthesis, is the process of converting written text to spoken audio. In most cases, text to speech refers specifically to text on a computer or other device.

How does a text-to-speech API work?

First, a program sends text to the API as a request, typically in JSON format. Optionally, text can often be formatted using SSML, a type of markup language created to improve the efficiency of speech synthesis programs.

Once the API receives the request, it will return the equivalent audio object. This object can then be integrated into the program which made the request and played for the user.

The best text to speech APIs also allow selection of accent and gender, as well as other options.

Who is text to speech for?

Text to speech is crucial for some users with disabilities. Users with vision problems may be unable to read text and interpret figures that rely on sight alone, so the ability to have content spoken to them instead of reading can mean the difference between an unusable program and a usable one.

While screen readers and other types of adaptive hardware and software exist to allow users with disabilities to use inaccessible programs, these can be complicated and expensive. It’s almost always better to provide a native text-to-speech solution within your program or app.

Text-to-speech APIs can also help nondisabled users, however. There are many use cases for text to speech, including safer use of an app or program in situations where looking at a screen might be dangerous, distracting or just inconvenient. For example, a sighted user following a recipe on their phone could have it read aloud to them instead of constantly having to clean their hands to check the next step.

Why is a text-to-speech API important?

Using an API for text to speech can make programs much more effective.

Especially because speech synthesis is such a specialized and complex field, an API can free up developers to focus on the unique strengths of their own program.

Users with disabilities also have higher expectations than in the past, and developers are better off meeting their needs with a robust, established text to speech API rather than using a homegrown solution.

What can you expect from the best text to speech APIs?

Any text to speech API will return an audio file.

The best produce seamless audio that sounds like it was spoken by a real human being. In some cases, APIs even allow developers to create their own voice model for the audio output they request.

High-quality APIs of any sort should also include support and extensive documentation.

Are there examples of the best free TTS APIs?

- Text to Speech

- IBM Watson TTS

- Robomatic.ai

- Text to Speech - TTS

- Microsoft Text Translator

- Text-to-Speech

Text to Speech API SDKs

All text to speech APIs are supported and made available in multiple developer programming languages and SDKs including:

- Objective-C

- Java (Android)

Just select your preference from any API endpoints page.

Sign up today for free on RapidAPI to begin using Text to Speech APIs!

Using the Web Speech API

Speech recognition

Speech recognition involves receiving speech through a device's microphone, which is then checked by a speech recognition service against a list of grammar (basically, the vocabulary you want to have recognized in a particular app.) When a word or phrase is successfully recognized, it is returned as a result (or list of results) as a text string, and further actions can be initiated as a result.

The Web Speech API has a main controller interface for this — SpeechRecognition — plus a number of closely-related interfaces for representing grammar, results, etc. Generally, the default speech recognition system available on the device will be used for the speech recognition — most modern OSes have a speech recognition system for issuing voice commands. Think about Dictation on macOS, Siri on iOS, Cortana on Windows 10, Android Speech, etc.

Note: On some browsers, such as Chrome, using Speech Recognition on a web page involves a server-based recognition engine. Your audio is sent to a web service for recognition processing, so it won't work offline.

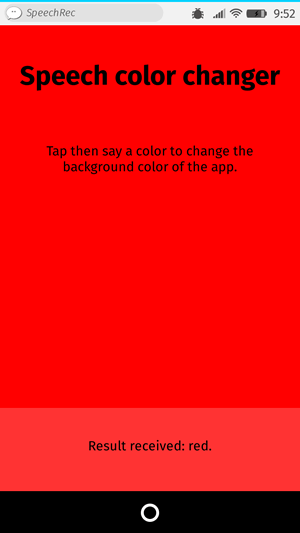

To show simple usage of Web speech recognition, we've written a demo called Speech color changer. When the screen is tapped/clicked, you can say an HTML color keyword, and the app's background color will change to that color.

To run the demo, navigate to the live demo URL in a supporting mobile browser (such as Chrome).

HTML and CSS

The HTML and CSS for the app is really trivial. We have a title, instructions paragraph, and a div into which we output diagnostic messages.

The CSS provides a very simple responsive styling so that it looks OK across devices.

Let's look at the JavaScript in a bit more detail.

Prefixed properties

Browsers currently support speech recognition with prefixed properties. Therefore at the start of our code we include these lines to allow for both prefixed properties and unprefixed versions that may be supported in future:

The grammar

The next part of our code defines the grammar we want our app to recognize. The following variable is defined to hold our grammar:

The grammar format used is JSpeech Grammar Format (JSGF); you can find a lot more about it in its spec. However, for now let's just run through it quickly:

- The lines are separated by semicolons, just like in JavaScript.

- The first line — #JSGF V1.0; — states the format and version used. This always needs to be included first.

- The second line indicates a type of term that we want to recognize. public declares that it is a public rule, the string in angle brackets defines the recognized name for this term (color), and the list of items that follow the equals sign are the alternative values that will be recognized and accepted as appropriate values for the term. Note how each is separated by a pipe character.

- You can have as many terms defined as you want on separate lines following the above structure, and include fairly complex grammar definitions. For this basic demo, we are just keeping things simple.
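To make the structure of such a grammar concrete, here is a short sketch that assembles a JSGF string from a list of color names. It is written in Python only to show the shape of the string; in the demo itself the string would be built in JavaScript. The color list is a shortened, assumed sample, and the "grammar colors;" declaration is the grammar name that JSGF expects after the header.

```python
# Shortened sample list; the demo would use a longer set of HTML color keywords.
colors = ["aqua", "azure", "beige", "bisque", "black", "blue"]

# Header first, then the grammar name, then one public rule whose
# alternatives are separated by pipe characters.
grammar = "#JSGF V1.0; grammar colors; public <color> = " + " | ".join(colors) + " ;"
print(grammar)
```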

Plugging the grammar into our speech recognition

The next thing to do is define a speech recognition instance to control the recognition for our application. This is done using the SpeechRecognition() constructor. We also create a new speech grammar list to contain our grammar, using the SpeechGrammarList() constructor.

We add our grammar to the list using the SpeechGrammarList.addFromString() method. This accepts as parameters the string we want to add, plus optionally a weight value that specifies the importance of this grammar in relation to other grammars available in the list (from 0 to 1 inclusive). The added grammar is available in the list as a SpeechGrammar object instance.

We then add the SpeechGrammarList to the speech recognition instance by setting it to the value of the SpeechRecognition.grammars property. We also set a few other properties of the recognition instance before we move on:

- SpeechRecognition.continuous: Controls whether continuous results are captured (true), or just a single result each time recognition is started (false).

- SpeechRecognition.lang: Sets the language of the recognition. Setting this is good practice, and therefore recommended.

- SpeechRecognition.interimResults: Defines whether the speech recognition system should return interim results, or just final results. Final results are good enough for this simple demo.

- SpeechRecognition.maxAlternatives: Sets the number of alternative potential matches that should be returned per result. This can sometimes be useful, say if a result is not completely clear and you want to display a list of alternatives for the user to choose the correct one from. It is not needed for this simple demo, so we are just specifying one (which is the default anyway).

Starting the speech recognition

After grabbing references to the output <div> and the HTML element (so we can output diagnostic messages and update the app background color later on), we implement an onclick handler so that when the screen is tapped/clicked, the speech recognition service will start. This is achieved by calling SpeechRecognition.start(). The forEach() method is used to output colored indicators showing what colors to try saying.

Receiving and handling results

Once the speech recognition is started, there are many event handlers that can be used to retrieve results and other pieces of surrounding information (see the SpeechRecognition events). The most common one you'll probably use is the result event, which is fired once a successful result is received:

The second line here is a bit complex-looking, so let's explain it step by step. The SpeechRecognitionEvent.results property returns a SpeechRecognitionResultList object containing SpeechRecognitionResult objects. It has a getter so it can be accessed like an array, so the first [0] returns the SpeechRecognitionResult at position 0. Each SpeechRecognitionResult object contains SpeechRecognitionAlternative objects that contain individual recognized words. These also have getters so they can be accessed like arrays, so the second [0] returns the SpeechRecognitionAlternative at position 0. We then read its transcript property to get the recognized result as a string, set the background color to that color, and report the color recognized as a diagnostic message in the UI.

We also use the speechend event to stop the speech recognition service from running (using SpeechRecognition.stop()) once a single word has been recognized and it has finished being spoken:

Handling errors and unrecognized speech

The last two handlers are there to handle cases where speech was recognized that wasn't in the defined grammar, or an error occurred. The nomatch event is meant to handle the first case, although note that at the moment it doesn't seem to fire correctly; it just returns whatever was recognized anyway:

The error event handles cases where there is an actual error with the recognition. The SpeechRecognitionErrorEvent.error property contains the actual error returned:

Speech synthesis

Speech synthesis (aka text-to-speech, or TTS) involves synthesizing text contained within an app into speech, and playing it out of a device's speaker or audio output connection.

The Web Speech API has a main controller interface for this — SpeechSynthesis — plus a number of closely-related interfaces for representing text to be synthesized (known as utterances), voices to be used for the utterance, etc. Again, most OSes have some kind of speech synthesis system, which will be used by the API for this task as available.

To show simple usage of Web speech synthesis, we've provided a demo called Speak easy synthesis. This includes a set of form controls for entering text to be synthesized, and setting the pitch, rate, and voice to use when the text is uttered. After you have entered your text, you can press Enter/Return to hear it spoken.

To run the demo, navigate to the live demo URL in a supporting mobile browser.

The HTML and CSS are again pretty trivial, containing a title, some instructions for use, and a form with some simple controls. The <select> element is initially empty, but is populated with <option>s via JavaScript (see later on).

Let's investigate the JavaScript that powers this app.

Setting variables

First of all, we capture references to all the DOM elements involved in the UI, but more interestingly, we capture a reference to Window.speechSynthesis. This is the API's entry point; it returns an instance of SpeechSynthesis, the controller interface for web speech synthesis.

Populating the select element

To populate the <select> element with the different voice options the device has available, we've written a populateVoiceList() function. We first invoke SpeechSynthesis.getVoices(), which returns a list of all the available voices, represented by SpeechSynthesisVoice objects. We then loop through this list: for each voice we create an <option> element, set its text content to display the name of the voice (grabbed from SpeechSynthesisVoice.name), the language of the voice (grabbed from SpeechSynthesisVoice.lang), and -- DEFAULT if the voice is the default voice for the synthesis engine (checked by seeing if SpeechSynthesisVoice.default returns true).

We also create data- attributes for each option, containing the name and language of the associated voice, so we can grab them easily later on, and then append the options as children of the select.

Older browsers don't support the voiceschanged event, and just return a list of voices when SpeechSynthesis.getVoices() is called. On others, such as Chrome, you have to wait for the event to fire before populating the list. To allow for both cases, we run the function as shown below:

Speaking the entered text

Next, we create an event handler to start speaking the text entered into the text field. We are using an onsubmit handler on the form so that the action happens when Enter / Return is pressed. We first create a new SpeechSynthesisUtterance() instance using its constructor — this is passed the text input's value as a parameter.

Next, we need to figure out which voice to use. We use the HTMLSelectElement selectedOptions property to return the currently selected <option> element. We then use this element's data-name attribute, finding the SpeechSynthesisVoice object whose name matches this attribute's value. We set the matching voice object to be the value of the SpeechSynthesisUtterance.voice property.

Finally, we set the SpeechSynthesisUtterance.pitch and SpeechSynthesisUtterance.rate to the values of the relevant range form elements. Then, with all necessary preparations made, we start the utterance being spoken by invoking SpeechSynthesis.speak(), passing it the SpeechSynthesisUtterance instance as a parameter.

In the final part of the handler, we include a pause event to demonstrate how SpeechSynthesisEvent can be put to good use. When SpeechSynthesis.pause() is invoked, this returns a message reporting the character number and name that the speech was paused at.

Finally, we call blur() on the text input. This is mainly to hide the keyboard on Firefox OS.

Updating the displayed pitch and rate values

The last part of the code updates the pitch / rate values displayed in the UI, each time the slider positions are moved.

Cloud Text-to-Speech basics

Text-to-Speech allows developers to create natural-sounding, synthetic human speech as playable audio. You can use the audio data files you create using Text-to-Speech to power your applications or augment media like videos or audio recordings (in compliance with the Google Cloud Platform Terms of Service including compliance with all applicable law).

Text-to-Speech converts text or Speech Synthesis Markup Language (SSML) input into audio data like MP3 or LINEAR16 (the encoding used in WAV files).

This document is a guide to the fundamental concepts of using Text-to-Speech. Before diving into the API itself, review the quickstarts.

Basic example

Text-to-Speech is ideal for any application that plays audio of human speech to users. It allows you to convert arbitrary strings, words, and sentences into the sound of a person speaking the same things.

Imagine that you have a voice assistant app that provides natural language feedback to your users as playable audio files. Your app might take an action and then provide human speech as feedback to the user.

For example, your app may want to report that it successfully added an event to the user's calendar. Your app constructs a response string to report the success to the user, something like "I've added the event to your calendar."

With Text-to-Speech, you can convert that response string to actual human speech to play back to the user, similar to the example provided below.

Example 1. Audio file generated from Text-to-Speech

To create an audio file like example 1, you send a request to Text-to-Speech like the following code snippet.
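A request body for such a call might look like the following sketch, which builds the JSON in Python. The field names (input, voice, audioConfig and their children) are stated here as assumptions based on the public REST reference and should be checked there. The specific voice selection is illustrative, and sending the request to the synthesize endpoint with credentials is omitted.

```python
import json

# Assumed field names; confirm them against the REST reference before use.
request_body = {
    # The text to be spoken.
    "input": {"text": "I've added the event to your calendar."},
    # Voice selection by language and gender (a British English female voice).
    "voice": {"languageCode": "en-GB", "ssmlGender": "FEMALE"},
    # Requested output encoding.
    "audioConfig": {"audioEncoding": "MP3"},
}

payload = json.dumps(request_body, indent=2)
print(payload)
```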

Speech synthesis

The process of translating text input into audio data is called synthesis and the output of synthesis is called synthetic speech. Text-to-Speech takes two types of input: raw text or SSML-formatted data (discussed below). To create a new audio file, you call the synthesize endpoint of the API.

The speech synthesis process generates raw audio data as a base64-encoded string. You must decode the base64-encoded string into an audio file before an application can play it. Most platforms and operating systems have tools for decoding base64 text into playable media files.
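The decoding step can be sketched in a few lines of Python. The response field name audioContent is an assumption about the response shape, the helper name is made up for this example, and the response itself is a stand-in built from placeholder bytes rather than real audio.

```python
import base64
import json
import tempfile
from pathlib import Path


def save_audio(response_json: str, out_path: Path) -> int:
    """Decode the base64 audio in a synthesis response and write it to disk.

    Returns the number of bytes written.
    """
    audio_b64 = json.loads(response_json)["audioContent"]  # assumed field name
    audio_bytes = base64.b64decode(audio_b64)
    out_path.write_bytes(audio_bytes)
    return len(audio_bytes)


# Stand-in response: placeholder bytes, not real audio data.
fake_audio = b"\x00\x01placeholder-audio-bytes"
fake_response = json.dumps(
    {"audioContent": base64.b64encode(fake_audio).decode("ascii")}
)

out_file = Path(tempfile.gettempdir()) / "tts_example_output.mp3"
written = save_audio(fake_response, out_file)
print(written)
```

With a real response, the written file would be playable in any player that supports the requested encoding.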

To learn more about synthesis, review the quickstarts or the Creating Voice Audio Files page.

Text-to-Speech creates raw audio data of natural, human speech. That is, it creates audio that sounds like a person talking. When you send a synthesis request to Text-to-Speech, you must specify a voice that 'speaks' the words.

Text-to-Speech has a wide selection of custom voices available for you to use. The voices differ by language, gender, and accent (for some languages). For example, you can create audio that mimics the sound of a female English speaker with a British accent like example 1, above. You can also convert the same text into a different voice, say a male English speaker with an Australian accent.

Example 2. Audio file generated with en-AU speaker

To see the complete list of the available voices, see Supported Voices.

WaveNet voices

Along with other, traditional synthetic voices, Text-to-Speech also provides premium, WaveNet-generated voices. Users find the WaveNet-generated voices to be warmer and more human-like than other synthetic voices.

What distinguishes a WaveNet voice is the WaveNet model used to generate it. WaveNet models have been trained using raw audio samples of actual humans speaking. As a result, these models generate synthetic speech with more human-like emphasis and inflection on syllables, phonemes, and words.

Compare the following two samples of synthetic speech.

Example 3. Audio file generated with a standard voice

Example 4. Audio file generated with a WaveNet voice

To learn more about the benefits of WaveNet-generated voices, see Types of voices.

Other audio output settings

Besides the voice, you can also configure other aspects of the audio data output created by speech synthesis. Text-to-Speech supports configuring the speaking rate, pitch, volume, and sample rate hertz.

Review the AudioConfig reference for more information.

Speech Synthesis Markup Language (SSML) support

You can enhance the synthetic speech produced by Text-to-Speech by marking up the text using Speech Synthesis Markup Language (SSML). SSML enables you to insert pauses, acronym pronunciations, or other additional details into the audio data created by Text-to-Speech. Text-to-Speech supports a subset of the available SSML elements.

For example, you can ensure that the synthetic speech correctly pronounces ordinal numbers by providing Text-to-Speech with SSML input that marks ordinal numbers as such.
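Marking a number as an ordinal could look like the sketch below, which wraps the number in a say-as element with interpret-as set to ordinal. The helper name and the sample sentence are assumptions for illustration; the exact set of supported interpret-as values should be checked in the service's SSML documentation.

```python
from xml.sax.saxutils import escape


def ordinal_ssml(before: str, number: int, after: str) -> str:
    """Wrap a number in <say-as interpret-as="ordinal"> inside a <speak> document."""
    return (
        "<speak>"
        f"{escape(before)}"
        f'<say-as interpret-as="ordinal">{number}</say-as>'
        f"{escape(after)}"
        "</speak>"
    )


ssml_ordinal = ordinal_ssml("You are the ", 3, " caller in the queue.")
print(ssml_ordinal)
```

With this markup, a service that supports the element is expected to read the number as "third" rather than "three".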

Example 5. Audio file generated from plain text input

Example 6. Audio file generated from SSML input

To learn more about how to synthesize speech from SSML, see Creating Voice Audio Files.

Try it for yourself

If you're new to Google Cloud, create an account to evaluate how Text-to-Speech performs in real-world scenarios. New customers also get $300 in free credits to run, test, and deploy workloads.

Except as otherwise noted, the content of this page is licensed under the Creative Commons Attribution 4.0 License, and code samples are licensed under the Apache 2.0 License. For details, see the Google Developers Site Policies. Java is a registered trademark of Oracle and/or its affiliates.

Last updated 2024-05-09 UTC.

Using the Text-to-Speech API with Python

1. Overview

The Text-to-Speech API enables developers to generate human-like speech. The API converts text into audio formats such as WAV, MP3, or Ogg Opus. It also supports Speech Synthesis Markup Language (SSML) inputs to specify pauses, numbers, date and time formatting, and other pronunciation instructions.

In this tutorial, you will focus on using the Text-to-Speech API with Python.

What you'll learn

- How to set up your environment

- How to list supported languages

- How to list available voices

- How to synthesize audio from text

What you'll need

- A Google Cloud project

- A browser, such as Chrome or Firefox

- Familiarity using Python

2. Setup and requirements

Self-paced environment setup

- Sign in to the Google Cloud Console and create a new project or reuse an existing one. If you don't already have a Gmail or Google Workspace account, you must create one.

- The Project name is the display name for this project's participants. It is a character string not used by Google APIs. You can always update it.

- The Project ID is unique across all Google Cloud projects and is immutable (cannot be changed after it has been set). The Cloud Console auto-generates a unique string; usually you don't care what it is. In most codelabs, you'll need to reference your Project ID (typically identified as PROJECT_ID). If you don't like the generated ID, you can generate another random one. Alternatively, you can try your own, and see if it's available. It can't be changed after this step and remains for the duration of the project.

- For your information, there is a third value, a Project Number, which some APIs use. Learn more about all three of these values in the documentation.

- Next, you'll need to enable billing in the Cloud Console to use Cloud resources/APIs. Running through this codelab won't cost much, if anything at all. To shut down resources to avoid incurring billing beyond this tutorial, you can delete the resources you created or delete the project. New Google Cloud users are eligible for the $300 USD Free Trial program.

Start Cloud Shell

While Google Cloud can be operated remotely from your laptop, in this codelab you will be using Cloud Shell, a command line environment running in the Cloud.

Activate Cloud Shell

If this is your first time starting Cloud Shell, you're presented with an intermediate screen describing what it is. If you were presented with an intermediate screen, click Continue.

It should only take a few moments to provision and connect to Cloud Shell.

This virtual machine is loaded with all the development tools needed. It offers a persistent 5 GB home directory and runs in Google Cloud, greatly enhancing network performance and authentication. Much, if not all, of your work in this codelab can be done with a browser.

Once connected to Cloud Shell, you should see that you are authenticated and that the project is set to your project ID.

- Run the following command in Cloud Shell to confirm that you are authenticated: gcloud auth list

- Run the following command in Cloud Shell to confirm that the gcloud command knows about your project: gcloud config list project

If it is not, you can set it with this command: gcloud config set project <PROJECT_ID>

3. Environment setup

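Before you can begin using the Text-to-Speech API, run the following command in Cloud Shell to enable the API: gcloud services enable texttospeech.googleapis.com

You should see a message confirming that the operation finished successfully.

Now, you can use the Text-to-Speech API!

Navigate to your home directory: cd ~

Create a Python virtual environment to isolate the dependencies: virtualenv venv-texttospeech

Activate the virtual environment: source venv-texttospeech/bin/activate

Install IPython and the Text-to-Speech API client library: pip install ipython google-cloud-texttospeech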

Now, you're ready to use the Text-to-Speech API client library!

In the next steps, you'll use an interactive Python interpreter called IPython, which you installed in the previous step. Start a session by running ipython in Cloud Shell.

You're ready to make your first request and list the supported languages...

4. List supported languages

In this section, you will get the list of all supported languages.

Copy the following code into your IPython session:
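The listing below is a minimal sketch of such a function, assuming the google-cloud-texttospeech package is installed and credentials are configured (as they are in Cloud Shell). The function names unique_languages_from_voices and list_languages, and the optional client parameter, are choices made for this sketch.

```python
from typing import Iterable, Set


def unique_languages_from_voices(voices: Iterable) -> Set[str]:
    """Collect every language code advertised by the given voices."""
    language_set = set()
    for voice in voices:
        # Each voice carries a sequence of language codes, e.g. ["en-US"].
        language_set.update(voice.language_codes)
    return language_set


def list_languages(client=None) -> None:
    """Print the supported language codes, five per row."""
    if client is None:
        # Imported lazily so the helper above can be used without the library.
        import google.cloud.texttospeech as tts

        client = tts.TextToSpeechClient()
    # Without a language filter, list_voices returns every available voice.
    response = client.list_voices()
    languages = unique_languages_from_voices(response.voices)

    print(f" Languages: {len(languages)} ".center(60, "-"))
    for i, language in enumerate(sorted(languages)):
        print(f"{language:>10}", end="\n" if i % 5 == 4 else "")
    print()
```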

Take a moment to study the code and see how it uses the list_voices client library method to build the list of supported languages.

Call the function. You should get a list of 58 or more languages and variants, such as:

- Chinese and Taiwanese Mandarin,

- Australian, British, Indian, and American English,

- French from Canada and France,

- Portuguese from Brazil and Portugal.

This list is not fixed and grows as new voices become available.

This step allowed you to list the supported languages.

5. List available voices

In this section, you will get the list of voices available in different languages.
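The following is a minimal sketch of how this could look, under the same assumptions as before (google-cloud-texttospeech installed, credentials configured). The function names list_voices and describe_voice, the optional client parameter, and the column layout are choices made for this sketch.

```python
def describe_voice(voice) -> str:
    """Format one voice as: languages | name | gender | sample rate."""
    languages = ", ".join(voice.language_codes)
    # ssml_gender is an enum value; fall back to its raw form if it has no name.
    gender = getattr(voice.ssml_gender, "name", str(voice.ssml_gender))
    rate = voice.natural_sample_rate_hertz
    return f"{languages:<8} | {voice.name:<24} | {gender:<8} | {rate:,} Hz"


def list_voices(language_code=None, client=None) -> None:
    """Print the voices available for a language code (all voices if None)."""
    if client is None:
        # Imported lazily so describe_voice can be used without the library.
        import google.cloud.texttospeech as tts

        client = tts.TextToSpeechClient()
    response = client.list_voices(language_code=language_code)
    voices = sorted(response.voices, key=lambda voice: voice.name)

    print(f" Voices: {len(voices)} ".center(60, "-"))
    for voice in voices:
        print(describe_voice(voice))
```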

Take a moment to study the code and see how it uses the client library method list_voices(language_code) to list voices available for a given language.

Now, get the list of available German voices by passing the language code de.

Multiple female and male voices are available, as well as standard, WaveNet, Neural2, and Studio voices:

- Standard voices are generated by signal processing algorithms.

- WaveNet, Neural2, and Studio voices are higher-quality voices, synthesized by machine learning models, that sound more natural.
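To compare these voice types side by side, the voice names can be grouped by the technology segment they contain. This is only a sketch: it assumes names follow a pattern like language-REGION-Technology-Letter (for example de-DE-Wavenet-B), which is an observed naming convention rather than a documented contract, and the helper names and sample voice names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List


def voice_technology(voice_name: str) -> str:
    """Return the technology segment of a voice name, or "Unknown"."""
    parts = voice_name.split("-")
    # Assumed layout: <language>-<region>-<technology>-<letter>
    return parts[2] if len(parts) >= 4 else "Unknown"


def group_by_technology(voice_names: Iterable[str]) -> Dict[str, List[str]]:
    """Group voice names by their technology segment."""
    groups = defaultdict(list)
    for name in voice_names:
        groups[voice_technology(name)].append(name)
    return dict(groups)


# Example names used for illustration only.
names = ["de-DE-Standard-A", "de-DE-Wavenet-B", "de-DE-Neural2-D", "de-DE-Standard-B"]
for technology, members in sorted(group_by_technology(names).items()):
    print(f"{technology:<10} {', '.join(members)}")
```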

Now, get the list of available English voices by passing the language code en.

In addition to a selection of multiple voices in different genders and qualities, multiple accents are available: Australian, British, Indian, and American English.

Take a moment to list the voices available for your preferred languages and variants (or even all of them).

This step allowed you to list the available voices. You can read more about the supported voices and languages.

6. Synthesize audio from text

You can use the Text-to-Speech API to convert a string into audio data. You can configure the output of speech synthesis in a variety of ways, including selecting a unique voice or modulating the output in pitch, volume, speaking rate, and sample rate.
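A minimal sketch of a synthesis function follows, assuming the google-cloud-texttospeech package is installed and credentials are configured. The function names text_to_wav and language_code_from_voice_name are choices made for this sketch, and deriving the language code from the first two segments of the voice name is an assumption about how voices are named.

```python
def language_code_from_voice_name(voice_name: str) -> str:
    """Derive a language code such as "en-AU" from a name like "en-AU-Neural2-A"."""
    return "-".join(voice_name.split("-")[:2])


def text_to_wav(voice_name: str, text: str) -> str:
    """Synthesize text with the given voice and save it as <voice_name>.wav."""
    # Imported here so the helper above can be used without the library.
    import google.cloud.texttospeech as tts

    text_input = tts.SynthesisInput(text=text)
    voice_params = tts.VoiceSelectionParams(
        language_code=language_code_from_voice_name(voice_name),
        name=voice_name,
    )
    # LINEAR16 is the uncompressed PCM encoding used in WAV files.
    audio_config = tts.AudioConfig(audio_encoding=tts.AudioEncoding.LINEAR16)

    client = tts.TextToSpeechClient()
    response = client.synthesize_speech(
        input=text_input,
        voice=voice_params,
        audio_config=audio_config,
    )

    filename = f"{voice_name}.wav"
    with open(filename, "wb") as out:
        out.write(response.audio_content)
    print(f'Generated speech saved to "{filename}"')
    return filename
```

Options such as speaking_rate and pitch can also be set on AudioConfig; they are left at their defaults here to keep the sketch short.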

Take a moment to study the code and see how it uses the synthesize_speech client library method to generate the audio data and save it as a wav file.

Now, generate sentences in a few different accents by calling the function with different voice names.

To download all generated files at once, you can use this Cloud Shell command from your Python environment: !cloudshell download *.wav

Confirm the download prompt and your browser will download the files.

Open each file and listen to the result.

In this step, you were able to use the Text-to-Speech API to convert sentences into WAV audio files. Read more about creating voice audio files.

7. Congratulations!

You learned how to use the Text-to-Speech API using Python to generate human-like speech!

To clean up your development environment, from Cloud Shell:

- If you're still in your IPython session, go back to the shell: exit

- Stop using the Python virtual environment: deactivate

- Delete your virtual environment folder: cd ~ ; rm -rf ./venv-texttospeech

To delete your Google Cloud project, from Cloud Shell:

- Retrieve your current project ID: PROJECT_ID=$(gcloud config get-value core/project)

- Make sure this is the project you want to delete: echo $PROJECT_ID

- Delete the project: gcloud projects delete $PROJECT_ID

Learn more

- Test the demo in your browser: https://cloud.google.com/text-to-speech

- Text-to-Speech documentation: https://cloud.google.com/text-to-speech/docs

- Python on Google Cloud: https://cloud.google.com/python

- Cloud Client Libraries for Python: https://github.com/googleapis/google-cloud-python

This work is licensed under a Creative Commons Attribution 2.0 Generic License.

