EDITORIAL article

Editorial: theoretical advances and practical applications of spiking neural networks.

- 1 Neuromorphic Sensor Signal Processing Lab, Department of Electronic and Electrical Engineering, Centre for Image and Signal Processing, University of Strathclyde, Glasgow, United Kingdom

- 2 Centre for Future Media, School of Computer Science and Engineering, University of Electronic Science and Technology of China, Chengdu, Sichuan, China

- 3 Learning and Intelligent Systems Lab, School of Electrical Engineering and Computer Science, Ohio University, Athens, OH, United States

Editorial on the Research Topic Theoretical advances and practical applications of spiking neural networks

1 Introduction

Neuromorphic engineering has grown significantly in popularity over the last 10 years. It has gone from being a niche academic research area, often confused with deep learning and mostly unknown to the wider industrial community, to being the main focus of many funding calls, significant industrial endeavours, and national and international initiatives. The arrival on the market of neuromorphic sensors, together with a growing understanding of the event-based sensing paradigm and the development of the first neuromorphic processors, has steered the wider academic community and industry toward the investigation and use of Spiking Neural Networks (SNNs). Often overlooked in favour of the now extremely popular Deep Neural Networks (DNNs), SNNs have become a serious alternative to DNNs in application domains where size, weight and power are key limiting factors for the deployment of AI systems, such as space applications, security and defence, automotive, and, more generally, AI at the Edge. Nonetheless, many aspects of SNNs still require significant investigation, and many avenues remain unexplored. To this end, the articles accepted in this special topic present novel research on methodologies for training SNNs and on the use of SNNs in real-life applications.

2 About the papers

The articles published in this special topic cover a varied set of application domains, including image processing (such as denoising and segmentation), analysis of biosignals (for seizure detection), activity recognition through wearable devices, and audio processing for the cocktail party effect. Moreover, the topic also hosts two papers on novel learning methods, namely Meta-SpikePropamine and Chip-In-Loop SNN Proxy Learning.

In the article “Efficient and generalizable cross-patient epileptic seizure detection through a spiking neural network”, Zhang et al. propose an EEG-based spiking neural network (EESNN) with a recurrent spiking convolution structure. The network leverages the biological plausibility of SNNs to better exploit the temporal and biological characteristics of EEG signals.

Li Y. et al. apply SNNs to time series of binary spikes generated by wearable devices, in their work entitled “Efficient human activity recognition with spatio-temporal spiking neural networks”. The reported results indicate that SNNs achieve competitive accuracy while significantly reducing energy consumption in this application.

In “Explaining cocktail party effect and McGurk effect with a spiking neural network improved by Motif-topology”, Jia et al. introduce a novel Motif-topology improved SNN (M-SNN) to enhance the network's ability to tackle complex cognitive tasks. The experimental results show lower computational cost and higher accuracy. They also give a better explanation of some key phenomena of these two effects, such as new concept generation and anti-background noise.

In their article “Meta-SpikePropamine: learning to learn with synaptic plasticity in spiking neural networks”, Schmidgall et al. propose a novel bi-level optimization framework that integrates neuroscience principles into SNNs to enhance their online learning capabilities. The experimental outcomes underscore the potential of neuroscience-inspired models to advance online learning, marking a promising direction for future research.

Li X. et al. propose a biologically plausible algorithm, the mixture of personality (MoP) improved spiking actor network (SAN), in their work “Mixture of personality improved spiking actor network for efficient multi-agent cooperation”. Experimental results on the benchmark cooperative Overcooked task show that the proposed MoP-SAN algorithm achieves higher performance for the paradigms with (learning) and without (generalization) unseen partners.

In the article “SPIDEN: Deep Spiking Neural Networks for Efficient Image Denoising”, Castagnetti et al. explore the use of SNNs for image denoising. Their goal is to reach the accuracy of conventional Deep Convolutional Neural Networks (DCNNs) while reducing computational costs. The authors present a formal analysis of data flow through Integrate-and-Fire (IF) spiking neurons and establish the trade-off between conversion error and activation sparsity in SNNs. Experimental results show that these design goals are effectively achieved.
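The conversion-error/sparsity trade-off can be made concrete with a single neuron. The sketch below is a minimal illustration, not the SPIDEN analysis. It assumes an IF neuron with reset-by-subtraction, a threshold of 1.0, and an ANN activation applied as a constant input current for T time steps. Under these assumptions the firing rate approximates the clipped ReLU activation with an error below 1/T. Fewer time steps mean fewer possible spikes (sparser activity) but a coarser approximation.

```python
def if_rate(activation: float, steps: int, threshold: float = 1.0) -> float:
    """Firing rate of an Integrate-and-Fire neuron driven by a constant input.

    Assumption: reset-by-subtraction, and inputs scaled so that an ANN
    activation equal to `threshold` maps to one spike per time step.
    """
    v = 0.0
    spikes = 0
    for _ in range(steps):
        v += activation          # integrate the constant input current
        if v >= threshold:       # fire, then subtract the threshold
            spikes += 1
            v -= threshold
    return spikes * threshold / steps


def conversion_error(activation: float, steps: int) -> float:
    """Absolute gap between the SNN firing rate and the clipped ReLU output."""
    target = min(max(activation, 0.0), 1.0)
    return abs(if_rate(activation, steps) - target)


if __name__ == "__main__":
    for t in (4, 16, 64):
        worst = max(conversion_error(a / 100, t) for a in range(101))
        print(f"T={t:3d}  worst-case error={worst:.3f}  bound 1/T={1 / t:.3f}")
```

With reset-by-subtraction, the residual membrane potential after T steps is always below the threshold. That residual, divided by T, is exactly the quantization error, which is why the error shrinks as 1/T.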

Yue et al. propose a novel three-stage SNN training scheme for segmenting human brain images, in the work “Spiking Neural Networks Fine-Tuning for Brain Image Segmentation”. Their pipeline begins with fully optimizing an ANN, followed by a quick ANN-to-SNN conversion to initialize the corresponding spiking network. Spike-based backpropagation is then employed to fine-tune the converted SNN. Experimental results show a significant advantage of the proposed scheme over both ANN-to-SNN conversion and direct SNN training solutions in terms of segmentation accuracy and training efficiency.
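A quick ANN-to-SNN conversion step is often implemented with layer-wise threshold balancing. The sketch below is a generic illustration under stated assumptions and not necessarily the scheme used by Yue et al. Each layer's firing threshold is set to a high percentile of that layer's ANN activations recorded on sample data. An ideal IF neuron then fires at roughly clip(activation / threshold, 0, 1). The function names and the 99.9th-percentile default are hypothetical choices.

```python
import math


def percentile(values, q):
    """Linear-interpolation percentile (q in [0, 100]) of a non-empty list."""
    ordered = sorted(values)
    pos = (len(ordered) - 1) * q / 100.0
    lo, hi = math.floor(pos), math.ceil(pos)
    return ordered[lo] + (ordered[hi] - ordered[lo]) * (pos - lo)


def balance_thresholds(layer_activations, q=99.9):
    """One firing threshold per layer, taken from recorded ANN activations.

    A high percentile rather than the maximum keeps rare outliers from
    forcing a very high threshold (and near-silent neurons) on the layer.
    """
    return [percentile(acts, q) for acts in layer_activations]


def expected_rate(activation, threshold):
    """Rate an ideal IF neuron approaches: clip(activation / threshold, 0, 1)."""
    return min(max(activation / threshold, 0.0), 1.0)
```

For a layer whose activations are 0 to 999 plus a single outlier at 1e6, the 99.9th percentile gives a threshold of about 999. Using the maximum would let the outlier set the threshold and push almost every other neuron's rate toward zero. Fine-tuning with spike-based backpropagation can then correct the residual conversion error.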

In “Chip-In-Loop SNN Proxy Learning: A New Method for Efficient Training of Spiking Neural Networks”, Liu et al. introduce the Chip-In-Loop SNN Proxy Learning (CIL-SPL) method. Their approach applies proxy learning principles and uses hardware devices as proxy agents. This combination allows the hardware to exhibit event-driven, asynchronous behavior while the synchronous SNN structure is trained using backward loss gradients. Experiments on the N-MNIST dataset show that CIL-SPL achieves the best performance among the evaluated methods on actual hardware chips.
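The proxy-learning idea can be illustrated with a toy, single-neuron sketch. This is written under stated assumptions and is not the CIL-SPL code. The "chip" is simulated by a Python IF neuron whose firing rate is treated as a black box with no gradients. A clipped-linear ANN sharing the same weights serves as the differentiable proxy. The loss is computed on the chip's measured rate, while the gradient is taken through the proxy. All function names, the learning rate and the target rate are hypothetical.

```python
def chip_rate(weights, inputs, steps=20, threshold=1.0):
    """Stand-in for an event-driven chip: returns a firing rate, no gradients."""
    current = sum(w * x for w, x in zip(weights, inputs))
    v, spikes = 0.0, 0
    for _ in range(steps):
        v += current
        if v >= threshold:
            spikes += 1
            v -= threshold
    return spikes / steps


def proxy_grad(weights, inputs):
    """Gradient of the proxy ANN output clip(w . x, 0, 1) with respect to w."""
    z = sum(w * x for w, x in zip(weights, inputs))
    active = 1.0 if 0.0 < z < 1.0 else 0.0
    return [active * x for x in inputs]


def train(weights, inputs, target, lr=0.5, epochs=50):
    """Forward on the chip, backward through the proxy (squared-error loss)."""
    for _ in range(epochs):
        rate = chip_rate(weights, inputs)        # measured on "hardware"
        err = rate - target                      # dL/d(rate) for L = 0.5 * err**2
        grads = proxy_grad(weights, inputs)      # surrogate path for backprop
        weights = [w - lr * err * g for w, g in zip(weights, grads)]
    return weights


if __name__ == "__main__":
    w = train([0.1, 0.1], [1.0, 0.5], target=0.6)
    print(w, chip_rate(w, [1.0, 0.5]))
```

Because the error term comes from the chip's actual output, hardware quantization is part of what the weights are optimized against. The proxy only supplies the gradient direction.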

3 Discussion and future trends

As can be seen, the range and type of applications covered demonstrate the wide potential of SNNs across different domains. That potential is not limited to event-based visual sensing and processing, which non-experts sometimes treat as almost synonymous with SNNs. Indeed, event-based cameras are currently the most popular example of event-based sensors. This is quite likely because they offer an easy-to-understand approach to spike generation by mimicking the human retina, and their output is rather straightforward to visualise and interpret. It is therefore encouraging to see the research community exploring other applications of SNNs, and this should be fostered further. More specifically, event extraction from other sensing modalities is a very interesting and welcome feature of some of the articles in this topic.

In this respect, new commercially available neuromorphic sensors would be welcome. Researchers have indeed proposed novel neuromorphic sensors in other modalities, for example audio, olfactory and tactile sensing; however, these remain largely confined to academic research. Furthermore, an interesting future direction is the investigation of novel event-based sensing approaches built on conventional, i.e., not natively neuromorphic, sensors and sensing hardware architectures. For example, since radar and lidar already handle information in the form of pulses, it would be ideal if existing technology could be used as is to generate event-based data fed directly to SNNs in raw format. This would avoid an initial transformation of the sensed data into more conventional formats, which may be redundant in the context of SNN-based processing.
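One generic way to derive events from a conventionally sampled signal is send-on-delta (level-crossing) coding, sketched below. The signal could be, for instance, a radar return amplitude. This sketch is an illustration under that assumption, not a method proposed in this editorial. It mirrors the per-pixel logic of event cameras. An ON (+1) or OFF (-1) event is emitted each time the signal moves one threshold away from the last reference level. A slowly varying signal therefore produces few events.

```python
def delta_events(samples, threshold):
    """Convert a sampled 1-D signal into ON/OFF events (send-on-delta coding).

    Returns a list of (sample_index, polarity) tuples, polarity +1 or -1.
    """
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    if not samples:
        return []
    reference = samples[0]
    events = []
    for i, x in enumerate(samples[1:], start=1):
        # A large jump can cross several thresholds and emit several events.
        while x - reference >= threshold:
            reference += threshold
            events.append((i, +1))
        while reference - x >= threshold:
            reference -= threshold
            events.append((i, -1))
    return events


if __name__ == "__main__":
    print(delta_events([0.0, 0.25, 0.5, 1.0, 0.4], threshold=0.3))
```

A constant signal produces no events at all. This is the kind of sparsity that SNN-based processing can exploit.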

In a different vein, research on SNNs can borrow ideas from DNN approaches, but it should avoid closely mimicking the development of DNNs. While this can provide useful research directions and hints, it may also steer SNNs toward uses that do not fully exploit the potential of event-based sensing and processing. More applications that leverage key aspects of SNNs would be welcome, such as the sparse nature of event-based data and its timing and asynchronous processing. Along this line of thought, these two key aspects should be investigated to devise learning methods that mimic the human brain and its learning. The motivation should not be merely a dogmatic concern for biological plausibility, but also a more pragmatic engineering angle.

4 Conclusion

Spiking Neural Networks and Neuromorphic Engineering have emerged from their niche. They are now known to the wider academic community and to industry, and are frequently indicated as the key technologies for successfully achieving AI at the Edge. This is a fundamental step toward encouraging further research on the core aspects: sensors, processing algorithms, and hardware architectures. Nonetheless, common misconceptions should be cleared up. There should also be a conscious effort to use SNNs and their capabilities for what they are, so that they are chosen as the right tool for the job rather than because of their similarity to other generations of neural networks.

Author contributions

GD: Writing – original draft, Writing – review & editing. JL: Writing – review & editing. MZ: Writing – review & editing.

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Keywords: Spiking Neural Networks (SNN), Neuromorphic Engineering (NE), event-based sensing, neural networks, artificial intelligence

Citation: Di Caterina G, Zhang M and Liu J (2024) Editorial: Theoretical advances and practical applications of spiking neural networks. Front. Neurosci. 18:1406502. doi: 10.3389/fnins.2024.1406502

Received: 25 March 2024; Accepted: 29 March 2024; Published: 22 April 2024.

Edited and reviewed by: André van Schaik, Western Sydney University, Australia

Copyright © 2024 Di Caterina, Zhang and Liu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Gaetano Di Caterina, gaetano.di-caterina@strath.ac.uk


An Overview of Spiking Neural Networks


SMT-Based Modeling and Verification of Spiking Neural Networks: A Case Study

- Conference paper

- First Online: 17 January 2023


- Soham Banerjee,

- Sumana Ghosh,

- Ansuman Banerjee &

- Swarup K. Mohalik

Part of the book series: Lecture Notes in Computer Science (LNCS, volume 13881)

Included in the following conference series:

- International Conference on Verification, Model Checking, and Abstract Interpretation


In this paper, we present a case study on modeling and verification of Spiking Neural Networks (SNN) using Satisfiability Modulo Theory (SMT) solvers. SNN are special neural networks that have great similarity in their architecture and operation with the human brain. These networks have shown similar performance when compared to traditional networks with comparatively lesser energy requirement. We discuss different properties of SNNs and their functioning. We then use Z3, a popular SMT solver to encode the network and its properties. Specifically, we use the theory of Linear Real Arithmetic (LRA). Finally, we present a framework for verification and adversarial robustness analysis and demonstrate it on the Iris and MNIST benchmarks.
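A spiking neuron with fixed weights fits in Linear Real Arithmetic, and the sketch below shows why. It generates an SMT-LIB 2 script (logic QF_LRA) for one IF neuron unrolled over a few time steps, with symbolic, bounded real inputs. This is a hypothetical encoding written for illustration; the authors' actual Z3 encoding is in their repository and may differ. Because the weights and the threshold are constants, every constraint is linear. Each spike decision becomes a Boolean defined by a linear inequality, and the membrane update becomes an if-then-else over reals.

```python
def smt_real(c):
    """Format a constant as an SMT-LIB Real literal (negatives need unary minus)."""
    c = float(c)
    return f"(- {abs(c):.6f})" if c < 0 else f"{abs(c):.6f}"


def smt_sum(terms):
    """SMT-LIB '+' needs at least two arguments."""
    return terms[0] if len(terms) == 1 else "(+ " + " ".join(terms) + ")"


def encode_if_neuron(weights, theta, steps, lo, hi, min_spikes):
    """SMT-LIB script asking: can some input in [lo, hi]^n make an IF neuron
    (reset-by-subtraction, constant input) emit at least `min_spikes` spikes
    within `steps` time steps?  Hypothetical encoding for illustration."""
    out = ["(set-logic QF_LRA)"]
    for i in range(len(weights)):
        out.append(f"(declare-const x{i} Real)")
        out.append(f"(assert (and (<= {smt_real(lo)} x{i}) (<= x{i} {smt_real(hi)})))")
    # Input current: linear combination of symbolic inputs with constant weights.
    out.append("(declare-const I Real)")
    drive = smt_sum([f"(* {smt_real(w)} x{i})" for i, w in enumerate(weights)])
    out.append(f"(assert (= I {drive}))")
    out.append("(declare-const v0 Real)")
    out.append(f"(assert (= v0 {smt_real(0)}))")
    for t in range(1, steps + 1):
        pre = f"(+ v{t - 1} I)"  # membrane potential before the spike check
        out.append(f"(declare-const s{t} Bool)")
        out.append(f"(declare-const v{t} Real)")
        out.append(f"(assert (= s{t} (>= {pre} {smt_real(theta)})))")
        out.append(f"(assert (= v{t} (ite s{t} (- {pre} {smt_real(theta)}) {pre})))")
    count = smt_sum([f"(ite s{t} {smt_real(1)} {smt_real(0)})" for t in range(1, steps + 1)])
    out.append(f"(assert (>= {count} {smt_real(min_spikes)}))")
    out.append("(check-sat)")
    return "\n".join(out)


if __name__ == "__main__":
    print(encode_if_neuron([0.5, -0.3], theta=1.0, steps=3, lo=0.0, hi=1.0, min_spikes=2))
```

The script can be saved to a `.smt2` file and passed to an SMT-LIB-compliant solver such as Z3. A `sat` answer yields a witness input. To verify that a property always holds, one asserts its negation instead and expects `unsat`.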


Code and benchmarks: https://github.com/Soham-Banerjee/SMT-Encoding-for-Spiking-Neural-Network

Alur, R.: Timed automata. In: Peled, D. (ed.) CAV 1999. LNCS, vol. 1633, pp. 8–22. Springer, Heidelberg (1999). https://doi.org/10.1007/3-540-48683-6_3

Chapter Google Scholar

Aman, B., Ciobanu, G.: Modelling and verification of weighted spiking neural systems. Theoret. Comput. Sci. 623 , 92–102 (2016)

Article MathSciNet Google Scholar

De Maria, E., Di Giusto, C., Laversa, L.: Spiking neural networks modelled as timed automata with parameter learning (2018)

Google Scholar

De Maria, E., Muzy, A., Gaffé, D., Ressouche, A., Grammont, F.: Verification of temporal properties of neuronal archetypes modeled as synchronous reactive systems. In: Cinquemani, E., Donzé, A. (eds.) HSB 2016. LNCS, vol. 9957, pp. 97–112. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-47151-8_7

Demin, V., Nekhaev, D.: Recurrent spiking neural network learning based on a competitive maximization of neuronal activity. Front. Neuroinf. 12 , 79 (2018)

Article Google Scholar

Deng, L.: The MNIST database of handwritten digit images for machine learning research. IEEE Signal Process. Mag. 29 (6), 141–142 (2012)

Diehl, P.U., Cook, M.: Unsupervised learning of digit recognition using spike-timing-dependent plasticity. Front. Comput. Neurosci. 9 , 99 (2015)

Ding, J., Yu, Z., Tian, Y., Huang, T.: Optimal ANN-SNN conversion for fast and accurate inference in deep spiking neural networks (2021)

Elboher, Y.Y., Gottschlich, J., Katz, G.: An abstraction-based framework for neural network verification. In: Lahiri, S.K., Wang, C. (eds.) CAV 2020. LNCS, vol. 12224, pp. 43–65. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-53288-8_3

Eshraghian, J.K., et al.: Training spiking neural networks using lessons from deep learning (2021)

Fisher, R.: Iris. UCI Machine Learning Repository (1988). https://archive.ics.uci.edu/ml/datasets/Iris

Gokulanathan, S., Feldsher, A., Malca, A., Barrett, C., Katz, G.: Simplifying neural networks using formal verification. In: Lee, R., Jha, S., Mavridou, A., Giannakopoulou, D. (eds.) NFM 2020. LNCS, vol. 12229, pp. 85–93. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-55754-6_5

Goldberger, B., Katz, G., Adi, Y., Keshet, J.: Minimal modifications of deep neural networks using verification. In: LPAR23. LPAR-23: 23rd International Conference on Logic for Programming, Artificial Intelligence and Reasoning, vol. 73, pp. 260–278 (2020)

Guo, W., Fouda, M.E., Eltawil, A.M., Salama, K.N.: Neural coding in spiking neural networks: a comparative study for robust neuromorphic systems. Front. Neurosci. 15 , 638474 (2021)

Katz, G., Barrett, C., Dill, D.L., Julian, K., Kochenderfer, M.J.: Reluplex: a calculus for reasoning about deep neural networks. Formal Methods Syst. Design 1–30 (2021)

Kim, T., et al.: Spiking neural network (SNN) with memristor synapses having non-linear weight update. Front. Comput. Neurosci. 15 , 646125 (2021)

Kuper, L., Katz, G., Gottschlich, J., Julian, K., Barrett, C., Kochenderfer, M.: Toward scalable verification for safety-critical deep networks (2018)

Lahav, O., Katz, G.: Pruning and slicing neural networks using formal verification (2021)

Li, S., Zhang, Z., Mao, R., Xiao, J., Chang, L., Zhou, J.: A fast and energy-efficient SNN processor with adaptive clock/event-driven computation scheme and online learning. IEEE Trans. Circuits Syst. I Regul. Pap. 68 (4), 1543–1552 (2021)

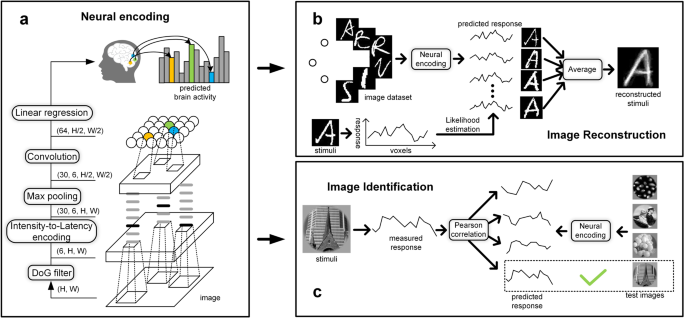

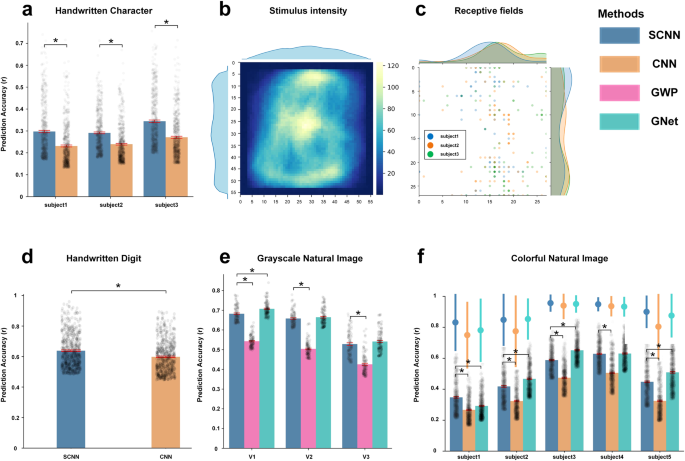

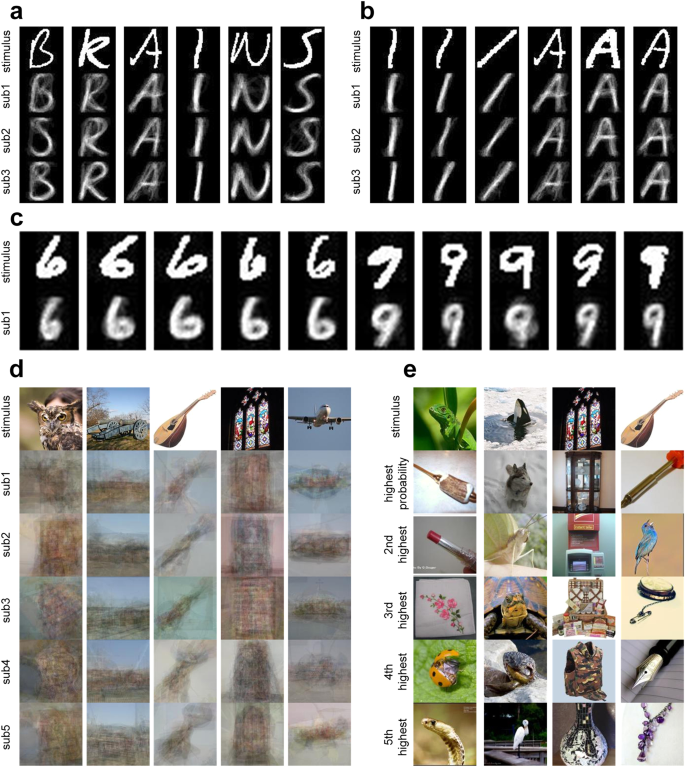

Liu, T.Y., Mahjoubfar, A., Prusinski, D., Stevens, L.: Neuromorphic computing for content-based image retrieval. PLOS One 17 (4), 1–13 (2022). https://doi.org/10.1371/journal.pone.0264364

Malik, N.: Artificial neural networks and their applications (2005)

de Maria, E., Gaffé, D., Ressouche, A., Girard Riboulleau, C.: A model-checking approach to reduce spiking neural networks. In: BIOINFORMATICS 2018 - 9th International Conference on Bioinformatics Models, Methods and Algorithms, pp. 1–8 (2018)

de Moura, L., Bjørner, N.: Z3: an efficient SMT solver. In: Ramakrishnan, C.R., Rehof, J. (eds.) TACAS 2008. LNCS, vol. 4963, pp. 337–340. Springer, Heidelberg (2008). https://doi.org/10.1007/978-3-540-78800-3_24

Stimberg, M., Brette, R., Goodman, D.F.: Brian 2, an intuitive and efficient neural simulator. eLife 8 , e47314 (2019)

Tavanaei, A., Ghodrati, M., Kheradpisheh, S.R., Masquelier, T., Maida, A.S.: Deep learning in spiking neural networks (2018)

Tjeng, V., Xiao, K., Tedrake, R.: Evaluating robustness of neural networks with mixed integer programming (2017)

Yu, Z., Abdulghani, A.M., Zahid, A., Heidari, H., Imran, M.A., Abbasi, Q.H.: An overview of neuromorphic computing for artificial intelligence enabled hardware-based hopfield neural network. IEEE Access 8 , 67085–67099 (2020). https://doi.org/10.1109/ACCESS.2020.2985839

Download references

Author information

Authors and affiliations.

Indian Statistical Institute, Kolkata, India

Soham Banerjee, Sumana Ghosh & Ansuman Banerjee

Ericsson Research, Bangalore, India

Swarup K. Mohalik


Corresponding author

Correspondence to Ansuman Banerjee.

Editor information

Editors and affiliations.

Inria, Amazon Web Services, Courbevoie, France

Cezara Dragoi

Amazon Web Services, Seattle, WA, USA

Michael Emmi

University of Southern California, Los Angeles, CA, USA

Jingbo Wang


Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper.

Banerjee, S., Ghosh, S., Banerjee, A., Mohalik, S.K. (2023). SMT-Based Modeling and Verification of Spiking Neural Networks: A Case Study. In: Dragoi, C., Emmi, M., Wang, J. (eds) Verification, Model Checking, and Abstract Interpretation. VMCAI 2023. Lecture Notes in Computer Science, vol 13881. Springer, Cham. https://doi.org/10.1007/978-3-031-24950-1_2

DOI: https://doi.org/10.1007/978-3-031-24950-1_2

Published: 17 January 2023

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-24949-5

Online ISBN: 978-3-031-24950-1

eBook Packages: Computer Science, Computer Science (R0)


Frontiers in Computational Neuroscience

Spiking neural network connectivity and its potential for temporal sensory processing and variable binding

1 Multimedia and Vision Research Group, School of Electronic Engineering and Computer Science, Queen Mary, University of London, London, UK

Cornelius Glackin

2 Adaptive Systems Research Group, Department of Computer Science, University of Hertfordshire, Hatfield, Hertfordshire, UK

The most biologically inspired artificial neurons are those of the third generation. They are termed spiking neurons because individual pulses, or spikes, are the means by which stimuli are communicated. In essence, a spike is a short-term change in electrical potential and is the basis of communication between biological neurons. Unlike previous generations of artificial neurons, spiking neurons operate in the temporal domain and exploit time as a resource in their computation. In 1952, Alan Lloyd Hodgkin and Andrew Huxley produced the first biophysically detailed model of a spiking neuron. Their model describes the complex electro-chemical process that enables spikes to propagate through, and hence be communicated by, spiking neurons. Since then, improvements in experimental procedures in neurobiology, particularly in vivo experiments, have provided an increasingly detailed understanding of biological neurons. For example, it is now well understood that the propagation of spikes between neurons requires neurotransmitter, which is typically in limited supply; when the supply is exhausted, neurons become unresponsive. Neuronal morphology and the number of receptor sites, amongst many other factors, cause neurons to consume neurotransmitter at different rates. This in turn produces variations over time in the responsiveness of neurons, yielding various computational capabilities. Such advances in the understanding of biological neurons have culminated in a wide range of neuron models, from the computationally efficient to the biologically realistic. These models enable the modeling of neural circuits found in the brain.
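The neurotransmitter-depletion mechanism described above is commonly modeled as short-term synaptic depression. The sketch below is a minimal, simplified resource model in the spirit of Tsodyks and Markram, and the parameter values are illustrative assumptions. Each presynaptic spike releases a fraction U of the available resource R. The resource then recovers exponentially toward 1 with time constant tau_rec. A high-frequency spike train therefore transmits progressively weaker responses, and a long pause restores them.

```python
import math


def depressing_synapse(spike_times, U=0.5, tau_rec=800.0):
    """Efficacy transmitted by each presynaptic spike under resource depletion.

    R is the fraction of available neurotransmitter (1 = fully replenished).
    Times are in milliseconds. Returns one efficacy value (U * R) per spike.
    """
    R = 1.0
    last_t = None
    efficacies = []
    for t in spike_times:
        if last_t is not None:
            # Exponential recovery of the resource during the inter-spike gap.
            R = 1.0 - (1.0 - R) * math.exp(-(t - last_t) / tau_rec)
        release = U * R          # neurotransmitter released by this spike
        efficacies.append(release)
        R -= release             # depletion
        last_t = t
    return efficacies


if __name__ == "__main__":
    print(depressing_synapse([0, 10, 20, 30]))       # 100 Hz burst: responses fade
    print(depressing_synapse([0, 10, 20, 10000]))    # long pause: response recovers
```

In this model the synapse effectively acts as a high-pass filter on spike rate, which is one concrete example of how depletion gives "various computational capabilities".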

In recent years, much of the focus in neuron modeling has moved to the study of the connectivity of spiking neural networks. Spiking neural networks provide a vehicle for understanding, from a computational perspective, aspects of the brain's neural circuitry. This understanding can then be used to tackle some of the historically intractable issues with artificial neurons, such as scalability and the lack of variable binding. Current knowledge is beginning to shed light on these issues. This includes knowledge of feed-forward, lateral, and recurrent connectivity of spiking neurons, and of the interplay between excitatory and inhibitory neurons. It improves our understanding of the temporal processing capabilities and synchronous behavior of biological neurons. This research topic spans current research from neuron models to spiking neural networks and their application to interesting and current computational problems. The research papers submitted to this topic fall into the following major areas: more efficient neuron modeling; lateral and recurrent spiking neural network connectivity; exploitation of biological neural circuitry by means of spiking neural networks; optimization of spiking neural networks; and spiking neural networks for sensory processing.

Moujahid and d'Anjou (2012) stimulate the giant squid axon with simulated spikes to gain new insights toward more relevant models of biological neurons. They observed that temperature mediates the efficiency of action potentials. It does so by reducing the overlap between sodium and potassium currents in the ion exchange, and hence the associated energy consumption. The original research article by Dockendorf and Srinivasa (2013) falls into the area of lateral and recurrent spiking neural network connectivity. It presents a recurrent spiking model capable of learning episodes featuring missing and noisy data. The presented topology provides a means of recalling previously encoded patterns, with high-frequency inhibition intended to promote network stability. Kaplan et al. (2013) also investigated the use of recurrent spiking connectivity in their work on motion-based prediction and the issue of missing data. They address how anisotropic connectivity patterns that take into account the tuning properties of neurons efficiently predict the trajectory of a disappearing moving stimulus. They test this by simulating the network response in a moving-dot blanking experiment.

Garrido et al. (2013) investigate how systematic modifications of synaptic weights can exert close control over the timing of spike transmissions. They demonstrate this using a network of leaky integrate-and-fire spiking neurons to simulate cells of the cerebellar granular layer. Börgers and Walker (2013) simulate excitatory pyramidal cells and inhibitory interneurons which interact and exhibit gamma rhythms in the hippocampus and neocortex. They focus on how inhibitory interneurons maintain synchrony using gap junctions. Ponulak and Hopfield (2013) also take inspiration from the neural structure of the hippocampus, to address the problem of spatial navigation. Their topology encodes the spatial environment through an exploratory phase in which “place” cells reflect all possible trajectory boundaries and environmental constraints. Subsequently, a wave propagation process maps the trajectory between the current location and one or more targets in a single pass, by altering the synaptic connectivity of these “place” cells. Aimone and Weick (2013) provide a novel viewpoint on the state of the art in exploiting biological neural circuitry by means of spiking neural networks, with a thorough review of modeling cortical damage due to stroke. They argue that a theoretical understanding of the damaged cortical area after disease is vital, and that it must take into account current thinking on models of adult neurogenesis.
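The link between synaptic weight and spike timing can already be seen in a single leaky integrate-and-fire neuron. The sketch below uses illustrative parameters, not those of Garrido et al. It drives an LIF neuron with a constant input scaled by a synaptic weight w and measures its first spike time. For w above the threshold θ, the latency is analytically τ ln(w / (w − θ)). Larger weights therefore yield earlier spikes, and a forward-Euler simulation agrees with the closed form.

```python
import math


def lif_first_spike(w, tau=10.0, threshold=1.0, dt=0.01, t_max=200.0):
    """First spike time (ms) of an LIF neuron with constant input of strength w.

    Membrane dynamics: tau * dv/dt = -v + w, with v(0) = 0 (forward Euler).
    Returns None if the neuron never reaches threshold within t_max.
    """
    v, t = 0.0, 0.0
    while t < t_max:
        v += dt / tau * (-v + w)
        t += dt
        if v >= threshold:
            return t
    return None


def lif_first_spike_exact(w, tau=10.0, threshold=1.0):
    """Closed-form latency: solve w * (1 - exp(-t / tau)) = threshold."""
    if w <= threshold:
        return None
    return tau * math.log(w / (w - threshold))


if __name__ == "__main__":
    for w in (1.5, 2.0, 3.0):
        print(w, round(lif_first_spike(w), 3), round(lif_first_spike_exact(w), 3))
```

Below threshold (w ≤ θ) the membrane potential saturates at w and the neuron never fires. This is why weight changes near that boundary have the strongest effect on timing.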

One of the issues with modeling large-scale spiking neural networks is the lack of tools to analyse such a large parameter space, as Buice and Chow (2013) discuss in their hypothesis and theory article. They propose an approach that combines mean field theory with information about spike correlations, thus reducing the complexity to that of a more comprehensible rate-like description. Demonstrations of spiking neural networks for sensory processing include the work of Srinivasa and Jiang (2013). They develop spiking neuron models that are initially assembled into an unstructured map topology. The authors show how combining self-organization with STDP-based continuous learning can provide the initial formation and ongoing maintenance of orientation and ocular dominance maps of the kind commonly found in the visual cortex.
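For readers unfamiliar with spike-timing-dependent plasticity (STDP), a minimal pair-based rule is sketched below. It is the common textbook exponential form, not necessarily the variant used by Srinivasa and Jiang. The amplitudes, time constants and weight bounds are illustrative assumptions. A presynaptic spike shortly before a postsynaptic spike strengthens the synapse. The reverse order weakens it, and the effect decays with the time difference.

```python
import math


def stdp_dw(t_pre, t_post, a_plus=0.01, a_minus=0.012, tau_plus=20.0, tau_minus=20.0):
    """Weight change for one pre/post spike pair (times in ms).

    Pre before post (causal) -> potentiation; post before pre -> depression.
    """
    dt = t_post - t_pre
    if dt > 0:
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


def apply_stdp(w, pre_spikes, post_spikes, w_min=0.0, w_max=1.0):
    """Sum all-to-all pair contributions and clip the weight to [w_min, w_max]."""
    dw = sum(stdp_dw(tp, tq) for tp in pre_spikes for tq in post_spikes)
    return min(max(w + dw, w_min), w_max)


if __name__ == "__main__":
    print(apply_stdp(0.5, pre_spikes=[10, 50], post_spikes=[15, 55]))
```

Making a_minus slightly larger than a_plus is a common choice to keep weights from growing without bound under uncorrelated activity. The hard clipping is the simplest way to bound weights.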

It is clear that research on spiking neural networks has expanded beyond computational models of individual neurons and now encompasses large-scale networks which aim to model the behavior of whole neural regions. This has resulted in a diverse and exciting field of research with many perspectives and a multitude of potential applications.

- Aimone J. B., Weick J. P. (2013). Perspectives for computational modeling of cell replacement for neurological disorders. Front. Comput. Neurosci. 7:150. doi: 10.3389/fncom.2013.00150

- Börgers C., Walker B. (2013). Toggling between gamma-frequency activity and suppression of cell assemblies. Front. Comput. Neurosci. 7:33. doi: 10.3389/fncom.2013.00033

- Buice M. A., Chow C. C. (2013). Generalized activity equations for spiking neural network dynamics. Front. Comput. Neurosci. 7:162. doi: 10.3389/fncom.2013.00162

- Dockendorf K., Srinivasa N. (2013). Learning and prospective recall of noisy spike pattern episodes. Front. Comput. Neurosci. 7:80. doi: 10.3389/fncom.2013.00080

- Garrido J. A., Ros E., D'Angelo E. (2013). Spike timing regulation on the millisecond scale by distributed synaptic plasticity at the cerebellum input stage: a simulation study. Front. Comput. Neurosci. 7:64. doi: 10.3389/fncom.2013.00064

- Kaplan B. A., Lansner A., Masson G. S., Perrinet L. U. (2013). Anisotropic connectivity implements motion-based prediction in a spiking neural network. Front. Comput. Neurosci. 7:112. doi: 10.3389/fncom.2013.00112

- Moujahid A., d'Anjou A. (2012). Metabolic efficiency with fast spiking in the squid axon. Front. Comput. Neurosci. 6:95. doi: 10.3389/fncom.2012.00095

- Ponulak F. J., Hopfield J. J. (2013). Rapid, parallel path planning by propagating wavefronts of spiking neural activity. Front. Comput. Neurosci. 7:98. doi: 10.3389/fncom.2013.00098

- Srinivasa N., Jiang Q. (2013). Stable learning of functional maps in self-organizing spiking neural networks with continuous synaptic plasticity. Front. Comput. Neurosci. 7:10. doi: 10.3389/fncom.2013.00010

IM-Loss: Information Maximization Loss for Spiking Neural Networks

Part of Advances in Neural Information Processing Systems 35 (NeurIPS 2022) Main Conference Track

Yufei Guo, Yuanpei Chen, Liwen Zhang, Xiaode Liu, Yinglei Wang, Xuhui Huang, Zhe Ma

Spiking Neural Network (SNN), recognized as a biologically plausible architecture, has recently drawn much research attention. It transmits information by $0/1$ spikes. This bio-mimetic mechanism gives SNNs extreme energy efficiency, since it avoids multiplications on neuromorphic hardware. However, the forward-passing $0/1$ spike quantization causes information loss and accuracy degradation. To deal with this problem, the paper proposes the Information Maximization Loss (IM-Loss), which aims to maximize the information flow in the SNN. The IM-Loss not only directly enhances the information expressiveness of an SNN but also partly plays the role of normalization, without introducing any additional operations (e.g., bias and scaling) in the inference phase. Additionally, we introduce a novel differentiable spike activity estimation for SNNs, Evolutionary Surrogate Gradients (ESG). ESG assigns automatically evolving surrogate gradients to the spike activity function. This ensures sufficient model updates at the beginning of training and accurate gradients at the end, resulting in both easy convergence and high task performance. Experimental results on both popular non-spiking static and neuromorphic datasets show that the SNN models trained by our method outperform the current state-of-the-art algorithms.
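The ESG idea is a surrogate gradient whose sharpness evolves during training. It can be sketched under explicit assumptions; the paper's exact surrogate function and schedule may differ. The sketch assumes a tanh-shaped surrogate of the Heaviside spike function, 0.5 · tanh(k(u − V_th)) + 0.5, whose derivative replaces the step's zero-almost-everywhere gradient. It also assumes a hypothetical geometric schedule that raises k from k_min to k_max over training. A small k gives a wide, smooth gradient early on (sufficient updates). A large k approaches the true step late in training (more accurate gradients).

```python
import math


def spike(u, v_th=1.0):
    """Forward pass: Heaviside step producing a 0/1 spike."""
    return 1.0 if u >= v_th else 0.0


def surrogate_grad(u, k, v_th=1.0):
    """d/du of 0.5 * tanh(k * (u - v_th)) + 0.5, used in place of the step's derivative."""
    return 0.5 * k * (1.0 - math.tanh(k * (u - v_th)) ** 2)


def evolving_k(epoch, num_epochs, k_min=1.0, k_max=10.0):
    """Hypothetical geometric schedule: k_min at epoch 0, k_max at the last epoch."""
    frac = epoch / max(num_epochs - 1, 1)
    return k_min * (k_max / k_min) ** frac


if __name__ == "__main__":
    for epoch in (0, 50, 99):
        k = evolving_k(epoch, 100)
        print(epoch, round(k, 3), surrogate_grad(1.5, k))
```

For every k, the surrogate derivative integrates to 1 over u, so raising k redistributes the gradient mass toward the threshold rather than changing its total.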


Temporal Spiking Neural Networks with Synaptic Delay for Graph Reasoning

27 May 2024 · Mingqing Xiao, Yixin Zhu, Di He, Zhouchen Lin

Spiking neural networks (SNNs) are investigated as biologically inspired models of neural computation, distinguished by their computational capability and energy efficiency due to precise spike timing and sparse spikes with event-driven computation. A significant question is how SNNs can emulate human-like graph-based reasoning of concepts and relations, especially by leveraging the temporal domain optimally. This paper reveals that SNNs, when combined with synaptic delay and temporal coding, are proficient at executing (knowledge) graph reasoning. It shows that spike timing can serve as an additional dimension to encode relation properties via a neural-generalized path formulation. Empirical results highlight the efficacy of temporal delay in relation processing and showcase exemplary performance in diverse graph reasoning tasks. The spiking model is theoretically estimated to achieve $20\times$ energy savings compared to non-spiking counterparts, deepening insights into the capabilities and potential of biologically inspired SNNs for efficient reasoning. The code is available at https://github.com/pkuxmq/GRSNN.
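A classic wavefront argument shows how spike timing plus synaptic delays can carry path information. Assume every neuron fires once, on its first incoming spike, and each synapse delays spikes by a fixed amount. Then each neuron's first spike time equals the shortest delay-weighted path length from the stimulated neuron. The sketch below simulates this event-driven propagation with a priority queue. It illustrates the idea under these assumptions and is not the GRSNN model; the graph and delays are made up.

```python
import heapq
from typing import Dict, List, Tuple

# Hypothetical graph: neuron -> list of (target neuron, synaptic delay in ms)
Graph = Dict[str, List[Tuple[str, float]]]


def first_spike_times(graph: Graph, source: str) -> Dict[str, float]:
    """Event-driven simulation: each neuron spikes once, on its first incoming spike."""
    spike_time: Dict[str, float] = {}
    events = [(0.0, source)]  # (arrival time, neuron); the source is stimulated at t = 0
    while events:
        t, neuron = heapq.heappop(events)
        if neuron in spike_time:  # already fired; later arrivals are ignored
            continue
        spike_time[neuron] = t
        for target, delay in graph.get(neuron, []):
            if target not in spike_time:
                heapq.heappush(events, (t + delay, target))
    return spike_time


if __name__ == "__main__":
    g: Graph = {"a": [("b", 2.0), ("c", 5.0)], "b": [("c", 1.0)], "c": []}
    print(first_spike_times(g, "a"))  # {'a': 0.0, 'b': 2.0, 'c': 3.0}
```

Processing spikes in arrival order makes this equivalent to Dijkstra's algorithm. Neuron `c` fires at 3.0 via `b`, not at 5.0 via the direct synapse.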


Stochastic Spiking Neural Networks with First-to-Spike Coding

Spiking Neural Networks (SNNs), recognized as the third generation of neural networks, are known for their bio-plausibility and energy efficiency, especially when implemented on neuromorphic hardware. However, the majority of existing studies on SNNs have concentrated on deterministic neurons with rate coding, a method that incurs substantial computational overhead due to lengthy information integration times and fails to fully harness the brain’s probabilistic inference capabilities and temporal dynamics. In this work, we explore the merger of novel computing and information encoding schemes in SNN architectures where we integrate stochastic spiking neuron models with temporal coding techniques. Through extensive benchmarking with other deterministic SNNs and rate-based coding, we investigate the tradeoffs of our proposal in terms of accuracy, inference latency, spiking sparsity, energy consumption, and robustness. Our work is the first to extend the scalability of direct training approaches of stochastic SNNs with temporal encoding to VGG architectures and beyond-MNIST datasets.


I Introduction

Spiking neural networks (SNNs) bridge the gap between artificial neural networks (ANNs) and biological neural networks, offering insights into neurological processes. In the human nervous system, most information is propagated between neurons using spike-based signals over time. Inspired by this property, SNNs use binary spike signals to transmit, encode, and process information. Compared with analog neural networks, in which neuron and synaptic states are represented by non-binary, multi-bit values, SNNs have demonstrated significant savings in energy and computational power, especially when deployed on neuromorphic hardware [ 1 ].

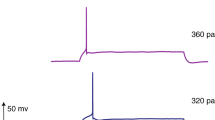

In SNNs, rate coding is one of the most popular coding methods. Under this scheme, information is represented by the rate, or frequency, of spikes over a defined period of time. However, such coding methods ignore the information carried by precise spike timing [ 2 ] and are constrained by slow information transmission and long processing latency. Temporal coding, on the other hand, represents information by the precise timing of individual spikes but often lacks scalability and robustness [ 3 ]. Whereas rate coding requires high firing rates to represent information, temporal coding can represent complex temporal patterns with relatively few spikes. In particular, First-to-Spike coding is a temporal coding scheme inspired by the rapid information processing observed in certain biological neural systems, such as the retina [ 4 ] and the auditory system [ 5 ]. In First-to-Spike coding, a prediction is made as soon as the first spike is observed on any one of the output neurons. This avoids operating over the redundant time window that rate coding requires. Therefore, temporal coding is often claimed to be more computationally efficient than rate coding [ 3 ].
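The difference between the two readout rules can be made concrete with a small sketch. It operates on an output spike raster, a hypothetical list of per-timestep 0/1 vectors. First-to-Spike decoding stops at the first output spike, while rate decoding must count spikes over the whole window. Breaking ties by the lowest neuron index is an assumption of this sketch.

```python
from typing import List, Optional, Tuple

# spikes[t][i] is 1 if output neuron i fires at timestep t, else 0.
SpikeRaster = List[List[int]]


def first_to_spike_decode(spikes: SpikeRaster) -> Optional[Tuple[int, int]]:
    """Return (predicted class, timestep) at the first output spike; reading stops early."""
    for t, row in enumerate(spikes):
        for i, s in enumerate(row):
            if s:
                return i, t  # ties broken by lowest neuron index (an assumption)
    return None  # no output neuron fired within the window


def rate_decode(spikes: SpikeRaster) -> int:
    """Return the neuron with the most spikes over the whole window (all timesteps needed)."""
    counts = [sum(col) for col in zip(*spikes)]
    return max(range(len(counts)), key=counts.__getitem__)


if __name__ == "__main__":
    raster = [[0, 0, 0], [0, 1, 0], [0, 1, 0], [1, 1, 0]]
    print(first_to_spike_decode(raster))  # (1, 1): decided after 2 of 4 timesteps
    print(rate_decode(raster))            # 1: decided only after all 4 timesteps
```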

Nevertheless, training an SNN is challenging due to the discontinuous nature of its information representation and processing, especially for frameworks based on temporal coding. Most existing works on scalable SNN training with temporal encoding convert a pre-trained ANN to an SNN [ 6 , 7 ]. The conversion process typically maps the analog activation values of the ANN's neurons to the spike timings of the SNN's neurons. SNN training algorithms with conventional architectures and rate encoding [ 8 ] have developed rapidly in recent years [ 9 , 10 ]. These range from global spike-driven backpropagation techniques [ 11 ] to more local approaches like Deep Continuous Local Learning (DECOLLE) [ 12 ], Equilibrium Propagation (EP) [ 13 , 14 ], and Deep Spike Timing Dependent Plasticity [ 15 ], among others. In stark contrast, the literature on direct training of SNNs with temporal encoding remains extremely sparse, with demonstrations primarily on toy datasets like MNIST.

Although the vast majority of algorithm development and applications in SNNs employ deterministic neuron models, it is important to recognize that biological neurons generate spikes stochastically [ 18 ]. Common deterministic models include the deterministic Spike Response Model (SRM) [ 16 ], Integrate-and-Fire (IF), and Leaky Integrate-and-Fire (LIF) models [ 17 ]. Furthermore, spike generation in deterministic neuron models is discontinuous and non-differentiable, which poses substantial challenges for gradient-based optimization methods. Stochastic neuron models, on the other hand, smooth the network model so that it is continuously differentiable [ 19 ]. They therefore have the potential to offer enhanced efficiency and robustness.

Recently, there has been growing interest in exploiting stochastic devices in the neuromorphic hardware community [ 20 ] , [ 21 ] . With the scaling of device dimensions, memristive devices lose their programming resolution and are characterized by increased cycle-to-cycle variation. Work has started in earnest to design stochastic state-compressed SNNs using such scaled neuromorphic devices that exhibit iso-accuracies in comparison to their multi-bit deterministic counterparts enabled by the alternate encoding of information in the probability domain [ 22 , 23 ] . Ref. [ 24 ] proposes noisy spiking neural network (NSNN) which leverages stochastic noise as a resource for training SNNs using rate-based coding. While there is significant progress in the domain of stochastic SNN training algorithms, there remains a noticeable gap in the design of SNN architectures that integrate the benefits of both stochastic neuronal computing and temporal information coding. Most of the current efforts on directly training stochastic SNNs with temporal coding have primarily demonstrated success on simple datasets and shallow network structures [ 25 , 26 , 27 ] . Scaling these networks to deeper architectures and more complex datasets presents significant challenges. Further, as we demonstrate in this work, many of the supposed benefits of temporal encoding, like enhanced spiking sparsity, may not necessarily hold true for deep architectures. This necessitates a co-design approach to identify the relative tradeoffs of stochastic temporally encoded SNNs in terms of accuracy, latency, sparsity, energy cost, and robustness. The specific contributions of this work are summarized below:

(i) Algorithm Development: We present a simple and structured algorithm framework to train stochastic SNNs directly with First-to-Spike coding. We also present training frameworks to train deterministic SNNs with temporal encoding that serve as a comparison baseline for our work to identify the relative merits/demerits of the computing and encoding scheme. We present empirical results to substantiate the scalability of our approach by demonstrating state-of-the-art accuracies on MNIST [ 28 ] and CIFAR-10 [ 29 ] datasets for 2-layer MLP, LeNet5, and VGG15 architectures. Notably, this is the first work to demonstrate direct SNN training employing First-to-Spike coding for VGG architectures on the CIFAR dataset.

(ii) Co-Design Analysis: We present a comprehensive quantitative analysis of previously unexplored trade-offs for stochastic SNNs with temporal encoding in terms of neuromorphic compute specific metrics like accuracy, latency, sparsity, energy efficiency, and robustness.

The rest of the paper is organized as follows. Section II describes related works. Section III introduces the training frameworks of SNNs with First-to-Spike coding for both deterministic and stochastic computing architectures. Section IV presents the experimental results and Section V provides conclusions and future outlook.

II Related Works

Temporal Coding: Temporal coding emphasizes the timing of spikes rather than their frequency, as in rate coding. Time-to-First-Spike (TTFS) coding [ 30 ] is a popular temporal coding scheme that has been demonstrated to enable rapid, low-power processing [ 31 , 32 , 33 , 34 ], since it typically restricts each neuron to generating at most one spike. This restriction lacks biological plausibility and cannot handle the complex temporal structure of sequences of real-world events [ 35 ]. Moreover, although TTFS coding significantly reduces latency compared to rate coding, its latency can still be high, particularly when processing complex datasets [ 33 , 36 , 37 ]. Therefore, we focus on a First-to-Spike temporal coding strategy that can further reduce latency in comparison to TTFS coding. First-to-Spike coding differs from other approaches in that it does not primarily rely on the precise timing of each spike. Instead, it focuses on which output neuron spikes first. The efficacy and potential applications of the First-to-Spike coding mechanism have been extensively explored in recent literature [ 35 , 38 ]. Nevertheless, spike generation in SNNs is non-differentiable. Several common methods exist for developing and training SNNs with temporal coding despite this problem; they are introduced next.
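TTFS input encoding can be sketched with a simple linear latency code, where stronger inputs spike earlier and each input emits at most one spike. The linear mapping, the rounding, and the rule that a zero intensity never fires are assumptions for illustration, not the specific encoders of the cited works.

```python
from typing import List, Optional


def ttfs_encode(intensities: List[float], num_steps: int) -> List[Optional[int]]:
    """Map each normalized intensity in [0, 1] to a single spike time (None = no spike).

    Assumed linear latency code: intensity 1.0 fires at step 0, smaller intensities
    fire later, and intensity 0.0 never fires, so each input emits at most one spike.
    """
    times: List[Optional[int]] = []
    for x in intensities:
        if not 0.0 <= x <= 1.0:
            raise ValueError("intensities must be normalized to [0, 1]")
        times.append(None if x == 0.0 else round((1.0 - x) * (num_steps - 1)))
    return times


if __name__ == "__main__":
    print(ttfs_encode([1.0, 0.5, 0.0], num_steps=11))  # [0, 5, None]
```

This encodes inputs by spike time. First-to-Spike coding, as discussed above, concerns how the output layer is read, namely which output neuron fires first.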

ANN-SNN Conversion Approaches for Temporal Coding: ANN-SNN conversion is a widely adopted method for converting pre-trained ANNs to SNNs [ 39 , 40 , 41 ]. Neurons with continuous activation functions, such as sigmoid or ReLU, need to be mapped to spiking neurons like IF/LIF neurons. Algorithmic approaches usually aim to reduce the information loss incurred during the conversion process. The work in [ 6 ] designed an exact mapping from an ANN with ReLUs to a corresponding SNN with TTFS coding. The key achievement of this mapping is that it maintains network accuracy after conversion with only a minimal drop. However, the conversion involves complex steps, which can make it difficult to implement and optimize. The need for different conversion strategies for different types of layers adds further complexity. It is also important to note that the ANNs are trained without any temporal information, which typically results in high latency when they are converted to SNNs [ 11 ]. Hence, it is critical to explore direct training strategies for SNNs with temporal encoding.
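The rate-based correspondence that conversion relies on (and that the D-R-CONV baseline uses) can be checked with a short sketch. A non-leaky IF neuron driven by a constant input fires at a rate that approximates a clipped ReLU of that input. Reset-by-subtraction and the default threshold are assumptions here. The rate is quantized to multiples of `1 / num_steps`, which hints at why converted rate-coded SNNs need many timesteps.

```python
def if_firing_rate(input_current: float, v_th: float = 1.0, num_steps: int = 100) -> float:
    """Simulate a non-leaky IF neuron driven by a constant input and return its firing rate.

    Uses reset-by-subtraction, a common choice in ANN-SNN conversion (an assumption here).
    """
    v, spikes = 0.0, 0
    for _ in range(num_steps):
        v += input_current
        if v >= v_th:
            spikes += 1
            v -= v_th  # keep the residual charge above threshold
    return spikes / num_steps


def clipped_relu(x: float, v_th: float = 1.0) -> float:
    """ANN activation the IF rate approximates: max(0, x) clipped at v_th, divided by v_th."""
    return min(max(x, 0.0), v_th) / v_th


if __name__ == "__main__":
    for x in (-0.5, 0.25, 2.0):
        print(x, if_firing_rate(x), clipped_relu(x))
```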

Direct SNN Training Approaches for Temporal Coding: In the domain of TTFS coding, a convolution-like coding method [ 42 , 43 ] was proposed to directly train an SNN; it uses a temporal kernel to integrate temporal and spatial information. This method can significantly reduce the model size and transform spatial localities into temporal localities, which can improve efficiency and accuracy. Some recent works use a surrogate gradient [ 35 , 44 ] or a surrogate model [ 37 ] to solve the non-differentiable backpropagation issue in deterministic neurons with temporal coding. Another technique is to directly train SNNs with stochastic neurons. The smoothing effect of stochastic neurons is crucial for enabling gradient-based optimization methods in SNNs [ 19 ], as it addresses the non-differentiable nature of the spiking mechanism. Research by [ 25 , 26 ] introduced a stochastic neuron model for directly training SNNs. This model uses the generalized linear model (GLM) [ 45 ] and First-to-Spike coding. The GLM consists of a set of linear filters that process the incoming spikes, followed by a nonlinear function that computes the neuron's firing probability from the filtered inputs. The model then employs a stochastic process, such as a Poisson process, to generate spike trains. However, the computational complexity of adapting a GLM for large-scale SNN training can be quite high. Other recent works have also explored stochastic SNNs with TTFS coding, where the stochastic neuron is implemented by the intrinsic physics of spin devices [ 27 ]. However, existing research primarily focuses on shallow networks and MNIST-level datasets. It also lacks a quantification of the benefits offered by stochastic computing and temporal encoding in SNNs at scale.
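The GLM pipeline described above (linear filtering of input spikes, a nonlinearity giving a firing probability, then stochastic spike generation) can be sketched in discrete time. The sketch makes three assumptions: one exponential filter per synapse, a sigmoid nonlinearity, and a per-timestep Bernoulli draw as a discrete-time stand-in for the Poisson process mentioned in the text. The function name and default parameters are hypothetical.

```python
import math
import random
from typing import List, Sequence


def glm_neuron(
    input_spikes: Sequence[Sequence[int]],  # input_spikes[t][j] in {0, 1}
    weights: Sequence[float],
    tau: float = 2.0,
    bias: float = -2.0,
    seed: int = 0,
) -> List[int]:
    """Sketch of a GLM spiking neuron: linear filtering -> sigmoid -> Bernoulli spike.

    Assumptions: one exponential filter per synapse and a sigmoid nonlinearity;
    a Bernoulli draw per timestep stands in for the stochastic spike generator.
    """
    rng = random.Random(seed)
    decay = math.exp(-1.0 / tau)
    traces = [0.0] * len(weights)  # filtered input of each synapse
    out: List[int] = []
    for row in input_spikes:
        traces = [decay * tr + s for tr, s in zip(traces, row)]
        drive = bias + sum(w * tr for w, tr in zip(weights, traces))
        p = 1.0 / (1.0 + math.exp(-drive))  # firing probability at this timestep
        out.append(1 if rng.random() < p else 0)
    return out


if __name__ == "__main__":
    print(glm_neuron([[1, 0], [0, 1], [1, 1], [0, 0]], weights=[1.5, 1.5]))
```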

III Methods

In this section, we introduce the methodology to train deterministic and stochastic SNNs with First-to-Spike coding where the key idea is to find the neuron that generates the first spike signal, thereby terminating the inference process. Associated loss function design and weight gradient calculations are also elaborated considering discontinuity issues observed in spiking neurons.

III-A Deterministic SNN

The Leaky Integrate-and-Fire (LIF) neuron model is one of the most widely used spiking neuron models in SNNs, chosen primarily for its balance between simplicity and biological plausibility [ 46 ]. The LIF model mimics neuronal behavior by integrating input signals into a membrane potential (voltage) until it reaches the firing threshold. Meanwhile, the accumulated voltage decays over time, which models the leakage of charge through real neuronal membranes. However, spike generation in LIF models is non-differentiable, which makes it challenging to apply traditional gradient-based methods. Defining a surrogate gradient (SG) as a continuous relaxation of the real gradient is a common way to tackle the discontinuous spiking nonlinearity [ 19 ]. The deterministic LIF neuron model used in our network can be summarized as:

The output spike train $o_i^t$ is generated by the following equation:

The temporal cross-entropy loss function [ 47 ], which integrates the principles of First-to-Spike coding, is formalized as follows. For each neuron $i$, the estimated activation probability is computed using the equation:

where $t_i$ is the time of the first spike of neuron $i$ and $n$ is the number of output neurons. The loss function is given by the following equation:

where $y_i \in \{0, 1\}$ is the $i$-th entry of the one-hot target vector and $n$ is the number of output neurons. In the context of First-to-Spike coding, the goal is to minimize the first spike time of the correct neuron. This maximizes the correct neuron's probability and thereby minimizes the cross-entropy loss.
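Since the equations themselves are not reproduced in this text, the sketch below assumes a common form consistent with the description. The probabilities are a softmax over negative first-spike times, $p_i = e^{-t_i} / \sum_{j=1}^{n} e^{-t_j}$, and the loss is $-\sum_i y_i \log p_i$. Assigning a placeholder time (for example the window length) to neurons that never fire is also an assumption.

```python
import math
from typing import List, Sequence


def first_spike_probs(first_spike_times: Sequence[float]) -> List[float]:
    """Softmax over negative first-spike times: earlier spikes get higher probability."""
    m = min(first_spike_times)  # shift for numerical stability; softmax is shift-invariant
    exps = [math.exp(-(t - m)) for t in first_spike_times]
    z = sum(exps)
    return [e / z for e in exps]


def temporal_cross_entropy(first_spike_times: Sequence[float], target: int) -> float:
    """Cross-entropy with a one-hot target: -sum_i y_i log p_i reduces to -log p_target."""
    return -math.log(first_spike_probs(first_spike_times)[target])


if __name__ == "__main__":
    # The correct neuron (index 0) firing earlier lowers the loss.
    print(temporal_cross_entropy([1.0, 3.0, 3.0], target=0))
    print(temporal_cross_entropy([2.0, 3.0, 3.0], target=0))
```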

The gradient of the weights corresponding to the deterministic LIF neuron model is given by the following equation:

For the term $\partial o_i^t / \partial V_i^t$, we need a surrogate gradient to handle the discontinuous spiking nonlinearity. In this paper, the Arctan surrogate [ 48 ] is used. After employing the Arctan surrogate, Eqn. 2 can be written as:

The term $\partial t_i / \partial o_i^t$ can be expressed as:

This allows the network to be trained using variants of backpropagation. In this paper, Backpropagation through time (BPTT) [ 49 ] is used where the network is unrolled across timesteps for backpropagation.
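The deterministic pieces of this section can be sketched for a single neuron. The exact LIF equations are not reproduced in this text, so the leak factor `beta`, the hard reset, and the convention that a silent neuron gets first-spike time `T` are all assumptions. The surrogate uses the arctan function as commonly written for [ 48 ], $g(x) = \frac{1}{\pi}\arctan(\frac{\pi}{2}\alpha x) + \frac{1}{2}$ with $x = V - V_{th}$, and $\alpha = 2$ as stated in Section IV-B. In BPTT, these per-timestep terms are chained across the unrolled timesteps.

```python
import math
from typing import List, Tuple


def lif_forward(currents: List[float], beta: float = 0.9, v_th: float = 1.0) -> Tuple[List[float], List[int]]:
    """Discrete-time LIF neuron with hard reset (assumed form).

    Returns the pre-spike membrane potentials V^t and the output spikes o^t.
    """
    v, potentials, spikes = 0.0, [], []
    for i_t in currents:
        v = beta * v + i_t          # leaky integration of the input current
        potentials.append(v)
        o = 1 if v >= v_th else 0   # Heaviside spike function (non-differentiable)
        spikes.append(o)
        if o:
            v = 0.0                 # hard reset after a spike
    return potentials, spikes


def atan_surrogate_grad(v: float, v_th: float = 1.0, alpha: float = 2.0) -> float:
    """Replaces d o / d V with the derivative of (1/pi) * atan(pi/2 * alpha * x) + 1/2, x = V - V_th."""
    x = v - v_th
    return alpha / (2.0 * (1.0 + (math.pi / 2.0 * alpha * x) ** 2))


def first_spike_time(spikes: List[int]) -> int:
    """Index of the first spike, or len(spikes) if the neuron stays silent (assumed convention)."""
    return next((t for t, o in enumerate(spikes) if o), len(spikes))


if __name__ == "__main__":
    pots, spk = lif_forward([0.5, 0.5, 0.5, 0.5])
    print(spk, first_spike_time(spk))                # [0, 0, 1, 0] 2
    print([round(atan_surrogate_grad(v), 3) for v in pots])
```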

III-B Stochastic SNN

As an alternative to using SG with deterministic neuron models, the discontinuous spiking nonlinearity can also be handled with stochastic neuron models. Inspired by [ 25 ], we integrate stochastic LIF neurons with First-to-Spike coding. The membrane potential is computed using the following equation:

where $k_i$ is a scaling factor of the membrane potential of neuron $i$. Subsequently, the sigmoid activation function is used to calculate $p_i^t$, the probability of neuron $i$ generating a spike at time $t$. The probability $p_i^t$ is used to draw an independent and identically distributed (i.i.d.) Bernoulli sample, which represents the discrete spike train generated by the neuron. Because the Bernoulli sampling step is non-differentiable, it poses a problem for backpropagation, which relies on gradient-based optimization. To address this issue, we use the Straight-Through (ST) estimator [ 50 ]. In the backward phase, it passes the gradient received from the deeper layer directly to the preceding layer without any modification. In the output layer, we compute the probability $P_t$ that the correct neuron generates the earliest spike at time $t$ by the following equation:

where $p_c^t$ is the probability of the correct neuron $c$ generating a spike at time $t$. This equation represents the probability that no wrong neuron generates a spike before the correct neuron produces a spike at time $t$. We use the same ML (Maximum Likelihood) criterion as [ 25 ], maximizing the sum of all $P_t$ through the following equation:

As the timestep increases, $P_t$ decreases, and its contribution to the overall loss diminishes progressively. This encourages neurons to fire earlier, but not before the correct neuron, resulting in reduced latency. Furthermore, the BPTT algorithm is employed in the same fashion as for the deterministic SNN model: the network is unfolded across timesteps, and the gradients of Eqn. 10 are calculated with respect to the weights at each timestep.
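The stochastic neuron and the First-to-Spike likelihood can be sketched as follows. The sigmoid, the Bernoulli draw, and the ST estimator follow the text. Three parts are assumptions, since the paper's equations are not reproduced here:

- The ST variant treats the Bernoulli step as the identity with respect to $p$, so the gradient still flows through the sigmoid.
- The exact form of $P_t$: the correct neuron spikes for the first time at $t$, while every other neuron stays silent up to and including $t$.
- The loss, written as $-\log \sum_t P_t$.

```python
import math
import random
from typing import List, Sequence


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def stochastic_spike(v: float, k: float, rng: random.Random) -> int:
    """Forward pass: spike probability p = sigmoid(k * V), then an i.i.d. Bernoulli draw."""
    return 1 if rng.random() < sigmoid(k * v) else 0


def straight_through_grad(v: float, k: float, grad_out: float) -> float:
    """Backward pass: the Bernoulli step is treated as identity (ST estimator),
    so dL/dV = grad_out * dp/dV = grad_out * k * p * (1 - p)."""
    p = sigmoid(k * v)
    return grad_out * k * p * (1.0 - p)


def correct_first_prob(p: Sequence[Sequence[float]], c: int) -> List[float]:
    """P_t for each t: the correct neuron c spikes for the first time at t while
    no other neuron has spiked at or before t (assumed tie handling).
    p[t][i] is the spike probability of output neuron i at timestep t."""
    probs, silent_so_far = [], 1.0  # probability that every output neuron was silent before t
    for row in p:
        others_silent = math.prod(1.0 - q for i, q in enumerate(row) if i != c)
        probs.append(silent_so_far * row[c] * others_silent)
        silent_so_far *= math.prod(1.0 - q for q in row)
    return probs


def first_to_spike_loss(p: Sequence[Sequence[float]], c: int) -> float:
    """Negative log of sum_t P_t, the probability that neuron c is the first to spike."""
    return -math.log(sum(correct_first_prob(p, c)))


if __name__ == "__main__":
    probs = [[0.5, 0.5], [0.5, 0.5]]
    print(correct_first_prob(probs, c=0))   # [0.25, 0.0625]: P_t shrinks as t grows
    print(first_to_spike_loss(probs, c=0))
```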

In this section, we evaluate the accuracy, latency, sparsity, energy cost, and noise sensitivity of different types of models. The goal is to isolate the influence of the information encoding, the computing scheme, and the training method. The four models are:

- ANN-SNN conversion utilizing deterministic neurons and rate coding (D-R-CONV) [ 39 ];
- BPTT-trained models with deterministic neurons utilizing rate coding (D-R-BPTT) [ 11 ];
- deterministic neural networks trained by BPTT utilizing First-to-Spike coding (D-F-BPTT);
- stochastic neural networks trained by BPTT utilizing First-to-Spike coding (S-F-BPTT).

Each acronym reflects the model's key features. The first part indicates the type of neurons, deterministic (D) or stochastic (S). The second part denotes the coding method, rate coding (R) or First-to-Spike coding (F). The third part represents the training method, ANN-SNN conversion (CONV) or Backpropagation Through Time (BPTT). We use these acronyms throughout the remainder of the paper for brevity. We conduct experiments on three neural network architectures, ranging from shallow to deep: 2-layer MLP, LeNet5, and VGG15.

IV-A Datasets

In this paper, we use the MNIST [ 28 ] and CIFAR-10 [ 29 ] datasets for our experiments. In the preprocessing stage for the MNIST dataset, we rescale the pixel intensities from their original range of 0-255 to a normalized range of 0-1. For the CIFAR-10 dataset, we use data augmentation to increase the diversity of the training data and reduce overfitting. Specifically, random horizontal flipping is applied with a probability of 0.5, and each image is rotated by an angle randomly selected from the range of -15 to 15 degrees [ 51 ]. Our preprocessing also includes random cropping of images with a padding of 4 pixels [ 52 ]. To further augment the dataset, a random affine transformation is applied to the image. It includes a shear transformation with the shear degree set to 10, which introduces a controlled level of distortion. It also randomly rescales the image to between 80% and 120% of its original size. Image attributes such as brightness, contrast, and saturation are also adjusted [ 53 ], each by a factor of 0.2, to enhance model robustness against varying lighting and color conditions. Finally, the input images are normalized using the mean and standard deviation of each color channel in the CIFAR-10 dataset.

IV-B Model Training

In the training of all architectures, the Adam optimizer [ 54 ] is used together with a learning rate scheduler. For deterministic LIF neurons, the parameter $\alpha$ is set to 2 in Eqn. 7. The detailed hyperparameter settings are listed in Table I. Additionally, a critical aspect of SNN-specific optimization involves layerwise tuning of two parameters (see Section III): the neuron's firing threshold $V_{th}$ in the D-F-BPTT model and the scaling factor $k_i$ in the S-F-BPTT model. For this purpose, we used a neuroevolutionary-optimized hybrid SNN training approach [ 55 ]. In this approach, the trained model was subsequently optimized using the gradient-free differential evolution (DE) algorithm [ 56 , 57 ] to achieve the best accuracy-latency tradeoff. Prior work has demonstrated that such a hybrid framework significantly outperforms approaches that perform this hyperparameter tuning during the BPTT training process itself [ 55 ].
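The gradient-free tuning step can be illustrated with a minimal DE/rand/1/bin optimizer over box-bounded parameters, such as one threshold per layer. In the paper, the fitness balances accuracy and latency of the trained SNN. Here a toy quadratic stands in for that fitness, and the population size, mutation factor, and crossover rate are hypothetical defaults. SciPy's `scipy.optimize.differential_evolution` provides a production implementation.

```python
import random
from typing import Callable, List, Sequence, Tuple


def differential_evolution(
    fitness: Callable[[Sequence[float]], float],
    bounds: Sequence[Tuple[float, float]],
    pop_size: int = 20,
    generations: int = 100,
    f: float = 0.5,    # differential weight
    cr: float = 0.9,   # crossover rate
    seed: int = 0,
) -> Tuple[List[float], float]:
    """Minimal DE/rand/1/bin minimizer over box-bounded parameters (e.g. per-layer V_th or k_i)."""
    rng = random.Random(seed)
    dim = len(bounds)
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    scores = [fitness(x) for x in pop]
    for _ in range(generations):
        for i in range(pop_size):
            a, b, c = rng.sample([j for j in range(pop_size) if j != i], 3)
            j_rand = rng.randrange(dim)  # guarantees at least one mutated coordinate
            trial = []
            for d in range(dim):
                if rng.random() < cr or d == j_rand:
                    v = pop[a][d] + f * (pop[b][d] - pop[c][d])  # mutation
                else:
                    v = pop[i][d]                                # inherit from the parent
                lo, hi = bounds[d]
                trial.append(min(max(v, lo), hi))  # clip back into the search box
            s = fitness(trial)
            if s <= scores[i]:  # greedy one-to-one selection
                pop[i], scores[i] = trial, s
    best = min(range(pop_size), key=scores.__getitem__)
    return pop[best], scores[best]


if __name__ == "__main__":
    # Hypothetical stand-in fitness: three layer thresholds whose optimum is 0.7 each.
    toy = lambda x: sum((xi - 0.7) ** 2 for xi in x)
    print(differential_evolution(toy, [(0.1, 2.0)] * 3))
```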

IV-C Quantitative Analysis

Accuracy: The performance of each network is summarized in Table II. The reported accuracy is the mean across ten independent runs. Transitioning from rate coding to temporal coding does not reduce the accuracy and even increases it in some cases. Moreover, introducing stochasticity into the model consistently increases the network accuracy on the more complex CIFAR-10 dataset. For complex datasets, the variability introduced by stochasticity could act as a form of data augmentation, presenting the network with a wider range of data during training. This can prevent the model from overfitting, leading to better generalization.

Inference Latency: In SNNs, reducing latency without sacrificing accuracy is a critical goal, as it allows faster and more energy-efficient computation. For the D-R-CONV and D-R-BPTT models, the optimal number of timesteps is the saturation point on a plot of timesteps versus accuracy. Beyond this point, further increasing the number of timesteps no longer significantly improves model accuracy. The SNN inference latencies in terms of timesteps are listed in Table II. Compared to other temporal coding models, the First-to-Spike coding models show a significantly lower latency. In the First-to-Spike coding scheme, the result is determined by which neuron in the output layer is the first to generate a spike. A single spike in the output layer therefore suffices to determine the result, which reduces the number of timesteps significantly. Rate coding, on the other hand, relies on the frequency of spikes over time, so the network needs a longer observation window to establish an accurate spike rate. This effect is magnified for more complex datasets. On CIFAR-10, the rate coding approaches require a substantially higher number of timesteps to achieve the same level of accuracy as the models employing First-to-Spike coding. Interestingly, we find that the S-F-BPTT model reduces the latency even further in comparison to the D-F-BPTT model. Spike generation in S-F-BPTT models is stochastic, so an output spike may occur even when the input stimulus is weak or the membrane potential is low. This allows a faster response to the input.
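The saturation-point rule can be written as a small helper. The paper does not state the numeric criterion for "no longer significantly improves", so the tolerance `tol` (in accuracy percentage points) and the accuracy curve in the example are hypothetical.

```python
from typing import Sequence


def saturation_timestep(timesteps: Sequence[int], accuracies: Sequence[float], tol: float = 0.1) -> int:
    """Smallest timestep after which accuracy never improves by more than `tol` points.

    `tol` is a hypothetical threshold for "no longer significantly improves".
    """
    for k, t in enumerate(timesteps):
        if all(acc - accuracies[k] <= tol for acc in accuracies[k + 1:]):
            return t
    return timesteps[-1]


if __name__ == "__main__":
    # Hypothetical accuracy curve (%) for a rate-coded model.
    ts = [10, 20, 50, 100, 200]
    accs = [80.0, 90.0, 95.0, 95.05, 95.08]
    print(saturation_timestep(ts, accs))  # 50
```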

Sparsity: Spiking sparsity in SNNs is an important metric for evaluating the efficiency and functionality of models. The average spiking rate of a layer, defined as the average number of spikes that a neuron generates over a fixed time interval, is used to quantify sparsity. A higher average spiking rate indicates lower sparsity, and vice versa. Fig. 1 shows the average spiking rate of each model across layers for two settings: the LeNet5 architecture trained on MNIST and the VGG15 architecture trained on CIFAR-10. A common assumption is that temporal coding models are sparser than rate coding models. The figure shows a more mixed picture: the First-to-Spike models do exhibit higher sparsity in the final layer, but their hidden layers show the opposite trend. The main reason is that temporally encoded models must convey the same information with reduced latency, which demands an increased spike count. This also explains why the S-F-BPTT model has the highest spiking rate together with the lowest latency. Moreover, to achieve a reliable and consistent output in the presence of stochasticity, the stochastic SNN model needs to increase its spiking rate. This compensates for the unpredictability of individual spikes, ensuring that the overall signal transmission between neurons remains stable and correct.
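The average spiking rate of one layer can be computed from its recorded spike raster. Normalizing by both the number of neurons and the window length (spikes per neuron per timestep) is an assumed reading of "over a fixed time interval". This reading matches how the rate multiplies $T$ in the energy estimate. A lower value means a sparser layer.

```python
from typing import Sequence


def average_spike_rate(layer_spikes: Sequence[Sequence[int]]) -> float:
    """Average number of spikes per neuron per timestep for one layer.

    layer_spikes[t][n] is the 0/1 output of neuron n at timestep t; dividing by
    the window length is an assumed normalization of "over a fixed time interval".
    """
    num_steps = len(layer_spikes)
    num_neurons = len(layer_spikes[0])
    total = sum(sum(row) for row in layer_spikes)
    return total / (num_steps * num_neurons)


if __name__ == "__main__":
    print(average_spike_rate([[1, 0, 0, 0], [1, 1, 0, 0]]))  # 0.375
```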

Energy Cost: The total number of SNN computations, which serves as a proxy metric for the resulting energy consumption of the model when deployed on neuromorphic hardware [ 39 ], is also a crucial factor in designing SNN models. The total “energy cost” $E$ of each model, defined as the ratio of the number of computations performed in the SNN to that of an iso-architecture ANN, can be estimated as:

where $L$ is the total number of layers, $S_{i-1}$ is the average spiking rate of the $(i-1)^{th}$ layer, $T$ is the number of timesteps used for inference, and $OP_i$ is the number of operations in the $i^{th}$ layer. Following [ 39 ], $OP_i$ of convolutional layers and linear layers can be summarized as:

where $l_i$ is the $i^{th}$ layer, $C_I$ and $C_O$ are the numbers of input and output channels, $K_H$ and $K_W$ are the height and width of the kernel, $O_H$ and $O_W$ are the height and width of the output, and $I_F$ and $O_F$ are the numbers of input and output features. Table II reports the energy cost of the four models: D-R-BPTT, D-R-CONV, S-F-BPTT, and D-F-BPTT. The D-R-BPTT model has the highest energy requirement, followed by the D-R-CONV, S-F-BPTT, and D-F-BPTT models, in descending order. These results demonstrate that the models using First-to-Spike coding are more energy efficient than the rate-coded models. Furthermore, compared to the D-F-BPTT model, the benefit of lower latency for the S-F-BPTT model is outweighed by its significantly higher spiking rate. This ultimately results in comparable or increased energy expenditure. On the CIFAR-10 dataset, however, this difference is less pronounced. There, the D-F-BPTT model requires almost twice as many timesteps as the S-F-BPTT model, so the difference in total energy cost is only slight.
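The energy proxy can be computed directly from the definitions above. The expression for $E$ is not reproduced in this text, so the sketch assumes the form suggested by those definitions: `E = sum_i S_{i-1} * T * OP_i / sum_i OP_i`, where the denominator is the operation count of the iso-architecture ANN. The layer sizes and spiking rates in the example are hypothetical.

```python
from typing import Sequence


def conv_ops(c_in: int, c_out: int, k_h: int, k_w: int, o_h: int, o_w: int) -> int:
    """OP_i of a convolutional layer: C_I * C_O * K_H * K_W * O_H * O_W."""
    return c_in * c_out * k_h * k_w * o_h * o_w


def linear_ops(in_features: int, out_features: int) -> int:
    """OP_i of a fully connected layer: I_F * O_F."""
    return in_features * out_features


def relative_energy_cost(ops: Sequence[int], input_rates: Sequence[float], timesteps: int) -> float:
    """Assumed form E = sum_i S_{i-1} * T * OP_i / sum_i OP_i.

    input_rates[i] is S_{i-1}, the average spiking rate (spikes per neuron per
    timestep) feeding layer i; the denominator is the op count of an iso-architecture ANN.
    """
    snn_ops = sum(s * timesteps * op for s, op in zip(input_rates, ops))
    return snn_ops / sum(ops)


if __name__ == "__main__":
    # Hypothetical two-layer network: one 5x5 conv layer and one linear layer.
    ops = [conv_ops(1, 6, 5, 5, 24, 24), linear_ops(100, 10)]
    print(ops, relative_energy_cost(ops, input_rates=[0.1, 0.05], timesteps=5))
```

A value below 1 means the SNN performs fewer (accumulate) operations than the ANN performs multiply-accumulates. Both higher spiking rates and more timesteps raise $E$, which is the tradeoff discussed above.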

Noise Sensitivity: A key aspect of ML model design is ensuring robustness to noise. A model's noise sensitivity can be measured by adding different levels of noise to the input and observing the impact on the network's accuracy. In this paper, Gaussian noise is used to assess how well each model maintains its performance under noisy conditions, with the noise variance varied from 0 to 1. Fig. 2 shows the relationship between accuracy degradation and the magnitude of the applied noise. The D-R-BPTT model demonstrates a higher tolerance to noise, maintaining higher accuracy as the noise intensity increases. It uses rate coding, which encodes information by the frequency of multiple spikes over time, so individual perturbations caused by noise have less impact on the overall information conveyed. Temporal coding models (D-F-BPTT and S-F-BPTT) are more sensitive to noise because they rely on the precise timing of spikes to encode information. However, the stochastic model performs better than the deterministic model at high noise levels. This can be attributed to the stochasticity incorporated during training itself, which provides more resilience to noise through greater tolerance of imprecision in individual spikes.
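A noise sweep of this kind can be sketched with the standard library. Note that `random.gauss` takes the standard deviation, so a target variance must be converted with a square root. The `predict` function and the toy dataset are hypothetical stand-ins for a trained model and test set. Whether inputs are clipped after adding noise is not specified, so no clipping is applied.

```python
import math
import random
from typing import Callable, Dict, List, Sequence, Tuple


def add_gaussian_noise(x: Sequence[float], variance: float, rng: random.Random) -> List[float]:
    """Add zero-mean Gaussian noise; random.gauss takes the standard deviation, i.e. sqrt(variance)."""
    sigma = math.sqrt(variance)
    return [xi + rng.gauss(0.0, sigma) for xi in x]


def noise_sweep(
    predict: Callable[[List[float]], int],
    dataset: Sequence[Tuple[Sequence[float], int]],
    variances: Sequence[float],
    seed: int = 0,
) -> Dict[float, float]:
    """Accuracy of a (hypothetical) `predict` function for each noise variance."""
    rng = random.Random(seed)
    results: Dict[float, float] = {}
    for var in variances:
        correct = sum(predict(add_gaussian_noise(x, var, rng)) == y for x, y in dataset)
        results[var] = correct / len(dataset)
    return results


if __name__ == "__main__":
    toy_predict = lambda x: int(x[0] > 0.5)  # hypothetical stand-in classifier
    toy_data = [([0.9], 1), ([0.1], 0), ([0.7], 1), ([0.3], 0)]
    print(noise_sweep(toy_predict, toy_data, [0.0, 0.25, 0.5, 1.0]))
```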

V Conclusions

In summary, our research explores the interplay of deterministic/stochastic computing with First-to-Spike information coding in SNNs. This integration bridges a gap in current research, demonstrating scalable direct training of SNNs with temporal encoding on large-scale datasets and deep architectures. We show that First-to-Spike coding offers significant benefits for SNN architectures over traditional rate-based models across several metrics, including latency, sparsity, and energy efficiency. We also highlight notable trade-offs between stochastic and deterministic SNN models in temporal encoding scenarios. Stochastic models reduce latency and provide enhanced noise robustness, which is important for real-time, confidence-critical applications. However, this advantage comes at the cost of slightly reduced sparsity, which in turn results in higher energy costs than deterministic SNNs employing First-to-Spike coding. In terms of accuracy, stochastic SNNs have the potential to aid generalization, especially on complex datasets. Although our results are promising, scaling this method to ImageNet-level vision tasks and to applications beyond vision remains a direction for future research. Energy- and sparsity-aware training techniques could also be explored for stochastic SNN models with temporal encoding to further enhance their applicability to resource-constrained edge devices.
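The latency and sparsity metrics weighed in this trade-off can both be read off recorded spike activity. The sketch below assumes output activity is stored as a binary timestep-by-neuron raster; it decodes the predicted class with First-to-Spike coding, takes the latency as the timestep of that first output spike, and reports the spiking rate as a sparsity measure. The function names and the lowest-index tie-breaking rule are illustrative assumptions, not the paper's implementation. Under these definitions, a model that fires earlier but more often shows a smaller latency together with a larger spiking rate.

```python
from __future__ import annotations

from typing import Optional, Sequence

# raster[t][n] == 1 if neuron n emits a spike at timestep t, else 0
Raster = Sequence[Sequence[int]]


def first_to_spike(output: Raster) -> tuple[Optional[int], Optional[int]]:
    """Decode an output-layer raster with First-to-Spike coding.

    Returns (predicted class, latency): the class is the index of the first
    output neuron to spike and the latency is the 1-based timestep of that
    spike. Ties within a timestep go to the lowest index (an assumed rule).
    Returns (None, None) if no output neuron spikes.
    """
    for t, row in enumerate(output):
        for n, spike in enumerate(row):
            if spike:
                return n, t + 1
    return None, None


def spiking_rate(raster: Raster) -> float:
    """Fraction of (timestep, neuron) slots holding a spike; lower means sparser."""
    slots = sum(len(row) for row in raster)
    spikes = sum(sum(row) for row in raster)
    return spikes / slots if slots else 0.0


# Three output neurons over three timesteps: neurons 1 and 2 tie at t = 2.
out = [[0, 0, 0],
       [0, 1, 1],
       [1, 0, 0]]
print(first_to_spike(out), spiking_rate(out))
```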

Acknowledgments

This material is based upon work supported in part by the U.S. Department of Energy, Office of Science, Office of Advanced Scientific Computing Research, under Award Number #DE-SC0021562, the U.S. National Science Foundation under award No. CCSS #2333881, CCF #1955815, CAREER #2337646 and EFRI BRAID #2318101 and by Oracle Cloud credits and related resources provided by the Oracle for Research program.

- [1] A. Javanshir, T. T. Nguyen, M. A. P. Mahmud, and A. Z. Kouzani, “Advancements in Algorithms and Neuromorphic Hardware for Spiking Neural Networks,” Neural Computation, vol. 34, no. 6, pp. 1289–1328, May 2022.

- [2] W. Maass, “Networks of spiking neurons: The third generation of neural network models,” Trans. Soc. Comput. Simul. Int., vol. 14, no. 4, pp. 1659–1671, Dec. 1997.

- [3] W. Guo, M. E. Fouda, A. M. Eltawil, and K. N. Salama, “Neural coding in spiking neural networks: A comparative study for robust neuromorphic systems,” Frontiers in Neuroscience, vol. 15, 2021.

- [4] T. Gollisch and M. Meister, “Rapid neural coding in the retina with relative spike latencies,” Science, vol. 319, no. 5866, pp. 1108–1111, 2008.

- [5] P. Heil, “First-spike latency of auditory neurons revisited,” Current Opinion in Neurobiology, vol. 14, no. 4, pp. 461–467, 2004.

- [6] A. Stanojevic, S. Woźniak, G. Bellec, G. Cherubini, A. Pantazi, and W. Gerstner, “An exact mapping from ReLU networks to spiking neural networks,” 2022.

- [7] B. Rueckauer and S.-C. Liu, “Conversion of analog to spiking neural networks using sparse temporal coding,” in 2018 IEEE International Symposium on Circuits and Systems (ISCAS), 2018, pp. 1–5.

- [8] J. Lin, S. Lu, M. Bal, and A. Sengupta, “Benchmarking spiking neural network learning methods with varying locality,” arXiv preprint arXiv:2402.01782, 2024.

- [9] M. Bal and A. Sengupta, “SpikingBERT: Distilling BERT to train spiking language models using implicit differentiation,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 38, no. 10, 2024, pp. 10998–11006.

- [10] R.-J. Zhu, Q. Zhao, G. Li, and J. K. Eshraghian, “SpikeGPT: Generative pre-trained language model with spiking neural networks,” arXiv preprint arXiv:2302.13939, 2023.

- [11] N. Rathi, G. Srinivasan, P. Panda, and K. Roy, “Enabling deep spiking neural networks with hybrid conversion and spike timing dependent backpropagation,” in International Conference on Learning Representations, 2020.

- [12] J. Kaiser, H. Mostafa, and E. Neftci, “Synaptic plasticity dynamics for deep continuous local learning (DECOLLE),” Frontiers in Neuroscience, vol. 14, May 2020. [Online]. Available: http://dx.doi.org/10.3389/fnins.2020.00424

- [13] B. Scellier and Y. Bengio, “Equilibrium propagation: Bridging the gap between energy-based models and backpropagation,” 2017.

- [14] M. Bal and A. Sengupta, “Sequence learning using equilibrium propagation,” arXiv preprint arXiv:2209.09626, 2022.

- [15] S. Lu and A. Sengupta, “Deep Unsupervised Learning Using Spike-Timing-Dependent Plasticity,” Neuromorphic Computing and Engineering, 2023.

- [16] W. Gerstner, R. Ritz, and J. L. van Hemmen, “Why spikes? Hebbian learning and retrieval of time-resolved excitation patterns,” Biol. Cybern., vol. 69, no. 5-6, pp. 503–515, Sep. 1993.

- [17] L. Lapicque, “Recherches quantitatives sur l’excitation électrique des nerfs traitée comme une polarisation,” J Physiol Paris, vol. 9, pp. 620–635, 1907.

- [18] W. Maass, “To spike or not to spike: That is the question,” Proceedings of the IEEE, vol. 103, no. 12, pp. 2219–2224, 2015.

- [19] E. O. Neftci, H. Mostafa, and F. Zenke, “Surrogate gradient learning in spiking neural networks,” 2019.

- [20] A. Sengupta, M. Parsa, B. Han, and K. Roy, “Probabilistic deep spiking neural systems enabled by magnetic tunnel junction,” IEEE Transactions on Electron Devices, vol. 63, no. 7, pp. 2963–2970, Jul. 2016.

- [21] K. Yang and A. Sengupta, “Stochastic magnetoelectric neuron for temporal information encoding,” Applied Physics Letters, vol. 116, no. 4, Jan. 2020.

- [22] A. Islam, K. Yang, A. K. Shukla, P. Khanal, B. Zhou, W.-G. Wang, and A. Sengupta, “Hardware in loop learning with spin stochastic neurons,” arXiv preprint arXiv:2305.03235, 2023.

- [23] A. Islam, A. Saha, Z. Jiang, K. Ni, and A. Sengupta, “Hybrid stochastic synapses enabled by scaled ferroelectric field-effect transistors,” Applied Physics Letters, vol. 122, no. 12, 2023.

- [24] G. Ma, R. Yan, and H. Tang, “Exploiting noise as a resource for computation and learning in spiking neural networks,” Patterns, vol. 4, no. 10, p. 100831, 2023.

- [25] A. Bagheri, O. Simeone, and B. Rajendran, “Training probabilistic spiking neural networks with first-to-spike decoding,” 2018.

- [26] B. Rosenfeld, O. Simeone, and B. Rajendran, “Learning first-to-spike policies for neuromorphic control using policy gradients,” in 2019 IEEE 20th International Workshop on Signal Processing Advances in Wireless Communications (SPAWC), 2019, pp. 1–5.

- [27] K. Yang, D. P. Gm, and A. Sengupta, “Leveraging probabilistic switching in superparamagnets for temporal information encoding in neuromorphic systems,” IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems, 2023.

- [28] Y. LeCun and C. Cortes, “MNIST handwritten digit database,” 2010.

- [29] A. Krizhevsky, V. Nair, and G. Hinton, “CIFAR-10 (Canadian Institute for Advanced Research).”

- [30] W. Gerstner and W. M. Kistler, Spiking Neuron Models: Single Neurons, Populations, Plasticity. Cambridge University Press, 2002.

- [31] J. Göltz, L. Kriener, A. Baumbach, S. Billaudelle, O. Breitwieser, B. Cramer, D. Dold, A. F. Kungl, W. Senn, J. Schemmel, K. Meier, and M. A. Petrovici, “Fast and energy-efficient neuromorphic deep learning with first-spike times,” Nature Machine Intelligence, vol. 3, no. 9, pp. 823–835, Sep. 2021.

- [32] S. Oh, D. Kwon, G. Yeom, W.-M. Kang, S. Lee, S. Y. Woo, J. Kim, and J.-H. Lee, “Neuron circuits for low-power spiking neural networks using time-to-first-spike encoding,” IEEE Access, vol. 10, pp. 24444–24455, 2022.

- [33] S. Park, S. Kim, B. Na, and S. Yoon, “T2FSNN: Deep spiking neural networks with time-to-first-spike coding,” 2020.

- [34] Y. Kim, A. Kahana, R. Yin, Y. Li, P. Stinis, G. E. Karniadakis, and P. Panda, “Rethinking skip connections in spiking neural networks with time-to-first-spike coding,” Frontiers in Neuroscience, vol. 18, 2024. [Online]. Available: https://www.frontiersin.org/journals/neuroscience/articles/10.3389/fnins.2024.1346805

- [35] S. Liu, V. C. H. Leung, and P. L. Dragotti, “First-spike coding promotes accurate and efficient spiking neural networks for discrete events with rich temporal structures,” Front. Neurosci., vol. 17, p. 1266003, Oct. 2023.

- [36] W. Wei, M. Zhang, H. Qu, A. Belatreche, J. Zhang, and H. Chen, “Temporal-coded spiking neural networks with dynamic firing threshold: Learning with event-driven backpropagation,” in Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Oct. 2023, pp. 10552–10562.

- [37] S. Park and S. Yoon, “Training energy-efficient deep spiking neural networks with time-to-first-spike coding,” 2021.

- [38] M. Mozafari, S. R. Kheradpisheh, T. Masquelier, A. Nowzari-Dalini, and M. Ganjtabesh, “First-spike-based visual categorization using reward-modulated STDP,” IEEE Transactions on Neural Networks and Learning Systems, vol. 29, no. 12, pp. 6178–6190, Dec. 2018.

- [39] S. Lu and A. Sengupta, “Exploring the connection between binary and spiking neural networks,” Frontiers in Neuroscience, vol. 14, Jun. 2020.

- [40] A. Sengupta, Y. Ye, R. Wang, C. Liu, and K. Roy, “Going deeper in spiking neural networks: VGG and residual architectures,” 2019.

- [41] Y. Li, S. Deng, X. Dong, R. Gong, and S. Gu, “A free lunch from ANN: Towards efficient, accurate spiking neural networks calibration,” 2021.

- [42] T. Liu, Z. Liu, F. Lin, Y. Jin, G. Quan, and W. Wen, “MT-Spike: A multilayer time-based spiking neuromorphic architecture with temporal error backpropagation,” 2018.

- [43] T. Liu, L. Jiang, Y. Jin, G. Quan, and W. Wen, “PT-Spike: A precise-time-dependent single spike neuromorphic architecture with efficient supervised learning,” in 2018 23rd Asia and South Pacific Design Automation Conference (ASP-DAC), 2018, pp. 568–573.

- [44] M. Zhang, J. Wang, B. Amornpaisannon, Z. Zhang, V. Miriyala, A. Belatreche, H. Qu, J. Wu, Y. Chua, T. E. Carlson, and H. Li, “Rectified linear postsynaptic potential function for backpropagation in deep spiking neural networks,” 2020.

- [45] J. W. Pillow, L. Paninski, V. J. Uzzell, E. P. Simoncelli, and E. J. Chichilnisky, “Prediction and decoding of retinal ganglion cell responses with a probabilistic spiking model,” J. Neurosci., vol. 25, no. 47, pp. 11003–11013, Nov. 2005.

- [46] E. Izhikevich, “Which model to use for cortical spiking neurons?” IEEE Transactions on Neural Networks, vol. 15, no. 5, pp. 1063–1070, 2004.

- [47] J. K. Eshraghian, M. Ward, E. Neftci, X. Wang, G. Lenz, G. Dwivedi, M. Bennamoun, D. S. Jeong, and W. D. Lu, “Training spiking neural networks using lessons from deep learning,” Proceedings of the IEEE, vol. 111, no. 9, pp. 1016–1054, 2023.

- [48] W. Fang, Z. Yu, Y. Chen, T. Masquelier, T. Huang, and Y. Tian, “Incorporating learnable membrane time constant to enhance learning of spiking neural networks,” 2021.

- [49] Y. Wu, L. Deng, G. Li, J. Zhu, and L. Shi, “Spatio-temporal backpropagation for training high-performance spiking neural networks,” Frontiers in Neuroscience, vol. 12, May 2018.

- [50] Y. Bengio, N. Léonard, and A. C. Courville, “Estimating or propagating gradients through stochastic neurons for conditional computation,” CoRR, vol. abs/1308.3432, 2013.

- [51] C. Shorten and T. M. Khoshgoftaar, “A survey on image data augmentation for deep learning,” J. Big Data, vol. 6, no. 1, Dec. 2019.

- [52] C.-Y. Lee, S. Xie, P. Gallagher, Z. Zhang, and Z. Tu, “Deeply-supervised nets,” 2014.

- [53] E. D. Cubuk, B. Zoph, D. Mane, V. Vasudevan, and Q. V. Le, “AutoAugment: Learning augmentation policies from data,” 2019.

- [54] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” 2017.

- [55] S. Lu and A. Sengupta, “Neuroevolution guided hybrid spiking neural network training,” Front. Neurosci., vol. 16, p. 838523, Apr. 2022.

- [56] R. Storn and K. Price, “Differential evolution – a simple and efficient heuristic for global optimization over continuous spaces,” Journal of Global Optimization, vol. 11, pp. 341–359, Jan. 1997.

- [57] P. Virtanen, R. Gommers, T. E. Oliphant, M. Haberland, T. Reddy, D. Cournapeau, E. Burovski, P. Peterson, W. Weckesser, J. Bright et al., “SciPy 1.0: Fundamental algorithms for scientific computing in Python,” Nature Methods, vol. 17, no. 3, pp. 261–272, 2020.

- [58] Y. Sakemi, K. Morino, T. Morie, and K. Aihara, “A supervised learning algorithm for multilayer spiking neural networks based on temporal coding toward energy-efficient VLSI processor design,” IEEE Transactions on Neural Networks and Learning Systems, vol. 34, no. 1, pp. 394–408, 2023.
