Semi-Supervised Visual Representation Learning for Fashion Compatibility

We consider the problem of complementary fashion prediction. Existing approaches focus on learning an embedding space where fashion items from different categories that are visually compatible are closer to each other. However, creating such labeled outfits is intensive and also not feasible to generate all possible outfit combinations, especially with large fashion catalogs. In this work, we propose a semi-supervised learning approach where we leverage large unlabeled fashion corpus to create pseudo positive and negative outfits on the fly during training. For each labeled outfit in a training batch, we obtain a pseudo-outfit by matching each item in the labeled outfit with unlabeled items. Additionally, we introduce consistency regularization to ensure that representation of the original images and their transformations are consistent to implicitly incorporate colour and other important attributes through self-supervision. We conduct extensive experiments on Polyvore, Polyvore-D and our newly created large-scale Fashion Outfits datasets, and show that our approach with only a fraction of labeled examples performs on-par with completely supervised methods.

1. Introduction

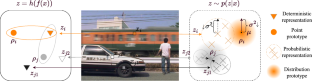

Recent advancements in computer vision have led to their several practical applications in fashion such as similar product recommendation (Lu et al . , 2019 ; Lin et al . , 2020 ; Tangseng and Okatani, 2020 ; Tangseng et al . , 2018 ) , shop-the-look (Hadi Kiapour et al . , 2015 ; Liu et al . , 2012 ) , virtual try-ons (Neuberger et al . , 2020 ; Yang et al . , 2020b ) and 3D avatars (Ma et al . , 2020 ; Patel et al . , 2020 ) . In this work, we focus on fashion compatibility where the objective is to compose matching clothing items that are appealing and complement well, as shown in the Fig. 1 A and Fig. 1 B. This could have potential application in online retail industry to recommend complementary products to the user based on their previously purchased product(s). For example, a formal shoe can be recommended to a customer who purchased a office-wear trouser .

What constitutes a good “complementary” similarity differs from visual similarity. Learning representations for compatibility requires reasoning about color, shape, product category and other high level attributes to ensure that the items from “different” categories are closer. Most existing works (Vasileva et al . , 2018 ; Tan et al . , 2019 ; Lin et al . , 2020 ) achieve this through careful labeling of dataset to identify items that go well together. A simple metric learning approach can then be used to learn an embedding space where the compatible items are brought closer compared to non-compatible ones. However, creating such labeled outfit pairs is expensive, laborious and sometimes require expert knowledge. This also becomes cumbersome and infeasible even for a reasonably large fashion catalog as one needs to compose several possible outfit combinations. In this work, we aim to learn powerful representation for compatibility task with very limited labeled outfit data. We propose a semi-supervised learning approach to leverage large amount of unlabeled fashion images that can be easily obtained. The approach is based on data augmentation technique (Berthelot et al . , 2019 ; Yun et al . , 2019 ) where the goal is to enhance the training set through techniques namely - pseudo-labeling and consistency regularization . Based on the idea that new combinations or associations can be formed from the current associations of the outfit items, we aim to generate pseudo positive and negative outfit pairs on-the-fly during each training iteration. For example, consider that if an item A is compatible with another item B and if item C is visually similar to item A, it is possible to then create a new outfit with item pairs B and C. For each image in the positive/negative training pairs, we find the most visually similar example in the unlabeled set and create a new pseudo-outfit pairs as shown in Fig. 1 C. Thus even with few training outfit collections, it is possible to generate a large stream of pseudo-outfits pairs.

As image attributes such as colour, shape and texture play a big role for compatibility, we additionally introduce self-supervised consistency regularization (Berthelot et al . , 2019 ) on unlabeled images to explicitly learn those attributes. For instance, we need to disentangle shape information from our representation as items in an outfit are usually of different shape. Similarly, colour can be very informative. We achieve this by applying random transformation on the images and direct the network to produce (dis)similar representations. Note that this is different from explicit attention mechanism employed by current schemes where they train conditional masks to learn a subspace for different attributes such as color and patterns (Tan et al . , 2019 ; Lin et al . , 2020 ) .

We conduct extensive experiments on Polyvore and Polyvore-Disjoint (Vasileva et al . , 2018 ) datasets and show that our proposed approach can achieve on-par performance with fully supervised methods even with 5 5 5 % of labeled outfits. In a fully supervised setting, our approach can achieve state-of-the-art performance on compatibility prediction task. Finally, we create a large scale Fashion Outfits dataset consisting of around 700 700 700 K outfits with more than 3 3 3 M images and demonstrate consistent improvement in performance over fully-supervised baseline.

To summarize, we make the following contributions in this paper.

We propose a semi-supervised approach for fashion compatibility prediction by learning powerful representation with limited outfit labels.

We introduce consistency regularization and pseudo-labeling based data augmentation techniques to learn different attributes and generate pseudo-outfits, completely from the unlabeled fashion images.

We demonstrate on-par performance as fully supervised approaches with only a fraction of labeled outfit on Polyvore, Polyvore-D and our newly created datasets.

2. Related Work

In this section, we discuss previous works on compatibility prediction and other related areas.

Fashion Compatibility introduced by Han et al. ( Han et al . , 2017 ) was formulated as a sequential problem and trained a bi-directional recurrent network model that predicts the next compatible item conditioned on previously observed items in the outfit sequence. They also introduced Polyvore dataset in this work. Vasileva et al. ( Vasileva et al . , 2018 ) train pairwise embedding spaces and employ metric learning to learn representations for fashion compatibility. Representations are learnt for different pairs of categories which is not feasible when the catalog of large category types. Further, they enriched the Polyvore dataset by introducing more challenging evaluation sets by filtering out common items across train and test splits. Tan et al. ( Tan et al . , 2019 ) learn embeddings by relying on a conditional weight branch on two image representations as an attention mechanism for selecting the subspace. Unlike (Vasileva et al . , 2018 ) , this work does not require access to the type information during evaluation.

Another additional ingredient of these works (Han et al . , 2017 ; Vasileva et al . , 2018 ; Tan et al . , 2019 ) is the usage of additional meta-data such as text description. They train a text module using word2vec features (Mikolov et al . , 2013 ) and enforce a visual-semantic embedding (VSE) loss to align text and image representations. In our work, we focus on only visual information.

More recently, some works (Cucurull et al . , 2019 ; Yang et al . , 2020a ; Duan et al . , 2019 ) have also exploited item-item relationships and trained a graph convolutional network ( GCN ) by exploiting didactic co-occurrences of items. Further, Lin et al. ( Lin et al . , 2020 ) introduce a fashion retrieval problem for images and learn a type-dependent attention mechanism. Most of the existing literature in fashion compatibility focus on the fully supervised paradigm. In contrast, we address a more practical and challenging semi-supervised paradigm without using additional text data.

Semi-supervised learning has seen lot of progress (Berthelot et al . , 2019 ; Yun et al . , 2019 ; Verma et al . , 2019 ) in the last few years where ever there is a scarcity of labeled data. In the context of deep representation learning, following techniques are widely employed. In consistency regularization (Ouali et al . , 2020 ; Cubuk et al . , 2018 ) , output class predictions are forced to remain unchanged for different augmentations of the input data. This provides regularization to the model to achieve good generalization. In our work, we incorporate consistency regularization to explicitly capture appearance and shape attributes for fashion items. However unlike previous approaches, we minimize the distance between original image and its shape transformation and simultaneously maximize the distance between original image and its colour transformation. This ensures that the model learns shape-invariant features and color variant features.

On the other hand, entropy minimization (Kundu et al . , 2020b ; Grandvalet and Bengio, 2005 ) aims to obey cluster assumption by forming low density regions between different classes. Pseudo-labeling (Lee, 2013 ; Kundu et al . , 2020c ; Venkat et al . , 2020 ) is a type of self-training algorithm that assign hard labels to unlabeled examples based on the maximum prediction probability. In our work, we create pseudo-outfits and augment our training dataset to exploit transitivity of items across different outfits. Generative adversarial network (GAN) (Goodfellow et al . , 2014 ) based approaches have also been proposed for semi-supervised learning, however these are challenging to train (Salimans et al . , 2016 ) . In a related area of few-shot learning, Prototypical networks (Snell et al . , 2017 ) exploit the simple inductive bias of a classifier by defining a prototype embedding as the mean of the support set in the latent space. We make use of a simple inductive bias that the nearest neighbour in embedding space should have similar attributes to construct pseudo-outfits.

Self-supervised learning literature is broadly based on solving a pre-defined task that exploits the structure present in the data (He et al . , 2020 ; Chen et al . , 2020b ; Caron et al . , 2018 ; Goyal et al . , 2019 ) , or equivariance (Noroozi et al . , 2017 ; Kundu et al . , 2020a ) and invariance (Misra and Maaten, 2020 ) properties of image transformations. For image classification, different pretext tasks such as jigsaw puzzle (Noroozi and Favaro, 2016 ) , colorization task (Deshpande et al . , 2015 ) , rotation prediction (Gidaris et al . , 2018 ) have been proposed. It has been shown recently that even simple data augmentation methods (Chen et al . , 2020b ; He et al . , 2020 ; Chen et al . , 2020a ) such as colour jittering and gray scale transformations can provide good supervision. These approaches employ contrastive loss to learn consistent representation for an image and its augmentation while treating other images in the batch as negative samples. In our work, we explicitly define positive and negative data augmentations and impose a self-supervised consistency loss on them. However, our regularizer does not require access to labels as required by (He et al . , 2020 ; Chen et al . , 2020b ) during evaluation.

3. Our Approach

We are interested in learning powerful visual representation for the task of fashion compatibility. This is achieved through metric learning by bringing the embedding of items (from different categories) that go well together in a outfit closer compared to non-compatible items. While the previous works (Vasileva et al . , 2018 ; Tan et al . , 2019 ; Lin et al . , 2020 ) relied mostly on large labeled outfits, in this work, we instead consider only a fraction of labeled outfits and leverage unlabeled fashion items to learn such representations.

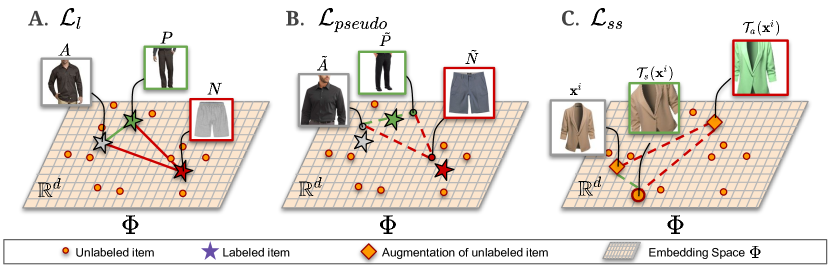

Our proposed approach is a data augmentation technique where pseudo-positive and negative triplet pairs (Fig 2 (b)) are generated on-the-fly during each training iteration based on visual similarity of individual examples in the triplet using the images in the unlabeled set. Since color and texture play a key role in determining matching outfits (Kim et al . , 2020 ) , we incorporate an additional self-supervised loss on the individual fashion items (Fig 2 (c)) to implicitly capture those attributes. Note that, this is different from explicit attention mechanism employed by current methods where they learn conditional masks that implicitly learns colour and pattern attributes (Tan et al . , 2019 ; Lin et al . , 2020 ) .

We next formulate the problem of semi-supervised fashion compatibility and then describe our proposed data augmentation techniques in greater detail.

3.1. Formulation

We are given a dataset 𝒟 = { 𝒟 l ∪ 𝒟 u } 𝒟 subscript 𝒟 𝑙 subscript 𝒟 𝑢 \mathcal{D}=\{\mathcal{D}_{l}\cup\mathcal{D}_{u}\} for training our model. 𝒟 l = { X 1 , X 2 … X l } subscript 𝒟 𝑙 superscript 𝑋 1 superscript 𝑋 2 … superscript 𝑋 𝑙 \mathcal{D}_{l}=\{X^{1},X^{2}\dots X^{l}\} corresponds to the labeled set where each X i = { 𝐱 1 i , 𝐱 2 i , … , 𝐱 k i } superscript 𝑋 𝑖 subscript superscript 𝐱 𝑖 1 subscript superscript 𝐱 𝑖 2 … subscript superscript 𝐱 𝑖 𝑘 X^{i}=\{\mathbf{x}^{i}_{1},\mathbf{x}^{i}_{2},\dots,\mathbf{x}^{i}_{k}\} is an outfit containing more than one fashion item from different categories. Note that, a fashion item 𝐱 j i subscript superscript 𝐱 𝑖 𝑗 \mathbf{x}^{i}_{j} can be a part of multiple outfits. Similarly, 𝒟 u = { 𝐱 1 , 𝐱 2 , … , 𝐱 u } subscript 𝒟 𝑢 superscript 𝐱 1 superscript 𝐱 2 … superscript 𝐱 𝑢 \mathcal{D}_{u}=\{\mathbf{x}^{1},\mathbf{x}^{2},\dots,\mathbf{x}^{u}\} is the large unlabeled corpus of individual fashion items that are not part of any outfit. We also denote 𝒳 𝒳 \mathcal{X} as the set of all fashion images. Our goal is then to learn a mapping function f θ : 𝒳 → Φ : subscript 𝑓 𝜃 → 𝒳 Φ f_{\theta}:\mathcal{X}\rightarrow\Phi that transforms the fashion images into an embedding space where compatible items are closer to each other. In our case, we use deep convolutional neural network ( CNN ) as f θ subscript 𝑓 𝜃 f_{\theta} where θ 𝜃 \theta denotes the parameters of our network.

3.2. Architecture

Our network architecture is similar to previous approaches (Vasileva et al . , 2018 ) that are based on siamese network (Rahul et al . , 2017 ) with ResNet18 backbone. To train our model, we need a batch of labeled triplets and unlabeled images. Each triplet consists of anchor ( A 𝐴 A ), positive ( P 𝑃 P ) and negative ( N 𝑁 N ) image pairs as shown in the Figure 2 . Anchor and positive images are complementary items that are part of the same outfit but from different categories (e.g. shirt and trouser ) while negative image is chosen randomly from the positive item category. The embeddings ϕ italic-ϕ \phi are obtained after the fully-connected layer, similar to (Vasileva et al . , 2018 ) . We train our network with triplet based margin loss defined as,

where, A 𝐴 A , P 𝑃 P and N 𝑁 N denote anchor, positive and negative images of the triplet, respectively. d ( ⋅ , ⋅ ) 𝑑 ⋅ ⋅ d(\cdot,\cdot) is the Euclidean distance function and m 𝑚 m is the margin.

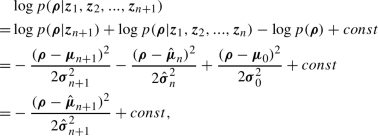

Labeled dataset: We directly minimize the above margin loss ( ℒ l subscript ℒ 𝑙 \mathcal{L}_{l} ) for the triplets sampled from labeled outfits to bring compatible items closer to each other pushing non-compatible items farther away as shown in Fig. 2 A. However, in the absence of large labeled dataset, we employ consistency regularization and pseudo-labeling losses on the unlabeled images that are described next.

3.3. Consistency regularization

Based on the hypothesis that learning discriminative representation for fashion compatibility prediction requires reasoning about important attributes such as colour and texture since matched outfits often have similar attributes. At the same time, it is necessary to disentangle shape information as items from different categories usually have different shapes. This becomes especially crucial when training the ImageNet pre-trained networks that learn discriminative shape information (Chen et al . , 2020b ; He et al . , 2020 ) for generic image classification. We propose a self-supervised consistency regularization on individual fashion items that enforce these observations on the network. The main difference to existing approaches is that we leverage unlabeled fashion images to explicitly learn such attributes without the need for an attention mechanism (Tan et al . , 2019 ) or a large labeled outfit dataset (Tan et al . , 2019 ; Lin et al . , 2020 ) .

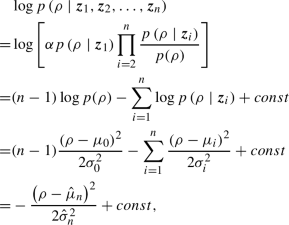

We consider entire fashion item set 𝒳 𝒳 \mathcal{X} that includes both labeled and unlabeled samples without their outfit association. Given each image in the batch, we apply random transformations for shape and appearance, and then measure the discrepancy between the representations of the original and perturbed images. Unlike (Berthelot et al . , 2019 ; Yun et al . , 2019 ) that ensures consistent output class distribution for a sample and its transformation, here we enforce the consistency for the representation as network is optimized for distance based loss function. We apply following transformations on the images and visualize a few examples in Fig. 3 .

Shape transformation ( 𝒯 s subscript 𝒯 𝑠 \mathcal{T}_{s} ) : For each image x i superscript 𝑥 𝑖 x^{i} in the mini-batch, we apply random affine transformations such as rotation and shearing. We rotate the image randomly in the range of ± 5 plus-or-minus 5 \pm 5 degrees and shear the image by utmost 30 30 30 pixels along x 𝑥 x and y 𝑦 y coordinates. We finally scale the images by a maximum of 1.2 1.2 1.2 times and take the center crop of the image. Colour and texture remain unaltered. We represent shape perturbed images as 𝐱 [ s ] i = 𝒯 s ( 𝐱 i ) subscript superscript 𝐱 𝑖 delimited-[] 𝑠 subscript 𝒯 𝑠 superscript 𝐱 𝑖 \mathbf{x}^{i}_{[s]}=\mathcal{T}_{s}(\mathbf{x}^{i}) . See Fig. 3 for examples.

Appearance transformation ( 𝒯 a subscript 𝒯 𝑎 \mathcal{T}_{a} ) : While it is challenging to modify the colour/texture of the items, we resort to simple transformations such as random gray scale, colour jitter and random cropping to obtain the perturbations 𝐱 [ a ] i = 𝒯 a ( 𝐱 i ) subscript superscript 𝐱 𝑖 delimited-[] 𝑎 subscript 𝒯 𝑎 superscript 𝐱 𝑖 \mathbf{x}^{i}_{[a]}=\mathcal{T}_{a}(\mathbf{x}^{i}) . Such techniques are previously employed in self-supervised works (He et al . , 2020 ; Chen et al . , 2020b ) for representation learning. See Fig. 3 for examples.

Finally, we construct a triplet with original fashion item x i superscript 𝑥 𝑖 x^{i} as anchor, and its shape 𝐱 [ s ] i subscript superscript 𝐱 𝑖 delimited-[] 𝑠 \mathbf{x}^{i}_{[s]} and color 𝐱 [ a ] i subscript superscript 𝐱 𝑖 delimited-[] 𝑎 \mathbf{x}^{i}_{[a]} perturbed images as positive and negative instances. We then impose a self-supervised loss ( ℒ s s subscript ℒ 𝑠 𝑠 \mathcal{L}_{ss} ) on the augmented triplet ( 𝐱 i , 𝐱 [ s ] i , 𝐱 [ a ] i ) superscript 𝐱 𝑖 subscript superscript 𝐱 𝑖 delimited-[] 𝑠 subscript superscript 𝐱 𝑖 delimited-[] 𝑎 (\mathbf{x}^{i},\mathbf{x}^{i}_{[s]},\mathbf{x}^{i}_{[a]}) using margin triplet loss as shown in Fig. 2 C.

3.4. Data Augmentation with Pseudo-Labels

While consistency regularization applied on individual fashion items directs the network what to focus on, we present a labeling strategy that generates pseudo-outfits by exploiting the knowledge of fashion items in their vicinity. Pseudo-outfits are the synthetically created outfit pairs created on-the-fly during each training iteration. We again leverage unlabeled images to achieve this.

We draw our motivation from few-shot learning (Snell et al . , 2017 ) and argue that nearest neighbour should have similar attributes at a thematic level (e.g. different office-wear items to be closer to each other compared to travel-wear items) due to the inductive bias of the network instilled by ℒ l subscript ℒ 𝑙 \mathcal{L}_{l} and ℒ s s subscript ℒ 𝑠 𝑠 \mathcal{L}_{ss} . Thus given a triplet with anchor, positive and negative items from the labeled outfit, we create a pseudo-triplet ( A ~ , P ~ , N ~ ) ~ 𝐴 ~ 𝑃 ~ 𝑁 (\tilde{A},\tilde{P},\tilde{N}) by finding nearest element for each of these items in the embedding space. As it is computationally infeasible to perform nearest neighbor search on entire unlabeled dataset 𝒟 U subscript 𝒟 𝑈 \mathcal{D}_{U} , we randomly sample sufficiently large mini-batch of unlabeled images, 𝐛 u subscript 𝐛 𝑢 \mathbf{b}_{u} , to generate pseudo-outfits and finally impose margin loss ( ℒ p s e u d o subscript ℒ 𝑝 𝑠 𝑒 𝑢 𝑑 𝑜 \mathcal{L}_{pseudo} ) on the pseudo-triplets ( A ~ , P ~ , N ~ ) ~ 𝐴 ~ 𝑃 ~ 𝑁 (\tilde{A},\tilde{P},\tilde{N}) as shown in Fig. 2 B.

3.5. Loss function

We formalize our algorithm in Algo. 1 . We minimize the following objective function to train our model

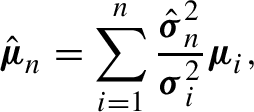

where ℒ l subscript ℒ 𝑙 \mathcal{L}_{l} , ℒ p s e u d o subscript ℒ 𝑝 𝑠 𝑒 𝑢 𝑑 𝑜 \mathcal{L}_{pseudo} and ℒ s s subscript ℒ 𝑠 𝑠 \mathcal{L}_{ss} are the triplet margin losses on the labeled, pseudo-outfits and individual instances, respectively. λ s s subscript 𝜆 𝑠 𝑠 \lambda_{ss} and λ p s e u d o subscript 𝜆 𝑝 𝑠 𝑒 𝑢 𝑑 𝑜 \lambda_{pseudo} are the hyper-parameters.

4. Datasets

Polyvore outfits (Vasileva et al . , 2018 ) and Polyvore disjoint (Vasileva et al . , 2018 ) are the two primary datasets used in the literature for evaluating fashion compatibility. We conduct our ablations and comparisons with previous state-of-the-art approaches on these datasets. In addition to these, we baseline our results on a newly created fashion dataset to provide large scale evaluation. We provide the statistics of these datasets in Table 4 .

Fashion outfits is a proprietary dataset created based on the user purchase transactions on an e-commerce platform. Our collection process makes a reasonable assumption that multiple fashion items from different categories that are purchased by an user in a single session are complementary and go well together. Only those fashion categories that are similar to high-level categories defined in the Polyvore dataset are considered. Based on the user purchase history over a period of time, we retained user sessions (a) with purchases from more than one category, (b) with uniquely purchased items and (c) that do not have multiple items from the same category. We finally apply association mining algorithm (Agrawal and Srikant, 1994 ) on these subset to retain highly frequent co-occurring items and remove any noisy transactions.

Overall, the dataset consists of 705 K 705 𝐾 ~{}705K outfits with more than 3 M 3 𝑀 ~{}3M images. We randomly split the dataset into 675 K 675 𝐾 ~{}675K train, 10 K 10 𝐾 10K validation, and 20 K 20 𝐾 20K test outfits. In our experiments, we primarily intend to use the train set as an unlabeled set to demonstrate the efficacy of our proposed approach with unlabeled examples.

5. Experiments

5.1. implementation details.

We use the same implementation procedure as (Tan et al . , 2019 ; Vasileva et al . , 2018 ) and modify ImageNet-pretrained ResNet-18 (He et al . , 2016 ) as our backbone. We consider a batch size of 256 256 256 for labeled set where each sample within a batch consists of anchor, positive and negative images. For the unlabeled set, we consider 1024 1024 1024 individual fashion items. The triplet loss margin m 𝑚 m is set to 0.4 0.4 0.4 . We determine the values of λ s s subscript 𝜆 𝑠 𝑠 \lambda_{ss} and λ p s e u d o subscript 𝜆 𝑝 𝑠 𝑒 𝑢 𝑑 𝑜 \lambda_{pseudo} empirically and set them to 0.1 0.1 0.1 and 1 1 1 , respectively. The network is optimized with Adam (Kingma and Ba, 2015 ) optimizer with a learning rate of 5 e 5 𝑒 5e - 5 5 5 . The network is trained for 10 10 10 epochs and the best results are reported based on a validation set. Our implementation is done in PyTorch (Paszke et al . , 2019 ) and trained on Nvidia-V100 GPU machine with 16 16 16 GB memory.

5.2. Evaluation Tasks

Fill-In-The-Blank (FITB) is a question and answering task in which model is presented with an incomplete outfit along with four candidate items as possible answers. The task is then to choose a candidate that is most compatible with the given outfit. As done in (Vasileva et al . , 2018 ) , we measure the pairwise cosine similarity between the candidate embedding and the average embedding of the outfit and choose the one with highest similarity. The performance is reported as overall accuracy on this task.

Compatibility prediction is a binary prediction task where model has to predict whether all the items in a given outfit are compatible or not. We calculate the average pairwise distance between items in the outfit and report the performance as area under the receiver operating curve ( AUC ).

5.3. Results

Baseline methods. To validate our hypothesis that color plays an important role for compatibility learning, we report our baseline result with color histogram features. As shown in Table 2 , these simple features perform remarkably well and achieve results on par with some deep architectures (Bi-LSTM approach (Han et al . , 2017 ) ). This motivates us to explicitly encode colour information in our representation from unlabeled images. Another baseline is a siamese network with triplet loss as reported in (Vasileva et al . , 2018 ) . Note that these baselines do not have include any other meta data such as text or label information.

Comparison with state-of-the-art. We make comparisons with previously reported approaches on Polyvore datasets in Table 2 . All these approaches rely on a fully supervised outfit dataset for representation learning. We also specify whether these approaches rely on any additional metadata information such as category and title. It is clear from the table that our approach achieves on par performance compared to fully supervised methods with only a fraction of labeled outfits and does not require any additional meta-data information.

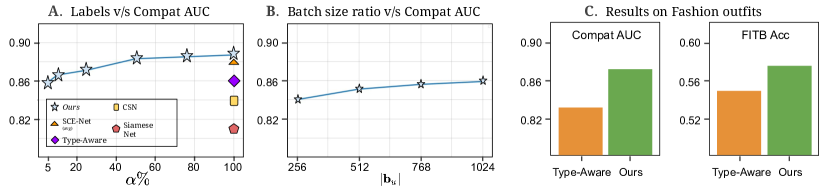

Result on Fashion outfits. We report our large-scale tests on Fashion outfits in Fig. 4 C. Our method obtains scores of 0.87/57.6 on Compat AUC/FITB tasks while (Vasileva et al . , 2018 ) obtains scores of 0.83/55.0. This demonstrates that our method works well even on large benchmarks. Due to non-availability of code for some methods, we report the result only for (Vasileva et al . , 2018 ) .

5.4. Ablation studies

Consistency regularization and pseudo-labels. We first conduct our ablation study to understand the effectiveness of ℒ s s subscript ℒ 𝑠 𝑠 \mathcal{L}_{ss} and ℒ p s e u d o subscript ℒ 𝑝 𝑠 𝑒 𝑢 𝑑 𝑜 \mathcal{L}_{pseudo} . We include different regularization terms to the baseline siamese model and report the results in Table 3 . It is evident that both these forms of regularization on unlabeled data have complementary benefits and improve the overall performance significantly achieving results on par with supervised methods.

Batch size . To create good quality pseudo-outfits, we need to have reasonably large unlabeled image batches to ensure better quality matches that share similar attributes for items in the labeled triplet (Chen et al . , 2020b ; Lee, 2013 ) . To evaluate this, we conduct an experiment with different unlabeled bath sizes and report the results in Fig 4 B. Results indicate that increasing the batch size consistently improves the performance of our model. However, we could not go beyond a batch size of 1024 1024 1024 due to GPU memory limitations.

How many labeled outfits are enough? Since it is challenging to annotate outfit pairs, an important question we seek to answer is the number of labeled outfits that are sufficient for learning good representations. For this study, we consider a proportion of the Polyvore dataset as labeled and consider remaining outfits as unlabeled in each experiment. As expected, performance improves by increasing the labeled set as shown in Fig 4 A. As depicted in the figure, our semi-supervised method (with α 𝛼 \alpha = 5 % percent 5 5\% ) outperforms fully-supervised CSN (Veit et al . , 2017 ) and Siamese network. Further, as we increase α 𝛼 \alpha to 50 % percent 50 50\% , our approach starts to outperform even the fully-supervised approaches that use additional data such as text description (Veit et al . , 2017 ; Vasileva et al . , 2018 ) .

Another interesting point to note is that, at full supervision (i.e. α 𝛼 \alpha = 100 % percent 100 100\% ), our approach achieves 0.89 0.89 0.89 AUC outperforming many supervised approaches on the compatibility task compared in Table 2 due to the added consistency regularization supervision.

5.5. Visualization

Embedding Space . We plot t-SNE (Maaten and Hinton, 2008 ) of the visual representation space Φ Φ \Phi for ImageNet and our model as shown in Fig 5 . As shown in Fig. 5 A, ImageNet pre-trained models trained for classification depicts stronger bias for learning shape discriminative features that bringing different category items farther away. This bias hampers the learning of fashion compatibility especially in a semi-supervised setting like ours as discussed in Sec. 3.3 . In Fig. 5 B, we portray the t-SNE plot of the representation space learned by our semi-supervised approach ( α 𝛼 \alpha = 5 % percent 5 5\% ). The t-SNE plot demonstrates that the embedding space has learnt strong representations for visual-appearance characterized by the color and texture information of fashion items. Hence, by explicitly disentangling the shape information of the fashion items, our approach overcomes the pre-training task bias.

Qualitative results : In Fig. 6 , we present qualitative results of our approach on the Polyvore and Fashion outfits dataset. The results show that our approach is able to model the concepts of color and texture well. In the Fig. 6 , we also present some of the failure cases where our approach produces suboptimal predictions for FITB and Compatibility tasks. Our model performs suboptimally on outfits that contain items with significantly varying appearance. For example, in the last box of Fig. 6 , the texture of the trouser is very different from that of the shirt and the outerwear .

6. Conclusion

In this work, we have presented an approach for learning strong visual representations for fashion compatibility using limited labeled training outfit data. We proposed two techniques for leveraging unlabeled data. First, to learn important attributes such as color, we introduced a self-supervision scheme that enforces consistency in the representation of input and its random transformations. While this acts like a data augmentation at instance level, we also proposed pseudo-labeling technique that creates pseudo-labels based on the visual similarity of labeled and unlabeled images. We conducted our experiments on Polyvore, Polyvore-D and newly created Fashion outfits dataset and achieved results on-par with supervised methods. This, however, is achieved with only a fraction of labeled data and without using any meta data such as text description.

- Agrawal and Srikant (1994) Rakesh Agrawal and Ramakrishnan Srikant. 1994. Fast algorithms for mining association rules. In International Conference on Very Large Data Bases (VLDB) .

- Berthelot et al . (2019) David Berthelot, Nicholas Carlini, Ian Goodfellow, Nicolas Papernot, Avital Oliver, and Colin A Raffel. 2019. Mixmatch: A holistic approach to semi-supervised learning. In Neural Information Processing Systems (NeurIPS) .

- Caron et al . (2018) Mathilde Caron, Piotr Bojanowski, Armand Joulin, and Matthijs Douze. 2018. Deep clustering for unsupervised learning of visual features. In European Conference of Computer Vision (ECCV) .

- Chen et al . (2020b) Ting Chen, Simon Kornblith, Mohammad Norouzi, and Geoffrey Hinton. 2020b. A simple framework for contrastive learning of visual representations. ICML (2020).

- Chen et al . (2020a) Xinlei Chen, Haoqi Fan, Ross Girshick, and Kaiming He. 2020a. Improved baselines with momentum contrastive learning. arXiv preprint arXiv:2003.04297 (2020).

- Cubuk et al . (2018) Ekin D Cubuk, Barret Zoph, Dandelion Mane, Vijay Vasudevan, and Quoc V Le. 2018. Autoaugment: Learning augmentation policies from data. arXiv preprint arXiv:1805.09501 (2018).

- Cucurull et al . (2019) Guillem Cucurull, Perouz Taslakian, and David Vazquez. 2019. Context-aware visual compatibility prediction. In Conference on Computer Vision and Pattern Recognition (CVPR) .

- Deshpande et al . (2015) Aditya Deshpande, Jason Rock, and David Forsyth. 2015. Learning large-scale automatic image colorization. In International Conference of Computer Vision (ICCV) .

- Duan et al . (2019) Jiali Duan, Xiaoyuan Guo, Son Tran, and Jay Kuo. 2019. Fashion Compatibility Recommendation via Unsupervised Metric Graph Learning. In Neural Information Processing Systems workshop .

- Gidaris et al . (2018) Spyros Gidaris, Praveer Singh, and Nikos Komodakis. 2018. Unsupervised representation learning by predicting image rotations. arXiv preprint arXiv:1803.07728 (2018).

- Goodfellow et al . (2014) Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. 2014. Generative adversarial nets. In Neural Information Processing Systems (NIPS) .

- Goyal et al . (2019) Priya Goyal, Dhruv Mahajan, Abhinav Gupta, and Ishan Misra. 2019. Scaling and benchmarking self-supervised visual representation learning. In International Conference of Computer Vision (ICCV) .

- Grandvalet and Bengio (2005) Yves Grandvalet and Yoshua Bengio. 2005. Semi-supervised learning by entropy minimization. In Neural Information Processing Systems (NIPS) .

- Hadi Kiapour et al . (2015) M Hadi Kiapour, Xufeng Han, Svetlana Lazebnik, Alexander C Berg, and Tamara L Berg. 2015. Where to buy it: Matching street clothing photos in online shops. In International Conference of Computer Vision (ICCV) .

- Han et al . (2017) Xintong Han, Zuxuan Wu, Yu-Gang Jiang, and Larry S Davis. 2017. Learning fashion compatibility with bidirectional lstms. In ACMMM .

- He et al . (2020) Kaiming He, Haoqi Fan, Yuxin Wu, Saining Xie, and Ross Girshick. 2020. Momentum contrast for unsupervised visual representation learning. In Conference on Computer Vision and Pattern Recognition (CVPR) .

- He et al . (2016) Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. 2016. Deep residual learning for image recognition. In Conference on Computer Vision and Pattern Recognition (CVPR) .

- Kim et al . (2020) Donghyun Kim, Kuniaki Saito, Kate Saenko, Stan Sclaroff, and Bryan A Plummer. 2020. Self-supervised Visual Attribute Learning for Fashion Compatibility. arXiv (2020).

- Kingma and Ba (2015) Diederik P Kingma and Jimmy Ba. 2015. Adam: A method for stochastic optimization. International Conference on Learning Representations (ICLR) (2015).

- Kundu et al . (2020a) Jogendra Nath Kundu, Ambareesh Revanur, Govind Vitthal Waghmare, Rahul Mysore Venkatesh, and R Venkatesh Babu. 2020a. Unsupervised Cross-Modal Alignment for Multi-Person 3D Pose Estimation. European Conference of Computer Vision (ECCV) (2020).

- Kundu et al . (2020b) Jogendra Nath Kundu, Naveen Venkat, Ambareesh Revanur, R Venkatesh Babu, et al . 2020b. Towards Inheritable Models for Open-Set Domain Adaptation. In Conference on Computer Vision and Pattern Recognition (CVPR) .

- Kundu et al . (2020c) Jogendra Nath Kundu, Rahul Mysore Venkatesh, Naveen Venkat, Ambareesh Revanur, and R. Venkatesh Babu. 2020c. Class-Incremental Domain Adaptation. In European Conference of Computer Vision (ECCV) .

- Lee (2013) Dong-Hyun Lee. 2013. Pseudo-label: The simple and efficient semi-supervised learning method for deep neural networks. In ICMLw .

- Lin et al . (2020) Yen-Liang Lin, Son Tran, and Larry S Davis. 2020. Fashion Outfit Complementary Item Retrieval. In Conference on Computer Vision and Pattern Recognition (CVPR) .

- Liu et al . (2012) Si Liu, Zheng Song, Guangcan Liu, Changsheng Xu, Hanqing Lu, and Shuicheng Yan. 2012. Street-to-shop: Cross-scenario clothing retrieval via parts alignment and auxiliary set. In Conference on Computer Vision and Pattern Recognition (CVPR) .

- Lu et al . (2019) Zhi Lu, Yang Hu, Yunchao Jiang, Yan Chen, and Bing Zeng. 2019. Learning Binary Code for Personalized Fashion Recommendation. In Conference on Computer Vision and Pattern Recognition (CVPR) .

- Ma et al . (2020) Qianli Ma, Jinlong Yang, Anurag Ranjan, Sergi Pujades, Gerard Pons-Moll, Siyu Tang, and Michael J Black. 2020. Learning to dress 3d people in generative clothing. In Conference on Computer Vision and Pattern Recognition (CVPR) .

- Maaten and Hinton (2008) Laurens van der Maaten and Geoffrey Hinton. 2008. Visualizing data using t-SNE. Journal of Machine Learning (JMLR) (2008).

- Mikolov et al . (2013) Tomas Mikolov, Ilya Sutskever, Kai Chen, Greg S Corrado, and Jeff Dean. 2013. Distributed representations of words and phrases and their compositionality. In Neural Information Processing Systems (NIPS) .

- Misra and Maaten (2020) Ishan Misra and Laurens van der Maaten. 2020. Self-supervised learning of pretext-invariant representations. In Conference on Computer Vision and Pattern Recognition (CVPR) .

- Neuberger et al . (2020) Assaf Neuberger, Eran Borenstein, Bar Hilleli, Eduard Oks, and Sharon Alpert. 2020. Image Based Virtual Try-On Network From Unpaired Data. In Conference on Computer Vision and Pattern Recognition (CVPR) .

- Noroozi and Favaro (2016) Mehdi Noroozi and Paolo Favaro. 2016. Unsupervised learning of visual representations by solving jigsaw puzzles. In European Conference of Computer Vision (ECCV) .

- Noroozi et al . (2017) Mehdi Noroozi, Hamed Pirsiavash, and Paolo Favaro. 2017. Representation learning by learning to count. In International Conference of Computer Vision (ICCV) .

- Ouali et al . (2020) Yassine Ouali, Céline Hudelot, and Myriam Tami. 2020. Semi-Supervised Semantic Segmentation with Cross-Consistency Training. In Conference on Computer Vision and Pattern Recognition (CVPR) .

- Paszke et al . (2019) Adam Paszke, Sam Gross, Francisco Massa, Adam Lerer, James Bradbury, Gregory Chanan, Trevor Killeen, Zeming Lin, Natalia Gimelshein, Luca Antiga, et al . 2019. Pytorch: An imperative style, high-performance deep learning library. In Neural Information Processing Systems (NeurIPS) .

- Patel et al . (2020) Chaitanya Patel, Zhouyingcheng Liao, and Gerard Pons-Moll. 2020. Tailornet: Predicting clothing in 3d as a function of human pose, shape and garment style. In Conference on Computer Vision and Pattern Recognition (CVPR) .

- Rahul et al . (2017) MV Rahul, Revanur Ambareesh, and G Shobha. 2017. Siamese network for underwater multiple object tracking. In International Conference on Machine Learning and Computing (ICMLC) .

- Salimans et al . (2016) Tim Salimans, Ian Goodfellow, Wojciech Zaremba, Vicki Cheung, Alec Radford, and Xi Chen. 2016. Improved techniques for training gans. In Neural Information Processing Systems (NIPS) .

- Snell et al . (2017) Jake Snell, Kevin Swersky, and Richard Zemel. 2017. Prototypical networks for few-shot learning. In Neural Information Processing Systems (NeurIPS) .

- Tan et al . (2019) Reuben Tan, Mariya I Vasileva, Kate Saenko, and Bryan A Plummer. 2019. Learning similarity conditions without explicit supervision. In International Conference of Computer Vision (ICCV) .

- Tangseng and Okatani (2020) Pongsate Tangseng and Takayuki Okatani. 2020. Toward explainable fashion recommendation. In Winter Conference on Applications of Computer Vision (WACV) .

- Tangseng et al . (2018) P. Tangseng, K. Yamaguchi, and T. Okatani. 2018. Recommending Outfits from Personal Closet. In Winter Conference on Applications of Computer Vision (WACV) .

- Vasileva et al . (2018) Mariya I Vasileva, Bryan A Plummer, Krishna Dusad, Shreya Rajpal, Ranjitha Kumar, and David Forsyth. 2018. Learning type-aware embeddings for fashion compatibility. In European Conference of Computer Vision (ECCV) .

- Veit et al . (2017) Andreas Veit, Serge Belongie, and Theofanis Karaletsos. 2017. Conditional similarity networks. In Conference on Computer Vision and Pattern Recognition (CVPR) .

- Venkat et al . (2020) Naveen Venkat, Jogendra Nath Kundu, Durgesh Kumar Singh, Ambareesh Revanur, and R. Venkatesh Babu. 2020. Your Classifier can Secretly Suffice Multi-Source Domain Adaptation. In Neural Information Processing Systems (NeurIPS) .

- Verma et al . (2019) Vikas Verma, Alex Lamb, Juho Kannala, Yoshua Bengio, and David Lopez-Paz. 2019. Interpolation consistency training for semi-supervised learning. International Joint Conference on Artificial Intelligence (IJCAI) (2019).

- Yang et al . (2020b) Han Yang, Ruimao Zhang, Xiaobao Guo, Wei Liu, Wangmeng Zuo, and Ping Luo. 2020b. Towards Photo-Realistic Virtual Try-On by Adaptively Generating-Preserving Image Content. In Conference on Computer Vision and Pattern Recognition (CVPR) .

- Yang et al . (2020a) Xun Yang, Xiaoyu Du, and Meng Wang. 2020a. Learning to Match on Graph for Fashion Compatibility Modeling. In AAAI conference on Artificial Intelligence (AAAI) .

- Yun et al . (2019) Sangdoo Yun, Dongyoon Han, Seong Joon Oh, Sanghyuk Chun, Junsuk Choe, and Youngjoon Yoo. 2019. Cutmix: Regularization strategy to train strong classifiers with localizable features. In International Conference of Computer Vision (ICCV) .

Semi-Supervised Visual Representation Learning for Fashion Compatibility

We consider the problem of complementary fashion prediction. Existing approaches focus on learning an embedding space where fashion items from different categories that are visually compatible are closer to each other. However, creating such labeled outfits is intensive and also not feasible to generate all possible outfit combinations, especially with large fashion catalogs. In this work, we propose a semi-supervised learning approach where we leverage large unlabeled fashion corpus to create pseudo-positive and pseudo-negative outfits on the fly during training. For each labeled outfit in a training batch, we obtain a pseudo-outfit by matching each item in the labeled outfit with unlabeled items. Additionally, we introduce consistency regularization to ensure that representation of the original images and their transformations are consistent to implicitly incorporate colour and other important attributes through self-supervision. We conduct extensive experiments on Polyvore, Polyvore-D and our newly created large-scale Fashion Outfits datasets, and show that our approach with only a fraction of labeled examples performs on-par with completely supervised methods.

Ambareesh Revanur

Vijay Kumar

Deepthi Sharma

Related Research

Learning fashion compatibility from in-the-wild images, theme-matters: fashion compatibility learning via theme attention, semi-supervised compatibility learning across categories for clothing matching, adaptive consistency regularization for semi-supervised transfer learning, semi-supervised fashion compatibility prediction by color distortion prediction, hard-aware fashion attribute classification, using discriminative methods to learn fashion compatibility across datasets.

Please sign up or login with your details

Generation Overview

AI Generator calls

AI Video Generator calls

AI Chat messages

Genius Mode messages

Genius Mode images

AD-free experience

Private images

- Includes 500 AI Image generations, 1750 AI Chat Messages, 30 AI Video generations, 60 Genius Mode Messages and 60 Genius Mode Images per month. If you go over any of these limits, you will be charged an extra $5 for that group.

- For example: if you go over 500 AI images, but stay within the limits for AI Chat and Genius Mode, you'll be charged $5 per additional 500 AI Image generations.

- Includes 100 AI Image generations and 300 AI Chat Messages. If you go over any of these limits, you will have to pay as you go.

- For example: if you go over 100 AI images, but stay within the limits for AI Chat, you'll have to reload on credits to generate more images. Choose from $5 - $1000. You'll only pay for what you use.

Out of credits

Refill your membership to continue using DeepAI

Share your generations with friends

Academia.edu no longer supports Internet Explorer.

To browse Academia.edu and the wider internet faster and more securely, please take a few seconds to upgrade your browser .

Enter the email address you signed up with and we'll email you a reset link.

- We're Hiring!

- Help Center

Semi-Supervised Visual Representation Learning for Fashion Compatibility

2021, Fifteenth ACM Conference on Recommender Systems

Related Papers

Computer Vision – ECCV 2018

Shreya Rajpal

2020 IEEE Winter Conference on Applications of Computer Vision (WACV)

Utkarsh Patel

Proceedings of the 28th ACM International Conference on Multimedia

Wanying Ding

Outfit recommendation requires the answers of some challenging outfit compatibility questions such as 'Which pair of boots and school bag go well with my jeans and sweater?'. It is more complicated than conventional similarity search, and needs to consider not only visual aesthetics but also the intrinsic fine-grained and multi-category nature of fashion items. Some existing approaches solve the problem through sequential models or learning pair-wise distances between items. However, most of them only consider coarse category information in defining fashion compatibility while neglecting the fine-grained category information often desired in practical applications. To better define the fashion compatibility and more flexibly meet different needs, we propose a novel problem of learning compatibility among multiple tuples (each consisting of an item and category pair), and recommending fashion items following the category choices from customers. Our contributions include: 1) D...

Fashion is an inherently visual concept and computer vision and artificial intelligence (AI) are playing an increasingly important role in shaping the future of this domain. Many research has been done on recommending fashion products based on the learned user preferences. However, in addition to recommending single items, AI can also help users create stylish outfits from items they already have, or purchase additional items that go well with their current wardrobe. Compatibility is the key factor in creating stylish outfits from single items. Previous studies have mostly focused on modeling pair-wise compatibility. There are a few approaches that consider an entire outfit, but these approaches have limitations such as requiring rich semantic information, category labels, and fixed order of items. Thus, they fail to effectively determine compatibility when such information is not available. In this work, we adopt a Relation Network (RN) to develop new compatibility learning models,...

2019 IEEE/CVF International Conference on Computer Vision (ICCV)

Mariya Vasileva

Fifteenth ACM Conference on Recommender Systems

IEEE Access

Aleksander Skorupa

Debopriyo Banerjee

Computer Vision – ECCV 2020

RELATED PAPERS

GIULIA PAZZAGLIA

ACM Transactions on Multimedia Computing, Communications, and Applications

Federico Becattini

Shubham Saurav

2020 IEEE Sixth International Conference on Multimedia Big Data (BigMM)

Dikshant Sagar

Arridhana Ciptadi

2018 IEEE Winter Conference on Applications of Computer Vision (WACV)

Pongsate Tangseng

Anuario De Historia De La Iglesia

Javier Vergara

Geraldine Macdonald

Journal of Student Research

Varnika Jain

Mithun Gupta

Shafin Rahman

Proceedings of the 29th ACM International Conference on Multimedia

Hongzhi Yin

2021 IEEE 4th International Conference on Multimedia Information Processing and Retrieval (MIPR)

Abhinav Ravi

Manjunath R

Pattern Recognition. ICPR International Workshops and Challenges

IEEE Transactions on Multimedia

Salman Hassan Khan

Cornell University - arXiv

Jean Paul Ainam

Applied Sciences

International Journal of Machine Learning and Cybernetics

Neural Processing Letters

mahta hassanpour

Youshan Zhang

Jonghyun Choi

Arabian Journal for Science and Engineering

Garima Gupta

Georgios Evangelopoulos

Rodrigo Laurens

Isabella Nogues

arXiv: Computer Vision and Pattern Recognition

Farhad Pourpanah

Hadi Kiapour

Rajeev Yasarla

Petr Buchal

Leulseged Tesfaye

IEEE Transactions on Pattern Analysis and Machine Intelligence

Laurin Wagner

Pattern Recognition

Ashish Shrivastava

RELATED TOPICS

- We're Hiring!

- Help Center

- Find new research papers in:

- Health Sciences

- Earth Sciences

- Cognitive Science

- Mathematics

- Computer Science

- Academia ©2024

- Conferences

- New Conferences

- search search

- You are not signed in

External Links

- Google Scholar

- RevanurKS21

- References: 0

- Cited by: 0

- Bibliographies: 0

- [Upload PDF for personal use]

Researchr is a web site for finding, collecting, sharing, and reviewing scientific publications, for researchers by researchers.

Sign up for an account to create a profile with publication list, tag and review your related work, and share bibliographies with your co-authors.

Semi-Supervised Visual Representation Learning for Fashion Compatibility

Ambareesh Revanur , Vijay Kumar , Deepthi Sharma . Semi-Supervised Visual Representation Learning for Fashion Compatibility . In Humberto Jesús Corona Pampín , Martha A. Larson , Martijn C. Willemsen , Joseph A. Konstan , Julian J. McAuley , Jean Garcia-Gathright , Bouke Huurnink , Even Oldridge , editors, RecSys '21: Fifteenth ACM Conference on Recommender Systems, Amsterdam, The Netherlands, 27 September 2021 - 1 October 2021 . pages 463-472 , ACM, 2021. [doi]

- Bibliographies

Abstract is missing.

- Web Service API

Self-supervised Visual Attribute Learning for Fashion Compatibility

Ieee account.

- Change Username/Password

- Update Address

Purchase Details

- Payment Options

- Order History

- View Purchased Documents

Profile Information

- Communications Preferences

- Profession and Education

- Technical Interests

- US & Canada: +1 800 678 4333

- Worldwide: +1 732 981 0060

- Contact & Support

- About IEEE Xplore

- Accessibility

- Terms of Use

- Nondiscrimination Policy

- Privacy & Opting Out of Cookies

A not-for-profit organization, IEEE is the world's largest technical professional organization dedicated to advancing technology for the benefit of humanity. © Copyright 2024 IEEE - All rights reserved. Use of this web site signifies your agreement to the terms and conditions.

Thank you for visiting nature.com. You are using a browser version with limited support for CSS. To obtain the best experience, we recommend you use a more up to date browser (or turn off compatibility mode in Internet Explorer). In the meantime, to ensure continued support, we are displaying the site without styles and JavaScript.

- View all journals

- My Account Login

- Explore content

- About the journal

- Publish with us

- Sign up for alerts

- Open access

- Published: 11 May 2024

Shifting to machine supervision: annotation-efficient semi and self-supervised learning for automatic medical image segmentation and classification

- Pranav Singh 1 ,

- Raviteja Chukkapalli 2 ,

- Shravan Chaudhari 2 ,

- Luoyao Chen 1 ,

- Mei Chen 1 ,

- Jinqian Pan 1 ,

- Craig Smuda 3 &

- Jacopo Cirrone 1 , 4

Scientific Reports volume 14 , Article number: 10820 ( 2024 ) Cite this article

563 Accesses

2 Altmetric

Metrics details

- Computational biology and bioinformatics

- Image processing

- Machine learning

- Medical research

- Preclinical research

- Predictive medicine

Advancements in clinical treatment are increasingly constrained by the limitations of supervised learning techniques, which depend heavily on large volumes of annotated data. The annotation process is not only costly but also demands substantial time from clinical specialists. Addressing this issue, we introduce the S4MI (Self-Supervision and Semi-Supervision for Medical Imaging) pipeline, a novel approach that leverages advancements in self-supervised and semi-supervised learning. These techniques engage in auxiliary tasks that do not require labeling, thus simplifying the scaling of machine supervision compared to fully-supervised methods. Our study benchmarks these techniques on three distinct medical imaging datasets to evaluate their effectiveness in classification and segmentation tasks. Notably, we observed that self-supervised learning significantly surpassed the performance of supervised methods in the classification of all evaluated datasets. Remarkably, the semi-supervised approach demonstrated superior outcomes in segmentation, outperforming fully-supervised methods while using 50% fewer labels across all datasets. In line with our commitment to contributing to the scientific community, we have made the S4MI code openly accessible, allowing for broader application and further development of these methods. The code can be accessed at https://github.com/pranavsinghps1/S4MI .

Similar content being viewed by others

Annotation-efficient deep learning for automatic medical image segmentation

Segment anything in medical images

Self-supervised learning for medical image classification: a systematic review and implementation guidelines

Introduction.

Medical imaging analysis plays a pivotal role in clinical decision-making, aiding in diagnosis, treatment planning, and monitoring. The advent of deep learning has significantly enhanced the capability to analyze medical images both effectively and efficiently, promising to automate aspects of the diagnostic process and thereby augment clinical decision-making. However, the efficacy of these deep learning techniques is heavily contingent upon the availability of well-annotated medical image data. Unlike in natural image processing, obtaining annotations in the medical domain is fraught with challenges due to privacy concerns, high costs, and the extensive time required for expert clinicians to produce accurate annotations 1 . Moreover, the unique requirements for expert knowledge in medical imaging mean that traditional crowd-sourcing for annotations is not viable. This results in medical imaging datasets being significantly smaller than their natural imaging counterparts, leading to suboptimal performance when neural networks are trained from scratch on such limited data volumes. Consequently, transfer learning has become a key strategy, leveraging knowledge acquired from source domain tasks to enhance performance on target domain tasks in medical imaging. Transfer learning typically involves initializing the network with weights from a pre-trained model and subsequently fine-tuning it with target domain data. While transfer learning has shown promise, especially when source and target datasets, as well as their respective output classes, are similar 2 , the distinct nature of medical imaging often limits its applicability.

The core motivation of our study is to address these challenges by comparing machine supervision with traditional fully-supervised approaches in the realm of medical imaging. The acquisition of labels in medical imaging is notably costly and time-intensive, a hurdle that machine supervision approaches, with their scalable and label-free nature, aim to overcome. By enabling the development of priors that can outperform supervised methods, machine supervision tackles a critical bottleneck in advancing clinical treatments-the heavy reliance on supervised learning techniques that necessitate extensive annotated data. This study aims to elucidate the efficiencies that machine supervision can introduce, particularly in light of the challenges posed by the need for domain-specific annotation, the limited size of medical datasets due to stringent privacy regulations and high annotation costs, and the indispensable requirement for expert knowledge in annotating medical images.

In recent times, the field of computer vision has witnessed remarkable strides due to achievements in learning algorithms such as Deep Metric Learning 3 , self-distillation 4 , masked image modeling 5 , etc. These innovative approaches have enabled the learning of meaningful visual representations from unannotated image data, with fine-tuning models from these learners showing competitive performance gains 4 . There is a growing interest in exploring and applying these learning algorithms to medical imaging modalities, where annotations are notably scarce. These algorithms, typically deployed in self-supervised, semi-supervised, or unsupervised settings, involve a two-stage process: (1) pre-training to acquire representations from unannotated data, and (2) fine-tuning using annotated data to refine the model for the specific target task.

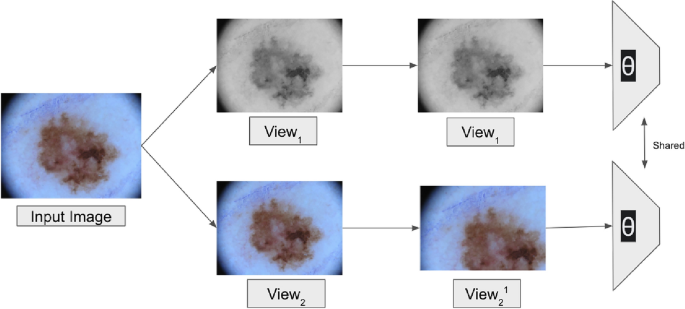

In the realm of self-supervised learning, our work specifically focuses on DINO (Distillation with NO labels) 4 and CASS (Cross-Architectural Self-supervision) 6 techniques, which are at the forefront of current research. These methods leverage joint embedding 7 based architecture, a cutting-edge approach that facilitates learning meaningful visual representations by aligning two different views of an image to the same embedding space. This strategy is particularly adept at extracting robust features from unannotated data, making it highly relevant to medical imaging analysis. Additionally, for semi-supervised learning, we delve into the cross-architectural, cross-teaching method 8 , which represents a significant advancement towards utilizing machine supervision over traditional human annotation. The adoption of such state-of-the-art self/semi-supervised techniques marks a pivotal shift in medical imaging, emphasizing machine supervision’s scalable and label-free advantages. This is crucial in the context of medical imaging, where the procurement of labels is not only cost-prohibitive but also requires extensive time and expertise.

Building upon these considerations, this work introduces the S4MI (Self-Supervision and Semi-Supervision for Medical Imaging) pipeline. This novel semi-/self-supervised learning approach is designed to directly address the aforementioned challenges by minimizing the dependency on extensive labeling efforts. By integrating cutting-edge self-supervised learning algorithms with the latest semi-supervised learning techniques, S4MI aims to significantly improve performance beyond the capabilities of traditional supervised approaches. This initiative represents a significant step towards the development of scalable healthcare solutions, leveraging the untapped potential of machine supervision to achieve and potentially exceed the efficacy of human-supervised methods in medical imaging analysis.

In this work, we evaluate the performance of state-of-the-art machine supervision approaches against traditional transfer learning across two pivotal tasks in medical image analysis: segmentation and classification. Our comparison spans three challenging datasets from distinct medical modalities, including histopathology slide images and skin lesion images. Additionally, we explore the effectiveness of machine supervision by varying the amount of annotated data used for fine-tuning. The machine supervision approaches examined in this study include 4 , 6 for classification and 8 , 9 for segmentation.

To compare transfer learning from ImageNet with machine supervision and show its general applicability, we selected three datasets representative of those clinicians encounter in real life, featuring varying levels of class imbalance and sample sizes.

Dermatomyositis : This dataset comprises 198 RGB samples from 7 patients, each image measuring 352 by 469 pixels. With this dataset, we conduct multilabel classification, aiming to classify cells based on their protein staining into TFH-1, TFH-217, TFH-Like, B cells, and cells that do not conform to the aforementioned categories, labeled as ’others.’ We utilize the F1 score as our evaluation metric on the test set.

Dermofit : This dataset consists of 1,300 image samples captured with an SLR camera, spanning the following ten classes: Actinic Keratosis (AK), Basal Cell Carcinoma (BCC), Melanocytic Nevus/Mole (ML), Squamous Cell Carcinoma (SCC), Seborrhoeic Keratosis (SK), Intraepithelial Carcinoma (IEC), Pyogenic Granuloma (PYO), Haemangioma (VASC), Dermatofibroma (DF), and Melanoma (MEL). Each image in this dataset is uniquely sized, with dimensions ranging from 205 \(\times\) 205 to 1020 \(\times\) 1020 pixels.

ISIC-2017 : Within this dataset, 2,000 JPEG images are distributed among three classes: Melanoma, Seborrheic Keratosis, and Benign Nevi. The evaluation of the test set is based on the Recall score.

Classification

For classification, we compare existing self-supervised techniques with transfer learning, the de facto norm in medical imaging. As mentioned, due to a lack of data in medical imaging, classifiers are often trained using initialization from other large visual datasets like ImageNet 10 . The architectures are then fine-tuned on the target medical imaging dataset using these initializations. Alternatively, we can train using self-supervised approaches to provide better classification priors for the target medical imaging dataset. In self-supervised learning, an auxiliary task is performed without labels to learn fine-grained information about the image. This auxiliary task can be performed in various ways, for example, by corrupting the image, followed by its reconstruction, creating copies of the same image (positive pairs), and minimizing the distance between them using two differently parameterized architectures or redundancy reduction. In our study, we focus on DINO 4 and CASS 6 . DINO learns by creating augmentation-asymmetric copies of the input image, whereas CASS creates architecturally asymmetric copies followed by similarity maximization between the copies. We pre-trained using these two self-supervised techniques for 100 epochs, followed by 50 epochs of fine-tuning with labels. We perform fine-tuning with 10% and 100% label fractions. For this, we use all of the available labels per image while using 10% or 100% of the total labels available.

Classification pipeline additional details

For the ImageNet supervised classification approach, we used images and their corresponding labels as our inputs. For training with x% labels, we only used x% of the entire training images and labels while keeping the number of labels per image the same. For the self-supervised approaches DINO and CASS, for pertaining, we start with only images. During the fine-tuning process, we initialize the networks with their pre-trained weights and use corresponding image-label pairs. Similar to the supervised approach, we also fine-tune these architectures for two label fractions, 10% and 100%. Further details can be inferred from our open-sourced code base .

Segmentation

This section will first introduce standard implementation designs mutually applied to all models, followed by additional implementation details for the semi-supervised model. Lastly, we will describe the procedure of testing different cross-entropy weight initialization.

Approach specific additional details

Semi-supervised approach The semi-supervised model uses a batch size of 16, and each batch consists of eight labeled images and eight unlabeled images. In the last column of Fig. 5 , we compare the performances between semi- and fully-supervised models in 100% labeled-ratio scenario; for this, we re-adjusted the batch to be 15 labeled images and one unlabeled image to approximate the fully-supervised setting while still retaining the “cross teaching” component so that the loss remains consistent and hence comparable with other semi-supervised label ratios. Additionally, when comparing the performance between fully and semi-supervised models, we adopt the same practice from Luo et al. 8 and use Swin Transformer to compare DEDL 11 with Resnet34 backbone as they have a similar number of parameters

Unsupervised approach We implemented PiCIE for Dermofit, Dermatomyositis, and ISIC-2017 datasets similar to the supervised and self/semi-supervised methods. We made slight changes that suit the datasets and ensure smooth learning, such as using SGD optimizer instead of Adam optimizer, keeping the learning rate the same as the original implementation (1e−4), and adding the StepLR (Step Learning Rate) scheduler provided by PyTorch. This is because we observed that the model sometimes failed to learn and was stuck at predicting all the image pixels as either foreground (thing) or background (stuff) with the Adam optimizer. We used batch sizes of 64 and 128, depending on the availability of CUDA RAM and dataset sizes. We trained the PiCIE unsupervised pipeline for 50 epochs with the ResNet34 backbone as a feature extractor and retained the unsupervised clustering technique described in 9 to achieve optimal segmentation performance. The hardware used was Nvidia RTX8000, similar to the previous methods. For PiCIE, labels were only used for validation and testing, not during training.

Supervised approach We adopted Singh and Cirrone’s 12 approach for the supervised part of our comparison. For this, we utilize image and mask pairs during training, validation, and testing with a ResNet-34-based U-Net 13 .

Common implementations

We implemented all models in Pytorch 14 using a single NVIDIA RTX-8000 GPU with 64 GB RAM and 3 CPU cores. All models are trained with an Adam optimizer with an initial learning rate (lr) of 3.6e−4 and a weight decay of 1e−5. We also set a cosine annealing scheduler with a maximum of 50 iterations and a minimum learning rate of 3.4e−4 to adjust the learning rate based on each epoch. For splitting our dataset into training, validation, and testing sets, we use a random train-validation-test split (70–10–20%), except in the ISIC2017 dataset, where we adopt the train/val/test split according to 15 for match-up comparison (57–8.5–34%). The batch size is 16, and we use data augmentation to enrich the training set using random rotation, random flip, and a further resizing to 224 \(\times\) 224 to fit in Swin Transformer’s patch size requirement 16 . Note that for 3-channel datasets (Dermofit, ISIC), we add a pre-processing step that normalizes the red channel of the RGB color model as proposed by 17 . We repeat all experiments with different seed values five times and report the mean value in the 95% confidence interval in all tables. Similar to classification, we fine-tune with multiple label fractions, with semi-supervised and DEDL. When we mention that we fine-tune with x% labels, we use all labels per image but only x% of the total available image-label pairs.

Data-preprocessing

Following 11 , we chose the processed image sample size to be 480 \(\times\) 480 to avoid empty tile issues. To ensure uniformity, the image input size for the model remains consistent across all three datasets, as illustrated below. For the Dermatomyositis dataset, since it contains images of uniform sizes 1408 \(\times\) 1876 each, we tiled them to a size of 480 \(\times\) 480 and then used blank padding at the edges to make them fit in 480 \(\times\) 480 sizes. This results in 12 tiled sub-images per sample, which are then resized to 224 \(\times\) 224. In contrast, the Dermofit and the ISIC2017 datasets contain images of different sizes. Since the two datasets are about skin lesions, they have significantly denser and larger mask labels than the Dermatomyositis dataset. Thus, a different image preprocessing step is applied to the latter two datasets: bilinear interpolation to 480 \(\times\) 480 followed by a resize to 224 \(\times\) 224.

We start by studying the classification pipeline in Sect. “ Classification ”, followed by understanding the interpretability aspect of classification in Sect. “ Classification ”. Since interpretability is ingrained in the segmentation task, we only study segmentation quantitatively in Sect. “ Segmentation ”.

For classification, we advocate using self-supervision to learn useful discriminative representations from unlabelled data. Self-supervised techniques rely on an auxiliary pretext task for pre-training to learn useful representations. These representations are further improved and aligned with the downstream task through labeled fine-tuning. We benchmark and study the performance of transfer learning as well as two self-supervised techniques: (1) Distillation with No Labels (DINO) 4 , a self-supervised approach designed for natural images, and (2) Cross cross-architectural self-supervision (CASS) 6 a self-supervision approach for medical imaging. We further expand on our methodology in Sect. “ Classification ”.

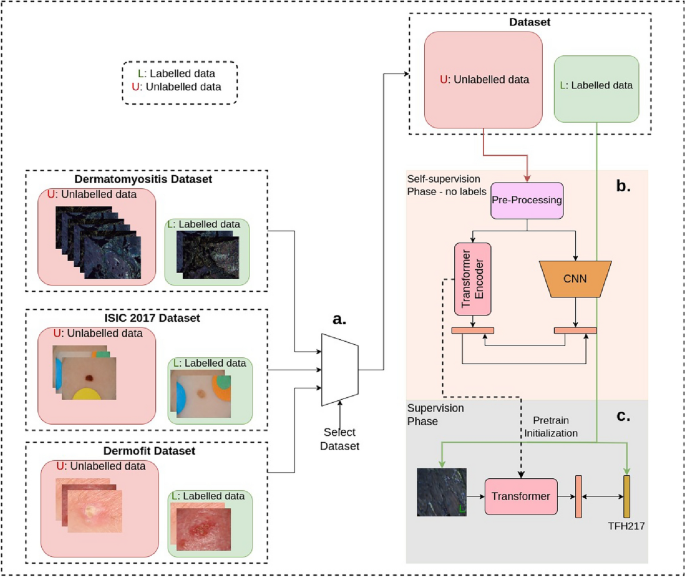

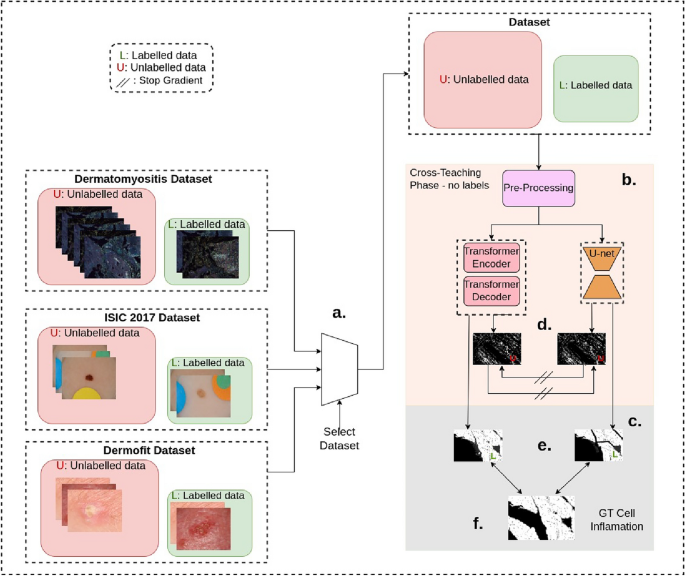

Applying CASS: In this figure we detail the steps involved in applying CASS, the best-performing machine supervision approach for classification 6 . We conducted experiments on three datasets listed on the left side: the Dermatomyositis, ISIC-2017, and Dermofit datasets. For training on a dataset, we initialize both the networks with their ImageNet weights and select one dataset at a time. To train CASS, we start by label-free pretraining as illustrated in Part (b) of Fig. 1 . During pre-training, a CNN and a Transformer are trained simultaneously. In the case of the Dermatomyositis dataset, the finetuning is multi-label, while in the case of the ISIC-2017 and the Dermofit dataset, it is multi-class. This pre-training in (b) is followed by labeled fine-tuning as shown in Part ( c ), where image are fine-tuned one at a time.

We present the results of supervised and self-supervised trained classifiers in Table 1 . We use the F1 score as the comparison metric, similar to its implementation in previous works 6 . The F1 score is defined as \(F1 = \frac{2*Precision*Recall}{Precision+Recall} = \frac{2*TP}{2*TP+FP+FN}\) , where TP represents True Positive, FN is False Negative and FP is False Positive. We observe that CASS (Fig. 1 ) outperforms DINO and transfer learning consistently across all the datasets and backbones, with the, exception for the ISIC 2017 challenge dataset, where DINO outperforms CASS using the ResNet50 backbone. Interestingly, we also observe that ViT trained with CASS using just 10% labels, which saves significant annotation time, performs significantly better than the transfer learning-based supervised approach with 100% labels for the Dermatomyositis dataset. For the same case, DINO performs on par with the supervised approach while using 90% fewer labels. This shows the impact that machine supervision can have on improving access to classified medical images. DINO and CASS take an image to create asymmetry through augmentation (DINO) and architecture (CASS). These are then passed through different parameterized feature extractors (DINO) and architectural feature extractors (CASS) to create embeddings. Since the two embeddings are generated from the same image, they are expected to be similar; this is then used as the supervisory signal to maximize the similarity between the two embedding and update encoders. In CASS, architectural invariance is used to create a positive pair instead of creating augmentation invariance, as in the case of DINO. This is because CNN and Transformers learn different representations from the same image 6 .

Saliency maps

In segmentation, interpretability is ingrained in the task itself, as we can easily identify whether model predictions are aligned with the ground truth. To understand the decision-making process of trained neural classifiers, we study pixel attribution or saliency maps 18 . To accomplish this, we first train the neural network and compute the gradient of a class score with respect to the input pixels. Backpropagating the gradients that lead to a particular classification onto the input image helps us understand the most filtered/rewarding extracted features related to classification. We present the results using DINO, CASS-trained ResNet-50, and ViT Base/16 on the ISIC-2017 dataset in Fig. 2 . Although most saliency maps fail to align with human-interested pathology, CASS-based saliency maps align more than those from DINO. This could be attributed to the joint training of CASS, where it trains a CNN that focuses on local information while a Transformer focuses on global or image-level features. Although both techniques fail to recognize and focus on the relevant area in all cases, CASS-trained architectures are consistently better focused on the relevant pathology than DINO-trained architectures.

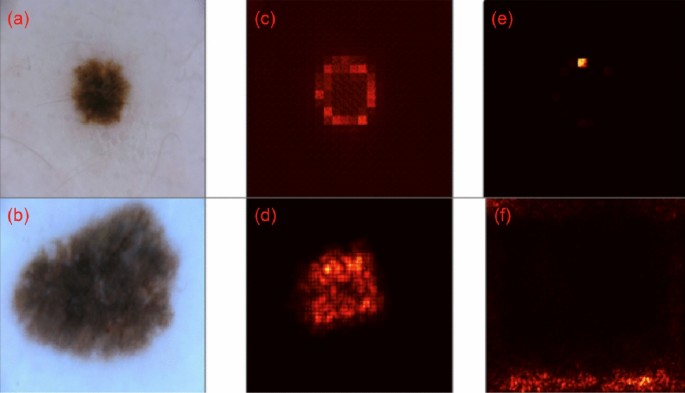

Visualization of saliency maps on a random sample from the ISIC-2017 dataset, left ( a , b ): data (input image), middle ( c , d ): saliency map from CASS, and right ( e , f ): saliency map from DINO-trained ViTB/16 at the top and ResNet-50 at the bottom ( f ). DINO’s saliency map exhibits notable stochasticity, displaying a lack of strong correlation with the specific pathology under consideration. Conversely, in the case of CASS, the saliency maps demonstrate a significantly more aligned with the pathology of interest both for CNN as well as the Transformer.

In this figure we present the semi-supervised pipeline as described in Sect. “ Segmentation ”. Similar to the classification experiments, we evaluate the segmentation pipeline on three challenging medical image segmentation datasets—the Dermatomyositis, ISIC-2017, and the Dermofit dataset. We use one dataset at a time to train the semi-supervised architecture. Unlike the classification pipeline, semi-supervised learning involves simultaneous learning from labeled and unlabeled data. In Part (b) of Fig. 3 , we start by training the data in an unlabeled fashion and during the same iteration labeled data is also passed to the architecture as shown in part (c) of the figure. Predictions from passing inputs of the labeled images yield learned predictions as shown in Part (e). Unsupervised loss is then calculated by comparing the outputs of the CNN and the Transformer (as shown in Part (d) of this figure) using the \({\mathcal {L}}_{\text {Unsupervised}}\) in Sect. “ Segmentation ”. This unsupervised loss is then added to the supervised loss denoted by \({\mathcal {L}}_{\text {Supervised}}\) in Sect. “ Segmentation ”. The supervised loss is calculated against the ground truth as shown in part (e) and (f) in this figure.

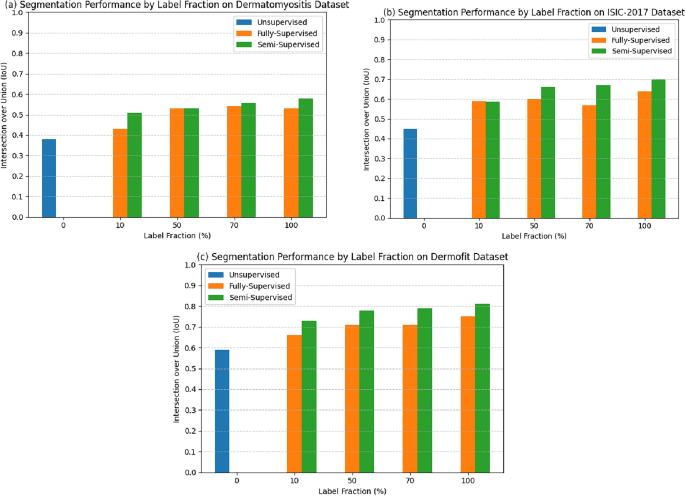

Image Segmentation is another important task in medical image analysis. The area of interest—usually containing the pathology—is segmented from the rest of the slide image. We study the performance of transfer learning, semi-supervised, and unsupervised approaches for segmentation. In computer vision, it is widely acknowledged that image segmentation presents a more intricate challenge than image classification. This distinction arises from the fact that image segmentation necessitates the meticulous classification of individual pixels, whereas image classification solely requires making predictions at the image level. Moreover, due to the aforementioned rationale, annotating images for segmentation exhibits a significantly higher complexity level. Therefore, the present study aims to investigate the effectiveness of unsupervised, semi-supervised, and fully supervised techniques across four distinct label fractions instead of the conventional two in classification. In our case, x% label fractions indicate that we only use x% labels from the dataset. We include the unsupervised approach 9 to study the performance for 0% labels scenario. We compare the performance of DEDL (Data-Efficient Deep Learning framework) 11 , a transfer learning-based approach, against 8 , a semi-supervised approach using the Swin transformer-based U-Net model (Fig. 3 ).

We choose 11 due to its significant promise in histopathology imaging, and 8 for its state-of-the-art approach to leveraging unannotated data in MR segmentation. The semi-supervised approach 8 trains two segmentation architectures—a Swin transformer-based U-Net and a CNN U-Net (as shown in Fig. 3 ), while DEDL 11 and unsupervised approach 9 only train one architecture.

To ensure a fair comparison, we make sure the number of overall parameters trained is the same in each case over three datasets and four-label fractions.

In their study 8 , compared the performance of Swin-U-Net trained in semi- and fully-supervised fashion for segmentation. Observing that Swin-U-Net exhibits superior performance in a semi-supervised setting, we extend this comparison to include a semi-supervised Swin-U-Net and a similarly parameterized ResNet-34 based U-Net, trained under both unsupervised and fully-supervised approaches. Furthermore, from 11 , we use their best-reported ResNet-34-based U-Net model.

The evaluations are based on three datasets, as detailed in Sect. “ Data ”. To ensure our results are statistically significant, we conduct all experiments with five different seed values and report the mean values in the 95% confidence interval (C.I.) over the five runs. For comparison, we use IoU (Intersection over Union) in line with previous works in the field 12 . IoU or Jaccard index for two images U and V is defined as \(IoU(U,V) = \frac{|U \cap V|}{|U \cup V|}\) . We present these results in Fig. 5 .

Semi-supervised approach

Semi-supervision approaches utilize the dataset’s labeled and unlabeled parts for learning. Techniques generally fall under (1) Adversarial training (e.g. DAN 19 ) (2) Self-Training (e.g. MR image segmentation 20 ) (3) Co-Training (e.g. DCT 21 ) and (4) Knowledge Distillation (e.g. UAMT 22 ). We study the co-training-based semi-supervision segmentation technique 8 , depicted in Fig. 4 . The model consists of two segmentation architectures, a U-Net (trained from scratch) and a pre-trained Swin Transformer, adapted from the Swin Transformer proposed by Liu et al. 16 . Both architectures are updated simultaneously using a combined loss over the labeled and unlabeled images.