Recent Publications (Vol. 109)

Vol. 109 (2024)

bizicount: Bivariate Zero-Inflated Count Copula Regression Using R

scikit-fda: A Python Package for Functional Data Analysis

openTSNE: A Modular Python Library for t-SNE Dimensionality Reduction and Embedding

magi: A Package for Inference of Dynamic Systems from Noisy and Sparse Data via Manifold-Constrained Gaussian Processes

funGp: An R Package for Gaussian Process Regression with Scalar and Functional Inputs

Extremes.jl: Extreme Value Analysis in Julia

cpop: Detecting Changes in Piecewise-Linear Signals

Generalized Plackett-Luce Likelihoods

fHMM: Hidden Markov Models for Financial Time Series in R

Emulation and History Matching Using the hmer Package

The IBM® SPSS® software platform offers advanced statistical analysis, a vast library of machine learning algorithms, text analysis, open-source extensibility, integration with big data and seamless deployment into applications.

Its ease of use, flexibility and scalability make SPSS accessible to users of all skill levels. What’s more, it’s suitable for projects of all sizes and levels of complexity, and can help you find new opportunities, improve efficiency and minimize risk.

Within the SPSS software family of products, IBM SPSS Statistics supports a top-down, hypothesis testing approach to your data, while IBM SPSS Modeler exposes patterns and models hidden in data through a bottom-up, hypothesis generation approach.

The AI studio that brings together traditional machine learning along with the new generative AI capabilities powered by foundation models.

SPSS Statistics for Students

Prepare and analyze data with an easy-to-use interface without having to write code.

Choose from purchase options including subscription and traditional licenses.

Empower coders, noncoders and analysts with visual data science tools.

IBM SPSS Modeler helps you tap into data assets and modern applications, with algorithms and models that are ready for immediate use.

IBM SPSS Modeler is available on IBM Cloud Pak for Data. Take advantage of IBM SPSS Modeler on the public cloud.

Manage analytical assets, automate processes and share results more efficiently and securely.

Get descriptive and predictive analytics, data preparation and real-time scoring.

Use structural equation modeling (SEM) to test hypotheses and gain new insights from data.

Create a platform that can make predictive analytics easier for big data.

Find support resources for SPSS Statistics.

Get technical tips and insights from other SPSS users.

Gain new perspective through expert guidance.

Find support resources for IBM SPSS Modeler.

Learn how to use linear regression analysis to predict the value of a variable based on the value of another variable.

Learn how logistic regression estimates the probability of an event occurring, based on a dataset of independent variables.

Learn about new statistical procedures, data visualization tools and other improvements in SPSS Statistics 29.

Discover how you can uncover data insights that solve business and research problems.

SPSS, SAS, R, Stata, JMP? Choosing a Statistical Software Package or Two

by Karen Grace-Martin

In addition to the five listed in this title, there are quite a few other options, so how do you choose which statistical software to use?

The default is to use whatever software they used in your statistics class–at least you know the basics.

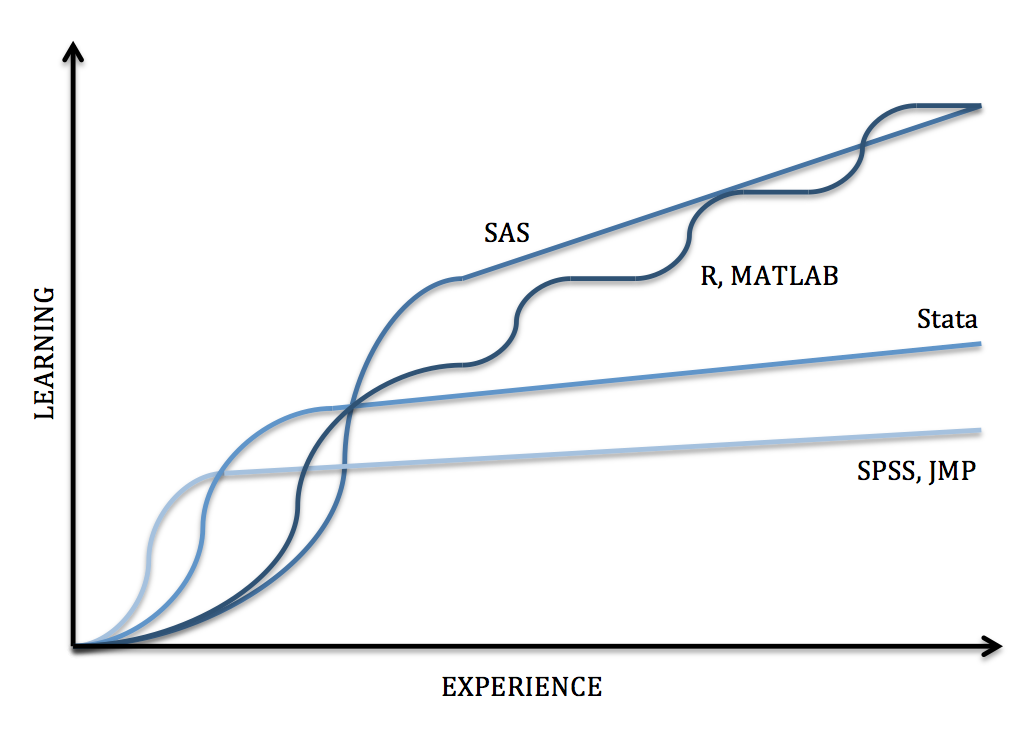

And this might turn out pretty well, but chances are it will fail you at some point. Many times the stat package used in a class is chosen for its shallow learning curve, not its ability to handle advanced analyses that are encountered in research.

I think I’ve used at least a dozen different statistics packages since my first stats class. And here are my observations:

1. The first one you learn is the hardest to learn. There are many similarities in the logic and wording they use even if the interface is different. So once you’ve learned one, it will be easier to learn the next one.

2. You will have to learn another one. Just accept it. If you have the self-discipline to do it, I suggest learning two software packages at the beginning. This will come in handy for a number of reasons:

– My favorite stat package for a while was BMDP. Until the company was bought up by SPSS. I’m not sure if they stopped producing or updating it, but my university cancelled their site license.

– Many schools offer a site license for only one package, and it may not be the one you’re used to. When I was at Cornell, they offered site licenses for 5 packages. But when a new stats professor decided to use JMP instead of Minitab, guess what happened to the Minitab site license? Unless you’re sure you’ll never leave your current university, you may have to start over.

– Even if you decide to outwit the powers-that-be in IT who control the site licenses and buy your own (or use R, which is free), no software package does every type of analysis. There is huge overlap, to be sure, and the major ones are much more comprehensive than they were even 5 years ago. Even so, the gaps are in the most complicated analyses: some mixed models, GEE, complex sampling, etc. And the moment when you’re trying to learn a new, highly complicated statistical method is not the time to learn a new, highly complicated stats package.

For these reasons, I recommend that everyone who plans to do research for the foreseeable future learn two packages.

I know, it’s hard enough to find the time to start over and learn one. Much less the self discipline. But if you can, it will save you grief later on. There are many great books, online tutorials, and workshops for learning all the major stats packages.

But I also recommend you choose one as your primary package and learn it really, really well. The defaults and assumptions and wording are not the same across packages. Knowing how yours handles dummy coding or missing data is imperative to doing correct statistics.
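To make the point about defaults concrete, here is a minimal Python sketch using only the standard library. The tiny dataset and the function names are invented for illustration and do not come from any particular package. It computes the mean of one variable two ways: over every available value, and over only the rows that are complete on both variables (listwise deletion). Packages and procedures differ in which of these they do by default, which is one way the same data can produce different answers.

```python
from statistics import mean

# Hypothetical scores; None marks a missing value.
rows = [
    {"test1": 86, "test2": 83},
    {"test1": 93, "test2": None},
    {"test1": 85, "test2": 81},
    {"test1": 70, "test2": None},
    {"test1": 91, "test2": 76},
]

def available_case_mean(rows, var):
    """Mean of `var` over every row where `var` itself is present."""
    return mean(r[var] for r in rows if r[var] is not None)

def listwise_mean(rows, var, all_vars):
    """Mean of `var` over rows that are complete on every variable in `all_vars`."""
    complete = [r for r in rows if all(r[v] is not None for v in all_vars)]
    return mean(r[var] for r in complete)

m_available = available_case_mean(rows, "test1")
m_listwise = listwise_mean(rows, "test1", ["test1", "test2"])
print(m_available, m_listwise)
```

The two means differ because listwise deletion discards the two rows with a missing test2, even though their test1 values are present.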

Which one? Mainly it depends on the field you’re in. Social scientists should generally learn SPSS as their main package, mainly because that is what their colleagues are using. You can then choose something else as a backup–either SAS, R, or Stata, based on availability and which makes most sense to you logically.

Reader Interactions

October 5, 2021 at 7:37 pm

Rguroo is a newly developed software for teaching statistics that is becoming increasingly popular. This software is web-based and is designed by instructors who have had many years of teaching experience. To get more information, please visit https://rguroo.com

October 19, 2021 at 3:50 pm

Interesting. Thanks for sharing this.

November 3, 2019 at 11:58 pm

Hello, I am an actuarial science student. Kindly help me choose the best software to learn.

July 8, 2019 at 3:41 am

Great stuff, thanks.

March 27, 2019 at 10:44 am

I want to pursue an MSc in ecology. Which is the best statistical package?

March 6, 2018 at 10:30 am

Sir/Madam, I am a graduate in statistics, B.Sc. (MSCS), and a postgraduate, M.Sc. (Mathematics). I’m interested in SAS. I want your suggestion.

January 24, 2018 at 6:20 pm

In my experience, SPSS is better than the others by far in terms of flexibility, user friendliness, and user interface. It is popular in academia compared to SAS and R. R is OK, but you have to know lots of things before you feel comfortable with it, and there are too many packages, which is confusing sometimes. SAS is OK, but I hate its web usage and old-fashioned UI. In addition, SPSS has just added Bayesian statistics, which is a huge plus. Stop using stingy SAS! See if what I have added here makes sense: SPSS, SAS, R, Stata, Minitab, OriginPro, NSCC (!) and Pass (good for sample size estimates), and forget the others!

April 8, 2017 at 5:22 am

This is Prasad. I am pursuing an MSc (statistics with computer applications). Can you suggest which software is better? Please give me suggestions.

June 30, 2017 at 12:22 am

I have also done an MSc in statistics, but now I have a problem: I do not know statistics software such as SPSS, SAS, STAT, etc. Can you give me any suggestions?

January 23, 2017 at 9:27 am

Hi folks. I have a statistics background: I completed my undergraduate degree in statistics and am now pursuing my postgraduate degree in statistics too. I am going to learn SAS. Is that okay for me, or is there anything else you would suggest? Please advise.

September 28, 2016 at 11:33 am

Hi friends! I’m in an agricultural institution,and most of my colleagues are using SAS & SPSS in their researches. For a change, I’m planning to use another statistical tool for my research, the JMP. Could there be any difference? Thanks

July 25, 2016 at 10:14 am

I have done an MA in economics and am looking for a supporting statistical package for career betterment. Please suggest a statistical package that can support my economics degree.

August 4, 2016 at 6:47 am

Dear Ganesh, Given that you’re from an economics background, I suggest going ahead with STATA or SPSS. Stata has seen a rapid rise in the last few years, especially among international organizations in India such as the UN office, the World Bank regional office, the Institute of Economic Growth, and NCAER; they have all swapped from SPSS to Stata.

August 5, 2016 at 8:21 am

I would recommend using R not only because it is free but because it has become an industry standard. Below is a link to a very good course that will teach you how to use R. Good luck! -Lisa

https://www.coursera.org/learn/r-programming

August 16, 2016 at 2:57 pm

Hey Ganesh and Lisa, thanks for posting. We have a great collection of resources for R, too, including 2 free webinars. Check them out here.

September 21, 2016 at 7:40 am

I personally did my first degree in Quantitative Economics at Makerere University, and I have always felt at home using both STATA and SPSS, though I prefer STATA. If you are in the field of economic research, agricultural research, or public health (epidemiology), I would advise you to use STATA instead of SPSS.

In contrast, I think SPSS has better procedures when it comes to using graphs.

In general, I prefer STATA to SPSS

June 30, 2017 at 12:25 am

January 1, 2018 at 2:15 pm

I can’t imagine how you did your MSc in statistics without knowledge of SPSS and STATA.

September 29, 2017 at 5:06 pm

I know exactly where Makerere university is, lived in Kampala for a year. Can’t remember my econ degree- think it was minitab, but then in EPI we learned both SPSS and SAS at the university

January 3, 2021 at 10:51 am

Use STATA .It is more rich than SPSS for policy research which is in your area as an economist.

May 25, 2015 at 9:03 am

Could anyone suggest me any site that has some good projects ( I am looking for beginners to intermediate level) that uses Stata as a tool?

August 5, 2016 at 8:18 am

Here is a link to a book that I purchased that I thought was very helpful. I do not use Stata, however, I learned a lot by reading this book. (The workflow concepts carry over to anything.) Hope this helps!

http://www.indiana.edu/~jslsoc/web_workflow/wf_home.htm

https://www.amazon.com/Workflow-Data-Analysis-Using-Stata-ebook/dp/B01GQJSGGI/ref=sr_1_1?ie=UTF8&qid=1470399079&sr=8-1&keywords=analysis+of+workflow+stata#nav-subnav

August 16, 2016 at 3:18 pm

Hey Paul and Lisa,

You can also check out our list of Stata resources, which includes 2 free webinars.

December 22, 2014 at 10:19 am

I definitely prefer NCSS, though not mentioned in the article. Now the newest version pre-released even in cloud. https://www.apponfly.com/en/application/ncss10

April 20, 2017 at 8:22 am

Hi Brandon. I am using AppOnFly as well, but I have chosen SPSS to do my analysis. They also provide a free trial of SPSS which is available for 30days: http://www.apponfly.com/en/ibm-spss-statistics-standard?EZE

August 27, 2014 at 1:53 am

For a comparison of SPSS, SAS, R, Stata and Matlab for each type of statistical analysis, see

http://www.stanfordphd.com/Statistical_Software.html

May 31, 2014 at 9:57 pm

I like to use Java since it has good graphics. Therefore, my choice is SCaVis ( http://jwork.org/scavis ). It integrates Java and Python with superb graphics.

December 7, 2013 at 12:08 pm

I am used to spss and stata for my data analysis, however today I tried adding “analyse-it” to my excel package. It really worked for me. Can I really go ahead with it?

December 9, 2013 at 10:48 am

I don’t know much about the excel plug ins (or whatever the correct software term is). As a general rule, I avoid excel for data analysis, but this add-on may be just fine.

December 1, 2018 at 1:44 pm

Thanks for the mention Kule, and yes Karen, Analyse-it is completely legitimate. We’ve developed it for over 20-years now, validate and test it thoroughly (see our NIST StRD results at https://analyse-it.com/support/NIST-StRD ), and most importantly we do not use any of the unreliable Excel statistical functions.

September 7, 2016 at 11:46 am

You could also try the XLSTAT software statistical add-on. It holds more than 200 statistical features including multivariate data analysis, modeling, machine learning, stat tests and field-oriented features https://www.xlstat.com/en/

August 2, 2013 at 1:58 pm

Hi Karen, nice suggestions backed with arguments!

On a different note, I wish to hear your opinion on free software… Have you, for example, had an experience with EasyReg? It seems to have much of the econometrics methods covered — by far more than I would ever imagine to use –, it’s easy to operate and is supported with PDF-files about relevant theory. What do you think? (I have currently no access to commercial software, unfortunately.)

August 7, 2013 at 3:28 pm

Thanks! I haven’t used that software before, but I can tell you there are many good stat software packages out there. If you like using it and you’re confident that it’s accurate, go with it.

May 26, 2013 at 9:47 am

I often use R, and sometimes work with SPSS and Excel, but overall I prefer R because I love programming and R is a wonderful language. R also isn’t limited! My goal is to create packages that cover the shortcomings of other software and link software together. Indeed, I like to ferret around in software. So my first software is R, but I haven’t decided on a primary package yet! So I researched statistical software and decided to use STATA inside R!

June 6, 2013 at 5:18 pm

Hi Morteza, I agree: R is awesome if you love programming. But do check out Stata too. 🙂

September 19, 2014 at 6:29 am

Hi Karen, do you really think that R is more efficient than Stata? I think you are right, because most of my fellow programmers use R rather than Stata. So I agree with you. 😀

November 26, 2018 at 10:59 am

Hi, is there any book that will help me learn STATA and R? If there is, please tell me how to get it. I’m in Nigeria.

May 23, 2013 at 8:34 pm

I use R and Stata regularly. Dollar for dollar, I personally think that Stata is the most comprehensive stats package you can buy. Excellent documentation and a great user community. R is excellent as well, but suffers from absolutely terrible online documentation, which (for me) requires third party sources (read: books).

If somebody is buying you a license, then you don’t care what it costs. If someone like me has to buy a license, then to me, Stata is a no-brainer, given all the stats you can do with it.

My college eliminated both SAS and SPSS for that reason and use R for most classes. Rumor has it SAS is offering a new “college” licensing fee, but I’m not privy to that information.

Small sidebar: SAS started on the mainframe and it annoys me that it still “looks” that way. JMP is probably better (and again, expensive) but doesn’t have anywhere near the capabilities of base SAS, the last time I looked.

Just my opinions.

May 24, 2013 at 1:54 pm

I actually agree with you about Stata. If I were to start over, that’s what I would use, especially, as you’ve said, if you’re buying your own license.

And Stata has the *best* manuals, IMHO.

February 5, 2013 at 5:43 pm

Depends on which social scientists you are talking about. I doubt you will find many economists, for example, who do most (if any) of their analyses in SPSS. If you absolutely must have a gui JMP is clearly the superior platform, since its scripting language can interface with R, and you can do whatever you please. Try searching for quantile regression in the SPSS documentation, it says the math is too hard, and SPSS cannot compute.

February 6, 2013 at 2:25 pm

Agreed, most economists I’ve talked to use either Stata or Eviews.

SPSS also interfaces with R.

Sure, there are examples of specific analyses that can’t be done in any software. That’s one reason why it’s good to be able to use at least two.

November 15, 2012 at 12:30 pm

I am SPSS and R lover…in my university they use JMP software…how should I convince them that SPSS is better than JMP…or First of all can I convince them???

November 16, 2012 at 11:53 am

Well, I’m sure they’ll cite budget issues. But there are some statistical options in SPSS that are not available in JMP. I don’t know of any where the reverse is true, although that may just be my lack of knowledge of JMP. For example, to the best of my knowledge, JMP doesn’t have a Linear Mixed Model procedure.

December 3, 2012 at 1:00 pm

When you add random effects to a linear model in JMP the default is REML. In fact the manual goes so far as to say REML for repeated measures data is the modern default, and JMP provides EMS solutions for univariate RM ANOVA only for historical reasons. JMP doesn’t do multilevel models (more than 1 level of random effects), and I don’t believe it does generalized linear mixed effects models (count or binary outcomes). I usually use Stata and R, but I keep an eye on JMP because it is a fun program sometimes. I have used it for repeated measures data by mixed model when a colleague wanted help doing it himself, where the post hoc tests were flexible and accessible, compared to his version of Stata or in R.

December 3, 2012 at 5:08 pm

Thanks, Dave. That’s great to know. The last time I used JMP (which was a few years ago), REML wasn’t an option.

Yes, I agree. JMP is very straightforward and for 95% of analyses that most researchers use, entirely sufficient.

October 11, 2011 at 11:58 pm

Good advice, all around. But… if you choose SPSS as your primary package, SAS has little to offer you, and vice versa. The overlap is just too great to make either a good complement to the other.

A factor to consider in choosing between the Big Two is your preferred user interface. If you don’t want to program (much) and you adore point-and-shoot interfaces, go with SPSS. If you don’t mind programming explicitly, and despise point-and-shoot interfaces SAS will make you happier.

Another factor in choosing among the Big Two is your use of structural equation models (SEMs). If you don’t use them it’s a non-issue. If you use them extensively, you should choose between EQS-like syntax (in SAS PROC CALIS) and SPSS’s AMOS. SEMs are confusing enough without worrying about converting from your preferred expression of the models into the expression your software wants.

Much better choices as a complement to one of the Big Two are Stata and some dialect of S (R, S, S-plus). Stata users say it has some very slick programming facilities. (I’m not among them, so I can’t say from experience.) The S dialects are killers for simulation studies. I benchmarked R against SAS/IML (in version 9.1) and found R was an order of magnitude faster. R is built entirely around an object-oriented programming interface. Language extensions are a snap. In my opinion bootstrap estimation is easier in R than in other languages. High resolution graphics are native to R, and (despite a lot of improvement from versions 6 to 7 to 9.1 and 9.2) not native to SAS.

March 10, 2015 at 1:47 pm

I think SAS becomes an asset over SPSS when the focus is on data preparation: Merging multiple tables, accessing SQL databases, using API functions, creating canned reports, etc..

January 29, 2010 at 10:08 am

hi friends, I am new to R.I would like to know R-PLUS.Does any know where can I get the free training for R-PLUS.

Regards, Peng.

May 23, 2013 at 12:59 pm

I believe the above comment is spam. I am not aware of the existence of R-plus; googling revealed a word for word comment on another site: http://www.talkstats.com/showthread.php/10761-free-training-for-R-PLUS .

Apologies to the commenter if this is a genuine enquiry.

May 23, 2013 at 2:56 pm

Thanks, Dave.

I suspect it was real, only because there was no link back to another site (you wouldn’t believe the strange links I get). I figured it was a language difficulty, and they meant S-Plus, on which R was based.


Quantitative Analysis Guide: Which Statistical Software to Use?


Statistical Software Comparison


Software Access

- The first version of SPSS was developed by Norman H. Nie, Dale H. Bent and C. Hadlai Hull and released in 1968 as the Statistical Package for Social Sciences.

- In July 2009, IBM acquired SPSS.

- Social sciences

- Health sciences

Data Format and Compatibility

- .sav file to save data

- Optional syntax files (.sps)

- Easily export .sav file from Qualtrics

- Import Excel files (.xls, .xlsx), Text files (.csv, .txt, .dat), SAS (.sas7bdat), Stata (.dta)

- Export Excel files (.xls, .xlsx), Text files (.csv, .dat), SAS (.sas7bdat), Stata (.dta)

- SPSS Chart Types

- Chart Builder: Drag and drop graphics

- Easy and intuitive user interface; menus and dialog boxes

- Similar feel to Excel

- SEMs through SPSS Amos

- Easily exclude data and handle missing data

Limitations

- Absence of robust methods (e.g., Least Absolute Deviation Regression, Quantile Regression)

- Unable to perform complex many to many merge
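For readers unsure what a many-to-many merge involves, the following Python sketch illustrates the idea with the standard library only. The two small tables and the function name are hypothetical, and the code describes the concept rather than the behavior of any package discussed here. In a many-to-many merge, a key can repeat in both tables, so each left-hand row is paired with every right-hand row that shares its key.

```python
from collections import defaultdict

# Hypothetical tables: a student can have several scores and several advisors.
scores = [("s1", 86), ("s1", 93), ("s2", 85)]
advisors = [("s1", "Lee"), ("s1", "Kim"), ("s2", "Cho")]

def many_to_many_merge(left, right):
    """Pair every left row with every right row that shares its key."""
    by_key = defaultdict(list)
    for key, value in right:
        by_key[key].append(value)
    return [
        (key, left_value, right_value)
        for key, left_value in left
        for right_value in by_key.get(key, [])
    ]

merged = many_to_many_merge(scores, advisors)
for row in merged:
    print(row)
```

Student s1 contributes 2 x 2 = 4 rows and s2 contributes 1, which is why such merges can grow quickly and need to be checked carefully.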

Sample Data

- JMP was developed by SAS

- Created in the 1980s by John Sall to take advantage of the graphical user interface introduced by Macintosh

- Originally stood for 'John's Macintosh Program'

- Five products: JMP, JMP Pro, JMP Clinical, JMP Genomics, JMP Graph Builder App

- Engineering: Six Sigma, Quality Control, Scientific Research, Design of Experiments

- Healthcare/Pharmaceutical

- .jmp file to save data

- Optional syntax files (.jsl)

- Import Excel files (.xls, .xlsx), Text files (.csv, .txt, .dat), SAS (.sas7bdat), Stata (.dta), SPSS (.sav)

- Export Excel files (.xls, .xlsx), Text files (.csv, .dat), SAS (.sas7bdat)

- Gallery of JMP Graphs

- Drag and Drop Graph Editor will try to guess what chart is correct for your data

- Dynamic interface can be used to zoom and change view

- Ability to lasso outliers on a graph and regraph without the outliers

- Interactive Graphics

- Scripting Language (JSL)

- SAS, R and MATLAB can be executed using JSL

- Interface for using R from within JMP, and an add-in for Excel

- Great interface for easily managing output

- Graphs and data tables are dynamically linked

- Great set of online resources!

- Absence of some robust methods (regression: 2SLS, LAD, Quantile)

- Stata was first released in January 1985 as a regression and data management package with 44 commands, written by Bill Gould and Sean Becketti.

- The name Stata is a syllabic abbreviation of the words statistics and data.

- The graphical user interface (menus and dialog boxes) was released in 2003.

- Political Science

- Public Health

- Data Science

- Who uses Stata?

Data Format and Compatibility

- .dta file to save dataset

- .do syntax file, where commands can be written and saved

- Import Excel files (.xls, .xlsx), Text files (.txt, .csv, .dat), SAS (.XPT), Other (.XML), and various ODBC data sources

- Export Excel files (.xls, .xlsx), Text files (.txt, .csv, .dat), SAS (.XPT), Other (.XML), and various ODBC data sources

- Newer versions of Stata can read datasets, commands, graphs, etc., from older versions, and in doing so, reproduce results

- Older versions of Stata cannot read newer versions of Stata datasets, but newer versions can save in the format of older versions

- Stata Graph Gallery

- UCLA - Stata Graph Gallery

- Syntax mainly used, but menus are an option as well

- Some user written programs are available to install

- Offers matrix programming in Mata

- Works well with panel, survey, and time-series data

- Data management

- Can only hold one dataset in memory at a time

- The specific Stata package (Stata/IC, Stata/SE, and Stata/MP) limits the size of usable datasets. One may have to sacrifice the number of variables for the number of observations, or vice versa, depending on the package.

- Overall, graphs have limited flexibility. Stata schemes, however, provide some flexibility in changing the style of the graphs.

- Sample Syntax

    * First enter the data manually
    input str10 sex test1 test2
    "Male" 86 83
    "Male" 93 79
    "Male" 85 81
    "Male" 83 80
    "Male" 91 76
    "Female" 94 79
    "Female" 91 94
    "Female" 83 84
    "Female" 96 81
    "Female" 95 75
    end

    * Next run a paired t-test
    ttest test1 == test2

    * Create a scatterplot
    twoway (scatter test2 test1 if sex == "Male") (scatter test2 test1 if sex == "Female"), legend(lab(1 "Male") lab(2 "Female"))

- The development of SAS (Statistical Analysis System) was begun in 1966 by Anthony Barr of North Carolina State University, who was later joined by James Goodnight.

- The National Institutes of Health funded this project with a goal of analyzing agricultural data to improve crop yields.

- The first release of SAS was in 1972. In 2012, SAS held 36.2% of the market making it the largest market-share holder in 'advanced analytics.'

- Financial Services

- Manufacturing

- Health and Life Sciences

- Available for Windows only

- Import Excel files (.xls, .xlsx), Text files (.txt, .dat, .csv), SPSS (.sav), Stata (.dta), JMP (.jmp), Other (.xml)

- Export Excel files (.xls, .xlsx), Text files (.txt, .dat, .csv), SPSS (.sav), Stata (.dta), JMP (.jmp), Other (.xml)

- SAS Graphics Samples Output Gallery

- Can be cumbersome at times to create perfect graphics with syntax

- ODS Graphics Designer provides a more interactive interface

- Base SAS contains the data management facility, programming language, data analysis and reporting tools

- SAS Libraries collect the SAS datasets you create

- A multitude of additional components are available to complement Base SAS, including SAS/GRAPH, SAS/PH (Clinical Trial Analysis), SAS/ETS (Econometrics and Time Series), and SAS/Insight (Data Mining)

- SAS Certification exams

- Handles extremely large datasets

- Predominantly used for data management and statistical procedures

- SAS has two main types of code: DATA steps and PROC steps

- With one procedure, test results, post estimation and plots can be produced

- Size of datasets analyzed is only limited by the machine

Limitations

- Graphics can be cumbersome to manipulate

- Because SAS is proprietary software, there may be an extensive lag time for the implementation of new methods

- Documentation and books tend to be very technical and not necessarily friendly to new users

    * First enter the data manually;
    data example;
      input sex $ test1 test2;
      datalines;
    M 86 83
    M 93 79
    M 85 81
    M 83 80
    M 91 76
    F 94 79
    F 91 94
    F 83 84
    F 96 81
    F 95 75
    ;
    run;

    * Next run a paired t-test;
    proc ttest data = example;
      paired test1*test2;
    run;

    * Create a scatterplot;
    proc sgplot data = example;
      scatter y = test1 x = test2 / group = sex;
    run;

- R first appeared in 1993 and was created by Ross Ihaka and Robert Gentleman at the University of Auckland, New Zealand.

- R is an implementation of the S programming language which was developed at Bell Labs.

- It is named partly after its first authors and partly as a play on the name of S.

- R is currently developed by the R Development Core Team.

- RStudio, an integrated development environment (IDE) was first released in 2011.

- Companies Using R

- Finance and Economics

- Bioinformatics

- Import Excel files (.xls, .xlsx), Text files (.txt, .dat, .csv), SPSS (.sav), Stata (.dta), SAS(.sas7bdat), Other (.xml, .json)

- Export Excel files (.xlsx), Text files (.txt, .csv), SPSS (.sav), Stata (.dta), Other (.json)

- ggplot2 package, grammar of graphics

- Graphs available through ggplot2

- The R Graph Gallery

- Network analysis (igraph)

- Flexible esthetics and options

- Interactive graphics with Shiny

- Many available packages to create field specific graphics

- R is free and open source

- Over 6000 user contributed packages available through CRAN

- Large online community

- Network Analysis, Text Analysis, Data Mining, Web Scraping

- Interacts with other software such as Python, Bioconductor, WinBUGS, JAGS, etc.

- Scope of functions, flexible, versatile, etc.

Limitations

- Large online help community but no 'formal' tech support

- Have to have a good understanding of different data types before real ease of use begins

- Many user written packages may be hard to sift through

    # Manually enter the data into a data frame
    dataset <- data.frame(
      sex = c("Male", "Male", "Male", "Male", "Male",
              "Female", "Female", "Female", "Female", "Female"),
      test1 = c(86, 93, 85, 83, 91, 94, 91, 83, 96, 95),
      test2 = c(83, 79, 81, 80, 76, 79, 94, 84, 81, 75)
    )

    # Now we will run a paired t-test
    t.test(dataset$test1, dataset$test2, paired = TRUE)

    # Last, let's simply plot these two test variables.
    # factor() is needed in R >= 4.0, where data.frame() keeps strings as character;
    # levels sort alphabetically, so Female is red and Male is blue.
    plot(dataset$test1, dataset$test2, col = c("red", "blue")[factor(dataset$sex)])
    legend("topright", legend = c("Male", "Female"), fill = c("blue", "red"))

    # Making the same graph using ggplot2
    install.packages('ggplot2')
    library(ggplot2)
    mygraph <- ggplot(data = dataset, aes(x = test1, y = test2, color = sex))
    mygraph + geom_point(size = 5) + ggtitle('Test1 versus Test2 Scores')

- Cleve Moler of the University of New Mexico began development of MATLAB in the late 1970s.

- Together with Jack Little, he cofounded MathWorks and released MATLAB (matrix laboratory) in 1984.

- Education (linear algebra and numerical analysis)

- Popular among scientists involved in image processing

- Engineering

- .m Syntax file

- Import Excel files (.xls, .xlsx), Text files (.txt, .dat, .csv), Other (.xml, .json)

- Export Excel files (.xls, .xlsx), Text files (.txt, .dat, .csv), Other (.xml, .json)

- MATLAB Plot Gallery

- Customizable but not point-and-click visualization

- Optimized for data analysis, matrix manipulation in particular

- Basic unit is a matrix

- Vectorized operations are quick

- Diverse set of available toolboxes (apps) [Statistics, Optimization, Image Processing, Signal Processing, Parallel Computing, etc.]

- Large online community (MATLAB Exchange)

- Image processing

- Vast number of pre-defined functions and implemented algorithms

- Lacks implementation of some advanced statistical methods

- Integrates easily with some languages such as C, but not others, such as Python

- Limited GIS capabilities

    sex = {'Male','Male','Male','Male','Male','Female','Female','Female','Female','Female'};
    t1 = [86,93,85,83,91,94,91,83,96,95];
    t2 = [83,79,81,80,76,79,94,84,81,75];

    % paired t-test
    [h,p,ci,stats] = ttest(t1,t2)

    % independent samples t-test
    sex = categorical(sex);
    [h,p,ci,stats] = ttest2(t1(sex=='Male'),t1(sex=='Female'))

    % scatterplots: all points, then split by sex
    plot(t1,t2,'o')
    g = sex=='Male';
    plot(t1(g),t2(g),'bx'); hold on; plot(t1(~g),t2(~g),'ro')
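The sample syntax for each package runs a paired t-test on the same ten rows of test scores. As a rough cross-check on what those procedures compute, here is a minimal Python sketch using only the standard library. The function names are invented for illustration, and the sketch stops at the t statistic and degrees of freedom because the standard library has no t distribution for p-values. The second function uses the pooled (equal-variance) form of the independent-samples test, which is an assumption of this sketch.

```python
from math import sqrt
from statistics import mean, stdev, variance

sex = ["Male"] * 5 + ["Female"] * 5
test1 = [86, 93, 85, 83, 91, 94, 91, 83, 96, 95]
test2 = [83, 79, 81, 80, 76, 79, 94, 84, 81, 75]

def paired_t(x, y):
    """Return (t, df) for a paired t-test of mean(x - y) = 0."""
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1

def pooled_t(x, y):
    """Return (t, df) for an independent-samples t-test assuming equal variances."""
    nx, ny = len(x), len(y)
    pooled_var = ((nx - 1) * variance(x) + (ny - 1) * variance(y)) / (nx + ny - 2)
    t = (mean(x) - mean(y)) / sqrt(pooled_var * (1 / nx + 1 / ny))
    return t, nx + ny - 2

t_paired, df_paired = paired_t(test1, test2)

male = [score for score, group in zip(test1, sex) if group == "Male"]
female = [score for score, group in zip(test1, sex) if group == "Female"]
t_indep, df_indep = pooled_t(male, female)

print(f"paired: t = {t_paired:.3f}, df = {df_paired}")
print(f"independent: t = {t_indep:.3f}, df = {df_indep}")
```

Comparing these statistics with a package's output is a quick way to confirm that the data were entered identically and that the package is running the test you intended.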

Software Features and Capabilities

*The primary interface is bolded in the case of multiple interface types available.

Learning Curve

Further Reading

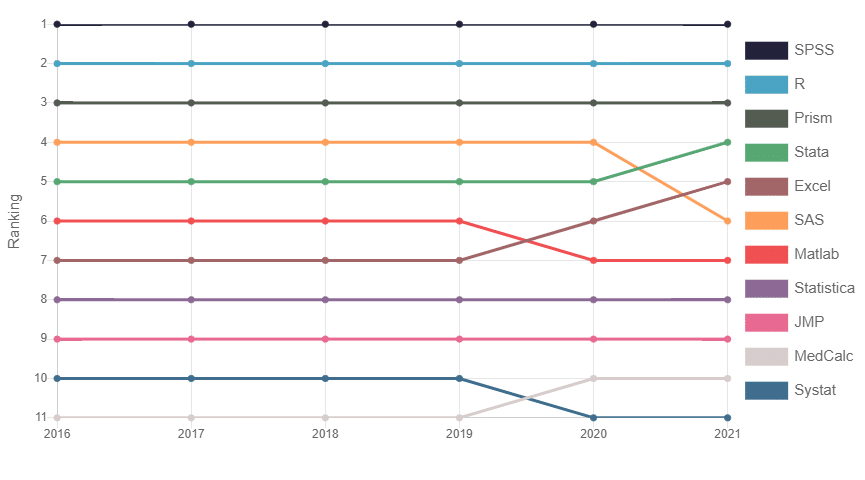

- The Popularity of Data Analysis Software

- Statistical Software Capability Table

- The SAS versus R Debate in Industry and Academia

- Why R has a Steep Learning Curve

- Comparison of Data Analysis Packages

- Comparison of Statistical Packages

- MATLAB commands in Python and R

- MATLAB and R Side by Side

- Stata and R Side by Side


- Last Updated: Jun 3, 2024 3:55 PM

- URL: https://guides.nyu.edu/quant

A Fresh Way to Do Statistics


JASP is an open-source project supported by the University of Amsterdam.

JASP has an intuitive interface that was designed with the user in mind.

JASP offers standard analysis procedures in both their classical and Bayesian form.

Main Features

Your choice.

- Frequentist analyses

- Bayesian analyses

User-friendly Interface

- Dynamic update of all results

- Spreadsheet layout and an intuitive drag-and-drop interface

- Progressive disclosure for increased understanding

- Annotated output for communicating your results

Developed for publishing analyses

- Integrated with The Open Science Framework (OSF)

- Support for APA format (copy graphs and tables directly into Word)


Mission Statement

Our main goal is to help statistical practitioners reach maximally informative conclusions with a minimum of fuss. This is why we have developed JASP, a free cross-platform software program with a state-of-the-art graphical user interface.

Your First Steps Using JASP

Getting started.

The introductory video on the left should give you a good idea of how JASP works. You can consult our Getting Started Guide for more information.

How to Use JASP

Take a look at our How to Use JASP page for in-depth explanations of the different features in JASP.

Past Sponsors

University of Bern

Department of Psychology

www.psy.unibe.ch

APS Fund for Teaching and Public Understanding of Psychological Science

www.psychologicalscience.org

European Research Council

www.erc.europa.eu

Nederlandse Organisatie voor Wetenschappelijk Onderzoek

Center for Open Science

Scientific Advisory Board

- Prof. James O. Berger, Duke University

- Prof. Jon Forster, University of Southampton

- Prof. Merlise A. Clyde, Duke University

- Prof. Ioannis Ntzoufras, Athens University of Economics and Business

- Prof. Jeffrey N. Rouder, University of California, Irvine

- Prof. Zoltan Dienes, University of Sussex

- Prof. Andy Field, University of Sussex

- Prof. Han L. J. van der Maas, University of Amsterdam

- Prof. Erin Buchanan, Missouri State University

- Prof. Casper Albers, University of Groningen

- Dr. Henrik Singmann, University College London

- Dr. Felix Schönbrodt, LMU Munich



- Indian J Anaesth

- v.60(9); 2016 Sep

Basic statistical tools in research and data analysis

Zulfiqar Ali

Department of Anaesthesiology, Division of Neuroanaesthesiology, Sheri Kashmir Institute of Medical Sciences, Soura, Srinagar, Jammu and Kashmir, India

S Bala Bhaskar

Department of Anaesthesiology and Critical Care, Vijayanagar Institute of Medical Sciences, Bellary, Karnataka, India

Statistical methods involved in carrying out a study include planning, designing, collecting data, analysing, drawing meaningful interpretation and reporting of the research findings. The statistical analysis gives meaning to the meaningless numbers, thereby breathing life into lifeless data. The results and inferences are precise only if proper statistical tests are used. This article will try to acquaint the reader with the basic research tools that are utilised while conducting various studies. The article covers a brief outline of the variables, an understanding of quantitative and qualitative variables and the measures of central tendency. An idea of the sample size estimation, power analysis and the statistical errors is given. Finally, there is a summary of parametric and non-parametric tests used for data analysis.

INTRODUCTION

Statistics is a branch of science that deals with the collection, organisation, analysis of data and drawing of inferences from the samples to the whole population.[ 1 ] This requires a proper design of the study, an appropriate selection of the study sample and choice of a suitable statistical test. An adequate knowledge of statistics is necessary for proper designing of an epidemiological study or a clinical trial. Improper statistical methods may result in erroneous conclusions which may lead to unethical practice.[ 2 ]

A variable is a characteristic that varies from one individual member of a population to another.[ 3 ] Variables such as height and weight are measured by some type of scale, convey quantitative information and are called quantitative variables. Sex and eye colour give qualitative information and are called qualitative variables[ 3 ] [ Figure 1 ].

Classification of variables

Quantitative variables

Quantitative or numerical data are subdivided into discrete and continuous measurements. Discrete numerical data are recorded as a whole number such as 0, 1, 2, 3,… (integer), whereas continuous data can assume any value. Observations that can be counted constitute the discrete data and observations that can be measured constitute the continuous data. Examples of discrete data are number of episodes of respiratory arrests or the number of re-intubations in an intensive care unit. Similarly, examples of continuous data are the serial serum glucose levels, partial pressure of oxygen in arterial blood and the oesophageal temperature.

A hierarchical scale of increasing precision can be used for observing and recording the data which is based on categorical, ordinal, interval and ratio scales [ Figure 1 ].

Categorical or nominal variables are unordered. The data are merely classified into categories and cannot be arranged in any particular order. If only two categories exist (as in gender: male and female), the data are called dichotomous (or binary). The various causes of re-intubation in an intensive care unit, such as upper airway obstruction, impaired clearance of secretions, hypoxemia, hypercapnia, pulmonary oedema and neurological impairment, are examples of categorical variables.

Ordinal variables have a clear ordering between the variables. However, the ordered data may not have equal intervals. Examples are the American Society of Anesthesiologists status or Richmond agitation-sedation scale.

Interval variables are similar to an ordinal variable, except that the intervals between the values of the interval variable are equally spaced. A good example of an interval scale is the Fahrenheit degree scale used to measure temperature. With the Fahrenheit scale, the difference between 70° and 75° is equal to the difference between 80° and 85°: The units of measurement are equal throughout the full range of the scale.

Ratio scales are similar to interval scales, in that equal differences between scale values have equal quantitative meaning. However, ratio scales also have a true zero point, which gives them an additional property. For example, the system of centimetres is an example of a ratio scale. There is a true zero point and the value of 0 cm means a complete absence of length. The thyromental distance of 6 cm in an adult may be twice that of a child in whom it may be 3 cm.

STATISTICS: DESCRIPTIVE AND INFERENTIAL STATISTICS

Descriptive statistics[ 4 ] try to describe the relationship between variables in a sample or population. Descriptive statistics provide a summary of data in the form of mean, median and mode. Inferential statistics[ 4 ] use a random sample of data taken from a population to describe and make inferences about the whole population. It is valuable when it is not possible to examine each member of an entire population. Examples of descriptive and inferential statistics are illustrated in Table 1 .

Example of descriptive and inferential statistics

Descriptive statistics

The extent to which the observations cluster around a central location is described by the central tendency and the spread towards the extremes is described by the degree of dispersion.

Measures of central tendency

The measures of central tendency are mean, median and mode.[ 6 ] Mean (or the arithmetic average) is the sum of all the scores divided by the number of scores. Mean may be influenced profoundly by extreme values. For example, the average stay of organophosphorus poisoning patients in ICU may be influenced by a single patient who stays in ICU for around 5 months because of septicaemia. The extreme values are called outliers. The formula for the mean is

$$\bar{x} = \frac{\sum x}{n}$$

where x = each observation and n = number of observations. Median[ 6 ] is defined as the middle of a distribution in ranked data (with half of the values in the sample above and half below the median value) while mode is the most frequently occurring value in a distribution. Range defines the spread, or variability, of a sample.[ 7 ] It is described by the minimum and maximum values of the variables. If we rank the data and, after ranking, group the observations into percentiles, we can get better information on the pattern of spread of the variables. In percentiles, we rank the observations into 100 equal parts. We can then describe the 25th, 50th, 75th or any other percentile. The median is the 50th percentile. The interquartile range covers the middle 50% of the observations about the median (25th-75th percentile). Variance[ 7 ] is a measure of how spread out the distribution is. It gives an indication of how closely individual observations cluster about the mean value. The variance of a population is defined by the following formula:

$$\sigma^2 = \frac{\sum_{i=1}^{N} (X_i - \bar{X})^2}{N}$$

where σ² is the population variance, X̄ is the population mean, Xᵢ is the ith element from the population and N is the number of elements in the population. The variance of a sample is defined by a slightly different formula:

$$s^2 = \frac{\sum_{i=1}^{n} (x_i - \bar{x})^2}{n - 1}$$

where s² is the sample variance, x̄ is the sample mean, xᵢ is the ith element from the sample and n is the number of elements in the sample. The formula for the variance of a population has the value 'N' as the denominator, whereas the sample formula uses 'n − 1'. The expression 'n − 1' is known as the degrees of freedom and is one less than the number of observations in the sample. Each observation is free to vary, except the last one which must be a defined value. The variance is measured in squared units. To make the interpretation of the data simple and to retain the basic unit of observation, the square root of variance is used. The square root of the variance is the standard deviation (SD).[ 8 ] The SD of a population is defined by the following formula:

$$\sigma = \sqrt{\frac{\sum_{i=1}^{N} (X_i - \bar{X})^2}{N}}$$

where σ is the population SD, X̄ is the population mean, Xᵢ is the ith element from the population and N is the number of elements in the population. The SD of a sample is defined by a slightly different formula:

$$s = \sqrt{\frac{\sum_{i=1}^{n} (x_i - \bar{x})^2}{n - 1}}$$

where s is the sample SD, x̄ is the sample mean, xᵢ is the ith element from the sample and n is the number of elements in the sample. An example for calculation of variance and SD is illustrated in Table 2 .
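As a rough illustration of these measures, the sketch below uses Python's standard `statistics` module on a small set of ICU stay lengths. The values are made up for this example and are not taken from Table 2. It shows how a single outlier pulls the mean away from the median, and how the population formulas (dividing by N) differ from the sample formulas (dividing by n − 1).

```python
import statistics

# Hypothetical ICU stays in days; the last value is an outlier.
stays = [3, 4, 4, 5, 6, 7, 150]

mean_stay = statistics.mean(stays)      # pulled upwards by the outlier
median_stay = statistics.median(stays)  # middle value of the ranked data
mode_stay = statistics.mode(stays)      # most frequently occurring value

pop_var = statistics.pvariance(stays)   # population variance, divides by N
samp_var = statistics.variance(stays)   # sample variance, divides by n - 1
pop_sd = statistics.pstdev(stays)       # square root of pop_var
samp_sd = statistics.stdev(stays)       # square root of samp_var

print("mean:", mean_stay, "median:", median_stay, "mode:", mode_stay)
print("population variance:", pop_var, "sample variance:", samp_var)
print("population SD:", pop_sd, "sample SD:", samp_sd)
```

Because the sample formulas divide by a smaller number, the sample variance and SD are always slightly larger than their population counterparts for the same data.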

Example of mean, variance, standard deviation

Normal distribution or Gaussian distribution

Most biological variables cluster around a central value, with symmetrical positive and negative deviations about this point.[ 1 ] The standard normal distribution curve is symmetrical and bell-shaped. In a normal distribution curve, about 68% of the scores are within 1 SD of the mean, around 95% are within 2 SDs and about 99.7% are within 3 SDs [ Figure 2 ].
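The proportions quoted for the normal curve can be checked numerically. The following minimal sketch uses `NormalDist` from Python's standard `statistics` module; the helper name `share_within` is introduced here only for illustration.

```python
from statistics import NormalDist

z = NormalDist(mu=0.0, sigma=1.0)  # standard normal distribution


def share_within(k):
    """Fraction of a normal distribution lying within k SDs of the mean."""
    return z.cdf(k) - z.cdf(-k)


for k in (1, 2, 3):
    print(f"within {k} SD: {share_within(k):.4f}")
```

The three printed fractions are approximately 0.6827, 0.9545 and 0.9973.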

Normal distribution curve

Skewed distribution

It is a distribution with an asymmetry of the variables about its mean. In a negatively skewed distribution [ Figure 3 ], the mass of the distribution is concentrated on the right of the figure, leading to a longer left tail. In a positively skewed distribution [ Figure 3 ], the mass of the distribution is concentrated on the left of the figure, leading to a longer right tail.

Curves showing negatively skewed and positively skewed distribution

Inferential statistics

In inferential statistics, data are analysed from a sample to make inferences in the larger collection of the population. The purpose is to answer or test the hypotheses. A hypothesis (plural hypotheses) is a proposed explanation for a phenomenon. Hypothesis tests are thus procedures for making rational decisions about the reality of observed effects.

Probability is the measure of the likelihood that an event will occur. Probability is quantified as a number between 0 and 1 (where 0 indicates impossibility and 1 indicates certainty).

In inferential statistics, the term 'null hypothesis' (H0, read as 'H-naught' or 'H-null') denotes that there is no relationship (difference) between the population variables in question.[ 9 ]

The alternative hypothesis (H1 or Ha) denotes that a relationship (difference) between the variables is expected to exist.[ 9 ]

The P value (or the calculated probability) is the probability of obtaining a result at least as extreme as the one observed, by chance alone, if the null hypothesis is true. The P value is a number between 0 and 1 and is interpreted by researchers in deciding whether to reject or retain the null hypothesis [ Table 3 ].

P values with interpretation

If the P value is less than the arbitrarily chosen value (known as α or the significance level), the null hypothesis (H0) is rejected [ Table 4 ]. However, if the null hypothesis (H0) is incorrectly rejected, this is known as a Type I error.[ 11 ] Further details regarding alpha error, beta error and sample size calculation and factors influencing them are dealt with in another section of this issue by Das S et al .[ 12 ]

Illustration for null hypothesis

PARAMETRIC AND NON-PARAMETRIC TESTS

Numerical data (quantitative variables) that are normally distributed are analysed with parametric tests.[ 13 ]

Two most basic prerequisites for parametric statistical analysis are:

- The assumption of normality which specifies that the means of the sample group are normally distributed

- The assumption of equal variance which specifies that the variances of the samples and of their corresponding population are equal.

However, if the distribution of the sample is skewed towards one side or the distribution is unknown due to the small sample size, non-parametric[ 14 ] statistical techniques are used. Non-parametric tests are used to analyse ordinal and categorical data.

Parametric tests

The parametric tests assume that the data are on a quantitative (numerical) scale, with a normal distribution of the underlying population. The samples have the same variance (homogeneity of variances). The samples are randomly drawn from the population, and the observations within a group are independent of each other. The commonly used parametric tests are the Student's t -test, analysis of variance (ANOVA) and repeated measures ANOVA.

Student's t -test

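Student's t-test is used to test the null hypothesis that there is no difference between the means of the two groups. It is used in three circumstances:

- To test if a sample mean (as an estimate of a population mean) differs significantly from a given population mean (the one-sample t-test). The formula for the one-sample t-test is:

$$t = \frac{\bar{X} - u}{SE}$$

where X̄ = sample mean, u = population mean and SE = standard error of mean

- To test if the population means estimated by two independent samples differ significantly (the unpaired t-test). The formula for the unpaired t-test is:

$$t = \frac{\bar{X}_1 - \bar{X}_2}{SE}$$

where X̄₁ − X̄₂ is the difference between the means of the two groups and SE denotes the standard error of the difference.

- To test if the population means estimated by two dependent samples differ significantly (the paired t-test). A usual setting for the paired t-test is when measurements are made on the same subjects before and after a treatment.

The formula for the paired t-test is:

$$t = \frac{\bar{d}}{SE}$$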

where d is the mean difference and SE denotes the standard error of this difference.
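A minimal sketch of the paired t statistic, assuming ten subjects measured before and after a treatment. The scores and the variable names are illustrative only. The sketch stops at the test statistic and its degrees of freedom, because Python's standard library has no t distribution from which to read the P value.

```python
import math
import statistics

# Illustrative paired measurements on the same ten subjects.
before = [86, 93, 85, 83, 91, 94, 91, 83, 96, 95]
after = [83, 79, 81, 80, 76, 79, 94, 84, 81, 75]

# Work on the within-subject differences.
diffs = [b - a for b, a in zip(before, after)]
n = len(diffs)

mean_diff = statistics.mean(diffs)                # mean difference
se_diff = statistics.stdev(diffs) / math.sqrt(n)  # standard error of the mean difference
t_stat = mean_diff / se_diff                      # paired t statistic
dof = n - 1                                       # degrees of freedom

print(f"mean difference = {mean_diff}, t = {t_stat:.3f}, df = {dof}")
```

The resulting t value would then be compared with the t distribution on n − 1 degrees of freedom, using a statistical table or a statistics package.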

The group variances can be compared using the F-test. The F-test is the ratio of variances (var 1/var 2). If F differs significantly from 1.0, then it is concluded that the group variances differ significantly.

Analysis of variance

The Student's t -test cannot be used for comparison of three or more groups. The purpose of ANOVA is to test if there is any significant difference between the means of two or more groups.

In ANOVA, we study two variances – (a) between-group variability and (b) within-group variability. The within-group variability (error variance) is the variation that cannot be accounted for in the study design. It is based on random differences present in our samples.

However, the between-group (or effect variance) is the result of our treatment. These two estimates of variances are compared using the F-test.

A simplified formula for the F statistic is:

$$F = \frac{MS_b}{MS_w}$$

where MS_b is the mean squares between the groups and MS_w is the mean squares within groups.
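The ratio of mean squares can be worked through by hand for a small one-way design. The sketch below assumes three independent groups with made-up values; the group labels and variable names are hypothetical.

```python
from statistics import mean

# Hypothetical measurements for three independent groups.
groups = {
    "A": [4.0, 5.0, 6.0],
    "B": [6.0, 7.0, 8.0],
    "C": [8.0, 9.0, 10.0],
}

all_values = [x for g in groups.values() for x in g]
grand_mean = mean(all_values)
k = len(groups)            # number of groups
n_total = len(all_values)  # total number of observations

# Between-group sum of squares: group size times squared distance
# of each group mean from the grand mean.
ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups.values())

# Within-group sum of squares: squared distance of each value from its own group mean.
ss_within = sum((x - mean(g)) ** 2 for g in groups.values() for x in g)

ms_between = ss_between / (k - 1)       # mean squares between groups
ms_within = ss_within / (n_total - k)   # mean squares within groups
f_stat = ms_between / ms_within

print(f"MS_b = {ms_between}, MS_w = {ms_within}, F = {f_stat}")
```

For these values MS_b is 12 and MS_w is 1, so F is 12. A large F indicates that the variation between group means is large relative to the variation within groups.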

Repeated measures analysis of variance

As with ANOVA, repeated measures ANOVA analyses the equality of means of three or more groups. However, a repeated measure ANOVA is used when all variables of a sample are measured under different conditions or at different points in time.

As the variables are measured from a sample at different points of time, the measurement of the dependent variable is repeated. Using a standard ANOVA in this case is not appropriate because it fails to model the correlation between the repeated measures: The data violate the ANOVA assumption of independence. Hence, in the measurement of repeated dependent variables, repeated measures ANOVA should be used.

Non-parametric tests

When the assumptions of normality are not met, and the sample means are not normally distributed, parametric tests can lead to erroneous results. Non-parametric tests (distribution-free tests) are used in such situations as they do not require the normality assumption.[ 15 ] Non-parametric tests may fail to detect a significant difference when compared with a parametric test. That is, they usually have less power.

As is done for the parametric tests, the test statistic is compared with known values for the sampling distribution of that statistic and the null hypothesis is accepted or rejected. The types of non-parametric analysis techniques and the corresponding parametric analysis techniques are delineated in Table 5 .

Analogue of parametric and non-parametric tests

Median test for one sample: The sign test and Wilcoxon's signed rank test

The sign test and Wilcoxon's signed rank test are used for median tests of one sample. These tests examine whether one instance of sample data is greater or smaller than the median reference value.

The sign test examines a hypothesis about the median θ0 of a population. It tests the null hypothesis H0: θ = θ0. When the observed value (Xi) is greater than the reference value (θ0), it is marked with a + sign. If the observed value is smaller than the reference value, it is marked with a − sign. If the observed value is equal to the reference value (θ0), it is eliminated from the sample.

If the null hypothesis is true, the numbers of + signs and − signs are expected to be approximately equal.

The sign test ignores the actual values of the data and only uses + or − signs. Therefore, it is useful when it is difficult to measure the values.
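The procedure can be sketched in a few lines. The function name `sign_test`, the sample values and the reference median of 10 are all assumptions made for illustration; the P value is the exact two-sided binomial probability of a split at least as uneven as the one observed.

```python
from math import comb


def sign_test(values, theta0):
    """Two-sided exact sign test of the null hypothesis that the median equals theta0."""
    plus = sum(1 for v in values if v > theta0)    # observations marked +
    minus = sum(1 for v in values if v < theta0)   # observations marked -
    n = plus + minus                               # values equal to theta0 are dropped
    k = min(plus, minus)
    # Probability of k or fewer signs of the rarer kind under Binomial(n, 1/2).
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    p_value = min(1.0, 2 * tail)
    return plus, minus, p_value


# Hypothetical observations tested against a reference median of 10.
sample = [12, 11, 14, 10, 13, 9, 15, 16, 12, 11]
plus, minus, p = sign_test(sample, 10)
print(f"+ signs: {plus}, - signs: {minus}, P = {p:.4f}")
```

Here eight values lie above the reference, one lies below and one is dropped as a tie, giving a two-sided P value of about 0.039.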

Wilcoxon's signed rank test

There is a major limitation of sign test as we lose the quantitative information of the given data and merely use the + or – signs. Wilcoxon's signed rank test not only examines the observed values in comparison with θ0 but also takes into consideration the relative sizes, adding more statistical power to the test. As in the sign test, if there is an observed value that is equal to the reference value θ0, this observed value is eliminated from the sample.

Wilcoxon's rank sum test ranks all data points in order, calculates the rank sum of each sample and compares the difference in the rank sums.

Mann-Whitney test

It is used to test the null hypothesis that two samples have the same median or, alternatively, whether observations in one sample tend to be larger than observations in the other.

Mann–Whitney test compares all data (xi) belonging to the X group and all data (yi) belonging to the Y group and calculates the probability of xi being greater than yi: P (xi > yi). The null hypothesis states that P (xi > yi) = P (xi < yi) =1/2 while the alternative hypothesis states that P (xi > yi) ≠1/2.
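The pairwise comparison described above can be written out directly. In this sketch the function name `mann_whitney_u` and both samples are hypothetical, and ties are counted as one half, which is a common convention rather than something stated in the text. Only the U statistic and the estimated P (xi > yi) are computed, not the P value of the test.

```python
def mann_whitney_u(xs, ys):
    """Return U for the xs and the estimated probability that an x exceeds a y."""
    u = 0.0
    for x in xs:
        for y in ys:
            if x > y:
                u += 1.0   # x wins the comparison
            elif x == y:
                u += 0.5   # a tie counts as half a win
    return u, u / (len(xs) * len(ys))


# Hypothetical samples from group X and group Y.
x_group = [7, 9, 12, 15]
y_group = [3, 5, 8, 10]

u_x, prob = mann_whitney_u(x_group, y_group)
print(f"U = {u_x}, estimated P(x > y) = {prob}")
```

Out of 16 possible pairs, the X value is larger in 13, so the estimated probability is 0.8125, well away from the value of 1/2 expected under the null hypothesis.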

Kolmogorov-Smirnov test

The two-sample Kolmogorov-Smirnov (KS) test was designed as a generic method to test whether two random samples are drawn from the same distribution. The null hypothesis of the KS test is that both distributions are identical. The statistic of the KS test is a distance between the two empirical distributions, computed as the maximum absolute difference between their cumulative curves.
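The KS statistic is simple to compute from the two empirical cumulative distributions. The following sketch assumes small made-up samples; `ks_statistic` and `ecdf` are illustrative names, not functions from any particular package.

```python
def ks_statistic(sample_a, sample_b):
    """Maximum absolute difference between two empirical cumulative distributions."""

    def ecdf(sample, x):
        # Proportion of the sample that is less than or equal to x.
        return sum(1 for v in sample if v <= x) / len(sample)

    # The difference can only change at observed values, so checking those is enough.
    points = sorted(set(sample_a) | set(sample_b))
    return max(abs(ecdf(sample_a, x) - ecdf(sample_b, x)) for x in points)


a = [1, 2, 3, 4]
b = [3, 4, 5, 6]
d = ks_statistic(a, b)
print(f"KS statistic D = {d}")
```

For these samples the largest gap between the cumulative curves is 0.5. Two identical samples would give a statistic of 0.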

Kruskal-Wallis test

The Kruskal–Wallis test is a non-parametric test to analyse the variance.[ 14 ] It analyses if there is any difference in the median values of three or more independent samples. The data values are ranked in an increasing order, and the rank sums calculated followed by calculation of the test statistic.
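The ranking and rank-sum steps can be sketched as follows. The text does not give the formula, so this example assumes the usual form of the test statistic, H = 12 / (N(N + 1)) × Σ(Rᵢ² / nᵢ) − 3(N + 1), without any correction for ties. The function names and data are hypothetical.

```python
def average_ranks(values):
    """Rank values from 1 upwards, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the end of the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of positions i..j, converted to 1-based ranks
        for pos in range(i, j + 1):
            ranks[order[pos]] = avg
        i = j + 1
    return ranks


def kruskal_wallis_h(groups):
    """H statistic for a list of independent samples (no tie correction)."""
    pooled = [x for g in groups for x in g]
    ranks = average_ranks(pooled)
    n_total = len(pooled)
    weighted, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])  # rank sum of this group
        weighted += rank_sum ** 2 / len(g)
        start += len(g)
    return 12 / (n_total * (n_total + 1)) * weighted - 3 * (n_total + 1)


h_stat = kruskal_wallis_h([[1, 2, 3], [4, 5, 6], [7, 8, 9]])
print(f"H = {h_stat:.2f}")
```

With three completely separated groups of three values, the rank sums are 6, 15 and 24 and H is 7.2.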

Jonckheere test

In contrast to the Kruskal–Wallis test, the Jonckheere test assumes an a priori ordering of the groups, which gives it more statistical power than the Kruskal–Wallis test.[ 14 ]

Friedman test

The Friedman test is a non-parametric test for testing the difference between several related samples. The Friedman test is an alternative for repeated measures ANOVAs which is used when the same parameter has been measured under different conditions on the same subjects.[ 13 ]

Tests to analyse the categorical data

Chi-square test, Fisher's exact test and McNemar's test are used to analyse the categorical or nominal variables. The Chi-square test compares the frequencies and tests whether the observed data differ significantly from that of the expected data if there were no differences between groups (i.e., the null hypothesis). It is calculated by the sum of the squared difference between observed ( O ) and the expected ( E ) data (or the deviation, d ) divided by the expected data by the following formula:

$$\chi^2 = \sum \frac{(O - E)^2}{E}$$

A Yates correction factor is used when the sample size is small. Fisher's exact test is used to determine if there are non-random associations between two categorical variables. It does not assume random sampling, and instead of referring a calculated statistic to a sampling distribution, it calculates an exact probability. McNemar's test is used for paired nominal data. It is applied to a 2 × 2 table with paired-dependent samples. It is used to determine whether the row and column frequencies are equal (that is, whether there is 'marginal homogeneity'). The null hypothesis is that the paired proportions are equal. The Mantel-Haenszel Chi-square test is a multivariate test as it analyses multiple grouping variables. It stratifies according to the nominated confounding variables and identifies any that affects the primary outcome variable. If the outcome variable is dichotomous, then logistic regression is used.
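As an illustration of the Chi-square calculation, the sketch below derives the expected counts from the row and column totals of a contingency table and sums (O − E)²/E over all cells. The function name `chi_square` and the 2 × 2 counts are hypothetical, and no Yates correction is applied.

```python
def chi_square(table):
    """Pearson Chi-square statistic for a table given as rows of observed counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if rows and columns were independent.
            expected = row_totals[i] * col_totals[j] / total
            stat += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)  # degrees of freedom
    return stat, dof


# Hypothetical 2 x 2 table: rows are two groups, columns are outcome yes/no.
observed = [[20, 10], [10, 20]]
chi2, dof = chi_square(observed)
print(f"Chi-square = {chi2:.3f} with {dof} degree(s) of freedom")
```

Every expected count in this table is 15, so each cell contributes 25/15 and the statistic is about 6.667 with 1 degree of freedom.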

SOFTWARES AVAILABLE FOR STATISTICS, SAMPLE SIZE CALCULATION AND POWER ANALYSIS

Numerous statistical software systems are currently available. The commonly used software systems are Statistical Package for the Social Sciences (SPSS – manufactured by IBM corporation), Statistical Analysis System (SAS – developed by SAS Institute, North Carolina, United States of America), R (designed by Ross Ihaka and Robert Gentleman from the R core team), Minitab (developed by Minitab Inc), Stata (developed by StataCorp) and MS Excel (developed by Microsoft).

There are a number of web resources which are related to statistical power analyses. A few are:

- StatPages.net – provides links to a number of online power calculators

- G-Power – provides a downloadable power analysis program that runs under DOS

- Power analysis for ANOVA designs – an interactive site that calculates power or sample size needed to attain a given power for one effect in a factorial ANOVA design

- SPSS makes a program called SamplePower. It gives an output of a complete report on the computer screen which can be cut and pasted into another document.

It is important that a researcher knows the concepts of the basic statistical methods used for conduct of a research study. This will help to conduct an appropriately well-designed study leading to valid and reliable results. Inappropriate use of statistical techniques may lead to faulty conclusions, inducing errors and undermining the significance of the article. Bad statistics may lead to bad research, and bad research may lead to unethical practice. Hence, an adequate knowledge of statistics and the appropriate use of statistical tests are important. An appropriate knowledge about the basic statistical methods will go a long way in improving the research designs and producing quality medical research which can be utilised for formulating the evidence-based guidelines.

Financial support and sponsorship

Conflicts of interest.

There are no conflicts of interest.

What is Statistical Analysis? Types, Methods, Software, Examples

Appinio Research · 29.02.2024 · 31min read

Ever wondered how we make sense of vast amounts of data to make informed decisions? Statistical analysis is the answer. In our data-driven world, statistical analysis serves as a powerful tool to uncover patterns, trends, and relationships hidden within data. From predicting sales trends to assessing the effectiveness of new treatments, statistical analysis empowers us to derive meaningful insights and drive evidence-based decision-making across various fields and industries. In this guide, we'll explore the fundamentals of statistical analysis, popular methods, software tools, practical examples, and best practices to help you harness the power of statistics effectively. Whether you're a novice or an experienced analyst, this guide will equip you with the knowledge and skills to navigate the world of statistical analysis with confidence.

What is Statistical Analysis?

Statistical analysis is a methodical process of collecting, analyzing, interpreting, and presenting data to uncover patterns, trends, and relationships. It involves applying statistical techniques and methodologies to make sense of complex data sets and draw meaningful conclusions.

Importance of Statistical Analysis

Statistical analysis plays a crucial role in various fields and industries due to its numerous benefits and applications:

- Informed Decision Making : Statistical analysis provides valuable insights that inform decision-making processes in business, healthcare, government, and academia. By analyzing data, organizations can identify trends, assess risks, and optimize strategies for better outcomes.

- Evidence-Based Research : Statistical analysis is fundamental to scientific research, enabling researchers to test hypotheses, draw conclusions, and validate theories using empirical evidence. It helps researchers quantify relationships, assess the significance of findings, and advance knowledge in their respective fields.

- Quality Improvement : In manufacturing and quality management, statistical analysis helps identify defects, improve processes, and enhance product quality. Techniques such as Six Sigma and Statistical Process Control (SPC) are used to monitor performance, reduce variation, and achieve quality objectives.

- Risk Assessment : In finance, insurance, and investment, statistical analysis is used for risk assessment and portfolio management. By analyzing historical data and market trends, analysts can quantify risks, forecast outcomes, and make informed decisions to mitigate financial risks.

- Predictive Modeling : Statistical analysis enables predictive modeling and forecasting in various domains, including sales forecasting, demand planning, and weather prediction. By analyzing historical data patterns, predictive models can anticipate future trends and outcomes with reasonable accuracy.

- Healthcare Decision Support : In healthcare, statistical analysis is integral to clinical research, epidemiology, and healthcare management. It helps healthcare professionals assess treatment effectiveness, analyze patient outcomes, and optimize resource allocation for improved patient care.

Statistical Analysis Applications

Statistical analysis finds applications across diverse domains and disciplines, including:

- Business and Economics : Market research, financial analysis, econometrics, and business intelligence.

- Healthcare and Medicine : Clinical trials, epidemiological studies, healthcare outcomes research, and disease surveillance.

- Social Sciences : Survey research, demographic analysis, psychology experiments, and public opinion polls.

- Engineering : Reliability analysis, quality control, process optimization, and product design.

- Environmental Science : Environmental monitoring, climate modeling, and ecological research.

- Education : Educational research, assessment, program evaluation, and learning analytics.

- Government and Public Policy : Policy analysis, program evaluation, census data analysis, and public administration.

- Technology and Data Science : Machine learning, artificial intelligence, data mining, and predictive analytics.

These applications demonstrate the versatility and significance of statistical analysis in addressing complex problems and informing decision-making across various sectors and disciplines.

Fundamentals of Statistics

Understanding the fundamentals of statistics is crucial for conducting meaningful analyses. Let's delve into some essential concepts that form the foundation of statistical analysis.

Basic Concepts

Statistics is the science of collecting, organizing, analyzing, and interpreting data to make informed decisions or conclusions. To embark on your statistical journey, familiarize yourself with these fundamental concepts:

- Population vs. Sample : A population comprises all the individuals or objects of interest in a study, while a sample is a subset of the population selected for analysis. Understanding the distinction between these two entities is vital, as statistical analyses often rely on samples to draw conclusions about populations.

- Independent Variables : Variables that are manipulated or controlled in an experiment.

- Dependent Variables : Variables that are observed or measured in response to changes in independent variables.

- Parameters vs. Statistics : Parameters are numerical measures that describe a population, whereas statistics are numerical measures that describe a sample. For instance, the population mean is denoted by μ (mu), while the sample mean is denoted by x̄ (x-bar).

Descriptive Statistics

Descriptive statistics involve methods for summarizing and describing the features of a dataset. These statistics provide insights into the central tendency, variability, and distribution of the data. Standard measures of descriptive statistics include:

- Mean : The arithmetic average of a set of values, calculated by summing all values and dividing by the number of observations.

- Median : The middle value in a sorted list of observations.

- Mode : The value that appears most frequently in a dataset.

- Range : The difference between the maximum and minimum values in a dataset.

- Variance : The average of the squared differences from the mean.

- Standard Deviation : The square root of the variance, providing a measure of the average distance of data points from the mean.

- Graphical Techniques : Graphical representations, including histograms, box plots, and scatter plots, offer visual insights into the distribution and relationships within a dataset. These visualizations aid in identifying patterns, outliers, and trends.
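
The numerical measures above can be computed with Python's built-in `statistics` module. This is a small sketch on a made-up dataset; the values in `data` are purely illustrative.

```python
import statistics

data = [2, 4, 4, 5, 7, 9, 11]  # hypothetical observations

mean = statistics.mean(data)          # 42 / 7 = 6
median = statistics.median(data)      # middle of the sorted values = 5
mode = statistics.mode(data)          # most frequent value = 4
value_range = max(data) - min(data)   # 11 - 2 = 9
pop_var = statistics.pvariance(data)  # population variance: divides by n
samp_var = statistics.variance(data)  # sample variance: divides by n - 1
samp_sd = statistics.stdev(data)      # square root of the sample variance

print(mean, median, mode, value_range)
print(round(pop_var, 3), samp_var, round(samp_sd, 3))
```

Note that `pvariance` and `variance` differ only in the divisor, so the choice depends on whether the data are the whole population or a sample from it.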

Inferential Statistics

Inferential statistics enable researchers to draw conclusions or make predictions about populations based on sample data. These methods allow for generalizations beyond the observed data. Fundamental techniques in inferential statistics include:

- Null Hypothesis (H0) : The hypothesis that there is no difference or relationship in the population. It is the default position that a test seeks evidence against.

- Alternative Hypothesis (H1) : The hypothesis that there is a difference or relationship in the population.

- Confidence Intervals : Confidence intervals provide a range of plausible values for a population parameter. They offer insights into the precision of sample estimates and the uncertainty associated with those estimates.

- Regression Analysis : Regression analysis examines the relationship between one or more independent variables and a dependent variable. It allows for the prediction of the dependent variable based on the values of the independent variables.

- Sampling Methods : Sampling methods, such as simple random sampling, stratified sampling, and cluster sampling, are employed to ensure that sample data are representative of the population of interest. These methods help mitigate biases and improve the generalizability of results.
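
As an illustration of the confidence interval idea, the sketch below builds an approximate interval for a population mean. It assumes the normal approximation is acceptable (reasonable for larger samples; for small samples a t-based interval is the usual choice). The function name `mean_confidence_interval` and the simulated measurements are hypothetical.

```python
import math
import random
import statistics

def mean_confidence_interval(sample, confidence=0.95):
    """Approximate CI for the population mean (normal approximation)."""
    n = len(sample)
    x_bar = statistics.fmean(sample)
    std_err = statistics.stdev(sample) / math.sqrt(n)  # estimated standard error
    # z critical value, roughly 1.96 for 95% confidence
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)
    return x_bar - z * std_err, x_bar + z * std_err

random.seed(1)
sample = [random.gauss(50, 8) for _ in range(200)]  # hypothetical measurements
low, high = mean_confidence_interval(sample)
print(f"95% CI for the mean: ({low:.2f}, {high:.2f})")
```

Raising `confidence` widens the interval, and a larger sample narrows it, which reflects the trade-off between certainty and precision.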

Probability Distributions

Probability distributions describe the likelihood of different outcomes in a statistical experiment. Understanding these distributions is essential for modeling and analyzing random phenomena. Some common probability distributions include:

- Normal Distribution : The normal distribution, also known as the Gaussian distribution, is characterized by a symmetric, bell-shaped curve. Many natural phenomena follow this distribution, making it widely applicable in statistical analysis.

- Binomial Distribution : The binomial distribution describes the number of successes in a fixed number of independent Bernoulli trials that share the same probability of success. It is commonly used to model binary outcomes, such as success or failure, heads or tails.

- Poisson Distribution : The Poisson distribution models the number of events occurring in a fixed interval of time or space, assuming events occur independently at a constant average rate. It is often used to analyze counts of rare events, such as the number of customer arrivals in a queue within a given time period.
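
The three distributions above have simple closed-form formulas. The following sketch writes them out directly with the `math` module; the function names are illustrative, and the example inputs (10 coin flips, an average of 2 arrivals) are assumptions.

```python
import math

def binomial_pmf(k, n, p):
    """P(exactly k successes in n independent trials with success probability p)."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)

def poisson_pmf(k, lam):
    """P(exactly k events) when events occur at an average rate of lam per interval."""
    return math.exp(-lam) * lam**k / math.factorial(k)

def normal_pdf(x, mu=0.0, sigma=1.0):
    """Density of the normal distribution with mean mu and standard deviation sigma."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2 * math.pi))

print(binomial_pmf(5, 10, 0.5))  # 252 / 1024: exactly 5 heads in 10 fair flips
print(poisson_pmf(0, 2.0))       # chance of no arrivals when 2 are expected
print(normal_pdf(0.0))           # peak height of the standard normal curve
```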

Types of Statistical Analysis

Statistical analysis encompasses a diverse range of methods and approaches, each suited to different types of data and research questions. Understanding the various types of statistical analysis is essential for selecting the most appropriate technique for your analysis. Let's explore some common distinctions in statistical analysis methods.

Parametric vs. Non-parametric Analysis

Parametric and non-parametric analyses represent two broad categories of statistical methods, each with its own assumptions and applications.

- Parametric Analysis : Parametric methods assume that the data follow a specific probability distribution, often the normal distribution. These methods rely on estimating parameters (e.g., means, variances) from the data. Parametric tests typically provide more statistical power but require stricter assumptions. Examples of parametric tests include t-tests, ANOVA, and linear regression.

- Non-parametric Analysis : Non-parametric methods make fewer assumptions about the underlying distribution of the data. Instead of estimating parameters, non-parametric tests rely on ranks or other distribution-free techniques. Non-parametric tests are often used when data do not meet the assumptions of parametric tests or when dealing with ordinal or non-normal data. Examples of non-parametric tests include the Wilcoxon rank-sum test, Kruskal-Wallis test, and Spearman correlation.
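
One way to see the difference is to compare Pearson correlation (parametric, measures linear association) with Spearman correlation (rank-based). The sketch below implements both by hand on made-up data; the helper names are hypothetical, and the `ranks` helper assumes there are no tied values to keep the example short.

```python
import math

def pearson(x, y):
    """Pearson correlation: strength of the linear relationship."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def ranks(values):
    """Ranks from 1 to n; assumes no tied values, for brevity."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, idx in enumerate(order, start=1):
        result[idx] = rank
    return result

def spearman(x, y):
    """Spearman correlation: Pearson correlation applied to the ranks."""
    return pearson(ranks(x), ranks(y))

x = [1, 2, 3, 4, 5, 6]
y = [v**3 for v in x]  # monotonic but clearly not linear

print(round(pearson(x, y), 3))   # below 1: the relationship is not a straight line
print(round(spearman(x, y), 3))  # 1.0: the ordering is perfectly preserved
```

Because Spearman only uses the ordering of the values, it is unaffected by the curvature that pulls the Pearson coefficient below 1.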

Descriptive vs. Inferential Analysis

Descriptive and inferential analyses serve distinct purposes in statistical analysis, focusing on summarizing data and making inferences about populations, respectively.

- Descriptive Analysis : Descriptive statistics aim to describe and summarize the features of a dataset. These statistics provide insights into the central tendency, variability, and distribution of the data. Descriptive analysis techniques include measures of central tendency (e.g., mean, median, mode), measures of dispersion (e.g., variance, standard deviation), and graphical representations (e.g., histograms, box plots).

- Inferential Analysis : Inferential statistics involve making inferences or predictions about populations based on sample data. These methods allow researchers to generalize findings from the sample to the larger population. Inferential analysis techniques include hypothesis testing, confidence intervals, regression analysis, and sampling methods. These methods help researchers draw conclusions about population parameters, such as means, proportions, or correlations, based on sample data.

Exploratory vs. Confirmatory Analysis

Exploratory and confirmatory analyses represent two different approaches to data analysis, each serving distinct purposes in the research process.

- Exploratory Analysis : Exploratory data analysis (EDA) focuses on exploring data to discover patterns, relationships, and trends. EDA techniques involve visualizing data, identifying outliers, and generating hypotheses for further investigation. Exploratory analysis is particularly useful in the early stages of research when the goal is to gain insights and generate hypotheses rather than confirm specific hypotheses.

- Confirmatory Analysis : Confirmatory data analysis involves testing predefined hypotheses or theories based on prior knowledge or assumptions. Confirmatory analysis follows a structured approach, where hypotheses are tested using appropriate statistical methods. Confirmatory analysis is common in hypothesis-driven research, where the goal is to validate or refute specific hypotheses using empirical evidence. Techniques such as hypothesis testing, regression analysis, and experimental design are often employed in confirmatory analysis.

Methods of Statistical Analysis

Statistical analysis employs various methods to extract insights from data and make informed decisions. Let's explore some of the key methods used in statistical analysis and their applications.

Hypothesis Testing

Hypothesis testing is a fundamental concept in statistics, allowing researchers to make decisions about population parameters based on sample data. The process involves formulating null and alternative hypotheses, selecting an appropriate test statistic, determining the significance level, and interpreting the results. Standard hypothesis tests include:

- t-tests : Used to compare means between two groups.

- ANOVA (Analysis of Variance) : Extends the t-test to compare means across multiple groups.

- Chi-square test : Assesses the association between categorical variables.
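
The steps of a hypothesis test can be sketched with a chi-square test of independence on a 2x2 table. This is a simplified illustration: it applies no continuity correction, the counts are invented, and the p-value shortcut used here is valid only for one degree of freedom (which is what a 2x2 table has).

```python
import math

def chi_square_2x2(table):
    """Chi-square test of independence for a 2x2 table of observed counts."""
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = [a + b, c + d]
    col_totals = [a + c, b + d]
    stat = 0.0
    for i, observed_row in enumerate(table):
        for j, observed in enumerate(observed_row):
            # Expected count if the two variables were independent (H0)
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    # With 1 degree of freedom, P(chi-square > x) equals erfc(sqrt(x / 2))
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value

# Hypothetical counts: rows are two groups, columns are outcome yes / no
table = [[30, 10], [20, 20]]
stat, p_value = chi_square_2x2(table)
alpha = 0.05  # significance level chosen before looking at the data
print(f"chi-square = {stat:.3f}, p = {p_value:.4f}")
print("reject H0" if p_value < alpha else "fail to reject H0")
```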

Regression Analysis

Regression analysis explores the relationship between one or more independent variables and a dependent variable. It is widely used in predictive modeling and understanding the impact of variables on outcomes. Key types of regression analysis include:

- Simple Linear Regression : Examines the linear relationship between one independent variable and a dependent variable.

- Multiple Linear Regression : Extends simple linear regression to analyze the relationship between multiple independent variables and a dependent variable.

- Logistic Regression : Used for predicting binary outcomes or modeling probabilities.
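
For simple linear regression, the least-squares slope and intercept have closed-form expressions. The sketch below computes them directly; `fit_line` is a hypothetical helper and the data points are made up.

```python
def fit_line(x, y):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sxy / sxx
    intercept = my - slope * mx
    return slope, intercept

x = [1, 2, 3, 4, 5]               # independent variable
y = [2.1, 3.9, 6.2, 7.8, 10.1]    # dependent variable (hypothetical)

slope, intercept = fit_line(x, y)
prediction = intercept + slope * 6  # predicted y for a new x value of 6
print(f"slope = {slope:.2f}, intercept = {intercept:.2f}")
print(f"prediction at x = 6: {prediction:.2f}")
```

Multiple and logistic regression follow the same idea but need matrix algebra or iterative fitting, which statistical software handles for you.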

Analysis of Variance (ANOVA)

ANOVA is a statistical technique used to compare means across two or more groups. It partitions the total variability in the data into components attributable to different sources, such as between-group differences and within-group variability. ANOVA is commonly used in experimental design and hypothesis testing scenarios.
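
The partition of variability described above can be written out in a few lines. This sketch computes the one-way ANOVA F statistic for three invented groups; it stops at the F statistic and does not compute a p-value, which would require the F distribution from a statistics library.

```python
def one_way_anova(groups):
    """Return (ss_between, ss_within, F) for a one-way ANOVA."""
    all_values = [v for g in groups for v in g]
    grand_mean = sum(all_values) / len(all_values)
    group_means = [sum(g) / len(g) for g in groups]

    # Variability of the group means around the grand mean
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, group_means))
    # Variability of observations around their own group mean
    ss_within = sum((v - m) ** 2
                    for g, m in zip(groups, group_means) for v in g)

    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return ss_between, ss_within, f_stat

groups = [[4, 5, 6], [6, 7, 8], [8, 9, 10]]  # hypothetical measurements
ss_between, ss_within, f_stat = one_way_anova(groups)
print(ss_between, ss_within, f_stat)  # 24.0 6.0 12.0
```

A large F value means the group means differ by more than the within-group scatter would suggest.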

Time Series Analysis

Time series analysis deals with analyzing data collected or recorded at successive time intervals. It helps identify patterns, trends, and seasonality in the data. Time series analysis techniques include:

- Trend Analysis : Identifying long-term trends or patterns in the data.

- Seasonal Decomposition : Separating the data into seasonal, trend, and residual components.

- Forecasting : Predicting future values based on historical data.
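
A simple moving average is one basic way to expose a trend. In the sketch below, the series is synthetic: a linear trend plus a repeating four-period seasonal pattern, both invented for illustration. Averaging over a window equal to the seasonal period cancels the seasonal component.

```python
def moving_average(series, window):
    """Simple moving average over each run of `window` consecutive points."""
    return [sum(series[i:i + window]) / window
            for i in range(len(series) - window + 1)]

# Hypothetical quarterly data: trend of +1 per period plus a seasonal effect
seasonal = [2, -1, -2, 1]  # repeats every 4 periods and sums to zero
series = [10 + i + seasonal[i % 4] for i in range(12)]

trend = moving_average(series, window=4)
print(series)
print(trend)  # rises by exactly 1 per step: the seasonal swings are averaged out
```

Real decomposition methods are more careful about centering the window and handling the ends of the series, so treat this as a sketch of the idea only.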

Survival Analysis

Survival analysis is used to analyze time-to-event data, such as time until death, failure, or occurrence of an event of interest. It is widely used in medical research, engineering, and social sciences to analyze survival probabilities and hazard rates over time.
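
A common estimator of survival probabilities is the Kaplan-Meier estimator, named here as one standard technique rather than something the text above prescribes. The sketch below is a bare-bones version on invented data, where `observed` marks whether the event was actually seen (`True`) or the subject was censored (`False`).

```python
def kaplan_meier(durations, observed):
    """Return [(time, survival probability)] at each time with an observed event."""
    event_times = sorted({t for t, e in zip(durations, observed) if e})
    survival = 1.0
    curve = []
    for t in event_times:
        # Subjects still being followed just before time t
        at_risk = sum(1 for d in durations if d >= t)
        # Events observed exactly at time t (censored subjects do not count)
        events = sum(1 for d, e in zip(durations, observed) if d == t and e)
        survival *= 1 - events / at_risk
        curve.append((t, survival))
    return curve

durations = [2, 3, 3, 5, 8]                   # hypothetical follow-up times
observed = [True, True, False, True, False]   # False = censored

for time, prob in kaplan_meier(durations, observed):
    print(f"t = {time}: S(t) = {prob:.2f}")
```

Censored subjects still count as "at risk" until they leave the study, which is what lets the method use incomplete follow-up data.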

Factor Analysis

Factor analysis is a statistical method used to identify underlying factors or latent variables that explain patterns of correlations among observed variables. It is commonly used in psychology, sociology, and market research to uncover underlying dimensions or constructs.

Cluster Analysis

Cluster analysis is a multivariate technique that groups similar objects or observations into clusters or segments based on their characteristics. It is widely used in market segmentation, image processing, and biological classification.

Principal Component Analysis (PCA)

PCA is a dimensionality reduction technique used to transform high-dimensional data into a lower-dimensional space while preserving most of the variability in the data. It identifies orthogonal axes (principal components) ordered by the amount of variance they capture, with the first component capturing the most. PCA is useful for data visualization, feature extraction, and data compression.
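
For two variables, PCA can be worked out by hand, because the eigenvalues of a 2x2 covariance matrix have a closed form. The sketch below is limited to that two-dimensional case and assumes the two variables are correlated (non-zero covariance); real analyses would use a linear algebra library. The function name and data are illustrative.

```python
import math

def pca_2d(xs, ys):
    """PCA for two variables: returns (eigenvalues, first component direction)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    # Sample covariance matrix [[a, b], [b, c]] of the centered data
    a = sum((x - mx) ** 2 for x in xs) / (n - 1)
    c = sum((y - my) ** 2 for y in ys) / (n - 1)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (n - 1)
    # Eigenvalues of a symmetric 2x2 matrix in closed form
    half_trace = (a + c) / 2
    spread = math.sqrt(((a - c) / 2) ** 2 + b**2)
    lam1, lam2 = half_trace + spread, half_trace - spread
    # Eigenvector for the larger eigenvalue; assumes b != 0
    vx, vy = b, lam1 - a
    norm = math.hypot(vx, vy)
    return (lam1, lam2), (vx / norm, vy / norm)

xs = [2.5, 0.5, 2.2, 1.9, 3.1, 2.3, 2.0, 1.0, 1.5, 1.1]  # hypothetical data
ys = [2.4, 0.7, 2.9, 2.2, 3.0, 2.7, 1.6, 1.1, 1.6, 0.9]

(lam1, lam2), direction = pca_2d(xs, ys)
explained = lam1 / (lam1 + lam2)  # share of total variance on the first component
print(f"first component direction: {direction}")
print(f"variance explained by first component: {explained:.1%}")
```

When the first component explains most of the variance, projecting the data onto it loses little information, which is the basis for using PCA to reduce dimensions.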

How to Choose the Right Statistical Analysis Method?

Selecting the appropriate statistical method is crucial for obtaining accurate and meaningful results from your data analysis.

Understanding Data Types and Distribution

Before choosing a statistical method, it's essential to understand the types of data you're working with and their distribution. Different statistical methods are suitable for different types of data:

- Continuous vs. Categorical Data : Determine whether your data are continuous (e.g., height, weight) or categorical (e.g., gender, race). Parametric methods such as t-tests and regression are typically used for continuous data, while non-parametric methods like chi-square tests are suitable for categorical data.

- Normality : Assess whether your data follow a normal distribution, for example with a histogram, a Q-Q plot, or a formal test such as Shapiro-Wilk. Parametric methods often assume normality, so if your data are not normally distributed, non-parametric methods may be more appropriate.

Assessing Assumptions

Many statistical methods rely on certain assumptions about the data. Before applying a method, it's essential to assess whether these assumptions are met:

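- Independence : Ensure that observations are independent of each other. Violations of independence, such as repeated measurements on the same subject treated as separate observations, can distort standard errors and lead to misleading results.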

- Homogeneity of Variance : Verify that variances are approximately equal across groups, especially in ANOVA and regression analyses. Levene's test or Bartlett's test can be used to assess homogeneity of variance.

- Linearity : Check for linear relationships between variables, particularly in regression analysis. Residual plots can help diagnose violations of linearity assumptions.
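
The linearity check can be illustrated by fitting a straight line to data that are actually curved and then inspecting the residuals. In this sketch the data follow y = x squared on purpose, and `fit_line` is a hypothetical helper implementing ordinary least squares.

```python
def fit_line(x, y):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    slope = (sum((a - mx) * (b - my) for a, b in zip(x, y))
             / sum((a - mx) ** 2 for a in x))
    return slope, my - slope * mx

x = [0, 1, 2, 3, 4]
y = [v**2 for v in x]  # deliberately non-linear relationship

slope, intercept = fit_line(x, y)
# Residual = observed value minus the value predicted by the fitted line
residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
print(residuals)  # positive at both ends, negative in the middle
```

Residuals that scatter randomly around zero support the linearity assumption; a systematic pattern like this U-shape suggests the model is missing curvature.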

Considering Research Objectives

Your research objectives should guide the selection of the appropriate statistical method.

- What are you trying to achieve with your analysis? : Determine whether you're interested in comparing groups, predicting outcomes, exploring relationships, or identifying patterns.

- What type of data are you analyzing? : Choose methods that are suitable for your data type and research questions.

- Are you testing specific hypotheses or exploring data for insights? : Confirmatory analyses involve testing predefined hypotheses, while exploratory analyses focus on discovering patterns or relationships in the data.

Consulting Statistical Experts

If you're unsure about the most appropriate statistical method for your analysis, don't hesitate to seek advice from statistical experts or consultants:

- Collaborate with Statisticians : Statisticians can provide valuable insights into the strengths and limitations of different statistical methods and help you select the most appropriate approach.

- Utilize Resources : Take advantage of online resources, forums, and statistical software documentation to learn about different methods and their applications.

- Peer Review : Consider seeking feedback from colleagues or peers familiar with statistical analysis to validate your approach and ensure rigor in your analysis.