NCBI Bookshelf. A service of the National Library of Medicine, National Institutes of Health.

StatPearls [Internet]. Treasure Island (FL): StatPearls Publishing; 2024 Jan-.

Hypothesis testing, p values, confidence intervals, and significance.

Jacob Shreffler ; Martin R. Huecker .

Last Update: March 13, 2023 .

- Definition/Introduction

Medical providers often rely on evidence-based medicine to guide decision-making in practice. Often a research hypothesis is tested with results provided, typically with p values, confidence intervals, or both. Additionally, statistical or research significance is estimated or determined by the investigators. Unfortunately, healthcare providers may have different comfort levels in interpreting these findings, which may affect the adequate application of the data.

- Issues of Concern

Without a foundational understanding of hypothesis testing, p values, confidence intervals, and the difference between statistical and clinical significance, healthcare providers may struggle to make clinical decisions without relying purely on the level of significance deemed by the research investigators. Therefore, an overview of these concepts is provided to allow medical professionals to use their expertise to determine if results are reported sufficiently and if the study outcomes are clinically appropriate to be applied in healthcare practice.

Hypothesis Testing

Investigators conducting studies need research questions and hypotheses to guide analyses. Starting with broad research questions (RQs), investigators then identify a gap in current clinical practice or research. Any research problem or statement is grounded in a better understanding of relationships between two or more variables. For this article, we will use the following research question example:

Research Question: Is Drug 23 an effective treatment for Disease A?

Research questions do not directly imply specific guesses or predictions; we must formulate research hypotheses. A hypothesis is a predetermined declaration regarding the research question in which the investigator(s) makes a precise, educated guess about a study outcome. This is sometimes called the alternative hypothesis and ultimately allows the researcher to take a stance based on experience or insight from medical literature. An example of a hypothesis is below.

Research Hypothesis: Drug 23 will significantly reduce symptoms associated with Disease A compared to Drug 22.

The null hypothesis states that there is no statistical difference between groups based on the stated research hypothesis. An example of a null hypothesis is below.

Null Hypothesis: There are no significant differences in the reduction of symptoms for Disease A between Drug 23 and Drug 22.

The null hypothesis is deemed true until a study presents significant data to support rejecting it. Based on the results, the investigators will either reject the null hypothesis (if they found significant differences or associations) or fail to reject the null hypothesis (if they could not provide sufficient evidence of significant differences or associations).

Researchers should be aware of journal recommendations when considering how to report p values, and manuscripts should remain internally consistent.

Regarding p values, as the number of individuals enrolled in a study (the sample size) increases, the likelihood of finding a statistically significant effect increases. With very large sample sizes, the p-value can be very low even when the difference between groups is small.
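The link between sample size and the p-value can be illustrated with a minimal sketch. It assumes a two-group comparison of means with a common, known standard deviation and a normal (z) approximation; the function name and all numbers are hypothetical and are not taken from any study of Drug 23.

```python
from math import sqrt
from statistics import NormalDist


def two_sided_p_value(mean_diff, sd, n_per_group):
    """Two-sided p-value for a difference in means between two equal-sized
    groups, using a normal (z) approximation with a common, known SD."""
    standard_error = sd * sqrt(2 / n_per_group)
    z = mean_diff / standard_error
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical values: the same small difference (0.2 points, SD 2.0)
# evaluated at increasingly large sample sizes.
for n in (25, 100, 400, 1600, 6400):
    p = two_sided_p_value(mean_diff=0.2, sd=2.0, n_per_group=n)
    print(f"n per group = {n:5d}  p = {p:.4g}")
```

The observed difference never changes in this sketch; only the sample size does. The p-value falls below 0.05 once the groups are large enough, which is one reason a small p-value should not be read as a large or clinically important effect.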

To test a hypothesis, researchers obtain data on a representative sample to determine whether to reject or fail to reject a null hypothesis. In most research studies, it is not feasible to obtain data for an entire population. Using a sampling procedure allows for statistical inference, though this involves a certain possibility of error. [1] When determining whether to reject or fail to reject the null hypothesis, mistakes can be made: Type I and Type II errors. Though it is impossible to ensure that these errors have not occurred, researchers should limit the possibilities of these faults. [2]
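A Type I error (rejecting a null hypothesis that is actually true) can be made concrete with a small simulation. The sketch below is only an illustration under stated assumptions: both groups are drawn from the same normal distribution with a known standard deviation of 1, a z-test is used, and the function name, seed, and sample sizes are hypothetical choices.

```python
import random
from math import sqrt
from statistics import NormalDist, mean


def simulate_type_i_error_rate(n_studies=2000, n_per_group=50, alpha=0.05, seed=0):
    """Fraction of simulated studies that reject the null hypothesis even
    though both groups come from the same population (no true effect)."""
    rng = random.Random(seed)
    standard_error = sqrt(2 / n_per_group)  # known SD of 1 in both groups
    rejections = 0
    for _ in range(n_studies):
        group_a = [rng.gauss(0, 1) for _ in range(n_per_group)]
        group_b = [rng.gauss(0, 1) for _ in range(n_per_group)]
        z = (mean(group_a) - mean(group_b)) / standard_error
        p = 2 * (1 - NormalDist().cdf(abs(z)))
        if p < alpha:
            rejections += 1  # a false positive: the null was true
    return rejections / n_studies


print(f"Observed Type I error rate: {simulate_type_i_error_rate():.3f}")
```

Under these assumptions the observed rate should land near the chosen threshold (alpha), which is what the threshold is meant to control. A Type II error is the opposite mistake: failing to reject the null hypothesis when a true effect exists.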

Significance

Significance is a term to describe the substantive importance of medical research. Statistical significance concerns how likely it is that results at least as extreme as those observed would arise by chance alone. [3] Healthcare providers should always delineate statistical significance from clinical significance; conflating the two is a common error when reviewing biomedical research. [4] When conceptualizing findings reported as either significant or not significant, healthcare providers should not simply accept researchers' results or conclusions without considering the clinical significance. Healthcare professionals should consider the clinical importance of findings and understand both p values and confidence intervals so they do not have to rely on the researchers to determine the level of significance. [5] One criterion often used to determine statistical significance is the utilization of p values.

P values are used in research to determine whether the sample estimate is significantly different from a hypothesized value. The p-value is the probability that an effect at least as large as the one observed in the study would have occurred by chance if, in reality, there was no true effect. Conventionally, data yielding a p<0.05 or p<0.01 is considered statistically significant. While some have argued that the 0.05 level should be lowered, it is still widely used. [6] Hypothesis testing allows us to judge whether an effect is distinguishable from chance; on its own, it does not tell us the size of the effect.

Examples of findings reported with p values are below:

Statement: Drug 23 reduced patients' symptoms compared to Drug 22. Patients who received Drug 23 (n=100) were 2.1 times less likely than patients who received Drug 22 (n = 100) to experience symptoms of Disease A, p<0.05.

Statement: Individuals who were prescribed Drug 23 experienced fewer symptoms (M = 1.3, SD = 0.7) compared to individuals who were prescribed Drug 22 (M = 5.3, SD = 1.9). This finding was statistically significant, p = 0.02.

For either statement, if the threshold had been set at 0.05, the null hypothesis (that there was no relationship) should be rejected, and we should conclude that there are significant differences. As can be seen in the two statements above, some researchers will report findings with < or > and others will provide an exact p-value (0.000001) but never zero. [6] When examining research, readers should understand how p values are reported. The best practice is to report all p values for all variables within a study design, rather than only providing p values for variables with significant findings. [7] The inclusion of all p values provides evidence for study validity and limits suspicion for selective reporting/data mining.
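The reporting conventions described above (an exact value where practical, an inequality for very small values, and never zero) can be sketched as a small formatting helper. The function name, the 0.001 cutoff, and the three-decimal rounding are assumptions for illustration; journals differ, so their own instructions take precedence.

```python
def format_p_value(p, floor=0.001):
    """Format a p-value for reporting: exact to three decimals, or as an
    inequality when it falls below the reporting floor (never as zero)."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("a p-value must lie between 0 and 1")
    if p < floor:
        return f"p < {floor}"
    return f"p = {p:.3f}"


print(format_p_value(0.02))      # exact value
print(format_p_value(0.000001))  # reported as an inequality, not as 0.000
```

Applying one helper to every variable in a results table is also a simple way to keep a manuscript internally consistent.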

While researchers have historically used p values, experts who find p values problematic encourage the use of confidence intervals. [8] P-values alone do not allow us to understand the size or the extent of the differences or associations. [3] In March 2016, the American Statistical Association (ASA) released a statement on p values, noting that scientific decision-making and conclusions should not be based on a fixed p-value threshold (e.g., 0.05). They recommend focusing on the significance of results in the context of study design, quality of measurements, and validity of data. Ultimately, the ASA statement noted that in isolation, a p-value does not provide strong evidence. [9]

When conceptualizing clinical work, healthcare professionals should consider p values with a concurrent appraisal of study design validity. For example, a p-value from a double-blinded randomized clinical trial (designed to minimize bias) should be weighted higher than one from a retrospective observational study. [7] The p-value debate has smoldered since the 1950s [10], and replacement with confidence intervals has been suggested since the 1980s. [11]

Confidence Intervals

A confidence interval provides a range of values that, with a given level of confidence (e.g., 95%), includes the true value of the statistical parameter within a targeted population. [12] Most research uses a 95% CI, but investigators can set any level (e.g., 90% CI, 99% CI). [13] A CI provides a range with the lower bound and upper bound limits of a difference or association that would be plausible for a population. [14] Therefore, a CI of 95% indicates that if a study were to be carried out 100 times, the range would contain the true value in about 95 of them. [15] Confidence intervals provide more evidence regarding the precision of an estimate compared to p-values. [6]
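The repeated-study reading of a 95% CI can be checked with a short simulation. This is a sketch under simplifying assumptions: a single normally distributed measurement, a known standard deviation, and hypothetical values for the true mean, sample size, and seed.

```python
import random
from math import sqrt
from statistics import NormalDist, mean


def simulate_ci_coverage(true_mean=10.0, sd=2.0, n=40,
                         n_studies=2000, confidence=0.95, seed=1):
    """Fraction of simulated studies whose CI for the mean contains the
    true population mean."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(0.5 + confidence / 2)
    margin = z_crit * sd / sqrt(n)  # SD treated as known for simplicity
    covered = 0
    for _ in range(n_studies):
        sample = [rng.gauss(true_mean, sd) for _ in range(n)]
        sample_mean = mean(sample)
        if sample_mean - margin <= true_mean <= sample_mean + margin:
            covered += 1
    return covered / n_studies


print(f"Share of intervals containing the true mean: {simulate_ci_coverage():.3f}")
```

Each simulated study produces a different interval, and under these assumptions roughly 95% of them contain the true value. The confidence level describes the procedure over many repetitions, not the probability that one particular published interval is correct.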

In consideration of the similar research example provided above, one could make the following statement with 95% CI:

Statement: Individuals who were prescribed Drug 23 had no symptoms after three days, which was significantly faster than those prescribed Drug 22; the mean difference between the two groups in days to recovery was 4.2 days (95% CI: 1.9 – 7.8).

It is important to note that the width of the CI is affected by the standard error and the sample size; reducing the sample size results in a less precise (wider) CI. [14] A larger width indicates a smaller sample size or a larger variability. [16] A researcher would want to increase the precision of the CI. For example, a 95% CI of 1.43 – 1.47 is much more precise than the one provided in the example above. In research and clinical practice, CIs provide valuable information on whether the interval includes or excludes any clinically significant values. [14]
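The effect of sample size and confidence level on CI width can be sketched as follows. The function assumes two equal-sized groups, a common known standard deviation, and a normal approximation; the name and the input values are hypothetical and do not reproduce the Drug 23 example.

```python
from math import sqrt
from statistics import NormalDist


def mean_difference_ci(mean_diff, sd, n_per_group, confidence=0.95):
    """Confidence interval for a difference in means between two
    equal-sized groups, using a normal approximation with a common SD."""
    z_crit = NormalDist().inv_cdf(0.5 + confidence / 2)
    margin = z_crit * sd * sqrt(2 / n_per_group)
    return mean_diff - margin, mean_diff + margin


# Hypothetical inputs: a 4.2-day mean difference with an SD of 5 days.
for n in (25, 100, 400):
    lower, upper = mean_difference_ci(4.2, 5.0, n)
    print(f"n per group = {n:3d}  95% CI: {lower:.2f} to {upper:.2f}")
```

In this sketch, quadrupling the sample size halves the width of the interval, while raising the confidence level (for example, from 95% to 99%) widens it.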

Null values are sometimes used for differences with CI (zero for differential comparisons and 1 for ratios). However, CIs provide more information than that. [15] Consider this example: A hospital implements a new protocol that reduced wait time for patients in the emergency department by an average of 25 minutes (95% CI: -2.5 – 41 minutes). Because the range crosses zero, implementing this protocol in different populations could result in longer wait times; however, the range is much higher on the positive side. Thus, while the p-value used to detect statistical significance for this may result in "not significant" findings, individuals should examine this range, consider the study design, and weigh whether or not it is still worth piloting in their workplace.
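Checking a CI against its null value is a mechanical step, sketched below. The function name and the `measure` labels are hypothetical; the null values (zero for differences, 1 for ratios) and the intervals for differences are the ones given in the text, while the ratio interval is made up for illustration.

```python
def ci_includes_null(lower, upper, measure="difference"):
    """Return True if the interval contains the null value:
    0 for differences, 1 for ratios (e.g., odds or risk ratios)."""
    null_values = {"difference": 0.0, "ratio": 1.0}
    if measure not in null_values:
        raise ValueError("measure must be 'difference' or 'ratio'")
    if lower > upper:
        raise ValueError("lower bound must not exceed upper bound")
    return lower <= null_values[measure] <= upper


print(ci_includes_null(-2.5, 41))          # wait-time example: crosses zero
print(ci_includes_null(1.9, 7.8))          # recovery example: excludes zero
print(ci_includes_null(0.8, 1.3, "ratio")) # hypothetical ratio crossing 1
```

A True result corresponds to a finding that would be labeled "not significant" at the matching threshold. As the wait-time example shows, that label alone does not settle whether the intervention is worth piloting.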

Similarly to p-values, 95% CIs cannot control for researchers' errors (e.g., study bias or improper data analysis). [14] In consideration of whether to report p-values or CIs, researchers should examine journal preferences. When in doubt, reporting both may be beneficial. [13] An example is below:

Reporting both: Individuals who were prescribed Drug 23 had no symptoms after three days, which was significantly faster than those prescribed Drug 22, p = 0.009. The mean difference between the two groups in days to recovery was 4.2 days (95% CI: 1.9 – 7.8).

- Clinical Significance

Recall that clinical significance and statistical significance are two different concepts. Healthcare providers should remember that a study with statistically significant differences and large sample size may be of no interest to clinicians, whereas a study with smaller sample size and statistically non-significant results could impact clinical practice. [14] Additionally, as previously mentioned, a non-significant finding may reflect the study design itself rather than relationships between variables.

Healthcare providers using evidence-based medicine to inform practice should use clinical judgment to determine the practical importance of studies through careful evaluation of the design, sample size, power, likelihood of type I and type II errors, data analysis, and reporting of statistical findings (p values, 95% CI or both). [4] Interestingly, some experts have called for "statistically significant" or "not significant" to be excluded from work as statistical significance never has and will never be equivalent to clinical significance. [17]

The decision on what is clinically significant can be challenging, depending on the providers' experience and especially the severity of the disease. Providers should use their knowledge and experiences to determine the meaningfulness of study results and make inferences based not only on significant or insignificant results by researchers but through their understanding of study limitations and practical implications.

- Nursing, Allied Health, and Interprofessional Team Interventions

All physicians, nurses, pharmacists, and other healthcare professionals should strive to understand the concepts in this chapter. These individuals should maintain the ability to review and incorporate new literature for evidence-based and safe care.

Disclosure: Jacob Shreffler declares no relevant financial relationships with ineligible companies.

Disclosure: Martin Huecker declares no relevant financial relationships with ineligible companies.

This book is distributed under the terms of the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International (CC BY-NC-ND 4.0) ( http://creativecommons.org/licenses/by-nc-nd/4.0/ ), which permits others to distribute the work, provided that the article is not altered or used commercially. You are not required to obtain permission to distribute this article, provided that you credit the author and journal.

- Cite this Page Shreffler J, Huecker MR. Hypothesis Testing, P Values, Confidence Intervals, and Significance. [Updated 2023 Mar 13]. In: StatPearls [Internet]. Treasure Island (FL): StatPearls Publishing; 2024 Jan-.

How to Write a Great Hypothesis

Hypothesis Definition, Format, Examples, and Tips

Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."

Amy Morin, LCSW, is a psychotherapist and international bestselling author. Her books, including "13 Things Mentally Strong People Don't Do," have been translated into more than 40 languages. Her TEDx talk, "The Secret of Becoming Mentally Strong," is one of the most viewed talks of all time.

A hypothesis is a tentative statement about the relationship between two or more variables. It is a specific, testable prediction about what you expect to happen in a study. It is a preliminary answer to your question that helps guide the research process.

Consider a study designed to examine the relationship between sleep deprivation and test performance. The hypothesis might be: "This study is designed to assess the hypothesis that sleep-deprived people will perform worse on a test than individuals who are not sleep-deprived."

At a Glance

A hypothesis is crucial to scientific research because it offers a clear direction for what the researchers are looking to find. This allows them to design experiments to test their predictions and add to our scientific knowledge about the world. This article explores how a hypothesis is used in psychology research, how to write a good hypothesis, and the different types of hypotheses you might use.

The Hypothesis in the Scientific Method

In the scientific method, whether it involves research in psychology, biology, or some other area, a hypothesis represents what the researchers think will happen in an experiment. The scientific method involves the following steps:

- Forming a question

- Performing background research

- Creating a hypothesis

- Designing an experiment

- Collecting data

- Analyzing the results

- Drawing conclusions

- Communicating the results

The hypothesis is a prediction, but it involves more than a guess. Most of the time, the hypothesis begins with a question which is then explored through background research. At this point, researchers then begin to develop a testable hypothesis.

Unless you are creating an exploratory study, your hypothesis should always explain what you expect to happen.

In a study exploring the effects of a particular drug, the hypothesis might be that researchers expect the drug to have some type of effect on the symptoms of a specific illness. In psychology, the hypothesis might focus on how a certain aspect of the environment might influence a particular behavior.

Remember, a hypothesis does not have to be correct. While the hypothesis predicts what the researchers expect to see, the goal of the research is to determine whether this guess is right or wrong. When conducting an experiment, researchers might explore numerous factors to determine which ones might contribute to the ultimate outcome.

In many cases, researchers may find that the results of an experiment do not support the original hypothesis. When writing up these results, the researchers might suggest other options that should be explored in future studies.

In many cases, researchers might draw a hypothesis from a specific theory or build on previous research. For example, prior research has shown that stress can impact the immune system. So a researcher might hypothesize: "People with high-stress levels will be more likely to contract a common cold after being exposed to the virus than people who have low-stress levels."

In other instances, researchers might look at commonly held beliefs or folk wisdom. "Birds of a feather flock together" is one example of a folk adage that a psychologist might try to investigate. The researcher might pose a specific hypothesis that "People tend to select romantic partners who are similar to them in interests and educational level."

Elements of a Good Hypothesis

So how do you write a good hypothesis? When trying to come up with a hypothesis for your research or experiments, ask yourself the following questions:

- Is your hypothesis based on your research on a topic?

- Can your hypothesis be tested?

- Does your hypothesis include independent and dependent variables?

Before you come up with a specific hypothesis, spend some time doing background research. Once you have completed a literature review, start thinking about potential questions you still have. Pay attention to the discussion section in the journal articles you read. Many authors will suggest questions that still need to be explored.

How to Formulate a Good Hypothesis

To form a hypothesis, you should take these steps:

- Collect as many observations about a topic or problem as you can.

- Evaluate these observations and look for possible causes of the problem.

- Create a list of possible explanations that you might want to explore.

- After you have developed some possible hypotheses, think of ways that you could confirm or disprove each hypothesis through experimentation. This is known as falsifiability.

In the scientific method, falsifiability is an important part of any valid hypothesis. In order to test a claim scientifically, it must be possible that the claim could be proven false.

Students sometimes assume that calling a claim falsifiable means the claim is false, which is not the case. Falsifiability means that if the claim were false, it would be possible to demonstrate that it is false.

One of the hallmarks of pseudoscience is that it makes claims that cannot be refuted or proven false.

The Importance of Operational Definitions

A variable is a factor or element that can be changed and manipulated in ways that are observable and measurable. However, the researcher must also define how the variable will be manipulated and measured in the study.

Operational definitions are specific definitions for all relevant factors in a study. This process helps make vague or ambiguous concepts detailed and measurable.

For example, a researcher might operationally define the variable "test anxiety" as the results of a self-report measure of anxiety experienced during an exam. A "study habits" variable might be defined by the amount of studying that actually occurs as measured by time.

These precise descriptions are important because many things can be measured in various ways. Clearly defining these variables and how they are measured helps ensure that other researchers can replicate your results.

Replicability

One of the basic principles of any type of scientific research is that the results must be replicable.

Replication means repeating an experiment in the same way to produce the same results. By clearly detailing the specifics of how the variables were measured and manipulated, other researchers can better understand the results and repeat the study if needed.

Some variables are more difficult than others to define. For example, how would you operationally define a variable such as aggression? For obvious ethical reasons, researchers cannot create a situation in which a person behaves aggressively toward others.

To measure this variable, the researcher must devise a measurement that assesses aggressive behavior without harming others. The researcher might utilize a simulated task to measure aggressiveness in this situation.

Hypothesis Checklist

- Does your hypothesis focus on something that you can actually test?

- Does your hypothesis include both an independent and dependent variable?

- Can you manipulate the variables?

- Can your hypothesis be tested without violating ethical standards?

The hypothesis you use will depend on what you are investigating and hoping to find. Some of the main types of hypotheses that you might use include:

- Simple hypothesis: This type of hypothesis suggests there is a relationship between one independent variable and one dependent variable.

- Complex hypothesis: This type suggests a relationship between three or more variables, such as two independent variables and one dependent variable.

- Null hypothesis: This hypothesis suggests no relationship exists between two or more variables.

- Alternative hypothesis: This hypothesis states the opposite of the null hypothesis.

- Statistical hypothesis: This hypothesis uses statistical analysis to evaluate a representative population sample and then generalizes the findings to the larger group.

- Logical hypothesis: This hypothesis assumes a relationship between variables without collecting data or evidence.

A hypothesis often follows a basic format of "If {this happens} then {this will happen}." One way to structure your hypothesis is to describe what will happen to the dependent variable if you change the independent variable.

The basic format might be: "If {these changes are made to a certain independent variable}, then we will observe {a change in a specific dependent variable}."

A few examples of simple hypotheses:

- "Students who eat breakfast will perform better on a math exam than students who do not eat breakfast."

- "Students who experience test anxiety before an English exam will get lower scores than students who do not experience test anxiety."

- "Motorists who talk on the phone while driving will be more likely to make errors on a driving course than those who do not talk on the phone."

- "Children who receive a new reading intervention will have higher reading scores than students who do not receive the intervention."

Examples of a complex hypothesis include:

- "People with high-sugar diets and sedentary activity levels are more likely to develop depression."

- "Younger people who are regularly exposed to green, outdoor areas have better subjective well-being than older adults who have limited exposure to green spaces."

Examples of a null hypothesis include:

- "There is no difference in anxiety levels between people who take St. John's wort supplements and those who do not."

- "There is no difference in scores on a memory recall task between children and adults."

- "There is no difference in aggression levels between children who play first-person shooter games and those who do not."

Examples of an alternative hypothesis:

- "People who take St. John's wort supplements will have less anxiety than those who do not."

- "Adults will perform better on a memory task than children."

- "Children who play first-person shooter games will show higher levels of aggression than children who do not."

Collecting Data on Your Hypothesis

Once a researcher has formed a testable hypothesis, the next step is to select a research design and start collecting data. The research method depends largely on exactly what they are studying. There are two basic types of research methods: descriptive research and experimental research.

Descriptive Research Methods

Descriptive research methods such as case studies, naturalistic observations, and surveys are often used when conducting an experiment is difficult or impossible. These methods are best used to describe different aspects of a behavior or psychological phenomenon.

Once a researcher has collected data using descriptive methods, a correlational study can examine how the variables are related. This research method might be used to investigate a hypothesis that is difficult to test experimentally.
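A correlational analysis of this kind often comes down to computing a correlation coefficient. The sketch below calculates Pearson's r by hand; the function name and the sleep and test-score figures are hypothetical, loosely following the sleep-deprivation example used earlier.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2 values)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariation = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs)
    spread_y = sum((y - mean_y) ** 2 for y in ys)
    return covariation / sqrt(spread_x * spread_y)


# Hypothetical data: hours of sleep and test scores for six students.
hours_slept = [4, 5, 6, 7, 8, 9]
test_scores = [61, 64, 70, 74, 79, 80]
print(f"r = {pearson_r(hours_slept, test_scores):.2f}")
```

A coefficient near +1 or -1 indicates a strong linear relationship and a value near 0 a weak one. It describes how the variables move together and does not show that one causes the other.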

Experimental Research Methods

Experimental methods are used to demonstrate causal relationships between variables. In an experiment, the researcher systematically manipulates a variable of interest (known as the independent variable) and measures the effect on another variable (known as the dependent variable).

Unlike correlational studies, which can only be used to determine if there is a relationship between two variables, experimental methods can be used to determine the actual nature of the relationship—whether changes in one variable actually cause another to change.

The hypothesis is a critical part of any scientific exploration. It represents what researchers expect to find in a study or experiment. In situations where the hypothesis is unsupported by the research, the research still has value. Such research helps us better understand how different aspects of the natural world relate to one another. It also helps us develop new hypotheses that can then be tested in the future.

Research Hypothesis In Psychology: Types, & Examples

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

A research hypothesis (plural: hypotheses) is a specific, testable prediction about the anticipated results of a study, established at its outset. It is a key component of the scientific method.

Hypotheses connect theory to data and guide the research process towards expanding scientific understanding.

Some key points about hypotheses:

- A hypothesis expresses an expected pattern or relationship. It connects the variables under investigation.

- It is stated in clear, precise terms before any data collection or analysis occurs. This makes the hypothesis testable.

- A hypothesis must be falsifiable. It should be possible, even if unlikely in practice, to collect data that disconfirms rather than supports the hypothesis.

- Hypotheses guide research. Scientists design studies to explicitly evaluate hypotheses about how nature works.

- For a hypothesis to be valid, it must be testable against empirical evidence. The evidence can then confirm or disprove the testable predictions.

- Hypotheses are informed by background knowledge and observation, but go beyond what is already known to propose an explanation of how or why something occurs.

Predictions typically arise from a thorough knowledge of the research literature, curiosity about real-world problems or implications, and integrating this to advance theory. They build on existing literature while providing new insight.

Types of Research Hypotheses

Alternative hypothesis.

The research hypothesis is often called the alternative or experimental hypothesis in experimental research.

It typically suggests a potential relationship between two key variables: the independent variable, which the researcher manipulates, and the dependent variable, which is measured based on those changes.

The alternative hypothesis states a relationship exists between the two variables being studied (one variable affects the other).

A hypothesis is a testable statement or prediction about the relationship between two or more variables. It is a key component of the scientific method. Some key points about hypotheses:

- Important hypotheses lead to predictions that can be tested empirically. The evidence can then confirm or disprove the testable predictions.

In summary, a hypothesis is a precise, testable statement of what researchers expect to happen in a study and why. Hypotheses connect theory to data and guide the research process towards expanding scientific understanding.

An experimental hypothesis predicts what change(s) will occur in the dependent variable when the independent variable is manipulated.

It states that the results are not due to chance and are significant in supporting the theory being investigated.

The alternative hypothesis can be directional, indicating a specific direction of the effect, or non-directional, suggesting a difference without specifying its nature. It’s what researchers aim to support or demonstrate through their study.

Null Hypothesis

The null hypothesis states no relationship exists between the two variables being studied (one variable does not affect the other). There will be no changes in the dependent variable due to manipulating the independent variable.

It states results are due to chance and are not significant in supporting the idea being investigated.

The null hypothesis, positing no effect or relationship, is a foundational contrast to the research hypothesis in scientific inquiry. It establishes a baseline for statistical testing, promoting objectivity by initiating research from a neutral stance.

Many statistical methods are tailored to test the null hypothesis, determining the likelihood of observed results if no true effect exists.

This dual-hypothesis approach provides clarity, ensuring that research intentions are explicit, and fosters consistency across scientific studies, enhancing the standardization and interpretability of research outcomes.

Nondirectional Hypothesis

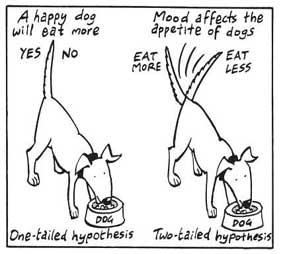

A non-directional hypothesis, also known as a two-tailed hypothesis, predicts that there is a difference or relationship between two variables but does not specify the direction of this relationship.

It merely indicates that a change or effect will occur without predicting which group will have higher or lower values.

For example, “There is a difference in performance between Group A and Group B” is a non-directional hypothesis.

Directional Hypothesis

A directional (one-tailed) hypothesis predicts the nature of the effect of the independent variable on the dependent variable. It predicts the direction in which the change will take place (e.g., greater, smaller, less, more).

It specifies whether one variable is greater or lesser than another, rather than just indicating that there’s a difference without specifying its nature.

For example, “Exercise increases weight loss” is a directional hypothesis.
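The practical consequence of choosing a directional or non-directional hypothesis shows up in how the p-value is computed. The sketch below is only an illustration under stated assumptions: the test statistic is taken to follow a standard normal distribution under the null hypothesis, and the value `z = 1.8` and the helper name `p_value_from_z` are hypothetical, not taken from any study.

```python
from statistics import NormalDist


def p_value_from_z(z: float, tail: str = "two-sided") -> float:
    """Return the p-value for a z statistic under a standard normal null.

    tail: "two-sided" (non-directional), "greater" or "less" (directional).
    """
    cdf = NormalDist().cdf(z)
    if tail == "greater":    # H1 predicts a positive effect
        return 1.0 - cdf
    if tail == "less":       # H1 predicts a negative effect
        return cdf
    if tail == "two-sided":  # H1 predicts a difference in either direction
        return 2.0 * min(cdf, 1.0 - cdf)
    raise ValueError(f"unknown tail: {tail!r}")


z = 1.8  # hypothetical test statistic, chosen for illustration only
p_two_tailed = p_value_from_z(z, "two-sided")
p_one_tailed = p_value_from_z(z, "greater")
print(f"two-tailed p = {p_two_tailed:.4f}, one-tailed p = {p_one_tailed:.4f}")
```

With this hypothetical statistic, the one-tailed p-value falls below 0.05 while the two-tailed p-value does not, which is why the direction of the prediction should be decided before the data are collected.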

Falsifiability

The Falsification Principle, proposed by Karl Popper, is a way of demarcating science from non-science. It suggests that for a theory or hypothesis to be considered scientific, it must be testable and conceivably proven false.

Falsifiability emphasizes that scientific claims shouldn’t just be confirmable but should also have the potential to be proven wrong.

It means that there should exist some potential evidence or experiment that could prove the proposition false.

However many confirming instances exist for a theory, it only takes one counter observation to falsify it. For example, the hypothesis that “all swans are white,” can be falsified by observing a black swan.

For Popper, science should attempt to disprove a theory rather than attempt to continually provide evidence to support a research hypothesis.

Can a Hypothesis be Proven?

Hypotheses make probabilistic predictions. They state the expected outcome if a particular relationship exists. However, a study result supporting a hypothesis does not definitively prove it is true.

All studies have limitations. There may be unknown confounding factors or issues that limit the certainty of conclusions. Additional studies may yield different results.

In science, hypotheses can realistically only be supported with some degree of confidence, not proven. The process of science is to incrementally accumulate evidence for and against hypothesized relationships in an ongoing pursuit of better models and explanations that best fit the empirical data. But hypotheses remain open to revision and rejection if that is where the evidence leads.

- Disproving a hypothesis is definitive. Solid disconfirmatory evidence will falsify a hypothesis and require altering or discarding it based on the evidence.

- However, confirming evidence is always open to revision. Other explanations may account for the same results, and additional or contradictory evidence may emerge over time.

We can never 100% prove the alternative hypothesis. Instead, we see if we can disprove, or reject the null hypothesis.

If we reject the null hypothesis, this doesn’t mean that our alternative hypothesis is correct but does support the alternative/experimental hypothesis.

Upon analysis of the results, an alternative hypothesis can be rejected or supported, but it can never be proven to be correct. We must avoid any reference to results proving a theory as this implies 100% certainty, and there is always a chance that evidence may exist which could refute a theory.

How to Write a Hypothesis

- Identify variables. The researcher manipulates the independent variable, and the dependent variable is the measured outcome.

- Operationalize the variables being investigated. Operationalization of a hypothesis refers to the process of making the variables physically measurable or testable, e.g., if you are about to study aggression, you might count the number of punches given by participants.

- Decide on a direction for your prediction. If there is evidence in the literature to support a specific effect of the independent variable on the dependent variable, write a directional (one-tailed) hypothesis. If there are limited or ambiguous findings in the literature regarding the effect of the independent variable on the dependent variable, write a non-directional (two-tailed) hypothesis.

- Make it testable. Ensure your hypothesis can be tested through experimentation or observation. It should be possible to prove it false (principle of falsifiability).

- Use clear and concise language. A strong hypothesis is concise (typically one to two sentences long), and formulated using clear and straightforward language, ensuring it’s easily understood and testable.

Consider a hypothesis many teachers might subscribe to: students work better on Monday morning than on Friday afternoon (IV=Day, DV= Standard of work).

Now, if we decide to study this by giving the same group of students a lesson on a Monday morning and a Friday afternoon and then measuring their immediate recall of the material covered in each session, we would end up with the following:

- The alternative hypothesis states that students will recall significantly more information on a Monday morning than on a Friday afternoon.

- The null hypothesis states that there will be no significant difference in the amount recalled on a Monday morning compared to a Friday afternoon. Any difference will be due to chance or confounding factors.
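To make the Monday/Friday example concrete, here is a minimal sketch of one way such a within-subjects comparison could be tested. It uses an exact sign-flip (permutation) test, which is a technique chosen here for illustration and not one named in the text. The recall scores and the function name `paired_sign_flip_p` are hypothetical.

```python
from itertools import product


def paired_sign_flip_p(monday, friday):
    """One-sided exact sign-flip test for paired scores.

    Under the null hypothesis, each student's Monday-minus-Friday
    difference is equally likely to be positive or negative, so every
    pattern of sign flips is equally probable. The p-value is the share
    of patterns whose total difference is at least as large as observed.
    """
    diffs = [m - f for m, f in zip(monday, friday)]
    observed = sum(diffs)
    at_least_as_extreme = 0
    total = 0
    for signs in product((1, -1), repeat=len(diffs)):
        total += 1
        if sum(s * d for s, d in zip(signs, diffs)) >= observed:
            at_least_as_extreme += 1
    return at_least_as_extreme / total


# Hypothetical recall scores for the same eight students (illustration only).
monday = [14, 12, 15, 11, 13, 16, 12, 14]
friday = [11, 12, 13, 9, 12, 13, 10, 13]

p_value = paired_sign_flip_p(monday, friday)
print(f"one-sided p = {p_value:.4f}")  # 2 of 256 sign patterns, about 0.0078
```

Because the alternative hypothesis here is directional (more recall on Monday), the test counts only outcomes in that direction. A small p-value would lead to rejecting the null hypothesis, but it would not rule out confounding factors such as lesson content or order effects.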

More Examples

- Memory: Participants exposed to classical music during study sessions will recall more items from a list than those who studied in silence.

- Social Psychology: Individuals who frequently engage in social media use will report higher levels of perceived social isolation compared to those who use it infrequently.

- Developmental Psychology: Children who engage in regular imaginative play have better problem-solving skills than those who don’t.

- Clinical Psychology: Cognitive-behavioral therapy will be more effective in reducing symptoms of anxiety over a 6-month period compared to traditional talk therapy.

- Cognitive Psychology: Individuals who multitask between various electronic devices will have shorter attention spans on focused tasks than those who single-task.

- Health Psychology: Patients who practice mindfulness meditation will experience lower levels of chronic pain compared to those who don’t meditate.

- Organizational Psychology: Employees in open-plan offices will report higher levels of stress than those in private offices.

- Behavioral Psychology: Rats rewarded with food after pressing a lever will press it more frequently than rats who receive no reward.

Hypothesis Testing, P Values, Confidence Intervals, and Significance

Definition/introduction.

Medical providers often rely on evidence-based medicine to guide decision-making in practice. Often a research hypothesis is tested with results provided, typically with p values, confidence intervals, or both. Additionally, statistical or research significance is estimated or determined by the investigators. Unfortunately, healthcare providers may have different comfort levels in interpreting these findings, which may affect the adequate application of the data.

Issues of Concern

Without a foundational understanding of hypothesis testing, p values, confidence intervals, and the difference between statistical and clinical significance, it may affect healthcare providers' ability to make clinical decisions without relying purely on the research investigators deemed level of significance. Therefore, an overview of these concepts is provided to allow medical professionals to use their expertise to determine if results are reported sufficiently and if the study outcomes are clinically appropriate to be applied in healthcare practice.

Hypothesis Testing

Investigators conducting studies need research questions and hypotheses to guide analyses. Starting with broad research questions (RQs), investigators then identify a gap in current clinical practice or research. Any research problem or statement is grounded in a better understanding of relationships between two or more variables. For this article, we will use the following research question example:

Research Question: Is Drug 23 an effective treatment for Disease A?

Research questions do not directly imply specific guesses or predictions; we must formulate research hypotheses. A hypothesis is a predetermined declaration regarding the research question in which the investigator(s) makes a precise, educated guess about a study outcome. This is sometimes called the alternative hypothesis and ultimately allows the researcher to take a stance based on experience or insight from medical literature. An example of a hypothesis is below.

Research Hypothesis: Drug 23 will significantly reduce symptoms associated with Disease A compared to Drug 22.

The null hypothesis states that there is no statistical difference between groups based on the stated research hypothesis.

Null Hypothesis: There are no significant differences in the reduction of symptoms for Disease A between Drug 23 and Drug 22.

The null hypothesis is deemed true until a study presents significant data to support rejecting the null hypothesis. Based on the results, the investigators will either reject the null hypothesis (if they found significant differences or associations) or fail to reject the null hypothesis (they could not provide evidence that there were significant differences or associations).

Regarding p values, as the number of individuals enrolled in a study (the sample size) increases, the likelihood of finding a statistically significant effect increases. With very large sample sizes, the p-value can be very low. Researchers should be aware of journal recommendations when considering how to report p values, and manuscripts should remain internally consistent.

To test a hypothesis, researchers obtain data on a representative sample to determine whether to reject or fail to reject a null hypothesis. In most research studies, it is not feasible to obtain data for an entire population. Using a sampling procedure allows for statistical inference, though this involves a certain possibility of error. [1] When determining whether to reject or fail to reject the null hypothesis, mistakes can be made: Type I and Type II errors. Though it is impossible to ensure that these errors have not occurred, researchers should limit the possibilities of these faults. [2]
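The two kinds of mistakes can be illustrated with a small simulation. The following is a sketch under simplifying assumptions that are not part of the article: two groups with normally distributed outcomes, a known standard deviation of 1, a two-sided z-test, and hypothetical values for the group size and true difference. The function name `rejection_rate` is likewise invented for this example.

```python
import random
from statistics import NormalDist


def rejection_rate(true_diff, n_per_group=30, alpha=0.05,
                   n_studies=2000, seed=0):
    """Share of simulated studies in which the null hypothesis is rejected."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    se = (2 / n_per_group) ** 0.5  # standard error of the difference, sd = 1
    rejections = 0
    for _ in range(n_studies):
        group_a = [rng.gauss(true_diff, 1) for _ in range(n_per_group)]
        group_b = [rng.gauss(0.0, 1) for _ in range(n_per_group)]
        diff = sum(group_a) / n_per_group - sum(group_b) / n_per_group
        if abs(diff / se) > z_crit:
            rejections += 1
    return rejections / n_studies


# No true difference: every rejection is a Type I error (false positive).
type_i_rate = rejection_rate(true_diff=0.0)

# A true difference of 0.5 SD: every failure to reject is a Type II error.
power = rejection_rate(true_diff=0.5)
type_ii_rate = 1 - power

print(f"Type I error rate  ~ {type_i_rate:.3f} (should be close to alpha)")
print(f"Type II error rate ~ {type_ii_rate:.3f} for this sample size")
```

In this setup the Type I error rate is governed by the chosen alpha, while the Type II error rate depends on the true effect and the sample size, which is why power calculations are usually done before enrollment.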

Significance

Significance is a term to describe the substantive importance of medical research. Statistical significance is the likelihood of results due to chance. [3] Healthcare providers should always distinguish statistical significance from clinical significance; conflating the two is a common error when reviewing biomedical research. [4] When conceptualizing findings reported as either significant or not significant, healthcare providers should not simply accept researchers' results or conclusions without considering the clinical significance. Healthcare professionals should consider the clinical importance of findings and understand both p values and confidence intervals so they do not have to rely on the researchers to determine the level of significance. [5] One criterion often used to determine statistical significance is the utilization of p values.

P values are used in research to determine whether the sample estimate is significantly different from a hypothesized value. The p-value is the probability that an effect at least as large as the one observed in the study would have occurred by chance if, in reality, there was no true effect. Conventionally, data yielding a p<0.05 or p<0.01 is considered statistically significant. While some have debated that the 0.05 level should be lowered, it is still widely used. [6] Hypothesis testing on its own does not allow us to determine the size of the effect.

Examples of findings reported with p values are below:

Statement: Drug 23 reduced patients' symptoms compared to Drug 22. Patients who received Drug 23 (n = 100) were 2.1 times less likely than patients who received Drug 22 (n = 100) to experience symptoms of Disease A, p<0.05.

Statement: Individuals who were prescribed Drug 23 experienced fewer symptoms (M = 1.3, SD = 0.7) compared to individuals who were prescribed Drug 22 (M = 5.3, SD = 1.9). This finding was statistically significant, p = 0.02.

For either statement, if the threshold had been set at 0.05, the null hypothesis (that there was no relationship) should be rejected, and we should conclude significant differences. Noticeably, as can be seen in the two statements above, some researchers will report findings with < or > and others will provide an exact p-value (0.000001) but never zero. [6] When examining research, readers should understand how p values are reported. The best practice is to report all p values for all variables within a study design, rather than only providing p values for variables with significant findings. [7] The inclusion of all p values provides evidence for study validity and limits suspicion for selective reporting/data mining.

While researchers have historically used p values, experts who find p values problematic encourage the use of confidence intervals. [8] P-values alone do not allow us to understand the size or the extent of the differences or associations. [3] In March 2016, the American Statistical Association (ASA) released a statement on p values, noting that scientific decision-making and conclusions should not be based on a fixed p-value threshold (e.g., 0.05). They recommend focusing on the significance of results in the context of study design, quality of measurements, and validity of data. Ultimately, the ASA statement noted that in isolation, a p-value does not provide strong evidence. [9]

When conceptualizing clinical work, healthcare professionals should consider p values with a concurrent appraisal of study design validity. For example, a p-value from a double-blinded randomized clinical trial (designed to minimize bias) should be weighted higher than one from a retrospective observational study. [7] The p-value debate has smoldered since the 1950s, [10] and replacement with confidence intervals has been suggested since the 1980s. [11]

Confidence Intervals

A confidence interval provides a range of values that, with a given level of confidence (e.g., 95%), includes the true value of the statistical parameter within a targeted population. [12] Most research uses a 95% CI, but investigators can set any level (e.g., 90% CI, 99% CI). [13] A CI provides a range with the lower bound and upper bound limits of a difference or association that would be plausible for a population. [14] Therefore, a CI of 95% indicates that if a study were to be carried out 100 times, the range would contain the true value in about 95 of them. [15] Confidence intervals provide more evidence regarding the precision of an estimate compared to p-values. [6]
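The repeated-study reading of a 95% CI can be checked with a short simulation. This is only a sketch under assumptions introduced for the example: a normally distributed outcome, a known standard deviation, and hypothetical values for the true mean and sample size. The function name `coverage` is not from the article.

```python
import random
from statistics import NormalDist


def coverage(true_mean=10.0, sd=2.0, n=50, level=0.95,
             n_studies=2000, seed=1):
    """Share of simulated studies whose CI contains the true mean."""
    rng = random.Random(seed)
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for 95%
    half_width = z * sd / n ** 0.5             # known-sd interval
    hits = 0
    for _ in range(n_studies):
        sample_mean = sum(rng.gauss(true_mean, sd) for _ in range(n)) / n
        if sample_mean - half_width <= true_mean <= sample_mean + half_width:
            hits += 1
    return hits / n_studies


observed_coverage = coverage()
print(f"Intervals containing the true mean: {observed_coverage:.1%}")
```

The simulated share should land near the nominal level. Note that the statement concerns the long-run behavior of the procedure, not the probability that any single published interval contains the true value.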

In consideration of the similar research example provided above, one could make the following statement with 95% CI:

Statement: Individuals who were prescribed Drug 23 had no symptoms after three days, which was significantly faster than those prescribed Drug 22; there was a mean difference between the two groups in days to recovery of 4.2 days (95% CI: 1.9 – 7.8).

It is important to note that the width of the CI is affected by the standard error and the sample size; reducing the sample size will result in less precision of the CI (increased width). [14] A larger width indicates a smaller sample size or a larger variability. [16] A researcher would want to increase the precision of the CI. For example, a 95% CI of 1.43 – 1.47 is much more precise than the one provided in the example above. In research and clinical practice, CIs provide valuable information on whether the interval includes or excludes any clinically significant values. [14]
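The dependence of CI width on variability and sample size can be sketched for the simplest case, a CI for a single mean with a known standard deviation, where the width is 2 × z × SD / √n. The function name `ci_width` and the numbers below are hypothetical and serve only to show the pattern.

```python
from statistics import NormalDist


def ci_width(sd: float, n: int, level: float = 0.95) -> float:
    """Width of a normal-approximation CI for a mean with known sd."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return 2 * z * sd / n ** 0.5


# Quadrupling the sample size halves the width.
for n in (25, 100, 400):
    print(f"n = {n:>3}: width = {ci_width(sd=10, n=n):.2f}")

# Doubling the variability doubles the width at the same sample size.
print(f"sd = 20, n = 100: width = {ci_width(sd=20, n=100):.2f}")
```

Because the width shrinks with the square root of n, gaining a little extra precision can require a much larger study.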

Null values are sometimes used for differences with CI (zero for differential comparisons and 1 for ratios). However, CIs provide more information than that. [15] Consider this example: A hospital implements a new protocol that reduced wait time for patients in the emergency department by an average of 25 minutes (95% CI: -2.5 – 41 minutes). Because the range crosses zero, implementing this protocol in different populations could result in longer wait times; however, the range is much higher on the positive side. Thus, while the p-value used to detect statistical significance for this may result in "not significant" findings, individuals should examine this range, consider the study design, and weigh whether or not it is still worth piloting in their workplace.
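The null-value check described above is simple enough to write down. The sketch below uses a hypothetical helper, `excludes_null`, and applies it to the wait-time interval from this section and to the recovery-time interval from the earlier statement; the ratio interval is invented for illustration.

```python
def excludes_null(lower: float, upper: float,
                  measure: str = "difference") -> bool:
    """Return True if the CI excludes the null value.

    The null value is 0 for differences and 1 for ratios
    (e.g., odds ratios or relative risks).
    """
    null_value = {"difference": 0.0, "ratio": 1.0}[measure]
    return not (lower <= null_value <= upper)


# Wait-time reduction, 95% CI: -2.5 to 41 minutes (crosses zero).
print(excludes_null(-2.5, 41))                  # False

# Mean difference in days to recovery, 95% CI: 1.9 to 7.8.
print(excludes_null(1.9, 7.8))                  # True

# Hypothetical relative risk, 95% CI: 0.8 to 1.3 (crosses one).
print(excludes_null(0.8, 1.3, measure="ratio"))  # False
```

A `False` result corresponds to a "not significant" finding at the matching alpha level, but as the wait-time example shows, the position and width of the interval still carry information that the yes/no answer discards.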

Similarly to p-values, 95% CIs cannot control for researchers' errors (e.g., study bias or improper data analysis). [14] In consideration of whether to report p-values or CIs, researchers should examine journal preferences. When in doubt, reporting both may be beneficial. [13] An example is below:

Reporting both: Individuals who were prescribed Drug 23 had no symptoms after three days, which was significantly faster than those prescribed Drug 22, p = 0.009. There was a mean difference between the two groups in days to recovery of 4.2 days (95% CI: 1.9 – 7.8).

Clinical Significance

Recall that clinical significance and statistical significance are two different concepts. Healthcare providers should remember that a study with statistically significant differences and large sample size may be of no interest to clinicians, whereas a study with smaller sample size and statistically non-significant results could impact clinical practice. [14] Additionally, as previously mentioned, a non-significant finding may reflect the study design itself rather than relationships between variables.

Healthcare providers using evidence-based medicine to inform practice should use clinical judgment to determine the practical importance of studies through careful evaluation of the design, sample size, power, likelihood of type I and type II errors, data analysis, and reporting of statistical findings (p values, 95% CI or both). [4] Interestingly, some experts have called for "statistically significant" or "not significant" to be excluded from work as statistical significance never has and will never be equivalent to clinical significance. [17]

The decision on what is clinically significant can be challenging, depending on the providers' experience and especially the severity of the disease. Providers should use their knowledge and experiences to determine the meaningfulness of study results and make inferences based not only on significant or insignificant results by researchers but through their understanding of study limitations and practical implications.

Nursing, Allied Health, and Interprofessional Team Interventions

All physicians, nurses, pharmacists, and other healthcare professionals should strive to understand the concepts in this chapter. These individuals should maintain the ability to review and incorporate new literature for evidence-based and safe care.

Jones M, Gebski V, Onslow M, Packman A. Statistical power in stuttering research: a tutorial. Journal of speech, language, and hearing research : JSLHR. 2002 Apr:45(2):243-55 [PubMed PMID: 12003508]

Sedgwick P. Pitfalls of statistical hypothesis testing: type I and type II errors. BMJ (Clinical research ed.). 2014 Jul 3:349():g4287. doi: 10.1136/bmj.g4287. Epub 2014 Jul 3 [PubMed PMID: 24994622]

Fethney J. Statistical and clinical significance, and how to use confidence intervals to help interpret both. Australian critical care : official journal of the Confederation of Australian Critical Care Nurses. 2010 May:23(2):93-7. doi: 10.1016/j.aucc.2010.03.001. Epub 2010 Mar 29 [PubMed PMID: 20347326]

Hayat MJ. Understanding statistical significance. Nursing research. 2010 May-Jun:59(3):219-23. doi: 10.1097/NNR.0b013e3181dbb2cc. Epub [PubMed PMID: 20445438]

Ferrill MJ, Brown DA, Kyle JA. Clinical versus statistical significance: interpreting P values and confidence intervals related to measures of association to guide decision making. Journal of pharmacy practice. 2010 Aug:23(4):344-51. doi: 10.1177/0897190009358774. Epub 2010 Apr 13 [PubMed PMID: 21507834]

Infanger D, Schmidt-Trucksäss A. P value functions: An underused method to present research results and to promote quantitative reasoning. Statistics in medicine. 2019 Sep 20:38(21):4189-4197. doi: 10.1002/sim.8293. Epub 2019 Jul 3 [PubMed PMID: 31270842]

Dorey F. Statistics in brief: Interpretation and use of p values: all p values are not equal. Clinical orthopaedics and related research. 2011 Nov:469(11):3259-61. doi: 10.1007/s11999-011-2053-1. Epub [PubMed PMID: 21918804]

Liu XS. Implications of statistical power for confidence intervals. The British journal of mathematical and statistical psychology. 2012 Nov:65(3):427-37. doi: 10.1111/j.2044-8317.2011.02035.x. Epub 2011 Oct 25 [PubMed PMID: 22026811]

Tijssen JG, Kolm P. Demystifying the New Statistical Recommendations: The Use and Reporting of p Values. Journal of the American College of Cardiology. 2016 Jul 12:68(2):231-3. doi: 10.1016/j.jacc.2016.05.026. Epub [PubMed PMID: 27386779]

Spanos A. Recurring controversies about P values and confidence intervals revisited. Ecology. 2014 Mar:95(3):645-51 [PubMed PMID: 24804448]

Freire APCF, Elkins MR, Ramos EMC, Moseley AM. Use of 95% confidence intervals in the reporting of between-group differences in randomized controlled trials: analysis of a representative sample of 200 physical therapy trials. Brazilian journal of physical therapy. 2019 Jul-Aug:23(4):302-310. doi: 10.1016/j.bjpt.2018.10.004. Epub 2018 Oct 16 [PubMed PMID: 30366845]

Dorey FJ. In brief: statistics in brief: Confidence intervals: what is the real result in the target population? Clinical orthopaedics and related research. 2010 Nov:468(11):3137-8. doi: 10.1007/s11999-010-1407-4. Epub [PubMed PMID: 20532716]

Porcher R. Reporting results of orthopaedic research: confidence intervals and p values. Clinical orthopaedics and related research. 2009 Oct:467(10):2736-7. doi: 10.1007/s11999-009-0952-1. Epub 2009 Jun 30 [PubMed PMID: 19565303]

Gardner MJ, Altman DG. Confidence intervals rather than P values: estimation rather than hypothesis testing. British medical journal (Clinical research ed.). 1986 Mar 15:292(6522):746-50 [PubMed PMID: 3082422]

Cooper RJ, Wears RL, Schriger DL. Reporting research results: recommendations for improving communication. Annals of emergency medicine. 2003 Apr:41(4):561-4 [PubMed PMID: 12658257]

Doll H, Carney S. Statistical approaches to uncertainty: P values and confidence intervals unpacked. Equine veterinary journal. 2007 May:39(3):275-6 [PubMed PMID: 17520981]

Colquhoun D. The reproducibility of research and the misinterpretation of p-values. Royal Society open science. 2017 Dec:4(12):171085. doi: 10.1098/rsos.171085. Epub 2017 Dec 6 [PubMed PMID: 29308247]

- Research article

- Open access

- Published: 19 May 2010

The null hypothesis significance test in health sciences research (1995-2006): statistical analysis and interpretation

- Luis Carlos Silva-Ayçaguer 1 ,

- Patricio Suárez-Gil 2 &

- Ana Fernández-Somoano 3

BMC Medical Research Methodology volume 10, Article number: 44 (2010)

The null hypothesis significance test (NHST) is the most frequently used statistical method, although its inferential validity has been widely criticized since its introduction. In 1988, the International Committee of Medical Journal Editors (ICMJE) warned against sole reliance on NHST to substantiate study conclusions and suggested supplementary use of confidence intervals (CI). Our objective was to evaluate the extent and quality in the use of NHST and CI, both in English and Spanish language biomedical publications between 1995 and 2006, taking into account the International Committee of Medical Journal Editors recommendations, with particular focus on the accuracy of the interpretation of statistical significance and the validity of conclusions.

Original articles published in three English and three Spanish biomedical journals in three fields (General Medicine, Clinical Specialties and Epidemiology - Public Health) were considered for this study. Papers published in 1995-1996, 2000-2001, and 2005-2006 were selected through a systematic sampling method. After excluding the purely descriptive and theoretical articles, analytic studies were evaluated for their use of NHST with P-values and/or CI for interpretation of statistical "significance" and "relevance" in study conclusions.

Among 1,043 original papers, 874 were selected for detailed review. The exclusive use of P-values was less frequent in English language publications as well as in Public Health journals; overall such use decreased from 41% in 1995-1996 to 21% in 2005-2006. While the use of CI increased over time, the "significance fallacy" (to equate statistical and substantive significance) appeared very often, mainly in journals devoted to clinical specialties (81%). In papers originally written in English and Spanish, 15% and 10%, respectively, mentioned statistical significance in their conclusions.

Conclusions

Overall, results of our review show some improvements in the handling of statistical results, but further efforts by scholars and journal editors are clearly required to move the communication toward ICMJE advice, especially in the clinical setting, which seems to be imperative among publications in Spanish.

Null hypothesis statistical testing (NHST) has been the most widely used statistical approach in health research over the past 80 years. Its origins date back to 1279 [ 1 ], although it was in the second decade of the twentieth century that the statistician Ronald Fisher formally introduced the concept of the "null hypothesis" H 0, which, generally speaking, establishes that certain parameters do not differ from each other. He was the inventor of the "P-value" through which it could be assessed [ 2 ]. Fisher's P-value is defined as a conditional probability calculated using the results of a study. Specifically, the P-value is the probability of obtaining a result at least as extreme as the one that was actually observed, assuming that the null hypothesis is true. The Fisherian significance testing theory considered the P-value as an index to measure the strength of evidence against the null hypothesis in a single experiment. The father of NHST never endorsed, however, the inflexible application of the ultimately subjective threshold levels almost universally adopted later on (although the 0.05 threshold was also his introduction).
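Fisher's definition can be worked through exactly in a small discrete case. The sketch below assumes a hypothetical study in which 9 of 10 patients improve and a null hypothesis that each patient has a 50% chance of improving; the function name `binomial_p_upper` is invented for this example.

```python
from math import comb


def binomial_p_upper(successes: int, n: int, p_null: float = 0.5) -> float:
    """P(X >= successes) when X ~ Binomial(n, p_null).

    This is the probability of a result at least as extreme as the one
    observed (in the upper direction), assuming the null hypothesis is true.
    """
    return sum(
        comb(n, k) * p_null ** k * (1 - p_null) ** (n - k)
        for k in range(successes, n + 1)
    )


# Hypothetical data: 9 of 10 patients improve; H0 says improvement is 50/50.
p_value = binomial_p_upper(9, 10)
print(f"P(9 or more of 10 | H0) = {p_value:.4f}")  # 11/1024, about 0.0107
```

The calculation conditions on the null hypothesis being true. It says nothing directly about the probability that the null hypothesis itself is true, a distinction the criticisms listed later in this section return to.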

A few years later, Jerzy Neyman and Egon Pearson considered the Fisherian approach inefficient, and in 1928 they published an article [ 3 ] that would provide the theoretical basis of what they called hypothesis statistical testing. The Neyman-Pearson approach is based on the notion that one out of two choices has to be taken: accept the null hypothesis based on the information provided, or reject it in favor of an alternative one. Thus, one can incur one of two types of errors: a Type I error, if the null hypothesis is rejected when it is actually true, and a Type II error, if the null hypothesis is accepted when it is actually false. They established a rule to optimize the decision process, using the P-value introduced by Fisher, by setting the maximum frequency of errors that would be admissible.

The null hypothesis statistical testing, as applied today, is a hybrid coming from the amalgamation of the two methods [ 4 ]. As a matter of fact, some 15 years later, both procedures were combined to give rise to the nowadays widespread use of an inferential tool that would satisfy none of the statisticians involved in the original controversy. The present method essentially goes as follows: given a null hypothesis, an estimate of the parameter (or parameters) is obtained and used to create statistics whose distribution, under H 0 , is known. With these data the P-value is computed. Finally, the null hypothesis is rejected when the obtained P-value is smaller than a certain comparative threshold (usually 0.05) and it is not rejected if P is larger than the threshold.
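The procedure just described (estimate, test statistic with a known null distribution, P-value, comparison with a threshold) can be sketched for one common case. The example below uses a two-proportion z-test as a stand-in; the test choice, the function name, and the counts are assumptions made for illustration and do not come from the article.

```python
from statistics import NormalDist


def two_proportion_z_test(x1, n1, x2, n2, alpha=0.05):
    """Two-sided z-test of H0: the two population proportions are equal."""
    # Step 1: estimate the parameters from the samples.
    p1, p2 = x1 / n1, x2 / n2
    # Step 2: build a statistic whose distribution under H0 is known
    # (approximately standard normal, using the pooled proportion).
    pooled = (x1 + x2) / (n1 + n2)
    se = (pooled * (1 - pooled) * (1 / n1 + 1 / n2)) ** 0.5
    z = (p1 - p2) / se
    # Step 3: compute the P-value from that distribution.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    # Step 4: compare the P-value with the chosen threshold.
    return z, p_value, p_value < alpha


# Hypothetical counts: 30 of 100 symptomatic vs 45 of 100 symptomatic.
z, p_value, reject = two_proportion_z_test(30, 100, 45, 100)
print(f"z = {z:.2f}, p = {p_value:.3f}, reject H0: {reject}")
```

The final step is the dichotomous decision inherited from Neyman and Pearson, while the P-value itself is Fisher's contribution, which is the hybrid the text refers to.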

The first reservations about the validity of the method began to appear around 1940, when some statisticians censured the logical roots and practical convenience of Fisher's P-value [ 5 ]. Significance tests and P-values have repeatedly drawn the attention and criticism of many authors over the past 70 years, who have kept questioning their epistemological legitimacy as well as their practical value. What remains in spite of these criticisms is the lasting legacy of researchers' unwillingness to eradicate or reform these methods.

Although there are very comprehensive works on the topic [ 6 ], we list below some of the criticisms most universally accepted by specialists.

The P-values are used as a tool to make decisions in favor of or against a hypothesis. What really may be relevant, however, is to get an effect size estimate (often the difference between two values) rather than rendering dichotomous true/false verdicts [ 7 – 11 ].

The P-value is a conditional probability of the data, provided that some assumptions are met, but what really interests the investigator is the inverse probability: what degree of validity can be attributed to each of several competing hypotheses, once that certain data have been observed [ 12 ].

The two elements that affect the results, namely the sample size and the magnitude of the effect, are inextricably linked in the value of P, and for any non-null observed effect we can always get a lower P-value by increasing the sample size. Thus, the conclusions depend on a factor completely unrelated to the reality studied (i.e. the available resources, which in turn determine the sample size) [ 13 , 14 ].

Those who defend the NHST often assert the objective nature of that test, but NHST does not ensure objectivity. This is reflected in the fact that we generally operate with thresholds that are ultimately no more than conventions, such as 0.01 or 0.05. What is more, for many years their use has unequivocally demonstrated the inherent subjectivity that goes with the concept of P, regardless of how it will be used later [ 15 – 17 ].

In practice, the NHST is limited to a binary response sorting hypotheses into "true" and "false" or declaring "rejection" or "no rejection", without demanding a reasonable interpretation of the results, as has been noted time and again for decades. This binary orthodoxy validates categorical thinking, which results in a very simplistic view of scientific activity that induces researchers not to test theories about the magnitude of effect sizes [ 18 – 20 ].
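The criticism concerning sample size listed above can be made concrete with a short numerical sketch. It assumes a two-sample z-test with equal group sizes and a common, known standard deviation; the observed difference (1 unit), the standard deviation (10 units) and the group sizes are all hypothetical values chosen for illustration.

```python
import math


def p_value_for_fixed_effect(diff, sd, n_per_group):
    """Two-sided P-value of a two-sample z-test (equal group sizes, known common SD)."""
    standard_error = sd * math.sqrt(2.0 / n_per_group)
    z = diff / standard_error
    return math.erfc(abs(z) / math.sqrt(2.0))


# The observed effect is held constant; only the sample size changes.
sizes = [20, 100, 500, 2000, 10000]
p_values = [p_value_for_fixed_effect(diff=1.0, sd=10.0, n_per_group=n) for n in sizes]

for n, p in zip(sizes, p_values):
    print(f"n per group = {n:>6}: P = {p:.4g}")
```

Under these assumptions the same observed difference moves from "not significant" to "significant" purely as a function of the resources available for recruitment, while the estimated effect size itself stays at 1 unit throughout.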

Despite the weaknesses and shortcomings of the NHST, it is frequently taught as if it were the key inferential statistical method or the most appropriate, or even the sole unquestioned one. The statistical textbooks, with only some exceptions, do not even mention the NHST controversy. Instead, the myth is spread that NHST is the "natural" final action of scientific inference and the only procedure for testing hypotheses. However, relevant specialists and important regulators of the scientific world advocate avoiding it.

Taking especially into account that NHST does not offer the most important information (i.e. the magnitude of an effect of interest, and the precision of the estimate of the magnitude of that effect), many experts recommend the reporting of point estimates of effect sizes with confidence intervals as the appropriate representation of the inherent uncertainty linked to empirical studies [ 21 – 25 ]. Since 1988, the International Committee of Medical Journal Editors (ICMJE, known as the Vancouver Group ) incorporates the following recommendation to authors of manuscripts submitted to medical journals: "When possible, quantify findings and present them with appropriate indicators of measurement error or uncertainty (such as confidence intervals). Avoid relying solely on statistical hypothesis testing, such as P-values, which fail to convey important information about effect size" [ 26 ].

As will be shown, the use of confidence intervals (CI), occasionally accompanied by P-values, is recommended as a more appropriate method for reporting results. Some authors noted several shortcomings of CI long ago [ 27 ]. In spite of the fact that calculating CI can indeed be complicated, and that their interpretation is far from simple [ 28 , 29 ], authors are urged to use them because they provide much more information than the NHST and do not merit most of the criticisms levelled at it [ 30 ]. While some have proposed different options (for instance, likelihood-based information theoretic methods [ 31 ], and the Bayesian inferential paradigm [ 32 ]), confidence interval estimation of effect sizes is clearly the most widespread alternative approach.
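A minimal sketch of what reporting a point estimate with its confidence interval involves is given below for a difference between two proportions. It uses the simple Wald (normal approximation) interval, which is only one of several available methods and is assumed here for brevity; the function name and the counts are hypothetical.

```python
import math


def risk_difference_ci(events_a, n_a, events_b, n_b, z_crit=1.959964):
    """Point estimate and Wald confidence interval for a difference of two proportions.

    z_crit = 1.959964 corresponds to a 95% interval under the normal approximation.
    """
    p_a = events_a / n_a
    p_b = events_b / n_b
    diff = p_a - p_b
    standard_error = math.sqrt(p_a * (1.0 - p_a) / n_a + p_b * (1.0 - p_b) / n_b)
    return diff, diff - z_crit * standard_error, diff + z_crit * standard_error


# Hypothetical trial: 30/200 events in group A versus 18/200 in group B.
diff, lower, upper = risk_difference_ci(30, 200, 18, 200)
print(f"risk difference = {diff:.3f} (95% CI {lower:.3f} to {upper:.3f})")
```

Unlike a bare "P > 0.05", the interval conveys both the magnitude of the estimated effect and the precision with which it was estimated.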

Although twenty years have passed since the ICMJE began to disseminate such recommendations, systematically ignored by the vast majority of textbooks and hardly incorporated in medical publications [ 33 ], it is interesting to examine the extent to which the NHST is used in articles published in medical journals during recent years, in order to identify what is still lacking in the process of eradicating the widespread ceremonial use that is made of statistics in health research [ 34 ]. Furthermore, it is enlightening in this context to examine whether these patterns differ between English- and Spanish-speaking worlds and, if so, to see if the changes in paradigms are occurring more slowly in Spanish-language publications. In such a case we would offer various suggestions.

In addition to assessing the adherence to the above cited statistical recommendation proposed by ICMJE relative to the use of P-values, we consider it of particular interest to estimate the extent to which the significance fallacy is present, an inertial deficiency that consists of attributing -- explicitly or not -- qualitative importance or practical relevance to the found differences simply because statistical significance was obtained.

Many authors produce misleading statements such as "a significant effect was (or was not) found" when it should be said that "a statistically significant difference was (or was not) found". A detrimental consequence of this equivalence is that some authors believe that finding out whether there is "statistical significance" or not is the aim, so that this term is then mentioned in the conclusions [ 35 ]. This means virtually nothing, except that it indicates that the author is letting a computer do the thinking. Since the real research questions are never statistical ones, the answers cannot be statistical either. Accordingly, the conversion of the dichotomous outcome produced by a NHST into a conclusion is another manifestation of the mentioned fallacy.

The general objective of the present study is to evaluate the extent and quality of use of NHST and CI, in both English- and Spanish-language biomedical publications, between 1995 and 2006, taking into account the International Committee of Medical Journal Editors recommendations, with particular focus on the accuracy of the interpretation of statistical significance and the validity of conclusions.

We reviewed the original articles from six journals, three in English and three in Spanish, over three disjoint periods sufficiently separated from each other (1995-1996, 2000-2001, 2005-2006) as to properly describe the evolution in prevalence of the target features along the selected periods.

The selection of journals was intended to get representation for each of the following three thematic areas: clinical specialties (Obstetrics & Gynecology and Revista Española de Cardiología); Public Health and Epidemiology (International Journal of Epidemiology and Atención Primaria); and general and internal medicine (British Medical Journal and Medicina Clínica). Five of the selected journals formally endorsed ICMJE guidelines; the remaining one (Revista Española de Cardiología) suggests observing ICMJE demands in relation to specific issues. We attempted to capture journal diversity in the sample by selecting general and specialty journals with different degrees of influence, as reflected by their 2007 impact factors, which ranged from 1.337 (MC) to 9.723 (BMJ). No special reasons guided us to choose these specific journals, but we opted for journals with rather large paid circulations. For instance, the Spanish Cardiology Journal has the largest impact factor among the fourteen Spanish journals devoted to clinical specialties that have an impact factor, and Obstetrics & Gynecology has an outstanding impact factor among the huge number of journals available for selection.

It was decided to take around 60 papers for each biennium and journal, which means a total of around 1,000 papers. As recently suggested [ 36 , 37 ], this number was not established using a conventional method, but by means of a purposive and pragmatic approach in choosing the maximum sample size that was feasible.

Systematic sampling in phases [ 38 ] was used in applying a sampling fraction equal to 60/N, where N is the number of articles, in each of the 18 subgroups defined by crossing the six journals and the three time periods. Table 1 lists the population size and the sample size for each subgroup. While the sample within each subgroup was selected with equal probability, estimates based on other subsets of articles (defined across time periods, areas, or languages) are based on samples with various selection probabilities. Proper weights were used to take into account the stratified nature of the sampling in these cases.
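The sampling and weighting logic can be sketched as follows. This is a simplified, single-phase systematic sample with a random start, not a reproduction of the phased procedure cited above; the function names, the stratum sizes and the outcome flags are hypothetical.

```python
import random


def systematic_sample(population, target_n, rng):
    """Select target_n items using a sampling fraction target_n / N.

    Uses a random start within the first interval and a fixed (fractional) step.
    """
    total = len(population)
    if total <= target_n:
        return list(population)
    step = total / target_n
    start = rng.random() * step
    return [population[int(start + k * step)] for k in range(target_n)]


def weighted_proportion(strata):
    """Combine strata sampled with different fractions.

    strata: list of (population_size, list of 0/1 flags observed in the sample).
    Each sampled article receives weight N / n, the inverse of its selection probability.
    """
    numerator = 0.0
    denominator = 0.0
    for population_size, flags in strata:
        weight = population_size / len(flags)
        numerator += weight * sum(flags)
        denominator += weight * len(flags)
    return numerator / denominator


rng = random.Random(2024)
articles = list(range(1, 301))               # hypothetical subgroup with N = 300 articles
sample = systematic_sample(articles, 60, rng)

# Two hypothetical subgroups: 30/60 articles with the feature (N = 300), 15/60 (N = 120).
estimate = weighted_proportion([(300, [1] * 30 + [0] * 30), (120, [1] * 15 + [0] * 45)])
print(len(sample), round(estimate, 4))
```

With equal sample sizes but unequal population sizes, the unweighted pooled proportion (45/120 = 0.375) would understate the contribution of the larger subgroup, which is why weights are needed for estimates that span several subgroups.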

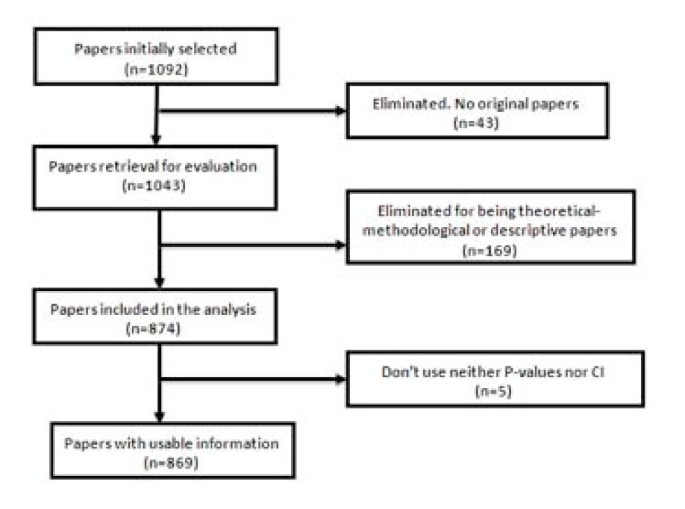

Forty-nine of the 1,092 selected papers were eliminated because, although the journal section to which they were assigned suggested that they were original articles, detailed scrutiny revealed that they were not. The sample, therefore, consisted of 1,043 papers. Each of them was classified into one of three categories: (1) purely descriptive papers, those designed to review or characterize the state of affairs as it exists at present, (2) analytical papers, or (3) articles that address theoretical, methodological or conceptual issues. An article was regarded as analytical if it sought to explain the reasons behind a particular occurrence by discovering causal relationships or if, even when self-classified as descriptive, it was carried out to assess cause-effect associations among variables. We classified as theoretical or methodological those articles that did not handle empirical data as such and focused instead on proposing or assessing research methods. We identified 169 papers as purely descriptive or theoretical, which were therefore excluded from the sample. Figure 1 presents a flow chart showing the process for determining eligibility for inclusion in the sample.

Flow chart of the selection process for eligible papers.

To estimate the adherence to ICMJE recommendations, we considered whether the papers used P-values, confidence intervals, or both simultaneously. By "the use of P-values" we mean that the article contains at least one P-value, explicitly mentioned in the text or at the bottom of a table, or that it reports that an effect was considered statistically significant. An article was deemed to use CI if it explicitly contained at least one confidence interval, but not when it only provided information that could allow its computation (usually by presenting both the estimate and the standard error). Probability intervals provided in Bayesian analysis were classified as confidence intervals (although conceptually they are not the same) since what is really of interest here is whether or not the authors quantify the findings and present them with appropriate indicators of the margin of error or uncertainty.

In addition, we determined whether the "Results" section of each article attributed the status of "significant" to an effect on the sole basis of the outcome of a NHST (i.e., without clarifying that it is strictly statistical significance). Similarly, we examined whether the term "significant" (applied to a test) was mistakenly used as synonymous with substantive, relevant or important. The use of the term "significant effect" when it is only appropriate as a reference to a "statistically significant difference" can be considered a direct expression of the significance fallacy [ 39 ] and, as such, constitutes one way to detect the problem in a specific paper.

We also assessed whether the "Conclusions," which sometimes appear as a separate section in the paper and otherwise in the last paragraphs of the "Discussion" section, mentioned statistical significance and, if so, whether any such mentions were no more than an allusion to results.

To perform these analyses we considered both the abstract and the body of the article. To assess the handling of the significance issue, however, only the body of the manuscript was taken into account.

The information was collected by four trained observers. Every paper was assigned to two reviewers. Disagreements were discussed and, if no agreement was reached, a third reviewer was consulted to break the tie and so moderate the effect of subjectivity in the assessment.