A Quick Guide to Experimental Design | 5 Steps & Examples

Published on 11 April 2022 by Rebecca Bevans. Revised on 5 December 2022.

Experiments are used to study causal relationships. You manipulate one or more independent variables and measure their effect on one or more dependent variables.

Experimental design means creating a set of procedures to systematically test a hypothesis. A good experimental design requires a strong understanding of the system you are studying.

There are five key steps in designing an experiment:

- Consider your variables and how they are related

- Write a specific, testable hypothesis

- Design experimental treatments to manipulate your independent variable

- Assign subjects to groups, either between-subjects or within-subjects

- Plan how you will measure your dependent variable

For valid conclusions, you also need to select a representative sample and control any extraneous variables that might influence your results. If random assignment of participants to control and treatment groups is impossible, unethical, or highly difficult, consider an observational study instead.

Table of contents

- Step 1: Define your variables

- Step 2: Write your hypothesis

- Step 3: Design your experimental treatments

- Step 4: Assign your subjects to treatment groups

- Step 5: Measure your dependent variable

- Frequently asked questions about experimental design

Step 1: Define your variables

You should begin with a specific research question. We will work with two research question examples, one from health sciences and one from ecology:

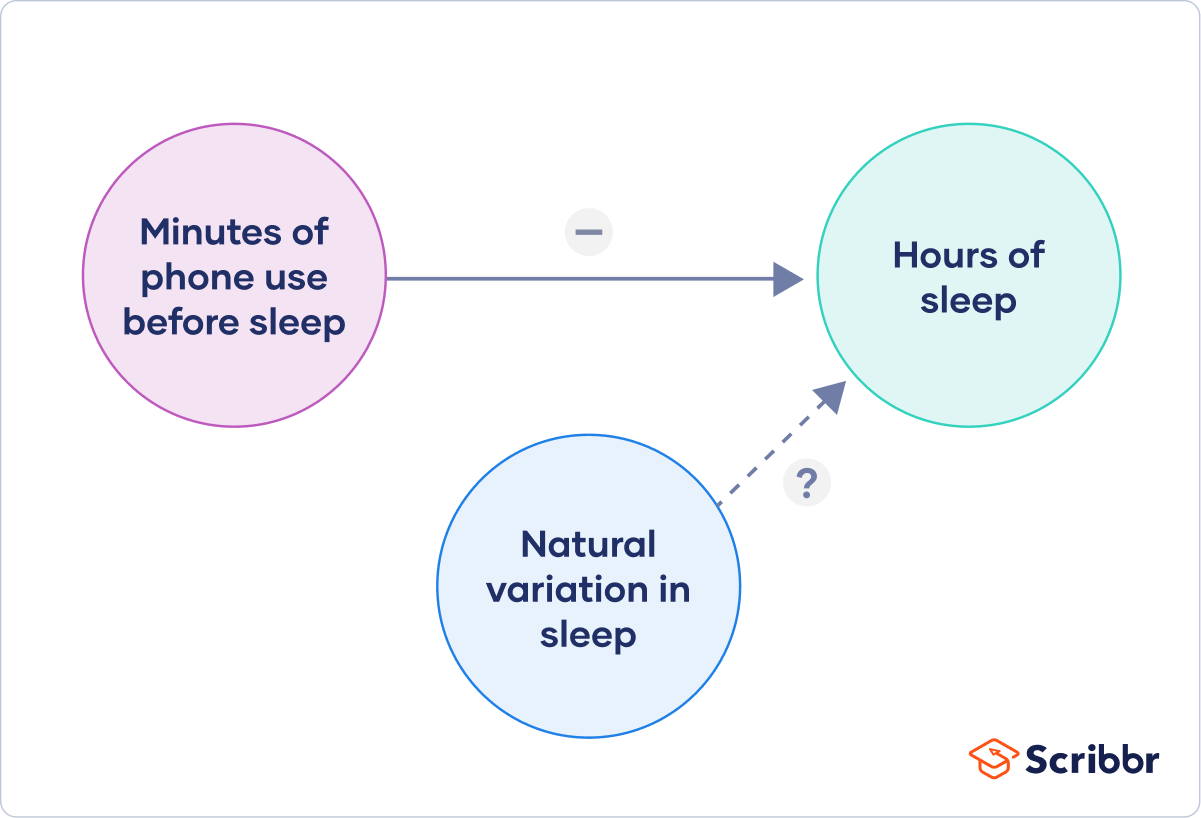

To translate your research question into an experimental hypothesis, you need to define the main variables and make predictions about how they are related.

Start by simply listing the independent and dependent variables.

Then you need to think about possible extraneous and confounding variables and consider how you might control them in your experiment.

Finally, you can put these variables together into a diagram. Use arrows to show the possible relationships between variables and include signs to show the expected direction of the relationships.

Here we predict that increasing temperature will increase soil respiration and decrease soil moisture, while decreasing soil moisture will lead to decreased soil respiration.
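
The signed diagram described above can also be written down as data. The following is a minimal sketch, assuming the three relationships predicted for the soil example; the variable names and the `path_sign` helper are illustrative and not part of the original guide. Multiplying the signs along a path gives the expected net direction of an indirect relationship.

```python
# Signed edges of the hypothesised causal diagram: +1 means the two
# variables move in the same direction, -1 means opposite directions.
EDGES = {
    ("temperature", "soil_respiration"): +1,
    ("temperature", "soil_moisture"): -1,
    ("soil_moisture", "soil_respiration"): +1,
}


def path_sign(path):
    """Multiply edge signs along a path to get the expected net direction."""
    sign = 1
    for cause, effect in zip(path, path[1:]):
        sign *= EDGES[(cause, effect)]
    return sign


direct = path_sign(["temperature", "soil_respiration"])
indirect = path_sign(["temperature", "soil_moisture", "soil_respiration"])
print(direct, indirect)
```

Here the direct path is positive while the path through soil moisture is negative, which is one reason soil moisture is worth measuring or controlling in this example.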

Step 2: Write your hypothesis

Now that you have a strong conceptual understanding of the system you are studying, you should be able to write a specific, testable hypothesis that addresses your research question.

The next steps will describe how to design a controlled experiment. In a controlled experiment, you must be able to:

- Systematically and precisely manipulate the independent variable(s).

- Precisely measure the dependent variable(s).

- Control any potential confounding variables.

If your study system doesn’t match these criteria, there are other types of research you can use to answer your research question.

Step 3: Design your experimental treatments

How you manipulate the independent variable can affect the experiment’s external validity – that is, the extent to which the results can be generalised and applied to the broader world.

First, you may need to decide how widely to vary your independent variable. In the soil-warming example, you could increase temperature:

- just slightly above the natural range for your study region.

- over a wider range of temperatures to mimic future warming.

- over an extreme range that is beyond any possible natural variation.

Second, you may need to choose how finely to vary your independent variable. Sometimes this choice is made for you by your experimental system, but often you will need to decide, and this will affect how much you can infer from your results. For example, you could treat phone use as:

- a categorical variable: either as binary (yes/no) or as levels of a factor (no phone use, low phone use, high phone use).

- a continuous variable (minutes of phone use measured every night).

Step 4: Assign your subjects to treatment groups

How you apply your experimental treatments to your test subjects is crucial for obtaining valid and reliable results.

First, you need to consider the study size: how many individuals will be included in the experiment? In general, the more subjects you include, the greater your experiment’s statistical power (its ability to detect an effect that really exists), which determines how much confidence you can have in your results.
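
As a rough illustration of how study size relates to statistical power, the sketch below uses a standard normal-approximation formula for comparing two group means. The function name, the default significance level of 0.05, and the default power of 0.80 are assumptions made for this example, not recommendations from the guide; a dedicated power analysis tool will give slightly different numbers for small samples.

```python
import math
from statistics import NormalDist


def subjects_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate n per group for a two-sided, two-group comparison of means.

    effect_size is the standardised difference (difference in means divided
    by the common standard deviation). Uses the normal approximation
    n = 2 * (z_{1 - alpha/2} + z_{power})**2 / effect_size**2.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_power) ** 2 / effect_size ** 2)


for d in (0.8, 0.5, 0.2):
    print(d, subjects_per_group(d))
```

The smaller the effect you expect, the more subjects each group needs to reach the same power.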

Then you need to randomly assign your subjects to treatment groups. Each group receives a different level of the treatment (e.g. no phone use, low phone use, high phone use).

You should also include a control group, which receives no treatment. The control group tells us what would have happened to your test subjects without any experimental intervention.

When assigning your subjects to groups, there are two main choices you need to make:

- A completely randomised design vs a randomised block design.

- A between-subjects design vs a within-subjects design.

Randomisation

An experiment can be completely randomised or randomised within blocks (aka strata):

- In a completely randomised design, every subject is assigned to a treatment group at random.

- In a randomised block design (aka stratified random design), subjects are first grouped according to a characteristic they share, and then randomly assigned to treatments within those groups.
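
The two randomisation schemes in the list above can be sketched in a few lines. This is a minimal illustration, assuming twelve hypothetical subjects, a made-up age-group blocking variable, and the three phone-use levels; the function names are invented for this example.

```python
import random
from collections import defaultdict


def completely_randomised(subjects, treatments, rng):
    """Shuffle all subjects, then deal them out to treatments in turn."""
    shuffled = list(subjects)
    rng.shuffle(shuffled)
    return {s: treatments[i % len(treatments)] for i, s in enumerate(shuffled)}


def randomised_block(subjects, block_of, treatments, rng):
    """Group subjects by a shared characteristic, then randomise within each block."""
    blocks = defaultdict(list)
    for s in subjects:
        blocks[block_of[s]].append(s)
    assignment = {}
    for members in blocks.values():
        assignment.update(completely_randomised(members, treatments, rng))
    return assignment


rng = random.Random(42)  # fixed seed only so the sketch is reproducible
subjects = [f"S{i:02d}" for i in range(12)]
# Hypothetical blocking characteristic: age group.
block_of = {s: ("under_30" if i < 6 else "30_plus") for i, s in enumerate(subjects)}
treatments = ["none", "low", "high"]

crd = completely_randomised(subjects, treatments, rng)
rbd = randomised_block(subjects, block_of, treatments, rng)
```

In the blocked version every age group contributes the same number of subjects to each treatment level, which the completely randomised version does not guarantee.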

Sometimes randomisation isn’t practical or ethical, so researchers create partially-random or even non-random designs. An experimental design where treatments aren’t randomly assigned is called a quasi-experimental design.

Between-subjects vs within-subjects

In a between-subjects design (also known as an independent measures design or classic ANOVA design), individuals receive only one of the possible levels of an experimental treatment.

In medical or social research, you might also use matched pairs within your between-subjects design to make sure that each treatment group contains the same variety of test subjects in the same proportions.

In a within-subjects design (also known as a repeated measures design), every individual receives each of the experimental treatments consecutively, and their responses to each treatment are measured.

Within-subjects or repeated measures can also refer to an experimental design where an effect emerges over time, and individual responses are measured over time in order to measure this effect as it emerges.

Counterbalancing (randomising or reversing the order of treatments among subjects) is often used in within-subjects designs to ensure that the order of treatment application doesn’t influence the results of the experiment.
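
One way to counterbalance is to use every possible treatment order equally often (complete counterbalancing). The sketch below assumes three treatment levels and six hypothetical subjects; the function name is illustrative, and in practice which subject receives which order would itself be decided at random.

```python
from itertools import permutations


def counterbalanced_orders(treatments, subjects):
    """Cycle through every possible treatment order so each is used equally often."""
    orders = list(permutations(treatments))
    return {s: orders[i % len(orders)] for i, s in enumerate(subjects)}


schedule = counterbalanced_orders(
    ["none", "low", "high"], [f"S{i}" for i in range(6)]
)
for subject, order in schedule.items():
    print(subject, order)
```

With three treatments there are six possible orders, so the number of subjects should be a multiple of six for the orders to be perfectly balanced.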

Step 5: Measure your dependent variable

Finally, you need to decide how you’ll collect data on your dependent variable outcomes. You should aim for reliable and valid measurements that minimise bias or error.

Some variables, like temperature, can be objectively measured with scientific instruments. Others may need to be operationalised to turn them into measurable observations. For example, to measure how long participants sleep, you could:

- Ask participants to record what time they go to sleep and get up each day.

- Ask participants to wear a sleep tracker.
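
If participants record their own bed and wake times, as in the first option above, those records still have to be turned into a number. The sketch below is one possible way to do that, assuming times are written as 24-hour `HH:MM` strings; the function name and input format are assumptions for illustration.

```python
from datetime import datetime, timedelta


def hours_slept(bedtime, wake_time):
    """Return hours between a 'HH:MM' bedtime and a 'HH:MM' wake time.

    Assumes the participant sleeps less than 24 hours and that a wake time
    not later on the clock than the bedtime falls on the following day.
    """
    fmt = "%H:%M"
    start = datetime.strptime(bedtime, fmt)
    end = datetime.strptime(wake_time, fmt)
    if end <= start:
        end += timedelta(days=1)
    return (end - start).total_seconds() / 3600


print(hours_slept("23:30", "07:00"))
print(hours_slept("01:00", "08:15"))
```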

How precisely you measure your dependent variable also affects the kinds of statistical analysis you can use on your data.

Experiments are always context-dependent, and a good experimental design will take into account all of the unique considerations of your study system to produce information that is both valid and relevant to your research question.

Frequently asked questions about experimental design

An experimental design is a set of procedures that you plan in order to examine the relationship between variables that interest you.

To design a successful experiment, first identify:

- A testable hypothesis

- One or more independent variables that you will manipulate

- One or more dependent variables that you will measure

When designing the experiment, first decide:

- How your variable(s) will be manipulated

- How you will control for any potential confounding or lurking variables

- How many subjects you will include

- How you will assign treatments to your subjects

The key difference between observational studies and experiments is that, done correctly, an observational study will never influence the responses or behaviours of participants. Experimental designs will have a treatment condition applied to at least a portion of participants.

A confounding variable, also called a confounder or confounding factor, is a third variable in a study examining a potential cause-and-effect relationship.

A confounding variable is related to both the supposed cause and the supposed effect of the study. It can be difficult to separate the true effect of the independent variable from the effect of the confounding variable.

In your research design, it’s important to identify potential confounding variables and plan how you will reduce their impact.

In a between-subjects design, every participant experiences only one condition, and researchers assess group differences between participants in various conditions.

In a within-subjects design, each participant experiences all conditions, and researchers test the same participants repeatedly for differences between conditions.

The word ‘between’ means that you’re comparing different conditions between groups, while the word ‘within’ means you’re comparing different conditions within the same group.

Bevans, R. (2022, December 05). A Quick Guide to Experimental Design | 5 Steps & Examples. Scribbr. Retrieved 21 May 2024, from https://www.scribbr.co.uk/research-methods/guide-to-experimental-design/

10 Experimental research

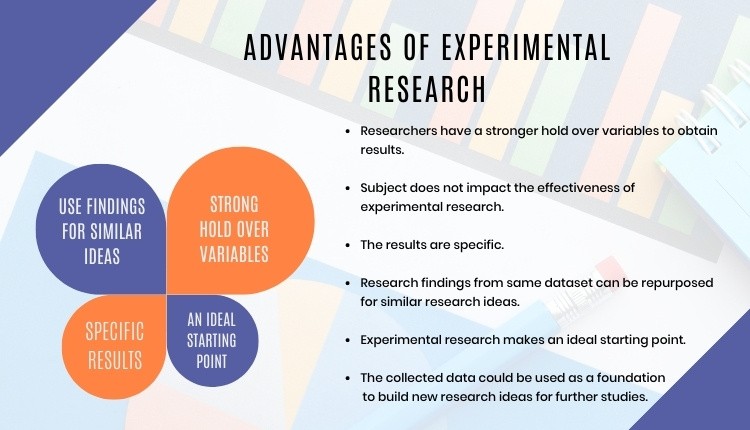

Experimental research, often considered to be the ‘gold standard’ in research designs, is one of the most rigorous of all research designs. In this design, one or more independent variables are manipulated by the researcher (as treatments), subjects are randomly assigned to different treatment levels (random assignment), and the results of the treatments on outcomes (dependent variables) are observed. The unique strength of experimental research is its internal validity (causality) due to its ability to link cause and effect through treatment manipulation, while controlling for the spurious effect of extraneous variables.

Experimental research is best suited for explanatory research (rather than for descriptive or exploratory research), where the goal of the study is to examine cause-effect relationships. It also works well for research that involves a relatively limited and well-defined set of independent variables that can either be manipulated or controlled. Experimental research can be conducted in laboratory or field settings. Laboratory experiments, conducted in laboratory (artificial) settings, tend to be high in internal validity, but this comes at the cost of low external validity (generalisability), because the artificial (laboratory) setting in which the study is conducted may not reflect the real world. Field experiments are conducted in field settings such as in a real organisation, and are high in both internal and external validity. But such experiments are relatively rare, because of the difficulties associated with manipulating treatments and controlling for extraneous effects in a field setting.

Experimental research can be grouped into two broad categories: true experimental designs and quasi-experimental designs. Both designs require treatment manipulation, but while true experiments also require random assignment, quasi-experiments do not. Sometimes, we also refer to non-experimental research, which is not really a research design, but an all-inclusive term that includes all types of research that do not employ treatment manipulation or random assignment, such as survey research, observational research, and correlational studies.

Basic concepts

Treatment and control groups. In experimental research, some subjects are administered one or more experimental stimuli called a treatment (the treatment group) while other subjects are not given such a stimulus (the control group). The treatment may be considered successful if subjects in the treatment group rate more favourably on outcome variables than control group subjects. Multiple levels of experimental stimulus may be administered, in which case, there may be more than one treatment group. For example, in order to test the effects of a new drug intended to treat a certain medical condition like dementia, if a sample of dementia patients is randomly divided into three groups, with the first group receiving a high dosage of the drug, the second group receiving a low dosage, and the third group receiving a placebo such as a sugar pill (control group), then the first two groups are experimental groups and the third group is a control group. After administering the drug for a period of time, if the condition of the experimental group subjects improved significantly more than that of the control group subjects, we can say that the drug is effective. We can also compare the conditions of the high and low dosage experimental groups to determine if the high dose is more effective than the low dose.

Treatment manipulation. Treatments are the unique feature of experimental research that sets this design apart from all other research methods. Treatment manipulation helps control for the ‘cause’ in cause-effect relationships. Naturally, the validity of experimental research depends on how well the treatment was manipulated. Treatment manipulation must be checked using pretests and pilot tests prior to the experimental study. Any measurements conducted before the treatment is administered are called pretest measures, while those conducted after the treatment are posttest measures.

Random selection and assignment. Random selection is the process of randomly drawing a sample from a population or a sampling frame. This approach is typically employed in survey research, and ensures that each unit in the population has a positive chance of being selected into the sample. Random assignment, however, is a process of randomly assigning subjects to experimental or control groups. This is a standard practice in true experimental research to ensure that treatment groups are similar (equivalent) to each other and to the control group prior to treatment administration. Random selection is related to sampling, and is therefore more closely related to the external validity (generalisability) of findings. However, random assignment is related to design, and is therefore most related to internal validity. It is possible to have both random selection and random assignment in well-designed experimental research, but quasi-experimental research involves neither random selection nor random assignment.

Threats to internal validity. Although experimental designs are considered more rigorous than other research methods in terms of the internal validity of their inferences (by virtue of their ability to control causes through treatment manipulation), they are not immune to internal validity threats. Some of these threats to internal validity are described below, within the context of a study of the impact of a special remedial math tutoring program for improving the math abilities of high school students.

History threat is the possibility that the observed effects (dependent variables) are caused by extraneous or historical events rather than by the experimental treatment. For instance, students’ post-remedial math score improvement may have been caused by their preparation for a math exam at their school, rather than the remedial math program.

Maturation threat refers to the possibility that observed effects are caused by natural maturation of subjects (e.g., a general improvement in their intellectual ability to understand complex concepts) rather than the experimental treatment.

Testing threat is a threat in pre-post designs where subjects’ posttest responses are conditioned by their pretest responses. For instance, if students remember their answers from the pretest evaluation, they may tend to repeat them in the posttest exam. Not conducting a pretest can help avoid this threat.

Instrumentation threat, which also occurs in pre-post designs, refers to the possibility that the difference between pretest and posttest scores is not due to the remedial math program, but due to changes in the administered test, such as the posttest having a higher or lower degree of difficulty than the pretest.

Mortality threat refers to the possibility that subjects may be dropping out of the study at differential rates between the treatment and control groups due to a systematic reason, such that the dropouts were mostly students who scored low on the pretest. If the low-performing students drop out, the results of the posttest will be artificially inflated by the preponderance of high-performing students.

Regression threat (also called regression to the mean) refers to the statistical tendency of a group’s overall performance to regress toward the mean during a posttest rather than in the anticipated direction. For instance, if subjects scored high on a pretest, they will have a tendency to score lower on the posttest (closer to the mean) because their high scores (away from the mean) during the pretest were possibly a statistical aberration. This problem tends to be more prevalent in non-random samples and when the two measures are imperfectly correlated.
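
Regression to the mean is easy to reproduce in a small simulation. The sketch below is purely illustrative: it assumes each simulated student has a stable ability plus independent random noise on each test, and that no treatment is applied at all. The score distribution and the top-10% selection rule are assumptions chosen for the example.

```python
import random
from statistics import mean

rng = random.Random(0)  # fixed seed so the simulation is reproducible

# Each simulated student has a stable ability; each test adds independent noise.
abilities = [rng.gauss(50, 10) for _ in range(10_000)]
pretest = [a + rng.gauss(0, 10) for a in abilities]
posttest = [a + rng.gauss(0, 10) for a in abilities]  # no treatment applied

# Select the top 10% of pretest scorers, as a non-random sample might.
cutoff = sorted(pretest)[int(0.9 * len(pretest))]
top = [i for i, score in enumerate(pretest) if score >= cutoff]

top_pre = mean(pretest[i] for i in top)
top_post = mean(posttest[i] for i in top)
print(f"top group pretest mean:  {top_pre:.1f}")
print(f"top group posttest mean: {top_post:.1f}")
```

Even though nothing was done to these students, the selected group scores lower on the posttest than on the pretest while still scoring above the overall average, which is the pattern a regression threat would produce.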

Two-group experimental designs

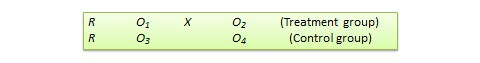

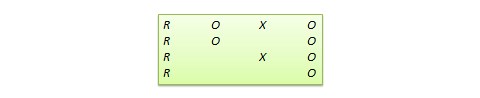

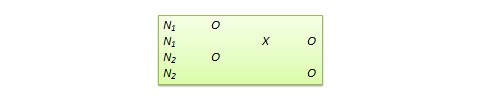

Pretest-posttest control group design. In this design, subjects are randomly assigned to treatment and control groups and subjected to an initial (pretest) measurement of the dependent variables of interest; the treatment group is then administered a treatment (representing the independent variable of interest), and the dependent variables are measured again (posttest). The notation of this design is shown in Figure 10.1.

Statistical analysis of this design involves a simple analysis of variance (ANOVA) between the treatment and control groups. The pretest-posttest design handles several threats to internal validity, such as maturation, testing, and regression, since these threats can be expected to influence both treatment and control groups in a similar (random) manner. The selection threat is controlled via random assignment. However, additional threats to internal validity may exist. For instance, mortality can be a problem if there are differential dropout rates between the two groups, and the pretest measurement may bias the posttest measurement—especially if the pretest introduces unusual topics or content.

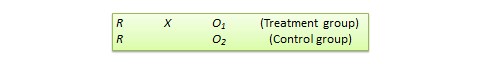

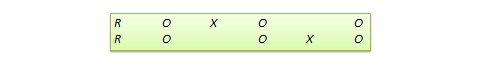

Posttest-only control group design. This design is a simpler version of the pretest-posttest design where pretest measurements are omitted. The design notation is shown in Figure 10.2.

The treatment effect is measured simply as the difference in the posttest scores between the two groups.

The appropriate statistical analysis of this design is also a two-group analysis of variance (ANOVA). The simplicity of this design makes it more attractive than the pretest-posttest design in terms of internal validity. This design controls for maturation, testing, regression, selection, and pretest-posttest interaction, though the mortality threat may continue to exist.
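
As a concrete illustration of the posttest-only analysis, the sketch below computes the treatment effect as the difference in group means and the one-way ANOVA F statistic by hand. The scores are invented for the example and the function name is an assumption; in practice a statistics package would also report the p-value.

```python
from statistics import mean


def one_way_anova_f(*groups):
    """Return the one-way ANOVA F statistic: between-group over within-group mean square."""
    all_scores = [x for g in groups for x in g]
    grand_mean = mean(all_scores)
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)


# Hypothetical posttest scores, invented purely for illustration.
treatment = [78, 85, 90, 72, 88]
control = [70, 75, 80, 68, 77]

effect = mean(treatment) - mean(control)
f_stat = one_way_anova_f(treatment, control)
print(f"treatment effect: {effect:.1f}, F = {f_stat:.2f}")
```

A larger F means the difference between the groups is large relative to the variation within them; whether it counts as significant depends on the degrees of freedom and the chosen significance level.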

Because the pretest measure in a covariance design is not a measurement of the dependent variable, but rather a covariate, the treatment effect is measured as the difference in the posttest scores between the treatment and control groups.

Due to the presence of covariates, the appropriate statistical analysis of this design is a two-group analysis of covariance (ANCOVA). This design has all the advantages of the posttest-only design, with added internal validity from controlling for covariates. Covariance designs can also be extended to the pretest-posttest control group design.

Factorial designs

Two-group designs are inadequate if your research requires manipulation of two or more independent variables (treatments). In such cases, you would need four or higher-group designs. Such designs, quite popular in experimental research, are commonly called factorial designs. Each independent variable in this design is called a factor, and each subdivision of a factor is called a level. Factorial designs enable the researcher to examine not only the individual effect of each treatment on the dependent variables (called main effects), but also their joint effect (called interaction effects).

In a factorial design, a main effect is said to exist if the dependent variable shows a significant difference between multiple levels of one factor, at all levels of other factors. No change in the dependent variable across factor levels is the null case (baseline), from which main effects are evaluated. In the above example, you may see a main effect of instructional type, instructional time, or both on learning outcomes. An interaction effect exists when the effect of differences in one factor depends upon the level of a second factor. In our example, if the effect of instructional type on learning outcomes is greater for three hours/week of instructional time than for one and a half hours/week, then we can say that there is an interaction effect between instructional type and instructional time on learning outcomes. Note that the presence of interaction effects dominates and makes main effects irrelevant, and it is not meaningful to interpret main effects if interaction effects are significant.
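
The distinction between main and interaction effects can be made concrete with cell means from a 2 x 2 design. In the sketch below, the learning-outcome means and the two instructional type labels are hypothetical, and the main effect is computed in the common descriptive way, as the simple effect averaged over the levels of the other factor. Judging whether any of these differences is significant would still require a factorial ANOVA.

```python
# Hypothetical cell means for a 2 x 2 design, invented for illustration:
# keys are (instructional type, hours of instruction per week).
cell_means = {
    ("in_class", 1.5): 60.0,
    ("in_class", 3.0): 65.0,
    ("online", 1.5): 62.0,
    ("online", 3.0): 75.0,
}


def simple_effect_of_type(hours):
    """Effect of switching instructional type at one level of instructional time."""
    return cell_means[("online", hours)] - cell_means[("in_class", hours)]


# Main effect of type: the simple effect averaged over both time levels.
main_effect_type = (simple_effect_of_type(1.5) + simple_effect_of_type(3.0)) / 2

# Interaction: how much the effect of type changes between the time levels.
interaction = simple_effect_of_type(3.0) - simple_effect_of_type(1.5)

print(main_effect_type, interaction)
```

In these made-up numbers the effect of instructional type is much larger at three hours per week than at one and a half, so the averaged main effect hides most of what is going on.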

Hybrid experimental designs

Hybrid designs are those that are formed by combining features of more established designs. Three such hybrid designs are randomised blocks design, Solomon four-group design, and switched replications design.

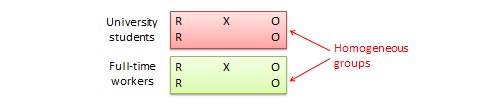

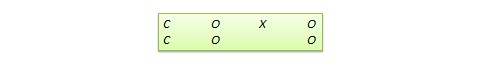

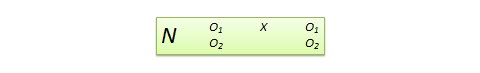

Randomised block design. This is a variation of the posttest-only or pretest-posttest control group design where the subject population can be grouped into relatively homogeneous subgroups (called blocks) within which the experiment is replicated. For instance, if you want to replicate the same posttest-only design among university students and full-time working professionals (two homogeneous blocks), subjects in both blocks are randomly split between the treatment group (receiving the same treatment) and the control group (see Figure 10.5). The purpose of this design is to reduce the ‘noise’ or variance in data that may be attributable to differences between the blocks so that the actual effect of interest can be detected more accurately.

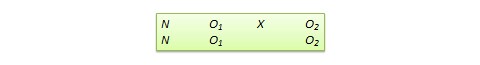

Solomon four-group design. In this design, the sample is divided into two treatment groups and two control groups. One treatment group and one control group receive the pretest, and the other two groups do not. This design represents a combination of posttest-only and pretest-posttest control group design, and is intended to test for the potential biasing effect of pretest measurement on posttest measures that tends to occur in pretest-posttest designs, but not in posttest-only designs. The design notation is shown in Figure 10.6.

Switched replication design. This is a two-group design implemented in two phases with three waves of measurement. The treatment group in the first phase serves as the control group in the second phase, and the control group in the first phase becomes the treatment group in the second phase, as illustrated in Figure 10.7. In other words, the original design is repeated or replicated temporally with treatment/control roles switched between the two groups. By the end of the study, all participants will have received the treatment either during the first or the second phase. This design is most feasible in organisational contexts where organisational programs (e.g., employee training) are implemented in a phased manner or are repeated at regular intervals.

Quasi-experimental designs

Quasi-experimental designs are almost identical to true experimental designs, but lack one key ingredient: random assignment. For instance, one entire class section or one organisation is used as the treatment group, while another section of the same class or a different organisation in the same industry is used as the control group. This lack of random assignment potentially results in groups that are non-equivalent, such as one group possessing greater mastery of certain content than the other group, say by virtue of having a better teacher in a previous semester, which introduces the possibility of selection bias. Quasi-experimental designs are therefore inferior to true experimental designs in internal validity due to the presence of a variety of selection-related threats such as selection-maturation threat (the treatment and control groups maturing at different rates), selection-history threat (the treatment and control groups being differentially impacted by extraneous or historical events), selection-regression threat (the treatment and control groups regressing toward the mean between pretest and posttest at different rates), selection-instrumentation threat (the treatment and control groups responding differently to the measurement), selection-testing (the treatment and control groups responding differently to the pretest), and selection-mortality (the treatment and control groups demonstrating differential dropout rates). Given these selection threats, it is generally preferable to avoid quasi-experimental designs to the greatest extent possible.

In addition, there are quite a few unique non-equivalent designs without corresponding true experimental design cousins. Some of the more useful of these designs are discussed next.

Regression discontinuity (RD) design. This is a non-equivalent pretest-posttest design where subjects are assigned to the treatment or control group based on a cut-off score on a preprogram measure. For instance, patients who are severely ill may be assigned to a treatment group to test the efficacy of a new drug or treatment protocol and those who are mildly ill are assigned to the control group. In another example, students who are lagging behind on standardised test scores may be selected for a remedial curriculum program intended to improve their performance, while those who score high on such tests are not selected for the remedial program.

Because of the use of a cut-off score, it is possible that the observed results may be a function of the cut-off score rather than the treatment, which introduces a new threat to internal validity. However, using the cut-off score also ensures that limited or costly resources are distributed to people who need them the most, rather than randomly across a population, while simultaneously allowing a quasi-experimental treatment. The control group scores in the RD design do not serve as a benchmark for comparing treatment group scores, given the systematic non-equivalence between the two groups. Rather, if there is no discontinuity between pretest and posttest scores in the control group, but such a discontinuity persists in the treatment group, then this discontinuity is viewed as evidence of the treatment effect.
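
One common way to look for such a discontinuity is to fit a separate straight line to the pretest-posttest relationship on each side of the cut-off and compare the two predictions at the cut-off itself. The sketch below does this on synthetic, noise-free data for the remedial program example; the cut-off of 50, the 10-point program effect, and the function names are all assumptions made for illustration, and this is not necessarily the exact procedure the chapter has in mind.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def discontinuity_at_cutoff(pre, post, cutoff):
    """Gap at the cut-off between lines fitted below it and at or above it."""
    below = [(x, y) for x, y in zip(pre, post) if x < cutoff]
    above = [(x, y) for x, y in zip(pre, post) if x >= cutoff]
    slope_b, icpt_b = fit_line(*zip(*below))
    slope_a, icpt_a = fit_line(*zip(*above))
    return (slope_b * cutoff + icpt_b) - (slope_a * cutoff + icpt_a)


# Synthetic, noise-free data: students scoring below 50 get the remedial
# program, which is assumed here to add exactly 10 points to the posttest.
pre = list(range(20, 81))
post = [x + 10 if x < 50 else x for x in pre]
jump = discontinuity_at_cutoff(pre, post, cutoff=50)
print(jump)
```

With real data the scores would be noisy, so the size of the jump would need to be judged against its uncertainty rather than read off directly.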

Proxy pretest design. This design, shown in Figure 10.11, looks very similar to the standard pretest-posttest non-equivalent groups design (NEGD), with one critical difference: the pretest score is collected after the treatment is administered. A typical application of this design is when a researcher is brought in to test the efficacy of a program (e.g., an educational program) after the program has already started and pretest data is not available. Under such circumstances, the best option for the researcher is often to use a different prerecorded measure, such as students’ grade point average before the start of the program, as a proxy for pretest data. A variation of the proxy pretest design is to use subjects’ posttest recollection of pretest data, which may be subject to recall bias, but nevertheless may provide a measure of perceived gain or change in the dependent variable.

Separate pretest-posttest samples design. This design is useful if it is not possible to collect pretest and posttest data from the same subjects for some reason. As shown in Figure 10.12, there are four groups in this design, but two groups come from a single non-equivalent group, while the other two groups come from a different non-equivalent group. For instance, say you want to test customer satisfaction with a new online service that is implemented in one city but not in another. In this case, customers in the first city serve as the treatment group and those in the second city constitute the control group. If it is not possible to obtain pretest and posttest measures from the same customers, you can measure customer satisfaction at one point in time, implement the new service program, and measure customer satisfaction (with a different set of customers) after the program is implemented. Customer satisfaction is also measured in the control group at the same times as in the treatment group, but without the new program implementation. The design is not particularly strong, because you cannot examine the changes in any specific customer’s satisfaction score before and after the implementation, but you can only examine average customer satisfaction scores. Despite the lower internal validity, this design may still be a useful way of collecting quasi-experimental data when pretest and posttest data is not available from the same subjects.

An interesting variation of the non-equivalent dependent variable (NEDV) design is a pattern-matching NEDV design, which employs multiple outcome variables and a theory that explains how much each variable will be affected by the treatment. The researcher can then examine if the theoretical prediction is matched in actual observations. This pattern-matching technique, based on the degree of correspondence between theoretical and observed patterns, is a powerful way of alleviating internal validity concerns in the original NEDV design.

Perils of experimental research

Experimental research is one of the most difficult of research designs, and should not be taken lightly. This type of research is often beset with a multitude of methodological problems. First, though experimental research requires theories for framing hypotheses for testing, much of current experimental research is atheoretical. Without theories, the hypotheses being tested tend to be ad hoc, possibly illogical, and meaningless. Second, many of the measurement instruments used in experimental research are not tested for reliability and validity, and are incomparable across studies. Consequently, results generated using such instruments are also incomparable. Third, often experimental research uses inappropriate research designs, such as irrelevant dependent variables, no interaction effects, no experimental controls, and non-equivalent stimuli across treatment groups. Findings from such studies tend to lack internal validity and are highly suspect. Fourth, the treatments (tasks) used in experimental research may be diverse, incomparable, and inconsistent across studies, and sometimes inappropriate for the subject population. For instance, undergraduate student subjects are often asked to pretend that they are marketing managers and asked to perform a complex budget allocation task in which they have no experience or expertise. The use of such inappropriate tasks introduces new threats to internal validity (i.e., a subject’s performance may be an artefact of the content or difficulty of the task setting), generates findings that are non-interpretable and meaningless, and makes integration of findings across studies impossible.

The design of proper experimental treatments is a very important task in experimental design and must never be rushed or neglected, because the treatment is the raison d’être of the experimental method. To design an adequate and appropriate task, researchers should use prevalidated tasks if available, conduct treatment manipulation checks to verify the adequacy of such tasks (by debriefing subjects after they perform the assigned task), conduct pilot tests (repeatedly, if necessary), and if in doubt, use tasks that are simple and familiar for the respondent sample rather than tasks that are complex or unfamiliar.

In summary, this chapter introduced key concepts in the experimental design research method and presented a variety of true experimental and quasi-experimental designs. Although these designs vary widely in internal validity, designs with less internal validity should not be overlooked and may sometimes be useful under specific circumstances and empirical contingencies.

Social Science Research: Principles, Methods and Practices (Revised edition) Copyright © 2019 by Anol Bhattacherjee is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License, except where otherwise noted.

Organizing Your Social Sciences Research Paper

Types of Research Designs

Introduction

Before beginning your paper, you need to decide how you plan to design the study.

The research design refers to the overall strategy and analytical approach that you have chosen in order to integrate, in a coherent and logical way, the different components of the study, thus ensuring that the research problem will be thoroughly investigated. It constitutes the blueprint for the collection, measurement, and interpretation of information and data. Note that the research problem determines the type of design you choose, not the other way around!

De Vaus, D. A. Research Design in Social Research. London: SAGE, 2001; Trochim, William M.K. Research Methods Knowledge Base. 2006.

General Structure and Writing Style

The function of a research design is to ensure that the evidence obtained enables you to effectively address the research problem logically and as unambiguously as possible. In social sciences research, obtaining information relevant to the research problem generally entails specifying the type of evidence needed to test the underlying assumptions of a theory, to evaluate a program, or to accurately describe and assess meaning related to an observable phenomenon.

With this in mind, a common mistake made by researchers is that they begin their investigations before they have thought critically about what information is required to address the research problem. Without attending to these design issues beforehand, the overall research problem will not be adequately addressed and any conclusions drawn will run the risk of being weak and unconvincing. As a consequence, the overall validity of the study will be undermined.

The length and complexity of describing the research design in your paper can vary considerably, but any well-developed description will achieve the following:

- Identify the research problem clearly and justify its selection, particularly in relation to any valid alternative designs that could have been used,

- Review and synthesize previously published literature associated with the research problem,

- Clearly and explicitly specify hypotheses [i.e., research questions] central to the problem,

- Effectively describe the information and/or data which will be necessary for an adequate testing of the hypotheses and explain how such information and/or data will be obtained, and

- Describe the methods of analysis to be applied to the data in determining whether the hypotheses are supported.

The research design is usually incorporated into the introduction of your paper. You can obtain an overall sense of what to do by reviewing studies that have utilized the same research design [e.g., using a case study approach]. This can help you develop an outline to follow for your own paper.

NOTE: Use the SAGE Research Methods Online and Cases and the SAGE Research Methods Videos databases to search for scholarly resources on how to apply specific research designs and methods. The Research Methods Online database contains links to more than 175,000 pages of SAGE publisher's book, journal, and reference content on quantitative, qualitative, and mixed research methodologies. Also included is a collection of case studies of social research projects that can be used to help you better understand abstract or complex methodological concepts. The Research Methods Videos database contains hours of tutorials, interviews, video case studies, and mini-documentaries covering the entire research process.

Creswell, John W. and J. David Creswell. Research Design: Qualitative, Quantitative, and Mixed Methods Approaches. 5th edition. Thousand Oaks, CA: Sage, 2018; De Vaus, D. A. Research Design in Social Research. London: SAGE, 2001; Gorard, Stephen. Research Design: Creating Robust Approaches for the Social Sciences. Thousand Oaks, CA: Sage, 2013; Leedy, Paul D. and Jeanne Ellis Ormrod. Practical Research: Planning and Design. Tenth edition. Boston, MA: Pearson, 2013; Vogt, W. Paul, Dianna C. Gardner, and Lynne M. Haeffele. When to Use What Research Design. New York: Guilford, 2012.

Action Research Design

Definition and Purpose

Action research design follows a characteristic cycle. Initially, an exploratory stance is adopted in which an understanding of a problem is developed and plans are made for some form of interventionary strategy. The intervention is then carried out [the "action" in action research], during which time pertinent observations are collected in various forms. New interventional strategies are then carried out, and this cyclic process repeats, continuing until a sufficient understanding of [or a valid implementation solution for] the problem is achieved. The protocol is iterative or cyclical in nature and is intended to foster deeper understanding of a given situation, starting with conceptualizing and particularizing the problem and moving through several interventions and evaluations.

What do these studies tell you?

- This is a collaborative and adaptive research design that lends itself to use in work or community situations.

- Design focuses on pragmatic and solution-driven research outcomes rather than testing theories.

- When practitioners use action research, it has the potential to increase the amount they learn consciously from their experience; the action research cycle can be regarded as a learning cycle.

- Action research studies often have direct and obvious relevance to improving practice and advocating for change.

- There are no hidden controls or preemption of direction by the researcher.

What these studies don't tell you?

- Action research is harder to conduct than conventional research because the researcher takes on responsibilities of advocating for change as well as for researching the topic.

- Action research is much harder to write up because it is less likely that you can use a standard format to report your findings effectively [i.e., data is often in the form of stories or observation].

- Personal over-involvement of the researcher may bias research results.

- The cyclic nature of action research to achieve its twin outcomes of action [e.g. change] and research [e.g. understanding] is time-consuming and complex to conduct.

- Advocating for change usually requires buy-in from study participants.

Coghlan, David and Mary Brydon-Miller. The Sage Encyclopedia of Action Research. Thousand Oaks, CA: Sage, 2014; Efron, Sara Efrat and Ruth Ravid. Action Research in Education: A Practical Guide. New York: Guilford, 2013; Gall, Meredith. Educational Research: An Introduction. Chapter 18, Action Research. 8th ed. Boston, MA: Pearson/Allyn and Bacon, 2007; Gorard, Stephen. Research Design: Creating Robust Approaches for the Social Sciences. Thousand Oaks, CA: Sage, 2013; Kemmis, Stephen and Robin McTaggart. “Participatory Action Research.” In Handbook of Qualitative Research. Norman Denzin and Yvonna S. Lincoln, eds. 2nd ed. (Thousand Oaks, CA: SAGE, 2000), pp. 567-605; McNiff, Jean. Writing and Doing Action Research. London: Sage, 2014; Reason, Peter and Hilary Bradbury. Handbook of Action Research: Participative Inquiry and Practice. Thousand Oaks, CA: SAGE, 2001.

Case Study Design

A case study is an in-depth study of a particular research problem rather than a sweeping statistical survey or comprehensive comparative inquiry. It is often used to narrow down a very broad field of research into one or a few easily researchable examples. The case study research design is also useful for testing whether a specific theory and model actually apply to phenomena in the real world. It is a useful design when not much is known about an issue or phenomenon.

What do these studies tell you?

- Approach excels at bringing us to an understanding of a complex issue through detailed contextual analysis of a limited number of events or conditions and their relationships.

- A researcher using a case study design can apply a variety of methodologies and rely on a variety of sources to investigate a research problem.

- Design can extend experience or add strength to what is already known through previous research.

- Social scientists, in particular, make wide use of this research design to examine contemporary real-life situations and provide the basis for the application of concepts and theories and the extension of methodologies.

- The design can provide detailed descriptions of specific and rare cases.

What these studies don't tell you?

- A single or small number of cases offers little basis for establishing reliability or for generalizing the findings to a wider population of people, places, or things.

- Intense exposure to the study of a case may bias a researcher's interpretation of the findings.

- Design does not facilitate assessment of cause and effect relationships.

- Vital information may be missing, making the case hard to interpret.

- The case may not be representative or typical of the larger problem being investigated.

- If a case is selected because it represents a very unusual or unique phenomenon or problem for study, then your interpretation of the findings can only apply to that particular case.

Case Studies. Writing@CSU. Colorado State University; Anastas, Jeane W. Research Design for Social Work and the Human Services. Chapter 4, Flexible Methods: Case Study Design. 2nd ed. New York: Columbia University Press, 1999; Gerring, John. “What Is a Case Study and What Is It Good for?” American Political Science Review 98 (May 2004): 341-354; Greenhalgh, Trisha, editor. Case Study Evaluation: Past, Present and Future Challenges. Bingley, UK: Emerald Group Publishing, 2015; Mills, Albert J., Gabrielle Durepos, and Elden Wiebe, editors. Encyclopedia of Case Study Research. Thousand Oaks, CA: SAGE Publications, 2010; Stake, Robert E. The Art of Case Study Research. Thousand Oaks, CA: SAGE, 1995; Yin, Robert K. Case Study Research: Design and Methods. Applied Social Research Methods Series, no. 5. 3rd ed. Thousand Oaks, CA: SAGE, 2003.

Causal Design

Causality studies may be thought of as understanding a phenomenon in terms of conditional statements in the form, “If X, then Y.” This type of research is used to measure what impact a specific change will have on existing norms and assumptions. Most social scientists seek causal explanations that reflect tests of hypotheses. Causal effect (nomothetic perspective) occurs when variation in one phenomenon, an independent variable, leads to or results, on average, in variation in another phenomenon, the dependent variable.

Conditions necessary for determining causality:

- Empirical association -- a valid conclusion is based on finding an association between the independent variable and the dependent variable.

- Appropriate time order -- to conclude that causation was involved, one must see that cases were exposed to variation in the independent variable before variation in the dependent variable.

- Nonspuriousness -- a relationship between two variables that is not due to variation in a third variable.
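The nonspuriousness condition can be illustrated with a small simulation. In the sketch below, a hypothetical third variable z drives both x and y, so x and y are associated even though neither causes the other. Controlling for z with a first-order partial correlation is one common check, chosen here as an illustrative assumption; the data are simulated and all names are hypothetical.

```python
import random
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length numeric sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def partial_r(xs, ys, zs):
    """Correlation between xs and ys after controlling for zs."""
    r_xy = pearson_r(xs, ys)
    r_xz = pearson_r(xs, zs)
    r_yz = pearson_r(ys, zs)
    return (r_xy - r_xz * r_yz) / sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


# Simulated data: z is a common cause; x and y do not influence each other.
rng = random.Random(42)
z = [rng.gauss(0, 1) for _ in range(5000)]
x = [zi + rng.gauss(0, 1) for zi in z]
y = [zi + rng.gauss(0, 1) for zi in z]

raw = pearson_r(x, y)          # clearly positive: a spurious association
adjusted = partial_r(x, y, z)  # near zero once z is held constant
print(f"raw r = {raw:.2f}, partial r controlling for z = {adjusted:.2f}")
```

In real studies the third variable is often unmeasured or unknown, which is why a check like this can weaken a causal claim but cannot by itself establish one.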

What do these studies tell you?

- Causality research designs assist researchers in understanding why the world works the way it does through the process of establishing a causal link between variables and by the process of eliminating other possibilities.

- Replication is possible.

- There is greater confidence the study has internal validity due to the systematic subject selection and equivalence of the groups being compared.

What these studies don't tell you?

- Not all relationships are causal! The possibility always exists that, by sheer coincidence, two unrelated events appear to be related [e.g., Punxsutawney Phil could accurately predict the duration of winter for five consecutive years but, the fact remains, he's just a big, furry rodent].

- Conclusions about causal relationships are difficult to determine due to a variety of extraneous and confounding variables that exist in a social environment. This means causality can only be inferred, never proven.

- If two variables are correlated, the cause must come before the effect. However, even though two variables might be causally related, it can sometimes be difficult to determine which variable comes first and, therefore, to establish which variable is the actual cause and which is the actual effect.

Beach, Derek and Rasmus Brun Pedersen. Causal Case Study Methods: Foundations and Guidelines for Comparing, Matching, and Tracing. Ann Arbor, MI: University of Michigan Press, 2016; Bachman, Ronet. The Practice of Research in Criminology and Criminal Justice. Chapter 5, Causation and Research Designs. 3rd ed. Thousand Oaks, CA: Pine Forge Press, 2007; Brewer, Ernest W. and Jennifer Kuhn. “Causal-Comparative Design.” In Encyclopedia of Research Design. Neil J. Salkind, editor. (Thousand Oaks, CA: Sage, 2010), pp. 125-132; Causal Research Design: Experimentation. Anonymous SlideShare Presentation; Gall, Meredith. Educational Research: An Introduction. Chapter 11, Nonexperimental Research: Correlational Designs. 8th ed. Boston, MA: Pearson/Allyn and Bacon, 2007; Trochim, William M.K. Research Methods Knowledge Base. 2006.

Cohort Design

Often used in the medical sciences, but also found in the applied social sciences, a cohort study generally refers to a study conducted over a period of time involving members of a population from which the subject or representative member comes, and who are united by some commonality or similarity. Using a quantitative framework, a cohort study makes note of statistical occurrence within a specialized subgroup, united by same or similar characteristics that are relevant to the research problem being investigated, rather than studying statistical occurrence within the general population. Using a qualitative framework, cohort studies generally gather data using methods of observation. Cohorts can be either "open" or "closed."

- Open Cohort Studies [dynamic populations, such as the population of Los Angeles] involve a population that is defined just by the state of being a part of the study in question (and being monitored for the outcome). Date of entry and exit from the study is individually defined; therefore, the size of the study population is not constant. In open cohort studies, researchers can only calculate rate-based data, such as incidence rates and variants thereof.

- Closed Cohort Studies [static populations, such as patients entered into a clinical trial] involve participants who enter into the study at one defining point in time and where it is presumed that no new participants can enter the cohort. Given this, the number of study participants remains constant (or can only decrease).
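Because participants in an open cohort enter and leave at different times, rate-based measures divide the number of events by the total time at risk rather than by a fixed head count. The sketch below is a minimal illustration of an incidence rate computed from person-years; the `Participant` record, its field names, and the four example participants are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Participant:
    entry_year: float   # time the person entered the study
    exit_year: float    # time of event, dropout, or end of follow-up
    had_event: bool     # whether the outcome of interest occurred


def incidence_rate(participants):
    """Events per person-year of follow-up."""
    person_years = sum(p.exit_year - p.entry_year for p in participants)
    if person_years <= 0:
        raise ValueError("total follow-up time must be positive")
    events = sum(1 for p in participants if p.had_event)
    return events / person_years


# Hypothetical open cohort: entry and exit differ for every participant.
cohort = [
    Participant(0.0, 5.0, False),
    Participant(1.0, 3.0, True),
    Participant(2.5, 5.0, False),
    Participant(0.0, 0.5, True),
]

rate = incidence_rate(cohort)
print(f"{rate * 1000:.0f} events per 1,000 person-years")
```

Here two events occur over ten person-years of follow-up, so the rate reflects how long each person was actually observed rather than how many people happened to be enrolled at any one moment.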

What do these studies tell you?

- The use of cohorts is often mandatory because a randomized controlled study may be unethical. For example, you cannot deliberately expose people to asbestos; you can only study its effects on those who have already been exposed. Research that measures risk factors often relies upon cohort designs.

- Because cohort studies measure potential causes before the outcome has occurred, they can demonstrate that these “causes” preceded the outcome, thereby avoiding the debate as to which is the cause and which is the effect.

- Cohort analysis is highly flexible and can provide insight into effects over time and related to a variety of different types of changes [e.g., social, cultural, political, economic, etc.].

- Either original data or secondary data can be used in this design.

What these studies don't tell you?

- In cases where a comparative analysis of two cohorts is made [e.g., studying the effects of one group exposed to asbestos and one that has not], a researcher cannot control for all other factors that might differ between the two groups. These factors are known as confounding variables.

- Cohort studies can end up taking a long time to complete if the researcher must wait for the conditions of interest to develop within the group. This also increases the chance that key variables change during the course of the study, potentially impacting the validity of the findings.

- Due to the lack of randomization in the cohort design, its internal validity is lower than that of study designs where the researcher randomly assigns participants.

Healy P, Devane D. “Methodological Considerations in Cohort Study Designs.” Nurse Researcher 18 (2011): 32-36; Glenn, Norval D, editor. Cohort Analysis. 2nd edition. Thousand Oaks, CA: Sage, 2005; Levin, Kate Ann. Study Design IV: Cohort Studies. Evidence-Based Dentistry 7 (2003): 51–52; Payne, Geoff. “Cohort Study.” In The SAGE Dictionary of Social Research Methods. Victor Jupp, editor. (Thousand Oaks, CA: Sage, 2006), pp. 31-33; Study Design 101. Himmelfarb Health Sciences Library. George Washington University, November 2011; Cohort Study. Wikipedia.

Cross-Sectional Design

Cross-sectional research designs have three distinctive features: no time dimension; a reliance on existing differences rather than change following intervention; and, groups are selected based on existing differences rather than random allocation. The cross-sectional design can only measure differences between or from among a variety of people, subjects, or phenomena rather than a process of change. As such, researchers using this design can only employ a relatively passive approach to making causal inferences based on findings.

What do these studies tell you?

- Cross-sectional studies provide a clear 'snapshot' of the outcome and the characteristics associated with it, at a specific point in time.

- Unlike an experimental design, where there is an active intervention by the researcher to produce and measure change or to create differences, cross-sectional designs focus on studying and drawing inferences from existing differences between people, subjects, or phenomena.

- Entails collecting data at and concerning one point in time. While longitudinal studies involve taking multiple measures over an extended period of time, cross-sectional research is focused on finding relationships between variables at one moment in time.

- Groups identified for study are purposely selected based upon existing differences in the sample rather than seeking random sampling.

- Cross-sectional studies are capable of using data from a large number of subjects and, unlike observational studies, are not geographically bound.

- Can estimate prevalence of an outcome of interest because the sample is usually taken from the whole population.

- Because cross-sectional designs generally use survey techniques to gather data, they are relatively inexpensive and take up little time to conduct.

What these studies don't tell you?

- Finding people, subjects, or phenomena to study that are very similar except in one specific variable can be difficult.

- Results are static and time bound and, therefore, give no indication of a sequence of events or reveal historical or temporal contexts.

- Studies cannot be utilized to establish cause and effect relationships.

- This design only provides a snapshot of analysis so there is always the possibility that a study could have differing results if another time-frame had been chosen.

- There is no follow up to the findings.
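The prevalence estimate mentioned in the list above can be sketched in a few lines. The example assumes a hypothetical survey in which 30 of 200 respondents report the outcome, and it attaches a 95% Wilson score interval to the point estimate; the choice of interval method and the function name are illustrative assumptions, not something the design prescribes.

```python
from math import sqrt


def prevalence_with_ci(cases, sample_size, z=1.96):
    """Point prevalence and an approximate 95% Wilson score interval."""
    if sample_size <= 0 or not 0 <= cases <= sample_size:
        raise ValueError("need 0 <= cases <= sample_size and sample_size > 0")
    p = cases / sample_size
    denom = 1 + z ** 2 / sample_size
    center = (p + z ** 2 / (2 * sample_size)) / denom
    half_width = (
        z * sqrt(p * (1 - p) / sample_size + z ** 2 / (4 * sample_size ** 2))
    ) / denom
    return p, center - half_width, center + half_width


# Hypothetical survey taken at a single point in time.
p, low, high = prevalence_with_ci(cases=30, sample_size=200)
print(f"prevalence = {p:.1%} (95% CI {low:.1%} to {high:.1%})")
```

The interval describes sampling uncertainty at that one moment only; it says nothing about how prevalence changes over time, which is the limitation noted above.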

Bethlehem, Jelke. "7: Cross-sectional Research." In Research Methodology in the Social, Behavioural and Life Sciences. Herman J Adèr and Gideon J Mellenbergh, editors. (London, England: Sage, 1999), pp. 110-43; Bourque, Linda B. “Cross-Sectional Design.” In The SAGE Encyclopedia of Social Science Research Methods. Michael S. Lewis-Beck, Alan Bryman, and Tim Futing Liao. (Thousand Oaks, CA: 2004), pp. 230-231; Hall, John. “Cross-Sectional Survey Design.” In Encyclopedia of Survey Research Methods. Paul J. Lavrakas, ed. (Thousand Oaks, CA: Sage, 2008), pp. 173-174; Helen Barratt, Maria Kirwan. Cross-Sectional Studies: Design Application, Strengths and Weaknesses of Cross-Sectional Studies. Healthknowledge, 2009; Cross-Sectional Study. Wikipedia.

Descriptive Design

Descriptive research designs help provide answers to the questions of who, what, when, where, and how associated with a particular research problem; a descriptive study cannot conclusively ascertain answers to why. Descriptive research is used to obtain information concerning the current status of the phenomena and to describe "what exists" with respect to variables or conditions in a situation.

What do these studies tell you?

- The subject is being observed in a completely natural and unchanged environment. True experiments, whilst giving analyzable data, often adversely influence the normal behavior of the subject [a.k.a., the Heisenberg effect whereby measurements of certain systems cannot be made without affecting the systems].

- Descriptive research is often used as a precursor to more quantitative research designs with the general overview giving some valuable pointers as to what variables are worth testing quantitatively.

- If the limitations are understood, descriptive studies can be a useful tool in developing a more focused study.

- Descriptive studies can yield rich data that lead to important recommendations in practice.

- Approach collects a large amount of data for detailed analysis.

What these studies don't tell you?

- The results from descriptive research cannot be used to discover a definitive answer or to disprove a hypothesis.

- Because descriptive designs often utilize observational methods [as opposed to quantitative methods], the results cannot be replicated.

- The descriptive function of research is heavily dependent on instrumentation for measurement and observation.

Anastas, Jeane W. Research Design for Social Work and the Human Services. Chapter 5, Flexible Methods: Descriptive Research. 2nd ed. New York: Columbia University Press, 1999; Given, Lisa M. "Descriptive Research." In Encyclopedia of Measurement and Statistics. Neil J. Salkind and Kristin Rasmussen, editors. (Thousand Oaks, CA: Sage, 2007), pp. 251-254; McNabb, Connie. Descriptive Research Methodologies. Powerpoint Presentation; Shuttleworth, Martyn. Descriptive Research Design, September 26, 2008; Erickson, G. Scott. "Descriptive Research Design." In New Methods of Market Research and Analysis. (Northampton, MA: Edward Elgar Publishing, 2017), pp. 51-77; Sahin, Sagufta, and Jayanta Mete. "A Brief Study on Descriptive Research: Its Nature and Application in Social Science." International Journal of Research and Analysis in Humanities 1 (2021): 11; K. Swatzell and P. Jennings. “Descriptive Research: The Nuts and Bolts.” Journal of the American Academy of Physician Assistants 20 (2007), pp. 55-56; Kane, E. Doing Your Own Research: Basic Descriptive Research in the Social Sciences and Humanities. London: Marion Boyars, 1985.

Experimental Design

An experimental design is a blueprint of the procedure that enables the researcher to maintain control over all factors that may affect the result of an experiment. In doing this, the researcher attempts to determine or predict what may occur. Experimental research is often used where there is time priority in a causal relationship (cause precedes effect), there is consistency in a causal relationship (a cause will always lead to the same effect), and the magnitude of the correlation is great. The classic experimental design specifies an experimental group and a control group. The independent variable is administered to the experimental group and not to the control group, and both groups are measured on the same dependent variable. Subsequent experimental designs have used more groups and more measurements over longer periods. True experiments must have control, randomization, and manipulation.
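The classic two-group design can be sketched in code. The example below randomly splits a hypothetical list of subject IDs into an experimental and a control group, then compares the two groups on the same dependent variable with a simple difference in means. The function names, the seed, and the scores are illustrative assumptions; a real analysis would normally add a significance test.

```python
import random
from statistics import mean


def randomly_assign(subject_ids, seed=None):
    """Shuffle subjects and split them into two groups of (near) equal size."""
    rng = random.Random(seed)
    shuffled = list(subject_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def mean_difference(experimental_scores, control_scores):
    """Difference in group means on the dependent variable."""
    return mean(experimental_scores) - mean(control_scores)


# Hypothetical pool of 20 subjects; the seed only makes the split repeatable.
subjects = list(range(1, 21))
experimental, control = randomly_assign(subjects, seed=7)
print("experimental group:", sorted(experimental))
print("control group:     ", sorted(control))

# Hypothetical post-treatment scores measured the same way in both groups.
effect = mean_difference([5, 6, 7], [4, 5, 6])
print("difference in means:", effect)
```

Random assignment is what allows pre-existing differences between subjects to be treated as chance variation rather than as a competing explanation for the observed difference.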

What do these studies tell you?

- Experimental research allows the researcher to control the situation. In so doing, it allows researchers to answer the question, “What causes something to occur?”

- Permits the researcher to identify cause and effect relationships between variables and to distinguish placebo effects from treatment effects.

- Experimental research designs support the ability to limit alternative explanations and to infer direct causal relationships in the study.

- Approach provides the highest level of evidence for single studies.

What these studies don't tell you?

- The design is artificial, and results may not generalize well to the real world.

- The artificial settings of experiments may alter the behaviors or responses of participants.

- Experimental designs can be costly if special equipment or facilities are needed.

- Some research problems cannot be studied using an experiment because of ethical or technical reasons.

- Difficult to apply ethnographic and other qualitative methods to experimentally designed studies.

Anastas, Jeane W. Research Design for Social Work and the Human Services. Chapter 7, Flexible Methods: Experimental Research. 2nd ed. New York: Columbia University Press, 1999; Chapter 2: Research Design, Experimental Designs. School of Psychology, University of New England, 2000; Chow, Siu L. "Experimental Design." In Encyclopedia of Research Design. Neil J. Salkind, editor. (Thousand Oaks, CA: Sage, 2010), pp. 448-453; "Experimental Design." In Social Research Methods. Nicholas Walliman, editor. (London, England: Sage, 2006), pp. 101-110; Experimental Research. Research Methods by Dummies. Department of Psychology. California State University, Fresno, 2006; Kirk, Roger E. Experimental Design: Procedures for the Behavioral Sciences. 4th edition. Thousand Oaks, CA: Sage, 2013; Trochim, William M.K. Experimental Design. Research Methods Knowledge Base. 2006; Rasool, Shafqat. Experimental Research. Slideshare presentation.

Exploratory Design

An exploratory design is conducted about a research problem when there are few or no earlier studies to refer to or rely upon to predict an outcome. The focus is on gaining insights and familiarity for later investigation, or the design is undertaken when research problems are in a preliminary stage of investigation. Exploratory designs are often used to establish an understanding of how best to proceed in studying an issue or what methodology would effectively apply to gathering information about the issue.

Exploratory research is intended to produce the following possible insights:

- Familiarity with basic details, settings, and concerns.

- Well-grounded picture of the situation being developed.

- Generation of new ideas and assumptions.

- Development of tentative theories or hypotheses.

- Determination about whether a study is feasible in the future.

- Issues get refined for more systematic investigation and formulation of new research questions.

- Direction for future research and techniques get developed.

What do these studies tell you?

- Design is a useful approach for gaining background information on a particular topic.

- Exploratory research is flexible and can address research questions of all types (what, why, how).

- Provides an opportunity to define new terms and clarify existing concepts.

- Exploratory research is often used to generate formal hypotheses and develop more precise research problems.

- In the policy arena or applied to practice, exploratory studies help establish research priorities and where resources should be allocated.

What these studies don't tell you?

- Exploratory research generally utilizes small sample sizes and, thus, findings are typically not generalizable to the population at large.

- The exploratory nature of the research limits the ability to draw definitive conclusions about the findings. Such studies provide insight but not definitive conclusions.

- The research process underpinning exploratory studies is flexible but often unstructured, leading to only tentative results that have limited value to decision-makers.

- Design lacks rigorous standards applied to methods of data gathering and analysis because one of the areas for exploration could be to determine what method or methodologies could best fit the research problem.

Cuthill, Michael. “Exploratory Research: Citizen Participation, Local Government, and Sustainable Development in Australia.” Sustainable Development 10 (2002): 79-89; Streb, Christoph K. "Exploratory Case Study." In Encyclopedia of Case Study Research. Albert J. Mills, Gabrielle Durepos and Elden Wiebe, editors. (Thousand Oaks, CA: Sage, 2010), pp. 372-374; Taylor, P. J., G. Catalano, and D.R.F. Walker. “Exploratory Analysis of the World City Network.” Urban Studies 39 (December 2002): 2377-2394; Exploratory Research. Wikipedia.

Field Research Design

Sometimes referred to as ethnography or participant observation, designs around field research encompass a variety of interpretative procedures [e.g., observation and interviews] rooted in qualitative approaches to studying people individually or in groups while inhabiting their natural environment as opposed to using survey instruments or other forms of impersonal methods of data gathering. Information acquired from observational research takes the form of “field notes” that involve documenting what the researcher actually sees and hears while in the field. Findings do not consist of conclusive statements derived from numbers and statistics because field research involves analysis of words and observations of behavior. Conclusions, therefore, are developed from an interpretation of findings that reveal overriding themes, concepts, and ideas.

What do these studies tell you?

- Field research is often necessary to fill gaps in understanding the research problem applied to local conditions or to specific groups of people that cannot be ascertained from existing data.

- The research helps contextualize already known information about a research problem, thereby facilitating ways to assess the origins, scope, and scale of a problem and to gauge the causes, consequences, and means to resolve an issue based on deliberate interaction with people in their natural inhabited spaces.

- Enables the researcher to corroborate or confirm data by gathering additional information that supports or refutes findings reported in prior studies of the topic.

- Because the researcher is embedded in the field, they are better able to make observations or ask questions that reflect the specific cultural context of the setting being investigated.

- Observing the local reality offers the opportunity to gain new perspectives or obtain unique data that challenges existing theoretical propositions or long-standing assumptions found in the literature.

What these studies don't tell you

- A field research study requires extensive time and resources to carry out the multiple steps involved in preparing to gather information, including, for example, examining background information about the study site, obtaining permission to access the study site, and building trust and rapport with subjects.

- Requires a commitment to staying engaged in the field to ensure that you can adequately document events and behaviors as they unfold.

- The unpredictable nature of fieldwork means that researchers can never fully control the process of data gathering. They must maintain a flexible approach to studying the setting because events and circumstances can change quickly or unexpectedly.

- Findings can be difficult to interpret and verify without access to documents and other source materials that help to enhance the credibility of information obtained from the field [i.e., the act of triangulating the data].

- Linking the research problem to the selection of study participants inhabiting their natural environment is critical. However, this specificity limits the ability to generalize findings to different situations or in other contexts or to infer courses of action applied to other settings or groups of people.

- The reporting of findings must take into account how the researcher themselves may have inadvertently affected respondents and their behaviors.

Historical Design

The purpose of a historical research design is to collect, verify, and synthesize evidence from the past to establish facts that defend or refute a hypothesis. It uses secondary sources and a variety of primary documentary evidence, such as diaries, official records, reports, archives, and non-textual information [maps, pictures, audio and visual recordings]. The limitation is that the sources must be both authentic and valid.

What do these studies tell you?

- The historical research design is unobtrusive; the act of research does not affect the results of the study.

- The historical approach is well suited for trend analysis.

- Historical records can add important contextual background required to more fully understand and interpret a research problem.

- There is often no possibility of researcher-subject interaction that could affect the findings.

- Historical sources can be used over and over to study different research problems or to replicate a previous study.

What these studies don't tell you

- The ability to fulfill the aims of your research is directly related to the amount and quality of documentation available to understand the research problem.

- Since historical research relies on data from the past, there is no way to manipulate it to control for contemporary contexts.

- Interpreting historical sources can be very time consuming.

- The sources of historical materials must be archived consistently to ensure access. This may be especially challenging for digital or online-only sources.

- Original authors bring their own perspectives and biases to the interpretation of past events and these biases are more difficult to ascertain in historical resources.

- Due to the lack of control over external variables, historical research is very weak with regard to the demands of internal validity.

- It is rare that the entirety of historical documentation needed to fully address a research problem is available for interpretation; therefore, gaps need to be acknowledged.

Howell, Martha C. and Walter Prevenier. From Reliable Sources: An Introduction to Historical Methods. Ithaca, NY: Cornell University Press, 2001; Lundy, Karen Saucier. "Historical Research." In The Sage Encyclopedia of Qualitative Research Methods. Lisa M. Given, editor. (Thousand Oaks, CA: Sage, 2008), pp. 396-400; Marius, Richard and Melvin E. Page. A Short Guide to Writing about History. 9th edition. Boston, MA: Pearson, 2015; Savitt, Ronald. “Historical Research in Marketing.” Journal of Marketing 44 (Autumn, 1980): 52-58; Gall, Meredith. Educational Research: An Introduction. Chapter 16, Historical Research. 8th ed. Boston, MA: Pearson/Allyn and Bacon, 2007.

Longitudinal Design

A longitudinal study follows the same sample over time and makes repeated observations. For example, with longitudinal surveys, the same group of people is interviewed at regular intervals, enabling researchers to track changes over time and to relate them to variables that might explain why the changes occur. Longitudinal research designs describe patterns of change and help establish the direction and magnitude of causal relationships. Measurements are taken on each variable over two or more distinct time periods. This allows the researcher to measure change in variables over time. It is a type of observational study sometimes referred to as a panel study.

What do these studies tell you?

- Longitudinal data facilitate the analysis of the duration of a particular phenomenon.

- Enables survey researchers to get close to the kinds of causal explanations usually attainable only with experiments.

- The design permits the measurement of differences or change in a variable from one period to another [i.e., the description of patterns of change over time].

- Longitudinal studies facilitate the prediction of future outcomes based upon earlier factors.

What these studies don't tell you

- The data collection method may change over time.

- Maintaining the integrity of the original sample can be difficult over an extended period of time.

- It can be difficult to show more than one variable at a time.

- This design often needs qualitative research data to explain fluctuations in the results.

- A longitudinal research design assumes present trends will continue unchanged.

- It can take a long period of time to gather results.

- There is a need to have a large sample size and accurate sampling to reach representativeness.
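
The idea of measuring change in the same subjects across waves, and the difficulty of keeping the original sample intact, can be illustrated with a minimal Python sketch. The data layout (a dictionary of subject IDs mapped to one measurement per wave, with `None` marking a missed wave) and the function names `wave_changes` and `retention_rate` are assumptions made for illustration, not part of any standard tool.

```python
def wave_changes(panel):
    """Return last-minus-first observed value for each subject.

    `panel` maps a subject id to a list of measurements, one per wave,
    with None for a wave in which the subject was not observed.
    Subjects observed fewer than twice are skipped, because no change
    can be computed for them.
    """
    changes = {}
    for subject, waves in panel.items():
        observed = [value for value in waves if value is not None]
        if len(observed) >= 2:
            changes[subject] = observed[-1] - observed[0]
    return changes


def retention_rate(panel, wave):
    """Return the share of subjects with a recorded value at wave index `wave`."""
    if not panel:
        raise ValueError("panel must contain at least one subject")
    present = sum(
        1 for waves in panel.values()
        if wave < len(waves) and waves[wave] is not None
    )
    return present / len(panel)


# Hypothetical three-wave panel; subject "c" drops out after the first wave.
panel = {
    "a": [10, 12, 15],
    "b": [8, None, 7],
    "c": [5, None, None],
}
print(wave_changes(panel))
print(retention_rate(panel, 2))
```

A real analysis would normally model all waves together rather than only the first and last observation; the sketch shows only the repeated-measures structure and how attrition shrinks the usable sample.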

Anastas, Jeane W. Research Design for Social Work and the Human Services. Chapter 6, Flexible Methods: Relational and Longitudinal Research. 2nd ed. New York: Columbia University Press, 1999; Forgues, Bernard, and Isabelle Vandangeon-Derumez. "Longitudinal Analyses." In Doing Management Research. Raymond-Alain Thiétart and Samantha Wauchope, editors. (London, England: Sage, 2001), pp. 332-351; Kalaian, Sema A. and Rafa M. Kasim. "Longitudinal Studies." In Encyclopedia of Survey Research Methods. Paul J. Lavrakas, ed. (Thousand Oaks, CA: Sage, 2008), pp. 440-441; Menard, Scott, editor. Longitudinal Research. Thousand Oaks, CA: Sage, 2002; Ployhart, Robert E. and Robert J. Vandenberg. “Longitudinal Research: The Theory, Design, and Analysis of Change.” Journal of Management 36 (January 2010): 94-120; Longitudinal Study. Wikipedia.

Meta-Analysis Design

Meta-analysis is an analytical methodology designed to systematically evaluate and summarize the results from a number of individual studies, thereby increasing the overall sample size and the ability of the researcher to study effects of interest. The purpose is not simply to summarize existing knowledge, but to develop a new understanding of a research problem using synoptic reasoning. The main objectives of meta-analysis include analyzing differences in the results among studies and increasing the precision with which effects are estimated. A well-designed meta-analysis depends upon strict adherence to the criteria used for selecting studies and the availability of information in each study to properly analyze its findings. Lack of information can severely limit the types of analyses and conclusions that can be reached. In addition, the more dissimilarity there is in the results among individual studies [heterogeneity], the more difficult it is to justify interpretations that govern a valid synopsis of results. A meta-analysis needs to fulfill the following requirements to ensure the validity of your findings:

- Clearly defined description of objectives, including precise definitions of the variables and outcomes that are being evaluated;

- A well-reasoned and well-documented justification for identification and selection of the studies;

- Assessment and explicit acknowledgment of any researcher bias in the identification and selection of those studies;

- Description and evaluation of the degree of heterogeneity among the sample of studies reviewed; and,

- Justification of the techniques used to evaluate the studies.

What do these studies tell you?

- Can be an effective strategy for determining gaps in the literature.

- Provides a means of reviewing research published about a particular topic over an extended period of time and from a variety of sources.

- Is useful in clarifying what policy or programmatic actions can be justified on the basis of analyzing research results from multiple studies.

- Provides a method for overcoming small sample sizes in individual studies that previously may have had little relationship to each other.

- Can be used to generate new hypotheses or highlight research problems for future studies.

What these studies don't tell you

- Small violations in defining the criteria used for content analysis can lead to findings that are difficult to interpret or meaningless.

- A large sample size can yield reliable, but not necessarily valid, results.

- A lack of uniformity regarding, for example, the type of literature reviewed, how methods are applied, and how findings are measured within the sample of studies you are analyzing, can make the process of synthesis difficult to perform.

- Depending on the sample size, the process of reviewing and synthesizing multiple studies can be very time consuming.
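
To make the ideas of pooled precision and heterogeneity concrete, the following Python sketch combines study-level effect estimates using inverse-variance (fixed-effect) weighting and reports Cochran's Q and the I² statistic. This is one common approach chosen for illustration, not a method the text prescribes; the function name `pool_fixed_effect` and the example numbers are hypothetical.

```python
import math


def pool_fixed_effect(effects, variances):
    """Pool study effects with inverse-variance (fixed-effect) weights.

    Returns the pooled effect, its standard error, Cochran's Q, and
    I^2 (the percentage of variation attributed to heterogeneity).
    """
    if len(effects) != len(variances) or len(effects) < 2:
        raise ValueError("need at least two studies with matching variances")
    if any(v <= 0 for v in variances):
        raise ValueError("variances must be positive")

    # More precise studies (smaller variance) receive larger weights.
    weights = [1.0 / v for v in variances]
    total_weight = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, effects)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)

    # Cochran's Q: weighted squared deviations from the pooled effect.
    q = sum(w * (e - pooled) ** 2 for w, e in zip(weights, effects))
    df = len(effects) - 1
    i_squared = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0

    return {"pooled": pooled, "se": pooled_se, "q": q, "i_squared": i_squared}


# Hypothetical effect sizes and variances from three studies.
result = pool_fixed_effect([0.30, 0.45, 0.10], [0.02, 0.05, 0.03])
print(result)
```

The pooled standard error is smaller than that of any single study, which is the sense in which combining studies increases precision. A large I² signals the kind of dissimilarity among results that makes a single summary harder to justify; in that case a random-effects model is often considered instead.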

Beck, Lewis W. "The Synoptic Method." The Journal of Philosophy 36 (1939): 337-345; Cooper, Harris, Larry V. Hedges, and Jeffrey C. Valentine, eds. The Handbook of Research Synthesis and Meta-Analysis. 2nd edition. New York: Russell Sage Foundation, 2009; Guzzo, Richard A., Susan E. Jackson and Raymond A. Katzell. “Meta-Analysis Analysis.” In Research in Organizational Behavior, Volume 9. (Greenwich, CT: JAI Press, 1987), pp. 407-442; Lipsey, Mark W. and David B. Wilson. Practical Meta-Analysis. Thousand Oaks, CA: Sage Publications, 2001; Study Design 101. Meta-Analysis. The Himmelfarb Health Sciences Library, George Washington University; Timulak, Ladislav. “Qualitative Meta-Analysis.” In The SAGE Handbook of Qualitative Data Analysis. Uwe Flick, editor. (Los Angeles, CA: Sage, 2013), pp. 481-495; Walker, Esteban, Adrian V. Hernandez, and Michael W. Kattan. "Meta-Analysis: Its Strengths and Limitations." Cleveland Clinic Journal of Medicine 75 (June 2008): 431-439.

Mixed-Method Design

What do these studies tell you?

- Narrative and non-textual information can add meaning to numeric data, while numeric data can add precision to narrative and non-textual information.

- Can utilize existing data while at the same time generating and testing a grounded theory approach to describe and explain the phenomenon under study.

- A broader, more complex research problem can be investigated because the researcher is not constrained by using only one method.

- The strengths of one method can be used to overcome the inherent weaknesses of another method.

- Can provide stronger, more robust evidence to support a conclusion or set of recommendations.

- May generate new knowledge and insights or uncover hidden patterns or relationships that a single methodological approach might not reveal.

- Produces more complete knowledge and understanding of the research problem that can be used to increase the generalizability of findings applied to theory or practice.

What these studies don't tell you

- A researcher must be proficient in understanding how to apply multiple methods to investigating a research problem as well as be proficient in optimizing how to design a study that coherently melds them together.

- Can increase the likelihood of conflicting results or ambiguous findings that inhibit drawing a valid conclusion or setting forth a recommended course of action [e.g., sample interview responses do not support existing statistical data].

- Because the research design can be very complex, reporting the findings requires a well-organized narrative, clear writing style, and precise word choice.

- Design invites collaboration among experts. However, merging different investigative approaches and writing styles requires more attention to the overall research process than studies conducted using only one methodological paradigm.

- Concurrent merging of quantitative and qualitative research requires greater attention to having adequate sample sizes, using comparable samples, and applying a consistent unit of analysis. For sequential designs where one phase of qualitative research builds on the quantitative phase or vice versa, decisions about what results from the first phase to use in the next phase, the choice of samples and estimating reasonable sample sizes for both phases, and the interpretation of results from both phases can be difficult.

- Due to multiple forms of data being collected and analyzed, this design requires extensive time and resources to carry out the multiple steps involved in data gathering and interpretation.