5 Quasi-Experimental Design Examples

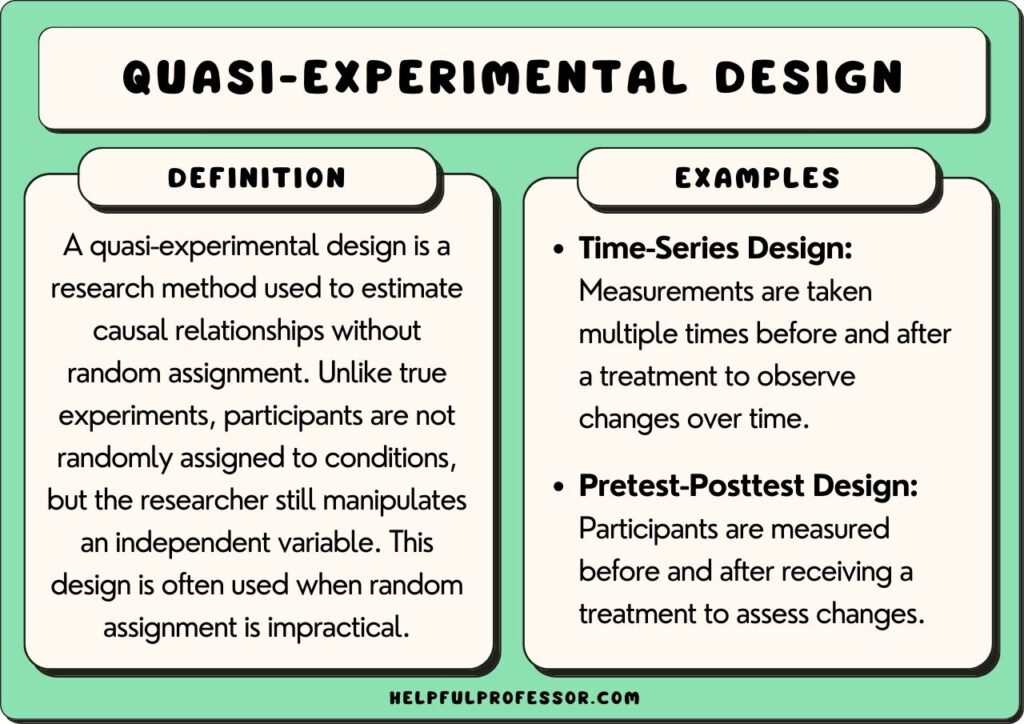

Quasi-experimental design refers to a type of research design that compares pre-existing groups of people rather than randomly assigned groups.

Because the groups of research participants already exist, they cannot be randomly assigned to a cohort. This makes inferring a causal relationship between the treatment and the observed/criterion variable difficult.

Quasi-experimental designs are generally considered inferior to true experimental designs.

Limitations of Quasi-Experimental Design

Since participants cannot be randomly assigned to the grouping variable (male/female; high education/low education), the internal validity of the study is questionable.

Extraneous variables may exist that explain the results. For example, with quasi-experimental studies involving gender, there are numerous cultural and biological variables that distinguish males and females other than gender alone.

Each one of those variables may be able to explain the results without the need to refer to gender.

Quasi-Experimental Design Examples

1. Smartboard Apps and Math

A school has decided to supplement their math resources with smartboard applications. The math teachers research the apps available and then choose two apps for each grade level. Before deciding on which apps to purchase, the school contacts the seller and asks for permission to demo/test the apps before purchasing the licenses.

The study involves having different teachers use the apps with their classes. Since there are two math teachers at each grade level, each teacher will use one of the apps in their classroom for three months. At the end of three months, all students will take the same math exams. Then the school can simply compare which app improved the students’ math scores the most.

This is a quasi-experiment because the school did not randomly assign students to one app or the other. The students were already in pre-existing groups/classes.

Randomly assigning students to one app or the other was impractical, but the lack of random assignment makes the results difficult to interpret.

For instance, if students in teacher A’s class did better than the students in teacher B’s class, then can we really say the difference was due to the app? There may be other differences between the two teachers that account for the results. This poses a serious threat to the study’s internal validity.
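
The teacher confound described above can be illustrated with a small simulation. This is only a sketch under invented assumptions: the function names, the 5-point teacher advantage, the score scale, and the large number of simulated students (chosen to keep sampling noise small) are all hypothetical. Neither app has any effect in the simulation, yet one class still comes out ahead.

```python
import random
from statistics import mean


def simulate_class(rng, n_students, teacher_boost, app_boost):
    """Simulated exam scores: baseline of 70 plus teacher and app effects plus noise."""
    return [70 + teacher_boost + app_boost + rng.gauss(0, 8) for _ in range(n_students)]


def class_gap(seed=0, n_students=2000):
    """Mean score of class A minus class B when the apps do nothing at all."""
    rng = random.Random(seed)
    # Assumption: both apps have zero effect, but teacher A adds 5 points.
    class_a = simulate_class(rng, n_students, teacher_boost=5.0, app_boost=0.0)
    class_b = simulate_class(rng, n_students, teacher_boost=0.0, app_boost=0.0)
    return mean(class_a) - mean(class_b)


print(f"Class A minus class B: {class_gap():.1f} points")
```

The gap in this sketch comes entirely from the teacher term, so a school comparing the two classes would wrongly credit the app used in class A.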

2. Leadership Training

There is reason to believe that teaching entrepreneurs modern leadership techniques will improve their performance and shorten how long it takes for them to reach profitability. Team members will feel better appreciated and work harder, which should translate to increased productivity and innovation.

This hypothetical study took place in a mid-sized city in a third-world country. The researchers marketed the training throughout the city and received interest from 5 start-ups in the tech sector and 5 in the textile industry. The leaders of each company then attended six weeks of workshops on employee motivation, leadership styles, and effective team management.

At the end of one year, the researchers returned. They conducted a standard assessment of each start-up’s growth trajectory and administered various surveys to employees.

The results indicated that tech start-ups were further along in their growth paths than textile start-ups. The data also showed that tech work teams reported greater job satisfaction and company loyalty than textile work teams.

Although the results appear straightforward, because the researchers used a quasi-experimental design, they cannot say that the training caused the results.

The two groups may differ in ways that could explain the results. For instance, perhaps there is less growth potential in the textile industry in that city, or perhaps tech leaders are more progressive and willing to accept new leadership strategies.

3. Parenting Styles and Academic Performance

Psychologists are very interested in factors that affect children’s academic performance. Since parenting styles affect a variety of children’s social and emotional profiles, it stands to reason that it may affect academic performance as well. The four parenting styles under study are: authoritarian, authoritative, permissive, and neglectful/uninvolved.

To examine this possible relationship, researchers assessed the parenting style of 120 families with third graders in a large metropolitan city. Trained raters made two-hour home visits to conduct observations of parent/child interactions. That data was later compared with the children’s grades.

The results revealed that children raised in authoritative households had the highest grades of all the groups.

However, because the researchers were not able to randomly assign children to one of the four parenting styles, the internal validity is called into question.

There may be other explanations for the results other than parenting style. For instance, maybe parents that practice authoritative parenting also come from a higher SES demographic than the other parents.

Because they have higher income and education levels, they may put more emphasis on their child’s academic performance. Or, because they have greater financial resources, their children attend STEM camps, co-curricular and other extracurricular academic-orientated classes.

4. Government Reforms and Economic Impact

Government policies can have a tremendous impact on economic development. Making it easier for small businesses to open and reducing bank loans are examples of policies that can have immediate results. So, a third-world country decides to test policy reforms in two mid-sized cities. One city receives reforms directed at small businesses, while the other receives reforms directed at luring foreign investment.

The government was careful to choose two cities that were similar in terms of size and population demographics.

Over the next five years, economic growth data were collected at the end of each fiscal year. The measures consisted of housing sales, local GDP, and unemployment rates.

At the end of five years, the results indicated that small business reforms had a much larger impact on economic growth than foreign investment reforms. The city that received small business reforms saw an increase in housing sales and GDP and a drop in unemployment. The other city saw stagnant sales and GDP and a slight increase in unemployment.

On the surface, it appears that small business reform is the better way to go. However, a more careful analysis revealed that the economic improvement observed in the one city was actually the result of two multinational real estate firms entering the market. The two firms specialize in converting dilapidated warehouses into shopping centers and residential properties.

5. Gender and Meditation

Meditation can help relieve stress and reduce symptoms of depression and anxiety. It is a simple and easy to use technique that just about anyone can try. However, are the benefits real or is it just that people believe it can help? To find out, a team of counselors designed a study to put it to a test.

Since they believe that women are more likely to benefit than men, they recruit both males and females to be in their study.

Both groups were trained in meditation by a licensed professional. The training took place over three weekends. Participants were instructed to practice at home at least four times a week for the next three months and keep a journal each time they meditate.

At the end of the three months, physical and psychological health data were collected on all participants. For physical health, participants’ blood pressure was measured. For psychological health, participants filled out a happiness scale and the emotional tone of their diaries was examined.

The results showed that meditation worked better for women than men. Women had lower blood pressure, scored higher on the happiness scale, and wrote more positive statements in their diaries.

Unfortunately, the researchers noticed that men apparently did not actually practice meditation as much as they should. They had very few journal entries and in post-study interviews, a vast majority of men admitted that they only practiced meditation about half the time.

The lack of practice is an extraneous variable. Perhaps if men had adhered to the study instructions, their results on the physical and psychological measures would have been as good as or better than the women’s.

The quasi-experiment is used when researchers want to study the effects of a variable/treatment on different groups of people. Groups can be defined based on gender, parenting style, SES demographics, or any number of other variables.

The problem is that when interpreting the results, even clear differences between the groups cannot be attributed to the treatment.

The groups may differ in ways other than the grouping variables. For example, leadership training in the study above may have improved the textile start-ups’ performance if the techniques had been applied at all. Similarly, men may have benefited from meditation as much as women, if they had just tried.

Baumrind, D. (1991). Parenting styles and adolescent development. In R. M. Lerner, A. C. Peterson, & J. Brooks-Gunn (Eds.), Encyclopedia of Adolescence (pp. 746–758). New York: Garland Publishing, Inc.

Cook, T. D., & Campbell, D. T. (1979). Quasi-experimentation: Design & analysis issues in field settings. Boston, MA: Houghton Mifflin.

Maciejewski, M. L. (2020). Quasi-experimental design. Biostatistics & Epidemiology, 4(1), 38–47. https://doi.org/10.1080/24709360.2018.1477468

Thyer, B. (2012). Quasi-experimental research designs. Oxford University Press. https://doi.org/10.1093/acprof:oso/9780195387384.001.0001

Dave Cornell (PhD)

Dr. Cornell has worked in education for more than 20 years. His work has involved designing teacher certification for Trinity College in London and in-service training for state governments in the United States. He has trained kindergarten teachers in 8 countries and helped businessmen and women open baby centers and kindergartens in 3 countries.

Chris Drew (PhD)

This article was peer-reviewed and edited by Chris Drew (PhD). The review process on Helpful Professor involves having a PhD level expert fact check, edit, and contribute to articles. Reviewers ensure all content reflects expert academic consensus and is backed up with reference to academic studies. Dr. Drew has published over 20 academic articles in scholarly journals. He is the former editor of the Journal of Learning Development in Higher Education and holds a PhD in Education from ACU.

Over the past couple of decades, teacher effectiveness has become a major focus to improve students’ mathematics learning. Teacher professional development (PD), in particular, has been at the center of efforts aimed at improving teaching practice and the mathematics learning of students. However, empirical evidence for the effectiveness of PD for improving student achievement is mixed and there is limited research-based knowledge about the features of effective PD not only in mathematics but also in other subject areas. In this quasi-experimental study, I examined the effect of a Math and Science Partnership (MSP) PD on student achievement trajectories. Results of hierarchical growth models for this study revealed that content-focused (Algebra 1 and Geometry), ongoing PD was effective for improving student achievement (relative to a matched comparison group) in Algebra 1 (both for high and low performing students) and in Geometry (for low performing students only). There was no effect of PD on students’ achievement in Algebra 2, which was not the focus of the MSP-PD. By demonstrating an effect of PD on student achievement, this study contributes to our growing knowledge base about features of PD programs that appear to contribute to their effectiveness. Moreover, it provides a case study showing how the research design might contribute in important ways to the ability to detect an effect of PD (if one exists) on student achievement. For example, given the data I had from the district, I was able to examine student growth within all Algebra 1, Geometry and Algebra 2 courses, while matching classrooms on aggregate student characteristics and school contexts. This allowed me to eliminate the potential confound of curriculum and to utilize longitudinal models to examine PD effects on students’ growth (relative to a comparison sample) for matched classrooms.
Findings of this study have implications for educational practitioners and policymakers in their efforts to design and support effective PD programs in mathematics, and these features likely transfer to the design of PD in all subject areas. Moreover, for educational researchers this study suggests potential strategies for demonstrating robust research-based evidence for the effectiveness of PD on student learning.

This site is hosted by the University Library System of the University of Pittsburgh as part of its D-Scribe Digital Publishing Program

7.3 Quasi-Experimental Research

Learning Objectives

- Explain what quasi-experimental research is and distinguish it clearly from both experimental and correlational research.

- Describe three different types of quasi-experimental research designs (nonequivalent groups, pretest-posttest, and interrupted time series) and identify examples of each one.

The prefix quasi means “resembling.” Thus quasi-experimental research is research that resembles experimental research but is not true experimental research. Although the independent variable is manipulated, participants are not randomly assigned to conditions or orders of conditions (Cook & Campbell, 1979). Because the independent variable is manipulated before the dependent variable is measured, quasi-experimental research eliminates the directionality problem. But because participants are not randomly assigned—making it likely that there are other differences between conditions—quasi-experimental research does not eliminate the problem of confounding variables. In terms of internal validity, therefore, quasi-experiments are generally somewhere between correlational studies and true experiments.

Quasi-experiments are most likely to be conducted in field settings in which random assignment is difficult or impossible. They are often conducted to evaluate the effectiveness of a treatment—perhaps a type of psychotherapy or an educational intervention. There are many different kinds of quasi-experiments, but we will discuss just a few of the most common ones here.

Nonequivalent Groups Design

Recall that when participants in a between-subjects experiment are randomly assigned to conditions, the resulting groups are likely to be quite similar. In fact, researchers consider them to be equivalent. When participants are not randomly assigned to conditions, however, the resulting groups are likely to be dissimilar in some ways. For this reason, researchers consider them to be nonequivalent. A nonequivalent groups design, then, is a between-subjects design in which participants have not been randomly assigned to conditions.

Imagine, for example, a researcher who wants to evaluate a new method of teaching fractions to third graders. One way would be to conduct a study with a treatment group consisting of one class of third-grade students and a control group consisting of another class of third-grade students. This would be a nonequivalent groups design because the students are not randomly assigned to classes by the researcher, which means there could be important differences between them. For example, the parents of higher achieving or more motivated students might have been more likely to request that their children be assigned to Ms. Williams’s class. Or the principal might have assigned the “troublemakers” to Mr. Jones’s class because he is a stronger disciplinarian. Of course, the teachers’ styles, and even the classroom environments, might be very different and might cause different levels of achievement or motivation among the students. If at the end of the study there was a difference in the two classes’ knowledge of fractions, it might have been caused by the difference between the teaching methods—but it might have been caused by any of these confounding variables.

Of course, researchers using a nonequivalent groups design can take steps to ensure that their groups are as similar as possible. In the present example, the researcher could try to select two classes at the same school, where the students in the two classes have similar scores on a standardized math test and the teachers are the same sex, are close in age, and have similar teaching styles. Taking such steps would increase the internal validity of the study because it would eliminate some of the most important confounding variables. But without true random assignment of the students to conditions, there remains the possibility of other important confounding variables that the researcher was not able to control.
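
One of the steps mentioned above, choosing two classes with similar standardized math scores, can be sketched in a few lines. The class names, the scores, and the `closest_pair` helper are hypothetical, and matching on a single pretest mean is a simplification: it does nothing about confounds that were never measured.

```python
from itertools import combinations
from statistics import mean


def closest_pair(pretest_scores):
    """Return the two class names whose mean pretest scores differ the least."""
    means = {name: mean(scores) for name, scores in pretest_scores.items()}
    return min(
        combinations(sorted(means), 2),
        key=lambda pair: abs(means[pair[0]] - means[pair[1]]),
    )


# Hypothetical standardized math scores for three third-grade classes.
classes = {
    "Class 1": [72, 65, 80, 77],
    "Class 2": [55, 60, 58, 62],
    "Class 3": [70, 75, 74, 73],
}

print(closest_pair(classes))
```

A researcher would then assign the treatment to one class in the chosen pair and use the other as the comparison group.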

Pretest-Posttest Design

In a pretest-posttest design, the dependent variable is measured once before the treatment is implemented and once after it is implemented. Imagine, for example, a researcher who is interested in the effectiveness of an antidrug education program on elementary school students’ attitudes toward illegal drugs. The researcher could measure the attitudes of students at a particular elementary school during one week, implement the antidrug program during the next week, and finally, measure their attitudes again the following week. The pretest-posttest design is much like a within-subjects experiment in which each participant is tested first under the control condition and then under the treatment condition. It is unlike a within-subjects experiment, however, in that the order of conditions is not counterbalanced because it typically is not possible for a participant to be tested in the treatment condition first and then in an “untreated” control condition.

If the average posttest score is better than the average pretest score, then it makes sense to conclude that the treatment might be responsible for the improvement. Unfortunately, one often cannot conclude this with a high degree of certainty because there may be other explanations for why the posttest scores are better. One category of alternative explanations goes under the name of history. Other things might have happened between the pretest and the posttest. Perhaps an antidrug program aired on television and many of the students watched it, or perhaps a celebrity died of a drug overdose and many of the students heard about it. Another category of alternative explanations goes under the name of maturation. Participants might have changed between the pretest and the posttest in ways that they were going to anyway because they are growing and learning. If it were a yearlong program, participants might become less impulsive or better reasoners and this might be responsible for the change.

Another alternative explanation for a change in the dependent variable in a pretest-posttest design is regression to the mean. This refers to the statistical fact that an individual who scores extremely on a variable on one occasion will tend to score less extremely on the next occasion. For example, a bowler with a long-term average of 150 who suddenly bowls a 220 will almost certainly score lower in the next game. Her score will “regress” toward her mean score of 150. Regression to the mean can be a problem when participants are selected for further study because of their extreme scores. Imagine, for example, that only students who scored especially low on a test of fractions are given a special training program and then retested. Regression to the mean all but guarantees that their scores will be higher even if the training program has no effect.

A closely related concept, and an extremely important one in psychological research, is spontaneous remission. This is the tendency for many medical and psychological problems to improve over time without any form of treatment. The common cold is a good example. If one were to measure symptom severity in 100 common cold sufferers today, give them a bowl of chicken soup every day, and then measure their symptom severity again in a week, they would probably be much improved. This does not mean that the chicken soup was responsible for the improvement, however, because they would have been much improved without any treatment at all. The same is true of many psychological problems. A group of severely depressed people today is likely to be less depressed on average in 6 months. In reviewing the results of several studies of treatments for depression, researchers Michael Posternak and Ivan Miller found that participants in waitlist control conditions improved an average of 10 to 15% before they received any treatment at all (Posternak & Miller, 2001). Thus one must generally be very cautious about inferring causality from pretest-posttest designs.
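
The fractions-test scenario for regression to the mean can be simulated directly. The sketch below is illustrative only: the score scale, the noise levels, the cutoff of 40, and the function name are assumptions made up for the example. Each simulated student has a stable skill level plus independent test-day noise, and the "training program" does nothing.

```python
import random
from statistics import mean


def regression_to_mean_demo(seed=1, n_students=10_000, cutoff=40.0):
    """Return (pretest mean, posttest mean) for students selected for low pretest scores."""
    rng = random.Random(seed)
    # Stable skill per student; every test adds fresh, independent noise.
    true_skill = [rng.gauss(60, 10) for _ in range(n_students)]
    pretest = [skill + rng.gauss(0, 10) for skill in true_skill]
    # Assumption: the training has zero effect, so the posttest is just a retest.
    posttest = [skill + rng.gauss(0, 10) for skill in true_skill]
    # Select only the students who scored especially low on the pretest.
    selected = [i for i, score in enumerate(pretest) if score < cutoff]
    pre_mean = mean(pretest[i] for i in selected)
    post_mean = mean(posttest[i] for i in selected)
    return pre_mean, post_mean


pre, post = regression_to_mean_demo()
print(f"Selected group: pretest mean {pre:.1f}, posttest mean {post:.1f}")
```

The selected group scores noticeably higher on the retest even though nothing was done to them, because part of what made their pretest scores so low was bad luck that does not repeat.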

Does Psychotherapy Work?

Early studies on the effectiveness of psychotherapy tended to use pretest-posttest designs. In a classic 1952 article, researcher Hans Eysenck summarized the results of 24 such studies showing that about two thirds of patients improved between the pretest and the posttest (Eysenck, 1952). But Eysenck also compared these results with archival data from state hospital and insurance company records showing that similar patients recovered at about the same rate without receiving psychotherapy. This suggested to Eysenck that the improvement that patients showed in the pretest-posttest studies might be no more than spontaneous remission. Note that Eysenck did not conclude that psychotherapy was ineffective. He merely concluded that there was no evidence that it was, and he wrote of “the necessity of properly planned and executed experimental studies into this important field” (p. 323). You can read the entire article here:

http://psychclassics.yorku.ca/Eysenck/psychotherapy.htm

Fortunately, many other researchers took up Eysenck’s challenge, and by 1980 hundreds of experiments had been conducted in which participants were randomly assigned to treatment and control conditions, and the results were summarized in a classic book by Mary Lee Smith, Gene Glass, and Thomas Miller (Smith, Glass, & Miller, 1980). They found that overall psychotherapy was quite effective, with about 80% of treatment participants improving more than the average control participant. Subsequent research has focused more on the conditions under which different types of psychotherapy are more or less effective.
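
The "about 80%" figure can be related to a standardized effect size with a short calculation. This is one possible reading, not something the text states: it assumes outcomes in both groups are normally distributed with equal spread and that "the average control participant" means the control-group mean. Under those assumptions, the share of treated participants above the control mean equals the normal CDF evaluated at the standardized mean difference, so the difference can be recovered with the inverse CDF.

```python
from statistics import NormalDist


def effect_size_from_share(share_above_control_mean):
    """Standardized mean difference implied by the share of treated
    participants who score above the control-group mean (normal model)."""
    return NormalDist().inv_cdf(share_above_control_mean)


d = effect_size_from_share(0.80)
print(round(d, 2))  # about 0.84 standard deviations
```

In other words, under this model the treatment group's average sits a little under one standard deviation above the control group's average.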

In a classic 1952 article, researcher Hans Eysenck pointed out the shortcomings of the simple pretest-posttest design for evaluating the effectiveness of psychotherapy.

Wikimedia Commons – CC BY-SA 3.0.

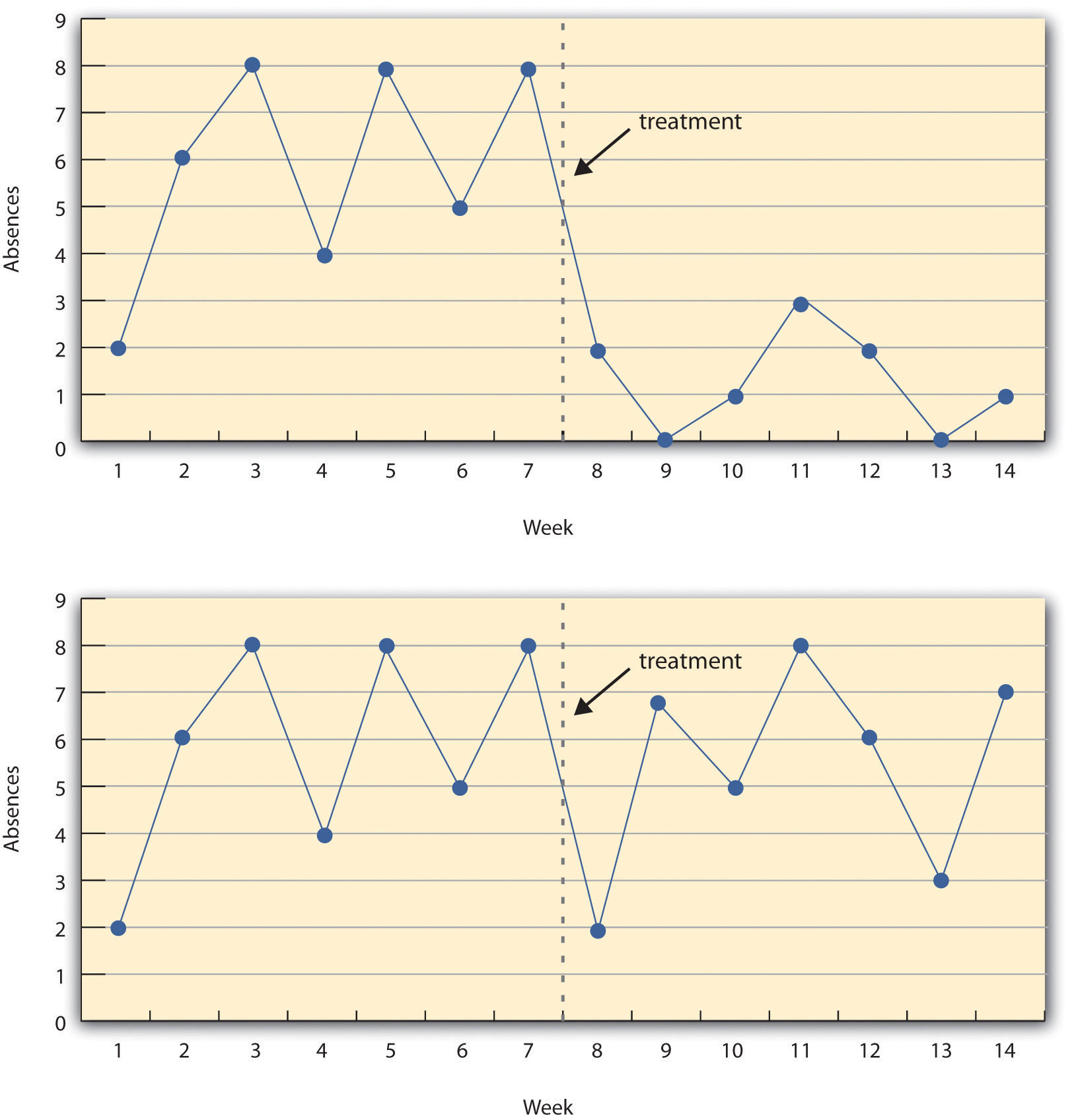

Interrupted Time Series Design

A variant of the pretest-posttest design is the interrupted time-series design. A time series is a set of measurements taken at intervals over a period of time. For example, a manufacturing company might measure its workers’ productivity each week for a year. In an interrupted time-series design, a time series like this is “interrupted” by a treatment. In one classic example, the treatment was the reduction of the work shifts in a factory from 10 hours to 8 hours (Cook & Campbell, 1979). Because productivity increased rather quickly after the shortening of the work shifts, and because it remained elevated for many months afterward, the researcher concluded that the shortening of the shifts caused the increase in productivity. Notice that the interrupted time-series design is like a pretest-posttest design in that it includes measurements of the dependent variable both before and after the treatment. It is unlike the pretest-posttest design, however, in that it includes multiple pretest and posttest measurements.

Figure 7.5 “A Hypothetical Interrupted Time-Series Design” shows data from a hypothetical interrupted time-series study. The dependent variable is the number of student absences per week in a research methods course. The treatment is that the instructor begins publicly taking attendance each day so that students know that the instructor is aware of who is present and who is absent. The top panel of Figure 7.5 “A Hypothetical Interrupted Time-Series Design” shows how the data might look if this treatment worked. There is a consistently high number of absences before the treatment, and there is an immediate and sustained drop in absences after the treatment. The bottom panel of Figure 7.5 “A Hypothetical Interrupted Time-Series Design” shows how the data might look if this treatment did not work. On average, the number of absences after the treatment is about the same as the number before. This figure also illustrates an advantage of the interrupted time-series design over a simpler pretest-posttest design. If there had been only one measurement of absences before the treatment at Week 7 and one afterward at Week 8, then it would have looked as though the treatment were responsible for the reduction. The multiple measurements both before and after the treatment suggest that the reduction between Weeks 7 and 8 is nothing more than normal week-to-week variation.

Figure 7.5 A Hypothetical Interrupted Time-Series Design

The top panel shows data that suggest that the treatment caused a reduction in absences. The bottom panel shows data that suggest that it did not.
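
The contrast between a single before-and-after comparison and the full series can be sketched with made-up numbers. The weekly absence counts below are invented for illustration and are not read off Figure 7.5. The treatment is assumed to begin after Week 7, and both helper functions are hypothetical.

```python
from statistics import mean


def single_point_change(series, n_pre):
    """Change from the last pre-treatment week to the first post-treatment week."""
    return series[n_pre] - series[n_pre - 1]


def mean_change(series, n_pre):
    """Change in the average level from all pre-treatment to all post-treatment weeks."""
    return mean(series[n_pre:]) - mean(series[:n_pre])


# Hypothetical absences per week for Weeks 1-14.
treatment_worked = [8, 7, 9, 8, 7, 8, 9, 3, 2, 3, 2, 3, 2, 3]
treatment_failed = [6, 3, 5, 7, 4, 3, 8, 4, 6, 3, 7, 5, 4, 6]

for label, series in [("worked", treatment_worked), ("failed", treatment_failed)]:
    print(label, single_point_change(series, 7), round(mean_change(series, 7), 2))
```

In the second series, the Week 7 to Week 8 comparison alone shows a drop of four absences, yet the average level barely moves, which is the week-to-week variation that the multiple measurements expose.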

Combination Designs

A type of quasi-experimental design that is generally better than either the nonequivalent groups design or the pretest-posttest design is one that combines elements of both. There is a treatment group that is given a pretest, receives a treatment, and then is given a posttest. But at the same time there is a control group that is given a pretest, does not receive the treatment, and then is given a posttest. The question, then, is not simply whether participants who receive the treatment improve but whether they improve more than participants who do not receive the treatment.

Imagine, for example, that students in one school are given a pretest on their attitudes toward drugs, then are exposed to an antidrug program, and finally are given a posttest. Students in a similar school are given the pretest, not exposed to an antidrug program, and finally are given a posttest. Again, if students in the treatment condition become more negative toward drugs, this could be an effect of the treatment, but it could also be a matter of history or maturation. If it really is an effect of the treatment, then students in the treatment condition should become more negative than students in the control condition. But if it is a matter of history (e.g., news of a celebrity drug overdose) or maturation (e.g., improved reasoning), then students in the two conditions would be likely to show similar amounts of change. This type of design does not completely eliminate the possibility of confounding variables, however. Something could occur at one of the schools but not the other (e.g., a student drug overdose), so students at the first school would be affected by it while students at the other school would not.
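
The comparison at the heart of this design is whether the treatment group changes more than the control group. It can be written as a difference between two pretest-to-posttest gains. The scores below are hypothetical attitude ratings (higher means more negative toward drugs), and the function names are invented for the sketch.

```python
from statistics import mean


def gain(pre, post):
    """Average change from pretest to posttest within one group."""
    return mean(post) - mean(pre)


def difference_in_gains(treat_pre, treat_post, ctrl_pre, ctrl_post):
    """Treatment-group gain minus control-group gain."""
    return gain(treat_pre, treat_post) - gain(ctrl_pre, ctrl_post)


# Hypothetical attitude scores for four students per school.
treat_pre, treat_post = [3, 4, 3, 4], [6, 6, 5, 7]
ctrl_pre, ctrl_post = [3, 4, 4, 3], [4, 5, 5, 4]

print(difference_in_gains(treat_pre, treat_post, ctrl_pre, ctrl_post))
```

A shared influence such as history or maturation shows up in both gains and cancels in the subtraction. An event at only one school does not cancel, which is why this design reduces confounding without eliminating it.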

Finally, if participants in this kind of design are randomly assigned to conditions, it becomes a true experiment rather than a quasi experiment. In fact, it is the kind of experiment that Eysenck called for—and that has now been conducted many times—to demonstrate the effectiveness of psychotherapy.

Key Takeaways

- Quasi-experimental research involves the manipulation of an independent variable without the random assignment of participants to conditions or orders of conditions. Among the important types are nonequivalent groups designs, pretest-posttest designs, and interrupted time-series designs.

- Quasi-experimental research eliminates the directionality problem because it involves the manipulation of the independent variable. It does not eliminate the problem of confounding variables, however, because it does not involve random assignment to conditions. For these reasons, quasi-experimental research is generally higher in internal validity than correlational studies but lower than true experiments.

- Practice: Imagine that two college professors decide to test the effect of giving daily quizzes on student performance in a statistics course. They decide that Professor A will give quizzes but Professor B will not. They will then compare the performance of students in their two sections on a common final exam. List five other variables that might differ between the two sections that could affect the results.

Discussion: Imagine that a group of obese children is recruited for a study in which their weight is measured, then they participate for 3 months in a program that encourages them to be more active, and finally their weight is measured again. Explain how each of the following might affect the results:

- regression to the mean

- spontaneous remission

Cook, T. D., & Campbell, D. T. (1979). Quasi-experimentation: Design & analysis issues in field settings. Boston, MA: Houghton Mifflin.

Eysenck, H. J. (1952). The effects of psychotherapy: An evaluation. Journal of Consulting Psychology, 16, 319–324.

Posternak, M. A., & Miller, I. (2001). Untreated short-term course of major depression: A meta-analysis of studies using outcomes from studies using wait-list control groups. Journal of Affective Disorders, 66, 139–146.

Smith, M. L., Glass, G. V., & Miller, T. I. (1980). The benefits of psychotherapy. Baltimore, MD: Johns Hopkins University Press.

Research Methods in Psychology Copyright © 2016 by University of Minnesota is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.

Quasi-experimental Research: What It Is, Types & Examples

Much like a true experiment, quasi-experimental research tries to demonstrate a cause-and-effect link between an independent and a dependent variable. Unlike a true experiment, however, a quasi-experiment does not rely on random assignment. Instead, subjects are sorted into groups based on non-random criteria.

What is Quasi-Experimental Research?

The prefix “quasi” means “resembling.” In quasi-experimental research, the independent variable is manipulated, but individuals are not randomly allocated to conditions or orders of conditions. As a result, quasi-experimental research is research that resembles an experiment but is not a true one.

The directionality problem is avoided in quasi-experimental research because the independent variable is manipulated before the dependent variable is measured. However, because individuals are not randomly assigned, there are likely to be other differences across conditions.

As a result, in terms of internal validity, quasi-experiments fall somewhere between correlational research and true experiments.

The key component of a true experiment is randomly allocated groups. This means that each person has an equal chance of being assigned to the experimental group, which receives the manipulation, or to the control group, which does not.

Simply put, a quasi-experiment is not a true experiment because it does not feature randomly allocated groups. Why is random allocation so crucial, given that it is the only distinction between quasi-experimental and true experimental research?

Let’s use an example to illustrate the point. Suppose we want to discover how a new psychological therapy affects depressed patients. In a true experiment, you would randomly split the patients on a psychiatric ward into two groups, with half receiving the new therapy and the other half receiving standard depression treatment.

The physicians would then compare the outcomes of the two groups to see whether the new therapy is more effective. Doctors, however, are unlikely to agree to such an experiment if they believe it is unethical to give one group a treatment while withholding it from another.

A quasi-experimental study is useful in this case. Instead of allocating patients at random, you identify pre-existing groups of psychotherapists in the hospitals. Some counselors will be eager to try the new therapy, while others will prefer to stick to the old ways.

These pre-existing groups can be used to compare the symptom development of individuals who received the novel therapy with those who received the normal course of treatment, even though the groups weren’t chosen at random.

If any substantial pre-existing differences between the groups can be accounted for, you can be reasonably confident that differences in outcome are attributable to the treatment rather than to other extraneous variables.

As we mentioned before, quasi-experimental research entails manipulating an independent variable without randomly assigning people to conditions or sequences of conditions. Non-equivalent group designs, pretest-posttest designs, and regression discontinuity designs are only a few of the essential types.

What are quasi-experimental research designs?

Quasi-experimental research designs are a type of research design that is similar to experimental designs but doesn’t give full control over the independent variable(s) like true experimental designs do.

In a quasi-experimental design, the researcher manipulates or observes an independent variable, but the participants are not assigned to groups at random. Instead, people are grouped based on characteristics they already share, such as their age, gender, or how many times they have been exposed to a certain stimulus.

Because the assignments are not random, it is harder to draw conclusions about cause and effect than in a real experiment. However, quasi-experimental designs are still useful when randomization is not possible or ethical.

The true experimental design may be impossible to accomplish or just too expensive, especially for researchers with few resources. Quasi-experimental designs enable you to investigate an issue by utilizing data that has already been paid for or gathered by others (often the government).

Because they are typically conducted in real-world settings, quasi-experiments have higher external validity than most true experiments. Because they allow better control for confounding variables than other non-experimental studies, they also have higher internal validity than those studies, though lower than true experiments.

Is quasi-experimental research quantitative or qualitative?

Quasi-experimental research is a quantitative research method. It involves numerical data collection and statistical analysis. Quasi-experimental research compares groups with different circumstances or treatments to find cause-and-effect links.

It draws statistical conclusions from quantitative data. Qualitative data can enhance quasi-experimental research by revealing participants’ experiences and opinions, but quantitative data is the method’s foundation.

Quasi-experimental research types

There are many different sorts of quasi-experimental designs. Three of the most popular are non-equivalent groups designs, regression discontinuity designs, and natural experiments.

Example: Natural Experiments

In the natural experiment example, the program could not afford to cover everyone who qualified, so slots were distributed by random lottery.

Researchers were able to investigate the program’s impact by using the people who enrolled as a treatment group and those who qualified but did not win the lottery as a control group.

How does QuestionPro help in quasi-experimental research?

QuestionPro can be a useful tool in quasi-experimental research because it includes features that can assist you in designing and analyzing your research study. Here are some ways in which QuestionPro can help in quasi-experimental research:

- Design surveys

- Randomize participants

- Collect data over time

- Analyze data

- Collaborate with your team

With QuestionPro, you have access to the most mature market research platform and tool that helps you collect and analyze the insights that matter the most. By leveraging InsightsHub, the unified hub for data management, you can leverage the consolidated platform to organize, explore, search, and discover your research data in one organized data repository .


Quasi-Experimental Research Design – Types, Methods


Quasi-Experimental Design

Quasi-experimental design is a research method that seeks to evaluate the causal relationships between variables, but without the full control over the independent variable(s) that is available in a true experimental design.

In a quasi-experimental design, the researcher uses an existing group of participants that is not randomly assigned to the experimental and control groups. Instead, the groups are selected based on pre-existing characteristics or conditions, such as age, gender, or the presence of a certain medical condition.

Types of Quasi-Experimental Design

There are several types of quasi-experimental designs that researchers use to study causal relationships between variables. Here are some of the most common types:

Non-Equivalent Control Group Design

This design involves comparing two groups of participants that were not formed by random assignment and that are chosen to be as similar as possible apart from the independent variable(s) the researcher is testing. One group receives the treatment or intervention being studied, while the other group does not. The two groups are then compared to see if there are any significant differences in the outcomes.

Interrupted Time-Series Design

This design involves collecting data on the dependent variable(s) over a period of time, both before and after an intervention or event. The researcher can then determine whether there was a significant change in the dependent variable(s) following the intervention or event.

Pretest-Posttest Design

This design involves measuring the dependent variable(s) before and after an intervention or event, but without a control group. This design can be useful for determining whether the intervention or event had an effect, but it does not allow for control over other factors that may have influenced the outcomes.

Regression Discontinuity Design

This design assigns participants to the intervention based on a specific cutoff point on a continuous variable, such as a test score. Participants just above and just below the cutoff point are then compared to determine whether the intervention or event had an effect.

Natural Experiments

This design involves studying the effects of an intervention or event that occurs naturally, without the researcher’s intervention. For example, a researcher might study the effects of a new law or policy that affects certain groups of people. This design is useful when true experiments are not feasible or ethical.

Data Analysis Methods

Here are some data analysis methods that are commonly used in quasi-experimental designs:

Descriptive Statistics

This method involves summarizing the data collected during a study using measures such as mean, median, mode, range, and standard deviation. Descriptive statistics can help researchers identify trends or patterns in the data, and can also be useful for identifying outliers or anomalies.

Inferential Statistics

This method involves using statistical tests to determine whether the results of a study are statistically significant. Inferential statistics can help researchers make generalizations about a population based on the sample data collected during the study. Common statistical tests used in quasi-experimental designs include t-tests, ANOVA, and regression analysis.
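As a minimal sketch of one such test, the snippet below computes Welch's t statistic for two independent groups using only the Python standard library. The function name and the exam scores are hypothetical and chosen purely for illustration; turning the statistic into a p-value would additionally require the t distribution, which is not shown here.

```python
from math import sqrt
from statistics import mean, variance

def welch_t(group_a, group_b):
    """Welch's t statistic for two independent samples (unequal variances allowed)."""
    # Standard error of the difference in means, using sample variances.
    se = sqrt(variance(group_a) / len(group_a) + variance(group_b) / len(group_b))
    return (mean(group_a) - mean(group_b)) / se

# Hypothetical exam scores for an intervention class and a comparison class.
intervention = [78, 82, 85, 90, 75]
comparison = [70, 72, 80, 68, 75]

t_stat = welch_t(intervention, comparison)
print(f"Welch t statistic: {t_stat:.2f}")
```

A large t statistic in a quasi-experiment still reflects only a statistical difference between the groups; without random assignment it does not by itself rule out confounding.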

Propensity Score Matching

This method is used to reduce bias in quasi-experimental designs by matching participants in the intervention group with participants in the control group who have similar characteristics. This can help to reduce the impact of confounding variables that may affect the study’s results.
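The matching step can be sketched as follows. This is only an illustration under stated assumptions: propensity scores (each participant's estimated probability of receiving the intervention, for example from a logistic regression) are assumed to have been computed already, and the function name, participant IDs, scores, and caliper value are all hypothetical. The sketch uses greedy one-to-one nearest-neighbour matching without replacement, which is one of several possible matching strategies.

```python
def match_nearest(treated, control, caliper=0.05):
    """Greedy 1:1 nearest-neighbour matching on precomputed propensity scores.

    treated, control: dicts mapping participant id -> propensity score.
    Returns a list of (treated_id, control_id) pairs; treated participants
    with no control within the caliper are left unmatched.
    """
    available = dict(control)  # controls not yet used in a pair
    pairs = []
    for t_id, t_score in sorted(treated.items(), key=lambda kv: kv[1]):
        if not available:
            break
        # Closest remaining control by absolute score distance.
        c_id = min(available, key=lambda c: abs(available[c] - t_score))
        if abs(available[c_id] - t_score) <= caliper:
            pairs.append((t_id, c_id))
            del available[c_id]  # match without replacement
    return pairs

# Hypothetical precomputed scores.
treated = {"t1": 0.62, "t2": 0.35, "t3": 0.90}
control = {"c1": 0.60, "c2": 0.33, "c3": 0.10, "c4": 0.70}

print(match_nearest(treated, control))
```

In this toy data, `t3` stays unmatched because no control falls within the caliper. Outcomes would then be compared only across the matched pairs.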

Difference-in-differences Analysis

This method compares the change in outcomes over time in a group that received the intervention with the change over the same period in a group that did not. Researchers can use the difference between those two changes to estimate whether a particular intervention has had an impact on the target population.
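The arithmetic behind a basic two-group, two-period difference-in-differences estimate can be sketched as below. The function name and the scores are hypothetical, and the estimate is only interpretable as an intervention effect under the usual assumption that both groups would have followed parallel trends without the intervention.

```python
from statistics import mean

def difference_in_differences(treat_pre, treat_post, ctrl_pre, ctrl_post):
    """(change in treated group) minus (change in comparison group)."""
    treat_change = mean(treat_post) - mean(treat_pre)
    ctrl_change = mean(ctrl_post) - mean(ctrl_pre)
    return treat_change - ctrl_change

# Hypothetical test scores before and after an intervention.
estimate = difference_in_differences(
    treat_pre=[60, 62, 58], treat_post=[70, 73, 67],
    ctrl_pre=[61, 59, 60], ctrl_post=[64, 63, 65],
)
print(estimate)  # treated group rose by 10, comparison group by 4, so this prints 6
```

Subtracting the comparison group's change removes influences, such as the simple passage of time, that affected both groups equally.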

Interrupted Time Series Analysis

This method is used to examine the impact of an intervention or treatment over time by comparing data collected before and after the intervention or treatment. This method can help researchers determine whether an intervention had a significant impact on the target population.
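One simple way to illustrate the idea is to fit a straight-line trend to the pre-intervention observations, project it forward, and compare the projection with what was actually observed afterwards. The sketch below does this with a hand-written least-squares helper; the function names and monthly values are hypothetical, and a full analysis would typically use segmented regression and account for autocorrelation, which this sketch does not.

```python
def fit_line(xs, ys):
    """Ordinary least-squares line through (xs, ys); returns (slope, intercept)."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar

def mean_gap_from_trend(pre, post):
    """Average of (observed - projected) over the post-intervention period."""
    slope, intercept = fit_line(list(range(len(pre))), pre)
    post_times = range(len(pre), len(pre) + len(post))
    projected = [intercept + slope * t for t in post_times]
    return sum(obs - proj for obs, proj in zip(post, projected)) / len(post)

# Hypothetical monthly outcome values before and after an intervention.
pre_values = [100, 102, 104, 106, 108, 110]
post_values = [98, 100, 102, 104]

print(mean_gap_from_trend(pre_values, post_values))
```

A negative gap means the post-intervention values sit below what the earlier trend would have predicted. Other events occurring at the same time remain a possible alternative explanation.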

Regression Discontinuity Analysis

This method is used to compare the outcomes of participants who fall on either side of a predetermined cutoff point. This method can help researchers determine whether an intervention had a significant impact on the target population.
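A minimal sketch of the comparison at the cutoff is shown below. It assumes, purely for illustration, that participants scoring at or above the cutoff receive the intervention, fits a separate straight line on each side within a chosen bandwidth, and takes the gap between the two lines at the cutoff as the estimate. The function names, data, cutoff, and bandwidth are all hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least-squares line through (xs, ys); returns (slope, intercept)."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar

def rd_estimate(scores, outcomes, cutoff, bandwidth):
    """Jump in the fitted outcome at the cutoff (above-side minus below-side)."""
    data = list(zip(scores, outcomes))
    below = [(s, y) for s, y in data if cutoff - bandwidth <= s < cutoff]
    above = [(s, y) for s, y in data if cutoff <= s <= cutoff + bandwidth]
    slope_b, icpt_b = fit_line(*zip(*below))
    slope_a, icpt_a = fit_line(*zip(*above))
    # Evaluate both fitted lines at the cutoff and take the difference.
    return (icpt_a + slope_a * cutoff) - (icpt_b + slope_b * cutoff)

# Hypothetical data: the outcome rises with the score and shifts at a cutoff of 50.
scores = [44, 46, 48, 50, 52, 54]
outcomes = [32, 33, 34, 40, 41, 42]

print(rd_estimate(scores, outcomes, cutoff=50, bandwidth=10))
```

The bandwidth controls how far from the cutoff observations are allowed to be; narrower bandwidths compare more similar participants but leave fewer data points on each side.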

Steps in Quasi-Experimental Design

Here are the general steps involved in conducting a quasi-experimental design:

- Identify the research question: Determine the research question and the variables that will be investigated.

- Choose the design: Choose the appropriate quasi-experimental design to address the research question. Examples include the pretest-posttest design, non-equivalent control group design, regression discontinuity design, and interrupted time series design.

- Select the participants: Select the participants who will be included in the study. Participants should be selected based on specific criteria relevant to the research question.

- Measure the variables: Measure the variables that are relevant to the research question. This may involve using surveys, questionnaires, tests, or other measures.

- Implement the intervention or treatment: Implement the intervention or treatment to the participants in the intervention group. This may involve training, education, counseling, or other interventions.

- Collect data: Collect data on the dependent variable(s) before and after the intervention. Data collection may also include collecting data on other variables that may impact the dependent variable(s).

- Analyze the data: Analyze the data collected to determine whether the intervention had a significant impact on the dependent variable(s).

- Draw conclusions: Draw conclusions about the relationship between the independent and dependent variables. If the results suggest a causal relationship, then appropriate recommendations may be made based on the findings.

Quasi-Experimental Design Examples

Here are some examples of real-world quasi-experimental designs:

- Evaluating the impact of a new teaching method: In this study, a group of students are taught using a new teaching method, while another group is taught using the traditional method. The test scores of both groups are compared before and after the intervention to determine whether the new teaching method had a significant impact on student performance.

- Assessing the effectiveness of a public health campaign: In this study, a public health campaign is launched to promote healthy eating habits among a targeted population. The behavior of the population is compared before and after the campaign to determine whether the intervention had a significant impact on the target behavior.

- Examining the impact of a new medication: In this study, a group of patients is given a new medication, while another group is given a placebo. The outcomes of both groups are compared to determine whether the new medication had a significant impact on the targeted health condition.

- Evaluating the effectiveness of a job training program : In this study, a group of unemployed individuals is enrolled in a job training program, while another group is not enrolled in any program. The employment rates of both groups are compared before and after the intervention to determine whether the training program had a significant impact on the employment rates of the participants.

- Assessing the impact of a new policy : In this study, a new policy is implemented in a particular area, while another area does not have the new policy. The outcomes of both areas are compared before and after the intervention to determine whether the new policy had a significant impact on the targeted behavior or outcome.

Applications of Quasi-Experimental Design

Here are some applications of quasi-experimental design:

- Educational research: Quasi-experimental designs are used to evaluate the effectiveness of educational interventions, such as new teaching methods, technology-based learning, or educational policies.

- Health research: Quasi-experimental designs are used to evaluate the effectiveness of health interventions, such as new medications, public health campaigns, or health policies.

- Social science research: Quasi-experimental designs are used to investigate the impact of social interventions, such as job training programs, welfare policies, or criminal justice programs.

- Business research: Quasi-experimental designs are used to evaluate the impact of business interventions, such as marketing campaigns, new products, or pricing strategies.

- Environmental research: Quasi-experimental designs are used to evaluate the impact of environmental interventions, such as conservation programs, pollution control policies, or renewable energy initiatives.

When to use Quasi-Experimental Design

Here are some situations where quasi-experimental designs may be appropriate:

- When the research question involves investigating the effectiveness of an intervention, policy, or program : In situations where it is not feasible or ethical to randomly assign participants to intervention and control groups, quasi-experimental designs can be used to evaluate the impact of the intervention on the targeted outcome.

- When the sample size is small: In situations where the sample size is small, random assignment may fail to balance confounding variables evenly across the intervention and control groups. Quasi-experimental designs, combined with alternative analytical methods, can be used to investigate the impact of an intervention in such cases.

- When the research question involves investigating a naturally occurring event : In some situations, researchers may be interested in investigating the impact of a naturally occurring event, such as a natural disaster or a major policy change. Quasi-experimental designs can be used to evaluate the impact of the event on the targeted outcome.

- When the research question involves investigating a long-term intervention: In situations where the intervention or program is long-term, it may be difficult to randomly assign participants to intervention and control groups for the entire duration of the intervention. Quasi-experimental designs can be used to evaluate the impact of the intervention over time.

- When the research question involves investigating the impact of a variable that cannot be manipulated : In some situations, it may not be possible or ethical to manipulate a variable of interest. Quasi-experimental designs can be used to investigate the relationship between the variable and the targeted outcome.

Purpose of Quasi-Experimental Design

The purpose of quasi-experimental design is to investigate the causal relationship between two or more variables when it is not feasible or ethical to conduct a randomized controlled trial (RCT). Quasi-experimental designs attempt to emulate the randomized control trial by mimicking the control group and the intervention group as much as possible.

The key purpose of quasi-experimental design is to evaluate the impact of an intervention, policy, or program on a targeted outcome while controlling for potential confounding factors that may affect the outcome. Quasi-experimental designs aim to answer questions such as: Did the intervention cause the change in the outcome? Would the outcome have changed without the intervention? And was the intervention effective in achieving its intended goals?

Quasi-experimental designs are useful in situations where randomized controlled trials are not feasible or ethical. They provide researchers with an alternative method to evaluate the effectiveness of interventions, policies, and programs in real-life settings. Quasi-experimental designs can also help inform policy and practice by providing valuable insights into the causal relationships between variables.

Overall, the purpose of quasi-experimental design is to provide a rigorous method for evaluating the impact of interventions, policies, and programs while controlling for potential confounding factors that may affect the outcome.

Advantages of Quasi-Experimental Design

Quasi-experimental designs have several advantages over other research designs, such as:

- Greater external validity : Quasi-experimental designs are more likely to have greater external validity than laboratory experiments because they are conducted in naturalistic settings. This means that the results are more likely to generalize to real-world situations.

- Ethical considerations: Quasi-experimental designs often involve naturally occurring events, such as natural disasters or policy changes. Researchers therefore do not need to manipulate variables themselves, which avoids the ethical concerns that such manipulation can raise.

- More practical: Quasi-experimental designs are often more practical than experimental designs because they are less expensive and easier to conduct. They can also be used to evaluate programs or policies that have already been implemented, which can save time and resources.

- No random assignment: Quasi-experimental designs do not require random assignment, which can be difficult or impossible in some cases, such as when studying the effects of a natural disaster. This means that researchers can still make causal inferences, although they must use statistical techniques to control for potential confounding variables.

- Greater generalizability : Quasi-experimental designs are often more generalizable than experimental designs because they include a wider range of participants and conditions. This can make the results more applicable to different populations and settings.

Limitations of Quasi-Experimental Design

There are several limitations associated with quasi-experimental designs, which include:

- Lack of Randomization: Quasi-experimental designs do not involve randomization of participants into groups, which means that the groups being studied may differ in important ways that could affect the outcome of the study. This can lead to problems with internal validity and limit the ability to make causal inferences.

- Selection Bias: Quasi-experimental designs may suffer from selection bias because participants are not randomly assigned to groups. Participants may self-select into groups or be assigned based on pre-existing characteristics, which may introduce bias into the study.

- History and Maturation: Quasi-experimental designs are susceptible to history and maturation effects, where the passage of time or other events may influence the outcome of the study.

- Lack of Control: Quasi-experimental designs may lack control over extraneous variables that could influence the outcome of the study. This can limit the ability to draw causal inferences from the study.

- Limited Generalizability: Quasi-experimental designs may have limited generalizability because the results may only apply to the specific population and context being studied.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer


- J Am Med Inform Assoc

- v.13(1); Jan-Feb 2006

The Use and Interpretation of Quasi-Experimental Studies in Medical Informatics


Quasi-experimental study designs, often described as nonrandomized, pre-post intervention studies, are common in the medical informatics literature. Yet little has been written about the benefits and limitations of the quasi-experimental approach as applied to informatics studies. This paper outlines a relative hierarchy and nomenclature of quasi-experimental study designs that is applicable to medical informatics intervention studies. In addition, the authors performed a systematic review of two medical informatics journals, the Journal of the American Medical Informatics Association (JAMIA) and the International Journal of Medical Informatics (IJMI), to determine the number of quasi-experimental studies published and how the studies are classified on the above-mentioned relative hierarchy. They hope that future medical informatics studies will implement higher level quasi-experimental study designs that yield more convincing evidence for causal links between medical informatics interventions and outcomes.

Quasi-experimental studies encompass a broad range of nonrandomized intervention studies. These designs are frequently used when it is not logistically feasible or ethical to conduct a randomized controlled trial. Examples of quasi-experimental studies follow. As one example of a quasi-experimental study, a hospital introduces a new order-entry system and wishes to study the impact of this intervention on the number of medication-related adverse events before and after the intervention. As another example, an informatics technology group is introducing a pharmacy order-entry system aimed at decreasing pharmacy costs. The intervention is implemented and pharmacy costs before and after the intervention are measured.

In medical informatics, the quasi-experimental, sometimes called the pre-post intervention, design often is used to evaluate the benefits of specific interventions. The increasing capacity of health care institutions to collect routine clinical data has led to the growing use of quasi-experimental study designs in the field of medical informatics as well as in other medical disciplines. However, little is written about these study designs in the medical literature or in traditional epidemiology textbooks. 1 , 2 , 3 In contrast, the social sciences literature is replete with examples of ways to implement and improve quasi-experimental studies. 4 , 5 , 6

In this paper, we review the different pretest-posttest quasi-experimental study designs, their nomenclature, and the relative hierarchy of these designs with respect to their ability to establish causal associations between an intervention and an outcome. The example of a pharmacy order-entry system aimed at decreasing pharmacy costs will be used throughout this article to illustrate the different quasi-experimental designs. We discuss limitations of quasi-experimental designs and offer methods to improve them. We also perform a systematic review of four years of publications from two informatics journals to determine the number of quasi-experimental studies, classify these studies into their application domains, determine whether the potential limitations of quasi-experimental studies were acknowledged by the authors, and place these studies into the above-mentioned relative hierarchy.

The authors reviewed articles and book chapters on the design of quasi-experimental studies. 4 , 5 , 6 , 7 , 8 , 9 , 10 Most of the reviewed articles referenced two textbooks that were then reviewed in depth. 4 , 6

Key advantages and disadvantages of quasi-experimental studies, as they pertain to the study of medical informatics, were identified. The potential methodological flaws of quasi-experimental medical informatics studies, which have the potential to introduce bias, were also identified. In addition, a summary table outlining a relative hierarchy and nomenclature of quasi-experimental study designs is described. In general, the higher the design is in the hierarchy, the greater the internal validity that the study traditionally possesses because the evidence of the potential causation between the intervention and the outcome is strengthened. 4

We then performed a systematic review of four years of publications from two informatics journals. First, we determined the number of quasi-experimental studies. We then classified these studies on the above-mentioned hierarchy. We also classified the quasi-experimental studies according to their application domain. The categories of application domains employed were based on categorization used by Yearbooks of Medical Informatics 1992–2005 and were similar to the categories of application domains employed by Annual Symposiums of the American Medical Informatics Association. 11 The categories were (1) health and clinical management; (2) patient records; (3) health information systems; (4) medical signal processing and biomedical imaging; (5) decision support, knowledge representation, and management; (6) education and consumer informatics; and (7) bioinformatics. Because the quasi-experimental study design has recognized limitations, we sought to determine whether authors acknowledged the potential limitations of this design. Examples of acknowledgment included mention of lack of randomization, the potential for regression to the mean, the presence of temporal confounders and the mention of another design that would have more internal validity.

All original scientific manuscripts published between January 2000 and December 2003 in the Journal of the American Medical Informatics Association (JAMIA) and the International Journal of Medical Informatics (IJMI) were reviewed. One author (ADH) reviewed all the papers to identify the number of quasi-experimental studies. Other authors (ADH, JCM, JF) then independently reviewed all the studies identified as quasi-experimental. The three authors then convened as a group to resolve any disagreements in study classification, application domain, and acknowledgment of limitations.

Results and Discussion

What Is a Quasi-experiment?

Quasi-experiments are studies that aim to evaluate interventions but that do not use randomization. Similar to randomized trials, quasi-experiments aim to demonstrate causality between an intervention and an outcome. Quasi-experimental studies can use both preintervention and postintervention measurements as well as nonrandomly selected control groups.

Using this basic definition, it is evident that many published studies in medical informatics utilize the quasi-experimental design. Although the randomized controlled trial is generally considered to have the highest level of credibility with regard to assessing causality, in medical informatics, researchers often choose not to randomize the intervention for one or more reasons: (1) ethical considerations, (2) difficulty of randomizing subjects, (3) difficulty of randomizing by location (e.g., by wards), and (4) small available sample size. Each of these reasons is discussed below.

Ethical considerations typically will not allow random withholding of an intervention with known efficacy. Thus, if the efficacy of an intervention has not been established, a randomized controlled trial is the design of choice to determine efficacy. But if the intervention under study incorporates an accepted, well-established therapeutic intervention, or if the intervention has either questionable efficacy or safety based on previously conducted studies, then the ethical issues of randomizing patients are sometimes raised. In the area of medical informatics, it is often believed prior to an implementation that an informatics intervention will likely be beneficial and thus medical informaticians and hospital administrators are often reluctant to randomize medical informatics interventions. In addition, there is often pressure to implement the intervention quickly because of its believed efficacy, thus not allowing researchers sufficient time to plan a randomized trial.

For medical informatics interventions, it is often difficult to randomize the intervention to individual patients or to individual informatics users. Although such randomization is technically possible, it is underused, which weakens any conclusion that an informatics intervention caused an outcome. For example, randomly allowing only half of medical residents to use pharmacy order-entry software at a tertiary care hospital is a scenario that hospital administrators and informatics users may not agree to for numerous reasons.

Similarly, informatics interventions often cannot be randomized to individual locations. Using the pharmacy order-entry system example, it may be difficult to randomize use of the system to only certain locations in a hospital or portions of certain locations. For example, if the pharmacy order-entry system involves an educational component, then people may apply the knowledge learned to nonintervention wards, thereby potentially masking the true effect of the intervention. When a design using randomized locations is employed successfully, the locations may be different in other respects (confounding variables), and this further complicates the analysis and interpretation.

In situations where it is known that only a small sample size will be available to test the efficacy of an intervention, randomization may not be a viable option. Randomization is beneficial because on average it tends to evenly distribute both known and unknown confounding variables between the intervention and control group. However, when the sample size is small, randomization may not adequately accomplish this balance. Thus, alternative design and analytical methods are often used in place of randomization when only small sample sizes are available.
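The effect of sample size on balance can be illustrated with a small simulation. The sketch below is not part of the reviewed studies; it assumes a single hypothetical baseline covariate (for example, a standardized severity score) drawn from a standard normal distribution, and simple 1:1 randomization. The function name `mean_imbalance` and all parameter values are illustrative assumptions.

```python
import random
import statistics

def mean_imbalance(n_subjects, n_trials=500, seed=0):
    """Average absolute difference in a baseline covariate between the two
    arms after simple 1:1 randomization of n_subjects people."""
    rng = random.Random(seed)
    diffs = []
    for _ in range(n_trials):
        # Hypothetical covariate, e.g. a standardized severity-of-illness score.
        covariate = [rng.gauss(0.0, 1.0) for _ in range(n_subjects)]
        # Random assignment: shuffle, then split into two equal arms.
        rng.shuffle(covariate)
        half = n_subjects // 2
        treated, control = covariate[:half], covariate[half:]
        diffs.append(abs(statistics.fmean(treated) - statistics.fmean(control)))
    return statistics.fmean(diffs)

small = mean_imbalance(10)
large = mean_imbalance(1000)
print(f"n=10:   mean |difference between arms| = {small:.3f}")
print(f"n=1000: mean |difference between arms| = {large:.3f}")
```

Under these assumptions, the typical chance imbalance between arms is far larger with 10 subjects than with 1000, which is the sense in which randomization "on average" balances confounders but may fail to do so in any one small study.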

What Are the Threats to Establishing Causality When Using Quasi-experimental Designs in Medical Informatics?

The lack of random assignment is the major weakness of the quasi-experimental study design. Associations identified in quasi-experiments meet one important requirement of causality since the intervention precedes the measurement of the outcome. Another requirement is that the outcome can be demonstrated to vary statistically with the intervention. Unfortunately, statistical association does not imply causality, especially if the study is poorly designed. Thus, in many quasi-experiments, one is most often left with the question: “Are there alternative explanations for the apparent causal association?” If these alternative explanations are credible, then the evidence of causation is less convincing. These rival hypotheses, or alternative explanations, arise from principles of epidemiologic study design.

Shadish et al. 4 describe nine threats to internal validity, which are listed in ▶ . Internal validity is defined as the degree to which observed changes in outcomes can be correctly inferred to be caused by an exposure or an intervention. In quasi-experimental studies of medical informatics, we believe that the methodological principles that most often result in alternative explanations for the apparent causal effect include (a) difficulty in measuring or controlling for important confounding variables, particularly unmeasured confounding variables, which can be viewed as a subset of the selection threat in ▶ ; and (b) results being explained by the statistical principle of regression to the mean. Each of these two principles is discussed in turn.

Threats to Internal Validity

Adapted from Shadish et al. 4

An inability to sufficiently control for important confounding variables arises from the lack of randomization. A variable is a confounding variable if it is associated with the exposure of interest and is also associated with the outcome of interest; the observed association between the exposure and the outcome may then reflect the influence of the confounding variable rather than a causal effect of the exposure. For example, in a study aiming to demonstrate that the introduction of a pharmacy order-entry system led to lower pharmacy costs, there are a number of important potential confounding variables (e.g., severity of illness of the patients, knowledge and experience of the software users, other changes in hospital policy) that may have differed in the preintervention and postintervention time periods ( ▶ ). In a multivariable regression, the first confounding variable could be addressed with severity of illness measures, but the second confounding variable would be difficult if not nearly impossible to measure and control. In addition, potential confounding variables that are unmeasured or immeasurable cannot be controlled for in nonrandomized quasi-experimental study designs and can only be properly controlled by the randomization process in randomized controlled trials.

Example of confounding. To get the true effect of the intervention of interest, we need to control for the confounding variable.
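A minimal simulation can make the confounding mechanism concrete. The sketch below is illustrative only: it assumes the intervention has no true effect on cost, that severe cases cost more, and that severe cases happen to be less common in the postintervention period. All numbers and names (`simulate_patient`, `TRUE_EFFECT`) are hypothetical, and the adjustment shown is a simple unweighted stratification rather than the multivariable regression mentioned above.

```python
import random
import statistics

rng = random.Random(1)
TRUE_EFFECT = 0.0  # assumption: the intervention does not change cost at all

def simulate_patient(post_period):
    # Assumed confounder: severe illness is rarer in the postintervention period.
    severe = rng.random() < (0.3 if post_period else 0.6)
    # Severe patients cost more, regardless of the intervention.
    cost = 100.0 + (50.0 if severe else 0.0) + rng.gauss(0.0, 10.0)
    if post_period:
        cost += TRUE_EFFECT
    return severe, cost

pre = [simulate_patient(False) for _ in range(5000)]
post = [simulate_patient(True) for _ in range(5000)]

def mean_cost(patients, severe=None):
    """Mean cost, optionally restricted to one severity stratum."""
    costs = [c for s, c in patients if severe is None or s == severe]
    return statistics.fmean(costs)

# Naive pre/post comparison ignores the confounder.
naive = mean_cost(post) - mean_cost(pre)
# Compare within each severity stratum, then average the two differences.
adjusted = statistics.fmean(
    [mean_cost(post, s) - mean_cost(pre, s) for s in (True, False)]
)
print(f"naive difference:    {naive:.1f}")
print(f"adjusted difference: {adjusted:.1f}")
```

In this setup the naive comparison shows a sizable drop in cost even though the intervention does nothing, while the within-stratum comparison is close to zero. The adjustment works here only because severity is measured; an unmeasured confounder could not be handled this way.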

Another important threat to establishing causality is regression to the mean. 12, 13, 14 This widespread statistical phenomenon can result in wrongly concluding that an effect is due to the intervention when in reality it is due to chance. The phenomenon was first described in 1886 by Francis Galton, who measured the adult height of children and their parents. He noted that when the average height of the parents was greater than the mean of the population, the children tended to be shorter than their parents, and conversely, when the average height of the parents was shorter than the population mean, the children tended to be taller than their parents.

In medical informatics, what often triggers the development and implementation of an intervention is a rise in the rate above the mean or norm. For example, increasing pharmacy costs and adverse events may prompt hospital informatics personnel to design and implement pharmacy order-entry systems. If this rise in costs or adverse events is really just an extreme observation that is still within the normal range of the hospital's pharmaceutical costs (i.e., the mean pharmaceutical cost for the hospital has not shifted), then the statistical principle of regression to the mean predicts that these elevated rates will tend to decline even without intervention. However, often informatics personnel and hospital administrators cannot wait passively for this decline to occur. Therefore, hospital personnel often implement one or more interventions, and if a decline in the rate occurs, they may mistakenly conclude that the decline is causally related to the intervention. In fact, an alternative explanation for the finding could be regression to the mean.
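Regression to the mean is easy to reproduce in a simulation. The sketch below assumes a hospital whose monthly pharmacy cost fluctuates independently around a fixed mean, with no intervention at any point; the mean, standard deviation, and trigger threshold are hypothetical values chosen for illustration.

```python
import random
import statistics

rng = random.Random(42)
MEAN_COST, SD = 100.0, 10.0  # hypothetical stable monthly pharmacy cost
THRESHOLD = 115.0            # a month this costly would "trigger" an intervention

# Independent monthly costs; the underlying mean never shifts.
months = [rng.gauss(MEAN_COST, SD) for _ in range(100_000)]

trigger, following = [], []
for this_month, next_month in zip(months, months[1:]):
    if this_month > THRESHOLD:
        trigger.append(this_month)
        following.append(next_month)

print(f"mean cost in trigger months:   {statistics.fmean(trigger):.1f}")
print(f"mean cost in following months: {statistics.fmean(following):.1f}")
```

The months that follow an extreme month average close to the long-run mean, so an intervention started after a trigger month would appear to lower costs even if it had no effect.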

What Are the Different Quasi-experimental Study Designs?

In the social sciences literature, quasi-experimental studies are divided into four study design groups 4 , 6 :

- A. Quasi-experimental designs without control groups

- B. Quasi-experimental designs that use control groups but no pretest

- C. Quasi-experimental designs that use control groups and pretests

- D. Interrupted time-series designs

There is a relative hierarchy within these categories of study designs, with category D studies being sounder than categories C, B, or A in terms of establishing causality. Thus, if feasible from a design and implementation point of view, investigators should aim to design studies that fall into the higher-rated categories. Shadish et al. 4 discuss 17 possible designs, with seven designs falling into category A, three designs in category B, six designs in category C, and one major design in category D. In our review, we determined that most medical informatics quasi-experiments could be characterized by 11 of the 17 designs (six in category A, one in category B, three in category C, and one in category D), because the other study designs were not used or not feasible in the medical informatics literature. Thus, for simplicity, we have summarized the 11 study designs most relevant to medical informatics research in ▶ .

Relative Hierarchy of Quasi-experimental Designs

O = Observational Measurement; X = Intervention Under Study. Time moves from left to right.

The nomenclature and relative hierarchy were used in the systematic review of four years of JAMIA and the IJMI. Similar to the relative hierarchy that exists in the evidence-based literature that assigns a hierarchy to randomized controlled trials, cohort studies, case-control studies, and case series, the hierarchy in ▶ is not absolute in that in some cases, it may be infeasible to perform a higher-level study. For example, there may be instances where an A6 design establishes stronger causality than a B1 design. 15, 16, 17

Quasi-experimental Designs without Control Groups

Here, X is the intervention and O is the outcome variable (this notation is continued throughout the article). In the first design (A1), an intervention (X) is implemented and a posttest observation (O1) is taken. For example, X could be the introduction of a pharmacy order-entry intervention and O1 could be the pharmacy costs following the intervention. This design is the weakest of the quasi-experimental designs that are discussed in this article. Without any pretest observations or a control group, there are multiple threats to internal validity. Unfortunately, this study design is often used in medical informatics when new software is introduced since it may be difficult to have pretest measurements due to time, technical, or cost constraints.

Design A2 is a commonly used study design. A single pretest measurement is taken (O1), an intervention (X) is implemented, and a posttest measurement is taken (O2). In this instance, period O1 frequently serves as the “control” period. For example, O1 could be pharmacy costs prior to the intervention, X could be the introduction of a pharmacy order-entry system, and O2 could be the pharmacy costs following the intervention. Including a pretest provides some information about what the pharmacy costs would have been had the intervention not occurred.

Design A3 adds a second pretest to design A2. Its advantage over A2 is that the second pretest helps provide evidence that can be used to refute regression to the mean and confounding as alternative explanations for any observed association between the intervention and the posttest outcome. For example, in a study where a pharmacy order-entry system led to lower pharmacy costs (O3 lower than both O1 and O2), if the two preintervention measurements of pharmacy costs (O1 and O2) were both elevated, it would be less likely that O3 is lower because of confounding or regression to the mean. Similarly, extending this study design by increasing the number of postintervention measurements could also help to provide evidence against confounding and regression to the mean as alternative explanations for observed associations.
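The reasoning behind the second pretest can be checked with a short simulation. The sketch below assumes monthly costs that vary independently around a fixed mean, with hypothetical values for the mean, standard deviation, and the threshold that counts as "elevated". It compares how often one elevated measurement occurs by chance with how often two consecutive elevated measurements do.

```python
import random

rng = random.Random(7)
MEAN_COST, SD, THRESHOLD = 100.0, 10.0, 115.0  # hypothetical values

# Stable process: the mean cost never shifts and months are independent.
months = [rng.gauss(MEAN_COST, SD) for _ in range(100_000)]
high = [m > THRESHOLD for m in months]

# Chance that a single pretest is elevated.
p_single = sum(high) / len(high)
# Chance that two consecutive pretests are both elevated.
pairs = list(zip(high, high[1:]))
p_double = sum(a and b for a, b in pairs) / len(pairs)

print(f"one elevated pretest:  {p_single:.4f}")
print(f"two elevated pretests: {p_double:.4f}")
```

Under these assumptions, two consecutive elevated pretests are much rarer by chance than one, so observing them makes a genuine shift in the mean more plausible and regression to the mean a weaker explanation for a later decline. Real cost series are often autocorrelated, which would narrow this gap.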

Design A4 includes a nonequivalent dependent variable (b) in addition to the primary dependent variable (a). Variables a and b should assess similar constructs; that is, the two measures should be affected by similar factors and confounding variables except for the effect of the intervention. Variable a is expected to change because of the intervention X, whereas variable b is not. Taking our example, variable a could be pharmacy costs and variable b could be the length of stay of patients. If our informatics intervention is aimed at decreasing pharmacy costs, we would expect to observe a decrease in pharmacy costs but not in the average length of stay of patients. However, a number of important confounding variables, such as severity of illness and knowledge of software users, might affect both outcome measures. Thus, if the average length of stay did not change following the intervention but pharmacy costs did, then the data are more convincing than if just pharmacy costs were measured.

The Removed-Treatment Design

This design adds a third measurement (O3), a second posttest, to the one-group pretest-posttest design and then removes the intervention before a final measure (O4) is made. The advantage of this design is that it allows one to test hypotheses about the outcome in the presence of the intervention and in the absence of the intervention. Thus, if one predicts a decrease in the outcome between O1 and O2 (after implementation of the intervention), then one would predict an increase in the outcome between O3 and O4 (after removal of the intervention). One caveat is that if the intervention is thought to have persistent effects, then O4 needs to be measured after these effects are likely to have disappeared. For example, a study would be more convincing if it demonstrated that pharmacy costs decreased after pharmacy order-entry system introduction (O2 and O3 less than O1) and that when the order-entry system was removed or disabled, the costs increased (O4 greater than O2 and O3 and closer to O1). In addition, there are often ethical issues in this design in terms of removing an intervention that may be providing benefit.
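The predicted pattern for the removed-treatment design can be written as a simple check. The function below is a hypothetical helper, not something taken from the reviewed studies; it assumes the intervention is expected to lower the outcome and compares point values only, ignoring sampling variability. The cost figures in the usage lines are made up.

```python
def removed_treatment_pattern(o1, o2, o3, o4):
    """Return True when four measurements match the pattern predicted for an
    intervention that lowers the outcome: O2 and O3 (intervention in place)
    below O1, and O4 (after removal) above both O2 and O3."""
    dropped_with_intervention = o2 < o1 and o3 < o1
    rose_after_removal = o4 > o2 and o4 > o3
    return dropped_with_intervention and rose_after_removal

# Hypothetical pharmacy costs: fall with the system, rebound after removal.
consistent = removed_treatment_pattern(100, 80, 82, 97)
# Hypothetical costs that stay low after removal, e.g. a persistent effect
# or an unrelated downward trend.
inconsistent = removed_treatment_pattern(100, 80, 82, 81)
print(consistent, inconsistent)
```

In a real analysis each comparison would be a statistical test rather than a strict inequality, and O4 would need to be measured after any persistent effect of the intervention had worn off.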

The Repeated-Treatment Design

The advantage of this design is that it demonstrates reproducibility of the association between the intervention and the outcome. For example, the association is more likely to be causal if one demonstrates that a pharmacy order-entry system results in decreased pharmacy costs when it is first introduced and again when it is reintroduced following an interruption of the intervention. As with design A5, the assumption must be made that the effect of the intervention is transient, which is most often applicable to medical informatics interventions. Because subjects may serve as their own controls in this design, it may yield greater statistical efficiency with fewer subjects.