Six Sigma Study Guide

Study notes and guides for Six Sigma certification tests

Hypothesis Testing Overview

Posted by Ted Hessing

You may know all the statistics in the world, but if you jump straight from those statistics to the wrong conclusion, you could make a million-dollar error. That’s where hypothesis testing comes in. It combines tried-and-tested analysis tools, real-world data, and a framework that allows us to test our assumptions and beliefs. This way, we can say how likely something is to be true or not within a given standard of accuracy.

When using hypothesis testing, we create the following:

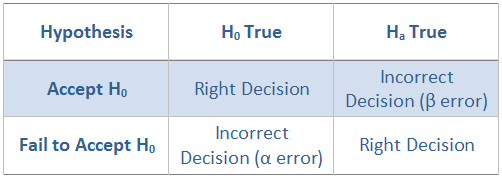

- A null hypothesis (H0): the assumption that the experimental results are due to chance alone; nothing (from 6M) influenced our results.

- An alternative hypothesis (Ha): we expect to find a particular outcome.

These hypotheses should always be mutually exclusive: if one is true, the other is false.

Once we have our null and alternative hypotheses, we evaluate them with a sample of an entire population, check our results, and conclude based on those results.

Note: We never accept a NULL hypothesis; we simply fail to reject it. We are always testing the NULL.

Basic Testing Process

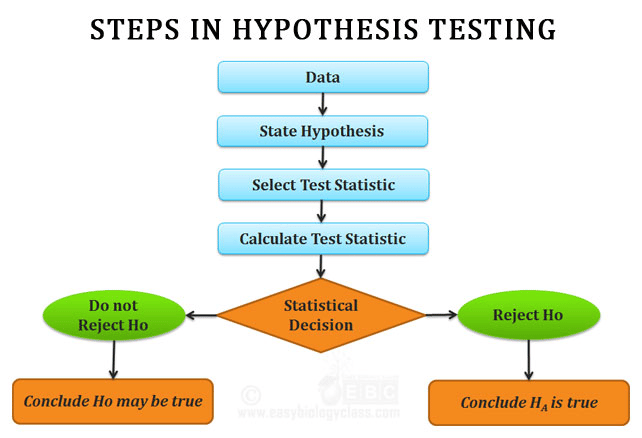

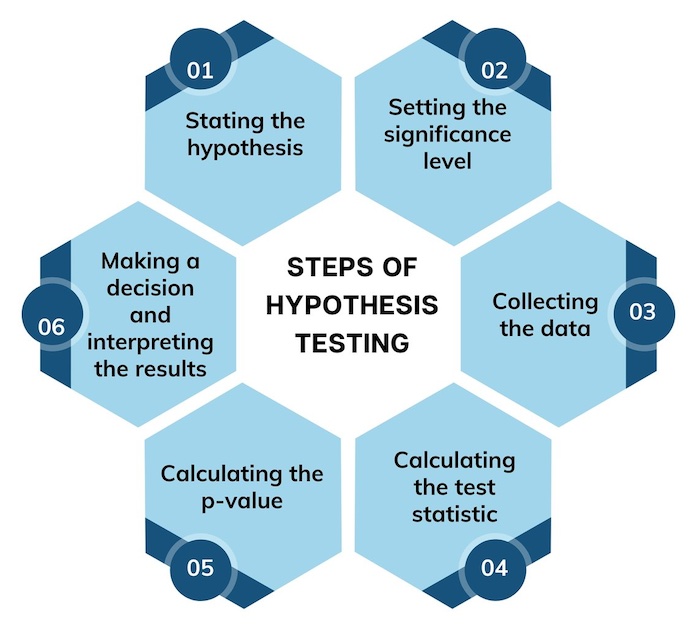

The basic testing process consists of five steps:

- Identify the question

- Determine the significance

- Choose the test

- Interpret the results

- Make a decision.

Read more about the hypothesis testing process .

Terminology

This field uses a lot of specialist terminologies. We’ve collated a list of the most common terms and their meanings for easy lookup. See the hypothesis testing terminology list .

Tailed Hypothesis Tests

These tests are commonly referred to according to their ‘tails’ or the critical regions that exist within them. There are three basic types: right-tailed, left-tailed, and two-tailed. Read more about tailed hypothesis tests .

Errors in Hypothesis Testing

When discussing an error in this context, the word has a very specific meaning: it refers to incorrectly either rejecting or accepting a hypothesis. Read more about errors in hypothesis testing .

We use P-values to determine how statistically significant our test results are and how probable we’ll make an error. Read more about p-values .

Types of Hypothesis Tests

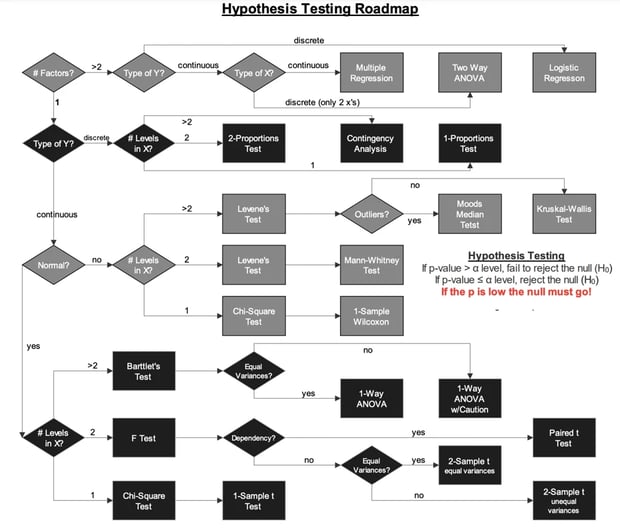

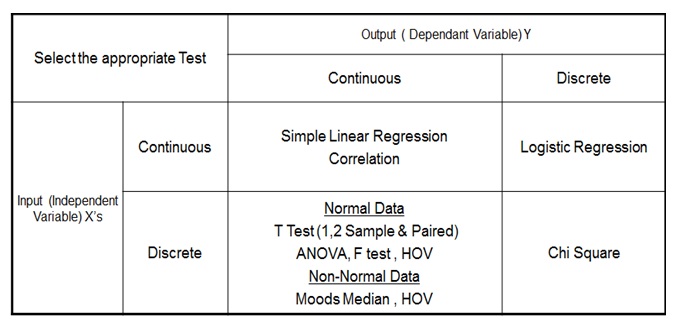

One aspect of testing that can confuse the new student is finding which–out of many available tests–is correct to use.

Parametric Tests

You can use these tests when it’s implied that a distribution is assumed for the population or the data is a sample from a distribution (often a normal distribution ).

Non Parametric Tests

You use non-parametric tests when you don’t know, can’t assume, and can’t identify what kind of distribution you have.

Hypothesis Test Study Guide

We run through the types of tests and briefly explain what each one is commonly used for. Read more about types of hypothesis tests .

Significance of Central Limit Theorem

The Central Limit Theorem is important for inferential statistics because it allows us to safely assume that the sampling distribution of the mean will be normal in most cases. This means that we can take advantage of statistical techniques that assume a normal distribution.

The Central Limit Theorem is one of the most profound and useful statistical and probability results. The large samples (more than 30) from any sort of distribution of the sample means will follow a normal distribution . The central limit theorem is vital in statistics for two main reasons—the normality assumption and the precision of the estimates.

The spread of the sample means is less (narrower) than the spread of the population you’re sampling from. So, it does not matter how the original population is skewed.

- The means of the sampling distribution of the mean is equal to the population mean µ x̅ =µ X

- The standard deviation of the sample means equals the standard deviation of the population divided by the square root of the sample size: σ( x̅ ) = σ(x) / √(n)

Central Limit Theorem allows using confidence intervals, hypothesis testing, DOE, regression analysis, and other analytical techniques. Many statistics have approximately normal distributions for large sample sizes, even when we are sampling from a non-normal distribution.

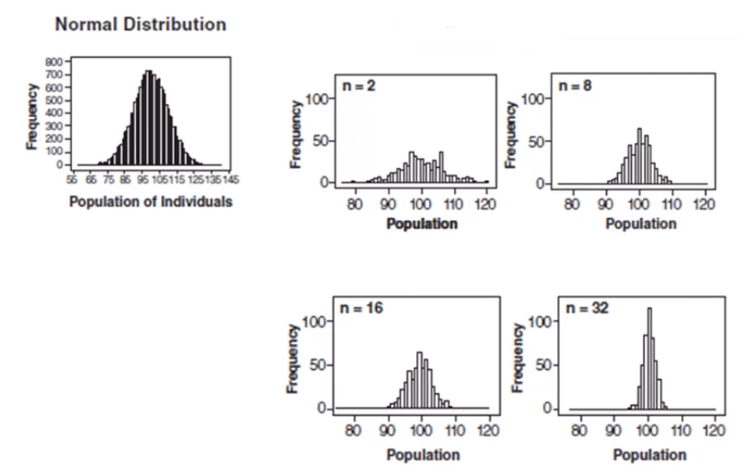

Control charts and the central limit theorem

In the above graph, subgroup sizes of 2, 8, 16, and 32 were used in this analysis. We can see the impact of the subgrouping. In Figure 2 (n=8), the histogram is not as wide and looks more “normally” distributed than Figure 1. Figure 3 shows the histogram for subgroup averages when n = 16, it is even more narrow and it looks more normally distributed. Figures 1 through 4 show, that as n increases, the distribution becomes narrower and more bell-shaped -just as the central limit theorem says. This means that we can often use well-developed statistical inference procedures and probability calculations that are based on a normal distribution, even if we are sampling from a population that is not normal, provided we have a large sample size.

Overview Videos

Leave a Reply Cancel reply

Your email address will not be published. Required fields are marked *

This site uses Akismet to reduce spam. Learn how your comment data is processed .

Insert/edit link

Enter the destination URL

Or link to existing content

🚀 Chart your career path in cutting-edge domains.

Talk to a career counsellor for personalised guidance 🤝.

9 Types of Hypothesis Testing for Six Sigma Data Analysis

Table of content, hypothesis testing and steps involved in it, why is hypothesis testing needed in six sigma, benefits of using hypothesis testing in six sigma, how to choose a hypothesis test for six sigma data analysis.

Hypothesis testing is a statistical technique that tells us whether the tests of our experiment have shown any meaningful results. After hypothesis testing, you can tell whether the test results have happened due to pure chance or a significant intervention. If it is down to chances, it will be difficult or impossible to replicate the results. However, if it is due to a particular instance, knowledge of that will enable us to replicate the results time and again.

Source: Medium

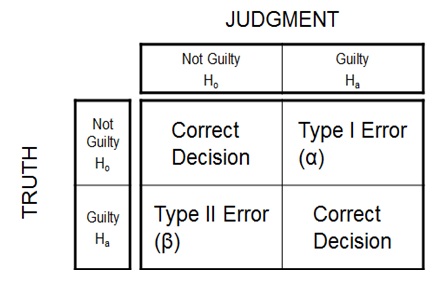

In hypothesis testing steps, the person conducting the test states these two hypotheses. Since both are opposites, only one of the two hypotheses can be correct. The alpha and beta risks are also identified as a part of this statistical data analysis. Alpha risk is the risk of incorrectly rejecting the null hypothesis. Beta risk, on the other hand, is the risk of not rejecting an incorrect null hypothesis.

The next step is to formulate the plan to evaluate the available data to arrive at the correct hypothesis. The tester carries out the plan and analyzes the sample. The tester then summarizes the result from the data analysis tools to find out which of the two hypotheses stands. Based on the data, the tester may reject the null hypothesis or the testing may fail to reject it. This, in short, answers what hypothesis testing is.

Using statistical techniques and formulae is a part of a Six Sigma manager’s work profile. In a Six Sigma project, the manager makes key decisions based on statistical test inferences. Hypothesis testing allows a greater degree of confidence in these decisions because they are not merely the mathematical differences between two samples.

Let us assume that a Six Sigma project produces thermal power. The quality of coal used as a raw material may influence the wattage of power generated. As the Six Sigma project manager, you want to establish whether there is a statistically significant relationship between the coal grade used and the power generated. With hypothesis testing, you can frame the proper null hypothesis, identify the alpha risk and the beta risk, and calculate the test statistic or p-value. This will help you to arrive at a more informed conclusion on your coal quality and power generation theory.

Hypothesis testing is useful in measuring the progress of your project as you strive to improve your product or service. Since it’s a test of significance, hypothesis testing helps you prove whether the data is statistically significant. In terms of Six Sigma hypothesis testing examples, it could be decisive in spotting the improvements in your product or service or the lack of them.

In a decision made based on a sample study, there is a probability that non-representative samples may flaw the decision. However, a hypothesis test converts a practical problem into a statistical problem, consequently giving us an estimate of the probability of a non-representative sample.

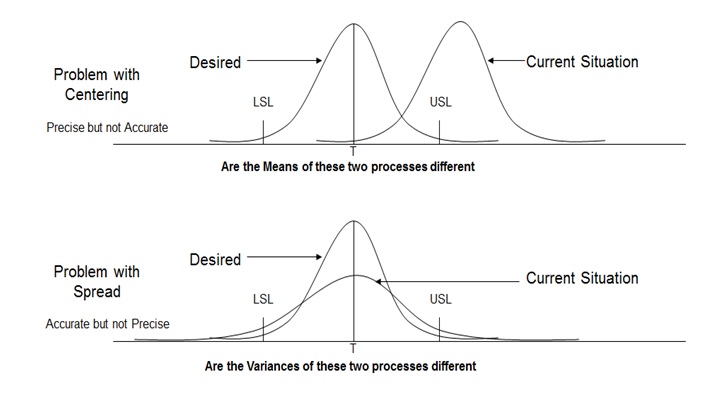

A process may face problems with centering or problems with spread. In other words, the mean of two processes may be different, or the variances of the two processes may be different. Both are instances of differences in distribution. Hypothesis testing can help us analyze whether the difference between the two data sets is statistically significant.

1) Normality – Normality tests whether the sample distributes normally. Here the null hypothesis states that the population is normally distributed, while the alternative hypothesis states otherwise. If the p-value of the test is less than the defined significance level, the tester can reject the null hypothesis.

The sample size is crucial in normality testing. If the sample size is large, a small deviation can project a statistically significant p-value, which will be difficult to detect in case of a small sample size. A Six Sigma project manager would consider the sample size before relying on the normality test result.

2) T-test – A T-test compares the respective means of two data groups. In a project, it is useful to find out whether two data sets are different from one another or whether a process or treatment has an effect on the population. In a T-test, the data has to be independent and normally distributed to an aggregable degree. The data groups should have a similar amount of variance. These assumptions are part of the T-test as it is a parametric test of difference. T-tests will make the pairwise comparison only, and other types of hypothesis testing are for more than two groups.

4) Homogeneity of Variance (HOV) – HOV tests the homogeneity of variances between populations. With an assumption that variances among different groups are equal, you can pool such groups together to estimate the population variance. With HOV you get a better assurance of this variance homogeneity, which can encourage you to use smaller sample sizes or make better interpretations from the same sample size.

In the case of two groups, you can assume that the variance would be one (the null hypothesis). Anything other than a variance of one, i.e., equal, would be in support of the alternative hypothesis. In the case of three or more populations, the alternative hypothesis would be that one population variance is different.

5) Analysis of Variance (ANOVA) – ANOVA compares the means of different groups to test if they significantly vary from one another. For instance, in a project, ANOVA can check whether there are multiple approaches to solving a particular problem. The mean, in this case, time taken to solve the problem, of all these approaches will help us find out the effectiveness of each approach. If there are only two groups, T-test and ANOVA will show the same result. The null hypothesis in ANOVA is that no sample means have any significant difference. Any difference in even one sample means would mean rejection of the null hypothesis.

6) Mood’s Median – Mood’s Median tests the similarity of medians across two or more populations. It is a non-parametric test, which means that it doesn’t make any assumption based on normally distributed data, unlike ANOVA, for instance. Non-parametric tests are a better failsafe against wrong inferences. Nevertheless, it has a null hypothesis as it should, which is that there are no significant differences between the medians under consideration.

7) Welch’s T-test – Also known as Welch’s Test for Unequal Variances, it checks two sample means for significant differences. The null hypothesis is that the means are equal while the alternative hypothesis is that the means are not equal. In Welch’s test, there is a modification in the degrees of freedom over the student’s T-test. Unlike the T-test, it doesn’t assume equal variances. It is more reliable when both the groups have unequal sample sizes and variances. But statisticians don’t recommend Welch’s T-test for small sample sizes.

8) Kruskal-Wallis H Test – Like Mood’s Median, Kruskal-Wallis H Test is a non-parametric variation of ANOVA. The two hypotheses in it are that the population medians are or are not equal. To run this test, the tester uses ranks of the data value, rather than the data values themselves. While Kruskal-Wallis will find out the significant differences between the populations, it doesn’t tell you which groups are, in fact, different. This test assumes there is one independent variable with two or more levels. The observations should be independent, with no relation among data points in different groups. Besides, all the tested groups must have s\distributions of similar shape.

9) Box-Cox Power Transformation – Box-Cox Power Transformation allows a broader test diameter, with normality not being a required assumption. It is known as power transformation because it transforms non-normal dependent variables into a normal shape. Box-Cox Power transformation uses an exponent called lambda. Its test value can vary from -5 to 5. Of all the test values of the lambda, the one that’s optimal value has the best approximation of a normal distribution curve.

As can be seen in the different types of hypothesis testing, the purpose of most of these tests are different, or should we say – significantly different. Even in the case of tests that are similar in mechanism and technique, smaller differences are there, whether it’s in the assumptions or the inclusion of an additional element.

Therefore, if you want to compare a population mean with a given standard, or two different population means, a T-test is the test to use. On the other hand, if means of more than two populations need comparing, Six Sigma project managers often use the ANOVA test.

If you are doing a comparison among the variances of two or more populations, the HOV is one of the appropriate tests. On the other hand, comparing the medians of two or more populations can be appropriate for the Mood’s Median test. To compare the differences in output between two or more sub-groups, a Chi-Square Test of Independence is the way to go.

The choice of the hypothesis depends on the needs of the Six Sigma data analytics. The broader goal in Six Sigma remains that we have to move the process mean and restrict the standard deviation to a minimum. The decisions are based on sample data because of the cost-effectiveness, rather than an exhaustive study of the total population. Hypothesis testing enables Six Sigma teams to decide whether there are different population parameters, or the difference, if any, is due to sample variations.

Once the Six Sigma team understands the problem it can correlate the practical difference in outcomes with the statistical differences found in testing. The hypothesis testing identifies the difference. The selection of the test type, in turn, will depend on underlying factors. Is the difference a change in the mean or the variance, for instance?

Planning to become a skilled Data Analyst? Learn Data Science from scratch with OdinSchool's Data Science Course and get dedicated placement assistance!

About the Author

Meet Raktim Sharma, a talented writer who enjoys baking and taking pictures in addition to contributing insightful articles. He contributes a plethora of knowledge to our blog with years of experience and skill.

Related Posts

Career Transition with Data Science Upskill Amidst Many Challenges!

A journey filled with twists and turns, Waquar Ahmed's career path unfolded like a novel, each chapter a step...

Unlocking Success: AON Analyst's Middle-Class Climb to a 124% Salary Hike!

Discover the importance of structured learning in the pursuit of success in the data science field.

The Role of Machine Learning in Data Science

Data Science is a field that studies data and employs a series of methods, algorithms, systems, and tools to...

Join OdinSchool's Data Science Bootcamp

With job assistance.

- Guide: Hypothesis Testing

Daniel Croft

Daniel Croft is an experienced continuous improvement manager with a Lean Six Sigma Black Belt and a Bachelor's degree in Business Management. With more than ten years of experience applying his skills across various industries, Daniel specializes in optimizing processes and improving efficiency. His approach combines practical experience with a deep understanding of business fundamentals to drive meaningful change.

- Last Updated: September 8, 2023

- Learn Lean Sigma

In the world of data-driven decision-making, Hypothesis Testing stands as a cornerstone methodology. It serves as the statistical backbone for a multitude of sectors, from manufacturing and logistics to healthcare and finance. But what exactly is Hypothesis Testing, and why is it so indispensable? Simply put, it’s a technique that allows you to validate or invalidate claims about a population based on sample data. Whether you’re looking to streamline a manufacturing process, optimize logistics, or improve customer satisfaction, Hypothesis Testing offers a structured approach to reach conclusive, data-supported decisions.

The graphical example above provides a simplified snapshot of a hypothesis test. The bell curve represents a normal distribution, the green area is where you’d accept the null hypothesis ( H 0), and the red area is the “rejection zone,” where you’d favor the alternative hypothesis ( Ha ). The vertical blue line represents the threshold value or “critical value,” beyond which you’d reject H 0.

Here’s a graphical example of a hypothesis test, which you can include in the introduction section of your guide. In this graph:

- The curve represents a standard normal distribution, often encountered in hypothesis tests.

- The green-shaded area signifies the “Acceptance Region,” where you would fail to reject the null hypothesis ( H 0).

- The red-shaded areas are the “Rejection Regions,” where you would reject H 0 in favor of the alternative hypothesis ( Ha ).

- The blue dashed lines indicate the “Critical Values” (±1.96), which are the thresholds for rejecting H 0.

This graphical representation serves as a conceptual foundation for understanding the mechanics of hypothesis testing. It visually illustrates what it means to accept or reject a hypothesis based on a predefined level of significance.

Table of Contents

What is hypothesis testing.

Hypothesis testing is a structured procedure in statistics used for drawing conclusions about a larger population based on a subset of that population, known as a sample. The method is widely used across different industries and sectors for a variety of purposes. Below, we’ll dissect the key components of hypothesis testing to provide a more in-depth understanding.

The Hypotheses: H 0 and Ha

In every hypothesis test, there are two competing statements:

- Null Hypothesis ( H 0) : This is the “status quo” hypothesis that you are trying to test against. It is a statement that asserts that there is no effect or difference. For example, in a manufacturing setting, the null hypothesis might state that a new production process does not improve the average output quality.

- Alternative Hypothesis ( Ha or H 1) : This is what you aim to prove by conducting the hypothesis test. It is the statement that there is an effect or difference. Using the same manufacturing example, the alternative hypothesis might state that the new process does improve the average output quality.

Significance Level ( α )

Before conducting the test, you decide on a “Significance Level” ( α ), typically set at 0.05 or 5%. This level represents the probability of rejecting the null hypothesis when it is actually true. Lower α values make the test more stringent, reducing the chances of a ‘false positive’.

Data Collection

You then proceed to gather data, which is usually a sample from a larger population. The quality of your test heavily relies on how well this sample represents the population. The data can be collected through various means such as surveys, observations, or experiments.

Statistical Test

Depending on the nature of the data and what you’re trying to prove, different statistical tests can be applied (e.g., t-test, chi-square test , ANOVA , etc.). These tests will compute a test statistic (e.g., t , 2 χ 2, F , etc.) based on your sample data.

Here are graphical examples of the distributions commonly used in three different types of statistical tests: t-test, Chi-square test, and ANOVA (Analysis of Variance), displayed side by side for comparison.

- Graph 1 (Leftmost): This graph represents a t-distribution, often used in t-tests. The t-distribution is similar to the normal distribution but tends to have heavier tails. It is commonly used when the sample size is small or the population variance is unknown.

Chi-square Test

- Graph 2 (Middle): The Chi-square distribution is used in Chi-square tests, often for testing independence or goodness-of-fit. Unlike the t-distribution, the Chi-square distribution is not symmetrical and only takes on positive values.

ANOVA (F-distribution)

- Graph 3 (Rightmost): The F-distribution is used in Analysis of Variance (ANOVA), a statistical test used to analyze the differences between group means. Like the Chi-square distribution, the F-distribution is also not symmetrical and takes only positive values.

These visual representations provide an intuitive understanding of the different statistical tests and their underlying distributions. Knowing which test to use and when is crucial for conducting accurate and meaningful hypothesis tests.

Decision Making

The test statistic is then compared to a critical value determined by the significance level ( α ) and the sample size. This comparison will give you a p-value. If the p-value is less than α , you reject the null hypothesis in favor of the alternative hypothesis. Otherwise, you fail to reject the null hypothesis.

Interpretation

Finally, you interpret the results in the context of what you were investigating. Rejecting the null hypothesis might mean implementing a new process or strategy, while failing to reject it might lead to a continuation of current practices.

To sum it up, hypothesis testing is not just a set of formulas but a methodical approach to problem-solving and decision-making based on data. It’s a crucial tool for anyone interested in deriving meaningful insights from data to make informed decisions.

Why is Hypothesis Testing Important?

Hypothesis testing is a cornerstone of statistical and empirical research, serving multiple functions in various fields. Let’s delve into each of the key areas where hypothesis testing holds significant importance:

Data-Driven Decisions

In today’s complex business environment, making decisions based on gut feeling or intuition is not enough; you need data to back up your choices. Hypothesis testing serves as a rigorous methodology for making decisions based on data. By setting up a null hypothesis and an alternative hypothesis, you can use statistical methods to determine which is more likely to be true given a data sample. This structured approach eliminates guesswork and adds empirical weight to your decisions, thereby increasing their credibility and effectiveness.

Risk Management

Hypothesis testing allows you to assign a ‘p-value’ to your findings, which is essentially the probability of observing the given sample data if the null hypothesis is true. This p-value can be directly used to quantify risk. For instance, a p-value of 0.05 implies there’s a 5% risk of rejecting the null hypothesis when it’s actually true. This is invaluable in scenarios like product launches or changes in operational processes, where understanding the risk involved can be as crucial as the decision itself.

Here’s an example to help you understand the concept better.

The graph above serves as a graphical representation to help explain the concept of a ‘p-value’ and its role in quantifying risk in hypothesis testing. Here’s how to interpret the graph:

Elements of the Graph

- The curve represents a Standard Normal Distribution , which is often used to represent z-scores in hypothesis testing.

- The red-shaded area on the right represents the Rejection Region . It corresponds to a 5% risk ( α =0.05) of rejecting the null hypothesis when it is actually true. This is the area where, if your test statistic falls, you would reject the null hypothesis.

- The green-shaded area represents the Acceptance Region , with a 95% level of confidence. If your test statistic falls in this region, you would fail to reject the null hypothesis.

- The blue dashed line is the Critical Value (approximately 1.645 in this example). If your standardized test statistic (z-value) exceeds this point, you enter the rejection region, and your p-value becomes less than 0.05, leading you to reject the null hypothesis.

Relating to Risk Management

The p-value can be directly related to risk management. For example, if you’re considering implementing a new manufacturing process, the p-value quantifies the risk of that decision. A low p-value (less than α ) would mean that the risk of rejecting the null hypothesis (i.e., going ahead with the new process) when it’s actually true is low, thus indicating a lower risk in implementing the change.

Quality Control

In sectors like manufacturing, automotive, and logistics, maintaining a high level of quality is not just an option but a necessity. Hypothesis testing is often employed in quality assurance and control processes to test whether a certain process or product conforms to standards. For example, if a car manufacturing line claims its error rate is below 5%, hypothesis testing can confirm or disprove this claim based on a sample of products. This ensures that quality is not compromised and that stakeholders can trust the end product.

Resource Optimization

Resource allocation is a significant challenge for any organization. Hypothesis testing can be a valuable tool in determining where resources will be most effectively utilized. For instance, in a manufacturing setting, you might want to test whether a new piece of machinery significantly increases production speed. A hypothesis test could provide the statistical evidence needed to decide whether investing in more of such machinery would be a wise use of resources.

In the realm of research and development, hypothesis testing can be a game-changer. When developing a new product or process, you’ll likely have various theories or hypotheses. Hypothesis testing allows you to systematically test these, filtering out the less likely options and focusing on the most promising ones. This not only speeds up the innovation process but also makes it more cost-effective by reducing the likelihood of investing in ideas that are statistically unlikely to be successful.

In summary, hypothesis testing is a versatile tool that adds rigor, reduces risk, and enhances the decision-making and innovation processes across various sectors and functions.

This graphical representation makes it easier to grasp how the p-value is used to quantify the risk involved in making a decision based on a hypothesis test.

Step-by-Step Guide to Hypothesis Testing

To make this guide practical and helpful if you are new learning about the concept we will explain each step of the process and follow it up with an example of the method being applied to a manufacturing line, and you want to test if a new process reduces the average time it takes to assemble a product.

Step 1: State the Hypotheses

The first and foremost step in hypothesis testing is to clearly define your hypotheses. This sets the stage for your entire test and guides the subsequent steps, from data collection to decision-making. At this stage, you formulate two competing hypotheses:

Null Hypothesis ( H 0)

The null hypothesis is a statement that there is no effect or no difference, and it serves as the hypothesis that you are trying to test against. It’s the default assumption that any kind of effect or difference you suspect is not real, and is due to chance. Formulating a clear null hypothesis is crucial, as your statistical tests will be aimed at challenging this hypothesis.

In a manufacturing context, if you’re testing whether a new assembly line process has reduced the time it takes to produce an item, your null hypothesis ( H 0) could be:

H 0:”The new process does not reduce the average assembly time.”

Alternative Hypothesis ( Ha or H 1)

The alternative hypothesis is what you want to prove. It is a statement that there is an effect or difference. This hypothesis is considered only after you find enough evidence against the null hypothesis.

Continuing with the manufacturing example, the alternative hypothesis ( Ha ) could be:

Ha :”The new process reduces the average assembly time.”

Types of Alternative Hypothesis

Depending on what exactly you are trying to prove, the alternative hypothesis can be:

- Two-Sided : You’re interested in deviations in either direction (greater or smaller).

- One-Sided : You’re interested in deviations only in one direction (either greater or smaller).

Scenario: Reducing Assembly Time in a Car Manufacturing Plant

You are a continuous improvement manager at a car manufacturing plant. One of the assembly lines has been struggling with longer assembly times, affecting the overall production schedule. A new assembly process has been proposed, promising to reduce the assembly time per car. Before rolling it out on the entire line, you decide to conduct a hypothesis test to see if the new process actually makes a difference. Null Hypothesis ( H 0) In this context, the null hypothesis would be the status quo, asserting that the new assembly process doesn’t reduce the assembly time per car. Mathematically, you could state it as: H 0:The average assembly time per car with the new process ≥ The average assembly time per car with the old process. Or simply: H 0:”The new process does not reduce the average assembly time per car.” Alternative Hypothesis ( Ha or H 1) The alternative hypothesis is what you aim to prove — that the new process is more efficient. Mathematically, it could be stated as: Ha :The average assembly time per car with the new process < The average assembly time per car with the old process Or simply: Ha :”The new process reduces the average assembly time per car.” Types of Alternative Hypothesis In this example, you’re only interested in knowing if the new process reduces the time, making it a One-Sided Alternative Hypothesis .

Step 2: Determine the Significance Level ( α )

Once you’ve clearly stated your null and alternative hypotheses, the next step is to decide on the significance level, often denoted by α . The significance level is a threshold below which the null hypothesis will be rejected. It quantifies the level of risk you’re willing to accept when making a decision based on the hypothesis test.

What is a Significance Level?

The significance level, usually expressed as a percentage, represents the probability of rejecting the null hypothesis when it is actually true. Common choices for α are 0.05, 0.01, and 0.10, representing 5%, 1%, and 10% levels of significance, respectively.

- 5% Significance Level ( α =0.05) : This is the most commonly used level and implies that you are willing to accept a 5% chance of rejecting the null hypothesis when it is true.

- 1% Significance Level ( α =0.01) : This is a more stringent level, used when you want to be more sure of your decision. The risk of falsely rejecting the null hypothesis is reduced to 1%.

- 10% Significance Level ( α =0.10) : This is a more lenient level, used when you are willing to take a higher risk. Here, the chance of falsely rejecting the null hypothesis is 10%.

Continuing with the manufacturing example, let’s say you decide to set α =0.05, meaning you’re willing to take a 5% risk of concluding that the new process is effective when it might not be.

How to Choose the Right Significance Level?

Choosing the right significance level depends on the context and the consequences of making a wrong decision. Here are some factors to consider:

- Criticality of Decision : For highly critical decisions with severe consequences if wrong, a lower α like 0.01 may be appropriate.

- Resource Constraints : If the cost of collecting more data is high, you may choose a higher α to make a decision based on a smaller sample size.

- Industry Standards : Sometimes, the choice of α may be dictated by industry norms or regulatory guidelines.

By the end of Step 2, you should have a well-defined significance level that will guide the rest of your hypothesis testing process. This level serves as the cut-off for determining whether the observed effect or difference in your sample is statistically significant or not.

Continuing the Scenario: Reducing Assembly Time in a Car Manufacturing Plant

After formulating the hypotheses, the next step is to set the significance level ( α ) that will be used to interpret the results of the hypothesis test. This is a critical decision as it quantifies the level of risk you’re willing to accept when making a conclusion based on the test. Setting the Significance Level Given that assembly time is a critical factor affecting the production schedule, and ultimately, the company’s bottom line, you decide to be fairly stringent in your test. You opt for a commonly used significance level: α = 0.05 This means you are willing to accept a 5% chance of rejecting the null hypothesis when it is actually true. In practical terms, if you find that the p-value of the test is less than 0.05, you will conclude that the new process significantly reduces assembly time and consider implementing it across the entire line. Why α = 0.05 ? Industry Standard : A 5% significance level is widely accepted in many industries, including manufacturing, for hypothesis testing. Risk Management : By setting α = 0.05 , you’re limiting the risk of concluding that the new process is effective when it may not be to just 5%. Balanced Approach : This level offers a balance between being too lenient (e.g., α=0.10) and too stringent (e.g., α=0.01), making it a reasonable choice for this scenario.

Step 3: Collect and Prepare the Data

After stating your hypotheses and setting the significance level, the next vital step is data collection. The data you collect serves as the basis for your hypothesis test, so it’s essential to gather accurate and relevant data.

Types of Data

Depending on your hypothesis, you’ll need to collect either:

- Quantitative Data : Numerical data that can be measured. Examples include height, weight, and temperature.

- Qualitative Data : Categorical data that represent characteristics. Examples include colors, gender, and material types.

Data Collection Methods

Various methods can be used to collect data, such as:

- Surveys and Questionnaires : Useful for collecting qualitative data and opinions.

- Observation : Collecting data through direct or participant observation.

- Experiments : Especially useful in scientific research where control over variables is possible.

- Existing Data : Utilizing databases, records, or any other data previously collected.

Sample Size

The sample size ( n ) is another crucial factor. A larger sample size generally gives more accurate results, but it’s often constrained by resources like time and money. The choice of sample size might also depend on the statistical test you plan to use.

Continuing with the manufacturing example, suppose you decide to collect data on the assembly time of 30 randomly chosen products, 15 made using the old process and 15 made using the new process. Here, your sample size n =30.

Data Preparation

Once data is collected, it often needs to be cleaned and prepared for analysis. This could involve:

- Removing Outliers : Outliers can skew the results and provide an inaccurate picture.

- Data Transformation : Converting data into a format suitable for statistical analysis.

- Data Coding : Categorizing or labeling data, necessary for qualitative data.

By the end of Step 3, you should have a dataset that is ready for statistical analysis. This dataset should be representative of the population you’re interested in and prepared in a way that makes it suitable for hypothesis testing.

With the hypotheses stated and the significance level set, you’re now ready to collect the data that will serve as the foundation for your hypothesis test. Given that you’re testing a change in a manufacturing process, the data will most likely be quantitative, representing the assembly time of cars produced on the line. Data Collection Plan You decide to use a Random Sampling Method for your data collection. For two weeks, assembly times for randomly selected cars will be recorded: one week using the old process and another week using the new process. Your aim is to collect data for 40 cars from each process, giving you a sample size ( n ) of 80 cars in total. Types of Data Quantitative Data : In this case, you’re collecting numerical data representing the assembly time in minutes for each car. Data Preparation Data Cleaning : Once the data is collected, you’ll need to inspect it for any anomalies or outliers that could skew your results. For example, if a significant machine breakdown happened during one of the weeks, you may need to adjust your data or collect more. Data Transformation : Given that you’re dealing with time, you may not need to transform your data, but it’s something to consider, depending on the statistical test you plan to use. Data Coding : Since you’re dealing with quantitative data in this scenario, coding is likely unnecessary unless you’re planning to categorize assembly times into bins (e.g., ‘fast’, ‘medium’, ‘slow’) for some reason. Example Data Points: Car_ID Process_Type Assembly_Time_Minutes 1 Old 38.53 2 Old 35.80 3 Old 36.96 4 Old 39.48 5 Old 38.74 6 Old 33.05 7 Old 36.90 8 Old 34.70 9 Old 34.79 … … … The complete dataset would contain 80 rows: 40 for the old process and 40 for the new process.

Step 4: Conduct the Statistical Test

After you have your hypotheses, significance level, and collected data, the next step is to actually perform the statistical test. This step involves calculations that will lead to a test statistic, which you’ll then use to make your decision regarding the null hypothesis.

Choose the Right Test

The first task is to decide which statistical test to use. The choice depends on several factors:

- Type of Data : Quantitative or Qualitative

- Sample Size : Large or Small

- Number of Groups or Categories : One-sample, Two-sample, or Multiple groups

For instance, you might choose a t-test for comparing means of two groups when you have a small sample size. Chi-square tests are often used for categorical data, and ANOVA is used for comparing means across more than two groups.

Calculation of Test Statistic

Once you’ve chosen the appropriate statistical test, the next step is to calculate the test statistic. This involves using the sample data in a specific formula for the chosen test.

Obtain the p-value

After calculating the test statistic, the next step is to find the p-value associated with it. The p-value represents the probability of observing the given test statistic if the null hypothesis is true.

- A small p-value (< α ) indicates strong evidence against the null hypothesis, so you reject the null hypothesis.

- A large p-value (> α ) indicates weak evidence against the null hypothesis, so you fail to reject the null hypothesis.

Make the Decision

You now compare the p-value to the predetermined significance level ( α ):

- If p < α , you reject the null hypothesis in favor of the alternative hypothesis.

- If p > α , you fail to reject the null hypothesis.

In the manufacturing case, if your calculated p-value is 0.03 and your α is 0.05, you would reject the null hypothesis, concluding that the new process effectively reduces the average assembly time.

By the end of Step 4, you will have either rejected or failed to reject the null hypothesis, providing a statistical basis for your decision-making process.

Now that you have collected and prepared your data, the next step is to conduct the actual statistical test to evaluate the null and alternative hypotheses. In this case, you’ll be comparing the mean assembly times between cars produced using the old and new processes to determine if the new process is statistically significantly faster. Choosing the Right Test Given that you have two sets of independent samples (old process and new process), a Two-sample t-test for Equality of Means seems appropriate for comparing the average assembly times. Preparing Data for Minitab Firstly, you would prepare your data in an Excel sheet or CSV file with one column for the assembly times using the old process and another column for the assembly times using the new process. Import this file into Minitab. Steps to Perform the Two-sample t-test in Minitab Open Minitab : Launch the Minitab software on your computer. Import Data : Navigate to File > Open and import your data file. Navigate to the t-test Menu : Go to Stat > Basic Statistics > 2-Sample t... . Select Columns : In the dialog box, specify the columns corresponding to the old and new process assembly times under “Sample 1” and “Sample 2.” Options : Click on Options and make sure that you set the confidence level to 95% (which corresponds to α = 0.05 ). Run the Test : Click OK to run the test. In this example output, the p-value is 0.0012, which is less than the significance level α = 0.05 . Hence, you would reject the null hypothesis. The t-statistic is -3.45, indicating that the mean of the new process is statistically significantly less than the mean of the old process, which aligns with your alternative hypothesis. Showing the data displayed as a Box plot in the below graphic it is easy to see the new process is statistically significantly better.

Why do a Hypothesis test?

You might ask, after all this why do a hypothesis test and not just look at the averages, which is a good question. While looking at average times might give you a general idea of which process is faster, hypothesis testing provides several advantages that a simple comparison of averages doesn’t offer:

Statistical Significance

Account for Random Variability : Hypothesis testing considers not just the averages, but also the variability within each group. This allows you to make more robust conclusions that account for random chance.

Quantify the Evidence : With hypothesis testing, you obtain a p-value that quantifies the strength of the evidence against the null hypothesis. A simple comparison of averages doesn’t provide this level of detail.

Control Type I Error : Hypothesis testing allows you to control the probability of making a Type I error (i.e., rejecting a true null hypothesis). This is particularly useful in settings where the consequences of such an error could be costly or risky.

Quantify Risk : Hypothesis testing provides a structured way to make decisions based on a predefined level of risk (the significance level, α ).

Decision-making Confidence

Objective Decision Making : The formal structure of hypothesis testing provides an objective framework for decision-making. This is especially useful in a business setting where decisions often have to be justified to stakeholders.

Replicability : The statistical rigor ensures that the results are replicable. Another team could perform the same test and expect to get similar results, which is not necessarily the case when comparing only averages.

Additional Insights

Understanding of Variability : Hypothesis testing often involves looking at measures of spread and distribution, not just the mean. This can offer additional insights into the processes you’re comparing.

Basis for Further Analysis : Once you’ve performed a hypothesis test, you can often follow it up with other analyses (like confidence intervals for the difference in means, or effect size calculations) that offer more detailed information.

In summary, while comparing averages is quicker and simpler, hypothesis testing provides a more reliable, nuanced, and objective basis for making data-driven decisions.

Step 5: Interpret the Results and Make Conclusions

Having conducted the statistical test and obtained the p-value, you’re now at a stage where you can interpret these results in the context of the problem you’re investigating. This step is crucial for transforming the statistical findings into actionable insights.

Interpret the p-value

The p-value you obtained tells you the significance of your results:

- Low p-value ( p < α ) : Indicates that the results are statistically significant, and it’s unlikely that the observed effects are due to random chance. In this case, you generally reject the null hypothesis.

- High p-value ( p > α ) : Indicates that the results are not statistically significant, and the observed effects could well be due to random chance. Here, you generally fail to reject the null hypothesis.

Relate to Real-world Context

You should then relate these statistical conclusions to the real-world context of your problem. This is where your expertise in your specific field comes into play.

In our manufacturing example, if you’ve found a statistically significant reduction in assembly time with a p-value of 0.03 (which is less than the α level of 0.05), you can confidently conclude that the new manufacturing process is more efficient. You might then consider implementing this new process across the entire assembly line.

Make Recommendations

Based on your conclusions, you can make recommendations for action or further study. For example:

- Implement Changes : If the test results are significant, consider making the changes on a larger scale.

- Further Research : If the test results are not clear or not significant, you may recommend further studies or data collection.

- Review Methodology : If you find that the results are not as expected, it might be useful to review the methodology and see if the test was conducted under the right conditions and with the right test parameters.

Document the Findings

Lastly, it’s essential to document all the steps taken, the methodology used, the data collected, and the conclusions drawn. This documentation is not only useful for any further studies but also for auditing purposes or for stakeholders who may need to understand the process and the findings.

By the end of Step 5, you’ll have turned the raw statistical findings into meaningful conclusions and actionable insights. This is the final step in the hypothesis testing process, making it a complete, robust method for informed decision-making.

You’ve successfully conducted the hypothesis test and found strong evidence to reject the null hypothesis in favor of the alternative: The new assembly process is statistically significantly faster than the old one. It’s now time to interpret these results in the context of your business operations and make actionable recommendations. Interpretation of Results Statistical Significance : The p-value of 0.0012 is well below the significance level of = 0.05 α = 0.05 , indicating that the results are statistically significant. Practical Significance : The boxplot and t-statistic (-3.45) suggest not just statistical, but also practical significance. The new process appears to be both consistently and substantially faster. Risk Assessment : The low p-value allows you to reject the null hypothesis with a high degree of confidence, meaning the risk of making a Type I error is minimal. Business Implications Increased Productivity : Implementing the new process could lead to an increase in the number of cars produced, thereby enhancing productivity. Cost Savings : Faster assembly time likely translates to lower labor costs. Quality Control : Consider monitoring the quality of cars produced under the new process closely to ensure that the speedier assembly does not compromise quality. Recommendations Implement New Process : Given the statistical and practical significance of the findings, recommend implementing the new process across the entire assembly line. Monitor and Adjust : Implement a control phase where the new process is monitored for both speed and quality. This could involve additional hypothesis tests or control charts. Communicate Findings : Share the results and recommendations with stakeholders through a formal presentation or report, emphasizing both the statistical rigor and the potential business benefits. Review Resource Allocation : Given the likely increase in productivity, assess if resources like labor and parts need to be reallocated to optimize the workflow further.

By following this step-by-step guide, you’ve journeyed through the rigorous yet enlightening process of hypothesis testing. From stating clear hypotheses to interpreting the results, each step has paved the way for making informed, data-driven decisions that can significantly impact your projects, business, or research.

Hypothesis testing is more than just a set of formulas or calculations; it’s a holistic approach to problem-solving that incorporates context, statistics, and strategic decision-making. While the process may seem daunting at first, each step serves a crucial role in ensuring that your conclusions are both statistically sound and practically relevant.

- McKenzie, C.R., 2004. Hypothesis testing and evaluation . Blackwell handbook of judgment and decision making , pp.200-219.

- Park, H.M., 2015. Hypothesis testing and statistical power of a test.

- Eberhardt, L.L., 2003. What should we do about hypothesis testing? . The Journal of wildlife management , pp.241-247.

Q: What is hypothesis testing in the context of Lean Six Sigma?

A: Hypothesis testing is a statistical method used in Lean Six Sigma to determine whether there is enough evidence in a sample of data to infer that a certain condition holds true for the entire population. In the Lean Six Sigma process, it’s commonly used to validate the effectiveness of process improvements by comparing performance metrics before and after changes are implemented. A null hypothesis ( H 0 ) usually represents no change or effect, while the alternative hypothesis ( H 1 ) indicates a significant change or effect.

Q: How do I determine which statistical test to use for my hypothesis?

A: The choice of statistical test for hypothesis testing depends on several factors, including the type of data (nominal, ordinal, interval, or ratio), the sample size, the number of samples (one sample, two samples, paired), and whether the data distribution is normal. For example, a t-test is used for comparing the means of two groups when the data is normally distributed, while a Chi-square test is suitable for categorical data to test the relationship between two variables. It’s important to choose the right test to ensure the validity of your hypothesis testing results.

Q: What is a p-value, and how does it relate to hypothesis testing?

A: A p-value is a probability value that helps you determine the significance of your results in hypothesis testing. It represents the likelihood of obtaining a result at least as extreme as the one observed during the test, assuming that the null hypothesis is true. In hypothesis testing, if the p-value is lower than the predetermined significance level (commonly α = 0.05 ), you reject the null hypothesis, suggesting that the observed effect is statistically significant. If the p-value is higher, you fail to reject the null hypothesis, indicating that there is not enough evidence to support the alternative hypothesis.

Q: Can you explain Type I and Type II errors in hypothesis testing?

A: Type I and Type II errors are potential errors that can occur in hypothesis testing. A Type I error, also known as a “false positive,” occurs when the null hypothesis is true, but it is incorrectly rejected. It is equivalent to a false alarm. On the other hand, a Type II error, or a “false negative,” happens when the null hypothesis is false, but it is erroneously failed to be rejected. This means a real effect or difference was missed. The risk of a Type I error is represented by the significance level ( α ), while the risk of a Type II error is denoted by β . Minimizing these errors is crucial for the reliability of hypothesis tests in continuous improvement projects.

Daniel Croft is a seasoned continuous improvement manager with a Black Belt in Lean Six Sigma. With over 10 years of real-world application experience across diverse sectors, Daniel has a passion for optimizing processes and fostering a culture of efficiency. He's not just a practitioner but also an avid learner, constantly seeking to expand his knowledge. Outside of his professional life, Daniel has a keen Investing, statistics and knowledge-sharing, which led him to create the website learnleansigma.com, a platform dedicated to Lean Six Sigma and process improvement insights.

Free Lean Six Sigma Templates

Improve your Lean Six Sigma projects with our free templates. They're designed to make implementation and management easier, helping you achieve better results.

Other Guides

- Certified Six Sigma Green Belt (CSSGB™)

- Certified Six Sigma Black Belt (CSSBB™)

- Certified Six Sigma Master Black Belt (CSSMBB™)

- Certified Six Sigma Champion (CSSC™)

- Certified Six Sigma Deployment Leader (CSSDL™)

- Certified Six Sigma Yellow Belt (CSSYB™)

- Certified Six Sigma Trainer (CSSTRA™)

- Certified Six Sigma Coach (CSSCOA™)

- Register Your Six Sigma Certification Program

- International Scrum Institute™

- International DevOps Certification Academy™

- International Organization for Project Management (IO4PM™)

- International Software Test Institute™

- International MBA Institute™

- Your Blog (US Army Personnel Selected Six Sigma Institute™)

- Our Industry Review and Feedback

- Our Official Recognition and Industry Clients

- Our Corporate Partners Program

- Frequently Asked Questions (FAQ)

- Shareable Digital Badge And Six Sigma Certifications Validation Registry (NEW)

- Recommend International Six Sigma Institute™ To Friends

- Introducing: World's First & Only Six Sigma AI Assistant (NEW)

- Your Free Six Sigma Book

- Your Free Premium Six Sigma Training

- Your Sample Six Sigma Certification Test Questions

- Your Free Six Sigma Events

- Terms and Conditions

- Privacy Policy

Six Sigma Hypothesis Testing: Results with P-Value & Data

Hypothesis testing is crucial in Six Sigma as it provides a statistical framework to analyze process improvements, measure project progress, and make data-driven decisions. By using hypothesis testing, Six Sigma practitioners can effectively determine whether changes made to a process have resulted in meaningful improvements or not, thus ensuring strategic decision-making based on evidence.

Six Sigma DMAIC - Analyze Phase - Hypothesis Testing

What is the difference that can be detected using Hypothesis Testing?

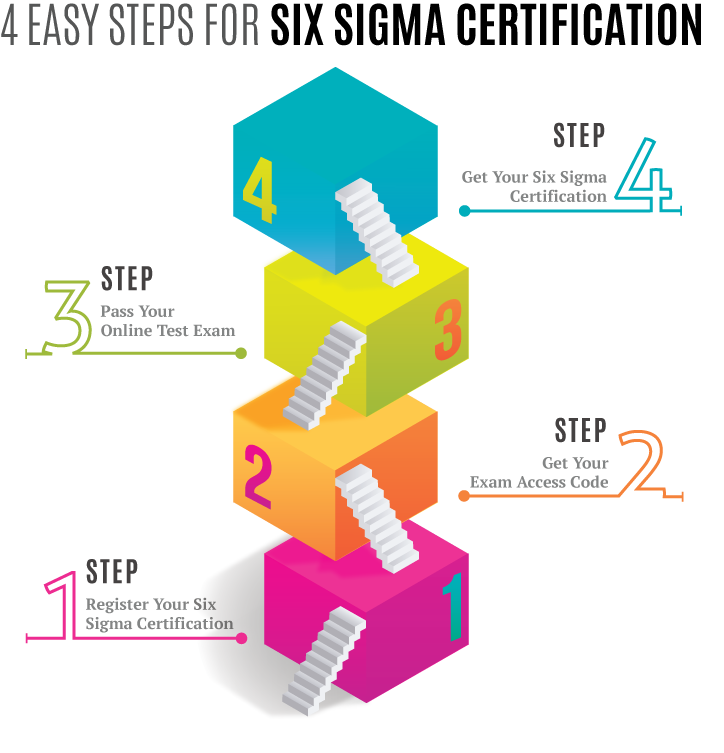

- Step 1: Determine appropriate Hypothesis test

- Step 2: State the Null Hypothesis Ho and Alternate Hypothesis Ha

- Step 3: Calculate Test Statistics / P-value against table value of test statistic

- Step 4: Interpret results – Accept or reject Ho

- Ho = Null Hypothesis – There is No statistically significant difference between the two groups

- Ha = Alternate Hypothesis – There is statistically significant difference between the two groups

P Value – Also known as Probability value, it is a statistical measure which indicates the probability of making an α error. The value ranges between 0 and 1. We normally work with 5% alpha risk, a p value lower than 0.05 means that we reject the Null hypothesis and accept alternate hypothesis.

What is P-Value in Six Sigma?

Six sigma hypothesis: null and alternative.

It's crucial to understand that both hypotheses play complementary roles in hypothesis testing. While the null hypothesis anchors the current state or standard processes, the alternative hypothesis serves as a beacon for evaluating potential enhancements or variances resulting from process improvements or changes. When these two hypotheses are utilized effectively, they provide a structured framework for detailed analysis and decision-making.

Tools for Six Sigma Hypothesis Testing

Difference analysis in six sigma hypothesis testing.

Applying a t-test for comparing means or another suitable hypothesis testing method will help evaluate whether the decrease from 10 minutes to 8 minutes is meaningful or just due to chance. It allows decision-makers to make informed choices based on statistical test inferences rather than relying solely on anecdotal evidence or individual perceptions.

Deciding Hypothesis Validity in Six Sigma

This decision-making process plays a pivotal role in organizational decision-making because it guides leaders in determining whether observed changes are truly beneficial or merely due to chance. In essence, what we are doing here is looking for hard evidence through data analysis—evidence that supports our belief about positive changes resulting from specific actions taken within our processes.

Enhancing Precision via Reduction of Variation

Practical applications of hypothesis testing in six sigma.

All these scenarios underscore the practical relevance of hypothesis testing in enabling organizations to make informed decisions and allocate resources effectively based on tangible evidence rather than intuition or assumptions.

Frequently Asked Questions about Six Sigma Hypothesis Testing

What are some common types of hypothesis tests used in six sigma projects, how does hypothesis testing fit into the six sigma methodology, how do you determine the significance level and power of a hypothesis test in six sigma, what are some practical examples or case studies where hypothesis testing has been applied successfully in a six sigma project.

What are the key steps involved in conducting hypothesis testing in Six Sigma?

What is the purpose of hypothesis testing in six sigma, in the dmaic method, which stage identifies with the confirmation and testing the statistical solution, project y is continuous and x is discrete to validate variance between subgroups. what does this mean, which hypothesis test will you perform when the y is continuous normal and x is discrete. what does this this mean, what is sigma test, what is beta testing is six sigma, what null hypothesis in six sigma, recap of six sigma hypothesis testing with p-values.

Mastering Six Sigma hypothesis testing, including the interpretation of P-values, is a skill that can significantly enhance one's ability to drive process improvements and minimize variation. The recap of these testing techniques serves as a valuable reminder of the precision required in decision-making within the Six Sigma framework. Whether you're initiating a project or refining existing processes, the insights gained from comprehending P-values contribute to the success of your continuous improvement initiatives.

Your Six Sigma Training Table of Contents

We guarantee that your free online training will make you pass your six sigma certification exam.

THE ONLY BOOK. YOU CAN SIMPLY LEARN SIX SIGMA.

YOUR SIX SIGMA REVEALED 2ND EDITION IS NOW READY. CLICK BOOK COVER FOR FREE DOWNLOAD...

Hypothesis Testing

Hypothesis Testing in Lean Six Sigma

One of the essential tools in the Lean Six Sigma toolkit is hypothesis testing. Hypothesis testing is crucial in the Define-Measure-Analyze-Improve-Control (DMAIC) cycle, helping organizations make data-driven decisions and achieve continuous improvement. This article will explore the significance of hypothesis testing in Lean Six Sigma, its key concepts, and practical applications.

Understanding Hypothesis Testing

Hypothesis testing is a statistical method used to determine whether there is a significant difference between two or more sets of data. In the context of Lean Six Sigma, it is primarily used to assess the impact of process changes or improvements. The process of hypothesis testing involves formulating two competing hypotheses:

- Null Hypothesis (H0): This hypothesis assumes that there is no significant difference or effect. It represents the status quo or the current state of the process.

- Alternative Hypothesis (Ha) or (H1): This hypothesis suggests that there is a significant difference or effect resulting from process changes or improvements.

The goal of hypothesis testing is to collect and analyze data to either accept or reject the null hypothesis in favor of the alternative hypothesis.

Key Steps in Hypothesis Testing in Lean Six Sigma

- Define the Problem: The first step in Lean Six Sigma’s DMAIC cycle is clearly defining the problem. This involves understanding the process, identifying the problem’s scope, and setting measurable goals for improvement.

- Formulate Hypotheses: Once the problem is defined, the next step is to formulate the null and alternative hypotheses. This step is crucial as it sets the foundation for the hypothesis testing process.

- Collect Data: Data collection is critical to hypothesis testing. Lean Six Sigma practitioners gather relevant data using various methods, ensuring the data is accurate, representative, and sufficient for analysis.

- Analyze Data: Statistical analysis is the heart of hypothesis testing. Different statistical tests are used depending on the data type and the analysis objectives. Common tests include t-tests, chi-square tests, and analysis of variance (ANOVA) .

- Determine Significance Level: A significance level (alpha) is set to determine the threshold for accepting or rejecting the null hypothesis in hypothesis testing. Common significance levels are 0.05 and 0.01, representing a 5% and 1% chance of making a Type I error, respectively.

- Calculate Test Statistic: The test statistic is computed from the collected data and compared to a critical value or a p-value to determine its significance.

- Make a Decision: Based on the test statistic and significance level, a decision is made either to reject the null hypothesis in favor of the alternative hypothesis or to fail to reject the null hypothesis.

- Draw Conclusions: The final step involves drawing conclusions based on the decision made in step 7. These conclusions inform the next steps in the Lean Six Sigma DMAIC cycle, whether it be process improvement, optimization, or control.

Practical Applications of Hypothesis Testing in Lean Six Sigma

- Process Improvement: Hypothesis testing is often used to assess whether process improvements, such as changes in machinery, materials, or procedures, lead to significant enhancements in process performance.

- Root Cause Analysis: Lean Six Sigma practitioners employ hypothesis testing to identify the root causes of process defects or variations, helping organizations address the underlying issues effectively.

- Product Quality Control: Manufacturers use hypothesis testing to ensure the quality of products meets predefined standards and specifications, reducing defects and customer complaints.

- Cost Reduction: By testing hypotheses related to cost reduction initiatives, organizations can determine whether cost-saving measures are effective and sustainable.

- Customer Satisfaction: Hypothesis testing can be applied to customer feedback data to determine if changes in products or services result in increased customer satisfaction.

Six Sigma Green Belt vs Six Sigma Black Belt in Hypothesis Testing

Six Sigma Black Belts and Six Sigma Green Belts both use hypothesis testing as a critical tool in process improvement projects, but there are differences in their roles and responsibilities, which influence how they employ hypothesis testing:

1. Project Leadership and Complexity:

- Black Belts : Black Belts typically lead larger and more complex improvement projects. They are responsible for selecting projects that significantly impact the organization’s strategic goals. Hypothesis testing for Black Belts often involves multifaceted analyses, intricate data collection strategies, and a deeper understanding of statistical techniques.

- Green Belts : Green Belts usually work on smaller-scale projects or support Black Belts on larger projects. Their projects may have a narrower focus and involve less complex hypothesis testing than Black Belts.

2. Statistical Expertise:

- Black Belts : Black Belts are expected to have a higher level of statistical expertise. They are often skilled in advanced statistical methods and can handle complex data analysis. They might use more advanced statistical techniques such as multivariate analysis, design of experiments (DOE), or regression modeling.

- Green Belts : Green Belts have a solid understanding of basic statistical methods and hypothesis testing but may not have the same depth of expertise as Black Belts. They typically use simpler statistical tools for hypothesis testing.

3. Project Oversight and Coaching:

- Black Belts : Black Belts often mentor or coach Green Belts and team members. They guide and oversee multiple projects simultaneously, ensuring that the right tools and methods, including hypothesis testing, are applied effectively.

- Green Belts : Green Belts focus primarily on their own project work but may receive guidance and support from Black Belts. They contribute to projects led by Black Belts and assist in data collection and analysis.

4. Strategic Impact:

- Black Belts : Black Belts work on projects that are closely aligned with the organization’s strategic goals. They are expected to deliver significant financial and operational benefits. Hypothesis testing for Black Belts may have a direct impact on strategic decision-making.

- Green Belts : Green Belts work on projects that often contribute to departmental or functional improvements. While their projects can still have a substantial impact, they may not be as closely tied to the organization’s overall strategic direction.

5. Reporting and Presentation:

- Black Belts : Black Belts are typically responsible for presenting project findings and recommendations to senior management. They must effectively communicate the results of hypothesis testing and their implications for the organization.

- Green Belts : Green Belts may present their findings to their immediate supervisors or project teams but may not have the same level of exposure to senior management as Black Belts.

Six Sigma Black Belts and Green Belts both use hypothesis testing, but Black Belts tend to handle more complex, strategically significant projects, often involving advanced statistical methods. They also play a coaching and leadership role within the organization, whereas Green Belts primarily focus on their own projects and may support Black Belts in larger initiatives. The level of statistical expertise, project complexity, and strategic impact are key factors that differentiate how each role uses hypothesis testing.

Drawbacks to Using Hypothesis Testing During a Six Sigma Project

It’s important to recognize that while hypothesis testing is a valuable tool, it is not without its challenges and limitations. Lets delve into some of the drawbacks and complexities associated with employing hypothesis testing within the context of Six Sigma projects.

Data Quality and Availability: One fundamental challenge lies in the quality and accessibility of data. Hypothesis testing relies heavily on having accurate and pertinent data at hand. Obtaining high-quality data can sometimes be a formidable task, and gaps or inaccuracies in the data can jeopardize the reliability of the analysis.

Assumptions and Simplifications: Many hypothesis tests are built upon certain assumptions about the data, such as adherence to specific statistical distributions or characteristics. These assumptions, when violated, can compromise the accuracy and validity of the test results. Real-world data often exhibits complexity that may not neatly conform to these assumptions.

Sample Size Considerations: The effectiveness of a hypothesis test is significantly influenced by the sample size. Smaller sample sizes may not possess the statistical power necessary to detect meaningful differences, potentially leading to erroneous conclusions. Conversely, larger sample sizes may unearth statistically significant differences that may not have practical significance.

Type I and Type II Errors: Hypothesis testing necessitates a careful balance between Type I errors (incorrectly rejecting a true null hypothesis) and Type II errors (failing to reject a false null hypothesis). The choice of the significance level (alpha) directly impacts the trade-off between these errors, making it crucial to select an appropriate alpha level for the specific context.

Complex Interactions: Real-world processes often involve intricate interactions between multiple variables and factors. Hypothesis testing, by design, simplifies these interactions, potentially leading to an oversimplified understanding of the process dynamics. Neglecting these interactions can result in inaccurate conclusions and ineffective process improvements.

Time and Resources: Hypothesis testing can be resource-intensive and time-consuming, especially when dealing with extensive datasets or complex statistical analyses. The process requires allocation of resources for data collection, analysis, and interpretation. Striking the right balance between the benefits of hypothesis testing and the resources invested is a consideration in Six Sigma projects.

Overemphasis on Statistical Significance: There is a risk of becoming overly focused on achieving statistical significance. While statistical significance holds importance, it does not always translate directly into practical significance or tangible business value. A fixation on p-values and statistical significance can sometimes lead to a myopic view of the broader context.

Contextual Factors: Hypothesis testing, on its own, does not encompass all contextual elements that may influence process performance. Factors such as external market conditions, customer preferences, and regulatory changes may not be adequately accounted for through hypothesis testing alone. Complementing hypothesis testing with qualitative analysis and a holistic understanding of the process environment is essential.

Hypothesis testing is a valuable tool in Six Sigma projects, but it is vital to acknowledge its limitations and complexities. Practitioners should exercise caution, ensuring that hypothesis testing is applied judiciously and that its results are interpreted within the broader framework of organizational goals. Success in Six Sigma projects often hinges on blending statistical rigor with practical wisdom.

Hypothesis testing is a fundamental tool in the Lean Six Sigma methodology, enabling organizations to make data-driven decisions, identify process improvements, and enhance overall efficiency and quality. When executed correctly, hypothesis testing empowers businesses to achieve their goals, reduce defects, cut costs, and, ultimately, deliver better products and services to their customers. By integrating hypothesis testing into the DMAIC cycle, Lean Six Sigma practitioners can drive continuous improvement and ensure the long-term success of their organizations.

These tools and techniques are not mutually exclusive, and their selection depends on the problem’s nature, the process’s complexity, and the data available. Six Sigma practitioners, including Green Belts and Black Belts, are trained to use these tools effectively to drive meaningful improvements during the Improve stage of DMAIC.

Lean Six Sigma Green Belt Certification

Lean Six Sigma Black Belt Certification

Six Sigma Body of Knowledge

View All Six Sigma Certifications

Six Sigma Resource Center

- All Certifications

- Accessibility

Connect With Us

Copyright © 2022 MSI. All Rights Reserved.

- Lean Six Sigma

Hypothesis Testing

Hypothesis Testing is a statistical method to infer and validate the significance of any assumption on a given data. While discussing about statistical significance of a data, it means that the data can be scientifically tested and determined on its significance against the predicted outcome. A detailed explanation given below will shed more information.

The data/information does not reveal the truth or is ambiguous at the first glance and require a prediction based on wisdom.

To start with, Hypothesis testing should follow the below steps:

- Hypothesis Selection: The prediction based on wisdom is then considered as Null Hypothesis (H 0 ). Say H 0 = x, H 0 <x, and H 0 > x. The alternate hypothesis (H a ) should be such that it can be accepted or is unpredictable at the end of the test. For the above H0 given, the respective alternate hypothesis would be: H a ≠x, H a ≥ x and H a ≤ x. Both the hypothesis are never rejected. It should always be: Accepted or Failed to accept either of the hypothesis (Refer the forthcoming example)

- H0 is true but Ha is accepted due to error in the data (α Error)

- Ha is true but H0 is accepted due to some error in the data (β Error)

The ‘α error’ is also known as Type I error and ‘β error’ as Type II Error. Various tests are available that can be used to check the significance of the data depending on the hypothesis. Few of the tests are ANOVA, Chi-Square test, One and Two sample t-test, etc.

Both the cases would result in incorrect inference causing us to take wrong decision and there by not achieving the desired results. To overcome that the test should be:

- Fixed with an acceptable significance level (1- α value). Say 95% or 99%. It means a variation or error in the test results of around (α): 5% or 1% is allowable. Higher the significance level, more accurate is the test result.

- Increase the sample size which will reduce the β error

Adequate care should be taken in defining the H0 and Ha. The ‘α error’ threshold should be clearly defined and compared with appropriate probabilistic value which would determine whether to accept H0 or not.

- Conduct the Test: Select the appropriate test and state the relevant test statistic based on the data type and distribution. Then calculate the test statistic and arrive at the probability table value of the statistic based on the given degrees of freedom and significance level. For example: In a chi-square test, an Actual Chi-square value will be calculated through the formulas; An expected Chi-square value will be looked up from the Probability table corresponding to the given degrees of freedom and significance level.

- Compare the actual and expected values and choose whether the Alternate Hypothesis can be accepted or not. (Refer to the respective Test for more details)

- p – Value < α value: Accept Alternate Hypothesis

- p – Value > α value: Reject Alternate Hypothesis

This is a purely probability based derivation and hence it is quite possible that different statistical tests may indicate different results.

Illustration: Now let us look at a world famous example which would help us in understanding hypothesis testing way clearer.

In a courtroom for a criminal trial, the defendant (Data point/observation) is not considered guilty unless proved.

H 0 : Defendant not guilty

H a : Defendant guilty

According to the law we know that an innocent should never be acquitted unless otherwise it is proved to be. Here we have considered H 0 as not guilty so that the erroneous decision of convicting an innocent is reduced. The alternate hypothesis would be accepted only when significant data is available to prove the defendant guilty.

When we have an innocent defendant and he is proved not guilty we accept the hypothesis H 0 ) of wisdom whereas when we prove the defendant to be guilty we either fail to accept the H 0 or simply accept the alternate hypothesis.

The Hypothesis Testing is indeed a very powerful statistical method and can provide support to the information that you can intend to prove to be either correct or incorrect.

Previous post: KAIZEN

Next post: Best Practice

- 10 Things You Should Know About Six Sigma

- Famous Six Sigma People

- Six Sigma Software

Recent Posts

- Control System Expansion

- Energy Audit Management

- Industrial Project Management

- Network Diagram

- Supply Chain and Logistics

- Visual Management

- Utilizing Pareto Charts in Business Analysis

- Privacy Policy

R Essentials for Six Sigma Analytics

Chapter 5 hypothesis testing, 5.1 common hypothesis testing for six sigma.

Although not comprehensive, this chapter discusses the most common hypothesis testing techniques a Six Sigma professional normally handles. It also assumes that you have the basic knowledge behind Hypothesis Testing such as Null and Alternative hypothesis, alpha and beta risks, Type I and II errors, confidence level, and so on. With that out of the way, let’s get right into the tests.

5.2 1-Sample t Test

Let’s create a vector with some random data using the rnorm function, like this: